- ¹Co-Innovation Center for Sustainable Forestry in Southern China, Nanjing Forestry University, Nanjing, China

- ²Citrus Research and Education Center, University of Florida, Lake Alfred, FL, United States

- ³Crop Development Centre/Department of Plant Sciences, University of Saskatchewan, Saskatoon, SK, Canada

- ⁴Gulf Coast Research and Education Center, University of Florida, Wimauma, FL, United States

Precision herbicide application can substantially reduce herbicide input and weed control cost in turfgrass management systems. Intelligent spot-spraying systems predominantly rely on machine vision-based detectors for autonomous weed control. In this work, several deep convolutional neural networks (DCNN) were trained and evaluated for detection of dandelion (Taraxacum officinale Web.), ground ivy (Glechoma hederacea L.), and spotted spurge (Euphorbia maculata L.) growing in perennial ryegrass. When the networks were trained using a dataset containing a total of 15,486 negative images (perennial ryegrass with no target weeds) and 17,600 positive images (containing target weeds), VGGNet achieved high F1 scores (≥0.9278) with high recall values (≥0.9952) for detection of E. maculata, G. hederacea, and T. officinale growing in perennial ryegrass. The F1 scores of AlexNet ranged from 0.8437 to 0.9418 and were generally lower than those of VGGNet for detecting E. maculata, G. hederacea, and T. officinale. GoogLeNet was not an effective DCNN for detecting these weed species, mainly due to its low precision. DetectNet was effective and achieved high F1 scores (≥0.9843) in the testing datasets for detection of T. officinale growing in perennial ryegrass. Moreover, VGGNet had the highest Matthews correlation coefficient (MCC) values, while GoogLeNet had the lowest. Overall, training DCNNs, particularly VGGNet and DetectNet, presents a clear path toward developing a machine vision-based decision system in smart sprayers for precision weed control in perennial ryegrass.

Introduction

Turfgrasses are the predominant vegetation cover in urban landscapes, including golf courses, institutional and residential lawns, parks, roadsides, and sports fields (Milesi et al., 2005). Weed control is one of the most challenging issues in turfgrass management. Weeds compete with turfgrass for nutrients, sunlight, and water, and they disrupt turfgrass aesthetics and functionality. Cultural practices, such as appropriate fertilization, irrigation, and mowing, can reduce weed infestation (Busey, 2003), but herbicides often provide the most effective weed control (McElroy and Martins, 2013). Conventional herbicide-based weed control relies on broadcast application, which sprays weed patches and pure turfgrass stands indiscriminately. Manual spot-spraying is commonly practiced to reduce herbicide input and weed control cost, but it is time-consuming and expensive.

Deep learning is a category of machine learning that allows a computer algorithm to learn and understand a dataset in terms of a hierarchy of concepts (Deng et al., 2009; LeCun et al., 2015). In recent years, deep learning has emerged as an effective approach in various scientific domains, including computer vision (LeCun et al., 2015; Kendall and Gal, 2017; Gu et al., 2018), natural language processing (Collobert and Weston, 2008; Collobert et al., 2011), and speech recognition (Hinton et al., 2012; LeCun et al., 2015). Deep learning has also proven promising in computer-assisted drug discovery and design (Gawehn et al., 2016), sentiment analysis and question answering (Ye et al., 2009; Bordes et al., 2014), prediction of the sequence specificities of DNA- and RNA-binding proteins (Alipanahi et al., 2015), and automatic brain tumor detection (Havaei et al., 2017).

Deep convolutional neural networks (DCNN) have an extraordinary ability to extract complex features from images (LeCun et al., 2015; Schmidhuber, 2015; Ghosal et al., 2018). They have been widely employed as powerful tools to classify images and detect objects (LeCun et al., 2015). In the 2012 ImageNet competition, a DCNN effectively classified a 1000-class dataset containing approximately a million high-resolution images (Krizhevsky et al., 2012). In recent years, a growing number of companies, such as Apple, Intel, Nvidia, and Tencent, have used DCNN-based machine vision for facial recognition (Song et al., 2014; Parkhi et al., 2015), self-driving cars (LeCun et al., 2015; Jordan and Mitchell, 2015), real-time smartphone vision applications (Ronao and Cho, 2016), and target identification for robotic grasping (Wang et al., 2016).

In agriculture, DCNNs have reliably detected various crop stresses (Ghosal et al., 2018). For example, Mohanty et al. (2016) presented a DCNN that identified 14 crop species and 26 diseases with an overall accuracy of >99%. DCNN-based machine vision can also identify plants. For example, Grinblat et al. (2016) presented a DCNN that reliably identified three legume species from leaf vein morphological patterns, while dos Santos Ferreira et al. (2017) presented a DCNN that discriminated various broadleaf and grassy weeds from soybean (Glycine max L. Merr.) and soil with an overall accuracy of >99%. Recently, Teimouri et al. (2018) presented a method for automatically identifying 18 weed species and determining their growth stages. Accurate image classification and object detection, along with fast image processing, are of paramount importance for real-time weed detection and precision herbicide application (Fennimore et al., 2016). Previous researchers noted that training a DCNN takes several hours on a high-performance graphics processing unit (GPU), whereas classifying an image is fast (<1 second per image) (Mohanty et al., 2016; Chen et al., 2017; Sharpe et al., 2019; Yu et al., 2019a).

In this work, four DCNN architectures, including i) AlexNet (Krizhevsky et al., 2012), ii) DetectNet (Tao et al., 2016), iii) GoogLeNet (Szegedy et al., 2015), and iv) VGG-16 (VGGNet) (Simonyan and Zisserman, 2014), were explored for weed detection in perennial ryegrass (Lolium perenne L.), a cool-season turfgrass. DetectNet is an object detection DCNN (Tao et al., 2016), whereas AlexNet, GoogLeNet, and VGGNet are image classification DCNNs (Krizhevsky et al., 2012; Simonyan and Zisserman, 2014; Szegedy et al., 2015). In previous work, Yu et al. (2019b) documented that DetectNet was highly effective in detecting annual bluegrass (Poa annua L.) and various broadleaf weeds in dormant bermudagrass [Cynodon dactylon (L.) Pers.], while VGGNet was highly effective in detecting dollar weed (Hydrocotyle spp.), old world diamond-flower [Hedyotis corymbosa (L.) Lam.], and Florida pusley (Richardia scabra L.) in actively growing bermudagrass. Similarly, Yu et al. (2019a) reported that DetectNet reliably detected cutleaf evening-primrose (Oenothera laciniata Hill) in bahiagrass (Paspalum notatum Flugge) with an overall accuracy >0.99 and a recall of 1.00. However, weed detection in cool-season turfgrass with these DCNNs has not been previously reported.

Dandelion (Taraxacum officinale Web.), ground ivy (Glechoma hederacea L.), and spotted spurge (Euphorbia maculata L.) are distributed throughout the continental United States (USDA-NRCS, 2018) and are commonly found in various cool-season turfgrasses. Herbicides such as 2,4-D, dicamba, MCPP, triclopyr, and sulfentrazone are broadcast-applied in cool-season turfgrasses for postemergence (POST) control of various broadleaf weeds (McElroy and Martins, 2013; Reed et al., 2013; Johnston et al., 2016; Yu and McCullough, 2016a; Yu and McCullough, 2016b). Precision herbicide application using machine vision-based sprayers could substantially reduce herbicide input and weed control costs. The objective of this research was to examine the feasibility of using DCNNs to detect broadleaf weeds in perennial ryegrass.

Materials and Methods

Image Acquisition

Images of E. maculata, G. hederacea, and T. officinale growing in perennial ryegrass, acquired at multiple golf courses and institutional lawns in Indianapolis, Indiana, United States (39.76° N, 86.15° W), were used in the training datasets. Images of E. maculata, G. hederacea, and T. officinale acquired at multiple institutional lawns and golf courses in Carmel, Indiana, United States (39.97° N, 86.11° W), were used in testing dataset 1 (TD 1). Images of E. maculata and G. hederacea acquired at multiple institutional lawns, roadsides, and parks in West Lafayette, Indiana, United States (40.42° N, 86.90° W), and images of T. officinale taken at multiple institutional and residential lawns in Saskatoon, Saskatchewan, Canada (52.13° N, 106.67° W), were used in testing dataset 2 (TD 2). The images acquired in Indiana and Saskatchewan were taken with a Sony® Cyber-Shot digital camera (Sony Corporation, Minato, Tokyo, Japan) and a Canon® EOS Rebel T6 digital camera (Canon Inc., Ohta-ku, Tokyo, Japan), respectively, at a resolution of 1920 × 1080 pixels. The camera height was adjusted to achieve a ground-sampling distance of 0.05 cm pixel⁻¹. Images were acquired during the daytime, from 9:00 AM to 5:00 PM, under various sunlight conditions, including clear, partly cloudy, and cloudy skies. Training and testing images were acquired multiple times between August and September 2018.
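As a quick check on the scale implied by these settings, the ground area covered by one frame follows from multiplying the pixel dimensions by the ground-sampling distance. The sketch below assumes a nadir (straight-down) view with a uniform ground-sampling distance across the frame, ignoring lens distortion and perspective; the function name `footprint_cm` is illustrative only.

```python
GSD_CM_PER_PX = 0.05  # ground-sampling distance reported in the text (cm per pixel)


def footprint_cm(width_px: int, height_px: int, gsd: float = GSD_CM_PER_PX) -> tuple[float, float]:
    """Ground width and height (cm) covered by an image, assuming a nadir view
    and a uniform ground-sampling distance across the frame."""
    return width_px * gsd, height_px * gsd


w_cm, h_cm = footprint_cm(1920, 1080)
area_m2 = (w_cm / 100) * (h_cm / 100)
print(f"{w_cm:.1f} cm x {h_cm:.1f} cm, about {area_m2:.2f} m^2")
```

Under these assumptions, each 1920 × 1080 frame covers roughly 96 cm × 54 cm of turf (about 0.52 m²).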

Training and Testing

For training and testing the image classification DCNNs, images were cropped to 426 × 240 pixels using IrfanView (version 5.50, Irfan Skiljan, Jajce, Bosnia). Only cropped images containing a single weed species were selected for training and testing the image classification DCNNs. The neural networks were trained using a training dataset containing either a single weed species (single-species neural networks) or multiple weed species (multiple-species neural networks). For the single-species neural networks, the E. maculata training dataset contained 6,180 negative images (perennial ryegrass without target weeds) and 6,500 positive images (perennial ryegrass infested with target weeds); the G. hederacea training dataset contained 4,470 negative and 4,600 positive images; and the T. officinale training dataset contained 4,836 negative and 6,500 positive images. For each weed species, 630 negative and 630 positive images were used for each of the validation dataset (VD), TD 1, and TD 2.
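The text does not state how the 426 × 240 crops were positioned within each 1920 × 1080 frame (cropping was done in IrfanView, possibly by hand). Purely as an illustration, the hypothetical helper `grid_tiles` below computes crop boxes for a non-overlapping grid, dropping partial tiles at the right and bottom edges. The 426 × 240 size stays close to the 16:9 aspect ratio of the original frames, and each box is a `(left, upper, right, lower)` tuple of the kind accepted by Pillow's `Image.crop`.

```python
def grid_tiles(img_w: int, img_h: int, tile_w: int = 426, tile_h: int = 240) -> list[tuple[int, int, int, int]]:
    """Hypothetical helper: (left, upper, right, lower) boxes for a
    non-overlapping grid of full-size tiles; partial edge tiles are dropped."""
    return [
        (x, y, x + tile_w, y + tile_h)
        for y in range(0, img_h - tile_h + 1, tile_h)  # rows, top to bottom
        for x in range(0, img_w - tile_w + 1, tile_w)  # columns, left to right
    ]


boxes = grid_tiles(1920, 1080)
print(len(boxes), boxes[0], boxes[-1])

# With Pillow installed, each box could be cropped from a frame
# ("frame.jpg" is a hypothetical file name):
# from PIL import Image
# tiles = [Image.open("frame.jpg").crop(b) for b in boxes]
```

For a 1920 × 1080 frame this yields a 4 × 4 grid of 16 tiles and leaves a 216-pixel strip on the right and a 120-pixel strip at the bottom uncovered; an overlapping stride would be needed to cover the whole frame.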

The multiple-species neural networks were trained to evaluate the feasibility of using a single image classification DCNN to detect multiple weed species growing in perennial ryegrass. We trained AlexNet, GoogLeNet, and VGGNet using two training datasets (A and B). Training dataset A was balanced and contained 19,500 negative and 19,500 positive images (6,500 positive images per weed species). Training dataset B was unbalanced and contained a total of 15,486 negative and 17,600 positive images (6,500 images of E. maculata, 4,600 images of G. hederacea, and 6,500 images of T. officinale). The VD contained 900 negative and 900 positive images (300 positive images per weed species). TD 1 and TD 2 for the multiple-species neural networks each contained 630 negative and 630 positive images.
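The class totals of training dataset B coincide with the sums of the three single-species training sets listed above, which makes the class balance of each dataset easy to check. The short calculation below only verifies this arithmetic from the reported counts; it assumes nothing about how dataset B was assembled.

```python
# Image counts reported for the single-species training datasets
single_species = {
    "E. maculata": {"negative": 6180, "positive": 6500},
    "G. hederacea": {"negative": 4470, "positive": 4600},
    "T. officinale": {"negative": 4836, "positive": 6500},
}

neg_b = sum(c["negative"] for c in single_species.values())
pos_b = sum(c["positive"] for c in single_species.values())
frac_pos_b = pos_b / (neg_b + pos_b)

# Training dataset A: 6,500 positive images per species and an equal number of negatives
pos_a = 3 * 6500
neg_a = 19500
frac_pos_a = pos_a / (neg_a + pos_a)

print(neg_b, pos_b, round(frac_pos_b, 3))  # dataset B counts and positive share
print(neg_a, pos_a, frac_pos_a)            # dataset A counts and positive share
```

Dataset B is therefore only mildly unbalanced overall (roughly 53% positive images), but its positive images are unevenly split among species, with G. hederacea contributing 4,600 images compared with 6,500 for each of the other two.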

For training the object detection DCNN, images were resized to 1280 × 720 pixels (720p) using IrfanView. A total of 810 images of T. officinale growing in perennial ryegrass were used for training DetectNet. Training images were imported into custom software compiled using Lazarus (http://www.lazarus-ide.org/), in which bounding boxes were drawn around the target weeds. The software then generated the corresponding text files used for DetectNet training. The VD, TD 1, and TD 2 each contained 100 images of T. officinale growing in perennial ryegrass, with a total of 630, 446, and 157 individual weeds, respectively.
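The label-file format is not described beyond "text files". DIGITS object-detection datasets for DetectNet are normally built from KITTI-style label files (one text file per image, one line per object), so the sketch below assumes that format. In a KITTI label line only the class name and the four 2-D bounding-box coordinates (left, top, right, bottom, in pixels) matter for 2-D detection; the remaining 3-D fields are zero-filled here. The function name `kitti_label_line` and the class name `dandelion` are hypothetical.

```python
def kitti_label_line(cls: str, left: float, top: float, right: float, bottom: float,
                     img_w: int = 1280, img_h: int = 720) -> str:
    """Format one bounding box as a 15-field KITTI-style label line (assumed format)."""
    if not (0 <= left < right <= img_w and 0 <= top < bottom <= img_h):
        raise ValueError(f"bounding box must lie inside the {img_w} x {img_h} image")
    # Fields: type, truncated, occluded, alpha, bbox (4), dimensions (3), location (3), rotation_y
    head = f"{cls} 0.00 0 0.00"
    bbox = f"{left:.2f} {top:.2f} {right:.2f} {bottom:.2f}"
    unused_3d = " ".join(["0.00"] * 7)
    return f"{head} {bbox} {unused_3d}"


# Hypothetical boxes drawn on one 720p training image
boxes = [(412, 188, 530, 301), (900, 40, 980, 122)]
label_text = "\n".join(kitti_label_line("dandelion", *b) for b in boxes)
print(label_text)
```

Validating each box against the 720p frame at export time catches boxes drawn before resizing, which would otherwise produce labels that fall outside the training image.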

Neural network training and testing were performed in the NVIDIA Deep Learning GPU Training System (DIGITS) (version 6.0.0, NVIDIA Corporation, Santa Clara, CA) using the Convolutional Architecture for Fast Feature Embedding (Caffe) framework (Jia et al., 2014). Networks were pre-trained using the ImageNet database (Deng et al., 2009) and the KITTI dataset (Geiger et al., 2013). To allow comparison among all DCNNs, the following hyperparameters were held constant:

● Base learning rate: 0.03

● Batch accumulation: 5

● Batch size: 2

● Gamma: 0.95

● Learning rate policy: Exponential decay

● Solver type: AdaDelta

● Training epochs: 30
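Two of these settings interact in a way worth making explicit. With batch accumulation, gradients from several mini-batches are accumulated before each weight update, so a batch size of 2 with an accumulation of 5 gives an effective batch of 10 images per update. The exponential-decay policy multiplies the base learning rate by gamma raised to a step count. Caffe's `exp` policy counts that step in iterations, and DIGITS may rescale gamma to training progress; the text does not say which unit applies, so the sketch below counts steps in epochs purely for illustration.

```python
BASE_LR = 0.03
GAMMA = 0.95
BATCH_SIZE = 2
BATCH_ACCUMULATION = 5
EPOCHS = 30

# Images contributing to each weight update when gradients are accumulated
effective_batch = BATCH_SIZE * BATCH_ACCUMULATION


def exp_decay_lr(step: float, base_lr: float = BASE_LR, gamma: float = GAMMA) -> float:
    """Exponential decay, lr = base_lr * gamma ** step (step unit is an assumption)."""
    return base_lr * gamma ** step


print("effective batch:", effective_batch)
for epoch in range(0, EPOCHS + 1, 10):
    print(f"epoch {epoch:2d}: lr = {exp_decay_lr(epoch):.5f}")
```

Under this per-epoch assumption, the learning rate would fall to about 21% of its initial value (0.03 × 0.95^30 ≈ 0.0064) by the end of 30 epochs.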

The results of validation and testing for all DCNNs were arranged in binary confusion matrices consisting of true positives (tp), true negatives (tn), false positives (fp), and false negatives (fn) (Sokolova and Lapalme, 2009). In this context, tp represents images containing target weeds that are correctly identified as containing target weeds; tn represents images of turfgrass without target weeds that are correctly identified as weed-free; fp represents images without target weeds that are incorrectly identified as containing target weeds; and fn represents images containing target weeds that are incorrectly identified as weed-free turfgrass. We computed the precision (Equation 1), recall (Equation 2), F1 score (Equation 3), and Matthews correlation coefficient (MCC) (Equation 4) for each DCNN. Precision, recall, and F1 score are unitless indices of predictive ability that range from 0 to 1; the higher the value, the better the predictive ability of the network. High precision indicates that the network rarely identifies weed-free turfgrass as containing target weeds, whereas high recall indicates that the network detects most of the target weeds present. MCC is a correlation coefficient between the observed and predicted binary classifications and ranges from -1 to +1: a value of -1 represents total disagreement between observation and prediction, 0 indicates a prediction no better than random, and +1 represents a perfect prediction (Matthews, 1975).

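Precision measures the ability of the neural network to accurately identify targets (the proportion of positive predictions that are correct) and was calculated using the following equation (Sokolova and Lapalme, 2009):

$$\text{Precision} = \frac{tp}{tp + fp} \tag{1}$$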

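Recall measures the ability of the neural network to detect its targets (the proportion of images containing target weeds that are correctly identified) and was calculated using the following equation (Sokolova and Lapalme, 2009; Hoiem et al., 2012):

$$\text{Recall} = \frac{tp}{tp + fn} \tag{2}$$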

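F1 score is the harmonic mean of precision and recall (Sokolova and Lapalme, 2009) and was calculated using the following equation:

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}$$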

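MCC measures the quality of binary classifications, specifically the correlation between the actual class labels and the predictions, and was determined using the following equation (Matthews, 1975):

$$\text{MCC} = \frac{tp \times tn - fp \times fn}{\sqrt{(tp + fp)(tp + fn)(tn + fp)(tn + fn)}} \tag{4}$$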
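Equations 1 to 4 translate directly into code. The sketch below computes all four metrics from confusion-matrix counts; returning 0 when a denominator is zero is a common convention assumed here, not something the text specifies. The usage example contrasts a perfect classifier with a hypothetical one that labels every image as containing weeds, illustrating why MCC is informative alongside F1.

```python
import math
from typing import NamedTuple


class Scores(NamedTuple):
    precision: float
    recall: float
    f1: float
    mcc: float


def _safe_div(num: float, den: float) -> float:
    # Assumed convention: report 0 when a denominator is 0
    return num / den if den else 0.0


def binary_scores(tp: int, tn: int, fp: int, fn: int) -> Scores:
    precision = _safe_div(tp, tp + fp)                           # Equation 1
    recall = _safe_div(tp, tp + fn)                              # Equation 2
    f1 = _safe_div(2 * precision * recall, precision + recall)   # Equation 3
    mcc = _safe_div(tp * tn - fp * fn,                           # Equation 4
                    math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    return Scores(precision, recall, f1, mcc)


# Balanced hypothetical test set: 630 positive and 630 negative images
perfect = binary_scores(tp=630, tn=630, fp=0, fn=0)
always_weed = binary_scores(tp=630, tn=0, fp=630, fn=0)
print(perfect)
print(always_weed)
```

The label-everything classifier still reaches perfect recall and an F1 score of about 0.67, yet its MCC, under the zero-denominator convention, is 0, matching a prediction no better than random.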

Results and Discussion

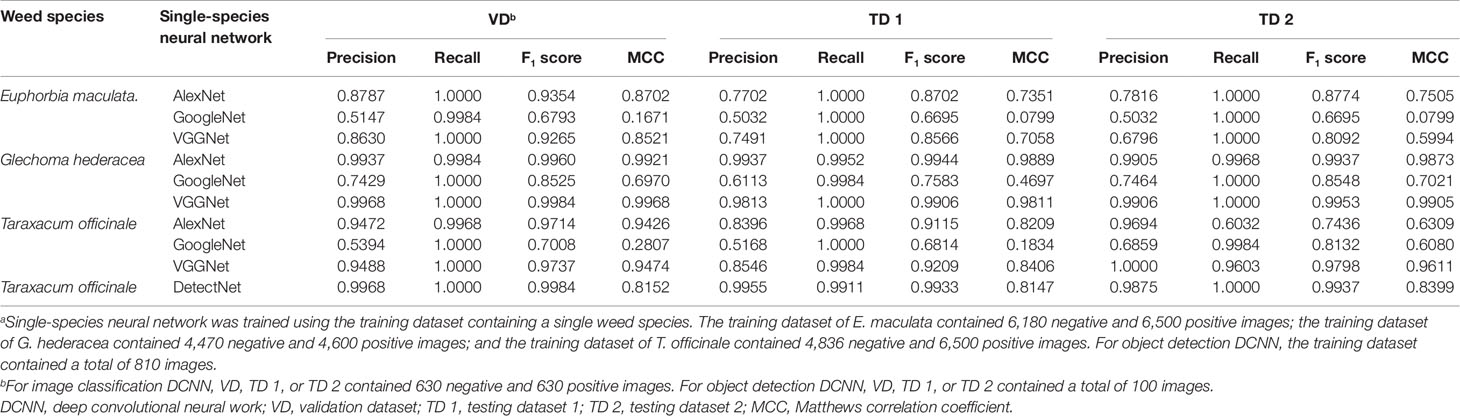

When single-species neural networks were trained to detect E. maculata, AlexNet and VGGNet exhibited higher precision, recall, F1 score, and MCC values than GoogLeNet (Table 1). AlexNet achieved a slightly higher F1 score than VGGNet, primarily due to its higher precision. For detection of G. hederacea, AlexNet and VGGNet performed excellently and achieved high F1 scores (≥0.9906) in the VD, TD 1, and TD 2. AlexNet and VGGNet exhibited high F1 scores (≥0.9115) and recall values (≥0.9952) for detecting T. officinale in the VD and TD 1, but their recall values decreased to 0.6032 and 0.9603, respectively, in TD 2. Among the neural networks, GoogLeNet consistently exhibited the lowest MCC values, indicating poor agreement between predicted and actual class labels. The poor performance of GoogLeNet was primarily due to its low precision (≤0.7464). DetectNet achieved high F1 scores (≥0.9933) and reliably detected T. officinale in the VD, TD 1, and TD 2.

Table 1 Training results of artificial neural networks for detection of weeds growing in perennial ryegrass.ᵃ

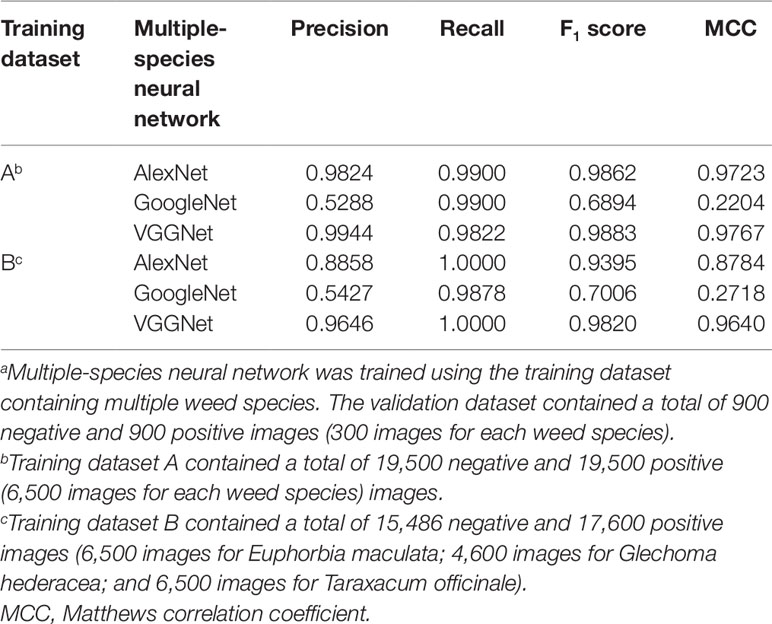

The ability of the multiple-species neural networks to simultaneously detect multiple weed species was evaluated using the VD, which contained all three weed species (Table 2). VGGNet exhibited the highest MCC values (≥0.9640), while GoogLeNet exhibited the lowest (≤0.2718). AlexNet and VGGNet achieved high F1 scores (≥0.9395) with high recall values (≥0.9822) for detecting these weeds. Both AlexNet and VGGNet exhibited higher precision but lower recall when trained using training dataset A than when trained using training dataset B. AlexNet and VGGNet outperformed GoogLeNet, which was not effective in detecting the selected weeds, with F1 scores of 0.6894 and 0.7006 when trained using training datasets A and B, respectively.

Table 2 Validation results of multiple-species neural networks for detection of weeds growing in perennial ryegrass.ᵃ

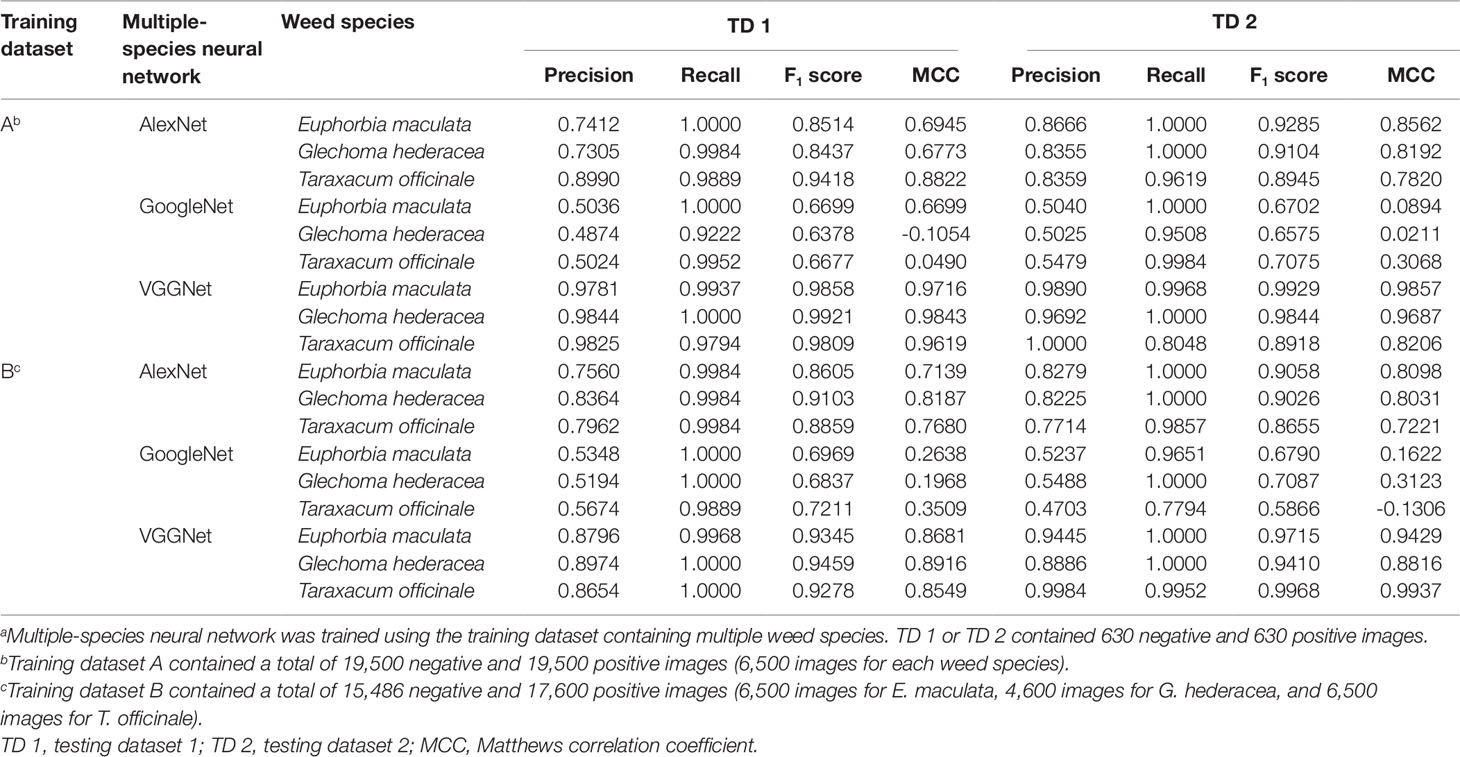

The ability of the multiple-species neural networks to detect these weed species was further evaluated using TD 1 and TD 2, in which each test dataset contained only a single weed species (Table 3). AlexNet performed similarly whether trained using training dataset A or training dataset B. AlexNet demonstrated high recall (≥0.9619) but relatively low precision (≤0.7412) for detecting E. maculata and T. officinale in TD 1 and T. officinale in TD 2, which reduced its F1 scores. GoogLeNet had the lowest and VGGNet the highest MCC values among the multiple-species neural networks for both training datasets.

Table 3 Testing results of multiple-species neural networks for detection of weeds growing in perennial ryegrass.ᵃ

When the neural networks were trained to detect multiple weed species, VGGNet performed excellently in detecting E. maculata and G. hederacea, achieving high F1 scores (≥0.9345) and recall values (≥0.9968) in TD 1 and TD 2. For detection of T. officinale, however, VGGNet trained using training dataset B exhibited considerably higher recall and F1 scores than when trained using training dataset A. GoogLeNet was ineffective at detecting these weed species, primarily due to its low precision.

Both image classification and object detection DCNNs can be used in the machine vision subsystem of smart sprayers, but each approach has pros and cons. Compared with an object detection DCNN, preparing training data for an image classification DCNN takes less time because it does not involve drawing bounding boxes. Conversely, because DetectNet locates individual weeds as objects, DetectNet-based smart sprayers can target individual weeds with narrow-pattern spray nozzles, whereas an image classification DCNN only indicates whether target weeds are present somewhere in an image, which makes such targeting less feasible.
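The practical difference can be illustrated with a hypothetical spray boom whose independently switched nozzles each cover an equal-width strip of the camera frame. The helper below, which is not part of the described system, returns the nozzles whose strips overlap a horizontal pixel span: a DetectNet bounding box for object detection, or a whole positive tile for image classification. The 16-nozzle boom, the frame width, and the pixel coordinates are assumptions chosen only for illustration.

```python
import math


def nozzles_for_span(x_left: float, x_right: float, frame_width: int, n_nozzles: int) -> list[int]:
    """Indices of nozzles whose equal-width strips overlap [x_left, x_right)."""
    if not 0 <= x_left < x_right <= frame_width:
        raise ValueError("span must lie inside the frame")
    strip = frame_width / n_nozzles  # pixels covered by each nozzle
    first = int(x_left // strip)
    last = min(math.ceil(x_right / strip) - 1, n_nozzles - 1)
    return list(range(first, last + 1))


FRAME_WIDTH, N_NOZZLES = 1920, 16  # hypothetical boom: 120 px per nozzle

# Object detection: a bounding box drawn tightly around one weed
print("box: ", nozzles_for_span(500, 560, FRAME_WIDTH, N_NOZZLES))
# Image classification: the whole 426-px-wide tile that contains the same weed
print("tile:", nozzles_for_span(426, 852, FRAME_WIDTH, N_NOZZLES))
```

Here the bounding box opens one nozzle, whereas the positive tile opens five, so more herbicide would land on weed-free turf around the weed.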

Except for T. officinale, the selected DCNNs produced consistent weed detection results, even across different geographical regions. When single-species neural networks were trained, AlexNet and VGGNet exhibited excellent precision and recall for detecting T. officinale in TD 1, but their recall values decreased in TD 2. This reduction in recall suggests that the networks were more likely to misclassify target weeds as turfgrass. This is undesirable in field applications because missed weeds would receive no herbicide, resulting in poor weed control. The cause is unknown, but we hypothesize that it is likely due to variations in the morphological characteristics of T. officinale between the training images (Indiana) and the TD 2 images (Saskatchewan). Fortunately, this problem was overcome by training the multiple-species neural networks, particularly VGGNet.

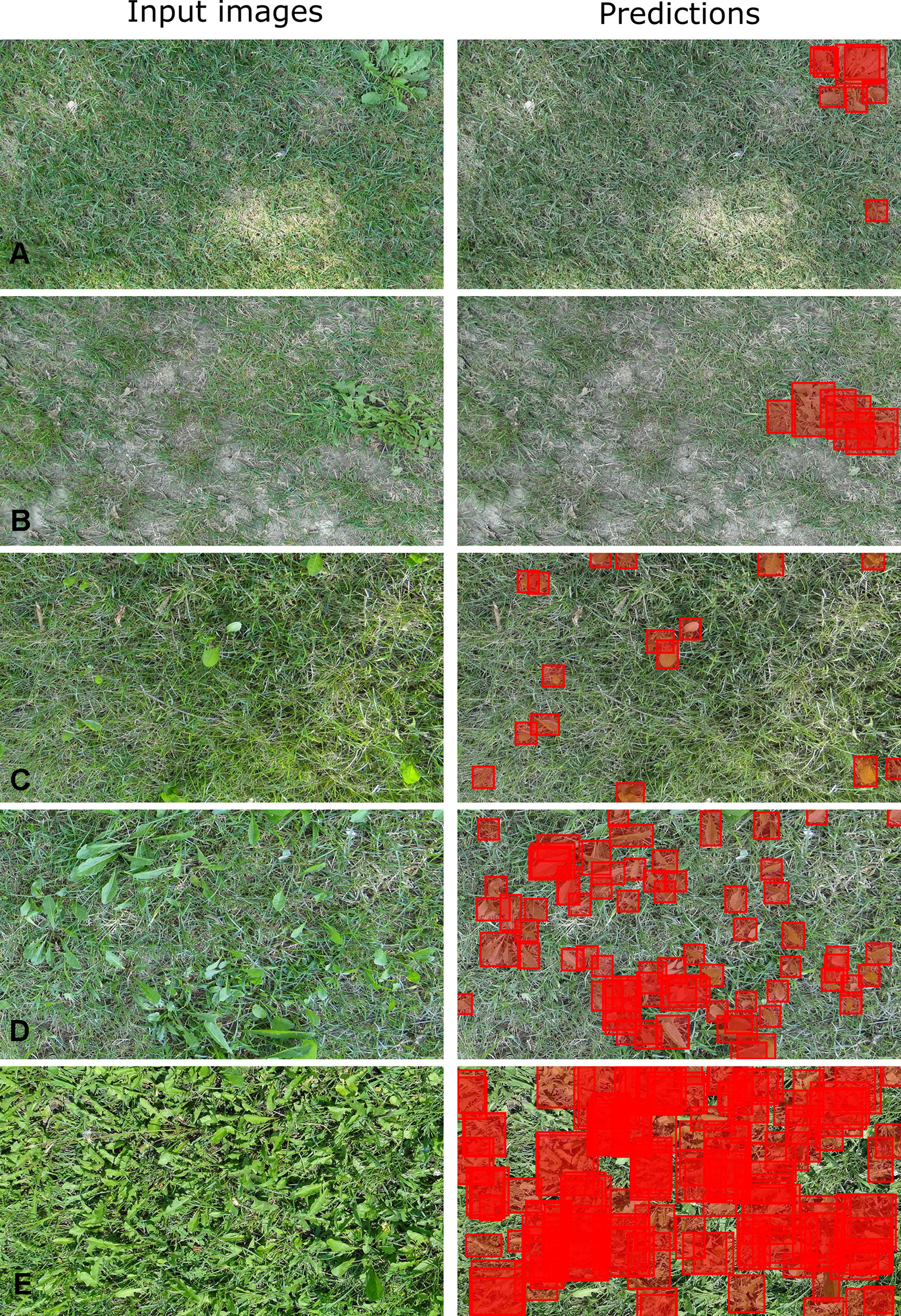

DetectNet performed excellently in detecting T. officinale across different growth stages and densities (Figures 1A–E). Several TD 1 images used for model testing had high weed densities. When testing images contained high weed densities, the bounding boxes generated by DetectNet failed to cover every leaf of the target weeds (Figure 1E), which reduced the recall values. However, this is unlikely to be an issue in field applications because the great majority of weeds in each image are detected, and the few undetected leaves would likely fall within the spray zone if herbicides are delivered with flat-fan nozzles. In addition, several TD 2 images contained smooth crabgrass [Digitaria ischaemum (Schreb.) Muhl.]. In a few cases, DetectNet incorrectly detected smooth crabgrass as T. officinale (Figure 1B), which reduced precision. Increasing the number of training images containing smooth crabgrass growing with T. officinale may eliminate this effect and increase precision and overall accuracy. In previous research, DetectNet trained to detect Carolina geranium (Geranium carolinianum L.) in plastic-mulched strawberry was successfully desensitized to black medic (Medicago lupulina L.) leaves in a similar circumstance (Sharpe et al., 2018).

Figure 1 DetectNet-generated bounding boxes (predictions) on testing images (input images) of Taraxacum officinale growing in perennial ryegrass. (A) DetectNet detected T. officinale at mature and seedling growth stages. (B) DetectNet incorrectly detected Digitaria ischaemum as T. officinale. (C–E) DetectNet detected T. officinale at different growth stages and weed densities.

In the present study, we evaluated the effectiveness of using a single DCNN to detect multiple weed species growing in perennial ryegrass. Simultaneous detection of multiple weed species is of paramount importance for precision herbicide application because weeds often grow in mixed stands, and postemergence herbicides such as 2,4-D, carfentrazone, dicamba, and MCPP are sprayed to provide broad-spectrum control of various broadleaf weeds (McElroy and Martins, 2013; Reed et al., 2013). For training purposes, E. maculata, G. hederacea, and T. officinale constituted a single category of objects to be discriminated from perennial ryegrass (Figure 2). The selected weed species differed substantially in plant morphology, and the perennial ryegrass images represented different turfgrass management regimes, mowing heights, and surface conditions (Figure 2). Moreover, weeds in the training and testing images were at different growth stages, which added complexity for the machine learning algorithms. Surprisingly, VGGNet trained using training dataset B achieved high F1 scores (≥0.92) with high recall values (≥0.99) in the VD, TD 1, and TD 2. These results suggest that simultaneous detection of multiple weed species with a single VGGNet is effective even when the target weeds differ in morphology and density.

Figure 2 Images for training the multiple-species neural networks. (A) Euphorbia maculata, Glechoma hederacea, and Taraxacum officinale at various weed densities. (B) Perennial ryegrass at different turfgrass management regimes, mowing heights, and surface conditions.

AlexNet and VGGNet trained using training dataset A achieved higher precision and F1 scores but lower recall than those trained using training dataset B in the VD and in most TD 1 and TD 2 results. Recall is a critical factor for precision herbicide application: high recall indicates that target weeds are likely to be correctly identified, whereas low recall indicates that target weeds are likely to be missed, leading to inadequate herbicide application and poor weed control. Regardless of the training dataset, GoogLeNet exhibited high recall but unacceptable precision. Low precision is undesirable because the resulting networks are more likely to misclassify turfgrass as target weeds and thus spray herbicides where weeds do not occur.
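The operational cost of each error type can be expressed as two rates computed from the confusion matrix: the share of weed-containing images left unsprayed, fn / (tp + fn), which equals 1 - recall, and the share of weed-free images sprayed anyway, fp / (fp + tn), the false-positive rate. The counts below are hypothetical, on a balanced test set of 630 negative and 630 positive images, and do not correspond to any network in Tables 1 to 3.

```python
def spray_error_rates(tp: int, tn: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (share of weed images missed, share of weed-free images sprayed)."""
    missed_weeds = fn / (tp + fn)   # equals 1 - recall
    sprayed_clean = fp / (fp + tn)  # false-positive rate
    return missed_weeds, sprayed_clean


# Hypothetical confusion counts on 630 positive and 630 negative images
high_recall_low_precision = spray_error_rates(tp=627, tn=230, fp=400, fn=3)
lower_recall_high_precision = spray_error_rates(tp=600, tn=620, fp=10, fn=30)

for name, (missed, wasted) in [("high recall, low precision", high_recall_low_precision),
                               ("lower recall, high precision", lower_recall_high_precision)]:
    print(f"{name}: missed {missed:.3f}, sprayed weed-free {wasted:.3f}")
```

The first profile, qualitatively resembling the behavior described for GoogLeNet, almost never misses a weed but would spray most weed-free turf; the second saves herbicide but leaves more weeds untreated.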

Training dataset A was balanced and contained equal numbers of negative and positive images, whereas training dataset B was unbalanced and contained fewer training images than training dataset A. Because the performance of the multiple-species neural networks, particularly AlexNet and VGGNet, differed between training datasets A and B, we hypothesize that (1) the ratio of negative to positive images in the training dataset may influence network performance, (2) changing the number of positive images for each weed species in the training dataset may alter weed detection performance, and (3) a larger training dataset may be needed to further improve precision and recall and enhance overall accuracy. These hypotheses will be tested in future work. It should be noted that all training images were taken in a relatively small geographical area. Although the models achieved high classification rates, a more diverse training dataset representing different weed biotypes collected in different geographical regions is highly desirable. Moreover, pixel-wise semantic segmentation has been reported to improve the accuracy of object detection with fewer training images (Milioto and Stachniss, 2018; Milioto et al., 2018), which warrants further evaluation. Expanding the neural networks to include a wider variety of weed species should be the next step of this research.

Conclusion and Summary

This research demonstrated the feasibility of using DCNNs for weed detection in perennial ryegrass. When the neural networks were trained using datasets containing a single weed species, AlexNet and VGGNet performed similarly for detection of E. maculata and G. hederacea growing in perennial ryegrass. AlexNet and VGGNet showed reduced recall in TD 2 for detection of T. officinale, but this problem was overcome by training multiple-species neural networks. VGGNet consistently exhibited the highest MCC values among the multiple-species neural networks for both training datasets. VGGNet trained using training dataset A exhibited higher precision and F1 scores but lower recall than when trained using training dataset B. VGGNet trained using training dataset B achieved high F1 scores (≥0.9278) with high recall (≥0.9952), indicating that it is highly suitable for automated detection of E. maculata, G. hederacea, and T. officinale growing in perennial ryegrass. GoogLeNet is not an effective DCNN for detecting these weed species, primarily due to its unacceptable precision. DetectNet exhibited excellent F1 scores (≥0.9843) and recall values (≥0.9911) in TD 1 and TD 2 and is thus an effective DCNN for detection of T. officinale growing in perennial ryegrass. To further improve the accuracy of weed detection, other DCNN architectures, such as the Single Shot MultiBox Detector (SSD; Liu et al., 2016), You Only Look Once (YOLO; Redmon et al., 2016), and residual networks (ResNet; He et al., 2016), may be investigated in the future.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Author Contributions

JY, AS, and NB designed the experiment. JY, ZC, and SS acquired the images. JY trained DCNN models, analyzed the data, and drafted the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research received no specific grant from any funding agency in the commercial, public, or not-for-profit organizations.

References

Alipanahi, B., Delong, A., Weirauch, M. T., Frey, B. J. (2015). Predicting the sequence specificities of DNA- and RNA-binding proteins by deep learning. Nat. Biotechnol. 33, 831–838. doi: 10.1038/nbt.3300

Bordes, A., Chopra, S., Weston, J. (2014). Question answering with subgraph embeddings. In Proceedings of the Conference on Empirical Methods in Natural Language Processing. http://arxiv.org/abs/1406.3676v3

Busey, P. (2003). Cultural management of weeds in turfgrass. Crop Sci. 43, 1899–1911. doi: 10.2135/cropsci2003.1899

Chen, Q., Xu, J., Koltun, V. (2017). Fast image processing with fully-convolutional networks. Pages 2516–2525 in Proceedings of the IEEE International Conference on Computer Vision. doi: 10.1109/ICCV.2017.273

Collobert, R., Weston, J. (2008). A unified architecture for natural language processing: Deep neural networks with multitask learning. Pages 160–167 in Proceedings of the 25th International Conference on Machine Learning. ACM. doi: 10.1145/1390156.1390177

Collobert, R., Weston, J., Bottou, L., Karlen, M., Kavukcuoglu, K., Kuksa, P. (2011). Natural language processing (almost) from scratch. J. Mach. Learn. Res. 12, 2493–2537.

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., Fei-Fei, L. (2009). ImageNet: A large-scale hierarchical image database. Pages 248–255 in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. doi: 10.1109/CVPR.2009.5206848

dos Santos Ferreira, A., Freitas, D. M., da Silva, G. G., Pistori, H. (2017). Weed detection in soybean crops using ConvNets. Comp. Electron. Agric. 143, 314–324. doi: 10.1016/j.compag.2017.10.027

Fennimore, S. A., Slaughter, D. C., Siemens, M. C., Leon, R. G., Saber, M. N. (2016). Technology for automation of weed control in specialty crops. Weed Technol. 30, 823–837. doi: 10.1614/WT-D-16-00070.1

Gawehn, E., Hiss, J. A., Schneider, G. (2016). Deep learning in drug discovery. Mol. Inform. 35, 3–14. doi: 10.1002/minf.201501008

Geiger, A., Lenz, P., Stiller, C., Urtasun, R. (2013). Vision meets robotics: the KITTI dataset. Int. J. Rob. Res. 32, 1231–1237. doi: 10.1177/0278364913491297

Ghosal, S., Blystone, D., Singh, A. K., Ganapathysubramanian, B., Singh, A., Sarkar, S. (2018). An explainable deep machine vision framework for plant stress phenotyping. Proc. Natl. Acad. Sci. U.S.A. 115, 4613–4618. doi: 10.1073/pnas.1716999115

Grinblat, G. L., Uzal, L. C., Larese, M. G., Granitto, P. M. (2016). Deep learning for plant identification using vein morphological patterns. Comp. Electron. Agric. 127, 418–424. doi: 10.1016/j.compag.2016.07.003

Gu, J., Wang, Z., Kuen, J., Ma, L., Shahroudy, A., Shuai, B., et al. (2018). Recent advances in convolutional neural networks. Pattern Recog. 77, 354–377. doi: 10.1016/j.patcog.2017.10.013

Havaei, M., Davy, A., Warde-Farley, D., Biard, A., Courville, A., Bengio, Y., et al. (2017). Brain tumor segmentation with deep neural networks. Med. Image Anal. 35, 18–31. doi: 10.1016/j.media.2016.05.004

He, K. M., Zhang, X. Y., Ren, S. Q., Sun, J. (2016). Deep residual learning for image recognition. Pages 770–778 in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). doi: 10.1109/CVPR.2016.90

Hinton, G., Deng, L., Yu, D., Dahl, G. E., Mohamed, A. R., Jaitly, N., et al. (2012). Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 29, 82–97. doi: 10.1109/MSP.2012.2205597

Hoiem, D., Chodpathumwan, Y., Dai, Q. (2012). Diagnosing error in object detectors. Pages 340–353 in Proceedings of the European Conference on Computer Vision (ECCV 2012). Berlin, Heidelberg: Springer.

Jia, Y., Shelhamer, E., Donahue, J., Karayev, S., Long, J., Girshick, R., et al. (2014). Caffe: Convolutional architecture for fast feature embedding. Pages 675–678 in Proceedings of the 22nd ACM International Conference on Multimedia. ACM.

Johnston, C. R., Yu, J., McCullough, P. E. (2016). Creeping bentgrass, perennial ryegrass, and tall fescue tolerance to Topramezone during establishment. Weed Technol. 30, 36–44. doi: 10.1614/WT-D-15-00072.1

Jordan, M. I., Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science 349, 255–260. doi: 10.1126/science.aaa8415

Kendall, A., Gal, Y. (2017). What uncertainties do we need in Bayesian deep learning for computer vision? Pages 5574–5584 in Advances in Neural Information Processing Systems 30.

Krizhevsky, A., Sutskever, I., Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Pages 1097–1105 in Advances in Neural Information Processing Systems 25 (NIPS 2012). doi: 10.1145/3065386

LeCun, Y., Bengio, Y., Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C. Y., Berg, A. C. (2016). SSD: Single shot multibox detector. Pages 21–37 in Proceedings of the European Conference on Computer Vision (ECCV). arXiv:1512.02325v5. doi: 10.1007/978-3-319-46448-0_2

Matthews, B. W. (1975). Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochim. Biophys. Acta (BBA)-Protein Struct. 405, 442–451. doi: 10.1016/0005-2795(75)90109-9

McElroy, J., Martins, D. (2013). Use of herbicides on turfgrass. Planta Daninha 31, 455–467. doi: 10.1590/S0100-83582013000200024

Milesi, C., Elvidge, C., Dietz, J., Tuttle, B., Nemani, R., Running, S. (2005). A strategy for mapping and modeling the ecological effects of US lawns. J. Turfgrass Manage. 1, 83–97.

Milioto, A., Stachniss, C. (2018). Bonnet: An open-source training and deployment framework for semantic segmentation in robotics using CNNs. in Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). arXiv:1802.08960. doi: 10.1109/ICRA.2019.8793510

Milioto, A., Lottes, P., Stachniss, C. (2018). Real-time semantic segmentation of crop and weed for precision agriculture robots leveraging background knowledge in CNNs. Pages 2229–2235 in Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE. doi: 10.1109/ICRA.2018.8460962

Mohanty, S. P., Hughes, D. P., Salathé, M. (2016). Using deep learning for image-based plant disease detection. Front. Plant Sci. 7, 1419. doi: 10.3389/fpls.2016.01419

Parkhi, O. M., Vedaldi, A., Zisserman, A. (2015). Deep face recognition. in Proceedings of the British Machine Vision Conference (BMVC). 6. doi: 10.5244/C.29.41

Redmon, J., Divvala, S., Girshick, R., Farhadi, A. (2016). You only look once: Unified, real-time object detection. Pages 779–788 in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). arXiv:1506.02640v5. doi: 10.1109/CVPR.2016.91

Reed, T. V., Yu, J., McCullough, P. E. (2013). Aminocyclopyrachlor efficacy for controlling Virginia buttonweed (Diodia virginiana) and smooth crabgrass (Digitaria ischaemum) in tall fescue. Weed Technol. 27, 488–491. doi: 10.1614/WT-D-12-00159.1

Ronao, C. A., Cho, S. B. (2016). Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 59, 235–244. doi: 10.1016/j.eswa.2016.04.032

Schmidhuber, J. (2015). Deep learning in neural networks: An overview. Neural Networks 61, 85–117. doi: 10.1016/j.neunet.2014.09.003

Sharpe, S. M., Schumann, A. W., Boyd, N. S. (2018). Detection of Carolina geranium (Geranium carolinianum) growing in competition with strawberry using convolutional neural networks. Weed Sci. 67, 239–245. doi: 10.1017/wsc.2018.66

Sharpe, S. M., Schumann, A. W., Yu, J., Boyd, N. S. (2019). Vegetation detection and discrimination within vegetable plasticulture row-middles using a convolutional neural network. Prec. Agric. 20, 1–7. doi: 10.1007/s11119-019-09666-6

Simonyan, K., Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. In the International Conference on Learning Representations (ICLR). https://arxiv.org/pdf/1409.1556.pdf. Accessed: October 5, 2018.

Sokolova, M., Lapalme, G. (2009). A systematic analysis of performance measures for classification tasks. Infor. Proc. Manage. 45, 427–437. doi: 10.1016/j.ipm.2009.03.002

Song, I., Kim, H. J., Jeon, P. B. (2014). Deep learning for real-time robust facial expression recognition on a smartphone. in Proceedings of the 2014 IEEE International Conference on Consumer Electronics (ICCE). Pages 564–567. doi: 10.1109/ICCE.2014.6776135

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., et al. (2015). Going deeper with convolutions. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Pages 1–9. doi: 10.1109/CVPR.2015.7298594

Tao, A., Barker, J., Sarathy, S. (2016). DetectNet: Deep neural network for object detection in DIGITS. https://devblogs.nvidia.com/detectnet-deep-neural-network-object-detection-digits. Accessed: October 2, 2018.

Teimouri, N., Dyrmann, M., Nielsen, P. R., Mathiassen, S. K., Somerville, G. J., Jørgensen, N. R. (2018). Weed growth stage estimator using deep convolutional neural networks. Sensors 18, 1580. doi: 10.3390/s18051580

USDA-NRCS. (2018). United States Department of Agriculture—Natural Resources Conservation Service. https://www.nrcs.usda.gov/wps/portal/nrcs/site/national/home. Accessed October 3, 2018.

Wang, Z., Li, Z., Wang, B., Liu, H. (2016). Robot grasp detection using multimodal deep convolutional neural networks. Adv. Mech. Eng. 8, 1–12. doi: 10.1177/1687814016668077

Ye, Q., Zhang, Z., Law, R. (2009). Sentiment classification of online reviews to travel destinations by supervised machine learning approaches. Expert Syst. Appl. 36, 6527–6535. doi: 10.1016/j.eswa.2008.07.035

Yu, J., McCullough, P. E. (2016a). Growth stage influences mesotrione efficacy and fate in two bluegrass (Poa) species. Weed Technol. 30, 524–532. doi: 10.1614/wt-d-15-00153.1

Yu, J., McCullough, P. E. (2016b). Triclopyr reduces foliar bleaching from mesotrione and enhances efficacy for smooth crabgrass control by altering uptake and translocation. Weed Technol. 30, 516–523. doi: 10.1614/wt-d-15-00189.1

Yu, J., Sharpe, S. M., Schumann, A. W., Boyd, N. S. (2019a). Detection of broadleaf weeds growing in turfgrass with convolutional neural networks. Pest Manage. Sci. 75, 2211–2218. doi: 10.1002/ps.5349

Keywords: artificial intelligence, machine vision, machine learning, precision herbicide application, weed control

Citation: Yu J, Schumann AW, Cao Z, Sharpe SM and Boyd NS (2019) Weed Detection in Perennial Ryegrass With Deep Learning Convolutional Neural Network. Front. Plant Sci. 10:1422. doi: 10.3389/fpls.2019.01422

Received: 11 July 2019; Accepted: 14 October 2019;

Published: 31 October 2019.

Edited by:

Franceschi Pietro, Fondazione Edmund Mach, Italy

Reviewed by:

Khan M. Sohail, Gyeongsang National University, South Korea

Giuseppe Jurman, Fondazione Bruno Kessler, Italy

Copyright © 2019 Yu, Schumann, Cao, Sharpe and Boyd. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nathan S. Boyd, nsboyd@ufl.edu
