- College of Internet of Things Technology, Hangzhou Polytechnic, Hangzhou, China

Colorectal cancer is a common malignant tumor of the gastrointestinal tract that usually evolves from adenomatous polyps. However, because polyps are similar in color to the surrounding tissue in colonoscopy images and vary widely in size, shape, and texture, intelligent diagnosis remains highly challenging. To address this, we present a novel dense residual-inception network (DRI-Net) that uses U-Net as its backbone. First, to increase the width of the network, a modified residual-inception block is designed to replace the traditional convolutional block, improving the network's capacity and expressiveness. Second, a dense connection scheme is adopted to increase the network depth so that more complex feature inputs can be fitted. Finally, an improved down-sampling module is built to reduce the loss of image feature information. For a fair comparison, we validated all methods on the Kvasir-SEG dataset using three popular evaluation metrics. Experimental results consistently show that DRI-Net attains IoU, Mcc and Dice values of 77.72%, 85.94% and 86.51%, which are 1.41%, 0.66% and 0.75% higher than those of the second-best model. Ablation studies further demonstrate the effectiveness of our approach for colorectal polyp segmentation.

1 Introduction

In today’s world, cancer has become one of the most serious threats to human health. Owing to genetic, environmental, dietary and other factors, the number of patients with colorectal cancer keeps increasing, and its mortality rate is the second highest among cancers. Research shows that colorectal cancer is closely related to colorectal polyps, so early detection and treatment can effectively prevent the disease and reduce mortality. To date, colonoscopy is an effective method for detecting intestinal polyps and has become the gold standard for early colorectal cancer screening. Although the size, shape and lesions of tumors can be observed visually during colonoscopy, the analysis of these images depends entirely on specialist physicians. This process not only has a long detection cycle and high labor intensity, but also relies heavily on the subjective judgment and experience of doctors. Moreover, as the number of patients grows, so does the demand for specialists, which places great pressure on the supply of medical professionals. Combining computer vision with pathological image diagnosis has therefore become extremely important in the medical field.

Deep learning currently performs very well in computer vision, especially in medical image-assisted diagnosis (Dang et al., 2023; Maria et al., 2023; Morita et al., 2023; Yang et al., 2023; Zhang et al., 2023). Compared with traditional segmentation frameworks (Srikanth and Bikshalu, 2022; Chen et al., 2023), the core advantage of deep learning is that it can discover and learn high-level image features directly from training data, which greatly reduces the need for hand-crafted feature extraction and enables end-to-end image processing in deep architectures. The convolutional neural network (CNN) (Lecun et al., 1998) is one of the most popular deep learning models. By introducing local receptive fields, weight sharing, and pooling operations, it greatly improves the generalization ability of the model. However, when a CNN is used for segmentation, a label must be assigned to each pixel, and since medical images often contain millions of pixels, running a separate forward pass for each pixel is very time-consuming. In addition, all pixels are classified independently, which leads to spatial inconsistencies in the segmentation results. To solve these problems, Long et al. (2015) proposed the fully convolutional network (FCN). By replacing the fully-connected layers of a CNN with convolutional layers and combining features from different layers through up-sampling, FCN preserves the spatial information of images. At the same time, it can accept input images of any size and is easier to implement than traditional patch-based classification methods.
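The key FCN idea, that a fully-connected layer over a k × k patch is equivalent to a k × k convolution and can therefore slide over inputs of any size, can be checked numerically. The following is a minimal NumPy sketch under that reading; the helper `conv2d_valid` and all tensor sizes are hypothetical illustrations, not part of the FCN paper or of DRI-Net.

```python
import numpy as np


def conv2d_valid(x, w, b):
    """Naive 'valid' 2D convolution (cross-correlation, as in CNN frameworks).

    x: (C_in, H, W), w: (C_out, C_in, k, k), b: (C_out,) -> (C_out, H-k+1, W-k+1)
    """
    c_out, _, k, _ = w.shape
    _, h, wd = x.shape
    out = np.empty((c_out, h - k + 1, wd - k + 1))
    for i in range(h - k + 1):
        for j in range(wd - k + 1):
            patch = x[:, i:i + k, j:j + k]
            # Contract over (C_in, k, k) to get one value per output channel.
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out


rng = np.random.default_rng(0)
c_in, k, c_out = 2, 3, 4  # hypothetical sizes

# A fully-connected layer that sees one flattened k x k patch.
w_fc = rng.standard_normal((c_out, c_in * k * k))
b_fc = rng.standard_normal(c_out)

# The same weights reshaped into a k x k convolution kernel.
w_conv = w_fc.reshape(c_out, c_in, k, k)

patch = rng.standard_normal((c_in, k, k))
fc_out = w_fc @ patch.reshape(-1) + b_fc
conv_out = conv2d_valid(patch, w_conv, b_fc)  # shape (c_out, 1, 1)

# On a larger input, the convolutional form yields a whole score map in one pass.
big = rng.standard_normal((c_in, 6, 6))
score_map = conv2d_valid(big, w_conv, b_fc)  # shape (c_out, 4, 4)
```

Each entry of `score_map` equals the fully-connected output for the corresponding patch, which is why the convolutional form avoids one forward pass per pixel.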

Inspired by FCN, numerous models with similar structures have been proposed, mainly improving on it through dilated convolutions (Liu et al., 2020; Karthika and Senthilselvi, 2023), recurrent neural networks (Tan et al., 2021; Chen J et al., 2022), multi-scale features (Dourthe et al., 2022; Goyal et al., 2022), residual connections (Anil and Dayananda, 2023; Selvaraj and Nithiyaraj, 2023) and attention mechanisms (Kanimozhi and Franklin, 2023; Rasti et al., 2023). Among them, Tang et al. (2022) proposed a guidance network for medical image segmentation that learns from and copes with uncertainty end-to-end. The method consists of three parts: first, a rough segmentation module produces a coarse segmentation and an uncertainty map; second, a feature refinement module embeds multiple dual-attention blocks to generate the final segmentation; finally, to extract richer context information, a multi-scale feature extractor is inserted between the encoder and decoder of the coarse segmentation module. Sun et al. (2022) proposed a dual-path CNN with DeepLabV3+ as the backbone, in which soft shape supervision blocks are inserted between the region path and the shape path to realize cross-path attention, so that thyroid nodules can be accurately detected and segmented. Zhang et al. (2022) proposed a retinal vessel segmentation algorithm based on M-Net. First, to reduce the influence of noise, a dual attention mechanism over channels and space was designed. Then, the Transformer self-attention mechanism was introduced into the skip connections to re-encode features and explicitly model long-range relationships. Fu et al. (2022) proposed an automatic segmentation method for cardiac MRI images. On the one hand, CNNs were used for feature extraction and spatial encoding of the inputs. On the other hand, a Transformer was used to add long-range dependencies to high-level features, so that the model's ability to capture details could be fully exploited.

In this research, we propose a new dense residual-inception network (DRI-Net) for colorectal polyp segmentation and perform comparative experiments on a public dataset. Our main contributions are as follows:

1) Using the standard U-Net architecture as the backbone, we present DRI-Net to support accurate polyp segmentation.

2) In DRI-Net, simple convolutional blocks are replaced with dense residual-inception blocks, making the network wider without causing vanishing gradients.

3) The down-sampling was carefully redesigned using average-pooling to reduce the loss of image feature information.

4) We conduct ablation studies on the residual-inception, dense, and down-sampling modules. Compared with several classical algorithms, our approach achieves better performance.

2 Methods

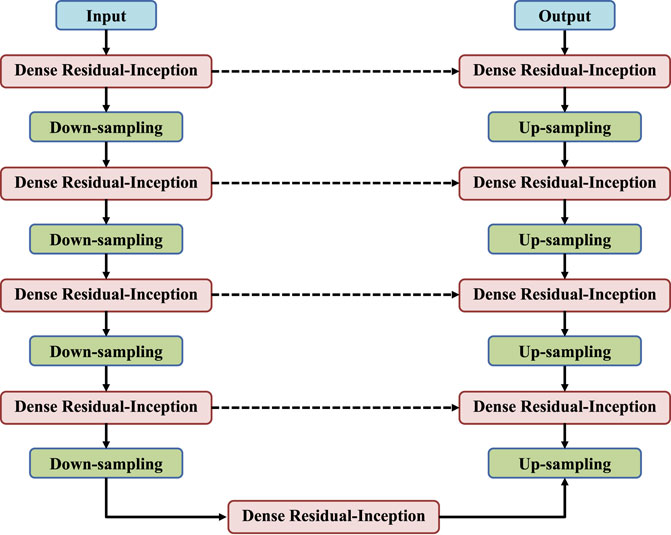

DRI-Net has a classic encoder-decoder structure, and the overall network is shown in Figure 1. The encoder on the left contains four dense residual-inception modules, each followed by a pooling layer that down-samples the feature maps. The decoder on the right also contains four dense residual-inception modules, and the resolution is successively increased by up-sampling until it matches the resolution of the input image. Skip connections concatenate each up-sampled result with the output of the encoder module at the same resolution, and the result serves as the input to the next decoder module. Finally, a Sigmoid activation in the last layer generates the binary segmentation result, while all other layers use rectified linear unit (ReLU) activations. Each block is explained in detail below.
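The resolution bookkeeping implied by four down-sampling and four up-sampling stages can be traced with a few lines of Python. This is an illustrative sketch only: the 256 × 256 input size is a hypothetical choice, and the function name `unet_resolutions` is not from the paper.

```python
def unet_resolutions(input_size, depth=4):
    """Trace the spatial size through a U-Net-style encoder and decoder.

    Each encoder stage halves the resolution; each decoder stage doubles it,
    so every up-sampled map matches an encoder output and can be joined to
    it by a skip connection.
    """
    encoder = [input_size]
    size = input_size
    for _ in range(depth):
        if size % 2:
            raise ValueError(
                f"size {size} cannot be halved exactly; "
                f"input must be divisible by {2 ** depth}"
            )
        size //= 2
        encoder.append(size)
    decoder = []
    for _ in range(depth):
        size *= 2  # up-sampling doubles the resolution
        decoder.append(size)
    return encoder, decoder


enc, dec = unet_resolutions(256)  # hypothetical 256 x 256 input
print(enc)  # [256, 128, 64, 32, 16]
print(dec)  # [32, 64, 128, 256]
```

The check on odd sizes reflects a practical constraint of this layout: inputs are typically resized so that each side is divisible by 2 to the power of the network depth.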

2.1 Residual-inception

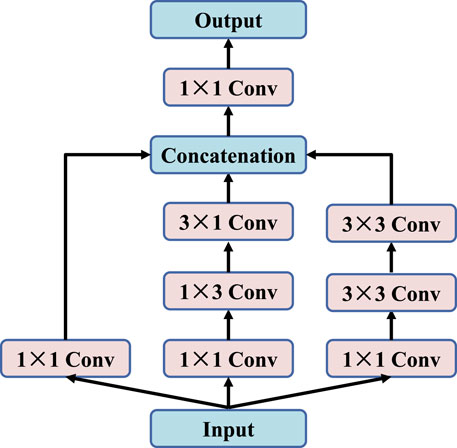

In deep learning, many algorithms achieve better results simply by making neural networks deeper or wider. However, this not only greatly increases the number of parameters and the amount of computation, but also causes problems such as over-fitting and vanishing gradients. To overcome these difficulties, we propose an improved inception module with 1 × 1, 1 × 3, 3 × 1 and 3 × 3 convolution kernels, as shown in Figure 2. Thanks to this parallel structure, the weights of each convolution kernel are adjusted adaptively during training, so that the network can adapt to images of different scales. At the same time, the three sets of parallel convolution kernels turn dense, fully-connected-style connections into sparse ones, which improves computational efficiency and extracts more features. Note, however, that the number of parameters grows as the number of convolution kernels increases. Therefore, each parallel branch first applies a 1 × 1 convolution to reduce the channel dimension.
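The saving from placing a 1 × 1 convolution before a larger kernel can be shown with simple parameter arithmetic. The sketch below assumes ordinary 2D convolutions with bias; the channel sizes (256 in, 32 after reduction, 64 out) are hypothetical and not taken from DRI-Net.

```python
def conv_params(k_h, k_w, c_in, c_out, bias=True):
    """Number of learnable parameters in a single 2D convolution layer."""
    return k_h * k_w * c_in * c_out + (c_out if bias else 0)


# Hypothetical channel sizes, chosen only for illustration.
C_IN, C_MID, C_OUT = 256, 32, 64

# A 3 x 3 convolution applied directly to the wide input.
direct = conv_params(3, 3, C_IN, C_OUT)

# The same 3 x 3 convolution preceded by a 1 x 1 channel reduction.
reduced = conv_params(1, 1, C_IN, C_MID) + conv_params(3, 3, C_MID, C_OUT)

print(direct, reduced)  # 147520 26720
```

With these sizes the reduced branch needs roughly one fifth of the parameters, which is the motivation for the 1 × 1 convolution at the head of each parallel branch.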

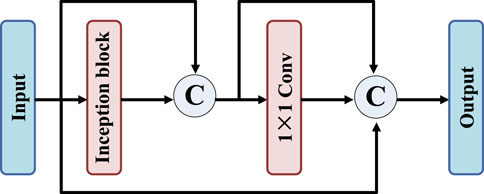

To further improve the feature extraction capability of the network, a residual-inception block is designed, as shown in Figure 3. First, shortcut connections link the input and output of the inception layer and of the 1 × 1 convolution layer, respectively. These connections are arranged sequentially, and each spans a short distance of only one layer. Then, the input features are connected with the output of the second connection. In this way, the shortcut connections combine features from both adjacent and more distant layers.
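The shortcut wiring described above can be sketched independently of any deep learning framework. This is a hypothetical illustration: it assumes the "connections" are element-wise additions (they could also be concatenations in Figure 3), and `inception` and `conv1x1` are stand-in callables rather than the paper's layers.

```python
def residual_inception(x, inception, conv1x1):
    """Shortcut wiring of a residual-inception block (hypothetical sketch).

    `inception` and `conv1x1` are any callables that map a feature map to a
    feature map of the same shape; `x` can be anything supporting `+`
    (a float here, a tensor in practice). Shortcuts are assumed additive.
    """
    y1 = x + inception(x)  # short shortcut around the inception layer
    y2 = y1 + conv1x1(y1)  # short shortcut around the 1 x 1 convolution
    return x + y2          # long shortcut: block input joined with the second connection


print(residual_inception(1.0, lambda t: 2 * t, lambda t: t + 1))  # 8.0
print(residual_inception(1.5, lambda t: 0 * t, lambda t: 0 * t))  # 3.0
```

The second call shows why such shortcuts ease training: even when both layers output zero, the input still reaches the block output through the identity paths, so gradients have a direct route back.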

2.2 Dense connections

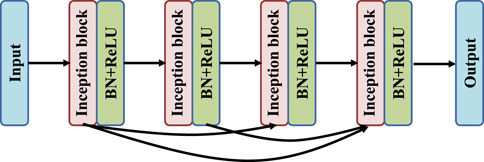

As shown in Figure 4, DRI-Net adopts a dense structure in which each convolutional layer is directly connected to the subsequent convolutional layers. Each convolution layer is followed by a Batch Normalization (BN) layer and a Rectified Linear Unit (ReLU) layer. These connections pass the feature maps of lower layers directly to higher layers, which can effectively alleviate over-fitting and vanishing gradients. In addition, existing colonoscopy image datasets are small, which makes training deep neural networks difficult, and vanishing gradients during training severely limit further gains in accuracy; dense structures can alleviate these problems to some extent.
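Dense connectivity in the style of Huang et al. (2017) feeds every layer the concatenation of all earlier feature maps, so the input width grows linearly with depth. The following framework-free sketch models a feature map as a list of channel labels; the growth rate and channel counts are hypothetical, and the layers are stand-ins for the conv/BN/ReLU units.

```python
def concat_channels(feature_maps):
    """Stand-in for channel-wise concatenation of feature maps."""
    return [c for fmap in feature_maps for c in fmap]


def dense_block(x, layers, concat=concat_channels):
    """Each layer sees the concatenation of the block input and all earlier outputs."""
    features = [x]
    for layer in layers:
        features.append(layer(concat(features)))
    return concat(features)


def dense_input_channels(c0, growth, n_layers):
    """Input width of each layer when every layer adds `growth` channels."""
    return [c0 + i * growth for i in range(n_layers)]


GROWTH = 2  # hypothetical growth rate
# Each hypothetical layer emits GROWTH channels tagged with the width it received.
layers = [lambda inp: [len(inp)] * GROWTH for _ in range(3)]
out = dense_block([0, 0, 0], layers)
print(out)  # [0, 0, 0, 3, 3, 5, 5, 7, 7]
```

The tags in `out` (3, 5, 7) match `dense_input_channels(3, 2, 3)`, confirming that each layer reuses every earlier feature map rather than only its immediate predecessor.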

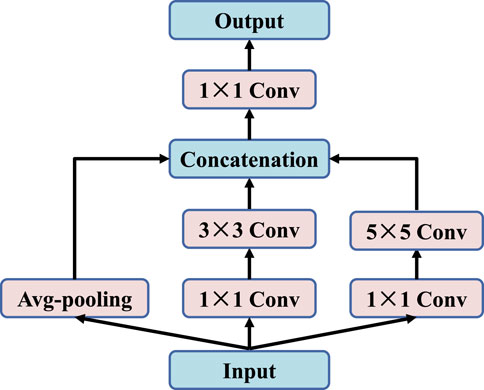

2.3 Down-sampling layer

The traditional U-Net (Ronneberger et al., 2015) uses max-pooling to reduce and compress features in the contracting path. However, this discards much useful information in the image. To preserve more fine-grained feature information and reduce the information loss caused by pooling, we process the input in parallel with two 1 × 1 convolutions, one 3 × 3 convolution, one 5 × 5 convolution and an average-pooling layer, as shown in Figure 5.
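For parallel branches to be concatenated, they must all land on the same down-sampled grid. The sketch below checks this with the standard output-size formula, assuming (hypothetically) stride 2 and "same"-style padding in every branch and a 2 × 2 average pool. Where exactly the two 1 × 1 convolutions sit in Figure 5 is not specified here, so only one 1 × 1 branch is modeled. A tiny max- versus average-pooling comparison is included to show what each keeps from a window.

```python
def conv_out_size(n, kernel, stride, padding):
    """Standard output-size formula for convolution or pooling along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


# Hypothetical branch settings as (kernel, stride, padding); stride 2 everywhere.
BRANCHES = {
    "conv1x1": (1, 2, 0),
    "conv3x3": (3, 2, 1),
    "conv5x5": (5, 2, 2),
    "avgpool2x2": (2, 2, 0),
}


def branch_sizes(n):
    return {name: conv_out_size(n, k, s, p) for name, (k, s, p) in BRANCHES.items()}


print(branch_sizes(256))  # every branch gives 128

# One 2 x 2 pooling window: max keeps a single value, average uses all four.
window = [8, 2, 4, 2]
max_pooled = max(window)                 # 8
avg_pooled = sum(window) / len(window)   # 4.0
```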

3 Experiments and results

The colorectal polyp images from the Kvasir-SEG dataset (Jha et al., 2020) were used to evaluate the performance of DRI-Net. The dataset contains 1,196 images, of which 700 were used for training, 300 for validation and 196 for testing. The experiments were implemented in Python 3.6 on Windows 10, with 24 GB of system memory and an NVIDIA Quadro RTX 6000 GPU. Based on the network's performance, we selected the Adam optimizer with an initial learning rate of 0.001 (Badshah and Ahmad, 2021), a batch size of 16, 200 iterations, and the Dice loss function.
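The Dice loss used for training is commonly written in a "soft" form that operates directly on predicted probabilities. The following is a minimal sketch of that common formulation for one flattened image; the smoothing term `eps` is an assumption, and the paper does not state which exact variant it uses.

```python
def soft_dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss for one flattened image.

    pred: predicted foreground probabilities (e.g. Sigmoid outputs) in [0, 1]
    target: ground-truth mask values in {0, 1}
    eps: small smoothing term (an assumption) that avoids division by zero
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same number of pixels")
    intersection = sum(p * g for p, g in zip(pred, target))
    total = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


print(soft_dice_loss([1, 0, 1, 1], [1, 0, 1, 1]))  # 0.0 for a perfect prediction
print(soft_dice_loss([1, 0], [0, 1]))              # close to 1.0 for no overlap
```

Because the loss depends on overlap relative to total foreground, it is less dominated by the large background area than per-pixel cross-entropy, which suits small polyps.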

3.1 Evaluation metrics

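Several quantitative metrics, namely Intersection over Union (IoU) (Ahmed et al., 2021), the Matthews correlation coefficient (Mcc) (Jiang et al., 2021) and Dice (Yang et al., 2020), were adopted to evaluate the performance of each algorithm. They are calculated as:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

$$\mathrm{Mcc} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

$$\mathrm{Dice} = \frac{2TP}{2TP + FP + FN}$$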

where TP (true positive) denotes a pixel that belongs to the target and is also predicted as the target by the algorithm; TN (true negative) denotes a background pixel that the algorithm correctly predicts as background; FP (false positive) denotes a background pixel that the algorithm incorrectly predicts as the target; and FN (false negative) denotes a target pixel that the algorithm incorrectly predicts as background.
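As a concrete illustration, the three metrics can be computed from pixel-level confusion counts using their standard definitions. This is a minimal sketch on flattened binary masks; the handling of empty masks (IoU and Dice of 1.0, Mcc of 0.0 when undefined) is an assumed convention, and how the paper aggregates per-image scores over the test set is not specified here.

```python
import math


def confusion_counts(pred, gt):
    """Pixel-level TP, TN, FP, FN for flattened binary masks (1 = polyp, 0 = background)."""
    tp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 1)
    tn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 0)
    fp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 1)
    return tp, tn, fp, fn


def iou(tp, fp, fn):
    denom = tp + fp + fn
    return tp / denom if denom else 1.0  # assumption: two empty masks match perfectly


def dice(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0  # same assumption as for IoU


def mcc(tp, tn, fp, fn):
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0  # assumption: 0 when undefined


gt = [1, 1, 1, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0]
tp, tn, fp, fn = confusion_counts(pred, gt)
print(tp, tn, fp, fn)  # 2 4 1 1
print(iou(tp, fp, fn), dice(tp, fp, fn), mcc(tp, tn, fp, fn))
```

Here IoU is 2/4, Dice is 4/6 and Mcc is 7/15. Dice and IoU are monotonically related (Dice = 2 IoU / (1 + IoU)), whereas Mcc also accounts for true negatives.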

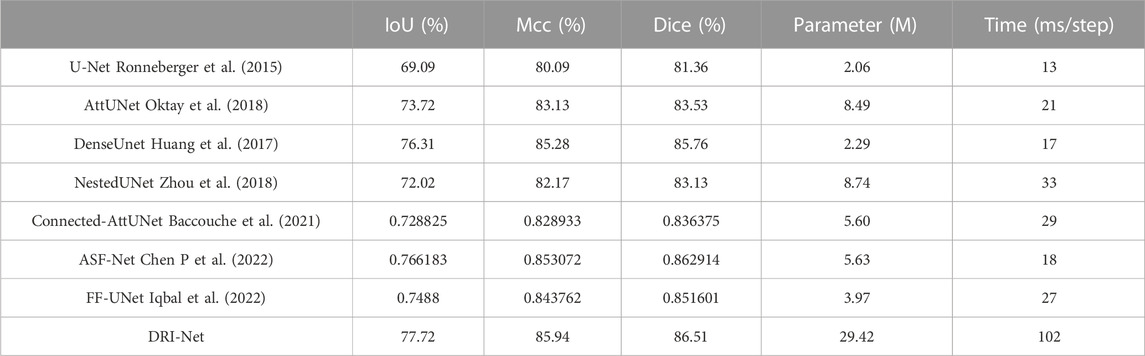

3.2 Comparison with other methods

To quantitatively analyze the performance of the models, IoU, Mcc and Dice were calculated between the automatic segmentations and the manual annotations, as shown in Table 1. By adding attention gates to the U-Net structure, AttUNet, Connected-AttUNet and FF-UNet effectively improve the segmentation precision on colonoscopy images and achieve good results. The results of DenseUnet, ASF-Net and DRI-Net are very close, and comparing them objectively reflects the advantages of dense connections. Although dense connections can enlarge the network and reduce over-fitting without a pre-trained model, their ability to enlarge the network is limited. Overall, our approach outperforms the other methods in deep feature representation and obtains more accurate segmentation results. As the last two columns show, however, DRI-Net achieves these better results at the cost of more parameters and longer runtime, owing to the many modules it introduces.

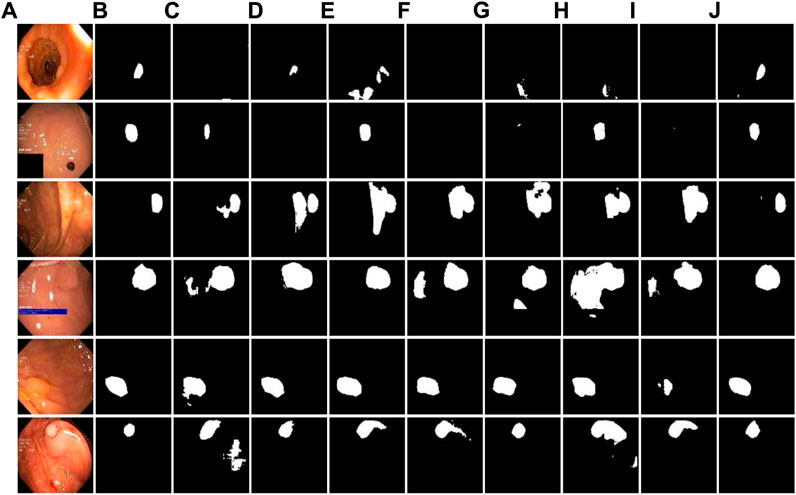

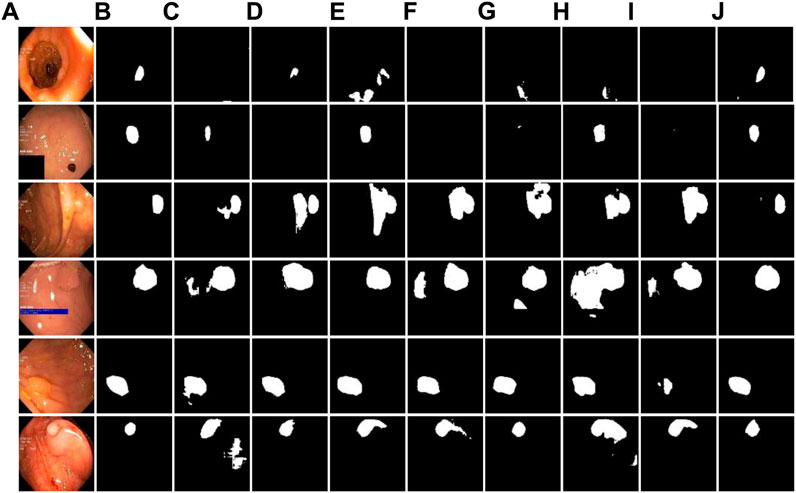

Figure 6 compares the segmentation results of DRI-Net on the Kvasir-SEG dataset with those of models proposed by other researchers in recent years. The examples show that U-Net, which lacks support from low-level convolutional information, produces poor segmentation details and many false negatives. The other models perform better than U-Net and reduce false negatives. However, because global associations are lost, over-segmentation appears and polyp segmentation shows relatively many false positives. Compared with the ground truth, our method distinguishes polyp boundaries well and better preserves the consistency of polyp morphological features, with fewer FPs and FNs than the other methods.

FIGURE 6. Comparison experiment with other methods on Kvasir-SEG dataset. (A) original images; (B) Ground-truth; (C–J) are the results of U-Net, AttUNet, DenseUnet, NestedUNet, Connected-AttUNet, ASF-Net, FF-UNet and DRI-Net.

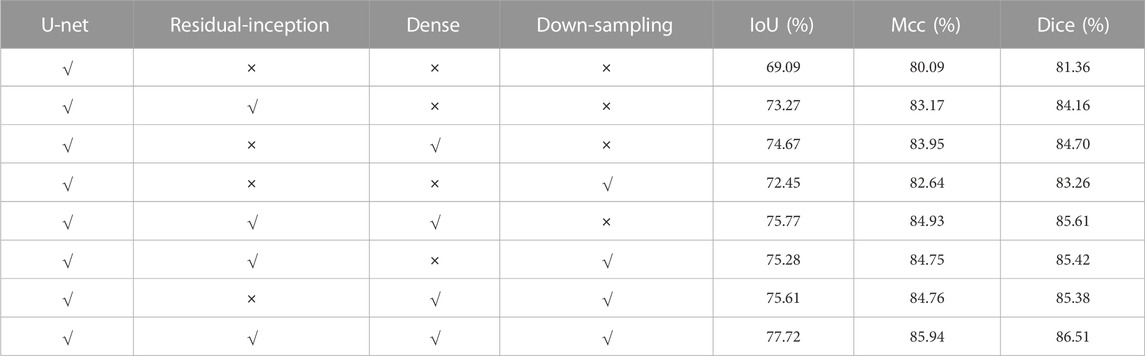

To verify that each module added to DRI-Net contributes to the segmentation of colorectal polyp images, an ablation experiment was conducted for each module. On the Kvasir-SEG dataset, we compared U-Net, U-Net with only the residual-inception module, U-Net with only the dense module, U-Net with only the down-sampling module, U-Net with residual-inception+dense modules, U-Net with residual-inception+down-sampling modules, U-Net with dense+down-sampling modules, and DRI-Net. As Table 2 shows, when the residual-inception, dense and down-sampling modules are combined, all evaluation metrics are better than those of the plain U-Net, which demonstrates the effectiveness of these modules.
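The eight configurations in this ablation are exactly the subsets of the three modules added to the U-Net baseline. A short sketch that enumerates them is given below; the string labels simply mirror the names used in the text and are not identifiers from the authors' code.

```python
from itertools import combinations

MODULES = ("residual-inception", "dense", "down-sampling")


def ablation_grid(modules=MODULES):
    """All 2**len(modules) configurations, from the bare baseline to the full model."""
    configs = []
    for r in range(len(modules) + 1):
        for combo in combinations(modules, r):
            configs.append("U-Net" + "".join("+" + m for m in combo))
    return configs


for name in ablation_grid():
    print(name)
```

Enumerating subsets by size in this order reproduces the sequence used in the ablation, from U-Net alone to U-Net+residual-inception+dense+down-sampling (DRI-Net).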

To further analyze the ablation performance, some segmentation results are shown in Figure 7. Visually, before the modules were fused, some lesion boundaries were still under-segmented and the complete tumor region could not be segmented well. After the residual-inception, dense and improved down-sampling modules were added, the segmentation accuracy of the whole network improved greatly. The ablation results therefore further verify the validity of these modules.

FIGURE 7. Ablation experiment of DRI-Net on Kvasir-SEG dataset. (A) original images; (B) Ground-truth; (C–J) are the combination results of baseline (U-Net), U-Net+residual-inception, U-Net+dense, U-Net+down-sampling, U-Net+residual-inception+dense, U-Net+residual-inception+down-sampling, U-Net+dense+down-sampling, and U-Net+residual-inception+dense+down-sampling.

4 Conclusion

Given the color similarity between colon polyps and surrounding tissue and the diversity of polyp size, shape and texture, we proposed a dense residual-inception network, an effective extension of the encoder-decoder U-Net. First, we integrated the residual-inception module and dense connections into U-Net to extract more discriminative features of colon cancer tissue from a large amount of information. Then, the redesigned down-sampling module suppresses useless information and improves the recognition accuracy of the network. We evaluated all methods on the Kvasir-SEG dataset using three popular evaluation metrics, and the experimental results consistently show that DRI-Net outperforms other typical networks. In future work, we will investigate lightweight designs and the over-fitting problem of these methods, and apply them to more medical image segmentation tasks.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislations and institutional requirements. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

XL: Conceptualization, Writing–original draft. HC: Validation, Writing–review and editing. WJ: Visualization, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ahmed I., Ahmad M., Jeon G. (2021). A real-time efficient object segmentation system based on U-Net using aerial drone images. J. Real-Time Image Process 18, 1745–1758. doi:10.1007/s11554-021-01166-z

Anil B., Dayananda P. (2023). Automatic liver tumor segmentation based on multi-level deep convolutional networks and fractal residual network. IETE J. Res. 69, 1925–1933. doi:10.1080/03772063.2021.1878066

Baccouche A., Garcia-Zapirain B., Castillo Olea C., Elmaghraby A. S. (2021). Connected-UNets: a deep learning architecture for breast mass segmentation. NPJ Breast Cancer 7, 151. doi:10.1038/s41523-021-00358-x

Badshah N., Ahmad A. (2021). ResBCU-Net: deep learning approach for segmentation of skin images. Biomed. Signal Process. Control 40, 103137–137. doi:10.1016/j.bspc.2021.103137

Chen H. Y., Zhang H. Q., Zhen X. J. (2023). A hybrid active contour image segmentation model with robust to initial contour position. Multimed. Tools Appl. 82, 10813–10832. doi:10.1007/s11042-022-13782-3

Chen J., Jiang Y., Luo L., Gong W. (2022). ASF-Net: adaptive screening feature network for building footprint extraction from remote-sensing images. IEEE Trans. Geosci. Remote. Sens. 60, 1–13. doi:10.1109/TGRS.2022.3165204

Chen P., Huang S., Yue Q. (2022). Skin lesion segmentation using recurrent attentional convolutional networks. IEEE Access 10, 94007–94018. doi:10.1109/ACCESS.2022.3204280

Dang H., Li M., Tao X. X., Zhang G., Qi X. Q. (2023). LVSegNet: a novel deep learning-based framework for left ventricle automatic segmentation using magnetic resonance imaging. Comput. Commun. 208, 124–135. doi:10.1016/j.comcom.2023.05.011

Dourthe B., Shaikh N., Pai S. A., Fels S., Brown S. H. M., Wilson D. R., et al. (2022). Automated segmentation of spinal muscles from upright open MRI using a multiscale pyramid 2D convolutional neural network. Spine 47, 1179–1186. doi:10.1097/BRS.0000000000004308

Fu Z. Y., Zhang J., Luo R. Y., Sun Y. T., Deng D. D., Xia L. (2022). TF-Unet: an automatic cardiac MRI image segmentation method. Math. Biosci. Eng. 19, 5207–5222. doi:10.3934/mbe.2022244

Goyal B., Lepcha D. C., Dogra A., Wang S. H. (2022). A weighted least squares optimisation strategy for medical image super resolution via multiscale convolutional neural networks for healthcare applications. Complex Intell. Syst. 8, 3089–3104. doi:10.1007/s40747-021-00465-z

Huang G., Liu Z., Van Der Maaten L., Weinberger K. Q. (2017). “Densely connected convolutional networks,” in IEEE conference on computer vision and pattern recognition, 21–26. doi:10.1109/CVPR.2017.243

Iqbal A., Sharif M., Khan M. A., Nisar W., Alhaisoni M. (2022). FF-UNet: a u-shaped deep convolutional neural network for multimodal biomedical image segmentation. Cogn. Comput. 14, 1287–1302. doi:10.1007/s12559-022-10038-y

Jha D., Smedsrud P. H., Riegler M. A., Halvorsen P., de Lange T., Johansen D., et al. (2020). “Kvasir-SEG: a segmented polyp dataset,” in Proceedings of the international conference on multimedia modeling. Available online: https://datasets.simula.no/kvasir-seg/.

Jiang M., Zhai F., Kong J. (2021). A novel deep learning model DDU-net using edge features to enhance brain tumor segmentation on MR images. Artif. Intell. Med. 121, 102180. doi:10.1016/j.artmed.2021.102180

Kanimozhi T., Franklin J. V. (2023). An automated cervical cancer detection scheme using deeply supervised shuffle attention modified convolutional neural network model. Automatika 64, 518–528. doi:10.1080/00051144.2023.2196114

Karthika J., Senthilselvi A. (2023). Smart credit card fraud detection system based on dilated convolutional neural network with sampling technique. Multimed. Tools Appl. 82, 31691–31708. doi:10.1007/s11042-023-15730-1

Lecun Y., Bottou L., Bengio Y., Haffner P. (1998). “Gradient-based learning applied to document recognition,” in Proceedings of the IEEE (IEEE), 2278–2324. doi:10.1109/5.726791

Liu Q. H., Fu M., Jiang H., Gong X. Q. (2020). Densely dilated spatial pooling convolutional network using benign loss functions for imbalanced volumetric prostate segmentation. Curr. Bioinform. 15, 788–799. doi:10.2174/1574893615666200127124145

Long J., Shelhamer E., Darrell T. (2015). Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 640–651. doi:10.1109/TPAMI.2016.2572683

Maria H. H., Jossy A. M., Malarvizhi S. (2023). A hybrid deep learning approach for detection and segmentation of ovarian tumours. Neural comput. Appl. 35, 15805–15819. doi:10.1007/s00521-023-08569-y

Morita D., Mazen S., Tsujiko S., Otake Y., Sato Y., Numajiri T. (2023). Deep-learning-based automatic facial bone segmentation using a two-dimensional U-Net. Int. J. Oral Max. Surg. 52, 787–792. doi:10.1016/j.ijom.2022.10.015

Oktay O., Schlemper J., Folgoc L. L., Lee M., Heinrich M., Misawa K., et al. (2018). Attention U-Net: learning where to look for the pancreas. https://arxiv.org/abs/1804.03999v2.

Rasti R., Biglari A., Rezapourian M., Yang Z. Y., Farsiu S. (2023). RetiFluidNet: a self-adaptive and multi-attention deep convolutional network for retinal OCT fluid segmentation. IEEE Trans. Med. Imaging 42, 1413–1423. doi:10.1109/TMI.2022.3228285

Ronneberger O., Fischer P., Brox T. (2015). “U-Net: convolutional networks for biomedical image segmentation,” in International conference on medical image computing and computer-assisted intervention (Springer), 234–241. arXiv:1505.04597.

Selvaraj A., Nithiyaraj E. (2023). CEDRNN: a convolutional encoder-decoder residual neural network for liver tumour segmentation. Neural process. Lett. 55, 1605–1624. doi:10.1007/s11063-022-10953-z

Srikanth R., Bikshalu K. (2022). Chaotic multi verse improved Harris hawks optimization (CMV-IHHO) facilitated multiple level set model with an ideal energy active contour for an effective medical image segmentation. Multimed. Tools Appl. 81, 20963–20992. doi:10.1007/s11042-022-12344-x

Sun J. W., Li C. Y., Lu Z. D., He M., Zhao T., Li X. Q., et al. (2022). TNSNet: thyroid nodule segmentation in ultrasound imaging using soft shape supervision. Comput. Meth. Prog. Bio. 215, 106600. doi:10.1016/j.cmpb.2021.106600

Tan Q. X., Ye M., Ma A. J., Yang B., Yip T. C. F., Wong G. L. H., et al. (2021). Explainable uncertainty-aware convolutional recurrent neural network for irregular medical time series. IEEE Trans. Neur. Net. Lear. Syst. 32, 4665–4679. doi:10.1109/TNNLS.2020.3025813

Tang P., Yang P. L., Nie D., Wu X., Zhou J. L., Wang Y. (2022). Unified medical image segmentation by learning from uncertainty in an end-to-end manner. Knowledge-Based Syst. 241, 108215. doi:10.1016/j.knosys.2022.108215

Yang T. T., Yuan L. L., Li P., Liu P. Z. (2023). Real-time automatic assisted detection of uterine fibroid in ultrasound images using a deep learning detector. Ultrasound Med. Biol. 49, 1616–1626. doi:10.1016/j.ultrasmedbio.2023.03.013

Yang Y. Y., Feng C., Wang R. F. (2020). Automatic segmentation model combining U-Net and level set method for medical images. Expert Syst. Appl. 153, 113419. doi:10.1016/j.eswa.2020.113419

Zhang C., Vinodhini B., Muthu B. A. (2023). Deep learning assisted medical insurance data analytics with multimedia system. Int. J. Interact. Multi. Artif. Intell. 8, 69–80. doi:10.9781/ijimai.2023.01.009

Zhang H. B., Zhong X., Li Z. J., Chen Y. A., Zhu Z. L., Lv J. Q., et al. (2022). TiM-Net: transformer in M-Net for retinal vessel segmentation. J. Healthc. Eng. 2022, 9016401. doi:10.1155/2022/9016401

Keywords: image segmentation, colonoscopy, residual-inception, dense, down-sampling

Citation: Lan X, Chen H and Jin W (2023) DRI-Net: segmentation of polyp in colonoscopy images using dense residual-inception network. Front. Physiol. 14:1290820. doi: 10.3389/fphys.2023.1290820

Received: 08 September 2023; Accepted: 04 October 2023;

Published: 25 October 2023.

Edited by:

Ruizheng Shi, Central South University, China

Reviewed by:

Yiling Lu, University of Derby, United Kingdom

Hui Wang, Hangzhou Cancer Hospital, China

Yucheng Song, Central South University, China

Copyright © 2023 Lan, Chen and Jin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Honghuan Chen, hhchen@hdu.edu.cn
