ORIGINAL RESEARCH article

Front. Physiol., 05 January 2024

Sec. Computational Physiology and Medicine

Volume 14 - 2023 | https://doi.org/10.3389/fphys.2023.1272814

Yang Li1,2,3†, Wen Li1,2,3,4†, Li Wang3, Xinrui Wang5, Shiyu Gao6, Yunyang Liao4, Yihan Ji4, Lisong Lin4*, Yiming Liu3*, Jiang Chen1,2*

Background: Magnetic resonance imaging (MRI) plays a crucial role in diagnosing anterior disc displacement (ADD) of the temporomandibular joint (TMJ). The primary objective of this study is to enhance diagnostic accuracy in two common disease subtypes of ADD of the TMJ on MRI, namely, ADD with reduction (ADDWR) and ADD without reduction (ADDWoR). To achieve this, we propose the development of transfer learning (TL) based on Convolutional Neural Network (CNN) models, which will aid in accurately identifying and distinguishing these subtypes.

Methods: A total of 668 TMJ MRI scans were obtained from two medical centers. High-resolution (HR) MRI images were enhanced through deep TL to generate super-resolution (SR) images. Naive Bayes (NB) and Logistic Regression (LR) models were applied, and performance was evaluated using receiver operating characteristic (ROC) curves. The models' outcomes in the test cohort were compared with diagnoses made by two clinicians.

Results: The NB model utilizing SR reconstruction with 400 × 400 pixel images demonstrated superior performance in the validation cohort, exhibiting an area under the ROC curve (AUC) of 0.834 (95% CI: 0.763–0.904) and an accuracy rate of 0.768. Both LR and NB models, with 200 × 200 and 400 × 400 pixel images after SR reconstruction, outperformed the clinicians’ diagnoses.

Conclusion: The ResNet152 model’s commendable AUC in detecting ADD highlights its potential application for pre-treatment assessment and improved diagnostic accuracy in clinical settings.

As the sole craniomandibular joint in humans and the only bilaterally linked (left-right) joint in the body, the temporomandibular joint (TMJ) is one of the most complex joints in human anatomy (Alomar et al., 2007). Temporomandibular disorders (TMD) are becoming increasingly prevalent, affecting the quality of life of approximately 5%–12% of the population (Dworkin et al., 1990; Nishiyama et al., 2012), with a higher incidence among women (Nekora-Azak, 2004). Typically, the disc becomes displaced anteriorly (Oğütcen-Toller et al., 2002), leading to impaired joint function, pain, and other symptoms. The two most prevalent forms of TMJ disc displacement are anterior disc displacement (ADD) with reduction (ADDWR) and ADD without reduction (ADDWoR) (Bas et al., 2012). Clinicians typically conduct a comprehensive clinical examination, which includes the assessment of joint sounds, range of motion, pain patterns, and palpation. Diagnostic imaging, such as magnetic resonance imaging (MRI), is invaluable for visualizing the disc's position and confirming the diagnosis (Limchaichana et al., 2007).

Deep learning (DL) and transfer learning (TL) have demonstrated significant potential in medicine for diagnosing diseases, largely due to their capacity to identify complex patterns within extensive datasets and provide valuable insights for clinical decision-making. DL algorithms can autonomously learn features from raw data, achieving high diagnostic accuracy that frequently surpasses traditional machine learning methods and, in some cases, even human experts (Asch et al., 2019). TL, on the other hand, employs pre-trained models that have already learned features from related tasks, reducing the time and computational resources necessary for training new models (Choudhary et al., 2023). Numerous studies have demonstrated the utilization of DL and TL techniques in the early detection and classification of oral cancers by analyzing histopathological slides and medical images (Bansal et al., 2022). Convolutional Neural Networks (CNNs), a pivotal component of deep learning technology, are designed to extract intricate and discriminative features from input images by employing multi-layered convolution operations (Suzuki, 2017). These extracted features serve as the foundation upon which the model can make precise determinations, ranging from discerning the presence or absence of tumors to performing tumor grading and other complex diagnostic tasks (Truong et al., 2020). Despite notable advancements, current medical imaging radiomics signatures often encounter challenges related to anisotropic resolution and limited voxel statistics (de Farias et al., 2021). To improve the specificity and sensitivity of radiomics models, it is imperative to generate higher resolution images. Super-resolution (SR) technology focuses on enhancing the spatial resolution of digital images derived from lower-resolution observations (Van Reeth et al., 2012). 
In recent years, the integration of DL techniques has enabled SR to achieve remarkable advances in the field of medical imaging (Li et al., 2021). Notably, a recent study by Mohammad-Rahimi et al. (2023) demonstrated the potential of SR images in enhancing the quality of oral panoramic images.

The primary aim of this study is to develop a TL model that uses SR-reconstructed MRI data for the diagnosis of ADDWR and ADDWoR, and thereby to enhance diagnostic efficiency for clinicians.

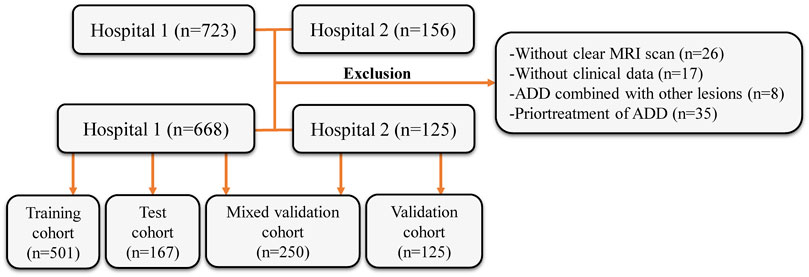

The inclusion criteria and participant recruitment procedures of this retrospective study adhered to the guidelines outlined in the 1964 Helsinki Declaration. The analysis was approved by the ethical review board (2023-KY-0256), and informed consent was waived. The patient registration process is illustrated in Figure 1. This study included patients who underwent TMJ MRI examinations at the First Affiliated Hospital of Zhengzhou University from January 2012 to December 2022 and at the First Affiliated Hospital of Fujian Medical University from October 2018 to December 2022. The exclusion criteria were: (1) no clear MRI scan (n = 26), (2) missing clinical data (gender, age, etc.) (n = 17), (3) ADD combined with other lesions (joint disc perforation, joint mass, etc.) (n = 8), and (4) prior treatment of ADD (conservative or surgical treatment) (n = 35). In accordance with the sequence of patient examinations, a total of 668 MRI images from the First Affiliated Hospital of Zhengzhou University were partitioned into two groups: the training cohort (n = 501) and the test cohort (n = 167). MRI images from the First Affiliated Hospital of Fujian Medical University formed the validation cohort (n = 125). In addition, 125 MRI images from the test cohort and 125 MRI images from the validation cohort were randomly chosen to create a new mixed validation cohort, which was used to assess model performance on mixed multicenter data.
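The cohort construction described above can be sketched in a few lines. This is a minimal illustration only: the identifiers (`ZZ0000`, `FJ0000`), the helper names and the random seed are hypothetical, and the sketch assumes the image list is already ordered by examination date.

```python
import random

def split_by_exam_order(image_ids, n_train):
    """Sequential split: the earliest examinations form the training cohort."""
    return image_ids[:n_train], image_ids[n_train:]

def mixed_cohort(test_ids, validation_ids, n_each, seed=0):
    """Randomly draw n_each images (without replacement) from each cohort and pool them."""
    rng = random.Random(seed)
    return rng.sample(test_ids, n_each) + rng.sample(validation_ids, n_each)

# Hypothetical identifiers, assumed to be sorted by examination date.
zhengzhou = [f"ZZ{i:04d}" for i in range(668)]
fujian = [f"FJ{i:04d}" for i in range(125)]

train, test = split_by_exam_order(zhengzhou, n_train=501)
mixed = mixed_cohort(test, fujian, n_each=125)
print(len(train), len(test), len(mixed))  # 501 167 250
```

Splitting by examination order rather than at random keeps the test cohort temporally separate from the training cohort.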

FIGURE 1. Flow diagram of the study population. MRI, magnetic resonance images; ADD, anterior disc displacement.

The First Affiliated Hospital of Zhengzhou University consistently employs a 3T superconducting magnetic resonance scanner (Siemens, Germany) along with a TMJ surface coil. In this study, T2-weighted imaging (T2WI) sequence images were selected for analysis. The parameters are as follows: oblique sagittal plane T2WI: TR 2,000 ms, TE 76 ms, FOV 256 mm × 256 mm. The thickness for the above sequence layers is 3 mm. Similarly, the First Affiliated Hospital of Fujian Medical University utilizes a 3T superconducting magnetic resonance scanner (Siemens, Germany) and a TMJ surface coil. Scanning parameters include: oblique sagittal plane T2WI: TR 4,000 ms, TE 79 ms, FOV 220 mm × 220 mm. The thickness for the above sequence layers is 5 mm.

Following the widely used MRI oblique sagittal reading method, oblique sagittal images in the open-mouth position were selected for evaluation (Orhan et al., 2021). Based on the position of the posterior band of the joint disc relative to the condyle in the open position, patients were judged to have either ADDWR (posterior band located anterior to the condylar head in the closed-mouth position but with a normal disc-condyle relationship in the open-mouth position) or ADDWoR (posterior band positioned anterior to the condyle in both the closed-mouth and open-mouth positions). All MRI image assessments in this study were conducted by two highly experienced physicians in the imaging department, each with over 10 years of experience in diagnosing TMD. In the event of any disagreement in the diagnosis, a senior imaging physician with over 30 years of experience in diagnosing TMD made the final judgment.

We standardized the preprocessing methodology for all images acquired from the two medical centers. Initially, we resampled the selected MRI images to a voxel size of 1 mm × 1 mm × 1 mm (Huang et al., 2023). This resampling procedure preserved spatial consistency across images with varying resolutions. Subsequently, we applied a standardization process to the resampled images, harmonizing image parameters across the two medical centers to conform to a unified standard (Nyúl et al., 2000). Images were manually selected, ensuring the condyle was visible and positioned within 1/3 of the image center. To improve training efficiency without affecting model recognition, the image size was reduced: the images were cropped to 50 × 50 pixels and 100 × 100 pixels. Building upon the acquired high-resolution (HR) images, we employed a DL network to enhance the longitudinal resolution by quadrupling the pixel count. First, Gaussian noise was added to the MRI to reduce the out-of-plane resolution by a factor of four and generate a new low-resolution image. Then, the low-resolution and synthetic HR image pairs were used to train a lightweight parallel generative adversarial network (GAN) model. Finally, the trained model was applied to the HR images by TL. The resulting images are referred to as SR images.
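To make the construction of low-resolution/HR training pairs more concrete, the following is a rough sketch of one possible degradation step. The text does not specify the exact procedure, so the choices here are assumptions: noise is added per pixel, the downsampling uses 4 × 4 block averaging along both image axes, and the function name `degrade`, the noise level and the synthetic image are all hypothetical.

```python
import random

def degrade(image, factor=4, noise_sd=0.01, seed=0):
    """Add Gaussian noise, then downsample by averaging factor x factor blocks.

    `image` is a list of rows of floats. Block averaging is an assumed
    stand-in for the resolution reduction; the source does not name the method.
    """
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    noisy = [[px + rng.gauss(0.0, noise_sd) for px in row] for row in image]
    low = []
    for r in range(0, height - height % factor, factor):
        row = []
        for c in range(0, width - width % factor, factor):
            block = [noisy[r + i][c + j]
                     for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        low.append(row)
    return low

# Synthetic 200 x 200 gradient image standing in for an HR slice.
hr = [[(r + c) / 400.0 for c in range(200)] for r in range(200)]
lr = degrade(hr)
print(len(lr), len(lr[0]))  # 50 50
```

Each (`lr`, `hr`) pair would then serve as one input/target example for training the SR network.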

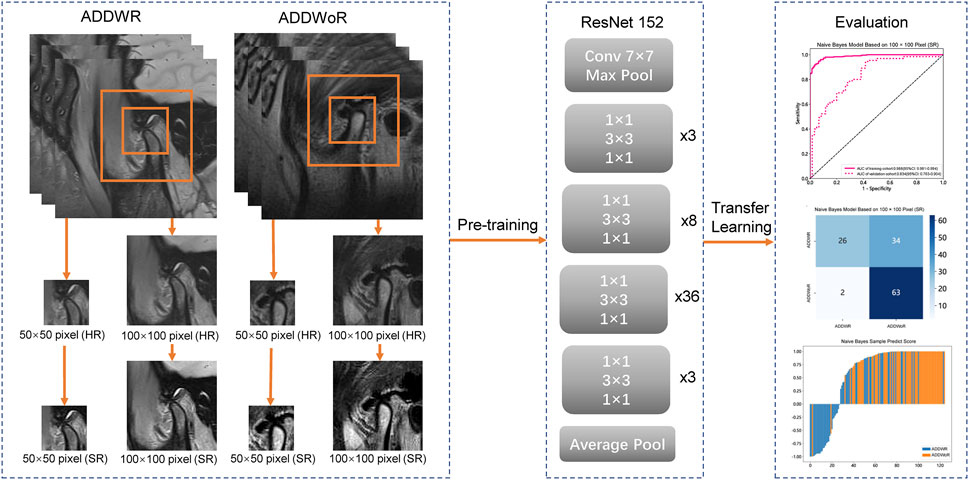

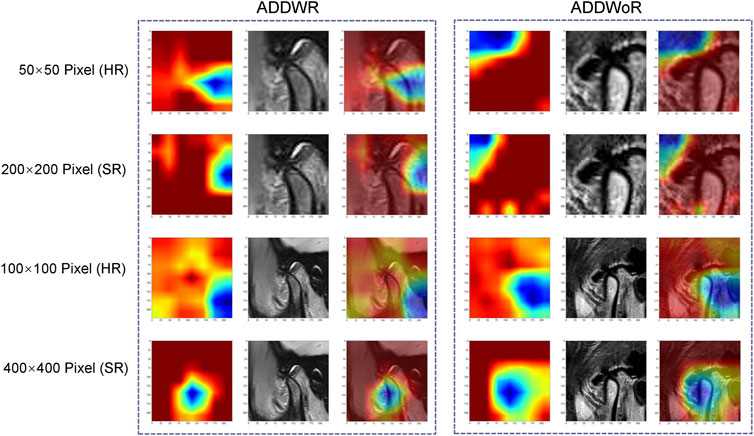

The model process is depicted in Figure 2. Cropped images were input separately into three CNN models (ResNet152, DenseNet201 and GoogLeNet), each pre-trained on the ImageNet dataset, and salient image features were extracted through TL. Following feature extraction, two machine learning models, Naive Bayes (NB) and Logistic Regression (LR), were used to assess and quantify model performance. On the one hand, HR images with dimensions of 50 × 50 and 100 × 100 pixels were input into the model structure. On the other hand, these two groups of images were subjected to SR reconstruction, and the resulting 200 × 200 and 400 × 400 pixel images were input into the same structure. These HR and SR images served as the original inputs for four distinct groups of models. Model training was performed by updating the network weights using a cross-entropy loss function for the prediction task. An adaptive moment estimation (Adam) optimizer was used with a learning rate of 0.1 for 30 epochs and a batch size of 64. The network parameters were fixed after training was completed, and the fixed model was used as a feature extractor. Finally, DL features were extracted from the penultimate layer of the fine-tuned model for each patient in the training and validation cohorts. The model was subsequently assessed visually to understand its functioning. Classification activation heatmaps were used to highlight the anatomical regions most important to the model when categorizing images as ADDWR or ADDWoR; warmer colors indicate regions of greater significance in the classification process.
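As an illustration of the second stage, in which a classical classifier is fitted on features taken from the frozen CNN, the sketch below implements a small Gaussian Naive Bayes classifier. The text does not state which NB variant was used, so the Gaussian form is an assumption, and the two-dimensional feature vectors and labels are invented toy values rather than real DL features.

```python
import math

def fit_gaussian_nb(X, y):
    """Estimate the prior, per-feature means and variances for each class."""
    model = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        # A small constant keeps variances strictly positive.
        variances = [sum((v - m) ** 2 for v in col) / n + 1e-9
                     for col, m in zip(zip(*rows), means)]
        model[label] = (n / len(y), means, variances)
    return model

def predict_proba(model, x, positive=1):
    """Posterior probability of the positive class for one feature vector."""
    log_post = {}
    for label, (prior, means, variances) in model.items():
        total = math.log(prior)
        for v, m, var in zip(x, means, variances):
            total += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        log_post[label] = total
    top = max(log_post.values())  # subtract the maximum for numerical stability
    norm = sum(math.exp(v - top) for v in log_post.values())
    return math.exp(log_post[positive] - top) / norm

# Toy features (hypothetical): label 0 = ADDWR, label 1 = ADDWoR.
X = [[0.1, 1.0], [0.2, 1.2], [0.9, 0.1], [1.1, 0.3]]
y = [0, 0, 1, 1]
model = fit_gaussian_nb(X, y)
print(predict_proba(model, [1.0, 0.2]) > 0.5)  # True
```

The predicted probabilities are what a ROC analysis would consume as scores.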

FIGURE 2. Workflow of the study. ADDWR, anterior disc displacement with reduction; ADDWoR, anterior disc displacement without reduction; HR, high-resolution; SR, super-resolution.

In this study, the Mann-Whitney U test and feature screening were conducted on all DL features. Only features with p < 0.05 were considered for further analysis. Spearman’s rank correlation coefficient was employed to assess the correlation between features with high repeatability. To avoid redundancy, only one feature from any pair exhibiting a correlation coefficient greater than 0.9 was retained (Wang et al., 2021). In order to maximize the informative value of the feature set, a greedy recursive deletion strategy was employed for feature filtering (Farahat et al., 2013). The Least Absolute Shrinkage and Selection Operator (LASSO) regression model was utilized to construct a signature based on the discovery dataset. LASSO regression shrinks all regression coefficients towards zero and sets many coefficients of uncorrelated features to exactly zero. The optimal regularization weight λ was determined using a minimum criterion and 10-fold cross-validation. After LASSO feature screening, the final features were input into machine learning models, including LR and NB for constructing the model.
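The redundancy filter in this pipeline can be sketched as follows. This is an illustrative implementation under stated assumptions: the first feature of each highly correlated pair is the one kept, the threshold is applied to the absolute value of Spearman's coefficient, and the feature names and values are hypothetical. The Mann-Whitney screening and LASSO steps are not shown.

```python
def ranks(values):
    """Average ranks (1-based); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result

def spearman(a, b):
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    ra, rb = ranks(a), ranks(b)
    n = len(a)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    va = sum((x - ma) ** 2 for x in ra)
    vb = sum((y - mb) ** 2 for y in rb)
    if va == 0 or vb == 0:
        return 0.0  # a constant feature carries no rank information
    return cov / (va * vb) ** 0.5

def drop_correlated(features, threshold=0.9):
    """Keep a feature only if |rho| <= threshold against every feature kept so far."""
    kept = []
    for name, values in features.items():
        if all(abs(spearman(values, features[k])) <= threshold for k in kept):
            kept.append(name)
    return kept

# Hypothetical feature columns: f2 is a monotone function of f1.
features = {
    "f1": [1, 2, 3, 4, 5],
    "f2": [2, 4, 6, 8, 11],
    "f3": [5, 1, 4, 2, 3],
}
print(drop_correlated(features))  # ['f1', 'f3']
```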

Two oral and maxillofacial clinicians were selected, one with 3 years of experience and the other with 5 years. Both first participated in a training session covering the identification and diagnosis of ADDWR and ADDWoR on oblique sagittal images from SR-processed MRI scans. They then applied this knowledge to diagnose the images of the test cohort, which consisted of 167 oblique sagittal MRI images that had undergone SR processing. These 167 images were distinct from those in the training cohort.

The predictive power of the NB and LR models was assessed using receiver operating characteristic (ROC) curves. The area under the ROC curve (AUC) was calculated, together with the balanced sensitivity and specificity at the cut-off point that maximized the Youden index. The 95% confidence interval (CI) of the AUC was calculated using the bootstrap method (1,000 iterations). The AUC ranges from 0.5 to 1.0 and serves as a metric for evaluating the discriminative power of a test. An AUC value of 1.0 indicates a perfect discriminant test, while an AUC ranging from 0.8 to 1.0 signifies a good discriminant test. An AUC between 0.6 and 0.8 is considered moderate, and an AUC between 0.5 and 0.6 is regarded as poor (Weinstein et al., 1980; Meehan et al., 2022). Statistical analyses were performed using SPSS software (version 21.0). Statistical significance was defined as a two-sided p ≤ 0.05.
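The evaluation quantities named here can be sketched in plain Python. This is not the authors' SPSS workflow: it assumes a percentile bootstrap over patients for the CI, resolves ties in the Youden index by keeping the lowest threshold, and uses invented labels and scores.

```python
import random

def auc(labels, scores):
    """AUC as the probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def youden_cutoff(labels, scores):
    """Return (threshold, sensitivity, specificity) maximizing J = sens + spec - 1."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best = None
    for t in sorted(set(scores)):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= t)
        tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < t)
        sens, spec = tp / n_pos, tn / n_neg
        if best is None or sens + spec > best[1] + best[2]:
            best = (t, sens, spec)
    return best

def bootstrap_ci(labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUC (an assumed variant of the bootstrap)."""
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # a resample needs both classes to define an AUC
            stats.append(auc(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int(alpha / 2 * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper

# Invented example data: 1 = positive class, 0 = negative class.
labels = [0, 0, 0, 0, 1, 1, 1, 1]
scores = [0.1, 0.3, 0.35, 0.6, 0.4, 0.7, 0.8, 0.9]
print(auc(labels, scores))            # 0.9375
print(youden_cutoff(labels, scores))  # (0.4, 1.0, 0.75)
print(bootstrap_ci(labels, scores))
```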

Sensitivity, specificity, and accuracy were computed to evaluate the performance of the classification model. The definitions are as follows:

$$\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP}, \qquad \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP (true positives) and TN (true negatives) represent correct classifications, while FP (false positives) and FN (false negatives) indicate incorrect classifications.
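As a brief worked example, the three measures follow directly from the four confusion-matrix counts. The counts below are hypothetical and are not taken from the study's tables.

```python
def classification_metrics(tp, tn, fp, fn):
    """Sensitivity, specificity and accuracy from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }

# Hypothetical counts: 50 positive and 50 negative cases.
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
print(metrics)  # sensitivity 0.8, specificity 0.9, accuracy 0.85
```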

A total of 391 patients, encompassing 668 TMJs, were identified in the First Affiliated Hospital of Zhengzhou University. Among these, 295 patients with a mean age of 30.31 ± 16.14 (age range of 11–90 years, 45 males, 250 females) and 501 MRI images were selected as the training cohort, while 96 patients with a mean age of 30.75 ± 16.04 (age range of 12–70 years, 10 males, 86 females) and 167 MRI images were chosen as the test cohort. The First Affiliated Hospital of Fujian Medical University contributed a total of 96 patients with a mean age of 25.90 ± 10.63 (age range of 12–68 years, 19 males, 77 females) and 125 MRI images for the validation cohort.

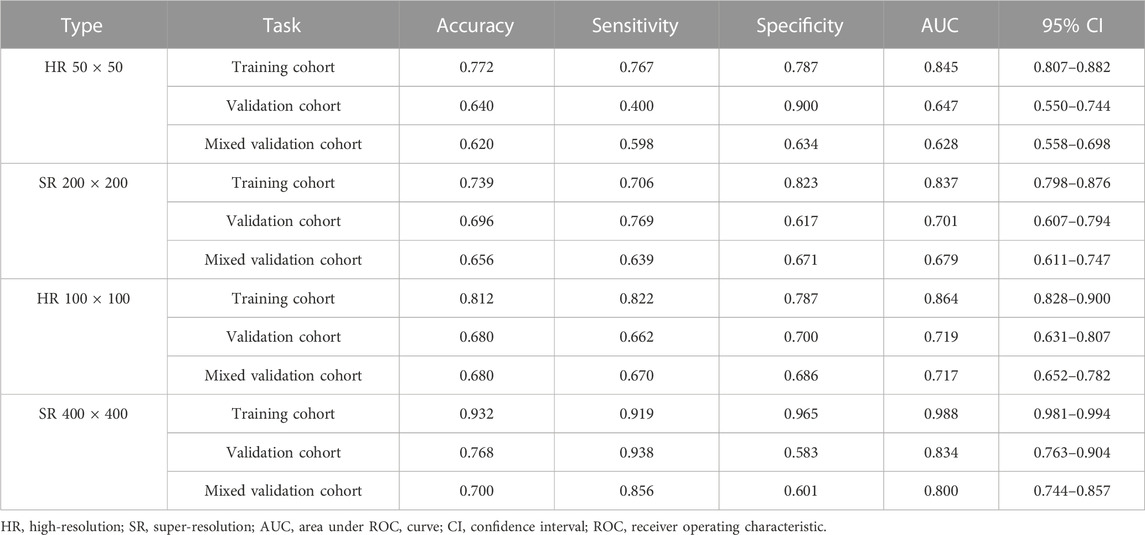

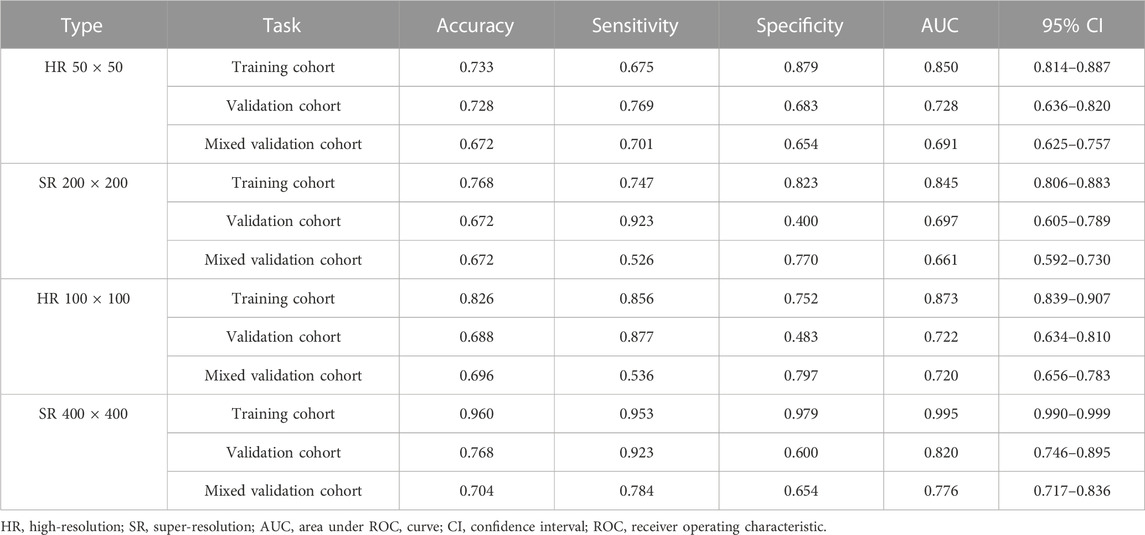

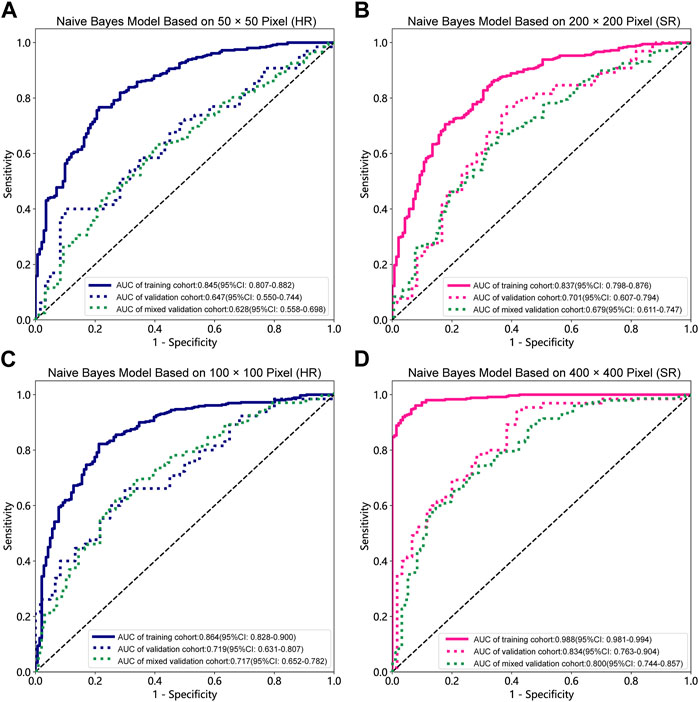

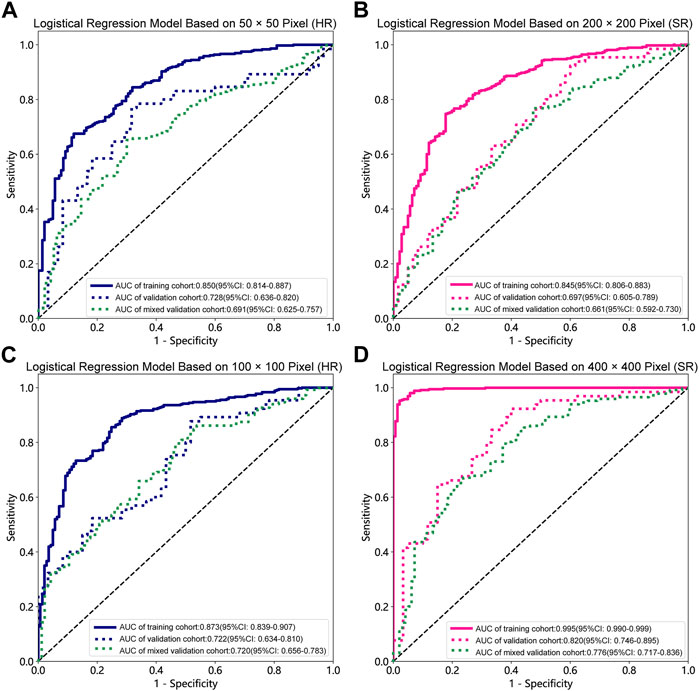

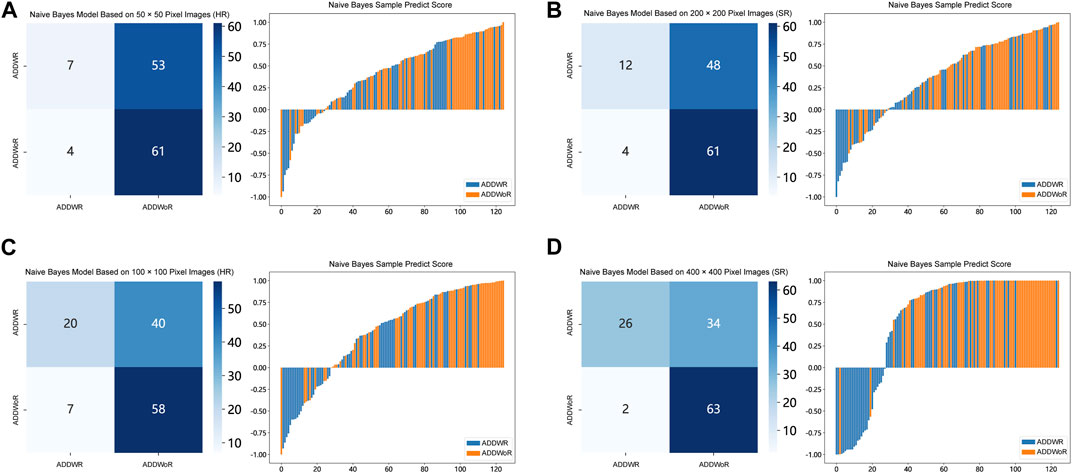

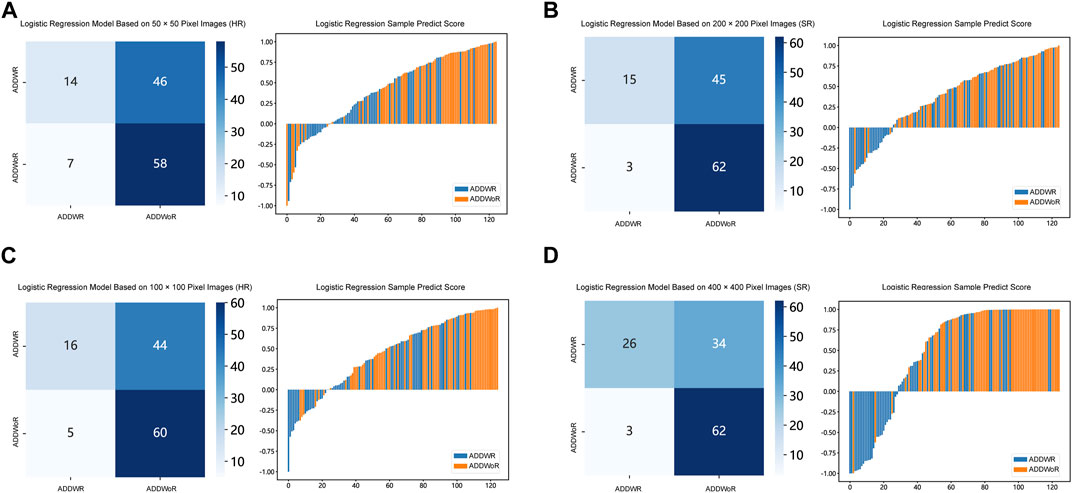

Table 1, Table 2 and Supplementary Tables S1–S4 summarize the performance of the NB and LR models using TL based on ResNet152, DenseNet201 and GoogLeNet, respectively. The ROC curves and AUCs with 95% CI of the NB and LR models in the training and validation cohorts are provided in Figure 3, Figure 4 and Supplementary Figures S1–S4. The AUCs with 95% CI of the ResNet152-based NB and LR models in the mixed validation cohort are provided in Table 1 and Table 2. Among the models compared across the four image types, the 400 × 400 pixel model using SR reconstruction based on ResNet152 with the NB algorithm performed best in the validation cohort. The AUC of this model reached 0.834 (95% CI: 0.763–0.904), with an accuracy of 0.768. Further performance evaluation was therefore conducted for the ResNet152 model. Figure 5 shows the confusion matrix and histogram of predicted outcomes for each patient in the NB model groups using the four types of images, alongside the corresponding true outcomes. Figure 6 presents the corresponding results for the LR model. Supplementary Figures S5 and S6 present the corresponding results for the mixed validation cohort.

TABLE 1. Performance measures in Naive Bayes models of two different pixels for images based on validation cohort (ResNet152).

TABLE 2. Performance measures in logistic regression models of two different pixels for images based on validation cohort (ResNet152).

FIGURE 3. Receiver operating characteristic (ROC) curves of the different pixel in the Naive Bayes models (ResNet152). (A) 50 × 50 pixel images (HR). (B) 200 × 200 pixel images (SR). (C) 100 × 100 pixel images (HR). (D) 400 × 400 pixel images (SR). AUC, area under ROC curve; HR, high-resolution; SR, super-resolution.

FIGURE 4. Receiver operating characteristic (ROC) curves of the different pixel in the Logistic Regression models (ResNet152). (A) 50 × 50 pixel images (HR). (B) 200 × 200 pixel images (SR). (C) 100 × 100 pixel images (HR). (D) 400 × 400 pixel images (SR). AUC, area under ROC curve; HR, high-resolution; SR, super-resolution.

FIGURE 5. Confusion matrix and sample prediction histogram in the Naive Bayes models of different pixel images (ResNet152) (Validation cohort). (A) 50 × 50 pixel images (HR). (B) 200 × 200 pixel images (SR). (C) 100 × 100 pixel images (HR). (D) 400 × 400 pixel images (SR). ADDWR: anterior disc displacement with reduction; ADDWoR, anterior disc displacement without reduction; HR, high-resolution; SR, super-resolution.

FIGURE 6. Confusion matrix and sample prediction histogram in the Logistic Regression models of different pixel images (ResNet152) (Validation cohort). (A) 50 × 50 pixel images (HR). (B) 200 × 200 pixel images (SR). (C) 100 × 100 pixel images (HR). (D) 400 × 400 pixel images (SR). ADDWR: anterior disc displacement with reduction; ADDWoR, anterior disc displacement without reduction; HR, high-resolution; SR, super-resolution.

Figure 7 depicts the class activation maps for the four image types. Red on the heatmap marks the most influential area, while blue marks the least influential area in the decision-making process. In the 400 × 400 pixel image after SR reconstruction, the mandibular condyle serves as the reference point, with the surrounding regions identified as significant areas.

FIGURE 7. Classification activation heatmap. ADDWR, anterior disc displacement with reduction; ADDWoR, anterior disc displacement without reduction; HR, high-resolution; SR, super-resolution.

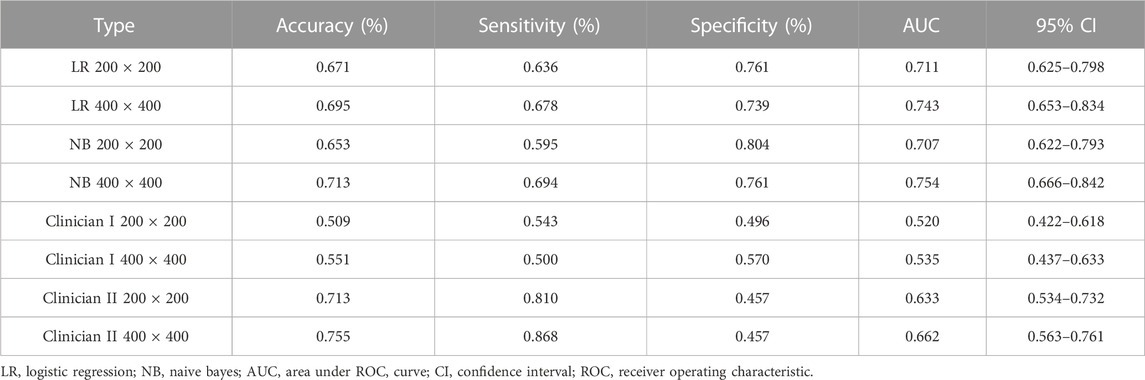

Table 3 demonstrates that the AUCs of both the NB and LR models, with 200 × 200 and 400 × 400 pixel images after SR reconstruction, surpass those of the two clinicians. Specifically, the AUC for the NB model with 400 × 400 pixel SR images is 0.754 (95% CI: 0.666–0.842), indicating moderate performance, while the AUC for the LR model with the same images is slightly lower at 0.743 (95% CI: 0.653–0.834). The AUC for the 200 × 200 pixel SR images is lower still. Notably, the AUC values for the 50 × 50 and 100 × 100 pixel images interpreted by the two clinicians show minimal disparity.

TABLE 3. Performance measures in Naive Bayes and logistic regression models for test cohort SR images, compared with judgments by oral and maxillofacial clinicians (ResNet152).

TMD encompass a group of conditions involving pain and dysfunction of the TMJ, masticatory muscles, and surrounding structures. An increasing number of studies have discovered that the disorder progressively results in emotional and psychological disturbances (Sójka et al., 2019), negatively impacting patients’ quality of life (Tjakkes et al., 2010). ADD represents a subgroup of TMD and is one of the most common TMD. It occurs when the articular disc, a fibrocartilaginous structure situated between the mandibular condyle and the temporal bone, becomes displaced forward relative to the condyle. ADD may lead to osteoarthritis, joint ankylosis, and reduced condylar height (Zhuo et al., 2015), potentially affecting mandibular development or causing mandibular asymmetry.

MRI is a non-invasive imaging modality that offers valuable insights into the soft tissues of the TMJ, such as the articular disc, ligaments, and surrounding muscle tissue. In ADDWoR, the joint space may appear narrower and the condyle may be situated more anteriorly, while in ADDWR, the joint space and condyle position have a more normal appearance during mouth opening. Consequently, by selecting MRI images of the patient's open-mouth position for diagnosis, clinicians can differentiate between these two types of ADD, thereby supporting individualized treatment planning and prediction of the prognosis of TMD (Ahmad et al., 2009). The diagnostic model developed in this study has the potential to enhance the diagnostic accuracy and efficiency of physicians in clinical practice. By leveraging the model, clinicians may identify TMJ anterior displacement conditions, namely ADDWR and ADDWoR, more accurately. Additionally, integrating this model into clinical workflows could reduce the time spent manually interpreting MRI films.

This study included a total of 793 TMJs from 487 patients across two hospitals in southern and northern China. The diverse and representative patient sample increases the potential for generalizing the study's results to a broader population. The multi-center data also reduce the risk of overfitting and bolster confidence in the model's ability to generalize to new, unseen data (Falconieri et al., 2020). As shown in Tables 1 and 2, there is no significant disparity in model performance between the validation cohort and the mixed validation cohort. We infer that, following the resampling and standardization procedures, the differences in the multi-center imaging data have minimal influence on the model's performance.

DL models have been employed for the identification of TMD. Jung et al. (2023) utilized a TL model to classify images of normal TMJs and osteoarthritis, and their results demonstrated the model’s effectiveness in recognizing osteoarthritis. Choi et al.’s research (Choi et al., 2021) indicates that image-based artificial intelligence models for diagnosing TMJ osteoarthritis offer diagnostic performance comparable to that of oral and maxillofacial radiology experts, suggesting that this model can play a crucial role in most clinics lacking such experts. However, there are currently limited reports on the application of SR reconstruction technology in the diagnosis of TMD. In the SR-processed 200 × 200 pixel group and 400 × 400 pixel group, the image resolution has been enhanced fourfold, resulting in finer texture and sharper edges. Upon analyzing the feature extraction outcomes of this image set, the NB model demonstrated superior performance. Comparing these results with those obtained without super-resolution reconstruction, it is observed that the AUC of the 200 × 200 pixel group, despite using SR technology, did not exhibit significant improvement. However, in the 400 × 400 pixel group, the AUC value increased from 0.719 to 0.834, signifying a notable enhancement from a moderate to a good performance level. Furthermore, the model’s confusion matrix and histogram of prediction results exemplify the ideal diagnostic effect. NB offers computational efficiency and the ability to effectively handle high-dimensional features extracted by DL models, resulting in faster training and inference times while simplifying complex representation processing (Singh et al., 2017). Comparing the models constructed using the four types of pixel collectively, it is evident that the AUC value of the 100 × 100 pixel group consistently surpasses that of the 50 × 50 pixel group. 
This finding implies that larger images offer enhanced resolution and finer details, thereby helping DL algorithms capture underlying patterns and features within the data more effectively (Cha et al., 2017). Moreover, larger images enable CNNs to extract a wider range of diverse and discriminative features, allowing models to learn from richer data (Mopuri et al., 2018).

Within the 400 × 400 pixel group, which has undergone SR reconstruction, the categorical activation heatmaps of the two samples (ADDWR and ADDWoR, respectively) exhibit more precise focus regions. This improved accuracy can be attributed to the utilization of SR-processed 400 × 400 pixel groups, which offer the model more comprehensive and detailed information. Consequently, the model can better prioritize the most relevant and discriminative features within the image (Dusmanu et al., 2019).

In predicting ADD classification, the AUC of the DL-based ResNet152 model was higher than that of the two trained clinicians. The model's specificity was also notably higher than that of the clinicians, and a highly specific model can make the diagnosis of TMD more effective. Since clinicians demonstrated higher sensitivity in determining the presence or absence of ADD, appropriate use of such models by clinicians could improve diagnostic accuracy.

The novelty of this article lies in applying deep transfer learning and super-resolution reconstruction to ADD diagnosis, thereby enhancing the efficiency of model evaluation. Compared with prior research, this study presents advances in sample selection, data preprocessing, and model construction. However, our research is not without limitations. Firstly, the current system is confined to recognizing ADD and cannot identify the less common posterior and lateral displacements that are also encountered in clinical practice. Addressing this limitation requires expanding our dataset to encompass a more comprehensive array of TMJ images. We intend to develop a DL-based multi-classification model that can discern and categorize various forms of TMJ displacement. Secondly, although our study included data from two medical centers, it was restricted by the limited number of images available for model training. Enlarging the dataset with more diverse images is a logical next step that may enhance the model's overall performance. Additionally, our current model analyzes only a single sagittal MRI image, whereas a substantial amount of valuable information is contained in the coronal images. Our future research will therefore involve developing DL models capable of recognizing and interpreting all image orientations.

In this investigation, we conducted a rigorous analysis of SR reconstruction applied to MRI images obtained from patients diagnosed with ADD of the TMJ. Through a meticulous comparative analysis of various models, our findings elucidate a notable trend: the SR reconstruction using 400 × 400 pixel group, in conjunction with the NB model, yielded superior performance outcomes. SR reconstruction, as exemplified by our investigation, represents an innovative approach that transcends the realm of MRI images. Indeed, this technique bears the potential to revolutionize the efficiency of diverse medical imaging modalities and, more significantly, facilitate the seamless translation of cutting-edge technology into the intricate landscape of clinical practice.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding authors.

The studies involving humans were approved by the First Affiliated Hospital of Zhengzhou University (approval number: 2023-KY-0256). The studies were conducted in accordance with the local legislation and institutional requirements. The ethics committee/institutional review board waived the requirement of written informed consent for participation from the participants or the participants' legal guardians/next of kin because obtaining informed consent was impractical for this retrospective study and the analysis posed no research risk. The exemption from informed consent was approved as it did not negatively impact the rights or welfare of the patients involved in the study.

YaL: Writing–original draft. WL: Writing–original draft. LW: Data curation, Investigation, Writing–original draft. XW: Data curation, Methodology, Writing–original draft. SG: Formal Analysis, Methodology, Writing–original draft. YuL: Methodology, Writing–original draft. YJ: Data curation, Writing–original draft. LL: Funding acquisition, Resources, Supervision, Writing–original draft. YiL: Funding acquisition, Resources, Supervision, Writing–original draft. JC: Funding acquisition, Supervision, Writing–original draft.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was supported by the Fujian Medical University Starting Fund (2019QH1099), the Medical Innovation Program of Fujian Health and Family Planning Commission (No.2021CXA035), the National Natural Science Foundation of China (U1904145) and the Fujian Provincial Science and Technology Innovation Joint Fund (2019Y9128).

Thanks to all the patients who participated in this study.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fphys.2023.1272814/full#supplementary-material

Ahmad M., Hollender L., Anderson Q., Kartha K., Ohrbach R., Truelove E. L., et al. (2009). Research diagnostic criteria for temporomandibular disorders (RDC/TMD): development of image analysis criteria and examiner reliability for image analysis. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endod. 107 (6), 844–860. doi:10.1016/j.tripleo.2009.02.023

Alomar X., Medrano J., Cabratosa J., Clavero J. A., Lorente M., Serra I., et al. (2007). Anatomy of the temporomandibular joint. Semin. Ultrasound CT MR 28 (3), 170–183. doi:10.1053/j.sult.2007.02.002

Asch F. M., Poilvert N., Abraham T., Jankowski M., Cleve J., Adams M., et al. (2019). Automated echocardiographic quantification of left ventricular ejection fraction without volume measurements using a machine learning algorithm mimicking a human expert. Circ. Cardiovasc Imaging 12 (9), e009303. doi:10.1161/CIRCIMAGING.119.009303

Bansal K., Bathla R. K., Kumar Y. (2022). Deep transfer learning techniques with hybrid optimization in early prediction and diagnosis of different types of oral cancer. SOFT Comput. 26 (21), 11153–11184. doi:10.1007/s00500-022-07246-x

Bas B., Ozgonenel O., Ozden B., Bekcioglu B., Bulut E., Kurt M. (2012). Use of artificial neural network in differentiation of subgroups of temporomandibular internal derangements: a preliminary study. J. Oral Maxillofac. Surg. 70 (1), 51–59. doi:10.1016/j.joms.2011.03.069

Cha Y., Choi W., Büyüköztürk O. (2017). Deep learning-based crack damage detection using convolutional neural networks. Computer-Aided Civ. Infrastructure Eng. 32 (5), 361–378. doi:10.1111/mice.12263

Choi E., Kim D., Lee J. Y., Park H. K. (2021). Artificial intelligence in detecting temporomandibular joint osteoarthritis on orthopantomogram. Sci. Rep. 11, 10246. doi:10.1038/s41598-021-89742-y

Choudhary T., Gujar S., Goswami A., Mishra V., Badal T. (2023). Deep learning-based important weights-only transfer learning approach for COVID-19 CT-scan classification. Appl. Intell. (Dordr) 53 (6), 7201–7215. doi:10.1007/s10489-022-03893-7

de Farias E. C., di Noia C., Han C., Sala E., Castelli M., Rundo L. (2021). Impact of GAN-based lesion-focused medical image super-resolution on the robustness of radiomic features. Sci. Rep. 11 (1), 21361. doi:10.1038/s41598-021-00898-z

Dusmanu M., Rocco I., Pajdla T., Pollefeys M., Sivic J., Torii A., et al. (2019). D2-Net: a trainable CNN for joint detection and description of local features. arXiv. doi:10.48550/arXiv.1905.03561

Dworkin S. F., Huggins K. H., LeResche L., Von Korff M., Howard J., Truelove E., et al. (1990). Epidemiology of signs and symptoms in temporomandibular disorders: clinical signs in cases and controls. J. Am. Dent. Assoc. 120 (3), 273–281. doi:10.14219/jada.archive.1990.0043

Falconieri N., Van Calster B., Timmerman D., Wynants L. (2020). Developing risk models for multicenter data using standard logistic regression produced suboptimal predictions: a simulation study. Biom J. 62 (4), 932–944. doi:10.1002/bimj.201900075

Farahat A. K., Ghodsi A., Kamel M. S. (2013). Efficient greedy feature selection for unsupervised learning. Knowl. Inf. Syst. 35, 285–310. doi:10.1007/s10115-012-0538-1

Huang Y., Zhu T., Zhang X., Li W., Zheng X., Cheng M., et al. (2023). Longitudinal MRI-based fusion novel model predicts pathological complete response in breast cancer treated with neoadjuvant chemotherapy: a multicenter, retrospective study. EClinicalMedicine 58, 101899. doi:10.1016/j.eclinm.2023.101899

Jung W., Lee K. E., Suh B. J., Seok H., Lee D. W. (2023). Deep learning for osteoarthritis classification in temporomandibular joint. Oral Dis. 29, 1050–1059. doi:10.1111/odi.14056

Li Y., Sixou B., Peyrin F. (2021). A review of the deep learning methods for medical images super resolution problems. IRBM 42 (2), 120–133. doi:10.1016/j.irbm.2020.08.004

Limchaichana N., Nilsson H., Ekberg E. C., Nilner M., Petersson A. (2007). Clinical diagnoses and MRI findings in patients with TMD pain. J. Oral Rehabil. 34 (4), 237–245. doi:10.1111/j.1365-2842.2006.01719.x

Meehan A. J., Baldwin J. R., Lewis S. J., MacLeod J. G., Danese A. (2022). Poor individual risk classification from adverse childhood experiences screening. Am. J. Prev. Med. 62 (3), 427–432. doi:10.1016/j.amepre.2021.08.008

Mohammad-Rahimi H., Vinayahalingam S., Mahmoudinia E., Soltani P., Bergé S. J., Krois J., et al. (2023). Super-resolution of dental panoramic radiographs using deep learning: a pilot study. Diagn. (Basel) 13 (5), 996. doi:10.3390/diagnostics13050996

Mopuri K. R., Garg U., Babu R. V. (2018). Cnn fixations: an unraveling approach to visualize the discriminative image regions. IEEE Trans. Im Process. 28 (5), 2116–2125. doi:10.1109/TIP.2018.2881920

Nekora-Azak A. (2004). Temporomandibular disorders in relation to female reproductive hormones: a literature review. J. Prosthet. Dent. 91 (5), 491–493. doi:10.1016/j.prosdent.2004.03.002

Nishiyama A., Kino K., Sugisaki M., Tsukagoshi K. (2012). A survey of influence of work environment on temporomandibular disorders-related symptoms in Japan. Head. Face Med. 8, 24. doi:10.1186/1746-160X-8-24

Nyúl L. G., Udupa J. K., Zhang X. (2000). New variants of a method of MRI scale standardization. IEEE Trans. Med. Imaging 19, 143–150. doi:10.1109/42.836373

Oğütcen-Toller M., Taşkaya-Yilmaz N., Yilmaz F. (2002). The evaluation of temporomandibular joint disc position in TMJ disorders using MRI. Int. J. Oral Maxillofac. Surg. 31 (6), 603–607. doi:10.1054/ijom.2002.0321

Orhan K., Driesen L., Shujaat S., Jacobs R., Chai X. (2021). Development and validation of a magnetic resonance imaging-based machine learning model for TMJ pathologies. Biomed. Res. Int. 2021, 6656773. doi:10.1155/2021/6656773

Singh A., Halgamuge M. N., Lakshmiganthan R., Suri J., Koul S., Mondhe D. M., et al. (2017). IN0523 (Urs-12-ene-3α,24β-diol) a plant based derivative of boswellic acid protect Cisplatin induced urogenital toxicity. Int. J. Adv. Comput. Sci. Appl. 318 (12), 8–15. doi:10.1016/j.taap.2017.01.011

Sójka A., Stelcer B., Roy M., Mojs E., Pryliński M. (2019). Is there a relationship between psychological factors and TMD. Brain Behav. 9 (9), e01360. doi:10.1002/brb3.1360

Suzuki K. (2017). Overview of deep learning in medical imaging. Radiol. Phys. Technol. 10, 257–273. doi:10.1007/s12194-017-0406-5

Tjakkes G. H., Reinders J. J., Tenvergert E. M., Stegenga B. (2010). TMD pain: the effect on health related quality of life and the influence of pain duration. Health Qual. Life Outcomes 8, 46. doi:10.1186/1477-7525-8-46

Truong A. H., Sharmanska V., Limbӓck-Stanic C., Grech-Sollars M. (2020). Optimization of deep learning methods for visualization of tumor heterogeneity and brain tumor grading through digital pathology. Neurooncol Adv. 2, vdaa110. doi:10.1093/noajnl/vdaa110

Van Reeth E., Tham I. W. K., Tan C. H., Poh C. L. (2012). Super-resolution in magnetic resonance imaging: a review. Concepts Magnetic Reson 40A (6), 306–325. doi:10.1002/cmr.a.21249

Wang W., Peng Y., Feng X., Zhao Y., Seeruttun S. R., Zhang J., et al. (2021). Development and validation of a computed tomography-based radiomics signature to predict response to neoadjuvant chemotherapy for locally advanced gastric cancer. JAMA Netw. Open 4 (8), e2121143. doi:10.1001/jamanetworkopen.2021.21143

Weinstein M. C., Fineberg H. V., Elstein A. S., Frazier H. S., Neuhauser D., Neutra R. R., et al. (1980). Clinical decision analysis. Philadelphia: Saunders.

Keywords: transfer learning, temporomandibular joint, MRI, super-resolution, anterior disc displacement

Citation: Li Y, Li W, Wang L, Wang X, Gao S, Liao Y, Ji Y, Lin L, Liu Y and Chen J (2024) Detecting anteriorly displaced temporomandibular joint discs using super-resolution magnetic resonance imaging: a multi-center study. Front. Physiol. 14:1272814. doi: 10.3389/fphys.2023.1272814

Received: 04 August 2023; Accepted: 18 December 2023; Published: 05 January 2024.

Edited by:

Christopher Rhodes Stephens, National Autonomous University of Mexico, Mexico

Reviewed by:

Andre Luiz Costa, Universidade Cruzeiro do Sul, Brazil

Copyright © 2024 Li, Li, Wang, Wang, Gao, Liao, Ji, Lin, Liu and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lisong Lin, dr_lls@hotmail.com; Yiming Liu, doctorliuym@163.com; Jiang Chen, jiangchen@fjmu.edu.cn

†These authors have contributed equally to this work
