ORIGINAL RESEARCH article

Front. Physiol., 19 December 2022

Sec. Computational Physiology and Medicine

Volume 13 - 2022 | https://doi.org/10.3389/fphys.2022.1084202

This article is part of the Research Topic: Data Assimilation in Cardiovascular Medicine: Merging Experimental Measurements with Physics-Based Computational Models

The manual identification and segmentation of intracranial aneurysms (IAs) involved in the 3D reconstruction procedure are labor-intensive and prone to human error. To meet the demands of routine clinical management and large cohort studies of IAs, fast and accurate patient-specific IA reconstruction has become a research frontier. In this study, a deep-learning-based framework for IA identification and segmentation was developed, and the impacts of image pre-processing and convolutional neural network (CNN) architectures on the framework’s performance were investigated. Three segmentation-dedicated three-dimensional (3D) architectures, namely 3D UNet, VNet, and 3D Res-UNet, were evaluated. The dataset used in this study included 101 sets of anonymized cranial computed tomography angiography (CTA) images with 140 IA cases. After labeling and image pre-processing, a training set and a test set containing 112 and 28 IA lesions, respectively, were used to train and evaluate the three CNNs. The performances of the three CNNs were compared in terms of training performance, segmentation performance, and segmentation efficiency using multiple quantitative metrics. All the CNNs showed a non-zero voxel-wise recall (V-Recall) at the case level. Among them, 3D UNet exhibited the best overall segmentation performance under the relatively small sample size. The automatic segmentation results based on 3D UNet reached an average V-Recall of 0.797 ± 0.140 (3.5% and 17.3% higher than that of VNet and 3D Res-UNet, respectively), as well as an average Dice similarity coefficient (DSC) of 0.818 ± 0.100, which was 4.1% and 11.7% higher than that of VNet and 3D Res-UNet, respectively. Moreover, the average Hausdorff distance (HD) of the 3D UNet was 3.323 ± 3.212 voxels, which was 8.3% and 17.3% lower than that of VNet and 3D Res-UNet, respectively.
The three-dimensional deviation analysis results also showed that the segmentations of 3D UNet had the smallest deviation, with a max distance of +1.4760/−2.3854 mm, an average distance of 0.3480 mm, a standard deviation (STD) of 0.5978 mm, and a root mean square (RMS) of 0.7269 mm. In addition, the average segmentation time (AST) of the 3D UNet was 0.053 s, equal to that of 3D Res-UNet and 8.62% shorter than that of VNet. The results of this study suggest that the proposed deep learning framework integrated with 3D UNet can provide fast and accurate IA identification and segmentation.

An intracranial aneurysm is a local abnormal bulge of the intracranial arterial wall, which occurs in 5%–8% of the general population (Schievink, 1997; Vlak et al., 2011; Cebral and Raschi, 2013). IAs remain asymptomatic until rupture. The global incidence of subarachnoid hemorrhage (SAH) caused by IA rupture varies from two to more than 20 per 100,000 person-years, and the mortality could be greater than 50% (Nam et al., 2015; Lawton and Vates, 2017; Etminan et al., 2019; Schatlo et al., 2021).

One of the major challenges in IA management is rupture prediction (Wiebers et al., 1987; England, 1998; Rayz and Cohen-Gadol, 2020). In current clinical practice, rupture risk estimation of IAs mainly relies on morphological metrics, including size, location, aspect ratio (AR), and size ratio (Hademenos et al., 1998; Wardlaw and White, 2000; Lall et al., 2009; Ma et al., 2010; Duan et al., 2018). Thus, accurate measurement is the basis of successful prediction (Raghavan et al., 2005; Dhar et al., 2008; Zanaty et al., 2014; Leemans et al., 2019), especially for sensitive parameters such as daughter sac and AR (Murayama et al., 2016; Wang et al., 2018). Traditionally, only 2D information from neuroimaging was used in interpreting and measuring IAs (Rayz and Cohen-Gadol, 2020), which neglected the complex 3D structure of IAs and may lead to measurement bias and inconsistency (Rajabzadeh-Oghaz et al., 2017, 2018). Studies have shown that morphological metrics derived from 3D information are more accurate and consistent than 2D manual measurements (Ma et al., 2004; Ryu et al., 2011; Rajabzadeh-Oghaz et al., 2018). However, recent studies have revealed that morphological metrics alone may not be sufficient for predicting the rupture risk of IAs, especially small unruptured IAs (Abboud et al., 2017; Korja et al., 2017; Longo et al., 2017; Hu et al., 2021; Ren et al., 2022).

In addition to morphological evaluation, the role of hemodynamics in IA rupture has drawn growing attention. Imaging-based patient-specific computational fluid dynamics (CFD) simulations have been regarded as a powerful tool for investigating the hemodynamics in IAs (Xiang et al., 2011; Takao et al., 2012; Liang et al., 2016; Xu et al., 2018; Zhu et al., 2019a; Medero et al., 2020; Hu et al., 2021; Le, 2021; Li et al., 2022). Several quantitative hemodynamic metrics, such as average wall shear stress (WSS), maximum intra-aneurysmal WSS, low WSS area, average oscillatory shear index, and relative residence time, were identified as playing a vital role in the pathology of IA rupture. However, most of these metrics are derived from studies that involve only a single patient or a relatively small number of patients, and are therefore statistically unconvincing. Moreover, clinical guidelines and practical scoring systems that include hemodynamic metrics for IA management have yet to be established. To overcome these problems, single-center and multicenter studies that include patient-specific hemodynamic analysis in larger cohorts are required (Xiang et al., 2013; Ionita et al., 2014; Rayz and Cohen-Gadol, 2020).

Precise individualized 3D modeling of IAs is the first and most crucial step in the workflow of accurate patient-specific morphological and hemodynamic analyses. Conventionally, IA recognition and segmentation in the modeling procedure mainly rely on manual operations. The manual detection and segmentation of IAs require researchers to have extensive experience in medical image interpretation (Firouzian et al., 2011; Sen et al., 2014; Yang et al., 2014; Kavur et al., 2020; Haider and Michahelles, 2021; Jalali et al., 2021). Due to the complexity of cerebrovascular anatomy, the procedure is error-prone, which could introduce inconsistency in the modeling and induce errors in subsequent analyses (Sen et al., 2014; Schwenke et al., 2019; Bo et al., 2021; Mensah et al., 2022). In addition, the highly labor-intensive nature of the manual operations prevents the application of patient-specific analyses in large cohorts. Thus, automating the modeling process has become a research frontier.

With the development of machine learning in recent years, convolutional neural network (CNN) architectures have shown great potential in automatic medical image segmentation. Ronneberger et al. proposed the UNet, a U-shaped convolutional neural network model (Ronneberger et al., 2015). This model can achieve accurate segmentation with a small dataset and has been continuously applied, developed, and optimized. Based on UNet, deep residual UNet (Res-UNet) simplifies the training process of deep neural networks with a residual mechanism, achieving higher accuracy in aerial image-based road extraction (Zhang et al., 2018). VNet also adopts the residual mechanism based on UNet, showing excellent results in prostate segmentation (Milletari et al., 2016). Based on these studies, many neural network models have emerged in the past 2 years to detect and segment IAs (Park et al., 2019; Shi et al., 2020a; Ma and Nie, 2021; Su et al., 2021). Park et al. (Park et al., 2019) developed a CNN model called HeadXNet, which processes the CTA images of patients, generates voxel-by-voxel predictions, and has been validated in clinical application. Su et al. (Su et al., 2021) introduced the attention gate (AG) mechanism into the 3D UNet model to improve its performance under a small sample size. Ma et al. (Ma and Nie, 2021) adopted the 3D UNet model and configured it with a larger patch size to obtain more context information. These early experiences of CNN-assisted automatic IA segmentation have demonstrated its practical value as a tool to assist clinical diagnosis and to improve the efficiency of the IA modeling procedure (Zhao et al., 2018).

Furthermore, several groups have conducted studies to compare the segmentation performance of different CNN architectures. Karimov et al. (Karimov et al., 2019) compared the accuracy and performance of three CNNs (UNet, ENet, and BoxENet) for the segmentation of mast cells in scans of histological slices and found that UNet showed higher accuracy in terms of DSC, intersection over union (IoU), and F1-score. Kartali et al. (Kartali et al., 2018) compared three CNN-based deep-learning approaches and two conventional approaches for real-time recognition of four basic emotions (happiness, sadness, anger, and fear) from facial images. Zhang et al. proposed the Dense-Dilated Neural Network (DDNet) based on 3D UNet for the segmentation of cerebral arteries in TOF-MRA images, which performed better than UNet, VNet, and Uception (Zhang and Chen, 2019). Zhu et al. (Zhu et al., 2019b) compared the segmentation performance of V-NAS, 3D UNet, and VNet on datasets of both normal organs (NIH Pancreas) and abnormal organs (MSD Lung tumors and MSD Pancreas tumors). These studies suggested that both the CNN architecture and the segmentation object could affect segmentation performance. However, the performance of existing CNNs in IA segmentation has yet to be investigated.

In this study, we proposed a deep-learning-based segmentation framework for IAs and quantitatively evaluated the impacts of pre-processing and convolutional neural network (CNN) architectures on IA segmentation performance.

The Institutional Ethics Review Committee of the First Affiliated Hospital of Xi’an Jiaotong University approved this retrospective study. A dataset containing the CTA images of 101 patients with 140 IAs was retrospectively collected from the First Affiliated Hospital of Xi’an Jiaotong University and fully anonymized. The CTA images were acquired with 256-slice spiral CT scanners (Brilliance iCT, Philips Healthcare, Cleveland, OH, United States). The scanning parameters were as follows: tube voltage, 120 kV; tube current, 1,000 mA; slice thickness, 0.9 mm.

We included all CTA acquisitions with at least one aneurysm, irrespective of etiology, symptomatology, and configuration (saccular, fusiform, and dissecting). The aneurysms were located in the anterior cerebral arteries (ACA), the anterior communicating arteries (ACoA), the internal carotid arteries (ICA), the middle cerebral arteries (MCA), the posterior cerebral arteries (PCA), and the vertebral basilar arteries (VA).

The CTA images were annotated under the guidance of experienced clinicians. All aneurysms were manually segmented using the manual segmentation tool of ITK-SNAP. The location and diameter of the 140 IAs were determined and statistically classified.

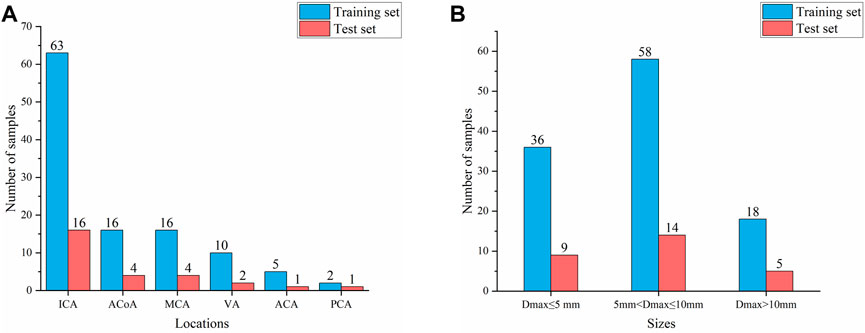

Based on the classification of IAs by location and size, 80% of the cases were randomly assigned to the training set and the remaining 20% to the test set, so that the data distributions of the training set and the test set were as consistent as possible. Thus, the training set and the test set contained 112 and 28 aneurysms, respectively, as shown in Figure 1. Finally, 112 negative cases (containing no IAs) were added to the training set to balance the proportion of positive and negative samples. No validation set was used because of the small sample size in this study.
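The split described above can be sketched as a stratified random split. This is a minimal illustration only: the record fields (`location`, `size_class`), the seed, and the toy case list are hypothetical, and the source does not state how the randomization was implemented.

```python
import random
from collections import defaultdict

def stratified_split(cases, train_fraction=0.8, seed=0):
    """Split cases into train/test so that every (location, size) stratum
    contributes roughly the same fraction to both sets."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for case in cases:
        # Field names are hypothetical placeholders for the IA attributes.
        strata[(case["location"], case["size_class"])].append(case)

    train, test = [], []
    for key in sorted(strata):
        members = strata[key][:]
        rng.shuffle(members)
        n_train = round(train_fraction * len(members))
        train.extend(members[:n_train])
        test.extend(members[n_train:])
    return train, test

# Toy data: 4 strata with 10 synthetic cases each (not the study data).
labels = [(loc, size) for loc in ("ICA", "MCA") for size in ("small", "large")] * 10
cases = [{"id": i, "location": loc, "size_class": size}
         for i, (loc, size) in enumerate(labels)]
train, test = stratified_split(cases)
```

Because the rounding is done per stratum, the overall ratio is only approximately 80/20 when stratum sizes are not multiples of five.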

FIGURE 1. Composition of IAs of different locations and sizes (A) Aneurysm location distribution (B) Aneurysm size distribution.

Before being input to the network, the data need to be pre-processed because the grey value of an IA is similar to that of the surrounding tissues and the lesion area occupies a small proportion of the original image. We therefore set up a comparative experiment in this study. First, we applied a first-order derivative to the images to emphasize the boundary features of the aneurysm, and performed the same follow-up pre-processing on the derivative-filtered and the original images. We then trained the network models on the derivative-filtered and the original images separately, and compared the segmentation results of the models in the two situations to explore the sensitivity of the deep learning models to edge information. The pre-processing workflow is shown in Figure 2.
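The source does not specify which first-order derivative operator was used. As one plausible reading, the sketch below computes the gradient magnitude with central differences via `numpy.gradient`; the operator choice and the toy volume are assumptions made for illustration.

```python
import numpy as np

def gradient_magnitude(volume):
    """Magnitude of the first-order intensity gradient of a 3D volume
    (central differences; an assumed stand-in for the paper's operator)."""
    gz, gy, gx = np.gradient(volume.astype(np.float32))
    return np.sqrt(gx ** 2 + gy ** 2 + gz ** 2)

# Toy volume: a bright cube on a dark background (synthetic, not CTA data).
volume = np.zeros((16, 16, 16), dtype=np.float32)
volume[4:12, 4:12, 4:12] = 100.0
edges = gradient_magnitude(volume)
```

The response is zero inside the homogeneous cube and non-zero on its faces, which is the boundary emphasis the paragraph describes.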

In the follow-up pre-processing, we first cropped the image into sub-volumes of 48 × 48 × 48 voxels, thereby increasing the proportion of the lesion in the image. We also performed a grayscale transformation to enhance the contrast between the IA and the background region. These two steps directly enhance the image features of the lesion. Considering the small sample size of the dataset in this study, random flipping and random rotation were also used for data augmentation in the pre-processing stage.
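The three steps above (cropping, grayscale transformation, augmentation) can be sketched with NumPy as follows. The intensity window (`low`, `high`), the restriction to 90-degree in-plane rotations, and the function names are hypothetical choices; the source gives only the patch size and the names of the operations.

```python
import numpy as np

def crop_patch(volume, center, size=48):
    """Crop a cubic patch around `center`, shifting the window inward at the
    borders. Assumes every volume dimension is at least `size`."""
    starts = [min(max(c - size // 2, 0), dim - size)
              for c, dim in zip(center, volume.shape)]
    z, y, x = starts
    return volume[z:z + size, y:y + size, x:x + size]

def window_intensity(patch, low, high):
    """Clip to [low, high] and rescale to [0, 1] (one simple grayscale transform)."""
    clipped = np.clip(patch.astype(np.float32), low, high)
    return (clipped - low) / (high - low)

def random_flip_rotate(patch, rng):
    """Random flip along each axis, then a random multiple of 90 degrees in-plane."""
    for axis in range(3):
        if rng.random() < 0.5:
            patch = np.flip(patch, axis=axis)
    k = int(rng.integers(0, 4))
    return np.rot90(patch, k=k, axes=(1, 2))

rng = np.random.default_rng(0)
volume = rng.integers(-1000, 1000, size=(64, 96, 96)).astype(np.int16)  # synthetic
patch = crop_patch(volume, center=(10, 50, 90))
patch = window_intensity(patch, low=0.0, high=600.0)  # hypothetical window
patch = random_flip_rotate(patch, rng)
```

For segmentation training, the same crop, flips, and rotation would have to be applied to the label mask so that image and annotation stay aligned.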

To explore the influence of different patch sizes on the automatic segmentation results of the model, this study set three different patch sizes, namely 32 × 32 × 32, 48 × 48 × 48, and 64 × 64 × 64 voxels.

The convolutional neural network (CNN) is one of the most representative deep learning algorithms, first proposed by LeCun et al. for image processing (LeCun et al., 1998). A CNN usually consists of an input layer, multiple convolution layers, pooling layers, and fully connected layers. It uses convolution kernels to extract features from the image and a padding strategy to retain as much of the original image information as possible. It can therefore extract high-order features from the input and is widely used in image recognition, target segmentation, natural language processing, and other fields. In this paper, we built and compared three popular CNN models for medical image processing: 3D UNet, VNet, and 3D Res-UNet.

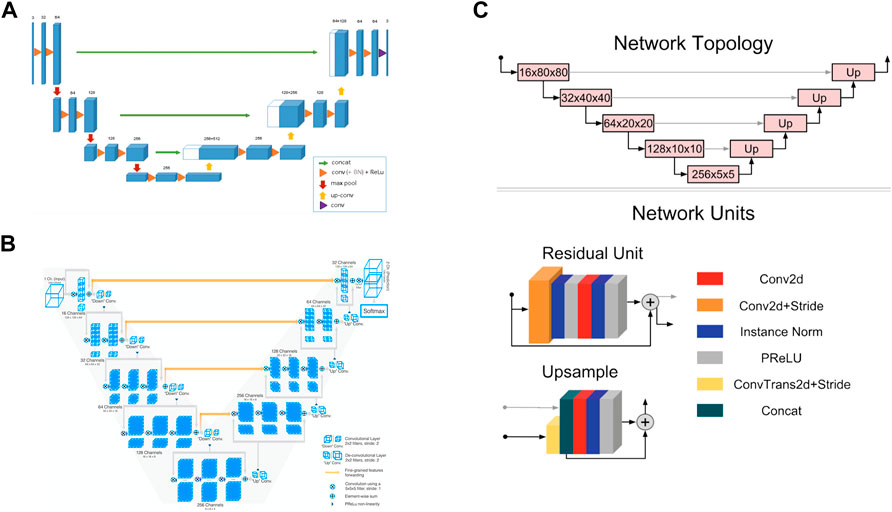

3D UNet is a CNN composed of a contracting path and an expansive path (Cicek et al., 2016). The contracting path is used to obtain context information, while the expansive path is used to locate accurately. They are almost symmetrical, forming a U-shaped network structure (Figure 3A).

FIGURE 3. Convolutional neural network structures of (A) 3D UNet (Cicek et al., 2016) (B) VNet (Milletari et al., 2016), and (C) 3D Res-UNet (Kerfoot et al., 2019).

In the encoder part of the left half, the downsampling module is repeatedly applied, which is composed of two 3 × 3 × 3 convolutions, each followed by a rectified linear unit (ReLU), and a 2 × 2 × 2 max pooling operation. After each pooling operation, the image size is halved, and the number of channels of the feature map is doubled.

In the decoder part of the right half, the upsampling module is repeatedly applied, which is composed of a 2 × 2 × 2 up-convolution and two 3 × 3 × 3 convolutions, each followed by a ReLU. In this process, the 2 × 2 × 2 up-convolution doubles the image size, while the number of channels of the feature map is reduced by half. Subsequently, the feature map obtained in the upsampling process and the corresponding cropped feature map in the contracting path are concatenated through skip connections so that more information can be integrated for more precise voxel localization. In the last layer, a 1 × 1 × 1 convolutional layer is added to reduce the number of channels of the output image to the number of labels.

3D UNet can accept images of any size because it does not contain fully connected layers. In addition, 3D UNet uses batch normalization (BN) before each ReLU to speed up convergence and avoid network structure bottlenecks.
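The size and channel bookkeeping of a U-shaped network can be traced with a few lines of plain Python. This is a simplified sketch: the base channel count (32) and the depth (3) are hypothetical, since the source does not report them, and the tracer follows the common convention that channels double on the way down and halve on the way up.

```python
def unet_feature_shapes(patch_size=48, base_channels=32, depth=3):
    """Return (stage, channels, spatial size) along a U-shaped encoder/decoder.
    Each pooling step halves the spatial size and doubles the channels;
    each up-convolution stage doubles the spatial size and halves the channels."""
    shapes = []
    channels, size = base_channels, patch_size
    for level in range(depth):
        shapes.append((f"enc{level}", channels, size))
        channels, size = channels * 2, size // 2
    shapes.append(("bottleneck", channels, size))
    for level in reversed(range(depth)):
        channels, size = channels // 2, size * 2
        shapes.append((f"dec{level}", channels, size))
    return shapes

shapes = unet_feature_shapes()
for stage, channels, size in shapes:
    print(f"{stage:10s} channels={channels:4d} size={size}^3")
```

The trace also shows why patch sizes such as 32, 48, and 64 are convenient: each is divisible by 2 raised to the depth, so the decoder returns exactly to the input size.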

VNet is a CNN proposed for 3D medical image segmentation (Milletari et al., 2016). Similar to UNet, its contracting path and expansive path are almost symmetrical, forming a V-shaped network structure (Figure 3B).

The contracting path on the left is divided into different stages, including one to three convolutional layers, and each stage uses a convolution kernel with a size of 5 × 5 × 5 voxels for convolution operation. VNet introduces the residual function, which connects the feature map after convolution operation and PReLU non-linearity with the original input of this stage for element-wise residual connection. Then the convolution with 2 × 2 × 2 voxels wide kernels applied with stride two is performed for downsampling. Therefore, after each downsampling operation, the size of the feature map is reduced by half, and the number of channels is doubled.
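The halving produced by the strided convolution follows from the standard output-size formula for a convolution along one axis. The sketch below applies it to the two kernel settings mentioned above; the padding of 2 for the 5 × 5 × 5 kernel is an assumption (it is what keeps the size unchanged so that the residual addition is possible) and the 48-voxel input is just the patch size used elsewhere in the study.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Output length along one axis: floor((n + 2 * padding - kernel) / stride) + 1."""
    return (n + 2 * padding - kernel) // stride + 1

# 5 x 5 x 5 convolution with assumed padding 2: size preserved for the residual sum.
same = conv_output_size(48, kernel=5, stride=1, padding=2)
# 2 x 2 x 2 convolution with stride 2: size halved, replacing a pooling layer.
down = conv_output_size(48, kernel=2, stride=2)
print(same, down)
```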

The expansive path on the right continuously extracts features during the up-sampling process and increases the spatial support for lower-resolution feature maps to collect important feature information. Finally, softmax is used to generate probability distributions to achieve voxel-by-voxel classification.

Like UNet, VNet also forwards the feature maps of the contracting path on the left to the expansive path on the right through skip connections, restoring the detail lost during downsampling and thereby improving the segmentation accuracy.

3D Res-UNet is a CNN model implemented by adding residual units based on UNet, as seen in Figure 3C (Kerfoot et al., 2019).

3D Res-UNet uses convolution and deconvolution with stride two to perform downsampling and upsampling instead of pooling layers, so that the network can learn the best upsampling or downsampling operation and the number of network layers is further reduced. In addition, parametric rectified linear units (PReLU) are used in the residual units to provide a learnable activation and improve the segmentation results. Instance normalization is used to prevent contrast shift.

In this study, the three CNN models were constructed with the PyTorch (Paszke et al., 2019) and MONAI (MONAI Consortium, 2020) deep learning frameworks. All training and testing tasks were carried out on the same deep learning platform and accelerated by a GeForce GTX 1080 Ti GPU with 10 GB of memory. Each model was trained for 500 epochs using the Adam optimizer with an initial learning rate of 0.0001. As the criterion for convergence of model training, the Dice coefficient loss function is defined as Eq. 1, where ygt and ypred are the ground truth and the binary predictions from the neural networks, respectively. Additionally, ε is a small constant that avoids division by zero, set to 1e-5 here.
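A Dice loss of the kind described above can be sketched in NumPy as follows. The exact placement of ε in the paper's Eq. 1 is not recoverable from the text, so adding it to both numerator and denominator is an assumption; it is one common convention and makes the loss zero when both masks are empty.

```python
import numpy as np

def dice_loss(y_gt, y_pred, eps=1e-5):
    """1 - Dice coefficient, with `eps` added to numerator and denominator
    (assumed placement) so that empty masks do not cause a division by zero."""
    y_gt = np.asarray(y_gt, dtype=np.float64).ravel()
    y_pred = np.asarray(y_pred, dtype=np.float64).ravel()
    intersection = np.sum(y_gt * y_pred)
    dice = (2.0 * intersection + eps) / (np.sum(y_gt) + np.sum(y_pred) + eps)
    return 1.0 - dice

# Synthetic masks: a small cube and its complement.
mask = np.zeros((4, 4, 4))
mask[1:3, 1:3, 1:3] = 1.0
perfect = dice_loss(mask, mask)        # identical masks
disjoint = dice_loss(mask, 1.0 - mask) # no overlap at all
empty = dice_loss(np.zeros((4, 4, 4)), np.zeros((4, 4, 4)))
```

During training the prediction would be a soft probability map rather than a binary mask; the same expression applies unchanged.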

In this paper, we used V-Recall, DSC, HD, and AST to comprehensively evaluate the segmentation performance of three CNN models. Among them, V-Recall was used to characterize the voxel-wise accuracy of lesion recognition. DSC and HD were used to characterize the quality of lesion segmentation, and AST was used to characterize segmentation efficiency.

V-Recall: V-Recall is the voxel-wise ratio of the number of true positive IA voxels to the number of all true IA voxels in an IA lesion, which is defined as follows:

$$\text{V-Recall} = \frac{|y_{gt} \cap y_{pred}|}{|y_{gt}|}$$

where ygt and ypred represent the ground truth and the binary predictions from the neural networks, and | ygt∩ypred | is the intersection of the ground truth and the prediction, representing the correctly predicted lesion voxels. We used V-Recall to evaluate the ability of the model to accurately identify true IA voxels in an IA lesion, since a segmentation task can be seen as a voxel-wise prediction. A non-zero V-Recall indicates that the lesion can be identified at the case level. The closer V-Recall is to 1, the more complete the segmentation of the lesion.

DSC: DSC represents the overlap ratio between the ground truth and the segmentation results. Its value ranges between 0 and 1; the closer it is to 1, the better the segmentation. DSC is defined as follows:

$$\text{DSC} = \frac{2\,|y_{gt} \cap y_{pred}|}{|y_{gt}| + |y_{pred}|}$$

HD: The HD measures the distance between two point sets, representing the similarity of the two sets. The HD is sensitive to the boundary of the segmentation results. The closer the segmentation results predicted by the neural networks are to the ground truth, the smaller the HD. The HD between the two sets is defined as follows:

$$\text{HD}(y_{gt}, y_{pred}) = \max\left\{ \max_{x \in y_{gt}} \min_{y \in y_{pred}} d(x, y),\ \max_{y \in y_{pred}} \min_{x \in y_{gt}} d(x, y) \right\}$$

where d(x, y) is the distance between point x of the ground truth (ygt) and point y of the predictions (ypred).

AST: AST is the average time a trained model takes to segment one sample, representing the model’s segmentation efficiency.
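The three overlap and distance metrics above can be computed from binary masks as in the following sketch. It assumes both masks are non-empty and measures the HD in voxel units with the Euclidean distance; the brute-force pairwise distance matrix is only suitable for small masks, and the toy masks are synthetic.

```python
import numpy as np

def v_recall(y_gt, y_pred):
    """Fraction of ground-truth voxels that are also predicted."""
    y_gt, y_pred = np.asarray(y_gt, bool), np.asarray(y_pred, bool)
    return np.logical_and(y_gt, y_pred).sum() / y_gt.sum()

def dsc(y_gt, y_pred):
    """Dice similarity coefficient between two binary masks."""
    y_gt, y_pred = np.asarray(y_gt, bool), np.asarray(y_pred, bool)
    intersection = np.logical_and(y_gt, y_pred).sum()
    return 2.0 * intersection / (y_gt.sum() + y_pred.sum())

def hausdorff_distance(y_gt, y_pred):
    """Symmetric Hausdorff distance (in voxels) between the foreground voxel sets."""
    a = np.argwhere(np.asarray(y_gt, bool)).astype(np.float64)
    b = np.argwhere(np.asarray(y_pred, bool)).astype(np.float64)
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return max(d.min(axis=1).max(), d.min(axis=0).max())

# Synthetic example: a 4 x 4 x 4 cube and the same cube shifted by one voxel.
gt = np.zeros((8, 8, 8), dtype=bool)
gt[2:6, 2:6, 2:6] = True
pred = np.zeros((8, 8, 8), dtype=bool)
pred[2:6, 2:6, 3:7] = True
recall_value = v_recall(gt, pred)
dsc_value = dsc(gt, pred)
hd_value = hausdorff_distance(gt, pred)
```

For full-size patches, restricting the point sets to surface voxels or using `scipy.spatial.distance.directed_hausdorff` would avoid the quadratic memory cost.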

Because the above metrics (DSC, HD, V-Recall, etc.) cannot reflect the bias of geometric details, which might affect the accuracy of subsequent mechanical analysis, a 3D deviation analysis between CNN-based segmentation and ground truth was carried out in Geomagic Studio 2014 software (Raindrop Geomagic, Development Triangle, NC, United States). The 3D models were reconstructed from segmentation and ground truth based on the Python platform and VTK library (Schroeder et al., 2006). Furthermore, the geometric quality was evaluated using four metrics widely used in 3D model deviation analysis, including maximum distance, average distance, standard deviation (STD), and root mean square (RMS) value.
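Given a list of signed point-to-surface distances, the four deviation metrics can be summarized as below. This is an illustrative sketch and not the Geomagic implementation: in particular, taking the average distance as the mean of absolute deviations is an assumption, and the input values are synthetic.

```python
import math
import statistics

def deviation_summary(signed_distances):
    """Summarize signed deviations (mm) between a reconstructed surface and
    the reference surface. Positive values lie outside the reference."""
    return {
        "max_positive": max(max(signed_distances), 0.0),
        "max_negative": min(min(signed_distances), 0.0),
        # Assumed definition: mean of absolute deviations.
        "average": statistics.fmean(abs(d) for d in signed_distances),
        "std": statistics.pstdev(signed_distances),
        "rms": math.sqrt(statistics.fmean(d * d for d in signed_distances)),
    }

summary = deviation_summary([0.5, -0.5, 1.0, -1.0])  # synthetic distances
```

When the signed deviations have zero mean, as in this toy input, the STD and the RMS coincide; a systematic over- or underestimation shows up as an RMS larger than the STD.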

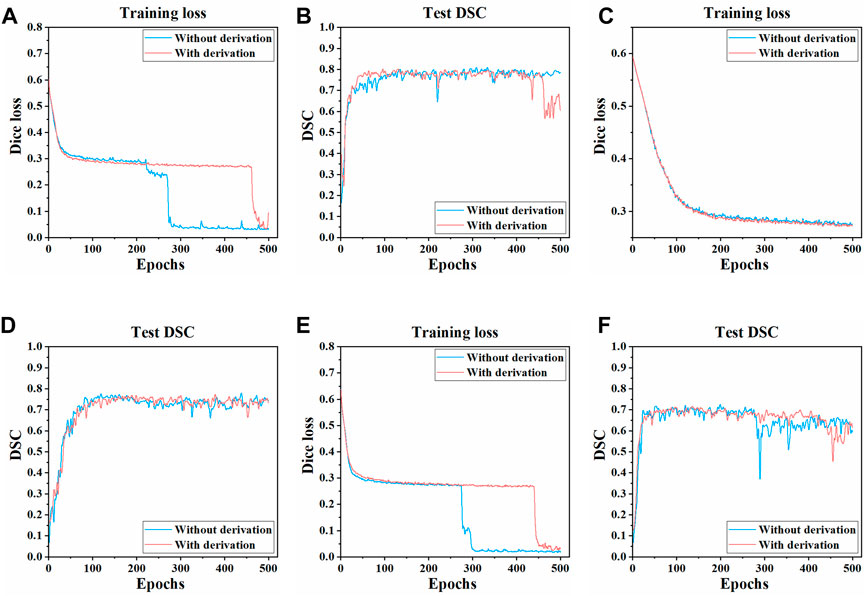

The training processes and results of the three models under the two pre-processing methods (with and without derivation) were compared. The changes in the Dice loss on the training set and in the DSC on the test set during training are shown in Figure 4. Without derivation, the 3D UNet and 3D Res-UNet models fitted and converged faster than with pre-derived images and achieved a higher DSC at the end of training. In addition, their Dice loss was lower and their DSC changed more smoothly after convergence. VNet, in contrast, was not sensitive to the pre-derivation of images: its Dice loss and DSC were similar with and without derivation during training.

FIGURE 4. Changes of dice loss value and DSC coefficient in the training process of three models. The changes of the Dice loss on the training set of (A) 3D UNet (C) VNet, and (E) 3D Res-UNet. The changes of the DSC on the test set of (B) 3D UNet (D) VNet, and (F) 3D Res-UNet. The red and blue curves in the figure represent the training process of images with derivation and without derivation, respectively.

In general, the overall training effect of the three models on the dataset without derivation was better. Therefore, the segmentation effects of the three models on the dataset without derivation were compared, and the subsequent studies in this paper were carried out on the data set without derivation.

To explore the influence of patch size on the automatic segmentation results of the 3D UNet model, this study set three different patch sizes (32 × 32 × 32, 48 × 48 × 48, and 64 × 64 × 64 voxels). We trained 3D UNet on samples of each patch size and compared the automatic segmentation results on the test set samples. The specific data are shown in Table 1.

As can be seen from Table 1, the patch size of the sample affected the segmentation performance of the model. When the patch size was 48 × 48 × 48 voxels, the average DSC on the test set was the highest (0.818 ± 0.100), which was 5.3% and 0.3% higher than that of 32 × 32 × 32 voxels and 64 × 64 × 64 voxels, respectively. The HD was also the lowest (3.323 ± 3.212 voxels) when the patch size was 48 × 48 × 48 voxels, which was 15.5% and 28.1% lower than that of 32 × 32 × 32 voxels and 64 × 64 × 64 voxels, respectively. Therefore, 3D UNet had the best segmentation performance for IAs when the patch size was 48 × 48 × 48 voxels.

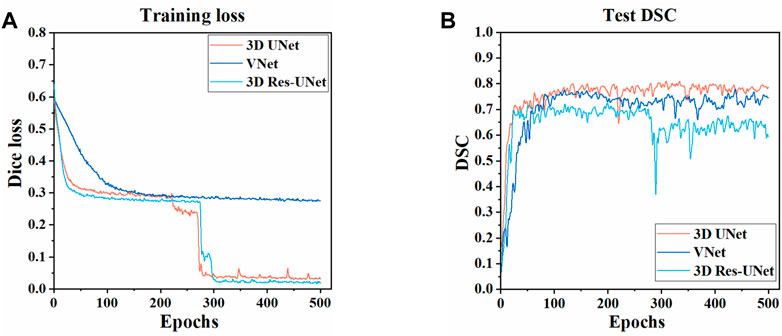

The three CNNs were trained under the same hyperparameters. Changes in the Dice loss value on the training set and the DSC on the test set during the training of three models are shown in Figures 5, 6. Compared with VNet and 3D Res-UNet, 3D UNet has the fastest convergence rate and the highest DSC value on the test set.

FIGURE 5. Changes of dice loss value and dice coefficient in the training of three models (A) Changes of dice loss on the training set (B) Changes of DSC on the test set.

Figure 6A illustrates the boxplot of the V-Recall of segmentation results on the test set. All the CNNs showed a non-zero voxel-wise recall at the case level, which indicates that all the IAs in each case were identified successfully. Moreover, 3D UNet achieved the highest average V-Recall (79.7%), as well as the highest median V-Recall (81.5%). The average V-Recall of 3D UNet on the test set was 3.5% and 17.3% higher than that of VNet and 3D Res-UNet, respectively. The specific data were listed in Table 2.

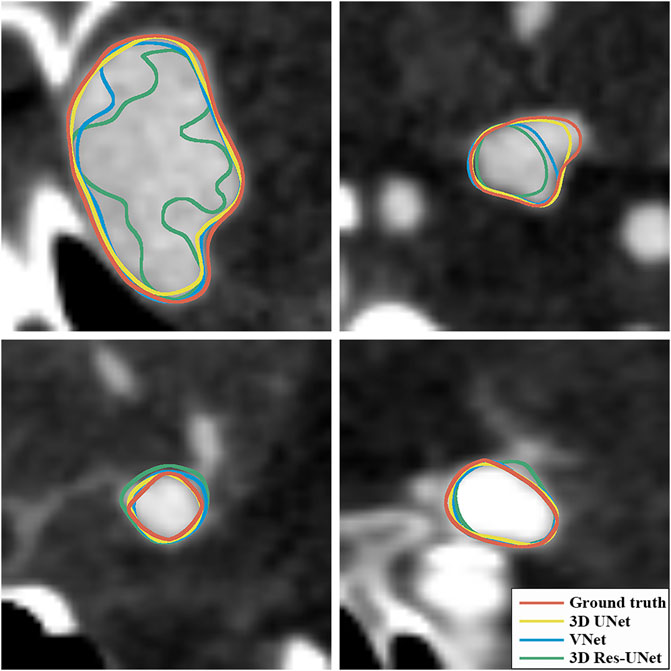

Figures 6B, C illustrate the boxplots of the DSC and HD of three CNNs’ segmentation results on the test set. The average DSC and HD values of 3D UNet, VNet, and 3D Res-UNet were 0.818 ± 0.100 and 3.323 ± 3.212 voxels, 0.786 ± 0.108 and 3.626 ± 3.167 voxels, 0.732 ± 0.139 and 6.080 ± 6.065 voxels, respectively (Table 2). The average DSC of 3D UNet was 4.1% and 11.7% higher than VNet and 3D Res-UNet, and the average HD was 8.3% and 17.3% lower than that of VNet and 3D Res-UNet, respectively. The segmentation results of three CNNs are illustrated in Figure 7. Among all the compared models, the 3D UNet provides segmentation results most similar to the ground truth.

FIGURE 7. Visualization of segmentation results of three models. The ground-truth annotations are shown in red, and the automatic segmentations of 3D UNet, VNet, and 3D Res-UNet are shown in yellow, blue, and green, respectively.

The AST of 3D UNet and 3D Res-UNet was 0.053 s, which was 8.62% faster than VNet (0.058 s). The specific data of the evaluation performance of the three models on the test set are shown in Table 2.
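The percentage comparisons in this section appear to be relative differences taken with respect to the comparison model; that convention is inferred from the reported numbers rather than stated in the text. A short sketch using the AST and average DSC values quoted in this section:

```python
def relative_difference(value, reference):
    """Signed difference of `value` with respect to `reference`, in percent."""
    return 100.0 * (value - reference) / reference

# AST: 3D UNet (0.053 s) relative to VNet (0.058 s).
ast_change = relative_difference(0.053, 0.058)
# Average DSC: 3D UNet (0.818) relative to VNet (0.786) and 3D Res-UNet (0.732).
dsc_vs_vnet = relative_difference(0.818, 0.786)
dsc_vs_resunet = relative_difference(0.818, 0.732)
print(round(ast_change, 2), round(dsc_vs_vnet, 1), round(dsc_vs_resunet, 1))
```

A negative value means the first model's figure is lower than the reference, which is the desirable direction for AST and HD and the undesirable one for DSC and V-Recall.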

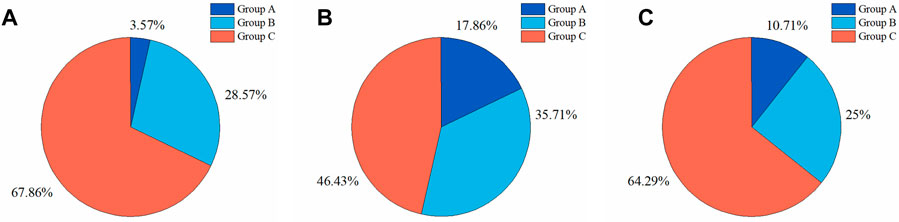

To further investigate the DSC distribution of the segmentation results, the DSC values of the segmentations in the test set were statistically analyzed. We divided the IA segmentation results into three categories according to the DSC: Group A, Group B, and Group C comprised samples with a DSC of 0.4–0.6, 0.6–0.8, and 0.8–1.0, respectively. For the automatic segmentation based on 3D UNet, 67.86% of the samples had a DSC greater than 0.8 (Figure 8A), which was 5.5% and 45.5% higher than that of VNet (Figure 8B) and 3D Res-UNet (Figure 8C), respectively, and only 3.57% (1 case) had a DSC between 0.4 and 0.6 (Figure 8A). For VNet and 3D Res-UNet, the proportion of Group C decreased, while the proportion of Group A increased (Figures 8B,C).
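The grouping can be sketched as a simple binning of per-case DSC values. The handling of values exactly on a boundary (0.6 or 0.8) is an assumption, and the DSC list is synthetic: it is chosen only so that 19 of 28 cases fall in Group C and 1 in Group A, which reproduces the 67.86% and 3.57% reported for 3D UNet, with the remaining 8 cases placed in Group B by inference.

```python
from collections import Counter

def dsc_group(dsc):
    """Assign a DSC value to Group A (0.4-0.6), B (0.6-0.8), or C (0.8-1.0).
    Upper bounds are treated as inclusive, which is an assumed convention."""
    if 0.8 < dsc <= 1.0:
        return "C"
    if 0.6 < dsc <= 0.8:
        return "B"
    if 0.4 <= dsc <= 0.6:
        return "A"
    return None  # outside the ranges used in the study

def group_percentages(dsc_values):
    """Percentage of cases in each group."""
    counts = Counter(dsc_group(v) for v in dsc_values)
    return {group: 100.0 * n / len(dsc_values) for group, n in counts.items()}

# Synthetic per-case DSC values (placeholders, not the study's measurements).
dsc_values = [0.9] * 19 + [0.7] * 8 + [0.5] * 1
percentages = group_percentages(dsc_values)
```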

FIGURE 8. Statistical results of DSC values of segmentation results on the test set. The proportion of segmentation results within different DSC values based on (A) 3D UNet (B) VNet, and (C) 3D Res-UNet. Group A, Group B, and Group C represented samples of DSC between 0.4 and 0.6, 0.6–0.8, and 0.8–1.0, respectively.

The size and location of IAs are important factors that may affect IA rupture. However, the sensitivity of different models to these factors remains to be studied. Therefore, we compared the segmentation performance of the three models on IAs of different sizes to study the impact of size on model performance.

We divided the IAs of the test set into Group 1, Group 2, and Group 3 according to maximum diameter (Dmax), which were distributed in Dmax < 5 mm, 5 mm < Dmax ≤ 10 mm, and Dmax > 10 mm, respectively. Group 1, Group 2, and Group 3 contained 9, 14, and 4 cases, with an average Dmax of 3.498, 7.143, and 13.281 mm, respectively.

Figure 9 illustrates the mean and standard deviation of the V-Recall, DSC, and HD values of the three models. In all size groups, 3D UNet achieved the highest average V-Recall (Figure 9A) and DSC (Figure 9B) as well as the lowest average HD (Figure 9C), followed by VNet and 3D Res-UNet. As the maximum diameter (Dmax) of the IAs increased, the V-Recall and DSC values of the segmentation results of the three models increased gradually, and the HD values decreased gradually. Thus, the three CNN models had good recognition and segmentation ability for larger IAs. As shown in Table 3, the DSC values of 3D UNet, VNet, and 3D Res-UNet for large aneurysms (Dmax > 10 mm) in Group 3 were 0.856 ± 0.043, 0.824 ± 0.063, and 0.711 ± 0.156, which were 13.5%, 15.9%, and 3.9% higher than those for small aneurysms (Dmax < 5 mm) in Group 1, respectively. In Group 1, 3D UNet still had a DSC of 0.754 ± 0.142, which was 6.0% and 10.2% higher than that of VNet and 3D Res-UNet, respectively. V-Recall and HD showed trends similar to those of DSC. Therefore, the segmentation performance of 3D UNet on small, medium-sized, and large aneurysms was relatively balanced and better than that of VNet and 3D Res-UNet. VNet performed second best, while the segmentation results of 3D Res-UNet were greatly affected by changes in aneurysm size. The specific data are listed in Table 3.

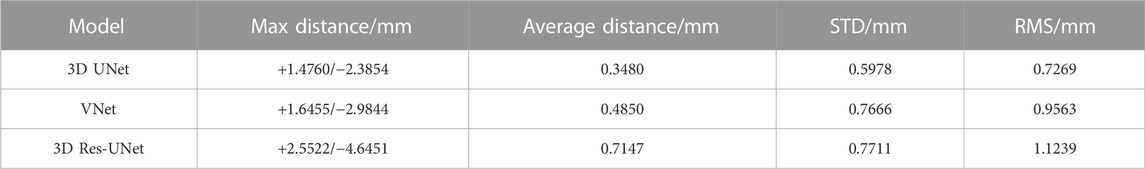

The 3D deviation analysis results (Table 4) showed that the 3D IA reconstruction models based on 3D UNet had the smallest deviation under the above four metrics, while those based on 3D Res-UNet had the highest deviation. The max distance of 3D UNet was +1.4760/−2.3854 mm, which was 10.3%/20.1% and 42.2%/48.6% lower in absolute value than that of VNet and 3D Res-UNet, respectively. The average distance, STD, and RMS of 3D UNet were 0.3480 mm, 0.5978 mm, and 0.7269 mm, which were 28.2% and 56.1%, 22.0% and 22.5%, and 24.0% and 35.3% lower than those of VNet and 3D Res-UNet, respectively.

TABLE 4. 3D deviation between 3D reconstruction models from CNN-based segmentation and ground truth.

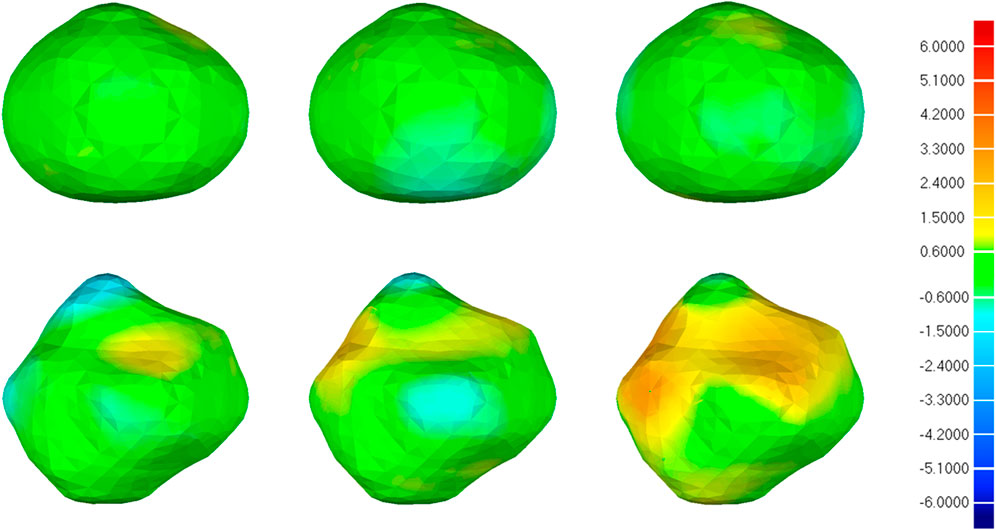

Figure 10 illustrates the visual distribution of the 3D deviation using the color-coded map to show the differences between CNN-based segmentations compared to the reference. For the IA represented in the first row, all 3D reconstruction models based on three CNN models have minor deviations compared to the reference, and the deviation distribution of the three models is consistent. For the IA in the second row, more yellow areas appear in all 3D reconstruction models, indicating higher deviations. Specifically, 3D IA reconstruction models based on 3D UNet have the smallest deviation.

FIGURE 10. 3D deviation analysis results of two IA reconstructed models based on CNN segmentation. The deviation is represented in the reference geometry. The first, second, and third columns represent 3D deviation results for 3D UNet, VNet, and 3D Res-UNet on two IA reconstructed models (upper and lower rows), respectively. The red areas show an overestimation of the reference model and the blue areas indicate an underestimation. Red: +6.000 mm deviation. Green: 0.000 mm deviation. Blue: −6.000 mm deviation.

In this study, three CNN models (3D UNet, VNet, and 3D Res-UNet) were constructed and applied to the automatic segmentation of IAs. We compared the automatic segmentation performance of the three models under a small sample size and found that 3D UNet outperformed VNet and 3D Res-UNet. The automatic segmentation results of IAs suggest that 3D UNet can achieve excellent segmentation quality, which can meet the requirements of the subsequent 3D reconstruction as well as assist clinical diagnosis and treatment. In addition, 3D UNet is highly time-efficient, with a single prediction taking less than a second.

The patch size plays an important role in obtaining a precise segmentation of IA lesions because it affects both the proportion of negative to positive voxels in the input and the consumption of computing resources. Therefore, it is necessary to select the most appropriate patch size for the specific task. This study compared the impact of three patch sizes (32 × 32 × 32, 48 × 48 × 48, and 64 × 64 × 64 voxels) on IA segmentation using 3D UNet and found that 3D UNet segments IAs best when the patch size is 48 × 48 × 48 voxels (Table 1). Some previous studies have also shown that a larger patch size does not necessarily yield better segmentation (Xu et al., 2019; Essa et al., 2020; Tang et al., 2021).
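The effect of patch size on the proportion of positive voxels can be illustrated with a minimal Python sketch. It assumes a synthetic binary label volume containing a single spherical lesion; the helper names `extract_patch` and `positive_fraction`, the volume size, and the sphere radius are hypothetical and are not taken from the pipeline used in this study.

```python
import numpy as np

def extract_patch(volume, center, size):
    """Crop a cubic patch of edge `size` centered as closely as possible on `center`.

    The crop window is shifted to stay inside the volume, so the patch has
    shape (size, size, size) whenever the volume is at least that large.
    """
    starts = []
    for c, dim in zip(center, volume.shape):
        start = int(c) - size // 2
        start = max(0, min(start, dim - size))  # clamp the window to the volume
        starts.append(start)
    z, y, x = starts
    return volume[z:z + size, y:y + size, x:x + size]

def positive_fraction(mask_patch):
    """Fraction of voxels in a binary label patch that belong to the lesion."""
    return float(mask_patch.astype(bool).mean())

# Synthetic label volume (assumption): a sphere of radius 6 voxels stands in for an IA.
shape = (96, 96, 96)
zz, yy, xx = np.indices(shape)
center = (48, 48, 48)
mask = ((zz - 48) ** 2 + (yy - 48) ** 2 + (xx - 48) ** 2) <= 6 ** 2

for size in (32, 48, 64):
    patch = extract_patch(mask, center, size)
    print(size, patch.shape, positive_fraction(patch))
```

In this toy setting the lesion fits inside every patch, so the number of positive voxels is constant while the number of negative voxels grows with the cube of the patch edge. This is one way in which a larger patch can make the class imbalance more severe.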

The convergence and efficiency of CNN training are major concerns in model evaluation, since training is computationally intensive and may affect the segmentation performance (Rehman et al., 2018). In our study, none of the three models showed obvious over-fitting. Among them, 3D UNet converged fastest and reached the highest DSC on the test set. This may be attributable to the use of batch normalization in 3D UNet, which helps the network train faster and achieve a higher DSC by reducing internal covariate shift (Ioffe and Szegedy, 2015; Cicek et al., 2016). In addition, the simple structure of 3D UNet makes it relatively light and shallow, so it can process the data efficiently under the same hardware conditions (Zou et al., 2018; Lyu et al., 2021).

The automatic segmentation and reconstruction quality of IAs have a primary influence on the subsequent hemodynamic analysis. Inaccurate segmentation (especially for important anatomical features, such as aneurysm necks) may result in unrealistic flow patterns and diverging flow parameter values and therefore may even lead to erroneous conclusions (Berg et al., 2018, 2019; Valen-Sendstad et al., 2018).

In this paper, 3D UNet showed excellent IA segmentation performance under a small sample size and outperformed VNet and 3D Res-UNet. In terms of lesion recognition, all the CNNs showed a non-zero V-Recall at the case level, indicating that all the IAs were identified successfully. Among them, 3D UNet achieved the highest average V-Recall of 0.797 ± 0.140, which was 3.5% and 17.3% higher than that of VNet and 3D Res-UNet, respectively (Table 2). This suggests that 3D UNet was more sensitive than the other two models in voxel-wise lesion identification. In terms of lesion segmentation, the automatic segmentation based on 3D UNet reached an average DSC of 0.818 ± 0.100, which was 4.1% and 11.7% higher than that of VNet and 3D Res-UNet, respectively, and an average HD of 3.323 ± 3.212 voxels, which was 8.3% and 17.3% lower than that of VNet and 3D Res-UNet, respectively (Table 2). These results are comparable to those of previous studies that used similar image modalities, sample sizes, and model architectures (Shahzad et al., 2020; Ma and Nie, 2021). Table 5 lists the average DSC achieved in previous studies, which ranges from 0.53 to 0.8632 (Sichtermann et al., 2019; Shi et al., 2020b; Shahzad et al., 2020; Bo et al., 2021; Ma and Nie, 2021).
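For readers who wish to reproduce metrics of this kind, the following minimal Python sketch shows one common way to compute the DSC, the voxel-wise recall, and a Hausdorff distance in voxel units from two binary masks. The function names are hypothetical, the conventions for empty masks are assumptions, and the Hausdorff distance is computed by brute force over all foreground voxels rather than over surfaces, which may differ from the implementation used in this study.

```python
import numpy as np

def dice(pred, truth):
    """Dice similarity coefficient: 2|P ∩ T| / (|P| + |T|)."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)

def voxel_recall(pred, truth):
    """Voxel-wise recall: |P ∩ T| / |T|."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    positives = truth.sum()
    if positives == 0:
        return float("nan")  # recall is undefined without positive voxels
    return float(np.logical_and(pred, truth).sum() / positives)

def hausdorff_voxels(pred, truth):
    """Symmetric Hausdorff distance between foreground voxel sets, in voxels.

    Brute force (all pairwise distances), so only suitable for small masks.
    """
    a = np.argwhere(pred.astype(bool)).astype(float)
    b = np.argwhere(truth.astype(bool)).astype(float)
    if len(a) == 0 or len(b) == 0:
        return float("inf")
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))

# Toy example (assumption): a 4x4x4 cube and the same cube shifted by one voxel.
truth = np.zeros((16, 16, 16), dtype=bool)
truth[4:8, 4:8, 4:8] = True
pred = np.zeros_like(truth)
pred[5:9, 4:8, 4:8] = True

print(dice(pred, truth), voxel_recall(pred, truth), hausdorff_voxels(pred, truth))
```

Under this definition, a non-zero recall for a case means that at least one ground-truth lesion voxel was predicted as lesion, which is the sense in which a lesion is counted as identified above.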

There are several possible reasons for the better segmentation performance of 3D UNet, given the differences among the three network architectures. VNet and 3D Res-UNet add residual mechanisms to the 3D UNet design and use convolution layers instead of pooling layers to perform downsampling. The residual mechanism was proposed mainly to mitigate vanishing and exploding gradients in deep network training, using skip connections to fuse features between different layers (He et al., 2016). However, Wang et al. found that skip connections are not always beneficial and that inappropriate feature fusion can degrade the segmentation performance (Wang et al., 2022b). The optimal combination of skip connections should be determined according to the scales and appearance of the target lesions (Wang et al., 2022b). Some studies have also shown that the effect of the residual mechanism depends on the implementation of the specific residual blocks, the input data, and the pre-processing methods (Naranjo-Alcazar et al., 2019). Our results likewise suggest that adding residual blocks may impair the effectiveness of feature fusion and weaken the IA segmentation performance.

In addition, the performance of CNNs varies across datasets. The study by Turečková et al. showed that VNet slightly outperforms 3D UNet on the Medical Decathlon Challenge (MDC) Liver dataset, while the trend is reversed on the MDC Pancreas dataset (Turečková et al., 2020). Wang et al. also showed that 3D UNet outperforms VNet in head and neck CT tumor segmentation (Wang et al., 2022a). Our dataset has high variability in lesion size and shape, similar to that of pancreas and head and neck tumors, which may explain why our results are consistent with the above studies.

Small IAs are a common risk factor for aneurysmal SAH and have a high risk of being missed in clinical screening (Kassell and Torner, 1983; Adams et al., 2000; Weir et al., 2002; Pradilla et al., 2013; Dolati et al., 2015). However, accurate automatic segmentation of small IAs remains a problem. To assess the impact of IA size on segmentation performance, we analyzed the results by size group and found that all models performed worse on small IAs than on large IAs, as in other studies (Sichtermann et al., 2019; Shi et al., 2020b; Bo et al., 2021), and that 3D UNet performed better on small IAs than the other models. The DSC of 3D UNet, VNet, and 3D Res-UNet for large IAs in Group 3 was 13.5%, 15.9%, and 3.9% higher, respectively, than that for small IAs in Group 1. In Group 1, the DSC of 3D UNet was 6.0% and 10.2% higher than that of VNet and 3D Res-UNet, respectively (Table 3).

Compared with VNet, 3D UNet uses a smaller convolution kernel size which may be more attentive to local IA features and not be overly distracted by the neighborhood when an IA lesion is relatively small and surrounded by many tissues, as Cao et al. demonstrated in their study (Cao et al., 2021).

To evaluate the usability and reliability of the 3D IA models in subsequent hemodynamic analysis, a specific geometric deviation analysis is necessary. The above metrics (DSC, HD, etc.) cannot reflect the accuracy of geometric details, which is important in subsequent mechanical analysis. Thus, we performed the 3D deviation analysis to evaluate the detailed deviation between the CNN-based segmentation and the ground truth. Table 4 shows that 3D UNet had the smallest deviation, with a max distance of +1.4760/−2.3854 mm and an average distance of 0.3480 mm, whereas 3D Res-UNet had the highest deviation, consistent with the trend shown by the above metrics.
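As an illustration of summary statistics of this kind, the following minimal Python sketch computes them from an array of signed surface-to-surface distances. The function name `deviation_summary`, the dictionary keys, the sample values, and the choice of the mean absolute distance as the "average distance" are assumptions made for illustration; the exact definitions used by the deviation analysis software in this study are not stated here and may differ.

```python
import numpy as np

def deviation_summary(signed_dist_mm):
    """Summarize signed deviations (mm) between a test surface and a reference.

    Positive values mean the test surface lies outside the reference
    (overestimation); negative values mean it lies inside (underestimation).
    """
    d = np.asarray(signed_dist_mm, dtype=float)
    return {
        "max_positive": float(d.max()),             # largest overestimation
        "max_negative": float(d.min()),             # largest underestimation
        "mean_signed": float(d.mean()),
        "mean_abs": float(np.abs(d).mean()),        # assumed "average distance"
        "std": float(d.std()),                      # population standard deviation
        "rms": float(np.sqrt(np.mean(d ** 2))),
    }

# Hypothetical signed distances sampled on a reference surface, in mm.
sample = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
summary = deviation_summary(sample)
print(summary)
```

With the population standard deviation, these quantities satisfy RMS² = STD² + (signed mean)², so the RMS exceeds the STD whenever the segmentation is biased toward over- or underestimation.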

Moreover, the visual deviation analysis suggested that high deviations usually occur at the concave and convex areas of irregular IAs (Figure 10). For an IA with regular morphology, the 3D reconstruction models based on the three CNN models are more likely to show a small deviation from the reference, as shown in the first row of Figure 10. In contrast, for an IA with irregular morphology, the original concave and convex areas are more likely to be overestimated and underestimated, respectively, in the 3D reconstruction models, as shown in the second row of Figure 10. This is because these areas are difficult for CNN models to segment accurately. Even so, the 3D IA reconstruction models based on 3D UNet were more likely to have smaller deviations than those based on VNet and 3D Res-UNet.

Improving the segmentation efficiency is of great significance for meeting clinical needs in a timely manner and for accelerating the morphological and hemodynamic analysis based on individualized 3D models. As far as we know, automatic segmentation time varies according to the task (Patel et al., 2020; Claux et al., 2022). According to some studies, automatic segmentation of an IA takes seconds to minutes per case (Sichtermann et al., 2019; Shi et al., 2020b; Bo et al., 2021).

In our study, all three CNN models are highly time-efficient, with an AST of less than a second, which is promising for practical use. Because the test set uses a smaller patch size (48 × 48 × 48 voxels) than other studies (Sichtermann et al., 2019; Shi et al., 2020b; Bo et al., 2021), the AST per IA of 3D UNet and 3D Res-UNet is only 0.053 s in this study, which is 9.4% less than that of VNet and comparable with that reported by Jin et al. (2019). The longer AST of VNet can be attributed to its larger convolution kernel size, which leads to a disproportionate increase in computational cost (Szegedy et al., 2016).
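The dependence of computational cost on kernel size can be made concrete with a short Python sketch that counts multiply-accumulate operations (MACs) for a single 3D convolution layer. The kernel edges of 3 and 5 and the channel count of 16 are illustrative assumptions and are not taken from the network configurations used in this study; the helper `conv3d_macs` is hypothetical.

```python
def conv3d_macs(out_voxels, c_in, c_out, k):
    """Multiply-accumulate count for one 3D convolution with a cubic k*k*k kernel.

    Each output voxel of each output channel sums over c_in * k**3 products.
    """
    return out_voxels * c_in * c_out * k ** 3

patch_voxels = 48 ** 3  # one 48 x 48 x 48 patch at full resolution

small = conv3d_macs(patch_voxels, c_in=16, c_out=16, k=3)
large = conv3d_macs(patch_voxels, c_in=16, c_out=16, k=5)
ratio = large / small  # equals (5 / 3) ** 3 = 125 / 27

print(small, large, ratio)
```

Because the cost scales with the cube of the kernel edge, a modest increase in kernel size multiplies the arithmetic per layer several times over. The measured difference in AST is much smaller than this ratio, since inference time also depends on network depth, memory traffic, and hardware utilization.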

The main limitation of this study is related to the small sample size of IAs from a single center, which may result in insufficient diversity of samples. Multicenter study validation should be performed to improve the robustness of results to data from different centers. Besides, all cases in this study were labeled by only one annotator. In the future, cross-validation between different annotators is needed to reduce the impact of individual differences among annotators. Finally, this study only compared three CNN models, and more updated network models can be included for comparison in the future following the same methodology proposed in this study.

In conclusion, we deployed three CNN models (3D UNet, VNet, 3D Res-UNet) and applied them to the automatic segmentation of IAs. After comparing the automatic segmentation performance of the three models under a small sample size, we found that 3D UNet outperformed VNet and 3D Res-UNet in terms of V-Recall, DSC, and HD. In addition, the 3D deviation analysis of the 3D reconstruction models from CNN-based segmentation showed that the segmentation of 3D UNet had the smallest deviation on all four deviation metrics while that of 3D Res-UNet had the highest, consistent with the above metrics and further indicating that 3D UNet is more suitable for IA segmentation than the other two models. In terms of segmentation efficiency, all three models are highly time-efficient, with a single prediction taking less than a second in this study, which is promising for practical use in the real-time diagnosis of cerebral hemorrhage and treatment of IAs. This can greatly facilitate large-scale CTA-based patient-specific modeling and analysis studies in healthcare.

The study of IA is vital for the health of the public. In the future, beyond the automatic detection and segmentation, predicting the rupture of IAs according to the morphological and hemodynamic analysis based on individualized 3D models will be worth exploring. Therefore, 3D UNet can not only assist clinicians in the diagnosis of IAs but can also encourage more implementations of artificial intelligence in healthcare.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

The Institutional Ethics Review Committee of the First Affiliated Hospital of Xi’an Jiaotong University approved this retrospective study. All data were fully anonymized.

GYZ and XQL contributed to the conception, design, and manuscript draft of this study. GYZ contributed to the funding acquisition. XQL contributed to the data analysis and visualization. TTY, LC, GY, and JHY contributed to the data interpretation and commented on the manuscript. JY and GY contributed to the clinical support of this study. All authors contributed to the manuscript revision and approved the submitted version.

This research was funded by the National Natural Science Foundation of China (NSFC) (12272289, 12271440).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abboud T., Rustom J., Bester M., Czorlich P., Vittorazzi E., Pinnschmidt H. O., et al. (2017). Morphology of ruptured and unruptured intracranial aneurysms. World Neurosurg. 99, 610–617. doi:10.1016/j.wneu.2016.12.053

Adams W. M., Laitt R. D., Jackson A. (2000). The role of mr angiography in the pretreatment assessment of intracranial aneurysms: A comparative study. AJNR. Am. J. Neuroradiol. 21, 1618–1628. Available at: http://www.ncbi.nlm.nih.gov/pubmed/11039340.

Berg P., Saalfeld S., Voß S., Beuing O., Janiga G. (2019). A review on the reliability of hemodynamic modeling in intracranial aneurysms: Why computational fluid dynamics alone cannot solve the equation. Neurosurg. Focus 47, E15–E19. doi:10.3171/2019.4.FOCUS19181

Berg P., Voß S., Saalfeld S., Janiga G., Bergersen A. W., Valen-Sendstad K., et al. (2018). Multiple aneurysms AnaTomy CHallenge 2018 (MATCH): Phase I: Segmentation. Cardiovasc. Eng. Technol. 9, 565–581. doi:10.1007/s13239-018-00376-0

Bo Z.-H., Qiao H., Tian C., Guo Y., Li W., Liang T., et al. (2021). Toward human intervention-free clinical diagnosis of intracranial aneurysm via deep neural network. Patterns 2, 100197. doi:10.1016/j.patter.2020.100197

Cao Y., Vassantachart A., Ye J. C., Yu C., Ruan D., Sheng K., et al. (2021). Automatic detection and segmentation of multiple brain metastases on magnetic resonance image using asymmetric UNet architecture. Phys. Med. Biol. 66, 015003. doi:10.1088/1361-6560/abca53

Cebral J. R., Raschi M. (2013). Suggested connections between risk factors of intracranial aneurysms: A review. Ann. Biomed. Eng. 41, 1366–1383. doi:10.1007/s10439-012-0723-0

Cicek O., Abdulkadir A., Lienkamp S. S., Brox T., Ronneberger O. (2016). “3D U-net: Learning Dense volumetric segmentation from sparse annotation,” Medical image Computing and computer-assisted intervention - MICCAI 2016 lecture notes in computer science. S. Ourselin, L. Joskowicz, M. R. Sabuncu, G. Unal, and W. Wells (Cham: Springer International Publishing), 424–432. doi:10.1007/978-3-319-46723-8

Claux F., Baudouin M., Bogey C., Rouchaud A. (2022). Dense, deep learning-based intracranial aneurysm detection on TOF MRI using two-stage regularized U-Net. J. Neuroradiol. 000, 3–9. doi:10.1016/j.neurad.2022.03.005

Dhar S., Tremmel M., Mocco J., Kim M., Yamamoto J., Siddiqui A. H., et al. (2008). Morphology parameters for intracranial aneurysm rupture risk assessment. Neurosurgery 63, 185–196. doi:10.1227/01.NEU.0000316847.64140.81

Dolati P., Pittman D., Morrish W. F., Wong J., Sutherland G. R. (2015). The frequency of subarachnoid hemorrhage from very small cerebral aneurysms (< 5 mm): A population-based study. Cureus 7, 2799–e310. doi:10.7759/cureus.279

Duan Z., Li Y., Guan S., Ma C., Han Y., Ren X., et al. (2018). Morphological parameters and anatomical locations associated with rupture status of small intracranial aneurysms. Sci. Rep. 8, 6440. doi:10.1038/s41598-018-24732-1

England T. N. (1998). Unruptured intracranial aneurysms — risk of rupture and risks of surgical intervention. N. Engl. J. Med. 339, 1725–1733. doi:10.1056/NEJM199812103392401

Essa E., Aldesouky D., Hussein S. E., Rashad M. Z. (2020). Neuro-fuzzy patch-wise R-CNN for multiple sclerosis segmentation. Med. Biol. Eng. Comput. 58, 2161–2175. doi:10.1007/s11517-020-02225-6

Etminan N., Chang H. S., Hackenberg K., De Rooij N. K., Vergouwen M. D. I., Rinkel G. J. E., et al. (2019). Worldwide incidence of aneurysmal subarachnoid hemorrhage according to region, time period, blood pressure, and smoking prevalence in the population: A systematic review and meta-analysis. JAMA Neurol. 76, 588–597. doi:10.1001/jamaneurol.2019.0006

Firouzian A., Manniesing R., Flach Z. H., Risselada R., van Kooten F., Sturkenboom M. C. J. M., et al. (2011). Intracranial aneurysm segmentation in 3D CT angiography: Method and quantitative validation with and without prior noise filtering. Eur. J. Radiol. 79, 299–304. doi:10.1016/j.ejrad.2010.02.015

Hademenos G. J., Massoud T. F., Turjman F., Sayre J. W. (1998). Anatomical and morphological factors correlating with rupture of intracranial aneurysms in patients referred for endovascular treatment. Neuroradiology 40, 755–760. doi:10.1007/s002340050679

Haider T., Michahelles F. (2021). “Human-machine collaboration on data annotation of images by semi-automatic labeling,” in Mensch und Computer 2021 (New York, NY, USA: ACM), 552–556. doi:10.1145/3473856.3473993

He K., Zhang X., Ren S., Sun J. (2016). Deep residual learning for image recognition, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV, USA, IEEE, 770–778. doi:10.1109/CVPR.2016.90

Hu B., Shi Z., Schoepf U. J., Varga-Szemes A., Few W. E., Zhang L. J. (2021). Computational fluid dynamics based hemodynamics in the management of intracranial aneurysms: State-of-the-art. Chin. J. Acad. Radiol. 4, 150–159. doi:10.1007/s42058-021-00081-3

Ioffe S., Szegedy C. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. 32nd Int. Conf. Mach. Learn. ICML, Lille, France 1, 448–456.

Ionita C. N., Mokin M., Varble N., Bednarek D. R., Xiang J., Snyder K. V., et al. (2014). “Challenges and limitations of patient-specific vascular phantom fabrication using 3D Polyjet printing,” in Medical imaging 2014: Biomedical applications in molecular, structural, and functional imaging. R. C. Molthen, and J. B. Weaver (SPIE), 90380M. doi:10.1117/12.2042266

Jalali Y., Fateh M., Rezvani M., Abolghasemi V., Anisi M. H. (2021). ResBCDU-net: A deep learning framework for lung CT image segmentation. Sensors Switz. 21, 268–324. doi:10.3390/s21010268

Jin H., Yin Y., Hu M., Yang G., Qin L. (2019). “Fully automated unruptured intracranial aneurysm detection and segmentation from digital subtraction angiography series using an end-to-end spatiotemporal deep neural network,” in Medical imaging 2019: Image processing. E. D. Angelini, and B. A. Landman (SPIE), 53. doi:10.1117/12.2512623

Karimov A., Razumov A., Manbatchurina R., Simonova K., Donets I., Vlasova A., et al. (2019). Comparison of UNet, ENet, and BoxENet for segmentation of mast cells in scans of histological slices, 2019 International Multi-Conference on Engineering, Computer and Information Sciences (SIBIRCON), Novosibirsk, Russia 0544–0547. IEEE. doi:10.1109/SIBIRCON48586.2019.8958121

Kartali A., Roglic M., Barjaktarovic M., Duric-Jovicic M., Jankovic M. M. (2018). Real-time algorithms for facial emotion recognition: A comparison of different approaches. 2018 14th Symp. Neural Netw. Appl. NEUREL, Belgrade, Serbia, 1–4. doi:10.1109/NEUREL.2018.8587011

Kassell N. F., Torner J. C. (1983). Size of intracranial aneurysms. Neurosurgery 12, 291–297. doi:10.1227/00006123-198303000-00007

Kavur A. E., Gezer N. S., Barış M., Şahin Y., Özkan S., Baydar B., et al. (2020). Comparison of semi-automatic and deep learning-based automatic methods for liver segmentation in living liver transplant donors. Diagn. Interv. Radiol. 26, 11–21. doi:10.5152/dir.2019.19025

Kerfoot E., Clough J., Oksuz I., Lee J., King A. P., Schnabel J. A. (2019). “Left-ventricle quantification using residual U-net,” in Lecture Notes in computer science (including subseries lecture Notes in artificial Intelligence and lecture Notes in bioinformatics) lecture notes in computer science. M. Pop, M. Sermesant, J. Zhao, S. Li, K. McLeod, and A. Young (Cham: Springer International Publishing), 371–380. doi:10.1007/978-3-030-12029-0_40

Korja M., Kivisaari R., Jahromi B. R., Lehto H. (2017). Size and location of ruptured intracranial aneurysms: Consecutive series of 1993 hospital-admitted patients. J. Neurosurg. 127, 748–753. doi:10.3171/2016.9.JNS161085

Lall R. R., Eddleman C. S., Bendok B. R., Batjer H. H. (2009). Unruptured intracranial aneurysms and the assessment of rupture risk based on anatomical and morphological factors: Sifting through the sands of data. Neurosurg. Focus 26, E2. doi:10.3171/2009.2.FOCUS0921

Lawton M. T., Vates G. E. (2017). Subarachnoid hemorrhage. N. Engl. J. Med. 377, 257–266. doi:10.1056/NEJMcp1605827

Le T. B. (2021). Dynamic modes of inflow jet in brain aneurysms. J. Biomech. 116, 110238. doi:10.1016/j.jbiomech.2021.110238

LeCun Y., Bottou L., Bengio Y., Haffner P. (1998). Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324. doi:10.1109/5.726791

Leemans E. L., Cornelissen B. M. W., Said M., van den Berg R., Slump C. H., Marquering H. A., et al. (2019). Intracranial aneurysm growth: Consistency of morphological changes. Neurosurg. Focus 47, E5. doi:10.3171/2019.4.FOCUS1987

Li Y., Amili O., Moen S., Van de Moortele P.-F., Grande A., Jagadeesan B., et al. (2022). Flow residence time in intracranial aneurysms evaluated by in vitro 4D flow MRI. J. Biomech. 141, 111211. doi:10.1016/j.jbiomech.2022.111211

Liang F., Liu X., Yamaguchi R., Liu H. (2016). Sensitivity of flow patterns in aneurysms on the anterior communicating artery to anatomic variations of the cerebral arterial network. J. Biomech. 49, 3731–3740. doi:10.1016/j.jbiomech.2016.09.031

Longo M., Granata F., Racchiusa S., Mormina E., Grasso G., Longo G. M., et al. (2017). Role of hemodynamic forces in unruptured intracranial aneurysms: An overview of a complex scenario. World Neurosurg. 105, 632–642. doi:10.1016/j.wneu.2017.06.035

Lyu Z., Jia X., Yang Y., Hu K., Zhang F., Wang G. (2021). A comprehensive investigation of LSTM-CNN deep learning model for fast detection of combustion instability. Fuel 303, 121300. doi:10.1016/j.fuel.2021.121300

Ma B., Harbaugh R. E., Raghavan M. L. (2004). Three-dimensional geometrical characterization of cerebral aneurysms. Ann. Biomed. Eng. 32, 264–273. doi:10.1023/B:ABME.0000012746.31343.92

Ma D., Tremmel M., Paluch R. A., Levy E. L. I., Meng H., Mocco J. (2010). Size ratio for clinical assessment of intracranial aneurysm rupture risk. Neurol. Res. 32, 482–486. doi:10.1179/016164109X12581096796558

Ma J., Nie Z. (2021). Exploring large context for cerebral aneurysm segmentation. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinforma., 7, 68–72. doi:10.1007/978-3-030-72862-5_7

Medero R., Ruedinger K., Rutkowski D., Johnson K., Roldán-Alzate A. (2020). In vitro assessment of flow variability in an intracranial aneurysm model using 4D flow MRI and tomographic PIV. Ann. Biomed. Eng. 48, 2484–2493. doi:10.1007/s10439-020-02543-8

Mensah E., Pringle C., Roberts G., Gurusinghe N., Golash A., Alalade A. F. (2022). Deep learning in the management of intracranial aneurysms and cerebrovascular diseases: A review of the current literature. World Neurosurg. 161, 39–45. doi:10.1016/j.wneu.2022.02.006

Milletari F., Navab N., Ahmadi S.-A. (2016). V-Net: Fully convolutional neural networks for volumetric medical image segmentation, 2016 Fourth International Conference on 3D Vision (3DV). Stanford, CA, USA 565–571. IEEE, doi:10.1109/3DV.2016.79

Monai Consortium (2020). Monai. New York, NY, USA: Medical Open Network for AI. doi:10.5281/zenodo.4323058

Murayama Y., Takao H., Ishibashi T., Saguchi T., Ebara M., Yuki I., et al. (2016). Risk analysis of unruptured intracranial aneurysms: Prospective 10-year cohort study. Stroke 47, 365–371. doi:10.1161/STROKEAHA.115.010698

Nam S. W., Choi S., Cheong Y., Kim Y. H., Park H. K. (2015). Evaluation of aneurysm-associated wall shear stress related to morphological variations of circle of Willis using a microfluidic device. J. Biomech. 48, 348–353. doi:10.1016/j.jbiomech.2014.11.018

Naranjo-Alcazar J., Perez-Castanos S., Martin-Morato I., Zuccarello P., Cobos M. (2019). On the performance of residual block design alternatives in convolutional neural networks for end-to-end audio classification. 1–6. Available at: http://arxiv.org/abs/1906.10891.

Park A., Chute C., Rajpurkar P., Lou J., Ball R. L., Shpanskaya K., et al. (2019). Deep learning-assisted diagnosis of cerebral aneurysms using the HeadXNet model. JAMA Netw. open 2, e195600. doi:10.1001/jamanetworkopen.2019.5600

Paszke A., Gross S., Massa F., Lerer A., Bradbury J., Chanan G., et al. (2019). “PyTorch: An imperative style, high-performance deep learning library,” in Advances in neural information processing systems (Red Hook, NY, USA: Curran Associates, Inc.), 32. 8024–8035. Available at: http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf.

Patel T. R., Paliwal N., Jaiswal P., Waqas M., Mokin M., Siddiqui A. H., et al. (2020). Multi-resolution CNN for brain vessel segmentation from cerebrovascular images of intracranial aneurysm: A comparison of U-net and DeepMedic. Computer-Aided Diagnosis 101. doi:10.1117/12.2549761

Pradilla G., Wicks R. T., Hadelsberg U., Gailloud P., Coon A. L., Huang J., et al. (2013). Accuracy of computed tomography angiography in the diagnosis of intracranial aneurysms. World Neurosurg. 80, 845–852. doi:10.1016/j.wneu.2012.12.001

Raghavan M. L., Ma B., Harbaugh R. E. (2005). Quantified aneurysm shape and rupture risk. J. Neurosurg. 102, 355–362. doi:10.3171/jns.2005.102.2.0355

Rajabzadeh-Oghaz H., Varble N., Davies J. M., Mowla A., Shakir H. J., Sonig A., et al. (2017). “Computer-assisted adjuncts for aneurysmal morphologic assessment: Toward more precise and accurate approaches,” in Medical imaging 2017: Computer-aided diagnosis. S. G. Armato, and N. A. Petrick Genève, Switzerland, 10134. doi:10.1117/12.2255553

Rajabzadeh-Oghaz H., Varble N., Shallwani H., Tutino V. M., Mowla A., Shakir H. J., et al. (2018). Computer-assisted three-dimensional morphology evaluation of intracranial aneurysms. World Neurosurg. 119, e541–e550. doi:10.1016/j.wneu.2018.07.208

Rayz V. L., Cohen-Gadol A. A. (2020). Hemodynamics of cerebral aneurysms: Connecting medical imaging and biomechanical analysis. Annu. Rev. Biomed. Eng. 22, 231–256. doi:10.1146/annurev-bioeng-092419-061429

Rehman S., Tu S., Rehman O., Huang Y., Magurawalage C., Chang C.-C. (2018). Optimization of CNN through novel training strategy for visual classification problems. Entropy 20, 290. doi:10.3390/e20040290

Ren S., Guidoin R., Xu Z., Deng X., Fan Y., Chen Z., et al. (2022). Narrative review of risk assessment of abdominal aortic aneurysm rupture based on biomechanics-related morphology. J. Endovasc. Ther., 1, 152660282211193. doi:10.1177/15266028221119309

Ronneberger O., Fischer P., Brox T. (2015). U-Net: Convolutional networks for biomedical image segmentation. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinforma., 28, 234–241. doi:10.1007/978-3-319-24574-4_28

Ryu C. W., Kwon O. K., Koh J. S., Kim E. J. (2011). Analysis of aneurysm rupture in relation to the geometric indices: Aspect ratio, volume, and volume-to-neck ratio. Neuroradiology 53, 883–889. doi:10.1007/s00234-010-0804-4

Schatlo B., Fung C., Stienen M. N., Fathi A. R., Fandino J., Smoll N. R., et al. (2021). Incidence and outcome of aneurysmal subarachnoid hemorrhage: The Swiss study on subarachnoid hemorrhage (Swiss SOS). Stroke 52, 344–347. doi:10.1161/STROKEAHA.120.029538

Schievink W. I. (1997). Intracranial aneurysms. N. Engl. J. Med. 336, 28–40. doi:10.1056/NEJM199701023360106

Schroeder W., Martin K., Lorensen B. (2006). The visualization toolkit. Kitware. North Carolina, NY, USA.

Schwenke H., Kemmling A., Schramm P. (2019). High-precision, patient-specific 3D models of brain aneurysms for therapy planning and training in interventional neuroradiology. Trans. Addit. Manuf. Meets Med. 1. doi:10.18416/AMMM.2019.1909

Sen Y., Qian Y., Avolio A., Morgan M. (2014). Image segmentation methods for intracranial aneurysm haemodynamic research. J. Biomech. 47, 1014–1019. doi:10.1016/j.jbiomech.2013.12.035

Shahzad R., Pennig L., Goertz L., Thiele F., Kabbasch C., Schlamann M., et al. (2020). Fully automated detection and segmentation of intracranial aneurysms in subarachnoid hemorrhage on CTA using deep learning. Sci. Rep. 10, 21799–21812. doi:10.1038/s41598-020-78384-1

Shi Z., Hu B., Schoepf U. J., Savage R. H., Dargis D. M., Pan C. W., et al. (2020a). Artificial intelligence in the management of intracranial aneurysms: Current status and future perspectives. AJNR. Am. J. Neuroradiol. 41, 373–379. doi:10.3174/AJNR.A6468

Shi Z., Miao C., Schoepf U. J., Savage R. H., Dargis D. M., Pan C., et al. (2020b). A clinically applicable deep-learning model for detecting intracranial aneurysm in computed tomography angiography images. Nat. Commun. 11, 6090. doi:10.1038/s41467-020-19527-w

Sichtermann T., Faron A., Sijben R., Teichert N., Freiherr J., Wiesmann M. (2019). Deep learning–based detection of intracranial aneurysms in 3D TOF-MRA. AJNR. Am. J. Neuroradiol. 40, 25–32. doi:10.3174/ajnr.A5911

Su Z., Jia Y., Liao W., Lv Y., Dou J., Sun Z., et al. (2021). “3D attention U-net with pretraining: A solution to CADA-aneurysm segmentation challenge,” in Lecture notes in computer science. A. Hennemuth, L. Goubergrits, M. Ivantsits, and J.-M. Kuhnigk (Cham: Springer International Publishing), 58–67. doi:10.1007/978-3-030-72862-5_6

Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. (2016). Rethinking the inception architecture for computer vision. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., Las Vegas, NV, USA, 2818–2826. doi:10.1109/CVPR.2016.308

Takao H., Murayama Y., Otsuka S., Qian Y., Mohamed A., Masuda S., et al. (2012). Hemodynamic differences between unruptured and ruptured intracranial aneurysms during observation. Stroke 43, 1436–1439. doi:10.1161/STROKEAHA.111.640995

Tang C., Chen H., Li X., Li J., Zhang Z., Hu X. (2021). Look closer to segment better: Boundary patch refinement for instance segmentation, 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville, TN, USA 13921–13930. IEEE. doi:10.1109/CVPR46437.2021.01371

Turečková A., Tureček T., Komínková Oplatková Z., Rodríguez-Sánchez A. (2020). Improving CT image tumor segmentation through deep supervision and attentional gates. Front. Robot. AI 7, 106–114. doi:10.3389/frobt.2020.00106

Valen-Sendstad K., Bergersen A. W., Shimogonya Y., Goubergrits L., Bruening J., Pallares J., et al. (2018). Real-world variability in the prediction of intracranial aneurysm wall shear stress: The 2015 international aneurysm CFD challenge. Cardiovasc. Eng. Technol. 9, 544–564. doi:10.1007/s13239-018-00374-2

Vlak M. H. M., Algra A., Brandenburg R., Rinkel G. J. E. (2011). Prevalence of unruptured intracranial aneurysms, with emphasis on sex, age, comorbidity, country, and time period: A systematic review and meta-analysis. Lancet. Neurol. 10, 626–636. doi:10.1016/S1474-4422(11)70109-0

Wang G., Huang Z., Shen H., Hu Z. (2022a). The head and neck tumor segmentation in PET/CT based on multi-channel attention network. Lect. Notes Comput. Sci. Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinforma., 9. 68–74. doi:10.1007/978-3-030-98253-9_5

Wang G., Zhang D., Wang Z., Yang L., Yang H., Li W. (2018). Risk factors for ruptured intracranial aneurysms. J Med Res 147(1), 51–57. doi:10.4103/ijmr.IJMR

Wang H., Cao P., Wang J., Zaiane O. R. (2022b). UCTransNet: Rethinking the skip connections in U-net from a channel-wise perspective with transformer. Biochemistry 36, 2441–2442. Proc. AAAI Conf. Artif. Intell. doi:10.1021/acs.biochem.2c00621

Wardlaw J. M., White P. M. (2000). The detection and management of unruptured intracranial aneurysms. Brain 123, 205–221. doi:10.1093/brain/123.2.205

Weir B., Disney L., Karrison T. (2002). Sizes of ruptured and unruptured aneurysms in relation to their sites and the ages of patients. J. Neurosurg. 96, 64–70. doi:10.3171/jns.2002.96.1.0064

Wiebers D. O., Whisnant J. P., Sundt T. M., O’Fallon W. M. (1987). The significance of unruptured intracranial saccular aneurysms. J. Neurosurg. 66, 23–29. doi:10.3171/jns.1987.66.1.0023

Xiang J., Natarajan S. K., Tremmel M., Ma D., Mocco J., Hopkins L. N., et al. (2011). Hemodynamic-morphologic discriminants for intracranial aneurysm rupture. Stroke 42, 144–152. doi:10.1161/STROKEAHA.110.592923

Xiang J., Tutino V. M., Snyder K. V., Meng H. (2013). CFD: Computational fluid dynamics or confounding factor dissemination? The role of hemodynamics in intracranial aneurysm rupture risk assessment. AJNR. Am. J. Neuroradiol. 35, 1849–1857. doi:10.3174/ajnr.A3710

Xu L., Liang F., Gu L., Liu H. (2018). Flow instability detected in ruptured versus unruptured cerebral aneurysms at the internal carotid artery. J. Biomech. 72, 187–199. doi:10.1016/j.jbiomech.2018.03.014

Xu M., Qi S., Yue Y., Teng Y., Xu L., Yao Y., et al. (2019). Segmentation of lung parenchyma in CT images using CNN trained with the clustering algorithm generated dataset. Biomed. Eng. Online 18, 2–21. doi:10.1186/s12938-018-0619-9

Yang X., Cheng K. T. T., Chien A. (2014). Geodesic active contours with adaptive configuration for cerebral vessel and aneurysm segmentation. Proc. - Int. Conf. Pattern Recognit., Stockholm, Sweden 3209–3214. doi:10.1109/ICPR.2014.553

Zanaty M., Chalouhi N., Tjoumakaris S. I., Fernando Gonzalez L., Rosenwasser R. H., Jabbour P. M. (2014). Aneurysm geometry in predicting the risk of rupture. A review of the literature. Neurol. Res. 36, 308–313. doi:10.1179/1743132814Y.0000000327

Zhang Y., Chen L. (2019). DDNet: A novel network for cerebral artery segmentation from MRA images. Proc. - 2019 12th Int. Congr. Image Signal Process. Biomed. Eng. Informatics, CISP-BMEI 2019. Suzhou, China. doi:10.1109/CISP-BMEI48845.2019.8965836

Zhang Z., Liu Q., Wang Y. (2018). Road extraction by deep residual U-net. IEEE Geosci. Remote Sens. Lett. 15, 749–753. doi:10.1109/LGRS.2018.2802944

Zhao G., Liu F., Oler J. A., Meyerand M. E., Kalin N. H., Birn R. M. (2018). Bayesian convolutional neural network based MRI brain extraction on nonhuman primates. Neuroimage 175, 32–44. doi:10.1016/j.neuroimage.2018.03.065

Zhu G.-Y., Wei Y., Su Y.-L., Yuan Q., Yang C.-F. (2019a). Impacts of internal carotid artery revascularization on flow in anterior communicating artery aneurysm: A preliminary multiscale numerical investigation. Appl. Sci. (Basel). 9, 4143. doi:10.3390/app9194143

Zhu Z., Liu C., Yang D., Yuille A., Xu D. (2019b). V-NAS: Neural architecture search for volumetric medical image segmentation. Proc. - 2019 Int. Conf. 3D Vis. 3, 240–248. doi:10.1109/3DV.2019.00035

Keywords: SAH, intracranial aneurysm, automatic segmentation, convolutional neural network, deep learning

Citation: Zhu G, Luo X, Yang T, Cai L, Yeo JH, Yan G and Yang J (2022) Deep learning-based recognition and segmentation of intracranial aneurysms under small sample size. Front. Physiol. 13:1084202. doi: 10.3389/fphys.2022.1084202

Received: 30 October 2022; Accepted: 28 November 2022;

Published: 19 December 2022.

Edited by:

Yubing Shi, Shaanxi University of Chinese Medicine, China

Reviewed by:

Fuyou Liang, Shanghai Jiao Tong University, China

Copyright © 2022 Zhu, Luo, Yang, Cai, Yeo, Yan and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guangyu Zhu, zhuguangyu@xjtu.edu.cn; Jian Yang, yj1118@mail.xjtu.edu.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
