REVIEW article

Front. Physiol., 23 December 2020

Sec. Computational Physiology and Medicine

Volume 11 - 2020 | https://doi.org/10.3389/fphys.2020.611596

This article is part of the Research Topic AI in Biological and Biomedical Imaging.

Minimally invasive surgery (MIS) has become the preferred surgical approach owing to its advantages over conventional open surgery. A major limitation, however, is the lack of tactile perception, which impairs surgeons' ability to distinguish tissues and perform maneuvers. Many studies have enabled industrial robots to perceive various kinds of tactile information, yet in MIS only force data are widely used to restore part of the surgeon's sense of touch. In recent years, inspired by image classification in computer vision, tactile data have been represented as images, in which each tactile element is treated as an image pixel. Processing raw data or features extracted from tactile images with artificial intelligence (AI) methods, including clustering, support vector machines (SVMs), and deep learning, has proven effective in industrial robotic tactile perception tasks. This holds great promise for making use of more tactile information in MIS. This review aims to identify potential tactile perception methods for MIS by surveying the literature on tactile sensing in MIS and on industrial robotic tactile perception technologies, especially AI methods applied to tactile images.

Minimally invasive surgery (MIS) is a surgical approach that gives surgeons indirect access to the anatomy by introducing specially designed surgical instruments or flexible catheters into the patient's body through minimal incisions (Verdura et al., 2000). Compared with conventional open surgery, MIS offers many advantages, including reduced anesthesia and hospitalization time, less tissue trauma and lower risk of postoperative infection, decreased intraoperative blood loss, and faster recovery (Puangmali et al., 2008). However, indirect access to the anatomy brings two challenges: a low degree of freedom (DOF) during manipulation and the absence of tactile feedback during tool–tissue interactions (Abushagur et al., 2014). With advances in mechatronics, robot-assisted minimally invasive surgery (RMIS) systems, such as the ZEUS Surgical System (Uranues et al., 2002) and the da Vinci Surgical System (Guthar and Salisbury, 2000), have been developed to improve the dexterity of tools during manipulation, which partly resolves the motion-constraint problem. Nevertheless, MIS still has limitations, including reduced hand–eye coordination, a narrowed field of view, and a limited tool workspace (Bandari et al., 2020). More importantly, surgeons receive little tactile information in MIS compared with the rich tactile feedback of their own hands, which severely impairs their ability to control the applied forces and can cause extra tissue trauma or unintentional damage to healthy tissue (Ahmadi et al., 2012).

Tactile sensations, including but not limited to force, distributed pressure, temperature, vibration, and texture, are complex information that humans obtain through cutaneous receptors during physical interaction with the environment. Depending on the sensing modality, tactile sensors can be grouped into different kinds, including force sensors for measuring contact forces, slippage sensors for detecting slippage between tissue and surgical instruments, and vibration sensors for measuring vibrations during contact. The goal of tactile technologies in MIS is to restore all of this tactile information, so that surgeons feel as if they were touching the patient's anatomy directly with their own hands rather than operating a mechanism. Among these types of tactile information, force data are relatively easy to acquire, model, quantify, and display, so they are the most widely used in MIS. The sensing principles, design requirements, specifications, and development of force sensors, as well as their applications in MIS, have been thoroughly reviewed (Eltaib and Hewit, 2003; Puangmali et al., 2008; Schostek et al., 2009; Tiwana et al., 2012; Abushagur et al., 2014; Konstantinova et al., 2014; Saccomandi et al., 2014; Park et al., 2018; Al-Handarish et al., 2020; Bandari et al., 2020). In contrast, studies on using other tactile information in MIS are very rare. Researchers have begun to recognize the advantages of various kinds of tactile information in MIS, but challenges remain. Westebring-van der Putten et al. found that slippage and texture information can augment force information to prevent tissue trauma during manipulation, but that its use was limited by cost and by instability, and that few studies addressed texture information (Westebring-van der Putten et al., 2008). Okamura reviewed studies on tactile sensor arrays that perceive pressure distribution or deformation over a contact area, but noted that acquiring and displaying such tactile data was challenging because of size and weight constraints (Okamura, 2009).

With respect to spatial resolution, tactile sensors are often categorized into single-point tactile sensors and tactile array sensors. A single-point tactile sensor is usually embedded in the tip of an instrument to confirm object–sensor contact and detect tactile signals at the contact point. A tactile array sensor is composed of several single-point tactile sensors arranged in a defined layout. Compared with single-point tactile sensors, a tactile array sensor can cover a wider area and capture the tactile information of an object in multiple dimensions, so it can achieve high spatial resolution of touch.

Tactile perception technologies have received considerable attention in the field of industrial robotics. Tactile perception is the process of obtaining tactile information from the data sensed by tactile sensors. Many methods have been proposed for robotic tactile perception tasks, including shape recognition, texture recognition, stiffness recognition, and slip detection (Liu et al., 2017). In early work, single-point tactile sensors were used to build point cloud models for these tasks. A current trend in tactile perception research is to represent tactile data as images, in which each tactile element is treated as an image pixel. Features are extracted from the tactile images acquired by tactile sensor arrays, such as statistical features, vision feature descriptors, principal component analysis (PCA)-based features, and self-organizing features (Luo et al., 2017). These features are usually processed by AI methods such as clustering, support vector machines (SVMs), and deep neural networks to obtain tactile information.

Robot-assisted surgery is a type of surgical procedure performed with robotic systems. It was developed to overcome the limitations of pre-existing minimally invasive surgery and to enhance the capabilities of surgeons performing open surgery. According to their level of autonomy, surgical robotic systems are often classified into two categories: autonomous systems, which execute tasks automatically without intervention by the practitioner, and nonautonomous systems, which reproduce the surgeon's motion in either a master/slave teleoperated configuration or a hands-on configuration (Okamura, 2009). Because of technical complexity and the high reliability required, most surgical robots belong to the second category. However, progress in robotic tactile perception is promising for autonomous robotic systems. Over the last decades, sensors have become smaller, cheaper, and more robust. Numerous studies on industrial robots have aimed to perceive touch in small areas such as fingertips, where sensors must be tiny, and some studies have accomplished tactile perception tasks with sensors made of soft materials. In MIS, tactile information is usually displayed as raw tactile data, which requires additional analysis. These tactile perception studies make it possible to provide more intuitive tactile information (e.g., a stiffness distribution map) to surgeons using nonautonomous surgical robotic systems, and they suggest potential designs for autonomous surgical robotic systems.

In this paper, we review the literature of the last decades on tactile perception technologies in industrial robots and in MIS to analyze the advantages and feasibility of applying tactile perception methods to MIS, especially state-of-the-art AI methods applied to tactile images. In addition, the features and advantages of tactile sensors with different sensing modalities are analyzed, together with their applications in MIS.

The remainder of this paper is organized as follows. Tactile Sensors and Their Applications in MIS introduces tactile sensors and their applications in MIS. Tactile Perception Algorithms in MIS reviews tactile perception algorithms in MIS. Tactile Perception Applications in MIS analyzes the feasibility of applying tactile perception methods to MIS. Finally, Conclusion summarizes the challenges and future perspectives for tactile perception in MIS.

Tactile sensors are used to collect tactile data at the contact point between surgical instruments and tissue. Depending on the modality of the tactile signal, various physical properties of a tissue (e.g., softness and roughness) can be extracted from the tactile data, and tactile feedback is then provided to surgeons based on these detected properties. In most of the literature, force feedback is the main form of tactile feedback, and force sensors are the most widely used tactile sensors. Tactile sensors can be categorized into single-point tactile sensors and tactile array sensors. In this section, studies that provide force feedback with these two kinds of tactile sensors are reviewed. Beyond force sensors and force feedback, some novel tactile sensors and tactile feedback methods are also examined.

A single-point tactile sensor is usually embedded in the tip of a surgical instrument to confirm object–sensor contact and detect tactile signals at the contact point. In MIS, force feedback is extremely important to surgeons because the consistency of tissues varies widely. Force feedback refers to an active force applied directly to the operator's hands, which is usually related to the reaction force exerted by the tissue on the tools. Many studies have investigated different application scenarios of force feedback in MIS; we group them into knotting, insertion, incision, and palpation, which are described in the following paragraphs. We then discuss the importance of force feedback in these scenarios through a series of relevant studies, including comparisons with visual force feedback. We also review the development of force feedback in the well-known da Vinci minimally invasive surgical robotic system. Finally, the limitations of force feedback in minimally invasive surgery are discussed.

During laparoscopic knot tying, force feedback indicating the tension of the thread at the tool tip is extremely important to ensure that knots are firm without damaging the tissue. Load cells are commonly used to sense the point force at the tool tip, as in (Song et al., 2009). In (Song et al., 2011), a load cell with fiber Bragg grating (FBG) sensors was used to measure thread tension; FBG sensors are optical fiber sensors that encode the measured quantity in the reflected wavelength, which improves accuracy. Richards et al. used the force/torque measured at the grasper side to calculate the grasping force (Richards et al., 2000). Fazal and Karsiti used a one-dimensional piezoelectric sensor and statistical analysis to decompose the reaction force arising during insertion into three components: the force due to tissue stiffness, the friction force, and the cutting force, which allows each component to be analyzed separately (Fazal and Karsiti, 2009). Mohareri et al. transferred the reaction force sensed by one hand to the other hand and improved knotting accuracy to 98% (Mohareri et al., 2014).
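As a brief illustration of what wavelength encoding means for an FBG, the following Python sketch applies the Bragg condition, λ_B = 2·n_eff·Λ, and the usual strain relation Δλ_B/λ_B = (1 − p_e)·ε at constant temperature. The effective index, grating period, and photo-elastic coefficient p_e ≈ 0.22 (a typical value for silica fiber) are assumed example values, not parameters from Song et al.; converting strain into thread tension would additionally require the stiffness of the load cell, which is not modeled here.

```python
def bragg_wavelength(n_eff: float, grating_period_nm: float) -> float:
    """Bragg condition: lambda_B = 2 * n_eff * Lambda (lengths in nm)."""
    return 2.0 * n_eff * grating_period_nm


def strain_from_shift(delta_lambda_nm: float, lambda_b_nm: float, p_e: float = 0.22) -> float:
    """Axial strain from a Bragg-wavelength shift at constant temperature.

    Uses delta_lambda / lambda_B = (1 - p_e) * strain, where p_e is the
    effective photo-elastic coefficient (about 0.22 for silica; assumed here).
    """
    return delta_lambda_nm / (lambda_b_nm * (1.0 - p_e))


lam = bragg_wavelength(1.447, 535.6)      # assumed values giving roughly 1550 nm
eps = strain_from_shift(0.0121, 1550.0)   # a 12.1 pm shift is about 10 microstrain
```

Because the strain is read from a wavelength shift rather than from light intensity, the measurement is largely insensitive to power fluctuations along the fiber.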

Apart from suturing, incision is another indispensable part of minimally invasive surgery. Callaghan and McGrath designed a force-feedback scissor with button load cells attached to the blades to measure the force between the blades and the tissue (Callaghan and McGrath, 2007). However, a load cell can normally sense only one axial force and two moments, so more complex designs were considered in later research. In (Valdastri et al., 2006), an integrated triaxial force sensor was developed and attached to a cutting tool for fetal surgery. A similar 3-DOF sensor design can be found in (Berkelman et al., 2003). In (Kim et al., 2015), a surgical instrument with 4-DOF force sensing based on the capacitive transduction principle was developed to measure the normal and tangential forces at the tool tip.

Palpation is another prominent application scenario of force feedback in minimally invasive surgery. Because a tumor is typically stiffer than the surrounding tissue, the pressure measured over it tends to be noticeably higher; therefore, sensors with a single point of contact can detect tumors by palpation (Talasaz and Patel, 2013). Similarly, to detect abnormal masses in the breast, a tactile sensing instrument (TSI) was designed in (Hosseini et al., 2010) and tested in a simulated scenario along a fixed scanning route, the transverse scan mode. By combining the stress variation curves of the scan lines, users could determine the x- and y-coordinates of the abnormal masses. The stress variations of the sensor showed a similar pattern whether it was operated manually or by a robot. In addition, a tactile sensory system combining a displacement sensor and a force sensor was developed in (Afshari et al., 2011) to determine the presence and location of kidney stones during laparoscopy. Since surface stiffness can be derived from the force and displacement readings, it could be computed from these two values and plotted as a curve along the path over the surface of the model. Yip et al. first developed a miniature uniaxial force sensor for endocardial measurements (Yip et al., 2010). In (Munawar and Fischer, 2016), elastic spherical proxy regions were designed to sense forces from various directions.
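The two palpation ideas above can be sketched in a few lines of Python: locating a stiff inclusion from a grid of transverse scan lines, and computing a stiffness profile from paired force and displacement readings. Both functions are illustrative assumptions: the scan layout, the largest-stress criterion, and the ratio k = F/δ (which presumes linear elastic indentation measured from first contact) are not taken from Hosseini et al. or Afshari et al.

```python
from __future__ import annotations

import numpy as np


def locate_mass(stress_lines: np.ndarray, xs: np.ndarray, ys: np.ndarray) -> tuple[float, float]:
    """Estimate (x, y) of a stiff inclusion from a transverse scan.

    stress_lines[j, i] is the stress recorded on scan line j (at height ys[j])
    at position xs[i]; the stiffest spot is assumed to give the largest stress.
    """
    j, i = np.unravel_index(np.argmax(stress_lines), stress_lines.shape)
    return float(xs[i]), float(ys[j])


def stiffness_profile(force: np.ndarray, displacement: np.ndarray) -> np.ndarray:
    """Local stiffness k = F / delta at each sample along the palpation path."""
    return force / displacement


# Synthetic scan: 5 lines (y = 0..8 mm) x 30 samples (x = 0..29 mm), bump at (12, 6).
xs = np.arange(0.0, 30.0, 1.0)
ys = np.arange(0.0, 10.0, 2.0)
X, Y = np.meshgrid(xs, ys)                 # both shaped (len(ys), len(xs))
stress = np.exp(-((X - 12.0) ** 2 + (Y - 6.0) ** 2) / 8.0)
print(locate_mass(stress, xs, ys))         # (12.0, 6.0)
```

In practice, a stiffness profile with a local maximum along the path would play the role of the curve described above for locating a kidney stone.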

To support the scenarios above, we also examined evidence for the necessity of force feedback in minimally invasive surgery, which was demonstrated and explained in (Morimoto et al., 1997) and (Tholey et al., 2005). The accuracy of the applied force appeared to improve with increasing force feedback in (Wagner and Howe, 2007) and (Bell et al., 2007). The reasons can be summarized in two points, as in (Mohareri et al., 2014): force feedback allows invisible properties such as stiffness and texture to be sensed, and it helps prevent undesired tissue damage. Many studies have compared force feedback, visual feedback, visual force feedback (force feedback presented as an image, e.g., color bars), and no feedback. Mahvash et al. reported that force feedback produced fewer errors than the other conditions in cardiac palpation (Mahvash et al., 2008), and a similar result can be found in (Kitagawa et al., 2005). Mohareri et al. found that knot tightness tended to be less uniform with visual feedback and concluded that visual feedback can compensate for part of the force feedback but is not sufficient on its own when handling needles and thread (Mohareri et al., 2014). Reiley et al. investigated the practicability of visual force feedback and concluded that operators without robotic experience benefited from it, whereas experienced robotic surgeons benefited less (Reiley et al., 2008). Similar results were reported in (Gwilliam et al., 2009) and summarized in (Okamura, 2009). However, visual force feedback may be the better solution in knot-tightening tasks, as demonstrated in (Talasaz et al., 2012) and (Talasaz et al., 2017). Following (Talasaz et al., 2012), Talasaz and Patel first operated the system with an MIS tactile sensing probe remotely and viewed the feedback on a camera display (Talasaz and Patel, 2013). Guo et al. applied visual force feedback in vascular interventional surgery and reported good conformity (Guo et al., 2012).

Based on the aforementioned techniques, many surgical platforms for minimally invasive surgery have been developed, including Robodoc, Probot, Zeus, and, most recently, da Vinci (Marohn and Hanly, 2004; Puangmali et al., 2008; Munawar and Fischer, 2016). The da Vinci system addressed several major limitations of earlier minimally invasive surgery, including the need for hand motion feedback, hand–eye coordination, the feeling of having one's hands inside the body, additional DOF, elimination of surgeon tremor, and variable motion scaling (Guthar and Salisbury, 2000). Much research has been built on the da Vinci system; however, force feedback has been added to it only recently, for example in (Mahvash et al., 2008) and (Reiley et al., 2008).

Force feedback has long been promising, but it still faces several unsolved problems. Although it provides better tumor localization and more precise suturing and incision with straightforward quantitative measures, it can be time-consuming, because measuring point by point is inefficient, as shown in (Talasaz and Patel, 2013). Another problem is attenuation of the force signal, because surgical tools are long and stiff. Force amplification has been considered to address this, as in (Song et al., 2009). However, because tissue disturbances are unpredictable, small disturbing forces may also be amplified, leading to potentially fatal maloperation. To address this, (Pitakwatchara et al., 2006) described and modeled several actions of the laparoscopic cholecystectomy procedure in spatial coordinates in order to amplify the reaction force of the operation while leaving the disturbing force unamplified. Nevertheless, that work remained theoretical, without a physical implementation.
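The idea of amplifying task-relevant forces while leaving disturbances unamplified can be illustrated with a vector projection. The following is a hypothetical Python sketch, not the model of Pitakwatchara et al.: it assumes that, for the current surgical action, the direction of the expected reaction force is known (`task_dir`), amplifies the component of the measured tip force along that direction by `gain`, and passes the orthogonal remainder through unchanged.

```python
import numpy as np


def selective_amplify(f_tip: np.ndarray, task_dir: np.ndarray, gain: float) -> np.ndarray:
    """Scale only the component of the tip force along task_dir by gain.

    task_dir is a hypothetical input: the direction of the reaction force
    expected for the current surgical action. The orthogonal remainder is
    treated as disturbance and returned unamplified.
    """
    u = task_dir / np.linalg.norm(task_dir)
    f_task = np.dot(f_tip, u) * u      # projection onto the task direction
    f_disturb = f_tip - f_task         # everything orthogonal to it
    return gain * f_task + f_disturb


# Example: a 1 N push along x with a 0.5 N sideways disturbance, gain 3.
print(selective_amplify(np.array([1.0, 0.5, 0.0]), np.array([1.0, 0.0, 0.0]), 3.0))
```

The hard part in a real system is obtaining `task_dir` reliably for each action, which is exactly what a model of the procedure would have to supply.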

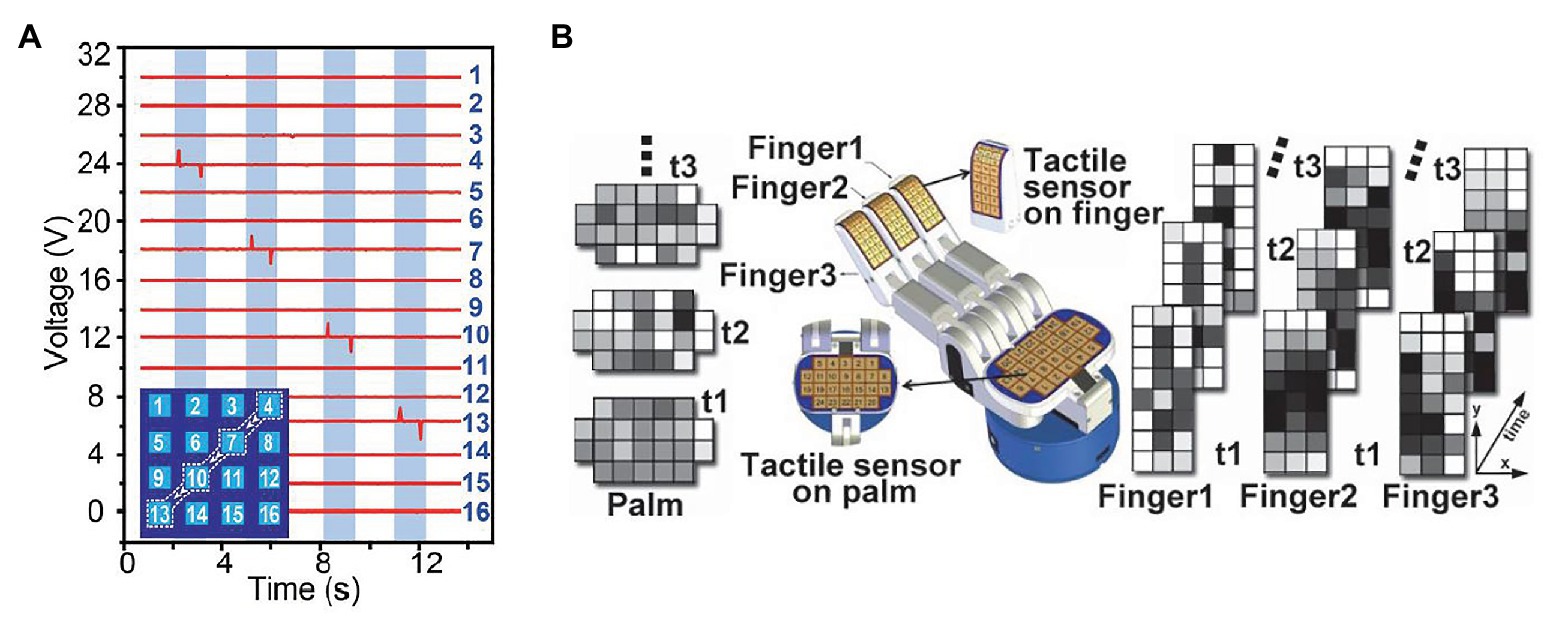

A tactile array sensor is composed of several single-point tactile sensors arranged in a defined layout. It is usually a flat cuboid with M × N tactile sensing units, where M and N are the numbers of rows and columns of sensing units. In past decades, tactile data sensed by a tactile array sensor were generally displayed as a wave diagram with M × N waveforms, each indicating a time-dependent physical quantity measured by one sensing unit. Recently, with advances in computer vision, image-processing methods have become faster and more accurate. Inspired by this, tactile data are now represented as image sequences, where each sequence represents the tactile data sensed by a tactile array sensor over time, and each image pixel represents the data sensed by one sensing unit at a given time. Figure 1 compares a wave diagram with tactile images.

Figure 1. (A) An example of a tactile wave diagram, where each waveform indicates the voltage sensed by one sensing unit. The diagram shows the sensing result when a capacitive stylus touched the surface of a 4 × 4 tactile array sensor along the path 4 → 7 → 10 → 13 (Wang et al., 2016). (B) An example of tactile image sequences, where each sequence represents the tactile data sensed by a tactile array sensor over time, and each image pixel represents the data sensed by one sensing unit at a given time (Cao et al., 2016).
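The conversion from a wave diagram to an image sequence is essentially a reshape. The Python sketch below assumes the sensing units are numbered row by row (unit k, counting from 0, sits at row k // N and column k % N); the numbering used by Wang et al. may differ. The usage lines rebuild the stylus path 4 → 7 → 10 → 13 of Figure 1A from synthetic waveforms, with units counted from 1 as in the figure; under row-major numbering this path runs along the anti-diagonal of the 4 × 4 image.

```python
import numpy as np


def waveforms_to_images(waveforms: np.ndarray, m: int, n: int) -> np.ndarray:
    """Turn M*N per-unit waveforms into a (T, M, N) sequence of tactile images.

    waveforms[k, t] is the reading of unit k (0-based) at time step t; unit k is
    assumed to sit at row k // n, column k % n (row-major numbering).
    """
    if waveforms.shape[0] != m * n:
        raise ValueError("expected one waveform per sensing unit")
    # Transposing gives one row of M*N readings per time step; reshape each row
    # into an M x N image.
    return waveforms.T.reshape(-1, m, n)


path = [4, 7, 10, 13]                 # stylus path of Figure 1A, units counted from 1
w = np.zeros((16, len(path)))
for t, unit in enumerate(path):
    w[unit - 1, t] = 1.0              # synthetic touch on that unit at time step t
frames = waveforms_to_images(w, 4, 4)
```

Once the data are in (T, M, N) form, standard image and video tools (convolutions, recurrent image models) can be applied directly.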

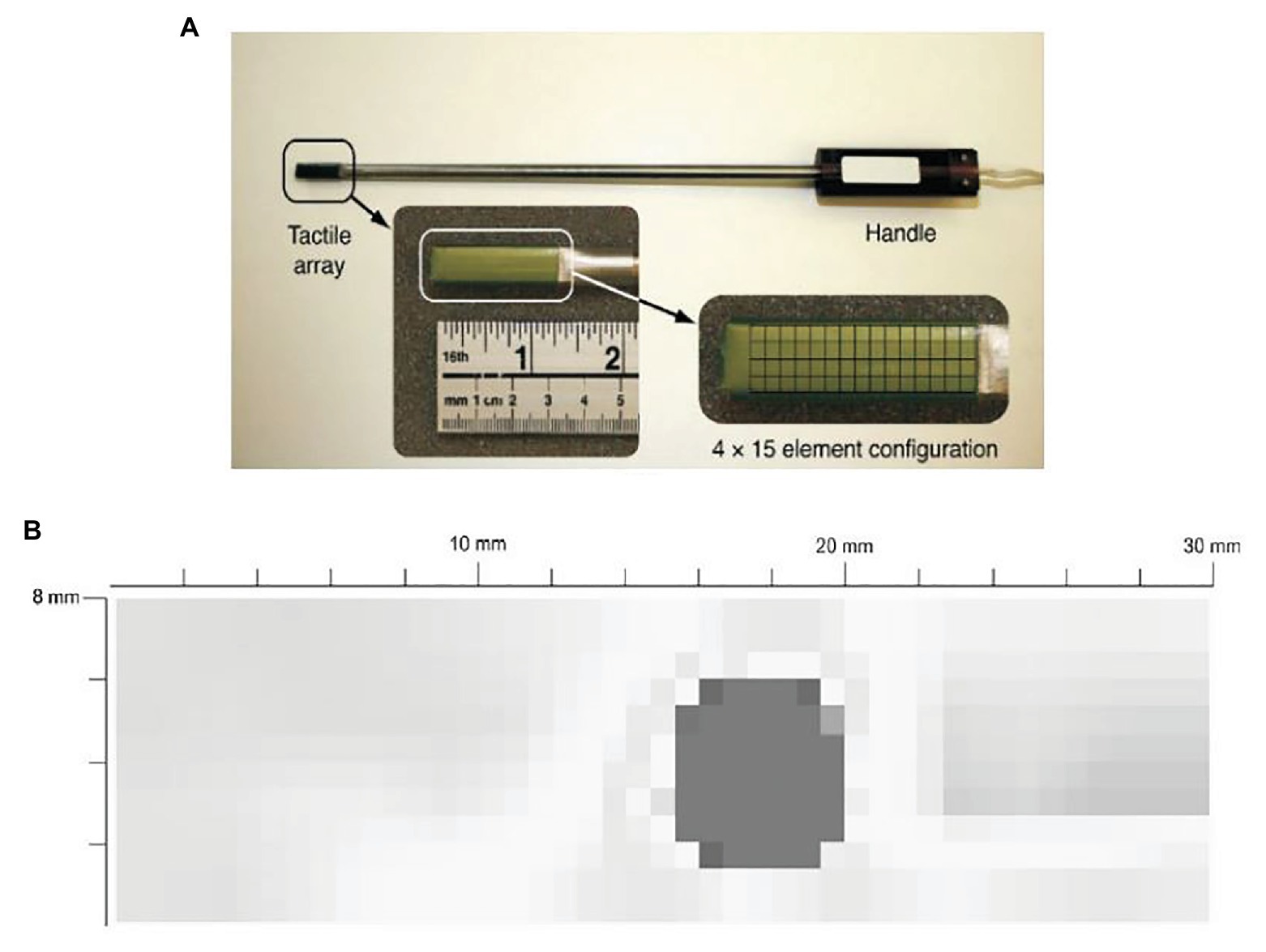

For example, Trejos et al. developed a TSI that uses a commercially available pressure pad (Trejos et al., 2009), shown in Figure 2A. The industrial TactArray sensor on this instrument consists of 15 row electrodes and 4 column electrodes oriented orthogonally to each other, and each area where a row electrode overlaps a column electrode forms a distinct capacitor. As shown in Figure 2B, the visualization software converts the voltages measured by the capacitive sensor into pressure values and displays them as a color contour map of the pressure distribution.

Figure 2. (A) A tactile sensor array with 4 × 15 sensing elements. (B) A typical contour map of a tumor obtained from the visualization software (Trejos et al., 2009).
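A minimal Python sketch of the voltage-to-pressure step for a 15 × 4 array, assuming a single piecewise-linear calibration curve shared by all elements. The calibration values are invented placeholders, not data from Trejos et al. or the TactArray documentation; a color contour map like Figure 2B could then be drawn from the returned array, for example with matplotlib's `contourf`.

```python
from __future__ import annotations

import numpy as np

# Hypothetical calibration curve shared by all elements: voltage (V) -> pressure (kPa).
CAL_VOLTS = np.array([0.0, 0.5, 1.0, 2.0, 3.0])
CAL_KPA = np.array([0.0, 2.0, 5.0, 15.0, 30.0])


def pressure_map(volts: np.ndarray) -> np.ndarray:
    """Convert element voltages to pressures by piecewise-linear interpolation
    (readings beyond the table are clamped to its end values by np.interp)."""
    return np.interp(volts, CAL_VOLTS, CAL_KPA)


def peak_location(pressures: np.ndarray) -> tuple[int, int]:
    """(row, column) of the highest-pressure element, a crude cue for a stiff inclusion."""
    r, c = np.unravel_index(np.argmax(pressures), pressures.shape)
    return int(r), int(c)


volts = np.full((15, 4), 0.5)          # 15 rows x 4 columns of elements
volts[7, 2] = 2.0                      # one element pressed harder
p = pressure_map(volts)
print(peak_location(p))                # (7, 2)
```

A real sensor would normally need per-element calibration, since the capacitors formed at each electrode crossing are not identical.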

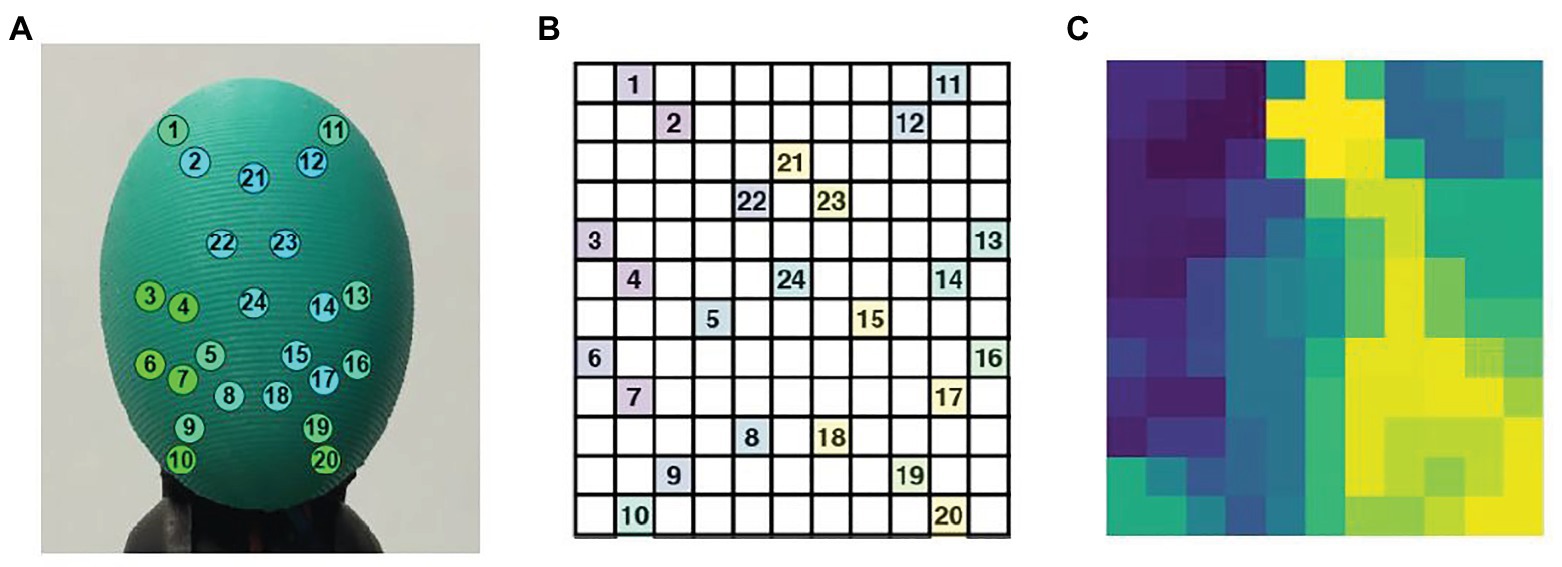

Zapata-Impata et al. used the BioTac SP tactile sensor manufactured by SynTouch (Zapata-Impata et al., 2019). Figure 3A shows the locations of the electrodes in the sensor. A tactile image can be created from this 2D layout by assigning the values of the 24 electrodes to image pixels at fixed coordinates. The tactile image is a 12 × 11 matrix in which the 24 electrodes are distributed as shown in Figure 3B. Figure 3C shows the final tactile image, in which all gaps (cells without assigned values) are filled with the mean value of the eight closest neighbors.

Figure 3. (A) The BioTac sensor and the distribution of its 24 electrodes, (B) distribution of the BioTac SP electrodes in a 12 × 11 tactile image, (C) result of filling the gaps in the tactile image with the mean value of the eight closest neighbors (Zapata-Impata et al., 2019).
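The gap-filling step can be sketched in Python as follows. Two assumptions should be flagged: the electrode coordinates below are a placeholder layout, not the actual BioTac SP map of Figure 3B, and "eight closest neighbors" is read here as the eight nearest electrodes by Euclidean distance on the grid, which is only one possible interpretation.

```python
import numpy as np

ROWS, COLS = 12, 11
# Placeholder layout (hypothetical): 24 (row, col) cells, NOT the real BioTac SP map.
ELECTRODE_RC = [(r, c) for r in range(1, 12, 2) for c in (1, 4, 6, 9)]


def tactile_image(values, k: int = 8) -> np.ndarray:
    """Build a 12 x 11 tactile image from 24 electrode readings.

    Electrode cells get their reading; every other cell gets the mean of the
    k electrodes nearest to it (Euclidean distance in grid units).
    """
    values = np.asarray(values, dtype=float)
    if values.shape != (len(ELECTRODE_RC),):
        raise ValueError("expected one value per electrode")
    coords = np.array(ELECTRODE_RC, dtype=float)      # shape (24, 2)
    img = np.full((ROWS, COLS), np.nan)
    for (r, c), v in zip(ELECTRODE_RC, values):
        img[r, c] = v
    for r in range(ROWS):
        for c in range(COLS):
            if np.isnan(img[r, c]):                    # a gap: no electrode here
                dist = np.hypot(coords[:, 0] - r, coords[:, 1] - c)
                nearest = np.argsort(dist)[:k]
                img[r, c] = values[nearest].mean()
    return img


img = tactile_image(np.arange(24, dtype=float))
```

Only the electrode coordinates need to change to match the real sensor layout; the filling rule stays the same.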

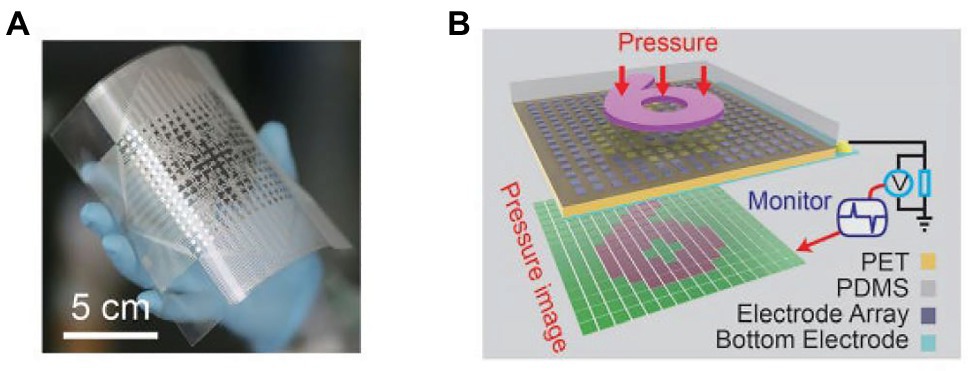

Wang et al. reported a self-powered, high-resolution, pressure-sensitive triboelectric sensor matrix (TESM) based on single-electrode triboelectric generators that enables real-time tactile mapping (Wang et al., 2016). Figure 4A shows a flexible 16 × 16 pixelated TESM with a resolution of 5 dpi that can map single-point and multipoint tactile stimuli in real time via multichannel data acquisition while maintaining an excellent pressure sensitivity of 0.06 kPa−1 and long-term durability. Figure 4B shows how the sensor matrix images the pressure distribution when a mold in the shape of a “6” is pressed against the top of the TESM.

Figure 4. (A) Photograph of a fabricated 16 × 16 TESM with good flexibility. (B) Demonstration of the mapping output voltage of the sensor matrix under the pressure of a mold in the shape of a “6” (Wang et al., 2016).
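How a pixelated matrix such as the TESM can report several simultaneous touches is illustrated by the following Python sketch: pixels above a threshold are grouped into 4-connected regions with a breadth-first search, and each region's centroid is reported as one contact point. The threshold value and the use of 4-connectivity are assumptions for illustration, not the processing described by Wang et al.

```python
from __future__ import annotations

from collections import deque

import numpy as np


def contact_regions(volt_map: np.ndarray, threshold: float) -> list[tuple[float, float]]:
    """Return the (row, col) centroid of every 4-connected region above threshold."""
    pressed = volt_map > threshold
    seen = np.zeros_like(pressed, dtype=bool)
    rows, cols = pressed.shape
    centroids = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not pressed[r0, c0] or seen[r0, c0]:
                continue
            # Breadth-first search collects all pixels of this contact region.
            queue, pixels = deque([(r0, c0)]), []
            seen[r0, c0] = True
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols and pressed[nr, nc] and not seen[nr, nc]:
                        seen[nr, nc] = True
                        queue.append((nr, nc))
            pr, pc = zip(*pixels)
            centroids.append((float(np.mean(pr)), float(np.mean(pc))))
    return centroids


# Two synthetic touches on a 16 x 16 matrix.
vm = np.zeros((16, 16))
vm[2:4, 2:4] = 1.0
vm[10:12, 12:14] = 1.0
print(contact_regions(vm, 0.5))        # [(2.5, 2.5), (10.5, 12.5)]
```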

Compared with single-point tactile sensors, a tactile array sensor can cover a wider area and capture tactile information about the target in multiple dimensions, so it can achieve high spatial resolution of touch. For this reason, tactile array sensors have been applied in minimally invasive surgery. For vascular interventional surgery, Guo et al. reported a novel catheter sidewall force tactile sensor array based on a robotic catheter operating system with a master–slave structure (Guo et al., 2013). It can detect detailed force information between the sidewall of the catheter and the blood vessel and transmit it to the surgeon through the robotic catheter system. To reduce postoperative pain, Li et al. proposed an original miniature three-dimensional force sensor that can detect interaction forces during tissue palpation in minimally invasive surgery (Li et al., 2015). To detect and locate tissue abnormalities, Li et al. presented a novel high-sensitivity optical tactile sensor array based on fiber Bragg gratings (FBGs) (Li et al., 2018); each tactile unit mainly consists of a spiral elastomer, a suspended optical fiber engraved with an FBG element, and a contact connected to the elastomers by threads. Also for tissue palpation, Xie et al. proposed a new type of optical fiber tactile probe consisting of 3 × 4 tactile sensors (Xie et al., 2013), in which a single camera captures the light intensity changes of all sensing elements, which are then converted into force information. Finally, for tissue palpation, Roesthuis et al. proposed an experimental bench that includes a tendon-driven manipulator. A nitinol wire was fabricated on which 12 FBG sensors are integrated, distributed over four groups. In closed-loop control, the minimum average tracking error for a circular trajectory was 0.67 mm (Roesthuis et al., 2013).

In conventional open surgery, surgeons make ample use of their cutaneous senses to differentiate tissue qualities, which can hardly be achieved with force sensors alone; this has motivated researchers to enable other sensing modalities in MIS. In the last decades, researchers have attempted to use other tactile signals to measure tissue properties. Eklund et al. developed an in vitro tissue hardness measurement method using a catheter-type piezoelectric vibration sensor (Eklund et al., 1999). Eltaib and Hewit proposed a tactile sensor in which a pressure sensor is attached to the end of a sinusoidally driven rod of a tactile probe; the sensor measured both vibration and contact force to distinguish soft from hard tissue and help surgeons detect abnormal tissue (Eltaib and Hewit, 2000). Baumann et al. presented a method of measuring mechanical tissue impedance by determining the resonance frequency with an electromechanical vibrotactile sensor integrated into an operating instrument (Baumann et al., 2001). Chuang et al. reported a miniature piezoelectric hardness sensor mounted on an endoscope to detect submucosal tumors (Chuang et al., 2015). Kim et al. fabricated sensorized surgical forceps with five-degree-of-freedom (5-DOF) force/torque (F/T) sensing capability (Kim et al., 2018). A summary of representative tactile sensors and their applications is presented in Table 1.
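The resonance-based approach can be illustrated with a lumped mass–spring model, in which contacting tissue adds its stiffness k_t in parallel with the probe's own spring k_s, so that f_r = (1/2π)·√((k_s + k_t)/m). This Python sketch, whose model and numbers are assumptions rather than the method of Baumann et al., picks the resonance as the peak of a measured amplitude response and inverts the model for k_t; a stiffer tissue raises the resonance frequency.

```python
import numpy as np


def resonance_frequency(freqs_hz: np.ndarray, amplitude: np.ndarray) -> float:
    """Frequency at which the measured response amplitude peaks."""
    return float(freqs_hz[np.argmax(amplitude)])


def tissue_stiffness(f_res_hz: float, mass_kg: float, k_sensor: float) -> float:
    """Tissue stiffness (N/m) from the lumped model
    f_res = sqrt((k_sensor + k_tissue) / mass) / (2 * pi)."""
    return mass_kg * (2.0 * np.pi * f_res_hz) ** 2 - k_sensor


# Round trip with assumed values: m = 10 g, probe spring 1000 N/m, tissue 300 N/m.
f_res = np.sqrt((1000.0 + 300.0) / 0.01) / (2.0 * np.pi)   # about 57.4 Hz
k_t = tissue_stiffness(f_res, 0.01, 1000.0)                 # recovers about 300 N/m
```

A full impedance measurement would also capture damping, which this undamped model ignores.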

Some novel tactile display systems have been developed to provide feedback to surgeons based on various kinds of tactile information. Schostek et al. proposed a tactile sensor integrated into a laparoscopic grasper jaw to obtain information about the shape and consistency of tissue structures (Schostek et al., 2006). The tactile data were transferred wirelessly via Bluetooth and displayed graphically to the surgeon; however, tissue exploration took longer than with a conventional grasper. Prasad et al. presented an audio display system to relay force information to the surgeon, but continual noise in the operating room remained a problem (Prasad et al., 2003). Fischer et al. developed a system that displayed tissue oxygenation values to surgeons. They used force sensors and oxygenation sensors simultaneously to measure tissue oxygenation in addition to tissue interaction (translational) forces, since trauma occurs when tissue oxygenation drops below a certain value (Fischer et al., 2006). Pacchierotti et al. reported a cutaneous feedback solution for a da Vinci surgical robot. They proposed a model-free algorithm based on look-up tables that maps contact deformations, DC pressure, and vibrations to input commands for the cutaneous device's motors. A custom cutaneous display attached to the master controller reproduced the tactile sensations by continually moving, tilting, and vibrating a flat plate at the operator's fingertip (Pacchierotti et al., 2016).
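A look-up-table mapping of this kind can be sketched in Python with linear interpolation. The table entries, units, and the choice of two channels (DC pressure to plate indentation, vibration amplitude to motor duty cycle) are hypothetical; Pacchierotti et al. also mapped contact deformation and drove a specific device whose command interface is not reproduced here.

```python
from __future__ import annotations

import numpy as np

# Hypothetical look-up tables (sensor reading -> device command).
PRESSURE_KPA = np.array([0.0, 5.0, 10.0, 20.0, 40.0])
PLATE_MM = np.array([0.0, 0.5, 1.0, 1.8, 2.5])       # plate indentation command
VIB_AMP_G = np.array([0.0, 0.1, 0.5, 1.0])
VIB_DUTY = np.array([0.0, 0.2, 0.6, 1.0])            # vibration motor duty cycle


def cutaneous_command(pressure_kpa: float, vib_amp_g: float) -> tuple[float, float]:
    """Map sensed DC pressure and vibration amplitude to device commands by
    linear interpolation between table entries; inputs outside a table are
    clamped to its end values (the behavior of np.interp)."""
    plate = float(np.interp(pressure_kpa, PRESSURE_KPA, PLATE_MM))
    duty = float(np.interp(vib_amp_g, VIB_AMP_G, VIB_DUTY))
    return plate, duty


plate, duty = cutaneous_command(15.0, 0.3)   # between table entries: about (1.4, 0.4)
```

The appeal of a model-free table is that it can be filled directly from calibration measurements, without a physical model of the fingertip or the device.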

Recent research on tactile perception algorithms has focused on tactile array sensors. A tactile array sensor yields an M × N tactile image, in which each tactile element is treated as an image pixel. Features such as statistical features, vision feature descriptors, PCA-based features, and self-organizing features are extracted from these tactile images and are usually processed by AI methods such as clustering, SVMs, and deep neural networks to obtain tactile information. Once trained, such algorithms can assist surgeons in minimally invasive surgery. They can be used in many scenarios; we group the related cases into wall following, shape recognition, grasp stability, and hardness detection.

To perform wall following, Fagogenis et al. designed a machine-learning-based image classifier that distinguishes among blood (no contact), ventricular wall tissue, and the bioprosthetic aortic valve (Fagogenis et al., 2019). The algorithm used the bag-of-words method, which groups tactile images according to the number of occurrences of specific features of interest. During training, the algorithm learns which features are of interest and how their counts relate to the tactile image class. For training, OpenCV was used to detect features in a set of manually labeled training images, and the detected features were encoded with LUCID descriptors for efficient online computation. To reduce the number of features, k-means clustering was used to identify the optimal feature representatives; the resulting cluster centers served as the representative features for the rest of training and for runtime image classification. After the representative feature set was determined, the training data were traversed a second time, and a feature histogram was constructed for each image by counting how many times each representative feature appeared in it. The last step was to train an SVM classifier that learned the relationship between the feature histograms and the corresponding classes. At runtime, the trained algorithm first detects features in an image and computes their LUCID descriptors, then matches them to the nearest representative features and builds the resulting feature histogram, from which the SVM classifier predicts the tissue contact state. A small training set (~2,000 tactile images) was sufficient, training took only about 4 min, and the results were good.
Because image classification took 1 ms, their haptic vision system could estimate the contact state at the camera's frame rate (~45 frames/s). The contact classification accuracy was 97% (tested on 7,000 images not used for training), with a type I error (false positive) rate of 3.7% and a type II error (false negative) rate of 2.3%.
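The codebook and histogram stages of such a bag-of-visual-words pipeline can be sketched in Python with NumPy alone. In this sketch, random vectors stand in for the LUCID descriptors that Fagogenis et al. computed with OpenCV, k-means is a plain Lloyd's iteration, and the final SVM step is omitted; the histograms would be passed to an SVM classifier (for instance scikit-learn's `SVC`) for training and prediction.

```python
import numpy as np


def kmeans(x: np.ndarray, k: int, iters: int = 20, seed: int = 0) -> np.ndarray:
    """Lloyd's k-means; returns the k cluster centers used as the codebook."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(iters):
        # Squared distance from every descriptor to every center: shape (n, k).
        d2 = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = d2.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):          # keep the old center if a cluster is empty
                centers[j] = x[labels == j].mean(axis=0)
    return centers


def bow_histogram(descriptors: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Count how often each codebook entry is the nearest match for a descriptor."""
    d2 = ((descriptors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return np.bincount(d2.argmin(axis=1), minlength=len(codebook))


rng = np.random.default_rng(1)
# Synthetic 8-D descriptors from two well-separated groups (stand-ins for LUCID).
train = np.vstack([rng.normal(0.0, 1.0, (50, 8)), rng.normal(10.0, 1.0, (50, 8))])
codebook = kmeans(train, k=2)
image_desc = np.vstack([rng.normal(0.0, 1.0, (30, 8)), rng.normal(10.0, 1.0, (10, 8))])
hist = bow_histogram(image_desc, codebook)   # counts per codebook entry, summing to 40
```

Because every image is reduced to a fixed-length histogram regardless of how many features it contains, a standard SVM can classify images with different feature counts.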

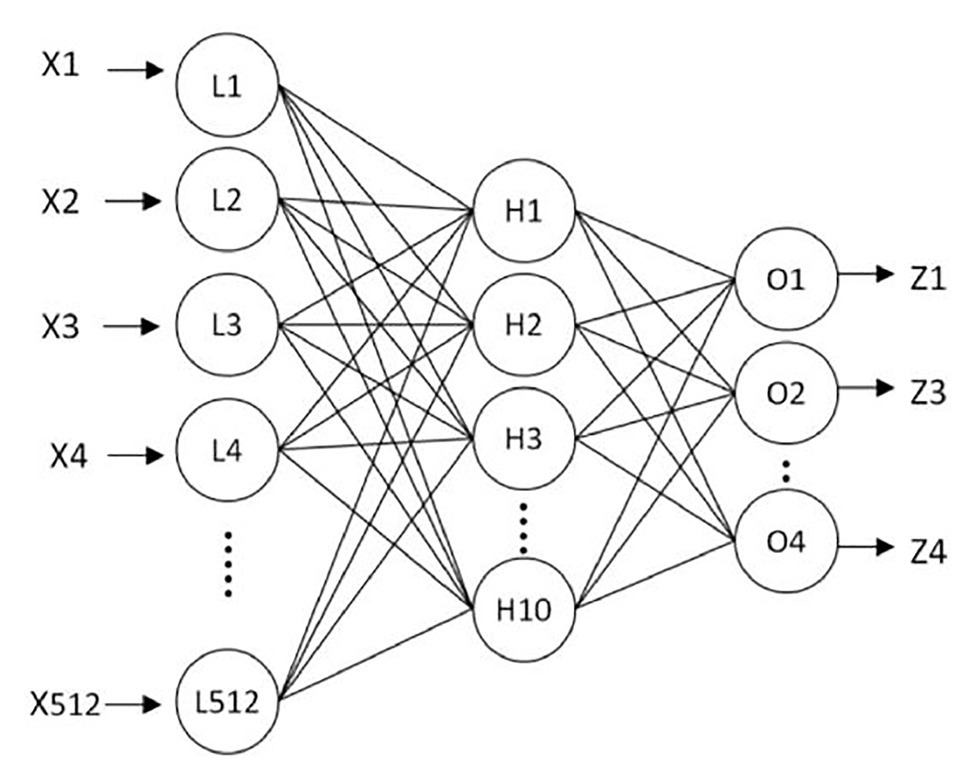

To recognize object shape, Liu et al. proposed an algorithm that identifies the shape of an object from tactile pressure images and can distinguish contact shapes in low-resolution pressure maps (Liu et al., 2012). The algorithm consists of four steps. The first step, “data extraction,” merges a sequence of strongly correlated tactile images into a single normalized map, which saves computation and reduces disturbances from signal noise. The second step, “preprocessing,” consists of several subalgorithms that prepare the data for the subsequent feature extraction and also enhance the regions of interest in the tactile images. In the third step, “feature extraction,” each tactile image is transformed into a 512-element feature vector, and the extracted features are used to train a neural network for object shape recognition. These features are unaffected by occlusion, position, scale, image size, resolution, and number of frames, which makes the algorithm robust and effective. Finally, a three-layer neural network, which takes the features extracted in the previous step as input and acts as the classifier, is trained, validated, and tested to evaluate the efficiency and success rate of the algorithm. Figure 5 shows a diagram of this three-layer neural network. In experiments with four different contact shapes, the average classification accuracy reached 91.07%, showing that the feature-based shape recognition algorithm is robust and effective in distinguishing target shapes.
It could be applied directly in minimally invasive surgery to identify the shape of the contact site and determine whether the tissue is abnormal, helping surgeons detect abnormally shaped tissue in time.

Figure 5. The three-layer neural network for object classification (Liu et al., 2012).
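The first three steps of this pipeline can be illustrated with a short NumPy sketch. It is a simplified stand-in, not Liu et al.'s implementation, and rests on several assumptions. Data extraction is approximated by averaging the frame sequence into one map. Preprocessing is approximated by min-max normalization followed by thresholding. The 512-dimensional feature vector is replaced by a small radial histogram of contact pixels about their centroid, which is translation invariant by construction and approximately scale invariant. The function names, threshold, and bin count are hypothetical.

```python
import numpy as np


def extract_map(frames: np.ndarray) -> np.ndarray:
    """Step 1 (data extraction): collapse a (T, H, W) sequence into one map.
    Averaging correlated frames suppresses uncorrelated sensor noise."""
    return frames.mean(axis=0)


def preprocess(pressure: np.ndarray, threshold: float = 0.2) -> np.ndarray:
    """Step 2 (preprocessing): min-max normalize, then keep contact pixels."""
    span = pressure.max() - pressure.min()
    if span > 0:
        norm = (pressure - pressure.min()) / span
    else:
        norm = np.zeros_like(pressure)
    return norm >= threshold  # boolean contact mask


def radial_features(mask: np.ndarray, n_bins: int = 8) -> np.ndarray:
    """Step 3 (feature extraction): histogram of pixel distances to the contact
    centroid. Distances are divided by the largest one (approximate scale
    invariance) and counts by the pixel total (resolution independence)."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return np.zeros(n_bins)
    r = np.hypot(rows - rows.mean(), cols - cols.mean())
    r_max = r.max() if r.max() > 0 else 1.0
    hist, _ = np.histogram(r / r_max, bins=n_bins, range=(0.0, 1.0))
    return hist / rows.size
```

In the fourth step, vectors like these would be the inputs of the three-layer classifier, which would be trained on labeled contact shapes.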

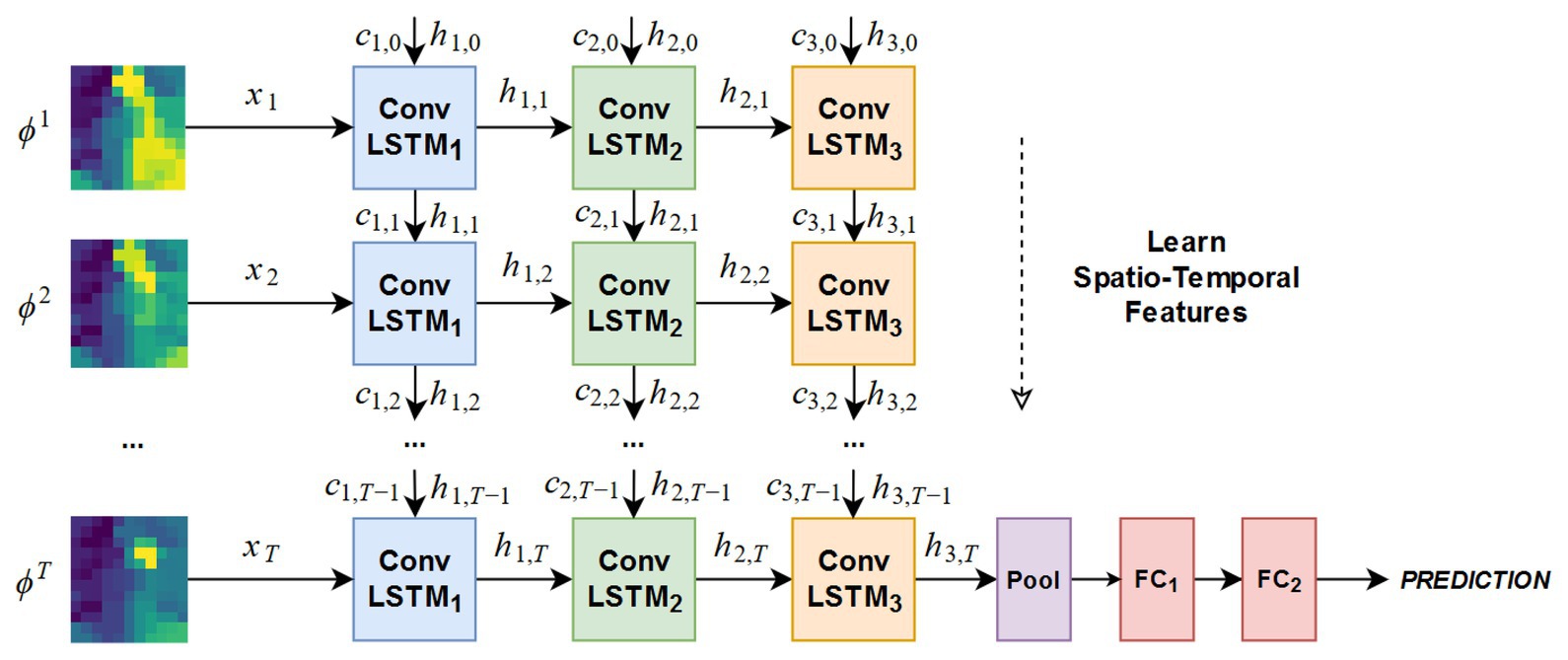

To judge grasp stability, Zapata-Impata et al. proposed a spatiotemporal tactile feature learning method based on convolutional long short-term memory (ConvLSTM) networks (Zapata-Impata et al., 2019). The method preprocesses the tactile readings and feeds them to a ConvLSTM that learns to detect multiple slip directions from just 50 ms of data. The architecture of the ConvLSTM network is shown in Figure 6. To obtain tactile images, the method uses a sensor with 24 electrodes, which record signals from four emitters and measure the impedance of the fluid between them and the sensor's elastic skin. When contact occurs, the fluid moves and changes the electrode measurements. Four object sets containing 11 different objects in total were used to capture a new tactile dataset covering seven states: slip north, slip south, slip east, slip west, slip clockwise, slip anticlockwise, and touch. The ConvLSTM learns spatial features from the tactile images while simultaneously learning temporal ones. The authors studied how the performance of the ConvLSTM depends on several parameters: the number of ConvLSTM layers, the size of the convolutional filters, and the number of filters in each layer. Based on the experimental results, a network with five ConvLSTM layers, each containing 32 filters of size 3 × 3, was selected. This configuration focuses on low-level details in the tactile image and yields better accuracy. For temporal feature learning, the network needs only three to five consecutive tactile images to detect the slip direction accurately.
On previously seen objects, the system detected these seven states with 99% accuracy. However, the ConvLSTM network was sensitive to novel objects. In the robustness experiments, its accuracy dropped to 82.56% for new objects with familiar properties (solids set) and to 73.54% and 70.94% for less familiar sets such as the textures and small sets. The spatiotemporal tactile feature learning method could be applied to minimally invasive surgery to improve the stability of tissue or mass grasping. At present, however, the method judges grasp stability with a single-point sensor, so the slip direction is inferred only from single-point tactile characteristics. Using an array tactile map would allow regional feature information to be considered, which could further improve grasp stability. Applying this algorithm to minimally invasive surgery is therefore very promising.

Figure 6. Architecture of the ConvLSTM network tested in the experimentation (Zapata-Impata et al., 2019).
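Saying that the ConvLSTM "learns spatial features while simultaneously learning temporal ones" can be made concrete with one cell step written in NumPy. The cell uses the usual LSTM gates, but 2D convolutions replace the matrix products, so the hidden and cell states keep the spatial layout of the tactile image. The 3 × 3 kernel and 32 hidden channels follow the configuration reported above. The 4 × 6 input grid, the random weights, and the function names are assumptions for illustration only, and the cell is untrained.

```python
import numpy as np


def conv2d_same(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Multi-channel 2D cross-correlation with zero 'same' padding.
    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W)."""
    c_out, _, k, _ = w.shape
    pad = k // 2
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c_out, h, wd))
    for di in range(k):
        for dj in range(k):
            # Contribution of kernel tap (di, dj), summed over input channels.
            out += np.einsum("oc,chw->ohw", w[:, :, di, dj], xp[:, di:di + h, dj:dj + wd])
    return out


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def convlstm_step(x, h_prev, c_prev, wx, wh, b):
    """One ConvLSTM time step. wx: (4*C_h, C_in, k, k), wh: (4*C_h, C_h, k, k),
    b: (4*C_h,). The gates come from convolutions instead of dense products."""
    z = conv2d_same(x, wx) + conv2d_same(h_prev, wh) + b[:, None, None]
    i, f, o, g = np.split(z, 4, axis=0)  # input, forget, output gates, candidate
    c = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(g)  # spatial cell state
    h = sigmoid(o) * np.tanh(c)  # spatial hidden state
    return h, c


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    c_in, c_h, k, H, W = 1, 32, 3, 4, 6  # hypothetical 4 x 6 tactile grid
    wx = rng.normal(scale=0.1, size=(4 * c_h, c_in, k, k))
    wh = rng.normal(scale=0.1, size=(4 * c_h, c_h, k, k))
    b = np.zeros(4 * c_h)
    h, c = np.zeros((c_h, H, W)), np.zeros((c_h, H, W))
    for x in rng.random((5, c_in, H, W)):  # five consecutive tactile images
        h, c = convlstm_step(x, h, c, wx, wh, b)
    print(h.shape)  # (32, 4, 6)
```

In a full model, several such layers would be stacked, and a classification head on the final hidden state would output the seven contact states.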

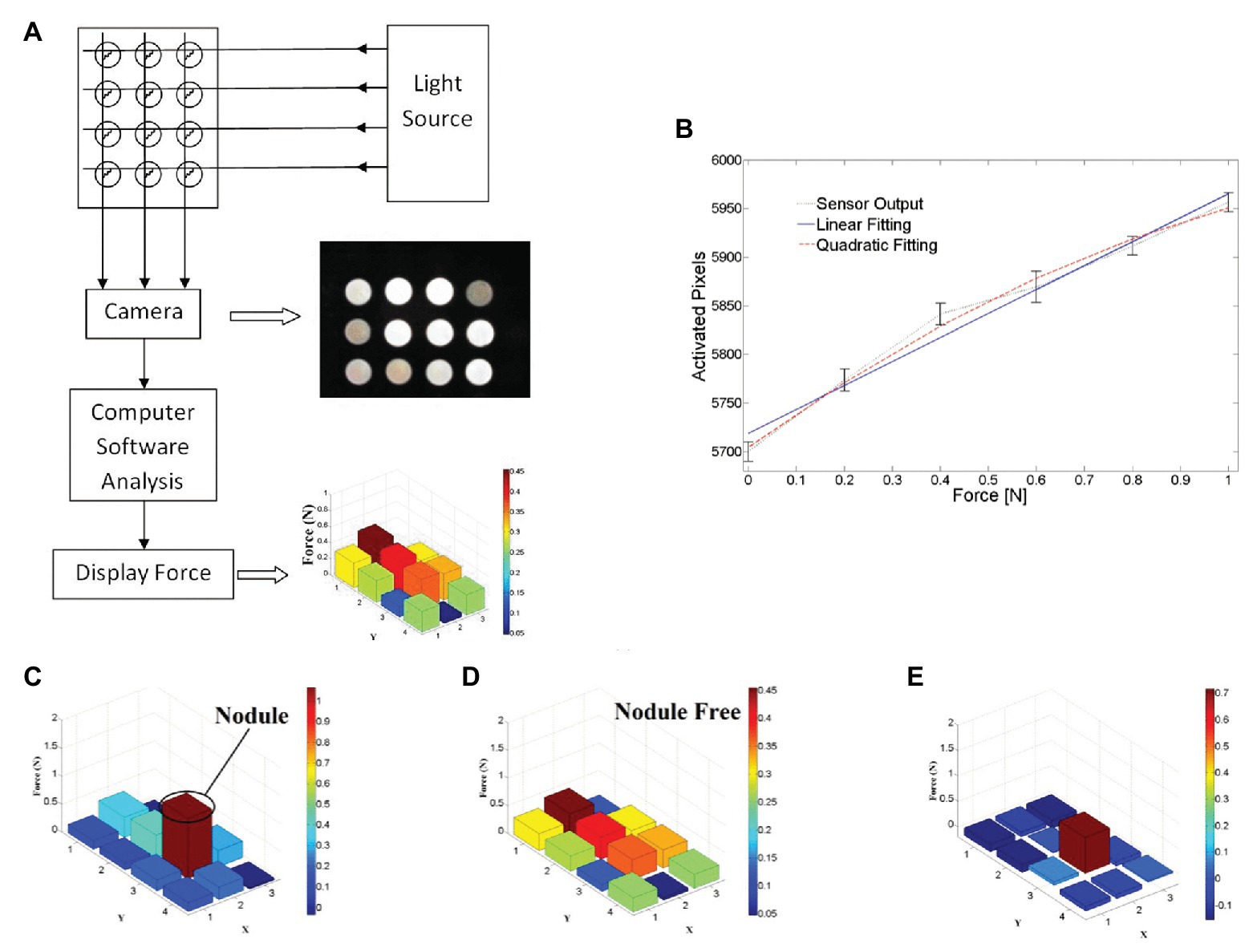

To detect hardness, Yuan et al. designed a deep learning method that estimates hardness independently of object shape (Yuan et al., 2017). The convolutional (CNN) feature layers of a VGG network extract physical features from the tactile images, and the resulting feature sequence is fed into an LSTM to estimate the hardness of the sample. The method can estimate the hardness of objects with different shapes and with hardness ranging from 8 to 87 on the Shore 00 scale. Tissue hardness detection is very important in minimally invasive surgery. Tumor detection is a good example: some solid tumors are harder than the surrounding tissue, so their presence can be detected through tactile feedback. This helps determine the resection location and increases the success rate of surgery. Xie et al. proposed a pixel-based method that measures the normal force and its distribution over the sensor area to judge the hardness range of each region and identify abnormal structures (Xie et al., 2013). As shown in Figure 7, an optical fiber tactile sensor containing 3 × 4 sensing elements captures tactile images whose brightness varies with the applied force. Each image is divided into 12 corresponding regions. The force applied to each region is obtained from that region's pixel values through a predetermined linear relationship. Figure 7C shows the sensor outputs after palpating an area containing a nodule, and Figure 7D shows the outputs for a nodule-free area. In the nodule-free area, the outputs of the sensing elements mostly range from 0 to 0.4 N. In the nodule-embedded area, the outputs of the elements in contact with the nodule exceed 0.8 N. Subtracting the nodule-free result (Figure 7D) from the nodule result (Figure 7C) yields the map in Figure 7E, in which the nodule location is more clearly visible.
This method locates tissue lumps from the pressure distribution map. Although it is effective to some extent, its reliance on linear fitting risks large errors, and it can only define a single hardness range. Image processing algorithms could overcome both limitations, so methods based on array tactile image processing are worth studying for tissue hardness detection.

Figure 7. (A) Schematic design of the proposed tactile sensor using a camera, (B) measured output responses of sensing element 1 to the applied normal force, (C) test results on the nodule area, (D) test results on the nodule-free area, and (E) effective stiffness distribution map obtained by comparing the test results on the two areas (Xie et al., 2013).
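The region-wise computation described for Xie et al.'s sensor can be sketched in four operations. The camera image is split into a 3 × 4 grid, each region is reduced to a pixel statistic, the statistic is mapped to force with a per-element linear calibration F = a·p + b, and the nodule-free map is subtracted from the nodule map. Several details are assumptions made for illustration and were not reported by the authors: the mean pixel value as the statistic, the calibration coefficients, and the synthetic images.

```python
import numpy as np

GRID = (3, 4)  # 3 x 4 sensing elements, one image region per element


def region_means(image: np.ndarray, grid=GRID) -> np.ndarray:
    """Split a grayscale image into grid regions; return each region's mean pixel value."""
    rows = np.array_split(image, grid[0], axis=0)
    return np.array([[blk.mean() for blk in np.array_split(r, grid[1], axis=1)] for r in rows])


def pixels_to_force(pixel_means: np.ndarray, a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Per-element linear calibration F = a * p + b (a and b are hypothetical fits)."""
    return a * pixel_means + b


def difference_map(force_with_nodule: np.ndarray, force_without: np.ndarray) -> np.ndarray:
    """Highlight stiffer regions by subtracting the nodule-free response."""
    return force_with_nodule - force_without


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a, b = np.full(GRID, 0.004), np.zeros(GRID)  # N per grey level (assumed)
    free = rng.integers(0, 80, size=(60, 80)).astype(float)
    nodule = free.copy()
    nodule[20:40, 40:60] += 150  # synthetic bright patch over a nodule
    diff = difference_map(pixels_to_force(region_means(nodule), a, b),
                          pixels_to_force(region_means(free), a, b))
    print(np.unravel_index(diff.argmax(), diff.shape))  # element over the nodule
```

Replacing the fixed linear map with a learned image model is the improvement the paragraph above argues for.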

In minimally invasive surgery, the tools operate through very small incisions, so the ability to feel is limited, as discussed by Eltaib and Hewit (2003). Dargahi and Najarian summarized four categories of properties that are usually considered important in minimally invasive surgery: force, position and size, hardness/softness, and roughness and texture (Dargahi and Najarian, 2004). Tactile perception can improve performance with respect to each of them. To show the feasibility of tactile assistance in minimally invasive surgery, we discuss the assistance that tactile perception provides for each property in turn and then give a general discussion.

Measuring the acting and reactive forces of tissues is useful in many cases. Examples include controlling a surgical knife on liver tissue (Chanthasopeephan et al., 2003), measuring thread tension while knotting (Song et al., 2009), modeling needle insertion force (Fazal and Karsiti, 2009), and differentiating between tissue samples during scissoring (Callaghan and McGrath, 2007). Surgeons usually rely on the magnitude of the force feedback to decide when to stop each cutting or insertion operation. For example, He et al. designed a 3-DOF force sensing pick instrument for retinal microsurgery, with fiber optic sensors placed at the distal tip of the instrument. To resolve forces in multiple degrees of freedom, a linear model and a second-order Bernstein polynomial were used to distinguish forces in different directions (He et al., 2014). Previous studies such as Mohareri et al. (2014) also show that, with force feedback, surgeons tend to perform each separate operation more uniformly, for example tying knots with similar thread tension. Nevertheless, sensing directions and accuracy remain the main concerns as researchers strive to improve force-based assistance.
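The text does not say exactly how He et al. parameterized their Bernstein model, so the following is only a generic sketch of the underlying tool. It fits a calibration curve by least squares using the second-order Bernstein basis B0 = (1 − t)², B1 = 2t(1 − t), B2 = t², where t is the sensor reading rescaled to [0, 1]. The function names and calibration data are hypothetical.

```python
import numpy as np


def bernstein2_basis(t: np.ndarray) -> np.ndarray:
    """Second-order Bernstein basis evaluated at t in [0, 1]; shape (len(t), 3)."""
    t = np.asarray(t, dtype=float)
    return np.stack([(1 - t) ** 2, 2 * t * (1 - t), t ** 2], axis=1)


def fit_bernstein2(reading: np.ndarray, force: np.ndarray):
    """Least-squares fit force ~ sum_k c_k * B_k(t), with t the reading rescaled to [0, 1].
    Returns the coefficients and a predictor for new readings."""
    lo, hi = reading.min(), reading.max()
    t = (reading - lo) / (hi - lo)
    coeffs, *_ = np.linalg.lstsq(bernstein2_basis(t), force, rcond=None)

    def predict(new_reading: np.ndarray) -> np.ndarray:
        return bernstein2_basis((new_reading - lo) / (hi - lo)) @ coeffs

    return coeffs, predict
```

Because the basis functions sum to 1 on [0, 1], the coefficients c0 and c2 equal the fitted values at the two ends of the calibration range, which makes them easy to interpret.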

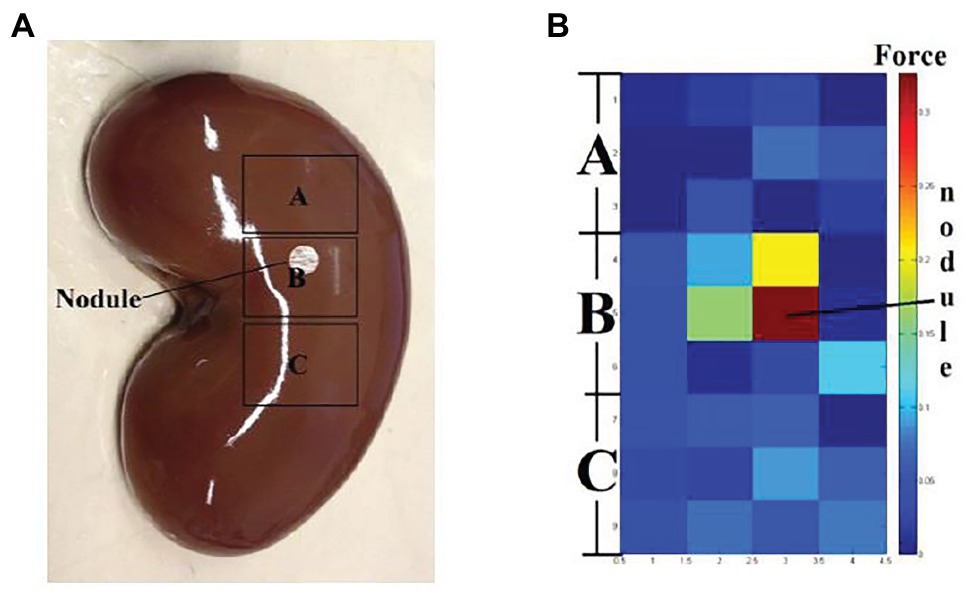

Assistance with position and size usually targets tumor localization (Hosseini et al., 2006; Perri et al., 2010). For example, Liu et al. measured indentation depth to detect abnormal parts of tissue (Liu et al., 2010). Lederman and Klatzky used rigid fingertip sheaths to locate 3D masses (Lederman and Klatzky, 1999). Afshari et al. used the stress distribution to locate stones inside the kidney (Afshari et al., 2010). In most cases, the position and size of a mass are revealed in one of two ways: by a sharp rise in the stiffness sensed by the tools during palpation, or by the image constructed from an array of force sensors. Xie et al. (2013) designed a 3 × 4 sensing array that detects the force distribution and shows surgeons which areas tend to be stiffer. In an evaluation test on a lamb kidney with embedded nodules, the design performed effectively. Surgeons still largely rely on their experience to estimate position and size from the force feedback. Figure 8 shows an example of mass localization using an array of force sensors.

Figure 8. (A) A kidney with an invisible nodule buried in area B. (B) Sensing results from palpating three areas; the colored blocks indicate different force values (Xie et al., 2013).

For hardness/softness measurement, sensors are usually placed on endoscopic graspers (Najarian et al., 2006). Tissue hardness can also be used to locate a mass during palpation. For instance, Ju et al. used sensors on a catheter robot to locate masses (Ju et al., 2018, 2019). Kalantari et al. measured the relative hardness/softness of tissue to distinguish various types of cardiac tissue during mitral valve annuloplasty (Kalantari et al., 2011). Yip et al. (2010) developed a novel uniaxial force sensor based on fiber optic transduction that could detect very small forces with low root mean square (RMS) error. The design specifically considered waterproofing, electrical passivity, and material constraints so that the instrument could meet the requirements of operating in the cardiac environment. However, there is no established threshold for a machine to classify tissue as hard or soft, so the subjective judgment of surgeons remains indispensable.
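Relative hardness is often summarized as an effective stiffness, the slope of the force-indentation curve. The sketch below estimates that slope by ordinary least squares and compares two samples. The linear-elastic assumption, the synthetic values, and the function name are illustrative and are not taken from the cited studies.

```python
import numpy as np


def effective_stiffness(indentation_mm: np.ndarray, force_n: np.ndarray) -> float:
    """Slope (N/mm) of a least-squares line through force-indentation samples.
    Assumes a roughly linear-elastic response over the probed range."""
    slope, _intercept = np.polyfit(indentation_mm, force_n, deg=1)
    return float(slope)


if __name__ == "__main__":
    depth = np.linspace(0.0, 2.0, 9)  # hypothetical indentation depths (mm)
    soft = 0.10 * depth + 0.01  # synthetic response of normal tissue (N)
    stiff = 0.45 * depth + 0.01  # synthetic response over a harder mass (N)
    ratio = effective_stiffness(depth, stiff) / effective_stiffness(depth, soft)
    print(f"relative stiffness: {ratio:.1f}")
```

The output is a continuous value. Deciding where "hard" begins is still left to the user, which is the point made above.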

Roughness and texture are the fourth group of properties that can assist surgeons in MIS. To measure roughness and texture, sensors are usually placed on endoscopic graspers (Bonakdar and Narayanan, 2010), such as laparoscopic graspers (Dargahi and Payandeh, 1998; Dargahi, 2002; Zemiti et al., 2006; Lee et al., 2016). These can be applied in cholecystectomy (Richards et al., 2000) and Nissen fundoplication (Rosen et al., 2001) and can also measure the viscoelastic properties of tissues (Narayanan et al., 2006). Bicchi et al. (1996) measured tissue elastic properties. Dargahi (2002), for instance, designed a polyvinylidene fluoride tactile sensor that measures the compliance and roughness of tissues from the relative deformation when the tissue contacts the sensor surface. However, probing the entire tissue surface point by point is time-consuming, so human judgment is still needed to use such sensors efficiently.

Given these cases, some researchers have developed intelligent algorithms that reduce reliance on subjective judgment. Many of them are described in Tactile Perception Algorithms in MIS. In this subsection, we introduce further AI-based tactile perception technologies that have proven effective in MIS. Beasley and Howe used a signal processing algorithm to find an artery from the pulsatile pressure variation measured by force sensors, and they applied an adaptive extrapolation algorithm to predict its position. In essence, adaptive extrapolation fits a linear regression to 15 sensing samples to predict the artery's path. Tested on the ZEUS Surgical Robot System, the method achieved a mean error of less than 2 mm (Beasley and Howe, 2002).
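The extrapolation idea can be sketched as a sliding-window linear regression: fit a line to the 15 most recent artery-position estimates and evaluate it one step ahead. This is a simplified reading of the description above, not Beasley and Howe's implementation. The adaptive part of their algorithm is not reproduced, and the function name and sample indexing are hypothetical.

```python
import numpy as np

WINDOW = 15  # number of sensing samples used for the fit, as described above


def extrapolate_position(samples_t: np.ndarray, samples_x: np.ndarray, t_next: float) -> float:
    """Fit x(t) = a*t + b to the last WINDOW samples and predict x at t_next."""
    t = np.asarray(samples_t[-WINDOW:], dtype=float)
    x = np.asarray(samples_x[-WINDOW:], dtype=float)
    if t.size < 2:
        raise ValueError("need at least two samples to fit a line")
    a, b = np.polyfit(t, x, deg=1)
    return float(a * t_next + b)
```

The window trades noise rejection, which favors more samples, against responsiveness to curvature in the artery's path, which favors fewer.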

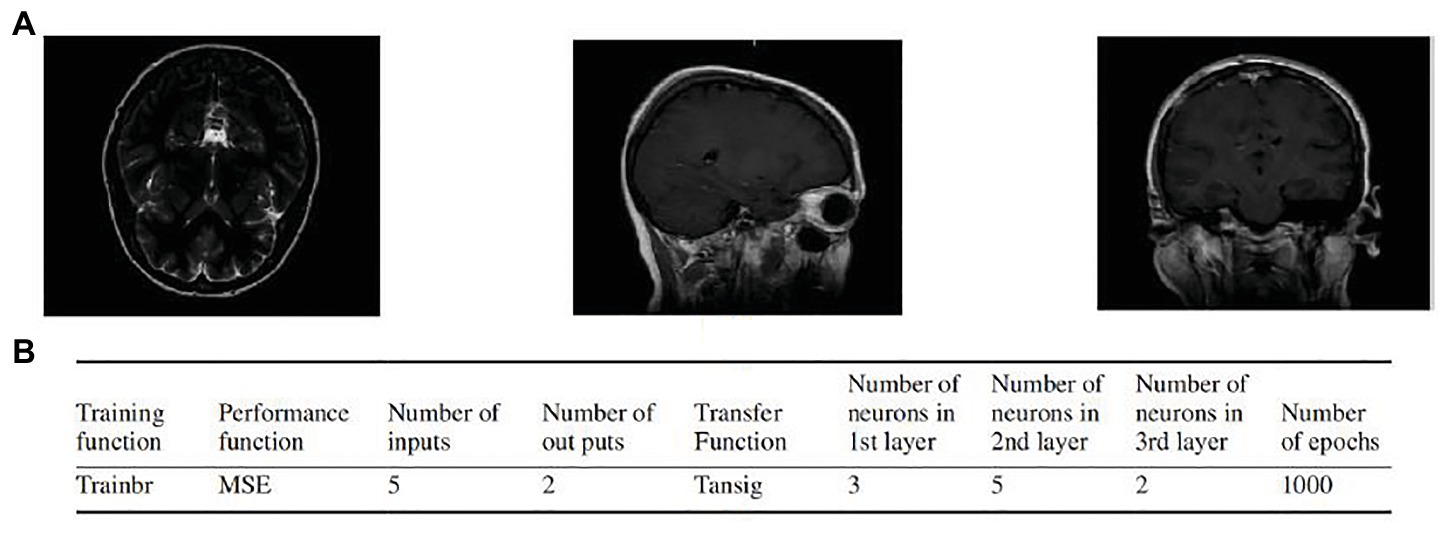

Sadeghi-Goughari et al. introduced a minimally invasive diagnosis technique named intraoperative thermal imaging (ITT), based on artificial tactile sensing (ATS) technology and artificial neural networks (ANNs) (Sadeghi-Goughari et al., 2016). In this study, a forward analysis and an ANN-based inverse analysis were proposed to estimate tumor features, including temperature and depth, from a brain thermogram (Figure 9A). The forward analysis modeled heat conduction in cancerous brain tissue with the finite element method (FEM). A three-layer feed-forward neural network (FFNN) trained with the backpropagation algorithm was then developed to estimate the tumor features; its parameters are shown in Figure 9B. The inputs of the FFNN are thermal parameters extracted from tissue surface temperature profiles. Comparing the estimated tumor features with the expected values revealed potential brain tissue abnormalities, which greatly facilitates the neurosurgeon's task during MIS.

Figure 9. (A) Examples of the brain thermogram. (B) Parameters of artificial neural network (ANN; Sadeghi-Goughari et al., 2016).
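The inverse analysis maps surface thermal parameters to tumor features with a three-layer (input, hidden, output) feed-forward network trained by backpropagation. The NumPy sketch below shows that training loop on synthetic data. The following are assumptions rather than the parameters in Figure 9B: the two input features, the single output (standing in for tumor depth), the tanh hidden layer, the layer size, and the learning rate.

```python
import numpy as np


class ThreeLayerFFNN:
    """Input -> tanh hidden layer -> linear output, trained by backpropagation on MSE."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(scale=0.5, size=(n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.w2 = rng.normal(scale=0.5, size=(n_hidden, n_out))
        self.b2 = np.zeros(n_out)

    def forward(self, x: np.ndarray) -> np.ndarray:
        self.h = np.tanh(x @ self.w1 + self.b1)  # hidden activations, cached for backprop
        return self.h @ self.w2 + self.b2

    def train_step(self, x: np.ndarray, y: np.ndarray, lr: float) -> float:
        """One full-batch gradient-descent step; returns the loss before the update."""
        err = self.forward(x) - y
        loss = float(np.mean(err ** 2))
        g_out = 2.0 * err / err.size  # dLoss/dOutput
        g_w2, g_b2 = self.h.T @ g_out, g_out.sum(axis=0)
        g_h = (g_out @ self.w2.T) * (1.0 - self.h ** 2)  # through tanh
        g_w1, g_b1 = x.T @ g_h, g_h.sum(axis=0)
        for p, g in ((self.w1, g_w1), (self.b1, g_b1), (self.w2, g_w2), (self.b2, g_b2)):
            p -= lr * g  # in-place parameter update
        return loss


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    x = rng.random((64, 2))  # synthetic "thermal parameters"
    y = 0.3 * x[:, :1] + 0.7 * x[:, 1:] ** 2  # synthetic "tumor depth"
    net = ThreeLayerFFNN(n_in=2, n_hidden=8, n_out=1)
    first = net.train_step(x, y, lr=0.1)
    for _ in range(2000):
        last = net.train_step(x, y, lr=0.1)
    print(f"MSE: {first:.4f} -> {last:.4f}")
```

In the cited work, FEM simulations of the forward problem supplied the training pairs. Here they are replaced by a made-up smooth function.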

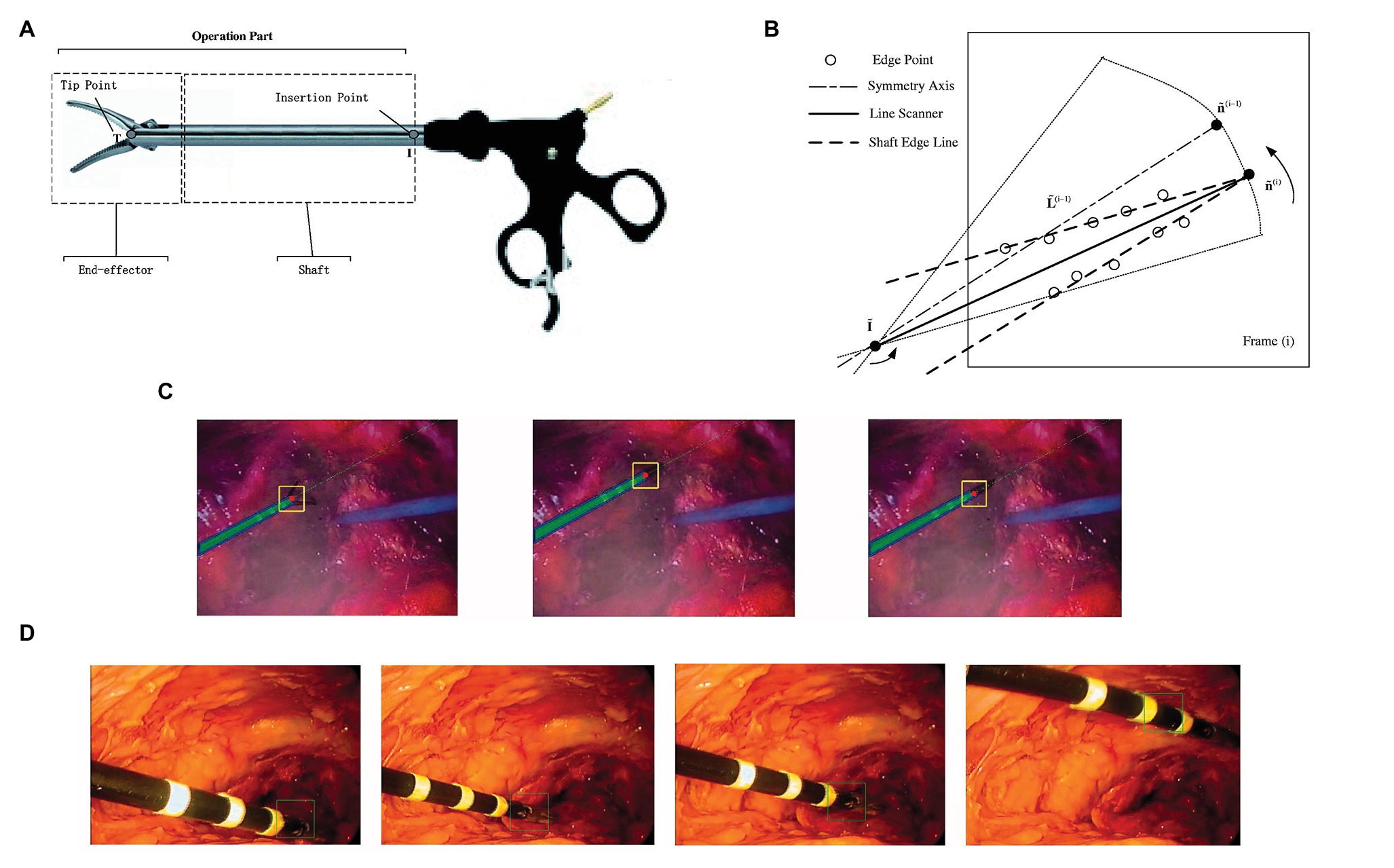

Zhao et al. proposed a tracking-by-detection framework for surgical instruments in MIS (Zhao et al., 2017). As shown in Figure 10A, a conventional MIS instrument can be divided into a shaft portion and an end effector. In the proposed method, the shaft portion was described by line features obtained with the random sample consensus (RANSAC) scheme, and the end effector was described by image features learned by a well-trained CNN. Given the camera parameters and insertion points, a tracking method was proposed to estimate the 3D position and orientation of the instruments. As shown in Figure 10B, the scanning range was restricted to a sector area around the shaft symmetry axis. For the current frame, a bounding box slid along the symmetry axis obtained by shaft detection. At every sliding step and scale, the image inside the bounding box was resized to 101 × 101 and used as the input of the trained CNN. The center of the bounding box with the highest positive CNN score was treated as the imaged tip position of the current frame. Figure 10C shows selected frames from the tracking procedure. However, the 2D measurement error in the in vivo test was larger than in the ex vivo test, at least 2.5 pixels. When the CNN-based instrument detection was applied to each frame, insufficient illumination of the end effector caused tracking to drift in some frames (see Figure 10D). This is the main reason for the higher in vivo error, and it could be addressed by adding in vivo sequences to the training database.

Figure 10. (A) The operation part of minimally invasive surgery (MIS) instrument: end effector and shaft portion; (B) line scanner application for detection of shaft edge lines and shaft image direction estimation; (C) selected frames of the instrument tracking and detection: the red circles are the tracked end-effector tip position, and the green dashed line is the shaft symmetry axis; (D) example frames of in vivo sequences with the end-effector positions shown by squares (Zhao et al., 2017).
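The tip search can be sketched as follows. Candidate box centers are sampled along the shaft symmetry axis. At each center and scale, a square window is cropped, resized to 101 × 101, and scored, and the best-scoring center is kept as the tip estimate. Several elements are hypothetical and stand in for details the text does not give: the scoring function (a placeholder for the trained CNN), the axis parameterization (a start point and a direction vector), the half-width scales, and nearest-neighbour resizing.

```python
from collections.abc import Callable

import numpy as np

PATCH = 101  # CNN input size reported above


def resize_nearest(patch: np.ndarray, size: int = PATCH) -> np.ndarray:
    """Nearest-neighbour resize of a 2D patch to size x size."""
    rows = (np.arange(size) * patch.shape[0] / size).astype(int)
    cols = (np.arange(size) * patch.shape[1] / size).astype(int)
    return patch[np.ix_(rows, cols)]


def slide_along_axis(image, origin, direction, scales, step,
                     score: Callable[[np.ndarray], float]):
    """Return (best_center, best_score) over boxes centered on the symmetry axis."""
    if step <= 0:
        raise ValueError("step must be positive")
    direction = np.asarray(direction, float) / np.linalg.norm(direction)
    h, w = image.shape
    best_center, best_score = None, -np.inf
    s = 0.0
    while True:
        center = np.asarray(origin, float) + s * direction
        r, c = int(round(center[0])), int(round(center[1]))
        if not (0 <= r < h and 0 <= c < w):
            break  # walked off the image along the axis
        for half in scales:  # half-width of the box at this scale
            r0, r1 = max(r - half, 0), min(r + half + 1, h)
            c0, c1 = max(c - half, 0), min(c + half + 1, w)
            value = score(resize_nearest(image[r0:r1, c0:c1]))
            if value > best_score:
                best_center, best_score = (r, c), value
        s += step
    return best_center, best_score
```

Restricting the search to the axis found by shaft detection keeps the number of CNN evaluations per frame small compared with scanning the whole image.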

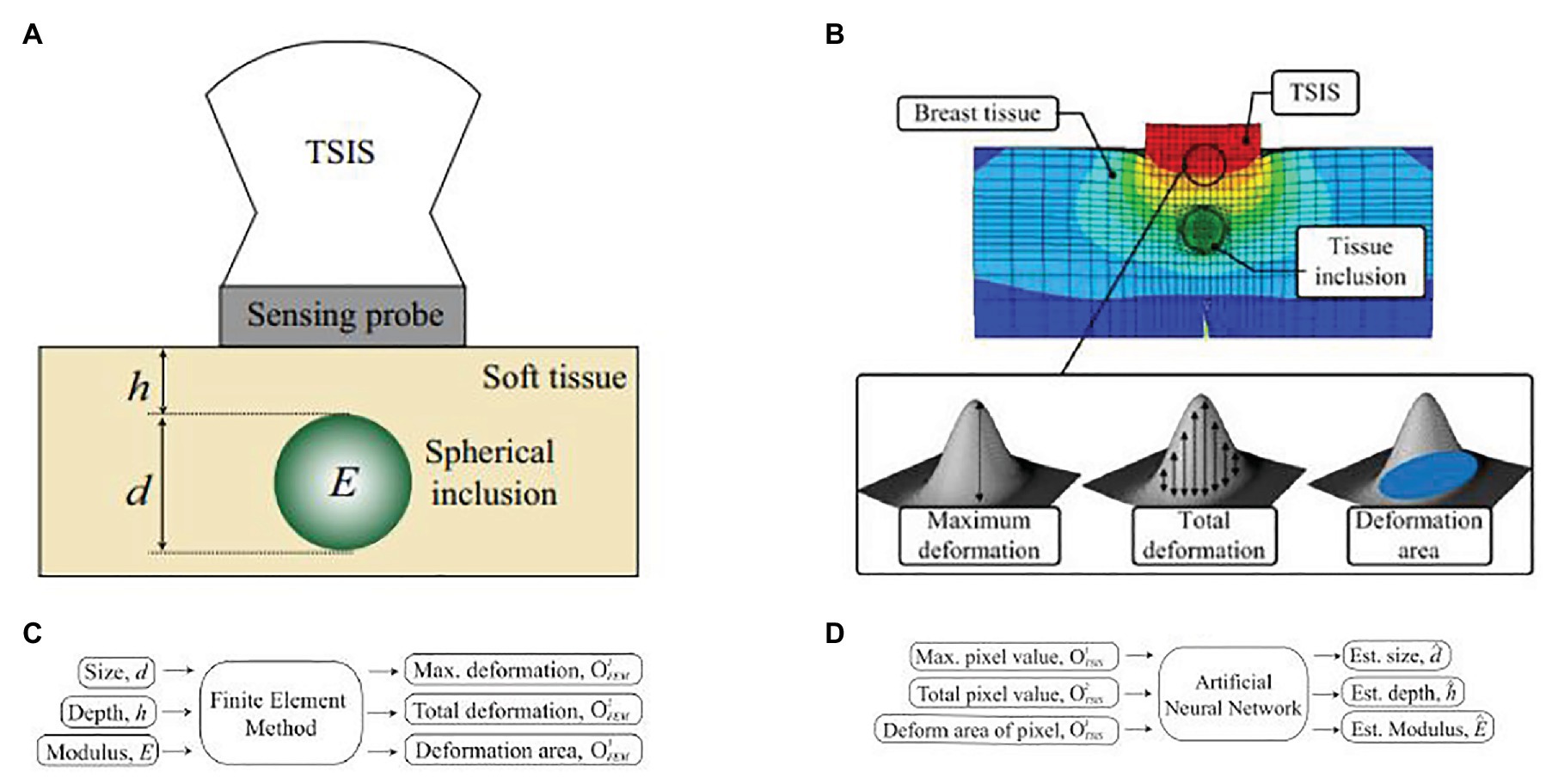

Lee and Won presented a method that estimates the stiffness and geometric information of a tissue inclusion from tactile data obtained on the tissue surface (Lee and Won, 2013). To obtain the tactile data, the authors developed an optical tactile sensation imaging system (TSIS). From the tactile images, the TSIS extracted the maximum pixel value, total pixel value, and deformation area, which were used to estimate the size, depth, and elasticity of embedded lesions. The method consists of a forward algorithm and an inversion algorithm. The forward algorithm predicts the maximum deformation, total deformation, and deformation area from the size, depth, and modulus of the tissue inclusion. The inversion algorithm uses the tactile parameters obtained from the TSIS and the values simulated by the forward algorithm to estimate the size, depth, and modulus of the embedded lesion. Figure 11A shows a cross-section of an idealized breast model, and Figure 11B shows the TSIS sensing probe modeled on top of the breast tissue. When the TSIS is pressed against a tissue surface containing a stiff inclusion, the resulting tactile data depend on the inclusion's size d, depth h, and Young's modulus E. The FEM in the forward algorithm quantifies deformation in three ways, as shown in Figure 11C. The maximum deformation is the largest vertical displacement of the sensing probe's FEM elements from the nondeformed position. The total deformation is the sum of those displacements. The deformation area is the projected area of the deformed surface of the sensing probe. To relate the FEM tactile data to the TSIS tactile data, the TSIS tactile data are defined as follows. The maximum pixel value is the pixel value at the centroid of the tactile data.
The total pixel value is the sum of the pixel values in the tactile data. The deformation area is the number of pixels whose values exceed a specific threshold. The inversion algorithm then estimates d, h, and E from the measured tactile parameters. In this method, a multilayer ANN serves as the inversion algorithm, as shown in Figure 11D.

Figure 11. (A) A cross-section of an idealized breast model for estimating inclusion parameters. The tissue inclusion has three parameters: size d, depth h, and Young’s modulus E. (B) Finite element method (FEM) model of an idealized breast tissue model. The sensing probe of tactile sensation imaging system (TSIS) is modeled on top of the breast tissue model. (C) The forward algorithm. (D) The inversion algorithm (Lee and Won, 2013).
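The three TSIS tactile parameters defined above map directly onto array operations. In this sketch, "the pixel value at the centroid" is interpreted as the value at the intensity-weighted centroid, rounded to the nearest pixel. The threshold is a free parameter. Both interpretations and the function name are assumptions.

```python
import numpy as np


def tsis_parameters(tactile: np.ndarray, threshold: float) -> dict:
    """Maximum pixel value (at the centroid), total pixel value, and deformation area."""
    img = np.asarray(tactile, dtype=float)
    total = img.sum()
    rows, cols = np.indices(img.shape)
    if total > 0:
        # Intensity-weighted centroid, rounded to the nearest pixel.
        cr = int(round((rows * img).sum() / total))
        cc = int(round((cols * img).sum() / total))
    else:
        cr, cc = img.shape[0] // 2, img.shape[1] // 2
    return {
        "max_pixel": float(img[cr, cc]),  # pixel value at the centroid
        "total_pixel": float(total),  # sum of all pixel values
        "deformation_area": int((img > threshold).sum()),  # pixels above threshold
    }
```

These three numbers would then form the input vector of the inversion ANN that estimates d, h, and E.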

Despite these methods, the design of tactile perception algorithms for minimally invasive surgery is still a new subject with relatively little research. Nevertheless, we believe that the clinical need makes this field highly promising.

Minimally invasive surgery has become the preferred surgical approach owing to its advantages over conventional open surgery. Tactile information has been shown to improve surgeons' performance, yet most reviews of MIS focus only on force sensors and force feedback, neglecting other tactile information. In this paper, we reviewed tactile sensors, tactile perception algorithms, and tactile perception applications for MIS. These include various tactile sensors and feedback modalities beyond force sensing and force feedback, state-of-the-art machine learning algorithms that process tactile images for tactile perception in MIS, and potential tactile perception applications in MIS, especially the detection of tissue properties. Finally, we discussed the limitations and challenges of each technical aspect.

An emerging research and development trend in the literature is the fusion of different kinds of tactile information. Using force information alone faces challenges, including low effectiveness at the algorithm level, attenuation of the force signal, and amplified disturbance forces. Therefore, some studies developed hybrid sensors that employ more than one sensing principle to measure one or multiple physical stimuli (e.g., force, slippage, and stiffness). These sensors obtain more robust measurements and cover wider working environments. With the development of tactile sensors of various modalities, novel tactile feedback systems have been reported (e.g., graphical and audio display systems). Other researchers have attempted to obtain more tactile information at the algorithm level. Inspired by computer vision, they reported machine learning algorithms that extract more than one kind of tactile information from tactile images, in which each tactile element is treated as an image pixel. The design of tactile perception algorithms for MIS is still a new subject with relatively little research. However, given the high accuracy, robustness, and real-time performance of machine learning algorithms and the clinical need, we believe this field is highly promising.

All authors researched the literature, drafted, and wrote the review article, and approved the submitted version.

This review was supported by grants from the Science and Technology Bureau of Ningbo National High-Tech Zone (Program for Development and Industrialization of Intelligent Assistant Robot), the Industrial Internet Innovation and Development Project in 2018 (Program for Industrial Internet platform Testbed for Network Collaborative Manufacturing), and the Industrial Internet Innovation and Development Project in 2020 (Program for Industrial Internet Platform Application Innovation Promotion Center).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abushagur, A. A. G., Arsad, N., Reaz, M. I., and Bakar, A. A. A. (2014). Advances in bio-tactile sensors for minimally invasive surgery using the fibre Bragg grating force sensor technique: a survey. Sensors 14, 6633–6665. doi: 10.3390/s140406633

Afshari, E., Najarian, S., and Simforoosh, N. (2010). Application of artificial tactile sensing approach in kidney-stone-removal laparoscopy. Biomed. Mater. Eng. 20, 261–267. doi: 10.3233/BME-2010-0640

Afshari, E., Najarian, S., Simforoosh, N., and Farkoush, S. H. (2011). Design and fabrication of a novel tactile sensory system applicable in artificial palpation. Minim. Invasive Ther. Allied Technol. 20, 22–29. doi: 10.3109/13645706.2010.518739

Ahmadi, R., Packirisamy, M., Dargahi, J., and Cecere, R. (2012). Discretely loaded beam-type optical fiber tactile sensor for tissue manipulation and palpation in minimally invasive robotic surgery. IEEE Sens. J. 12, 22–32. doi: 10.1109/jsen.2011.2113394

Al-Handarish, Y., Omisore, O. M., Igbe, T., Han, S., Li, H., Du, W., et al. (2020). A survey of tactile-sensing systems and their applications in biomedical engineering. Adv. Mater. Sci. Eng. 2020, 1–17. doi: 10.1155/2020/4047937

Bandari, N., Dargahi, J., and Packirisamy, M. (2020). Tactile sensors for minimally invasive surgery: a review of the state-of-the-art, applications, and perspectives. IEEE Access 8, 7682–7708. doi: 10.1109/access.2019.2962636

Baumann, I., Plinkert, P. K., Kunert, W., and Buess, G. F. (2001). Vibrotactile characteristics of different tissues in endoscopic otolaryngologic surgery ‐ in vivo and ex vivo measurements. Minim. Invasive Ther. Allied Technol. 10, 323–327. doi: 10.1080/136457001753337627

Beasley, R. A., and Howe, R. D. (2002). “Tactile tracking of arteries in robotic surgery” in 2002 IEEE International Conference on Robotics and Automation, Vols I-IV, Proceedings; May 11-15, 2002.

Bell, A. K., and Cao, C. G. L. (2007). “Effects of artificial force feedback in laparoscopic surgery training simulators” in 2007 IEEE International Conference on Systems, Man and Cybernetics, Vols 1–8; October 07-10, 2007; 564–568.

Berkelman, P. J., Whitcomb, L. L., Taylor, R. H., and Jensen, P. (2003). A miniature microsurgical instrument tip force sensor for enhanced force feedback during robot-assisted manipulation. IEEE Trans. Robot. Autom. 19, 917–922. doi: 10.1109/tra.2003.817526

Bicchi, A., Canepa, G., De Rossi, D., Iacconi, P., and Scilingo, E. P. (1996). “A sensor-based minimally invasive surgery tool for detecting tissue elastic properties” in Proceedings of IEEE International Conference on Robotics and Automation; April 22-28, 1996; Minneapolis, MN, USA: IEEE.

Bonakdar, A., and Narayanan, N. (2010). Determination of tissue properties using microfabricated piezoelectric tactile sensor during minimally invasive surgery. Sens. Rev. 30, 233–241. doi: 10.1108/02602281011051425

Callaghan, D. J., and McGrath, M. M. (2007). “A Force measurement evaluation tool for telerobotic cutting applications: development of an effective characterization platform,” in Proceedings of World Academy of Science, Engineering and Technology, Vol 25, ed. C. Ardil, 274–280.

Cao, L., Kotagiri, R., Sun, F., Li, H., Huang, W., Aye, Z. M. M., et al. (2016). “Efficient spatio-temporal tactile object recognition with randomized tiling convolutional networks in a hierarchical fusion strategy” in Thirtieth AAAI Conference on Artificial Intelligence; February 12-17, 2016; 3337–3345.

Chanthasopeephan, T., Desai, J. P., and Lau, A. C. W. (2003). Measuring forces in liver cutting: new equipment and experimental results. Ann. Biomed. Eng. 31, 1372–1382. doi: 10.1114/1.1624601

Chuang, C. H., Li, T. H., Chou, I. C., and Teng, Y. J. (2015). “Piezoelectric tactile sensor for submucosal tumor hardness detection in endoscopy” in 2015 Transducers - 2015 18th International Conference on Solid-State Sensors, Actuators and Microsystems; June 21-25, 2015.

Dargahi, J. (2002). An endoscopic and robotic tooth-like compliance and roughness tactile sensor. J. Mech. Des. 124, 576–582. doi: 10.1115/1.1471531

Dargahi, J., and Najarian, S. (2004). Human tactile perception as a standard for artificial tactile sensing—a review. Int. J. Med. Robot. 1, 23–35. doi: 10.1002/rcs.3

Dargahi, J., and Payandeh, S. (1998). “Surface texture measurement by combining signals from two sensing elements of a piezoelectric tactile sensor” in Sensor Fusion: Architectures, Algorithms, and Applications II. ed. B. V. Dasarathy (Orlando, FL, USA). 122–128.

Eklund, A., Bergh, A., and Lindahl, O. A. (1999). A catheter tactile sensor for measuring hardness of soft tissue: measurement in a silicone model and in an in vitro human prostate model. Med. Biol. Eng. Comput. 37, 618–624. doi: 10.1007/bf02513357

Eltaib, M., and Hewit, J. R. (2000). A tactile sensor for minimal access surgery applications. IFAC Proc. Vol. 33, 505–508. doi: 10.1016/S1474-6670(17)39194-2

Eltaib, M. E. H., and Hewit, J. R. (2003). Tactile sensing technology for minimal access surgery ‐ a review. Mechatronics 13, 1163–1177. doi: 10.1016/S0957-4158(03)00048-5

Fagogenis, G., Mencattelli, M., Machaidze, Z., Rosa, B., Price, K., Wu, F., et al. (2019). Autonomous robotic intracardiac catheter navigation using haptic vision. Sci. Robot. 4:eaaw1977. doi: 10.1126/scirobotics.aaw1977

Fazal, I., and Karsiti, M. N. (2009). “Needle insertion simulation forces v/s experimental forces for haptic feedback device” in 2009 IEEE Student Conference on Research and Development: SCOReD 2009, Proceedings; November 16-18, 2009.

Fischer, G. S., Akinbiyi, T., Saha, S., Zand, J., Talamini, M., Marohn, M., et al. (2006). “Ischemia and force sensing surgical instruments for augmenting available surgeon information” in 2006 1st IEEE RAS-EMBS International Conference on Biomedical Robotics and Biomechatronics, Vols 1–3; February 20-22, 2006; 989.

Guo, J., Guo, S., Wang, P., Wei, W., and Wang, Y. (2013). “A Novel type of catheter sidewall tactile sensor array for vascular interventional surgery” in 2013 ICME International Conference on Complex Medical Engineering; May 25-28, 2013.

Guo, J., Guo, S., Xiao, N., Ma, X., Yoshida, S., Tamiya, T., et al. (2012). A novel robotic catheter system with force and visual feedback for vascular interventional surgery. Int. J. Mech. Autom. 2, 15–24. doi: 10.1504/IJMA.2012.046583

Guthar, G. S., and Salisbury, J. K. (2000). “The Intuitive™ telesurgery system: overview and application” in Proceedings-IEEE International Conference on Robotics and Automation 1; April 24-28, 2000; 618–621.

Gwilliam, J. C., Mahvash, M., Vagvolgyi, B., Vacharat, A., Yuh, D. D., Okamura, A. M., et al. (2009). “Effects of haptic and graphical force feedback on teleoperated palpation” in ICRA: 2009 IEEE International Conference on Robotics and Automation, Vols 1–7; May 12-17, 2009; 3315.

He, X., Handa, J., Gehlbach, P., Taylor, R., and Iordachita, I. (2014). A Submillimetric 3-DOF force sensing instrument with integrated fiber Bragg grating for retinal microsurgery. IEEE Trans. Biomed. Eng. 61, 522–534. doi: 10.1109/tbme.2013.2283501

Hosseini, S. M., Kashani, S. M. T., Najarian, S., Panahi, F., Naeini, S. M. M., and Mojra, A. (2010). A medical tactile sensing instrument for detecting embedded objects, with specific application for breast examination. Int. J. Med. Robot. 6, 73–82. doi: 10.1002/rcs.291

Hosseini, M., Najarian, S., Motaghinasab, S., and Dargahi, J. (2006). Detection of tumours using a computational tactile sensing approach. Int. J. Med. Robot. 2, 333–340. doi: 10.1002/rcs.112

Ju, F., Wang, Y., Zhang, Z., Wang, Y., Yun, Y., Guo, H., et al. (2019). A miniature piezoelectric spiral tactile sensor for tissue hardness palpation with catheter robot in minimally invasive surgery. Smart Mater. Struct. 28:025033. doi: 10.1088/1361-665X/aafc8d

Ju, F., Yun, Y., Zhang, Z., Wang, Y., Wang, Y., Zhang, L., et al. (2018). A variable-impedance piezoelectric tactile sensor with tunable sensing performance for tissue hardness sensing in robotic tumor palpation. Smart Mater. Struct. 27:115039. doi: 10.1088/1361-665X/aae54f

Kalantari, M., Ramezanifard, M., Ahmadi, R., Dargahi, J., and Koevecses, J. (2011). A piezoresistive tactile sensor for tissue characterization during catheter-based cardiac surgery. Int. J. Med. Robot. 7, 431–440. doi: 10.1002/rcs.413

Kim, U., Kim, Y. B., So, J., Seok, D. -Y., and Choi, H. R. (2018). Sensorized surgical forceps for robotic-assisted minimally invasive surgery. IEEE Trans. Ind. Electron. 65, 9604–9613. doi: 10.1109/tie.2018.2821626

Kim, U., Lee, D. -H., Yoon, W. J., Hannaford, B., and Choi, H. R. (2015). Force sensor integrated surgical forceps for minimally invasive robotic surgery. IEEE Trans. Robot. 31, 1214–1224. doi: 10.1109/tro.2015.2473515

Kitagawa, M., Dokko, D., Okamura, A. M., and Yuh, D. D. (2005). Effect of sensory substitution on suture-manipulation forces for robotic surgical systems. J. Thorac. Cardiovasc. Surg. 129, 151–158. doi: 10.1016/j.jtcvs.2004.05.029

Konstantinova, J., Jiang, A., Althoefer, K., Dasgupta, P., and Nanayakkara, T. (2014). Implementation of tactile sensing for palpation in robot-assisted minimally invasive surgery: a review. IEEE Sens. J. 14, 2490–2501. doi: 10.1109/jsen.2014.2325794

Lederman, S. J., and Klatzky, R. L. (1999). Sensing and displaying spatially distributed fingertip forces in haptic interfaces for teleoperator and virtual environment systems. Presence Teleoperat. Virt. Environ. 8, 86–103. doi: 10.1162/105474699566062

Lee, D. -H., Kim, U., Gulrez, T., Yoon, W. J., Hannaford, B., and Choi, H. R. (2016). A laparoscopic grasping tool with force sensing capability. IEEE-ASME Trans. Mech. 21, 130–141. doi: 10.1109/tmech.2015.2442591

Lee, J. -H., and Won, C. -H. (2013). The tactile sensation imaging system for embedded lesion characterization. IEEE J. Biomed. Health Inform. 17, 452–458. doi: 10.1109/jbhi.2013.2245142

Li, K., Pan, B., Zhan, J., Gao, W., Fu, Y., and Wang, S. (2015). Design and performance evaluation of a 3-axis force sensor for MIS palpation. Sens. Rev. 35, 219–228. doi: 10.1108/sr-04-2014-632

Li, T., Shi, C., and Ren, H. (2018). A high-sensitivity tactile sensor array based on fiber Bragg grating sensing for tissue palpation in minimally invasive surgery. IEEE/ASME Trans. Mechatron. 23, 2306–2315. doi: 10.1109/tmech.2018.2856897

Liu, H., Greco, J., Song, X., Bimbo, J., Seneviratne, L., and Althoefer, K. (2012). “Tactile image based contact shape recognition using neural network” in 2012 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI); September 13-15, 2012; 138–143.

Liu, H., Puangmali, P., Zbyszewski, D., Elhage, O., Dasgupta, P., Dai, J. S., et al. (2010). An indentation depth-force sensing wheeled probe for abnormality identification during minimally invasive surgery. Proc. Inst. Mech. Eng. H 224, 751–763. doi: 10.1243/09544119jeim682

Liu, H., Wu, Y., Sun, F., and Guo, D. (2017). Recent progress on tactile object recognition. Int. J. Adv. Robot. Syst. 14, 1–12. doi: 10.1177/1729881417717056

Luo, S., Bimbo, J., Dahiya, R., and Liu, H. (2017). Robotic tactile perception of object properties: a review. Mechatronics 48, 54–67. doi: 10.1016/j.mechatronics.2017.11.002

Mahvash, M., Gwilliam, J., Agarwal, R., Vagvolgyi, B., Su, L. -M., Yuh, D. D., et al. (2008). “Force-feedback surgical teleoperator: controller design and palpation experiments” in Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems 2008, Proceedings. eds. J. Weisenberger, A. Okamura and K. MacLean.

Marohn, M. R., and Hanly, E. J. (2004). Twenty-first century surgery using twenty-first century technology: surgical robotics. Curr. Surg. 61, 466–473. doi: 10.1016/j.cursur.2004.03.009

Mohareri, O., Schneider, C., and Salcudean, S. (2014). “Bimanual telerobotic surgery with asymmetric force feedback: A da Vinci® surgical system implementation” in 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems; September 14-18, 2014; 4272–4277.

Morimoto, A. K., Foral, R. D., Kuhlman, J. L., Zucker, K. A., Curet, M. J., Bocklage, T., et al. (1997). Force sensor for laparoscopic Babcock. Stud. Health Technol. Inform. 39, 354–361.

Munawar, A., and Fischer, G. (2016). “Towards a haptic feedback framework for multi-DOF robotic laparoscopic surgery platforms” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems; October 9-14, 2016.

Najarian, S., Dargahi, J., and Zheng, X. Z. (2006). A novel method in measuring the stiffness of sensed objects with applications for biomedical robotic systems. Int. J. Med. Robot. 2, 84–90. doi: 10.1002/rcs.75

Narayanan, N. B., Bonakdar, A., Dargahi, J., Packirisamy, M., and Bhat, R. (2006). Design and analysis of a micromachined piezoelectric sensor for measuring the viscoelastic properties of tissues in minimally invasive surgery. Smart Mater. Struct. 15, 1684–1690. doi: 10.1088/0964-1726/15/6/021

Okamura, A. M. (2009). Haptic feedback in robot-assisted minimally invasive surgery. Curr. Opin. Urol. 19, 102–107. doi: 10.1097/MOU.0b013e32831a478c

Pacchierotti, C., Prattichizzo, D., and Kuchenbecker, K. J. (2016). Cutaneous feedback of fingertip deformation and vibration for palpation in robotic surgery. IEEE Trans. Biomed. Eng. 63, 278–287. doi: 10.1109/tbme.2015.2455932

Park, M., Bok, B. -G., Ahn, J. -H., and Kim, M. -S. (2018). Recent advances in tactile sensing technology. Micromachines 9:321. doi: 10.3390/mi9070321

Perri, M. T., Trejos, A. L., Naish, M. D., Patel, R. V., and Malthaner, R. A. (2010). New tactile sensing system for minimally invasive surgical tumour localization. Int. J. Med. Robot. 6, 211–220. doi: 10.1002/rcs.308

Pitakwatchara, P., Warisawa, S. I., and Mitsuishi, M. (2006). “Analysis of the surgery task for the force feedback amplification in the minimally invasive surgical system” in Conference proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society; August 30-September 3, 2006; 829–832.

Prasad, S. K., Kitagawa, M., Fischer, G. S., Zand, J., Talamini, M. A., Taylor, R. H., et al. (2003). “A modular 2-DOF force-sensing instrument for laparoscopic surgery” in Medical Image Computing and Computer-Assisted Intervention (MICCAI 2003), Part 1. eds. R. E. Ellis and T. M. Peters, 279–286.

Puangmali, P., Althoefer, K., Seneviratne, L. D., Murphy, D., and Dasgupta, P. (2008). State-of-the-art in force and tactile sensing for minimally invasive surgery. IEEE Sens. J. 8, 371–381. doi: 10.1109/jsen.2008.917481

Reiley, C. E., Akinbiyi, T., Burschka, D., Chang, D. C., Okamura, A. M., and Yuh, D. D. (2008). Effects of visual force feedback on robot-assisted surgical task performance. J. Thorac. Cardiovasc. Surg. 135, 196–202. doi: 10.1016/j.jtcvs.2007.08.043

Richards, C., Rosen, J., Hannaford, B., Pellegrini, C., and Sinanan, M. (2000). Skills evaluation in minimally invasive surgery using force/torque signatures. Surg. Endosc. 14, 791–798. doi: 10.1007/s004640000230

Roesthuis, R. J., Janssen, S., and Misra, S. (2013). “On using an array of fiber Bragg grating sensors for closed-loop control of flexible minimally invasive surgical instruments” in 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems. ed. N. Amato. November 3-7, 2013; 2545–2551.

Rosen, J., Solazzo, M., Hannaford, B., and Sinanan, M. (2001). “Objective laparoscopic skills assessments of surgical residents using hidden Markov models based on haptic information and tool/tissue interactions” in Medicine meets virtual reality 2001: Outer space, inner space, virtual space. eds. J. D. Westwood, H. M. Hoffman, G. T. Mogel, D. Stredney, and R. A. Robb (Amsterdam: IOS Press). 417–423.

Saccomandi, P., Schena, E., Oddo, C. M., Zollo, L., Silvestri, S., and Guglielmelli, E. (2014). Microfabricated tactile sensors for biomedical applications: a review. Biosensors 4, 422–448. doi: 10.3390/bios4040422

Sadeghi-Goughari, M., Mojra, A., and Sadeghi, S. (2016). Parameter estimation of brain tumors using intraoperative thermal imaging based on artificial tactile sensing in conjunction with artificial neural network. J. Phys. D. Appl. Phys. 49:075404. doi: 10.1088/0022-3727/49/7/075404

Schostek, S., Ho, C. -N., Kalanovic, D., and Schurr, M. O. (2006). Artificial tactile sensing in minimally invasive surgery: a new technical approach. Minim. Invasive Ther. Allied Technol. 15, 296–304. doi: 10.1080/13645700600836299

Schostek, S., Schurr, M. O., and Buess, G. F. (2009). Review on aspects of artificial tactile feedback in laparoscopic surgery. Med. Eng. Phys. 31, 887–898. doi: 10.1016/j.medengphy.2009.06.003

Song, H. -S., Kim, H., Jeong, J., and Lee, J. -J. (2011). “Development of FBG sensor system for force-feedback in minimally invasive robotic surgery” in 2011 Fifth International Conference on Sensing Technology; November 28-December 1, 2011.

Song, H. -S., Kim, K. -Y., and Lee, J. -J. (2009). “Development of the dexterous manipulator and the force sensor for minimally invasive surgery” in Proceedings of the Fourth International Conference on Autonomous Robots and Agents; February 10-12, 2009.

Talasaz, A., and Patel, R. V. (2013). Integration of force reflection with tactile sensing for minimally invasive robotics-assisted tumor localization. IEEE Trans. Haptics 6, 217–228. doi: 10.1109/ToH.2012.64

Talasaz, A., Trejos, A. L., and Patel, R. V. (2012). “Effect of force feedback on performance of robotics-assisted suturing” in 2012 4th IEEE RAS and EMBS International Conference on Biomedical Robotics and Biomechatronics. eds. J. P. Desai, L. P. S. Jay and L. Zollo. June 24-27, 2012; 823–828.

Talasaz, A., Trejos, A. L., and Patel, R. V. (2017). The role of direct and visual force feedback in suturing using a 7-DOF dual-arm teleoperated system. IEEE Trans. Haptics 10, 276–287. doi: 10.1109/toh.2016.2616874

Tholey, G., Desai, J. P., and Castellanos, A. E. (2005). Force feedback plays a significant role in minimally invasive surgery: results and analysis. Ann. Surg. 241, 102–109. doi: 10.1097/01.sla.0000149301.60553.1e

Tiwana, M. I., Redmond, S. J., and Lovell, N. H. (2012). A review of tactile sensing technologies with applications in biomedical engineering. Sens. Actuat. Phys. 179, 17–31. doi: 10.1016/j.sna.2012.02.051

Trejos, A. L., Jayender, J., Perri, M. T., Naish, M. D., Patel, R. V., and Malthaner, R. A. (2009). Robot-assisted tactile sensing for minimally invasive tumor localization. Int. J. Robot. Res. 28, 1118–1133. doi: 10.1177/0278364909101136

Uranues, S., Maechler, H., Bergmann, P., Huber, S., Hoebarth, G., Pfeifer, J., et al. (2002). Early experience with telemanipulative abdominal and cardiac surgery with the Zeus™ robotic system. Eur. Surg. 34, 190–193. doi: 10.1046/j.1563-2563.2002.t01-1-02049.x

Valdastri, P., Harada, K., Menciassi, A., Beccai, L., Stefanini, C., Fujie, M., et al. (2006). Integration of a miniaturised triaxial force sensor in a minimally invasive surgical tool. IEEE Trans. Biomed. Eng. 53, 2397–2400. doi: 10.1109/tbme.2006.883618

Verdura, J., Carroll, M. E., Beane, R., Ek, S., and Callery, M. P. (2000). Systems methods and instruments for minimally invasive surgery. United States patent application 6165184.

Wagner, C. R., and Howe, R. D. (2007). Force feedback benefit depends on experience in multiple degree of freedom robotic surgery task. IEEE Trans. Robot. 23, 1235–1240. doi: 10.1109/tro.2007.904891

Wang, X., Zhang, H., Dong, L., Han, X., Du, W., Zhai, J., et al. (2016). Self-powered high-resolution and pressure-sensitive triboelectric sensor matrix for real-time tactile mapping. Adv. Mater. 28, 2896–2903. doi: 10.1002/adma.201503407

Westebring-van der Putten, E. P., Goossens, R. H. M., Jakimowicz, J. J., and Dankelman, J. (2008). Haptics in minimally invasive surgery: a review. Minim. Invasive Ther. Allied Technol. 17, 3–16. doi: 10.1080/13645700701820242

Xie, H., Liu, H., Luo, S., Seneviratne, L. D., and Althoefer, K. (2013). “Fiber optics tactile array probe for tissue palpation during minimally invasive surgery” in 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems. ed. N. Amato. November 3-7, 2013; 2539–2544.

Yip, M. C., Yuen, S. G., and Howe, R. D. (2010). A robust uniaxial force sensor for minimally invasive surgery. IEEE Trans. Biomed. Eng. 57, 1008–1011. doi: 10.1109/tbme.2009.2039570

Yuan, W., Zhu, C., Owens, A., Srinivasan, M., and Adelson, E. (2017). “Shape-independent hardness estimation using deep learning and a GelSight tactile sensor” in 2017 IEEE International Conference on Robotics and Automation (ICRA); May 29-June 3, 2017; Singapore, Singapore: IEEE.

Zapata-Impata, B. S., Gil, P., and Torres, F. (2019). Learning spatio temporal tactile features with a ConvLSTM for the direction of slip detection. Sensors 19:523. doi: 10.3390/s19030523

Zemiti, N., Ortmaier, T., Vitrani, M. A., and Morel, G. (2006). “A force controlled laparoscopic surgical robot without distal force sensing” in Experimental Robotics IX. eds. M. H. Ang and O. Khatib (Berlin, Heidelberg: Springer), 153.

Keywords: tactile sensors, tactile perception, tactile images, minimally invasive surgery, robotic surgery, artificial intelligence

Citation: Huang C, Wang Q, Zhao M, Chen C, Pan S and Yuan M (2020) Tactile Perception Technologies and Their Applications in Minimally Invasive Surgery: A Review. Front. Physiol. 11:611596. doi: 10.3389/fphys.2020.611596

Received: 29 September 2020; Accepted: 16 November 2020;

Published: 23 December 2020.

Edited by:

Xin Gao, King Abdullah University of Science and Technology, Saudi Arabia

Reviewed by:

Yan Wang, Jilin University, China

Copyright © 2020 Huang, Wang, Zhao, Chen, Pan and Yuan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chao Huang, chuang@ict.ac.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
