- ¹School of Artificial Intelligence, Chongqing University of Education, Chongqing, China

- ²School of Pharmacy (School of Traditional Chinese Medicine), Chongqing Medical and Pharmaceutical College, Chongqing, China

Ultrasound imaging has a history of several decades. Because it is non-invasive and low-cost, it has been widely adopted in medicine, and it has seen many significant breakthroughs. Nevertheless, it still has drawbacks, and novel image reconstruction and image analysis algorithms have been proposed to address them. Although these solutions are effective to some extent, many introduce side effects of their own, such as high computational complexity in beamforming. At the same time, operating medical ultrasound equipment demands considerable skill, which makes it difficult for inexperienced beginners to use. As artificial intelligence technology advances, researchers have begun to apply deep learning to these challenges, for example to reduce the computational complexity of adaptive beamforming and to guide novices during image acquisition. In this survey, we explore the application of deep learning in medical ultrasound imaging, from image reconstruction to clinical diagnosis.

1 Introduction

1.1 Brief introduction to medical imaging

Medical imaging relies on various physical phenomena to visualize the internal and external tissues of the human body through non-invasive or invasive techniques. Key modalities such as computed tomography (CT), magnetic resonance imaging (MRI), X-ray radiography, ultrasound, and digital pathology generate essential healthcare data, constituting around 90% of medical information [1]. Consequently, medical imaging plays a vital role in clinical assessment and healthcare interventions. Deep learning, the cornerstone technology of the ongoing artificial intelligence (AI) revolution, shows significant potential in medical imaging, with applications ranging from image reconstruction to comprehensive image analysis [2–8]. Its integration with medical imaging has spurred advances that could reshape clinical practice and healthcare delivery. Empirical studies have shown that deep learning algorithms can perform comparably to medical professionals in diagnosing various conditions from imaging data [9], and many clinical applications of deep learning have emerged [10–16]. Consequently, there is a discernible trend towards certifying such software for clinical use [17].

1.2 Literature reviews of deep learning in ultrasound beamforming

Medical ultrasound has now been developing for 80–90 years. It began as an investigative technology around the end of World War II [18] and improved with advances in electronics. Ultrasound continues to be refined towards better resolution, more portable devices, and more automated systems that can even support remote diagnostics. The most recent advance in medical ultrasound is the incorporation of AI to assist diagnosis.

AI has a long history of application in medical ultrasound [19–25], and the explosion of deep learning has made its use in medicine even more widespread. A medical ultrasound system mainly comprises image reconstruction and image analysis, both of which have seen extensive applications of deep learning [26]. Deep learning has substantially changed ultrasound beamforming, improving both image quality and computational efficiency. Ultrasound beamforming is the process of combining signals from multiple transducer elements to form a focused image. Traditional methods rely heavily on user intervention and predefined parameters, which can limit image quality and accuracy. Deep learning, in contrast, uses neural network models that learn and generalize from examples. In ultrasound beamforming, such models can learn to extract relevant features from raw ultrasound data and form a high-quality image without explicit instructions or predefined parameters, which makes the process relatively autonomous and adaptable. In the training phase, a model is trained on a large amount of paired data, typically raw radio-frequency (RF) data as inputs and the desired images as outputs, and learns to predict the outputs from the inputs. Once trained, the model can map new input data to the corresponding output images. This prediction step is usually faster than traditional beamforming because it bypasses complex signal processing. Deep learning models such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have been used successfully in ultrasound beamforming.
They have shown promising results in enhancing image resolution, reducing speckle noise, improving contrast, and even in advanced tasks such as tissue characterization and acoustic aberration correction. Applications of deep learning in beamforming began to appear in publications around 2017 [27,28], and interest in the area has grown ever since. In plane wave imaging, transmitting a single plane wave achieves a very high frame rate but yields poor image quality. Coherent plane wave compounding (CPWC) [29] was proposed to address this, but it requires transmitting plane waves at multiple angles, which lowers the frame rate. Gasse et al. [27] propose a CNN-based method that produces high-quality images from only three plane wave transmissions. Luchies and Byram [28,30] use deep neural networks (DNNs) to suppress off-axis scattering, operating in the frequency domain via the short-time Fourier transform. Other studies bypass beamforming altogether [31–36], using deep learning to reconstruct images or perform segmentation directly from raw RF data. Deep learning has also been used to reduce artifacts in multi-line acquisition (MLA) and multi-line transmission (MLT) [37–39]. Luijten et al. [40,41] investigate how deep learning can be applied to adaptive beamforming, addressing its computational challenges while aiming to produce better ultrasound images. Wiacek et al. [42,43] use DNNs to estimate the normalized cross-correlation as a function of spatial lag, specifically for coherence-based beamforming such as short-lag spatial coherence (SLSC) beamforming [44].
Reconstructing images from sub-sampled RF data can increase the frame rate, but at the cost of image quality; several researchers [31,45–47] propose deep learning to address this trade-off. Much of the research focuses on deep learning for plane wave imaging [48–56], while other studies [57–59] discuss training schemes. In addition, the ultrasound community has organized a challenge to encourage deep learning research in this area [60,61].

1.3 Overview of deep learning in clinical application of ultrasound

Deep learning plays a significant role in clinical ultrasound: it improves the efficiency and accuracy of diagnosis, reduces human error, and paves the way for more sophisticated applications. Deep learning models can be trained to detect and segment lesions in ultrasound images automatically, which reduces radiologists' workload and increases accuracy, since human interpretation can be subjective and variable. They can also be trained to classify diseases from ultrasound images, to build more detailed 3D and 4D representations from 2D ultrasound images, giving a more comprehensive picture of the patient's condition, and to predict clinical outcomes or disease progression from ultrasound imaging data. As Refs. [19–25] show, AI was applied to medical ultrasound analysis before it was applied to beamforming. Medical ultrasound analysis mainly includes segmentation, classification, registration, and localization [62,63], and its integration with deep learning has the potential to reshape clinical practice. Breast cancer is a serious threat to health [64], and deep learning applied to breast ultrasound can effectively assist radiologists and clinicians in diagnosis. Becker et al. [65] use deep learning software (DLS) to classify breast cancer in ultrasound images. Xu et al. [66] use CNNs to segment breast ultrasound images into functional tissues, which aids tumor localization, breast density measurement, and treatment response assessment, all crucial for breast cancer diagnosis. Qian et al. [67] describe a deep learning system that predicts Breast Imaging Reporting and Data System (BI-RADS) scores from multimodal breast ultrasound images. Chen et al. [68] introduce a deep learning model for breast cancer diagnosis from contrast-enhanced ultrasound (CEUS) videos. Jabeen et al. [69] present a framework for classifying breast cancer in ultrasound images that combines deep learning with optimized feature selection and fusion to improve classification accuracy. Raza et al. [70] propose DeepBreastCancerNet, a deep learning framework for detecting and classifying breast cancer in ultrasound images. Deep learning is also widely applied in cardiac ultrasound. Degel et al. [71] segment the left atrium in 3D echocardiography images using CNNs. Leclerc et al. [72] evaluate encoder-decoder deep CNNs for assessing 2D echocardiographic images and introduce the Cardiac Acquisitions for Multi-structure Ultrasound Segmentation (CAMUS) dataset, the largest publicly available and fully annotated dataset for echocardiographic assessment, with images from 500 patients. Ghorbani et al. [73] investigate deep learning models, particularly CNNs, for interpreting echocardiograms. Narang et al. [74] assess how well a deep learning algorithm helps novice operators obtain diagnostic-quality transthoracic echocardiograms. Ultrasonography is also a primary diagnostic method for thyroid diseases [75], and several studies have explored deep learning assistance in their diagnosis [76–81]. There are also many applications of deep learning in prostate cancer detection [82–84] and prostate segmentation [85–91]. In fetal ultrasound imaging, deep learning plays an increasingly important role [92–97], and deep learning research in ultrasound brain imaging continues to emerge [98–103].

1.4 Other review articles on deep learning in ultrasound imaging

Several review articles already address deep learning in medical ultrasound imaging. van Sloun et al. [104] present a comprehensive examination of the potential and applications of deep learning strategies in ultrasound systems, from the front end to more complex applications. In another article [105], they focus specifically on deep learning in beamforming: they introduce the role deep learning can play there, summarize existing achievements, and look ahead to new opportunities. Ref. [106] discusses the shortcomings of traditional signal processing methods in ultrasound imaging and advocates blending model-based signal processing with machine learning, arguing that probability theory can seamlessly bridge conventional strategies and modern machine/deep learning approaches. However, these articles mainly focus on the principles of ultrasound imaging and do not discuss clinical applications. Other reviews take the perspective of image analysis and clinical practice. References [62,63] discuss deep learning in medical ultrasound analysis from multiple perspectives. Afrin et al. [107] discuss deep learning in different ultrasound methods for breast cancer management, from diagnosis to prognosis. Reference [108] presents an in-depth analysis of AI in echocardiography interpretation. Khachnaoui et al. [109] discuss the role of ultrasound imaging in diagnosing thyroid lesions. In this review, we introduce the application of deep learning in medical ultrasound from image reconstruction to clinical applications. Although this scope is broad, our aim is to provide a comprehensive perspective on deep learning in medical ultrasound and to describe its potential role in ultrasound imaging from a system perspective.

2 Overview of medical ultrasound system

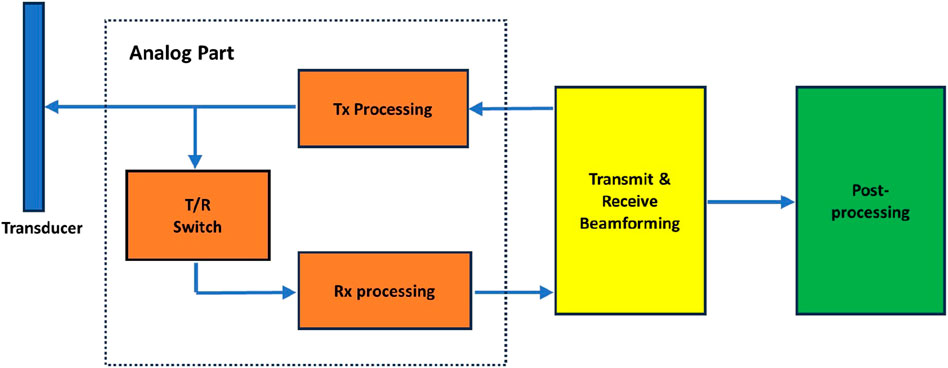

A medical ultrasound system consists of various interconnected modules, each of which is further divided into many smaller components. Figure 1 shows a simplified block diagram of a medical ultrasound system. The complete signal processing pipeline is relatively complex, and each module can be subdivided in much more detail; readers interested in a deeper exploration can refer to [110]. Here we give only a high-level overview and cover the modules of interest in more depth later. The dashed box in Figure 1 represents the analog signal processing module, which is outside the scope of this survey. We focus on transmit and receive beamforming and also introduce post-processing. Transmit and receive beamforming are two distinct parts; although Figure 1 groups both under beamforming, we address them separately below. The post-processing category used here is deliberately broad: after beamforming, a series of intermediate processing steps precedes the final post-processing, but in this context we refer to all of these steps collectively as post-processing.

2.1 Transmit processing

In ultrasound systems, transmit beamforming is a technique that involves controlling the timing of excitation of multiple transducer elements to produce a directional beam or focus the beam within a specific area. By precisely adjusting the phase and amplitude of each element, an ultrasound beam with a specific direction and focal depth can be formed [111].

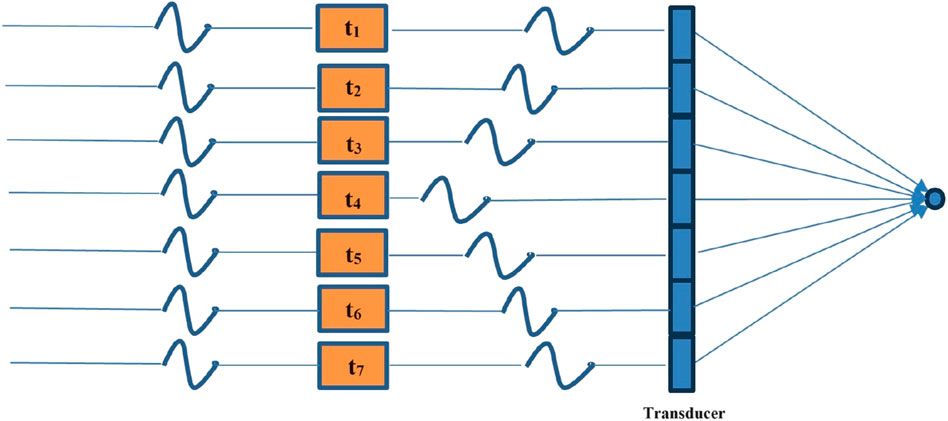

As Figure 2 illustrates, the distance from each transducer element to the focal point is different. To make the beam converge at that point, the emission time of each element must be controlled so that all wavefronts arrive there simultaneously. Note that in conventional focused transmission only a subset of the elements is active. In plane wave imaging, by contrast, all elements transmit, and the direction of the plane wave is controlled by adjusting each element's transmission delay.

Figure 2. Schematic of transmit beamforming. By controlling the emission time of each element on the transducer, the waves emitted by each element can ultimately be focused on one point.
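The delay rule described above can be sketched in a few lines. The Python example below is an illustration under simple assumptions (a linear array lying along x at depth 0, a constant sound speed of 1540 m/s, and hypothetical array dimensions): it computes per-element firing delays for a focused transmit and for a steered plane wave.

```python
import numpy as np

SOUND_SPEED = 1540.0  # m/s, a conventional soft-tissue value (assumption)

def focused_tx_delays(x_elem, focus_x, focus_z, c=SOUND_SPEED):
    """Firing delays (s) that focus a transmit beam at (focus_x, focus_z).

    Elements farther from the focus fire first, so all wavefronts reach the
    focal point at the same time.
    """
    dist = np.hypot(x_elem - focus_x, focus_z)   # element-to-focus path length (m)
    return (dist.max() - dist) / c              # earliest-firing element has delay 0

def plane_wave_tx_delays(x_elem, theta, c=SOUND_SPEED):
    """Firing delays (s) that steer a plane wave by angle theta (rad)."""
    d = x_elem * np.sin(theta) / c
    return d - d.min()                          # shift so all delays are non-negative

# Hypothetical 64-element linear array with 0.3 mm pitch, centred at x = 0.
x = (np.arange(64) - 31.5) * 0.3e-3
tau_focus = focused_tx_delays(x, focus_x=0.0, focus_z=30e-3)
tau_pw = plane_wave_tx_delays(x, theta=np.deg2rad(10.0))
```

Because `focused_tx_delays` shifts the delays so that the earliest-firing element starts at zero, adding each element's travel time to the focus gives the same arrival time for every element, which is the focusing condition illustrated in Figure 2. For the plane wave, the delays grow linearly across the aperture, tilting the wavefront.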

2.2 Receive processing

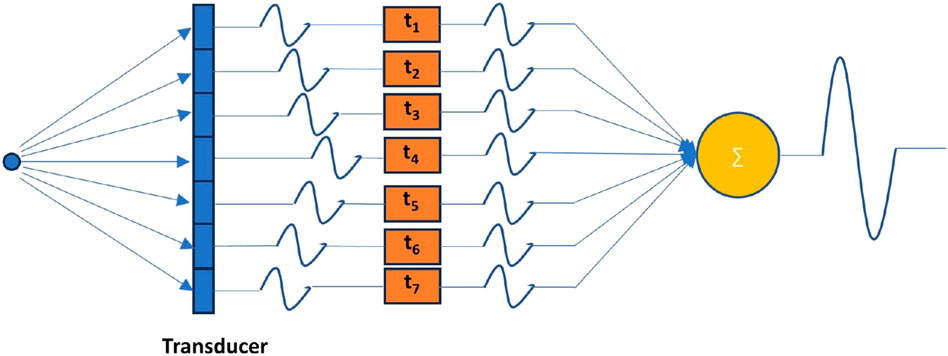

Receive beamforming is a crucial signal processing technique used in ultrasound imaging to construct a high-quality image from the echoes returning from scanned tissues or organs. When an ultrasound probe emits high-frequency sound waves, they travel through the body, are partially reflected at tissue boundaries and scatterers, and return to the probe. The reflected echoes are captured by multiple transducer elements arranged in an array on the probe, as illustrated schematically in Figure 3.

Figure 3. Schematic of receive beamforming. In order to align the echo received by each transducer element that is reflected from a certain point, it is necessary to properly delay the signal received by each element.

Receive beamforming combines the signals received by these elements to construct a coherent, high-resolution representation of the scanned region. The most fundamental technique is delay-and-sum (DAS) [112], in which the signals received by different elements are delayed relative to one another to compensate for their different times of flight from the reflecting structure. They are then summed, reinforcing the signal from a specific direction or focal point while attenuating signals from other directions. Whereas the transmitted beam is focused at a single depth, receive beamforming allows dynamic focusing: the delays are continuously updated as echoes return from increasing depths, effectively focusing the beam at every depth in real time. Apodization weights the received signals before summation, which lowers the side lobes and improves contrast at the cost of a somewhat wider main lobe. Advanced techniques such as Minimum Variance (MV) [113] beamforming further improve image quality by adapting the weights to the signal environment, reducing the impact of off-axis scattering and noise. The result of receive beamforming is a narrow, well-defined beam that can accurately locate and display internal structures of the body, providing detailed images for diagnosis. Advances in digital signal processing and hardware have made beamforming techniques increasingly sophisticated and effective.
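To make DAS with dynamic receive focusing and apodization concrete, here is a minimal NumPy sketch. It assumes a single 0° plane-wave transmit, a constant sound speed, linear interpolation between samples, and a Hann receive apodization with a fixed f-number; the array geometry, pulse model, and all parameter values are illustrative choices, not taken from [112] or from any particular scanner.

```python
import numpy as np

# Assumed acquisition parameters (illustrative, not from a specific system).
c, fs, f0 = 1540.0, 40e6, 5e6            # sound speed (m/s), sampling rate (Hz), centre frequency (Hz)
n_elem, pitch = 64, 0.3e-3
x_elem = (np.arange(n_elem) - (n_elem - 1) / 2) * pitch

def pulse(t):
    """Gaussian-windowed sinusoid standing in for the received pulse."""
    return np.exp(-(t * f0 / 0.8) ** 2) * np.cos(2 * np.pi * f0 * t)

def das_image(rf, xs, zs, f_number=1.5):
    """DAS image for a single 0-degree plane-wave transmit.

    rf: channel data, shape (n_elem, n_samples). Delays are recomputed for every
    pixel (dynamic receive focusing), and a Hann apodization whose width grows
    with depth (fixed f-number) is applied before summation.
    """
    X, Z = np.meshgrid(xs, zs)                              # pixel grid, shape (nz, nx)
    dx = x_elem - X[..., None]                              # element-minus-pixel lateral offsets
    tof = (Z[..., None] + np.hypot(dx, Z[..., None])) / c   # transmit depth + receive path
    idx = tof * fs                                          # fractional sample index
    i0 = np.clip(np.floor(idx).astype(int), 0, rf.shape[1] - 2)
    frac = idx - i0
    ch = np.arange(n_elem)
    s = rf[ch, i0] * (1 - frac) + rf[ch, i0 + 1] * frac     # interpolated, delay-aligned samples
    r = np.abs(dx) / (Z[..., None] / (2 * f_number))        # position within the active aperture
    apod = np.where(r <= 1, 0.5 * (1 + np.cos(np.pi * np.minimum(r, 1))), 0.0)
    return np.sum(apod * s, axis=-1)                        # weighted sum over elements

# Simulated channel data for one point scatterer.
t_axis = np.arange(2048) / fs
x_s, z_s = 0.5e-3, 30e-3
t_echo = (z_s + np.hypot(x_elem - x_s, z_s)) / c
rf = pulse(t_axis[None, :] - t_echo[:, None])

xs = np.arange(-2e-3, 2.0001e-3, 0.1e-3)
zs = np.arange(28e-3, 32.0001e-3, 0.05e-3)
img = das_image(rf, xs, zs)
iz, ix = np.unravel_index(np.argmax(np.abs(img)), img.shape)
```

Recomputing `tof` for every pixel is what realizes dynamic receive focusing, and the depth-dependent aperture in `apod` keeps the f-number constant. On the simulated point scatterer, the strongest DAS output lies within one grid step of the scatterer position.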

2.3 Post processing

Ultrasound imaging can be roughly divided into pre-processing and post-processing [114]. Beamforming, a key part of pre-processing, strongly influences image quality, but post-processing is also indispensable. Post-processing is the research field concerned with the steps applied after the channel data have been mapped to the image domain by beamforming. These include further image processing to improve B-mode image quality, for example contrast enhancement, resolution improvement, and despeckling, as well as spatiotemporal processing to suppress tissue clutter and estimate motion. For 2D or 3D ultrasound data, post-processing is crucial for automatic analysis and quantitative measurements [115]; for instance, recovering quantitative volume parameters enables objective, reproducible, and operator-independent diagnoses.
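One common chain of intermediate steps between beamforming and display is envelope detection followed by log compression. The sketch below shows that chain under the assumption that beamformed RF lines are stored with axial samples along the first axis; the analytic signal is built with NumPy's FFT in the same way as `scipy.signal.hilbert`, and the 60 dB dynamic range is an arbitrary illustrative choice.

```python
import numpy as np

def analytic_signal(x, axis=0):
    """FFT-based analytic signal (same construction as scipy.signal.hilbert)."""
    n = x.shape[axis]
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    shape = [1] * x.ndim
    shape[axis] = n
    return np.fft.ifft(np.fft.fft(x, axis=axis) * h.reshape(shape), axis=axis)

def to_bmode(rf_lines, dynamic_range_db=60.0):
    """Beamformed RF lines (axial samples along axis 0) -> log-compressed B-mode in dB."""
    env = np.abs(analytic_signal(rf_lines, axis=0))   # envelope detection
    env = env / env.max()                             # brightest pixel maps to 0 dB
    db = 20 * np.log10(np.maximum(env, 1e-12))        # log compression
    return np.clip(db, -dynamic_range_db, 0.0)        # limit the displayed dynamic range

# Two synthetic RF lines: the echo in the second line is 10x weaker (-20 dB).
fs, f0 = 40e6, 5e6
t = np.arange(1024) / fs
echo = np.exp(-((t - 10e-6) * f0 / 0.8) ** 2) * np.cos(2 * np.pi * f0 * (t - 10e-6))
bmode = to_bmode(np.stack([echo, 0.1 * echo], axis=1))
```

Further post-processing steps such as despeckling or clutter filtering would operate on an image like `bmode` (or on the envelope before compression).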

Medical ultrasound image analysis extracts diagnostic information from ultrasound images of internal body structures such as blood vessels, joints, muscles, tendons, and internal organs. These images can be used to measure characteristics such as distances and velocities. Medical ultrasound image analysis has extensive applications in many medical fields, including fetal, cardiac, transrectal, and intravascular examinations.

Common tasks in medical ultrasound image analysis include segmentation and classification. Segmentation separates different organs and structures in the image, particularly regions of interest, using edge detection, region growing, and thresholding, as well as more advanced techniques such as cascade classifiers, random forests, and deep learning. Classification is another key part of image analysis: it labels images as normal or abnormal based on previously extracted features, or goes further and distinguishes specific diseases. Common classification methods include neural networks, K-nearest neighbors (K-NN), decision trees, and support vector machines (SVMs). In recent years, deep learning classification models such as CNNs have been widely adopted in medical image analysis because of their strong performance and accuracy.

3 Deep learning in medical ultrasound imaging

We discuss the application of deep learning in medical ultrasound imaging from several perspectives: first, how deep learning improves different beamforming techniques; then its clinical applications; and then its use in portable ultrasound devices and training schemes. Finally, we briefly introduce CNNs and transformers.

3.1 Image reconstruction

3.1.1 Bypass beamforming

Beamforming plays a crucial role in image quality. The DAS algorithm is a classic beamforming technique widely used in ultrasound imaging systems. Despite its simplicity and ease of implementation, it has limitations. DAS beamforming produces relatively high side lobes and grating lobes, unwanted lobes in the beam pattern that pick up echoes from outside the target region and thereby reduce image contrast and resolution. A common way to suppress side lobes is apodization, that is, weighting the element signals with a window function.
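The effect of apodization on side lobes can be checked numerically. This sketch compares the far-field array factor of a hypothetical 64-element array with half-wavelength pitch under uniform weights (plain DAS) and a Hann window; it is a simplified narrowband, one-way model, not a full pulse-echo simulation.

```python
import numpy as np

def beam_pattern_db(weights, u):
    """Normalised one-way array factor (dB) of a linear array with half-wavelength pitch.

    u = sin(steering angle); with pitch = lambda/2 the phase step between
    neighbouring elements is pi * u, and no grating lobes appear for |u| <= 1.
    """
    n = np.arange(len(weights))
    af = np.abs(np.exp(1j * np.pi * np.outer(u, n)) @ weights)
    return 20 * np.log10(af / af.max() + 1e-15)

def peak_sidelobe_db(pattern):
    """Level of the highest local maximum other than the main-lobe peak."""
    mid = pattern[1:-1]
    peaks = mid[(mid > pattern[:-2]) & (mid >= pattern[2:])]
    return np.sort(peaks)[-2]          # the largest peak is the 0 dB main lobe

def mainlobe_width(pattern, u, level_db=-6.0):
    """Width in u of the region above level_db (only the main lobe exceeds -6 dB here)."""
    return np.ptp(u[pattern > level_db])

u = np.linspace(-1.0, 1.0, 20001)
n_elem = 64
p_uniform = beam_pattern_db(np.ones(n_elem), u)    # plain DAS: equal weights
p_hann = beam_pattern_db(np.hanning(n_elem), u)    # Hann-apodized DAS
print(f"peak side lobe: uniform {peak_sidelobe_db(p_uniform):.1f} dB, "
      f"Hann {peak_sidelobe_db(p_hann):.1f} dB")
print(f"-6 dB main-lobe width: uniform {mainlobe_width(p_uniform, u):.4f}, "
      f"Hann {mainlobe_width(p_hann, u):.4f}")
```

Under this model the uniform array shows the familiar first side lobe near -13 dB, while the Hann window lowers the side lobes to about -31 dB but widens the main lobe, which is the contrast-versus-resolution trade-off that apodization makes.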

Beamforming synthesizes the signals received by an array of elements into a directional response, or beam pattern, and this process can be computationally intensive. Deep learning approaches can potentially learn to perform the beamforming operation more efficiently, enabling faster image reconstruction without compromising quality. Simson et al. [31] address the challenge of reconstructing high-quality ultrasound images from sub-sampled raw data. Traditional beamforming methods can produce high-resolution images but impose considerable computational demands, and their performance degrades on sub-sampled data. To overcome this, the authors propose DeepFormer, an end-to-end deep learning method that reconstructs high-quality ultrasound images in real time from sub-sampled raw data. Traditional beamforming algorithms often ignore the information shared between scan lines, whereas a fully convolutional neural network (FCNN) can capture and exploit it, enabling interpolation across scan lines in sub-sampled data. As shown in Eq. 1 [31], the loss function used in DeepFormer combines an ℓ1 loss with the structural similarity index measure (SSIM) [116].
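The following is a generic sketch of how an ℓ1 term and an SSIM term can be combined into a single training loss for DeepFormer-style reconstruction. The weighting `alpha`, the `1 - SSIM` form, and the single-window SSIM are assumptions for illustration and need not match the exact form of Eq. 1 in [31]. NumPy keeps the example self-contained; in practice the same expression would be written in a deep learning framework so that it can be differentiated.

```python
import numpy as np

def global_ssim(x, y, data_range=1.0):
    """SSIM computed over the whole image as a single window.

    Standard SSIM averages this expression over local (e.g. Gaussian) windows;
    the single-window form is a simplification for illustration.
    """
    c1 = (0.01 * data_range) ** 2   # usual stabilising constants (K1 = 0.01, K2 = 0.03)
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))

def l1_ssim_loss(pred, target, alpha=0.5):
    """Hypothetical combined loss: alpha * L1 + (1 - alpha) * (1 - SSIM)."""
    l1 = np.mean(np.abs(pred - target))
    return alpha * l1 + (1 - alpha) * (1 - global_ssim(pred, target))

rng = np.random.default_rng(0)
target = rng.random((64, 64))
slightly_noisy = np.clip(target + 0.05 * rng.standard_normal(target.shape), 0, 1)
very_noisy = np.clip(target + 0.3 * rng.standard_normal(target.shape), 0, 1)
```

The ℓ1 term penalizes pixel-wise intensity errors, while the SSIM term rewards preserving local mean, contrast, and structure; a perfect reconstruction drives both terms to zero.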

Tested on in vivo data acquired from a group of participants, DeepFormer proved a promising approach for improving ultrasound image quality while providing the speed needed for clinical use. Nair et al. [32–35] aim to achieve high frame rates for automated imaging tasks over an extended field of view using single plane wave transmissions. They address the poor image quality typically produced by single plane wave insonification and propose using DNNs to extract information directly from raw RF data, producing an image and a segmentation map simultaneously. Unlike traditional beamforming, which only reconstructs images, their deep learning approach performs image reconstruction and segmentation at the same time. They employ an FCNN consisting of one encoder and two decoders, one for image reconstruction and the other for segmentation. As shown in Eq. 2 [35], the loss function of the whole network combines an ℓ1 loss with a Dice similarity coefficient (DSC) loss.

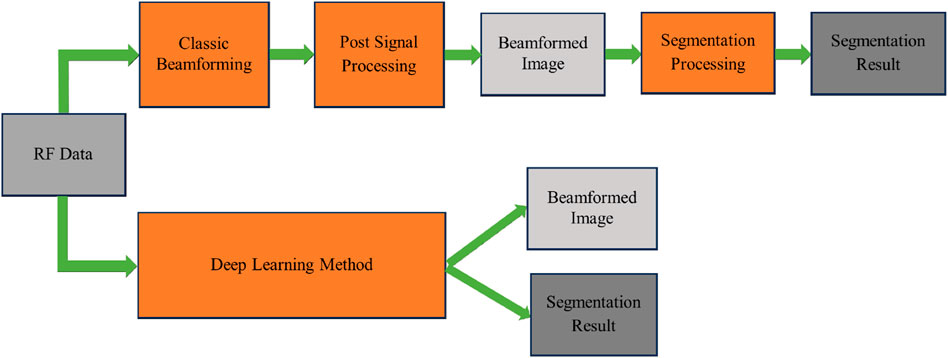

The DSC loss measures the overlap between the predicted and ground-truth segmentation masks during training. The DSC is a function of the overlap between the two masks, with a value of one indicating perfect overlap and zero indicating no overlap. It complements the ℓ1 (mean absolute error) loss: whereas the ℓ1 term compares the predicted and reference images pixel by pixel, the DSC loss gives a more holistic measure of segmentation quality, which is especially important in medical imaging, where precise localization of structures is vital. This dual-loss design lets the network learn the reconstruction and segmentation tasks jointly, so that its parameters are optimized to produce accurate segmentations alongside the reconstructed images. Figure 4 compares classic beamforming with this method.

Figure 4. A comparison between DAS beamforming (top) and a deep learning method that bypasses the beamforming process [35] (bottom).
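The dual-decoder objective described for Nair et al. can be illustrated with a generic sketch: an ℓ1 term on the reconstructed image plus a soft (1 - DSC) term on the predicted mask. The weight `beta`, the soft-Dice form, and the smoothing constant `eps` are assumptions and need not match Eq. 2 in [35].

```python
import numpy as np

def soft_dice(pred_mask, true_mask, eps=1e-7):
    """Soft Dice similarity coefficient for masks with values in [0, 1]."""
    inter = np.sum(pred_mask * true_mask)
    return (2 * inter + eps) / (np.sum(pred_mask) + np.sum(true_mask) + eps)

def image_and_mask_loss(img_pred, img_true, mask_pred, mask_true, beta=1.0):
    """Hypothetical two-branch loss: L1 on the image decoder plus (1 - DSC) on the mask decoder.

    beta (assumed) balances the reconstruction and segmentation terms.
    """
    l1 = np.mean(np.abs(img_pred - img_true))
    return l1 + beta * (1.0 - soft_dice(mask_pred, mask_true))

# Toy example: a circular "cyst" mask and a copy shifted by 5 pixels.
yy, xx = np.mgrid[:64, :64]
mask = ((yy - 32) ** 2 + (xx - 32) ** 2 < 10 ** 2).astype(float)
shifted = np.roll(mask, 5, axis=1)
image = np.random.default_rng(1).random((64, 64))
```

Using soft (probability-valued) masks keeps the Dice term differentiable, which is why this form is popular for training segmentation decoders.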

3.1.2 Adaptive beamforming

Traditional beamforming typically applies a fixed, predetermined set of weights to the signals received by each transducer element. These weights are either uniform (plain DAS) or follow a simple apodization (windowing) function. Because the weights are fixed, the main lobe width cannot adapt to different signal scenarios, which limits resolution and can lead to a less focused image. These approaches may also exhibit relatively high side lobes, introducing more interference and clutter into the image. However, they are simpler to implement and faster to compute, which makes them suitable for many real-time imaging applications.

MV beamforming determines the weights adaptively. It computes the weights that minimize the output power, and hence the contribution of noise and interference, while passing the signal from the focal point undistorted, which amounts to maximizing the signal-to-interference-plus-noise ratio. Adapting the weights produces a much narrower main lobe in the beam pattern, which translates into higher spatial resolution and a better ability to distinguish closely spaced scatterers. Together with suppressed side lobes, this gives MV significantly improved resolution and contrast, allowing clearer delineation of small or closely spaced structures. The MV algorithm uses the data from the transducer elements to estimate the covariance matrix of the received signals. As shown in Eq. 3 [113], the weights are derived to minimize the output variance while maintaining unity gain in the direction of the signal of interest:

$$\min_{w}\; w^{H} R_x\, w \quad \text{subject to} \quad w^{H} a = 1 \qquad\Longrightarrow\qquad w = \frac{R_x^{-1} a}{a^{H} R_x^{-1} a} \qquad (3)$$

where $R_x$ is the covariance matrix of the received signals, $w$ is the vector of apodization weights, and $a$ is the steering vector, a vector of ones once the channel signals have been delay-aligned. Solving this constrained minimization is more complex than applying fixed weights. First, the covariance matrix must be estimated from the correlations between the signals received at each pair of array elements; its size grows quadratically with the number of elements, increasing the computational burden. Second, computing the weights requires inverting the covariance matrix, which is computationally expensive and becomes more so as the matrix grows with the number of transducer elements. Finally, the weights must be recomputed for every focal point and for each new set of received signals, so these operations are repeated continuously in real time, which is computationally demanding.
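The computational steps listed above (covariance estimation, solving a linear system instead of forming an explicit inverse, and normalizing by the distortionless constraint) can be sketched for a single pixel as follows. Spatial smoothing over subarrays and diagonal loading are common stabilizing choices in ultrasound MV beamforming and are included here as assumptions; the subarray length and loading factor are illustrative.

```python
import numpy as np

def mv_weights(x, subarray_len, diag_load=1e-2):
    """Minimum-variance apodization for one pixel.

    x: delay-aligned channel samples, shape (n_elem,).
    The covariance is estimated by averaging over overlapping subarrays of
    length subarray_len (spatial smoothing) and stabilised by diagonal loading.
    """
    n, L = len(x), subarray_len
    R = np.zeros((L, L), dtype=complex)
    for p in range(n - L + 1):                           # spatial smoothing
        s = x[p:p + L, None]
        R += s @ s.conj().T
    R /= (n - L + 1)
    R += diag_load * np.trace(R).real / L * np.eye(L)    # diagonal loading
    a = np.ones(L)                                       # steering vector after delay alignment
    Ri_a = np.linalg.solve(R, a)                         # R^{-1} a without an explicit inverse
    return Ri_a / (a.conj() @ Ri_a)                      # enforce w^H a = 1

def mv_pixel(x, subarray_len):
    """Beamformed value: w^H s averaged over all subarrays s."""
    w = mv_weights(x, subarray_len)
    L = subarray_len
    subs = np.array([x[p:p + L] for p in range(len(x) - L + 1)])
    return np.mean(subs @ w.conj())

rng = np.random.default_rng(0)
aligned = rng.standard_normal(32) + 1j * rng.standard_normal(32)   # stand-in for one pixel's samples
w = mv_weights(aligned, subarray_len=16)
y = mv_pixel(aligned, subarray_len=16)
```

Each call solves an L × L linear system, roughly O(L³) work per pixel, and this is repeated for every pixel of every frame; that per-pixel cost is what learned approaches such as ABLE aim to avoid.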

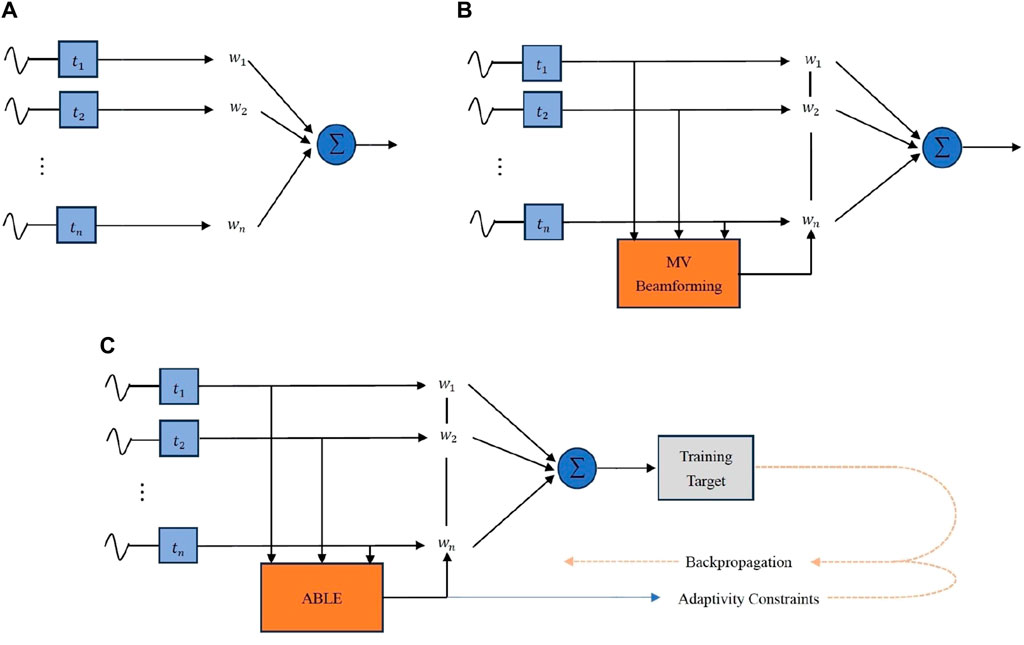

Luijten et al. [40,41] examine how deep learning can augment adaptive beamforming, addressing its computational challenges while aiming to produce better ultrasound images. They develop a neural network architecture, termed Adaptive Beamforming by deep LEarning (ABLE), that adaptively computes apodization weights for image reconstruction from the received RF data. The goal is to mimic adaptive beamforming efficiently, improving image quality without its high computational burden. ABLE consists of fully connected layers arranged in an encoder-decoder structure that forms a compact representation of the data, aiding noise suppression and signal representation. It is trained with a total loss composed of an image loss and an apodization-weight penalty. The image loss promotes similarity between the target image and the image produced by ABLE, while the weight penalty encourages unity gain, that is, weights that yield a distortionless response. This penalty is inspired by MV beamforming, which minimizes output power while ensuring a distortionless response in the desired direction. By incorporating this constraint, the network learns apodization weights that not only reconstruct high-quality images but also satisfy a fundamental beamforming criterion, keeping its predictions consistent with the physical beamforming process. The study demonstrates ABLE's effectiveness on two imaging modalities: plane wave imaging with a linear array and synthetic aperture imaging with a circular array. Moreover, ABLE's computational cost, measured by the number of required floating-point operations, is significantly lower than that of eigen-based minimum variance (EBMV) beamforming, highlighting its potential for real-time imaging. Figure 5 compares MV and ABLE.

Figure 5. A comparison among (A) DAS, (B) MV, and (C) ABLE [41]. The weights in DAS are typically fixed, pre-set values, whereas those in MV and ABLE are adaptive. ABLE can be seen as an alternative form of MV: both estimate the weights adaptively from the received signals, but computing the MV weights requires a large amount of computation, whereas ABLE reduces the computational complexity.
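The training objective described for ABLE can be illustrated with a hypothetical, untrained fully connected encoder-decoder that maps one pixel's delay-aligned channel samples to apodization weights. Layer widths, the tanh activation, the squared-error image loss, the stand-in target, and the exact form of the unity-gain penalty are all assumptions made for this sketch; it is not the architecture or loss published in [40,41], and it shows only the forward pass and loss, not training.

```python
import numpy as np

rng = np.random.default_rng(0)
n_elem = 64
sizes = [n_elem, 128, 32, 128, n_elem]     # hypothetical encoder-decoder widths

# Untrained, randomly initialised fully connected layers (weight matrix, bias).
layers = [(rng.standard_normal((a, b)) / np.sqrt(a), np.zeros(b))
          for a, b in zip(sizes[:-1], sizes[1:])]

def predict_apodization(x):
    """Map delay-aligned samples x, shape (n_pixels, n_elem), to per-pixel weights."""
    h = x
    for i, (W, b) in enumerate(layers):
        h = h @ W + b
        if i < len(layers) - 1:
            h = np.tanh(h)                 # assumed hidden activation
    return h

def unity_gain_penalty(w):
    """Penalise deviation from sum(w) = 1, i.e. a distortionless response
    for a signal that is identical on all delay-aligned channels."""
    return np.mean((np.sum(w, axis=1) - 1.0) ** 2)

def able_style_loss(x, target_pixels, lam=1.0):
    """Image loss plus weighted unity-gain penalty (hypothetical form)."""
    w = predict_apodization(x)
    y = np.sum(w * x, axis=1)              # beamformed pixel = weighted channel sum
    image_loss = np.mean((y - target_pixels) ** 2)
    return image_loss + lam * unity_gain_penalty(w), y, w

x_batch = rng.standard_normal((8, n_elem))   # 8 pixels of stand-in channel data
target = x_batch.mean(axis=1)                # stand-in target; in practice e.g. an MV-beamformed reference
loss, y, w = able_style_loss(x_batch, target)
```

The key design point mirrored here is that the network outputs weights rather than pixels, so the final image is still formed by a physically meaningful weighted sum, and the penalty nudges those weights towards MV's distortionless constraint.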

3.1.3 Spatial coherence-based beamforming

Spatial coherence-based beamforming forms diagnostic images by analyzing the spatial coherence of the received echo signals. It improves image clarity by emphasizing echoes that remain similar across neighboring transducer elements after delay alignment, which indicates that they originate from a genuine reflector, such as a tissue structure, rather than from random noise or incoherent scattering. By exploiting this spatial coherence, the beamformer can better distinguish signal from noise, yielding images with better resolution and contrast.

The DAS algorithm essentially uses only one attribute of the received signals, their amplitude, whereas spatial coherence reflects their similarity [117]. Coherence is therefore another property that can be exploited to enhance image quality, and many methods build on it, such as the coherence factor (CF) [118], the generalized coherence factor (GCF) [119], and the phase coherence factor (PCF) [120]. Lediju et al. [44] proposed a spatial coherence-based method named short-lag spatial coherence (SLSC), which uses the coherence of echoes at short lags, that is, between closely spaced elements. SLSC aims to overcome limitations of conventional ultrasound imaging caused by acoustic clutter, speckle noise, and phase aberration, and SLSC images show improved visualization compared with matched B-mode images, which can benefit diagnostic accuracy. The spatial coherence is calculated by Eq. 4 [44],

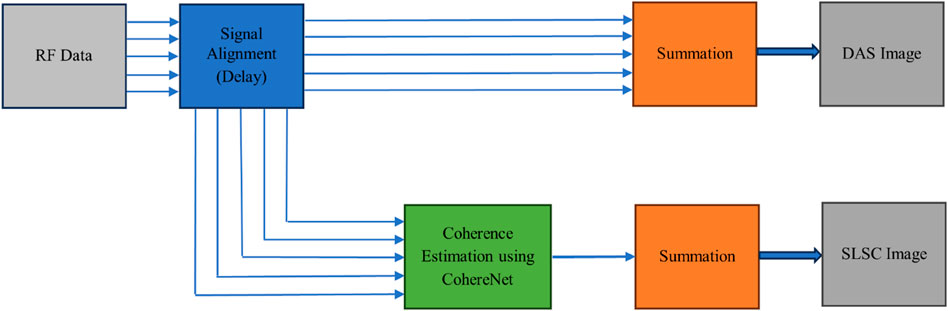

where x_i is the delay-aligned signal received by the i-th element, s_i represents the sample index along the axial direction, N denotes the number of elements in the receive aperture, and m indicates the lag. Because a normalized cross-correlation must be evaluated for every element pair at each lag and for every image pixel, the computational complexity of SLSC is relatively high. Wiacek et al. [35,42] proposed a deep learning approach named CohereNet that estimates the normalized cross-correlation as a function of lag, so that the network can replace the correlation computation in SLSC beamforming. They explored the potential of fully connected neural networks (FCNNs) as "universal approximators" that can, in principle, approximate any continuous function. CohereNet takes a 7 × 64 input: the axial kernel spans seven samples in the axial direction, and the aperture contains 64 elements. The output is the spatial correlation at different lags. The network consists of an input layer, three fully connected layers with rectified linear unit (ReLU) activations, a fully connected layer with hyperbolic tangent (tanh) activation, and an average-pooling output layer. In essence, CohereNet uses a DNN to enhance the beamforming process, improving both image quality and computational efficiency. As described in [43], CohereNet is faster than SLSC and generalizes well. Figure 6 compares DAS and CohereNet.
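
A minimal sketch of the SLSC computation, assuming real-valued RF data and our reading of the standard SLSC definition from [44]: for each lag m, the normalized correlation over the axial kernel is averaged over the N − m element pairs, and the SLSC pixel value is the sum of this coherence curve over lags 1 to M. Columns of the input correspond to the element signals x_i, rows to the axial sample index, and `max_lag` (M, the short-lag cutoff) is an assumed parameter that is not specified in this section.

```python
import numpy as np

def spatial_coherence(aligned, max_lag):
    """Normalized spatial coherence R(m) for lags m = 1..max_lag.

    aligned: (n_axial, n_elements) delay-aligned samples inside the
             axial kernel around one pixel (one column per element).
    """
    n_axial, n_elements = aligned.shape
    coherence = np.zeros(max_lag)
    for m in range(1, max_lag + 1):
        a = aligned[:, :n_elements - m]   # signals from elements i
        b = aligned[:, m:]                # signals from elements i + m
        num = np.sum(a * b, axis=0)       # cross-correlation over the kernel
        den = np.sqrt(np.sum(a ** 2, axis=0) * np.sum(b ** 2, axis=0))
        # Average the normalized correlation over the N - m element pairs
        coherence[m - 1] = np.mean(num / np.maximum(den, 1e-12))
    return coherence

def slsc_value(aligned, max_lag):
    """SLSC pixel value: the coherence curve summed over the short lags."""
    return float(np.sum(spatial_coherence(aligned, max_lag)))
```

The nested work (M lags × N element pairs × kernel length, repeated for every pixel) is the cost that CohereNet aims to reduce. Identical signals on all elements give R(m) = 1 at every lag, while signals that alternate in sign between neighboring elements give R(1) = −1.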

Figure 6. A comparison between DAS beamforming (top) and CohereNet [43] (bottom). The DAS algorithm aligns the received signals and then weights and sums them, whereas SLSC obtains the final result by calculating the spatial coherence of the aligned signals. CohereNet reduces the computational complexity of SLSC.
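
The layer sequence described for CohereNet can be sketched as an untrained NumPy forward pass. The 7 × 64 input, the three ReLU layers, the tanh layer, and the average-pooling output follow the text. The hidden width (128), the number of output lags (16), and pooling over groups of 4 tanh units are hypothetical choices, because the exact mapping of the pooling layer in [43] is not described here. The weights are random, so the outputs are meaningless until the network is trained against correlations computed with Eq. 4.

```python
import numpy as np

class CohereNetSketch:
    """Untrained, CohereNet-style MLP mapping a (7, 64) kernel to per-lag correlations."""

    def __init__(self, n_axial=7, n_elements=64, hidden=128, n_lags=16, pool=4, seed=0):
        rng = np.random.default_rng(seed)

        def layer(n_in, n_out):
            # He-style random initialization; these weights are NOT trained
            return rng.standard_normal((n_in, n_out)) * np.sqrt(2.0 / n_in), np.zeros(n_out)

        # Three fully connected layers with ReLU activations
        self.relu_layers = [layer(n_axial * n_elements, hidden),
                            layer(hidden, hidden),
                            layer(hidden, hidden)]
        # One fully connected layer with tanh, keeping values in [-1, 1] like correlations
        self.tanh_layer = layer(hidden, n_lags * pool)
        self.n_lags, self.pool = n_lags, pool
        self.input_shape = (n_axial, n_elements)

    def __call__(self, aligned):
        if aligned.shape != self.input_shape:
            raise ValueError(f"expected input of shape {self.input_shape}")
        x = aligned.reshape(-1)
        for w, b in self.relu_layers:
            x = np.maximum(x @ w + b, 0.0)
        w, b = self.tanh_layer
        x = np.tanh(x @ w + b)
        # Average pooling over groups of `pool` units gives one value per lag
        return x.reshape(self.n_lags, self.pool).mean(axis=1)
```

Once trained, one forward pass of a small, fixed-size network replaces the per-pair correlation loop. The tanh layer keeps the predicted coherence within the valid range of a correlation coefficient.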

3.2 Deep learning in clinical applications

Because it is non-invasive and offers real-time imaging, ultrasound is used extensively across many medical domains. This section examines clinical applications of deep learning in breast imaging, cardiology, thyroid, prostate, fetal, and brain imaging.

3.2.1 Breast imaging

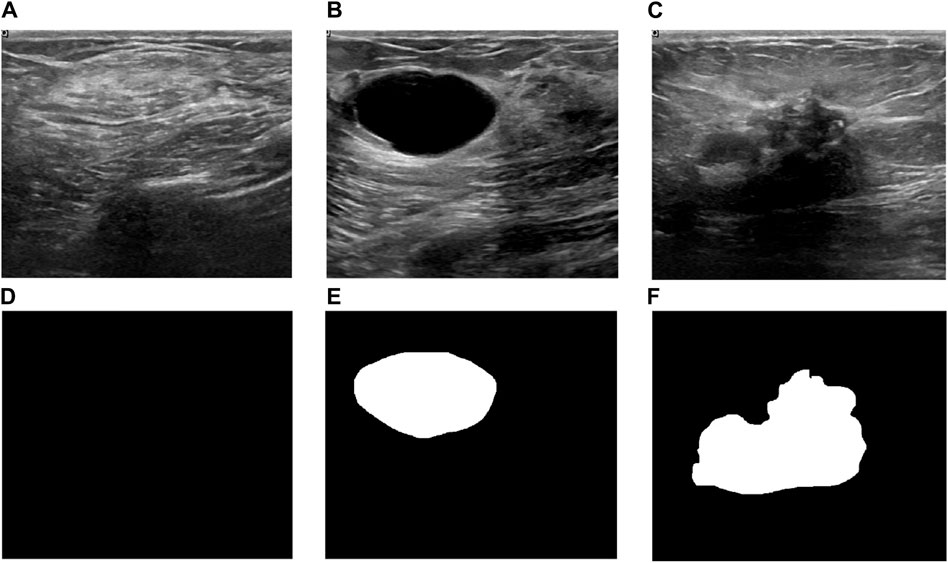

Breast ultrasound imaging is commonly used to detect potential breast diseases [121]. Although it is less effective than X-ray mammography at identifying microcalcifications, it is instrumental in distinguishing benign masses, such as cysts and fibroadenomas, from malignant ones. The development of AI, especially deep learning, has also advanced breast ultrasound imaging, and open-source datasets such as the Breast Ultrasound Images Dataset (BUSI) [122] have promoted the widespread application of deep learning. As shown in Figure 7, the images in the BUSI dataset are classified into three categories: normal, benign, and malignant.

Figure 7. Three examples from the BUSI dataset [122]. (A) A normal image and (D) its corresponding mask; (B) a benign image and (E) its corresponding mask; (C) a malignant image and (F) its corresponding mask.

In 2018, Becker et al. [65] reported using generic deep learning software (DLS) to classify breast cancer in ultrasound images and compared its performance with that of human readers with varying levels of breast imaging experience. Diagnostic accuracy was assessed with receiver operating characteristic (ROC) analysis. The DLS achieved diagnostic accuracy comparable to that of radiologists and performed better than a medical student with no prior experience. Although the technical details of the deep learning model were not discussed, the study showed that the software could achieve high diagnostic accuracy even with a limited number of training cases. Its fast evaluation speed also supports the feasibility of real-time image analysis during ultrasound examinations.

Xu et al. [66] developed a CNN-based method that automatically segments breast ultrasound images into four major tissue types (skin, fibroglandular tissue, mass, and fatty tissue) to aid tumor localization, breast density measurement, and assessment of treatment response. They designed two CNN architectures. CNN-I is an 8-layer CNN for pixel-centric patch classification. CNN-II is a smaller CNN that combines the outputs of three CNN-Is, each trained on one of three orthogonal image planes, to provide a comprehensive evaluation. The CNNs were trained with the Adam optimizer, and dropout was applied to prevent overfitting. Accuracy, precision, recall, and F1-measure all exceeded 80%, and the Jaccard similarity index (JSI) for mass segmentation reached 85.1%, outperforming previous methods.
The method also provided better segmentation visualization and quantitative evaluation than previous studies, and such automated segmentation can offer objective references for radiologists in breast cancer diagnosis and breast density assessment.

Qian et al. [67] proposed a deep learning system to assess breast cancer risk. The system was trained on a large dataset from two hospitals, comprising 10,815 ultrasound images of 721 biopsy-confirmed lesions, and was then prospectively tested on an additional 912 images of 152 lesions. Applied to bimodal (B-mode and color Doppler) and multimodal (additionally including elastography) images, the system achieved high accuracy in predicting BI-RADS scores, and its predictions aligned with radiologists' assessments. It could facilitate the adoption of ultrasound in breast cancer screening, particularly for women with dense breasts, for whom mammography is less effective. It also offers a tool that is consistent with current BI-RADS standards and supports radiologists' decision-making.

Chen et al. [68] introduced a deep learning model for breast cancer diagnosis that leverages radiologists' domain knowledge, in particular their diagnostic patterns when viewing contrast-enhanced ultrasound (CEUS) videos. The model integrates a 3D CNN with a domain-knowledge-guided temporal attention module (DKG-TAM) and a domain-knowledge-guided channel attention module (DKG-CAM). These modules mimic the attention patterns of radiologists, focusing on specific time slots in the CEUS videos and incorporating relevant features from both CEUS and conventional ultrasound images.
The study used a Breast-CEUS dataset of 221 cases, including CEUS videos and corresponding images, which is one of the largest datasets of its kind.

Reference [69] addresses breast cancer, the second leading cause of death among women worldwide, and emphasizes early detection through automated systems, since manual diagnosis is time-consuming. The study introduces a framework that combines deep learning and feature fusion to classify breast cancer in ultrasound images. The framework comprises five main steps: data augmentation, model selection, feature extraction, feature optimization, and feature fusion with classification. Horizontal flips, vertical flips, and 90-degree rotations were applied to increase the size and diversity of the original dataset. A pre-trained DarkNet-53 model was modified and trained with transfer learning, and features were extracted from the global average pooling layer of the modified model. Two improved optimization algorithms, reformed differential evolution (RDE) and reformed gray wolf (RGW), were used to select the best features. The optimized features were fused using a probability-based approach and classified with machine learning algorithms. The authors conclude that the framework significantly improves the accuracy and efficiency of breast cancer classification from ultrasound images and can provide reliable support for radiologists in early detection and treatment planning.

Raza et al. [70] presented DeepBreastCancerNet, a deep learning model for detecting and classifying breast cancer in ultrasound images. It addresses the challenges of manual detection, which is often time-consuming and error-prone.
The DeepBreastCancerNet framework has 24 layers, including six convolutional layers, nine inception modules, and one fully connected layer. It employs both clipped ReLU and leaky ReLU activation functions, together with batch normalization and cross-channel normalization. Images were augmented with random translations and rotations to increase the dataset's size and diversity, thereby reducing overfitting. The architecture starts with a convolutional layer followed by max pooling, batch normalization, and leaky ReLU activation. Inception modules then extract multi-scale features, and the model ends with a global average pooling layer and a fully connected layer for classification. The model achieved a classification accuracy of 99.35%, outperforming several state-of-the-art deep learning models, and an accuracy of 99.63% on a binary classification dataset. It outperformed pre-trained models such as AlexNet, ResNet, and GoogLeNet in accuracy, precision, recall, and F1-score. Ablation studies confirmed the importance of combining leaky ReLU and clipped ReLU activations with global average pooling for optimal performance.
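
The flip-and-rotate augmentation listed for the framework in [69] is simple to reproduce. The sketch below assumes that the original image is kept alongside the three transformed copies and that the 90-degree rotation is counter-clockwise, which is NumPy's default. Neither detail is specified in the text, and `augment_dataset` is a hypothetical helper.

```python
import numpy as np

def flip_rotate_augment(image):
    """Original image plus horizontal-flip, vertical-flip and 90-degree-rotation copies."""
    return [
        image,
        np.fliplr(image),   # horizontal flip (mirror left-right)
        np.flipud(image),   # vertical flip (mirror top-bottom)
        np.rot90(image),    # 90-degree counter-clockwise rotation
    ]

def augment_dataset(images, labels):
    """Expand a labelled dataset; every variant inherits its source label."""
    out_images, out_labels = [], []
    for img, label in zip(images, labels):
        variants = flip_rotate_augment(img)
        out_images.extend(variants)
        out_labels.extend([label] * len(variants))
    return out_images, out_labels
```

Each image yields four training samples with the same class label. For non-square images, the rotated copy swaps height and width, so in practice it would be resized to the network's input size.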

3.2.2 Cardiology

In cardiology, echocardiography (ultrasound imaging of the heart) is a pivotal area of medical ultrasound research. There is abundant literature on automated methods for segmenting and tracking the left ventricle, a crucial structure in the diagnosis of heart disease. Echocardiography uses high-frequency sound waves to image the heart and can show its size, shape, motion, pumping strength, valves, blood flow, and other features. Image quality can be affected by factors such as the patient's body habitus, lung disease, or surgical dressings, which can make interpretation difficult, and interpreting an echocardiography exam requires significant expertise and experience. In addition, not all views of the heart can always be visualized adequately, which may limit the information obtained from the test. Deep learning techniques can mitigate these problems to some extent.

Ref. [71] addressed the segmentation of the left atrium (LA) in 3D ultrasound images using CNNs, automating a process that is traditionally time-consuming and observer-dependent. Introducing shape priors and adversarial learning into the CNN framework improves segmentation accuracy and adaptability across different ultrasound devices. The framework integrates three existing methods: a 3D fully convolutional segmentation network (V-Net), an anatomical constraint via an autoencoder network, and domain adaptation with adversarial networks. The V-Net processes 3D image volumes and produces segmentation masks. Shape priors are incorporated through an autoencoder trained on ground-truth segmentation masks, which encourages the predicted masks to have anatomically plausible shapes. Domain adaptation is achieved by training a classifier to identify the data source while the segmentation network learns feature maps that make this identification difficult, so that the features become domain invariant. Combining shape priors and adversarial learning significantly improves LA segmentation in 3D ultrasound images. It boosts accuracy and helps the model generalize across devices, which makes the approach a promising tool for clinical use.
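
The three ingredients can be combined into one training objective for the segmentation network. The NumPy sketch below is a hedged illustration, not the loss used in [71]. It uses a soft Dice term for segmentation, a shape-prior term measured as the squared distance between autoencoder codes of the prediction and the ground truth, and an adversarial term that trains the segmenter against flipped domain labels, which is one common formulation. The `encoder` argument is a hypothetical stand-in for a pre-trained autoencoder's encoder, and the weights `w_shape` and `w_adv` are hypothetical.

```python
import numpy as np

def soft_dice_loss(pred, target, eps=1e-6):
    """1 - Dice between a probability map and a binary mask (any dimensionality)."""
    inter = np.sum(pred * target)
    return 1.0 - (2.0 * inter + eps) / (np.sum(pred) + np.sum(target) + eps)

def shape_prior_loss(encoder, pred, target):
    """Squared distance between latent codes of prediction and ground truth.
    `encoder` is a hypothetical stand-in for an autoencoder trained on masks."""
    return float(np.mean((encoder(pred) - encoder(target)) ** 2))

def adversarial_loss(domain_prob, from_domain_a, eps=1e-7):
    """Segmenter's adversarial term: binary cross-entropy against the FLIPPED
    domain label, so minimizing it pushes the domain classifier toward errors.
    domain_prob: classifier's probability that the features come from domain A."""
    flipped = 0.0 if from_domain_a else 1.0
    p = np.clip(domain_prob, eps, 1.0 - eps)
    return float(-(flipped * np.log(p) + (1.0 - flipped) * np.log(1.0 - p)))

def total_loss(pred, target, encoder, domain_prob, from_domain_a, w_shape=0.1, w_adv=0.01):
    """Weighted sum of segmentation, shape-prior and adversarial terms."""
    return (soft_dice_loss(pred, target)
            + w_shape * shape_prior_loss(encoder, pred, target)
            + w_adv * adversarial_loss(domain_prob, from_domain_a))
```

In a real system, these terms would be written in a deep learning framework so that gradients flow into the V-Net, and the domain classifier would be updated in alternation with its own, non-flipped loss.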

2D echocardiographic image analysis is crucial in clinical settings for assessing cardiac morphology and function. Manual and semi-automatic annotation is still common because fully automatic methods lack accuracy and reproducibility, and segmentation is hampered by poor contrast, brightness inhomogeneities, speckle patterns, and anatomical variability. Leclerc et al. [72] evaluated state-of-the-art encoder-decoder deep CNNs for segmenting cardiac structures in 2D echocardiographic images and estimating clinical indices on the CAMUS dataset. CAMUS is the largest publicly available, fully annotated dataset for echocardiographic assessment and contains data from 500 patients. The study confirms that encoder-decoder networks, particularly U-Net, provide highly accurate and reproducible segmentation of 2D echocardiographic images, paving the way for fully automatic analysis in clinical practice. However, matching inter-observer variability remains challenging, and more sophisticated architectures did not significantly outperform simpler U-Net designs. The findings suggest that further improvements in deep learning methods and larger annotated datasets are essential for advancing fully automatic cardiac image analysis.
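
Segmentation studies such as this one are usually scored with overlap and boundary-distance metrics. The sketch below computes the Dice coefficient between two binary masks and the symmetric Hausdorff distance between two point sets by brute force. Using all mask pixels as the point sets is a simplification (contour points are common in practice), and distances are in pixels, so they must be multiplied by the pixel spacing to obtain millimetres. SciPy's `scipy.spatial.distance.directed_hausdorff` is an alternative for large point sets.

```python
import numpy as np

def dice(a, b):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for binary masks."""
    a, b = a.astype(bool), b.astype(bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(a, b).sum() / denom

def hausdorff(points_a, points_b):
    """Symmetric Hausdorff distance between point sets of shape (n, 2) and (m, 2)."""
    # Pairwise Euclidean distances, shape (n, m); fine for small point sets
    d = np.linalg.norm(points_a[:, None, :] - points_b[None, :, :], axis=-1)
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))
```

For two 4 × 4 squares offset by one column, the overlap is 12 of 16 pixels each, which gives a Dice coefficient of 0.75 and a Hausdorff distance of 1 pixel.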

Ghorbani et al. [73] developed a deep learning model named EchoNet to interpret echocardiograms. The model could identify local cardiac structures and estimate cardiac function, including parameters such as ejection fraction and cardiac volumes that are crucial for diagnosing and managing heart conditions. It could also predict, with significant accuracy, systemic phenotypes such as age, sex, weight, and height that are not directly observable in echocardiogram images. By automating echocardiogram interpretation, such AI models could streamline clinical workflows, provide preliminary interpretations in regions lacking specialized cardiologists, and offer insights into phenotypes that are difficult for humans to evaluate. This is a step toward more automated, accurate, and comprehensive cardiovascular imaging diagnostics. To support inexperienced operators, Narang et al. [74] proposed a deep learning algorithm that provides real-time guidance, enabling novices without prior ultrasonography experience to capture essential cardiac views. In their study, eight nurses without prior echocardiography experience used the AI guidance to scan 240 patients, and their scans were compared with those obtained by experienced sonographers. The primary outcome was whether the AI-assisted novices could acquire images of sufficient quality to assess left and right ventricular size and function and the presence of pericardial effusion. The novice-acquired, AI-assisted echocardiograms were of diagnostic quality in a high percentage of cases, closely matching the quality of scans performed by experienced sonographers. 
The study suggests that AI-guided echocardiogram acquisition can potentially expand the accessibility of echocardiographic diagnostics to settings where expert sonographers are unavailable, thereby enhancing patient care in diverse clinical environments.
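
Ejection fraction, one of the parameters EchoNet estimates, follows the standard clinical definition EF = (EDV − ESV) / EDV × 100%, where EDV and ESV are the end-diastolic and end-systolic left-ventricular volumes. The sketch below only illustrates this definition with hypothetical volumes. It does not reproduce how EchoNet itself predicts EF.

```python
def ejection_fraction(edv_ml, esv_ml):
    """Ejection fraction in percent: (EDV - ESV) / EDV * 100."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("expected 0 <= ESV <= EDV")
    return 100.0 * (edv_ml - esv_ml) / edv_ml
```

For example, hypothetical volumes of EDV = 120 mL and ESV = 50 mL give an EF of about 58.3%.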

3.2.3 Thyroid

The thyroid gland, located in the neck and consisting of two connected lobes, secretes hormones that affect protein synthesis, metabolic rate, and calcium homeostasis. These hormones are particularly influential in children's growth and development. Despite its small size, the thyroid is susceptible to various disorders, such as hyperthyroidism, hypothyroidism, and nodule formation. These conditions are diagnosed with a range of techniques, including blood tests for hormone levels, ultrasound imaging for gland volume and nodule detection, and fine-needle aspiration (FNA) for definitive tissue analysis. Because FNA is the most invasive of these methods, ultrasound imaging combined with deep learning and computer-aided diagnosis (CAD) systems is increasingly used to improve diagnostic accuracy and nodule characterization, reducing the need for FNA.

Wang et al. [79] introduced a deep learning method for diagnosing thyroid nodules from the multiple ultrasound images acquired in one examination. Their architecture comprises three networks and addresses the challenge of combining multiple views for a comprehensive diagnosis. The dataset contains 7803 images from 1046 examinations acquired with various ultrasound equipment. It is annotated at the examination level, with each examination labeled malignant or benign based on ultrasound reports and pathological records. An attention-based feature aggregation network integrates features from the multiple images and assigns higher weights to images with significant features. This mirrors clinical practice, in which sonographers consider multiple views and prioritize certain ones. The model demonstrated high diagnostic performance on the dataset, showing the potential of deep learning to improve the accuracy and objectivity of thyroid nodule diagnosis. Peng et al. [77] developed a deep learning AI model called ThyNet to differentiate malignant from benign thyroid nodules, with the aim of improving radiologists' diagnostic performance and reducing unnecessary FNAs. ThyNet was developed using 18,049 images from 8,339 patients at two hospitals and tested on 4,305 images from 2,775 patients at seven hospitals. Its performance was first compared with that of 12 radiologists, and a ThyNet-assisted diagnostic strategy was then developed and tested in real-world clinical settings. 
ThyNet outperformed the individual radiologists, with an area under the receiver operating characteristic curve (AUROC) of 0.922, significantly higher than the radiologists' AUROC of 0.839. With ThyNet assistance, the radiologists' diagnostic performance improved significantly: the pooled AUROC increased from 0.837 to 0.875 for image review and from 0.862 to 0.873 in a clinical setting involving image and video review. The ThyNet-assisted strategy also significantly decreased the percentage of unnecessary FNAs from 61.9% to 35.2% while reducing the rate of missed malignancies from 18.9% to 17.0%.
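
Examination-level aggregation of this kind can be sketched with attention pooling. Each image's feature vector receives a learned score, a softmax turns the scores into weights, and the examination feature is the weighted average. The tanh-scoring form below follows the widely used attention-based multiple-instance pooling and is our assumption, not necessarily the network in [79]. The parameters `w` and `v` would be learned and are random in the usage example.

```python
import numpy as np

def attention_pool(features, w, v):
    """Attention-weighted aggregation of per-image feature vectors.

    features: (n_images, d) features of the images in one examination.
    w: (d, h) and v: (h,) are learnable parameters.
    Returns the examination-level feature (d,) and the image weights (n_images,).
    """
    scores = np.tanh(features @ w) @ v      # one relevance score per image
    scores = scores - scores.max()          # subtract max for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum()       # softmax over the images
    return weights @ features, weights
```

The weights also indicate which views drove the decision. If all images in an examination had identical features, the weights would be uniform and the pooled feature would equal that shared feature.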

3.2.4 Prostate

Prostate cancer ranks as the most frequently diagnosed malignancy among adult and elderly men, and early detection and intervention are crucial for reducing mortality. Transrectal ultrasound (TRUS) imaging, together with prostate-specific antigen (PSA) testing and digital rectal examination (DRE), plays a pivotal role in diagnosing prostate cancer. Delineating the prostate volume and boundaries is critical for accurate diagnosis, treatment, and follow-up [123]. Delineation typically involves outlining the prostate boundary on parallel transverse 2D slices along the length of the gland, which has motivated various (semi-)automatic boundary detection methods. Deep learning techniques provide additional insights into the diagnosis of prostate disease.

Azizi et al. [83] presented a deep learning approach for detecting prostate cancer with temporal enhanced ultrasound (TeUS) based on recurrent neural networks (RNNs), in particular long short-term memory (LSTM) networks. The study aimed to exploit the temporal information in TeUS to distinguish malignant from benign prostate tissue. The authors used RNNs to model temporal variations in the ultrasound backscatter signals and showed that LSTM networks outperformed other models in identifying cancerous tissue. On data from 255 prostate biopsy cores from 157 patients, the LSTM networks achieved an area under the curve (AUC) of 0.96, with sensitivity, specificity, and accuracy of 0.76, 0.98, and 0.93, respectively. The study also introduced algorithms for analyzing the LSTM networks to identify which temporal features are relevant for cancer detection. This analysis revealed that discriminative features could be captured within the first half of the TeUS sequence, suggesting that data acquisition time could be reduced in clinical applications. The results suggest that LSTM-based RNNs can significantly enhance prostate cancer detection with ultrasound and may help guide biopsy procedures. Karimi et al. [89] introduced a method for automatically segmenting the prostate clinical target volume (CTV) in TRUS images, a crucial step in brachytherapy treatment planning. The method uses an ensemble of CNNs to improve segmentation accuracy, particularly for challenging images with weak landmarks or strong artifacts. Adaptive sampling focuses training on difficult-to-segment images, and the ensemble is also used to estimate segmentation uncertainty, improving robustness and accuracy. 
For segmentations with high uncertainty, a statistical shape model (SSM) is used to refine the segmentation, utilizing prior knowledge about the expected shape of the prostate. The method achieved a Dice score of 93.9% ± 3.5% and a Hausdorff distance of 2.7 ± 2.3 mm, outperforming several other methods and demonstrating its effectiveness in reducing the likelihood of large segmentation errors. This study highlights the potential of deep learning and ensemble methods to enhance the accuracy and reliability of medical image segmentation, particularly in applications like prostate cancer treatment where precision is crucial.
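
The uncertainty-gated workflow can be sketched as follows. The ensemble's probability maps are averaged to produce the mask, the per-pixel variance across members serves as a disagreement measure, and images whose mean variance exceeds a limit are flagged for shape-model refinement. Variance as the uncertainty measure and the 0.05 limit are hypothetical choices, not values from [89], and the SSM refinement itself is not shown.

```python
import numpy as np

def ensemble_segmentation(prob_maps, threshold=0.5, uncertainty_limit=0.05):
    """Fuse an ensemble's foreground probabilities and flag uncertain images.

    prob_maps: (n_models, H, W) foreground probabilities, one map per CNN.
    Returns (binary mask, mean per-pixel variance, needs_refinement flag).
    """
    mean_prob = prob_maps.mean(axis=0)
    variance = prob_maps.var(axis=0)            # disagreement between members
    mask = mean_prob >= threshold
    image_uncertainty = float(variance.mean())
    needs_refinement = image_uncertainty > uncertainty_limit
    return mask, image_uncertainty, needs_refinement
```

When all members agree, the uncertainty is zero and the fused mask is accepted as is. When members disagree strongly, the image is routed to the statistical shape model.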

3.2.5 Fetal

Ultrasonography is a pivotal technology in prenatal diagnosis, valued for its safety for both mother and fetus. This research area spans numerous subfields, which often use segmentation and classification techniques similar to those in adult diagnostics but adapted to the smaller scale of fetal organs. At this small scale, signs of abnormality are less pronounced, which makes diagnosis more challenging. Furthermore, the ultrasound beam must pass through maternal tissue and the placenta to reach the fetus, which can introduce noise, and movements of both mother and fetus degrade the images further. These factors underscore the need for better automated diagnostic methods, which can be especially helpful in underdeveloped areas with a shortage of medical personnel.

Van den Heuvel et al. [94] developed a system that estimates the fetal head circumference (HC) from ultrasound data acquired with an obstetric sweep protocol (OSP). The OSP can be taught within a day to any healthcare worker without prior knowledge of ultrasound. The study aims to make ultrasound imaging more accessible in developing countries by removing the need for a trained sonographer to acquire and interpret images. The system uses two FCNNs: the first detects frames containing the fetal head in the OSP data, and the second measures the HC in these frames. The HC measurements are then converted to gestational age (GA) using the Hadlock curve. On data from 183 pregnant women in Ethiopia, the system estimated GA automatically with reasonable accuracy, indicating its potential for maternal care in resource-constrained settings. Pu et al. [96] developed an automatic fetal ultrasound standard plane recognition (FUSPR) model that operates in an Industrial Internet of Things (IIoT) environment and uses deep learning to identify standard planes in fetal ultrasound video. The research introduces a distributed platform for processing ultrasound data based on IIoT and high-performance computing (HPC) technology. The FUSPR model combines a CNN and an RNN to learn the spatial and temporal features of ultrasound video streams, improving the accuracy and robustness of fetal plane recognition. Its goal is to support gestational age assessment and fetal weight estimation by accurately identifying key anatomical structures in the video frames. In experiments on over 1000 ultrasound videos, the FUSPR model significantly outperformed baseline models in recognizing four standard fetal planes. 
This use of deep learning within an IIoT framework is a promising approach to improving the efficiency and reliability of fetal ultrasound analysis, particularly in resource-constrained environments. Xu et al. [124] introduced a segmentation framework that incorporates vector self-attention layers (VSAL) and a context aggregation loss (CAL) to address the challenges of fetal ultrasound image segmentation. The VSAL module applies spatial and channel attention simultaneously, capturing both global and local contextual information. The CAL further helps the model differentiate similar-looking structures by considering both inter-class and intra-class dependencies. On the multi-target Fetal Apical Four-chamber dataset and the single-target Fetal Head dataset, the framework outperformed several state-of-the-art CNN-based methods, such as U-Net [125], in pixel accuracy (PA), Dice coefficient (DCS), and Hausdorff distance (HD). The study shows the effectiveness of self-attention for accurate and reliable fetal ultrasound image segmentation, offering a promising tool for improving prenatal diagnosis and care.
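
In practice, HC is commonly measured as the circumference of an ellipse fitted to the fetal skull outline. Assuming such an ellipse is available (for example, fitted to the output of the measurement network), its perimeter can be computed with Ramanujan's approximation, as sketched below. The ellipse-fitting step and the conversion from pixels to millimetres via `pixel_spacing_mm` are assumptions, and the Hadlock conversion from HC to GA is not reproduced here.

```python
import math

def ellipse_circumference(semi_major, semi_minor):
    """Ramanujan's approximation of an ellipse perimeter:
    pi * (3(a + b) - sqrt((3a + b)(a + 3b)))."""
    a, b = semi_major, semi_minor
    return math.pi * (3 * (a + b) - math.sqrt((3 * a + b) * (a + 3 * b)))

def head_circumference_mm(semi_major_px, semi_minor_px, pixel_spacing_mm):
    """HC of a fitted ellipse given in pixels, converted to millimetres."""
    return ellipse_circumference(semi_major_px, semi_minor_px) * pixel_spacing_mm
```

For a circle (a = b = r), the formula reduces exactly to 2πr, which is a convenient sanity check.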

3.2.6 Brain

The brain, the central organ of the nervous system, is among the most complex structures in the human body and coordinates the functions of voluntary organs and muscles. Despite its critical role, many of its operations remain only partially understood, prompting ongoing research into its mechanisms. During surgery, the brain can deform, a phenomenon termed "brain shift." Brain shift reduces the accuracy of navigation based on preoperative images and can therefore affect surgical outcomes. Ultrasound, particularly when integrated with magnetic resonance (MR) imaging data, is a crucial aid in neurosurgery, helping to compensate for brain shift and to improve intraoperative navigation and decision-making.

Milletari et al. [98] proposed a deep learning approach that combines CNNs with a Hough voting strategy to segment deep brain regions in MRI and ultrasound images. The method performs fully automatic localization and segmentation of anatomical regions of interest, using CNN features for robust, multi-region segmentation that adapts to different imaging modalities. It proved effective on both MRI and transcranial ultrasound volumes. The study also systematically compares various CNN architectures across different scenarios, offering insights into the application of deep learning to brain imaging, where accurate segmentation of anatomical structures is critical. Reference [99] presents a method for segmenting brain tumors during surgery in 3D intraoperative ultrasound (iUS) images. The technique uses a tumor model derived from preoperative MR data for local MR-iUS registration, which improves the visualization of brain tumor contours in iUS. The multi-step process defines a region of interest based on the patient-specific tumor model, extracts hyperechogenic structures from this region in both modalities, and performs registration using gradient values with rigid and affine transformations to align the tumor model with the 3D iUS data. Evaluated on a dataset of 33 patients, the method showed promising computational time and accuracy, indicating its potential to support neurosurgeons during brain tumor resection. Di Ianni and Airan [102] introduced a deep learning-based image reconstruction method for functional ultrasound (fUS) imaging of the brain. 
The method significantly reduces the amount of data required for imaging while maintaining image quality, using a CNN to reconstruct power Doppler images from sparsely sampled ultrasound data. The approach enables high-quality fUS imaging of brain activity in rodents, with potential applications in various settings where dedicated ultrasound hardware is not available, thereby broadening the accessibility and utility of fUS imaging technology.
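
For context, the sketch below shows a conventional way to form a power Doppler image from a stack of beamformed IQ frames. Frames are arranged as a space × time (Casorati) matrix, the strongest singular components (slowly varying, high-energy tissue clutter) are removed with an SVD filter, and the remaining signal power is averaged over time. SVD clutter filtering is a common choice in fUS but is our assumption here, as is the number of removed components (`n_clutter`). The CNN of [102] that reconstructs such images from sparse data is not shown.

```python
import numpy as np

def power_doppler(iq, n_clutter=1):
    """Power Doppler image from beamformed IQ frames with SVD clutter filtering.

    iq: complex array of shape (n_z, n_x, n_t), one IQ image per time frame.
    n_clutter: number of leading singular components treated as tissue clutter.
    """
    n_z, n_x, n_t = iq.shape
    casorati = iq.reshape(n_z * n_x, n_t)           # space x time matrix
    u, s, vh = np.linalg.svd(casorati, full_matrices=False)
    s_filtered = s.copy()
    s_filtered[:n_clutter] = 0.0                    # remove tissue components
    blood = (u * s_filtered) @ vh                   # rebuild the filtered signal
    return np.mean(np.abs(blood) ** 2, axis=1).reshape(n_z, n_x)
```

A perfectly static tissue signal forms a rank-one Casorati matrix, so removing one component leaves essentially zero power. Time-varying (blood-like) signals survive the filter.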

3.3 Deep learning in portable ultrasound system

Because of their portability and low cost, handheld ultrasound devices have great potential in emergency care, point-of-care settings, sports fields, and outdoor use. They are also suitable for assisting doctors in diagnosing diseases in remote and medically underserved areas. Portable ultrasound diagnostic devices appeared in the 1980s and were initially used mainly to scan the bladder and measure its volume [126–129]. Unlike the common invasive method of urinary catheterization, bladder scanning does not harm the patient, and bladder scanners remain an active direction in the development of portable ultrasound devices [130–132]. Beyond this field, portable ultrasound devices have many other applications, such as color Doppler imaging [133], blood flow imaging [134], echocardiography [135], and skin imaging [136]. During the COVID-19 outbreak, portable ultrasound devices also played a positive role in assisting diagnosis [137–139].

The compact size of portable ultrasound devices poses significant challenges for both hardware design and imaging algorithm development [140,141]. These challenges must be addressed carefully to ensure that the devices perform efficiently and accurately. With advances in semiconductor technology, field programmable gate arrays (FPGAs) [142] and application-specific integrated circuits (ASICs) [143] have been applied to portable ultrasound devices to overcome some of the hardware challenges. On the algorithmic side, beamforming based on compressed sensing [144] has been studied extensively for portable ultrasound [145–148].
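
Compressed-sensing methods recover a signal from fewer measurements by assuming it is sparse, typically by solving an ℓ1-regularized least-squares (LASSO) problem. The sketch below is a generic ℓ1 solver, the iterative shrinkage-thresholding algorithm (ISTA), on real-valued data. It is not the beamformer of [144]: the measurement matrix `A` is a random placeholder, whereas in compressed-sensing beamforming it would model the acquisition, and `lam` is a hypothetical regularization weight.

```python
import numpy as np

def soft_threshold(x, t):
    """Proximal operator of t * ||x||_1 (element-wise shrinkage)."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def lasso_objective(A, x, y, lam):
    """0.5 * ||A x - y||^2 + lam * ||x||_1."""
    return 0.5 * np.sum((A @ x - y) ** 2) + lam * np.sum(np.abs(x))

def ista(A, y, lam, n_iter=500):
    """Minimize the LASSO objective by iterative shrinkage-thresholding."""
    L = np.linalg.norm(A, 2) ** 2        # Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)         # gradient of the data-fit term
        x = soft_threshold(x - grad / L, lam / L)
    return x
```

With the step size 1/L, every ISTA iteration is guaranteed not to increase the LASSO objective. Accelerated variants such as FISTA converge faster, which matters on the limited compute of handheld devices.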

These methods have advanced portable ultrasound devices, and progress in artificial intelligence has opened new directions for them. Zhou et al. [149] proposed a two-stage generative adversarial network (GAN) framework to enhance the image quality of handheld ultrasound devices. The framework combines a U-Net and a GAN to reconstruct high-quality ultrasound images from low-quality ones, focusing on recovering tissue structure details and speckle, which are essential for accurate diagnosis. A comprehensive loss function combining texture, structure, and perceptual features guides the GAN training. Experiments on simulated, phantom, and clinical data showed significant improvements in image quality over the original low-quality images and other algorithms. In addition, Soleimani et al. [103] developed a lightweight, portable ultrasound computed tomography (USCT) system for high-resolution noninvasive imaging of the human head. The study compared a deep neural network combining CNN and LSTM layers with traditional deterministic methods for creating tomographic images of the head. The network handled noisy and synthetic data more effectively than the deterministic methods, which often require additional filtering to improve image quality. The findings suggest that the CNN + LSTM model is more versatile and generalizable, making it a strong choice for medical ultrasound tomography. 
The study contributes to the advancement of USCT by demonstrating the potential of deep learning approaches in improving the accuracy and reliability of noninvasive brain imaging techniques.
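As a hedged illustration of how composite objectives of this kind are commonly assembled, the generator loss can be sketched as a weighted sum of terms. The weights $\lambda$, the SSIM-based structure term, the Gram-matrix texture term, and the pretrained feature extractor $\phi$ below are assumptions chosen for illustration, not the exact definitions used by Zhou et al. [149]. Here $x$ is the low-quality input, $y$ the high-quality reference, $G$ the generator, and $\mathcal{L}_{\mathrm{adv}}$ the adversarial term supplied by the discriminator.

```latex
\mathcal{L}_{G} = \lambda_{\mathrm{adv}}\,\mathcal{L}_{\mathrm{adv}}
  + \lambda_{\mathrm{tex}}\,\mathcal{L}_{\mathrm{tex}}
  + \lambda_{\mathrm{str}}\,\mathcal{L}_{\mathrm{str}}
  + \lambda_{\mathrm{per}}\,\mathcal{L}_{\mathrm{per}},
\qquad
\mathcal{L}_{\mathrm{str}} = 1 - \mathrm{SSIM}\big(G(x), y\big),
\qquad
\mathcal{L}_{\mathrm{per}} = \big\lVert \phi(G(x)) - \phi(y) \big\rVert_2^2,
\qquad
\mathcal{L}_{\mathrm{tex}} = \big\lVert \mathrm{Gram}\big(\phi(G(x))\big) - \mathrm{Gram}\big(\phi(y)\big) \big\rVert_F^2
```

Each term penalizes a different kind of discrepancy (local structure, deep-feature similarity, and texture statistics such as speckle), and the $\lambda$ weights trade them off against the adversarial term.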

3.4 Training scheme

Vienneau et al. [57] discuss training methods for DNNs in ultrasound imaging. They address a problem with traditional ℓp-norm loss functions: lower loss values do not necessarily translate into improved image quality. Ref. [57] therefore aims to better align the optimization objective with the relevant image quality metrics, and the authors suggest that their training scheme can potentially raise the maximum achievable image quality for DNN-based ultrasound beamforming. Luchies and Byram [58] investigate practical considerations for training DNN beamformers, including the use of combinations of multiple point target responses, rather than single point target responses, as training data. They also examine how various hyperparameter settings affect image quality in simulated scans. The study demonstrates that DNN beamforming is robust to electronic noise and points out that the mean squared error (MSE) validation loss is not a reliable predictor of image quality. Goudarzi and Rivaz [52] used real photographic images as ground-truth echogenicity maps in their simulations, exposing the network to a diverse range of textures, contrasts, and object geometries during training. This approach not only increases the variety of the training dataset, which is crucial for preventing overfitting, but also brings the simulation settings closer to the experimental imaging settings of the in vivo test data, thereby minimizing unwanted domain shift between the training and test datasets.
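The mismatch between a pixel-wise loss and image quality can be seen in a toy example. The sketch below is an illustration only, not the method of [57] or [58]: it uses a hypothetical 1D "image" with a dark lesion and a bright background, and the contrast ratio in dB (20·log10 of mean background over mean lesion) as an example quality metric. Two predictions with the same MSE can have clearly different contrast.

```python
import math

def mse(a, b):
    """Mean squared error between two equal-length pixel lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def contrast_db(img, lesion_mask):
    """Contrast ratio in dB: 20*log10(mean background / mean lesion)."""
    lesion = [p for p, m in zip(img, lesion_mask) if m]
    background = [p for p, m in zip(img, lesion_mask) if not m]
    mu_lesion = sum(lesion) / len(lesion)
    mu_background = sum(background) / len(background)
    return 20 * math.log10(mu_background / mu_lesion)

# Hypothetical toy image: 4 dark lesion pixels followed by 4 background pixels.
mask = [True] * 4 + [False] * 4
reference = [0.2] * 4 + [1.0] * 4

# Prediction A: a uniform brightness offset of +0.1 everywhere.
pred_a = [p + 0.1 for p in reference]
# Prediction B: lesion brightened by 0.1 and background darkened by 0.1.
pred_b = [0.3] * 4 + [0.9] * 4

for name, pred in [("A", pred_a), ("B", pred_b)]:
    print(name, round(mse(pred, reference), 4), round(contrast_db(pred, mask), 2))
```

Both predictions have an MSE of about 0.01, yet their contrast is roughly 11.3 dB (A) versus 9.5 dB (B), against about 14.0 dB for the reference. A loss that only sees the per-pixel error cannot distinguish them.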

3.5 Transformer/attention mechanism and CNN

CNNs have been the backbone of medical image analysis for years, and, as the preceding review shows, a large number of architectures are based on them. They excel at extracting local features through convolutional layers, pooling, and activation functions. Networks such as U-Net [125] and its variants [150–153] have been particularly successful in medical image segmentation because their encoder-decoder architectures capture detailed spatial hierarchies. However, CNNs are limited in modeling global context and long-range dependencies, because each convolution aggregates information only from a local neighborhood. This shortfall can lead to suboptimal performance in tasks where the relationship between distant image regions is crucial. In ultrasound imaging, the limitation manifests as difficulty in handling speckle noise and artifacts, which require broader contextual understanding to be mitigated effectively. The advent of Transformer models and their self-attention mechanism [154] has opened new opportunities for ultrasound image analysis, and integrating U-Net with Transformers has become an active research direction [155–159]. This section examines the application of Transformers and attention mechanisms in medical imaging, with a focus on ultrasound, and compares their performance with that of traditional CNNs.
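To make the locality argument concrete, the theoretical receptive field of a stack of convolution and pooling layers can be computed with a standard recurrence. This is a simplified one-axis sketch that assumes no dilation; the layer configurations are hypothetical and not taken from U-Net [125].

```python
def receptive_field(layers):
    """Theoretical receptive field (pixels along one axis) of stacked layers.

    `layers` is a list of (kernel_size, stride) pairs from input to output.
    Uses the recurrence r_l = r_{l-1} + (k_l - 1) * j_{l-1}, where j is the
    cumulative stride ("jump") of all preceding layers.
    """
    r, jump = 1, 1
    for k, s in layers:
        r += (k - 1) * jump
        jump *= s
    return r

# Ten 3x3 convolutions with stride 1: the field grows only linearly (21 pixels).
print(receptive_field([(3, 1)] * 10))
# Two 3x3 convolutions plus a stride-2 pooling, repeated four times (76 pixels).
print(receptive_field([(3, 1), (3, 1), (2, 2)] * 4))
```

Even after twelve layers and four downsampling steps, each output unit sees only 76 pixels along an axis, which is small compared with a typical B-mode image several hundred pixels wide; in practice the effective receptive field is usually smaller still than this theoretical bound.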

Transformers, originally designed for natural language processing, use a self-attention mechanism that lets the model dynamically weigh the importance of different input elements [154]. This capability is particularly beneficial for medical image analysis [124,156,160–167], where different regions of an image may differ in their significance for accurate diagnosis. Self-attention computes attention scores between all pairs of input elements, which for images correspond to pixels, patches, or features. These scores determine how much each element attends to the others. This global view enables the Transformer to capture long-range dependencies and contextual information that CNNs may miss because of their localized receptive fields [154]. In medical imaging, Transformer models have been adapted to the unique challenges of the domain. For instance, the TransUNet [156] architecture integrates CNNs and Transformers into a unified framework: CNNs extract initial feature maps from medical images, and Transformers encode these features as tokenized patches to capture global context. This hybrid approach retains detailed spatial information while benefiting from the global attention of Transformers. Similarly, the GPA-TUNet [162] model integrates Group Parallel Axial Attention (GPA) with Transformers to enhance both local and global feature extraction, combining the strength of Transformers in capturing long-range dependencies with the efficiency of GPA in highlighting local information. Another segmentation method tailored to ultrasound images integrates a Vector Self-Attention Layer (VSAL) [124], which performs long-range spatial and channel-wise reasoning simultaneously.
VSAL is designed to maintain translational equivariance and accommodate multi-scale inputs, both of which are critical for handling the variability of ultrasound images. The layer can be integrated into existing CNN architectures, adding the benefits of self-attention to them. Studies such as [124] have shown that self-attention-based models significantly improve the accuracy of ultrasound image segmentation. For example, in the segmentation of fetal ultrasound images, models incorporating VSAL and a context aggregation loss (CAL) outperformed traditional CNNs.
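The pairwise scoring described above is the scaled dot-product attention of [154], softmax(QKᵀ/√d)V. The following is a minimal single-head sketch in plain Python, written for readability rather than speed; it omits multi-head projections and positional encodings, the tokens are hypothetical patch features, and it is not the TransUNet, GPA-TUNet, or VSAL implementation.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def project(tokens, w):
    """Multiply each token (a row vector) by a d_in x d_out weight matrix w."""
    return [[sum(t[i] * w[i][j] for i in range(len(t))) for j in range(len(w[0]))]
            for t in tokens]

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention.

    Returns (outputs, weights), where weights[i][j] is how strongly token i
    attends to token j. Every token is scored against every other token,
    so the cost grows quadratically with the number of tokens.
    """
    q, k, v = project(tokens, wq), project(tokens, wk), project(tokens, wv)
    scale = math.sqrt(len(k[0]))
    outputs, weights = [], []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w[j] * v[j][c] for j in range(len(v)))
                        for c in range(len(v[0]))])
    return outputs, weights

# Four hypothetical patch tokens with 2-dimensional features.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(tokens, identity, identity, identity)
for row in attn:
    print([round(x, 3) for x in row])
```

Each row of `attn` is a probability distribution over all tokens, regardless of how far apart they are in the image. This is the global context a single convolution lacks, and also the source of the quadratic cost that efficient attention variants try to reduce.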

The adaptive multimodal attention mechanism [160] is another advanced approach, used to improve the generation of descriptive and coherent medical image reports. Yang et al. [160] propose a framework for generating high-quality medical reports from ultrasound images using an adaptive multimodal attention network (AMAnet), automating the tedious and time-consuming task of manual report writing. The core innovation of AMAnet is its adaptive multimodal attention mechanism, which integrates three components: spatial attention, semantic attention, and a sentinel gate. Spatial attention focuses on the relevant regions of the ultrasound image so that the model captures essential visual details. Semantic attention uses a multi-label classification network to predict crucial local properties, such as boundary conditions and tumor morphology; these predicted properties then serve as semantic features for report generation. The sentinel gate dynamically controls how much attention is placed on visual features versus language model memory: when generating the next word, the model decides whether to rely on the current visual features or on the knowledge stored in the Long Short-Term Memory (LSTM). This adaptive mechanism is particularly helpful for the fixed phrases common in medical reports, ensuring that the generated text is coherent and contextually appropriate. Together, the semantic features and the adaptive attention mechanism account for the model's superior performance, highlighting its potential for clinical use. As a concrete scenario, consider the model generating a report for an ultrasound image showing a tumor.
The spatial attention mechanism might focus on the region containing the tumor, while the semantic attention mechanism considers properties such as “irregular morphology” and “unclear boundary” predicted by the multi-label classification network. The sentinel gate then balances these features against the language model's internal memory to generate a sentence such as “The ultrasound image shows an irregularly shaped tumor with unclear boundaries.” This adaptive attention mechanism yields accurate and contextually appropriate reports, enhancing the model's utility in clinical settings in a way that CNNs alone would struggle to achieve.
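One common way to implement such a gate is the "visual sentinel" formulation of adaptive attention for captioning: the sentinel vector competes with the image regions inside a single softmax, and the weight it receives, β, decides how much the next word relies on language memory rather than on the image. The sketch below follows that formulation under the assumption that AMAnet's gate behaves similarly; its exact equations may differ, and all feature vectors and scores here are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def adaptive_context(region_feats, region_scores, sentinel, sentinel_score):
    """Blend spatial attention over image regions with a sentinel memory vector.

    region_scores: unnormalized relevance of each region for the next word.
    sentinel: vector summarizing what the language model (e.g. an LSTM) knows.
    Returns (context, beta), where beta in (0, 1) is the sentinel's weight.
    """
    # One softmax over k regions plus the sentinel; the region weights then sum
    # to (1 - beta), so context = (1 - beta) * visual_context + beta * sentinel.
    weights = softmax(region_scores + [sentinel_score])
    alphas, beta = weights[:-1], weights[-1]
    context = [sum(a * f[c] for a, f in zip(alphas, region_feats)) + beta * sentinel[c]
               for c in range(len(sentinel))]
    return context, beta

regions = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical features: tumor region, background
memory = [0.5, 0.5]                 # hypothetical sentinel vector
# A word describing the lesion: the tumor region scores high, beta stays small.
_, beta_visual = adaptive_context(regions, [3.0, 0.0], memory, -1.0)
# A fixed-phrase word: the sentinel scores high, beta approaches 1.
_, beta_phrase = adaptive_context(regions, [0.0, 0.0], memory, 4.0)
print(round(beta_visual, 3), round(beta_phrase, 3))
```

In a trained model the scores come from learned projections of the LSTM state and the image features; they are fixed numbers here only to show how β shifts between visually grounded words and stock phrases.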

Chi et al. [168] propose a unified framework that combines 2D and 3D Transformer-UNets into a single end-to-end network for segmenting the thyroid gland in ultrasound sequences, addressing several key limitations of existing deep learning models. The proposed Hybrid Transformer UNet (H-TUNet) integrates intra-frame and inter-frame features through its 2D and 3D Transformer-UNet components, exploiting both detailed intra-frame features and broader inter-frame context to achieve more accurate and robust segmentation. It outperforms state-of-the-art CNN-based models such as 3D UNet in segmentation accuracy, demonstrating the effectiveness of hybrid 2D-3D Transformer models in ultrasound image analysis. Wang et al. [169] present TransVFS, a method for improving the safety and efficiency of robot-assisted prostate biopsy through vision-based force sensing (VFS). It addresses the limitations of existing VFS techniques, particularly in accurately sensing the interaction force between surgical tools and prostate tissue. The core innovation of TransVFS is a spatio-temporal local–global transformer architecture that captures local image details and global dependencies simultaneously, which is crucial for accurately estimating prostate deformation and the resulting forces during biopsy. Efficient local–global attention modules reduce the computational burden of processing 4D spatio-temporal data, making the method suitable for real-time force sensing in clinical settings. The method was extensively validated in experiments on prostate phantoms and beagle dogs.
The results showed that TransVFS outperforms state-of-the-art VFS methods and other spatio-temporal transformer models in force estimation accuracy; in particular, it achieved significantly lower mean absolute errors than the most competitive model, ResNet3dGRU. By providing accurate real-time force feedback, TransVFS can help reduce the risk of tissue damage and improve the precision of biopsy procedures, thereby improving patient outcomes. Ahmadi et al. [170] propose a spatio-temporal architecture that combines anatomical features with the motion of the aortic valve to classify the severity of aortic stenosis (AS). Its Temporal Deformable Attention (TDA) mechanism is designed to capture small local motions and spatial changes across frames, which are critical for assessing AS severity. A temporal coherent loss enforces sensitivity to small motions in spatially similar frames without requiring explicit aortic valve localization labels; it keeps frame-level embeddings consistent and improves the model's ability to detect subtle changes in valve movement. A novel attention layer aggregates disease severity likelihoods over a sequence of echocardiographic frames, focusing on the most clinically informative ones; this temporal localization lets the model identify and prioritize the frames that matter most for AS diagnosis. The model achieved state-of-the-art accuracy in AS detection and severity classification on both private and public datasets: 95.2% in AS detection and 78.1% in severity classification on the private dataset, and 91.5% and 83.8%, respectively, on the public TMED-2 dataset.
By reducing reliance on Doppler measurements and enabling automated AS severity assessment from two-dimensional echocardiographic data, the proposed framework facilitates broader access to AS screening. This is particularly valuable in clinical settings with limited access to expert cardiologists and specialized Doppler imaging equipment.
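The frame-aggregation step can be illustrated as attention-weighted pooling: each frame contributes its class probabilities in proportion to a softmax-normalized informativeness score. This is a minimal sketch under assumed inputs, not the layer of Ahmadi et al. [170]; in their model the scores are learned from frame embeddings, and the TDA module and temporal coherent loss are not shown. The three severity classes and all numbers are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def aggregate_frames(frame_probs, frame_scores):
    """Attention-weighted pooling of per-frame class probabilities.

    frame_probs[t][c]: probability of severity class c for frame t.
    frame_scores[t]: unnormalized informativeness of frame t (learned in a real
    model, fixed here). Returns (video-level probabilities, frame weights).
    """
    w = softmax(frame_scores)
    n_classes = len(frame_probs[0])
    video = [sum(w[t] * frame_probs[t][c] for t in range(len(w)))
             for c in range(n_classes)]
    return video, w

# Three hypothetical frames, three hypothetical severity classes.
frame_probs = [
    [0.6, 0.3, 0.1],  # frame 0: valve poorly visible
    [0.1, 0.2, 0.7],  # frame 1: valve clearly visible
    [0.5, 0.3, 0.2],  # frame 2: valve partly occluded
]
video, weights = aggregate_frames(frame_probs, [0.0, 2.0, 0.0])
print([round(p, 3) for p in video], [round(x, 3) for x in weights])
```

Because the weights form a convex combination, the pooled output is still a valid probability distribution; here most of the mass goes to the third class because attention concentrates on frame 1, whereas a plain average over frames would favor the first class.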