- 1Los Alamos National Laboratory, Los Alamos, NM, United States

- 2Department of Electrical and Computer Engineering, The University of Texas at Austin, Austin, TX, United States

- 3Center for Nanoscale Materials, Argonne National Laboratory, Lemont, IL, United States

- 4Advanced Photon Source, Argonne National Laboratory, Lemont, IL, United States

- 5Microelectronics Research Center, The University of Texas at Austin, Austin, TX, United States

- 6Omega Optics Inc, Austin, TX, United States

Recent advances in image data proccesing through deep learning allow for new optimization and performance-enhancement schemes for radiation detectors and imaging hardware. This enables radiation experiments, which includes photon sciences in synchrotron and X-ray free electron lasers as a subclass, through data-endowed artificial intelligence. We give an overview of data generation at photon sources, deep learning-based methods for image processing tasks, and hardware solutions for deep learning acceleration. Most existing deep learning approaches are trained offline, typically using large amounts of computational resources. However, once trained, DNNs can achieve fast inference speeds and can be deployed to edge devices. A new trend is edge computing with less energy consumption (hundreds of watts or less) and real-time analysis potential. While popularly used for edge computing, electronic-based hardware accelerators ranging from general purpose processors such as central processing units (CPUs) to application-specific integrated circuits (ASICs) are constantly reaching performance limits in latency, energy consumption, and other physical constraints. These limits give rise to next-generation analog neuromorhpic hardware platforms, such as optical neural networks (ONNs), for high parallel, low latency, and low energy computing to boost deep learning acceleration (LA-UR-23-32395).

1 Introduction

X-rays produced by synchrotrons and free electron lasers (XFELs), together with high-energy photons above 100 keV, which are often generated using high-current (kA) electron accelerators and lately high-power lasers, are widely used as radiographic imaging and tomography (RadIT) tools to examine material properties and their temporal evolution [1–3]. Spatial resolution (δ) down to atomic dimensions is possible by using diffraction-limited X-rays, δ ∼ λ/2, corresponding to Abbe’s diffraction limit for X-ray wavelength λ [4,5]. The overall object size that X-rays can probe readily reaches a length (L) greater than 1 mm, which is limited by the X-ray attenuation length and is X-ray energy dependent. In room-temperature water, for example, L = 0.19, 1.2, 5.9, and 14.1 cm for 1/e-attenuation length of 10 keV, 20 keV, 100 keV, and 1 MeV X-rays, respectively. The temporal resolution has now approached a few femtoseconds by using XFELs, where an XFEL experiment can be repeated for many hours in a pump-probe configuration [6,7]. In other words, the spatial dynamic range (i.e., for 10 keV X-rays, L ∼ 1 mm) is 2L/λ > 107 and temporal dynamic range is

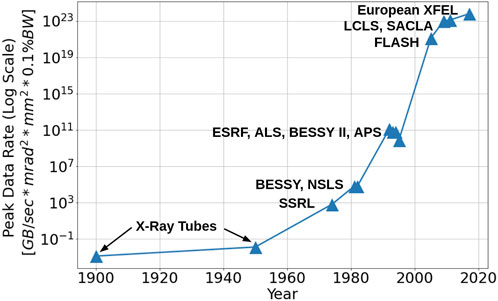

The enormous spatial and temporal dynamic ranges give rise to “big data” in X-ray imaging, tomography, and photon science. Theoretically, 1 mm3 of water contains about 5.6 × 10−5 mol of water molecules (N = 3.3 × 1019). If the position of every molecule were recorded, the memory size would be N log2N (log2N is the bit length for a binary data system) or 2.2 × 1021 bits. In experiments, explosive data growth in X-ray and other forms of RadIT is built upon steady progress for more than 120 years in X-ray and radiation sources, detectors, computation, and lately data science. Figure 1 shows the evolution of the peak data rate due to the increasing X-ray source brilliance over the years [8]. The fourth generation synchrotrons such as APS-U [9] and PETRA IV [10] will have a significant reduction in emittance and a brilliance increase by a factor about 103 over the parameters of the third generation synchrotrons such as APS and PETRA III. XFELs, which are many of orders of magnitude brighter than synchrotrons, will run at a higher repetition rate up to 1 MHz [11]. The original LCLS, in comparison, operates at 120 Hz. However, the upgraded LCLS-II greatly increased the repetition rate to 1 MHz. High-speed detectors with frame rate frequencies above 1 MHz are commercially available. The combination of high-repetition-rate experiments with a mega-pixel and larger recording system leads to high data rates, exceeding 1 TB/s (1 TB = 1012 bytes), as we discuss further in Sec. 2.1.

FIGURE 1. Peak data rate evolution of laboratory X-ray sources. Values are obtained by converting the peak brilliance to bits by assuming 100% detector efficiency and 1 photon = 1 bit.

Big data not only presents a significant challenge to data handling in terms of computing speed, computing power, short- and long-term computer memory, and computer energy consumption, which all together is called “computational resources”, but also offer a transformative approach to process and interpret data, i.e., machine learning (ML) and AI through data-enabled algorithms. Such algorithms, including deep learning (DL) [12,13], are distinctive from traditional physics, statistical, and other forward-model- or domain-knowledge-driven algorithms. Traditional algorithms are based on the domain knowledge, such as physics and statistics, and applicable to both small or large ensembles of data. In contrast, data-driven models may only rely on data explicitly for model training (tuning), model validation and use, with no domain knowledge required. In practice, domain knowledge always helps, partly due to the fact that some aspects of data models, such as the model architecture and other hyper-parameters, are chosen pragmatically and do not depend on the data. The amount of data required for data model training depends on the number of model parameters such as weights, activation functions, the number of nodes, etc. It is not uncommon that a deep neural network (DNN) may contain billions of tunable free parameters, which require a commensurate amount of data for training. Hybrid approaches to ML and AI [14,15], which merge data and domain knowledge, are increasingly popular. Hybrid models not only supplement data-driven models with domain knowledge and reduce the amount of data required for training, but also accelerate the computational speed of traditional forward models by 10 to more than 100 times by bypassing some detailed and time-consuming computations [16–18].

We may differentiate two approaches to ML and AI by the computational resources involved and how the resources are distributed. In the centralized approach, data are collected from distributed locations or different data acquisition instruments through the internet. The data are then stored in a data center, and processed by high-performance computers or mainframes. Cloud computing and data centers are now widely used to process ‘big data’ in industry, healthcare, and research institutions. However, using cloud computing to process data generated at the network edge is not always efficient. One limiting factor is the limited network bandwidth for data transportation due to increasing data generation rates. For example, in 2017, CERN had to install a third 100 Gigabit per second fiber optic line to increase their network capacity and bandwidth [19]. Other factors include the scalability and privacy issues of data transmission to the cloud [20]. Through the cloud computing and data center approach, data generation and data processing tasks can be separated, which can mitigate the computation and data processing burden on people who generate data. In the distributed or edge approach, ML and AI, together with the computing hardware, are deployed at the individual device or instrument level. Distributed computing now pairs with distributed data. Through an internet of ML/AI-enhanced instruments, each ML/AI-enhanced instrument can be optimized for a specific purpose such as data reduction and real-time data processing. Shown later in Table 1, detection cameras used at various synchrotron and XFEL facilities can generate data at a rate of

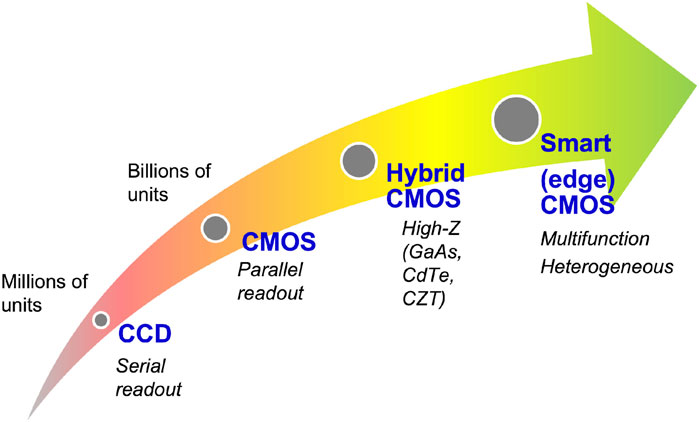

TABLE 1. A comparison of different camera data rates and specifications for individual integration modules for each detector. Additional details and examples may be found in [1]. Note that this table tabulates select cameras to illustrate the data rates and their uses in light sources or X-ray measurements. The state of the art is

We will give an overview of DL methods for real-time radiation image analysis as well as hardware solutions for DL acceleration at the edge. We note that while not all scientific applications may require real-time image analysis, it is possible to offload some computing and preprocessing steps to an edge device. The edge device can preprocess the acquired data in real-time before sending the processed data to upstream processing centers for heavier computations. This paper is organized as follows. In Section 2, we discuss different radiation detectors and imaging devices, the resulting big data generation at photon sources, and the motivations for edge computing and DL. In Section 3, we present an overview of popular neural network architectures and several image processing tasks that have potential to be performed on edge devices. In addition, we discuss examples of DL-based methods for each. In Section 4, we present on overview of hardware solutions for DL acceleration and recent works that have applied them for computing at the edge. Lastly, Section 5 concludes this paper.

2 Experimental data generation at photon sources

Data science at light sources is centered around scientific data generation and processing. Scientific data at synchrotrons and XFEL sources consist of experimental data, simulation and synthetic data, and meta data, such as detector calibration data, material properties of objects and sensors, and point spread functions of the detectors. Methods (imaging modalities) and detectors to collect experimental data are driven by the light sources, which continue to improve in source brightness, repetition rate, source coherence, photon energy, and spectral tunability. Computing hardware and algorithms are used to process experimental data and for data visualization. Computing hardware and algorithms are also used to simulate the experiments and produce synthetic data as close to the experimental data as possible for experimental data interpretation. Diversity of the materials to be integrated and imaged, together with the photon source and detector improvement have demanded continued improvements in computing hardware and algorithms towards real-time data processing, reductions in data transmission over long distances, and reducing data storage volumes.

2.1 Radiation detectors and imaging for photon science

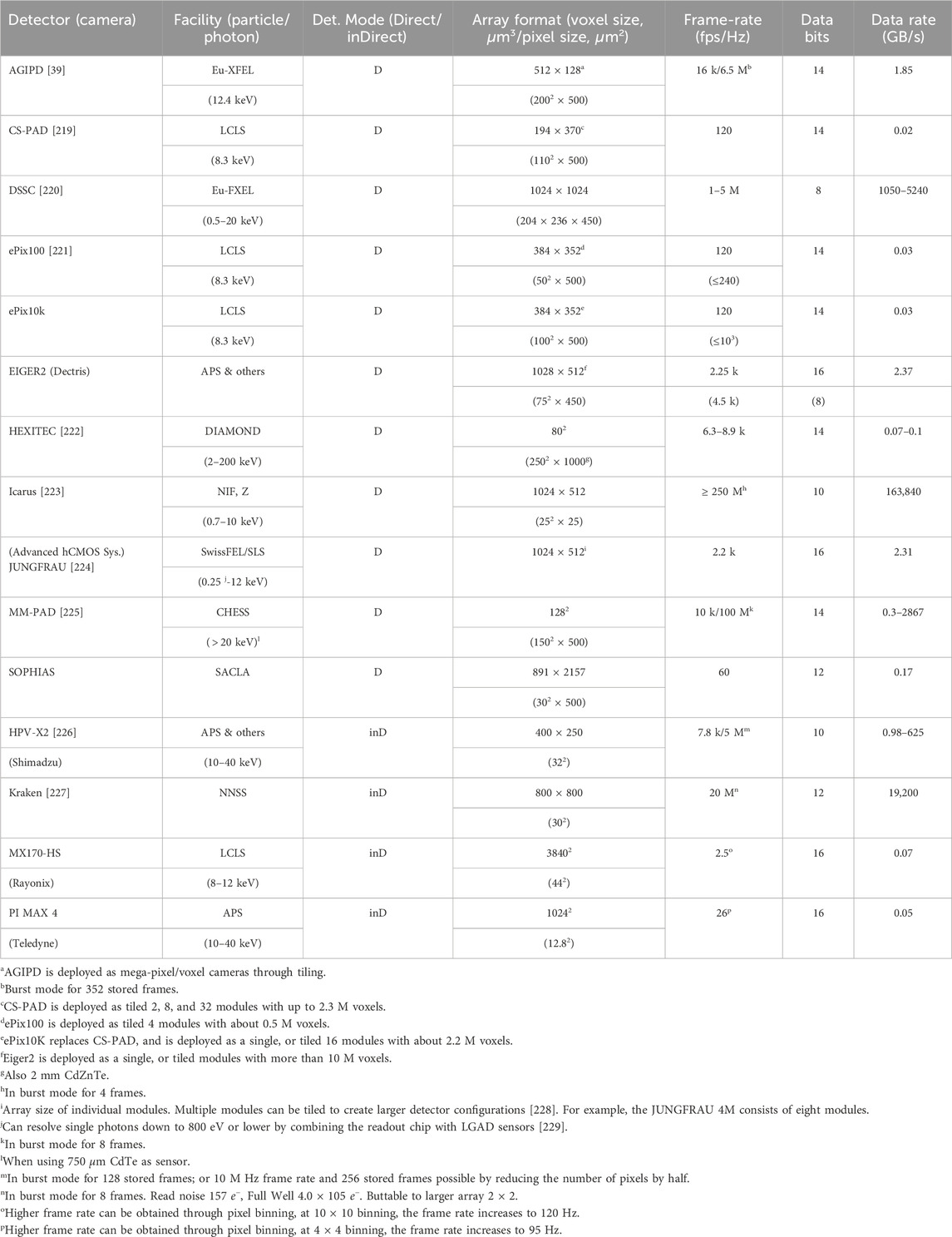

Complementary metal-oxide semiconductor (CMOS) pixelated detectors, including hybrid CMOS, are now widely used for X-ray photon science, replacing charge-coupled devices (CCDs) as the primary digital imaging technology, see Figure 2. CMOS technology is rapidly catching up to CCD cameras, with recent developments such as Sony’s STARVIS which can offer better sensitivity than traditional CCD sensors [21]. In addition, CMOS sensors are much cheaper than CCD sensors, making them more cost efficient while achieving matching performance. The latest trend is smart CMOS technology to enable edge computing and neural networks on CMOS sensors [22,23]; see Section 2.3 for more details.

FIGURE 2. Evolution of digital image sensor technology, which started with the introduction of the charge-coupled device (CCD) in the late 1960s. The latest trend is smart multi-functional CMOS image sensors enabled by three-dimensional (3D) integration in fabrication, innovations in heterogeneous materials and structures, neural networks, and edge computing.

CMOS sensors are used in many state-of-the-art radiation applications. For example, CMOS-based back-thinned monolithic active pixel sensors (MAPS) are the state-of-the-art detectors used for cryo-electron microscopy applications. MAPS detectors are CMOS sensors that combine the photodetectors and readout electronics on the same silicon layer, while backthinning reduces the electron scattering within pixels. MAPS detectors are also being developed for high-energy physics [24], cryogenic electron microscopy (cryo-EM), cryo-ptychography, integrated differential phase contrast (iDPC), and liquid cell imaging applications [25]. Meanwhile, hybrid CMOS detectors such as the AGIPD, ePix, and MM-PAD (see Table 1 for more detectors) are popularly used at facilities for photon science applications. Hybrid detectors are composed of a sensor array and pixel electronics readout layer that are interconnected through bump bonding, while the sensor frontend can be fabricated using different semiconductor materials. The thickness and material properties of the sensor array is dependent on the active absorbing layer design requirements and given X-ray energy to obtain high quantum efficiency. For example, high-Z sensors use materials with high atomic numbers such as Gallium Arsenide (GaAs), Cadmium Telluride (CdTE) and Cadmium Zinc Telluride (CZT) [26]. The hybrid design architecture allows for independent optimization of the quantum efficiency of the sensor array and pixel electronics functionality to meet imaging and measurement performance requirements [27]. Currently, hybrid CMOS detectors are the most widely used image sensors for high energy physics experiments [24]. Another family of image sensor is called the low-gain avalanche detector (LGAD), a silicon sensor fabricated on thin substrates to deliver fast signal pulses to achieve enhanced time resolution [28], as well as to increase the X-ray signal amplitudes and the signal-to-noise ratio to achieve single photon resolution [29]. As a result, LGADs are popularly used in experiments that require fast time resolution and good spatial resolution such as 4D tracking [30] and for soft X-ray applications in low energy diffraction, spectro-microscopy and imaging experiments such as the resonant inelastic X-ray scattering experiments [29]. In summary, radiation pixel detectors aim to capture incident photons and convert the accumulated charges in the pixel into an output image. We also mention that CMOS image sensors, including hybrid CMOS, may also be extended to neutron imaging by converting incident neutrons to visible photons through neutron capture reactions [31].

The particle nature of photons motivates digitized detectors for photon counting. Hybrid CMOS detectors are one of the most popular detectors that use the photon counting mode of operation, where individual photons are detected by tuning the discriminator threshold and the energy value of each incident photon is recorded as electronic signals. However, several factors complicate photon counting implementation in high-luminosity X-ray sources. The intensity of the sources can be too high to count individual photons one by one. The amount of X-ray photon-induced charge in CMOS detectors, which is the basis of X-ray photon counting, is not constant for the same X-ray energy. Furthermore, the detectors suffer from a charge sharing issue when a photon interacts on the border between neighboring pixels. The source energy is not monochromatic, especially in imaging applications. Inelastic scattering of mono-chromatic X-rays can result in a broad distribution of X-ray photon energies after scattering by the object. When an optical camera is used together with a X-ray scintillator, the energy resolution of individual X-rays based on the photon detection is worse than direct detection when the X-ray directly deposits its energy in a silicon photo-diode. See Table 1 for a comparison of different direct and indirect detection cameras and their data rates. Note that Table 1 tabulates the specifications of select cameras to illustrate their data rates as well as their uses in light sources or X-ray measurements. To overcome the issue of the too high photon flux rate, hybrid detectors are developed to operate under a charge integration mode, where the signal intensity is obtained by integrating over the exposure time. The current generation of hybrid CMOS detectors are capable of different modes of operation (i.e., photon counting and charge integration) for direct photon detection [32].

2.2 Imaging modalities

X-ray microscopy uses X-ray lenses, zone plates, mirrors and other optics to modulate the X-ray field to form images [33]. As the X-ray intensities generated by synchrotrons and XFELs continue to increase, the advances in computational imaging modalities and lens-less X-ray modalities are increasingly used in synchrotrons and XFELs. In some cases, lens-less modalities may be preferred to avoid damages to X-ray lenses and mirrors. Lens-less modalities may also avoid aberration, diffraction due to imperfect X-ray lens, defects in zone plates, and other optics. The simplest lens-less X-ray imaging setup is radiography or projection imaging, pioneered by Röntgen. Röntgen’s lens-less radiographic imaging modality directly measures attenuated X-ray intensity due to absorption. Synchrotrons and XFELs also allow a growing number of phase contrast imaging, see Ref. [1] and references therein. Other modalities include in-line holography [34] and coherent diffractive imaging [35]. Additional phase and intensity modulation using pinholes, coded apertures, and kinoforms are also possible. Combinatorial X-ray modalities have also been introduced. For example, X-ray ptychography microscopy combines raster scanning X-ray microscopy with coherent diffraction imaging [36]. Compton scattering, usually ignored in the synchrotron and XFEL setting, may offer some additional information about the samples and potentially reduce the dose required [37]. The versatility of modalities requires different off-line and real-time data processing techniques. Background reduction is a common issue for all X-ray modalities. Real-time data processing, including energy-resolving detection, is highly desirable to distinguish different sources of X-rays since the detector pixel may simultaneously collect X-ray photons from different sources of X-ray attenuation and scattering.

2.3 Real time in-pixel data-processing

When an X-ray photon is detected directly or indirectly through the use of a scintillator, charge-hole pairs are created through photo-to-electric conversion, or the photoelectric effect, within pixels of a camera or a pixelated array. CCD cameras, CMOS cameras, and LGAD arrays are now available for synchrotron and XFEL applications. Unlike a CCD camera, a CMOS image sensor collects charge and stores it in capacitors in pixels in parallel. Parallel charge collection and capacitor voltage digitization, which turns analog voltage signals into digitized signals, allow CMOS image sensors to operate at a much higher frame rate than CCDs. Charge and voltage amplification, in LGADs and sometimes in CMOS image sensors, are also used to improve signal-to-noise ratio. Any source of charge or voltage modulation not related to the photoelectric effect is a potential source of noise. The photoelectric effect itself can lead to so-called Poisson noise due to the probabilistic process of photo-to-electric conversion. Other sources of noise include thermal noise or dark current, salt’n’pepper noise (due to charge migration in and out of pixel defects and traps), and readout noise.

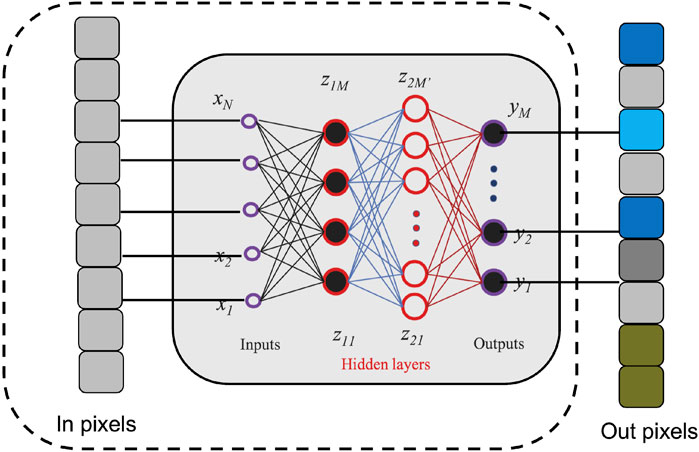

Automated real-time in-pixel signal and data processing are therefore required in CMOS and other pixelated array sensors for noise rejection and noise reduction for charge and voltage amplification controls, and for charge sharing corrections. Figure 3 illustrates a generic approach on in-pixel neural network processing for optimized and real-time data processing. Common approaches process the data by transmitting it to a separate processor and storing the data in memory. However, the data transmission and memory access actions are known to be among the most power hungry in imaging systems [38]. As a result, it is desirable to optimize the end-to-end processes of sensing, data transmission, and processing tasks. One solution is to utilize in-pixel processing to directly extract features of the input pixels which can significantly reduce system bandwidth and power consumption of data transmission, memory management, and downstream data processing. In recent years, a number of works have been proposed to implement image sensors with in-pixel neural network processing; see [22,23] and references therein. This motivates real-time image processing for image sensors for various image processing tasks including noise removal. If uncorrected, noise can corrupt the image information and make it hard for post processing or misleading for data interpretation. Charge and voltage amplification may lead to nonlinear distortion between the X-ray flux and voltage signal. When the X-ray flux is too high, the so-called plasma effect may also need correction. Charge-sharing happens when an X-ray photon arrives at a pixel border and the electron-hole pairs created are spread across multiple neighboring pixels.

FIGURE 3. A generic illustration of in-pixel neural network processing for optimized and real-time end-to-end data processing and reductions. The neural network is directly implemented on the imaging sensor. For this specific example, the network illustrated is a fully connected neural network (FCNN). The network takes in the sensor pixel values as inputs x (in pixels) then feeds the input into hidden layers z with the number of neurons per layer denoted by M. The processed pixels (out pixels) is the output of the neural network denoted by y.

By using transistor circuits, correlated double sampling (CDS) is an extremely successful example in noise reduction. Adaptive gain control circuits have been implemented in the AGIPD high-speed camera [39,40]. While real-time pixel-level signal processing by novel transistor circuits is important, there is also room for novel data-processing approaches that do not require hardware modifications to the pixels. As a recent example [41], a physics-informed neural network was demonstrated to improve spatial resolution of neutron imaging. Other novel applications of neural networks and their integration with hardware, see Figure 3, may offer new possibilities in noise reduction and image corrections. Integrated hardware and software (neutral networks are emphasized here) approaches for optimal performance also need to take into account of the complexity of the workflow [42–44], or computational cost, power consumptions, constrained by the frame rate and other metrics. For example, the computational cost of an n × n matrix is O (n3) [45].

3 Deep learning for image processing

In recent years, deep learning (DL) has contributed significantly to the progress in computer vision, especially in different areas of image processing tasks including but not limited to image denoising, segmentation, super-resolution, and classification. DL is a sub-field of ML and AI that utilize neural networks (NNs) and their superior nonlinear approximation capabilities to learn underlying structures and patterns within high-dimensional data [12]. In other words, DL aims to learn multiple levels of representation, corresponding to a hierarchy of features or concepts, where higher-level features are defined from lower-level ones and lower-level ones can help build up higher-level features.

For DL algorithms to extract underlying features and to obtain accurate predictions, it is important to understand the workflow of the DL process. In general, the DL process can be broken into several stages: i) data acquisition, ii) data preprocessing, iii) model training, testing, and evaluation, iv) model deployment and monitoring [46,47]. The first step to ML and DL problems is to collect large amounts of data from sources including but not limited to sensors, cameras, and databases. Next, the collected data needs to be preprocessed into useful features as inputs into the DL model. At a high level, the preprocessing step aims to prepare the raw data (e.g., data cleaning, outlier removal, data normalization, etc.) and to allow data analysts to preform data exploration (i.e., identifying data structure, relevance, type, and suitability). The preprocessed data is split for model training, testing, and evaluation. The appropriate DL training algorithm, model, and ML problem are dependent on the nature of the application. The model is trained on the training dataset to tune the model hyperparameters and is evaluated using unseen data, also known as the test dataset. This process is reiterated until a desired accuracy performance or stopping criteria has been achieved. Last, the trained model is deployed and monitored for further retraining and redeployment. See [46,48,49] for comprehensive details on the basics of DL.

3.1 Centralized and decentralized learning

Recall that ML and AI, and thus DL, can be differentiated into two approaches, namely, centralized and decentralized approaches. In the centralized approach, the data collected from network edge detectors are transmitted to and stored in a data center and then processed by high-performance computers. Very large traditional, ML, or hybrid algorithms can be deployed in the data center, which also requires correspondingly large memory, energy and power consumption. Estimated global data center electricity consumption in 2022 was 240–340 TWh [50], or around 1%–1.3% of global final electricity demand from data centers and data transmission networks [51]. To put this value in a better perspective, it is estimated that Bitcoin alone consumes around 125 TWh per year [52] and that the combination of Bitcoin and Ethereum consumed around 190 TWh (0.81% of the world energy consumption) in 2021 [53]. Furthermore, centralized approaches and large ML models are commonly executed by a large team of people. A Meta AI research team recently introduced the model called Segment Anything Model (SAM) and a dataset of more than 1 billion masks on 11 Million images [54]. Nvidia unveiled Project Clara at its recent GTC conference, showing early results using DL post-processing to dramatically enhance existing, often grainy and indistinct echocardiograms (sonograms of the heart). Clara motivates acceleration in research being done on several fronts that exploits explosive growth in DL computational capability to perform analysis that was previously impossible or far too costly. One technique is called 3D volumetric segmentation that can accurately measure the size of organs, tumors or fluids, such as the volume of blood flowing through arteries and the heart. Nvidia claims that a recent implementation, an algorithm called V-Net, “would’ve needed a computer that cost $10 million and consumed 500 kW of power [15 years ago]. Today, it can run on a few Tesla V100 GPUs” [55,56]. This claim accentuates the rapid advancements made in the hardware industry to accommodate DL computational requirements. For example, a work by [57] implemented and trained V-Net for 48 h on a workstation equipped with an NVIDIA GTX 1080 with 8 GB of video memory.

However, data processing using cloud computing and data centers are inefficient due to factors including limited network latency, scalability, and privacy [20]. To address these challenges, edge computing, or the distributed approach, offloads computing resources to the edge devices to improve network latency, to enable real-time services, and to address data privacy challenges by directly analyzing data generated by the source. In addition, edge computing will help reduce the high costs of memory storage as well as high energy costs for data transmission and memory access during data processing. For example, CERN’s Large Hadron Collider (LHC) and non-LHC experiments generate over 100 petabytes of data each year and CERN’s main data center had an energy consumption of about 37 GWh over the year of 2021 [58]. Assuming that the average cost of electricity is $0.15/kWh, then the cost of using 37 GWh is $5.55 million. CERN’s new data center in Prévessin aims to have a power usage effectiveness (PUE) below 1.1 (ideal PUE is 1.0), and in future data centers, CERN aims to implement ML based approaches to key computing tasks to help reduce the amount of computing resources and energy consumption [58].

There are some other successes using ML and AI in areas such as HEP experiments (i.e., Higgs boson discovery) and electron microscopy. The discovery of the Higgs boson was a major challenge in HEP and can be setup as a classification problem. Many ML methods such as decision trees, logistic regression, and DL algorithms have been applied to solve the signal separation problem [59,60]. Meanwhile, ML and AI in electron microscopy are proposed to enable autonomous experimentation. Specifically, the automation of routine operations including but not limited to probe conditioning, guided exploration of large images, optimized spectroscopy measurements, and time-intensive and repetitive operations [61]. Edge ML and edge AI have already attracted a lot of attention in medicine. The fusion of DL and medical images creates dramatic improvements [56]. The concept is similar to techniques like high-dynamic range (HDR) photography, digital remastering of recordings or even film colorization in that one or more original sources of data are post-processed and enhanced to bring out additional detail, remove noise or improve aesthetics.

3.2 Neural network architectures

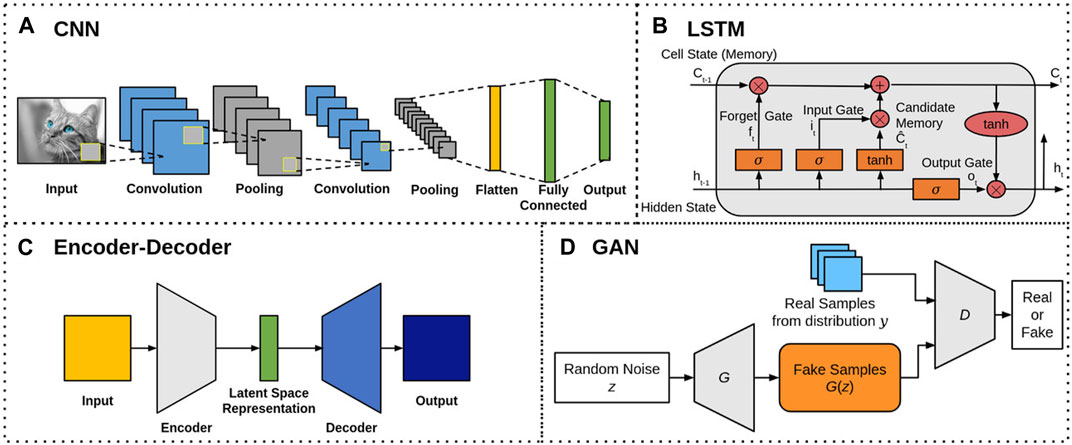

This section provides an overview of different popular deep neural network (DNN) architectures used for image processing tasks. These widely used architectures include but are not limited to convolutional neural networks (CNNs), long short-term memory (LSTM), encoder-decoder networks, and generative adversarial networks (GANs). Due to space limitations, other DNN architectures such as transformers [62], restricted boltzamann machines [63], and extreme learning [64] will not be covered here.

3.2.1 Convolutional neural networks (CNNs)

CNNs are one of the most widely used architectures in DL, especially for image processing tasks, due to their inherent spatial invariance property. The built-in convolutional layers allow the network to naturally reduce the high dimensionality of the input data, i.e., images, without information loss. Figure 4A shows the basic architecture of CNNs, which usually consists of 3 types of layers: i) convolutional layers, ii) pooling layers, and iii) fully connected layers. The convolutional layer uses various kernels to convolve the entire input image, including intermediate feature maps, and generate new feature maps. There are 3 major advantages of the convolutional operation [65]: i) the number of parameters is reduced by using weight sharing mechanisms, ii) the correlation among neighboring pixels are easily learned through local connectivity, and iii) the location of objects are fixed due to spatial invariance. Generally following a convolutional layer, the pooling layer is used to further reduce the dimensions of feature maps and network parameters. The average pooling and max pooling methods are commonly used, and their theoretical performances have been evaluated by [66,67], where max pooling is shown to achieve faster convergence and improved CNN performance. Lastly, the fully connected layer follows the last pooling or convolutional layer to convert the 2D feature maps into a 1D vector for additional feature mapping, i.e., labels. A few of the well known CNN models are the AlexNet [68], VGG [69], GoogLeNet [70], and ResNet [71], where all the models were top 3 finishers in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). Discussed later in Section 3.3, CNNs are popularly used in many image processing tasks such as image restoration (e.g., denoising, deblurring, and super resolution), segmentation, classification, and 3D reconstruction. A few examples from photon sciences include but are not limited to denoising synchrotron computed tomography images, deblurring neutron images, segmentation of inertial confinement fusion radiographs, and 3D reconstruction of coherent diffraction imaging.

FIGURE 4. Basic neural network architectures for (A) CNNs, (B) LSTMs, (C) encoder-decoders, and (D) GANs.

3.2.2 Long short-term memory (LSTM)

LSTMs [72] are a special type of recurrent neural network (RNN) that is commonly used to process sequential datasets, such as audio recordings, videos, and time-series data. Figure 4B shows the basic structure of a LSTM block, which consists of 3 gates (the forget gate ft, the input gate it, and output gate ot), as well as the candidate memory (new information)

3.2.3 Encoder-decoders

Encoder-decoder neural networks, also known as sequence-to-sequence networks, are a type of network that learns to map the input domain to a desired output domain [13]. As shown in Figure 4C, the network consists of two main components: an encoder network which uses an encoder function h = f(x) to compress the input x into a latent-space representation h, and a decoder network y = g(h) that produces a reconstruction y from h. The latent-space representation h prioritizes learning the important aspects of the input x which are useful in reconstructing the output y. A special case of encoder-decoder models, autoencoders are networks in which the input and output domains are the same. These networks are popularly used in DL applications involving sequence-to-sequence modeling such as natural language processing [73], image captioning [74], and speech recognition [75]. In image processing, encoder-decoder networks are popularly used for image denoising, segmentation, compression, and 3D reconstruction. For example, one popular encoder-decoder model for image segmentation is U-Net [76]. Discussed later in Section 3.3, U-Net is used for image segmentation of inertial confinement fusion images and modified versions of the U-Net architecture are used in many works for image processing tasks. A few examples include but are not limited to the denoising and super resolution of synchrotron and X-ray computed tomography images.

3.2.4 Generative adversarial networks (GANs)

GANs [77] are increasingly popular DL frameworks for generative AI models. Classical GANs consist of 2 different networks, a generator and a discriminator, as shown in Figure 4D. The generator network G aims to generate data G(z) that is indistinguishable from the real data by learning a mapping from an input noise distribution z to a target distribution y of the real data. Meanwhile, the discriminator network D takes as input the real and generated data, and aims to correctly classify them as “real” or “fake” (generated). The GAN learning objective takes on a game-theoretic approach as a two player minimax game between G and D. Let

3.3 Image processing techniques

This section provides an overview of several image processing tasks that have potential to be performed on edge devices. In addition, this section surveys different works that have applied the DL-based image processing techniques to radiographic image processing.

3.3.1 Restoration

Image restoration is the process of adjusting the quality of digital images such that the enhanced image can facilitate further image analysis. Common enhancement operations include histogram-based equalization, brightness, and contrast adjustment. However, these operations are very elemental and advanced operations are necessary to further improve the perceptual quality. These advanced operations include image denoising, deblurring, and super-resolution (SR); see [80–83] for examples of images before and after processing.

3.3.1.1 Denoising

One of the fundamental challenges in image processing, image denoising aims to estimate the ground-truth image by suppressing internal and external noise factors such as sensor and environmental noise, as discussed in Section 2.3. Sources of noise include but are not limited to Poisson noise due to photo-electric conversion, camera thermal noise or dark current, salt’n’pepper noise, camera readout noise, and shot noise for low-dose X-ray imaging conditions. Conventional methods including but not limited to adaptive nonlinear filters, Markov random field (MRF), and weighed nuclear norm minimization (WNNM), have achieved good performance in image denoising [84], however, they suffer from several drawbacks [85]. Two major drawbacks are the need to manually set parameters as the proposed methods are non-convex and the high computational cost for the optimization problem for the test phase. To overcome these challenges, DL methods are applied for image denoising problems to learn the underlying noise distribution. Various neural network architectures, such as CNNs, encoder-decoders, and GANs, have been proposed for image denoising in recent years; see [84] for details.

An example application that uses image denoising is in X-ray computed tomography (CT). X-ray CT imaging is a common noninvasive imaging technique that allows for reconstructing the internal structure of objects by using 3D reconstruction from 2D projection images; see Section 3.3.6 on 3D reconstruction. The spatial resolution of XFEL-based and synchrotron-based X-ray CT images can range from tens of microns to a few nanometers, while higher resolutions can be obtained by using higher radiation doses. However, some experiments may require short exposure times or low radiation dosage to avoid damaging the sample. The low-dose image conditions results in noisy 2D projection images, which in turn impacts the quality of the 3D reconstructed image. To address this issue [86], developed a GAN-based image denoising method called TomoGAN. TomoGAN is a conditional GAN model where the generator G conditionally uses the noisy reconstruction as input and outputs enhanced (denoised) reconstructions. Furthermore, the generator network architecture adopts a modified U-Net [76] architecture, popularly used for image segmentation. Meanwhile, the discriminator D is trained to classify reconstructions of the enhanced reconstructions and reconstructions of normal dose projections [86]. Evaluates the effectiveness of TomoGAN on two experimental (shale sample) datasets. TomoGAN outperforms conventional methods in noise reduction and reports a higher structural similarity (SSIM) value. In addition, TomoGAN is demonstrated to be robust to images with dynamic features from faster experiments, e.g., collecting fewer projections and/or using shorter exposure times.

Denoising has also been applied to synchrotron radiation CT (SR-CT) in a recent work by [87], which developed a CNN-based image denoising method called Sparse2Noise. Similar to the previous work for TomoGAN, this work presents a low-dose imaging strategy and utilizes paired normal-flux CT images (sparse-view) and low-flux CT image (full-view) to train Sparse2Noise. In addition, Sparse2Noise also adopts a modified U-Net architecture for its performance of removing image degradation factors such as noise and ring artifacts. The Sparse2Noise network takes as input the normal-flux CT images into the modified U-Net architecture and outputs the enhanced image. During training, the network is trained in a supervised fashion using the low-flux CT images. The loss function to update the network weights is defined to minimize the difference between the enhanced image and the reconstructed low-flux CT image [87]. Evaluates the effectiveness of Sparse2Noise on one simulated and two experimental datasets. Furthermore, Sparse2Noise is compared to simultaneous iterative reconstruction technique (SIRT), unsupervised deep imaging prior (DIP), and supervised training algorithms Noise2Inverse [88] and Noise2Noise [89]. For the simulated dataset, Sparse2Noise outperforms all methods by achieving the highest SSIM and peak signal to noise ratio (PSNR) values, and in terms of removing image degradation factors such as noise and ring artifacts. For the experimental datasets, Sparse2Noise also achieves the best performance in terms of noise and ring artifact removal. Most importantly, however, Sparse2Noise can achieve excellent performance for low-dose experiments (0.5 Gy per scan).

3.3.1.2 Deblurring

Image deblurring aims to recover a sharp image from a blurred image by suppressing blur factors such as lack of focus, camera shake, and target motion. Some blur factors are application specific such as multiple Coulomb scattering and chromatic aberration in proton radiography [90]. A blurred image can be modeled mathematically as B = K*I + N, where B denotes the blurred image, K the blur kernel, I the sharp image, N the additive noise, and * the convolution operation. The blur kernel K is typically modeled as a convolution of blur kernels that are spatially invariant or varying [82]. Conventional methods aim to solve the inverse filtering problem to estimate K, however, this is an ill-posed problem as the sharp image I needs to be estimated as well. To address this issue, prior-based optimization approaches, also known as maximum a posteriori (MAP)-based approaches, have been proposed to define priors for K and I [91]. While these approaches are shown to achieve good results for image deblurring, deep learning approaches can further improve the accuracy of the blur kernel estimation or even skip the kernel estimation process altogether by using end-to-end methods. Various neural network architectures, such as CNNs, LSTMs, and GANs, have been proposed for image deblurring; see [82,91] for details.

One example application that uses image deblurring is in neutron imaging restoration (NIR), a non-destructive imaging method. However, the neutron images suffer from noise and blur artifacts due to the neutron source and the digital image system. The low quality of raw neutron images limits their applications in research, and thus image denoising and deblurring techniques are necessary to produce sharp images. To address these issues [92], proposes a fast and lightweight neural network called DAUNet. DAUNet consists of three main blocks: a feature extraction block (FEB), multiple cascaded attention U-Net blocks (AUB), and a reconstruction block (RB). First, DAUNet takes as input a degraded neutron image and feeds it into the FEB to extract important underlying features. Next, the AUB inputs the extracted feature maps into a modified U-Net with an attention mechanism, which allows U-Net to focus on harder to address features such as texture and structure information, and outputs a restored image. Last, the RB block outputs the enhanced image by reconstructing the restored image. To evaluate DAUNet, its performance is compared with several popular DNN image restoration methods such as DnCNN [93] and RDUNet [94]. Due to the lack of available neutron imaging datasets, the networks are trained on X-ray images that are similar to the neutron imaging principle; specifically, the X-ray images are obtained from the SIXray dataset [95], where 4699 and 23 images are used as the training and test set respectively. In addition, seven clean neutron images are added to the test set. Results show that DAUNet can effectively improve the image quality by removing noise and blurring artifacts, while achieving quality close to the large network with faster running times and a smaller number of network parameters.

3.3.1.3 Super-resolution (SR)

Image SR is the process of reconstructing high-resolution images from low-resolution images. It has been widely applied in many real-world applications, especially in medical imaging [96] and surveillance [97], where the spatial resolution of captured images are not sufficient due to limitations such as hardware and imaging conditions. A variety of DL-based methods for SR have been explored, ranging from CNN-based methods (e.g., SRCNN [98]) to more recent GAN-based methods (e.g., SRGAN [99]). In addition to utilizing different neural network architectures, DL-based SR algorithms also differ in other major aspects such as their loss functions and training approaches [83,100]. These differences result from various factors that contribute to the degradation of image quality including but not limited to blurring, sensor noise, and compression artifacts. Intuitively, one can think of the low-resolution image as the output of a degradation function with an input high-quality image. In the most general case, the degradation function is unknown and an approximate mapping is learned through deep learning. These degradation factors influence the design of loss function, and thus training approaches. A detailed discussion of the various loss functions, SR network architectures, and learning frameworks is out of scope for this paper; however, see [100] for details.

An example application that applies super resolution is for X-ray CT imaging. As mentioned earlier, CT imaging has many factors that impact the resulting image quality such as radiation dose and slice thickness. In addition, 3D image reconstruction may require heavy computational power due to the number of slices or projection views taken, where thicker slices results in lower image resolution, and slower operational speed, which increases with the number of slices. To address this issue, it is desirable to obtain higher-resolution (thin-slice) images from low-resolution (thick-slice) ones [101]. Develops an end-to-end super-resolution method based on a modified U-Net. The network takes as input the low-resolution image and outputs the high-resolution one. The network is trained on slices of brain CT images obtained from a 65 clinical positron emission tomography (PET)/CT studies for Parkinson’s disease. The low-resolution images are generated as the moving average of five high resolution slices and the ground-truth image is taken as the middle slice. The performance of the proposed method is compared with the Richardson-Lucy (RL) deblurring algorithm using the PSNR and normalized root mean square error (NRMSE) metrics. The results show that the proposed method achieves the highest PSNR and lowest NRMSE values compared to the RL algorithm. In addition, the noise level of the enhanced images are reported to be lower than that of the ground-truth.

Super resolution has also been applied to transmission and cryogenic electron microscopy (cryo-EM) imaging applications for sub-pixel electron event localization [25,102]. In transmission electron microscopy, electron events are captured using pixelated detectors as a 2D projection track of the energy deposition [102]. Conventional reconstruction methods, such as the weighted centroid method and the furthest away method (FAM), require an event analysis procedure to extract electron track events. However, these classical algorithms are unable to separate overlapping electron event tracks, and do not take into consideration the statistical behavior of the electron movement and energy deposition. To address this issue [102], used a U-Net-based CNN to learn a mapping from input electron track image to an output probability map that indicates the probability of the point of entry for each pixel. The network is trained using a labeled dataset generated through Monte Carlo simulations, and tested on simulated data and experimental data from a pnCCD [103]. The performance of the proposed CNN model is compared with FAM. The results show that the proposed method achieves superior localization performance compared to FAM by reducing the distribution spread of the Euclidean distance on the simulated dataset, while achieving a modulation transfer function closer to the ground truth on the experimental dataset. For cryo-EM, a CNN model was applied in a similar manner for electron event localization, but with a slightly different dataset. Cyro-EM experiments popularly use MAPS detectors to directly detect electron events, where each captured electron results in a pixel cluster on the captured image. In [25], a CNN model is designed to output a sub-pixel incident position given an input pixel cluster image and the corresponding time over threshold values.

3.3.2 Segmentation

Image segmentation is the process which segments an image or video frames into multiple regions or clusters, where each pixel can be represented by a mask or be assigned a class [104]. This task is essential in a broad range of computer vision problems, especially for visual understanding systems. A few applications that utilize image segmentation include but are not limited to medical imaging for organ and tumor localization [105], autonomous vehicles for surface and object detection, and video footage for object and people detection and tracking [106]. Numerous techniques for image segmentation have been proposed throughout the years, ranging from early techniques based on region splitting or merging such as thresholding and clustering algorithms, to newer algorithms based on active contours and level sets such as graph cuts and Markov random fields [104,107]. Although these conventional methods have achieved acceptable performance for some applications, image segmentation still remains a challenging task due to various image degradation factors such as noise, blur, and contrast. To address these issue, numerous deep learning methods have been developed and have been shown to achieve remarkable performance. This is due to the powerful feature learning capabilities of DNNs, which allows DNNs to have reduced sensitivity to image degradation factors compared to the conventional methods. Popular neural network architectures used for DL-based segmentation includes CNNs, encoder-decoder models, and multiscale architectures; see [107] for details. Two popular DNN architectures used for image segmentation problems are U-Net [76] and SegNet [106]; see [107] for examples of images before and after processing.

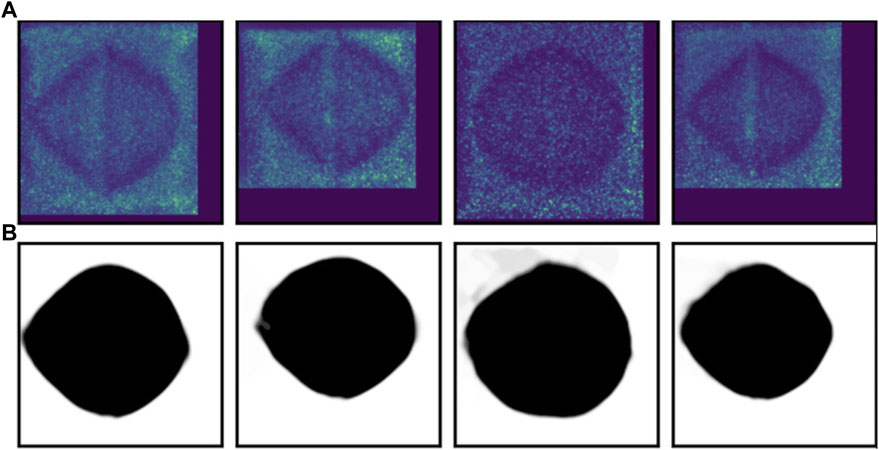

Image segmentation is an important step in analyzing X-ray radiographs from, for example, inertial confinement fusion (ICF) experiments [79]. ICF experiments typically use single or double shell targets which are imploded as the laser energy or laser-induced X-rays rapidly compress the target surface. X-ray and neutron radiographs of the target provide insight to the shape of target shells during the implosion. Contour extraction methods are used to extract the shell shape to conduct shot diagnostics such as quantifying the implosion and kinetic energy, identifying shell shape asymmetries, and determining instability information [79]. Uses U-Net [76], a CNN architecture for image segmentation, to output a binary masked image of the outer shell in ICF images. The shell contour is then extracted from the masked image using edge detection and shape extraction methods. Due to the limited number of actual ICF images, a synthetic dataset consisting of 2000 experimental-like radiographs is used to train the U-Net. In addition, the synthetic dataset provides ground-truth ICF image-mask pairs, which are required to train U-Net. The trained U-Net is tested on experimental images and has successfully extracted the binary mask of high-signal-to-noise ratio ICF images as shown in Figure 5.

FIGURE 5. Results of image segmentation using U-Net on experimental ICF images (A) and the corresponding output masks (B). Reproduced with permission from [79].

Another example of X-ray image segmentation is for the Magnetized Linear Inertial Fusion (MagLIF) experiments at Sandia National Laboratory’s Z-facility [108]. The MagLIF experiments compresses a cylindrical beryllium tube, also known as a liner, filled with pure deuterium fuel using a very large electric current on the order of O (20MA). Before compression, the deuterium fuel is pre-heated and an axially oriented magnetic field is applied. The electric current causes the liner to implode and compresses the deuterium fuel in a quasi-adiabatic implosion. The magnetic field flux is also compressed which aids in the trapping of charged fusion particles at stagnation. X-ray radiographs are taken during the implosion process for diagnostics and to analyze the resulting plasma conditions and liner shape. To better analyze the implosion, a CNN model is proposed to segment the captured X-ray images into fuel strand and background. The CNN is trained using synthetically generated and augmented dataset of 10,000 X-ray images and their corresponding binary masks. The trained CNN is tested on experimental images where the results generally demonstrate excellent fuel-background segmentation performance. The worst segmentation performance is due to factors such as excessive background noise and X-ray image plate damage.

3.3.3 Image classification and object detection

Image classification, a fundamental problem in computer vision, aims to assign labels or categories to images or specific regions in images. It is known to form the basis of other computer vision tasks including segmentation and object detection. Traditional approaches to solve the classification problem typically use a two stage approach, where handcrafted features extracted from the image are used to train a classifier. The traditional approaches suffer from low classification accuracy due to the heavy dependence on the design of the handcrafted features. DL approaches can easily overcome this challenge by exploiting neural network layers for automated feature extraction, transformation, and pattern analysis. CNNs are the most popular neural network architecture used for image classification [109,110] due to their capability of reducing the high dimensionality of images without information loss, as discussed in Section 3.2.1. In addition, recall in Section 3.2.1, the CNN architectures AlexNet, VGG, GoogLeNet, and ResNet were top 3 finishers in the ILSVRC. The ILSVRC is an annual software contest where algorithms compete to correctly classify images in the ImageNet database.

Object detection builds upon image classification by estimating the location of the object in an input image in addition to classifying the object. As a result, the workflow for traditional detection algorithms can be broken down into informative region selection, feature extraction, and classification. For informative region selection, a multiscale sliding window (bounding box) is used to scan the image to determine regions of interest. Feature extraction is used on the selected region, which is then used for object classification. However, traditional methods are time consuming and robust algorithms are difficult to design. For example, a large number of candidate sliding windows need to be considered or the algorithm may return bad regions of interest. In addition, the imaging conditions can vary significantly due to factors such as lighting conditions, backgrounds, and distortion effects. Again, DL algorithms can overcome these challenges due to their capability of learning complex features using robust training algorithms [111–113]. Popular DL object detection models are Fast R-CNN [114,115] which jointly optimizes the classification and bounding box regression tasks, You Only Look Once (YOLO) [116] which uses a fixed-grid regression, and Single Shot MultiBox Detector (SSD) [117] which improves upon YOLO using multi-reference and multi-resolution techniques.

3.3.4 Compression

Image compression is the process of reducing the file size, in bytes, without reducing the quality of the image below a threshold. This process is important in order to save memory storage space and to reduce the memory bandwidth to transmit data, especially for running image processing algorithms on edge devices. The fundamental principle of compression is to reduce spatial and visual redundancies in images, by exploiting inter-pixel, psycho-visual, and coding redundancies. Conventional methods commonly leverage various quantization and entropy coding techniques [118]. Popularly used conventional methods for lossy and lossless compression includes but are not limited to JPEG [119], JPEG2000, wavelet, and PNG. While conventional methods are widely used for both image and video compression, their performance is not the most optimal for all types of image and video applications. DL approaches can achieve improved compression results due to several factors. DNNs can learn non-linear mappings to capture the compression process as well as extract the important underlying features of the image through dimensionality reduction. For example, an encoder network or CNN can extract important features into a latent feature space for compact representation. In addition, DNNs can implement direct end-to-end methods using networks such as encoder-decoders to directly obtain the compressed image from an input sharp image. Furthermore, once a DNN is trained, the inference time is much faster. For DL-based image compression methods, the most commonly used neural network architectures are CNNs, encoder-decoders, and GANs [118].

3.3.5 Sparse sampling

A closely related process to image compression is sparse sampling. While compression aims to reduce the file size, sparse sampling, also known as compressed sensing (CS), aims to efficiently acquire and reconstruct a signal by solving underdetermined linear systems. It has been shown in CS theory that a signal can be recovered from sampling fewer measurements than required by the Niquist-Shannon sampling theorem [120]. As a result, both memory storage space and data transmission bandwidth can be reduced. In conventional methods, CS algorithms need to overcome two main challenges: the design of the sampling and reconstruction matrices. Numerous methods have been proposed including but no limited to random and binary sampling matrices and reconstruction methods using convex-optimization and greedy algorithms [121]. However, these conventional methods suffer from long computational times or low quality reconstruction. DL approaches allow for fast inference (reconstruction) times for a trained network, as well as learning non-linear functions for higher quality signal reconstruction [121,122]; see [123] for examples of images before and after processing.

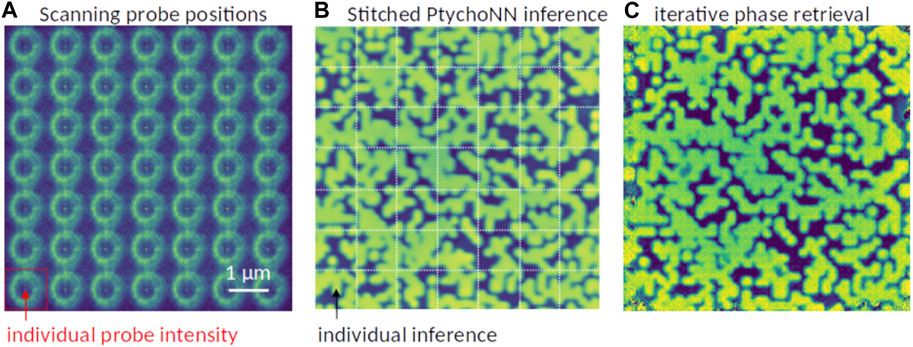

Neural network (NN) models that learn to invert X-ray data have also been shown to significantly reduce the sampling requirements faced by traditional iterative approaches. For example, in ptychography, traditional iterative phase retrieval methods require at least 50% overlap between adjacent scan positions to successfully reconstruct sample images as required by Nyquist-Shannon sampling. In contrast, Figure 6B shows image reconstructions obtained from PtychoNN when sampled at 25× less than required for conventional phase retrieval methods [124]. Figure 6A shows the probe positions and intensities, there is minimal overlap between probes. Through use of inductive bias provided through online training of the network [125], PtychoNN is able to reproduce most of the features seen in the sample even when provided extremely sparse data. Figure 6C shows the same region reconstructed using an oversampled dataset and traditional iterative phase retrieval. Furthermore [125], demonstrated live inference performance during a real experiment using an edge device and running the detector at its maximum frame rate of 2 kHz.

FIGURE 6. Sparse-sampled single-shot ptychography reconstruction using PtychoNN. (A) Scanning probe positions with minimal overlap. (B) Single-shot PtychoNN predictions on 25 × sub-sampled data compared to (C) ePIE reconstruction of the full resolution dataset.

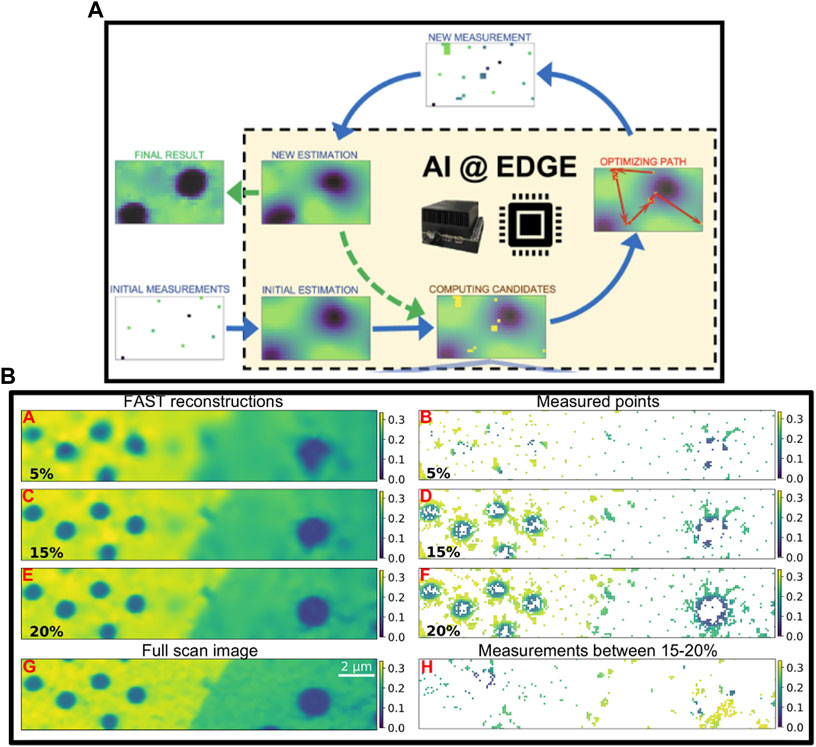

In the previous example, DL is used to reduce sampling requirements but not to alter the sampling strategy. In other words, the scan proceeds using a conventional acquisition strategy, but using fewer points along that trajectory than traditionally required. In contrast, active learning approaches are being developed that use data-driven priors to direct the acquisition strategy. Typically, this is treated as a Bayesian optimization (BO) problem using Gaussian processes (GPs). This method has been applied to a variety of characterization modalities including scanning probe microscopy [126], X-ray scattering [127], and neutron characterization [128]. A downside to such approaches is that the computational complexity typically increases as O(N3) with the action space [129], making real-time decision a challenge. To address these scaling limitations which are critical especially in fast scanning instruments, recent work has demonstrated the use of pre-trained NNs to make such control decisions [130,131]. Figure 7 shows the workflow and results from the Fast Autonomous Scanning Toolkit applied to a scanning diffraction X-ray microscopy measurement of a WSe2 sample. Starting from some quasi-random initial measurements, FAST generates an estimate of the sample morphology, predicts the next batch of 50 points to sample from, triggers acquisition on the instrument, analyzes the image after the next set of points has been acquired and continues the process until the improvement in sample image is minimal. Figures 7B, A, C, and E show the predicted image after 5%, 15%, and 20% sampling while Figure 7 B, D, and F shows the points preferentially selected by the AI. The AI has learned to prioritize acquisition where the expected information gain is maximum, e.g., around contrast features on the sample.

FIGURE 7. FAST framework for autonomous experimentation. (A) shows the workflow that enables real-time steering of scanning microscopy experiments. (B) shows reconstructed images at 5%, 15% and 20% sampling along with the corresponding locations from which they were sampled. In addition, the full-grid pointwise scan and corresponding points sampled between 15% and 20% is also shown. Reproduced with permission from [131].

3.3.6 3D reconstruction

Image-based 3D reconstruction is the process of inferring a 3D structure from a single or multiple 2D images, and is a common topic in the fields of computer vision, medical imaging, and virtual reality. This problem is well known to be an ill-posed inverse problem. Conventional methods attempt to formulate a mathematical formula for the 3D to 2D projection process, use prior models, 2D annotations, and other techniques [132,133]. In addition, high quality reconstruction typically requires 2D projections from multiple views or angles, which may be difficult to calibrate (i.e., cameras) or time consuming to obtain (i.e., CT) depending on the application. DL techniques and the increasing availability of large datasets motivates new advances in 3D reconstruction by address challenges found in conventional methods. The popular networks used for image-based 3D reconstruction are CNNs, encoder-decoder, and GAN models [132]; see [132] for examples on 2D to 3D reconstruction.

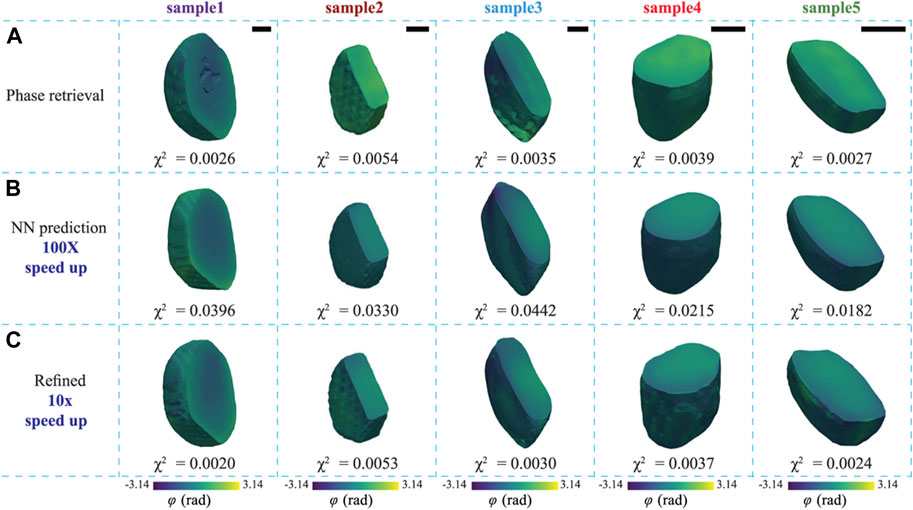

X-ray phase information is now available for 3D reconstruction in the state-of-the-art X-ray sources such as synchrotrons and XFELs. In contrast to iterative phase retrieval methods that incorporate NNs through a DIP or other means, single-shot phase retrieval NNs provide sample images from a single pass through a trained NN. The inference time on a trained NN is minimal and such methods are hundreds of times faster than conventional phase retrieval [134,135]. Figures 8A, B compare AutoPhaseNN and traditional phase retrieval for 3D coherent image reconstruction, respectively [136]. AutoPhaseNN is trained to invert 3D coherently scattered data into sample image in a single shot. Once trained AutoPhaseNN is

FIGURE 8. Comparison of 3D sample images obtained by (A) phase retrieval, (B) AutoPhaseNN, and (C) AutoPhaseNN + phase retrieval. Reproduced with permission from [136].

A recent work by Scheinker and Pokharel [137] developed an adaptive CNN-based 3D reconstruction method for coherent diffraction imaging (CDI), a non-destructive X-ray imaging technique that provides 3D measurements of electron density with nanometer resolution. The CDI detectors record only the intensity of the complex diffraction pattern of the incident object. However, all phase information is lost in this detection method, and thus results in an ill-posed inverse Fourier transform problem to obtain the 3D electron density. Conventional methods encounter many challenges including expert knowledge, sensitivity to small variations, and heavy computation requirements. While DL methods currently cannot completely substitute conventional methods, they can speed up the 3D reconstruction speed given an initial guess, and can be fine-tuned using conventional methods to achieve better performances. For CDI 3D reconstruction, Scheinker and Pokharel [137] proposes a 3D CNN architecture with model-independent adaptive feedback agents. The network takes in 3D diffracted intensities as inputs and outputs a vector of spherical harmonic coefficients, which describe the surface of the 3D incident object. The adaptive feedback agents take as input the spherical harmonics to adaptively adjust the intensities, positions, and decay rates of a collection of radial basis functions. The 3DCNN is trained using a synthetic dataset consisting of 500,000 training set of 49 sampling coefficients as well as the spherical surface and volume of each in order to perform a 3D Fourier transform. An additional 100 random 3D shapes and their corresponding 3D Fourier transforms are used to test the adaptive model-independent feedback algorithm, with the CNN output as its initial guess. Last, the robustness of the trained 3DCNN is tested on the experimental data of a 3D grain from a polycrystalline copper sample measured using high-energy diffraction microscopy. Results show that the 3DCNN provides an initial guess that captures the average size and a rough estimate of the shape of the grain. The adaptive feedback algorithm uses the 3DCNN initial guess to fine-tune the harmonic coefficients to match and converge the generated and measured diffraction patterns of the grain.

4 Hardware solutions for deep learning

DNNs have been implemented for many imaging processing tasks ranging from enhancement to generation as discussed above. To achieve good performance, these algorithms use very deep networks which can be very computationally intensive during training and inference in their own ways. During training, DNNs are fed large amounts of data and a large number of computations must be performed to update network weights to achieve accurate predictions. For example, AlexNet [68] took five to 6 days to train on two NVIDIA GTX 580 graphical processing units. As a result, powerful computing hardware is needed to accelerate DNN training. Meanwhile, during inference, larger networks require more computing power and memory storage space, and thus results in higher energy consumption and latency to obtain predictions in real-time. For very large networks such as AlexNet, a single forward pass may require millions of multiply and accumulate (MAC) operations, thus making DNNs both computationally and energy costly. For real-time data processing in imaging devices, DNN algorithms need to be executed with low latency, limited energy, and other design constraints. Hence, there is a need to develop cost and energy efficient hardware solutions for DL applications.

Interestingly, neural network algorithms are known to have at least two types of inherent parallelism, namely, model and data parallelism [138]. Model parallelism refers to the partitioning of the neural network weights for MAC operations for parallel execution as there are no data dependencies. Data parallelism refers to processing the data samples in batches rather than a single sample at a time. Hardware accelerators can exploit these characteristics by implementing parallel computing paradigms. This section presents different hardware accelerators used for DL applications. Note that the best hardware solution is dependent on the application and corresponding design requirements. For example, edge computing devices such as cameras and sensors may require small chip area with limited power consumption.

4.1 Electronic-based accelerators

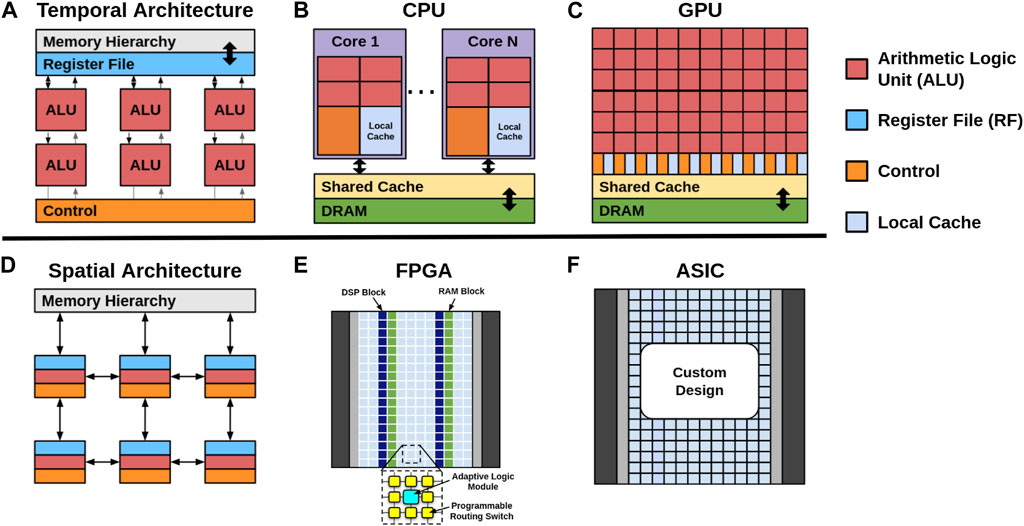

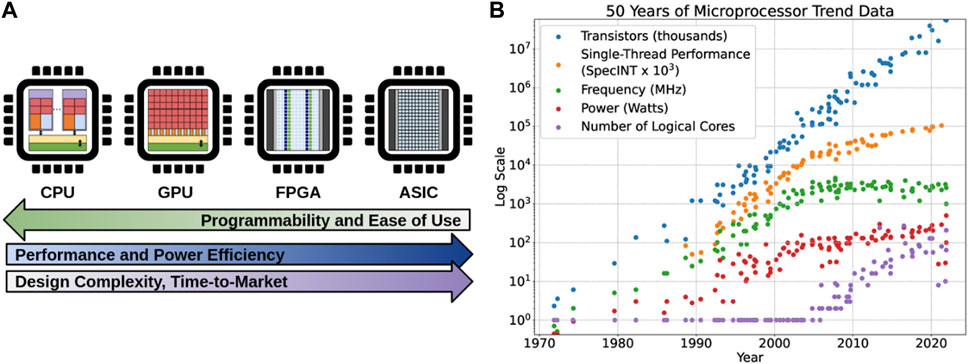

The electronic-based hardware solutions for DL are broad, ranging from general purpose processors such as central processing units (CPUs) and graphical processing units (GPUs), field-programmable gate arrays (FPGAs), to application-specific integrated circuits (ASICs). The circuit architecture design typically follows either temporal or spatial architectures [139] as shown in Figures 9A, D. The architectures are similar in using multiple processing elements (PEs) for parallel computing, however, there are differences in control, memory, and communication. The temporal architecture features a centralized control for simple PEs, consisting of only arithmetic logic units (ALUs), which can only access data from the centralized memory. Meanwhile, the spatial architecture features a decentralized control scheme with complex PEs, where each unit can have its own local memory or register file (RF), ALU, and control logic. The decentralized control scheme forms interconnections between neighboring PEs to exchange data directly, allowing for dataflow processing techniques.

FIGURE 9. Basic models of the (A) temporal, (B) CPU, (C) GPU, (D) spatial, (E) FPGA, and (F) ASIC architectures.

4.1.1 Temporal architectures: CPUs and GPUs

CPUs and GPUs are general purpose processors that typically adopt the temporal architecture as shown in Figures 9B, C. Modern CPUs can be realized as vector processors, which adopt the single-instruction multiple-data (SIMD) model to process a single instruction on multiple ALUs simultaneously. In addition, CPUs are optimized for instruction-level parallelism in order to accelerate the execution time of serial algorithms and programs. Meanwhile, modern GPUs adopt the single-instruction multiple threads (SIMT) model to process a single instruction across multiple threads or cores. Different from CPUs, GPUs are made up of more specialized, parallel, and smaller cores than CPUs to efficiently process vector data with high performance and reduced latency. As a result, GPU optimization relies on software defined parallelism rather than instruction-level parallelism [140]. Both the SIMD and SIMT execution models for CPUs and GPUs, respectively, allow for parallel MAC operations for accelerated computations.

Nonetheless, CPUs are not the most used processor for DNN training and inference. Compared to GPUs, CPUs have a limited number of cores, and thus a limited number of parallel executions. For example, one of Intel’s server-grade CPUs is the Intel Xeon Platinum 8280 processor which can have up to 28 cores, 56 threads, 131.12 GB/s maximum memory bandwidth, and 2190 Giga-floating point operations per second (GFLOPS) for single-precision compute power. In addition, AMD’s server-grade EPYC 9645 features 96 cores, 192 threads, and a memory bandwidth of 460.8 GB/s. In comparison, NVIDIA’s GeForce RTX 2080 Ti is a desktop-grade GPU with 4352 CUDA cores, 616.0 GB/s memory bandwidth, and 13450 GFLOPS single-precision compute power. Furthermore, a recently released NVIDIA RTX 4090 desktop-grade GPU features 16,834 CUDA cores, 1008 GB/s memory bandwidth, and 82.85 TFLOPS single-precision compute power. Therefore, GPUs outperform CPUs in terms of parallel computing.

For DL at the edge, the hardware industry has developed embedded platforms for AI. One popular platform is the NVIDIA Jetson for next-generation embedded computing. The Jetson processor features a heterogeneous CPU-GPU architecture [141] where the CPU accelerates the serial instructions and the GPU accelerates the parallel neural network computation. Furthermore, the Jetson is designed with a small form factor, size, and power consumption. A broad survey by [142] presents different works using the Jetson platform for DL applications such as medical, robotics, and speech recognition. Several surveyed works have used the Jetson platform to implement imaging processing tasks including segmentation, object detection, and classification.

Also using the NVIDIA Jetson platform, a work by [143] investigates the performance of the Jetson TX2 for edge deployment for TomoGAN [86], an image denoising technique using generative adversarial networks (GANs) for low-dose X-ray images. The training and testing datasets consist of 1024 pairs of images of size 1024 × 1024 with each image pair consisting of a noisy image and its corresponding ground truth. The pre-trained TomoGAN network is deployed and tested on the Jetson TX2 and a laptop with an Intel Core i7-6700HQ CPU @2.60GHz with 32GB RAM. The laptop CPU achieves an average inference performance of 1.537 s per image, while the TX2 achieves an inference performance of 0.88 s per image, approximately 1.7× faster than the laptop CPU.

A recent work by [144] investigates the classification accuracy of tuberculosis detection from chest X-ray images using MobileNet [145], ShuffleNet [146], SqueezeNet [147], and their proposed E-TBNet. In addition, they further investigate the inference time during testing of each network on the NVIDIA Jetson Xavier and a laptop with Intel Core i5-9600KF CPU and NVIDIA Titan V GPU. The dataset consists of 800 chest X-ray images scaled to size 512 × 512 × 3. The MobileNet network achieves the highest accuracy at 90% while their proposed E-TBNet achieves 85%. However, the inference time for E-TBNet is the fastest for all investigated networks with an inference time of 0.3 m and 3 m per image when deployed on the laptop with Titian GPU and Jetson Xavier, respectively. The slowest reported inference time for the Jetson Xavier is 6 m per image for the ShuffleNet. Although the inference time for the Xavier is an order of magnitude slower, classification inference can be achieved in real-time with smaller hardware footprint for edge deployment.

4.1.2 Spatial architectures: FPGAs and ASICs

Field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs) typically adopt the spatial architecture as shown in Figures 9E, F. FPGAs and ASICs are specialized hardware that are tailored for specific applications due to their design process. FPGAs can be configured to perform any function as it is made up of programmable logic modules and interconnecting switches as shown in Figure 9E. The FPGA software is used to directly build the logic and data flow directly into the chip architecture. On the other hand, ASICs are designed and optimized for a single application, and cannot be reconfigured. Nonetheless, the spatial architecture of FPGAs and ASICs makes them well suited for neural network computations as the mathematical operations of each layer are fixed and known a priori. As a result, FPGAs and ASICs can attain highly optimized performance.

As shown in Figure 9D, the spatial architecture consists of an array of PEs interconnected with a Network-on-Chip (NoC) design, allowing for custom data flow schemes. Although not shown in Figure 9D, the memory hierarchy consists of three levels. The lowest level consists of the RF in each PE, which is used to locally store data for inter-PE data movement or local accumulation operations. The middle level consists of a global buffer (GB) that holds the neural network weights and inputs to feed the PEs. The highest level is the off-chip memory, usually a DRAM, to store the weights and activations of the whole network. MAC operations need to be performed on large data sets. Hence, the major bottleneck is the high latency and energy costs of DRAM accesses. A comparison between DianNao and Cambricon-X, two CNN accelerators, show that DRAM accesses consume more that 80% of the total energy consumption [148]. In addition [149], reports that the energy cost of DRAM access is approximately 200× more than a RF access. Therefore, energy efficiency can be greatly improved through the reduction of DRAM accesses, commonly done by exploiting the idea of data reuse.

The focus of data reuse is to utilize the data already stored in RFs and the GB as often as possible. This gives rise to the investigations of efficient data flow paradigms in both the spatial and temporal operations of PEs. For example, in fully connected layers, the input reuse scheme is popular since the input vector is dot multiplied by each row of the weight matrix to compute the layer output. For convolutional layers, the weight reuse scheme is popular as the weight kernel matrix is used for multiple subsets of the input feature map. In addition for convolutional layers, convolutional reuse can be applied by exploiting the overlapping region of the sliding window of kernel weights and the input feature map. Additional data reuse schemes are the weight stationary, output stationary, row stationary, and no local reuse schemes. A detailed discussion of the data reuse schemes is out of scope for this paper. However, for a comprehensive review, see details in [139,150,151]. In summary, optimizing the data flow is crucial for FPGAs and ASICs to attain high energy efficiency.

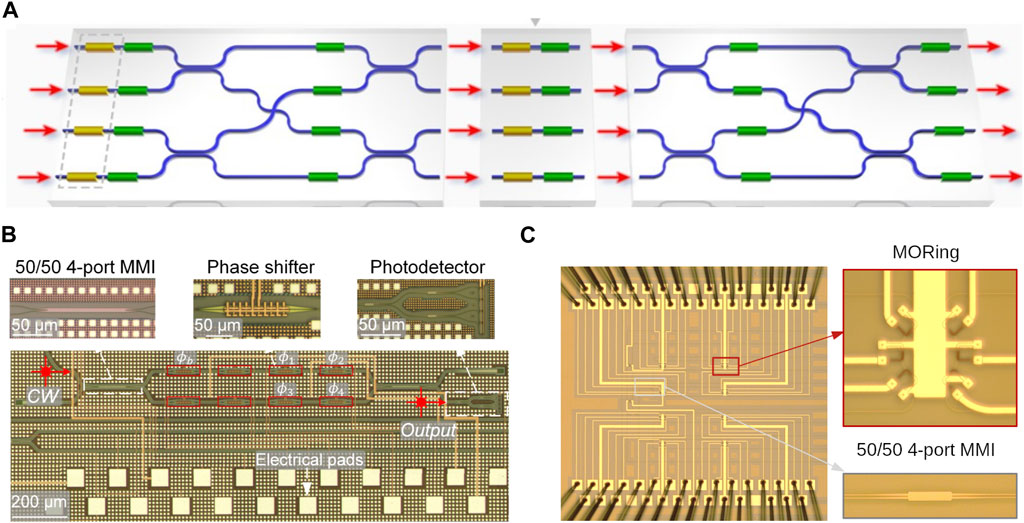

Nonetheless, it is important to note the challenges faced by FPGAs, and in turn ASICs, have in implementing DL networks. A few challenges include but are not limited to memory storage requirements, memory bandwidth, and large computational requirements on the order of Giga-operations per second (GOPS). For example, AlexNet requires 250 MB of memory with 32-bit representation to store 60 million model parameters and 1.5 GOPS for each input image [152], while VGG has 138 million model parameters and requires 30 GOPS per image [153]. Commercial FPGAs do not have enough memory storage space and thus requires external memory to store model parameters, which needs to be transmitted to the FPGA during computation. One way to address this issue, is to compress the neural network by reducing its size through methods such as compression and quantization [154,155]. For example, SqueezeNet [147] can be thought of a compressed AlexNet with 50× fewer model parameters and