94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Phys., 19 July 2023

Sec. Nuclear Physics

Volume 11 - 2023 | https://doi.org/10.3389/fphy.2023.1198572

We present global predictions of the ground state mass of atomic nuclei based on a novel Machine Learning algorithm. We combine precision nuclear experimental measurements together with theoretical predictions of unmeasured nuclei. This hybrid data set is used to train a probabilistic neural network. In addition to training on this data, a physics-based loss function is employed to help refine the solutions. The resultant Bayesian averaged predictions have excellent performance compared to the testing set and come with well-quantified uncertainties which are critical for contemporary scientific applications. We assess extrapolations of the model’s predictions and estimate the growth of uncertainties in the region far from measurements.

Mass is a defining quantity of an atomic nucleus and appears ubiquitously in research efforts ranging from technical applications to scientific studies such as the synthesis of the heavy elements in astrophysical environments [1, 2]. While accurate nuclear data of masses is available for nuclei that are relatively stable, the same is not true for nuclei farther away from beta stability because measurements on radioactive nuclei are exceedingly challenging [3]. As a consequence, theoretical models of atomic nuclei are required for extrapolations used in present-day scientific applications [4].

The goal of theoretical nuclear models is to describe all atomic nuclei (from light to heavy) using fundamental interactions. Attainment of this challenging goal remains elusive, however, due to the sheer complexity of modeling many-body systems with Quantum Chromodynamics [5]. To understand the range of nuclei that may exist in nature, mean-field approximations are often made which simplify complex many-body dynamics into a non-interacting system of quasi-particles where remaining residual interactions can be added as perturbations [6]. A consequence of this approximation is that current nuclear modeling efforts are unable to describe the rich correlations that are found across the chart of nuclides.

In contrast, Machine Learning (ML) based approaches do not have to rely on the assumption of modeling nuclei from a mean-field. This provides freedom in finding solutions that contemporary modeling may not be capable of ascertaining. Furthermore, Bayesian approaches to ML afford the ability to associate predictions with uncertainties [7, 8]. Such tasks are more difficult to achieve in modern nuclear modeling due to relatively higher computational costs.

ML approaches in nuclear physics were pioneered by J.W. Clark and colleagues [9, 10]. These studies were the first to show that networks could approximate stable nuclei, learn to predict masses and analyze nuclear systematics of separation energies as well as spin-parity assignments [11, 12]. Powered by open-source frameworks, research into ML methods has seen a recent resurgence in nuclear physics [13]. ML approaches have shown promise in optimizing data and experiments [14], building surrogate models of density functional theory [15], and describing quantum many-body wave functions for light nuclei [16, 17].

Several research groups are actively pursuing the problem of describing nuclear systems with ML from a more data-centric approach. These efforts currently attempt to improve existing nuclear models by adding correction terms [18]. Gaussian Processes (GP) have also been used for model averaging [19], but this approach is inherently limited to where data is known as GP methods typically revert to the mean when extrapolating. A further limitation to training ML models on residuals (or the discrepancy of theoretical model predictions with experimental data) is that the methods are arbitrary. The changes learned by the network to improve one model will not be applicable to another. These approaches thus provide limited insight into the underlying missing physics in modern models of the atomic nucleus.

In [20], a different approach was taken, where the masses of atomic nuclei were modeled directly with a neural network. It was shown that the masses of nuclei can be well described, and model predictions with increased fidelity correlate strongly with a careful selection of physically motivated input features. The selection of input features is especially important in ML applications [21, 22]. Following this work, [23] showed that the size of the training set can greatly be reduced, and the fidelity of model solutions increases drastically, when an additional physical constraint is introduced as a second loss function during model training.

The focus of this work is to present a Bayesian approach for combining precision data with theoretical predictions to model the mass of atomic nuclei. In Section 2, we present our ML algorithm and define the model hyperparameters. In Section 3, we show the results of our approach and assess the quality of model extrapolations. We end with a short summary.

In this section, we outline our methodology: describe the neural network, define our physics-based feature space, list model hyperparameters, and discuss training.

In a feed-forward neural network, inputs, x, are mapped to outputs, y, in a deterministic manner. We employ the Mixture Density Network (MDN) of Bishop [24] which differs from the standard approach. This ML network takes as inputs stochastic realizations of probability distributions and maps this to a mixture of Gaussians. Thus, the network fundamentally respects the probabilistic nature of both known data and model predictions by both sampling the prior distribution of inputs and predicting the posterior distribution of the outputs.

Formally, the conditional probability can be written as

where

The neural network outputs are π, μ, and σ which depend only on the input training set information x and the network weights. For ease of reading the equation we have kept the dependence of the network weights implicit.

The hyperbolic tangent function

We now discuss the components of the input vector, x. The ground state of an atomic nucleus comprises Z protons and N neutrons. While it is reasonable to start from these two independent features as inputs [21], and [20] reported that a modestly larger physics-based feature space drastically improves the prediction of masses. For this reason, we employ a combination of macroscopic and microscopic features that are of relevance to low-energy nuclear physics properties.

In addition to the proton number Z and neutron number N, we also use the mass number A = Z + N, and a measure of isospin asymmetry,

The input feature space is then a nine component vector:

where the first four components can be considered macroscopic features and the last five are microscopic features. All remaining features beyond the second are functions of Z and N exclusively.

Our training is hybrid data consisting of two distinct input sets. The first is the mass data provided by the 2020 Atomic Mass Evaluation (AME2020) [28]. The information in this set is very precise with an average reported mass uncertainty of roughly 25 keV. Modern experimental advances, such as Penning trap mass spectrometers enhanced with the Phase-Imaging Ion-Cyclotron-Resonance technique, enable such high precision measurements [29, 30].

The second mass data are provided by modern theoretical models. The information in this set is less precise, owing to the approximations made in the modeling of atomic nuclei. This set can be calculated for nuclei that have not yet been measured, providing a valuable new source of information. Nuclear models in this second set include macroscopic-microscopic approaches like the Duflo-Zuker model [31], the 2012 version of the Finite Range Droplet Model (FRDM) [32], the WS4 model [33] and microscopic approaches like UNEDF [34], and HFB32 [35].

In this work, we combine predictions from three theoretical models: FRDM2012, WS4, and HFB32. Because these models do not report individual uncertainties on their predictions, we instead estimate theoretical model uncertainty using the commonly quoted root-mean-square error or

where dj is the atomic mass from the AME and tj is the predicted atomic mass from the theoretical model. The sum runs over each j which defines a nucleus, (Z, N). For FRDM2012, WS4, and HFB32, σRMS = 0.606, 0.295, and 0.608 MeV respectively, using AME2020 masses. While the σRMS is a good measure of overall model accuracy for measured nuclei, uncertainties are certainly larger for shorter-lived systems. In this work, we do not seek to preferentiate one model over another. For this reason, we increase the assumed uncertainty of WS4 to a more reasonable 0.500 MeV when probing its predicted masses further from stability.

Training for the hybrid input data is taken at random, rather than selected based on any given criteria. The number of unique nuclei from experimental data is a free parameter in our training. The best performance is found for models provided with approximately 20% of the AME, or 400–500 nuclei [23]. Adding more than 20% of the AME data does not provide subsequent new information for the model regarding the different types of nuclei that may exist. Thus increasing this number does not offer any predictive benefits, however, it does slow down training due to the larger input space. The number of unique nuclei from theory is also a free parameter. We find that as few as 50 additional unmeasured nuclei can influence training, and therefore use this minimal number. There is flexibility in the choice of this number. Larger values would more strongly preference theoretical data in training than what is considered here. Such studies may be useful in understanding the properties of nuclei at the extremes of nuclear binding. In this work, we sample the masses of 50 randomly chosen nuclei from each of the three theoretical mass models independently.

The benefit of using hybrid data is that the neural network is not limited to solutions of model averaging which can regress to the mean when extrapolating. Instead, the combination of hybrid data with ML-based methods affords the opportunity to create new models that are capable of reproducing data, capturing trends, and predicting yet to be measured masses with sound uncertainties.

The network is set up with 6 hidden layers and 10 hidden nodes per layer. The final layer turns the network into a Gaussian ad-mixture. For masses we choose a single Gaussian, although other physical quantities, such as fission yields, may require additional components [36]. The Adam optimizer is used with learning rate 0.0002 [37]. We also implement a weight-decay regularization with value 0.01. These hyperparameters were determined from a select set of runs where the values were varied. By setting the network architecture to the aforementioned values and fixing the feature space to 9 inputs this work has 683 trainable parameters in the model. Similar results can be found with a smaller number of trainable parameters (on the order of 300) as in Mumpower et al. [23].

We perform model training with two loss functions. The first loss function,

where y is the vector of training outputs and K is the total number of Gaussian mixtures. The πi(x), μi(x), and σi(x), variables define the Gaussians, as in Eq. 1. The minimization of this loss function furnishes the posterior distributions of predicted masses.

The hybrid mixture of experimental data and theoretical data enter into training as the variable y. Each nucleus defined uniquely by a proton number Z and neutron number N. A Gaussian distribution is assumed to represent the probability distribution for sampling both experimental and theoretical data,

For the high-precision experimental data taken from the AME, the mean is set to the evaluated mass,

In this work, we do not include masses of isomeric states in the training set. However, we note that since our previous works [20, 23], the AME2020 is now utilized, rather than the earlier AME2016. This data better refines the separation of ground state and isomeric states in evaluated masses, which continues to be a known source of systematic uncertainty in the evaluation of atomic masses.

For the AME data, we take roughly 500 nuclei for training, leaving the remaining 80% of the AME as testing data. The number of stochastic realizations per nucleus is 50. For theory data, we take only 50 additional nuclei explicitly outside the AME. These nuclei are also taken at random. A given nucleus is set to have 20 stochastic realizations per theory model, for a total of 60 samples overall.

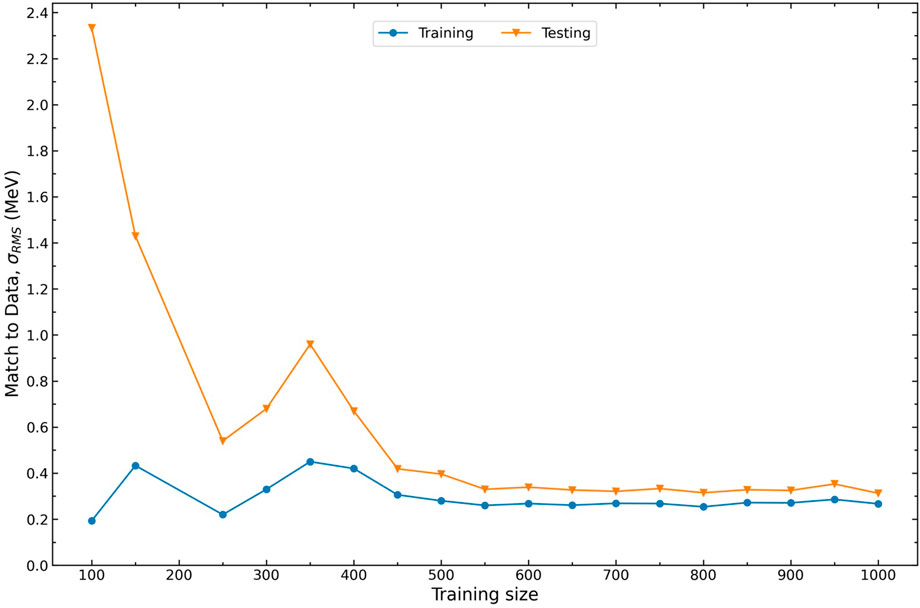

The training is most sensitive to precision masses that comprise the AME. The training size of this data has been carefully determined to be approximately 500 through a series of runs. To determine this number, we fix all model hyperparameters and incrementally increase the AME training size. In this way, additional nuclei are continually added to the previous set, which introduces new information to the model. The obtained results from this set of runs are depicted in Figure 1.

FIGURE 1. Root-Mean-Square (RMS) Error relative to AME2020 data across various training sizes for a fixed random seed.

When the training size is set to 450 or larger, the resulting training and testing RMS values remain consistently around 0.35 MeV with minimal fluctuations. Conversely, if the training set consists of fewer than 450 data points, the training RMS remains small while the testing RMS is large. This observation can be attributed to the limited amount of data available to the model. With limited data, the model does not have sufficient information to generalize outside of the training set. Consequently, the testing masses are predicted poorly. To ensure that our model possesses sufficient training information while preserving its predictive capability, we have chosen to set the training size to 500. This selection strikes a balance between providing ample training data for robust learning while avoiding a potential pitfall of overfitting.

We summarize the model hyperparameters in Table 1. This table lists the parameters which control the input data, the size of the network, the admixture of Gaussians, the valid range of predictions, the weight of the physics constraint, as well as basic physics knowledge of the closed shells. In this work we fix these parameters. A more complete study of all model hyperparameters is the subject of future investigations.

One essential observation of ground-state masses is that they obey the eponymous Garvey-Kelson (GK) relations [38]. This result suggests a judicious choice of mass differences of neighboring nuclei that minimizes the interactions between nucleons to first order, resulting in particular linear combinations that strategically sum to zero.

If N ≥ Z, the GK relations state that the mass difference is

and for N < Z,

Higher order GK mass relations may also be considered, as in [39]. However, the use of these constraints alone does not yield viable predictions far from stability due to the accumulation of uncertainty as the relationship is recursively applied beyond known data [40].

As an alternative, we perform no such iteration in our application of the GK relations. Eqs. 6, 7 are used directly, and it is important to recognize that these equations depend exclusively on the masses. Thus the second (physics-based) loss function can be defined purely as a function of the ML model’s mass predictions.

To enforce this physics-based observation, the second loss function can be defined as

where GK is function that defines the left-hand side of Eqs. 6, 7 and we only use the model’s predicted mean value of the masses, μ. The sum is performed over any choice of subset, {C}, of masses and does not have to overlap with the hybrid training data. The absolute value is necessary to ensure that the log-loss remains a real number. As with the data loss,

An alternative to Eq. 8 that is, potentially more restrictive, is to take the absolute value inside the summation

Because Eq. 9 sums many non-zero items, it is a larger loss than using Eq. 8. In this case, the strength of the physics hyperparameter (discussed below) should generally be lower than in the case of using Eq. 8. A strong preference for selecting one functional form for the physics-based loss over the other has not been found.

The total loss function used in training is taken as a sum of the data and physics losses

where λphysics is a model hyperparameter which defines the strength of enforcement of the physics loss. We have found that values between 0.1 and 2 generally enforce the physics constraint in model predictions.

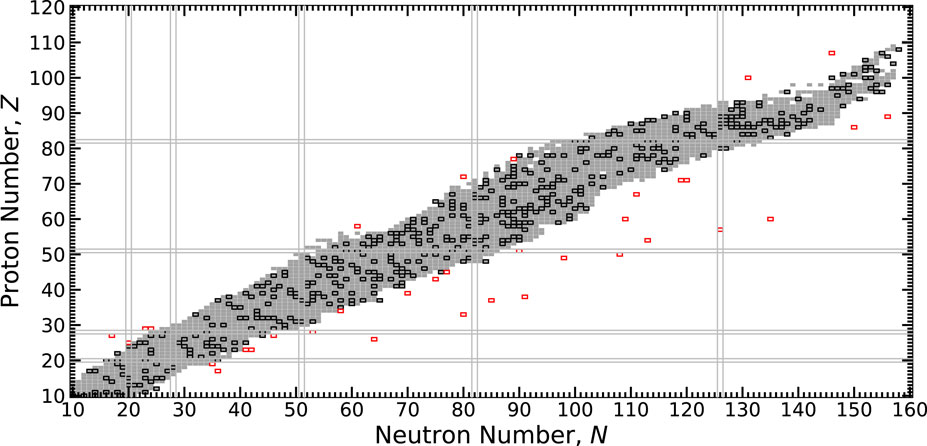

A schematic of our methodology is shown in Figure 2 and encapsulated below. The modeling of masses begins by setting hyperparameters, summarized in Table 1, for the particular calculation. A random selection of hybrid data is made, as can be seen in Figure 3. The bulk of the masses selected for training comes from the AME (black squares) where high-precision evaluated data resides (gray squares). Only a handful of masses from theoretical models are taken for training (red squares).

FIGURE 2. A schematic of our methodology. The procedure used in this work combines high precision evaluated data with a handful of less-precise theoretical data. This results in predictions with well-quantified uncertainties across the chart of nuclides.

FIGURE 3. The chart of nuclides showing the extent of the 2020 Atomic Mass Evaluation (AME) indicated by grey squares, training nuclei part of the AME indicated by black squares, and the extra theoretical nuclei indicated by red squares. Closed proton and neutron shells are indicated by parallel lines.

After selection of hyperparameters and data, training begins which seeks to minimize the total loss, Eq. 10. Training can take many epochs, and the data loss as well as the physics loss play important roles throughout this process, as discussed in [23]. Once the MDN has been trained on data, the results are assembled into predictions by sampling the posterior distribution several thousand times. The final output is a prediction of the mean value of the expected mass, M, and its associated uncertainty, σ(M) for any provided nucleus defined by (Z, N).

In this section we present a MDN model trained on hybrid data. We analyze the performance with known data and discern the ability to extrapolate model predictions. We evaluate the impact of including theoretical data and the physics-based loss function.

The final match to 506 training nuclei for our MDN model is σRMS = 0.279 MeV. The total σRMS for the entire AME2020 is 0.395 MeV. This is an increase of roughly 0.116 MeV between training and verification data which is on the order of the accuracy of the GK relations. We limit the calculation of σRMS to nuclei with A ≥ 50 as this more aptly captures the predictive region of our model. While the model can predict masses for nuclei lighter than A = 50, it generally performs worse in this region because there are fewer nuclei at lower mass numbers than in heavier mass regions. Therefore, there are fewer light nuclei selected in the random sample than heavy nuclei. Different sampling techniques (for instance, first grouping nuclei using K-means) may be employed that could alleviate the present bias.

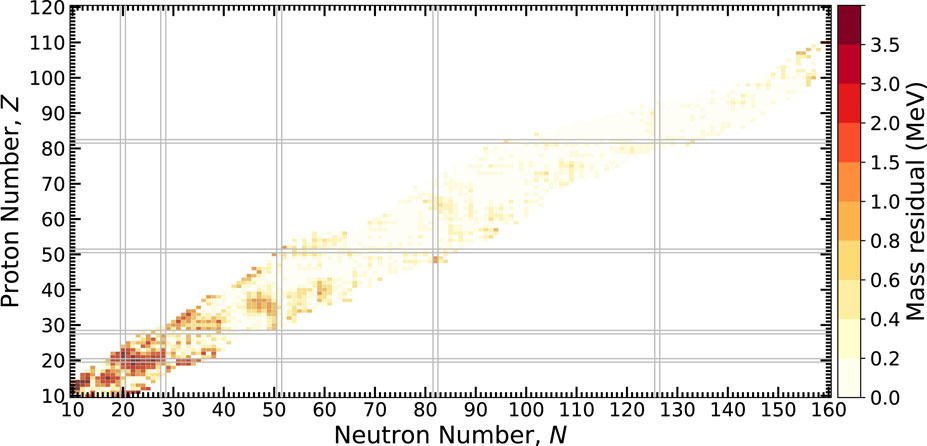

The absolute value of the mass residual,

FIGURE 4. The absolute value of mass residuals across the chart of nuclides using an MDN model and the AME2020. Heavier nuclei are generally well described by the model while lighter nuclei exhibit larger discrepancies. See text for details.

In comparison to our previous results discussed in [23], the addition of light nuclei in training is found to relatively increase the discrepancy for heavier nuclei. The additional information modestly reduces the overall model quality as measured by σRMS. On the other hand, the model is better positioned to describe the nuclear landscape more completely, insofar as the training process introduces information on the nuclear interaction, that is, uniquely captured in low-mass systems.

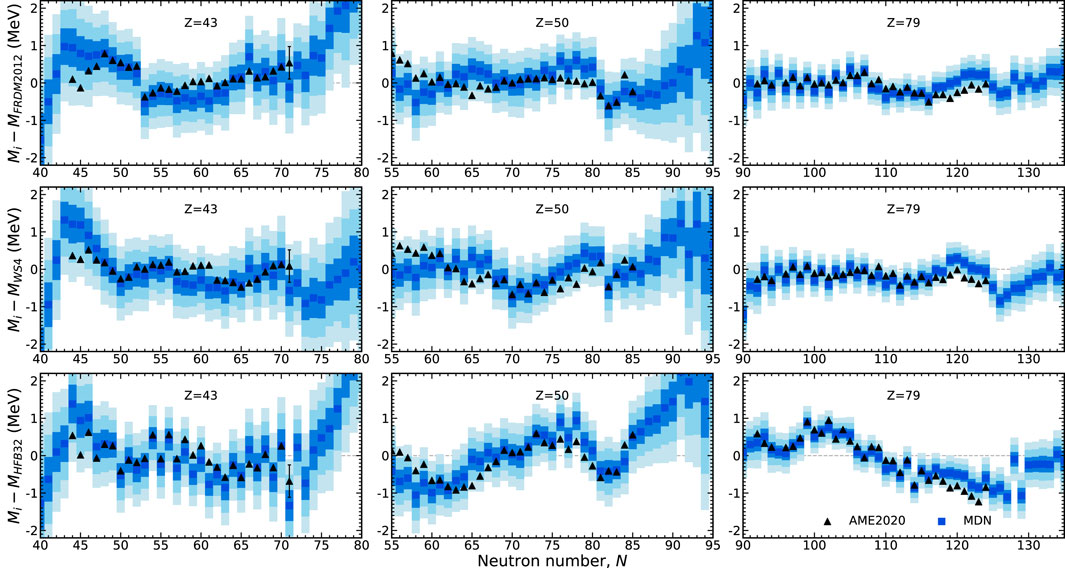

The behavior of the model with respect to select isotopic chains are shown in Figure 5. In regions of the chart where the MDN model is confident in its predictions such as in the Z = 79 isotopic chain, the uncertainties are very well constrained. The converse is also true, as is the case with higher uncertainties along the Z = 43 isotopic chain. The tin (Z = 50) isotopic chain highlights an intermediate case.

FIGURE 5. The prediction of masses along three isotopic chains in comparison to AME2020 data (black triangles). Masses are plotted in reference to the theoretical training models: FRDM2012, WS4, and HFB32. The MDN model captures the trends exhibited in data and furnishes individual uncertainties (the one, two, and, three sigma confidence intervals are shown by blue shading).

Inspection of this figure shows that the MDN model has a preference for evaluated data in this region and does not follow the trends of any of the theoretical predictions, despite some of these masses being provided for training. This result reveals a unique feature of our modeling: evaluated points, due to their low uncertainties, are highly favored while theoretical points, with relatively larger uncertainties, are used as guideposts for how nuclei behave where there is no data. How much a particularly model is favored farther from stability depends on how much weighting we provide it with the choice of model uncertainty. In this work we treat the choice of weighting of theoretical models as free parameters. As these parameters are set to be roughly equivalent, recall Table 1, no specific theoretical model is favored where measurements do not exist. The trends of the MDN predictions far from the stable isotopes are discussed in the next section.

The extrapolation quality of atomic mass predictions is an important problem, especially for astrophysical applications where this information is needed for thousands of unmeasured species [41, 42]. The formation of the elements in particular requires robust predictions with well-quantified uncertainties [43]. The MDN supplies such information, and we now gauge the quality of the extrapolations by comparison with other theoretical models.

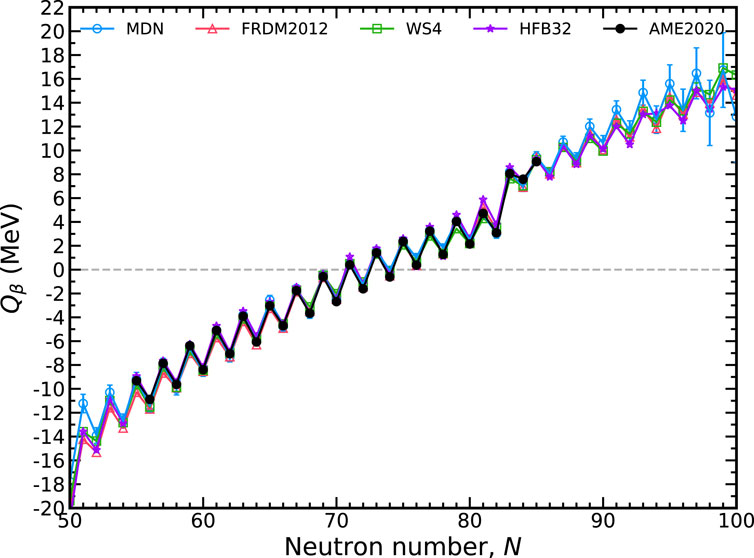

The Qβ(Z, N) = MZ,N − MZ+1,N−1 is plotted in Figure 6 for the tin (Z = 50) isotopic chain. Additionally plotted is the uncertainty, δQβ, which is calculated via propagation of error

The mass uncertainties σ(MZ,N), σ(MZ+1,N−1) are outputs of the MDN. The correlation between the masses, σ(MZ,N, MZ+1,N−1), is assumed to be zero. The model has excellent performance where data is known and this result can be considered representative for other isotopic chains. Predicted uncertainties grow with decreasing and increasing neutron number outside of measured data, underscoring the Bayesian nature of our approach.

FIGURE 6. The total available energy for nuclear β− decay, Qβ, along the tin isotopic chain (Z =50) with a 1-σ confidence interval. The MDN model (blue) reproduces known data (black) and continues reasonably physical behavior when extrapolating. Theoretical models used in training are plotted for comparison.

Also shown in Figure 6 are the theoretical models used in training. Comparison with these models shows that the MDN continues to retain physical behavior when extrapolating to neutron deficient or neutron rich regions. Intriguingly, the MDN model does not preferentiate one specific model when extrapolating. Instead, where there begins to be discrepancies among the theoretical models, the uncertainties begin to increase. For Qβ, the predictions along the tin isotopic chain begin to be dominated by uncertainties roughly ten units from the last available AME2020 data point.

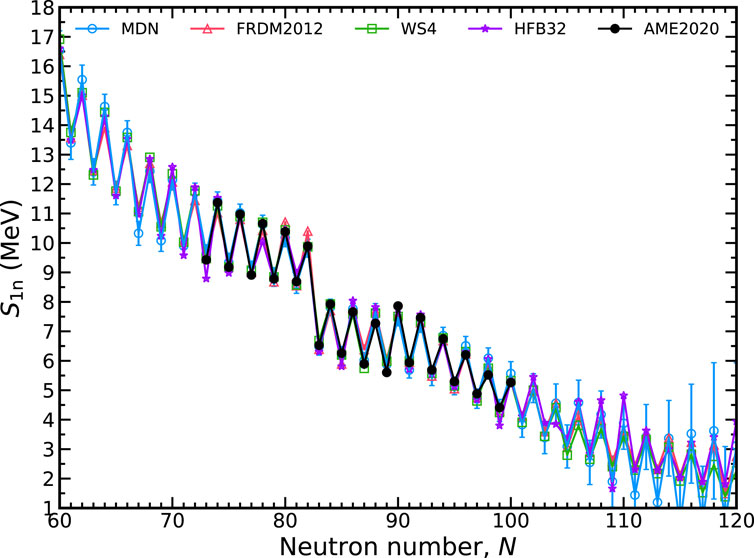

In Figure 7 we show the extrapolation quality of one-neutron separation energies, S1n(Z, N) = Mn + MZ,N−1 − MZ,N. These energies play a significant role in defining the r-process path and are pivotal in shaping the isotopic abundance pattern [44]. The propagation of error formula, Eq. 11, is again employed to calculate δS1n since this quantity also depends on mass differences. The uncertainty of the mass of the neutron, Mn, can be safely ignored. The qualitative behavior of S1n is well described. No unphysical inversions of S1n are found with the MDN model in contrast to the HFB32 model where this behavior can arise; observe around N = 105. Again, we find that roughly ten units from the last measured isotope, uncertainties begin to rise substantially.

FIGURE 7. The one-neutron separation energy, S1n, along the promethium isotopic chain (Z =61) with a 1-σ confidence interval. The MDN model (blue) reproduces known data (black) and continues reasonably physical behavior when extrapolating. Theoretical models used in training are plotted for comparison.

A consequence of the growth of uncertainties is that the prediction of the neutron dripline, S1n = 0 is also largely uncertain for any isotopic chain. We conclude that hybrid data does not presently place stringent constraints on this quantity, which is widely recognized as an open problem by the community [45–47].

The comparison with theoretical models in Figures 6, 7 serves two purposes. First, it shows that our model remains physical when extrapolating into regions where data does not exist, mimicking the behavior of well-established theoretical models. Second, despite training on theoretical data, the model does not regress to the average of the model predictions far from the stable isotopes as observed in model averaging procedures. Instead, the model is free to explore various solutions whilst retaining physical qualities. This is an important point, as there could be “missing physics” or other deficiencies in standard theoretical models that can be explored freely in physics-constrained ML based methods such as the one presented here.

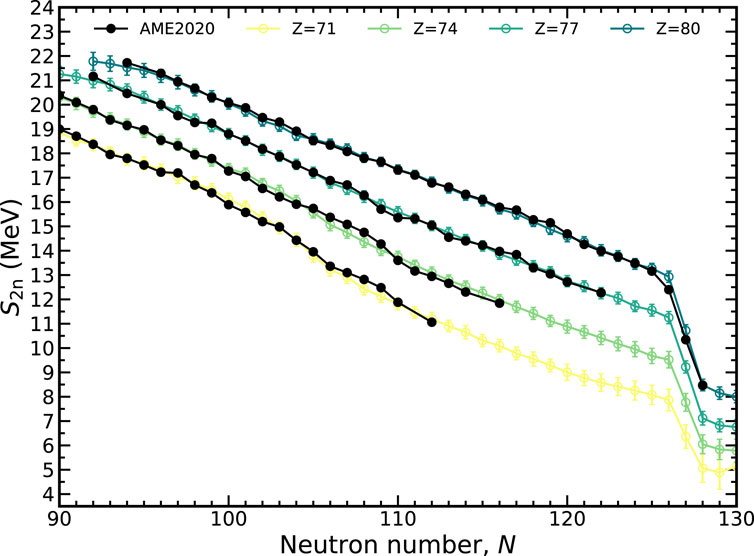

Another quantity that can be used to gauge the quality of extrapolations is the two-neutron separation energy, S2n(Z, N) = 2Mn + MZ,N−2 − MZ,N. The two-neutron separation exhibits less odd-even staggering than S1n because the subtraction always pairs even-N or odd-N nuclei. The behavior of the MDN model is shown in Figure 8 for the lutetium (Z = 71), tungsten (Z = 74), iridium (Z = 71), and mercury (Z = 80) isotopic chains. All experimental data falls within the 1-σ confidence intervals except for 206Hg. A relatively robust shell closure is predicted at N = 126, though there is some weakening at the smaller proton numbers.

FIGURE 8. The two-neutron separation energy, S2n, along several isotopic chains plotted with a 1-σ confidence interval. The MDN model (colors) reproduces known data (black) and continues reasonably physical behavior when extrapolating.

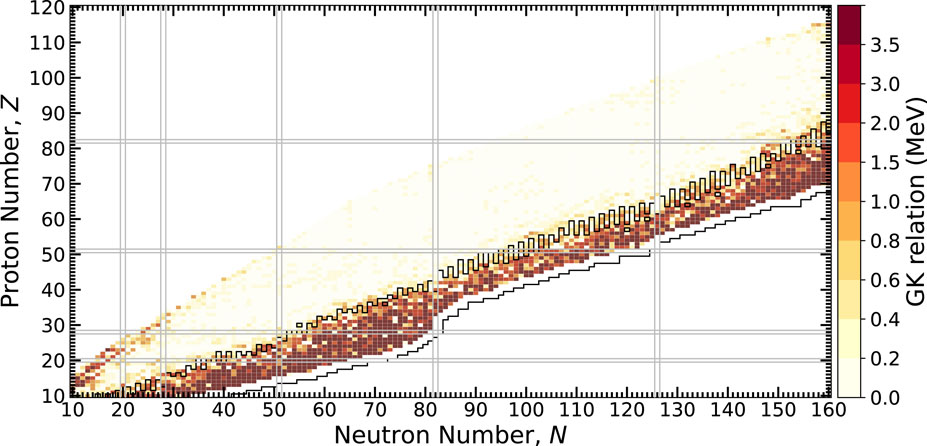

Finally, we consider the behavior of the physics of ground-state masses across the nuclear chart using the predictions of the MDN model. Whether or not the GK relations are preserved is yet another test of the extrapolation quality of the MDN. The calculation of the left hand side of Eqs. 6, 7 is shown in Figure 9 across the chart of nuclides. Yellow shading indicates the GK relations are satisfied while orange and red shading indicate potential problem areas where the relations have broken down. Given the behavior seen in the previous figures, it is clear that towards the neutron dripline, the uncertainties have grown so large that the model is unsure of the preservation of the GK relations. To emphasize this point, we calculate the nuclei for which the mass uncertainty, δM, is larger than the one-neutron separation energy, S1n, and designate this as a region bound in black. We observe that this bounded region is precisely where the orange and red regions are located. Figure 9 suggests that a potential modification of the loss function that encodes the GK relations could be made to include uncertainties obtained from the MDN. This line of reasoning is the subject of future work.

FIGURE 9. A test of how well the GK relations are maintained throughout the chart of nuclides. Lower values indicate predictions inline with GK, which is found nearly everywhere, except for the most extreme cases where the model is uncertain at the limits of bound nuclei. The black outlined nuclei have δM > S1n, indicating where mass uncertainties are large.

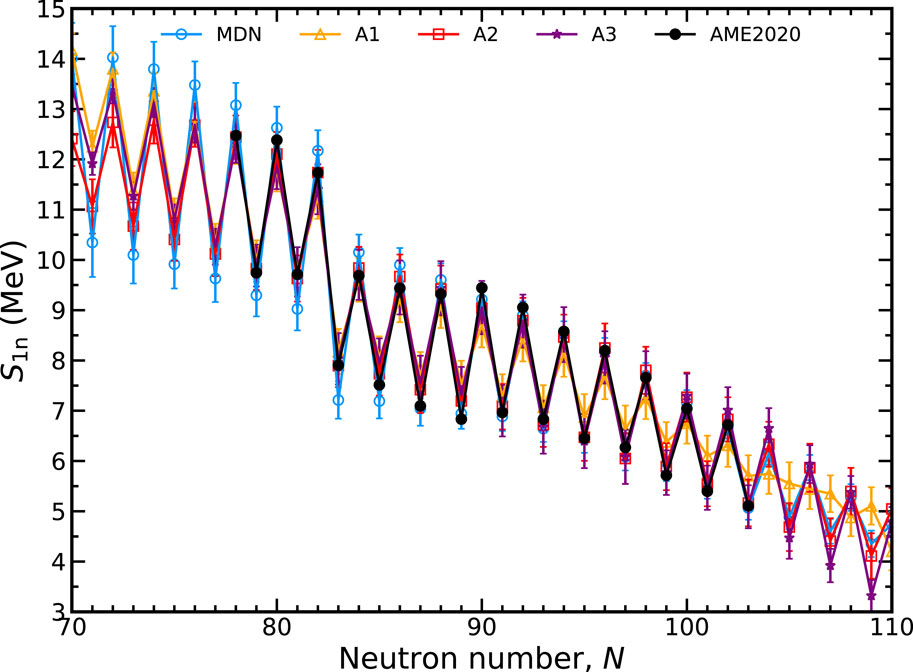

We now assess the impact of the inclusion of theoretical data and the physics loss on the predictive capabilities of the MDN. Figure 10 shows four different training sets in the context of S1n values. The line labeled MDN is the network shown throughout this manuscript that includes both hybrid data and physical constraint. A1 is a MDN model trained only with experimental data, lacking information about theory or the physical loss defined by the GK relations; A2 is a MDN model trained with the physics loss but without theoretical data; and A3 is a MDN model trained with theory data but without any physics loss.

FIGURE 10. Comparison of MDN models with different assumptions for input data and training loss along the dysprosium (Z = 66) isotopic chain. See text for details.

From these four sets, it is clear that both hybrid data and the physics-based loss are necessary to provide quality extrapolations into unknown regions. Training with the lack of theory and GK (A1) exhibits a less desirable preference for a smooth extrapolation of S1n values. The addition of the physics loss (A2) improves the situation by restoring the odd-even behavior observed in measured nuclei. The expected behavior in S1n is also restored by run A3, where the hybrid data includes theory but training is not informed of the GK relations.

We note that the improvement in extrapolation behavior resulting from the hybrid data and physics-based loss is generally independent of any hyperparameters that otherwise appear in the MDN. In particular, we have preliminarily verified this result against systematic variations in relevant hyperparameters, including network size, training size, different nuclei, different input features and different blends of theoretical models. All of these variations demonstrate a similar propensity for improvement when both physics loss and hybrid data are used. These results lead us to reaffirm our previous observation that the addition of theory data serves as guideposts for the network solutions while the GK relations are used to ensure a refined solution.

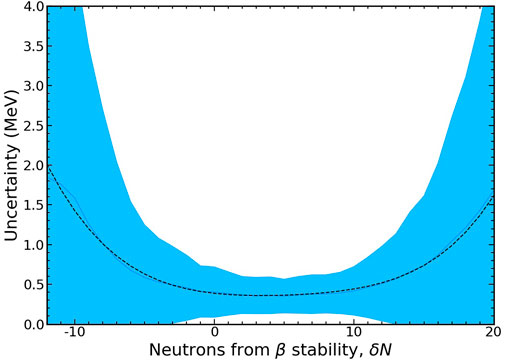

The behavior of mass uncertainties as one traverses the chart of nuclides can be ascertained using the MDN predictions. Here we consider the evolution of uncertainties provided by an MDN model as a function of neutrons from the line of β stability, δN. The line of β stability may be approximated using the Weizsäcker formula,

where Nβ is the neutron number of the β-stable nucleus for the given value of Z (proton number) and A (nucleon number). The Coulomb coefficient is taken to be aC ∼ 0.711 and the asymmetry coefficient is taken to be aA ∼ 23.7. For a given nucleus with Z protons and N neutrons, the distance from the β stability line is then,

Note that this quantity can be negative indicating neutron deficient nuclei. A final remark regarding the definition of δN is an observation that nearly identical behavior can be found by measuring instead from the last stable isotope defined in the NuBase (2020) evaluation [48].

It is important to realize that for each isotopic chain, δN may reference a slightly different neutron number for the particular isotope. The choice of this variable provides a relatively straight forward way to observe how mass uncertainties grow far from known data.

The average and standard deviation of the MDN model’s uncertainties are shown by a blue line and shaded region in Figure 11. Here we can see that the model predictions are fairly robust for neutron-rich nuclei (δN > 0) up to about 10 neutrons from β stability. At this point the uncertainties start to rapidly grow. Because the model is trained on a random selection of nuclei, neutron-deficient nuclei are naturally less represented. Thus, uncertainties grow even faster for nuclei with δN < 0 in contrast to their neutron-rich counterparts.

FIGURE 11. The average (solid blue line) and standard deviation (shaded area) of the growth of mass uncertainties as a function of neutrons from β-stability. The functional form of the average can be well modeled by Eq. 14 as plotted with a dashed black line.

The functional form of the average uncertainty growth as a function of neutrons from the β-stability line is well approximated by the following relation,

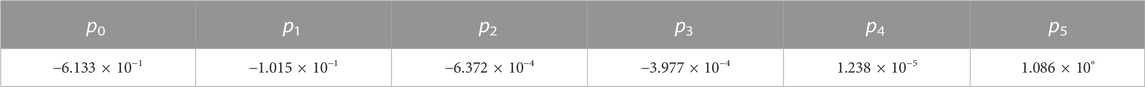

where the parameters, pi, are fit numerically (least squares) and are given in Table 2. This functional form may be readily used in simulations of nucleosynthesis to approximate uncertainties in masses with models that do not provide this information.

TABLE 2. The parameters found using a least-square fit of Eq. 14 to the MDN uncertainties across the chart of nuclides.

We present a Bayesian averaging technique that can be used to study the ground-state masses of atomic nuclei with corresponding uncertainties. In this work, we combine high-precision evaluated data, weighted strongly, with theoretical data for nuclei which are further from stability, more poorly understood, and therefore weighted more weakly. Training of a probabilistic neural network is used to construct the posterior distribution of ground-state masses. Along with a loss function for matching data, a second, physics-based loss function is employed in training to emphasize the relevant local behavior of masses. Excellent performance is obtained with comparison to known data, on the order of σRMS ∼ 0.3 MeV and the physical behavior of solutions is maintained when extrapolating. Furthermore, the model does not regress to the mean of theoretical predictions when extrapolating which implies flexibility in finding novel physics-constrained solutions. In contrast to our previous work (limited to Z > 20) [23], the MDN model of this work is capable of describing systems as light as boron (Z = 5). It is found that available data from experiment and theory are not, at this time, sufficient to resolve the relatively large uncertainties towards the limits of bound nuclei using the framework developed in this work. Uncertainties in predicted masses are found to grow moderately as in Eq. 14. We emphasize the continuing need for advances in nuclear experiment and theory to reduce these uncertainties.

Our Bayesian averaging procedure enables the rapid construction of a mass model using any combination of precise and imprecise data through adjustable stochastic weights of the hybrid training inputs. For instance, if a particular theoretical model is favored over another, its sampling can be adjusted accordingly to emphasize its importance. Similarly, new high-precision data may be incorporated in the future from measurement campaigns at radioactive beam facilities. At the same time, our technique also enables freedom in the exploration of the relevant physics of ground-state masses. This can be achieved by probing a variety of physics-based features, for example, or by introducing alternative physics-based loss functions in training. Machine Learning/Artificial Intelligence approaches such as the one presented here hold great promise to advance modeling efforts in low-energy nuclear physics where the typical model development time scale is on the order of a decade or longer.

The methodology outlined here can be generalized to describe any nuclear physics property of interest, particularly when reliable extrapolations are necessary. This technique opens new avenues into Machine Learning research in the context of nuclear physics through the unification of data, theory, and associated physical constraints to empower predictions with quantified uncertainties. We look forward to extensions of this work to model nuclear decay properties, such as half-lives and branching ratios, as particularly promising opportunities in the near future.

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

MM led the study, overseeing all aspects of the project, including writing the code used in this study. ML ran and analyzed combinations of hybrid input data as well as hyperparamters. TS, AL, and AM provided technical expertise for Machine Learning methods. All authors contributed to the article and approved the submitted version.

This research was supported by the Los Alamos National Laboratory (LANL) through its Center for Space and Earth Science (CSES). CSES is funded by LANL’s Laboratory Directed Research and Development (LDRD) program under project number 20210528CR. MM, ML, TS, AL, and AM were supported by the United States Department of Energy through the Los Alamos National Laboratory (LANL). LANL is operated by Triad National Security, LLC, for the National Nuclear Security Administration of United States Department of Energy (Contract No. 89233218CNA000001). ML and BM were supported by NASA Emerging Worlds grant 80NSSC20K0338.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Horowitz CJ, Arcones A, Côté B, Dillmann I, Nazarewicz W, Roederer IU, et al. r-process nucleosynthesis: connecting rare-isotope beam facilities with the cosmos. J Phys G Nucl Phys (2019) 46:083001. doi:10.1088/1361-6471/ab0849

2. Kajino T, Aoki W, Balantekin A, Diehl R, Famiano M, Mathews G. Current status of r-process nucleosynthesis. Prog Part Nucl Phys (2019) 107:109–66. doi:10.1016/j.ppnp.2019.02.008

3. Thoennessen M. Plans for the facility for rare isotope beams. Nucl Phys A (2010) 834:688c–693c. The 10th International Conference on Nucleus-Nucleus Collisions (NN2009). doi:10.1016/j.nuclphysa.2010.01.125

4. Möller P, Bernd P, Karl-Ludwig K. New calculations of gross β-decay properties for astrophysical applications: Speeding-up the classical r process. Phys Rev C (2003) 67:055802. doi:10.1103/PhysRevC.67.055802

5. Geesaman D. Reaching for the horizon: The 2015 NSAC long range plan. In: APS DNP (2015), APS Meeting Abstracts; October 28–31, 2015; Santa Fe, New Mexico (2015). AA1.001.

6. Negele JW. The mean-field theory of nuclear structure and dynamics. Rev Mod Phys (1982) 54:913–1015. doi:10.1103/RevModPhys.54.913

7. Goan E, Fookes C. Bayesian neural networks: An introduction and survey. In: Case studies in applied bayesian data science. Springer International Publishing (2020). p. 45–87. doi:10.1007/978-3-030-42553-1_3

8. Mohebali B, Tahmassebi A, Meyer-Baese A, Gandomi AH. Chapter 14 - probabilistic neural networks: A brief overview of theory, implementation, and application. In: P Samui, D Tien Bui, S Chakraborty, and RC Deo, editors. Handbook of probabilistic models. Butterworth-Heinemann (2020). p. 347–67. doi:10.1016/B978-0-12-816514-0.00014-X

9. Clark JW, Gazula S. Artificial neural networks that learn many-body physics. Boston, MA: Springer US (1991). p. 1–24. doi:10.1007/978-1-4615-3686-4_1

10. Clark JW, Gazula S, Bohr H. Nuclear phenomenology with neural nets. In: JG Taylor, ER Caianiello, RMJ Cotterill, and JW Clark, editors. Neural network dynamics. London: Springer London (1992). p. 305–22.

11. Gazula S, Clark J, Bohr H. Learning and prediction of nuclear stability by neural networks. Nucl Phys A (1992) 540:1–26. doi:10.1016/0375-9474(92)90191-L

12. Gernoth KA, Clark JW, Prater JS, Bohr H. Neural network models of nuclear systematics. Phys Lett B (1993) 300:1–7. doi:10.1016/0370-2693(93)90738-4

13. Boehnlein A, Diefenthaler M, Sato N, Schram M, Ziegler V, Fanelli C, et al. Colloquium: Machine learning in nuclear physics. Rev Mod Phys (2022) 94:031003. doi:10.1103/revmodphys.94.031003

14. Hutchinson JD, Louise J, Rich A, Elizabeth T, John M, Wim H, et al. Euclid: A new approach to improve nuclear data coupling optimized experiments with validation using machine learning [slides]. In: 15.International Conference on Nuclear Data for Science and Technology (ND2022); 21-29 Jul 2022; Sacramento, CA (2022). doi:10.2172/1898108

15. Verriere M, Schunck N, Kim I, Marević P, Quinlan K, Ngo MN, et al. Building surrogate models of nuclear density functional theory with Gaussian processes and autoencoders. Front Phys (2022) 10:1028370. doi:10.3389/fphy.2022.1028370

16. Adams C, Carleo G, Lovato A, Rocco N. Variational Monte Carlo calculations of a ≤ 4 nuclei with an artificial neural-network correlator ansatz. Phys Rev Lett (2021) 127:022502. doi:10.1103/PhysRevLett.127.022502

17. Gnech A, Adams C, Brawand N, Lovato A, Rocco N. Nuclei with up to a = 6 nucleons with artificial neural network wave functions. Few-Body Syst (2022) 63:7. doi:10.1007/s00601-021-01706-0

18. Utama R, Piekarewicz J, Prosper HB. Nuclear mass predictions for the crustal composition of neutron stars: A bayesian neural network approach. Phys Rev C (2016) 93:014311. doi:10.1103/PhysRevC.93.014311

19. Neufcourt L. Neutron drip line from bayesian model averaging. Phys Rev Lett (2019) 122:062502. doi:10.1103/PhysRevLett.122.062502

20. Lovell AE, Mohan AT, Sprouse TM, Mumpower MR. Nuclear masses learned from a probabilistic neural network. Phys Rev C (2022) 106:014305. doi:10.1103/PhysRevC.106.014305

21. Niu Z, Liang H. Nuclear mass predictions based on bayesian neural network approach with pairing and shell effects. Phys Lett B (2018) 778:48–53. doi:10.1016/j.physletb.2018.01.002

22. Perez RN, Schunck N. Controlling extrapolations of nuclear properties with feature selection. arXiv preprint arXiv:2201.08835 (2022).

23. Mumpower MR, Sprouse TM, Lovell AE, Mohan AT. Physically interpretable machine learning for nuclear masses. Phys Rev C (2022) 106:L021301. doi:10.1103/PhysRevC.106.L021301

24. Bishop CM. Mixture density networks. Tech. Rep. Birmingham, United Kingdom: Aston University (1994).

25. Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, et al. Pytorch: An imperative style, high-performance deep learning library. In: H Wallach, H Larochelle, A Beygelzimer, F d’Alche Buc, E Fox, and R Garnett, editors. Advances in neural information processing systems 32. Red Hook, NY: Curran Associates, Inc. (2019). p. 8024–35.

26. Casten RF, Brenner DS, Haustein PE. Valence p-n interactions and the development of collectivity in heavy nuclei. Phys Rev Lett (1987) 58:658–61. doi:10.1103/PhysRevLett.58.658

27. Casten R, Zamfir N. The evolution of nuclear structure: The npnn scheme and related correlations. J Phys G: Nucl Part Phys (1999) 22:1521. doi:10.1088/0954-3899/22/11/002

28. Wang M, Huang W, Kondev F, Audi G, Naimi S. The AME 2020 atomic mass evaluation (II). tables, graphs and references. Chin Phys C (2021) 45:030003. doi:10.1088/1674-1137/abddaf

29. Nesterenko DA, Eronen T, Kankainen A, Canete L, Jokinen A, Moore ID, et al. Phase-Imaging Ion-Cyclotron-Resonance technique at the JYFLTRAP double Penning trap mass spectrometer. Eur Phys J A (2018) 54:154. doi:10.1140/epja/i2018-12589-y

30. Clark J, Savard G, Mumpower M, Akankainen . Precise mass measurements of radioactive nuclides for astrophysics. Berlin, Germany: EPJA (2023).

31. Duflo J, Zuker A. Microscopic mass formulas. Phys Rev C (1995) 52:R23. doi:10.1103/PhysRevC.52.R23

32. Möller P, Sierk A, Ichikawa T, Sagawa H. Nuclear ground-state masses and deformations: FRDM(2012). Atomic Data Nucl. Data Tables (2016) 109-110:1–204. doi:10.1016/j.adt.2015.10.002

33. Wang N, Liu M, Wu X, Meng J. Surface diffuseness correction in global mass formula. Phys Lett B (2014) 734:215–9. doi:10.1016/j.physletb.2014.05.049

34. Kortelainen M, McDonnell J, Nazarewicz W, Reinhard PG, Sarich J, Schunck N, et al. Nuclear energy density optimization: Large deformations. Phys Rev C (2012) 85:024304. doi:10.1103/PhysRevC.85.024304

35. Goriely S, Chamel N, Pearson JM. Further explorations of skyrme-Hartree-Fock-bogoliubov mass formulas. xvi. inclusion of self-energy effects in pairing. Phys Rev C (2016) 93:034337. doi:10.1103/PhysRevC.93.034337

36. Lovell AE, Mohan AT, Talou P. Quantifying uncertainties on fission fragment mass yields with mixture density networks. J Phys G Nucl Phys (2020) 47:114001. doi:10.1088/1361-6471/ab9f58

38. Garvey GT, Gerace WJ, Jaffe RL, Talmi I, Kelson I. Set of nuclear-mass relations and a resultant mass table. Rev Mod Phys (1969) 41:S1–S80. doi:10.1103/RevModPhys.41.S1

39. Barea J, Frank A, Hirsch JG, Isacker PV, Pittel S, Velázquez V. Garvey-kelson relations and the new nuclear mass tables. Phys Rev C (2008) 77:041304. doi:10.1103/PhysRevC.77.041304

40. Jänecke J, Masson P. Mass predictions from the garvey-kelson mass relations. Atomic Data Nucl Data Tables (1988) 39:265–71. doi:10.1016/0092-640X(88)90028-9

41. Mumpower M, Surman R, McLaughlin G, Aprahamian A. The impact of individual nuclear properties on r-process nucleosynthesis. Prog Part Nucl Phys (2016) 86:86–126. doi:10.1016/j.ppnp.2015.09.001

42. Martin D, Arcones A, Nazarewicz W, Olsen E. Impact of nuclear mass uncertainties on the r process. Phys Rev Lett (2016) 116:121101. doi:10.1103/PhysRevLett.116.121101

43. Sprouse TM, Navarro Perez R, Surman R, Mumpower MR, McLaughlin GC, Schunck N. Propagation of statistical uncertainties of skyrme mass models to simulations of r-process nucleosynthesis. Phys Rev C (2020) 101:055803. doi:10.1103/PhysRevC.101.055803

44. Li M, Meyer BS. Dependence of (n, γ) − (γ, n) equilibrium r-process abundances on nuclear physics properties. Phys Rev C (2022) 106:035803. doi:10.1103/PhysRevC.106.035803

45. Erler J, Birge N, Kortelainen M, Nazarewicz W, Olsen E, Perhac AM, et al. The limits of the nuclear landscape. Nature (2012) 486:509–12. doi:10.1038/nature11188

46. Xia X, Lim Y, Zhao P, Liang H, Qu X, Chen Y, et al. The limits of the nuclear landscape explored by the relativistic continuum hartree–bogoliubov theory. At Data Nucl Data Tables (2018) 121-122:1–215. doi:10.1016/j.adt.2017.09.001

47. Neufcourt L, Cao Y, Giuliani SA, Nazarewicz W, Olsen E, Tarasov OB. Quantified limits of the nuclear landscape. Phys Rev C (2020) 101:044307. doi:10.1103/physrevc.101.044307

Keywords: atomic nuclei, Bayesian averaging, binding energies and masses, Machine Learning-ML, nuclear physics, computational physics

Citation: Mumpower M, Li M, Sprouse TM, Meyer BS, Lovell AE and Mohan AT (2023) Bayesian averaging for ground state masses of atomic nuclei in a Machine Learning approach. Front. Phys. 11:1198572. doi: 10.3389/fphy.2023.1198572

Received: 01 April 2023; Accepted: 07 July 2023;

Published: 19 July 2023.

Edited by:

Paul Stevenson, University of Surrey, United KingdomReviewed by:

Mihai Horoi, Central Michigan University, United StatesCopyright © 2023 Mumpower, Li, Sprouse, Meyer, Lovell and Mohan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Matthew Mumpower, bXVtcG93ZXJAbGFubC5nb3Y=

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.