ORIGINAL RESEARCH article

Front. Phys., 22 February 2023

Sec. Optics and Photonics

Volume 11 - 2023 | https://doi.org/10.3389/fphy.2023.1139976

This article is part of the Research Topic: Application of Spectroscopy in Agricultural Environment and Livestock Breeding

Poultry behaviors reflect the health status of poultry. This paper compares four deep learning methods, Efficientnet-YoloV3, YoloV4-Tiny, YoloV5, and Faster-RCNN, for recognizing four behaviors of laying hens: standing, lying, feeding, and grooming. First, a behavior detection dataset was built from monitoring video data. Then, each of the four algorithms was trained on it. Finally, recognition on the validation set gave the following mAP values: Efficientnet-YoloV3 reached 81.82% (standing), 88.36% (lying), 98.20% (feeding), and 77.30% (grooming) at 9.83 FPS; YoloV4-Tiny reached 65.50% (standing), 78.40% (lying), 94.51% (feeding), and 62.70% (grooming) at 14.73 FPS; YoloV5 reached 97.24% (standing), 98.61% (lying), 97.43% (feeding), and 92.33% (grooming) at 55.55 FPS; and Faster-RCNN reached 95.40% (standing), 98.50% (lying), 99.10% (feeding), and 85.40% (grooming) at 3.54 FPS. The results showed that YoloV5 was the best of the four algorithms and could meet the requirements for real-time recognition of laying hens’ behavior.

With the increasing demand for poultry meat and eggs, the poultry farming industry is rapidly developing towards industrialization and large-scale production. The level of informatization in modern poultry breeding has been continuously improving [1]. The welfare of poultry under large-scale breeding has gradually attracted attention in various countries [2], and standardized farming conditions have been proposed to improve it [3]. Many factors affect the welfare of farmed poultry. A significant one is the breeding environment [4], and the behavioral information of poultry is a good reflection of its welfare level and health status.

Traditional manual observation and counting of poultry behavior is time-consuming, easily influenced by the farmer’s experience, and prone to missed detections. With the development of science and technology, artificial intelligence breeding and non-invasive precision breeding technologies have gradually emerged [5, 6]. Artificial intelligence breeding has good potential for poultry behavior detection. Combining sensors with AI models allows poultry behavior to be detected well [5], and non-invasive sensor monitoring, image monitoring, and sound monitoring technologies are also widely used in the poultry breeding industry [6]. The application of the Internet of Things and data analysis to monitoring chicken welfare on poultry farms has been studied using radio frequency identification (RFID) technology [7]. RFID transponders attached to chicken legs were combined with weighing sensors to build an automatic monitoring system for identifying poultry roosting behavior [8]. Because it is difficult to assess behavioral changes in chickens when humans are present, using an Internet-based camera to monitor and record chicken behavior can be effective in assessing chicken welfare [9]. The suitability of different vision systems and image processing algorithms for monitoring poultry activity on breeding farms has been tested [10]. Machine vision has been proposed for assessing broiler health, allowing early warning and prediction of broiler disease [11].

The sound of poultry eating differs clearly from normal vocalization; based on a combined analysis of timbre and temporal change, three types of poultry feeding vocalization networks were proposed and experimentally shown to achieve a high recognition rate [12]. Researchers studied the relationship between animal vocalization and body weight, and the results showed that the method can be used for early warning [13]. Monitoring and analyzing the nocturnal vocalizations of poultry can provide a practical method for judging abnormal poultry status [14].

With the development of convolutional neural network (CNN) methods in computer vision, deep learning image analysis has increasingly been used for animal behavior detection to improve the welfare of farm animals [15]. Pose analysis of broilers is the basis of poultry behavior prediction: a deep neural network was used for pose estimation and a Naive Bayes model (NBM) to classify broiler poses, achieving high recognition accuracy [16]. CNN-based deep learning models were used to identify rumination behavior in cattle [17]. A CNN was used to extract the feeding features of pigs, and an image processing algorithm to determine the status of the pigs and the feeding areas and to identify the feeding time of individual pigs [18]. CNNs were proposed for identifying three important activities in sheep, and the importance of the method for data capture and data labeling was demonstrated [19]. A deep convolutional neural network was used to detect the walking key points of broilers; the extracted key point information was fed into a classification model, which provided an effective detection method for the clinical symptoms of lameness in poultry [20]. A Kinect sensor combined with a convolutional neural network was effective in identifying chicken behavior [21]. Yolo detection models are also commonly used in animal behavior recognition. The YoloV3 deep learning model was used to identify six behaviors of laying hens and to analyze the frequency of each behavior [22]. A comparison of the YoloV4 and YoloV5 deep learning models in training and detection provided data support for poultry embryo detection [23]. The YoloV5 deep learning model was trained and tested to identify domesticated chickens, and a Kalman-filter-based model was proposed to track multiple chickens, thereby improving the welfare of chickens in animal breeding [24].

To achieve real-time, accurate identification of laying hen behavior, this paper analyzes and compares different first- and second-order target detection algorithms, which verifies the applicability of target detection algorithms to poultry behavior identification and provides experimental support for further real-time monitoring of poultry health.

The experiment was conducted at Huixin Breeding Co., LTD., Lingqiu County, Datong City, Shanxi Province. The white-green-shell laying hens in the chicken house were pure white, 7 weeks of age, and weighed 750–1100 g. During the experiment, two 100 cm × 120 cm × 150 cm wire mesh fences were built in the chicken house. The feed trough was fixed on the long side of the fence; 1/3 of the trough was used for drinking and the remaining 2/3 for feeding. The fence was raised 15 cm above the ground to facilitate the cleaning of manure, and a webcam was installed 200 cm from the center of the fence. The camera was connected to a hard disk video recorder to record and save the experimental videos, and a monitor was connected for observation. Ten white-green-shell hens were placed in each enclosure. The chicken house had natural lighting, vents, and a thermometer and hygrometer, to keep the environment comfortable. The structure diagram of the experimental field is shown in Figure 1.

The experiment was recorded with a Hikvision webcam at an image resolution of 1920 × 1080 and a frame rate of 25 fps. Recording ran from 7:00 to 22:00 each day for 15 days, and the video files on the hard disk video recorder were sorted and saved daily. After the experiment, VSPlayer software was used to capture suitable single frames from the video, and 1,500 pictures with different light intensities were selected. The selected images were labeled using the LabelImg software, which generated annotation files in XML format. Four behaviors were labeled: eating, standing, lying, and grooming. Labeling yielded 2,800 eating, 5,000 lying, 3,000 standing, and 600 grooming labels. The data set was divided into validation and training sets in a 1:9 ratio.
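The label counting and the 1:9 split described above can be sketched as follows. This is a minimal illustration, not the authors' script: it assumes the XML files follow the Pascal VOC layout (LabelImg's default XML output), and the function names, the sample annotation, the `frame_0000` style identifiers, and the random seed are all hypothetical.

```python
import random
import xml.etree.ElementTree as ET
from collections import Counter


def count_labels(xml_documents):
    """Count object labels across Pascal VOC style annotation strings."""
    counts = Counter()
    for text in xml_documents:
        root = ET.fromstring(text)
        for obj in root.iter("object"):
            counts[obj.findtext("name")] += 1
    return counts


def split_dataset(items, val_fraction=0.1, seed=0):
    """Shuffle reproducibly and return (train, val) with val_fraction held out."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# Hypothetical annotation with two labeled hens in one frame.
sample_xml = """
<annotation>
  <object><name>eating</name></object>
  <object><name>lying</name></object>
</annotation>
"""
label_counts = count_labels([sample_xml, sample_xml])

# 1,500 selected pictures split 9:1 into training and validation sets.
image_ids = [f"frame_{i:04d}" for i in range(1500)]
train_ids, val_ids = split_dataset(image_ids)
print(len(train_ids), len(val_ids))
```

Splitting by image rather than by label keeps every annotation of a frame on the same side of the split, which avoids leaking a frame into both sets.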

The computer used for this experiment ran Windows 10 Home Edition on an Intel(R) Core(TM) i7-10750H CPU @ 2.60 GHz with 16 GB of RAM and an NVIDIA GeForce GTX 1650Ti graphics card. Anaconda3 with a Python 3.7 environment was installed, and the algorithms were trained and run for prediction in the PyCharm integrated development environment. All four object detection algorithms were trained for 300 epochs, and the optimal training weights of each algorithm were selected for prediction. Both Efficientnet-YoloV3 and YoloV4-tiny were trained 100 times in an uninterrupted training process, with a batch size of 8, a thawing (unfrozen) training batch size of 4, and 4 threads. Because the Faster-RCNN algorithm is complex and occupies a large amount of memory, its freezing training was set to 50 epochs with a batch size of 4, and its thawing training used a batch size of 2, with 2 threads. The batch size of the YoloV5 algorithm was set to 4, and 4 threads were used for training.

Four target detection algorithms are used in this paper: three first-order (one-stage) algorithms, namely Efficientnet-YoloV3, YoloV4-tiny, and YoloV5, and one second-order (two-stage) algorithm, Faster-RCNN. A first-order algorithm regresses the target bounding box directly, while a second-order algorithm first generates candidate boxes and then classifies them with convolutional processing. Of the two, the first-order algorithm has the advantage of high detection speed, while the second-order algorithm has high detection accuracy. Because real-time detection of laying hens’ behavior requires a fast algorithm, several first-order algorithms were selected; a second-order algorithm was also selected to compare and verify the detection accuracy of the first-order algorithms.

The YoloV3 algorithm improves on YoloV1 and YoloV2, raising detection speed and performing outstandingly in small object detection [25]. The backbone feature extraction network of YoloV3 is Darknet53, which contains residual structures. Stacking multiple residual structures in Darknet53 deepens the network and improves detection accuracy. The FPN (Feature Pyramid Network) structure strengthens feature extraction on the three feature layers output by the backbone to obtain three effective feature layers, on which predictions are finally made. In the Efficientnet-YoloV3 detection algorithm, the main change is replacing the backbone feature extraction network with the EfficientNet model. EfficientNet is an efficient model with few parameters proposed by Google, which improves detection efficiency while retaining detection accuracy. The EfficientNet model consists of a Stem part for preliminary feature extraction, a Blocks part for further extraction, and a classification head. The Efficientnet-YoloV3 network structure is shown in Figure 2.

The YoloV4 target detection algorithm improves on YoloV3 [26]. The main improvements are as follows. The backbone feature extraction network of YoloV4 uses the CSPDarkNet53 structure; the activation function in the backbone is changed from YoloV3’s LeakyReLU to the Mish function, and the CSPnet structure is used in the backbone to optimize the residual structure. The feature pyramid uses the SPP and PANet structures. The SPP structure applies maximum pooling at four different scales to the features extracted by the backbone to enlarge the receptive field, and the PANet structure extracts features from top to bottom after SPP processing, achieving iterative feature extraction that enhances the feature layers. YoloV4-tiny is a simplified version of YoloV4 in which part of the structure is removed to improve detection speed. YoloV4-tiny uses the reduced CSPDarkNet53-tiny backbone, and the activation function is replaced with LeakyReLU to increase operation speed. First, two convolutions compress the image; the Resblock-body residual structures then extract features, and two effective feature layers are finally obtained. A feature pyramid structure is applied to these effective features: after convolution, the last feature layer is upsampled and combined with the preceding one. The CSPnet network structure is shown in Figure 3.
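The two activation functions and the multi-scale pooling named above can be sketched in plain Python. This is an illustration under stated assumptions rather than the network code used in the experiments: the LeakyReLU slope of 0.1 and the pooling kernels 5, 9, and 13 (plus the unpooled input, giving four scales) follow common YoloV4 implementations and are not given in the text, and all function names are hypothetical.

```python
import math


def leaky_relu(x, slope=0.1):
    """LeakyReLU: identity for positive inputs, small slope otherwise (slope assumed)."""
    return x if x > 0 else slope * x


def mish(x):
    """Mish: x * tanh(softplus(x)), with softplus(x) = ln(1 + e^x)."""
    return x * math.tanh(math.log1p(math.exp(x)))


def max_pool_same(grid, k):
    """Stride-1 max pooling over a k x k window; output keeps the input size."""
    h, w = len(grid), len(grid[0])
    r = k // 2
    return [
        [
            max(
                grid[i][j]
                for i in range(max(0, y - r), min(h, y + r + 1))
                for j in range(max(0, x - r), min(w, x + r + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def spp(grid, kernels=(5, 9, 13)):
    """Return the input map plus one pooled map per kernel, to be stacked as channels."""
    return [grid] + [max_pool_same(grid, k) for k in kernels]


feature_map = [[1, 2, 3], [4, 9, 6], [7, 8, 5]]
spp_maps = spp(feature_map)
print(len(spp_maps), "maps of size", len(spp_maps[1]), "x", len(spp_maps[1][0]))
```

Because every pooled map keeps the spatial size of the input, the four maps can be concatenated along the channel axis, which is how the larger windows widen the receptive field without changing resolution.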

In this paper, version 6.0 of YoloV5 is used for training and prediction [27]. The YoloV5 network mainly consists of four parts: the input side, Backbone, Neck, and Prediction. The input side, like that of YoloV4, uses data augmentation, which can be adjusted by modifying program parameters. The augmentation mainly re-stitches randomly scaled, cropped, and arranged images to improve the robustness and generalization of network training. The input side of YoloV5 also integrates the initial anchor box calculation directly into the program to realize adaptive anchor computation. The Backbone of YoloV5 consists of the Focus structure and the CSP structure and uses the more effective SiLU activation function. The Focus structure performs a slicing operation on the input picture, compressing its length and width to increase the number of channels. Two CSP structures are used in YoloV5: CSP1_X in the Backbone and CSP2_X in the Neck. The FPN + PAN structure is used to construct the feature pyramid, while the SPP structure used in YoloV4 is applied directly in the backbone feature extraction network. The YoloV5 network structure is shown in Figure 4.
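The Focus slicing step and the SiLU activation described above can be illustrated with nested lists. This is a minimal sketch, assuming the image is stored as a list of channels and has even height and width; the slice order mirrors the commonly published Focus layer, and the function names are hypothetical.

```python
import math


def silu(x):
    """SiLU activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def focus_slice(image):
    """Rearrange C x H x W into 4C x H/2 x W/2 by taking every other pixel.

    image is a list of channels, each a list of H rows of W values.
    """
    offsets = [(0, 0), (1, 0), (0, 1), (1, 1)]  # (row offset, column offset)
    sliced = []
    for dy, dx in offsets:
        for channel in image:
            sliced.append([row[dx::2] for row in channel[dy::2]])
    return sliced


# One 4 x 4 channel holding the values 0..15 in row-major order.
image = [[[r * 4 + c for c in range(4)] for r in range(4)]]
sliced = focus_slice(image)
print(len(sliced), "channels of size", len(sliced[0]), "x", len(sliced[0][0]))
```

No pixel is discarded: the spatial size halves in each direction while the channel count quadruples, so the following convolution sees the full image at a quarter of the spatial cost.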

Second-order algorithms have high detection accuracy and work well for small target detection. Faster-RCNN was selected from the second-order algorithms for training and testing, to compare with the test results of the Yolo algorithms [28]. The Faster-RCNN algorithm consists of four parts: conv layers (backbone feature extraction), the RPN network, the ROI Pooling structure, and classification and regression. Faster-RCNN can use multiple backbone feature extraction networks; this paper uses Resnet50, which contains two residual structure blocks, Conv Block and Identity Block. The shared feature layer produced by the backbone has two functions. One is to generate proposal boxes through the RPN structure, and the other is to feed the ROI Pooling structure, which produces feature layers of the same size and sends them to the fully connected layer for classification and regression. The Faster-RCNN network structure is shown in Figure 5.
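How ROI Pooling turns proposals of different sizes into feature layers of the same size can be sketched as below. This is a simplified single-channel illustration, assuming integer proposal coordinates on the feature map with an exclusive right and bottom edge; the function name and the 2 × 2 output size are hypothetical and chosen only to keep the example small.

```python
def roi_max_pool(feature, roi, out_size=2):
    """Max-pool the region roi = (x1, y1, x2, y2) into an out_size x out_size grid.

    feature is an H x W list of rows; x2 and y2 are exclusive.
    """
    x1, y1, x2, y2 = roi
    height, width = y2 - y1, x2 - x1
    pooled = []
    for i in range(out_size):
        y_start = y1 + (i * height) // out_size
        y_end = max(y_start + 1, y1 + ((i + 1) * height) // out_size)
        row = []
        for j in range(out_size):
            x_start = x1 + (j * width) // out_size
            x_end = max(x_start + 1, x1 + ((j + 1) * width) // out_size)
            row.append(
                max(
                    feature[y][x]
                    for y in range(y_start, y_end)
                    for x in range(x_start, x_end)
                )
            )
        pooled.append(row)
    return pooled


feature = [[r * 4 + c for c in range(4)] for r in range(4)]
full_roi = roi_max_pool(feature, (0, 0, 4, 4))
small_roi = roi_max_pool(feature, (1, 1, 4, 3))
print(full_roi, small_roi)
```

Both proposals, one 4 × 4 and one 3 × 2, come out as 2 × 2 grids, which is what lets a fixed-size fully connected layer follow the pooling step.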

After training, the optimal weights of each algorithm were selected to obtain its evaluation indexes. Precision, Recall, mAP (mean Average Precision), and IOU were selected as the evaluation indicators of this experiment. IOU (Intersection over Union) is a standard for measuring the accuracy of detecting objects in a specific data set; it is a simple measure that can be applied to any task whose output is a predicted range. The calculation methods are as follows:
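Under the standard definitions, which agree with the term descriptions in this section, the four indicators can be written as below. The symbols $P(R)$ (precision as a function of recall), $N$ (number of behavior classes), $AP_i$ (average precision of class $i$), and $B_p$, $B_{gt}$ (predicted and ground-truth boxes) are notation assumed here for illustration.

$$
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}
$$

$$
AP = \int_0^1 P(R)\,dR, \qquad
mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i
$$

$$
IOU = \frac{\lvert B_p \cap B_{gt} \rvert}{\lvert B_p \cup B_{gt} \rvert}
$$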

In the formulas, TP (True Positives) means that the sample is determined as positive and the determination is correct, TN (True Negatives) means that the sample is determined as negative and the determination is correct, FP (False Positives) means that the sample is determined as positive but the determination is incorrect, and FN (False Negatives) means that the sample is determined as negative but the determination is incorrect. Precision is the ratio of the number of correctly determined positive samples to the total number of samples determined as positive. Recall is the ratio of the number of correctly determined positive samples to the total number of actual positive samples. mAP (mean Average Precision) is the mean of the per-class average precision, which is calculated with the interpolated average, that is, the area under the Precision-Recall curve.
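These definitions can be turned into a small numeric sketch. It assumes the all-point interpolation commonly used for Pascal VOC style evaluation (precision is replaced by its running maximum from the right before the area is summed); the text does not state which interpolation was used, and the function names and sample numbers are hypothetical.

```python
def precision(tp, fp):
    """Correct positive determinations over all positive determinations."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Correct positive determinations over all actual positives."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(recalls, precisions):
    """Area under the Precision-Recall curve; recalls must be in ascending order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from left to right (interpolation step).
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


ap_example = average_precision([0.5, 1.0], [1.0, 0.5])
print(precision(8, 2), recall(8, 8), ap_example)
```

In the two-point example, precision is 1.0 up to a recall of 0.5 and 0.5 from there to a recall of 1.0, so the area is 0.5 × 1.0 + 0.5 × 0.5 = 0.75.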

After training, the evaluation files were generated, with the nms_iou threshold used for non-maximum suppression set to 0.5, to obtain the evaluation indexes of the four algorithms. Figure 6 shows the results of the various algorithms at mAP@0.5, and the detailed data analysis is shown in Table 1.
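The role of the 0.5 threshold in non-maximum suppression can be sketched as follows. This is a generic greedy version, assuming boxes are given as (x1, y1, x2, y2) corner coordinates with a confidence score and that the procedure is run separately for each behavior class; the function names and sample boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.5):
    """Greedy non-maximum suppression over (box, score) pairs of one class."""
    remaining = sorted(detections, key=lambda d: d[1], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        # Drop every box that overlaps the kept box by more than the threshold.
        remaining = [d for d in remaining if iou(best[0], d[0]) <= iou_threshold]
    return kept


detections = [
    ((0, 0, 10, 10), 0.9),
    ((1, 1, 11, 11), 0.8),   # overlaps the first box with IOU 81/119
    ((20, 20, 30, 30), 0.7),
]
kept = nms(detections)
print(kept)
```

A lower threshold removes more overlapping boxes and risks merging neighboring hens into one detection, while a higher one keeps more duplicates.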

Figure 7 and Table 2 compare the Precision of the four algorithms, showing the detection accuracy of each trained algorithm for the different behaviors of laying hens.

Figure 8 and Table 3 compare the Recall of the four algorithms.

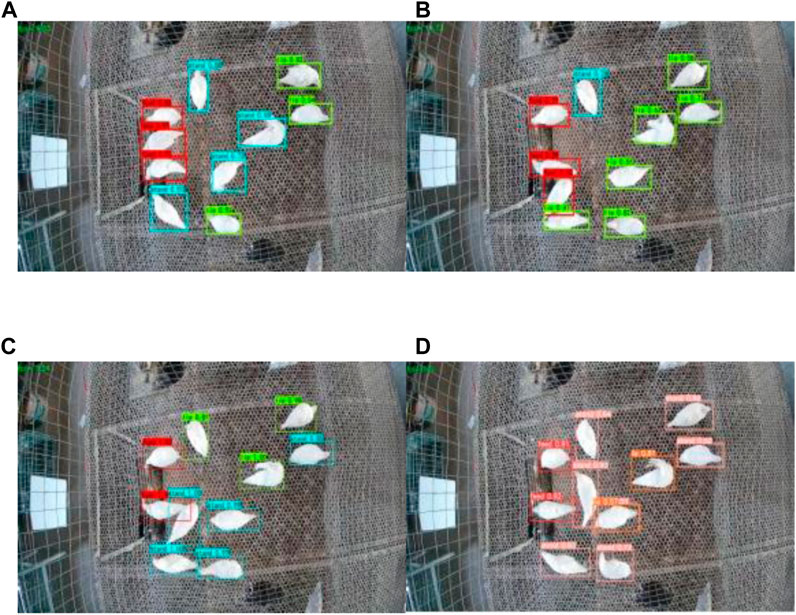

After comparing the evaluation indicators, pictures of the laying hens in two environments, day and night, were selected and tested with the different target detection algorithms; the results are shown in Figure 9. Panels (A) to (D) show the behavior of a laying hen detected in the daytime environment using YoloV3, YoloV4, YoloV5, and Faster-RCNN, respectively, and panels (E) to (H) show detection on a picture of a laying hen in the night environment. The identification accuracy of each algorithm can be seen in the figure.

FIGURE 9. Detection effect of various algorithms in different environments (A) YoloV3 Day; (B) YoloV4 Day; (C) YoloV5 Day; (D) Faster-RCNN Day; (E) YoloV3 Night; (F) YoloV4 Night; (G) Faster-RCNN Night; (H) YoloV5 Night.

The experiment also compared the FPS values of the algorithms. A 9 s test video was clipped from the recordings and run through the different target detection algorithms. Figure 10 shows the real-time FPS values, where (A) to (D) are the FPS values when detecting with YoloV3, YoloV4, YoloV5, and Faster-RCNN, respectively. The results are shown in Figure 11.
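An FPS value of this kind is the number of processed frames divided by the elapsed wall-clock time. The sketch below shows one way to measure it; it is not the measurement code used in the experiment, `dummy_detect` is a hypothetical stand-in for a real detector, and the 225 frames assume that the 9 s clip kept the 25 fps recording rate.

```python
import time


def measure_fps(detect, frames):
    """Run detect on every frame and return frames processed per second."""
    start = time.perf_counter()
    for frame in frames:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


def dummy_detect(frame):
    """Stand-in for a detector; a real model's inference call would go here."""
    return sum(frame)


# 9 s of video at 25 fps gives 225 frames (synthetic data here).
frames = [list(range(1000)) for _ in range(9 * 25)]
fps = measure_fps(dummy_detect, frames)
print(f"{fps:.2f} FPS")
```

Measured this way, the figure depends on the hardware and on whether decoding and drawing are included in the timed loop, so values are only comparable when all algorithms are timed under the same conditions.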

FIGURE 10. Video detection of the FPS values of various algorithms (A) YoloV3 FPS; (B) YoloV4 FPS; (C) Faster-RCNN FPS; (D) YoloV5 FPS.

In this paper, four target detection algorithms, YoloV3, YoloV4, YoloV5, and Faster-RCNN, were selected for training and detection of four behaviors of laying hens. Across the three evaluation indexes, the YoloV5 target detection algorithm showed a very good detection effect in this experiment. In Figure 6, the mAP value of YoloV5 is significantly higher than those of YoloV3 and YoloV4, and better than that of Faster-RCNN. In particular, the grooming (embellish) behavior of laying hens has a mAP value of 92.33%. In Figures 7, 8, the precision and recall values of YoloV5 are 91.34% and 98.15%, respectively, higher than the values of the other three detection algorithms for the lying behavior. Experiments with the same data set size and experimental equipment showed that YoloV5 and Faster-RCNN were able to accurately identify the various behaviors of laying hens, while YoloV3 and YoloV4 performed worse, with missed detections and misjudgments. Two environments, day and night, were also selected for detection. In Figure 9, the detection confidence of YoloV3, YoloV4, YoloV5, and Faster-RCNN for the standing behavior is 91%, 91%, 96%, and 100% in the daytime environment, and 81%, 77%, 93%, and 99% in the dark environment. The detection confidence of YoloV5 and Faster-RCNN was very high in both environments, with Faster-RCNN reaching 100% in the daytime, while the detection accuracy of YoloV3 and YoloV4 dropped noticeably at night. Figures 10, 11 show that YoloV5 has the highest detection speed under the same hardware conditions, with an FPS value that can reach 55, while the Faster-RCNN FPS is 3.54; YoloV5 can therefore fully meet the needs of real-time detection on farms. In subsequent studies, the YoloV5 detection accuracy can be further improved by enlarging the training data set and strengthening data augmentation.

The comparative experimental results of the four target detection algorithms show that the detection accuracy and detection speed of YoloV5 are better than those of YoloV3 and YoloV4. The detection accuracy of Faster-RCNN and YoloV5 is similar, but Faster-RCNN has a low detection speed and occupies more memory. The precision values of the YoloV5 target detection algorithm were 96.12%, 91.34%, 86.97%, and 97.78% for the four behaviors feed, stand, lie, and embellish, respectively; the recall values were 98.21%, 98.15%, 94.12%, and 86.45%, respectively. YoloV5 can effectively identify four different behaviors of laying hens in day and night environments, and its detection speed is fast enough to meet the needs of real-time detection. It can be used for real-time detection of laying hens’ behavior in breeding farms and can provide data support for the health assessment of laying hens. Its high detection accuracy and fast detection speed make it easy to deploy on embedded intelligent front-end detection equipment. In the experiments of this paper, the breeding density of the experimental environment still needs to be increased. In future work, we will strengthen research on breeding farms with higher breeding density.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

FW and JC: Investigation, formal analysis, and prepare draft; YX: Writing, review and editing; and HL: Conceptualization, methodology, review and editing, project administration.

This paper was supported by the Fundamental Research Program of Shanxi Province (20210302123054).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Wu D, Cui D, Zhou M, Ying Y. Information perception in modern poultry farming: A review. Comput Electron Agric (2022) 199:107131. doi:10.1016/j.compag.2022.107131

2. Hartung J, Nowak B, Springorum AC. Animal welfare and meat quality. In: J Kerry, editor. Improving the sensory and nutritional quality of fresh meat. Netherlands: Elsevier (2009). p. 628–646.

3. Silva RTBR, Nääs IA, Neves DP. Selecting the best norms for broiler rearing welfare legislation. In: Precision Livestock Farming 2011 - Papers Presented at the 5th European Conference on Precision Livestock Farming, ECPLF 2011 (2011). p. 522–527.

4. Li B, Wang Y, Zheng W, Tong Q. Research progress in environmental control key technologies, facilities and equipment for laying hen production in China. Nongye Gongcheng Xuebao/Transactions Chin Soc Agric Eng (2020) 36(16):212–221. doi:10.11975/j.issn.1002-6819.2020.16.026

5. Bao J, Xie Q. Artificial intelligence in animal farming: A systematic literature review. J Clean Prod (2022) 331:129956. doi:10.1016/j.jclepro.2021.129956

6. Wang K, Zhao X, He Y. Review on noninvasive monitoring technology of poultry behavior and physiological information. Nongye Gongcheng Xuebao/Transactions Chin Soc Agric Eng (2017) 33(20):197–209. doi:10.11975/j.issn.1002-6819.2017.20.025

7. Gridaphat S, Phakkaphong K, Kraivit W. Toward IoT and data analytics for the chicken welfare using RFID technology. In: 19th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, ECTI-CON; 24-27 May 2022; Prachuap Khiri Khan, Thailand (2022).

8. Wang K, Liu K, Xin H, Chai L, Wang Y, Fei T, et al. An RFID-based automated individual perching monitoring system for group-housed poultry. Trans ASABE (2019) 62(3):695–704. doi:10.13031/trans.13105

9. Dan H, John B. Video recording system to assess animal welfare impact of environmental changes in barns. In: 2022 ASABE Annual International Meeting; 17 - 20 Jul 2022; Houston, USA (2022).

10. Carlos G, Ricardo P, José F, María D V. Real-time monitoring of poultry activity in breeding farms. In: Proceedings IECON 2017 - 43rd Annual Conference of the IEEE Industrial Electronics Society; 29 October 2017 - 01 November 2017; Beijing, China (2017). p. 3574–3579.

11. Okinda C, Lu M, Liu L, Nyalala I, Muneri C, Wang J, et al. A machine vision system for early detection and prediction of sick birds: A broiler chicken model. Biosyst Eng (2019) 188:229–42. doi:10.1016/j.biosystemseng.2019.09.015

12. Huang J, Zhang T, Cuan K, Fang C. An intelligent method for detecting poultry eating behaviour based on vocalization signals. Comput Electron Agric (2021) 180:105884. doi:10.1016/j.compag.2020.105884

13. Fontana I, Tullo E, Butterworth A, Guarino M. An innovative approach to predict the growth in intensive poultry farming. Comput Electron Agric (2015) 119:178–83. doi:10.1016/j.compag.2015.10.001

14. Du X, Lao F, Teng G. A sound source localisation analytical method for monitoring the abnormal night vocalisations of poultry. Sensors (2018) 18(9):2906. doi:10.3390/s18092906

15. Li G, Huang Y, Chen Z, Chesser GD, Purswell JL, Linhoss J, et al. Practices and applications of convolutional neural network-based computer vision systems in animal farming: A review. Sensors (2021) 21(4):1492. doi:10.3390/s21041492

16. Fang C, Zhang T, Zheng H, Huang J, Cuan K. Pose estimation and behavior classification of broiler chickens based on deep neural networks. Comput Electron Agric (2021) 180:105863. doi:10.1016/j.compag.2020.105863

17. Ayadi S, Said A, Jabbar R, Aloulou C. Dairy cow rumination detection: A deep learning approach (2021) arXiv.

18. Chen C, Zhu W, Steibel J, Siegford J, Han J, Norton T. Recognition of feeding behaviour of pigs and determination of feeding time of each pig by a video-based deep learning method. Comput Electron Agric (2020) 176:105642. doi:10.1016/j.compag.2020.105642

19. Kleanthous N, Hussain A, Khan W, Sneddon J, Liatsis P. Deep transfer learning in sheep activity recognition using accelerometer data. Expert Syst Appl (2022) 207:117925. doi:10.1016/j.eswa.2022.117925

20. Nasiri A, Yoder J, Zhao Y, Hawkins S, Prado M, Gan H. Pose estimation-based lameness recognition in broiler using CNN-LSTM network. Comput Electron Agric (2022) 197:106931. doi:10.1016/j.compag.2022.106931

21. Pu H, Lian J, Fan M. Automatic recognition of flock behavior of chickens with convolutional neural network and kinect sensor. Int J Pattern Recognition Artif Intelligence (2018) 32(07):1850023. doi:10.1142/s0218001418500234

22. Wang J, Wang N, Li L, Ren Z. Real-time behavior detection and judgment of egg breeders based on YOLO v3. Neural Comput Appl (2019) 32:5471–81. doi:10.1007/s00521-019-04645-4

23. Nakaguchi VM, Ahamed T. Development of an early embryo detection methodology for quail eggs using a thermal micro camera and the YOLO deep learning algorithm. Sensors (2022) 22(15):5820. doi:10.3390/s22155820

24. Neethirajan S. ChickTrack – a quantitative tracking tool for measuring chicken activity. Measurement (2022) 191:110819. doi:10.1016/j.measurement.2022.110819

25. Jia Y, Liu L, Zhang L, Li H. Target detection method based on improved YOLOv3. In: 2022 3rd International Conference on Computer Vision, Image and Deep Learning and International Conference on Computer Engineering and Applications. CVIDL and ICCEA; 20-22 May 2022; Changchun, China (2022).

26. Jiang Z, Zhao L, Li S, Jia Y. Real-time object detection method based on improved YOLOv4-tiny (2020) arXiv.

27. Cao C, Chen C, Kong X, Wang Q, Deng Z. A method for detecting the death state of caged broilers based on improved Yolov5. SSRN Electron J (2022). doi:10.2139/ssrn.4107058

Keywords: behavior detection, deep learning, poultry behaviors, faster-RCNN, YoloV5

Citation: Wang F, Cui J, Xiong Y and Lu H (2023) Application of deep learning methods in behavior recognition of laying hens. Front. Phys. 11:1139976. doi: 10.3389/fphy.2023.1139976

Received: 08 January 2023; Accepted: 09 February 2023;

Published: 22 February 2023.

Edited by:

Leizi Jiao, Beijing Academy of Agriculture and Forestry Sciences, China

Reviewed by:

Jiangbo Li, Beijing Academy of Agriculture and Forestry Sciences, China

Copyright © 2023 Wang, Cui, Xiong and Lu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Huishan Lu, MTM5MzQ1OTczNzlAMTM5LmNvbQ==
