- ¹Department of Data Science, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany

- ²Max-Planck-Institut für Mathematik in den Naturwissenschaften, Leipzig, Germany

- ³Fakultät für Mathematik und Informatik, Universität Leipzig, Leipzig, Germany

- ⁴Santa Fe Institute for the Sciences of Complexity, Santa Fe, NM, United States

In this paper, we numerically investigate two distinct phenomena, coherence resonance (CR) and self-induced stochastic resonance (SISR), in multiplex neural networks in the presence of spike-timing-dependent plasticity (STDP). The high degree of CR achieved in a single layer network turns out to be more robust than that of SISR against variations in the network topology and the STDP parameters. This behavior is the opposite of that reported by Yamakou and Jost (Phys. Rev. E 100, 022313, 2019), where, in the absence of STDP, SISR is more robust than CR against variations in the network parameters. Moreover, the degree of SISR in a single layer network increases with a decreasing (increasing) depression temporal window (potentiation adjusting rate) of STDP. However, the poor degree of SISR in a single layer network can be significantly enhanced by multiplexing this layer with another one exhibiting a high degree of CR or SISR, with suitable inter-layer STDP parameter values. In addition, for all inter-layer STDP parameter values, the enhancement strategy of SISR based on the occurrence of SISR outperforms the one based on CR. Finally, the optimal enhancement of SISR based on the occurrence of SISR (CR) occurs via long-term potentiation (long-term depression) of the inter-layer synaptic weights.

1 Introduction

Spiking activity in neural systems can be induced and affected by noise, which can be produced internally by the system itself and/or externally by processes acting on the system. The sources of neural noise include 1) synaptic noise, which is externally produced and is caused by the quasi-random release of neurotransmitters by synapses and/or random synaptic input from other neurons, and 2) channel noise, which is internally produced and comes from the random switching of ion channels [47, 49]. Synaptic and channel noise have been found to give rise to peculiar dynamical behavior in neural networks, including various resonance phenomena. The most prominent of these noise-induced resonance phenomena are stochastic resonance (SR) [3, 28], coherence resonance (CR) [36], self-induced stochastic resonance (SISR) [10, 34], and inverse stochastic resonance (ISR) [18, 42]. The emergence and the dynamics of SR, CR, SISR, and ISR differ considerably from one another, and they have therefore mostly been investigated separately. Nevertheless, fundamental relationships might exist between some or even all of these noise-induced resonances, and efficient enhancement schemes for information processing could emerge from their co-existence in a neural network. Some studies have shown intriguing results about the interplay between some of these phenomena. The bifurcation and stochastic analysis in [48] revealed that the parameter that changes the relative geometric positioning (and stability) of the fixed point (the quiescent state of the neuron) with respect to the fold point of the cubic nullcline of the FitzHugh-Nagumo (FHN) model [11] can cause a switch between SISR and ISR in the same synaptic weak-noise limit.
Experiments have frequently shown that real biological neurons with similar physiological features, excited by identical stimuli, may spike quite differently, thus expressing different neuro-computational properties [40]. The analysis presented in [48] may therefore provide a qualitative explanation for this behavior. Zamani et al. [51] later showed a similar interplay between SR and ISR in a minimal bistable spiking neural circuit, where both mechanisms could co-exist under careful preparation of the circuit. Whether, and if so how, all of these different types of noise-induced resonance mechanisms are related, and what efficient enhancement schemes for information processing emerge from their interactions, is far from being completely understood.

In this paper, CR and SISR are the phenomena of interest. In both CR and SISR, small random perturbations of an excitable neural system with a large time scale separation ratio may lead to the emergence of coherent spiking activity. The mechanisms behind these two phenomena, however, are entirely different [10]. CR [36] occurs when a maximal degree of regularity in the neuron's spiking activity is achieved at an optimal noise intensity without a periodic input signal, provided the neuron's bifurcation parameters are tuned near the Hopf bifurcation [25, 26, 36] or the saddle-node bifurcation [17, 19, 20, 27] threshold. In this case, a relatively small noise amplitude can easily (without overwhelming the entire dynamics) drive the neuron towards the coherent spiking activity that emerges right after the bifurcation threshold. Thus, during CR, noise plays a relatively passive role. In the FitzHugh-Nagumo neuron model, which is used in this work, CR requires that the noise source be attached to the slow recovery variable, mimicking the dynamics of channel noise [10].

SISR, on the other hand, occurs when a vanishingly small noise intensity perturbing the fast variable of an excitable system results in the onset of limit cycle behavior that is absent without noise [34]. SISR combines a coherence resonance-type mechanism with an intrinsic reset mechanism, and no external periodic driving is required. The period of the coherent oscillations created by the noise depends non-trivially on the noise intensity and on the timescale separation between the fast and slow variables of the excitable system. SISR essentially depends on the interplay between three different timescales: the two timescales of the deterministic part of the excitable system (i.e., the fast and slow timescales of the fast and slow variables, respectively), plus a third timescale characteristic of the noise, which, in contrast to CR, plays an active role in the mechanism of SISR. Thus, the mechanism behind SISR differs from that of CR (see [10]), and remarkably, SISR can also occur away from bifurcation thresholds, in a parameter regime where the zero-noise (deterministic) dynamics display neither a limit cycle nor even its precursor. The properties of the limit cycle induced by SISR are controlled by both the noise intensity and the time scale separation ratio. Moreover, unlike CR, SISR requires a strong timescale separation between the variables of the excitable system. In the FitzHugh-Nagumo neuron model, SISR (in contrast to CR) requires that the noise source be attached to the fast membrane potential variable, mimicking the dynamics of synaptic noise [10].
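To make the two noise placements concrete, the following minimal sketch integrates a single stochastic FHN neuron. It is an illustration under stated assumptions, not the model of Eq. 1: the right-hand sides below are an assumed classical FHN form, γ = 1.05 is a hypothetical value chosen because it places this particular form in the excitable regime (it is not the value γ = 0.75 used with Eq. 1), the function name is hypothetical, and the Euler-Maruyama scheme is a simpler stand-in for the stochastic Runge-Kutta method used in this work. Noise on the fast variable only corresponds to the synaptic-noise (SISR) setting; noise on the slow variable only corresponds to the channel-noise (CR) setting.

```python
import numpy as np


def simulate_fhn(eps, gamma, sigma_fast, sigma_slow, dt=0.01, n_steps=100_000, seed=0):
    """Euler-Maruyama integration of an ASSUMED stochastic FHN neuron:

        dv = (v - v**3 / 3 - w) dt + sigma_fast dW1   (fast variable: synaptic noise, SISR setting)
        dw = eps * (v + gamma) dt  + sigma_slow dW2   (slow variable: channel noise, CR setting)

    Returns the membrane-potential trace v(t_k), k = 1..n_steps.
    """
    rng = np.random.default_rng(seed)
    # Gaussian Wiener increments dW ~ N(0, dt), pre-drawn for both noise channels
    dW = rng.standard_normal((n_steps, 2)) * np.sqrt(dt)
    # start at the deterministic fixed point (v*, w*) = (-gamma, -gamma + gamma**3 / 3)
    v, w = -gamma, -gamma + gamma**3 / 3.0
    trace = np.empty(n_steps)
    for k in range(n_steps):
        dv = (v - v**3 / 3.0 - w) * dt + sigma_fast * dW[k, 0]
        dw = eps * (v + gamma) * dt + sigma_slow * dW[k, 1]
        v, w = v + dv, w + dw
        trace[k] = v
    return trace


# noise on the fast variable only (SISR-type setting) vs. the slow variable only (CR-type setting)
v_sisr = simulate_fhn(eps=0.001, gamma=1.05, sigma_fast=0.05, sigma_slow=0.0)
v_cr = simulate_fhn(eps=0.01, gamma=1.05, sigma_fast=0.0, sigma_slow=0.05)
```

Without noise the trajectory stays at the stable fixed point, so any spiking in either trace is noise-induced; only the variable that receives the noise differs between the two calls.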

Of course, it is worth pointing out that a neuron can have both channel and synaptic noise simultaneously. In this case, CR and SISR will compete with each other. The dominant phenomenon will correspond to the one whose conditions are met first. It is shown in [10, 34] that SISR will dominate CR because the oscillations due to SISR are contained in those of CR. Thus, the conditions necessary for SISR can always be met first. In the current work, we shall not consider the situations where we have both channel and synaptic noise in a given layer. Just one type of noise will be considered in a given layer, and thus the competition between CR and SISR in a given layer will also not be considered.

The characteristic features of CR and SISR based on 1) time-delayed feedback couplings and network topology [1, 15, 30], 2) the multiplexing of layer networks [6, 31, 39, 46, 50], and 3) the use of one type of noise-induced resonance mechanism to optimize another type [50] have been established. It has been shown that an appropriate selection of the time-delayed feedback parameters of FHN neurons coupled in a ring network can modulate CR: with a local coupling topology, synaptic time delay weakens CR, while with non-local and global coupling, only appropriate synaptic time delays can strengthen or weaken CR [1, 15, 30, 38]. The enhancement of CR and SISR in neural systems with multiplex layer networks has recently attracted attention. In a multiplex network [5], the nodes participate in several networks simultaneously, and the connections and interaction patterns differ between the networks, although the nodes preserve their identities across the different networks or layers. Since there may exist different types of relations between neurons or brain regions, multiplex networks have also been proposed as neurophysiological models [8]. For instance, functional couplings between brain regions, such as synchronization, can be realized in different frequency bands, and each frequency band can process a different type of information, e.g., phonetic, semantic, and prosodic information in language processing [13]. The main purpose of our paper, however, is a formal investigation of the interplay between different types of stochastic resonance in a model whose individual units follow FHN dynamics, since FHN has become a paradigmatic model system for nonlinear dynamics [24] in which many features can be studied in rather explicit terms.

In particular, the enhancement of CR in one layer of a multiplex network of FHN neurons based on the occurrence of SISR in another layer was established in [50]. There, two enhancement schemes for CR were compared. In the first scheme (CR-CR scheme), one layer of the multiplex network is configured so that CR is optimal, and the other layer is configured so that CR is non-optimal in isolation. In the second scheme (SISR-CR scheme), one layer is configured so that SISR is optimal, and the other layer is configured so that CR is non-optimal in isolation. It was then shown that which optimal resonance mechanism (CR or SISR) in one layer yields the better enhancement of CR in the other layer depends on the multiplexing time delay and strength between the two layers. With weaker multiplexing strength and shorter time delays between the layers, the CR-CR scheme performs better than the SISR-CR scheme. With stronger multiplexing connections, however, the SISR-CR scheme outperforms the CR-CR scheme, especially at weaker noise amplitudes and longer multiplexing time delays. This result suggests that the interactions between different noise-induced resonance mechanisms, combined with the multiplexing of layer networks, could open up new possibilities for the control and enhancement of information processing in neural networks. Enhancement schemes based on multiplexing (or, more generally, on connecting several layers into a multilayer network) are advantageous because the dynamics of one layer can be controlled by adjusting the parameters of another layer. So far, the enhancement of CR and SISR based on the multiplexing of neural layer networks has been established only in regular networks in the absence of STDP [6, 31, 39, 46, 50].

Adaptive (or learning) rules in biological neural networks have been linked to an important mechanism, namely spike-timing-dependent plasticity (STDP) [16, 29]. STDP describes how synaptic weights are modified by repeated pairings of pre- and postsynaptic action potentials (spikes), with the sign and the magnitude of the modification depending on the relative timing of the firing of the neurons. Depending on the precise timing of pre- and postsynaptic action potentials, the synaptic weights can exhibit long-term potentiation (LTP, i.e., persistent strengthening of synapses) or long-term depression (LTD, i.e., persistent weakening of synapses). There are two main types of STDP: Hebbian excitatory STDP (eSTDP) and anti-Hebbian inhibitory STDP (iSTDP). In this paper, we focus only on eSTDP.

The ubiquity and importance of STDP in neural dynamics call for an investigation of the enhancement of CR and SISR in adaptive neural networks driven by STDP. Such an investigation should be valuable both theoretically and experimentally. Previous works have shown the crucial role of adaptivity in networks of coupled oscillators. For example, [2] shows that the plasticity of the connections between oscillators plays a facilitatory role for inverse stochastic resonance (ISR), where adaptive couplings guide the dynamics of coupled oscillators to parameter domains in which noise effectively enhances ISR. In [12], it is shown how the interaction of noise and multiscale dynamics, induced by slowly adapting feedback, may affect an excitable system, giving rise to a new mode of behavior based on switching dynamics that allows for efficient control of the properties of CR.

In the current work, the main questions we want to address are the following: 1) How do network topology and STDP parameters affect the degree of coherence due to CR and SISR? 2) In the presence of STDP, which of these noise-induced resonance phenomena is more robust to parametric perturbations? 3) In the presence of STDP, is an enhancement of the less robust phenomenon in an isolated layer network still possible via multiplexing? 4) Can the occurrence of one resonance phenomenon in one layer be used to enhance the other phenomenon in another layer in the presence of STDP? 5) If the answers to the previous questions are affirmative, then what behavior (LTP or LTD) of the STDP learning rule optimizes the multiplexing enhancement strategy?

Here is an outline of the remainder of this article. In Section 2, we present the mathematical model of the stochastic neural networks and briefly describe the STDP learning rule and the dynamical differences in the emergence of the two noise-induced resonance phenomena of interest. In Section 3, we present the numerical methods used in our simulations. In Section 3.1, we discuss the dynamics of CR and SISR in isolated layer networks, and in Section 3.2, the enhancement of SISR using the multiplexing technique. Finally, we summarize our findings and conclude in Section 4.

2 Mathematical Model

We consider the following two-layer multiplex network, where each layer represents a network of N diffusively coupled FHN neurons in the excitable regime and in the presence of noise:

The terms

where the synaptic connectivity matrices

The inter-layer synaptic currents

where τ12 represents the multiplexing (inter-layer) time delay of the electrical synaptic coupling between layer 1 and layer 2. With increasing time t, the intra-layer synaptic strengths

The intra-layer synaptic modifications

where LTP occurs if

Experimental investigations [7, 9, 14, 45, 52] have shown that, for a fixed stimulus, D1τ1d > P1τ1p ensures dominant depression of synapses, whereas D1τ1d ≤ P1τ1p yields dominant potentiation. In Figures 1A,B, we show the asymmetric Hebbian time window for the synaptic modification

FIGURE 1. Time windows for an asymmetric Hebbian eSTDP learning rule. Plot of synaptic modification
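Because the explicit form of the eSTDP rule (Eq. 4) is not reproduced in this section, the sketch below assumes the standard exponential asymmetric Hebbian window with Δt = t_post − t_pre: ΔM = P exp(−Δt/τp) for Δt > 0 (LTP) and ΔM = −D exp(Δt/τd) for Δt < 0 (LTD). Under this assumption, the areas under the potentiation and depression branches are Pτp and Dτd, which is why the sign of Pτp − Dτd decides between dominant potentiation and dominant depression. The function names are hypothetical.

```python
import numpy as np


def stdp_window(delta_t, P=0.1, tau_p=20.0, D=0.5, tau_d=20.0):
    """ASSUMED asymmetric Hebbian eSTDP window, delta_t = t_post - t_pre.

    delta_t > 0: potentiation  +P * exp(-delta_t / tau_p)
    delta_t < 0: depression    -D * exp(+delta_t / tau_d)
    delta_t = 0: no change
    """
    delta_t = np.asarray(delta_t, dtype=float)
    ltp = P * np.exp(-np.abs(delta_t) / tau_p)
    ltd = -D * np.exp(-np.abs(delta_t) / tau_d)
    return np.where(delta_t > 0, ltp, np.where(delta_t < 0, ltd, 0.0))


def net_window_area(P, tau_p, D, tau_d):
    """Integral of the assumed window over all delta_t: P*tau_p - D*tau_d.

    Negative -> depression dominates; non-negative -> potentiation dominates.
    """
    return P * tau_p - D * tau_d


# parameter values quoted in the figure captions and in Section 3.2
print(net_window_area(P=0.1, tau_p=20.0, D=0.5, tau_d=20.0))   # -8.0 -> depression-dominated
print(net_window_area(P=1.0, tau_p=20.0, D=0.5, tau_d=0.01))   # positive -> potentiation-dominated
```

With the caption values P1 = 0.1, D1 = 0.5, τ1p = τ1d = 20, the criterion D1τ1d > P1τ1p holds (10 > 2), so depression dominates; with P1 = 1 and τ1d = 0.01, D1τ1d = 0.005 ≤ P1τ1p = 20, so potentiation dominates.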

3 Numerical Results

To quantify the degree of CR and SISR (i.e., the degree of coherence of the noise-induced spiking activity), we use the standard deviation of the interspike intervals (ISIs) normalized by their mean, commonly known as the coefficient of variation [36]. Because the coefficient of variation is based on the time intervals between spikes, it is related to the timing precision of information processing in neural systems [35] and is therefore an essential statistical measure in neural coding. The coefficient of variation (CV) of N coupled neurons is defined as [30]:

where

⟨ISIi⟩ and
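Since the CV formula is incomplete in this section, the sketch below assumes the network-averaged definition commonly used for FHN networks: CV = sqrt(⟨ISI²⟩ − ⟨ISI⟩²)/⟨ISI⟩, with ⟨ISI⟩ = (1/N) Σ_i ⟨ISI_i⟩ and ⟨ISI²⟩ = (1/N) Σ_i ⟨ISI_i²⟩, where ⟨ISI_i⟩ is the time-averaged interspike interval of neuron i. Spikes are detected as upward crossings of a threshold; the threshold value and the function names are hypothetical.

```python
import numpy as np


def spike_times(v, dt, threshold=1.0):
    """Times of upward crossings of an (assumed) spike-detection threshold by the trace v."""
    v = np.asarray(v, dtype=float)
    idx = np.flatnonzero((v[:-1] < threshold) & (v[1:] >= threshold)) + 1
    return idx * dt


def network_cv(spike_trains):
    """Assumed network CV: sqrt(<ISI^2> - <ISI>^2) / <ISI>, where <ISI> and <ISI^2>
    are averages over neurons of each neuron's time-averaged ISI and ISI^2."""
    mean_isi, mean_isi_sq = [], []
    for times in spike_trains:
        isi = np.diff(np.asarray(times, dtype=float))
        if isi.size == 0:          # fewer than two spikes: no ISI for this neuron
            continue
        mean_isi.append(isi.mean())
        mean_isi_sq.append(np.mean(isi**2))
    if not mean_isi:
        return float("nan")
    m1, m2 = np.mean(mean_isi), np.mean(mean_isi_sq)
    return float(np.sqrt(max(m2 - m1**2, 0.0)) / m1)
```

Perfectly periodic spiking gives CV = 0 and a Poisson spike train gives CV = 1, so lower CV values indicate a higher degree of coherence.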

For our investigations, we numerically integrate the set of stochastic differential equations in Eq. 1 with the Hebbian eSTDP rule of Eq. 4 using the fourth-order Runge-Kutta algorithm for stochastic processes [21] and the Box-Muller algorithm [23]. The integration time step is fixed at dt = 0.01 for a total time of T = 1.0 × 10⁶ time units. Averages are taken over 20 different realizations of the initial conditions. For each realization, we choose random initial points (v_i^ℓ(0), w_i^ℓ(0)) for the ith neuron in the ℓth layer with uniform probability in the ranges v_i^ℓ(0) ∈ (−2, 2) and w_i^ℓ(0) ∈ (−2/3, 2/3). The initial synaptic weights
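The Gaussian increments required by the stochastic integrator can be generated with the Box-Muller transform, which maps two independent uniform variates u1 ∈ (0, 1] and u2 ∈ [0, 1) to two independent standard normal variates z0 = sqrt(−2 ln u1) cos(2π u2) and z1 = sqrt(−2 ln u1) sin(2π u2). The sketch below also draws the random initial conditions described above. It is a minimal illustration with hypothetical function names, not the code used to produce the results; note that NumPy's `uniform` samples the half-open interval [low, high) rather than the open interval stated in the text, which makes no practical difference.

```python
import numpy as np


def box_muller(n, rng):
    """Return n independent N(0, 1) samples via the Box-Muller transform."""
    m = (n + 1) // 2
    u1 = 1.0 - rng.random(m)          # in (0, 1], so log(u1) is finite
    u2 = rng.random(m)                # in [0, 1)
    r = np.sqrt(-2.0 * np.log(u1))
    z = np.concatenate([r * np.cos(2.0 * np.pi * u2), r * np.sin(2.0 * np.pi * u2)])
    return z[:n]


def random_initial_conditions(n_layers, n_neurons, rng):
    """v_i(0) ~ U(-2, 2) and w_i(0) ~ U(-2/3, 2/3) for every neuron in every layer."""
    v0 = rng.uniform(-2.0, 2.0, size=(n_layers, n_neurons))
    w0 = rng.uniform(-2.0 / 3.0, 2.0 / 3.0, size=(n_layers, n_neurons))
    return v0, w0


rng = np.random.default_rng(42)
dt = 0.01
dW = box_muller(1000, rng) * np.sqrt(dt)   # Wiener increments with variance dt
v0, w0 = random_initial_conditions(n_layers=2, n_neurons=50, rng=rng)
```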

Following the work in [10, 50], we note that while CR and SISR generally require an excitable regime (achieved by fixing γ1 = γ2 = 0.75) for their occurrence, for the particular case of the FHN neuron model, the occurrence of CR requires the presence of only channel noise. Thus, when investigating CR in a given layer of Eq. 1, we will set all synaptic noises to zero (i.e.,

3.1 Optimal CR and SISR in an Isolated Layer

Before investigating the dynamics of CR and SISR in the multiplex network of Eq. 1, we should first understand their dynamics in an isolated layer network. Thus, this subsection is devoted to the investigation of CR and SISR in a single isolated layer of Eq. 1. We present the numerical results on the dynamics of CR and SISR in terms of the network parameters (i.e., the average degree ⟨s1⟩, the rewiring probability β1, and the intra-layer time delay τ1) and the parameters of the eSTDP learning rule (i.e., the potentiation adjusting rate P1 and depression temporal window τ1d) in layer 1 of Eq. 1.

3.1.1 With respect to Network Parameters: ⟨s1⟩, β1, τ1

The average number of synaptic inputs per neuron (i.e., the average degree connectivity) in layer 1 is given by

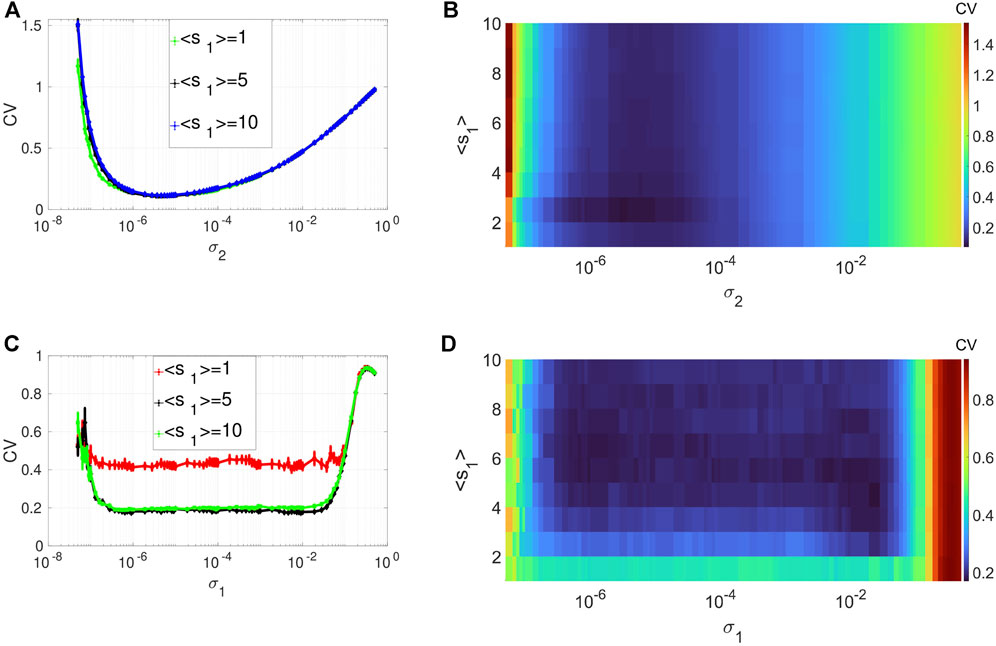
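The construction of the layer networks is not spelled out in this section. Assuming a Watts-Strogatz-type procedure, the usual way to interpolate between a regular ring (β = 0) and a random network (β = 1) via a rewiring probability β, a layer with average degree ⟨s⟩ could be generated as in the sketch below. The directed ring lattice (each neuron receiving inputs from its ⟨s⟩ nearest neighbours on one side), the rewiring of presynaptic sources, and the function names are hypothetical choices; they keep the average degree exactly equal to ⟨s⟩ for every β, including the sparse case ⟨s1⟩ = 1 considered in Figure 2.

```python
import numpy as np


def small_world_adjacency(n, s, beta, rng):
    """Hypothetical directed Watts-Strogatz-type layer.

    A[i, j] = 1 means neuron j sends a synaptic input to neuron i.
    Start from a ring lattice in which every neuron receives s inputs,
    then rewire each input, with probability beta, to a randomly chosen
    presynaptic neuron (no self-loops, no duplicate links).
    """
    if not 0 < s < n:
        raise ValueError("need 0 < s < n")
    A = np.zeros((n, n), dtype=int)
    for i in range(n):
        for k in range(1, s + 1):
            A[i, (i + k) % n] = 1
    for i in range(n):
        for j in np.flatnonzero(A[i]):          # snapshot of the original inputs of neuron i
            if rng.random() < beta:
                free = np.flatnonzero((A[i] == 0) & (np.arange(n) != i))
                if free.size:                    # rewiring is impossible if neuron i is saturated
                    A[i, j] = 0
                    A[i, rng.choice(free)] = 1
    return A


def average_degree(A):
    """<s> = (1/N) * sum_ij A[i, j], the mean number of synaptic inputs per neuron."""
    return A.sum() / A.shape[0]


rng = np.random.default_rng(0)
A_sparse = small_world_adjacency(n=50, s=1, beta=0.1, rng=rng)
A_dense = small_world_adjacency(n=50, s=10, beta=1.0, rng=rng)
```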

Figures 2A,B depict the variation in the degree of CR in layer 1 (in isolation) of Eq. 1. We show the variation of CV as a function of the channel noise intensity σ2 (with σ1 = 0) and the average degree ⟨s1⟩ of this layer. In these panels, CR is characterized by a family of non-monotonic CV curves as a function of the noise intensity σ2. Panels A and B also indicate that CR is robust to changes in the average degree ⟨s1⟩ of the network connectivity: as ⟨s1⟩ increases, the minimum of the CV curves (CVmin = 0.1108 at σ2 = 7.3 × 10⁻⁶) does not change. However, when the network is very sparse, i.e., when ⟨s1⟩ = 1, the green curve in Figure 2A extends slightly further to the left than those of the denser network configurations. This indicates that when ⟨s1⟩ = 1, a relatively weaker noise intensity can enhance the degree of CR. Therefore, in the rest of our simulations, to have the best degree of CR while also allowing the network to have a small-world topology, we will fix the average degree of the layer networks at ⟨s1⟩ = 2.

FIGURE 2. Variation of CV w.r.t. the average degree ⟨s1⟩ and the noise intensity σ2 for CR or σ1 for SISR. Panels (A) and (B) show CR characterized by a family of non-monotonic CV curves w.r.t. σ2 (σ1 = 0) at ɛ1 = 0.01. Panels (C) and (D) show SISR characterized by a family of non-monotonic CV curves w.r.t. σ1 (σ2 = 0) at ɛ1 = 0.001. In both phenomena, the other parameters of layer 1 are fixed at: β1 = 0.1, τ1 = 1, P1 = 0.1, τ1d = 20, D1 = 0.5, τ1p = 20, N = 50.

Figures 2C,D show the variation in the degree of SISR in layer 1 (in isolation) of Eq. 1. In this case, we switched on the synaptic noise intensity σ1 (and turned off the channel noise, i.e., σ2 = 0), decreased the timescale separation parameter from ɛ1 = 0.01 to ɛ1 = 0.001, and kept the remaining parameters at the same values as in Figures 2A,B. In contrast to CR, SISR is sensitive to changes in the average degree ⟨s1⟩. In particular, when the network is very sparse, i.e., when ⟨s1⟩ = 1, the degree of SISR becomes significantly lower, with the minimum of the CV curve around CVmin = 0.4 over a wide range of noise intensities, i.e., σ1 ∈ (3.7 × 10⁻⁷, 1.9 × 10⁻²). As the network becomes denser, i.e., as ⟨s1⟩ increases, the degree of SISR also increases, with the minimum of the CV curves at CVmin ≈ 0.1903 for σ1 ∈ (3.7 × 10⁻⁷, 1.9 × 10⁻²). Thus, in contrast to CR, in the rest of our simulations we will fix the average degree of the layer networks at ⟨s1⟩ = 10 (⟨s1⟩ = 1) to obtain a high (low) degree of SISR.

Interestingly, Figure 2 shows that the best degree of coherence is higher for CR (CVmin = 0.1108) than for SISR (CVmin ≈ 0.1903). In previous work [50], where we investigated CR and SISR in one isolated layer of Eq. 1 in the absence of STDP, the opposite behavior occurs, i.e., SISR produces a higher degree of coherence (CVmin = 0.012) than CR (CVmin = 0.130) when all the other parameters are at their optimal values. This means that in the presence (absence) of STDP, the degree of coherence due to CR (SISR) is higher than that due to SISR (CR). Furthermore, while the degree of CR is higher than that of SISR, the range of noise intensities in which the degree of CR is high is significantly smaller than that of SISR. This is explained by the fact that high coherence due to SISR emerges from the asymptotic matching of the deterministic and stochastic timescales, whereas high coherence due to CR emerges from the proximity to the Hopf bifurcation [10, 50]. Figures 2C,D indicate that this matching of timescales occurs over a wider range of synaptic noise intensities (flat-bottomed CV curves), hence the larger interval of coherence. On the other hand, Figures 2A,B show a smaller interval of channel noise intensities in which the degree of CR is highest. In this case, the noise intensity has to be just right (neither too weak nor too strong) to let the system switch between the excitable and the oscillatory regimes via a noise-induced Hopf bifurcation, leading to the emergence of limit cycle behavior (i.e., noise-induced coherent oscillations). The channel noise should not be too weak, otherwise the system stays too long in the excitable regime, which destroys the coherence; nor should it be so strong that it overwhelms the oscillations in the oscillatory regime. Hence the relatively smaller noise interval of highest coherence in the case of CR.

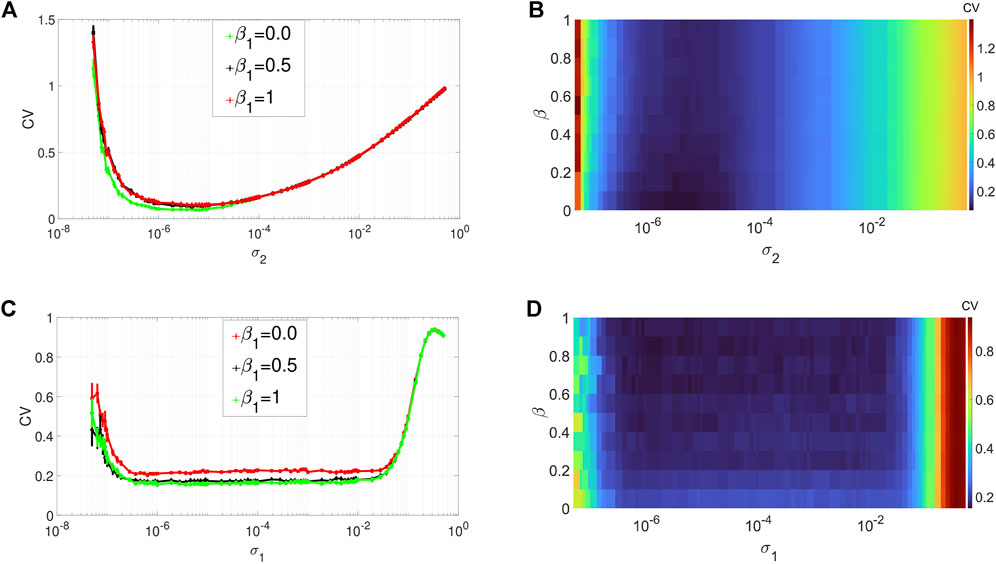

Figures 3A,B depict the variation in the degree of CR in layer 1 (in isolation) of Eq. 1 as a function of the channel noise intensity σ2 and the network rewiring probability β1 at the optimal value of the average degree (i.e., ⟨s1⟩ = 2) chosen from Figure 2A. The other parameters are kept fixed at the values given in Figure 2. From Figures 3A,B, we notice that variations in the rewiring probability do not destroy the high degree of CR: the minima of the CV curves remain very low. However, when the network is regular, i.e., when β1 = 0.0, the minimum of the CV curve (see, e.g., the green curve in Figure 3A) is noticeably lower than for the small-world (β1 ∈ (0, 1)) and random (β1 = 1) topologies. Nevertheless, because in this work we are interested in non-regular networks (i.e., β1 ∈ (0, 1]), in the rest of our simulations we will fix the rewiring probability of the layer networks at a very low but non-zero value, β1 = 0.1, so as to have a high degree of CR in a small-world network.

FIGURE 3. Variation of CV w.r.t. the rewiring probability β1 and the noise intensity σ1 for SISR or σ2 for CR. Panels (A) and (B) show the CV curves due to CR (σ1 = 0, ɛ1 = 0.01, ⟨s1⟩ = 2). Panels (C) and (D) show the CV curves due to SISR (σ2 = 0, ɛ1 = 0.001, ⟨s1⟩ = 10). In both phenomena, the other parameters are fixed at: τ1 = 1, P1 = 0.1, τ1d = 20, D1 = 0.5, τ1p = 20, N = 50.

Figures 3C,D show the variation in the degree of SISR in layer 1 (in isolation) of Eq. 1 as a function of the synaptic noise intensity σ1 and the network rewiring probability β1 at the optimal value of the average degree (i.e., ⟨s1⟩ = 10) chosen from Figure 2C. The rest of the parameter values are the same as in Figures 3A,B. We observe again that the degree of SISR is more sensitive to changes in the parameter β1 than the degree of CR. Furthermore, we observe that varying the rewiring probability β1 has the opposite effect on the degree of SISR compared to its effect on the degree of CR. The more regular the network is (see, e.g., the red curve in Figure 3C), the higher the CV curve and hence the lower the degree of SISR. Thus, in the rest of our simulations, to have the best degree of SISR in our network, we will fix the rewiring probability of the layer networks at β1 = 1 (see the green curve in Figure 3C).

Figures 4A,B depict the variation in the degree of CR in layer 1 (in isolation) of Eq. 1 as a function of the channel noise intensity σ2 and the intra-layer time delay τ1, at the optimal values of the average degree (i.e., ⟨s1⟩ = 2) and the network rewiring probability (i.e., β1 = 0.1) chosen from Figure 2A and Figure 3A, respectively. Again, the low minima of the CV curves show that the degree of CR is robust to variations of a network parameter, here τ1. Nonetheless, although the degree of CR remains high, we can still observe that when the synaptic connections between the neurons are instantaneous (i.e., when τ1 = 0), the CV curve (see the green curve) is slightly lower than the others. A further increase in the time delay does not affect the degree of CR. In the rest of the simulations, to have the best degree of CR in our network, we will fix the intra-layer time delay at a low but non-zero value, i.e., τ1 = 1.

FIGURE 4. Variation of CV w.r.t. the time delay τ1 and the noise intensity σ1 for SISR or σ2 for CR. Panels (A) and (B) show the CV curves due to CR (σ1 = 0, ɛ1 = 0.01, ⟨s1⟩ = 2). Panels (C) and (D) show the CV curves due to SISR (σ2 = 0, ɛ1 = 0.001, ⟨s1⟩ = 10). In both phenomena, the other parameters are fixed at: P1 = 0.1, τ1d = 20, D1 = 0.5, τ1p = 20, N = 50.

Figures 4C,D show the variation in the degree of SISR in layer 1 (in isolation) of Eq. 1 as a function of the synaptic noise intensity σ1 and the intra-layer time delay τ1, at the optimal values of the average degree (i.e., ⟨s1⟩ = 10) and the network rewiring probability (i.e., β1 = 1) chosen from Figure 2C and Figure 3C, respectively. We observe that the degree of SISR is again more sensitive to parametric perturbations than the degree of CR. Moreover, the degree of SISR varies non-monotonically with the intra-layer time delay τ1 and depends significantly on the synaptic noise intensity σ1. In Figure 4C, for τ1 = 0.0, the CV values are higher than those at τ1 = 1,000 and lower than those at τ1 = 500. Thus, in the rest of the simulations, to have the best degree of SISR in our network, we will fix the intra-layer time delay at τ1 = 1,000.

3.1.2 With respect to STDP Parameters: P1, τ1d

Using the insight from the previous section on the effects of each network parameter (i.e., ⟨s1⟩, β1, and τ1) on the degree of CR and SISR, we now investigate the effects of STDP on the degree of CR and SISR by varying the potentiation adjusting rate P1 and the depression temporal window τ1d. We first note that the results in Figures 2–4 were obtained with the STDP parameters (i.e., P1 and τ1d) fixed at the values indicated in the captions. In what follows, we fix the network parameters at their non-optimal values, i.e., at the values for which each phenomenon exhibits the lowest degree of coherence, and then vary the STDP parameters P1 and τ1d in layer 1.

Extensive numerical simulations (not shown) indicate that the variations in the degree of CR and SISR with respect to P1 and τ1d are larger at the corresponding optimal network parameter values indicated in Figures 2–4 than at the non-optimal ones. Qualitatively, however, the variations in the degrees of both phenomena are essentially the same for optimal and non-optimal network parameter values. Because we are ultimately interested in achieving the highest degree of CR and SISR starting from a poor network configuration, we present the effects of STDP on the degree of CR and SISR for non-optimal network parameters. We then investigate strategies for enhancing the degree of each phenomenon using the multiplexing technique.

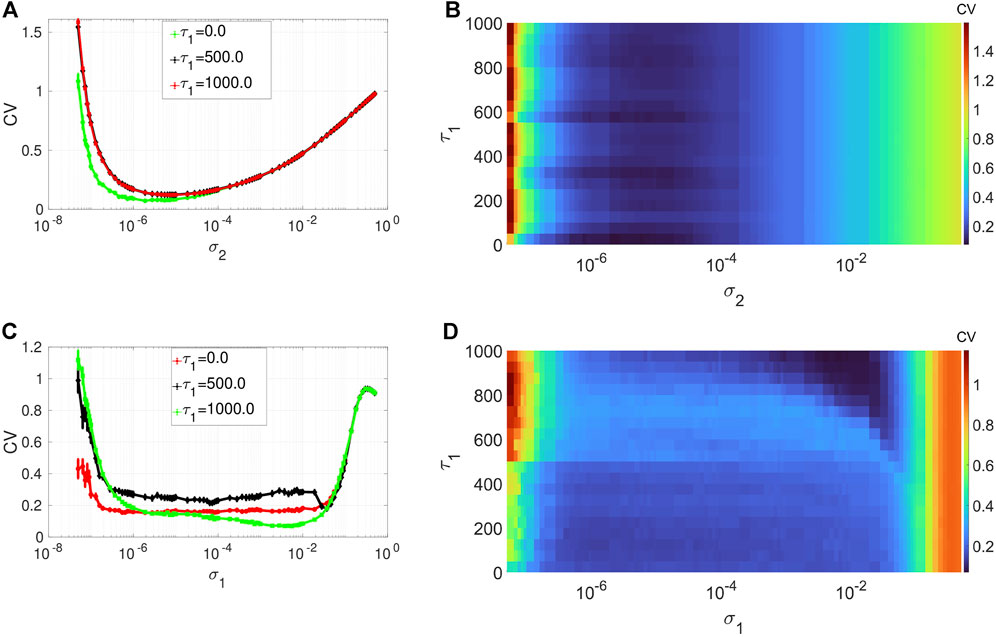

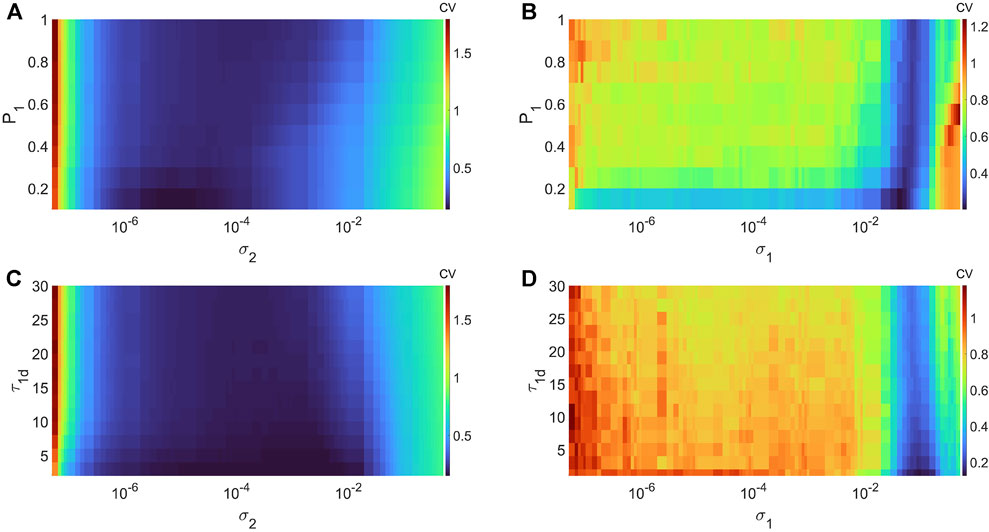

In Figures 5A,B, we show the variations in the degree of CR and SISR in layer 1 (in isolation) of Eq. 1 as a function of the channel (σ2) and synaptic (σ1) noise intensities, respectively, and of the potentiation adjusting rate P1, at the corresponding non-optimal values of the network parameters. Figure 5A shows that the degree of coherence induced by CR is both higher and more robust to variations in P1 than the coherence induced by SISR in Figure 5B. Although the lowest CV values for the different values of P1 are relatively close to each other, for CR the lowest (highest) CVmin = 0.1363 (CVmin = 0.1804) occurs at P1 = 0.1 (P1 = 1). For SISR, the lowest (highest) CVmin = 0.2009 (CVmin = 0.2588) occurs at P1 = 0.1 (P1 = 1).

FIGURE 5. Variation of CV w.r.t. the potentiation adjusting rate P1, the depression temporal window τ1d, and the noise intensity σ1 or σ2, with the corresponding non-optimal network parameter values. Panels (A) and (B) show the values of CV during CR (σ1 = 0, ɛ1 = 0.01, ⟨s1⟩ = 10, β1 = 1, τ1 = 100, τ1d = 20) and during SISR (σ2 = 0, ɛ1 = 0.001, ⟨s1⟩ = 1, β1 = 0.1, τ1 = 600, τ1d = 20). Panels (C) and (D) show the values of CV during CR (σ1 = 0, ɛ1 = 0.01, ⟨s1⟩ = 10, β1 = 1, τ1 = 100, P1 = 1) and during SISR (σ2 = 0, ɛ1 = 0.001, ⟨s1⟩ = 1, β1 = 0.1, τ1 = 600, P1 = 1). In both phenomena, the other parameters are fixed at: D1 = 0.5, τ1p = 20, N = 50.

In Figures 5C,D, we show the variations in the degree of CR and SISR in layer 1 (in isolation) of Eq. 1 as a function of the channel (σ2) and synaptic (σ1) noise intensities, respectively, and of the depression temporal window τ1d, at the corresponding non-optimal values of both the network parameters and the potentiation adjusting rate P1 (obtained from Figures 5A,B). For CR, the lowest (highest) CVmin = 0.0254 (CVmin = 0.1849) occurs at τ1d = 0.01 (τ1d = 30). For SISR, the lowest (highest) CVmin = 0.1245 (CVmin = 0.2632) occurs at τ1d = 0.01 (τ1d = 30). Thus, unlike the network parameters, whose variations have opposite effects on the degree of CR and SISR, the STDP parameters have similar effects on both phenomena.

In previous work [50], we aimed at enhancing CR (and not SISR) via the multiplexing technique because CR showed more sensitivity to parametric perturbations than SISR in the absence of the STDP learning rule. The results in Figures 2–5 indicate that the degree of CR becomes more robust to parametric perturbations than the degree of SISR, which is particularly sensitive to the variations in the parameters of the STDP learning rule. For this reason, in the next section, we focus only on the enhancement of the more sensitive phenomenon, i.e., SISR, via the multiplexing technique used in [50].

3.2 Enhancement of SISR via the Multiplexing Technique

It has been shown in [50] that in a two-layer multiplex network with static (non-adaptive) synaptic couplings, CR or SISR in one layer can induce and enhance CR in the other layer. Here, we address whether a low degree of SISR in one layer of a multiplex network can be enhanced using a high degree of CR or SISR in the other layer when the network is driven by adaptive synaptic couplings. We then investigate which of two enhancement schemes performs better: 1) the CR-SISR scheme or 2) the SISR-SISR scheme.

In the CR-SISR scheme, we use the results from Section 3.1 to set layer 1 such that it has a low degree of SISR, i.e., we choose the network and STDP parameter values (⟨s1⟩ = 1, β1 = 0.1, τ1 = 600, P1 = 1, τ1d = 0.01) so that the CV curve is high, indicating a poor degree of SISR in layer 1 in isolation. We set layer 2 such that it has a high degree of CR, i.e., we choose the network and STDP parameter values (⟨s2⟩ = 2, β2 = 0.1, τ2 = 1, P2 = 0.1, τ2d = 20) so that the CV curve is low, indicating a high degree of CR in layer 2 in isolation. We then couple the two layers in a multiplex fashion, i.e., each neuron in one layer is coupled only to its replica neuron in the other layer via a synaptic coupling driven by STDP. The SISR-SISR scheme uses the same settings as the CR-SISR scheme, except that in layer 2 we set the network and STDP parameter values (⟨s2⟩ = 10, β2 = 1, τ2 = 1, P2 = 0.1, τ2d = 20) so that the CV curve is low, indicating a high degree of SISR in layer 2 in isolation.
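The replica-to-replica coupling described above amounts to a block adjacency matrix: the intra-layer topologies sit on the diagonal, and an identity matrix links neuron i in layer 1 to neuron i in layer 2. This is a minimal sketch, assuming both layers contain the same number N of neurons; `multiplex_adjacency` and `ring_lattice` are hypothetical helpers, and in the actual model the off-diagonal entries are weighted by the STDP-driven inter-layer couplings rather than fixed to 1.

```python
import numpy as np


def multiplex_adjacency(a_layer1, a_layer2):
    """Two-layer multiplex adjacency with replica-to-replica inter-layer links.

    Diagonal blocks: intra-layer topologies (N x N each).
    Off-diagonal blocks: identity, so neuron i of layer 1 is linked only to
    neuron i of layer 2 (and vice versa).
    """
    a1 = np.asarray(a_layer1)
    a2 = np.asarray(a_layer2)
    n = a1.shape[0]
    if a1.shape != (n, n) or a2.shape != (n, n):
        raise ValueError("both layers need square adjacency matrices of the same size N")
    eye = np.eye(n, dtype=a1.dtype)
    return np.block([[a1, eye], [eye, a2]])


def ring_lattice(n, k):
    """Regular ring: each node links to its k nearest neighbours on each side
    (the starting point of a Watts-Strogatz small-world graph, before rewiring)."""
    a = np.zeros((n, n), dtype=int)
    for i in range(n):
        for j in range(1, k + 1):
            a[i, (i + j) % n] = a[(i + j) % n, i] = 1
    return a
```

The random rewiring step that turns the ring into a small-world layer is omitted here; any symmetric N×N adjacency can be passed in its place.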

The STDP driving the multiplexing (inter-layer) synaptic connections is governed by synaptic weight

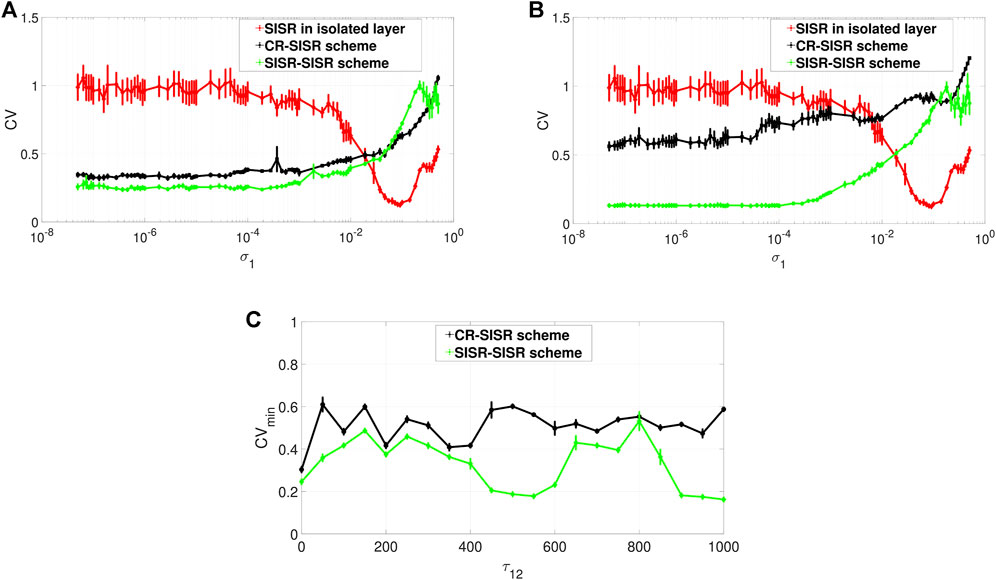
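Because the inter-layer STDP equation is only available as an image here, the following is a hedged sketch of a common pair-based exponential STDP window that is consistent with the parameter names used in this paper (potentiation adjusting rate P, depression adjusting rate D, potentiation window τp, depression window τd). The exact functional form, sign convention, and weight bounds are assumptions: Δt = t_post - t_pre, potentiation occurs for Δt > 0, and the weight is clipped to a hypothetical range [w_min, w_max]. The defaults P12 = 0.05 and τ12d = 30 follow the values used for Figure 6; D = 0.5 and τp = 20 are borrowed from the layer-1 values of Figure 5 as an assumption.

```python
import numpy as np


def stdp_window(dt, p=0.05, d=0.5, tau_p=20.0, tau_d=30.0):
    """Assumed pair-based exponential STDP window.

    dt = t_post - t_pre.  dt > 0: potentiation  p * exp(-|dt| / tau_p)
                          dt < 0: depression   -d * exp(-|dt| / tau_d)
                          dt = 0: no change
    """
    dt = np.asarray(dt, dtype=float)
    mag = np.abs(dt)  # use |dt| so that neither branch can overflow
    return np.where(dt > 0, p * np.exp(-mag / tau_p),
                    np.where(dt < 0, -d * np.exp(-mag / tau_d), 0.0))


def update_weight(w, t_post, t_pre, w_min=0.0, w_max=1.0, **stdp_params):
    """Apply one spike-pair increment and keep w in the hypothetical range [w_min, w_max]."""
    return float(np.clip(w + stdp_window(t_post - t_pre, **stdp_params), w_min, w_max))
```

In this form, a larger τd lets depression act over a wider range of negative spike-time differences, while P scales the size of each potentiating step.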

In Figure 6, we show the performance of each scheme in enhancing the low degree of SISR in layer 1. Figures 6A,B depict the performance for an instantaneous (τ12 = 0) and a time-delayed (τ12 = 1,000) multiplexing between layer 1 and layer 2, respectively. Recall that layer 1 is the layer of interest, i.e., the layer with a low degree of SISR when in isolation. The red curves in Figures 6A,B represent the degree of coherence due to SISR in layer 1 in isolation. For the selected set of network and STDP parameters (i.e., ⟨s1⟩ = 1, β1 = 0.1, τ1 = 600, P1 = 1, τ1d = 0.01), the degree of coherence due to SISR is very low, as indicated by the high values of the red CV curve over the synaptic noise interval σ1 ∈ (5.0 × 10⁻⁸, 3.5 × 10⁻²).
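A time-delayed multiplexing such as τ12 = 1,000 requires each layer to read the other layer's state from τ12 time units in the past. In a fixed-step integrator, one way to do this is a ring buffer holding delay_steps = round(τ12/dt) past states, sketched below. The class name `DelayLine`, the constant initial history, and the diffusive (electrical) form g(v_other(t - τ12) - v_self(t)) of the inter-layer input are assumptions for illustration, not the paper's Eq. 1.

```python
import numpy as np


class DelayLine:
    """Ring buffer holding the last delay_steps + 1 states of one layer.

    After push(x_t), delayed() returns x_{t - delay_steps}; before enough
    history exists, the (assumed constant) initial state is returned.
    """

    def __init__(self, delay_steps, initial_state):
        self.d = int(delay_steps)
        init = np.asarray(initial_state, dtype=float)
        self.buf = np.tile(init, (self.d + 1, 1))  # shape (d + 1, N)
        self.head = 0                               # row holding the newest state

    def push(self, state):
        self.head = (self.head + 1) % (self.d + 1)
        self.buf[self.head] = state

    def delayed(self):
        # the row after the newest one is the oldest, i.e. d steps ago
        return self.buf[(self.head + 1) % (self.d + 1)].copy()


def interlayer_current(v_self, v_other_delayed, g):
    """Assumed electrical (diffusive) inter-layer input g * (v_other(t - tau12) - v_self(t))."""
    return np.asarray(g, dtype=float) * (np.asarray(v_other_delayed, dtype=float)
                                         - np.asarray(v_self, dtype=float))
```

At each integration step, the current state of layer 2 is pushed first and `delayed()` is then read to build the input to layer 1; with τ12 = 0, the buffer has a single row and simply returns the current state.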

FIGURE 6. Variation of CV of the controlled layer (exhibiting SISR) w.r.t. the noise intensity σ1 and the inter-layer time delay τ12. Panels (A) and (B) show the CV curves in the absence (τ12 = 0) and presence (τ12 = 1,000) of the inter-layer time delay τ12, respectively. The red curves represent the variation in the degree of SISR in layer 1 when in isolation. The black and the green curves show the enhancement performances of the CR-SISR and SISR-SISR schemes, respectively. Panel (C) shows that for the same value of the inter-layer time delay, the SISR-SISR scheme always outperforms the CR-SISR scheme. Parameters of layer 2 in the CR-SISR scheme:

When layer 1, with its poor degree of SISR, is multiplexed with layer 2 exhibiting a high degree of CR, the performance of this CR-SISR scheme is depicted by the black curves in Figures 6A,B, which represent the new degree of SISR in layer 1. Multiplexing layer 1 with a layer exhibiting strong CR can significantly improve the degree of SISR in layer 1, as the CV curve of layer 1 drops from the red curve to the black one. Likewise, when layer 1 is multiplexed with layer 2 exhibiting a high degree of SISR, the performance of this SISR-SISR scheme is depicted by the green curves in Figures 6A,B: multiplexing with a layer exhibiting strong SISR also significantly improves the degree of SISR in layer 1, as the CV curve drops from the red curve to the green one. However, in both schemes, this enhancement fails when the synaptic noise intensity exceeds 1.9 × 10⁻², beyond which the black and green CV curves lie above the red CV curve of layer 1 in isolation.
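The noise intensity at which the enhancement breaks down can be read off sampled CV curves by comparing the multiplexed curve with the isolated one point by point. This is a minimal sketch, assuming both curves are sampled on the same grid of σ1 values sorted in ascending order; `failure_onset` is a hypothetical helper.

```python
import numpy as np


def failure_onset(sigma, cv_isolated, cv_multiplexed):
    """Smallest grid value of sigma from which the multiplexed CV is never below the
    isolated CV again (i.e., enhancement fails for all larger sigma).

    Returns None if multiplexing still lowers the CV at the largest sampled sigma.
    Assumes sigma is sorted in ascending order.
    """
    sigma = np.asarray(sigma, dtype=float)
    helps = np.asarray(cv_multiplexed, dtype=float) < np.asarray(cv_isolated, dtype=float)
    if helps[-1]:
        return None
    helped_at = np.flatnonzero(helps)
    # failure starts right after the last grid point where multiplexing still helped
    start = 0 if helped_at.size == 0 else helped_at[-1] + 1
    return float(sigma[start])
```

Because the result is a grid value, its accuracy is limited by the resolution of the σ1 sweep near the crossover.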

Furthermore, even though the degree of coherence induced by CR can be higher than that induced by SISR in an isolated layer network driven by STDP, the degree of SISR achieved with the SISR-SISR enhancement scheme is higher than that achieved with the CR-SISR scheme. This is indicated by the black and green curves simulated without an inter-layer time delay in Figure 6A and with one in Figure 6B: for most values of the synaptic noise intensity of layer 1, the green curve lies below the black one.

To further investigate the effect of the inter-layer time delay τ12 on the degree of coherence due to SISR in layer 1, we computed the minimum value of the CV curve (CVmin) over a wide range of τ12. The result is shown in Figure 6C, where the green curve, representing the performance of the SISR-SISR scheme, lies below the black curve, representing the performance of the CR-SISR scheme, for all τ12 ∈ [0, 1,000]. Figure 6C also indicates that at τ12 = 550 and τ12 = 1,000, the SISR-SISR scheme performs significantly better than at other values of the inter-layer time delay, and significantly better than the CR-SISR scheme. In the CR-SISR scheme, the best inter-layer time delays are τ12 = 0 and τ12 = 350. The results presented in Figure 6 are obtained for fixed values of the adjustable parameters of the inter-layer STDP learning rule, i.e., P12 = 0.05 and τ12d = 30.
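The procedure behind Figure 6C (minimise the CV over the noise grid for each delay, then compare the two schemes delay by delay) can be sketched as follows. Here `cv_of(tau12, sigma)` stands for a hypothetical user-supplied function that runs the multiplex simulation and returns the CV of layer 1; it is not part of the paper, and the helper names are likewise illustrative.

```python
import numpy as np


def cvmin_vs_delay(delays, sigmas, cv_of):
    """CVmin for each inter-layer delay: the CV curve over the noise grid is minimised.

    cv_of(tau12, sigma) is a hypothetical user-supplied function that runs the
    multiplex simulation and returns the CV of layer 1.
    """
    return np.array([min(cv_of(tau, s) for s in sigmas) for tau in delays], dtype=float)


def best_delay(delays, cvmin):
    """Delay giving the smallest CVmin, together with that CVmin."""
    k = int(np.argmin(cvmin))
    return delays[k], float(cvmin[k])


def always_below(cvmin_a, cvmin_b):
    """True if scheme A has a strictly lower CVmin than scheme B at every swept delay."""
    return bool(np.all(np.asarray(cvmin_a) < np.asarray(cvmin_b)))
```

Running `cvmin_vs_delay` once per scheme gives the two curves of Figure 6C; `best_delay` then picks the delay at which each scheme is evaluated in the subsequent STDP-parameter scan.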

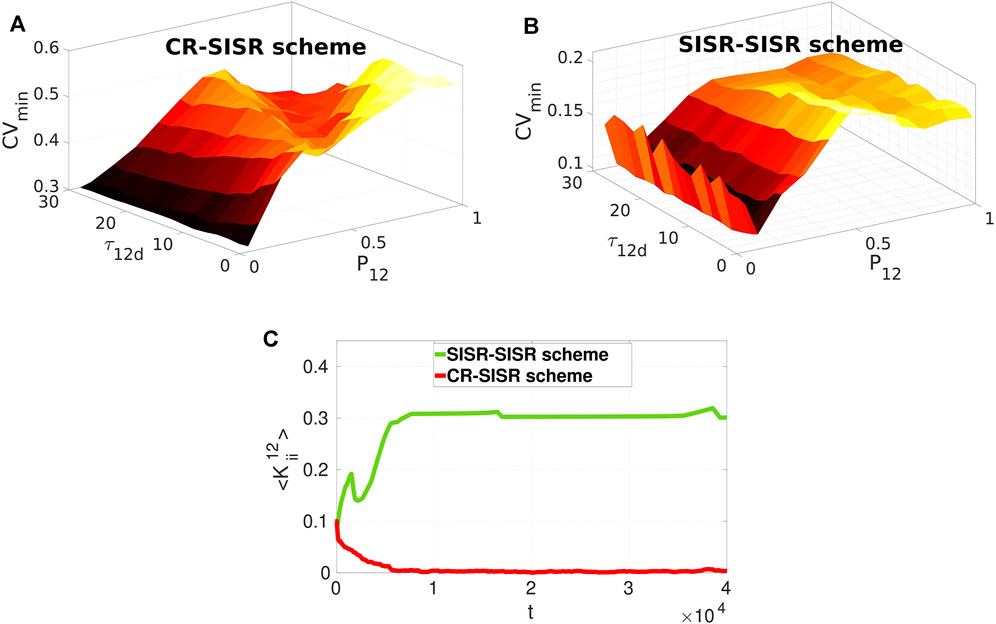

We now investigate the effects of varying these two parameters on the performance of the CR-SISR and SISR-SISR enhancement schemes. To this end, we fix the inter-layer time delay at the optimal value for each scheme from Figure 6C, i.e., τ12 = 350 for the CR-SISR scheme and τ12 = 1,000 for the SISR-SISR scheme. Figures 7A,B show the performance of the CR-SISR and SISR-SISR schemes, respectively, as a function of the multiplexing STDP parameters P12 and τ12d. For the CR-SISR scheme, a larger depression temporal window (τ12d → 30) and a smaller potentiation adjusting rate (P12 → 0.05) yield the lowest minimum CV value, CVmin = 0.2989, which occurs at the synaptic noise intensity of

FIGURE 7. Minimum CV against the multiplexing potentiation adjusting rate P12 and depression temporal window τ12d for layer 1 exhibiting SISR when multiplexed with layer 2 exhibiting CR (Panel A) or SISR (Panel B). The SISR-SISR scheme outperforms the CR-SISR scheme in enhancing SISR in layer 1 over the entire (τ12d, P12) plane. Parameter values in Panels (A) and (B) are the same as in Figure 6A, with τ12 = 350 and τ12 = 1,000 in the CR-SISR and SISR-SISR schemes, respectively. Panel (C) shows the time-evolution of population-averaged inter-layer synaptic weights

The SISR-SISR scheme in Figure 7B shows better overall performance than the CR-SISR scheme with respect to these inter-layer STDP parameters. First, the CVmin surface of the SISR-SISR scheme (whose highest value, CVmin = 0.2098, occurs at P12 = 0.5 and τ12d = 0.5) lies entirely below the CVmin surface of the CR-SISR scheme (whose lowest value, CVmin = 0.2989, occurs at P12 = 0.05 and τ12d = 30). Second, Figure 7B shows that small, but not too small, values of the potentiation adjusting rate (P12 = 0.1, compared with P12 = 0.05 for the CR-SISR scheme in Figure 7A) and large values of the depression temporal window (τ12d = 30) yield the lowest minimum CV value, CVmin = 0.1005, which occurs at the synaptic noise intensity of

In [50], the synaptic connections between the FHN neurons are static, and the effects of STDP on the strength of synaptic couplings are entirely ignored. The results in [50] show that intermediate-to-strong multiplexing between the layer networks is required to enhance the coherence, irrespective of the enhancement scheme. In the current paper, however, the inter-layer synaptic strength is not static. Thus, we cannot choose the strength of the inter-layer synaptic connections a priori, because it is entirely controlled by the STDP rule, which depends on the neurons’ random spike times. The best performance of the CR-SISR scheme (the lowest value of CVmin in Figure 7A) occurs at P12 = 0.05, τ12d = 30, τ12 = 350, and σ1 = 1.9 × 10⁻⁴, whereas the best performance of the SISR-SISR scheme (the lowest value of CVmin in Figure 7B) occurs at P12 = 0.1, τ12d = 30, τ12 = 1,000, and σ1 = 7.3 × 10⁻⁵. Using these two sets of parameter values, we computed the time-evolution of the population-averaged multiplexing synaptic weights
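Whether the inter-layer weights end in long-term potentiation (LTP) or long-term depression (LTD) can be judged from the population-averaged weight trajectory. This is a minimal heuristic sketch, assuming the weights are recorded as an array of shape (T, N), one column per replica pair, and that the net effect is classified by comparing the late-time average with the initial value; the tail fraction and tolerance are arbitrary illustrative choices, and the helper names are hypothetical.

```python
import numpy as np


def population_average(weights):
    """Population-averaged inter-layer weight over time; weights has shape (T, N)."""
    return np.asarray(weights, dtype=float).mean(axis=1)


def plasticity_outcome(weights, tail_fraction=0.1, tol=1e-3):
    """Heuristic LTP/LTD label: compare the late-time mean of the population-averaged
    weight with its initial value (tail_fraction and tol are arbitrary choices)."""
    w_bar = population_average(weights)
    n_tail = max(1, int(round(tail_fraction * w_bar.size)))
    change = w_bar[-n_tail:].mean() - w_bar[0]
    if change > tol:
        return "LTP"
    if change < -tol:
        return "LTD"
    return "none"
```

Averaging over the last part of the run rather than taking the final sample reduces the influence of the spike-driven fluctuations in the weights.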

4 Summary and Conclusion

In this paper, we have numerically investigated the effects of varying the network and STDP parameters on the degree of CR and SISR in isolated and coupled (multiplexed) layer networks of electrically connected FHN model neurons. In the isolated layer networks and for a fixed set of parameters, the results indicate that: 1) in the presence of STDP, the degree and the robustness of the coherence due to CR are always higher than those of the coherence due to SISR, unlike in the absence of STDP, where the opposite behavior occurs [50]; 2) the degree of coherence due to SISR increases with the average degree of the network connectivity and with the rewiring probability of the network topology, while it responds nonlinearly to the electrical time delay between the neurons, with smaller and significantly larger time delays yielding a higher degree of coherence than intermediate values; and 3) the degree of coherence due to SISR increases with a decreasing (increasing) value of the depression temporal window (potentiation adjusting rate) of the STDP learning rule, especially at relatively large synaptic noise intensities.

In the multiplex networks, we set up two enhancement schemes for the more sensitive phenomenon, i.e., SISR in layer 1, by multiplexing it with layer 2, in which either CR or SISR with a high degree of coherence acts as the enhancing phenomenon. In the first scheme, termed the CR-SISR scheme, layer 1 exhibits SISR with a low degree of coherence and layer 2 exhibits CR with a high degree of coherence, each achieved with network and STDP parameter values determined in advance. In the second scheme, termed the SISR-SISR scheme, layer 1 exhibits SISR with a low degree of coherence and layer 2 exhibits SISR with a high degree of coherence. Our results show that: 1) both schemes can significantly enhance the poor degree of SISR in layer 1, as indicated by the lower CV curves; 2) at the optimal inter-layer time delay τ12 of each scheme, a larger depression temporal window τ12d and a smaller potentiation adjusting rate P12 of the inter-layer STDP learning rule improve both the CR-SISR and the SISR-SISR schemes, although for the SISR-SISR scheme the potentiation adjusting rate should not be too small; 3) the SISR-SISR scheme outperforms the CR-SISR scheme in enhancing SISR in layer 1 for all parameter values of the inter-layer STDP learning rule; and 4) at their respective optimal values of the inter-layer time delay, synaptic noise intensity, potentiation adjusting rate, and depression temporal window, the SISR-SISR scheme enhances SISR in layer 1 via long-term potentiation (LTP) of the inter-layer synaptic strength, whereas the CR-SISR scheme does so via long-term depression (LTD).

An interesting direction for future research would be to investigate the robustness of the results presented in this paper when the topologies of the layer and multiplex networks differ, and when the networks are driven by homeostatic structural plasticity.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

MY designed the study, conducted the numerical simulations, analyzed the results, and wrote the manuscript. TT analyzed the results and wrote the manuscript. JJ analyzed the results and wrote the manuscript. All the authors have approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

MY acknowledges support from the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)—Project No. 456989199.

References

1. Aust R, Hövel P, Hizanidis J, Schöll E. Delay Control of Coherence Resonance in Type-I Excitable Dynamics. Eur Phys J Spec Top (2010) 187:77–85. doi:10.1140/epjst/e2010-01272-5

2. Bačić I, Klinshov V, Nekorkin V, Perc M, Franović I. Inverse Stochastic Resonance in a System of Excitable Active Rotators with Adaptive Coupling. EPL (2018) 124:40004.

3. Benzi R, Sutera A, Vulpiani A. The Mechanism of Stochastic Resonance. J Phys A: Math Gen (1981) 14:L453–L457. doi:10.1088/0305-4470/14/11/006

4. Bi G-q, Poo M-m. Synaptic Modifications in Cultured Hippocampal Neurons: Dependence on Spike Timing, Synaptic Strength, and Postsynaptic Cell Type. J Neurosci (1998) 18:10464–72. doi:10.1523/jneurosci.18-24-10464.1998

6. Bönsel F, Krauss P, Metzner C, Yamakou ME. Control of Noise-Induced Coherent Oscillations in Three-Neuron Motifs. Cogn Neurodynamics (2021) 1–20. doi:10.1007/s11571-021-09770-2

7. Dan Y, Poo M-M. Spike Timing-dependent Plasticity: from Synapse to Perception. Physiol Rev (2006) 86:1033–48. doi:10.1152/physrev.00030.2005

8. De Domenico M. Multilayer Modeling and Analysis of Human Brain Networks. Gigascience (2017) 6:1–8. doi:10.1093/gigascience/gix004

9. Debanne D, Gähwiler BH, Thompson SM. Long-term Synaptic Plasticity between Pairs of Individual CA3 Pyramidal Cells in Rat Hippocampal Slice Cultures. J Physiol (1998) 507:237–47. doi:10.1111/j.1469-7793.1998.237bu.x

10. DeVille RE, Vanden-Eijnden E, Muratov CB. Two Distinct Mechanisms of Coherence in Randomly Perturbed Dynamical Systems. Phys Rev E Stat Nonlin Soft Matter Phys (2005) 72:031105. doi:10.1103/PhysRevE.72.031105

11. FitzHugh R. Mathematical Models of Threshold Phenomena in the Nerve Membrane. Bull Math Biophys (1955) 17:257–78. doi:10.1007/bf02477753

12. Franović I, Yanchuk S, Eydam S, Bačić I, Wolfrum M. Dynamics of a Stochastic Excitable System with Slowly Adapting Feedback. Chaos: Interdiscip J Nonlinear Sci (2020) 30:083109.

13. Friederici AD, Singer W. Grounding Language Processing on Basic Neurophysiological Principles. Trends Cogn Sci (2015) 19:329–38. doi:10.1016/j.tics.2015.03.012

14. Froemke RC, Dan Y. Spike-timing-dependent Synaptic Modification Induced by Natural Spike Trains. Nature (2002) 416:433–8. doi:10.1038/416433a

15. Geffert PM, Zakharova A, Vüllings A, Just W, Schöll E. Modulating Coherence Resonance in Non-excitable Systems by Time-Delayed Feedback. Eur Phys J B (2014) 87:291. doi:10.1140/epjb/e2014-50541-2

16. Gerstner W, Kempter R, Van Hemmen JL, Wagner H. A Neuronal Learning Rule for Sub-millisecond Temporal Coding. Nature (1996) 383:76–8. doi:10.1038/383076a0

17. Gu H, Zhang H, Wei C, Yang M, Liu Z, Ren W. Coherence Resonance-Induced Stochastic Neural Firing at a Saddle-Node Bifurcation. Int J Mod Phys B (2011) 25:3977–86. doi:10.1142/s0217979211101673

18. Gutkin BS, Jost J, Tuckwell HC. Inhibition of Rhythmic Neural Spiking by Noise: the Occurrence of a Minimum in Activity with Increasing Noise. Naturwissenschaften (2009) 96:1091–7. doi:10.1007/s00114-009-0570-5

19. Hizanidis J, Schöll E. Control of Coherence Resonance in Semiconductor Superlattices. Phys Rev E Stat Nonlin Soft Matter Phys (2008) 78:066205. doi:10.1103/PhysRevE.78.066205

20. Jia B, Gu H-G, Li Y-Y. Coherence-resonance-induced Neuronal Firing Near a Saddle-Node and Homoclinic Bifurcation Corresponding to Type-I Excitability. Chin Phys Lett (2011) 28:090507. doi:10.1088/0256-307x/28/9/090507

21. Kasdin NJ. Runge-Kutta Algorithm for the Numerical Integration of Stochastic Differential Equations. J Guidance, Control Dyn (1995) 18:114–20. doi:10.2514/3.56665

22. Kim S-Y, Lim W. Stochastic Spike Synchronization in a Small-World Neural Network with Spike-timing-dependent Plasticity. Neural Networks (2018) 97:92–106. doi:10.1016/j.neunet.2017.09.016

25. Lindner B, García-Ojalvo J, Neiman A, Schimansky-Geier L. Effects of Noise in Excitable Systems. Phys Rep (2004) 392:321–424. doi:10.1016/j.physrep.2003.10.015

26. Lindner B, Schimansky-Geier L. Analytical Approach to the Stochastic FitzHugh-Nagumo System and Coherence Resonance. Phys Rev E (1999) 60:7270–6. doi:10.1103/physreve.60.7270

27. Liu Z-Q, Zhang H-M, Li Y-Y, Hua C-C, Gu H-G, Ren W. Multiple Spatial Coherence Resonance Induced by the Stochastic Signal in Neuronal Networks Near a Saddle-Node Bifurcation. Physica A: Stat Mech its Appl (2010) 389:2642–53. doi:10.1016/j.physa.2010.02.029

28. Longtin A. Stochastic Resonance in Neuron Models. J Stat Phys (1993) 70:309–27. doi:10.1007/bf01053970

29. Markram H, Lübke J, Frotscher M, Sakmann B. Regulation of Synaptic Efficacy by Coincidence of Postsynaptic APs and EPSPs. Science (1997) 275:213–5. doi:10.1126/science.275.5297.213

30. Masoliver M, Malik N, Schöll E, Zakharova A. Coherence Resonance in a Network of FitzHugh-Nagumo Systems: Interplay of Noise, Time-Delay, and Topology. Chaos (2017) 27:101102. doi:10.1063/1.5003237

31. Masoliver M, Masoller C, Zakharova A. Control of Coherence Resonance in Multiplex Neural Networks. Chaos, Solitons & Fractals (2021) 145:110666. doi:10.1016/j.chaos.2021.110666

32. Masquelier T, Guyonneau R, Thorpe SJ. Spike Timing Dependent Plasticity Finds the Start of Repeating Patterns in Continuous Spike Trains. PLoS ONE (2008) 3:e1377. doi:10.1371/journal.pone.0001377

33. Morrison A, Aertsen A, Diesmann M. Spike-timing-dependent Plasticity in Balanced Random Networks. Neural Comput (2007) 19:1437–67. doi:10.1162/neco.2007.19.6.1437

34. Muratov CB, Vanden-Eijnden E, E W. Self-induced Stochastic Resonance in Excitable Systems. Physica D: Nonlinear Phenomena (2005) 210:227–40. doi:10.1016/j.physd.2005.07.014

35. Pei X, Wilkens L, Moss F. Noise-mediated Spike Timing Precision from Aperiodic Stimuli in an Array of Hodgekin-Huxley-Type Neurons. Phys Rev Lett (1996) 77:4679–82. doi:10.1103/physrevlett.77.4679

36. Pikovsky AS, Kurths J. Coherence Resonance in a Noise-Driven Excitable System. Phys Rev Lett (1997) 78:775–8. doi:10.1103/physrevlett.78.775

37. Ren Q, Kolwankar KM, Samal A, Jost J. Hopf Bifurcation in the Evolution of Networks Driven by Spike-timing-dependent Plasticity. Phys Rev E Stat Nonlin Soft Matter Phys (2012) 86:056103. doi:10.1103/PhysRevE.86.056103

38. Semenov V, Feoktistov A, Vadivasova T, Schöll E, Zakharova A. Time-delayed Feedback Control of Coherence Resonance Near Subcritical Hopf Bifurcation: Theory versus Experiment. Chaos (2015) 25:033111. doi:10.1063/1.4915066

39. Semenova N, Zakharova A. Weak Multiplexing Induces Coherence Resonance. Chaos (2018) 28:051104. doi:10.1063/1.5037584

42. Tuckwell HC, Jost J, Gutkin BS. Inhibition and Modulation of Rhythmic Neuronal Spiking by Noise. Phys Rev E Stat Nonlin Soft Matter Phys (2009) 80:031907. doi:10.1103/PhysRevE.80.031907

43. Watts DJ. Small Worlds: The Dynamics of Networks between Order and Randomness. Princeton: Princeton University Press (2000).

44. Watts DJ, Strogatz SH. Collective Dynamics of 'Small-World' Networks. Nature (1998) 393:440–2. doi:10.1038/30918

45. Wolters A, Sandbrink F, Schlottmann A, Kunesch E, Stefan K, Cohen LG, et al. A Temporally Asymmetric Hebbian Rule Governing Plasticity in the Human Motor Cortex. J Neurophysiol (2003) 89:2339–45. doi:10.1152/jn.00900.2002

46. Yamakou ME, Hjorth PG, Martens EA. Optimal Self-Induced Stochastic Resonance in Multiplex Neural Networks: Electrical vs. Chemical Synapses. Front Comput Neurosci (2020) 14:62. doi:10.3389/fncom.2020.00062

47. Yamakou ME, Inack EM. Coherence Resonance and Stochastic Synchronization in a Small-World Neural Network: An Interplay in the Presence of Spike-timing-dependent Plasticity. arXiv preprint arXiv:2201.05436 (2022).

48. Yamakou ME, Jost J. A Simple Parameter Can Switch between Different Weak-Noise-Induced Phenomena in a Simple Neuron Model. EPL (2017) 120:18002. doi:10.1209/0295-5075/120/18002

49. Yamakou ME, Jost J. Weak-noise-induced Transitions with Inhibition and Modulation of Neural Oscillations. Biol Cybern (2018) 112:445–63. doi:10.1007/s00422-018-0770-1

50. Yamakou ME, Jost J. Control of Coherence Resonance by Self-Induced Stochastic Resonance in a Multiplex Neural Network. Phys Rev E (2019) 100:022313. doi:10.1103/PhysRevE.100.022313

51. Zamani A, Novikov N, Gutkin B. Concomitance of Inverse Stochastic Resonance and Stochastic Resonance in a Minimal Bistable Spiking Neural Circuit. Commun Nonlinear Sci Numer Simulation (2020) 82:105024. doi:10.1016/j.cnsns.2019.105024

Keywords: coherence resonance, self-induced stochastic resonance, small-world network, multiplex network, STDP

Citation: Yamakou ME, Tran TD and Jost J (2022) Optimal Resonances in Multiplex Neural Networks Driven by an STDP Learning Rule. Front. Phys. 10:909365. doi: 10.3389/fphy.2022.909365

Received: 31 March 2022; Accepted: 08 June 2022;

Published: 04 July 2022.

Edited by:

André H. Erhardt, Weierstrass Institute for Applied Analysis and Stochastics (LG), Germany

Reviewed by:

Manish Dev Shrimali, Central University of Rajasthan, India

Rico Berner, Humboldt University of Berlin, Germany

Copyright © 2022 Yamakou, Tran and Jost. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marius E. Yamakou, yamakoumarius@gmail.com

Marius E. Yamakou, Tat Dat Tran, Jürgen Jost