- 1School of Mathematics and Statistics, Zhoukou Normal University, Zhoukou, China

- 2College of Mathematics and System Sciences, Xinjiang University, Urumqi, China

In this article, both the stability and the dissipativity of a class of bidirectional associative memory (BAM) neural systems with time delays are investigated. By using generalized Halanay inequalities and constructing appropriate Lyapunov functionals, some novel criteria are obtained for the asymptotic stability of BAM neural systems with time delays. In addition, without assuming boundedness or differentiability of the activation functions, some new sufficient conditions for dissipativity are established by means of matrix theory and inner product properties. The obtained conclusions extend and improve some previously known results on these problems for general BAM neural systems. Finally, numerical simulation examples are given to show the effectiveness of the theoretical conclusions.

1 Introduction

The BAM neural network model, proposed by Kosko in [1], consists of neurons arranged in two layers, the x-layer and the y-layer. The neurons in one layer are fully interconnected with the neurons in the other layer, but there are no interconnections among neurons in the same layer. A useful feature of BAM is its ability to recall stored pattern pairs in the presence of noise. For the detailed memory structure and examples of the BAM neural network, please refer to [2]. In recent years, BAM neural systems have received significant attention due to their wide applications in fields such as pattern recognition, image processing, signal processing, associative memories, optimization problems, and other engineering areas [3–6].

In general, due to the limited switching speed and signal propagation speed of neuron amplifiers, the implementation of a neural network inevitably involves time delays. It is also known that a delayed version of a neural network is important for solving some motion-related optimization problems. However, research shows that time delays may lead to divergence, oscillation, and instability, which may be harmful to BAM neural systems [7, 8]. Therefore, the applications of BAM neural systems with delays rely greatly on the dynamical behavior of these systems. For these reasons, it is necessary to study the dynamical behavior of neural systems with delays, and this topic has been widely studied by a great number of researchers [9, 10].

In the design and analysis of neural networks, stability analysis is an essential step. Any system, from a specific control system to a social, financial, or ecological system, operates under accidental or persistent disturbances. It is therefore important whether the system can keep running after such interference without losing control or oscillating. For neural networks, the output is a function of time, so for a given input the response of the network may converge to a stable output, oscillate, grow without bound, or behave chaotically. Therefore, a neural network system must be stable if it is to be useful in engineering.

The notion of global dissipativity, proposed in the 1970s, is a general concept in dynamical systems, and it has been applied in chaos and synchronization theory, stability theory, robust control, and system norm estimation [11–14]. Hence, studying the dissipativity of dynamical networks is an interesting problem. Up to now, the dissipativity of several classes of simple neural networks with delays has begun to attract attention, and some sufficient conditions have been obtained [15–17]. Yet, to our knowledge, only a few articles have studied such problems without using Lyapunov–Krasovskii functionals or Lyapunov functionals [18–22]. In this study, several dissipativity results are obtained for BAM neural networks with time-varying delays via inner product properties and matrix theory, and the model considered differs from the neural systems investigated in [23, 24].

Inspired by the previous discussion, the global asymptotic stability and dissipativity of BAM neural systems with time delays are investigated. Some new criteria ensuring the dissipativity and stability of the BAM neural system are obtained. Compared with previous results, our main results are more general and less conservative. The main contributions of this study include the following.

1) The BAM neural network model studied in this article has a time-varying delay.

2) In our article, the nonlinear activation functions are not assumed to be differentiable or bounded.

3) In this article, the sufficient conditions for the dissipativity of BAM neural networks with time-varying delay are obtained by using only the inner product property and matrix theory.

4) Moreover, the global attraction sets, namely, positive invariant sets, are obtained.

The remainder of the article is organized as follows. The model description and some preliminary knowledge, including the necessary definitions and lemmas, are given in Section 2. In Section 3, by constructing Lyapunov functionals, we discuss the global asymptotic stability of the equilibrium point of delayed BAM neural systems. In Section 4, some sufficient criteria guaranteeing global dissipativity are obtained by using inner product properties. Two examples and their simulation results are provided in Section 5. Finally, conclusions are drawn in Section 6.

2 Preliminaries

Notations: In this article, let Rn be a Euclidean space with the inner product

In this article, the model of delayed BAM neural networks is investigated.

for t > 0,

In this study, we considered the following continuous activation functions:

(H1):

Remark 1:. The hypothesis H1 on the activation functions has been widely used in the literature. In particular, H1 is a common assumption when discussing the stability, synchronization, and dissipativity of neural networks. In this study, the activation function is Lipschitz continuous, so it is monotonically increasing, but it may not be differentiable or bounded. In [8, 13], however, the activation function must satisfy not only the hypothesis H1 of this study but also boundedness. In [15], the derivative of the activation function is also required to be bounded. In this study, the activation function only needs to satisfy the hypothesis H1. Compared with [8, 13, 15], the assumption on the activation function in this study is therefore more general.

The initial condition of the system (1) is considered as

where

Definition 1:. [25]. The neural system (1) is globally dissipative if there exists a compact set S ⊆ R2n, such that ∀z0 ∈ S, ∃ T(z0) > 0, when t ≥ t0 + T(z0), z(t, t0, z0) ⊆ S, in which z(t, t0, z0) represents the solution for (1) from initial time t0 and initial state z0. A set S is said to be forward invariant if ∀z0 ∈ S indicates z(t, t0, z0) ⊆ S for t ≥ t0.

Definition 2:. [26]. The point

Lemma 1:. [27]. For every k > 0 and every a, b ∈ Rn,

holds, in which X > 0.
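The displayed inequality of Lemma 1 is not reproduced in this text. As a hedged illustration only, the sketch below assumes it has the form commonly used in this literature, 2aᵀb ≤ k aᵀXa + (1/k) bᵀX⁻¹b for a symmetric positive definite X, and checks that assumed form on random data. The helper names `lemma1_gap` and `random_spd` and the sampling ranges are illustrative choices, not part of the original article.

```python
import numpy as np


def lemma1_gap(a, b, X, k):
    """Right side minus left side of the ASSUMED inequality
    2 a^T b <= k a^T X a + (1/k) b^T X^{-1} b."""
    lhs = 2.0 * (a @ b)
    rhs = k * (a @ X @ a) + (b @ np.linalg.solve(X, b)) / k
    return rhs - lhs


def random_spd(n, rng):
    """Random symmetric positive definite matrix (hypothetical test data)."""
    A = rng.standard_normal((n, n))
    return A @ A.T + n * np.eye(n)


rng = np.random.default_rng(0)
gaps = []
for _ in range(1000):
    n = int(rng.integers(1, 6))
    a = rng.standard_normal(n)
    b = rng.standard_normal(n)
    X = random_spd(n, rng)
    k = float(rng.uniform(0.1, 10.0))
    gaps.append(lemma1_gap(a, b, X, k))

min_gap = min(gaps)
# A nonnegative gap (up to rounding) on every sample is consistent with the assumed form.
print("smallest gap over all samples:", min_gap)
```

Under this assumed form, equality is attained at a = (1/k) X⁻¹b, which is why the free constant k can be tuned to tighten the estimates in which such an inequality is applied.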

Lemma 2:. (Generalized Halanay inequalities) [28]. If V(t) ≥ 0, t ∈ (−∞, + ∞) and

for t ∈ [t0, + ∞), in which γ(t) ≥ 0, η(t) ≥ 0, and ξ(t) ≤ 0 are continuous functions and τ(t) ≥ 0, and there exists α > 0 such that

Then,

where

3 Global Asymptotic Stability for BAM Neural Networks

First of all, under condition (H1), neural system (1) always has at least one equilibrium point. In the following, the asymptotic stability of the equilibrium point will be proved. For simplicity, we transform the equilibrium point of system (1) to the origin. We assume that

where

Remark 2:. It is easy to verify that systems (1) and (2) have the same stability. Therefore, to prove the stability of the equilibrium point z* of the system (1), it is sufficient to prove the stability of the trivial solution of the system (2).

Theorem 1:. Under condition (H1), if there exist positive definite diagonal matrices P = {pi} ∈ Rn×n, N = {ni} ∈ Rn×n and constants ς1, ς2, β1, β2 > 0 such that

where M = diag{m1, …, mn}, L = diag{l1, …, ln}; then the zero solution of neural system (2) is a unique equilibrium point and is globally asymptotically stable. Proof. We choose the following Lyapunov functional.

Then,

By Lemma 1, we obtained

This implies that the origin solution of system (2) is asymptotically stable. So the equilibrium point of system (1) is asymptotically stable.

Corollary 1:. Under condition (H1), suppose L = M = E, ς1 = ς2 = β1 = β2 = 1, if there exist positive definite diagonal matrices P = {pi} ∈ Rn×n, N = {ni} ∈ Rn×n such that

then, the origin solution of network (2) is a unique equilibrium point, and it is globally asymptotically stable.

4 Global Dissipativity for BAM Neural Networks

In this part, the global dissipativity for the BAM neural system (1) is considered.

Theorem 2:. Under assumption (H1), suppose

then for any given ɛ > 0, there exists T such that for all t ≥ T

So, network (1) is dissipative, and the closed ball

Then,

By

By using Eqs 11–16 in Eq. 10, it is easy to obtain

Then, by Lemma 2, we obtain

where

where ɛ > 0 is sufficiently small. □
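The last step of the proof relies on Lemma 2, whose displayed inequalities are not reproduced in this text. The sketch below therefore assumes the commonly used scalar form D⁺V(t) ≤ γ + ξV(t) + η sup V(s) over t − τ ≤ s ≤ t, with ξ + η ≤ −α < 0, for which V is eventually bounded by approximately γ/α. It integrates the equality case with forward Euler. All coefficients, the constant delay, and the function name `halanay_trajectory` are hypothetical and chosen only for illustration.

```python
import numpy as np


def halanay_trajectory(gamma=1.0, xi=-3.0, eta=1.0, tau=1.0,
                       v0=5.0, T=20.0, dt=0.01):
    """Forward-Euler solution of V' = gamma + xi*V(t) + eta*max_{[t-tau, t]} V.

    Assumed (hypothetical) constants with xi + eta = -alpha < 0.
    The history on [-tau, 0] is the constant v0.
    """
    n_hist = int(round(tau / dt))
    n_steps = int(round(T / dt))
    V = np.empty(n_hist + n_steps + 1)
    V[: n_hist + 1] = v0
    for k in range(n_hist, n_hist + n_steps):
        sup_v = V[k - n_hist: k + 1].max()  # supremum over the delay window
        V[k + 1] = V[k] + dt * (gamma + xi * V[k] + eta * sup_v)
    return V[n_hist:]


gamma, xi, eta = 1.0, -3.0, 1.0
alpha = -(xi + eta)                 # here alpha = 2
V = halanay_trajectory(gamma, xi, eta)
print("final value:", V[-1], " gamma/alpha:", gamma / alpha)
```

In this toy setting the trajectory decays from its initial value toward γ/α, which is the same mechanism the proof uses to obtain an ultimate bound, and hence an attracting ball, for the network state.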

Corollary 2:. Taking δ1 = δ2 = δ3 = ρ1 = ρ2 = ρ3 = 1, under assumption (H1), suppose that

then network (1) is dissipative, and the closed ball

Corollary 3:. Under assumptions (H1), suppose that

and

then system (1) is globally stable, where

Remark 3:. In the existing literature, many researchers have studied the qualitative behavior of neural systems via Lyapunov functions combined with linear matrix inequality techniques [26, 29, 30]. In this article, however, some new sufficient criteria for the dissipativity of BAM neural networks with time delays are given by using only matrix theory and inner product properties.

5 Numerical Simulations

In this section, two examples are presented to show the effectiveness of the theoretical results.

Example 1. Consider the following delayed BAM neural network model:

in which

Choose fj(yj) = (|yj + 1| + |yj − 1|)/2, gj(xj) = (|xj + 1| + |xj − 1|)/2, j = 1, 2, l1 = l2 = m1 = m2 = L = M = β1 = β2 = ς1 = ς2 = 1.
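As a quick numerical check of the properties used for this choice of activation function, the sketch below evaluates (|u + 1| + |u − 1|)/2 exactly as written above and estimates its Lipschitz constant, its one-sided slopes at u = 1, and its growth. The grid, the step `h`, and the name `act` are illustrative assumptions.

```python
import numpy as np


def act(u):
    """Activation as written in the example: (|u + 1| + |u - 1|) / 2."""
    return (np.abs(u + 1.0) + np.abs(u - 1.0)) / 2.0


# Estimate the Lipschitz constant from slopes between neighbouring grid points.
grid = np.linspace(-5.0, 5.0, 2001)
slopes = np.abs(np.diff(act(grid))) / np.diff(grid)
lipschitz_estimate = slopes.max()

# One-sided difference quotients at u = 1 expose the kink.
h = 1e-6
left_slope = (act(1.0) - act(1.0 - h)) / h
right_slope = (act(1.0 + h) - act(1.0)) / h

print("Lipschitz estimate:", lipschitz_estimate)
print("left/right slope at u = 1:", left_slope, right_slope)
print("value at u = 1e6:", act(1e6))
```

The estimated Lipschitz constant agrees with the choice l1 = l2 = m1 = m2 = 1, while the differing one-sided slopes and the unbounded growth illustrate that this function is neither differentiable everywhere nor bounded.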

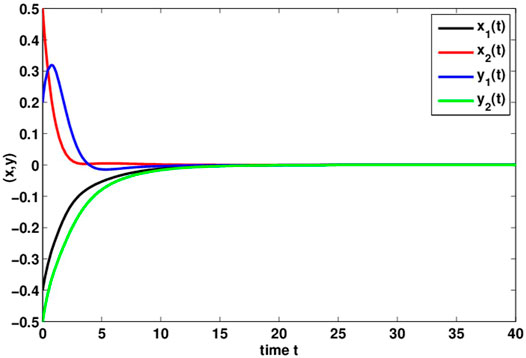

By computing, we can get

So, from Theorem 1, network (18) has a unique equilibrium, and it is globally asymptotically stable. Using MATLAB, the unique equilibrium (0,0,0,0)T of network (18) is computed, and the simulation results are given in Figure 1.
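The coefficient matrices and delays of network (18) are not reproduced in this text, so the article's simulation cannot be repeated here. The sketch below is a stand-in only: it assumes a standard two-neuron delayed BAM form, x′ = −Ax + B f(y(t)) + C f(y(t − τ(t))) + I and y′ = −Dy + P g(x(t)) + Q g(x(t − σ(t))) + J, with hypothetical parameters in which the decay rates dominate the connection weights, and integrates it with forward Euler in Python rather than MATLAB. Every matrix, input, delay function, and name below is an assumption.

```python
import numpy as np


def act(u):
    """Activation from the example: (|u + 1| + |u - 1|) / 2, elementwise."""
    return (np.abs(u + 1.0) + np.abs(u - 1.0)) / 2.0


# Hypothetical parameters (NOT the article's values).
A = np.array([3.0, 3.0])                    # decay rates of the x-layer
D = np.array([3.0, 3.0])                    # decay rates of the y-layer
B = np.array([[0.2, -0.1], [0.1, 0.2]])     # instantaneous weights y -> x
C = np.array([[0.1, 0.2], [-0.2, 0.1]])     # delayed weights y -> x
P = np.array([[0.1, 0.2], [0.2, -0.1]])     # instantaneous weights x -> y
Q = np.array([[-0.2, 0.1], [0.1, 0.2]])     # delayed weights x -> y
I_EXT = np.array([0.5, -0.5])               # external input to the x-layer
J_EXT = np.array([-0.3, 0.4])               # external input to the y-layer
TAU_MAX = 1.0                               # upper bound of both delays


def tau(t):
    return 0.5 + 0.5 * np.sin(t) ** 2       # assumed time-varying delay


def sigma(t):
    return 0.5 + 0.5 * np.cos(t) ** 2       # assumed time-varying delay


def simulate(x0, y0, T=20.0, dt=0.01):
    """Forward Euler with a constant history on [-TAU_MAX, 0]."""
    n_hist = int(round(TAU_MAX / dt))
    n_steps = int(round(T / dt))
    x = np.empty((n_hist + n_steps + 1, 2))
    y = np.empty((n_hist + n_steps + 1, 2))
    x[: n_hist + 1] = x0
    y[: n_hist + 1] = y0
    for k in range(n_hist, n_hist + n_steps):
        t = (k - n_hist) * dt
        lag_tau = int(round(tau(t) / dt))    # delay expressed in grid steps
        lag_sig = int(round(sigma(t) / dt))
        dx = -A * x[k] + B @ act(y[k]) + C @ act(y[k - lag_tau]) + I_EXT
        dy = -D * y[k] + P @ act(x[k]) + Q @ act(x[k - lag_sig]) + J_EXT
        x[k + 1] = x[k] + dt * dx
        y[k + 1] = y[k] + dt * dy
    return x[n_hist:], y[n_hist:]


xa, ya = simulate([2.0, -1.5], [-3.0, 1.0])
xb, yb = simulate([-4.0, 3.0], [0.5, -2.0])
print("final state, run a:", xa[-1], ya[-1])
print("final state, run b:", xb[-1], yb[-1])
```

With these assumed values, trajectories started from different initial conditions settle at the same state, which is the qualitative behavior that global asymptotic stability predicts. The location of that state depends on the hypothetical inputs and is not meant to reproduce the equilibrium reported for network (18).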

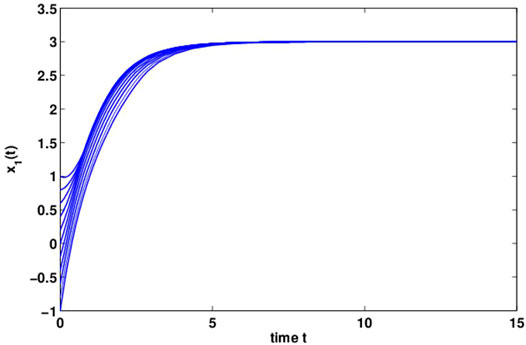

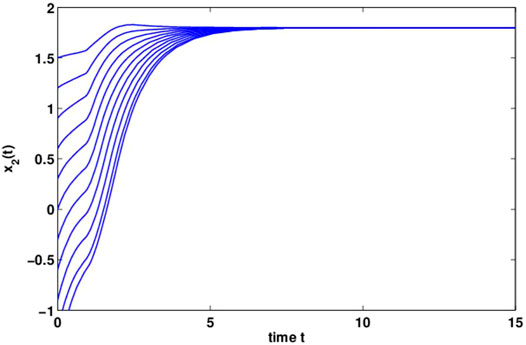

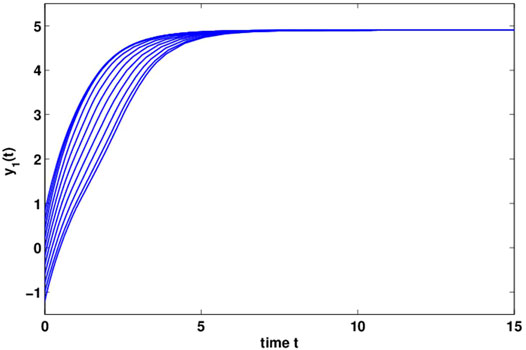

Example 2. Consider the BAM neural model with delays given by Eq. 18, where

Choose fj(yj) = (|yj + 1| + |yj − 1|)/2, gj(xj) = (|xj + 1| + |xj − 1|)/2, j = 1, 2 and l1 = l2 = m1 = m2 = l = m = δ1 = δ2 = δ3 = ρ1 = ρ2 = ρ3 = 1.

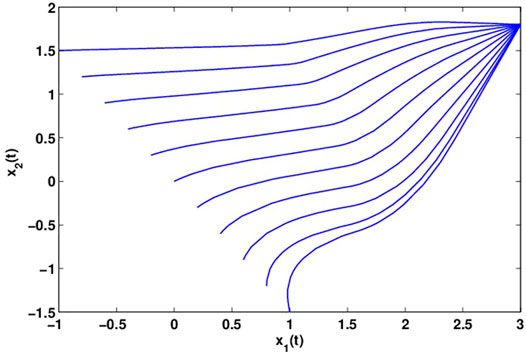

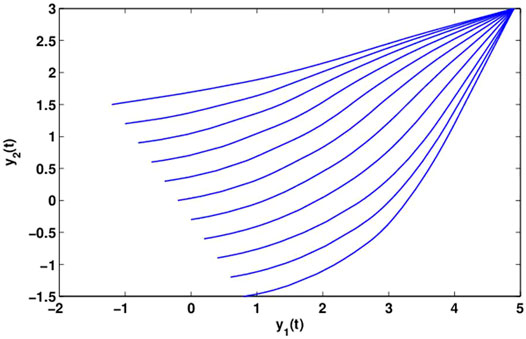

By computing, we can get γ = 7.75, ξ(t) = 2.703, η(t) = 1.04. Letting α = 4 and ɛ = 0.98, it follows from Theorem 2 that system (18) is globally dissipative. Figures 2, 3 show the behaviors of the states x1(t) and x2(t) with different initial conditions. Figures 4, 5 show the phase plane behaviors of y1(t) and y2(t) with different initial conditions. Figures 6, 7 show the time-domain behaviors of the states x1(t), x2(t) and y1(t), y2(t) with different initial conditions. The numerical simulations confirm that system (18) is globally dissipative.

Remark 4:. In the numerical simulation part of [13], the author only gives the simulation diagram of the BAM neural network model with one node. This article presents the simulation diagram of the BAM neural network model with two nodes. Moreover, in [13], the values of σ(t) and τ(t) are all 1, while the values of σ1(t), σ2(t), τ1(t), and τ2(t) in this study are different. Therefore, the numerical simulation in this study is more general in terms of the model and the time delays. In addition, the unique equilibrium point (0,0,0,0)T of the system (18) is obtained by MATLAB. Figure 1 shows the globally asymptotically stable behavior of the system (18) under initial conditions

6 Conclusion

In this study, by using matrix theory, inner product properties, and generalized Halanay inequalities and by constructing appropriate Lyapunov functionals, novel sufficient criteria for the global asymptotic stability of the equilibrium point and the global dissipativity of the system have been derived for a class of BAM neural systems with delays. The given results might have an impact on investigating the instability, the existence of periodic solutions, and the stability of BAM neural networks. A comparison with previous works, together with the numerical simulations, indicates that the derived criteria are less conservative and more general.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

ML established the mathematical model, theoretical analysis, and wrote the original draft; HJ provided modeling ideas and analysis methods; CH checked the correctness of theoretical results; BL and ZL performed the simulation experiments. All authors read and approved the final manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 62003380).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors are grateful to the editors and referees for their valuable suggestions and comments, which greatly improved the presentation of this article.

References

1. Kosko B. Adaptive Bidirectional Associative Memories. Appl Opt (1987) 26:4947–60. doi:10.1364/ao.26.004947

2. Rajivganthi C, Rihan FA, Lakshmanan S. Dissipativity Analysis of Complex Valued BAM Neural Networks with Time Delay. Neural Comput Applic (2019) 31:127–37. doi:10.1007/s00521-017-2985-9

3. Kosko B. Bidirectional Associative Memories. IEEE Trans Syst Man Cybern (1988) 18(1):49–60. doi:10.1109/21.87054

4. Hopfield JJ. Neural Networks and Physical Systems with Emergent Collective Computational Abilities. Proc Natl Acad Sci U.S.A (1982) 79:2554–8. doi:10.1073/pnas.79.8.2554

5. Hopfield JJ. Neurons with Graded Response Have Collective Computational Properties like Those of Two-State Neurons. Proc Natl Acad Sci U.S.A (1984) 81:3088–92. doi:10.1073/pnas.81.10.3088

6. Kosko B. Neural Networks and Fuzzy Systems: A Dynamical Systems Approach to Machine Intelligence. Englewood Cliffs, NJ: Prentice-Hall (1992).

7. Abdurahman A, Jiang H. Nonlinear Control Scheme for General Decay Projective Synchronization of Delayed Memristor-Based BAM Neural Networks. Neurocomputing (2019) 357:282–91. doi:10.1016/j.neucom.2019.05.015

8. Xu G, Bao H. Further Results on Mean-Square Exponential Input-To-State Stability of Time-Varying Delayed BAM Neural Networks with Markovian Switching. Neurocomputing (2020) 376:191–201. doi:10.1016/j.neucom.2019.09.033

9. Liu J, Jian J, Wang B. Stability Analysis for BAM Quaternion-Valued Inertial Neural Networks with Time Delay via Nonlinear Measure Approach. Mathematics Comput Simulation (2020) 174:134–52. doi:10.1016/j.matcom.2020.03.002

10. Priya B, Ali MS, Thakur GK, Sanober S, Dhupia B. Pth Moment Exponential Stability of Memristor Cohen-Grossberg BAM Neural Networks with Time-Varying Delays and Reaction-Diffusion. Chin J Phys (2021) 74:184–94. doi:10.1016/j.cjph.2021.06.027

11. Fang T, Ru T, Fu D, Su L, Wang J. Extended Dissipative Filtering for Markov Jump BAM Inertial Neural Networks under Weighted Try-Once-Discard Protocol. J Franklin Inst (2021) 358:4103–17. doi:10.1016/j.jfranklin.2021.03.009

12. Yan M, Jiang M. Synchronization with General Decay Rate for Memristor-Based BAM Neural Networks with Distributed Delays and Discontinuous Activation Functions. Neurocomputing (2020) 387:221–40. doi:10.1016/j.neucom.2019.12.124

13. Wu Z-G, Shi P, Su H, Lu R. Dissipativity-Based Sampled-Data Fuzzy Control Design and its Application to Truck-Trailer System. IEEE Trans Fuzzy Syst (2015) 23:1669–79. doi:10.1109/tfuzz.2014.2374192

14. Xu C, Aouiti C, Liu Z. A Further Study on Bifurcation for Fractional Order BAM Neural Networks with Multiple Delays. Neurocomputing (2020) 417:501–15. doi:10.1016/j.neucom.2020.08.047

15. Yan M, Jian J, Zheng S. Passivity Analysis for Uncertain BAM Inertial Neural Networks with Time-Varying Delays. Neurocomputing (2021) 435:114–25.

16. Li R, Cao J. Passivity and Dissipativity of Fractional-Order Quaternion-Valued Fuzzy Memristive Neural Networks: Nonlinear Scalarization Approach. IEEE Trans Cybern (2022) 52 (99) 2821–2832. doi:10.1109/tcyb.2020.3025439

17. Li Y, He Y. Dissipativity Analysis for Singular Markovian Jump Systems with Time-Varying Delays via Improved State Decomposition Technique. Inf Sci (2021) 580:643–54. doi:10.1016/j.ins.2021.08.092

18. Zhou L. Delay-dependent Exponential Stability of Cellular Neural Networks with Multi-Proportional Delays. Neural Process Lett (2013) 38(3):321–46. doi:10.1007/s11063-012-9271-8

19. Zhou L, Chen X, Yang Y. Asymptotic Stability of Cellular Neural Networks with Multiple Proportional Delays. Appl Maths Comput (2014) 229(1):457–66. doi:10.1016/j.amc.2013.12.061

20. Zhou L. Global Asymptotic Stability of Cellular Neural Networks with Proportional Delays. Nonlinear Dyn (2014) 77(1):41–7. doi:10.1007/s11071-014-1271-y

21. Zhou L. Delay-dependent Exponential Synchronization of Recurrent Neural Networks with Multiple Proportional Delays. Neural Process Lett (2015) 42(3):619. doi:10.1007/s11063-014-9377-2

22. Cong Z, Li N, Cao J. Matrix Measure Based Stability Criteria for High-Order Networks with Proportional Delay. Neurocomputing (2015) 149:1149. doi:10.1016/j.neucom.2014.09.016

23. Zhang T, Li Y. Global Exponential Stability of Discrete-Time Almost Automorphic Caputo-Fabrizio BAM Fuzzy Neural Networks via Exponential Euler Technique. Knowledge-Based Syst (2022) 246:108675. doi:10.1016/j.knosys.2022.108675

24. Wang S, Zhang Z, Lin C, Chen J. Fixed-time Synchronization for Complex-Valued BAM Neural Networks with Time-Varying Delays via Pinning Control and Adaptive Pinning Control. Chaos, Solitons & Fractals (2021) 153:111583. doi:10.1016/j.chaos.2021.111583

25. Cai Z, Huang L. Functional Differential Inclusions and Dynamic Behaviors for Memristor-Based BAM Neural Networks with Time-Varying Delays. Commun Nonlinear Sci Numer Simulation (2014) 19:1279–300. doi:10.1016/j.cnsns.2013.09.004

26. Zhou L. Novel Global Exponential Stability Criteria for Hybrid BAM Neural Networks with Proportional Delays. Neurocomputing (2015) 161:99–106. doi:10.1016/j.neucom.2015.02.061

27. Ren F, Cao J. LMI-based Criteria for Stability of High-Order Neural Networks with Time-Varying Delay. Nonlinear Anal Real World Appl (2006) 7:967–79. doi:10.1016/j.nonrwa.2005.09.001

28. Liu B, Lu W, Chen T. Generalized Halanay Inequalities and Their Applications to Neural Networks with Unbounded Time-Varying Delays. IEEE Trans Neural Netw (2011) 22(9):1508–13. doi:10.1109/tnn.2011.2160987

29. Wang Z, Huang L. Global Stability Analysis for Delayed Complex-Valued BAM Neural Networks. Neurocomputing (2016) 173:2083–9. doi:10.1016/j.neucom.2015.09.086

Keywords: BAM neural network, global asymptotic stability, dissipativity, inner product, generalized Halanay inequalities, matrix theory

Citation: Liu M, Jiang H, Hu C, Lu B and Li Z (2022) Novel Global Asymptotic Stability and Dissipativity Criteria of BAM Neural Networks With Delays. Front. Phys. 10:898589. doi: 10.3389/fphy.2022.898589

Received: 25 March 2022; Accepted: 26 April 2022;

Published: 30 June 2022.

Edited by:

Erik Andreas Martens, Lund University, Sweden

Reviewed by:

Baogui Xin, Shandong University of Science and Technology, China

Shaobo He, Central South University, China

Copyright © 2022 Liu, Jiang, Hu, Lu and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mei Liu
