- 1 Department of Radiation Oncology, Tianjin Medical University Cancer Institute and Hospital, National Clinical Research Center for Cancer, Tianjin’s Clinical Research Center for Cancer, Key Laboratory of Cancer Prevention and Therapy, Tianjin, China

- 2 Perception Vision Medical Technologies Co. Ltd, Guangzhou, China

Purpose: The aim of this study is to develop a practicable automatic clinical target volume (CTV) delineation method for radiotherapy of breast cancer after modified radical mastectomy.

Methods: Unlike breast-conserving surgery, the radiotherapy CTV after modified radical mastectomy involves several regions, including the CTV in the chest wall (CTVcw), the supra- and infra-clavicular region (CTVsc), and the internal mammary lymphatic region (CTVim). For accurate and efficient segmentation of these CTVs, a multi-scale convolutional neural network with an orientation attention mechanism is proposed to capture the corresponding features with different receptive fields. A channel-specific local Dice loss, together with several data augmentation methods, is also designed to stabilize model training and improve the generalization performance of the model. The segmentation performance is evaluated quantitatively with statistical metrics and qualitatively by clinicians in terms of consistency and time efficiency.

Results: The proposed method is trained and evaluated on a self-collected dataset of 110 computed tomography scans from patients with breast cancer who underwent modified radical mastectomy. The experimental results show that the proposed segmentation method achieved superior performance in terms of Dice similarity coefficient (DSC), Hausdorff distance (HD), and average symmetric surface distance (ASSD) compared with baseline approaches.

Conclusion: Both quantitative and qualitative evaluation results demonstrated that the specifically designed method is practical and effective for automatic contouring of CTVs for radiotherapy of breast cancer after modified radical mastectomy. By employing this method, clinicians can save substantial time on manual delineation while obtaining highly consistent contouring results.

1 Introduction

According to a report from the World Health Organization, breast cancer has overtaken lung cancer as the most commonly diagnosed cancer worldwide [1]. Different stages of tumor progression require different types of surgical treatment, including breast-conserving surgery (BCS) and radical mastectomy (RM). Modified radical mastectomy (MRM) is widely used in clinical practice for the treatment of breast cancer because it ensures surgical efficacy while reducing surgical damage and improving the patient’s quality of life [2]. In particular, MRM has become a cornerstone of breast cancer treatment in China. It involves excising only the mammary gland and clearing the axillary lymph nodes while preserving the pectoralis major and minor muscles, thereby maintaining postoperative mobility and appearance.

Although MRM is beneficial to patients, it challenges clinicians contouring the clinical target volume (CTV) for postoperative radiotherapy, because the corresponding CTVs involve several target areas with relatively complex anatomical structures compared with their counterparts in BCS and HS. The CTV delineation for radiotherapy of breast cancer after MRM comprises three targets whose positions and volumes vary significantly: the CTV in the chest wall (CTVcw), the supraclavicular region (CTVsc), and the internal mammary lymphatic region (CTVim). The substantial variation between patients and the inter- and intra-observer variability [3, 4] also make delineation demanding and time-consuming for clinicians. Moreover, research has demonstrated that incidental doses to regions such as the contralateral breast and thyroid, caused by contouring errors, can affect patients’ quality of life [5–7]. Therefore, there is an urgent need for an automatic CTV delineation method for radiotherapy of breast cancer after MRM that reduces the burden on clinicians while improving work efficiency and accuracy.

Currently, most automatic contouring methods are developed for radiotherapy after breast-conserving surgery, where only the breast containing the mammary gland needs to be segmented. For example, atlas-based methods are successful in breast segmentation [8] when the amount of data and the inter-patient variation are small. As data volumes grow, deep learning-based approaches have made significant progress in handling cases with large deformations and other considerable variations, and they have been adopted by an increasing number of institutes and clinicians.

To the best of our knowledge, this is the first study to develop a deep learning-based automatic CTV delineation algorithm for radiotherapy of breast cancer after MRM. In this study, we propose a specifically designed multi-objective segmentation method for this task. An orientation attention mechanism is proposed to prevent the misrecognition of structurally similar regions on the breast (anterior) and back (posterior) sides, a similarity caused by modified radical surgery. To enable the model to correctly segment targets with significantly different volumes, an inception block-based multi-scale convolution architecture is constructed to obtain different receptive fields and capture the corresponding features. In addition, the model is trained with a local Dice loss to handle the imbalance between segmentation categories and stabilize training. Furthermore, three dedicated data augmentation strategies, namely attention position variance, deformation simulation, and breast implant simulation, are designed to cope with data scarcity and inter-patient variation.

The remainder of this paper is organized as follows. Section 2 introduces related research on automatic breast CTV delineation. Section 3 describes the materials and the specifically designed methods. Section 4 presents the quantitative and qualitative experimental results. Section 5 provides the discussion, and Section 6 presents the conclusion and future work.

2 Related Works

For the past few decades, traditional methods, particularly atlas-based methods, have been the preferred solution for automatic CTV delineation. Atlas-based approaches use deformable image registration to map labeled atlas images onto the target image. The patient to be segmented is compared with an atlas library, and the most anatomically similar atlas is selected and transformed into the same coordinate space as the input data so that its contours can be propagated. Anders et al. [9] and Velker et al. [10] collected 9 and 124 cases, respectively, to build atlas libraries for breast cancer. The method proposed by Velker et al. achieved good performance on well-defined CTVs such as the breast and chest wall, with Dice similarity coefficient (DSC) values of 0.87 and 0.89 for the left- and right-side breast, respectively.
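The atlas-selection step described above can be sketched as follows. This is a minimal illustration, not the method of the cited works: it assumes the atlas images have already been resampled and rigidly aligned to the target grid, and it uses normalized cross-correlation (NCC) as the similarity measure, which is an assumption. The subsequent deformable registration that warps the selected atlas contours onto the patient is omitted, and the function names are hypothetical.

```python
import numpy as np


def ncc(a, b):
    """Normalized cross-correlation between two images of equal shape."""
    a = (a - a.mean()) / (a.std() + 1e-8)
    b = (b - b.mean()) / (b.std() + 1e-8)
    return float((a * b).mean())


def select_atlas(target, atlas_images, atlas_labels):
    """Pick the most similar atlas (by NCC) and return its index, labels, and all scores.

    Assumes every atlas is already aligned to the target grid; in a full
    atlas-based pipeline the returned labels would next be warped to the
    target by deformable registration (not shown here).
    """
    scores = [ncc(target, img) for img in atlas_images]
    best = int(np.argmax(scores))
    return best, atlas_labels[best], scores
```

Selecting a single best atlas is the simplest variant; multi-atlas approaches instead register several atlases and fuse their propagated labels.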

Atlas-based solutions have been widely used for cancer sites such as the head and neck [11], breast [12], and lungs [13]. However, the performance of these approaches is limited by the degree of deformation, the image registration quality, and the need for additional corrections. For instance, for highly variable structures such as the internal mammary nodes, Velker’s method performed poorly, with a DSC of 0.3. To address these limitations, several deep learning-based approaches have been proposed and have made significant progress in terms of accuracy and consistency [14].

Deep learning methods have demonstrated excellent performance in several fields. Convolutional neural networks (CNNs), which extract and learn features from well-organized training data, have become a standard tool for image processing and analysis. Deep learning-based semantic segmentation is therefore a suitable solution for automatic CTV delineation. Min et al. [15] proposed a deep learning-based breast segmentation algorithm (a 3D fully convolutional DenseNet) and compared its performance with the aforementioned atlas-based segmentation methods; the deep learning method performed more consistently and robustly on the majority of structures. In addition to segmentation accuracy, clinicians are concerned with the inference speed of the algorithms, because the produced segmentation results still require manual correction. To this end, Jan et al. [16] proposed BibNet, a neural network built on U-Net [17] with a multi-resolution processing structure and residual connections, together with a full-image processing strategy that increases inference speed while improving segmentation quality. Kuo et al. [18] proposed a deep dilated residual network (DD-ResNet) for auto-segmentation of the clinical target volume for breast cancer radiotherapy, which outperformed a deep dilated convolutional neural network (DDCNN) and a deep deconvolutional neural network (DDNN). Compared with these studies, we build on an optimized U-Net to help clinicians contour the CTVs of breast cancer after MRM.

3 Materials and Methods

3.1 Data Acquisition

The data supporting this study comprised 110 CT scans of patients who underwent modified radical mastectomy, collected from Tianjin Medical University Cancer Institute and Hospital. These patients received adjuvant radiotherapy to the chest wall, supra- and infra-clavicular, and internal mammary lymphatic regions after surgery. The CTVs delineated for radiotherapy by an experienced clinician according to the RTOG criteria were used as the ground truth for model training [19]. CTVs on both the left and right sides were delineated to stabilize model training. Patients with breast implants were also included in our dataset, and these cases were extended using the breast implant simulation data augmentation method. The in-plane matrix size and slice thickness of the reconstructed CT images were 512 × 512 and 5 mm, respectively. The dataset was randomly split into a training set of 82 cases and a testing set of 28 cases. Given the limited overall sample size, a training-to-test ratio of approximately 3:1 was adopted instead of the commonly used 4:1; this allocates a slightly larger share of the data to testing and yields an adequately sized test set.
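A random case-level split of the kind described can be sketched as below. The case identifiers and the seed are hypothetical; because each patient contributes one CT scan here, splitting by case also keeps all slices of a patient on the same side of the split, which avoids leakage between training and testing.

```python
import random


def split_cases(case_ids, n_test, seed=0):
    """Randomly split case IDs into (train, test) lists with a reproducible seed."""
    rng = random.Random(seed)
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    # The first n_test shuffled cases form the test set; the rest are for training.
    return sorted(shuffled[n_test:]), sorted(shuffled[:n_test])


# Hypothetical IDs for the 110 scans; 28 test cases give 82 training cases (about 2.9:1).
case_ids = [f"case_{k:03d}" for k in range(110)]
train_ids, test_ids = split_cases(case_ids, n_test=28, seed=0)
```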

3.2 Architecture and Strategies

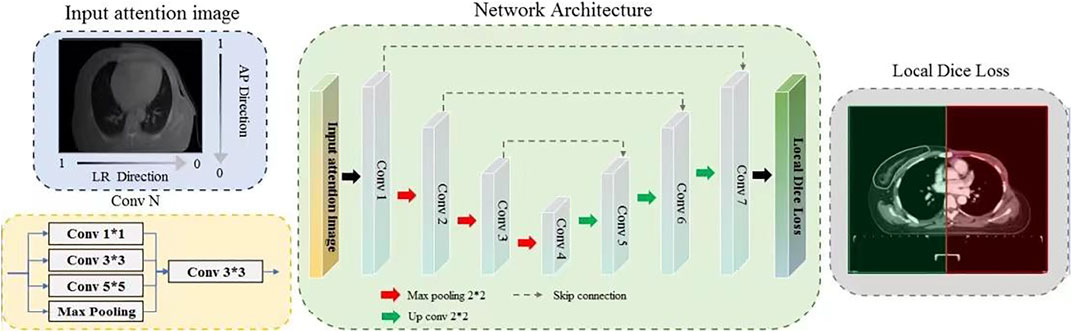

The architecture of the proposed network is illustrated in Figure 1. The input images are preprocessed with a specific orientation attention method before being fed into the network. Each convolution block in the network comprises an inception module followed by an activation layer and a batch normalization layer. Red arrows denote max pooling, green arrows denote transposed convolution, and black arrows indicate the inputs and outputs of the model. The model is trained with a local Dice loss for multi-objective segmentation, and a sigmoid activation function generates the output mask. In this study, we focused on the specific characteristics of CTVs after MRM and designed corresponding solutions for the automatic contouring task.

FIGURE 1. Illustration of the specifically designed deep learning-based multi-objective segmentation method for the automatic delineation of CTVs for radiotherapy after modified radical mastectomy. Input attention images are obtained by overlaying an anterior-posterior (AP) direction attention map onto the input images.
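The convolution block in Figure 1 is built around an inception module with parallel 1×1, 3×3, and 5×5 convolutions and a max-pooling branch. The NumPy sketch below illustrates that multi-scale idea under several assumptions not stated in the text: a single 2D input of shape (channels, H, W), branch fusion by channel concatenation, ReLU as the activation, a 1×1 projection after pooling (as in the original GoogLeNet design), and a parameter-free per-channel normalization standing in for batch normalization. The paper’s exact “refined” block is not specified, so all names and weights here are hypothetical; a practical implementation would use a deep learning framework such as PyTorch.

```python
import numpy as np


def conv2d_same(x, w):
    """Stride-1 'same' 2D cross-correlation.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k.
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c_out, h, wd))
    for dy in range(k):
        for dx in range(k):
            # Add the contribution of kernel tap (dy, dx) at every output pixel.
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out


def max_pool_same(x, k=3):
    """Stride-1 max pooling padded with -inf so the spatial size is preserved."""
    p = k // 2
    h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)), constant_values=-np.inf)
    out = np.full(x.shape, -np.inf)
    for dy in range(k):
        for dx in range(k):
            out = np.maximum(out, xp[:, dy:dy + h, dx:dx + wd])
    return out


def inception_block(x, params, eps=1e-5):
    """Multi-scale block: parallel branches with different receptive fields, then fusion."""
    branches = [
        conv2d_same(x, params["w1"]),                 # 1x1: channel mixing, smallest field
        conv2d_same(x, params["w3"]),                 # 3x3: medium receptive field
        conv2d_same(x, params["w5"]),                 # 5x5: largest receptive field
        conv2d_same(max_pool_same(x), params["wp"]),  # 3x3 max pool, then 1x1 projection
    ]
    y = np.concatenate(branches, axis=0)  # fuse multi-scale features along channels
    y = np.maximum(y, 0.0)                # activation (ReLU assumed)
    # Per-channel normalization over H, W (stand-in for batch normalization).
    mean = y.mean(axis=(1, 2), keepdims=True)
    var = y.var(axis=(1, 2), keepdims=True)
    return (y - mean) / np.sqrt(var + eps)
```

Because the branches run in parallel and are concatenated, the block widens the receptive field without additional downsampling, which matches the stated goal of keeping pooling operations to a minimum.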

In MRM, the breast on the affected side is excised and only the pectoralis major and minor muscles are preserved, resulting in a flat structure that is similar to the back. In addition, the collected data contained patients with left-sided, right-sided, and even bilateral breast cancer; therefore, the model should be encouraged to focus on the affected side and segment it delicately. To this end, an orientation attention mechanism was designed as a preprocessing step. Specifically, direction attention maps are calculated as AP_i = 1 − i/H and LR_i = 1 − i/W, where i is the row (column) index and H (W) is the image resolution along the anterior–posterior (AP) (left–right, LR) direction. The input of the model is the product of the AP and LR direction attention maps and the CT image normalized to the range [−1, 1]. In the attention map, the values on the breast side and the affected side are close to 1, whereas those on the opposite sides are close to 0, thereby assigning higher importance to the breast and affected sides. This can be observed in Figure 1, where the input attention image shows a gray-level gradient along the vertical and horizontal directions; the darker side is emphasized, which implicitly promotes breast segmentation.
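The attention formulas above can be sketched in NumPy as follows. Several details are assumptions for illustration: row 0 is taken as the anterior edge of the slice, column 0 as the patient’s right (the usual radiological display convention), the LR ramp is flipped for left-sided cancer so that the affected side receives values near 1, and the HU window used for normalization to [−1, 1] is hypothetical.

```python
import numpy as np


def orientation_attention_input(ct_hu, affected_side="left", hu_window=(-1000.0, 1000.0)):
    """Return (attended_image, attention_map) for one axial CT slice of shape (H, W)."""
    h, w = ct_hu.shape
    lo, hi = hu_window
    # Normalize CT intensities to [-1, 1] (window limits are an assumption).
    img = np.clip(ct_hu.astype(float), lo, hi)
    img = 2.0 * (img - lo) / (hi - lo) - 1.0
    # AP_i = 1 - i/H: near 1 at the anterior (breast) side, assuming row 0 is anterior.
    ap = 1.0 - np.arange(h) / h
    # LR_j = 1 - j/W: near 1 at column 0, assumed to be the patient's right.
    lr = 1.0 - np.arange(w) / w
    if affected_side == "left":
        lr = lr[::-1]  # emphasize the patient's left instead
    elif affected_side != "right":
        raise ValueError("affected_side must be 'left' or 'right'")
    attention = np.outer(ap, lr)  # product of the AP and LR direction maps
    return img * attention, attention
```

Multiplying the normalized image by the outer product of the two ramps attenuates the posterior and contralateral regions, which is how the structurally similar back region is suppressed before the image reaches the network.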

The segmentation targets of the model are the CTV in the chest wall (CTVcw), the supra-clavicular region (CTVsc), and the internal mammary lymphatic region (CTVim), which vary greatly in volume: CTVcw and CTVsc are long and thin, whereas CTVim occupies only a small region. This imbalance may confuse the model and reduce segmentation performance, especially for small targets. Therefore, to enable the model to extract features with different receptive fields and thereby delicately segment targets at different scales, a network with a multi-scale convolution structure is constructed. A refined inception block [20] is used as the basic convolution element, which enlarges the receptive field while keeping pooling operations to a minimum. Specifically, the input to each convolution block is fed into 1×1, 3×3, and 5×5 convolution layers and a max pooling layer to obtain different receptive fields, and the extracted multi-scale features are then fused to model higher-level semantic information. In addition, to overcome the problem of incomplete labels, a novel local loss is introduced for network optimization, in which a local mask is calculated from the label. If parts of the targets are not annotated, the corresponding local mask is initialized with zeros, so that segmentation errors outside the local regions do not drive the optimization. Given the large variation in the breast cancer dataset, this local loss proved effective in this study. Moreover, because labels may overlap, a sigmoid activation function is employed in the output layer to produce an independent probability for each category at each pixel.
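The text does not give the exact form of the local mask, so the following is only a sketch of a channel-specific local Dice loss under stated assumptions: the local mask of each channel is the ground-truth bounding box padded by a hypothetical `margin`; a channel flagged as unannotated (or with no foreground) gets an all-zero mask and is skipped; and a soft Dice is computed inside the mask for each channel before averaging. Predictions are assumed to be per-channel sigmoid probabilities of shape (C, H, W).

```python
import numpy as np


def local_mask(label, annotated, margin=2):
    """Hypothetical local mask: the label's bounding box padded by `margin` pixels."""
    mask = np.zeros(label.shape, dtype=float)
    if not annotated or not label.any():
        return mask  # unannotated target: all zeros, so it is excluded from the loss
    rows, cols = np.nonzero(label)
    r0 = max(int(rows.min()) - margin, 0)
    r1 = min(int(rows.max()) + margin + 1, label.shape[0])
    c0 = max(int(cols.min()) - margin, 0)
    c1 = min(int(cols.max()) + margin + 1, label.shape[1])
    mask[r0:r1, c0:c1] = 1.0
    return mask


def local_dice_loss(pred, label, annotated, margin=2, eps=1e-6):
    """1 - mean soft Dice over channels, each computed only inside its local mask."""
    dices = []
    for c in range(pred.shape[0]):
        m = local_mask(label[c], annotated[c], margin)
        if m.sum() == 0:
            continue  # no supervision for this channel
        p = pred[c] * m  # errors outside the local region are ignored
        g = label[c] * m
        dices.append((2.0 * (p * g).sum() + eps) / (p.sum() + g.sum() + eps))
    if not dices:
        return 0.0
    return 1.0 - float(np.mean(dices))
```

Averaging Dice per channel (rather than pooling all pixels) keeps the small CTVim from being dominated by the larger CTVcw, which is one common way to address the category imbalance mentioned above.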

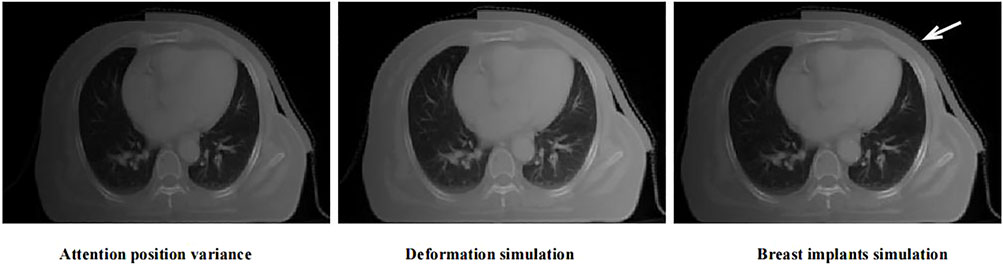

To cope with individual variations, such as various deformations and cases with breast implants, we designed several targeted data augmentation methods. Three specific data augmentation approaches are used to improve data diversity: attention position variance, deformation simulation, and breast implant simulation. The CT scan center may vary significantly between patients. Furthermore, the attention map is calculated based on the body center, which may be affected by the treatment couch and similar materials in the image. Thus, we shifted the body center within a limited range and generated the corresponding input images for training. The breast is a deformable organ, and small deformations are common in breast cancer radiotherapy. Thus, a random elastic deformation vector field was applied to the CT images for deformation augmentation. In particular, a breast implant simulation method was designed for data augmentation. Patients who have undergone breast reconstruction have anatomical structures completely different from those of other patients, which may confuse the model during training. Therefore, we simulated breast implants in the breast region via morphological processing and density simulation. In this study, we collected CT images from 110 patients with breast cancer for model training and testing. They received radiotherapy from June 6, 2016 to January 31, 2020, at Tianjin Medical University Cancer Hospital. The contouring of the target areas was examined and modified by senior radiotherapy doctors. To reduce the influence of individual differences, these CT images were processed with the above data augmentation methods. As Figure 2 shows, the simulated images have an appearance relatively similar to the real data. These approaches increase the amount of data, reduce overfitting, and improve the generalization performance of the model.

FIGURE 2. Examples of the proposed data augmentation strategies. The red arrow indicates the position of the breast implant.
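Deformation simulation with a random elastic displacement field can be sketched with SciPy as below, in the style of the widely used smoothed-random-field approach. The parameter values for `alpha` (displacement scale in pixels) and `sigma` (smoothing width) are hypothetical, the sketch works on a single 2D slice, and the labels are warped with nearest-neighbor interpolation so that they stay binary.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, map_coordinates


def elastic_deform(image, label=None, alpha=30.0, sigma=6.0, rng=None):
    """Apply one random smooth displacement field to a 2D image (and optional label)."""
    rng = np.random.default_rng() if rng is None else rng
    shape = image.shape
    # Random per-pixel displacements, smoothed so neighboring pixels move coherently.
    dy = gaussian_filter(rng.uniform(-1.0, 1.0, shape), sigma, mode="constant") * alpha
    dx = gaussian_filter(rng.uniform(-1.0, 1.0, shape), sigma, mode="constant") * alpha
    yy, xx = np.meshgrid(np.arange(shape[0]), np.arange(shape[1]), indexing="ij")
    coords = np.array([yy + dy, xx + dx])
    # Linear interpolation for intensities, nearest neighbor for label masks.
    warped = map_coordinates(image, coords, order=1, mode="nearest")
    warped_label = None
    if label is not None:
        warped_label = map_coordinates(label, coords, order=0, mode="nearest")
    return warped, warped_label
```

Applying the same displacement field to the image and its labels keeps the contours aligned with the deformed anatomy.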

3.3 Evaluation Metrics

To evaluate this method, the DSC was employed as the quantitative metric; it measures the overlap between the segmented mask and the manually labeled mask delineated by experienced radiologists. The DSC formula is shown in Eq. 1, where A denotes the ground truth and B denotes the predicted results. A higher DSC indicates more precise segmentation.
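In its standard form, with A the ground-truth mask and B the predicted mask, the DSC is:

$$
\mathrm{DSC}(A, B) = \frac{2\,\lvert A \cap B \rvert}{\lvert A \rvert + \lvert B \rvert} \tag{1}
$$

It ranges from 0 (no overlap) to 1 (perfect overlap).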

In some cases, more attention should be paid to segmentation boundaries. Therefore, the Hausdorff distance (HD) and average symmetric surface distance (ASSD) were calculated to evaluate the segmentation performance at the boundaries. HD measures the distance between two boundary point sets X and Y, as defined by Eq. 2. ASSD averages the distances from each point on the boundary of the predicted result to the nearest point on the boundary of the ground truth, and vice versa, as calculated by Eq. 3.
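In their standard forms, with X and Y the boundary point sets of the prediction and the ground truth and ‖·‖ the Euclidean distance, these metrics are:

$$
\mathrm{HD}(X, Y) = \max\Big\{ \max_{x \in X} \min_{y \in Y} \lVert x - y \rVert,\ \max_{y \in Y} \min_{x \in X} \lVert y - x \rVert \Big\} \tag{2}
$$

$$
\mathrm{ASSD}(X, Y) = \frac{1}{\mathrm{len}(X) + \mathrm{len}(Y)} \Big( \sum_{x \in X} \min_{y \in Y} \lVert x - y \rVert + \sum_{y \in Y} \min_{x \in X} \lVert y - x \rVert \Big) \tag{3}
$$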

where len(X) and len(Y) represent the total number of pixels in boundaries X and Y, respectively.
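The three metrics can be sketched in NumPy as below, assuming binary masks. Boundary points are taken as foreground voxels with at least one face-connected background neighbor, which works for both 2D slices and 3D volumes. The optional `spacing` converts voxel indices to physical units such as mm. Distances are computed by brute force, which is fine for illustration; a KD-tree or distance transform would be preferable for full-resolution volumes. Function names are hypothetical.

```python
import numpy as np


def dice(a, b):
    """DSC = 2|A ∩ B| / (|A| + |B|) for binary masks."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else float(2.0 * np.logical_and(a, b).sum() / denom)


def boundary_points(mask, spacing=None):
    """Coordinates of foreground voxels with a face-connected background neighbor."""
    m = np.asarray(mask, dtype=bool)
    p = np.pad(m, 1, constant_values=False)
    interior = m.copy()
    center = [slice(1, -1)] * m.ndim
    for axis in range(m.ndim):
        for shift in (-1, 1):
            sl = list(center)
            sl[axis] = slice(1 + shift, p.shape[axis] - 1 + shift)
            interior &= p[tuple(sl)]  # neighbor along this axis is also foreground
    pts = np.argwhere(m & ~interior).astype(float)
    if spacing is not None:
        pts *= np.asarray(spacing, dtype=float)
    return pts


def _min_dists(src, dst):
    """For each point in src, the distance to its nearest point in dst."""
    d = np.linalg.norm(src[:, None, :] - dst[None, :, :], axis=-1)
    return d.min(axis=1)


def hausdorff(a, b, spacing=None):
    x, y = boundary_points(a, spacing), boundary_points(b, spacing)
    if len(x) == 0 or len(y) == 0:
        return float("inf")
    return float(max(_min_dists(x, y).max(), _min_dists(y, x).max()))


def assd(a, b, spacing=None):
    x, y = boundary_points(a, spacing), boundary_points(b, spacing)
    if len(x) == 0 or len(y) == 0:
        return float("inf")
    total = _min_dists(x, y).sum() + _min_dists(y, x).sum()
    return float(total / (len(x) + len(y)))
```

For example, two 4 × 4 squares offset by one column give DSC = 0.75, HD = 1, and ASSD = 0.5 (in voxel units).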

Although the above metrics provide an objective assessment of the proposed segmentation method, they may not reliably reflect its significance for clinical practice [21]. To this end, we conducted a user study in which three experienced radiologists provided a practical assessment.

3.4 Statistical Analysis

A paired t-test was conducted to assess the statistical difference between the quantitative evaluation results of the proposed method and those of the other approaches. The test was also performed on the clinicians’ scores. A p value of less than 0.05 was considered to indicate a significant difference between the proposed method and the baseline approaches.
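A paired t-test of this kind can be run with SciPy’s `scipy.stats.ttest_rel`, which tests whether the mean of the per-case differences is zero. The sketch below assumes one score per test case for each method, in the same case order; the score values in the usage line are hypothetical and only illustrate the call.

```python
import numpy as np
from scipy import stats


def paired_comparison(scores_a, scores_b, alpha=0.05):
    """Paired t-test on per-case scores; returns (t statistic, p value, significant?)."""
    a = np.asarray(scores_a, dtype=float)
    b = np.asarray(scores_b, dtype=float)
    res = stats.ttest_rel(a, b)  # tests mean(a - b) == 0
    return float(res.statistic), float(res.pvalue), bool(res.pvalue < alpha)


# Hypothetical per-case DSC values for the proposed method and a baseline.
t_stat, p_value, significant = paired_comparison(
    [0.90, 0.85, 0.80, 0.88, 0.92], [0.80, 0.82, 0.70, 0.85, 0.86]
)
```

Pairing matters here because both methods are evaluated on the same test cases, so per-case difficulty cancels out in the differences.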

4 Results

4.1 Segmentation Performance

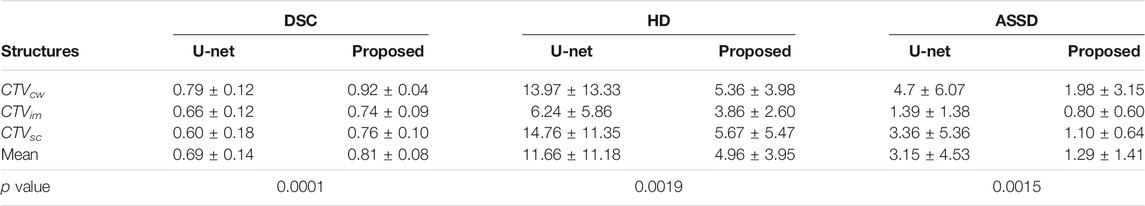

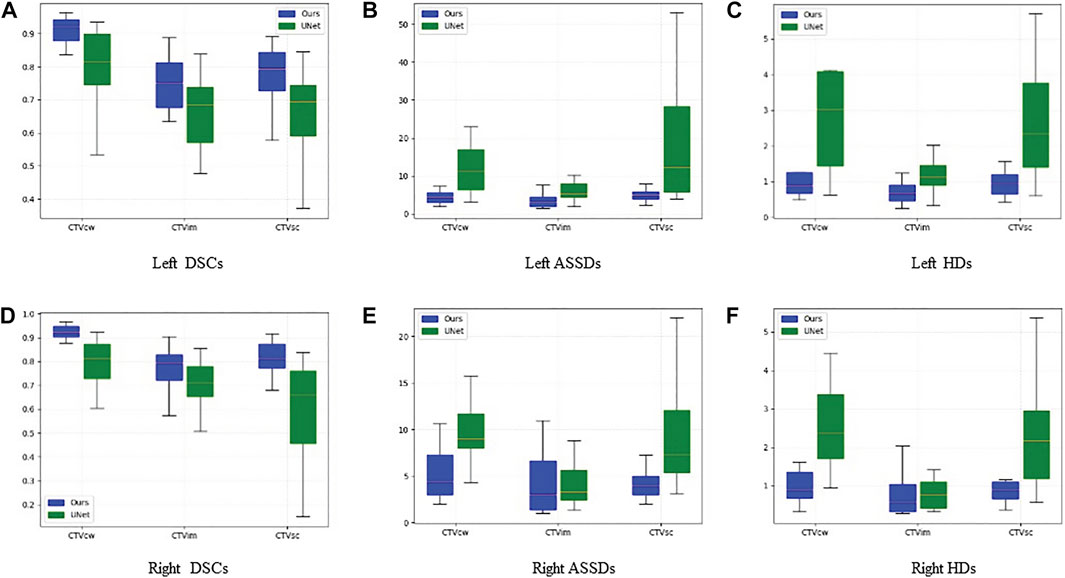

Table 1 presents the quantitative evaluation results of the proposed method and the baseline (U-Net) in terms of DSC, HD, and ASSD. The proposed method achieved a mean DSC of 0.92 (standard deviation 0.04) for CTVcw, 0.74 (0.09) for CTVim, and 0.76 (0.10) for CTVsc. Its average DSC over all categories is 0.81, which significantly outperforms the baseline (p = 0.0001). Figures 3A,B show that the proposed method has larger inter-subject variation in the left CTVs.

TABLE 1. Quantitative evaluation of the proposed method and U-Net on CTVcw, CTVim, and CTVsc in terms of DSC, HD, and ASSD. A p value smaller than 0.05 indicates a significant difference between the two approaches.

FIGURE 3. Box plots of DSC, HD, and ASSD for the left and right CTVs on the test set, using the gold standard as reference. Blue denotes the proposed method and green denotes U-Net; the proposed method achieves better scores than U-Net.

The HD and ASSD evaluations show that the proposed method produced smaller surface discrepancies than U-Net for all CTVs. Figures 3B,C,E,F reveal that the proposed method tends to generate segmentation results with smaller inter-subject diversity than U-Net, demonstrating the inference quality and robustness of the proposed method.

Specifically, our method produces significantly better results with small inter-subject diversity compared with U-Net on CTVcw and CTVsc, likely because the multi-scale convolution module enables the model to extract sufficient features to segment targets with complex structures such as CTVcw and CTVsc. For small targets such as CTVim, the proposed method also produces precise results by utilizing receptive fields of different scales.

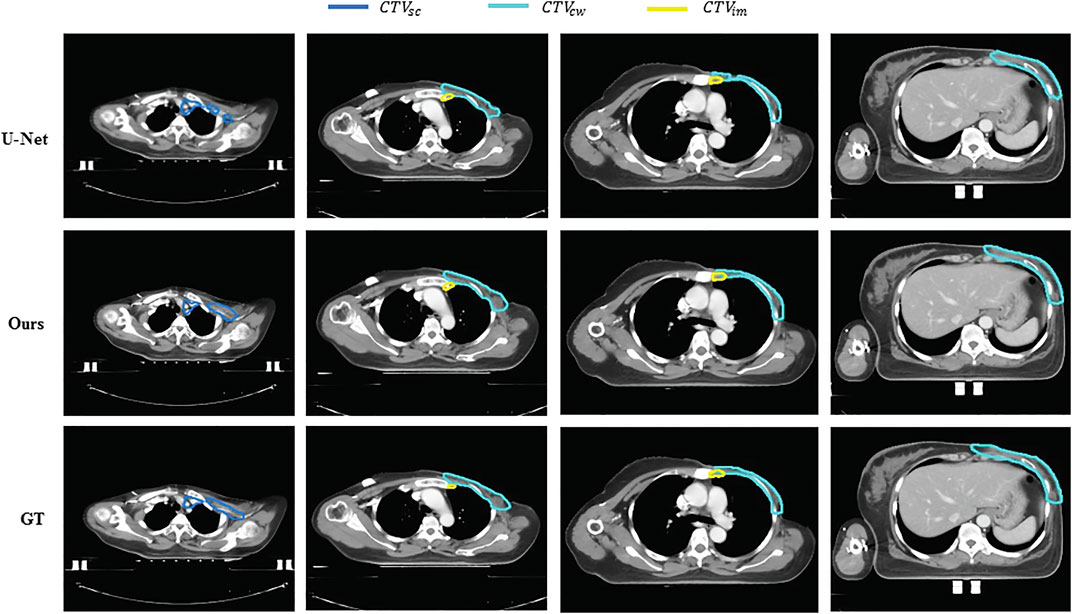

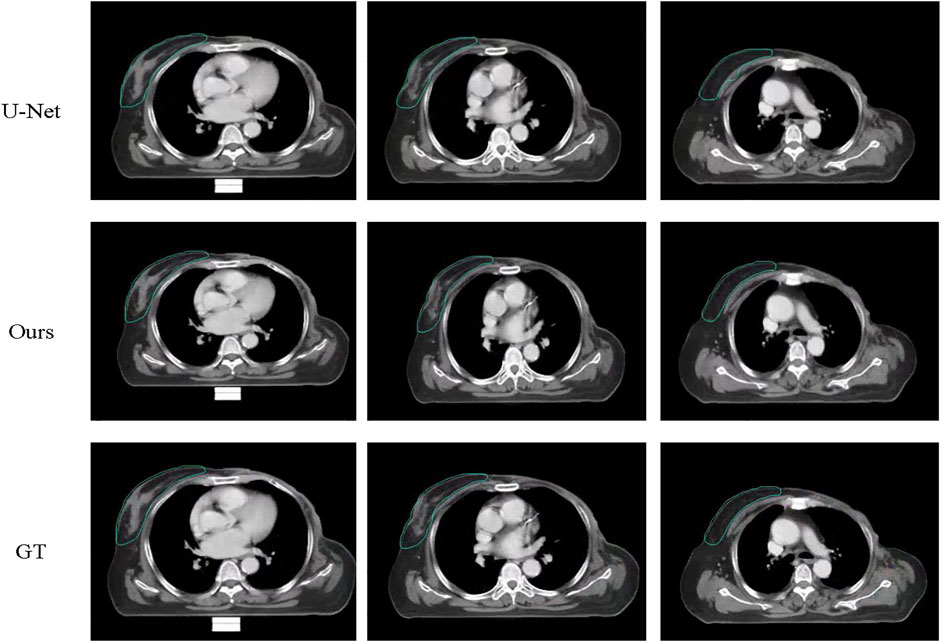

Figures 4 and 5 compare the segmentation results of U-Net and the proposed method with the manual segmentation on the cancer-affected side and the contralateral side, respectively. Because the mammary gland is removed, the CTV in the chest wall (CTVcw) has anatomically different structures on the affected side and the contralateral side. The results produced by U-Net suffer from moderate under-segmentation and holes in the targets, which is clinically unacceptable. In contrast, the results of our proposed method are closer to the gold standard than those of U-Net in terms of shape, location, and volume.

FIGURE 4. Examples of segmentation results of U-Net and the proposed method against the gold standard on the affected side. The first row shows the results of U-Net, the second row the results of our method, and the third row the ground truth. Different colors represent different segmentation targets: blue, the supra-clavicular region (CTVsc); yellow, the internal mammary lymphatic region (CTVim); and the remaining color, the CTV in the chest wall (CTVcw).

FIGURE 5. Examples of segmentation results of U-Net and the proposed method against the gold standard on the contralateral side. The first image shows the result of U-Net, the second the ground truth, and the third the result of our proposed method.

4.2 Ablation Study

In this section, we explored the importance and effectiveness of the orientation attention mechanism and breast implant simulation.

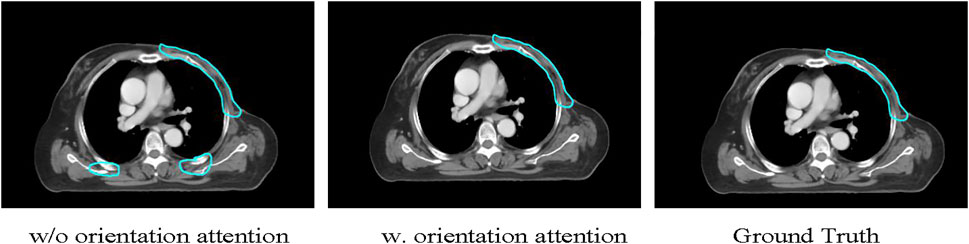

4.2.1 Importance of Orientation Attention

The input orientation attention strategy is expected to encourage the model to distinguish the breast region from the back region in the transverse CT slices and perform segmentation. To verify the effectiveness of this strategy, we conducted an ablation experiment by removing the input orientation attention mechanism and compared the segmentation performance. Figure 6 shows the segmentation results for a test case generated by models with and without input orientation attention preprocessing. The model trained without the orientation attention mechanism incorrectly performs segmentation on the back region, whereas the targets are correctly segmented by the model trained with the orientation attention strategy.

FIGURE 6. Illustration of the usefulness of the proposed method in correctly recognizing the breast side. The U-Net model incorrectly segments structurally similar dorsal regions as target CTVs, whereas the proposed method successfully identifies the breast side and segments the target CTVs.

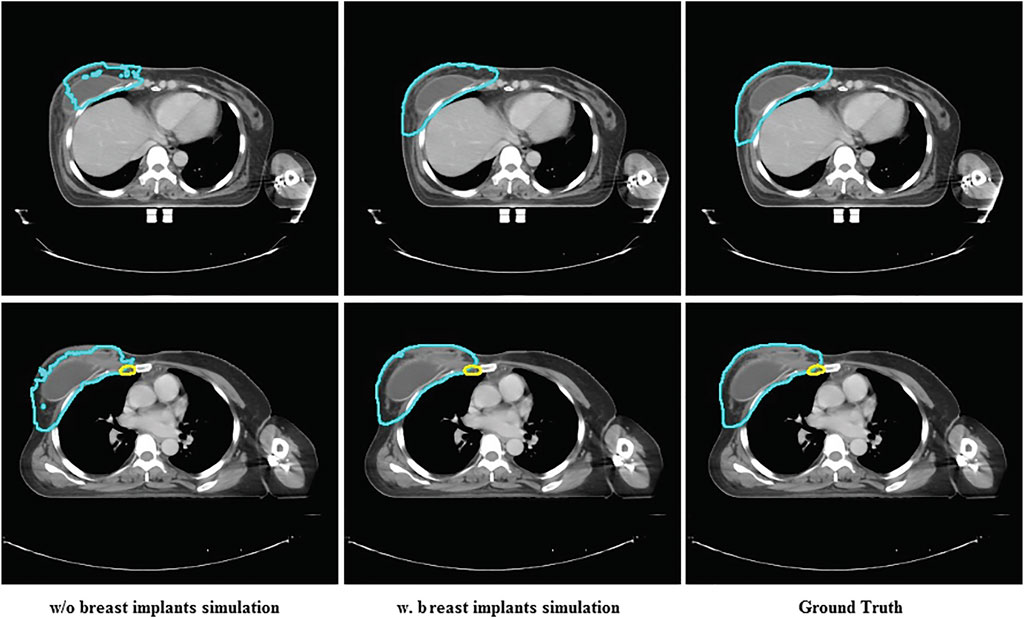

4.2.2 Importance of Breast Implants Simulation

Only six patients with breast implants were included in the training data, which is extremely imbalanced for training. The different anatomical structures of patients with and without breast implants can confuse the model during training. Thus, we expected the proposed breast implant simulation to address this problem by increasing the amount of data with breast implants. We investigated the importance of breast implant simulation by training the model with only the original data. Figure 7 presents the segmentation results for a case with breast implants. The model trained without the specific data augmentation was confused by cases with breast implants, resulting in poor segmentation results. The proposed method is well suited to cases with breast implants, whereas U-Net performs poorly.

FIGURE 7. The comparison between the segmentation results of U-Net and the proposed method and ground truth on the case with breast implants.

4.3 Timing Performance

Training the proposed model on two NVIDIA GTX 1080 GPUs took approximately 24 h. With the automatic segmentation method, the time required to delineate the breast CTVs of a patient is drastically reduced from approximately 40 min (manual delineation) to several seconds. Even when special cases require clinicians to correct the delineation manually, the complete breast CTV contouring, including manual correction, can be finished within 10 min, demonstrating the feasibility and effectiveness of the proposed approach.

5 Discussion

In this study, we proposed a specifically designed deep learning-based framework for automatic contouring of 10 targets in CT scans for modified mastectomy RT. The experimental results indicate that our method performed well, showing excellent agreement with the CTVs manually delineated by clinicians. Both quantitative and qualitative evaluations demonstrated the feasibility of the proposed method for contouring CTVs for modified mastectomy RT. The orientation attention provides reliable guidance for the model to recognize the breast and affected sides in CT images. Rather than simply applying a deep learning-based segmentation network to automatic CTV contouring, we conducted a statistical analysis of the CTVs in radiotherapy after modified mastectomy and designed the network according to their statistical characteristics. The multi-scale convolutional structure built from refined inception modules increases both the width of the network and its adaptability to scale, thereby producing delicate segmentation results for targets of different volumes. In addition, the local loss drives the optimization for all targets, even in cases with missing labels.

Considering the limited data volume and the variability among data, we designed three data augmentation methods to improve the generalization performance of the model while avoiding overfitting. Data augmentation is particularly essential for medical research, since collecting medical data is time-consuming and costly. Apart from attention position variance and general deformation simulation, we specifically designed the breast implant simulation method to increase the number of cases with breast implants. The breast anatomy of patients with breast implants is completely different from that of patients without implants, so a small number of cases with breast implants can disturb model training and even prevent the model from converging. Breast implant simulation alleviates this category imbalance and enables the model to generate more accurate segmentation results for patients with breast implants.

Although deep learning solutions perform well in producing contouring results for RT (stored as RT structure files that contain the contour coordinates of the regions of interest), the nature of deep learning makes it somewhat debatable [22], because the model learns to segment only from the ground truth delineated by one clinician. Radiotherapy requires clinical input and creativity in terms of both science and art [19]. The delineations of the same case can vary between clinicians, and it is sometimes difficult to determine which one is optimal. Therefore, the ground truth used for training deep learning models should also reflect this diversity. Reinforcement learning provides a potential way to enable deep learning models to learn how to segment targets optimally.

Manual delineation of OARs and CTVs for RT is a laborious task that requires not only experience but also physical exertion. Repetitive work over long periods can reduce productivity and even lead to errors by clinicians [2]. Automatic segmentation algorithms therefore serve as a useful tool for reducing clinicians’ workload and producing highly consistent results. A previous study showed that atlas-based automatic segmentation (ABAS) for loco-regional RT of breast cancer reduced the time needed for manual delineation by 93% (before correction) and 32% (after correction) [23]. Our method reduced the contouring time from about 40 min (manual) to about 10 min on average (automatic contouring plus manual correction), a reduction of roughly 75%. With the assistance of deep learning-based auto-segmentation, radiation oncologists can work more efficiently.

To evaluate the segmentation results more thoroughly and to explore in detail the gap between the deep learning-based automatic contouring algorithm and manual contouring, we used both HD and ASSD to evaluate the contouring results at edges and surfaces. These surface metrics assess the proposed method at the 3D level rather than only at the 2D level. Table 1 and Figure 3 illustrate that the proposed method produces segmentation results in better agreement with the manually delineated structures in terms of both region and surface.

This study has several limitations. First, we conducted this research at a single center with limited sample size and diversity, which may limit the generalization of the proposed model. A model that performs well here may produce unacceptable segmentation results when applied at other centers owing to differences in the data. Therefore, we plan to validate the proposed method using data from other institutions. Second, the accuracy and pattern of the segmentation results depend heavily on the manual annotations used for training, which can be both advantageous and disadvantageous. As mentioned above, the model can be trained using a homogeneous gold standard created by a single clinician. However, no gold standard is 100% reliable in clinical settings, as inter- and intra-observer variations always exist. Thus, further studies should evaluate generalization using a gold standard created by multiple clinicians. Additionally, it may be beneficial to segment the OARs simultaneously. By extracting the corresponding features and segmenting related organs and tissues, the model could obtain a better perception of the target region. In particular, which OARs are most helpful for segmenting target CTVs in the breast region still needs to be investigated; for instance, the importance of coronary vessels has been increasingly acknowledged.

6 Conclusion

Auto-contouring of CTVs can relieve clinicians of tedious contouring work while improving the consistency and reliability of radiotherapy. In this study, a specifically designed deep learning-based segmentation method was developed to delineate CTVs for modified mastectomy radiotherapy. Qualitative and quantitative evaluations demonstrated the strong performance of the proposed method, which can also handle cases with breast implants and large shape variability. The user study further suggests that the proposed method is practical and beneficial to clinical work, saving considerable time and improving the consistency of decisions.

Data Availability Statement

The datasets presented in this article are not readily available because of the data security requirements of our hospital. Requests to access the datasets should be directed to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by the Medical Ethics Committee of Tianjin Medical University Cancer Institute and Hospital. The ethics committee waived the requirement of written informed consent for participation. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements. Written informed consent was not obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 81872472).

Conflict of Interest

Authors RW, QA, HC, ZY and JW were employed by the company Guangzhou Perception Vision Medical Technologies Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA A Cancer J Clin (2021) 71:209–49. doi:10.3322/caac.21660

2. Abdlaty R, Doerwald L, Hayward J, Fang Q. Radiation-Therapy-Induced Erythema: Comparison of Spectroscopic Diffuse Reflectance Measurements and Visual Assessment. In: Medical Imaging 2019: Image Perception, Observer Performance, and Technology Assessment. Bellingham, WA: International Society for Optics and Photonics (2019). 10952:109520H.

3. Li XA, Tai A, Arthur DW, Buchholz TA, Macdonald S, Marks LB, et al. Variability of Target and Normal Structure Delineation for Breast Cancer Radiotherapy: An RTOG Multi-Institutional and Multiobserver Study. Int J Radiat Oncol Biol Phys (2009) 73:944–51. doi:10.1016/j.ijrobp.2008.10.034

4. Louie AV, Rodrigues G, Olsthoorn J, Palma D, Yu E, Yaremko B, et al. Inter-observer and Intra-observer Reliability for Lung Cancer Target Volume Delineation in the 4D-CT Era. Radiother Oncol (2010) 95:166–71. doi:10.1016/j.radonc.2009.12.028

5. Gross JP, Lynch CM, Flores AM, Jordan SW, Helenowski IB, Gopalakrishnan M, et al. Determining the Organ at Risk for Lymphedema after Regional Nodal Irradiation in Breast Cancer. Int J Radiat Oncol Biol Phys (2019) 105:649–58. doi:10.1016/j.ijrobp.2019.06.2509

6. Stovall M, Smith SA, Langholz BM, Boice JD, Shore RE, Andersson M, et al. Dose to the Contralateral Breast from Radiotherapy and Risk of Second Primary Breast Cancer in the WECARE Study. Int J Radiat Oncol Biol Phys (2008) 72:1021–30. doi:10.1016/j.ijrobp.2008.02.040

7. Yaney A, Ayan A, Pan X, Jhawar S, Healy E, Beyer S, et al. Dosimetric Parameters Associated with Radiation-Induced Esophagitis in Breast Cancer Patients Undergoing Regional Nodal Irradiation. Radiother Oncol (2021) 155:167–73. doi:10.1016/j.radonc.2020.10.042

8. Bell LR, Dowling JA, Pogson EM, Metcalfe P, Holloway L. Atlas-based Segmentation Technique Incorporating Inter-observer Delineation Uncertainty for Whole Breast. J Phys Conf Ser (2017) 777:012002. doi:10.1088/1742-6596/777/1/012002

9. Anders LC, Stieler F, Siebenlist K, Schäfer J, Lohr F, Wenz F. Performance of an Atlas-Based Autosegmentation Software for Delineation of Target Volumes for Radiotherapy of Breast and Anorectal Cancer. Radiother Oncol (2012) 102:68–73. doi:10.1016/j.radonc.2011.08.043

10. Velker VM, Rodrigues GB, Dinniwell R, Hwee J, Louie AV. Creation of RTOG Compliant Patient CT-Atlases for Automated Atlas Based Contouring of Local Regional Breast and High-Risk Prostate Cancers. Radiat Oncol (2013) 8:188. doi:10.1186/1748-717x-8-188

11. Lee H, Lee E, Kim N, Kim JH, Park K, Lee H, et al. Clinical Evaluation of Commercial Atlas-Based Auto-Segmentation in the Head and Neck Region. Front Oncol (2019) 9:239. doi:10.3389/fonc.2019.00239

12. Reed VK, Woodward WA, Zhang L, Strom EA, Perkins GH, Tereffe W, et al. Automatic Segmentation of Whole Breast Using Atlas Approach and Deformable Image Registration. Int J Radiat Oncol Biol Phys (2009) 73:1493–500. doi:10.1016/j.ijrobp.2008.07.001

13. Pirozzi S, Horvat M, Piper J, Nelson A. SU-E-J-106: Atlas-Based Segmentation: Evaluation of a Multi-Atlas Approach for Lung Cancer. Med Phys (2012) 39:3677. doi:10.1118/1.4734942

14. Hoffman R, Jain AK. Segmentation and Classification of Range Images. IEEE Trans Pattern Anal Mach Intell (1987) 9:608–20. doi:10.1109/TPAMI.1987.4767955

15. Choi MS, Choi BS, Chung SY, Kim N, Chun J, Kim YB, et al. Clinical Evaluation of Atlas- and Deep Learning-Based Automatic Segmentation of Multiple Organs and Clinical Target Volumes for Breast Cancer. Radiother Oncol (2020) 677.

16. Schreier J, Attanasi F, Laaksonen H. A Full-Image Deep Segmenter for CT Images in Breast Cancer Radiotherapy Treatment. Front Oncol (2019) 9:677. doi:10.3389/fonc.2019.00677

17. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Cham, Switzerland: Springer (2015). p. 234–41. doi:10.1007/978-3-319-24574-4_28

18. Men K, Zhang T, Chen X, Chen B, Tang Y, Wang S, et al. Fully Automatic and Robust Segmentation of the Clinical Target Volume for Radiotherapy of Breast Cancer Using Big Data and Deep Learning. Physica Med (2018) 50:13–9. doi:10.1016/j.ejmp.2018.05.006

19. Abdlaty R, Doerwald-Munoz L, Madooei A, Sahli S, Yeh S, Zerubia J, et al. Hyperspectral Imaging and Classification for Grading Skin Erythema. Front Phys (2018) 6:72. doi:10.3389/fphy.2018.00072

20. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going Deeper with Convolutions. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Boston, MA (2015). p. 1–9. doi:10.1109/cvpr.2015.7298594

21. Bertels J, Eelbode T, Berman M, Vandermeulen D, Maes F, Bisschops R, et al. Optimizing the Dice Score and Jaccard Index for Medical Image Segmentation: Theory and Practice. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Cham, Switzerland: Springer (2019). p. 92–100. doi:10.1007/978-3-030-32245-8_11

22. Wong J, Fong A, Mcvicar N, Smith SL, Alexander AS. Comparing Deep Learning-Based Auto-Segmentation of Organs at Risk and Clinical Target Volumes to Expert Inter-observer Variability in Radiotherapy Planning. Int J Radiat Oncol Biol Phys (2019) 105:S70–S71. doi:10.1016/j.ijrobp.2019.06.523

23. Eldesoky AR, Yates ES, Nyeng TB, Thomsen MS, Nielsen HM, Poortmans P, et al. Internal and External Validation of an ESTRO Delineation Guideline-Dependent Automated Segmentation Tool for Loco-Regional Radiation Therapy of Early Breast Cancer. Radiother Oncol (2016) 121:424–30. doi:10.1016/j.radonc.2016.09.005

Keywords: modified radical mastectomy breast cancer surgery, auto-contouring, deep learning, clinical target volume, radiotherapy

Citation: You J, Wang Q, Wang R, An Q, Wang J, Yuan Z, Wang J, Chen H, Yan Z, Wei J and Wang W (2022) Deep Learning-Aided Automatic Contouring of Clinical Target Volumes for Radiotherapy in Breast Cancer After Modified Radical Mastectomy. Front. Phys. 9:754248. doi: 10.3389/fphy.2021.754248

Received: 06 August 2021; Accepted: 08 December 2021;

Published: 12 January 2022.

Edited by:

Daniel Rodriguez Gutierrez, Nottingham University Hospitals NHS Trust, United Kingdom

Copyright © 2022 You, Wang, Wang, An, Wang, Yuan, Wang, Chen, Yan, Wei and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wei Wang, weiwang_2@126.com

†These authors have contributed equally to this work and share first authorship
