
REVIEW article

Front. Phys., 25 February 2020

Sec. Nuclear Physics

Volume 8 - 2020 | https://doi.org/10.3389/fphy.2020.00029

This article is part of the Research Topic The Long-Lasting Quest for Nuclear Interactions: The Past, the Present and the Future.

This review presents some of the challenges in constructing models of atomic nuclei starting from theoretical descriptions of the strong interaction between nucleons. The focus is on statistical computing and methods for analyzing the link between bulk properties of atomic nuclei, such as radii and binding energies, and the underlying microscopic description of the nuclear interaction. The importance of careful model calibration and uncertainty quantification of theoretical predictions is highlighted.

The ab initio approach to describing atomic nuclei and nuclear matter is grounded in a theoretical description of the interaction between the constituent protons and neutrons. The long-term goal of this approach is to construct models that describe and analyze the properties of nuclear systems with maximum predictive power. It is of course well known that the elementary particles of the strongly interacting sector of the Standard Model are quarks and gluons, not protons and neutrons. However, since the relevant momentum scales of typical nuclear structure phenomena are low enough not to resolve the internal degrees of freedom of nucleons, it is reasonable to model the nucleus as a collection of strongly interacting, point-like nucleons. This idea has inspired significant efforts aimed at developing algorithms and mathematical approaches for solving the many-nucleon Schrödinger equation in a bottom-up fashion and with as few uncontrolled approximations as possible (see e.g., references [1–10]), as well as a multitude of theoretical descriptions of the interaction between nucleons at various levels of phenomenology (see e.g., references [11, 12]). References [13–15] provide comprehensive reviews of (chiral) effective field theory (EFT) methods, and reference [16] offers a historical account of various approaches to understanding the nuclear interaction.

Currently, ab initio modeling of atomic nuclei faces two main challenges:

• We have limited knowledge about the details of the interaction between nucleons, which in turn limits our ability to predict nuclear properties.

• Given a microscopic description of the interaction between nucleons inside a nucleus, a quantum-mechanical solution of the nuclear many-body problem is hampered by the curse of dimensionality.

There is, however, continuous progress on both fronts, and attempts at quantifying the uncertainty of model predictions are beginning to emerge in the community. Rapid algorithmic advances, combined with a dramatic increase in available computational resources, make it possible to employ several complementary mathematical methods for solving the nuclear Schrödinger equation. We can nowadays generate numerical representations of microscopic many-nucleon wavefunctions for selected medium-mass and heavy nuclei with rather impressive precision. Although several observables remain beyond the reach of state-of-the-art models, e.g., most properties associated with highly collective states, we can still describe certain classes of observables rather well, such as total ground-state binding energies and radii, and sometimes low-energy excitation spectra. We are thus capable of analyzing experimentally relevant nuclei directly in terms of a quantum-mechanical description of the interaction between their constituent nucleons. Indeed, the list of, sometimes glaring, discrepancies between theory and experiment furnishes some of the most interesting nuclear physics questions at the moment (see e.g., references [17–23]). Many of these efforts are aimed at understanding the nuclear binding mechanism, the location of the neutron dripline, the existence of shell closures and magic numbers in exotic systems, and the emergence of nuclear saturation.

State-of-the-art theoretical analyses of experimental data indicate a large, non-negligible systematic uncertainty in the description of bulk nuclear observables (see e.g., reference [24]). Given the high precision of modern many-body methods, much of this uncertainty can be traced to the description of the interaction potential. Although there exist ab initio models that describe nuclei rather well, albeit in a limited domain, it is less clear why other models sometimes fail. Indeed, the NNLOsat interaction potential [25] reproduces several key experimental binding energies and charge radii for nuclei up to mass A ~ 50 [23, 26–28], while the so-called 1.8/2.0 (EM) interaction potential [29, 30] reproduces binding energies and low-energy spectra up to mass A ~ 100 [26, 31–35] but underestimates radii. The origin of the differences between these potentials is unknown. It is of course the role of nuclear theory to close the gap between theory and experiment by developing and refining the theoretical underpinnings of the model. But given the complex nature of atomic nuclei, there is significant value in trying to quantify, or estimate, the detailed structure of the observed theoretical uncertainty. This might provide important clues about where we should focus our efforts. There exist well-defined statistical inference methods that can provide additional guidance, and several ongoing projects are currently focused on applying statistical computing methods in the field of ab initio modeling. The topic of uncertainty quantification in nuclear physics has been discussed at a series of workshops on Information and Statistics in Nuclear Experiment and Theory (ISNET). Recent developments in this field are documented in the associated focus issue published in Journal of Physics G [36]. A second focus issue has just been announced, and the first few papers are already published.

In sections 2 and 3 of this paper I will review a selection of recent results and commonly applied methods for calibrating ab initio models. In sections 4 and 5 I will discuss some of the recently emerging strategies for making progress using statistical computing and Bayesian inference methods. The aim is to provide an overview of selected accomplishments in the field of statistical inference and statistical computing with ab initio models of atomic nuclei. Hopefully, this paper can serve as a brief introduction for practitioners who wish to learn about ongoing developments and possible future directions.

As a final remark, in this paper I will try to consistently use the word model when referring to any current method for theoretically describing the properties of atomic nuclei, including descriptions that claim to build on more fundamental underpinnings, such as EFT. One can certainly make a finer distinction between models, EFTs, and theories. As outlined in reference [37], theories provide a unified framework, a categorization, and the joint language used for discussions; EFTs capture physics at a given momentum scale; and models can be used to study aspects of a theory, increase understanding, and provide intuition.

An ab initio model is here defined as a description based on a state $|\Psi\rangle$ that solves the many-nucleon Schrödinger equation

$$\left[\hat{T} + \hat{V}(\vec{\alpha})\right]|\Psi\rangle = E\,|\Psi\rangle. \tag{1}$$

In this schematic representation, $\hat{T}$ is the total kinetic energy operator for the A-nucleon system, $\hat{V}(\vec{\alpha})$ is the potential energy operator for the interaction between the nucleons, and E is the total energy of the system in the state represented by $|\Psi\rangle$. The potential operator depends on a set of parameters $\vec{\alpha}$ that govern the strengths of the various interaction pieces in the potential. In the context of EFT, these parameters are often referred to as low-energy constants (LECs). Given a particular expression for the potential $\hat{V}(\vec{\alpha})$, numerical values for the parameter vector $\vec{\alpha}$, and a mathematical method to solve Equation (1) for, e.g., the state $|\Psi\rangle$ with the lowest energy, it is in principle possible to quantitatively compute the expectation value of any observable with respect to this state, e.g., its charge radius. Of course, the trustworthiness of the result and its level of agreement with experimental data can vary dramatically between different models, i.e., combinations of potentials and many-body methods.

I will denote an ab initio model by $M(\vec{\alpha}; \vec{x})$. It is defined as the combination of a definite expression for the potential $\hat{V}(\vec{\alpha})$ and a method for solving the Schrödinger equation. The vector $\vec{x}$ is a set of control inputs that specify all necessary settings, such as nucleon numbers, which observable to compute, values of the fundamental physical constants, and algorithmic settings for the mathematical method used for solving Equation (1). Once a set of numerical values for $\vec{\alpha}$ has been determined, a subset of the control inputs of the model can be varied to make model predictions, preferably at some physical setting, e.g., for exotic nuclei where we cannot easily make measurements. Provided that the form of the potential operator and the relevant physical constants remain the same, and that the model parameters were calibrated carefully, it is of course possible to transfer the vector $\vec{\alpha}$ between ab initio models based on different methods for solving the many-nucleon Schrödinger equation. This is also in line with a physical interpretation of the parameters that elevates them beyond simple tunable parameters, inherent to a specific model, whose sole purpose is to achieve a good fit to calibration data. This will be discussed further in section 3.

One of the most exciting developments in nuclear theory is that we nowadays have access to a range of methods for solving Equation (1) with very high numerical precision for selected isotopes and observables. This gives us the opportunity to compare model predictions with experimental data to learn more about the elusive structure of the interaction between nucleons. However, such analyses require careful statistical interpretation of the theoretical results, in particular a sensible estimate of the uncertainty associated with each theoretical prediction. Indeed, only with reliable theoretical errors is it possible to infer the significance of a disagreement between experiment and theory, which in turn may hint at new physics.

On a fundamental level, the atomic nucleus is a quantum-mechanical, self-bound system of interacting nucleons. In turn, these particles are composed of three quarks whose mutual interactions are described well by the Standard Model of particle physics. As such, starting from the Standard Model it should be possible to account for all observed phenomena in atomic nuclei as well, apart from possible signals of physics beyond the Standard Model. However, theoretically understanding the emergence of nuclei from the Standard Model is an open problem, and linking quantitative predictions for atomic nuclei to the dynamics of quarks and gluons is a central challenge in low-energy nuclear theory. That said, viewing the atomic nucleus as a (color-singlet) composite multi-quark system is not the most economical choice. Indeed, the strong interaction, which is the most important component for nuclear binding and is well described by quantum chromodynamics (QCD), is non-perturbative in the low-energy region inhabited by atomic nuclei. Non-perturbative Monte Carlo sampling of the quantum fields of QCD amounts to a computational problem of tremendous proportions. This strategy, referred to as lattice QCD, is expected to require at least exascale resources for a realistic analysis of even the lightest multi-nucleon systems. Barring unforeseen disruptive technology, this approach will not provide an operational method for routine analyses of nuclei. For the cases where numerically converged results can be obtained, lattice QCD offers a unique computational laboratory for theoretical studies of QCD in a low-energy setting (see e.g., references [38, 39]).

The description of nuclei should nevertheless build on QCD, or the Standard Model in general. A turning point in the development of QCD-based descriptions of the nuclear interaction came when EFTs of QCD [40] were extended to many-nucleon physics [41]. An EFT formulates the dynamics between low-energy degrees of freedom, e.g., nucleons and pions, in harmony with the assumed symmetries of an underlying theory, e.g., QCD, while any high-energy dynamics, e.g., quark-gluon interactions, are integrated out of the theory. The resulting chiral effective Lagrangian models the low-energy interactions between two or more nucleons in terms of pion exchanges between nucleons, and the high-energy dynamics is incorporated as zero-range contact interactions. This approach introduces several model parameters referred to as low-energy constants (LECs). They were denoted by $\vec{\alpha}$ above, and play a central role during the model calibration discussed below. The notion of high- and low-energy scales in EFT requires the presence of at least two scales in the physical system under study. An EFT formally exploits this scale separation to expand observables in powers of the low-energy (soft) scale over the high-energy (hard) scale, and in chiral EFT the resulting ratio is often denoted

$$Q = \frac{\max(m_\pi, k)}{\Lambda_b}, \tag{2}$$

where, in the case of chiral EFT, the soft scales are mπ and k, the pion mass and a typical external momentum scale, respectively. The hard scale is denoted Λb and is set by, e.g., the nucleon mass MN. Depending on the system under study, one can always try to exploit existing scale separations to construct other kinds of EFTs in nuclear physics, e.g., pion-less EFT [42], vibrational EFT [43], or chiral perturbation theory (the prototypical EFT of QCD) [44]. In the following, I will only discuss results from ab initio models based on chiral EFT, i.e., a pion-full EFT, but many of the methods can be applied generally.
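Equation (2) is simple enough to evaluate directly. The sketch below computes Q for a few external momenta; the numbers (mπ ≈ 138 MeV, and Λb taken as the nucleon mass ≈ 939 MeV, following the example in the text) are illustrative assumptions rather than values used in any particular calibration, and the function name `expansion_parameter` is hypothetical.

```python
def expansion_parameter(m_pi: float, k: float, lambda_b: float) -> float:
    """Return the chiral EFT expansion parameter Q = max(m_pi, k) / Lambda_b.

    All three scales must be given in the same units (here MeV).
    """
    if lambda_b <= 0.0:
        raise ValueError("the hard scale Lambda_b must be positive")
    return max(m_pi, k) / lambda_b


# Illustrative inputs (assumed): m_pi ~ 138 MeV and Lambda_b set to the
# nucleon mass, M_N ~ 939 MeV.
M_PI, M_N = 138.0, 939.0
for k in (50.0, 138.0, 300.0):
    print(f"k = {k:5.1f} MeV  ->  Q = {expansion_parameter(M_PI, k, M_N):.3f}")
```

For momenta below mπ the pion mass dominates the numerator, so Q stops decreasing for softer processes.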

In chiral EFT, the nuclear interaction potential V is analyzed as an order-by-order expansion in $Q^\nu$ and organized following the principles of an underlying power counting (PC). Terms at higher chiral orders ν should be less important than terms at lower orders, and potentials expanded to higher orders are expected to describe data better. Higher chiral orders contain more involved pion exchanges and polynomial nucleon contacts of increasing degree in the momenta, and therefore more undetermined model parameters to handle during the calibration stage. To provide some detail about the chiral potentials: the leading order (LO) typically consists of the familiar one-pion exchange interaction plus a nucleonic contact potential. The structure of the contact potential, and the exact treatment of sub-leading orders, vary depending on the PC. Still, typical chiral potentials include contributions up to at most a handful of chiral orders, e.g., next-to-next-to-leading order (NNLO) and next-to-next-to-next-to-leading order (N3LO), and the total number of LECs, i.e., undetermined model parameters, ranges between ~10 and 20, sometimes a few more, at such chiral orders. Several important contributions to the two-, three-, and four-nucleon interactions at higher orders in the chiral expansion have also been worked out [45–50]. At N5LO, a new set of 26 contact LECs appears, bringing the total number of contacts to 50. Some of the unique advantages of chiral EFT descriptions of the nuclear interaction are the natural emergence of two-, three-, and many-nucleon interactions [51–55], the consistent formulation of quantum currents, e.g., with respect to electroweak operators [56–62], and a clear connection with the pion-nucleon Lagrangian, which makes it possible to link nuclei with low-energy pion-nucleon scattering processes [63]. For a detailed account of chiral EFT potentials, see references [13–15].
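The quoted total of 50 contacts follows from summing the nucleon-nucleon contact terms order by order. Assuming Weinberg-style counting, in which new contacts enter only at even powers of Q (2 at LO, 7 at NLO, 15 at N3LO, plus the 26 at N5LO quoted above), the tally reads:

$$
N_{\text{contact}} = \underbrace{2}_{\text{LO},\,Q^0} + \underbrace{7}_{\text{NLO},\,Q^2} + \underbrace{15}_{\text{N}^3\text{LO},\,Q^4} + \underbrace{26}_{\text{N}^5\text{LO},\,Q^6} = 50.
$$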

To ensure steady progress toward a realistic ab initio model of atomic nuclei, we need to critically examine and evaluate the quality and predictive power of different theoretical approaches and model predictions. To this end, it is crucial to equip all quantitative theoretical results with uncertainties, and this is where another advantageous aspect of EFT comes into play: it promises to deliver a handle on the systematic uncertainty of a theoretical prediction. Indeed, on a high level the EFT expansion for an observable y can be written

$$y = y_0 \sum_{\nu=0}^{\infty} c_\nu Q^\nu, \tag{3}$$

where $y_0$ is the first term in the above expansion, and $c_\nu$ are dimensionless expansion coefficients. Here, and in the following, the LO result ($y_0$) was pulled out in front of the sum to set the overall scale, so that $c_0 = 1$. One could equally well use the experimental value of the observable or the highest-order calculation to set the scale of the expansion. If we are dealing with an EFT, one should expect the expansion coefficients to be of natural size, i.e., of order unity, such that corrections at successive chiral orders are suppressed by a factor of Q. See also references [64, 65] for discussions on how to assess the convergence of such expansions. In an actual calculation, the order-by-order description of the observable y is truncated at some finite order k, which induces a truncation error δk in the prediction. The underlying EFT description then, in principle, allows us to determine the formal structure of the truncation error

$$\delta_k = y_0 \sum_{\nu=k+1}^{\infty} c_\nu Q^\nu. \tag{4}$$
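Equations (3) and (4) suggest a simple workflow: read off the coefficients $c_\nu$ from order-by-order results, check that they are of natural size, and use them to gauge the truncation error. The sketch below does this on synthetic numbers. Keeping only the first omitted term and replacing the unknown $c_{k+1}$ by the largest $|c_\nu|$ seen so far is one common heuristic, assumed here rather than prescribed by the text; the function names are hypothetical.

```python
import numpy as np


def expansion_coefficients(predictions, y_ref, q, orders):
    """Recover the coefficients c_nu of Equation (3) from order-by-order results.

    predictions[j] is the observable computed up to chiral order orders[j]; the
    increment between successive orders is y_ref * c_nu * Q**nu.
    """
    predictions = np.asarray(predictions, dtype=float)
    orders = np.asarray(orders, dtype=float)
    coeffs = np.empty_like(predictions)
    coeffs[0] = predictions[0] / y_ref  # equals 1 when y_ref is the LO result
    coeffs[1:] = np.diff(predictions) / (y_ref * q ** orders[1:])
    return coeffs


def truncation_error_estimate(coeffs, y_ref, q, k):
    """Heuristic size of delta_k in Equation (4): keep only the first omitted
    term and replace the unknown c_{k+1} by the largest |c_nu| seen so far."""
    c_bar = np.max(np.abs(coeffs))
    return abs(y_ref) * c_bar * q ** (k + 1)


# Synthetic example (assumed numbers): orders 0, 2, 3, 4 mimic LO, NLO, NNLO,
# N3LO in chiral EFT, where no Q^1 term appears.
orders = np.array([0, 2, 3, 4])
true_c = np.array([1.0, 0.5, -0.8, 0.4])
q, y0 = 0.3, -20.0
predictions = np.cumsum(y0 * true_c * q ** orders)

c = expansion_coefficients(predictions, y0, q, orders)
print("recovered c_nu:", c)
print("estimated |delta_4|:", truncation_error_estimate(c, y0, q, k=4))
```

In a real analysis the coefficients would come from calculations at successive chiral orders, and a coefficient far from order unity would be a warning sign for the assumed value of Q.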

This type of handle on the theoretical uncertainty of a prediction is not present in purely phenomenological descriptions of the nuclear interaction, such as the Argonne V18 potential [11] or the CD-Bonn potential [12]. Despite all of the promised advantages of chiral EFT, it should be pointed out that much work remains to be done regarding the analysis and theoretical underpinnings of chiral EFT, in particular the formulation of a PC that, arguably, fulfills the field-theoretic requirements for an EFT of QCD (see e.g., references [66–73] for various views on this topic). Indeed, one cannot yet confidently claim that the uncertainty estimates in ab initio predictions of nuclear observables based on proposed chiral EFT interactions are linked to missing physics at the level of the effective Lagrangian. The details of the PC, the regularization approach, and the chosen maximum chiral order k in Equation (3) are some of many possible choices that give rise to the rich landscape of different chiral interactions in nuclear theory. Although there is a flurry of activity, and it is far from clear which is the best way to proceed, there is tremendous overarching value in organizing the model analysis according to the fundamental ideas and expectations of EFT, most importantly the promise of order-by-order improvement.

The goal of model calibration is to learn about the parameters $\vec{\alpha}$ of the model using a pool of calibration data. This can mean many different things depending on the situation, and in this section I will discuss a few representative model calibration examples from ab initio nuclear theory.

Assume that we have a model $M(\vec{\alpha}; \vec{x})$ that consists of a method for solving the Schrödinger equation and some theoretical description of the nuclear interaction, e.g., a particular interaction potential from chiral EFT, and that we do not know the permissible values for $\vec{\alpha}$. The vector $\vec{\alpha}$ denotes the N physically relevant and adjustable calibration parameters of model M, and the vector $\vec{x}$ denotes the set of control inputs. The adjustable parameters of interest will typically correspond to the LECs of the nuclear interaction potential, and the vector $\vec{x}$ will contain, e.g., proton and neutron numbers, the observable type, or some kinematical setting. In principle, the model might contain additional adjustable parameters that for some reason can be considered constants. For instance, we typically do not consider the pion mass as a calibration parameter, although the variation of such fundamental properties can also play an important role (see e.g., references [74, 75]). The choice of many-body method will depend on which class of observables is targeted, either during prediction or calibration. For instance, coupled-cluster theory performs very well for nuclei in the vicinity of closed shells, and Faddeev integration can access the positive-energy spectrum of the three-nucleon Hamiltonian. Throughout, I will implicitly assume that the model is realized only on a computer, i.e., M is defined through some computer code, and that there is no stochastic element in the output. This means that each time the model is evaluated with the same input and settings, we get essentially the same result.

To calibrate the parameters, suppose that we have a set of n experimental observations compiled in a data vector D = [z1, z2, …, zn]. They correspond to particular settings $\vec{x}_i$ of the control variables, which produce model outputs for, e.g., ground-state energies of light nuclei or scattering cross sections at selected scattering momenta. We can link the data points to the model outputs via the following relation

$$z_i = M(\vec{\alpha}; \vec{x}_i) + \delta(\vec{x}_i) + \varepsilon_i. \tag{5}$$

This expression relates the reality of measurement to our model, and includes a so-called model discrepancy term δ that depends on the control variable $\vec{x}$. The measurement error is denoted by εi. In cases where the measurement comes with zero uncertainty, something that is highly unlikely of course, the model discrepancy term represents the entire difference between the model and reality. The theoretical discrepancy δ is not physics per se, but should rather be interpreted as a random variable of statistical origin, informed via domain knowledge.

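The model discrepancy term can be partitioned into at least three terms

$$\delta(\vec{x}) = \delta_{\text{interaction}}(\vec{x}) + \delta_{\text{many-body}}(\vec{x}) + \delta_{\text{numerical}}(\vec{x}), \tag{6}$$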

and they represent the neglected or missing physics in the theoretical description of the nuclear interaction, neglected or missing many-body correlations in the mathematical solution of the many-body Schrödinger equation, and any numerical errors arising from algorithmic approximations in the implementation of the computer model, respectively. We are currently most interested in understanding δinteraction in situations where we, to a good approximation, can neglect δmany−body and δnumerical. Thus, in most of the literature, the dominant part of the model discrepancy is taken to originate from the chiral EFT description of the nuclear interaction. It should be pointed out that the discrepancy term of the many-body method can be quite large for many types of observables. However, ab initio methods are often applied wisely, and there exists plenty of domain knowledge regarding which many-body methods are best suited for different kinds of observables. Yet, it is not easy to set bounds on this discrepancy a priori. Comparison between several complementary ab initio models provides important validation [76–78]. Although challenging, it would be of great value to quantify the many-body discrepancy more carefully. Finally, the last term in Equation (6) is currently not the dominant part of the discrepancy, provided that the computer code has been benchmarked.

Two related questions immediately arise: (i) what is the impact of the discrepancy term on the inference about the model parameters $\vec{\alpha}$? and (ii) what happens if we neglect all sources of model discrepancy during model calibration?

Let us consider the second question, since it is easier and also sheds light on the first one. Ignoring $\delta(\vec{x}_i)$ in Equation (5) leaves us with the following expression

$$z_i = M(\vec{\alpha}; \vec{x}_i) + \varepsilon_i. \tag{7}$$

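This is the conventional starting point in nuclear model calibration. If one also assumes that the measurement errors εi have zero mean and finite variance $\sigma_i^2$, then the principle of maximum entropy dictates that the likelihood of the data is normally distributed. For independent errors, this leads to the canonical expression for the likelihood

$$P(D|\vec{\alpha}) = \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi}\,\sigma_i} \exp\left[-\frac{\left(z_i - M(\vec{\alpha}; \vec{x}_i)\right)^2}{2\sigma_i^2}\right] \propto \exp\left[-\frac{1}{2}\chi^2(\vec{\alpha})\right], \qquad \chi^2(\vec{\alpha}) = \sum_{i=1}^{n} \frac{\left(z_i - M(\vec{\alpha}; \vec{x}_i)\right)^2}{\sigma_i^2}. \tag{8}$$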

Here, the notation P(X|Y) denotes the probability density function (pdf) of X conditioned on Y. The structure of the likelihood remains the same for correlated measurement errors, although one must then employ the full covariance matrix instead of only its diagonal terms to represent the variance of the data. Model calibration in ab initio nuclear theory is typically formulated as a maximum likelihood problem. This boils down to finding the optimal, or best-fitting, parameters that minimize the exponent in Equation (8). We are thus facing a mathematical optimization (minimization) problem

$$\min_{\vec{\alpha} \in \Omega} \chi^2(\vec{\alpha}), \tag{9}$$

i.e., the problem of finding the point $\vec{\alpha}_\star$ that fulfills $\chi^2(\vec{\alpha}_\star) \leq \chi^2(\vec{\alpha})$ for all $\vec{\alpha} \in \Omega$, where Ω represents the parameter domain. In general, this is an intractable problem unless we have detailed information about $\chi^2(\vec{\alpha})$ or the parameter domain is discrete and contains a finite number of points. In reality, we are trying to find local minimizers of $\chi^2(\vec{\alpha})$, i.e., points $\vec{\alpha}_\star$ for which $\chi^2(\vec{\alpha}_\star) \leq \chi^2(\vec{\alpha})$ for all $\vec{\alpha}$ close to $\vec{\alpha}_\star$.
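The pieces above (the χ2 of Equation (8), its generalization to a full data covariance matrix, and a local minimizer of Equation (9)) can be sketched in a few lines. This is a minimal illustration under stated assumptions: the one-parameter toy model sin(αx) is hypothetical, chosen only because its χ2 surface can have several local minima, and SciPy's Nelder–Mead method stands in for a generic local optimizer; neither is the method used in the works cited here.

```python
import numpy as np
from scipy.optimize import minimize


def chi2(residuals, cov):
    """chi^2 = r^T C^{-1} r for a residual vector r and data covariance matrix C.

    For a diagonal C this reduces to the variance-weighted sum in Equation (8).
    """
    r = np.asarray(residuals, dtype=float)
    return float(r @ np.linalg.solve(cov, r))


def log_likelihood(residuals, cov):
    """Gaussian log-likelihood log P(D|alpha), including the normalization."""
    n = len(residuals)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (chi2(residuals, cov) + logdet + n * np.log(2.0 * np.pi))


# Hypothetical one-parameter toy model M(alpha; x) = sin(alpha * x), whose chi^2
# surface can have several local minima in alpha.
x = np.linspace(0.0, 3.0, 20)
sigma = 0.05
rng = np.random.default_rng(1)
z = np.sin(1.3 * x) + rng.normal(0.0, sigma, x.size)
cov = np.diag(np.full(x.size, sigma**2))


def objective(alpha):
    return chi2(z - np.sin(alpha[0] * x), cov)


# A local optimizer returns the minimizer of the basin it starts in, so the
# result can depend on the starting point.
for start in (1.0, 4.0):
    result = minimize(objective, x0=[start], method="Nelder-Mead")
    print(f"start {start}: alpha = {result.x[0]:.4f}, chi2 = {result.fun:.2f}")
```

Solving with the covariance matrix, rather than inverting it, is the numerically safer choice once correlated errors make C non-diagonal.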

For ab initio models, optimization of the likelihood function typically proceeds in several steps [11, 12, 79–82]. First, the parameters, i.e., the LECs in chiral EFT, are calibrated such that the model optimally reproduces nucleon-nucleon scattering phase shifts from published partial-wave analyses [83, 84]. This typically yields model parameters confined to some narrow range of values. Although each scattering phase shift depends only on a limited subset of the entire vector of model parameters $\vec{\alpha}$, this stage still benefits from using mathematical optimization algorithms, such as the derivative-free algorithm called pounders [85, 86]. In a next step, the results from the phase-shift optimization serve as the starting point for a second round of parameter optimization where all model parameters are varied to best reproduce thousands of nucleon-nucleon scattering cross sections up to scattering energies in the vicinity of the pion-production threshold.
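The two-stage procedure can be illustrated with a toy problem. In the sketch below, a hypothetical "phase shift" is sensitive to only one parameter and a hypothetical "cross section" depends on both; stage 1 fits the former, and stage 2 varies all parameters against the latter, warm-started from stage 1. SciPy's Nelder–Mead is used purely as a readily available derivative-free stand-in; it is not pounders, and the observables, noise levels, and parameter values are all assumptions.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical toy observables: a "phase shift" that is sensitive to alpha[0]
# only, and a "cross section" that depends on both parameters.
energies = np.linspace(1.0, 10.0, 15)


def phase_shift(a0, e):
    return a0 * np.sqrt(e)


def cross_section(a, e):
    return (a[0] + a[1] * e) ** 2 / e


alpha_true = np.array([0.8, 0.15])
rng = np.random.default_rng(7)
delta_err = 0.02
delta_data = phase_shift(alpha_true[0], energies) + rng.normal(0.0, delta_err, energies.size)
xs_true = cross_section(alpha_true, energies)
xs_err = 0.01 * xs_true  # assumed 1% relative uncertainty
xs_data = xs_true + rng.normal(0.0, 1.0, energies.size) * xs_err


def chi2_phase(a):
    return np.sum(((delta_data - phase_shift(a[0], energies)) / delta_err) ** 2)


def chi2_xs(a):
    return np.sum(((xs_data - cross_section(a, energies)) / xs_err) ** 2)


# Stage 1: calibrate only the parameter the phase shifts constrain.
stage1 = minimize(chi2_phase, x0=[0.5], method="Nelder-Mead")

# Stage 2: vary all parameters against the cross sections, warm-started from
# stage 1 (Nelder-Mead is only a stand-in for a derivative-free solver).
stage2 = minimize(chi2_xs, x0=[stage1.x[0], 0.0], method="Nelder-Mead",
                  options={"xatol": 1e-8, "fatol": 1e-8, "maxiter": 4000})
print("stage 1:", stage1.x, " stage 2:", stage2.x)
```

The warm start matters here because the toy cross section is invariant under flipping the sign of both parameters; starting from the stage-1 value keeps the optimizer in the physically intended basin.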

Minimizing the χ2 in Equation (8) for nucleon-nucleon interaction potentials with respect to nucleon-nucleon scattering data¹ has been the workhorse of model calibration in nuclear theory for decades². For a long time, the figure of merit for a nuclear interaction potential has been the χ2-per-datum value. If this value is close to unity for some particular parameterization $\vec{\alpha}$, then the corresponding potential is dubbed “high-precision.” This is beginning to change. This approach can only be justified for models M where the model discrepancy is in fact negligible. Otherwise, chasing a low χ2 leads down the path of significant over-fitting, with unreliable predictions as a consequence. For the calculation of nucleon-nucleon scattering phase shifts and cross sections it is valid to ignore δmany−body and δnumerical, since the corresponding equations can be solved numerically more or less exactly. However, since we clearly cannot claim to have a zero-valued δinteraction term, the χ2-per-datum with respect to nucleon-nucleon scattering data is not the optimal measure to guide future efforts in nuclear theory. Before and during the development of ab initio many-body methods and EFT principles, when it was very unclear how to understand the concept of model discrepancy in nuclear theory, it was certainly more warranted to benchmark nuclear potentials based solely on a straightforward χ2 value.

State-of-the-art interaction potentials also contain three-nucleon force terms. Although some of the parameters in chiral EFT are shared between two- and three-nucleon terms, there exists a subset of parameters inherent only to the three-nucleon interaction. Such parameters must be determined using observables from A > 2 systems. Arguably, all parameters of a chiral potential should be optimized simultaneously to a joint dataset D. The simplest approach is to also employ, e.g., the binding energies and charge radii of 3He, 4He, and 3H. Unfortunately, there exists a universal correlation between the binding energies of 3H and 4He, the so-called Tjon line [88]. The radii and binding energies also exhibit a strong correlation. Altogether, this reduces the information content of these data. Fortunately, it was demonstrated in reference [89] that the beta decay of 3H can add valuable, although limited [90], information about the parameters in the three-nucleon interaction, and this has been employed in several works, as indicated by the long list of citations of reference [89]. Recently, selected three-nucleon scattering observables have been added to the pool of calibration data [91, 92], though not routinely, since it is still computationally quite costly to evaluate ab initio models for such observables. There are indications that it is also necessary to include data from nuclei heavier than 4He to learn about the parameters in ab initio models. This is discussed in section 3.2.

Ignoring the δinteraction discrepancy term during model calibration can have serious consequences. Most importantly, it reduces the LECs to tuning parameters without any physical meaning. Indeed, in the drive to replicate the data at any cost, the numerical values can be driven far away from the true values of the model. At some point, continued tuning of the parameters induces over-fitting, and the model will pick up on the noise in the data. Naturally, this leads to poor predictive power. With increasing amounts of data, the optimization process will converge with increasing certainty to false values for $\vec{\alpha}$. A pedagogical introduction to the statistics of model discrepancies, with a physics example, is provided in reference [93].
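The last point, convergence with increasing certainty to a false value, can be reproduced with a toy version of Equation (5). In this hypothetical setup the data contain a discrepancy δ(x) = 0.3x² that the model M(α; x) = αx cannot represent, and the fit uses the conventional χ2 that ignores δ. The numbers are assumptions chosen for illustration only.

```python
import numpy as np


def fit_ignoring_discrepancy(n, rng, alpha_true=2.0, discrepancy=0.3, sigma=0.1):
    """Fit M(alpha; x) = alpha * x to data from z = alpha_true*x + discrepancy*x**2 + eps.

    The x**2 term plays the role of a model discrepancy delta(x) that the fit
    ignores (hypothetical setup). Returns the least-squares alpha and the
    standard error implied by the conventional chi^2.
    """
    x = rng.uniform(0.0, 1.0, n)
    z = alpha_true * x + discrepancy * x**2 + rng.normal(0.0, sigma, n)
    # Closed-form minimizer of sum((z - alpha * x)**2) / sigma**2.
    alpha_hat = np.sum(x * z) / np.sum(x * x)
    std_err = sigma / np.sqrt(np.sum(x * x))
    return alpha_hat, std_err


rng = np.random.default_rng(0)
for n in (10, 100, 1_000, 100_000):
    alpha_hat, std_err = fit_ignoring_discrepancy(n, rng)
    print(f"n = {n:7d}: alpha = {alpha_hat:.4f} +/- {std_err:.4f}  (true value 2.0)")
```

As n grows, the quoted error bar shrinks roughly as 1/√n, while the estimate settles near 2 + 0.3·E[x³]/E[x²] = 2.225 for uniformly distributed x, so the true value is excluded with ever higher apparent confidence.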

A total model discrepancy, according to Equation (6), was included in ab initio model calibration for the first time in reference [80]. The parameters in a set of chiral interactions at LO, NLO, and NNLO were optimized using nucleon-nucleon and pion-nucleon scattering data. The terms in the three-nucleon interaction were simultaneously informed using bound-state observables from A = 2, 3 nuclei. The details of the analysis and results can be found in the original paper. The discrepancy terms were interpreted as uncorrelated errors and added in quadrature with the data uncertainties, leading to a slight modification of the corresponding χ2 function

$$\tilde{\chi}^2(\vec{\alpha}) = \sum_{i=1}^{n} \frac{\left(z_i - M(\vec{\alpha}; \vec{x}_i)\right)^2}{\sigma_i^2 + \sigma_{\text{th},i}^2}, \tag{10}$$

where $\sigma_i$ is the experimental uncertainty of datum i and $\sigma_{\text{th},i}$ denotes the estimated magnitude of the model discrepancy for that datum.

The interaction discrepancy was constructed from the EFT assumption that it scales with the external momenta flowing through the interaction diagrams to a power corresponding to the truncation of the chiral expansion, in accordance with Equation (4). The intrinsic scale of this error was solved for self-consistently by requiring that the χ2-per-datum approach unity, as it should provided that the model error is correctly estimated. This implicitly assumes a correct estimate of the number of statistical degrees of freedom, something that cannot easily be estimated for non-linear χ2 functions [94].
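The self-consistency condition can be phrased as a one-dimensional root-finding problem. The sketch below assumes a hypothetical error model σth,i = c̄·|y_ref,i|·Q^(k+1), in the spirit of Equation (4), and solves for the scale c̄ that makes the modified χ2 of Equation (10) equal to one per datum. It is a sketch of the idea, not the procedure of reference [80]; the function names, inputs, and the choice to divide by n are assumptions.

```python
import numpy as np
from scipy.optimize import brentq


def chi2_with_theory_error(residuals, sigma_exp, sigma_th):
    """Modified chi^2 of Equation (10): uncertainties added in quadrature."""
    return float(np.sum(residuals**2 / (sigma_exp**2 + sigma_th**2)))


def solve_theory_scale(residuals, sigma_exp, y_ref, q, k):
    """Find c_bar such that chi^2 per datum equals one, using the hypothetical
    error model sigma_th_i = c_bar * |y_ref_i| * Q**(k + 1).

    chi^2 is divided by the number of data n, not by n minus the number of fitted
    parameters; choosing the degrees of freedom is exactly the subtle point noted
    in the text.
    """
    n = len(residuals)
    scale = np.abs(y_ref) * q ** (k + 1)

    def excess(c_bar):
        return chi2_with_theory_error(residuals, sigma_exp, c_bar * scale) / n - 1.0

    if excess(0.0) <= 0.0:
        return 0.0  # experimental errors alone already give chi^2/n <= 1
    upper = 1.0
    for _ in range(100):  # chi^2/n decreases monotonically as c_bar grows
        if excess(upper) <= 0.0:
            break
        upper *= 2.0
    else:
        raise ValueError("no theory-error scale brings chi^2 per datum down to one")
    return brentq(excess, 0.0, upper)


# Synthetic residuals z_i - M(alpha; x_i) and inputs, for illustration only.
residuals = np.array([0.5, -0.3, 0.8, -0.6])
sigma_exp = np.full(4, 0.1)
y_ref = np.array([10.0, 8.0, 12.0, 9.0])
c_bar = solve_theory_scale(residuals, sigma_exp, y_ref, q=0.3, k=3)
print("c_bar =", c_bar)
```

Because every term of the modified χ2 decreases as c̄ grows, the bracket found by doubling is guaranteed to contain a single root, which makes Brent's method a natural fit.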

To summarize, although the inclusion of model discrepancies is preferred, it is not without problems. Blindly including a term to capture model discrepancies in the process of model calibration can lead to statistical confounding between $\vec{\alpha}$ and a general discrepancy function δ(·) [93]. This means that the model parameters and the discrepancy term are not identifiable, and we only recover some joint pdf for the two components. Indeed, for any $\vec{\alpha}$ there is a δ(·) given by the difference between model and reality. Making progress requires us to specify some a priori ranges for $\vec{\alpha}$ and/or δ(·), or, in the language of Bayesian inference, to specify the prior pdf for the model parameters and the theory uncertainties. This is partly related to approaches where one augments the χ2 function with a penalty term to constrain the values of the model parameters (see e.g., reference [95]). For EFT descriptions of the nuclear interaction, one can argue that the LECs should maintain values of order unity, if expressed in units of the breakdown scale, and that the discrepancy could follow the pattern of Equation (4). Adequately representing the discrepancy term in nuclear models is ongoing research, and it appears advantageous to reformulate model calibration as a Bayesian inference problem; see section 4.
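Both the confounding problem and the role of a prior show up already in a linear toy model. In the hypothetical setup below, a parameter α0 and a constant discrepancy δ enter the model only through their sum, so χ2 alone cannot separate them. Adding a penalty Σ(αj/ᾱ)², which equals −2 log of a zero-centred Gaussian prior of width ᾱ (a naturalness-style assumption), makes the minimizer unique. The function name and numbers are illustrative.

```python
import numpy as np


def penalized_linear_fit(design, z, sigma, alpha_bar):
    """Minimize chi^2(alpha) + sum_j (alpha_j / alpha_bar)**2 for M(alpha) = design @ alpha.

    The penalty equals -2 log of a Gaussian prior of width alpha_bar centred on
    zero (a naturalness-style assumption), so the minimizer is the maximum of the
    posterior P(alpha|D) for this linear toy problem.
    """
    w = 1.0 / sigma**2
    normal_matrix = design.T @ (design * w[:, None]) + np.eye(design.shape[1]) / alpha_bar**2
    return np.linalg.solve(normal_matrix, design.T @ (w * z))


# Confounded toy problem: a parameter alpha_0 and a constant discrepancy delta
# enter only through their sum, so chi^2 alone cannot separate them.
n = 8
design = np.ones((n, 2))  # columns: [alpha_0, delta]
z = np.ones(n)
sigma = np.full(n, 0.1)

print("rank of design matrix:", np.linalg.matrix_rank(design))
print("penalized estimate:", penalized_linear_fit(design, z, sigma, alpha_bar=1.0))
```

Without the penalty the normal matrix is singular. With it, the total is split evenly between the two components, which shows that the split is dictated entirely by the prior and not by the data.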

At the optimum parameter point , a Taylor expansion of the χ2 function to second order gives

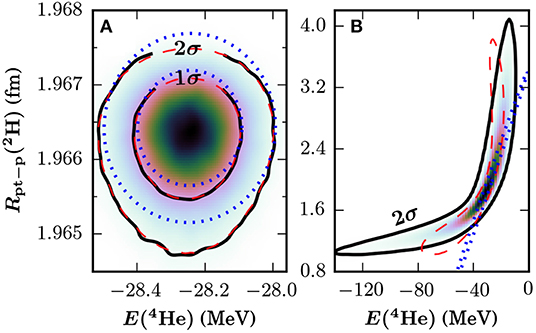

where H denotes a Hessian matrix, the inverse of which is proportional to the covariance matrix for the model parameters [96]. Contracting the parameter-Jacobian of any model prediction with this covariance matrix yields the standard error propagation result of the parameter uncertainties. For the conventional χ2 function, the parameter covariances reflect the impact of the experimental uncertainties on the precision of the optimum and predicted observables. Sometimes, this is referred to as statistical uncertainties, which is a bit confusing since all uncertainties are statistical in nature. See Figure 1 for an example result of applying a parameter covariance matrix to obtain the joint pdf for the 4He ground-state energy and the 2H point-proton radius, two important few-nucleon observables. This particular result is taken from reference [80], where in fact a model discrepancy term δ(·) was incorporated during the optimization, thus in this particular case the covariances reflect more than just the measurement noise. See e.g., references [97–102] for details about statistical error analysis and illuminating examples of forward error propagation in ab initio nuclear theory.

Figure 1. Joint distribution for the ground-state energy of 4He (x-axis) and the point-proton radius of 2H (y-axis) for (A) the chiral potential NNLOsim and (B) the chiral potential NNLOsep (see reference [80]). Contour lines for the distributions are shown as black solid lines, while blue dotted (red dashed) contours are obtained assuming a linear (quadratic) dependence on the LECs for the observables.
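The standard error propagation described above can be sketched for a model that is linear in its parameters, where the quadratic expansion of χ2 is exact. Assumptions: a hypothetical straight-line model M(α; x) = α0 + α1·x and uncorrelated data errors. The Hessian of the conventional χ2 is then H = 2JᵀWJ, with J the parameter Jacobian and W the diagonal matrix of inverse squared errors, so the parameter covariance is 2H⁻¹, and a prediction with parameter gradient g has variance gᵀ(2H⁻¹)g.

```python
def chi2_hessian(xs, sigmas):
    """Hessian of chi^2 = sum(((y - a0 - a1*x) / sigma)**2) w.r.t. (a0, a1).

    For a model linear in its parameters this is 2 J^T W J and does not depend on y.
    """
    h = [[0.0, 0.0], [0.0, 0.0]]
    for x, s in zip(xs, sigmas):
        grad = (1.0, x)                  # d(model)/d(a0), d(model)/d(a1)
        w = 1.0 / (s * s)
        for i in range(2):
            for j in range(2):
                h[i][j] += 2.0 * w * grad[i] * grad[j]
    return h


def inverse_2x2(m):
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]


def parameter_covariance(xs, sigmas):
    """Covariance C = 2 H^{-1} of the fitted parameters (a0, a1)."""
    return [[2.0 * v for v in row] for row in inverse_2x2(chi2_hessian(xs, sigmas))]


def prediction_variance(cov, x_new):
    """Forward error propagation g^T C g for the prediction a0 + a1 * x_new."""
    g = (1.0, x_new)
    return sum(g[i] * cov[i][j] * g[j] for i in range(2) for j in range(2))


cov = parameter_covariance([0.0, 1.0], [1.0, 1.0])   # two data points, unit errors
var_at_2 = prediction_variance(cov, 2.0)
```

With data at x = 0 and x = 1, the propagated variance is 1 at both data points but 5 at x = 2, illustrating how extrapolation inflates the uncertainty.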

Extracting the covariance matrix requires computing the second-order derivatives of the χ2 function with respect to the model parameters. Numerically differentiating an ab initio model with respect to its parameters is significantly simplified, and made much more precise, by automatic differentiation (AD) [80]. AD applies the chain rule of differentiation to a function represented as computer code. It relies on the principle that any computer code, no matter how complicated, executes a sequence of elementary arithmetic operations on a finite set of elementary functions (exponentiation, logarithms, etc.). Implementing AD requires modifying the original computer code, e.g., via operator overloading provided by third-party libraries. Once implemented, AD also enables the use of more advanced derivative-based optimization algorithms and Markov chain Monte Carlo methods [103] with the computer model M. An alternative, derivative-free approach to computing the Hessian matrix for forward error propagation is to employ Lagrange multipliers [104]. This method is more robust, but also more computationally demanding. From a practical and computational perspective, anyone considering Lagrange multipliers should also look into performing a Bayesian analysis (see section 4).
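To make the operator-overloading idea concrete, here is a minimal sketch of forward-mode AD using hyper-dual numbers. This technique is chosen here for illustration (it is not necessarily the scheme used in reference [80]) because it yields exact second derivatives, and hence the χ2 Hessian, without finite-difference step sizes. The exponential model and its data are hypothetical, and only +, −, × and exp are overloaded; a real code would need every elementary function it uses.

```python
import math


class HyperDual:
    """Number a + b*e1 + c*e2 + d*e1*e2 with e1**2 = e2**2 = 0.

    Seeding x_i with e1 and x_j with e2 makes the e1*e2 part of f(x)
    the exact mixed second derivative d^2 f / (dx_i dx_j).
    """

    def __init__(self, a, b=0.0, c=0.0, d=0.0):
        self.a, self.b, self.c, self.d = a, b, c, d

    def __add__(self, other):
        o = lift(other)
        return HyperDual(self.a + o.a, self.b + o.b, self.c + o.c, self.d + o.d)

    __radd__ = __add__

    def __sub__(self, other):
        o = lift(other)
        return HyperDual(self.a - o.a, self.b - o.b, self.c - o.c, self.d - o.d)

    def __rsub__(self, other):
        return lift(other) - self

    def __mul__(self, other):
        o = lift(other)
        return HyperDual(self.a * o.a,
                         self.a * o.b + self.b * o.a,
                         self.a * o.c + self.c * o.a,
                         self.a * o.d + self.b * o.c + self.c * o.b + self.d * o.a)

    __rmul__ = __mul__


def lift(x):
    """Promote a plain number to a hyper-dual constant."""
    return x if isinstance(x, HyperDual) else HyperDual(float(x))


def hd_exp(x):
    """exp(x) via f(a) + f'(a)(b e1 + c e2) + (f'(a) d + f''(a) b c) e1 e2."""
    e = math.exp(x.a)
    return HyperDual(e, e * x.b, e * x.c, e * (x.d + x.b * x.c))


def hessian(f, point):
    """Exact Hessian of f (built from +, -, * and hd_exp) at point."""
    n = len(point)
    return [[f([HyperDual(p, float(k == i), float(k == j))
                for k, p in enumerate(point)]).d
             for j in range(n)]
            for i in range(n)]


# Hypothetical calibration problem: model y = alpha0 * exp(alpha1 * x).
XS, YS, SIG = [0.0, 0.5, 1.0], [1.0, 1.6, 2.8], [0.1, 0.1, 0.2]


def chi2(alpha):
    total = HyperDual(0.0)
    for x, y, s in zip(XS, YS, SIG):
        r = (y - alpha[0] * hd_exp(alpha[1] * x)) * (1.0 / s)
        total = total + r * r
    return total


H = hessian(chi2, [1.0, 1.0])
```

Each Hessian entry costs one model evaluation, so this forward-mode approach scales quadratically with the number of parameters; reverse-mode AD typically scales more favorably for large parameter sets.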

It is preferable to use data corresponding to observables that are computationally cheap to evaluate and, if possible, model settings for which the discrepancy is small. One should also strive to include data with highly complementary information content, constraining as many linearly independent combinations of model parameters as possible.
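One way to quantify how complementary a set of calibration data is, sketched here for a hypothetical two-parameter model with made-up sensitivities, is to inspect the eigenvalues of the Fisher-type matrix JᵀWJ (the same matrix that controls the parameter covariance in the linear case). A (near-)zero eigenvalue flags a parameter combination that the data do not constrain; adding a datum whose sensitivity vector points in a new direction lifts it.

```python
import math


def fisher_2x2(jacobian_rows, sigmas):
    """J^T W J for two parameters; each row is d(observable)/d(alpha_0, alpha_1)."""
    f = [[0.0, 0.0], [0.0, 0.0]]
    for row, s in zip(jacobian_rows, sigmas):
        for i in range(2):
            for j in range(2):
                f[i][j] += row[i] * row[j] / (s * s)
    return f


def eigenvalues_sym_2x2(m):
    """Eigenvalues (ascending) of a symmetric 2x2 matrix."""
    half_tr = 0.5 * (m[0][0] + m[1][1])
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    disc = math.sqrt(max(half_tr * half_tr - det, 0.0))
    return half_tr - disc, half_tr + disc


# Made-up sensitivities: the first two observables respond to the same
# parameter combination, the third one to a different combination.
collinear = [(1.0, 2.0), (2.0, 4.0)]
complementary = collinear + [(1.0, -1.0)]
lo_1, _ = eigenvalues_sym_2x2(fisher_2x2(collinear, [1.0, 1.0]))
lo_2, _ = eigenvalues_sym_2x2(fisher_2x2(complementary, [1.0, 1.0, 1.0]))
```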

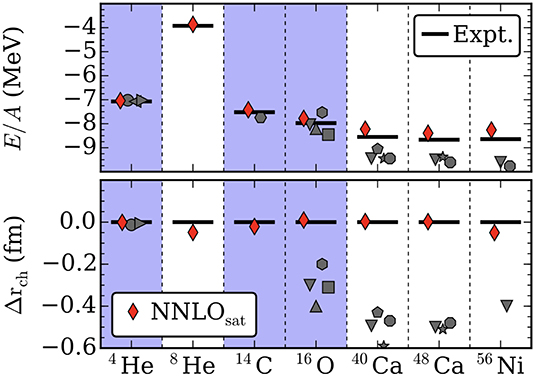

The conventional approach to calibrate ab initio models is to use only data from A ≲ 4 nuclei, as was discussed above. It was observed in reference [25] that the additional inclusion of ground-state energies and charge radii of selected carbon and oxygen isotopes dramatically increases the predictive power of models for bulk properties of nuclei up to the medium-mass nickel region (see Figure 2). This calibration strategy led to the construction of the so-called NNLOsat interaction. The strategy to include data from selected A > 4 nuclei was also used in the construction of the Illinois 3NF presented in reference [105]. From a quantitative perspective, the advent of models capable of accurate predictions is of course an important step forward and has proven very useful [26, 27, 106, 107].

Figure 2. Ground-state energies per nucleon (top) and differences between theoretical and experimental charge radii (bottom) for selected light and medium-mass nuclei and results from ab initio computations. The red diamonds mark results based on the chiral interaction NNLOsat. The blue columns indicate which nuclei were included in the optimization of the LECs in NNLOsat, while the white columns are predictions. Gray symbols indicate other chiral interactions.

The major drawback of any model based on the NNLOsat interaction is the lack of quantified theoretical uncertainties. This is quite common also for ab initio models based on other interaction potentials. At the moment, the best we can do is to estimate the truncation error using Equation (4). This requires potentials at lower chiral orders optimized with the same protocol, e.g., LOsat and NLOsat, which do not exist. The calibration of such models requires an even more careful inclusion of model discrepancies, as discussed further in section 4. One can certainly argue that it becomes even more important to quantify the theory errors for models that we strongly believe will make accurate predictions, like the ones based on the NNLOsat interaction; otherwise we are limited in our ability to assess discrepancies with respect to experiment. This argument applies equally well to models based on, e.g., the 1.8/2.0 interaction from references [29, 30], which typically yield good predictions for binding energies and low-energy spectra. In reference [26], the predictions from ab initio models based on different interactions, NNLOsat and the 1.8/2.0 interactions among others, were compared to estimate the overall theoretical uncertainty.

It is difficult to judge the degree of over-fitting to finite nuclei in NNLOsat. It was noted during calibration that this interaction fails to reproduce experimental nucleon-nucleon scattering cross sections for scattering momenta larger than ~mπ. Enforcing a good reproduction of all scattering data up to e.g., the pion-production threshold most likely corresponds to over-fitting in the A = 2 sector. It is the role of the model discrepancy term, with appropriate priors, to balance this.

One clearly gains predictive power regarding saturation properties by including additional medium-mass data during the calibration stage. This was also observed in a lattice EFT analysis of the nuclear binding mechanism [108]. The related topic of possibly emergent nuclear phenomena like saturation, binding, and deformation of atomic nuclei is discussed further in reference [109]. Although including a model discrepancy term while calibrating to data from heavier nuclei will be important, it does not solve the underlying problem of having a systematically uncertain model. It was noted in references [110–112] that the explicit inclusion of the Δ isobar in the chiral description of the nuclear interaction dramatically improves the description of nuclei while also reproducing nucleon-nucleon scattering data. A possibly fruitful way forward is to employ improved models, i.e., with explicit inclusion of the Δ isobar, that are also calibrated using data from selected heavier nuclei, while systematically accounting for model discrepancies. Furthermore, it will be interesting to see how much additional information is contained in three-nucleon scattering data [91, 92, 113].

The previous section introduced the concept of model calibration and the fundamental expression in Equation (5) that relates a model with measured data. In this section I will outline the Bayesian strategy for learning about the model parameters and some existing estimates of the discrepancy term. The overarching goal is still to calibrate an ab initio model M and reliably predict properties of atomic nuclei. However, instead of finding a single point in parameter space that maximizes the likelihood for the data, we can use Bayes' theorem to relate the data likelihood to a pdf for the model parameters $\vec{\alpha}$ themselves

$$P(\vec{\alpha} \mid D, M, I) = \frac{P(D \mid \vec{\alpha}, M, I)\, P(\vec{\alpha} \mid M, I)}{P(D \mid M, I)}, \tag{12}$$

where $P(\vec{\alpha} \mid M, I)$ denotes the prior pdf for the parameters, $P(D \mid \vec{\alpha}, M, I)$ denotes the likelihood of the data, the denominator P(D|M, I) denotes the marginal likelihood of the data, and $P(\vec{\alpha} \mid D, M, I)$ denotes the sought-after posterior pdf of the model parameters. The additional I represents any other information at hand.

The Bayesian reformulation of the inference problem can at first sight appear to be a subtle point, and it is easy to overlook the fundamental difference between computing the pdf for the parameters and maximizing the likelihood, i.e., frequentist inference. From a practical perspective, it is clearly advantageous to obtain a pdf for the model parameters. This quantity is also more intuitive to interpret than frequentist interval estimates, e.g., confidence intervals, which might or might not contain the true value of the unknown model parameters. The prior pdf for the parameters given a model M offers an up-front possibility to incorporate any prior knowledge (or belief) about the parameters before we look at the data. In the case of ab initio modeling, an underlying EFT description of the nuclear interaction embodies substantial prior knowledge, such as the typical magnitude of the model parameters as well as a handle on the systematic uncertainty. The Bayesian requirement of prior specification also ensures full transparency regarding the assumptions that go into the analysis.

The existence of priors in Bayesian inference is sometimes criticized, and one can argue that the scientific method should let the data speak for themselves, without the explicit insertion of subjective prior belief. Inference about model parameters in terms of hypothesis tests or confidence intervals, derived from the frequency of the data, is referred to as frequentist inference. Note, however, that the likelihood itself rests on initial subjective choice(s) regarding the data model. In this review, I will maintain a practical perspective and simply recognize the usefulness of the Bayesian approach for encoding prior information about the model parameters and the model discrepancy terms, which is also required in order to handle possible confounding between the discrepancy and the model parameters [93]. Either way, it is difficult to avoid subjective choices in statistical inference involving uncertainties and limited data. In fact, one can even argue that only subjective probabilities exist [114].

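Bayesian model calibration, sometimes called Bayesian parameter estimation, is currently emerging in ab initio modeling [115–117]. To build some intuition about this topic, let us look at Bayesian parameter estimation in its simplest form. This amounts to assuming a (bounded) uniform prior pdf for the model parameters $\vec{\alpha}$, i.e.,

$$P(\vec{\alpha} \mid M, I) = \begin{cases} \text{const.} & \text{if } \vec{\alpha} \text{ lies within the chosen bounds,} \\ 0 & \text{otherwise,} \end{cases} \tag{13}$$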

and adopting a data likelihood as in Equation (8). In practice, what remains is to evaluate the posterior pdf in Equation (12) by computing the product of the two terms in the numerator. The denominator can be neglected since it does not depend on the model parameters; this marginal likelihood does, however, matter for the absolute normalization of the posterior pdf. In some simple cases the posterior can be evaluated by brute force, but computationally expensive models and/or high-dimensional parameter spaces typically require more clever strategies, such as Markov chain Monte Carlo. With uniform priors, the maximum of the posterior coincides exactly with the maximum-likelihood point (provided it lies within the prior bounds), which for normal likelihood distributions is nothing but the least-squares solution.
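As a brute-force illustration of the statement above, the sketch below evaluates the unnormalized posterior on a grid for a hypothetical one-parameter model y = αx with made-up data, Gaussian errors, and a uniform prior on an assumed range [−10, 10]. The grid maximum coincides with the least-squares estimate to within the grid spacing, and the grid sum supplies the normalization that the marginal likelihood would otherwise provide.

```python
import math

# Made-up data for a hypothetical one-parameter model y = alpha * x.
XS = [1.0, 2.0, 3.0, 4.0]
YS = [2.1, 3.9, 6.2, 7.8]
SIGMA = 0.2                      # Gaussian measurement error (assumed)
LOWER, UPPER = -10.0, 10.0       # bounds of the uniform prior (assumed)


def log_prior(alpha):
    """Bounded uniform prior: constant inside [LOWER, UPPER], zero outside."""
    return 0.0 if LOWER <= alpha <= UPPER else -math.inf


def log_likelihood(alpha):
    return -0.5 * sum(((y - alpha * x) / SIGMA) ** 2 for x, y in zip(XS, YS))


def grid_posterior(n=20001):
    """Normalized posterior density on an equally spaced grid (brute force)."""
    step = (UPPER - LOWER) / (n - 1)
    grid = [LOWER + i * step for i in range(n)]
    logp = [log_prior(a) + log_likelihood(a) for a in grid]
    top = max(logp)
    weights = [math.exp(lp - top) for lp in logp]   # shift by the max for stability
    norm = sum(weights) * step     # marginal likelihood P(D | M, I), up to a constant
    return grid, [w / norm for w in weights], step


grid, post, step = grid_posterior()
alpha_map = grid[max(range(len(post)), key=post.__getitem__)]
alpha_ls = sum(x * y for x, y in zip(XS, YS)) / sum(x * x for x in XS)  # least squares
```

For more than a handful of parameters the number of grid points explodes, which is why sampling methods such as Markov chain Monte Carlo take over.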

The advantages of Bayesian parameter estimation become apparent once we include non-uniform prior knowledge, and in most cases we know a bit more about the parameters than what a simple uniform pdf reflects. The general strategies for applying Bayesian methods to calibrate EFTs are pedagogically outlined in reference [116]. To exemplify the use of priors and some of the related techniques, let us assume a Gaussian prior with zero mean for the model parameters $\vec{\alpha}$, i.e.,

$$P(\vec{\alpha} \mid \bar{a}, M, I) \propto \exp\left(-\frac{|\vec{\alpha}|^2}{2\bar{a}^2}\right), \tag{14}$$

where the parameter ā² denotes the prior variance. This is not an unreasonable prior for the model parameters in chiral EFT. The impact of this prior is to penalize model parameters that are too large, which would typically signal over-fitting. When a large amount of precise data exists, the prior specification for the parameters matters less. Nevertheless, the question remains: what value should we pick for ā? This can be dealt with straightforwardly by marginalizing over ā, i.e., we express the prior for the parameters as

$$P(\vec{\alpha} \mid M, I) = \int \mathrm{d}\bar{a}\; P(\vec{\alpha} \mid \bar{a}, M, I)\, P(\bar{a} \mid M, I), \tag{15}$$

which only requires us to specify a prior for ā, i.e., for the width of our belief about the model parameters; here we can choose a rather broad range if we like. With an appropriate analytical form for the prior on ā, it is even possible to carry out this marginalization analytically. See reference [117] for illuminating examples of the impact of different priors in model calibration with scattering phase shifts.
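The marginalization over ā is easy to carry out numerically for a single parameter. As a sketch, assume a log-uniform hyperprior P(ā) ∝ 1/ā on a hypothetical range 0.5 ≤ ā ≤ 5; the integrand is then flat in ln ā and a midpoint rule suffices. The resulting prior on α is a scale mixture of Gaussians, which is more heavy-tailed than a single Gaussian of the same variance.

```python
import math


def gaussian(alpha, abar):
    """Zero-mean Gaussian prior density with standard deviation abar."""
    return math.exp(-0.5 * (alpha / abar) ** 2) / (math.sqrt(2.0 * math.pi) * abar)


def marginal_prior(alpha, abar_min=0.5, abar_max=5.0, n=2000):
    """P(alpha) = integral of P(alpha | abar) P(abar) d abar.

    Assumed hyperprior: log-uniform on [abar_min, abar_max], i.e.
    P(abar) = 1 / (abar * ln(abar_max / abar_min)). In t = ln(abar) it is flat,
    so a midpoint rule in t is used.
    """
    t_min, t_max = math.log(abar_min), math.log(abar_max)
    dt = (t_max - t_min) / n
    total = sum(gaussian(alpha, math.exp(t_min + (i + 0.5) * dt)) for i in range(n))
    return total * dt / (t_max - t_min)
```

For the conjugate choice of an inverse-gamma hyperprior on ā², the same integral can be done analytically and yields a Student-t prior, one example of the analytical route mentioned above.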

Observables computed with potentials from chiral EFT should exhibit a pattern where contributions from successive orders ν = 0, 1, 2, 3, … are suppressed by factors $Q^\nu$. This is reflected in Equation (3). Therefore, the expansion coefficients {cν} should remain of natural size, a clear example of a situation where we have prior knowledge³. Given a series of model calculations of an observable X up to the chiral order ν = k, i.e., $X_0, X_1, \dots, X_k$, and an estimate of the factor Q, it is straightforward to extract the coefficients [c0, c1, …, ck]. It was shown in references [118, 119] how to extract a pdf for the EFT truncation error δk in Equation (4) using this information. First, we factor out the overall scale $X_{\mathrm{ref}}$ and define

$$\Delta_k \equiv \sum_{\nu=k+1}^{\infty} c_\nu Q^\nu \tag{16}$$

as the overall dimensionless truncation error. We now seek an expression for the posterior pdf $P(\Delta_k \mid c_0, \dots, c_k)$ given the known values of the first k + 1 coefficients. It turns out that for independent, bounded, and uniform prior pdfs for the expansion coefficients, the integrals can be solved analytically if one also approximates $\Delta_k$ with its leading term. Thus, we assume

$$\Delta_k \approx c_{k+1} Q^{k+1} \tag{17}$$

The posterior pdf is given in reference [119] (Equation 22) and explicitly derived in the appendix of reference [118]. This posterior pdf is the complete inference about $\Delta_k$. If the pdf is multi-modal or otherwise non-trivial, one should use it in its entirety in forward analyses. However, we can sometimes use a so-called degree of belief (DOB) value to quantify the width of a pdf. This is the probability p%, expressed in percent, that the value of an uncertain variable η, distributed according to the pdf P(η), falls within an interval [a, b]. This interval is then referred to as a credible interval with p% DOB, where

$$p\% = \int_a^b P(\eta)\, \mathrm{d}\eta \tag{18}$$

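The posterior pdf for $\Delta_k$ is not Gaussian; however, it is symmetric and has zero mean. Therefore, we can define a smallest interval that captures p% of the probability mass

$$p\% = \int_{-d_k^{(p)}}^{d_k^{(p)}} P(\Delta_k \mid c_0, \dots, c_k)\, \mathrm{d}\Delta_k \tag{19}$$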

and solve for $d_k^{(p)}$. This defines the half-width of the credible interval within which the next term in the EFT expansion will fall with p% DOB, i.e., an estimate of the truncation error. The expression is derived in references [118, 119] and given by

$$d_k^{(p)} = \begin{cases} \dfrac{n_c+1}{n_c}\, p\%\; \bar{c}_{(k)}\, Q^{k+1}, & p\% \le \dfrac{n_c}{n_c+1}, \\[2ex] \left[\dfrac{1}{(n_c+1)(1-p\%)}\right]^{1/n_c} \bar{c}_{(k)}\, Q^{k+1}, & p\% > \dfrac{n_c}{n_c+1}, \end{cases} \tag{20}$$

where $\bar{c}_{(k)} = \max(|c_0|, \dots, |c_k|)$ and nc denotes the number of available coefficients. Thus, with nc/(nc + 1) × 100% DOB, the EFT truncation error for the observable X, in dimensionful units, is estimated by $X_{\mathrm{ref}}\, \bar{c}_{(k)}\, Q^{k+1}$. This estimate also corresponds to the prescription employed in reference [120]. This a posteriori truncation error estimate essentially boils down to guessing the largest number one can expect based on a series of numbers drawn from the same underlying distribution. For example, given only one (nc = 1) expansion coefficient c0, there is a 50% DOB that we have already encountered the largest coefficient in the series. This procedure has been applied to estimate the truncation error in several ab initio model calculations; see the many papers citing references [119, 120].
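The recipe can be sketched in a few lines. Assumptions: orders ν = 0, 1, …, k as in the text, the hypothetical reference scale X_ref = |X_0|, and made-up order-by-order predictions. The half-width follows the form derived in references [118, 119]: (n_c + 1)/n_c · p · c̄ Q^(k+1) up to p = n_c/(n_c + 1), with a power-law tail beyond. A short Monte Carlo check illustrates the "largest number" interpretation: among n_c + 1 exchangeable draws, the last one fails to be the largest with probability n_c/(n_c + 1).

```python
import random


def expansion_coefficients(predictions, q):
    """c_nu from order-by-order predictions X_0, ..., X_k of one observable.

    Assumes X_k = X_ref * sum_{nu <= k} c_nu * Q**nu with orders 0, 1, ..., k
    and the hypothetical choice X_ref = |X_0|.
    """
    x_ref = abs(predictions[0])
    coeffs = [predictions[0] / x_ref]
    for nu in range(1, len(predictions)):
        coeffs.append((predictions[nu] - predictions[nu - 1]) / (x_ref * q ** nu))
    return x_ref, coeffs


def dob_half_width(coeffs, q, p):
    """Dimensionless half-width d_k^(p) of the credible interval for Delta_k.

    p is the DOB as a fraction (0 < p < 1); multiply by X_ref for physical units.
    """
    n_c = len(coeffs)
    k = n_c - 1
    scale = max(abs(c) for c in coeffs) * q ** (k + 1)    # cbar_(k) * Q**(k+1)
    if p <= n_c / (n_c + 1):
        return scale * p * (n_c + 1) / n_c                 # flat part of the posterior
    return scale * (1.0 / ((n_c + 1) * (1.0 - p))) ** (1.0 / n_c)   # power-law tail


def prob_next_not_largest(n_c, trials=100_000, seed=1):
    """Monte Carlo: with n_c + 1 i.i.d. draws, P(|last| <= max |earlier|)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        draws = [abs(rng.uniform(-1.0, 1.0)) for _ in range(n_c + 1)]
        hits += draws[-1] <= max(draws[:-1])
    return hits / trials


# Made-up order-by-order predictions (e.g. energies in MeV) and expansion parameter.
x_ref, coeffs = expansion_coefficients([-7.0, -7.8, -8.1], q=0.3)
delta = x_ref * dob_half_width(coeffs, q=0.3, p=0.75)   # 75% = n_c/(n_c+1) DOB, n_c = 3
```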

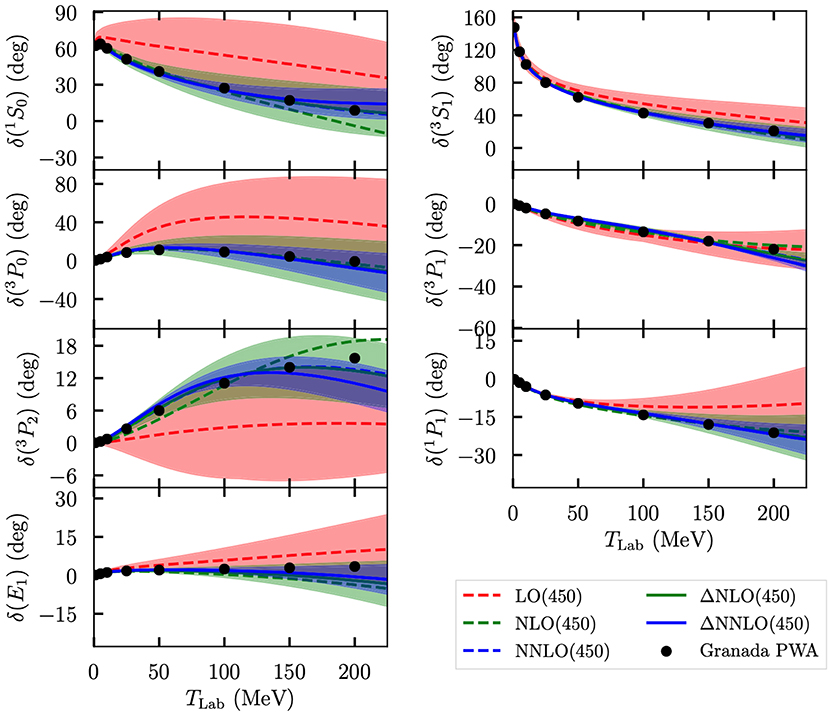

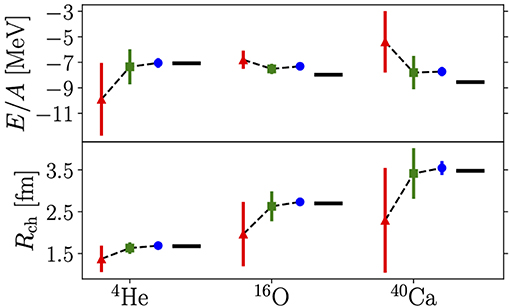

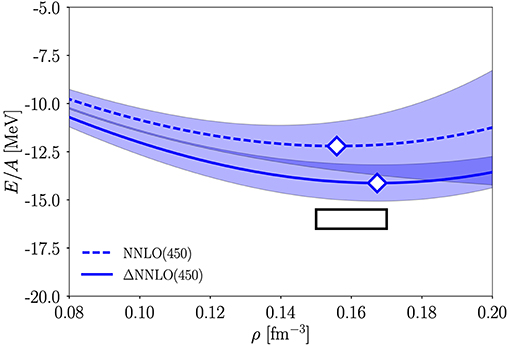

The procedure for estimating the EFT truncation error, i.e., part of the model discrepancy, requires an estimate of the high-energy (breakdown) scale Λb of the underlying EFT. For the models discussed here, the results are based on chiral EFT, for which the naive estimate of Λb is roughly MN ~ 1 GeV. This was analyzed more carefully for semi-local chiral potentials [45, 120] in reference [121]. The posterior pdf for Λb indicated that a more probable value is Λb ≈ 500 MeV. This value was also used for the breakdown scale in the truncation error analysis of nucleon-nucleon scattering phase shifts from the Δ-full models at LO, NLO, and NNLO in reference [110]. The results are presented in Figure 3. They also strengthen the earlier observation that the inclusion of the Δ degree of freedom tends to improve model descriptions of nuclear systems. This is more clearly seen when employing the same potentials to make model predictions for the ground-state energies and charge radii of selected finite nuclei (see Figure 4) and the energy per nucleon in symmetric nuclear matter (see Figure 5).

Figure 3. Neutron-proton scattering phase shifts computed with models based on Δ-full and Δ-less chiral interaction potentials. The bands indicate the limits of the expected DOB intervals at each chiral order ν. The black dots represent the values from the Granada partial wave analysis [84].

Figure 4. Ground-state energy (negative of binding energy) per nucleon and charge radii for selected nuclei computed with coupled cluster theory and the Δ-full potential ΔNNLO(450). For each nucleus, from left to right as follows: LO (red triangle), NLO (green square), and NNLO (blue circle). The black horizontal bars are data. Vertical bars estimate uncertainties from the order-by-order EFT truncation errors.

Figure 5. Coupled-cluster based model prediction of the energy per nucleon (in MeV) in symmetric nuclear matter using an NNLO potential with (solid line) and without (dashed line) the Δ isobar. Both interactions employ a momentum regulator cutoff Λ = 450 MeV. The shaded areas indicate the estimated EFT truncation errors. The diamonds mark the saturation point, and the black rectangle indicates the region E/A = −16 ± 0.5 MeV and ρ = 0.16 ± 0.01 fm⁻³.

The model predictions indicate that, on average, the Δ-full models agree better with experimental energies and radii. The uncertainty bands for the predictions were extracted under the additional assumption that the relevant soft scales for finite and infinite nuclear systems are given by the pion mass and the Fermi momentum, respectively. Although these are rough estimates of the soft scales, it is important to note that the truncation error in Equation (20) only holds up to factors of order unity. A comparison of theoretical error estimates based on different statistical methods provides additional validation. The Bayesian method for estimating the truncation error and the model errors estimated using the modified χ2 function in Equation (10) are quite different in nature. Nevertheless, the theoretical errors in nucleon-nucleon cross sections at high scattering energies obtained with these two methods agree very well [80, 119]. The link between the two approaches for estimating the model uncertainties is discussed further in reference [117]. A complete Bayesian parameter estimation including model discrepancy will hopefully reveal more details about the structure of the chiral EFT error.
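The soft-scale choices quoted above translate into rough expansion parameters. As a back-of-the-envelope sketch, assuming Λb ≈ 500 MeV (the value quoted earlier), an approximate isospin-averaged pion mass of 138 MeV for finite nuclei, and the Fermi momentum of symmetric nuclear matter, k_F = (3π²ρ/2)^(1/3), at ρ = 0.16 fm⁻³:

```python
import math

HBARC = 197.327     # MeV fm
LAMBDA_B = 500.0    # MeV, breakdown scale quoted in the text
M_PI = 138.0        # MeV, approximate pion mass (assumed soft scale for finite nuclei)


def fermi_momentum(rho):
    """k_F in fm^-1 for symmetric nuclear matter, from rho = 2 k_F^3 / (3 pi^2)."""
    return (1.5 * math.pi ** 2 * rho) ** (1.0 / 3.0)


q_finite = M_PI / LAMBDA_B                             # soft scale: pion mass
q_matter = fermi_momentum(0.16) * HBARC / LAMBDA_B     # soft scale: Fermi momentum
```

This gives Q ≈ 0.28 for finite nuclei and Q ≈ 0.53 at saturation density (k_F ≈ 1.33 fm⁻¹), so at a given order the estimated truncation error, which scales as Q^(k+1), is noticeably larger for nuclear matter.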

At the moment, most model discrepancies in ab initio modeling based on chiral EFT are extracted a posteriori using predictions from calibrated models. This relies on the expectation that the predictions follow an EFT pattern, which of course remains to be validated on theoretical grounds. However, under the assumption that the interaction potential actually gives rise to an EFT pattern for the observable, we can build on Equation (4) to include a discrepancy term in the likelihood for calibrating ab initio models. See reference [122] for a discussion of correlated truncation errors in nucleon-nucleon scattering observables along this line of thought, where it is also observed that the expansion parameters behave largely as expected.
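Building the truncation error into the likelihood amounts to adding a theory covariance to the experimental one. A sketch under stated assumptions: the truncation error of each datum has a standard deviation of the form X_ref c̄ Q^(k+1) (values made up below), and errors at different control values x are correlated through a squared-exponential kernel with a hypothetical length scale ℓ. For ℓ → 0 the correlations vanish and the result reduces to the quadrature-added χ2 of Equation (10).

```python
import numpy as np


def truncation_covariance(xs, sigma_th, length):
    """Theory covariance sigma_i sigma_j exp(-(x_i - x_j)^2 / (2 length^2)).

    sigma_th: assumed per-datum truncation errors, e.g. X_ref * cbar * Q**(k+1).
    length:   hypothetical correlation length in the control variable x.
    """
    x = np.asarray(xs, dtype=float)
    s = np.asarray(sigma_th, dtype=float)
    corr = np.exp(-0.5 * ((x[:, None] - x[None, :]) / length) ** 2)
    return np.outer(s, s) * corr


def correlated_chi2(residuals, sigma_exp, cov_th):
    """r^T (Sigma_exp + Sigma_th)^{-1} r, via a linear solve instead of an inverse."""
    r = np.asarray(residuals, dtype=float)
    cov = np.diag(np.asarray(sigma_exp, dtype=float) ** 2) + cov_th
    return float(r @ np.linalg.solve(cov, r))


# Made-up example: three data points at control values x.
xs = [0.0, 1.0, 2.0]
residuals = [0.3, -0.2, 0.5]
sigma_exp = [0.1, 0.2, 0.1]
sigma_th = [0.2, 0.2, 0.4]
chi2_uncorrelated = correlated_chi2(residuals, sigma_exp, truncation_covariance(xs, sigma_th, 1e-6))
chi2_correlated = correlated_chi2(residuals, sigma_exp, truncation_covariance(xs, sigma_th, 1.0))
```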

Statistical representation of a sound model discrepancy term is certainly challenging. Still, the assumption of zero model discrepancy is a rather extreme position. Almost any reasonable guess is better than nothing in order to avoid biased estimates of the model parameters and to minimize over-fitting.

The importance of acknowledging model discrepancies is neatly summarized in the famous quote of George E. P. Box: “Essentially, all models are wrong, but some are useful” [123], with the additional comment in reference [93]: “But a model that is wrong can only be useful if we acknowledge the fact that it is wrong.”

Fortunately, most of the ab initio models of atomic nuclei are built on methods from EFT, which by construction promises extra information about the expected impact of the neglected or missing physics in theoretical predictions. Bayesian inference is a natural choice for accounting for model discrepancies and prior knowledge, especially when the priors have a physical basis. Indeed, extracting the posterior pdf for the model parameters via Bayesian inference methods makes it possible to abandon the notion of having a single parameterization of a particular interaction potential and instead build models based on a continuous pdf of parameters. Developments along these lines are already taking place in e.g., density functional theory for atomic nuclei [124].

At the moment, most theoretical analyses of atomic nuclei proceed in the following fashion. Given a potential with parameters $\vec{\alpha}_\star$ optimized to reproduce some set of calibration data D, we set up a model M to analyze an experimental result corresponding to the control setting $\vec{x}$, i.e., we evaluate $M(\vec{\alpha}_\star; \vec{x})$. In a few cases we propagate uncertainties originating from the measurement errors in the data vector D, and sometimes we estimate the EFT truncation error using a series of models at different chiral orders. This takes a lot of effort. Indeed, ab initio nuclear models are represented by complex computer codes, implemented through years of dedicated work by several people, and are computationally expensive to evaluate. On top of that, understanding the underlying nuclear interaction is, arguably, one of the most difficult problems in all of physics. Still, we would like to answer questions like: How much should we trust a model prediction? Is the model M over-fitted? Why does it not agree with observed data, and how do we understand this discrepancy?

We should strive to use Bayesian methods for calibrating our models to obtain posterior pdfs for the parameters. Subsequent evaluations of an observable y, corresponding to setting the model control variable to $\vec{x}$, should be marginalized over the parameter posterior pdf to produce a posterior predictive pdf

$$P(y \mid \vec{x}, D, M, I) = \int \mathrm{d}\vec{\alpha}\; P(y \mid \vec{\alpha}, \vec{x}, M, I)\, P(\vec{\alpha} \mid D, M, I)$$

This quantity best reflects our state of knowledge and is meaningful to compare with data. Various marginalizations with respect to subsets of the parameters can provide better insights into the qualities of the ab initio model. Bayesian inference also allows us to compare different models via the computation of Bayes factors [125], which in turn enables us to address questions like: which PC in chiral EFT has the strongest support from data? It is also theoretically straightforward to compute the posterior predictive pdf averaged over a set of different models [126], each weighted by its probability of being true within the finite space spanned by the models considered, given data D.
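In practice the posterior predictive integral is usually approximated by averaging over posterior samples, for instance from Markov chain Monte Carlo. A sketch with assumed inputs: a hypothetical linear model M(α; x) = α0 + α1·x, a Gaussian parameter posterior standing in for sampler output, and Gaussian measurement noise. Each posterior sample is pushed through the model and noise is added, which samples the integrand of the posterior predictive pdf.

```python
import numpy as np


def posterior_predictive_samples(alpha_samples, x_new, noise_sigma, rng):
    """Samples of y ~ P(y | x_new, D): push posterior samples through the model, add noise.

    Hypothetical model M(alpha; x) = alpha_0 + alpha_1 * x with Gaussian noise.
    """
    a = np.asarray(alpha_samples, dtype=float)
    model = a[:, 0] + a[:, 1] * x_new
    return model + rng.normal(0.0, noise_sigma, size=model.shape)


rng = np.random.default_rng(0)
# Stand-in for MCMC output: samples from an assumed Gaussian parameter posterior.
post_mean = np.array([1.0, 2.0])
post_cov = np.array([[1.0, -1.0], [-1.0, 2.0]])
alpha_samples = rng.multivariate_normal(post_mean, post_cov, size=200_000)
ys = posterior_predictive_samples(alpha_samples, x_new=2.0, noise_sigma=0.5, rng=rng)
```

For this linear-Gaussian toy the predictive mean should approach 1 + 2·2 = 5 and the variance gᵀCg + σ² = 5 + 0.25 = 5.25 with g = (1, 2); for a non-linear ab initio model no such closed form exists, which is where the sampling approach pays off.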

There are several challenges connected with the outlook presented above: working out the theoretical underpinnings of chiral EFT, specifying prior information, formulating model discrepancy terms, and performing challenging Markov chain Monte Carlo evaluations of complicated posterior pdfs. From a practical point of view, handling the computational complexity is the most difficult one. Indeed, evaluating models of medium- and heavy-mass atomic nuclei typically requires vast high-performance computing resources. This clearly puts the feasibility of the Bayesian scenario presented above into question. Without any unforeseen disruptive computer technologies or dramatic algorithmic advances, it will be necessary to employ, where possible, fast emulators that accurately mimic the response of the original ab initio models. This is where we can draw on advances in machine learning. Possibly useful methods are, e.g., Gaussian process regression and artificial neural networks. Both approaches can be challenging since they introduce hyperparameters that require additional optimization. Although it can be difficult to assess how well such methods will work, there exist several examples of useful surrogate interpolation and extrapolation in nuclear modeling (see e.g., references [122, 124, 127–131]). A new method called eigenvector continuation [132] turns out to be a promising tool for accurate extrapolation and fast emulation of nuclear properties [133]. In a recent paper [134], this method proved capable of emulating (with a root-mean-square error of 1%) more than one million solutions of an ab initio model for the ground-state energy and radius of 16O in one hour on a standard laptop. An equivalent set of exact ab initio coupled-cluster computations would require 20 years.
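The core idea of eigenvector continuation can be sketched in a few lines under simplifying assumptions: a hypothetical Hamiltonian H(c) = H0 + c·H1 that is linear in a single parameter c, random real-symmetric matrices standing in for an ab initio Hamiltonian, and exact diagonalization at a handful of training values. Ground-state vectors at the training points span a small subspace; projecting H(c) onto it and solving the resulting small generalized eigenvalue problem emulates the ground-state energy at new values of c. This is a toy illustration, not the implementation of references [132–134].

```python
import numpy as np


def ground_state(h):
    """Lowest eigenvalue and eigenvector of a real-symmetric matrix."""
    w, v = np.linalg.eigh(h)
    return w[0], v[:, 0]


def build_ec_emulator(h0, h1, train_cs, rcond=1e-10):
    """Eigenvector-continuation emulator for the ground-state energy of h0 + c * h1."""
    # Snapshots: exact ground states at the training values of c.
    basis = np.column_stack([ground_state(h0 + c * h1)[1] for c in train_cs])
    # Project the Hamiltonian pieces once; each emulation is then tiny.
    h0_sub = basis.T @ h0 @ basis
    h1_sub = basis.T @ h1 @ basis
    norm = basis.T @ basis                    # overlap (norm) matrix of the snapshots
    # Solve the generalized eigenproblem via N^(-1/2), dropping near-dependent directions.
    nw, nv = np.linalg.eigh(norm)
    keep = nw > rcond * nw.max()
    t = nv[:, keep] / np.sqrt(nw[keep])       # t.T @ norm @ t is the identity

    def emulate(c):
        return np.linalg.eigvalsh(t.T @ (h0_sub + c * h1_sub) @ t)[0]

    return emulate


# Toy stand-in for an ab initio Hamiltonian: random real-symmetric matrices.
rng = np.random.default_rng(42)
dim = 200
a = rng.normal(size=(dim, dim))
b = rng.normal(size=(dim, dim))
h0 = 0.5 * (a + a.T)
h1 = 0.05 * (b + b.T)
emulate = build_ec_emulator(h0, h1, train_cs=[-1.0, 0.0, 1.0, 2.0])
```

At a training value the emulator reproduces the exact ground-state energy, and elsewhere it gives a variational upper bound, because it is a Rayleigh-Ritz projection onto the snapshot subspace.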

The author has made a substantial contribution to the work, and approved it for publication.

This work was supported by the European Research Council (ERC) under the European Union's Horizon 2020 research and innovation programme (Grant agreement No. 758027).

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

I would like to thank all my collaborators for fruitful discussions and for sharing their insights during our joint work on the topics presented here.

1. ^A recent compilation of scattering data that is typically employed for this is provided in reference [84].

2. ^The χ2 function employed for nucleon-nucleon scattering data is slightly more involved to encompass partially correlated measurements (see e.g., [87]).

3. ^The wording (prior knowledge vs. prior expectation, or even prior belief) signals the level of subjective certainty or source for the prior.

1. Dickhoff WH, Barbieri C. Self-consistent Green's function method for nuclei and nuclear matter. Prog Part Nucl Phys. (2004) 52:377–496. doi: 10.1016/j.ppnp.2004.02.038

2. Lee D. Lattice simulations for few- and many-body systems. Prog Part Nucl Phys. (2009) 63:117–54. doi: 10.1016/j.ppnp.2008.12.001

3. Bogner SK, Furnstahl RJ, Schwenk A. From low-momentum interactions to nuclear structure. Prog Part Nucl Phys. (2010) 65:94–147. doi: 10.1016/j.ppnp.2010.03.001

4. Barrett BR, Navrátil P, Vary JP. Ab initio no core shell model. Prog Part Nucl Phys. (2013) 69:131–81. doi: 10.1016/j.ppnp.2012.10.003

5. Carbone A, Cipollone A, Barbieri C, Rios A, Polls A. Self-consistent Green's functions formalism with three-body interactions. Phys Rev C. (2013) 88:054326. doi: 10.1103/PhysRevC.88.054326

6. Hagen G, Papenbrock T, Hjorth-Jensen M, Dean DJ. Coupled-cluster computations of atomic nuclei. Rep Prog Phys. (2014) 77:096302. doi: 10.1088/0034-4885/77/9/096302

7. Hergert H, Bogner SK, Morris TD, Binder S, Calci A, Langhammer J, et al. Ab initio multireference in-medium similarity renormalization group calculations of even calcium and nickel isotopes. Phys Rev C. (2014) 90:041302. doi: 10.1103/PhysRevC.90.041302

8. Carlson J, Gandolfi S, Pederiva F, Pieper SC, Schiavilla R, Schmidt KE, et al. Quantum Monte Carlo methods for nuclear physics. Rev Mod Phys. (2015) 87:1067–118. doi: 10.1103/RevModPhys.87.1067

9. Barnea N, Leidemann W, Orlandini G. Ground state wave functions in the hyperspherical formalism for nuclei with A>4. Nucl Phys A. (1999) 650:427–42. doi: 10.1016/S0375-9474(99)00113-X

10. Glöckle W, Witala H, Hüber D, Kamada H, Golak J. The three-nucleon continuum: achievements, challenges and applications. Phys Rep. (1996) 274:107–285. doi: 10.1016/0370-1573(95)00085-2

11. Wiringa RB, Stoks VGJ, Schiavilla R. Accurate nucleon-nucleon potential with charge-independence breaking. Phys Rev C. (1995) 51:38–51.

12. Machleidt R. High-precision, charge-dependent Bonn nucleon-nucleon potential. Phys Rev C. (2001) 63:024001. doi: 10.1103/PhysRevC.63.024001

14. Epelbaum E, Hammer HW, Meißner UG. Modern theory of nuclear forces. Rev Mod Phys. (2009) 81:1773–825. doi: 10.1103/RevModPhys.81.1773

15. Machleidt R, Entem DR. Chiral effective field theory and nuclear forces. Phys Rep. (2011) 503:1–75. doi: 10.1016/j.physrep.2011.02.001

16. Machleidt R. 2. In: The Meson Theory of Nuclear Forces and Nuclear Structure. Boston, MA: Springer US (1989). p. 189–376. doi: 10.1007/978-1-4613-9907-0_2

17. Elhatisari S, Lee D, Rupak G, Epelbaum E, Krebs H, Lähde TA, et al. Ab initio alpha–alpha scattering. Nature. (2015) 528:111–4. doi: 10.1038/nature16067

18. Rosenbusch M, Ascher P, Atanasov D, Barbieri C, Beck D, Blaum K, et al. Probing the N = 32 shell closure below the magic proton number Z = 20: mass measurements of the exotic isotopes 52, 53K. Phys Rev Lett. (2015) 114:202501. doi: 10.1103/PhysRevLett.114.202501

19. Lapoux V, Somà V, Barbieri C, Hergert H, Holt JD, Stroberg SR. Radii and binding energies in oxygen isotopes: a challenge for nuclear forces. Phys Rev Lett. (2016) 117:052501. doi: 10.1103/PhysRevLett.117.052501

20. Lu BN, Li N, Elhatisari S, Lee D, Epelbaum E, Meißner UG. Essential elements for nuclear binding. Phys Lett B. (2019) 797:134863. doi: 10.1016/j.physletb.2019.134863

21. Taniuchi R, Santamaria C, Doornenbal P, Obertelli A, Yoneda K, Authelet G, et al. 78Ni revealed as a doubly magic stronghold against nuclear deformation. Nature. (2019) 569:53–8. doi: 10.1038/s41586-019-1155-x

22. Gysbers P, Hagen G, Holt JD, Jansen GR, Morris TD, Navrátil P, et al. Discrepancy between experimental and theoretical β-decay rates resolved from first principles. Nat Phys. (2019) 15:428–31. doi: 10.1038/s41567-019-0450-7

23. Somà V, Navrátil P, Raimondi F, Barbieri C, Duguet T. Novel chiral Hamiltonian and observables in light and medium-mass nuclei. Phys Rev C. (2020) 101:014318. doi: 10.1103/PhysRevC.101.014318

24. Binder S, Langhammer J, Calci A, Roth R. Ab initio path to heavy nuclei. Phys Lett B. (2014) 736:119–23. doi: 10.1016/j.physletb.2014.07.010

25. Ekström A, Jansen GR, Wendt KA, Hagen G, Papenbrock T, Carlsson BD, et al. Accurate nuclear radii and binding energies from a chiral interaction. Phys Rev C. (2015) 91:051301. doi: 10.1103/PhysRevC.91.051301

26. Hagen G, Ekström A, Forssén C, Jansen GR, Nazarewicz W, Papenbrock T, et al. Neutron and weak-charge distributions of the 48Ca nucleus. Nat Phys. (2016) 12:186. doi: 10.1038/nphys3529

27. Garcia Ruiz RF, Bissell ML, Blaum K, Ekström A, Frömmgen N, Hagen G, et al. Unexpectedly large charge radii of neutron-rich calcium isotopes. Nat Phys. (2016) 12:594–8. doi: 10.1038/nphys3645

28. Duguet T, Somà V, Lecluse S, Barbieri C, Navrátil P. Ab initio calculation of the potential bubble nucleus 34Si. Phys Rev C. (2017) 95:034319. doi: 10.1103/PhysRevC.95.034319

29. Nogga A, Bogner SK, Schwenk A. Low-momentum interaction in few-nucleon systems. Phys Rev C. (2004) 70:061002. doi: 10.1103/PhysRevC.70.061002

30. Hebeler K, Bogner SK, Furnstahl RJ, Nogga A, Schwenk A. Improved nuclear matter calculations from chiral low-momentum interactions. Phys Rev C. (2011) 83:031301. doi: 10.1103/PhysRevC.83.031301

31. Hagen G, Jansen GR, Papenbrock T. Structure of 78Ni from first-principles computations. Phys Rev Lett. (2016) 117:172501. doi: 10.1103/PhysRevLett.117.172501

32. Morris TD, Simonis J, Stroberg SR, Stumpf C, Hagen G, Holt JD, et al. Structure of the lightest tin isotopes. Phys Rev Lett. (2018) 120:152503. doi: 10.1103/PhysRevLett.120.152503

33. Simonis J, Stroberg SR, Hebeler K, Holt JD, Schwenk A. Saturation with chiral interactions and consequences for finite nuclei. Phys Rev C. (2017) 96:014303. doi: 10.1103/PhysRevC.96.014303

34. Liu HN, Obertelli A, Doornenbal P, Bertulani CA, Hagen G, Holt JD, et al. How robust is the N = 34 subshell closure? First spectroscopy of ⁵²Ar. Phys Rev Lett. (2019) 122:072502. doi: 10.1103/PhysRevLett.122.072502

35. Holt JD, Stroberg SR, Schwenk A, Simonis J. Ab initio limits of atomic nuclei. arXiv [Preprint] arXiv:1905.10475 (2019).

36. Ireland DG, Nazarewicz W. Enhancing the interaction between nuclear experiment and theory through information and statistics. J Phys G Nucl Part Phys. (2015) 42:030301. doi: 10.1088/0954-3899/42/3/030301

37. Hartmann S. Effective field theories, reductionism and scientific explanation. Stud Hist Philos Sci B Stud Hist Philos Mod Phys. (2001) 32:267–304. doi: 10.1016/S1355-2198(01)00005-3

38. Barnea N, Contessi L, Gazit D, Pederiva F, van Kolck U. Effective field theory for lattice nuclei. Phys Rev Lett. (2015) 114:052501. doi: 10.1103/PhysRevLett.114.052501

39. Chang CC, Nicholson AN, Rinaldi E, Berkowitz E, Garron N, Brantley DA, et al. A per-cent-level determination of the nucleon axial coupling from quantum chromodynamics. Nature. (2018) 558:91–4. doi: 10.1038/s41586-018-0161-8

42. Bedaque PF, van Kolck U. Effective field theory for few-nucleon systems. Annu Rev Nucl Part Sci. (2002) 52:339–96. doi: 10.1146/annurev.nucl.52.050102.090637

43. Papenbrock T. Effective theory for deformed nuclei. Nucl Phys A. (2011) 852:36–60. doi: 10.1016/j.nuclphysa.2010.12.013

45. Epelbaum E, Krebs H, Meißner UG. Precision nucleon-nucleon potential at fifth order in the chiral expansion. Phys Rev Lett. (2015) 115:122301. doi: 10.1103/PhysRevLett.115.122301

46. Epelbaum E. Four-nucleon force in chiral effective field theory. Phys Lett B. (2006) 639:456–61. doi: 10.1016/j.physletb.2006.06.046

47. Krebs H, Gasparyan A, Epelbaum E. Chiral three-nucleon force at N4LO: longest-range contributions. Phys Rev C. (2012) 85:054006. doi: 10.1103/PhysRevC.85.054006

48. Krebs H, Gasparyan A, Epelbaum E. Chiral three-nucleon force at N4LO. II. Intermediate-range contributions. Phys Rev C. (2013) 87:054007. doi: 10.1103/PhysRevC.87.054007

49. Entem DR, Kaiser N, Machleidt R, Nosyk Y. Peripheral nucleon-nucleon scattering at fifth order of chiral perturbation theory. Phys Rev C. (2015) 91:014002. doi: 10.1103/PhysRevC.91.014002

50. Entem DR, Kaiser N, Machleidt R, Nosyk Y. Dominant contributions to the nucleon-nucleon interaction at sixth order of chiral perturbation theory. Phys Rev C. (2015) 92:064001. doi: 10.1103/PhysRevC.92.064001

52. Epelbaum E, Nogga A, Glöckle W, Kamada H, Meißner UG, Witała H. Three-nucleon forces from chiral effective field theory. Phys Rev C. (2002) 66:064001. doi: 10.1103/PhysRevC.66.064001

53. Bernard V, Epelbaum E, Krebs H, Meißner UG. Subleading contributions to the chiral three-nucleon force: long-range terms. Phys Rev C. (2008) 77:064004. doi: 10.1103/PhysRevC.77.064004

54. Bernard V, Epelbaum E, Krebs H, Meißner UG. Subleading contributions to the chiral three-nucleon force. II. Short-range terms and relativistic corrections. Phys Rev C. (2011) 84:054001. doi: 10.1103/PhysRevC.84.054001

55. Girlanda L, Kievsky A, Viviani M. Subleading contributions to the three-nucleon contact interaction. Phys Rev C. (2011) 84:014001. doi: 10.1103/PhysRevC.84.014001

56. Park TS, Min DP, Rho M. Chiral dynamics and heavy-fermion formalism in nuclei: exchange axial currents. Phys Rep. (1993) 233:341–95.

57. Park TS, Min DP, Rho M. Chiral Lagrangian approach to exchange vector currents in nuclei. Nucl Phys A. (1996) 596:515–52.

58. Krebs H, Epelbaum E, Meißner UG. Nuclear axial current operators to fourth order in chiral effective field theory. Ann Phys. (2017) 378:317–95. doi: 10.1016/j.aop.2017.01.021

59. Baroni A, Girlanda L, Pastore S, Schiavilla R, Viviani M. Nuclear axial currents in chiral effective field theory. Phys Rev C. (2016) 93:015501. doi: 10.1103/PhysRevC.93.015501

60. Kölling S, Epelbaum E, Krebs H, Meißner UG. Two-nucleon electromagnetic current in chiral effective field theory: one-pion exchange and short-range contributions. Phys Rev C. (2011) 84:054008. doi: 10.1103/PhysRevC.84.054008

61. Pastore S, Girlanda L, Schiavilla R, Viviani M, Wiringa RB. Electromagnetic currents and magnetic moments in chiral effective field theory (χEFT). Phys Rev C. (2009) 80:034004. doi: 10.1103/PhysRevC.80.034004

62. Piarulli M, Girlanda L, Marcucci LE, Pastore S, Schiavilla R, Viviani M. Electromagnetic structure of A = 2 and 3 nuclei in chiral effective field theory. Phys Rev C. (2013) 87:014006. doi: 10.1103/PhysRevC.87.014006

63. Hoferichter M, Ruiz de Elvira J, Kubis B, Meißner UG. Roy-Steiner-equation analysis of pion-nucleon scattering. Phys Rep. (2016) 625:1–88. doi: 10.1016/j.physrep.2016.02.002

64. Lepage GP. How to renormalize the Schrödinger equation. arXiv [Preprint] arXiv:nucl-th/9706029 (1997).

65. Griesshammer HW. Assessing Theory Uncertainties in EFT Power Countings From Residual Cutoff Dependence. Vol. 253 of The 8th International Workshop on Chiral Dynamics, CD2015. Proceedings of Science (Pisa) (2015). doi: 10.22323/1.253.0104

66. Nogga A, Timmermans RGE, van Kolck U. Renormalization of one-pion exchange and power counting. Phys Rev C. (2005) 72:054006. doi: 10.1103/PhysRevC.72.054006

67. Pavón Valderrama M, Ruiz Arriola E. Renormalization of the NN interaction with a chiral two-pion-exchange potential: central phases and the deuteron. Phys Rev C. (2006) 74:054001. doi: 10.1103/PhysRevC.74.054001

68. Long B, Yang CJ. Renormalizing chiral nuclear forces: triplet channels. Phys Rev C. (2012) 85:034002. doi: 10.1103/PhysRevC.85.034002

69. Epelbaum E, Meißner UG. On the renormalization of the one-pion exchange potential and the consistency of Weinberg's power counting. Few Body Syst. (2013) 54:2175–90. doi: 10.1007/s00601-012-0492-1

70. Dyhdalo A, Furnstahl RJ, Hebeler K, Tews I. Regulator artifacts in uniform matter for chiral interactions. Phys Rev C. (2016) 94:034001. doi: 10.1103/PhysRevC.94.034001

71. Sánchez Sánchez M, Yang CJ, Long B, van Kolck U. Two-nucleon ¹S₀ amplitude zero in chiral effective field theory. Phys Rev C. (2018) 97:024001. doi: 10.1103/PhysRevC.97.024001

72. Epelbaum E, Gasparyan AM, Gegelia J, Meißner UG. How (not) to renormalize integral equations with singular potentials in effective field theory. Eur Phys J A. (2018) 54:186. doi: 10.1140/epja/i2018-12632-1

73. Yang CJ. Do we know how to count powers in pionless and pionful effective field theory? arXiv [Preprint] arXiv:1905.12510 (2019).

74. Flambaum VV, Wiringa RB. Dependence of nuclear binding on hadronic mass variation. Phys Rev C. (2007) 76:054002. doi: 10.1103/PhysRevC.76.054002

75. Epelbaum E, Krebs H, Lähde TA, Lee D, Meißner UG. Viability of carbon-based life as a function of the light quark mass. Phys Rev Lett. (2013) 110:112502. doi: 10.1103/PhysRevLett.110.112502

76. Kamada H, Nogga A, Glöckle W, Hiyama E, Kamimura M, Varga K, et al. Benchmark test calculation of a four-nucleon bound state. Phys Rev C. (2001) 64:044001. doi: 10.1103/PhysRevC.64.044001

77. Hebeler K, Holt JD, Menéndez J, Schwenk A. Nuclear forces and their impact on neutron-rich nuclei and neutron-rich matter. Annu Rev Nucl Part Sci. (2015) 65:457–84. doi: 10.1146/annurev-nucl-102313-025446

78. Hergert H. In-medium similarity renormalization group for closed and open-shell nuclei. Phys Scr. (2016) 92:023002. doi: 10.1088/1402-4896/92/2/023002

79. Entem DR, Machleidt R. Accurate charge-dependent nucleon-nucleon potential at fourth order of chiral perturbation theory. Phys Rev C. (2003) 68:041001. doi: 10.1103/PhysRevC.68.041001

80. Carlsson BD, Ekström A, Forssén C, Strömberg DF, Jansen GR, Lilja O, et al. Uncertainty analysis and order-by-order optimization of chiral nuclear interactions. Phys Rev X. (2016) 6:011019. doi: 10.1103/PhysRevX.6.011019

81. Piarulli M, Girlanda L, Schiavilla R, Navarro Pérez R, Amaro JE, Ruiz Arriola E. Minimally nonlocal nucleon-nucleon potentials with chiral two-pion exchange including Δ resonances. Phys Rev C. (2015) 91:024003. doi: 10.1103/PhysRevC.91.024003

82. Reinert P, Krebs H, Epelbaum E. Semilocal momentum-space regularized chiral two-nucleon potentials up to fifth order. Eur Phys J A. (2018) 54:86. doi: 10.1140/epja/i2018-12516-4

83. Stoks VGJ, Klomp RAM, Rentmeester MCM, de Swart JJ. Partial-wave analysis of all nucleon-nucleon scattering data below 350 MeV. Phys Rev C. (1993) 48:792–815. doi: 10.1103/PhysRevC.48.792

84. Navarro Pérez R, Amaro JE, Ruiz Arriola E. Coarse-grained potential analysis of neutron-proton and proton-proton scattering below the pion production threshold. Phys Rev C. (2013) 88:064002. doi: 10.1103/PhysRevC.88.064002

85. Wild SM. Solving derivative-free nonlinear least squares problems with POUNDERS. In: Terlaky T, Anjos MF, Ahmed S, editors. Advances and Trends in Optimization With Engineering Applications. SIAM (2017). p. 529–40.

86. Ekström A, Baardsen G, Forssén C, Hagen G, Hjorth-Jensen M, Jansen GR, et al. Optimized chiral nucleon-nucleon interaction at next-to-next-to-leading order. Phys Rev Lett. (2013) 110:192502. doi: 10.1103/PhysRevLett.110.192502

87. Bergervoet JR, van Campen PC, van der Sanden WA, de Swart JJ. Phase shift analysis of 0–30 MeV pp scattering data. Phys Rev C. (1988) 38:15–50.

89. Gazit D, Quaglioni S, Navrátil P. Three-nucleon low-energy constants from the consistency of interactions and currents in chiral effective field theory. Phys Rev Lett. (2009) 103:102502. doi: 10.1103/PhysRevLett.103.102502

90. Klos P, Carbone A, Hebeler K, Menéndez J, Schwenk A. Uncertainties in constraining low-energy constants from ³H β decay. Eur Phys J A. (2017) 53:168. doi: 10.1140/epja/i2017-12357-7

91. Epelbaum E, Golak J, Hebeler K, Hüther T, Kamada H, Krebs H, et al. Few- and many-nucleon systems with semilocal coordinate-space regularized chiral two- and three-body forces. Phys Rev C. (2019) 99:024313. doi: 10.1103/PhysRevC.99.024313

92. Girlanda L, Kievsky A, Viviani M, Marcucci LE. Short-range three-nucleon interaction from A = 3 data and its hierarchical structure. Phys Rev C. (2019) 99:054003. doi: 10.1103/PhysRevC.99.054003

93. Brynjarsdóttir J, O'Hagan A. Learning about physical parameters: the importance of model discrepancy. Inv Probl. (2014) 30:114007. doi: 10.1088/0266-5611/30/11/114007

94. Andrae R, Schulze-Hartung T, Melchior P. Dos and don'ts of reduced Chi-squared. arXiv [Preprint] arXiv:1012.3754 (2010).

95. Furnstahl RJ, Phillips DR, Wesolowski S. A recipe for EFT uncertainty quantification in nuclear physics. J Phys G Nucl Part Phys. (2015) 42:034028. doi: 10.1088/0954-3899/42/3/034028

96. Dobaczewski J, Nazarewicz W, Reinhard PG. Error estimates of theoretical models: a guide. J Phys G Nucl Part Phys. (2014) 41:074001. doi: 10.1088/0954-3899/41/7/074001

97. Ekström A, Carlsson BD, Wendt KA, Forssén C, Hjorth-Jensen M, Machleidt R, et al. Statistical uncertainties of a chiral interaction at next-to-next-to leading order. J Phys G. (2015) 42:034003. doi: 10.1088/0954-3899/42/3/034003

98. Navarro Pérez R, Amaro JE, Ruiz Arriola E, Maris P, Vary JP. Statistical error propagation in ab initio no-core full configuration calculations of light nuclei. Phys Rev C. (2015) 92:064003. doi: 10.1103/PhysRevC.92.064003

99. Navarro Pérez R, Amaro JE, Ruiz Arriola E. Statistical error analysis for phenomenological nucleon-nucleon potentials. Phys Rev C. (2014) 89:064006. doi: 10.1103/PhysRevC.89.064006

100. Acharya B, Carlsson BD, Ekström A, Forssén C, Platter L. Uncertainty quantification for proton-proton fusion in chiral effective field theory. Phys Lett B. (2016) 760:584–9. doi: 10.1016/j.physletb.2016.07.032

101. Acharya B, Ekström A, Platter L. Effective-field-theory predictions of the muon-deuteron capture rate. Phys Rev C. (2018) 98:065506. doi: 10.1103/PhysRevC.98.065506

102. Hernandez OJ, Ekström A, Dinur NN, Ji C, Bacca S, Barnea N. The deuteron-radius puzzle is alive: a new analysis of nuclear structure uncertainties. Phys Lett B. (2018) 778:377–83. doi: 10.1016/j.physletb.2018.01.043

103. Brooks S, Gelman A, Jones G, Meng XL. Handbook of Markov Chain Monte Carlo. Boca Raton, FL; London; New York, NY: CRC Press (2011).

104. Carlsson BD. Quantifying statistical uncertainties in ab initio nuclear physics using Lagrange multipliers. Phys Rev C. (2017) 95:034002. doi: 10.1103/PhysRevC.95.034002

105. Pieper SC, Pandharipande VR, Wiringa RB, Carlson J. Realistic models of pion-exchange three-nucleon interactions. Phys Rev C. (2001) 64:014001. doi: 10.1103/PhysRevC.64.014001

106. Rotureau J, Danielewicz P, Hagen G, Jansen GR, Nunes FM. Microscopic optical potentials for calcium isotopes. Phys Rev C. (2018) 98:044625. doi: 10.1103/PhysRevC.98.044625

107. Idini A, Barbieri C, Navrátil P. Ab initio optical potentials and nucleon scattering on medium mass nuclei. Phys Rev Lett. (2019) 123:092501. doi: 10.1103/PhysRevLett.123.092501

108. Elhatisari S, Li N, Rokash A, Alarcón JM, Du D, Klein N, et al. Nuclear binding near a quantum phase transition. Phys Rev Lett. (2016) 117:132501. doi: 10.1103/PhysRevLett.117.132501

109. Hagen G, Hjorth-Jensen M, Jansen GR, Papenbrock T. Emergent properties of nuclei from ab initio coupled-cluster calculations. Phys Scr. (2016) 91:063006. doi: 10.1088/0031-8949/91/6/063006

110. Ekström A, Hagen G, Morris TD, Papenbrock T, Schwartz PD. Δ isobars and nuclear saturation. Phys Rev C. (2018) 97:024332. doi: 10.1103/PhysRevC.97.024332

111. Piarulli M, Baroni A, Girlanda L, Kievsky A, Lovato A, Lusk E, et al. Light-nuclei spectra from chiral dynamics. Phys Rev Lett. (2018) 120:052503. doi: 10.1103/PhysRevLett.120.052503

112. Logoteta D, Bombaci I, Kievsky A. Nuclear matter properties from local chiral interactions with Δ isobar intermediate states. Phys Rev C. (2016) 94:064001. doi: 10.1103/PhysRevC.94.064001

113. Kalantar-Nayestanaki N, Epelbaum E, Messchendorp JG, Nogga A. Signatures of three-nucleon interactions in few-nucleon systems. Rep Prog Phys. (2011) 75:016301. doi: 10.1088/0034-4885/75/1/016301

114. de Finetti B. Theory of Probability: A Critical Introductory Treatment. New York, NY: John Wiley and Sons Ltd. (2017).

115. Schindler MR, Phillips DR. Bayesian methods for parameter estimation in effective field theories. Ann Phys. (2009) 324:682–708. doi: 10.1016/j.aop.2008.09.003

116. Wesolowski S, Klco N, Furnstahl RJ, Phillips DR, Thapaliya A. Bayesian parameter estimation for effective field theories. J Phys G Nucl Part Phys. (2016) 43:074001. doi: 10.1088/0954-3899/43/7/074001

117. Wesolowski S, Furnstahl RJ, Melendez JA, Phillips DR. Exploring Bayesian parameter estimation for chiral effective field theory using nucleon–nucleon phase shifts. J Phys G Nucl Part Phys. (2019) 46:045102. doi: 10.1088/1361-6471/aaf5fc