REVIEW article

Front. Phys., 09 August 2016

Sec. Interdisciplinary Physics

Volume 4 - 2016 | https://doi.org/10.3389/fphy.2016.00034

This article is part of the Research Topic At the Crossroads: Lessons and Challenges in Computational Social Science

In recent years researchers have gravitated to Twitter and other social media platforms as fertile ground for empirical analysis of social phenomena. Social media provides researchers access to trace data of interactions and discourse that once went unrecorded in the offline world. Researchers have sought to use these data to explain social phenomena both particular to social media and applicable to the broader social world. This paper offers a minireview of Twitter-based research on political crowd behavior. This literature offers insight into particular social phenomena on Twitter, but often fails to use standardized methods that permit interpretation beyond individual studies. Moreover, the literature fails to ground methodologies and results in social or political theory, divorcing empirical research from the theory needed to interpret it. Rather, investigations focus primarily on methodological innovations for social media analyses, but these too often fail to sufficiently demonstrate the validity of such methodologies. This minireview considers a small number of selected papers; we analyse their (often lack of) theoretical approaches, review their methodological innovations, and offer suggestions as to the relevance of their results for political scientists and sociologists.

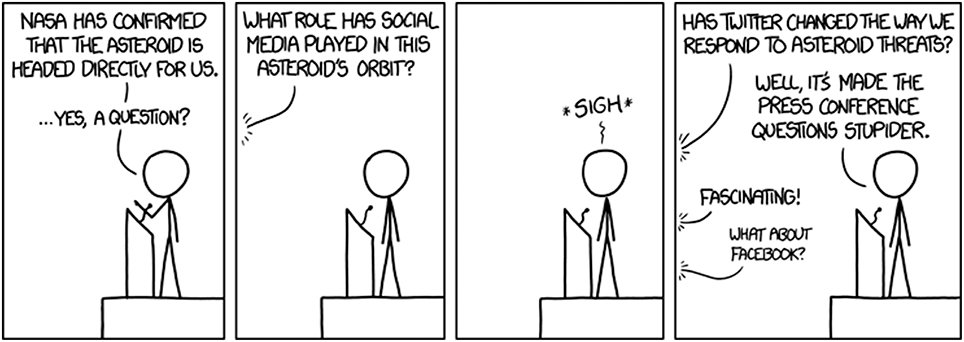

Since its founding in 2006, Twitter has become an important platform for news, politics, culture, and more across the globe [1]. Twitter, like other social media platforms, empowers new forms of social organization that were once impossible. Margetts et al. discuss changing conceptions of membership and organization on social media [2]; Twitter communities and conversations need not be bounded by geography, propinquity, or social hierarchy. As a result, social and political movements have taken to the site as a means of organizing activity both online and offline. In facilitating these movements, Twitter simultaneously makes available a data trail never before seen in social research. Researchers have embraced these data to create an expanding body of literature on Twitter and social media writ large. On the other hand, some researchers have been more skeptical about using social media data in general, and especially data from Twitter, in studying social behavior [3]. Others question the relevance of such data to the social sciences altogether; see Figure 1 for a satirical illustration of this view.

Figure 1. Social Media: an illustration of overestimating the relevance of social media to social events, from XKCD. Available online at http://xkcd.com/1239/ (Accessed June 16, 2016).

This literature is quite diverse. Some investigations seek to relate Twitter to the offline world [4]. Kwak et al. [5] crawl the entirety of Twitter and find that the platform's social networks differ from offline sociability in important ways. Huberman et al. [6] examine user behavior in addition to network structure, and find that strong “friend” relationships, akin to offline sociability, are important predictors of Twitter activity. Gonçalves et al. [7] use Twitter data to validate anthropologist Robin Dunbar's proposed quantitative limit to social relationships. Still other investigations analyze the various uses of Twitter. Examining social influence, Bakshy et al. [8] study Twitter cascades, and find that the largest are started by past influential users with many followers. Semantic investigations in various languages and national contexts have been quite popular [9–11]. Questions of how Twitter and platform phenomena map onto offline geography have also been widely studied [12, 13].

Yet, this body of literature is only unified in the source of its data; it remains fractured across many disciplines and fails to establish set procedures for drawing conclusions from these rich datasets; for an earlier survey of the literature see Jungherr [14]. Indeed, metareviews of election prediction using Twitter have raised significant concerns about this literature's validity [15, 16]. This minireview extends this critical discussion of Twitter literature to political action. We selected the reviewed studies in order to sample a variety of topics and methodologies; however, this collection is by no means exhaustive, and hence we named the paper a “biased review.” The approach we have taken in this work deviates from systematic reviews in the field such as the ones described in Petticrew and Roberts [17]. Our sample purposively draws a geographic diversity of papers studying Twitter-based political action in Europe, the Middle East, and the United States. Yet, some gaps certainly remain, including the glaring absence of hashtag activism studies and terrorist propaganda activity, two topics important to political action on Twitter that warrant further study. Hence we acknowledge that our review is not inclusive in terms of coverage of all the relevant papers in the field. For a general overview of studies on online behavior see DiMaggio et al. [18].

In reviewing the state of Twitter literature on political action, we seek to explain the role of computational social science (also called social data science) methodologies in augmenting political scientific and sociological understanding of these phenomena. Our minireview is structured as follows. We begin by examining the role of theory, and find that most often authors do not consider the expansive political and social theoretical literature in their analyses of online social phenomena. Instead, they provide case studies and methodological developments exclusively for Twitter research. We next examine the methodologies of these studies, and, drawing upon Ruths and Pfeffer [3], we find that many papers fail to support their choice of methodology within the greater literature. We then examine significant results and discuss implications for further Twitter studies of political action.

Social and political theory serves an important role in making sense of social research by fitting individual studies into larger theoretical frameworks. In this way, individual studies can intelligibly inform future research. Alternatively, data analysis without a coherent, defensible theoretical framework serves only to explain a single observation at one point in time. The papers reviewed here fall into three broad categories in their use of theory: no theory, theory-light, and theory-heavy. Papers fall into these categories irrespective of methodological or phenomenological focus.

Papers without theoretical grounding may cursorily cite but fail to engage theoretical texts. Beguerisse-Díaz et al. [19] examine communities and functional roles on Twitter during the UK riots of August 2011. To explain these phenomena, however, they cite no social theory. While the authors offer sophisticated methodological innovations for determining interest communities and individual roles in those communities, they do so without reference to a broader social science literature. Some other investigations offer cursory theory in their discussions of Twitter data. Borge-Holthoefer et al. [20] investigate political polarization surrounding the events that precipitated Egyptian President Morsi's removal from power in 2013. Their analysis of changes in loudness of opposing factions, although quite enlightening, is not grounded in any theoretical model of political action. Instead, the authors proceed based on a number of platform-specific assumptions that do not readily permit results to be generalized beyond Twitter. The authors suggest their findings contribute to the study of bipolar societies yet do not develop a theoretical model for such applications. The authors do use social theory, however sparingly, in order to contextualize their results, but even here theoretical discussion is lacking. Conover et al. [21] study partisan communities and behavior on Twitter during the 2010 U.S. midterm elections. They similarly prioritize analytical innovations over theoretical explanation. The authors analyze behavior, communication, and connectivity between users, but do not seek to explain observed partisan differences. Their research yields statistically significant differences between liberal and conservative communities in follower and retweet networks, which raises the question: why do these differences exist? Such explanations could benefit from examining elections literature to develop a general theoretical model of partisan sharing. Although the authors do briefly address the 2008 U.S. presidential election, it is only to contrast resulting phenomena, not to offer explanatory theories.

In contrast, Alvarez et al. [22] explain political action in the Spanish 15M movement using the Durkheimian theory of collective identity and establish their work on a firm basis in collective action literature. Yet, while the authors base their methodology in theory, their findings do not directly engage with that theory aside from “quantifying” it. A similar fate befalls sampled predictive studies, which draw on theory to produce empirical results, but often fail to engage those results with underlying theory. Weng et al. [23] develop a model that predicts viral memes using community structure, based on theoretical insight from contagion theory. The authors find that viral memes spread by simple contagion, in contrast to unsuccessful memes which spread via complex contagion; still, only the briefest theoretical discussion for this result is offered. Garcia-Herranz et al. [24] develop a methodological innovation using individual Twitter users as sensors for contagious outbreaks, based on the “friendship paradox” and contagion theory. This mechanism uses network topology as an effective predictor, but does not address the social phenomena that create and sustain that topology. Such methodological innovations provide researchers new analytical tools for observational analysis, but these tools remain of dubious explanatory value because they fail to ground methods in theory of the social world.

Twitter data present an opportunity not simply for analysis of social interactions on the platform but, if done well, these insights hold potential to contribute to new visions of the social world. Rigorous data science can generate new theory. Coppock et al. [25] are particularly notable in this regard. The authors base their methodological innovation in Twitter mobilization inducement on an extensive theoretical literature review, which yields three opposing hypotheses. They assess the political theory of collective action as it applies to Twitter via these three hypotheses, and find that the Civic Voluntarism Model is most consistent with their results. Likewise, González-Bailón et al. [26], in their study of protest recruitment dynamics in the Spanish 15M movement, offer both an extensive grounding in social theory and theory-engaging results. The authors' findings serve to clarify threshold models of political action and “collective effervescence.”

As to the particular theories addressed, the above mentioned papers focus primarily on political action and network theories of diffusion and contagion. Important in such topics, but absent from all investigations, is discussion of power or hierarchy. Although Twitter may permit communication between the powerful and powerless, it does not do so in a vacuum. The platform operates within numerous contexts, e.g., the offline influence of particular users and the online influence of those with numerous followers. Reconciling methodologies with theories of power promises to provide further insight into political action on Twitter. More broadly, a greater focus on theory is needed for Twitter analyses to provide externally valid insight into the social world, both online and off.

In developing analyses of Twitter data, researchers have not drawn on a coherent body of agreed-upon methodologies. Rather, methodological choices differ considerably from one paper to another. Ruths and Pfeffer [3] offer a critique of many common social media analysis practices. Drawing from that work as well as our own insights, we examine many of the methodological choices made in our sample papers. We have delineated these choices into several overarching categories: data, filtering, networks and centrality, cascades and communities, experiments, and conjecture.

Before addressing the methodological choices outlined above, we first address several important findings from Ruths and Pfeffer [3]. Today, academic research writ large, including social media work and much more, is insufficiently transparent. Academic journals publish only “successful” studies. Without publishing methodologies that failed to explain political action phenomena, how is one to weigh the probability that the supposed “fit” observed is not due to random chance? Even those papers which address the robustness of their analysis often stop at very shallow significance tests using p-values, which is argued to be a flawed practice [27, 28]. Similarly, when new methodologies are created, as in Weng et al. [23], Garcia-Herranz et al. [24], and Coppock et al. [25], they are justified vis-à-vis random baselines and not prior methods. New methods are useful, but are they better than existing tools? These opacity critiques are fundamental to the current state of Twitter scholarship. Researchers should be cognizant of these limitations when drawing conclusions from their work and should alter their methodologies to account for these limitations whenever possible.

Twitter data ultimately comes from the Twitter platform. If scholars wish to make claims about the versatility of their methodologies and findings, they must justify their data-collection methods as representative of underlying populations, on Twitter or elsewhere. This proves a problematic task. The Twitter API offers researchers an incredible array of data on tweets, users, and more for analysis; yet, the API acts as a “blackbox” filter that may not yield representative data [29, 30]. For example, Weng et al. [23] “randomly” collect 10% of public tweets for one month from the API. Not only does the API preclude analysis of the representativeness of the sample, but it also prevents researchers from comparing studies over time, as the API sampling algorithm itself will change. Proprietary sampling methods only further exacerbate the opacity problem. In González-Bailón et al. [26], the authors use a proprietary sampling method to generate their dataset of Spanish tweets from Spain. The authors of Borge-Holthoefer et al. [20] do as well, using Twitter4J (footnote 1) and TweetMogaz (footnote 2) as data sources.

Other papers do not use a global sampling method, but obtain data in other ways. Beguerisse-Díaz et al. [19] use a list of “influential Twitter users” published in The Guardian as the starting point for data collection. Coppock et al. [25] develop their experimental design in cooperation with the League of Conservation Voters, and use their Twitter followers as test subjects. Other papers, including Conover et al. [21] and Alvarez et al. [22] collect data by following particular hashtags and the users who tweeted them. Garcia-Herranz et al. [24] collect Twitter data by snowball-sampling from one influential user, Paris Hilton, as well as all users mentioning trending topics. None of these sampling methods allows authors to make broad claims about the Twitter platform and political action in general. The method used in Garcia-Herranz et al. [24] is particularly concerning, as it attempts to collect a large sample to sufficiently model a Twitter population, but the choice of method undermines this very goal.

A final complication of data in Twitter studies regards the publication of that data. Once data is collected and analyzed, it is rarely made available for others to replicate these studies, the hallmark of good research. The problem here lies with Twitter itself; the terms of use preclude the republication of tweet contents that have been scraped from the site (footnote 3).

Following data collection, researchers often filter an intractable dataset into a manageable, coherent sample. Language and geography offer clear examples. Borge-Holthoefer et al. [20] limit their dataset to Arabic tweets about Egypt. Both González-Bailón et al. [26] and Alvarez et al. [22] limit their datasets to Spanish tweets from Spain. To do so, however, both papers use a proprietary filtering process from Cierzo Development Ltd (footnote 4). As addressed above, proprietary methodologies stymie research transparency and replication.

Filtering can likewise facilitate a narrowing of research focus given a particular sample population. One common means of achieving a relevant dataset is to use hashtags as labels for tweets in which they appear. In González-Bailón et al. [26] the authors obtain a sample of protest-related tweets using a list of 70 hashtags affiliated with the Spanish 15M movement. Conover et al. [21] filter to a sample of political tweets using a list of political hashtags and, in an excellent technique, allow the list of hashtags to grow based on co-occurring hashtags. In Borge-Holthoefer et al. [20] the authors go one step further, and query not only hashtags but complete tweet content. Arabic tweets were normalized for spelling and filtered by a series of Boolean queries with a set of 112 relevant keywords.
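The idea of letting a hashtag list grow through co-occurrence can be illustrated with a minimal sketch. This is not the procedure of Conover et al. [21]; the function name `expand_hashtags`, the threshold `min_cooccurrence`, and the toy tweets are all assumptions made for illustration.

```python
from collections import Counter


def expand_hashtags(tweets, seeds, min_cooccurrence=2, max_rounds=5):
    """Grow a hashtag list by adding tags that co-occur with accepted tags.

    tweets: iterable of sets of hashtags, one set per tweet (toy input format).
    min_cooccurrence: hypothetical threshold; a real study would need to justify it.
    """
    accepted = set(seeds)
    for _ in range(max_rounds):
        counts = Counter()
        for tags in tweets:
            tags = set(tags)
            # Only tweets that already match the list can nominate new tags.
            if tags & accepted:
                counts.update(tags - accepted)
        new_tags = {t for t, c in counts.items() if c >= min_cooccurrence}
        if not new_tags:
            break
        accepted |= new_tags
    return accepted


# Toy data, invented for this example.
tweets = [
    {"#election", "#vote"},
    {"#election", "#vote", "#news"},
    {"#vote", "#debate"},
    {"#debate", "#vote"},
    {"#cats"},
    {"#news", "#cats"},
]
expanded = expand_hashtags(tweets, seeds={"#election"})
```

In this toy run the list grows from `#election` to include `#vote` and then `#debate`, while `#news` and `#cats` never reach the threshold. The choice of threshold directly shapes the resulting sample, which is exactly the kind of filtering decision the text argues should be defended.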

Researchers, after filtering for a relevant sample and topic, may further filter for user attributes. Borge-Holthoefer et al. [20] restrict their sample to high-activity users with more than ten tweets extant in the limited sample. Beguerisse-Díaz et al. [19] limit their dataset to users central in the friend-follower network, those in the giant component. Users outside the giant component generally had incomplete Twitter information, and, as such, were dropped from the analysis. Weng et al. [23] limit the data to only reciprocal relationships. Conover et al. [21] filter tweets with geo-tags. The authors use a self-reported location field as their data source, despite the fact that someone can put “the moon” or anything else as their location. Indeed, Graham et al. use linguistic analyses to determine that such user-provided locations are poor proxies for true physical location [13]. Although the authors acknowledge the preliminary status of their analysis and its utility as an illustration of potential data-driven hypotheses, it left us unsatisfied with a lack of methodological rigor that should underlie even the most tentative of filtering claims.
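Two of the user-level filters mentioned above, keeping only reciprocal relationships and keeping only the giant component, can be sketched in a few lines. This is an illustrative sketch on an undirected toy network, not the code of any reviewed paper; the function names and the follow list are hypothetical.

```python
from collections import defaultdict, deque


def reciprocal_edges(follows):
    """Return undirected edges for pairs of users who follow each other."""
    follow_set = set(follows)
    return {
        tuple(sorted((u, v)))
        for u, v in follow_set
        if u != v and (v, u) in follow_set
    }


def giant_component(edges):
    """Return the node set of the largest connected component."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    seen, best = set(), set()
    for start in adj:
        if start in seen:
            continue
        # Breadth-first search collects one component at a time.
        component, queue = {start}, deque([start])
        while queue:
            node = queue.popleft()
            for neighbor in adj[node]:
                if neighbor not in component:
                    component.add(neighbor)
                    queue.append(neighbor)
        seen |= component
        if len(component) > len(best):
            best = component
    return best


# Toy (follower, followed) pairs, invented for this example.
follows = [("a", "b"), ("b", "a"), ("b", "c"), ("c", "b"),
           ("c", "d"), ("e", "f"), ("f", "e")]
mutual = reciprocal_edges(follows)
core_users = giant_component(mutual)
```

Here user `d` is dropped by the reciprocity filter and users `e` and `f` are dropped by the giant-component filter, so three of six users survive. Even in a toy case, stacked filters remove a large share of the population.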

Authors may choose to filter for no other reason than to obtain a manageable dataset. Such decisions need not be arbitrary. Garcia-Herranz et al. [24] settle on a particular sample size for their analyses, seeking to balance statistical power and the need to keep test and control groups from overlapping in the network. The authors offer an effective defense of their decision, presenting brief analyses of other sample sizes as well. Coppock et al. [25], on the other hand, arbitrarily remove Twitter users with more than 5000 followers from their sample because, they argue, these users are “more likely” to be influential or organizations, and therefore differ from the rest of the sample. The decision to remove these outliers and the arbitrary choice of threshold introduce systematic biases in the results, fundamentally undermining their analyses.

These myriad filtering decisions often go insufficiently defended. Those who do defend filtering choices often do so without referencing past literature. Even sound filtering decisions, however, undermine the general claims researchers can make. This may be one reason most of the studies fail to contribute to social theory beyond their micro case studies.

Twitter lends itself to fruitful network analyses of both explicit interactions and other derivative relations. Conover et al. [21] use three network projections to analyze partisan political behavior during the 2010 U.S. midterm elections: one in which users are connected when mentioned together in a tweet, another in which users are linked by retweeting behavior, and a third, the original explicit user follow-ship network. Weng et al. [23] also use three networks (mention, retweet, and follow) to study meme virality. The authors conduct primary analysis on the follow network and use the other two as robustness tests. In studying protest recruitment to the 15M movement, González-Bailón et al. [26] make use of two networks, one symmetric (composed of reciprocated following relationships) and one asymmetric. The authors use these networks to determine the influence of broadcasting users. Still other authors use single, traditional follower networks in their analyses [24, 25].

Network analyses are all the more powerful when they are combined, as in Weng et al. [23] and González-Bailón et al. [26]. In Borge-Holthoefer et al. [20], the authors offer another insight when they use network analyses over time with temporally evolving networks in response to events that preceded Egyptian President Morsi's removal from power. The authors recreate a sequence of networks that evolve over time. This method offers insight into how online activity responds to offline events in Egypt, and could be a powerful tool in many other contexts, helping to parse a key question of political action: how groups respond to events and evolve over time. The opposite, to assume a network remains static during a given period, precludes this insight and undermines social analysis. In González-Bailón et al. [26], the authors exemplify this pitfall, as a network of protesters being recruited surely saw significant changes during their study's time period. Given the fast growing literature on temporal networks [31, 32], more attention is required in analyzing the dynamics of networked political activities.
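The contrast between a static network and a sequence of temporally evolving networks can be made concrete with a small sketch. The fixed-width windowing, the record format `(timestamp, retweeter, original_author)`, and the name `windowed_networks` are assumptions for illustration; they are not taken from Borge-Holthoefer et al. [20].

```python
from collections import defaultdict


def windowed_networks(retweets, window):
    """Split retweet records into one edge set per time window.

    retweets: iterable of (timestamp, retweeter, original_author) tuples.
    window: window width in the same units as the timestamps.
    """
    snapshots = defaultdict(set)
    for timestamp, retweeter, author in retweets:
        snapshots[timestamp // window].add((retweeter, author))
    # Return the non-empty snapshots in chronological order.
    return [snapshots[key] for key in sorted(snapshots)]


# Toy records, invented for this example.
retweets = [(0, "a", "b"), (5, "b", "c"), (12, "a", "c"), (25, "c", "a")]
snapshots = windowed_networks(retweets, window=10)

# A static analysis would instead pool every edge into a single network.
static_network = set().union(*snapshots)
```

The pooled `static_network` contains the same edges but loses the order in which ties appeared, which is the information needed to ask how groups respond to events over time.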

Beyond decisions of network type and temporality, authors make important choices in projecting and using Twitter networks. Weng et al. [23] do not weight network edges by number of tweets, and choose to limit the network projection to reciprocal relationships. Both decisions fundamentally affect results and undermine the projection's validity as a representation of activity on the Twitter platform. Others, including González-Bailón et al. [26], account for asymmetry in their network projections.

In doing network analysis, many researchers use centrality scores as a means to find the most influential users. Researchers have developed a number of different definitions and algorithms for centrality [33]. The choice of a specific approach, however, depends on the particular context and research questions. Often this choice is not well justified in the given context of online political mobilizations. Among the papers considered here, k-core centrality [34] is the most common choice [21, 22, 26]. While k-core centrality is a very useful tool to find the backbone of the network, it neglects social brokers, the nodes with high betweenness centrality, which are relevant features in their own right when studying social behavior [35].
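A toy network shows how a broker can rank lowest by k-core while ranking highest by betweenness. The sketch below uses only the standard library and an invented graph of two four-person cliques joined through a single user `x`. The betweenness routine follows the standard Brandes approach for unweighted graphs, and none of this is code from the reviewed papers.

```python
from collections import defaultdict, deque
from itertools import combinations


def build_adjacency(edges):
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return dict(adj)


def core_numbers(adj):
    """k-core index of each node, found by repeatedly peeling the lowest-degree node."""
    degree = {v: len(neighbors) for v, neighbors in adj.items()}
    remaining, core, k = set(adj), {}, 0
    while remaining:
        v = min(remaining, key=degree.get)
        k = max(k, degree[v])
        core[v] = k
        remaining.remove(v)
        for w in adj[v]:
            if w in remaining:
                degree[w] -= 1
    return core


def betweenness(adj):
    """Unnormalized betweenness centrality for an undirected, unweighted graph."""
    score = dict.fromkeys(adj, 0.0)
    for s in adj:
        order, preds = [], {v: [] for v in adj}
        paths = dict.fromkeys(adj, 0)
        dist = dict.fromkeys(adj, -1)
        paths[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:  # breadth-first search counting shortest paths
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    paths[w] += paths[v]
                    preds[w].append(v)
        dependency = dict.fromkeys(adj, 0.0)
        while order:  # accumulate dependencies from the farthest nodes back
            w = order.pop()
            for v in preds[w]:
                dependency[v] += paths[v] / paths[w] * (1 + dependency[w])
            if w != s:
                score[w] += dependency[w]
    # Each unordered pair was counted from both endpoints.
    return {v: value / 2 for v, value in score.items()}


# Two 4-cliques bridged by broker "x" (toy graph, invented for this example).
edges = list(combinations("abcd", 2)) + list(combinations("efgh", 2))
edges += [("d", "x"), ("x", "e")]
adj = build_adjacency(edges)
core = core_numbers(adj)
between = betweenness(adj)
top_broker = max(between, key=between.get)
```

In this graph every clique member sits in the 3-core while `x` sits only in the 2-core, yet all 16 shortest paths between the two cliques pass through `x`. An analysis that keeps only the innermost core would discard the one user connecting the two groups.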

Whether in networks or another form, Twitter data yield insight through a multitude of different analytical techniques. One such technique examines tweets as they flow through the network in cascades. Cascades follow a single tweet that is retweeted or similar tweets as they move across a network. The Twitter platform makes these analyses difficult, however, as retweets are connected to the original tweet, not the tweet that triggered the retweet [3]. Researchers address this pitfall by using temporal sequencing to order and connect tweets or retweets. To achieve meaningful results, studies must sufficiently filter the tweets to establish that sequential tweets are related in content as well as time, which undermines representativeness, as discussed above [20, 22, 23].
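One way to picture the temporal-sequencing workaround is a heuristic that assigns each retweeter the most recent earlier poster they follow, falling back to the original author. This is only a sketch under that stated assumption; the reviewed papers may order and connect tweets differently, and the names `infer_cascade` and `follows` are hypothetical.

```python
def infer_cascade(original_author, retweets, follows):
    """Guess who each retweeter saw the content from.

    retweets: list of (timestamp, user) pairs for one original tweet.
    follows: dict mapping a user to the set of accounts that user follows.
    Returns a dict mapping each retweeter to an inferred parent.
    """
    posters = [original_author]  # users who have posted the content, oldest first
    parent = {}
    for _, user in sorted(retweets):
        followed = follows.get(user, set())
        # Prefer the most recent earlier poster that this user follows.
        candidates = [p for p in reversed(posters) if p in followed]
        parent[user] = candidates[0] if candidates else original_author
        posters.append(user)
    return parent


# Toy data, invented for this example.
follows = {"a": {"o"}, "b": {"a", "o"}, "c": {"z"}}
retweets = [(3, "c"), (1, "a"), (2, "b")]
parent = infer_cascade("o", retweets, follows)
```

In this toy run user `b` is attached to `a` and not to the original author `o`, while `c`, who follows no earlier poster, falls back to `o`. The inferred tree depends entirely on the heuristic, so its validity would need to be demonstrated before interpreting cascade shapes.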

Another common technique examines tweet content. Alvarez et al. [22] analyze their data for its social and sentiment content using semantic and sentiment analytic algorithms that analyze tweets based on a test set. The authors use this technique to draw conclusions about individual users' opinions of the 15M movement in Spain by analyzing up to 200 authored tweets on the topic per user. This technique holds great promise for future studies of political activity, and indeed any activity, on Twitter. Borge-Holthoefer et al. [20] use a less sophisticated solution toward a similar goal: they characterize users as either for or against military intervention in Egypt. The authors attempt to show changes in opinion, and so cannot rely on comprehensive opinion from a mass of past tweets as done in Alvarez et al. [22]. Instead, Borge-Holthoefer et al. [20] use coded hashtags to indicate users' opinions. Although this technique allows for discernible changes in opinion, the authors establish a dichotomy that threatens to oversimplify users' opinions.

Community detection is another key analytical tool for Twitter researchers. Using network topology or node (user or tweet) content, researchers can cluster similar nodes and provide insight into social systems on a macroscopic scale. There are a variety of techniques, each with its own set of strengths and weaknesses. Weng et al. [23] use the Infomap algorithm [36] and test the robustness of their results by applying a second community detection technique, Link Clustering. Conover et al. [21] use a combination of two techniques, Raghavan's label propagation method [37] seeded with node labels from Newman's leading eigenvector modularity maximization [38]. The authors selected this combination of methods because it “neatly divides the population … into two distinct communities.” Yet, the authors fail to defend these observations rigorously in their paper. Beguerisse-Díaz et al. [19], on the other hand, effectively defend their decisions in setting resolution parameters for the Markov Stability method [39]. The authors also use community detection creatively in conjunction with a functional role-determining algorithm to assign “roles” to users without a priori assignments of those groups. Borge-Holthoefer et al. [20] select an apt community detection method that corresponds well with their objectives: to follow changes in polarity over time, the authors use label propagation, whereby nodes spread their assigned polarity. This method allows for seeding with nodes of known belief, which is useful in monitoring the progression of the Egyptian protests on Twitter, as many important actors' positions were publicly known. Yet this decision too comes with a cost: the authors program the label propagation to allow for only two polarities, Secularist or Islamist, even though they acknowledge that a third camp likely existed, namely supporters of deposed Hosni Mubarak.
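The cost of seeding with only two polarities can be seen in a minimal sketch of seeded label propagation. This is a simplified, deterministic variant written for illustration, with neutral labels `X` and `Y` and a toy network. It is not the implementation used by Borge-Holthoefer et al. [20] or by Raghavan's method [37], both of which differ in update order and tie handling.

```python
from collections import Counter


def propagate_labels(adj, seeds, max_iters=20):
    """Spread seed labels through a network by neighbor majority.

    adj: dict mapping each node to a set of neighbors.
    seeds: dict mapping nodes of known position to a label; seeds never change.
    Nodes whose labeled neighbors are tied keep their current label (or none).
    """
    labels = dict(seeds)
    for _ in range(max_iters):
        changed = False
        for node in sorted(adj):  # fixed order keeps the sketch deterministic
            if node in seeds:
                continue
            votes = Counter(labels[n] for n in adj[node] if n in labels)
            if not votes:
                continue
            top = max(votes.values())
            winners = [label for label, count in votes.items() if count == top]
            if len(winners) == 1 and labels.get(node) != winners[0]:
                labels[node] = winners[0]
                changed = True
        if not changed:
            break
    return labels


# Toy network with two seeded users, invented for this example.
adj = {
    "s1": {"a", "b"},
    "a": {"s1", "b"},
    "b": {"s1", "a", "c"},
    "c": {"b", "d", "s2"},
    "d": {"c", "s2"},
    "s2": {"c", "d"},
}
labels = propagate_labels(adj, seeds={"s1": "X", "s2": "Y"})
```

Every reachable user ends up labeled `X` or `Y` because those are the only labels in circulation. A user belonging to an unseeded third camp would still be absorbed into one of the two, which is the limitation noted above.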

While community detection is still considered an open question in network science, at the level of both definition and algorithmic implementation [40], many papers use one or more of these methods without taking enough care to ensure that the methods and definitions they apply to their specific problem are well justified.

Twitter also lends itself as an experimental platform for researchers to implement controlled studies of social phenomena. In particular, Garcia-Herranz et al. [24] and Weng et al. [23] seek to predict viral memes on Twitter using network topology and activation in linked users and communities, respectively. Coppock et al. [25] run two experiments on inducing political behavior on Twitter using different types and phrasing of messages. In all cases, authors necessarily use controls in their experimental context. Garcia-Herranz et al. [24] create a null distribution of tweets with randomly shuffled timestamps to distinguish the effect of user centrality from user tweeting rate. Weng et al. [23] use two baseline models to quantify the predictive power of their community-based model. The authors use a random guess and community-blind predictor, against both of which the model is highly statistically significant. Coppock et al. [25], with a true experimental design, offer an extensive discussion of experimental controls on Twitter. The platform has inherent limitations for public tweet experiments because there is no effective way to separate experimental and control users given an inherently interconnected network structure. But the authors design their study to use direct messages to selected users as the experimental variable. The authors even tweaked and repeated the study to improve randomization in the control. Such a methodology makes [25] an example of a particularly strong experimental Twitter paper.
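The logic of a shuffled-timestamp null distribution can be sketched as a simple permutation test. The version below shuffles each user's first-use time across users, which is a deliberate simplification and not the procedure of Garcia-Herranz et al. [24]. The function names, the fixed seed, and the toy times are assumptions for illustration.

```python
import random


def mean_lead(times, sensors, controls):
    """How much earlier, on average, sensors first use a hashtag than controls."""
    sensor_mean = sum(times[u] for u in sensors) / len(sensors)
    control_mean = sum(times[u] for u in controls) / len(controls)
    return control_mean - sensor_mean


def shuffled_null(times, sensors, controls, n_shuffles=1000, seed=0):
    """Null distribution of the lead when timestamps are reassigned at random."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    users = list(times)
    values = [times[u] for u in users]
    null = []
    for _ in range(n_shuffles):
        rng.shuffle(values)
        null.append(mean_lead(dict(zip(users, values)), sensors, controls))
    return null


# Toy first-use times (arbitrary units), invented for this example.
times = {"s1": 1, "s2": 2, "s3": 3, "c1": 7, "c2": 8, "c3": 9}
sensors, controls = ["s1", "s2", "s3"], ["c1", "c2", "c3"]

observed = mean_lead(times, sensors, controls)
null = shuffled_null(times, sensors, controls)
# Share of shuffles producing a lead at least as large as the observed one.
p_value = sum(x >= observed for x in null) / len(null)
```

Comparing `observed` against `null` asks whether the sensor group's lead could arise from timing alone. As the text notes, beating a random baseline of this kind does not show that a method outperforms existing tools.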

As we have seen, Twitter provides researchers myriad analytical techniques. Methodological choices as to which techniques to use present a fundamental challenge for researchers. They must select and properly defend their choice of methods that both work and fit their theoretical objectives. As we have noted above, some researchers do a better job than others of achieving a methodological fit and defending it in their studies. Some researchers may face the temptation to extend analyses to produce exciting results, but do so at the expense of sound methodologies. Future Twitter research would be well served to stress defensible, rigorous methodologies that are couched within existing theoretical literature from the social sciences, something that is rare today.

Taken collectively, the reviewed investigations offer considerable insight into political activities conducted on the Twitter platform, through analyses that examine political action in the abstract and others that offer case studies of concrete political action. These insights particularly address the roles of communities and individual users, connections between such entities, as well as the content they tweet. Predictive models take these insights and offer tools for, perhaps, understanding political action in real-time. Garcia-Herranz et al. use a sensor group of central users to predict virality of content, and extend this predictive sensor beyond Twitter to Google searches [24]. Weng et al. use connection topology to predict virality, although the predictive model is not extended to other content [23]. González-Bailón et al. observe viral tweets emerge from randomly distributed seed users, indicating exogenous factors determine the origins of viral content [26]. Taken together, these three studies offer an understanding of mass communication on Twitter: viral content tends to originate randomly across the platform, reach more central users first, and spread across communities more easily than non-viral content. Theoretical explanations of what makes content viral in the first place, however, are lacking in these analyses, and warrant further attention.

Given a methodological focus, topology can offer insights into its embedded users. Beguerisse-Díaz et al. [19] use topological analyses to reveal flow-based roles, interest communities, and individual vantage points without a priori assignment. Conover et al. [21] assign political leaning and then examine differences in partisan topologies in communities, tweeting activity, retweeting behavior, and mentions. Both approaches offer insight into political behavior using topology, with different strengths. The techniques used in Beguerisse-Díaz et al. [19] are quite useful when the partisan landscape on a particular issue is unknown; the approach in Conover et al. [21] yields greater understanding of known divisions.

Topology is not the sole determinant of activity, however, and tweet content analyses offer a second means of understanding political activity on Twitter. Alvarez et al. [22] find that, in the context of the Spanish 15M indignados, tweets with high social and negative content spread in larger cascades. Tweet content also readily lends itself to analyses that link Twitter with offline phenomena. Borge-Holthoefer et al. [20] and González-Bailón et al. [26] find that, in the 2013 Egyptian protests and the Spanish 15M protests, respectively, real-world events impact tweeting behavior. Coppock et al. [25] successfully induce off-Twitter behavior using the content of tweets. Content analyses thus offer insight into political activity that does not depend on the platform.

Topology and content are distinct analyses. Research that combines the two to answer a single question can yield robust results. Several papers attempt this, Borge-Holthoefer et al. [20] most successfully. The authors use content analysis to classify tweets and users into opinion groups, and then create temporally based retweet networks to follow changes in the activity and composition of those opinion groups. Alvarez et al. [22] use content analysis of observed network topological phenomena, e.g., cascades, to quantify the social and emotional effects of content on sharing outcomes. Beguerisse-Díaz et al. [19] too combine methodologies, although less rigorously: they use word clouds to label topologically derived network communities.
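The combination of content-derived opinion groups with time-sliced retweet networks can be sketched as follows. This is a minimal illustration and not the pipeline of Borge-Holthoefer et al. [20]: it assumes opinion labels have already been produced by some content classifier, that retweets arrive as `(time, retweeter, author)` tuples with integer timestamps, and that the share of cross-group retweets per window is a reasonable summary. All names are hypothetical.

```python
from collections import defaultdict


def windowed_retweet_networks(retweets, window):
    """Bucket (time, retweeter, author) records into weighted edge counts per window."""
    networks = defaultdict(lambda: defaultdict(int))
    for t, retweeter, author in retweets:
        networks[t // window][(retweeter, author)] += 1
    return networks


def cross_group_share(network, group_of):
    """Fraction of retweets in one window that link users from different groups.

    Edges touching unlabeled users are ignored; returns None if nothing remains.
    """
    total = cross = 0
    for (retweeter, author), weight in network.items():
        if retweeter not in group_of or author not in group_of:
            continue
        total += weight
        if group_of[retweeter] != group_of[author]:
            cross += weight
    return cross / total if total else None


# Toy data (assumed): two opinion groups, X and Y, observed over two windows.
group_of = {"a": "X", "b": "X", "c": "Y", "d": "Y"}
retweets = [(0, "a", "b"), (1, "c", "d"), (2, "a", "c"), (10, "b", "a"), (11, "d", "c")]
networks = windowed_retweet_networks(retweets, window=10)
shares = {w: cross_group_share(net, group_of) for w, net in sorted(networks.items())}
```

A falling cross-group share over successive windows would be one crude signal of increasing polarization. The window length and the choice of summary statistic are modeling decisions that a real study would need to justify.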

In this vein, many of the above-mentioned investigations could benefit from incorporating mixed methodologies and drawing on each other's analyses. Future research should seek to emulate the approach in Borge-Holthoefer et al. [20]. Further use of sentiment analyses from Alvarez et al. [22] would render even more robust results. Additional joint content and topology analyses would be even more useful: would selecting the central users of Garcia-Herranz et al. [24] within communities, i.e., incorporating the methods of Weng et al. [23], result in a more precise virality predictor? Would adding content analysis as used in Alvarez et al. [22] further improve precision? If a holistic understanding of social phenomena is the researchers' goal, future efforts should seek to incorporate not one but numerous methodologies in pursuit of that end.

The papers considered in this minireview offer several important considerations on the state of Twitter research into social phenomena. Political action and social phenomena, once the arena solely of political scientists and sociologists, have now become research topics for computer scientists and social physicists. New disciplines have much to offer social research, as indicated in the methodology review of our sample papers; yet these methodologies are often divorced from underlying social theory. Thus far, Twitter studies offer primarily observational, not explanatory, analyses.

What accounts for this bias away from social theory? Some possible explanations are readily apparent. Twitter research is new, and computational social science is an emerging field; thus far both have tended to prioritize methodological innovation over incorporation or analysis of preexisting social theories. This tendency has surely been exacerbated by the relatively narrow range of disciplines contributing to the field: despite its name, the field has drawn from computer scientists, mathematicians, and physicists far more than from social scientists. Interdisciplinary collaboration may present a solution as the field continues to develop; see Beguerisse-Díaz et al. [41] for a recent example. The tendency to disregard social theory likely also has its origins in the structure of technical journals, whose high premium on space and technical audience simply do not permit lengthy discussion of theory.

Greater dialog between theory and methods, as well as a holistic use of all available methodologies, is needed for data science to truly offer insight into our social world, both on Twitter and off it.

All authors listed have made substantial, direct, and intellectual contributions to the work, and approved it for publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

For providing useful feedback on the original manuscript we thank Mariano Beguerisse-Díaz and Peter Grindrod.

1. ^http://twitter4j.org/en/index.html

2. ^http://www.tweetmogaz.com/

3. ^Twitter Terms of Service: https://twitter.com/tos?lang=en

4. ^Formerly http://www.cierzo-development.com; see archive at http://tinyurl.com/jzbewt8

1. Weller K, Bruns A, Burgess J, Mahrt M, Puschmann C. Twitter and Society. New York, NY: Peter Lang (2013).

2. Margetts H, John P, Hale S, Yasseri T. Political Turbulence: How Social Media Shape Collective Action. Princeton, NJ: Princeton University Press (2015).

3. Ruths D, Pfeffer J. Social media for large studies of behavior. Science (2014) 346:1063–4. doi: 10.1126/science.346.6213.1063

4. Steinert-Threlkeld ZC, Mocanu D, Vespignani A, Fowler J. Online social networks and offline protest. EPJ Data Sci. (2015) 4:1. doi: 10.1140/epjds/s13688-015-0056-y

5. Kwak H, Lee C, Park H, Moon S. What is Twitter, a social network or a news media? In: Proceedings of the 19th International Conference on World Wide Web. New York, NY: ACM (2010). pp. 591–600.

6. Huberman BA, Romero DM, Wu F. Social Networks that Matter: Twitter under the Microscope. First Monday (2009) 14:1.

7. Gonçalves B, Perra N, Vespignani A. Modeling users' activity on twitter networks: validation of dunbar's number. PLoS ONE (2011) 6:e22656. doi: 10.1371/journal.pone.0022656

8. Bakshy E, Hofman JM, Mason WA, Watts DJ. Everyone's an influencer: quantifying influence on twitter. In: Proceedings of the Fourth ACM International Conference on Web Search and Data Mining. New York, NY: ACM (2011). pp. 65–74.

9. Yan P, Yasseri T. Two roads diverged: a semantic network analysis of guanxi on twitter. arXiv:1605.05139 (2016).

10. Livne A, Simmons MP, Adar E, Adamic LA. The party is over here: structure and content in the 2010 election. ICWSM (2011) 11:17–21. Available online at: http://www.aaai.org/ocs/index.php/ICWSM/ICWSM11/paper/view/2852

11. Lietz H, Wagner C, Bleier A, Strohmaier M. When politicians talk: assessing online conversational practices of political parties on Twitter. In: Proceedings of the Eighth International Conference on Weblogs and Social Media. Ann Arbor, MI: ICWSM (2014).

12. Grindrod P, Lee T. Comparison of social structures within cities of very different sizes. R Soc Open Sci. (2016) 3:150526. doi: 10.1098/rsos.150526

13. Graham M, Hale SA, Gaffney D. Where in the world are you? Geolocation and language identification in Twitter. Prof Geogr. (2014) 66:568–78. doi: 10.1080/00330124.2014.907699

14. Jungherr A. Twitter in politics: a comprehensive literature review. SSRN 2402443. (2014). doi: 10.2139/ssrn.2402443

15. Gayo-Avello D. “I wanted to predict elections with Twitter and all I got was this lousy paper”: a balanced survey on election prediction using Twitter data. arXiv:1204.6441 (2012).

16. Metaxas PT, Mustafaraj E. Social media and the elections. Science (2012) 338:472–3. doi: 10.1126/science.1230456

17. Petticrew M, Roberts H. Systematic reviews in the social sciences: a practical guide. Hoboken, NJ: John Wiley & Sons (2008).

18. DiMaggio P, Hargittai E, Celeste C, Shafer S. From unequal access to differentiated use: a literature review and agenda for research on digital inequality. In: Social Inequality. New York, NY: Russell Sage Foundation (2004). pp. 355–400.

19. Beguerisse-Díaz M, Garduño-Hernández G, Vangelov B, Yaliraki SN, Barahona M. Interest communities and flow roles in directed networks: the Twitter network of the UK riots. J R Soc Interface (2014) 11:20140940. doi: 10.1098/rsif.2014.0940

20. Borge-Holthoefer J, Magdy W, Darwish K, Weber I. Content and network dynamics behind Egyptian political polarization on Twitter. In: Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing. New York, NY: ACM (2015). pp. 700–11.

21. Conover MD, Gonçalves B, Flammini A, Menczer F. Partisan asymmetries in online political activity. EPJ Data Sci. (2012) 1:1–19. doi: 10.1140/epjds6

22. Alvarez R, Garcia D, Moreno Y, Schweitzer F. Sentiment cascades in the 15M movement. EPJ Data Sci. (2015) 4:1–13. doi: 10.1140/epjds/s13688-015-0042-4

23. Weng L, Menczer F, Ahn YY. Virality prediction and community structure in social networks. Sci Rep. (2013) 3:2522. doi: 10.1038/srep02522

24. Garcia-Herranz M, Moro E, Cebrian M, Christakis NA, Fowler JH. Using friends as sensors to detect global-scale contagious outbreaks. PLoS ONE (2014) 9:e92413. doi: 10.1371/journal.pone.0092413

25. Coppock A, Guess A, Ternovski J. When treatments are Tweets: a network mobilization experiment over Twitter. Pol Behav. (2016) 38:105–128. doi: 10.1007/s11109-015-9308-6

26. González-Bailón S, Borge-Holthoefer J, Rivero A, Moreno Y. The dynamics of protest recruitment through an online network. Sci Rep. (2011) 1:197. doi: 10.1038/srep00197

27. Vidgen B, Yasseri T. P-values: misunderstood and misused. Front Phys. (2016) 4:6. doi: 10.3389/fphy.2016.00006

28. Wasserstein RL, Lazar NA. The ASA's statement on p-values: context, process, and purpose. Am Stat. (2016) 70:129–33. doi: 10.1080/00031305.2016.1154108

29. Morstatter F, Kumar S, Liu H, Maciejewski R. Understanding Twitter data with TweetXplorer. In: Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. KDD '13. New York, NY: ACM (2013). pp. 1482–5.

30. Morstatter F, Pfeffer J, Liu H, Carley KM. Is the sample good enough? Comparing data from Twitter's streaming API with Twitter's firehose. In: Proceedings of the Seventh International AAAI Conference on Weblogs and Social Media. New York, NY: AAAI Press (2013). p. 400.

31. Holme P, Saramäki J. Temporal networks. Phys Rep. (2012) 519:97–125. doi: 10.1016/j.physrep.2012.03.001

32. Holme P. Modern temporal network theory: a colloquium. Eur Phys J B (2015) 88:1–30. doi: 10.1140/epjb/e2015-60657-4

34. Wasserman S, Faust K. Social Network Analysis: Methods and Applications. Vol. 8. Cambridge: Cambridge University Press (1994).

35. González-Bailón S, Wang N. The bridges and brokers of global campaigns in the context of social media. In: SSRN Work Paper (2013).

36. Rosvall M, Bergstrom CT. Maps of random walks on complex networks reveal community structure. Proc Natl Acad Sci USA. (2008) 105:1118–23. doi: 10.1073/pnas.0706851105

37. Raghavan UN, Albert R, Kumara S. Near linear time algorithm to detect community structures in large-scale networks. Phys Rev E (2007) 76:036106. doi: 10.1103/PhysRevE.76.036106

38. Newman ME. Finding community structure in networks using the eigenvectors of matrices. Phys Rev E (2006) 74:036104. doi: 10.1103/PhysRevE.74.036104

39. Schaub MT, Delvenne JC, Yaliraki SN, Barahona M. Markov dynamics as a zooming lens for multiscale community detection: non clique-like communities and the field-of-view limit. PLoS ONE (2012) 7:e32210. doi: 10.1371/journal.pone.0032210

40. Fortunato S. Community detection in graphs. Phys Rep. (2010) 486:75–174. doi: 10.1016/j.physrep.2009.11.002

Keywords: social media, twitter, mobilization, campaign, collective action, bias, theory

Citation: Cihon P and Yasseri T (2016) A Biased Review of Biases in Twitter Studies on Political Collective Action. Front. Phys. 4:34. doi: 10.3389/fphy.2016.00034

Received: 17 May 2016; Accepted: 26 July 2016;

Published: 09 August 2016.

Edited by:

Matjaž Perc, University of Maribor, Slovenia

Reviewed by:

Renaud Lambiotte, Université de Namur, Belgium

Copyright © 2016 Cihon and Yasseri. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Taha Yasseri, taha.yasseri@oii.ox.ac.uk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
