94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

METHODS article

Front. Pharmacol. , 11 September 2023

Sec. Drugs Outcomes Research and Policies

Volume 14 - 2023 | https://doi.org/10.3389/fphar.2023.1186456

A delayed treatment effect is a commonly observed phenomenon in tumor immunotherapy clinical trials. It can cause a loss of statistical power and complicate the interpretation of the analytical findings. This phenomenon also poses challenges for interim analysis in the context of phase II/III seamless design or group sequential design. It shows potential to lead researchers to make incorrect go/no-go decisions. Despite its significance, rare research has explored the impact of delayed treatment effects on the decision success rate of the interim analysis and the methods to compensate for this loss. In this study, we propose an analysis procedure based on change points for improving the decision success rate at the interim analysis in the presence of delayed treatment effects. This procedure primarily involves three steps: I. detecting and testing the number and locations of change points; II. estimating treatment efficacy; and III. making go/no-go decisions. Simulation results demonstrate that when there is a delayed treatment effect with a single change point, using the proposed analysis procedure significantly improves the decision success rate while controlling the type I error rate. Moreover, the proposed method exhibits very little disparity compared to the unadjusted method when the proportional hazards assumption holds. Therefore, the proposed analysis procedure provides a feasible approach for decision-making at the interim analysis when delayed treatment effects are present.

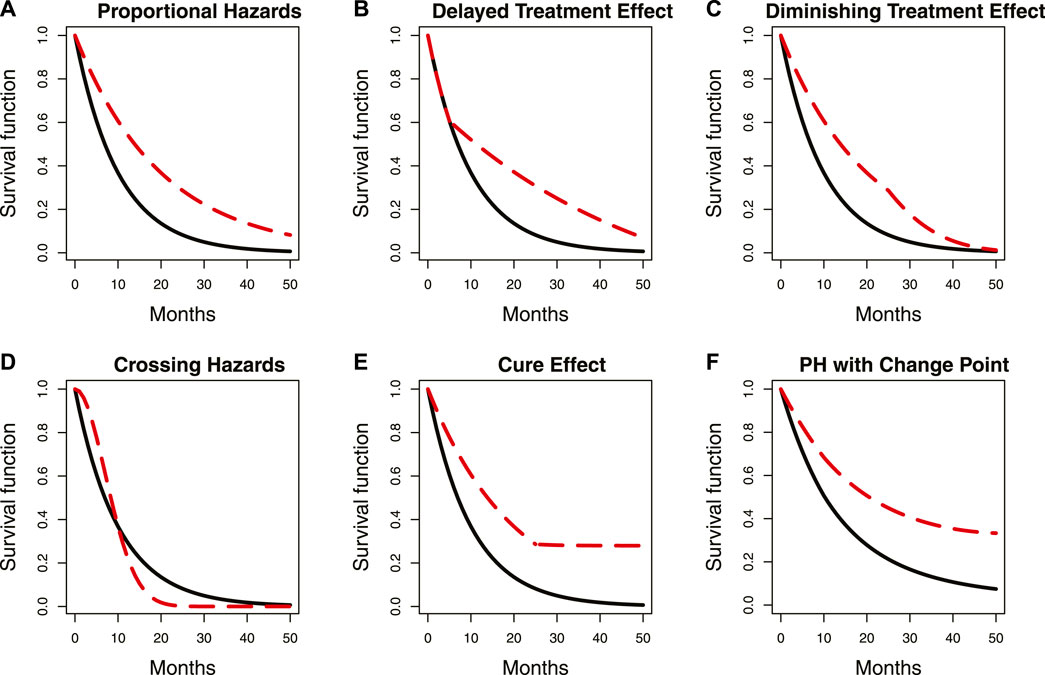

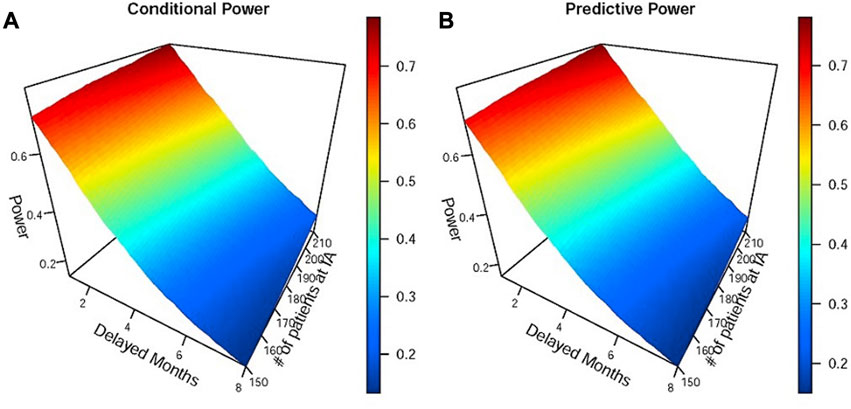

Tumor immunotherapy research has emerged as a prominent focus in clinical drug development (Melero et al., 2015; Farkona et al., 2016) and is one of the most promising areas in the current anti-cancer drug research and development pipeline. The mechanism of action of tumor immunotherapy involves the following key steps (Freeman et al., 2000; Ribas and Wolchok, 2018): i) inhibiting the activity of immune checkpoints; ii) releasing the immune “brake” in the tumor microenvironment; and iii) activating anti-cancer immune responses. Consequently, immunotherapy needs time to activate immune responses, which results in a typical issue when designing a tumor immunotherapy trial, namely, delayed treatment effects (Hodi et al., 2010; Chen, 2013; Larkin et al., 2015; Mick and Chen, 2015; Kudo, 2019; Finn et al., 2020). As shown in Panel B of Figure 1, the delayed treatment effect can lead to an accelerated failure phenomenon at the early stage of the survival curve, which violates the proportional hazards assumption for statistical analysis. This phenomenon can diminish treatment efficacy, reduce the power of the study, and make it challenging to interpret the final results (Reck et al., 2016). In other words, studies may ultimately fail if this issue is not adequately and thoroughly taken into account at the study design stage (Ren et al., 2023). Particularly in an adaptive phase II/III seamless design or a group sequential design, delayed treatment effects can lead to wrong go/no-go decisions at the interim analysis. This is attributed to a substantial proportion of events occurring in the accelerated failure stage due to the limitations imposed by the observation period. The following two situations may occur if left unaddressed: first, early termination of an effective treatment due to a low predictive success rate in the final analysis with a fixed sample size; and second, re-estimating an overpowered sample size when adjustments based on interim analysis results are permitted. Similar situations can arise in phase II/III seamless designs. Figure 2 demonstrates the simulated impact of delayed treatment effects on the conditional and predictive power at the interim analysis of group sequential designs. It is evident that increasing delay time results in decreased conditional and predictive power. Another point worth noting in Figure 2 is that solely increasing the number of interim analysis events has limited efficacy in compensating for the loss caused by the delayed treatment effect. Thus, this action is insufficient to address the issue. In light of the aforementioned considerations, the primary objective of this paper is to find targeted methods that improve the decision success rate of the interim analysis in the presence of delayed treatment effects in phase II/III seamless designs or group sequential designs.

FIGURE 1. Summary of some common proportional and non-proportional hazard examples. Panels (A–F) represent the scenarios described by the captions in each panel. The location of a change point in the survival curve with delayed treatment effects is indicated by the circle. The red dashed line represents survival curves with change points, while the solid black line represents those without change points. PH denotes proportional hazards.

FIGURE 2. Simulations of the impact of different delayed months on conditional and predictive power at the interim analysis of the group sequential design. Panels (A) and (B) represent conditional and predictive power, respectively. IA denotes interim analysis. The efficacy parameters used in simulations were as follows: the median OS of the immunotherapy group was 8 and 12 months before and after the delayed effect, and the median OS of the control group was 8 months.

In contrast to the existing body of research on delayed treatment effects that predominantly focuses on fixed designs and final analysis, there is a notable dearth of methods specifically tailored for interim analysis. Moreover, the majority of existing methods rely on the weighted log-rank test statistic and its extension methods (Hasegawa, 2016; Yang, 2019; Prior, 2020). Despite disregarding the complex calculation and poor interpretability associated with the weighted log-rank test statistic, there is still a deficiency in its application. Specifically, in phase III confirmatory tumor clinical trials, the log-rank test serves as the primary method for analyzing time-to-event endpoints, regardless of whether the proportional hazard assumption is satisfied, while the weighted log-rank test statistic is often only used as a sensitivity analysis. Consequently, we shift our research direction toward improving the go/no-go decision success rate of the interim analysis. To address this research issue, we propose a comprehensive set of methods and procedures. We first detect the number and locations of change points in the survival curve, estimate the hazard function values for each segment of the survival curve based on these change points, then estimate the treatment effect size, and finally apply it to go/no-go decision indicators such as conditional power, predictive power, or the probability of success.

A change point is defined as a specific juncture at which the probability density function of random variables changes (Hinkley, 1970). The survival curve typically has one or more change points when the proportional hazards assumption is violated. Panel B in Figure 1 illustrates an example where the two survival curves overlap in the first 5 months due to a delayed treatment effect. Notably, a distinct change point can be observed in the red dashed survival curve, as indicated by the circle in the figure. Various statistical methods have been proposed for change point detection and hazard function estimation. Matthews and Farewell (1982) used the maximum likelihood function to find a single change point in the survival curve at an unknown time. Goodman et al. (2011) proposed using the maximum profile likelihood function to detect multiple change points and hazard functions in a piecewise exponential model. They also applied the Wald-type statistic for sequential testing of the statistical significance of these change points. The sequential testing means testing the kth change point only when the kth-1 change point is significant. Moreover, they used a decreasing alpha spending function to control the type I error rate, which is similar to the approach proposed by Lan and DeMets (1983) in group sequential design. Matthews et al. (1985) suggested using the score statistic to test the significance of change points. He et al. (2013) proposed a sequential testing procedure using the likelihood ratio statistic to identify multiple change points. In addition to frequentist methods, Bayesian methods have also been used, with Raftery and Akman (1986) first introducing a Bayesian framework that focuses on the Poisson process with a single change point. Arjas and Gasbarra (1994) used a Gibbs sampler to identify multiple change points. Chapple et al. (2020) applied the Markov Chain Monte Carlo (MCMC) method and a reversible jump algorithm to determine the number and location of change points. Additionally, a non-parametric approach was proposed by Müller and Wang (1994), who used a non-parametric smoothing technique to detect change points. Although the aforementioned methods can be used for change point detection and hazard function estimation, none of them mentioned clinical phase II/III seamless design or group sequential design, and there are few methods related to clinical trial practice. Therefore, we modify some frequentist and Bayesian methods, and provide recommendations on the order of searching for multiple change points to ensure their suitability for our research purposes. Furthermore, we propose two non-parametric methods based on the Kaplan–Meier estimator and the area under the survival curve, respectively. These two non-parametric methods demonstrate comparable performance to the parametric methods under certain simulation scenarios while reducing the complexity of change point detection. Next, while the aforementioned methods allow for testing the statistical significance of the detected change points, most of them are based on the hazard ratio (HR) and are recommended to be used in the unblinded analysis. However, in real clinical trials, interim analysis may need to be conducted without unblinding. Therefore, we propose a sequential testing approach based on the ratio of the log-likelihood function, which differs from the method proposed by He et al. (2013) and can be applied to test change points in both blinded and unblinded analyses. Finally, by calculating the average hazard ratio (AHR), we can apply the change points and hazard functions to the efficacy estimation. The AHR is then incorporated into go/no-go decision indicators to assist us in making decisions. In addition to the traditional AHR (Kalbfleisch and Prentice, 1981), we also propose a new AHR calculation method whose weighting considers both the influence of time and the number of events. Our proposed analysis procedure involves both blinded and unblinded interim analyses. The main distinction is that unblinded analysis allows for an additional test for the proportional hazards assumption prior to commencing the analysis procedure. If the test result is statistically significant, it indicates that the proportional hazards assumption may not hold. We proceed to detect change points and estimate hazard functions. Otherwise, the interim analysis is conducted directly without using the proposed analysis procedure. Since existing methods for testing the proportional hazards assumption are primarily based on the HR (Moore, 2016), estimating it under the blind condition is complex and requires some strong assumptions. As a result, we skip this step and directly detect change points in the blinded analysis. Moreover, since determining an appropriate time for interim analysis when the proportional hazards assumption does not hold is a topic worth discussing, we propose a maximum interim information design. This flexible and simple-to-implement method considers both event-driven and calendar-driven factors when selecting the interim analysis time.

The subsequent sections of this paper are organized as follows: Section 2 introduces the methods for detecting and testing change points, estimating the effect size, and choosing the analysis time for the interim analysis. To depict the proposed analysis procedure more clearly, we also provide an analysis flowchart at the end of this section. In Section 3, Section 4, Section 5, we compare the performance of the proposed methods with the unadjusted traditional method in various scenarios and a real case. The discussion remarks are summarized in the last section.

The survival time and censoring time of N subjects are denoted as

where

where

The log-likelihood function is changed by substituting the given equation of

We obtain

In a Bayesian framework, the posterior density is proportional to the product of the likelihood function and the prior density. Specifically,

where

The model’s parameters can be estimated using the MCMC method.

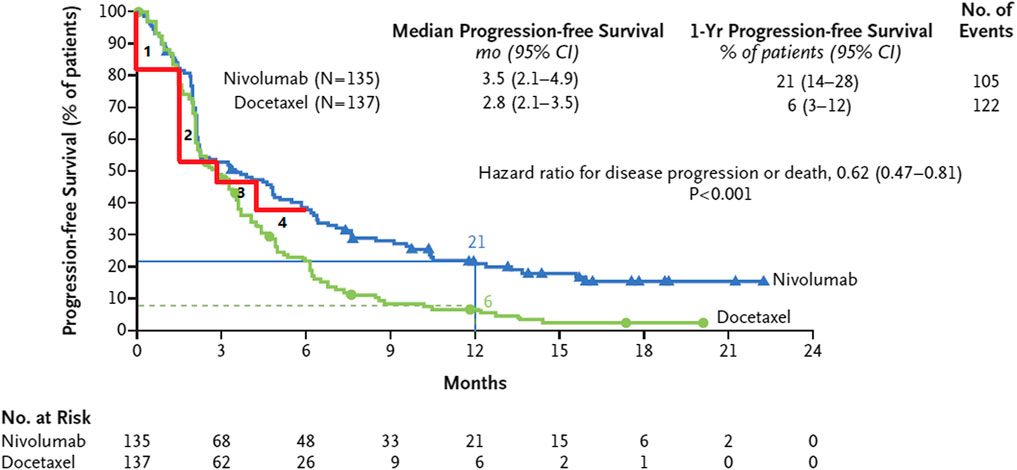

The frequentist and Bayesian approaches have a disadvantage which is the fact that their calculation processes can become overly complex. To overcome this limitation, we propose two non-parametric methods. The idea of the non-parametric approach is very intuitive, as shown in Figure 3 (Brahmer et al., 2015), which describes that the survival curve of the nivolumab group has one change point near the 3rd month after the first administration. This implies that there are different hazard rates before and after the change point. Our first approach involves dividing the survival curve into multiple time subintervals of equal length. We calculate the area under the survival curve for each time subinterval and identify the subinterval that has the largest change in comparison to the previous subinterval. The starting point of this subinterval is chosen as the change point. Taking the identification of one change point as an example, we first predict a range of the change point, such as from the 1st to the 5th month after the first administration. The survival curve is divided into equal-length time subintervals, for example, from the 1st to the 2nd month, from 1.1th to the 2.1th month, or from the 1.2th to the 2.2th month, denoted by

FIGURE 3. The survival curve of nivolumab has one change point in the 3rd month after the first administration and is divided into four subintervals of equal length.

After identifying the change points, researchers may want to know which of these change points are statistically significant. In addition to the methods introduced in Section 1, we propose a sequential likelihood ratio test that is applicable to both blinded and unblinded analyses. This test consists of the following three steps to assess whether there is one change point or no change point.

I. Use a frequentist, Bayesian, or non-parametric approach to find one change point in real trial data. Calculate the log-likelihood function value based on the piecewise exponential model for this change point, denoted as

II. Simulate 1000 samples of data using the hazard function parameters estimated from the exponential distribution without any change points, which were obtained in the previous step. Each sample has the same sample size as the real trial. Repeat step I for each sample, resulting in 1000 likelihood ratio statistics,

III. Substitute

By repeating the same sequential test steps, it is possible to determine the presence of two change points beyond one. If two change points are confirmed, we can proceed to test whether there are three or more change points. In general, this sequential test does not require adjusting the type I error rate. However, to avoid the over-fitted issue with a large number of change points, we can refer to the decreasing alpha spending function proposed by Goodman et al. (2011). It is important to note that there may be cases where the hazard function changes significantly after a change point, yet the proportional hazards assumption still holds, such as in Panel F of Figure 1. We simulate and discuss the impact of such a case in Section 3, Section 4.

To estimate the treatment effect using the change points and hazard functions obtained from the previous steps, a common approach is to use the AHR proposed by Kalbfleisch and Prentice (1981). For any two treatment groups, the AHR can be calculated as follows:

where

The aforementioned weighting method has the disadvantage of being complex to calculate when there is more than one change point, and it is also challenging to choose the value of

Although the weights assigned to AHR2 are easily chosen and assume equal weight for each event, they only consider the number of events and disregard the influence of the duration of each subinterval in the survival curve. For instance, in a survival curve with one change point in the 3rd month after the first administration, there exists an accelerated failure phase before the change point. It is assumed that 100 events are observed both before and after the change point, respectively. Both subintervals are equally weighted when using the AHR2. However, the observation duration for the first 100 events is only 3 months, considerably shorter than the duration after the change point, and there are still large amounts of censored data after the change point that is not included in the weights. Considering these factors, we propose another weighting method that takes into account the impact of both time and the number of events, which is called the time- and event-weighted HR (TEHR). It is calculated as follows:

One benefit of the TEHR is that the duration of time used in the AHR1 and the number of events used in the AHR2 are taken into account when assigning weights. Both the AHR and TEHR can be calculated directly in unblinded analysis. The challenge is in the estimation of the hazard function for each treatment group without unblinding. One approach is to use the EM algorithm to estimate the hazard function. The other approach is to make certain assumptions. For example, it is assumed that the blind combined hazard rate

When planning an interim analysis in a group sequential design, the interim analysis time is usually determined using either an event- or calendar-driven approach. In an event-driven design, the interim analysis is triggered when a predetermined number of events is observed. A calendar-driven design refers to conducting the interim analysis after a pre-specified time has arrived. Researchers typically choose one of these approaches as the criteria for initiating the interim analysis. For example, a tumor immunotherapy trial plans to conduct an interim analysis when 150 events are observed. In the case where the proportional hazards assumption is established, 150 events are expected to be observed in the 18th month after the first administration. Hence, either event-driven or calendar-driven criteria could be used to determine the interim analysis time. However, if a delayed treatment effect is present, the hazard rate would be higher before the change point and decrease afterward. Consequently, it is possible to observe 150 events before the 18th month. On the other hand, if the hazard rates of both groups are relatively low before the change point but rise rapidly in the control group after the change point, fewer than 150 events may be observed in the 18th month. To this end, we propose a flexible method for determining the interim analysis time that considers event- and calendar-driven information. The approach selects the later of the two criteria as the interim analysis time. In the previously described first scenario, the interim analysis would be conducted in the 18th month after the first administration, where there would be more than 150 events due to the presence of a delayed treatment effect. This approach, which selects the interim analysis time with the larger information fraction between event- and calendar-driven, is referred to as the maximum interim information design.

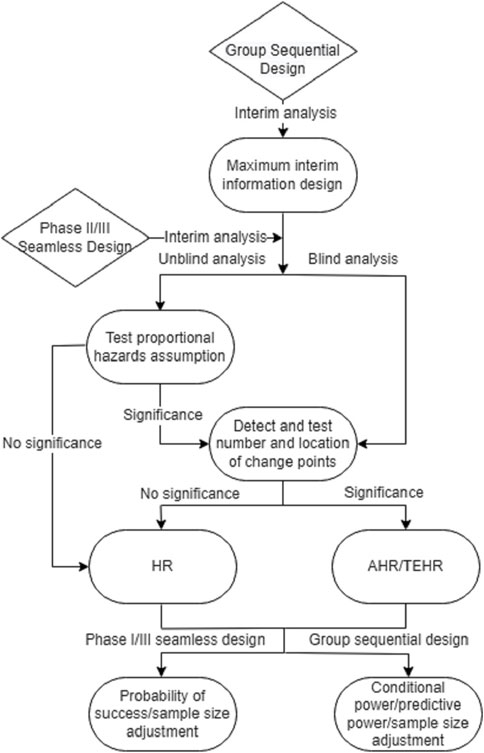

Figure 4 introduces a flowchart that provides an outline of the proposed analysis procedure for the phase II/III seamless design and the group sequential design in the presence of a potential delayed treatment effect. This flowchart can serve as a useful reference when implementing the proposed analysis procedure.

FIGURE 4. Analysis flowchart for phase II/III seamless design and group sequential design when there may be a delayed treatment effect.

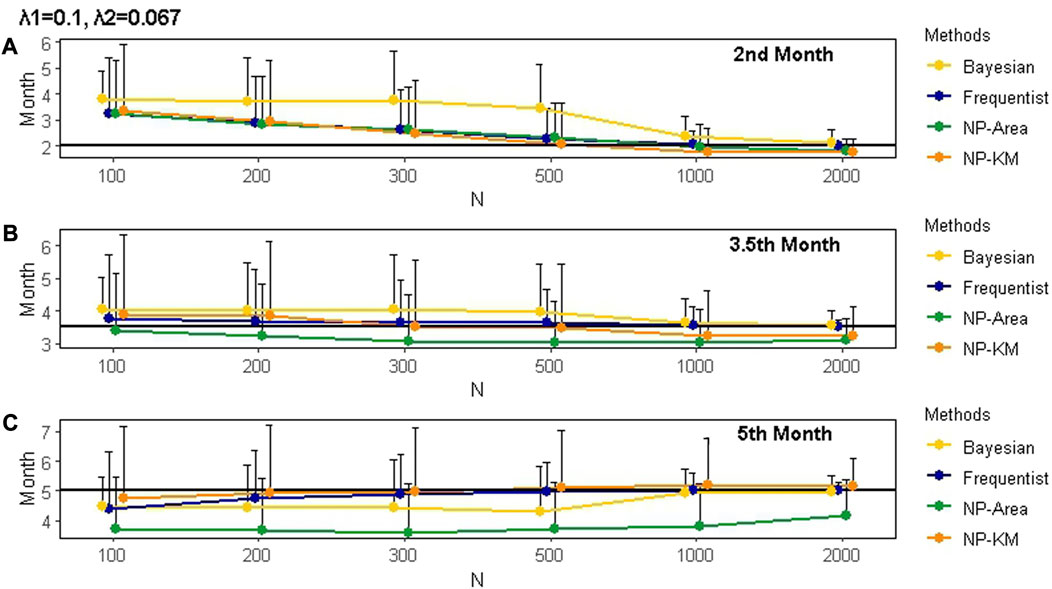

In this section, we first conducted a comparative simulation for different change point detection methods under various sample sizes and data maturities. Our simulations focused on a scenario with a single treatment group and assumed that there would be only one change point, which was in the 2nd, 3.5th, or 5th month after the first administration, respectively. The sample sizes were 200, 300, and 500, while the censoring rates were set at 0%, 20%, or 50%. We denoted the hazard rates of the survival curve before and after the change point as

Next, to assess the impact of the proposed analysis procedure on the decision success rate at the unblinded interim analysis, we conducted a simulation of a double-blind, randomization, placebo-controlled phase III clinical trial. The trial had two treatment groups. Overall survival (OS) was the primary efficacy endpoint. The estimated median OS for the test group was 12 months (

To calculate these two indicators, the clinical success criterion was set to 0.76. The predictive power solely relied on the interim analysis data without incorporating any prior knowledge. The decision threshold was set at 90%, indicating that if the conditional or predictive power at the interim analysis exceeded 90%, it was deemed likely that the trial would succeed in the final analysis without requiring any additional actions, such as sample size re-estimation. To measure whether the decision success rate at the interim analysis was improved, we compared the go/no-go decisions with the final log-rank test results. The treatment effect size at the interim analysis was estimated by the AHR1 and TEHR, with

The estimates of change points and hazard rates obtained by different methods, considering varying sample sizes and censoring rates, are summarized in Supplementary Table S1. It is evident that the accuracy of estimates was influenced by the magnitude of the hazard rate gap before and after the change point. Higher accuracy was achieved when the hazard rate gap was larger. In addition, larger sample sizes led to more accurate estimates, and censoring rates also had an impact. The hazard rates estimated by various methods were close to the true value when the estimated change points did not significantly deviate from the true change points. Taking a sample size of 200 and the true change point occurring in the 2nd month after the first administration as an example, we observed that when the estimated change point differed by 0.5 months from the true change point, the estimated hazard rates showed a minor deviation from the true values. Furthermore, when the difference between the estimated and true change points increased to 1 month, as demonstrated in the frequentist method with true hazard rates

FIGURE 5. Mean and SD of the actual change points and the change points estimated by different methods under various scenarios for 1000 simulations. Panels (A), (B), and (C) represent change points occurring in the 2nd, 3.5th, and 5th months after the first administration, respectively. The black line represents the true value of the change point. The censoring rate is zero. SD is represented by the length of the whisker. The true hazard rates before and after the change points are 0.1 and 0.067, respectively. NP is non-parametric. KM is Kaplan–Meier.

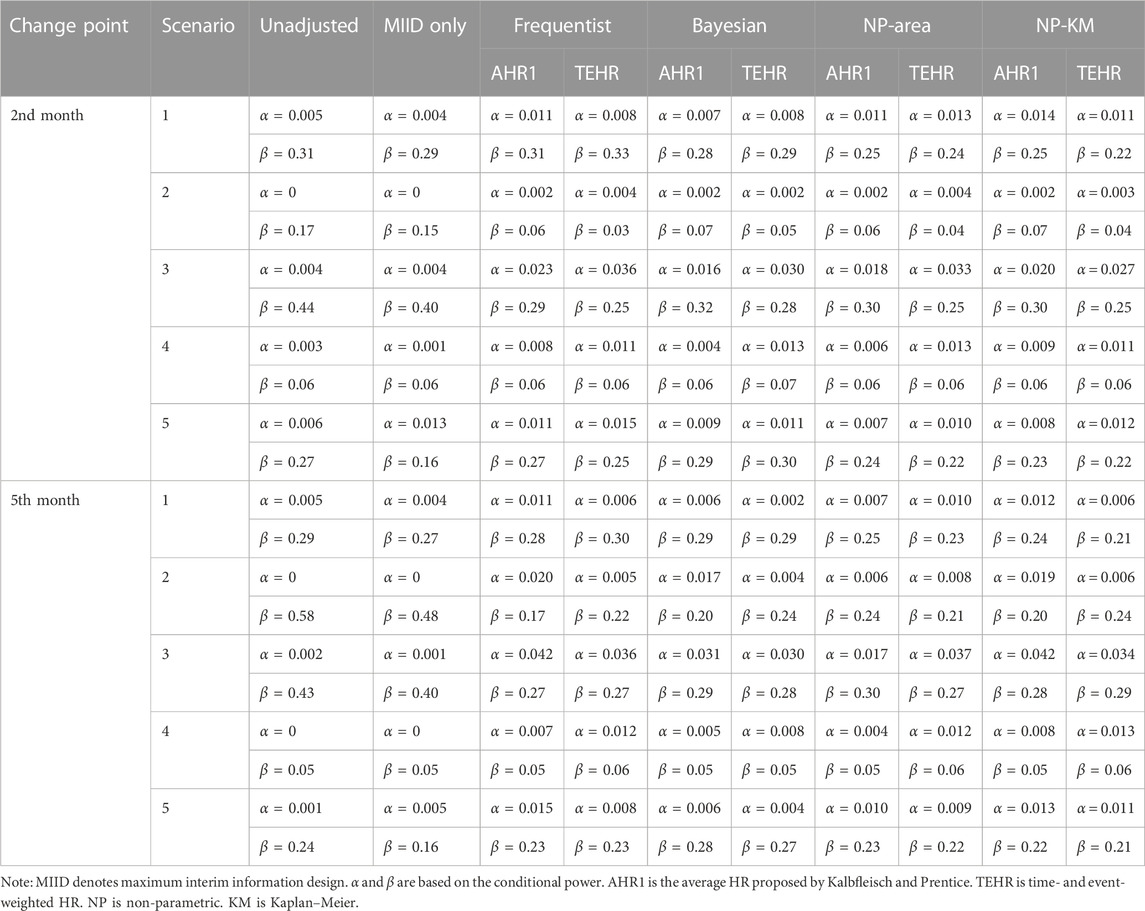

For the results of the decision success rate at the interim analysis,

TABLE 2.

We first focus on the results in Scenarios 2 and 3 as they had a delayed treatment effect, which is the main issue of this research. In Scenario 3, when we analyzed the data directly without considering the impact of the delayed treatment effect,

In all scenarios, the

In the comparison between AHR1 and TEHR, the

The number of events adjusted by the maximum interim information design at the interim analysis for various scenarios is shown in Supplementary Table S5. The unadjusted method maintained a fixed number of events at 174. In previous simulations, all change point detection methods selected the interim analysis time based on the maximum interim information design. Therefore, they had the same number of events as the maximum interim information design. In cases where the proportional hazards assumption was held, such as scenarios 1, 4, and 5, different change points had no impact on the number of events. The number of events in scenarios 2 and 3 increased as the delayed treatment effect persisted. This maintained the power of the study to some extent and demonstrated the benefits of the maximum interim information design. Supplementary Table S6 provides a summary of the actual average time of the interim analysis under various scenarios. When there was a delayed treatment effect due to the longer accelerated failure period in the survival curve, the interim analysis time shifted earlier as the number of delayed months increased. In Scenario 5, although the proportional hazards assumption was established, the interim analysis time was postponed. However, as observed in Table 2, this phenomenon had a limited impact on the decision results in Scenario 5. It further reaffirmed that the violation of the proportional hazards assumption was the key factor influencing the accuracy of the analysis and decision-making. In scenarios 1 and 4, where no delayed treatment effect was present, the interim analysis time was not impacted.

The Bladder1 dataset in the R package survival, which contains information on bladder cancer recurrences, is frequently used by researchers to assess statistical methods. It consists of three treatment groups, namely, thiotepa, pyridoxine, and placebo, comprising 81, 85, and 128 subjects, respectively. The primary endpoint event is defined as the occurrence of bladder cancer recurrence or death due to any reason. Upon plotting the survival curves for these groups, the figure reveals no discernible disparity in the curves during the first 7 or 8 months. However, the decline in the survival curve for the thiotepa group subsequently decelerates, indicating a potential delayed treatment effect. We opted to apply the proposed analysis procedure only to the thiotepa and pyridoxine groups as the placebo group’s survival curve fell between them. We used the log-rank test to compare the thiotepa and pyridoxine groups, yielding a p-value of 0.258, suggesting a lack of statistical significance. To convert the trial’s data into interim analysis data, we sorted the data by start time and set the last 30% as censoring, regardless of whether the events were observed. The censoring rate increased to 50.6% as a result. Our intent is to make an accurate go/no-go decision by applying the proposed analysis procedure to the unblinded interim data. To accomplish this, we used frequentist, Bayesian, and two non-parametric methods to detect the change point in the combined data from the 5th to the 9th month after the first administration, maintaining the same settings as the previous simulations for other parameters. Subsequently, we calculated the TEHR, conditional power, and predictive power based on the estimates of the hazard rates before and after the change point for the two groups. The outcomes of the proposed analysis procedure were then compared with those of the unadjusted method. The clinical success criterion was set to 0.8 when calculating the conditional and predictive power. The final results are presented in Table 3.

The change point detection results obtained through the frequentist and non-parametric methods based on the KM estimator demonstrated higher accuracy than the other two methods. If 50% of this was used as the go/no-go decision criterion, it was evident from the conditional and predictive power that the unadjusted method would have incorrectly decided to continue the trial. In contrast, all values of calculated conditional and predictive power from the proposed analysis process were below 50%, especially the results of the frequentist and non-parametric methods based on the KM estimator, which were exceptionally low.

In the context of phase II/III seamless design and group sequential design, the violation of the proportional hazards assumption can result in two potential outcomes at the interim analysis: overpower and underpower. Overpower occurs when the power of the interim analysis exceeds expectations, while underpower occurs when the power is insufficient due to a delayed treatment effect. Given the impact of the latter on statistical analysis, this paper proposes an analysis procedure that includes detecting the number and location of change points in the survival curve and then using them to estimate the treatment effect size, ultimately improving the decision success rate of the interim analysis. The main benefit of the proposed analysis procedure over the unadjusted method is a significant reduction in the type II error rate at the expense of a slight increase in the type I error rate. The accuracy of the four proposed change point detection methods is influenced by the sample size and censoring rate. In simulations, we found that the frequentist method demonstrated relatively stable performance, while the non-parametric methods, particularly those based on the Kaplan–Meier estimator, outperformed the frequentist method in some scenarios with a sample size of less than 300. All four methods yielded superior results compared to the unadjusted method for decision-making. We introduce TEHR, which incorporates both time and events to estimate efficacy in the presence of delayed treatment effects. In comparison to the commonly used AHR, TEHR has its own advantages, particularly when dealing with multiple change points. We also provide a method for selecting the interim analysis time in the group sequential design, which can limitedly improve the decision success rate. In this section, we discuss important considerations while applying the analysis procedure.

In the previous sections, we summarized the performance of various change point detection methods. However, these conclusions were based on the assumption that the simulated data followed the piecewise exponential model. The selection of the detection method should be guided by the specific characteristics of the data, such as the distribution, sample size, and available prior information. Simulation can assist in identifying an appropriate method for a given scenario. In general, if there is a good understanding of the distribution and an adequate sample size, frequentist methods can be considered. This approach is particularly convenient when the survival curve has only one change point, which is the most common situation in tumor immunotherapy studies. On the other hand, if the distribution is uncertain or the sample size is small, non-parametric methods may be more appropriate. In cases where reliable prior information is available, Bayesian methods can be utilized.

In comparison to AHR2, which applies the same weights for all time intervals, TEHR can be regarded as a weighted approach that incorporates the element of time. In scenarios with a significant treatment delay, TEHR tends to provide a relatively conservative estimate of treatment efficacy between the two groups. Conversely, when there is a shorter delay, TEHR yields a relatively larger estimate of treatment efficacy. This characteristic of TEHR was adequately illustrated through the simulations and the provided example. It is important to note that TEHR is just one type of weighted method that can be used based on the aforementioned rules; it may not be applicable in all situations. Researchers should carefully consider the specific context and characteristics of the study when using TEHR. We did not specifically test for violations of the proportional hazards assumption or the significance of change points while calculating AHR1 or TEHR in the simulations. The results showed that the performance of the proposed analysis procedure was not inferior to that of the unadjusted method when the proportional hazards assumption held, and it exhibited clear advantages when this assumption was violated. This suggests that the proposed analysis procedure can be directly applied to scenarios with no more than one change point without the need to test its statistical significance. Since most real tumor immunotherapy scenarios only involve a single change point, this application condition is particularly useful. It demonstrates the robustness of the proposed analysis procedure while simultaneously reducing the complexity of statistical analysis.

One more issue that has not been discussed is the adjustment and allocation of the type I error rate when using the maximum interim information design. Some traditional methods can still be used to address this issue. For example, the alpha spending function (DeMets and Lan, 1994) can be used to adjust the type I error rate according to the updated interim analysis time. Moreover, the proposed analysis procedure makes decisions based on indicators such as conditional power, predictive power, or probability of success. These indicators can play a decisive role in the phase II/III seamless design. For the group sequential design, in addition to its application in go/no-go decisions, the analysis procedure can also be used in conjunction with the p-value obtained at the interim analyses. For instance, if the p-value of the interim analysis results cannot reject or accept

Publicly available datasets were analyzed in this study. The dataset Bladder1 of the Survival package used in this study can be found in the CRAN website: https://cran.r-project.org/.

L-SX developed the methods and drafted the manuscript, while HL provided additional review and content verification. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fphar.2023.1186456/full#supplementary-material

Arjas, E., and Gasbarra, D. (1994). Nonparametric Bayesian inference from right censored survival data, using the Gibbs sampler. Stat. Sin. 4, 505–524. doi:10.1007/BF01199902

Brahmer, J., Reckamp, K. L., Baas, P., Crinò, L., Eberhardt, W. E. E., Poddubskaya, E., et al. (2015). Nivolumab versus docetaxel in advanced squamous-cell non-small-cell lung cancer. N. Engl. J. Med. 373, 123–135. doi:10.1056/NEJMoa1504627

Chapple, A. G., Peak, T., and Hemal, A. (2020). A novel Bayesian continuous piecewise linear log-hazard model, with estimation and inference via reversible jump Markov chain Monte Carlo. Statistics Med. 39, 1766–1780. doi:10.1002/sim.8511

Chen, T.-T. (2013). Statistical issues and challenges in immuno-oncology. J. Immunother. Cancer 1, 18. doi:10.1186/2051-1426-1-18

DeMets, D. L., and Lan, K. K. G. (1994). Interim analysis: the alpha spending function approach. Stat. Med. 13, 1341–1352. doi:10.1002/sim.4780131308

Farkona, S., Diamandis, E. P., and Blasutig, I. M. (2016). Cancer immunotherapy: the beginning of the end of cancer? BMC Med. 14, 73. doi:10.1186/s12916-016-0623-5

Finn, R. S., Ryoo, B.-Y., Merle, P., Kudo, M., Bouattour, M., Lim, H. Y., et al. (2020). Pembrolizumab as second-line therapy in patients with advanced hepatocellular carcinoma in KEYNOTE-240: a randomized, double-blind, phase III trial. J. Clin. Oncol. 38, 193–202. doi:10.1200/JCO.19.01307

Freeman, G. J., Long, A. J., Iwai, Y., Bourque, K., Chernova, T., Nishimura, H., et al. (2000). Engagement of the PD-1 immunoinhibitory receptor by a novel B7 family member leads to negative regulation of lymphocyte activation. J. Exp. Med. 192, 1027–1034. doi:10.1084/jem.192.7.1027

Goodman, M. S., Li, Y., and Tiwari, R. C. (2011). Detecting multiple change points in piecewise constant hazard functions. J. Appl. Statistics 38, 2523–2532. doi:10.1080/02664763.2011.559209

Hasegawa, T. (2016). Group sequential monitoring based on the weighted log-rank test statistic with the fleming-harrington class of weights in cancer vaccine studies: group sequential monitoring based on the weighted log-rank test statistic with the fleming-harrington class of weights in cancer vaccine studies. Pharm. Stat. 15, 412–419. doi:10.1002/pst.1760

He, P., Fang, L., and Su, Z. (2013). A sequential testing approach to detecting multiple change points in the proportional hazards model. Stat. Med. 32, 1239–1245. doi:10.1002/sim.5605

Hinkley, D. V. (1970). Inference about the change-point in a sequence of random variables. Biometrika 57, 1–17. doi:10.1093/biomet/57.1.1

Hodi, F. S., O’Day, S. J., McDermott, D. F., Weber, R. W., Sosman, J. A., Haanen, J. B., et al. (2010). Improved survival with ipilimumab in patients with metastatic melanoma. N. Engl. J. Med. 363, 711–723. doi:10.1056/NEJMoa1003466

Kalbfleisch, J. D., and Prentice, R. L. (1981). Estimation of the average hazard ratio. Biometrika 68, 105–112. doi:10.1093/biomet/68.1.105

Kudo, M. (2019). Pembrolizumab for the treatment of hepatocellular carcinoma. Liver Cancer 8, 143–154. doi:10.1159/000500143

Lan, K. K. G., and DeMets, D. L. (1983). Discrete sequential boundaries for clinical trials. Biometrika 70, 659–663. doi:10.2307/2336502

Larkin, J., Hodi, F. S., and Wolchok, J. D. (2015). Combined Nivolumab and ipilimumab or monotherapy in untreated melanoma. N. Engl. J. Med. 373, 1270–1271. doi:10.1056/NEJMc1509660

Matthews, D. E., and Farewell, V. T. (1982). On testing for a constant hazard against a change-point alternative. Biometrics 38, 463–468. doi:10.2307/2530460

Matthews, D. E., Farewell, V. T., and Pyke, R. (1985). Asymptotic score-statistic processes and tests for constant hazard against a change-point alternative. Ann. Stat. 13. doi:10.1214/aos/1176349540

Melero, I., Berman, D. M., Aznar, M. A., Korman, A. J., Pérez Gracia, J. L., and Haanen, J. (2015). Evolving synergistic combinations of targeted immunotherapies to combat cancer. Nat. Rev. Cancer 15, 457–472. doi:10.1038/nrc3973

Mick, R., and Chen, T.-T. (2015). Statistical challenges in the design of late-stage cancer immunotherapy studies. Cancer Immunol. Res. 3, 1292–1298. doi:10.1158/2326-6066.CIR-15-0260

Moore, D. F. (2016). Applied survival analysis using R. Cham: Springer International Publishing. doi:10.1007/978-3-319-31245-3

Müller, H. G., and Wang, J.-L. (1994). “Change-point models for hazard functions,” in Institute of mathematical statistics lecture notes - monograph series (Hayward, CA: Institute of Mathematical Statistics), 224–241. doi:10.1214/lnms/1215463127

Prior, T. J. (2020). Group sequential monitoring based on the maximum of weighted log-rank statistics with the Fleming–Harrington class of weights in oncology clinical trials. Stat. Methods Med. Res. 29, 3525–3532. doi:10.1177/0962280220931560

Raftery, A. E., and Akman, V. E. (1986). Bayesian analysis of a Poisson process with a change-point. Biometrika 73, 85–89. doi:10.1093/biomet/73.1.85

Reck, M., Rodríguez-Abreu, D., Robinson, A. G., Hui, R., Csőszi, T., Fülöp, A., et al. (2016). Pembrolizumab versus chemotherapy for PD-L1–positive non–small-cell lung cancer. N. Engl. J. Med. 375, 1823–1833. doi:10.1056/NEJMoa1606774

Ren, S., Feng, J., Ma, S., Chen, H., Ma, Z., Huang, C., et al. (2023). KEYNOTE-033: randomized phase 3 study of pembrolizumab vs docetaxel in previously treated, PD-L1-positive, advanced NSCLC. Intl J. Cancer 153, 623–634. doi:10.1002/ijc.34532

Ribas, A., and Wolchok, J. D. (2018). Cancer immunotherapy using checkpoint blockade. Science 359, 1350–1355. doi:10.1126/science.aar4060

Keywords: tumor immunotherapy, delayed treatment effect, change point, interim analysis, decision-making

Citation: Xie L-S and Lu H (2023) A change point-based analysis procedure for improving the success rate of decision-making in clinical trials with delayed treatment effects. Front. Pharmacol. 14:1186456. doi: 10.3389/fphar.2023.1186456

Received: 15 March 2023; Accepted: 18 August 2023;

Published: 11 September 2023.

Edited by:

Jean-Marie Boeynaems, Université libre de Bruxelles, BelgiumReviewed by:

Shein-Chung Chow, Duke University, United StatesCopyright © 2023 Xie and Lu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hui Lu, aHVpbHVAc2p0dS5lZHUuY24=

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.