94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

TECHNOLOGY AND CODE article

Front. Pharmacol. , 21 February 2022

Sec. Experimental Pharmacology and Drug Discovery

Volume 13 - 2022 | https://doi.org/10.3389/fphar.2022.833099

This article is part of the Research Topic Chemoinformatics Approaches to Structure- and Ligand-Based Drug Design, Volume II View all 20 articles

Benjamin P. Brown1*

Benjamin P. Brown1* Oanh Vu2

Oanh Vu2 Alexander R. Geanes2

Alexander R. Geanes2 Sandeepkumar Kothiwale2

Sandeepkumar Kothiwale2 Mariusz Butkiewicz2

Mariusz Butkiewicz2 Edward W. Lowe Jr2

Edward W. Lowe Jr2 Ralf Mueller2

Ralf Mueller2 Richard Pape2

Richard Pape2 Jeffrey Mendenhall2*

Jeffrey Mendenhall2* Jens Meiler3,4*

Jens Meiler3,4*The BioChemical Library (BCL) cheminformatics toolkit is an application-based academic open-source software package designed to integrate traditional small molecule cheminformatics tools with machine learning-based quantitative structure-activity/property relationship (QSAR/QSPR) modeling. In this pedagogical article we provide a detailed introduction to core BCL cheminformatics functionality, showing how traditional tasks (e.g., computing chemical properties, estimating druglikeness) can be readily combined with machine learning. In addition, we have included multiple examples covering areas of advanced use, such as reaction-based library design. We anticipate that this manuscript will be a valuable resource for researchers in computer-aided drug discovery looking to integrate modular cheminformatics and machine learning tools into their pipelines.

Computer-aided drug discovery (CADD) methods are routinely employed to improve the efficiency of hit identification and lead optimization (Macalino et al., 2015; Usha et al., 2017). The importance of in silico methods in drug discovery is exemplified by the multitude of cheminformatics tools available today. These tools frequently include capabilities for tasks such as high-volume molecule processing (Hassan et al., 2006; SciTegic, 2007), ligand-based (LB) small molecule alignment (Labute et al., 2001; Jain Ajay, 2004; Chan, 2017; Brown et al., 2019), conformer generation (Cappel et al., 2015; Kothiwale et al., 2015; Friedrich et al., 2017a, 2019), pharmacophore modeling (Hecker et al., 2002; Acharya et al., 2011; Vlachakis et al., 2015), structure-based (SB) protein-ligand docking (Friesner et al., 2004; Meiler and Baker, 2006; Davis and Baker, 2009; Hartmann et al., 2009; Morris et al., 2009; Kaufmann and Meiler, 2012; Lemmon et al., 2012), and ligand design. Increasingly, modern drug discovery relies on customizable and target-specific machine learning-based quantitative structure-activity relationship (QSAR) and structure-property relationship (QSPR) modeling during virtual high-throughput screening (vHTS) (Lo et al., 2018; Vamathevan et al., 2019).

Frequently, building a drug discovery pipeline with all of these parts requires users to combine multiple different software packages into their workflow. This can be challenging because of different version requirements in package dependencies. Moreover, file- and data-type incompatibilities between packages can lead to errors and pipeline inefficiencies. Here, we describe the BioChemical Library (BCL) cheminformatics toolkit, a freely available academic open-source software package with tightly integrated machine learning-based QSAR/QSPR capabilities.

The BCL is an application-based software package programmed and compiled in C++. This means that BCL applications can be integrated into existing pipelines without the need for package dependency management (i.e., maintaining directory-dependent virtual environments, or keeping separate Miniconda environments for each task). In addition, BCL applications are modular and can be easily combined into complex protocols with simple Shell scripts. Output files from the BCL are primarily common file types that can also be read as input by other software packages. Its command-line usage will be familiar to users of the popular macromolecular modeling software Rosetta (Kaufmann et al., 2010). The simple command line user interface (UI) makes it easy to create complex protocols without extensive coding or scripting experience. Our goal with this manuscript is to describe the core functionalities of the BCL cheminformatics toolkit and provide detailed examples for real use cases. At the end, we briefly discuss ongoing software developments that may be of interest to users.

The first thing to complete after downloading and installing the BCL is to add the license file to the/path/to/bcl folder. We further recommend adding/<path>/<to>/bcl to the LD_LIBRARY_PATH and PATH environment variables in the. cshrc/.bashrc. This allows users to access the BCL from any terminal window simply by typing bcl. exe into the command-line. For detailed setup instructions, read the appropriate operating system (OS)-specific ReadMe file in bcl/installer/.

The BCL is organized into application groups each of which contains multiple applications. To view the application groups and associated applications, run the BCL help command:

bcl.exe help

The BCL has application groups for cheminformatics, protein folding, machine learning, and other tasks (Supplementary Table S1). To isolate and view the applications associated with the application group molecule, for example, run the application group help command:

bcl.exe molecule:Help

Generally, the syntax to access a BCL application is as follows:

bcl.exe application_group:Application

The help menu for any application cans similarly be accessed as

bcl.exe application_group:Application --help

These help options list the basic arguments and parameters available for each application. More detailed help options are also frequently available for individual application parameters. In this way, all of the documentation required to run the BCL can be readily accessed from the command line. The application groups composing the core of the BCL cheminformatics toolkit include the following: Molecule, Descriptor, and Model (Table 1).

Molecules are input to the BCL in the MDL structure-data format (SDF) file. Often, molecules that are downloaded or converted from one source to another contain errors (e.g., incorrect bond order assignments, undesired protonation states/formal charge, etc.). Dataset sanitization is a critical component of computational chemistry and informatics projects. The BCL molecule: Filter application is the first step in correcting these errors or identifying molecules that cannot be easily and automatically corrected.

To see all of the options available in molecule:Filter, run the following command:

bcl.exe molecule:Filter--help

or view the supplementary material (Supplementary Table S2,S3).

For the following examples we will make use of a set of the Platinum Diverse Dataset, a subset of high-quality ligands in their protein-bound 3D conformations (Friedrich et al., 2017b).

bcl.exe molecule:Filter \

-input_filenames platinum_diverse_dataset_2017_01. sdf.gz \

-output_matched platinum_diverse_dataset_2017_01. matched.sdf.gz \

-output_unmatched platinum_diverse_dataset_2017_01. unmatched.sdf.gz \

-add_h -neutralize \

-defined_atom_types–simple \

-logger File platinum_diverse_dataset_2017_01. Filter.log

This command reads in the SDF platinum_diverse_dataset_2017_01. sdf.gz, saturates all molecules with hydrogen atoms, neutralizes any formal charges, checks to see whether the molecules have valid atom types (e.g., carbon atoms making five covalent bonds are not valid), and then checks to see whether the molecules have simple connectivity (e.g., whether they are part of a molecular complex, such as a salt). The neutralization flag identifies atoms with formal charge and tries to remove the formal charge. The default behavior allows modification of the protonation state of the atom (i.e., pH) and/or the bond order. Other options (more or less aggressive neutralization schemes) are also available and can be seen in the help menu. Adding hydrogen atoms and neutralizing charges are not required operations but are shown above to demonstrate the functionality.

All molecules that match the filter (i.e., molecules with defined atom types and are not part of molecular complexes) are output into platinum_diverse_dataset_2017_01. matched.sdf, and molecules that fail to pass the filters are output into platinum_diverse_dataset_2017_01. unmatched.sdf. In this case, all molecules pass the filter. This allows the user to review the molecules that failed the filter and choose to either fix them or continue without them.

The molecule:Filter application can also be used to separate molecules by property and/or substructure using the compare_property_values flag. For example, to filter out molecules that contain 10 or more rotatable bonds and a topological polar surface area (TPSA) less than 140 Å2, the following command can be used:

bcl.exe molecule:Filter \

-input_filenames platinum_diverse_dataset_2017_01. sdf.gz \

-output_matched platinum_diverse_dataset_2017_01. veber_pass.sdf.gz \

-output_unmatched platinum_diverse_dataset_2017_01. veber_fail.sdf.gz \

-compare_property_values TopologicalPolarSurfaceArea less 140 \

NRotBond less_equal 10 \

-logger File platinum_diverse_dataset_2017_01. veber.log

Of 2,859 molecules, 395 were first filtered out for have a TPSA ≥140 Å2, and then an additional 84 molecules that had greater than 10 rotatable bonds were filtered out. Notice that the filters are applied sequentially, and molecules must pass both filters to be output to the matched file. Alternatively, the any flag can be specified such that if a molecule meets any one of the filter criteria, then it is output to the matched file:

bcl.exe molecule:Filter \

-input_filenames platinum_diverse_dataset_2017_01. sdf.gz \

-output_matched platinum_diverse_dataset_2017_01. any_pass.sdf.gz \

-output_unmatched platinum_diverse_dataset_2017_01. any_fail.sdf.gz \

-compare_property_values TopologicalPolarSurfaceArea less 140 \

NRotBond less_equal 10 \

-any -logger File platinum_diverse_dataset_2017_01. any.log

In this example, 2,801 molecules passed at least one of the filters and only 58 were filtered out.

One may also filter based on substructure similarity. This is particularly useful if there are specific substructures that are desired or that need to be avoided. For example, aromatic amines are a well-known toxicophore and cannot be incorporated into potential druglike molecules; however, it is not uncommon to find these substructures in datasets. Here, we will filter a subset of DrugBank (Wishart et al., 2018) molecules to remove aniline-containing compounds:

bcl.exe molecule:Filter \

-input_filenames drugbank_nonexperimental.simple.sdf.gz \

-output_matched drugbank_nonexperimental.simple.anilines.sdf.gz \

-output_unmatched drugbank_nonexperimental.simple.clean.sdf.gz \

-contains_fragments_from aniline. sdf.gz \

-logger File drugbank_nonexperimental.simple.toxicity_check.log

In practice, we usually explicitly filter certain toxicophore substructures via graph search with the MoleculeTotalToxicFragments descriptor in conjunction with compare_property_values flag; however, this example illustrates the flexibility to filter by substructure similarity with molecule:Filter. In addition to the standard use cases presented here, molecule:Filter can identify molecules with clashes in 3D space, conformers outside of some tolerance value from a reference conformer, exact substructure matches, specific chemical properties, and more. Some of these filters will be further explored in other subsections.

Another critical aspect of dataset sanitization is removing redundancy. This is especially important when preparing datasets for QSAR model training and testing. If molecules appear more than once in a dataset, then it is possible that they could appear simultaneously in the training and test sets, leading to an artificial inflation in test set performance.

The BCL application molecule:Unique can help with this task. It has four levels at which it can compare and differentiate molecules:

1. Constitutions–compares atom identities and connectivity disregarding stereochemistry;

2. Configurations–compares atom identities, connectivity, and stereochemistry;

3. Conformations–compares configurations as well as 3D conformations;

4. Exact–checks to see whether atom identities and order are equal with the same connectivities, bond orders, stereochemistry, and 3D coordinates.

The first time the BCL encounters a molecule in an SDF it will store it in memory. Any additional encounters with the same molecule (at the chosen level described above) will be marked as duplicate encounters. The default behavior is to output only the first encounter of each molecule. There are cases in which a molecule appears multiple times but has different MDL properties and/or property values. It may not be desirable to lose the stored properties on duplicate compounds. In such cases, the user can choose to merge the properties or overwrite the duplicate descriptors instead.

For example, one may want to see if any high-throughput screening (HTS) hits have activity on multiple targets. Previously, we published nine high-quality virtual HTS (vHTS) benchmark sets for QSAR modeling binary classification tasks (Butkiewicz et al., 2013). Here, we will take a look at the active compounds from each of those nine datasets and see if any of them have activity on multiple targets.

bcl.exe molecule:Unique \

-input_filenames 1798_actives.sdf.gz 1843_actives.sdf.gz \

2258_actives.sdf.gz 2689_actives.sdf.gz \

435008_actives.sdf.gz 435034_actives.sdf.gz \

463087_actives.sdf.gz 485290_actives.sdf.gz 488997_actives.sdf.gz \

-compare Constitutions \

–output_dupes all_actives.dupes.sdf.gz \

–logger File all_actives.unique.log

The output file all_actives.dupes.sdf.gz contains 22 molecules that are active in at least two different datasets (note that each individual dataset was pre-processed to remove redundant molecules). If we want to merge the properties of these 22 compounds and isolate them from the rest of the actives, we can perform a second molecule: Unique with the merge_descriptors flag set, and then use molecule:Filter with the contains flag to isolate the duplicated compounds:

bcl.exe molecule:Unique \

-input_filenames 1798_actives.sdf.gz 1843_actives.sdf.gz \

2258_actives.sdf.gz 2689_actives.sdf.gz \

435008_actives.sdf.gz 435034_actives.sdf.gz \

463087_actives.sdf.gz 485290_actives.sdf.gz 488997_actives.sdf.gz \

-compare Constitutions–merge_descriptors \

-output all_actives.unique_merged.sdf.gz \

–logger File all_actives.unique_merged.log

followed by

bcl.exe molecule:Filter \

-input_filenames all_actives.unique_merged.sdf.gz \

-contains all_actives.dupes.sdf.gz \

-output_matched all_actives.dupes_merged.sdf.gz \

–logger File all_actives.dupes_merged.log

When merge_descriptors is passed, all unique properties are included in the resultant output file. If the same property is present on duplicates, then the first observation of that property is stored on the output molecule. If overwrite_descriptors is passed instead of merge_descriptors, the last observation of a duplicate property is stored. By default, without either of these flags only the MDL properties on the first occurrence of a molecule are stored in the output.

It may be that some of the compounds in the previous example that have activity on multiple targets are actually stereoisomers. Here, the molecules were compared based on atom identity and connectivity (Constitutions). Iterative runs of molecule:Unique coupled with molecule:Filter can be used to identify such cases.

Sorting molecules is also useful during vHTS. After making predictions on a million compounds with a QSAR model, frequently users will want to identify some small top fraction of most probable hits for experimental testing. This can be readily achieved with molecule:Reorder (note–this example utilizes pseudocode for filenames):

bcl.exe molecule:Reorder \

-input_filenames < screened_molecules.sdf> \

-output < screened_molecules.best.sdf > -output_max 100 \

-sort <QSAR_Score> -reverse \

–logger File < screened_molecules.best.log>

In this example, the reverse flag indicates that the scores will be sorted from largest to smallest (default behavior is smallest to largest). Not more than 100 molecules will be output into the file screened_molecules.best.sdf.gz because of the output_max specification (the default behavior returns all molecules in the new order).

In the previous section, we demonstrated that the BCL could identify duplicate compounds at multiple levels of discrimination. One important note is that redundant molecules are excluded (i.e., sent to the output_dupes file) in the order in which they are observed in the original input. Often, the user may want to control this sequence by sorting the molecules according to some property. In these cases, molecule:Reorder can be used to do just that.

Finally, a general note on SDF input and output. Aromaticity is automatically detected when reading input files; however, output structures are Kekulized (represented as alternating single-double bonds) by default. To output an SDF that contains explicit aromatic bonds (achieved by labeling bond order as 4 in the MDL SDF), pass the explicit_aromaticity flag on the command line.

The BCL application molecule:Split gives researchers a tool to derive fragments from starting small molecules to aid in pharmacophore modeling, fragment-based drug discovery, and de novo drug design. There are many different types of fragments molecule:Split is able generate from whole molecule(s) (Table 2).

For example, we can derive the Murcko scaffold from the FDA-approved 3rd generation tyrosine kinase inhibitor (TKI) osimertinib (Ramalingam et al., 2017) as follows:

bcl.exe molecule:Split \

-input_filenames osimertinib. sdf.gz \

-output osi. murcko.sdf.gz \

-implementation Scaffolds

Alternatively, we could remove the Murcko scaffold and return the other components:

bcl.exe molecule:Split \

-input_filenames osimertinib. sdf.gz \

-output osi. inverse_scaffold.sdf.gz \

-implementation InverseScaffold

Substructure comparisons are described in more detail in Section 5.1.

The last application of interest for molecule processing is molecule: Coordinates molecule: Coordinates is a minor application that performs several convenience tasks. First, molecule: Coordinates can recenter all molecules in the input file(s) to the origin. Second, it can compute molecular centroids. Third, molecule: Coordinates can compute statistics on molecular conformers.

For example, passing the statistics flag compute statistics on bond lengths, bond angles, and dihedral angles. Passing the dihedral_scores flag will compute a per-dihedral breakdown of the BCL 3D conformer score. The BCL 3D conformer score, or ConfScore, computes an amide non-planarity penalty in addition to a normalized dihedral score. Passing the amide_deviations and amide_penalties will output the amide deviations and penalties on a per-amide basis, respectively. This can be useful when comparing conformations obtained from conformation sampling algorithms, crystal structures, and/or molecular dynamics (MD) trajectory ensembles. See Section 4 for more information on conformer sampling.

Computing molecular descriptors/properties is a critical component of cheminformatics model building. We use the term “properties” to refer to individual chemical features and “descriptors” to refer to combinations of properties, often used to train QSAR/QSPR models; however, the terms are often used interchangeably in the BCL. In conjunction with substructure-based comparisons, generating molecular descriptors is arguably the foundation of LB CADD. The BCL was designed with a modular descriptor interface and extensible property definitions framework. This allows both developers and users alike to write new descriptors for specific applications as needed. To see a list of available predefined molecular properties, perform the following command:

bcl.exe molecule:Properties–help

The property interface is organized into two general categories: 1) Descriptors of Molecules, and 2) Descriptors of Atoms. As you will see throughout this section and Section 6, properties can be modified and recombined in a highly customizable fashion. See the Supplementary Materials for an example containing multiple custom property definitions, as well as for sample output from the molecule:Properties help menu options detailing available features.

As the names suggest, some descriptors are intrinsic to the whole molecule, while others are specific to atoms. For example, compute some whole molecule descriptors for the EGFR kinase inhibitor osimertinib:

bcl.exe molecule:Properties \

-input_filenames osimertinib. sdf.gz \

-output osi. mol_properties.sdf.gz \

-add Weight NRotBond NRings TopologicalPolarSurfaceArea \

-tabulate Weight NRotBond NRings TopologicalPolarSurfaceArea \

-output_table osi. mol_properties.table.txt

The flag add will add the specified properties to the SDF as MDL properties. The tabulate flag will output the properties for each molecule in row-column format in the file specified by output_table. There is also a statistics flag that will compute basic statistics for each of the specific descriptors across all the molecules in the input SDFs and output to output_histogram. The key observation regarding the output file is that the values for Weight, NRotBond, etc., are emergent properties of the whole molecule.

Next, compute some atomic descriptors for osimertinib:

bcl.exe molecule:Properties \

-add_h–neutralize \

-input_filenames osimertinib. sdf.gz \

-output osi. atom_properties.sdf.gz \

-add Weight Atom_SigmaCharge Atom_TopologicalPolarSurfaceArea \

-tabulate Atom_SigmaCharge Atom_TopologicalPolarSurfaceArea \

-output_table osi. atom_properties.table.txt \

-statistics Atom_SigmaCharge Atom_TopologicalPolarSurfaceArea \

-output_histogram osi. atom_properties.hist.txt

Notice here that the statistics flag outputs statistics across each atom property rather than across each molecule property. This is also the behavior when there are multiple input molecules. Importantly, here we see that the output is an array of values for each property. The indices of the array correspond to the atom indices of the molecule.

Each category of descriptors can further be modified by molecule-specific or atom-specific operations. For example, some whole molecule properties can be obtained by performing simple operations on the per-atom properties. TopologicalPolarSurfaceArea (whole molecule property) is the sum of Atom_TopologicalPolarSurfaceArea (atomic property) across the whole molecule.

bcl.exe molecule:Properties \

-add_h–neutralize \

-input_filenames osimertinib. sdf.gz \

-output osi. mol_properties.sdf.gz \

-add TopologicalPolarSurfaceArea \

“MoleculeSum (Atom_TopologicalPolarSurfaceArea)”

Check to verify that TopologicalPolarSurfaceArea and MoleculeSum (Atom_TopologicalPolarSurfaceArea) yield the same value for osimertinib.

Examples of additional operations include other basic statistics (mean, max, min, standard deviation, etc.), property radial distribution function (RDF), Coulomb force, and shape moment. See the help menu for additional options and details.

In Section 2.2 we discussed using the molecule:Filter application to remove molecules from a dataset that failed specific druglikeness criteria (e.g., TPSA ≥140 Å2). Several familiar druglikeness metrics come prepackaged in the BCL (i.e., Lipinski’s Rule of 5 and Veber’s Rule), as well as several others inspired by the literature and conventional medicinal chemistry practices. For each molecule in the Platinum Diverse dataset, count how many Lipinski and Veber violations there are. In addition, count as drug-like all molecules that have fewer than two Lipinski violations:

bcl.exe molecule:Properties \

-input_filenames platinum_diverse_dataset_2017_01. sdf.gz \

-output_table platinum_diverse_dataset_2017_01. druglike.txt \

-tabulate LipinskiViolations LipinskiViolationsVeber LipinskiDruglike

The property LipinskiViolations counts how many times a molecule violates one of Lipinski’s Rules ( ≤ 5 hydrogen bond donors (HBD; –NH and–OH groups), ≤10 hydrogen bond acceptors (HBA; any–N or–O), molecular weight (MW) < 500 Daltons, and water-octanol partition coefficient (logP) < 5). The LipinskiViolationsVeber property computes the number of times a molecule violates Veber’s Rule (infraction if TPSA ≥140 Å2 and/or number of rotatable bonds >10). The LipinskiDruglike property is a Boolean that returns 1 if fewer than two Lipinski violations occur; 0 otherwise. There is no equivalent Boolean operator for Veber druglikeness; however, it is simple to implement one using the aforementioned operators.

bcl.exe molecule:Properties \

-input_filenames platinum_diverse_dataset_2017_01. sdf.gz \

-output_table platinum_diverse_dataset_2017_01. veber_druglike.txt \

-tabulate “Define [VeberDruglike = Less (lhs = LipinskiViolationsVeber, rhs = 1)]” VeberDruglike

This command makes use of the Define and Less operators to return 1 if there are no violations to Veber’s Rule and 0 otherwise. New properties created with Define can also be passed to subsequent operators on the same line. For example, one could create a descriptor called VeberAndLipinskiDruglike by doing the following:

bcl.exe molecule:Properties \

-input_filenames platinum_diverse_dataset_2017_01. sdf.gz \

-output_table platinum_diverse_dataset_2017_01. veber_druglike.txt \

-tabulate \

“Define [VeberDruglike = Less (lhs = LipinskiViolationsVeber, rhs = 1)]” \

“Define [VeberAndLipinskiDruglike = Multiply (LipinskiDruglike, VeberDruglike)]” \

VeberAndLipinskiDruglike

This new descriptor returns 1 if a molecule passes both druglikeness filters, and 0 otherwise.

Many metrics can be created using the BCL descriptor framework without modifying the source code. This can be useful to users who come across novel methods in the literature and wish to implement them in their own work. Take as an example a seminal work from Bickerton et al., which sought to quantify the chemical aesthetics of potential druglike compounds. Bickerton et al. asked 79 medicinal chemists at AstraZeneca to answer “would you undertake chemistry on this compound if it were a hit?” for ∼200 compounds each, to which chemists replied either “yes” or “no” (Bickerton et al., 2012). They generated a regression function that yielded a quantitative estimate of druglikeness (QED) using eight chemical descriptors: molecular weight, logP, number of hydrogen bond acceptors, number of hydrogen bond donors, polar surface area, number of rotatable bonds, number of aromatic rings, and number of ALERTS (Bickerton et al., 2012).

Using the same dataset and descriptors as Bickerton et al. (generously provided in their Supplemental Materials), similar druglikeness metrics can be implemented in the BCL through the descriptor framework. One approach could be to use the operators described above to reproduce the algebraic expression described in Eq. 1 of Bickerton et al. with the parameters described in their Supplemental Materials. The algebra expressed in BCL notation can be saved to an external text file and passed to the command-line using standard shell script syntax (e.g., @File.txt in Bash). Because there are relatively few descriptors in the Bickerton et al. model, an alternative approach could be to create a classification model.

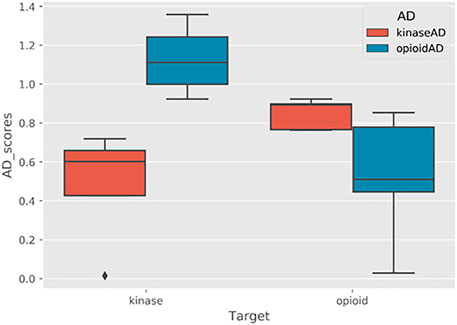

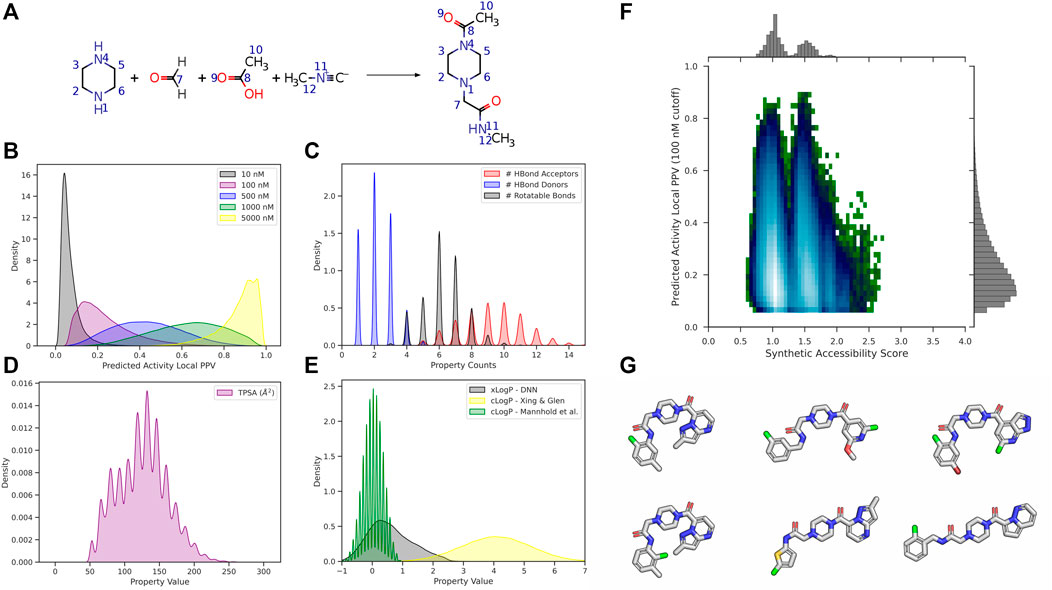

Here, we demonstrate the latter by (Eq. 1) generating a decision tree (DT) model and then 2) converting our DT into a single logic statement to pass to the BCL descriptor interface. For comparison, we also generate linear regression (LinReg) and artificial neural network (ANN) models, and we include the original QED score. All models are trained to predict a chemist’s verdict for each potential compound based on the descriptors used in Bickerton et al. (for details on model training and validation, see Supplementary Methods; for details on how to build machine learning models with the BCL, see Section 7).

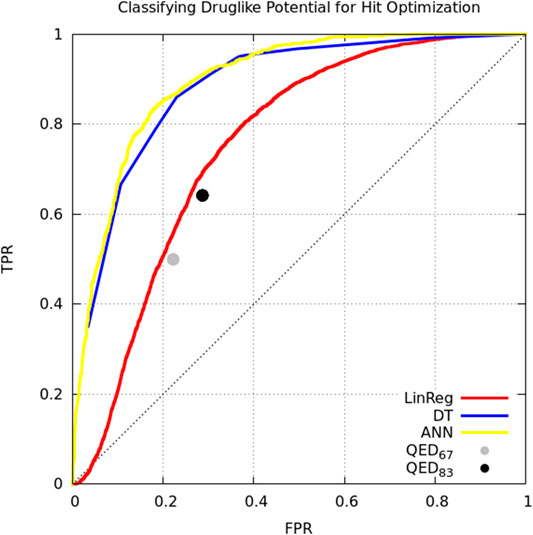

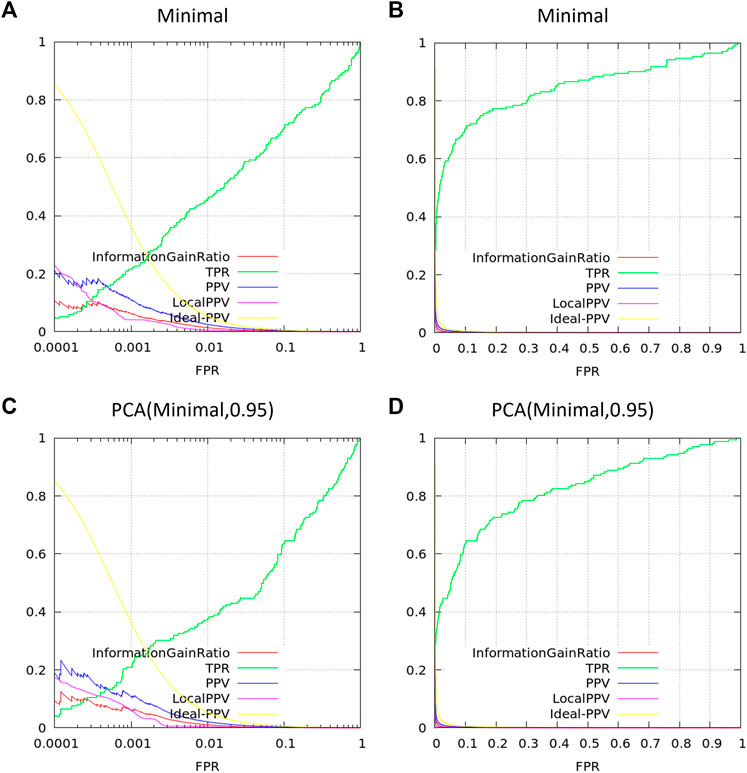

Model classification performance is displayed as a receiver-operating characteristic (ROC) curve (Figure 1). Bickerton et al. found that the mean QED score for molecules that medicinal chemists found favorable was 0.67 (±0.16 standard deviation). Taking the mean and mean plus standard deviation QED scores as cutoffs, we see that QED performs comparably to multiple linear regression. The ANN and DT perform better, but perhaps owing to the small number of and simple relation between variables there is no performance benefit of the ANN over the DT (Figure 1).

FIGURE 1. Classifying small molecules’ potential for hit optimization. Models were trained to predict whether medicinal chemists would perform hit optimization on target molecules (“yes” or “no”) starting with seven descriptors: molecular weight, logP, number of hydrogen bond acceptors, number of hydrogen bond donors, polar surface area, number of rotatable bonds, number of aromatic rings. Model types include linear regression (red), decision tree (blue), single-layer artificial neural network (yellow), and the quantitative estimate of druglikeness score with cutoffs at mean score for attractive molecules (0.67; gray) and the mean plus one standard deviation (0.83; black) by Bickerton et al. (Bickerton et al., 2012). Models trained on supplemental data from Bickerton et al. (Bickerton et al., 2012).

Now that we have our DT, we can reduce it to a readable if-else style format that can be converted into a BCL descriptor. Run the script SimplifyDecisionTree.py, passing as an argument the DT model:

/path/to/bcl/scripts/machine_learning/analysis/SimplifyDecisionTree.py \

./models/DT/model000000. model > DT. logic_summary.txt

We can see in the contents of DT. logic_summary.txt that the first thing the DT checks is whether the small molecule has less than two aromatic rings. Molecules with no aromatic rings are excluded, and molecules with one aromatic ring are subject to different criteria than molecules with two or more. Subsequent criteria are then evaluated. We can rewrite the logic summary as a descriptor and save it in a file called “dt.obj”. Then, we pass that file to molecule:Properties as a descriptor definition and use it to classify molecules:

bcl.exe molecule:Properties \

-add_h -neutralize \

-input_filenames platinum_diverse_dataset_2017_01. sdf.gz \

-output_table platinum_diverse_dataset_2017_01. dt_druglike.txt \

-tabulate “Define (Hitlike = @dt.obj)” Hitlike

The “dt.obj” code object file is a plain text file that can be opened with any text editor. The syntax mimics the BCL command-line syntax. Code object files are a convenient way to write a long, multi-line BCL command-line that makes it easier to build and reuse feature sets.

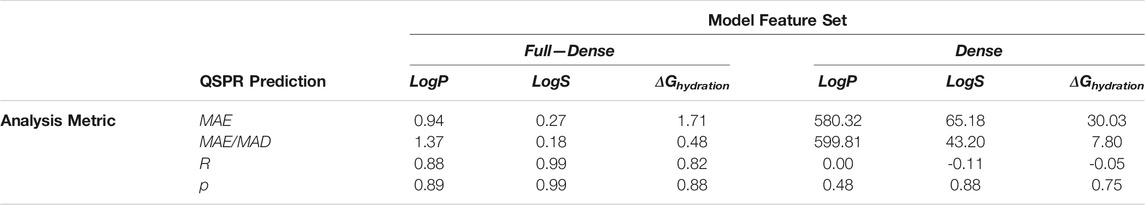

On the topic of druglikeness, it is worth noting that additional advanced methods are also available to classify the chemical space of molecules in a dataset. In some cases, it is useful to identify potential drug-like compounds that not only fit the criteria discussed above but are also similar to some known class (es) of drugs. For example, when performing fragment-based combinatorial library design for kinase inhibitors, in addition to filtering out molecules that violate Veber’s rules, it may also be desirable to filter molecules that are not sufficiently chemically similar to existing kinase inhibitors. This can be accomplished by building and scoring against an applicability domain (AD) model. For further details on creating and using AD models in the BCL, see Section 7.4.3.

We have described multiple uses of the molecule:Properties application, placing special emphasis on how it can be utilized to build different types of druglikeness metrics. As it is fundamentally a tool to obtain information from small molecule chemical structures, molecule:Properties can also be used to help generate statistical potentials, chemical filters, QSAR/QSPR models, and more. Some of these use-cases will be explored in later sections.

Small molecule 3D conformer generation is a critical aspect of both SB and LB CADD because the biologically relevant conformation of the molecule of interest is rarely known a priori. In SB molecular docking, small molecule flexibility is often represented through the inclusion of multiple discrete pre-generated conformers (Brylinski and Skolnick, 2008; Morris et al., 2009; Lemmon and Meiler, 2012; Combs et al., 2013; DeLuca et al., 2015). Small molecule conformations need to be sampled to arrive at the correct binding pose. Molecules that appear in binding pockets of substantially different proteins often bind in distinct modes for each protein, suggesting that the binding pose of the molecule need not be near the global energy minima of the molecule (Nicklaus et al., 1995; Boström et al., 1998; Perola and Charifson, 2004; Sitzmann et al., 2012; Friedrich et al., 2018). Likewise, in LB pharmacophore modeling, small molecules need to be flexibly aligned according to their chemical properties to identify the biologically relevant 3D features conferring bioactivity.

The BCL conformer generator, also called BCL:Conf, utilizes a fragment-based rotamer library derived from the crystallography open database (COD) to combine rotamers consisting of one or more dihedral angles according to a statistically-derived energy (Mendenhall et al., 2020). Clashes are dynamically resolved by iteratively identifying clashed atom pairs and rotating the central-most bonds between them without changing dihedral bins. In this way, conformational ensembles are stochastically generated according to likely rotamer combinations from the COD.

The BCL small molecule conformation sampler is a leader among general purpose small molecule conformer generation algorithms (Kothiwale et al., 2015; Mendenhall et al., 2020). In this section, we demonstrate how to use the BCL to generate global and local conformational ensembles and sample discrete rotamers within a molecule.

Start by generating conformers of osimertinib with the default settings. Here, all that is needed is an input filename and an output filename:

bcl.exe molecule:ConformerGenerator \

-ensemble_filenames osimertinib. sdf.gz \

-conformers_single_file osimertinib. global_confs.sdf.gz

The ensemble_filenames argument is equivalent to the input_filenames argument used elsewhere (the difference is historical). The conformers_single_file argument is one of two output options. The other option is conformers_separate_files. As implied by the name, in the former case all conformers are output to a single file. In the latter case, if multiple molecules are input to ensemble_filenames, then a unique SDF will be written for the conformational ensembles of each of the input molecules [e.g., if the input SDF(s) contained 10 molecules, then conformers_separate_files would output 10SDFs each with a conformational ensemble of one of the input molecules].

By default, BCL:Conf will perform 8,000 conformer generation iterations, each of which rebuilds the molecule essentially from scratch (excepting rigid ring structures and bonds that do not vary substantially in length or angle). Without any other options, the top conformations will be clustered, yielding the 100 best-scoring representatives of each different cluster. An unbiased view of the conformational space around the ligand can be obtained by setting the skip_cluster flag. For this application, it is advisable to lower the number of iterations to roughly double the number of desired conformations; the conformers are rebuilt from scratch at every iteration, so there is little gain from doing more iterations than conformers desired. The returned conformers are sorted by score. Number of iterations and final conformers can be specified with the max_iterations and top_models flags, respectively.

Conformations can be filtered to remove highly-similar conformations using the conformation_comparer flag (e.g., to standard RMSD, dihedral distance, etc.) and the tolerance for what constitutes an “identical” conformer increased from the default (0.0) to an arbitrarily large value (note that RMSD- and dihedral-based metrics have units of Å and degrees, respectively) (Kothiwale et al., 2015). For most applications, we recommend the use of SymmetryRMSD with a modest tolerance of 0.25 Å. By default, the tolerance is adjusted automatically to yield the desired number of clusters so as to best represent conformational space, however, a user-provided tolerance is treated as a minimal acceptable difference between clusters.

For high-throughput applications, we recommend reducing iterations from 8,000 down to 800 or even 250. BCL:Conf’s speed is nearly linear in number of iterations. Generally, more iterations yield better performance, at a trade-off of slightly-faster than linear increase in time per conformation when clustering is used (Mendenhall et al., 2020).

Alternatively, if conformation_comparer is set to “RMSD 0.0”, then no filtering or clustering is specified, and BCL:Conf will perform max_iterations conformer generation iterations, randomly select top_models conformers, sort them from best to worst by score, and return them. This option is the fastest, and the ensembles returned are arguably the most Boltzmann-like. For a recent comparison of each set of parameters to one another and other conformer generation algorithms, please see Mendenhall et al., 2020 (Mendenhall et al., 2020). We recommend generating conformers with explicit hydrogen atoms added.

Generate conformers using two of the protocols described protocols. First, run

bcl.exe molecule:ConformerGenerator \

-add_h -ensemble_filenames osimertinib. sdf.gz \

-conformers_single_file osimertinib. symrmsd_cluster.confs.sdf.gz \

-max_iterations 8,000 –top_models 25 \

-conformation_comparer SymmetryRMSD 0.25

Then,

bcl.exe molecule:ConformerGenerator \

-add_h -ensemble_filenames osimertinib. sdf.gz \

-conformers_single_file osimertinib. raw.confs.sdf.gz \

-max_iterations 8,000 –top_models 250 –skip_cluster

-conformation_comparer RMSD 0.0

Notice that the ensemble generated with the SymmetryRMSD comparer and clustering enabled occupies the densest part of the broader conformational space sampled in the raw distribution.

Local sampling was implemented in the recent algorithmic improvements to BCL:Conf (Mendenhall et al., 2020). The idea is that sometimes users know or have predicted with some degree of certainty a chemically meaningful or bioactive pose of a small molecule, but additional refinement is needed. This is a common use case when modeling protein-ligand complexes starting with another ligand with some similarity to the ligand of interest (Bozhanova et al., 2021; Hanker et al., 2021). When using pre-generated conformers for docking or small molecule flexible alignment, it is unlikely that the best ligand conformer will be chosen and simultaneously have its position fully optimized in Cartesian space. Local sampling around an input conformer allows the user to refine ligand poses after an initial search.

Local sampling in the BCL is accomplished by restricting the rotamer search in one of four ways:

1. -skip_rotamer_dihedral_sampling–preserve input dihedrals to within 15-degrees of closest 30-degree bin (centered on 0°) in non-ring bonds.

2. -skip_bond_angle_sampling–preserve input conformer bond lengths and angles

3. -skip_ring_sampling–preserve input ring conformations

4. –change_chirality–by default, input chirality and isometry are preserved. Use this flag to allow for generation of enantiomers and stereoisomers.

These options are not mutually exclusive. Depending on how they are combined, different levels of sampling can be achieved. Moreover, they can be used in combination with any of the other options (e.g., conformation comparison type, clustering) described above. Generate local conformational ensembles of osimertinib by first placing all three restrictions:

bcl.exe molecule:ConformerGenerator \

-ensemble_filenames osimertinib. sdf.gz \

-conformers_single_file osimertinib. skip_all.local_confs.sdf.gz \

-skip_rotamer_dihedral_sampling -skip_bond_angle_sampling \

-skip_ring_sampling–skip_cluster

Next, apply only the skip_rotamer_dihedral_sampling and skip_bond_angle_sampling restrictions to generate a local ensemble:

bcl.exe molecule:ConformerGenerator \

-ensemble_filenames osimertinib. sdf.gz \

-conformers_single_file osimertinib. skip_dihed_ring.local_confs.sdf.gz \

-skip_rotamer_dihedral_sampling -skip_ring_sampling–skip_cluster

Both of the ensembles show less conformational diversity than the global conformational ensemble created in the previous section. Notice the relative sampling differences between each of the local conformation sampling protocols described.

Often times one may wish to only sample conformations of part of a molecule. For example, in docking congeneric ligand series, the core scaffold pose may be known with a high degree of confidence, and the goal is to optimize the pose of the rest of the molecule while keep the core scaffold fixed. Alternatively, crystal structures of protein-ligand complexes often have low or missing density for part of a bound ligand, and thus coordinate assignment may not accurate. Discretely sampling specific small molecule rotamers thus becomes a useful task to perform.

In the BCL, this is accomplished by first assigning an MDL miscellaneous property named “SampleByParts” to the molecule(s) of interest. The value of the SampleByParts property corresponds to the 0-indexed atom indices of atoms in dihedrals that are allowed to be sampled by molecule:ConformerGenerator. By encoding this as a molecule-specific property, we avoid multiple command-line calls with different atom index specifications, allowing users to generate conformers more rapidly for multiple molecules and/or different independent rotamers within a molecule.

As an example, consider a crystal structure of epidermal growth factor receptor (EGFR) kinase in complex with osimertinib (PDB ID 4ZAU) (Yosaatmadja et al., 2015). This is the first publicly available crystal structure of the EGFR-osimertinib complex. In this structure, the solvent-exposed ethyldimethylamine substituent is missing density. We will sample alternative conformations of the ethyldimethylamine substituent than that which is proposed in the PDB ID 4ZAU. First, add the corresponding atom indices to the file osimertinib. sdf:

bcl.exe molecule:Properties \

-add “Define [SampleByParts = Constant (3,36,18,19,6,20,21)]” SampleByParts \

-input_filenames osimertinib. sdf.gz–output \

osimertinib.sample_by_parts.sdf

Also, note that if you have many molecules for which you want to assign SampleByParts atom indices and you do not want to have to manually identify the relevant indices, you can also use the molecule:SetSampleByPartsAtoms application. This application sets SampleByParts indices based on comparison to user-supplied substructures. With the SampleByParts property defined in the SDF, generate global conformers as previously described:

bcl.exe molecule:ConformerGenerator \

-ensemble_filenames osimertinib. sample_by_parts.sdf.gz \

-conformers_single_file osimertinib. sample_by_parts.confs.sdf.gz \

-top_models 250 –cluster

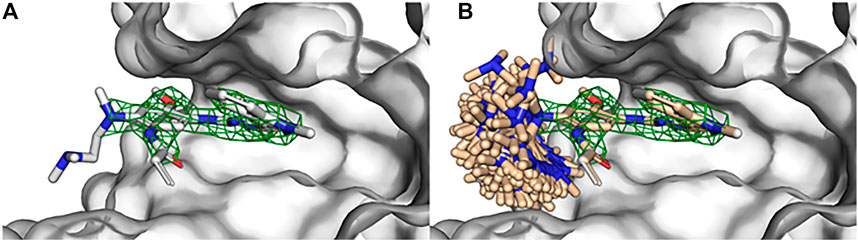

Observe that sampling global conformers (i.e., sampling across dihedral bins allowing bond angle/length adjustment and ring conformer changes) with SampleByParts maintains the coordinates of all unspecified atoms. In this case, only dihedrals containing strictly the ethyldimethylamine atoms are sampled (Figure 2). Similarly, SampleByParts can be used in conjunction with the local sampling methods described above.

FIGURE 2. Substructure sampling of small molecule rotamers with BCL:Conf. (A) Crystallographic structure of osimertinib bound to EGFR kinase (PDB ID 4ZAU) contains missing density of the ethyldimethylamine substituent of osimertinib. (B) Global conformational sampling of the osimertinib ethyldimethylamine substituent without perturbing the rest of the bound pose using BCL:Conf. Osimertinib electron density visualized with green mesh by importing the 2fo-fc map in PyMOL and contouring at 2σ.

A critical component of LB CADD is molecular similarity analysis. Provided a set of molecules, we frequently want to know how similar each molecule is to a reference molecule(s). Fundamentally, this requires 1) defining what specifically will be compared between the molecules, and 2) defining the metric with which similarity will be measured. In the BCL, this is accomplished primarily through use of the molecule:Compare application. The command-line syntax of molecule:Compare differs from the syntax of other applications discussed so far. The SDF input files to molecule:Compare are passed as parameters instead of argument flags.

bcl.exe molecule:Compare < mandatory_parameter_one.sdf> \

<optional_parameter_two.sdf> –output < mandatory_output.file> \

This syntax strictly enforces two types of behavior:

1. If a single SDF is specified as a parameter, then all molecules in the file are compared with one another

2. If two SDFs are specified, then the molecule(s) in the second file will be compared against the molecule(s) in the first file.

Finally, it is worth noting that molecule:Compare’s performance scales approximately linearly with number of threads for costly comparisons. To enable threads, set -scheduler PThread <number_threads>. We suggest setting number_threads to number of physical cores on the device for maximum performance.

The BCL encodes molecules as graphs where the edges are bonds, and the atoms are nodes. For substructure-based comparisons, we can define equivalence between bonds and atoms using various comparisons dubbed comparison types. For any substructure-based comparison between two or more molecules, some combination of atom and bond comparison types is required, which defines the equivalence class for the atoms and bonds, respectively. The default combination differs between tasks. For a summary of available atom and bond type comparisons, examine the help menu options of any comparer that utilizes substructures. For example,

bcl.exe molecule:Compare \

-method “LargestCommonSubstructureTanimoto (help)”

will display the default atom and bond comparison types for this comparison method as well as list the available comparison types. For example, if atom type resolution occurs at AtomType, then an SP3 carbon would match another SP3 carbon but not an SP2. If the resolution is lowered to ElementType, then all carbon atoms can match one another independent of their orbital configuration. Similarly, bond type resolutions of BondOrder and BondOrderAmideWithIsometryOrAromaticWithRingness will yield different substructure matches.

Not all similarity comparisons occur at the structural/substructural level. A number of comparison metrics in the BCL occur between properties computed at the whole molecular, substructural, or atomic level. Further, distance-based comparisons between molecules that are constitutionally identical can also be made.

In cases where the similarity between unique molecules is desired there are broadly two approaches for measuring similarity: by substructure and by property. These are not mutually exclusive; depending on the desired resolution of the substructure comparisons, one can further measure property differences between substructures of different molecules.

One common substructure similarity metric is the Tanimoto coefficient (TC), expressed between two molecules as the ratio of matched-to-unmatched atoms:

where A and B are the two molecules. The intersection of atoms in (Eq. 1) is the size of the largest common substructure under the specified comparison types. This is a specific formalism of the more general Tversky index when both α and β are equal to 1:

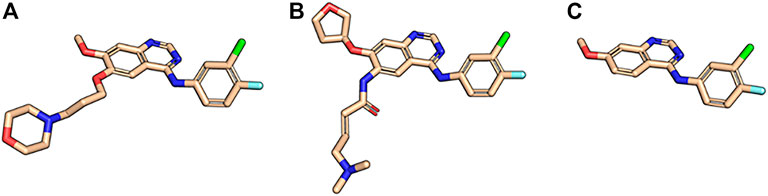

The first-generation EGFR tyrosine kinase inhibitor gefitinib and the second-generation inhibitor afatinib are structurally very similar. Afatinib is modified from the gefitinib scaffold and incorporates an acrylamide linker. Visualize the maximum common substructure (MCS) of afatinib and gefitinib using molecule:Split (Figure 3):

bcl.exe molecule:Split \

-implementation “LargestCommonSubstructure (file = afatinib.sdf)" \

-input_filenames gefitinib. sdf.gz–output mcs_gef_afa.sdf.gz

FIGURE 3. Maximum common substructure between gefitinib and afatinib. (A) Afatinib (PDB ID 4G5J) and (B) gefitinib (PDB ID 4I22) in their binding mode 3D conformations next to (C) their maximum common substructure extracted with the BCL.

Next, calculate the MCS TC of the gefitinib and afatinib:

bcl.exe molecule:Compare gefitinib. sdf.gz afatinib. sdf.gz \

-method LargestCommonSubstructureTanimoto–output gef_afa_mcs_tani.txt

This method searches for the single largest common connected substructure as the intersection of two molecules and computes the TC. In this case, the MCS TC is approximately 0.48. Sometimes searching for a single connected substructure can be disadvantageous. For example, if the primary differences between molecules results from core substitutions bridging two otherwise identical halves, then the single largest common substructure approach will fail to account for the complete degree of similarity. Alternatively, the user can calculate the maximum common disconnected substructure (MCDS) TC:

bcl.exe molecule:Compare gefitinib. sdf.gz afatinib. sdf.gz \

-method LargestCommonDisconnectedSubstructureTanimoto \

–output gef_afa_mcds_tani.txt

As expected, the MCDS TC is greater than the MCS TC at approximately 0.86.

In Section 4 we demonstrated how the BCL can be used to generate small molecule conformational ensembles. One common way to measure the performance of small molecule conformer generators is to measure how close we can recover biologically relevant conformations. We can do this in the BCL by measuring the RMSD or SymmetryRMSD of molecules in our conformational ensemble to the experimentally determined conformations. Generate a global ensemble of osimertinib:

bcl.exe molecule:ConformerGenerator \

-add_h -ensemble_filenames osimertinib. sdf.gz \

-conformers_single_file osimertinib. confs.sdf.gz \

-max_iterations 8,000 –top_models 50 –cluster \

-conformation_comparer SymmetryRMSD 0.25 –generate_3D

Note that we are generating the molecule completely de novo ignoring all information from input coordinates by using generate_3D. Measure the heavy-atom symmetric RMSD to the native conformation:

bcl.exe molecule:Compare osimertinib. sdf.gz osimertinib. confs.sdf.gz \

-method SymmetryRMSD -logger File osi. sym_rmsd_native.log \

-output osi. sym_rmsd_native.txt -remove_h

On examination of osi. sym_rmsd_native.txt, we see that see that of our 25 generated conformers, 3 are less than 2.0 Å from the native conformer, and the best is approximately 0.66 Å from native. If we repeat this process for two additional TKIs, the first-generation inhibitor erlotinib and the second-generation inhibitor afatinib, we also see that we are able to obtain multiple conformers less than 1.0 Å from native.

In addition to RMSD-based metrics, molecule:Compare can also measure distance in the form of dihedral angle sums and dihedral distance bins. For additional information, examine the help menu options.

The BCL can be used to align small molecules according to their MCS. Unlike most of the examples in this section, this is accomplished through the molecule:AlignToScaffold application by passing three parameters:

bcl.exe molecule:AlignToScaffold <scaffold> <ensemble> <output>

For example, to align afatinib to gefitinib based on their MCS, use the following command:

bcl.exe molecule:AlignToScaffold gefitinib. sdf.gz afatinib. sdf.gz \

afatinib.ats.sdf.gz \

Instead of aligning by MCS, the user may also align the target ensemble to the largest rigid component of the scaffold structure by passing the align_rigid flag. Moreover, if the user wants to a define an alternative set of atoms to be aligned instead of the defaults, this can be accomplished by specifying those atoms for each the scaffold and target ensemble with align_scaffold_atoms and align_ensemble_atoms, respectively.

In addition to substructure-based alignment, we can also perform property-based alignment. Property-based alignment algorithms typically maximize the overlap or minimize the distance between molecular and/or atomic properties (Sliwoski et al., 2014). We have previously demonstrated that the performance of the BCL property-based alignment algorithm, also referred to as BCL:MolAlign, is on par with leading academic and commercial molecular alignment algorithms (Brown et al., 2019).

BCL:MolAlign combines the conformational sampling ability of BCL:Conf with the property framework described in Section 3 to minimize the property-distance between two molecules through flexible superimposition. The property-distance is computed between mutually-matching atom pairs that are dynamically updated with each iteration. Alignment pose sampling is accomplished through a series of moves that traverse the co-space defined by the relative position of the two molecules to one another (Brown et al., 2019). BCL:MolAlign can be used to perform alignments which can be classified as rigid (two molecules with fixed conformers), semi-flexible (one molecule with a fixed conformer, one molecule whose conformers are sampled), and fully-flexible (two molecules whose conformers are sampled).

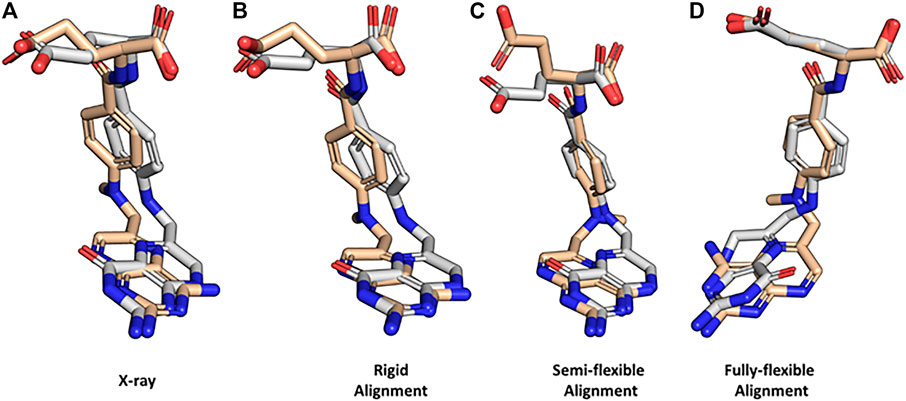

To demonstrate how BCL:MolAlign can be used to perform each of these alignments, consider the classic problem of obtaining the crystallographic alignment of methotrexate (MTX) and dihydrofolic acid (DHF). This example is a good one because the intuitive heterocyclic overlap is not the correct one (Labute et al., 2001). Instead, alignment of the binding pockets of dihydrofolate reductase (DHFR) co-crystallized with MTX (PDB ID 1DLS) and DHF (PDB ID 1DHF) shows only partial heterocycle overlap and superimposition of the heterocycle carbonyl in DHF and an aromatic hydrogen bond accepting nitrogen in MTX (Figure 4A). Perform a rigid alignment of MTX to DHF with the following command:

bcl.exe molecule:Compare mtx. perturbed.sdf.gz dhf. sdf.gz \

-add_h–neutralize \

-output mtx_dhf_rigid_rmsdx.output \

-logger File rigid_alignment.log -random_seed \

-method “PsiField \

(

output aligned mol a = mtx. rigid_aligned.sdf,

iterations = 1,000,

number outputs = 1

)"

FIGURE 4. Property-based alignment of dihydrofolic acid and methotrexate with BCL:MolAlign. (A) Superimposed crystallographic structures of dihydrofolic acid (DHF; PDB ID 1DHF) and methotrexate (MTX; PDB ID 1DLS) in complex with dihydrofolate reductase (DHFR). (B) Rigid alignment of DHF and MTX starting from the bioactive conformers from the crystal structures. (C) Flexible alignment of MTX (flexible) to DHF (rigid, bioactive conformer). (D) Fully flexible alignment of DHF and MTX. DHF is colored white and MTX is colored wheat. MTX was randomly rotated and translated prior to rigid alignment to DHF. All flexible alignments performed using generate_3D to remove bias from start coordinates.

The rigid alignment ranks the correct alignment mode as the top scoring alignment (Figure 4B). Rigid alignments are rarely useful for drug discovery because the bioactive conformation of the target small molecule is usually unknown; however, they provide a useful check for alignment scoring functions. Next, flexibly align MTX to the DHFR-binding pose of DHF:

bcl.exe molecule:Compare mtx. perturbed.sdf.gz dhf. sdf.gz \

-add_h–neutralize \

-output mtx_dhf_rigid_rmsdx.output \

-logger File semi-flexible_alignment.log -random_seed \

-method “PsiFlexField

(

output_aligned_mol_a = mtx. semiflex_aligned.sdf,

rigid_mol_b = true,

number_flexible_trajectories = 3,

fraction_filtered_initially = 0.25,

fraction_filtered_iteratively = 0.50,

iterations = 400,

filter_iterations = 200,

refinement_iterations = 50,

conformer_pairs = 500,

number_outputs = 1,

sample_conformers = SampleConformations (

conformation_comparer = SymmetryRMSD,

generate_3D = 1,tolerance = 0.10,rotamer_library = cod,

max_iterations = 8,000,max_conformations = 50,

cluster = true)

)”

Here, we can see that BCL:MolAlign correctly determines the alignment of the heterocycles, central aromatic rings, and (partially) the acidic groups (Figure 4C). Note that rigid_mol_b is enabled, which fixes the pose of the second parameter molecule. For a detailed description of how each argument modifies the alignment algorithm, see Brown et al. (Brown et al., 2019). For performance considerations, we generally find that the number of conformer pairs is more critical to pose recovery than the numbers of iterations at each stage. For complex ligands with many rotational bonds, we recommend increasing max_conformations and conformer_pairs.

Fully-flexible alignment is useful when one is trying to recover pharmacophore features without knowing the binding pose of either molecule. Here, the goal is to align pharmacophore features of the molecules, not recover the native pose of the target molecule(s) by aligning to another molecule with a known binding mode. Perform a fully-flexible alignment of MTX and DHF.

bcl.exe molecule:Compare mtx. perturbed.sdf.gz dhf. perturbed.sdf.gz \

-add_h–neutralize \

-output mtx_dhf_rigid_rmsdx.output \

-logger File fully-flexible_alignment.log \

-random_seed–scheduler PThread 8 \

-method “PsiFlexField \

( \

output_aligned_mol_a = mtx-dhf. fullflex_aligned.sdf, \

rigid_mol_b = false, \

number_flexible_trajectories = 5, \

fraction_filtered_initially = 0.25, \

fraction_filtered_iteratively = 0.50, \

iterations = 800, \

filter_iterations = 400, \

refinement_iterations = 100, \

conformer_pairs = 2,500, \

number_outputs = 1, \

sample_conformers = SampleConformations ( \

conformation_comparer = SymmetryRMSD, \

generate_3D = 1,tolerance = 0.10,rotamer_library = cod, \

max_iterations = 8,000,max_conformations = 50, \

cluster = true) \

)”

Fully-flexible alignment of MTX and DHF does not recover the most native-like conformations of MTX and DHF; however, it does recover correct alignments of the heterocycles, central aromatic rings, and acidic groups (Figure 4D). Notice that we increased the number of conformer pairs from 500 to 2,500 when we went from semi-flexible to fully-flexible alignment.

The descriptor application group is the workhorse for molecule featurization. Similar to the molecule:Properties application, the descriptor application group provides command-line access to the internal descriptor framework. Unlike molecule, descriptor is dataset centric; its primary purpose is to generate, manipulate, and analyze feature datasets for QSAR/QSPR. In this section, we will demonstrate core applications in descriptor and how they can be utilized in QSAR/QSPR modeling.

Four specifications are required to generate feature datasets from small molecules:

1. The molecules for which to generate the features; these can be any valid SDF.

2. The types of features to generate; these are properties such as those described in Section 3. Typically, these are stored in a separate file and passed to the command-line at run-time; however, they can also be specified directly on the command-line. Importantly, combining multiple descriptors for feature generation requires the use of the Combine descriptor.

3. The feature result label; this indicates the output(s) that models will train toward. This can be a constant value (i.e., if featurization is being done for some purpose other than model training), a property (e.g., LogP for a QSPR model), or another label (e.g., bioactivity label from experimental data).

4. The output filename; three output types are available. The BCL has a partial binary format with the “.bin” suffix that is used for all model training. Feature datasets can also be output with the “.csv” suffix for a comma-separated values (CSV) file. Moreover, “.csv” files and “.bin” files can be interconverted. In this way, features generated with the BCL can be used by other software, and vice versa. For inter-operability with Weka software, “.arff” format is also supported, with a limitation of only working with continuous variables.

Generate a simple feature dataset consisting of several scalar descriptors for a set of confirmed active M1 Muscarinic Receptor positive allosteric modulators (PAMs) and corresponding true negatives (Butkiewicz et al., 2013). The SDF corresponding to these compounds is 1798. combined.sdf. These molecules have been labeled with the MDL property “IsActive” such that the confirmed actives have a value of 1 and the negatives have a value of 0.

bcl.exe descriptor:GenerateDataset \

-source “SdfFile (filename = 1798. combined.sdf)” –id_labels “String (M1)” \

-result_labels “Combine (IsActive)” \

-feature_labels “Combine (Weight, LogP,HbondDonor, HbondAcceptor)” \

-output 1798. combined.scalars.bin

Binary files were designed for rapid non-consecutive reading and writing, but the interested reader will find that the file format consists of a textual header specifying the properties and their sizes followed by a simple binary output of all features. Dataset information and statistics can be obtained by calling descriptor:GenerateDataset compare. For example:

bcl.exe descriptor:GenerateDataset–compare 1798. combined.scalars.bin

To better understand the binary file encodings, convert 1798. combined.scalars.bin to a CSV file:

bcl.exe descriptor:GenerateDataset \

-source “Subset (filename = 1798. combined.scalars.bin)” \

-output 1798. combined.scalars.csv

The first column of every row contains the ID label “M1” as specified when the binary file was generated. The next four columns contain the descriptors specified above: Weight, LogP, HbondDonor, and HbondAcceptor. The very last column is the result value, which contains either 0 or 1 depending on the value in the SDF MDL property “IsActive”.

Convert CSV file back to a binary file:

bcl.exe descriptor:GenerateDataset \

-source “Csv(filename = 1798. combined.scalars.csv, number result cols = 1, number id chars = 2)” \

-output 1798. combined.scalars.bin

CSV files do not contain all of the supplementary information contained within the partial binary file format. Thus, certain information needs to be provided directly. For example, we need to specify the number of characters that are part of the row ID label, otherwise the BCL will try to convert the string (or numerical) ID into feature values. ID labels therefore must be fixed-width. In addition, we need to tell the BCL how many of the columns are result values. By default, the BCL will assume that only the last column is the result label. By specifying number result cols = N, we tell the BCL to take the last N columns of the CSV as the result value(s).

Also notice that the feature and result label information is not informative after converting from CSV to binary. The values are transferred to the new file format, but the BCL obviously cannot know where those values came from. These must be manually specified.

bcl.exe descriptor:GenerateDataset \

-source “Csv(filename = 1798. combined.scalars.csv, number result cols = 1, number id chars = 2)” \

–id_labels “String (M1)” \

-result_labels “Combine (IsActive)” \

-feature_labels “Combine (Weight, LogP,HbondDonor, HbondAcceptor)” \

-output 1798. combined.scalars.bin

In this case, the feature labels are internal parsable properties of the BCL; however, when relabeling feature labels upon converting from CSV to binary format, the user can specify any labels so long as the total number of labels is consistent with the number of feature columns.

After generating a dataset or importing a CSV file and converting it to binary format, feature datasets can be modified. The most frequent form of modification is randomization. Training a machine learning model, for example a neural network, often requires dataset randomization.

bcl.exe descriptor:GenerateDataset \

-source “Randomize [Subset (filename = 1798. combined.scalars.bin)]” \

-output 1798. combined.scalars.rand.bin

Binary files are read by the “Subset” retriever. The Randomize operator is passed through the source flag and provided the dataset retriever option corresponding to the binary file.

Additional dataset operators can be classified by how they modify the dataset. For example, the PCA (principal components analysis) and EncodeByModel operators perform dimensionality reduction across feature (column) space, while the KMeans operator reduces dimensionality across molecule (row) space. Other operators are useful during model training and validation, such as Balanced, Chunks, and YScramble. Still others can be used to select particular ranges of rows from a dataset, such as Rows. Here, we will take a look at a few dataset operators. For full details on all available dataset operators, see the descriptor:GenerateDataset help menu.

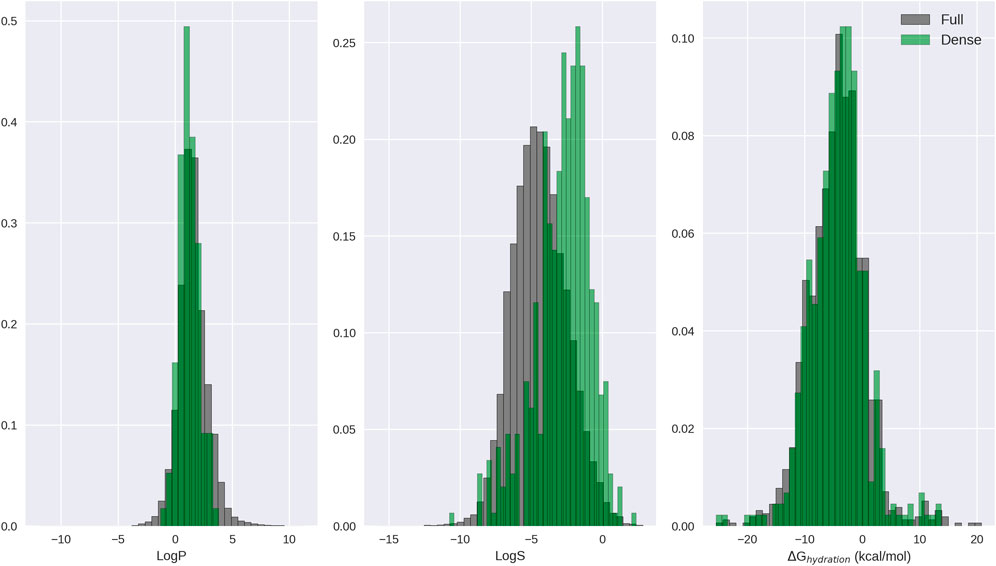

Start by generating a dataset for the Kir2.1 inward rectifying potassium channel using the dataset compiled in Butkiewicz et al. (Butkiewicz et al., 2013) and the best performing LB descriptor set from Mendenhall and Meiler (Mendenhall and Meiler, 2016). This dataset contains 301,493 small molecules, 172 of which are confirmed active molecules. For each molecule, there will be 1,315 feature columns and 1 result column.

bcl.exe descriptor:GenerateDataset \

-source “SdfFile (filename = 1843. combined.sdf.gz)” –scheduler PThread 8 \

-feature_labels MendenhallMeiler2015. Minimal.object \

-result_labels “Combine (IsActive)” \

–output 1843. Minimal.bin–logger File 1843. Minimal.log

Randomize the dataset:

bcl.exe descriptor:GenerateDataset \

-source “Randomize (Subset (filename = 1843. combined.bin))” \

-output 1843. combined.rand.bin–logger File 1843. Minimal.rand.log

Note that we could have generated a randomized dataset with a single command by wrapping the SdfFile dataset retriever with Randomize; however, the Randomize dataset retriever is unable to support hyperthreading. Consequently, it is faster to generate larger datasets first using multiple threads and randomize them afterward. Next, perform PCA on the dataset using OpenCL to accelerate the calculation with a GPU. The flag opencl is optional and may not be supported on all platforms, but may provide a substantial speedup, depending on the GPU and dataset size:

bcl.exe descriptor:GeneratePCAEigenVectors \

-training “Subset (filename = 1843. Minimal.rand.bin)” \

-output_filename 1843. Minimal.PCs.dat–opencl \

-logger File 1843. Minimal.PCs.log

Finally, generate a new feature dataset accounting for 95% of the variance:

bcl.exe descriptor:GenerateDataset \

-source “PCA(dataset = Subset (filename = 1843. Minimal.rand.bin), fraction = 0.95, filename = 1843. Minimal.PCs.dat)” \

-output 1843. Minimal.rand.pca_095. bin–opencl \

-logger File 1843. Minimal.rand.pca_095. log

Performing PCA on the dataset has reduced the number of descriptors from 1,315 to 695. Alternatively, one could use EncodeByModel to reduce the number of feature columns using a pre-generated model. The following example utilizes pseudocode and a hypothetical pre-generated ANN with the MendenhallMeiler2015. Minimal.object features.

bcl.exe descriptor:GenerateDataset/

-source “EncodeByModel [storage = File (directory = /path/to/model/directory, prefix = model),retriever = Subset (filename=<my_binary_file.bin>)]” \

-output < my_encoded_binary_file.bin>

The input file < my_binary_file.bin > would have 1,315 descriptors from MendenhallMeiler2015. Minimal.object, and the output file < my_encoded_binary_file.bin > would have a number of descriptors corresponding to the number of neurons in the final hidden layer preceding the output layer of our hypothetical pre-generated ANN.

As a practical note, we have found that PCA-based dimensionality reduction useful for dataset visualization, but of limited value in improving model performance. Performance can often be recovered to that of the initial dataset when requiring at least 95% of the variance to be preserved, but performance improvement is rare from PCA, when using a regularized method such as dropout-ANNs.

Suppose you encoded the same original feature set using two different models and now want to combine the new encoded files for further training. This can readily be accomplished with the Combine operator.

bcl.exe descriptor:GenerateDataset \

-source “Combined [Subset (filename=<my_binary_file_1. bin>), Subset (filename=<my_binary_file_2. bin>)]” \

-output < my_combined_binary_file.bin>

Next, instead of performing dimensionality reduction along the column (features) axis, we will reduce the dimensionality along the row (molecule) axis. Perform K-means clustering of the feature dataset to reduce our row number from 301,493 to 300.

bcl.exe descriptor:GenerateDataset \

-source “KMeans [dataset = Subset (filename = 1843. combined.rand.bin), clusters = 300]” \

-output 1843. combined.rand.k300. bin \

-logger File 1843. combined.rand.k300. log

This form of dimensionality reduction is unlikely to be as useful for training a deep neural network (DNN); however, it can be useful in similarity analysis in low dimensional feature space. Some of the datasets generated in this section will be referenced again in Section 7 to train classification machine learning models.

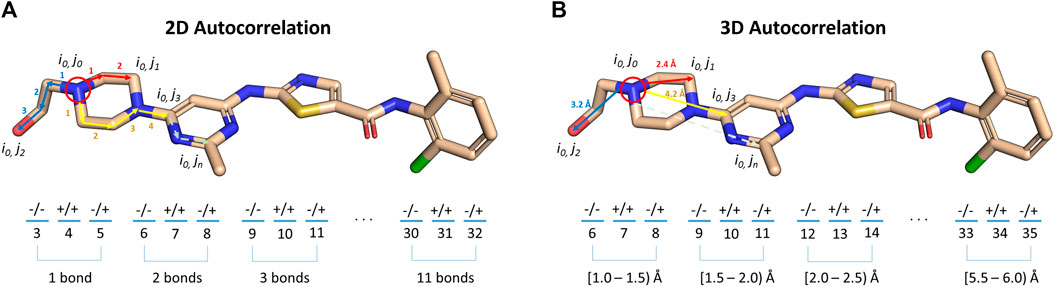

As indicated in the previous section, the BCL can also compute signed autocorrelation functions. Autocorrelations are regularly used as features in cheminformatics machine learning models (Sliwoski et al., 2014). When computed for atomic descriptors, such as Atom_SigmaCharge, the autocorrelations sum pairwise property products into distance bins by calculating the separation between molecule atom pairs in number of bonds (2DA) or Euclidean distance (3DA). Each distance bin is further separated into three sign-pair bins corresponding to property value sign of each atom in the pair (Eq. 3) (Sliwoski et al., 2015).

where ra and rb are the boundaries of the current distance interval, N is the total number of atoms in the molecule, r(i,j) is the distance between the two atoms being considered, δ is the Kronecker delta, and P is the property computed for each atom. 2DAs are conformation-independent, while 3DAs are conformation-dependent (Figure 5).

FIGURE 5. Illustration of signed autocorrelation descriptors. Signed autocorrelations are the sums of products of each atom property pair (e.g., i0,j2) in a distance bin defined by (A) bond separation, or (B) Euclidean distance in 3D space. Within each distance bin, atom property pairs are further separated into bins corresponding to the sign of the property of the first (left hand side of ‘/’) and second (right hand side of ‘/’) atoms in the pair.

The “dasatinibs.sdf” file contains the coordinates and connectivity for two dasatinib molecules: one with 2D coordinates, the other with 3D coordinates. Compute the signed 2DA and 3DA for Atom_SigmaCharge on both dasatinib molecules.

bcl.exe descriptor:GenerateDataset \

–source “SdfFile (filename = dasatinibs.sdf)” \

-feature_labels “Combine (3daSmoothSign (property = Atom_SigmaCharge))” \

-result_labels “Combine [Constant (999)]” -output dasatinibs.3da.csv \

–logger File dasatinibs.3da.log

bcl.exe descriptor:GenerateDataset \

–source “SdfFile (filename = dasatinibs.sdf)” \

-feature_labels “Combine [2DASmoothSign (property = Atom_SigmaCharge)]” \

-result_labels “Combine [Constant (999)]” -output dasatinibs.2da.csv \

–logger File dasatinibs.2da.log

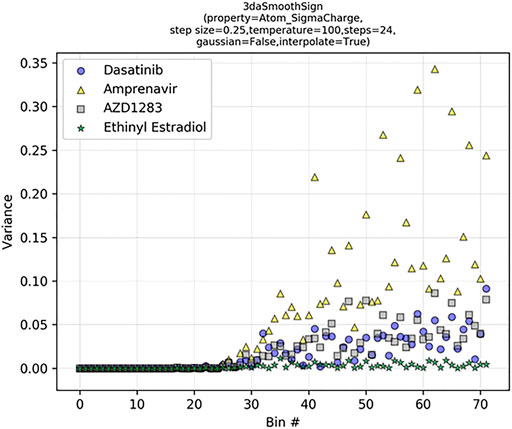

Upon examination of the tabulated 2DA and 3DA values for the two different dasatinib molecules, we observe that the 2DA contains the same values in both cases, while the 3DA contains unique values for the different conformers. To visualize the variance in each 3DA distance bin, we can tabulate the 3DAs for Atom_SigmaCharge on an ensemble of 3D conformations for several different molecules (Figure 6). Dasatinib is a TKI with 7 rotatable bonds, amprenavir is a HIV protease inhibitor with 12 rotatable bonds, AZD1283 is an antagonist of the P2Y12 receptor with 9 rotatable bonds, and ethinyl estradiol is a synthetic estradiol with only 1 rotatable bond that binds and activates estrogen receptors.

FIGURE 6. Signed 3DA variance increases with bin distance in flexible molecules. The 3DA distance bins extend to 6.0 Å at intervals of 0.25 Å. At each distance bin, there are three sign-pair bins (−/−, +/+, −/+).

We can see that the variance in each descriptor column increases as a function of distance and number of rotatable bonds. In ethinyl estradiol there is little change in descriptor column variance as a function of distance. In contrast, molecules with increasing numbers of rotatable bonds display increasingly large variances at longer distance bins. This suggests that increasing conformational heterogeneity at longer distance bins leads to increased noise. Indeed, we have previously found that extending LB 3DAs beyond approximately 6.0 Å generally results in reduced performance on QSAR classification tasks (Sliwoski et al., 2015), consistent with our example here (Figure 6). Importantly, however, at shorter distances where there is less conformational heterogeneity we are able to improve our performance with 3DAs even when the active conformation of the small molecule is unknown (Sliwoski et al., 2015; Mendenhall and Meiler, 2016). Moreover, models making predictions on molecules that are fairly rigid (e.g., steroid derivatives) may benefit from longer range distance bins.

It is also possible to use molecule:Properties to tabulate and compute statistics for molecules instead of plotting the CSV file data from descriptor:GenerateDataset. Here, we used descriptor:GenerateDataset to illustrate its usage. In practice, we do not just use a single 3DA or 2DA, but instead build sets of descriptors for feature and result labels and store them as separate code object files. As mentioned previously, the code object file format is the same format as allowed on the command line.

The BCL supports multiple machine learning algorithms for QSAR/QSPR modeling. Among the methods available are ANNs (including DNNs and multitasking neural networks) (Dahl, 2014; Bharath et al., 2015; Mendenhall and Meiler, 2016; Xu et al., 2017), support vector machines (SVM) (Kawai et al., 2008; Ma et al., 2008; Mariusz et al., 2009), Kohonen networks (KN) (Kohonen, 1990; Korolev et al., 2003; Wang et al., 2005), restricted Boltzmann machines (RBM) (Le Roux and Bengio, 2008; Tijmen Tieleman, 2008), and decision trees (DT) (Mariusz et al., 2009; Sheridan, 2012; Butkiewicz et al., 2013). GPU acceleration is available for ANNs and SVMs through OpenCL (Munshi, 2008). The primary application group for machine learning in the BCL is model. To see the applications within model, check the help menu:

bcl.exe model:Help

Here, we will first introduce the user to the overall workflow involved in training, analyzing, and subsequently testing BCL machine learning models. The basic workflow for model training is the same for each machine learning method and can be completed via the model:Train application. To see the available machine learning methods, access the help options within model:Train.

bcl.exe model:Train --help

As of this writing, the available model types can be found in Table 3. The most reliable way to see available model types is via the help menu options of your version of the BCL.

To expose all options for a particular machine learning method, pass the algorithm name as the first parameter to the application with the help menu request:

bcl.exe model:Train “<training algorithm>(help)”

The following is a typical command-line format to train a model beginning with a pre-generated descriptor binary file:

bcl.exe model:Train < training algorithm> \

-max_minutes < maximum time of training in minutes> \

-max_iterations < maximum number of training iterations> \

-final_objective_function < performance metrics for model evaluation> \

-feature_labels < names of descriptors> \

-training < training set> \

-monitoring < monitoring set> \

-independent < independent set> \

-storage_model < location in which to store the model> \

-opencl < enables GPU acceleration> \

-logger File < log file>

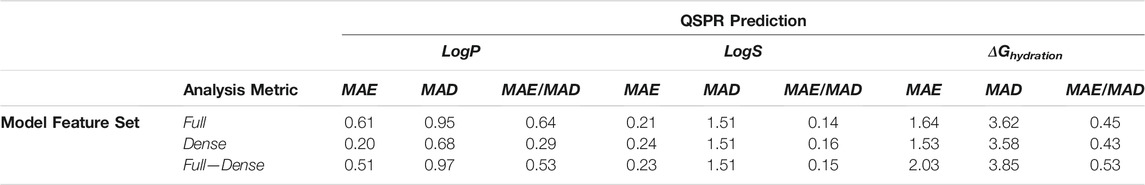

Model performance is evaluated with the user-specified objective function. The choice of objective function is typically related to the task being performed (e.g., classification vs regression) (Table 4).