ORIGINAL RESEARCH article

Front. Pharmacol. , 18 October 2019

Sec. Drugs Outcomes Research and Policies

Volume 10 - 2019 | https://doi.org/10.3389/fphar.2019.01228

Rieko Ueda1,2; Yuji Nishizaki1,2*; Yasuhiro Homma1,3; Shoji Sanada4,5,6; Toshiaki Otsuka7,8; Shinji Yasuno9; Kotone Matsuyama10,11; Naotake Yanagisawa1; Masashi Nagao1,3,12; Kazutoshi Fujibayashi1,13; Shuko Nojiri1; Yumiko Seo1; Natsumi Yamada1; Patrick Devos14; Hiroyuki Daida2,15

Background: The number of papers published by an institution is acknowledged as an easy-to-understand research outcome. However, not only the quantity but also the quality of research papers needs to be assessed.

Methods: To determine the relation between the number of published papers and paper quality, a survey was conducted to assess publications focusing on interventional clinical trials reported by 11 core clinical research hospitals. A score was calculated for each paper using the Système d’interrogation, de gestion et d’analyse des publications scientifiques (SIGAPS) scoring system, allowing for a clinical paper quality assessment independent of the field. Paper quality was defined as the relative Journal impact factor (IF) total score/number of papers.

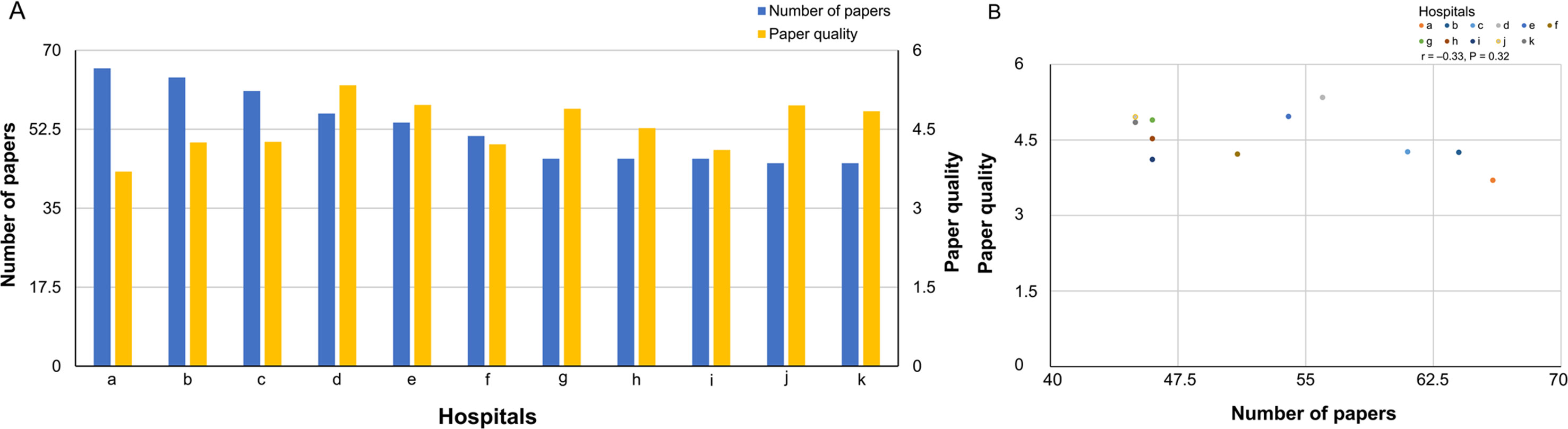

Results: We surveyed 580 clinical trial papers. For each of the 11 medical institutions (a–k), respectively, the following was found: number of published papers: a:66, b:64, c:61, d:56, e:54, f:51, g:46, h:46, i:46, j:45, k:45 (median: 51, maximum: 66, minimum: 45); total Journal IF: a:204, b:252, c:207, d:225, e:257, f:164, g:216, h:190, i:156, j:179, k:219 (median: 207, maximum: 257, minimum: 156); relative Journal IF total score: a:244, b:272, c:260, d:299, e:268, f:215, g:225, h:208, i:189, j:223, k:218 (median: 225, maximum: 299, minimum: 189); and paper quality (relative Journal IF total score/number of papers): a:3.70, b:4.25, c:4.26, d:5.34, e:4.96, f:4.22, g:4.89, h:4.52, i:4.11, j:4.96, k:4.84 (median: 4.52, maximum: 5.34, minimum: 3.70). Additionally, no significant relation was found between the number of published papers and paper quality (correlation coefficient, −0.33, P = 0.32).

Conclusions: The number of published papers does not correspond to paper quality. When assessing an institution’s ability to perform clinical research, an assessment of paper quality should be included along with the number of published papers.

Assessing a medical institution’s ability to perform clinical research is extremely important. The number of scientific papers published by an institution is acknowledged as an easy-to-understand research outcome. However, not only the quantity but also the quality of papers needs to be addressed. One of the most well-established metric indicators for assessing paper quality is the impact factor (IF) of the journal in which the article has been published (Garfield, 2006). However, citation frequency and trends vary across research fields, and the inability to compare the IF of journals from different scientific areas is the main shortcoming of this metric. To address this issue, the bibliometric software tool developed in France, Système d’interrogation, de gestion et d’analyse des publications scientifiques (SIGAPS; “software to identify, manage, and analyze scientific publications”), is used to calculate a score that objectively assesses paper quality (Devos et al., 2003; Devos et al., 2006; Devos, 2008), allowing a comparison of the IF of journals from different research fields using the SIGAPS estimated score. This score is therefore considered a journal’s “relative IF score.” To estimate the SIGAPS score, the IFs of journals from a specific research field are ranked from high to low and points are attributed based on the IF percentiles, providing a quality assessment that is independent of the research field.

For this study, 580 clinical trial papers were surveyed to investigate the relation between number of papers and paper quality (relative Journal IF total score/number of papers). Clinical trial papers were retrieved from 11 core clinical research hospitals in Japan.

Based on data published on the website of the Ministry of Health, Labour and Welfare, a descriptive study was conducted to compare the quantity and quality of clinical trial papers published by 11 core clinical research hospitals. A relative Journal IF score, based on the SIGAPS scoring system, was used to assess paper quality.

In Japan, core clinical research hospitals are appointed by the Medical Care Act. They play a central role in physician-led clinical trials and in clinical research developed according to international standards toward the development of innovative pharmaceuticals and medical instruments in Japan. These hospitals support clinical research developed in other medical institutions and play a key role in optimizing next-generation healthcare by enhancing the quality of clinical research in those medical institutions where joint research efforts are conducted.

The Ministry of Health, Labour and Welfare grants approval for core clinical research hospitals (Ministry of Health, Labour and Welfare, 2017), which are required to meet specific requirements established by the Medical Care Act. Approval requirements include the existence of infrastructures to support clinical research, both in terms of facilities and personnel, as well as evidence of former clinical research performance. Moreover, each of these hospitals is strictly audited based on on-site inspections. This system was established in April 2015 and, as of February 2019, 12 medical institutions (nine national universities, two national centers, and one private university) have been granted formal approval.

To meet former clinical research performance requirements, core hospitals must have submitted a minimum of 45 clinical trial papers published over the last three years. All papers were required to be indexed in PubMed. The approval requirements for core clinical research hospitals, as determined by the Ministry of Health, Labour and Welfare, count only interventional clinical trials and exclude observational studies.

For study purposes, all clinical trial papers submitted until November 2018 by 11 core hospitals were extracted from the Ministry of Health, Labour and Welfare website and examined. Additionally, a list of clinical trial publications from each study hospital was retrieved from a 2017 business report of core clinical research hospitals published by the Ministry of Health, Labour and Welfare (Ministry of Health, Labour and Welfare, 2017).

Using the PubMed “JournalTitle,” “MedAbbr,” and “IsoAbbr” search strings, both print and online International Standard Serial Number (ISSN) information was obtained by matching the journals in which the papers listed in the business report were published. If a match between PubMed records and the journal list could not be found, a visual check was conducted whenever appropriate.
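The matching step described above can be sketched as a lookup over the three PubMed name fields. This is a minimal illustration and not the authors' procedure: the record layout, the `issn_print` and `issn_online` keys, the normalization rule, and the sample catalog entry are all hypothetical.

```python
import re

def normalize(name):
    """Lowercase a journal name and collapse punctuation and whitespace."""
    return re.sub(r"[^a-z0-9]+", " ", name.lower()).strip()

def build_index(catalog):
    """Map every normalized name variant of a journal to its catalog record."""
    index = {}
    for record in catalog:
        for field in ("JournalTitle", "MedAbbr", "IsoAbbr"):
            value = record.get(field)
            if value:
                index[normalize(value)] = record
    return index

def match_journals(reported_names, catalog):
    """Return (matched, unmatched); unmatched names are left for a visual check."""
    index = build_index(catalog)
    matched, unmatched = {}, []
    for name in reported_names:
        record = index.get(normalize(name))
        if record is None:
            unmatched.append(name)
        else:
            matched[name] = (record["issn_print"], record["issn_online"])
    return matched, unmatched

# Hypothetical catalog entry and report names, for illustration only.
catalog = [{
    "JournalTitle": "Example Journal of Clinical Trials",
    "MedAbbr": "Ex J Clin Trials",
    "IsoAbbr": "Ex. J. Clin. Trials",
    "issn_print": "0000-0001",
    "issn_online": "0000-0002",
}]
matched, unmatched = match_journals(
    ["Ex J Clin Trials", "ex. j. clin. trials", "Unknown Journal"], catalog
)
print(matched)
print(unmatched)
```

Names that fail the exact normalized lookup are returned separately rather than guessed at, which mirrors the manual visual check mentioned in the text.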

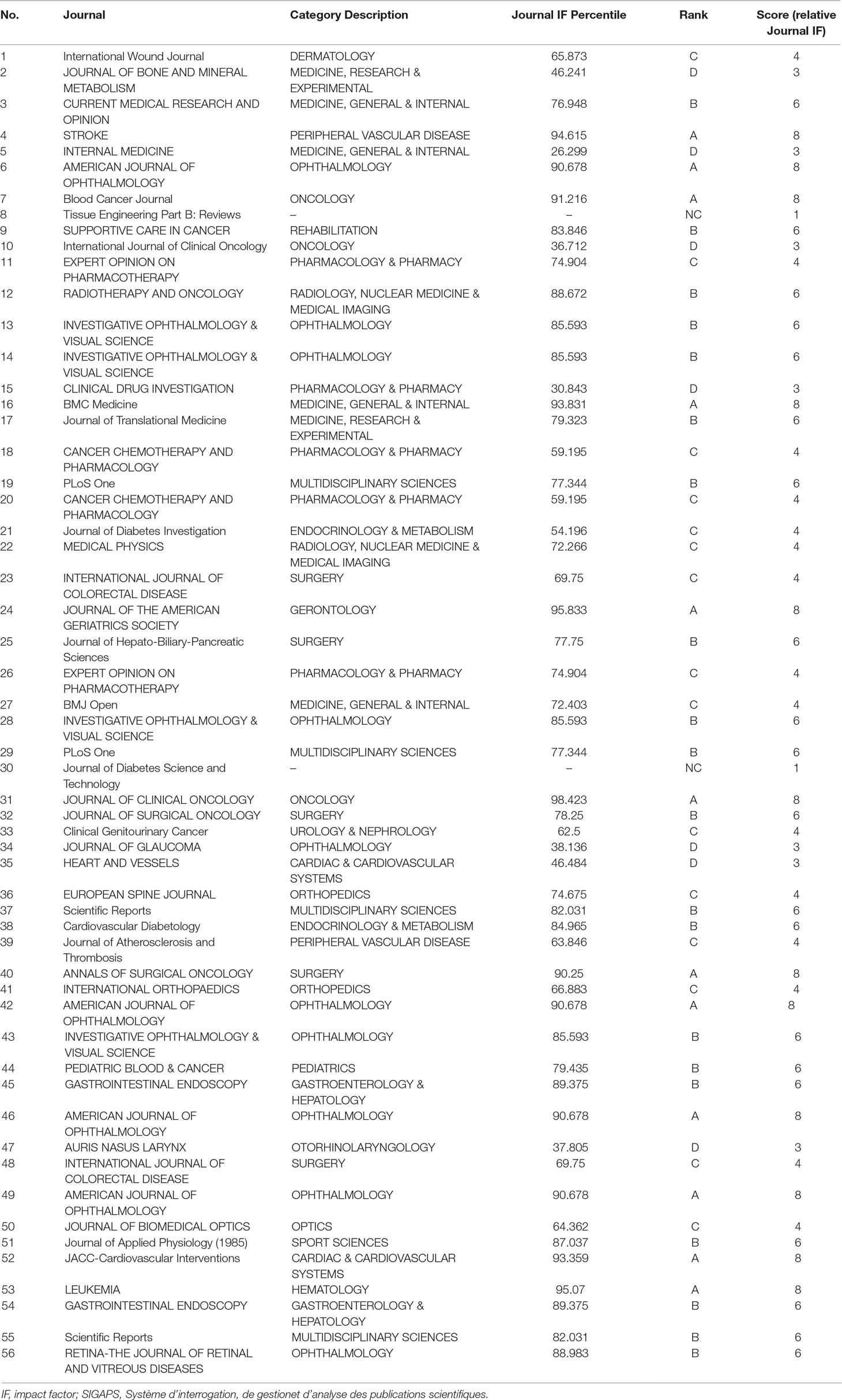

Relative Journal IF score was calculated based on the SIGAPS scoring system. The research field of each clinical trial paper was initially categorized based on the Web of Science Category. A Journal IF percentile was then calculated for each field, and both a rank and a score (relative Journal IF score) were attributed to each journal based on that percentile (Table 1). The Journal IF percentile was based on the 2018 release of Clarivate Analytics’ Journal Citation Reports (Journal Citation Reports 2017 Metrics). The Journal IF percentile was calculated as follows: Journal IF percentile = (N − R + 0.5)/N, where N is the number of journals in the category and R is the descending rank.

For journals with multiple IF percentiles, the highest value was selected. Additionally, paper quality was defined as relative Journal IF total score/number of papers.
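The calculation described above can be sketched in a few lines. The percentile formula and the rule of taking the highest percentile come from the text. Table 1 is not reproduced here, so the band boundaries and point values in `ASSUMED_BANDS` are an assumption based on how the SIGAPS categories are commonly described, and should be replaced with the values from Table 1. All function names are illustrative.

```python
def if_percentile(n_journals, descending_rank):
    """Journal IF percentile = (N - R + 0.5) / N, as given in the text."""
    return (n_journals - descending_rank + 0.5) / n_journals

# ASSUMPTION: Table 1 is not available in this text. These lower bounds and
# points follow the SIGAPS categories as commonly described; verify before use.
ASSUMED_BANDS = [
    (0.90, "A", 8),
    (0.75, "B", 6),
    (0.50, "C", 4),
    (0.25, "D", 3),
    (0.00, "E", 2),
]

def rank_and_score(percentile, bands=ASSUMED_BANDS):
    """Return the (rank, score) of the first band whose lower bound is met."""
    for lower_bound, rank, score in bands:
        if percentile >= lower_bound:
            return rank, score
    raise ValueError("percentile is below every band")

def journal_score(categories, bands=ASSUMED_BANDS):
    """categories: (N, R) pairs, one per Web of Science category of the journal.

    When a journal has several percentiles, the highest one is used.
    """
    best = max(if_percentile(n, r) for n, r in categories)
    return rank_and_score(best, bands)

def paper_quality(scores):
    """Relative Journal IF total score divided by the number of papers."""
    return sum(scores) / len(scores)

# A hypothetical journal ranked 8th of 100 in one category and 12th of 40 in another.
print(if_percentile(100, 8))
print(journal_score([(100, 8), (40, 12)]))
print(paper_quality([8, 6, 4, 3]))
```

Passing the bands as a parameter keeps the percentile formula, which the text states explicitly, separate from the score table, which is assumed here.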

SIGAPS components include the journal’s rank and the author’s rank, including first or last author (4 points), second or second-to-last author (3 points), third author (2 points), or any other contributing author (1 point) with a weighting factor. However, to approve a clinical research hospital, the Ministry of Health, Labour and Welfare requires that the first author of a clinical publication belongs to the considered institution. For this reason, in this study the relative Journal IF score was calculated based on only the journal’s rank.
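For reference, the author-position points listed above can be written as a small function. This is only a sketch of the point values stated in the text. The order in which overlapping positions are resolved for short author lists is an assumption, and the weighting factor that combines author points with the journal score is not specified in the text, so it is not modeled.

```python
def author_position_points(position, n_authors):
    """Points for the author at 1-based `position` in a list of `n_authors`.

    ASSUMPTION: first/last takes precedence over second/second-to-last,
    which in turn takes precedence over third.
    """
    if not 1 <= position <= n_authors:
        raise ValueError("position must be between 1 and n_authors")
    if position in (1, n_authors):
        return 4  # first or last author
    if position in (2, n_authors - 1):
        return 3  # second or second-to-last author
    if position == 3:
        return 2  # third author
    return 1      # any other contributing author

# Points for each position on a hypothetical eight-author paper.
points = [author_position_points(p, 8) for p in range(1, 9)]
print(points)
```

Because the Ministry requires the first author to belong to the institution, every paper in this study would receive the same author points, which is why only the journal rank was used.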

Table 2 shows journal names, category descriptions, Journal IF percentiles, ranks, and scores (relative Journal IF score) for 56 papers published by clinical researchers at Hospital D.

Table 2 Example of relative Journal IF score calculated using the SIGAPS scoring system (Hospital D).

The correlation between paper quantity and quality was estimated using Spearman’s correlation coefficient. Aggregation and analysis of all data were performed using SAS ver. 9.4 (SAS Institute Inc., Cary, NC, USA).

Overall, 580 clinical trial publications from the last three years were surveyed. The number of published papers, total Journal IF, relative Journal IF total score, and paper quality (relative Journal IF total score/number of papers) for each of the 11 medical institutions investigated are shown in Table 3.

Figure 1 depicts journal rank distribution based on Journal IF percentile for each medical institution.

A comparison between the number of published papers and paper quality is shown in Figure 2A (bar graph) and Figure 2B (scatter plot). Spearman’s correlation showed that the number of published papers did not correlate with paper quality (correlation coefficient, −0.33, P = 0.32). Additionally, no significant association was found between the number of published papers and paper quality as calculated by the absolute Journal IF (total Journal IF/number of papers) (correlation coefficient, −0.53, P = 0.09).
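The rank correlation can be checked from the per-hospital figures reported in the abstract. The sketch below is not the authors' SAS code: it is a plain-Python Spearman coefficient that assigns average ranks to ties, with paper quality recomputed as the unrounded ratio of relative Journal IF total score to number of papers. The P value is not computed.

```python
from math import sqrt

# Per-hospital values as reported in the abstract (hospitals a to k).
papers = {"a": 66, "b": 64, "c": 61, "d": 56, "e": 54, "f": 51,
          "g": 46, "h": 46, "i": 46, "j": 45, "k": 45}
relative_if_total = {"a": 244, "b": 272, "c": 260, "d": 299, "e": 268, "f": 215,
                     "g": 225, "h": 208, "i": 189, "j": 223, "k": 218}

def average_ranks(values):
    """Ascending ranks, with tied values sharing the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # ranks are 1-based
        for i in order[start:end + 1]:
            ranks[i] = mean_rank
        start = end + 1
    return ranks

def spearman(x, y):
    """Pearson correlation of the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

hospitals = sorted(papers)
counts = [papers[h] for h in hospitals]
quality = [relative_if_total[h] / papers[h] for h in hospitals]
rho = spearman(counts, quality)
print(round(rho, 2))
```

With these inputs the coefficient comes out at about −0.33, in line with the reported value. Using the quality values rounded to two decimals instead would create a tie between hospitals e and j and shift the result slightly, so the unrounded ratios are used here.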

Figure 2 (A) A comparison of number of published papers and paper quality (bar graph). (B) A comparison of number of published papers and paper quality (scatter plot).

We performed additional analyses with data excluding 44 protocol papers. The following was found: number of published papers: a:41, b:56, c:61, d:54, e:54, f:51, g:45, h:45, i:40, j:44, k:45 (median: 45, maximum: 61, minimum: 40); total Journal IF: a:166, b:221, c:207, d:218, e:257, f:164, g:213, h:188, i:147, j:175, k:219 (median: 207, maximum: 257, minimum: 147); relative Journal IF total score: a:180, b:243, c:260, d:289, e:268, f:215, g:221, h:205, i:172, j:219, k:218 (median: 219, maximum: 289, minimum: 172); and paper quality (relative Journal IF total score/number of papers): a:4.39, b:4.34, c:4.26, d:5.35, e:4.96, f:4.22, g:4.91, h:4.56, i:4.30, j:4.98, k:4.84 (median: 4.56, maximum: 5.35, minimum: 4.22). Again, no significant relation was found between the number of published papers and paper quality (correlation coefficient, −0.08, P = 0.80).
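The summary figures for this sensitivity analysis can be recomputed from the per-hospital values listed above. The following is a small consistency check rather than part of the original analysis; the `summarize` helper is illustrative.

```python
from statistics import median

# Per-hospital values after excluding the 44 protocol papers (hospitals a to k).
papers = {"a": 41, "b": 56, "c": 61, "d": 54, "e": 54, "f": 51,
          "g": 45, "h": 45, "i": 40, "j": 44, "k": 45}
relative_if_total = {"a": 180, "b": 243, "c": 260, "d": 289, "e": 268, "f": 215,
                     "g": 221, "h": 205, "i": 172, "j": 219, "k": 218}

# Paper quality = relative Journal IF total score / number of papers.
quality = {h: relative_if_total[h] / papers[h] for h in papers}

def summarize(values):
    """Return (median, maximum, minimum) of a collection of numbers."""
    values = list(values)
    return median(values), max(values), min(values)

print(sum(papers.values()))  # remaining papers: 580 minus the 44 protocol papers
print(summarize(papers.values()))
print(summarize(relative_if_total.values()))
print(tuple(round(v, 2) for v in summarize(quality.values())))
```

The recomputed totals and medians agree with the figures in the paragraph above, and the paper count sums to 536, that is, 580 minus the 44 excluded protocol papers.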

In this study, 580 clinical trial papers reported by 11 core clinical research hospitals in Japan were surveyed to examine the relation between quantity and quality of publications. Results showed no significant relation between the number of papers published by a hospital and the quality of those papers. Therefore, an evaluation of both the number and quality of published papers should be performed when assessing an institution’s competence to execute clinical research based on their scientific publications.

This study employed a quality assessment metric indicator (relative Journal IF score) calculated based on the SIGAPS scoring system. SIGAPS was developed in 2002 at the French University Hospital, Lille (CHU) (Devos et al., 2003; Devos et al., 2006; Devos, 2008). The SIGAPS score is one of the metric indicators used by the French Ministry of Health when allocating research funds to public research institutions such as university hospitals (Rouvillain et al., 2014). In France, several studies evaluated surgery and internal medicine scientific publication outputs using the SIGAPS scoring system (Rouprêt et al., 2012; Lefèvre et al., 2013). Griffon et al. evaluated the association between the SIGAPS score and publications in French (Griffon et al., 2012). Additionally, several researchers have discussed how to use the SIGAPS score in several fields (Sabourin and Darmoni, 2008; Mancini et al., 2009; Darmoni et al., 2009; Ruffion et al., 2012). The present study represents the first effort toward the development of a quality assessment method for core clinical research hospitals in Japan using a SIGAPS-based score: the relative Journal IF score.

In this study, the only paper quality assessment measure used was the relative Journal IF score. However, other metrics must be considered. The citation index is one such candidate (Garfield, 2006). However, the year of publication and the research field impact associated with this metric should be considered, and a method that corrects for these factors is required. As an example, the authors propose to use the percentage of publications in top 1% or top 10%, referring to the percentage of published papers within the same research field and year falling in the top 1% or top 10% of papers with the highest number of citations. Moreover, h-index is a useful metric indicator for measuring both paper productivity and impact at the author level (Hirsch, 2007; Allen et al., 2009; Durieux and Gevenois, 2010). PubMed currently provides citation indexes and impact metrics, such as the Relative Citation Ratio (Hutchins et al., 2016). The next phase of this research comprises a multidimensional clinical paper assessment through the use of a composite metric combining the relative IF, citation index, and h-index. Moreover, unlike IF and citation index, an advantage of altmetrics is that it can be evaluated instantaneously, eliminating the need to wait until the article is published. In addition, altmetrics can provide a comprehensive overview of the degree of impact on society by incorporating aspects such as the social impact of publications, including media and mass media references. Based on both the aforementioned points, introducing altmetrics to the evaluation index could prove to be of considerable value.
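Two of the candidate metrics mentioned above are simple to compute once citation counts are available. The sketch below shows the standard h-index definition and the share of papers at or above a citation threshold. The threshold that marks the top 1% or top 10% for a given field and year must come from an external reference set, so here it is a plain parameter, and the citation counts are hypothetical.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for position, count in enumerate(ranked, start=1):
        if count >= position:
            h = position
        else:
            break
    return h

def share_at_or_above(citations, threshold):
    """Fraction of papers cited at least `threshold` times.

    ASSUMPTION: `threshold` is the field- and year-specific citation count
    that marks the top 1% or top 10%; it is supplied by the caller.
    """
    if not citations:
        return 0.0
    return sum(1 for c in citations if c >= threshold) / len(citations)

# Hypothetical citation counts for six papers.
counts = [10, 8, 5, 4, 3, 0]
print(h_index(counts))
print(share_at_or_above(counts, 8))
```

Neither function corrects for field or publication year by itself; that correction enters only through the choice of reference threshold.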

When assessing the quality of medical publications, it is also necessary to account for their contribution to the development of treatment guidelines. Medical treatment guidelines are developed by field specialists, and they provide the latest evidence-based data on medical practices and procedures in the clinical setting. Areas covered range from disease pathophysiology to prevention, diagnosis, treatment, and rehabilitation, and guidelines in each of these areas contribute to improving quality standards of medical treatment. Medical treatment guidelines are important contributions to healthcare, and publications focusing on them should be considered as high-quality, regardless of the journal’s IF or citation index.

The method for calculating relative Journal IF based on the SIGAPS scoring system that was developed in France is clear and technically acceptable in any country other than France, as described in the Methods section. However, there are issues regarding Journal IF that still need to be solved. One challenge is the adequate assessment of negative studies. For example, clinical researchers tend not to publish negative studies with small sample sizes. This could cause publication bias. Since negative studies with small sample sizes may have a very high social significance, there is a need to create a mechanism to appropriately incorporate the value of negative studies with small sample sizes in Journal IF.

Although the focus of this study was paper quality assessment, it is equally important to develop objective metric indicators for quality assessment of clinical research itself. Months or years can pass between completion of clinical studies and publication of their results. Therefore, in the absence of a metric indicator for paper quality assessment, quality assessment of the ongoing or recently completed clinical study is not possible. Developing metric indicators to assess the quality of clinical research, and not only the quality of resulting publications, is therefore an unmet and urgent need. As the next step, an index should be added that can objectively evaluate the quality of the research process, including the number of patients enrolled, speed of enrollment, rate of satisfaction with enrollment, number of protocol deviations, and number of protocol amendments.

The present study has several limitations, including undeniable social issues. The first limitation is the method used to calculate the relative Journal IF score. This method attributes a score by categorizing a paper based on the percentiles for its field, and a concern exists that the maximum and minimum Journal IF in the same percentile may be treated as the same score.

The second limitation relates to policy differences for assessment of track records of research institutions, which constitutes an undeniable social issue. When assessing faculty track records, some research institutions may prioritize paper quality over the number of published papers, others may take the opposite approach, and others may even prioritize citations instead. Overcoming these inconsistencies will require the development of metric indicators combining number of papers and relative Journal IF scores with citation indexes or h-indexes in a well-balanced fashion.

The third limitation is the impact of research fields considered, at certain times, as “fashionable.” This also constitutes an indisputable social issue. At different time periods, research in popular fields may register great advances, and government agencies and foundations tend to apply their budgets accordingly. Research institutions that can adapt to such trends are likely to experience an increase in both the number of published papers and paper quality. Counteracting this trend-driven impact is difficult. For example, in the field of rare and incurable diseases, the Government of Japan’s “Act on Medical Care for Patients with Intractable Diseases,” passed on May 23, 2014, promoted a reform and the establishment of a sustainable social insurance system. The law went into effect on January 1, 2015, using consumption taxes to create funding for healthcare subsidies and a stable healthcare subsidy system for patients with rare and incurable diseases (Japan Intractable Diseases Information Center). Research in the field of rare and incurable diseases has made great progress due to this wave of government support. The Japan Agency for Medical Research and Development invested in rare and incurable diseases’ research (Japan Agency for Medical Research and Development (AMED), 2017), and the Initiative on Rare and Undiagnosed Diseases was created as a platform for research and treatment of these conditions (Adachi et al., 2017; Adachi et al., 2018), thus generating a relatively large amount of new research in the field.

The fourth limitation is the management of protocol papers. Because the approval requirements for core clinical research hospitals include protocol papers, a total of 44 protocol papers have been included in this study (44/580, 7.6%). Although these have several benefits, such as deterring publication bias, preventing similar research, and giving hope to patients regarding the possibility of new or innovative treatments, protocol papers are published at the start of research, prior to result generation. Therefore, their quality should be evaluated separately from that of result-generating publications. We performed additional analyses with the data, excluding the 44 protocol papers. The results revealed a trend similar to that of the overall results. However, an analysis of protocol publications alone was not performed this time because only 44 were available.

The fifth limitation is the evaluation of open access journals, which are known to have a higher citation impact than closed journals (Piwowar et al., 2018). Future studies should consider the handling of open access journals.

Finally, the relation between paper quantity and quality was only assessed for interventional clinical trials in this study. To address this issue, a subsequent study targeting both interventional and observational studies is currently being planned.

This study revealed that the quantity of papers published by an institution does not necessarily correspond to their quality. When assessing an institution’s ability to execute clinical research, assessment of paper quality should be included alongside assessment of the number of published papers.

The datasets analyzed in this manuscript are not publicly available. Requests to access the datasets should be directed to the corresponding author, Yuji Nishizaki.

RU and YN designed this study in whole and drafted this manuscript. RU contributed to data collection. NaoY and SN contributed to statistical analysis. YH, SS, TO, SY, KM, NaoY, MN, KF, SN, YS, NatY, and PD provided advice on the interpretation of results. YN and HD provided a critical revision of the manuscript for intellectual content and gave the final approval for the submitted manuscript. All authors have read and approved the final manuscript.

The study was supported by a grant from the Japan Agency for Medical Research and Development (AMED) under Grant Number JP181k1903001 from April 1, 2018 to March 31, 2019.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors would like to thank Ms. Kaoru Hatano and Ms. Miho Sera, Clarivate Analytics (Japan) Co., Ltd., for their help in searching for journals and calculating the score based on the SIGAPS scoring system. This research was supported by the Japan Agency for Medical Research and Development (AMED) under Grant Number JP181k1903001.

Adachi, T., Kawamura, K., Furusawa, Y., Nishizaki, Y., Imanishi, N., Umehara, S., et al. (2017). Japan’s initiative on rare and undiagnosed diseases (IRUD): towards an end to the diagnostic odyssey. Eur. J. Hum. Genet. 25, 1025–1028. doi: 10.1038/ejhg.2017.106

Adachi, T., Imanishi, N., Ogawa, Y., Furusawa, Y., Izumida, Y., Izumi, Y., et al. (2018). Survey on patients with undiagnosed diseases in Japan: potential patient numbers benefiting from Japan’s initiative on rare and undiagnosed diseases (IRUD). Orphanet J. Rare Dis. 13, 208. doi: 10.1186/s13023-018-0943-y

Allen, L., Jones, C., Dolby, K., Lynn, D., Walport, M. (2009). Looking for landmarks: the role of expert review and bibliometric analysis in evaluating scientific publication outputs. PLoS One 4, e5910. doi: 10.1371/journal.pone.0005910

Darmoni, S. J., Ladner, J., Devos, P., Gehanno, J. F. (2009). [Reliability of a bibliometric tool used in France for hospital founding]. [Article in French]. Presse Med. 38, 1056–1061. doi: 10.1016/j.lpm.2009.03.011

Devos, P. (2008). [From the bibliometry to the financing: the SIGAPS software]. [Article in French]. J. Neuroradiol 35, 31–33. doi: 10.1016/j.neurad.2008.01.003

Devos, P., Dufresne, E., Renard, J. M., Beuscart, R. (2003). SIGAPS: a prototype of bibliographic tool for medical research evaluation. Stud. Health. Technol. Inform. 95, 721–726. doi: 10.3233/978-1-60750-939-4-721

Devos, P., Lefranc, H., Dufresne, E., Beuscart, R. (2006). From bibliometric analysis to research policy: the use of SIGAPS in Lille University Hospital. Stud. Health. Technol. Inform. 124, 543–548.

Durieux, V., Gevenois, P. A. (2010). Bibliometric indicators: quality measurements of scientific publication. Radiology 255, 342–351. doi: 10.1148/radiol.09090626

Garfield, E. (2006). Citation indexes for science. A new dimension in documentation through association of ideas. Int. J. Epidemiol. 35, 1123–7; discussion 1127-8. doi: 10.1093/ije/dyl189

Garfield, E. (2006). The history and meaning of the journal impact factor. JAMA 295, 90–93. doi: 10.1001/jama.295.1.90

Griffon, N., Devos, P., Gehanno, J. F., Darmoni, S. J. (2012). [Is there a correlation between the SIGAPS score and publishing articles in French]? Presse Med. 41, e432–e435. doi: 10.1016/j.lpm.2012.03.015

Hirsch, J. E. (2007). Does the h index have predictive power? Proc. Natl. Acad. Sci. U.S.A. 104, 19193–19198. doi: 10.1073/pnas.0707962104

Hutchins, B. I., Yuan, X., Anderson, J. M., Santangelo, G. M. (2016). Relative Citation Ratio (RCR): a new metric that uses citation rates to measure influence at the article level. PLoS Biol. 14, e1002541. doi: 10.1371/journal.pbio.1002541

Japan Agency for Medical Research and Development (AMED). (2017). About AMED [Internet] [cited 27 Feb 2019]. Available from: https://www.amed.go.jp/index.html.

Japan Intractable Diseases Information Center. New measures against intractable diseases since 2015 [Internet] [cited 27 Feb 2019]. Available from: http://www.nanbyou.or.jp/entry/4141. (in Japanese).

Journal Citation Reports 2017 Metrics. [Internet] [cited 1 Apr 2019]. Available from: https://jcr.clarivate.com/.

Lefèvre, J. H., Faron, M., Drouin, S. J., Glanard, A., Chartier-Kastler, E., Parc, Y., et al. (2013). [Objective evaluation and comparison of the scientific publication from the departments of the Assistance publique-Hôpitaux de Paris: analysis of the SIGAPS score]. [Article in French]. Rev. Med. Interne 34, 342–348. doi: 10.1016/j.revmed.2012.08.006

Mancini, J., Darmoni, S., Chaudet, H., Fieschi, M. (2009). [The paradox of bibliometric activity-based funding (T2A) SIGAPS: a risk of deleterious effects on French hospital research]? [Article in French]. Presse Med. 38, 174–176. doi: 10.1016/j.lpm.2008.10.005

Ministry of Health, Labour and Welfare. (2017). About publication of business report of core clinical research hospitals [Internet] [cited 27 Feb 2019]. Available from: https://www.mhlw.go.jp/stf/seisakunitsuite/bunya/0000165585.html. (in Japanese).

Ministry of Health, Labour and Welfare. (2017). About core clinical research hospitals [Internet] [cited 27 Feb 2019]. Available from: https://www.mhlw.go.jp/stf/seisakunitsuite/bunya/tyukaku.html. (in Japanese).

Piwowar, H., Priem, J., Larivière, V., Alperin, J. P., Matthias, L., Norlander, B., et al. (2018). The state of OA: a large-scale analysis of the prevalence and impact of Open Access articles. PeerJ 6, e4375. doi: 10.7717/peerj.4375

Rouprêt, M., Drouin, S. J., Faron, M., Glanard, A., Bitker, M. O., Richard, F., et al. (2012). [Analysis of the bibliometrics score of surgical department from the academic hospitals of Paris: what is the rank of urology]? [Article in French]. Prog. Urol. 22, 182–188. doi: 10.1016/j.purol.2011.08.032

Rouvillain, J. L., Derancourt, C., Moore, N., Devos, P. (2014). Scoring of medical publications with SIGAPS software: application to orthopedics. Orthop. Traumatol. Surg. Res. 100, 821–825. doi: 10.1016/j.otsr.2014.06.020

Ruffion, A., Descotes, J. L., Kleinclauss, F., Zerbib, M., Dore, B., Saussine, C., et al. (2012). [Is SIGAPS score a good evaluation criteria for university departments]? [Article in French]. Prog. Urol. 22, 195–196. doi: 10.1016/j.purol.2011.09.013

Keywords: clinical trial, core clinical research hospital, journal impact factor, quality assessment, SIGAPS

Citation: Ueda R, Nishizaki Y, Homma Y, Sanada S, Otsuka T, Yasuno S, Matsuyama K, Yanagisawa N, Nagao M, Fujibayashi K, Nojiri S, Seo Y, Yamada N, Devos P and Daida H (2019) Importance of Quality Assessment in Clinical Research in Japan. Front. Pharmacol. 10:1228. doi: 10.3389/fphar.2019.01228

Received: 18 July 2019; Accepted: 23 September 2019;

Published: 18 October 2019.

Edited by: Sandor Kerpel-Fronius, Semmelweis University, Hungary

Reviewed by: Domenico Criscuolo, Italian Society of Pharmaceutical Medicine, Italy

Copyright © 2019 Ueda, Nishizaki, Homma, Sanada, Otsuka, Yasuno, Matsuyama, Yanagisawa, Nagao, Fujibayashi, Nojiri, Seo, Yamada, Devos and Daida. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuji Nishizaki

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
