ORIGINAL RESEARCH article

Front. Pediatr. , 04 July 2022

Sec. Pediatric Critical Care

Volume 10 - 2022 | https://doi.org/10.3389/fped.2022.919481

This article is part of the Research Topic Simulation in Pediatric Critical Care Medicine Training and Education

Background: Entrustable professional activities (EPAs) were first introduced by Olle ten Cate in 2005. Since then, hundreds of applications in medical research have been reported worldwide. However, few studies discuss the use of EPAs for residency training in pediatric intensive care medicine. We conducted a pilot study of EPA for pediatric intensive care medicine to evaluate the use of EPAs in this subspecialty.

Materials and Methods: A cross-sectional study was conducted within the standardized residency training program in pediatric intensive care medicine at the Qilu Hospital of Shandong University. An electronic survey assessing EPA performance, comprising 15 EPA categories each rated on an eight-level scale, was distributed among residents and directors.

Results: A total of 217 director-assessment and 44 residents’ self-assessment questionnaires were collected, both demonstrating a rising trend in scores across postgraduate years. There were significant differences in PGY1-vs.-PGY2 and PGY1-vs.-PGY3 director-assessment scores, while there were no differences in PGY2-vs.-PGY3 scores. PGY had a significant effect on the score of each EPA, while position significantly affected the scores of all EPAs except for EPA1 (Admit a patient) and EPA2 (Select and interpret auxiliary examinations). Gender only significantly affected the scores of EPA6 (Report a case), EPA12 (Perform health education), and EPA13 (Inform bad news).

Conclusion: This study indicates that EPA assessments have a certain discriminating capability among different PGYs in Chinese standardized residency training in pediatric intensive care medicine. Postgraduate year, gender, and resident position affected EPA scores to a certain extent. Given the inconsistency between resident-assessed and director-assessed scores, an improved feedback program is needed in the future.

Entrustable professional activities (EPAs) were formally conceptualized in 2005 by Olle ten Cate, who defined EPAs as “units of professional practice, defined as tasks or responsibilities to be entrusted to the unsupervised execution by a trainee once he or she has attained sufficient specific competence” (1). The focus of competency-based medical education (CBME) in recent years has been on achieving EPAs, which at present are likely the most widespread approach to CBME worldwide (2–7). Supervising consultants need a valid and reliable tool for assessing a learner’s performance, one that helps both parties recognize the learner’s actual abilities and supports timely improvement. EPAs appear well suited to this purpose. Despite the seemingly universal affinity for EPAs, there is limited empirical evidence for their use in trainee assessment and a paucity of feedback about their clinical implementation.

It has become clear over the past decade that various EPA phenotypes exist worldwide (8–10). These phenotypes may vary based on how they define a stage of training or a profession. These differences may also reflect regulatory oversight in different regions, with some having a single regulatory body that facilitates alignment across the continuum and others having different regulatory bodies overseeing different phases of training and practice (7, 11–15). A previous study (35) evaluated a formative assessment system based on EPAs in pediatric residency training at the Peking University First Hospital, proposing an EPA system to assess postgraduate medical education (PGME) that was made up of 15 EPA categories, each rated on an eight-level scale (16, 17). This highlighted the complementary advantage of EPAs, which could be integrated with an ongoing CBME formative assessment program, including mini-clinical-evaluation exercises (Mini-CEX), direct observation of procedural skills (DOPS), subjective-objective-assessment-plan (SOAP), and 360-degree assessment. Hence, in the last year, we began implementing an EPA assessment program at the Qilu Hospital of Shandong University based on the CBME system for standardized residency training at the Peking University First Hospital.

Entrustable professional activities have been developed and published for a variety of pediatric subspecialties (18–23) as an emerging and practical tool for assessing trainees’ clinical competencies. Hennus et al. (19) reported a national modified Delphi study on developing a set of EPAs for Dutch pediatric intensive care medicine fellows. However, few studies discuss EPAs for residency training in pediatric intensive care medicine. To preliminarily explore the effectiveness of EPAs and deficiencies in residency training, we therefore performed a pilot study of 44 residents within the Chinese standardized residency training program in the pediatric intensive care medicine department at the Qilu Hospital of Shandong University and solicited both resident self-assessment and director assessment of this training model.

Like many other Chinese standardized training residency programs, Qilu Hospital of Shandong University has a CBME evaluation course that spans the resident’s training after graduation from medical school and includes Mini-CEX, DOPS, SOAP, and 360-degree assessment. According to the national guidelines for standardized residency training, every pediatric resident is supposed to rotate through Pediatric Hematology, Urology, Neurology, Respiratory, Neonatology, Angiocardiopathy, Gastroenterology, Outpatient and Emergency, Infectious Diseases, and Child Healthcare subspecialties for at least 3 months within a 3-year training phase. The departmental rotation examination is administered at the end of each subspecialty rotation phase and is composed of all of the aforementioned skill tests and formative assessments. Directors in charge of pediatric intensive care medicine were pediatric intensive care unit physicians well-trained by the national or provincial director course for Chinese standardized training residency program, who obtained qualification certifications from the Chinese Health Commission or Shandong Provincial Health Commission.

This study enrolled 44 residents who were trained in pediatric intensive care medicine as part of a standardized residency training program from January 2021 to February 2022 at the Qilu Hospital of Shandong University. In total, seven directors in charge of pediatric intensive care medicine over the same time period were also recruited for this study. All the enrolled residents were categorized into postgraduate year 1 (PGY1) to PGY3 according to their seniority. The study was approved by the Qilu Hospital of Shandong University Institutional Review Board.

EPA resident self-assessments and director assessments were conducted at the end of the pediatric intensive care department rotation to evaluate resident performance and competency from both points of view. An electronic questionnaire covering 15 EPA categories, each rated on an eight-level scale, was administered to solicit both resident self-assessment and director assessment in addition to the ongoing evaluation program (Mini-CEX, DOPS, SOAP, and 360-degree assessment). Each resident was assessed by several directors, whereas each self-assessment was completed by the resident himself or herself. Each questionnaire included general information (director name, resident name, resident gender, seniority, and position: professional master, entrusted training resident from a junior hospital, permanent staff resident at the Qilu Hospital of Shandong University, or social training resident) and the EPA evaluation. The 15 categories of the EPA evaluation were established using the guidelines of the Peking University First Hospital (Table 1; 6). Based on the previous literature (16), each EPA was rated on an eight-level scale (Table 2). EPA assessments were performed only after participating residents and directors had been fully informed about the details of the questionnaire. All questionnaires were administered electronically via mobile software. Data collection staff sent multiple reminders and made follow-up phone calls to ensure that all required responses were collected in time. All questions were completed in every included questionnaire.

All questionnaires were administered using the Wenjuanwang APP 2.7.0 (Zhongyan Network Technology Co., Ltd., Shanghai, China). Data collection was performed using Excel (Microsoft, Redmond, WA, United States), and statistical analysis and figure creation were performed using SPSS 23.0.0 (IBM, Armonk, NY, United States). For both self-assessments and director assessments, scores for every EPA were compared across PGY levels using the Kruskal–Wallis test, with a two-sided p < 0.05 considered statistically significant. Pairwise comparisons between PGY levels for every EPA were performed using the Mann–Whitney U test, with significance defined as p < 0.017 after Bonferroni correction for the three pairwise tests per EPA (0.05/3 ≈ 0.017). The effects of PGY, gender, and position on the director-assessment EPA scores were analyzed using generalized estimating equations (GEE), with p < 0.05 considered statistically significant.
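The pairwise testing scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the SPSS procedure used in the study: the scores are hypothetical, the function names are our own, and only the U statistic and the Bonferroni threshold are computed (no p-values).

```python
from itertools import combinations

def bonferroni_alpha(alpha, n_tests):
    """Per-comparison significance threshold under Bonferroni correction."""
    return alpha / n_tests

def mann_whitney_u(x, y):
    """Mann-Whitney U statistic for sample x versus sample y.

    Counts pairs (xi, yj) with xi > yj; each tie contributes 0.5.
    """
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u

# Hypothetical EPA scores on a 1-8 scale, for illustration only (not study data).
scores = {
    "PGY1": [3, 4, 4, 5],
    "PGY2": [5, 6, 6, 7],
    "PGY3": [6, 6, 7, 7],
}

# Three PGY levels give three pairwise comparisons per EPA.
pairs = list(combinations(scores, 2))
threshold = bonferroni_alpha(0.05, len(pairs))  # 0.05 / 3
u_stats = {(a, b): mann_whitney_u(scores[a], scores[b]) for a, b in pairs}

for pair, u in u_stats.items():
    print(pair, "U =", u)
print("Bonferroni-corrected threshold:", round(threshold, 3))
```

A small U for a pair such as PGY1 versus PGY2 means that PGY1 scores rarely exceed PGY2 scores; a statistics package would convert U into a p-value and compare it with the corrected threshold.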

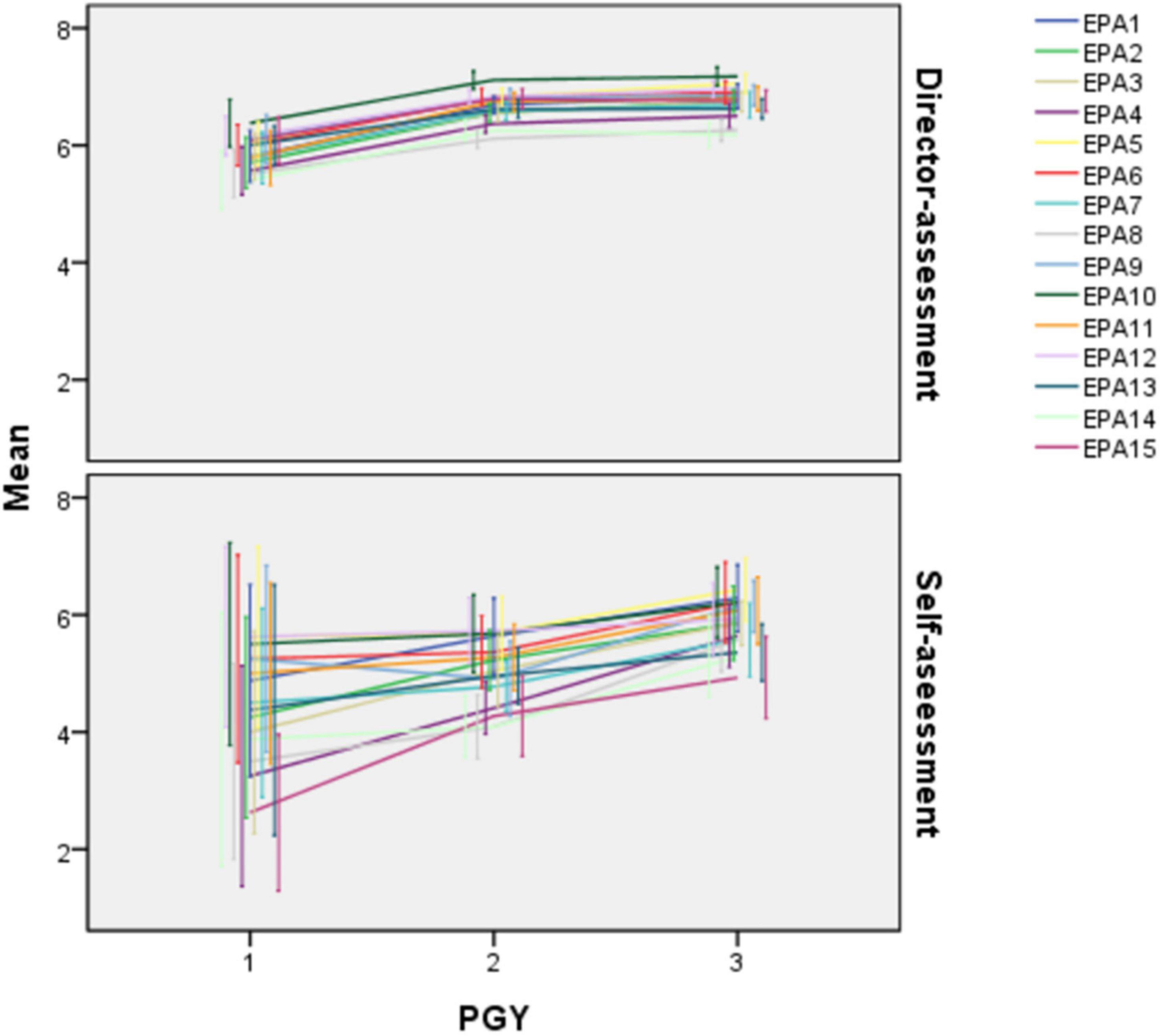

This study recruited 44 residents (Table 3) and seven directors. The collected results included 44 resident self-assessment questionnaires and 217 director-assessment questionnaires, with a 100% response rate. The numbers of director-assessment and self-assessment questionnaires are listed in Table 3. A line graph was created to show the trend in director-assessment and self-assessment EPA scores over progressive PGY levels (Figure 1). Director-assessment scores rose slowly with PGY level across all EPAs, while self-assessment scores showed no distinct trend across PGY levels.

Figure 1. Line graph of scores of director-assessment and self-assessment in each entrustable professional activity (EPA). Each point stands for the mean of scores of a certain subgroup, with bars standing for the 95% CI of the mean of each subgroup.
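Each point and bar in such a plot corresponds to a subgroup mean and its 95% confidence interval. The sketch below shows one common way to compute them, assuming a normal approximation (z = 1.96); the article does not state how the intervals were calculated, and the scores and function name are hypothetical.

```python
import math
import statistics

def mean_with_ci(values, z=1.96):
    """Return (mean, lower, upper) for a normal-approximation 95% CI of the mean."""
    m = statistics.mean(values)
    # Standard error of the mean from the sample standard deviation.
    se = statistics.stdev(values) / math.sqrt(len(values))
    return m, m - z * se, m + z * se

# Hypothetical scores for one subgroup (not study data).
example = [5, 6, 6, 7]
mean, lower, upper = mean_with_ci(example)
print(f"mean = {mean}, 95% CI = ({lower:.2f}, {upper:.2f})")
```

For small subgroups, a t-based interval would be wider than this normal approximation.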

Director-assessment EPA scores are listed in Table 4. EPA scores differed significantly across PGY levels: the more senior the residents, the higher their scores. In pairwise comparisons between PGY levels, there were significant differences between PGY1 and PGY2 and between PGY1 and PGY3 (p < 0.017), whereas there were no significant differences between PGY2 and PGY3 in any EPA category.
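For readers unfamiliar with the omnibus test behind the across-PGY comparison, the Kruskal–Wallis H statistic can be computed from pooled ranks. The sketch below is illustrative only: the function names and data are hypothetical, tied values receive average ranks, and no tie correction or p-value is computed, so it will not reproduce SPSS output exactly when ties are present.

```python
def midranks(values):
    """Assign 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic (no tie correction applied)."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = midranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (n * (n + 1)) * total - 3 * (n + 1)

# Hypothetical, fully separated groups (not study data).
h_separated = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
# Identical groups should give H = 0.
h_identical = kruskal_wallis_h([[1, 2], [1, 2]])
print(h_separated, h_identical)
```

Larger H values indicate that the rank distributions of the groups differ more than would be expected by chance.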

Given that resident PGY, gender, and position all could affect director EPA scores (Table 5), a GEE model analysis was performed to analyze the effect of these factors on EPA scores (Table 6). PGY had a significant effect on all EPA scores (p < 0.05), whereas resident position significantly affected every EPA score except for EPA1 (p = 0.714) and EPA2 (p = 0.076). Resident gender significantly affected only EPA6 (p = 0.002), EPA12 (p = 0.010), and EPA13 (p = 0.018) (Table 6).

The scores of all 15 EPA categories rose with PGY level except for EPA14 (Perform clinical education; with PGY1 as the reference, PGY2: B = 0.753, p = 0.001; PGY3: B = 0.693, p = 0.001) and EPA15 (Manage public health events; PGY2: B = 0.695, p < 0.001; PGY3: B = 0.634, p = 0.001), for which mean scores were higher for PGY2s than for PGY3s and lowest for PGY1s. The mean scores of male residents in EPA6 (Report a case; with male as the reference, female: B = −0.394, p = 0.002), EPA12 (Perform health education; female: B = −0.346, p = 0.010), and EPA13 (Inform bad news; female: B = −0.463, p = 0.018) were higher than those of female residents. With professional masters as the reference, entrusted training residents got the highest scores in EPA3 (Diagnose and make differential diagnosis; B = 0.350, p = 0.001), EPA7 (Recognize and manage general clinical conditions; B = 0.233, p = 0.019), EPA8 (Recognize and manage emergent and critical conditions; B = 0.288, p = 0.025), EPA9 (Transfer and hand over a patient; B = 0.332, p = 0.003), and EPA11 (Perform basic operation; B = 0.277, p = 0.006), while permanent staff ranked as the top subgroup in EPA5 (Compose medical documents; B = 0.360, p < 0.001), EPA6 (Report a case; B = 0.381, p < 0.001), EPA10 (Perform informed consent; B = 0.424, p < 0.001), EPA12 (Perform health education; B = 0.698, p < 0.001), EPA13 (Inform bad news; B = 0.809, p < 0.001), and EPA14 (Perform clinical education; B = 0.431, p < 0.001). Social training residents were the best subgroup in EPA2 (Select and interpret auxiliary examinations; B = 0.315, p = 0.036) and EPA4 (Make therapeutic decision; B = 0.446, p = 0.003), while professional masters performed best in EPA15 (Manage public health events, p < 0.05).
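The reference-category coding behind these coefficients ("set PGY1 as zero") can be written as a linear predictor. The following is a sketch under stated assumptions: an identity link and indicator variables are assumed, and the symbols are illustrative rather than taken from the article. Here $Y_{ij}$ denotes the score given to resident $i$ by director $j$, and $k$ runs over the three non-reference positions.

```latex
\mathbb{E}[Y_{ij}] = \beta_0
  + \beta_{\mathrm{PGY2}}\,\mathbf{1}[\mathrm{PGY}_i = 2]
  + \beta_{\mathrm{PGY3}}\,\mathbf{1}[\mathrm{PGY}_i = 3]
  + \beta_{\mathrm{F}}\,\mathbf{1}[\mathrm{female}_i]
  + \sum_{k} \beta_{k}\,\mathbf{1}[\mathrm{position}_i = k]
```

Under this reading, each B is the adjusted difference in mean score from the reference level. For EPA14, for example, the PGY2 and PGY3 coefficients of 0.753 and 0.693 imply that PGY2s scored about 0.753 − 0.693 = 0.060 points higher than PGY3s, with the other factors held fixed.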

Self-assessment EPA scores are listed in Table 7. There were significant differences only within EPA2 (Select and interpret auxiliary examinations), EPA3 (Diagnose and make the differential diagnosis), EPA4 (Make therapeutic decision), EPA8 (Recognize and manage emergent and critical conditions), EPA9 (Transfer and hand over a patient), EPA14 (Perform clinical education), and EPA15 (Manage public health events) across the different PGY years, with higher level PGY residents scoring better. There were no obvious differences in the other EPAs across different PGYs. As for the comparisons between the two PGYs, there were significant differences in EPA15 (Manage public health events) scores between PGY1 and PGY2 (p < 0.017), and in EPA3 (Diagnose and make the differential diagnosis), EPA4 (Make therapeutic decision), EPA8 (Recognize and manage emergent and critical conditions), EPA9 (Transfer and hand over a patient), and EPA14 (Perform clinical education) between PGY2 and PGY3 (p < 0.017). Significant differences in EPA3 (Diagnose and make the differential diagnosis), EPA4 (Make therapeutic decision), EPA8 (Recognize and manage emergent and critical conditions), and EPA15 (Manage public health events) were seen between PGY1 and PGY3 (p < 0.017).

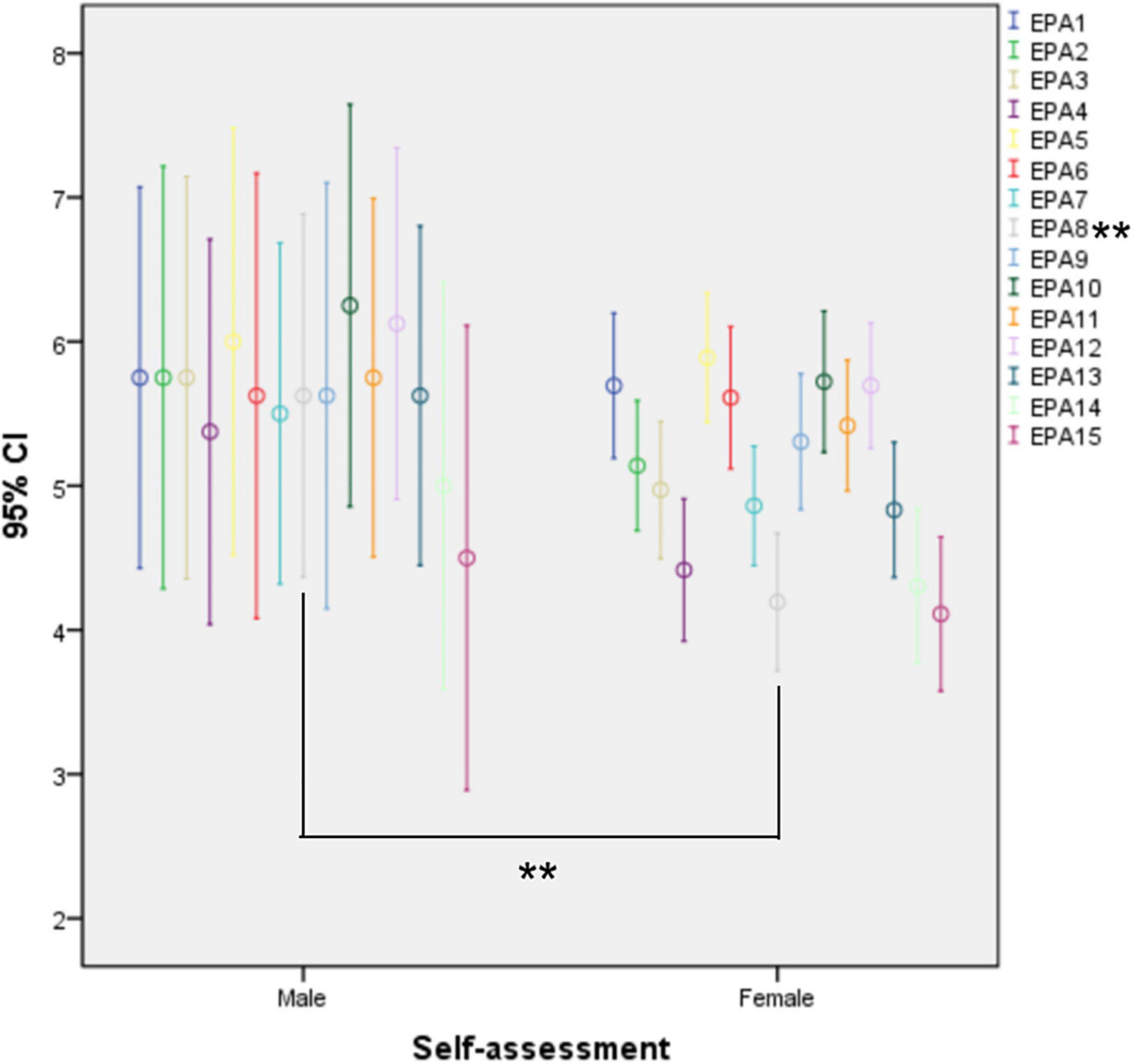

There was a significant difference in EPA8 (Recognize and manage emergent and critical conditions, p = 0.019, p < 0.05) between the self-assessment scores of male and female residents, with male residents self-scoring better than females (Figure 2).

Figure 2. Error bar chart of self-assessment between genders. The edges of each bar stand for the 95% CI of scores in subgroups. **EPA8: p = 0.019, p < 0.05.

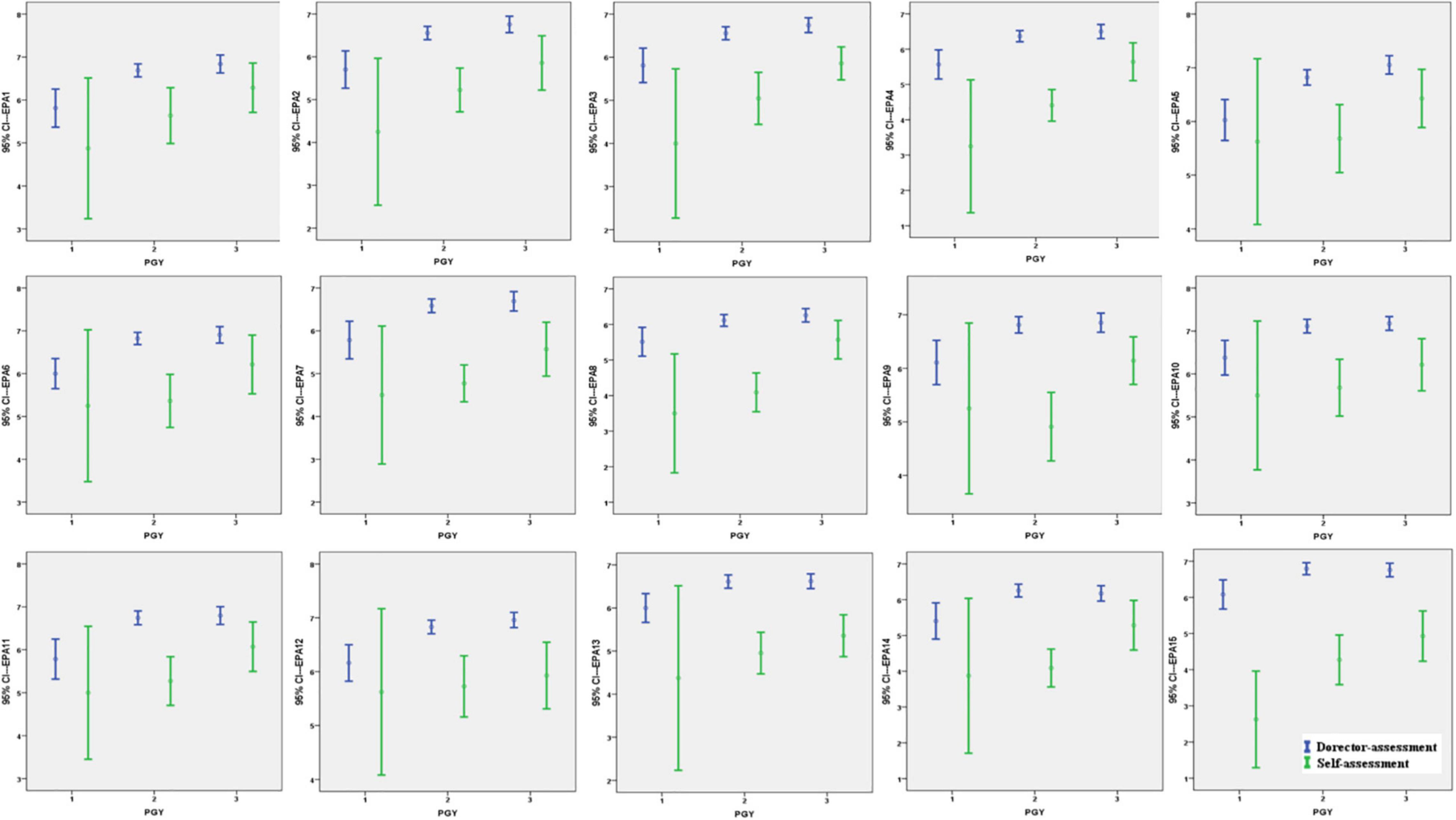

The director and self-assessment scores of PGY1s were mostly consistent except for EPA2 (Select and interpret auxiliary examinations, p = 0.31, p < 0.05), EPA3 (Diagnose and make a differential diagnosis, p = 0.12, p < 0.05), EPA4 (Make the therapeutic decision, p = 0.03, p < 0.05), EPA7 (Recognize and manage general clinical conditions, p = 0.39, p < 0.05), EPA8 (Recognize and manage emergent and critical conditions, p = 0.002, p < 0.05), and EPA15 (Manage public health events, p = 0.00, p < 0.05), where directors awarded higher scores. There were significant differences between the self-assessment and director-assessment scores for every EPA for PGY2s and PGY3s (PGY2: EPA1 p = 0.001, other EPAs p < 0.001; PGY3: EPA1 p = 0.036, EPA2 p = 0.003, EPA3 p < 0.001, EPA4 p = 0.002, EPA5 p = 0.012, EPA6 p = 0.034, EPA7 p = 0.001, EPA8 p = 0.008, EPA9 p = 0.002, EPA10 p = 0.001, EPA11 p = 0.014, EPA12 p < 0.001, EPA13 p < 0.001, EPA14 p = 0.009, EPA15 p < 0.001), with higher scores awarded by the directors for each EPA (Figure 3).

Figure 3. Comparison of director-assessment vs. self-assessment in entrustable professional activities (EPAs) within the same postgraduate year (PGY). The edges of each bar indicate the 95% CI of the scores of each subgroup. The point in the middle of each bar stands for the mean score of that subgroup.

Since their initial introduction by Olle ten Cate (1) in 2005, EPAs have become an important part of CBME in undergraduate and postgraduate medical education settings (17, 19, 24). EPAs are designed to be real-life activities, and as such can be understood and applied more easily than prior concepts within CBME, such as milestones (25). An EPA combines the knowledge, skills, and attitudes necessary to perform a task, incorporating and synthesizing learning objectives into a meaningful unit. EPAs provide a framework to make judgments of trainee ability explicit, which is important at all stages of medical education (26). In their literature search, Kerth et al. (27) reported a notable shift from descriptions of EPA development processes toward aspects beyond development, such as implementation, feasibility, acceptance/perception, and assessment. Of note, there are few studies about EPAs in pediatric postgraduate education, most of which are from general pediatric residencies or other subspecialties, such as pediatric emergency medicine, pediatric cardiology, and neonatology. Furthermore, studies from Asia are scarce. This study focused on the implementation and feasibility of EPAs in Chinese standardized residency training in pediatric intensive care medicine.

Our study suggested that the director-assessment scores of residents in pediatric intensive care rose significantly over postgraduate training in every EPA, with significant differences between PGY1 vs. PGY2 and PGY1 vs. PGY3 but not PGY2 vs. PGY3. These findings were largely consistent with previous studies of residency training programs (28) and of fellows assessed using American Board of Pediatrics subspecialty EPAs (29). However, with respect to self-assessment scores, only a subset of EPA scores differed significantly across PGYs and between individual PGY years. When the effects of PGY, gender, and position on EPA scores were analyzed, EPA scores rose with PGY except for EPA14 and EPA15, while gender significantly affected only EPA6, EPA12, and EPA13, with male residents scoring higher. In contrast, residents in different positions scored better in different EPAs. The male self-assessment scores in “Recognize and manage emergent and critical conditions” in pediatric intensive care were significantly higher than female scores, while self-assessment scores in the other EPAs were equivalent between genders. When self-assessment and director-assessment scores of PGY1s were compared, the director-assessed scores were significantly higher than the resident self-assessments in six EPAs. Furthermore, all of the director-assessment scores in every EPA category were significantly higher than the self-assessment scores of both PGY2s and PGY3s.

This was a cross-sectional study in pediatric intensive care that evaluated the implementation and feasibility of EPAs in the formative assessment of the ongoing CBME for standardized residency training. The upward trend in director-assessed scores for each EPA across postgraduate years was significant. PGY1 residents were less capable of certain professional activities in pediatric intensive care than PGY2s and PGY3s, while there were no significant differences in any director-assessed score between PGY2 and PGY3. The marked change in ability between PGY1 and PGY2/PGY3 may reflect rapid development during the first year of training immediately after graduation, whereas development during the second and third years may be more incremental because residents rotate widely across all the pediatric subspecialties instead of training continuously within one subspecialty. In addition, the relatively lower scores of PGY1 residents revealed insufficient exposure to professional activities during undergraduate education before standardized training. As stepped elevation is emphasized in the CBME program, residents are expected to develop their professional skills as their training time increases (30, 31). The absence of a difference between PGY2 and PGY3 extended to all EPAs, suggesting that the curriculum for training residents in these areas requires notable improvement, and directors and regulatory agencies should be encouraged to reinforce the idea of upgrading professional skills between the PGY2 and PGY3 years (32, 33).

For most of these 15-category EPAs, there was a certain percentage of residents who were able to practice EPAs unsupervised by the end of 3 years of residency training. However, for the remaining group of unqualified residents to be able to practice those EPAs unsupervised by the end of their required training, educators and regulatory agencies would need to implement EPA-based assessments more broadly or efficiently in pediatric subspecialties, as suggested previously (34). If we expect residents to meet the standards for unsupervised practice after training in all 15 EPA categories, either training needs to be enhanced significantly in these areas or our expectations of what residents are required to achieve by the completion of their training need to be adjusted. Future studies should be performed to determine whether similar experiences have been reported in other specialties.

Given that PGY, gender, and position could affect EPA scores, we used a GEE model to analyze their effects. EPA scores rose significantly across PGY years except for EPA14 (Perform clinical education) and EPA15 (Manage public health events), for which the highest scores were noted among PGY2s. This suggests a lack of stepwise training between the 2nd and 3rd year within this standardized training program. After the first postgraduate year of training, individual talent might become the main factor distinguishing each resident’s abilities. With respect to the gender gap, the scores for EPA6 (Report a case), EPA12 (Perform health education), and EPA13 (Inform bad news) were significantly higher among the male residents. In interviews, the enrolled directors attributed this result to perceived advantages of male physicians in logical thinking and in professional image and credibility in the daily workplace. There were four kinds of resident positions, which had an effect on EPA score differences. Professional masters had just graduated with their bachelor’s degrees from medical school, while permanent staff mostly had doctoral degrees, which required a prolonged research period or more professional knowledge in some fields. The entrusted training residents and social training residents were more experienced in clinical work, having usually worked for a few years before attending standardized residency training, but they generally had a lesser educational background. Different backgrounds led to different advantages in professional activities, which can help us tailor personalized training plans for residents in different positions so as to play to everyone’s strengths.

Resident self-assessment scores were inconsistent with the directors’ assessments. Residents believed that they had significantly developed only in EPA2 (Select and interpret auxiliary examinations), EPA3 (Diagnose and make the differential diagnosis), EPA4 (Make therapeutic decision), EPA8 (Recognize and manage emergent and critical conditions), EPA9 (Transfer and hand over a patient), EPA14 (Perform clinical education), and EPA15 (Manage public health events) over their 3 years of standardized training. There was a significant difference in self-assessment scores between genders only in EPA8 (Recognize and manage emergent and critical conditions), with males scoring higher. This might come from the male advantage in physical strength and adaptability to a heavy daily workload and the burden of the pediatric intensive care medicine rotation. Of note, only a limited number of male residents were enrolled in this study, which might contribute to inconsistencies between director- and self-assessment scores. A larger cohort of residents is required to produce more reliable results.

The director-assessment scores were higher than the self-assessment scores of PGY1s in EPA2 (Select and interpret auxiliary examinations), EPA3 (Diagnose and make the differential diagnosis), EPA4 (Make therapeutic decision), EPA7 (Recognize and manage general clinical conditions), EPA8 (Recognize and manage emergent and critical conditions), and EPA15 (Manage public health events). Similar situations were found in the PGY2 and PGY3 years across all EPA categories. This is likely due to a lack of self-confidence and self-recognition among the residents. It may also indicate a lack of efficient feedback from directors to residents, which prevents trainees from understanding how they performed and what they need to improve. More effective feedback on EPAs is required.

Our study has several strengths. It reported the implementation and feasibility of EPAs in the Chinese standardized training of pediatric intensive care residents. It established clear differences in EPA performance between lower PGY and higher PGY residents and provided a well-structured framework to guide residents in the development of the knowledge, skills, and attitudes necessary to perform a task while incorporating and synthesizing learning objectives. We analyzed the effects of PGY, gender, and resident position on EPA scores, finding that PGY affected every EPA score, resident position affected most EPA scores, and gender affected three. The incongruity between director-assessed and self-assessment scores indicates the need for an efficient feedback program.

There are also limitations to our study. First, the sample size is limited, which prevented us from analyzing the reliability and validity of EPA implementation in pediatric intensive care medicine training. This reflects the limited resident training capacity of our hospital and the small number of directors at our institution. The translation into clinical practice and how these skills affect patient outcomes remain to be determined. Second, this is a cross-sectional study that enrolled residents trained in pediatric intensive care medicine within the last year. No detailed outcomes related to clinical practice or patient outcomes were measured. Since EPAs were only recently integrated into the ongoing CBME program in China, we had limited experience with them. A longitudinal study is warranted, and a multicenter longitudinal study would be of great value.

In summary, this study indicates that EPA assessments had a certain discriminating capability between class years of Chinese standardized residency training in pediatric intensive care medicine, with scores rising with PGY year. Postgraduate year, gender, and resident position impacted EPA scores. Given the incongruities between resident-assessed and director-assessed scores, an improved feedback program is needed.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Qilu Hospital of Shandong University Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

ZY initiated the study, participated in the design and coordination, did the basic statistical analysis, and drafted the manuscript. LJ did the majority of the statistical analysis. ZA helped to initiate the study and edit the manuscript. CJ, ZW, WJ, and YT helped to collect the original data and did the statistical analysis. All authors read and approved the final manuscript.

This research received a grant from the Innovative Research Project on Standardized Residency Training in the Qilu Hospital of Shandong University (ZPZX2019A07).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We are grateful to all the staff in the Medical Training Office at the Qilu Hospital of Shandong University. Finally, we are especially grateful to the residents and directors whose data were included in the study.

1. Ten CO. Entrustability of professional activities and competency-based training. Med Educ. (2005) 39:1176–7. doi: 10.1111/j.1365-2929.2005.02341.x

2. Ten CO, Scheele F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. (2007) 82:542–7. doi: 10.1097/ACM.0b013e31805559c7

3. Peters H, Holzhausen Y, Boscardin C, Ten CO, Chen HC. Twelve tips for the implementation of EPAs for assessment and entrustment decisions. Med Teach. (2017) 39:802–7. doi: 10.1080/0142159X.2017.1331031

4. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system–rationale and benefits. N Engl J Med. (2012) 366:1051–6. doi: 10.1056/NEJMsr1200117

5. Simpson JG, Furnace J, Crosby J, Cumming AD, Evans PA, Friedman BDM, et al. The Scottish doctor–learning outcomes for the medical undergraduate in Scotland: a foundation for competent and reflective practitioners. Med Teach. (2002) 24:136–43. doi: 10.1080/01421590220120713

6. Iobst WF, Sherbino J, Cate OT, Richardson DL, Dath D, Swing SR, et al. Competency-based medical education in postgraduate medical education. Med Teach. (2010) 32:651–6. doi: 10.3109/0142159X.2010.500709

7. Hart D, Franzen D, Beeson M, Bhat R, Kulkarni M, Thibodeau L, et al. Integration of entrustable professional activities with the milestones for emergency medicine residents. West J Emerg Med. (2019) 20:35–42. doi: 10.5811/westjem.2018.11.38912

8. Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, et al. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. (2010) 376:1923–58. doi: 10.1016/S0140-6736(10)61854-5

9. Carraccio C, Englander R, Gilhooly J, Mink R, Hofkosh D, Barone MA, et al. Building a framework of entrustable professional activities, supported by competencies and milestones, to bridge the educational continuum. Acad Med. (2017) 92:324–30. doi: 10.1097/ACM.0000000000001141

10. Caverzagie KJ, Cooney TG, Hemmer PA, Berkowitz L. The development of entrustable professional activities for internal medicine residency training: a report from the education redesign committee of the alliance for academic internal medicine. Acad Med. (2015) 90:479–84.

11. Deitte LA, Gordon LL, Zimmerman RD, Stern EJ, McLoud TC, Diaz-Marchan PJ, et al. Entrustable professional activities: ten things radiologists do. Acad Radiol. (2016) 23:374–81. doi: 10.1016/j.acra.2015.11.010

12. McCloskey CB, Domen RE, Conran RM, Hoffman RD, Post MD, Brissette MD, et al. Entrustable professional activities for pathology: recommendations from the college of american pathologists graduate medical education committee. Acad Pathol. (2017) 4:2374289517714283. doi: 10.1177/2374289517714283

13. Shaughnessy AF, Sparks J, Cohen-Osher M, Goodell KH, Sawin GL, Gravel JJ. Entrustable professional activities in family medicine. J Grad Med Educ. (2013) 5:112–8. doi: 10.4300/JGME-D-12-00034.1

14. Young JQ, Hasser C, Hung EK, Kusz M, O’Sullivan PS, Stewart C, et al. Developing end-of-training entrustable professional activities for psychiatry: results and methodological lessons. Acad Med. (2018) 93:1048–54.

15. Mink RB, Myers AL, Turner DA, Carraccio CL. Competencies, milestones, and a level of supervision scale for entrustable professional activities for scholarship. Acad Med. (2018) 93:1668–72.

16. Chen HC, van den Broek WE, ten Cate O. The case for use of entrustable professional activities in undergraduate medical education. Acad Med. (2015) 90:431–6. doi: 10.1097/ACM.0000000000000586

17. Meyer EG, Chen HC, Uijtdehaage S, Durning SJ, Maggio LA. Scoping review of entrustable professional activities in undergraduate medical education. Acad Med. (2019) 94:1040–9. doi: 10.1097/ACM.0000000000002735

18. Werho DK, DeWitt AG, Owens ST, McBride ME, van Schaik S, Roth SJ. Establishing entrustable professional activities in pediatric cardiac critical care. Pediatr Crit Care Med. (2022) 23:54–9. doi: 10.1097/PCC.0000000000002833

19. Hennus MP, Nusmeier A, van Heesch GGM, Riedijk MA, Schoenmaker NJ, Soeteman M, et al. Development of entrustable professional activities for paediatric intensive care fellows: a national modified Delphi study. PLoS One. (2021) 16:e0248565. doi: 10.1371/journal.pone.0248565

20. Turner DA, Schwartz A, Carraccio C, Herman B, Weiss P, Baffa JM, et al. Continued supervision for the common pediatric subspecialty entrustable professional activities may be needed following fellowship graduation. Acad Med. (2021) 96:S22–8. doi: 10.1097/ACM.0000000000004091

21. Pitts S, Schwartz A, Carraccio CL, Herman BE, Mahan JD, Sauer CG, et al. Fellow entrustment for the common pediatric subspecialty entrustable professional activities across subspecialties. Acad Pediatr. (2021) [Online ahead of print]. doi: 10.1016/j.acap.2021.12.019

22. Cully JL, Schwartz S. The argument for entrustable professional activities in pediatric dentistry. Pediatr Dent. (2019) 41:427–8.

23. Emke AR, Park YS, Srinivasan S, Tekian A. Workplace-based assessments using pediatric critical care entrustable professional activities. J Grad Med Educ. (2019) 11:430–8. doi: 10.4300/JGME-D-18-01006.1

24. O’Dowd E, Lydon S, O’Connor P, Madden C, Byrne D. A systematic review of 7 years of research on entrustable professional activities in graduate medical education, 2011-2018. Med Educ. (2019) 53:234–49. doi: 10.1111/medu.13792

25. ten Cate O, Chen HC, Hoff RG, Peters H, Bok H, van der Schaaf M. Curriculum development for the workplace using Entrustable Professional Activities (EPAs): AMEE Guide No. 99. Med Teach. (2015) 37:983–1002. doi: 10.3109/0142159X.2015.1060308

26. ten Cate O, Hart D, Ankel F, Busari J, Englander R, Glasgow N, et al. Entrustment decision making in clinical training. Acad Med. (2016) 91:191–8. doi: 10.1097/ACM.0000000000001044

27. Kerth JL, van Treel L, Bosse HM. The use of entrustable professional activities in pediatric postgraduate medical education: a systematic review. Acad Pediatr. (2022) 22:21–8. doi: 10.1016/j.acap.2021.07.007

28. Schumacher DJ, West DC, Schwartz A, Li ST, Millstein L, Griego EC, et al. Longitudinal assessment of resident performance using entrustable professional activities. JAMA Netw Open. (2020) 3:e1919316. doi: 10.1001/jamanetworkopen.2019.19316

29. Mink RB, Schwartz A, Herman BE, Turner DA, Curran ML, Myers A, et al. Validity of level of supervision scales for assessing pediatric fellows on the common pediatric subspecialty entrustable professional activities. Acad Med. (2018) 93:283–91. doi: 10.1097/ACM.0000000000001820

30. Schultz K, Griffiths J. Implementing competency-based medical education in a postgraduate family medicine residency training program: a stepwise approach, facilitating factors, and processes or steps that would have been helpful. Acad Med. (2016) 91:685–9. doi: 10.1097/ACM.0000000000001066

31. Li ST, Tancredi DJ, Schwartz A, Guillot AP, Burke AE, Trimm RF, et al. Competent for unsupervised practice: use of pediatric residency training milestones to assess readiness. Acad Med. (2017) 92:385–93. doi: 10.1097/ACM.0000000000001322

32. McMillan JA, Land MJ, Leslie LK. Pediatric residency education and the behavioral and mental health crisis: a call to action. Pediatrics. (2017) 139:e20162141. doi: 10.1542/peds.2016-2141

33. Peter NG, Forke CM, Ginsburg KR, Schwarz DF. Transition from pediatric to adult care: internists’ perspectives. Pediatrics. (2009) 123:417–23. doi: 10.1542/peds.2008-0740

34. Taylor DR, Park YS, Smith CA, Karpinski J, Coke W, Tekian A. Creating entrustable professional activities to assess internal medicine residents in training: a mixed-methods approach. Ann Intern Med. (2018) 168:724–9. doi: 10.7326/M17-1680

Keywords: entrustable professional activities (EPA), pediatric intensive care medicine, standardized residency training (SRT), Chinese, assessment and education

Citation: Yun Z, Jing L, Junfei C, Wenjing Z, Jinxiang W, Tong Y and Aijun Z (2022) Entrustable Professional Activities for Chinese Standardized Residency Training in Pediatric Intensive Care Medicine. Front. Pediatr. 10:919481. doi: 10.3389/fped.2022.919481

Received: 13 April 2022; Accepted: 10 June 2022; Published: 04 July 2022.

Edited by:

Muhammad Waseem, Lincoln Medical Center, United States

Reviewed by:

Yves Ouellette, Mayo Clinic, United States

Copyright © 2022 Yun, Jing, Junfei, Wenjing, Jinxiang, Tong and Aijun. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhang Aijun, zhangaijun@sdu.edu.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
