- 1Department of Chemical Engineering, Indian Institute of Technology Delhi, New Delhi, India

- 2Yardi School of Artificial Intelligence, Indian Institute of Technology Delhi, New Delhi, India

- 3Department of Computer Science and Engineering, Indian Institute of Technology Delhi, New Delhi, India

- 4Henry Ford Pancreatic Cancer Center, Henry Ford Health, Detroit, MI, United States

- 5Department of Surgery, Henry Ford Health, Detroit, MI, United States

Introduction: Early detection of pancreatic cancer continues to be a challenge due to the difficulty in accurately identifying specific signs or symptoms that might correlate with the onset of pancreatic cancer. Unlike breast or colon or prostate cancer where screening tests are often useful in identifying cancerous development, there are no tests to diagnose pancreatic cancers. As a result, most pancreatic cancers are diagnosed at an advanced stage, where treatment options, whether systemic therapy, radiation, or surgical interventions, offer limited efficacy.

Methods: A two-stage weakly supervised deep learning-based model has been proposed to identify pancreatic tumors using computed tomography (CT) images from Henry Ford Health (HFH) and publicly available Memorial Sloan Kettering Cancer Center (MSKCC) data sets. In the first stage, the nnU-Net supervised segmentation model was used to crop an area in the location of the pancreas, which was trained on the MSKCC repository of 281 patient image sets with established pancreatic tumors. In the second stage, a multi-instance learning-based weakly supervised classification model was applied on the cropped pancreas region to segregate pancreatic tumors from normal appearing pancreas. The model was trained, tested, and validated on images obtained from an HFH repository with 463 cases and 2,882 controls.

Results: The proposed deep learning model, the two-stage architecture, offers an accuracy of 0.907 ± 0.01, sensitivity of 0.905 ± 0.01, specificity of 0.908 ± 0.02, and AUC (ROC) of 0.903 ± 0.01. The two-stage framework can automatically differentiate pancreatic tumor from non-tumor pancreas with improved accuracy on the HFH dataset.

Discussion: The proposed two-stage deep learning architecture shows significantly enhanced performance for predicting the presence of a tumor in the pancreas using CT images compared with other reported studies in the literature.

1 Introduction

Pancreatic adenocarcinoma is currently one of the deadliest cancers, with an overall 5-year survival rate of approximately 11% (1). Signs and symptoms of pancreatic cancer are non-specific and thus have limited utility in early detection. Moreover, efficient screening tests for the early detection of pancreatic tumors do not currently exist. It is clear that the pancreatic cancer survival rate is likely to significantly improve if the cancer can be detected at an early stage where definitive treatment with surgery and systemic therapy can be offered (2). Computed tomography (CT) and magnetic resonance imaging (MRI) are two common screening modalities that can be better utilized for diagnosing pancreatic cancer. Recent advancements in artificial intelligence and radiographic imaging provide hope that there may be the opportunity to use the aforementioned modalities as early screening detection tests, especially for early pancreatic cancer detection (3–5). Automated medical image segmentation and classification have been extensively investigated in the image analysis community due to the fact that manual, dense labeling of large amounts of medical images is tedious and error-prone. Accurate and reliable solutions are desired to increase clinical workflow efficiency and support decision-making through fast and automatic extraction of quantitative measurements (6, 7).

In biomedical image analysis, pancreatic imaging is important for clinical diagnosis and research and spans a range of tasks: (1) segmenting the tumor region, (2) diagnosing the presence of cancer, and (3) clustering the region. This research primarily focuses on an integrated framework that has the potential to perform classification, segmentation, and clustering. Traditional fully supervised techniques require accurately annotated data, and producing such annotations is laborious, uncertain, and time-consuming. Unsupervised methods, in contrast, extract features from unlabeled data but have limited application to high-level tasks such as image segmentation and classification. In this scenario, the proposed algorithm strikes a balance by leveraging the benefits of both supervised and unsupervised approaches. This paper examines how effectively and efficiently high-level tasks can be accomplished with a minimum of manual annotation, and how fine-grained information can be extracted automatically from coarse-grained labels.

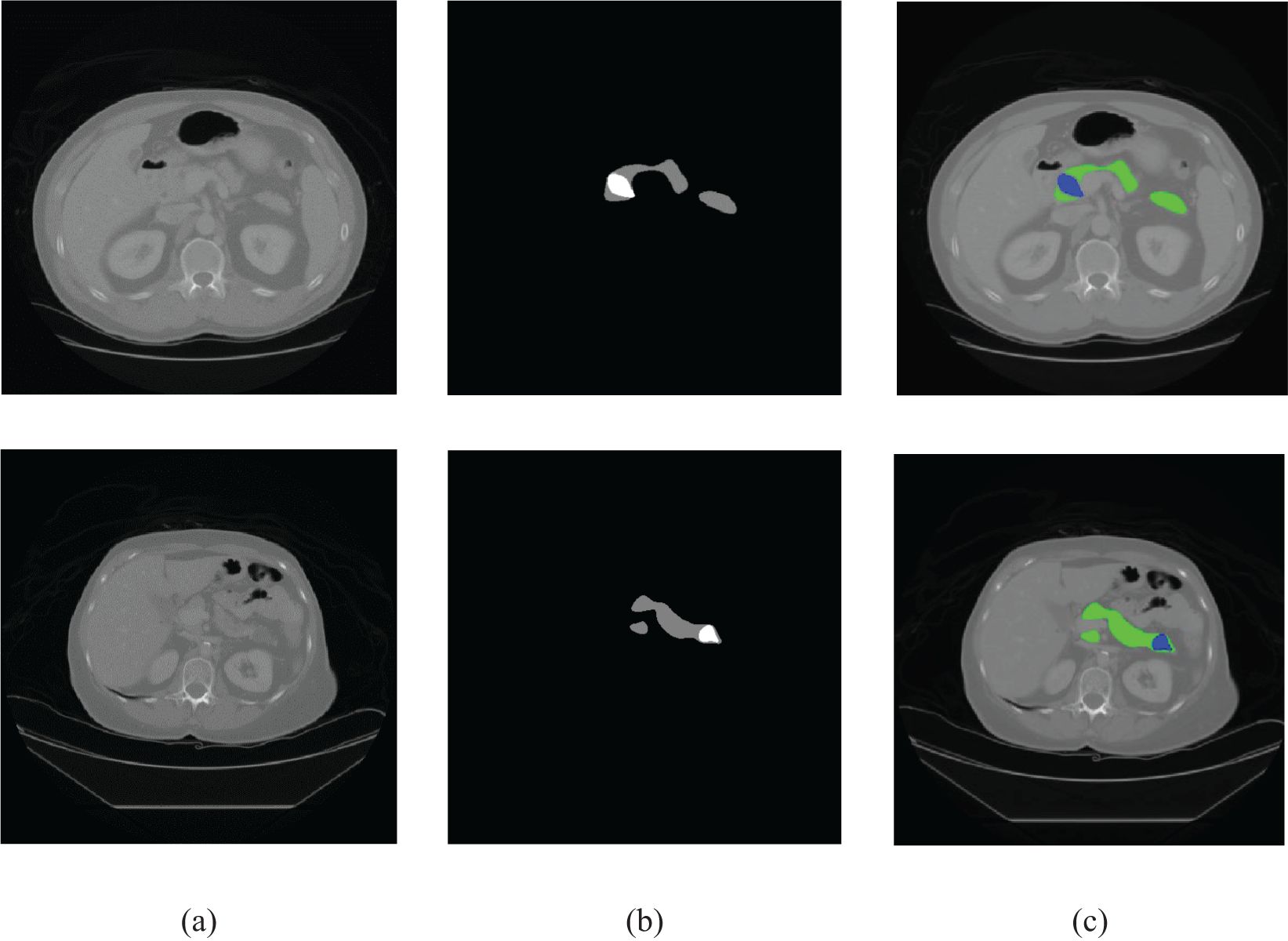

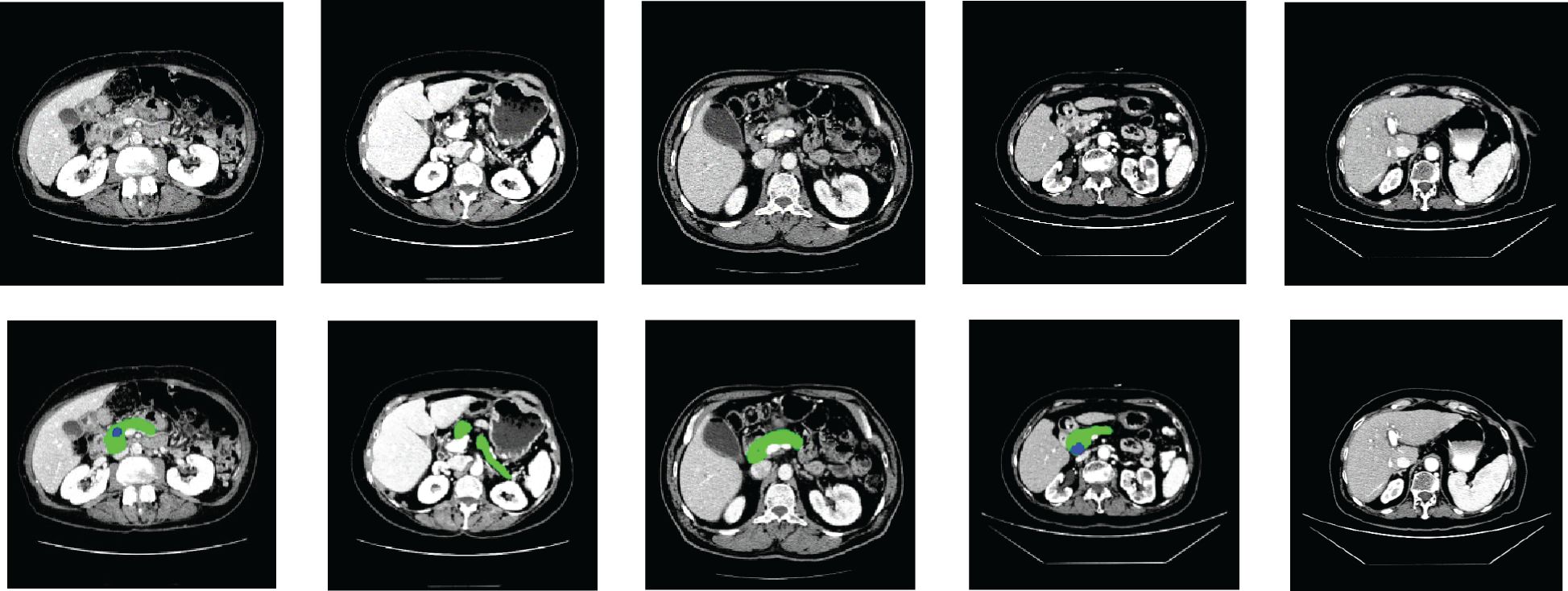

Convolutional neural networks (CNNs) (8) have made incredible strides in the medical imaging industry over the past 10 years, particularly in the areas of CT, MRI, and ultrasound image analysis. Since their advent, near-radiologist level performance has been achieved in automated medical image analysis tasks, including detection or prediction of hypertrophic cardiomyopathy (9, 10), future cardiovascular event (11), cancerous lung nodules (12, 13), liver tumors (14), and hepatocellular carcinoma (15). Figure 1 demonstrates the difficulty in traditionally segmenting the pancreas compared with other organs due to various factors such as relatively small size, complicated anatomical structure due to adjacent structures, and uncertain boundaries of the organ in the limited slices in which the pancreas appears on traditional CTs.

Figure 1. Sample CT images. (A) Original image, (B) ground truth, and (C) color-coded depiction of overlay on an axial CT image (pancreas: green, cancer: blue).

High representation power and fast inference properties have made CNNs the de-facto standard for image segmentation and classification. Fully convolutional networks (FCNs) (12, 16) in general and U-Net (6) in particular are some of the commonly used architectures for automated medical image segmentation. The architectures are typically cascaded with multistage CNN models when the target organs show large inter-patient variation in terms of shape and size (17). Although deep learning has been studied for the detection of pancreatic cancer (18–24), pancreatic neuroendocrine tumors (pNETs) (25), and intraductal papillary mucinous neoplasms (IPMNs) in pancreas (26), it is yet to be incorporated as a part of the routine workup for patients diagnosed with pancreatic cancers. The U-Net model and its extended versions have been used in the literature for organ segmentation and have been demonstrated to deliver good accuracy (27–29).

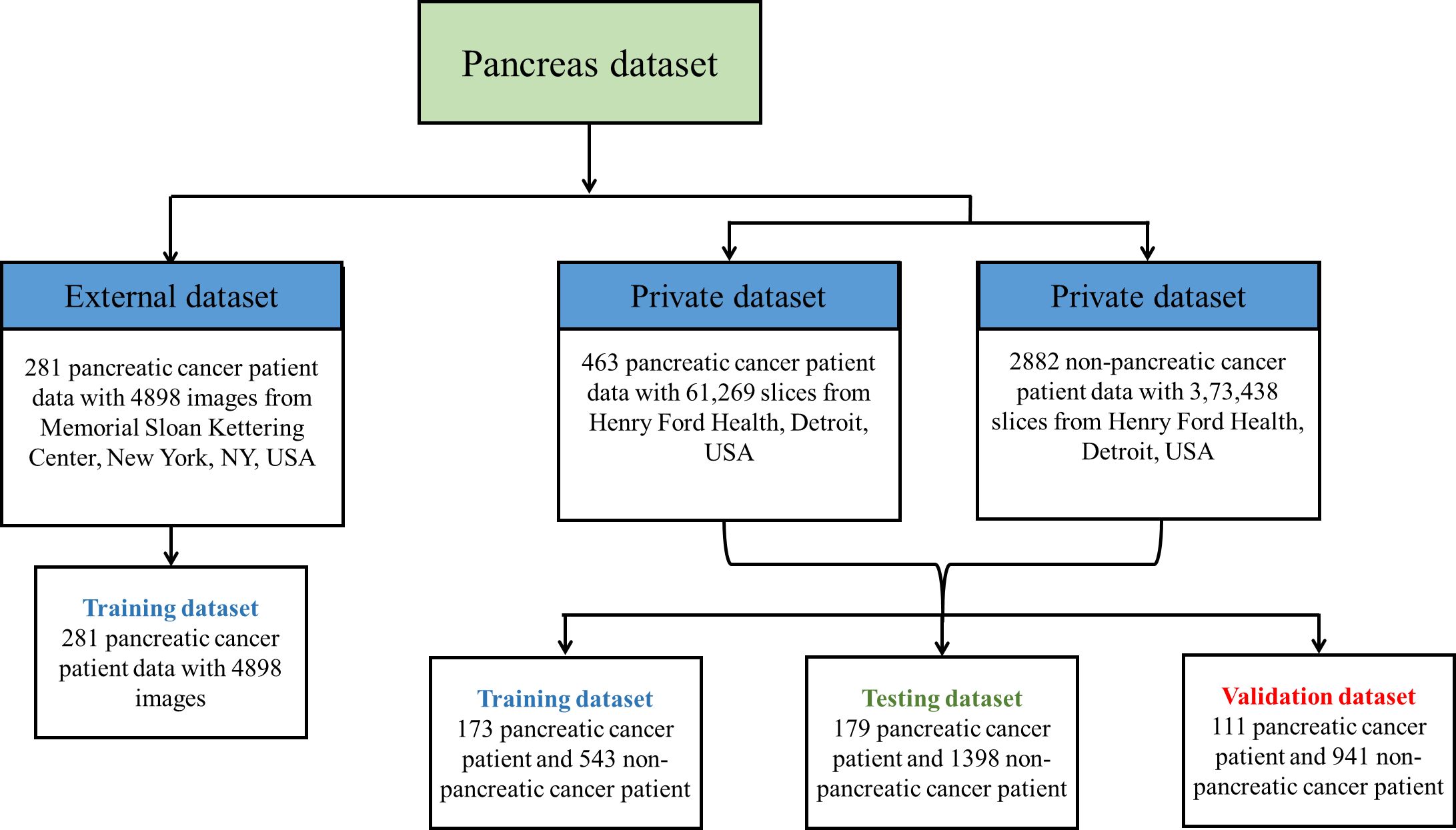

Pancreatic cancer detection from CT images by applying deep learning is a challenging task because the pancreas is a small organ and is located in a complicated position in the retroperitoneum [Nakao et al., (30)]. A typical CT scan of a patient contains 131 slices in the full axial view, with approximately 20 to 60 slices containing the image of the pancreas. In early stages, the pancreatic tumors are too small and irregularly shaped for easy identification. Following that, several researchers proposed cutting-edge CNN techniques based on segmentation of the pancreas using either cascaded or coarse-to-fine segmentation networks. However, prior investigations of pancreatic segmentation were conducted on extremely limited populations (4, 18, 31, 32) and the results have been unsatisfactory (maximum dice coefficient 0.58) (33). This is primarily due to the minor differences between a singular image that contains the tumor vs. an image that does not. To the best of our knowledge, there are very few DL studies that have been conducted on big CT datasets that encompass a variety of pancreatic volumes. Figure 2 illustrates the private and publicly available pancreatic dataset with normal and abnormal patients used for training the proposed framework. Therefore, the objective of this investigation is to carry out an effectiveness study using a weakly supervised algorithm with a total of 463 patients suffering from pancreatic tumors and a total of 2,882 controls.

Furthermore, because the tumor is very small compared with the overall size of the CT image, the task of identification is further complicated when all slices are analyzed together. To circumvent these issues, we propose a weakly supervised two-stage architecture with a cascade of segmentation and classification. Because annotated pancreas masks are not available for our local Henry Ford Health (HFH) dataset, the segmentation model was trained on the Memorial Sloan Kettering Cancer Center (MSKCC) data to segment the pancreas. It should be noted that the MSKCC dataset is small and does not capture all possible clinical variations of tumors. On the other hand, HFH is a much larger dataset but contains only patient-level label information (cancer versus non-cancer). Our proposed approach combines the best of both worlds by using a weakly supervised classification that utilizes the patient-level labels of the HFH data and applies them to the cropped pancreas images, after initial processing by the segmentation model trained on the MSKCC data.

The goal of semantic segmentation (34) is to identify common features in an input image by learning and then to label each pixel in an image with a class. In this technique, raw image data are converted to quantitative, spatially structured information and can then be used for further processing. The segmentation method is an essential component in finding features in several clinical applications, such as applications of artificial intelligence in a diagnostic support system, tumor growth monitoring, therapy planning, and intraoperative assistance.

Based on FCN models, researchers have recently proposed a variety of strategies, including Hierarchical 3D FCN (35), DeepLab (36), SegNet (37), PSPNet (38), and RefineNet (39). The majority of these techniques fall into the category of fully supervised learning methods and hence require a sufficient amount of annotated data for training. Overall, the existing state-of-the-art methods for segmentation and classification either involve careful generation of handcrafted features or rely heavily on the extensive delineation of pancreatic tumor areas to produce the annotation masks, which places a tremendous load on the oncologists and researchers, respectively. Fully supervised methods have achieved remarkable performance in segmentation tasks such as brain tumor segmentation, lesion segmentation, and multiorgan segmentation (40). However, when fully supervised algorithms are applied to pancreatic cancer detection and segmentation, they have not achieved satisfactory results (3).

When applying advanced machine learning to the diagnosis of pancreatic tumors, the following major challenges arise: (1) Over 70% of pancreatic tumors have irregular shapes and ambiguous margins, resulting in imperceptible boundaries with the surrounding tissues. This characteristic increases the complexity of the segmentation process and may result in oversights when segmenting tumors. (2) The pancreas region is surrounded by many organs and tissues, and cancers affect a small area of the organ. As a result, training a CNN architecture becomes difficult, and the model can be distracted by irrelevant regions of the image, potentially leading to misclassification. (3) Training a deep learning model requires a substantial quantity of precisely annotated images. However, owing to the anatomical intricacy of the organ and differences in tumor appearance, manually identifying the pancreas and tumor locations is a labor-intensive and time-consuming task.

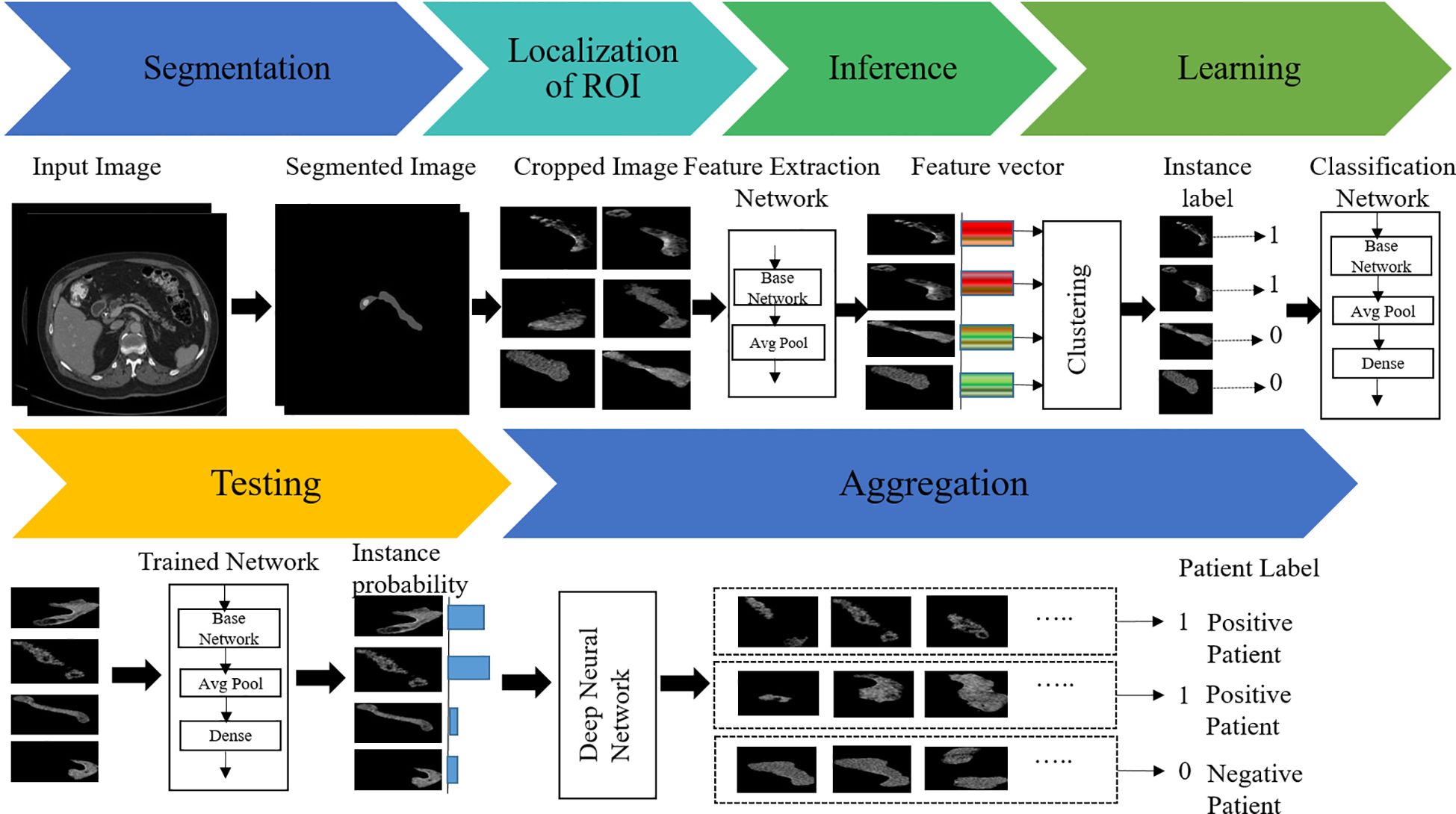

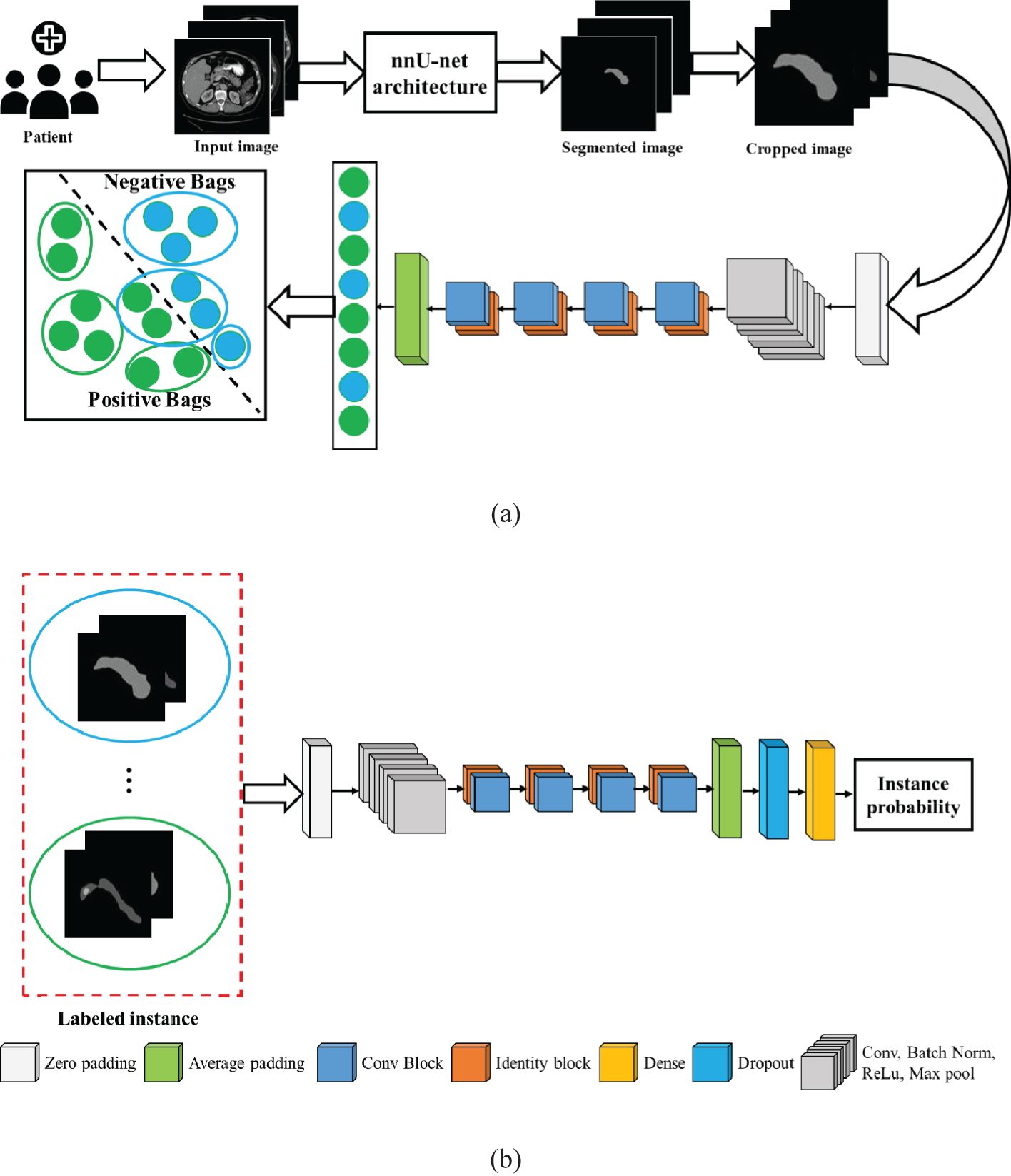

To overcome these issues, we herein propose a new weakly supervised algorithm that has a two-stage architecture, namely, segmentation and classification. In the first stage, the pancreas is segmented by the supervised segmentation model, and in the second stage, the multi-instance learning-based weakly supervised classification method is applied to the cropped pancreas images, which were obtained from segmentation, to classify pancreatic tumor images and normal-appearing pancreas, as schematically illustrated in Figure 3. There are three major impactful contributions from this work:

1. An end-to-end model for highly accurate pancreas segmentation along with classification has been proposed. The segmentation model is built upon the nnU-Net architecture, which segregates the pancreas, pancreatic cancers, and the residual background organs and intraperitoneal space.

2. Furthermore, end-to-end multiple instance learning has been performed by multiple-instance neural networks, which accept a bag containing different numbers of instances as input and output the bag label right away.

3. Finally, comprehensive experiments on the unannotated HFH dataset have been conducted to demonstrate that the proposed approach outperforms other state-of-the-art techniques. To validate the effectiveness of the overall approach, the proposed architecture has been tested on a large volume of data obtained from the local data repository at Henry Ford Health (HFH) in Detroit, Michigan.

In this paper, Section 2 highlights the dataset used, network architectures, its components, and methodology. Sections 3 and 4 present the results and discussion in terms of ablation and comparison study. Finally, the conclusions of the study are presented in Section 5.

2 Materials and methods

CT images from HFH and Memorial Sloan Kettering Cancer Center (MSKCC) were used to develop an end-to-end algorithm to detect the presence of pancreatic tumors in case versus control images. MSKCC is an open-source dataset that was used to train the segmentation model. Following this, we focused on the larger HFH dataset to further fine-tune and validate the model. The proposed method uses axial view CT images together with segmentation and classification techniques.

2.1 Dataset

In the proposed algorithm, the publicly available MSKCC dataset has been used to develop the segmentation model, followed by a separate and more robust HFH dataset used to train, test, and validate the MIL classification model. The MSKCC dataset comprised patients undergoing resection of pancreatic masses (31). These data consist of portal venous phase CT scans of 281 patients. Each patient has a single NIfTI (.nii) file that contains a full series of axial view images at the volumetric level. The training, testing, and validation data for the classification model included CT images of 3,453 adult patients from HFH. Cases were images from patients diagnosed with pancreatic ductal adenocarcinoma, and controls were those where there was no suspicion of pancreas disease. Patients who had pancreatitis and women who were pregnant were excluded from the dataset.

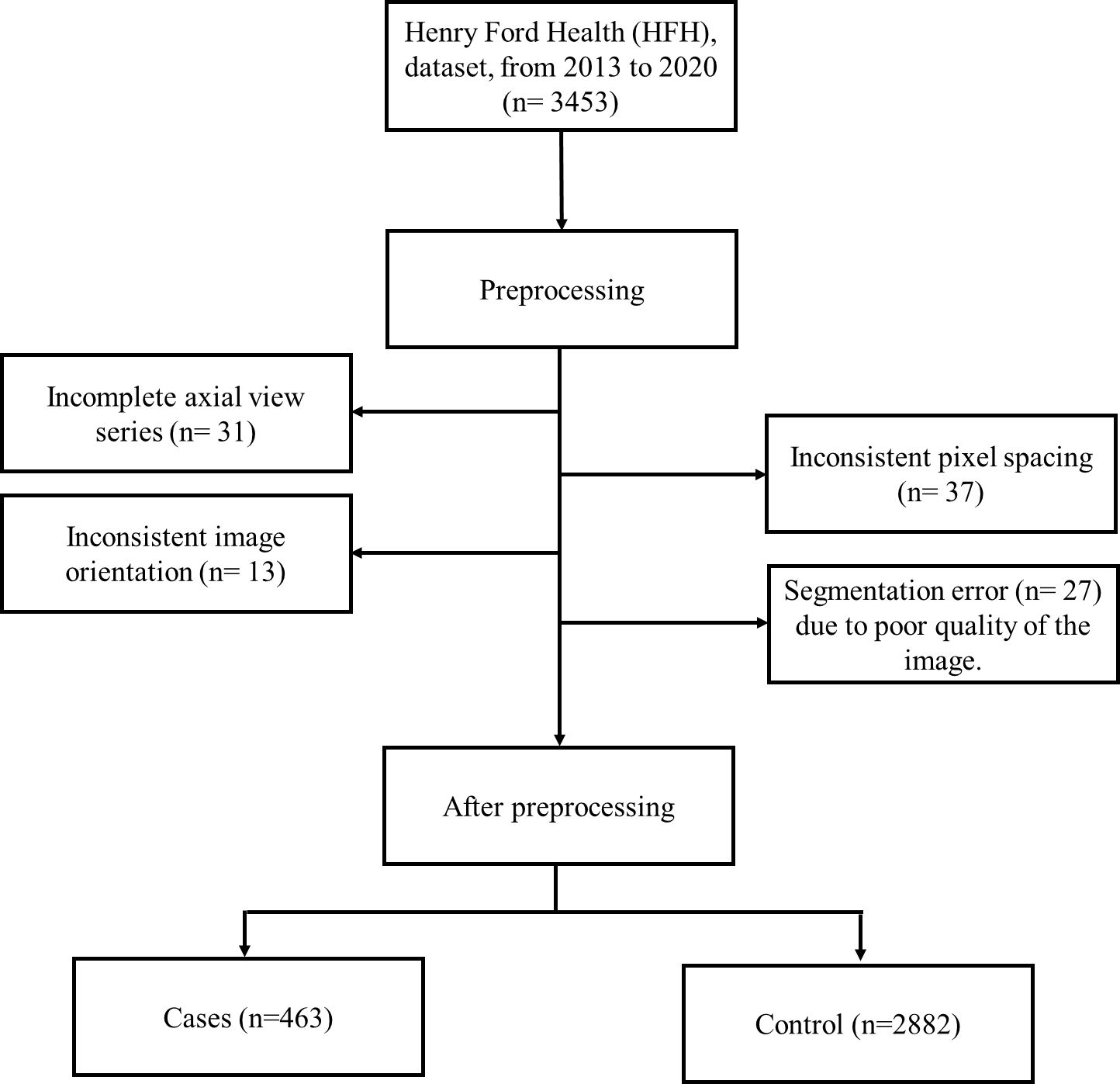

2.2 Data preprocessing

In this study, retrospective imaging data were collected at Henry Ford Health (HFH), Detroit, Michigan, United States, from 2013 to 2020. Each patient on the HFH dataset had axial, sagittal, and coronal view images. In this investigation, the axial view is preferred because it provides a higher resolution and more detailed cross-sectional images of the pancreas. In addition, the axial perspective is consistent with the conventional clinical methods, which guarantees that our analysis and findings are reliable and consistent every time. For patients with known pancreatic tumors, image acquisition was performed with a pancreas protocol, high-resolution imaging cut at 2.5 mm with dedicated arterial and portal venous phases to identify vascular abnormalities, characteristics of hypoattenuating tumors, and to recognize hepatic metastases. The axial raw images were in DICOM format with several images (60 to 350) per study.

A Python function was used to convert the multiple 2D slice-level images of each study into a single 3D volumetric image in NIfTI (.nii) format. To account for scanner and acquisition variability, third-order spline interpolation was used for the image data and nearest-neighbor interpolation for the corresponding segmentation mask to convert heterogeneous voxel spacing to homogeneous spacing (3, 41). During training and inference, each image was normalized using a global normalization scheme. While preprocessing the HFH data, the CT images of 108 patients were excluded due to incomplete axial view series (38), inconsistent pixel spacing (33), inconsistent image orientation (13), and segmentation error (29) due to poor quality of the image. This is represented in the flowchart (Figure 4). After this preprocessing step, the combined train, test, and validation dataset comprised 463 patients with pancreatic tumors and 2,882 controls.
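The preprocessing described above can be illustrated with a minimal, dependency-free sketch. It is not the authors' function: the slice dictionaries, the target spacing, and the dataset statistics are hypothetical, and the actual spline and nearest-neighbor interpolation is left out (only the resampled grid size is computed).

```python
def stack_slices(slices):
    """Order 2D slices by their z-position and stack them into one 3D volume.

    Each slice is a dict {"z": float, "pixels": 2D list}; this layout is a
    hypothetical stand-in for DICOM slice metadata.
    """
    ordered = sorted(slices, key=lambda s: s["z"])
    return [s["pixels"] for s in ordered]


def resampled_shape(shape, spacing, target_spacing):
    """Grid size after resampling to a homogeneous spacing.

    The physical extent (voxels * spacing) is preserved along every axis.
    """
    return tuple(
        max(1, round(n * s / t))
        for n, s, t in zip(shape, spacing, target_spacing)
    )


def global_normalize(volume, mean, std):
    """Z-score every voxel with dataset-level (global) statistics.

    Using mean/std as the global scheme is an assumption; the text only
    states that a global normalization scheme was used.
    """
    return [[[(v - mean) / std for v in row] for row in sl] for sl in volume]


# Toy usage with made-up numbers.
volume = stack_slices([
    {"z": 5.0, "pixels": [[100.0, 0.0]]},
    {"z": 2.5, "pixels": [[50.0, 50.0]]},
])
new_shape = resampled_shape((100, 512, 512), (2.5, 0.8, 0.8), (1.0, 0.8, 0.8))
normalized = global_normalize(volume, mean=50.0, std=50.0)
```

In practice the interpolation itself would be delegated to an imaging library, with the higher-order scheme applied to intensities and nearest-neighbor applied to label masks so that no new label values are created.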

2.3 Model architectures and training

In this study, the objectives of the segmentation method were to identify the slices containing the pancreas from the full-volume image and thereby identify the location of the pancreas in each slice. The segmentation method was applied to the volumetric axial view images, and it produced a segmented portion of the pancreas. In the first stage, the pancreas was segmented by the nnU-Net model (3). The segmented pancreas image was then used to find the location of the pancreas in the input image. A fully supervised classification model provides satisfactory results only if the training dataset captures a wide variation of the clinical samples. Because the HFH data carry only patient-level labels, we instead applied a weakly supervised classification model known as multi-instance learning (MIL). The MIL-based classification model was applied to distinguish pancreatic tumor and non-tumor pancreas on the cropped pancreas images. MIL is a weakly supervised classification wherein a label is only assigned to a collection of observations or a bag of instances (42–45).
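The hand-off between the two stages, from a predicted pancreas mask to a cropped region, can be sketched as a bounding-box crop. This is an illustrative sketch only: the function names and the `margin` parameter are hypothetical, and the text does not state how the crop window was actually chosen.

```python
def pancreas_bounds(mask, margin=0):
    """Bounding box of the non-zero voxels of a 3D mask indexed [z][y][x].

    Returns ((z0, z1), (y0, y1), (x0, x1)) with exclusive upper bounds,
    or None when the mask is empty. `margin` (hypothetical) pads the box.
    """
    coords = [
        (z, y, x)
        for z, sl in enumerate(mask)
        for y, row in enumerate(sl)
        for x, v in enumerate(row)
        if v
    ]
    if not coords:
        return None
    dims = (len(mask), len(mask[0]), len(mask[0][0]))
    bounds = []
    for axis in range(3):
        lo = max(0, min(c[axis] for c in coords) - margin)
        hi = min(dims[axis], max(c[axis] for c in coords) + 1 + margin)
        bounds.append((lo, hi))
    return tuple(bounds)


def crop(volume, bounds):
    """Cut the bounding box out of a volume with the same [z][y][x] layout."""
    (z0, z1), (y0, y1), (x0, x1) = bounds
    return [[row[x0:x1] for row in sl[y0:y1]] for sl in volume[z0:z1]]


# Toy 3 x 4 x 4 example: the "pancreas" occupies z=1, y in {1, 2}, x=2.
mask = [[[0] * 4 for _ in range(4)] for _ in range(3)]
mask[1][1][2] = 1
mask[1][2][2] = 1
volume = [[[100 * z + 10 * y + x for x in range(4)] for y in range(4)] for z in range(3)]

bounds = pancreas_bounds(mask)
cropped = crop(volume, bounds)
```

The z-range of the box also identifies which slices contain the pancreas, which is the first objective stated above.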

In this approach, a three-dimensional image is converted into a bag of two-dimensional slices. Initially, each image is divided into patches that serve as instances, with bags representing a subset of these patches. Each instance is assigned a label based on the presence or absence of the cancer region. If at least one instance within a bag contains the tumor region, the bag is labeled as positive; if all instances within the bag are classified as non-tumor, the bag is labeled as negative, so a negative bag contains no positive instances. One of the challenges in applying MIL is separating the positive instances from the negative instances within a positive bag. Given the coarse-grained (bag) label, MIL aims to predict the fine-grained (instance) label of each patch within the image.
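The bag-labeling rule in this paragraph is the standard MIL assumption and fits in a few lines. The sketch below uses hypothetical helper names and toy labels; it only illustrates the rule and is not the authors' code.

```python
def volume_to_bag(volume):
    """Treat every 2D slice of a 3D volume (indexed [z][y][x]) as one instance."""
    return list(volume)


def bag_label(instance_labels):
    """Standard MIL rule: a bag is positive iff at least one instance is positive."""
    return int(any(instance_labels))


# Toy instance labels (1 = tumor visible in the slice, 0 = not visible).
positive_patient = [0, 0, 1, 1, 0]
control_patient = [0, 0, 0, 0]

labels = (bag_label(positive_patient), bag_label(control_patient))
```

During training only the bag label is known, so the rule is used in the reverse direction: a negative bag fixes all of its instance labels to 0, whereas a positive bag leaves its instance labels to be inferred.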

To guarantee that each positive bag consists of more than one positive-labeled instance, the K-means clustering algorithm is employed to partition the data into distinct groups based on the features extracted from the images. This ensures that each positive bag consists of multiple instances representing tumor regions within the image. The architecture of the proposed MIL approach is given in Figure 5. The features of the instances were extracted at the average pooling layer of the base network, ResNet50, a popular CNN framework used for image processing (46–48). The following are the steps involved to obtain the predicted instance probability:

Figure 5. Architecture of the proposed model: (A) multi-instance learning, and (B) classification model.

Step 1: We label the instances in a positive bag by clustering the image features into two groups: a positive group that contains all positive instances and a negative group that contains the negative instances. Consider a dataset $D = \{(X_i, Y_i)\}_{i=1}^{N}$ containing $N$ volumetric images $X_i$, where $Y_i \in \{0, 1\}$ is the patient label, with 1 indicating a positive patient and 0 indicating a normal patient. Each volumetric image is a bag of $n_i$ instances, $X_i = \{x_{i1}, x_{i2}, \ldots, x_{in_i}\}$.

Step 2: For the negative bag, all instances are negative. In the MIL pipeline, the features of the images are captured by ResNet50 and classified by ResNet50 (46).

Step 3: After extracting the features of the images with ResNet50, K-means clustering was employed to obtain the label of each instance. In the feature extraction network, instance features are extracted at the average pooling layer and returned as $F_i = \{f_{i1}, f_{i2}, \ldots, f_{in_i}\}$, where $f_{ij}$ is the feature of instance $x_{ij}$.

Step 4: The instance labels are assigned by the clustering method as $\{y_{i1}, y_{i2}, \ldots, y_{in_i}\}$, where $y_{ij} \in \{0, 1\}$.

Step 5: The labeled instances are fed into the classification model, which produces the predicted instance probability.
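The clustering step in the list above can be sketched with a plain two-cluster K-means over instance feature vectors. This is a minimal illustration under stated assumptions, not the authors' implementation: the features are toy 2D vectors rather than ResNet50 pooling outputs, the initialisation is a simple deterministic choice, and the rule for deciding which cluster is "positive" (the one farther from a reference point derived from negative bags) is an assumption, since the text does not specify it.

```python
import math


def _dist(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def _mean(points):
    """Component-wise mean of a non-empty list of feature vectors."""
    n = len(points)
    return [sum(p[d] for p in points) / n for d in range(len(points[0]))]


def two_means(features, iters=50):
    """Lloyd's K-means with k=2; returns (assignments, centroids)."""
    c0 = features[0]
    c1 = max(features, key=lambda f: _dist(f, c0))  # farthest point from c0
    centroids = [list(c0), list(c1)]
    assign = None
    for _ in range(iters):
        new_assign = [
            0 if _dist(f, centroids[0]) <= _dist(f, centroids[1]) else 1
            for f in features
        ]
        if new_assign == assign:  # converged
            break
        assign = new_assign
        for k in (0, 1):
            members = [f for f, a in zip(features, assign) if a == k]
            if members:
                centroids[k] = _mean(members)
    return assign, centroids


def label_positive_bag(features, negative_reference):
    """Assign instance labels inside one positive bag.

    ASSUMPTION: the cluster whose centroid lies farther from
    `negative_reference` (e.g. the mean feature of negative-bag instances)
    is taken as the positive cluster.
    """
    assign, centroids = two_means(features)
    d0 = _dist(centroids[0], negative_reference)
    d1 = _dist(centroids[1], negative_reference)
    positive_cluster = 0 if d0 > d1 else 1
    return [1 if a == positive_cluster else 0 for a in assign]


# Toy features: two instances near the negative reference, two far from it.
toy_features = [[0.1, 0.0], [0.0, 0.2], [5.0, 5.1], [4.9, 5.0]]
instance_labels = label_positive_bag(toy_features, negative_reference=[0.0, 0.0])
```

The resulting instance labels, together with the all-zero labels of negative bags, form the training set for the instance classifier in Step 5.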

In this case, the ResNet50 model was used as the classification model. ResNet50 is a CNN-based classification network available in Keras with pretrained weights and a TensorFlow backend. The original model was trained on the ImageNet dataset and was slightly modified in the proposed method. The fully connected output layers used for prediction in the original model were removed. Instead, an average pooling layer with a pool size of (4 × 4) was added, followed by a dense layer with 256 neurons. As the problem is one of binary classification, the output layer is a dense layer with dimension 2 and a softmax activation function. A dropout rate of 0.3 was applied between the output layer and the preceding layer. The final layer of the network, called the probability layer, calculates the probability of the input (cropped) image belonging to each class. Furthermore, a multilayer perceptron neural network (NN) was applied to aggregate the instance probabilities into a patient probability, as schematically illustrated in Figure 5. The NN structure was optimized, and the best-performing network was found to have one hidden layer with 18 neurons. The MIL and NN models were trained on 173 cases and 543 control patients from the HFH dataset.
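The modified classification head described above might be assembled in Keras roughly as follows. This is a sketch under assumptions rather than the authors' code: the 128 × 128 × 3 input shape and the ReLU activation of the 256-unit layer are not stated in the text and are chosen here only so that the example is concrete. TensorFlow is imported inside the function so that the stated hyperparameters can be inspected without it.

```python
# Values stated in the text.
POOL_SIZE = (4, 4)
DENSE_UNITS = 256
DROPOUT_RATE = 0.3
NUM_CLASSES = 2

# ASSUMPTION (not stated in the text): 128 x 128 crops replicated to three
# channels, so the backbone emits a 4 x 4 map that matches the pool size.
INPUT_SHAPE = (128, 128, 3)


def backbone_map_side(input_side, total_stride=32):
    """Spatial side of the ResNet50 output map for inputs that are a
    multiple of the total stride (ResNet50 downsamples by 32 overall)."""
    return input_side // total_stride


def build_instance_classifier():
    """ResNet50 backbone without its original top, plus the described head."""
    from tensorflow import keras
    from tensorflow.keras import layers

    backbone = keras.applications.ResNet50(
        include_top=False, weights="imagenet", input_shape=INPUT_SHAPE
    )
    x = layers.AveragePooling2D(pool_size=POOL_SIZE)(backbone.output)
    x = layers.Flatten()(x)
    # The hidden activation is an assumption; the text gives only the width.
    x = layers.Dense(DENSE_UNITS, activation="relu")(x)
    x = layers.Dropout(DROPOUT_RATE)(x)
    outputs = layers.Dense(NUM_CLASSES, activation="softmax")(x)
    return keras.Model(inputs=backbone.input, outputs=outputs)
```

Calling `build_instance_classifier()` requires TensorFlow and downloads the ImageNet weights on first use. The patient-level aggregation network (one hidden layer with 18 neurons) is not sketched here because the text does not specify how a variable number of instance probabilities is presented to it.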

3 Results

To validate the effectiveness of the proposed architecture, a series of ablation studies with different baseline models were conducted in this section. The model was trained, tested, and validated on images obtained from an HFH repository with 463 cases and 2,882 controls.

3.1 Quantitative evaluation

We created a two-stage weakly supervised deep learning-based model to identify pancreatic tumors using CT images from publicly available Memorial Sloan Kettering Cancer Center (MSKCC) and Henry Ford Health (HFH) data sets. In the first stage, the nnU-Net supervised segmentation model was used to crop an area in the location of the pancreas, which was trained on the MSKCC repository of 281 patient image sets with established pancreatic tumors. In the second stage, a multi-instance learning-based weakly supervised classification model was applied on the cropped pancreas region to segregate pancreatic tumors from normal-appearing pancreas. The performance of the proposed two-stage architecture was then compared with the existing models that have been recently published.

The performance of the proposed method was tested on the HFH dataset comprising 179 patients with known pancreatic tumors (23,715 slices) as well as 1,398 patients without pancreatic cancer (182,757 slices), whereas the model parameters were fixed by the validation dataset including 111 patients with known pancreatic tumors (14,612 slices) as well as 941 patients without pancreatic cancer (122,214 slices), both selected randomly, yielding an average number of slices per patient of 131. A training dataset of HFH patients was created with 173 randomly selected patients with pancreatic cancer (22,942 slices) and 543 patients without pancreatic cancer (68,467 slices). The training dataset was used to train the MIL classification and NN aggregation models. The segmentation model was fed with the input CT image. The train and test data of HFH were segmented by the trained nnU-Net model. The nnU-Net model was trained on the 281 patients of the MSKCC dataset. Figure 6 depicts the segmentation results for both normal and abnormal samples obtained by applying the nnU-Net model to the HFH dataset. The Supplementary Material (Supplementary Figure S1) contains a box plot of the dice score for pancreas and pancreatic cancer regions.
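The split sizes quoted in this paragraph can be cross-checked with a few lines of arithmetic. The numbers below are copied from the text; the dictionary layout and function names are illustrative only.

```python
# Patient and slice counts as reported in the text.
SPLITS = {
    "train": {"cases": 173, "controls": 543,
              "case_slices": 22942, "control_slices": 68467},
    "validation": {"cases": 111, "controls": 941,
                   "case_slices": 14612, "control_slices": 122214},
    "test": {"cases": 179, "controls": 1398,
             "case_slices": 23715, "control_slices": 182757},
}


def total(key):
    """Sum one count across the three splits."""
    return sum(split[key] for split in SPLITS.values())


def slices_per_patient(name):
    """Average number of slices per patient within one split."""
    s = SPLITS[name]
    return (s["case_slices"] + s["control_slices"]) / (s["cases"] + s["controls"])


total_cases = total("cases")
total_controls = total("controls")
test_average = slices_per_patient("test")
```

The three splits add up to the 463 cases and 2,882 controls reported for the full HFH dataset, and the test split works out to roughly 131 slices per patient.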

Figure 6. Segmentation results on HFH data: the first row represents the raw input image, and the second row represents the results of segmentation (pancreas: green, cancer: blue).

The cropped pancreas images from the HFH train dataset were used to train the MIL classification model. In this case, only the list of positive patients and negative patients is known. In the MIL approach, each patient is considered as a bag, a cancerous patient is called a positive bag, and a control patient is called a negative bag. All instances in the negative bag are negative, and we directly fed the instances with label 0 to the classification model without clustering. In the positive bag, positive and negative instances are mixed. The instances in the positive bag are fed to the feature extraction model to extract their features. The clustering method segregates the positive and negative instances by utilizing their features. The positive instances are labelled as 1 and fed to the classification model. The classification model was trained on the labeled instances from the training dataset. For test data, the instance probability for the risk of cancer was predicted by the trained classification model. In the aggregation model, the neural network produces each patient's cancer probability by combining the probabilities of all of that patient's instances. The neural network model parameters were fixed by the validation dataset. The results on the validation dataset were as follows: sensitivity 0.847 ± 0.015, specificity 0.880 ± 0.025, accuracy 0.876 ± 0.021, and AUC 0.863 ± 0.01.
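The validation figures can be cross-checked against the validation split sizes (111 cases and 941 controls), because accuracy is the prevalence-weighted average of sensitivity and specificity. The sketch below uses illustrative function names and a toy confusion matrix; only the validation numbers are taken from the text.

```python
def confusion_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity, and accuracy from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
    }


def accuracy_from_rates(sensitivity, specificity, n_pos, n_neg):
    """Accuracy implied by sensitivity and specificity for given class sizes."""
    return (sensitivity * n_pos + specificity * n_neg) / (n_pos + n_neg)


# Toy counts to show the definitions.
toy = confusion_metrics(tp=9, fn=1, tn=8, fp=2)

# Validation split: reported sensitivity and specificity, 111 cases, 941 controls.
implied_accuracy = accuracy_from_rates(0.847, 0.880, n_pos=111, n_neg=941)
```

With these inputs the implied accuracy is about 0.877, in line with the 0.876 reported among the validation results. Because controls outnumber cases roughly 8 to 1, accuracy tracks specificity much more closely than sensitivity.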

3.2 Ablation study of the proposed model

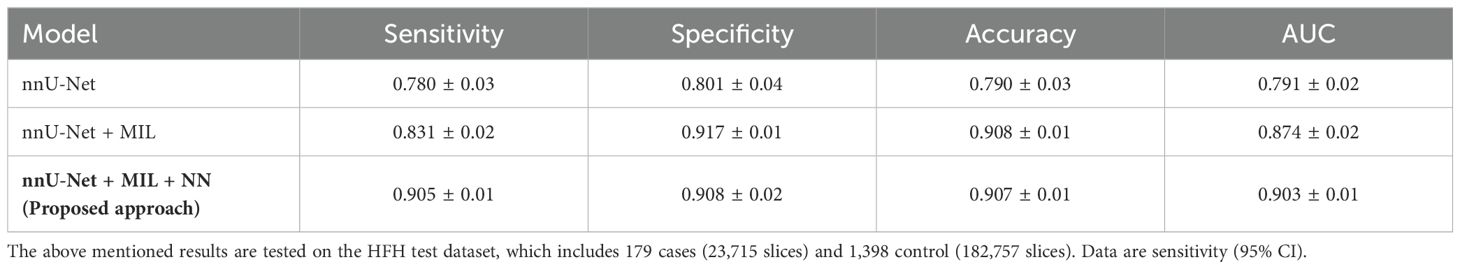

The proposed pancreatic tumor detection method was then compared with the existing nnU-Net-based segmentation method, as shown in Table 1. As the nnU-Net is a segmentation method for pancreas and pancreatic tumor segmentation (3), its efficacy toward identification of pancreatic cancer in the HFH dataset was characterized by measuring sensitivity, specificity, accuracy, and area under the curve (AUC) based on the tumor segmentation results. The corresponding 95% confidence intervals (CI) were obtained using the DeLong technique (49). For the proposed framework, the sensitivity was 0.905 (95% CI: 0.88, 0.92), demonstrating a high level of reliability in detecting true positives. Its ability to detect true negatives is reflected by the specificity, which is 0.908 (95% CI: 0.86, 0.94). The model demonstrated strong performance across the different assessment measures, with an overall accuracy of 0.907 (95% CI: 0.887, 0.927) and an AUC of 0.903 (95% CI: 0.883, 0.923).

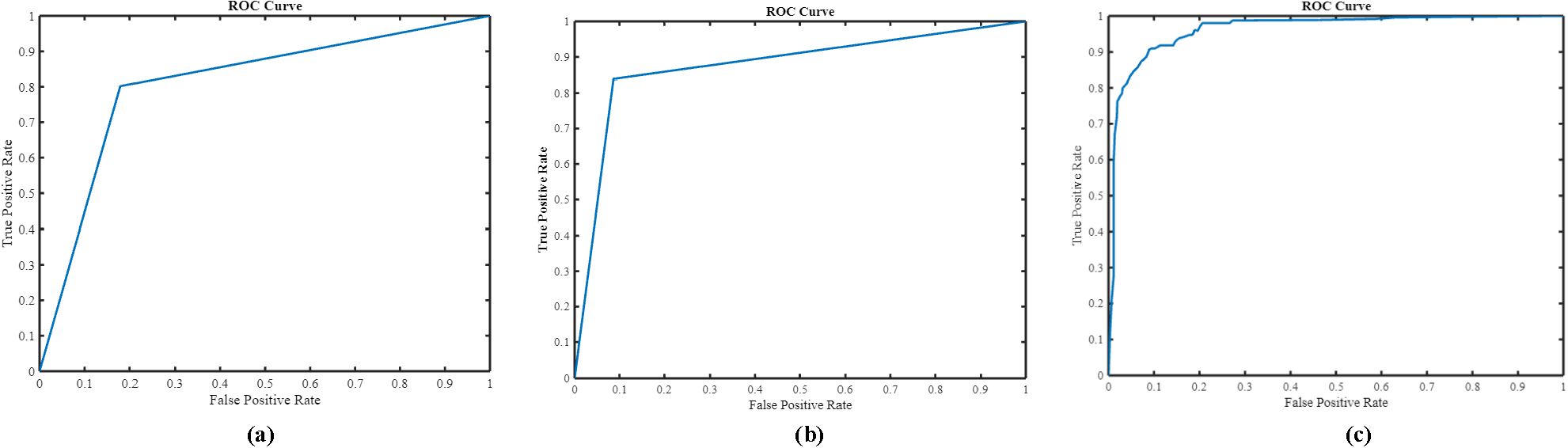

As is evident, nnU-Net + MIL with the NN aggregation model (the proposed method) yielded the best performance with sensitivity 0.905 ± 0.01, specificity 0.908 ± 0.02, accuracy 0.907 ± 0.01, and AUC (ROC) 0.903 ± 0.01. In comparison, the nnU-Net + MIL and nnU-Net alone significantly underperformed, as shown in Table 1. The ROC curves for the three methods are illustrated in Figure 7. As the nnU-Net + MIL + NN method provides each patient label as a probability, its ROC is a smooth curve, unlike the other two methods, which involve detection by setting a threshold and therefore yield a binary patient label (0 or 1).
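The remark about ROC shape can be made concrete with a small sketch. A probabilistic output produces one ROC operating point per distinct score, whereas a hard 0/1 output produces a single interior point. The labels and scores below are toy values, not study data, and the function names are illustrative.

```python
def roc_points(labels, scores):
    """ROC operating points (FPR, TPR), one per distinct score threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= t)
        points.append((fp / n_neg, tp / n_pos))
    return points


def roc_auc(labels, scores):
    """AUC as the probability that a positive outranks a negative (ties = 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data: patient labels, probabilistic scores, and the same scores thresholded at 0.5.
labels = [1, 1, 1, 0, 0, 0]
probabilities = [0.9, 0.8, 0.4, 0.5, 0.2, 0.1]
hard_labels = [1 if p >= 0.5 else 0 for p in probabilities]

smooth_curve = roc_points(labels, probabilities)
single_point_curve = roc_points(labels, hard_labels)
```

With the probabilistic scores the curve has one point per distinct score plus the origin, while the thresholded output collapses to the origin, one interior point, and (1, 1), which is why such curves appear as two straight segments.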

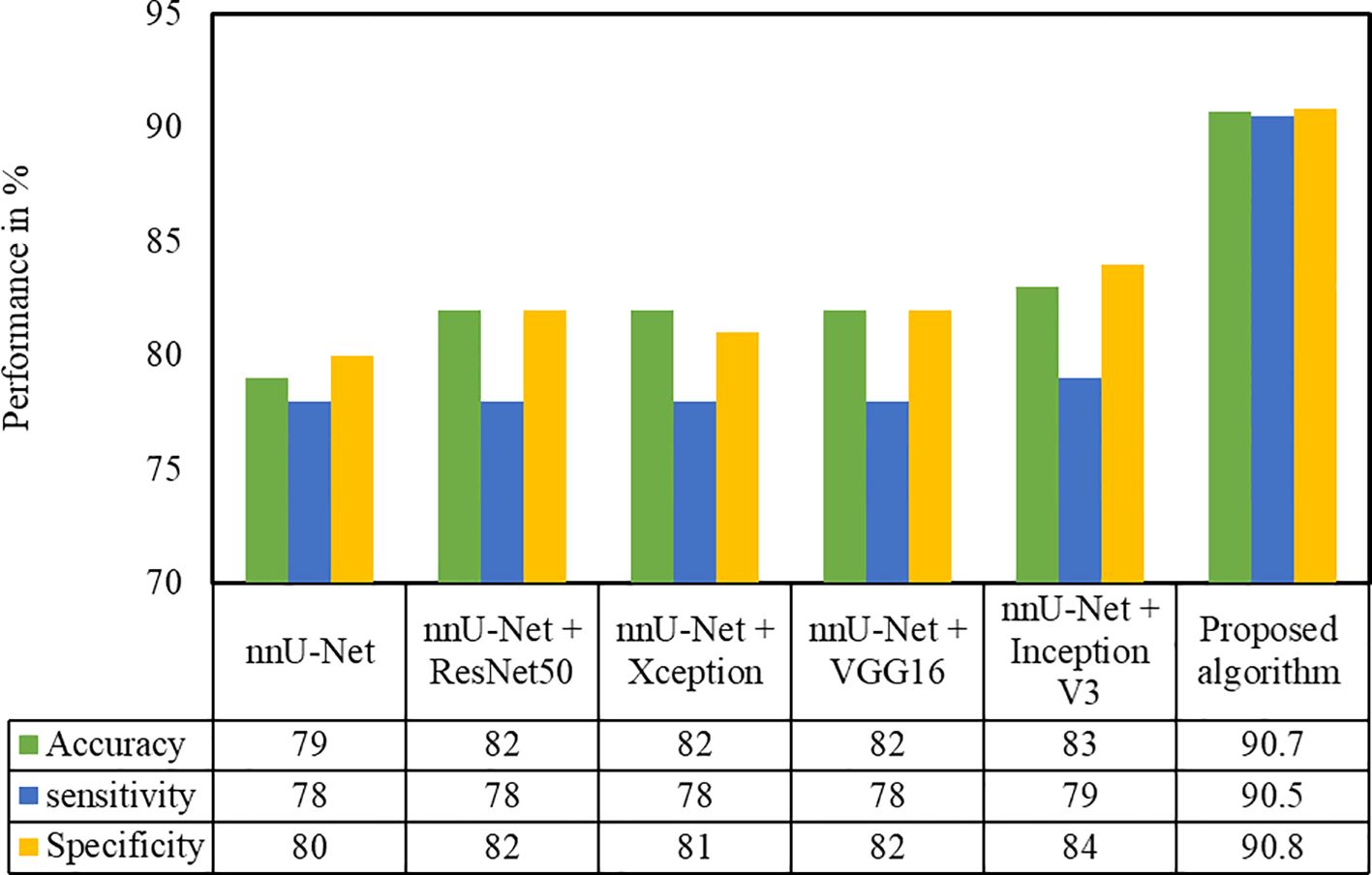

Several classification methods, namely ResNet50, Xception, VGG16, and InceptionV3, were applied in the second stage to the cropped pancreas images of the test dataset. The pancreatic tumor detection results of these methods and of the proposed architecture are depicted in Figure 8. It is evident that the proposed algorithm yields better performance in terms of accuracy and sensitivity. We also compared the proposed architecture (nnU-Net + MIL with the NN aggregation model) with nnU-Net alone. As can be deduced from Figure 8, among the baseline models, the InceptionV3 classification model yielded the best performance (accuracy 0.83, sensitivity 0.79, and specificity 0.84) compared with the nnU-Net (accuracy 0.79, sensitivity 0.78, specificity 0.80), nnU-Net + ResNet50 (accuracy 0.82, sensitivity 0.78, specificity 0.82), nnU-Net + Xception (accuracy 0.82, sensitivity 0.78, specificity 0.81), and nnU-Net + VGG16 (accuracy 0.82, sensitivity 0.78, specificity 0.82) models.

Figure 8. Performance evaluation of the proposed approach with different classification techniques on the HFH test dataset.

P-values are computed for both proposed and state-of-the-art techniques and are represented in Supplementary Figure S2.

4 Discussion

We have developed and validated an image analysis model that can identify pancreatic tumors on CT images by a combination of segmentation and classification methods, both based on deep learning techniques. The two-stage framework was developed utilizing a previously reported pancreas dataset from MSKCC and validated on the HFH dataset of control patients and pancreatic tumor patients. It has been demonstrated that the proposed method results in satisfactory performance. As is evident from Table 1, the proposed method yields superior performance when compared with other existing models and offers an accuracy of 0.907. Equally important, the performance of the proposed two-stage approach is demonstrated to be stable on a large dataset. The robust performance of the proposed method is an outcome of the verification and optimization performed at each stage. The diagnostically relevant information is concentrated in the segmented pancreas region. Hence, in the proposed method, the MIL classification method was applied only to the cropped pancreas image. As a result, the chances of false positives are minimized. Overall, our results indicate that two-stage image analysis can distinguish between the presence and absence of pancreatic tumors on CT images when given blinded images.

The nnU-Net used in this study has been applied in the Medical Segmentation Decathlon (MSD) competition to multiple organ segmentation and tumor detection tasks involving the liver, spleen, kidney, pancreas, gallbladder, colon, and prostate (3). However, the efficacy of nnU-Net in detecting pancreatic tumors is limited, as exemplified by a low Dice coefficient of 0.53. In our study, we found that a single-stage approach using nnU-Net produces more false positives, primarily because the contour of the pancreatic tumor is irregular and its margins are ill-defined on the CT image, leading to portions of the normal pancreas being falsely detected as cancer. A CNN patch-based classification method has also been attempted in the literature for pancreatic cancer detection (18) but suffers from the following shortcomings. First, the CNN was trained to classify pancreas patches and pancreatic cancer patches, with the patches generated by a sliding-window method within a region of interest defined by a pancreas mask supplied as input. While this may be feasible in training, such masks are unlikely to be available for a test image. Second, in this patch-based analysis, a patch is labeled positive even if only a single pixel is predicted as cancerous. Apart from the above method, whole slide image (WSI) classification has been studied for pancreatic cancer detection (50, 51). Since the pancreas is a small organ, the tumor is also very small in the early stages, and thus a classification model alone is unlikely to be sufficient for locating the features of the small tumor portion with respect to the whole slide.

This study also had limitations. Manual labeling of pancreatic images is labor-intensive, so we used publicly available and private datasets for training and validation; the testing dataset included only American participants from a single institution. In response to this limitation, we used the MIL technique to balance supervised and unsupervised approaches and verified the generalizability of the model. The findings demonstrate the effectiveness of the proposed two-stage weakly supervised deep learning system for detecting pancreatic cancer. By employing the proposed prediction model to aid in the radiographic diagnosis of tumors, therapeutic intervention may be accelerated, leading to better clinical results for the patient.
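For readers less familiar with the two quantities referred to in the discussion of prior work, the sketch below shows how a Dice coefficient is computed for binary masks and how the single-pixel patch-labeling rule behaves. The arrays are synthetic, and the function names are illustrative assumptions rather than code from any of the cited studies.

```python
import numpy as np

def dice_coefficient(pred, truth):
    """Dice = 2 * |pred AND truth| / (|pred| + |truth|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Both masks empty: treated as perfect agreement by convention.
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)

def patch_is_positive(pred_patch):
    """Single-pixel rule: a patch is positive if any pixel is predicted positive."""
    return bool(np.any(pred_patch))

# Synthetic 8x8 example: the prediction is shifted two columns from the truth.
truth = np.zeros((8, 8), dtype=bool)
truth[2:6, 2:6] = True
pred = np.zeros((8, 8), dtype=bool)
pred[2:6, 4:8] = True
print(dice_coefficient(pred, truth))  # 0.5

# A 16x16 patch with one predicted-positive pixel is labeled positive.
patch = np.zeros((16, 16), dtype=bool)
patch[0, 0] = True
print(patch_is_positive(patch))  # True
```

The second example makes the concern concrete: under the single-pixel rule, one spurious pixel is enough to flip the label of an otherwise normal patch.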

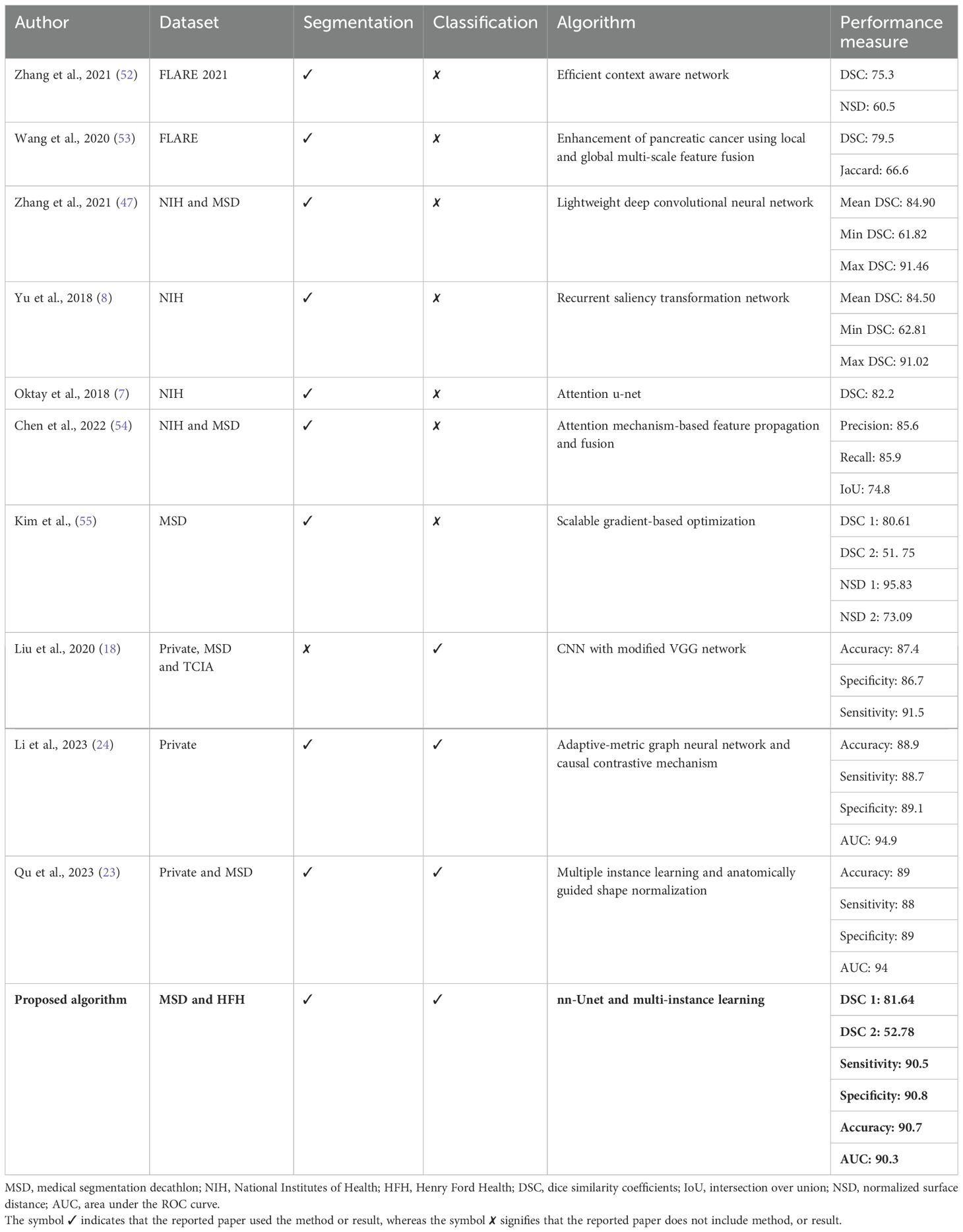

Table 2 summarizes the state-of-the-art techniques validated on different datasets such as FLARE, NIH, and MSD. As indicated by the references in the table, the code for these studies is not available, making it impossible to reproduce their investigations. Therefore, a direct comparison of these approaches is not feasible. However, all these studies used pancreatic images for either classification or segmentation tasks. A modified CNN model was trained to classify patches as cancerous or non-cancerous (18); the model was trained on a local dataset and externally tested on 281 patients with pancreatic cancer and 82 individuals with a normal pancreas. A generalized pancreatic cancer diagnosis method consisting of anatomically guided shape normalization, instance-level contrastive learning, and a balance-adjustment strategy (23) was evaluated on two unseen datasets (a private test set with 316 and a publicly available test set with 281). An adaptive-metric graph neural network with a causal contrastive mechanism has been developed to enhance the discriminability of the target features and improve the stability of early diagnosis (24); its training dataset with cross-validation consists of 953 subjects, including 554 with pancreatic cancer and 399 with non-tumor pancreas. Despite the superior performance reported in the majority of these studies, each used a limited dataset of just a few hundred samples. We evaluated our pipeline on a significantly larger dataset of CT images from 463 cases and 2,882 controls, on which accuracy and sensitivity values above 0.90 were achieved. The significant diversity in the HFH dataset ensured strong generalization capabilities, demonstrating the superior performance of the approach. Moreover, our deployment of the segmentation model enabled accurate detection of both normal pancreas and pancreatic cancer. As a result, our approach may enable an early detection modality that affords comprehensive options for clinicians to assess earlier onset of pancreatic cancers as well as offer curative-intent options for pancreatic cancers that would not otherwise be feasible.
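Because the comparison above rests on accuracy and sensitivity, and the evaluation set contains far more controls than cases, it may help to recall how these metrics follow from confusion-matrix counts. The sketch below uses purely hypothetical counts chosen for easy arithmetic. They are not results from this study, and the function name is an assumption.

```python
def classification_metrics(tp, fn, tn, fp):
    """Return accuracy, sensitivity, and specificity from confusion-matrix counts.

    tp/fn count tumor cases (detected/missed); tn/fp count controls
    (correctly cleared/falsely flagged).
    """
    cases = tp + fn
    controls = tn + fp
    if cases == 0 or controls == 0:
        raise ValueError("need at least one case and one control")
    return {
        "accuracy": (tp + tn) / (cases + controls),
        "sensitivity": tp / cases,       # fraction of tumor cases detected
        "specificity": tn / controls,    # fraction of controls correctly cleared
    }

# Hypothetical counts for illustration only (100 cases, 200 controls).
metrics = classification_metrics(tp=90, fn=10, tn=180, fp=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With imbalanced classes, accuracy is dominated by the majority class, which is why sensitivity and specificity are worth reading alongside it.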

5 Conclusion

In this paper, we propose an end-to-end model for accurate pancreatic tumor prediction. The model incorporates segmentation using the nnU-Net architecture and multi-instance classification using weakly supervised learning. The pancreatic tumor samples are processed by localizing the area of interest from the segmented image. A bag is then formed for each region, which is labeled based on the grade. Finally, the multi-instance learning model is trained for classification. The proposed MIL classification technique achieves optimal performance by utilizing patient label information on the cropped image rather than on the whole pixel patches. Our experimental findings demonstrate that the proposed framework outperforms nnU-Net with Inception V3 by a large margin (7.0%) on the HFH test dataset. These results indicate that the two-stage deep learning analysis of patient radiographic imaging has the potential to be of great assistance in the pursuit of early pancreatic tumor detection. Furthermore, it has the potential to reduce the number of incorrect diagnoses of pancreatic cancer, which would ultimately result in much-needed improvements in patient care. In the future, we will investigate the possibility of employing an auto-encoding DNN rather than K-means.
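The bag-level labeling idea summarized above can be sketched as follows. The sketch assumes that each patient's cropped pancreas region yields a bag of instance-level tumor probabilities and that the bag score is the mean of the top-k instance scores (max pooling when k = 1). This aggregation rule, the threshold, and the function names are illustrative assumptions and may differ from the pooling used in the study.

```python
import numpy as np

def bag_probability(instance_probs, top_k=1):
    """Aggregate instance scores into one bag score (mean of the top-k scores)."""
    probs = np.sort(np.asarray(instance_probs, dtype=float))[::-1]
    if probs.size == 0:
        raise ValueError("a bag needs at least one instance")
    k = min(top_k, probs.size)
    return float(probs[:k].mean())

def classify_bag(instance_probs, threshold=0.5, top_k=1):
    """Label the whole bag (patient) positive if its bag score reaches the threshold."""
    return bag_probability(instance_probs, top_k) >= threshold

# Synthetic bags: only the patient-level label is needed for training,
# not a label for every instance.
control_bag = [0.05, 0.10, 0.20]
tumor_bag = [0.05, 0.10, 0.92]
print(classify_bag(control_bag))  # False
print(classify_bag(tumor_bag))    # True
```

Under this formulation a single strongly suspicious instance is enough to flag the patient, which is what allows supervision from patient-level labels alone.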

Data availability statement

The datasets presented in this article are not readily available because CT images from HFH and Memorial Sloan Kettering Cancer Center (MSKCC) were used to develop a novel algorithm to detect the presence of pancreatic tumors in case versus control images. These data cannot be shared without patient approval. MSKCC is an open-source dataset that was used to train the segmentation model. Requests to access the datasets should be directed to DK, System Director, Surgical Oncology, Department of Surgery, Henry Ford Health, Detroit, MI, USA.

Ethics statement

The studies involving humans were approved by Henry Ford Health, Detroit MI, USA. The studies were conducted in accordance with the local legislation and institutional requirements. The ethics committee/institutional review board waived the requirement of written informed consent for participation from the participants or the participants’ legal guardians/next of kin because data has been anonymized for this study. Written informed consent was not obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article because data has been anonymized for this study.

Author contributions

SM: Conceptualization, Formal analysis, Investigation, Methodology, Software, Visualization, Writing – original draft. KB: Formal analysis, Software, Validation, Writing – review & editing. HK: Formal analysis, Validation, Writing – review & editing, Methodology. CA: Formal analysis, Methodology, Validation, Writing – review & editing. JC: Data curation, Investigation, Validation, Visualization, Writing – review & editing. DK: Data curation, Investigation, Supervision, Validation, Visualization, Writing – review & editing. AR: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Visualization, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was supported by the Henry Ford Pancreatic Cancer Center (Grant no. FT/03/2032/2019).

Acknowledgments

The Department of Radiology at Henry Ford Health assisted in obtaining images for this project. The authors would like to express their gratitude to the Yardi School of Artificial Intelligence for their valuable assistance with this project. This study used the high-performance computing capabilities of the IIT Delhi.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2024.1362850/full#supplementary-material

References

1. Pancreatic cancer prognosis, Aug. 08, 2021. Available online at: https://www.hopkinsmedicine.org/health/conditions-and-diseases/pancreatic-cancer/pancreatic-cancer-prognosis (Accessed Oct. 04, 2022).

2. Kenner B, Chari ST, Kelsen D, Klimstra DS, Pandol SJ, Rosenthal M, et al. Artificial intelligence and early detection of pancreatic cancer: 2020 summative review. Pancreas. (2021) 50:251. doi: 10.1097/MPA.0000000000001762

3. Isensee F. From manual to automated design of biomedical semantic segmentation methods. University of Heidelberg, Heidelberg, Germany (2020). doi: 10.11588/HEIDOK.00029345

4. Wang T, Lei Y, Schreibmann E, Roper J, Liu T, Schuster D, et al. Lesion segmentation on 18F-fluciclovine (18F-FACBC) PET/CT images using deep learning. Front Oncol. (2023) 13:1274803. doi: 10.3389/fonc.2023.1274803

5. Zhou Y, Lalande A, Chevalier C, Baude J, Aubignac L, Boudet J, et al. Deep learning application for abdominal organs segmentation on 0.35 T MR-Linac images. Front Oncol. (2024) 13:1285924. doi: 10.3389/fonc.2023.1285924

6. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science. Cham: Springer (2015), pp. 234–41. doi: 10.1007/978-3-319-24574-4_28

7. Oktay O, Schlemper J, Folgoc LL, Lee M, Heinrich M, Misawa K, et al. Attention u-net: Learning where to look for the pancreas. arXiv preprint arXiv:1804.03999. (2018). doi: 10.48550/arXiv.1804.03999

8. Yu Q, Xie L, Wang Y, Zhou Y, Fishman EK, Yuille AL. (2018). Recurrent saliency transformation network: Incorporating multi-stage visual cues for small organ segmentation, in: Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, UT, USA. pp. 8280–9. doi: 10.1109/CVPR.2018.00864

9. Augusto JB, Davies RH, Bhuva AN, Knott KD, Seraphim A, Alfarih M, et al. Diagnosis and risk stratification in hypertrophic cardiomyopathy using machine learning wall thickness measurement: a comparison with human test-retest performance. Lancet Digital Health. (2021) 3:e20–8. doi: 10.1016/S2589-7500(20)30267-3

10. Zhou H, Li L, Liu Z, Zhao K, Chen X, Lu M, et al. Deep learning algorithm to improve hypertrophic cardiomyopathy mutation prediction using cardiac cine images. Eur Radiol. (2021) 31:3931–40. doi: 10.1007/s00330-020-07454-9

11. Wu JR, Leu HB, Yin WH, Tseng WK, Wu YW, Lin TH, et al. The benefit of secondary prevention with oat fiber in reducing future cardiovascular event among CAD patients after coronary intervention. Sci Rep. (2019) 9:1–6. doi: 10.1038/s41598-019-39310-2

12. Long J, Shelhamer E, Darrell T. (2015). Fully convolutional networks for semantic segmentation, in: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 3431–40. doi: 10.48550/arXiv.1411.4038

13. Jiang C, Liu X, Qu Q, Jiang Z, Wang Y. Prediction of adenocarcinoma and squamous carcinoma based on CT perfusion parameters of brain metastases from lung cancer: a pilot study. Front Oncol. (2023) 13:1225170. doi: 10.3389/fonc.2023.1225170

14. Wang CJ, Hamm CA, Savic LJ, Ferrante M, Schobert I, Schlachter T, et al. Deep learning for liver tumor diagnosis part II: convolutional neural network interpretation using radiologic imaging features. Eur Radiol. (2019) 29:3348–57. doi: 10.1007/s00330-019-06214-8

15. Yao W, He JC, Yang Y, Wang JM, Qian YW, Yang T, et al. The prognostic value of tumor-infiltrating lymphocytes in hepatocellular carcinoma: a systematic review and meta-analysis. Sci Rep. (2017) 7:1–11. doi: 10.1038/s41598-017-08128-1

16. Wang G, Van Stappen G, De Baets B. Automated detection and counting of Artemia using U-shaped fully convolutional networks and deep convolutional networks. Expert Syst Appl. (2021) 171:114562. doi: 10.1016/j.eswa.2021.114562

17. Bohr A, Memarzadeh K. The rise of artificial intelligence in healthcare applications. Artif Intell healthcare. (2020), 25–60. doi: 10.1016/B978-0-12-818438-7.00002-2

18. Liu KL, Wu T, Chen PT, Tsai YM, Roth H, Wu MS, et al. Deep learning to distinguish pancreatic cancer tissue from non-cancerous pancreatic tissue: a retrospective study with cross-racial external validation. Lancet Digital Health. (2020) 2:e303–13. doi: 10.1016/S2589-7500(20)30078-9

19. Sekaran K, Chandana P, Krishna NM, Kadry S. Deep learning convolutional neural network (CNN) With Gaussian mixture model for predicting pancreatic cancer. Multimedia Tools Appl. (2020) 79:10233–47. doi: 10.1007/s11042-019-7419-5

20. Xuan W, You G. Detection and diagnosis of pancreatic tumor using deep learning-based hierarchical convolutional neural network on the internet of medical things platform. Future Generation Comput Syst. (2020) 111:132–42. doi: 10.1016/j.future.2020.04.037

21. Hussein S, Kandel P, Bolan CW, Wallace MB, Bagci U. Lung and pancreatic tumor characterization in the deep learning era: novel supervised and unsupervised learning approaches. IEEE Trans Med Imaging. (2019) 38:1777–87. doi: 10.1109/TMI.42

22. Liu Z, Su J, Wang R, Jiang R, Song YQ, Zhang D, et al. Pancreas Co-segmentation based on dynamic ROI extraction and VGGU-Net. Expert Syst Appl. (2022) 192:116444. doi: 10.1016/j.eswa.2021.116444

23. Qu J, Wei X, Qian X. Generalized pancreatic cancer diagnosis via multiple instance learning and anatomically-guided shape normalization. Med Image Anal. (2023) 86:102774. doi: 10.1016/j.media.2023.102774

24. Li X, Guo R, Lu J, Chen T, Qian X. Causality-driven graph neural network for early diagnosis of pancreatic cancer in non-contrast computerized tomography. IEEE Trans Med Imaging. (2023) 42:1656–67. doi: 10.1109/TMI.2023.3236162

25. Luo Y, Chen X, Chen J, Song C, Shen J, Xiao H, et al. Preoperative prediction of pancreatic neuroendocrine neoplasms grading based on enhanced computed tomography imaging: validation of deep learning with a convolutional neural network. Neuroendocrinology. (2020) 110:338–50. doi: 10.1159/000503291

26. Song C, Wang M, Luo Y, Chen J, Peng Z, Wang Y, et al. Predicting the recurrence risk of pancreatic neuroendocrine neoplasms after radical resection using deep learning radiomics with preoperative computed tomography images. Ann Trans Med. (2021) 9:833. doi: 10.21037/atm

27. Fu M, Wu W, Hong X, Liu Q, Jiang J, Ou Y, et al. Hierarchical combinatorial deep learning architecture for pancreas segmentation of medical computed tomography cancer images. BMC Syst Biol. (2018) 12:119–27. doi: 10.1186/s12918-018-0572-z

28. Qiao Z, Du C. RAD-UNet: a residual, attention-based, dense UNet for CT sparse reconstruction. J Digital Imaging. (2022) 35:1748–58. doi: 10.1007/s10278-022-00685-w

29. Qu T, Wang X, Fang C, Mao L, Li J, Li P, et al. M3Net: A multi-scale multi-view framework for multi-phase pancreas segmentation based on cross-phase non-local attention. Med Image Anal. (2022) 75:102232. doi: 10.1016/j.media.2021.102232

30. Nakao M, Nakamura M, Mizowaki T, Matsuda T. Statistical deformation reconstruction using multi-organ shape features for pancreatic cancer localization. Med image Anal. (2021) 67:101829. doi: 10.1016/j.media.2020.101829

31. Simpson AL, Antonelli M, Bakas S, Bilello M, Farahani K, Van Ginneken B, et al. A large annotated medical image dataset for the development and evaluation of segmentation algorithms. arXiv preprint arXiv:1902.09063. (2019). doi: 10.48550/arXiv.1902.09063

32. Clark K, Vendt B, Smith K, Freymann J, Kirby J, Koppel P, et al. The Cancer Imaging Archive (TCIA): maintaining and operating a public information repository. J digital Imaging. (2013) 26:1045–57. doi: 10.1007/s10278-013-9622-7

33. Campanella G, Hanna MG, Geneslaw L, Miraflor A, Werneck Krauss Silva V, Busam KJ, et al. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat Med. (2019) 25:1301–9. doi: 10.1038/s41591-019-0508-1

34. Dong D, Fu G, Li J, Pei Y, Chen Y. An unsupervised domain adaptation brain CT segmentation method across image modalities and diseases. Expert Syst Appl. (2022) 207:118016. doi: 10.1016/j.eswa.2022.118016

35. Roth HR, Oda H, Hayashi Y, Oda M, Shimizu N, Fujiwara M, et al. Hierarchical 3D fully convolutional networks for multi-organ segmentation. arXiv preprint arXiv:1704.06382. (2017). doi: 10.48550/arXiv.1704.06382

36. Chen LC, Papandreou G, Kokkinos I, Murphy K, Yuille AL. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans Pattern Anal Mach Intell. (2017) 40:834–48. doi: 10.1109/TPAMI.2017.2699184

37. Badrinarayanan V, Kendall A, Cipolla R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell. (2017) 39:2481–95. doi: 10.1109/TPAMI.34

38. Zhao H, Shi J, Qi X, Wang X, Jia J. (2017). Pyramid scene parsing network, in: Proceedings of the IEEE conference on computer vision and pattern recognition, Honolulu, HI, USA., pp. 2881–90. doi: 10.1109/CVPR.2017.660

39. Lin G, Milan A, Shen C, Reid I. (2017). Refinenet: Multi-path refinement networks for high-resolution semantic segmentation, in: Proceedings of the IEEE conference on computer vision and pattern recognition, Honolulu, HI, USA, pp. 1925–34. doi: 10.1109/CVPR.2017.549

40. Tahri S, Texier B, Nunes JC, Hemon C, Lekieffre P, Collot E, et al. A deep learning model to generate synthetic CT for prostate MR-only radiotherapy dose planning: a multicenter study. Front Oncol. (2023) 13:1279750. doi: 10.3389/fonc.2023.1279750

41. Yao J, Shi Y, Lu L, Xiao J, Zhang L. (2019). Deepprognosis: Preoperative prediction of pancreatic cancer survival and surgical margin via contrast-enhanced CT imaging, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, MICCAI 2020. Lecture Notes in Computer Science, Lima, Peru, October 4–8, 2020, vol 12262, pp. 272–82. Springer, Cham. doi: 10.1007/978-3-030-59713-9_27

42. Tennakoon R, Bortsova G, Ørting S, Gostar AK, Wille MM, Saghir Z, et al. Classification of volumetric images using multi-instance learning and extreme value theorem. IEEE Trans Med Imaging. (2019) 39:854–65. doi: 10.1109/TMI.42

43. Tibo A, Jaeger M, Frasconi P. Learning and interpreting multi-multi-instance learning networks. J Mach Learn Res. (2020) 21:1–60.

44. He K, Zhang X, Ren S, Sun J. (2016). Deep residual learning for image recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, pp. 770–8. doi: 10.1109/CVPR.2016.90

45. Waqas M, Tahir MA, Khan SA. Robust bag classification approach for multi-instance learning via subspace fuzzy clustering. Expert Syst Appl. (2023) 214:119113. doi: 10.1016/j.eswa.2022.119113

46. Li Y, Wu H. A clustering method based on K-means algorithm. Phys Proc. (2012) 25:1104–9. doi: 10.1016/j.phpro.2012.03.206

47. Zhang D, Zhang J, Zhang Q, Han J, Zhang S, Han J. Automatic pancreas segmentation based on lightweight DCNN modules and spatial prior propagation. Pattern Recognition. (2021) 114:107762. doi: 10.1016/j.patcog.2020.107762

48. Ibragimov B, Toesca DA, Chang DT, Yuan Y, Koong AC, Xing L. Automated hepatobiliary toxicity prediction after liver stereotactic body radiation therapy with deep learning-based portal vein segmentation. Neurocomputing. (2020) 392:181–8. doi: 10.1016/j.neucom.2018.11.112

49. DeLong ER, DeLong DM, Clarke-Pearson DL. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics. (1988) 44(3):837–45. doi: 10.2307/2531595

50. Luo Y, Chen X, Chen J, Song C, Shen J, Xiao H, et al. Preoperative prediction of pancreatic neuroendocrine neoplasms grading based on enhanced computed tomography imaging: validation of deep learning with a convolutional neural network. Neuroendocrinology. (2020) 110:338–50. doi: 10.1159/000503291

51. Ma H, Liu ZX, Zhang JJ, Wu FT, Xu CF, Shen Z, et al. Construction of a convolutional neural network classifier developed by computed tomography images for pancreatic cancer diagnosis. World J Gastroenterol. (2020) 26:5156. doi: 10.3748/wjg.v26.i34.5156

52. Zhang F, Wang Y, Yang H. Efficient context-aware network for abdominal multi-organ segmentation. arXiv preprint arXiv:2109.10601. (2021). doi: 10.48550/arXiv.2109.10601

53. Wang H, Wang G, Liu Z, Zhang S. Global and local multi-scale feature fusion enhancement for brain tumor segmentation and pancreas segmentation. In: Crimi A, Bakas S. (eds) Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes. Lecture Notes in Computer Science, Shenzhen, China, October 17, 2019, Springer, Cham. (2020) 11992:80–88. doi: 10.1007/978-3-030-46640-4_8

54. Chen H, Liu Y, Shi Z. FPF-Net: feature propagation and fusion based on attention mechanism for pancreas segmentation. Multimedia Syst. (2022) 29(2):525–38. doi: 10.1007/s00530-022-00963-1

Keywords: pancreatic cancer, multi-instance learning, image segmentation, feature extraction, medical image analysis

Citation: Mandal S, Balraj K, Kodamana H, Arora C, Clark JM, Kwon DS and Rathore AS (2024) Weakly supervised large-scale pancreatic cancer detection using multi-instance learning. Front. Oncol. 14:1362850. doi: 10.3389/fonc.2024.1362850

Received: 29 December 2023; Accepted: 01 August 2024;

Published: 29 August 2024.

Edited by:

Harrison Kim, University of Alabama at Birmingham, United States

Reviewed by:

Shanglong Liu, Affiliated Hospital of Qingdao University, China

Jakub Nalepa, Silesian University of Technology, Poland

Lukas Vrba, University of Arizona, United States

Man Lu, Sichuan Cancer Hospital, China

Copyright © 2024 Mandal, Balraj, Kodamana, Arora, Clark, Kwon and Rathore. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anurag S. Rathore
