
ORIGINAL RESEARCH article

Front. Oncol., 23 September 2022

Sec. Cancer Imaging and Image-directed Interventions

Volume 12 - 2022 | https://doi.org/10.3389/fonc.2022.969707

This article is part of the Research Topic Deep Learning Approaches in Image-guided Diagnosis for Tumors.

Purpose: Preoperative evaluation of lymph node metastasis (LNM) is the basis of personalized treatment of locally advanced gastric cancer (LAGC). We aimed to develop and evaluate a CT-based model using deep learning features to predict LNM in LAGC preoperatively.

Methods: A total of 523 patients with pathologically confirmed LAGC treated at our hospital between August 2012 and July 2019 were retrospectively enrolled. Five pre-trained convolutional neural networks were used to extract deep learning features from pretreatment CT images, and a support vector machine (SVM) was employed as the classifier. Performance was assessed using the area under the receiver operating characteristic curve (AUC), and the optimal model was compared with a radiomics model developed from the training cohort. A clinical model built with clinical factors only served as a baseline for comparison.

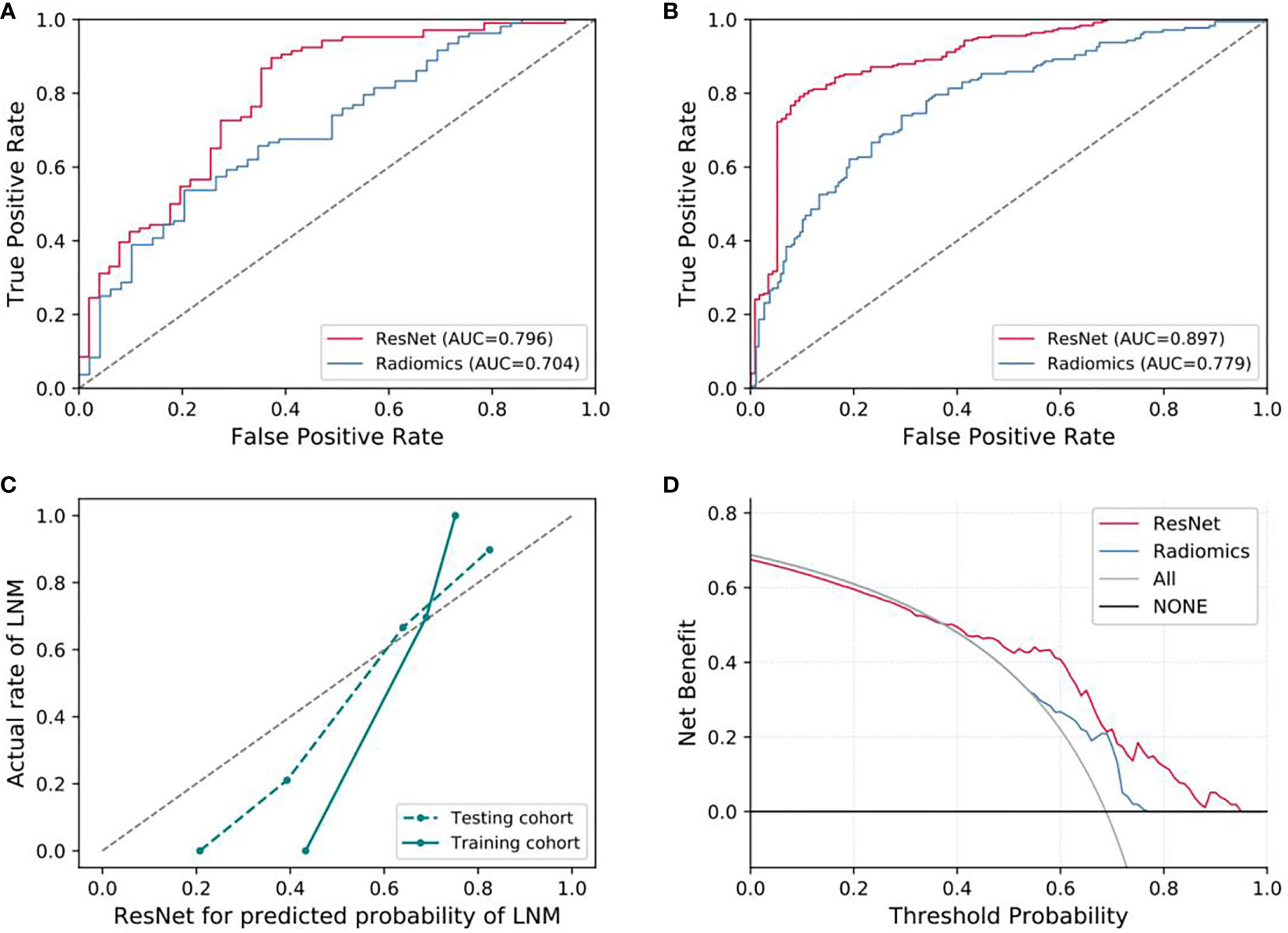

Results: The optimal model, using features extracted from ResNet, performed best, with an AUC of 0.796 [95% confidence interval (95% CI), 0.715-0.865] and an accuracy of 75.2% (95% CI, 67.2%-81.5%) in the testing cohort, compared with 0.704 (0.625-0.783) and 61.8% (54.5%-69.9%) for the radiomics model. The predictive performance of all the radiological models was significantly better than that of the clinical model.

Conclusion: This novel, noninvasive deep learning approach could provide efficient and accurate prediction of lymph node metastasis in LAGC and support clinical decision-making on therapeutic strategy.

Gastric cancer (GC) is one of the most common cancers and the third leading cause of cancer death worldwide (1). Its incidence is relatively high in Asia, South America, and Europe (2–4). Locally advanced gastric cancer (LAGC) refers to tumors invading deeper than the submucosa and is associated with a high rate of lymph node metastasis (LNM) and poor prognosis (5–7). Accurate evaluation of lymphatic metastasis on preoperative computed tomography (CT) images is crucial for individualized treatment of LAGC (8–10). Preoperative knowledge of LNM status is clinically important for selecting the optimal surgical procedure (endoscopic procedures or gastrectomy plus lymph node dissection) and for deciding on adjuvant therapy (11–13). The National Comprehensive Cancer Network recommends CT as a first-line imaging technique for detecting LNM, but its overall accuracy of 50%-70% is unsatisfactory (14).

Advances in deep learning have opened a new field for CT image analysis, which can convert medical images into mineable data and generate thousands of quantitative features (15). Convolutional neural networks (CNNs) have proven effective for improving the diagnostic accuracy of medical imaging (16–18). Because enough annotated cases are rarely available, training a CNN from scratch for one specific clinical problem is often infeasible. An effective alternative is transfer learning with pre-trained CNNs, in which additional training steps on the specific medical domain start from an existing checkpoint. This approach is frequently used to alleviate the limitations of small datasets and expensive annotation (19, 20). Some descriptors developed for object detection in natural images have also been used for lesion segmentation in medical image analysis (21). Another option is to use pretrained CNN models as feature extractors and traditional machine learning methods as classifiers, which can also perform satisfactorily in terms of prediction accuracy and computational cost (22). Handcrafted radiomics has been studied extensively for radiological diagnosis and prediction (8, 23, 24). However, the application of transfer learning to the prediction of LNM in gastric cancer has not been explored.

In this study, we hypothesized that CT-based transfer learning techniques can be used to extract deep learning features for preoperatively predicting LNM risk. To this end, we aimed to build a noninvasive method based on pre-trained deep learning models for the preoperative prediction of LNM in patients with gastric cancer and to compare it with the handcrafted radiomics method. We also explored the value of deep learning features for predicting LNM and guiding treatment decisions.

This retrospective study was approved by the institutional review board of our hospital, and the requirement for informed consent was waived. A total of 523 consecutive patients with gastric cancer treated between August 2012 and July 2019 were enrolled according to the following inclusion criteria: (a) pathologically diagnosed locally advanced gastric cancer (pT2-4aNxM0); (b) gastrectomy plus lymph node dissection, with complete CT imaging data; (c) no systemic or local treatment before the CT examination or surgery; and (d) a lesion covering at least 3 slices on axial CT images. Exclusion criteria were: (a) lesion not visible on CT images; (b) insufficient gastric distension; and (c) image quality too poor for post-processing because of artifacts. The flowchart of patient selection is shown in Figure 1. Computer-generated random numbers were used to split the patients into a training cohort (n=367, 74.40% male; mean age, 59.75 ± 10.38 years; range, 22-82 years) and a testing cohort (n=156, 73.98% male; mean age, 59.36 ± 9.94 years; range, 22-81 years). Tumor location was obtained from medical or endoscopic reports, and clinical information was obtained by reviewing medical records.
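The paper states only that computer-generated random numbers were used to split the cohort. A minimal sketch of such a split, assuming NumPy's `default_rng` with an arbitrary seed (so it does not reproduce the authors' actual assignment), which can also generate the ten repeated re-splits used in the reproducibility analysis:

```python
import numpy as np


def random_split(n_patients, n_train, seed):
    """Randomly partition patient indices 0..n_patients-1 into train/test sets."""
    rng = np.random.default_rng(seed)   # computer-generated random numbers
    order = rng.permutation(n_patients)
    return np.sort(order[:n_train]), np.sort(order[n_train:])


# One split with the cohort sizes reported above; the seed is arbitrary.
train_idx, test_idx = random_split(523, 367, seed=2022)

# Ten independent re-splits, as used for the reproducibility analysis.
repeated_splits = [random_split(523, 367, seed=s) for s in range(10)]
```

A plain random split does not stratify by LNM status; the text does not say whether stratification was used.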

All patients underwent contrast-enhanced CT, and informed consent forms were signed before the examination. Scans covering the whole abdomen were acquired during a breath-hold with the patient supine and head first in all phases. Details of the CT acquisition parameters are provided in the Supplementary Material.

Tumor regions of interest (ROIs) were manually segmented on CT images by two experienced radiologists using ITK-SNAP software (version 3.6.0; http://www.itksnap.org). To compare the different feature types fairly, the radiologists chose only the single slice with the maximum cross-sectional area of the tumor. We randomly chose 30 patients from the training cohort to assess the interobserver reproducibility of ROI-based radiomics features in a blinded manner. One month later, the segmentation procedure was repeated to assess intraobserver reproducibility. Features with an intraclass correlation coefficient (ICC) greater than 0.75 were selected for further analysis. For deep learning feature extraction, the 3 axial slices containing the delineated tumor were cropped with a bounding box covering the radiologist-contoured tumor area and resized to 224 × 224 pixels (the input size of the pretrained CNN models).
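The reproducibility filter can be sketched as follows. The text does not state which ICC form was used; this sketch assumes ICC(2,1) (two-way random effects, absolute agreement, single measurement, in the Shrout and Fleiss notation), and the segmentation data are synthetic. Only the 0.75 threshold comes from the text.

```python
import numpy as np


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    ratings: array of shape (n_subjects, k_raters) holding ONE feature.
    """
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * ((x.mean(axis=1) - grand) ** 2).sum()    # between subjects
    ss_cols = n * ((x.mean(axis=0) - grand) ** 2).sum()    # between raters
    ss_err = ((x - grand) ** 2).sum() - ss_rows - ss_cols  # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)


def reproducible_features(seg_a, seg_b, threshold=0.75):
    """Indices of features with ICC > threshold between two segmentations."""
    a, b = np.asarray(seg_a, dtype=float), np.asarray(seg_b, dtype=float)
    iccs = np.array([icc_2_1(np.column_stack([a[:, j], b[:, j]]))
                     for j in range(a.shape[1])])
    return np.flatnonzero(iccs > threshold), iccs


# Synthetic example: 30 patients, 3 features measured on two segmentations.
rng = np.random.default_rng(0)
truth = rng.normal(size=(30, 3))
seg_a = truth.copy()
seg_b = truth + np.array([0.0, 0.1, 0.0]) * rng.normal(size=(30, 3))
seg_b[:, 2] = rng.normal(size=30)   # feature 2 is not reproducible at all
keep, iccs = reproducible_features(seg_a, seg_b)
```

The same function applies to both the interobserver pair (two radiologists) and the intraobserver pair (one radiologist, one month apart).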

We employed five commonly used convolutional neural networks, ResNet (25), VGG16 (26), VGG19 (26), Xception (27), and InceptionV3 (28), as base models to extract deep learning features automatically. These five CNN models were pre-trained on MedMNIST v2, a large-scale, lightweight, well-annotated biomedical image database (29). We removed the last fully connected layer at the top of each network and applied global max pooling to capture the maximum value of each feature map. Finally, the pooled feature maps were converted to raw feature values, and these deep features were used to construct the machine learning model. Because of the complexity of deep network architectures, the mechanisms underlying their predictive value remain unclear. Additional details of deep feature extraction are provided in the Supplementary Material. Gradient-weighted Class Activation Mapping (Grad-CAM) can generate visual explanations for any CNN-based model (30); we used this visualization technique to investigate which regions of the ROI were most important for the deep features.
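Global max pooling keeps one number per channel of the final convolutional feature maps, so the length of the deep-feature vector equals the channel count. A minimal NumPy sketch, assuming a ResNet-50-like output of 2048 × 7 × 7 for a 224 × 224 input (the paper does not state the ResNet depth) and using random values in place of real activations:

```python
import numpy as np


def global_max_pool(feature_maps):
    """Reduce (channels, height, width) feature maps to one maximum per channel."""
    fm = np.asarray(feature_maps, dtype=float)
    return fm.reshape(fm.shape[0], -1).max(axis=1)


# Hypothetical stand-in for the last convolutional block of a ResNet-50-style
# backbone on a 224 x 224 input: 2048 channels on a 7 x 7 spatial grid.
rng = np.random.default_rng(0)
feature_maps = rng.normal(size=(2048, 7, 7))
deep_features = global_max_pool(feature_maps)   # one value per channel
```

In Keras, `tf.keras.applications.ResNet50(include_top=False, pooling="max")` applies the same pooling on top of the convolutional base; the paper does not say which framework was used.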

Image standardization was performed before feature extraction: bicubic spline interpolation was used to resample the in-plane image scale, reducing heterogeneity resulting from different scanners and yielding a voxel size of 1 mm × 1 mm × 1 mm (31, 32). Radiomics features were automatically extracted from each radiologist's ROIs using the Python package PyRadiomics (http://pyradiomics.readthedocs.io) (33) and were standardized with reference to the Image Biomarker Standardization Initiative (IBSI) (34); the study followed the IBSI reporting guidelines. The handcrafted radiomics features were divided into three groups: shape features, histogram statistics, and second-order features, namely the Gray Level Co-occurrence Matrix (GLCM), Gray Level Dependence Matrix (GLDM), Gray Level Run Length Matrix (GLRLM), Gray Level Size Zone Matrix (GLSZM), and Neighboring Gray Tone Difference Matrix (NGTDM). Most of these features are defined according to the IBSI, and detailed descriptions are provided in the Supplementary Material.
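Resampling to 1 mm pixels preserves the physical field of view while changing the pixel grid: the new size along each axis is old size × old spacing / new spacing. A NumPy sketch with two plainly labelled simplifications: separable linear interpolation (`np.interp`) stands in for the bicubic spline used in the study, and only the two in-plane axes are resampled.

```python
import numpy as np


def resample_slice(image, spacing, new_spacing=(1.0, 1.0)):
    """Resample a 2D slice to a new pixel spacing (linear, not bicubic).

    image: 2D array (rows, cols); spacing/new_spacing: (row_mm, col_mm).
    """
    img = np.asarray(image, dtype=float)
    # Preserve physical extent: new_size = old_size * old_spacing / new_spacing
    new_shape = [max(1, int(round(n * s / ns)))
                 for n, s, ns in zip(img.shape, spacing, new_spacing)]
    # New pixel positions expressed in old-pixel index units
    rows = np.arange(new_shape[0]) * new_spacing[0] / spacing[0]
    cols = np.arange(new_shape[1]) * new_spacing[1] / spacing[1]
    # Interpolate along columns for every row, then along rows for every new column
    tmp = np.array([np.interp(cols, np.arange(img.shape[1]), r) for r in img])
    return np.array([np.interp(rows, np.arange(img.shape[0]), c) for c in tmp.T]).T


# Example: a 512 x 512 slice with 0.7 mm pixels becomes 358 x 358 at 1 mm.
slice_07mm = np.random.default_rng(0).normal(size=(512, 512))
slice_1mm = resample_slice(slice_07mm, spacing=(0.7, 0.7))
```

For a cubic spline, `scipy.ndimage.zoom(image, zoom, order=3)` is a common choice, and PyRadiomics can resample internally through its `resampledPixelSpacing` and `interpolator` settings.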

Radiomics extracts features from medical images more precisely than visual assessment. However, radiomics features are affected by the acquisition protocol and reconstruction method, which can obscure biologically important texture information. In retrospective clinical studies, it is impractical to standardize the acquisition parameters of different devices in advance. To reduce such batch effects, the ComBat harmonization technique has been successfully applied to correct radiomic feature values for scanner and protocol effects (35). We used ComBat to pool and harmonize the radiomics and deep learning features after extraction.
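ComBat models each feature as a batch-specific shift and scale around a common mean and pooled scale, then removes both. The sketch below implements only that location-scale core: it omits the empirical-Bayes shrinkage of the batch parameters and any protected covariates, so it is a simplified stand-in rather than ComBat itself. What defined a batch in this study (for example, scanner model) is not stated, so the scanner labels are hypothetical.

```python
import numpy as np


def location_scale_harmonize(features, batch):
    """Remove per-batch shifts and scales feature by feature.

    Simplified ComBat-style adjustment: no empirical-Bayes shrinkage and no
    covariates. features: (n_samples, n_features); batch: n_samples labels.
    """
    x = np.asarray(features, dtype=float)
    batch = np.asarray(batch)
    labels = np.unique(batch)
    grand_mean = x.mean(axis=0)
    # Pooled within-batch residual SD (the common scale data are mapped back to).
    resid_sq = sum(((x[batch == b] - x[batch == b].mean(axis=0)) ** 2).sum(axis=0)
                   for b in labels)
    pooled_sd = np.sqrt(resid_sq / len(x))
    out = np.empty_like(x)
    for b in labels:
        idx = batch == b
        mu = x[idx].mean(axis=0)
        sd = x[idx].std(axis=0, ddof=1)
        sd[sd == 0] = 1.0   # avoid dividing by zero for constant features
        out[idx] = (x[idx] - mu) / sd * pooled_sd + grand_mean
    return out


# Hypothetical example: scanner "B" shifts and stretches every feature.
rng = np.random.default_rng(1)
scanner = np.repeat(["A", "B"], 50)
feats = rng.normal(size=(100, 4))
feats[scanner == "B"] = feats[scanner == "B"] * 3.0 + 5.0
harmonized = location_scale_harmonize(feats, scanner)
```

Because every batch is aligned to the same mean, a batch variable correlated with LNM status would also remove real signal; full ComBat implementations allow such covariates to be protected.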

Based on the training set, we performed deep learning or radiomics feature selection and constructed models for predicting lymph node metastasis. First, features were standardized by z-score normalization. Next, the top 20% of features were retained by univariate analysis. Then, an embedded feature selection approach based on the least absolute shrinkage and selection operator (LASSO) algorithm was used to select the most predictive features. The classification model was constructed with an SVM (36). We also built a clinical model based on clinical characteristics. The code for model construction is available on GitHub (https://github.com/cmingwhu/DL-LNM).
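These steps can be chained in scikit-learn so that every statistic (z-score means, univariate ranking, LASSO coefficients) is learned from the training data only. This is a sketch on synthetic data; the ANOVA F-test for the univariate step, the LASSO penalty `alpha=0.02`, and the RBF kernel are assumptions, not settings reported in the paper (the authors' own code is in the repository cited above).

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectFromModel, SelectPercentile, f_classif
from sklearn.linear_model import Lasso
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for a patients x features matrix with a binary LNM label.
X, y = make_classification(n_samples=523, n_features=500, n_informative=10,
                           random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=367,
                                                    random_state=0)

model = Pipeline([
    # z-score normalization; means and SDs come from the training set only
    ("zscore", StandardScaler()),
    # univariate filter: keep the top 20% of features by ANOVA F-score
    ("univariate", SelectPercentile(f_classif, percentile=20)),
    # embedded LASSO selection: keep features with non-zero coefficients
    ("lasso", SelectFromModel(Lasso(alpha=0.02, max_iter=10000))),
    # SVM classifier (kernel and C are assumptions, not reported values)
    ("svm", SVC(kernel="rbf", C=1.0)),
])
model.fit(X_train, y_train)

auc = roc_auc_score(y_test, model.decision_function(X_test))
n_selected = int(model.named_steps["lasso"].get_support().sum())
```

Here the LASSO regresses the 0/1 label directly; an L1-penalized logistic regression is a common alternative for the embedded step.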

P values for differences in clinical characteristics between cohorts were assessed by Fisher's exact test or the chi-square test for categorical variables, and by the Mann-Whitney U test or independent t-test for numeric variables. Receiver operating characteristic (ROC) curves were used to evaluate the predictive performance of the models, and DeLong's test was used to compare AUCs between models. AUCs and their 95% confidence intervals (CIs) were calculated. Accuracy, specificity, and sensitivity were calculated to assess diagnostic performance. Model calibration was evaluated with calibration curves and the Hosmer-Lemeshow test. To assess the reproducibility of our results, we randomly divided the patients into training and testing sets ten times, and the model was rebuilt and validated on each split. P < 0.05 was considered statistically significant. Python version 3.6 (https://www.python.org/) and R version 4.0.3 (https://www.r-project.org) were used for statistical analysis and graphics. The packages used in this study are listed in the Supplementary Material.
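The Hosmer-Lemeshow statistic sorts patients by predicted risk, splits them into groups, and compares observed with expected event counts: H = sum over groups g of (O_g - E_g)^2 / (N_g * pbar_g * (1 - pbar_g)), referred to a chi-square distribution with (groups - 2) degrees of freedom. The sketch assumes ten groups (the usual choice; the paper does not state it) and uses the closed-form chi-square tail that holds for even degrees of freedom, so it needs only NumPy and the standard library. Risks and outcomes are synthetic.

```python
import math
import numpy as np


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form is valid for even df only."""
    if df % 2:
        raise ValueError("this closed form requires an even number of degrees of freedom")
    half = x / 2.0
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))


def hosmer_lemeshow(y_true, y_prob, n_groups=10):
    """Hosmer-Lemeshow statistic over groups of predicted risk; returns (H, df, p)."""
    y = np.asarray(y_true, dtype=float)
    p = np.asarray(y_prob, dtype=float)
    order = np.argsort(p)
    h = 0.0
    for idx in np.array_split(order, n_groups):   # near-equal groups sorted by risk
        n = len(idx)
        observed, expected = y[idx].sum(), p[idx].sum()
        mean_p = expected / n
        h += (observed - expected) ** 2 / (n * mean_p * (1.0 - mean_p))
    df = n_groups - 2
    return h, df, chi2_sf_even_df(h, df)


# Synthetic example: outcomes drawn from the predicted risks themselves.
rng = np.random.default_rng(0)
prob = rng.uniform(0.05, 0.95, size=156)   # hypothetical predicted risks
outcome = rng.binomial(1, prob)
h, df, p_value = hosmer_lemeshow(outcome, prob)
```

A large p-value indicates no detectable miscalibration; it does not prove the model is well calibrated, which is why calibration curves are reported alongside it.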

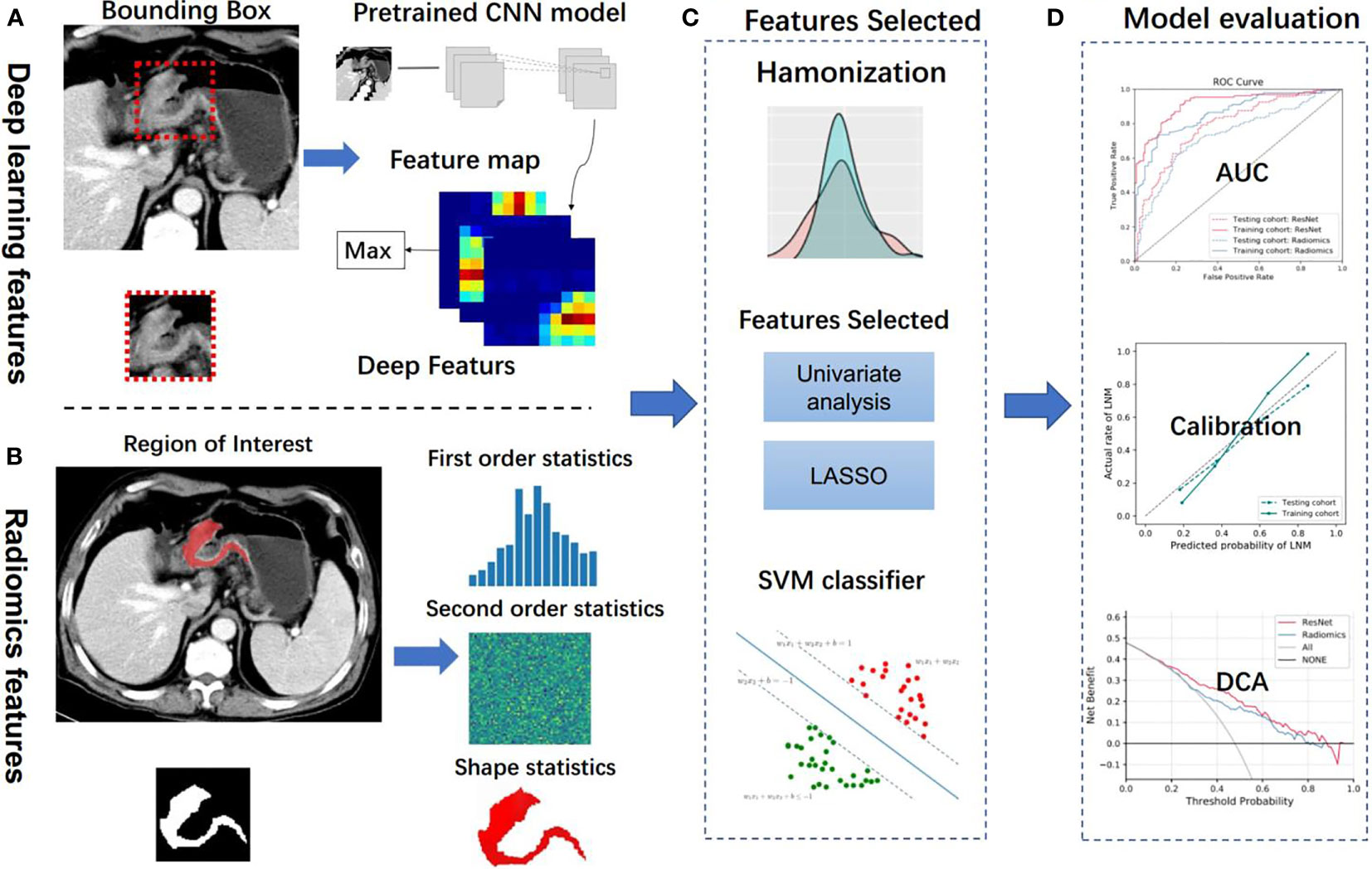

Figure 2 depicts the workflow. Of the 523 patients with locally advanced gastric cancer in this study (mean age, 59.64 ± 10.24 years; 74.40% male), 367 were assigned to the training cohort and 156 to the testing cohort. Clinical characteristics of the two cohorts are shown in Table 1. No significant differences in sex, age, tumor location, or tumor thickness were identified between the two cohorts (Tables 1, S1). Tumor diameter, clinical T stage, and CT-reported LN status differed significantly between the LNM-negative and LNM-positive groups in both cohorts (p < 0.05). Finally, a clinical model incorporating tumor diameter, CT-reported LN status, and clinical T stage was established for predicting LNM, yielding AUCs of 0.683 and 0.756 in the testing and training cohorts, respectively (Tables 2, S2).

Figure 2 Analysis flowchart. (A, B) Feature extraction using the deep learning method and the handcrafted radiomics method. (C) Machine learning methods employed in model construction. (D) Model evaluation. CNN, convolutional neural network; LASSO, the least absolute shrinkage and selection operator; SVM, support vector machine; AUC, area under the receiver operating characteristic curve; DCA, decision curve analysis.

A total of 851 handcrafted radiomics features were extracted: 107 from the original images and 744 from the wavelet-filtered images. After ComBat harmonization (35), 48 features were selected, 3 from the original images and 45 from the wavelet-filtered images (Table S3). In the testing cohort, the handcrafted radiomics model achieved an AUC of 0.704, C-index of 0.704, accuracy of 61.8%, sensitivity of 56.5%, specificity of 73.5%, positive predictive value (PPV) of 82.4%, and negative predictive value (NPV) of 43.4%; in the training cohort, it achieved an AUC of 0.779, C-index of 0.779, accuracy of 74.0%, sensitivity of 77.5%, specificity of 66.4%, PPV of 83.2%, and NPV of 57.9% (Tables 2, S2).
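The 107 + 744 split can be reproduced with simple arithmetic under one assumption: the per-class counts commonly reported for PyRadiomics defaults (14 shape, 18 first-order, 24 GLCM, 16 GLRLM, 16 GLSZM, 14 GLDM, 5 NGTDM; the paper does not list them) and eight wavelet sub-bands, with shape features counted once because they depend on the mask rather than on the filtered intensities.

```python
# Per-class counts assumed to match PyRadiomics defaults (not listed in the paper).
ORIGINAL_CLASS_COUNTS = {
    "shape": 14, "firstorder": 18, "glcm": 24, "glrlm": 16,
    "glszm": 16, "gldm": 14, "ngtdm": 5,
}
N_WAVELET_SUBBANDS = 8   # LLL, LLH, LHL, LHH, HLL, HLH, HHL, HHH

n_original = sum(ORIGINAL_CLASS_COUNTS.values())
# Shape depends only on the mask, so each filtered image adds the other classes only.
n_per_filtered_image = n_original - ORIGINAL_CLASS_COUNTS["shape"]
n_wavelet = N_WAVELET_SUBBANDS * n_per_filtered_image
n_total = n_original + n_wavelet
print(n_original, n_wavelet, n_total)   # 107 744 851
```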

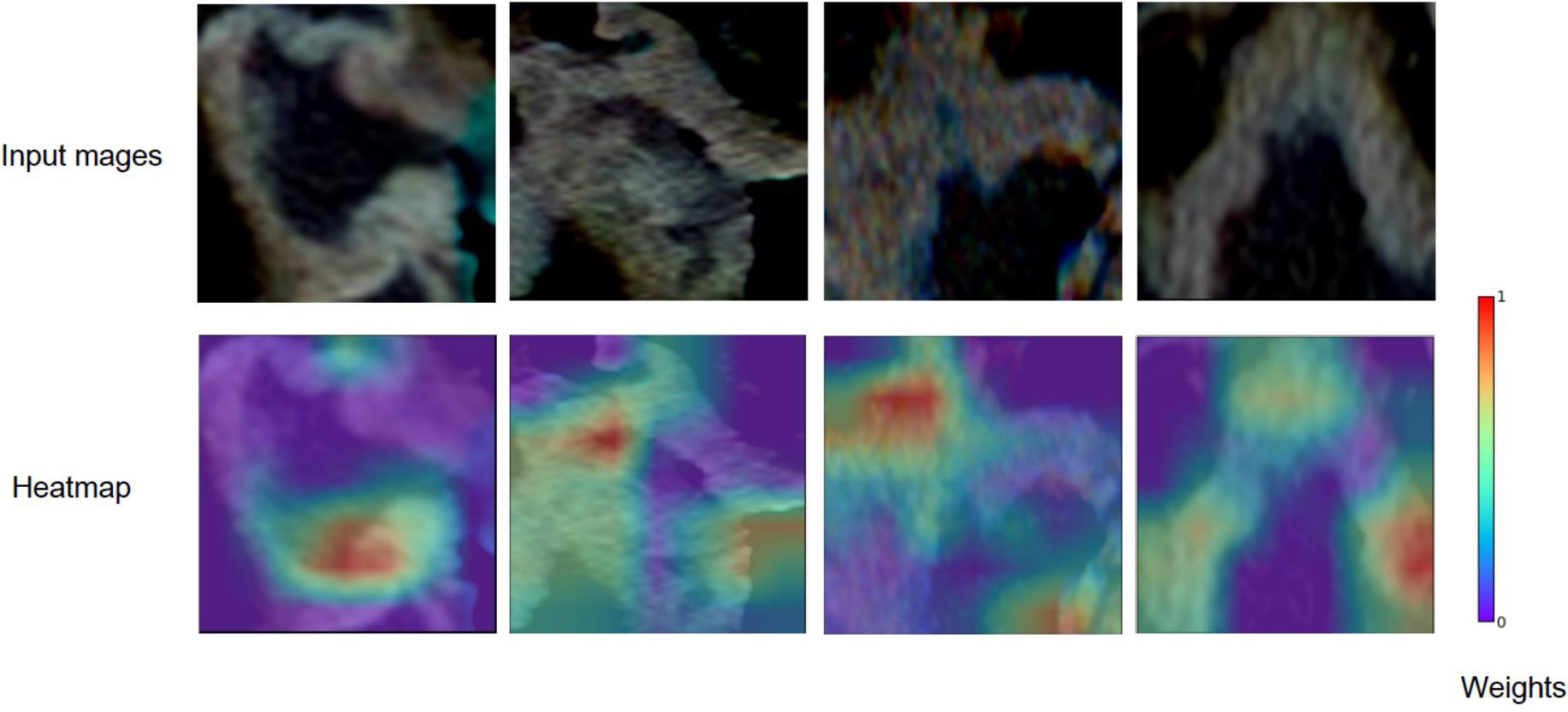

For predicting LNM from deep learning features, we compared five CNN models as deep feature extractors to optimize prediction performance. AUCs ranged from 0.578 to 0.796 in the testing cohort and from 0.804 to 0.897 in the training cohort (Tables 2, S2). The ResNet-SVM model, containing 116 deep learning features, achieved the best classification performance among the five CNN models and outperformed the radiomics model, yielding an AUC of 0.796, C-index of 0.796, accuracy of 75.2%, sensitivity of 80.2%, specificity of 64.7%, PPV of 82.5%, and NPV of 61.1% in the testing cohort (Figures 3A, B). The model also showed good calibration and favorable clinical benefit (Figures 3C, D). The numbers of features used in the models for the different CNNs are listed in Table S4. Feature maps from the ResNet model can indicate the locations that were important in generating the output. After the tumor region was delineated, the informative slice (the single slice with the maximum tumor area) was cropped to 224 × 224 pixels using a bounding box covering the whole tumor area. The cropped images were used to generate features from ResNet, and feature heatmaps were visualized with Guided Grad-CAM (Figure 4). The tumoral lesion and peritumoral areas in the images were highly valuable for feature pattern extraction. We further analyzed the performance of features extracted from different layers to determine whether the last layer was the most suitable for feature extraction; the current feature extraction strategy performed best for ResNet (Table S5).
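Accuracy, sensitivity, specificity, PPV, and NPV are linked through the proportion p of LNM-positive patients: accuracy = sensitivity × p + specificity × (1 - p), and PPV and NPV follow from Bayes' rule. As a consistency check that uses only the testing-cohort rates reported in the text (no patient-level data), the sketch solves for the implied p and recomputes PPV and NPV for both models:

```python
def implied_prevalence(accuracy, sensitivity, specificity):
    """Solve accuracy = sens * p + spec * (1 - p) for the positive fraction p."""
    return (accuracy - specificity) / (sensitivity - specificity)


def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) from Bayes' rule; terms are fractions of all patients."""
    tp = sensitivity * prevalence
    fp = (1 - specificity) * (1 - prevalence)
    tn = specificity * (1 - prevalence)
    fn = (1 - sensitivity) * prevalence
    return tp / (tp + fp), tn / (tn + fn)


# Testing-cohort rates as reported: (accuracy, sensitivity, specificity).
reported = {"ResNet-SVM": (0.752, 0.802, 0.647), "radiomics": (0.618, 0.565, 0.735)}
results = {}
for name, (acc, sens, spec) in reported.items():
    p = implied_prevalence(acc, sens, spec)
    ppv, npv = predictive_values(sens, spec, p)
    results[name] = (p, ppv, npv)
    print(f"{name}: implied prevalence {p:.3f}, PPV {ppv:.3f}, NPV {npv:.3f}")
```

Both models imply that roughly 68% of the testing cohort was LNM-positive, and the recomputed PPV and NPV agree with the reported values to within about 0.2 percentage points, which is what rounding of the inputs would produce.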

Figure 3 Evaluation of the predictive performance of the ResNet-SVM and radiomics models. (A) ROC curves of the ResNet and radiomics models in the testing cohort. (B) ROC curves of the ResNet and radiomics models in the training cohort. (C, D) Calibration and decision curve analyses for the ResNet and radiomics models. AUC, area under the receiver operating characteristic curve; LNM, lymph node metastasis.

Figure 4 Grad-CAM visualizations for the feature heatmaps of representative patients generated from the ResNet. The right color bar indicates the scaled weights of deep features.
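Grad-CAM weights each channel of a convolutional layer by the spatial average of the class-score gradient and keeps the positive part of the weighted sum (30). A NumPy sketch of that final step, assuming the activations and gradients have already been obtained from a backward pass in a deep learning framework (they are synthetic here); upsampling to the 224 × 224 input and the guided-backpropagation product that turns Grad-CAM into Guided Grad-CAM are omitted.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map for one convolutional layer, scaled to [0, 1].

    activations, gradients: arrays of shape (channels, height, width); gradients
    are d(class score)/d(activations) taken from a framework's backward pass.
    """
    A = np.asarray(activations, dtype=float)
    G = np.asarray(gradients, dtype=float)
    alpha = G.mean(axis=(1, 2))                              # channel weights
    cam = np.maximum(np.tensordot(alpha, A, axes=1), 0.0)   # ReLU(sum_k alpha_k A_k)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Synthetic example: only channel 2 influences the class score.
rng = np.random.default_rng(0)
acts = rng.uniform(0.0, 1.0, size=(4, 7, 7))
grads = np.zeros((4, 7, 7))
grads[2] = 1.0
heatmap = grad_cam(acts, grads)
```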

Different classifiers and feature selection methods can greatly affect prediction performance. For the features extracted from the different CNNs, we compared cross-combinations of multiple classifiers and feature selection methods. Performance varied across combinations, and the current combination of feature extractor and classifier (ResNet-SVM) showed the best discrimination, with an AUC of 0.796 (95% CI, 0.715-0.865) on our dataset (Figure S1 and Table S6), although further generalization tests on other datasets are required. The DeLong test showed significant improvements over the radiomics model and the clinical model (p < 0.05), which yielded AUCs of 0.704 (95% CI, 0.625–0.783) and 0.683 (95% CI, 0.632–0.721), respectively.
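DeLong's test compares two AUCs measured on the same patients by estimating their covariance from per-patient placement values. A self-contained NumPy sketch with synthetic scores (in R, `pROC::roc.test()` provides the same test; the paper does not say which implementation was used):

```python
import math
import numpy as np


def _placements(pos_scores, neg_scores):
    """DeLong structural components: V10 per positive case, V01 per negative case."""
    diff = pos_scores[:, None] - neg_scores[None, :]
    psi = (diff > 0) + 0.5 * (diff == 0)      # 1 if ranked correctly, 0.5 if tied
    return psi.mean(axis=1), psi.mean(axis=0)


def delong_test(y_true, scores_a, scores_b):
    """Two-sided DeLong test for two correlated AUCs; returns (auc_a, auc_b, z, p)."""
    y = np.asarray(y_true).astype(bool)
    v10, v01 = [], []
    for s in (np.asarray(scores_a, dtype=float), np.asarray(scores_b, dtype=float)):
        a10, a01 = _placements(s[y], s[~y])
        v10.append(a10)
        v01.append(a01)
    aucs = [v.mean() for v in v10]
    m, n = int(y.sum()), int((~y).sum())
    # 2 x 2 covariance matrix of the two AUC estimates
    cov = np.cov(np.vstack(v10)) / m + np.cov(np.vstack(v01)) / n
    var_diff = cov[0, 0] + cov[1, 1] - 2.0 * cov[0, 1]
    z = (aucs[0] - aucs[1]) / math.sqrt(var_diff)
    p = math.erfc(abs(z) / math.sqrt(2.0))    # equals 2 * (1 - Phi(|z|))
    return aucs[0], aucs[1], z, p


# Synthetic example: two scores for the same 156 patients, one less noisy.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=156)
strong = y + rng.normal(scale=0.8, size=156)
weak = y + rng.normal(scale=2.0, size=156)
auc_a, auc_b, z, p = delong_test(y, strong, weak)
```

The variance term is zero when both score vectors produce identical placement values, in which case the test is undefined.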

We further integrated radiomics and deep learning features to explore whether predictive capability could be improved. The combined features did not improve prediction performance, yielding a comparable AUC of 0.787 in the testing cohort (Figure S2). We also evaluated whether adding clinical factors to radiomics or deep learning features could improve prediction; models combining deep and/or radiomics features with clinical features did not improve prediction performance in the testing cohort (Figures S3, S4).

In this retrospective study, we applied deep transfer learning techniques to build a CT image-based model for LNM prediction in gastric cancer. Our previous studies showed that a noninvasive CT image-based deep learning radiomics model was effective for LNM prediction and prognosis in GC (37). Here, we adopted transfer learning to extract deep learning features from five different pre-trained CNNs, and the ResNet-SVM model achieved better performance than the handcrafted radiomics and clinical models. In addition, gastric cancers differ in their potential for lymph node metastasis because of the heterogeneity and complexity of primary tumors. Previous studies have shown that tumor size is an independent risk factor for LNM. Our results are consistent with these studies, and it is plausible that larger GCs tend to carry a higher risk of lymph node metastasis.

As an emerging image quantification approach, radiomics has been widely used in the diagnosis and prognosis of cancer patients based on medical images (5, 38, 39). Previous studies mainly focused on characteristic CT manifestations to develop radiomic models and did not use transfer learning for the radiological prediction of LNM in gastric cancer. We established a CT-based model using a novel deep learning technique. Deep learning feature extraction only requires placing a fixed-size bounding box over the tumor area, which not only improves efficiency but also reduces the subjectivity of manual segmentation in the radiomics procedure.

Deep learning has been widely used in medical image processing. However, training a deep learning model from scratch is often not feasible for several reasons: (1) the lack of sufficient annotated images for a specific clinical problem; and (2) reaching convergence can take too long for the experiments to be worthwhile. In the medical domain, using pre-trained CNNs as feature extractors is an effective way to alleviate these issues (19, 39–41). Transfer learning carries prior knowledge of image features over to medical imaging, with better generalization and ease of replication and testing. Our research shows that deep learning features extracted by transfer learning generalized well to this medical task and achieved fairly good results. Moreover, combining radiomics and deep learning features did not improve prediction performance in our study (Figure S2), consistent with previously published results (40, 41). A possible reason is that features computed by different frameworks have different high-dimensional characteristics that are not suitable for combination.

Our study has some limitations worth noting. First, tumor regions of interest were manually delineated on CT images, which is a costly and laborious task; semi-automatic or automatic segmentation methods may be preferable. Second, although our experimental results showed good prediction performance, indicating that transfer learning could alleviate the domain difference, heterogeneity exists between datasets. The main obstacle in this research is the lack of sufficient annotated medical images to further train the deep learning models; such datasets could allow more valuable features to be extracted and improve prediction performance. Third, we followed the IBSI benchmarks to filter the images after resampling, so we could not estimate to what extent this affects the wavelet features. Last, this is a single-center study without external validation of the developed model; instead, we randomly divided the patients into training and testing sets and rebuilt and tested the model ten times to evaluate the results. We are working to further assess our model in a larger dataset that may come from multiple centers.

In conclusion, our study adopted a noninvasive deep learning technique to predict LNM in GC. Compared with the handcrafted radiomics method, the ResNet-SVM model achieved better performance, and its implementation is simple and efficient, without the need to draw the tumor contour manually. This study suggests that a transfer learning strategy can also achieve good performance in medical imaging tasks without sufficient annotated medical images.

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author. Requests to access these datasets should be directed to fccchengm@zzu.edu.cn.

H-PZ and MC: designed the research. A-QZ: performed the research. A-QZ and H-PZ: collected the data. FL and PL: analyzed the data. A-QZ and MC: analyzed the data and wrote the paper. J-BG and MC: reviewed the paper. All authors contributed to the article and approved the submitted version.

This work was supported by the Key Project of Science and Technology Research of Henan Province (No. 222102210112), the National Natural Science Foundation of China (Nos. 61802350, 81971615), and the National Key Research and Development Program of China (No. 2019YFC0118803).


The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2022.969707/full#supplementary-material

1. Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA, Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA: Cancer J Clin (2018) 68(6):394–424. doi: 10.3322/caac.21492

2. Shen L, Shan YS, Hu HM, Price TJ, Sirohi B, Yeh KH, et al. Management of gastric cancer in Asia: resource-stratified guidelines. Lancet Oncol (2013) 14(12):e535–47. doi: 10.1016/S1470-2045(13)70436-4

3. Smyth EC, Verheij M, Allum W, Cunningham D, Cervantes A, Arnold D. ESMO guidelines committee. gastric cancer: ESMO clinical practice guidelines for diagnosis, treatment and follow-up. Ann Oncol (2016) 27(suppl 5):v38–49. doi: 10.1093/annonc/mdw350

4. Chen W, Zheng R, Baade PD, Zhang S, Zeng H, Bray F, et al. Cancer statistics in China. CA: Cancer J Clin (2016) 66(2):115–32. doi: 10.3322/caac.21338

5. Dong D, Fang MJ, Tang L, Shan XH, Gao JB, Giganti F, et al. Deep learning radiomic nomogram can predict the number of lymph node metastasis in locally advanced gastric cancer: an international multicenter study. Ann Oncol (2020) 31(7):912–20. doi: 10.1016/j.annonc.2020.04.003

6. Hartgrink HH, van de Velde CJ, Putter H, Bonenkamp JJ, Klein Kranenbarg E, Songun I, et al. Extended lymph node dissection for gastric cancer: who may benefit? final results of the randomized Dutch gastric cancer group trial. J Clin Oncol (2004) 22(11):2069–77. doi: 10.1200/JCO.2004.08.026

7. Amin MB, Edge SB, Greene FL, Schilsky RL, Washington ML, Sullivan DC, et al. AJCC cancer staging manual. 8th ed. Basel, Switzerland: Springer (2017).

8. Li J, Fang M, Wang R, Dong D, Tian J, Liang P, et al. Diagnostic accuracy of dual-energy CT-based nomograms to predict lymph node metastasis in gastric cancer. Eur Radiol (2018) 28(12):5241–9. doi: 10.1007/s00330-018-5483-2

9. Yamashita K, Hosoda K, Ema A, Watanabe M. Lymph node ratio as a novel and simple prognostic factor in advanced gastric cancer. Eur J Surg Oncol (2016) 42(9):1253–60. doi: 10.1016/j.ejso.2016.03.001

10. Persiani R, Rausei S, Biondi A, Boccia S, Cananzi F, D’Ugo D. Ratio of metastatic lymph nodes: impact on staging and survival of gastric cancer. Eur J Surg Oncol (2008) 34(5):519–24. doi: 10.1016/j.ejso.2007.05.009

11. Oka S, Tanaka S, Kaneko I, Mouri R, Hirata M, Kawamura T, et al. Advantage of endoscopic submucosal dissection compared with EMR for early gastric cancer. Gastrointest Endosc (2006) 64(6):877–83. doi: 10.1016/j.gie.2006.03.932

12. Ajani JA, Bentrem DJ, Besh S, D’Amico TA, Das P, Denlinger C, et al. Gastric cancer, version 2.2013: featured updates to the NCCN guidelines. J Natl Compr Canc Netw (2013) 11(5):531–46. doi: 10.6004/jnccn.2013.0070

13. Hyung WJ, Cheong JH, Kim J, Chen J, Choi SH, Noh SH. Application of minimally invasive treatment for early gastric cancer. J Surg Oncol (2004) 85(4):181–5. doi: 10.1002/jso.20018

14. Limkin EJ, Sun R, Dercle L, Zacharaki EI, Robert C, Reuzé S, et al. Promises and challenges for the implementation of computational medical imaging (radiomics) in oncology. Ann Oncol (2017) 28(6):1191–206. doi: 10.1093/annonc/mdx034

15. Shen DG, Wu GR, Suk HI. Deep learning in medical image analysis. Annu Rev Biomed Eng (2017) 19(1):221–48. doi: 10.1146/annurev-bioeng-071516-044442

16. Kermany DS, Goldbaum M, Cai W, Valentim CCS, Liang H, Baxter SL, et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell (2018) 172(5):1122–31. doi: 10.1016/j.cell.2018.02.010

17. Li F, Chen H, Liu Z, Zhang X, Wu Z. Fully automated detection of retinal disorders by image-based deep learning. Graefes Arch Clin Exp Ophthalmol (2019) 257(3):495–505. doi: 10.1007/s00417-018-04224-8

18. Wakiya T, Ishido K, Kimura N, Nagase H, Kanda T, Ichiyama S, et al. CT-based deep learning enables early postoperative recurrence prediction for intrahepatic cholangiocarcinoma. Sci Rep (2022) 12(1):8428. doi: 10.1038/s41598-022-12604-8

19. Shin HC, Roth HR, Gao M, Le L, Xu Z, Nogues I, et al. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans Med Imaging (2016) 35(5):1285–98. doi: 10.1109/TMI.2016.2528162

20. Alzubaidi L, Fadhel MA, Al-Shamma O, Zhang JL, Santamaría J, Duan Y, et al. Towards a better understanding of transfer learning for medical imaging: a case study. Appl Sci (2020) 10(13):4523. doi: 10.3390/app10134523

21. Lowe DG. Distinctive image features from scale-invariant keypoints. Int J Comput Vision (2004) 60(2):91–110. doi: 10.1023/B:VISI.0000029664.99615.94

22. Raghu S, Sriraam N, Temel Y, Rao SV, Kubben PL. EEG Based multi-class seizure type classification using convolutional neural network and transfer learning. Neural Netw (2020) 124:202–12. doi: 10.1016/j.neunet.2020.01.017

23. van Rossum PSN, Xu C, Fried DV, Goense L, Court LE, Lin SH. The emerging field of radiomics in esophageal cancer: current evidence and future potential. Transl Cancer Res (2016) 5(4):410–23. doi: 10.21037/tcr.2016.06.19

24. Gu L, Liu Y, Guo X, Tian Y, Ye H, Zhou S, et al. Computed tomography-based radiomic analysis for prediction of treatment response to salvage chemoradiotherapy for locoregional lymph node recurrence after curative esophagectomy. J Appl Clin Med Phys (2021) 22(11):71–9. doi: 10.1002/acm2.13434

25. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016 June 27–30; Las Vegas, NV, USA. IEEE (2016). p. 770–8. doi: 10.1109/CVPR.2016.90

26. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: 3rd International Conference on Learning Representations (ICLR); 2015 May 7–9; San Diego, CA, USA. OpenReview.net (2015). doi: 10.48550/arXiv.1409.1556

27. Chollet F. Xception: Deep learning with depthwise separable convolutions. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017 July 21–26; Honolulu, HI, USA. IEEE (2017). doi: 10.1109/CVPR.2017.195

28. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. IEEE (2016). p. 2818–26. doi: 10.1109/CVPR.2016.308

29. Yang J, Shi R, Wei D, Liu Z, Zhao L, Ke B, et al. MedMNIST v2: A Large-scale lightweight benchmark for 2D and 3D biomedical image classification. CoRR (2021) abs/2110.14795. doi: 10.48550/arXiv.2110.14795

30. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int J Comput Vision (2020) 128(2):336–59. doi: 10.1007/s11263-019-01228-7

31. Mackin D, Fave X, Zhang L, Yang J, Jones AK, Ng CS, et al. Correction: Harmonizing the pixel size in retrospective computed tomography radiomics studies. PloS One (2018) 13(1):e0191597. doi: 10.1371/journal.pone.0191597

32. Ligero M, Jordi-Ollero O, Bernatowicz K, Garcia-Ruiz A, Delgado-Muñoz E, Leiva D, et al. Minimizing acquisition-related radiomics variability by image resampling and batch effect correction to allow for large-scale data analysis. Eur Radiol (2021) 31(3):1460–70. doi: 10.1007/s00330-020-07174-0

33. van Griethuysen JJM, Fedorov A, Parmar C, Hosny A, Aucoin N, Narayan V, et al. Computational radiomics system to decode the radiographic phenotype. Cancer Res (2017) 77(21):e104–7. doi: 10.1158/0008-5472.CAN-17-0339

34. Zwanenburg A, Vallières M, Abdalah MA, Aerts HJWL, Andrearczyk V, Apte A, et al. The image biomarker standardization initiative: Standardized quantitative radiomics for high-throughput image-based phenotyping. Radiology (2020) 295(2):328–38. doi: 10.1148/radiol.2020191145

35. Orlhac F, Frouin F, Nioche C, Ayache N, Buvat I. Validation of a method to compensate multicenter effects affecting CT radiomics. Radiology (2019) 291(1):53–9. doi: 10.1148/radiol.2019182023

36. Huang S, Cai N, Pacheco PP, Narrandes S, Wang Y, Xu W. Applications of support vector machine (SVM) learning in cancer genomics. Cancer Genomics Proteomics (2018) 15(1):41–51. doi: 10.21873/cgp.20063

37. Li J, Dong D, Fang M, Wang R, Tian J, Li H, et al. Dual-energy CT-based deep learning radiomics can improve lymph node metastasis risk prediction for gastric cancer. Eur Radiol (2020) 30(4):2324–33. doi: 10.1007/s00330-019-06621-x

38. Wang S, Dong D, Zhang W, Hu H, Li H, Zhu Y, et al. Specific borrmann classification in advanced gastric cancer by an ensemble multilayer perceptron network: a multicenter research. Med Phys (2021) 48(9):5017–28. doi: 10.1002/mp.15094

39. Lopes UK, Valiati JF. Pre-trained convolutional neural networks as feature extractors for tuberculosis detection. Comput Biol Med (2017) 89:135–43. doi: 10.1016/j.compbiomed.2017.08.001

40. Yun J, Park JE, Lee H, Ham S, Kim N, Kim HS. Radiomic features and multilayer perceptron network classifier: a robust MRI classification strategy for distinguishing glioblastoma from primary central nervous system lymphoma. Sci Rep (2019) 9(1):5746. doi: 10.1038/s41598-019-42276-w

Keywords: deep learning, locally advanced gastric cancer, lymph node metastasis, radiomics, computed tomography

Citation: Zhang A-q, Zhao H-p, Li F, Liang P, Gao J-b and Cheng M (2022) Computed tomography-based deep-learning prediction of lymph node metastasis risk in locally advanced gastric cancer. Front. Oncol. 12:969707. doi: 10.3389/fonc.2022.969707

Received: 15 June 2022; Accepted: 05 September 2022;

Published: 23 September 2022.

Edited by:

Wei Wei, Xi’an University of Technology, China

Reviewed by:

Qiongwen Zhang, Sichuan University, China

Copyright © 2022 Zhang, Zhao, Li, Liang, Gao and Cheng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ming Cheng, fccchengm@zzu.edu.cn

†These authors have contributed equally to this work and share first authorship
