
METHODS article

Front. Oncol., 15 July 2022

Sec. Cancer Imaging and Image-directed Interventions

Volume 12 - 2022 | https://doi.org/10.3389/fonc.2022.905955

This article is part of the Research Topic: Artificial Intelligence in Digital Pathology Image Analysis

Jingya Yang1,2†, Xiaoli Shi2,3†, Bing Wang1, Wenjing Qiu1,2, Geng Tian2,3, Xudong Wang1, Peizhen Wang1*, Jiasheng Yang1*

A thyroid nodule, defined as an abnormal growth of thyroid cells, can indicate excessive iodine intake, thyroid degeneration, inflammation, or other diseases. Although most thyroid nodules are benign, the likelihood of malignancy grows steadily every year. To reduce the burden on doctors and avoid unnecessary fine needle aspiration (FNA) and surgical resection, various studies have diagnosed thyroid nodules through deep-learning-based image recognition. In this study, a novel deep learning framework is proposed to predict benign and malignant thyroid nodules accurately. Five hundred eight ultrasound images were collected from the Third Hospital of Hebei Medical University in China for model training and validation. First, a ResNet18 model pretrained on ImageNet was trained on the ultrasound image dataset, and the training set was randomly sampled 10 times to reduce the effect of chance. The results show that the model performs well: averaged over the 10 runs, the area under the curve (AUC) is 0.997, the accuracy is 0.984, the recall is 0.978, the precision is 0.939, and the F1 score is 0.957. Second, Gradient-weighted Class Activation Mapping (Grad-CAM) was used to highlight the sensitive regions in an ultrasound image during the learning process. The sensitive regions identified by Grad-CAM were then extracted and their shape features analyzed. The results show obvious differences between benign and malignant thyroid nodules; therefore, shape features of the sensitive regions are helpful in diagnosis to a great extent. Overall, the proposed model demonstrates the feasibility of using deep learning and ultrasound images to distinguish benign from malignant thyroid nodules.

Thyroid nodules can be divided into benign and malignant nodules. According to research, the incidence of thyroid cancer has increased 2.4-fold in the past 30 years, making it one of the fastest-growing malignant tumors (1–4).

There are two ways to diagnose thyroid nodules: fine needle aspiration (FNA) and ultrasound. FNA is the gold standard for thyroid nodule detection, but it is traumatic to the human body (5). Acharya et al. indicated that 70% of the diagnoses with FNA were benign and about 4% (1% to 10%) were malignant or suspected malignant (6). Therefore, FNA is not suitable for all thyroid nodules. Ultrasound is a non-invasive, radiation-free, low-cost, and fast method that can show lesions as small as a few millimeters, so it has been widely used (7, 8). However, ultrasound diagnosis depends to a great extent on the experience and judgment of doctors, which may lead to misdiagnosis (9). A computer-aided diagnosis (CAD) system could help doctors diagnose thyroid nodules objectively (10–14). Park et al. confirmed that CAD and radiologists were generally comparable, so CAD can feasibly assist doctors in diagnosis (15). Chang et al. extracted six features, calculated the F-score of these feature sets, and screened out the main texture features through support vector machines (SVMs) for subsequent classification (16). Lyra et al. adopted the gray-level co-occurrence matrix (GLCM) to characterize texture features of thyroid nodules (17). Keramidas et al. classified thyroid nodules using local binary patterns (LBPs) (18). Acharya et al. segmented thyroid nodule images manually; extracted four texture features from the images, namely fractal dimension (FD), LBP, Fourier spectrum descriptor (FS), and Laws’ texture energy (LTE); and then used these feature vectors to predict thyroid nodules with SVM, decision tree (DT), Sugeno fuzzy, Gaussian mixture model (GMM), K-nearest neighbor (KNN), radial basis probabilistic neural network (RBPNN), and naive Bayes classifier (NBC) (19). Ma et al. classified thyroid nodules with five different machine learning methods, namely, deep neural network (DNN), SVM, central clustering methods, KNN, and logistic regression; the accuracy was 0.87 (20).

These methods need to extract, and even fuse, many features manually to achieve good results. In contrast, deep learning can handle massive data and learn deeper and more abstract features automatically, avoiding complex manual feature extraction (21–23). Guan et al. employed the Inception v3 model to classify thyroid nodules (24). Chi et al. proposed a deep learning framework to extract features from ultrasound images; the model achieved 96.34% accuracy, 86% sensitivity, and 99% specificity on their own database (25). Peng et al. developed the ThyNet model to classify thyroid nodules from multiple hospitals; the area under the receiver operating characteristic curve (AUROC) reached 0.922, and the AUROC of the radiologists’ diagnosis improved from 0.837 to 0.875 with the aid of ThyNet, and from 0.862 to 0.873 in the clinical test. The frequency of FNA was reduced in the simulated scenario, and the missed diagnosis of malignant tumors was reduced from 18.9% to 17% as well (26). Avola et al. proposed a knowledge-driven classification framework that achieved an AUC of 98.79% (27). Ye et al. applied a residual network pretrained by transfer learning to classify thyroid nodules; the highest accuracy reached 93.75% (28). Ma et al. classified thyroid nodules with two pretrained convolutional neural networks (CNNs) and fused the feature maps obtained by the two CNNs (29). Sun et al. transferred a CNN model learned from ImageNet, as a pretrained feature extractor, to a new dataset of ultrasound images. Their method combined traditional low-level features extracted from the histogram of oriented gradient (HOG) and LBP with high-level deep features extracted from CNN models to form a hybrid feature space; the experimental accuracy was 93.10% (30). Furthermore, Chen et al. used a deep learning ultrasound text classifier for predicting thyroid nodules. The method achieved 93% and 95% accuracy on real medical datasets and standard datasets, respectively (31).

In this paper, 508 ultrasound images were collected from the Third Hospital of Hebei Medical University in China; this is the same dataset as that used by Ma et al. (20). The main work is as follows. First, the ResNet18 model, combined with transfer learning, was employed to classify benign and malignant thyroid nodules (32). Second, a heatmap was used to visualize the model’s attention on thyroid nodule images. Next, the highlighted regions indicated by the heatmaps were extracted from the original images and analyzed. Finally, we found that the characteristics of these regions differed significantly between benign and malignant thyroid nodules (P < 0.05 was taken to indicate a statistically significant difference). In summary, our method is effective in the classification of thyroid nodule images.

In this paper, we used the ultrasound images of thyroid nodules provided by the Third Hospital of Hebei Medical University in China. Images of thyroid nodules had been checked by an experienced doctor; artifacts and blurred images were excluded. The pathologist determined the benign and malignant nodules according to the pathological diagnosis. Finally, we collected 508 ultrasound images, of which 415 were benign nodules and 93 were malignant nodules. Each ultrasound image corresponded to a patient. Among them, 70% of the dataset served as the training set and 30% as the test set. There was no overlap between the training and testing sets. Table 1 shows the distribution of benign and malignant nodules in the training and testing groups.
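The 70/30 division described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes a stratified split (the text does not say whether class proportions were preserved), and the function name `stratified_split`, the seeds, and the label encoding (0 = benign, 1 = malignant) are hypothetical.

```python
import random

def stratified_split(labels, train_fraction=0.7, seed=0):
    """Return (train_indices, test_indices), splitting each class separately.

    Stratification is an assumption of this sketch; the paper only states
    that 70% of the images were used for training and 30% for testing.
    """
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, test = [], []
    for indices in by_class.values():
        shuffled = indices[:]
        rng.shuffle(shuffled)
        cut = int(train_fraction * len(shuffled))
        train.extend(shuffled[:cut])
        test.extend(shuffled[cut:])
    return sorted(train), sorted(test)

# 415 benign (label 0) and 93 malignant (label 1) images, as reported above.
labels = [0] * 415 + [1] * 93

# Ten independent random divisions, one per seed.
splits = [stratified_split(labels, seed=s) for s in range(10)]
```

Because every index is assigned to exactly one of the two lists, the training and test sets cannot overlap, which matches the constraint stated in the text.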

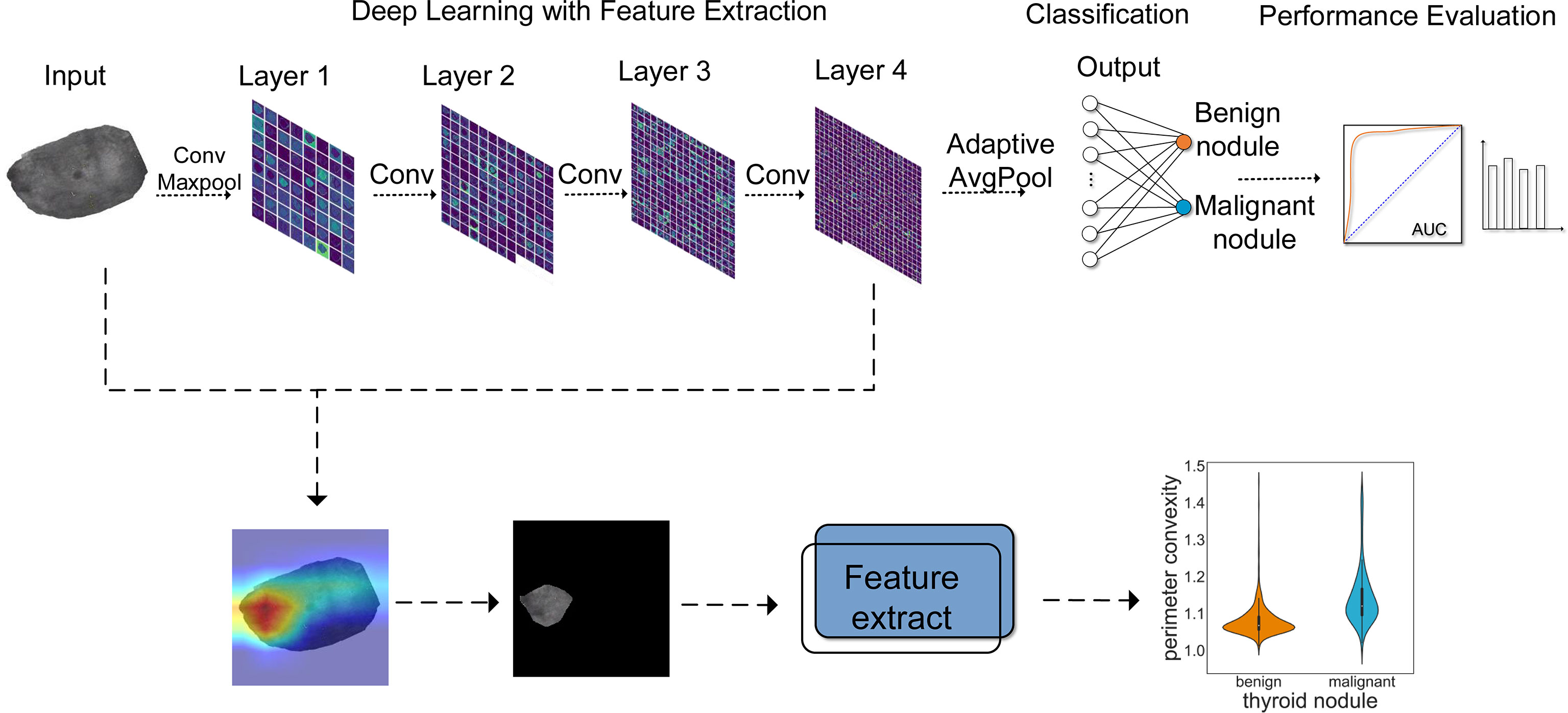

The complete process of predicting benign and malignant thyroid nodules is shown in Figure 1 and can be divided into three components. The ResNet18 model was employed to diagnose whether a nodule is benign or malignant; the heatmap shows the regions highlighted by the model, which were then extracted and analyzed.

Figure 1 The workflow for thyroid nodule classification with ResNet18. Layer 1~layer 4 show the process of image analysis by ResNet18. As the layers deepen, the features extracted by the model become more abstract. AUC and other evaluation indicators were used to evaluate the classification performance of the model. In addition, a heatmap was employed to visualize the prediction results, from which we extracted and analyzed the highlighted areas.

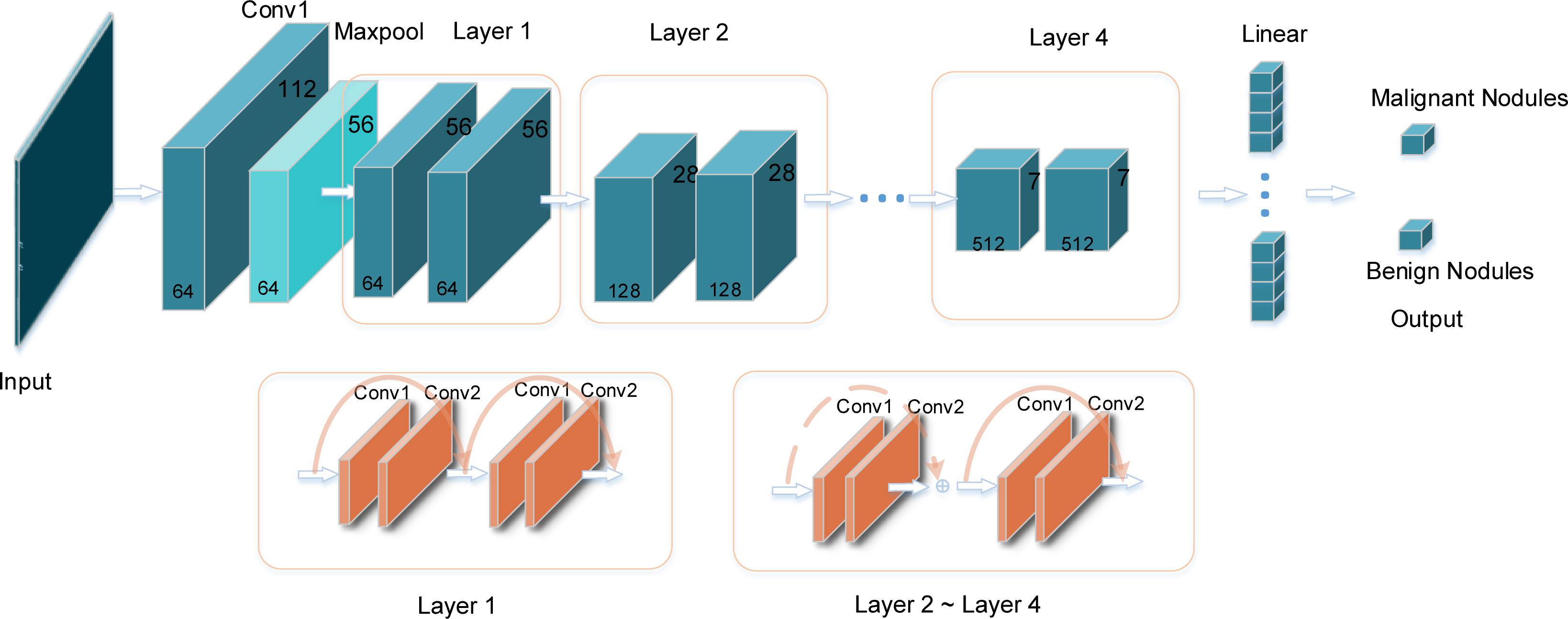

The convolutional neural network (CNN) performs well in image classification. A CNN has an input layer, hidden layers, and an output layer. With the image as input, the hidden layers are used to extract features from the image. ResNet is a classical convolutional neural network proposed by He et al. (32); it won the 2015 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). The structure of the ResNet18 network is shown in Figure 2. A 7×7 convolution and a 3×3 max pooling operation are applied successively, followed by four layers; each layer contains two basic blocks, and each basic block contains two convolutions, batch normalization, an activation function, and a shortcut connection. The final output layer was then modified to two classes. Batch normalization speeds up the training and convergence of the network and helps to prevent vanishing gradients, exploding gradients, and overfitting. The activation function adds non-linear factors to improve the expressive ability of the neural network. The shortcut connection passes the input information directly to the output, which preserves the integrity of information transmission. The structure of the layers is depicted in Figure 2; a dotted line indicates that the number of channels has changed, and a solid line indicates that the number of channels has not changed.

Figure 2 The specific structure of the ResNet18 model. The input is the image of thyroid nodules with the same size. After the convolution layers and pooling layers, the image features are extracted automatically. The output layer is the result of classification: benign or malignant nodule.
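The shortcut connection of a basic block can be written compactly using the residual formulation of He et al. The symbols $x$, $y$, $\mathcal{F}$, $W_i$, and $W_s$ are notation introduced here for illustration and do not appear in the text above. When the number of channels is unchanged (solid line), the input is added to the block output directly:

$$
y = \mathcal{F}(x, \{W_i\}) + x
$$

When the number of channels changes (dotted line), the input is first projected to the new shape, commonly by a 1×1 convolution, here denoted $W_s$:

$$
y = \mathcal{F}(x, \{W_i\}) + W_s x
$$

Here $\mathcal{F}$ stands for the two stacked convolutions of the block together with their batch normalization and activation.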

Although ResNet18 predicts well in natural images, the results cannot be guaranteed to be optimal due to the huge difference between ultrasonic images and natural images. In addition, it is very complicated to train the neural network from scratch, which needs a lot of computing and memory resources. Transfer learning extracts basic information from the source dataset and applies it to the target domain by fine-tuning parameters. It can use fewer computing resources and shorter time to train the model to obtain better results.

Cross entropy (CE) is a commonly used loss function. Results consistent with expectations can be obtained by using cross entropy as the loss function and minimizing it. Unfortunately, our dataset is unbalanced. If CE is used as the objective function, all categories are given equal weights, which may greatly interfere with the learning of model parameters. The many negative samples constitute a large part of the loss and thus control the direction of gradient updates, making the final trained model more inclined to classify samples as negative. Therefore, to avoid the problems that dataset imbalance may cause, focal loss was employed (33). Focal loss was proposed by Lin et al. in 2017 to address data imbalance and hard samples. Focal loss is derived from the CE loss function; CE is defined as:

$$
\mathrm{CE}(p, y) =
\begin{cases}
-\log(p), & \text{if } y = 1 \\
-\log(1 - p), & \text{otherwise}
\end{cases}
\tag{1}
$$

where y represents the labels of negative and positive samples, corresponding to 0 or 1 in binary classification, and p ∈ [0,1] represents the estimated probability of the class labeled y = 1.

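For convenience, $p_t$ is defined as:

$$
p_t =
\begin{cases}
p, & \text{if } y = 1 \\
1 - p, & \text{otherwise}
\end{cases}
\tag{2}
$$

At this point, CE becomes:

$$
\mathrm{CE}(p, y) = \mathrm{CE}(p_t) = -\log(p_t)
\tag{3}
$$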

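Lin et al. added a modulating factor $(1 - p_t)^{\gamma}$ on the basis of CE, together with an adjustment factor $\alpha_t$ (equal to $\alpha$ for the positive class and $1 - \alpha$ for the negative class). Finally, focal loss can be written as:

$$
\mathrm{FL}(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)
\tag{4}
$$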
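The relationship between cross entropy and focal loss for a single sample can be sketched in a few lines of Python. This is an illustrative sketch only: the defaults `alpha=0.25` and `gamma=2.0` are the values suggested by Lin et al., not values reported in this paper, and the clamping constant `EPS` is an assumption added for numerical safety.

```python
import math

EPS = 1e-12  # assumed clamp to avoid log(0); not taken from the paper

def _p_t(p, y):
    """Probability assigned to the true class: p if y == 1, else 1 - p."""
    p_t = p if y == 1 else 1.0 - p
    return min(max(p_t, EPS), 1.0)

def cross_entropy(p, y):
    """Binary cross entropy for one sample, written as -log(p_t)."""
    return -math.log(_p_t(p, y))

def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Focal loss for one sample: -alpha_t * (1 - p_t)**gamma * log(p_t)."""
    p_t = _p_t(p, y)
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

# A confidently correct sample contributes far less than a hard one.
easy = focal_loss(0.9, 1)
hard = focal_loss(0.3, 1)
```

With `gamma=0` the modulating factor disappears and the loss reduces to class-weighted cross entropy, which is why larger values of `gamma` shift the training signal toward hard samples.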

In summary, our framework consists of the following steps.

1. Assign a label for each thyroid nodule image.

2. Resize input images to 224×224, augment them by flipping, rotating, and similar transforms to generate a more complex and diverse dataset, then normalize the images to improve accuracy and generalization.

3. Pretrain the ResNet18 model on ImageNet to learn general image feature parameters from natural images.

4. Fine-tune the pretrained model, replacing the 1000-class ImageNet output with the 2 classes of our dataset.

5. Set the network parameters: use a learning rate of 0.0001, and employ the Adam optimizer and the focal loss function to optimize the network.

6. Apply pooling and the fully connected layer, then output the prediction results.

CNNs perform excellently, but at the cost of intuition and interpretability: it is hard to interpret the prediction results of the model. Class activation mapping (CAM) is a modern technique for model interpretation (34). Its disadvantage is that it depends on a global average pooling layer; if the model does not have one, the model must be changed and retrained. Therefore, CAM is not convenient to use. Grad-CAM alleviates this problem (35). It does not need to modify the structure of the existing model, which makes it applicable to any CNN-based architecture. Grad-CAM shows the distribution of contributions to the model output as a heatmap, with the contribution shown by color: red represents a large contribution to the output, which is the main basis for the judgment, while blue represents a small contribution. As shown in formula (5), let $y^c$ be the probability of class $c$ and $A^k$ be the $k$-th feature map of the last convolutional layer of the network. The gradient of $y^c$ with respect to $A^k$ is computed and global average pooling is taken over all pixels ($i$ and $j$ index the width and height, respectively, and $Z$ is the number of pixels) to obtain a weight $\alpha_k^c$, which represents the importance of feature map $k$ for discriminating the thyroid nodule category.

$$
\alpha_k^c = \frac{1}{Z} \sum_i \sum_j \frac{\partial y^c}{\partial A_{ij}^k}
\tag{5}
$$

Next, the feature maps $A^k$ were combined in a weighted sum using the weights $\alpha_k^c$. A ReLU function was then applied, because we only focus on the areas that have a positive impact on the class judgment, as described in formula (6). Grad-CAM shows the areas of positive impact clearly in the heatmap.

$$
L_{\text{Grad-CAM}}^c = \mathrm{ReLU}\left( \sum_k \alpha_k^c A^k \right)
\tag{6}
$$
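The two Grad-CAM steps (average the gradients of each feature map into a weight, then take a ReLU of the weighted sum of feature maps) can be sketched on plain nested lists. This is a toy illustration, not the authors' implementation: in practice the feature maps and gradients come from the last convolutional layer of the trained network, and the function name `grad_cam` and the example values are hypothetical.

```python
def grad_cam(feature_maps, gradients):
    """Compute Grad-CAM weights and heatmap.

    feature_maps: list of K maps, each an H x W nested list (last conv layer).
    gradients:    list of K maps of the same shape, holding the gradient of
                  the class score with respect to each feature-map pixel.
    Returns (weights, cam) where cam is an H x W nested list.
    """
    num_maps = len(feature_maps)
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    num_pixels = height * width

    # One weight per feature map: global average pooling of its gradients.
    weights = [sum(sum(row) for row in grad) / num_pixels for grad in gradients]

    # Weighted sum of the feature maps followed by ReLU.
    cam = [
        [
            max(0.0, sum(weights[k] * feature_maps[k][i][j] for k in range(num_maps)))
            for j in range(width)
        ]
        for i in range(height)
    ]
    return weights, cam

# Toy example with two 2 x 2 feature maps.
maps = [[[1.0, 2.0], [3.0, 4.0]], [[4.0, 3.0], [2.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-2.0, -2.0], [-2.0, -2.0]]]
weights, cam = grad_cam(maps, grads)
```

In the toy example the second feature map has a negative weight, so only the pixel where the first map dominates survives the ReLU. To be displayed as a heatmap, the resulting map is normally upsampled to the input image size and color-mapped.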

After visualizing the area of interest of the model by Grad-CAM, the highlighted regions based on the heatmap were extracted. First of all, according to the corresponding relationship between HSV and RGB colors, the image was converted from RGB to HSV color space. Then, the red areas in the heatmap were captured; we extracted the boundaries of the red area and overlapped them with the original image. Then the extracted areas were analyzed.
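The red-region selection in HSV space can be sketched with the standard-library `colorsys` module. This is a pure-Python stand-in for illustration, not the OpenCV pipeline used in the study, and the thresholds `hue_tol`, `min_sat`, and `min_val` are assumed values that are not given in the paper.

```python
import colorsys

def is_red(r, g, b, hue_tol=0.05, min_sat=0.5, min_val=0.5):
    """Return True if an 8-bit RGB pixel counts as 'red' in HSV space.

    Hue is on a 0-1 circle and red sits at both ends of it, so a pixel is
    accepted when its hue is within hue_tol of 0 or of 1.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    near_red = h <= hue_tol or h >= 1.0 - hue_tol
    return near_red and s >= min_sat and v >= min_val

def red_mask(image):
    """Map an H x W image of (r, g, b) tuples to an H x W boolean mask."""
    return [[is_red(*pixel) for pixel in row] for row in image]

# Tiny 2 x 2 heatmap: red, blue, white, dark red.
heatmap = [[(255, 0, 0), (0, 0, 255)], [(255, 255, 255), (200, 30, 30)]]
mask = red_mask(heatmap)
```

Testing saturation and value in addition to hue excludes white and near-black pixels, whose hue is undefined or unreliable. The boundary of the resulting mask is what would then be traced and overlaid on the original image.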

In general, image feature extraction includes shape, texture, and color feature extraction. These features depict images from different aspects. Shape features are mostly used to describe the shapes of objects in images (36–38). For the extracted areas of thyroid nodules, the form parameter, area convexity, and perimeter convexity were adopted to describe the shape. These features may not capture every property of the target regions, but they are appropriate descriptors of the shape of the regions.

The form parameter refers to the ratio of the area to the square of the perimeter of a region, which indicates the complexity of the edge. The formula of the form parameter is as follows:

$$
\text{Form parameter} = \frac{S}{P^2}
\tag{7}
$$

where $S$ represents the area of the region and $P$ represents the perimeter of the region. A smaller value for the form parameter indicates a more complex edge of the region.

The area convexity is the ratio of the area to the convex hull area. The convex hull refers to the smallest convex polygon containing the specified area; that is to say, all points in the target region are on or inside the surrounding convex hull. The formula for area convexity is as follows:

$$
\text{Area convexity} = \frac{S}{S_{ch}}
\tag{8}
$$

where $S_{ch}$ represents the convex hull area. The area convexity is smaller than or equal to 1; the smaller the value, the more complex the edge of the region.

Similarly, the perimeter convexity refers to the ratio of the perimeter to the convex hull perimeter of the region:

$$
\text{Perimeter convexity} = \frac{P}{P_{ch}}
\tag{9}
$$

where $P_{ch}$ represents the perimeter of the convex hull, and the perimeter convexity is equal to or larger than 1. The larger the value, the more complex the edge of the region.
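The three shape features can be sketched for a region whose boundary is given as a polygon. This is an illustrative sketch under stated assumptions: the study extracted region boundaries from heatmaps with OpenCV, whereas this example uses a plain list of vertices, Andrew's monotone chain algorithm for the convex hull, and the literal ratio "area over squared perimeter" for the form parameter (some texts multiply it by 4π). All function names are hypothetical.

```python
import math

def polygon_area(points):
    """Area of a simple polygon by the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def polygon_perimeter(points):
    """Sum of the edge lengths of a closed polygon."""
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1])
    )

def convex_hull(points):
    """Convex hull vertices in counter-clockwise order (monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def shape_features(points):
    """Form parameter, area convexity, and perimeter convexity of a polygon."""
    area = polygon_area(points)
    perimeter = polygon_perimeter(points)
    hull = convex_hull(points)
    return {
        "form_parameter": area / perimeter ** 2,
        "area_convexity": area / polygon_area(hull),
        "perimeter_convexity": perimeter / polygon_perimeter(hull),
    }

square = [(0, 0), (2, 0), (2, 2), (0, 2)]
l_shape = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
square_features = shape_features(square)
l_features = shape_features(l_shape)
```

The square is its own convex hull, so both convexities equal 1, while the concave L-shaped region has an area convexity below 1 and a perimeter convexity above 1, which is the direction the text associates with a more complex edge.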

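Our model was evaluated by ROC curve, accuracy, recall, precision, and F1 score (39, 40), which are defined as follows:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
\tag{10}
$$

$$
\text{Recall} = \frac{TP}{TP + FN}
\tag{11}
$$

$$
\text{Precision} = \frac{TP}{TP + FP}
\tag{12}
$$

$$
F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
\tag{13}
$$

where TP, TN, FP, and FN represent true positive, true negative, false positive, and false negative, respectively.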
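The four count-based metrics can be computed directly from the confusion-matrix entries, as in the following minimal sketch. The function name and the example counts are hypothetical and are not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, recall, precision, and F1 score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "recall": recall,
        "precision": precision,
        "f1": f1,
    }

# Hypothetical counts for a 20-image test set.
metrics = classification_metrics(tp=8, tn=9, fp=1, fn=2)
```

On an unbalanced dataset such as this one, recall and precision on the malignant class are more informative than accuracy alone, because a model that labels every nodule benign would still reach a high accuracy.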

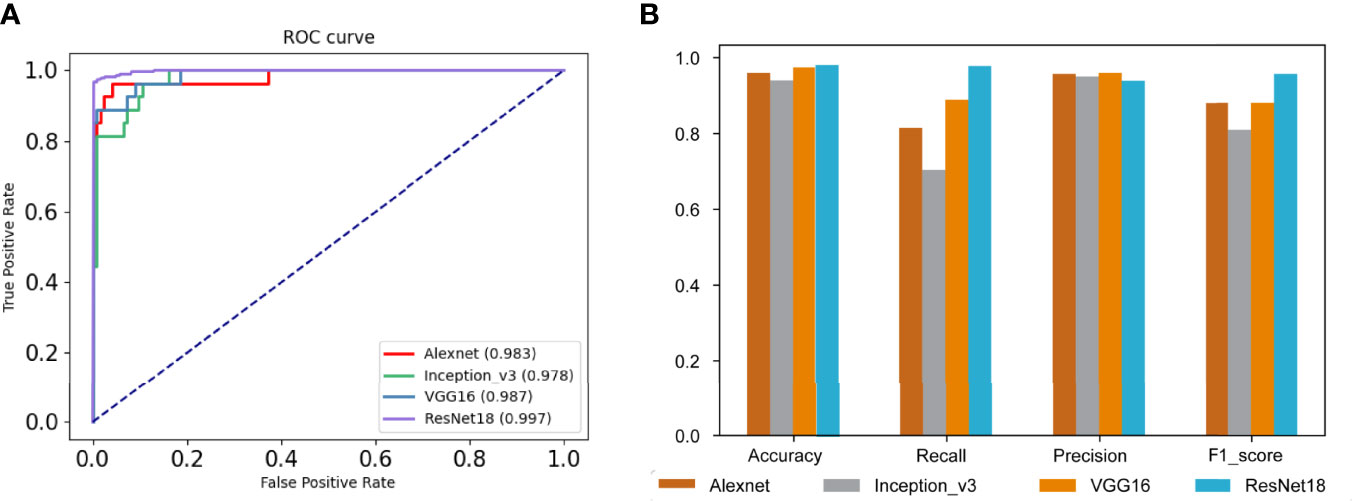

To avoid chance results caused by a single division, the dataset was randomly divided 10 times and the results of the 10 runs were averaged. Compared with AlexNet, Inception_v3, and VGG16, our model performed best. As shown in Figure 3A, the AUC was 0.997; the average accuracy, recall, precision, and F1 score were 0.984, 0.978, 0.939, and 0.957, respectively, as shown in Figure 3B.

Figure 3 Evaluations of model results. (A) The receiver operating characteristic (ROC) curves and the area under the curve (AUC) of our model and other comparative models. (B) The performance of our model and other comparative models on accuracy, recall, precision, and F1 score.
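The AUC reported in panel (A) can be read as the probability that a randomly chosen malignant image receives a higher score than a randomly chosen benign one. The sketch below computes it by direct pairwise comparison; it is an illustration of that definition, not the evaluation code used in the study, and the function name and example scores are hypothetical.

```python
def roc_auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative sample")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Hypothetical predicted probabilities of malignancy.
auc = roc_auc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0])
```

The pairwise form is quadratic in the number of samples, which is acceptable for a test set of this size; it gives the same value as the area under the ROC curve.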

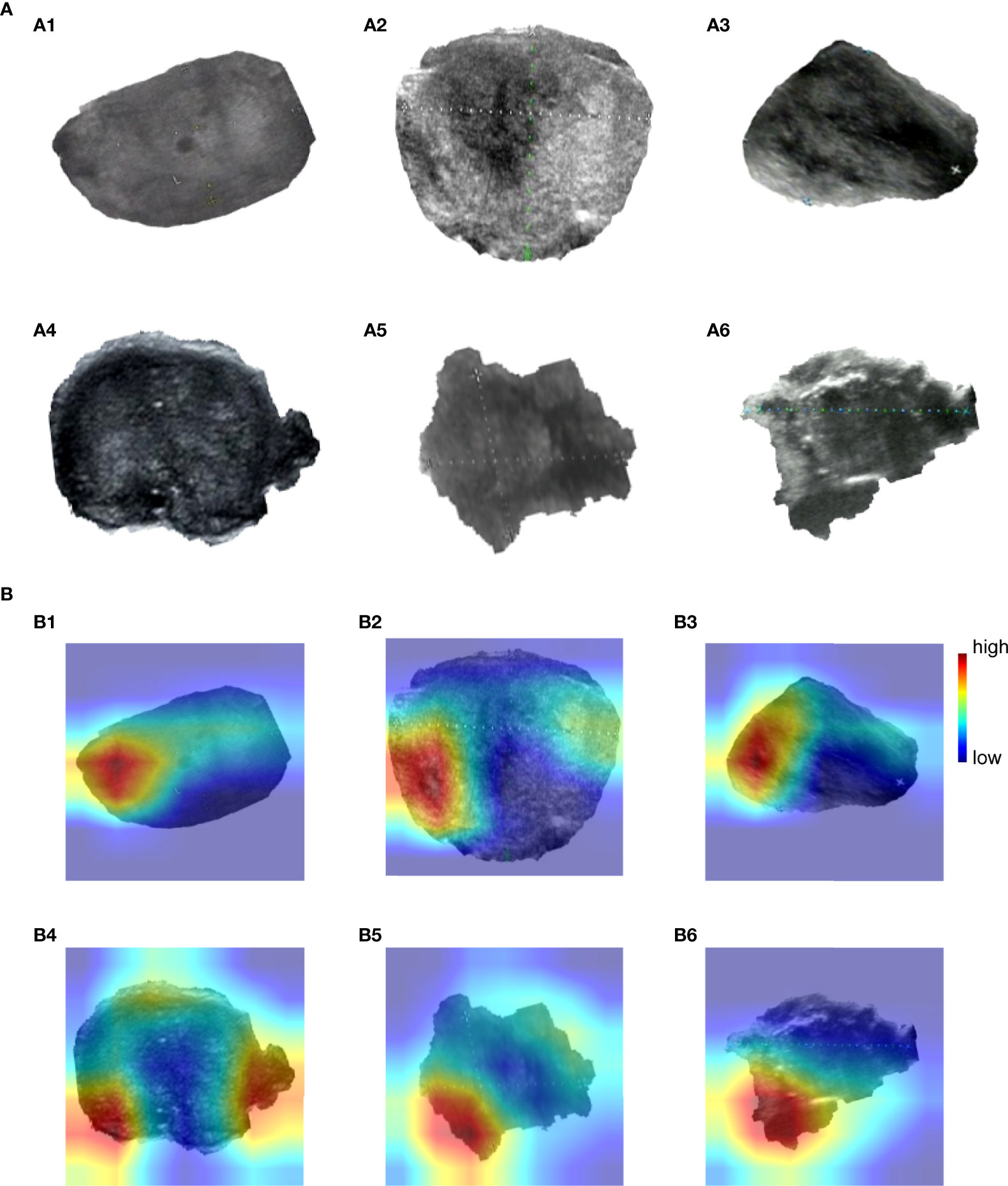

As mentioned in the “Visualizing the highlighted regions by heatmap” section, CNNs achieve high accuracy in image classification, but their interpretability is poor. Visualization helps to understand and debug the model. Grad-CAM was used to validate model predictions on images; it uses the gradients of the final convolutional layer to generate localization heatmaps at prediction time. Figure 4A shows the original images, where (a1)~(a3) are benign nodules and (a4)~(a6) are malignant nodules. Figure 4B shows the corresponding heatmaps produced by Grad-CAM. Regions of strong emphasis are shown in red, and regions of weak emphasis are shown in blue.

Figure 4 Grad-CAM visualizes highlighted regions. (A) (a1)~(a6) are original images, (a1)~(a3) are benign nodules, (a4)~(a6) are malignant nodules. (B) (b1)~(b6) are heatmaps drawn by Grad-CAM, corresponding to Figure 4 (a1)~(a6).

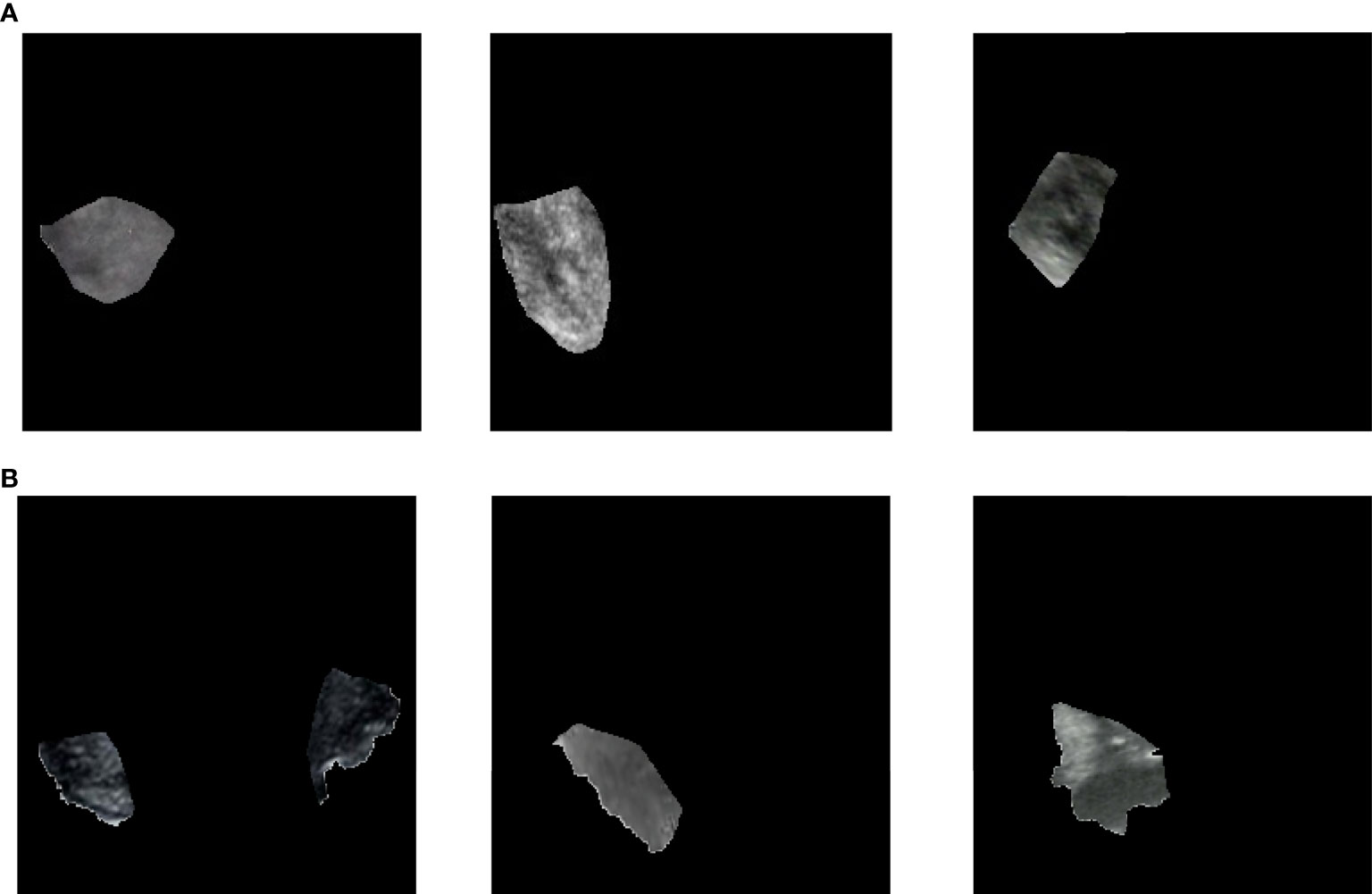

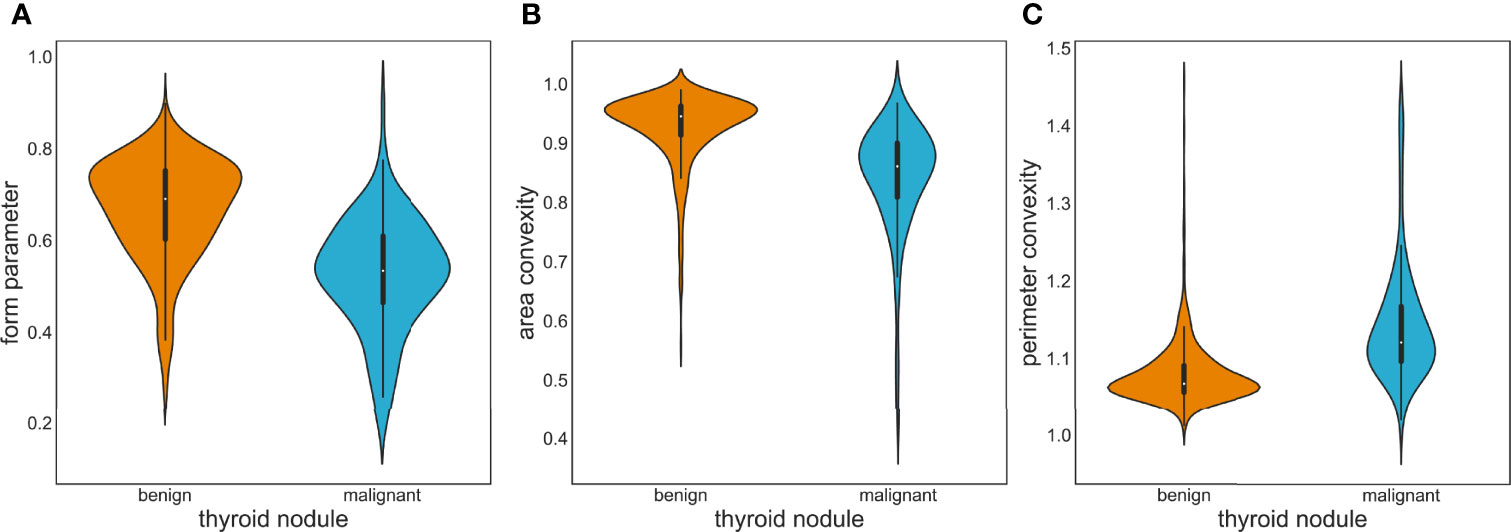

OpenCV was used to extract the red highlighted regions in the heatmaps. The extracted results are shown in Figure 5; samples in (A) are the extraction results for benign thyroid nodules, and those in (B) are for malignant nodules. We found that the outline boundary of benign nodules was relatively regular, while the outline boundary of malignant nodules was relatively irregular. To verify whether this holds for thyroid nodules in general, the shape features of the nodules were calculated according to the above formulas. The form parameter, area convexity, and perimeter convexity all differed significantly between benign and malignant nodules (the p-values were 1.68e-27, 7.01e-32, and 8.1e-33, respectively; P < 0.05 was taken to indicate a statistically significant difference). As shown in Figure 6, violin plots were used to describe the distributions of these features for benign and malignant thyroid nodules.

Figure 5 Extract highlighted regions in heatmaps. These heatmaps correspond to Figure 4A or 4B. Samples in (A) are the extraction results for benign thyroid nodules, which correspond to Figure 4 (a1)~(a3) or (b1)~(b3). Samples in (B) are the extraction results for malignant thyroid nodules, which correspond to Figure 4 (a4)~(a6) or (b4)~(b6).

Figure 6 Violin plots of image feature distribution with benign and malignant nodules. (A) Form parameter. (B) Area convexity. (C) Perimeter convexity.

The prevalence of thyroid nodules is increasing year by year, and people’s awareness of health management is also gradually improving. As a result, the burden on ultrasound doctors in hospitals and physical examination institutions is increasing. If an AI-assisted diagnosis system can help doctors interpret ultrasound image data, the pressure on doctors will be relieved and work efficiency will be improved. Moreover, the interpretation of ultrasound images largely depends on the clinical experience of radiologists. It was reported that the sensitivity of radiologists varied from 40.3% to 100%, and the specificity from 50% to 100% (41–44). Computer-aided diagnosis can provide more objective and more accurate results, which is very helpful to doctors with less experience. Grani et al. showed that many thyroid nodules removed by surgery were not malignant, which increased the economic burden and physical pain for patients (45), whereas an AI-assisted diagnosis system based on deep learning algorithms could lower the false positive rate and thus help to reduce unnecessary FNA and surgery.

In this paper, the ResNet18 framework was applied to train the model and Grad-CAM was used to highlight sensitive regions in the ultrasound images. The 10-run average AUC of our proposed method was 0.997, and the average accuracy was 0.984, which is higher than the accuracy of 0.89 reported by Ma et al. (20). Moreover, the shape features of the sensitive regions, rather than other features, are more helpful in discriminating benign from malignant tumors. From the perspective of methodology, the performance of neural network-based methods is generally higher than that of traditional feature-based methods. CNNs can learn efficient and useful features automatically, avoiding the time-consuming and laborious task of obtaining features manually.

Although the proposed method achieved encouraging results, it still has some limitations. First, the number of images for training and testing is insufficient and multicenter data are not available. In future work, we will collect more data to validate the model performance. Second, a more detailed classification of thyroid nodules may be attempted using a variety of algorithms, since clinically benign thyroid nodules can be divided into benign follicular nodules, follicular adenomas, etc., and malignant thyroid nodules can be divided into papillary, follicular, and medullary carcinomas, etc. Last but not least, the results were obtained from static ultrasound images, and we should consider how to better assist doctors in making decisions in a real clinical environment.

We believe that deep learning has a bright future in diagnosing thyroid nodules. Deep learning algorithms have attracted wide attention and have been applied in various fields. They can map unstructured information to structured forms and learn relevant information automatically. Such automatic and intelligent methods not only improve the efficiency of diagnosis but also help to ensure its reliability, which may significantly affect early diagnosis and subsequent treatment.

In this paper, we have explored the problem of thyroid nodule classification. ResNet18 was trained on 508 thyroid nodule ultrasound images. Because the dataset is small, transfer learning was adopted. At the same time, considering the imbalance of the dataset, focal loss was employed to adjust the weight of the data. The resulting AUC was 0.997, which means that the model separated almost all benign and malignant thyroid nodules in our dataset correctly. Moreover, to visualize the model’s attention in thyroid nodule images and make the model’s predictions easier to understand, Grad-CAM was used to identify the sensitive regions in ultrasound images, which are an important reference for the prediction. The regions attended to by the model were segmented and analyzed, and within these regions of interest there are differences between benign and malignant nodules. The results of this study show that our model can distinguish benign and malignant thyroid nodules well in ultrasound images. In addition, our localization information could be regarded as a second opinion for clinical decision-making. The proposed method could assist doctors in making better decisions, reducing the time required of human readers, and improving the efficiency of diagnosis.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

PW and JSY designed the study; JYY, XS, BW, WQ, and GT performed the study, analyzed the data, and interpreted the data; JYY and JSY wrote the manuscript; BW, JYY, GT, and PW reviewed the manuscript. All authors contributed to the article and approved the submitted version.

This work was supported by the National Natural Science Foundation of China (Nos. 51574004, 62172004) and Natural Science Foundation of the Higher Education Institutions of Anhui Province, China (Nos. KJ2019A0085, KJ2019ZD05).

JYY, XS, WQ, and GT are currently employed in Geneis Beijing Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Camargo RY, Tomimori EK. [Usefulness of Ultrasound in the Diagnosis and Management of Well-Differentiated Thyroid Carcinoma]. Arq Bras Endocrinol Metabol (2007) 51:783–92. doi: 10.1590/S0004-27302007000500016

2. Enewold L, Zhu K, Ron E, Marrogi AJ, Stojadinovic A, Peoples GE, et al. Rising Thyroid Cancer Incidence in the United States by Demographic and Tumor Characteristics, 1980-2005. Cancer Epidemiol Biomarkers Prev (2009) 18:784–91. doi: 10.1158/1055-9965.EPI-08-0960

3. Russ G, Leboulleux S, Leenhardt L, Hegedüs L. Thyroid Incidentalomas: Epidemiology, Risk Stratification With Ultrasound and Workup. Eur Thyroid J (2014) 3:154–63. doi: 10.1159/000365289

4. Liu H, Qiu C, Wang B, Bing P, Tian G, Zhang X, et al. Evaluating DNA Methylation, Gene Expression, Somatic Mutation, and Their Combinations in Inferring Tumor Tissue-Of-Origin. Front Cell Dev Biol (2021) 9:619330. doi: 10.3389/fcell.2021.619330

5. Baloch ZW, Fleisher S, Livolsi VA, Gupta PK. Diagnosis of "Follicular Neoplasm": A Gray Zone in Thyroid Fine-Needle Aspiration Cytology. Diagn Cytopathol (2002) 26:41–4. doi: 10.1002/dc.10043

6. Acharya UR, Swapna G, Sree SV, Molinari F, Gupta S, Bardales RH, et al. A Review on Ultrasound-Based Thyroid Cancer Tissue Characterization and Automated Classification. Technol Cancer Res Treat (2014) 13:289–301. doi: 10.7785/tcrt.2012.500381

7. Haugen BR, Alexander EK, Bible KC, Doherty GM, Mandel SJ, Nikiforov YE, et al. 2015 American Thyroid Association Management Guidelines for Adult Patients With Thyroid Nodules and Differentiated Thyroid Cancer: The American Thyroid Association Guidelines Task Force on Thyroid Nodules and Differentiated Thyroid Cancer. Thyroid (2016) 26:1–133. doi: 10.1089/thy.2015.0020

8. Wang L, Zhang L, Zhu M, Qi X, Yi Z. Automatic Diagnosis for Thyroid Nodules in Ultrasound Images by Deep Neural Networks. Med Imag Anal (2020) 61:101665. doi: 10.1016/j.media.2020.101665

9. Li X, Wang S, Xi W, Zhu J, Liu S. Fully Convolutional Networks for Ultrasound Image Segmentation of Thyroid Nodules. In: 2018 IEEE 20th International Conference on High Performance Computing and Communications; IEEE 16th International Conference on Smart City; IEEE 4th International Conference on Data Science and Systems (HPCC/SmartCity/DSS). Exeter, UK: IEEE (2018).

10. Huang L, Li X, Guo P, Yao Y, Liao B, Zhang W, et al. Matrix Completion With Side Information and Its Applications in Predicting the Antigenicity of Influenza Viruses. Bioinformatics (2017) 33:3195–201. doi: 10.1093/bioinformatics/btx390

11. Cheng L, Hu Y, Sun J, Zhou M, Jiang Q. DincRNA: A Comprehensive Web-Based Bioinformatics Toolkit for Exploring Disease Associations and ncRNA Function. Bioinformatics (2018) 34:1953–6. doi: 10.1093/bioinformatics/bty002

12. Yang J, Peng S, Zhang B, Houten S, Schadt E, Zhu J, et al. Human Geroprotector Discovery by Targeting the Converging Subnetworks of Aging and Age-Related Diseases. Geroscience (2020) 42:353–72. doi: 10.1007/s11357-019-00106-x

13. Hunt C, Montgomery S, Berkenpas JW, Sigafoos N, Cao R. Recent Progress of Machine Learning in Gene Therapy. Curr Gene Ther (2021) 22:132–43. doi: 10.2174/1566523221666210622164133

14. Meng Y, Lu C, Jin M, Xu J, Zeng X, Yang J. A Weighted Bilinear Neural Collaborative Filtering Approach for Drug Repositioning. Brief Bioinform (2022) 23(2):bbab581. doi: 10.1093/bib/bbab581

15. Park VY, Han K, Seong YK, Park MH, Kim EK, Moon HJ, et al. Diagnosis of Thyroid Nodules: Performance of a Deep Learning Convolutional Neural Network Model vs. Radiologists. Sci Rep (2019) 9:17843. doi: 10.1038/s41598-019-54434-1

16. Chang CY, Tsai MF, Chen SJ. Classification of the Thyroid Nodules using Support Vector Machines. In: IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence). Hong Kong, China: IEEE (2008). p. 3093–8.

17. Lyra ME, Lagopati N, Charalabatou P, Vasoura E, Skouroliakou K. Texture Characterization in Ultrasonograms of the Thyroid Gland. Corfu, Greece: IEEE (2010).

18. Keramidas EG. Efficient and Effective Ultrasound Image Analysis Scheme for Thyroid Nodule Detection. Springer-Verlag (2007) 4633:1052–60. doi: 10.1007/978-3-540-74260-9_93

19. Acharya UR, Vinitha S Sree, Krishnan MM, Molinari F, Garberoglio R, Suri JS. Non-Invasive Automated 3D Thyroid Lesion Classification in Ultrasound: A Class of ThyroScan™ Systems. Ultrasonics (2012) 52:508–20. doi: 10.1016/j.ultras.2011.11.003

20. Ma X, Xi B, Zhang Y, Zhu L, Yang J. A Machine Learning-Based Diagnosis of Thyroid Cancer Using Thyroid Nodules Ultrasound Images. Curr Bioinf (2020) 15(4):349–58. doi: 10.2174/1574893614666191017091959

21. Zhao T, Hu Y, Peng J, Cheng L. DeepLGP: A Novel Deep Learning Method for Prioritizing lncRNA Target Genes. Bioinformatics (2020) 36:4466–72. doi: 10.1093/bioinformatics/btaa428

22. Du B, Tang L, Liu L, Zhou W. Predicting LncRNA-Disease Association Based on Generative Adversarial Network. Curr Gene Ther (2022) 22:144–51. doi: 10.2174/1566523221666210506131055

23. Yang J, Ju J, Guo L, Ji B, Shi S, Yang Z, et al. Prediction of HER2-Positive Breast Cancer Recurrence and Metastasis Risk From Histopathological Images and Clinical Information via Multimodal Deep Learning. Comput Struct Biotechnol J (2022) 20:333–42. doi: 10.1016/j.csbj.2021.12.028

24. Guan Q, Wang Y, Du J, Qin Y, Lu H, Xiang J, et al. Deep Learning Based Classification of Ultrasound Images for Thyroid Nodules: A Large Scale of Pilot Study. Ann Transl Med (2019) 7:137. doi: 10.21037/atm.2019.04.34

25. Chi J, Walia E, Babyn P, Wang J, Groot G, Eramian M. Thyroid Nodule Classification in Ultrasound Images by Fine-Tuning Deep Convolutional Neural Network. J Dig Imaging (2017) 30:477–86. doi: 10.1007/s10278-017-9997-y

26. Peng S, Liu Y, Lv W, Liu L, Zhou Q, Yang H, et al. Deep Learning-Based Artificial Intelligence Model to Assist Thyroid Nodule Diagnosis and Management: A Multicentre Diagnostic Study. Lancet Dig Health (2021) 3:e250–9. doi: 10.1016/S2589-7500(21)00041-8

27. Avola D, Cinque L, Fagioli A, Filetti S, Grani G, Rodolà E. Knowledge-Driven Learning via Experts Consult for Thyroid Nodule Classification. ArXiv E-prints (2020). arXiv:2005.14117. doi: 10.48550/arXiv.2005.14117

28. Ye Z, Zhuang F, Jian F. An Image Augmentation Method using Convolutional Network for Thyroid Nodule Classification by Transfer Learning. In: 2017 3rd IEEE International Conference on Computer and Communications (ICCC). Chengdu, China: IEEE (2017). p. 1819–23.

29. Ma J, Wu F, Zhu J, Xu D, Kong D. A Pre-Trained Convolutional Neural Network Based Method for Thyroid Nodule Diagnosis. Ultrasonics (2017) 73:221–30. doi: 10.1016/j.ultras.2016.09.011

30. Sun W, Liu T, Xie S, Yu J, Niu L, Sun W. Classification of Thyroid Nodules in Ultrasound Images using Deep Model Based Transfer Learning and Hybrid Features. In: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). New Orleans, LA, USA: IEEE (2017). p. 919–23.

31. Chen D, Niu J, Qiao P, Yue L, Mei W. A Deep-Learning Based Ultrasound Text Classifier for Predicting Benign and Malignant Thyroid Nodules. In: International Conference on Green Informatics. Fuzhou, China: IEEE (2017). p. 199–204.

32. Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, et al. ImageNet Large Scale Visual Recognition Challenge. Int J Comput Vision (2015) 115:211–52. doi: 10.1007/s11263-015-0816-y

33. Lin TY, Goyal P, Girshick R, He K, Dollar P. Focal Loss for Dense Object Detection. IEEE Trans Pattern Anal Mach Intell (2020) 42:318–27. doi: 10.1109/TPAMI.2018.2858826

34. Zhou B, Khosla A, Lapedriza A, Oliva A, Torralba A. Learning Deep Features for Discriminative Localization. CVPR (2016) 2921–9. doi: 10.1109/CVPR.2016.319

35. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual Explanations From Deep Networks via Gradient-Based Localization. Int J Comput Vision (2020) 128:336–59. doi: 10.1007/s11263-019-01228-7

36. Wu SG, Bao FS, Xu EY, Wang YX, Xiang QL. A Leaf Recognition Algorithm for Plant Classification Using Probabilistic Neural Network. IEEE (2007).

37. Yang M, Kpalma K, Ronsin J. A Survey of Shape Feature Extraction Techniques. InTech (2007) 15(7):43–90. doi: 10.5772/6237

38. Lande MV, Bhanodiya P, Jain P. An Effective Content-Based Image Retrieval Using Color, Texture and Shape Feature. Intelligent Computing, Networking, and Informatics (2014).

39. Cheng L, Jiang Y, Ju H, Sun J, Peng J, Zhou M, et al. InfAcrOnt: Calculating Cross-Ontology Term Similarities Using Information Flow by a Random Walk. BMC Genomics (2018) 19:919. doi: 10.1186/s12864-017-4338-6

40. Puranik N, Yadav D, Chauhan PS, Kwak M, Jin JO. Exploring the Role of Gene Therapy for Neurological Disorders. Curr Gene Ther (2021) 21(1):11–22. doi: 10.2174/1566523220999200917114101

41. Park SH, Kim SJ, Kim EK, Kim MJ, Son EJ, Kwak JY. Interobserver Agreement in Assessing the Sonographic and Elastographic Features of Malignant Thyroid Nodules. AJR Am J Roentgenol (2009) 193:W416–423. doi: 10.2214/AJR.09.2541

42. Kim SH, Park CS, Jung SL, Kang BJ, Kim JY, Choi JJ, et al. Observer Variability and the Performance Between Faculties and Residents: US Criteria for Benign and Malignant Thyroid Nodules. Kor J Radiol (2010) 11:149–55. doi: 10.3348/kjr.2010.11.2.149

43. Park CS, Kim SH, Jung SL, Kang BJ, Kim JY, Choi JJ, et al. Observer Variability in the Sonographic Evaluation of Thyroid Nodules. J Clin Ultrasound (2010) 38:287–93. doi: 10.1002/jcu.20689

44. Kim HG, Kwak JY, Kim EK, Choi SH, Moon HJ. Man to Man Training: Can it Help Improve the Diagnostic Performances and Interobserver Variabilities of Thyroid Ultrasonography in Residents? Eur J Radiol (2012) 81:e352–356. doi: 10.1016/j.ejrad.2011.11.011

Keywords: thyroid nodule, ultrasound images, deep learning, convolutional neural network, Grad-CAM, feature extraction

Citation: Yang J, Shi X, Wang B, Qiu W, Tian G, Wang X, Wang P and Yang J (2022) Ultrasound Image Classification of Thyroid Nodules Based on Deep Learning. Front. Oncol. 12:905955. doi: 10.3389/fonc.2022.905955

Received: 28 March 2022; Accepted: 22 June 2022; Published: 15 July 2022.

Edited by:

Min Tang, Jiangsu University, China

Reviewed by:

Weinan Zhou, University of Illinois at Urbana-Champaign, United States

Copyright © 2022 Yang, Shi, Wang, Qiu, Tian, Wang, Wang and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Peizhen Wang, pzhwang@ahut.edu.cn; Jiasheng Yang, jsyang.mcc@gmail.com

†These authors have contributed equally to this work

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
