
ORIGINAL RESEARCH article

Front. Oncol., 14 April 2022

Sec. Radiation Oncology

Volume 12 - 2022 | https://doi.org/10.3389/fonc.2022.856346

This article is part of the Research Topic Machine Learning in Radiation Oncology.

Suqing Tian1†, Cuiying Wang2†, Ruiping Zhang3, Zhuojie Dai4, Lecheng Jia5, Wei Zhang6, Junjie Wang1* and Yinglong Liu5*

Objectives: Glioblastoma is the most common primary malignant brain tumor in adults and can be treated with radiation therapy. However, contouring the tumor target for radiation therapy of the head is labor-intensive and highly dependent on the experience of the radiation oncologist. Autosegmentation of the tumor target has recently come to play an increasingly important role in the development of radiotherapy plans. We therefore established a deep learning model and used transfer learning to improve its performance in autosegmenting and contouring the primary gross tumor volume (GTV) of glioblastomas.

Methods: Preoperative MRI data from 20 patients with glioblastomas were collected in our department (ST) and split into a training set and a test set. Through transfer learning, we fine-tuned a deep learning model that had been trained for hippocampus autosegmentation on separate MRI scans (RZ); for comparison, we also trained the same deep learning model directly on the training set. Finally, we evaluated the performance of both trained models in autosegmenting glioblastomas on the test set.

Results: The fine-tuned model converged within 20 epochs, compared with over 50 epochs for the model trained directly on the same training set, and it achieved better autosegmentation performance on the same test set [Dice similarity coefficient (DSC) 0.9404 ± 0.0117, 95% Hausdorff distance (95HD) 1.8107 ± 0.3964 mm, average surface distance (ASD) 0.6003 ± 0.1287 mm] than the directly trained model (DSC 0.9158 ± 0.0178, 95HD 2.5761 ± 0.5365 mm, ASD 0.7579 ± 0.1468 mm). The differences in DSC, 95HD, and ASD between the two models were statistically significant (P < 0.05).

Conclusion: A model developed with semisupervised transfer learning and trained on independent data achieved good performance in autosegmenting glioblastoma. The autosegmented volume of glioblastomas is sufficiently accurate for radiotherapy treatment, which could have a positive impact on tumor control and patient survival.

Glioblastoma is the most common primary malignant brain tumor in adults (1). The current standard treatment is combination therapy: initial surgery followed by postoperative radiotherapy and adjuvant chemotherapy. Intensity-modulated radiotherapy (IMRT) is commonly used to deliver radiotherapy to glioblastomas, and an accurate radiotherapy plan is required to ensure accurate treatment (2). Delineating brain tumor targets and other brain structures on multimodal MRI sequences provides important information for the radiotherapy plan. Traditionally, these areas are contoured manually, which is time-consuming and dependent on the physician's experience.

Deep learning has opened new approaches to the automatic and accurate delineation of brain tumors (3). Deep learning approaches based on convolutional neural networks (CNNs) have been proposed for glioblastoma segmentation (4–6). For example, Yi et al. (4) developed a framework of three-dimensional (3D) fully convolutional neural network models for glioblastoma segmentation from multimodal MRI data and achieved a Dice score of 0.89 for whole-tumor segmentation on the dataset of the Brain Tumor Image Segmentation Challenge (BRATS), which contains 274 tumor samples.

Recently, transfer learning has found multiple applications in brain MRI (7). Transfer learning reuses a pretrained model to solve a related target problem, and fine-tuning a pretrained CNN can potentially yield better results than training a CNN from scratch (8). In this work, we present a deep learning model for autosegmentation of the gross tumor volume (GTV) of glioma. A deep learning model trained for hippocampus autosegmentation was fine-tuned using a limited MRI dataset of 20 glioblastoma patients. This approach is expected to serve as a basis for accurate radiotherapy dose calculation and optimization in the development of high-quality radiotherapy plans (9).

We retrospectively collected the MRI scans and medical records of patients with histologically proven glioblastomas from a single institution (Department of Radiation Oncology, Peking University Third Hospital). All patients underwent preoperative contrast-enhanced T1-weighted MRI. Details of the MRI characteristics are shown in Table 1. The dataset consisted of MRI scans with GTVs of high-grade gliomas (HGGs) from 20 patients and was randomly split into three cohorts: 16 patients for model development (12 for training and 4 for validation, the latter used to optimize hyperparameters during training) and 4 patients as the test set for evaluating the performance of the trained model.
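The patient-level split described above can be illustrated with a short Python sketch. The function name `split_patients`, the placeholder patient identifiers, and the random seed are hypothetical; the study does not report how its random split was generated. Splitting by patient (rather than by slice or patch) keeps all data from one patient in a single cohort.

```python
import random


def split_patients(patient_ids, n_test=4, n_val=4, seed=0):
    """Randomly split patient IDs into disjoint training, validation and test cohorts.

    The seed is an arbitrary illustrative choice, not a value from the study.
    """
    ids = list(patient_ids)
    rng = random.Random(seed)
    rng.shuffle(ids)
    test = ids[:n_test]
    val = ids[n_test:n_test + n_val]
    train = ids[n_test + n_val:]
    return train, val, test


# 20 hypothetical patient identifiers
train, val, test = split_patients([f"patient_{i:02d}" for i in range(20)])
print(len(train), len(val), len(test))  # 12 4 4
```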

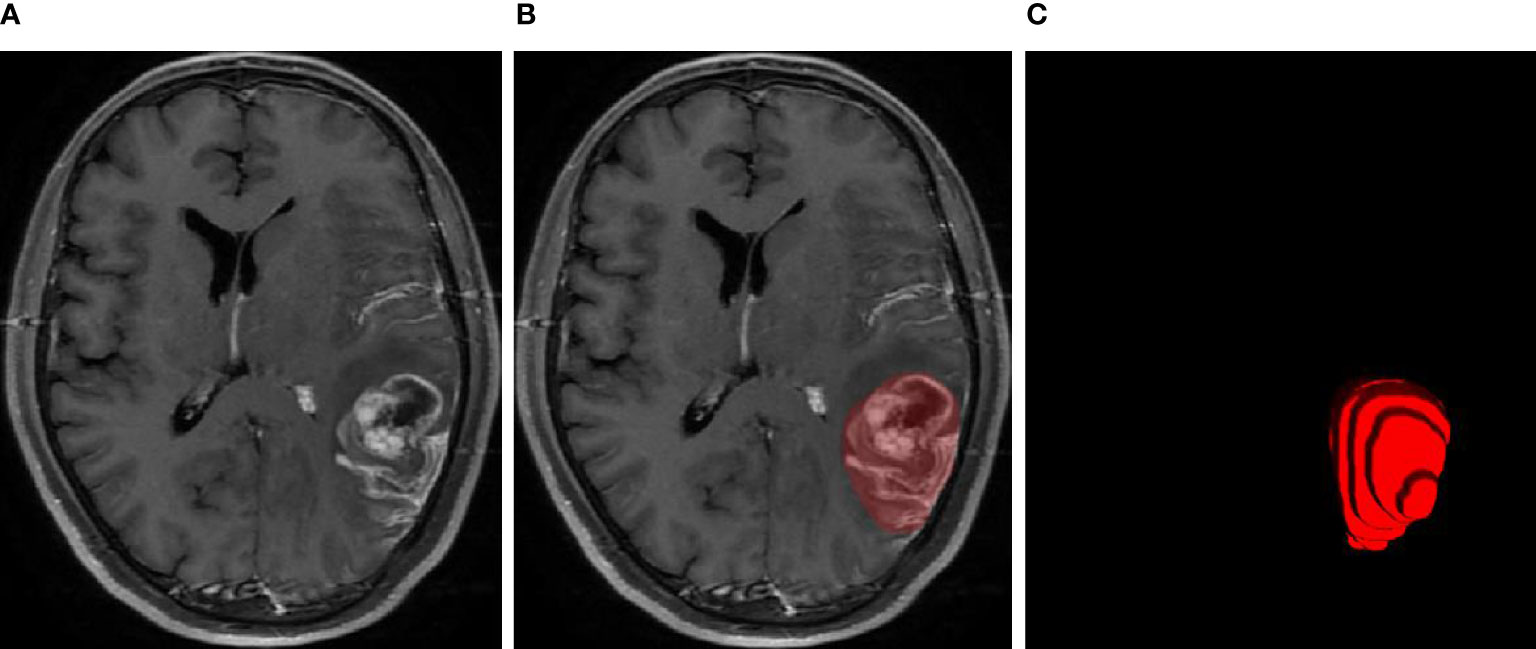

The MRI examinations of the 20 patients were assigned to two expert radiation oncologists (ST and ZD, both with more than 15 years of experience in radiotherapy of head and neck tumors), who delineated the ground-truth GTVs by consensus. A third radiologist specializing in radiation oncology (CW, with more than 20 years of experience) was consulted in cases of disagreement. An example of the GTV contours delineated by the human experts is presented in Figure 1.

Figure 1 MRI examination of the glioblastoma (A), GTV contours delineated by human experts (B), and 3D diagram corresponding to the GTV contours (C).

All MRI sequences were cropped to the region of nonzero voxels to reduce the size of the network input and thereby the computational load of the network (10). To enable the network to learn spatial semantics properly, all MRI sequences were resampled to the median voxel spacing of the dataset, using third-order spline interpolation for the images and nearest-neighbor interpolation for the corresponding contours. Finally, each patient's images were normalized by simple Z-score normalization (11).
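A minimal NumPy/SciPy sketch of these three preprocessing steps follows, assuming each scan is available as a 3D array together with its voxel spacing. The helper names are hypothetical, and `scipy.ndimage.zoom` is used here as one convenient way to apply third-order spline and nearest-neighbor interpolation; the authors' own implementation may differ (for example, in how strongly anisotropic spacing is handled).

```python
import numpy as np
from scipy.ndimage import zoom


def median_spacing(spacings):
    """Target spacing: per-axis median voxel spacing over all scans in the dataset."""
    return np.median(np.asarray(spacings, dtype=float), axis=0)


def crop_to_nonzero(image, mask):
    """Crop image and contour mask to the bounding box of nonzero image voxels."""
    coords = np.argwhere(image != 0)
    lower = coords.min(axis=0)
    upper = coords.max(axis=0) + 1
    box = tuple(slice(lo, hi) for lo, hi in zip(lower, upper))
    return image[box], mask[box]


def resample(image, mask, spacing, target_spacing):
    """Third-order spline for the image, nearest neighbour (order=0) for the contour."""
    factors = np.asarray(spacing, dtype=float) / np.asarray(target_spacing, dtype=float)
    image_rs = zoom(image.astype(np.float32), factors, order=3)
    mask_rs = zoom(mask.astype(np.uint8), factors, order=0)
    return image_rs, mask_rs


def zscore(image):
    """Z-score normalization using the statistics of this patient's image only."""
    return (image - image.mean()) / (image.std() + 1e-8)


def preprocess(image, mask, spacing, target_spacing):
    """Crop, resample, then normalize one scan and its contour."""
    image, mask = crop_to_nonzero(image, mask)
    image, mask = resample(image, mask, spacing, target_spacing)
    return zscore(image), mask
```

In this sketch, `median_spacing` would be computed once over the whole dataset and passed as `target_spacing` for every scan.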

To mitigate the overfitting caused by training a deep network on limited data, we applied a number of on-the-fly data augmentation techniques, such as random flipping, random zooming, random elastic deformation, gamma adjustment, and mirroring, to increase the diversity of the training data.
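As an illustration, two of the listed augmentations (mirroring and gamma adjustment) can be sketched as below; the same spatial transform is applied to the image and its contour so that they stay aligned. The gamma range (0.7 to 1.5) and the flip probability (0.5) are assumed values that the text does not report, and random zooming and elastic deformation are omitted for brevity.

```python
import numpy as np


def random_mirror(image, mask, rng, p=0.5):
    """Flip image and contour together along each spatial axis with probability p."""
    for axis in range(image.ndim):
        if rng.random() < p:
            image = np.flip(image, axis=axis)
            mask = np.flip(mask, axis=axis)
    return image.copy(), mask.copy()


def gamma_adjust(image, gamma):
    """Apply a gamma curve to intensities rescaled to [0, 1], then restore the range."""
    lo, hi = image.min(), image.max()
    scaled = (image - lo) / (hi - lo + 1e-8)
    return scaled ** gamma * (hi - lo) + lo


def augment(image, mask, rng, gamma_range=(0.7, 1.5)):
    """One random augmentation draw; gamma changes intensities only, not the contour."""
    image, mask = random_mirror(image, mask, rng)
    gamma = rng.uniform(*gamma_range)
    return gamma_adjust(image, gamma), mask


rng = np.random.default_rng(0)
aug_image, aug_mask = augment(np.random.rand(8, 16, 16), np.zeros((8, 16, 16)), rng)
```

Because the augmentations are drawn anew each time a patch is sampled, the network rarely sees exactly the same input twice.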

U-Net is a popular encoder-decoder network (11, 12) that has been widely used for semantic segmentation. Its encoder works similarly to a traditional classification CNN in that it successively aggregates semantic information at the expense of spatial information. Its decoder receives semantic information from the bottom layer of the encoder and recombines it with higher-resolution feature maps obtained directly from the encoder through skip connections (13) to recover the spatial information lost in the encoder.

Since 3D CNNs have been shown to aggregate valuable information effectively from 3D medical images (14), we implemented a 3D deep CNN to extract representative features of complex GTVs from the MRI sequences. Our network is based on the 3D U-Net architecture (15), with five encoder and five decoder stages. Each encoder and decoder stage contains two convolutional layers with 3×3×3 kernels to extract image features; each convolutional layer is followed by a LeakyReLU activation (negative slope 1e−2) and instance normalization (16) with a dropout rate of 0.5, which replace the more common ReLU activation and batch normalization, respectively. Each encoder uses 2×2×2 max pooling to produce a downsampled feature map; conversely, each decoder uses a 2×2×2 transposed convolution (deconvolution) to produce an upsampled feature map. Encoder feature maps are passed through skip connections and concatenated with the feature maps of the corresponding decoder stages, so that fine-grained details learned by the encoder can be used to construct the decoder feature maps. The detailed architecture of our network is shown in Figure 2.
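The architecture described above can be sketched in PyTorch (the framework used in this study) as follows. This is an illustrative reconstruction rather than the authors' code: the channel widths, the bottleneck block, the placement of dropout inside every block, and the interpretation of the 5 encoders and 5 decoders as five pooling and five upsampling steps are all assumptions.

```python
import torch
from torch import nn


class ConvBlock(nn.Sequential):
    """Two 3x3x3 convolutions, each followed by LeakyReLU, instance norm and dropout."""

    def __init__(self, in_ch, out_ch, dropout=0.5):
        layers = []
        for ch_in in (in_ch, out_ch):
            layers += [
                nn.Conv3d(ch_in, out_ch, kernel_size=3, padding=1),
                nn.LeakyReLU(negative_slope=1e-2),
                nn.InstanceNorm3d(out_ch, affine=True),
                nn.Dropout3d(p=dropout),
            ]
        super().__init__(*layers)


class UNet3D(nn.Module):
    """3D U-Net sketch: `levels` encoder/decoder stages plus a bottleneck block."""

    def __init__(self, in_ch=1, num_classes=2, base=32, levels=5, dropout=0.5):
        super().__init__()
        widths = [base * 2 ** i for i in range(levels + 1)]  # last entry: bottleneck
        self.encoders = nn.ModuleList()
        ch = in_ch
        for w in widths[:-1]:
            self.encoders.append(ConvBlock(ch, w, dropout))
            ch = w
        self.pool = nn.MaxPool3d(kernel_size=2, stride=2)
        self.bottleneck = ConvBlock(ch, widths[-1], dropout)
        self.ups = nn.ModuleList()
        self.decoders = nn.ModuleList()
        for w_skip, w_low in zip(reversed(widths[:-1]), reversed(widths[1:])):
            self.ups.append(nn.ConvTranspose3d(w_low, w_skip, kernel_size=2, stride=2))
            self.decoders.append(ConvBlock(2 * w_skip, w_skip, dropout))
        self.head = nn.Conv3d(widths[0], num_classes, kernel_size=1)

    def forward(self, x):
        skips = []
        for enc in self.encoders:
            x = enc(x)
            skips.append(x)  # kept for the skip connection
            x = self.pool(x)  # 2x2x2 max pooling
        x = self.bottleneck(x)
        for up, dec, skip in zip(self.ups, self.decoders, reversed(skips)):
            x = up(x)  # 2x2x2 transposed convolution
            x = dec(torch.cat([x, skip], dim=1))  # concatenate encoder features
        return self.head(x)  # per-voxel class logits
```

Under this interpretation each patch dimension must be divisible by 2^5 = 32, which the 32 × 256 × 256 patch size used for training satisfies; for example, `UNet3D(base=32)` applied to a batch of shape (2, 1, 32, 256, 256) returns logits of shape (2, 2, 32, 256, 256).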

Because of limited Graphics Processing Unit (GPU) memory, we extracted smaller image patches from the original images with a sliding window and used them as the input of the segmentation network. Although patch-based training limits the model's field of view and the contextual information it can capture, the effect on the segmentation of small targets is minimal.
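A simple way to generate such overlapping windows is sketched below. The 50% overlap (`step_fraction=0.5`) and the rule of shifting the last window so that it ends flush with the image border are assumptions; the text does not report the stride that was used.

```python
import itertools


def patch_starts(size, patch, step_fraction=0.5):
    """Start indices of overlapping windows along one axis; the last window ends at the border."""
    if size < patch:
        raise ValueError("axis shorter than the patch; pad the image first")
    step = max(1, int(patch * step_fraction))
    starts = list(range(0, size - patch + 1, step))
    if starts[-1] != size - patch:
        starts.append(size - patch)
    return starts


def iter_patches(shape, patch_size, step_fraction=0.5):
    """Yield tuples of slices whose windows together cover a volume of the given shape."""
    per_axis = [patch_starts(s, p, step_fraction) for s, p in zip(shape, patch_size)]
    for corner in itertools.product(*per_axis):
        yield tuple(slice(c, c + p) for c, p in zip(corner, patch_size))
```

For example, with these settings a hypothetical 64 × 512 × 512 volume is covered by 3 × 3 × 3 = 27 windows of 32 × 256 × 256 voxels.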

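The objective loss L of the segmentation network is the weighted sum of the Dice loss $L_{dice}$ and the cross-entropy loss $L_{CE}$:

$$L = \alpha_1 L_{dice} + \beta_1 L_{CE}$$

Here, the weights $\alpha_1$ and $\beta_1$ were set to 0.4 and 0.6, respectively. The two terms are

$$L_{dice} = 1 - \frac{2}{C}\sum_{k=1}^{C}\frac{\sum_{i=1}^{N} y_i^k\, p_i^k}{\sum_{i=1}^{N} y_i^k + \sum_{i=1}^{N} p_i^k}$$

$$L_{CE} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{C} y_i^k \log p_i^k$$

where C is the number of classes, N is the number of voxels in each training patch, and $y_i^k$ and $p_i^k$ are the ground-truth label of the ith voxel for the kth class and the corresponding probability predicted by the model, respectively.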
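The loss can be evaluated directly from these definitions. The NumPy sketch below computes it for a single patch from predicted class probabilities and one-hot ground-truth labels, each stored as an array of shape (C, N). The soft Dice form averaged over classes and the small `eps` guard are assumed formulations, and during training the same expression would be written with PyTorch tensors so that it can be differentiated.

```python
import numpy as np


def dice_ce_loss(probs, onehot, alpha=0.4, beta=0.6, eps=1e-8):
    """Weighted soft-Dice + cross-entropy loss for one patch.

    probs:  (C, N) predicted class probabilities per voxel.
    onehot: (C, N) one-hot ground-truth labels per voxel.
    """
    num_classes, num_voxels = probs.shape
    intersection = (onehot * probs).sum(axis=1)        # per-class overlap
    denominator = onehot.sum(axis=1) + probs.sum(axis=1)
    l_dice = 1.0 - (2.0 / num_classes) * np.sum(intersection / (denominator + eps))
    l_ce = -np.sum(onehot * np.log(probs + eps)) / num_voxels
    return alpha * l_dice + beta * l_ce
```

With the reported weights, a perfect prediction gives a loss close to 0, while a maximally uncertain two-class prediction (all probabilities 0.5) gives 0.4 × 0.5 + 0.6 × ln 2 ≈ 0.616.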

We implemented the 3D U-Net in PyTorch 1.6 on Ubuntu 18.04 and trained it on an NVIDIA Tesla V100 GPU. During training, the input patch size was 32 × 256 × 256, the batch size was 2, the optimizer was RMSprop, the initial learning rate was 0.001, the gradient descent strategy was stochastic gradient descent (SGD) with momentum (0.9), and the maximum number of training epochs was 150. In addition, because of the limited amount of data collected, we did not partition the test set separately but adopted an 8:2 division of the dataset. The patch-wise predictions of the model were merged by Gaussian fusion into a full-resolution segmentation for each class, which was then postprocessed by retaining the largest connected component to obtain the final segmentation result.
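The Gaussian fusion and largest-connected-component steps can be sketched as follows. Following a common convention (used, for example, in nnU-Net (11)), each patch prediction is weighted by a Gaussian centered on the patch with a standard deviation of 1/8 of the patch size. This value, the helper names, and the assumption that all patches share one size and together cover the whole volume are illustrative assumptions rather than details reported in the text.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, label


def gaussian_importance_map(patch_size, sigma_scale=1.0 / 8):
    """Weights that peak at the patch centre and decay toward its borders."""
    delta = np.zeros(patch_size)
    delta[tuple(p // 2 for p in patch_size)] = 1.0
    weights = gaussian_filter(delta, sigma=[p * sigma_scale for p in patch_size],
                              mode="constant", cval=0.0)
    weights /= weights.max()
    weights[weights == 0] = weights[weights > 0].min()  # avoid zero weight at corners
    return weights


def fuse_patches(patch_probs, patch_slices, volume_shape, num_classes):
    """Gaussian-weighted average of overlapping patch probabilities of shape (C, *patch)."""
    accum = np.zeros((num_classes, *volume_shape))
    norm = np.zeros(volume_shape)
    weights = None
    for probs, slices in zip(patch_probs, patch_slices):
        if weights is None:  # all patches are assumed to have the same size
            weights = gaussian_importance_map(probs.shape[1:])
        accum[(slice(None), *slices)] += probs * weights
        norm[slices] += weights
    return accum / norm


def largest_connected_component(mask):
    """Keep only the largest connected foreground component of a binary mask."""
    labels, n = label(mask)
    if n == 0:
        return mask.astype(bool)
    sizes = np.bincount(labels.ravel())[1:]  # skip the background label 0
    return labels == (np.argmax(sizes) + 1)
```

For a two-class (background/GTV) model, `largest_connected_component(fused.argmax(axis=0) == 1)` would then give the final binary GTV mask.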

We calculated the Dice similarity coefficient (DSC), 95% Hausdorff distance (95HD), and average surface distance (ASD) between the GTVs segmented automatically by the model and the corresponding manual annotations to quantify the accuracy of the model segmentation. The DSC is defined as:

$$DSC = \frac{2\left|P \cap G\right|}{\left|P\right| + \left|G\right|}$$

where P is the automatically segmented contour and G is the ground-truth contour. The DSC measures the spatial overlap between the automatic and ground-truth segmentations; it ranges from 0 (no overlap) to 1 (perfect agreement). The 95HD is defined as:

$$95HD(P, G) = \max\left\{ \operatorname{perc}_{95}\{d(p, S(G)) : p \in S(P)\},\ \operatorname{perc}_{95}\{d(g, S(P)) : g \in S(G)\} \right\}, \qquad d(x, S) = \min_{s \in S} \lVert x - s \rVert$$

where S(P) and S(G) denote the sets of surface voxels of the automatic and ground-truth segmentations, respectively, and $\operatorname{perc}_{95}$ denotes the 95th percentile. The DSC is more sensitive to the interior (volumetric overlap) of the segmented contour, whereas the Hausdorff distance (HD) is more sensitive to its boundary. The 95HD is similar to the maximum HD, but it takes the 95th percentile of the distances between the boundary points of P and G instead of the maximum, which eliminates the influence of a very small subset of outliers. The ASD is defined as:

$$ASD(P, G) = \frac{1}{\left|S(P)\right| + \left|S(G)\right|}\left(\sum_{p \in S(P)} d\big(p, S(G)\big) + \sum_{g \in S(G)} d\big(g, S(P)\big)\right)$$

An ASD of 0 indicates perfect agreement between the two surfaces.
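These metrics can be computed with a short NumPy/SciPy sketch. Surface voxels are taken as foreground voxels removed by one binary erosion, and surface-to-surface distances are obtained from a Euclidean distance transform scaled by the voxel spacing (in mm); these are common choices but assumptions here, the 95HD uses the maximum of the two directed 95th percentiles, and the function names are hypothetical. Both masks are assumed to be nonempty.

```python
import numpy as np
from scipy.ndimage import binary_erosion, distance_transform_edt


def dice(pred, gt):
    """DSC = 2|P ∩ G| / (|P| + |G|)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    return 2.0 * np.logical_and(pred, gt).sum() / (pred.sum() + gt.sum())


def surface(mask):
    """Surface voxels: foreground voxels removed by one binary erosion."""
    mask = mask.astype(bool)
    return mask & ~binary_erosion(mask)


def surface_distances(pred, gt, spacing):
    """Distances from each surface voxel of one mask to the nearest surface voxel of the other."""
    s_p, s_g = surface(pred), surface(gt)
    dist_to_g = distance_transform_edt(~s_g, sampling=spacing)  # 0 on G's surface
    dist_to_p = distance_transform_edt(~s_p, sampling=spacing)  # 0 on P's surface
    return dist_to_g[s_p], dist_to_p[s_g]


def hd95(pred, gt, spacing=(1.0, 1.0, 1.0)):
    """Maximum of the two directed 95th-percentile surface distances."""
    d_pg, d_gp = surface_distances(pred, gt, spacing)
    return max(np.percentile(d_pg, 95), np.percentile(d_gp, 95))


def asd(pred, gt, spacing=(1.0, 1.0, 1.0)):
    """Symmetric average surface distance."""
    d_pg, d_gp = surface_distances(pred, gt, spacing)
    return (d_pg.sum() + d_gp.sum()) / (d_pg.size + d_gp.size)
```

For instance, two 4 × 4 × 4 cubes offset by one voxel along one axis overlap in 48 of their 64 voxels each, so their DSC is 96/128 = 0.75.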

Transfer learning reuses existing models to solve a new task and is commonly used to address overfitting caused by data scarcity (17). Given the limited size of the dataset delineated by our human experts, and because our modified 3D U-Net is a supervised learning method whose performance depends on large amounts of labeled data, we applied transfer learning to fine-tune the glioblastoma segmentation model.

To apply transfer learning in this work, the 3D U-Net was first trained to autosegment the hippocampus using contrast-enhanced T1-weighted (T1C) MRI data from 50 patients with glioblastomas (spacing [mm]: 0.5 × 0.36 × 0.36; resolution: (327–364) × 640 × 640). This hippocampal dataset was collected from a single institution (The First Hospital of Tsinghua University) for the radiotherapy treatment of brain metastases. All hippocampal contours were delineated by two expert radiation oncologists (ZD, with more than 15 years of experience in radiotherapy of head and neck tumors; RZ, with more than 20 years of experience in radiotherapy) according to the RTOG 0933 trial and then cross-checked and revised.

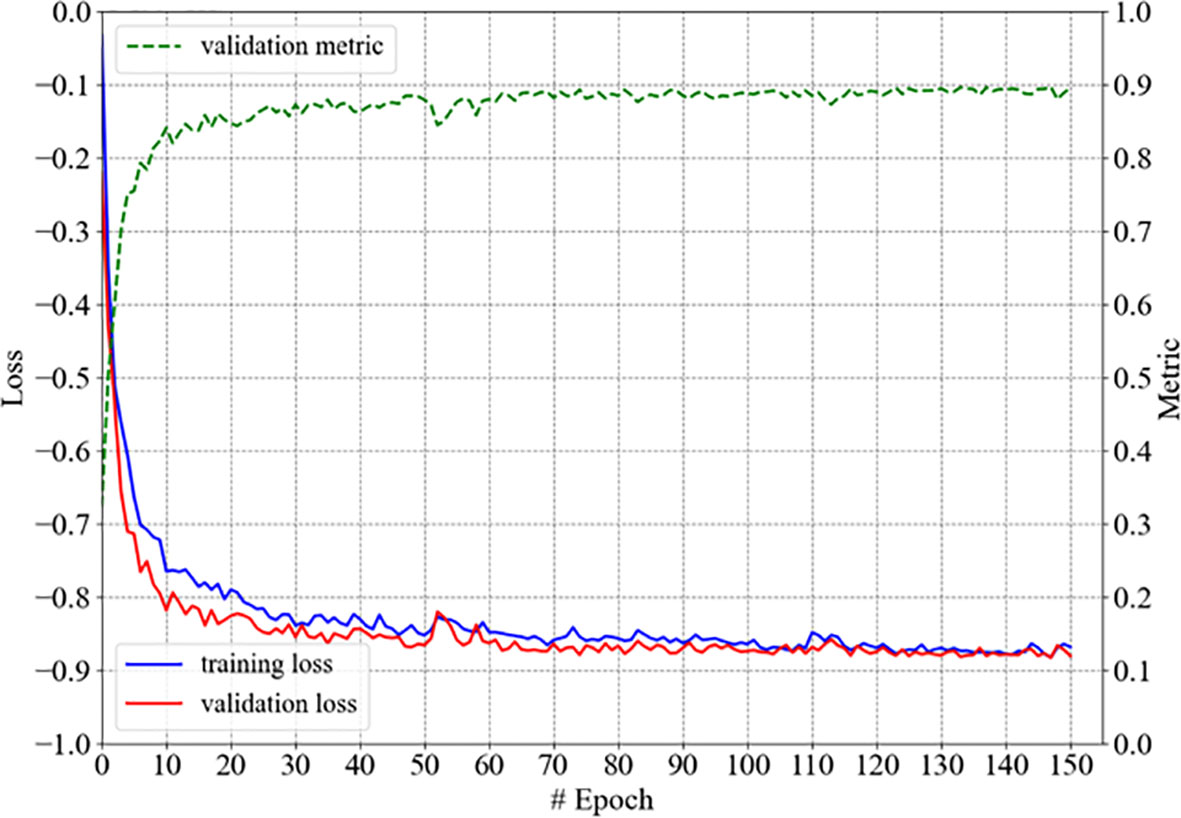

Our 3D U-Net for hippocampus autosegmentation was trained on the hippocampal data from 40 patients, converged within 150 epochs, and is denoted model-hippo; its training process is shown in Figure 3. On the test set (10 cases), the model achieved a DSC of 0.897 (±0.011) for the left hippocampus and 0.895 (±0.019) for the right hippocampus.

Figure 3 The training process of model-hippo: the loss is the objective loss L and the metric is DSC.

The model for autosegmenting the GTV of glioblastomas was trained as follows. First, the parameters of our 3D U-Net were randomly initialized, and the model was trained for 50 epochs on the glioma training set, as illustrated in Figure 4A; the resulting model is denoted model-glioma. Second, transfer learning was applied: instead of random initialization, the network parameters of model-hippo were used as the initial parameters, and the network was then trained for 50 epochs on the same training set used for model-glioma. This model is denoted model-glioma-TL, where TL refers to transfer learning. Its training process is shown in Figure 4B.
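The initialization step can be sketched in PyTorch as follows. A tiny stand-in network replaces the full 3D U-Net to keep the example short (`make_net` and `init_from_pretrained` are hypothetical names). Copying every parameter whose name and shape match, while leaving a mismatched output layer randomly initialized, is an assumption: the text does not state how the output layer was handled, and it is assumed here that model-hippo has three output channels (background, left and right hippocampus) whereas the glioma model has two (background and GTV).

```python
import torch
from torch import nn


def make_net(num_classes):
    """Hypothetical stand-in for the 3D U-Net: feature layers plus a 1x1x1 output head."""
    return nn.Sequential(
        nn.Conv3d(1, 8, kernel_size=3, padding=1),
        nn.LeakyReLU(0.01),
        nn.InstanceNorm3d(8, affine=True),
        nn.Conv3d(8, num_classes, kernel_size=1),
    )


def init_from_pretrained(target, source_state):
    """Copy every pretrained tensor whose name and shape match; return the copied names."""
    target_state = target.state_dict()
    transferred = {
        name: tensor
        for name, tensor in source_state.items()
        if name in target_state and target_state[name].shape == tensor.shape
    }
    target_state.update(transferred)
    target.load_state_dict(target_state)
    return sorted(transferred)


model_hippo = make_net(num_classes=3)      # background, left and right hippocampus
model_glioma_tl = make_net(num_classes=2)  # background, GTV
copied = init_from_pretrained(model_glioma_tl, model_hippo.state_dict())
```

All layers, including the transferred ones, would then be fine-tuned on the glioma training set, whereas model-glioma would start from the default random initialization.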

As shown in Figure 4, model-glioma-TL converged faster than model-glioma within the same 50 epochs: the validation metric reached 0.9 within 10 epochs for model-glioma-TL but only within 40 epochs for model-glioma. Moreover, the validation metric of model-glioma-TL at the final epoch was higher than that of model-glioma.

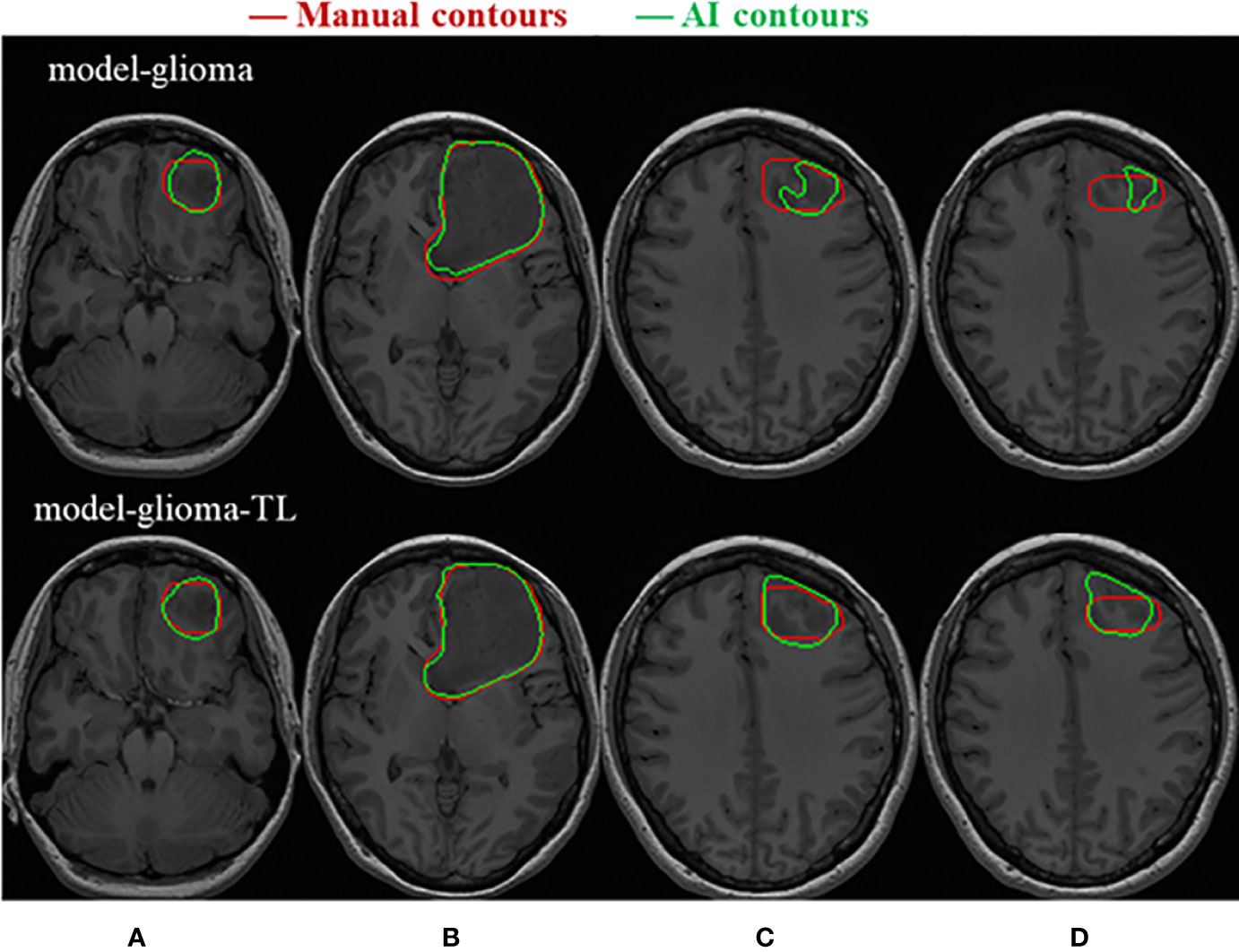

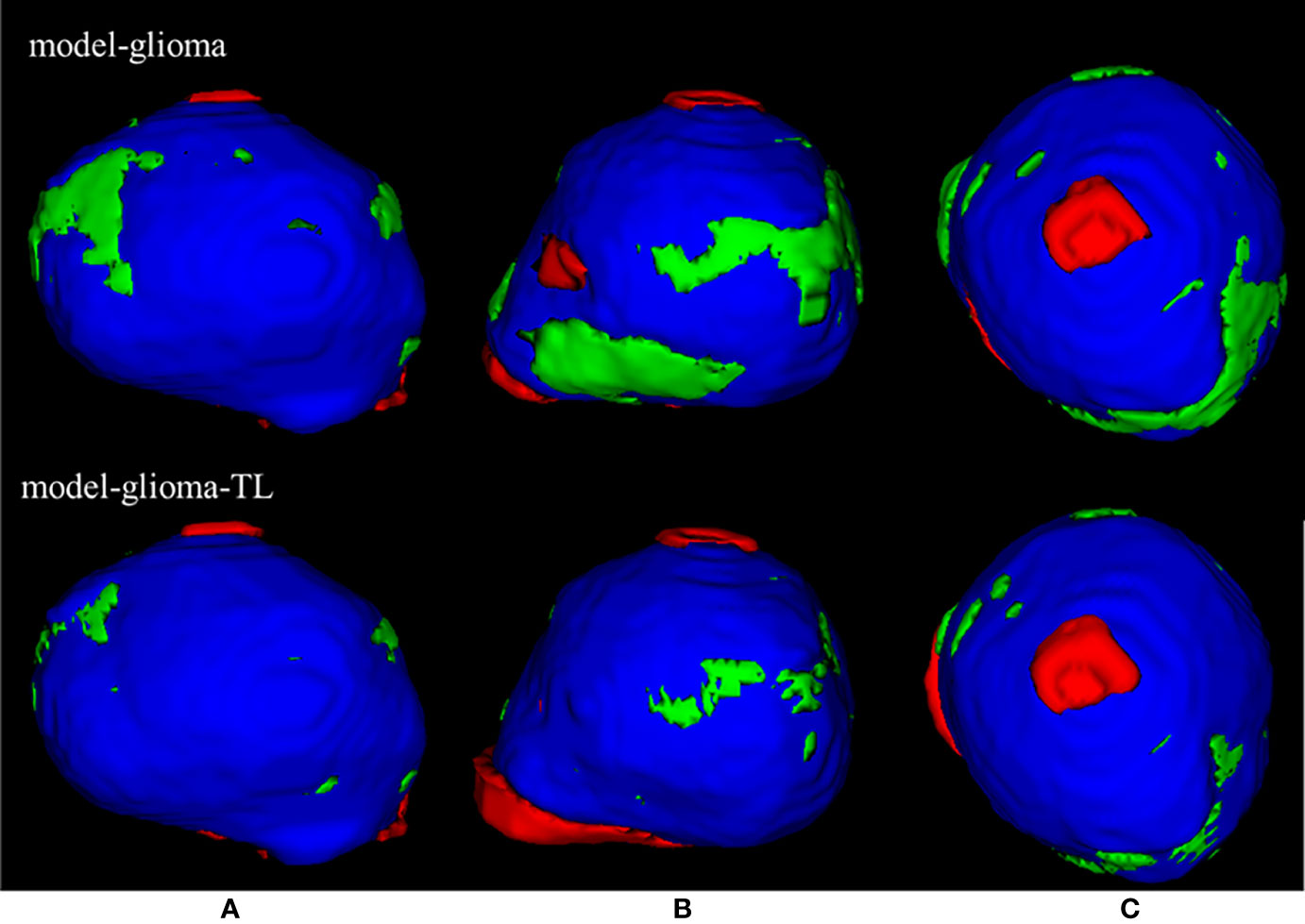

Two sets of experiments (model-glioma and model-glioma-TL) were conducted to verify the effectiveness of transfer learning when training with a small sample and to evaluate the performance of the two models using the DSC, 95HD, and ASD metrics. The evaluation metrics of the two experiments on the same test set, including the mean, standard deviation (SD), and P value of a two-tailed t-test, are presented in Table 2. Model-glioma-TL significantly outperformed model-glioma on all three metrics (DSC 0.9404 vs. 0.9158, 95HD 1.8107 mm vs. 2.5761 mm, ASD 0.6003 mm vs. 0.7579 mm; P < 0.05). The autosegmentation results of a test sample are visualized in Figure 5, and its 3D morphology is visualized in Figure 6.
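The statistical comparison can be reproduced from per-case metric values with SciPy. Because both models were evaluated on the same test cases, the sketch defaults to a paired two-tailed t-test; the text specifies only a two-tailed t-test, so the paired default (and the function name `compare_models`) is an assumption, and the unpaired variant is available through `paired=False`.

```python
from scipy import stats


def compare_models(metric_tl, metric_scratch, paired=True):
    """Two-tailed t-test on per-case metric values (e.g. DSC) from the same test cases."""
    if paired:
        result = stats.ttest_rel(metric_tl, metric_scratch)  # two-sided by default
    else:
        result = stats.ttest_ind(metric_tl, metric_scratch)
    return result.statistic, result.pvalue
```

Each argument would hold one value per test patient, in the same patient order for both models.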

Figure 5 Visualization of the test samples for the two models. The performance of model-glioma-TL was superior to that of model-glioma in the autosegmentation of glioblastoma GTVs, especially in the recognition of the small GTV in the upper and lower MRI slices (A, D) and the boundary delineation of the GTV contours in the intermediate MRI slices (B, C).

Figure 6 The 3D visualization of the test samples for the two models. The red region is the contour delineated only by the human experts, the green region is the contour delineated only by the AI models, and the blue region is the overlap between the expert and AI contours. The contour produced by model-glioma-TL agreed better with the expert contour than that of model-glioma in three different views of the test sample (A–C). The mean absolute percentage error of model-glioma-TL in the autosegmentation of glioblastoma GTVs on the same test set was 2.58%, lower than the 4.74% of model-glioma.
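For reference, the mean absolute percentage error can be computed as below. The caption does not state which quantity the error refers to; the sketch assumes it is the GTV volume of each test case, computed from a binary mask and its voxel spacing, and the helper names are hypothetical.

```python
import numpy as np


def gtv_volume(mask, spacing):
    """Volume (mm^3) of a binary mask: voxel count times the volume of one voxel."""
    return float(np.count_nonzero(mask) * np.prod(spacing))


def mape(predicted, reference):
    """Mean absolute percentage error (%) of predicted values relative to reference values."""
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    return float(100.0 * np.mean(np.abs(predicted - reference) / np.abs(reference)))
```

For example, predicted volumes of 110 and 90 mm³ against reference volumes of 100 mm³ each give a MAPE of 10%.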

The rapid development of modern radiotherapy technology has made multimodal medical imaging information increasingly abundant (18). Because manually contouring MRI slices takes a considerable amount of time, and the results of manual tumor segmentation often depend on the physician's prior knowledge and experience, the final target volumes can vary (19, 20). Deep learning combined with MRI can therefore help improve the accuracy of tumor target delineation and reduce differences caused by subjective factors (10). Additionally, it can help physicians complete tumor target delineation tasks efficiently and practically (21, 22).

Gliomas are the most common primary brain tumors and seriously endanger human health (23). Consequently, brain tumor image segmentation has become a popular research topic (24, 25). In recent years, brain tumor image segmentation methods have improved continuously, progressing from manual to semiautomatic and automatic techniques (22, 26). In the present study, we demonstrated that the performance of the transfer learning approach is comparable to that of previously reported 3D CNN models (4, 6), while requiring a much smaller dataset and fewer training epochs. Nevertheless, this method should be further evaluated on larger datasets such as BRATS.

In conclusion, transfer learning is feasible and effective for training models for accurate and consistent glioblastoma autosegmentation. This is particularly important for radiation oncology departments that wish to implement deep learning with a limited number of clinical cases.

In this work, the artificial intelligence (AI) algorithm based on transfer learning achieved good results in the autosegmentation of the glioblastoma GTV; however, several issues remain to be addressed. 1) In clinical practice, determining the location and boundary of the glioblastoma GTV requires consideration of both the enhancing region on contrast-enhanced T1-weighted images and the abnormal region on T2 FLAIR images; autosegmentation of glioblastoma using multimodal MRI may therefore better meet clinical needs (27). 2) Scanners from different manufacturers and different scanning protocols often produce images with different voxel spacings and resolutions, as well as different image quality and style; these differences are especially pronounced for MRI. In addition, different physicians delineate tumors in different styles because of subjectivity. These differences seriously affect the generalizability of AI algorithms, so further study using data from multiple centers is an important topic. 3) We confirmed that transfer learning can significantly improve the automatic segmentation of the glioblastoma GTV; however, some organs and target areas have characteristic shapes (for example, the optic chiasm is X-shaped and the brain stem is apple-shaped) (28, 29), and their delineation requires combining different medical imaging modalities with the extensive prior medical knowledge of professional physicians (30, 31). How to incorporate such shape priors and medical prior knowledge into AI models to further improve their generalizability remains an open problem.

The original contributions presented in the study are included in the article/supplementary materials. Further inquiries can be directed to the corresponding authors.

Conceptualization, ST and CW. Methodology, ST and YL. Software, YL and LJ. Validation, ST, CW, RZ and ZD. Formal analysis, ST and ZD. Investigation, ST and CW. Resources, ST and JW. Data curation, ST, JW, ZD, and YL. Writing—original draft preparation, ST and YL. Writing—review and editing, JW, CW and RZ. Visualization, YL. Supervision, WZ. Project administration, WZ. ST and CW contributed equally to this work and are listed as co-first authors. All authors contributed to the article and approved the submitted version.

WZ is employed by Shanghai United Imaging Healthcare Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Ostrom QT, Gittleman H, Truitt G, Boscia A, Kruchko C, Barnholtz-Sloan JS. CBTRUS Statistical Report: Primary Brain and Other Central Nervous System Tumors Diagnosed in the United States in 2011-2015. Neuro Oncol (2018) 20 Suppl 4:iv1. doi: 10.1093/neuonc/noy131

2. Ten Haken RK, Thornton AF Jr, Sandler HM, LaVigne ML, Quint DJ, Fraass BA, et al. A Quantitative Assessment of the Addition of MRI to CT-Based, 3-D Treatment Planning of Brain Tumors. Radiother Oncol (1992) 25:121. doi: 10.1016/0167-8140(92)90018-P

3. Ranjbarzadeh R, Bagherian Kasgari A, Jafarzadeh Ghoushchi S, Anari S, Naseri M, Bendechache M. Brain Tumor Segmentation Based on Deep Learning and an Attention Mechanism Using MRI Multi-Modalities Brain Images. Sci Rep (2021) 11:10930. doi: 10.1038/s41598-021-90428-8

4. Yi D, Zhou M, Chen Z, Gevaert O. 3-D Convolutional Neural Networks for Glioblastoma Segmentation. Comput Vision Pattern Recognit (2016) arXiv:1611.04534. doi: 10.48550/arXiv.1611.04534.

5. Havaei M, Davy A, Warde-Farley D, Biard A, Courville A, Bengio Y, et al. Brain Tumor Segmentation With Deep Neural Networks. Med Image Anal (2017) 35:18–31. doi: 10.1016/j.media.2016.05.004

6. Chen L, Wu Y, DSouza AM, Abidin AZ, Wismüller A, Xu C. MRI Tumor Segmentation With Densely Connected 3D CNN. In: Medical Imaging 2018: Image Processing, vol. 10574. Bellingham, WA, USA: International Society for Optics and Photonics (2018). p. 105741F.

7. Valverde JM, Imani V, Abdollahzadeh A, De Feo R, Prakash M, Ciszek R, et al. Transfer Learning in Magnetic Resonance Brain Imaging: A Systematic Review. J Imaging (2021) 7:66. doi: 10.3390/jimaging7040066

8. Shin HC, Roth HR, Gao M, Lu L, Xu Z, Nogues I, et al. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans Med Imaging (2016) 35:1285–98. doi: 10.1109/TMI.2016.2528162

9. Isensee F, Jäger PF, Kohl SA, Petersen J, Maier-Hein KH. Automated Design of Deep Learning Methods for Biomedical Image Segmentation. arXiv preprint (2019) arXiv:1904.08128.

10. Zhao Y-X, Zhang Y-M, Liu C-L. Bag of Tricks for 3D MRI Brain Tumor Segmentation. In: Crimi A., Bakas S. (eds) Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2019. Lecture Notes in Computer Science, vol 11992. Cham: Springer (2020). doi: 10.1007/978-3-030-46640-4_20

11. Isensee F, Jaeger PF, Kohl SAA, Petersen J, Maier-Hein KH. nnU-Net: A Self-Configuring Method for Deep Learning-Based Biomedical Image Segmentation. Nat Methods (2021) 18(2):203–11. doi: 10.1038/s41592-020-01008-z

12. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N., Hornegger J., Wells W., Frangi A. (eds) Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science, vol. 9351. Cham: Springer (2015). doi: 10.1007/978-3-319-24574-4_28.

13. Drozdzal M, Vorontsov E, Chartrand G, Kadoury S, Pal C. The Importance of Skip Connections in Biomedical Image Segmentation. Comput Vision Pattern Recognit (2016) arXiv:1608.04117. doi: 10.48550/arXiv.1608.04117

14. Milletari F, Navab N, Ahmadi S-A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In: International Conference on 3D Vision (3DV). Stanford, CA, USA: IEEE (2016). p. 565–71. doi: 10.1109/3DV.2016.79

15. Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. 3D U-Net: Learning Dense Volumetric Segmentation From Sparse Annotation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Cham: Springer (2016). p. 424–32. doi: 10.1007/978-3-319-46723-8_49

16. Ulyanov D, Vedaldi A, Lempitsky V. Instance Normalization: The Missing Ingredient for Fast Stylization. Comput Vision Pattern Recognit (2016) arXiv:1607.08022. doi: 10.48550/arXiv.1607.08

17. Zhuang F, Qi Z, Duan K, Xi D, Zhu Y, Zhu H, et al. A Comprehensive Survey on Transfer Learning. Proc IEEE (2021) 109(1):43–76. doi: 10.1109/JPROC.2020.3004555

18. Ostrom QT, Bauchet L, Davis FG, Deltour I, Fisher JL, Langer CE, et al. The Epidemiology of Glioma in Adults: A State of the Science Review. Neuro Oncol (2014) 16(7):896–913. doi: 10.1093/neuonc/nou087

19. Menze BH, Jakab A, Bauer S, Kalpathy-Cramer J, Farahani K, Kirby J, et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Trans Med Imaging (2014) 34(10):1993–2024.

20. Wang G, Li W, Ourselin S, Vercauteren T. Automatic Brain Tumor Segmentation Using Cascaded Anisotropic Convolutional Neural Networks. In: Crimi A., Bakas S., Kuijf H., Menze B., Reyes M. (eds) Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2017. Lecture Notes in Computer Science, vol 10670. Cham: Springer (2018). doi: 10.1007/978-3-319-75238-9_16.

21. Meier R, Bauer S, Slotboom J, Wiest R, Reyes M. Patient-Specific Semi-Supervised Learning for Postoperative Brain Tumor Segmentation. Med image Comput computer-assisted Intervention: MICCAI Int Conf Med Image Comput Computer-Assisted Intervention (2014) 17:714–21. doi: 10.1007/978-3-319-10404-1_89

22. Gutman DA, Dunn WD Jr, Grossmann P, Cooper LA, Holder CA, Ligon KL, et al. Somatic Mutations Associated With MRI-Derived Volumetric Features in Glioblastoma. Neuroradiology (2015) 57:1227–37. doi: 10.1007/s00234-015-1576-7

23. Menze BH, Jakab A, Bauer S, Kalpathy-Cramer J, Farahani K, Kirby J, et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Trans Med Imaging (2015) 34(10):1993–2024. doi: 10.1109/TMI.2014.2377694

24. Egger J, Kapur T, Fedorov A, Pieper S, Miller JV, Veeraraghavan H, et al. GBM Volumetry Using the 3D Slicer Medical Image Computing Platform. Sci Rep (2013) 3:1364. doi: 10.1038/srep01364

25. Bauer S, Nolte LP, Reyes M. Fully Automatic Segmentation of Brain Tumor Images Using Support Vector Machine Classification in Combination With Hierarchical Conditional Random Field Regularization. Med Image Comput Computer-Assisted Intervention: MICCAI Int Conf Med Image Comput Computer-Assisted Intervention (2011) 14:354–61. doi: 10.1007/978-3-642-23626-6_44

26. Stummer W, Reulen HJ, Meinel T, Pichlmeier U, Schumacher W, Tonn JC, et al. Extent of Resection and Survival in Glioblastoma Multiforme: Identification of and Adjustment for Bias. Neurosurgery (2008) 62:564–76; discussion 564–76.

27. Meyer P, Noblet V, Mazzara C, Lallement A. Survey on Deep Learning for Radiotherapy. Comput Biol Med (2018) 98:126–46. doi: 10.1016/j.compbiomed.2018.05.018

28. Gondi V, Tomé WA, Mehta MP. Why Avoid the Hippocampus? A Comprehensive Review. Radiother Oncol (2010) 97:370–6. doi: 10.1016/j.radonc.2010.09.013

29. Brown PD, Gondi V, Pugh S. Hippocampal Avoidance During Whole-Brain Radiotherapy Plus Memantine for Patients With Brain Metastases: Phase III Trial NRG Oncology CC001. J Clin Oncol (2020) 38:1019–29. doi: 10.1200/JCO.19.02767

30. Sze V, Chen Y, Yang T, Emer JS. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc IEEE (2017) 105(12):2295–329. doi: 10.1109/JPROC.2017.2761740

31. Isensee F, Kickingereder P, Wick W, Bendszus M, Maier-Hein KH. Brain Tumor Segmentation and Radiomics Survival Prediction: Contribution to the Brats 2017 Challenge. In: Crimi A., Bakas S., Kuijf H., Menze B., Reyes M. (eds) Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2017. Lecture Notes in Computer Science, vol 10670. Cham: Springer (2018). doi: 10.1007/978-3-319-75238-9_25.

Keywords: glioblastoma, autosegmentation, deep learning, transfer learning, radiotherapy treatment

Citation: Tian S, Wang C, Zhang R, Dai Z, Jia L, Zhang W, Wang J and Liu Y (2022) Transfer Learning-Based Autosegmentation of Primary Tumor Volumes of Glioblastomas Using Preoperative MRI for Radiotherapy Treatment. Front. Oncol. 12:856346. doi: 10.3389/fonc.2022.856346

Received: 17 January 2022; Accepted: 23 February 2022;

Published: 14 April 2022.

Edited by:

Wei Zhao, Beihang University, China

Reviewed by:

Fuli Zhang, Chinese PLA General Hospital, China

Copyright © 2022 Tian, Wang, Zhang, Dai, Jia, Zhang, Wang and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Junjie Wang, junjiewang_edu@sina.cn; Yinglong Liu, yinglong.liu@cri-united-imaging.com

†These authors have contributed equally to this work and share first authorship
