
ORIGINAL RESEARCH article

Front. Oncol., 26 May 2022

Sec. Breast Cancer

Volume 12 - 2022 | https://doi.org/10.3389/fonc.2022.850515

This article is part of the Research Topic: Quantitative Imaging and Artificial Intelligence in Breast Tumor Diagnosis

Background: The detection of phosphatidylinositol-3 kinase catalytic alpha (PIK3CA) gene mutations in breast cancer is a key step in designing a personalized, optimal treatment strategy. Traditional genetic testing methods are invasive and time-consuming, so a non-invasive method to estimate PIK3CA mutation status is urgently needed. Ultrasound (US), one of the most common methods for breast cancer screening, has the advantages of being non-invasive, fast, and inexpensive. In this study, we propose a deep convolutional neural network (DCNN) to identify PIK3CA mutations in breast cancer based on US images.

Materials and Methods: We retrospectively enrolled 312 patients with pathologically confirmed breast cancer who underwent genetic testing. All US images (n=800) of these patients were collected and divided into a training set (n=600) and a test set (n=200). A DCNN, the Improved Residual Network (ImResNet), was designed to identify PIK3CA mutations. We also compared the ImResNet model with the original ResNet50 model, classical machine learning models, and other deep learning models.

Results: The proposed ImResNet model can identify PIK3CA mutations in breast cancer based on US images. Notably, our ImResNet model outperforms the original ResNet50, DenseNet201, Xception, MobileNetv2, and two machine learning models (SVM and KNN), with an average area under the curve (AUC) of 0.775. Moreover, the overall accuracy, average precision, recall rate, and F1-score of the ImResNet model reached 74.50%, 74.17%, 73.35%, and 73.76%, respectively. All of these measures were significantly higher than those of the other models.

Conclusion: The ImResNet model gives an encouraging performance in predicting PIK3CA mutations based on breast US images, providing a new method for noninvasive gene prediction. In addition, this model could provide the basis for clinical adjustments and precision treatment.

Breast cancer became the leading cause of global cancer incidence in 2020 (1), and it is the fifth leading cause of cancer death among Chinese women (2). Breast cancer shows a high degree of heterogeneity, and genomic instability is regarded as a major driver of tumor heterogeneity (3). Differences at the genetic and molecular levels lead to very different clinical treatment options. Somatic mutations are stable mutations and play an important role in cancer development and progression (4). The phosphatidylinositol-3 kinase catalytic alpha (PIK3CA) gene is one of the most frequently mutated genes in breast cancer. According to the Cancer Genome Atlas Network, the percentage of PIK3CA mutations is 34% (5). Phosphatidylinositol 3-kinase (PI3K) is an activator of AKT, which participates in the regulation of cell growth, proliferation, survival, and motility. The PI3K heterodimer consists of two subunits: the regulatory subunit (p85) and the catalytic subunit (p110). PIK3CA mutations induce hyperactivation of the alpha isoform (p110α) of PI3K and can act on the PI3K-AKT-mTOR signaling pathway to trigger oncogene activation; they also lead to persistent AKT activation and regulation of tumor growth in breast cancer (6–8).

Currently available treatment options for breast cancer are chemotherapy, endocrine therapy (ET), targeted therapy, and immunotherapy. Two-thirds of breast cancer patients express hormone receptors (HR) and lack human epidermal growth factor receptor 2 (HER2) overexpression and/or amplification, and for them ET is the paramount medical treatment (9, 10). However, about 50% of patients eventually develop ET resistance through several mechanisms, such as dysregulation of PI3K-AKT-mTOR signaling (11). The orally available α-selective PI3K inhibitor alpelisib has been approved by the U.S. Food and Drug Administration (FDA) for the treatment of patients with HR+/HER2- advanced or metastatic breast cancer (12, 13). In addition, alterations in the PI3K pathway are associated with poor outcomes of targeted therapy in HER2+ breast cancer (14). For triple-negative breast cancer (TNBC), PIK3CA protein expression is significantly associated with improved overall survival and disease-free survival (15). Therefore, PIK3CA mutation status plays a vital role in determining the optimal treatment choice for breast cancer patients.

Clinically relevant PIK3CA alterations are detected in several biospecimens using different genetic testing techniques including direct sequencing, real-time polymerase chain reaction (PCR), next-generation sequencing (NGS), and analysis of liquid biopsy samples (16). Although these methods for detecting genetic mutations have improved considerably, molecular testing is often time-consuming, operator dependent, and may be limited by inadequate sample availability. In addition, the cost of genetic testing remains too high for patients. Thus, it is necessary to develop noninvasive and efficient methods for estimating PIK3CA mutation status.

Recently, medical images from modalities such as computed tomography (CT) and magnetic resonance imaging (MRI) have been used to identify gene mutations in different cancers. For instance, Weiss et al. (17) found that texture analysis of CT images can differentiate K-ras mutant from pan-wildtype non-small cell lung cancer. Dang et al. (18) used MRI texture analysis to predict p53 mutation status in head and neck squamous cell carcinoma. Texture analysis has also been used to assess the relationship between genetic mutations in breast cancer and the morphological features of masses on MRI. Moon et al. (19) applied texture and morphological analysis to breast MRI images to evaluate TP53 and PIK3CA mutations. Vasileiou et al. (20) performed texture analysis of breast MRI to predict BRCA-associated genetic risk. However, CT and MRI are relatively expensive, time-consuming, and not available to all patients.

As one of the most widely used tools in breast tumor assessment, ultrasound (US) can assess breast tumor features in a way similar to CT and MRI, with the added advantages of being non-invasive, real-time, and low cost (21). To address the drawback of operator dependence, many deep learning methods have been proposed for US images. Unlike traditional machine learning and radiomics methods, a deep convolutional neural network (DCNN), a special type of deep learning model, does not require domain experts to select specific features beforehand. Instead, it takes the raw medical images as inputs and automatically learns features related to classification or segmentation tasks (22). To improve the efficiency of clinical workflows and reduce inter-observer variation, deep learning has already been applied to large datasets of US images for classifying benign and malignant breast tumors (23–25), classifying molecular subtypes of breast cancer (26, 27), predicting breast cancer lymph node metastasis (28–31), and predicting the response of breast cancer to neoadjuvant chemotherapy (32, 33).

Some studies have applied deep learning models to identify TP53 mutations in pancreatic cancer using multi-modal MRI (34), EGFR mutation status in lung adenocarcinoma using CT imaging (35, 36), and KRAS mutations in colorectal cancer using CT imaging (37). However, it remains unclear whether deep learning models can identify breast cancer gene mutations on US images. This study examined the differences in breast morphology and other features that result from microstructural changes in PIK3CA-mutant breast cancers, investigated whether these differences could be captured by US images, and identified them using an improved residual network (ImResNet).

This study enrolled 589 female patients with breast cancer who were treated at Guangdong Provincial People’s Hospital between January 2017 and October 2021. To obtain PIK3CA mutation status, all patients submitted breast tissue samples and blood samples for targeted sequencing to a clinical laboratory accredited by the College of American Pathologists (CAP) and certified under the Clinical Laboratory Improvement Amendments (CLIA). This retrospective study was approved by the Institutional Review Board of Guangdong Provincial People’s Hospital, which waived the requirement for informed consent from patients.

Mutational analysis of the PIK3CA gene was performed using next-generation sequencing (NGS). First, tissue and genomic DNA were extracted from formalin-fixed, paraffin-embedded (FFPE) tumor tissues using the QIAamp DNA FFPE tissue kit and from blood samples using the QIAamp DNA blood mini kit (Qiagen, Hilden, Germany), respectively. NGS library construction required at least 50 ng of DNA. Tissue DNA was then sheared using a Covaris M220 (Covaris, MA, USA), followed by end repair, phosphorylation, and adaptor ligation. Fragments of 200-400 bp were purified, followed by hybridization with capture probes, magnetic bead hybridization selection, and PCR amplification. Fragment quality and size were assessed with the high sensitivity DNA kit (Bioanalyzer 2100, Agilent Technologies, CA, USA). Target capture was performed using a commercial panel consisting of 520 cancer-related genes.

Cases were selected according to the following criteria: ① surgical resection was performed for the target tumor; ② complete pathological and immunohistochemical results were available; ③ preoperative breast US images of the patient were fully obtained and stored. Finally, 312 patients with 800 US images were included in this study: 127 patients with PIK3CA mutations (mean age 51.2 years; range 25-76 years) and 185 patients without PIK3CA mutations (mean age 48.7 years; range 22-89 years). The flowchart of the study cohort selection is shown in Figure 1. To ensure the robustness and accuracy of the model, multiple US images of different sections were acquired per lesion whenever possible.

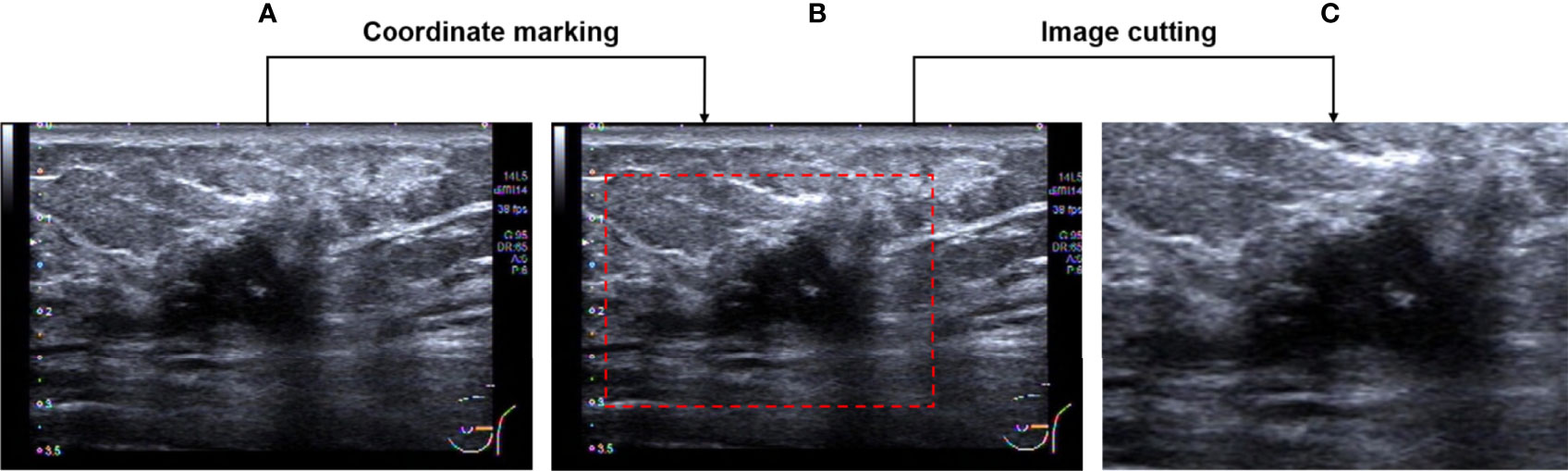

First, the region of interest (ROI), which includes the entire tumor area together with a minimal margin of peritumoral tissue, was manually cropped from each breast US image by a senior radiologist with 12 years of experience. An example ROI is shown in Figure 2. A total of 800 ROI images were obtained and then split into training and test sets at a ratio of 75% to 25%.
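The 75%/25% split described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the identifiers in `roi_ids`, the helper `split_train_test`, and the fixed seed are all hypothetical, and the sketch splits at the image level because the text does not state whether images were grouped by patient before splitting.

```python
import random


def split_train_test(items, train_fraction=0.75, seed=0):
    """Shuffle a copy of items and split it into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical identifiers standing in for the 800 ROI crops.
roi_ids = [f"roi_{i:03d}" for i in range(800)]
train_ids, test_ids = split_train_test(roi_ids)
print(len(train_ids), len(test_ids))  # 600 200
```

When several images come from the same lesion, splitting by patient rather than by image is a common way to keep near-duplicate views out of both sets at once.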

Figure 2 Image pre-processing. (A) An original breast US image. (B) Image after coordinate marking. (C) The selected effective image area.

In the proposed method, the PIK3CA mutation status is inferred from the ROI images, so the mutation identification problem is recast as an image classification problem. A deep residual network (ResNet) is redesigned: its architecture is changed to extract textural features from the images, and its output layers are modified to perform this classification task.

In deep learning networks, multiple layers are stacked in sequence and the output of each layer is fed to the following layer. A convolution layer is a basic layer in which filters with different kernels perform convolution operations to extract features from the preceding layer (22). The kth convolution layer $L_k$ is written as:

$$x_n^{k} = f\left(\sum_{m} w_{nm}^{k} \otimes x_m^{k-1} + b_n^{k}\right)$$

where $x_m^{k-1}$ is the mth feature map of layer $L_{k-1}$, $w_{nm}^{k}$ is the connecting weights between the nth feature map of the output layer and the mth feature map of the previous layer, $b_n^{k}$ is the bias of the nth feature map, and ⊗ denotes the convolution operation. The weights $w_{nm}^{k}$ are randomly initialized, then tuned using backpropagation and optimized with the stochastic gradient descent (SGD) algorithm (38). f is an activation function that introduces nonlinearity into the layer output. Commonly used activation functions include rectified linear units (ReLU), sigmoid, hyperbolic tangent, and softmax functions (39).
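To make the convolution layer concrete, the sketch below computes one layer in plain Python: each output feature map is the activation of the summed convolutions of all input maps plus a bias. The function names, the choice of ReLU as f, the absence of padding, and the toy values are assumptions made for illustration; like most deep learning libraries, it applies the kernel without flipping (cross-correlation).

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image without padding (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(value):
    """Rectified linear unit, used here as the activation f."""
    return max(0.0, value)


def conv_layer(inputs, weights, biases):
    """inputs: M feature maps; weights[n][m]: kernel from input m to
    output n; biases[n]: scalar bias of output map n."""
    outputs = []
    for kernels_n, bias_n in zip(weights, biases):
        per_input = [conv2d_valid(x_m, w_nm)
                     for x_m, w_nm in zip(inputs, kernels_n)]
        rows, cols = len(per_input[0]), len(per_input[0][0])
        outputs.append([
            [relu(sum(fm[r][c] for fm in per_input) + bias_n)
             for c in range(cols)]
            for r in range(rows)
        ])
    return outputs


# Toy example: one 3x3 input map, one output map with a 2x2 kernel.
x = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]]
w = [[[[-1.0, 0.0], [0.0, 1.0]]]]
b = [0.5]
y = conv_layer(x, w, b)
print(y)  # [[[4.5, 4.5], [4.5, 4.5]]]
```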

Pooling layers downsample the feature maps produced by the convolution layers, which removes redundant information and increases computing speed:

$$x_n^{k} = P\left(x_n^{k-1}\right)$$

where P is a pooling function; max pooling, average pooling, global max pooling, and global average pooling are commonly used for this process.
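Two of the pooling functions named above can be sketched in a few lines. The window size of 2, the non-overlapping stride, and the toy feature map are illustrative assumptions rather than settings reported in the study.

```python
def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a
    complete window are dropped."""
    rows = len(fmap) // size
    cols = len(fmap[0]) // size
    return [
        [
            max(fmap[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def global_average_pool(fmap):
    """Collapse a whole feature map to its mean value."""
    values = [v for row in fmap for v in row]
    return sum(values) / len(values)


fmap = [
    [1.0, 3.0, 2.0, 0.0],
    [4.0, 2.0, 1.0, 5.0],
    [0.0, 1.0, 7.0, 2.0],
    [3.0, 2.0, 4.0, 6.0],
]
pooled = max_pool2d(fmap)
gap = global_average_pool(fmap)
print(pooled)  # [[4.0, 5.0], [3.0, 7.0]]
print(gap)     # 2.6875
```

Max pooling shrinks the 4x4 map to 2x2, while global average pooling reduces each map to a single number, which is why it can feed a classifier directly.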

In the fully connected layer, each neuron is connected to all neurons of the previous layer. Its outputs estimate the confidence for the different categories.

For a classification problem, the final layer usually applies an activation function and serves as the classification layer. The classification layer yields the probability that an input belongs to a certain class (40). For a binary target with a sigmoid output, this can be written as:

$$P(y = 1 \mid x) = \frac{1}{1 + e^{-(w^{T}x + b)}}$$

where y is the class target, $x \in \mathbb{R}^{N \times 1}$ is an N-dimensional feature vector, $w \in \mathbb{R}^{N \times 1}$ is the weight parameter, and b is a bias term. In our model, the output layer has two outputs, for PIK3CA mutation and Non-PIK3CA mutation, respectively.
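As a minimal sketch of how a final layer turns a feature vector into class probabilities, the code below applies a two-output linear layer followed by softmax. The feature values, weights, and biases are invented for illustration, and the softmax formulation is an assumption; the exact output activation of the authors' implementation is not confirmed by the text.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(x, weights, biases):
    """One linear score per class (w . x + b), then softmax."""
    scores = [
        sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
        for w, b in zip(weights, biases)
    ]
    return softmax(scores)


# Hypothetical 3-dimensional feature vector and two-class parameters.
x = [0.2, -1.0, 0.5]
weights = [[1.0, 0.0, 2.0], [-1.0, 0.5, 0.0]]
biases = [0.0, 0.1]
probs = classify(x, weights, biases)
print(probs)
```

With two classes, softmax reduces to a sigmoid of the difference between the two scores, so the two-output and single-output formulations give the same probabilities.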

The ResNet employs a residual operation that makes the network easier to optimize and lets it gain accuracy from increased depth. A ResNet uses skip connections, or shortcuts, to jump over some layers. Typically, it consists of convolutional layers, rectified linear unit (ReLU) layers, batch normalization layers, and layer skips (41). The transfer learning approach adapts a pre-trained network to a different task, which reduces training time and improves the network’s generalization ability. In our proposed network, rather than building a model from scratch, a ResNet50 model pre-trained on natural images from ImageNet is selected as the backbone to extract features from the ROI images. ImageNet comprises more than 14 million images that have been hand-annotated to indicate the pictured objects and are organized into more than 20,000 categories (42). Of note, in breast US transfer learning, ImageNet is used as the pre-training dataset in most cases (43–45). The advantages of using a pre-trained network include shorter training time, better performance, and a smaller requirement for training data. The original ResNet is improved by adding a new fully connected layer for feature extraction and a new global average pooling layer to interpret these features in the classification task. The idea is to generate one feature map for each category of the classification task in the last convolutional layer, so that the feature maps can be interpreted as category confidence maps. Global average pooling also acts as a structural regularizer that helps prevent overfitting of the overall structure. Then, another fully connected layer is added as a classification layer whose number of outputs matches the number of categories, and a binary cross-entropy (BCE) function, which computes the BCE between predictions and targets, is used as the loss function (46). Figure 3 shows the structure diagram of our proposed ImResNet.
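The binary cross-entropy loss mentioned above can be sketched as follows. This is a generic illustration of the standard formula, averaged over samples; the function name, the clamping constant `eps`, and the toy targets and predictions are assumptions, not details taken from the authors' training code.

```python
import math


def binary_cross_entropy(targets, predictions, eps=1e-12):
    """Mean BCE between 0/1 targets and predicted probabilities."""
    total = 0.0
    for t, p in zip(targets, predictions):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(targets)


# Hypothetical labels (1 = mutation, 0 = no mutation) and predictions.
targets = [1, 0, 1, 0]
confident = [0.9, 0.1, 0.8, 0.2]
uncertain = [0.5, 0.5, 0.5, 0.5]
loss_confident = binary_cross_entropy(targets, confident)
loss_uncertain = binary_cross_entropy(targets, uncertain)
print(loss_confident < loss_uncertain)  # True
```

Predictions of 0.5 for every sample give a loss of ln 2, which is a useful reference point: a model whose loss stays near that value has learned little beyond chance.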

A confusion matrix (CM) is used to evaluate classification performance. The rows of the CM represent the instances of a predicted class and the columns represent the instances of an actual class. Using the results in the CM, four parameters, namely precision (P), recall (R), F1-score, and accuracy (ACC), were defined as follows:

$$P(i) = \frac{M_{ii}}{\sum_{j} M_{ji}}, \qquad R(i) = \frac{M_{ii}}{\sum_{j} M_{ij}}, \qquad F1(i) = \frac{2\,P(i)\,R(i)}{P(i) + R(i)}$$

P(i) is the fraction of samples correctly predicted as class i out of all samples predicted as class i, and R(i) is the fraction of samples correctly predicted as class i out of all true cases of class i. $M_{ij}$ is the number of samples whose true class is i and predicted class is j.

$$ACC = \frac{\sum_{i} M_{ii}}{\sum_{ij} M_{ij}}$$

where $\sum_{i} M_{ii}$ is the number of correct predictions and $\sum_{ij} M_{ij}$ is the total number of predictions. Accuracy is thus the fraction of correct predictions out of all predictions.
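As a minimal sketch, the four metrics can be computed directly from a confusion matrix indexed as `cm[i][j]`, the number of samples with true class i and predicted class j (the convention stated for Mij). The counts below are invented for illustration and are not the study's results.

```python
def classification_metrics(cm):
    """cm[i][j]: samples with true class i and predicted class j.
    Returns (accuracy, per-class list of precision/recall/F1)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    per_class = []
    for i in range(n):
        predicted_i = sum(cm[j][i] for j in range(n))  # column sum
        actual_i = sum(cm[i])                          # row sum
        precision = cm[i][i] / predicted_i if predicted_i else 0.0
        recall = cm[i][i] / actual_i if actual_i else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_class.append(
            {"precision": precision, "recall": recall, "f1": f1}
        )
    return accuracy, per_class


# Hypothetical two-class counts (not taken from Table 1 or Figure 5).
cm = [[40, 10],
      [5, 45]]
accuracy, per_class = classification_metrics(cm)
print(accuracy)                 # 0.85
print(per_class[0]["recall"])   # 0.8
```

Averaging the per-class values gives the macro-averaged precision, recall, and F1-score, which is one common reading of the averaged figures reported for a two-class task.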

The receiver operating characteristic (ROC) curve was also used to measure the classification performance of the different models. The area under the curve (AUC) was calculated and used as a metric to evaluate classification performance.
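The AUC can be computed without drawing the curve, using its rank interpretation: it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below uses that definition; the labels and scores are made up, and the pairwise loop is chosen for clarity rather than speed.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of positive/negative pairs in which the
    positive sample has the higher score (ties count as 0.5)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical labels (1 = mutation) and model scores.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
auc = roc_auc(labels, scores)
print(round(auc, 4))  # 0.8889
```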

The modified deep learning model was trained on a server with a 2 x Six-Core Intel Xeon processor and 128GB of memory. The server is equipped with an NVIDIA Tesla K40 GPU with 12GB of memory.

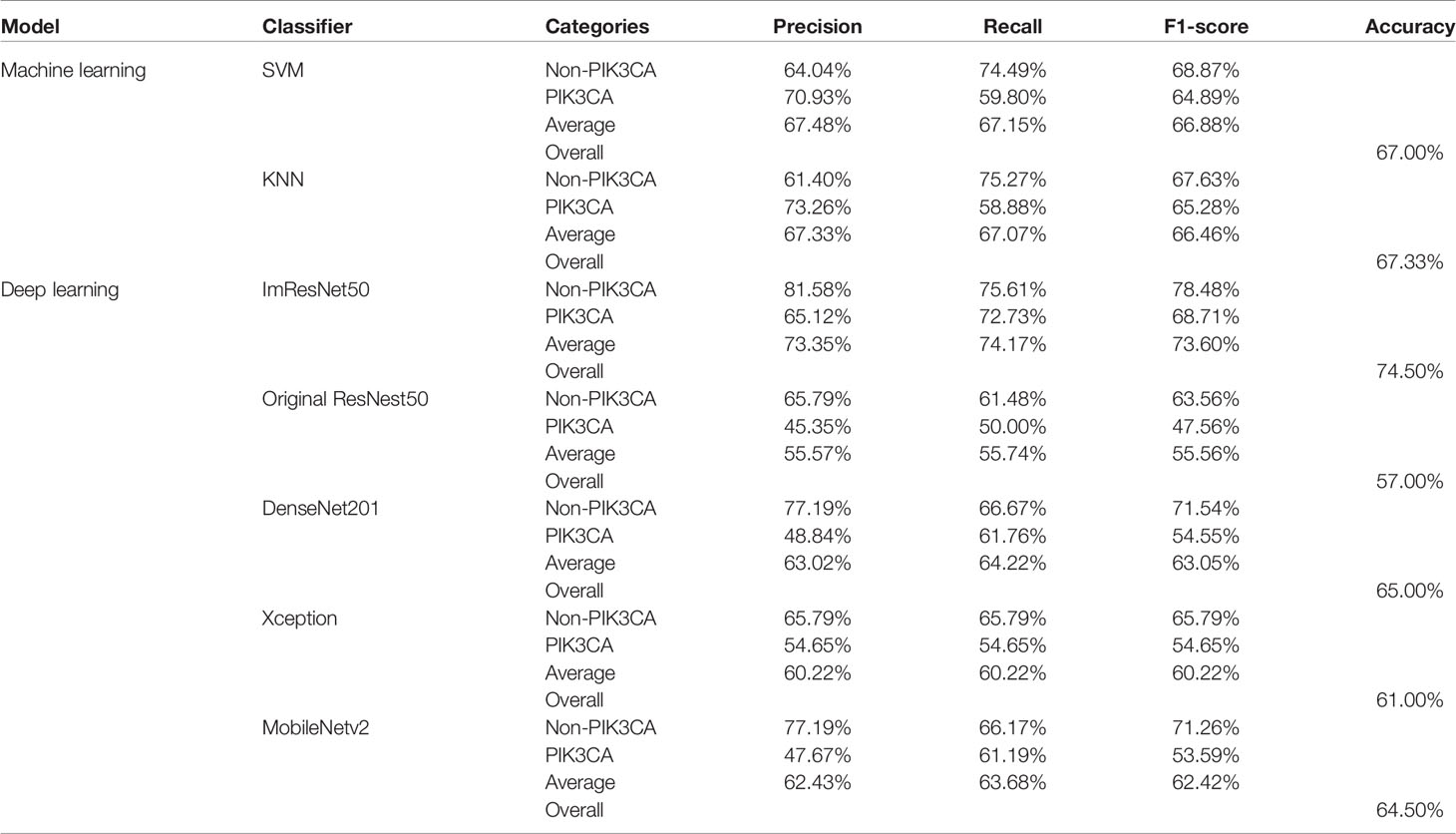

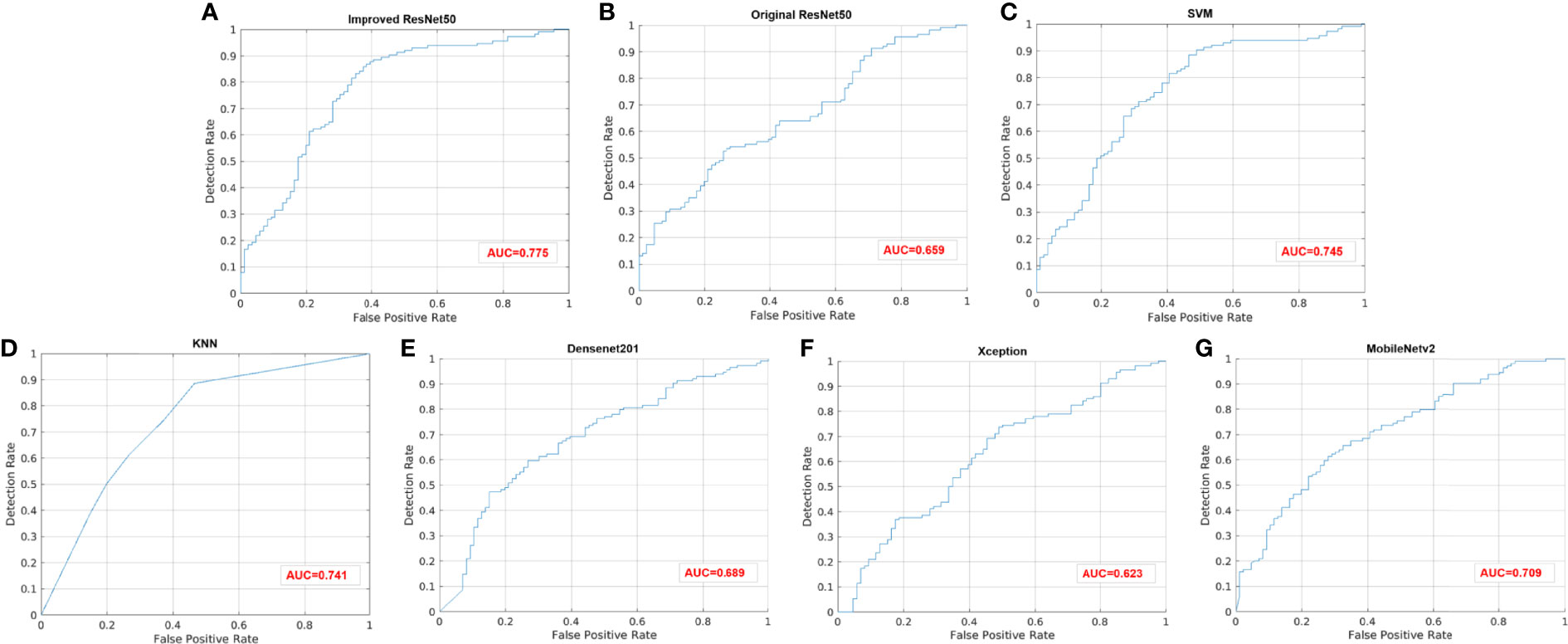

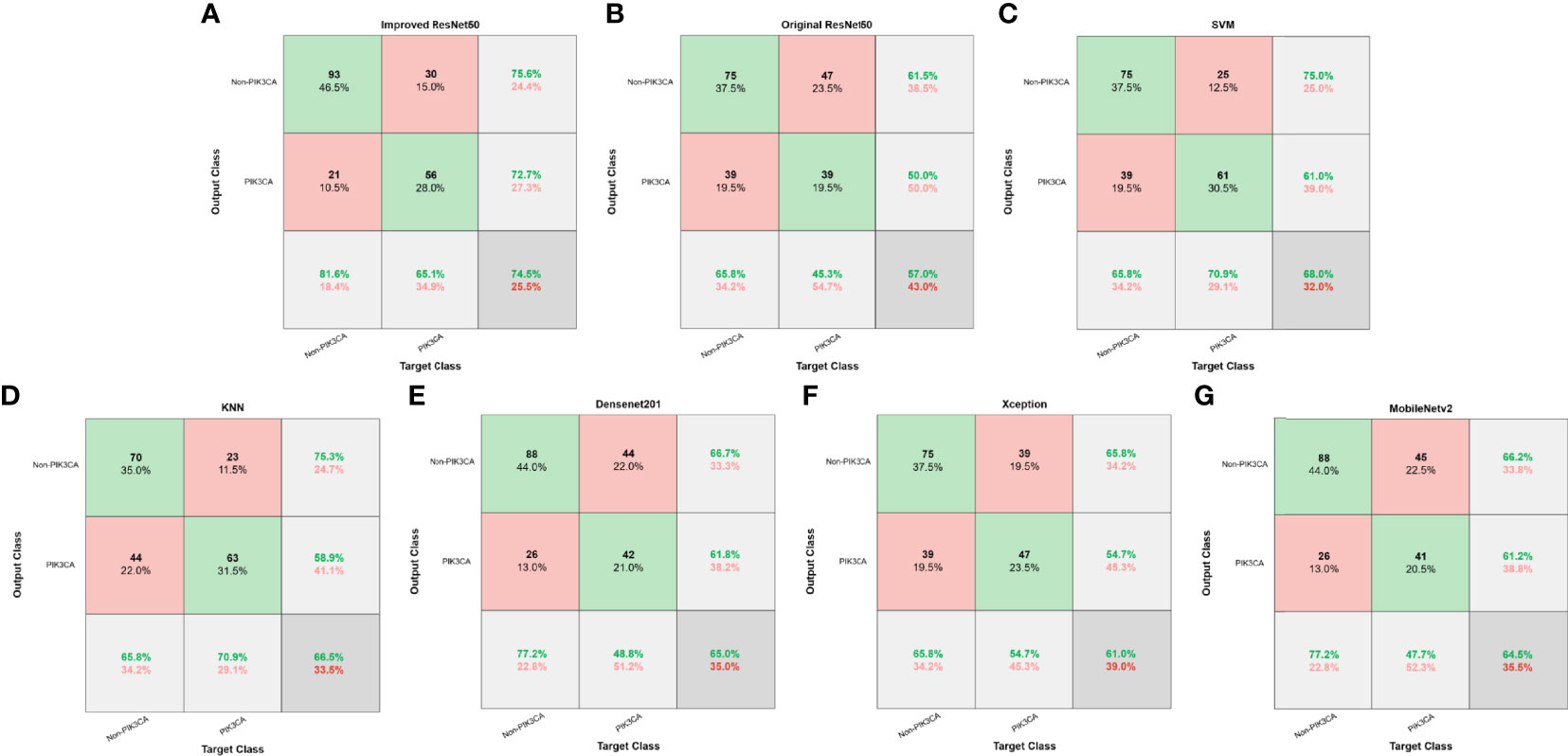

For the test set of 200 US images, the performance of the ImResNet model is given in Table 1. The ImResNet model achieved the best performance among all models, with an overall accuracy of 74.50%, and the average precision, recall, and F1-score reached 73.35%, 74.17%, and 73.60%, respectively. Figure 4A shows that the model achieved an AUC of 0.775. The performance of the ImResNet model can also be visualized from the CM in Figure 5. In each CM figure, the first two rows represent the instances of a predicted class, the first two columns represent the instances of an actual class, the diagonal cells correspond to correctly classified observations, and the off-diagonal cells correspond to incorrectly classified observations. The bottom row is the row-normalized row summary, showing the percentages of correctly and incorrectly classified observations for each true class. The rightmost column is the column-normalized column summary, displaying the percentages of correctly (in green) and incorrectly (in red) classified observations for each predicted class. In each cell, the percentage is the cell count divided by the total number of samples. Figure 6 shows classification examples from the ImResNet model: four images in the PIK3CA category are listed in the first row, and four images in the Non-PIK3CA category are shown in the second row.

Table 1 A performance summary of the ImResNet model and other models in identifying PIK3CA mutations of breast cancer.

Figure 4 ROC curves of different models. (A) Improved ResNet50. (B) Original ResNet50. (C) SVM. (D) KNN. (E) DenseNet201. (F) Xception. (G) MobileNetv2.

Figure 5 Confusion matrices of different models. (A) Improved ResNet50. (B) Original ResNet50. (C) SVM. (D) KNN. (E) DenseNet201. (F) Xception. (G) MobileNetv2.

First, we compared our proposed ImResNet model with two commonly used machine learning methods for identifying PIK3CA mutations on the same dataset. The support vector machine (SVM) (47) is one of the most robust supervised learning models for classification and regression analysis. It maps the training examples to points in space so as to maximize the width of the gap between the two categories, then maps new examples into that same space and predicts their category based on the side of the gap on which they fall. The K-nearest neighbors (KNN) algorithm is an instance-based classification method in which an unknown object is classified by a plurality vote of its neighbors, with the object being assigned to the class most common among its K nearest neighbors. For KNN, 5 neighbors were selected, Euclidean distance was used as the distance metric, and all features were scaled to the range [0, 1]. The performance of the two machine learning models is listed in Table 1, and their ROC curves are depicted in Figures 4C, D. The ImResNet achieves an AUC of 0.775, higher than that of the SVM and KNN models (AUC: 0.745, 0.741). The overall accuracy, average precision, recall rate, and F1-score of the ImResNet model were all significantly higher than those of the SVM and KNN models. The CMs of the two machine learning models are shown in Figures 5C, D. Compared with the SVM and KNN models, the ImResNet model is better at identifying Non-PIK3CA mutation. Compared with the KNN model, the ImResNet model correctly identified 23 more Non-PIK3CA mutation cases (11.5%).
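The KNN configuration described above (5 neighbors, Euclidean distance, features scaled to [0, 1]) can be sketched in plain Python. The two-dimensional feature vectors and labels are hypothetical; the text does not specify which features were fed to the SVM and KNN baselines, so this only illustrates the voting mechanism.

```python
import math
from collections import Counter


def min_max_scale(rows):
    """Rescale each feature column to [0, 1]. Returns the scaled rows
    and a function that applies the same scaling to new samples."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    spans = [max(c) - min(c) for c in cols]

    def scale(row):
        return [(v - lo) / sp if sp else 0.0
                for v, lo, sp in zip(row, lows, spans)]

    return [scale(r) for r in rows], scale


def knn_predict(train_x, train_y, query, k=5):
    """Plurality vote among the k nearest training samples."""
    nearest = sorted(
        zip(train_x, train_y),
        key=lambda pair: math.dist(pair[0], query),  # Euclidean distance
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical feature vectors for seven training lesions.
train_raw = [[1.0, 10.0], [2.0, 12.0], [1.5, 11.0], [8.0, 40.0],
             [9.0, 42.0], [8.5, 41.0], [2.5, 13.0]]
train_y = ["non-mutant", "non-mutant", "non-mutant", "mutant",
           "mutant", "mutant", "non-mutant"]
train_x, scale = min_max_scale(train_raw)

pred_a = knn_predict(train_x, train_y, scale([2.0, 11.0]))
pred_b = knn_predict(train_x, train_y, scale([8.8, 41.0]))
print(pred_a, pred_b)  # non-mutant mutant
```

Scaling matters here because Euclidean distance would otherwise be dominated by whichever feature has the largest numeric range.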

To confirm the enhanced performance of the improved ResNet50 model, we compared it with the original ResNet50 model and other deep learning models (DenseNet201, Xception, MobileNetv2). We obtained the ROC curves and AUC values (shown in Figure 4) as well as the accuracy, precision, recall, and F1-score (presented in Table 1). Our model’s AUC value was 11.6% higher than that of the original ResNet50, 8.6% higher than DenseNet201, 15.2% higher than Xception, and 6.6% higher than MobileNetv2. All other quantitative metrics were also better than those of the other deep learning models. From the CMs in Figure 5, we found that the ImResNet model correctly identified 17 (19.8%), 5 (2.5%), 18 (9.0%), and 5 (2.5%) more PIK3CA mutation cases than the original ResNet50 model, DenseNet201, Xception, and MobileNetv2, respectively.

In this study, we proposed a DCNN, ImResNet, that uses non-invasive US images to identify PIK3CA mutation status in patients with breast cancer. As one of the most commonly mutated genes in breast cancer, PIK3CA plays an essential role in both the development and progression of breast cancer (48, 49). As an oral PI3K inhibitor, alpelisib has received FDA approval for targeted breast cancer therapy (13). Accordingly, determining the PIK3CA mutation status of breast cancer patients is critical to their management. However, the complexity of genetic testing has limited timely testing and targeted treatment for breast cancer patients in the era of precision medicine. Previously, Moon et al. (19) found that texture analysis of segmented tumors on breast MRI based on the ranklet transform showed potential for recognizing TP53 and PIK3CA mutations; for PIK3CA mutation, the AUC of the ranklet texture feature was 0.70. That study has some limitations, however. On the one hand, acquiring breast MRI images is time-consuming and expensive. On the other hand, the computer-aided diagnostic approach in that study is semi-automated and still needs manual interaction. Hence, we proposed the ImResNet model, which can automatically identify PIK3CA mutations. To our knowledge, this is the first deep learning study based on US images for the identification of PIK3CA mutations in breast cancer.

The ImResNet model is a feasible model for identifying PIK3CA mutations, with an AUC of 0.775 on the test cohort, outperforming the two machine learning models (SVM and KNN) and the other deep learning models (original ResNet50, DenseNet201, Xception, and MobileNetv2). This performance suggests that the differences in breast morphology and other features resulting from microstructural changes in PIK3CA-mutant breast cancers can be captured by US images and identified using a deep learning model. ResNet50 has been shown to perform well in breast US image classification because it can go deeper without losing generalization capability (26). We used transfer learning with a pre-trained ResNet50 to overcome our small sample size, and improved the original ResNet50 by adding a new fully connected layer for feature extraction and a new global average pooling layer to interpret these features in the classification task, yielding the ImResNet. We then trained the ImResNet model using the presence or absence of PIK3CA mutations as the label and confirmed that PIK3CA mutation status can be identified from US image data alone.

One of the advantages of our model is that it automatically learns US image features without the need to extract features manually. In recent years, radiomics features extracted from non-invasive images have been applied to identify gene mutations in some tumors. Zhang et al. (35) developed a deep learning model to recognize the EGFR status of lung adenocarcinoma using radiomics features extracted from CT images. Their results show that this method can precisely recognize the EGFR mutation status of lung adenocarcinoma patients. Nevertheless, radiomics features rely on manual annotation by professionals or on automatic segmentation of the target area. Manual annotation is time-consuming and labor-intensive, and automated segmentation requires a well-established segmentation system in clinical practice. By contrast, deep learning models can automatically learn multi-level features. Kan et al. (37) used a deep learning method to estimate KRAS mutation status in colorectal cancer patients based on CT imaging and compared it with a radiomics model; the deep learning model performed better.

Meanwhile, some studies have focused on pathological specimens of tumors to test whether deep learning models can predict gene mutations from pathology images. Wang et al. (50) demonstrated that a DCNN could assist pathologists in the detection of BRCA gene mutation in breast cancer. Velmahos et al. (51) used a deep learning model to identify bladder cancers with FGFR-activating mutations from histology images. Furthermore, Coudray et al. (52) trained a DCNN to predict the ten most commonly mutated genes in lung adenocarcinoma from pathology images. They found that six of them (STK11, EGFR, FAT1, SETBP1, KRAS, and TP53) can be predicted with AUCs from 0.733 to 0.856. However, histopathological information can only be evaluated after invasive biopsy or surgical resection. The proposed ImResNet model on US images can be applied repeatedly during the course of tumor treatment, including when the patient’s physical condition is not suitable for invasive biopsy or surgery.

Although the ImResNet model outperformed the other models in identifying PIK3CA mutations, it still has several limitations that can be addressed in future work. First, the sample size was relatively small and the data were collected retrospectively. Prospective investigation using considerably larger datasets is therefore required to further validate the robustness and reproducibility of our conclusions. Second, we included only a single-center cohort with an internal test set. In the future, multi-center cohorts should be recruited for evaluation. Third, the 74.50% accuracy of our proposed method is not yet sufficient for clinical needs, and further performance improvements are needed. Nevertheless, this promising performance could encourage more researchers to use deep learning methods based on US imaging to identify breast cancer gene mutations.

In this study, we proposed a DCNN, ImResNet, for the automated identification of PIK3CA mutations in breast cancer based on US images. The main advantage of our method is that it is non-invasive, which makes it suitable when surgery and biopsy are inconvenient. In addition, US images are readily available for monitoring PIK3CA mutation status throughout the treatment period of breast cancer, and the cost and time needed to obtain them are relatively low. Although the ImResNet model shows potential in identifying PIK3CA mutations, there is still room for performance improvement. In the future, prospective multicenter validation should be performed to provide a high level of evidence for the clinical application of the ImResNet model.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

The studies involving human participants were reviewed and approved by The Institutional Review Board of Guangdong Provincial People’s Hospital. The ethics committee waived the requirement of written informed consent for participation.

G-QD and NL conceived and designed the study. YG designed the proposed method and carried out the experiments. W-QS, W-ER, CL, and G-CZ collected the clinical and imaging data. W-QS and W-ER performed the data interpretation and the statistical analysis. All authors approved the final manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J Clin (2021) 71(3):209–49. doi: 10.3322/caac.21660

2. Lei S, Zheng R, Zhang S, Chen R, Wang S, Sun K, et al. Breast Cancer Incidence and Mortality in Women in China: Temporal Trends and Projections to 2030. Cancer Biol Med (2021) 18(3):900–9. doi: 10.20892/j.issn.2095-3941.2020.0523

3. Haynes B, Sarma A, Nangia-Makker P, Shekhar MP. Breast Cancer Complexity: Implications of Intratumoral Heterogeneity in Clinical Management. Cancer Metastasis Rev (2017) 36(3):547–55. doi: 10.1007/s10555-017-9684-y

4. Kuijjer ML, Paulson JN, Salzman P, Ding W, Quackenbush J. Cancer Subtype Identification Using Somatic Mutation Data. Br J Cancer (2018) 118(11):1492–501. doi: 10.1038/s41416-018-0109-7

5. Deng L, Zhu X, Sun Y, Wang J, Zhong X, Li J, et al. Prevalence and Prognostic Role of PIK3CA/AKT1 Mutations in Chinese Breast Cancer Patients. Cancer Res Treat (2019) 51(1):128–40. doi: 10.4143/crt.2017.598

6. Goncalves MD, Hopkins BD, Cantley LC. Phosphatidylinositol 3-Kinase, Growth Disorders, and Cancer. N Engl J Med (2018) 379(21):2052–62. doi: 10.1056/NEJMra1704560

7. Hernandez-Aya LF, Gonzalez-Angulo AM. Targeting the Phosphatidylinositol 3-Kinase Signaling Pathway in Breast Cancer. Oncologist (2011) 16(4):404–14. doi: 10.1634/theoncologist.2010-0402

8. Miron A, Varadi M, Carrasco D, Li H, Luongo L, Kim HJ, et al. PIK3CA Mutations in in Situ and Invasive Breast Carcinomas. Cancer Res (2010) 70(14):5674–8. doi: 10.1158/0008-5472.CAN-08-2660

9. Rugo HS, Rumble RB, Macrae E, Barton DL, Connolly HK, Dickler MN, et al. Endocrine Therapy for Hormone Receptor–Positive Metastatic Breast Cancer: American Society of Clinical Oncology Guideline. J Clin Oncol (2016) 34(25):3069–103. doi: 10.1200/jco.2016.67.1487

10. Cardoso F, Senkus E, Costa A, Papadopoulos E, Aapro M, Andre F, et al. 4th ESO-ESMO International Consensus Guidelines for Advanced Breast Cancer (ABC 4) Dagger. Ann Oncol (2018) 29(8):1634–57. doi: 10.1093/annonc/mdy192

11. Liu CY, Wu CY, Petrossian K, Huang TT, Tseng LM, Chen S. Treatment for the Endocrine Resistant Breast Cancer: Current Options and Future Perspectives. J Steroid Biochem Mol Biol (2017) 172:166–75. doi: 10.1016/j.jsbmb.2017.07.001

12. Andre F, Ciruelos E, Rubovszky G, Campone M, Loibl S, Rugo HS, et al. Alpelisib for PIK3CA-Mutated, Hormone Receptor-Positive Advanced Breast Cancer. N Engl J Med (2019) 380(20):1929–40. doi: 10.1056/NEJMoa1813904

13. Markham A. Alpelisib: First Global Approval. Drugs (2019) 79(11):1249–53. doi: 10.1007/s40265-019-01161-6

14. Yang SX, Polley E, Lipkowitz S. New Insights on PI3K/AKT Pathway Alterations and Clinical Outcomes in Breast Cancer. Cancer Treat Rev (2016) 45:87–96. doi: 10.1016/j.ctrv.2016.03.004

15. Elfgen C, Reeve K, Moskovszky L, Guth U, Bjelic-Radisic V, Fleisch M, et al. Prognostic Impact of PIK3CA Protein Expression in Triple Negative Breast Cancer and Its Subtypes. J Cancer Res Clin Oncol (2019) 145(8):2051–9. doi: 10.1007/s00432-019-02968-2

16. Fusco N, Malapelle U, Fassan M, Marchio C, Buglioni S, Zupo S, et al. PIK3CA Mutations as a Molecular Target for Hormone Receptor-Positive, HER2-Negative Metastatic Breast Cancer. Front Oncol (2021) 11:644737. doi: 10.3389/fonc.2021.644737

17. Weiss GJ, Ganeshan B, Miles KA, Campbell DH, Cheung PY, Frank S, et al. Noninvasive Image Texture Analysis Differentiates K-Ras Mutation From Pan-Wildtype NSCLC and Is Prognostic. PLoS One (2014) 9(7):e100244. doi: 10.1371/journal.pone.0100244

18. Dang M, Lysack JT, Wu T, Matthews TW, Chandarana SP, Brockton NT, et al. MRI Texture Analysis Predicts P53 Status in Head and Neck Squamous Cell Carcinoma. AJNR Am J Neuroradiol (2015) 36(1):166–70. doi: 10.3174/ajnr.A4110

19. Moon WK, Chen HH, Shin SU, Han W, Chang RF. Evaluation of TP53/PIK3CA Mutations Using Texture and Morphology Analysis on Breast MRI. Magn Reson Imaging (2019) 63:60–9. doi: 10.1016/j.mri.2019.08.026

20. Vasileiou G, Costa MJ, Long C, Wetzler IR, Hoyer J, Kraus C, et al. Breast MRI Texture Analysis for Prediction of BRCA-Associated Genetic Risk. BMC Med Imaging (2020) 20(1):86. doi: 10.1186/s12880-020-00483-2

21. Berg WA, Bandos AI, Mendelson EB, Lehrer D, Jong RA, Pisano ED. Ultrasound as the Primary Screening Test for Breast Cancer: Analysis From ACRIN 6666. J Natl Cancer Inst (2016) 108(4):djv367. doi: 10.1093/jnci/djv367

22. LeCun Y, Bengio Y, Hinton G. Deep Learning. Nature (2015) 521(7553):436–44. doi: 10.1038/nature14539

23. Qian X, Pei J, Zheng H, Xie X, Yan L, Zhang H, et al. Prospective Assessment of Breast Cancer Risk From Multimodal Multiview Ultrasound Images via Clinically Applicable Deep Learning. Nat BioMed Eng (2021) 5(6):522–32. doi: 10.1038/s41551-021-00711-2

24. Fujioka T, Kubota K, Mori M, Kikuchi Y, Katsuta L, Kasahara M, et al. Distinction Between Benign and Malignant Breast Masses at Breast Ultrasound Using Deep Learning Method With Convolutional Neural Network. Jpn J Radiol (2019) 37(6):466–72. doi: 10.1007/s11604-019-00831-5

25. Zhang X, Liang M, Yang Z, Zheng C, Wu J, Ou B, et al. Deep Learning-Based Radiomics of B-Mode Ultrasonography and Shear-Wave Elastography: Improved Performance in Breast Mass Classification. Front Oncol (2020) 10:1621. doi: 10.3389/fonc.2020.01621

26. Jiang M, Zhang D, Tang SC, Luo XM, Chuan ZR, Lv WZ, et al. Deep Learning With Convolutional Neural Network in the Assessment of Breast Cancer Molecular Subtypes Based on US Images: A Multicenter Retrospective Study. Eur Radiol (2021) 31(6):3673–82. doi: 10.1007/s00330-020-07544-8

27. Zhang X, Li H, Wang C, Cheng W, Zhu Y, Li D, et al. Evaluating the Accuracy of Breast Cancer and Molecular Subtype Diagnosis by Ultrasound Image Deep Learning Model. Front Oncol (2021) 11:623506. doi: 10.3389/fonc.2021.623506

28. Zhou LQ, Wu XL, Huang SY, Wu GG, Ye HR, Wei Q, et al. Lymph Node Metastasis Prediction From Primary Breast Cancer US Images Using Deep Learning. Radiology (2020) 294(1):19–28. doi: 10.1148/radiol.2019190372

29. Sun Q, Lin X, Zhao Y, Li L, Yan K, Liang D, et al. Deep Learning vs. Radiomics for Predicting Axillary Lymph Node Metastasis of Breast Cancer Using Ultrasound Images: Don't Forget the Peritumoral Region. Front Oncol (2020) 10:53. doi: 10.3389/fonc.2020.00053

30. Zheng X, Yao Z, Huang Y, Yu Y, Wang Y, Liu Y, et al. Deep Learning Radiomics can Predict Axillary Lymph Node Status in Early-Stage Breast Cancer. Nat Commun (2020) 11(1):1236. doi: 10.1038/s41467-020-15027-z

31. Guo X, Liu Z, Sun C, Zhang L, Wang Y, Li Z, et al. Deep Learning Radiomics of Ultrasonography: Identifying the Risk of Axillary Non-Sentinel Lymph Node Involvement in Primary Breast Cancer. EBioMedicine (2020) 60:103018. doi: 10.1016/j.ebiom.2020.103018

32. Byra M, Dobruch-Sobczak K, Klimonda Z, Piotrzkowska-Wroblewska H, Litniewski J. Early Prediction of Response to Neoadjuvant Chemotherapy in Breast Cancer Sonography Using Siamese Convolutional Neural Networks. IEEE J BioMed Health Inform (2021) 25(3):797–805. doi: 10.1109/JBHI.2020.3008040

33. Jiang M, Li CL, Luo XM, Chuan ZR, Lv WZ, Li X, et al. Ultrasound-Based Deep Learning Radiomics in the Assessment of Pathological Complete Response to Neoadjuvant Chemotherapy in Locally Advanced Breast Cancer. Eur J Cancer (2021) 147:95–105. doi: 10.1016/j.ejca.2021.01.028

34. Chen X, Lin X, Shen Q, Qian X. Combined Spiral Transformation and Model-Driven Multi-Modal Deep Learning Scheme for Automatic Prediction of TP53 Mutation in Pancreatic Cancer. IEEE Trans Med Imaging (2021) 40(2):735–47. doi: 10.1109/TMI.2020.3035789

35. Zhang B, Qi S, Pan X, Li C, Yao Y, Qian W, et al. Deep CNN Model Using CT Radiomics Feature Mapping Recognizes EGFR Gene Mutation Status of Lung Adenocarcinoma. Front Oncol (2020) 10:598721. doi: 10.3389/fonc.2020.598721

36. Wang S, Shi J, Ye Z, Dong D, Yu D, Zhou M, et al. Predicting EGFR Mutation Status in Lung Adenocarcinoma on Computed Tomography Image Using Deep Learning. Eur Respir J (2019) 53(3):1800986. doi: 10.1183/13993003.00986-2018

37. He K, Liu X, Li M, Li X, Yang H, Zhang H. Noninvasive KRAS Mutation Estimation in Colorectal Cancer Using a Deep Learning Method Based on CT Imaging. BMC Med Imaging (2020) 20(1):59. doi: 10.1186/s12880-020-00457-4

38. Bottou L. Large-Scale Machine Learning With Stochastic Gradient Descent. Physica-Verlag HD (2010) 177–86. doi: 10.1007/978-3-7908-2604-3

39. Krizhevsky A, Sutskever I, Hinton G. ImageNet Classification With Deep Convolutional Neural Networks. Commun ACM (2017) 60(6):84–90. doi: 10.1145/3065386

40. Bridle JS. Probabilistic Interpretation of Feedforward Classification Network Outputs, With Relationships to Statistical Pattern Recognition. Berlin Heidelberg: Springer (1990). doi: 10.1007/978-3-642-76153-9

41. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. IEEE (2016) 770–78. doi: 10.1109/CVPR.2016.90

42. Ayana G, Dese K, Choe SW. Transfer Learning in Breast Cancer Diagnoses via Ultrasound Imaging. Cancers (Basel) (2021) 13(4):738. doi: 10.3390/cancers13040738

43. Byra M, Galperin M, Ojeda-Fournier H, Olson L, O'Boyle M, Comstock C, et al. Breast Mass Classification in Sonography With Transfer Learning Using a Deep Convolutional Neural Network and Color Conversion. Med Phys (2019) 46(2):746–55. doi: 10.1002/mp.13361

44. Yap MH, Pons G, Marti J, Ganau S, Sentis M, Zwiggelaar R, et al. Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE J BioMed Health Inform (2018) 22(4):1218–26. doi: 10.1109/JBHI.2017.2731873

45. Yap MH, Goyal M, Osman FM, Marti R, Denton E, Juette A, et al. Breast Ultrasound Lesions Recognition: End-to-End Deep Learning Approaches. J Med Imaging (Bellingham) (2019) 6(1):11007. doi: 10.1117/1.JMI.6.1.011007

46. Shen Y. Loss Functions for Binary Classification and Class Probability Estimation. [dissertation]. Philadelphia: University of Pennsylvania (2005).

47. Cortes C, Vapnik V. Support-Vector Networks. Mach Learn (1995) 20(3):273–97. doi: 10.1023/A:1022627411411

48. Cully M, You H, Levine AJ, Mak TW. Beyond PTEN Mutations: The PI3K Pathway as an Integrator of Multiple Inputs During Tumorigenesis. Nat Rev Cancer (2006) 6(3):184–92. doi: 10.1038/nrc1819

49. Samuels Y, Wang Z, Bardelli A, Silliman N, Ptak J, Szabo S, et al. High Frequency of Mutations of the PIK3CA Gene in Human Cancers. Science (2004) 304(5670):554. doi: 10.1126/science.1096502

50. Wang X, Zou C, Zhang Y, Li X, Wang C, Ke F, et al. Prediction of BRCA Gene Mutation in Breast Cancer Based on Deep Learning and Histopathology Images. Front Genet (2021) 12:661109. doi: 10.3389/fgene.2021.661109

51. Velmahos CS, Badgeley M, Lo YC. Using Deep Learning to Identify Bladder Cancers With FGFR-Activating Mutations From Histology Images. Cancer Med (2021) 10(14):4805–13. doi: 10.1002/cam4.4044

Keywords: breast cancer, gene mutation, PIK3CA, deep learning, ultrasonic image

Citation: Shen W-Q, Guo Y, Ru W-E, Li C, Zhang G-C, Liao N and Du G-Q (2022) Using an Improved Residual Network to Identify PIK3CA Mutation Status in Breast Cancer on Ultrasound Image. Front. Oncol. 12:850515. doi: 10.3389/fonc.2022.850515

Received: 07 January 2022; Accepted: 11 April 2022;

Published: 26 May 2022.

Reviewed by:

Fajin Dong, Jinan University, China

Copyright © 2022 Shen, Guo, Ru, Li, Zhang, Liao and Du. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guo-Qing Du; Ning Liao

†These authors have contributed equally to this work

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
