- 1National Cancer Center/National Clinical Research Center for Cancer/Cancer Hospital, Chinese Academy of Medical Sciences and Peking Union Medical College, Beijing, China

- 2Cloud Computing and Big Date Research Institute, China Academy of Information and Communications Technology, Beijing, China

Background: Radical radiotherapy is the main treatment modality for early and locally advanced nasopharyngeal carcinoma (NPC). Magnetic resonance imaging (MRI) has the advantages of no ionizing radiation and high soft-tissue resolution compared to computed tomography (CT), but it does not provide electron density (ED) information for radiotherapy planning. Therefore, in this study, we developed a pseudo-CT (pCT) generation method to provide necessary ED information for MRI-only planning in NPC radiotherapy.

Methods: Twenty patients with early-stage NPC who received radiotherapy in our hospital were investigated. First, 1433 sets of paired T1 weighted magnetic resonance (MR) simulation images and CT simulation images were rigidly registered and preprocessed. A 16-layer U-Net was used to train the pCT generative model and a “pix2pix” generative adversarial network (GAN) was also trained to compare with the pure U-Net regrading pCT quality. Second, the contours of all target volumes and organs at risk in the original CT were transferred to the pCT for planning, and the beams were copied back to the original CT for reference dose calculation. Finally, the dose distribution calculated on the pCT was compared with the reference dose distribution through gamma analysis and dose-volume indices.

Results: The average time for pCT generation for each patient was 7.90 ± 0.47 seconds. The average mean (absolute) error was −9.3 ± 16.9 HU (102.6 ± 11.4 HU), and the mean-root-square error was 209.8 ± 22.6 HU. There was no significant difference between the pCT quality of pix2pix GAN and that of pure U-Net (p > 0.05). The dose distribution on the pCT was highly consistent with that on the original CT. The mean gamma pass rate (2 mm/3%, 10% low dose threshold) was 99.1% ± 0.3%, and the mean absolute difference of nasopharyngeal PGTV D99% and PTV V95% were 0.4% ± 0.2% and 0.1% ± 0.1%.

Conclusion: The proposed deep learning model can accurately predict CT from MRI, and the generated pCT can be employed in precise dose calculations. It is of great significance to realize MRI-only planning in NPC radiotherapy, which can improve structure delineation and considerably reduce additional imaging dose, especially when an MR-guided linear accelerator is adopted for treatment.

Introduction

Nasopharyngeal carcinoma (NPC) is the most common malignant tumor in the head and neck (HN), especially in southern China and Southeast Asia. Radical radiotherapy (RT) is the main treatment modality for early or locally advanced NPC, and computed tomography (CT) is necessary for patient positioning and RT planning, since it provides electron density (ED) information for dose calculation. Magnetic resonance imaging (MRI) has the advantages of high soft-tissue resolution and no additional imaging dose compared to CT. With the development of the MR-guided linear accelerator (MR-linac), an MRI-only RT-planning workflow is desirable. However, MRI does not provide ED information, which hinders its application in RT planning. Therefore, there is a need for a reliable and effective method to predict ED information based on MR images.

Currently, this issue is addressed using three main methods. The primary one is to simply segment soft tissue and bone (1, 2) and assign the densities of water and bone to them, respectively. However, it is difficult to distinguish between bone and air in MRI.

The second is the atlas-based pseudo-CT (pCT) generation. It requires a deformable registration from an MRI atlas to the patient MRI to obtain a special transformation, which is then applied to a paired CT atlas to generate pCT images (3–8). However, when the patient’s MRI is quite different from the image in the atlas library, and there is a special anatomical structure (such as a large tumor or surgical cavity), leading to deformation registration errors, which affect the accuracy of pCT.

The third is the voxel-wise pCT generation (9–12). By establishing a voxel-wise pCT generation model, point-by-point prediction is performed. This method prevents manual or semi-manual segmentation of soft tissue and bone, and it is not sensitive to abnormal anatomical structures. Earlier studies employed machine learning methods, such as cluster analysis, Gaussian regression, and principal component analysis, to establish such a generative model. However, some of them still need manual or semi-automatic delineation of bone and air cavity, and the prediction accuracy still needs to be improved.

Recently, convolutional neural network (CNN) and its derivate deep learning models have been widely used for cross-modality image generation owing to their ability to automatically extract multilevel features of data. At present, most studies on MR-pCT generation focus on brain and prostate RT (11, 13–16), and promising accuracy has been achieved. However, there is a need for further studies to develop and verify deep learning based pCT generative models for treatment sites with more intertwined air cavities and bony structures, such as HN. A previous study (17) used U-Net and T2-weighted (T2w) MRI to generate HN pCT for NPC and reported a promising image quality. In this study, we adopted U-Net but with T1w MRI, another routine clinical MRI modality, to train an MR-pCT generative model for NPC. We not only evaluated the CT number prediction accuracy but also systematically analyzed the dosimetric difference between the obtained pCT and the corresponding original CT with the same beam layout. Besides, we compared the performance of the generative adversarial network (GAN), another popular deep learning network, with U-Net for pCT generation.

Materials and Methods

Image Collection

The image data in this study were obtained from 20 patients with NPC who received RT in our hospital from September 2017 to April 2018. All data are retrospective and nonidentifiable so that the institutional ethics review and written consent are exempted.

Before treatment, all patients underwent CT and MR simulation scanning in our department within very close time and with the same fixing devices for each patient. CT scanning was performed using a CT simulator (SOMATOM Definition AS 40, Brilliance CT big bore, Philips) with the acquisition parameters (voltage: 120 kV; exposure: 240 mAs; pitch: 0.94; image size: 512 × 512; pixel spacing: 0.96 mm; slice thickness: 3.0 mm). MRI scanning was performed using a 3.0-T MR simulator (Discovery MR750w, GE Healthcare) with a 6-channel split head coil and T1-FSE sequence with the acquisition parameters (repetition time (TR): 834 ms; echo time (TE): 7.96 ms; flip angle: 111°; image size: 512 × 512; pixel spacing: 0.55 mm; and slice thickness: 3 mm). All patients were fixed with head-neck-shoulder thermoplastic film. The upper boundary of the scanning range is half of the frontal sinus, and the lower boundary extends to the supraclavicular region.

Data Preprocessing

Due to the design and characteristics of the coil, the signal intensity distribution of the same tissue might be uneven. We used an N3 algorithm to calibrate the bias field and performed gray value normalization and histogram matching of the MR images. Then, the MR and CT images were rigidly registered, and outlines were drawn on the aligned CT and MR images, respectively, using the thresholding segmentation algorithm. The overlap of the two outlines was used to generate a mask, and the density outside the mask was set equal to that of air.

Deep Learning Architecture for MR-pCT Generation

Two deep learning models, CNN and conditional GAN (cGAN), were adopted in this study for comparison. For both models, we used several data enhancement techniques, including random clipping and flipping, to expand the number of data. The Adam method was used to optimize the loss function. The initial learning rate was set to 0.0002, and the maximum number of iterations was set to 40000. The network training and test were based on the Tensorflow platform and NVIDIA Tesla K80 GPU.

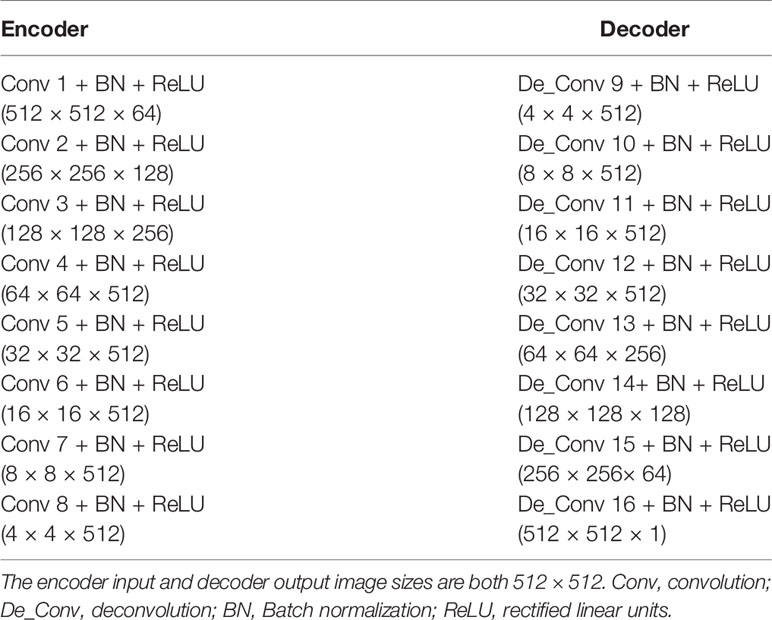

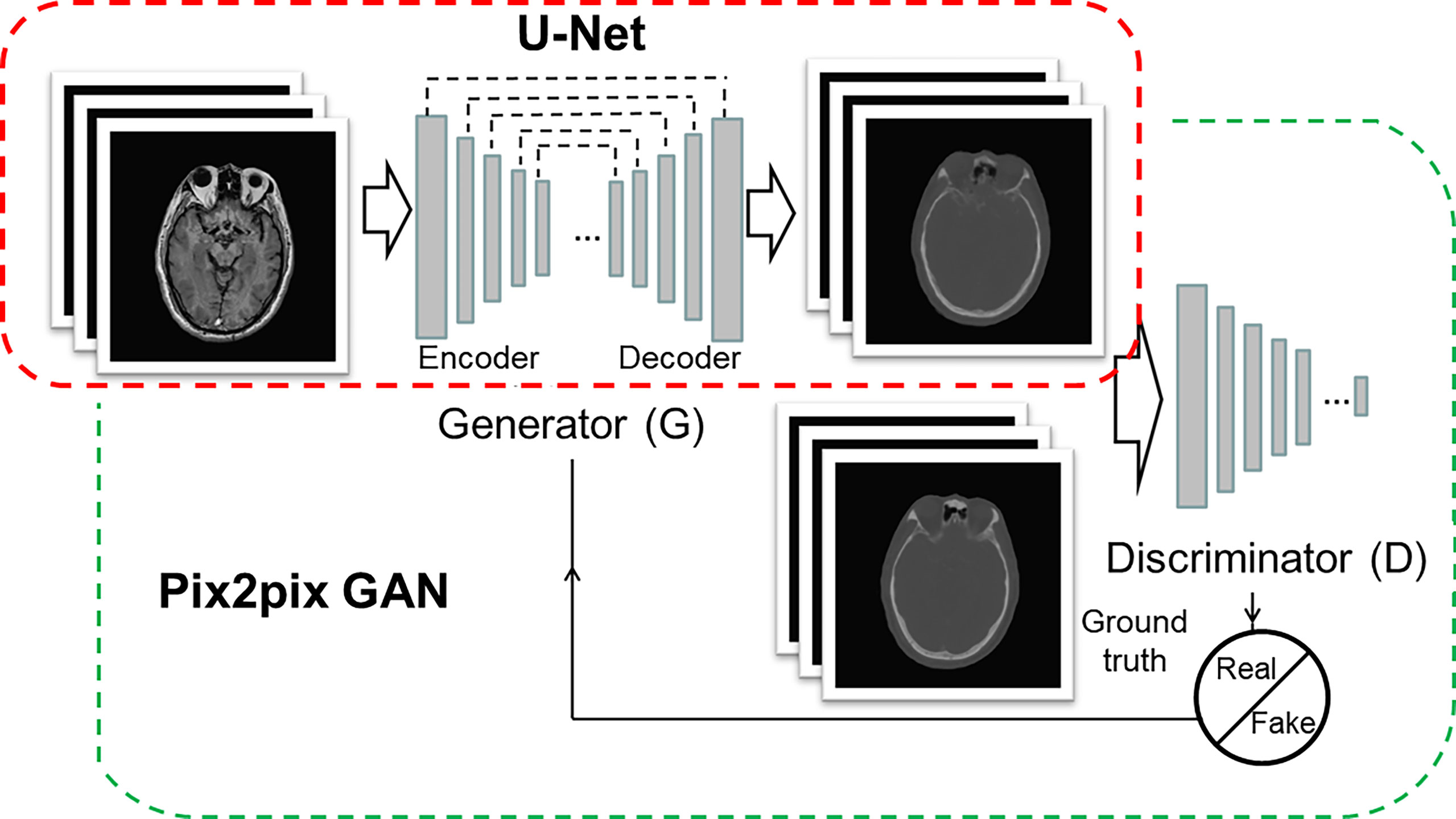

The architecture of the CNN model was 16-layer U-Net, which was developed for MR-pCT generation (Figure 1, red box). As shown in Table 1, the modules of convolution-batch normalization and rectified linear units (ReLU) were used for the encoder–decoder network. The kernel size was 4 × 4 for the convolutional and deconvolutional layers. Skip connections were added between each mirrored encoder and decoder layers for better recovery of image details.

Figure 1 Architectures of U-Net (red box) and pix2pix GAN (green box). The U-Net is composed of an encoder and a decoder, and each of them has eight mosaic layers, which are detailed in Table 1.

The architecture of cGAN, as shown in Figure 1 (green box), was the “pix2pix” model, which used paired MR and CT images as input and ground truth, respectively. It learned a loss that uses a discriminator to determine if an output image is real or fake while simultaneously training a generator to minimize the loss. GAN is supposed to have the ability to overcome problems such as image blurring. For better comparison with the performance of U-Net, the generator part adopted the same U-Net architecture as aforementioned. The patch size of the discriminator was set to 70 × 70. We adopted “cGAN + L1” as a loss function, as suggested in (18). It comprises a standard cGAN loss function and a weighted L1 distance term.

To get a reliable and stable model based on a small sample size, a 10-fold cross-validation method was used to train the pCT generation model. Through cross-validation, optimal model parameters were determined and then used to generate pCT images for the 20 patients. Then, voxel-wise Hounsfield units (HU) comparison was performed between the pCT and the original CT for each patient, considering the mean error (ME), mean absolute error (MAE), and root-mean-square error (RMSE) (Equations 1–3).

where N is the total number of voxels of interest, p(i) the value of the i-th voxel in the pseudo-CT, and g(i) the corresponding voxel value in the ground truth (original) CT.

Dosimetric Evaluation

For each patient, contours of target volumes and organs at risk (OARs) were transferred from the original planning CT by rigid fusion to the corresponding pCT. RT plans were first designed with a Pinnacle treatment planning system (Philips) based on the pCT, and the beams were then copied to the original CT with the same isocenter for the ground truth dose calculation. Gamma analysis was performed using Sun Nuclear Patient (SNC Patient) software with a 2 mm/3% (global mode, 10% low dose threshold) criterion to compare dose distributions of pCT and original CT. The gamma criterion for the calculation is inconsistent in the literature, ranging from 1 mm/1% to 3 mm/3% (14–16). Hence, we used an intermediate value herein. For each patient, 10 slices with a 10-mm interval near the image central slice were selected for 2D gamma analysis, and the mean gamma pass rate of the slices was calculated for dosimetry consistency assessment. Besides, a dose-volume histogram (DVH) comparison was performed to evaluate the accuracy of the clinically concerned dosimetry metrics of PTVs and OARs.

Results

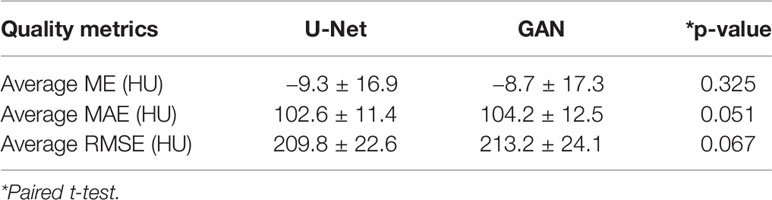

Performance Comparison Between U-Net and pix2pix GAN

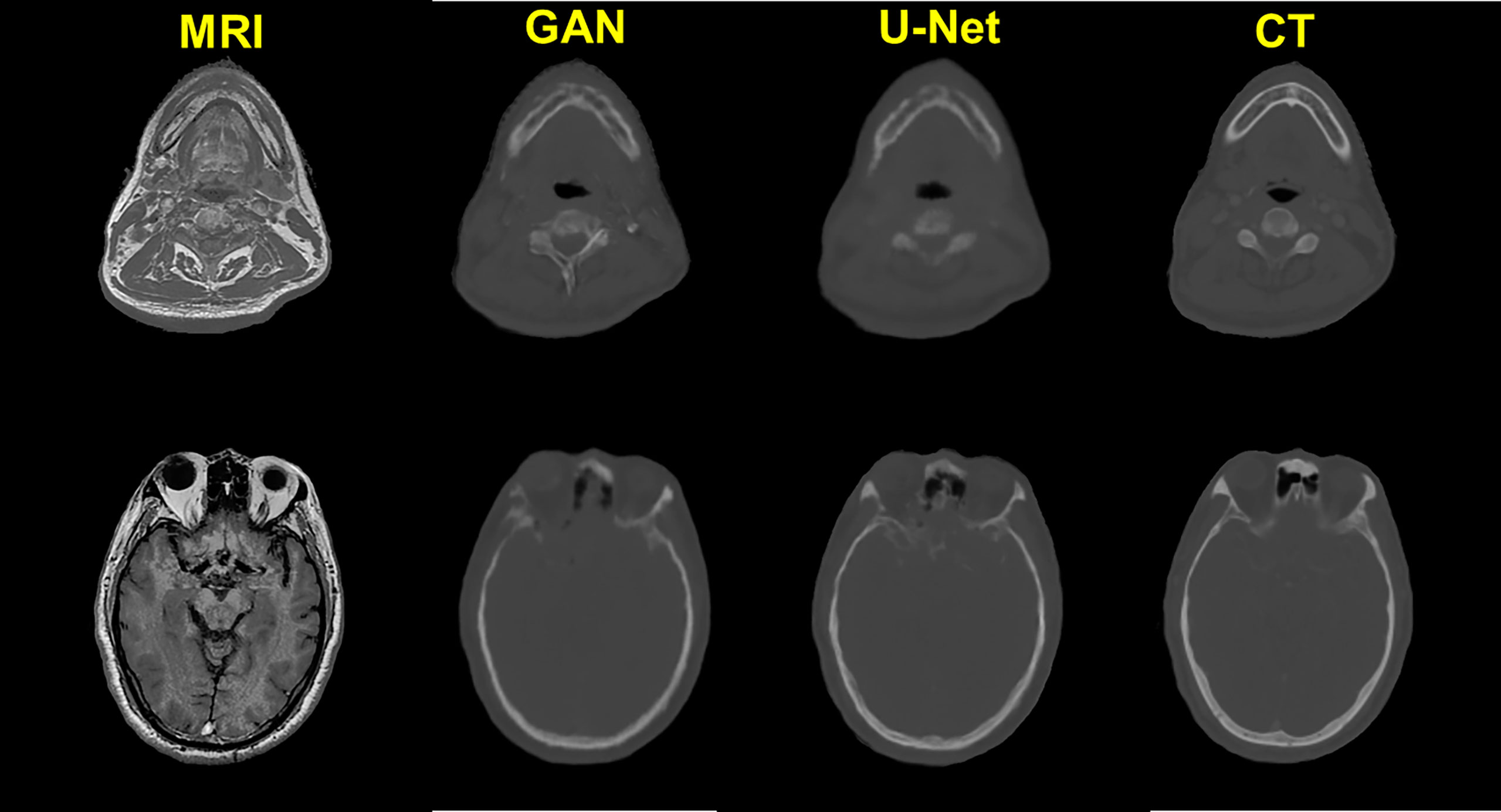

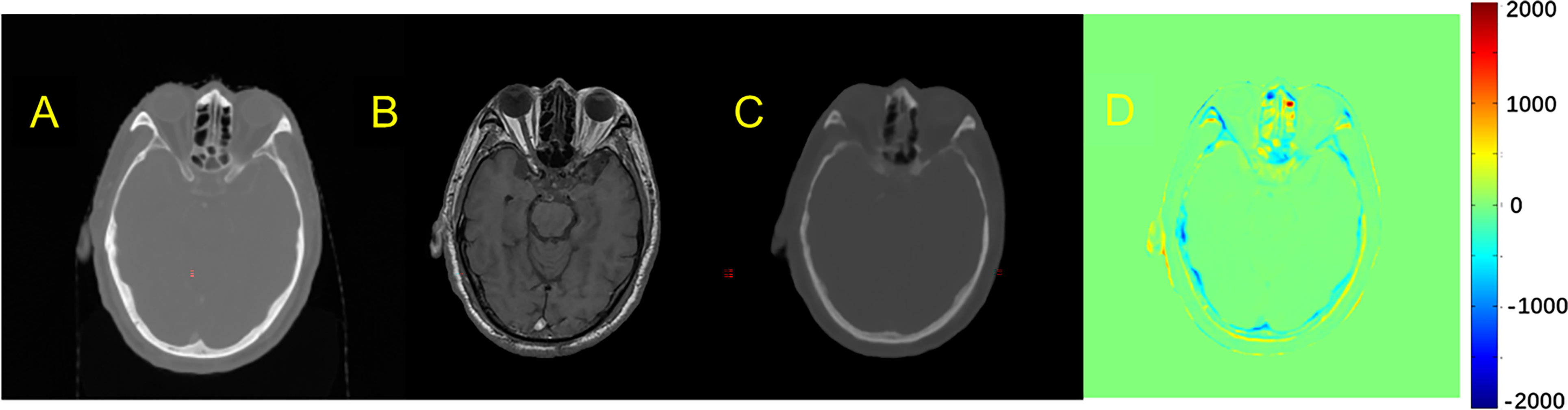

The quality of pCT generated by U-Net and pix2pix GAN has no statistical difference (paired t-test, p > 0.05) in terms of ME, MAE, and RMSE (Table 2), whereas the performance of U-Net was slightly better than that of pix2pix GAN. A visual comparison of the pCTs generated by the two types of networks is shown in Figure 2, and a spatial discrepancy map for the U-Net is shown in Figure 3. Since there was no significant difference between the performance of U-Net and pix2pix GAN in this task, we adopted the simpler-structured U-Net for the further dosimetric comparison.

Figure 3 Comparison example of pCT and original CT: (A) Original CT images; (B) T1-weighted MR images; (C) Predicted pCT images; (D) Difference between the real CT and predicted pCT values, where MAE is 73.1 HU.

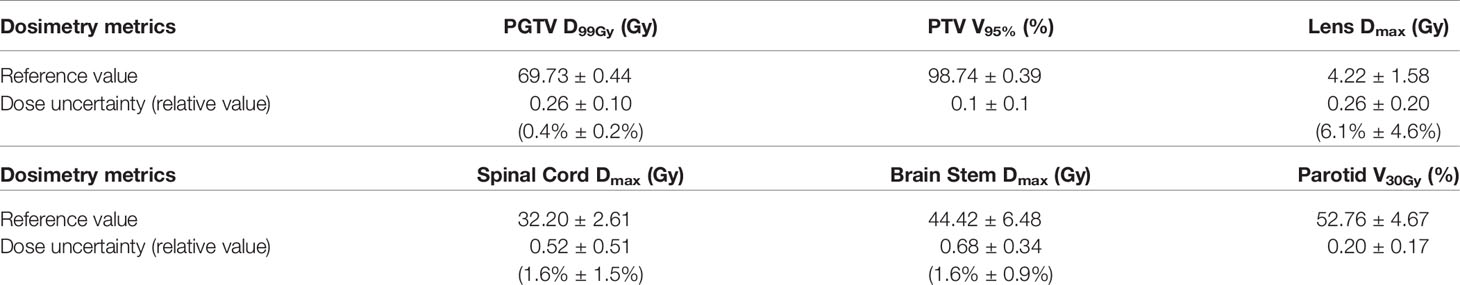

Dosimetric Consistency Between Real CT and pCT-Based RT Planning

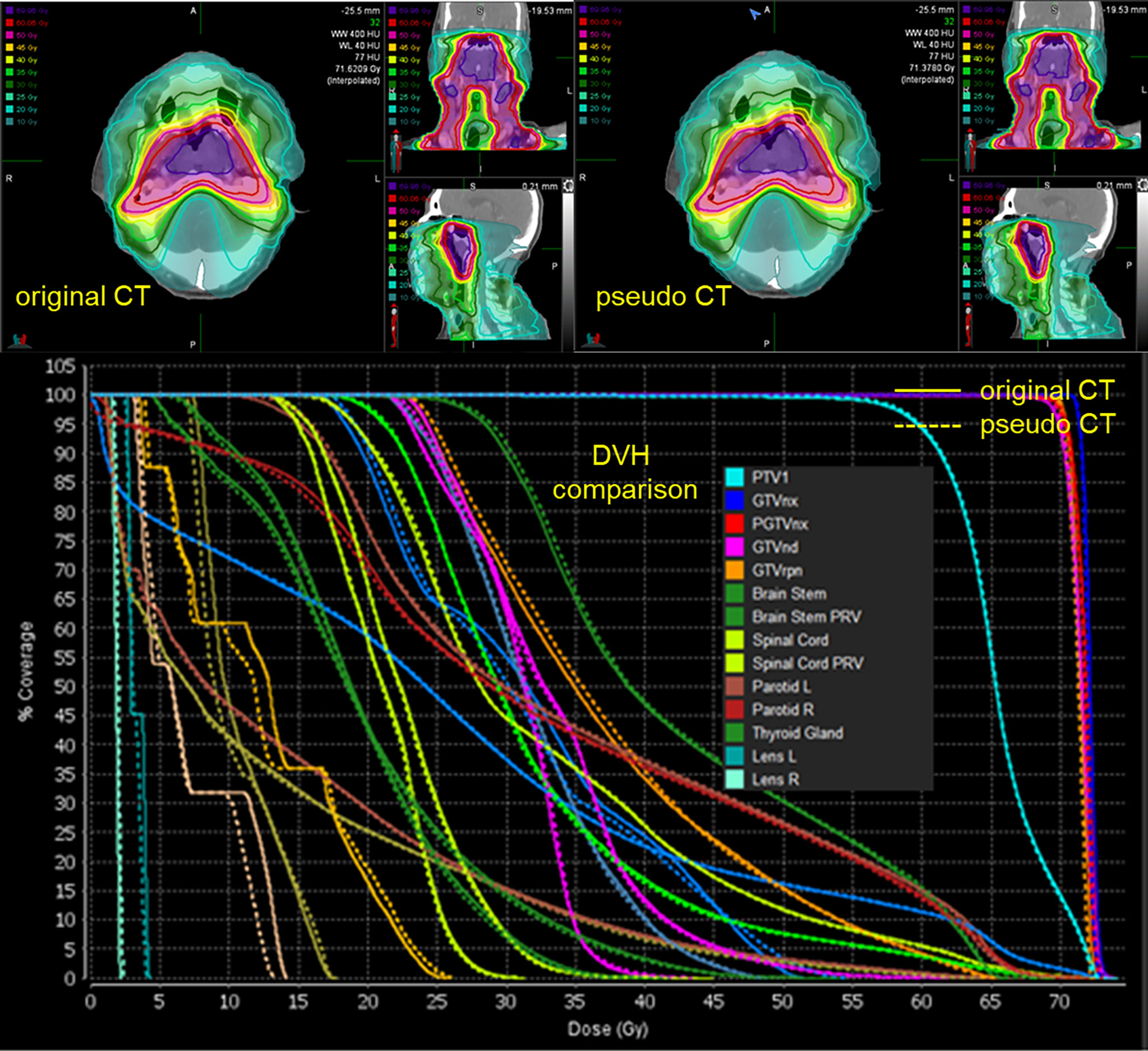

The spatial dose distribution of the pCT-based RT plan could be replicated very well on real CT with the same beam layout, and it demonstrates a good overlap between pCT and CT, regarding the structure DVH (Figure 4). Detailed DVH metric comparison is shown in Table 3.

Figure 4 Spatial dose distributions of the original CT (left-up panel) and pCT (down-left panel) with identical beam assignment and their DVH comparison. The solid lines in the DVH correspond to the original CT, and the dotted lines correspond to pCT.

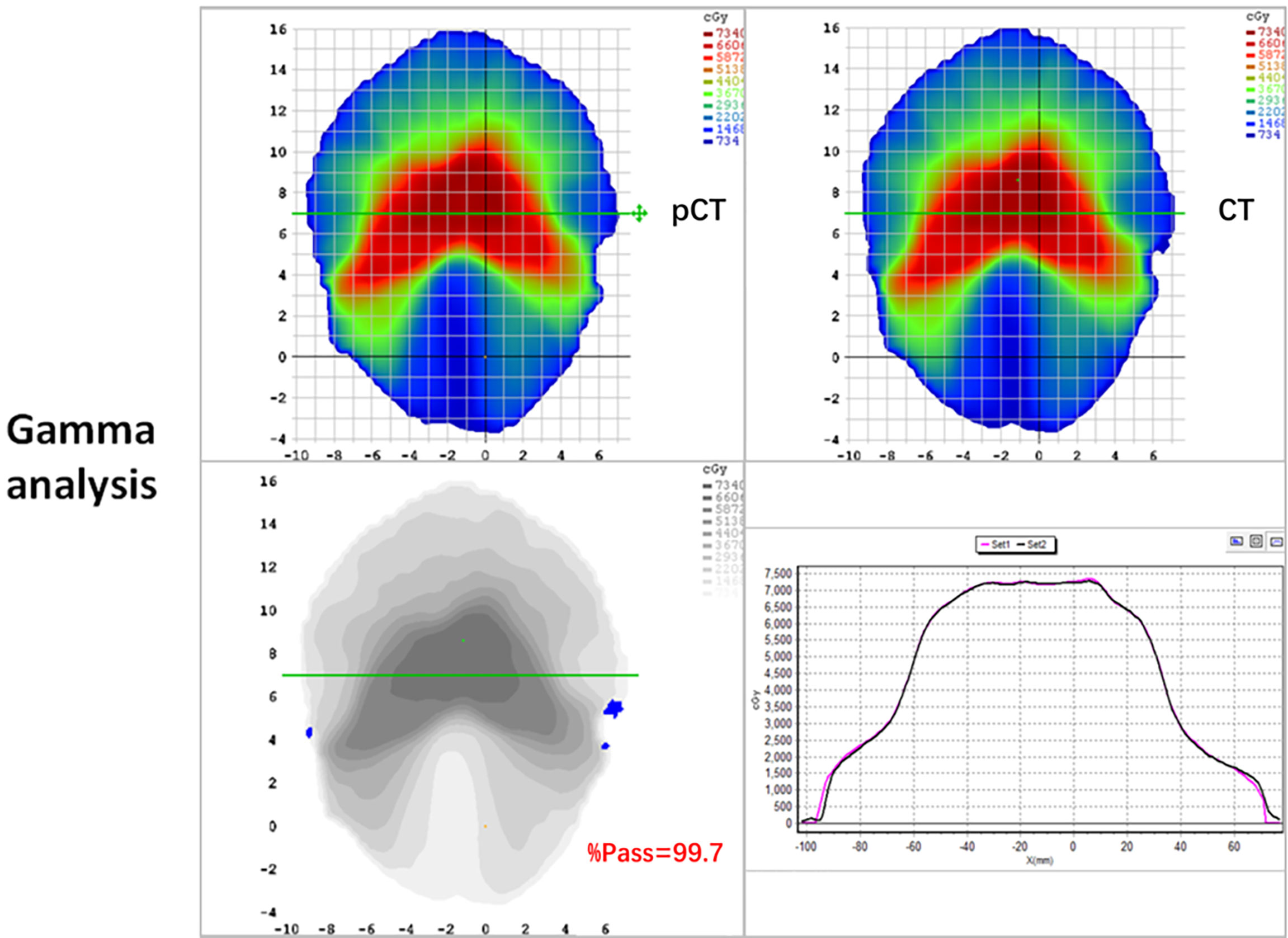

The mean ( ± standard deviation) gamma pass rate of all the patients was 99.1% ± 0.3%, and the median gamma pass rate of all the selected slices was 99.3%, demonstrating a high consistency between the real CT and pCT-based RT planning. The worst slice pass rate was 95.9%, and the best was 100%. An exemplary gamma analysis result is shown in Figure 5. Notably, the positions that failed to pass the analysis are all in the peripheral areas because the CT and MR images were not acquired simultaneously, thus they could not be perfectly registered, especially near the outline.

Figure 5 2D gamma analysis of pCT (up-left panel) and original CT dose distributions (up-right panel). The gamma pass rate of the slice is 99.7% (down-left panel), and the dose profiles are in good agreement in the high dose range (down-right panel).

Discussion

In this study, deep CNN (U-Net) was used to generate pCT from T1 weighted MRI for NPC radiotherapy, and the dosimetric accuracy was assessed for pCT-based RT planning. We proved that the deep learning network can reliably convert MR images to pCT for HN position to provide ED information. Although deep learning network usually needs much training data, we achieved promising intensity and dosimetry prediction accuracy with limited data.

Previous studies on MR-pCT generation mostly focused on the brain or prostate, and only a few considered HN. The variance of existing brain pCT generation quality recorded by atlas-based studies is relatively large (average MAE from 85 to 184 HU) (3, 6, 19), which is attributed to the different data and image processing algorithms. For machine learning-based studies, Gudur et al. (20) used a Bayesian probability model and realized an average MAE of 126 HU for brain pCT generation. Despite the larger structure density variance around NPC than brain tumors, the pCT quality of the proposed model (MAE of 102.6 HU) is comparable, even superior to that in previous brain tumor studies. A direct comparison between the atlas and CNN-based pCT generation was performed by Han et al. (13), and the MAE of the CNN-based method was 10.26% lower than that of the atlas-based method, demonstrating the advantage of CNN-based pCT generation.

T1w MRI was used in this study, whereas a previous study on HN pCT generation (17) adopted T2w MRI for pCT generation using U-Net, and good pCT quality (MAE of 131 HU) was achieved. A dosimetric comparison was performed on a single patient regarding DVH metrics, and the difference between the minimum-dose-of-98%-volume (D98%) of high-risk, intermediate-risk, and low-risk PTVs on true CT and pCT was less than 1%. Herein, we further evaluated dosimetric accuracy in terms of global dose distribution consistency and OAR dose-volume metrics statistically. Combining our results and their study, regardless of the different network architecture, the performance of deep CNN on pCT generation from the two routine clinical MR modalities (i.e., T1w and T2w) prove to be promising, especially in clinical practice when it comes to MR-linac based adaptive RT, where both T1w and T2w MRI are possible to be adopted for online planning for each individual patient. Besides, although GAN is supposed to have a strong nonlinearity modeling ability (17), our results show that there is no significant difference in the performance of pix2pix GAN and U-Net. The training process of GAN can be improved via some sophisticated strategies, such as using other kinds of activation functions, cost functions, normalization, or optimizers, but this is beyond the scope of this study; thus, there is a need for further studies.

As for the computational efficiency of the proposed model, the average pCT generation time is 7.9 s using GPU acceleration, in contrast to several minutes or a few hours in the aforementioned atlas-based studies. The speed advantage of CNN is more important in MR-linac based radiotherapy, where online adaptive RT planning is needed.

For the key factors for CNN-based MRI-pCT generative model training, Andres et al. (21) evaluated the influence of training set size, MR sequence (T1w or contrast-enhanced T1w), MRI standardization approach, bias field correction, and architecture of CNN on the quality of brain pCT generation. They found that larger training set sizes result in higher pCT quality, whereas the other factors have no significant effect on the dosimetry quality, and all the candidate methods are relevant for potential use in clinical practice. The best MAE obtained using the slightly optimized preprocessing method was 78 ± 22 HU. Regarding the network architectures, 3%/3-mm gamma indices of 99.83% 0.19% and 99.74% 0.24% were obtained for HighRes-Net and 3D U-Net, respectively. Largent et al. (14) evaluated and compared U-Net and GAN using various loss functions (L2, single-scale perceptual loss (PL), multiscale PL, weighted multiscale PL), and patch-based method (PBM) based on T2w MRIs in prostate cancer. They found that GAN L2 and U-Net L2 show a lower MAE (≤34.4 HU) than U-Net PL, GAN PL, and PBM. The gamma pass rates were greater than 99% for all DLMs. GAN L2 and U-Net L2 provided the lowest dose uncertainties together with a low computation time. Their results show that the performance of U-Net and GAN is similar for pCT generation, which is consistent with our findings, although their study was for prostate pCT generation.

Besides conventional MRI modalities, such as T1w and T2w, other sequences were used to generate pCT. Many of the previous studies adopted the ultra-short time echo sequence, which could make the segmentation of bone and air easier, for brain pCT generation (22–26). However, MAEs in these studies ranged from 130 to 165 HU, which are not better than that of this study or the aforementioned conventional modality-based methods. Meanwhile, such dedicated MRI modalities result in additional scanning time, which is not conducive to their clinical applications.

The limitation of this study lies in the relatively low CT number and dose prediction accuracy at the surface of the patient. As mentioned in Results section, this may mainly due to the imperfect MR-CT registration and the residual MR distortions, despite the bias correction. A dedicated phantom experiment may be needed to further validate the performance of the proposed MR-pCT generative model, where a perfect MR-CT alignment could be implemented.

This study proved that deep CNN is an important tool to solve the problem of MRI to pCT generation for HN, with high conversion accuracy and efficiency. It can be of great value to the MRI-only radiotherapy community, especially those sites equipped with MR-linacs, by greatly reducing the additional imaging dose to patients and by ensuring the accuracy of delineation and dose calculation for each fraction.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding authors.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

Concept and design of study: XC, KM, and JD. Acquisition of data: XC and JL. Analysis and interpretation of data: XM and XC. Drafting the manuscript: XM and XC. Revising the manuscript critically for important intellectual content: XM, XC, YW, KM, and JD. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the National Natural Science Foundation of China (11975313, 12005302), the Beijing Nova Program (Z201100006820058), the Beijing Municipal Science & Technology Commission (Z181100001918002), and the Beijing Hope Run Special Fund of Cancer Foundation of China (LC2019B06, LC2018A14).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors sincerely thank Dr. Weijie Cui, Dr. Junjie Miao and Dr. Wenlong Xia for technical assistance. We also thank the radiation oncologists in our department for delineation.

References

1. Ramsey CR, Arwood D, Scaperoth D, Oliver AL. Clinical Application of Digitally-Reconstructed Radiographs Generated From Magnetic Resonance Imaging for Intracranial Lesions. Int J Radiat Oncol Biol Phys (1999) 45(3):797–802. doi: 10.1016/S0360-3016(99)00173-X

2. Lee YK, Bollet M, Charles-Edwards G, Flower MA, Leach MO, McNair H, et al. Radiotherapy Treatment Planning of Prostate Cancer Using Magnetic Resonance Imaging Alone. Radiother Oncol (2003) 66(2):203–16. doi: 10.1016/S0167-8140(02)00440-1

3. Andreasen D, Van Leemput K, Hansen RH, Andersen JA, Edmund JM. Patch-Based Generation of a Pseudo CT From Conventional MRI Sequences for MRI-Only Radiotherapy of the Brain. Med Phys (2015) 42(4):1596–605. doi: 10.1118/1.4914158

4. Dowling JA, Lambert J, Parker J, Salvado O, Fripp J, Capp A, et al. An Atlas-Based Electron Density Mapping Method for Magnetic Resonance Imaging (MRI)-Alone Treatment Planning and Adaptive MRI-Based Prostate Radiation Therapy. Int J Radiat Oncol Biol Phys (2012) 83(1):e5–11. doi: 10.1016/j.ijrobp.2011.11.056

5. Dowling JA, Sun J, Pichler P, Rivest-Henault D, Ghose S, Richardson H, et al. Automatic Substitute Computed Tomography Generation and Contouring for Magnetic Resonance Imaging (MRI)-Alone External Beam Radiation Therapy From Standard MRI Sequences. Int J Radiat Oncol Biol Phys (2015) 93(5):1144–53. doi: 10.1016/j.ijrobp.2015.08.045

6. Edmund JM, Andreasen D, Mahmood F, Van Leemput K. Cone Beam Computed Tomography Guided Treatment Delivery and Planning Verification for Magnetic Resonance Imaging Only Radiotherapy of the Brain. Acta Oncol (2015) 54(9):1496–500. doi: 10.3109/0284186X.2015.1062546

7. Greer PB, Dowling JA, Lambert JA, Fripp J, Parker J, Denham JW, et al. A Magnetic Resonance Imaging-Based Workflow for Planning Radiation Therapy for Prostate Cancer. Med J Aust (2011) 194(4):S24–7. doi: 10.5694/j.1326-5377.2011.tb02939.x

8. Sjolund J, Forsberg D, Andersson M, Knutsson H. Generating Patient Specific Pseudo-CT of the Head From MR Using Atlas-Based Regression. Phys Med Biol (2015) 60(2):825–39. doi: 10.1088/0031-9155/60/2/825

9. Kim J, Glide-Hurst C, Doemer A, Wen N, Movsas B, Chetty IJ. Implementation of a Novel Algorithm for Generating Synthetic CT Images From Magnetic Resonance Imaging Data Sets for Prostate Cancer Radiation Therapy. Int J Radiat Oncol Biol Phys (2015) 91(1):39–47. doi: 10.1016/j.ijrobp.2014.09.015

10. Koivula L, Wee L, Korhonen J. Feasibility of MRI-Only Treatment Planning for Proton Therapy in Brain and Prostate Cancers: Dose Calculation Accuracy in Substitute CT Images. Med Phys (2016) 43(8):4634. doi: 10.1118/1.4958677

11. Korhonen J, Kapanen M, Keyrilainen J, Seppala T, Tenhunen M. A Dual Model HU Conversion From MRI Intensity Values Within and Outside of Bone Segment for MRI-Based Radiotherapy Treatment Planning of Prostate Cancer. Med Phys (2014) 41(1):011704. doi: 10.1118/1.4842575

12. Yu H, Caldwell C, Balogh J, Mah K. Toward Magnetic Resonance-Only Simulation: Segmentation of Bone in MR for Radiation Therapy Verification of the Head. Int J Radiat Oncol Biol Phys (2014) 89(3):649–57. doi: 10.1016/j.ijrobp.2014.03.028

13. Han X. MR-Based Synthetic CT Generation Using a Deep Convolutional Neural Network Method. Med Phys (2017) 44(4):1408–19. doi: 10.1002/mp.12155

14. Largent A, Barateau A, Nunes JC, Mylona E, Castelli J, Lafond C, et al. Comparison of Deep Learning-Based and Patch-Based Methods for Pseudo-CT Generation in MRI-Based Prostate Dose Planning. Int J Radiat Oncol Biol Phys (2019) 105(5):1137–50. doi: 10.1016/j.ijrobp.2019.08.049

15. Wang T, Manohar N, Lei Y, Dhabaan A, Shu HK, Liu T, et al. MRI-Based Treatment Planning for Brain Stereotactic Radiosurgery: Dosimetric Validation of a Learning-Based Pseudo-CT Generation Method. Med Dosim (2019) 44(3):199–204. doi: 10.1016/j.meddos.2018.06.008

16. Liu F, Yadav P, Baschnagel AM, McMillan AB. MR-Based Treatment Planning in Radiation Therapy Using a Deep Learning Approach. J Appl Clin Med Phys (2019) 20(3):105–14. doi: 10.1002/acm2.12554

17. Wang Y, Liu C, Zhang X, Deng W. Synthetic CT Generation Based on T2 Weighted MRI of Nasopharyngeal Carcinoma (NPC) Using a Deep Convolutional Neural Network (DCNN). Front Oncol (2019) 9:1333. doi: 10.3389/fonc.2019.01333

18. Isola P, Zhu JY, Zhou TH, Efros AA. (2017). Image-to-Image Translation with Conditional Adversarial Networks. In: 30th Ieee Conference on Computer Vision and Pattern Recognition (Cvpr 2017). pp. 5967–76.

19. Hofmann M, Steinke F, Scheel V, Charpiat G, Farquhar J, Aschoff P, et al. MRI-Based Attenuation Correction for PET/MRI: A Novel Approach Combining Pattern Recognition and Atlas Registration. J Nucl Med (2008) 49(11):1875–83. doi: 10.2967/jnumed.107.049353

20. Gudur MS, Hara W, Le QT, Wang L, Xing L, Li R. A Unifying Probabilistic Bayesian Approach to Derive Electron Density From MRI for Radiation Therapy Treatment Planning. Phys Med Biol (2014) 59(21):6595–606. doi: 10.1088/0031-9155/59/21/6595

21. Alvarez Andres E, Fidon L, Vakalopoulou M, Lerousseau M, Carre A, Sun R, et al. Dosimetry-Driven Quality Measure of Brain Pseudo Computed Tomography Generated From Deep Learning for MRI-Only Radiation Therapy Treatment Planning. Int J Radiat Oncol Biol Phys (2020) 108(3):813–23. doi: 10.1016/j.ijrobp.2020.05.006

22. Johansson A, Garpebring A, Karlsson M, Asklund T, Nyholm T. Improved Quality of Computed Tomography Substitute Derived From Magnetic Resonance (MR) Data by Incorporation of Spatial Information–Potential Application for MR-Only Radiotherapy and Attenuation Correction in Positron Emission Tomography. Acta Oncol (2013) 52(7):1369–73. doi: 10.3109/0284186X.2013.819119

23. Rank CM, Hunemohr N, Nagel AM, Rothke MC, Jakel O, Greilich S. MRI-Based Simulation of Treatment Plans for Ion Radiotherapy in the Brain Region. Radiother Oncol (2013) 109(3):414–8. doi: 10.1016/j.radonc.2013.10.034

24. Zheng W, Kim JP, Kadbi M, Movsas B, Chetty IJ, Glide-Hurst CK. Magnetic Resonance-Based Automatic Air Segmentation for Generation of Synthetic Computed Tomography Scans in the Head Region. Int J Radiat Oncol Biol Phys (2015) 93(3):497–506. doi: 10.1016/j.ijrobp.2015.07.001

25. Price RG, Kim JP, Zheng W, Chetty IJ, Glide-Hurst C. Image Guided Radiation Therapy Using Synthetic Computed Tomography Images in Brain Cancer. Int J Radiat Oncol Biol Phys (2016) 95(4):1281–9. doi: 10.1016/j.ijrobp.2016.03.002

Keywords: nasopharyngeal carcinoma, radiotherapy, MRI-only planning, pseudo CT, deep learning, dosimetric evaluation

Citation: Ma X, Chen X, Li J, Wang Y, Men K and Dai J (2021) MRI-Only Radiotherapy Planning for Nasopharyngeal Carcinoma Using Deep Learning. Front. Oncol. 11:713617. doi: 10.3389/fonc.2021.713617

Received: 23 May 2021; Accepted: 25 August 2021;

Published: 08 September 2021.

Edited by:

Jose Eduardo Villarreal Barajas, Royal Devon and Exeter Hospital, United KingdomReviewed by:

Ruijie Yang, Peking University Third Hospital, ChinaJoshua Pohyun Kim, Henry Ford Health System, United States

Copyright © 2021 Ma, Chen, Li, Wang, Men and Dai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kuo Men, bWVua3VvMTI2QDEyNi5jb20=; Jianrong Dai, ZGFpX2ppYW5yb25nQGNpY2Ftcy5hYy5jbg==

†These authors have contributed equally to this work

Xiangyu Ma

Xiangyu Ma Xinyuan Chen

Xinyuan Chen Jingwen Li2

Jingwen Li2 Yu Wang

Yu Wang Kuo Men

Kuo Men