- Department of Radiation Medicine, North Shore-LIJ Cancer Institute, Hofstra North Shore-LIJ School of Medicine, New Hyde Park, NY, USA

By combining incident learning and process failure-mode-and-effects-analysis (FMEA) in a structure-process-outcome framework, we have created a risk profile for our radiation medicine practice and implemented evidence-based risk-mitigation initiatives focused on patient safety. Based on reactive reviews of incidents reported in our departmental incident-reporting system and proactive FMEA, high safety-risk procedures in our paperless radiation medicine process and latent risk factors were identified. Six initiatives aimed at the mitigation of associated severity, likelihood-of-occurrence, and detectability risks were implemented. These were the standardization of care pathways and toxicity grading, pre-treatment-planning peer review, a policy to thwart delay-rushed processes, an electronic whiteboard to enhance coordination, and the use of six sigma metrics to monitor operational efficiencies. The effectiveness of these initiatives over a 3-year period was assessed using process- and outcome-specific metrics within the framework of the department structure. There has been a 47% increase in incident-reporting, with no increase in adverse events. Care pathways have been used with a clinical compliance rate greater than 97%. The implementation of peer review prior to treatment-planning and use of the whiteboard have provided opportunities for proactive detection and correction of errors. There has been a twofold drop in the occurrence of high-risk procedural delays. Patient treatment start delays are routinely enforced on cases that would have historically been rushed. Z-scores for high-risk procedures have steadily improved from 1.78 to 2.35. The initiatives resulted in sustained reductions of failure-mode risks as measured by a set of evidence-based metrics over a 3-year period. These augment or incorporate many of the published recommendations for patient safety in radiation medicine by translating them to clinical practice.

Introduction

The preparation of a treatment plan for delivery of radiation therapy to a patient requires several process steps and checks spread over about a week with interactions and handoffs between a heterogeneous set of caregivers, hardware, and software interfaces. Thus pathways for errors to propagate toward an unsafe event for any patient are multifold. Yet the rate of serious or adverse errors in radiation therapy is estimated to be around 0.2% per patient (1–4). While comparable to serious error rates in chemotherapy (5), a field that is technologically less complex than radiotherapy, the adverse error rates in radiation medicine are less favorable than those in blood transfusion and anesthesiology (3), or in aviation (6) – an industry that is cognate with radiation therapy in its hierarchical organizational structures and reliance on complex machinery. Thus while serious error rates are low, benchmarks suggest we could do better in radiation medicine.

Recommendations to do better in this regard are typically summarized in reports published by radiotherapy quality and safety organizations worldwide. A recent study of seven such reports from influential radiation therapy safety organizations yielded 117 recommendations, 61 of which were deemed to be unique (7). Most reports, with the possible exception of one, were based on expert opinion rather than evidence. Twelve pertinent topics appeared in more than three of these reports. These were training, staffing, documentation, standard-operating-procedures, incident learning, communication, checklists, quality control, preventive maintenance, dosimetric audits, accreditation, minimizing interruptions, prospective risk assessments, and safety culture. While such recommendations are clearly valuable, the message to translate them uniformly, effectively, and efficiently to clinical practice in multiple departments appears nebulous, as this study highlights.

There appears to be only modest improvement in error rates in medicine in general where similar broad recommendations may have been made (8, 9). In one study with 10 US based hospitals chosen on the basis of patient safety activities no significant reduction in overall rates of harm or preventable harm was reported (10), while in another, a substantial percentage of patients from tertiary care hospitals recognized for their efforts in patient safety received harm (11). On the contrary, substantial improvements have been noted where specific evidence-based approaches were used, such as with the prevention of central venous catheter bloodstream infections or reductions in mortality rates in anesthesiology (9). Focused assessments of patient safety outcomes targeted by specific evidence-based interventions and metrics may therefore be a useful complement to translate such recommendations to effective practice (9, 12).

In spite of having implemented many of these traditional recommendations, quantitative analysis of the metadata from our electronic medical records (EMR) or event-reporting database in our radiation medicine department suggested we were at a substantially higher risk than we would have perceived (13). Recognizing that not all poor processes lead to poor outcomes, and some good processes may lead to poor outcomes (14) particularly in an environment where some risk prone behavior is not discouraged, our approach was to develop a quality management (QM) framework that integrated the structure-process-outcomes approach put forth by Donabedian (15–17), knowledge of variations in clinical practice using the Deming approach (18) and event-reporting based on Codman principles (19). In order to incorporate this framework, we utilized systems engineering tools such as those used in high reliability industrial organizations and applied these to our practice (13).

In this work we reviewed incidents reported in our Aspects-of-Care (AOC) incident-reporting database to extract causes and contributory factors for known failures (20) and conducted a Failure-Mode-and-Effects-Analysis (FMEA) on our process-map (21) to predict hypothetical effects relative to patient safety. The rationale for either approach per se is that for every effect there must be a set of causes and for every cause there must be some set of effects (22). Based on the combined approach, we developed and implemented six initiatives for risk-mitigation with specific evidence-based metrics for evaluation that were both process and outcomes based (23, 24). We report on the sustained impact of these initiatives since these were implemented over the past few years at our facility.

Materials and Methods

Department Structure

Our department of Radiation Medicine is spread across the New York metropolitan area over five sites within a major healthcare system. The sites that provide radiation therapy are characterized by a blend of academic, private, and community-practice traits that may be representative, to some extent, of a range of practice cultures in many other departments in the country. We treat over 2000 new patients each year with external beam radiation and brachytherapy utilizing eight linear accelerators and three high-dose-rate brachytherapy systems. Our operations are paperless, driven by quality checklists (QCLs) in our EMR and policies and procedures that are updated regularly. Internal and external dosimetric audits and reviews of our programs are conducted regularly by various accreditation agencies. Our QM program encompasses all aspects of patient care using the AOC database as a catchment framework for the registry and analysis of events that represent departures from standard operations or expected patient outcomes.

Incident-Reporting and Learning System (AOC Database)

The AOC database is electronically available to all staff members with an anonymous reporting option. Incidents are reported with a brief narrative and associated reporting elements. The events are reviewed weekly by a multidisciplinary QM team and reported department and health-system wide on monthly and quarterly bases. The taxonomy for analysis of events evolved historically from a drop-down menu with shorthand notations for typical causes prior to 2010 to a more structured and recursive approach that mapped patient effects with underlying structure-process elements. The primary reason for the change was the observation that reviews of the incidents were being overwhelmingly attributed to just a handful of causes. A focused independent review of these however revealed that the causes typically picked were more the result of deeper issues not being considered. Thus efforts to make changes until then were not directed at the correct underlying issues, rendering our reporting system ineffective. The changes in the database taxonomy in 2010 thus allowed for more comprehensive analyses. Events are broken down into the observed patient effect (19) and the underlying structure-process factors that may have contributed to its occurrence. Patient effects include treatment delays, treatment-planning delays, treatment interruptions, patients not starting treatment, patients not completing treatments, morbidity (grade 3 or higher), mortality, preventive safety events (inclusive of near misses), and reactive safety events (inclusive of variances). The taxonomy evolved using validation studies that demonstrated improvements in the consistency of independent analysis by various members of the QM team as measured using free-marginal kappa statistics (25). These included randomly selected events that analyzers independently categorized. There have been over 2600 reports logged and analyzed since its initial use in 2007.
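
The inter-rater consistency check described above can be illustrated with a minimal sketch of a free-marginal multirater kappa. It assumes the commonly used formulation in which chance agreement is fixed at 1/k for k categories; whether this matches the exact form used in reference (25) is an assumption, and the function name, category labels, and ratings below are hypothetical.

```python
from collections import Counter


def free_marginal_kappa(ratings, n_categories):
    """Free-marginal multirater kappa (chance agreement fixed at 1/k).

    ratings: list of events, each a list with one category label per rater.
    n_categories: number of categories the raters could choose from.
    """
    n_events = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(event) != n_raters for event in ratings):
        raise ValueError("each event needs the same number (>= 2) of raters")

    # Observed agreement: proportion of agreeing rater pairs, averaged over events.
    total = 0.0
    for event in ratings:
        counts = Counter(event)
        agreeing_pairs = sum(c * (c - 1) for c in counts.values())
        total += agreeing_pairs / (n_raters * (n_raters - 1))
    p_observed = total / n_events

    p_chance = 1.0 / n_categories
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical test set: four events, three raters, two categories.
example_ratings = [
    ["delay", "delay", "delay"],
    ["safety", "safety", "delay"],
    ["delay", "delay", "delay"],
    ["safety", "safety", "safety"],
]
kappa = free_marginal_kappa(example_ratings, n_categories=2)
print(f"free-marginal kappa = {kappa:.3f}")
```

Because chance agreement depends only on the number of categories, this statistic does not assume that raters are constrained to assign a fixed number of events to each category.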

Failure-Mode-and-Effect-Analysis

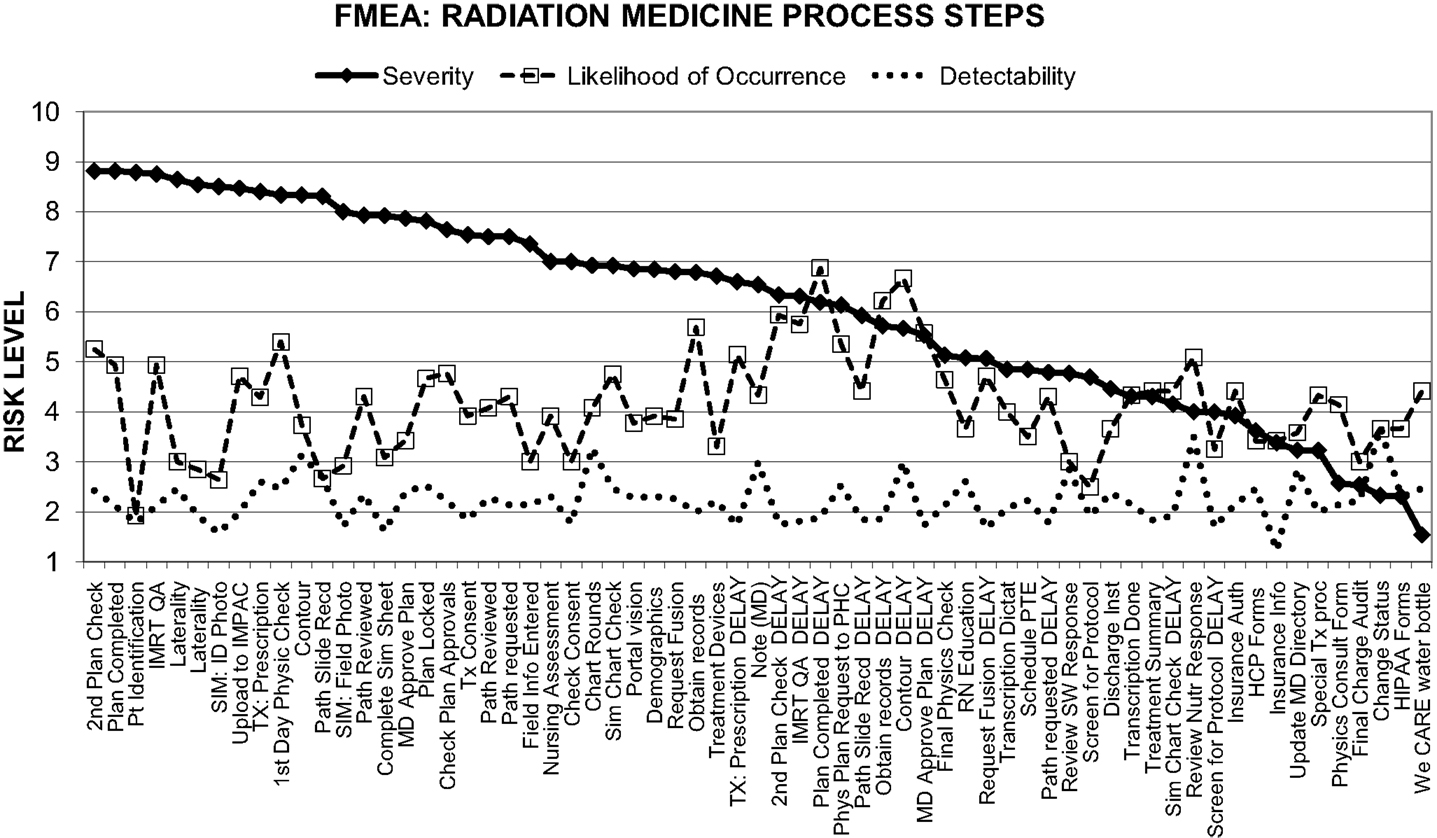

Given the cascaded steps and handoffs evident in the radiation medicine process, our goal was to reorganize the chronologically arranged process-map into one that was risk-sorted for patient safety. The QCL process-map (QPM) in use at our facility and similar to others (1, 26) was cast in the framework of an FMEA spreadsheet with explicit procedural definitions. Our FMEA was based on the electronic process-map driven by the QCLs in the EMR. Thus metadata from the EMR to estimate likelihood-of-occurrence risks over any interrogated time period was potentially available.

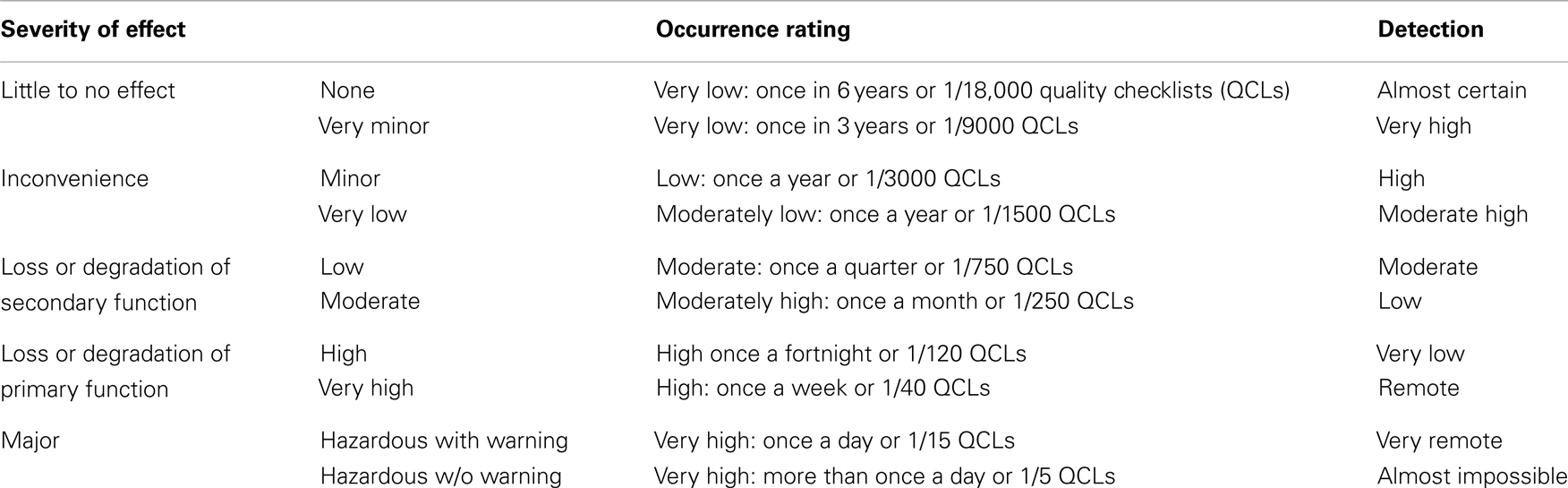

The exercise was conducted by 15 staff members across the various functional groups in the department including radiation oncologists, physicists, dosimetrists, nurses, therapists, and one non-clinical observer. There were two to four participants in each functional group bracketing the hierarchical spread within. The participants were asked to scrutinize the process-map, prospectively consider various modes of failure for each step, and list their causes and safety consequences. In addition to individual experience, the AOC database, group discussions, and other event-reporting databases or publications (27–29) served as guidelines. Events in the AOC database were considered for alternate potential manifestations regardless of reported impact. Independent responses were amalgamated after a 2-week response period. Risk-scoring guides were established for the three components of risk – severity, likelihood-of-occurrence, and detectability – using logarithmic scales (Table 1). Similar scales have been used by others. The master spreadsheet was sent to the participants to grade each listed failure-mode on the three risk components, following clarification of the grading scheme and collective walkthroughs of sample failure-modes. Responses were obtained within 2 weeks and discussed with the team. Average scores for each failure-mode were computed. The consistency of grades assigned by various participants was evaluated using kappa statistics. A risk priority number (RPN) was computed as the product of the three risk elements for each of the failure-modes.
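
The RPN calculation described above can be sketched as follows. This is an illustration only: it assumes the RPN is the product of the participant-averaged scores (the text does not say whether averaging preceded or followed multiplication), it assumes 1 to 10 scoring scales, and the failure-mode names and scores are hypothetical.

```python
from statistics import mean


def risk_priority_number(severity, occurrence, detectability):
    """RPN as the product of the mean score for each risk component.

    Each argument is a list of scores, one per participant (assumed 1-10 scale).
    """
    return mean(severity) * mean(occurrence) * mean(detectability)


# Hypothetical scores from three participants per failure-mode:
# (severity, likelihood-of-occurrence, detectability)
failure_modes = {
    "contours delayed": ([7, 8, 6], [5, 4, 6], [3, 4, 2]),
    "wrong laterality": ([9, 10, 9], [2, 1, 2], [4, 3, 4]),
}

rpn_by_mode = {
    name: risk_priority_number(*scores) for name, scores in failure_modes.items()
}

# Risk-sort the failure-modes, highest RPN first.
ranked = sorted(rpn_by_mode.items(), key=lambda item: item[1], reverse=True)
for name, rpn in ranked:
    print(f"{name}: RPN = {rpn:.1f}")
```

Since the scales are ordinal, a ranking like this is best read alongside the severity scores rather than on its own.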

Development and Evaluation of Risk-Mitigation Initiatives

Given the observed (AOC) and predicted (FMEA) propensity for either delays or defects in high-risk procedures, mitigation strategies were developed to reduce their severity, likelihood-of-occurrence, and detectability risks. The AOC findings were scrutinized to seek evidence for resident pathogens, or latent error-provoking conditions, in the clinic and to ascertain if these had any bearing on the performance of the high-risk procedures (30, 31). Staffing, training, and equipment QM were deemed adequate based on external audits by accreditation agencies. Initiatives that would confer robustness in our system in regard to the pathogens regardless of violations or variability were sought (18, 30). Metrics to gauge effectiveness of the initiatives, both in terms of specific outcomes and specific processes, were developed and reported to the department and health-system on a regular basis.

Results

Incident-Reporting and Learning System

Incident-reporting increased by 47% following the AOC database restructure in 2010. There was significant variation in reporting by functional group: therapists (62.9%), nurses (26.7%), dosimetrists (6.4%), physicists (1.5%), support staff (1.2%), anonymous (0.9%), and radiation oncologists (0.4%). About 89.6% of the reports were logged by therapists and nurses while 8.3% were logged by the treatment-planning team. A high level of inter-rater agreement (free-marginal multi-rater kappa score of 0.837) was noted amongst QM team members who routinely analyzed incident reports.

Patient effects reported included treatment delays (25.2%), treatment-planning delays (20.9%), incomplete treatments (14.5%), safety: preventive incidents (12.8%), treatment interruptions (6.9%), safety: reactive incidents (6.7%), mortality (5.3%), patients not starting treatment (4.2%), and morbidity (3.5%). Patient treatment-planning or initiation delays and safety incidents (preventive and reactive) comprised 65.6% of the reports in the AOC database. Under-reporting of treatment-planning delays by the treatment-planning group in the AOC database was partially compensated by reporting by the therapist group, albeit at a later date than when the planning team could have known the delays were imminent. The planning delay issues were instead typically identified and reported during the routine pre-treatment audit of plans in the EMR, conducted by therapists in our department 2 days prior to treatment initiation, when expected documentation was found to be incomplete or missing in the EMR.

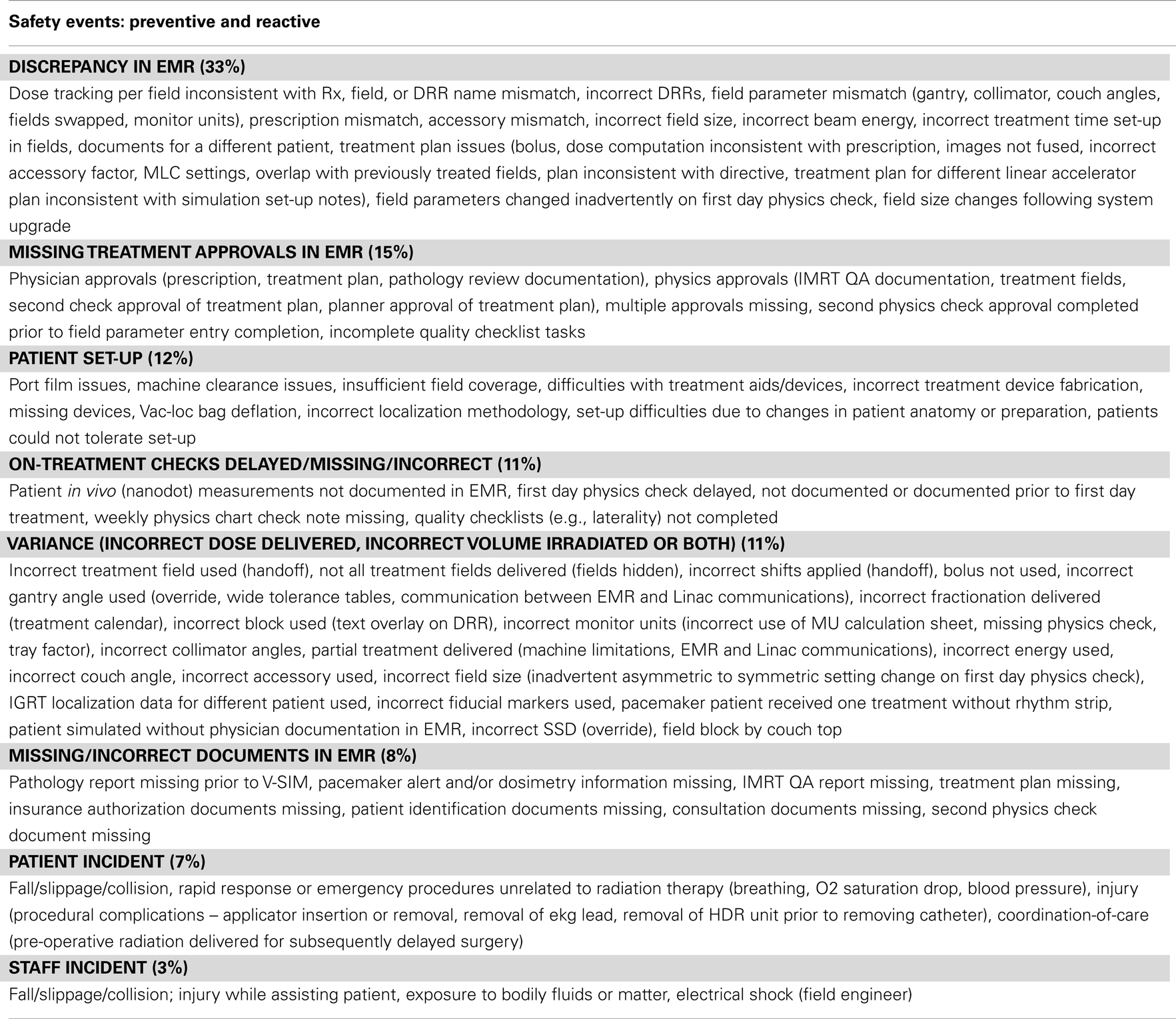

Treatment-planning delays (some of which lead to treatment delays) are attributed to planning procedural delays (58%), plan verification delays (23%), environmental issues (10%), coordination-of-care with other caregivers (5%), and patient factors (4%) (Table 2). Most of the planning procedural delays resulted from the need for additional information, issues with image fusion, modifications in contours or prescriptions, and coordination and scheduling. Safety incidents are attributed to discrepancies in the EMR (33%), missing approvals (15%), patient set-up issues (12%), on-treatment check delays or defects (11%), variances (11%), missing documents (8%), and patient and staff incidents related to falls or collisions (10%) (Table 3). The variance rate per patient was under 0.5% while the serious error rate was under 0.01%.

Table 2. Factors contributing toward treatment-planning delays or treatment delays as reported and analyzed based on our incident-reporting and analysis system.

Table 3. Factors contributing toward either preventive (good catches/near misses) or reactive findings as reported and analyzed based on our incident-reporting and analysis system.

Failure-Mode-and-Effect-Analysis

Based on the Landis-Koch criteria (32), Kappa-scores for severity-risks (0.533) and detectability risks (0.629) were fair or good for the highest risk-processes but poor for the likelihood-of-occurrence risk (0.140). The highest RPN based on this exercise was 127 with all participant responses considered and 151 when responses for specific process steps were limited to those who routinely performed them.

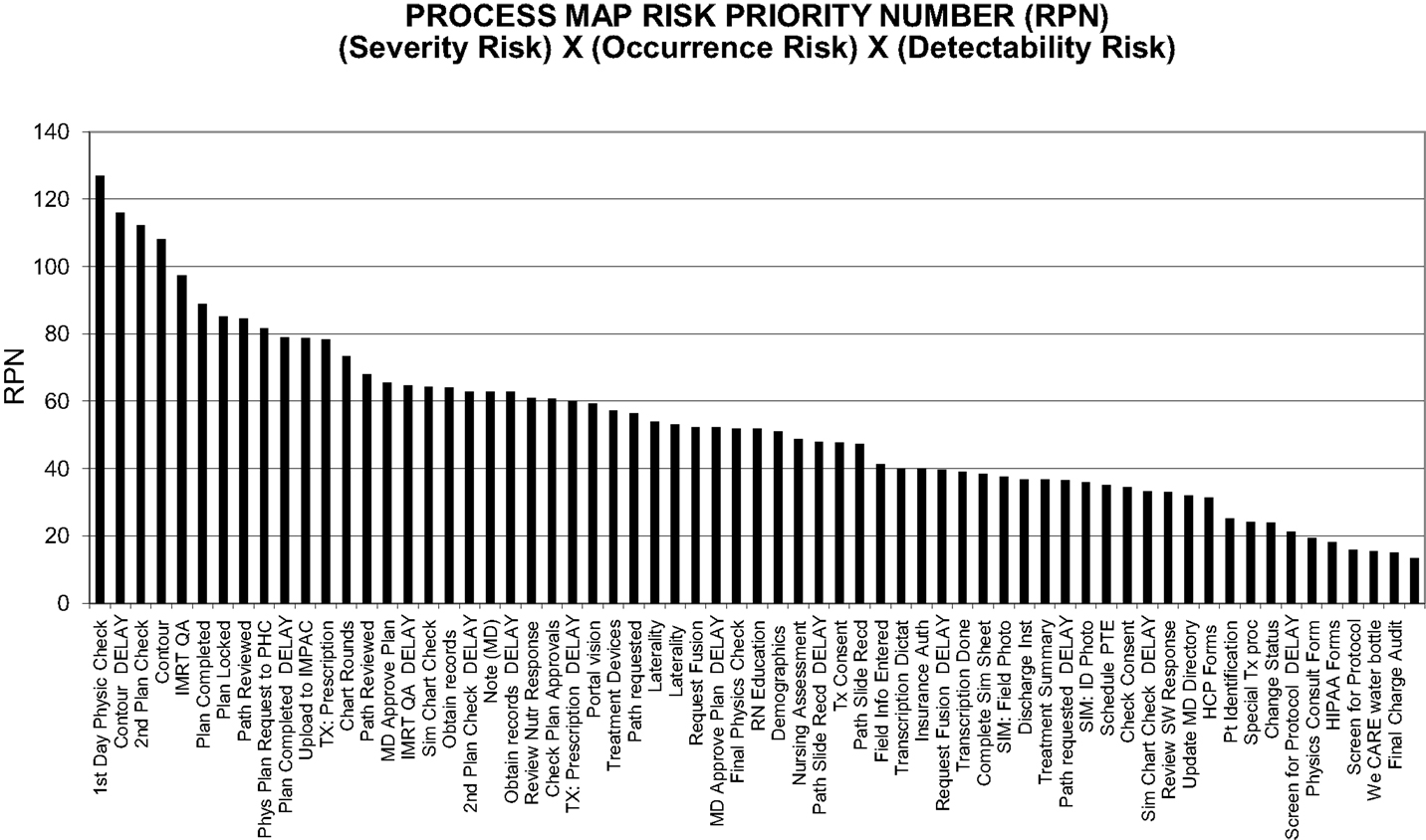

The procedures with the highest severity-risks and RPNs included contours, prescription, treatment plan completion, and transfer to the EMR, MD plan approvals, IMRT QA, second physics checks, pathology review, patient consent, laterality, and first day physics checks (Figures 1 and 2).

Figure 1. Decomposition of our process-map in radiation medicine into three components of risk levels based on prospective failure-mode analysis.

Figure 2. Composite risk probabilities of failure-modes from combined components of risk elements. Based on inputs from all staff members.

Baseline Risk Profile

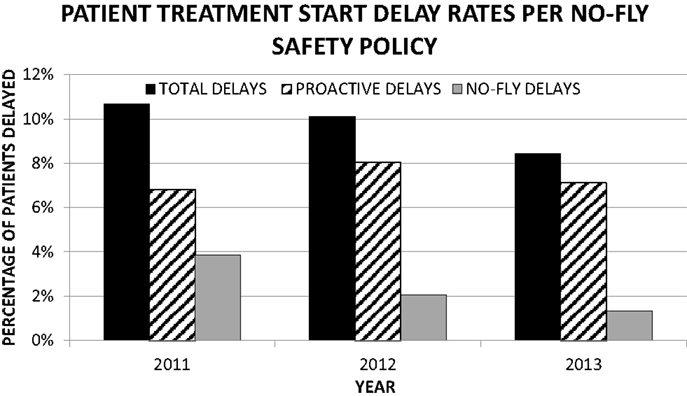

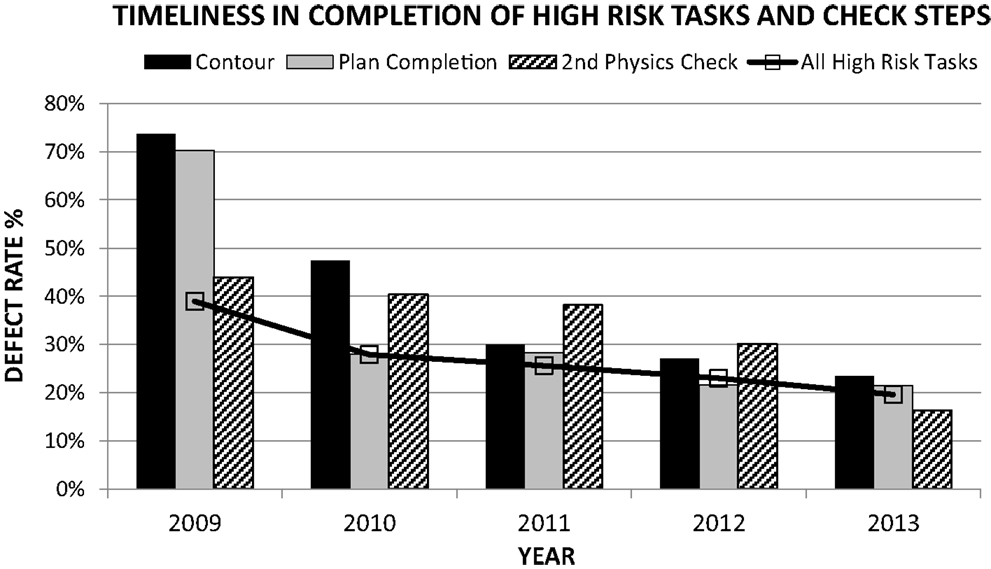

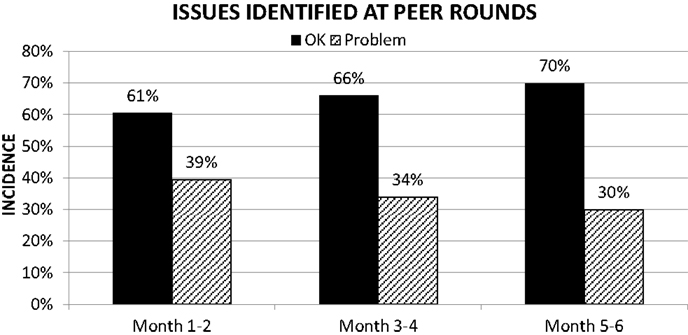

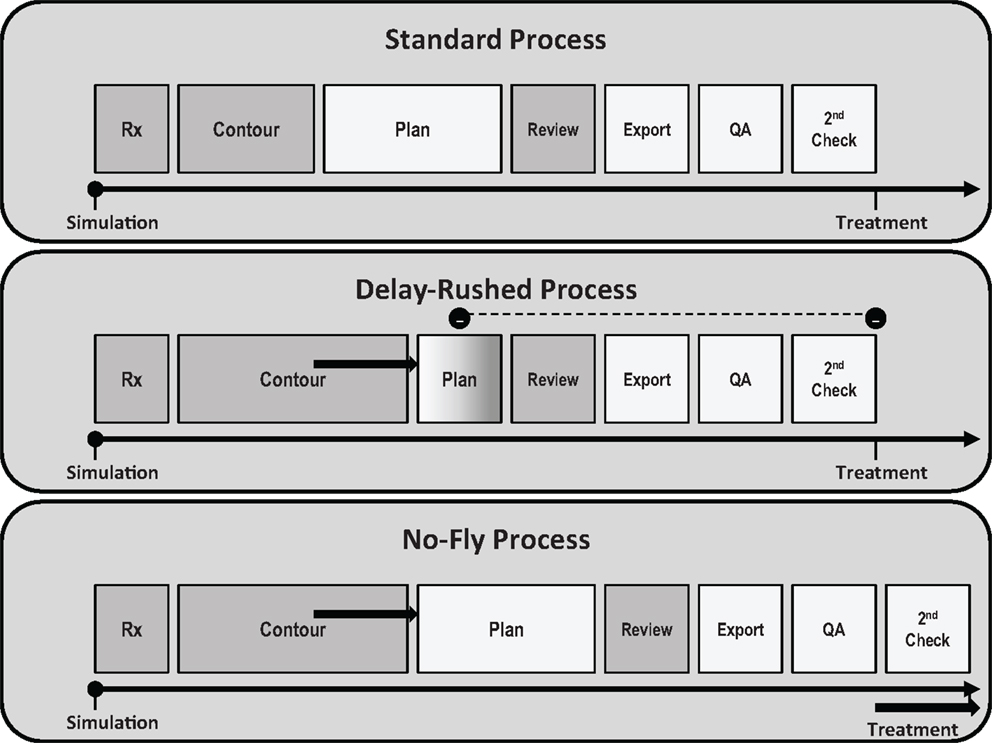

A baseline review of metadata extracted from our EMR indicated that approximately 40% of all the high-risk procedures identified on the FMEA were completed with delays (Figure 3). In particular, 70% of contours and plan completion procedures were delayed. Approximately 39% of all cases presented at pre-treatment-planning peer review rounds presented with issues that required contour completion, modification, or directive changes (Figure 4). Latent risk factors that contributed to these included communication, procedural compliance, and time pressures (Tables 2 and 3). The latent risk factors created an environment where upstream high-risk procedures were delayed, but the overarching tendency to treat on time necessitated a hastened completion of downstream high-risk tasks. The net effect of the latent risk factors was the prevalence of a local delay-rushed culture (Figure 5) incompatible with patient safety goals.

Figure 3. Mitigation of the likelihood-of-occurrence risks for high-risk-process steps over time based on actual data extracted from the EMR.

Figure 4. Mitigation of defects increased process control for high-risk-process steps (contours and prescription in care pathways) over time.

Figure 5. Delay-rushed processes. On the upper panel is a qualitative plot of a nominal timeline for task completions – the width of each block corresponding to the time needed for each task. Not changing the expected treatment date, in the event of upstream task delays, would require shortening the time for completion of the downstream tasks (middle panel) and thus exacerbated time pressures that could lead to errors. On the other hand, extending the treatment date proactively to account for the upstream task delay would restore the downstream timelines (bottom panel) and a “treat safely” first culture.

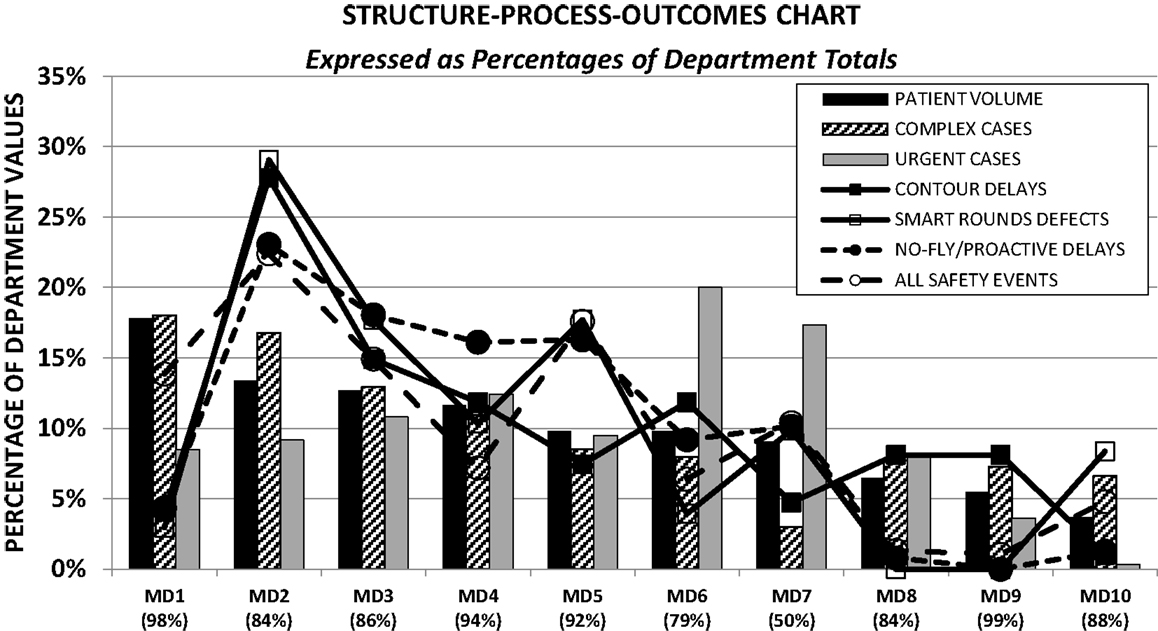

Substantial variability in execution of high-risk procedures was noted amongst staff as illustrated by the structure-process-outcomes chart (Figure 6). Patient treatment volume was chosen as an indicator for structure (17, 33, 34). Three of the 10 radiation oncologists who collectively carried 36% of the department patient volume were associated with 50% of the department contour delays and 65% of the issues (contour modification or inconsistent directives) identified at pre-treatment-planning peer reviews, 52% of all reports logged in the AOC database, 56% of treatment delays, 55% of all safety incidents, and 46% of department variances; thus more than their volume share would predict. In contrast one radiation oncologist who carried 18% of the department volume had 4% of the department contour delays, 3% of the peer review issues, 12% of all AOC reports, 9% of all treatment delays, 14% of all safety incidents, and 18% of department variances; well below or at par with the treatment volume share. For the remaining radiation oncologists process delays or peer review issues and AOC effects were at par with or less than total department shares of patient volumes.
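
The comparison above amounts to dividing each group's share of an effect by its share of patient volume. A minimal sketch follows, using the percentages quoted in the preceding paragraph; the ratio itself and the names used here are illustrative and are not a metric defined in the study.

```python
def share_ratio(effect_share, volume_share):
    """Ratio of a group's share of an effect to its share of patient volume.

    Values above 1 mean the group accounts for more of the effect than its
    caseload alone would predict; values below 1 mean less.
    """
    if volume_share <= 0:
        raise ValueError("volume share must be positive")
    return effect_share / volume_share


# Percent-of-department shares quoted in the text.
group_of_three = {
    "volume": 36, "contour delays": 50, "peer review issues": 65,
    "AOC reports": 52, "treatment delays": 56, "safety incidents": 55,
    "variances": 46,
}
single_oncologist = {
    "volume": 18, "contour delays": 4, "peer review issues": 3,
    "AOC reports": 12, "treatment delays": 9, "safety incidents": 14,
    "variances": 18,
}


def ratios(shares):
    """Volume-normalized ratio for every category except volume itself."""
    volume = shares["volume"]
    return {
        category: round(share_ratio(value, volume), 2)
        for category, value in shares.items()
        if category != "volume"
    }


group_ratios = ratios(group_of_three)
single_ratios = ratios(single_oncologist)
for category in group_ratios:
    print(f"{category}: {group_ratios[category]} vs {single_ratios[category]}")
```

Every ratio for the group of three exceeds 1, while every ratio for the single radiation oncologist is at or below 1, which restates the contrast drawn in the text.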

Figure 6. Variability amongst radiation oncologists in process execution and patient effects in the framework of department caseload distributions. All percentages are quoted based on department totals for each category. Numbers quoted near the MD designations correspond to the fraction of patients seen by each MD at their prominent practice site amongst the four sites of the department.

Risk-Mitigation Strategies and Their Impact

Analysis of the AOC database, FMEA, and baseline risk profile resulted in six risk-mitigation initiatives to minimize process delays, defects, and variability amongst staff. These were standardization of care pathways (35) and toxicity grading (36) to reduce the severity risk; pre-treatment-planning peer review rounds (37), and a no-fly-policy (38) to reduce the likelihood-of-occurrence and detectability risks; an electronic whiteboard (39) as a process mapping and error detection tool to enhance communications, reduce detectability risks, and augment the AOC database; and the use of six sigma metrics to gage operational efficiencies in the context of timely completions and reduced variability (13).

The respective metrics used to gage effectiveness were compliance-with-directive usage, kappa-scores for inter-rater reliability of toxicity grading schemes, fraction of cases presented at pre-treatment-planning peer review rounds with no issues, proactive and no-fly treatment delay rates, incident-reporting rates and high-risk-process task delay rates, or Z-scores.

There has been a sustained rate of improvement across all metrics evaluated over the past 3 years. The compliance with the use of care pathways has steadily increased to 97%. Inter-rater reliability for toxicity grading has improved by a factor of two using kappa statistics. Defect rates in timely completion of high-risk tasks have dropped steadily from 39 to 20% (Figure 3). The percentage of cases presented at pre-treatment-planning peer review rounds with no defects identified has increased from 61 to 70% in the first 6 months of its implementation (Figure 4). Current defect detection rates for contours and care pathways are approximately 7 and 9% respectively of patients presented at rounds. Proactive and no-fly delay rates have dropped from 12 to 8% of all new patient starts (Figure 7). Incident-reporting in the whiteboard by the treatment-planning team has increased more than three times in 9 months of usage relative to 6 years of usage of the AOC database with no increase in the observation of adverse events. Operational Z-scores have improved from 1.78 to 2.35.
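
The text does not state how the operational Z-scores were derived. One common six sigma convention converts a defect rate into a sigma level by taking the inverse standard normal CDF of the yield and adding a 1.5 sigma shift. The sketch below applies that convention as an assumption, not as the authors' documented method; the function name and default shift are illustrative.

```python
from statistics import NormalDist


def sigma_level(defect_rate, shift=1.5):
    """Sigma level (Z-score) for a given defect rate.

    Assumes the conventional six sigma 1.5-sigma shift; the source does not
    confirm that this convention was used.
    """
    if not 0.0 < defect_rate < 1.0:
        raise ValueError("defect_rate must be strictly between 0 and 1")
    process_yield = 1.0 - defect_rate
    return NormalDist().inv_cdf(process_yield) + shift


# Delay rates for high-risk tasks quoted in the text: 39% at baseline, 20% later.
baseline_z = sigma_level(0.39)
current_z = sigma_level(0.20)
print(f"baseline Z = {baseline_z:.2f}, current Z = {current_z:.2f}")
```

Under this assumption the quoted delay rates of 39% and 20% map to roughly 1.78 and 2.34, close to the reported improvement from 1.78 to 2.35. The agreement is suggestive, but it does not confirm how the study computed its Z-scores.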

Discussion

Moving toward safer radiotherapy practice requires an active surveillance of associated failures, their causes and effects, and evidence-based approaches to mitigate them. Surveillance may be conducted reactively, such as via the analysis of incidents reported at any given institution, in multi-institutional databases or published reports (1, 27–29, 40–54). Alternatively, it may be conducted proactively using probabilistic safety assessment tools, risk matrices, or failure-mode-and-effect analysis (29, 55–60). Understanding the nature, cause and effects of errors in radiation medicine is confounded by the involvement of human or cultural factors and their interactions with systems (30, 61), varying human perceptions during incident-reporting and analysis (62), under-reporting, or skewed reporting of incidents amongst functional groups (3), and practical limitations of anticipating all possible trajectories for failure propagation in complex systems (22). This is partially illustrated in one recent study, where it was shown that a large fraction (42%) of incidents reported were not predicted by the FMEA conducted at the same institution (3) and is likely to be true for other studies including ours.

From a practical perspective, neither reactive nor proactive surveillance can, on its own, reveal all underlying causes of specific effects observed or all possible adverse effects of specific causes (22). Much may be learned then from the combined surveillance approach. Using probabilistic safety assessment tools on 443 failure-modes, a multidisciplinary task group of the Ibero American FORO of nuclear and radiation safety agencies has shown that 90% of potentially catastrophic accidental exposures involving multiple patients could be attributed to as few as eight event sequences, with misunderstanding of delineated treatment volumes, initial treatment sessions, patient positioning, treatment delivery, and treatment-planning being the most vulnerable steps in the process (29, 56). Continued incident-reporting on a national or international level may reveal a similar cluster of risk-heightened sequences and therefore direct mitigation strategies more effectively.

A limitation that affects both forms of surveillance is that of the differences in perception by staff members. The same incident report could be interpreted differently by different staff members analyzing the report. This in turn has important consequences on incident learning or relative risk assessment and therefore the legitimacy or effectiveness of resulting corrective actions. The difference in perception was exemplified when the kappa-scores for consistency in analysis of a set of test cases from our AOC database amongst members of the QM team (typically at 0.837) dropped with the addition of a new team member (to 0.747), and by the low kappa score (0.140) for the likelihood-of-occurrence risks for the high-severity risk tasks during the FMEA exercise. This emphasized the need for the continual assessment of inter-rater reliability in the use and development of taxonomies for incident learning. In regard to the FMEA, there was a wider variation in perceptual differences for the likelihood-of-occurrence assessments for two reasons. First, the FMEA was conducted by members across all functional groups and thus some of the failure-modes for a particular type of procedure were less understood by or familiar to those who did not routinely perform them. As errors started by one professional group however are often caught by another (63), an observation exemplified several times in our AOC database, the group perspective was an important step in determining and assessing various modes of procedural failures. Second, many of the failure-modes identified were hypothetical in nature, and thus the estimation of their likelihood-of-occurrence with existing controls in place was subject to individual perception bias. 
To reduce the effects of these limitations, RPN scores were also calculated by restricting the likelihood-of-occurrence risks to those who routinely performed the procedures and by finding ways to measure actual defect rates by querying the EMR directly where possible. The scales used for FMEA are ordinal scales and are subject therefore to rater perception. The RPN computation is thus somewhat flawed as it involves the multiplication of grades based on ordinal data. Hence our assessment of the risk profile for the various process steps during the FMEA was based on collective consideration of the severity-risks and the semi-quantitative RPN scores rather than on the latter alone.

Despite the limitations of failure analyses mentioned above, the use of various incident-reporting systems, differing taxonomies, and FMEA study designs (including grading schemes used) at different institutions, in comparing our findings (Figures 1 and 2; Tables 2 and 3) with those of others referenced above, we have noted several similarities for the types of incidents observed, the causal relationships and the process steps where high-risk sub-steps appear to be clustered. Incident learning and FMEA studies at our institution have shown us that failures occur due to delays in the completion of the radiation medicine process steps or defects relative to standard-operating-procedures in key steps along the way and that the nature of process execution is dependent on the structural set-up of the department and coordination with other caregivers or the patients themselves. There were three main contributors to failures including treatment delays and safety findings in our database.

The first was the timeliness and accuracy of the execution of high-risk-process steps identified in our FMEA. In particular, contours, prescription, the completion of the treatment plan and second checks are complex in nature, requiring many inter-related subtasks, pre-requisite information, and handoffs, and have a tight coupling with observed treatment delays or safety incidents (Tables 2 and 3) (31, 64). Delays and defects in completing these tasks are attributed not just to staff delinquencies but to a large extent also the need for obtaining the requisite information at the right time from the right source. Structural barriers to this include inadequate procedural training or skills, ineffective handoffs or communications, improper scheduling or coordination of staff and equipment failures. For approximately 40% of the variances noted in our system, the errors were determined to have germinated at various stages of the treatment-planning preparation, clustered around these tasks. Thus despite multiple defenses-in-depth serving as quality control checkpoints (plan reviews, second physics checks, verification simulation, timeouts, and initial treatment checks), quality was not fully assured and errors were allowed to propagate. Second, our review of the structure-process-outcomes metrics highlights variability in practice amongst our radiation oncologists (Figure 6). A similar trend was noted for planners and physicists. As with the limited cluster of high-risk-process steps, the propensity toward risk is elevated for a handful of the staff where high-risk task delays and defects as well as patient effects noted in the AOC database were exacerbated relative to others despite no significant differences in their share of patient volumes, case complexity or urgency. 
Finally, cultural tendencies to prioritize time pressures for treatment initiation elevate the risk profile by creating local traps and recurrent patterns for delay-rushed practices and thus error-provoking conditions. Prior to 2010, 75% of treatment-planning delays in the high-risk steps did not culminate in treatment delays; this dropped by a factor of two in the transition phase leading to the initiation of the no-fly-policy. Multiple incidents were reported with incomplete high-risk procedures while the patient was awaiting treatment initiation, suggesting the site culture somehow supported delay-rushed practices instead of focusing on proactively addressing underlying systemic deficiencies. Thus high-risk task delays or defects, staff variability, and delay-rushed tendencies were the three main error-provoking factors in our study.

Risk-mitigation was therefore geared toward these three factors, using the mitigation strategies of the FMEA process – namely reduction of associated severity metrics, likelihood-of-occurrence, and the likelihood of errors going undetected if they occurred. The main focus was on building robustness within our radiation medicine process with the awareness that it may be formidable to completely eliminate failures (65) but that we may be better oriented to contain their damaging effects by injecting quality control and assurance measures between consultation and completion of planning stages and enhancing defenses-in-depth (66).

Mitigation of the severity risk was approached by enhancing standardization of care pathways and the grading of adverse effects. Non-compliance with treatment protocols and variability in practice have been shown to lead to inferior outcomes (67, 68). To avoid ad hoc care, we instituted detailed evidence-based treatment pathways that encompassed all domains of care including prescription, simulation, planning, nursing interventions, and follow-up assessments (35). These were based on the Institute of Medicine and quantitative analysis of normal tissue effects in the clinic (QUANTEC) guidelines (4). In order to ensure that patients would be evaluated for toxicities in a standardized way regardless of caregiver (69, 70), we incorporated the Common Terminology Criteria for Adverse Events (CTCAE V4.0) grading scheme from the National Cancer Institute into our EMR (36, 71). These initiatives were aimed toward reducing defects in the completion of high-risk tasks due to care based on individual caregiver experience rather than published evidence. Incorporating the standards into the EMR provided us with a baseline reference with which to compare pathways chosen for specific patients and thus a means to detect and assess differences and reduce variability in practice.

To reduce the likelihood-of-occurrence and detection risks, three initiatives were introduced. Pre-treatment-planning peer reviews were instituted on a daily basis with all faculty, treatment-planning and scheduling team members to review patient charts prior to the initiation of treatment-planning (37). Similar rounds at other institutions demonstrate the value added by peer review (72). The goal was to detect errors in the care pathways as well as contours delineated in the treatment-planning systems for specific patients. The standardized care pathways were based on all faculty inputs and thus provided a framework for managing potentially contentious discussions during rounds as well as exploring opportunities for improvement based on new published evidence in the literature. The rounds served as a checkpoint for the initiation of treatment-planning so that planning would commence after reconciliation of identified defects.

Coordination-of-care amongst caregivers was enhanced by the creation of an electronic whiteboard that was used for peer rounds and for efficiently coordinating all treatment-planning tasks for all patients at all sites of the department in a more transparent manner than what was available in the EMR (39). Traditionally staff members were accustomed to mainly reviewing QCL items assigned just to them in the EMR. This reduced simultaneous awareness of gaps in the planning process by others. With the whiteboard, alerts for potential delays in all high-risk planning tasks for specific patients were made more obvious to all members of the planning team without the need for additional mouse clicks.

Given the paucity of reports in the AOC database by members of the planning team, the whiteboard additionally served as a platform to report errors identified on peer rounds as each case was reviewed. A substantial increase in incident-reporting resulted from the use of the whiteboard as an augment to the AOC database. This does not necessarily reflect an increase in the incidence of events, but rather an increase in the reporting of these events. It has been reported that departments that register more events in such systems actually have fewer events that lead to patient harm (3, 73). Three factors contributed toward this improvement. First, the electronic whiteboard was used as an operational tool to navigate daily pre-treatment-planning rounds where all staff members involved in the plans of all patients being reviewed on a certain day were present. In addition to navigating rounds, its immediate access during rounds provided an efficient interface to summarize the review for each patient and also to register issues. Second, the reporting interface within the whiteboard was simpler to use as it did not require onerous and duplicative data entry such as patient demographic information or associated data, since these data were already available. Thus the overhead in reporting was substantially reduced compared to the AOC database. This enhanced reporting efficiency not just during rounds, but at all times. For planning staff members, selecting a drop-down entry in an already open electronic whiteboard was less of a barrier to reporting than the need to separately open the AOC database and complete all entries. Third, any event identified during pre-treatment-planning rounds was required to be logged with all planning staff present. This requirement served as a forcing function for staff members to complete event entries.

Defect rates as well as delay rates of all procedures were gauged using Z-scores, a standard practice in high reliability industries (13). These metrics were computed previously with metadata from the EMR but are now routinely computed with data from the whiteboard. Z-scores for all high-risk tasks were routinely reported to all staff members at monthly QM meetings using control charts so as to increase awareness of underlying issues and highlight individual staff operational performance improvement needs. The Z-score combines the accuracy with which tasks are completed based on specification limits as well as the variability in task completion. The use of the Z-score has provided a means of evaluating our progress in the same framework used by high reliability industries that strive toward six sigma levels of operations.
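
To make the Z-score concrete, the following is a minimal sketch of two common six sigma formulations. It is not the exact computation used in our department, which is described in reference (13). The first function assumes a one-sided upper specification limit on a task completion measure and returns the number of sample standard deviations between the mean and that limit. The second assumes a normal model and converts an observed defect fraction into the equivalent Z value. The function names, the specification limit, and the sample data are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def z_from_spec_limit(values, upper_spec_limit):
    """Z = (USL - mean) / sample standard deviation.

    A larger Z means the process sits further inside the
    specification limit relative to its own variability.
    """
    return (upper_spec_limit - mean(values)) / stdev(values)


def z_from_defect_rate(defects, opportunities):
    """Z value whose standard normal CDF equals the observed yield.

    Requires 0 < defects < opportunities; inv_cdf is undefined
    for a yield of exactly 0 or 1.
    """
    yield_fraction = 1 - defects / opportunities
    return NormalDist().inv_cdf(yield_fraction)


if __name__ == "__main__":
    # Hypothetical completion times (days) for one high-risk task,
    # with a hypothetical upper specification limit of 7 days.
    completion_days = [1, 2, 3, 4, 5]
    print(round(z_from_spec_limit(completion_days, 7), 2))
    # Hypothetical counts: 4 late completions out of 100 tasks.
    print(round(z_from_defect_rate(4, 100), 2))
```

In both forms the Z-score rises when tasks are completed closer to target or with less spread, which is the behavior the control charts are intended to track over time.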

Finally, to mitigate risks associated with rushed completions of high-risk tasks, we implemented a “No-fly-policy” with stopping rules that proactively delayed or prevented patient treatment starts in situations where such delay-rushed processes would otherwise have occurred (38). Overall, there has been a steady trend showing a drop in high-risk task delays as well as a drop in patient treatment initiation delays. The policy provides a robust mechanism to mitigate potentially unsafe treatment starts regardless of variability in high-risk task completions or staff performance. It has transformed the culture from “treat on time” first to “treat safely” first.

An oft-quoted goal in radiation medicine is to deliver the right dose to the right target while minimizing dose to organs at risk. Much has been done by way of algorithm or technique development toward meeting that goal, but little in terms of addressing comprehensive structure-process-outcome deficiencies. Given our baseline risk profile (likely similar to that for other departments), we felt we needed more than a set of policies or checklists in order to transform our culture to one that was actively focused on safety. Driving these risk-mitigation initiatives has challenged traditional norms of operations such as expediting treatment initiation in delay-rushed environments or sustaining care pathways that are more experience-based than evidence-based. Their implementation has therefore met with substantial cultural barriers to adoption. Working practices evolve over decades, and changing them with such initiatives creates uncertainty. The inertia of sustaining past cultures and arguments for not changing tend to persist (74). Direct persuasion only goes so far (75). In anticipation of these barriers, our initiatives were not implemented abruptly, but via transitional phases focused on staff feedback, education, training, and communication (76). The goal was to introduce them in a manner that would help the staff embrace the measures by the time of formal implementation. Regardless of the barriers, our focus on patient safety, combined with statistical process control, regular event database reviews and staff meetings, and use of quantitative metrics, has been instrumental in realizing these changes and crossing barriers. We have seen sustained improvements over the past 3 years of implementation in our department.

Institution of the following items has helped drive our department toward improvements in patient safety: (i) standardization of treatment care pathways, (ii) standardization of toxicity grading, (iii) institution of prospective pre-treatment-planning peer reviews, (iv) process management for treatment-planning components, (v) assessment of operational efficiencies, and (vi) enforcement of the No-Fly-Policy. We believe that most centers can augment their safety programs by instituting some or all of these initiatives. By doing so, they can use the work from this study to build a culture of safety without necessarily replicating the more time-consuming aspects of the study. For others, there is value in validating our results.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. World Health Organization. Radiotherapy Risk Profile. Technical Manual. Geneva: WHO Publishing (2007).

2. Ford EC, Terezakis SA. How safe is safe? Risk in radiotherapy. Int J Radiat Oncol Biol Phys (2010) 78:321–2. doi: 10.1016/j.ijrobp.2010.04.047

3. Terezakis SA, Pronovost P, Harris K, Deweese T, Ford E. Safety strategies in an academic radiation oncology department and recommendations for action. Jt Comm J Qual Patient Saf (2011) 37:291–9.

4. Marks LB, Ten Haken RK, Martel M. Guest editor’s introduction to QUANTEC: a user’s guide. Int J Radiat Oncol Biol Phys (2010) 76(Suppl 3):S1–2. doi:10.1016/j.ijrobp.2009.08.075

5. Munro AJ. Hidden danger, obvious opportunity: error and risk in the management of cancer. Br J Radiol (2007) 80:955–66. doi:10.1259/bjr/12777683

6. Gordon S, Mendenhall P, O’Connor BB. Beyond the Checklist: What Else Health Care Can Learn from Aviation Teamwork and Safety. Ithaca, NY: Cornell University Press (2012).

7. Dunscombe PB. Recommendations for safer radiotherapy: what’s the message? Front Oncol (2012) 2:129. doi:10.3389/fonc.2012.00129

8. Kohn LT, Corrigan JM, Donaldson MS editors. To Err is Human: Building a Safer Health System. Washington, DC: National Academy Press (2000).

9. Shojania KG, Thomas EJ. Trends in adverse events over time: why are we not improving? BMJ Qual Saf (2013) 22:273–7. doi:10.1136/bmjqs-2013-001935

10. Landrigan CP, Parry GJ, Bones CB, Hackbarth AD, Goldmann DA, Sharek PJ. Temporal trends in rates of patient harm resulting from medical care. N Engl J Med (2010) 363:2124–34. doi:10.1056/NEJMsa1004404

11. Classen DC, Resar R, Griffin F, Federico F, Frankel T, Kimmel N, et al. ‘Global trigger tool’ shows that adverse events in hospitals may be ten times greater than previously measured. Health Aff (Millwood) (2011) 30:581–9. doi:10.1377/hlthaff.2011.0190

12. Terezakis SA, Ford E. Patient safety improvement efforts: how do we know we have made an impact? Pract Radiat Oncol (2013) 3:164–6. doi:10.1016/j.prro.2013.02.002

13. Kapur A, Potters L. Six Sigma tools for a patient safety-oriented, quality-checklist driven radiation medicine department. Pract Radiat Oncol (2012) 2:86–96. doi:10.1016/j.prro.2011.06.010

14. Brook RH, McGlynn EA, Cleary PD. Quality of health care. Part 2: measuring quality of care. N Engl J Med (1996) 335:966–70. doi:10.1056/NEJM199609263351311

15. Donabedian A. Evaluating the quality of medical care. Milbank Mem Fund Q (1966) 44:166–206. doi:10.2307/3348969

16. Donabedian A. The quality of care: how can it be assessed? JAMA (1988) 260:1743–8. doi:10.1001/jama.1988.03410120089033

17. Albert JM, Das P. Quality assessment in oncology. Int J Radiat Oncol Biol Phys (2012) 83:773–81. doi:10.1016/j.ijrobp.2011.12.079

18. Deming WE. The New Economics for Industry, Government, Education. 2nd ed. Cambridge, MA: MIT Press (2000).

19. Codman EA. A Study in Hospital Efficiency. Oakbrook Terrace, IL: Joint Commission on Accreditation of Healthcare Organizations Press (1996).

20. Kapur A, Potters L, Bloom B, Mallalieu LB, Adair N, Zuvic P, et al. Cause or effect? Development and validation of an event reporting database in radiation medicine. Int J Radiat Oncol Biol Phys (2011) 81(Suppl 2):S886. doi:10.1016/j.ijrobp.2011.06.1585

21. Kapur A, Potters L, Sharma R, Lee L, Cao Y, Zuvic P, et al. Failure modes and effects analysis of an electronic quality-checklist process map in radiation medicine – has it made a difference? Int J Radiat Oncol Biol Phys (2011) 81(Suppl 2):S562. doi:10.1016/j.ijrobp.2011.06.890

22. Senders JW. FMEA and RCA: the mantras of modern risk management. Qual Saf Health Care (2004) 13:249–50. doi:10.1136/qshc.2004.010868

23. Rubin HR, Pronovost P, Diette GB. From a process of care to a measure: the development and testing of a quality indicator. Int J Qual Health Care (2001) 13:489–96. doi:10.1093/intqhc/13.6.489

24. Hayman JA. Measuring the quality of care in radiation oncology. Semin Radiat Oncol (2008) 18:201–6. doi:10.1016/j.semradonc.2008.01.008

25. Randolph JJ. Free-Marginal Multirater Kappa: an Alternative to Fleiss’ Fixed Marginal Multirater Kappa. Joensuu: Joensuu University Learning and Instruction Symposium (2005).

26. Ford EC, Fong de Los Santos L, Pawlicki T, Sutlief S, Dunscombe P. Consensus recommendations for incident learning database structures in radiation oncology. Med Phys (2012) 39:7272–90. doi:10.1118/1.4764914

27. Cunningham J, Coffey M, Knöös T, Holmberg O. Radiation oncology safety information system (ROSIS) – profiles of participants and the first 1074 incident reports. Radiother Oncol (2010) 97:601–7. doi:10.1016/j.radonc.2010.10.023

28. International Atomic Energy Agency. Lessons Learned from Accidental Exposures in Radiotherapy. Vienna: IAEA (2000). IAEA Safety Reports Series No. 17.

29. Ortiz López P, Cosset JM, Dunscombe P, Holmberg O, Rosenwald JC, Pinillos Ashton L, et al. ICRP publication 112. Preventing accidental exposures from new external beam radiation therapy technologies. Ann ICRP (2009) 39:1–86. doi:10.1016/j.icrp.2010.02.002

30. Reason J. Human error: models and management. BMJ (2000) 320:768–70. doi:10.1136/bmj.320.7237.768

31. van Beuzekom M, Boer F, Akerboom S, Hudson P. Patient safety: latent risk factors. Br J Anaesth (2010) 105:52–9. doi:10.1093/bja/aeq135

32. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics (1977) 33:159–74. doi:10.2307/2529310

33. Begg CB, Cramer LD, Hoskins WJ. Impact of hospital volume on operative mortality for major cancer surgery. JAMA (1998) 280:1747–51. doi:10.1001/jama.280.20.1747

34. Hillner BE, Smith TJ, Desch CE. Hospital and physician volume or specialization and outcomes in cancer treatment: importance in quality of cancer care. J Clin Oncol (2000) 18:2327–40.

35. Potters L, Raince J, Chou H, Kapur A, Bulanowski D, Stanzione R, et al. Development, implementation, and compliance of treatment pathways in radiation medicine. Front Oncol (2013) 3:105. doi:10.3389/fonc.2013.00105

36. Kapur A, Evans C, Bloom B, Ames JWA, Morgenstern C, Raince J, et al. Inter-rater variability in the assessment of skin reactions in breast cancer radiation therapy – impact of grading scales. Int J Radiat Oncol Biol Phys (2013) 87(Suppl 2):S494. doi:10.1016/j.ijrobp.2013.06.1306

37. Cox BW, Sharma A, Potters L, Kapur A. Prospective contouring rounds: a novel, high-impact tool for optimizing quality assurance. Int J Radiat Oncol Biol Phys (2013) 87(Suppl 2):S118. doi:10.1016/j.ijrobp.2013.06.304

38. Potters L, Kapur A. Implementation of a “no fly” safety culture in a multicenter radiation medicine department. Pract Radiat Oncol (2012) 2:18–26. doi:10.1016/j.prro.2011.04.010

39. Brewster Mallalieu LJ, Kapur A, Sharma A, Potters L, Jamshidi A, Pinsky J. An electronic whiteboard and associated databases for physics workflow coordination in a paperless, multi-site Radiation Oncology department [Abstract]. Med Phys (2011) 38:3755. doi:10.1118/1.3613130

40. Barach P, Small SD. Reporting and preventing medical mishaps: lessons from non-medical near miss reporting systems. BMJ (2000) 320:759–63. doi:10.1136/bmj.320.7237.759

41. Huang G, Medlam G, Lee J, Billingsley S, Bissonnette JP, Ringash J, et al. Error in the delivery of radiation therapy: results of a quality assurance review. Int J Radiat Oncol Biol Phys (2005) 61:1590–5. doi:10.1016/j.ijrobp.2004.10.017

42. Yeung TK, Bortolotto K, Crosby S, Hoar M, Lederer E. Quality assurance in radiotherapy: evaluation of errors and incidents recorded over a 10 year period. Radiother Oncol (2005) 74:283–91. doi:10.1016/j.radonc.2004.12.003

43. Cooke DL, Dunscombe PB, Lee RC. Using a survey of incident reporting and learning practices to improve organisational learning at a cancer care centre. Qual Saf Health Care (2007) 16:342–8. doi:10.1136/qshc.2006.018754

44. Marks LB, Light KL, Hubbs JL, Georgas DL, Jones EL, Wright MC, et al. The impact of advanced technologies on treatment deviations in radiation treatment delivery. Int J Radiat Oncol Biol Phys (2007) 69:1579–86. doi:10.1016/j.ijrobp.2007.08.017

45. Williams MV. Improving patient safety in radiotherapy by learning from near misses, incidents and errors. Br J Radiol (2007) 80:297–301. doi:10.1259/bjr/29018029

47. Klein EE, Drzymala RE, Purdy JA, Michalski J. Errors in radiation oncology: a study in pathways and dosimetric impact. J Appl Clin Med Phys (2005) 6:81–94. doi:10.1120/jacmp.2025.25355

48. Shafiq J, Barton M, Noble D, Lemer C, Donaldson LJ. An international review of patient safety measures in radiotherapy practice. Radiother Oncol (2009) 92:15–21. doi:10.1016/j.radonc.2009.03.007

49. Bissonnette JP, Medlam G. Trend analysis of radiation therapy incidents over seven years. Radiother Oncol (2010) 96:139–44. doi:10.1016/j.radonc.2010.05.002

50. Clark BG, Brown RJ, Ploquin JL, Kind AL, Grimard L. The management of radiation treatment error through incident learning. Radiother Oncol (2010) 95:344–9. doi:10.1016/j.radonc.2010.03.022

51. Mutic S, Brame RS, Oddiraju S, Parikh P, Westfall MA, Hopkins ML, et al. Event (error and near-miss) reporting and learning system for process improvement in radiation oncology. Med Phys (2010) 37:5027–36. doi:10.1118/1.3471377

52. Marks LB, George TJ, Siddiqui T, Mendenhall WM, Zlotecki RA, Grobmyer S, et al. The challenge of maximizing safety in radiation oncology. Pract Radiat Oncol (2011) 1:2–14. doi:10.1097/COC.0b013e3181a650e8

53. Clark BG, Brown RJ, Ploquin J, Dunscombe P. Patient safety improvements in radiation treatment through 5 years of incident learning. Pract Radiat Oncol (2013) 3:157–63. doi:10.1016/j.prro.2012.08.001

54. Terezakis SA, Harris KM, Ford E, Michalski J, DeWeese T, Santanam L, et al. An evaluation of departmental radiation oncology incident reports: anticipating a national reporting system. Int J Radiat Oncol Biol Phys (2013) 85:919–23. doi:10.1016/j.ijrobp.2012.09.013

55. Rath F. Tools for developing a quality management program: proactive tools (process mapping, value stream mapping, fault tree analysis and failure modes and effects analysis). Int J Radiat Oncol Biol Phys (2008) 71(Suppl 1):S187–90. doi:10.1016/j.ijrobp.2007.07.2385

56. Vilaragut LJJ, Ferro FR, Rodriguez M, Ortiz López P, Ramírez ML, Pérez Mulas A, et al. Probabilistic safety assessment (PSA) of the radiation therapy treatment process with an electron linear accelerator (LINAC) for medical uses. XII Congress of the International Association of Radiation Protection, IRPA 12. Buenos Aires (2008).

57. Huq MS, Fraass BA, Dunscombe PB, Gibbons JP Jr, Ibbott GS, Medin PM, et al. A method for evaluating quality assurance needs in radiation therapy. Int J Radiat Oncol Biol Phys (2008) 71(Suppl 1):S170–3. doi:10.1016/j.ijrobp.2007.06.081

58. Ford EC, Gaudette R, Myers L, Vanderver B, Engineer L, Zellars R, et al. Evaluation of safety in a radiation oncology setting using failure mode and effects analysis. Int J Radiat Oncol Biol Phys (2009) 74:852–8. doi:10.1016/j.ijrobp.2008.10.038

59. Scorsetti M, Signori C, Lattuada P, Urso G, Bignardi M, Navarria P, et al. Applying failure mode effects and criticality analysis in radiotherapy: lessons learned and perspectives of enhancement. Radiother Oncol (2010) 94:367–74. doi:10.1016/j.radonc.2009.12.040

60. Denny DS, Allen DK, Worthington N, Gupta D. The use of failure mode and effect analysis in a radiation oncology setting: the Cancer Treatment Centers of America Experience. J Healthc Qual (2012). doi:10.1111/j.1945-1474.2011.00199.x

61. Rivera AJ, Karsh BT. Human factors and systems engineering approach to patient safety for radiotherapy. Int J Radiat Oncol Biol Phys (2008) 71(Suppl 1):S174–7. doi:10.1016/j.ijrobp.2007.06.088

62. Po-Hui H, O’Connor C, Marcia S. A comparison of perceptual and reported errors in radiation therapy. J Med Imag Radiat Sci (2013) 44:23–30. doi:10.1016/j.jmir.2012.11.001

63. Bates DW, Boyle DL, Vander Vliet MB, Schneider J, Leape L. Relationship between medication errors and adverse drug events. J Gen Intern Med (1995) 10:199–205. doi:10.1007/BF02600255

64. Weick KE. Organizational culture as a source of high reliability. Calif Manage Rev (1987) 29:112–27. doi:10.1136/qshc.2007.023978

66. Thomadsen B. Critique of traditional quality assurance paradigm. Int J Radiat Oncol Biol Phys (2008) 71(Suppl 1):S166–9. doi:10.1016/j.ijrobp.2007.07.2391

67. Weber DC, Tomsej M, Melidis C, Hurkmans CW. QA makes a clinical trial stronger: evidence-based medicine in radiation therapy. Radiother Oncol (2012) 105:4–8. doi:10.1016/j.radonc.2012.08.008

68. Ohri N, Shen X, Dicker AP, Doyle LA, Harrison AS, Showalter TN. Radiotherapy protocol deviations and clinical outcomes: a meta-analysis of cooperative group clinical trials. J Natl Cancer Inst (2013) 105:387–93. doi:10.1093/jnci/djt001

69. Brundage MD, Pater JL, Zee B. Assessing the reliability of two toxicity scales: implications for interpreting toxicity data. J Natl Cancer Inst (1993) 85:1138–48. doi:10.1093/jnci/85.14.1138

70. Trotti A, Bentzen SM. The need for adverse effects reporting standards in oncology clinical trials. J Clin Oncol (2004) 22:19–22. doi:10.1200/JCO.2004.10.911

71. National Cancer Institute. Common Terminology Criteria for Adverse Events v4.0 (2010). Available from http://evs.nci.nih.gov/ftp1/CTCAE/About.html

72. Marks LB, Adams RD, Pawlicki T, Blumberg AL, Hoopes D, Brundage MD, et al. Enhancing the role of case-oriented peer review to improve quality and safety in radiation oncology: executive summary. Pract Radiat Oncol (2013) 3:149–56. doi:10.1016/j.prro.2012.11.010

73. Mardon RE, Khanna K, Sorra J, Dyer N, Famolaro T. Exploring relationships between hospital patient safety culture and adverse events. J Patient Saf (2010) 6:226–32. doi:10.1097/PTS.0b013e3181fd1a00

74. Reid W. Developing and implementing organisational practice that delivers better, safer care. Qual Saf Health Care (2004) 13:247–8. doi:10.1136/qshc.2004.009787

75. Reason J. Achieving a safe culture: theory and practice. Work Stress (1998) 12(3):293–306. doi:10.1080/02678379808256868

Keywords: incident learning, failure-mode-and-effects-analysis, root cause analysis, patient safety, no-fly-policy, six sigma, electronic whiteboard

Citation: Kapur A, Goode G, Riehl C, Zuvic P, Joseph S, Adair N, Interrante M, Bloom B, Lee L, Sharma R, Sharma A, Antone J, Riegel A, Vijeh L, Zhang H, Cao Y, Morgenstern C, Montchal E, Cox B and Potters L (2013) Incident learning and failure-mode-and-effects-analysis guided safety initiatives in radiation medicine. Front. Oncol. 3:305. doi: 10.3389/fonc.2013.00305

Received: 07 November 2013; Paper pending published: 21 November 2013; Accepted: 02 December 2013; Published online: 16 December 2013.

Edited by:

Edward Sternick, Rhode Island Hospital, USA

Copyright: © 2013 Kapur, Goode, Riehl, Zuvic, Joseph, Adair, Interrante, Bloom, Lee, Sharma, Sharma, Antone, Riegel, Vijeh, Zhang, Cao, Morgenstern, Montchal, Cox and Potters. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ajay Kapur, Department of Radiation Medicine, North Shore-LIJ Health System, Hofstra North Shore-LIJ School of Medicine, 276-05 76th Avenue, New Hyde Park, NY 11040, USA. e-mail: akapur@nshs.edu
