- Frontier Research Laboratory, Corporate Research and Development Center, Toshiba Corporation, Kawasaki, Japan

Spiking randomly connected neural network (RNN) hardware is promising as an ultra-low-power device for temporal data processing at the edge. Although the potential of RNNs for temporal data processing has been demonstrated, the randomness of the network architecture often causes performance degradation. To mitigate such degradation, a self-organization mechanism using intrinsic plasticity (IP) and synaptic plasticity (SP) should be implemented in the spiking RNN. We therefore propose hardware-oriented models of these functions. To implement IP, a variable firing threshold that changes stepwise in accordance with the neuron’s activity is introduced to each excitatory neuron in the RNN. We also define further thresholds for SP that are synchronized with the firing threshold and determine the direction of the stepwise synaptic update executed on receiving a pre-synaptic spike. To evaluate the effectiveness of our model, we perform simulations of temporal data learning and anomaly detection on publicly available electrocardiograms (ECGs) with a spiking RNN. We observe that the spiking RNN with our IP and SP models achieves a true positive rate of 1 while the false positive rate is suppressed to 0, which does not occur otherwise. Furthermore, we find that these thresholds as well as the synaptic weights can be reduced to binary if the RNN architecture is appropriately designed. This contributes to minimizing the circuit of a neuronal system having IP and SP.

1 Introduction

Randomly connected neural networks (RNNs), which have been studied as a simplified theoretical model of the nervous system of biological brains (Sompolinsky et al., 1988; Lazar et al., 2009; Kadmon and Sompolinsky, 2015; Bourdoukan and Deneve, 2015; Tetzlaff et al., 2015; Thalmeier et al., 2016; Landau and Sompolinsky, 2018; Frenkel and Indiveri, 2022), are attracting much attention as a promising artificial intelligence (AI) technique that can perform prediction and anomaly detection of time series data in real time without executing sophisticated AI algorithms (Jaeger, 2001; Maass et al., 2002; Sussillo and Abbott, 2009; Nicola and Clopath, 2017; Das et al., 2018; Bauer et al., 2019). In particular, hardware implementation of RNNs is expected to reduce the power consumption of time series data processing, enabling intelligent operation of edge systems in our society. While the potential of RNNs has been well demonstrated in previous works (Jaeger, 2001; Maass et al., 2002; Sussillo and Abbott, 2009; Nicola and Clopath, 2017; Das et al., 2018; Bauer et al., 2019; Covi et al., 2021), their inherent randomness sometimes causes uncontrollable data inference failures, leading to low reliability of the technique. A self-organization mechanism, which can be realized by including intrinsic plasticity (IP) and synaptic plasticity (SP) in the neuronal operation model, improves the reliability (Lazar et al., 2009). IP is a homeostatic mechanism of biological neurons that keeps neuron firing frequencies within a certain range. It has been shown to be indispensable for unsupervised learning in neuromorphic systems (Desai et al., 1999; Steil, 2007; Bartolozzi et al., 2008; Lazar et al., 2009; Diehl and Cook, 2015; Qiao et al., 2017; Davies et al., 2018; Payvand et al., 2022).
SP is a mechanism where a synapse changes its own weight in accordance with incoming signals and the post-synaptic neuron’s activity, known as the fundamental principle of learning in biological brains (Legenstein et al., 2005; Pfister et al., 2006; Ponulak and Kasinski, 2010; Kuzum et al., 2012; Ning et al., 2015; Prezioso et al., 2015; Ambrogio et al., 2016; Covi et al., 2016; Kreiser et al., 2017; Srinivasan et al., 2017; Ambrogio et al., 2018; Faria et al., 2018; Li et al., 2018; Amirshahi and Hashemi, 2019; Cai et al., 2020; Yongqiang et al., 2020; Dalgaty et al., 2021; Frenkel et al., 2023).

Since computing resources may be limited at the edge, we focus on analog spiking neural network (SNN) hardware, which offers very high power efficiency for edge AI devices (Ning et al., 2015; Davies et al., 2018; Payvand et al., 2022). The most general neuron model for SNNs is the leaky integrate-and-fire (LIF) model (Holt and Koch, 1997). For a LIF neuron, an IP function may be added by adjusting the time constant of its membrane potential according to its own firing rate. If we are to design LIF neurons with analog circuitry, a tunable capacitor or resistor is required to control the time constant. The former is difficult because no practical device element having variable capacitance has been invented. For the latter, Payvand et al. (2022) proposed an IP circuit using memristors, namely, variable resistors. However, this circuit requires an auxiliary unit for memristor control, the details of which have not yet been discussed. Considering the large device-to-device variability of memristors, each unit must be tuned according to the respective memristor’s characteristics, which would result in a complicated circuit system with large overhead (Payvand et al., 2020; Demirag et al., 2021; Moro et al., 2022; Payvand et al., 2023).

An alternative method for controlling the firing rate is to adjust the firing threshold itself (Diehl and Cook, 2015; Zhang and Li, 2019; Zhang et al., 2021). For a LIF neuron designed with analog circuitry, the firing threshold is given as a reference voltage applied to a comparator connected to the neuron’s membrane capacitor (Chicca et al., 2014; Ning et al., 2015; Chicca and Indiveri, 2020; Payvand et al., 2022); hence IP can be implemented by adding a circuit that changes the reference voltage in accordance with the firing rate. It would be straightforward to employ a variable voltage source, but considerable effort is needed to design a voltage source compact enough to be added to every neuron. Instead, we may prepare several fixed voltages and multiplex them to the comparator according to neuronal activity. This is the motivation of this study. We are interested in (i) whether stepwise control of the threshold voltage is effective for the IP function in a spiking RNN (SRNN) for temporal data learning and (ii) if it is, how far we can reduce the number of voltage lines.

When we introduce a variable firing threshold, we need to take care of SP in the hardware design. With regard to SP implementation, spike-timing dependent plasticity (STDP; Legenstein et al., 2005; Ning et al., 2015; Srinivasan et al., 2017) is the most popular synaptic update rule. STDP is a comprehensive synaptic update rule that obeys Hebb’s law, but it is not hardware-friendly; it requires every synapse to have a mechanism to measure the elapsed time from the arrival of a spike. Alternatively, we employ spike-driven synaptic plasticity (SDSP; Brader et al., 2007; Mitra et al., 2009; Ning et al., 2015; Frenkel et al., 2019; Gurunathan and Iyer, 2020; Payvand et al., 2022; Frenkel et al., 2023), which is much more convenient for hardware implementation. It is a rule where an incoming spike changes the synaptic weight depending on whether the membrane potential of the post-synaptic neuron is higher than one threshold or lower than another threshold. The magnitude relationship between these learning thresholds and the firing threshold is essential for correct learning; hence the two learning thresholds should be defined according to the firing threshold.

In this work, we study an SRNN with IP and SP where the firing threshold and the two learning thresholds are discretized and synchronized. In order to make our model hardware-oriented, synaptic weights are also discretized so that we can assume conventional digital memory circuits for storing weights. We perform simulations of learning and anomaly detection tasks for publicly available electrocardiograms (ECGs; Liu et al., 2013; Kiranyaz et al., 2016; Das et al., 2018; Amirshahi and Hashemi, 2019; Bauer et al., 2019; Wang et al., 2019) and show the effectiveness of our model. In particular, we discuss how far we can reduce the number of discretized levels of the thresholds and the synaptic weights, which is an essential aspect for hardware implementation.

2 Methods

2.1 LIF neuron model

The neuron model we employ in this work is the LIF model (Holt and Koch, 1997), which is one of the best-known spiking neuron models owing to its computational efficiency and mathematical simplicity. The membrane potential of a neuron is given as

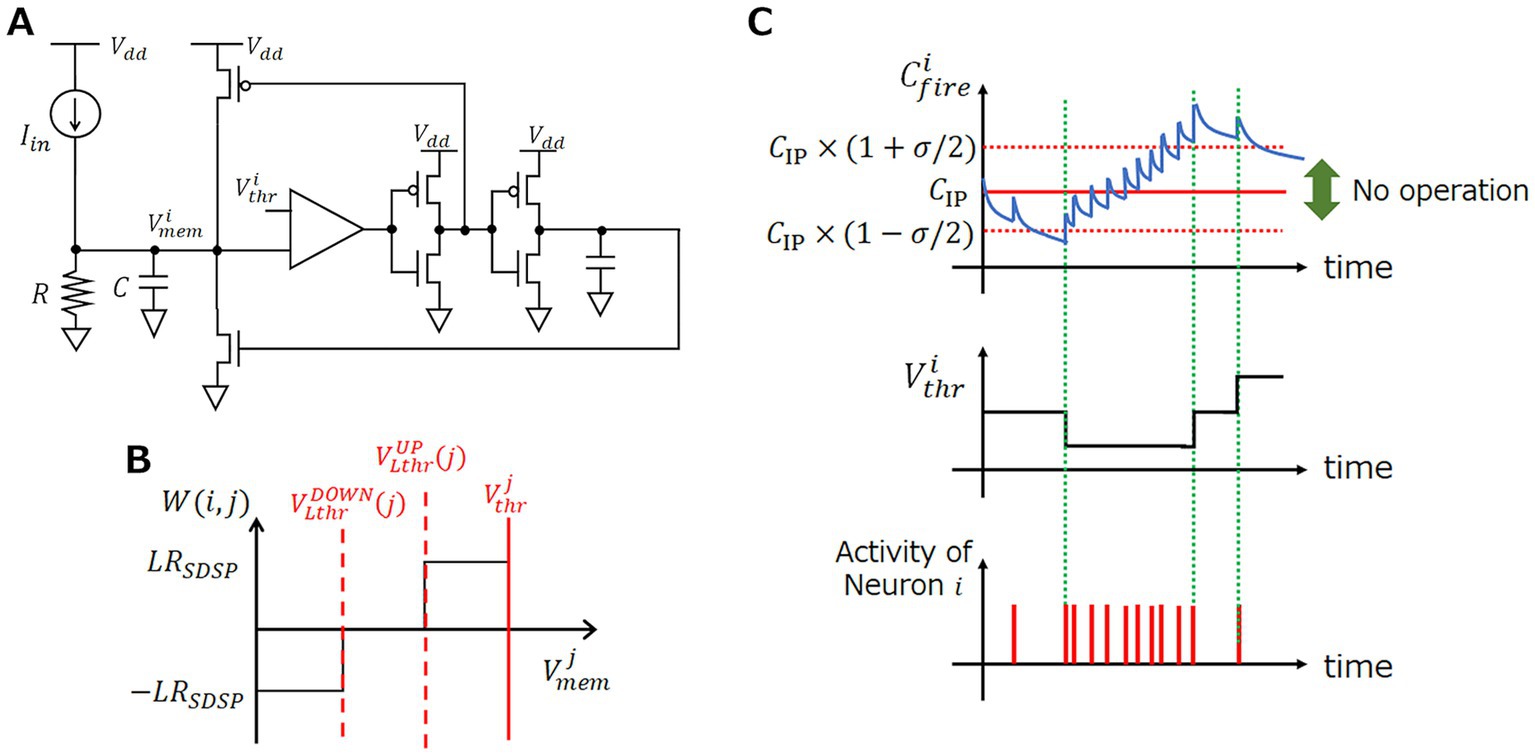

where the parameters denote the membrane capacitance, the membrane resistance, and the sum of the input currents flowing into the neuron, respectively. If the membrane potential exceeds the firing threshold, the neuron fires and transfers a spike signal to the next neurons connected via synapses. The neuron then resets its membrane potential to the reset value and enters a refractory state for a fixed time, during which the membrane potential stays at the reset value regardless of the input current. The LIF neuron is hardware-friendly because it can be implemented in analog circuits using industrially manufacturable complementary metal-oxide-semiconductor (CMOS) devices (Indiveri et al., 2011), as illustrated in Figure 1A.
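As a minimal sketch of the LIF behavior described above, the following Python snippet integrates a membrane potential with the forward Euler method, including the reset and the refractory state. The equation form `C dV/dt = -V/R + I`, the function and variable names, and all numerical values are assumptions chosen for illustration; they are not the parameters of Table 1.

```python
import numpy as np

def simulate_lif(i_in, dt=1e-4, c_m=1e-9, r_m=1e7,
                 v_th=0.2, v_reset=0.0, t_ref=2e-3):
    """Forward-Euler LIF neuron (illustrative parameter values).

    i_in : input current per time step [A]
    Returns the membrane trace [V] and the spike times [s].
    """
    v = v_reset
    refractory_left = 0.0
    trace, spike_times = [], []
    for k, i_k in enumerate(i_in):
        if refractory_left > 0.0:
            # Refractory state: hold the reset value regardless of the input.
            refractory_left -= dt
            v = v_reset
        else:
            # Leaky integration: C dV/dt = -V/R + I (assumed form).
            v += dt * (-v / r_m + i_k) / c_m
            if v > v_th:
                # The comparator fires: emit a spike, reset, start refractory time.
                spike_times.append(k * dt)
                v = v_reset
                refractory_left = t_ref
        trace.append(v)
    return np.array(trace), np.array(spike_times)

# Constant 50 nA input for 0.5 s of simulated time.
trace, spike_times = simulate_lif(np.full(5000, 50e-9))
```

With these assumed values the neuron fires periodically, and the interval between spikes can never be shorter than the refractory time.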

Figure 1. Model and behavior of each component of SRNN. (A) LIF neuron circuit diagram. (B) Schematic diagram of synaptic weight variation. (C) Behavior of and depending on .

2.2 Synapse and SDSP

A synapse receives spikes from neurons and external input nodes. When a spike comes, a synapse converts the spike into a synaptic current proportional to defined as

where and are a time constant, and is an appropriately defined constant. This synapse model is also compatible with the CMOS design.

As mentioned above, we employ SDSP as the synaptic update rule for SP. When the pre-synaptic neuron fires, the synaptic weight between the pre-synaptic neuron and the post-synaptic neuron increases if the membrane potential of the post-synaptic neuron is higher than the upper learning threshold and decreases if it is lower than the lower learning threshold, as follows:

where is the learning step, which is set to a constant value, as illustrated in Figure 1B.

In practice, the range of the synaptic weight is finite; hence the learning step defines the resolution of the weight. Higher resolution is generally favorable for better performance, but it leads to a larger circuit area for storing the weight values. Emerging memory elements such as memristors and phase change memory devices may be employed to avoid this issue (Lazar et al., 2009; Li and Li, 2013), but practical use of these emerging technologies is still a big challenge. In this work, we assume conventional CMOS digital memory cells for storing the weights, which raises the question of how far we can reduce the weight resolution for a practical application task. In this view, we discuss the feasibility of binary weights, which are ideal for hardware implementation, later in this work.

A circuit that determines whether the synaptic weight should be potentiated, depressed, or left unchanged can be designed with two comparator circuits; one compares the membrane potential with the upper learning threshold and the other with the lower learning threshold (see Supplementary materials). Note that it is sufficient for each neuron to have one such decision circuit; it is not necessary for each synapse to have one.
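The SDSP decision described in this section can be sketched in a few lines of Python. The names `theta_up` and `theta_down` for the two learning thresholds, the weight range, and the numerical values in the usage lines are hypothetical; the two comparisons mirror the two comparator circuits mentioned above.

```python
def sdsp_update(w, v_post, theta_up, theta_down, dw, w_min=0.0, w_max=1.0):
    """Return the synaptic weight after one pre-synaptic spike.

    w          : current weight
    v_post     : membrane potential of the post-synaptic neuron
    theta_up   : upper learning threshold (potentiation), assumed name
    theta_down : lower learning threshold (depression), assumed name
    dw         : learning step, which sets the weight resolution
    """
    if v_post > theta_up:        # comparator 1: potentiate
        w = w + dw
    elif v_post < theta_down:    # comparator 2: depress
        w = w - dw
    # Otherwise the weight is left unchanged.
    return min(max(w, w_min), w_max)  # keep the weight in its finite range

# Binary weights: the learning step spans the whole range.
w_after_potentiation = sdsp_update(0.0, v_post=0.18, theta_up=0.15, theta_down=0.05, dw=1.0)
w_after_depression = sdsp_update(1.0, v_post=0.01, theta_up=0.15, theta_down=0.05, dw=1.0)
```

Setting `dw` equal to the full weight range, as in the usage lines, corresponds to the binary-weight case discussed later in the paper.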

2.3 Event-driven stepwise IP

The IP model we employ executes a stepwise change of the firing threshold voltage of neuron in an event-driven manner as

where the symbols denote, in order, the change step of the firing threshold in a single IP operation, a parameter that measures the activity of the neuron, and a constant corresponding to the target activity. A further parameter defines a healthy regime of the activity around the target, in which the IP operation is not executed (see Supplementary materials for details; Payvand et al., 2022). The activity parameter is often referred to as a calcium potential (Brader et al., 2007; Indiveri and Fusi, 2007; Ning et al., 2015), defined as

Here, the definition involves a constant and all the firing times of the neuron (note that the contributions of all the firing times are summed up). The behavior of the calcium potential is illustrated in Figure 1C, showing that it can be used as an indicator of the neuron activity if the threshold is appropriately determined.

The firing threshold of a LIF neuron is given as a reference voltage applied to a comparator connected to the membrane capacitor. Stepwise change of the firing threshold is advantageous for hardware implementation because we do not need to design a compact voltage source circuit that can tune the output continuously. Instead, we only need to prepare several fixed voltage lines and select one of them using a multiplexer, which is not a difficult task.
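The event-driven stepwise IP rule can be sketched as follows. This is a hedged illustration only: the class name, the exponentially decaying calcium trace with a unit jump per spike, and every numerical value are assumptions, since the exact definitions are given by the equations of this section. The list `levels` plays the role of the fixed voltage lines, and `idx` plays the role of the multiplexer select signal.

```python
import math

class StepwiseIP:
    """Event-driven stepwise firing-threshold control (illustrative sketch)."""

    def __init__(self, levels, ca_target, ca_margin, ca_jump=1.0, tau_ca=0.1):
        self.levels = list(levels)  # fixed voltage lines, lowest first
        self.idx = 0                # multiplexer select signal
        self.ca = 0.0               # calcium-like activity trace
        self.last_t = 0.0
        self.ca_target = ca_target  # target activity
        self.ca_margin = ca_margin  # half-width of the healthy regime
        self.ca_jump = ca_jump
        self.tau_ca = tau_ca

    def on_spike(self, t):
        """Call when the neuron fires at time t; returns the new threshold."""
        # Decay the trace since the last spike, then add this spike's contribution.
        self.ca = self.ca * math.exp(-(t - self.last_t) / self.tau_ca) + self.ca_jump
        self.last_t = t
        if self.ca > self.ca_target + self.ca_margin:
            # Too active: step the threshold up by one level.
            self.idx = min(self.idx + 1, len(self.levels) - 1)
        elif self.ca < self.ca_target - self.ca_margin:
            # Too quiet: step the threshold down by one level.
            self.idx = max(self.idx - 1, 0)
        # Inside the healthy regime no IP operation is executed.
        return self.levels[self.idx]

# Binary threshold: two voltage lines and a 2-input multiplexer.
ip = StepwiseIP(levels=[0.2, 0.3], ca_target=3.0, ca_margin=1.0)
thresholds = [ip.on_spike(0.001 * k) for k in range(1, 6)]  # burst of 5 spikes
```

In this usage example a burst of closely spaced spikes pushes the activity trace above the healthy regime, so the higher voltage line is selected; a long silent period followed by a single spike selects the lower line again.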

2.4 Synchronization of IP and SP thresholds

If the SDSP thresholds are fixed to constants, the IP rule introduced above interferes with SP because it changes the magnitude relationship between the firing threshold and the learning thresholds. For example, let us assume that the firing threshold is lowered by IP and falls below the potentiation threshold. In this case, the synaptic weight decreases every time a spike comes and finally reaches zero, because the membrane potential is always lower than the firing threshold and never exceeds the potentiation threshold. This would lead to incorrect learning of the input information.

To operate both IP and SP correctly at the same time, we synchronize the three thresholds of each neuron, that is, the firing threshold and the two learning thresholds, so that their magnitude relationship is kept during IP operations. Along with the firing threshold, the two learning thresholds are updated by IP as follows,

where are the change width of the learning thresholds.
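A short sketch may help to see why synchronizing the thresholds preserves their ordering. The function below shifts all three thresholds in the same direction in one IP operation. Using the same change width for the learning thresholds as for the firing threshold is an assumption made here for illustration, and the names and voltages are hypothetical.

```python
def shift_thresholds(v_th, theta_up, theta_down, direction, d_vth,
                     d_up=None, d_down=None):
    """Apply one IP step to the firing and learning thresholds together.

    direction : +1 to raise the thresholds, -1 to lower them
    d_vth     : change width of the firing threshold
    d_up, d_down : change widths of the learning thresholds
                   (default: equal to d_vth, an assumption of this sketch)
    """
    d_up = d_vth if d_up is None else d_up
    d_down = d_vth if d_down is None else d_down
    return (v_th + direction * d_vth,
            theta_up + direction * d_up,
            theta_down + direction * d_down)

# Lowering the firing threshold by 0.1 V with synchronized learning thresholds.
v_th, theta_up, theta_down = shift_thresholds(0.2, 0.15, 0.05, direction=-1, d_vth=0.1)

# Without synchronization the potentiation threshold (0.15 V) would end up
# above the lowered firing threshold, so potentiation could never occur.
unsynchronized_ok = 0.15 < 0.2 - 0.1
```

With equal change widths the ordering of the lower learning threshold, the upper learning threshold, and the firing threshold is preserved after every step, which is the property the synchronization is meant to guarantee.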

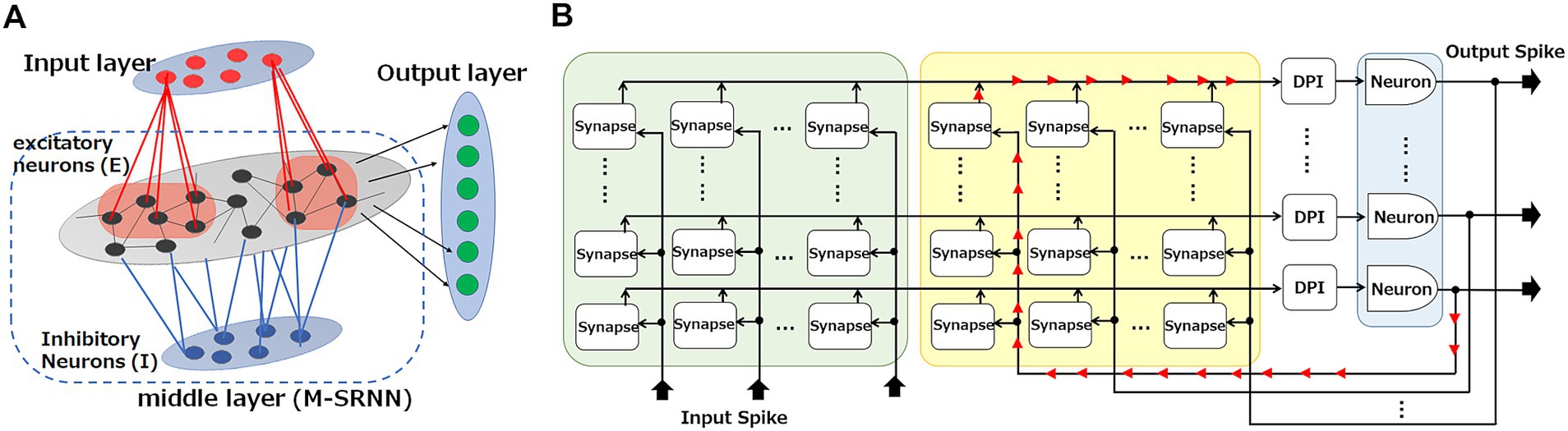

2.5 Network model

Figure 2A shows the architecture of the SRNN system we study in this work. It consists of an input layer, a middle layer, and an output layer. The middle layer (M-SRNN) is an RNN with random connections and synaptic weights, consisting of two neuron types, excitatory and inhibitory; in this work, 80% of its neurons are excitatory and 20% are inhibitory. Input-layer neurons send Poisson spikes to the neurons of the M-SRNN at a frequency corresponding to the value of the input data. The input-layer neurons connect with excitatory neurons of the M-SRNN with a probability of 0.1 in this work. Note that they have no connections to inhibitory neurons. The excitatory neurons connect with other excitatory neurons and with inhibitory neurons, each with its own connection probability. Inhibitory neurons connect with excitatory neurons with a further connection probability and do not connect with other inhibitory neurons. All excitatory neurons of the M-SRNN are connected to the output-layer neurons. Because of the random nature of the network, not all M-SRNNs give the desired result, so the parameters related to the structure of the M-SRNN must be set carefully (Payvand et al., 2022). With the self-organization mechanism by IP and SP, the M-SRNN is reconstructed automatically using spike signals from the input-layer neurons.
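The connectivity rules of this section can be illustrated by drawing random Boolean connection masks. In the sketch below, only the input-to-excitatory probability of 0.1 and the 80%/20% split come from the text; the other probabilities, the population sizes, the function name, and the removal of self-connections are assumptions made for illustration.

```python
import numpy as np

def build_connectivity(n_in, n_exc, n_inh,
                       p_in=0.1, p_ee=0.1, p_ei=0.1, p_ie=0.1, seed=0):
    """Draw random connection masks for the SRNN (illustrative sketch).

    Each mask has shape (n_source, n_target); True marks an active synapse.
    p_ee, p_ei and p_ie are placeholder values, not the paper's parameters.
    """
    rng = np.random.default_rng(seed)
    exc_to_exc = rng.random((n_exc, n_exc)) < p_ee
    np.fill_diagonal(exc_to_exc, False)  # no self-connections (assumption)
    return {
        "in_to_exc": rng.random((n_in, n_exc)) < p_in,    # input -> excitatory only
        "exc_to_exc": exc_to_exc,
        "exc_to_inh": rng.random((n_exc, n_inh)) < p_ei,
        "inh_to_exc": rng.random((n_inh, n_exc)) < p_ie,  # no inhibitory -> inhibitory
        "exc_to_out": np.ones((n_exc, 1), dtype=bool),    # all excitatory -> output
    }

# 100 middle-layer neurons: 80% excitatory, 20% inhibitory.
masks = build_connectivity(n_in=10, n_exc=80, n_inh=20)
```

In a crossbar implementation, the `False` entries of these masks correspond to the synapses that are set inactive.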

Figure 2. Hardware implementation for an SRNN. (A) SRNN consists of input, middle (M-SRNN), and output layers. The M-SRNN consists of excitatory (E, black) and inhibitory (I, blue) sub-population layers. (B) Hardware implementation for M-SRNN.

The M-SRNN can be implemented as a crossbar architecture (Lazar et al., 2009) shown in Figure 2B. There, each row line is connected to a neuron of the M-SRNN, and each column line is connected to either an input-neuron emitting spikes in response to external inputs or a recurrent input from an M-SRNN neuron. A cross point is a synapse, where spikes from the column line are converted to synaptic current flowing into the row line. Some of the synapses are set inactive to realize the random connection of the RNN.

3 Simulation and results

3.1 Simulation configuration and parameters

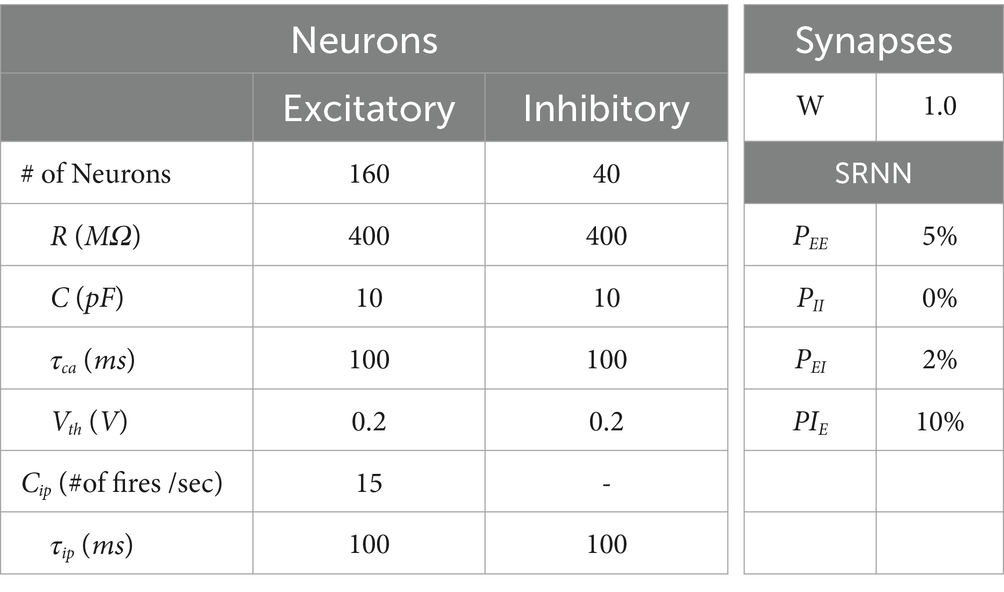

The effectiveness of our M-SRNN model with IP and SP explained above is evaluated on an ECG anomaly detection benchmark (PhysioNet, 1999; Goldberger et al., 2000; Moody and Mark, 2001) using the Brian simulator (Goodman and Brette, 2008) with the parameters listed in Table 1. Input-layer neurons convert the ECG data to Poisson spikes and send them to excitatory neurons in the M-SRNN. The simulation consists of three phases. Phase 1 is the unsupervised learning phase of the M-SRNN using the ECG training data. The thresholds (the firing threshold and the two learning thresholds) of excitatory neurons and the synaptic weights between excitatory neurons in the M-SRNN are learned by IP and SP, respectively. Phase 2 is the readout learning phase. The synaptic weights between neurons inside the M-SRNN are not changed; the synaptic weights between excitatory neurons in the M-SRNN and the neurons in the output layer are calculated by linear regression in a supervised fashion. Phase 3 is the test phase. Using test ECG data, the anomaly detection performance of the M-SRNN determined in Phase 1 is evaluated.
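The readout learning of Phase 2 can be sketched as an ordinary least-squares fit from the firing rates of the excitatory neurons to the target signal. The function names, the bias column, and the synthetic data below are assumptions for illustration; the paper only states that linear regression is used.

```python
import numpy as np

def train_readout(rates, targets):
    """Fit readout weights by least squares.

    rates   : array (T, N_exc), firing rates of excitatory neurons per input
    targets : array (T,), desired output (for example, the next input value)
    Returns an array (N_exc + 1,); the last entry is a bias (an assumption).
    """
    x = np.hstack([rates, np.ones((rates.shape[0], 1))])
    weights, *_ = np.linalg.lstsq(x, targets, rcond=None)
    return weights

def predict(rates, weights):
    """Apply the trained readout to new firing rates."""
    x = np.hstack([rates, np.ones((rates.shape[0], 1))])
    return x @ weights

# Synthetic check: a target that is exactly linear in the rates is recovered.
rng = np.random.default_rng(0)
demo_rates = rng.random((200, 5))
demo_targets = demo_rates @ np.array([0.5, -1.0, 2.0, 0.0, 0.3]) + 0.3
demo_weights = train_readout(demo_rates, demo_targets)
```

During this phase the recurrent weights stay fixed; only the readout weights returned by `train_readout` are fitted.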

In the simulation, the learning step and the firing threshold change width are selected from and respectively. The ranges of and are and . With regard to the SP synchronization with IP, we set throughout this work, hence = . All initial synaptic weights between excitatory neurons are set to , and the initial firing threshold is set to 0.2 V for all neurons. All other synaptic weights are set randomly. The validity of our method is also confirmed through the counting task benchmark (Lazar et al., 2009; Payvand et al., 2022), as shown in Supplementary materials 4.1.

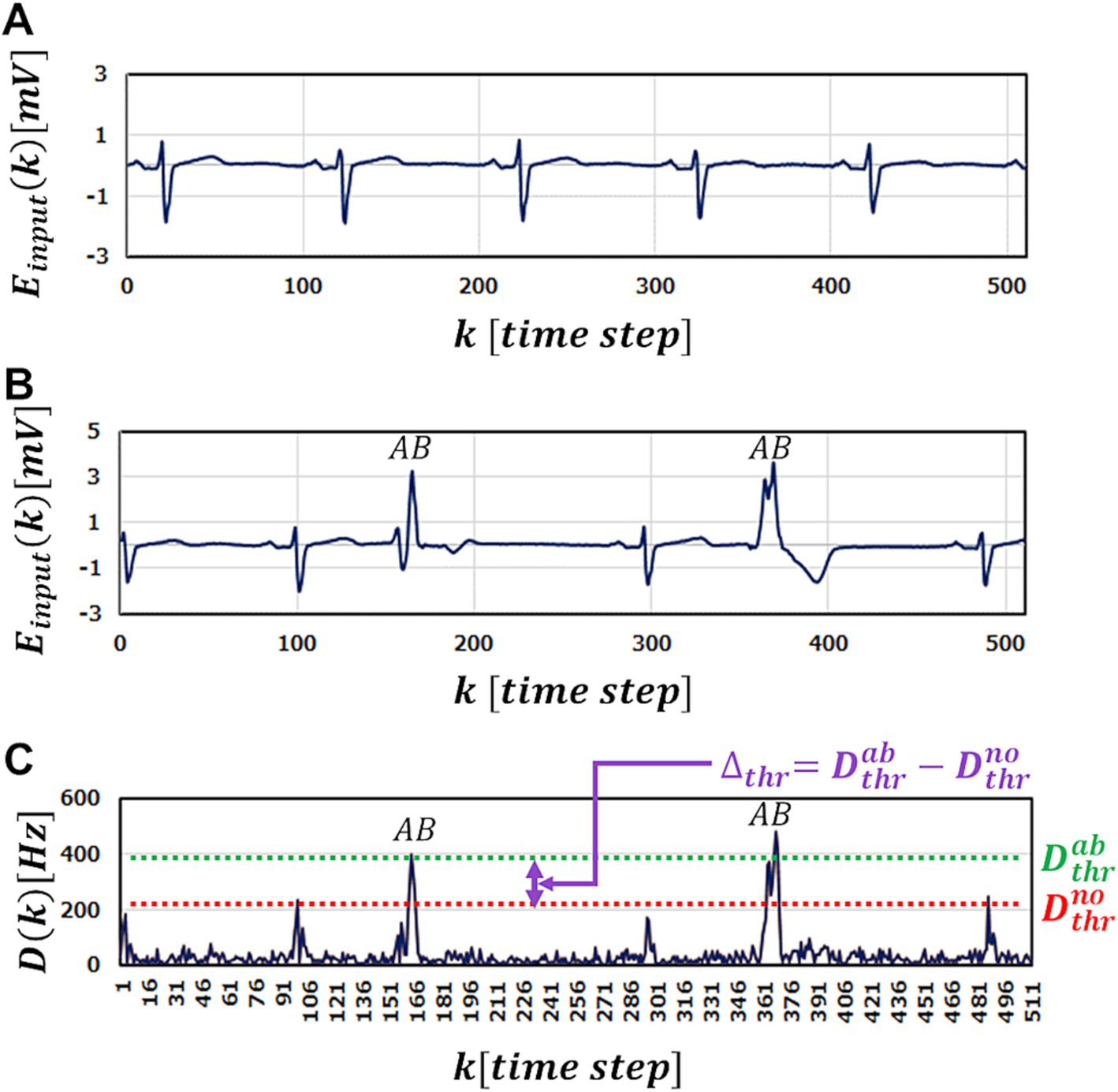

3.2 ECG anomaly detection

For ECG anomaly detection, we use the MIT-BIH arrhythmia database (PhysioNet, 1999; Goldberger et al., 2000; Moody and Mark, 2001). Using the PhysioBank ATM provided by PhysioNet (1999), we download and use MIT-BIH Long-Term ECG data No. 14046 for performance evaluation. Figure 3A shows a part of the normal waveform of the ECG used as training data. As test data, we use 10 h of waveform data of No. 14046 that partially include multiple abnormal waveforms. Figure 3B shows a part of the ECG waveform data used in the test. To perform anomaly detection, the SRNN is used as an inference machine. The values of the data points of the ECG waveform are input to the SRNN one by one in time order. At each input, the SRNN predicts the next input. The firing frequency of the output-layer neuron at a given input is compared with the firing frequency of the input neuron at the next input. Here, we define the abnormality judgment level to detect anomalies; if the absolute difference between the two frequencies is greater than this predefined level, the next input data point is regarded as abnormal.

Figure 3. A part of ECG benchmark waveform No. 14046 used in the simulation. (A) A part of Normal ECG waveform used in training for M-SRNN. (B) A part of ECG waveform with abnormal points (labeled with AB). (C) The test results of input (B).

Figure 3C shows the anomaly detection results using the M-SRNN reconstructed by our proposed method when the waveform data in Figure 3B is input. For highly accurate abnormality detection, the judgment level must be set between a lower bound and an upper bound, where the lower bound is the highest peak of the absolute difference at the normal data points and the upper bound is the lowest peak of the absolute difference at the abnormal points (Figure 3C). In other words, the lower bound is the smallest judgment level that does not misdetect normal data points, and the upper bound is the largest judgment level that does not overlook any anomalies. Note that the upper bound is unknown in practical use; it is defined for discussion purposes. The window between the two bounds represents the judgment margin, which should be large enough for correct detection without overlooking or misdetecting.
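The judgment rule and the margin can be written compactly. The sketch below is an illustration under simplifying assumptions: it takes the maximum and minimum over individual samples rather than over waveform peaks, and the function names and toy numbers are hypothetical.

```python
import numpy as np

def detect(predicted_next, observed_next, level):
    """Flag a data point as abnormal when |observed - predicted| > level."""
    diff = np.abs(np.asarray(observed_next, float) - np.asarray(predicted_next, float))
    return diff > level

def judgment_window(diff, is_abnormal):
    """Return (lower bound, upper bound, margin) of the judgment level.

    lower : largest difference among normal points
    upper : smallest difference among abnormal points
    A positive margin means a level exists that separates the two groups.
    """
    diff = np.asarray(diff, float)
    is_abnormal = np.asarray(is_abnormal, bool)
    lower = diff[~is_abnormal].max()
    upper = diff[is_abnormal].min()
    return lower, upper, upper - lower

# Toy example with hand-made differences and labels.
toy_diff = np.array([1.0, 2.0, 9.0, 1.5, 8.0])
toy_labels = np.array([False, False, True, False, True])
lower, upper, margin = judgment_window(toy_diff, toy_labels)
flags = detect(np.zeros(5), toy_diff, level=5.0)
```

In the toy example the margin is positive, so any level inside the window flags exactly the abnormal points. In practice the labels of abnormal points are not available, which is why the upper bound is only a quantity for discussion.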

Since the raw ECG data is given by time-series data of electrostatic potential in mV, the input-layer neurons convert the potential to the firing frequency as follows,

where is the conversion coefficient. Since an input-layer neuron fires with Poisson probability , a single input is required to be kept for a certain duration ( ) to generate a desired Poisson spike train.
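The input encoding can be sketched as a rate conversion followed by Poisson spike generation. A purely proportional conversion with clipping at zero, the per-step Bernoulli approximation of a Poisson process, and all names and values below are assumptions made for illustration.

```python
import numpy as np

def ecg_to_rate(v_mv, coeff_hz_per_mv):
    """Convert an ECG potential [mV] to a firing frequency [Hz].

    A proportional conversion with negative rates clipped to zero is assumed.
    """
    return np.maximum(coeff_hz_per_mv * np.asarray(v_mv, float), 0.0)

def poisson_spike_train(rate_hz, duration_s, dt=1e-4, rng=None):
    """Generate a Boolean spike train of the given duration.

    Each time step spikes with probability rate_hz * dt, which approximates
    a Poisson process when rate_hz * dt is much smaller than 1.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_steps = int(round(duration_s / dt))
    return rng.random(n_steps) < rate_hz * dt

# Hold one input value for 10 s and count the spikes (expected about 1000).
rate = float(ecg_to_rate(2.0, coeff_hz_per_mv=50.0))  # 100 Hz
spikes = poisson_spike_train(rate, duration_s=10.0, rng=np.random.default_rng(0))
n_spikes = int(spikes.sum())
```

The shorter the holding duration, the fewer spikes represent each data point and the noisier the encoded rate becomes, which is why each input must be kept for a certain duration.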

3.3 Simulation results

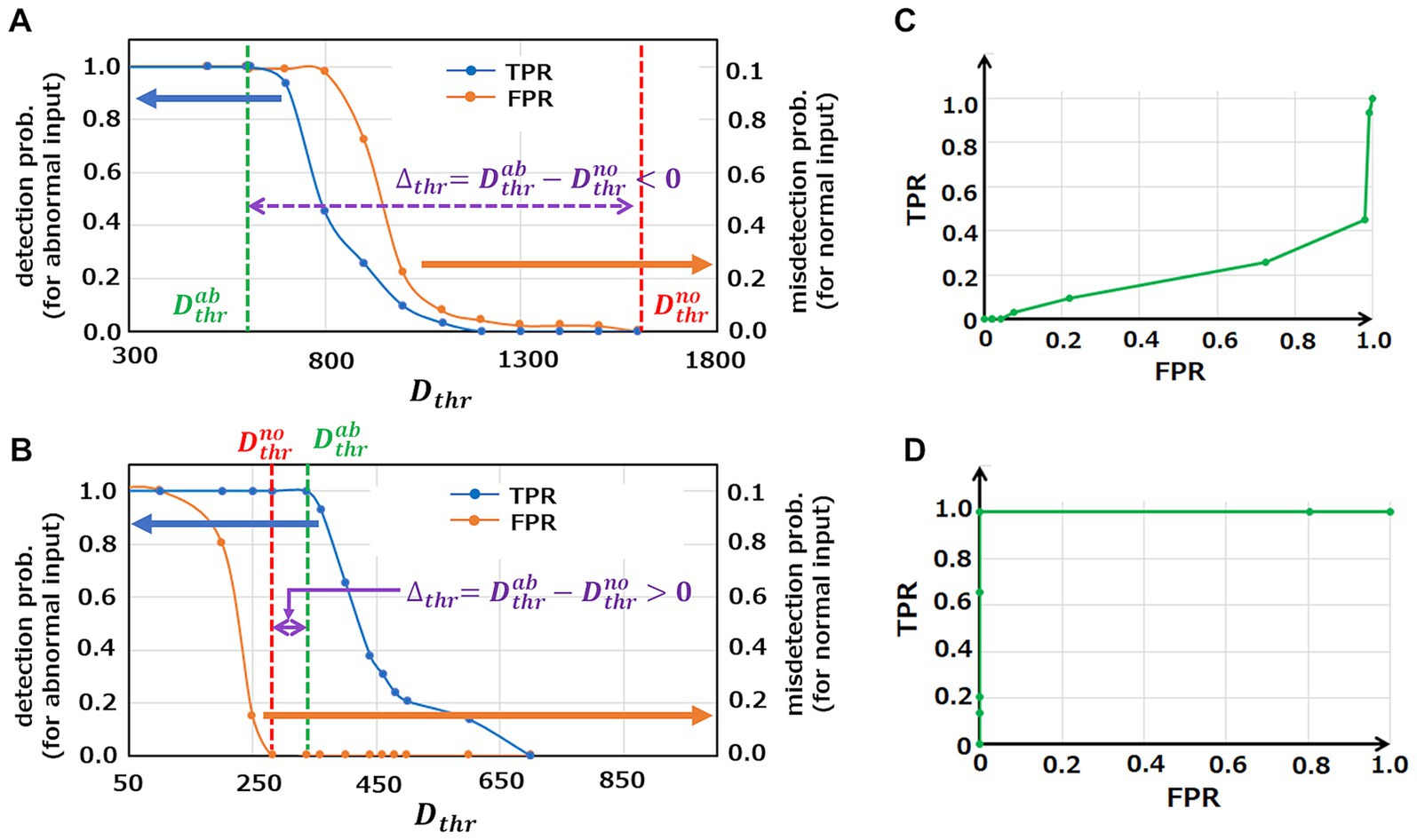

3.3.1 Effectiveness of proposed method on anomaly detection

Anomaly detection results of the initial M-SRNN and the M-SRNN reconstructed with both SP and IP are shown in Figures 4A,B, respectively. For reconstruction of the M-SRNN, we use a segment of ECG waveform No. 14046 that does not include anomalies. The blue and orange lines represent the probability of detecting an abnormal point as abnormal (true positive rate, TPR) and the probability of misdetecting a normal point (false positive rate, FPR) at each judgment level, respectively. These probabilities are obtained statistically from the 10 h data of No. 14046. As shown in Figure 4A, the initial M-SRNN cannot detect anomalies correctly because the judgment margin is negative; the misdetection rate (orange) is always larger than the correct detection rate (blue) at any judgment level. On the other hand, since the judgment margin is positive, the reconstructed M-SRNN can correctly detect anomalies (Figure 4B). Indeed, if the judgment level is selected within the judgment margin window, all abnormal points are detected while the misdetection rate is suppressed to zero. Figures 4C,D show receiver operating characteristic (ROC) curves of the initial M-SRNN and the reconstructed M-SRNN, respectively. Since the judgment margin is negative in the case of the initial M-SRNN, the ideal condition for anomaly detection, TPR = 1.0 and FPR = 0.0, cannot be achieved (Figure 4C). On the other hand, this condition is realized in the case of the reconstructed M-SRNN because the judgment margin is positive (Figure 4D). Therefore, our proposed method for the M-SRNN reconstruction is effective for detecting abnormalities in periodic waveform data (in practical use of this method, the judgment level may be defined as a value slightly larger than the highest peak observed for normal data, because the lowest peak for abnormal data, and hence the margin, is unknown). Note that the M-SRNN should be reconstructed for individual ECG data (in this case No. 14046). If we are to execute detection tasks for another data set, we need to reconstruct the M-SRNN using a normal part of the target data set prior to the detection task.
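The TPR and FPR curves as functions of the judgment level can be computed with a simple sweep. The following sketch uses hand-made differences and labels; the function name and the data are hypothetical and do not reproduce the values of Figure 4.

```python
import numpy as np

def tpr_fpr(diff, is_abnormal, levels):
    """Compute TPR and FPR for each judgment level.

    diff        : absolute difference between prediction and observation
    is_abnormal : ground-truth labels (True for abnormal points)
    levels      : judgment levels to sweep
    """
    diff = np.asarray(diff, float)
    is_abnormal = np.asarray(is_abnormal, bool)
    tpr, fpr = [], []
    for level in levels:
        flagged = diff > level
        tpr.append(flagged[is_abnormal].mean())   # detected abnormal points
        fpr.append(flagged[~is_abnormal].mean())  # misdetected normal points
    return np.array(tpr), np.array(fpr)

# Toy data with a positive margin between 2.0 and 8.0.
toy_diff = np.array([1.0, 2.0, 9.0, 1.5, 8.0])
toy_labels = np.array([False, False, True, False, True])
tpr, fpr = tpr_fpr(toy_diff, toy_labels, levels=[0.5, 5.0, 10.0])
```

A level inside the margin window gives TPR = 1 and FPR = 0, whereas a level that is too low flags every point and a level that is too high flags none. Plotting `tpr` against `fpr` over a fine sweep of levels gives the ROC curve.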

Figure 4. Analysis of anomaly detection capability in the case of using initial M-SRNN and reconstructed M-SRNN with , , , and . (A,B) The probability of detecting an abnormal point as abnormal (TPR, blue) and the probability of misdetecting a normal point (FPR, orange) at each using initial M-SRNN and reconstructed M-SRNN, respectively. (C,D) ROC for initial M-SRNN and reconstructed M-SRNN, respectively.

3.3.2 Reduction of parameter resolutions toward hardware implementation

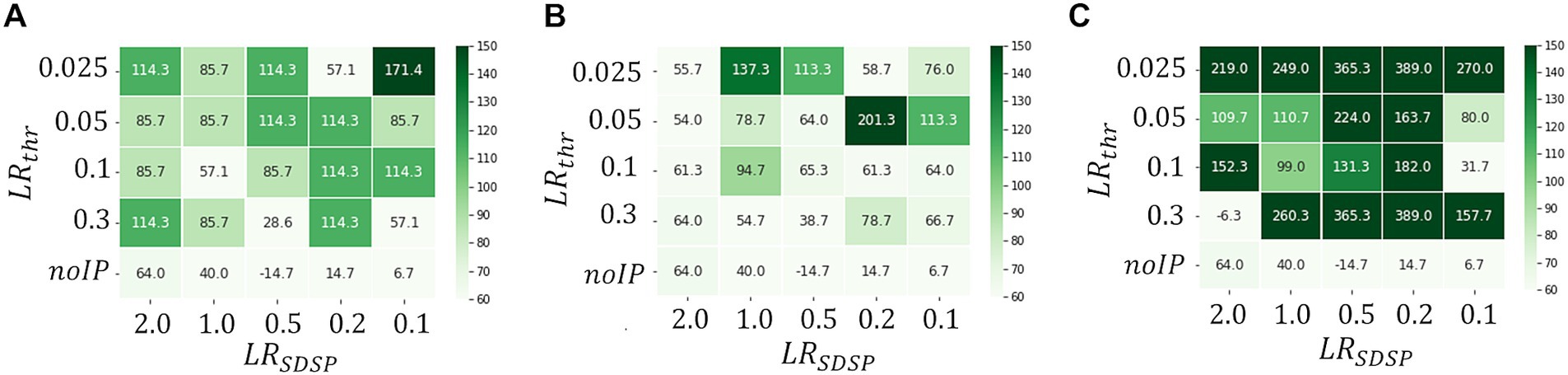

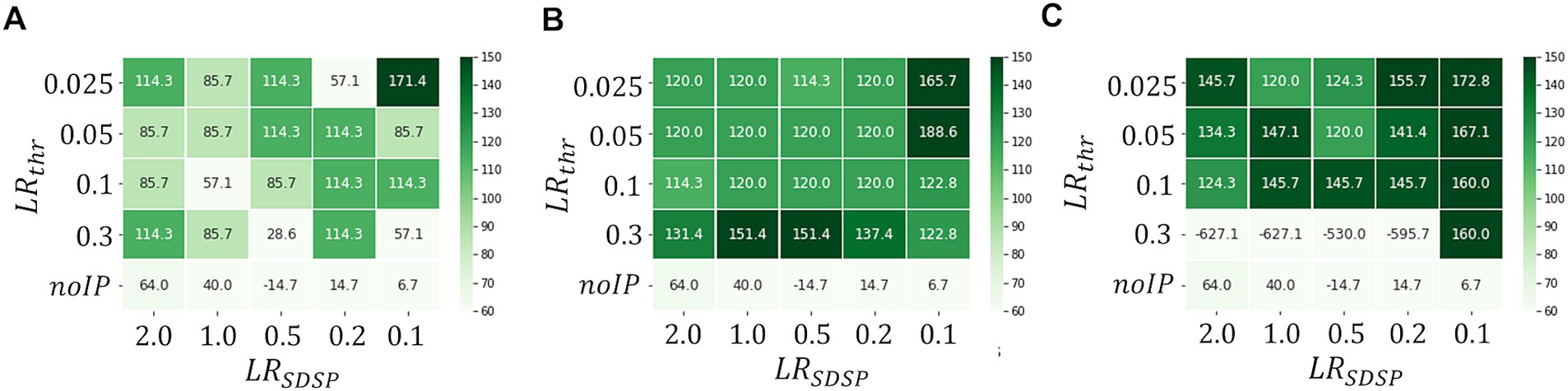

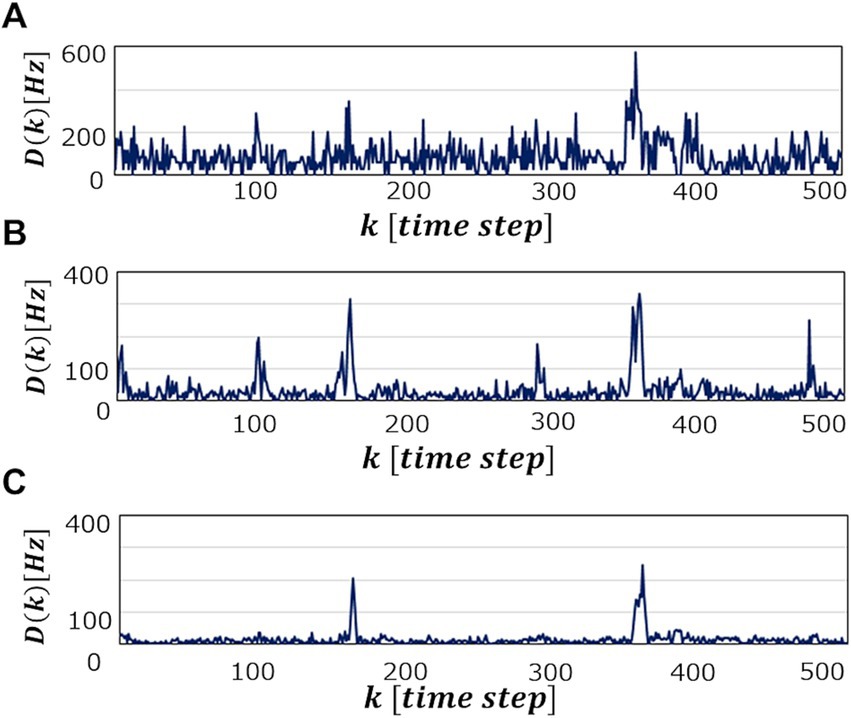

Figure 5 shows a heat map of at each and when the processing time per one ECG data point for reconstruction is set to be (A), (B) and (C). These figures show that becomes large as the operation time increases, which is a reasonable result because the longer becomes, the more information is learned from the data point, leading to higher accuracy of the abnormal detection. In fact, as can be seen in Figure 6, which shows patterns for an abnormal waveform obtained with the M-SRNN reconfigured by and for each , becomes smoother and lower as is set longer.

Figure 6. when abnormal ECG waveform No. 14046 is detected in SRNN reconstructed with and . and (C) , respectively.

3.3.3 Real-time operation for practical applications

For practical applications, abnormal data should be detected at the moment they occur; thus real-time operation is highly desirable. In this sense, the processing time per data point should be as short as possible. In the case of ECG anomaly detection, data are collected at 128 steps/s. Therefore, the learning process and anomaly detection must be performed within one sampling interval, 1/128 s ≈ 7.8 ms. However, as discussed above, such a short processing time leads to a small judgment margin because the learning duration for each data point is insufficient.

Now we assume that employing a longer processing time is equivalent to increasing the number of IP and SP operations within a short processing time. To increase the number of IP and SP operations, we have to enhance the activities of the neurons, for which there are two options. The first is to enhance the parallelism of the inputs; we increase the number of neurons in the input layer so that a neuron in the M-SRNN connected to the input layer receives more spike signals during the short processing time. The other is to enhance the seriality of the input neuron signals; we increase the rate of Poisson spikes from the input layer. The effects of these two methods are verified by simulation.

Figure 7 shows the heatmaps of for in the cases of , and . We observe that increases with in general, indicating that our first idea is effective; real-time anomaly detection without false positive detection is possible by increasing . Note that the binary and i.e., and result in sufficiently large even with in the case of . Thus, a highly parallelized input layer has been shown to be effective for performance improvement with short . However, when is increased too much, the effect would be negative. As can be seen in Figure 7C, where , the M-SRNN does not work appropriately when and . Since the M-SRNN neurons that receive input spikes are always very close to the saturation in the case of large , precise control of the parameters such as and is required.

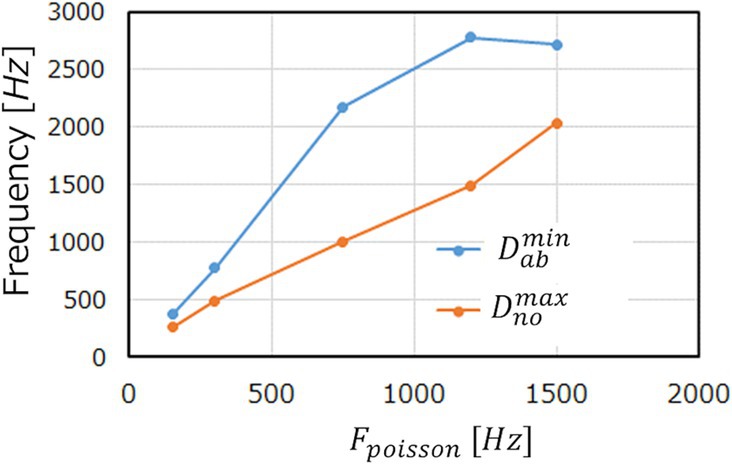

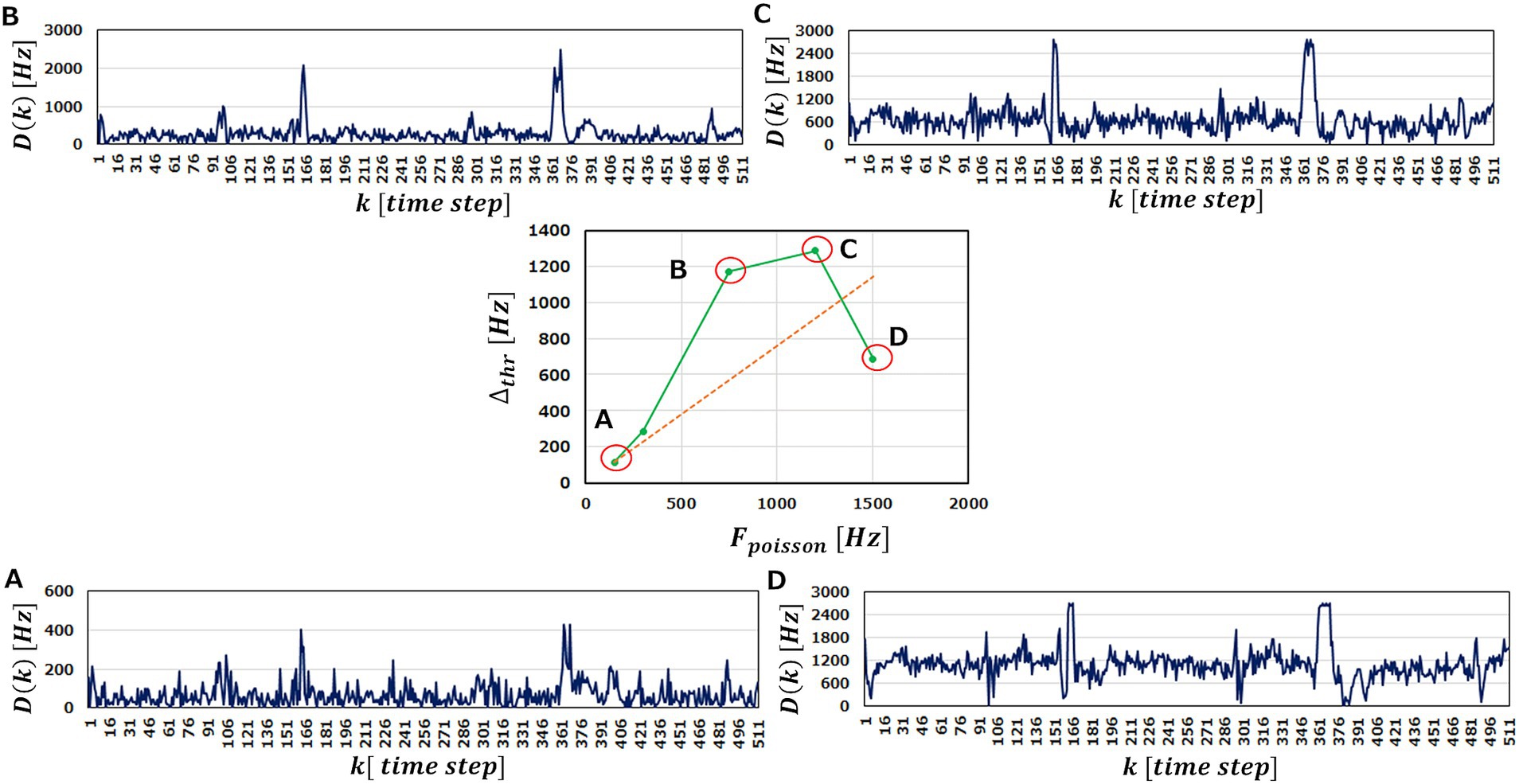

To examine the latter idea, we perform the anomaly detection tasks while varying the input spike rate. In the center of Figure 8, we plot the judgment margin obtained as a function of the input spike rate. If increasing the input spike rate did not play an effective role in performance improvement, the margin would increase just linearly with it, as indicated by a red dotted line. As a matter of fact, however, we obtain margins above the red line up to a certain input spike rate, indicating that raising the input spike rate improves the anomaly detection performance of an M-SRNN.

Figure 8. and in the case of and . The center graph shows the against . The outer diagrams represent corresponding to (A–D) points in the center diagram. , and (D) , respectively. (ECG benchmark No. 14046).

We observe in Figures 8A–C that increasing the input spike rate elevates the baseline of the difference signal and magnifies the peaks. This is reasonable because the more input spikes come, the more frequently the neurons in the M-SRNN fire; hence the difference signal scales with the input spike rate. At the same time, it smooths the variation of the difference signal, indicating improved learning performance due to the increased IP and SP operations. This results in a judgment margin larger than the red dotted line. When the input spike rate is increased further, the peaks corresponding to the abnormal data in the original waveform saturate, as can be seen in Figure 8D. This is because of the refractory time of neurons. Since a neuron cannot fire faster than its refractory time allows, its firing frequency has an upper limit. The saturation observed in Figure 8D is interpreted as a case where the firing frequency at the anomaly data points reaches this limit. As a result, the margin at this input spike rate is suppressed and falls below the red dotted line. This can be clearly seen in Figure 9, which shows the evolution of the highest normal-data peak and the lowest abnormal-data peak with the spike rate of the input neurons. We observe that the normal-data peak increases linearly, while the abnormal-data peak increases only up to a certain input spike rate. Beyond that rate, the abnormal-data peak reaches its limit and only the normal-data peak increases, hence a smaller margin. We note that the results shown in Figure 8 are obtained with binarized firing thresholds and synaptic weights.

It is noteworthy that binary firing thresholds and synaptic weights may be employed if the input layer is optimized. This is highly advantageous for hardware implementation. For the firing threshold (and also for the learning thresholds), we may prepare the smallest 2-input multiplexers and only two voltage lines (see Supplementary materials). What is more striking is that the synaptic weight can be reduced to binary. This means that for synapses we need neither an area-hungry multi-bit SRAM array nor analog emerging memories, and may employ just small 1-bit latches (see Supplementary materials). Since the number of synapses scales with the square of the number of neurons, this result has a large impact on the SRNN chip size.
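The impact of the weight resolution on storage can be made concrete with a small calculation. The sketch below counts one memory cell group per cross point of a crossbar whose columns are the external inputs plus the recurrent inputs; the function name and the neuron and input counts are hypothetical examples, not figures from this study.

```python
def synapse_memory_bits(n_neurons, n_inputs, bits_per_weight):
    """Total weight storage of a crossbar in bits.

    Rows are the neurons of the middle layer; columns are the external
    inputs plus the recurrent inputs, so the synapse count grows with the
    square of the number of neurons.
    """
    n_synapses = n_neurons * (n_neurons + n_inputs)
    return n_synapses * bits_per_weight

# Example: 100 neurons and 10 input lines (hypothetical sizes).
multi_bit = synapse_memory_bits(100, 10, bits_per_weight=8)  # 8-bit weights
binary = synapse_memory_bits(100, 10, bits_per_weight=1)     # 1-bit latches
```

For these example sizes, moving from 8-bit weights to binary weights reduces the storage from 88,000 bits to 11,000 bits, and the saving grows quadratically with the number of neurons.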

Thus, optimization of the input has a large impact on both the performance and the physical chip size of the SRNN. Whether we optimize the number of input neurons or the input spike rate may be left to engineering convenience. It is also possible to optimize both. As we have seen in Figures 7, 8, the former has a better smoothing effect in the normal data area than the latter. Considering hardware implementation, on the other hand, the latter is more favorable because the former requires physical extension of the input layer system. For the latter, we only have to tune the conversion rate of raw input data to spike trains, which may be done externally. Therefore, the parameters in the input layer should be designed carefully, taking the conditions discussed above into consideration.

4 Discussion

Lazar et al. proposed introducing two plasticity mechanisms, SP and IP, to an RNN to reconstruct its network structure in the training phase (Lazar et al., 2009). While software implementation of SP and IP seems quite simple, hardware implementation requires some effort.

With regard to the IP operation, Lazar et al. adjusted the firing threshold of each neuron according to its firing rate at every time step. In hardware implementation, constantly controlling the thresholds of all N neurons is not realistic. Therefore, we proposed a mechanism that regulates the threshold of a neuron in an event-driven way; each neuron changes its firing threshold when it fires, depending on whether its activity is higher or lower than the predetermined levels. This event-driven mechanism frees us from designing a circuit for precise control of the thresholds. As discussed by Lazar et al., the thresholds must be controlled with fine accuracy if this is done constantly, which requires quite large hardware resources that also consume power. Our event-driven method, on the other hand, has been shown to allow stepwise control of the thresholds with only a few gradations, which is highly advantageous for hardware implementation.

Another way to realize the IP mechanism is to regulate the current of a LIF neuron (Holt and Koch, 1997). In previous studies, the current value was adjusted by changing resistance values (Dalgaty et al., 2019; Zhang et al., 2021). This can be achieved by using variable resistors such as memristors (Dalgaty et al., 2019; Payvand et al., 2022) or by selecting among several fixed resistors prepared in advance. For the former method, precise control of the resistance is the central technical issue, and it remains a big challenge because current memristors have large variation (Dalgaty et al., 2019). Payvand et al. discussed that variation and stochasticity of rewriting may lead to better performance, but further studies including practical hardware implementation and general verification are yet to be done. The latter requires a set of large resistors for each neuron, which is not favorable for hardware implementation because resistors occupy quite a large chip area. We believe that stepwise change of the firing threshold is the most favorable implementation of IP.

For implementation of the SP mechanism, STDP (Legenstein et al., 2005; Ning et al., 2015; Srinivasan et al., 2017) is widely known as a biologically plausible synaptic update rule, but it is not hardware friendly as discussed in the introduction. Hence recent neuromorphic chips tend to employ SDSP (Brader et al., 2007; Mitra et al., 2009; Ning et al., 2015; Frenkel et al., 2019; Gurunathan and Iyer, 2020; Payvand et al., 2022; Frenkel et al., 2023). However, SDSP cannot be implemented concurrently with threshold-controlled IP in its original form, because the latter may push down the upper limit of the membrane potential (i.e., the firing threshold) below the synaptic potentiation threshold. Our proposal that the synaptic update thresholds synchronize with the firing threshold realized the concurrent implementation of the two, and their interplay with each other led to successful learning and anomaly detection of ECG benchmark data (PhysioNet, 1999; Goldberger et al., 2000; Moody and Mark, 2001) even with binary thresholds and weights if the parallelism and the seriality of the input are well optimized. This is highly advantageous for analog circuitry implementation from the viewpoints of circuit complexity and size.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://physionet.org/content/ltdb/1.0.0/.

Author contributions

KN: Conceptualization, Formal analysis, Methodology, Visualization, Writing – original draft. YN: Conceptualization, Formal analysis, Methodology, Supervision, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

KN and YN were employed by Toshiba Corporation.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2024.1402646/full#supplementary-material

References

Ambrogio, S., Ciocchini, N., Laudato, M., Milo, V., Pirovano, A., Fantini, P., et al. (2016). Unsupervised learning by spike timing dependent plasticity in phase change memory (PCM) synapses. Front. Neurosci. 10:56. doi: 10.3389/fnins.2016.00056

Ambrogio, S., Narayanan, P., Tsai, H., Shelby, R. M., Boybat, I., Nolfo, C., et al. (2018). Equivalent-accuracy accelerated neural-network training using analogue memory. Nature 558, 60–67. doi: 10.1038/s41586-018-0180-5

Amirshahi, A., and Hashemi, M. (2019). ECG classification algorithm based on STDP and R-STDP neural networks for real-time monitoring on ultra low-power personal wearable devices. IEEE Trans. Biomed. Circ. Syst. 13, 1483–1493. doi: 10.1109/TBCAS.2019.2948920

Bartolozzi, C., Nikolayeva, O., and Indiveri, G. (2008). “Implementing homeostatic plasticity in VLSI networks of spiking neurons.” in Proc. of 15th IEEE International Conference on Electronics, Circuits and Systems. pp. 682–685.

Bauer, F. C., Muir, D. R., and Indiveri, G. (2019). Real-time ultra-low power ECG anomaly detection using an event-driven neuromorphic processor. IEEE Trans. Biomed. Circ. Syst. 13, 1575–1582. doi: 10.1109/TBCAS.2019.2953001

Bourdoukan, R., and Deneve, S. (2015). Enforcing balance allows local supervised learning in spiking recurrent networks. Advances in Neural Information Processing Systems.

Brader, J. M., Senn, W., and Fusi, S. (2007). Learning real-world stimuli in a neural network with spike-driven synaptic dynamics. Neural Comput. 19, 2881–2912. doi: 10.1162/neco.2007.19.11.2881

Cai, F., Kumar, S., Vaerenbergh, T. V., Sheng, X., Liu, R., Li, C., et al. (2020). Power-efficient combinatorial optimization using intrinsic noise in memristor Hopfield neural networks. Nat. Elect. 3, 409–418. doi: 10.1038/s41928-020-0436-6

Chicca, E., and Indiveri, G. (2020). A recipe for creating ideal hybrid memristive-CMOS neuromorphic processing systems. Appl. Phys. Lett. 116:120501. doi: 10.1063/1.5142089

Chicca, E., Stefanini, F., Bartolozzi, C., and Indiveri, G. (2014). Neuromorphic electronic circuits for building autonomous cognitive systems. Proc. IEEE 102, 1367–1388. doi: 10.1109/JPROC.2014.2313954

Covi, E., Brivio, S., Serb, A., Prodromakis, T., Fanciulli, M., and Spiga, S. (2016). Analog memristive synapse in spiking networks implementing unsupervised learning. Front. Neurosci. 10:482. doi: 10.3389/fnins.2016.00482

Covi, E., Donati, E., Liang, X., Kappel, D., Heidari, H., Payvand, M., et al. (2021). Adaptive extreme edge computing for wearable devices. Front. Neurosci. 15:611300. doi: 10.3389/fnins.2021.611300

Dalgaty, T., Castellani, N., Turck, C., Harabi, K.-E., Querlioz, D., and Vianello, E. (2021). In situ learning using intrinsic memristor variability via Markov chain Monte Carlo sampling. Nat. Elect. 4, 151–161. doi: 10.1038/s41928-020-00523-3

Dalgaty, T., Payvand, M., Moro, F., Ly, D. R. B., Pebay-Peyroula, F., Casas, J., et al. (2019). Hybrid neuromorphic circuits exploiting non-conventional properties of RRAM for massively parallel local plasticity mechanisms. APL Materials 7:8663. doi: 10.1063/1.5108663

Das, A., Pradhapan, P., Groenendaal, W., Adiraju, P., Rajan, R. T., Catthoor, F., et al. (2018). Unsupervised heart-rate estimation in wearables with liquid states and a probabilistic readout. Neural Netw. 99, 134–147. doi: 10.1016/j.neunet.2017.12.015

Davies, M., Srinivasa, N., Lin, T. H., Chinya, G., Cao, Y., Choday, S. H., et al. (2018). Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro. 38, 82–99. doi: 10.1109/MM.2018.112130359

Demirag, Y., Moro, F., Dalgaty, T., Navarro, G., Frenkel, C., Indiveri, G., et al. (2021). “PCM-trace: scalable synaptic eligibility traces with resistivity drift of phase-change materials.” in Proc. of 2021 IEEE International Symposium on Circuits and Systems. pp. 1–5.

Desai, N. S., Rutherford, L. C., and Turrigiano, G. G. (1999). Plasticity in the intrinsic excitability of cortical pyramidal neurons. Nat. Neurosci. 2, 515–520. doi: 10.1038/9165

Diehl, P. U., and Cook, M. (2015). Unsupervised learning of digit recognition using spike-timing-dependent plasticity. Front. Comput. Neurosci. 9:99. doi: 10.3389/fncom.2015.00099

Faria, R., Camsari, K. Y., and Datta, S. (2018). Implementing Bayesian networks with embedded stochastic MRAM. AIP Adv. 8:1332. doi: 10.1063/1.5021332

Frenkel, C., Bol, D., and Indiveri, G. (2023). Bottom-up and top-down neural processing systems design: neuromorphic intelligence as the convergence of natural and artificial intelligence. Proc. IEEE 28:1288. doi: 10.48550/arXiv.2106.01288

Frenkel, C., and Indiveri, G. (2022). ReckOn: a 28nm sub-mm2 task-agnostic spiking recurrent neural network processor enabling on-chip learning over second-long timescales. IEEE International Solid-State Circuits Conference.

Frenkel, C., Lefebvre, M., Legat, J. D., and Bol, D. (2019). A 0.086-mm2 12.7-pJ/SOP 64k-synapse 256-neuron online-learning digital spiking neuromorphic processor in 28nm CMOS. IEEE Trans. Biomed. Circ. Syst. 13, 145–158. doi: 10.1109/TBCAS.2018.2880425

Goldberger, A., Amaral, L., Glass, L., Hausdorff, J., Ivanov, P. C., Mark, R., et al. (2000). PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation 101, e215–e220. doi: 10.1161/01.cir.101.23.e215

Goodman, D. F. M., and Brette, R. (2008). Brian: a simulator for spiking neural networks in Python. Front. Neuroinform. 2:5. doi: 10.3389/neuro.11.005.2008

Gurunathan, A., and Iyer, L. (2020). “Spurious learning in networks with spike driven synaptic plasticity.” in International Conference on Neuromorphic Systems. pp. 1–8.

Holt, G. R., and Koch, C. (1997). Shunting inhibition does not have a divisive effect on firing rates. Neural Comput. 9, 1001–1013. doi: 10.1162/neco.1997.9.5.1001

Indiveri, G., and Fusi, S. (2007). “Spike-based learning in VLSI networks of integrate-and-fire neurons.” in Proc. of 2007 IEEE International Symposium on Circuits and Systems.

Indiveri, G., Linares-Barranco, B., Hamilton, T. J., van Schaik, A., Etienne-Cummings, R., Delbruck, T., et al. (2011). Neuromorphic silicon neuron circuits. Front. Neurosci. 5:73. doi: 10.3389/fnins.2011.00073

Jaeger, H. (2001). The echo state approach to analysing and training recurrent neural networks with an erratum note. GMD Report.

Kadmon, J., and Sompolinsky, H. (2015). Transition to chaos in random neuronal networks. Phys. Rev. X 5:041030. doi: 10.1103/PhysRevX.5.041030

Kiranyaz, S., Ince, T., and Gabbouj, M. (2016). Real-time patient-specific ECG classification by 1-D convolutional neural networks. IEEE Trans. Biomed. Eng. 63, 664–675. doi: 10.1109/TBME.2015.2468589

Kreiser, R., Moraitis, T., Sandamirskaya, Y., and Indiveri, G. (2017). “On-chip unsupervised learning in winner-take-all networks on spiking neurons.” in Proc. of 2017 IEEE Biomedical Circuits and Systems Conference. pp. 1–4.

Kuzum, D., Jeyasingh, R.-G.-D., Lee, B., and Wong, H.-S. P. (2012). Nanoelectronic programmable synapses based on phase change materials for brain-inspired computing. Nano Lett. 12, 2179–2186. doi: 10.1021/nl201040y

Landau, I. D., and Sompolinsky, H. (2018). Coherent chaos in a recurrent neural network with structured connectivity. PLoS Comput. Biol. 14:e1006309. doi: 10.1371/journal.pcbi.1006309

Lazar, A., Pipa, G., and Triesch, J. (2009). SORN: a self-organizing recurrent neural network. Front. Comput. Neurosci. 3:23. doi: 10.3389/neuro.10.023.2009

Legenstein, R., Naeger, C., and Maass, W. (2005). What can a neuron learn with spike-timing-dependent plasticity? Neural Comput. 17, 2337–2382. doi: 10.1162/0899766054796888

Li, C., Belkin, D., Li, Y., Yan, P., Hu, M., Ge, N., et al. (2018). Efficient and self-adaptive in-situ learning in multilayer memristor neural networks. Nat. Commun. 9:2385. doi: 10.1038/s41467-018-04484-2

Li, C., and Li, Y. (2013). A spike-based model of neuronal intrinsic plasticity. IEEE Trans. Auton. Ment. Dev. 5, 62–73. doi: 10.1109/TAMD.2012.2211101

Liu, S.-H., Cheng, D.-C., and Lin, C.-M. (2013). Arrhythmia identification with two-lead electrocardiograms using artificial neural networks and support vector machines for a portable ECG monitor system. Sensors 13, 813–828. doi: 10.3390/s130100813

Maass, W., Natschläger, T., and Markram, H. (2002). Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560. doi: 10.1162/089976602760407955

Mitra, S., Fusi, S., and Indiveri, G. (2009). Real-time classification of complex patterns using spike-based learning in neuromorphic VLSI. IEEE Trans. Biomed. Circ. Syst. 3, 32–42. doi: 10.1109/TBCAS.2008.2005781

Moody, G. B., and Mark, R. G. (2001). The impact of the MIT-BIH arrhythmia database. IEEE Eng. Med. Biol. Mag. 20, 45–50. doi: 10.1109/51.932724

Moro, F., Esmanhotto, E., Hirtzlin, T., Castellani, N., Trabelsi, A., Dalgaty, T., et al. (2022). “Hardware calibrated learning to compensate heterogeneity in analog RRAM-based spiking neural networks.” IEEE International Symposium on Circuits and Systems (ISCAS). pp. 380–383.

Nicola, W., and Clopath, C. (2017). Supervised learning in spiking neural networks with FORCE training. Nat. Commun. 8:2208. doi: 10.1038/s41467-017-01827-3

Ning, Q., Hesham, M., Federico, C., Marc, O., Stefanini, F., Sumislawska, D., et al. (2015). A reconfigurable on-line learning spiking neuromorphic processor comprising 256 neurons and 128K synapses. Front. Neurosci. 9:141. doi: 10.3389/fnins.2015.00141

Payvand, M., D’Agostino, S., Moro, F., Demirag, Y., Indiveri, G., and Vianello, E. (2023). “Dendritic computation through exploiting resistive memory as both delays and weights.” in Proc. of the 2023 International Conference on Neuromorphic Systems. p. 27.

Payvand, M., Demirag, Y., Dalgaty, T., Vianello, E., and Indiveri, G. (2020). “Analog weight updates with compliance current modulation of binary ReRAMs for on-chip learning.” in Proc. of 2020 IEEE International Symposium on Circuits and Systems. pp. 1–5.

Payvand, M., Moro, F., Nomura, K., Dalgaty, T., Vianello, E., Nishi, Y., et al. (2022). Self-organization of an inhomogeneous memristive hardware for sequence learning. Nat. Commun. 13:5793. doi: 10.1038/s41467-022-33476-6

Pfister, J., Toyoizumi, T., Barber, D., and Gerstner, W. (2006). Optimal spike-timing-dependent plasticity for precise action potential firing in supervised learning. Neural Comput. 18, 1318–1348. doi: 10.1162/neco.2006.18.6.1318

PhysioNet (1999). MIT-BIH Long-Term ECG Database (Version 1.0). Available at: https://physionet.org/content/ltdb/1.0.0/ [Accessed August 3, 1999].

Ponulak, F., and Kasinski, A. (2010). Supervised learning in spiking neural networks with ReSuMe: sequence learning, classification, and spike shifting. Neural Comput. 22, 467–510. doi: 10.1162/neco.2009.11-08-901

Prezioso, M., Merrikh-Bayat, F., Hoskins, B. D., Adam, G. C., Likharev, K. K., and Strukov, D. B. (2015). Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64. doi: 10.1038/nature14441

Qiao, N., Bartolozzi, C., and Indiveri, G. (2017). An ultralow leakage synaptic scaling homeostatic plasticity circuit with configurable time scales up to 100ks. IEEE Trans. Biomed. Circ. Syst. 11, 1271–1277. doi: 10.1109/TBCAS.2017.2754383

Sompolinsky, H., Crisanti, A., and Sommers, H. J. (1988). Chaos in random neural networks. Phys. Rev. Lett. 61, 259–262. doi: 10.1103/PhysRevLett.61.259

Srinivasan, G., Roy, S., Raghunathan, V., and Roy, K. (2017). “Spike timing dependent plasticity based enhanced self-learning for efficient pattern recognition in spiking neural networks.” in Proc. of 2017 International Joint Conference on Neural Networks. pp. 1847–1854.

Steil, J. J. (2007). Online reservoir adaptation by intrinsic plasticity for backpropagation-decorrelation and echo state learning. Neural Netw. 20, 353–364. doi: 10.1016/j.neunet.2007.04.011

Sussillo, D., and Abbott, L. F. (2009). Generating coherent patterns of activity from chaotic neural networks. Neuron 63, 544–557. doi: 10.1016/j.neuron.2009.07.018

Tetzlaff, C., Dasgupta, S., Kulvicius, T., and Wörgötter, F. (2015). The use of Hebbian cell assemblies for nonlinear computation. Sci. Rep. 5:12866. doi: 10.1038/srep12866

Thalmeier, D., Uhlmann, M., Kappen, J. H., and Memmesheimer, R. M. (2016). Learning universal computations with spikes. PLoS Comput. Biol. 12:e1004895. doi: 10.1371/journal.pcbi.1004895

Wang, N., Zhou, J., Dai, G., Huang, J., and Xie, Y. (2019). Energy-efficient intelligent ECG monitoring for wearable devices. IEEE Trans. Biomed. Circ. Syst. 13, 1112–1121. doi: 10.1109/TBCAS.2019.2930215

Yongqiang, M., Donati, E., Chen, B., Ren, P., Zheng, N., and Indiveri, G. (2020). “Neuromorphic implementation of a recurrent neural network for EMG classification.” in Proc. of 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS). pp. 69–73.

Zhang, W., and Li, P. (2019). Information-theoretic intrinsic plasticity for online unsupervised learning in spiking neural networks. Front. Neurosci. 13:31. doi: 10.3389/fnins.2019.00031

Keywords: spiking neural network, intrinsic plasticity, synaptic plasticity, randomly connected neural network, neuromorphic chip

Citation: Nomura K and Nishi Y (2024) Synchronized stepwise control of firing and learning thresholds in a spiking randomly connected neural network toward hardware implementation. Front. Neurosci. 18:1402646. doi: 10.3389/fnins.2024.1402646

Edited by:

Qinru Qiu, Syracuse University, United States

Reviewed by:

Seenivasan M. A., National Institute of Technology Meghalaya, India

Jingang Jin, Syracuse University, United States

Copyright © 2024 Nomura and Nishi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kumiko Nomura, kumiko.nomura@toshiba.co.jp

†These authors have contributed equally to this work and share first authorship
