ORIGINAL RESEARCH article

Front. Neurosci., 27 February 2024

Sec. Visual Neuroscience

Volume 18 - 2024 | https://doi.org/10.3389/fnins.2024.1361486

This article is part of the Research Topic: Artificial Systems Based on Human and Animal Natural Vision in Image and Video

Introduction: Binocular color fusion and rivalry are two specific phenomena in binocular vision that can be used as experimental tools to study how the brain processes conflicting information. However, objective evaluation indexes for distinguishing fusion from rivalry under dichoptic color stimulation are lacking.

Methods: This paper introduced EEGNet to construct an EEG-based model for binocular color fusion and rivalry classification. We developed an EEG dataset from 10 subjects.

Results: We trained separate models on EEG data from five brain areas. The experimental results showed that: (1) the back area showed a large difference in EEG signals between fusion and rivalry, the model trained on it reached the highest accuracy of 81.98%, and adding more channels decreased model performance; (2) inter-subject variability had a large effect, and EEG-based recognition across subjects remains very challenging; and (3) the statistics of the EEG data were relatively stationary over time for the same individual, so EEG-based recognition was highly reproducible within an individual.

Discussion: The critical channels for EEG-based binocular color fusion and rivalry could be meaningful for developing the brain computer interfaces (BCIs) based on color-related visual evoked potential (CVEP).

With improvements in hardware and software performance, people in the 21st century have wanted displays to reproduce the real world as faithfully as possible. Stereo display technology has therefore become a hot research topic (Liberatore and Wagner, 2021). Binocular color fusion and rivalry, two special phenomena in stereoscopic displays, have also attracted a lot of attention and discussion. Suppose the left and right eyes view different colors at the same time, for example red and green. If the color difference between the two is small, the brain fuses them into a single color; this phenomenon is called binocular color fusion. If the color difference increases beyond a certain threshold, the brain perceives the red and the green alternating periodically; this phenomenon is called binocular color rivalry (Malkoc and Kingdom, 2012).

Binocular color fusion and rivalry, as two specific phenomena in binocular vision, reflected physiological changes in the brain’s visual perception of color. They could be used as experimental tools to study consciousness, attention, and how the brain processes conflicting information. A large number of studies had also been reported on the threshold values for binocular color fusion and rivalry. Jung et al. (2011) measured the binocular color fusion limit which was quantified by ellipses for eight chromaticity points sampled from the CIE 1976 chromaticity diagram. Malkoc and Kingdom (2012) measured the dichoptic color difference threshold (DCDT) in MacLeod-Boynton color space. The DCDT was the smallest detectable difference in color between two dichoptically superimposed stimuli. Chen et al. (2019) measured the binocular color fusion limit for five hues at different disparities in the 1976 CIE u’v’ chromaticity diagram. The binocular color fusion limit varied for each hue and different disparities. Xiong et al. (2021) conducted a psychophysical experiment to quantitatively measure the binocular chromatic fusion limit on four opposite color directions in the CIELAB color space. They suggested the fusion limit was independent of the distribution of cells and had nothing to do with the color inconsistency between eyes. The dominant eye might have some effects on binocular color fusion. But binocular color rivalry mainly involved the participation of brain cognition. However, these studies mainly used the subjective evaluation method to measure the binocular color fusion limit, and there was a lack of an objective indicator for judging binocular color fusion or binocular color rivalry.

The perception of binocular color fusion and rivalry in current traditional psychophysical experiments was mainly discriminated through subjects’ subjective reports, and lacked an objective judging index. And electroencephalography (EEG) was a technique for studying the relationship between brain activity and cognition, behavior, emotion, and physiological responses. It was also increasingly used by scholars to study the science of color. Cao et al. (2010) designed experiments to investigate the stimulus event-related potentials (ERPs) for blue/yellow colors in normal subjects. Experiments had shown that the brain was more sensitive to yellow in equal luminance mode. Yellow stimuli caused not only a shortening of the latency and an increase in the amplitude of the early ERP components N1 and P2, but also a shortening of the latency and an increase in the amplitude of the late ERP components N2 and P3. Wang and Zhang (2010) used red and blue car pictures to induce ERP. The experimental results showed that the average potential evoked by the red car picture was greater than that evoked by the blue car picture. Zakzewski et al. (2014) used eight different colors such as yellow, blue, red, cyan, white, black, magenta and green to design the experiment. Experiments were conducted to investigate whether EEG responses to stimuli of different colors were suitable for color classification. The results showed that only skewness data (averaged over 10 trials) could be used as a feasible feature for color classification by EEG features. Bekdash et al. (2015) designed experiments to study P1 components of normal subjects for four colors of different intensities. The four colors included red, green, blue, and yellow. The experimental results showed that the greater the color brightness, the greater the amplitude of P1 components. Liu et al. (2019) designed experiments to conduct the color-difference evaluation based on EEG signals. 
The experimental results showed that N1 component in the right-side and occipital area of brain had obvious regularity when observers were gazing at color-difference stimuli. However, the above studies did not target the discussion of the effect of individual variability on the EEG of binocular color fusion and rivalry as well as the experimental reproducibility for a single individual, which is the main issue explored in this paper.

EEG had become a key tool for research in many fields (Tang et al., 2022; Huang et al., 2023), but there were still some challenges in its analysis and processing. Firstly, the signal-to-noise ratio (SNR) of EEG was low (Jas et al., 2017). Second, individual variability among subjects also affected the performance of the model (Jeunet et al., 2016). Third, EEG statistics varied across time on the same individual (Gramfort et al., 2013; Cole and Voytek, 2019). To overcome the challenges described above, new approaches are required to improve the processing of EEG toward better generalization capabilities and more flexible applications. For example, the hierarchical nature of deep neural networks (DNNs) means that features can be learned from raw or minimally preprocessed EEG data, reducing the need for domain-specific processing and feature extraction pipelines. Features learned through DNNs might also be more effective or expressive than the ones engineered by humans (Roy et al., 2019). Deep learning (LeCun et al., 2015) was also gradually being applied to process EEG data in various fields (Bi et al., 2023) such as emotion recognition, epilepsy diagnosis, and depression diagnosis. Cecotti and Graser (2010) implemented the first classification of P300 event-related potentials using a Convolutional Neural Network (CNN). The method achieved a character recognition accuracy of 95.5%. Kulasingham et al. (2016) used a deep belief network and a stacked autoencoder to classify event-related potentials P300, achieving an average accuracy of 86.9% without feature extraction. Liu et al. (2018) introduced Batch Normalization into the training of network models to improve the performance for P300 classification. Liu et al. (2017) used SVM and Logistic classification model with EEG data of depressed patients as features while using CNN for recognition classification with 96.7% accuracy. 
Zheng and Lu (2015) used Deep Belief Networks to explore the key channels and bands for emotion recognition. Lawhern et al. (2018) proposed CNN-based EEGNet to accurately classify EEG signals for different brain-computer interface (BCI) paradigms. The above mainly described the research related to deep learning for emotion recognition, event-related potential classification, and depression diagnosis. However, for two special visual phenomena, binocular color fusion and rivalry, there were no studies using deep learning for recognition classification and exploration of key channels.

In this paper, we introduced EEGNet to construct an EEG-based model for binocular color fusion and rivalry classification. By using the model to explore the key channels for identifying binocular color fusion and rivalry, this research contributed to three distinct areas: (1) examining the model performance in five brain areas to identify the critical brain area associated with the binocular color fusion/rivalry task; (2) examining the model performance across subjects to explore the effect of inter-subject variability on EEG-based recognition of binocular color fusion and rivalry; and (3) examining the model performance at different times for the same individual to verify reproducibility across time with limited amounts of data. Figure 1 illustrates the general process of EEG-based classification for binocular color fusion and rivalry. The framework consists of four components: (1) the EEG experiment, which covers the design of our experiments and the data acquisition process; (2) data preprocessing, which describes how the raw data were processed; (3) model structure, which introduces the network framework of EEGNet; and (4) model evaluation, which presents the evaluation indicators and the model performance.

The EGI geodesic EEG system (GES 400) was used in this experiment. The GES 400 includes a standard 128-channel electrode cap, an EEG signal amplifier, a computer running NetStation5 software, and a computer running E-prime software. The E-prime computer presented the stimulus images and sent event marks to the NetStation5 computer. Stimuli were displayed on a 23-inch Samsung 3D monitor (S23A950D) with a resolution of 1920 (horizontal) × 1080 (vertical) pixels. The monitor offers a 2D/3D switching function with 3D glasses and was connected to a graphics card (NVIDIA GeForce GTX 1080). To ensure that the stimulus colors shown by the monitor were consistent with the selected color samples, we used a PR-715 spectroradiometer to characterize the displayed colors as seen through the 3D glasses. The luminance of the digital input, as well as the luminance and chromaticity of the center point of the display, were obtained through the look-up-table (LUT) method. The NetStation5 computer collected and recorded the EEG signals transmitted from the electrode cap through the EEG signal amplifier.

To avoid the influence of other factors (especially external light), all experiments were conducted in a dark room. Subjects were required to minimize physical activity during the experiment to avoid the effect of electromyography (EMG) on the EEG data. According to the International Telecommunication Union standards (Series, 2009), subjects were required to sit approximately 860 mm from the screen. Subjects wore the electrode cap and the 3D glasses connected to the monitor, which presented different color stimulus images to the left and right eyes, thereby inducing binocular color fusion or binocular color rivalry.

Ten university students participated in this experiment, all of whom had normal visual acuity, normal stereopsis and normal color vision, and their age range was 22–25 years. Subjects signed an informed consent form for the experiment, which met the criteria set out in the Declaration of Helsinki (WMA, 2022).

Stimulus images were generated by custom software written in C++, as shown in Figure 2. Each generated image was 3840 × 1080 pixels, and the 3D glasses presented a 1920 × 1080 image to each of the left and right eyes. The color stimulus samples were selected in the CIELAB color space with luminance held fixed. Each stimulus picture showed a 2° circular color block centered on a black background. Chen et al. (2019) suggested that gray rectangular boxes can be used as a zero-disparity reference to avoid or reduce the influence of disparity cues on the experimental result. The color values of the circular blocks were selected along the a* (red-green) and b* (yellow-blue) directions, and the specific color values are shown in Table 1. The left-eye entries in Table 1 give the coordinates of the color sample points observed by the left eye, and the right-eye entries give those observed by the right eye. The selection of color sample points was based on our previous work (Xiong et al., 2021), which confirmed that they induce binocular color fusion or rivalry for most people with normal vision.

There were four types of stimulus images: binocular color fusion on red/green direction (FoRG), binocular color rivalry on red/green direction (RoRG), binocular color fusion on yellow/blue direction (FoYB), binocular color rivalry on yellow/blue direction (RoYB). The experiment session did not last more than roughly 10 min at a time, including the explanations to the participant and 48 trials. The order of stimulus presentation for a trial was as follows: a FoRG image was presented for 500 ms, and a Mid-Gray field image lasted for 1,000 ms, and a RoRG image was presented for 500 ms, then the Mid-Gray field image was presented for 1,000 ms, next, a FoYB image was presented for 500 ms, and the Mid-Gray field image lasted for 1,000 ms, and a RoYB image was presented for 500 ms, and then Mid-Gray field image presented for 1,000 ms. The experimental stimulus presentation process was shown in Figure 3.

To explore the effect of inter-subject variability, eight subjects completed the session once, in the afternoon. To verify the reproducibility across time on the same individual, the other two subjects completed the session 6 times. Their sessions were repeated 2 times each in the morning, afternoon, and evening over 3 days. Hence, a total of 960 (= 8 subjects × 48 trials + 2 subjects × 48 trials × 6 times) trials were recorded.
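The timing and trial counts above can be checked with a few lines of arithmetic. The sketch below only restates the numbers given in the protocol (500 ms stimuli, 1,000 ms mid-gray fields, four stimuli per trial, 48 trials per session); the function and constant names are illustrative, not taken from the authors' code.

```python
# Values restated from the protocol description; names are illustrative.
STIMULUS_MS = 500         # each colour stimulus (FoRG, RoRG, FoYB, RoYB)
GRAY_MS = 1000            # mid-gray field shown after each stimulus
STIMULI_PER_TRIAL = 4
TRIALS_PER_SESSION = 48


def trial_duration_ms():
    """Duration of one trial: four stimulus/gray pairs."""
    return STIMULI_PER_TRIAL * (STIMULUS_MS + GRAY_MS)


def session_duration_min():
    """Pure stimulation time of one session, excluding instructions."""
    return TRIALS_PER_SESSION * trial_duration_ms() / 60000


def total_trials(single_session_subjects=8, repeated_subjects=2, repeats=6):
    """Total recorded trials across all subjects and repeated sessions."""
    return (single_session_subjects * TRIALS_PER_SESSION
            + repeated_subjects * TRIALS_PER_SESSION * repeats)


if __name__ == "__main__":
    print(trial_duration_ms(), session_duration_min(), total_trials())
```

Under these numbers a trial lasts 6 s and the stimulation itself takes 4.8 min per session, which is consistent with the stated limit of roughly 10 min including the explanations to the participant.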

We recorded the EEG data of the binocular color fusion/rivalry task for 10 subjects. Figure 4 shows the flow of EEG data preprocessing. The sampling rate of the raw data was 250 Hz. The data were band-pass filtered from 0.5 Hz to 30 Hz. Independent Component Analysis (ICA) was then used to remove artifacts such as electromyogram (EMG) and electrooculogram (EOG) activity from the EEG data. After ICA, we extracted the data from 200 ms before each color stimulus to 800 ms after it, so each channel was divided into equal-length, non-overlapping epochs. The 200 ms before each color stimulus served as the baseline for correction, and a threshold was applied to remove extreme data (potential amplitudes greater than 100 μV). All preprocessing was implemented in Python with the MNE toolkit. Our raw data and source code are available at: https://figshare.com/s/e3350a7fb74e504012c7.
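The epoching, baseline correction, and amplitude rejection steps can be sketched in plain NumPy. This is not the authors' MNE pipeline: filtering and ICA are omitted, the function name `extract_epochs` is hypothetical, and applying the 100 μV threshold to the peak absolute amplitude after baseline correction is an assumption about how the rejection was done.

```python
import numpy as np

SFREQ = 250               # Hz, sampling rate stated in the text
TMIN, TMAX = -0.2, 0.8    # seconds relative to stimulus onset
REJECT_UV = 100.0         # rejection threshold in microvolts


def extract_epochs(data_uv, onsets, sfreq=SFREQ, tmin=TMIN, tmax=TMAX,
                   reject_uv=REJECT_UV):
    """Cut baseline-corrected epochs out of continuous data.

    data_uv: array (n_channels, n_samples) in microvolts.
    onsets:  stimulus onsets as sample indices.
    Returns an array (n_epochs, n_channels, n_times).
    """
    n_pre = int(round(-tmin * sfreq))    # 50 samples of baseline
    n_post = int(round(tmax * sfreq))    # 200 samples after onset
    kept = []
    for onset in onsets:
        start, stop = onset - n_pre, onset + n_post
        if start < 0 or stop > data_uv.shape[1]:
            continue                      # epoch would run off the recording
        epoch = data_uv[:, start:stop].astype(float)   # copy of the slice
        # Baseline correction: subtract each channel's pre-stimulus mean.
        epoch -= epoch[:, :n_pre].mean(axis=1, keepdims=True)
        # Assumed rejection rule: drop the epoch if any sample exceeds the threshold.
        if np.abs(epoch).max() > reject_uv:
            continue
        kept.append(epoch)
    if not kept:
        return np.empty((0, data_uv.shape[0], n_pre + n_post))
    return np.stack(kept)
```

At 250 Hz, the window from 200 ms before to 800 ms after onset gives 250 samples per epoch, so each retained epoch has shape (channels, 250).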

EEGNet is a deep neural network-based EEG signal classification model (Lawhern et al., 2018). It can process raw EEG signals without complex feature extraction. The network mainly consists of a series of convolutional and pooling layers; Figure 5 shows its structure. The convolutional layers use three types of convolution: Conv2D, DepthwiseConv2D, and SeparableConv2D. Conv2D convolves the EEG data along the time dimension to capture the temporal information and dynamic changes in the signal. DepthwiseConv2D processes the spatial information of the EEG signals. It computes the convolution independently for each channel of its input, so it has fewer parameters and lower computational complexity than a standard convolution while maintaining good feature extraction capability. SeparableConv2D is a depthwise separable convolution that consists of two stages: a depthwise convolution followed by a pointwise convolution. In the depthwise stage, each channel of the input is convolved independently. In the pointwise stage, a convolution kernel combines the information across channels and extends the feature dimension. This design significantly reduces the number of parameters and improves the efficiency of the model. In EEGNet, SeparableConv2D is used to process the spatio-temporal information of the EEG signals. Through the DepthwiseConv2D layer, the model is able to capture the spatial dependencies between different brain areas and extract distinguishing features. The SeparableConv2D layer increases the nonlinear expressiveness of the model and further enriches the feature representation.
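To make the parameter saving concrete, the sketch below counts the weights of a standard 2D convolution and of a depthwise separable convolution (a depthwise stage followed by a 1 × 1 pointwise stage) with the same input and output sizes. The layer sizes in the usage note are illustrative assumptions, not the values used in this paper, and the helper names are hypothetical.

```python
def conv2d_params(in_ch, out_ch, kh, kw, bias=False):
    """Weights of a standard convolution: every output filter sees every input channel."""
    return in_ch * out_ch * kh * kw + (out_ch if bias else 0)


def separable_conv2d_params(in_ch, out_ch, kh, kw, bias=False):
    """Weights of a depthwise separable convolution (depth multiplier of 1)."""
    depthwise = in_ch * kh * kw      # one kh x kw kernel per input channel
    pointwise = in_ch * out_ch       # 1 x 1 kernels that mix the channels
    return depthwise + pointwise + (out_ch if bias else 0)


if __name__ == "__main__":
    # Illustrative sizes only: 16 input maps, 16 output maps, a 1 x 16 kernel.
    print(conv2d_params(16, 16, 1, 16), separable_conv2d_params(16, 16, 1, 16))
```

For these illustrative sizes the standard convolution needs 4,096 weights and the separable one 512, an eightfold reduction.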

In EEGNet, Batch Normalization (BN) is a normalization technique used to speed up the training process of neural networks and to improve the stability of the model. The BN operation normalizes the inputs to each layer in the neural network. The following expressions are the core formulas of the BN operation:

$$\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i \tag{1}$$

$$\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_B\right)^2 \tag{2}$$

$$\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}} \tag{3}$$

$$y_i = \gamma \hat{x}_i + \beta \tag{4}$$

In Expression (1), $x_1, \ldots, x_m$ are the $m$ inputs, and $\mu_B$ is the mean of the data. In Expression (2), $\sigma_B^2$ is the variance of the data. Expression (3) is the most crucial: it normalizes the inputs $x_1, \ldots, x_m$, and the main purpose of $\epsilon$ is to keep the denominator from being zero during normalization. In Expression (4), $\gamma$ and $\beta$ are trainable parameters, and the output $y_i$ is obtained by a linear mapping of $\hat{x}_i$ to new data values. In forward propagation, the new distribution values are derived from the learnable $\gamma$ and $\beta$. In backward propagation, the gradients of $\gamma$, $\beta$, and the associated weights are derived by the chain rule.
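A minimal NumPy sketch of the training-time forward pass described by Expressions (1) to (4) follows. It assumes the usual symbol names (scale `gamma`, shift `beta`, small constant `eps`) and a batch laid out as (samples, features); the running statistics that deep learning frameworks keep for inference are left out.

```python
import numpy as np


def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Batch normalization over the first (batch) axis.

    x: array (m, n_features); gamma, beta: arrays (n_features,).
    """
    mu = x.mean(axis=0)                      # Expression (1): batch mean
    var = x.var(axis=0)                      # Expression (2): batch variance (1/m)
    x_hat = (x - mu) / np.sqrt(var + eps)    # Expression (3): normalize
    return gamma * x_hat + beta              # Expression (4): scale and shift


if __name__ == "__main__":
    batch = np.array([[1.0, 2.0], [3.0, 6.0], [5.0, 10.0]])
    print(batch_norm_forward(batch, np.ones(2), np.zeros(2)))
```

With `gamma` set to one and `beta` to zero, each output feature has approximately zero mean and unit variance across the batch; the learnable parameters then let the network move away from that distribution when it helps.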

In EEGNet, Dropout is a common regularization technique used to reduce overfitting. Specifically, two Dropout layers are used in EEGNet: one after DepthwiseConv2D and the other before the fully connected layer. The Dropout layer after DepthwiseConv2D randomly discards channel features at each time point. This helps the network to better learn the correlation between different channels and enhances its robustness to noise and other disturbances. The Dropout layer before the fully connected layer randomly discards, for each sample, part of the features passed to the fully connected layer. This prevents the network from over-relying on specific neurons and thus enhances its generalization ability.
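As an illustration of what a Dropout layer does, here is a sketch of the common "inverted dropout" formulation in NumPy. It is a generic version under stated assumptions, not the EEGNet implementation: the rate of 0.5 in the usage lines is a placeholder rather than the value in Table 2, and the function name is hypothetical.

```python
import numpy as np


def dropout(x, rate, training=True, rng=None):
    """Inverted dropout for 0 <= rate < 1.

    During training, each element is zeroed with probability `rate` and the
    survivors are scaled by 1 / (1 - rate) so the expected value is unchanged.
    At inference time the input is returned untouched.
    """
    if not training or rate == 0.0:
        return x
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(x.shape) >= rate       # True where the unit survives
    return x * keep / (1.0 - rate)


if __name__ == "__main__":
    features = np.ones(10)
    print(dropout(features, 0.5, rng=np.random.default_rng(0)))
    print(dropout(features, 0.5, training=False))
```

Because a different random subset is dropped on every training step, no single feature can be relied on exclusively, which is the regularizing effect the paragraph above describes.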

In this paper, the training can be briefly described as the following steps: (1) capture temporal features in the EEG data through Conv2D; (2) capture spatial features of the EEG through DepthwiseConv2D; and (3) use SeparableConv2D to continue to capture the channel feature information while reducing the number of parameters and computation, provide regularization, and finally pass the features directly to the Softmax classification unit.

We used the deep neural network EEGNet to explore the key brain areas for binocular color fusion and rivalry. We collected EEG data from 10 subjects, and all data were shuffled before being fed into EEGNet for training. Table 2 shows some of the training parameters for EEGNet. Dropout rate is the fraction of units randomly dropped by each Dropout layer. F1 was the number of filters in the DepthwiseConv2D; F2 was the number of filters in the SeparableConv2D. The batch size was 32; the optimizer was Adam; the loss function was CrossEntropyLoss; the activation function was ELU; the learning rate was 0.001; and the proportion of the test set was 10%.
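The shuffling and the 10% hold-out can be sketched with the standard library alone. The function name `shuffle_split` and the fixed seed are assumptions for illustration; the paper does not say whether a seed was fixed or whether the split was stratified by class.

```python
import random


def shuffle_split(samples, test_fraction=0.10, seed=0):
    """Shuffle sample indices and hold out a fraction as the test set.

    Returns (train, test) lists; every sample appears in exactly one of them.
    """
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)         # reproducible shuffle
    n_test = round(len(samples) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return [samples[i] for i in train_idx], [samples[i] for i in test_idx]


if __name__ == "__main__":
    train, test = shuffle_split(list(range(100)))
    print(len(train), len(test))
```

Note that shuffling pooled data before splitting means the test set contains epochs from the same subjects and sessions as the training set, so the resulting score measures within-pool recognition rather than generalization to an unseen subject.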

Identifying the critical brain area for EEG-based binocular color fusion and rivalry is a matter for discussion. In this paper, we used the EGI geodesic EEG system (GES 400) to acquire EEG data from up to 128 channels. Because the experimental data contain a large number of channels, and because the position of the electrode cap on each subject was to some extent affected by individual variability, we divided the electrode positions into five areas according to the International 10–20 standard lead system (Sanei and Chambers, 2013), as shown in Figure 6. The front area (F) is concentrated in the prefrontal lobe, the left area (L) is distributed in the left temporal lobe, the right area (R) is distributed in the right temporal lobe, the top area (T) is in the parietal lobe, and the back area (B) covers the occipital region.

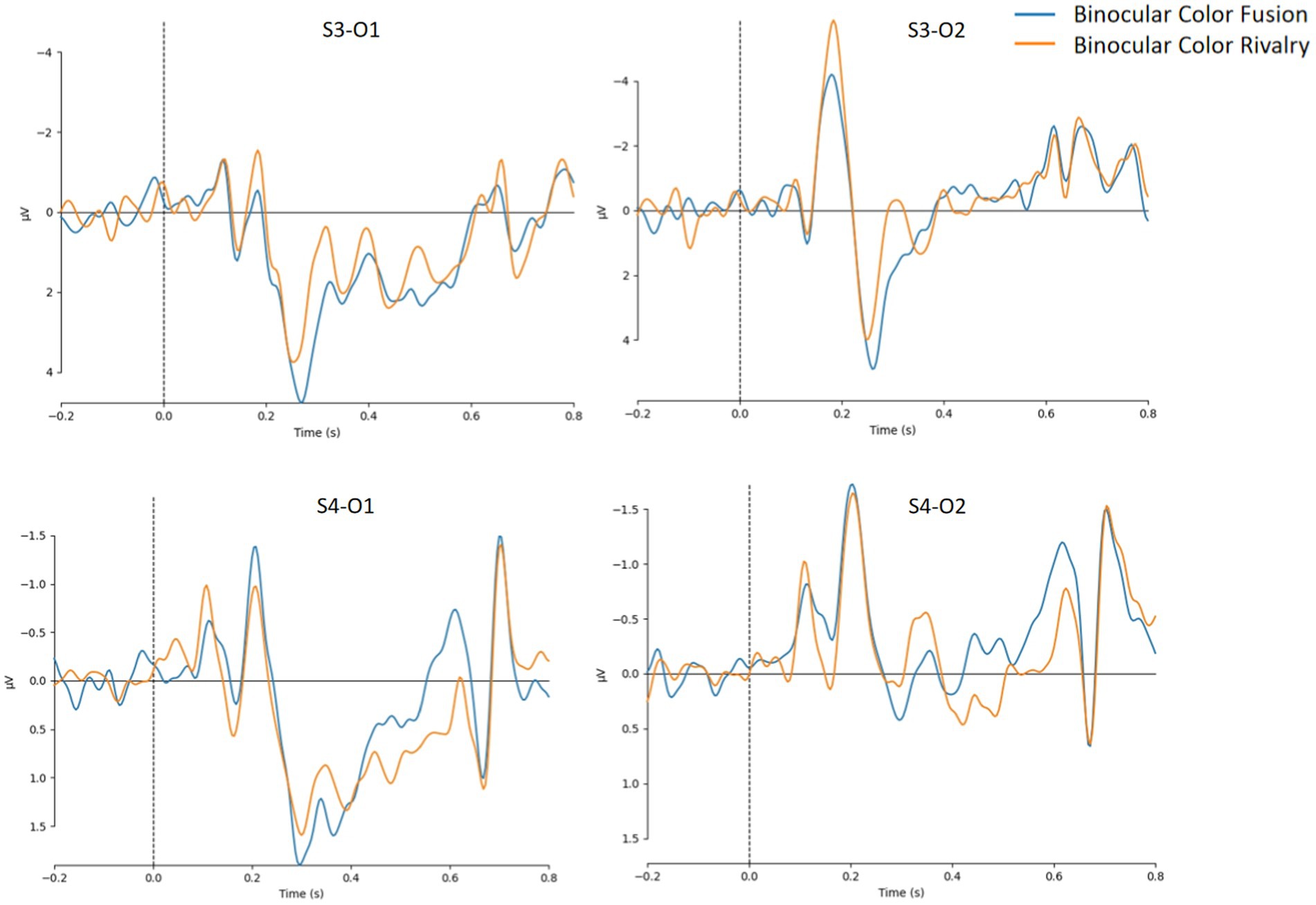

First, we trained EEGNet models on the corresponding data from 8 subjects (labeled S1-S8); the model evaluation indicators are shown in Table 3. For models trained on data from S1-S8, performance was unsatisfactory both for the full 128 channels and for each single area. The models did not effectively distinguish EEG data between binocular color fusion and rivalry. Based on these results, we then examined the average data of O1, O2, and their surrounding 6 channels for two subjects (S3 and S4), as shown in Figure 7. Figure 7 shows a high degree of individual variability between the two subjects. This indicated that the poor performance of the EEGNet model might be due to individual variability among subjects.

Figure 7. Mean potentials of O1, O2, and their surrounding 6 channels about binocular color fusion and rivalry for subject 3 (S3) and subject 4 (S4).

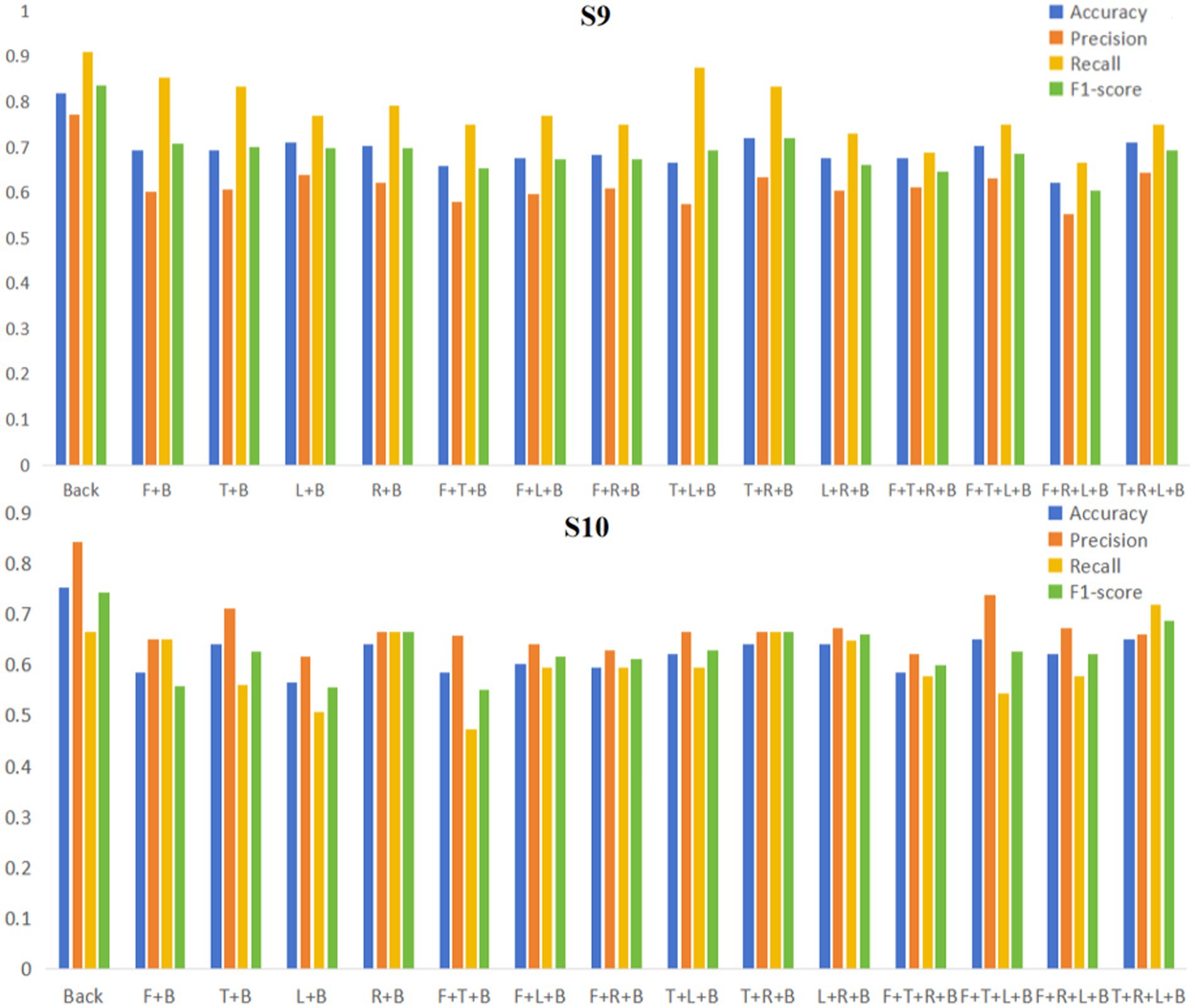

We then collected six sets of data for the other two subjects (labeled S9, S10) using the GES 400 to further verify whether there was an effect of individual variability between subjects on the performance of the EEGNet model and to validate the reproducibility of our experiments. We trained the model using EEGNet with the experimental data of the two subjects according to the previously divided areas, and the obtained model evaluation indicators are shown in Table 4 and Figure 8. By comparing the evaluation indicators of each model, we could find that for the same subject, the model trained with data from the back area could get relatively high evaluation indicators (Accuracy, Precision, Recall, and F1-score) compared to the model using data from full area or other single areas.
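For reference, the four evaluation indicators named above can be computed from true and predicted labels as sketched below. Treating one class (for example rivalry) as the positive class is an assumption, since the paper does not state which class was used, and the function name is hypothetical.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1-score for a two-class problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    truth = [1, 1, 1, 0, 0, 0, 1, 0]
    guess = [1, 1, 0, 0, 0, 1, 1, 0]
    print(binary_metrics(truth, guess))
```

Precision and recall can diverge even when accuracy is similar, which is why a single area combination can beat the back area on recall while losing on the other three indicators.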

To verify whether the back area is the critical brain area for EEG-based binocular color fusion and rivalry identification, we proceeded to train EEGNet models on the experimental data from the two subjects with different combinations of areas (all containing the back area). The model performances are shown in Table 5 and Figure 9. The training results showed that for subject 9, all model evaluation indicators for the back area alone were higher than those for the combinations that included the back area. For subject 10, the back area alone scored higher than the area combinations on three of the four evaluation indicators; the exception was recall, where the T + R + L + B combination was higher. These results indicated that once data from other areas are added, the overall performance of the model decreases. Therefore, we can confirm that binocular color fusion and rivalry differ strongly in the data from the back area, and that the back area is the key area for distinguishing binocular color fusion from rivalry.
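One way to organize such a comparison is to enumerate every area combination that contains the back area and merge the corresponding channel lists before training. The sketch below does only that bookkeeping. It lists all possible combinations, which may be more than the ones reported in Table 5, and the channel indices in the usage example are placeholders, not the actual GES 400 channel assignment.

```python
from itertools import combinations

AREAS = ["F", "T", "L", "R", "B"]   # front, top, left, right, back


def combinations_with_back(areas=AREAS, back="B"):
    """All combinations of the back area with at least one other area."""
    others = [a for a in areas if a != back]
    combos = []
    for size in range(1, len(others) + 1):
        for subset in combinations(others, size):
            combos.append("+".join(subset + (back,)))
    return combos


def merge_channels(combo, area_channels):
    """Sorted union of the channel indices of every area named in `combo`."""
    merged = set()
    for area in combo.split("+"):
        merged.update(area_channels[area])
    return sorted(merged)


if __name__ == "__main__":
    # Placeholder channel indices for illustration only.
    toy_layout = {"F": [0, 1], "T": [2], "L": [3], "R": [4], "B": [5, 6]}
    for combo in combinations_with_back():
        print(combo, merge_channels(combo, toy_layout))
```

With five areas there are 15 such combinations in addition to the back area on its own.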

Figure 9. EEGNet model evaluation indicators in different regional combinations (S9, S10). F represents the Front area; T represents the Top area; L represents the Left area; R represents the Right area; and B represents the Back area.

For binocular color fusion and rivalry, the above results and analysis demonstrated that the back area (the occipital region) showed a large difference in EEG signals, and that more channels decreased the performance of the model. Reducing the number of electrodes reduced the computational complexity and also filtered out some irrelevant noise. Irrelevant channels required more computation and reduced the performance of the trained model. However, it should be noted that although the model trained on the 29 channels of the back area performed better than the model trained on all channels, this does not mean that the remaining 99 channels are useless for identifying binocular color fusion and rivalry.

In this study, our goal was to use EEGNet to validate the reproducibility of the experiment and to roughly determine the brain areas associated with binocular color fusion and rivalry in different individuals. However, there are structural and functional differences in the brains of subjects. Different optimal combinations of channels may exist in different subjects. Some channels contribute significantly to the performance for some subjects, but not for others. Our results showed that inter-subject variability had a large effect on EEG-based recognition, and that the statistics of the EEG data were relatively stationary over time for the same individual. Consequently, EEG recognition of binocular color fusion and rivalry across subjects remains very challenging, but the across-time problem appears solvable for an individual. We can train a model for each individual to objectively judge binocular color fusion and rivalry, and the individual model can also be used in BCI applications.

The rise of BCI in recent years has triggered scholars’ interest in EEG. As two special visual phenomena, binocular color fusion and rivalry are mostly judged based on the subjective evaluation of the subjects, and there is a lack of objective evaluation indexes. The current mainstream BCI paradigms are the steady-state visual evoked potential paradigm (SSVEP) (Vidaurre and Blankertz, 2010), the motor imagery paradigm (Xie et al., 2017), and so on. Chen et al. described how to use a BCI for high-speed spelling (Chen et al., 2015). The Berlin BCI group proposed a small-sample based motor imagery system (Vidaurre and Blankertz, 2010). Eye artifacts contaminating EEG signals were considered as a valuable source of information by Karas et al. (2023). The above studies involve different BCI paradigms, and for some of the already well-established BCI paradigms, the related key channels have been quite well studied. However, for some newly proposed BCI paradigms, these studies did not propose a simple and general method for exploring the key channels of a BCI paradigm. Offline analysis of EEG signals helps us to improve the classification accuracy, and once an EEG signal is classified, it can be sent to an external application for the rest of the operations. In addition to searching for key channels for binocular color fusion and rivalry, our experiments also performed classification operations on relevant EEG signals. This is very meaningful for the development of relevant BCI applications in the future. For example, the key channels of the relevant BCI paradigm are first found through our experimental approach, and then the optimal stimulus combinations are found to refine the previous BCI paradigm based on the classification results.

In this paper, we conducted EEG experiments to investigate critical brain area for binocular color fusion and rivalry classification with the EEGNet. By training the EEGNet model with data from 8 subjects (S1-S8) and comparing model performance for different channel combinations, we propose the hypothesis that individual variability between subjects has a great effect on the recognition of binocular color fusion and rivalry. By modeling two subjects (S9, S10) separately using EEGNet and comparing the model performance of different channel combinations, it is found that the model performance in the back area is higher than those in other areas. It helps us to verify that the individual variability between subjects has a large effect on the EEGNet model performance. It also verifies that our experiments are highly reproducible for the same individual. More importantly, the relatively high EEGNet model performance in the back area suggests that the back brain area is more distinct for binocular color fusion and rivalry. Reducing electrodes by selecting key channels not only reduces the cost of computation, but also significantly improves the performance and robustness of the model. This is of great research significance for the development of wearable devices for BCI based on the color-related visual evoked potential (VEP).

There are also some limitations to this study. The labels we consider in this study are limited to binocular color fusion and binocular color rivalry. In our future work, we will also apply the method proposed in this paper to more categories of datasets.

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://figshare.com/s/e3350a7fb74e504012c7.

ZL: Writing – original draft, Data curation, Methodology, Validation, Visualization. XL: Data curation, Formal analysis, Writing – review & editing. MD: Data curation, Formal analysis, Writing – review & editing. XJ: Data curation, Formal analysis, Writing – review & editing. XH: Data curation, Formal analysis, Writing – review & editing. ZC: Conceptualization, Project administration, Supervision, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by National Science Foundation of China (62165019) and Yunnan Youth and Middle-aged Academic and Technical Leaders Reserve Talent Program (202305 AC160084).

We thank the Color and Image Vision Laboratory of Yunnan Normal University for providing the experimental equipment and space.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Bekdash, M., Asirvadam, V. S., and Kamel, N. (2015). Visual evoked potentials response to different colors and intensities. In: 2015 International Conference on BioSignal Analysis, Processing and Systems (ICBAPS). IEEE, pp. 104–107.

Bi, J., Chu, M., Wang, G., and Gao, X. (2023). TSPNet: a time-spatial parallel network for classification of EEG-based multiclass upper limb motor imagery BCI. Front. Neurosci. 17:1303242. doi: 10.3389/fnins.2023.1303242

Cao, Q., Li, I., and Chen, X. (2010). Study on the event related potentials of blue/yellow color stimulus in normal-vision subjects. Chinese Journal of Forensic Sciences. 3. doi: 10.3969/j.issn.1671-2072.2010.03.005

Cecotti, H., and Graser, A. (2010). Convolutional neural networks for P300 detection with application to brain-computer interfaces. IEEE Trans. Pattern Anal. Mach. Intell. 33, 433–445. doi: 10.1109/TPAMI.2010.125

Chen, Z., Tai, Y., Shi, J., Zhang, J., Huang, X., and Yun, L. (2019). Changes in binocular color fusion limit caused by different disparities. IEEE Access 7, 70088–70101. doi: 10.1109/ACCESS.2019.2918785

Chen, X., Wang, Y., Nakanishi, M., Gao, X., Jung, T. P., and Gao, S. (2015). High-speed spelling with a noninvasive brain–computer interface. Proc. Natl. Acad. Sci. 112, E6058–E6067. doi: 10.1073/pnas.1508080112

Cole, S., and Voytek, B. (2019). Cycle-by-cycle analysis of neural oscillations. J. Neurophysiol. 122, 849–861. doi: 10.1152/jn.00273.2019

Gramfort, A., Strohmeier, D., Haueisen, J., Hämäläinen, M. S., and Kowalski, M. (2013). Time-frequency mixed-norm estimates: sparse M/EEG imaging with non-stationary source activations. NeuroImage 70, 410–422. doi: 10.1016/j.neuroimage.2012.12.051

Huang, Y., Zheng, J., Xu, B., Li, X., Liu, Y., Wang, Z., et al. (2023). An improved model using convolutional sliding window-attention network for motor imagery EEG classification. Front. Neurosci. 17:1204385. doi: 10.3389/fnins.2023.1204385

Jas, M., Engemann, D. A., Bekhti, Y., Raimondo, F., and Gramfort, A. (2017). Autoreject: automated artifact rejection for MEG and EEG data. NeuroImage 159, 417–429. doi: 10.1016/j.neuroimage.2017.06.030

Jeunet, C., Lotte, F., and N'Kaoua, B. (2016). Human learning for brain–computer interfaces. Brain–Computer Interfaces 1: Foundations and Methods, pp. 233–250.

Jung, Y. J., Sohn, H., Lee, S. I., Ro, Y. M., and Park, H. W. (2011). Quantitative measurement of binocular color fusion limit for non-spectral colors. Opt. Express 19, 7325–7338. doi: 10.1364/OE.19.007325

Karas, K., Pozzi, L., Pedrocchi, A., Braghin, F., and Roveda, L. (2023). Brain-computer interface for robot control with eye artifacts for assistive applications. Sci. Rep. 13:17512. doi: 10.1038/s41598-023-44645-y

Kulasingham, J. P., Vibujithan, V., and De Silva, A. C. (2016). Deep belief networks and stacked autoencoders for the p300 guilty knowledge test. In: 2016 IEEE EMBS conference on biomedical engineering and sciences (IECBES). IEEE, pp. 127–132.

Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., and Lance, B. J. (2018). EEGNet: a compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 15:056013. doi: 10.1088/1741-2552/aace8c

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

Liberatore, M. J., and Wagner, W. P. (2021). Virtual, mixed, and augmented reality: a systematic review for immersive systems research. Virtual Reality 25, 773–799. doi: 10.1007/s10055-020-00492-0

Liu, Y., Cui, G., Chu, J., Xu, T., Jiang, L., Ruan, X., et al. (2018). The study of color-difference evaluation based on EEG signals. Journal of Wenzhou University (Natural Science Edition), 1:10. doi: 10.3875/j.issn.1674-3563.2019.01.006

Liu, M., Wu, W., Gu, Z., Yu, Z., Qi, F., and Li, Y. (2019). Deep learning based on batch normalization for P300 signal detection. Neurocomputing 275, 288–297.

Liu, Y., Li, Y., and Chen, M. (2017). Classification analysis of depression EEG signals based on intrinsic mode decomposition and deep learning. Chinese Journal of Medical Physics, 34, 5. doi: 10.3969/j.issn.1005-202X.2017.09.021

Malkoc, G., and Kingdom, F. A. (2012). Dichoptic difference thresholds for chromatic stimuli. Vis. Res. 62, 75–83. doi: 10.1016/j.visres.2012.03.018

Roy, Y., Banville, H., Albuquerque, I., Gramfort, A., Falk, T. H., and Faubert, J. (2019). Deep learning-based electroencephalography analysis: a systematic review. J. Neural Eng. 16:051001. doi: 10.1088/1741-2552/ab260c

Series, B. T. (2009). Methodology for the subjective assessment of the quality of television pictures. Recommendation ITU-R BT. Available at: http://www.itu.int/dms_pubrec/itu-r/rec/bt/R-REC-BT,500–12.

Tang, C., Gao, T., Li, Y., and Chen, B. (2022). EEG channel selection based on sequential backward floating search for motor imagery classification. Front. Neurosci. 16:1045851. doi: 10.3389/fnins.2022.1045851

Vialatte, F. B., Maurice, M., Dauwels, J., and Cichocki, A. (2010). Steady-state visually evoked potentials: focus on essential paradigms and future perspectives. Prog. Neurobiol. 90, 418–438. doi: 10.1016/j.pneurobio.2009.11.005

Vidaurre, C., and Blankertz, B. (2010). Towards a cure for BCI illiteracy. Brain Topogr. 23, 194–198. doi: 10.1007/s10548-009-0121-6

Wang, H., and Zhang, N. (2010). The analysis on vehicle color evoked EEG based on ERP method. In: 2010 4th International Conference on Bioinformatics and Biomedical Engineering. IEEE, pp. 1–3.

WMA. (2022). Ethical principles for medical research involving human subjects. Available at: https://www.wma.net/en/30publications/10policies/b3/ (Accessed October 8, 2022).

Xie, X., Yu, Z. L., Lu, H., Gu, Z., and Li, Y. (2017). Motor imagery classification based on bilinear sub-manifold learning of symmetric positive-definite matrices. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 504–516. doi: 10.1109/TNSRE.2016.2587939

Xiong, Q., Liu, H., and Chen, Z. (2021). Detection of binocular chromatic fusion limit for opposite colors. Opt. Express 29, 22–25. doi: 10.1364/OE.433319

Zakzewski, D., Jouny, I., and Yu, Y. C. (2014). Statistical features of EEG responses to color stimuli. In: 2014 40th Annual Northeast Bioengineering Conference (NEBEC). IEEE, pp. 1–2.

Keywords: binocular color fusion, binocular color rivalry, electroencephalography, EEGNet, BCI

Citation: Lv Z, Liu X, Dai M, Jin X, Huang X and Chen Z (2024) Investigating critical brain area for EEG-based binocular color fusion and rivalry with EEGNet. Front. Neurosci. 18:1361486. doi: 10.3389/fnins.2024.1361486

Received: 26 December 2023; Accepted: 14 February 2024;

Published: 27 February 2024.

Edited by:

Yuki Todo, Kanazawa University, Japan

Reviewed by:

Florina Ungureanu, Gheorghe Asachi Technical University of Iași, Romania

Copyright © 2024 Lv, Liu, Dai, Jin, Huang and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zaiqing Chen, zaiqingchen@ynnu.edu.cn
