ORIGINAL RESEARCH article

Front. Neurosci., 14 July 2023

Sec. Perception Science

Volume 17 - 2023 | https://doi.org/10.3389/fnins.2023.1221740

This article is part of the Research Topic Advanced Methods and Applications for Neurointelligence

This study proposes a multidimensional uncalibrated technique that enables a robotic arm in eye-in-hand mode to track and grasp dynamic targets. The method avoids complex and cumbersome calibration procedures, so that machine vision tasks can be applied adaptively in a variety of complex environments, and it addresses the instability of traditional calibration methods in such environments. The method proceeds as follows. First, in eye-in-hand mode, the robotic arm moves along the x, y, and z axes in sequence, and an image is taken before and after each movement. Next, the image Jacobian matrix is calculated from the three (or more) sets of images collected. Finally, using the image Jacobian matrix, the robotic arm converts the target coordinates in the images captured in real time by the camera into coordinates in the robotic arm coordinate system and tracks the target in real time. This study tests the dynamic quasi-Newton method for estimating the Jacobian matrix and uses an orthogonal movement method to resolve the coupling problem during initialization. This optimization significantly shortens the iteration process, allowing uncalibrated techniques to be applied more fully to dynamic object tracking. In addition, this study proposes a servo control algorithm with predictive compensation to reduce, or even eliminate, the systematic error caused by time delay when a robot visual servo system tracks a dynamic target.

In the 1960s, advances in robotics and computer technology led researchers to study robots with visual capabilities, and the concept of robot visual servoing was proposed in the 1980s. Robot visual servoing has developed rapidly in the decades since. In visual servo control, the visual information provided by visual sensors is fed into the control system, allowing the controller to respond to external information. Traditional robot visual servo systems are mostly implemented with system model calibration (Gans, 2003; Huang et al., 2022), which mainly involves camera models, robot models, and target object models. The camera model comprises the intrinsic and extrinsic parameters of the camera; the robot model generally refers to the robot kinematics model; and the target model mainly comprises the depth from the target to the end of the robotic arm, together with the pose and motion parameters of the target in a fixed coordinate system. In a traditional robot visual servo system, the first step is to calibrate the camera and the camera-robot relationship (Hutchinson et al., 1996) to obtain an accurate transformation matrix between the image coordinate system and the robot coordinate system. Then, using this calibrated transformation matrix, the coordinates of the target object in the images captured by the visual system are converted into the target's pose in the robot coordinate system. Finally, the robot tracks, locates, and grasps the target object in the camera's field of view based on the converted coordinates (Kang et al., 2020). The entire process therefore depends heavily on the accuracy of the transformation matrix between the image coordinate system and the robot coordinate system (Malis, 2004).
Calibrating the camera and the robot is extremely cumbersome, requiring the intrinsic and extrinsic parameters of the camera, the kinematic model of the robot, and the relative pose between the camera and the robot. Moreover, in practical applications, replacing the camera or its lens, or a loosened mounting between the camera and the robot, causes the calibration results to deviate, so the complex calibration must be carried out again. Traditional calibration methods therefore make it difficult for robot visual servo systems to operate in complex working environments, which is currently a bottleneck limiting their development.

To break this bottleneck, researchers have turned to eye-in-hand visual servo control methods that estimate the image Jacobian matrix without knowledge of the system parameters. Even so, robot visual servo systems must still overcome many technical difficulties before they can be used routinely in complex production environments.

The development of uncalibrated camera-robot techniques, which require no knowledge of the system parameters, can be divided into three stages: (1) the robot visual servo system achieves precise positioning and grasping of static targets; (2) it achieves tracking and positioning of dynamic targets; and (3) it achieves practical production use with low latency and high accuracy in complex environments.

The first goal of a robot visual servo system is precise positioning and grasping of static targets. Hosoda and Asada first proposed an exponentially weighted recursive least squares method to obtain the Jacobian matrix. This method achieves servo tracking and positioning of stationary targets in an uncalibrated state, but it still has shortcomings in system stability and in the accuracy of image feature extraction (Hosoda and Asada, 1994; Cao et al., 2022a,b). Yoshimi and Allen introduced additional exploratory movements of the robotic arm and observed the corresponding changes in image features during each calculation cycle. They then used the least squares method to calculate the current image Jacobian matrix, achieving more accurate two-dimensional target tracking. However, this method is cumbersome and lacks real-time performance, which makes it difficult to apply in practice (Yoshimi and Allen, 1995). In addition, many researchers have obtained the image Jacobian matrix by recasting its online estimation as a system state observation problem (Jianbo, 2004) or as a recursive calculation (Longjiang et al., 2003), and have evaluated the algorithms in terms of initial values, operating range, stability, and robustness. Simulation experiments have verified the reliability of such algorithms (Hao and Sun, 2007). At this stage, robot visual servo systems can position and grasp static targets in industrial applications with a variety of requirements (Singh et al., 1998). Compared with traditional calibration methods (Jingmei et al., 2014), this approach avoids the tedious process of repeated calibration.
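To illustrate the recursive least squares idea mentioned above, the following Python sketch updates an image Jacobian estimate from pairs of end-effector displacements and feature displacements using a generic exponentially weighted RLS step. It is a textbook form written for illustration, not Hosoda and Asada's exact formulation; the forgetting factor `lam`, the initial covariance, and the noise-free toy data are assumptions.

```python
import numpy as np

def rls_jacobian_update(J, P, dp, df, lam=0.98):
    """One exponentially weighted RLS step for the model df ~= J @ dp.

    J   : (m, n) current Jacobian estimate
    P   : (n, n) inverse correlation matrix of the regressor dp
    dp  : (n,) end-effector displacement
    df  : (m,) observed image-feature displacement
    lam : forgetting factor in (0, 1]; smaller values discount old data faster
    """
    Pdp = P @ dp
    gain = Pdp / (lam + dp @ Pdp)        # (n,) RLS gain vector
    residual = df - J @ dp                # (m,) prediction error of the old estimate
    J = J + np.outer(residual, gain)      # correct every row of J at once
    P = (P - np.outer(gain, Pdp)) / lam   # covariance update with forgetting
    return J, P

# Toy check with a hypothetical constant Jacobian and noise-free data.
rng = np.random.default_rng(0)
J_true = rng.normal(size=(2, 3))
J_est = np.zeros((2, 3))
P = 1e4 * np.eye(3)
for _ in range(50):
    dp = rng.normal(size=3)               # exploratory end-effector motion
    df = J_true @ dp                      # corresponding feature change
    J_est, P = rls_jacobian_update(J_est, P, dp, df)
print(np.abs(J_est - J_true).max())       # close to zero
```

Smaller values of `lam` let the estimate follow a Jacobian that changes as the arm moves, at the cost of greater sensitivity to measurement noise.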

As production technology has advanced, precise positioning and grasping of static targets alone no longer meets the production needs of enterprises. Piepmeier therefore proposed using the Broyden method to estimate the image Jacobian matrix, thereby achieving tracking and positioning of moving targets. However, when the deviation of the image features is large, the performance of the control system degrades and control may even fail (Piepmeier and Lipkin, 2003). When a robot visual servo system tracks irregularly moving targets (Haifeng et al., 2010), both the real-time performance of the system (Zaien et al., 2014) and the convergence speed of the image Jacobian matrix (Chang et al., 2020) must be improved. However, during high-speed movement, meeting the real-time requirement can expose problems such as slow recognition and low accuracy in the visual system. Many researchers have combined BP neural networks and genetic algorithms (Samad and Haq, 2016; Chen et al., 2020; Yuhan et al., 2021; Wu et al., 2022) and applied them to real-time image processing in the visual system, improving its processing speed. It is also necessary to improve the robustness of robot visual servo systems (Li et al., 2009; Hao et al., 2020) so that they operate stably in various complex environments. In medical equipment, for example, the robot servo system must operate with very high accuracy and stability (Piepmeier, 2003; Gu et al., 2018; Zhang et al., 2020), so improving the robustness and anti-interference capability of the system is very important (Cao et al., 2021; Gao and Xiao, 2021).

This study addresses the tracking and trajectory coverage of irregularly moving targets. First, online estimation of the Jacobian matrix by the dynamic quasi-Newton method was tested in a simulation system. Based on an analysis of the simulation results, the system initialization process was then optimized, which significantly improved the convergence speed of the Jacobian matrix iteration. In addition, this study proposes a Jacobian matrix PI control algorithm with predictive compensation to address the lag of the visual system during dynamic tracking, which effectively improves the accuracy of the robot servo system in dynamic tracking.

The remainder of this article is structured as follows. Section 2 describes the control system in detail, including its hardware composition, the theoretical derivation of the uncalibrated technique, and the servo control algorithms. Section 3 presents and discusses the experimental results, including the iterative process of the proposed uncalibrated visual servo system before and after optimization. Section 4 presents a comparative analysis of the research and experimental data in this study.

The uncalibrated robot servo technique studied here is motivated by applications that track moving targets and follow coating trajectories. The same technique can be applied in other fields, such as mobile robots working on building cracks and robotic welding. The robot platform used in this study is a six-axis industrial robot independently developed by Bozhilin, as shown in Figure 1. A Daheng high-speed industrial camera is mounted at the end of the robotic arm to collect image information within the arm's working range. The camera needs a large field of view, because the target object must not leave the camera's field of view during uncalibrated initialization; otherwise, the error of the Jacobian matrix increases. The camera and robot are installed in eye-in-hand mode, and the model diagram is shown in Figure 2.

Like traditional calibration techniques, uncalibrated techniques describe the relationship between the velocity of the robot end effector and the rate of change of the features in the image. Consider a point P in three-dimensional space. Based on the traditional pinhole camera model shown in Figure 3, it can be concluded that

Pc(xc, yc, zc) is the coordinate of point P in the camera coordinate system, Pw(xw, yw, zw) is the Cartesian coordinate of point P in the world coordinate system (robotic arm base coordinate system), PI(xi, yi) is the projection coordinate of point P in the camera plane coordinate system, and (ui, vi) is the pixel coordinate in the pixel plane coordinate system.

The relationship between the camera imaging plane coordinate PI(xi, yi) and the pixel plane coordinate (ui, vi) is

In the above equation, u0 and v0 are the pixel coordinates of the principal point, where the camera's optical axis intersects the pixel plane, while dx and dy are the physical dimensions of a single pixel in the X and Y directions of the pixel plane, respectively.

Convert the above equation into a matrix equation as follows:
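For reference, with the symbols defined above and assuming no pixel skew, the standard relation between image-plane coordinates and pixel coordinates, in scalar and homogeneous matrix form, is given below. This is the common textbook form and is only assumed to match the equations omitted here.

```latex
u_i = \frac{x_i}{d_x} + u_0, \qquad v_i = \frac{y_i}{d_y} + v_0
\qquad\Longleftrightarrow\qquad
\begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix}
=
\begin{bmatrix} 1/d_x & 0 & u_0 \\ 0 & 1/d_y & v_0 \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} x_i \\ y_i \\ 1 \end{bmatrix}
```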

Assuming that the focal length of the camera is f, under the ideal pinhole model of the eye-in-hand system, the conversion relationship between the camera coordinate system and the pixel coordinate system is
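The camera-to-pixel conversion under the ideal pinhole model can be sketched in Python as follows, combining the perspective division x_i = f·x_c/z_c, y_i = f·y_c/z_c with the pixel relation defined by u0, v0, dx, and dy. The principal point (512, 512) and the 0.01 mm pixel size in the example are hypothetical values chosen to resemble a 1,024 × 1,024 camera with an 8 mm lens; they are not taken from the paper.

```python
def project_to_pixel(p_cam, f, dx, dy, u0, v0):
    """Ideal pinhole projection of a camera-frame point to pixel coordinates.

    p_cam  : (xc, yc, zc) in the camera frame; zc > 0 means in front of the camera
    f      : focal length, same length unit as dx and dy
    dx, dy : physical size of one pixel along x and y
    u0, v0 : principal point in pixels
    """
    xc, yc, zc = p_cam
    if zc <= 0:
        raise ValueError("point must lie in front of the camera (zc > 0)")
    xi, yi = f * xc / zc, f * yc / zc     # image-plane coordinates (perspective division)
    return xi / dx + u0, yi / dy + v0     # pixel coordinates

# Hypothetical camera: f = 8 mm, 0.01 mm pixels, principal point at the image centre.
u, v = project_to_pixel((10.0, -20.0, 500.0), f=8.0, dx=0.01, dy=0.01, u0=512.0, v0=512.0)
print(u, v)   # approximately 528.0 480.0
```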

According to the motion equation of the robot's end effector, we have

Converting the above equation into matrix form, we obtain

In practical applications, it is not feasible to measure every variable in the above equation, so the transformation matrix between (ui, vi) and [Tc, Ωc]T cannot be obtained directly. The entries of the matrix are therefore treated as unknowns:

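On the six-axis robotic arm platform, a single feature point provides only two equations (one each for u and v), which is not enough to determine the six components of the end-effector velocity. Therefore, three feature points are used, and their equations are stacked vertically: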

Ḟ represents the rate of change of image features, J0 represents the Jacobian transformation matrix, and Ṗ represents the motion vector of the robotic arm end effector. The above equation can be expressed as follows:
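When the camera intrinsics and point depths are known, J0 has a well-known analytical form, which shows why three points are stacked: each point contributes a 2 × 6 block, and three non-collinear points give a 6 × 6 matrix that is invertible except in special configurations. The Python sketch below uses the normalized-coordinate convention of Chaumette and Hutchinson's visual servo tutorial (other references, including Hutchinson et al., 1996, use different sign conventions); the point coordinates, depths, and camera velocity are hypothetical. The uncalibrated method estimates J0 instead of using this analytical form precisely because the depths and intrinsics are treated as unknown.

```python
import numpy as np

def point_interaction_matrix(x, y, Z):
    """2 x 6 interaction matrix of a normalized image point (x, y) at depth Z.

    Maps the camera twist [vx, vy, vz, wx, wy, wz] to [x_dot, y_dot].
    """
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])

def stacked_jacobian(points):
    """Stack the 2 x 6 blocks of several (x, y, Z) feature points vertically."""
    return np.vstack([point_interaction_matrix(x, y, Z) for x, y, Z in points])

# Three hypothetical, non-collinear feature points (normalized coordinates, depth in metres).
pts = [(0.1, 0.05, 0.5), (-0.1, 0.05, 0.5), (0.0, -0.1, 0.6)]
J0 = stacked_jacobian(pts)
print(J0.shape)                        # (6, 6)

twist = np.array([0.01, 0.0, 0.0, 0.0, 0.0, 0.02])   # example camera velocity
F_dot = J0 @ twist                     # stacked feature velocities: F_dot = J0 @ P_dot
```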

In practical applications, the rate of change of the image features must be converted into the motion vector of the robotic arm end effector, which requires inverting the Jacobian matrix.

In application, the rate of change of the image features is obtained from two adjacent images, so the equations must also be discretized. Because the camera captures images at a high frequency, we assume that the Jacobian matrix remains approximately unchanged between two adjacent frames. The discrete equation can be obtained as follows:
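The discrete relation ΔF ≈ J0 ΔP can be inverted numerically rather than by forming J0⁻¹ explicitly. In the sketch below, a least-squares solve plays the role of the inverse (it coincides with J0⁻¹ ΔF for a square, invertible J0); the `gain` parameter and the random Jacobian are hypothetical and not defined in the paper.

```python
import numpy as np

def end_effector_step(J, f_now, f_goal, gain=1.0):
    """Discrete inverse-Jacobian step: solve J @ dP = gain * (f_goal - f_now).

    Least squares is used instead of an explicit inverse; for a square,
    invertible J the result equals J^{-1} (gain * (f_goal - f_now)).
    """
    dF = gain * (np.asarray(f_goal, float) - np.asarray(f_now, float))
    dP, *_ = np.linalg.lstsq(J, dF, rcond=None)
    return dP

rng = np.random.default_rng(3)
J = np.eye(6) + 0.1 * rng.normal(size=(6, 6))   # hypothetical well-conditioned Jacobian
f_now = np.zeros(6)
f_goal = np.ones(6)
dP = end_effector_step(J, f_now, f_goal, gain=0.5)
print(np.allclose(J @ dP, 0.5 * (f_goal - f_now)))   # True
```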

During initialization of the robot visual servo system, the multiple movements of the robot are coupled, which can make the Jacobian matrix non-invertible and therefore unsolvable. To obtain a more accurate Jacobian matrix, this study optimizes the initialization process by standardizing the movement directions of the robotic arm: the arm's motion during initialization is decomposed into independent movements along each degree of freedom, so the resulting sets of feature points are linearly independent by construction. When moving independently in the Tx direction, we get
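The orthogonal initialization described here can be sketched as follows: the arm moves along one degree of freedom at a time, and each resulting feature displacement divided by the step length gives one column of the initial Jacobian. The callbacks `measure_features` and `move_axis`, the step length, and the linear toy model used to exercise the function are hypothetical stand-ins for the real camera and robot interfaces.

```python
import numpy as np

def init_jacobian_orthogonal(measure_features, move_axis, n_dof=6, step=1e-3):
    """Initial image Jacobian from one independent move per degree of freedom.

    measure_features() -> stacked feature vector of shape (m,)
    move_axis(i, d)    -> moves the end effector by d along DOF i only
    Both callbacks are hypothetical stand-ins for the camera and robot interfaces.
    Column i of the result is the feature change per unit motion along DOF i.
    """
    f_prev = np.asarray(measure_features(), float)
    cols = []
    for i in range(n_dof):
        move_axis(i, step)
        f_new = np.asarray(measure_features(), float)
        cols.append((f_new - f_prev) / step)
        f_prev = f_new                  # the next move starts from the new pose
    return np.column_stack(cols)

# Toy check: a hypothetical linear feature model f = A @ p.
rng = np.random.default_rng(1)
A = rng.normal(size=(6, 6))
p = np.zeros(6)

def measure():
    return A @ p

def move(i, d):
    p[i] += d

J0 = init_jacobian_orthogonal(measure, move)
print(np.allclose(J0, A))               # True for this linear model
```

Because each exploratory move excites exactly one degree of freedom, no decoupling of the measured feature sets is needed afterward.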

After the image Jacobian matrix is initialized, it must be updated iteratively in real time to maintain accuracy while the robot operates. In the image plane, the difference between the actual feature and the expected feature is f(θ, t) = y(θ, t) − y*, where θ is the joint angle and t is time. A Taylor expansion of the deviation function f(θ, t) is performed, and the resulting affine model is defined as m(θ, t).

At moment k-1, we get

The iterative equation can be obtained as follows:
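One common form of the dynamic quasi-Newton (dynamic Broyden) update, following the approach of Piepmeier and Lipkin (2003), corrects the previous estimate with the smallest rank-one change that satisfies the secant condition J_k Δθ = Δf − (∂f/∂t) Δt. The sketch below implements that form; it is assumed, not confirmed, to match the iterative equation omitted here, and how ∂f/∂t is estimated (for example, from the target's apparent velocity) is left to the caller. The random inputs and the 25 ms time step are hypothetical. With ∂f/∂t = 0 the update reduces to the ordinary Broyden update.

```python
import numpy as np

def dynamic_broyden_update(J, d_theta, d_f, df_dt, dt):
    """Dynamic Broyden (quasi-Newton) update of the image Jacobian.

    J       : (m, n) previous estimate J_{k-1}
    d_theta : (n,) joint change theta_k - theta_{k-1}
    d_f     : (m,) error change f_k - f_{k-1}
    df_dt   : (m,) estimate of the partial derivative of f with respect to time
    dt      : time step t_k - t_{k-1}
    Returns the rank-one correction of J that satisfies
    J_k @ d_theta = d_f - df_dt * dt.
    """
    denom = float(d_theta @ d_theta)
    if denom < 1e-12:                   # (almost) no motion: keep the old estimate
        return J
    residual = d_f - J @ d_theta - df_dt * dt
    return J + np.outer(residual, d_theta) / denom

rng = np.random.default_rng(4)
J_prev = rng.normal(size=(6, 6))
d_theta = rng.normal(scale=1e-2, size=6)
d_f = rng.normal(scale=1e-2, size=6)
df_dt = rng.normal(size=6)
J_new = dynamic_broyden_update(J_prev, d_theta, d_f, df_dt, dt=0.025)
print(np.allclose(J_new @ d_theta, d_f - df_dt * 0.025))   # True: secant condition holds
```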

The robot visual servo control system runs as follows: the visual system first captures and processes images, and the processed image information is then passed to the robot controller, which moves the robot. The time delay between image capture by the visual system and the start of robot motion causes systematic errors when the robot tracks dynamic targets. Therefore, this study designs a Jacobian matrix PI control algorithm with predictive compensation to reduce the systematic errors caused by this time lag.

Assume that the expected image feature of the moving target is f*(u*, v*) and that the actual feature of the robot pose after the image Jacobian matrix transformation is ft(ut, vt). The error between the actual and expected pose features of the system is then

To improve the real-time performance of the system and ensure that tracking remains effective when the target moves quickly, predictive compensation is introduced into the inverse Jacobian matrix visual servo control algorithm, yielding a Jacobian matrix PI control algorithm with predictive compensation. We define the system image feature error as follows:

In the above equation, the error is taken between the current image feature and the expected image feature fd. The predicted compensation amount ξ is defined as follows:

Vimage is the rate of change in image features, and k is the compensation coefficient.

In dynamic target tracking, to reduce the system tracking error, the PI control algorithm is introduced into the inverse Jacobian matrix control algorithm, giving the control input

To reduce the impact of the image processing delay on the system, the predicted compensation amount ξ is incorporated into the control algorithm, giving the final visual servo control law:

KP and KI are the proportional and integral coefficients, and k is the predictive compensation coefficient of the system, which is related to the rate of change of the image features. As shown in Figure 4, the robot control system is combined with the visual system to form a closed-loop robot visual servo system.
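A minimal discrete-time sketch of such a controller is given below. Because the defining equations are not reproduced in this text, its form is an assumption: e = f − fd, V_image is approximated by a backward difference of successive features, ξ = k·V_image, and the control is the negative pseudo-inverse of J applied to a PI law on e + ξ. The compensation coefficient is named `k_c` to avoid a clash with the time index k; under this assumption, choosing it close to the processing delay extrapolates the features forward by roughly that delay.

```python
import numpy as np

class PredictivePIServo:
    """Inverse-Jacobian PI visual servo with predictive compensation (assumed form).

    e_k  = f_k - f_d
    xi_k = k_c * V_image, with V_image ~= (f_k - f_{k-1}) / dt
    u_k  = -J^+ (Kp * (e_k + xi_k) + Ki * sum_j (e_j + xi_j) * dt)
    """

    def __init__(self, kp, ki, k_c, dt):
        self.kp, self.ki, self.k_c, self.dt = kp, ki, k_c, dt
        self.f_prev = None
        self.integral = None

    def control(self, J, f, f_d):
        f = np.asarray(f, float)
        e = f - np.asarray(f_d, float)
        if self.f_prev is None:          # no history yet: assume zero feature velocity
            v_image = np.zeros_like(f)
            self.integral = np.zeros_like(f)
        else:
            v_image = (f - self.f_prev) / self.dt
        self.f_prev = f
        e_comp = e + self.k_c * v_image                # error plus predicted compensation
        self.integral = self.integral + e_comp * self.dt
        command = self.kp * e_comp + self.ki * self.integral
        u, *_ = np.linalg.lstsq(J, command, rcond=None)
        return -u                                       # end-effector velocity command

servo = PredictivePIServo(kp=1.0, ki=0.0, k_c=0.2, dt=0.1)
I2 = np.eye(2)
u1 = servo.control(I2, [0.0, 0.0], [0.0, 0.0])   # first frame: zero error, zero command
u2 = servo.control(I2, [0.1, 0.0], [0.0, 0.0])   # e = 0.1, V_image = 1.0
print(u2)                                         # about [-0.3, 0.0]
```

In this toy run the feature moved by 0.1 in one 0.1 s frame, so the compensated command is larger than the plain proportional term alone would give.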

To verify the correctness of the uncalibrated visual servo algorithm, a robotic arm model, a monocular camera model, and a target object model were established in MATLAB to simulate real robotic arm servo experiments. An eye-in-hand camera-arm configuration was adopted, and visual feedback used online Jacobian estimation by the dynamic quasi-Newton method. A visual controller computes the control input from the image feature deviation and drives the end of the robotic arm toward the target. Finally, the simulation experiments verified the effectiveness of the uncalibrated visual servo algorithm and provided a theoretical basis for practical development.

We established the robotic arm model, monocular camera model, and target object model in MATLAB. The robotic arm is a six-axis Puma560. The camera has a resolution of 1,024 × 1,024 pixels and a focal length of 8 mm, and is installed at the end of the robotic arm (eye in hand). The target object consists of three small balls located above the robotic arm.

At the initial moment, the end of the robotic arm performs six exploratory movements. Figure 5 shows the simulation model of the servo system: the robotic arm is a Puma560, the camera (green) is installed at the end of the arm, and the three blue balls are the target objects. The robot movements produce a displacement ΔP0 of the end of the robotic arm and a displacement ΔF0 of the feature points in the image plane. The initial value of the Jacobian matrix is
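With the six exploratory displacements collected column-wise into ΔP0 and the matching feature displacements into ΔF0, the initial Jacobian solves J0 ΔP0 = ΔF0, that is, J0 = ΔF0 ΔP0⁻¹ when ΔP0 is invertible. The sketch below uses a hypothetical random linear model in place of the Puma560 simulation and computes J0 with a least-squares solve, which also accepts more than six moves.

```python
import numpy as np

def initial_jacobian(dP0, dF0):
    """Solve J0 @ dP0 = dF0 for the initial image Jacobian J0.

    dP0 : (n, k) end-effector displacements, one exploratory move per column
    dF0 : (m, k) matching feature displacements, one move per column
    For k == n and invertible dP0 this equals dF0 @ inv(dP0); least squares
    also accepts k > n moves.
    """
    # J0 @ dP0 = dF0  is equivalent to  dP0.T @ J0.T = dF0.T
    J0_T, *_ = np.linalg.lstsq(dP0.T, dF0.T, rcond=None)
    return J0_T.T

# Toy check with a hypothetical linear model in place of the Puma560 simulation.
rng = np.random.default_rng(5)
A = rng.normal(size=(6, 6))
dP0 = 1e-3 * rng.normal(size=(6, 6))    # six exploratory moves
dF0 = A @ dP0
J0 = initial_jacobian(dP0, dF0)
print(np.allclose(J0, A))               # True when dP0 is well conditioned
```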

The Jacobian matrix is then updated with the dynamic quasi-Newton method, with the robotic arm moving 0.1–0.2 mm per step, until the image pixel error falls within the specified range.

In this experiment, the error of the robotic arm is 0.31 after 20 iterations, 0.011 after 35 iterations, and 0.0001 after 56 iterations. The final image coordinates of the three small balls were (761.999, 661.999), (761.999, 412.0), and (212.0, 661.999), compared with the initially specified expected pixel coordinates of (762, 662), (762, 412), and (212, 662).
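As a quick arithmetic check on the coordinates above, the per-coordinate residual between the final and expected pixel positions can be computed directly. The text does not state which metric the reported error values use, so these residuals need not coincide with them.

```python
import numpy as np

final = np.array([[761.999, 661.999], [761.999, 412.0], [212.0, 661.999]])
expected = np.array([[762.0, 662.0], [762.0, 412.0], [212.0, 662.0]])
residual = final - expected                  # pixel residual per ball and coordinate

print(np.abs(residual).max())                # largest deviation, about 0.001 pixel
print(np.sqrt(np.mean(residual ** 2)))       # RMS deviation, about 8.2e-4 pixel
```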

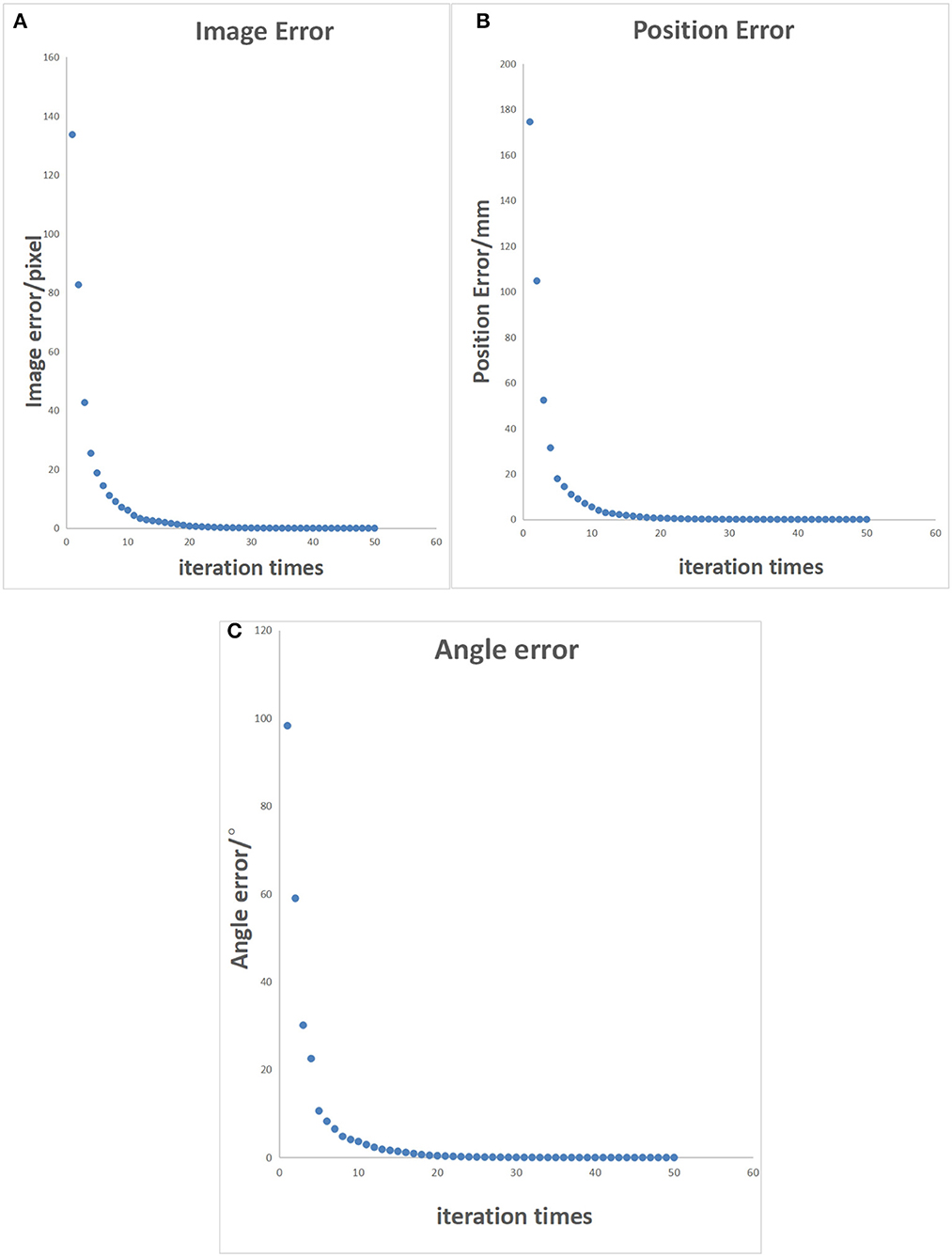

The initial posture of the robotic arm servo system and the pixel coordinates of three small balls are shown in Figure 6. The posture and ball pixel coordinates at the end of the servo are shown in Figure 7. The motion trajectories of the feature points of three small balls in the image plane are shown in Figure 8. The error of the entire process (image error and robotic arm end pose error) varies with the number of cycles, as shown in Figure 9.

Figure 9. Characteristic points error control chart. (A) Pixel error of characteristic points on the image. (B) Position error of characteristic points in reality. (C) Angle error of the robotic arm.

The simulation results in Table 1 show that the uncalibrated system requires many iterations to reach the specified accuracy. In actual production environments, however, there is not enough time for iterative optimization. The simulation data show that the Jacobian matrix obtained from the uncalibrated initialization of the original scheme produced significant conversion errors. Analysis showed that during initialization, images before and after each movement were obtained by moving the robotic arm, and the movements of the manipulator in this process are coupled, which can make the Jacobian matrix non-invertible and unsolvable.
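The effect of coupled initialization moves can be illustrated through the condition number of the displacement matrix ΔP0, which governs how strongly measurement errors are amplified when solving J0 ΔP0 = ΔF0. In the toy comparison below (hypothetical data, not the paper's), per-axis moves give a perfectly conditioned ΔP0, while moves that share a common direction give a nearly singular one.

```python
import numpy as np

rng = np.random.default_rng(2)
step = 1e-3

# Orthogonal initialization: one move per degree of freedom, so dP0 = step * I.
dP_orth = step * np.eye(6)

# Coupled initialization (hypothetical): every move shares one common direction
# plus a small independent part, so the columns are nearly parallel.
common = np.ones((6, 1))
dP_coupled = step * (common + 1e-3 * rng.normal(size=(6, 6)))

print(np.linalg.cond(dP_orth))      # 1.0 up to rounding
print(np.linalg.cond(dP_coupled))   # very large (hundreds or more)
```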

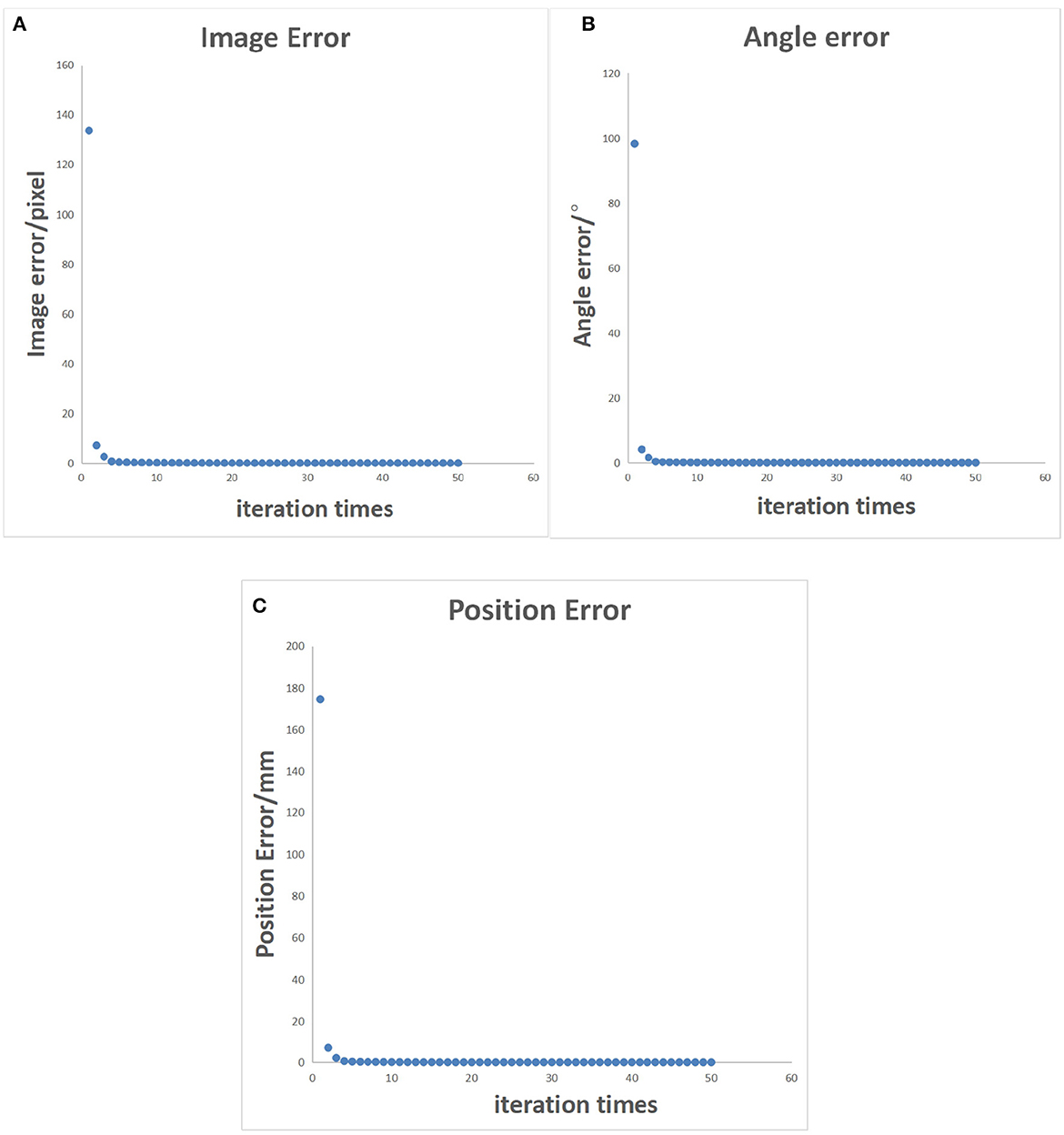

Making the sets of image feature points obtained after the robotic arm moves linearly independent would otherwise require decoupling the collected feature point sets, which is a complex and cumbersome task. Instead, by standardizing the movement directions of the robotic arm during initialization, the resulting feature point sets are linearly independent by construction. Table 2 gives the error data of the iterative process of the uncalibrated system after this decoupling optimization of initialization, and Figure 10 shows how the error of the entire process varies with the number of cycles. Figure 11 shows that decoupling optimization significantly speeds up the iteration of the Jacobian matrix. Faster iterative convergence can effectively improve the real-time performance of robot visual servo systems during dynamic tracking.

Figure 10. Error control chart after decoupled optimization. (A) Pixel error of characteristic points on the image. (B) Position error of characteristic points in reality. (C) Angle error of the robotic arm.

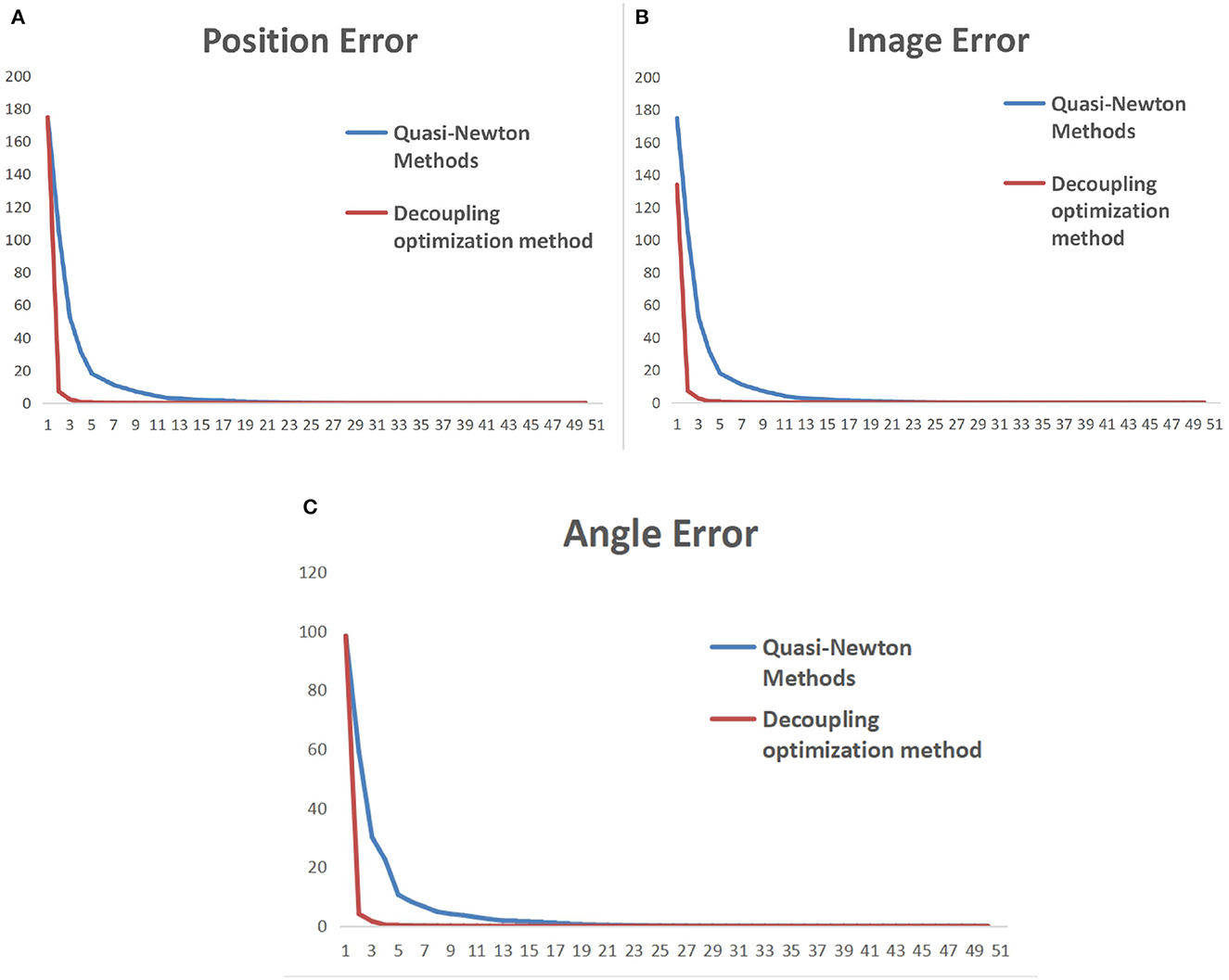

Figure 11. Comparison of iterative convergence before and after initialization optimization. (A) Pixel error of characteristic points on the image. (B) Position error of characteristic points in reality. (C) Angle error of the robotic arm.

In Figure 11, the vertical axis is the error during the robot iteration process and the horizontal axis is the number of iterations. The update period of each robot iteration is not fixed: each iteration includes image capture, image processing, and robot motion, and because the amount of information differs from cycle to cycle, the iteration period fluctuates between 20 and 30 ms.

The above simulation tests verified the reliability of the dynamic quasi-Newton method and of the iterative algorithm after decoupling optimization. Next, these two algorithms and the servo control algorithm with predictive compensation were tested on the robotic arm. During testing, the robot dynamically tracked a target ball moving on a conveyor belt. The tracking error was recorded as the distance, in the images captured by the camera during tracking, between the centroid of the target ball and the spot of a laser projected vertically by the robotic arm. The tracking error curves of the three algorithms are shown in Figure 12.
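The error measurement described above can be sketched as the Euclidean pixel distance between the centroid of the ball's segmentation mask and the detected laser spot. The segmentation step, the boolean mask input, and the laser-spot coordinates are assumptions, since the paper does not describe how they are obtained; converting the pixel distance to millimetres would also require a scale factor that is not given here.

```python
import numpy as np

def centroid(mask):
    """Pixel centroid (u, v) of a boolean mask; u is the column, v is the row."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("empty mask: target not detected")
    return float(cols.mean()), float(rows.mean())

def tracking_error(ball_mask, laser_uv):
    """Euclidean pixel distance between the ball centroid and the laser spot."""
    u, v = centroid(ball_mask)
    return float(np.hypot(u - laser_uv[0], v - laser_uv[1]))

# Toy frame: a 5 x 5 "ball" whose centroid is at (u, v) = (12, 22).
mask = np.zeros((40, 40), dtype=bool)
mask[20:25, 10:15] = True
print(centroid(mask))                      # (12.0, 22.0)
print(tracking_error(mask, (15.0, 26.0)))  # 5.0
```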

The tracking error curves in Figure 12 show that the iterative algorithm after decoupling optimization and the servo control algorithm with predictive compensation converge faster than the dynamic quasi-Newton method, and that the servo control algorithm with predictive compensation further reduces the tracking error in the converged state.

This study investigates the application of the dynamic quasi-Newton method, the iterative algorithm after decoupling optimization, and the servo control algorithm with predictive compensation in uncalibrated robot visual servo systems. Because the dynamic quasi-Newton method requires many iterations to obtain an accurate Jacobian matrix, a decoupling optimization of the initialization process was proposed based on an analysis of the entire uncalibrated robot visual servo process. Simulation tests show that the iterative algorithm after decoupling optimization effectively reduces the number of iterations and improves the convergence speed of the Jacobian matrix. This algorithm therefore has high practical value in production applications.

Because the time lag between the visual system acquiring position information and the robot acting on it cannot be completely eliminated in eye-in-hand mode, this study proposes a servo control algorithm with predictive compensation to reduce, or even eliminate, the tracking error caused by this time lag. The method showed a very significant effect in the experimental tests.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

HQ conducted theoretical research and algorithm design and wrote the paper. DH conducted simulation testing and built the framework of the robot visual servo system. BZ collected and analyzed the experimental data. MW optimized and revised the content of the paper. All authors contributed to the article and approved the submitted version.

The study was supported by the Science and Technology Planning Project of Guangzhou City (Grant No. 2023A04J1691).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Cao, H., Chen, G., Li, Z., Feng, Q., Lin, J., and Knoll, A. (2022a). Efficient grasp detection network with gaussian-based grasp representation for robotic manipulation. IEEE ASME Trans. Mech. doi: 10.1109/TMECH.2022.3224314

Cao, H., Chen, G., Li, Z., Feng, Q., Lin, J., and Knoll, A. (2022b). NeuroGrasp: multimodal neural network with euler region regression for neuromorphic vision-based grasp pose estimation. IEEE Trans. Instrument. Measure. 71, 1–11. doi: 10.1109/TIM.2022.3179469

Cao, H., Chen, G., Xia, J., Zhuang, G., and Knoll, A. (2021). Fusion-based feature attention gate component for vehicle detection based on event camera. IEEE Sensors J. 21, 24540–24548. doi: 10.1109/JSEN.2021.3115016

Chang, Y., Li, L., Wang, Y., and You, K. (2020). Toward fast convergence and calibration-free visual servoing control: a new image based uncalibrated finite time control scheme. IEEE Access 8, 88333–88347. doi: 10.1109/ACCESS.2020.2993280

Chen, G., Cao, H., Conradt, J., Tang, H., Rohrbein, F., and Knoll, A. (2020). Event-based neuromorphic vision for autonomous driving: a paradigm shift for bio-inspired visual sensing and perception. IEEE Signal Process. Magazine 37, 34–49. doi: 10.1109/MSP.2020.2985815

Gans, N. R. (2003). Performance tests for visual servo control systems, with application to partitioned approaches to visual servo control. Int. J. Robot. Res. 22, 955–981. doi: 10.1177/027836490302210011

Gao, Q., and Xiao, W. (2021). Research on the Robot Uncalibrated Visual Servo Method Based on the Kalman Filter With Optimized Parameters. Singapore: Springer.

Gu, J., Wang, W., Zhu, M., Lv, Y., Huo, Q., and Xu, Z. (2018). “Research on a technology of automatic assembly based on uncalibrated visual servo system,” in 2018 IEEE International Conference on Mechatronics and Automation (ICMA). IEEE.

Haifeng, L., Jingtai, L., Yan, L., Xiang, L., and Lei, S. (2010). Visual Servoing With an Uncalibrated Eye-in-Hand Camera. Technical Committee on Control Theory, Chinese Association of Automation (Beihang University Press), 3741–3747.

Hao, M., and Sun, Z. (2007). “Uncalibrated eye-in-hand visual servoing using recursive least squares,” in IEEE International Conference on Systems, Man and Cybernetics, IEEE (2007).

Hao, T., Wang, H., Xu, F., Wang, J., and Miao, Y. (2020). “Uncalibrated visual servoing for a planar two link rigid-flexible manipulator without joint-space-velocity measurement,” in IEEE Transactions on Systems, Man, and Cybernetics: Systems, 1–13.

Hosoda, K., and Asada, M. (1994). “Versatile visual servoing without knowledge of true Jacobian,” in Proceedings of the IEEE/RSJ/GI International Conference on Intelligent Robots and Systems (IROS '94): Advanced Robotic Systems and the Real World, Vol. 1, 186–193.

Huang, H., Bian, X., Cai, F., Li, J., Jiang, T., Zhang, Z., et al. (2022). A review on visual servoing for underwater vehicle manipulation systems automatic control and case study. Ocean Eng. 260, 112065. doi: 10.1016/j.oceaneng.2022.112065

Hutchinson, S., Hager, G. D., and Corke, P. I. (1996). A tutorial on visual servo control. IEEE Trans. Robot Automat. 12, 651–670. doi: 10.1109/70.538972

Jianbo, S. (2004). Uncalibrated Robotic Hand-Eye Coordination of Full Degree-of-Freedoms Based on Fuzzy Neural Network (Su Jianbo), 42–44. doi: 10.13245/j.hust.2004.s1.012

Jingmei, Z., Pengfei, D., and Tie, Z. (2014). Positioning and grasping system design of industrial robot based on visual guidance. Machine Design Res. 30, 45–49. doi: 10.13952/j.cnki.jofmdr.2014.0135

Kang, M., Chen, H., and Dong, J. (2020). Adaptive visual servoing with an uncalibrated camera using extreme learning machine and Q-leaning. Neurocomputing 402, 384–394. doi: 10.1016/j.neucom.2020.03.049

Li, Y. X., Mao, Z. Y., and Tian, L. F. (2009). Visual servoing of 4DOF using image moments and neural network. Control Theory Appl. 26, 1162–1166.

Longjiang, X., Bingyu, S., Dingyu, X., and Xinhe, X. (2003). Model independent uncalibration visual servo control. Robot 25, 424–427. doi: 10.13973/j.cnki.robot.2003.05.009

Malis, E. (2004). Visual servoing invariant to changes in camera-intrinsic parameters. IEEE Trans. Robot. Automat. 20, 72–81. doi: 10.1109/TRA.2003.820847

Piepmeier, J. A. (2003). “Experimental results for uncalibrated eye-in-hand visual servoing,” in IEEE, 335–339.

Piepmeier, J. A., and Lipkin, H. (2003). Uncalibrated eye-in-hand visual servoing. Int. J. Robot. Res. 22, 805–819. doi: 10.1177/027836490302210002

Samad, A. A. I., and Haq, M. Z. (2016). Uncalibrated Visual Servoing Using Modular MRAC Architecture. doi: 10.13140/RG.2.2.25994.34244

Singh, R., Voyles, R. M., Littau, D., and Papanikolopoulos, N. P. (1998). “Grasping real objects using virtual images,” in IEEE Conference on Decision and Control (Cat. No.98CH36171), Tampa, FL, USA, Vol. 3, 3269–3274.

Wu, W., Su, H., and Gou, Z. (2022). “Research on precision motion control of micro-motion platform based on uncalibrated visual servo,” in 2022 4th International Conference on Control and Robotics (ICCR), Guangzhou, China, 77–81.

Yoshimi, B. H., and Allen, P. K. (1995). Alignment using an uncalibrated camera system. IEEE Trans. Robot. Automat. 11, 516–521. doi: 10.1109/70.406936

Yuhan, D., Lisha, H., and Shunlei, L. (2021). Research on computer vision enhancement in intelligent robot based on machine learning and deep learning. Neural Comput. Appl. doi: 10.1007/S00521-021-05898-8

Zaien, Y., Xueliang, P., Zhengyang, L., Yi, J., and Shenglong, C. (2014). The simulation and reconstruction of the complex robot trajectories based on visual tracking. Machine Design Res. 30, 39–46. doi: 10.13952/j.cnki.jofmdr.2014.01.038

Keywords: image Jacobian matrix, machine vision, uncalibrated visual servo, dynamic quasi-Newton algorithm, robot

Citation: Qiu H, Huang D, Zhang B and Wang M (2023) A novel multidimensional uncalibration method applied to six-axis manipulators. Front. Neurosci. 17:1221740. doi: 10.3389/fnins.2023.1221740

Received: 12 May 2023; Accepted: 20 June 2023;

Published: 14 July 2023.

Edited by:

Alois C. Knoll, Technical University of Munich, Germany

Reviewed by:

Yingbai Hu, Technical University of Munich, Germany

Copyright © 2023 Qiu, Huang, Zhang and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dan Huang, dan78huang@163.com
