ORIGINAL RESEARCH article

Front. Neurosci., 06 July 2023

Sec. Neuroprosthetics

Volume 17 - 2023 | https://doi.org/10.3389/fnins.2023.1219553

This article is part of the Research Topic: Smart Wearable Devices in Healthcare—Methodologies, Applications, and Algorithms

The integration of haptic technology into affective computing has led to a new field known as affective haptics. Nonetheless, the mechanism underlying the interaction between haptics and emotions remains unclear. In this paper, we proposed a novel haptic pattern whose vibration intensity and rhythm adapt to the audio volume, and applied it to the emotional experiment paradigm. To verify its superiority, the proposed haptic pattern was compared with an existing haptic pattern by combining each of them with conventional visual–auditory stimuli to induce emotions (joy, sadness, fear, and neutral), while the subjects’ EEG signals were collected simultaneously. The features of power spectral density (PSD), differential entropy (DE), differential asymmetry (DASM), and differential caudality (DCAU) were extracted, and a support vector machine (SVM) was used to recognize the four target emotions. The results demonstrated that haptic stimuli enhanced the activity of the lateral temporal and prefrontal areas of the emotion-related brain regions. Moreover, the classification accuracy of the existing constant haptic pattern and the proposed adaptive haptic pattern increased by 7.71% and 8.60%, respectively. These findings indicate that flexible and varied haptic patterns can enhance immersion and fully stimulate target emotions, which is of great importance for wearable haptic interfaces and emotion communication through haptics.

Emotions play a crucial role in human social communication and interaction (Keltner et al., 2019). With the development of computer technology and human-computer interaction, the field of affective computing (Picard, 1997) has emerged with the primary objective of studying and developing theories, methods, and systems to recognize, interpret, process, and simulate human emotions. Emotions normally change in response to external stimuli, and haptic stimuli can convey more intricate and subtle emotional experiences to the human body compared to visual and auditory stimuli (Hertenstein et al., 2006, 2009). Consequently, a new research trend has arisen in affective computing, which aims to explore the potential of incorporating haptic technology into the processes of emotion recognition, interpretation, and simulation. This integration of haptic technology with affective computing has given rise to a new area called “Affective Haptics” (Eid and Al Osman, 2016).

Affective haptics focuses on the analysis, design, and evaluation of systems capable of inducing, processing, and simulating emotions through touch, which has been applied in many fields. For example, e-learning applications may benefit from affective haptics by reinvigorating learners’ interest when they feel bored, frustrated, or angry (Huang et al., 2010). In healthcare applications, affective haptics can be used to treat depression and anxiety (Bonanni and Vaucelle, 2006), as well as assist in the design of enhanced communication systems for children with autism (Changeon et al., 2012). Other applications include entertainment and games (Hossain et al., 2011), social and interpersonal communication (Eid et al., 2008), and psychological testing (Fletcher et al., 2005).

Affective haptics consists of two subfields: emotion recognition and haptic interfaces. Emotion recognition is to identify emotional states through the user’s behavior and physiological reactions (Kim et al., 2013). Haptic interfaces provide a communication medium between touch and the human subject (Culbertson et al., 2018). The current status of these two subfields will be discussed in the following paragraphs.

Emotion recognition can be broadly divided into two categories: recognition based on non-physiological signals and physiological signals (Fu et al., 2022). Non-physiological signals, such as speech signals (Zhang et al., 2022), facial expressions (Casaccia et al., 2021), and body posture (Dael et al., 2012), are easily influenced by personal volition and the environment, which makes it hard to accurately evaluate an individual’s emotional state. Conversely, physiological signals, which include electroencephalogram (EEG) (Li et al., 2018), electrocardiogram (ECG) (Sarkar and Etemad, 2022), electromyogram (EMG) (Xu et al., 2023), and electrodermal activity (EDA) (Yin et al., 2022), vary according to emotional states, thus providing a more objective means of measuring emotions (Giannakakis et al., 2022). Among these signals, EEG is widely applied in various fields (Zhang et al., 2023; Zhong et al., 2023) and particularly closely associated with emotions (Zhang et al., 2020), so emotion recognition based on EEG has gained widespread usage.

The process of EEG-based emotion recognition involves emotion induction, EEG data preprocessing, feature extraction, and classification models. Koelstra created a publicly available emotion dataset, the DEAP dataset, which contains the EEG and peripheral physiological signals of subjects watching music videos (Koelstra et al., 2012). Additionally, Zheng successively published three emotion datasets based on 62-channel EEG signals: SEED, SEED-IV, and SEED-V (Zheng et al., 2019a,b; Wu et al., 2022). Currently, these datasets are commonly used to extract various features and to boost classification performance with deep learning algorithms (Craik et al., 2019). Liu proposed a three-dimensional convolution attention neural network composed of a spatio-temporal feature extraction module and an EEG channel attention weight learning module (Liu et al., 2022). Zhong proposed a regularized graph neural network for EEG-based emotion recognition and validated its superiority on two public datasets, SEED and SEED-IV (Zhong et al., 2022). The datasets mentioned above induced emotions in subjects using movie clips of specific emotions. Recently, a few researchers have attempted to combine other senses to induce emotions. Wu developed a novel experimental paradigm that allowed odor stimuli to participate in video-induced emotions, and investigated the effects of the different stages of olfactory stimuli application on subjects’ emotions (Wu et al., 2023). Raheel verified that enhancing more than two of the human senses from cold air, hot air, olfaction, and haptic effects could evoke significantly different emotions (Raheel et al., 2020). In general, emotion induction often relies on visual–auditory stimuli, whereas research on emotions induced by haptic stimuli remains quite limited. In other words, there are few studies on recognizing emotions using EEG signals in affective haptics, which to some extent hinders the development of this field.

The haptic interfaces in affective haptics are primarily used to transmit touch sensations to the user through haptic devices (Culbertson et al., 2018). Incorporating haptic devices into emotional induction can convey feelings that are difficult to express with visual–auditory stimuli. Nardelli developed a haptic device that mimicked the sensation of stroking by moving a fabric strip at varying speeds and pressures to examine how the speed and pressure of haptic stimuli elicit different emotional responses (Nardelli et al., 2020). Tsalamlal used a haptic stimulation method of spraying air on the participant’s arm (Tsalamlal et al., 2018). Haynes developed a wearable electronic emotional trigger device that produced a sense of pleasure by stretching and compressing the skin surface of the wearer (Haynes et al., 2019). Wearable devices such as haptic jackets (Rahman et al., 2010) and haptic gloves (Mazzoni and Bryan-Kinns, 2015) are also commonly used in the field of affective haptics. Ceballos designed a haptic jacket and proposed a haptic vibration pattern that enhanced emotions in terms of valence and arousal (Ceballos et al., 2018). Subsequently, Li combined this vibration pattern with visual–auditory stimuli to form a visual–auditory-haptic fusion induction method, demonstrating that haptic vibration improved the accuracy of EEG-based emotion recognition tasks (Li et al., 2022). However, the design of haptic patterns requires further exploration to better understand the mechanism linking haptics and emotions.

In conclusion, significant progress has been made in the field of affective haptics. However, the mechanism linking haptics and emotions has not yet been clearly revealed. This is primarily due to: (1) the limited application of objective emotion recognition methods in affective haptics; and (2) the lack of diverse haptic patterns. To address these issues, this study designed two haptic vibration patterns and combined them with conventional visual–auditory stimuli to induce emotions (joy, sadness, fear, and neutral). EEG signals were used to classify the four target emotions and to explore the effects of haptic stimuli on emotions. The contributions of this work are summarized as follows:

• This paper proposed a novel haptic pattern whose vibration intensity and rhythm adapt to the video volume, and applied it to the EEG emotional experiment paradigm.

• Compared to the existing haptic pattern with fixed vibration intensity and rhythm, the proposed haptic pattern significantly enhanced emotions.

• This paper analyzed the possible reasons for emotional enhancement due to haptic vibration from the perspective of neural patterns.

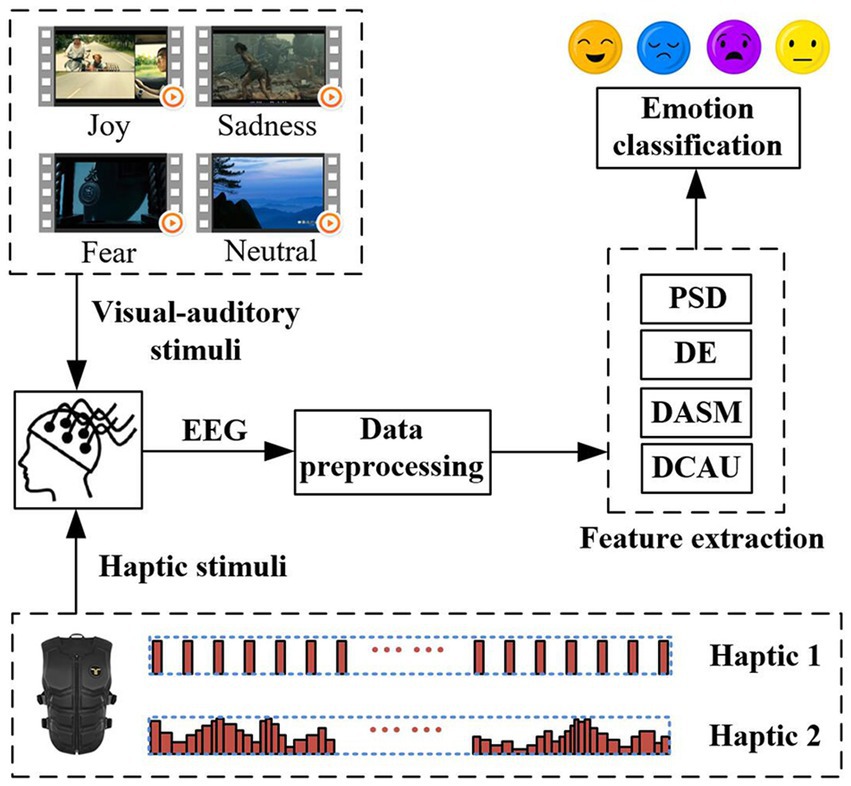

In this work, we presented a novel framework for emotion recognition in combination with two haptic vibration patterns, as illustrated in Figure 1. It included visual–auditory-haptic fusion stimuli, EEG acquisition, EEG pre-processing, feature extraction, and emotion classification. Compared to the conventional EEG-based emotion recognition framework, the innovation of this study is to incorporate two haptic patterns with visual–auditory stimuli to explore the effects on emotions. The former is an existing haptic pattern, named Haptic 1. The latter is the proposed adaptive haptic pattern, named Haptic 2. Please see Section 2.3 for details of the two haptic patterns.

Figure 1. The framework for emotion recognition that incorporates two haptic patterns with traditional visual–auditory stimuli.

Sixteen subjects (11 males and 5 females) aged between 20 and 30 years old, all right-handed and with no history of psychiatric illness, participated in the emotion experiment. Prior to the experiment, they were informed about the procedure and allowed to adapt to the experimental setting. The study was approved by the Ethics Committee of Southeast University, and all subjects received compensation for their involvement in the experiment.

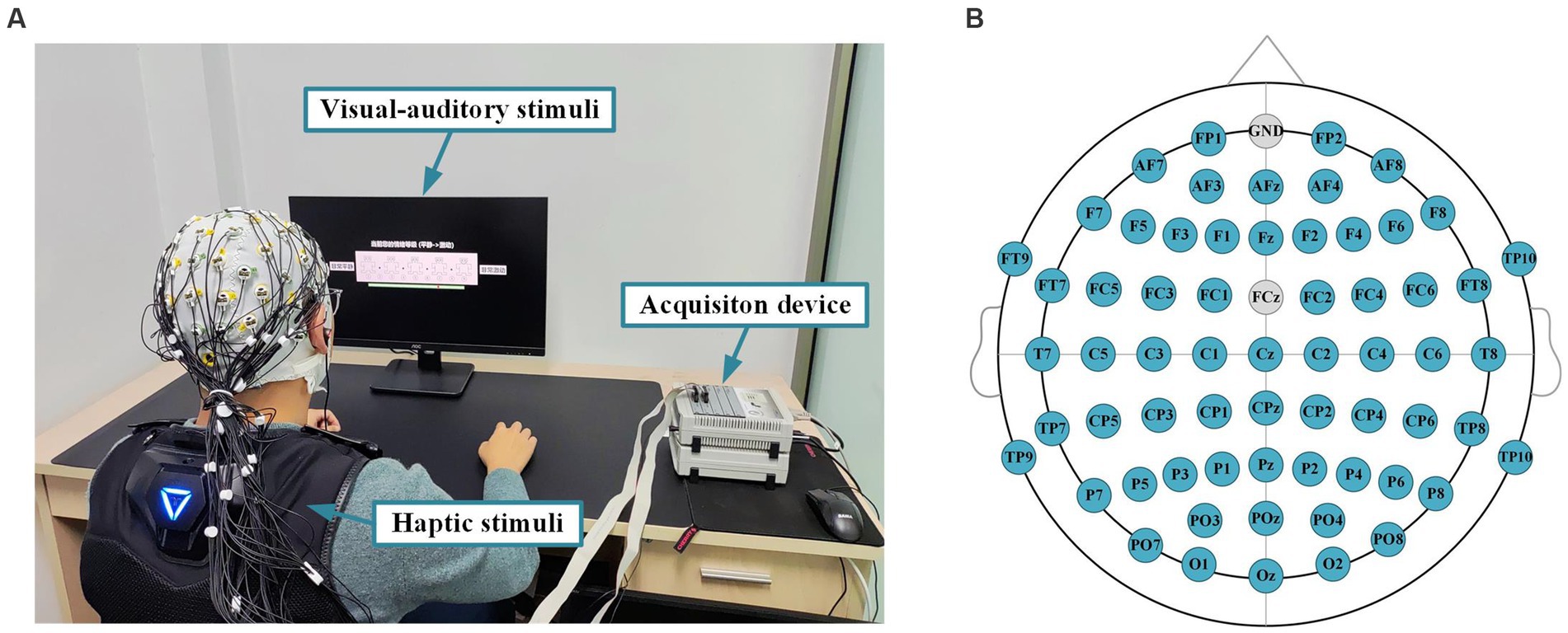

In this experiment, the subjects were exposed to visual–auditory-haptic fusion stimuli to induce emotion. The subjects wore a haptic vest and sat in a comfortable chair approximately 0.7 meters away from the monitor, as shown in Figure 2A. EEG signals were recorded with a 64-channel active electrode cap (Brain Products GmbH, Germany), following the international standard 10–20 system. All channels were referenced to the FCz channel, and the Fpz channel was chosen as the ground, as shown in Figure 2B, so a total of 63 channels of EEG data were available. During the recording, the impedance of all channels was kept below 10 kΩ. The EEG sampling frequency was set to 1,000 Hz, and a band-pass filter from 0.05 to 100 Hz was applied to the EEG signals to remove slow drifts and attenuate high-frequency components. Meanwhile, a notch filter at 50 Hz was applied to reduce power line interference.

Figure 2. Experimental setup and paradigm for emotion recognition. (A) An experimental platform for evoking subjects’ emotions through the visual–auditory-haptic fusion stimulation. (B) The EEG cap layout for 64 channels.

In order to elicit target emotions (joy, sadness, fear, and neutral) in the subjects, we employed a team of eight psychology graduate students to jointly select 16 movie clips that characterized the above four emotions. Each emotion corresponded to 4 movie clips, and all the movie clips were accompanied by Chinese subtitles. Further details can be found in Table 1.

The haptic stimuli implemented in the experiment were realized by using a haptic vest from bHaptics Inc., as illustrated in Figure 3A. As we can see from Figure 3B, this wearable and portable vest provides a double 5 × 4 matrix with motors positioned at both the front and back areas. Each motor provides two methods of vibration modulation: the first is to set the motor’s vibration intensity and rhythm directly, while the second one adjusts the intensity and rhythm of the motor adaptively according to the intensity and frequency of the audio signals. Various haptic vibration patterns can be created by setting the individual parameters of each motor and the overall linkage to give users a specific feeling. The device is controlled through the Unity application via Bluetooth. By incorporating corresponding haptic vibration patterns with different movie clips, we generated emotional stimulation materials that combine visual, auditory, and haptic sensations. The detailed descriptions of the vibration patterns are provided in the next section Experimental protocol.

Figure 3. The haptic vest with a dual vibration motors matrix: (A) Vest. (B) Front view of the motors matrix.

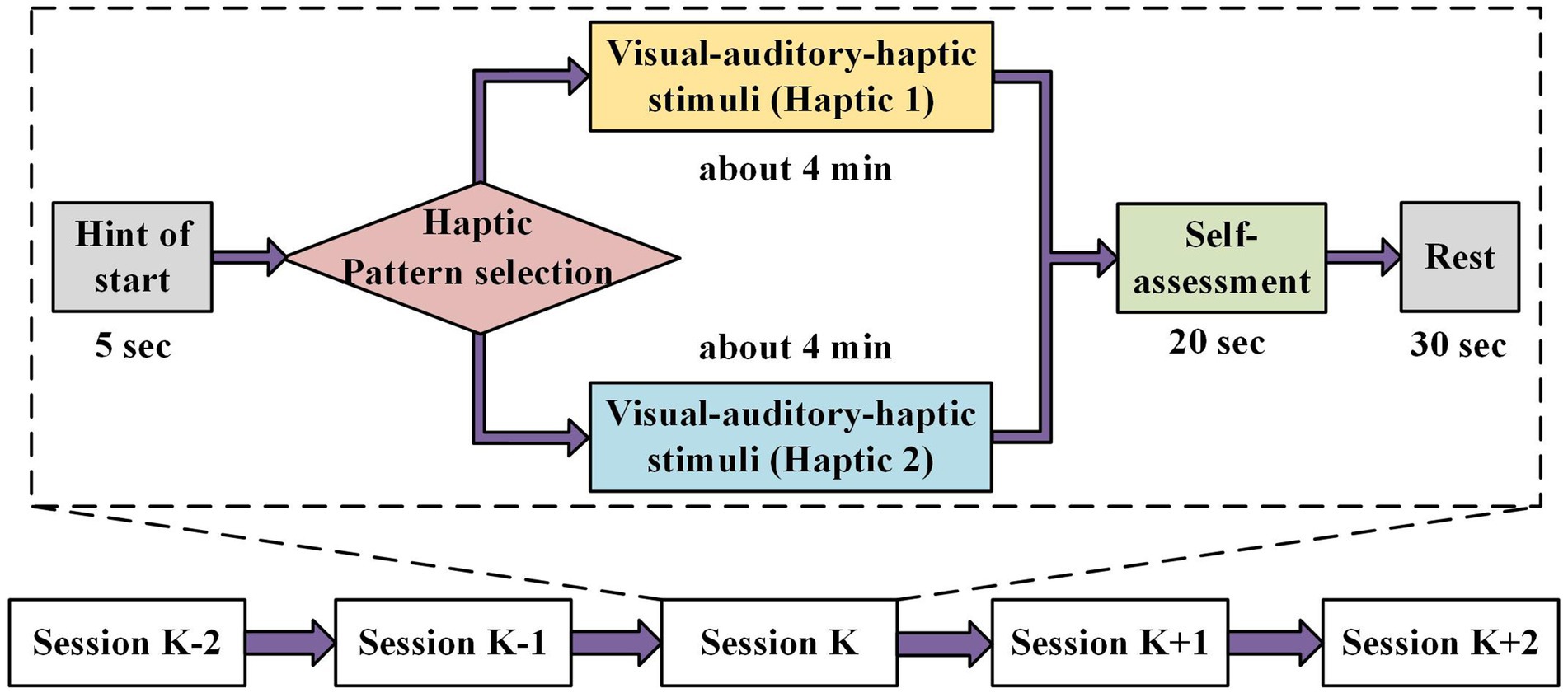

Two different haptic vibration patterns were designed to explore their differential effects on emotion. The detailed flow of the emotion experiment is depicted in Figure 4. In total, there were 16 sessions for each experiment. Firstly, each session had a 5-s cue to start. Next, the visual–auditory-haptic fusion stimuli were applied for approximately 4 min, where the visual–auditory stimuli used a previously selected film clip and the haptic stimuli were chosen from either of the two haptic patterns. Then, the subjects were required to conduct a 20-s self-assessment, followed by a 30-s rest. During the self-assessment, the subjects were requested to declare their emotional responses to each session, which would be used later as a reference for assessing the validity of the collected data.

Figure 4. Emotion experimental paradigm based on the visual–auditory-haptic stimuli. The visual–auditory stimuli include a previously selected film clip and the haptic stimuli chosen from either of the two haptic patterns.

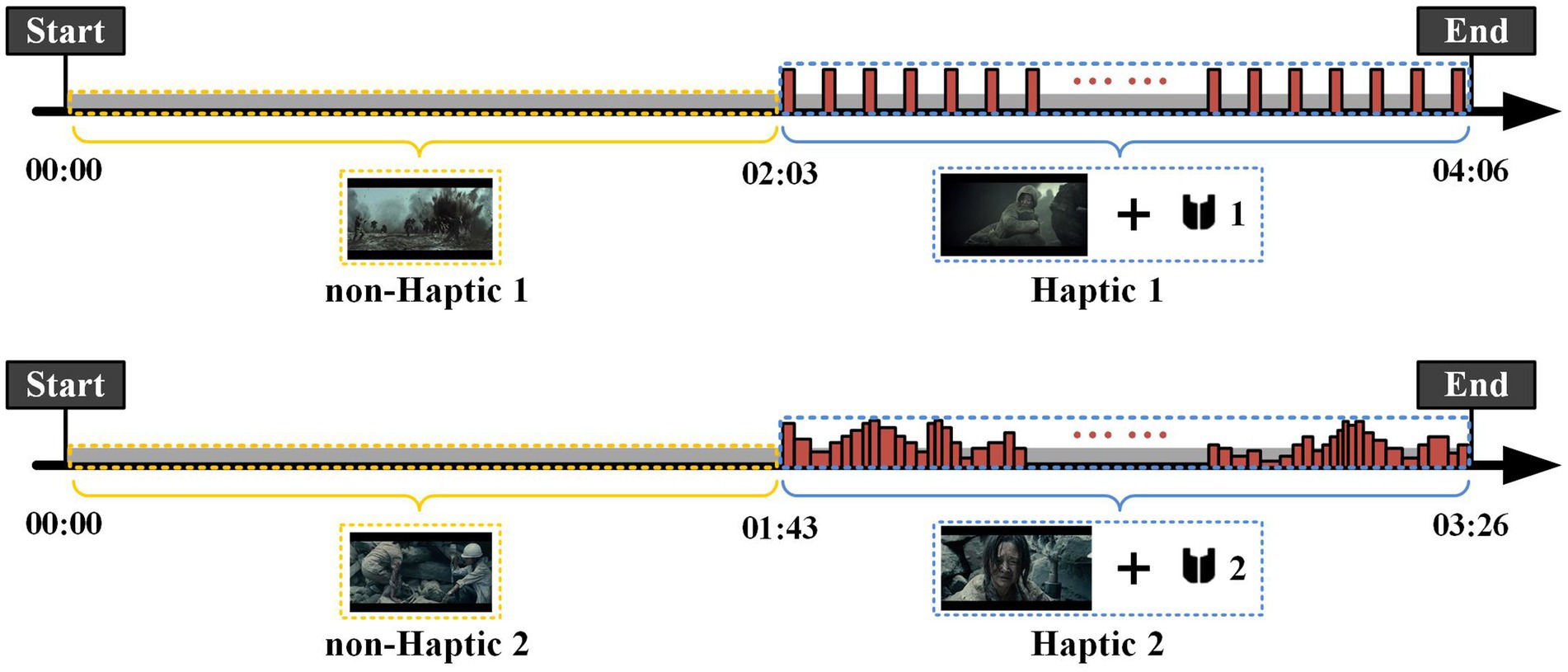

The visual–auditory-haptic fusion stimuli scheme is shown in Figure 5. The visual–auditory stimuli were presented as movie clips throughout the experiment, while the haptic stimuli were applied only in the second half of each clip to explore whether haptic stimuli could enhance emotions. Importantly, we aimed to examine the differences between the two haptic patterns in inducing emotions. We randomly assigned Haptic 1 or Haptic 2 to the 16 movie clips. The first haptic vibration pattern employed a fixed vibration intensity and rhythm, where each emotion (joy, sadness, and fear) corresponded to a specific intensity and rhythm of vibration, as displayed in Table 2. This pattern has been demonstrated to be effective in previous studies (Ceballos et al., 2018; Li et al., 2022). The second haptic pattern adapted the vibration intensity and rhythm to the video volume. Specifically, the vibration intensity was positively correlated with volume, and the vibration rhythm was adjusted by setting a volume threshold below which no vibration was generated. To maintain sample balance, both patterns were presented for eight sessions.
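The adaptive rule described for Haptic 2 (intensity rising with volume, and a volume threshold that gates vibration on and off) can be illustrated with a minimal sketch. This is not the bHaptics or Unity implementation used in the experiment; the function names, the linear mapping, and the default threshold of 0.1 are all assumptions made purely for illustration.

```python
import math


def rms(frame):
    """Root-mean-square amplitude of one audio frame (samples in [-1, 1])."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def adaptive_intensity(volume, threshold=0.1, max_volume=1.0):
    """Map a volume level to a motor intensity in [0, 1].

    Below `threshold` the motor stays off, so quiet passages create pauses
    that shape the vibration rhythm. Above it, intensity grows with volume.
    The linear mapping and the default threshold are hypothetical choices.
    """
    if volume < threshold:
        return 0.0
    return min(1.0, (volume - threshold) / (max_volume - threshold))


def vibration_track(frames, threshold=0.1):
    """Convert a sequence of audio frames into per-frame motor intensities."""
    return [adaptive_intensity(rms(frame), threshold) for frame in frames]
```

Under this sketch, raising the threshold yields sparser, more rhythmic vibration, while lowering it makes the vest follow the soundtrack almost continuously.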

Figure 5. Procedure of two visual–auditory-haptic fusion stimuli. The visual–auditory stimuli were presented as movie clips throughout the experiment, while the haptic stimuli were applied only in the second half of each clip. The first haptic vibration pattern employed a fixed vibration intensity and rhythm, and the second haptic pattern adapted the vibration intensity and rhythm to the video volume.

The EEG signals were preprocessed using the EEGLAB toolbox. First, the sampling rate of the EEG signals was reduced from 1,000 to 200 Hz to expedite computation, and a band-pass filter from 1 to 50 Hz was applied to the signals. Then, independent component analysis (ICA) was employed to remove EOG and EMG artifacts, and the common average reference (CAR) was used to re-reference the EEG signals and eliminate global background activity. To correct for stimulus-unrelated variations in power over time, the EEG signal from the 5 s before each video was extracted as a baseline. Finally, the non-haptic and haptic segments were extracted separately for subsequent analysis. In this study, the EEG signals were divided into five frequency bands: delta (1–4 Hz), theta (4–8 Hz), alpha (8–14 Hz), beta (14–31 Hz), and gamma (31–50 Hz).
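Two of these steps are simple enough to sketch without a toolbox: common average re-referencing and the band definitions. The snippet below is a plain-Python illustration, not the EEGLAB routines the study used. The function names are hypothetical, and treating each band as a half-open interval (lower edge included, upper edge excluded) is an assumption, since the text does not say how shared edges such as 4 Hz are assigned.

```python
# Frequency bands as listed in the text, in Hz.
BANDS = {
    "delta": (1, 4),
    "theta": (4, 8),
    "alpha": (8, 14),
    "beta": (14, 31),
    "gamma": (31, 50),
}


def common_average_reference(eeg):
    """Subtract the across-channel mean from every channel at each sample.

    `eeg` is a list of channels, each a list of samples of equal length.
    """
    n_channels = len(eeg)
    n_samples = len(eeg[0])
    mean_per_sample = [
        sum(channel[t] for channel in eeg) / n_channels for t in range(n_samples)
    ]
    return [
        [channel[t] - mean_per_sample[t] for t in range(n_samples)]
        for channel in eeg
    ]


def band_of(freq_hz):
    """Return the band name for a frequency, or None if it is outside 1-50 Hz.

    Half-open intervals are an assumption made for this sketch.
    """
    for name, (low, high) in BANDS.items():
        if low <= freq_hz < high:
            return name
    return None
```

After re-referencing, the channels sum to zero at every sample, which is what removes activity common to all electrodes.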

After data preprocessing, we extracted the frequency domain features and their combinations in this study. Four features that proved to be efficient for EEG-based emotion recognition were compared (Zheng et al., 2019b), including PSD, DE, DASM, and DCAU.

The PSD feature is the average energy of the EEG signals in the five frequency bands for each of the 63 channels, and can be computed directly using a 256-point short-time Fourier transform (STFT) with a non-overlapping 1-s Hanning window. The DE feature is defined as follows,

$$h(X) = -\int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\delta^{2}}} \exp\left(-\frac{(x-\mu)^{2}}{2\delta^{2}}\right) \log\left[\frac{1}{\sqrt{2\pi\delta^{2}}} \exp\left(-\frac{(x-\mu)^{2}}{2\delta^{2}}\right)\right] dx = \frac{1}{2}\log\left(2\pi e\delta^{2}\right)$$

where the time series X obeys the Gaussian distribution N(μ, δ²), x is a variable, and π and e are constants. It has been proven that, in a certain band, DE corresponds to the logarithmic spectral energy of a fixed-length EEG series (Shi et al., 2013). Compared with the PSD, DE has a balanced ability to distinguish between low and high frequency energy in EEG patterns.
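For a Gaussian series, the differential entropy has the closed form ½·log(2πe·δ²), so in practice DE can be obtained from the variance of a band-filtered segment. The sketch below shows that calculation only. It assumes the segment has already been band-pass filtered upstream, and the use of the population variance (rather than the sample variance) is an assumption, as the text does not specify which estimator was used.

```python
import math
import statistics


def differential_entropy(segment):
    """DE of a band-filtered EEG segment under a Gaussian assumption.

    Uses the closed form 0.5 * log(2 * pi * e * variance). The segment is
    assumed to be band-pass filtered already; it must not be constant,
    because log(0) is undefined.
    """
    variance = statistics.pvariance(segment)
    return 0.5 * math.log(2 * math.pi * math.e * variance)
```

Because the variance sits inside a logarithm, doubling the signal amplitude raises DE by a constant log(2) regardless of the starting level, which is one way to see why DE compresses the large energy gap between low and high frequency bands.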

The DASM feature is calculated as the differences between DE features of 28 pairs of hemispheric asymmetry electrodes (Fp1-Fp2, F7-F8, F3-F4, FT7-FT8, FC3-FC4, T7-T8, P7-P8, C3-C4, TP7-TP8, CP3-CP4, P3-P4, O1-O2, AF3-AF4, F5-F6, FC5-FC6, FC1-FC2, C5-C6, C1-C2, CP5-CP6, CP1-CP2, AF7-AF8, P5-P6, P1-P2, PO7-PO8, PO3-PO4, FT9-FT10, TP9-TP10, and F1-F2), expressed as

$$\mathrm{DASM} = \mathrm{DE}\left(X_{\mathrm{left}}\right) - \mathrm{DE}\left(X_{\mathrm{right}}\right)$$

The DCAU feature is defined as the differences between DE features of 22 pairs of frontal-posterior electrodes (FT7-TP7, FC5-CP5, FC3-CP3, FC1-CP1, FCZ-CPZ, FC2-CP2, FC4-CP4, FC6-CP6, FT8-TP8, F7-P7, F5-P5, F3-P3, F1-P1, FZ-PZ, F2-P2, F4-P4, F6-P6, F8-P8, Fp1-O1, Fp2-O2, AF3-CB1, and AF4-CB2). DCAU is defined as

$$\mathrm{DCAU} = \mathrm{DE}\left(X_{\mathrm{frontal}}\right) - \mathrm{DE}\left(X_{\mathrm{posterior}}\right)$$
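Both asymmetry features are pairwise differences of per-channel DE values, so one helper can serve for each. The sketch below uses the electrode pairs exactly as listed in the text; the helper name and the sign convention (first electrode of each pair minus the second, that is, left minus right and frontal minus posterior) are assumptions based on the order in which the pairs are written.

```python
# Electrode pairs copied from the text; each "A-B" becomes the tuple (A, B).
DASM_PAIRS = [tuple(pair.split("-")) for pair in (
    "Fp1-Fp2 F7-F8 F3-F4 FT7-FT8 FC3-FC4 T7-T8 P7-P8 C3-C4 TP7-TP8 CP3-CP4 "
    "P3-P4 O1-O2 AF3-AF4 F5-F6 FC5-FC6 FC1-FC2 C5-C6 C1-C2 CP5-CP6 CP1-CP2 "
    "AF7-AF8 P5-P6 P1-P2 PO7-PO8 PO3-PO4 FT9-FT10 TP9-TP10 F1-F2"
).split()]

DCAU_PAIRS = [tuple(pair.split("-")) for pair in (
    "FT7-TP7 FC5-CP5 FC3-CP3 FC1-CP1 FCZ-CPZ FC2-CP2 FC4-CP4 FC6-CP6 FT8-TP8 "
    "F7-P7 F5-P5 F3-P3 F1-P1 FZ-PZ F2-P2 F4-P4 F6-P6 F8-P8 "
    "Fp1-O1 Fp2-O2 AF3-CB1 AF4-CB2"
).split()]


def pairwise_difference(de_by_channel, pairs):
    """Return DE(first) - DE(second) for every electrode pair.

    `de_by_channel` maps a channel name to its DE value in one band.
    """
    return [de_by_channel[a] - de_by_channel[b] for a, b in pairs]
```

Calling `pairwise_difference(de, DASM_PAIRS)` yields a 28-element DASM vector for one band, and `pairwise_difference(de, DCAU_PAIRS)` yields the 22-element DCAU vector.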

After feature extraction, we utilized linear-kernel SVM classifiers for the 4-class classification. For statistical analysis, a 4-fold cross-validation strategy was utilized to evaluate the classification performance.
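The evaluation loop behind a 4-fold cross-validation can be sketched independently of the classifier. In the snippet below, `nearest_centroid` is a deliberately simple stand-in so that the example runs with the standard library alone; it is not the linear-kernel SVM used in the study. How the folds were actually formed (shuffled, stratified, or split by session) is not stated in the text, so the interleaved split here is an assumption, and all function names are hypothetical.

```python
import random


def k_fold_indices(n_samples, k=4, seed=None):
    """Split sample indices into k interleaved folds (shuffled if seed is set)."""
    indices = list(range(n_samples))
    if seed is not None:
        random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(features, labels, fit_predict, k=4, seed=None):
    """Mean accuracy over k folds; `fit_predict` trains and predicts in one call."""
    accuracies = []
    for test_idx in k_fold_indices(len(labels), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(labels)) if i not in held_out]
        predictions = fit_predict(
            [features[i] for i in train_idx],
            [labels[i] for i in train_idx],
            [features[i] for i in test_idx],
        )
        correct = sum(p == labels[i] for p, i in zip(predictions, test_idx))
        accuracies.append(correct / len(test_idx))
    return sum(accuracies) / k


def nearest_centroid(train_x, train_y, test_x):
    """Stand-in classifier (NOT the paper's SVM): pick the closest class mean."""
    centroids = {}
    for label in set(train_y):
        rows = [x for x, y in zip(train_x, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def squared_distance(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))

    return [
        min(centroids, key=lambda c: squared_distance(x, centroids[c]))
        for x in test_x
    ]
```

Any function with the same `(train_x, train_y, test_x)` signature, for example a thin wrapper around an SVM library, can be passed as `fit_predict` without changing the loop.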

To investigate the continual progression of emotional states, we employed wavelet transform methods to conduct time-frequency analysis on the EEG signals. The EEG data were analyzed in non-overlapping 5-s sliding windows. For each of the two haptic patterns, we calculated the average value of all channels across all subjects for the four emotion types, with the results presented in Figures 6, 7. We find that the energy of all emotional states diminishes as frequency increases. In comparison to the corresponding non-haptic patterns, the energy distributions of the three emotions (joy, sadness, and fear) are clearly higher in the high-frequency band under the two haptic patterns. Joy and sadness show no obvious changes in the low-frequency band, whereas fear shows a decrease in energy. This suggests that haptic vibration patterns are capable of affecting various emotions. Moreover, comparing Figure 6 with Figure 7 shows that Haptic 2 has more energy in the alpha, beta, and gamma bands than Haptic 1. Since the average energy is computed over all channels and all time segments, a further analysis based on topographic maps is needed and is presented next.

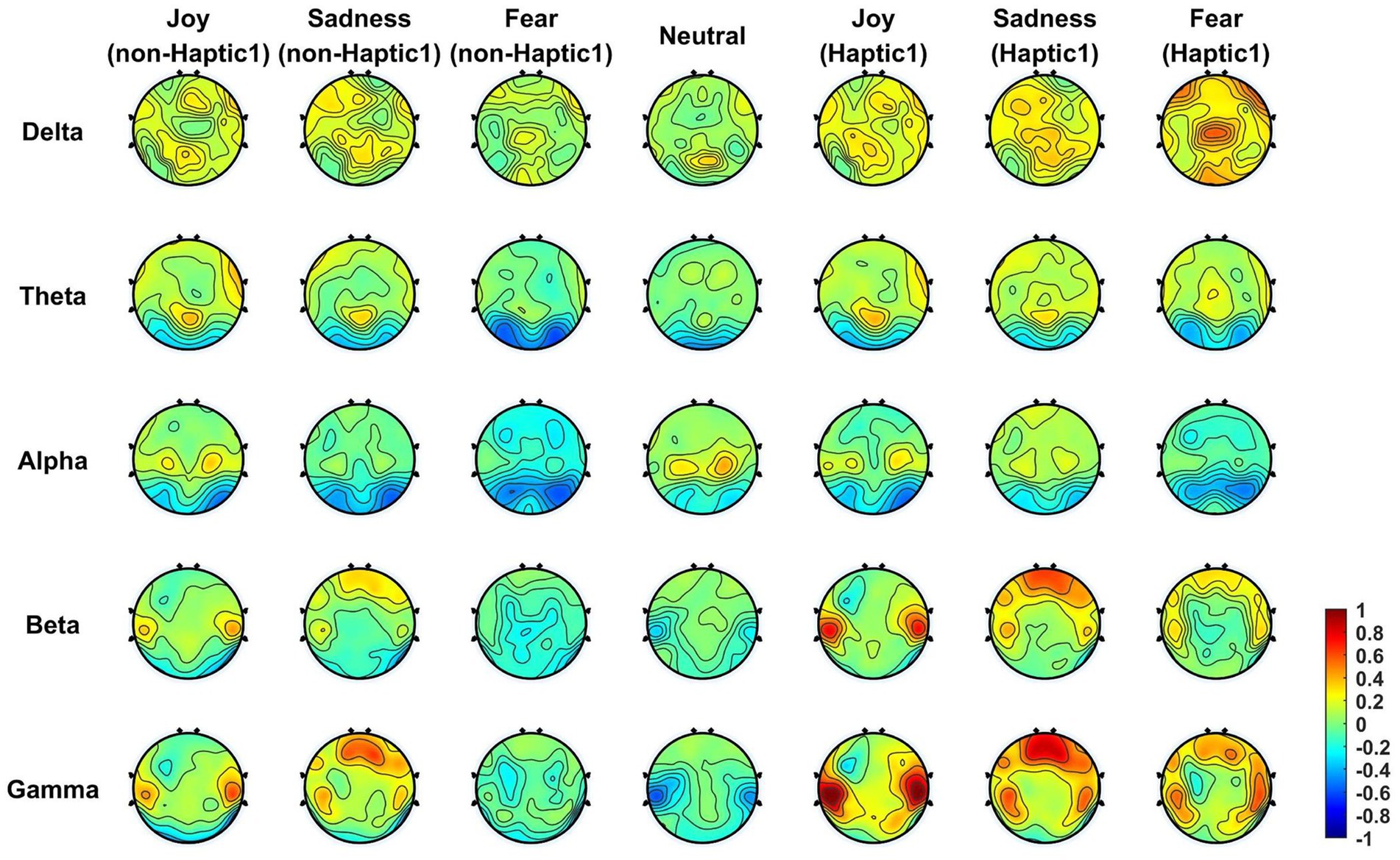

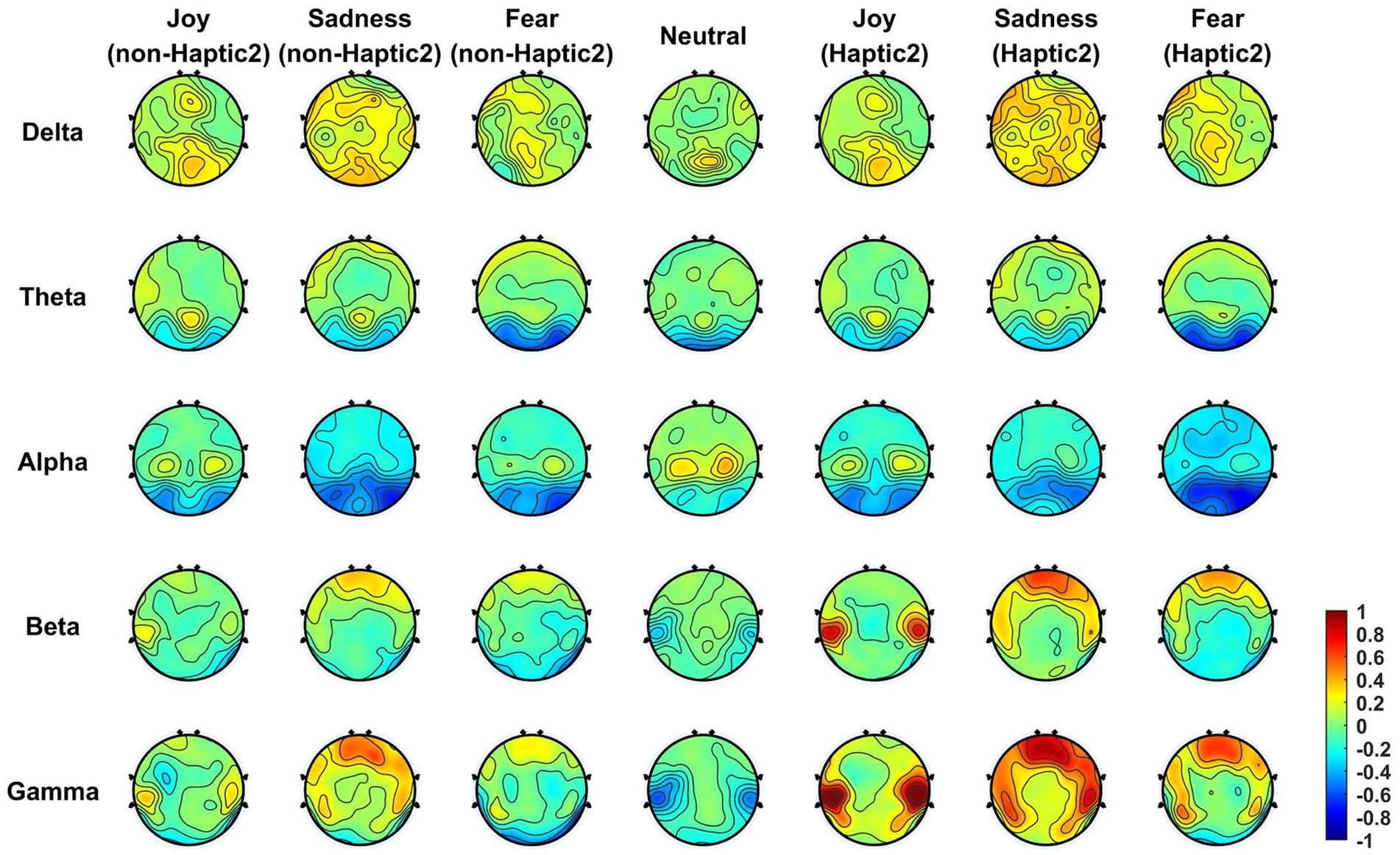

In order to analyze whether and how haptic stimuli affect emotional brain regions, we computed the mean DE features across all subjects in five frequency bands and then projected them onto the scalp. To highlight the changes more distinctly, the corresponding baseline features (collected during the 5-s preparation period) were subtracted from the projected DE features. Figures 8, 9 display the brain topographic maps for different emotional states in non-Haptic 1, Haptic 1, non-Haptic 2, and Haptic 2, respectively.

Figure 8. The average neural patterns in different emotional states for 16 subjects with non-Haptic 1 or Haptic 1.

Figure 9. The average neural patterns in different emotional states for 16 subjects with non-Haptic 2 or Haptic 2.

In Figure 8, the average neural patterns for different emotions in non-Haptic 1 and Haptic 1 are depicted. In the non-Haptic 1 state, the four types of emotions differ in the lateral temporal areas, mainly in the beta and gamma frequency bands. Specifically, the lateral temporal areas exhibit obvious activation for joy in the beta and gamma bands, while the corresponding areas for neutral emotions are inhibited. For sadness, the prefrontal areas show more activation in the beta and gamma bands than for the other emotions. The alpha band activation in the parietal areas is higher for joy and neutral emotions than for sadness and fear. The occipital regions show low activation in the theta and alpha bands for all emotions, with sadness and fear showing the least. With the application of Haptic 1, the energy distribution of different emotions across brain regions follows a similar trend to that of non-Haptic 1, but with more notable variations. The lateral temporal areas show more activation in the beta and gamma bands for joy. The lateral temporal and parietal areas for sadness and fear concentrate more energy in both the beta and gamma bands. Therefore, haptic vibration not only maintains the fundamental neural patterns for different emotions, but also increases the activation of the lateral temporal and prefrontal areas.

Figure 9 presents the average neural patterns of the four emotions for all subjects in non-Haptic 2 or Haptic 2. In non-Haptic 2, the brain topography maps for the four emotions are similar to those displayed in Figure 8, with only minor discrepancies. This phenomenon can be attributed to the fact that subjects are unlikely to respond identically to various audiovisual materials conveying the same emotion. Subsequently, the neural patterns of the different emotions in Haptic 2 state showed some similarity to those of Haptic 1. However, there is a more concentrated distribution of energy in brain regions in Haptic 2 state, with greater activation in lateral temporal and prefrontal areas in beta and gamma bands, and more inhibition in occipital areas in alpha bands. Hence, the above two haptic patterns indeed have different effects on emotion-related regions.

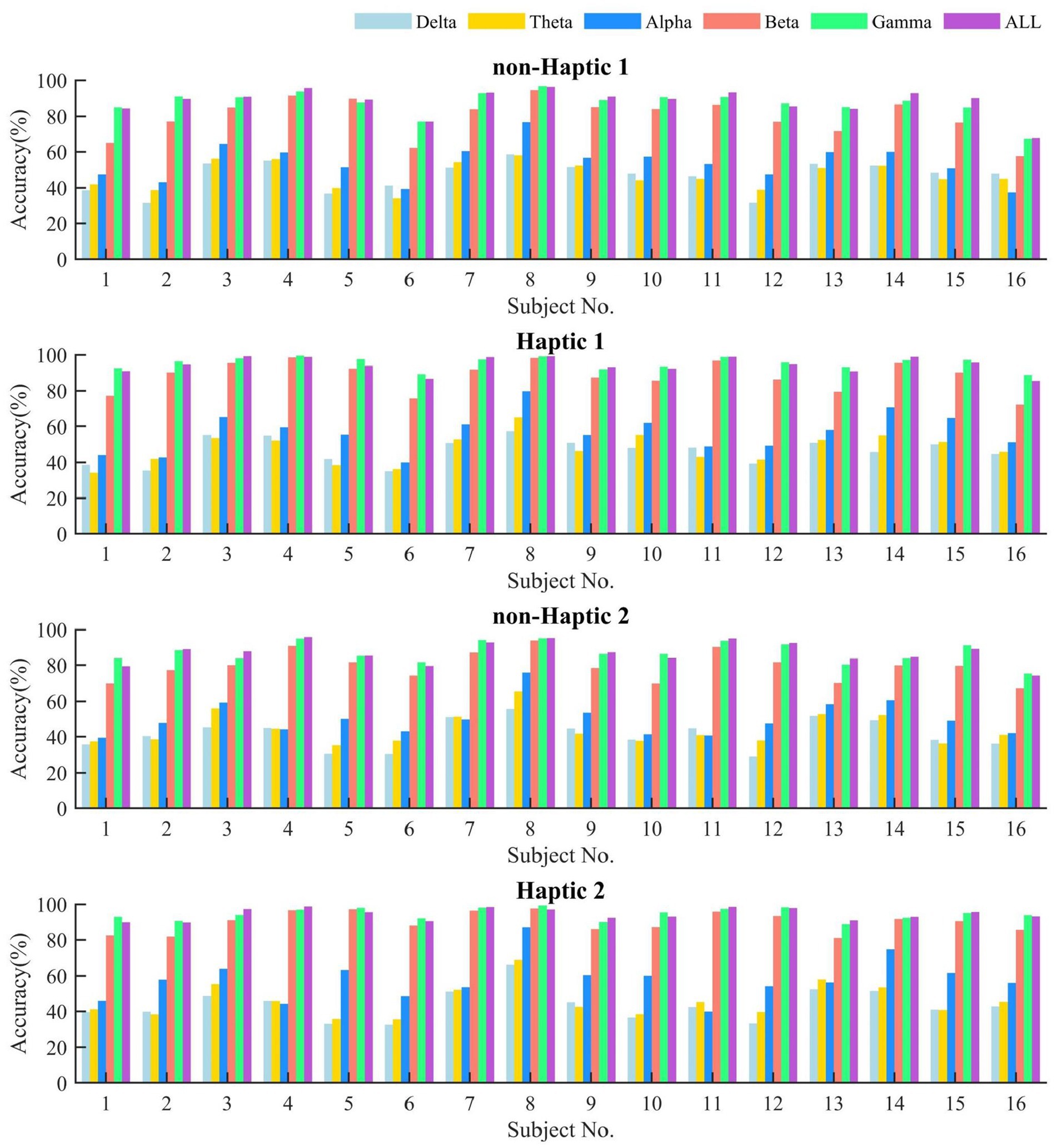

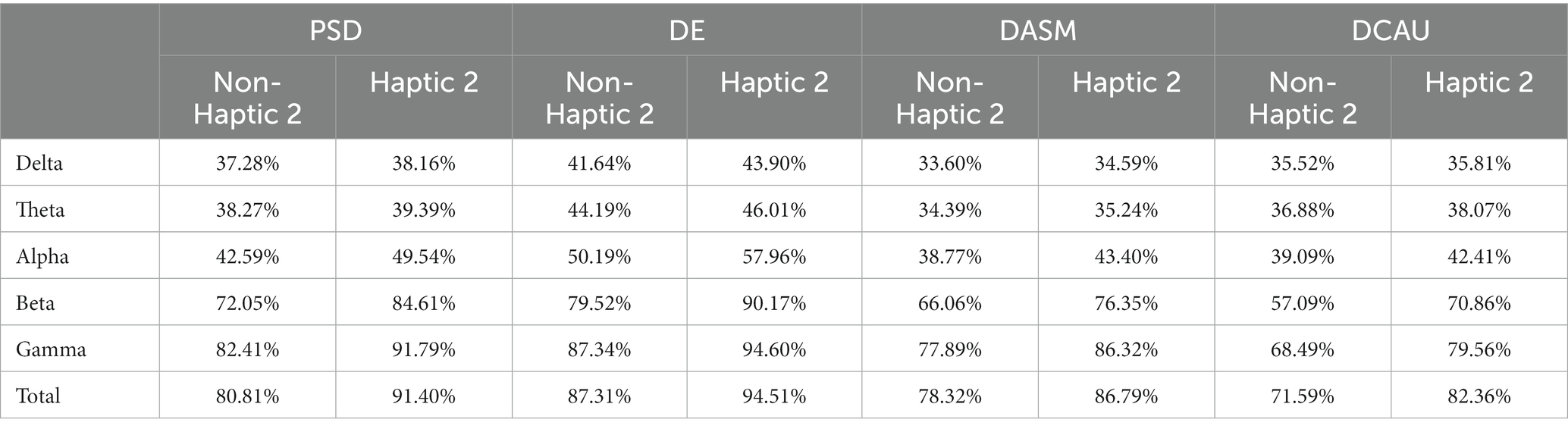

We employed SVM to classify the DE features in all frequency bands. Figure 10 provides a visual representation of the emotional classification results for 16 subjects in non-Haptic 1, Haptic 1, non-Haptic 2, and Haptic 2, respectively. It can be observed that the classification accuracy of DE features in beta, gamma, and full band frequency ranges is significantly higher than in delta and theta bands. This indicates that the delta and theta bands have little impact on emotion recognition, similar to the difficulty of finding obvious differences between these two bands in different emotions in brain topographic maps. Moreover, the accuracy in haptic patterns is significantly higher than that in non-haptic patterns, especially in beta, gamma, and full band frequencies. The results demonstrate that combining traditional visual–auditory stimuli with haptic stimuli can effectively induce emotions.

Figure 10. The average classification accuracy of DE feature by SVM in different frequency bands with non-haptic and haptic patterns.

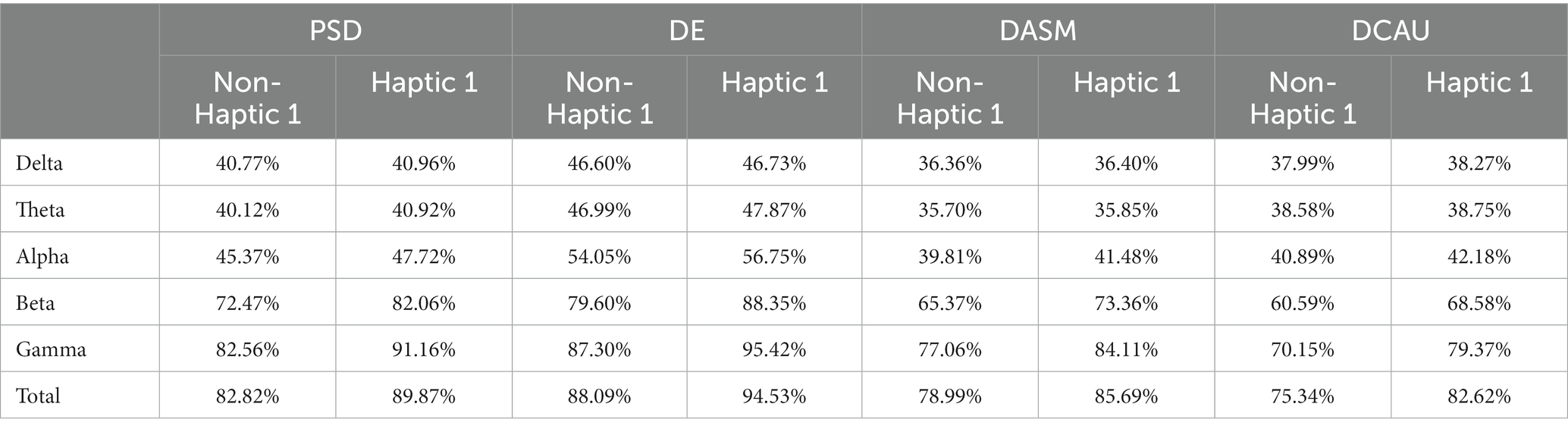

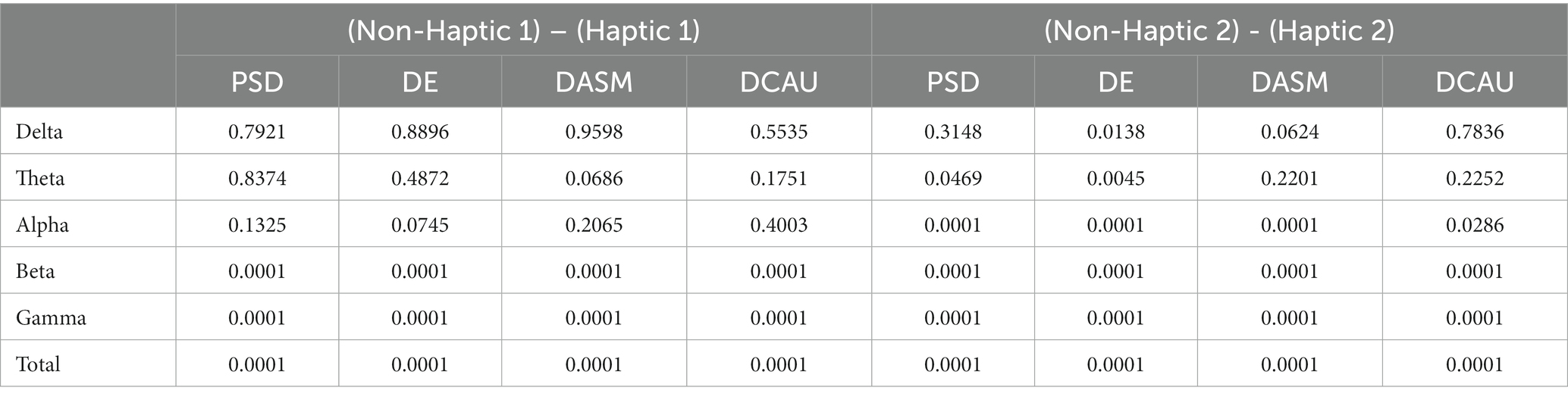

Tables 3, 4 present the average SVM classification results of different features of the five frequency bands in Haptic 1 and Haptic 2, respectively. Meanwhile, the paired-sample t-test results on the accuracy of four features in different frequency bands for the two cases of non-Haptic 1 - Haptic 1, and non-Haptic 2 - Haptic 2 are shown in Table 5. The results indicate that the average accuracy of PSD, DE, DASM, and DCAU features in the two haptic patterns is significantly higher than that in non-haptic patterns, demonstrating that haptic stimuli can enhance subjects’ emotions. In most cases, the average accuracy of DE features is higher than that of the other three features, indicating that DE features are superior in representing emotions. Additionally, the average accuracy of beta and gamma bands is significantly higher than that of other bands for four features. These findings suggest that beta and gamma bands play a crucial role in EEG-based emotion recognition and are highly correlated with emotional states. These quantitative results are consistent with the qualitative results obtained from the brain topographic maps.
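A paired-sample t-test compares two conditions measured on the same units by testing whether the mean of the per-unit differences is zero. The sketch below computes the t statistic and degrees of freedom with the standard library. It assumes the pairing is across the 16 subjects and that the test is two-sided, neither of which is stated explicitly in the text; under those assumptions the critical value at α = 0.05 with 15 degrees of freedom is about 2.131. The function name is hypothetical.

```python
import math
import statistics

# Two-sided critical value of Student's t for alpha = 0.05 and df = 15,
# i.e. 16 paired observations (assumed here to be the 16 subjects).
T_CRITICAL_DF15 = 2.131


def paired_t_statistic(without_haptic, with_haptic):
    """Return (t, degrees_of_freedom) for paired accuracy measurements.

    Each position in the two lists must refer to the same subject. The
    differences must not all be identical, otherwise the standard
    deviation is zero and t is undefined.
    """
    diffs = [h - n for n, h in zip(without_haptic, with_haptic)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1
```

With 16 subjects, an improvement would be called significant at the 5% level when the absolute value of `t` exceeds `T_CRITICAL_DF15`.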

Table 3. The average classification accuracy of four features in different frequency bands with non-Haptic 1 and Haptic 1.

Table 4. The average classification accuracy of four features in different frequency bands with non-Haptic 2 and Haptic 2.

Table 5. Paired-sample t-test results on the accuracy of four features in different frequency bands for two cases of non-Haptic 1 - Haptic 1, and non-Haptic 2 - Haptic 2 (α = 0.05).

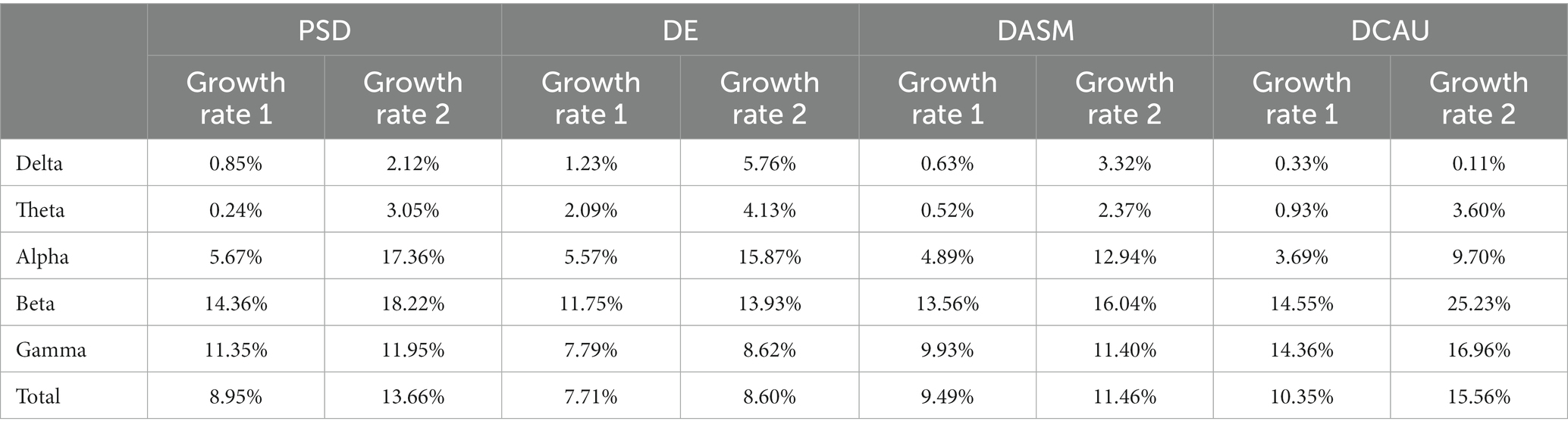

To further compare the emotional enhancement effects of different haptic patterns, we calculated the average classification accuracy growth rates of the four features across the frequency bands in Haptic 1 and Haptic 2, as presented in Table 6. It can be seen that the accuracy growth rates in Haptic 2 are higher than those in Haptic 1 for all features and all frequency bands, with a larger increase observed in the alpha and beta frequency bands. Taking DE features as an example, the classification accuracy of Haptic 1 and Haptic 2 increased by 7.71% and 8.60%, respectively. In particular, as shown in Table 5, Haptic 2 presents a significant improvement in classification accuracy compared to not applying haptic stimuli in almost all bands, but the classification accuracy of Haptic 1 is not significantly improved in the lower bands. These results suggest that Haptic 2, with adaptive vibration intensity and rhythm, is more effective in eliciting emotions than Haptic 1, which has constant vibration intensity and rhythm.

Table 6. The average classification accuracy growth rates of four features in different frequency bands with Haptic 1 and Haptic 2.

This paper presented the average neural patterns associated with four emotional states evoked by visual–auditory stimuli, as depicted in Figures 8, 9. Notably, the activation of lateral temporal and prefrontal areas in beta and gamma bands varied obviously in different emotional states. It suggests that these brain regions are highly correlated with different emotions and are considered the key regions for generating emotions. Overall, these findings are consistent with the results of previous research (Zheng and Lu, 2015; Zheng et al., 2019b). Besides, the accuracy of emotion classification was obviously higher in beta and gamma bands compared to other single bands. Interestingly, in some cases, the accuracy in total bands was lower than that in gamma bands. This may be due to the low classification accuracy of lower frequency band signals, which disturb the overall emotion classification. In summary, collecting EEG signals in beta and gamma bands from lateral temporal and prefrontal regions is an effective approach for recognizing emotions induced by visual–auditory stimuli. This finding can be utilized as a reference to simplify the number of EEG acquisition electrodes and reduce the data scale.

This paper demonstrated the superiority of haptic patterns over non-haptic patterns in EEG-based emotion recognition. Previous studies have come to a similar conclusion that haptic stimuli improve the efficiency of emotion recognition tasks (Raheel et al., 2020; Li et al., 2022). However, few studies have analyzed and explained the phenomenon from the perspective of neural patterns. Notably, haptic stimuli not only maintain the fundamental neural patterns for different emotions, but also increase the activation of the lateral temporal and prefrontal areas that are closely associated with emotion, as illustrated in Figures 8, 9. We speculate that there are two reasons for this phenomenon: (1) Although haptic stimuli applied to the torso typically activate the somatosensory cortex in the parietal area directly, there was only a weak enhancement of the parietal area in the brain topographic maps. However, the brain is a complex interconnected structure, and it is possible that haptic stimuli affect lateral temporal and frontal regions through the somatosensory cortex. Meanwhile, a converging body of literature has shown that the somatosensory cortex plays an important role in each stage of emotional processing (Kropf et al., 2019; Sel et al., 2020). (2) The application of haptic stimuli increased the subjects’ immersion. Rather than exclusively focusing on haptic stimuli, the subjects’ senses may have been fully engaged in watching emotional movie clips.

In this paper, a novel haptic pattern (Haptic 2) was designed and compared with the existing haptic pattern (Haptic 1) in the EEG emotional experiment paradigm. The experimental results demonstrate that different haptic patterns provide different levels of emotional enhancement. According to the t-test in Table 5, the classification accuracy of Haptic 2 was significantly higher than that of the non-haptic pattern in almost all frequency bands. However, the classification accuracy of Haptic 1 was not significantly improved in the lower bands. Furthermore, as shown in Table 6, the classification accuracy growth rates in Haptic 2 were slightly higher than those in Haptic 1. As Figure 9 shows, Haptic 2 resulted in a more concentrated energy distribution in the subjects’ brain regions. Specifically, lateral temporal and prefrontal regions increased activation in the beta and gamma bands, while occipital regions exhibited greater inhibition in the alpha band. This amplified difference in energy distribution in emotion-related regions may account for the higher classification accuracy growth rates of Haptic 2. Additionally, in their subjective feedback, most subjects said that Haptic 2 was better suited to the movie scenes. We hypothesize that the adaptive adjustment of vibration intensity and rhythm with audio in Haptic 2 can enhance immersion and stimulate target emotions more fully than Haptic 1. In general, these findings suggest that the proposed haptic pattern is, to some degree, superior in evoking target emotions.

In our work, we combined two haptic vibration patterns with visual–auditory stimuli to induce emotions and classified four emotions based on EEG signals. However, our study still has certain limitations. Firstly, the number of subjects was not large enough, and the age range was limited to 20 to 30 years old. In the future, we will extend the proposed experiment paradigm to a larger number of subjects and a wider age range to investigate whether there are gender and age differences in the effects of haptic stimuli on emotion. In addition, this study only extracted features from single-channel EEG data, ignoring the functional connectivity between brain regions. In future studies, we will use EEG-based functional connectivity patterns and more advanced deep learning algorithms that take brain topology into account. Moreover, our experimental results preliminarily showed that the adaptive haptic vibration pattern is more advantageous for enhancing emotion, but more detailed and well-founded designs of haptic patterns require further exploration. In the design of the two haptic patterns, we only considered vibration intensity and rhythm, and neglected the impact of vibration location. Hence, we will create more comprehensive haptic vibration patterns to further investigate the mechanism of haptic stimuli on emotion enhancement.

The motivation of this study was to investigate how the emotional effects induced by different haptic patterns vary. This paper proposed a novel haptic pattern whose vibration intensity and rhythm adapt to the video volume, and compared it with an existing haptic pattern in an emotion experiment paradigm. Specifically, the two haptic patterns were combined with traditional visual–auditory stimuli to induce emotions, and four target emotions were classified based on EEG signals. Compared with the visual–auditory stimuli alone, the visual–auditory–haptic fusion stimuli significantly improved the emotion classification accuracy. A possible reason is that haptic stimuli cause distinct activation in the lateral temporal and prefrontal areas of the emotion-related regions. Moreover, different haptic patterns had varying effects on enhancing emotions: the classification accuracy of the existing and the proposed haptic patterns increased by 7.71% and 8.60%, respectively. In addition, the proposed haptic pattern showed a significant improvement in classification accuracy over the non-haptic pattern in almost all bands. The results suggest that the haptic pattern with adaptive vibration intensity and rhythm is more effective in enhancing emotion. Therefore, flexible and varied haptic patterns have extensive potential in the field of affective haptics.
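As a rough illustration of what adapting vibration to volume could look like, the sketch below maps the loudness of successive audio windows to a normalized vibration level. It is not the authors' implementation: the windowed RMS loudness measure, the linear mapping, the function names, and the sample values are all assumptions made for this example, and rhythm adaptation is not covered.

```python
import math


def window_rms(window):
    """Root-mean-square amplitude of one window of audio samples."""
    return math.sqrt(sum(s * s for s in window) / len(window))


def volume_to_vibration(samples, window_size):
    """Map per-window audio volume to a vibration level in [0.0, 1.0].

    The loudest window maps to 1.0; a fully silent track maps to all zeros.
    """
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    windows = [samples[i:i + window_size]
               for i in range(0, len(samples), window_size)]
    volumes = [window_rms(w) for w in windows]
    peak = max(volumes, default=0.0)
    if peak == 0.0:
        return [0.0] * len(volumes)
    return [v / peak for v in volumes]


# Hypothetical audio track: silence, then a quiet passage, then a loud one.
audio = [0.0] * 4 + [0.5, -0.5] * 2 + [1.0, -1.0] * 2
levels = volume_to_vibration(audio, window_size=4)
print(levels)
```

A constant pattern would instead return the same level for every window, so the difference between the two patterns lies entirely in whether the level tracks the soundtrack.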

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Ethics Committee of Southeast University. The patients/participants provided their written informed consent to participate in this study.

XW and BX designed the study, analyzed the data, and wrote the manuscript. XW, WZ, and JW set up the experiment platform. LD and JP performed the experiment. JW, CH, and HL reviewed and edited the manuscript. All authors read and approved the final manuscript.

This work was supported by the National Key Research and Development Program of China (2022YFC2405602), the Natural Science Foundation of Jiangsu Province (No. BK20221464), the Key Research and Development Program of Jiangsu Province (No. BE2022363), the Basic Research Project of Leading Technology of Jiangsu Province (No. BK20192004), the National Natural Science Foundation of China (Nos. 92148205, 62173088, and 62173089), and the Guangxi Key Laboratory of Automatic Detecting Technology and Instruments (No. YQ22207).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Bonanni, L., and Vaucelle, C. (2006). “A framework for haptic psycho-therapy,” in Proceedings of IEEE ICPS Pervasive Services (ICPS), France.

Casaccia, S., Sirevaag, E. J., Frank, M. G., O'Sullivan, J. A., Scalise, L., and Rohrbaugh, J. W. (2021). Facial muscle activity: high-sensitivity noncontact measurement using laser doppler vibrometry. IEEE Trans. Instrum. Meas. 70, 1–10. doi: 10.1109/TIM.2021.3060564

Ceballos, R., Ionascu, B., Park, W., and Eid, M. (2018). Implicit emotion communication: EEG classification and haptic feedback. ACM Trans. Multimed. Comput. Commun. Appl. 14, 1–18. doi: 10.1145/3152128

Changeon, G., Graeff, D., Anastassova, M., and Lozada, J. (2012). “Tactile emotions: a vibrotactile tactile gamepad for transmitting emotional messages to children with autism” in Haptics: Perception, devices, mobility, and communication, 2012 (Finland)

Craik, A., He, Y. T., and Contreras-Vidal, J. L. (2019). Deep learning for electroencephalogram (EEG) classification tasks: a review. J. Neural Eng. 16:031001. doi: 10.1088/1741-2552/ab0ab5

Culbertson, H., Schorr, S. B., and Okamura, A. M. (2018). Haptics: the present and future of artificial touch sensation. Annu. Rev. Control Robot. Auton. Syst. 1, 385–409. doi: 10.1146/annurev-control-060117-105043

Dael, N., Mortillaro, M., and Scherer, K. R. (2012). Emotion expression in body action and posture. Emotion 12, 1085–1101. doi: 10.1037/a0025737

Eid, M. A., and Al Osman, H. (2016). Affective haptics: current research and future directions. IEEE Access. 4, 26–40. doi: 10.1109/ACCESS.2015.2497316

Eid, M., Cha, J. E., and El Saddik, A. (2008). “HugMe: a haptic videoconferencing system for interpersonal communication,” VECIMS 2008 – IEEE conference on virtual environments, human-computer interfaces and measurement systems proceedings. Turkey

Fletcher, D. C., Dreer, L. E., and Elliott, T. R. (2005). Tactile analogue scale instrument for investigation of low vision patient psychological characteristics. Int. Congr. Ser. 1282, 125–128. doi: 10.1016/j.ics.2005.05.173

Fu, Z. Z., Zhang, B. N., He, X. R., Li, Y. X., Wang, H. Y., and Huang, J. (2022). Emotion recognition based on multi-modal physiological signals and transfer learning. Front. Neurosci. 16:1000716. doi: 10.3389/fnins.2022.1000716

Giannakakis, G., Grigoriadis, D., Giannakaki, K., Simantiraki, O., Roniotis, A., and Tsiknakis, M. (2022). Review on psychological stress detection using biosignals. IEEE Trans. Affect. Comput. 13, 440–460. doi: 10.1109/TAFFC.2019.2927337

Haynes, A., Simons, M. F., Helps, T., Nakamura, Y., and Rossiter, J. (2019). A wearable skin-stretching tactile interface for human-robot and human-human communication. IEEE Robot. Autom. Lett. 4, 1641–1646. doi: 10.1109/LRA.2019.2896933

Hertenstein, M. J., Holmes, R., McCullough, M., and Keltner, D. (2009). The communication of emotion via touch. Emotion 9, 566–573. doi: 10.1037/a0016108

Hertenstein, M. J., Keltner, D., App, B., Bulleit, B. A., and Jaskolka, A. R. (2006). Touch communicates distinct emotions. Emotion 6, 528–533. doi: 10.1037/1528-3542.6.3.528

Hossain, S. K. A., Rahman, A., and El Saddik, A. (2011). Measurements of multimodal approach to haptic interaction in second life interpersonal communication system. IEEE Trans. Instrum. Meas. 60, 3547–3558. doi: 10.1109/TIM.2011.2161148

Huang, K., Starner, T., Do, E., Weinberg, G., Kohlsdorf, D., Ahlrichs, C., et al. (2010). “Mobile music touch: mobile tactile stimulation for passive learning,” Proceedings of the SIGCHI conference on Human factors in computing systems (CHI), 2010 Atlanta

Keltner, D., Sauter, D., Tracy, J., and Cowen, A. (2019). Emotional expression: advances in basic emotion theory. J. Nonverbal Behav. 43, 133–160. doi: 10.1007/s10919-019-00293-3

Kim, M. K., Kim, M., Oh, E., and Kim, S. P. (2013). A review on the computational methods for emotional state estimation from the human EEG. Comput. Math. Method Med. 2013:573734. doi: 10.1155/2013/573734

Koelstra, S., Muhl, C., Soleymani, M., Lee, J. S., Yazdani, A., Ebrahimi, T., et al. (2012). DEAP: a database for emotion analysis using physiological signals. IEEE Trans. Affect. Comput. 3, 18–31. doi: 10.1109/T-AFFC.2011.15

Kropf, E., Syan, S. K., Minuzzi, L., and Frey, B. N. (2019). From anatomy to function: the role of the somatosensory cortex in emotional regulation. Braz. J. Psychiat. 41, 261–269. doi: 10.1590/1516-4446-2018-0183

Li, X., Song, D. W., Zhang, P., Zhang, Y. Z., Hou, Y. X., and Hu, B. (2018). Exploring EEG features in cross-subject emotion recognition. Front. Neurosci. 12:162. doi: 10.3389/fnins.2018.00162

Li, D. H., Yang, Z. Y., Hou, F. H., Kang, Q. J., Liu, S., Song, Y., et al. (2022). EEG-based emotion recognition with haptic vibration by a feature fusion method. IEEE Trans. Instrum. Meas. 71, 1–11. doi: 10.1109/TIM.2022.3147882

Liu, S. Q., Wang, X., Zhao, L., Li, B., Hu, W. M., Yu, J., et al. (2022). 3DCANN: a spatio-temporal convolution attention neural network for EEG emotion recognition. IEEE J. Biomed. Health Inform. 26, 5321–5331. doi: 10.1109/JBHI.2021.3083525

Mazzoni, A., and Bryan-Kinns, N. (2015). “How does it feel like? An exploratory study of a prototype system to convey emotion through haptic wearable devices,” International conference on intelligent technologies for interactive entertainment (INTETAIN), 2015. Torino

Nardelli, M., Greco, A., Bianchi, M., Scilingo, E. P., and Valenza, G. (2020). Classifying affective haptic stimuli through gender-specific heart rate variability nonlinear analysis. IEEE Trans. Affect. Comput. 11, 459–469. doi: 10.1109/TAFFC.2018.2808261

Raheel, A., Majid, M., and Anwar, S. M. (2020). DEAR-MULSEMEDIA: dataset for emotion analysis and recognition in response to multiple sensorial media. Inf. Fusion. 65, 37–49. doi: 10.1016/j.inffus.2020.08.007

Rahman, A., Hossain, S., and El-Saddik, A. (2010). “Bridging the gap between virtual and real world by bringing an interpersonal haptic communication system in second life,” IEEE International Symposium on Multimedia, 2010 Taiwan

Sarkar, P., and Etemad, A. (2022). Self-supervised ECG representation learning for emotion recognition. IEEE Trans. Affect. Comput. 13, 1541–1554. doi: 10.1109/TAFFC.2020.3014842

Sel, A., Calvo-Merino, B., Tsakiris, M., and Forster, B. (2020). The somatotopy of observed emotions. Cortex 129, 11–22. doi: 10.1016/j.cortex.2020.04.002

Shi, L. C., Jiao, Y. Y., and Lu, B. L. (2013). “Differential entropy feature for EEG-based vigilance estimation,” 35th Annual International Conference of the IEEE-Engineering-in-Medicine-and-Biology-Society (EMBC), 2013, Japan

Tsalamlal, M. Y., Rizer, W., Martin, J. C., Ammi, M., and Ziat, M. (2018). Affective communication through air jet stimulation: evidence from event-related potentials. Int. J. Hum. Comput. Interact. 34, 1157–1168. doi: 10.1080/10447318.2018.1424065

Wu, M. C., Teng, W., Fan, C. H., Pei, S. B., Li, P., and Lv, Z. (2023). An investigation of olfactory-enhanced video on EEG-based emotion recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 1602–1613. doi: 10.1109/TNSRE.2023.3253866

Wu, X., Zheng, W. L., Li, Z. Y., and Lu, B. L. (2022). Investigating EEG-based functional connectivity patterns for multimodal emotion recognition. J. Neural Eng. 19:016012. doi: 10.1088/1741-2552/ac49a7

Xu, M. H., Cheng, J., Li, C., Liu, Y., and Chen, X. (2023). Spatio-temporal deep forest for emotion recognition based on facial electromyography signals. Comput. Biol. Med. 156:106689. doi: 10.1016/j.compbiomed.2023.106689

Yin, G. H., Sun, S. Q., Yu, D. A., Li, D. J., and Zhang, K. J. (2022). A multimodal framework for large-scale emotion recognition by fusing music and electrodermal activity signals. ACM Trans. Multimed. Comput. Commun. Appl. 18, 1–23. doi: 10.1145/3490686

Zhang, M. M., Wu, J. D., Song, J. B., Fu, R. Q., Ma, R., Jiang, Y. C., et al. (2023). Decoding coordinated directions of bimanual movements from EEG signals. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 248–259. doi: 10.1109/TNSRE.2022.3220884

Zhang, J. H., Yin, Z., Chen, P., and Nichele, S. (2020). Emotion recognition using multi-modal data and machine learning techniques: a tutorial and review. Inf. Fusion. 59, 103–126. doi: 10.1016/j.inffus.2020.01.011

Zhang, S. Q., Zhao, X. M., and Tian, Q. (2022). Spontaneous speech emotion recognition using multiscale deep convolutional LSTM. IEEE Trans. Affect. Comput. 13, 680–688. doi: 10.1109/TAFFC.2019.2947464

Zheng, W. L., Liu, W., Lu, Y. F., Lu, B. L., and Cichocki, A. (2019a). EmotionMeter: a multimodal framework for recognizing human emotions. IEEE T. Cybern. 49, 1110–1122. doi: 10.1109/TCYB.2018.2797176

Zheng, W. L., and Lu, B. L. (2015). Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 7, 162–175. doi: 10.1109/TAMD.2015.2431497

Zheng, W. L., Zhu, J. Y., and Lu, B. L. (2019b). Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 10, 417–429. doi: 10.1109/TAFFC.2017.2712143

Zhong, B., Shen, M., Liu, H. W., Zhao, Y. J., Qian, Q. Y., Wang, W., et al. (2023). A cable-driven exoskeleton with personalized assistance improves the gait metrics of people in subacute stroke. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 2560–2569. doi: 10.1109/TNSRE.2023.3281409

Keywords: affective haptics, wearable haptic vibration, electroencephalogram, affective computing, emotion recognition

Citation: Wang X, Xu B, Zhang W, Wang J, Deng L, Ping J, Hu C and Li H (2023) Recognizing emotions induced by wearable haptic vibration using noninvasive electroencephalogram. Front. Neurosci. 17:1219553. doi: 10.3389/fnins.2023.1219553

Received: 09 May 2023; Accepted: 20 June 2023; Published: 06 July 2023.

Edited by:

Lei Zhang, Nanjing Normal University, China

Reviewed by:

Yuanpeng Zhang, Nantong University, China

Jing Xue, Wuxi People’s Hospital Affiliated to Nanjing Medical University, China

Mingming Zhang, Southern University of Science and Technology, China

Copyright © 2023 Wang, Xu, Zhang, Wang, Deng, Ping, Hu and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Baoguo Xu, xubaoguo@seu.edu.cn
