- ¹Institute of Innovative Research, Tokyo Institute of Technology, Yokohama, Japan

- ²Institute of Artificial Intelligence and Robotics, Xi'an Jiaotong University, Xi'an, China

- ³Key Laboratory of Biomedical Information Engineering of Ministry of Education, Xi'an Jiaotong University, Xi'an, China

- ⁴School of Computing, Tokyo Institute of Technology, Yokohama, Japan

The Partial Least Square Regression (PLSR) method has shown admirable competence for predicting continuous variables from inter-correlated electrocorticography signals in the brain-computer interface. However, PLSR is essentially formulated with the least square criterion and is therefore considerably prone to performance deterioration caused by noise in the brain recordings. To address this problem, this study proposes a new robust variant of PLSR. To this end, the maximum correntropy criterion (MCC) is utilized to develop a robust implementation of PLSR, called Partial Maximum Correntropy Regression (PMCR). Half-quadratic optimization is used to calculate the robust projectors for dimensionality reduction, and the regression coefficients are optimized by a fixed-point method. The proposed PMCR is evaluated with a synthetic example and a public electrocorticography dataset under three performance indicators. For the synthetic example, PMCR achieved better prediction results than the existing methods. PMCR could also extract valid information with a limited number of decomposition factors in a noisy regression scenario. For the electrocorticography dataset, PMCR achieved superior decoding performance in most cases and also showed the least deterioration of the neurophysiological pattern under noise interference. The experimental results demonstrate that the proposed PMCR can outperform the existing methods in a noisy, inter-correlated, and high-dimensional decoding task. PMCR can alleviate the performance degradation caused by adverse noise and improve the robustness of electrocorticography decoding for the brain-computer interface.

1. Introduction

Brain-computer interface (BCI) has been conceived as a promising technology that translates cerebral recordings generated by cortical neurons into appropriate commands for controlling neuroprosthetic devices (Wolpaw et al., 2002). The capability of BCI for repairing or reproducing sensory-motor functions has been steadily strengthened by recent scientific and technological advances (Donoghue, 2002; Mussa-Ivaldi and Miller, 2003; Lebedev and Nicolelis, 2006). Non-invasive recordings, especially electroencephalogram (EEG) and magnetoencephalogram (MEG), are widely used to build BCI systems owing to their ease of use and satisfactory temporal resolution, although non-invasive BCI systems can be limited in their capabilities and customarily require considerable training (Amiri et al., 2013). Invasive single-unit activities and local field potentials commonly provide better decoding performance but suffer from poor long-term stability owing to variability in the recorded neuronal ensembles (Chestek et al., 2007). A sophisticated alternative, which exhibits higher signal amplitudes than EEG while presenting superior long-term stability compared with invasive modalities, is the semi-invasive electrocorticography (ECoG) (Buzsáki et al., 2012). Numerous studies in recent years have investigated the potential of ECoG signals for decoding motions (Levine et al., 2000; Leuthardt et al., 2004; Chin et al., 2007; Pistohl et al., 2008; Ball et al., 2009b; Chao et al., 2010; Shimoda et al., 2012). The serviceability of ECoG signals for online practice has also been demonstrated in Leuthardt et al. (2004, 2006) and Schalk et al. (2008).

To accomplish the inter-correlated and potentially high-dimensional ECoG decoding tasks, the partial least square regression (PLSR) algorithm, as well as various improved versions, has been widely utilized in the last decade to predict continuous variables from ECoG signals (Chao et al., 2010; Eliseyev et al., 2011, 2012, 2017; Shimoda et al., 2012; Zhao et al., 2012, 2013; Eliseyev and Aksenova, 2016; Foodeh et al., 2020). Chao et al. (2010) successfully predicted the three-dimensional continuous hand trajectories of two monkeys during asynchronous food-reaching tasks from time-frequency features of subdural ECoG signals with the PLSR algorithm. They further showed the admirable prediction capability of PLSR in an epidural ECoG study (Shimoda et al., 2012). Recently, different strategies have been investigated to improve the decoding performance of PLSR. For instance, multi-way PLSR algorithms have been proposed as a generalization for tensor analysis in the ECoG decoding tasks (Bro, 1996; Shimoda et al., 2012; Zhao et al., 2013; Eliseyev et al., 2017). Moreover, regularization techniques have been used to penalize the objective function with an extra regularization term to achieve desirable prediction (Eliseyev et al., 2012; Eliseyev and Aksenova, 2016; Foodeh et al., 2020). Although the PLSR algorithm was initially developed for econometrics and chemometrics (Wold, 1966), it has emerged as a popular method for neural imaging and decoding (Krishnan et al., 2011; Zhao et al., 2014).

PLSR solves a regression problem primarily through dimensionality reduction on both the explanatory matrix (input) and the response matrix (output), in which the dimensionality-reduced samples (commonly called latent variables) of the two sets exhibit maximal correlation, thus establishing the association from input variables to output variables. Nevertheless, the conventional PLSR and most existing variants are in essence formulated by the least square criterion, which assigns excessive importance to large deviations caused by noise. On the other hand, although the ECoG signal usually exhibits a relatively higher signal-to-noise ratio (SNR) than the non-invasive EEG recording, previous studies have revealed that ECoG is also prone to contamination by physiological artifacts with pronounced amplitudes (Otsubo et al., 2008; Ball et al., 2009a). As a result, PLSR can be inadequate for noisy ECoG decoding tasks because of its insufficient robustness.

The present study aims to propose a novel robust version of PLSR by replacing the conventional least square criterion with the maximum correntropy criterion (MCC), which was proposed in information theoretic learning (ITL) (Principe, 2010) and has yielded state-of-the-art robust approaches in different tasks, including regression (Liu et al., 2007; Chen and Príncipe, 2012; Feng et al., 2015), classification (Singh et al., 2014; Ren and Yang, 2018), principal component analysis (He et al., 2011), and feature extraction (Dong et al., 2017). Recently, a rudimentary implementation of the MCC in the PLSR algorithm has been investigated in Mou et al. (2018), where the MCC was employed in the process of dimensionality reduction. However, the algorithm proposed in Mou et al. (2018) may be limited in some respects. First, apart from the MCC-based dimensionality reduction, it still obtains the regression relations under the least square criterion. Second, it only considers the dimensionality reduction for the explanatory matrix. Consequently, one has to calculate the regression coefficients separately for each dimension of the response matrix, which means it could be inadequate for multivariate response prediction.

By comparison, the present study aims to realize a more comprehensive implementation of the MCC framework in PLSR. The main contributions of this study are summarized as follows.

1) We reformulate PLSR thoroughly with the MCC framework, so that not only the dimensionality reduction but also the regression relations between the different variables are established under the MCC.

2) Both the explanatory matrix (input) and the response matrix (output) are treated with MCC-based dimensionality reduction. As a result, the proposed algorithm is adequate for multivariate response prediction.

3) We utilize Gaussian kernel functions with individual kernel bandwidths for different reconstruction errors and prediction errors. In addition, each kernel bandwidth value could be calculated from the corresponding set of errors directly.

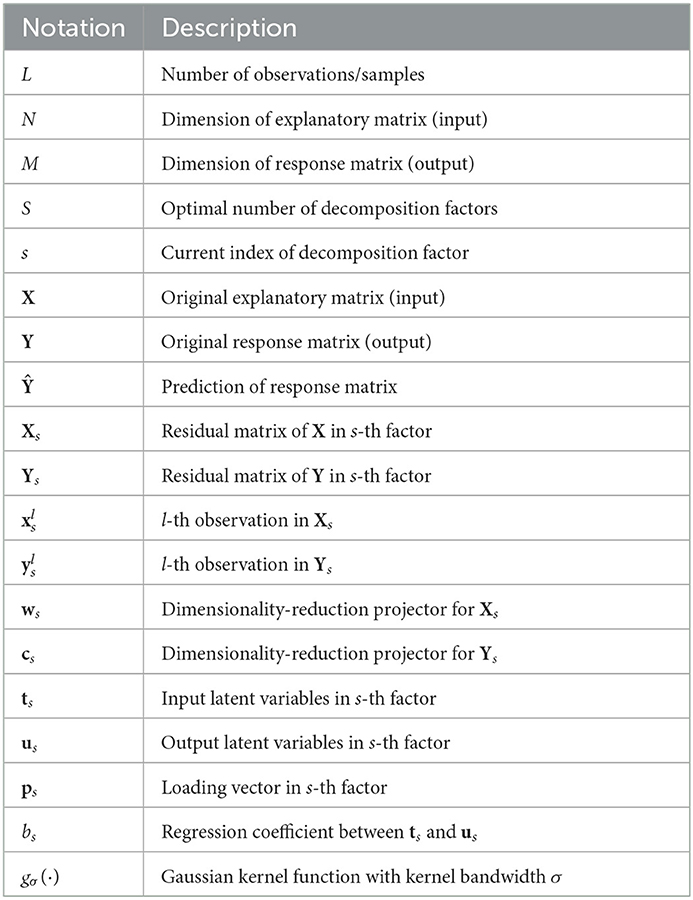

The remainder of this paper is organized as follows. Section 2 introduces the conventional PLSR method as well as the regularized versions. Section 3 gives a brief introduction about MCC and the rudimentary MCC-based PLSR algorithm. Section 4 presents the reformulation of PLSR with the MCC framework, proposing the partial maximum correntropy regression (PMCR) algorithm. Section 5 evaluates the proposed method on synthetic and real ECoG datasets, respectively. Some discussions about the proposed method are given in Section 6. Finally, this paper is concluded in Section 7. To facilitate the presentation of this paper, the main notations are listed in Table 1.

2. Partial least square regression

2.1. Conventional PLSR

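Consider a data set with the explanatory matrix X ∈ ℝ^{L×N} and the response matrix Y ∈ ℝ^{L×M}, in which N and M denote the respective numbers of dimensions and L is the number of observations. PLSR is an iterative regression method that performs dimensionality reduction and decomposition on the explanatory and response matrices simultaneously for S iterations, so that they can be expressed by

$$X = TP^{T}, \qquad Y = TBC^{T}, \tag{1}$$

where T = [t_1, …, t_S] ∈ ℝ^{L×S} and P = [p_1, …, p_S] ∈ ℝ^{N×S} are the latent variables and loading vectors for X, respectively, C = [c_1, …, c_S] ∈ ℝ^{M×S} collects the loading vectors of Y, and B = diag(b_1, …, b_S) ∈ ℝ^{S×S} is a diagonal matrix. For dimensionality reduction, in the s-th iteration with residual matrices X_s and Y_s, the covariance between the latent variables t_s = X_s w_s and u_s = Y_s c_s is maximized by

$$\max_{w_s, c_s} \; t_s^{T} u_s = (X_s w_s)^{T} (Y_s c_s) \quad \text{s.t.} \quad \|w_s\|_2 = \|c_s\|_2 = 1, \tag{2}$$

in which w_s ∈ ℝ^{N} and c_s ∈ ℝ^{M} are the projectors utilized for dimensionality reduction on X_s and Y_s, respectively, u_s is the latent variable for Y_s, and ∥·∥2 denotes the L2-norm. After obtaining the latent variables t_s and u_s, the loading vector p_s and the scalar b_s relating t_s to u_s are found by the least square criterion

$$p_s = \arg\min_{p} \|X_s - t_s p^{T}\|_F^2 = \frac{X_s^{T} t_s}{t_s^{T} t_s}, \tag{3}$$

$$b_s = \arg\min_{b} \|u_s - b\, t_s\|_2^2 = \frac{u_s^{T} t_s}{t_s^{T} t_s}, \tag{4}$$

where ∥·∥F denotes the Frobenius norm. The residual matrices are updated by X_{s+1} = X_s − t_s p_s^T and Y_{s+1} = Y_s − b_s t_s c_s^T. S is usually selected by cross-validation. Eventually, the prediction from X to Y is given by

$$\hat{Y} = XH, \tag{5}$$

where H = (P^T)^+ B C^T ∈ ℝ^{N×M}, (P^T)^+ is the pseudo-inverse of P^T, and Ŷ denotes the prediction for Y.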
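
The iteration described above can be illustrated for the simplest case of a univariate response, where the output projector reduces to c = 1 and u = y. The following is a minimal sketch in plain Python rather than the authors' implementation: the function name `pls1_factor` and the toy data are hypothetical, and the closed forms used for the projector, the loading vector, and the scalar b are the standard least-squares PLSR expressions, assumed here to match the ones in the text.

```python
import math

def pls1_factor(X, y):
    """One PLSR factor for a univariate response (so c = 1 and u = y)."""
    n_feat = len(X[0])
    # Projector: the unit vector maximizing (Xw)^T y is X^T y / ||X^T y||.
    xty = [sum(row[j] * yi for row, yi in zip(X, y)) for j in range(n_feat)]
    norm = math.sqrt(sum(v * v for v in xty))
    w = [v / norm for v in xty]
    # Latent variable t = X w.
    t = [sum(xj * wj for xj, wj in zip(row, w)) for row in X]
    tt = sum(ti * ti for ti in t)
    # Least-squares loading p = X^T t / (t^T t) and scalar b = y^T t / (t^T t).
    p = [sum(row[j] * ti for row, ti in zip(X, t)) / tt for j in range(n_feat)]
    b = sum(yi * ti for yi, ti in zip(y, t)) / tt
    # Deflation of the residual matrices for the next factor.
    X_res = [[row[j] - ti * p[j] for j in range(n_feat)] for row, ti in zip(X, t)]
    y_res = [yi - b * ti for yi, ti in zip(y, t)]
    return w, t, p, b, X_res, y_res

# Toy data: X has rank one and y is exactly twice the latent variable.
X = [[3.0, 4.0], [6.0, 8.0], [9.0, 12.0]]
y = [10.0, 20.0, 30.0]
w, t, p, b, X_res, y_res = pls1_factor(X, y)
```

On this toy example a single factor explains both X and y completely, so the residuals vanish; with real data the function would be called again on `X_res` and `y_res` for each further factor.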

Maximizing the covariance between latent variables in Eq. (2) can be rewritten as (Barker and Rayens, 2003)

$$\min_{w_s, c_s} \sum_{l=1}^{L} \left( \|x_l - x_l w_s w_s^{T}\|_2^2 + \|y_l - y_l c_s c_s^{T}\|_2^2 + \|x_l w_s - y_l c_s\|_2^2 \right) \quad \text{s.t.} \quad \|w_s\|_2 = \|c_s\|_2 = 1, \tag{6}$$

where x_l ∈ ℝ^{1×N} and y_l ∈ ℝ^{1×M} denote the l-th samples (rows) in X_s and Y_s, respectively. One can observe that PLSR employs the least square criterion not only to obtain the regression relations in Eqs. (3, 4), but also to obtain the projectors w_s and c_s. In Eq. (6), the first and second terms are the reconstruction errors for input and output, respectively. The third term denotes the prediction error for the l-th latent variables. Since each step of PLSR is based on the least square criterion, the prediction from input to output can be seriously deteriorated by noise.
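
The link between the covariance maximization and the three least-squares terms (two reconstruction errors and one prediction error) can be checked by expanding each term for a single sample. The following is a sketch under the assumptions that x_l and y_l are row vectors and that both projectors have unit length; the subscript s is dropped for brevity.

$$
\begin{aligned}
\|x_l - x_l w w^{T}\|_2^2 &= x_l x_l^{T} - (x_l w)^2, \\
\|y_l - y_l c c^{T}\|_2^2 &= y_l y_l^{T} - (y_l c)^2, \\
(x_l w - y_l c)^2 &= (x_l w)^2 + (y_l c)^2 - 2\,(x_l w)(y_l c), \\
\text{sum of the three terms} &= x_l x_l^{T} + y_l y_l^{T} - 2\,(x_l w)(y_l c).
\end{aligned}
$$

Summed over l, the first two contributions do not depend on the projectors, so minimizing the total is the same as maximizing Σ_l (x_l w)(y_l c) = t^T u, which is the covariance between the latent variables.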

2.2. Regularized PLSR

Regularization technique has been popularly employed to ameliorate the decoding performance of the PLSR algorithm. For example, L1-regularization on the projectors was employed so as to acquire sparse projectors, conducting the feature selection simultaneously (Eliseyev et al., 2012). The authors further extended their study in Eliseyev and Aksenova (2016), in which Sobolev-norm and polynomial penalization were introduced into PLSR algorithm to strengthen the smoothness of the predicted response. Recently, the state-of-the-art regularized PLSR was proposed by utilizing L2-regularization to find the regression relation between the latent variables ts and us, so as to reduce the over-fitting risk of each latent variable on the desired response (Foodeh et al., 2020). In particular, for each decomposition factor, the scalar bs is acquired with an individual regularization parameter λs as

$$b_s = \arg\min_{b} \; \|u_s - b\, t_s\|_2^2 + \lambda_s b^2 = \frac{u_s^{T} t_s}{t_s^{T} t_s + \lambda_s}. \tag{7}$$

Experimental results in Foodeh et al. (2020) showed that the regularization technique in Eq. (7) can achieve better ECoG decoding performance than regularizing the projectors.

Nevertheless, the regularized PLSR variants are still formulated on the non-robust least square criterion and therefore remain prone to performance deterioration caused by adverse noise.

3. Maximum correntropy criterion

3.1. Maximum correntropy criterion

The correntropy concept was developed in the field of ITL as a generalized correlation function of random processes (Santamaría et al., 2006), which measures the similarity and interaction between two vectors in a kernel space. Correntropy is associated with the information potential of quadratic Rényi's entropy (Liu et al., 2007), where the data's probability density function (PDF) is estimated by the Parzen window method (Parzen, 1962; Silverman, 1986). The correntropy, which evaluates the similarity between two arbitrary variables A and B, is defined by

$$\mathcal{C}(A, B) = E\left[k(A, B)\right], \tag{8}$$

in which k(·) is a kernel function satisfying Mercer's theorem and E[·] is the expectation operator. In practical applications, one calculates the correntropy from L observations (a_l, b_l), l = 1, …, L, by the following empirical estimation

$$\hat{\mathcal{C}}(A, B) = \frac{1}{L} \sum_{l=1}^{L} k(a_l, b_l), \tag{9}$$

where the Gaussian kernel function with kernel bandwidth σ is widely used for the kernel function k(·), thus leading to

$$\hat{\mathcal{C}}(A, B) = \frac{1}{L} \sum_{l=1}^{L} \exp\left(-\frac{(a_l - b_l)^2}{2\sigma^2}\right). \tag{10}$$

Maximizing the correntropy in Eq. (10), called the maximum correntropy criterion (MCC), exhibits numerous advantages. Correntropy is essentially a local similarity measure, which is chiefly determined along A = B, i.e., where the error is zero. Consequently, the effect of large errors caused by adverse noise is alleviated, leading to superior robustness. Additionally, correntropy can extract sufficient information from observations, since it considers all the even moments of errors (Liu et al., 2007). It is also closely related to m-estimation, since it can be regarded as a robust formulation of the Welsch m-estimator (Huber, 2004).
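
The local nature of correntropy can be seen in a small numerical comparison with the mean squared error. The sketch below assumes the unnormalized Gaussian kernel exp(−e²/(2σ²)) and uses made-up data; the function names are illustrative only.

```python
import math

def gaussian_correntropy(a, b, sigma):
    """Empirical correntropy: mean of exp(-(a_l - b_l)^2 / (2 sigma^2))."""
    total = sum(math.exp(-((ai - bi) ** 2) / (2.0 * sigma ** 2)) for ai, bi in zip(a, b))
    return total / len(a)

def mean_squared_error(a, b):
    """Least-squares counterpart, for comparison."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)

target = [0.0, 1.0, 2.0, 3.0, 4.0]
clean = [0.1, 0.9, 2.1, 2.9, 4.1]
outlier = [0.1, 0.9, 2.1, 2.9, 104.1]  # last sample hit by a large artifact

c_clean = gaussian_correntropy(target, clean, sigma=1.0)
c_outlier = gaussian_correntropy(target, outlier, sigma=1.0)
mse_clean = mean_squared_error(target, clean)
mse_outlier = mean_squared_error(target, outlier)
```

A single corrupted sample can lower the empirical correntropy by at most 1/L, whereas its contribution to the mean squared error grows quadratically with the size of the deviation.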

3.2. MCC-PLSR

Recently, a rudimentary MCC-based PLSR variant, named MCC-PLSR, has been investigated in Mou et al. (2018). For a univariate output, according to Mou et al. (2018), the dimensionality reduction in Eq. (2) can be rewritten as

$$\max_{w_s} \; w_s^{T} X_s^{T} Y_s Y_s^{T} X_s w_s \quad \text{s.t.} \quad \|w_s\|_2 = 1, \tag{11}$$

which aims to maximize the quadratic covariance. Mou et al. (2018) utilized a proposition similar to that of the MCC-based principal component analysis (He et al., 2011) to propose a correntropy-based objective function, Eq. (12), from which one can calculate the robust projector w_s. Then, one obtains the latent variables by t_s = X_s w_s, and acquires the other model parameters as in Eqs. (3-5).

Despite the robust implementation of the projector w_s in Eq. (12), the above-described MCC-PLSR algorithm could be inadequate for the following reasons. First, except for the calculation of w_s, the other model parameters are still acquired under the least square criterion. Second, dimensionality reduction is not considered for the output matrix. As a result, the prediction performance for multivariate responses could be limited. In addition, MCC-PLSR is prone to excessive computation time, since one has to obtain the prediction model for each dimension of the response matrix separately.

4. Partial maximum correntropy regression

In this section, we present a comprehensive reformulation of PLSR with the MCC framework. Compared with the existing MCC-PLSR, our proposed method aims to acquire each model parameter under the MCC. In addition, the generalization for multivariate response prediction is taken into account in this study. The detailed mathematical derivations of the proposed method are given as follows, in which the subscript s denoting the s-th decomposition factor is omitted for the purpose of simplicity.

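Substituting the least-squares reconstruction errors and prediction errors in the conventional PLSR objective Eq. (6) with the maximum correntropy yields

$$\max_{w, c} \sum_{l=1}^{L} \left[ \exp\left(-\frac{\|x_l - x_l w w^{T}\|_2^2}{2\sigma_x^2}\right) + \exp\left(-\frac{\|y_l - y_l c c^{T}\|_2^2}{2\sigma_y^2}\right) + \exp\left(-\frac{(x_l w - y_l c)^2}{2\sigma_r^2}\right) \right], \tag{13}$$

where σx, σy, and σr denote the Gaussian kernel bandwidths for X-reconstruction errors, Y-reconstruction errors, and the prediction errors, respectively.

Then, one can transform the squared norms of the vectors (x_l − x_l w w^T) and (y_l − y_l c c^T) into scalar expressions, provided that the two projectors w and c are unit-length vectors, i.e., w^T w = c^T c = 1,

$$\|x_l - x_l w w^{T}\|_2^2 = x_l x_l^{T} - w^{T} x_l^{T} x_l w, \qquad \|y_l - y_l c c^{T}\|_2^2 = y_l y_l^{T} - c^{T} y_l^{T} y_l c. \tag{14}$$

Subsequently, one obtains the following optimization problem to acquire the projectors

$$\max_{w, c} \sum_{l=1}^{L} \left[ \exp\left(-\frac{x_l x_l^{T} - w^{T} x_l^{T} x_l w}{2\sigma_x^2}\right) + \exp\left(-\frac{y_l y_l^{T} - c^{T} y_l^{T} y_l c}{2\sigma_y^2}\right) + \exp\left(-\frac{(x_l w - y_l c)^2}{2\sigma_r^2}\right) \right] \quad \text{s.t.} \quad w^{T} w = c^{T} c = 1. \tag{15}$$

After obtaining w and c, one can calculate the latent variables as in the conventional PLSR by t = Xw and u = Yc. We then calculate the loading vector p and the regression coefficient b under the MCC by

$$p = \arg\max_{p} \sum_{l=1}^{L} \exp\left(-\frac{\|x_l - t_l p^{T}\|_2^2}{2\sigma_p^2}\right), \tag{16}$$

$$b = \arg\max_{b} \sum_{l=1}^{L} \exp\left(-\frac{(u_l - b\, t_l)^2}{2\sigma_b^2}\right), \tag{17}$$

in which t_l and u_l denote the l-th elements of the latent variables t and u, respectively. σp and σb denote the corresponding Gaussian kernel bandwidths. The residual matrices are then updated in the same way as in PLSR.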

One repeats these procedures for the optimal number of factors and collects the vectors acquired in each iteration to form the matrices T, P, B, and C, as in the original PLSR. Ultimately, the predicted response can be obtained from X by the regression relationship Eq. (5). The above-mentioned PLSR variant, which is comprehensively reformulated based on the MCC, is named partial maximum correntropy regression (PMCR). In what follows, we discuss in detail the optimization, convergence analysis, and determination of hyper-parameters of the proposed PMCR algorithm.

4.1. Optimization

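Three optimization problems, Eqs. (15, 16, 17), need to be addressed in PMCR. We first consider Eq. (15) for the calculation of the projectors w and c. Based on the half-quadratic (HQ) optimization method (Ren and Yang, 2018), Eq. (15) can be rewritten as

$$\max_{w, c, \alpha, \beta, \gamma} J = \sum_{l=1}^{L} \left[ \alpha_l \frac{x_l x_l^{T} - w^{T} x_l^{T} x_l w}{2\sigma_x^2} - \varphi(\alpha_l) + \beta_l \frac{y_l y_l^{T} - c^{T} y_l^{T} y_l c}{2\sigma_y^2} - \varphi(\beta_l) + \gamma_l \frac{(x_l w - y_l c)^2}{2\sigma_r^2} - \varphi(\gamma_l) \right] \quad \text{s.t.} \quad w^{T} w = c^{T} c = 1, \tag{18}$$

where φ(·) is the convex conjugate function of g(z) = exp(−z), so that exp(−z) = sup_{α<0} {αz − φ(α)} with the supremum attained at α = −exp(−z), and {α_l}, {β_l}, and {γ_l}, l = 1, …, L, denote three sets of introduced auxiliaries, respectively. Thus, we can conclude that optimizing Eq. (15) is equivalent to updating (α_l, β_l, γ_l) and (w, c) alternately by

$$(\alpha_{l,k+1}, \beta_{l,k+1}, \gamma_{l,k+1}) = \arg\max_{\alpha_l, \beta_l, \gamma_l} J(w_k, c_k, \alpha, \beta, \gamma), \qquad (w_{k+1}, c_{k+1}) = \arg\max_{w, c} J(w, c, \alpha_{k+1}, \beta_{k+1}, \gamma_{k+1}). \tag{19}$$

Since the HQ optimization is an iterative process, we denote the k-th HQ iteration with the subscript k. First, according to the HQ technique (Ren and Yang, 2018), we update the auxiliaries with the current projectors (w_k, c_k) by

$$\alpha_{l,k+1} = -\exp\left(-\frac{x_l x_l^{T} - w_k^{T} x_l^{T} x_l w_k}{2\sigma_x^2}\right), \quad \beta_{l,k+1} = -\exp\left(-\frac{y_l y_l^{T} - c_k^{T} y_l^{T} y_l c_k}{2\sigma_y^2}\right), \quad \gamma_{l,k+1} = -\exp\left(-\frac{(x_l w_k - y_l c_k)^2}{2\sigma_r^2}\right). \tag{20}$$

Then, to optimize the projectors, we rewrite Eq. (19) by collecting the terms that involve the projectors and omitting the terms that depend only on the auxiliaries as

$$\max_{w, c} J_p(w, c) = \sum_{l=1}^{L} \left[ -\alpha_l \frac{w^{T} x_l^{T} x_l w}{2\sigma_x^2} - \beta_l \frac{c^{T} y_l^{T} y_l c}{2\sigma_y^2} + \gamma_l \frac{(x_l w - y_l c)^2}{2\sigma_r^2} \right] \quad \text{s.t.} \quad w^{T} w = c^{T} c = 1, \tag{21}$$

which is a quadratic optimization problem with nonlinear (unit-norm) constraints. Numerous methods exist in the literature for solving Eq. (21), such as sequential quadratic programming (SQP), which has been widely utilized for nonlinear programming problems (Fletcher, 2013).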

After one obtains the projectors w and c, the latent variables are computed by t = Xw and u = Yc. Then, Eqs. (16, 17) can be solved by the following iterative fixed-point optimization method with fast convergence (Chen et al., 2015):

$$p = \frac{X^{T} \Psi_p\, t}{t^{T} \Psi_p\, t}, \tag{22}$$

$$b = \frac{u^{T} \Psi_b\, t}{t^{T} \Psi_b\, t}, \tag{23}$$

where Ψp and Ψb are L×L diagonal matrices with the diagonal elements (Ψp)_{ll} = exp(−∥x_l − t_l p^T∥₂² / (2σp²)) and (Ψb)_{ll} = exp(−(u_l − b t_l)² / (2σb²)), respectively. Since Ψp and Ψb depend on the current solutions p and b, the updates in Eqs. (22, 23) are fixed-point equations that require multiple iterations (Chen et al., 2015). The complete procedure of PMCR is summarized in Algorithm 1.
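
To make the fixed-point idea concrete, the following sketch iterates the scalar update for b, in which each sample is weighted by a Gaussian function of its current prediction error. It is an illustration under stated assumptions and not the authors' code: the update b = Σ ψ_l u_l t_l / Σ ψ_l t_l² is obtained by setting the derivative of the correntropy objective to zero, the bandwidth is held fixed (the paper estimates it from the errors), and the function name and toy data are hypothetical.

```python
import math

def mcc_scalar_regression(t, u, sigma, n_iter=50, tol=1e-10):
    """Fixed-point iteration b = (u^T Psi t) / (t^T Psi t) with Gaussian weights."""
    # Least-squares initialization (all weights equal to one).
    b = sum(ui * ti for ui, ti in zip(u, t)) / sum(ti * ti for ti in t)
    for _ in range(n_iter):
        # Diagonal of Psi: small weight for samples with a large current error.
        psi = [math.exp(-((ui - b * ti) ** 2) / (2.0 * sigma ** 2)) for ui, ti in zip(u, t)]
        num = sum(w * ui * ti for w, ui, ti in zip(psi, u, t))
        den = sum(w * ti * ti for w, ti in zip(psi, t))
        b_new = num / den
        converged = abs(b_new - b) < tol
        b = b_new
        if converged:
            break
    return b

t = [1.0, 2.0, 3.0, 4.0, 5.0]
u = [2.0, 4.0, 6.0, 8.0, 60.0]  # true slope is 2; the last target is an outlier
b_ls = sum(ui * ti for ui, ti in zip(u, t)) / sum(ti * ti for ti in t)
b_mcc = mcc_scalar_regression(t, u, sigma=1.0)
```

In this toy case the least-squares slope is pulled far away from 2 by the single outlier, while the reweighted iteration returns to the slope supported by the remaining samples. With a very small bandwidth all weights can underflow to zero, so a practical implementation would guard the division.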

4.2. Convergence analysis

For the regression relations p and b, one can find the detailed convergence analysis in Chen et al. (2015). We mainly consider the convergence of the projectors w and c in the optimization problem (15). Because correntropy is in nature an m-estimator (Liu et al., 2007), the local optima of Eq. (15) will be sufficiently close to the global optimum, which has been proved in a recent theoretical study (Loh and Wainwright, 2015). Therefore, it suffices to prove that Eq. (15) converges to a local optimum with the HQ optimization method.

Proposition 1. If we have J_p(w_k, c_k) ≤ J_p(w_{k+1}, c_{k+1}) by fixing (α_l, β_l, γ_l) = (α_{l,k+1}, β_{l,k+1}, γ_{l,k+1}), the optimization problem (Eq. 15) will converge to a local optimum.

Proof: Let J(w, c, α, β, γ) denote the HQ-augmented objective function of Eq. (15), as in Eq. (18). The convergence is proved as

$$J(w_k, c_k, \alpha_k, \beta_k, \gamma_k) \leq J(w_k, c_k, \alpha_{k+1}, \beta_{k+1}, \gamma_{k+1}) \leq J(w_{k+1}, c_{k+1}, \alpha_{k+1}, \beta_{k+1}, \gamma_{k+1}), \tag{24}$$

in which the first inequality is guaranteed by the HQ mechanism (Ren and Yang, 2018), and the second inequality arises from the assumption of the present proposition. Since the objective function of Eq. (15) is a sum of 3L Gaussian kernel values and is therefore bounded above, the non-decreasing sequence converges.

One can observe that, to guarantee the convergence of Eq. (15), it is unnecessary to attain the strict maximum of Eq. (21) at each projector-step in Algorithm 1. Instead, so long as the updated projectors lead to a larger objective function J_p at each projector-step, Eq. (15) will converge to a local optimum. This is very convenient in practice, because only a few SQP iterations are needed for each projector-step. One can finish the projector-step as soon as an increase in J_p is confirmed, thus accelerating the convergence.

4.3. Hyper-parameter determination

There are five Gaussian kernel bandwidths, σx, σy, σr, σp, and σb, to be determined in practice. In the literature, an effective method to estimate a proper kernel bandwidth for probability density estimation, named Silverman's rule, was proposed in Silverman (1986). Denoting the current set of errors as E with L observations, the kernel bandwidth is computed by Silverman's rule (Eq. 25) from σE, the standard deviation of the L errors, and R, their interquartile range.
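
A bandwidth rule of this kind can be sketched as follows. The form used here, factor × min(σE, R/1.34) × L^(−1/5), is a common statement of Silverman's rule of thumb; the default factor of 1.06 is an assumption of this sketch (Silverman's own recommendation for the min-based form is 0.9), and the exact constant used by the authors should be taken from their equation. The function name is hypothetical.

```python
import statistics

def silverman_bandwidth(errors, factor=1.06):
    """Rule-of-thumb bandwidth: factor * min(std, IQR / 1.34) * L^(-1/5).

    The value of `factor` is an assumption of this sketch, not taken from the paper.
    """
    n = len(errors)
    std = statistics.stdev(errors)
    q1, _, q3 = statistics.quantiles(errors, n=4)  # quartile cut points
    iqr = q3 - q1
    return factor * min(std, iqr / 1.34) * n ** (-0.2)

errors = [0.1 * i for i in range(-50, 51)]              # well-behaved errors
sigma_clean = silverman_bandwidth(errors)
sigma_outlier = silverman_bandwidth(errors + [1000.0])  # one huge error added
```

Because the interquartile range barely changes when a single extreme error is added, the estimated bandwidth stays of the same order, which is the reason for taking the minimum with the standard deviation.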

5. Experiments

In this section, we assessed the proposed PMCR algorithm on a synthetic dataset and a real ECoG dataset, respectively, comparing it with the existing PLSR methods. Specifically, we compared PMCR to the following methods: the conventional PLSR, the state-of-the-art regularized PLSR (RPLSR) (Foodeh et al., 2020) described in Eq. (7), and the rudimentary MCC-PLSR (Mou et al., 2018) described in Section 3.2. For a fair comparison, each algorithm used an identical number of factors, which was selected by the conventional PLSR in five-fold cross-validation. The maximal number of factors was set to 100.

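For the evaluation, we used three typical performance indicators in regression tasks: i) Pearson's correlation coefficient (r)

$$r = \frac{\mathrm{Cov}(\hat{Y}, Y)}{\sqrt{\mathrm{Var}(\hat{Y})\, \mathrm{Var}(Y)}}, \tag{26}$$

where Cov(·, ·) and Var(·) denote the covariance and variance, respectively, ii) root mean squared error (RMSE), which is computed by

$$\mathrm{RMSE} = \sqrt{\frac{1}{L} \sum_{l=1}^{L} \|\hat{y}_l - y_l\|_2^2}, \tag{27}$$

in which ŷ_l and y_l denote the l-th observations of the prediction Ŷ and the target Y, respectively, and iii) mean absolute error (MAE), which represents the average L1-norm distance

$$\mathrm{MAE} = \frac{1}{L} \sum_{l=1}^{L} \|\hat{y}_l - y_l\|_1. \tag{28}$$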

To compare the robustness of different algorithms, a widely adopted protocol in the literature is to contaminate only the training samples with noise while keeping the testing data clean, as advised in Zhu and Wu (2004). Accordingly, only the training samples were contaminated in the following experiments.
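
The three indicators can be written down directly for a single output dimension, as in the sketch below; the function names and toy values are illustrative, and the results for a multivariate output would be averaged across dimensions.

```python
import math

def pearson_r(pred, target):
    """Pearson's correlation coefficient between prediction and target."""
    n = len(pred)
    mp, mt = sum(pred) / n, sum(target) / n
    cov = sum((p - mp) * (t - mt) for p, t in zip(pred, target))
    var_p = sum((p - mp) ** 2 for p in pred)
    var_t = sum((t - mt) ** 2 for t in target)
    return cov / math.sqrt(var_p * var_t)

def rmse(pred, target):
    """Root mean squared error."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred))

def mae(pred, target):
    """Mean absolute error."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

target = [1.0, 2.0, 3.0, 4.0]
pred = [2.0, 3.0, 4.0, 5.0]  # perfectly correlated with the target but offset by 1
r_val, rmse_val, mae_val = pearson_r(pred, target), rmse(pred, target), mae(pred, target)
```

The toy prediction shows why the indicators complement each other: r is insensitive to a constant offset or scaling of the prediction, whereas RMSE and MAE report it.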

5.1. Synthetic dataset

5.1.1. Dataset description

First, we considered an inter-correlated, high-dimensional, and noisy synthetic example, in which various PLSR methods were assessed with different levels of contamination. We randomly generated 300 i.i.d.¹ latent variables t ~ U(0, 1) for training and 300 i.i.d. latent variables t ~ U(0, 1) for testing, in which U denotes the uniform distribution, and the dimension of t was set to 20. We then generated the mappings from the latent variables to the explanatory and response matrices. Specifically, we randomly generated the transformation matrices with entries drawn from the standard normal distribution. The latent variables t were multiplied by a 20 × 500 transformation matrix, resulting in a 300 × 500 explanatory matrix for input. Similarly, we used a 20 × 3 transformation matrix to acquire a 300 × 3 response matrix for output. Accordingly, we predicted the multivariate responses from 500-dimensional explanatory variables with 300 training samples, and evaluated the prediction performance on the other 300 testing samples.

For the contamination of the synthetic dataset, we supposed that the explanatory matrix was contaminated, because adverse noise mainly affects the brain recordings, which are usually used as the explanatory variables in a BCI system. Therefore, a certain proportion (from 0 to 1.0 with a step of 0.05) of the training samples was randomly selected with equal probability, and the inputs of the selected samples were replaced by noise with large amplitude. For the distribution of the noise, we utilized a zero-mean Gaussian distribution with a large standard deviation to imitate outliers, where standard deviations of 30, 100, and 300 were used, respectively.
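
The data generation and contamination described above can be sketched in plain Python as follows. This is an illustration and not the authors' script: the helper names, the seeds, and the reduced sizes in the usage lines are assumptions, while the defaults follow the dimensions stated in the text.

```python
import random

def matmul(P, Q):
    """Plain-Python matrix product of two lists of rows."""
    cols = list(zip(*Q))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in P]

def make_synthetic(n_train=300, n_test=300, n_latent=20, n_in=500, n_out=3, seed=0):
    """Latent variables ~ U(0, 1) mapped by standard-normal transformation matrices."""
    rng = random.Random(seed)
    A = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_latent)]
    B = [[rng.gauss(0.0, 1.0) for _ in range(n_out)] for _ in range(n_latent)]

    def draw(n):
        T = [[rng.uniform(0.0, 1.0) for _ in range(n_latent)] for _ in range(n)]
        return matmul(T, A), matmul(T, B)

    # Training and testing sets share the same transformation matrices.
    return draw(n_train), draw(n_test)

def contaminate_inputs(X, proportion, noise_std, seed=0):
    """Replace the inputs of a random subset of samples by zero-mean Gaussian noise."""
    rng = random.Random(seed)
    n_bad = round(proportion * len(X))
    bad_rows = set(rng.sample(range(len(X)), n_bad))
    X_noisy = [[rng.gauss(0.0, noise_std) for _ in row] if i in bad_rows else list(row)
               for i, row in enumerate(X)]
    return X_noisy, bad_rows

# Reduced sizes keep the example fast; the defaults reproduce the stated dimensions.
(X_train, Y_train), (X_test, Y_test) = make_synthetic(n_train=30, n_test=30, n_in=50)
X_noisy, bad_rows = contaminate_inputs(X_train, proportion=0.2, noise_std=100.0)
```

Only `X_train` is passed through `contaminate_inputs`; the responses and the testing set are left untouched, in line with the evaluation protocol.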

5.1.2. Results

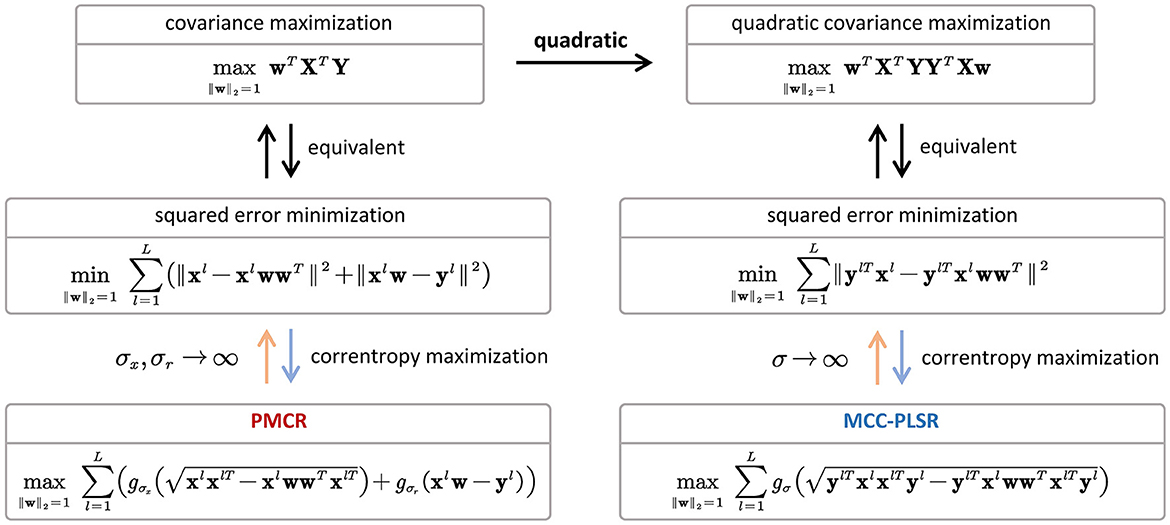

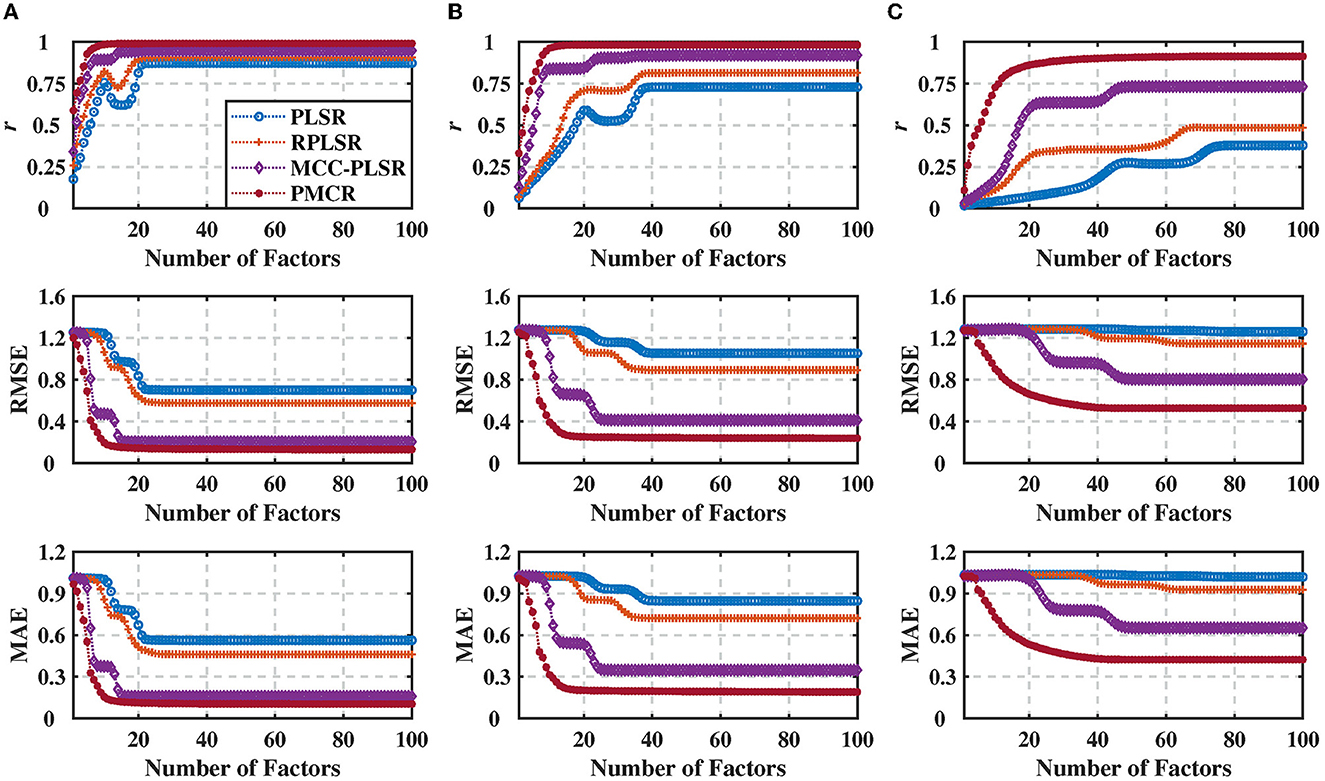

We evaluated the various PLSR methods with 100 Monte-Carlo repetitive trials, and present the results in Figure 1, where the results were averaged across three dimensions of the output. One could observe from Figure 1 that, for all the three different noise standard deviations, the proposed PMCR algorithm achieved superior prediction performance compared with the other existing methods consistently for r, RMSE, and MAE, respectively, in particular when the training set suffered considerable contamination.

Figure 1. Regression performance indicators of the inter-correlated, high-dimensional, and contaminated synthetic dataset under different noise standard deviations with noise levels from 0 to 1.0. (A) Noise standard deviation = 30, (B) noise standard deviation = 100, and (C) noise standard deviation = 300. The performance indicators were acquired from 100 Monte-Carlo repetitive trials and averaged across three dimensions of the output. The proposed PMCR algorithm realized better performance than the existing PLSR algorithms consistently for r, RMSE, and MAE, in particular when the training set was contaminated considerably.

The number of factors S plays a vital role in PLSR methods, representing the number of iterations used to decompose the input and output matrices. Since it usually has a notable effect on the results, we additionally evaluated the performance of each method with respect to the number of factors. To this end, we utilized the noise standard deviation 100 under three different noise levels, 0.2, 0.5, and 0.8, respectively. The prediction results of each method over 100 repetitive trials are presented in Figure 2 with respect to the number of decomposition factors. One can observe that the proposed PMCR not only achieved superior regression performance eventually with the optimal number of factors, but also realized commendable performance with a small number of factors. For example, when the noise level was equal to 0.5, the proposed PMCR achieved its optimal performance with no more than 20 factors. By comparison, the performance of the other methods kept improving markedly when the number of factors exceeded 20. One can also observe a similar result for the other two noise levels. This suggests that PMCR can extract substantial information from the training samples with a rather small number of factors in a noisy regression task.

Figure 2. Regression performance indicators of the synthetic dataset with noise standard deviation being 100 under three different noise levels with the number of factors increasing from 1 to 100. (A) Noise level = 0.2, (B) noise level = 0.5, and (C) noise level = 0.8. The performance indicators were obtained from 100 repetitive trials and averaged across three dimensions of the output. The proposed PMCR algorithm not only acquired better prediction results than the other algorithms ultimately with the optimal number of factors, but also achieved admirable regression performance with a small number of factors.

5.2. ECoG dataset

To further demonstrate the superior robustness of the PMCR algorithm, we evaluated the various PLSR algorithms on the following practical brain decoding task. In this subsection, we used the publicly available Neurotycho ECoG dataset², which was initially presented in Shimoda et al. (2012).

5.2.1. Dataset description

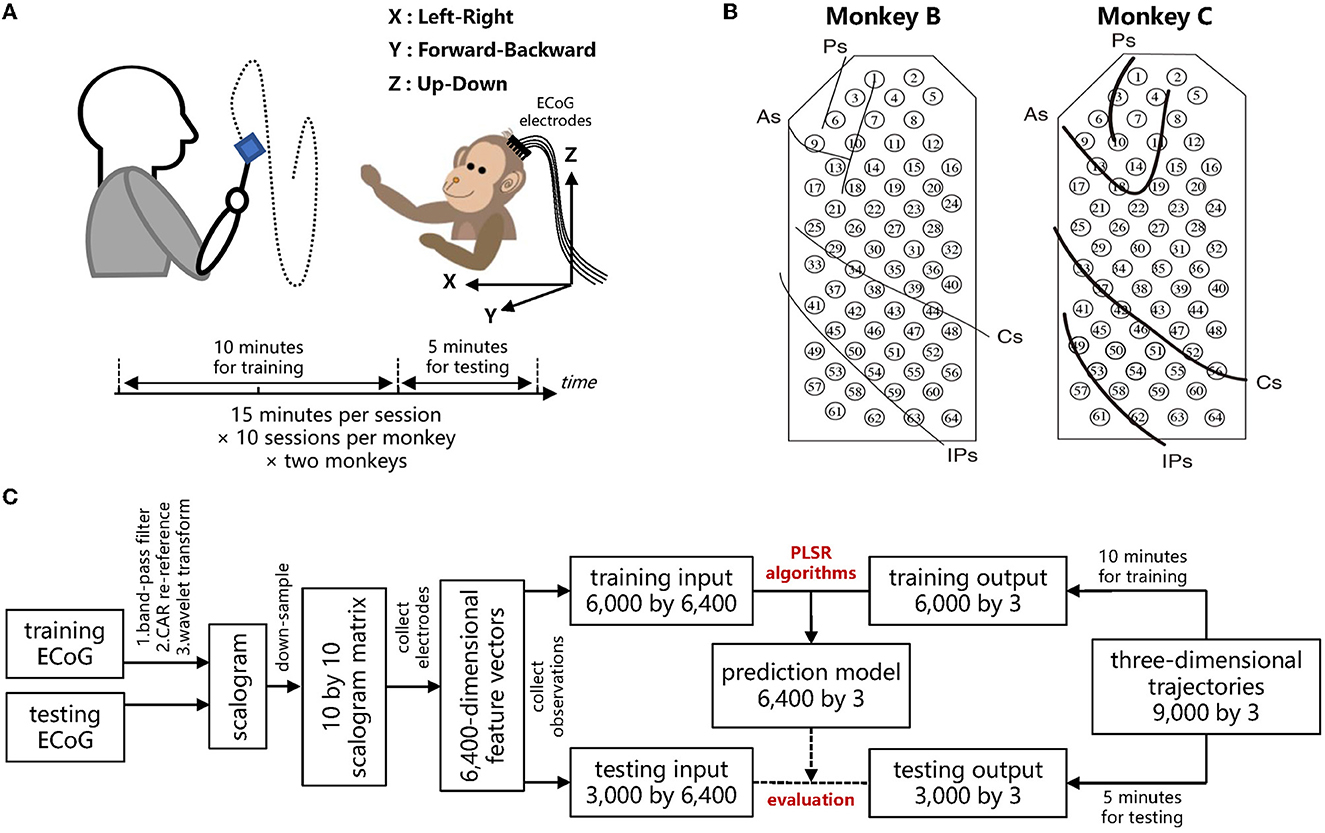

Two Japanese macaques, denoted by Monkey B and C, respectively, performed a food-tracking task with their right hands, during which the continuous three-dimensional trajectories of the right hands were recorded by an optical motion capture instrument with a sampling rate of 120 Hz. For both Monkey B and C, ten recording sessions were performed, each lasting 15 minutes. The two macaques had been implanted in advance with customized 64-channel ECoG electrodes on the contralateral (left) hemisphere, which covered the regions from the prefrontal cortex to the parietal cortex. ECoG signals were recorded simultaneously during each session with a sampling rate of 1,000 Hz. In accordance with Shimoda et al. (2012), for each recording session, the data of the first ten minutes were used to train a prediction model, while the data of the remaining five minutes were used to evaluate the prediction performance of the trained model. The schemes of the experiments and ECoG electrodes are shown in Figures 3A, B, respectively.

Figure 3. Experimental protocol of the Neurotycho ECoG dataset and decoding paradigm to evaluate the robustness of the different PLSR algorithms. (A) The macaque retrieved foods in a three-dimensional random location, during which the body-centered coordinates of the right wrists and the ECoG signals were recorded simultaneously. (B) Both Monkey B and C were implanted with 64-channel epidural ECoG electrodes on the contralateral (left) hemisphere, overlaying the regions from the prefrontal cortex to the parietal cortex. Ps: principal sulcus, As: arcuate sulcus, Cs: central sulcus, IPs: intraparietal sulcus. Panels (A, B) were reproduced from Shimoda et al. (2012), which provides the details of this public dataset. (C) Decoding diagram from ECoG signals to three-dimensional trajectories. The training ECoG signals are contaminated to assess the robustness of different algorithms.

5.2.2. Decoding paradigm

For the feature extraction, we used the same offline decoding paradigm as in Shimoda et al. (2012). Initially, ECoG signals were preprocessed with a tenth-order Butterworth bandpass filter with cutoff frequencies from 1 to 400 Hz, and then re-referenced by the common average referencing (CAR) method. The three-dimensional trajectories of the right wrist were down-sampled to 10 Hz, leading to 9,000 samples in one session (10 Hz × 60 sec × 15 min). The three-dimensional position at time t was predicted from the ECoG signals during the previous one second. To extract the features of ECoG signals, we utilized the time-frequency representation. For the time t, the ECoG signals at each electrode from t − 1.1 s to t were processed by Morlet wavelet transformation. Ten center frequencies ranging from 10 to 120 Hz with equal spacing on the logarithmic scale were considered for the wavelet transformation, covering the frequency bands that are most relevant to motion tasks (Shimoda et al., 2012). The time-frequency scalogram was then resampled at ten temporal lags with a 0.1 s gap (t − 1 s, t − 0.9 s, ..., t − 0.1 s). Thus, the input of each sample was a 6,400-dimensional vector (64 channels × 10 frequencies × 10 temporal lags), and the output was the three-dimensional position of the right hand. Hence, we trained a regression model with 6,000 samples (the first ten minutes) to predict the three-dimensional output from the 6,400-dimensional input, and evaluated the algorithms with the other 3,000 testing samples (the remaining five minutes). The illustrative diagrams for ECoG decoding are summarized in Figure 3C.
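
The sizes quoted in the decoding paradigm follow from a few lines of arithmetic, sketched below. The variable names are illustrative, and the assumption that the ten center frequencies include both endpoints (10 and 120 Hz) is made here for concreteness; the text only states that they are equally spaced on a logarithmic scale.

```python
# Feature dimension: channels x frequencies x temporal lags.
n_channels, n_freqs, n_lags = 64, 10, 10
feature_dim = n_channels * n_freqs * n_lags

# Number of samples per session after down-sampling the trajectories to 10 Hz.
rate_hz, session_min, train_min = 10, 15, 10
n_samples = rate_hz * 60 * session_min
n_train = rate_hz * 60 * train_min
n_test = n_samples - n_train

# Ten center frequencies from 10 to 120 Hz, equally spaced on a log scale
# (endpoint-inclusive spacing is an assumption of this sketch).
f_lo, f_hi = 10.0, 120.0
center_freqs = [f_lo * (f_hi / f_lo) ** (i / (n_freqs - 1)) for i in range(n_freqs)]

# Temporal lags relative to t, in seconds: -1.0, -0.9, ..., -0.1.
lags_s = [round(-1.0 + 0.1 * i, 1) for i in range(n_lags)]
```

With 6,400 features and only 6,000 training samples per session, the regression problem is under-determined, which is one reason why a dimensionality-reducing method such as PLSR is used.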

5.2.3. Contamination

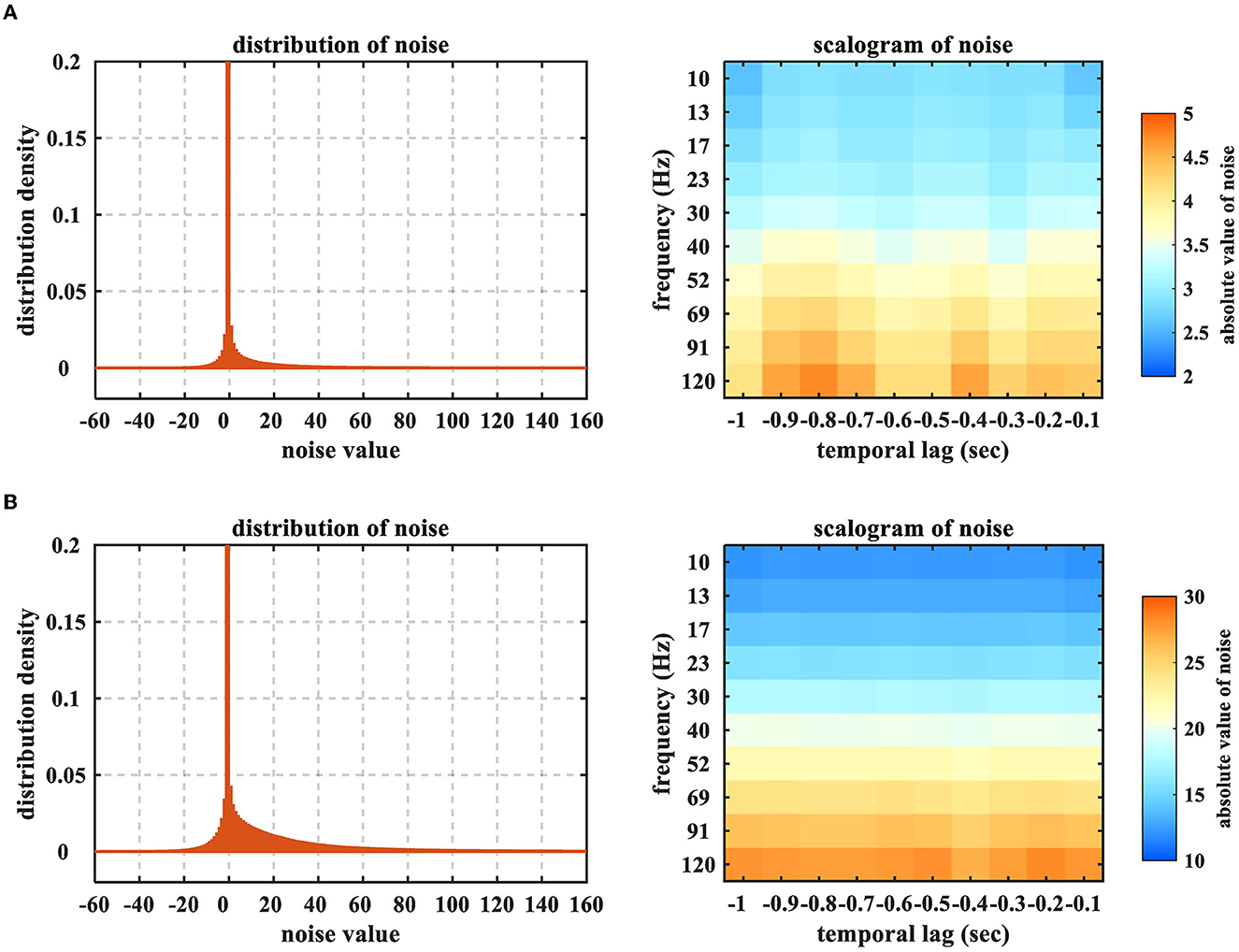

To evaluate the robustness of different algorithms in the practical ECoG decoding task, the ECoG signals were artificially contaminated by outliers to simulate detrimental artifacts. Specifically, we randomly selected a proportion of the training ECoG sampling points, set to 0 (no contamination), 10⁻³, or 10⁻², and corrupted them with outliers drawn from a zero-mean Gaussian distribution whose variance was 50 times that of the signal of the corresponding channel. As stated in Ball et al. (2009a), blink-related artifacts are clearly present in ECoG signals and exhibit much larger amplitudes than a normal ECoG recording. Hence, we used the above-mentioned approach to artificially generate adverse artifacts, so as to contaminate the ECoG signals. This method has been widely utilized in the literature to deteriorate brain signals for evaluating the robustness of different algorithms (Wang et al., 2011; Chen et al., 2018).

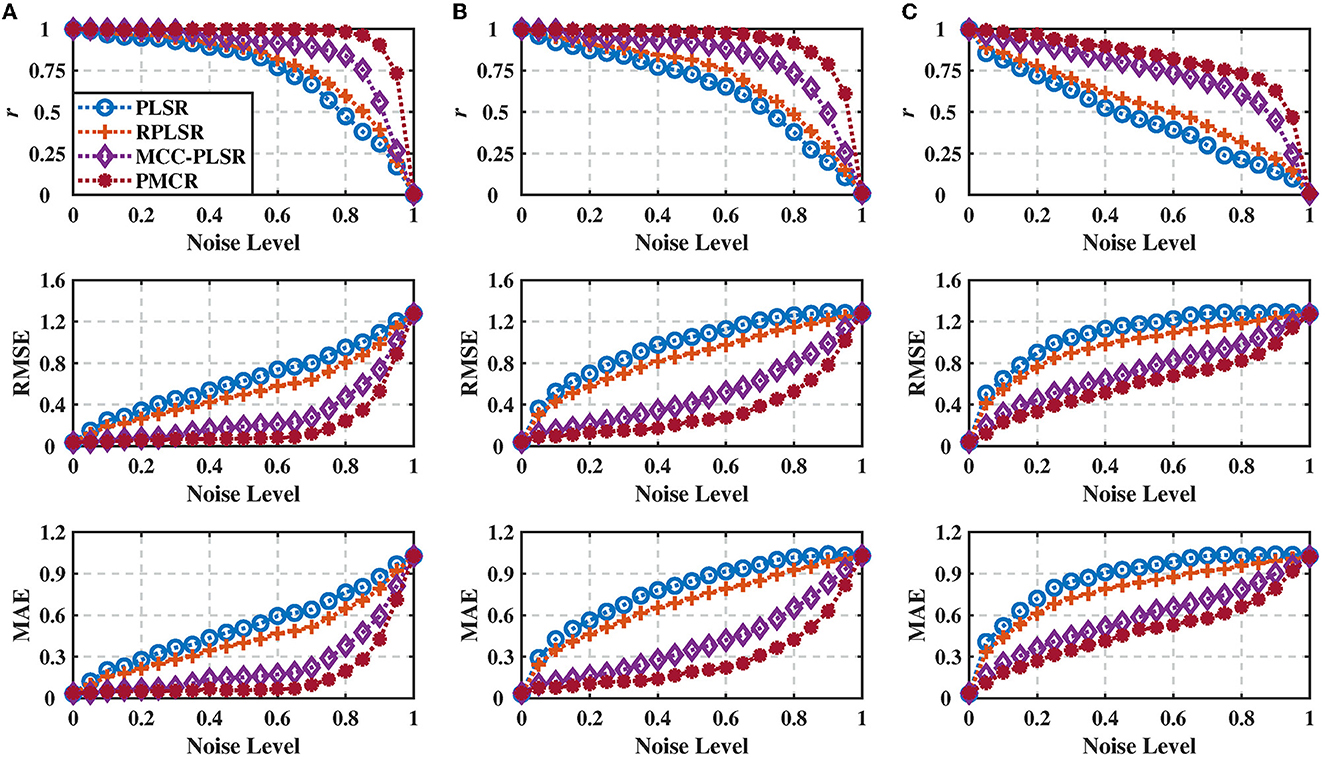

Note that, for this ECoG dataset, the "noise level" denotes the ratio of contaminated ECoG samplings to all samplings, which differs from the ratio of deteriorated samples among the 6,000 training samples. The ratio of affected training samples can be considerably larger than the indicated noise level, since one contaminated ECoG sampling can deteriorate several time windows in feature extraction. For example, when the noise level is $10^{-3}$, the deteriorated proportion of the training set is 0.6645 ± 0.0089. Furthermore, Figure 4 illustrates how the noise influences the time-frequency features. The feature noise is clearly heavy-tailed, which is particularly intractable for the least square criterion. In addition, the effects in the high-frequency band are more prominent, owing to the impulsive nature of the noise.
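A back-of-the-envelope check makes the gap between the noise level and the deteriorated proportion concrete. The sketch below assumes a 1 kHz ECoG sampling rate (not stated in this section) and treats a training sample as deteriorated when its 1.1 s wavelet window contains at least one contaminated sampling. The function names and the simulation settings are hypothetical.

```python
import numpy as np


def affected_fraction(noise_level, window_sec=1.1, fs_hz=1000):
    """Probability that a window holds at least one contaminated sampling,
    assuming samplings are contaminated independently at rate noise_level."""
    n = int(round(window_sec * fs_hz))
    return 1.0 - (1.0 - noise_level) ** n


def simulated_fraction(noise_level, duration_sec=900, fs_hz=1000,
                       out_hz=10, window_sec=1.1, seed=0):
    """Monte Carlo version: contaminate a fixed proportion of samplings and
    count the windows (one per output sample) that contain any of them."""
    rng = np.random.default_rng(seed)
    n = duration_sec * fs_hz
    mask = np.zeros(n, dtype=bool)
    n_bad = int(round(noise_level * n))
    mask[rng.choice(n, size=n_bad, replace=False)] = True

    csum = np.concatenate(([0], np.cumsum(mask)))
    win = int(round(window_sec * fs_hz))
    ends = np.arange(win, n + 1, fs_hz // out_hz)   # window end indices
    hits = csum[ends] - csum[ends - win]            # contaminated count per window
    return float(np.mean(hits > 0))


for level in (1e-3, 1e-2):
    print(level, affected_fraction(level), simulated_fraction(level))
```

Under these assumptions the analytical value is roughly 0.67 for a noise level of $10^{-3}$ and very close to 1 for $10^{-2}$, which is in line with the reported proportions.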

Figure 4. Distributions and scalograms of the time-frequency feature noises resulting from the ECoG sampling contamination. (A) Noise level = $10^{-3}$ (the deteriorated proportion of the training set = 0.6645 ± 0.0089), (B) Noise level = $10^{-2}$ (the deteriorated proportion of the training set ≈ 1). The time-frequency feature noises were calculated as the difference between the training datasets obtained from the uncontaminated and the contaminated ECoG signals, respectively. The distributions were averaged across the 20 sessions of Monkeys B and C, while the scalograms were averaged across all electrodes. The peaks of the distributions are truncated to emphasize the heavy-tailed characteristic.

5.2.4. Spatio-spectro-temporal pattern

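Studying how the spatio-spectro-temporal weights in the regression model contribute to the whole can help investigate the neurophysiological pattern. An element of the trained prediction model H can be denoted by $h_{\mathrm{ch},\mathrm{freq},\mathrm{temp}}$, which corresponds to the ECoG electrode "ch," the frequency "freq," and the temporal lag "temp." Thus, one can calculate the spatio-spectro-temporal contributions as the ratio between the sum of absolute values over each domain and the sum of absolute values over the entire model

$$
W_c(\mathrm{ch}) = \frac{\sum_{\mathrm{freq}} \sum_{\mathrm{temp}} \left| h_{\mathrm{ch},\mathrm{freq},\mathrm{temp}} \right|}{\sum_{\mathrm{ch}} \sum_{\mathrm{freq}} \sum_{\mathrm{temp}} \left| h_{\mathrm{ch},\mathrm{freq},\mathrm{temp}} \right|}, \quad
W_f(\mathrm{freq}) = \frac{\sum_{\mathrm{ch}} \sum_{\mathrm{temp}} \left| h_{\mathrm{ch},\mathrm{freq},\mathrm{temp}} \right|}{\sum_{\mathrm{ch}} \sum_{\mathrm{freq}} \sum_{\mathrm{temp}} \left| h_{\mathrm{ch},\mathrm{freq},\mathrm{temp}} \right|}, \quad
W_t(\mathrm{temp}) = \frac{\sum_{\mathrm{ch}} \sum_{\mathrm{freq}} \left| h_{\mathrm{ch},\mathrm{freq},\mathrm{temp}} \right|}{\sum_{\mathrm{ch}} \sum_{\mathrm{freq}} \sum_{\mathrm{temp}} \left| h_{\mathrm{ch},\mathrm{freq},\mathrm{temp}} \right|}
$$

where $W_c(\mathrm{ch})$, $W_f(\mathrm{freq})$, and $W_t(\mathrm{temp})$ denote the contributions of the ECoG electrode "ch," the frequency "freq," and the temporal lag "temp," respectively.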
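The contribution ratios described above can be computed in a few lines once the weights for one output dimension are arranged as a three-way array. This is a minimal sketch with placeholder weights; the function name `domain_contributions` and the assumed (channel, frequency, lag) ordering of the 6,400 coefficients are illustrative choices, not taken from the paper.

```python
import numpy as np


def domain_contributions(h):
    """Return spatial, spectral, and temporal contributions of a weight array.

    h: array of shape (channels, freqs, lags) holding the regression weights
       for one output dimension.
    """
    abs_h = np.abs(h)
    total = abs_h.sum()
    w_c = abs_h.sum(axis=(1, 2)) / total   # one value per electrode
    w_f = abs_h.sum(axis=(0, 2)) / total   # one value per frequency
    w_t = abs_h.sum(axis=(0, 1)) / total   # one value per temporal lag
    return w_c, w_f, w_t


rng = np.random.default_rng(0)
h_flat = rng.standard_normal(6400)         # placeholder weights
h = h_flat.reshape(64, 10, 10)             # assumed ordering: channel, freq, lag
w_c, w_f, w_t = domain_contributions(h)
print(w_c.shape, w_f.shape, w_t.shape, w_c.sum(), w_f.sum(), w_t.sum())
```

By construction, each of the three contribution vectors is non-negative and sums to one, so the patterns of different models can be compared on a common scale.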

5.2.5. Results

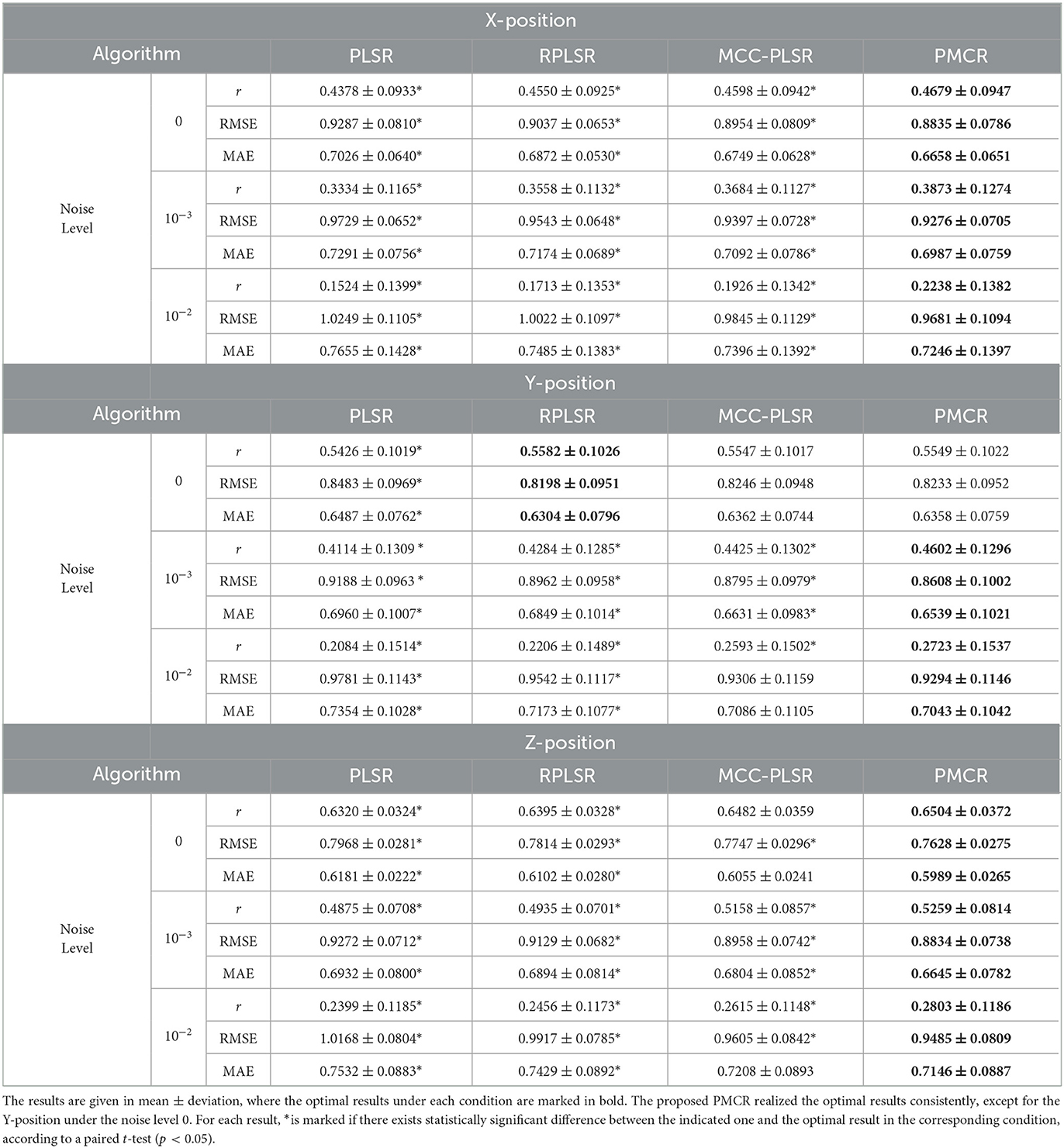

First, we assessed the different algorithms on the uncontaminated ECoG signals. Accordingly, when the noise level was zero, the average performance indicators were obtained from the 20 uncontaminated sessions (Monkeys B and C). We then contaminated each session in 5 repeated trials. Hence, for each contaminated noise level, each algorithm was evaluated 100 times (20 sessions × 5 repeated trials). Table 2 presents the performance indicators of each algorithm for the noise levels 0, $10^{-3}$, and $10^{-2}$. In each row of a specific condition, the best result is marked in bold. Moreover, the other results are marked with (*) if they differ from the best result with statistical significance under that condition. Table 2 shows that the proposed PMCR consistently achieved the best prediction results, except for the Y-axis under noise level 0. In most cases, PMCR outperformed the other methods with a statistically significant difference. Even when the noise level was 0, PMCR achieved better results than the other algorithms for the X-axis and Z-axis. One major reason is that, even in the uncontaminated sessions, motion-related artifacts are considerably present in the ECoG signals (Shimoda et al., 2012), which further demonstrates the necessity of using PMCR in practical ECoG decoding tasks.

Table 2. Performance indicators of each algorithm on the Neurotycho ECoG dataset under the three noise levels 0, $10^{-3}$, and $10^{-2}$.

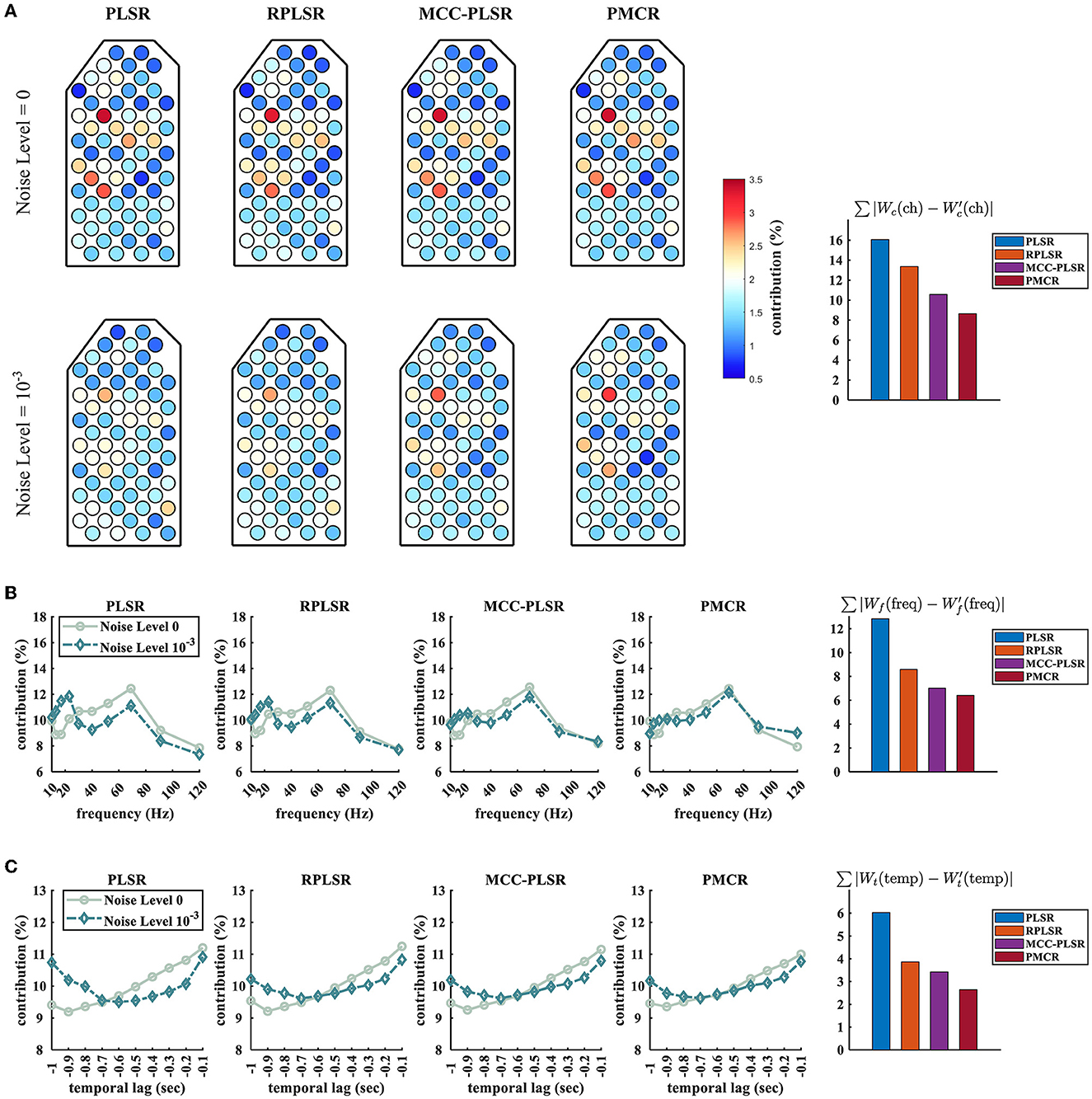

Furthermore, we studied how the neurophysiological patterns of the different algorithms were influenced by the sampling noises. Figure 5 shows the differences between the spatial, spectral, and temporal contributions acquired from the uncontaminated and the contaminated sessions (under the noise level $10^{-3}$), respectively. The regression model for Monkey B's Z-position was used here. We also quantified the effects by computing the sum of the absolute values of the difference between the patterns obtained from the uncontaminated and the contaminated sessions. Specifically, we report $\sum_{\mathrm{ch}} |W_c(\mathrm{ch}) - \tilde{W}_c(\mathrm{ch})|$, $\sum_{\mathrm{freq}} |W_f(\mathrm{freq}) - \tilde{W}_f(\mathrm{freq})|$, and $\sum_{\mathrm{temp}} |W_t(\mathrm{temp}) - \tilde{W}_t(\mathrm{temp})|$ for the spatial, spectral, and temporal patterns, respectively. $W_c(\mathrm{ch})$, $W_f(\mathrm{freq})$, and $W_t(\mathrm{temp})$ were obtained from the uncontaminated sessions, while $\tilde{W}_c(\mathrm{ch})$, $\tilde{W}_f(\mathrm{freq})$, and $\tilde{W}_t(\mathrm{temp})$ were obtained from the contaminated sessions. Figure 5 shows that the proposed PMCR algorithm exhibited the smallest deterioration for the pattern of each domain. This further demonstrates the robustness of PMCR in noisy ECoG decoding tasks.
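The deterioration measure used here is the sum of absolute differences between two contribution patterns. A minimal sketch, with made-up three-element patterns and a hypothetical function name `pattern_deterioration`:

```python
import numpy as np


def pattern_deterioration(w_clean, w_contaminated):
    """Sum of absolute differences between two contribution patterns."""
    w_clean = np.asarray(w_clean, dtype=float)
    w_contaminated = np.asarray(w_contaminated, dtype=float)
    return float(np.sum(np.abs(w_clean - w_contaminated)))


# Toy patterns (each sums to one, as contribution ratios do).
w_clean = np.array([0.50, 0.30, 0.20])
w_noisy = np.array([0.40, 0.35, 0.25])
d = pattern_deterioration(w_clean, w_noisy)
print(d)
```

Because both patterns are non-negative and sum to one, this measure lies between 0 (identical patterns) and 2 (patterns with disjoint support), which gives a scale for reading the values reported in Figure 5.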

Figure 5. Spatio-spectro-temporal contributions of the prediction model for Monkey B's Z-position under noise levels 0 and $10^{-3}$. (A) Spatial patterns, (B) spectral patterns, and (C) temporal patterns. For each domain, the quantitative deterioration is calculated as the sum of the absolute differences between the original and the deteriorated patterns. The original patterns $W_c(\mathrm{ch})$, $W_f(\mathrm{freq})$, and $W_t(\mathrm{temp})$ were averaged across the 10 uncontaminated sessions of Monkey B, while the deteriorated patterns $\tilde{W}_c(\mathrm{ch})$, $\tilde{W}_f(\mathrm{freq})$, and $\tilde{W}_t(\mathrm{temp})$ were averaged across 50 trials (10 sessions of Monkey B × 5 repeated trials). The proposed PMCR achieved the smallest deterioration for each domain.

6. Discussion

6.1. Proposed method

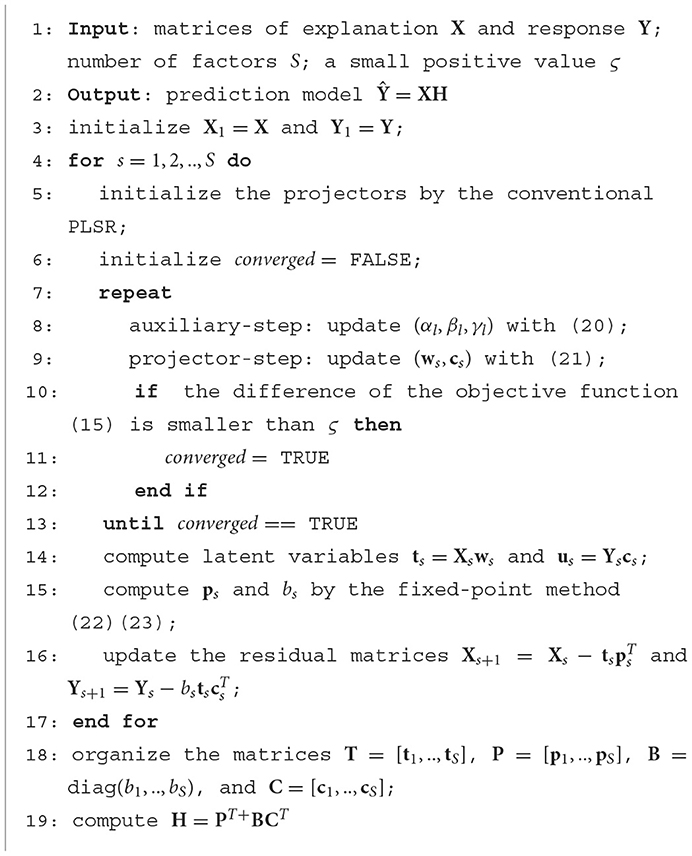

In the present study, we proposed a new robust version of PLSR based on the MCC framework, named PMCR. Similar to the existing PLSR methods, the proposed PMCR decomposes the explanatory matrix (input) and the response matrix (output) iteratively for S decomposition factors. The crucial differences of the proposed PMCR are as follows. First, the objective function for the projectors $w_s$ and $c_s$ in Eq. (15) can be considered a generalized formulation of the conventional PLSR (Eq. 6), and is closely related to the calculation in MCC-PLSR (Eq. 12) under specific conditions. As proved in Liu et al. (2007), maximizing the correntropy between two variables, when the kernel bandwidth tends to infinity, is equivalent to minimizing their squared Euclidean distance. Hence, if we let $\sigma_x, \sigma_y, \sigma_r \to \infty$, the projector calculation of PMCR degenerates to that of the conventional PLSR. Next, we consider the differences between MCC-PLSR and the proposed PMCR. For a univariate response, the projector c for the dimensionality reduction of the response can be ignored. Thus, we can rewrite the dimensionality reduction in PMCR (Eq. 15) as

which could be regarded as a generalized form for the quadratic error minimization (Liu et al., 2007).

which is essentially equal to the conventional PLSR for univariate output. By comparison, MCC-PLSR adopts the MCC framework for the quadratic covariance (Eq. 11), which can be written as (Mou et al., 2018)

which is the special case of MCC-PLSR when the kernel bandwidth in Eq. (12) tends to infinity. Thus, the connection between PMCR (Eq. 15) and MCC-PLSR (Eq. 12) can be illustrated as in Figure 6. The starting points of PMCR and MCC-PLSR are different: the proposed PMCR begins from the original covariance maximization, whereas MCC-PLSR was derived from the quadratic covariance. Therefore, we argue that our proposed PMCR is a more rational robust implementation of PLSR. Moreover, note that the above discussion assumes a univariate output, which is only a special, degenerate case of the proposed PMCR. On the other hand, for the calculation of the loading vector $p_s$ and the regression coefficient $b_s$, the proposed PMCR employs the MCC (Eqs. 16, 17), whereas the conventional PLSR and MCC-PLSR use the least square criterion. As mentioned above, Eqs. (16, 17) can also be regarded as generalized forms of square error minimization. In summary, the proposed PMCR is more general than the conventional PLSR and MCC-PLSR.
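The limiting argument used in this discussion (large kernel bandwidth recovers the square error) can be checked numerically. The sketch below uses a Gaussian kernel and the scaled loss $2\sigma^2 (1 - \frac{1}{N}\sum_i \exp(-e_i^2 / 2\sigma^2))$, which is one common way to write a correntropy-induced loss; it is an illustration on synthetic errors, not one of the paper's objective functions, and the function name is hypothetical.

```python
import numpy as np


def correntropy_loss(errors, sigma):
    """Scaled correntropy-induced loss: 2*sigma^2 * (1 - mean Gaussian kernel).

    Maximizing the mean Gaussian kernel of the errors is the same as
    minimizing this quantity for a fixed sigma.
    """
    x = np.asarray(errors, dtype=float) ** 2 / (2.0 * sigma ** 2)
    # -expm1(-x) equals 1 - exp(-x) but stays accurate when x is tiny.
    return 2.0 * sigma ** 2 * np.mean(-np.expm1(-x))


rng = np.random.default_rng(0)
errors = rng.standard_normal(1000)
errors[:10] += 50.0                      # a few gross outliers

mse = np.mean(errors ** 2)
for sigma in (1.0, 10.0, 100.0, 1e4):
    print(f"sigma={sigma:>8}: loss={correntropy_loss(errors, sigma):.4f}  mse={mse:.4f}")
```

For a small bandwidth each error contributes at most $2\sigma^2$, so the outliers barely move the loss; as the bandwidth grows, the loss approaches the mean squared error and the outliers dominate again.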

In addition, we discuss the advantages and disadvantages of the proposed PMCR algorithm. The essential benefit of using the PMCR algorithm in a noisy ECoG decoding task is its robustness to noise, which was demonstrated by the extensive experiments in Section 5. Further, mathematically, the proposed PMCR algorithm is more general than the conventional PLSR and MCC-PLSR. As mentioned above, the conventional PLSR and MCC-PLSR can be regarded as special cases of the proposed PMCR under specific conditions on the kernel bandwidths. In particular, compared with MCC-PLSR, the proposed PMCR takes into account the dimensionality reduction of the response matrix. As a result, PMCR can achieve better prediction performance for a multivariate response. Moreover, PMCR can be further combined with regularization techniques and extended to the multi-way scenario, which are discussed in the following subsections. However, PMCR might suffer performance degradation from inadequate kernel bandwidths, which are calculated by Silverman's rule (Eq. 25). Although the experimental results in this study verified empirically that the proposed PMCR performs well with the kernel bandwidths obtained by Eq. (25), it is difficult to guarantee that Silverman's rule always provides adequate kernel bandwidths. Hence, we would like to investigate a better way to determine the kernel bandwidths with solid theoretical guarantees in our future work. In addition, the proposed PMCR is effective in dealing with outliers, but it may be inadequate for multi-modal-distributed noise, because MCC uses only one kernel function for each reconstruction error. To address this issue, it is promising to reformulate PLSR with the minimum error entropy (MEE) criterion, another popular learning criterion in ITL (Principe, 2010). MEE employs multiple kernel functions for each reconstruction error, so that it can achieve satisfactory robustness to multi-modal-distributed noise, and it has already led to robust neural decoding algorithms (Chen et al., 2018; Li et al., 2021).
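For reference, a widely used form of Silverman's rule of thumb (Silverman, 1986) sets the bandwidth from the spread of the errors and the sample size. The sketch below implements that textbook form, $1.06 \cdot \min(\hat{s}, \mathrm{IQR}/1.34) \cdot N^{-1/5}$; whether Eq. (25) uses exactly these constants is an assumption here, and the function name is hypothetical.

```python
import numpy as np


def silverman_bandwidth(errors):
    """Rule-of-thumb kernel bandwidth from a sample of errors.

    Uses 1.06 * min(std, IQR / 1.34) * N^(-1/5); the constants follow a
    common textbook form and may differ from the paper's Eq. (25).
    """
    e = np.asarray(errors, dtype=float).ravel()
    n = e.size
    std = e.std(ddof=1)
    q75, q25 = np.percentile(e, [75, 25])
    spread = min(std, (q75 - q25) / 1.34)   # IQR term limits outlier influence
    return 1.06 * spread * n ** (-1 / 5)


rng = np.random.default_rng(0)
errors = rng.standard_normal(1000)
bw_clean = silverman_bandwidth(errors)

errors_outliers = errors.copy()
errors_outliers[:10] += 50.0                # a few gross outliers
bw_outliers = silverman_bandwidth(errors_outliers)
print(bw_clean, bw_outliers)
```

The interquartile-range term keeps the bandwidth from being inflated by a handful of outliers, which is one reason this rule is a convenient default, though it offers no guarantee of being adequate for a given noise distribution.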

6.2. PMCR with regularization

One should additionally note that PMCR was proposed by reformulating the conventional PLSR algorithm with the robust MCC instead of the non-robust least square criterion. Hence, the proposed PMCR has the potential for further performance improvements with regularization techniques, as in the existing regularized PLSR methods. For example, L1-regularization could be used in Eq. (15) to encourage sparse and robust projectors. In addition, if better smoothness of the predicted output is required, polynomial or Sobolev-norm penalization could be used in PMCR. Moreover, L2-regularization could be applied to Eq. (17) to reduce the risk of over-fitting the regression scalar $b_s$, similar to Eq. (7) (Foodeh et al., 2020). In the literature, MCC-based algorithms with regularization have been widely investigated. For instance, a robust version of the sparse representation classifier (SRC) for face recognition was developed by applying L1-regularization to the MCC-based SRC objective function (He et al., 2010).
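To illustrate what an L2-regularized MCC estimate of a regression scalar might look like, the sketch below fits a single slope b in u ≈ b·t by a fixed-point (iteratively reweighted) update with a ridge term in the denominator. It is a generic illustration under assumptions made here (Gaussian kernel, fixed bandwidth, penalty folded into one `ridge` constant), not the paper's Eq. (17) or its optimizer; all names are hypothetical.

```python
import numpy as np


def mcc_ridge_slope(t, u, sigma, ridge=0.0, n_iter=100, tol=1e-10):
    """Fixed-point estimate of b in u ~ b * t under MCC with an L2 penalty.

    Each iteration is a weighted ridge solution, where the Gaussian-kernel
    weights shrink the influence of samples with large residuals.
    """
    t = np.asarray(t, dtype=float)
    u = np.asarray(u, dtype=float)
    b = (t @ u) / (t @ t + ridge)                 # (ridge) least-squares start
    for _ in range(n_iter):
        w = np.exp(-(u - b * t) ** 2 / (2.0 * sigma ** 2))
        b_new = np.sum(w * t * u) / (np.sum(w * t * t) + ridge)
        if abs(b_new - b) < tol:
            b = b_new
            break
        b = b_new
    return b


rng = np.random.default_rng(0)
t = rng.standard_normal(500)
u = 2.0 * t + 0.1 * rng.standard_normal(500)      # true slope is 2
u[:25] += 20.0 * rng.standard_normal(25)          # 5% gross outliers

b_ls = (t @ u) / (t @ t)                          # plain least squares
b_mcc = mcc_ridge_slope(t, u, sigma=1.0)          # MCC, no penalty
b_mcc_ridge = mcc_ridge_slope(t, u, sigma=1.0, ridge=50.0)
print(b_ls, b_mcc, b_mcc_ridge)
```

The ridge term shrinks the slope toward zero, trading a small bias for lower variance, while the kernel weights continue to suppress the outliers.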

6.3. Extension to multi-way application

The multi-way PLSR establishes the regression relationship between tensor variables, with dimensionality reduction performed by tensor factorization. In the literature, the multi-way PLSR has usually been reported to achieve better decoding capability than the generic PLSR algorithm in brain decoding tasks where the spatio-spectro-temporal features are organized in tensor form. Essentially, the multi-way PLSR decomposes the input and output under the least square criterion by minimizing the Frobenius norm (Kolda and Bader, 2009). Therefore, the multi-way PLSR is also prone to performance deterioration caused by noise.

The proposed PMCR method treats the regression problem for matrices, i.e., two-way variables. Extending the PMCR algorithm to multi-way applications could probably improve the prediction performance further, which will be investigated in our future work. Promisingly, MCC has been shown to be effective for tensor variable analysis in a recent study (Zhang et al., 2016).

7. Conclusion

This paper proposed a new robust variant of the PLSR algorithm by replacing the non-robust least square criterion with the MCC framework. The proposed robust objective functions can be effectively optimized by half-quadratic and fixed-point optimization methods. Extensive experimental results on the synthetic dataset and the Neurotycho epidural ECoG dataset demonstrate that the proposed PMCR can outperform the existing PLSR algorithms, revealing promising robustness for high-dimensional and noisy ECoG decoding tasks.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

YL and BC contributed to the conceptualization of the study and developed the algorithm derivation. YL and GW conducted the experiments and analyzed the results. YL wrote the first draft of the manuscript. NY and YK directed the study and guided the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was supported in part by the Japan Society for the Promotion of Science (JSPS) KAKENHI under Grant 19H05728, in part by the Japan Science and Technology Agency (JST) Support for the Pioneering Research Initiated by Next Generation (SPRING) under Grant JPMJSP2106, and in part by the National Natural Science Foundation of China under Grants U21A20485 and 61976175.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. Independent and identically distributed.

2. Available online at http://neurotycho.org/epidural-ecog-food-tracking-task.

References

Amiri, S., Fazel-Rezai, R., and Asadpour, V. (2013). “A review of hybrid brain-computer interface systems,” in Advances in Human-Computer Interaction 2013. doi: 10.1155/2013/187024

Ball, T., Kern, M., Mutschler, I., Aertsen, A., and Schulze-Bonhage, A. (2009a). Signal quality of simultaneously recorded invasive and non-invasive EEG. Neuroimage 46, 708–716. doi: 10.1016/j.neuroimage.2009.02.028

Ball, T., Schulze-Bonhage, A., Aertsen, A., and Mehring, C. (2009b). Differential representation of arm movement direction in relation to cortical anatomy and function. J. Neur. Eng. 6, 016006. doi: 10.1088/1741-2560/6/1/016006

Barker, M., and Rayens, W. (2003). Partial least squares for discrimination. J. Chemometr. 17, 166–173. doi: 10.1002/cem.785

Bro, R. (1996). Multiway calibration: multilinear PLS. J. Chemometr. 10, 47–61. doi: 10.1002/(SICI)1099-128X(199601)10:1<47::AID-CEM400>3.0.CO;2-C

Buzsáki, G., Anastassiou, C. A., and Koch, C. (2012). The origin of extracellular fields and currents–EEG, ECOG, LFP, and spikes. Nat. Rev. Neurosci. 13, 407–420. doi: 10.1038/nrn3241

Chao, Z. C., Nagasaka, Y., and Fujii, N. (2010). Long-term asynchronous decoding of arm motion using electrocorticographic signals in monkey. Front. Neuroeng. 3, 3. doi: 10.3389/fneng.2010.00003

Chen, B., Li, Y., Dong, J., Lu, N., and Qin, J. (2018). Common spatial patterns based on the quantized minimum error entropy criterion. IEEE Trans. Syst. Man Cybern. 50, 4557–4568. doi: 10.1109/TSMC.2018.2855106

Chen, B., and Príncipe, J. C. (2012). Maximum correntropy estimation is a smoothed map estimation. IEEE Signal Proc. Lett. 19, 491–494. doi: 10.1109/LSP.2012.2204435

Chen, B., Wang, J., Zhao, H., Zheng, N., and Principe, J. C. (2015). Convergence of a fixed-point algorithm under maximum correntropy criterion. IEEE Signal Proces. Lett. 22, 1723–1727. doi: 10.1109/LSP.2015.2428713

Chestek, C. A., Batista, A. P., Santhanam, G., Yu, B. M., Afshar, A., Cunningham, J. P., et al. (2007). Single-neuron stability during repeated reaching in macaque premotor cortex. J. Neurosci. 27, 10742–10750. doi: 10.1523/JNEUROSCI.0959-07.2007

Chin, C. M., Popovic, M. R., Thrasher, A., Cameron, T., Lozano, A., and Chen, R. (2007). Identification of arm movements using correlation of electrocorticographic spectral components and kinematic recordings. J. Neur. Eng. 4, 146. doi: 10.1088/1741-2560/4/2/014

Dong, J., Chen, B., Lu, N., Wang, H., and Zheng, N. (2017). “Correntropy induced metric based common spatial patterns,” in 2017 IEEE 27th International Workshop on Machine Learning for Signal Processing (MLSP) (IEEE) 1–6. doi: 10.1109/MLSP.2017.8168132

Donoghue, J. P. (2002). Connecting cortex to machines: recent advances in brain interfaces. Nat. Neurosci. 5, 1085–1088. doi: 10.1038/nn947

Eliseyev, A., and Aksenova, T. (2016). Penalized multi-way partial least squares for smooth trajectory decoding from electrocorticographic (ecog) recording. PLoS ONE 11, e0154878. doi: 10.1371/journal.pone.0154878

Eliseyev, A., Auboiroux, V., Costecalde, T., Langar, L., Charvet, G., Mestais, C., et al. (2017). Recursive exponentially weighted n-way partial least squares regression with recursive-validation of hyper-parameters in brain-computer interface applications. Sci. Rep. 7, 1–15. doi: 10.1038/s41598-017-16579-9

Eliseyev, A., Moro, C., Costecalde, T., Torres, N., Gharbi, S., Mestais, C., et al. (2011). Iterative n-way partial least squares for a binary self-paced brain-computer interface in freely moving animals. J. Neur. Eng. 8, 046012. doi: 10.1088/1741-2560/8/4/046012

Eliseyev, A., Moro, C., Faber, J., Wyss, A., Torres, N., Mestais, C., et al. (2012). L1-penalized n-way pls for subset of electrodes selection in bci experiments. J. Neur. Eng. 9, 045010. doi: 10.1088/1741-2560/9/4/045010

Feng, Y., Huang, X., Shi, L., Yang, Y., and Suykens, J. A. (2015). Learning with the maximum correntropy criterion induced losses for regression. J. Mach. Learn. Res. 16, 993–1034.

Foodeh, R., Ebadollahi, S., and Daliri, M. R. (2020). Regularized partial least square regression for continuous decoding in brain-computer interfaces. Neuroinformatics 18, 465–477. doi: 10.1007/s12021-020-09455-x

He, R., Hu, B.-G., Zheng, W.-S., and Kong, X.-W. (2011). Robust principal component analysis based on maximum correntropy criterion. IEEE Trans. Image Proc. 20, 1485–1494. doi: 10.1109/TIP.2010.2103949

He, R., Zheng, W.-S., and Hu, B.-G. (2010). Maximum correntropy criterion for robust face recognition. IEEE Trans. Patt. Analy. Mach. Intell. 33, 1561–1576. doi: 10.1109/TPAMI.2010.220

Kolda, T. G., and Bader, B. W. (2009). Tensor decompositions and applications. SIAM Rev. 51, 455–500. doi: 10.1137/07070111X

Krishnan, A., Williams, L. J., McIntosh, A. R., and Abdi, H. (2011). Partial least squares (PLS) methods for neuroimaging: a tutorial and review. Neuroimage 56, 455–475. doi: 10.1016/j.neuroimage.2010.07.034

Lebedev, M. A., and Nicolelis, M. A. (2006). Brain-machine interfaces: past, present and future. TRENDS Neurosci. 29, 536–546. doi: 10.1016/j.tins.2006.07.004

Leuthardt, E. C., Miller, K. J., Schalk, G., Rao, R. P., and Ojemann, J. G. (2006). Electrocorticography-based brain computer interface-the seattle experience. IEEE Trans. Neur. Syst. Rehabilit. Eng. 14, 194–198. doi: 10.1109/TNSRE.2006.875536

Leuthardt, E. C., Schalk, G., Wolpaw, J. R., Ojemann, J. G., and Moran, D. W. (2004). A brain-computer interface using electrocorticographic signals in humans. J. Neur. Eng. 1, 63. doi: 10.1088/1741-2560/1/2/001

Levine, S. P., Huggins, J. E., BeMent, S. L., Kushwaha, R. K., Schuh, L. A., Rohde, M. M., et al. (2000). A direct brain interface based on event-related potentials. IEEE Trans. Rehabil. Eng. 8, 180–185. doi: 10.1109/86.847809

Li, Y., Chen, B., Yoshimura, N., and Koike, Y. (2021). Restricted minimum error entropy criterion for robust classification. IEEE Trans. Neur. Netw. Learn. Syst. 33, 6599–6612. doi: 10.1109/TNNLS.2021.3082571

Liu, W., Pokharel, P. P., and Principe, J. C. (2007). Correntropy: Properties and applications in non-gaussian signal processing. IEEE Trans. Signal Process. 55, 5286–5298. doi: 10.1109/TSP.2007.896065

Loh, P.-L., and Wainwright, M. J. (2015). Regularized m-estimators with nonconvexity: Statistical and algorithmic theory for local optima. J. Mach. Learn. Res. 16, 559–616.

Mou, Y., Zhou, L., Chen, W., Fan, J., and Zhao, X. (2018). Maximum correntropy criterion partial least squares. Optik 165, 137–147. doi: 10.1016/j.ijleo.2017.12.126

Mussa-Ivaldi, F. A., and Miller, L. E. (2003). Brain-machine interfaces: computational demands and clinical needs meet basic neuroscience. TRENDS Neurosci. 26, 329–334. doi: 10.1016/S0166-2236(03)00121-8

Otsubo, H., Ochi, A., Imai, K., Akiyama, T., Fujimoto, A., Go, C., et al. (2008). High-frequency oscillations of ictal muscle activity and epileptogenic discharges on intracranial eeg in a temporal lobe epilepsy patient. Clin. Neurophysiol. 119, 862–868. doi: 10.1016/j.clinph.2007.12.014

Parzen, E. (1962). On estimation of a probability density function and mode. Ann. Mathem. Stat. 33, 1065–1076. doi: 10.1214/aoms/1177704472

Pistohl, T., Ball, T., Schulze-Bonhage, A., Aertsen, A., and Mehring, C. (2008). Prediction of arm movement trajectories from ecog-recordings in humans. J. Neurosci. Methods 167, 105–114. doi: 10.1016/j.jneumeth.2007.10.001

Principe, J. C. (2010). Information Theoretic Learning: Renyi's Entropy and Kernel Perspectives. New York: Springer Science & Business Media. doi: 10.1007/978-1-4419-1570-2

Ren, Z., and Yang, L. (2018). Correntropy-based robust extreme learning machine for classification. Neurocomputing 313, 74–84. doi: 10.1016/j.neucom.2018.05.100

Santamaría, I., Pokharel, P. P., and Principe, J. C. (2006). Generalized correlation function: definition, properties, and application to blind equalization. IEEE Trans. Signal Proces. 54, 2187–2197. doi: 10.1109/TSP.2006.872524

Schalk, G., Miller, K. J., Anderson, N. R., Wilson, J. A., Smyth, M. D., Ojemann, J. G., et al. (2008). Two-dimensional movement control using electrocorticographic signals in humans. J. Neur. Eng. 5, 75. doi: 10.1088/1741-2560/5/1/008

Shimoda, K., Nagasaka, Y., Chao, Z. C., and Fujii, N. (2012). Decoding continuous three-dimensional hand trajectories from epidural electrocorticographic signals in japanese macaques. J. Neur. Eng. 9, 036015. doi: 10.1088/1741-2560/9/3/036015

Silverman, B. W. (1986). Density Estimation for Statistics and Data Analysis, volume 26. New York: CRC press.

Singh, A., Pokharel, R., and Principe, J. (2014). The c-loss function for pattern classification. Patt. Recogn. 47, 441–453. doi: 10.1016/j.patcog.2013.07.017

Wang, H., Tang, Q., and Zheng, W. (2011). L1-norm-based common spatial patterns. IEEE Trans. Biomed. Eng. 59, 653–662. doi: 10.1109/TBME.2011.2177523

Wold, H. (1966). “Estimation of principal components and related models by iterative least squares,” in Multivariate Analysis, ed. P. R. Krishnaiah (New York: Academic Press) 391–420.

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain-computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/S1388-2457(02)00057-3

Zhang, M., Gao, Y., Sun, C., La Salle, J., and Liang, J. (2016). “Robust tensor factorization using maximum correntropy criterion,” in 2016 23rd International Conference on Pattern Recognition (ICPR) (IEEE) 4184–4189.

Zhao, Q., Caiafa, C. F., Mandic, D. P., Chao, Z. C., Nagasaka, Y., Fujii, N., et al. (2012). Higher order partial least squares (hopls): A generalized multilinear regression method. IEEE Trans. Patt. Analy. Mach. Intell. 35, 1660–1673. doi: 10.1109/TPAMI.2012.254

Zhao, Q., Zhang, L., and Cichocki, A. (2014). Multilinear and nonlinear generalizations of partial least squares: an overview of recent advances. Wiley Interdisc. Rev. 4, 104–115. doi: 10.1002/widm.1120

Zhao, Q., Zhou, G., Adalı, T., Zhang, L., and Cichocki, A. (2013). “Kernel-based tensor partial least squares for reconstruction of limb movements,” in 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (IEEE) 3577–3581. doi: 10.1109/ICASSP.2013.6638324

Keywords: brain-computer interface, partial least square regression, maximum correntropy, robustness, electrocorticography decoding

Citation: Li Y, Chen B, Wang G, Yoshimura N and Koike Y (2023) Partial maximum correntropy regression for robust electrocorticography decoding. Front. Neurosci. 17:1213035. doi: 10.3389/fnins.2023.1213035

Received: 27 April 2023; Accepted: 15 June 2023;

Published: 30 June 2023.

Edited by:

Peng Xu, University of Electronic Science and Technology of China, China

Reviewed by:

Dakun Lai, University of Electronic Science and Technology of China, China

Yakang Dai, Chinese Academy of Sciences, China

M. Van Hulle, KU Leuven, Belgium

Copyright © 2023 Li, Chen, Wang, Yoshimura and Koike. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuanhao Li, li.y.ay@m.titech.ac.jp
