- 1 Department of Psychology and Neuroscience, BioImaging Research Center, University of Georgia, Athens, GA, United States

- 2 Institute for Biomagnetism and Biosignal Analysis, University of Münster, Münster, Germany

- 3 Otto Creutzfeldt Center for Cognitive and Behavioral Neuroscience, University of Münster, Münster, Germany

The advent of the Research Domain Criteria (RDoC) approach to funding translational neuroscience has highlighted a need for research that includes measures across multiple task types. However, the duration of any given experiment is quite limited, particularly in neuroimaging contexts, and robust estimates of multiple behavioral domains are therefore often difficult to achieve. Here we offer a “turn-key” emotion-evoking paradigm suitable for neuroimaging experiments that demonstrates strong effect sizes across widespread cortical and subcortical structures. This short series could easily be added to existing fMRI protocols to yield a reliable estimate of emotional reactivity that complements research in other behavioral domains. This experimental adjunct could enable an initial comparison of emotional modulation with the primary behavioral focus of an investigator’s work and could potentially identify new relationships between domains of behavior that have not previously been recognized.

Introduction

The advent of the Research Domain Criteria (RDoC) approach to funding translational neuroscience has highlighted a need for research that includes measures across multiple task types (Insel et al., 2010; Morris and Cuthbert, 2012). This manifold approach, put forth by the National Institute of Mental Health (NIMH), has led to novel advances in our understanding of complex mental disorders such as depression (Drysdale et al., 2017), anxiety (McTeague and Lang, 2012), and psychosis (Clementz et al., 2016). The majority of mental disorders include substantial emotional symptoms (American Psychiatric Association, 2022), and one major theme of the RDoC approach is to improve the utility of individual studies of mental disorders by expanding data collection across behavioral domains, including cognitive, social, and affective processing. However, the duration of any given experiment is limited (particularly in neuroimaging contexts), and robust estimates of multiple behavioral domains are often difficult to achieve. There is a need for time-efficient paradigms that evoke emotional states with robust measurement properties and that can be easily integrated into diverse research programs (LeDoux and Pine, 2016; Peng et al., 2021). Here we offer a brief yet robust emotional perception paradigm suitable for neuroimaging experiments that evokes strong activation effect sizes in cortical and subcortical structures known to be engaged by emotional processing. This 5-min series could be added to any fMRI protocol to yield a reliable estimate of emotional reactivity that complements research in other behavioral domains.

Naturalistic scene stimuli are often used to evoke emotion in human neuroscience studies. This approach has led to considerable progress in our understanding of basic and translational research problems in psychology and psychiatry (McTeague et al., 2012; Bradley et al., 2014). Most neuroimaging investigations of emotional perception have identified modulation of brain activity evoked by naturalistic scenes that can be classified along the two primary dimensions of valence (pleasantness) and arousal. A reliable finding is that emotionally arousing scenes, whether pleasant or unpleasant, evoke enhanced activation in a broad network of regions, including the visual system, amygdala, thalamus, anterior insula, and ventral prefrontal cortex (Kober et al., 2008; Liu et al., 2011; Sabatinelli et al., 2011; Sabatinelli and Frank, 2019). The utility of the emotional scene paradigm for the study of psychosis within the RDoC framework is evident in recent studies of emotional scene perception, which revealed altered emotional reactivity in bipolar disorder with psychosis compared to healthy controls (Trotti et al., 2020) and blunted emotional reactivity in schizophrenia and schizoaffective disorder (Trotti et al., 2021).

The novel purpose of this report is to offer an opportunity for “cross-fertilization” of BOLD signal patterns in emotion-sensitive brain networks with BOLD signal patterns evoked in other behavioral domains that may be relevant to defining the mechanisms of mental disorders. The paradigm described here provides neuroimaging researchers with a straightforward means to add an assessment of emotional reactivity to any study. The basic turn-key package includes the scene stimuli and the Python code to present the scenes in an event-related fashion in under 5 min. Brain activation magnitudes and emotional effect sizes are described for an unselected sample of 23 healthy participants, using common acquisition, reduction, and analysis procedures, but the parameters of image collection and analysis can be adapted to the needs and preferences of the researcher.

Methods

Participants

Our intention is to provide a credible demonstration of the emotional impact that a researcher might expect when using a sample size typical of human fMRI studies (Szucs and Ioannidis, 2017). Twenty-four members of the University of Münster community participated in the fMRI experiment. All participants gave written informed consent, as approved by the University of Münster Human Subjects Review Board. One participant’s data were excluded due to excessive head motion, leaving 23 participants in the final sample (11 female; mean age 26.9 years, SD 3.8 years). All participants reported no neurological abnormalities and had normal or corrected-to-normal vision. Participants received 50 Euros as compensation.

Experimental procedure

In brief, the functional imaging protocol involves a passive scene viewing period of 5 min in the scanner. Participants are asked to maintain central fixation on a projection screen while the scene stimuli are presented in a mixed, event-related design.

Participants were fitted with earplugs and headphones and were given a patient-alarm squeeze ball before entering the bore of the scanner. Padding inside the head coil and explicit verbal instruction were used to limit head motion. After a 5-min structural volume was collected, a 5-min functional scan was acquired. Participants were instructed to attend to the series of 20 emotional and neutral scenes while maintaining fixation on a red dot at the center of the screen. The scenes were rear-projected onto a screen visible through a coil-mounted mirror. Following the 20-scene series, participants left the scanner room and reviewed each scene on a laptop computer while rating its pleasantness and emotional arousal using the Self-Assessment Manikin (SAM; Bradley and Lang, 1994). Participants then received transcranial direct current stimulation and viewed two additional scene series while functional imaging data were collected (described in Junghöfer et al., 2017).

Scene stimuli

Participants viewed an event-related series of 20 natural scene photographs presented in 256 levels of grayscale, at 800 × 600 resolution, over a 30° horizontal field of view. The stimuli depict 8 pleasant (happy children and families, erotica), 4 neutral (people in daily activities, landscapes, and cityscapes), and 8 unpleasant scenes (threatening people and animals, bodily injuries). All scene stimuli included a central fixation point and were balanced across emotional and neutral content to be statistically equivalent in luminosity and complexity, with complexity measured as Joint Photographic Experts Group (JPEG) file size at 90% quality using GIMP 2.8.

The scene series began with a 2 s checkerboard acclimation image, followed by the 20 experimental scenes, presented for 2 s each, in pseudorandom order, separated by interstimulus intervals of 10 or 12 s. The total acquisition time was 4 min and 42 s, including 5 dummy acquisitions (10 s) prior to the first trial to allow MR signal stabilization.
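The timing arithmetic above can be sketched as follows. This is a minimal Python illustration, not the distributed PsychoPy code. It assumes that the first scene follows the 2-s checkerboard directly and that every scene, including the last, is followed by one 10- or 12-s interval. How many intervals of each length the original series used is not stated here, so `n_short` is a hypothetical parameter, and the computed end time will match 4 min 42 s only for the right split.

```python
import random

TR_S = 2.0             # repetition time
DUMMY_S = 5 * TR_S     # five dummy volumes before the first trial (10 s)
CHECKER_S = 2.0        # acclimation checkerboard
SCENE_S = 2.0          # each scene is shown for 2 s


def make_isis(n_trials, n_short, short_s=10.0, long_s=12.0, seed=None):
    """Return a shuffled list of n_short short and (n_trials - n_short) long intervals."""
    isis = [short_s] * n_short + [long_s] * (n_trials - n_short)
    random.Random(seed).shuffle(isis)
    return isis


def build_schedule(isis):
    """Return (scene onsets, end of series), both in seconds from scan start.

    Assumes the first scene directly follows the checkerboard and that each
    scene is followed by the corresponding interval in `isis`.
    """
    t = DUMMY_S + CHECKER_S
    onsets = []
    for isi in isis:
        onsets.append(t)
        t += SCENE_S + isi
    return onsets, t


if __name__ == "__main__":
    onsets, end = build_schedule(make_isis(20, n_short=10, seed=1))
    print(f"{len(onsets)} scenes; first onset {onsets[0]:.0f} s; series ends at {end:.0f} s")
```

Because the scene duration and both interval lengths are multiples of the 2-s TR, every onset in such a schedule falls on a volume boundary. This simplifies the window-based signal change scores used in the ROI analysis.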

In the original experiment, the scenes were presented using the Psychophysics Toolbox (Brainard, 1997), which runs in MATLAB (MathWorks, Inc.). So that researchers need not depend on a MATLAB license, the presentation paradigm was rewritten in the open-source PsychoPy software (Peirce et al., 2019). The code was created with the “Builder” graphical user interface so that it is simple to use and to adjust for individual research applications. The code includes a generic trigger wait loop that synchronizes presentation with the first functional image acquisition; this loop must be configured for each scanner and the chosen repetition time (the default is a 2-s TR). In addition, the scene presentation order is randomized for each run, with the constraint that a given scene content category (pleasant, neutral, or unpleasant) is not repeated twice in succession. This randomization is performed before the experiment is fully loaded. The code also tracks and corrects the slight timing variation (∼1–2 ms) that occurs as each scene is loaded into video memory. This variation is compensated for in the following intertrial interval, so that each trial has the same duration and the full presentation series ends on time. The scene presentation order and onset/offset times are recorded in a log file for each run.
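The two run-level safeguards described above, constrained randomization and compensation for scene-loading delays, can be sketched in plain Python. This sketch is built on assumptions and is not the released Builder code. It interprets “not repeated twice in succession” as no two consecutive trials from the same category (`max_run=1`) and uses simple rejection sampling. Both function names are hypothetical. For the 8/4/8 split, only a small fraction of random shuffles (well under 0.1%) satisfy `max_run=1`, so the loop allows many retries.

```python
import random


def constrained_order(labels, max_run=1, seed=None, max_tries=200_000):
    """Shuffle category labels so no category occurs more than max_run times in a row.

    Rejection sampling: reshuffle until the run-length constraint holds.
    """
    rng = random.Random(seed)
    order = list(labels)
    for _ in range(max_tries):
        rng.shuffle(order)
        run = 1
        for prev, cur in zip(order, order[1:]):
            run = run + 1 if cur == prev else 1
            if run > max_run:
                break
        else:  # inner loop ended without break: constraint satisfied
            return order
    raise RuntimeError("no valid order found; relax max_run")


def corrected_iti(nominal_iti, nominal_scene, measured_scene):
    """Shorten the next interval by the scene's overrun so the trial keeps
    its nominal total length (scene + interval)."""
    overrun = measured_scene - nominal_scene
    return nominal_iti - overrun


if __name__ == "__main__":
    labels = ["pleasant"] * 8 + ["neutral"] * 4 + ["unpleasant"] * 8
    print(constrained_order(labels, seed=0))
    # A scene that stayed on 1.5 ms too long shortens the following interval.
    print(corrected_iti(10.0, 2.0, 2.0015))
```

A more drift-proof variant computes each wait from the absolute scheduled onset (scheduled onset minus current clock time) rather than from per-trial corrections, so small errors cannot accumulate across the run.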

MR data acquisition and reduction

Using a Siemens Prisma 3T MR scanner (Siemens Healthcare, Erlangen, Germany), a T1-weighted structural volume was collected consisting of 192 sagittal slices with a 256 × 256 matrix and 1-mm isotropic voxels. The functional prescription covered the whole brain with 30 interleaved axial slices with 3.5-mm isotropic voxels (64 × 64 gradient echo EPI, 224 mm FOV, 3.5 mm thickness, no gap, 90° flip angle, 30 ms TE, 2000 ms TR). The functional time series was slice-time corrected using cubic spline interpolation, spatially smoothed across 2 voxels (7 mm full width at half maximum), linearly detrended, and high-pass filtered at 0.02 Hz. Structural and co-registered functional data were resampled into 1 mm³ voxels and transformed into standardized Talairach coordinate space using BrainVoyager (Brain Innovation).
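Several of the acquisition and preprocessing numbers above follow from one another, and the arithmetic can be checked directly. The sketch below is offered only as an illustration. It derives the 3.5-mm in-plane voxel size from the FOV and matrix. It converts the 7-mm FWHM smoothing kernel to the standard deviation of the equivalent Gaussian. It also expresses the 0.02-Hz high-pass cutoff as a period in seconds and in TRs. The Gaussian FWHM-to-sigma relation is standard; nothing is assumed about how BrainVoyager internally constructs its kernel or filter.

```python
import math

FOV_MM = 224.0       # EPI field of view
MATRIX = 64          # EPI matrix (64 x 64)
N_SLICES = 30        # axial slices, no gap
TR_S = 2.0           # repetition time
HP_CUTOFF_HZ = 0.02  # high-pass cutoff


def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


voxel_mm = FOV_MM / MATRIX                  # in-plane voxel size: 3.5 mm
coverage_mm = N_SLICES * voxel_mm           # 30 slices x 3.5 mm, no gap: 105 mm
fwhm_mm = 2 * voxel_mm                      # "smoothed across 2 voxels": 7 mm FWHM
sigma_mm = fwhm_to_sigma(fwhm_mm)           # about 2.97 mm
cutoff_period_s = 1.0 / HP_CUTOFF_HZ        # slower fluctuations (> ~50 s) are attenuated
cutoff_period_trs = cutoff_period_s / TR_S  # about 25 volumes

if __name__ == "__main__":
    print(f"voxel {voxel_mm} mm, coverage {coverage_mm} mm, sigma {sigma_mm:.2f} mm, "
          f"high-pass period {cutoff_period_s:.0f} s ({cutoff_period_trs:.0f} TRs)")
```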

Estimates of BOLD signal reactivity

A repeated-measures ANOVA, with scene presentations convolved with a two-gamma hemodynamic response function (Friston et al., 1998), assessed the effect of emotional scene content on brain activation. The resulting group-averaged BOLD signal map was thresholded at a false discovery rate of p < 0.01 (Genovese et al., 2002) and a minimum cluster size of 250 mm³. From each participant’s data, five regions of interest (ROIs) were sampled. Their locations were derived from prior studies employing this paradigm (Frank and Sabatinelli, 2014, 2019; Sambuco et al., 2020) and from three large meta-analyses of visual emotional processing (Kober et al., 2008; Liu et al., 2011; Sabatinelli et al., 2011). These ROIs are not intended as a complete list of the brain regions that show emotional sensitivity; instead, they demonstrate the effect sizes of BOLD signal differences that may be expected when employing this paradigm and protocol. The ROIs were the bilateral amygdala (±22, −7, −12), fusiform gyrus (FG; ±44, −50, −14), lateral occipital cortex (LOC; ±45, −70, 2), inferior frontal gyrus/anterior insula (IFG; ±34, 19, 6), and the medial dorsal nucleus of the thalamus (MDN; 0, −19, 5). Because our goal was to enhance the reliability of the BOLD activation estimates, we chose a relatively large ROI volume to enable the averaging of roughly 70 voxels at raw acquisition size. A 100 mm³ sample of BOLD signal was extracted from each ROI location for each participant. From these functional time series, BOLD signal change scores were calculated as the average percent signal change 4–8 s after scene onset, relative to the 2-s pre-stimulus baseline.
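Two steps of the analysis above can be sketched without any neuroimaging library. The first builds a predicted response by convolving the 2-s scene presentations with a two-gamma hemodynamic response function. The gamma shapes (6 and 16, with a 1:6 undershoot ratio) are the commonly used SPM-style canonical values; they are an assumption here and not necessarily BrainVoyager’s exact defaults. The second computes the ROI change score described in the text: the mean percent signal change 4–8 s after scene onset, relative to the 2-s pre-stimulus baseline. Which volumes fall inside each window is also an assumption: volume i is treated as acquired at i × TR, the baseline window is half-open, and the response window is inclusive.

```python
import math

TR_S = 2.0


def two_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Two-gamma HRF (unit scale): a response peak minus a scaled undershoot."""
    if t <= 0:
        return 0.0

    def gamma_pdf(shape):
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

    return gamma_pdf(peak_shape) - ratio * gamma_pdf(under_shape)


def predicted_regressor(onsets, duration, n_vols, tr=TR_S, dt=0.1, hrf_len=32.0):
    """Boxcar of scene presentations convolved with the HRF, sampled once per TR."""
    n_hi = int(round(n_vols * tr / dt))
    box = [0.0] * n_hi
    for onset in onsets:
        start = int(round(onset / dt))
        stop = min(n_hi, start + int(round(duration / dt)))
        for i in range(start, stop):
            box[i] = 1.0
    kernel = [two_gamma_hrf(k * dt) for k in range(int(round(hrf_len / dt)))]
    conv = [dt * sum(box[i - k] * kernel[k] for k in range(min(i + 1, len(kernel))))
            for i in range(n_hi)]
    return conv[::int(round(tr / dt))]


def percent_signal_change(ts, onset_s, tr=TR_S, base_s=2.0, window=(4.0, 8.0)):
    """Mean % change in `window` seconds after onset, relative to the base_s seconds before onset."""
    times = [i * tr for i in range(len(ts))]
    base = [v for t, v in zip(times, ts) if onset_s - base_s <= t < onset_s]
    resp = [v for t, v in zip(times, ts) if window[0] <= t - onset_s <= window[1]]
    baseline = sum(base) / len(base)
    return 100.0 * (sum(resp) / len(resp) - baseline) / baseline
```

With a 2-s TR and onsets on volume boundaries, `percent_signal_change` averages the three volumes acquired 4, 6, and 8 s after onset and compares them against the single volume acquired 2 s before onset.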

Results

Scene content effects

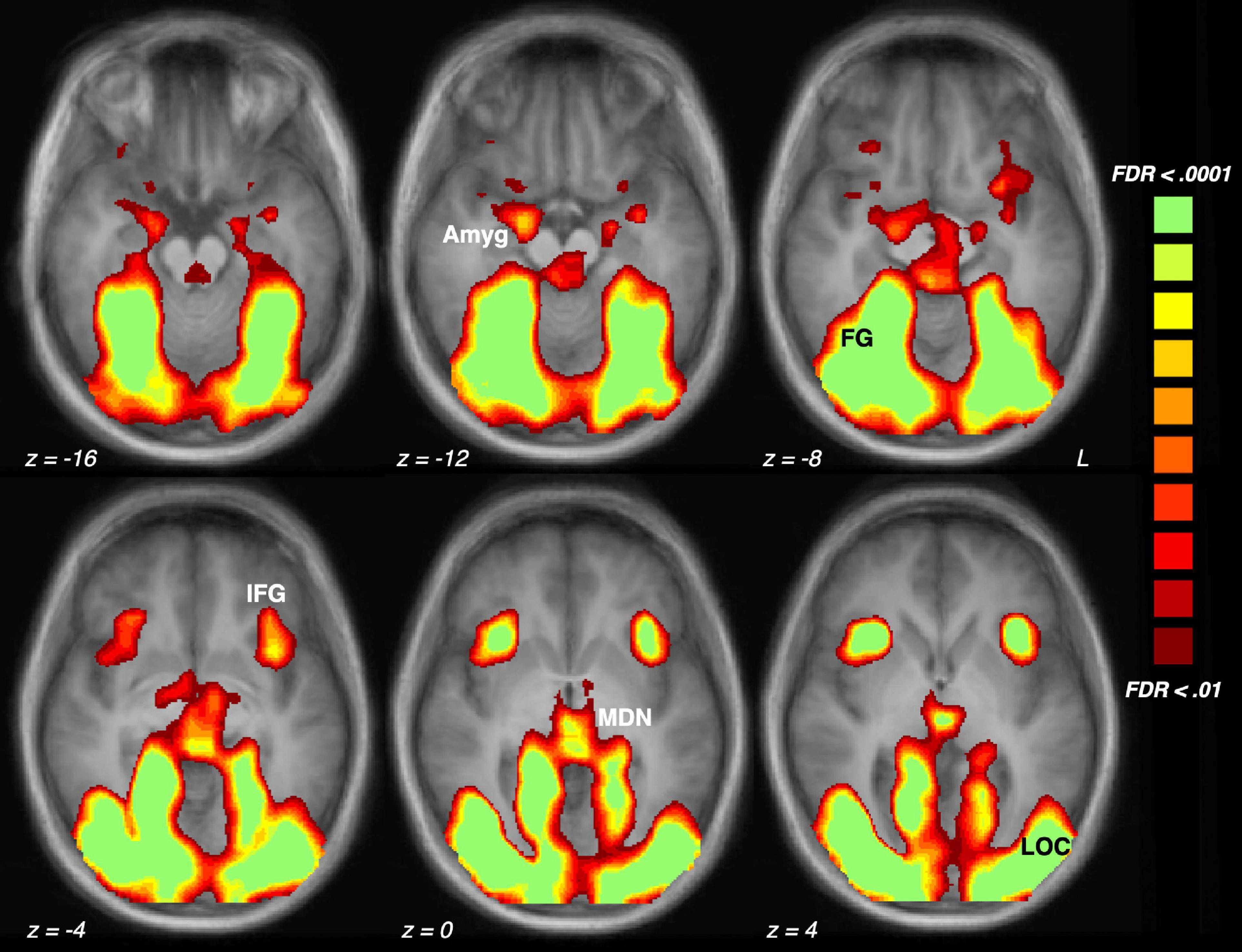

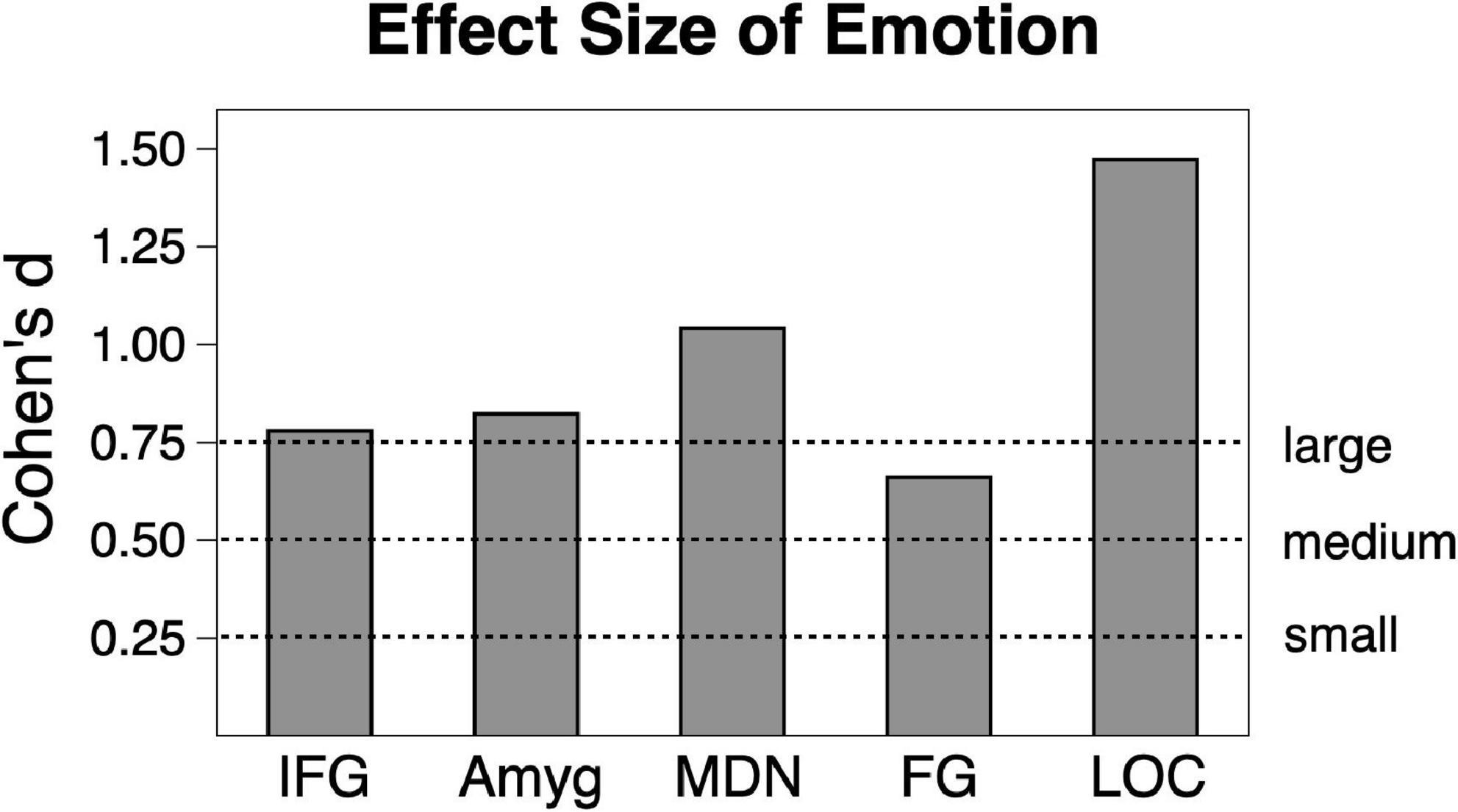

Scene content effects (Figure 1) were assessed with repeated-measures ANOVA across pleasant, neutral, and unpleasant scenes, applying corrections for violations of sphericity where necessary. Effect sizes are reported as generalized eta squared (ηG²; 0.01, 0.06, and 0.14 indicate small, medium, and large effects, respectively) and, for ease of interpretation, as Cohen’s d in Figure 2.
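For a single within-subject factor such as scene content, generalized eta squared equals the effect sum of squares divided by the sum of the effect, subject, and error sums of squares. The sketch below computes the F ratio and ηG² from a participants × conditions table. Its function name is illustrative, and it applies no sphericity correction. A Greenhouse–Geisser or Huynh–Feldt adjustment would change the degrees of freedom and the p value but not F or ηG². With 23 participants and 3 scene contents it yields df = (2, 44), matching the tests reported below.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA on data[subject][condition].

    Returns (F, df_effect, df_error, generalized eta squared), uncorrected for sphericity.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_effect = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subject = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_effect - ss_subject  # condition x subject residual

    df_effect, df_error = k - 1, (n - 1) * (k - 1)
    f_ratio = (ss_effect / df_effect) / (ss_error / df_error)
    eta_g2 = ss_effect / (ss_effect + ss_subject + ss_error)
    return f_ratio, df_effect, df_error, eta_g2
```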

Figure 1. Brain activation driven by emotional (pleasant and unpleasant) compared to neutral scene perception across the sample of 23 participants. The five regions of interest are indicated: Amyg, amygdala; FG, fusiform gyrus; IFG, inferior frontal gyrus/anterior insula; MDN, medial dorsal nucleus of the thalamus; LOC, lateral occipital cortex.

Figure 2. Cohen’s d effect size for the emotional vs. neutral contrast across the sample (n = 23) in the five regions of interest. Amyg, amygdala; FG, fusiform gyrus; IFG, inferior frontal gyrus/anterior insula; MDN, medial dorsal nucleus of the thalamus; LOC, lateral occipital cortex.

As expected, scene content significantly modulated ratings of valence (F(2,44) = 168.71, p < 0.001, ηG² = 0.818) and arousal (F(2,44) = 99.86, p < 0.001, ηG² = 0.741). On the 1–9 pleasantness scale, mean ratings (standard error) were highest for pleasant scenes, 7.12 (0.181), followed by neutral scenes, 6.70 (0.184), and unpleasant scenes, 2.99 (0.175). On the 1–9 arousal scale, unpleasant scenes were rated most arousing, 6.90 (0.196), followed by pleasant scenes, 5.53 (0.203), and neutral scenes, 2.87 (0.235).

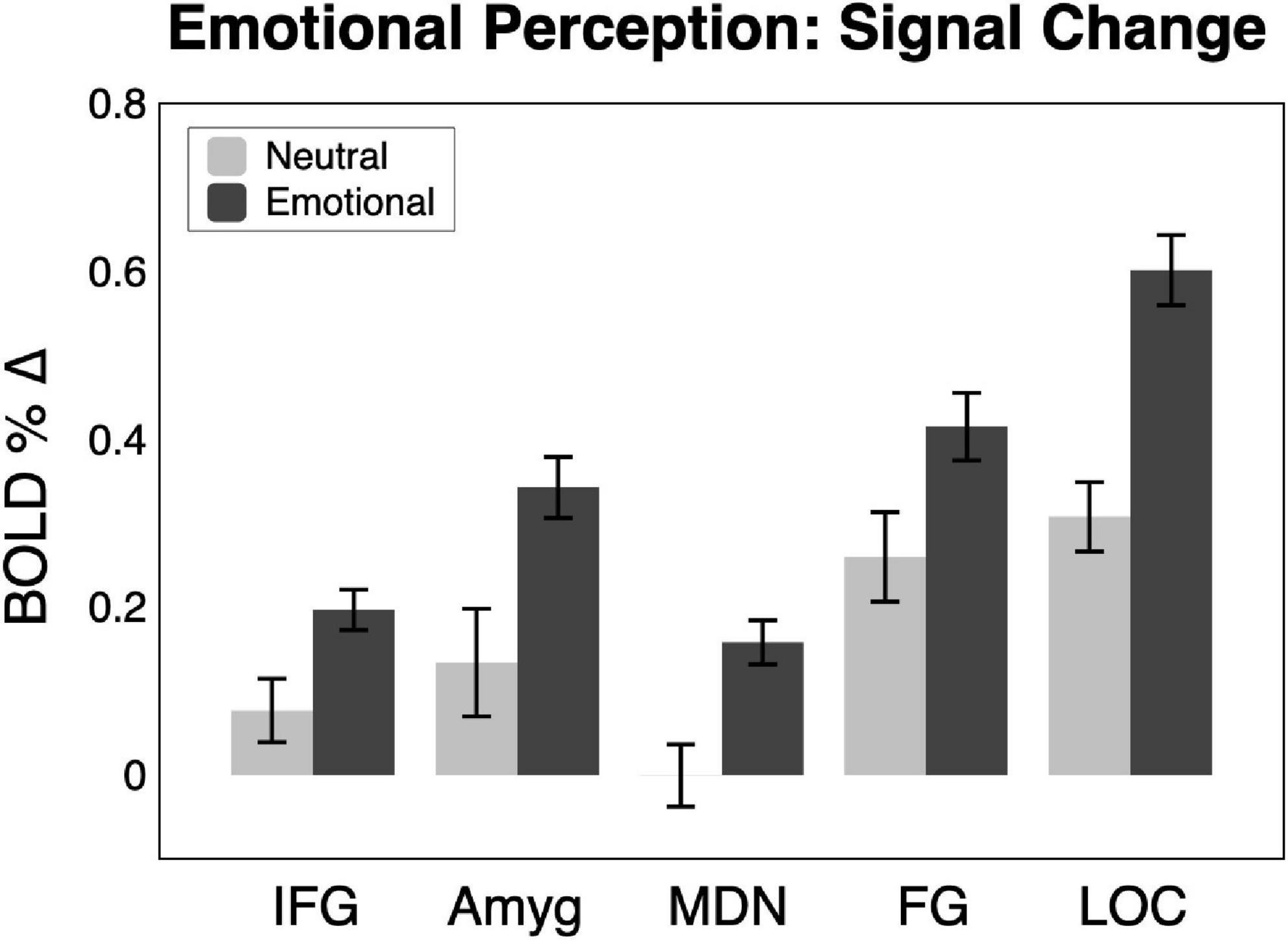

As shown in Figures 2 and 3, emotional scene perception significantly modulated the BOLD signal in the amygdala (F(2,44) = 6.34, p < 0.01, ηG² = 0.138), fusiform gyrus (F(2,44) = 12.94, p < 0.001, ηG² = 0.129), lateral occipital cortex (F(2,44) = 30.10, p < 0.001, ηG² = 0.337), inferior frontal gyrus/anterior insula (F(2,44) = 5.47, p < 0.01, ηG² = 0.127), and medial dorsal nucleus of the thalamus (F(2,44) = 5.83, p < 0.01, ηG² = 0.172).

Figure 3. BOLD signal change across the sample (n = 23) in the five regions of interest for neutral and emotional scenes. Amyg, amygdala; FG, fusiform gyrus; IFG, inferior frontal gyrus/anterior insula; MDN, medial dorsal nucleus of the thalamus; LOC, lateral occipital cortex.

Consistent with much prior work, both pleasant and unpleasant scenes elevated BOLD signal compared to neutral scene perception in all regions. To provide an estimate of the strength of emotional reactivity in each ROI, Cohen’s d effect size was calculated for BOLD signal enhancement by emotional (pleasant and unpleasant) relative to neutral scene perception, as shown in Figure 2. These effect sizes were quite strong, ranging from medium to large in FG (0.66), large in IFG (0.78), large in amygdala (0.82), large in MDN (1.04), and very large in LOC (1.47).
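Cohen’s d has several variants for within-subject designs, and the text does not specify which one was used. As an assumption, the sketch below computes the paired-samples version (often written d_z). Each participant’s emotional score is the mean of their pleasant and unpleasant change scores. d is then the mean emotional-minus-neutral difference divided by the standard deviation of those differences. Other variants, such as dividing by the average condition SD, give different values from the same data.

```python
from statistics import mean, stdev


def cohens_dz(pleasant, unpleasant, neutral):
    """Paired Cohen's d for emotional (mean of pleasant and unpleasant) vs. neutral.

    Each argument is a list of per-participant BOLD change scores in the same order.
    """
    diffs = [(p + u) / 2.0 - n for p, u, n in zip(pleasant, unpleasant, neutral)]
    return mean(diffs) / stdev(diffs)
```

This variant is tied directly to the paired t test on the same differences (t = d_z × √n), which makes it easy to cross-check against a standard paired comparison.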

Discussion

Scientific progress is often produced through the study of a single domain of behavior in a simplified model. The opposing trend toward interdisciplinary and multimodal approaches to longstanding research questions has been formalized in the RDoC funding initiative for mental health research. The use of multiple measures that cut across behavioral domains is desirable, but any single experiment is of course limited in scope and duration. Here we offer a 5-min turn-key paradigm that can be added to an fMRI protocol and that evokes substantial emotional reactivity across widespread regions of the brain. This short extension would allow investigators to assess potential relationships between the dependent measures of their target behavioral domain and emotional modulation during scene perception. It requires no local collaborator and yields predictable effect sizes, even in a relatively small sample. The emotional scenes and the open-source Python code to present them are freely available on the Open Science Framework. The investigator only needs to insert the information necessary to register the scanner’s first RF trigger, so that scene presentation is time-locked to image acquisition.
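The trigger step amounts to blocking until the scanner’s first pulse arrives and then timing every scene relative to it. Many MR trigger interfaces forward each volume pulse as a keypress, but the key and the transport are site-specific assumptions here. In PsychoPy this wait is commonly implemented with `event.waitKeys(keyList=[...])`. The plain-Python sketch below instead takes a list of timestamped key events so that the logic can be checked offline. Its function names and the default trigger key `"5"` are hypothetical.

```python
def first_trigger_time(events, trigger_keys=("5",)):
    """Return the timestamp of the first scanner trigger in (time_s, key) events."""
    for time_s, key in events:
        if key in trigger_keys:
            return time_s
    raise RuntimeError("no scanner trigger received")


def absolute_onsets(relative_onsets, trigger_time):
    """Convert onsets defined relative to the first volume into clock times."""
    return [trigger_time + onset for onset in relative_onsets]
```

Keypresses that arrive before the first trigger, such as a participant’s button press, are ignored. Later trigger pulses are not needed once the run clock is anchored to the first one.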

The naturalistic emotional scene paradigm has been employed widely and offers several benefits compared with other emotion-elicitation paradigms. No task training is required, no specific language or culture is represented, and the scenes are inherently engaging. There is also no dependence on the interpretation of facial expressions, which can conflate socio-communicative and emotional processes (Barrett et al., 2019) and are known to affect clinical and non-clinical populations differently (Anticevic et al., 2012; Filkowski and Haas, 2016).

We consider this brief paradigm to represent a robust but basic assessment of emotional reactivity that recruits a broad network of structures repeatedly shown to be active in emotional perception (Sabatinelli et al., 2011; Sambuco et al., 2020). The 5-min series is intended as an adjunct to an existing fMRI protocol and provides a predictable index of emotional network activity. This index could be used in an exploratory manner to enable an initial comparison of emotional modulation with the primary behavioral focus of an investigator’s work. In this minimal form, interpretive limitations are an accepted compromise for brevity. Should potentially meaningful relationships between the primary behavior of interest and emotional reactivity emerge, a more extended assessment of emotional reactivity would be justified. For example, this 5-min protocol is not sufficiently powered to test predictions regarding the relative impact of pleasant and unpleasant scenes, because the great majority of brain reactivity is driven by emotional arousal rather than valence (Kober et al., 2008; Liu et al., 2011; Lindquist et al., 2016). Investigators who can afford more time in the scanner and want more trials and greater scene variability have two options. The widely used International Affective Picture System (Lang et al., 2008) is one. Additional perceptually balanced scene sets depicting a broad range of contents are also available from the corresponding author.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the University of Münster Human Subjects Review Board. The patients/participants provided their written informed consent to participate in this study.

Author contributions

DS and MJ designed and conducted the study, analyzed the data, and wrote the manuscript. MR and CW conducted the study, analyzed the data, and reviewed the manuscript. AF analyzed the data and wrote the presentation code for the dissemination of the turn-key study protocol. All authors contributed to the article and approved the submitted version.

Funding

This work was funded by the Interdisciplinary Center for Clinical Research of the University of Münster (Ju2/024/15) and the Deutsche Forschungsgemeinschaft (DFG, SFB-TRR58-C01).

Acknowledgments

We thank Harald Kugel, Jochen Bauer, Nina Nagelmann, and Andreas Wollbrink for their technical assistance and help with the data collection.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.


References

American Psychiatric Association. (2022). Diagnostic and statistical manual of mental disorders, 5th Edn. Washington, DC: American Psychiatric Association. doi: 10.1176/appi.books.9780890425787

Anticevic, A., Van Snellenberg, J. X., Cohen, R. E., Repovs, G., Dowd, E. C., and Barch, D. M. (2012). Amygdala recruitment in schizophrenia in response to aversive emotional material: A meta-analysis of neuroimaging studies. Schizophr. Bull. 38, 608–621. doi: 10.1093/schbul/sbq131

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., and Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychol. Sci. Public Interest 20, 1–68. doi: 10.1177/1529100619832930

Bradley, M. M., and Lang, P. J. (1994). Measuring emotion: The self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 25, 49–59.

Bradley, M. M., Sabatinelli, D., and Lang, P. J. (2014). “Emotion and motivation in the perceptual processing of natural scenes,” in Scene vision: Making sense of what we see, eds K. Kveraga, and M. Bar (Cambridge, MA: MIT Press), 273–290.

Clementz, B. A., Sweeney, J. A., Hamm, J. P., Ivleva, E. I., Ethridge, L. E., Pearlson, G. D., et al. (2016). Identification of distinct psychosis biotypes using brain-based biomarkers. Am. J. Psychiatry 173, 373–384.

Drysdale, A. T., Grosenick, L., Downar, J., Dunlop, K., Mansouri, F., Meng, Y., et al. (2017). Resting-state connectivity biomarkers define neurophysiological subtypes of depression. Nat. Med. 23, 28–38.

Filkowski, M. M., and Haas, B. W. (2016). Rethinking the use of neutral faces as a baseline in fMRI neuroimaging studies of axis-I psychiatric disorders. J. Neuroimag. 27, 281–291. doi: 10.1111/jon.12403

Frank, D. W., and Sabatinelli, D. (2014). Higher-order thalamic and amygdala modulation in human emotional scene perception. Brain Res. 1587, 69–76. doi: 10.1016/j.brainres.2014.08.061

Frank, D. W., and Sabatinelli, D. (2019). Hemodynamic and electrocortical reactivity to specific scene contents in emotional perception. Psychophysiology 56:e13340. doi: 10.1111/psyp.13340

Friston, K. J., Fletcher, P., Josephs, O., Holmes, A., Rugg, M. D., and Turner, R. (1998). Event-related fMRI: Characterizing differential responses. Neuroimage 7, 30–40.

Genovese, C. R., Lazar, N. A., and Nichols, T. (2002). Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage 15, 870–878.

Insel, T., Cuthbert, B., Garvey, M., Heinssen, R., Pine, D. S., Quinn, K., et al. (2010). Research domain criteria (RDoC): Toward a new classification framework for research on mental disorders. Am. J. Psychiatry 167, 748–751. doi: 10.1176/appi.ajp.2010.09091379

Junghöfer, M., Winker, C., Rehbein, M. A., and Sabatinelli, D. (2017). Noninvasive stimulation of the ventromedial prefrontal cortex enhances pleasant scene processing. Cereb. Cortex 27, 3449–3456. doi: 10.1093/cercor/bhx073

Kober, H., Barrett, L. F., Joseph, J., Bliss-Moreau, E., Lindquist, K., and Wager, T. D. (2008). Functional grouping and cortical-subcortical interactions in emotion: A meta-analysis of neuroimaging studies. Neuroimage 42, 998–1031. doi: 10.1016/j.neuroimage.2008.03.059

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (2008). International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual. Technical Report A-8. Gainesville, FL: University of Florida.

LeDoux, J. E., and Pine, D. S. (2016). Using neuroscience to help understand fear and anxiety: A two-system framework. Am. J. Psychiatry 173, 1083–1093. doi: 10.1176/appi.ajp.2016.16030353

Lindquist, K. A., Satpute, A. B., Wager, T. D., Weber, J., and Barrett, L. F. (2016). The brain basis of positive and negative affect: Evidence from a meta-analysis of the human neuroimaging literature. Cereb. Cortex 26, 1910–1922. doi: 10.1093/cercor/bhv001

Liu, X., Hairston, J., Schrier, M., and Fan, J. (2011). Common and distinct networks underlying reward valence and processing stages: A meta-analysis of functional neuroimaging studies. Neurosci. Biobehav. Rev. 35, 1219–1236. doi: 10.1016/j.neubiorev.2010.12.012

McTeague, L. M., and Lang, P. J. (2012). The anxiety spectrum and the reflex physiology of defense: From circumscribed fear to broad distress. Depress. Anxiety 29, 264–281. doi: 10.1002/da.21891

McTeague, L. M., Lang, P. J., Wangelin, B. C., Laplante, M.-C., and Bradley, M. M. (2012). Defensive mobilization in specific phobia: Fear specificity, negative affectivity, and diagnostic prominence. Biol. Psychiatry 72, 8–18. doi: 10.1016/j.biopsych.2012.01.012

Morris, S. E., and Cuthbert, B. N. (2012). Research domain criteria: Cognitive systems, neural circuits, and dimensions of behavior. Dialogues Clin. Neurosci. 14, 29–37.

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., et al. (2019). PsychoPy2: Experiments in behavior made easy. Behav. Res. Methods 51, 195–203. doi: 10.3758/s13428-018-01193-y

Peng, Y., Knotts, J. D., Taylor, C. T., Craske, M. G., Stein, M. B., Bookheimer, S., et al. (2021). Failure to identify robust latent variables of positive or negative valence processing across units of analysis. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 6, 518–526. doi: 10.1016/j.bpsc.2020.12.005

Sabatinelli, D., and Frank, D. W. (2019). Assessing the primacy of human amygdala-inferotemporal emotional scene discrimination with rapid whole-brain fMRI. Neuroscience 406, 212–224. doi: 10.1016/j.neuroscience.2019.03.001

Sabatinelli, D., Fortune, E. E., Li, Q., Siddiqui, A., Krafft, C., Oliver, W. T., et al. (2011). Emotional perception: Meta analyses of face and natural scene processing. Neuroimage 54, 2524–2533. doi: 10.1016/j.neuroimage.2010.10.011

Sambuco, N., Bradley, M. M., Herring, D. R., and Lang, P. J. (2020). Common circuit or paradigm shift? The functional brain in emotional scene perception and emotional imagery. Psychophysiology 57:e13522.

Szucs, D., and Ioannidis, J. P. (2017). Empirical assessment of published effect sizes and power in the recent cognitive neuroscience and psychology literature. PLoS Biol. 15:e2000797.

Trotti, R. L., Abdelmageed, S., Parker, D. A., Sabatinelli, D., Tamminga, C. A., Gershon, E. S., et al. (2021). Neural processing of repeated emotional scenes in schizophrenia, schizoaffective disorder, and bipolar disorder. Schizophr. Bull. 47, 1473–1481. doi: 10.1093/schbul/sbab018

Keywords: RDoC, emotion, functional MRI, protocol, stimuli, amygdala, visual perception

Citation: Sabatinelli D, Winker C, Farkas AH, Rehbein MA and Junghoefer M (2023) A 5-min paradigm to evoke robust emotional reactivity in neuroimaging studies. Front. Neurosci. 17:1102213. doi: 10.3389/fnins.2023.1102213

Received: 18 November 2022; Accepted: 06 February 2023;

Published: 07 March 2023.

Edited by:

Zhi Yang, Shanghai Mental Health Center, China

Reviewed by:

Xujun Duan, University of Electronic Science and Technology of China, China

Pengfei Xu, Beijing Normal University, China

Copyright © 2023 Sabatinelli, Winker, Farkas, Rehbein and Junghoefer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dean Sabatinelli, sabat@uga.edu
