
ORIGINAL RESEARCH article

Front. Neurosci., 12 September 2022

Sec. Brain Imaging Methods

Volume 16 - 2022 | https://doi.org/10.3389/fnins.2022.903448

Micro-expressions can reflect an individual’s subjective emotions and true mental state and are widely used in the fields of mental health, justice, law enforcement, intelligence, and security. However, current micro-expression recognition, which relies on image analysis and expert assessment, has limitations such as restricted application scenarios and high time costs. To overcome these limitations, this study is the first to explore, from a neuroscientific perspective, the brain mechanisms of micro-expressions and how they differ from those of macro-expressions, laying a foundation for micro-expression recognition based on EEG signals. We designed a real-time supervision and emotional expression suppression (SEES) experimental paradigm to collect facial expressions and electroencephalograms (EEG) synchronously. EEG signals were analyzed at the scalp and source levels to determine the temporal and spatial neural patterns of micro- and macro-expressions. Under positive emotions, micro-expressions elicited stronger activation than macro-expressions in frontal regions, namely the premotor cortex, supplementary motor cortex, and middle frontal gyrus. Under negative emotions, micro-expressions elicited weaker activation than macro-expressions in the somatosensory cortex and angular gyrus. Activation of the right temporoparietal junction (rTPJ) during micro-expressions was stronger under positive than under negative emotions. We attribute this difference to distinct pathways of facial control: the production of micro-expressions under positive emotion depends on control of the face, whereas micro-expressions under negative emotions depend more on the intensity of the emotion.

In human interpersonal interactions, the face’s complex musculature and its direct relationship with the brain’s processing and perception of emotions make it a dynamic canvas on which humans transmit their own emotional states and infer those of others (Porter et al., 2012). However, individuals may suppress or hide their true emotional expressions in certain social situations, which complicates the interpretation of facial expressions. Brief facial expressions revealed under such voluntary manipulation are often referred to as micro-expressions (Ekman and Rosenberg, 2005; Ekman, 2009). Lasting between 1/25 and 1/2 of a second, micro-expressions are faint and difficult to recognize with the naked eye, but they are believed to reflect a person’s true intent, especially intent of a hostile nature (ten Brinke et al., 2012a). Like macro-expressions, micro-expressions comprise basic, discrete types of emotional expression, such as disgust, anger, fear, sadness, happiness, or surprise, that reveal the emotions a person may be attempting to hide (Ekman, 2003; Porter and Brinke, 2008). Micro-expressions can therefore provide essential behavioral clues for lie detection, an approach increasingly used in fields such as national security, judicial systems, medical clinics, and social interaction research.

However, the neural mechanisms of micro-expressions remain unclear, which limits the further development of micro-expression recognition applications. Current micro-expression recognition relies heavily on image recognition technology (Peng et al., 2017; Zhang et al., 2018; Wang et al., 2021) and expert assessment (Yan et al., 2013, 2014). Image recognition technology applies machine learning (Wang et al., 2015; Liu et al., 2016) and deep learning algorithms (Verma et al., 2020; Wang et al., 2021) to images of human faces to recognize micro-expressions. Expert assessment is a method in which professionally trained micro-expression experts judge micro-expressions manually. Image recognition technology is limited in its application scenarios (Goh et al., 2020), for example when the face is covered (e.g., by a mask during the pandemic), when illumination is insufficient (Xu et al., 2017), or for special populations (e.g., patients with facial paralysis). The main problem with expert assessment is that it is subjective and time-consuming and has a low accuracy rate (Monaro et al., 2022). Exploring the neural mechanisms of micro-expressions can therefore lay the foundation for micro-expression recognition based on physiological signals, which can help overcome these limitations and broaden the application scenarios of micro-expression recognition.

The inhibition hypothesis proposed by Ekman (Malatesta, 1985) suggests that micro-expressions arise from competition between cortical and subcortical pathways during emotional arousal, a process involving both emotional arousal and voluntary cognitive control. When an emotion is triggered, subcortical brain regions project a strong involuntary signal from the amygdala to the facial nucleus. The individual then recruits the voluntary motor cortex to conceal this response, sending a signal to suppress the expression in a socially and culturally acceptable manner. Accordingly, cortical pathways, including the temporal cortex, primary motor cortex, ventrolateral premotor cortex, and supplementary motor area, can evaluate and make decisions regarding facial expressions and then recruit the motor areas that directly control voluntary facial movements (Paiva-Silva et al., 2016). This view can be considered the basic assumption of the neural perspective on micro-expressions (Frank and Svetieva, 2015).

Building on this view, this study is the first to explore the brain mechanisms underlying micro-expressions and their differences from macro-expressions from a neuroscientific perspective. Micro-expressions are spontaneous, short, and of low intensity, so the millisecond temporal resolution of EEG makes it well suited to capturing brain activity when micro-expressions occur. EEG is also non-invasive and low-risk, and many studies have used EEG to examine the brain mechanisms underlying macro-expression generation (Recio et al., 2014; Shangguan et al., 2019; Yuan et al., 2021). For example, Recio et al. found that, compared with happy expressions, angry expressions were accompanied by greater allocation of processing resources to inhibiting the preactivated motor plan (N2) and updating a new one (P3) (Recio et al., 2014). Shangguan et al. also used EEG to examine the brain mechanisms underlying the production of happy and angry facial expressions and found that happiness and anger did not differ during the motor-preparation phase, whereas differences in N2 and P3 amplitudes showed that the inhibition and reprogramming costs of anger were greater than those of happiness (Shangguan et al., 2019). These studies illustrate the substantial advantage of EEG’s high temporal resolution for studying the brain mechanisms underlying the generation of micro- and macro-expressions.

Currently, the suppression-elicitation and lying-leakage paradigms are the approaches typically used to elicit micro-expressions (ten Brinke et al., 2012b; Yan et al., 2014). The lying-leakage method (Ekman and Friesen, 1974; Frank and Ekman, 1997), although more ecologically valid, is problematic because micro-expressions occur at quite a low rate, whereas EEG studies require a certain number of occurrences for analysis. In contrast, the suppression-elicitation paradigm requires participants to keep their facial expressions neutral while watching videos that elicit strong emotions. Their performance is tied to an experimental reward, which increases their motivation to hide their true emotions (Yan et al., 2013, 2014). The high emotional intensity induced by the videos increases the occurrence of micro-expressions: 109 micro-expressions (MEs) were detected among 1,000 facial expressions (approximately 11%). However, micro-expressions mostly occur in interpersonal situations; we therefore added a real-time supervision module to the suppression-elicitation paradigm to improve its ecological validity. Participants and supervisors took part in the experiments simultaneously, providing simulated social supervision. We named this improved paradigm the real-time supervision and emotional expression suppression (SEES) experimental paradigm.

In summary, we designed the SEES paradigm, recording EEG in synchrony with a high-speed camera, to investigate at the scalp and source levels how the neural mechanisms of micro- and macro-expressions differ under voluntary suppression. We also examined the effect of emotion type, that is, whether the neural mechanisms of micro-expressions differ under positive and negative emotions.

Eighty participants, all self-reported right-handed, took part in the current study. All were healthy and did not use psychoactive substances. Individuals at risk for depression (Beck Depression Inventory score > 18) were excluded. Of the 80 participants, 78 showed at least one happy expression during the positive video clips and at least one fearful expression during the negative video clips. The final sample therefore comprised 78 participants (age range: 17–22 years; 23 males, 45 females). All participants provided written informed consent, and the ethics committee of Southwest University approved the study.

We chose amusing, fearful, and neutral videos as emotional stimuli to elicit sufficient, relatively pure facial expressions that were not accompanied by unrelated, non-emotional facial movements. Chinese comedy film clips and variety shows were used as the amusement videos, because native cultural factors may affect emotion elicitation (Zheng et al., 2017). Classic horror films were used as the fear videos. The video materials were selected according to the following criteria: (a) videos were shorter than 3 min to avoid visual fatigue, (b) the materials were easy to understand and did not require excessive thinking, and (c) each clip elicited a single target emotion (e.g., an urge to laugh or to express fear). Based on these criteria, we manually selected 35 online videos as candidate emotional materials. Twenty raters who did not take part in the formal experiment assessed the valence of these videos and rated their intensity on a seven-point Likert scale; videos rated 6 or above were retained. We selected seven videos as the elicitation materials: three positive (eliciting an urge to laugh; rating 6.05 ± 0.83), one neutral, and three negative (eliciting fear; rating 6.13 ± 0.92) video clips.
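The selection rule described above can be sketched in a few lines of Python. This is a minimal illustration only, assuming that each candidate clip’s intensity ratings are averaged across the pilot raters and that the "6 or above" criterion is applied to that mean; the function name, clip IDs, and ratings are hypothetical, and the valence check is not modeled.

```python
from statistics import mean, stdev


def select_videos(ratings, threshold=6.0):
    """Keep clips whose mean intensity rating reaches the threshold.

    ratings: dict mapping a (hypothetical) clip ID to the list of
    seven-point Likert intensity ratings given by the pilot raters.
    Returns {clip_id: (mean, standard deviation)} for the retained clips.
    """
    selected = {}
    for clip_id, scores in ratings.items():
        m = mean(scores)
        if m >= threshold:  # assumed: criterion applied to the mean rating
            selected[clip_id] = (m, stdev(scores))
    return selected


# Hypothetical pilot ratings for two candidate clips.
pilot = {"clip_a": [7, 6, 6, 7], "clip_b": [5, 4, 6, 5]}
print(select_videos(pilot))
```

Applying the threshold to the mean, rather than requiring every rater to score 6 or higher, is one reading of the text; a stricter per-rater rule would simply replace `mean(scores)` with `min(scores)`.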

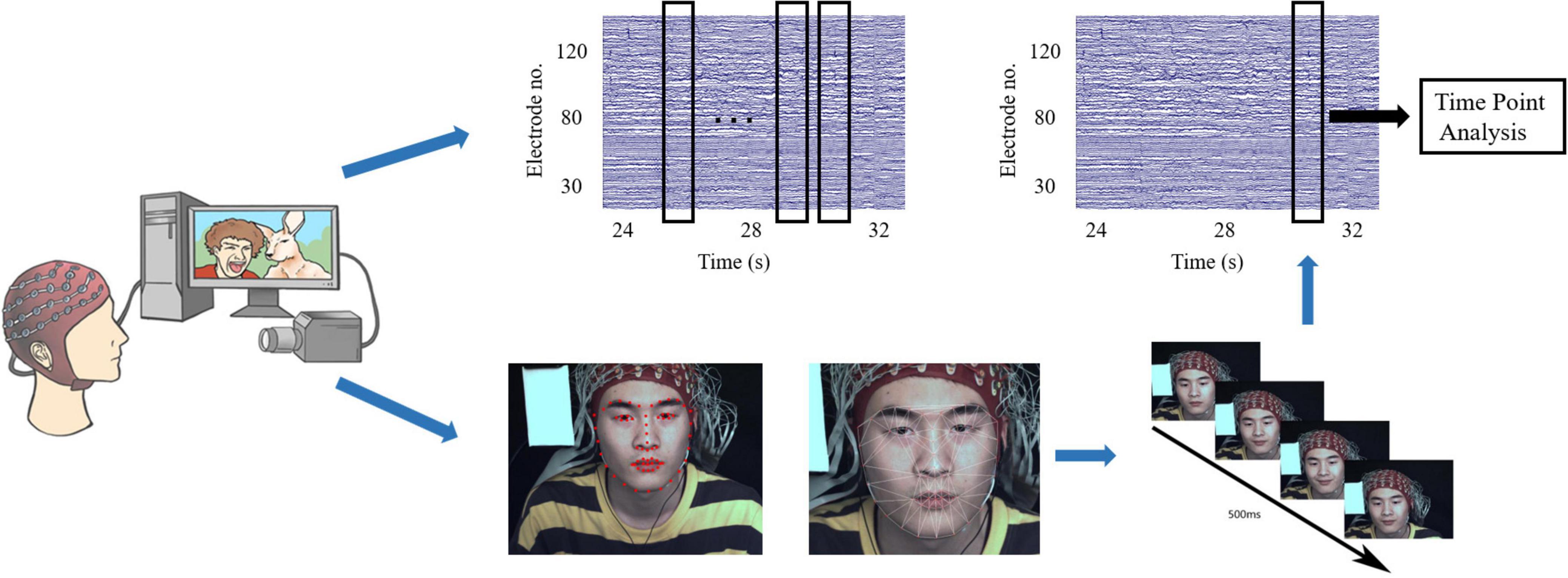

To motivate participants strongly to inhibit their facial expressions and to improve ecological validity, we added social pressure (a simulated social supervision situation) to the classic micro-expression suppression-elicitation paradigm, yielding the SEES experimental paradigm. Participants and supervisors took part in the experiment simultaneously. Participants were seated approximately 1 m from a 23-inch screen, behind which were two cameras: a high-speed recording camera (90 frames/s) and a real-time surveillance camera mounted on a tripod. The supervisor sat approximately 1 m to the left of the participant and observed the participant’s facial expressions in real time on a monitor. Participants were aware of the supervisor’s presence. A curtain separated participants from supervisors so that the supervisors’ movements did not distract the participants. EEG signals were recorded from 128 active electrodes using a BioSemi ActiveTwo system. A LabVIEW-based synchronization system was developed to synchronize the EEG acquisition device precisely with the high-speed camera: the same trigger was sent simultaneously to the camera recording and the BioSemi system to generate time stamps in both, ensuring that the EEG signal was accurately aligned with the facial images (see Figure 1).
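The text describes a shared trigger but not how frame times are converted to EEG samples. A minimal sketch of one way to do it, assuming that the trigger is stamped at a known camera frame and a known EEG sample and that the two clocks do not drift afterwards; the function name and trigger positions are hypothetical, while the 90 frames/s and 2,048 Hz rates come from the text.

```python
FS_EEG = 2048     # EEG sampling rate in Hz (from the recording description)
FPS_CAMERA = 90   # high-speed camera frame rate in frames/s


def frame_to_eeg_sample(frame_idx, trigger_frame, trigger_sample,
                        fs=FS_EEG, fps=FPS_CAMERA):
    """Map a camera frame index to the nearest EEG sample index.

    Assumes the shared trigger appears at `trigger_frame` in the video and at
    `trigger_sample` in the EEG, and that neither clock drifts afterwards.
    """
    elapsed_s = (frame_idx - trigger_frame) / fps   # time since the trigger
    return trigger_sample + round(elapsed_s * fs)   # same time in EEG samples


# An apex frame 45 frames (0.5 s) after the trigger lands 1,024 samples later.
print(frame_to_eeg_sample(145, trigger_frame=100, trigger_sample=5000))
```

With long recordings, clock drift between devices would accumulate; re-anchoring on repeated triggers is a common remedy, but the text does not say whether that was done.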

Participants sat in a comfortable chair in a quiet room at 24–26°C. They were instructed to concentrate on the video clips and keep their facial expressions neutral, and they were told that their payment depended directly on their performance: two yuan would be deducted for each facial expression the supervisor observed, whereas if the supervisor observed no facial expressions, ten yuan would be added to their payment as a bonus.
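The incentive rule can be written out as a small function. This is a hedged sketch: the base payment is not stated in the text, so `base_yuan` is a hypothetical parameter, and the sketch assumes no minimum payment and that the bonus applies only when no expression is detected.

```python
def session_payment(base_yuan, n_detected, penalty_yuan=2, bonus_yuan=10):
    """Payment under the described rule (base amount is hypothetical).

    - 2 yuan deducted per facial expression the supervisor detects;
    - 10-yuan bonus if the supervisor detects none.
    No floor on the payment is assumed.
    """
    if n_detected == 0:
        return base_yuan + bonus_yuan
    return base_yuan - penalty_yuan * n_detected


print(session_payment(50, 0))  # no detected expressions: base plus bonus
print(session_payment(50, 3))  # three detected expressions: base minus 6
```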

The seven video clips were grouped into three blocks (three positive, one neutral, and three negative videos), which were played sequentially. To avoid order effects, the order of the positive and negative video clips was reversed across participants. Before the experiment started, 60 s of resting-state data was recorded as the baseline. After each video, participants rested for 60 s and rated their emotional activation on a 7-point Likert scale. The average rating was 5.91 for the negative materials and 6.11 for the positive materials.

Complete micro-expressions are difficult to elicit in the laboratory; typically only partial facial expressions, such as movements of the lower or upper face, are observed (Porter et al., 2012; Yan et al., 2013). However, partial micro-expressions tend to be subtle manifestations of an underlying emotion, and observations of rapid facial expressions in various social situations indicate that micro-expressions are more often partial than full-face. In this study, partial or full facial expressions lasting ≤ 500 ms were considered micro-expressions, whereas those lasting > 500 ms were classified as macro-expressions. We first used action units (AUs) from the Facial Action Coding System (Yan et al., 2014) to detect micro-expressions among participants at the same stimulus time points, based on the discriminative response map fitting method (Asthana et al., 2013), which tracks 66 facial landmarks (Liu et al., 2016). Two coders analyzed the micro-expressions (Yan et al., 2014). The procedure comprised the following three steps (see Figure 2).

Figure 2. Diagram of facial expression identification and determination of the time points of micro-expressions.

Step 1: Identify the time points of all micro-expressions across all participants. This step detected all time points at which participants showed micro-expressions while watching the videos. We determined the approximate time points of the onset, apex, and offset frames by playing the recordings at one-third speed and selected all time points based on these results.

Step 2: Frame-by-frame coding. This step determined the onset, apex, and offset frames of the micro-expressions around the previously selected time points. For example, for micro-expressions under positive emotion, the first frame showing activation of AU6, AU12, or both was taken as the onset frame. The apex frame displayed the full expression at its maximum intensity, and the offset frame was the last frame before the face returned to its original expression (Hess and Kleck, 1990; Hoffmann, 2010; Yan et al., 2013). The coders repeatedly examined minute changes between adjacent frames around the onset, apex, and offset to identify these frames accurately. The duration of each micro-expression was then calculated.

Step 3: Determining time points. Based on the apex frames, we selected time points at which more than 20 participants showed micro-expressions. Global time points were determined by averaging the timestamps of the apex frames. Around these reference points, 2-s segments of the corresponding EEG signals were extracted for each participant for analysis. Participants who showed micro-expressions at these time points were included in the micro-expression group.
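Steps 2 and 3 can be summarized in code. The sketch below is a hedged illustration, not the authors’ tooling: it assumes that duration is measured as (offset frame − onset frame) / 90 frames/s, that apex timestamps have already been grouped by candidate time point (the grouping rule is not described), and that the 2-s EEG window is centered on the averaged apex time. All function names are hypothetical.

```python
from statistics import mean

FPS = 90              # high-speed camera frame rate (frames/s)
FS_EEG = 2048         # EEG sampling rate (Hz)
MICRO_MAX_MS = 500.0  # expressions lasting <= 500 ms count as micro-expressions


def duration_ms(onset_frame, offset_frame, fps=FPS):
    """Expression duration in ms, assumed to be (offset - onset) / fps."""
    return (offset_frame - onset_frame) / fps * 1000.0


def classify_expression(onset_frame, offset_frame, fps=FPS):
    """Label an expression 'micro' (<= 500 ms) or 'macro' (> 500 ms)."""
    if duration_ms(onset_frame, offset_frame, fps) <= MICRO_MAX_MS:
        return "micro"
    return "macro"


def global_time_point(apex_times_s, min_participants=20):
    """Average apex timestamps (one per participant) at a candidate time point.

    Returns None unless more than `min_participants` participants contributed.
    """
    if len(apex_times_s) <= min_participants:
        return None
    return mean(apex_times_s)


def eeg_window(center_s, fs=FS_EEG, length_s=2.0):
    """Start/stop sample indices of a `length_s`-second window centered on `center_s`."""
    half = round(length_s * fs / 2)
    center = round(center_s * fs)
    return center - half, center + half


print(classify_expression(0, 45), classify_expression(0, 46))
print(eeg_window(global_time_point([10.0] * 21)))
```

Because 45 frames at 90 frames/s is exactly 500 ms, the boundary case falls on the micro side, matching the "≤ 500 ms" criterion.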

EEG was recorded continuously from 128 electrodes using an ActiveTwo acquisition system (BioSemi, The Netherlands) at a sampling rate of 2,048 Hz. EEG data were processed offline using EEGLAB (Delorme and Makeig, 2004) and BESA Research software (Hoechstetter et al., 2004). Drift and noise were reduced by applying a 0.5–50 Hz band-pass filter. To maximize the signal-to-noise ratio, the EEG signals were re-referenced to the Reference Electrode Standardization Technique (REST) reference using the REST software (Yao, 2001; Dong et al., 2017). BESA Research software was used to correct EEG signals contaminated by eye blinks and movements.
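For readers working outside EEGLAB, the 0.5–50 Hz band-pass step can be approximated with SciPy. This is a swapped-in sketch, not the authors’ pipeline: the filter family (zero-phase Butterworth) and order (4) are assumptions, since the text gives only the passband, and REST re-referencing and BESA artifact correction are not reproduced.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(eeg, fs=2048.0, low=0.5, high=50.0, order=4):
    """Zero-phase Butterworth band-pass applied along the time axis.

    eeg: array of shape (n_channels, n_samples).
    Filter type and order are assumptions; only the passband is from the text.
    """
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


# Example: a 10-Hz component passes, a 200-Hz component is removed.
fs = 2048
t = np.arange(10 * fs) / fs
signal = np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 200 * t)
filtered = bandpass(signal[np.newaxis, :], fs=fs)[0]
```

Second-order sections (`output="sos"`) are used because a very low high-pass edge (0.5 Hz at 2,048 Hz) can make transfer-function coefficients numerically unstable; `sosfiltfilt` runs the filter forward and backward so the output has no phase shift.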

The time synchronization signal and the timing of the apex frame of each facial expression were used to locate the corresponding EEG segment. The EEG responses to micro-expressions were relatively short, generally within 1 s, whereas those to macro-expressions were generally longer. We therefore extracted 2-s segments of the pre-processed EEG centered on the apex of each facial expression and calculated the global field power (GFP) (Khanna et al., 2015) for each segment. From each segment, we then selected 1 s of data centered on the GFP maximum as the sample. GFP represents the strength of the electric field over the brain at each instant and is often used to characterize rapid changes in brain activity; we centered the sample on the GFP maximum because the peak of the GFP curve marks the instant of strongest field strength.
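GFP is conventionally defined as the spatial standard deviation of the potentials across all electrodes at each time point. A minimal NumPy sketch of the GFP-centered selection, assuming a (channels × samples) array; how the authors handled peaks near a segment edge is not stated, so shifting the window back inside the segment is an assumption here, and the function names are hypothetical.

```python
import numpy as np


def global_field_power(eeg):
    """GFP at each sample: standard deviation of the potentials across channels.

    eeg: array of shape (n_channels, n_samples).
    """
    return eeg.std(axis=0)  # ddof=0: root-mean-square deviation from the channel mean


def gfp_centered_window(eeg, fs=2048, length_s=1.0):
    """Return a `length_s`-second window centered on the GFP maximum, and the peak index.

    Assumption: if the peak is too close to an edge, the window is shifted so
    that it stays inside the segment (the segment must be at least that long).
    """
    gfp = global_field_power(eeg)
    peak = int(np.argmax(gfp))
    half = int(round(length_s * fs / 2))
    start = min(max(peak - half, 0), eeg.shape[1] - 2 * half)
    return eeg[:, start:start + 2 * half], peak


# A 2-s, 4-channel segment that is flat except for one deflection at sample 3000.
segment = np.zeros((4, 4096))
segment[:, 3000] = [1.0, -1.0, 2.0, -2.0]
window, peak = gfp_centered_window(segment)
```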

To obtain the average power spectral density (PSD) in different frequency bands for each condition (micro- and macro-expressions under positive and negative emotions), we analyzed the EEG signals with the open-source MATLAB toolbox FieldTrip (Oostenveld et al., 2011). The PSD at each electrode was calculated in 1-Hz steps between 0.5 and 50 Hz using a sliding window seven cycles long, so the window shortened as frequency increased (e.g., 700 ms at 10 Hz and 350 ms at 20 Hz). Average PSD values in the alpha (8 Hz < f < 12 Hz), beta (12 Hz < f < 30 Hz), and gamma (30 Hz < f < 50 Hz) bands were extracted for all 128 electrodes.
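The seven-cycle window rule (window length = 7 / f) can be illustrated with a Hanning-tapered sliding-window estimate, in the spirit of FieldTrip’s `mtmconvol` method. This NumPy version is a hedged sketch, not the authors’ FieldTrip configuration: the output is mean power per frequency in arbitrary units (not a normalized density), frequencies whose window exceeds the data length are left as NaN, and the function names are hypothetical.

```python
import numpy as np

# Open intervals, as in the text.
BANDS = {"alpha": (8, 12), "beta": (12, 30), "gamma": (30, 50)}


def sliding_window_power(signal, fs, freqs, n_cycles=7):
    """Mean power at each frequency with a Hanning-tapered window of n_cycles / f seconds."""
    power = np.full(len(freqs), np.nan)
    for i, f in enumerate(freqs):
        n = int(round(n_cycles / f * fs))  # 7 cycles: 700 ms at 10 Hz, 350 ms at 20 Hz
        if n > signal.size:
            continue                       # window longer than the data: leave NaN
        taper = np.hanning(n)
        t = np.arange(n) / fs
        kernel = taper * np.exp(2j * np.pi * f * t) / taper.sum()
        coeffs = np.convolve(signal, kernel, mode="valid")  # one value per window position
        power[i] = np.mean(np.abs(coeffs) ** 2)
    return power


def band_mean(freqs, power, band):
    """Average power over the frequencies strictly inside a band."""
    lo, hi = BANDS[band]
    mask = (freqs > lo) & (freqs < hi)
    return np.nanmean(power[mask])


fs = 256
t = np.arange(2 * fs) / fs
x = np.sin(2 * np.pi * 10 * t)   # unit-amplitude 10-Hz test signal
freqs = np.arange(1, 51)         # 1-Hz steps
p = sliding_window_power(x, fs, freqs)
```

For a unit-amplitude sine, the normalized kernel returns a complex amplitude of about 1/2, so the power at 10 Hz is close to 0.25. The example also shows a practical constraint: with short samples, the lowest frequencies cannot support a full seven-cycle window.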

To evaluate differences between micro- and macro-expressions across electrodes and frequency points, we computed the difference between conditions at each frequency point. These differences were tested with post hoc independent-sample t-tests comparing micro-expressions with macro-expressions. The statistical significance of all t-values (p < 0.05) was assessed with multiple-comparison correction based on randomization statistical non-parametric mapping (5,000 permutations) (Maris and Oostenveld, 2007).
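The text names a randomization-based correction with 5,000 permutations but does not give the exact statistic. As a hedged sketch, the code below implements one common variant, a label-permutation test for two independent groups with max-|t| correction across electrodes; it is not necessarily the authors’ procedure, and the data in the example are synthetic.

```python
import numpy as np


def tstat(a, b):
    """Pooled-variance independent-sample t-statistic per column (electrode)."""
    n1, n2 = a.shape[0], b.shape[0]
    v1, v2 = a.var(axis=0, ddof=1), b.var(axis=0, ddof=1)
    pooled = ((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2)
    return (a.mean(axis=0) - b.mean(axis=0)) / np.sqrt(pooled * (1 / n1 + 1 / n2))


def maxt_permutation(a, b, n_perm=5000, seed=0):
    """Max-|t| permutation test; returns observed t and family-wise corrected p.

    a, b: arrays of shape (n_subjects, n_electrodes) for the two conditions.
    """
    rng = np.random.default_rng(seed)
    observed = tstat(a, b)
    data = np.concatenate([a, b], axis=0)
    n1 = a.shape[0]
    max_null = np.empty(n_perm)
    for i in range(n_perm):
        idx = rng.permutation(data.shape[0])  # shuffle condition labels
        max_null[i] = np.max(np.abs(tstat(data[idx[:n1]], data[idx[n1:]])))
    # Proportion of permutation maxima at least as extreme (observed counted once).
    exceed = np.sum(max_null[None, :] >= np.abs(observed)[:, None], axis=1)
    return observed, (1 + exceed) / (n_perm + 1)


# Synthetic example: 20 + 20 subjects, 5 electrodes, a true effect at electrode 0.
rng = np.random.default_rng(1)
micro = rng.normal(size=(20, 5))
macro = rng.normal(size=(20, 5))
micro[:, 0] += 2.0
t_obs, p_corr = maxt_permutation(micro, macro, n_perm=1000)
```

Comparing each electrode against the distribution of the maximum statistic controls the family-wise error rate across electrodes; cluster-based statistics (as in Maris and Oostenveld, 2007) instead pool adjacent significant electrodes and would need a neighbor definition.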

We used standardized low-resolution brain electromagnetic tomography (sLORETA) to localize the sources of the differences in brain oscillations between the two types of facial expressions (Fuchs et al., 2002; Pascual-Marqui, 2002; Jurcak et al., 2007). sLORETA computes the three-dimensional cortical distribution of current source density (CSD) for micro-expressions and macro-expressions. For this purpose, the scalp activity recorded at each electrode was first transformed, using the transformation matrix, into a CSD field in a three-dimensional source space (6,239 cortical gray-matter voxels at 5-mm resolution). Voxel-by-voxel one-sample t-tests on log-transformed data were then used to compare the two conditions and determine whether their differences were mediated by distinct functional neural structures. The statistical significance of all t-values (p < 0.05) was assessed with multiple-comparison correction based on randomization statistical non-parametric mapping (5,000 permutations).
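The core of sLORETA (Pascual-Marqui, 2002) is a regularized minimum-norm estimate whose voxel values are standardized by the diagonal of the resolution matrix. The sketch below is a simplified illustration under stated assumptions: one fixed-orientation source per voxel (real sLORETA uses three dipole components per voxel and a realistic head model), an average-reference centering operator, and a random toy lead field in place of the 6,239-voxel source space. The function name and regularization value are hypothetical.

```python
import numpy as np


def sloreta(K, phi, alpha=0.05):
    """Standardized source power for fixed-orientation sources.

    K:   lead field, shape (n_electrodes, n_voxels)
    phi: scalp potentials, shape (n_electrodes,)
    """
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n                # average-reference (centering) operator
    Kc = H @ K
    T = Kc.T @ np.linalg.pinv(Kc @ Kc.T + alpha * H)   # regularized minimum-norm inverse
    j = T @ (H @ phi)                                  # current density estimate per voxel
    R = T @ Kc                                         # resolution matrix
    return j ** 2 / np.diag(R)                         # standardize by resolution diagonal


# Toy check: a single noise-free source at voxel 57 of a random lead field.
rng = np.random.default_rng(0)
K = rng.normal(size=(32, 200))
power = sloreta(K, 3.0 * K[:, 57])
print(int(np.argmax(power)))
```

The toy check reflects the property that motivated sLORETA: for a single noise-free point source, the standardized estimate peaks at the true voxel, because each value equals R_lk² / R_ll, which by the Cauchy-Schwarz inequality is largest at l = k.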

The topographical maps showed that, under positive emotion, micro-expressions had significantly higher power than macro-expressions over the right central and parietal regions in the alpha and gamma bands. However, in the theta and alpha bands over the prefrontal and left temporal regions, micro-expressions had lower power than macro-expressions (Figure 3A).

Figure 3. Topographical maps of EEG power for micro-expressions and macro-expressions in the theta, alpha, beta, and gamma bands under (A) positive emotion (micro-expression minus macro-expression) and (B) negative emotion (macro-expression minus micro-expression). White dots on the difference maps mark electrodes with a significant difference in power between micro-expressions and macro-expressions.

In contrast to the pattern under positive emotion, macro-expressions under negative emotion showed greater power than micro-expressions. Significant differences were located at channels over the parietal and occipital regions in the theta band; the right prefrontal, right frontal, right central, right parietal, and right temporal regions in the alpha band; the right frontal, right central, and right parietal regions in the beta band; and the right frontal, right central, and right temporal regions in the gamma band (Figure 3B).

Micro- and macro-expressions showed a similar activation pattern under both positive and negative emotions, most prominently in the left prefrontal and right frontal regions in the theta and alpha bands and in the left temporal region in the theta, alpha, and gamma bands.

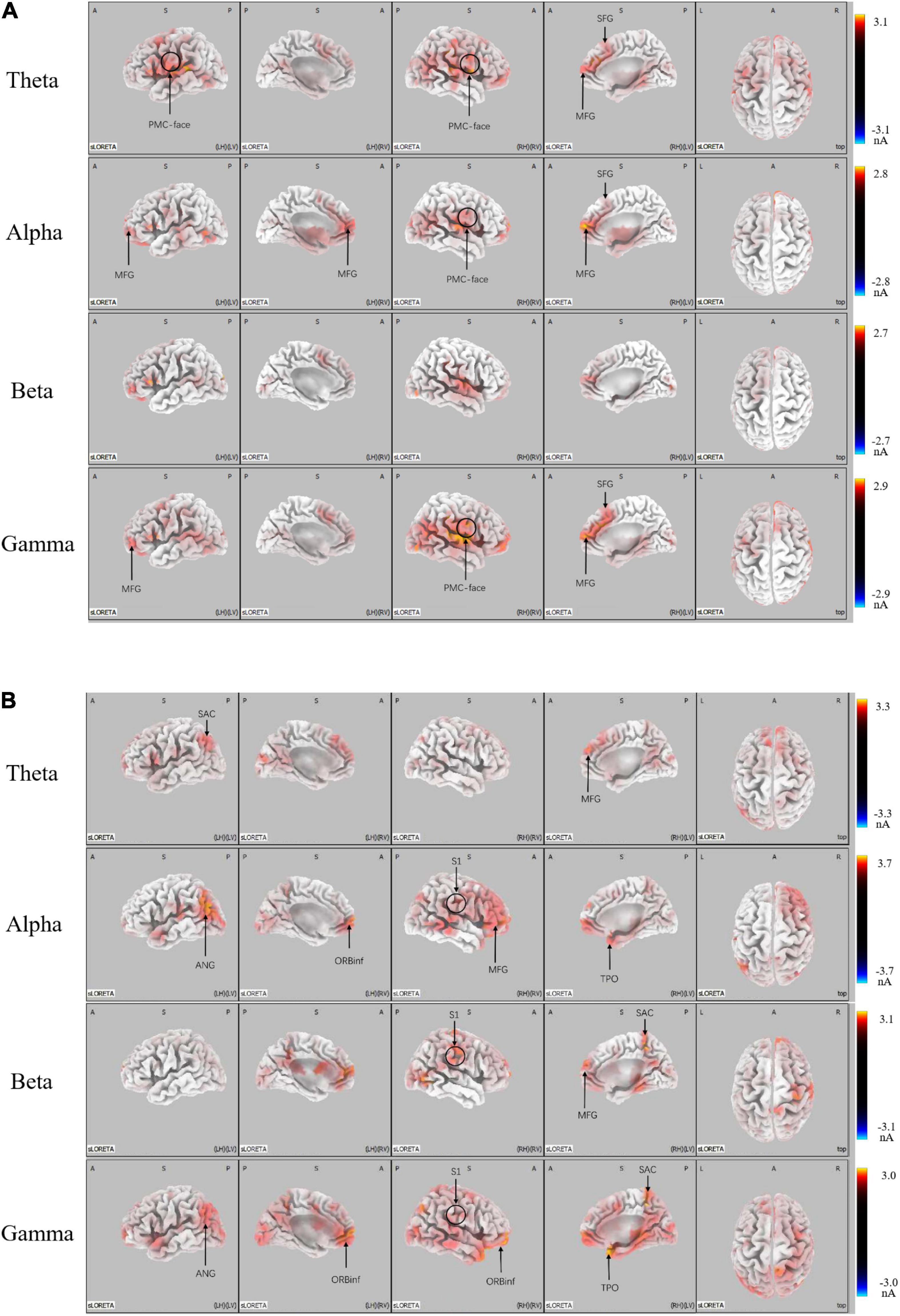

We conducted the sLORETA analysis to determine whether these differences were mediated by distinct functional neural generators. Under positive emotion, micro-expressions showed greater activation (p < 0.05; Figure 4A), with significant differences in the theta band in the premotor cortex (PMC), right superior frontal gyrus (SFG), and right middle frontal gyrus (MFG); in the alpha band, also in the PMC, right SFG, and MFG; and in the gamma band, in the PMC, MFG, and SFG. Under negative emotion, macro-expressions showed greater activation (p < 0.05; Figure 4B), with significant differences in the theta band in the left somatosensory association cortex (SAC) in the occipital region; in the alpha band, in the MFG in the prefrontal region, the somatosensory cortex (S1) in the right central region, the left angular gyrus (ANG) in the occipital region, and the temporal pole (TPO) in the temporal region; in the beta band, in the SAC in the occipital region and S1 in the right central region; and in the gamma band, in the SAC and ANG in the occipital region, S1 in the right central region, and the TPO in the temporal region.

Figure 4. LORETA probabilistic maps showing cortical activation and significant differences between micro-expressions and macro-expressions under (A) positive emotion (micro-expression minus macro-expression) and (B) negative emotion (macro-expression minus micro-expression). Red indicates greater activation; blue indicates less activation.

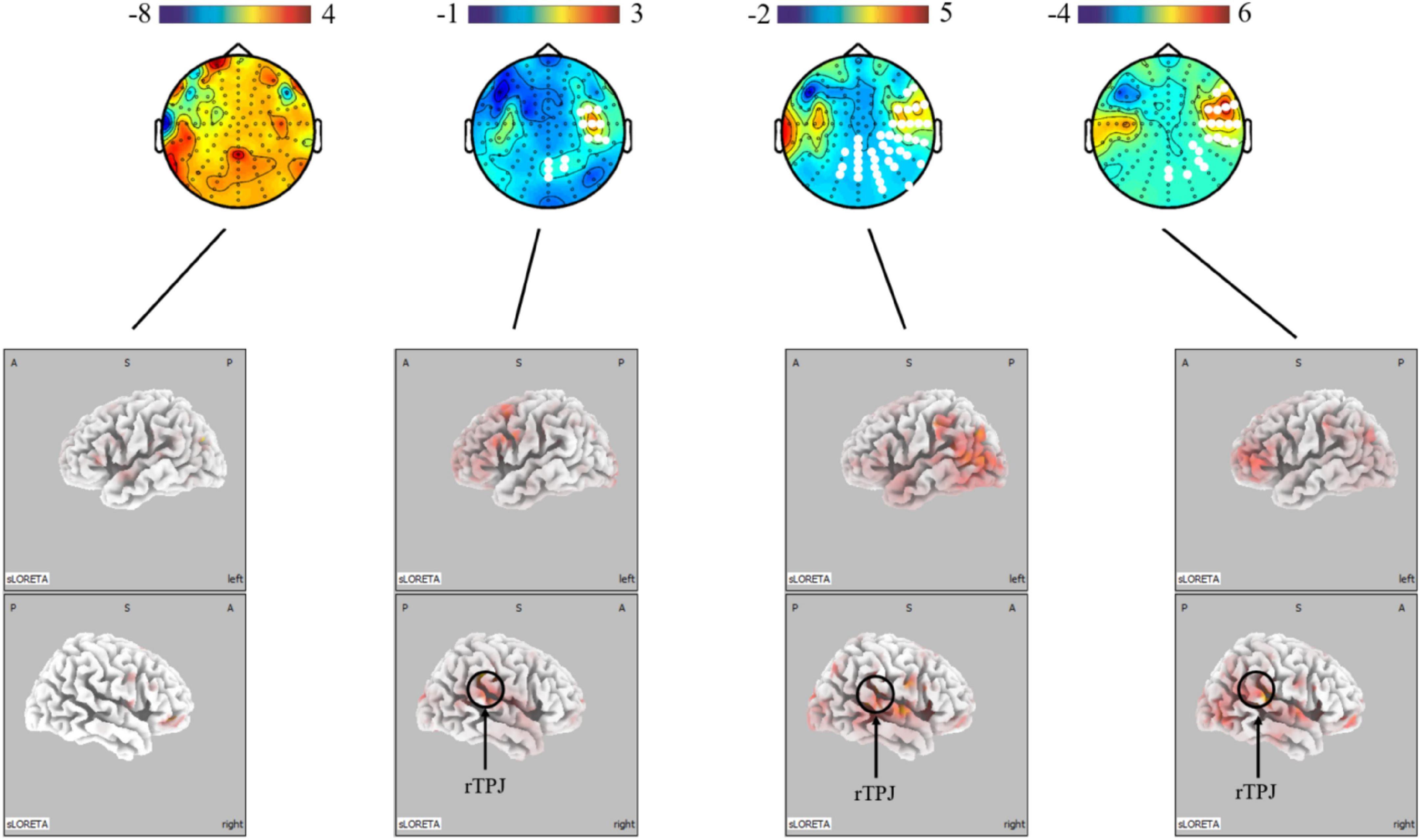

The present study also analyzed differences in micro-expressions under positive and negative emotions. At the scalp level, power in the alpha, beta, and gamma bands was greater under positive than under negative emotion in the right parietal, temporal, and occipital regions; this difference was not significant in the theta band. At the source level, activation under positive emotion was stronger in the right temporoparietal junction (rTPJ), a region associated with attentional control (see Figure 5).

Figure 5. Topographical maps of EEG power and LORETA probabilistic map showing cortical activation and significant differences between micro-expressions under positive and negative emotion (positive emotion minus negative emotion). Red indicates greater activation; blue indicates less activation. White dots mark electrodes with a significant difference.

To help overcome the limitations of expert assessment and image recognition techniques for micro-expressions, and to lay the foundation for micro-expression recognition based on EEG signals, this study is the first to investigate, from a neuroscientific perspective, the brain mechanisms of micro-expressions and how they differ from those of macro-expressions. We found (1) general activation of the left temporal and frontal regions for both micro- and macro-expressions under both positive and negative emotions; (2) that under positive emotion, micro-expressions showed stronger power than macro-expressions over the central and parietal scalp regions in the alpha and gamma bands, localized at the source level to the premotor cortex, supplementary motor cortex, and MFG; and (3) that under negative emotion, micro-expressions showed lower power than macro-expressions across frequencies over the right prefrontal, right frontal, right central, right parietal, and right temporal regions, localized at the source level to the somatosensory cortex and angular gyrus. These three main findings are discussed below.

We found similar scalp activation for micro- and macro-expressions under both positive and negative emotions, with general activation in the left temporal and prefrontal regions, consistent with previous studies of emotion suppression (Ochsner et al., 2012; Buhle et al., 2014). In the present study, participants in the SEES paradigm were required to maintain neutral facial expressions that conflicted with their actual emotional state. Activation of the prefrontal region, especially higher power in the alpha band (Pfurtscheller et al., 1996; Klimesch, 1999; Coan and Allen, 2004), has been associated with voluntary control of social-emotional behavior and with coordinating rapid action selection, detecting emotional conflict, and inhibiting emotional responses (Volman et al., 2011; Roy et al., 2012; Rive et al., 2013). For instance, in an emotional conflict task (face-word task), successful resolution of emotional conflict was associated with the ventral medial prefrontal cortex, supplementary motor area, and superior temporal gyrus (Deng et al., 2014). A meta-analysis of fMRI studies showed that response inhibition activates the frontostriatal system, including the ventral lateral prefrontal cortex and supplementary motor areas (Hung et al., 2018). In addition, temporal regions are core regions for emotion detection, and the left temporal lobe is thought to be involved in the action component of emotion, such as avoiding negative items (Bamford et al., 2009; Jastorff et al., 2016). Recent research on activation of the superior temporal cortex further suggests that the extrastriate and temporal cortices (such as the superior temporal sulcus) are relevant to facial processing (Gerbella et al., 2019), and in patients with schizophrenia, structural abnormalities of the temporal lobe are related to deficits in facial emotion recognition (Goghari et al., 2011).
Considering these findings together, the general activation in the frontal and left temporal regions appears to reflect participants’ inhibitory control over facial responses to emotional stimulation while suppressing emotional facial expressions.

For positive emotions, the premotor area showed a significant difference, with micro-expressions showing higher activation in this area. Higher premotor activation is associated with facial expression control: the premotor cortex, supplementary motor area, and primary somatosensory cortex are key regions controlling all subdivisions of the facial nucleus and are implicated in sensorimotor simulation during action observation (Grosbras and Paus, 2006; Majdandzic et al., 2009). For instance, direct electrical stimulation of the rostral portion of the supplementary motor area elicits complex facial movement patterns (Fried et al., 1991). Furthermore, the premotor cortex is more directly implicated in emotion-related motor behavior, as often reported in studies of passive action observation (Balconi and Bortolotti, 2013; Mattavelli et al., 2016). The prefrontal and premotor cortices are core regions controlling responses to emotional cues (Coombes et al., 2012; Braadbaart et al., 2014; Perry et al., 2017). Activation of these areas has been interpreted as contributing to explicit emotion processing by linking emotion perception with representations of the somatic states that emotions engender (Balconi and Bortolotti, 2013). Supporting this view, comprehending another person’s facial expressions has been associated with increased activity in similar sensorimotor cortices (Banissy et al., 2010; Karakale et al., 2019). In the present study, the higher premotor activity during micro-expressions may therefore reflect stronger engagement of facial control under positive emotions.

In contrast, under negative emotions, macro-expressions activated the somatosensory cortex and angular gyrus more strongly than micro-expressions did. This may indicate stronger emotional arousal during macro-expressions than during micro-expressions of fear. Previous studies of fear processing found preferential involvement of the somatosensory cortex (Williams et al., 2004; Bertini and Ladavas, 2021). For instance, studies of embodied emotional simulation have highlighted the prominent role of somatosensory cortices, showing consistent activation of somatosensory areas both when observing and when producing emotional facial expressions (Carr et al., 2003; Winston et al., 2003). Moreover, information about the presence of a potential threat relies more heavily on somatosensory representations than information about other emotions (Pourtois et al., 2004), and activation of the right somatosensory cortex has been linked to recognizing fearful expressions (Pourtois et al., 2004). Lesion studies showed that damage to the right somatosensory cortex significantly impairs recognition of emotional facial expressions (Adolphs et al., 2000), and inhibiting the activity of the right somatosensory cortex with repetitive transcranial magnetic stimulation interferes with the embodied simulation mechanism, disrupting the ability to recognize emotional facial expressions (Pitcher et al., 2008). More importantly, conscious visual perception of fear-related stimuli involves cortical visual pathways to the amygdala, including the primary and extrastriate visual areas (Morris et al., 1999; Cecere et al., 2014; Bertini et al., 2018). This is consistent with the ANG activation observed here, suggesting that salient fear-related visual stimuli elicit activity in defense circuits that can heighten visual perceptual processing (Keil et al., 2010).
Therefore, the higher activation specific to macro-expressions seems to reflect a stronger facial representation elicited by fear. This has been attributed to preliminary activation of the somatosensory cortices in response to threat, owing to a possible interaction between the networks subserving visual perception and emotional arousal (Gall and Latoschik, 2020). Accordingly, the stronger, right-lateralized activation of macro-expressions in the somatosensory cortex and angular gyrus suggests a stronger sensation of fear during macro-expressions.

Collectively, these findings indicate that micro-expressions differ from macro-expressions, but the differences are not the same for positive and negative emotions. A possible explanation is that the face is controlled by two main pathways: cortical and subcortical (Haxby et al., 2000; Adolphs, 2002). The cortical pathway mediates top-down voluntary control, whereas the subcortical pathway mediates bottom-up physiological responses (Vuilleumier and Pourtois, 2007; Ishai, 2008). For instance, in the presence of a potential threat, the amygdala can influence the activity of the somatosensory cortices, resulting in increased activity in the sensorimotor system and direct projections to the facial nucleus (Bertini and Ladavas, 2021). Thus, the stronger activation of the somatosensory and visual cortices for macro-expressions than for micro-expressions under negative emotions may reflect the contribution of this subcortical pathway. In contrast, smiles require little preparation and are usually easy to control as required. Voluntary, cortical-pathway control of micro-expressions under positive emotions can therefore be observed in the premotor cortex. In other words, micro- and macro-expressions elicited under the same conditions show different neural activity, but these differences vary between positive and negative emotions. Moreover, micro-expressions under positive emotions activated the right temporoparietal junction (rTPJ) more strongly than micro-expressions under negative emotions. Previous studies have associated stronger rTPJ activation with greater attentional control. For instance, the rTPJ influences the detection of deviant stimuli in oddball paradigms (Arrington et al., 2000; Jakobs et al., 2009). 
Taken together, these results suggest that the pathways of facial control differ between emotions. The production of micro-expressions under positive emotions depends on control of the face, whereas micro-expressions under negative emotions depend more on the intensity of the emotion.

This study had several limitations. We used the dipole-source method to localize the neural activity underlying significant differences between micro-expressions and macro-expressions. However, because scalp EEG has limited sensitivity to deep sources, we were unable to detect the activity of key emotion-processing structures located deep in subcortical regions, such as the amygdala. We therefore could not capture the complete picture of the brain mechanisms involved in micro-expressions. Future research should use tools with higher spatial resolution, such as fMRI.

This study is the first to investigate the neural mechanisms underlying the differences between micro- and macro-expressions using EEG signals. It helps fill a gap in identifying micro-expressions from neural activity. We designed the SEES paradigm, which we believe is a worthwhile contribution to the elicitation of micro-expressions. Our findings show that both micro- and macro-expressions activate the left temporal and prefrontal lobes under different emotions. This reflects the participants' state of inhibiting facial control in response to emotional stimuli and/or their responses to emotional facial expressions. Under positive emotions, micro-expressions were more strongly activated than macro-expressions in the premotor cortex, supplementary motor cortex, and MFG regions. Under negative emotions, they were more weakly activated than macro-expressions in the somatosensory cortex and angular gyrus regions. This indicates that micro-expressions under positive emotions depend on control of the face, whereas those under negative emotions depend on the intensity of the emotion. We attribute this difference to distinct pathways of facial control, which lays groundwork for future research on the mechanisms and pattern recognition of micro-expressions.

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

The studies involving human participants were reviewed and approved by the Ethical Review Committee of Southwest University. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

All authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

This work was supported in part by the National Natural Science Foundation of China (Grant/Award Numbers: 61472330 and 61872301).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Adolphs, R., Damasio, H., Tranel, D., Cooper, G., and Damasio, A. R. (2000). A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J. Neurosci. 20, 2683–2690. doi: 10.1523/JNEUROSCI.20-07-02683.2000

Arrington, C. M., Carr, T. H., Mayer, A. R., and Rao, S. M. (2000). Neural mechanisms of visual attention: Object-based selection of a region in space. J. Cogn. Neurosci. 12, 106–117.

Asthana, A., Zafeiriou, S., Cheng, S., and Pantic, M. (2013). “Robust discriminative response map fitting with constrained local models,” in Proceedings of the 2013 IEEE conference on computer vision and pattern recognition, Portland, OR.

Balconi, M., and Bortolotti, A. (2013). Conscious and unconscious face recognition is improved by high-frequency rTMS on pre-motor cortex. Conscious. Cogn. 22, 771–778. doi: 10.1016/j.concog.2013.04.013

Bamford, S., Turnbull, O. H., Coetzer, R., and Ward, R. (2009). To lose the frame of action: A selective deficit in avoiding unpleasant objects following a unilateral temporal lobe lesion. Neurocase 15, 261–270. doi: 10.1080/13554790802680313

Banissy, M. J., Sauter, D. A., Ward, J., Warren, J. E., Walsh, V., and Scott, S. K. (2010). Suppressing sensorimotor activity modulates the discrimination of auditory emotions but not speaker identity. J. Neurosci. 30, 13552–13557. doi: 10.1523/JNEUROSCI.0786-10.2010

Bertini, C., and Ladavas, E. (2021). Fear-related signals are prioritised in visual, somatosensory and spatial systems. Neuropsychologia 150, 107698. doi: 10.1016/j.neuropsychologia.2020.107698

Bertini, C., Pietrelli, M., Braghittoni, D., and Ladavas, E. (2018). Pulvinar lesions disrupt fear-related implicit visual processing in hemianopic patients. Front. Psychol. 9:2329. doi: 10.3389/fpsyg.2018.02329

Braadbaart, L., de Grauw, H., Perrett, D. I., Waiter, G. D., and Williams, J. H. G. (2014). The shared neural basis of empathy and facial imitation accuracy. Neuroimage 84, 367–375. doi: 10.1016/j.neuroimage.2013.08.061

Buhle, J. T., Silvers, J. A., Wager, T. D., Lopez, R., Onyemekwu, C., Kober, H., et al. (2014). Cognitive reappraisal of emotion: A meta-analysis of human neuroimaging studies. Cereb. Cortex 24, 2981–2990.

Carr, L., Iacoboni, M., Dubeau, M. C., Mazziotta, J. C., and Lenzi, G. L. (2003). Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proc. Natl. Acad. Sci. U.S.A. 100, 5497–5502. doi: 10.1073/pnas.0935845100

Cecere, R., Bertini, C., Maier, M. E., and Ladavas, E. (2014). Unseen fearful faces influence face encoding: Evidence from ERPs in hemianopic patients. J. Cogn. Neurosci. 26, 2564–2577. doi: 10.1162/jocn_a_00671

Coan, J. A., and Allen, J. J. B. (2004). Frontal EEG asymmetry as a moderator and mediator of emotion. Biol. Psychol. 67, 7–49.

Coombes, S. A., Corcos, D. M., Pavuluri, M. N., and Vaillancourt, D. E. (2012). Maintaining force control despite changes in emotional context engages dorsomedial prefrontal and premotor cortex. Cereb. Cortex 22, 616–627. doi: 10.1093/cercor/bhr141

Delorme, A., and Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21.

Deng, Z., Wei, D., Du, S. X. X., Hitchman, G., and Qiu, J. (2014). Regional gray matter density associated with emotional conflict resolution: Evidence from voxel-based morphometry. Neuroscience 275, 500–507. doi: 10.1016/j.neuroscience.2014.06.040

Dong, L., Li, F. L., Liu, Q., Wen, X., Lai, Y. X., Xu, P., et al. (2017). MATLAB toolboxes for reference electrode standardization technique (REST) of scalp EEG. Front. Neurosci. 11:601. doi: 10.3389/fnins.2017.00601

Ekman, P. (2003). Emotions revealed: Recognizing faces and feelings to improve communication and emotional life. New York, NY: Times Books.

Ekman, P. (2009). Telling lies: Clues to deceit in the marketplace, politics, and marriage (revised edition). New York, NY: W. W. Norton & Company.

Ekman, P., and Friesen, W. V. (1974). Detecting deception from the body or face. J. Pers. Soc. Psychol. 29, 288.

Ekman, P., and Rosenberg, E. L. (2005). What the face reveals: Basic and applied studies of spontaneous expression using the facial action coding system (FACS), 2nd Edn. New York, NY: Oxford University Press, 21–38. doi: 10.1093/acprof:oso/9780195179644.001.0001

Frank, M. G., and Ekman, P. (1997). The ability to detect deceit generalizes across different types of high-stake lies. J. Pers. Soc. Psychol. 72, 1429–1439. doi: 10.1037//0022-3514.72.6.1429

Frank, M. G., and Svetieva, E. (2015). “Microexpressions and deception,” in Understanding facial expressions in communication, eds M. Mandal and A. Awasthi (New Delhi: Springer), 227–242.

Fried, I., Katz, A., Mccarthy, G., Sass, K., Williamson, P., Spencer, S., et al. (1991). Functional organization of human supplementary motor cortex studied by electrical stimulation. J. Neurosci. 11, 3656–3666.

Fuchs, M., Kastner, J., Wagner, M., Hawes, S., and Ebersole, J. S. (2002). A standardized boundary element method volume conductor model. Clin. Neurophysiol. 113, 702–712.

Gall, D., and Latoschik, M. E. (2020). Visual angle modulates affective responses to audiovisual stimuli. Comput. Hum. Behav. 109:106346.

Gerbella, M., Caruana, F., and Rizzolatti, G. (2019). Pathways for smiling, disgust and fear recognition in blindsight patients. Neuropsychologia 128, 6–13. doi: 10.1016/j.neuropsychologia.2017.08.028

Goghari, V. M., MacDonald, A. W., and Sponheim, S. R. (2011). Temporal lobe structures and facial emotion recognition in schizophrenia patients and nonpsychotic relatives. Schizophr. Bull. 37, 1281–1294.

Goh, K. M., Ng, C. H., Lim, L. L., and Sheikh, U. U. (2020). Micro-expression recognition: An updated review of current trends, challenges and solutions. Vis. Comput. 36, 445–468.

Grosbras, M. H., and Paus, T. (2006). Brain networks involved in viewing angry hands or faces. Cereb. Cortex 16, 1087–1096.

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233.

Hess, U., and Kleck, R. E. (1990). Differentiating emotion elicited and deliberate emotional facial expressions. Eur. J. Soc. Psychol. 20, 369–385.

Hoechstetter, K., Bornfleth, H., Weckesser, D., Ille, N., Berg, P., and Scherg, M. (2004). BESA source coherence: A new method to study cortical oscillatory coupling. Brain Topogr. 16, 233–238. doi: 10.1023/b:brat.0000032857.55223.5d

Hoffmann, H. (2010). Perceived realism of dynamic facial expressions of emotion: Optimal durations for the presentation of emotional onsets and offsets. Cogn. Emot. 24, 1369–1376.

Hung, Y. W., Gaillard, S. L., Yarmak, P., and Arsalidou, M. (2018). Dissociations of cognitive inhibition, response inhibition, and emotional interference: Voxelwise ALE meta-analyses of fMRI studies. Hum. Brain Mapp. 39, 4065–4082. doi: 10.1002/hbm.24232

Jakobs, O., Wang, L. E., Dafotakis, M., Grefkes, C., Zilles, K., and Eickhoff, S. B. (2009). Effects of timing and movement uncertainty implicate the temporo-parietal junction in the prediction of forthcoming motor actions. Neuroimage 47, 667–677. doi: 10.1016/j.neuroimage.2009.04.065

Jastorff, J., De Winter, F. L., Van den Stock, J., Vandenberghe, R., Giese, M. A., and Vandenbulcke, M. (2016). Functional dissociation between anterior temporal lobe and inferior frontal gyrus in the processing of dynamic body expressions: Insights from behavioral variant frontotemporal dementia. Hum. Brain Mapp. 37, 4472–4486. doi: 10.1002/hbm.23322

Jurcak, V., Tsuzuki, D., and Dan, I. (2007). 10/20, 10/10, and 10/5 systems revisited: Their validity as relative head-surface-based positioning systems. Neuroimage 34, 1600–1611. doi: 10.1016/j.neuroimage.2006.09.024

Karakale, O., Moore, M. R., and Kirk, I. J. (2019). Mental simulation of facial expressions: Mu suppression to the viewing of dynamic neutral face videos. Front. Hum. Neurosci. 13:34. doi: 10.3389/fnhum.2019.00034

Keil, A., Bradley, M. M., Ihssen, N., Heim, S., Vila, J., Guerra, P., et al. (2010). Defensive engagement and perceptual enhancement. Neuropsychologia 48, 3580–3584.

Khanna, A., Pascual-Leone, A., Michel, C. M., and Farzan, F. (2015). Microstates in resting-state EEG: Current status and future directions. Neurosci. Biobehav. Rev. 49, 105–113. doi: 10.1016/j.neubiorev.2014.12.010

Klimesch, W. (1999). EEG alpha and theta oscillations reflect cognitive and memory performance: A review and analysis. Brain Res. Rev. 29, 169–195. doi: 10.1016/s0165-0173(98)00056-3

Liu, Y. J., Zhang, J. K., Yan, W. J., Wang, S. J., Zhao, G. Y., and Fu, X. L. (2016). A main directional mean optical flow feature for spontaneous micro-expression recognition. IEEE Trans. Affect. Comput. 7, 299–310.

Majdandzic, J., Bekkering, H., van Schie, H. T., and Toni, I. (2009). Movement-specific repetition suppression in ventral and dorsal premotor cortex during action observation. Cereb. Cortex 19, 2736–2745. doi: 10.1093/cercor/bhp049

Malatesta, C. Z. (1985). Telling lies–clues to deceit in the marketplace, politics, and marriage, Vol. 9. New York, NY: Times Book Review.

Maris, E., and Oostenveld, R. (2007). Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164, 177–190.

Mattavelli, G., Rosanova, M., Casali, A. G., Papagno, C., and Lauro, L. J. R. (2016). Timing of emotion representation in right and left occipital region: Evidence from combined TMS-EEG. Brain Cogn. 106, 13–22. doi: 10.1016/j.bandc.2016.04.009

Monaro, M., Maldera, S., Scarpazza, C., Sartori, G., and Navarin, N. (2022). Detecting deception through facial expressions in a dataset of videotaped interviews: A comparison between human judges and machine learning models. Comput. Hum. Behav. 127:107063.

Morris, J. S., Ohman, A., and Dolan, R. J. (1999). A subcortical pathway to the right amygdala mediating “unseen” fear. Proc. Natl. Acad. Sci. U.S.A. 96, 1680–1685. doi: 10.1073/pnas.96.4.1680

Ochsner, K. N., Silvers, J. A., and Buhle, J. T. (2012). “Functional imaging studies of emotion regulation: A synthetic review and evolving model of the cognitive control of emotion,” in Year in cognitive neuroscience, Vol. 1251, eds A. Kingstone and M. B. Miller (New York, NY: Annals of the New York Academy of Sciences), E1–E24.

Oostenveld, R., Fries, P., Maris, E., and Schoffelen, J. M. (2011). FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011:156869. doi: 10.1155/2011/156869

Paiva-Silva, A. I., Pontes, M. K., Aguiar, J. S. R., and de Souza, W. C. (2016). How do we evaluate facial emotion recognition? Psychol. Neurosci. 9, 153–175.

Pascual-Marqui, R. D. (2002). Standardized low-resolution brain electromagnetic tomography (sLORETA): Technical details. Methods Find. Exp. Clin. Pharmacol. 24, 5–12.

Peng, M., Wang, C., Chen, T., Liu, G., and Fu, X. (2017). Dual temporal scale convolutional neural network for micro-expression recognition. Front. Psychol. 8:1745. doi: 10.3389/fpsyg.2017.01745

Perry, A., Saunders, S. N., Stiso, J., Dewar, C., Lubell, J., Meling, T. R., et al. (2017). Effects of prefrontal cortex damage on emotion understanding: EEG and behavioural evidence. Brain 140, 1086–1099. doi: 10.1093/brain/awx031

Pfurtscheller, G., Stancak, A., and Neuper, C. (1996). Event-related synchronization (ERS) in the alpha band–an electrophysiological correlate of cortical idling: A review. Int. J. Psychophysiol. 24, 39–46. doi: 10.1016/s0167-8760(96)00066-9

Pitcher, D., Garrido, L., Walsh, V., and Duchaine, B. C. (2008). Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. J. Neurosci. 28, 8929–8933. doi: 10.1523/JNEUROSCI.1450-08.2008

Porter, S., and ten Brinke, L. (2008). Reading between the lies: Identifying concealed and falsified emotions in universal facial expressions. Psychol. Sci. 19, 508–514. doi: 10.1111/j.1467-9280.2008.02116.x

Porter, S., ten Brinke, L., and Wallace, B. (2012). Secrets and lies: Involuntary leakage in deceptive facial expressions as a function of emotional intensity. J. Nonverbal Behav. 36, 23–37.

Pourtois, G., Sander, D., Andres, M., Grandjean, D., Reveret, L., Olivier, E., et al. (2004). Dissociable roles of the human somatosensory and superior temporal cortices for processing social face signals. Eur. J. Neurosci. 20, 3507–3515. doi: 10.1111/j.1460-9568.2004.03794.x

Recio, G., Shmuilovich, O., and Sommer, W. (2014). Should I smile or should I frown? An ERP study on the voluntary control of emotion-related facial expressions. Psychophysiology 51, 789–799.

Rive, M. M., van Rooijen, G., Veltman, D. J., Phillips, M. L., Schene, A. H., and Ruhe, H. G. (2013). Neural correlates of dysfunctional emotion regulation in major depressive disorder. A systematic review of neuroimaging studies. Neurosci. Biobehav. Rev. 37, 2529–2553. doi: 10.1016/j.neubiorev.2013.07.018

Roy, M., Shohamy, D., and Wager, T. D. (2012). Ventromedial prefrontal-subcortical systems and the generation of affective meaning. Trends Cogn. Sci. 16, 147–156. doi: 10.1016/j.tics.2012.01.005

Shangguan, C. Y., Wang, X., Li, X., Wang, Y. L., Lu, J. M., and Li, Z. Z. (2019). Inhibition and production of anger cost more: Evidence from an ERP study on the production and switch of voluntary facial emotional expression. Front. Psychol. 10:1276. doi: 10.3389/fpsyg.2019.01276

ten Brinke, L., MacDonald, S., Porter, S., and O’Connor, B. (2012a). Crocodile tears: Facial, verbal and body language behaviours associated with genuine and fabricated remorse. Law Hum. Behav. 36, 51–59. doi: 10.1037/h0093950

ten Brinke, L., Porter, S., and Baker, A. (2012b). Darwin the detective: Observable facial muscle contractions reveal emotional high-stakes lies. Evol. Hum. Behav. 33, 411–416.

Verma, M., Vipparthi, S. K., Singh, G., and Murala, S. (2020). LEARNet: Dynamic imaging network for micro expression recognition. IEEE Trans. Image Process. 29, 1618–1627. doi: 10.1109/TIP.2019.2912358

Volman, I., Toni, I., Verhagen, L., and Roelofs, K. (2011). Endogenous testosterone modulates prefrontal-amygdala connectivity during social emotional behavior. Cereb. Cortex 21, 2282–2290. doi: 10.1093/cercor/bhr001

Vuilleumier, P., and Pourtois, G. (2007). Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia 45, 174–194.

Wang, S.-J., He, Y., Li, J., and Fu, X. (2021). MESNet: A convolutional neural network for spotting multi-scale micro-expression intervals in long videos. IEEE Trans. Image Process. 30, 3956–3969. doi: 10.1109/TIP.2021.3064258

Wang, Y. D., See, J., Phan, R. C. W., and Oh, Y. H. (2015). Efficient spatio-temporal local binary patterns for spontaneous facial micro-expression recognition. PLoS One 10:e0124674. doi: 10.1371/journal.pone.0124674

Williams, L. M., Brown, K. J., Das, P., Boucsein, W., Sokolov, E. N., Brammer, M. J., et al. (2004). The dynamics of cortico-amygdala and autonomic activity over the experimental time course of fear perception. Cogn. Brain Res. 21, 114–123. doi: 10.1016/j.cogbrainres.2004.06.005

Winston, J. S., O’Doherty, J., and Dolan, R. J. (2003). Common and distinct neural responses during direct and incidental processing of multiple facial emotions. Neuroimage 20, 84–97. doi: 10.1016/s1053-8119(03)00303-3

Xu, F., Zhang, J. P., and Wang, J. Z. (2017). Microexpression identification and categorization using a facial dynamics map. IEEE Trans. Affect. Comput. 8, 254–267.

Yan, W. J., Li, X. B., Wang, S. J., Zhao, G. Y., Liu, Y. J., Chen, Y. H., et al. (2014). CASME II: An improved spontaneous micro-expression database and the baseline evaluation. PLoS One 9:e86041. doi: 10.1371/journal.pone.0086041

Yan, W. J., Wu, Q., Liang, J., Chen, Y. H., and Fu, X. (2013). How fast are the leaked facial expressions: The duration of micro-expressions. J. Nonverbal Behav. 37, 217–230.

Yao, D. Z. (2001). A method to standardize a reference of scalp EEG recordings to a point at infinity. Physiol. Meas. 22, 693–711.

Yuan, G. J., Liu, G. Y., and Wei, D. T. (2021). Roles of P300 and late positive potential in initial romantic attraction. Front. Neurosci. 15:718847. doi: 10.3389/fnins.2021.718847

Zhang, Z. H., Chen, T., Meng, H. Y., Liu, G. Y., and Fu, X. L. (2018). SMEConvNet: A convolutional neural network for spotting spontaneous facial micro-expression from long videos. IEEE Access 6, 71143–71151.

Keywords: micro-expressions, macro-expressions, emotion, electroencephalography (EEG), expression inhibition

Citation: Zhao X, Liu Y, Chen T, Wang S, Chen J, Wang L and Liu G (2022) Differences in brain activations between micro- and macro-expressions based on electroencephalography. Front. Neurosci. 16:903448. doi: 10.3389/fnins.2022.903448

Received: 24 March 2022; Accepted: 23 August 2022;

Published: 12 September 2022.

Edited by:

Tessa Marzi, University of Florence, Italy

Reviewed by:

Yuanxiao Ma, Nanjing Normal University, China

Copyright © 2022 Zhao, Liu, Chen, Wang, Chen, Wang and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guangyuan Liu, liugy@swu.edu.cn

†These authors have contributed equally to this work
