- ¹School of Electronic and Information Engineering, South China University of Technology, Guangzhou, China

- ²School of Future Technology, South China University of Technology, Guangzhou, China

Emotion recognition with affective brain-computer interfaces (aBCI) has attracted considerable attention in human-computer interaction. Electroencephalographic (EEG) signals collected and stored in a single database are the most widely used because they capture brain activity in real time and are reliable. Nevertheless, large individual differences in EEG across subjects prevent models from sharing information across subjects. As a result, new labeled data must be collected and a model trained separately for each new subject, which is time-consuming. In addition, different databases use different stimuli during EEG data collection. Audio-visual stimulation (AVS) is commonly used to study the emotional responses of subjects. In this article, we propose a brain region aware domain adaptation (BRADA) algorithm that treats features from auditory and visual brain regions differently, which effectively tackles subject-to-subject variations and mitigates distribution mismatch across databases. BRADA is a new framework that works with existing transfer learning methods. We apply BRADA to both cross-subject and cross-database settings. The experimental results indicate that our proposed transfer learning method can improve valence-arousal emotion recognition.

1. Introduction

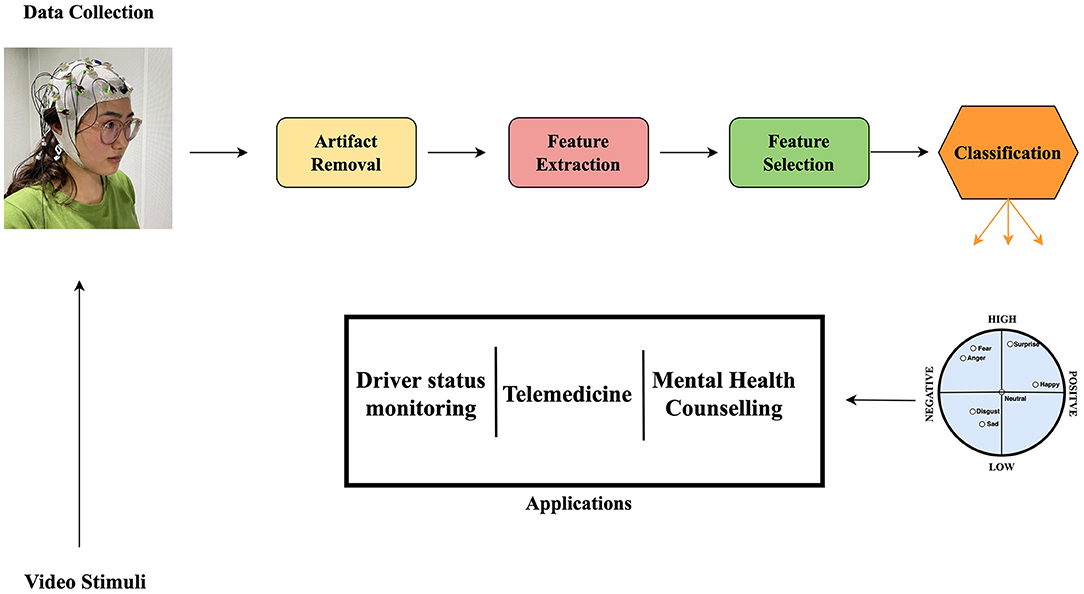

Emotions play an important role in human-human interaction by creating pathways for individuals to learn and adapt to behaviors (Ko et al., 2021). Human-computer interaction should be designed so that systems better interpret users' behavior and emotions and respond accordingly (Principi et al., 2021; Yun et al., 2021). Among the many physiological methods available, emotion recognition from electroencephalograph (EEG) signals has been shown to be advantageous in its non-invasiveness and reliability. EEG is a widely used medical instrument for recording electrical currents generated by brain activity (Kwak et al., 2021). An affective brain-computer interface (aBCI) presents stimuli of different kinds to subjects and captures their neural responses in the form of EEG recordings. EEG-based aBCI emotion recognition has gained research attention recently as a rapidly growing field with multiple interdisciplinary applications (Alarcão and Fonseca, 2019; Torres et al., 2020). Figure 1 is an illustration of an aBCI and some of its applications. However, EEG signals have high inter-subject variations, which creates problems in designing models that generalize well across subjects. Conventionally, data are collected individually for each subject and a classifier is trained specifically for that subject, rather than formulating a model with a classifier that generalizes well to all subjects concurrently. Another generalization problem arising in aBCI is session-to-session variation within the same subject (Zheng and Lu, 2015). Domain adaptation (DA) methods can address this issue (Yan et al., 2018). In order to establish an EEG database that fully elicits human emotions and meets a required classification task, some studies collect data for a single subject in multiple trials or sessions. Data collected in each session can be regarded as a somewhat new task because of the non-stationary characteristics of EEG signals (Shen and Lin, 2019).
These procedures require time-consuming re-calibration (Fdez et al., 2021) to minimize the variations in the subject-to-subject data and the session-to-session data for a single subject. Over the years, studies have focused on publicly available affective databases to assist researchers in designing and modeling their own affective state estimation methods (Koelstra et al., 2012; Soleymani et al., 2012; Katsigiannis and Ramzan, 2018; Subramanian et al., 2018; Zheng et al., 2019). These databases are collected using different stimuli, equipment, experimental environments and protocols, and target labels, among many other technical discrepancies. These differences are unique to a particular database but create research challenges when designing and building models intended to adapt across databases.

Figure 1. Illustration of an affective brain-computer interface (aBCI) and its applications (Fordson et al., 2021). Source for the photo top left: Center for Human Body Data Science, School of Electronic and Information Engineering, South China University of Technology.

Transfer learning (TL) focuses on storing knowledge acquired from a source domain task and applying it to a different but related target domain task without the need to learn from scratch (Pan et al., 2011; Niu et al., 2021). TL compensates for the inter-subject variability that appears in EEG feature dispersion as a covariate shift for aBCI, increasing confidence in classification performance compared with non-transfer learning approaches (Saha and Baumert, 2020). Lin (2020) applied a TL model based on robust principal component analysis (RPCA) to single-day (sD) and multiple-day (mD) data to deal with inadequate labeled data while concurrently addressing inter- and intra-individual differences. Pan et al. (2011) proposed transfer component analysis (TCA) for domain adaptation, which learns a feature representation shared across domains. Yan et al. (2018) proposed a maximum independence domain adaptation (MIDA) technique to tackle different distributions of training and test data. Fernando et al. (2013) introduced subspace alignment (SA), which learns a mapping function that aligns the source subspace with the target subspace, each described by eigenvectors. Unlike image processing (Su, 2021), EEG-based emotion recognition requires a lot of time, effort, and equipment to collect data. Therefore, in order to reduce the constraints on aBCI systems, TL is of great importance. It has been widely used in EEG-based emotion recognition and has made good progress in dealing with cross-domain problems of EEG signals (Li et al., 2021). However, to the best of our knowledge, all existing transfer learning methods for EEG treat all channels the same, whereas different EEG channels actually play different roles. It is more reasonable to transfer knowledge differentially according to channel locations.

Channel selection, which uses only part of the channels, is a widely used pre-processing step in EEG signal analysis (Alotaiby et al., 2015; Boonyakitanont et al., 2020). It can reduce the overfitting caused by the use of unnecessary channels. Various channel selection methods for EEG-based emotion recognition have been proposed, such as measuring the contribution of each channel (Yan et al., 2020), selecting according to classification performance (Özerdem and Polat, 2017), excluding channels least correlated with the emotional state (Dura and Wosiak, 2021), normalized mutual information (Wang, 2019), and the weight distribution of a trained neural network (Zheng and Lu, 2015). All of these select channels by analyzing EEG data or model parameters. EEG signals for emotion recognition are usually elicited by visual or auditory materials. However, prior knowledge of the brain regions responsible for visual and auditory stimuli is overlooked in channel selection.

In this study, we transfer knowledge from only the stimulated brain regions according to prior neuroanatomy knowledge (Sotgiu et al., 2020) for EEG emotion recognition. Our approach not only improves the accuracy of emotion recognition but also provides new insight into EEG transfer learning.

Also, we show that for EEG signals, transferring knowledge in a finer way instead of treating all the channels the same will improve the performance. We reveal the effectiveness of applying prior knowledge of EEG signals for channel selection, unlike previous studies that utilized all EEG signals from channels that may not be needed. Our proposed improved transfer learning method not only works for cross-subject scenarios but also for cross-database scenarios.

2. Materials and Methods

2.1. Related Work

In this section, we review studies on aBCI using EEG signals and the transfer learning methods most related to our proposed study. We present studies on cross-subject, cross-database, and EEG channel selection for emotion classification. Moreover, we demonstrate the uniqueness of our article to distinguish it from recently published studies.

2.1.1. Transfer Learning for EEG-Based Emotion Recognition

2.1.1.1. Cross-Subject

The amount of available training data in an aBCI affects model performance. However, the statistical distribution of training data varies across subjects as well as across trials/sessions within subjects, thus limiting the transferability of a trained model between them (Lin, 2020). Azab et al. (2019) proposed a transfer learning system that reduces calibration time yet maintains classification accuracy by incorporating previously recorded data from other subjects when only a few subject-specific sessions are available for training. Standard methods for dealing with subject differences are mostly based on the common spatial pattern (CSP) (Martin-Clemente et al., 2019), a dimensionality reduction technique that linearly projects training data onto directions maximizing or minimizing the variations between them. CSP filtering methods reveal more information about the data and result in high efficiency values. Wu et al. (2020) proposed a TL protocol for closed-loop BCI systems and suggested data alignment before spatial filtering to make data from different subjects consistent and facilitate subsequent TL algorithms. Li et al. (2020) identified the problem of time consumption and built models for new subjects that reduce the demand for labeled data. Their method includes source selection and mapping destinations. They used style transfer mapping (STM) to reduce EEG differences between source and target data and explored mapping destination settings. He and Wu (2020) proposed an approach that aligns EEG trials from several subjects in Euclidean space, reducing variations and thereby improving the learning performance for new incoming subjects. Their method aligns EEG sessions well in Euclidean space, has a low computational cost, and exhibits the usefulness of unsupervised classification (Rouast et al., 2021).

2.1.1.2. Cross-Database

The cross-database setting involves using two or more databases to build an effective aBCI for emotion recognition. We searched numerous academic databases for works that address this setting with transfer learning methods. Although the search was difficult, we found some related works. Jayaram et al. (2016) introduced a model for transfer learning in EEG-based BCI that exploits structures shared between the training data of multiple subjects and/or sessions to increase performance. They demonstrated their method's usefulness in limiting time consumption and its capability of outperforming comparable methods on identical datasets. Rodrigues et al. (2019) presented a transfer learning approach that deals with statistical variations of EEG signals collected from different subjects in different sessions. Their article proposed a Procrustes analysis method to match the statistical distributions of two datasets (simulated data and real data) using simple geometrical transformations over data points. Cimtay and Ekmekcioglu (2020) investigated pre-trained neural network models trained on the SEED dataset (Zheng and Lu, 2015) and tested them on the DEAP dataset (Koelstra et al., 2012), which yielded a reasonable mean prediction accuracy. Lan et al. (2019) focused on comparative studies on the SEED and DEAP datasets. They used existing domain adaptation (DA) techniques on these datasets and reported their effectiveness in an unsupervised setting (Fernando et al., 2013).

2.1.1.3. EEG Channel Selection

Daoud and Bayoumi (2019) used channel selection methods to identify relevant EEG channels using a semi-supervised approach based on transfer learning. In order to simplify the training model, Ramadhani et al. (2021) used integrated selection (IS) to remove irrelevant EEG channel signals, which further improved the performance of an aBCI system. Basar et al. (2020) used Welch power spectral density-based analysis to examine the effects of CSP algorithms on the relationship between EEG bands and channels, its neural efficacy, and emotional stimuli types. Also, Cao et al. (2020) selected EEG channels according to the Fisher criterion and trained their model on a convolutional neural network based on parameter transfer. The selected channel features achieved higher accuracy than features without channel selection.

Transfer learning and channel selection in the cross-database setting have not been sufficiently investigated, even though they relax the research constraints of a typical aBCI. Previous studies on EEG-based transfer learning do not investigate two real EEG databases with significantly related components, nor do they focus on selecting the channels that contribute most to improving affective computing systems. They either use simulated data vs. real data (Yan et al., 2018; Rodrigues et al., 2019) or try to reduce components of one database to the other (Lan et al., 2019). Also, they do not focus on stimulated brain regions or select channels that can contribute meaningful insight. Instead, they extract features from all channels and follow traditional feature combination techniques in recognition tasks. Our article utilizes two databases with a significant focus on selecting stimulated brain regions that can affect participant responses in emotion elicitation. Emotional responses to videos in both databases are correlated, with both employing the dimensional model of valence and arousal. The motivation of this article is to adapt feature-space and parameter-space transfer learning to new subjects within one database by decreasing subject-to-subject variations, and to new databases by decreasing database-to-database variations. This will in turn produce a robust classification method for affective BCI.

2.2. Database

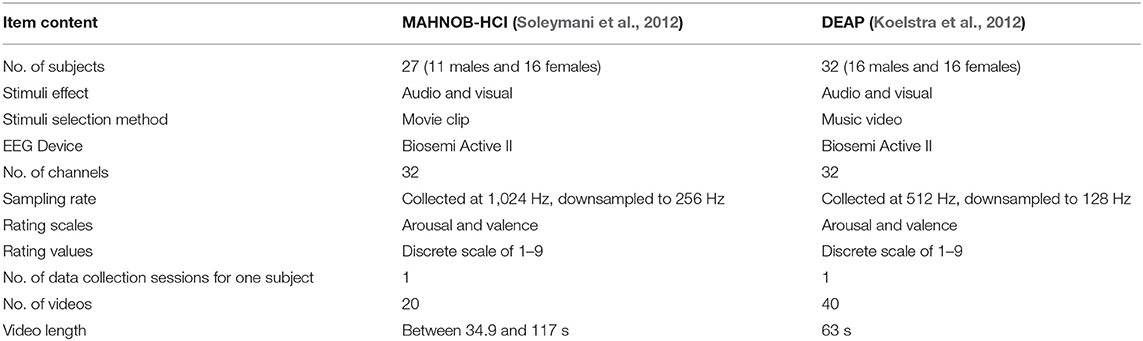

In this article, we utilized two publicly available databases, MAHNOB-HCI (Soleymani et al., 2012) and DEAP (Koelstra et al., 2012). We chose these databases because of the similarities and differences between them. As a similarity, both were collected with the same type of device; as a difference, MAHNOB-HCI contains both audio and video while DEAP only contains audio. As mentioned in Section 1, transfer learning involves creating new models by fine-tuning previously trained models, adapting knowledge learned while solving one problem to a different but related problem. These two databases are different but related in this sense. Furthermore, our study is interested in the robustness of an applicable aBCI in a cross-database fashion. We plan to train our model on one database with different subjects and test it on another database with new subjects. We also intend to investigate the possible effects of transfer learning techniques on improving classification accuracy.

The MAHNOB-HCI database was recorded in response to affective stimuli with the common goal of recognizing emotions and implicit tagging. It consists of 30 subjects (13 men, 17 women) aged between 19 and 40 years (mean age of 26.06). The data of three subjects were lost due to technical errors; thus, the data of 27 subjects (11 men, 16 women) were considered for processing. The subjects watched 20 emotional movie videos and self-reported their felt emotions using arousal, valence, dominance, and predictability in addition to emotional keywords. The database comprises 32-channel EEG signals in accordance with the international 10-20 system. The EEG signals were recorded using the Biosemi Active II system with active electrodes at a 1,024 Hz sampling rate and downsampled to 256 Hz to reduce memory and processing cost.

The DEAP database was recorded for the analysis of human affective states. A total of 32 subjects (16 men, 16 women) participated in the experiment. They were aged between 19 and 37 years (mean age of 26.9). As stimuli, the subjects watched 40 one-minute excerpts of music videos while their physiological signals were collected. After each trial, participants rated the music video in terms of arousal, valence, dominance, liking, and familiarity. The ratings use a continuous scale of 1–9 for arousal, valence, dominance, and liking, and a discrete scale of 1–5 for familiarity. The EEG signals were recorded with 32 electrodes placed according to the international 10-20 system at a sampling rate of 512 Hz and downsampled to 128 Hz. Table 1 sums up the important technical specifications of the two EEG databases.

2.3. Methodology

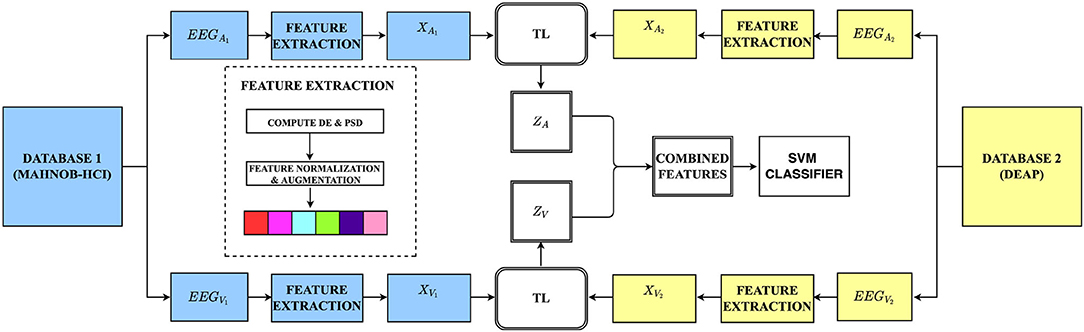

This section introduces the unsupervised transductive learning approach used in this study. The sub-sections describe how the data are formulated, which features are extracted, and our proposed brain region aware domain adaptation (BRADA) method. Figure 2 illustrates BRADA, taking transfer learning between two databases as an example.

Figure 2. The overall framework of our method: We extracted and selected features according to auditory and visual channels on both databases. Using transfer learning (TL) techniques, we transferred knowledge separately from the MAHNOB-HCI database and employed a feature concatenation method in an attempt to boost performance accuracy in the DEAP dataset ($\mathrm{EEG}_{A,V}$, EEG auditory or visual data; $X_{A,V}$, auditory or visual input features; $Z_{A,V}$, combined auditory or visual features).

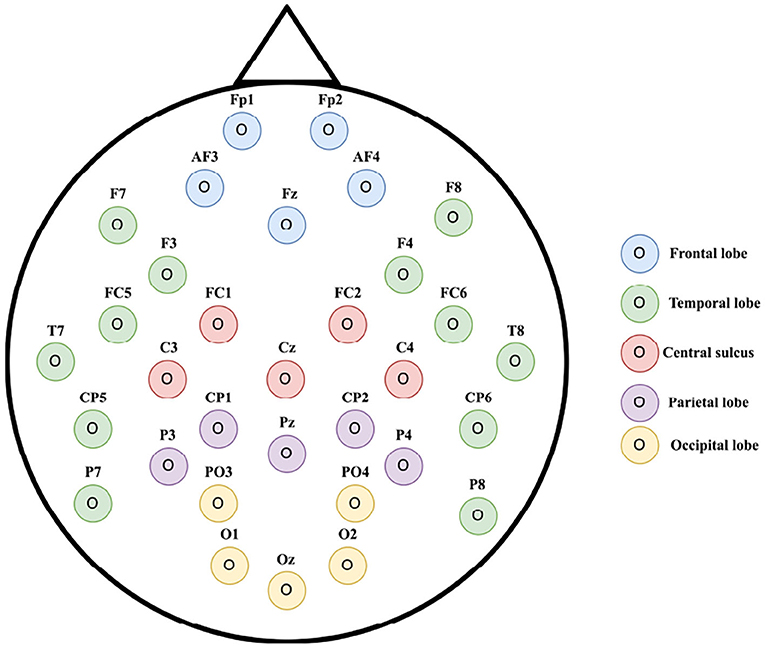

2.3.1. Visual and Auditory Channels

According to neuroanatomy (Sotgiu et al., 2020), we divide the international 10-20 EEG system into five regions (the frontal, parietal, occipital, and temporal lobes, and the central sulcus). Figure 3 gives an illustration. Among them, the occipital lobe's primary function is to control vision and visual processing, and the temporal lobe is related to the perception and recognition of auditory stimuli, speech, and memory. We refer to the electrodes located in the occipital lobe (PO3, PO4, O1, Oz, and O2) as visual channels and to the electrodes located in the temporal lobe (F7, F8, F3, F4, FC5, FC6, T7, T8, CP5, CP6, P7, and P8) as auditory channels. We only extract features for these channels and transfer knowledge for each region separately.
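The channel grouping above can be sketched as a simple index lookup. The electrode order in `BIOSEMI_32` is an assumption (the order in which the 32 Biosemi channels are commonly listed for DEAP recordings); the actual order must be checked against the files of each database, and the helper name `channel_indices` is hypothetical.

```python
# Assumed 32-channel electrode order; verify against the actual recording files.
BIOSEMI_32 = [
    "Fp1", "AF3", "F3", "F7", "FC5", "FC1", "C3", "T7",
    "CP5", "CP1", "P3", "P7", "PO3", "O1", "Oz", "Pz",
    "Fp2", "AF4", "Fz", "F4", "F8", "FC6", "FC2", "Cz",
    "C4", "T8", "CP6", "CP2", "P4", "P8", "PO4", "O2",
]

# Channel groups exactly as listed in the text.
VISUAL = ["PO3", "PO4", "O1", "Oz", "O2"]
AUDITORY = ["F7", "F8", "F3", "F4", "FC5", "FC6",
            "T7", "T8", "CP5", "CP6", "P7", "P8"]


def channel_indices(names, montage=BIOSEMI_32):
    """Map electrode names to their row indices in the montage."""
    index = {name: i for i, name in enumerate(montage)}
    return [index[name] for name in names]


visual_idx = channel_indices(VISUAL)      # 5 visual channels
auditory_idx = channel_indices(AUDITORY)  # 12 auditory channels
```

With an EEG array of shape (32, n_samples), `eeg[visual_idx]` and `eeg[auditory_idx]` would then give the two region-specific signals from which features are extracted.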

Figure 3. Feature selection on the electrode placement of the 32-channel electroencephalographic (EEG) system according to the international 10-20 system (best seen in color) (Alarcão and Fonseca, 2019).

2.3.2. Feature Extraction

In this article, we adopt differential entropy (DE) (Lan et al., 2019) and power spectral density (PSD) (Fang et al., 2021; Zhu and Zhong, 2021) features for emotion classification. These features have been extensively used in EEG-based emotion recognition (Zheng et al., 2015; Arnau-Gonzalez et al., 2021; Zhu and Zhong, 2021). The features comprise DE and PSD from the theta (4 Hz < f < 8 Hz), slow alpha (8 Hz < f < 10 Hz), alpha (8 Hz < f < 12 Hz), beta (12 Hz < f < 30 Hz), and gamma (30 Hz < f) bands of the EEG signal. Therefore, for the 32-electrode baseline classification, the dimension $m$ of the feature vector is 32 × 5 + 32 × 5 = 320. Specifically, for auditory channel selection, the dimension $m_A$ of the feature vector is 12 × 5 + 12 × 5 = 120; for visual channel selection, the dimension $m_V$ of the feature vector is 5 × 5 + 5 × 5 = 50. After feature extraction, we use min-max normalization to scale the feature values to a proper range as follows:

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)} \tag{1}$$

where $x$ is the original feature vector, and $x'$ is the normalized feature value.
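As a minimal sketch of the feature pipeline described above, the following NumPy code computes band-wise PSD and DE features for one trial and applies min-max normalization. Several details are assumptions rather than the authors' implementation: the PSD is a plain periodogram, DE is computed under a Gaussian assumption as $\tfrac{1}{2}\log(2\pi e \sigma^2)$ of the band-limited signal, the gamma band is capped at 45 Hz, band edges are treated as half-open intervals, and normalization is applied per feature across samples.

```python
import numpy as np

# Band edges in Hz. The 45 Hz upper gamma edge is an assumption;
# the text only states f > 30 Hz.
BANDS = {
    "theta": (4.0, 8.0),
    "slow_alpha": (8.0, 10.0),
    "alpha": (8.0, 12.0),
    "beta": (12.0, 30.0),
    "gamma": (30.0, 45.0),
}


def band_features(eeg, fs):
    """Return (psd, de), each of shape (n_channels, n_bands), for one trial.

    eeg: array of shape (n_channels, n_samples); fs: sampling rate in Hz.
    """
    n = eeg.shape[1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum = np.fft.rfft(eeg - eeg.mean(axis=1, keepdims=True), axis=1)
    periodogram = np.abs(spectrum) ** 2 / (fs * n)
    psd, de = [], []
    for low, high in BANDS.values():
        mask = (freqs >= low) & (freqs < high)
        # Mean periodogram value inside the band.
        psd.append(periodogram[:, mask].mean(axis=1))
        # Band-limited signal obtained by zeroing the out-of-band FFT bins.
        band_signal = np.fft.irfft(spectrum * mask, n=n, axis=1)
        variance = band_signal.var(axis=1) + 1e-12  # avoid log(0)
        de.append(0.5 * np.log(2 * np.pi * np.e * variance))
    return np.stack(psd, axis=1), np.stack(de, axis=1)


def min_max_normalize(features):
    """Scale each feature (column) to [0, 1] across samples (rows)."""
    lo = features.min(axis=0, keepdims=True)
    hi = features.max(axis=0, keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)  # guard constant features
    return (features - lo) / span
```

Flattening and concatenating the two returned arrays for all 32 channels gives the 320-dimensional baseline vector; restricting `eeg` to the 12 auditory or 5 visual channels gives the 120- and 50-dimensional vectors.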

2.3.3. Brain Region Aware Domain Adaptation

Assume $X_s \in \mathbb{R}^{m \times n_s}$ and $X_t \in \mathbb{R}^{m \times n_t}$ are respectively the normalized source and target domain data, where $m$ is the feature dimension, and $n_s$ and $n_t$ are the sample numbers of the source and target domains. Usually, data from different domains follow different distributions. Domain adaptation (Pan and Yang, 2010) aims to find a domain-invariant subspace such that a classifier trained on the source domain can be directly applied to the target domain. That is to say, we want to reduce the discrepancies between the source and target domains by transforming $X = [X_s, X_t] \in \mathbb{R}^{m \times n}$ to $Z \in \mathbb{R}^{h \times n}$, where $h$ is the dimension of the domain-invariant subspace, and $n = n_s + n_t$ is the total number of samples.

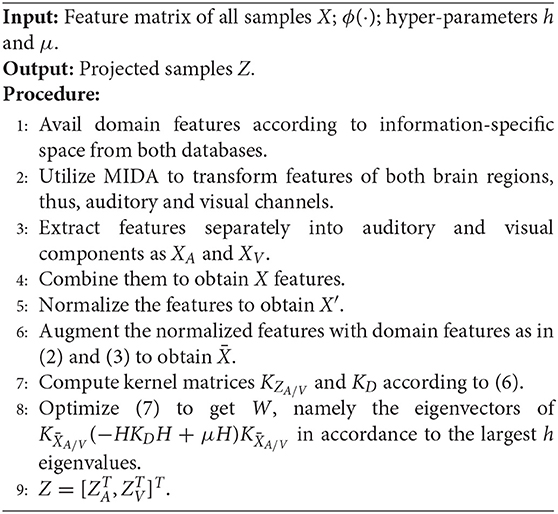

We utilize maximum independence domain adaptation (MIDA) (Yan et al., 2018) for feature transformation of both brain regions. Following Yan et al. (2018) and Lan et al. (2019), we define domain features to describe the background information of a sample and maximize the independence between the projected features and the domain features. The domain feature of a sample is defined by a one-hot encoding vector $d$ with $d_i = 1$ if the sample is from subject $i$ and 0 otherwise; stacking the domain features of all $n$ samples column-wise gives the domain feature matrix $D$. Following Lan et al. (2019), we encode the background information with feature augmentation by concatenation. The intention of the augmentation operation is to learn information-specific subspaces and to deal with time-varying drift. The main difference between our method and Lan et al. (2019) is that we augment the features of the audio and video channels separately. Denote the audio channel features and video channel features after normalization as $X_A$ and $X_V$, respectively. Then, the augmented features are represented by

$$\hat{X}_A = \begin{bmatrix} X_A \\ D \end{bmatrix} \tag{2}$$

and

$$\hat{X}_V = \begin{bmatrix} X_V \\ D \end{bmatrix} \tag{3}$$

For simplification, we use A/V to denote either A or V. We use the kernel trick to project $\hat{X}_{A/V}$ to the desired subspace. Denote $\phi$ as the mapping function. The transformed features are represented by a linear combination of the mapped features, i.e.,

$$Z_{A/V} = \tilde{W}_{A/V}^{\top} \phi(\hat{X}_{A/V}) \tag{4}$$

Following kernel dimension reduction methods (Kempfert et al., 2020), $\tilde{W}_{A/V}$ is constructed by a linear combination of all samples in $\phi(\hat{X}_{A/V})$, i.e., $\tilde{W}_{A/V} = \phi(\hat{X}_{A/V}) W_{A/V}$. Then, we have

$$Z_{A/V} = W_{A/V}^{\top} \phi(\hat{X}_{A/V})^{\top} \phi(\hat{X}_{A/V}) = W_{A/V}^{\top} K_{\hat{X}_{A/V}} \tag{5}$$

where $W_{A/V} \in \mathbb{R}^{n \times h}$ is the linear transformation matrix to be determined and $K_{\hat{X}_{A/V}} = \phi(\hat{X}_{A/V})^{\top} \phi(\hat{X}_{A/V})$ is the kernel matrix of $\hat{X}_{A/V}$. By the kernel trick, we can compute $K_{\hat{X}_{A/V}}$ with a kernel function and do not need the explicit $\phi$ function. In this article, we use the polynomial kernel function.

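As in Lan et al. (2019), we use the Hilbert-Schmidt independence criterion (HSIC) (Gretton et al., 2005) to measure the dependence between $Z_{A/V}$ and $D$, which can be conveniently estimated by

$$\mathrm{HSIC}(Z_{A/V}, D) = (n-1)^{-2}\, \mathrm{tr}\left(K_{Z_{A/V}} H K_D H\right) \tag{6}$$

where $K_{Z_{A/V}}$ and $K_D$ are the kernel matrices of $Z_{A/V}$ and $D$, $H = I - \frac{1}{n} 1_n 1_n^{\top}$ is the centering matrix, and $1_n$ is an $n$-dimensional vector full of ones.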
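The empirical HSIC estimate above is short enough to write out directly. The following NumPy sketch takes two precomputed kernel matrices; the function names are illustrative only.

```python
import numpy as np


def centering_matrix(n):
    """H = I - (1/n) 1 1^T, which removes the mean when applied to a kernel."""
    return np.eye(n) - np.ones((n, n)) / n


def hsic(K_z, K_d):
    """Empirical HSIC between two n x n kernel matrices."""
    n = K_z.shape[0]
    H = centering_matrix(n)
    return np.trace(K_z @ H @ K_d @ H) / (n - 1) ** 2
```

As a sanity check, features that are identical for every sample carry no information about the domain, so their HSIC with any domain kernel is zero, whereas features equal to the domain indicators themselves give a strictly positive value.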

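Following Yan et al. (2018) and Lan et al. (2019), we simultaneously maximize the variance of the projected data and their independence from the domain features. Using a linear kernel for $Z_{A/V}$, i.e., $K_{Z_{A/V}} = Z_{A/V}^{\top} Z_{A/V}$, and omitting the scaling factor, the final objective function is given by:

$$\max_{W_{A/V}} \; -\mathrm{tr}\left(W_{A/V}^{\top} K_{\hat{X}_{A/V}} H K_D H K_{\hat{X}_{A/V}} W_{A/V}\right) + \mu\, \mathrm{tr}\left(W_{A/V}^{\top} K_{\hat{X}_{A/V}} H K_{\hat{X}_{A/V}} W_{A/V}\right), \quad \text{s.t. } W_{A/V}^{\top} W_{A/V} = I \tag{7}$$

where $\mu > 0$ is a trade-off parameter. Here, we add an orthogonal constraint to the projection matrix $W_{A/V}$ to ease the optimization. The solution to (7) can be obtained in closed form by finding the $h$ eigenvectors of $K_{\hat{X}_{A/V}} (-H K_D H + \mu H) K_{\hat{X}_{A/V}}$ corresponding to the top-$h$ eigenvalues.

After obtaining $W_{A/V}$, we compute the features of the audio and visual channels by Equation (5). The final feature $Z$ is then composed of the concatenation of $Z_A$ and $Z_V$, i.e., $Z = [Z_A; Z_V]$. The algorithm of our proposed brain region aware domain adaptation is summarized in Algorithm 1. With the extracted features and their given labels, we apply a support vector machine (SVM) (Burges, 1998) for classification.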
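The procedure described in this section can be sketched in NumPy as follows. This is an illustrative reading of the text, not the authors' code: samples are stored as rows (so the projection is `K @ W` rather than $W^{\top} K$), the polynomial kernel degree and offset are assumed values, the domain kernel is assumed linear, and the same subspace dimension `h` is assumed for both regions.

```python
import numpy as np


def poly_kernel(X, degree=2, coef0=1.0):
    """Polynomial kernel; degree and coef0 are assumed hyper-parameters."""
    return (X @ X.T + coef0) ** degree


def mida_fit_transform(X, D, h, mu=1.0, kernel=poly_kernel):
    """Project one brain region to an h-dimensional subspace.

    X: (n, m) normalized features; D: (n, n_domains) one-hot domain features.
    """
    n = X.shape[0]
    X_aug = np.hstack([X, D])            # feature augmentation
    K = kernel(X_aug)                    # kernel matrix of augmented features
    H = np.eye(n) - np.ones((n, n)) / n  # centering matrix
    K_d = D @ D.T                        # linear kernel on domain features
    M = K @ (-H @ K_d @ H + mu * H) @ K
    M = (M + M.T) / 2                    # symmetrize against round-off
    eigvals, eigvecs = np.linalg.eigh(M)
    top = np.argsort(eigvals)[::-1][:h]  # indices of the h largest eigenvalues
    W = eigvecs[:, top]                  # orthonormal columns
    return K @ W


def brada_features(X_a, X_v, D, h, mu=1.0):
    """Transform auditory and visual features separately, then concatenate."""
    Z_a = mida_fit_transform(X_a, D, h, mu)
    Z_v = mida_fit_transform(X_v, D, h, mu)
    return np.hstack([Z_a, Z_v])
```

Because the eigenvectors returned by `np.linalg.eigh` are orthonormal, the constraint on the projection matrix is satisfied by construction. The concatenated output would then be passed, together with the source labels, to a classifier such as an SVM.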

3. Results

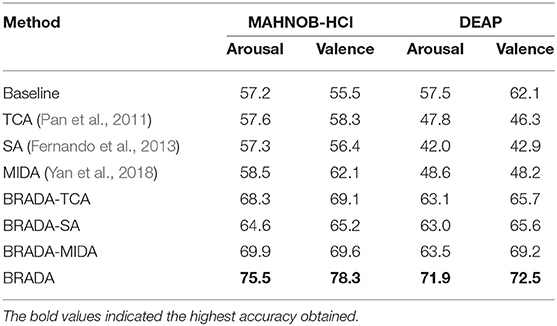

This section demonstrates the effectiveness of BRADA in cross-subject and cross-database settings. We first show that BRADA is compatible with the classical transfer learning methods TCA (Pan et al., 2011), SA (Fernando et al., 2013), and MIDA (Yan et al., 2018) in both cross-subject and cross-database settings for EEG emotion recognition. We then conduct ablation experiments to show the effect of the brain regions, feature normalization and augmentation, and the kernel function.

Baseline denotes applying SVM to the extracted features of all the channels directly. TCA, SA, and MIDA denote applying these methods to the extracted features of all the channels and then classifying the transformed features by SVM. We also replace MIDA in BRADA with the other transfer learning methods, denoted by “BRADA-” followed by a method name. Note that we use a polynomial kernel in Algorithm 1, but the original method (Yan et al., 2018) uses a linear kernel. To avoid confusion, we use BRADA to denote Algorithm 1 and BRADA-MIDA to denote the variant with a linear kernel. Unless otherwise specified, all the transfer learning methods adopt a linear kernel. For all the methods, we set the trade-off parameter μ to 1, and the latent subspace dimension h is searched through {10, 20, ..., 100}.

For MAHNOB-HCI, since the data of three subjects could not be validated, data from the 27 subjects who had sufficient completed trials were used, giving 27 × 20 = 540 EEG samples. For DEAP, data from all 32 subjects were used, giving 32 × 40 = 1,280 EEG samples.

3.1. Cross-Subject Transfer Learning

For the cross-subject setting, we apply leave-one-subject-out cross-validation on each database, i.e., one subject is chosen as the test set, and the remaining subjects are used for training. Each subject constitutes a single domain, and thus, our approach is formulated in a multi-source domain setting. Since our method is unsupervised, it is not constrained by the number of domains. Thus, it can also operate in a single-source, multi-target domain mode.
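The evaluation protocol above can be sketched as a small generator that yields one fold per held-out subject. The function name and the `subject_ids` layout (one subject label per sample) are assumptions made for illustration.

```python
import numpy as np


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    subject_ids = np.asarray(subject_ids)
    for held_out in np.unique(subject_ids):
        test_mask = subject_ids == held_out
        yield held_out, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)


# Example with the MAHNOB-HCI sample layout: 27 subjects x 20 trials.
subject_ids = np.repeat(np.arange(27), 20)
folds = list(leave_one_subject_out(subject_ids))
```

In each fold, the latent subspace would be learned without labels from all samples, the classifier trained on the training indices, and accuracy measured on the held-out subject; the reported figure is then the average over folds.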

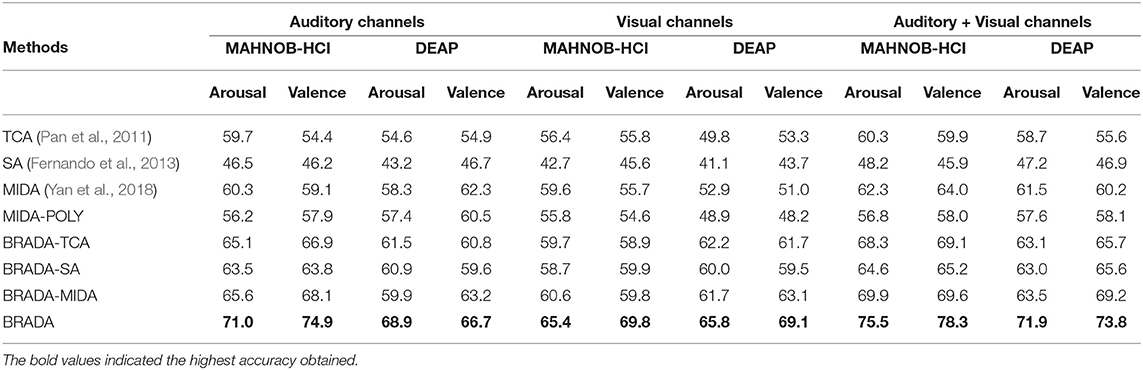

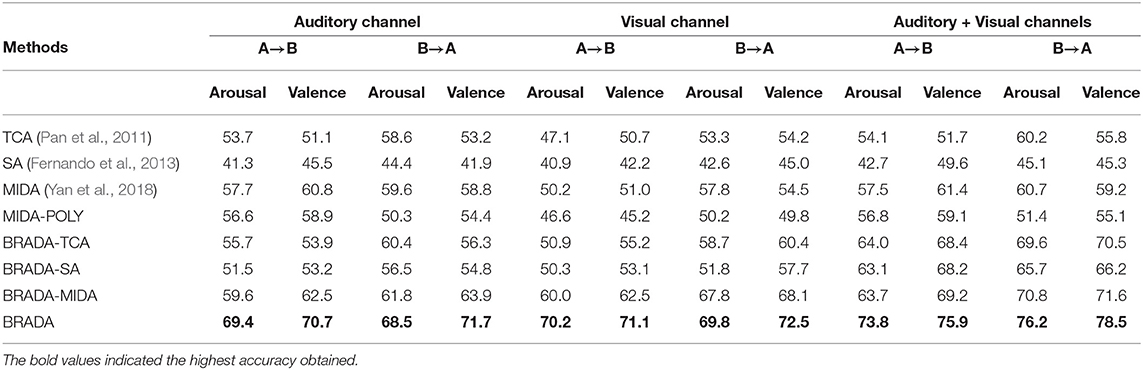

The classification accuracy on both databases is reported in Table 2. We can see that BRADA achieves the best performance. Compared with the baseline, all transfer learning methods using all the channels improve the results for MAHNOB-HCI. However, for DEAP, the transfer learning methods result in negative transfer, i.e., degraded results. This is consistent with Chai et al. (2017), who report that negative transfer hinders domain adaptation methods from operating successfully on DEAP. Nevertheless, when these methods are placed in the BRADA framework, the results are consistently improved. BRADA improves classification accuracy by 10.4–22.8%, suggesting that individual differences across subjects are significantly reduced. Statistical significance analysis shows that the accuracy improvements are significant (t-test, p < 0.05). This strongly suggests that brain regions with different functions should be treated separately and that not all channels are needed for EEG emotion recognition. We emphasize that the performance gain is significant compared to all the other methods.

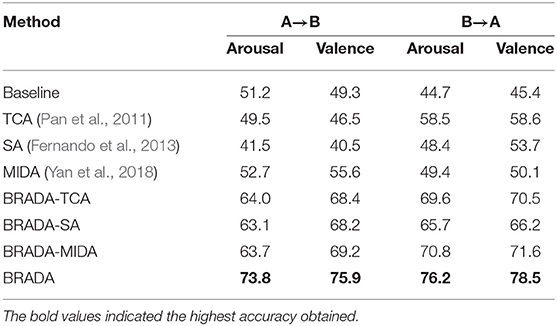

3.2. Cross-Database Transfer Learning

The previous sub-section presents the use of domain adaptation techniques, which reduce inter-subject variations and improve classification performance on a single database. Here, we extend our study to a cross-database setting, i.e., the latent subspace is learned from all samples of both databases, and training and testing are applied to different databases. Note that this is a harder task because the differences between the samples come not only from personality and experimental sessions but also from equipment and experimental protocol. Each subject constitutes a single domain, and thus this setting is multi-source and multi-target. For simplicity, we refer to MAHNOB-HCI as A and DEAP as B. We report the results of training on A and testing on B, and of the reverse order, in Table 3. We can see that, under both training settings, BRADA and its variants outperform their counterparts that take all the channels as input. Compared to the baseline accuracies with no domain adaptation method, BRADA improves classification accuracy by 22.6–33.1%, suggesting that individual differences across the databases are significantly reduced. Statistical significance analysis shows that the accuracy improvements are significant (t-test, p < 0.05). This again verifies that selecting channels according to the stimuli sources can effectively reduce noise in EEG signals and that transfer learning should be conducted separately for different brain regions.
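The cross-database setup above, in which every subject of both databases is its own domain, can be sketched as follows. The subject-id offset and the helper name are illustrative assumptions; only the sample counts (27 × 20 and 32 × 40) come from the text.

```python
import numpy as np


def one_hot_domains(subject_ids):
    """Build the (n_samples, n_subjects) one-hot domain feature matrix."""
    subjects, inverse = np.unique(subject_ids, return_inverse=True)
    D = np.zeros((len(subject_ids), len(subjects)))
    D[np.arange(len(subject_ids)), inverse] = 1.0
    return D


ids_a = np.repeat(np.arange(27), 20)       # MAHNOB-HCI (A): 27 subjects x 20 trials
ids_b = np.repeat(np.arange(32), 40) + 27  # DEAP (B): offset so ids do not collide
subject_ids = np.concatenate([ids_a, ids_b])

D = one_hot_domains(subject_ids)           # one domain per subject, both databases

# Train on A, test on B; swapping the two index sets gives the reverse order.
is_a = np.arange(len(subject_ids)) < len(ids_a)
train_idx, test_idx = np.flatnonzero(is_a), np.flatnonzero(~is_a)
```

The subspace is learned from all 1,820 samples without labels, after which the classifier is fitted on one database and evaluated on the other.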

3.3. Ablation Study

In order to effectively evaluate the BRADA algorithm and audio-visual feature combination for classification, we perform an ablation study to better understand our contributions and compare the effects of channel selection, normalization/augmentation, and kernel function selection on baseline features and existing models.

3.3.1. Effect of Channel Selection

The baseline model consists of features from all 32 electrode channels, including channels that may not be needed. In our proposed methodology, we selected features from the auditory and visual brain regions, where we believe participants are most strongly stimulated. The results are reported in Tables 4 and 5. Combining both brain regions with BRADA performs better than transferring knowledge from a single brain region or directly concatenating the features.

Table 4. Cross-subject classification accuracy (%) comparison with single brain region on MAHNOB-HCI and DEAP databases.

Table 5. Cross-database classification accuracy (%) comparison with single brain region on MAHNOB-HCI and DEAP databases.

Another trend worth mentioning is that, during the experiments, separating features into auditory and visual components and then combining them yielded better performance than directly extracting features from all brain regions together. It also enables our algorithm to adapt well and transfer knowledge effectively.

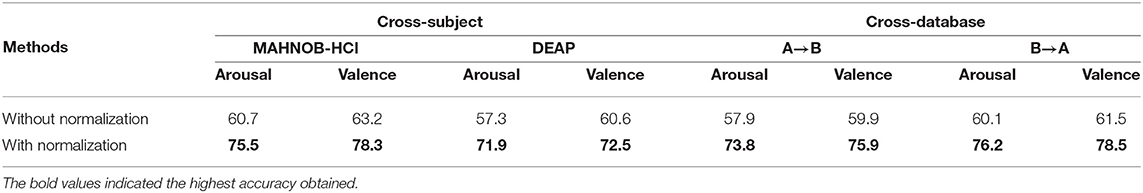

3.3.2. Effect of Normalization

Our method handles features as random variables whose distribution is derived from previous subjects within a database. We introduce a procedure for normalization and augmentation of the data that reduces variations within individual subjects. We show that normalization significantly improves performance in both the cross-subject and cross-database settings. The results are reported in Table 6. In our experiments, we observe that applying domain adaptation techniques directly to the original features may result in negative transfer.

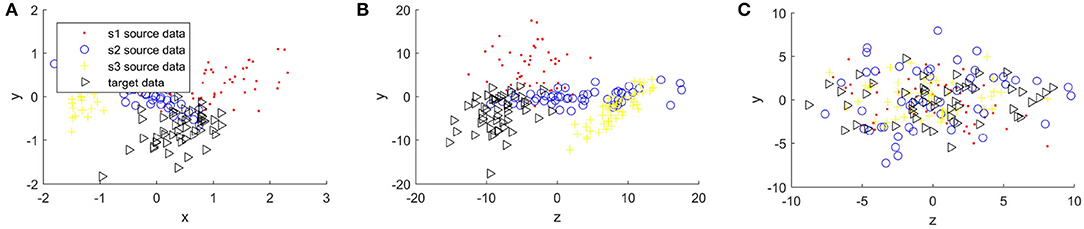

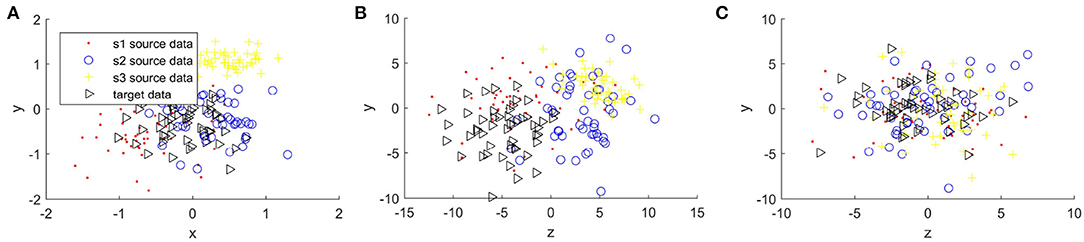

Figures 4 and 5 compare the sample distributions of unnormalized and normalized features projected into 2D space with BRADA. We also visualize the original data in 2D space using principal component analysis (PCA). Here, we use four subjects as an example, with three subjects as source data and one subject as target data. Figures 4A and 5A show the source and target domain features in their original space. As we can observe, feature samples are distributed differently across the participating subjects. Each subject, whether a source or target domain, occupies its own space and forms a separate cluster. This indicates a large divergence between individual subjects. Figures 4B and 5B show the sample distribution when data from the original space are directly passed to the BRADA algorithm. We observe that, in both databases, there was no significant reduction in the discrepancies between subjects. Figures 4C and 5C show the feature sample distribution when features are normalized and augmented. The discrepancies and subject-specific clusters are clearly reduced. Feature samples are better aligned in both databases, suggesting that the domain adaptation algorithm was somewhat successful in learning structures across subjects and adapting to their spaces.

Figure 4. Sample distribution comparison of features in their original space and normalized projected spaces in the MAHNOB-HCI database: (A) original MAHNOB-HCI features, (B) unnormalized features + brain region aware domain adaptation (BRADA), and (C) normalized features + BRADA.

Figure 5. Sample distribution comparison of features in their original space and normalized projected spaces in the DEAP database: (A) original DEAP features, (B) unnormalized features + BRADA, and (C) normalized features + BRADA.
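The PCA projection used to visualize the original features in 2D can be sketched as follows. This is a minimal illustration, assuming NumPy and synthetic toy data in place of the EEG features (four hypothetical subjects, three source and one target); `pca_2d` is an illustrative helper and not the authors' code.

```python
import numpy as np

def pca_2d(X):
    """Project the rows of X onto their first two principal components."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)  # center every feature
    # Rows of Vt are principal directions, sorted by decreasing variance
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:2].T

rng = np.random.default_rng(0)
# Hypothetical toy data: 4 subjects x 20 trials x 8 features,
# each subject shifted by its own random offset.
offsets = rng.normal(scale=5.0, size=(4, 8))
X = np.vstack([rng.normal(size=(20, 8)) + offsets[k] for k in range(4)])
subject = np.repeat(np.arange(4), 20)

Z = pca_2d(X)
# One 2D centroid per subject; well-separated centroids correspond to the
# subject-specific clusters seen in panel (A) of the figures.
centroids = np.stack([Z[subject == k].mean(axis=0) for k in range(4)])
```

Plotting `Z` colored by `subject` (for example with a scatter plot) gives the kind of view shown in the figures; the plotting call is omitted to keep the sketch dependency-free.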

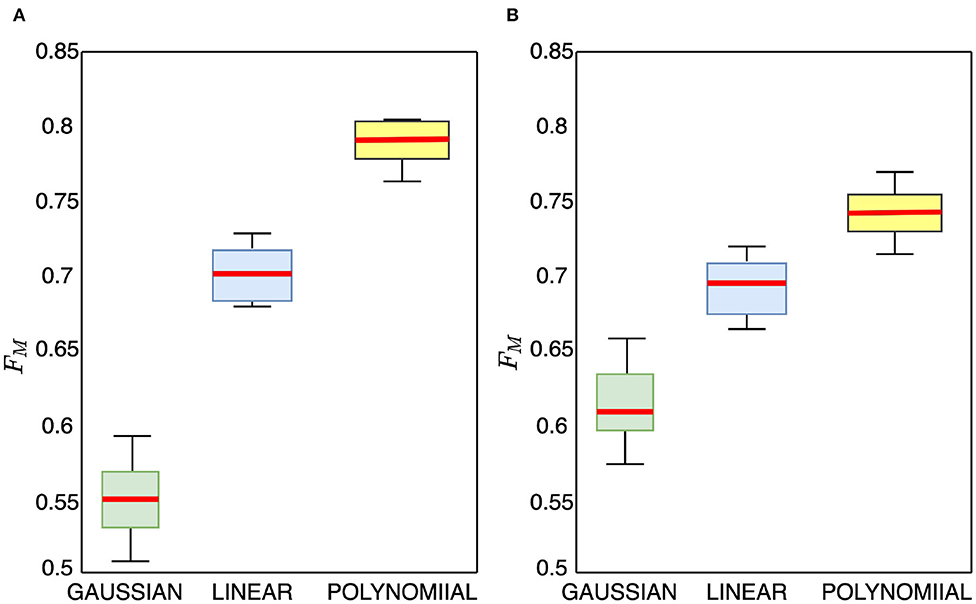

3.3.3. Effect of Kernel Function Selection

The compared TL methods use a linear kernel function, whereas we employ a polynomial kernel function. To further study the effectiveness of the polynomial kernel, we compare it with the commonly used Gaussian and linear kernels and show the box plots in Figure 6. The Gaussian kernel achieves the lowest performance. The polynomial kernel achieves statistically significantly better performance than the linear kernel, with no overlap between the boxes. It also obtains a higher median accuracy and a narrower box than the linear kernel, indicating that it reduces variation in accuracy, which is beneficial for transfer learning. We also observe during the experiments that applying a polynomial kernel improves both the recall and the precision of the model on the test data in both cross-subject and cross-database classification, indicating that our method returns relevant results.

Figure 6. Box plots of the ablation experiment on valence cross-subject classification accuracy for the MAHNOB-HCI and DEAP data using Gaussian, linear, and polynomial kernel mapping functions: (A) MAHNOB-HCI, (B) DEAP.
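For reference, the three kernel functions compared above can be written in a few lines. This is a minimal sketch, assuming NumPy and the standard textbook definitions; the hyper-parameter values (`degree`, `gamma`, `coef0`, `sigma`) are placeholders chosen for illustration and are not the settings used in the experiments.

```python
import numpy as np

def linear_kernel(X, Y):
    """k(x, y) = x . y"""
    return X @ Y.T

def polynomial_kernel(X, Y, degree=2, gamma=1.0, coef0=1.0):
    """k(x, y) = (gamma * x . y + coef0) ** degree"""
    return (gamma * (X @ Y.T) + coef0) ** degree

def gaussian_kernel(X, Y, sigma=1.0):
    """k(x, y) = exp(-||x - y||^2 / (2 * sigma^2))"""
    sq_dist = (X ** 2).sum(axis=1)[:, None] + (Y ** 2).sum(axis=1)[None, :] - 2.0 * (X @ Y.T)
    # Clip tiny negative values caused by floating-point round-off
    return np.exp(-np.maximum(sq_dist, 0.0) / (2.0 * sigma ** 2))

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))  # hypothetical toy feature matrix (6 samples, 4 features)
K_lin = linear_kernel(X, X)
K_poly = polynomial_kernel(X, X)
K_rbf = gaussian_kernel(X, X)
```

Each function returns a Gram matrix that a kernel-based domain adaptation method can consume in place of the raw inner products, so swapping kernels only changes this one step.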

4. Discussion

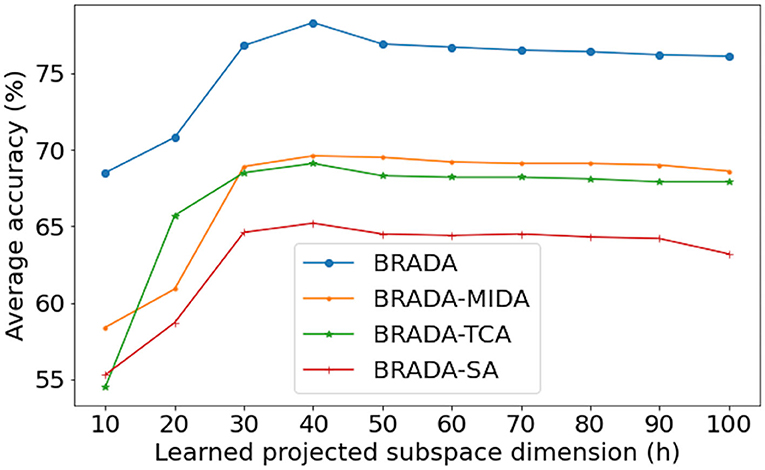

The dimension h of the latent space is a critical hyper-parameter of domain adaptation methods. It is hard to determine the optimal dimension directly because the intrinsic dimension is affected by the number of channels and the type of feature. To study the effect of feature dimension, Figure 7 shows the average valence classification accuracy of all BRADA variants on MAHNOB-HCI under the cross-subject setting as the dimension h of the learned projected subspace varies. The classification accuracy increases with the dimension when h is small and becomes stable once h is large enough. All BRADA variants achieve their best performance at h = 40, which suggests that the features have an intrinsic dimension of around 40.

The cross-database strategy shows that the requirements of the conventional aBCI prototype cannot always be fully satisfied. The BRADA algorithm copes effectively with domain and technical discrepancies. This is of practical value, as it can reduce the constraints on aBCI systems and support their development toward clinical applications and novel therapies for stress, depression, and other nervous system disorders. Further studies on this topic are therefore needed.
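The sweep over the subspace dimension h described in this section can be sketched as a simple loop. This is only an illustration of the procedure, assuming NumPy, synthetic data, a PCA projection as a stand-in for the learned subspace, and a nearest-centroid classifier as a stand-in for the real classifier. None of these choices is taken from the BRADA implementation, and all function names are hypothetical.

```python
import numpy as np

def fit_projection(X, h):
    """Return the mean and top-h principal directions of X (stand-in for a learned subspace)."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:h].T

def nearest_centroid_accuracy(Z_train, y_train, Z_val, y_val):
    """Classify each validation sample by its closest class centroid."""
    classes = np.unique(y_train)
    centroids = np.stack([Z_train[y_train == c].mean(axis=0) for c in classes])
    dists = ((Z_val[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    pred = classes[dists.argmin(axis=1)]
    return float((pred == y_val).mean())

def sweep_dimension(X_train, y_train, X_val, y_val, dims):
    """Evaluate validation accuracy for every candidate dimension h."""
    results = {}
    for h in dims:
        mean, W = fit_projection(X_train, h)
        results[h] = nearest_centroid_accuracy(
            (X_train - mean) @ W, y_train, (X_val - mean) @ W, y_val
        )
    return results

rng = np.random.default_rng(0)

def make_split(n, d=60):
    # Hypothetical toy data: two classes that differ in the first 10 features
    y = rng.integers(0, 2, size=n)
    X = rng.normal(size=(n, d))
    X[:, :10] += y[:, None]
    return X, y

X_tr, y_tr = make_split(200)
X_va, y_va = make_split(100)
dims = [5, 10, 20, 40, 60]
acc_by_h = sweep_dimension(X_tr, y_tr, X_va, y_va, dims)
best_h = max(acc_by_h, key=acc_by_h.get)
```

In practice the candidate dimensions should be scored on held-out validation data rather than on the final test subjects, so that the selected h does not leak information from the test set.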

This article argues that not all electrode channels are needed and investigates the effects of auditory and visual channels on two EEG databases for emotion recognition. We propose a multi-source and multi-target transfer learning method that first applies domain adaptation to different brain regions separately and then combines them. The method effectively reduces inter-subject discrepancy and the demand for calibration data. It can also be used to train on one database and test on a distinct but related database. Experimental results show the superiority of our approach.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found here: https://mahnob-db.eu/hci-tagging/ and https://www.eecs.qmul.ac.uk/mmv/datasets/deap/.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author Contributions

HP, XXi, KG, and XXu proposed the idea. HP conducted the experiment, analyzed the results, and wrote the manuscript. XXi was in charge of technical supervision and provided revision suggestions. KG analyzed the results, reviewed the article, and was in charge of technical supervision. XXu was in charge of technical supervision and funding. All the authors contributed to the article and approved the submitted version.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant U1801262, Guangdong Provincial Key Laboratory of Human Digital Twin (2022B1212010004), in part by Science and Technology Program of Guangzhou under Grant 2018-1002-SF-0561, in part by the Key-Area Research and Development Program of Guangdong Province, China, under Grant 2019B010154003, in part by Natural Science Foundation of Guangdong Province, China, under Grants 2020A1515010781 and 2019B010154003, in part by the Guangzhou key Laboratory of Body Data Science, under Grant 201605030011, in part by Science and Technology Project of Zhongshan, under Grant 2019AG024, and in part by the Fundamental Research Funds for Central Universities, SCUT, under Grants 2019PY21 and 2019MS028.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alarcão, S. M., and Fonseca, M. J. (2019). Emotions recognition using EEG signals: a survey. IEEE Trans. Affect. Comput. 10, 374–393. doi: 10.1109/TAFFC.2017.2714671

Alotaiby, T., El-Samie, F. E., Alshebeili, S. A., and Ahmad, I. (2015). A review of channel selection algorithms for EEG signal processing. EURASIP J. Adv. Signal Process. 66, 1–21. doi: 10.1186/s13634-015-0251-9

Arnau-Gonzalez, P., Arevalillo-Herraez, M., Katsigiannis, S., and Ramzan, N. (2021). On the influence of affect in EEG-based subject identification. IEEE Trans. Affect. Comput. 12, 391–401. doi: 10.1109/TAFFC.2018.2877986

Azab, A. M., Mihaylova, L., Ang, K. K., and Arvaneh, M. (2019). Weighted transfer learning for improving motor imagery-based brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 1352–1359. doi: 10.1109/TNSRE.2019.2923315

Basar, M. D., Duru, A. D., and Akan, A. (2020). Emotional state detection based on common spatial patterns of EEG. Signal Image Video Process. 14, 473–481. doi: 10.1007/s11760-019-01580-8

Boonyakitanont, P., Lek-uthai, A., Chomtho, K., and Songsiri, J. (2020). A review of feature extraction and performance evaluation in epileptic seizure detection using EEG. Biomed. Signal Process. Control 57, 1–28. doi: 10.1016/j.bspc.2019.101702

Burges, C. J. (1998). A tutorial on support vector machines for pattern recognition. Data Mining Knowl. Discov. 2, 121–167. doi: 10.1023/A:1009715923555

Cao, Y., Gao, C., Yu, H., Zhang, L., and Wang, J. (2020). Epileptic EEG channel selection and seizure detection based on deep learning. J. Tianjin Univ. Sci. Technol. 53, 426–432. doi: 10.1174/tdxbz201904026

Chai, X., Wang, Q., Zhao, Y., Li, Y., Liu, D., Liu, X., et al. (2017). A fast, efficient domain adaptation technique for cross-domain electroencephalography (EEG)-based emotion recognition. Sensors 17, 1014. doi: 10.3390/s17051014

Cimtay, Y., and Ekmekcioglu, E. (2020). Investigating the use of pretrained convolutional neural network on cross-subject and cross-dataset EEG emotion recognition. Sensors 20, 2034. doi: 10.3390/s20072034

Daoud, H., and Bayoumi, M. A. (2019). Efficient epileptic seizure prediction based on deep learning. IEEE Trans. Biomed. Circuits Syst. 13, 804–813. doi: 10.1109/TBCAS.2019.2929053

Dura, A., and Wosiak, A. (2021). “EEG channel selection strategy for deep learning in emotion recognition,” in Procedia Computer Science, Vol. 192 (Łódź: Elsevier B.V), 2789–2796. doi: 10.1016/j.procs.2021.09.049

Fang, Y., Yang, H., Zhang, X., Liu, H., and Tao, B. (2021). Multi-feature input deep forest for EEG-based emotion recognition. Front. Neurorobot. 14, 617531. doi: 10.3389/fnbot.2020.617531

Fdez, J., Guttenberg, N., Witkowski, O., and Pasquali, A. (2021). Cross-subject EEG-based emotion recognition through neural networks with stratified normalization. Front. Neurosci. 15, 626277. doi: 10.3389/fnins.2021.626277

Fernando, B., Habrard, A., Sebban, M., and Tuytelaars, T. (2013). “Unsupervised visual domain adaptation using subspace alignment,” in Proceedings of the IEEE International Conference on Computer Vision (Sydney, NSW), 2960–2967. doi: 10.1109/ICCV.2013.368

Fordson, H. P., Xing, X., Guo, K., and Xu, X. (2021). “A feature learning approach based on multimodal human body data for emotion recognition,” in IEEE Signal Processing in Medicine and Biology Symposium (SPMB), 1–6. doi: 10.1109/SPMB52430.2021.9672303

Gretton, A., Bousquet, O., Smola, A., and Schölkopf, B. (2005). “Measuring statistical dependence with Hilbert-Schmidt norms,” in Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Vol. 3734 LNAI, eds S. Jain, H.U. Simon, and E. Tomita (Berlin; Heidelberg: Springer), 63–77. doi: 10.1007/11564089_7

He, H., and Wu, D. (2020). Transfer learning for brain-computer interfaces: a Euclidean space data alignment approach. IEEE Trans. Biomed. Eng. 67, 399–410. doi: 10.1109/TBME.2019.2913914

Jayaram, V., Alamgir, M., Altun, Y., Scholkopf, B., and Grosse-Wentrup, M. (2016). Transfer learning in brain-computer interfaces. IEEE Comput. Intell. Mag. 11, 20–31. doi: 10.1109/MCI.2015.2501545

Katsigiannis, S., and Ramzan, N. (2018). DREAMER: a database for emotion recognition through EEG and ECG signals from wireless low-cost off-the-shelf devices. IEEE J. Biomed. Health Inform. 22, 98–107. doi: 10.1109/JBHI.2017.2688239

Kempfert, K. C., Wang, Y., Chen, C., and Wong, S. W. (2020). A comparison study on nonlinear dimension reduction methods with kernel variations: visualization, optimization and classification. Intell. Data Anal. 24, 267–290. doi: 10.3233/IDA-194486

Ko, W. R., Jang, M., Lee, J., and Kim, J. (2021). AIR-Act2Act: human-human interaction dataset for teaching non-verbal social behaviors to robots. Int. J. Robot. Res. 40, 691–697. doi: 10.1177/0278364921990671

Koelstra, S., Mühl, C., Soleymani, M., Lee, J. S., Yazdani, A., Ebrahimi, T., et al. (2012). DEAP: a database for emotion analysis using physiological signals. IEEE Trans. Affect. Comput. 3, 18–31. doi: 10.1109/T-AFFC.2011.15

Kwak, Y., Song, W. J., and Kim, S. E. (2021). “Deep feature normalization using rest state EEG signals for Brain-Computer Interface,” in 2021 International Conference on Electronics, Information, and Communication, ICEIC 2021 (Xian), 1–3. doi: 10.1109/ICEIC51217.2021.9369712

Lan, Z., Sourina, O., Wang, L., Scherer, R., and Muller-Putz, G. R. (2019). Domain adaptation techniques for EEG-based emotion recognition: a comparative study on two public datasets. IEEE Trans. Cogn. Dev. Syst. 11, 85–94. doi: 10.1109/TCDS.2018.2826840

Li, J., Qiu, S., Shen, Y. Y., Liu, C. L., and He, H. (2020). Multisource transfer learning for cross-subject EEG emotion recognition. IEEE Trans. Cybern. 50, 3281–3293.

Li, W., Huan, W., Hou, B., Tian, Y., Zhang, Z., and Song, A. (2021). Can emotion be transferred? - A review on transfer learning for EEG-based emotion recognition. IEEE Trans. Cogn. Dev. Syst. 1–14. doi: 10.1109/TCDS.2021.3098842

Lin, Y. P. (2020). Constructing a personalized cross-day EEG-based emotion-classification model using transfer learning. IEEE J. Biomed. Health Inform. 24, 1255–1264. doi: 10.1109/JBHI.2019.2934172

Martin-Clemente, R., Olias, J., Cruces, S., and Zarzoso, V. (2019). Unsupervised common spatial patterns. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 2135–2144. doi: 10.1109/TNSRE.2019.2936411

Niu, S., Liu, Y., Wang, J., and Song, H. (2021). A decade survey of transfer learning (2010-2020). IEEE Trans. Artif. Intell. 1, 151–166. doi: 10.1109/TAI.2021.3054609

Özerdem, M. S., and Polat, H. (2017). Emotion recognition based on EEG features in movie clips with channel selection. Brain Inform. 4, 241–252. doi: 10.1007/s40708-017-0069-3

Pan, S. J., Tsang, I. W., Kwok, J. T., and Yang, Q. (2011). Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 22, 199–210. doi: 10.1109/TNN.2010.2091281

Pan, S. J., and Yang, Q. (2010). A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359. doi: 10.1109/TKDE.2009.191

Principi, R. D. P., Palmero, C., Junior, J. C. S. J., and Escalera, S. (2021). On the effect of observed subject biases in apparent personality analysis from audio-visual signals. IEEE Trans. Affect. Comput. 12, 607–621. doi: 10.1109/TAFFC.2019.2956030

Ramadhani, A., Fauzi, H., Wijayanto, I., Rizal, A., and Shapiai, M. I. (2021). “The implementation of EEG transfer learning method using integrated selection for motor imagery signal,” in Lecture Notes in Electrical Engineering, Vol. 746 LNEE, eds Triwiyanto, H. Adi Nugroh, A. Riza, and W. Caesarendra (Surabaya: Springer Science and Business Media Deutschland GmbH), 457–466. doi: 10.1007/978-981-33-6926-9_39

Rodrigues, P. L. C., Jutten, C., and Congedo, M. (2019). Riemannian procrustes analysis: transfer learning for brain-computer interfaces. IEEE Trans. Biomed. Eng. 66, 2390–2401. doi: 10.1109/TBME.2018.2889705

Rouast, P. V., Adam, M. T., and Chiong, R. (2021). Deep learning for human affect recognition: insights and new developments. IEEE Trans. Affect. Comput. 12, 524–543. doi: 10.1109/TAFFC.2018.2890471

Saha, S., and Baumert, M. (2020). Intra- and inter-subject variability in EEG-based sensorimotor brain computer interface: a review. Front. Comput. Neurosci. 13, 87. doi: 10.3389/fncom.2019.00087

Shen, Y. W., and Lin, Y. P. (2019). Challenge for affective brain-computer interfaces: non-stationary spatio-spectral EEG oscillations of emotional responses. Front. Hum. Neurosci. 13, 366. doi: 10.3389/fnhum.2019.00366

Soleymani, M., Lichtenauer, J., Pun, T., and Pantic, M. (2012). A multimodal database for affect recognition and implicit tagging. IEEE Trans. Affect. Comput. 3, 42–55. doi: 10.1109/T-AFFC.2011.25

Sotgiu, M. A., Mazzarello, V., Bandiera, P., Madeddu, R., Montella, A., and Moxham, B. (2020). Neuroanatomy, the Achille's heel of medical students. a systematic analysis of educational strategies for the teaching of neuroanatomy. Anat. Sci. Educ. 13, 107–116. doi: 10.1002/ase.1866

Su, H. (2021). Data research on tobacco leaf image collection based on computer vision sensor. J. Sens. 2021, 4920212. doi: 10.1155/2021/4920212

Subramanian, R., Wache, J., Abadi, M. K., Vieriu, R. L., Winkler, S., and Sebe, N. (2018). Ascertain: emotion and personality recognition using commercial sensors. IEEE Trans. Affect. Comput. 9, 147–160. doi: 10.1109/TAFFC.2016.2625250

Torres, P. E. P., Torres, E. A., Hernández-Álvarez, M., and Yoo, S. G. (2020). EEG-based BCI emotion recognition: a survey. Sensors 20, 5083. doi: 10.3390/s20185083

Wang, S. (2019). “Knowledge representation for emotion intelligence,” in Proceedings - International Conference on Data Engineering (Macao), 2096–2100. doi: 10.1109/ICDE.2019.00247

Wu, D., Peng, R., Huang, J., and Zeng, Z. (2020). Transfer learning for brain-computer interfaces: a complete pipeline. arXiv:2007.03746. doi: 10.48550/arXiv.2007.03746

Yan, K., Kou, L., and Zhang, D. (2018). Learning domain-invariant subspace using domain features and independence maximization. IEEE Trans. Cybern. 48, 288–298. doi: 10.1109/TCYB.2016.2633306

Yan, M., Lv, Z., Sun, W., and Bi, N. (2020). An improved common spatial pattern combined with channel-selection strategy for electroencephalography-based emotion recognition. Med. Eng. Phys. 83, 130–141. doi: 10.1016/j.medengphy.2020.05.006

Yun, Y., Ma, D., and Yang, M. (2021). Human-computer interaction-based decision support system with applications in data mining. Fut. Gener. Comput. Syst. 114, 285–289. doi: 10.1016/j.future.2020.07.048

Zheng, W. L., Liu, W., Lu, Y., Lu, B. L., and Cichocki, A. (2019). EmotionMeter: a multimodal framework for recognizing human emotions. IEEE Trans. Cybern. 49, 1110–1122. doi: 10.1109/TCYB.2018.2797176

Zheng, W. L., and Lu, B. L. (2015). Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Mental Dev. 7, 162–175. doi: 10.1109/TAMD.2015.2431497

Zheng, W. L., Zhang, Y. Q., Zhu, J. Y., and Lu, B. L. (2015). “Transfer components between subjects for EEG-based emotion recognition,” in 2015 International Conference on Affective Computing and Intelligent Interaction, ACII 2015, 917–922. doi: 10.1109/ACII.2015.7344684

Keywords: emotion recognition, transfer learning, brain region, channel selection, EEG, domain adaptation

Citation: Perry Fordson H, Xing X, Guo K and Xu X (2022) Not All Electrode Channels Are Needed: Knowledge Transfer From Only Stimulated Brain Regions for EEG Emotion Recognition. Front. Neurosci. 16:865201. doi: 10.3389/fnins.2022.865201

Received: 29 January 2022; Accepted: 13 April 2022;

Published: 24 May 2022.

Edited by:

Mufti Mahmud, Nottingham Trent University, United Kingdom

Reviewed by:

Chang Li, Hefei University of Technology, China

Sepideh Hatamikia, Austrian Center for Medical Innovation and Technology, Austria

Irma Nayeli Angulo Sherman, University of Monterrey, Mexico

Copyright © 2022 Perry Fordson, Xing, Guo and Xu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kailing Guo, guokl@scut.edu.cn; Xiangmin Xu, xmxu@scut.edu.cn
