- Laureate Institute for Brain Research, Tulsa, OK, United States

Real-time fMRI (rtfMRI) has enormous potential for both mechanistic brain imaging studies or treatment-oriented neuromodulation. However, the adaption of rtfMRI has been limited due to technical difficulties in implementing an efficient computational framework. Here, we introduce a python library for real-time fMRI (rtfMRI) data processing systems, Real-Time Processing System in python (RTPSpy), to provide building blocks for a custom rtfMRI application with extensive and advanced functionalities. RTPSpy is a library package including (1) a fast, comprehensive, and flexible online fMRI image processing modules comparable to offline denoising, (2) utilities for fast and accurate anatomical image processing to define an anatomical target region, (3) a simulation system of online fMRI processing to optimize a pipeline and target signal calculation, (4) simple interface to an external application for feedback presentation, and (5) a boilerplate graphical user interface (GUI) integrating operations with RTPSpy library. The fast and accurate anatomical image processing utility wraps external tools, including FastSurfer, ANTs, and AFNI, to make tissue segmentation and region of interest masks. We confirmed that the quality of the output masks was comparable with FreeSurfer, and the anatomical image processing could complete in a few minutes. The modular nature of RTPSpy provides the ability to use it for a simulation analysis to optimize a processing pipeline and target signal calculation. We present a sample script for building a real-time processing pipeline and running a simulation using RTPSpy. The library also offers a simple signal exchange mechanism with an external application using a TCP/IP socket. While the main components of the RTPSpy are the library modules, we also provide a GUI class for easy access to the RTPSpy functions. The boilerplate GUI application provided with the package allows users to develop a customized rtfMRI application with minimum scripting labor. The limitations of the package as it relates to environment-specific implementations are discussed. These library components can be customized and can be used in parts. Taken together, RTPSpy is an efficient and adaptable option for developing rtfMRI applications.

Code available at: https://github.com/mamisaki/RTPSpy

Introduction

Online evaluation of human brain activity with real-time functional magnetic resonance imaging (rtfMRI) expands the possibility of neuroimaging. Its application has been extended from on-site quality assurance (Cox et al., 1995), brain-computer-interface (BCI) (Goebel et al., 2010), brain self-regulation with neurofeedback (Sulzer et al., 2013), and online optimization in brain stimulation (Mulyana et al., 2021). Nevertheless, a complex system setup specific to an individual environment and noisy online evaluation of neural activation due to a limited real-time fMRI signal processing have hindered the utility of rtfMRI applications and reproducibility of its result (Thibault et al., 2018). Indeed, the significant risk of noise contamination in the neurofeedback signal has been demonstrated in recent studies (Weiss et al., 2020; Misaki and Bodurka, 2021). These issues have been addressed with a community effort releasing easy-to-use rtfMRI frameworks (Cox et al., 1995; Goebel, 2012; Sato et al., 2013; Koush et al., 2017; Heunis et al., 2018; Kumar et al., 2021; MacInnes et al., 2020) and consensus on reporting detailed online processing and experimental setups (Ros et al., 2020).

As one of the contributions to such an effort, we introduce a software library for rtfMRI; fMRI Real-Time Processing System in python (RTPSpy). The goal of the RTPSpy is to provide building blocks for making a highly customized and advanced rtfMRI system. The library is not assumed to provide a complete application package but offers rtfMRI data processing components to be used as a part of a user’s custom application. We suppose that the tools of RTPSpy can also be combined with other frameworks as a part of processing modules.

RTPSpy is a python library that includes a fast and comprehensive online fMRI image processing pipeline comparable to offline processing (Misaki and Bodurka, 2021) and an interface module for an external application to receive real-time brain activation signals via TCP/IP socket. Each online data processing component is implemented in an independent class, and a processing pipeline can be created by chaining these modules. In addition to the online fMRI signal processing modules, the library provides several utility modules, including brain anatomical image processing tools for fast and accurate tissue segmentation, and an online fMRI processing simulation system. Although these utilities may not always be required in a rtfMRI session, the fast anatomical image processing can be useful for identifying anatomically defined target regions, and the simulation analysis is vital for building an optimal processing pipeline (Ramot and Gonzalez-Castillo, 2019; Misaki et al., 2020; Misaki and Bodurka, 2021). We also provide a boilerplate graphical user interface (GUI) application integrating operations with RTPSpy, and a sample application of neurofeedback presentation using PsychoPy (Peirce, 2008) to demonstrate how the RTPSpy is implemented in an application and to interface to another external application. The GUI application is presented as just one example of library usage. However, a user may develop a custom neurofeedback application with minimum modification on this example script.

The aim of this manuscript is to introduce the structure of the RTPSpy library and its usages as a part of a neurofeedback application. We hope that RTPSpy is used as a part of a user’s own custom application so that the current report focuses on how to script the RTPSpy online processing pipeline and implement it in an application. The detailed usage of the example application is presented in GitHub.1 Also, this manuscript does not provide a comprehensive evaluation of the library’s performance in detail. Such evaluations have been done in our previous report (Misaki and Bodurka, 2021), and only a short overview of the previous report was given in this report. Comparison with other exiting rtfMRI frameworks is also out of the scope of this manuscript. We recognize that many excellent packages are released for rtfMRI (Cox et al., 1995; Goebel, 2012; Sato et al., 2013; Koush et al., 2017; Heunis et al., 2018; Kumar et al., 2021; MacInnes et al., 2020), and we do not claim that RTPSpy is the best. The claim of the advanced functionality of the RTPSpy is for its own sake and not relative to other tools. RTPSpy and this manuscript aim to offer users another option for developing a custom rtfMRI application.

This manuscript is organized as follows. The next section summarizes the installation and supporting system information. The third section introduces the online fMRI data processing modules in RTPSpy, the main components of the library. The issues and caveats in online fMRI data analysis and how they are addressed in RTPspy implementation are discussed here. The fourth section describes fast and accurate anatomical image processing tools. A custom processing stream was made by wrapping external tools, FastSurfer (Henschel et al., 2020), AFNI,2 and ANTs.3 We also evaluated the accuracy of tissue segmentation and the quality of tissue-based noise regressors made by this stream compared to FreeSurfer segmentation. The fifth and sixth sections illustrate the usage of library classes to build a processing pipeline and run a simulation analysis. An example GUI implementation is presented in the seventh section. The last section discusses the system components that are not provided with RTPSpy but are required for a complete system depending on an individual environment. The RTPSpy can be obtained from GitHub4 with GPL3 license.

Installation and Supporting Systems

RTPSpy is assumed to be run on a miniconda35 or Anaconda6 environment. A yaml file for installing the required python libraries in an anaconda environment is provided with the package for easy installation. RTPSpy’s anatomical image processing depends on several external tools, AFNI (see text footnote 2), FastSurfer,7 and ANTs.8 While AFNI needs to be installed separately, FastSurfer and ANTs installation is integrated into the RTPSpy setup. Refer to the GitHub site (see text footnote 4) for further details.

RTPSpy can take advantage of graphical processing unit (GPU) computation. GPU can be utilized in the online fMRI data processing and anatomical image processing with FastSurfer (see text footnote 7). To use GPU computation, a user needs a GPU compatible with NVIDIA’s CUDA toolkit9 and to install a GPU driver compatible with the CUDA. The CUDA toolkit will be installed with the yaml file. We note that GPU is not mandatory for RTPSpy. Online data processing speed in RTPSpy is fast enough for real-time fMRI even without GPU, while GPU can enhance it further (Section “Real-Time Performance”). Also, RTPSpy provides an alternative anatomical image processing stream not using FastSurfer, while the image segmentation accuracy is better with FastSurfer (Section “Evaluations for the Segmentation Masks and Noise Regressors”).

RTPSpy has been developed on a Linux system (Ubuntu 20.04). It can also be run on Mac OS X and Windows with the Windows Subsystem for Linux (WSL), while GPU computation is not supported on OS X and WSL for now.

RTPSpy Online fMRI Data Processing

Overview of the Library Design

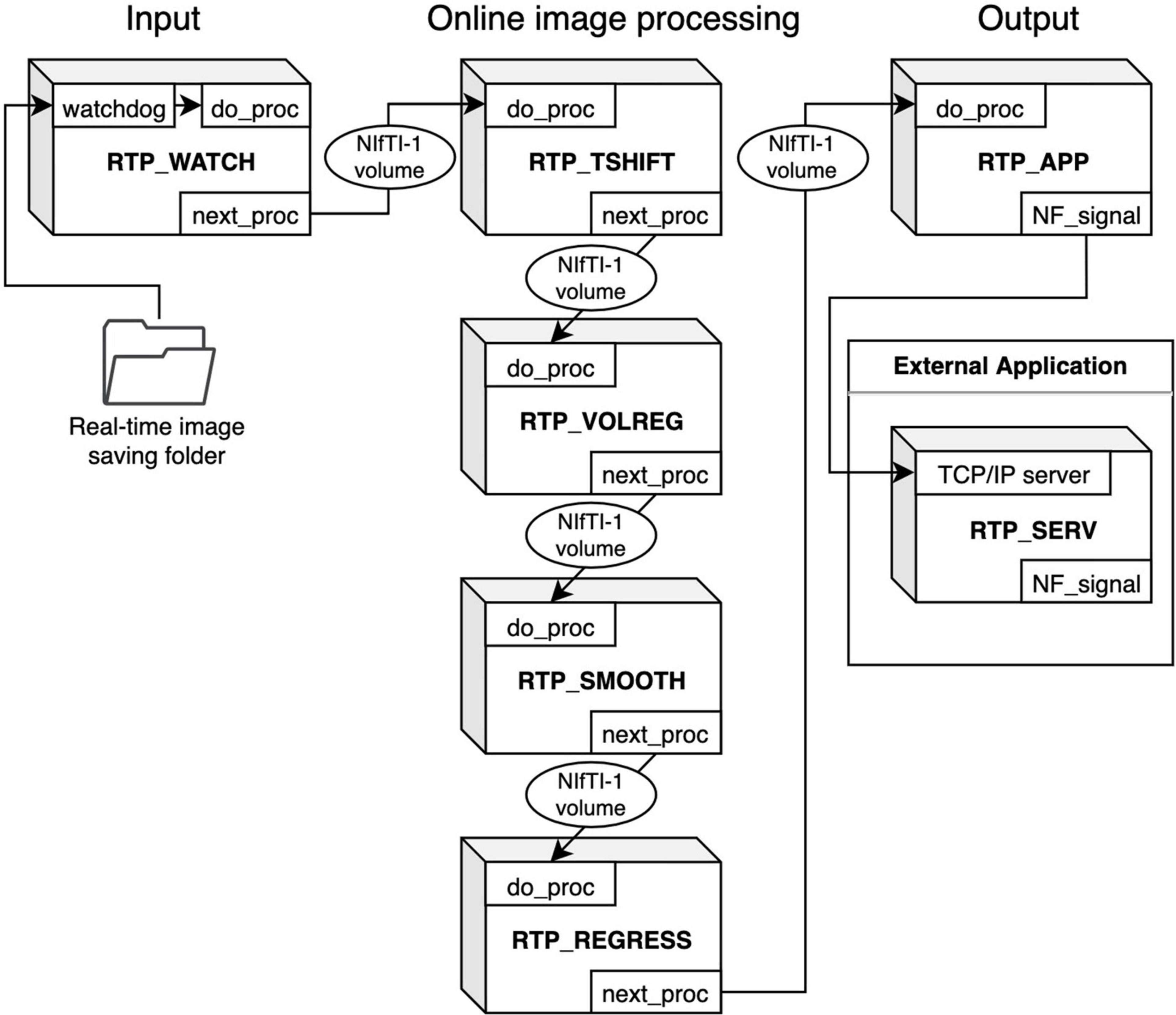

Figure 1 shows an overview of the modules composing an online processing pipeline. RTPSpy includes six online fMRI data processing modules; a real-time data loader (RTP_WATCH), slice-timing correction (RTP_TSHIFT), motion correction (RTP_VOLREG), spatial smoothing (RTP_SMOOTH), noise regression (RTP_REGRESS), and an application module (RTP_APP). A utility module for an external application to receive a processed signal, RTP_SERV, is also provided. RTP_WATCH is the entrance module, and RTP_APP is the terminal module of a pipeline. Other modules have common input and output interfaces so that they can be connected in any combination and order. For example, when a conventional pipeline only with a motion correction is enough, the pipeline can be made only with RTP_WATCH, RTP_VOLREG, and RTP_APP modules. If more comprehensive processing is required, all the components can be chained in a pipeline.

Figure 1. Overview of the RTPSpy module design for creating an online fMRI processing pipeline. RTP_WATCH is the entrance module, and RTP_APP is the terminal module of a pipeline. Other modules have common input and output interfaces. They can be connected in any combination and order. The modules exchange data with the Nifti1Image object of the whole-brain volume data. RTP_SERV is a utility module for an external application to receive a processed signal.

These modules are implemented as a python class. The module’s interface method is “do_proc,” which receives a NiBabel10 Nifti1Image object. Its calling format is the same for all modules. The modules exchange data with the Nifti1Image object of the whole-brain volume data. The processing chain can be made by setting the “next_proc” property to an object of the next module. Calling the “do_proc” method at the head of the pipeline calls the next module’s “do_proc” method in the chain. This simple function interface enables the easy creation of a custom pipeline (see Section “Building a Processing Pipeline” for details).

We assume that the input and output parts should be customized according to the user’s environment and an application need. For example, if a user wants to use another real-time image feeding (e.g., a dicom export feature of a scanner), RTP_WATCH can be replaced or modified in a preferred way. Also, the RTP_APP can be customized to calculate a neurofeedback signal in a user’s way. An example script for such customization is presented in Section “An Example Graphical User Interface Application Integrating the RTPSpy Modules.” We note that RTPSpy is not intended to provide a complete application for any environment. Instead, a necessary module for each environment is supposed to be developed by a user. RTPSpy offers a framework of the interface and building blocks of online fMRI data processing.

Real-Time Performance

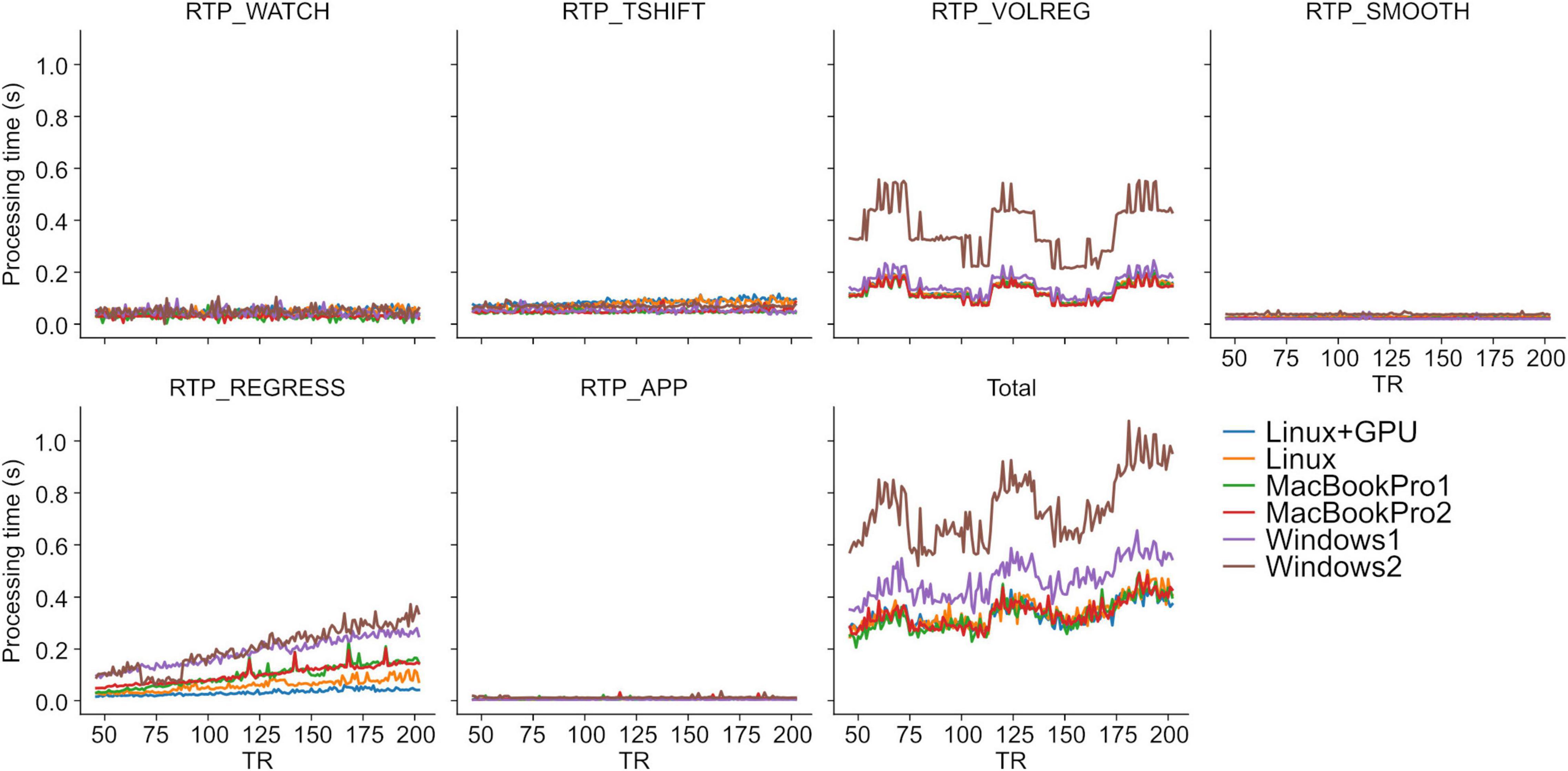

Retaining the whole-brain data throughout the pipeline enables a common interface between modules. It also provides freedom of neurofeedback signal calculation (i.e., an ROI average, connectivity of multiple regions, and multi-voxel patterns in the whole brain) with various combinations of processing modules. Although this implementation seemed burdensome for real-time computation, we found that processing whole-brain volume does not significantly affect the real-time performance in RTPSpy. Our previous report (Misaki and Bodurka, 2021) showed that the pipeline processing was completed in less than 400 ms on a current PC equipped with a GPU. Here, we also evaluated the processing time with several PC specifications with and without GPU for a sample fMRI data (128 × 128 × 34 matrix, 203 volumes). Note that this evaluation is not a comprehensive performance test but rather a rough guide to the PC specifications required for real-time processing with RTPSpy.

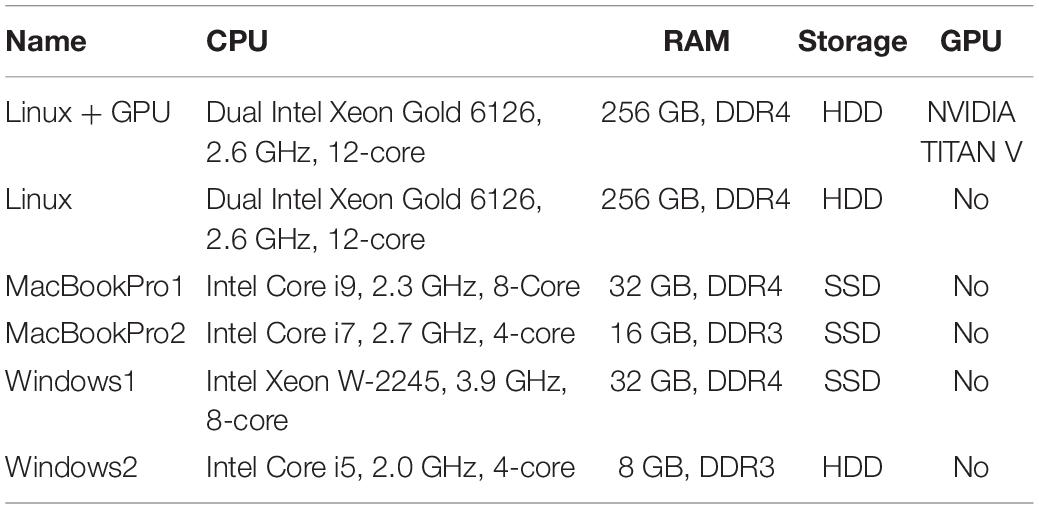

Table 1 shows the specifications of the tested PCs. “Linux+GPU” is the same one used in Misaki and Bodurka (2021). The evaluated pipeline included all modules implemented in RTPSpy and RTP_REGRESS includes all available regressors. Figure 2 shows processing times for each module. The figure shows the results after TR = 45 since the regression waited to receive 40 volumes, excluding the initial three volumes, and the processing of the first regressed volume took a long time due to initialization and retrospective processing (see Section “Implementations of the Online fMRI Processing Algorithms,” RTP_REGRESS). The processing time of RTP_WATCH is from a file creation time to send the volume data to the next module. The processing time of RTP_APP is to extract an ROI average signal and write it in a text file.

Figure 2. RTPSpy online fMRI data processing times. See Table 1 for the specification of the PCs. The evaluation was done with a sample fMRI data (128 × 128 × 34 matrix, 203 volumes). Processing with RTP_REGRESS included all available regressors (Legendre polynomials for high-pass filtering, 12 motion parameters, global signal, mean white matter and ventricle signals, and RETROICOR regressors).

The results were consistent with the previous report (Misaki and Bodurka, 2021). The most time-consuming processing was RTP_VOLREG. RTP_REGRESS’s processing time increased with TR since cumulative GLM uses more samples in later TRs (see Section “Implementations of the Online fMRI Processing Algorithms,” RTP_REGRESS). The slope of the increase was the lowest with GPU, indicating that GPU can be beneficial when a scan has many volumes. Interestingly, however, the total processing time was not significantly different by GPU usage, and MacBookPro showed comparable performance with a high-end Linux PC, at least for the present scan length. The Windows showed relatively longer processing times regardless of the specification, which might be due to the overhead of the Windows subsystem for Linux. These results indicate that the PC requirement for RTPSpy is not high, at least for an ordinary real-time fMRI scan with a few seconds TR and less than a few hundred volumes.

Even if computation time does not limit real-time fMRI processing, the limited number of sample points available online poses a challenge for online processing yet. The next section describes the details of each module functionalities and online analysis methods in RTPSpy to address this issue.

Implementations of the Online fMRI Processing Algorithms

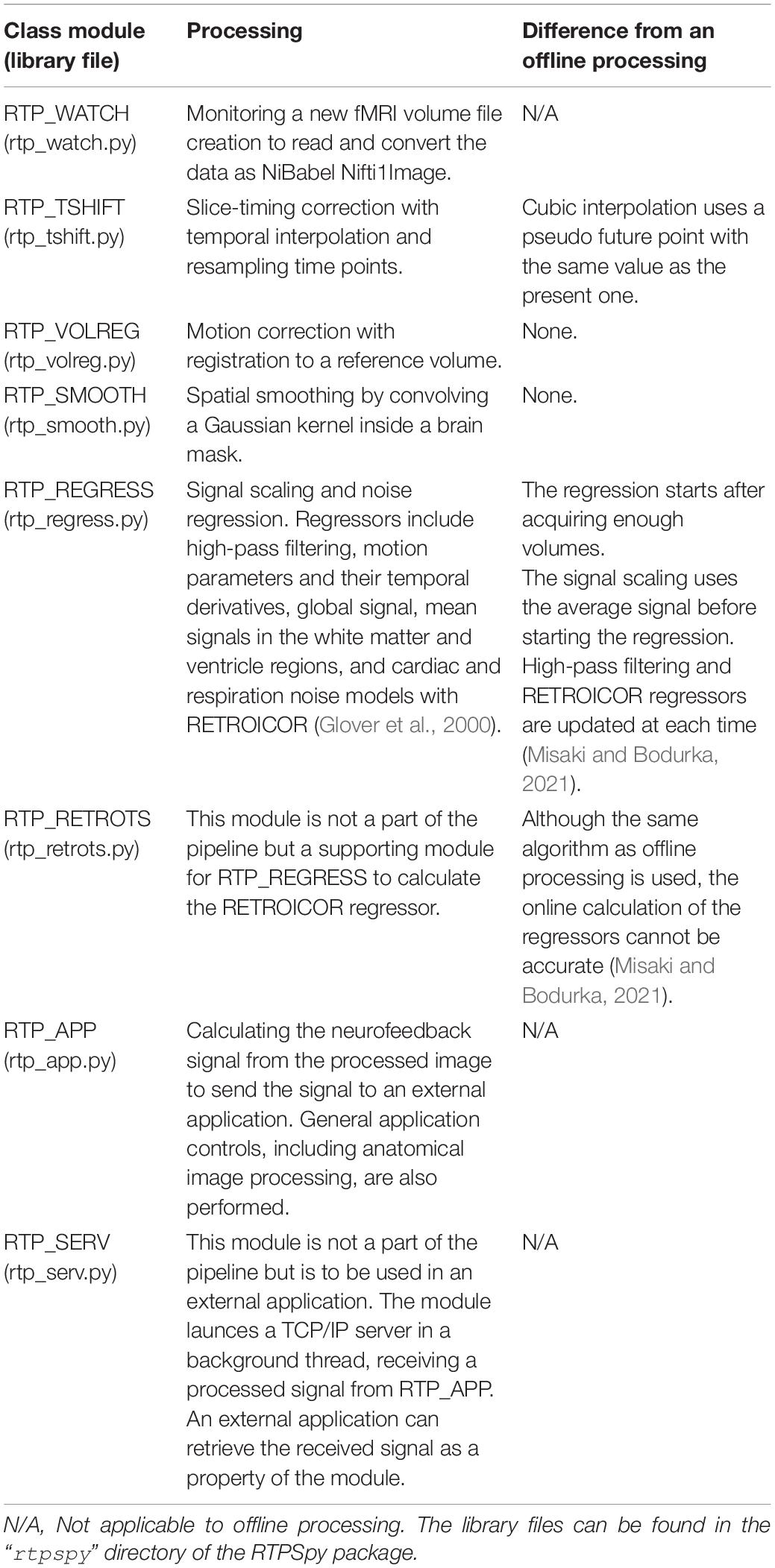

Table 2 summarizes the functions of RTPSpy processing modules. The class files for these modules can be found in the “rtpspy” directory of the package. The issue of the limited number of online available sample points is critical for slice timing correction, signal scaling, and online noise regression. This section describes the methods used in the RTPSpy modules to address this issue.

Table 2. Summaries of RTPSpy real-time processing modules and their differences from offline processing.

RTP_WATCH finds a newly created file in a watching directory in real-time, reads the data, and sends it to the next processing module. The watchdog module in python11 is used to detect a new file creation. RTP_WATCH uses the NiBabel library (see text footnote 10) to read the file. The currently supported file types are NIfTI, AFNI’s BRIK, and Siemens mosaic dicom. Technically, this module can handle all file types supported by NiBabel, so a user can easily extend the supported files as needed. The whole-brain volume data is retained in the NiBabel Nifti1Image format throughout the RTPSpy pipeline. The observer function used in RTP_WATCH (PollingObserverVFS) is system-call independent and can work with various file systems. However, polling may take a long time if many files are in the monitored directory, hindering real-time performance. If a user finds a significant delay by saving files of many runs in a single directory, it is recommended to clean or move files at each run.

Although RTP_WATCH offers a simple interface to read a data file in real-time, how the MRI scanner saves the reconstructed image varies across the manufacturers and sites. RTPSpy does not provide a universal solution for that. A user may need another package to send data to the watched directory or modify the script file (rtp_watch.py) to adjust for each environment. We will discuss this limitation of the environment-specific issues in the last section.

RTP_TSHIFT performs a slice-timing correction by aligning the signal sampling times in different slices to the same one with temporal interpolation. Because we cannot access the future time point in real-time, the online processing cannot be equivalent to offline. RTPSpy aligns the sampling time to the earliest slice for avoiding extrapolation. RTPSpy implements two interpolation methods, linear and cubic. The linear interpolation uses only the current and one past time point so that it is equivalent to offline processing. The cubic interpolation uses four time-points; two from the past, the present, and one future. RTPSpy puts a pseudo future point with the same value as the present one to perform the cubic interpolation. We have confirmed that this pseudo cubic method has a higher correlation with a high-order interpolation method (e.g., FFT) than a linear method (Misaki et al., 2015). By default, RTPSpy uses cubic interpolation.

Slice-timing correction is often skipped in real-time fMRI processing, and its effect could be minor when TR is short (Kiebel et al., 2007; Sladky et al., 2011). However, some neurofeedback signal extraction methods, such as the two-point connectivity (Ramot et al., 2017), could be sensitive to a small timing difference between slices. The two-point method evaluates the consistency of the signal change direction (increase/decrees) at each TR, which could be sensitive to the timing of signal direction change between ROIs in different slices. The user can choose to use this module or not, and RTPSpy does not enforce the specific pipeline for the real-time fMRI processing. We also note that slice-timing correction takes no significant cost of computational time (Figure 2).

RTP_VOLREG performs motion correction by registering volumes to a reference one. The same functions as the AFNI’s 3dvolreg,12 a motion correction command in the AFNI toolkit, is implemented in RTP_VOLREG. We compiled the C source codes of 3dvolreg functions into a custom C shared library file (librtp.so in the RTPSpy package), and RTP_VOLREG accesses it via the python ctypes interface. Thus, this online processing is equivalent to the offline 3dvolreg. By default, RTP_VOLREG uses heptic (seventh order polynomial) interpolation at image reslicing, the same as the 3dvolreg default.

RTP_SMOOTH performs spatial smoothing by convolving a Gaussian kernel within a masked region. Like RTP_VOLREG, RTPSpy uses the AFNI’s 3dBlurInMask13 functions compiled into a C shared library file (librtp.so), and accessed via ctypes interface in python. This process has no difference between online and offline processing.

RTP_REGRESS performs a signal scaling and noise regression analysis. The regression requires at least as many data points as the number of regressors and will not commence the process until sufficient number of data points have been collected. The signal scaling is done with the average signal in this waiting period and converts a signal into percent change relative to the average in each voxel. We note that this scaling is not equivalent to the offline processing using an average of all time points in a run so that the absolute amplitude cannot be comparable between the online and offline processing. We also note that the volumes before the start of regression are processed retrospectively so that the saved data includes all volumes. Once enough volumes are received, the regression is done with an ordinary least square (OLS) approach using the PyTorch library,14 which allows a seamless switching of CPU and GPU usage according to the system equipment. The residual of regression is obtained as a denoised signal.

The regressors can include high-pass filtering (Legendre polynomials), motion parameters (three shifts and three rotations), their temporal derivatives, global signal, mean signals in the white matter and ventricle regions, and cardiac and respiration noise models with RETROICOR (Glover et al., 2000). The order of the Legendre polynomials for high-pass filtering is adjusted according to the data length at each volume with 1 + int(d/150), where d is the scan duration in seconds (the default in AFNI). The motion parameters were received from the RTP_VOLREG module in real-time. The global signal and the mean white matter and ventricle signals are calculated from the unsmoothed data, which is also received from the RTP_VOLREG module. These regressors were made from the mask files defined in “GS_mask” (global signal mask), “WM_mask,” and “Vent_mask” properties of the module. As the RTP_REGRESS depends on RTP_VOLREG outputs, RTP_VOLREG must be included before RTP_REGRESS when it is used in a pipeline. A user can also include any pre-defined time-series such as a task design as a covariate in the regressors. It is up to a user to decide which regressor to use. The report in Misaki and Bodurka (2021) has shown which regressor was effective in reducing what noise in what brain regions and connectivity, which may help decide the noise regressor choice.

RTPSpy uses cumulative GLM (cGLM), which performs regression with all samples at each time, rather than incremental GLM (iGLM), which updates only the most recent estimates based on previous estimates (Bagarinao et al., 2003). In Misaki and Bodurka (2021), we indicated that high-pass filtering regressor, either Legendre polynomial or discrete cosine transform, filtered higher frequencies than the designed threshold at early TRs unless the regressor was adjusted at each TR. This adjustment requires a retrospective update of the regressor. Similarly, the online creation of RETROICOR regressors, made from real-time cardiac and respiration signal recordings, could not be accurate compared to the offline creation, and the error was accumulated unless retrospective correction was made (see Figures 2, 3 in Misaki and Bodurka, 2021). RTPSpy uses cGLM because cGLM has the advantage of being able to recalculate regressors at each volume, thereby improving the quality of regressors made online with limited samples. Although this implementation, whole-brain processing with cGLM, seemed burdensome for real-time processing, the computation time is not inhibitive to real-time performance, as shown in Section “Real-Time Performance.”

RTP_RETROTS is not a pipeline component (thus, not shown in Figure 1) but a supporting module for RTP_REGRESS to calculate the RETROICOR regressors from cardiac and respiration signals in real-time. In our environment, cardiac and respiration signals are measured using photoplethysmography and a pneumatic respiration belt, respectively. Although this hardware implementation could depend on the environment of each site (see Section “Limitations and Environment-Specific Issues”), once the signal acquisition is set up, the usage of RTP_RETROTS is simple. Its interface method (“do_proc”) receives respiration and cardiac signal values as one-dimensional arrays, the signals’ sampling frequency, and fMRI TR parameters. Then, the method returns the RETROICORE regressors.

RTP_RETROTS implements the same functions as the AFNI’s RetroTS.py script, which are rewritten in C codes and compiled into a shared library (librtp.so). The module makes four cardiac and four respiration noise basis regressors. It is possible to also create a respiration volume per time (RVT) regressors (Birn et al., 2008). However, we do not recommend using them in online processing. Our previous study (Misaki and Bodurka, 2021) indicated that the online evaluation of RVT regressors could not be accurate, and its usage could introduce an artifactual signal fluctuation in the processed signal in an online regression.

RTP_APP receives the processed image and calculates the neurofeedback signal from it. The default implementation extracts the average signal in an ROI mask, defined in the “do_proc” method of the rtp_app.py file. This method is provided as a prototype and can be customized according to the need for individual applications. Section “An Example Graphical User Interface Application Integrating the RTPSpy Modules” and Figure 10 show an example of a customized method. The RTPSpy noise reduction is performed for the whole-brain voxels, which is advantageous in calculating the feedback signals from multiple regions, such as the functional connectivity and decoding neurofeedback (Watanabe et al., 2017). The calculated signal can be sent to an external application through a network socket to the RTP_SERV module (Figure 1; see also Section “An Example Graphical User Interface Application Integrating the RTPSpy Modules” and Figure 11). The RTP_APP class also implements general application control methods, including anatomical image processing described in the next section and a high-level scripting interface explained in Section “Example Real-Time fMRI Session.”

RTP_SERV is not a part of the image processing pipeline but offers an interface class for an external application to communicate to RTPSpy. This module is assumed to be implemented in an external application as a receiver of the processed signal. Instantiating this class launches a TCP/IP server in a background thread in an external application to receive a real-time neurofeedback signal (see Section “An Example Graphical User Interface Application Integrating the RTPSpy Modules”).

Anatomical Image Processing With Fast and Accurate Tissue Segmentation

Anatomical image processing is often required in a rtfMRI session. While there are several ways to define the target region for neurofeedback (e.g., functional localizer), if the target brain region is defined in the template brain with a group analysis, we need to warp the region mask into the participant’s brain. The noise regressions with the global signal and white matter and ventricle mean signals also require a brain mask and tissue segmentation masks on an individual brain image.

Although there are many tools for brain tissue segmentation using a signal intensity, they are prone to an image bias field and often need a manual correction. Another approach for tissue segmentation uses anatomical information to segment the regions in addition to the signal intensity, such as FreeSurfer.15 FreeSurfer usually offers more accurate and robust segmentation than using only the signal intensity, but its process takes hours or longer to complete, inhibiting its use in a single visit rtfMRI session. Recently, an alternative approach of brain image segmentation using a deep neural network has been released as FastSurfer (Henschel et al., 2020). FastSurfer uses a U-net architecture (Ronneberger et al., 2015) trained to output a segmentation map equivalent to the FreeSurfer’s volume segmentation from an input of anatomical MRI image. FastSurfer can complete the segmentation in a few minutes with GPU. We made a script called FastSeg utilizing the advantage of FastSurfer to extract a brain mask (skull stripping), gray matter, white matter, and ventricle segmentation. FastSeg is implemented as part of the RTPSpy anatomical image processing pipeline and also released as an independent tool.16 The FastSurfer process in the FastSeg could take very long (about an hour) if GPU was not available. Therefore, RTPSpy also offers another processing stream that does not use FastSeg. This section describes the flow of the anatomical image processing steps and shows the evaluation results of their segmentation accuracy and noise regressor quality compared to FreeSurfer’s segmentation.

Anatomical Image Processing Pipeline

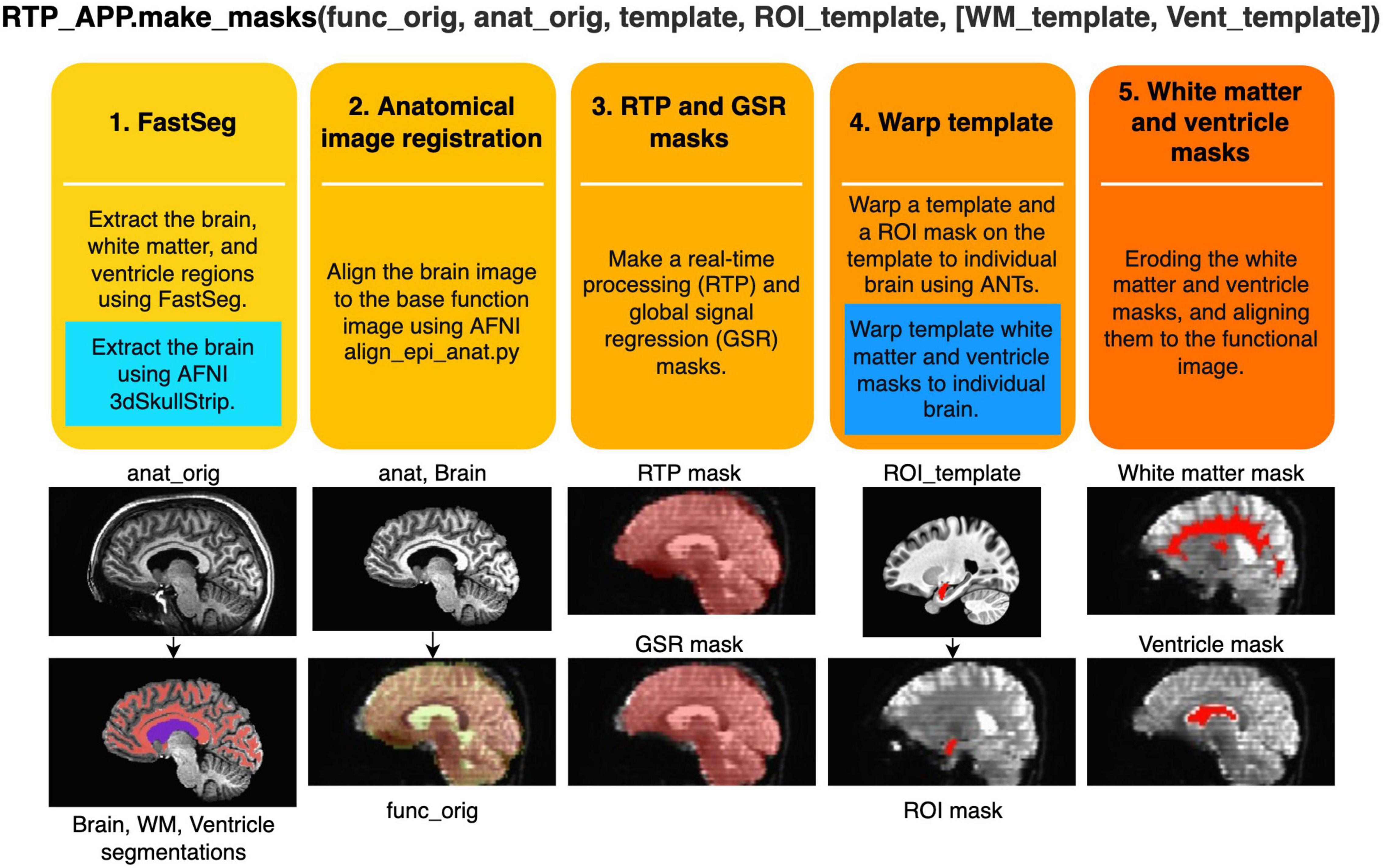

RTPspy offers a simple function interface to run a series of anatomical image processing, the “make_masks” method in RTP_APP class. Figure 3 shows the processing pipeline in this method. The method receives filenames of a reference function image (func_orig), anatomical image (anat_orig), template image (template, optional), and a region of interest (ROI) image in the template space (ROI_template, optional). If the alternative processing stream without FastSeg is used, white matter and ventricle masks defined in the template space (WM_template, Vent_template) can also be received. The process includes the following five steps.

Figure 3. Procedures of creating image masks in the “make_masks” method of RTP_APP. Two blue boxes show the procedures used in the alternative stream without using FastSeg. In the alternative stream, the first process is replaced by a blue box, and the fourth process is performed by adding the procedure in the blue box. The images below demonstrate what image processing is done at each step.

(1) Extracting the brain (skull stripping), white matter, and ventricle regions using FastSeg. FastSeg uses the first stage of the FastSurfer process to make a volume segmentation map (DKTatlas+aseg.mgz). Then, all the segmented voxels are extracted as the brain mask with filling holes. The white matter mask is made with a union of the white matter and corpus callosum segmentations. The ventricle mask is made with lateral ventricle segmentation. We did not include small ventricle areas because the mask is used only for making a regressor for online fMRI denoising.

In the alternative stream not using FastSeg (blue box in Figure 3), AFNI’s 3dSkullStrip is used for brain extraction. White matter and ventricle masks are made in a later step (step 4) by warping the template masks into an individual brain.

(2) Aligning the extracted brain image to a reference function image using AFNI align_epi_anat.py.

(3) Aligning and resampling the brain mask into the reference function image space using the parameters made in step 2 and making a signal mask of the function image using 3dAutomask in AFNI. The union of these masks is made as a real-time processing mask (RTPmask.nii.gz), used at spatial smoothing in RTP_SMOOTH and defining the processing voxels in RTP_REGRESS. The intersect of these masks is also made as a global signal mask (GSRmask.nii.gz), used in RTP_REGRESS.

(4) If the template image and the ROI mask on the template are provided, the template brain is warped into the participant’s anatomical brain image using the python interface of ANTs registration.17 Then, the ROI mask on the template is warped into the participant’s brain anatomy image and resampled to the reference function image to make an ROI mask in the functional image space. This mask will be used for neurofeedback signal calculation.

In the alternative stream not using FastSeg (blue box in Figure 3), white matter and ventricle masks defined in the template brain are also warped into an individual brain.

(5) Eroding the white matter (two voxels) and ventricle (one voxel) masks and aligning them to the functional image space using the alignment parameters (affine transformation) estimated at step 2. These masks will be used for the white matter and ventricle average signal regression.

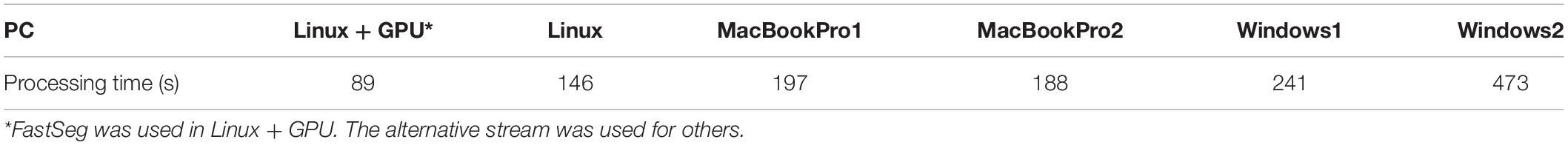

These anatomical image processing could be completed in less than a few minutes. Table 3 shows the processing times with and without FastSeg on the PCs listed in Table 1 for one sample image (MPRAGE image with 256 × 256 × 120 matrix and 0.9 × 0.9 × 1.2 mm voxel size).

Table 3. Anatomical image processing times on the PCs shown in Table 1.

Evaluations for the Segmentation Masks and Noise Regressors

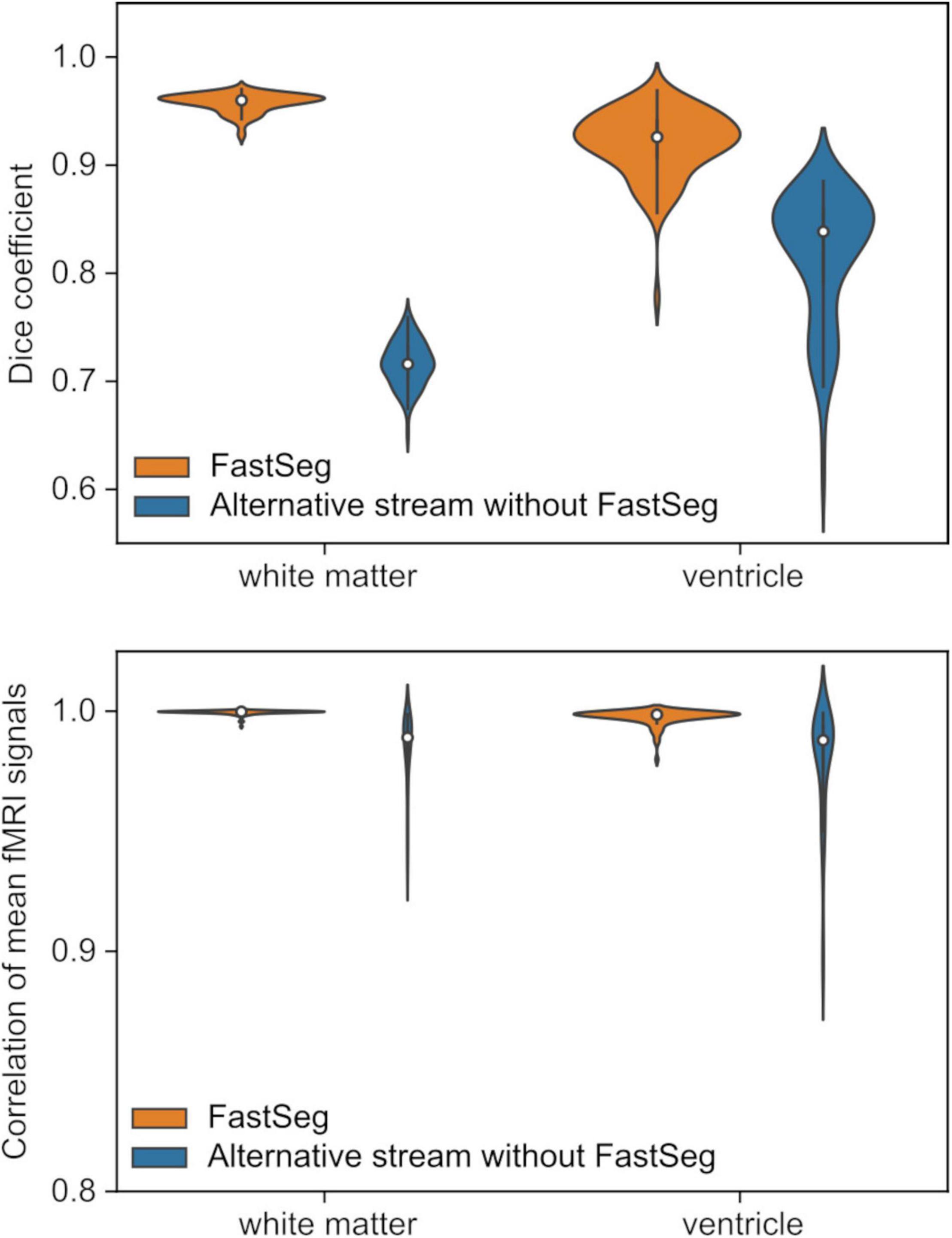

Since the anatomical segmentation by FastSurfer is not exactly the same as FreeSurfer (Henschel et al., 2020), we evaluated the quality of white matter and ventricle masks made by the FatSeg compared to the FreeSurfer segmentation. The comparison was made for 87 healthy participants’ anatomical and resting-state fMRI images (age 18–55 years, 45 females) used in our previous study (Misaki and Bodurka, 2021). We also performed the same comparison for the masks made by the alternative processing stream without FastSeg.

Figure 4 upper panel shows the Dice coefficients of the segmentation masks with the FreeSufer segmentation in anatomical image resolution. The masks made by FastSeg had high Dice coefficients with FreeSurfer segmentation showing their good agreement, while the masks made by the alternative stream had lower agreements, especially for the white matter mask. Nevertheless, the effect of these discrepancies could be minor in creating a noise regressor at functional image resolution. The bottom panel of Figure 4 shows the correlation between the average white matter and ventricle fMRI signals created from the FastSeg (or alternative stream) and FreeSurfer masks. For the FastSeg, the correlation was nearly 1.0 (higher than 0.98 even for the minimum sample). Although a few samples had a relatively low Dice coefficient for the FastSeg ventricle mask, that was because their ventricle region was small, and minor error affected the Dice coefficient much. Indeed, the signal correlation for the sample with minimum Dice coefficient (0.77) was as high as 0.99, indicating a minor segmentation error. Thus, the effect of the segmentation difference between the FastSeg and FreeSurfer was minor on the mean white matter and ventricle signals. The noise regressors made from the FastSeg hold equal quality to those made from FreeSurfer segmentations.

Figure 4. Quality evaluations for the masks created by the RTPSpy anatomical image processing streams by comparing to FreeSurfer segmentation. The upper panel shows Dice coefficients with FreeSurfer segmentations for the white matter and ventricle masks in the anatomical image resolution. The bottom panel shows correlations of the mean signals calculated from the masks in functional images.

For the alternative processing stream without FastSeg, the correlation was lower than the FastSeg, while they were still higher than 0.9 for most samples with the minimum of 0.89. Although the FastSeg offers a better segmentation quality, the alternative processing stream would have an acceptable quality to make a noise regressor if GPU is not available.

RTPSpy Usage

Building a Processing Pipeline

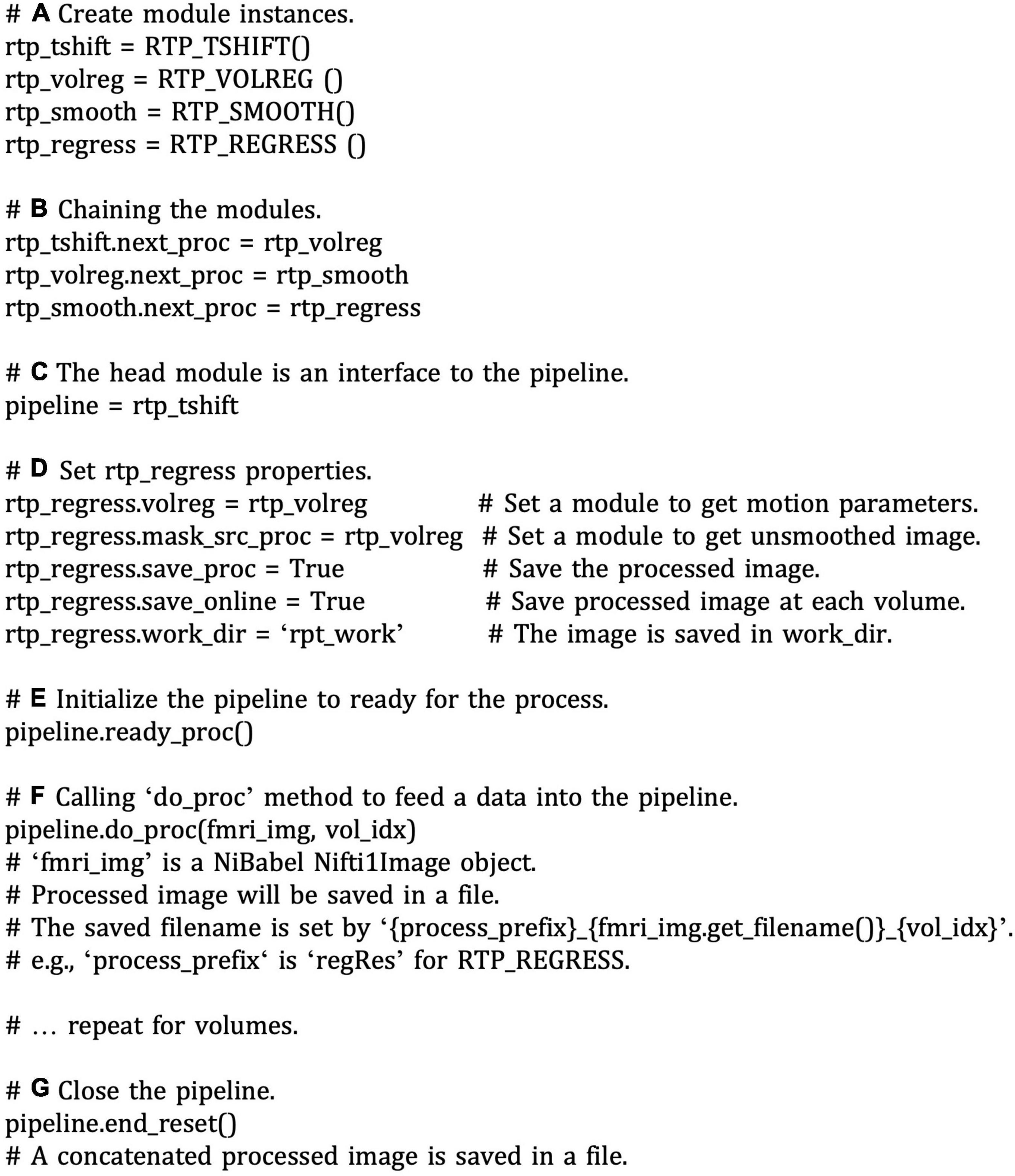

Low-Level Interface

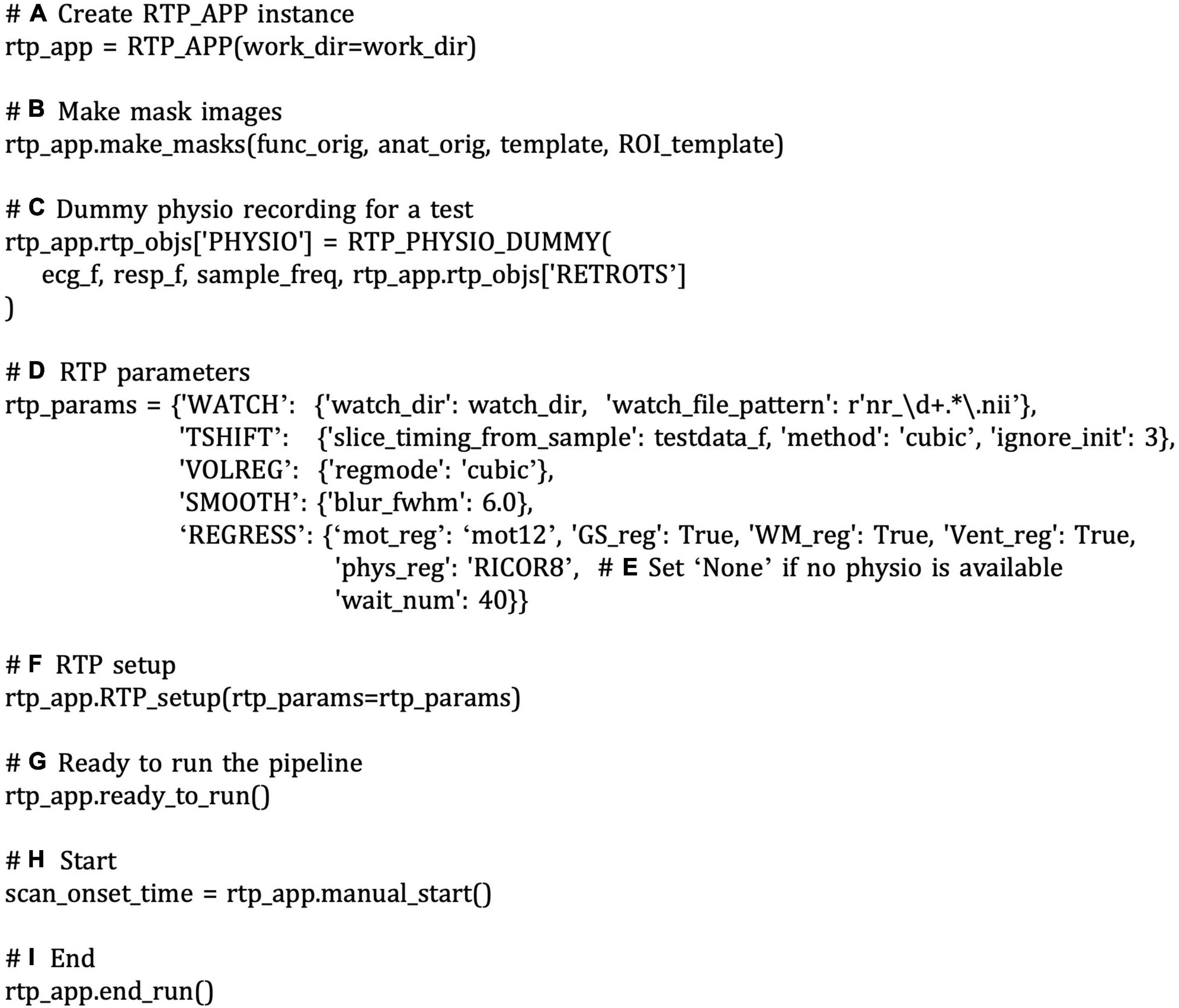

Figure 5 shows a pseudo script to create an online fMRI data processing pipeline. This is presented for explaining the low-level interfaces of building an RTPSpy pipeline. A script with higher-level interfaces using RTP_APP utility methods is presented later (Figure 6). To make a pipeline, we should create instances of each processing module (Figure 5A), set the chaining module in the “next_proc” property (Figure 5B), and the pipeline is ready. The modules’ combination and the order can be arbitrary, except that RTP_VOLREG must exist before RTP_REGRESS. The head module can be used as an interface to the pipeline (Figure 5C).

Figure 5. A pseudo script of low-level interfaces of pipeline creation with RTPSpy. The comments with bold alphabet indicate a part of the script explained in the main text. To make a pipeline, we should create instances of each processing module (A) and set a chaining module to the “next_proc” property (B). The head module can be used as an interface to the pipeline (C). Properties of the modules in a pipeline can be directly accessed and set by the module instances (D). Calling the “redy_proc” method initializes the pipeline (E). The processing is run by feeding a NiBabel Nifti1Image object to the “do_proc” method of the pipeline (F). The pipeline is closed by calling the “end_reset” method (G).

Figure 6. A snippet of an example script to run a real-time processing pipeline using RTP_APP interfaces. The comments with bold alphabet indicate a part of the script explained in the main text. Instantiating the RTP_APP class (A) creates all processing modules inside it. If you use the “make_masks” method to create the mask images, call the method of the rtp_app instance (B). This example uses dummy physiological signals (C). The module properties can be set directly by accessing the “rtp_app.rtp_obj” property (C) or by feeding a property dictionary to the “rtp_app.RTP_setup” method (D,F). If physiological signals are not available, you can disable RETROICOR by setting the “phys_reg” property of “REGRESS” to “None” (E). Calling the “rtp_app.ready_to_run” initializes the pipeline (G). The processing can be started by calling the “manual_start” method (H). To close the pipeline, call the “end_run” method (I).

Properties of the modules in a pipeline can be directly accessed and set by the module instances (Figure 5D). For example, “volreg” and “mask_src_proc” is set to rtp_regress to receive motion parameters and unsmoothed image data, which are used to create a motion regressor and an average signal regressor in a segmented mask. The “save_proc” property (saving the processed volume data in a NIfTI file) and “online_saving” property (saving is done at each volume) are also set for rtp_regress to save the processed volume image in a file. When the “online_saving” property is set True, the processed image at each volume is saved in real-time. The online saving is done after the downstream processing of the pipeline is completed so as not to affect the real-time performance of the pipeline processing.

Calling the “redy_proc” method initialize the pipeline (Figure 5E). The processing is run by feeding a NiBabel Nifti1Image object to the “do_proc” method of the pipeline (Figure 5F). The pipeline is closed by calling the “end_reset” method (Figure 5G), and then a concatenated image file is saved in a file. These low-level interfaces could be useful to develop custom input and output modules by users.

High-Level Interface With RTP_APP

RTPSpy also offers high-level utility methods in RTP_APP. Figure 6 shows a snippet of an example script to run a real-time processing pipeline using RTP_APP interfaces. Refer also to the system check script in the package (rtpspy_system_check.py) for a complete script. Instantiating the RTP_APP class (Figure 6A) creates all processing modules automatically inside it. The processing modules can be accessed by “rtp_app.rtp_obj[‘TSHIFT’]” for RTP_TSHIFT, for example. If you use the “make_masks” method to create the mask images, call the method of the rtp_app instance (Figure 6B). Then, the properties of the mask files, RTP_SMOOTH.mask_file, RTP_REGRESS.mask_file, RTP_REGRESS.GS_mask (global signal mask), RTP_REGRESS.WM_mask, RTP_REGRESS. Vent_mask, and RTP_APP.ROI_mask are automatically set. The module properties can also be set directly by accessing the “rtp_app.rtp_obj” property (e.g., Figure 6C) or by feeding a dictionary to the “rtp_app.RTP_setup” method (Figures 6D,F). You can set a custom mask using these interfaces when you want to set a mask without using the “make_masks” method. The order of the pipeline cannot be modified in this interface, but you can disable a specific module by setting the “enables” property False (e.g., Figure 8A). This example uses dummy physiological signals (Figure 6C) to simulate the cardiac and respiration signal recordings. If these signals are not available, set the “phys_reg” property of “REGRESS” to “None” (Figure 6E). All the properties and possible parameters are described in the script files of each module (see Table 2 for the filenames). The pipeline creation is done in the “rtp_app. RTP_setup” method. The “save_proc” property of the last module (e.g., RTP_REGRESS) is automatically set True, and the RTP_APP object is connected after the last module in the “rtp_app.RTP_setup” method.

Calling the “rtp_app.ready_to_run” initializes the pipeline (Figure 6G). The processing can be started by calling the “manual_start” method (Figure 6H), and then the RTP_WATCH module starts watching a new file in the watched directory. The start of the processing can also be triggered by a TTL signal implemented in the RTP_SCANONSET module (rtp_scanonset.py file). To close the pipeline, call the “end_run” method (Figure 6I), then the WATCH module stops monitoring, and the online processed data is saved in a file.

To customize the feedback signal calculation, you can modify the “do_proc” method in RTP_APP (rtp_app.py). The RTP_APP module works as a pipeline terminal, receiving the processed data, extracting the neurofeedback signal, and sending the signal to an external application. By default, it extracts the mean signal in the ROI mask, but it can be overridden according to the individual application need. An example way to make a customized application class is shown in Section “An Example Graphical User Interface Application Integrating the RTPSpy Modules,” and Figure 10.

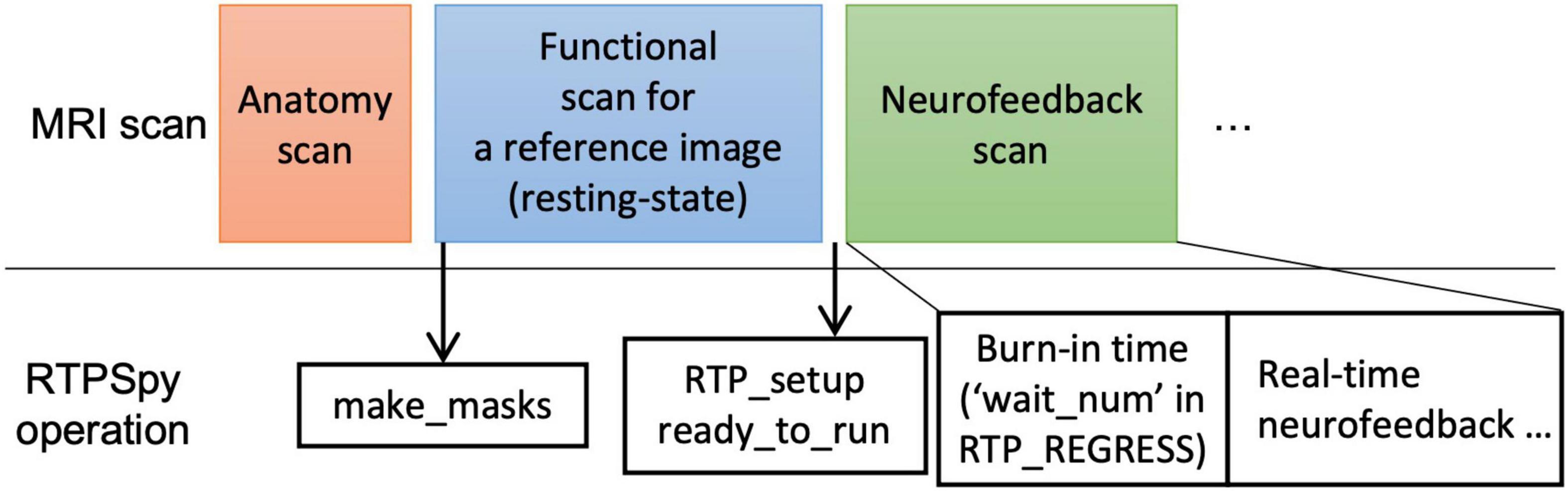

Example Real-Time fMRI Session

Figure 7 presents an example procedure of a real-time fMRI (rtfMRI) session. Note, this is not a requirement for the library but only an example of a single-visit session and anatomically-defined neurofeedback target region. The rtfMRI session using RTPSpy could start with an anatomical image scan and a reference functional image scan to make the mask images. We usually perform a resting-state scan after an anatomical scan, and an initial image of the resting-state scan acquired in real-time is used as the reference function image. A pre-acquired anatomical image can also be used in the processing if the study is multi-visits and an anatomical image has been scanned previously. The mask creation using the “make_masks” method in RTP_APP can be finished during the resting-state scan so that no waiting time is required for a participant. If no resting-state scan is necessary, a short functional scan with the same imaging parameters as the neurofeedback runs can also be used. Then, you can set the RTP parameters, run the “RTP_setup” and “ready_to_run” methods, and start the neurofeedback scan.

Figure 7. Example of real-time fMRI session procedure with RTPSpy. This is an example session and not a requirement for library use.

A critical RTP property related to the task design is the “wait_num” in RTP_REGRESS. This property determines how many volumes the module waits before starting the regression. The task block should start after this burn-in time. Note that this number does not include the initial volumes discarded before the fMRI signal reaches a steady state. The “wait_num” must be larger than the number of regressors, but the just enough number is not enough because the regression with small samples overfits the data, resulting in a very small variance in the denoised output (Misaki et al., 2015). The actual number of required samples depends on the number of regressors and the target region. This waiting time could limit the neurofeedback task design. However, using a noise-contaminated signal as neurofeedback has a high risk of artifactual training effect (Weiss et al., 2020), which may degrade the validity of an experiment. Therefore, the online image processing should include necessary noise regressors, and the task design should accept the initial burn-in time. A simulation analysis would help determine the necessary noise regressors and the optimal number of waiting TRs. Our previous report (Misaki and Bodurka, 2021), investigating what brain regions and connectivity were more contaminated with noises as well as the effect of each noise regressor to reduce the noise, may also help. The volumes during the burn-in time are processed at the beginning of RTP_REGRESS processing (thus, the first processing time of RTP_REGRESS could take long, as shown in Figure 4 of Misaki and Bodurka (2021). These volumes can be used, for example, for the baseline calculation to scale the neurofeedback signal (Zotev et al., 2011; Young et al., 2017).

Simulating Real-Time fMRI Processing

One of the most effective ways to examine the integrity of real-time signal calculation is to simulate online processing and neurofeedback signal calculation using previously obtained fMRI data (Ramot and Gonzalez-Castillo, 2019; Misaki et al., 2020; Misaki and Bodurka, 2021). Assuring the integrity of online noise reduction is critical for neurofeedback training. If the noise reduction is insufficient, other factors than brain activation could confound the training effect (Weiss et al., 2020). Not only for the online image processing, but the feedback signal calculation also can be unique in the online analysis, for example, in the connectivity neurofeedback. The online connectivity calculation should use a short window width for a timely feedback signal reflecting the current brain state, and the optimal window width for the neurofeedback training would be specific to the target region and the task design (Misaki et al., 2020). In addition, simulating the signal processing is useful to evaluate the level of the actual feedback signal. For example, when the baseline level of the neurofeedback signal is adjusted by a mean signal in the preceding rest block (Zotev et al., 2011; Young et al., 2017), simulating such signal calculation could help to estimate a possible signal range to adjust a feedback presentation.

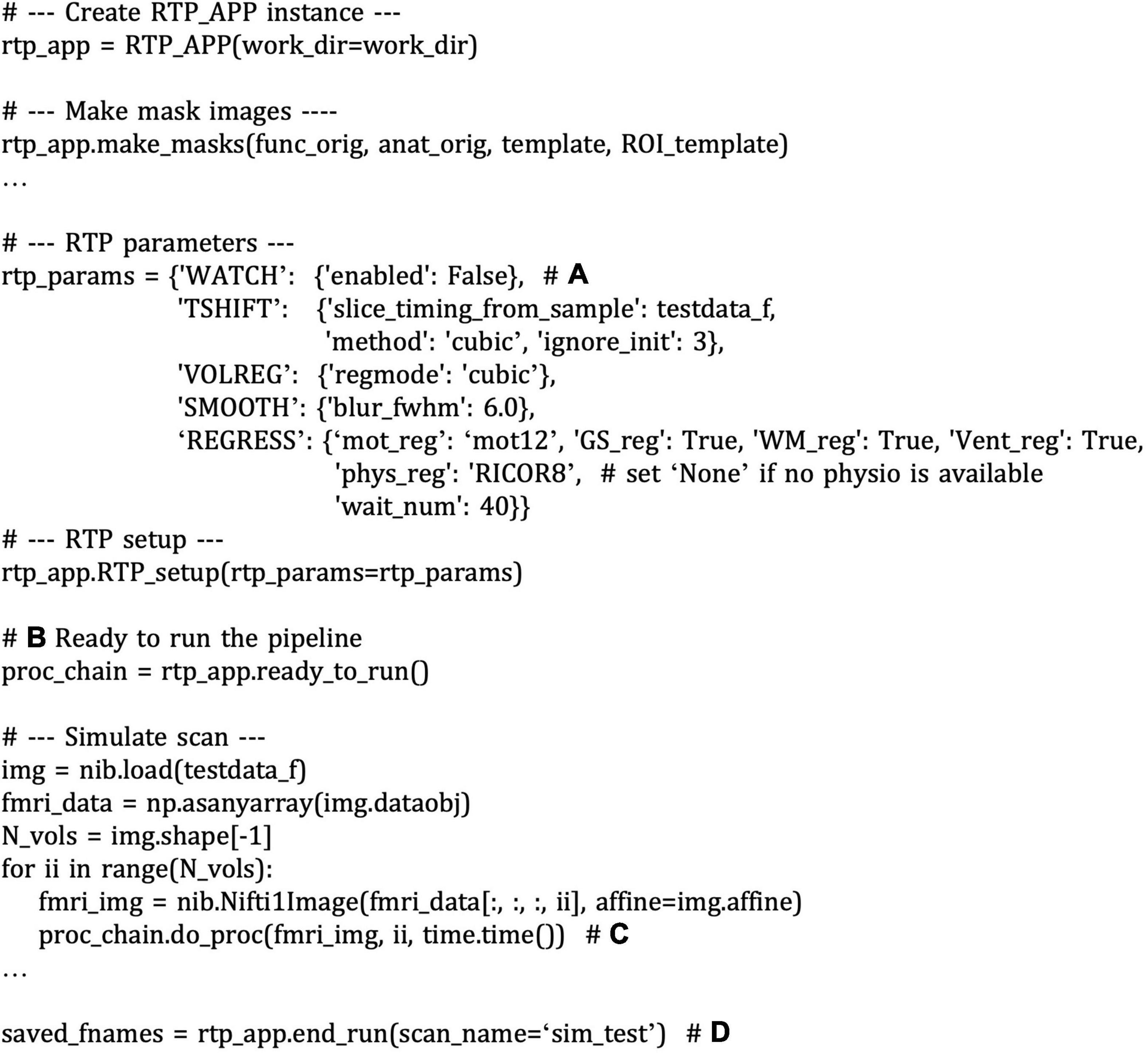

The modular library design of the RTPSpy helps perform a simulation with a simple script. While the simulation can be done by copying the data volume-by-volume into the watched directory, you can also inject the data directly into the pipeline for faster simulation. An example simulation script is provided as the “example/Simulation/rtpspy_simulation.py” file in the package. Figure 8 shows a snippet of the example simulation script. The pipeline creation is the same as shown in Figure 6 except for disabling the RTP_WATCH module (Figure 8A) and getting the pipeline object returned from the “ready_to_run” method (Figure 8B). The simulation can proceed with feeding the Nibabel Nifti1Image object to the “do_proc” method of the pipeline (Figure 8C). This method receives a volume image, image index (optional), and the end time of the previous process (optional). Calling the “end_run” method closes the pipeline and returns the saved filenames (Figure 8D). The output files include the parameter log (text file), ROI signal time-series (csv file), and the denoised image saved as a NIfTI file. You can modify the neurofeedback signal calculation by overriding the do_proc method in the RTP_APP, as explained in the next section, Figure 10.

Figure 8. A snippet of an example script of running a real-time fMRI processing simulation with RTPSpy. The comments with bold alphabet indicate a part of the script explained in the main text. The pipeline creation is the same as shown in Figure 6 except for disabling the RTP_WATCH module (A) and returning the pipeline object from the “ready_to_run” method (B). The simulation can proceed with feeding the Nibabel Nifti1Image object to the “do_proc” method of the pipeline (C). Calling the “end_run” method closes the pipeline and returns the saved filenames (D).

An Example Graphical User Interface Application Integrating the RTPSpy Modules

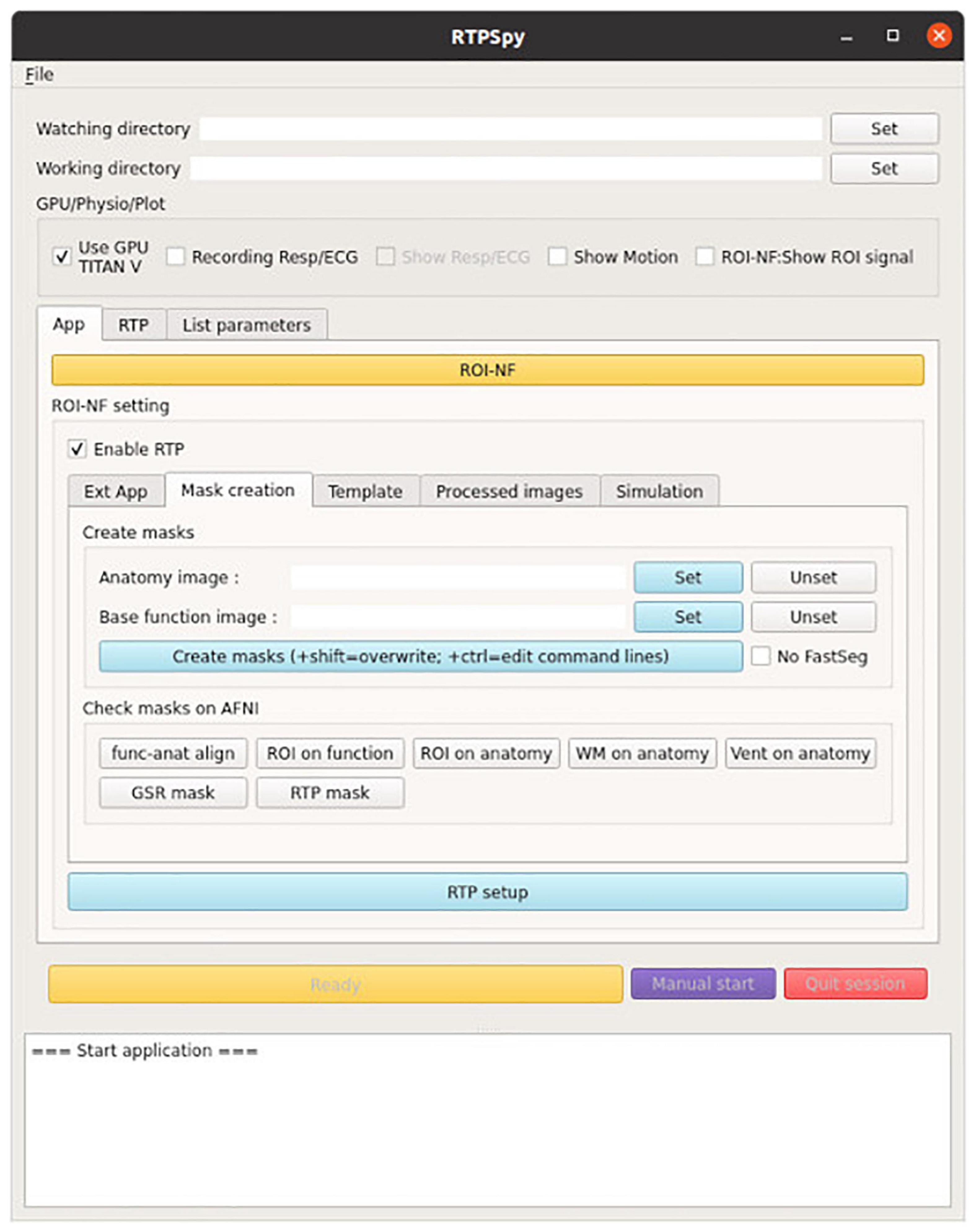

RTPSpy offers a graphical user interface (GUI) class (RTP_UI, rtp_ui.py) for easy access to the module functions. The example GUI application is provided in the “example/ROI-NF” directory in the package. Figure 9 shows the initial window of this example application. This application is presented for demonstrating how the RTPSpy library can be used to build a custom application and as a boilerplate for making a custom application by a user. This section explains how these example scripts can be modified to make a custom application. For a step-by-step usage of this application other than scripting, please refer to GitHub (see text footnote 1).

Figure 9. A view of the example GUI application integrating the RTPSpy modules. The figure presents the “mask creation” tab to run the make_masks process with GUI. The example application also offers graphical interfaces to almost all parameters in RTPSpy. Detailed usage of the application is presented in GitHub (see text footnote 1).

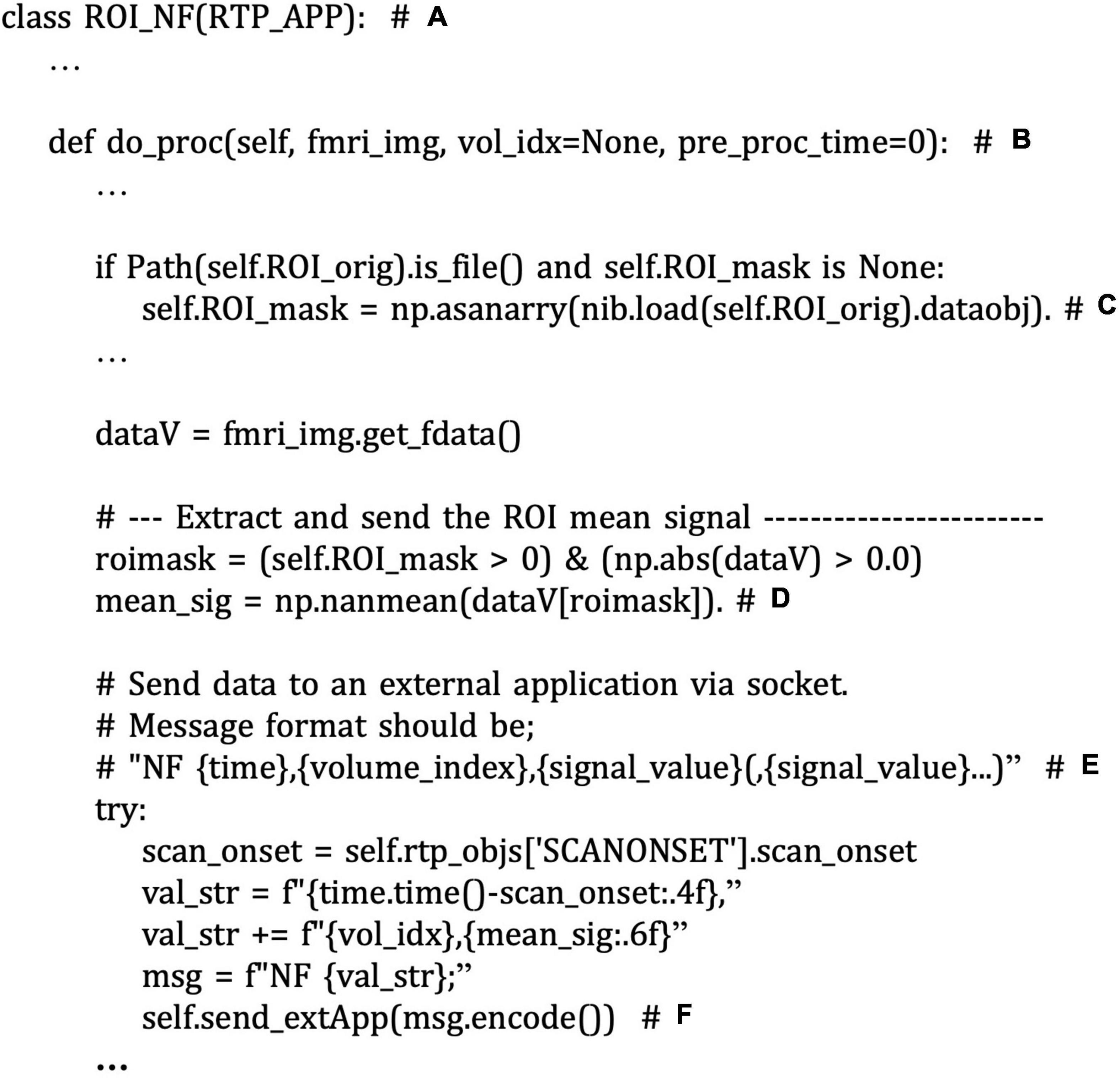

The application development can start with defining a user’s own application class inheriting from the RTP_APP. Figure 10 shows the code snippet from the “roi_nf.py” script file. In this application, ROI_NF class is defined by inheriting RTP_APP class (Figure 10A). Neurofeedback signal extraction is performed in the “do_proc” method in the ROI_NF class (Figure 10B). To customize the neurofeedback signal calculation, a user should override this method. The example script calculates the mean value within the ROI mask (Figure 10D). The ROI mask file is defined in the “ROI_orig” property defined in the RTP_APP class (Figure 10C). If an external application implements the RTP_SERV module, the signal can be sent to it using the “send_extApp” method (Figure 10F) by putting a signal value in a specific format string (Figure 10E).

Figure 10. A snippet of an example script of customized neurofeedback signal extraction and sending the signal to an external application in the “example/ROI-NF/roi_nf.py” file. The comments with bold alphabet indicate a part of the script explained in the main text. ROI_NF class is defined by inheriting RTP_APP class (A). Neurofeedback signal extraction is performed in the “do_proc” method (B). The example script calculates the mean value within the ROI mask (D). The ROI mask file is defined in the “ROI_orig” property (C). The signal can be sent to an external application in real-time using the “send_extApp” method (F) by putting it in a specific format string (E).

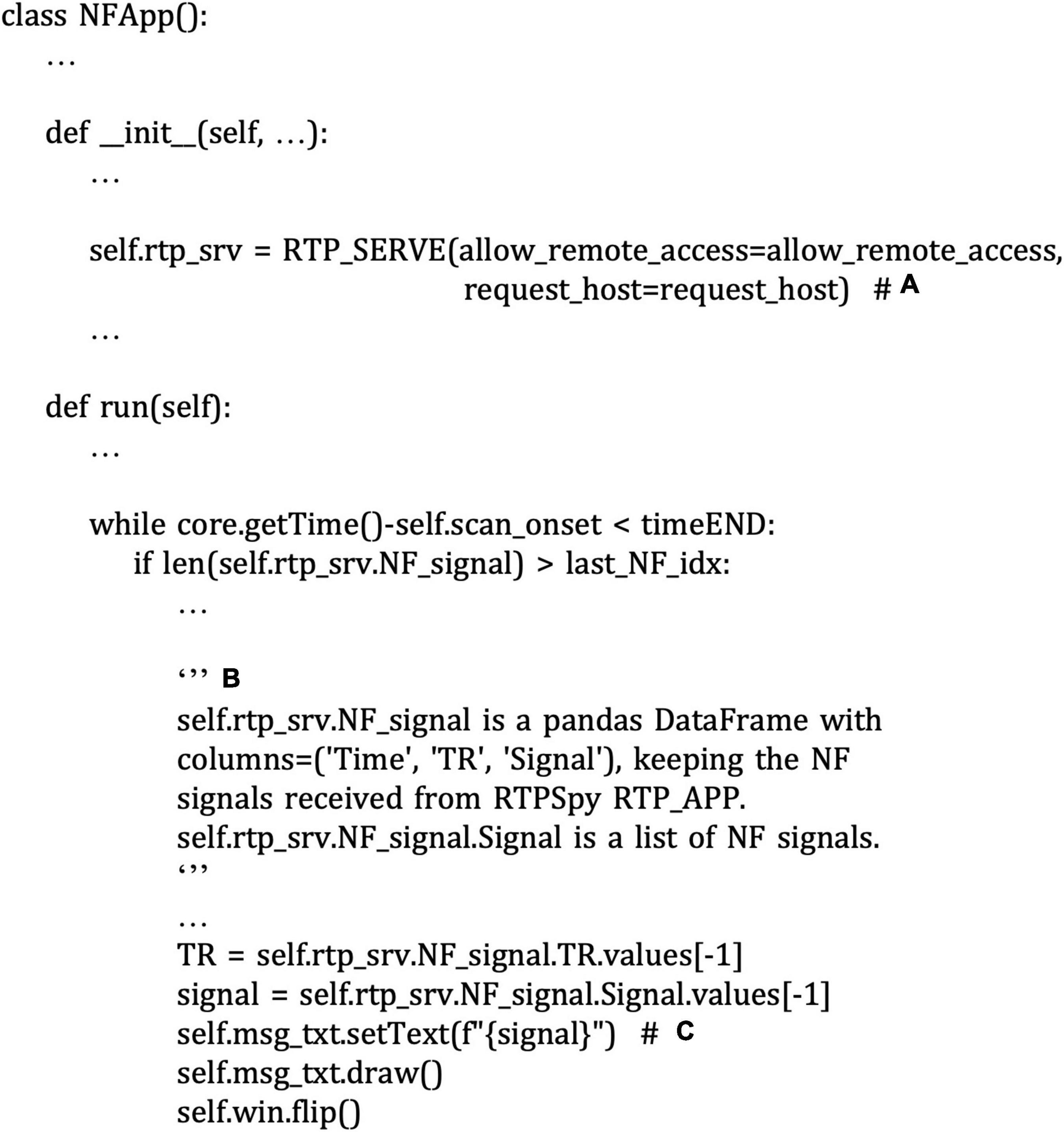

Figure 11 shows the code snippet of an example external application script for neurofeedback presentation (“example/ROI-NF/NF_psypy.py” file). This is an independent PsychoPy18 application from RTPspy but uses the RTP_SERVE module to communicate with an RTPSpy application. Instantiating the RTP_SERVE class object starts a TCP/IP server running in another thread (Figure 11A). This class does all the data exchange in the background. The RTP_SERVE object holds the received neurofeedback data in the pandas data frame19 (Figure 11B). While this example script just displays the latest received value on the screen with text (Figure 11C), users can modify this part to make a decent feedback presentation.

Figure 11. A snippet of an example script of neurofeedback presentation application in the “example/ROI-NF/NF_psypy.py” file. The comments with bold alphabet indicate a part of the script explained in the main text. Instantiating the RTP_SERV class object starts a TCP/IP server running in another thread (A). The RTP_SERVE object holds the received neurofeedback data in the pandas data frame (B). This example script displays the latest received value on the screen with text (C).

In this example application, the GUI operation can be done in parallel to the online image processing as the watchdog in the RTP_WATCH module runs in a separate thread, on which the processing runs. The anatomical image processing tools and a neurofeedback presentation application run on independent processes. Thus, they also run in parallel for a user to operate RTPSpy while an experiment is running. Using these example scripts, a user can develop an easy-to-use and highly customized rtfMRI application with minimum scripting labor. We also provide a full-fledged application of the left-amygdala neurofeedback session (Zotev et al., 2011; Young et al., 2017) in the “example/LA-NF” directory, which is explained in the Supplementary Material, “LA-NF application” and in GitHub (see text footnote 1).

Limitations and Environment-Specific Issues

While the RTPSpy provides general-use libraries for rtfMRI data processing, it is not a complete toolset for all environments. There could be several site-specific settings that a general library cannot support. One of the first critical settings is to obtain a reconstructed MRI image in real-time. The image format, the saved directory, and how to access the data (e.g., network mount or copying to the processing PC) could differ across manufacturers and sites. The RTP_WATCH detects a new file in the watched directory, but setting up the environment to put an fMRI file to an accessible place in real-time is not covered by the library. Specifically, our site uses AFNI’s Dimon command20 running on the scanner console computer and receives the data sent by Dimon with AFNI’s realtime plugin on a rtfMRI operation computer. This is not a part of the RTPSpy library and may not be possible for all if one cannot install additional software on the scanner console. Users may have to set up real-time access to the reconstructed image according to their environment.

Another caveat of environment-specific implementation is physiological signal recording. One of the advantages of the RTPSpy is its ability to run a physiological noise regression with RETROICOR in real-time. However, the equipment for cardiac and respiration signal recording could vary across the sites and manufacturer. In our site, we measure a cardiac signal using a photoplethysmography with an infrared emitter placed under the pad of a participant’s finger and respiration using a pneumatic respiration belt. These are equipped with the MRI scanner, GE MR750, and the signal is bypassed to the processing PC via serial port. Although we developed an interface class for these signals as RTP_PHYSIO, its implementation is highly optimized for our environment. A user may need to develop a custom script to replace the RTP_PHYSIO module adjusting to the individual environment. Similarly, detection of the TTL signal of a scan start, which is defined in the custom RTP_SCANONSET class, is device-dependent and needs to be implemented by a user according to the user’s device.

RTPSpy depends on several external tools for its anatomical image processing stream. Indeed, the package does not intend to provide an all-around solution by itself. Rather, RTPSpy is supposed to be used as a part of the user’s own application project. A required function specific to each application or environment should be implemented by an external tool or developed by users. We assume that the RTPSpy is used not as a complete package by itself but as a part of a custom application.

Conclusion

RTPSpy is a library for real-time fMRI processing, including comprehensive online fMRI processing, fast and accurate anatomical image processing, and a simulation system for optimizing neurofeedback signals. RTPSpy focuses on providing the building blocks to make a highly customized rtfMRI system. It also provides an example GUI application wrapped around RTPSpy modules. Although a library package requiring scripting skills may not be easy to use for everyone, we believe that RTPSpy’s modular architecture and easy-to-script interface will benefit developers who want to create customized rtfMRI applications. With its rich toolset and highly modular architecture, RTPSpy must be an attractive choice for developing optimized rtfMRI applications.

Data Availability Statement

The code and example data can be found here: https://github.com/mamisaki/RTPSpy.

Ethics Statement

The studies involving human participants were reviewed and approved by Western Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

MM: conceptualization, data curation, formal analysis, investigation, methodology, software, writing–original draft, and writing–review and editing. JB: conceptualization, funding acquisition, investigation, methodology, software, project administration, and supervision. MP: conceptualization, funding acquisition, project administration, supervision, and writing–review and editing. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by the Laureate Institute for Brain Research (LIBR) and the William K Warren Foundation. The funding agencies were not involved in the design of the experiment, data collection and analysis, interpretation of results, and preparation and submission of the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We dedicate this work in memory and honor of the late JB.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2022.834827/full#supplementary-material

Footnotes

- ^ https://github.com/mamisaki/RTPSpy/tree/main/example/ROI-NF

- ^ https://afni.nimh.nih.gov/

- ^ http://stnava.github.io/ANTs/

- ^ https://github.com/mamisaki/RTPSpy

- ^ https://docs.conda.io

- ^ https://www.anaconda.com/

- ^ https://deep-mi.org/research/fastsurfer/

- ^ https://pypi.org/project/antspyx/

- ^ https://developer.nvidia.com/cuda-toolkit

- ^ https://nipy.org/nibabel/

- ^ https://pythonhosted.org/watchdog/index.html

- ^ https://afni.nimh.nih.gov/pub/dist/doc/program_help/3dvolreg.html

- ^ https://afni.nimh.nih.gov/pub/dist/doc/program_help/3dBlurInMask.html

- ^ https://pytorch.org/

- ^ https://freesurfer.net/

- ^ https://github.com/mamisaki/FastSeg

- ^ https://github.com/ANTsX/ANTsPy

- ^ https://www.psychopy.org

- ^ https://pandas.pydata.org/

- ^ https://afni.nimh.nih.gov/pub/dist/doc/program_help/Dimon.html

References

Bagarinao, E., Matsuo, K., Nakai, T., and Sato, S. (2003). Estimation of general linear model coefficients for real-time application. Neuroimage 19, 422–429. doi: 10.1016/s1053-8119(03)00081-8

Birn, R. M., Smith, M. A., Jones, T. B., and Bandettini, P. A. (2008). The respiration response function: the temporal dynamics of fmri signal fluctuations related to changes in respiration. Neuroimage 40, 644–654.

Cox, R. W., Jesmanowicz, A., and Hyde, J. S. (1995). Real-time functional magnetic resonance imaging. Magn. Reson. Med. 33, 230–236. doi: 10.1002/mrm.1910330213

Glover, G. H., Li, T. Q., and Ress, D. (2000). Image-based method for retrospective correction of physiological motion effects in fmri: retroicor. Magn. Reson. Med. 44, 162–167.

Goebel, R. (2012). Brainvoyager–past, present, future. Neuroimage 62, 748–756. doi: 10.1016/j.neuroimage.2012.01.083

Goebel, R., Zilverstand, A., and Sorger, B. (2010). Real-time fmri-based brain-computer interfacing for neurofeedback therapy and compensation of lost motor functions. Imag. Med. 2, 407–415. doi: 10.2217/iim.10.35

Henschel, L., Conjeti, S., Estrada, S., Diers, K., Fischl, B., and Reuter, M. (2020). Fastsurfer - a fast and accurate deep learning based neuroimaging pipeline. Neuroimage 219:117012. doi: 10.1016/j.neuroimage.2020.117012

Heunis, S., Besseling, R., Lamerichs, R., de Louw, A., Breeuwer, M., Aldenkamp, B., et al. (2018). Neu(3)Ca-Rt: a framework for real-time fmri analysis. Psychiatry Res. Neuroi. 282, 90–102. doi: 10.1016/j.pscychresns.2018.09.008

Kiebel, S. J., Kloppel, S., Weiskopf, N., and Friston, K. J. (2007). Dynamic causal modeling: a generative model of slice timing in fmri. Neuroimage 34, 1487–1496. doi: 10.1016/j.neuroimage.2006.10.026

Koush, Y., Ashburner, J., Prilepin, E., Sladky, R., Zeidman, P., Bibikov, S., et al. (2017). Opennft: an open-source python/matlab framework for real-time fmri neurofeedback training based on activity. Connect. Multiv. Pattern Analy. Neuroi. 156, 489–503. doi: 10.1016/j.neuroimage.2017.06.039

Kumar, M., Michael, A., Antony, J., Baldassano, C., Brooks, P. P., Cai, M. B., et al. (2021). Brainiak: The Brain Imaging Analysis Kit. Aperture Neuro. Available online at: https://apertureneuropub.cloud68.co/articles/42/index.html

MacInnes, J. J., Adcock, R. A., Stocco, A., Prat, C. S., Rao, R. P. N., and Dickerson, K. C. (2020). Pyneal: open source real-time fmri software. Front. Neurosci. 14:900. doi: 10.3389/fnins.2020.00900

Misaki, M., and Bodurka, J. (2021). The impact of real-time fmri denoising on online evaluation of brain activity and functional connectivity. J. Neural. Eng. 18:20210701. doi: 10.1088/1741-2552/ac0b33

Misaki, M., Barzigar, N., Zotev, V., Phillips, R., Cheng, S., and Bodurka, J. (2015). Real-time fmri processing with physiological noise correction - comparison with off-line analysis. J. Neurosci. Methods 256, 117–121. doi: 10.1016/j.jneumeth.2015.08.033

Misaki, M., Tsuchiyagaito, A., Al Zoubi, O., Paulus, M., Bodurka, J., and Tulsa, I. (2020). Connectome-wide search for functional connectivity locus associated with pathological rumination as a target for real-time fmri neurofeedback intervention. Neuroi. Clin. 26:102244. doi: 10.1016/j.nicl.2020.102244

Mulyana, B., Tsuchiyagaito, A., Smith, J., Misaki, M., Kuplicki, R., Soleimani, G., et al. (2021). Online closed-loop real-time tes-fmri for brain modulation: feasibility, noise/safety and pilot study. bioRxiv[preprint]. doi: 10.1101/2021.04.10.439268

Peirce, J. W. (2008). Generating stimuli for neuroscience using psychopy. Front. Neuroinform. 2:10. doi: 10.3389/neuro.11.010.2008

Ramot, M., and Gonzalez-Castillo, J. A. (2019). Framework for offline evaluation and optimization of real-time algorithms for use in neurofeedback, demonstrated on an instantaneous proxy for correlations. Neuroimage 188, 322–334. doi: 10.1016/j.neuroimage.2018.12.006

Ramot, M., Kimmich, S., Gonzalez-Castillo, J., Roopchansingh, V., Popal, H., White, E., et al. (2017). Direct modulation of aberrant brain network connectivity through real-time neurofeedback. Elife 6:e28974. doi: 10.7554/eLife.28974.001

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-net: convolutional networks for biomedical image segmentation,” in Medical Image Computing and Computer-Assisted Intervention – Miccai 2015. Lecture Notes in Computer Science, eds N. Navab, J. Hornegger, W. M. Wells, and A. F. Frangi (Cham: Springer International Publishing), 234–241.

Ros, T., Enriquez-Geppert, S., Zotev, V., Young, K. D., Wood, G., Whitfield-Gabrieli, S., et al. (2020). Consensus on the reporting and experimental design of clinical and cognitive-behavioural neurofeedback studies (cred-nf checklist). Brain 143, 1674–1685. doi: 10.1093/brain/awaa009

Sato, J. R., Basilio, R., Paiva, F. F., Garrido, G. J., Bramati, I. E., Bado, P., et al. (2013). Real-time fmri pattern decoding and neurofeedback using friend: an fsl-integrated bci toolbox. PLoS One 8:e81658. doi: 10.1371/journal.pone.0081658

Sladky, R., Friston, K. J., Trostl, J., Cunnington, R., Moser, E., and Windischberger, C. (2011). Slice-timing effects and their correction in functional mri. Neuroimage 58, 588–594. doi: 10.1016/j.neuroimage.2011.06.078

Sulzer, J., Haller, S., Scharnowski, F., Weiskopf, N., Birbaumer, N., Blefari, M. L., et al. (2013). Real-time fmri neurofeedback: progress and challenges. Neuroimage 76, 386–399. doi: 10.1016/j.neuroimage.2013.03.033

Thibault, R. T., MacPherson, A., Lifshitz, M., Roth, R. R., and Raz, A. (2018). Neurofeedback with fmri: a critical systematic review. Neuroimage 172, 786–807. doi: 10.1016/j.neuroimage.2017.12.071

Watanabe, T., Sasaki, Y., Shibata, K., and Kawato, M. (2017). Advances in fmri real-time neurofeedback. Trends Cogn. Sci. 21, 997–1010. doi: 10.1016/j.tics.2017.09.010

Weiss, F., Zamoscik, V., Schmidt, S. N. L., Halli, P., Kirsch, P., and Gerchen, M. F. (2020). Just a very expensive breathing training? Risk of respiratory artefacts in functional connectivity-based real-time fmri neurofeedback. Neuroimage 210:116580. doi: 10.1016/j.neuroimage.2020.116580

Young, K. D., Siegle, G. J., Zotev, V., Phillips, R., Misaki, M., Yuan, H., et al. (2017). Randomized clinical trial of real-time fmri amygdala neurofeedback for major depressive disorder: effects on symptoms and autobiographical memory recall. Am. J. Psychiatry 174, 748–755. doi: 10.1176/appi.ajp.2017.16060637

Keywords: real-time fMRI, neurofeedback, online noise reduction, python library, fast segmentation

Citation: Misaki M, Bodurka J and Paulus MP (2022) A Library for fMRI Real-Time Processing Systems in Python (RTPSpy) With Comprehensive Online Noise Reduction, Fast and Accurate Anatomical Image Processing, and Online Processing Simulation. Front. Neurosci. 16:834827. doi: 10.3389/fnins.2022.834827

Received: 13 December 2021; Accepted: 14 February 2022;

Published: 11 March 2022.

Edited by:

Nikolaus Weiskopf, Max Planck Institute for Human Cognitive and Brain Sciences, GermanyReviewed by:

Stephan Heunis, Research Center Jülich, GermanyRonald Sladky, University of Vienna, Austria

Yury Koush, Yale University, United States

Copyright © 2022 Misaki, Bodurka and Paulus. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Masaya Misaki, bW1pc2FraUBsYXVyZWF0ZWluc3RpdHV0ZS5vcmc=

†Deceased

Masaya Misaki

Masaya Misaki Jerzy Bodurka

Jerzy Bodurka Martin P. Paulus

Martin P. Paulus