
ORIGINAL RESEARCH article

Front. Neurosci., 19 October 2022

Sec. Perception Science

Volume 16 - 2022 | https://doi.org/10.3389/fnins.2022.1031505

This article is part of the Research Topic Advances in Color Science: From Color Perception to Color Metrics and its Applications in Illuminated Environments.

We use a mobile phone camera as a new spectral imaging device to obtain raw responses of samples for spectral estimation, and we propose an improved sequential adaptive weighted spectral estimation method. First, we verify the linearity of the cell phone camera's raw response and investigate its feasibility for spectral estimation experiments. Then, we propose a sequential adaptive spectral estimation method based on the color perception features of the CIE1976 L*a*b* (CIELAB) uniform color space. In the first stage, the method weights the training samples by the differences in CIELAB color perception features between samples and performs a first spectral reflectance estimation. In the second stage, it adaptively selects the locally optimal training samples, weights them by the root mean square error (RMSE) of the first estimate, and performs a second spectral reconstruction. The novelty of the method is that it weights samples by their perception features in the CIELAB uniform color space, which characterizes color differences more accurately. Compared with several existing methods, the proposed method achieves the best performance in both spectral error and chromaticity error. Finally, we apply this CIELAB color-perception-feature weighting strategy to existing methods, and their spectral estimation performance improves substantially over the original versions, which demonstrates the effectiveness of the weighting method.

Surface spectral reflectance is known as the fingerprint of object color (Wang et al., 2020). It characterizes color more accurately than RGB trichromatic information and enables the faithful replication and reproduction of color information. Obtaining accurate spectral information through spectral imaging is therefore an active topic in color science research, and spectral imaging technology is widely used in fields such as heritage conservation, remote sensing mapping, skin disease diagnosis, printing color management, food safety monitoring, and non-destructive material testing (Del Pozo et al., 2017; Liang and Wan, 2017; Rateni et al., 2017; Huang et al., 2021; Liang et al., 2021; Tominaga et al., 2022).

Over the past decades, many color scientists and research institutions have built spectral imaging systems consisting of monochrome or color cameras combined with filter sets and multiple light sources (Liang and Wan, 2017). However, because such multichannel spectral imaging systems have low imaging efficiency, low spatial resolution, and high construction cost, Murakami and colleagues began in 2002 to study methods of estimating spectral reflectance from a single RGB image captured by a digital camera (Murakami et al., 2002).

Spectral reflectance estimation from digital camera responses solves an ill-posed inverse problem: it estimates the high-dimensional spectral reflectance from low-dimensional camera responses (Xiao et al., 2019). Compared with multichannel spectral imaging, this approach offers fast imaging, high spatial resolution, no geometric distortion, and low cost (Liang et al., 2019), which has gradually made professional SLR digital cameras a new class of spectral imaging device.

Since Kyocera released the first camera-equipped mobile phone in 1999 (Hussain and Bowden, 2021), mobile phone camera technology has developed rapidly. Today, mainstream high-end phone cameras have resolutions of at least 64 megapixels. On the hardware side, large-area CMOS sensors, optical image stabilization, large apertures, phase-detection autofocus, 4-in-1 super-pixel binning, and many other technologies have become widespread; on the software side, features such as AI portrait, super night mode, time-lapse, live photos, and 120 fps slow-motion video have been added. These hardware and software upgrades have greatly improved the imaging capability of phone cameras and steadily narrowed the gap with professional digital cameras. As a result, mobile phones have become the everyday shooting tool for most people and the imaging instrument in many scientific studies, replacing heavy and expensive professional digital cameras (Kim et al., 2019; Hussain and Bowden, 2021; Stuart et al., 2021; Liang et al., 2022; Tominaga et al., 2022).

Spectral estimation methods based on a single RGB image mainly include the Wiener method, the infinite-dimensional model method, the pseudo-inverse method, the R-matrix method, the principal component analysis (PCA) method, and kernel methods. Because the camera sensitivity required by the Wiener method and the infinite-dimensional model is difficult to obtain directly (Tominaga et al., 2021), and estimating it indirectly introduces additional error, these two methods are less widely applied. In contrast, methods such as pseudo-inverse estimation, the R-matrix, and PCA require no prior data, have simple procedures, and have low computational cost, but their spectral estimation accuracy is relatively low. Many optimized methods have been proposed to improve accuracy. Connah and Hardeberg (2005) introduced the polynomial model to three-channel and multichannel spectral imaging systems. Heikkinen et al. (2007) applied a regularization framework to estimate spectral reflectance from digital camera responses. Shen et al. (2010) proposed a partial least squares-based spectral estimation method that improves on least squares regression. Xiao et al. (2016) combined the polynomial model with PCA in eigenvector space and applied it to skin color detection. These methods improve estimation accuracy to some extent, but they all rely on global training, which limits their performance. Subsequent studies therefore optimized the selection and weighting of training samples. Cao et al. (2017) proposed a local linear spectral reflectance estimation method with optimized sample selection. Zhang et al. (2017) proposed a spectral estimation method with local sample selection based on CIEXYZ color differences under multiple light sources.
Shen and Xin (2006) proposed a scanner-based spectral estimation method with locally weighted training samples. Liang and Wan (2017) proposed a local inverse-distance-weighted linear regression method for spectral estimation from camera responses. Amiri and Amirshahi (2014) studied weighted nonlinear regression models with global weighting. These methods compute a weight matrix from the Euclidean distance between training and test samples in a color space. Although they estimate spectra more accurately than earlier methods, they have two shortcomings. First, RGB color space is device-dependent and not perceptually uniform, so Euclidean distances in it differ considerably from the color differences perceived by the human eye, yet these methods select and weight samples by exactly such color differences. Second, they ignore the spectral differences between samples. Wang et al. (2020) proposed a two-stage sequential weighting method that considers chromaticity and spectral error together and adaptively optimizes sample selection, achieving good results.

The development of these methods shows that sample selection is critical: how the training samples are selected and weighted largely determines estimation accuracy. The more similar the training and test samples are in color space, the better the accuracy, so training samples with colors similar to the test sample should be selected wherever possible (Liang and Wan, 2017; Liang et al., 2022). At the same time, the color difference between training and test samples should be measured precisely and used to assign different weights, which further improves accuracy.

However, existing methods that measure the difference between training and test samples by Euclidean distance or by chromaticity vector angle in a color space are still not accurate enough. If several training samples are at the same Euclidean distance from a test sample but differ from it in different color perception features, these methods still assign them the same weight, which introduces error and can lead to metamerism. Taking differences in color perception features such as lightness, hue, and chroma into account should therefore measure the color differences between samples more accurately.

In this paper, we explore the cell phone camera as a new spectral imaging device, using its raw responses as the data source, and propose an improved sequential adaptive weighted spectral estimation method based on color perception features of the CIELAB uniform color space. The study has two main novelties: (1) a cell phone camera replaces a professional digital camera as the imaging device, and we verify the linearity of its raw responses and the feasibility of using them for spectral estimation; (2) three perceptual features of the CIE1976 L*a*b* (CIELAB) uniform color space are used for local sample selection and for calculating the weighting matrix, giving a more accurate measure of color differences between samples. We compare the proposed method with existing methods using ten-times-repeated ten-fold cross-validation, all on raw responses from the same cell phone, and the proposed method performs best on all four metrics, covering both spectral error and chromaticity error. Finally, applying the color-perception-feature weighting strategy to existing methods significantly improves their accuracy relative to the original versions.

Although the interior of a cell phone is small and its camera components differ from those of professional digital cameras, the imaging principle is the same. Light emitted by the source is reflected from the object surface, passes through the camera lens group, and is converted by the CMOS sensor into raw electrical response signals; the phone's ISP chip then processes these signals to produce the output photo. The three-channel response yi of the camera is therefore jointly determined by the spectral power distribution l(λ) of the light source, the reflectance r(λ) of the object surface, the sensitivity function m(λ) of the cell phone camera system, and the system noise ni, and the imaging model of the cell phone camera can be written as the integral in Equation (1).

Here, the subscript i denotes the three channels of the camera and φ denotes the wavelength range of the visible spectrum. Assuming for mathematical simplicity that the noise ni = 0, Equation (1) can be written as the matrix equation in Equation (2).

Here, y denotes the camera response vector, M denotes the spectral sensitivity matrix of the whole system, which includes the spectral power distribution of the light source and the sensitivity function of the cell phone camera, and r is the surface spectral reflectance of the target object.
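Equations (1) and (2) are not reproduced in this text. A sketch of the standard form implied by the definitions above is given below; it is our reconstruction, assuming per-channel sensitivities m_i(λ) and sampling at the n = 31 wavelengths (400–700 nm, 10 nm step) used later in the paper, not a copy of the typeset equations.

```latex
y_i = \int_{\varphi} l(\lambda)\, r(\lambda)\, m_i(\lambda)\, \mathrm{d}\lambda + n_i,
\qquad i \in \{R, G, B\}

% sampled at \lambda_1, \dots, \lambda_n with step \Delta\lambda, and n_i = 0:
\mathbf{y} = \mathbf{M}\,\mathbf{r}, \qquad
M_{ij} = l(\lambda_j)\, m_i(\lambda_j)\, \Delta\lambda, \qquad
\mathbf{y} \in \mathbb{R}^{3},\ \mathbf{r} \in \mathbb{R}^{n},\ \mathbf{M} \in \mathbb{R}^{3 \times n}
```

Because M has only three rows, recovering r from y is underdetermined, which is why the methods below learn an inverse mapping from training samples.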

Based on the camera imaging model, spectral estimation can be divided into two steps. The first step calculates the spectral conversion matrix from training samples: the spectral reflectance of the training samples is measured, their three-channel camera responses y are extracted from the raw files, and the conversion matrix M is calculated by the pseudo-inverse method, as in Equation (3).

The second step is to calculate the spectral reflectance of the target sample using the conversion matrix M. The high-dimensional spectral reflectance R of the target sample is estimated from the known three-channel response Y of the target sample, as in Equation (4).

As described previously, the conversion matrix M can be calculated by the pseudo-inverse method, PCA, and other methods; this paper uses the pseudo-inverse method, as shown in Equation (5).

Where the superscript T denotes matrix transpose and the superscript -1 denotes matrix inverse operation.
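A minimal NumPy sketch of the two-step pseudo-inverse scheme (Equations 3 to 5) follows. It assumes column-wise data (one sample per column) and writes the conversion matrix as Q, to keep it distinct from the sensitivity matrix M of Equation (2). The synthetic check uses hypothetical random data in which reflectances lie in a three-dimensional subspace, the one case where a three-channel pseudo-inverse can recover them exactly.

```python
import numpy as np

def train_conversion(R_train, Y_train):
    """Pseudo-inverse conversion matrix Q = R Y^T (Y Y^T)^-1.

    R_train: (n, k) measured reflectances, one training sample per column.
    Y_train: (c, k) camera responses (c = 3 for raw RGB).
    """
    return R_train @ Y_train.T @ np.linalg.inv(Y_train @ Y_train.T)

def estimate_reflectance(Q, Y):
    """Estimate (n, m) reflectances from (c, m) responses: R_hat = Q Y."""
    return Q @ Y

# Synthetic check with hypothetical data (not the paper's measurements).
rng = np.random.default_rng(0)
n, k = 31, 50
B = rng.standard_normal((n, 3))      # hypothetical 3-D spectral basis
M = rng.standard_normal((3, n))      # hypothetical system sensitivity matrix
R_train = B @ rng.standard_normal((3, k))
Y_train = M @ R_train                # noise-free imaging model y = M r

Q = train_conversion(R_train, Y_train)
R_test = B @ rng.standard_normal((3, 5))
R_est = estimate_reflectance(Q, M @ R_test)
```

Real reflectance spectra need more than three basis vectors, so with measured data R_est is only an approximation; this is the accuracy gap that polynomial expansion and local weighting try to close.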

JPG and HEIF photos are processed and compressed by the phone's ISP. To obtain the raw response of the cell phone camera, we set each phone to output raw files and compared them with JPG pictures of the same scene. Under the same lighting, the Xrite ColorChecker CLASSIC color chart (hereafter referred to as the CC chart) was photographed with five cell phones, and the RGB values of the grayscale patches in the fourth row of the CC chart were extracted to check the raw-response linearity of cameras from different models and brands (Liang et al., 2019). The results show that the raw response of cell phone cameras is highly linear. The experimental steps and results are detailed in “Imaging conditions and raw response extraction” and “Verification of cell phone camera raw response,” respectively.

Next, to verify how the differences between cell phone and professional digital camera raw responses affect spectral estimation, the Xrite ColorChecker SG chart (hereafter referred to as the CCSG chart) was photographed with the same five cell phones under the same lighting, and its 140 color patches were used as the sample set; the raw response of a Nikon D3x professional digital camera served as a comparison benchmark. Raw responses from all six devices were used in spectral estimation experiments with both the OLS method and the modified ALWLR method, and the detailed experimental procedures and results are described in “Imaging conditions and raw response extraction” and “Verification of cell phone camera raw response,” respectively.

The proposed method improves on the classical pseudo-inverse method by applying two sequential rounds of adaptive sample weighting and selection. Starting from the raw responses extracted from the cell phone camera, the method has five main steps. (1) Convert the three-channel RGB values of the samples to the CIELAB uniform color space and calculate the color perception feature differences between samples. (2) Construct a color difference weight matrix Wc from these differences and weight the training samples. (3) Calculate the transformation matrix Qc from the weighted training-sample raw responses and spectral data, and perform the first spectral estimation with Qc. (4) Calculate the root mean square error (RMSE) of the first estimate, construct the spectral difference weighting matrix Wr, and select and weight the training samples a second time. (5) Use Wr to weight the selected training samples, calculate the conversion matrix Qr, and perform the second spectral estimation of the test samples to obtain the final spectral reflectance. Figure 1 shows the flow chart of the method.

The RGB values of each sample are obtained from the cell phone camera raw response by the method described in “Extraction and verification of cell phone camera raw response.” This RGB space is device-dependent, so we use the CIELAB uniform color space to calculate color differences. First, the RGB values of the training samples are converted to CIELAB: the color space conversion matrix T is obtained by the least squares method, as in Equation (6).

Here, Dtrain denotes the raw responses of the training samples, Ltrain denotes the matrix of their CIELAB values, the superscript ‘T’ denotes the matrix transpose, and the superscript ‘–1’ denotes the pseudo-inverse operation. We then use T to transform the camera raw responses of the test samples into CIELAB color space, as in Equation (7).

Here, Dtest denotes the raw response matrix of the test samples and Ltest denotes their color matrix transformed into CIELAB color space.
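The least-squares fit of T (Equations 6 and 7) can be sketched as follows, assuming row-wise data (one sample per row) so that Ltrain ≈ Dtrain T. Because CIELAB is a nonlinear function of XYZ, a single 3 × 3 linear map is only an approximation; the synthetic check uses an exactly linear, hypothetical relation purely to confirm the algebra.

```python
import numpy as np

def fit_color_transform(D_train, L_train):
    """T = (D^T D)^-1 D^T L, the least-squares fit of L_train ≈ D_train @ T.

    D_train: (k, 3) raw RGB responses; L_train: (k, 3) measured CIELAB values.
    """
    return np.linalg.inv(D_train.T @ D_train) @ D_train.T @ L_train

def responses_to_lab(D, T):
    """Map (m, 3) raw responses to approximate (m, 3) CIELAB values."""
    return D @ T

rng = np.random.default_rng(1)
D_train = rng.random((140, 3))          # hypothetical raw responses
T_true = rng.standard_normal((3, 3))    # hypothetical exact linear map
T = fit_color_transform(D_train, D_train @ T_true)
L_test = responses_to_lab(rng.random((5, 3)), T)
```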

Existing studies measure sample color differences in two main ways. The first uses the Euclidean distance in a color space as the color difference between samples (Liang and Wan, 2017): training samples with colors similar to the test sample are selected by their color difference in that space, and different training samples receive different weights, achieving local sample selection and weighting with little computation. The second uses the chromaticity vector angle, that is, the angle between sample color vectors in a color space, for sample selection and weighting (Wang et al., 2020). This second approach works in the device-independent CIEXYZ color space, so its color differences agree better with human perception than those computed from device RGB. Both approaches, however, still measure sample color differences imprecisely. Samples at the same or similar Euclidean distance receive almost the same weight even when their color perception features differ considerably, which is why such methods are prone to metamerism, and the chromaticity vector angle likewise cannot characterize color perception features. This study therefore measures sample color difference with the three color perception features of the CIELAB uniform color space. Let the CIELAB values of a training sample and a test sample be (L*1, a*1, b*1) and (L*2, a*2, b*2), respectively; the color difference between them is the Euclidean distance in the color space, as in Equation (8).

The color difference can also be expressed in terms of the differences in the samples' color perception features, namely the lightness difference ΔL*, hue difference ΔH*, and chroma difference ΔC*, as in Equation (9).

The color perception features ΔL*, ΔH*, and ΔC* are calculated as in Equations (10), (11), and (12).
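Equations (10) to (12) are not reproduced here. The sketch below uses the standard CIELAB definitions, which we assume match them: ΔL* is the lightness difference, ΔC* the difference of chroma C* = sqrt(a*² + b*²), and ΔH* the remaining part of ΔE*ab, so that ΔE*ab² = ΔL*² + ΔC*² + ΔH*² (Equation 9). ΔH* is returned as a non-negative magnitude, which is enough when the differences are later used as absolute values.

```python
import numpy as np

def perceptual_differences(lab1, lab2):
    """Return (dE_ab, dL, dC, dH) between CIELAB arrays of shape (..., 3)."""
    lab1, lab2 = np.asarray(lab1, dtype=float), np.asarray(lab2, dtype=float)
    dL = lab1[..., 0] - lab2[..., 0]
    C1 = np.hypot(lab1[..., 1], lab1[..., 2])   # chroma C* = sqrt(a*^2 + b*^2)
    C2 = np.hypot(lab2[..., 1], lab2[..., 2])
    dC = C1 - C2
    da = lab1[..., 1] - lab2[..., 1]
    db = lab1[..., 2] - lab2[..., 2]
    dE = np.sqrt(dL**2 + da**2 + db**2)        # Euclidean CIELAB difference
    # dH* is what remains of dE*ab after lightness and chroma; clip float noise
    dH = np.sqrt(np.clip(dE**2 - dL**2 - dC**2, 0.0, None))
    return dE, dL, dC, dH

# Same lightness and chroma, different hue: the whole difference is dH*
dE, dL, dC, dH = perceptual_differences([50, 10, 0], [50, 0, 10])
print(round(float(dE), 3), float(dL), float(dC), round(float(dH), 3))
# 14.142 0.0 0.0 14.142
```

This example is exactly the case the text worries about: a Euclidean distance alone does not reveal that the two colors differ only in hue.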

We use ΔL*, ΔH*, and ΔC* as the parameters of the first weighting matrix of the training samples, so that a difference in any single color perception feature directly affects the sample weight, even when that difference is not reflected in the Euclidean color difference; this measures color difference more precisely. Smaller values mean smaller differences in visual perception, so we calculate the color perception feature weighting matrix WC from the inverse of the sum of ΔL*, ΔH*, and ΔC*, and the weight Wi of the ith training sample is calculated as in Equation (13).

To keep the denominator non-zero, we add a very small value α; in this study, α = 0.0001. k is the total number of training samples. The weights of all training samples are then sorted in descending order and placed on the diagonal of a matrix to form the color perception feature weighting matrix WC, as shown in Equation (14).
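A sketch of Equations (13) and (14) follows, assuming the “sum” in Equation (13) is taken over absolute values, since signed differences could cancel. Sorting only reorders the samples: as long as the responses and reflectances are reordered with the same index, the weighted transformation matrix is unchanged.

```python
import numpy as np

def color_feature_weights(dL, dC, dH, alpha=1e-4):
    """W_i = 1 / (|dL*_i| + |dC*_i| + |dH*_i| + alpha), one weight per training sample."""
    return 1.0 / (np.abs(dL) + np.abs(dC) + np.abs(dH) + alpha)

def color_weight_matrix(weights):
    """Sort weights in descending order; return (W_C, order).

    `order` must also be used to reorder the training responses and reflectances.
    """
    order = np.argsort(weights)[::-1]          # most similar sample first
    return np.diag(weights[order]), order

# Three hypothetical training samples compared with one test sample
dL = np.array([1.0, 0.0, -4.0])
dC = np.array([-1.0, 0.0, 0.0])
dH = np.array([0.0, 1.0, 0.0])
W_C, order = color_weight_matrix(color_feature_weights(dL, dC, dH))
print(order)   # [1 0 2]
```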

Before the first spectral estimation, we apply a polynomial expansion to the cell phone camera raw responses. In “mobile phone raw response spectral estimation performance validation,” we tested how the number of polynomial terms affects estimation accuracy and chose 18 terms, which avoids the overfitting caused by too many terms. The 18-term expansion is shown in Equation (15).
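Equation (15) lists the paper's specific 18 terms, which are not reproduced in this text. As a hypothetical stand-in, the sketch below builds every monomial of R, G, and B up to a chosen degree. The full third-order set has 20 terms including the constant, so the paper's 18-term model is a different, specific selection that we do not attempt to guess.

```python
import numpy as np
from itertools import combinations_with_replacement

def poly_expand(rgb, degree=3, include_constant=True):
    """Expand (m, 3) raw RGB responses into all monomials up to `degree`.

    Returns an (m, p) matrix; transpose it for the column-per-sample
    convention used in the pseudo-inverse sketches.
    """
    rgb = np.atleast_2d(np.asarray(rgb, dtype=float))
    cols = [np.ones(len(rgb))] if include_constant else []
    for d in range(1, degree + 1):
        # e.g. (0, 0, 1) -> R*R*G
        for idx in combinations_with_replacement(range(3), d):
            cols.append(np.prod(rgb[:, list(idx)], axis=1))
    return np.stack(cols, axis=1)

print(poly_expand([[0.2, 0.5, 0.1]]).shape)            # (1, 20)
print(poly_expand([[0.2, 0.5, 0.1]], degree=2).shape)  # (1, 10)
```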

After polynomial expansion, the weighted spectral transformation matrix QC is calculated according to Equation (16).

In Equation (16), the two inputs are the weighted spectral reflectance matrix of the training samples and their weighted polynomial expansion matrix. Finally, the transformation matrix QC is used to estimate the spectral reflectance of the test samples, as in Equation (17).

In Equation (17), the output is the estimated spectral reflectance of the test samples, and ytest,exp denotes the polynomially expanded raw response matrix of the test samples.
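A sketch of Equations (16) and (17) follows, assuming column-wise matrices and a diagonal weight matrix W. Weighting both the reflectance matrix and the expanded-response matrix by W and then taking a pseudo-inverse is equivalent to weighted least squares with per-sample weights W²; the synthetic check confirms that any positive weights recover an exactly linear, hypothetical mapping.

```python
import numpy as np

def weighted_transform(R_train, Y_exp_train, weights):
    """Q = (R W) pinv(Y_exp W) with W = diag(weights).

    R_train: (n, k) reflectances; Y_exp_train: (p, k) expanded responses.
    """
    W = np.diag(weights)
    return (R_train @ W) @ np.linalg.pinv(Y_exp_train @ W)

def estimate(Q, y_exp_test):
    """Estimated reflectance(s) Q @ y_exp_test, as in Equation (17)."""
    return Q @ y_exp_test

rng = np.random.default_rng(2)
G = rng.random((31, 10))             # hypothetical exact linear map
Y_exp = rng.random((10, 60))         # hypothetical expanded responses
Q = weighted_transform(G @ Y_exp, Y_exp, rng.uniform(0.1, 10.0, 60))
```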

After the first spectral estimate is obtained, the spectral RMSE between this estimate and the measured reflectance of each training sample is calculated for the second weighting and selection of the training samples, as in Equation (18).

Here, n denotes the number of spectral sampling points over the visible wavelength range; in this study the range is 400–700 nm at 10 nm intervals, so n = 31. The training samples are sorted in ascending order of RMSE; a smaller RMSE means a smaller spectral difference between the training sample and the test sample. In this step, we construct the weight matrix from the inverse of the RMSE, and the weight Wj of each training sample is calculated as in Equation (19).

β plays the same role as α in Equation (13): it is a very small constant that keeps the denominator non-zero, and we take β = 0.0001. The first L local samples are then selected according to Wj, and the second diagonal weight matrix WR is constructed in the same way as WC in Equation (14), as shown in Equation (20).

The responses and spectral reflectances of the selected training samples are weighted by WR in the same way to obtain updated Ytrain and Rtrain, and the new transformation matrix QR is calculated using Equation (16). Finally, the spectral reflectance of the test sample is calculated as in Equation (21).
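Putting the steps together, the sketch below runs both stages for a single test sample. It assumes column-wise, already-expanded responses, CIELAB values already obtained through T, absolute differences in the first-stage weights, and a hypothetical default of L = 30 local samples (the paper's choice of L is not stated in this section). The synthetic check uses an exactly linear relation between responses and reflectances, so both stages should reproduce the true spectrum.

```python
import numpy as np

def _weighted_q(R, Y, w):
    """(R W) pinv(Y W) with W = diag(w)."""
    W = np.diag(w)
    return (R @ W) @ np.linalg.pinv(Y @ W)

def two_stage_estimate(R_train, Y_train, lab_train, y_test, lab_test,
                       L=30, alpha=1e-4, beta=1e-4):
    """R_train (n, k), Y_train (p, k), lab_train (k, 3), y_test (p,), lab_test (3,)."""
    # Stage 1: weights from CIELAB lightness, chroma and hue differences
    dL = lab_train[:, 0] - lab_test[0]
    dC = np.hypot(lab_train[:, 1], lab_train[:, 2]) - np.hypot(lab_test[1], lab_test[2])
    dE2 = np.sum((lab_train - lab_test) ** 2, axis=1)
    dH = np.sqrt(np.clip(dE2 - dL**2 - dC**2, 0.0, None))
    w_c = 1.0 / (np.abs(dL) + np.abs(dC) + dH + alpha)
    r_first = _weighted_q(R_train, Y_train, w_c) @ y_test

    # Stage 2: keep the L training spectra closest to r_first (RMSE), weight by 1/RMSE
    rmse = np.sqrt(np.mean((R_train - r_first[:, None]) ** 2, axis=0))
    idx = np.argsort(rmse)[:L]
    w_r = 1.0 / (rmse[idx] + beta)
    return _weighted_q(R_train[:, idx], Y_train[:, idx], w_r) @ y_test

rng = np.random.default_rng(3)
p, k = 10, 80
G = rng.random((31, p))                               # hypothetical exact map
Y_train = rng.random((p, k))
lab_train = rng.uniform([20, -40, -40], [90, 40, 40], size=(k, 3))
y_test = rng.random(p)
r_hat = two_stage_estimate(G @ Y_train, Y_train, lab_train,
                           y_test, np.array([50.0, 5.0, -5.0]))
```

With real data the two stages no longer agree exactly, and the second stage's local selection is what narrows the error.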

To verify the usability of cell phone camera raw responses, we first conducted linearity verification and spectral estimation performance experiments on them. We then compared the proposed sequential adaptive weighted spectral estimation method based on color perception features with existing methods. Finally, we applied the color-perception-feature weighting strategy to existing methods and compared the results with the original methods. All cell phone camera raw responses for these experiments were obtained in the same experimental environment.

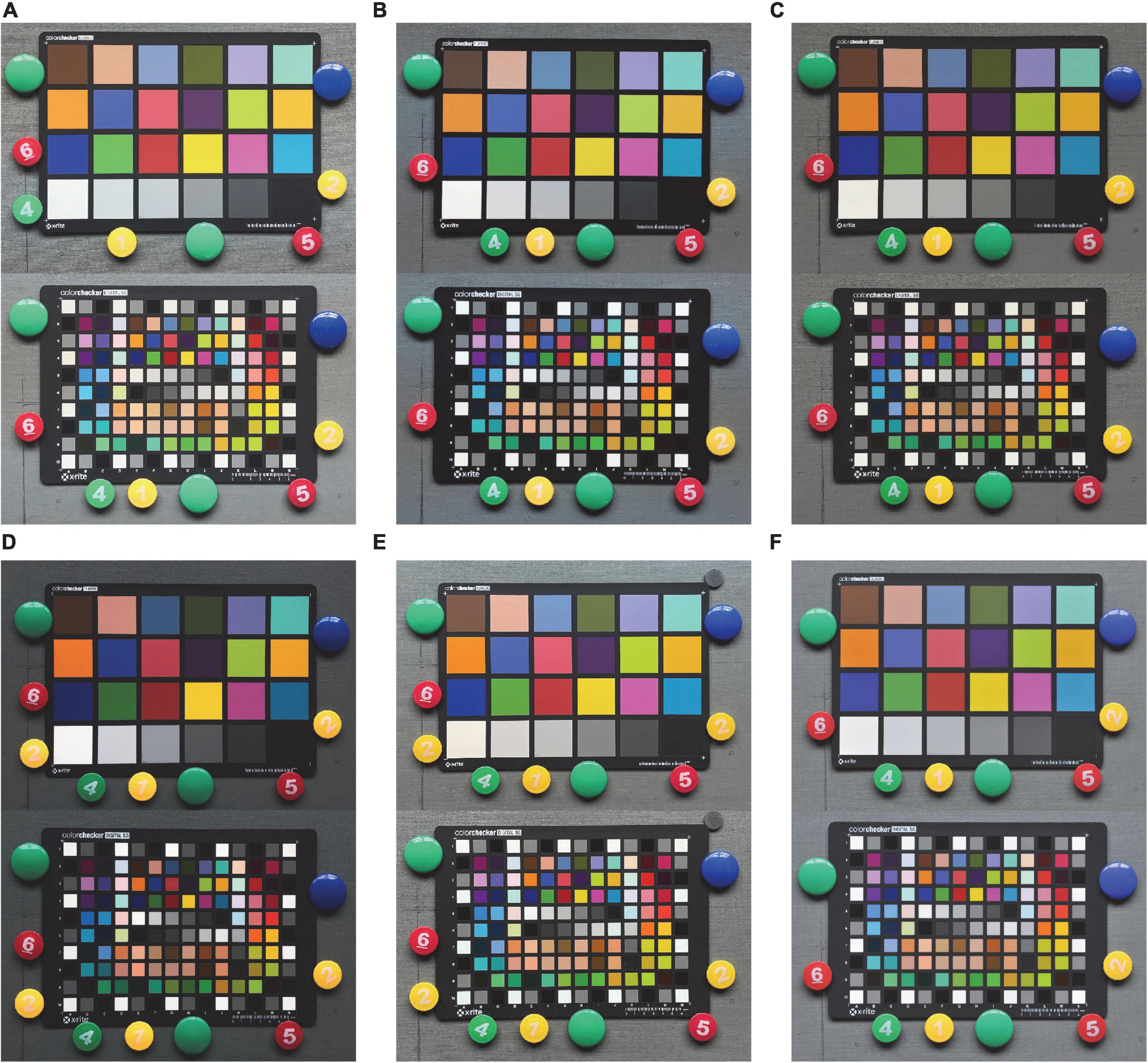

To prevent stray light from interfering with imaging, the experiments were conducted in a fully light-shielded laboratory with neutral gray walls. The light source was a set of fluorescent lamps with a color temperature of about 6000 K. The color chart was fixed to a vertical wall with magnets, and its height was adjusted so that the lamps illuminated the chart at an angle of 45 degrees. The cell phone was mounted on a tripod with a holder to avoid shaking, and its height was kept level with the center of the color chart. The imaging scene is shown in Figure 2, and some pictures of the color charts taken by the five cell phones and the DSLR camera are shown in Figure 3. The photos were taken in several formats, including DNG raw files and post-processed JPG photos.

Figure 3. JPG pictures of the Xrite CC chart (top) and SG chart (bottom) taken by the five cell phones and the DSLR: (A) Huawei Mate10, (B) Meizu 17Pro, (C) Xiaomi12S Ultra, (D) iPhone 8, (E) iPhone 12, and (F) Nikon D3x.

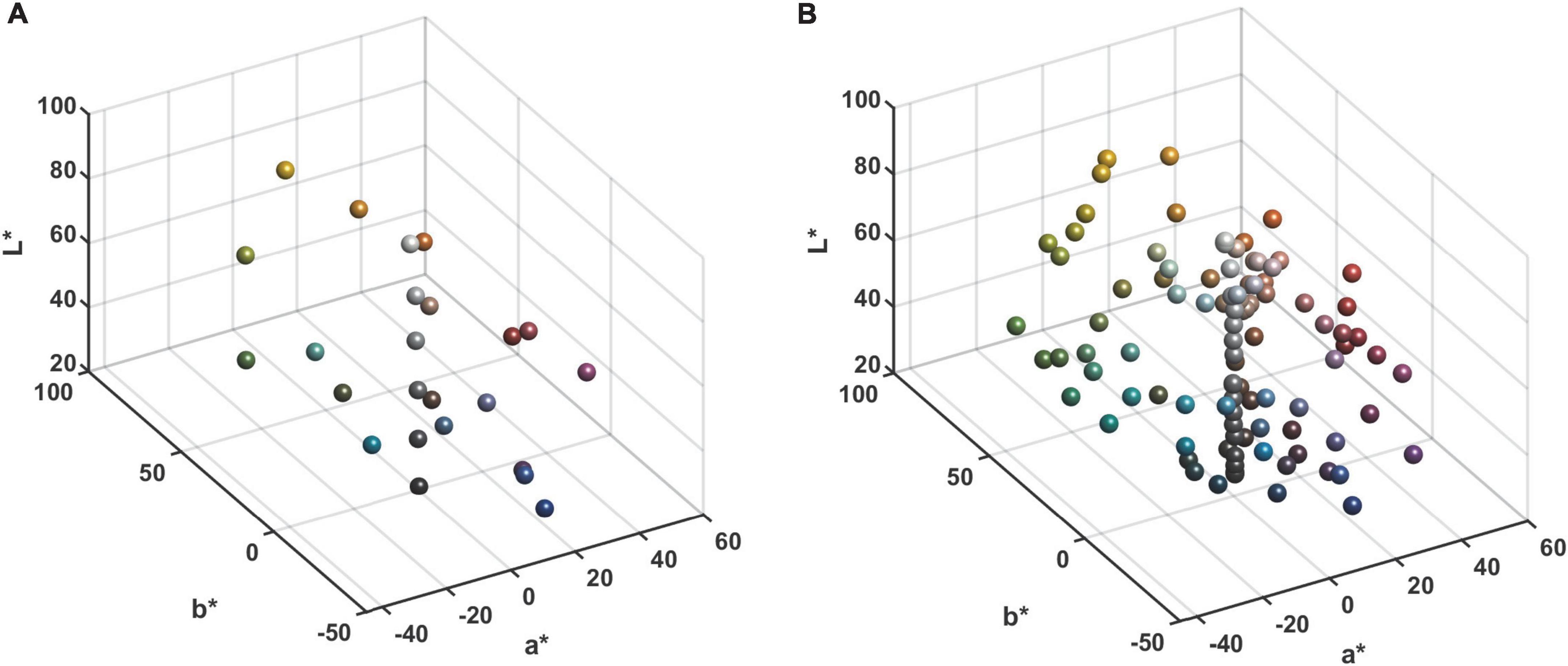

The experiments used the CC chart, whose last row contains six grayscale gradient patches, and the SG chart. The distribution of the color patches of the CC and SG charts in CIELAB color space is shown in Figure 4.

Figure 4. Distribution of the Xrite ColorChecker chart samples in CIELAB color space: (A) The CC chart. (B) The SG chart.

The devices comprise three Android phones and two Apple phones, with the Nikon D3x camera used for comparison. The main specifications and CMOS parameters of the six devices are listed in Table 1. Most phones' built-in camera apps were used to take photos; for phones whose camera app does not support raw output, the Lightroom app was used to capture raw responses in DNG format. Raw files from the Nikon D3x were in NEF format.

A raw file mainly contains the four-channel response of the CMOS Bayer filter array. We wrote a MATLAB batch-processing program for raw files based on the open-source software dcraw, with three main steps: linear normalization of the response values, white balance recovery, and demosaicing; it outputs a linear, uncompressed three-channel TIFF image. A specified region of each patch is then cropped to obtain its mean RGB values. These steps convert the camera raw response into three-channel RGB values and complete the extraction of raw-response color information, and all of the processing steps above are linear transformations (Rob, 2014).
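The authors' MATLAB/dcraw program is not shown. The Python sketch below only illustrates why these steps stay linear: black/white-level normalization, per-channel white-balance gains, and patch averaging are all linear maps. It assumes an RGGB Bayer layout with even image dimensions and hypothetical black and white levels, and it replaces demosaicing with simple 2 × 2 binning (a swap-in, not dcraw's interpolation).

```python
import numpy as np

def raw_patch_rgb(mosaic, black, white, wb_gains, roi):
    """Mean linear RGB of one patch from an RGGB Bayer mosaic (hypothetical layout).

    mosaic: 2-D array of raw counts with even height and width.
    black, white: sensor black and saturation levels.
    wb_gains: per-channel (R, G, B) white-balance gains.
    roi: (row0, row1, col0, col1) in binned (half-resolution) pixels.
    """
    x = (np.asarray(mosaic, dtype=float) - black) / (white - black)
    x = np.clip(x, 0.0, 1.0)   # only affects pixels outside the linear range
    r = x[0::2, 0::2]
    g = 0.5 * (x[0::2, 1::2] + x[1::2, 0::2])   # average the two green sites
    b = x[1::2, 1::2]
    rgb = np.stack([r, g, b], axis=-1) * np.asarray(wb_gains, dtype=float)
    r0, r1, c0, c1 = roi
    return rgb[r0:r1, c0:c1].reshape(-1, 3).mean(axis=0)

# Uniform hypothetical 12-bit mosaic at half of the usable range
mosaic = np.full((8, 8), 64 + 0.5 * (4095 - 64))
patch = raw_patch_rgb(mosaic, 64, 4095, (2.0, 1.0, 1.5), (0, 4, 0, 4))
```

Clipping is the one nonlinear step, which is why exposure is controlled so that patch values stay below saturation.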

Because the main camera of a phone usually has the best CMOS sensor, the best imaging capability, and the best optical components, all shots were taken with the main camera. The focal length and aperture of a phone camera are usually fixed, and the apertures of the phones' main cameras differ, so we fixed the ISO and adjusted each device's shutter time to obtain a suitable response (the D3x used its own shooting parameters). For each photo, we aimed to keep the maximum of the three channel values of the white patch between 230 and 245, avoiding over- or underexposure and ensuring a good dynamic range.

The spectral reflectance of each color block was measured by Xrite CI64 spectrophotometer and Color iControl software. The spectral reflectance was taken in the range of 400–700 nm with a wavelength interval of 10 nm and a measurement aperture of 6 mm.

All spectral estimation methods were evaluated with ten-times-repeated ten-fold cross-validation: the samples are randomly partitioned into 10 groups, each group serves once as the test set while the other 9 groups form the training set, and the whole ten-fold procedure is repeated 10 times to ensure stable results. Estimation accuracy is evaluated with four indicators: (1) the spectral root-mean-square error (RMSE), which quantifies the difference between the estimated and measured spectral curves across wavelengths and is calculated as in Equation (18) in 3.2.4; it ranges from 0 to 1, and smaller values indicate smaller error; (2) the spectral goodness-of-fit coefficient (GFC), which measures the similarity in shape between two spectral curves by the cosine of the angle between them; it ranges from 0 to 1, values closer to 1 indicate that the estimated curve fits the measured curve better, and it is calculated as in Equation (21).

(3) the CIELAB color difference ΔE*ab, the Euclidean distance in CIELAB color space, calculated as in Equation (8); and (4) the CIEDE2000 color difference ΔE00, which is computed from CIELAB values like ΔE*ab but incorporates corrections derived from subjective visual experiments so that differences in lightness, chroma, and hue correspond more closely to perceived differences. It is widely regarded as the color difference formula that agrees best with visual perception, and it is calculated as in Equation (22).

Here, kL, kC, and kH are parametric factors for the viewing conditions, set to kL = kC = kH = 1. The differences ΔL′, ΔH′, and ΔC′ are calculated analogously to Equations (10), (11), and (12), except that CIEDE2000 uses a modified a′ coordinate. SL, SC, and SH are the lightness, chroma, and hue weighting functions, and RT is a rotation term that accounts for the interaction between chroma and hue differences in the blue region, bringing the formula closer to visual perception (Yang et al., 2022). Smaller values of ΔE*ab and ΔE00 indicate smaller color perception differences between samples.
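The repeated ten-fold splitting and the four indicators can be sketched as below. RMSE and GFC follow the verbal definitions above (GFC as the absolute cosine of the angle between the two spectra), ΔE*ab is the CIELAB Euclidean distance, and ΔE00 follows the published CIEDE2000 formula with kL = kC = kH = 1. The random seed and the use of NumPy's permutation for fold assignment are our assumptions. The ΔE00 check uses the first pair of the widely used CIEDE2000 test data of Sharma, Wu, and Dalal.

```python
import numpy as np

def repeated_kfold(n_samples, k=10, repeats=10, seed=0):
    """Yield (train_idx, test_idx) for `repeats` independent random k-fold partitions."""
    rng = np.random.default_rng(seed)
    for _ in range(repeats):
        folds = np.array_split(rng.permutation(n_samples), k)
        for i in range(k):
            train = np.concatenate([f for j, f in enumerate(folds) if j != i])
            yield train, folds[i]

def rmse(r, r_hat):
    return float(np.sqrt(np.mean((np.asarray(r) - np.asarray(r_hat)) ** 2)))

def gfc(r, r_hat):
    """|<r, r_hat>| / (||r|| ||r_hat||); 1 means identical spectral shape."""
    return float(abs(np.dot(r, r_hat)) / (np.linalg.norm(r) * np.linalg.norm(r_hat)))

def delta_e_ab(lab1, lab2):
    return float(np.linalg.norm(np.asarray(lab1, float) - np.asarray(lab2, float)))

def delta_e_00(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 color difference between two CIELAB triples."""
    L1, a1, b1 = map(float, lab1)
    L2, a2, b2 = map(float, lab2)
    C_bar = 0.5 * (np.hypot(a1, b1) + np.hypot(a2, b2))
    G = 0.5 * (1 - np.sqrt(C_bar**7 / (C_bar**7 + 25.0**7)))
    a1p, a2p = (1 + G) * a1, (1 + G) * a2          # modified a' axis
    C1p, C2p = np.hypot(a1p, b1), np.hypot(a2p, b2)
    h1p = np.degrees(np.arctan2(b1, a1p)) % 360
    h2p = np.degrees(np.arctan2(b2, a2p)) % 360

    dLp, dCp = L2 - L1, C2p - C1p
    dhp = 0.0
    if C1p * C2p != 0:
        dhp = h2p - h1p
        if dhp > 180:
            dhp -= 360
        elif dhp < -180:
            dhp += 360
    dHp = 2 * np.sqrt(C1p * C2p) * np.sin(np.radians(dhp / 2))

    Lp_bar, Cp_bar = 0.5 * (L1 + L2), 0.5 * (C1p + C2p)
    if C1p * C2p == 0:
        hp_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180:
        hp_bar = 0.5 * (h1p + h2p)
    elif h1p + h2p < 360:
        hp_bar = 0.5 * (h1p + h2p + 360)
    else:
        hp_bar = 0.5 * (h1p + h2p - 360)

    T = (1 - 0.17 * np.cos(np.radians(hp_bar - 30))
         + 0.24 * np.cos(np.radians(2 * hp_bar))
         + 0.32 * np.cos(np.radians(3 * hp_bar + 6))
         - 0.20 * np.cos(np.radians(4 * hp_bar - 63)))
    S_L = 1 + 0.015 * (Lp_bar - 50) ** 2 / np.sqrt(20 + (Lp_bar - 50) ** 2)
    S_C = 1 + 0.045 * Cp_bar
    S_H = 1 + 0.015 * Cp_bar * T
    d_theta = 30 * np.exp(-(((hp_bar - 275) / 25) ** 2))
    R_T = -2 * np.sqrt(Cp_bar**7 / (Cp_bar**7 + 25.0**7)) * np.sin(np.radians(2 * d_theta))

    l, c, h = dLp / (kL * S_L), dCp / (kC * S_C), dHp / (kH * S_H)
    return float(np.sqrt(l**2 + c**2 + h**2 + R_T * c * h))

splits = list(repeated_kfold(140))     # 140 samples, as for the SG chart
print(len(splits))                     # 100
print(round(delta_e_00((50.0, 2.6772, -79.7751), (50.0, 0.0, -82.7485)), 4))  # 2.0425
```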

First, we analyze the linearity of the mobile phone camera raw response. Then, we run spectral estimation experiments with raw responses from several cell phone cameras, using a professional DSLR camera for comparison, to determine whether the phones can achieve comparable estimation accuracy. Most importantly, we compare the proposed method with seven existing methods on the same sample data and analyze the error results and distributions in detail to demonstrate the advantages of the method. Finally, we apply the color-perception-feature weighting strategy to existing methods to demonstrate its positive effect.

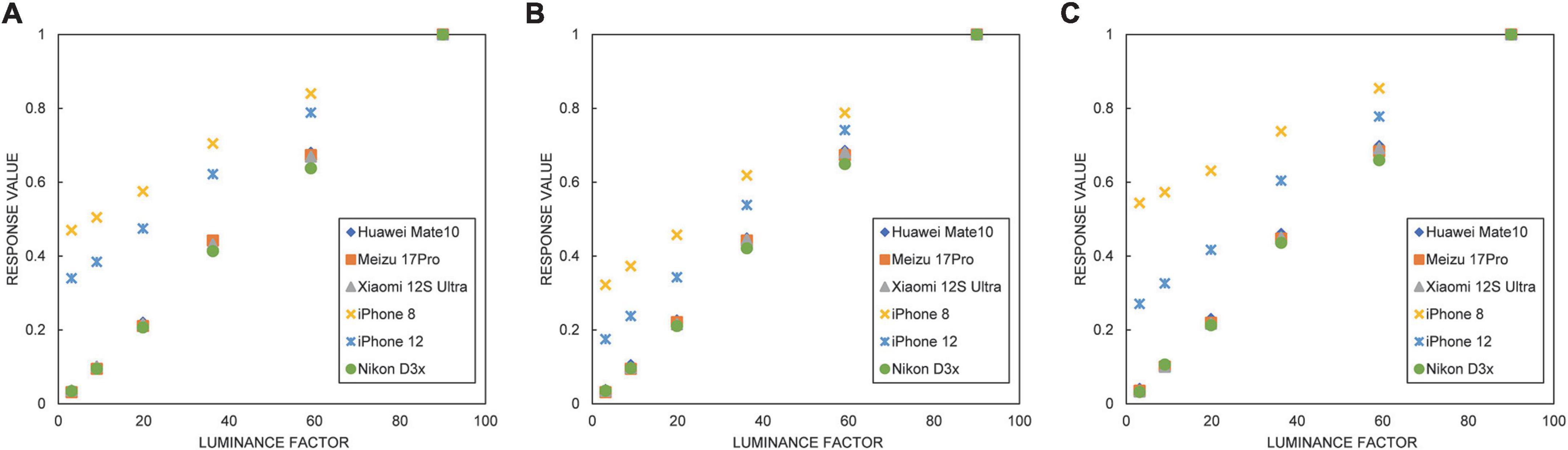

For a thorough validation, we compared several brands of cell phones with different operating systems and price ranges; the devices are listed in Table 1. The Xrite ColorChecker CLASSIC chart was photographed under the same lighting, and the linearity of the responses of its grayscale patches was checked using the raw response extraction method described in “Imaging conditions and raw response extraction,” as shown in Figure 5.

Figure 5. Linearity of the three channels of raw response for six imaging devices: (A) R channel, (B) G channel, and (C) B channel.

In Figure 5, the horizontal axis is the luminance factor (the CIEXYZ Y value) of the grayscale patches of the CC chart, and the vertical axis is the raw-response value of each channel. The raw responses of the three Android phones are as linear as those of the Nikon D3x. The responses of the iPhone 8 and iPhone 12 are also highly linear, but the slopes of their linear fits are slightly smaller than those of the other four devices. Overall, the raw responses of all five phones are highly linear.

We also added JPG photos from the six devices for comparison; Table 2 lists the R-squared values of the linear fit for each channel of each device. The R-squared values of the TIFF images derived from raw files exceed 99% for all devices, whereas those of the JPG images are markedly lower, showing that the camera ISP post-processing substantially reduces the three-channel linearity of JPG pictures. In other words, the cell phone camera raw response is itself highly linear, but after processing by the phone's built-in chip and algorithms, the three-channel response values of JPG images change markedly and are difficult to model or characterize accurately. Raw responses are therefore a better data source for spectral estimation than images in formats such as JPG.
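The R-squared check can be sketched as an ordinary least-squares line fitted to channel value against luminance factor. The luminance factors below are illustrative values for a six-step gray scale, not the paper's measurements, and the gamma-encoded curve only mimics the kind of nonlinearity that JPG processing can introduce.

```python
import numpy as np

def linear_r2(x, y):
    """R^2 of the least-squares line y ≈ a*x + b."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    a, b = np.polyfit(x, y, 1)
    residual = y - (a * x + b)
    return 1.0 - np.sum(residual**2) / np.sum((y - y.mean()) ** 2)

Y_gray = np.array([0.03, 0.09, 0.19, 0.36, 0.59, 0.90])   # illustrative values
raw_like = 0.8 * Y_gray + 0.01                            # linear response
jpg_like = Y_gray ** (1 / 2.2)                            # gamma-encoded response
print(linear_r2(Y_gray, raw_like), linear_r2(Y_gray, jpg_like))
```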

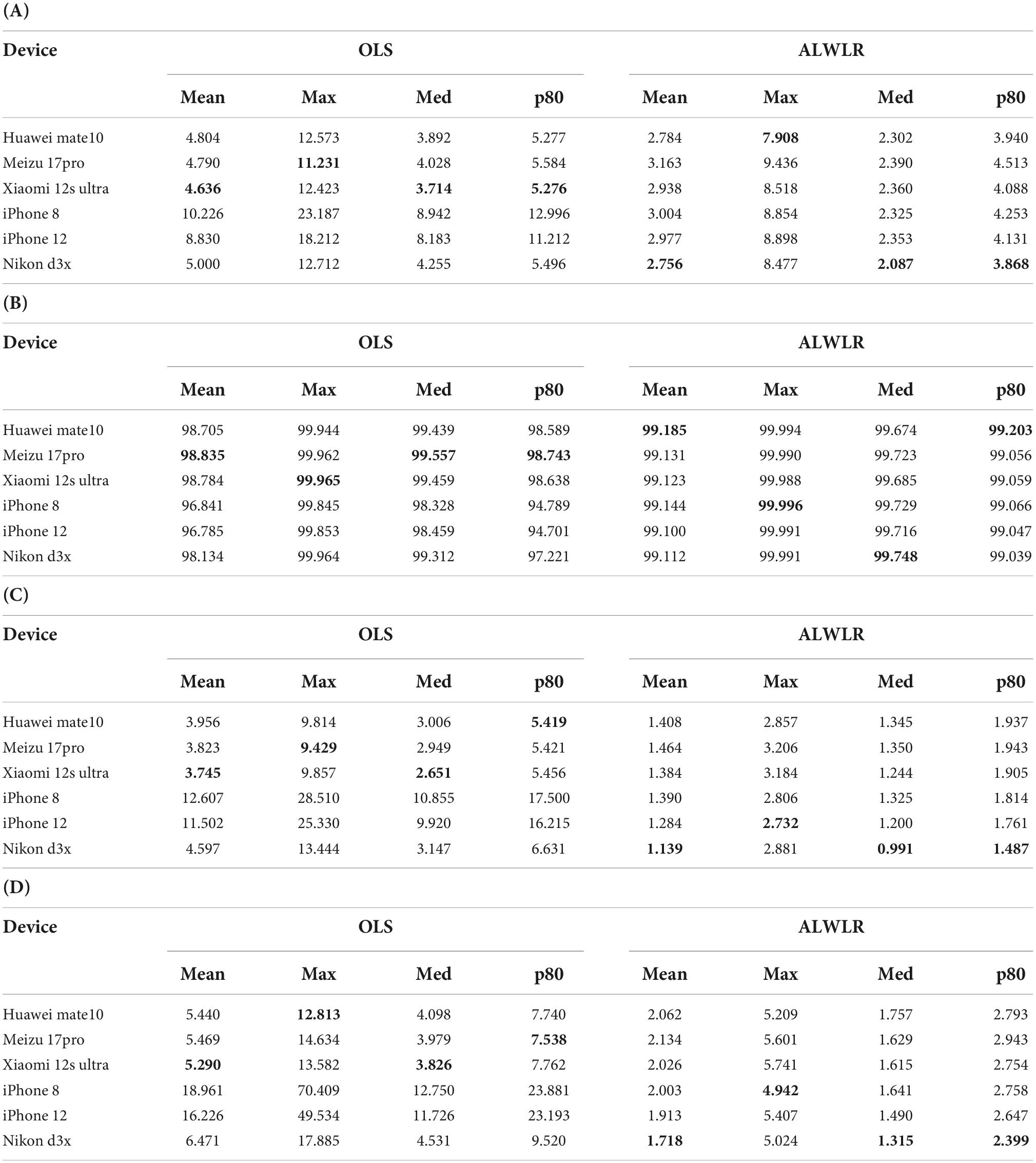

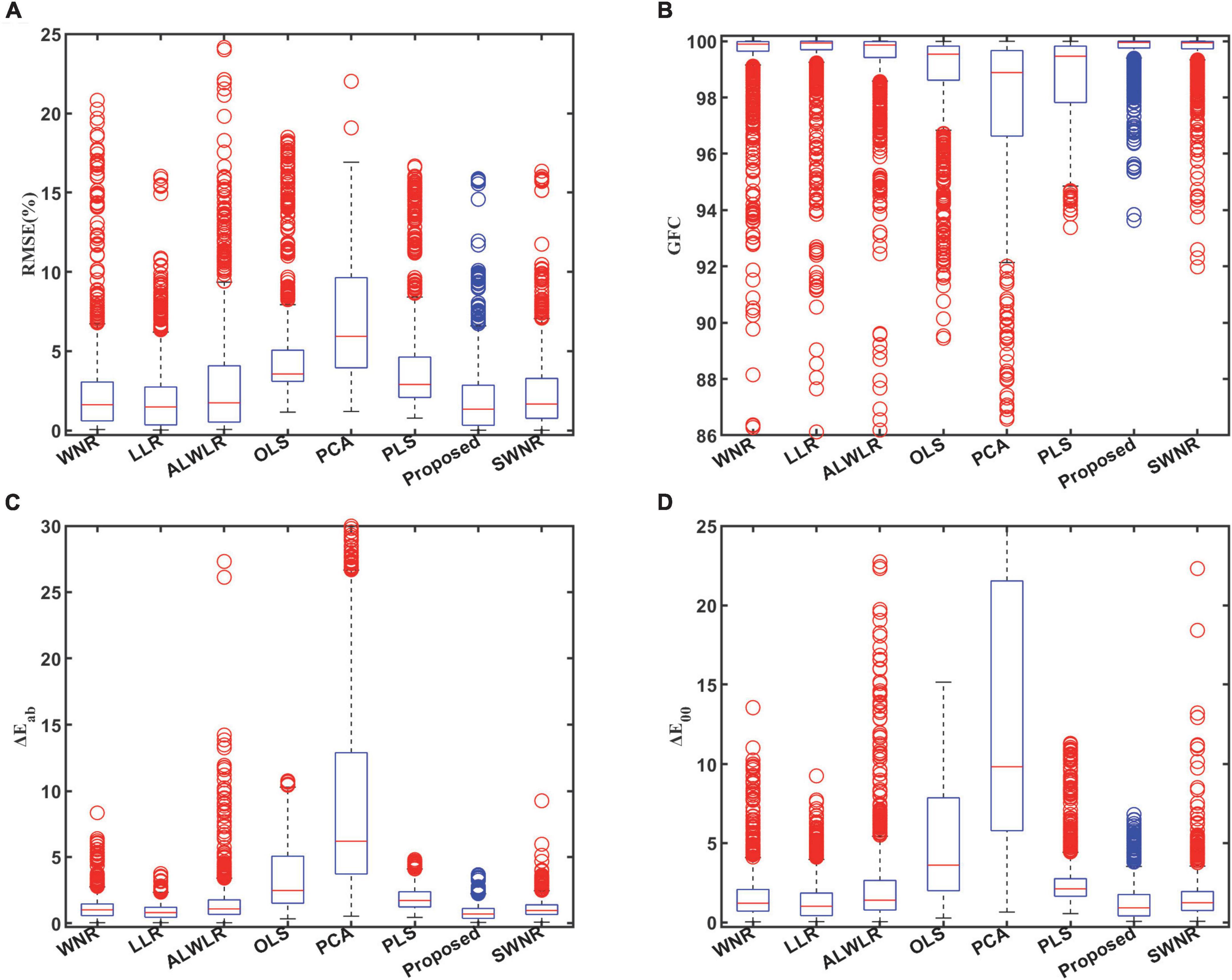

Because the interior of a cell phone is extremely compact, its CMOS sensor is several times smaller than that of a DSLR, and the imaging capability of cell phones differs greatly from that of professional digital cameras; the impact of these factors on spectral estimation accuracy has not been studied or verified. To compare the two kinds of raw response, we used the same six imaging devices to photograph the SG chart in the same way and under the same illumination, took its 140 color patches as the sample set, and applied both the classical OLS method and the improved ALWLR method. To relate the imaging capability of different cell phones to spectral estimation accuracy, the Nikon D3x was used as a benchmark against which each phone's spectral estimation performance was evaluated. As before, ten repetitions of ten-fold cross-validation were used to improve the stability of the results, which are shown in Table 3.
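Ten repetitions of ten-fold cross-validation can be generated as below. This is a sketch, assuming a fresh random partition of the sample set in each repetition; the function name `repeated_kfold` and the seed are hypothetical. Equivalent splits are available from scikit-learn's `RepeatedKFold(n_splits=10, n_repeats=10)`, but this version uses only NumPy.

```python
import numpy as np

def repeated_kfold(n_samples, n_splits=10, n_repeats=10, seed=0):
    """Yield (train_idx, test_idx) index arrays for n_repeats rounds of n_splits-fold CV."""
    rng = np.random.default_rng(seed)
    for _ in range(n_repeats):
        order = rng.permutation(n_samples)           # new random partition each repetition
        folds = np.array_split(order, n_splits)      # fold sizes differ by at most one
        for k in range(n_splits):
            test_idx = folds[k]
            train_idx = np.concatenate([folds[j] for j in range(n_splits) if j != k])
            yield train_idx, test_idx

# 140 SG patches -> 100 train/test splits, each test fold holding 14 patches.
splits = list(repeated_kfold(140))
print(len(splits), len(splits[0][1]))
```

Each patch falls in exactly one test fold per repetition, so every patch is predicted ten times, and error statistics can be computed over all of these predictions.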

Table 3. Performance comparison of raw responses from six devices using the OLS and ALWLR methods: (A) RMSE, (B) GFC, (C) ΔE*ab, and (D) ΔE00.

For the OLS method, all three Android phones outperform the D3x on every metric, with the Xiaomi 12S Ultra performing relatively best in RMSE, ΔE*ab, and ΔE00. For the two iPhones, only the GFC is close to that of the other four devices; their other three errors are all more than twice those of the Android phones. For the ALWLR method the situation changes: because samples are selected and weighted by color difference, the estimation accuracy of all six devices improves substantially and the spread of errors across devices becomes much smaller. In terms of RMSE, the Nikon D3x achieves the best overall accuracy, exceeding the Huawei Mate 10 only in maximum RMSE, and the two iPhones are very close to each other in mean and median RMSE. In terms of GFC, the three Android phones perform comparably, with the Huawei Mate 10 the best and slightly better than the D3x; the Huawei Mate 10, Meizu 17 Pro, and Xiaomi 12S Ultra all have better mean and p80 GFC than the D3x. The two iPhones are also at a similar GFC level. For the chromaticity errors ΔE*ab and ΔE00, the Nikon D3x performs slightly better, but the differences among the six devices are very small: the mean ΔE*ab values span less than 0.4, and the mean ΔE00 values span less than 0.45.
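The metrics and summary statistics reported in the tables can be sketched with their standard definitions: RMSE over wavelengths, the goodness-of-fit coefficient (GFC), and the CIE 1976 color difference ΔE*ab. This is a minimal illustration rather than the authors' code; ΔE00 (CIEDE2000) is omitted for brevity, the function names are hypothetical, and p80 is assumed to be the 80th percentile with NumPy's default linear interpolation, which may differ from the convention behind the tables.

```python
import numpy as np

def rmse(r_true, r_est):
    """Root mean square error between two spectra sampled at the same wavelengths."""
    d = np.asarray(r_true, float) - np.asarray(r_est, float)
    return float(np.sqrt(np.mean(d ** 2)))

def gfc(r_true, r_est):
    """Goodness-of-fit coefficient |<r, r_est>| / (||r|| ||r_est||); 1.0 means identical shape."""
    r, e = np.asarray(r_true, float), np.asarray(r_est, float)
    return float(abs(r @ e) / (np.linalg.norm(r) * np.linalg.norm(e)))

def delta_e_ab(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance between two CIELAB triplets."""
    return float(np.linalg.norm(np.asarray(lab1, float) - np.asarray(lab2, float)))

def summarize(errors):
    """Mean, median, 80th percentile (p80) and maximum of per-sample errors."""
    e = np.asarray(errors, float)
    return {"mean": float(e.mean()), "median": float(np.median(e)),
            "p80": float(np.percentile(e, 80)), "max": float(e.max())}

print(delta_e_ab([50, 0, 0], [53, 4, 0]))   # 5.0 (a 3-4-5 triangle)
print(summarize([1, 2, 3, 4, 5]))
```

Note that GFC is a similarity (larger is better), whereas the other three are errors (smaller is better).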

In general, with a classical spectral estimation method such as OLS, the Android phones outperform the professional camera on all four metrics. With the ALWLR method, after effective sample selection and weighting by color difference, the spectral estimation errors of all devices drop significantly, and the p80 of each error is smaller than the corresponding mean error under OLS. For some phones, the mean of an individual metric is better than that of the professional camera, while the remaining metrics differ only slightly from it, and the chromaticity errors are well below the discrimination threshold of the human eye.

The results show that, under the same conditions, the estimation method has a decisive influence on estimation accuracy; with more sophisticated methods, the difference in accuracy between most cell phone camera raw responses and a professional digital camera is very small, and some metrics are even better for the phones. We therefore consider it broadly feasible for cell phones to replace professional digital cameras as spectral imaging devices.

In this section, we first vary the number of polynomial expansion terms of the proposed method to determine the optimal value. We then compare the proposed method with representative existing methods: ordinary least squares (OLS) (Connah and Hardeberg, 2005), partial least squares (PLS) (Shen et al., 2010), principal component analysis (PCA) (Xiao et al., 2016), the local linear weighting method (LLR) (Liang and Wan, 2017), weighted non-linear regression (WNR) (Amiri and Amirshahi, 2014), adaptive local-weighted linear regression (ALWLR) (Liang et al., 2019), and sequential adaptive weighted non-linear regression (SWNR) (Wang et al., 2020). In addition, the running times of all eight methods are measured and compared.

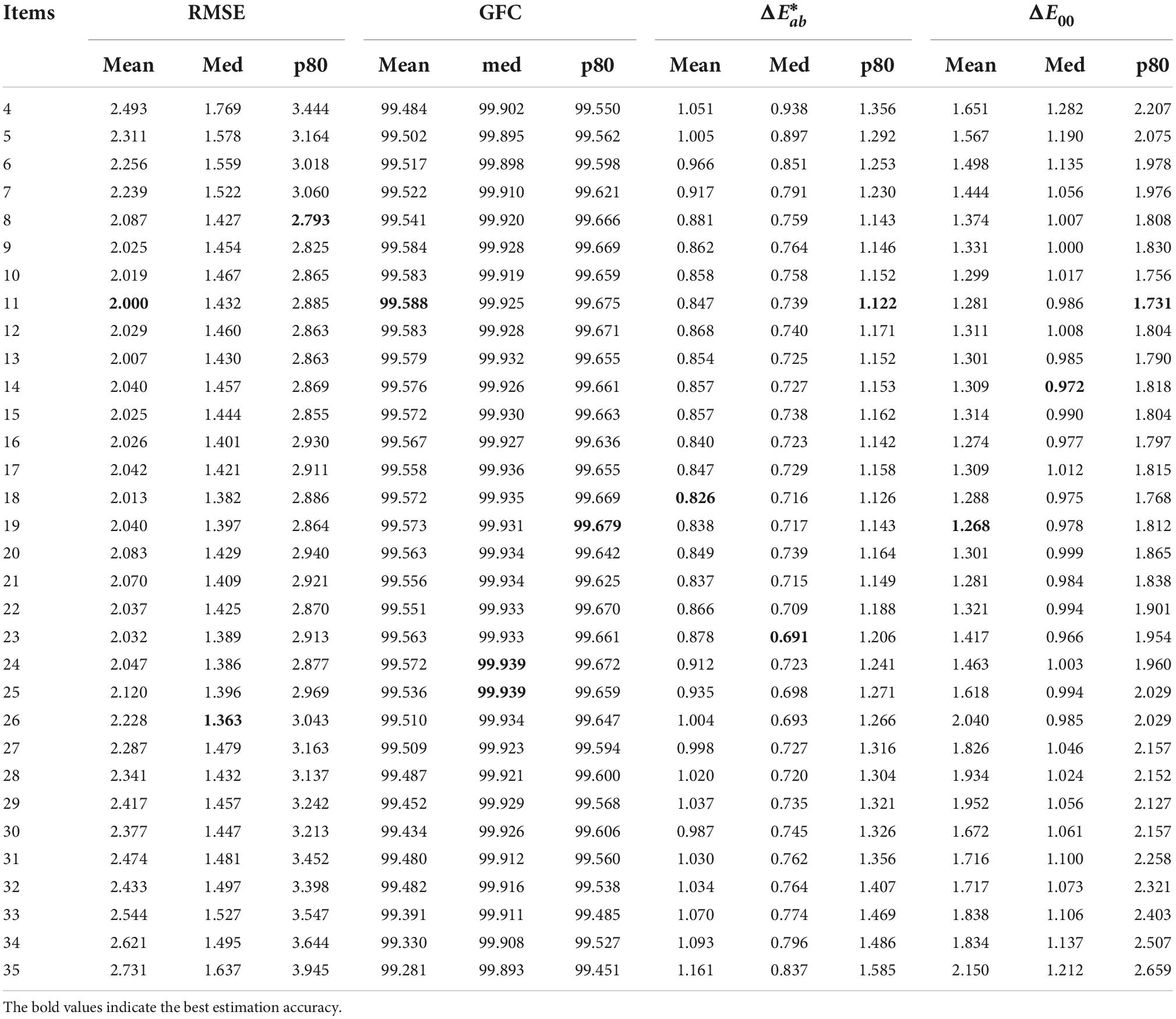

As described in section “The proposed method,” the camera raw response is polynomially expanded, so an appropriate number of expansion terms must be determined. We evaluated the proposed method with the number of terms ranging from 4 to 35, using the SG chart sample set captured by the Xiaomi 12S Ultra, which showed well-balanced performance in section “Mobile phone raw response spectral estimation performance validation,” under the same light source as before. The effect of the number of polynomial terms on the spectral estimation error is shown in Table 4.
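A polynomial expansion with a variable number of terms can be sketched as follows. One observation: the number of monomials in R, G, and B up to total degree d is 4, 10, 20, and 35 for d = 1 to 4, so the tested range of 4 to 35 terms is consistent with expansions up to fourth order, with intermediate counts obtained by truncation. The term ordering below (by total degree, then lexicographic within a degree) and the name `poly_expand` are assumptions; this section does not specify the ordering, so an 18-term expansion here is illustrative rather than the authors' exact term set.

```python
from itertools import combinations_with_replacement
import numpy as np

def poly_expand(rgb, n_terms):
    """Expand raw RGB responses of shape (n, 3) into the first n_terms monomials.

    Assumed ordering: constant, then degree-1, degree-2, ... terms; within a degree,
    the order produced by itertools.combinations_with_replacement (R before G before B).
    """
    rgb = np.atleast_2d(np.asarray(rgb, dtype=float))
    columns = [np.ones(rgb.shape[0])]                      # constant term
    degree = 1
    while len(columns) < n_terms:
        for combo in combinations_with_replacement(range(3), degree):
            columns.append(np.prod(rgb[:, combo], axis=1))  # e.g. (0, 1) -> R*G
            if len(columns) == n_terms:
                break
        degree += 1
    return np.column_stack(columns)

print(poly_expand([[1.0, 2.0, 3.0]], 10))
```

For (R, G, B) = (1, 2, 3), the 10-term row is 1, R, G, B, R², RG, RB, G², GB, B².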

Table 4. Estimation accuracy of the proposed spectral estimation method with the number of polynomial expansion terms ranging from 4 to 35.

The data in Table 4 show that the mean, median, and p80 values of all four metrics follow the same trend, first decreasing and then increasing as the number of terms grows. Between 9 and 22 terms, all errors are clearly in the optimal range and differ very little; beyond 22 terms, accuracy gradually declines. After comparison, we chose 18 terms, which gives relatively balanced errors, as the number of polynomial expansion terms for the rest of the study.

We compared the proposed method in detail with the seven existing methods. For the WNR, ALWLR, and SWNR methods, which include polynomial expansion, the number of terms was fixed at 18. For the methods that include sample selection, the local sample selection parameter L was fixed at 100, meaning that the top 100 local training samples are selected. All other experimental settings were kept identical, and the same SG chart sample set captured by the Xiaomi 12S Ultra was used.
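Local sample selection with L = 100 followed by weighted least squares can be sketched as below. This is a generic illustration of local weighted linear regression, not the exact WNR, ALWLR, SWNR, or proposed formulation: the weights are taken as given, the inverse color-difference weights in the usage example are a hypothetical choice, and the function name and array layout are assumptions.

```python
import numpy as np

def local_weighted_estimate(x_test, X_train, R_train, weights, L=100):
    """Estimate one reflectance spectrum from the L most heavily weighted training samples.

    x_test  : (p,)   expanded response of the test sample
    X_train : (n, p) expanded responses of the training samples
    R_train : (n, w) training reflectances sampled at w wavelengths
    weights : (n,)   non-negative similarity weights (larger = more similar)
    """
    keep = np.argsort(weights)[::-1][:L]                # indices of the L largest weights
    sw = np.sqrt(weights[keep])[:, None]                 # row scaling for weighted least squares
    # Solve min_M sum_i w_i * ||R_i - X_i M||^2 for the (p, w) mapping matrix M.
    M, *_ = np.linalg.lstsq(sw * X_train[keep], sw * R_train[keep], rcond=None)
    return x_test @ M

# Hypothetical usage: weights from inverse CIELAB color difference to the test sample.
rng = np.random.default_rng(1)
X_train = rng.random((120, 4))                           # e.g. 4-term expanded responses
R_train = rng.random((120, 31))                          # e.g. 400-700 nm at 10 nm steps
lab_train = rng.random((120, 3)) * 100
lab_test = np.array([50.0, 10.0, -5.0])
weights = 1.0 / (np.linalg.norm(lab_train - lab_test, axis=1) + 1e-3)
x_test = rng.random(4)
r_hat = local_weighted_estimate(x_test, X_train, R_train, weights, L=100)
print(r_hat.shape)
```

Scaling each row by the square root of its weight turns the weighted problem into an ordinary least-squares problem that `np.linalg.lstsq` solves directly.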

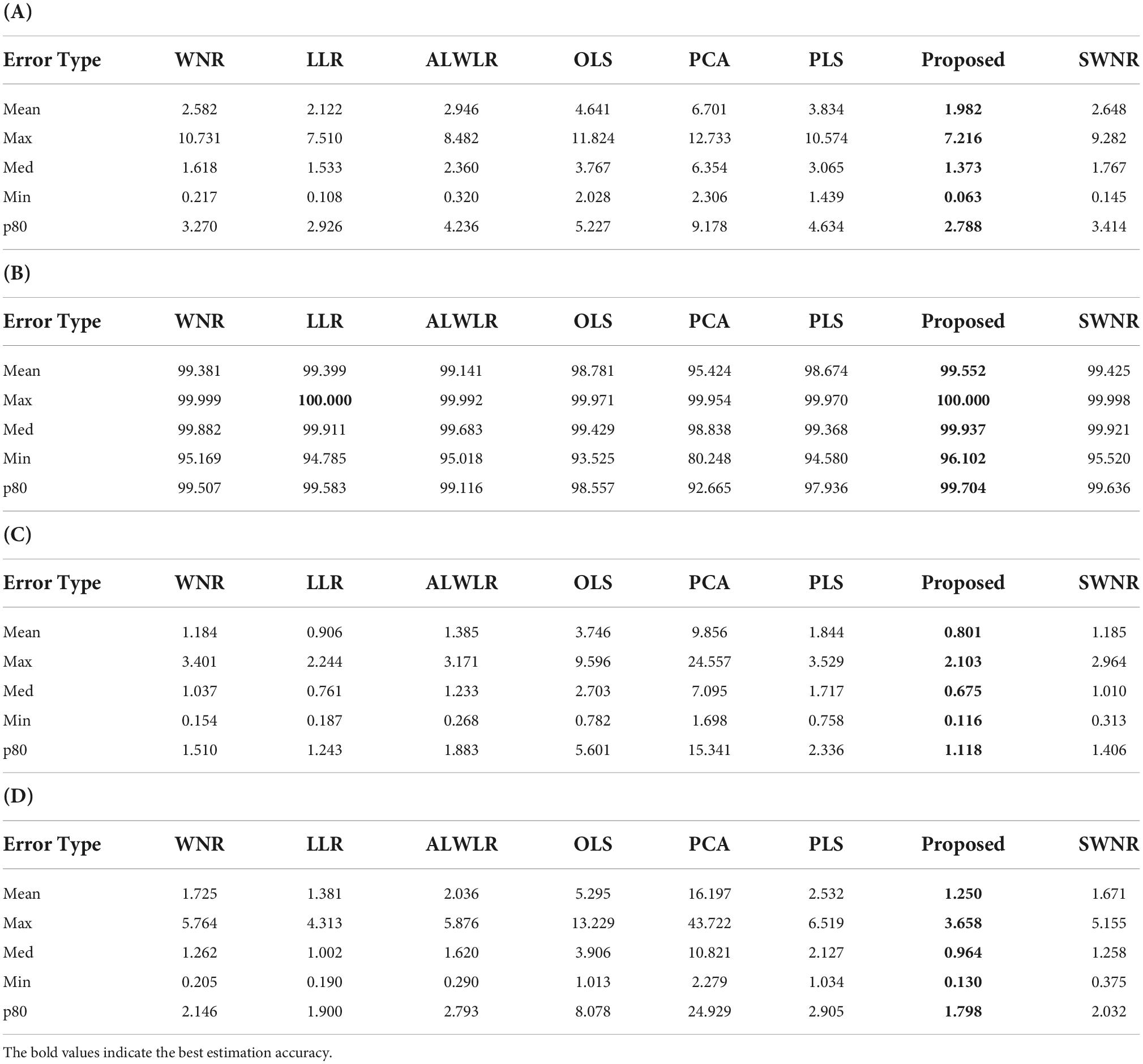

Ten repetitions of 10-fold cross-validation were used for all eight methods to improve the stability of the results, which are shown in Table 5.

Table 5. Comparison of estimation accuracy between the proposed method and seven existing methods: (A) RMSE, (B) GFC, (C) ΔE*ab, and (D) ΔE00.

In Table 5, the best value in each row is shown in bold; the proposed method achieves the best spectral reflectance estimation performance on all four accuracy metrics. Table 5 also shows that the OLS, PCA, and PLS methods have larger overall errors, whereas improved methods such as WNR, LLR, ALWLR, and SWNR, which use various forms of weighting and sample selection, are more accurate and closer to the proposed method. To analyze the error distributions further, we plotted boxplots of the four evaluation metrics for the eight methods (Figure 6).

Figure 6. Boxplots of estimation errors for the comparison of eight methods: (A) RMSE, (B) GFC, (C) ΔE*ab, and (D) ΔE00.

The four boxplots in Figure 6 show that, for the proposed method, the whiskers and the box are more compact, and the box lies closest to the minimum value for all four metrics, indicating a smaller overall error. In addition, the outliers of this method cluster more closely near the upper whisker (the lower whisker for GFC).

We measured the running time of each method as the computation time of 10 repetitions of 10-fold cross-validation (excluding the time to save results), averaged over five runs; the results are shown in Table 6. The hardware was a Lenovo Legion R9000P2021H laptop with an AMD Ryzen 7 5800H CPU (3.2 GHz, 8 cores, 16 threads) and 16 GB of DDR4-3200 memory; the software was MATLAB R2021a on 64-bit Windows 10. The OLS and PLS methods, which neither weight nor select samples, complete ten repetitions of ten-fold cross-validation in under 1 s; the WNR, LLR, and ALWLR methods, which weight and select samples once, take slightly more than 1.5 s; and the proposed method takes about 0.5 s longer than the SWNR method, which also weights samples twice, because of the additional computation of the three color perception features. The average times of all methods are within 2 s, so the differences in running time are very small even on a home computer.

Based on these three comparisons, the proposed method outperforms existing methods on every accuracy metric, with a smaller overall error and a more compact error distribution, while its time cost is only slightly higher than that of existing methods. This demonstrates the advantage of the proposed method.

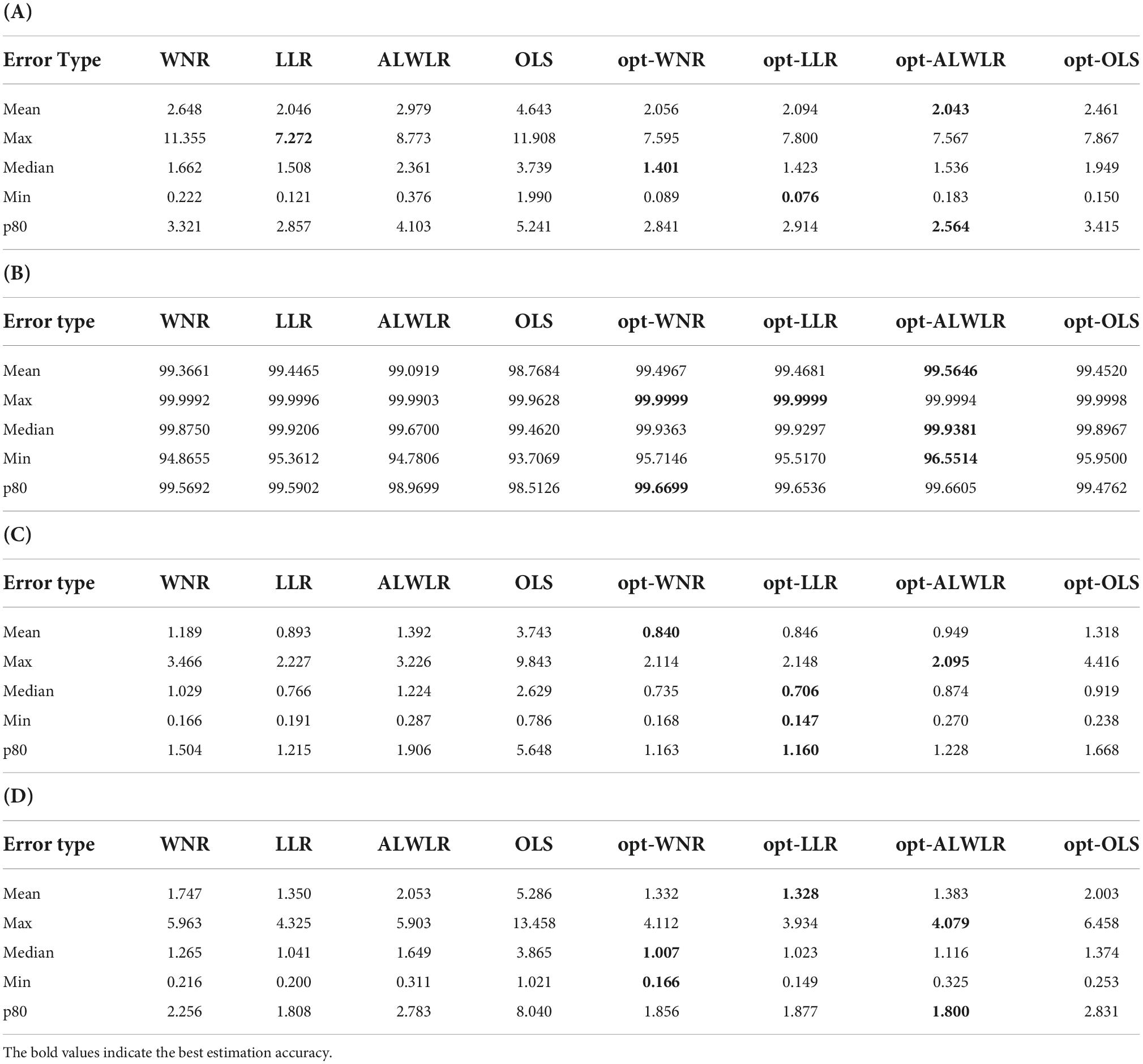

The novelty of this method is a weighting strategy based on color perception features in the CIELAB uniform color space: samples are weighted by the differences in their color perception features in CIELAB rather than by color difference, which achieves better performance. In this section, we apply the proposed weighting strategy to the OLS, WNR, LLR, and ALWLR methods. The sample set is again the SG chart captured by the Xiaomi 12S Ultra. Table 7 presents the estimation accuracies of these four methods before and after applying the strategy; methods with the prefix ‘opt-’ are those to which the proposed weighting strategy has been applied.

Table 7. Comparison of the estimation accuracy of four methods before and after applying the weighting strategy: (A) RMSE, (B) GFC, (C) ΔE*ab, and (D) ΔE00.

Overall, Table 7 shows that all four methods achieve lower estimation errors after applying this weighting strategy. Opt-OLS improves the most because its original error is relatively large. The errors of opt-WNR, opt-LLR, and opt-ALWLR are also significantly reduced relative to the original methods on all four metrics; for example, in the GFC data of sub-table (B), the p80 values of these three methods exceed 99.6%, and in sub-table (C), the mean and median ΔE*ab of all three fall below 1.

In summary, for the OLS method, which has no sample weighting or selection, applying the present weighting strategy brings a significant improvement. For methods such as WNR, LLR, and ALWLR, which already use sample weighting and selection, both the spectral and the chromaticity errors are also noticeably reduced. These results confirm the effectiveness of the proposed weighting strategy.

The proposed method adopts the two-stage sample weighting and selection idea of the SWNR method. It improves the weighting of the first spectral estimation by replacing weighting based on color difference or on the chromaticity vector angle with weighting based on color perception features in the CIELAB color space, which measures the color difference between samples more accurately and yields better estimation accuracy.
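The second stage, as summarized in the abstract, selects training samples by the RMSE between their reflectances and the first-stage estimate and weights them by that RMSE. A minimal sketch of this selection step, assuming an inverse-RMSE weight 1/(RMSE + ε); the weighting function, the value of ε, and the function name `second_stage_selection` are hypothetical, since this section does not give them.

```python
import numpy as np

def second_stage_selection(r_first, R_train, L=100, eps=1e-3):
    """Pick the L training spectra closest in RMSE to a first-stage estimate and weight them.

    r_first : (w,)   first-stage reflectance estimate for one test sample
    R_train : (n, w) training reflectances
    Returns (indices, weights); the weight form 1 / (rmse + eps) is an assumption.
    """
    rmse = np.sqrt(np.mean((R_train - r_first[None, :]) ** 2, axis=1))
    keep = np.argsort(rmse)[:L]                     # smallest RMSE first
    return keep, 1.0 / (rmse[keep] + eps)

R_train = np.array([[0.1, 0.2, 0.3],
                    [0.5, 0.5, 0.5],
                    [0.2, 0.2, 0.3],
                    [0.9, 0.8, 0.7]])
idx, w = second_stage_selection(np.array([0.2, 0.2, 0.3]), R_train, L=2)
print(idx, w)
```

The selected indices and weights would then feed a weighted regression such as the local weighted least-squares sketch for the second spectral reconstruction.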

The color perception features of the CIELAB uniform color space, namely hue, chroma, and lightness, are closely related to human visual perception. In our weighting approach the weights are tied directly to these three features, which avoids assigning identical weights to samples merely because their Euclidean color differences are equal; this makes it more effective than the weighting used in existing methods.
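The point about equal Euclidean color differences can be illustrated by splitting a CIELAB difference into lightness, chroma, and hue components with the CIE 1976 definitions, where ΔE*ab² = ΔL*² + ΔC*ab² + ΔH*ab². In the sketch below, two samples lie at the same ΔE*ab = 5 from a reference, yet one differs only in lightness and the other mostly in hue. How the proposed method turns these components into weights is not given in this section, so no weighting formula is implied; the function name and colors are hypothetical.

```python
import numpy as np

def lch_differences(lab_ref, lab_sample):
    """Return (dL, dC, dH, dE) between two CIELAB colors (CIE 1976 definitions).

    dH is the magnitude of the metric hue difference, so dE**2 == dL**2 + dC**2 + dH**2.
    """
    L1, a1, b1 = lab_ref
    L2, a2, b2 = lab_sample
    dL = L2 - L1                                             # lightness difference
    dC = np.hypot(a2, b2) - np.hypot(a1, b1)                 # chroma C*ab = sqrt(a*^2 + b*^2)
    dE = np.sqrt(dL ** 2 + (a2 - a1) ** 2 + (b2 - b1) ** 2)  # CIE 1976 color difference
    dH = np.sqrt(max(dE ** 2 - dL ** 2 - dC ** 2, 0.0))      # clip tiny negative rounding errors
    return dL, dC, dH, dE

ref = (50.0, 20.0, 0.0)
lighter = (55.0, 20.0, 0.0)      # differs only in lightness
hue_shift = (50.0, 20.0, 5.0)    # same dE, mostly a hue change
for name, lab in [("lighter", lighter), ("hue_shift", hue_shift)]:
    dL, dC, dH, dE = lch_differences(ref, lab)
    print(f"{name}: dL={dL:.2f} dC={dC:.2f} dH={dH:.2f} dE={dE:.2f}")
```

A pure color-difference weight would treat both samples identically, whereas a weighting that looks at ΔL*, ΔC*ab, and ΔH*ab separately can distinguish them.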

This study also has the following limitations: (1) all experiments use the 140 color patches of the SG chart as the sample set, so further experiments on other sample sets are needed; (2) the method was validated under a single light source, and its stability under other light sources remains to be studied.

This study starts from the cell phone camera raw response. First, we examined the linearity and spectral estimation performance of cell phone camera raw responses, confirming that they are highly linear and that their spectral estimation performance is comparable to that of professional digital cameras under the same conditions, which demonstrates the feasibility of using cell phone camera raw responses for spectral estimation. We then proposed a sequential adaptive weighted spectral estimation method based on color perception features and investigated the effect of polynomial expansion on it to determine the optimal number of expansion terms. Most importantly, detailed comparisons with existing methods on cell phone camera raw responses show that the proposed method performs best on all four metrics of spectral and chromaticity error. Finally, applying the color-perception-feature weighting strategy to existing methods improved their estimation accuracy, demonstrating the advantage of the method and the effectiveness of the weighting strategy in reducing estimation error.

Future work should further characterize cell phone camera imaging, improve spectral estimation methods on more diverse color sample sets to enhance their generalization, and study spectral estimation with cell phone cameras under different light sources.

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

DL: conceptualization, methodology, software, investigation, formal analysis, writing – original draft, writing – review and editing, and visualization. XWW: data curation, formal analysis, and writing – review and editing. JL: conceptualization, writing – review and editing. TW: writing – review and editing. XXW: resources, supervision, writing – review and editing. All authors contributed to the article and approved the submitted version.

This study was supported by the Hubei Province Natural Science Foundation, China, Research on Key Technologies of Multispectral Imaging in Open Environment Based on Spectral Reconstruction (2022CFB), and National Natural Science Foundation of China (NSFC) (61275172 and 61575147).

The authors would like to thank the graduate students Yanhong Yang, Yue Dan, Xiangru Ren, Yu Fu, and Yiren Liu at the Research Center of Graphic Communication, Printing and Packing of Wuhan University for providing mobile phones for the experiment. We also thank Yanhong Yang for help processing the raw responses in MATLAB.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Amiri, M. M., and Amirshahi, S. H. (2014). A hybrid of weighted regression and linear models for extraction of reflectance spectra from CIEXYZ tristimulus values. Opt. Rev. 21, 816–825. doi: 10.1007/s10043-014-0134-6

Cao, B., Liao, N., and Cheng, H. (2017). Spectral reflectance reconstruction from RGB images based on weighting smaller color difference group. Color Res. Appl. 42, 327–332. doi: 10.1002/col.22091

Connah, D., and Hardeberg, J. Y. (2005). “Spectral recovery using polynomial models,” in Color Imaging X: Processing, Hardcopy, and Applications, eds R. Eschbach and G. G. Marcu (Bellingham: SPIE), 65–75. doi: 10.1117/12.586315

Del Pozo, S., Rodríguez-Gonzálvez, P., Sánchez-Aparicio, L. J., Muñoz-Nieto, A., Hernández-López, D., Felipe-García, B., et al. (2017). “Multispectral Imaging in Cultural Heritage Conservation,” in The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences. (Göttingen: Copernicus GmbH), 155–162. doi: 10.5194/isprs-archives-XLII-2-W5-155-2017

Heikkinen, V., Jetsu, T., Parkkinen, J., Hauta-Kasari, M., Jaaskelainen, T., and Lee, S. D. (2007). Regularized learning framework in the estimation of reflectance spectra from camera responses. J. Opt. Soc. Am. A 24:2673. doi: 10.1364/JOSAA.24.002673

Huang, Z., Chen, W., Liu, Q., Wang, Y., Pointer, M. R., Liu, Y., et al. (2021). Towards an optimum colour preference metric for white light sources: A comprehensive investigation based on empirical data. Opt. Express 29, 6302–6319. doi: 10.1364/OE.413389

Hussain, I., and Bowden, A. K. (2021). Smartphone-based optical spectroscopic platforms for biomedical applications: A review invited. Biomed. Opt. Express 12, 1974–1998. doi: 10.1364/boe.416753

Kim, S., Kim, J., Hwang, M., Kim, M., Jo, S. J., Je, M., et al. (2019). Smartphone-based multispectral imaging and machine-learning based analysis for discrimination between seborrheic dermatitis and psoriasis on the scalp. Biomed. Opt. Express 10, 879–891. doi: 10.1364/boe.10.000879

Liang, J. X., Xiao, K. D., Pointer, M. R., Wan, X. X., and Li, C. J. (2019). Spectra estimation from raw camera responses based on adaptive local-weighted linear regression. Opt. Express 27, 5165–5180. doi: 10.1364/oe.27.005165

Liang, J., and Wan, X. (2017). Optimized method for spectral reflectance reconstruction from camera responses. Opt. Express 25, 28273–28287. doi: 10.1364/OE.25.028273

Liang, J., Xiao, K., and Hu, X. (2021). Investigation of light source effects on digital camera-based spectral estimation. Opt. Express 29, 43899–43916. doi: 10.1364/OE.447031

Liang, J., Zhu, Q., Liu, Q., and Xiao, K. (2022). Optimal selection of representative samples for efficient digital camera-based spectra recovery. Color Res. Appl. 47, 107–120. doi: 10.1002/col.22718

Murakami, Y., Obi, T., Yamaguchi, M., and Ohyama, N. (2002). Nonlinear estimation of spectral reflectance based on Gaussian mixture distribution for color image reproduction. Appl. Opt. 41, 4840–4847. doi: 10.1364/AO.41.004840

Rateni, G., Dario, P., and Cavallo, F. (2017). Smartphone-based food diagnostic technologies: A Review. Sensors 17:1453. doi: 10.3390/s17061453

Rob, S. (2014). Processing Raw Images in Matlab. Available online at: http://www.rcsumner.net/raw_guide/ (accessed July 4, 2022).

Shen, H.-L., and Xin, J. H. (2006). Spectral characterization of a color scanner based on optimized adaptive estimation. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 23, 1566–1569. doi: 10.1364/JOSAA.23.001566

Shen, H.-L., Wan, H.-J., and Zhang, Z.-C. (2010). Estimating reflectance from multispectral camera responses based on partial least-squares regression. J. Electron. Imaging 19:020501. doi: 10.1117/1.3385782

Stuart, M. B., McGonigle, A. J. S., Davies, M., Hobbs, M. J., Boone, N. A., Stanger, L. R., et al. (2021). Low-Cost Hyperspectral Imaging with A Smartphone. J. Imaging 7:136. doi: 10.3390/jimaging7080136

Tominaga, S., Nishi, S., and Ohtera, R. (2021). Measurement and Estimation of Spectral Sensitivity Functions for Mobile Phone Cameras. Sensors 21:4985. doi: 10.3390/s21154985

Tominaga, S., Nishi, S., Ohtera, R., and Sakai, H. (2022). Improved method for spectral reflectance estimation and application to mobile phone cameras. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 39, 494–508. doi: 10.1364/JOSAA.449347

Wang, L. X., Wan, X. X., Xiao, G. S., and Liang, J. X. (2020). Sequential adaptive estimation for spectral reflectance based on camera responses. Opt. Express 28, 25830–25842. doi: 10.1364/oe.389614

Xiao, G., Wan, X., Wang, L., and Liu, S. (2019). Reflectance spectra reconstruction from trichromatic camera based on kernel partial least square method. Opt. Express 27, 34921–34936. doi: 10.1364/OE.27.034921

Xiao, K., Zhu, Y., Li, C., Connah, D., Yates, J. M., and Wuerger, S. (2016). Improved method for skin reflectance reconstruction from camera images. Opt. Express 24, 14934–14950. doi: 10.1364/OE.24.014934

Yang, Y., Zou, T., Huang, G., and Zhang, W. (2022). A high visual quality color image reversible data hiding scheme based on B-R-G embedding principle and CIEDE2000 assessment metric. IEEE Trans. Circuits Syst. Video Technol. 32, 1860–1874. doi: 10.1109/TCSVT.2021.3084676

Keywords: spectral estimation, spectral reflectance, mobile phone camera, color perception feature, CIELAB uniform color space, spectral imaging, sample weighting, raw response

Citation: Liu D, Wu X, Liang J, Wang T and Wan X (2022) An improved spectral estimation method based on color perception features of mobile phone camera. Front. Neurosci. 16:1031505. doi: 10.3389/fnins.2022.1031505

Received: 30 August 2022; Accepted: 03 October 2022;

Published: 19 October 2022.

Edited by:

Yandan Lin, Fudan University, China

Reviewed by:

Zheng Huang, Hong Kong Polytechnic University, Hong Kong SAR, China

Copyright © 2022 Liu, Wu, Liang, Wang and Wan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaoxia Wan, d2FuQHdodS5lZHUuY24=

