ORIGINAL RESEARCH article

Front. Neurosci., 06 November 2019

Sec. Brain Imaging Methods

Volume 13 - 2019 | https://doi.org/10.3389/fnins.2019.01159

This article is part of the Research Topic Brain Imaging Methods Editor’s Pick 2021.

Electroencephalography (EEG) and source estimation can be used to identify brain areas activated during a task, which could offer greater insight into cortical dynamics. Source estimation requires knowledge of the locations of the EEG electrodes, which can be provided by a template or obtained by digitizing the EEG electrode locations. Operator skill and the inherent uncertainties of a digitizing system likely produce a range of digitization reliabilities, which could affect source estimation and the interpretation of the estimated source locations. Here, we compared the reliabilities of five digitizing methods (ultrasound, structured-light 3D scan, infrared 3D scan, motion capture probe, and motion capture) and determined the relationship between digitization reliability and source estimation uncertainty, assuming other contributors to source estimation uncertainty were constant. We digitized a mannequin head five times with each method and quantified the reliability and validity of each method. We created five hundred sets of electrode locations based on our reliability results and applied a dipole fitting algorithm (DIPFIT) to perform source estimation. The motion capture method, which recorded the locations of markers placed directly on the electrodes, had the best reliability, with an average electrode variability of 0.001 cm. In order of decreasing reliability, it was followed by the method using a digitizing probe in the motion capture system, an infrared 3D scanner, a structured-light 3D scanner, and an ultrasound digitization system. Unsurprisingly, uncertainty in the estimated source locations increased with greater variability of the EEG electrode locations and with less reliable digitizing systems. If EEG electrode location variability was ∼1 cm, a single source could shift by as much as 2 cm.
To help translate these distances into practical terms, we quantified Brodmann area accuracy for each digitizing method and found that the average Brodmann area accuracy was >80% for all digitizing methods. Using a template of electrode locations reduced the Brodmann area accuracy to ∼50%. Overall, more reliable digitizing methods can reduce source estimation uncertainty, but the significance of the source estimation uncertainty depends on the desired spatial resolution. For accurate Brodmann area identification, any of the digitizing methods tested can be used confidently.

Estimating active cortical sources using electroencephalography (EEG) is becoming widely adopted in multiple research areas as a non-invasive and mobile functional brain imaging modality (Nyström, 2008; Landsness et al., 2011; Bradley C. et al., 2016; Tsolaki et al., 2017). EEG is the recording of electrical activity on the scalp and is appealing for studying cortical dynamics during movement and decision making because of its high (millisecond) temporal resolution. One of the challenges of using EEG is that the signal recorded at an EEG electrode is a mixture of electrical activity from multiple sources, including the cortex, muscles, heart, and eyes, as well as 60 Hz noise from power lines and motion artifacts from cable sway and head movements (Kline et al., 2015; Symeonidou et al., 2018). To meaningfully relate EEG analyses to brain function, unwanted source content such as muscle activity, eye blinks, and motion artifacts needs to be attenuated or separated from the cortical signal content. A multitude of tools, such as independent component analysis, artifact rejection algorithms, and phantom heads, have been developed to separate the source signals and extract the underlying cortical signal (Delorme et al., 2012; Mullen et al., 2013; Artoni et al., 2014; Oliveira et al., 2016; Nordin et al., 2018). High-density EEG and improved EEG post-processing techniques have also improved the spatial resolution of source estimation to ∼1 cm in experimental studies (He and Musha, 1989; Lantz et al., 2003; Scarff et al., 2004; Klamer et al., 2015; Hedrich et al., 2017; Seeber et al., 2019).

Source estimation uses the EEG signals and the locations of the EEG electrodes to estimate the locations of the cortical sources that produced the signals measured on the scalp. An intuitive assumption of source estimation is that precise placement of the EEG electrodes on the scalp is essential for accurate estimation of source locations (Keil et al., 2014). Computational studies reported shifts of 0.5–1.2 cm in estimated source locations as a result of a 0.5 cm (or 5°) error in electrode digitization (Kavanagh et al., 1978; Khosla et al., 1999; Wang and Gotman, 2001; Beltrachini et al., 2011; Akalin Acar and Makeig, 2013). For EEG studies conducted inside a magnetic resonance imaging (MRI) scanner, the electrode locations with respect to the cortex can be captured and processed with <0.3 cm position error, which results in near-perfect alignment of identified brain areas (Scarff et al., 2004; Marino et al., 2016). However, for studies that do not involve MRI, the electrode locations should be “digitized,” i.e., recorded via a three-dimensional (3D) position recording method (Koessler et al., 2007). These digitized locations can then be coupled with either a subject-specific or an averaged template of the brain structure obtained from MRI or other imaging techniques to perform EEG source estimation.

Just over a decade ago, in the mid-2000s, the main digitizing technologies available were based on ultrasound and electromagnetism, which were expensive and time consuming and needed trained operators (Koessler et al., 2007; Rodríguez-Calvache et al., 2018). An ultrasound digitizing system uses differences in ultrasound-wave travel times from emitters on the person's face and on a digitizing wand to an array of receivers to estimate the 3D location of the wand tip with respect to the face emitters. An electromagnetic system tracks the locations of receivers placed on the person's head and on a wand within an emitted electromagnetic field to estimate the position of the wand tip with respect to the head receivers. The environment must be clear of magnetic objects when using an electromagnetic digitizing system; otherwise, the digitized electrode locations will be warped (Engels et al., 2013; Cline et al., 2018).
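The ultrasound principle can be illustrated with a simplified geometric sketch. It is not the Zebris/ElGuide algorithm. The text describes the system in terms of travel-time differences, but this sketch assumes absolute times of flight from one emitter to three receivers at known positions, synchronized clocks, and a speed of sound of about 343 m/s (air at roughly 20 °C). It then trilaterates the emitter position. The wand geometry is also hypothetical: the tip is assumed to lie on the line through two wand emitters at a known offset.

```python
import math

SPEED_OF_SOUND_CM_PER_S = 34_300.0  # assumption: air at about 20 degrees C


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _add(a, b):
    return [x + y for x, y in zip(a, b)]


def _scale(a, s):
    return [x * s for x in a]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _unit(a):
    return _scale(a, 1.0 / math.sqrt(_dot(a, a)))


def trilaterate(receivers, times_s):
    """Locate one emitter from its travel times (s) to three receivers (cm).

    Of the two mirror-image solutions, returns the one on the side of the
    receiver plane given by ex x ey (depends on receiver ordering)."""
    p1, p2, p3 = receivers
    r1, r2, r3 = (t * SPEED_OF_SOUND_CM_PER_S for t in times_s)
    # local frame: ex along p1->p2, ey in the receiver plane, ez normal to it
    ex = _unit(_sub(p2, p1))
    i = _dot(ex, _sub(p3, p1))
    ey = _unit(_sub(_sub(p3, p1), _scale(ex, i)))
    ez = _cross(ex, ey)
    d = math.dist(p1, p2)
    j = _dot(ey, _sub(p3, p1))
    # intersection of the three spheres in the local frame
    x = (r1**2 - r2**2 + d**2) / (2 * d)
    y = (r1**2 - r3**2 + i**2 + j**2) / (2 * j) - (i / j) * x
    z = math.sqrt(max(r1**2 - x**2 - y**2, 0.0))  # clamp small negative noise
    return _add(p1, _add(_scale(ex, x), _add(_scale(ey, y), _scale(ez, z))))


def wand_tip(emitter_near, emitter_far, tip_offset_cm):
    """Extrapolate the tip along the line through two wand emitters (hypothetical geometry)."""
    direction = _unit(_sub(emitter_near, emitter_far))
    return _add(emitter_near, _scale(direction, tip_offset_cm))
```

In a real system the tip position would then be expressed relative to the face emitters, so that head movement between recordings cancels out.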

Recent efforts have focused on developing technologies to make digitization more accessible and convenient, mainly by incorporating image-based technologies (Baysal and Sengül, 2010; Koessler et al., 2010). For example, using photogrammetry and motion capture methods for digitization can provide accurate electrode locations in a short period of time (Reis and Lochmann, 2015; Clausner et al., 2017). Photogrammetry involves using cameras to take a series of color images at different view angles. These images can then be analyzed to identify the locations of specific points in the 3D space (Russell et al., 2005; Clausner et al., 2017). Motion capture typically uses multiple infrared cameras around the capture volume to take simultaneous images to identify the locations of reflective or emitting markers. If markers are placed directly on the EEG electrodes, a motion capture system could conveniently record the position of all of the electrodes at once (Engels et al., 2013; Reis and Lochmann, 2015). Motion capture could also be used to record the position of the tip of a probe, a rigid body with multiple markers, to digitize 3D locations of the electrodes with respect to the reflective face markers. Several recent commercial digitizing systems use simple motion capture approaches to digitize EEG electrode locations with or without a probe (Cline et al., 2018; Song et al., 2018; ANT-Neuro, 2019; Rogue-Resolutions, 2019).

Another option for digitizing EEG electrodes that has recently gained much interest is 3D scanning. A common approach for 3D scanning is to detect the infrared or visible reflections of projected light patterns with a camera to estimate the shape of an object (Chen and Kak, 1987). The 3D scanned shape can then be plotted in a software program such as MATLAB, and the locations of specific points on it can be determined. Common EEG analysis toolboxes such as EEGLAB (Delorme and Makeig, 2004) and FieldTrip (Oostenveld et al., 2010) now support using 3D scanners to digitize electrode locations. Studies suggest that 3D scanners can improve digitization accuracy and significantly reduce digitization time (Taberna et al., 2019). Other camera-based systems, such as time-of-flight scanners and virtual reality headsets, have also been reported to provide digitization reliabilities comparable to those of ultrasound or electromagnetic digitizing methods while reducing the time spent digitizing the EEG electrodes (Vema Krishna Murthy et al., 2014; Zhang et al., 2014; Cline et al., 2018).

The purposes of this study were (1) to compare the reliability and validity of five digitizing methods and (2) to quantify the relationship between digitization reliability and source estimation uncertainty. We determined source estimation uncertainty using spatial metrics and Brodmann areas. We hypothesized that less reliable digitizing methods would increase uncertainty in the estimated electrocortical source locations. For our analyses, we assumed that all other contributors to source estimation uncertainty, such as variability of head meshes and assumed electrical conductivity values, were constant.

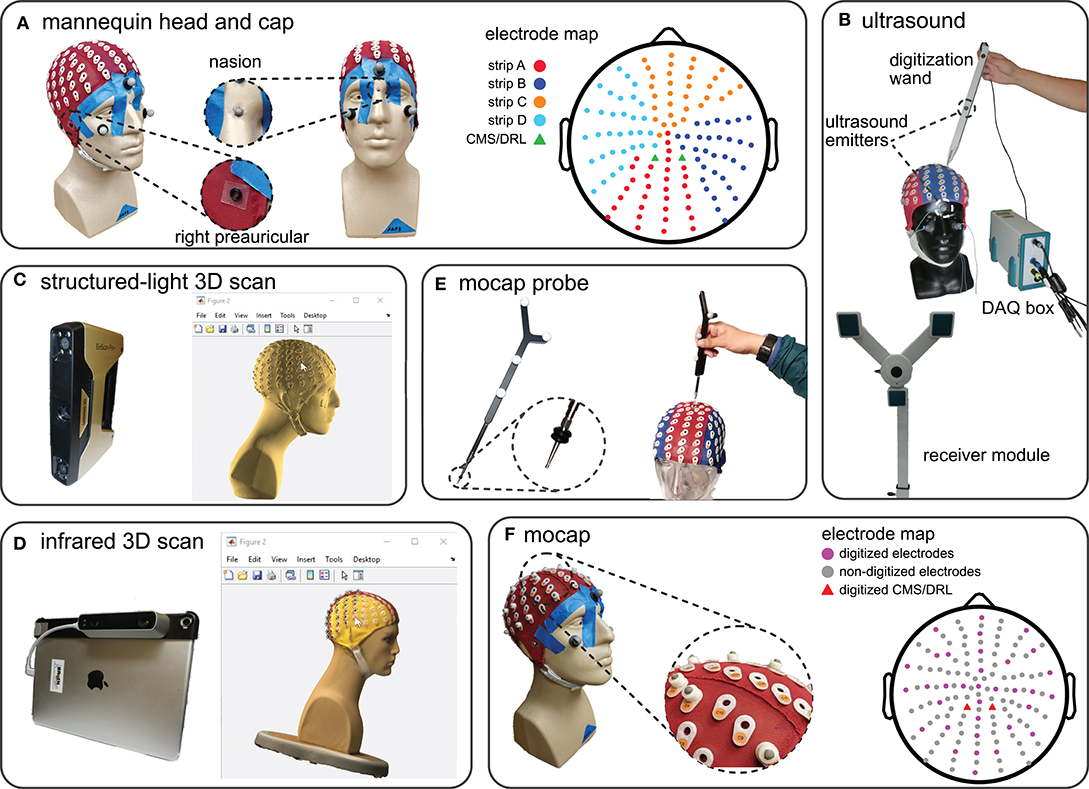

We fitted a mannequin head with a 128-channel EEG cap (ActiveTwo EEG system, BioSemi B.V., Amsterdam, the Netherlands, Figure 1A) and used this mannequin head setup to record multiple digitizations of the locations of the EEG electrodes and fiducials, i.e., right preauricular, left preauricular, and nasion (Klem et al., 1999). To prevent the cap from moving from digitization to digitization, we taped the cap to the mannequin head (Figure 1A). To help ensure that the fiducials were digitized at the same locations for every digitizing method, we marked the fiducials with small 4-mm markers on the mannequin head and with small o-rings on the cap (Figure 1A).

Figure 1. The mannequin head used for digitization and the five digitizing methods tested. (A) The mannequin head fitted with the 128-electrode EEG cap used for all of the digitizing recordings. The right and left preauriculars were marked by o-rings, and the nasion was marked with a reflective marker. The color-coded map of the cap shows the different electrode strips (A–D), which also give the order of digitization. (B) The ultrasound digitizing system and an operator placing the tip of the wand in the electrode well on the cap. Two of the five ultrasound emitters on the face and wand, the data acquisition (DAQ) box, and the receiver module are also indicated. (C) The structured-light 3D scanner and an operator manually marking the locations of individual electrodes of the scanned model in MATLAB. (D) The infrared 3D scanner and an operator manually marking the locations of individual electrodes of the colored 3D scan in MATLAB. (E) The motion capture digitizing probe with a close-up view of the o-rings placed 7 mm away from the tip. The probe has a similar role to the wand in the ultrasound system. (F) The EEG cap with 35 3D-printed EEG electrode-shaped reflective markers, 3 face markers, and 3 fiducial markers used for the motion capture digitization. We placed reflective markers on top of the preauricular o-rings to capture the fiducial locations. The electrode map depicts the approximate locations of the digitized electrodes and grounds.

We compared five methods for digitization: ultrasound, structured-light 3D scanning, infrared 3D scanning, motion capture with a digitizing probe, and motion capture with reflective markers. We calibrated each digitizing device only once and collected all data for each digitizing method in a single session (see Table S1 in the supplement for the calibration results). We also kept the position and orientation of the mannequin head, the start and end points of digitizing, lighting, and temperature constant to avoid introducing additional sources of error into our data collection and analysis.

For each method, four different members of the laboratory digitized the mannequin head five times (one person performed the digitization twice). All of the operators had prior experience in digitizing and were asked to follow each method's specific guidelines. We imported the digitization data to MATLAB (version 9.4, R2018a, Mathworks, Natick, MA) and performed all analyses in MATLAB.

We used a Zebris positioning system with ElGuide software version 1.6 (Zebris Medical GmbH, Tübingen, Germany, Figure 1B) to digitize the electrodes with an ultrasound method. Following the Zebris manual, we placed 3 ultrasound emitters on the face of the mannequin head, placed the receiver module in front of the mannequin head, and used the digitizing wand to record the electrode locations. We calibrated the system using the ElGuide calibration procedure. We marked the fiducials repeatedly until the digitized 3D location of the nasion was within 2 mm of the midline and the two preauriculars differed by <5 mm in the anterior/posterior and top/bottom directions. Operators followed the interactive ElGuide template to digitize each electrode location. This process involved fully inserting the wand tip into each electrode well and ensuring that the receivers could detect all emitters whenever an electrode location was recorded, so that the estimated position of the wand tip was stable.

We used an Einscan Pro+ (Shining 3D Tech. Co. Ltd., Hangzhou, China, Figure 1C) to digitize the electrodes with a structured-light 3D scanner. This scanner estimates the shape of an object from reflections of projected visible light. We calibrated the Einscan Pro+ once with the Einscan calibration board, following the software's step-by-step instructions. We used the scanner's hand-held rapid mode with high detail and allowed the scanner to track both texture and markers during scanning. Each operator scanned the mannequin head until the scan included the cap, fiducials, and face. We then applied the watertight model option to the scan and exported the model as a PLY file to continue the digitization process in MATLAB.

After acquiring the 3D scan, we imported the 3D head model into a software program, where the operator manually marked the EEG electrode locations on the model. We followed the FieldTrip toolbox documentation for digitization using 3D scanners (FieldTrip, 2018) and created a MATLAB script for importing and digitizing 3D models of the mannequin head. The operator first marked the fiducials on the mannequin head model in MATLAB to define the head coordinate system. Then, the operator marked the locations of the electrodes on the screen for each section of the cap in alphanumerical order (A, B, C, D, and the fiducials; total: 131 locations, Figure 1A). Because these scans were not in color and the letter labels of the electrodes were not visible on the 3D model, the operators referred to a physical EEG cap to help mark the locations in the expected order.

We used the Structure sensor (model ST01, Occipital Inc., San Francisco, CA) integrated with an Apple® iPad (10-inch Pro) to digitize the electrodes with an infrared dot-projection 3D scanner (Figure 1D). This scanner works on principles similar to those of a structured-light scanner but uses infrared light projection to estimate the shape of objects. We calibrated the sensor in daylight and office light according to the manual. We scanned the head using the high color and mesh resolutions. The operator started scanning once the mannequin head was completely within the sensor's field of view. The Structure sensor interface gives the operator visual feedback to help obtain a complete, high-quality scan. We visually confirmed that the scanned model matched the mannequin and then exported the model to MATLAB. We used the FieldTrip toolbox to import and digitize the 3D mannequin head scans following the same procedure described for the structured-light 3D scan digitization.

We used a digitizing probe and a 22-camera motion capture system (OptiTrack, Corvallis, OR) to digitize the electrodes. The probe is a rigid body with four fixed reflective markers (Figure 1E). We placed three reflective markers on the face of the mannequin to account for possible head movements during data collection. Each operator digitized the fiducials and each section of the cap (A, B, C, D, Figure 1A) in separate takes. We placed double o-rings 7 mm away from the probe tip to ensure consistent placement of the tip inside the electrode wells (Figure 1E). The tracking error of the motion capture system was <0.4 mm.

We used the motion capture system to record the locations of 35 3D-printed reflective markers, each resembling a 4-mm reflective marker on top of a BioSemi active pin electrode (Figure 1F). We did not use actual BioSemi electrodes because their wires could prevent the cameras from seeing the markers. We placed 27 electrode-shaped markers to approximate the international 10–20 EEG cap layout and an additional eight electrode-shaped markers randomly on the cap to add asymmetry and improve marker tracking. We recorded 2-s takes of the positions of the 35 markers, the three fiducial markers, and the three face markers. Before transforming the locations to the head coordinate system, we used the three face markers to identify and cancel head movements during data collection.

We developed a dedicated pipeline to convert the digitized electrode locations for each digitizing method to a format that could be imported to the common toolboxes for EEG analyses. Because EEGLAB and FieldTrip can easily read Zebris ElGuide's output file (an SFP file), we created SFP files for all digitizations.

The head coordinate system in ElGuide defines the X-axis as the vector from the left preauricular to the right preauricular and the origin as the projection of the nasion onto the X-axis. The Y-axis is therefore the vector from the origin to the nasion, and the Z-axis, which also starts at the origin, is the cross product of the X and Y unit vectors.
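The convention above can be written out directly. This is a minimal sketch in plain Python, with hypothetical function names. It builds the frame from the three fiducials and expresses any digitized point in that frame. Because the origin is the projection of the nasion onto the X-axis, the Y-axis is orthogonal to X by construction. With X pointing left to right and Y pointing toward the nasion, Z = X × Y then points toward the vertex.

```python
import math


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _unit(a):
    n = math.sqrt(_dot(a, a))
    return [x / n for x in a]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def head_frame(lpa, rpa, nasion):
    """Origin and unit axes of the fiducial-based head coordinate system."""
    x_axis = _unit(_sub(rpa, lpa))                      # left -> right preauricular
    t = _dot(_sub(nasion, lpa), x_axis)                 # project nasion onto LPA-RPA line
    origin = [l + t * x for l, x in zip(lpa, x_axis)]
    y_axis = _unit(_sub(nasion, origin))                # origin -> nasion
    z_axis = _cross(x_axis, y_axis)                     # unit length: X and Y are orthonormal
    return origin, x_axis, y_axis, z_axis


def to_head_coords(point, frame):
    """Express a digitized point (same units as the fiducials) in the head frame."""
    origin, x_axis, y_axis, z_axis = frame
    d = _sub(point, origin)
    return [_dot(d, x_axis), _dot(d, y_axis), _dot(d, z_axis)]
```

Writing every method's output in this one frame is what makes centroids from different digitizing systems directly comparable.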

Variations in the digitized electrode locations could originate from random errors and systematic bias. The effects of random errors can be quantified as variability. Reliability is inversely related to variability. Systematic bias can be quantified as the difference between measured locations and the ground truth locations. Validity is inversely related to systematic bias.

To assess the effects of random errors, we quantified digitization variability. We averaged the five digitized locations for each electrode to find its centroid. We then calculated the average Euclidean distance of the five digitized points to the centroid for each electrode and averaged those distances across all electrodes to quantify within-method variability. We identified and excluded outliers, i.e., single measurements that were beyond five standard deviations of the average variability for a digitizing method (1 of 655 measurements for ultrasound, 4 of 655 measurements for the motion capture probe, and 2 of 190 measurements for motion capture). If there were outliers, we recalculated the average variability with the updated dataset. Throughout the paper, we use “variability” to refer to “within-method variability.” Because reliability is inversely related to variability, the most reliable method has the least variability.
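The variability computation can be sketched as follows. The sketch assumes that each method's digitizations are stored as a dict mapping a hypothetical electrode label to its five repeated (x, y, z) locations in cm. The outlier rule is one reading of the text, not necessarily the authors' exact criterion. A single measurement is dropped if its distance to its electrode centroid exceeds the method-wide mean distance by more than five standard deviations. The centroid is then recomputed from the remaining points.

```python
import math
from statistics import mean, pstdev


def centroid(points):
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def electrode_variability(points):
    """Average Euclidean distance of repeated digitizations to their centroid."""
    c = centroid(points)
    return mean(math.dist(p, c) for p in points)


def method_variability(digitizations, n_sd=5.0):
    """Within-method variability averaged over electrodes, after outlier removal."""
    # distance of every single measurement to its electrode's centroid
    dists = [math.dist(p, centroid(pts))
             for pts in digitizations.values() for p in pts]
    cutoff = mean(dists) + n_sd * pstdev(dists)   # assumed outlier threshold
    per_electrode = []
    for pts in digitizations.values():
        c = centroid(pts)
        kept = [p for p in pts if math.dist(p, c) <= cutoff] or pts
        per_electrode.append(electrode_variability(kept))  # centroid recomputed
    return mean(per_electrode)
```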

To quantify the systematic bias of a digitizing method, we calculated the Euclidean distance between the centroid from that digitizing method and the ground-truth centroid for the same electrode. As the ground truth, we used the electrode centroids from the most reliable digitizing method that recorded all 128 electrodes, the motion capture probe (Dalal et al., 2014). We then averaged the Euclidean distances over the 128 electrodes to obtain the magnitude of the systematic bias for each digitizing method. Because validity is inversely related to systematic bias, the most valid method has the least systematic bias.
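Systematic bias follows the same pattern as variability, but it compares centroids rather than repeated points. This short sketch assumes both sets of centroids are already expressed in the same head coordinate system and keyed by the same hypothetical electrode labels. Only labels present in both sets are compared.

```python
import math
from statistics import mean


def systematic_bias(method_centroids, truth_centroids):
    """Mean Euclidean distance between a method's electrode centroids and the
    ground-truth centroids, over the electrode labels both dicts share."""
    shared = sorted(method_centroids.keys() & truth_centroids.keys())
    return mean(math.dist(method_centroids[k], truth_centroids[k]) for k in shared)
```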

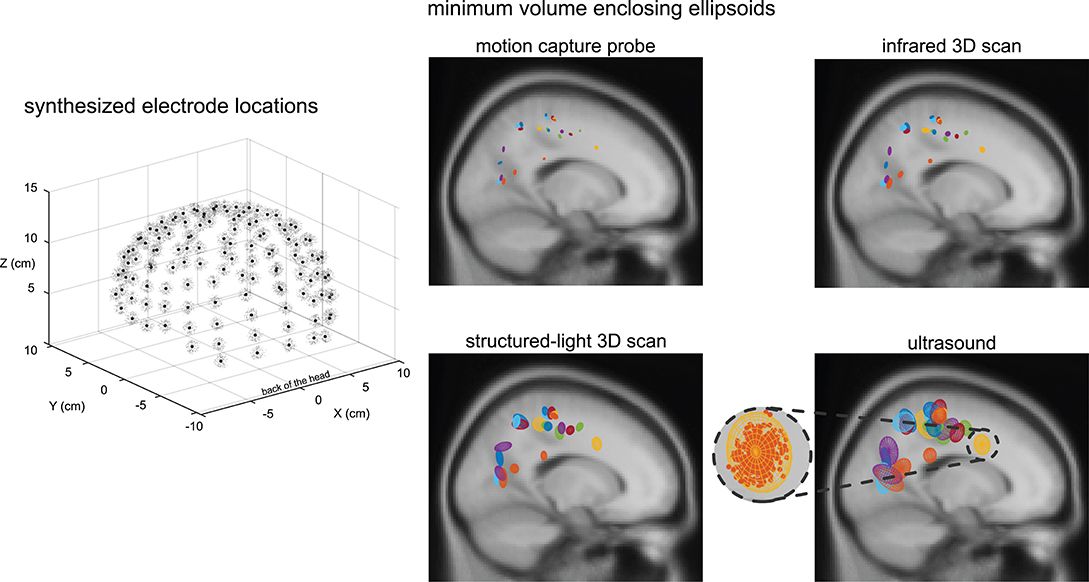

To generalize the possible effects of digitization reliability, we synthesized 500 sets of electrode locations with a Gaussian distribution using the variability average and standard deviation calculated for each digitizing method in section 2.3.1. We excluded the motion capture method from the source estimation uncertainty analyses because we recorded the locations of only 35 EEG electrode-shaped reflective markers instead of all 128 EEG electrode locations. We used a single representative 128-channel EEG dataset from a separate study for the source estimation analyses. We applied Adaptive Mixture Independent Component Analysis (AMICA) to decompose the EEG signals into independent components (ICs) (Palmer et al., 2007); ICs have been reported to represent the dipolar activity of different brain and non-brain sources (Delorme et al., 2012).
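The text does not specify how the Gaussian was applied in 3D. The sketch below therefore makes an assumption for illustration only, which is not necessarily the authors' procedure. Each synthesized electrode is displaced from its centroid in a uniformly random direction, by a distance drawn from a Gaussian with the method's mean and standard deviation of variability. The function name and seed are hypothetical.

```python
import math
import random


def synthesize_sets(centroids, mean_var, sd_var, n_sets=500, seed=0):
    """Return n_sets dicts mapping electrode label -> perturbed (x, y, z).

    Assumption: displacement distance ~ |N(mean_var, sd_var)|, direction uniform
    on the sphere.
    """
    rng = random.Random(seed)
    sets = []
    for _ in range(n_sets):
        perturbed = {}
        for label, c in centroids.items():
            # a normalised isotropic Gaussian vector gives a uniform direction
            v = [rng.gauss(0.0, 1.0) for _ in range(3)]
            norm = math.sqrt(sum(x * x for x in v))
            r = abs(rng.gauss(mean_var, sd_var))
            perturbed[label] = tuple(ci + r * vi / norm for ci, vi in zip(c, v))
        sets.append(perturbed)
    return sets
```

For example, `synthesize_sets(centroids, 0.50, 0.09)` would use the structured-light mean and SD reported in the Results. Each returned set would then feed one DIPFIT run.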

We used EEGLAB's DIPFIT toolbox version 2.3 to estimate an equivalent dipole for each IC and applied DIPFIT 500 times for each digitizing method. Each DIPFIT iteration used one of the 500 sets of synthesized electrode locations, the Montreal Neurological Institute (MNI) head model (Evans et al., 1993), and the ICs from AMICA. The MNI head model is a structural head model averaged across 305 participants and provides 1 × 1 × 1 mm resolution. To make the mannequin head compatible with the MNI model, we warped the electrode locations to the MNI model using only the fiducials, to preserve individual characteristics of the mannequin head. Using the dipoles produced with the electrode location centroids from the digitizing method with the highest reliability, we identified the dipoles that described >85% of the IC signal variance. We also excluded any dipole that was estimated to be outside of the brain volume in any of the DIPFIT results (500/method × five methods = 2,500 DIPFIT results). In the end, 23 ICs remained.

We fitted a minimum-volume enclosing ellipsoid to each IC's cluster of 500 dipoles (Moshtagh, 2005) and quantified spatial uncertainty in terms of the volume and width of the ellipsoid. A larger ellipsoid volume indicated that a single dipole could reside within a larger volume and thus had greater volumetric uncertainty. A larger ellipsoid width indicated that a single dipole could have a larger shift in location. We calculated the ellipsoid width as the maximum distance between any two of the IC's dipoles. We averaged the volumes and widths of all 23 ICs to quantify the spatial uncertainty for each digitizing method.
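Moshtagh (2005) describes Khachiyan's algorithm for computing a minimum-volume enclosing ellipsoid. Below is a dependency-free re-implementation of that algorithm for 3D points, written for illustration; it is not the MATLAB code used in the study. It also computes the ellipsoid volume, (4/3)π/√det(A), and the width as the largest pairwise distance between dipoles. The tolerance default is an assumption.

```python
import math
from itertools import combinations


def _inv(m):
    """Invert a small square matrix (list of lists) by Gauss-Jordan elimination."""
    n = len(m)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))  # partial pivoting
        a[col], a[piv] = a[piv], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a]


def min_volume_ellipsoid(points, tol=1e-4, max_iter=100_000):
    """Khachiyan's algorithm: returns (center c, matrix A) with (x-c)^T A (x-c) <= ~1."""
    n, d = len(points), 3
    q = [list(p) + [1.0] for p in points]          # lift points to d+1 dimensions
    u = [1.0 / n] * n                               # start from uniform weights
    for _ in range(max_iter):
        x = [[sum(u[k] * q[k][i] * q[k][j] for k in range(n)) for j in range(d + 1)]
             for i in range(d + 1)]
        xi = _inv(x)
        m = [sum(qk[i] * xi[i][j] * qk[j] for i in range(d + 1) for j in range(d + 1))
             for qk in q]
        jmax = max(range(n), key=m.__getitem__)    # point farthest outside
        step = (m[jmax] - d - 1) / ((d + 1) * (m[jmax] - 1))
        new_u = [(1 - step) * uk for uk in u]
        new_u[jmax] += step
        err = math.sqrt(sum((a - b) ** 2 for a, b in zip(new_u, u)))
        u = new_u
        if err < tol:
            break
    c = [sum(u[k] * points[k][i] for k in range(n)) for i in range(d)]
    s = [[sum(u[k] * points[k][i] * points[k][j] for k in range(n)) - c[i] * c[j]
          for j in range(d)] for i in range(d)]
    a = [[v / d for v in row] for row in _inv(s)]
    return c, a


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def ellipsoid_volume(a):
    """Volume of {x : (x-c)^T A (x-c) <= 1}; semi-axes are 1/sqrt(eig(A))."""
    return 4.0 / 3.0 * math.pi / math.sqrt(_det3(a))


def cluster_width(points):
    """Maximum distance between any two dipoles in a cluster."""
    return max(math.dist(p, r) for p, r in combinations(points, 2))
```

For the eight corners of a cube with half-side 1, the enclosing ellipsoid is the sphere of radius √3. Its volume is 4√3π, and the cluster width is 2√3.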

To identify Brodmann areas, we used a modified version of the eeg_tal_lookup function from EEGLAB's Measure Projection Toolbox (MPT). This function looks for the anatomic structures and Brodmann areas in a 10-mm vicinity of each dipole and assigns the dipole to the Brodmann area with the highest posterior probability (Lancaster et al., 2000; Bigdely-Shamlo et al., 2013). We identified the “ground-truth” Brodmann areas from the dipoles estimated using the centroid electrode locations of the most reliable digitizing method. Then, we calculated Brodmann area accuracy as the percentage of the other 500 Brodmann area assignments that matched the “ground-truth” Brodmann area.
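The lookup itself is done by the modified eeg_tal_lookup function and is not reproduced here. Once each of the 500 dipoles has a Brodmann area label, however, the accuracy is a simple proportion. The sketch below computes it, together with the share of each label shown in pie-chart summaries. The function names and labels are hypothetical.

```python
from collections import Counter


def brodmann_accuracy(assignments, ground_truth):
    """Percentage of Brodmann area labels that match the ground-truth label."""
    return 100.0 * sum(label == ground_truth for label in assignments) / len(assignments)


def assignment_distribution(assignments):
    """Share (%) of each Brodmann area label, most common first."""
    counts = Counter(assignments)
    return {label: 100.0 * n / len(assignments) for label, n in counts.most_common()}
```

For example, a hypothetical split of 430 "BA18", 50 "BA17", and 20 "BA19" labels against a ground truth of "BA18" gives an accuracy of 86%.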

We also analyzed Brodmann area accuracy using a template of electrode locations based on the MNI head model (Oostenveld and Praamstra, 2001). Because the BioSemi 128-electrode cap is not based on the 10-10 electrode map, we warped the BioSemi electrode locations to reside on the outer surface of the MNI head model instead of using the 10-10 electrode locations. We then compared the Brodmann area identified from the template to the “ground-truth” Brodmann area. Because there is only one template for the BioSemi 128-electrode locations on the MNI head model and the locations are fixed, we could not calculate a percentage of assignments; thus, the template's Brodmann area for each IC was either a hit or a miss. However, we did calculate the distance between the template's dipole and the “ground truth” dipole. We also calculated the distances between each digitizing method's dipoles and the “ground truth” dipole. These distances indicated whether the dipoles estimated using each digitization method were near the “ground truth” dipole.

We used a one-way repeated measures analysis of variance (rANOVA) to compare the reliability and validity of the digitizing methods, the spatial uncertainty of the estimated dipoles, and the Brodmann area accuracy. For significant rANOVAs, we performed Tukey–Kramer post-hoc analyses to determine which comparisons were significant. We also performed one-sided Student t-tests to identify whether the Brodmann area accuracy of each digitizing method was different from that of the template. The level of significance for all statistics was α = 0.05. For each rANOVA, we report the degrees of freedom (DF), the F statistic, and the probability value (p-value). For the post-hoc and Student t-test results, we report p-values.
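The F statistic of a one-way repeated-measures ANOVA can be sketched in a few lines. The sketch assumes that the repeated units (for example, electrodes or ICs) are rows and that the digitizing methods are columns. It applies no sphericity correction and omits the Tukey–Kramer post-hoc step. If a p-value is needed, it could be obtained from the F distribution's survival function (for example `scipy.stats.f.sf(F, df_cond, df_error)`). That call is not used here, to keep the block dependency-free.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data[s][k] is the measurement for repeated unit s under condition k.
    Returns (F, df_conditions, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(map(sum, data)) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj   # condition x unit residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error
```

Removing the between-unit sum of squares is what distinguishes this from an ordinary one-way ANOVA. Consistent differences between electrodes or ICs do not inflate the error term.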

Additionally, we fit a polynomial, using a step-wise linear model (MATLAB stepwiselm function), to describe spatial uncertainty as a function of digitization variability. We forced the y-intercept of first-order polynomials to be zero, and both the y-intercept and the y'-intercept (i.e., the first derivative at zero variability) of higher-order polynomials to be zero. We set the intercepts to zero for two reasons: (1) when we used the exact same electrode locations and performed DIPFIT 100 times, the maximum distance between source locations was on the order of 10⁻⁴ cm, and (2) the fit should not predict uncertainty values <0 for positive digitization variability values. The step-wise linear model started with a zero-order model and added a higher-order polynomial term only when necessary. The criterion for adding a higher-order polynomial term was a statistically significant decrease in the sum of squared errors between the data points and the predicted values.
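The constrained fits can be sketched as ordinary least squares on chosen monomials with no constant term. Powers [1] give a first-order fit through the origin. Powers such as [2, 3] give a higher-order fit whose value and slope at zero variability are both zero. MATLAB's stepwise term selection is not reproduced; the powers are passed in explicitly. As illustrative inputs, the snippet uses the four group means reported in the Results. These may differ from the data the study actually fit.

```python
def fit_through_origin(x, y, powers):
    """Least-squares fit of y = sum_p b_p * x**p with no constant term.

    Returns the coefficients in the order of `powers`.
    """
    cols = [[xi ** p for xi in x] for p in powers]
    m = len(powers)
    # augmented normal equations [C^T C | C^T y]
    a = [[sum(ci * cj for ci, cj in zip(cols[i], cols[j])) for j in range(m)]
         + [sum(ci * yi for ci, yi in zip(cols[i], y))] for i in range(m)]
    # Gaussian elimination with partial pivoting
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, m):
            f = a[r][col] / a[col][col]
            a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    # back substitution
    b = [0.0] * m
    for i in reversed(range(m)):
        b[i] = (a[i][m] - sum(a[i][j] * b[j] for j in range(i + 1, m))) / a[i][i]
    return b


# Illustrative inputs: group means reported in the Results (cm and cm^3),
# ordered m.+probe, infrared, str.-light, ultrasound.
variability = [0.147, 0.24, 0.50, 0.86]
width = [0.34, 0.53, 1.09, 1.90]
volume = [0.007, 0.029, 0.21, 1.37]
(k_width,) = fit_through_origin(variability, width, [1])     # about 2.2
(c_volume,) = fit_through_origin(variability, volume, [3])   # about 2.1
```

A slope of about 2.2 on the group means is consistent with the roughly two-to-one relationship between ellipsoid width and digitization variability described in the Results.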

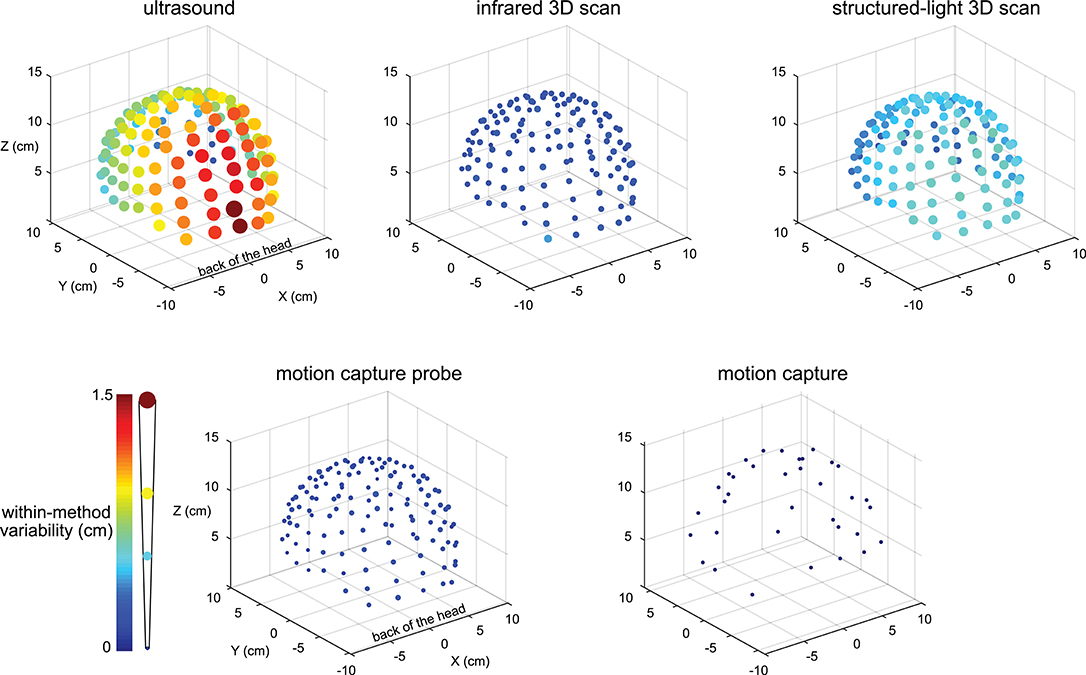

The variability results for the five digitizing methods were visibly different, and electrodes located at the back of the head tended to have greater variability (Figure 2). The variability for the ultrasound method was generally the largest of all the methods and could be as large as ∼1.5 cm for electrodes at the back of the head. The variability of every electrode digitized with the motion capture method was small, no greater than 0.001 cm.

Figure 2. Visualization of the digitization reliability. Colored and scaled dots show the electrode location within-method variability for all 128 electrodes for the five digitizing methods. Ultrasound had the greatest variability and was the least reliable. The electrodes at the back of the head also tended to have the greatest variability. The motion capture method had the least variability and was the most reliable. The color bar and scale for the radii of the dots illustrate the magnitude of variability.

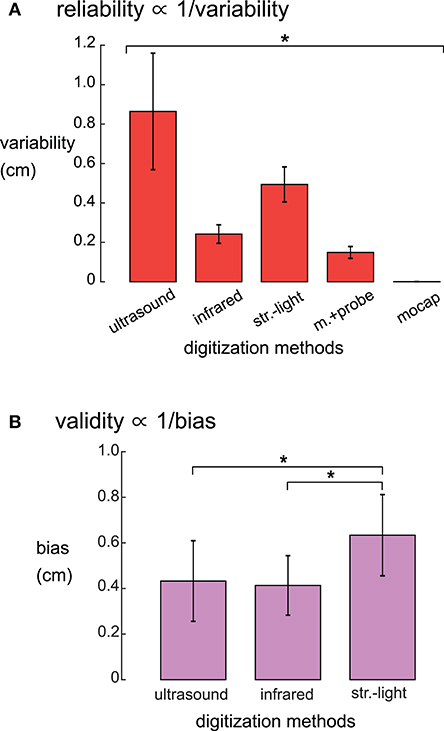

There was a range of reliabilities among the digitizing methods (Figure 3A). The motion capture digitizing method had the smallest variability, 0.001 ± 0.0003 cm (mean ± standard deviation), and hence the greatest digitization reliability. The motion capture probe was the next most reliable method, with an average variability of 0.147 ± 0.03 cm, followed by the infrared 3D scan (0.24 ± 0.05 cm), the structured-light scan (0.50 ± 0.09 cm), and the ultrasound digitization (0.86 ± 0.3 cm). The variabilities of the digitizing methods were significantly different (rANOVA DF = 4, F = 1,121, p < 0.001), and the variability of each digitizing method was significantly different from that of every other digitizing method (post-hoc Tukey–Kramer, p's < 0.001).

Figure 3. (A) Reliabilities, quantified as the average variability, were significantly different for the five digitizing methods. The reliability of each digitizing method was significantly different from all other methods (*Tukey–Kramer p's < 0.001 for all pair-wise comparisons). (B) Validity, quantified as the average systematic bias, showed that the structured-light 3D scan had a larger systematic bias than ultrasound and the infrared 3D scan. The motion capture probe method was assumed to be the ground truth and thus has no systematic bias and is not shown. *Tukey–Kramer p's < 0.001. Error bars are the standard deviation. Infrared = infrared 3D scan; str.-light = structured-light 3D scan; m.+probe = motion capture probe; mocap = motion capture.

The systematic biases, and thus the validities, of the digitizing methods were significantly different (rANOVA DF = 2, F = 143.1, p < 0.01, Figure 3B). The structured-light 3D scan had the worst digitization validity of the digitizing methods, with a systematic bias of 0.63 ± 0.18 cm that was significantly larger than those of the other digitizing methods (post-hoc Tukey–Kramer, p's < 0.001). The validities of the ultrasound digitization and the infrared 3D scan were similar, with systematic biases of 0.43 ± 0.18 and 0.41 ± 0.13 cm, respectively.

Within a given digitizing method, dipoles generally showed similar spatial uncertainty, whereas different digitizing methods generally differed in spatial uncertainty (Figure 4). Ellipsoid sizes increased in order from the motion capture probe (smallest) to the infrared 3D scan, the structured-light 3D scan, and the ultrasound digitization (largest). The enclosing ellipsoids of adjacent ICs also overlapped when the ellipsoids were large, on the order of 1 cm³, as for the ultrasound method.

Figure 4. An example depiction of the synthesized electrode locations, drawn from a Gaussian distribution with the same average variability and standard deviation as the structured-light 3D scans, and of the enclosing ellipsoids of the 500 dipoles for each independent component (IC) and digitizing method. Black dots = centroids of the electrode locations. Light gray dots = first 150 of the 500 synthesized electrode locations. Each color represents a different IC (23 ICs total). Also shown is a close-up view of the ellipsoid fit for an Anterior Cingulate IC based on the reliability of the ultrasound digitizing method.

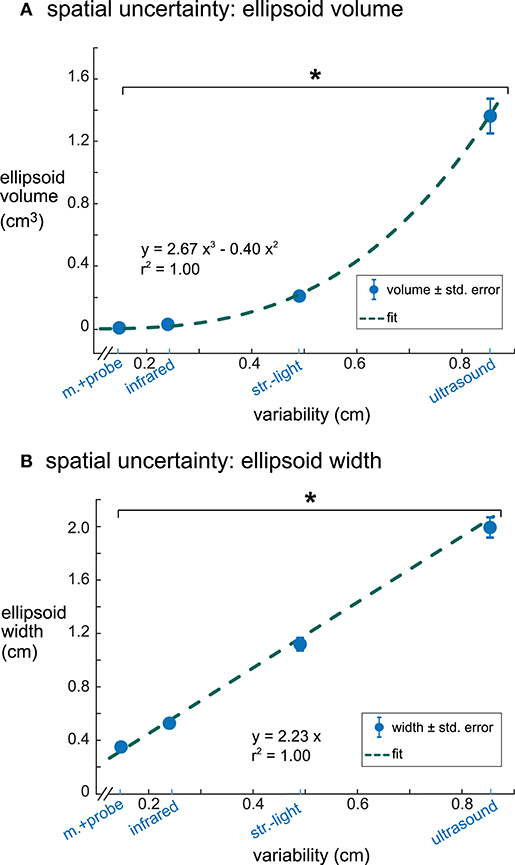

Ellipsoid volumes increased significantly with increasing digitization variability and followed a cubic relationship (r² = 1.00, Figure 5A). The motion capture probe and infrared 3D scan had the smallest uncertainty volumes (mean ± standard error), 0.007 ± 0.0007 and 0.029 ± 0.0027 cm³, respectively, whereas ultrasound had the largest uncertainty volume (1.37 ± 0.13 cm³). The structured-light 3D scan had an average uncertainty volume of 0.21 ± 0.014 cm³. The volumes of the enclosing ellipsoids showed a significant between-group difference (rANOVA, DF = 3, F = 114.4, p < 0.001), and the uncertainty volumes of every pair of digitizing methods were significantly different (Tukey–Kramer post-hoc, p's < 0.001).

Figure 5. The relationships between digitization variability and dipole spatial uncertainty. (A) Digitization variability and ellipsoid volume had a cubic relationship with an r² of 1.00. (B) Digitization variability and ellipsoid width had a linear relationship with an r² of 1.00. Error bars are the standard error. *Tukey–Kramer p's < 0.001 for all pair-wise comparisons. m.+probe = motion capture probe; infrared = infrared 3D scan; str.-light = structured-light 3D scan.

Ellipsoid widths also increased significantly with increasing digitization variability among the digitizing methods but followed a linear relationship, with the ellipsoid width twice the digitization variability (r² = 1.00, Figure 5B). The motion capture probe had the smallest average ellipsoid width (mean ± standard error), 0.34 ± 0.018 cm. The average ellipsoid widths for the two 3D scans were 0.53 ± 0.028 cm for the infrared 3D scan and 1.09 ± 0.051 cm for the structured-light 3D scan. Ultrasound digitization had the largest average ellipsoid width, 1.90 ± 0.081 cm. The rANOVA for the widths of the enclosing ellipsoids showed a significant between-group difference (DF = 3, F = 434.8, p < 0.001), and the uncertainty widths differed significantly for every pair of digitizing methods (Tukey–Kramer post-hoc, p's < 0.001).
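The two fits are consistent with each other under a simple geometric reading, offered here as an interpretation rather than a reported result: if every semi-axis of an IC's enclosing ellipsoid grows in proportion to the digitization variability σ, as the linear width fit suggests, then the ellipsoid volume must grow with σ³. Here a, b, and c are the semi-axes, and k_a, k_b, and k_c are assumed shape constants.

$$
V = \frac{4}{3}\pi\,abc, \qquad a = k_a\sigma,\; b = k_b\sigma,\; c = k_c\sigma
\quad\Longrightarrow\quad
V = \frac{4}{3}\pi\,k_a k_b k_c\,\sigma^{3}.
$$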

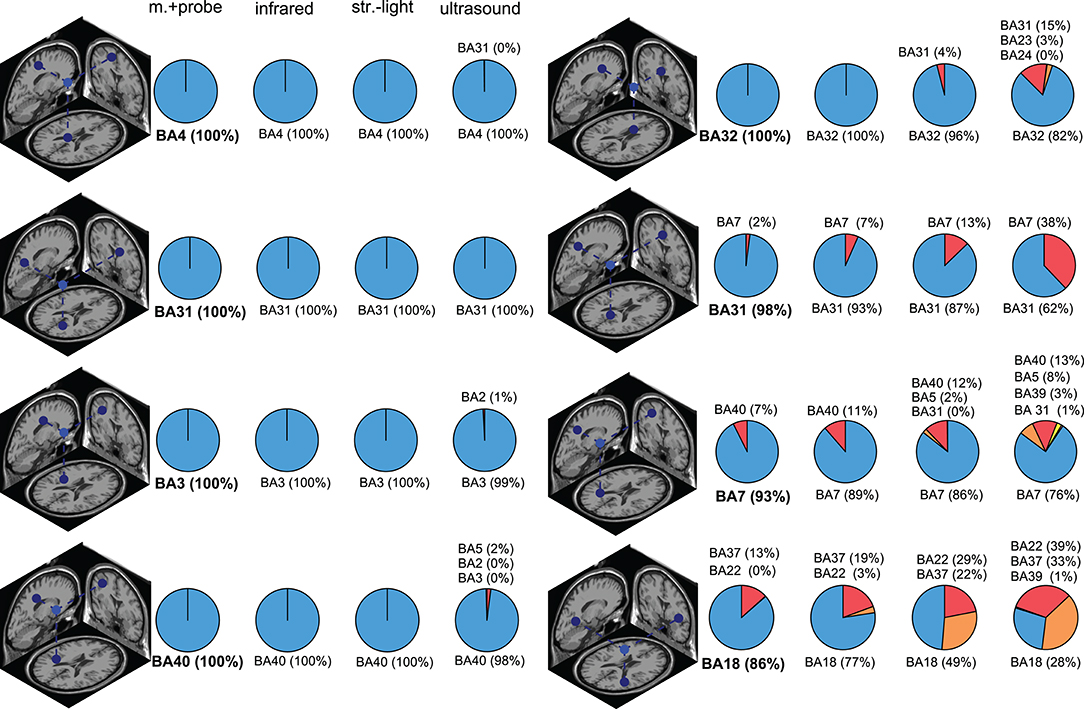

Brodmann area accuracy among the digitizing methods was highly consistent for some ICs but differed drastically for others (Figure 6 and Figure S1). In general, within a given IC, the digitizing method with the highest reliability also had the highest Brodmann area accuracy. For some ICs, all digitizing methods had >98% Brodmann area accuracy. For other ICs, Brodmann area accuracy decreased as reliability decreased. The most drastic example in this dataset was BA18 in Figure 6, where Brodmann area accuracy was 86% with the motion capture probe method but dropped to 26% with the ultrasound method.

Figure 6. Brodmann area (BA) accuracy for a subset of ICs. The dipole depicts the “ground truth” dipole produced from the most reliable digitizing method, the motion capture probe method. The pie charts show the distribution of the Brodmann area assignments compared to the “ground truth” Brodmann area (shown in bold). ICs in the left column had consistent Brodmann area assignments regardless of digitizing method while the ICs in the right column had more varied Brodmann area assignments for the different digitizing methods. In general, less reliable digitizing methods led to less consistent Brodmann area assignments. Infrared = infrared 3D scan; str.-light = structured-light 3D scan; m.+probe = motion capture probe.

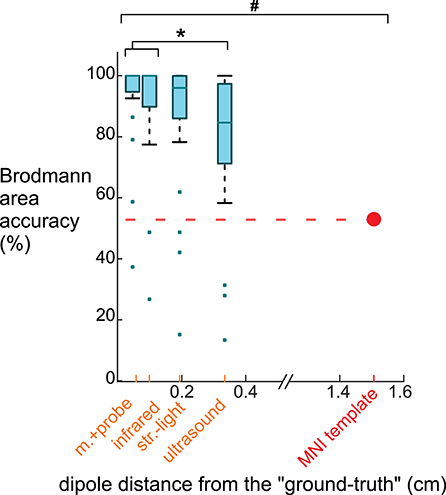

Brodmann area accuracy differed significantly among the digitizing methods and the template (Figure 7). The motion capture probe had the highest Brodmann area accuracy, 93 ± 16% (mean ± standard deviation). The remaining digitizing methods, in order of decreasing Brodmann area accuracy, were the infrared 3D scan (91 ± 19%), the structured-light 3D scan (87 ± 23%), and ultrasound digitization (79 ± 25%). The rANOVA for Brodmann area accuracy showed a significant between-group difference (DF = 4, F = 306.4, p < 0.001). Post-hoc Tukey–Kramer analysis showed significant pair-wise differences between all groups except the motion capture probe and infrared 3D scan. Using the MNI electrode template decreased Brodmann area accuracy to 53%, which was significantly different from every digitizing method (p's < 0.001). The average distance from each digitizing method's dipoles to the “ground truth” dipole was <0.4 cm, whereas the average distance from the template dipoles to the “ground truth” dipole was ~1.4 cm.

Figure 7. Brodmann area accuracy plotted vs. the average dipole distance from the “ground truth” dipole for the different digitizing methods and the MNI template. Because larger distances between the dipoles and the “ground truth” would likely decrease Brodmann area accuracy, each method is plotted on the x-axis at its averaged dipole distance from the “ground truth” dipole. The box-whisker plot contains the Brodmann area accuracy averages for the 23 ICs. The Brodmann area accuracy average for an IC was the average percentage of the 500 iterations in which the identified Brodmann area matched the “ground truth” Brodmann area for that IC. For the template, 53% of the Brodmann areas assigned to the 23 ICs matched the “ground truth” Brodmann area. Brodmann area accuracy differed significantly among the digitizing methods, except between the motion capture probe and infrared 3D scan (*Tukey–Kramer p's < 0.001). The template's Brodmann area accuracy differed significantly from that of every digitizing method (# Student's t-test p's < 0.001). m.+probe = motion capture probe; infrared = infrared 3D scan; str.-light = structured-light 3D scan.
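The per-IC accuracy defined for Figure 7 can be written out directly. The Python sketch below is minimal and assumes that a Brodmann area label has already been assigned to every fitted dipole (the atlas lookup is not shown) and that a method's x-axis distance is the per-IC mean dipole distance averaged across ICs; that averaging order is an assumption, and the function names are hypothetical.

```python
import math


def ba_accuracy(assigned_labels, truth_label):
    """Percentage of iterations whose Brodmann area label matches the ground truth."""
    if not assigned_labels:
        raise ValueError("need at least one iteration")
    hits = sum(1 for label in assigned_labels if label == truth_label)
    return 100.0 * hits / len(assigned_labels)


def mean_distance(dipoles, truth_xyz):
    """Mean Euclidean distance from each dipole (x, y, z) to the ground-truth dipole."""
    return sum(math.dist(p, truth_xyz) for p in dipoles) / len(dipoles)


def summarize_method(per_ic):
    """Average BA accuracy (%) and dipole distance across ICs for one method.

    per_ic: list of (assigned_labels, truth_label, dipoles, truth_xyz), one per IC.
    """
    accuracies = [ba_accuracy(labels, truth) for labels, truth, _, _ in per_ic]
    distances = [mean_distance(dips, txyz) for _, _, dips, txyz in per_ic]
    return sum(accuracies) / len(accuracies), sum(distances) / len(distances)


# Hypothetical toy data: one IC, four iterations instead of 500.
labels = ["BA18", "BA18", "BA19", "BA18"]
print(ba_accuracy(labels, "BA18"))  # 75.0
```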

We found a range of reliability and validity values among the digitizing methods. We also observed that less reliable digitizing methods translated to greater uncertainty in source estimation and poorer Brodmann area accuracy, assuming all other contributors to source estimation uncertainty were constant. Of the five digitizing methods (ultrasound, structured-light 3D scan, infrared 3D scan, motion capture probe, and motion capture), motion capture was the most reliable and ultrasound the least reliable. The structured-light digitizing method had the greatest systematic bias and was thus the least valid. We had hypothesized that less reliable digitizing methods would lead to greater source estimation uncertainty. In support of this hypothesis, digitizing methods with lower reliability resulted in greater spatial uncertainty of the dipole locations and lower Brodmann area accuracy. Surprisingly, every digitizing method led to an average Brodmann area accuracy of roughly 80% or higher. Using a template of electrode locations decreased Brodmann area accuracy to 53%. Overall, these results indicate that electrode digitization is crucial for accurate Brodmann area identification using source estimation and that more reliable digitizing methods are beneficial if source estimates are to be interpreted at a functional resolution finer than Brodmann areas.

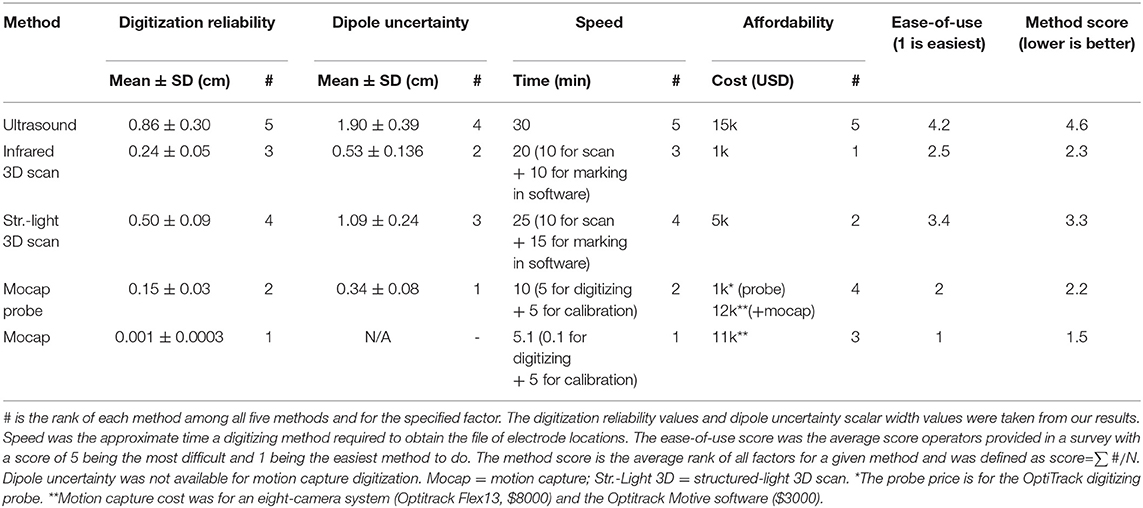

To help summarize the advantages of the different digitization systems, we created a table comparing digitization reliability, dipole uncertainty, speed, affordability, and ease of use, all factors that could influence which digitizing method a laboratory chooses (Table 1). We estimated digitizing speed as the time each digitization required. Among the methods that required manual electrode marking, the fastest was the motion capture probe method, which took 5 min to mark the electrodes and 5 min to calibrate the system. The least expensive system was the infrared 3D scanner; such scanning is likely to become even less expensive as smartphone cameras improve, and smartphones could soon be used to obtain 3D scans accurate enough for digitizing EEG electrodes. We also surveyed the operators to score each digitizing method on a scale of 1–5, with 1 being the easiest to use. While performing the actual 3D scan was perceived as easy, marking the electrodes in MATLAB was not. The operators rated motion capture as the easiest method and ultrasound as the most difficult. To create a final ranking, we averaged the rankings for each factor (digitization reliability, dipole uncertainty, speed, affordability, and ease of use) to obtain a method score. Based on the method score, the best digitizing method was motion capture. The motion capture probe and infrared 3D scan tied for second. The structured-light 3D scan ranked fourth, and the ultrasound method, which ranked poorly on all factors, ranked last.

Table 1. Rankings for each digitizing method based on factors related to performance, cost, and convenience.
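The method score is a mean of per-factor rankings. A minimal Python sketch, assuming each factor ranks the methods with 1 as best and that equal means are reported as ties (as with the motion capture probe and infrared 3D scan); the function name is hypothetical, and the ranks in the usage example are placeholders, not the values in Table 1.

```python
def method_scores(rankings):
    """Average per-factor ranks into one score per method (lower is better).

    rankings: {factor: {method: rank}}, with rank 1 = best for that factor.
    Returns [(method, mean_rank), ...] sorted best to worst; equal means are
    ties, listed alphabetically only so the output order is deterministic.
    """
    factors = list(rankings.values())
    methods = factors[0].keys()
    scores = {m: sum(f[m] for f in factors) / len(factors) for m in methods}
    return sorted(scores.items(), key=lambda item: (item[1], item[0]))


# Hypothetical ranks for three methods on two factors (not the Table 1 values).
example = {
    "reliability": {"A": 1, "B": 2, "C": 3},
    "speed": {"A": 2, "B": 1, "C": 3},
}
print(method_scores(example))  # [('A', 1.5), ('B', 1.5), ('C', 3.0)]
```

Averaging ranks weights every factor equally; a laboratory with different priorities could weight the factors before averaging.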

Our results suggest that the motion capture method currently provides the most reliable electrode digitization. The average variability of the motion capture digitization was less than the mean calibration error reported by the motion capture system (0.001 vs. <0.04 cm, respectively). This difference might arise from the different natures of the two quantities. The digitization variability is measured for a seated subject (or mannequin) from multiple sub-second snapshots of static electrodes on the cap, whereas the mean calibration error is defined for a set of moving markers in a much larger volume over a calibration period of several minutes. Placing the mannequin in the same position each time and the absence of head movement may also have contributed to the small digitization variability with motion capture. In a previous study, Reis and Lochmann developed an active-electrode motion capture approach for an EEG system with 30 electrodes and reported small deviations of the digitized locations from the ground-truth locations (Reis and Lochmann, 2015). In addition to having sub-millimeter variability, the motion capture method required only 1–2 s to digitize, assuming the markers were already placed on the EEG electrodes. However, tracking 64+ markers on an EEG cap may be challenging for most motion capture systems, and determining the maximum number of EEG electrodes that can be digitized with a motion capture approach would be worth pursuing in future work. Laboratories that already have a motion capture system and do not need to digitize more than 64 EEG electrodes could use the motion capture method for a cost-effective, fast, and easy digitizing process. For laboratories that already have a motion capture system but need to digitize 64+ electrodes, the motion capture probe method would be the recommended option.

Our results support recent efforts to use 3D scanners as a reliable and cost-effective way to digitize EEG electrodes (Chen et al., 2019; Homölle and Oostenveld, 2019; Taberna et al., 2019). Both the structured-light and infrared 3D scanning methods were more reliable than the ultrasound method. Furthermore, our reliability results for the two 3D scanners align well with a recent study showing that an infrared 3D scan could automatically digitize electrode locations on three different EEG caps and achieve good reliability after additional post-processing (Taberna et al., 2019). Of the two 3D scanners we tested, the less expensive infrared 3D scanner was more reliable, had higher validity, and resulted in less dipole uncertainty than the structured-light 3D scanner. Even though the structured-light 3D scanner captures more detail of the mannequin head and cap, that detail did not seem to improve digitization reliability or validity. Additionally, the highly detailed structured-light 3D scans produced large files and sluggish refresh rates, which made rotating and manipulating the scans in the FieldTrip toolbox difficult. Unlike the structured-light 3D scan, the infrared 3D scan was in color, which helped the operators identify the EEG electrodes on the computer screen. In the future, artificial intelligence approaches may be able to fully automate the digitizing process and exploit the additional topographic detail in high-resolution 3D scans. A continuous image-based digitizing method, for example one based on ordinary video recorded with a smartphone, could also potentially be developed to digitize EEG electrode locations.

Compared with simulation studies, our experimental results showed that source estimation uncertainty increased steeply with increasing EEG electrode variability. We showed that a digitizing method with an average variability of 1 cm could shift a single dipole by more than 2 cm, which is >20% of the head radius. We know of only one simulation study that also showed a two-fold increase in source uncertainty per unit of digitization variability (Akalin Acar and Makeig, 2013). In that study, digitization variability was created by applying systematic rotations to every electrode location. Most simulation studies, however, suggest that source uncertainty is at most as large as the digitization variability (Khosla et al., 1999; Van Hoey et al., 2000; Wang and Gotman, 2001; Beltrachini et al., 2011). In one of the mathematical studies, the theoretical lower bound of source estimation uncertainty was 0.1 cm for 0.5 cm shifts in EEG electrode location (Beltrachini et al., 2011), which is 10 times smaller than our experimental results. While simulation studies can be insightful, their results should also be cross-validated with a conventional source estimation method (e.g., DIPFIT, LORETA, or minimum norm) to determine whether they reflect real-world source estimation uncertainty.
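The ten-fold gap can be checked with a short worked calculation. It treats the roughly two-fold scaling observed here (dipole shift Δ ≈ 2σ for digitization variability σ) as a rule of thumb rather than an exact law, and takes Δ_bound = 0.1 cm as the lower bound reported by Beltrachini et al. (2011) for σ = 0.5 cm:

$$
\Delta \approx 2\sigma = 2 \times 0.5\ \text{cm} = 1.0\ \text{cm},
\qquad
\frac{\Delta}{\Delta_{\text{bound}}} \approx \frac{1.0\ \text{cm}}{0.1\ \text{cm}} = 10.
$$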

Because researchers often use Brodmann areas to describe the function of a source, we also expressed our results in terms of Brodmann area accuracy, which led to a few surprising revelations. The main revelation was that, despite the range of digitization reliabilities, every digitizing method we tested produced an average Brodmann area accuracy of roughly 80% or higher. As long as sources are discussed only in terms of Brodmann areas or larger cortical regions, any current digitizing method can be used. The second revelation was that using template electrode locations instead of digitizing the electrodes significantly decreased Brodmann area accuracy to ~50%, which may be due to a ~1.5 cm shift in dipole locations (Figure 7). This shift may occur because the template discards information about the individual's head shape. The third revelation was that for several sources, the same Brodmann area was almost always identified regardless of the digitizing method used (left column in Figure 6). For other sources, less reliable digitizing methods led to more varied Brodmann area assignments (right column in Figure 6), although those different Brodmann areas may be functionally similar. Most likely, both the proximity of a source to a Brodmann area boundary and the size of the Brodmann area contribute to Brodmann area accuracy. Ultimately, the accuracy of source estimation will depend on the target volumes of the cortical regions of interest.

This study does not account for all possible sources of error in digitizing EEG electrodes or in source estimation. We placed markers on the fiducials to control for their digitization error, but in practice, marking the fiducials while the subject wears the cap can be challenging. Mismarking a fiducial can shift every dipole location by twice the distance of the fiducial mismarking (Shirazi and Huang, 2019a). We also used a mannequin to control for head movements and for movements of the cap relative to the head. In reality, participants may move their head, and the cap may shift slightly during digitization or data collection, which would change the locations of the EEG electrodes with respect to the head. Further, we calibrated our digitizing devices only once for multiple data collections, whereas in a real laboratory setup, device calibration might be required before each data collection. We did, however, include digitization by multiple experienced operators to reflect that, in a research laboratory, different members might complete the digitization for different participants. Overall, our results suggest that as long as all sources of digitization error combined do not create variability >1 cm, Brodmann area accuracy would remain at roughly 80% or higher. Using the same electrical head model and source localization approach throughout allowed us to isolate the effects of digitization variability on source estimation uncertainty. In reality, EEG signal noise, the number and distribution of EEG electrodes, the electrical properties of the head model, head model shape and mesh accuracy, and the solution approach are among the other potential contributors to source estimation uncertainty (Akalin Acar and Makeig, 2013; Dalal et al., 2014; Song et al., 2015; Akalin Acar et al., 2016; Mahjoory et al., 2017; Beltrachini, 2019).

Limitations of this study were that we tested only a subset of digitizing methods, used a mannequin head rather than an actual human head for digitization, and did not perform source estimation with other common algorithms. Even though we did not test many of the marketed digitizing systems, we replicated and tested the fundamental methods used by most of them. One widely used EEG electrode digitizing method we did not test is electromagnetic digitization (e.g., the Polhemus Patriot or Fastrak systems). Another study using similar digitization reliability analyses reported an average variability of 0.76 cm for an electromagnetic digitization system (Clausner et al., 2017), which is slightly better than that of the ultrasound digitizing method (0.86 ± 0.3 cm). Collecting digitization data from an actual participant might have produced a better distribution of sources within the brain volume, but we chose a mannequin to better control for head movements, relative cap movements, and other environmental factors. Here, we used the EEG data only as a platform for understanding the relationship between digitization variability and source uncertainty; the source locations have no neurological implications. Last, we did not use other source estimation algorithms such as LORETA or beamforming. Studies indicate that commonly used source estimation algorithms generally identify similar source locations (Song et al., 2015; Bradley A. et al., 2016; Mahjoory et al., 2017), which suggests that the choice of source estimation algorithm would probably not substantially alter our results.

Future efforts to improve source estimation, so that sources can be interpreted in terms of cortical regions smaller than Brodmann areas, will involve more than developing more reliable, convenient, and cost-effective digitizing methods. Even with a perfect digitizing method, source estimation would still be uncertain as a result of other factors such as improper head-model meshes and inaccurate electrical conductivity values (Akalin Acar et al., 2016; Beltrachini, 2019), which our analyses assumed contributed a constant amount to source estimation uncertainty. Obtaining and using as much subject-specific information as possible, such as subject-specific MRI scans in addition to digitized EEG electrode locations, should improve source estimation. EEGLAB's Neuroelectromagnetic Forward Head Modeling Toolbox (NFT) could be used to warp the MNI head model to the digitized electrode locations to retain the individual's head shape, but this is computationally expensive (Acar and Makeig, 2010). Using subject-specific MRIs instead of the MNI head model is also limited to groups with access to MRI at an affordable cost per scan.

In summary, there was a range of digitization reliabilities among the five digitizing methods tested (ultrasound, structured-light 3D scanning, infrared 3D scanning, motion capture with a digitizing probe, and motion capture with reflective markers), and less reliable digitization resulted in greater spatial uncertainty in source estimation and poorer Brodmann area accuracy. Motion capture was the most reliable digitizing method, while ultrasound was the least reliable. Interestingly, average Brodmann area accuracy dropped only from ~90% to ~80% between the most and least reliable digitizing methods. If source locations will be discussed in terms of Brodmann areas, any of the digitizing methods tested could provide accurate Brodmann area identification. Using a template of EEG electrode locations, however, decreased Brodmann area accuracy to ~50%, indicating that digitizing EEG electrode locations for source estimation yields more accurate Brodmann area identification. Even though electrode digitization is just one of the factors that affect source estimation, developing more reliable and accessible digitizing methods can help reduce source estimation uncertainty and may eventually allow sources to be interpreted in terms of cortical regions more specific than Brodmann areas.

The datasets generated for this study are available on request to the corresponding author.

HH proposed the problem. SS and HH designed the experiment, conducted data collections, analyzed the data, and wrote and edited the manuscript.

This work was partially supported by the National Institute on Aging of the National Institutes of Health, under award number R01AG054621. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This manuscript has been released as a Pre-Print at BioRxiv (Shirazi and Huang, 2019b). MATLAB functions and dependencies for the motion capture and 3D scan digitizing methods are available on GitHub at: github.com/neuromechanist/eLocs.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2019.01159/full#supplementary-material

Acar, Z. A., and Makeig, S. (2010). Neuroelectromagnetic forward head modeling toolbox. J. Neurosci. Methods 190, 258–270. doi: 10.1016/j.jneumeth.2010.04.031

Akalin Acar, Z., Acar, C. E., and Makeig, S. (2016). Simultaneous head tissue conductivity and EEG source location estimation. Neuroimage 124(Pt A):168–180. doi: 10.1016/j.neuroimage.2015.08.032

Akalin Acar, Z., and Makeig, S. (2013). Effects of forward model errors on EEG source localization. Brain Topogr. 26, 378–396. doi: 10.1007/s10548-012-0274-6

ANT-Neuro (2019). xensor™. ANT neuro. Available online at: https://www.ant-neuro.com/products/xensor (accessed February 8, 2019).

Artoni, F., Menicucci, D., Delorme, A., Makeig, S., and Micera, S. (2014). RELICA: a method for estimating the reliability of independent components. Neuroimage 103, 391–400. doi: 10.1016/j.neuroimage.2014.09.010

Baysal, U., and Sengül, G. (2010). Single camera photogrammetry system for EEG electrode identification and localization. Ann. Biomed. Eng. 38, 1539–1547. doi: 10.1007/s10439-010-9950-4

Beltrachini, L. (2019). Sensitivity of the projected subtraction approach to mesh degeneracies and its impact on the forward problem in EEG. IEEE Trans. Biomed. Eng. 66, 273–282. doi: 10.1109/TBME.2018.2828336

Beltrachini, L., von Ellenrieder, N., and Muravchik, C. H. (2011). General bounds for electrode mislocation on the EEG inverse problem. Comput. Methods Programs Biomed. 103, 1–9. doi: 10.1016/j.cmpb.2010.05.008

Bigdely-Shamlo, N., Mullen, T., Kreutz-Delgado, K., and Makeig, S. (2013). Measure projection analysis: a probabilistic approach to EEG source comparison and multi-subject inference. Neuroimage 72, 287–303. doi: 10.1016/j.neuroimage.2013.01.040

Bradley, A., Yao, J., Dewald, J., and Richter, C. P. (2016). Evaluation of electroencephalography source localization algorithms with multiple cortical sources. PLoS ONE 11:e0147266. doi: 10.1371/journal.pone.0147266

Bradley, C., Joyce, N., and Garcia-Larrea, L. (2016). Adaptation in human somatosensory cortex as a model of sensory memory construction: a study using high-density EEG. Brain Struct. Funct. 221, 421–431. doi: 10.1007/s00429-014-0915-5

Chen, C., and Kak, A. (1987). “Modeling and calibration of a structured light scanner for 3-D robot vision,” in 1987 IEEE International Conference on Robotics and Automation (Raleigh, NC). doi: 10.1109/ROBOT.1987.1087958

Chen, S., He, Y., Qiu, H., Yan, X., and Zhao, M. (2019). Spatial localization of EEG electrodes in a TOF+CCD camera system. Front. Neuroinform. 13:21. doi: 10.3389/fninf.2019.00021

Clausner, T., Dalal, S. S., and Crespo-Garcia, M. (2017). Photogrammetry-Based head digitization for rapid and accurate localization of EEG electrodes and MEG fiducial markers using a single digital SLR camera. Front. Neurosci. 11:264. doi: 10.3389/fnins.2017.00264

Cline, C. C., Coogan, C., and He, B. (2018). EEG electrode digitization with commercial virtual reality hardware. PLoS ONE 13:e0207516. doi: 10.1371/journal.pone.0207516

Dalal, S. S., Rampp, S., Willomitzer, F., and Ettl, S. (2014). Consequences of EEG electrode position error on ultimate beamformer source reconstruction performance. Front. Neurosci. 8:42. doi: 10.3389/fnins.2014.00042

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Delorme, A., Palmer, J., Onton, J., Oostenveld, R., and Makeig, S. (2012). Independent EEG sources are dipolar. PLoS ONE 7:e30135. doi: 10.1371/journal.pone.0030135

Engels, L., Warzee, N., and De Tiege, X. (2013). Method of Locating EEG and MEG Sensors on a Head. U.S. Patent No. EP2561810:A1.

Evans, A. C., Collins, D. L., Mills, S. R., Brown, E. D., Kelly, R. L., and Peters, T. M. (1993). “3D statistical neuroanatomical models from 305 MRI volumes,” in 1993 IEEE Conference Record Nuclear Science Symposium and Medical Imaging Conference (San Francisco, CA), 1813–1817. doi: 10.1109/NSSMIC.1993.373602

FieldTrip (2018). Tutorial:3dscanner [FieldTrip]. Available online at: http://www.fieldtriptoolbox.org/tutorial/electrode (accessed January 29, 2019).

He, B., and Musha, T. (1989). Effects of cavities on EEG dipole localization and their relations with surface electrode positions. Int. J. Biomed. Comput. 24, 269–282. doi: 10.1016/0020-7101(89)90022-6

Hedrich, T., Pellegrino, G., Kobayashi, E., Lina, J. M., and Grova, C. (2017). Comparison of the spatial resolution of source imaging techniques in high-density EEG and MEG. Neuroimage 157, 531–544. doi: 10.1016/j.neuroimage.2017.06.022

Homölle, S., and Oostenveld, R. (2019). Using a structured-light 3D scanner to improve EEG source modeling with more accurate electrode positions. J. Neurosci. Methods 326:108378. doi: 10.1016/j.jneumeth.2019.108378

Kavanagh, R. N., Darcey, T. M., Lehmann, D., and Fender, D. H. (1978). Evaluation of methods for three-dimensional localization of electrical sources in the human brain. IEEE Trans. Biomed. Eng. 25, 421–429. doi: 10.1109/TBME.1978.326339

Keil, A., Debener, S., Gratton, G., Junghöfer, M., Kappenman, E. S., Luck, S. J., et al. (2014). Committee report: publication guidelines and recommendations for studies using electroencephalography and magnetoencephalography. Psychophysiology 51, 1–21. doi: 10.1111/psyp.12147

Khosla, D., Don, M., and Kwong, B. (1999). Spatial mislocalization of EEG electrodes – effects on accuracy of dipole estimation. Clin. Neurophysiol. 110, 261–271. doi: 10.1016/S0013-4694(98)00121-7

Klamer, S., Elshahabi, A., Lerche, H., Braun, C., Erb, M., Scheffler, K., et al. (2015). Differences between MEG and high-density EEG source localizations using a distributed source model in comparison to fMRI. Brain Topogr. 28, 87–94. doi: 10.1007/s10548-014-0405-3

Klem, G. H., Lüders, H. O., Jasper, H. H., and Elger, C. (1999). The ten-twenty electrode system of the International Federation. The International Federation of Clinical Neurophysiology. Electroencephalogr. Clin. Neurophysiol. Suppl. 52, 3–6.

Kline, J. E., Huang, H. J., Snyder, K. L., and Ferris, D. P. (2015). Isolating gait-related movement artifacts in electroencephalography during human walking. J. Neural Eng. 12:046022. doi: 10.1088/1741-2560/12/4/046022

Koessler, L., Cecchin, T., Ternisien, E., and Maillard, L. (2010). 3D handheld laser scanner based approach for automatic identification and localization of EEG sensors. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2010, 3707–3710. doi: 10.1109/IEMBS.2010.5627659

Koessler, L., Maillard, L., Benhadid, A., Vignal, J. P., Braun, M., and Vespignani, H. (2007). Spatial localization of EEG electrodes. Neurophysiol. Clin. 37, 97–102. doi: 10.1016/j.neucli.2007.03.002

Lancaster, J. L., Woldorff, M. G., Parsons, L. M., Liotti, M., Freitas, C. S., Rainey, L., et al. (2000). Automated Talairach atlas labels for functional brain mapping. Hum. Brain Mapp. 10, 120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8

Landsness, E. C., Goldstein, M. R., Peterson, M. J., Tononi, G., and Benca, R. M. (2011). Antidepressant effects of selective slow wave sleep deprivation in major depression: a high-density EEG investigation. J. Psychiatr. Res. 45, 1019–1026. doi: 10.1016/j.jpsychires.2011.02.003

Lantz, G., Grave de Peralta, R., Spinelli, L., Seeck, M., and Michel, C. M. (2003). Epileptic source localization with high density EEG: how many electrodes are needed? Clin. Neurophysiol. 114, 63–69. doi: 10.1016/S1388-2457(02)00337-1

Mahjoory, K., Nikulin, V. V., Botrel, L., Linkenkaer-Hansen, K., Fato, M. M., and Haufe, S. (2017). Consistency of EEG source localization and connectivity estimates. Neuroimage 152, 590–601. doi: 10.1016/j.neuroimage.2017.02.076

Marino, M., Liu, Q., Brem, S., Wenderoth, N., and Mantini, D. (2016). Automated detection and labeling of high-density EEG electrodes from structural MR images. J. Neural Eng. 13:056003. doi: 10.1088/1741-2560/13/5/056003

Moshtagh, N. (2005). Minimum Volume Enclosing Ellipsoid. Available online at: semanticscholar.org

Mullen, T., Kothe, C., Chi, Y. M., Ojeda, A., Kerth, T., Makeig, S., et al. (2013). Real-time modeling and 3D visualization of source dynamics and connectivity using wearable EEG. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2013, 2184–2187. doi: 10.1109/EMBC.2013.6609968

Nordin, A. D., Hairston, W. D., and Ferris, D. P. (2018). Dual-electrode motion artifact cancellation for mobile electroencephalography. J. Neural Eng. 15:056024. doi: 10.1088/1741-2552/aad7d7

Nyström, P. (2008). The infant mirror neuron system studied with high density EEG. Soc. Neurosci. 3, 334–347. doi: 10.1080/17470910701563665

Oliveira, A. S., Schlink, B. R., Hairston, W. D., Konig, P., and Ferris, D. P. (2016). Induction and separation of motion artifacts in EEG data using a mobile phantom head device. J. Neural Eng. 13:036014. doi: 10.1088/1741-2560/13/3/036014

Oostenveld, R., Fries, P., Maris, E., and Schoffelen, J.-M. (2010). FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011:156869. doi: 10.1155/2011/156869

Oostenveld, R., and Praamstra, P. (2001). The five percent electrode system for high-resolution EEG and ERP measurements. Clin. Neurophysiol. 112, 713–719. doi: 10.1016/S1388-2457(00)00527-7

Palmer, J. A., Kreutz-Delgado, K., Rao, B. D., and Makeig, S. (2007). “Modeling and estimation of dependent subspaces with non-radially symmetric and skewed densities,” in Independent Component Analysis and Signal Separation, eds M. E. Davies, C. J. James, S. A. Abdallah, and M. D. Plumbley (Berlin; Heidelberg: Springer), 97–104.

Reis, P. M., and Lochmann, M. (2015). Using a motion capture system for spatial localization of EEG electrodes. Front. Neurosci. 9:130. doi: 10.3389/fnins.2015.00130

Rodríguez-Calvache, M., Calle, A., Valderrama, S., López, I. A., and López, J. D. (2018). “Analysis of exact electrode positioning systems for multichannel-EEG,” in Applied Computer Sciences in Engineering, eds J. Figueroa-García, E. López-Santana, and J. Rodriguez-Molano (Cham: Springer International Publishing), 523–534.

Rogue-Resolutions (2019). Brainsight TMS Navigation - Rogue Resolutions. Available online at: https://www.rogue-resolutions.com/catalogue/neuro-navigation/brainsight-tms-navigation/ (accessed February 8, 2019).

Russell, G. S., Jeffrey Eriksen, K., Poolman, P., Luu, P., and Tucker, D. M. (2005). Geodesic photogrammetry for localizing sensor positions in dense-array EEG. Clin. Neurophysiol. 116, 1130–1140. doi: 10.1016/j.clinph.2004.12.022

Scarff, C. J., Reynolds, A., Goodyear, B. G., Ponton, C. W., Dort, J. C., and Eggermont, J. J. (2004). Simultaneous 3-T fMRI and high-density recording of human auditory evoked potentials. Neuroimage 23, 1129–1142. doi: 10.1016/j.neuroimage.2004.07.035

Seeber, M., Cantonas, L.-M., Hoevels, M., Sesia, T., Visser-Vandewalle, V., and Michel, C. M. (2019). Subcortical electrophysiological activity is detectable with high-density EEG source imaging. Nat. Commun. 10:753. doi: 10.1038/s41467-019-08725-w

Shirazi, S. Y., and Huang, H. J. (2019a). “Influence of mismarking fiducial locations on EEG source estimation,” in 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER) (San Francisco, CA), 377–380.

Shirazi, S. Y., and Huang, H. J. (2019b). More reliable EEG electrode digitizing methods can reduce source estimation uncertainty, but current methods already accurately identify Brodmann areas. bioRxiv 557074. doi: 10.1101/557074

Song, C., Jeon, S., Lee, S., Ha, H. G., Kim, J., and Hong, J. (2018). Augmented reality-based electrode guidance system for reliable electroencephalography. Biomed. Eng. Online 17:64. doi: 10.1186/s12938-018-0500-x

Song, J., Davey, C., Poulsen, C., Luu, P., Turovets, S., Anderson, E., et al. (2015). EEG source localization: sensor density and head surface coverage. J. Neurosci. Methods 256, 9–21. doi: 10.1016/j.jneumeth.2015.08.015

Symeonidou, E.-R., Nordin, A. D., Hairston, W. D., and Ferris, D. P. (2018). Effects of cable sway, electrode surface area, and electrode mass on electroencephalography signal quality during motion. Sensors 18:E1073. doi: 10.3390/s18041073

Taberna, G. A., Marino, M., Ganzetti, M., and Mantini, D. (2019). Spatial localization of EEG electrodes using 3D scanning. J. Neural Eng. 16:026020. doi: 10.1088/1741-2552/aafdd1

Tsolaki, A. C., Kosmidou, V., Kompatsiaris, I. Y., Papadaniil, C., Hadjileontiadis, L., Adam, A., et al. (2017). Brain source localization of MMN and P300 ERPs in mild cognitive impairment and Alzheimer's disease: a high-density EEG approach. Neurobiol. Aging 55, 190–201. doi: 10.1016/j.neurobiolaging.2017.03.025

Van Hoey, G., Vanrumste, B., D'Havé, M., Van de Walle, R., Lemahieu, I., and Boon, P. (2000). Influence of measurement noise and electrode mislocalisation on EEG dipole-source localisation. Med. Biol. Eng. Comput. 38, 287–296. doi: 10.1007/BF02347049

Vema Krishna Murthy, S., MacLellan, M., Beyea, S., and Bardouille, T. (2014). Faster and improved 3-D head digitization in MEG using Kinect. Front. Neurosci. 8:326. doi: 10.3389/fnins.2014.00326

Wang, Y., and Gotman, J. (2001). The influence of electrode location errors on EEG dipole source localization with a realistic head model. Clin. Neurophysiol. 112, 1777–1780. doi: 10.1016/S1388-2457(01)00594-6

Keywords: electrocortical dynamics, electrode position, 3D scanning, source localization, spatial accuracy, independent component analysis (ICA), mobile brain/body imaging (MoBI)

Citation: Shirazi SY and Huang HJ (2019) More Reliable EEG Electrode Digitizing Methods Can Reduce Source Estimation Uncertainty, but Current Methods Already Accurately Identify Brodmann Areas. Front. Neurosci. 13:1159. doi: 10.3389/fnins.2019.01159

Received: 01 March 2019; Accepted: 14 October 2019;

Published: 06 November 2019.

Edited by:

Enkelejda Kasneci, University of Tübingen, Germany

Reviewed by:

Evangelia-Regkina Symeonidou, University of Florida, United States

Copyright © 2019 Shirazi and Huang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Seyed Yahya Shirazi, shirazi@ieee.org

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.