ORIGINAL RESEARCH article

Front. Neurosci., 06 June 2019

Sec. Neuroprosthetics

Volume 13 - 2019 | https://doi.org/10.3389/fnins.2019.00578

This article is part of the Research Topic: Neuroprosthetics Editor's Pick 2021

State of the art myoelectric hand prostheses can restore some feedforward motor function to their users, but they cannot yet restore sensory feedback. It has been shown, using psychophysical tests, that multi-modal sensory feedback is readily used in the formation of the users’ representation of the control task in their central nervous system – their internal model. Hence, to fully describe the effect of providing feedback to prosthesis users, not only should functional outcomes be assessed, but so should the internal model. In this study, we compare the complex interactions between two different feedback types, as well as a combination of the two, on the internal model, and the functional performance of naïve participants without limb difference. We show that adding complementary audio biofeedback to visual feedback enables the development of a significantly stronger internal model for controlling a myoelectric hand compared to visual feedback alone, but adding discrete vibrotactile feedback to vision does not. Both types of feedback, however, improved the functional grasping abilities to a similar degree. Contrary to our expectations, when both types of feedback are combined, the discrete vibrotactile feedback seems to dominate the continuous audio feedback. This finding indicates that simply adding sensory information may not necessarily enhance the formation of the internal model in the short term. In fact, it could even degrade it. These results support our argument that assessment of the internal model is crucial to understanding the effects of any type of feedback, although we cannot be sure that the metrics used here describe the internal model exhaustively. Furthermore, all the feedback types tested herein have been proven to provide significant functional benefits to the participants using a myoelectrically controlled robotic hand. 
This article, therefore, proposes a crucial conceptual and methodological addition to the evaluation of sensory feedback for upper limb prostheses – the internal model – as well as new types of feedback that promise to considerably improve functional prosthesis control.

The ease with which adults use their hands is owed to an intricate feedforward-feedback mechanism that has been honed since birth (Johansson and Cole, 1992). For those who have lost a hand (i.e., amputees) or were born without one, some feedforward motor functions can be restored with hand prostheses. However, while prostheses with myoelectric control represent the clinical state of the art (Schmidl, 1973), current commercial devices do not intentionally provide sensory feedback, and only a few sensory feedback systems have found their way out of the research labs (Antfolk et al., 2013; Clemente et al., 2016; Ortiz-Catalan et al., 2017).

Feedforward control of myoelectric hand prostheses is chiefly influenced by two factors: (1) the robustness of the control of the movements of the prosthesis, which is affected by the method of recording and decoding the users’ intent (i.e., their signals) (Geethanjali, 2016); and (2) the users’ ability to produce these control signals, which depends on their understanding of the system – how it is represented in the central nervous system – known as the internal model (Kawato, 1999). In the unimpaired individual, internal models are continuously updated through multi-modal sensory feedback (tactile, visual, and auditory) during and after any movement (Imamizu et al., 2000). In amputees wearing a prosthesis, this differs due to the poor implicit sensory feedback available. Prosthesis users rely chiefly on proprioception in the remaining muscles (sense of contraction), visual feedback and, to some extent, the incidental feedback that motor noise and socket vibration provide (Simpson, 1973; Childress, 1980; Antfolk et al., 2013; Markovic et al., 2018b). Consequently, they cannot adequately hone their internal model, which negatively affects their ability to control the prosthesis (Lum et al., 2014; Shehata et al., 2018c). When highly reliable efferent signals are available for control, incomplete sensory inputs may suffice to retain the internal model (Hermsdörfer et al., 2008; Saunders and Vijayakumar, 2011; Ninu et al., 2014; Dosen et al., 2015b; Markovic et al., 2018b). However, it is a desirable goal to restore natural closed-loop control with supplementary (explicit) sensory feedback.

To address this goal, researchers have devised and assessed ways of providing feedback through invasive and non-invasive methods (Childress, 1980; Antfolk et al., 2013). Invasive peripheral nerve stimulation holds the promise of eventually being able to restore close-to-natural, modality- and somatotopically matched sensations (Riso, 1999; Graczyk et al., 2016). So far, however, realization of this hope has proven difficult; truly natural “touch” sensations have only been reported once (Tan et al., 2014). Non-invasive feedback does not directly interface with the nerves and is thus potentially less informative, but it is preferred by prospective users (Engdahl et al., 2015). It has also proven capable of improving functional performance in prosthetic hand users (Chatterjee et al., 2008; Ninu et al., 2014; Raspopovic et al., 2014; Clemente et al., 2016; Dosen et al., 2016; Markovic et al., 2017, 2018a). All these studies demonstrated new technological devices and methods, produced new knowledge, and revived the question of whether sensory feedback is needed, how effective it is, and how to assess it. However, no study had assessed the effects of sensory feedback on the internal model within a formalized framework.

In an attempt to reduce this gap, we recently proposed to assess the internal model strength developed while controlling myoelectric prostheses by using a psychophysical framework borrowed and modified from motor adaptation studies (Johnson et al., 2017; Shehata et al., 2018a,c). This framework uses parameters, such as sensory and control noise, to compute uncertainties in the developed internal model. Our recent work (Shehata et al., 2018c) showed that this framework can be used to investigate the effect of the feedback level on internal model strength. As a test bed for assessing this new method, we developed a versatile non-invasive human-machine interface that included a classifier for control and an audio sensory feedback system conveying continuous information about the control inputs of the classifier (EMG biofeedback) (Shehata et al., 2018a,b). The psychophysical framework showed that the strength of the internal model depends on the sensory input received (Shehata et al., 2018c). In particular, it showed that adding audio biofeedback to vision outperformed visual feedback alone in terms of internal model strength and performance in a functional task – both in a virtual environment and while using a multi-DoF hand prosthesis (Shehata et al., 2018a,b).

Based on these results, we sought to further enhance the sensory input available to the user, with complementary cues, in order to assess whether and how this could result in an even stronger internal model, and better performance in a functional task. To this aim we assessed and compared four sensory feedback conditions while controlling a myoelectric research hand prosthesis in psychophysical and functional tests. The three main conditions differed regarding the amount of complementary information: “visual-only (V),” “visual-plus-audio (VA),” and “visual-plus-audio-plus-tactile (VAT).” To disentangle the effects of the tactile component on the outcomes of the VAT feedback, the fourth condition was “visual-plus-tactile (VT).” The tactile feedback was provided by means of short-lasting vibrotactile cues (time-discrete) rather than continuous feedback, according to our previous work (Cipriani et al., 2014; Crea et al., 2015; Clemente et al., 2016; Barone et al., 2017; Aboseria et al., 2018) and the discrete event-driven sensory feedback control (DESC) policy (Johansson and Cole, 1992; Johansson and Edin, 1993; Johansson and Flanagan, 2009). The latter is a neuroscientific hypothesis of the mechanisms involved in human sensorimotor control, which posits that manipulation tasks are organized by means of multi-modally encoded discrete sensory events, e.g., resulting from object contact and lift-off.

Our findings show that all augmented feedback types significantly improved the performance compared to vision alone in the functional task, but only the audio biofeedback (VA) had an effect on the internal model strength, as measured by the psychophysical framework/metrics. Conversely, the tactile feedback demonstrated poor psychophysical metrics without (VT) and in combination with the audio biofeedback (VAT). These results on how the different inputs combine (either constructively or destructively) in the integrated sensory percept contribute to the scientific debate on the internal model and suggest ways for providing effective supplementary sensory feedback to prosthetic hand users.

We collected data from 28 healthy participants without any limb difference [13 females; age: 25 ± 4.5 (mean and standard deviation)]. All participants had normal or corrected-to-normal vision, were right-handed, and had no previous experience with myoelectric control. We had already collected the data for the “visual-only (V)” and “visual-plus-audio (VA)” groups (14 participants) and presented some aspects of it in our previous study (Shehata et al., 2018a). Written informed consent according to the University of New Brunswick Research and Ethics Board and the Scuola Superiore Sant’Anna Ethical Committee was obtained from all participants before conducting the experiments (UNB REB 2014-019 and SSSA 02/2017). The protocol used in this study was approved by the University of New Brunswick Research and Ethics Board and the Scuola Superiore Sant’Anna Ethical Committee.

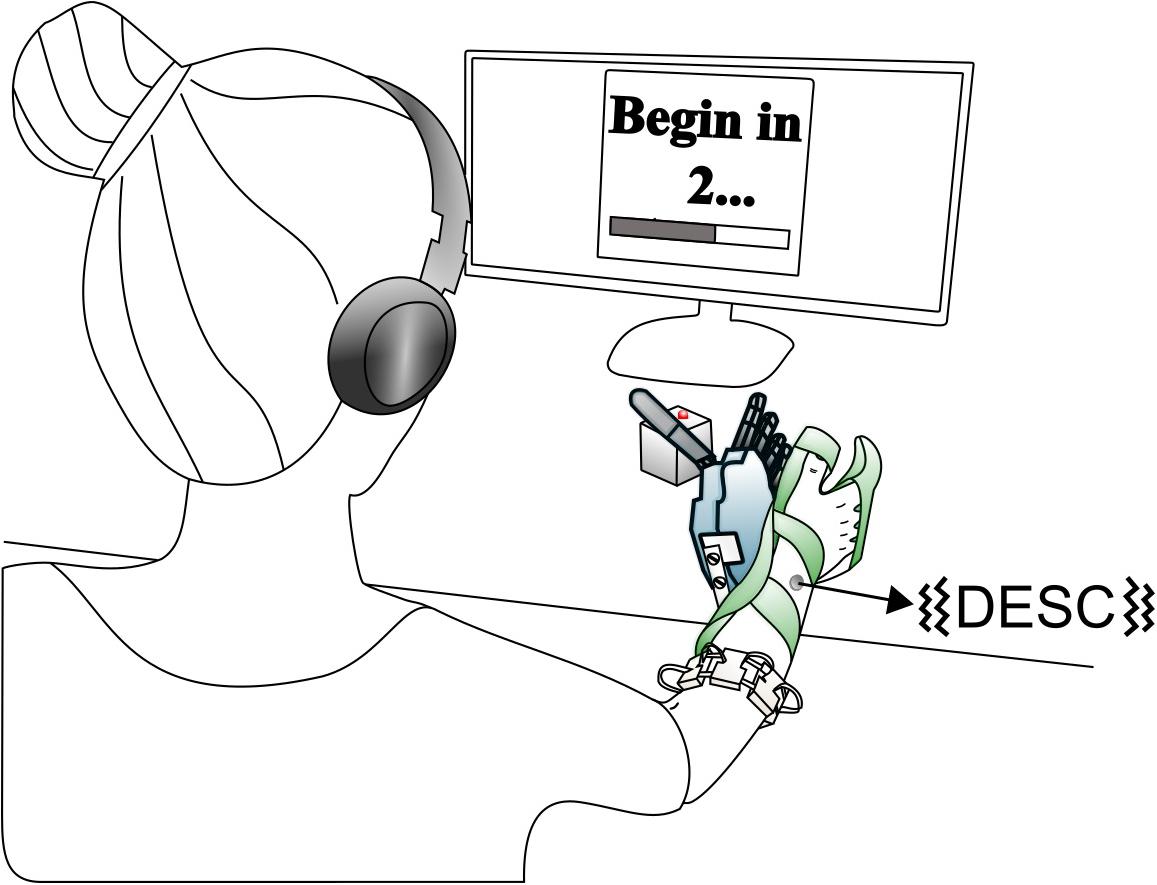

The experimental setup was similar to that of our previous study (Shehata et al., 2018a) and is briefly described here. It comprised an array of eight custom-made myoelectric sensors in a bracelet; a right-handed sensorized research hand prosthesis (IH2 Azzurra hand, Prensilia S.r.l., IT) that was mounted on a bypass attached to the participant’s forearm; a PC running the control and feedback algorithms; standard commercial headphones (MDRZX100, Sony, JP) for the audio feedback; a vibrotactor for the tactile feedback (Pico Vibe 312-101, Precision Microdrives, United Kingdom); and an instrumented test object [57 mm × 57 mm × 57 mm, ca. 180 g; (Controzzi et al., 2017; Figure 1)].

Figure 1. Overview of the setup. The robotic hand was attached to the participant via a bypass. The EMG signals recorded from the electrode bracelet around the forearm controlled the hand. A vibrotactor on the dorsal forearm provided discrete feedback, i.e., discrete event-driven sensory feedback control (DESC), and the headphones provided continuous feedback. If the grasping force on the test object exceeded a breaking threshold in fragile mode, its red LED turned on. Modified from Shehata et al. (2018a), used under CC BY 4.0.

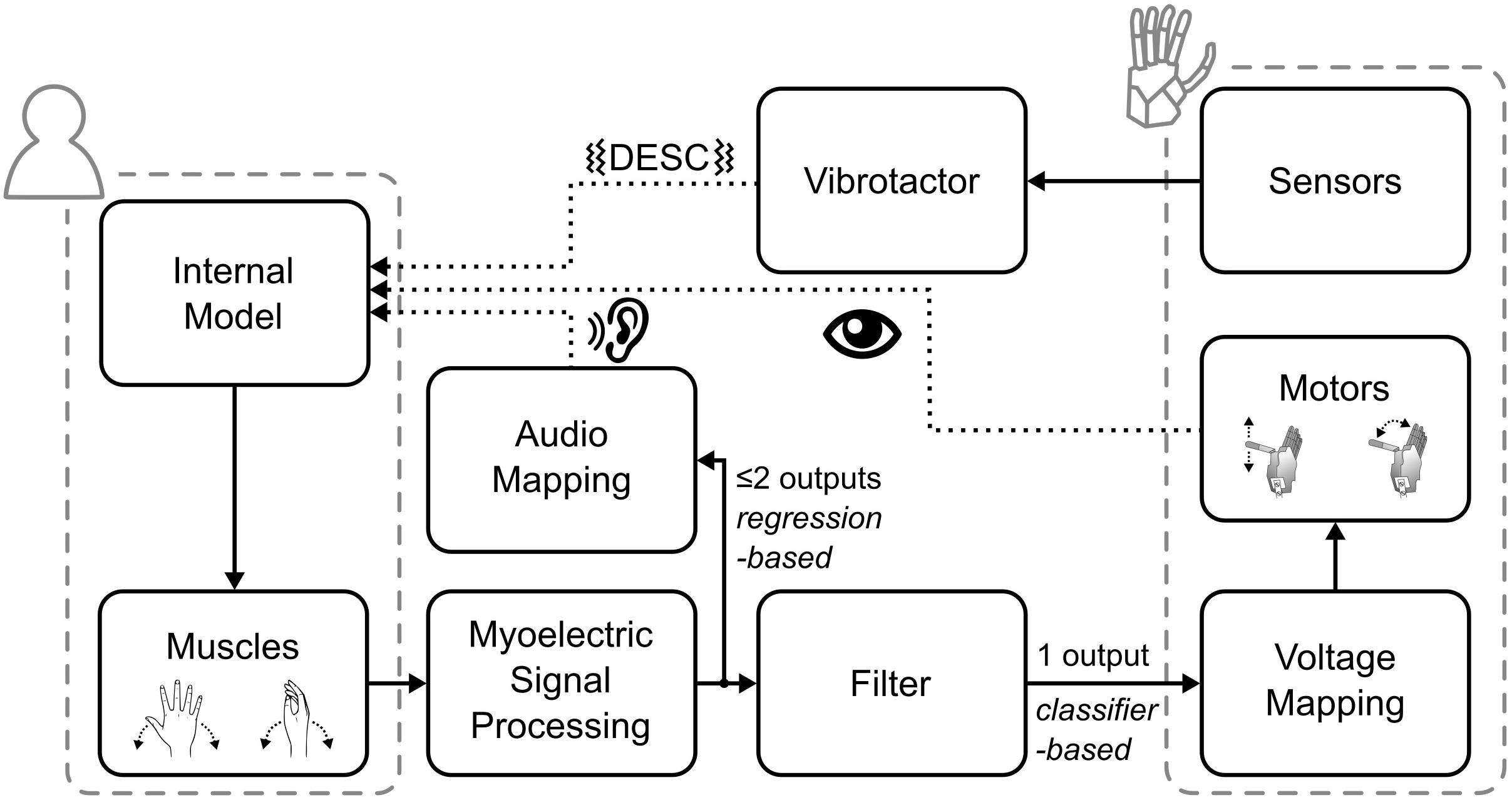

The myoelectric sensor bracelet was placed around the forearm of the participant and recorded the muscle activity used to control the robotic hand. We limited robotic hand movements to two degrees of freedom (DoF): (1) flexion/extension of the thumb, index and middle fingers, and (2) abduction/adduction of the thumb. Each of the four directions of movement of the robotic hand was mapped to one of four specific wrist movements: flexion and extension of the wrist corresponded to flexion/extension of the digits, while wrist abduction and adduction corresponded to abduction/adduction of the thumb. To implement the mapping, i.e., to interpret the electromyographic (EMG) signals, we used a Support Vector Regression algorithm that provided two regression-based control signals, which could simultaneously activate the two DoFs (e.g., thumb adduction and finger flexion) (Shehata et al., 2017, 2018c). These signals were then gated by a classifier; that is, the hand moved in only one direction at a time (Figure 2).
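The gating step can be illustrated with a minimal sketch. It assumes the classifier emits one label per control cycle and that each regression output is a signed value; the label names, the sign convention, and the function name are hypothetical and are not taken from the original implementation.

```python
from typing import Tuple


def gate_outputs(flex_ext: float, abd_add: float, active_dof: str) -> Tuple[float, float]:
    """Let through only the regression output of the DoF chosen by the classifier.

    flex_ext, abd_add: signed regression outputs (sign convention is assumed).
    active_dof: hypothetical classifier label: "flex_ext", "abd_add" or "rest".
    """
    if active_dof == "flex_ext":
        return flex_ext, 0.0
    if active_dof == "abd_add":
        return 0.0, abd_add
    # "rest" or any unknown label: neither DoF is driven.
    return 0.0, 0.0


# Both regressors are active, but only the selected DoF reaches the hand.
hand_command = gate_outputs(0.6, -0.3, "flex_ext")
```

In this arrangement the ungated pair of regression outputs remains available upstream of the gate, which is the information the audio biofeedback relays.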

Figure 2. Overview of the control and feedback loop. The dotted lines indicate the three types of feedback provided to the participants.

Biofeedback is the technique of providing biological information to participants in real-time that would otherwise be unknown (Giggins et al., 2013). Accordingly, the audio biofeedback continuously relayed the two outputs of the regression-based controller to the participant, in the form of four distinct tones. Wrist flexion and extension were mapped to tones of 400 and 500 Hz, wrist abduction and adduction to 800 and 900 Hz, respectively. The amplitude of the tones was proportional to the output of the regression algorithm (max volume = 53 ± 3 dB Sound Pressure Level). With this architecture (Figure 2), while the hand moved only one DoF at a time, the audio biofeedback provided richer information about the participant’s myoelectric signals, which encompassed both proprioceptive and motor output information.
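As an illustration of this mapping, the sketch below turns two signed regression outputs into four tone amplitudes and mixes the corresponding sine waves. The tone frequencies come from the text; the normalization of the outputs to [-1, 1], the sign convention (positive for flexion and abduction), the sample rate, and all function names are assumptions made for the example.

```python
import math

# Tone frequencies as stated in the text (Hz).
TONE_HZ = {"flexion": 400.0, "extension": 500.0, "abduction": 800.0, "adduction": 900.0}


def _clip01(x: float) -> float:
    """Clamp a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


def tone_gains(flex_ext: float, abd_add: float) -> dict:
    """Map two signed outputs (assumed in [-1, 1]) to four tone amplitudes in [0, 1]."""
    return {
        "flexion": _clip01(flex_ext),
        "extension": _clip01(-flex_ext),
        "abduction": _clip01(abd_add),
        "adduction": _clip01(-abd_add),
    }


def synthesize(flex_ext: float, abd_add: float, duration_s: float = 0.05,
               sample_rate: int = 8000) -> list:
    """Mix the four tones, each scaled by its amplitude, into one sample buffer."""
    gains = tone_gains(flex_ext, abd_add)
    n_samples = int(duration_s * sample_rate)
    return [
        sum(g * math.sin(2.0 * math.pi * TONE_HZ[name] * i / sample_rate)
            for name, g in gains.items())
        for i in range(n_samples)
    ]


gains = tone_gains(0.5, -0.25)   # finger flexion plus some thumb adduction
buffer = synthesize(0.5, -0.25)
```

Because both regression outputs feed the mix, two tones can sound at once even though the gated hand moves in only one direction.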

The tactile feedback provided information about the physical interactions of the robotic hand with the environment through the vibrotactor on the dorsal forearm. It delivered a short-lasting vibration burst (60 ms, 150 Hz, peak-to-peak force amplitude of ca. 0.3 N) upon contact, liftoff, replacement, and release of the test object. These events are known to be highly significant for the normal grasp-and-lift control, as per the DESC policy (Johansson and Cole, 1992; Johansson and Edin, 1993; Cipriani et al., 2014).
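The event-driven logic can be sketched as edge detection on two binary states. The sketch assumes that the sensor data have already been reduced to a `touching` flag and a `lifted` flag per sample; how those flags are derived from the load cells, and the names used here, are assumptions rather than details given in the text.

```python
# Burst parameters as stated in the text.
BURST_MS = 60
BURST_HZ = 150


def desc_events(samples):
    """Return (sample_index, event_name) for each state transition.

    samples: iterable of (touching, lifted) booleans, one pair per time step.
    A vibration burst would be triggered at every returned event.
    """
    events = []
    prev_touching, prev_lifted = False, False
    for i, (touching, lifted) in enumerate(samples):
        if touching and not prev_touching:
            events.append((i, "contact"))
        if lifted and not prev_lifted:
            events.append((i, "liftoff"))
        if prev_lifted and not lifted:
            events.append((i, "replacement"))
        if prev_touching and not touching:
            events.append((i, "release"))
        prev_touching, prev_lifted = touching, lifted
    return events


# One grasp-lift-replace-release cycle.
trial = [(False, False), (True, False), (True, True),
         (True, True), (True, False), (False, False)]
events = desc_events(trial)
```

Between events nothing is delivered, which is what distinguishes this time-discrete scheme from the continuous audio channel.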

The test object – called an iVE – contained load cells measuring the grasp and load forces. The iVE could virtually break when a grasp exceeded a force of ca. 3 N, which was signaled to the participant by the activation of a red-colored LED on the iVE (Controzzi et al., 2017).

Participants were divided into four groups (7 persons each) according to the kind of feedback they received: “visual-only (V),” “visual-plus-audio (VA),” “visual-plus-tactile (VT),” and “visual-plus-audio-plus-tactile (VAT).” They performed two tests according to a previously developed psychophysical framework (Shehata et al., 2018c): the “adaptation rate test” to measure the rate of optimization of grasping due to the feedback, and the “just-noticeable difference (JND) test” to measure the threshold of perceiving a control perturbation. A third “functional test” was added in order to measure the ability to use the robotic hand in a manipulation task (Clemente et al., 2016).

In the adaptation rate test, the participants had 40 trials and were asked to grasp, lift, and replace the iVE as quickly as possible without breaking it. The iVE was placed in front of the participant so that the LED faced upward and the two instrumented sides could be grasped without the need to turn the object. A limit of 5 s was given to execute each trial, after which the hand automatically reopened. During trials 1–25, breaking was signaled through a red LED (fragile mode); during trials 26–40, the breaking was no longer signaled (rigid mode). This was done to keep subjects engaged with the task and prepare them for the following test.

In the JND test, the participants grasped the iVE (fragile mode) in two consecutive trials, lasting 4 s each (after which the hand automatically reopened). In one of the two, a stimulus perturbed the control of the hand. Participants were told to identify the altered trial (two-alternative forced choice) by pressing a key on a keypad placed near their unconstrained hand. The stimulus was calculated using an adaptive staircase procedure with a target probability set to 0.84 and a step size of 67 degrees and repeated until 23 reversals were achieved, as in our previous work (Shehata et al., 2018c).
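The staircase rule itself is not spelled out here. One common rule that converges on a chosen target probability p is the weighted up-down method, in which the step after an incorrect response is p/(1 − p) times the step after a correct one. The sketch below assumes that rule and is not claimed to be the procedure of Shehata et al. (2018c); `respond`, `start`, `step_down`, and the trial cap are hypothetical.

```python
def weighted_up_down(respond, start, step_down, target_p=0.84,
                     max_reversals=23, floor=0.0, max_trials=10_000):
    """Run a weighted up-down staircase and return the stimulus at each reversal.

    respond(stimulus) -> True if the perturbed trial was identified correctly.
    The up/down step ratio p / (1 - p) makes the track settle where the
    probability of a correct answer equals target_p (assumed rule).
    """
    step_up = step_down * target_p / (1.0 - target_p)
    stimulus = start
    last_direction = 0          # -1: stimulus was lowered, +1: raised
    reversals = []
    for _ in range(max_trials):  # cap guards against a track that never reverses
        if len(reversals) >= max_reversals:
            break
        correct = respond(stimulus)
        direction = -1 if correct else 1
        if last_direction != 0 and direction != last_direction:
            reversals.append(stimulus)
        last_direction = direction
        step = step_down if correct else step_up
        stimulus = max(floor, stimulus + direction * step)
    return reversals


# Idealized observer who notices any perturbation of at least 40 degrees.
reversals = weighted_up_down(lambda s: s >= 40.0, start=90.0, step_down=4.0)
```

With this idealized observer the reversal points straddle the 40-degree threshold; a real participant answers probabilistically, so the track fluctuates around the stimulus level that yields the target probability.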

In the functional test, for 20 trials of 10 s each, the participants attempted to grasp and transfer the iVE over a barrier (H: 14.5 cm × W: 25 cm) without breaking it (fragile mode), akin to the well-known Box and Block test (Mathiowetz et al., 1985; Clemente et al., 2016). For a more detailed description of the tests, please refer to Shehata et al. (2018a).

In all groups, participants first trained freely to become familiar with the control and then trained to grasp and lift the test object. After that, they completed the three tests receiving only visual feedback. Subsequently, participants repeated the training and the three tests with either V, VA, VT, or VAT feedback. Ergo, each participant completed training and the three tests twice. The four groups were thus different and received the following feedback (in order): V-V, V-VA, V-VT, and V-VAT. In between the tests, the participants took short breaks; they took additional breaks during the (long-lasting) JND (every 12 min, or more often if desired). Each trial of the three tests was started with the hand fully opened and ended with the hand (automatically) returning to this starting pose. In the adaptation rate test and in the JND test, the thumb was fully adducted, meaning that participants had to activate only one DoF to close the hand [see also Figure 3 in Shehata et al., 2018a]. In the functional test, the fingers were extended, and the thumb fully abducted in resting position, meaning that the participants had to activate the two DoFs, mimicking the control of a multi-DoF prosthetic hand.

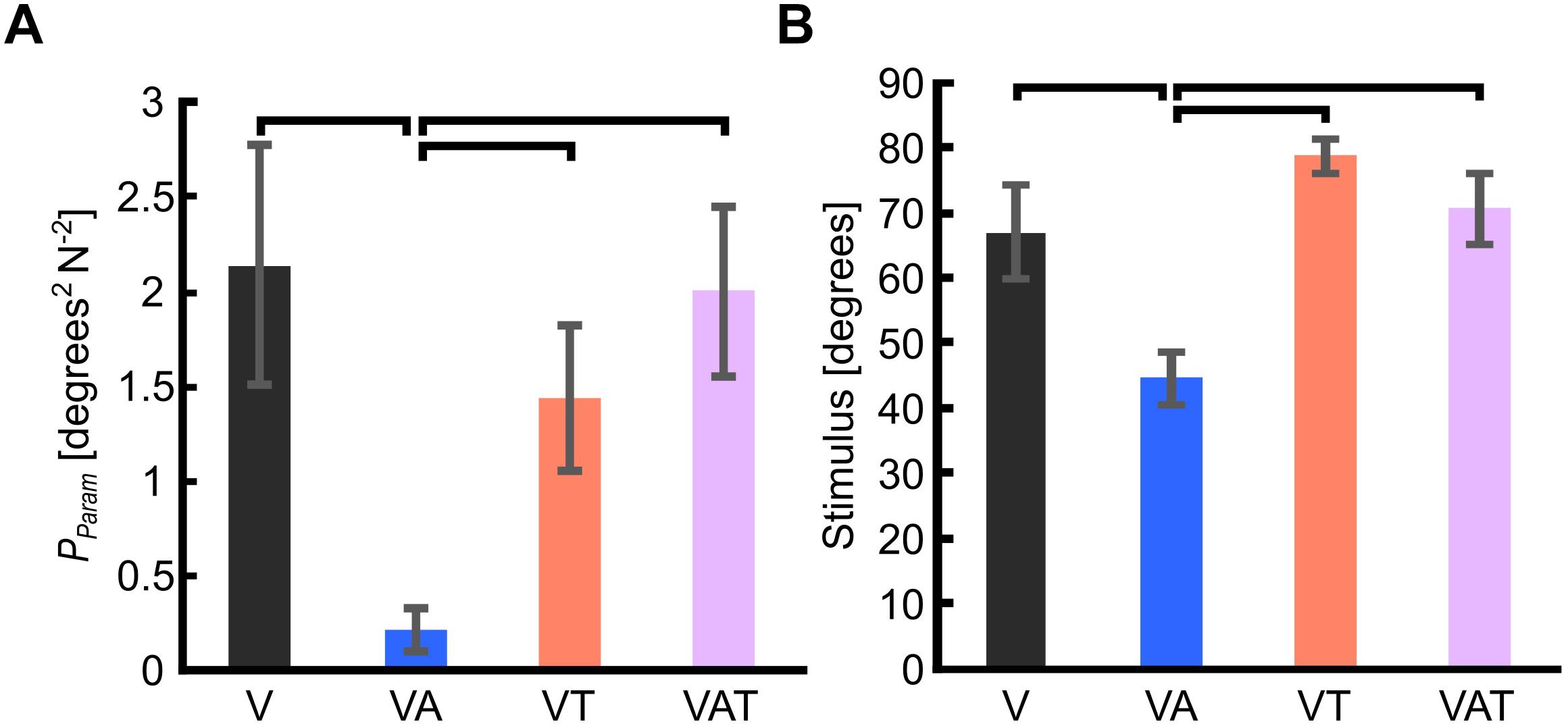

Figure 3. (A) Internal model uncertainty (Pparam). (B) Sensorimotor threshold (JND). Horizontal bars denote p < 0.05. Error bars show the standard error of the mean for each group.

The internal model developed while using the robotic hand was reconstructed from the data of the second repetition of the tests, by extracting four metrics from the adaptation rate and the JND tests, following the procedure described in our previous study (Shehata et al., 2018a). These are termed psychophysical metrics and consist of:

• Pparam: Internal model uncertainty. This measure describes the confidence participants have in their developed internal model, and it is computed from the outcomes of the adaptation rate test and the JND test (Shehata et al., 2018c and their Supplementary Materials).

• JND: Just-noticeable difference (or sensorimotor threshold). It describes, in degrees, the smallest external control perturbation from the trajectory (generated by the participant) that the participant perceived. The JND was defined as the final noticeable stimulus after 23 reversals of the adaptive staircase in the JND test (Shehata et al., 2018c).

• R: Sensory uncertainty. R determines the participants’ trust in the sensory information they receive from the system (Shehata et al., 2018c). It is derived from the JND and the controller noise (Q) as follows:

Q was extracted from the adaptation rate test as the variance in the control signal between the start of each trial and the first activation of the muscles (ca. 100–200 ms).

• −β1: Adaptation rate. This is a measure of the participants’ modification of the feedforward control signal (from one trial to the next) based on the perceived error between the optimal and their actual movement (Bastian, 2008; Johnson et al., 2017). It was computed from each trial in the adaptation rate test by analyzing the first 150–300 ms window of the output signal from the controller (Shehata et al., 2018b). This window was selected to assess modifications in the control signals before integration of the visual feedback (Elliott and Allard, 1985). Since this test required only flexion of the digits, any other activations were considered self-generated errors (Shehata et al., 2017). Participants were incentivized to minimize these errors while executing the task as quickly as possible without (virtually) breaking the object. We computed the −β1 as follows:

where error is the angle between the ideal and the actual hand trajectory, β0 is the linear regression constant, β1 is the adaptation rate, and n is the trial number. A unity value for −β1 indicates perfect adaptation, i.e., an internal model that is modified to perfectly compensate for errors. Higher or lower adaptation rates suggest over- or under-compensation.
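A minimal sketch of such a fit is given here, assuming the regression takes the form error(n+1) − error(n) = β1 · error(n) + β0. This form is consistent with the symbol definitions above and with −β1 = 1 meaning that an error is fully corrected on the next trial, but it is an assumption rather than a reproduction of the original equation; the function name is hypothetical.

```python
def adaptation_rate(errors):
    """Least-squares fit of error[n+1] - error[n] = beta1 * error[n] + beta0.

    errors: per-trial error angles in trial order.
    Returns (-beta1, beta0); -beta1 is the adaptation rate.
    """
    x = errors[:-1]
    y = [nxt - cur for cur, nxt in zip(errors[:-1], errors[1:])]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    beta1 = sxy / sxx
    beta0 = mean_y - beta1 * mean_x
    return -beta1, beta0


# Synthetic series in which 80% of each error is corrected on the next trial.
errors = [10.0]
for _ in range(10):
    errors.append(0.2 * errors[-1] + 1.0)
rate, offset = adaptation_rate(errors)
```

For this synthetic series the fit recovers an adaptation rate of 0.8 and an offset of 1, i.e., under-compensation relative to the ideal value of 1.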

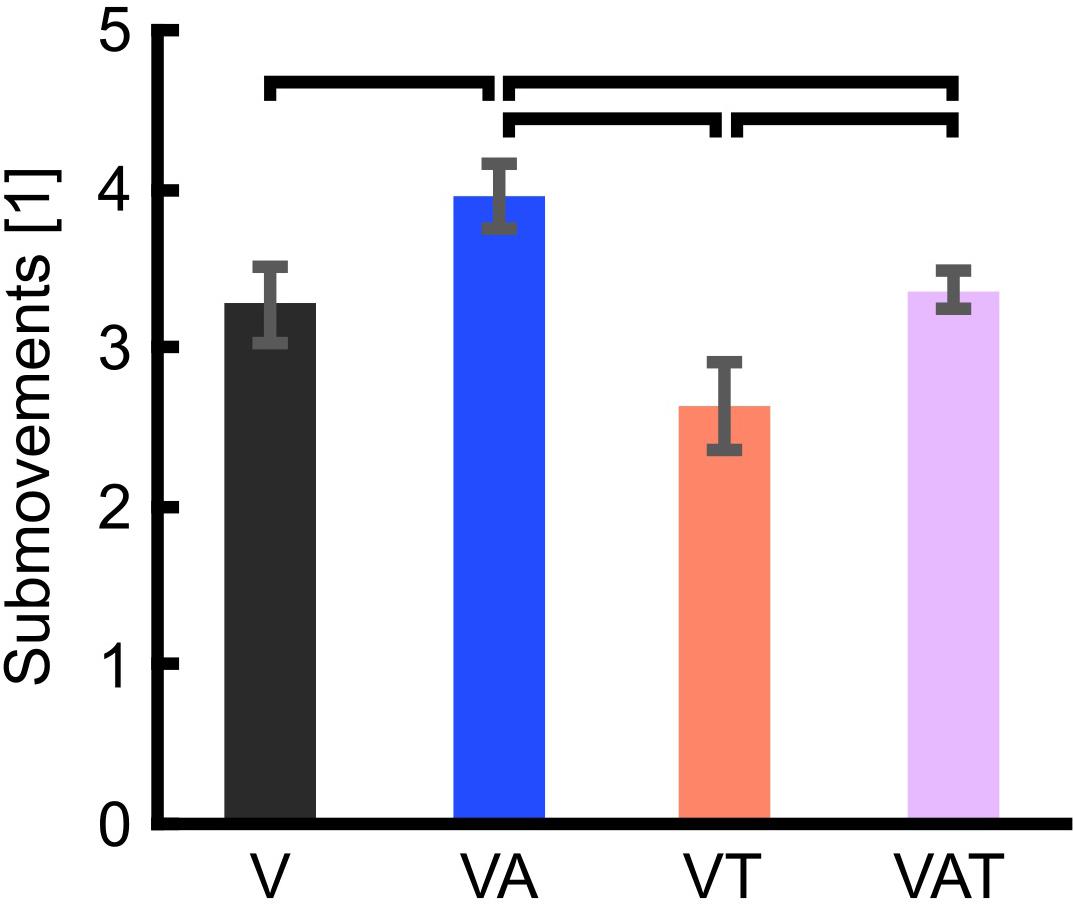

In addition – to infer the way participants used the sensory input to control the robotic hand to grasp and lift the object – we computed the number of sub-movements from the second block of data in the adaptation test. Sub-movements are defined as the number of zero-crossing pairs of the third derivative of the grasp force profile per trial (Fishbach et al., 2007). This measure describes the real-time (or closed-loop) regulation of the grasp force and depends on the received feedback (Doeringer and Hogan, 1998; Kositsky and Barto, 2001; Dipietro et al., 2009). Specifically, a higher number of sub-movements indicates more closed-loop regulation of the grasp force.
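The counting rule can be sketched as follows, assuming a uniformly sampled force trace, plain forward differences for the derivatives, and one sub-movement per two sign changes. Real force data would normally be low-pass filtered first; the filtering and the function names are outside what the text specifies.

```python
import math


def third_derivative(force, dt):
    """Approximate the third time derivative with three forward differences."""
    d = list(force)
    for _ in range(3):
        d = [(b - a) / dt for a, b in zip(d[:-1], d[1:])]
    return d


def count_submovements(force, dt):
    """Count zero-crossing pairs of the third derivative of a force profile."""
    d3 = [v for v in third_derivative(force, dt) if v != 0.0]  # skip exact zeros
    crossings = sum(1 for a, b in zip(d3[:-1], d3[1:]) if a * b < 0.0)
    return crossings // 2


# Smooth test profile: two full periods of a sine wave sampled at 100 Hz.
dt = 0.01
profile = [math.sin(i * dt) for i in range(int(4 * math.pi / dt) + 1)]
n_sub = count_submovements(profile, dt)
```

The third derivative of this test profile changes sign four times, so the sketch counts two pairs. A grasp that is corrected repeatedly during closure would produce more sign changes and therefore a higher count.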

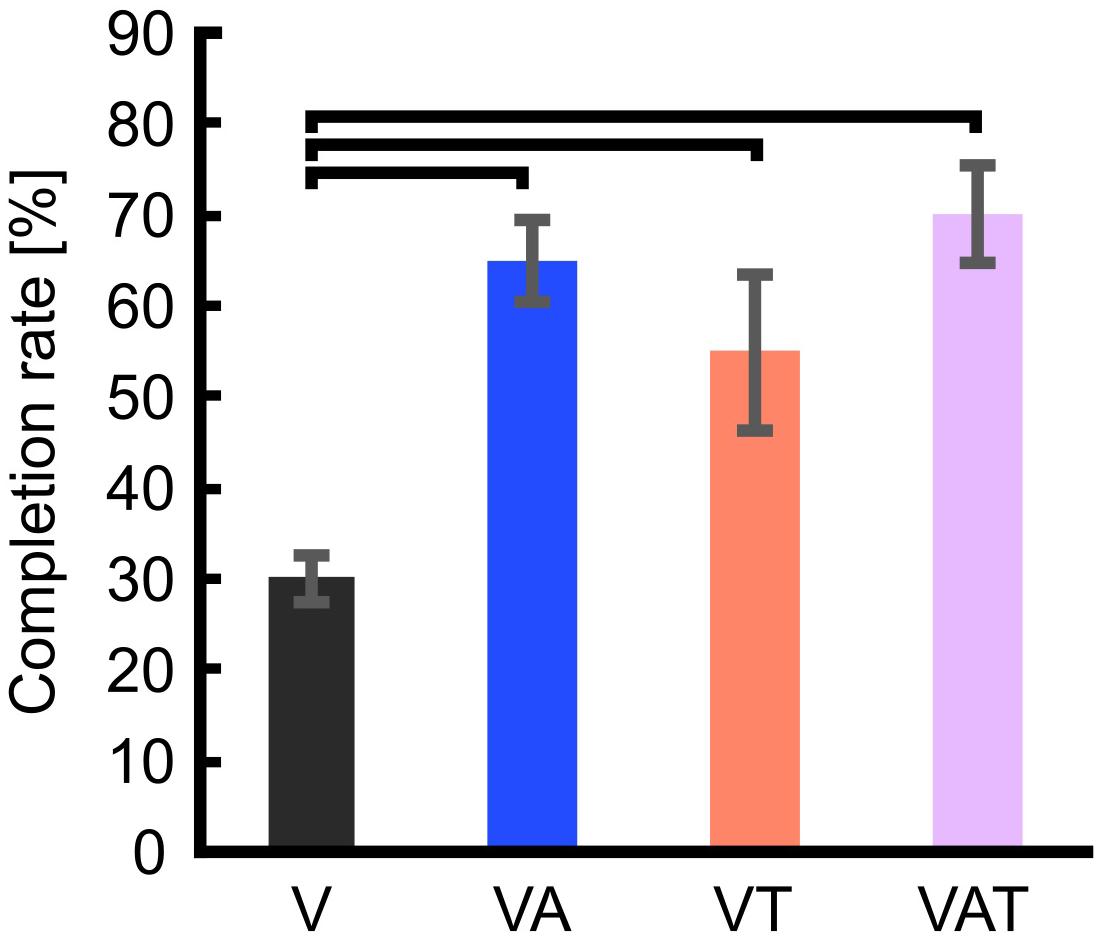

Finally, the completion rate (defined as the proportion of successful transfers) and the mean completion time (the average time needed to successfully transfer the object) were computed from the second repetition of the functional test, akin to our previous studies (Clemente et al., 2016).

We tested all parameters for homogeneity of variances by using Levene’s test in SPSS (IBM Corp., United States). If data variances were homogeneous, one-way ANOVAs were used to assess differences among metrics for the feedback types tested. If statistical significance was found, a Bonferroni post hoc test was performed. However, if data variances were found to be non-homogeneous, a robust Welch ANOVA was used instead, followed by post hoc analysis using the Games-Howell test. The confidence interval was calculated using the standard deviation (95% CI = mean ± 1.96 × SD).
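For readers without SPSS, the Welch statistic and the interval above can be sketched with the Python standard library. The code uses the textbook Welch formula and stops at the F statistic and its degrees of freedom; obtaining a p-value would additionally require the F distribution from a statistics package. Function names and the example data are hypothetical.

```python
from statistics import mean, stdev, variance


def welch_anova(groups):
    """Welch's one-way ANOVA for groups with unequal variances.

    Returns (F, df1, df2) using the standard Welch formulas.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    weights = [n / variance(g) for n, g in zip(sizes, groups)]
    w_sum = sum(weights)
    grand_mean = sum(w * m for w, m in zip(weights, means)) / w_sum
    lam = sum((1.0 - w / w_sum) ** 2 / (n - 1) for w, n in zip(weights, sizes))
    numerator = sum(w * (m - grand_mean) ** 2 for w, m in zip(weights, means)) / (k - 1)
    denominator = 1.0 + 2.0 * (k - 2) * lam / (k ** 2 - 1)
    return numerator / denominator, k - 1, (k ** 2 - 1) / (3.0 * lam)


def interval_95(values):
    """Mean +/- 1.96 x SD, as defined in the text."""
    m, sd = mean(values), stdev(values)
    return m - 1.96 * sd, m + 1.96 * sd


# Illustrative numbers only, not study data.
f_stat, df1, df2 = welch_anova([[1.0, 2.0, 3.0], [2.0, 3.0, 4.0]])
low, high = interval_95([1.0, 2.0, 3.0])
```

With two groups the Welch F reduces to the square of Welch's t statistic, which gives a quick sanity check on the implementation.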

The internal model uncertainty, Pparam, proved significantly lower with the audio augmented feedback (VA) compared to all other conditions [robust Welch ANOVA (F (3, 24) = 8.6, p = 0.006) and Games-Howell post hoc tests p < 0.05] (Figure 3A). No other statistical differences were observed. In contrast to our expectations, with VAT, Pparam (2.0 ± 0.45) was larger than with VA (0.22 ± 0.11) and VT (1.4 ± 0.4), and not statistically different from V (2.14 ± 0.64). In other words, adding the tactile component to the audio biofeedback not only produced lower confidence in the internal model than either component (VA and VT) individually, but degraded it to the level of visual feedback alone.

The JND was 67 ± 7.2 degrees for V, 44.6 ± 3.9 degrees for VA, 78.6 ± 2.5 degrees for VT, and 70.6 ± 5.4 degrees for VAT. Its trend matched that of Pparam. Indeed, it was lowest for the VA condition (one-way ANOVA with Bonferroni post hoc tests, p < 0.05), while no other statistical differences were found among the conditions (Figure 3B).

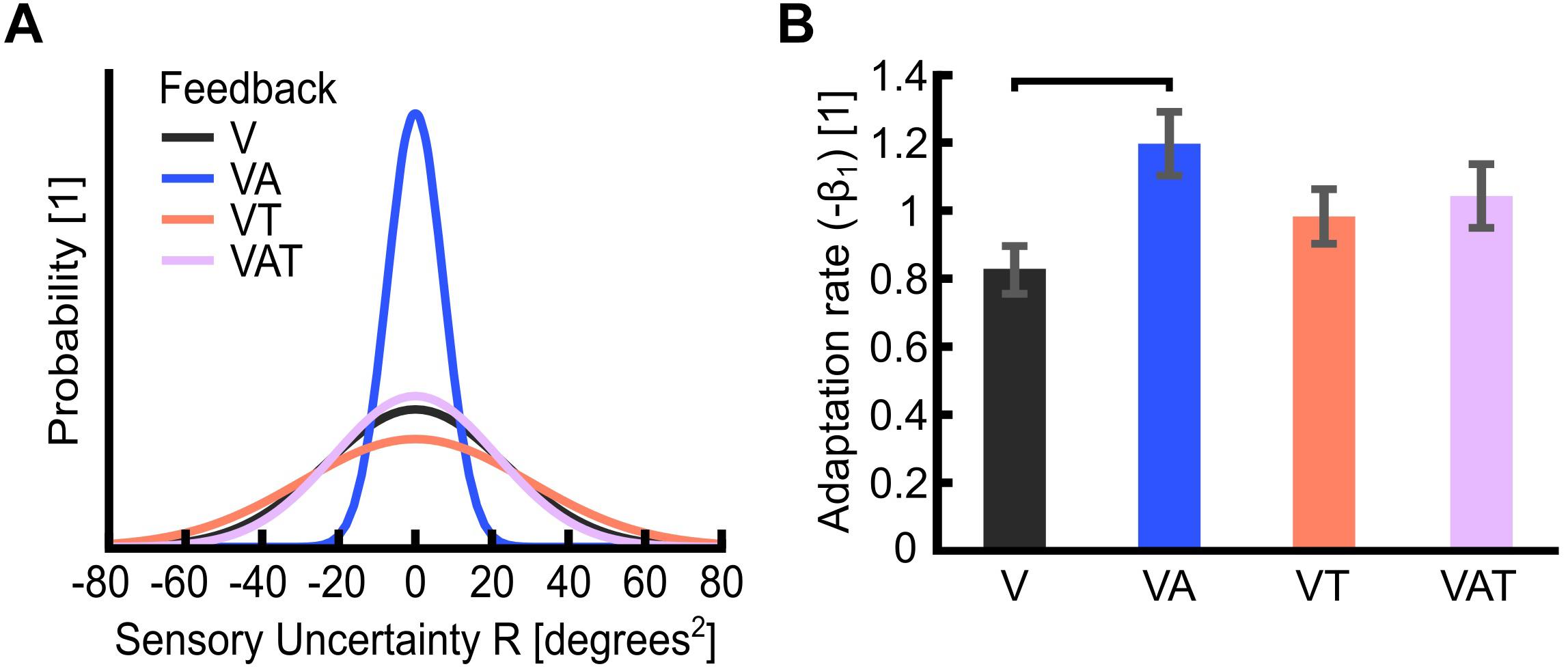

Akin to Pparam and JND, the sensory uncertainty (R) was lowest under VA and largest under VT (Figure 4A).

Figure 4. (A) Sensory uncertainty, R. The graph is a visualization of the variance of each feedback strategy in the probability curve. It displays Gaussian curves constructed with a variance of R and an arbitrary area under the curve of 1 unit. Visual-plus-audio biofeedback (VA) shows the narrowest variance, i.e., the lowest uncertainty. Conversely, visual-plus-tactile (VT) shows the widest variance, i.e., the highest uncertainty. (B) Adaptation rate (−β1). The adaptation rate describes the extent to which participants adapted to the self-generated error, i.e., how well they could optimize their control from one trial to the next. Horizontal bars denote p < 0.05. Error bars show the standard error of the mean for each group.

The adaptation rate was 0.82 ± 0.1 for V, 1.2 ± 0.1 for VA, 0.98 ± 0.1 for VT and 1.03 ± 0.1 for VAT. These outcomes indicated that, when using the VA feedback, participants adapted more than when using the other types, although this difference was statistically significant only in comparison to V (Figure 4B; Shehata et al., 2018a). No other statistical differences could be observed across conditions. It is worth noting that the controller noise Q extracted from this test was consistent across all conditions (<20%; not shown). This means that any variability in R is an effect of the sensory feedback, not of the variability of Q.

Altogether, the psychophysical metrics all indicated VA as the condition yielding the strongest internal model, as assessed by a lower internal model uncertainty (Pparam), a lower JND and sensory uncertainty, and a higher adaptation rate.

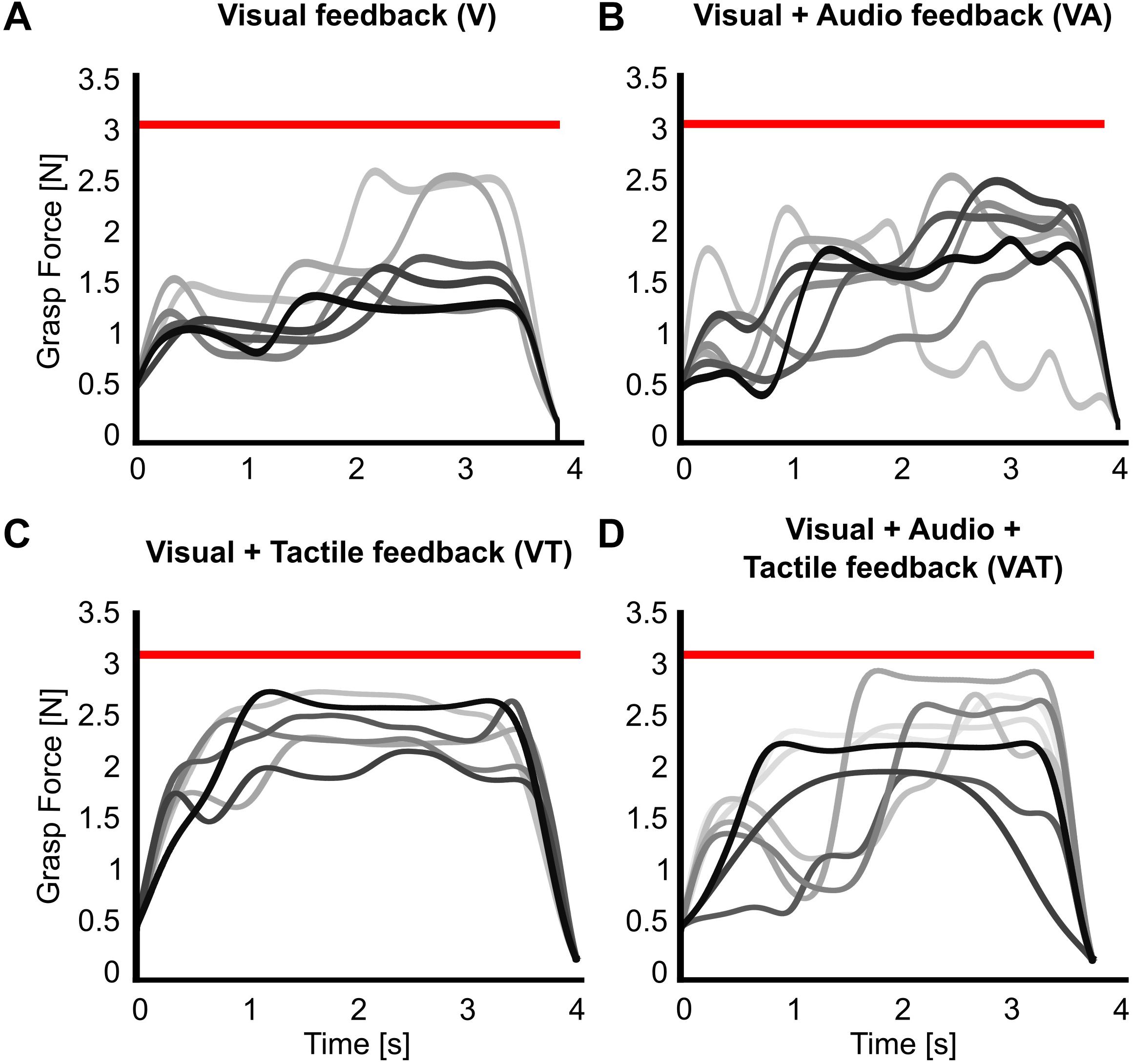

The analysis of the sub-movements highlighted a statistically significant difference across conditions (one-way ANOVA; p = 0.001) (Figure 5). Participants using VA adjusted their control signals significantly more than participants using the other feedback types (Bonferroni post hoc tests, p < 0.05). In other words, the participants tended to use the audio biofeedback in real time, in order to modulate their grasp force to prevent breaking the object. Conversely, participants using VT performed significantly fewer sub-movements than participants using the audio biofeedback (VAT and VA) (Bonferroni post hoc tests, p < 0.05). No other statistical differences were observed. These general behaviors were captured by the time series of the grasp forces during the adaptation rate test (Figure 6). The evolution of the grasp force profiles under the different conditions suggests that, in the VAT, the participants used the audio biofeedback in the initial trials (light gray traces in Figure 6), and the discrete tactile feedback in the later trials (dark gray traces).

Figure 5. Trial sub-movements. The graph shows significantly more sub-movements with VA than with all other feedback types. VAT led to significantly more sub-movements than VT. VT and V are not statistically different. Horizontal bars denote p < 0.05. Error bars show the standard error of the mean for each group.

Figure 6. Grasp force profiles. Representative grasp force profiles from individual trials during the adaptation rate test for all feedback types. A participant using (A) only visual feedback had lower variance in their grasping patterns over trials, (B) visual-plus-audio adjusted their grasping force throughout the trials and had higher variance in their grasping patterns, (C) visual-plus-tactile had automated grasping patterns with very low variance, and (D) visual-plus-audio-plus-tactile seemed to have a highly variable grasping pattern in the initial trials but a more automated grasping pattern in the final trials. Earlier trials are in lighter, later trials in darker shades of gray. The red horizontal bar indicates the breaking threshold. Incomplete trials in which the iVE was broken are not shown.

Regarding the functional test, the completion rate with visual feedback only (V) proved significantly worse than with VA, VT, and VAT (one-way ANOVA; Bonferroni post hoc tests, p < 0.05). Further, there may be a slight trend toward more successful transfers with VAT (70 ± 5.4) compared to VT (55 ± 8.5) and VA (65 ± 4.6), but it did not reach significance (p = 0.3 and p = 0.6, respectively) (Figure 7).

Figure 7. Completion rate. The figure shows that the percentage of successful transfers (i.e., the iVE was not broken) was significantly higher when the participants received any kind of augmented feedback compared to visual alone. Horizontal bars denote p < 0.05. Error bars show the standard error of the mean for each group.

The mean completion time was not affected by the different feedback types and was 8.4 ± 0.65 s for V, 8.3 ± 0.74 s for VA, 8.6 ± 0.32 s for VT, and 8.4 ± 0.34 s for VAT.

Many researchers have explored ways of providing hand prostheses with supplementary sensory feedback, showing that it could indeed improve the performance in functional tasks. Yet, very little consideration has been given to the causes underpinning improved performance; in particular, it is still unknown how feedback contributes and combines to build strong internal models of the myoelectric control system. Here, we hypothesized that increasing the sensory information provided to a myoelectric hand user could result in a stronger internal model and better performance in a functional task. Hence, we combined continuous audio biofeedback with event-based vibratory tactile feedback in a myocontrolled prosthetic hand. Furthermore, we also explored the complex interactions between different feedback types (i.e., continuous visual and audio, and discrete tactile feedback) and their effects on the internal model strength.

Audio biofeedback provided continuous information about intensity of the control signal but not about the actual grasp, whereas time-discrete tactile feedback exclusively conveyed precise information about the interactions between the robotic hand and the environment. According to Johansson and colleagues (reviewed in Johansson and Flanagan, 2009), these interactions are processed and signaled to the nervous system through discrete sensory events and are crucial for developing efficient and natural feed-forward grasping in humans.

In this study we confirmed our previous findings (Shehata et al., 2018a): adding complementary audio biofeedback to visual feedback enables the development of a stronger internal model for controlling a myoelectric hand, as assessed by all psychophysical metrics (Pparam, JND, R, and −β1). The fact that the VA feedback yielded a lower sensory uncertainty (or variance) than V (cf. Figure 4A) suggests that the audio component dominates the integrated visual-audio percept, according to the maximum-likelihood estimate theory (Ernst and Banks, 2002). In other words, when the visual input is complemented with a coherent audio biofeedback, participants would likely rely more on the latter to execute the motor task. This is in agreement with current understanding of human sensorimotor control: the nervous system can never be completely certain about the relevance of visual information, as it provides only indirect information about the motor task and the interactions with the environment (Johansson and Flanagan, 2009; Wei and Kording, 2009). Whether these results are due to the modality of the biofeedback (i.e., audio) or to the nature of the biofeedback itself (i.e., the sensory input which closely matches the intended motor output) remains to be assessed. Interestingly, our results align with those of Dosen et al. (2015a), who conveyed EMG biofeedback using a visual interface.
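For two independent cues with Gaussian noise, the maximum-likelihood estimate weights each cue by its inverse variance. The standard form of that model is written out here for reference; the symbols (single-cue estimates and variances for vision, V, and audio, A) are introduced for illustration and are not the metrics measured in this study.

```latex
\hat{s}_{VA} = w_V \hat{s}_V + w_A \hat{s}_A, \qquad
w_V = \frac{1/\sigma_V^2}{1/\sigma_V^2 + 1/\sigma_A^2}, \qquad
w_A = 1 - w_V, \qquad
\sigma_{VA}^2 = \frac{\sigma_V^2 \, \sigma_A^2}{\sigma_V^2 + \sigma_A^2}
\le \min\left(\sigma_V^2, \sigma_A^2\right)
```

Under this model the combined variance is never larger than that of either cue, and the cue with the smaller variance receives the larger weight. This is the sense in which a low-variance audio cue would dominate the integrated visual-audio percept.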

The reconstructed internal model did not further improve when another piece of redundant information – this time about the touch event – was added to visual and audio biofeedback. In fact, the VAT condition yielded significantly worse psychophysical metrics compared to VA, showing results closer to the basic condition V (and also to VT). These results – if the psychophysical metrics are a truthful and complete description of the internal model – indicate that adding sensory information, albeit consistent with the already available information, may not necessarily enhance the formation of the internal model in the short term. In fact, it could even degrade it. A possible explanation for this is given by the causal inference hypothesis (Knill, 2003; Ernst, 2006; Körding et al., 2007), which posits that the nervous system interprets cues in terms of their causes. When the cues are very different from one another in space and time, the nervous system will infer that they are not related and thus should be processed separately. The visual and audio cues were indeed caused by the same process (i.e., the control input) while the tactile cues were due to the interaction of the robot hand with the environment (the control input is also causal of touch but through a transformation that involves extrinsic factors as well).

When combined in the VAT condition, the tactile component apparently dominated the integrated percept, as indicated by the sub-movements and grasp force profiles (Figures 5, 6) and also shown by the clear degradation of the psychophysical metrics. This suggests that, when combined and during grasping, (extrinsic) tactile sensory cues are more relevant to the central nervous system than (intrinsic) biofeedback cues – at least in the time frame explored. It is interesting to observe that this degradation was not immediate, as the tactile feedback only became predominant after several trials (Figure 6). This could mean that, when both types are present, audio biofeedback may be easier to pick up in the initial phases – perhaps because it is very informative and closely matches the motor output – whereas it becomes less relevant in the later stages – possibly because it is more cognitively taxing compared to the tactile input. This argument would be supported by the literature on motor adaptation (Wei and Kording, 2009) and sensorimotor control of dexterous manipulation tasks (Johansson and Flanagan, 2009). Another possible reason for favoring the continuous feedback in the initial phases is related to how people expect to receive information about the grasp based on their top-down knowledge of the interactions of the body with the environment: in nature these interactions are continuous (although they may be processed differently by the nervous system). However, why and how the tactile input corrupted the psychophysical metrics (instead of enhancing them) remains unclear so far. Future tests could investigate whether the internal model is updated more efficiently with audio biofeedback than with tactile feedback after disturbances, for example by doing a pick-and-lift task with unexpectedly changing object weight (Jenmalm et al., 2006).

The degradation of the psychophysical metrics with VAT is, nevertheless, interesting, as one would expect the tactile feedback to barely interfere in such tests, contrary to what we observed. The JND tested how well the participants could perceive discrepancies in the control input. Here, audio biofeedback provided a lot of relevant information and visual feedback provided some (because the hand was not always and not completely under visual control), but tactile feedback only notified the participants about touching the object (which was expected to be meaningless in the JND). In the adaptation rate test – where the task was to grasp and lift the object – tactile feedback conveyed some more information about the final result of the control, i.e., a successful or unsuccessful grasp. In fact, in the adaptation rate test, tactile information (VT and VAT) yielded optimal results (−β1 close to 1) whereas with only audio feedback (VA) participants overcompensated (−β1 > 1). While the near-perfect adaptation with VT and VAT may be due to the saliency of the time-discrete sensory feedback policy (Johansson and Cole, 1992; Johansson and Edin, 1993; Johansson and Flanagan, 2009), we argue that audio feedback alone – being a reliable and continuous sensory input coherent with vision – induced the participants to adapt continuously. However, it is still unclear whether this difference between VA and VT/VAT (and V and VT/VAT) is meaningful.
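The interpretation of −β1 can be illustrated with a minimal sketch. It assumes, for illustration only, that the adaptation rate is the negated slope of a linear regression of the trial-to-trial change in the control signal on the error of the preceding trial; the simulated learner, the disturbance values, and the function names are hypothetical and do not reproduce our analysis pipeline.

```python
def simulate_trials(rate, disturbances, target=1.0, start=0.0):
    """Hypothetical learner that corrects a fraction `rate` of each error."""
    command = start
    errors, changes = [], []
    for disturbance in disturbances:
        error = (command + disturbance) - target  # signed error on this trial
        next_command = command - rate * error     # correction for the next trial
        errors.append(error)
        changes.append(next_command - command)
        command = next_command
    return errors, changes


def adaptation_rate(errors, changes):
    """Return -beta1 from the least-squares fit change = beta0 + beta1 * error."""
    n = len(errors)
    mean_error = sum(errors) / n
    mean_change = sum(changes) / n
    sxy = sum((e - mean_error) * (c - mean_change)
              for e, c in zip(errors, changes))
    sxx = sum((e - mean_error) ** 2 for e in errors)
    return -sxy / sxx


# Hypothetical trial-by-trial disturbances (arbitrary units).
disturbances = [0.2, -0.1, 0.3, 0.0, -0.2, 0.1]
rate_full = adaptation_rate(*simulate_trials(1.0, disturbances))
rate_over = adaptation_rate(*simulate_trials(1.3, disturbances))
```

In this sketch, a learner that fully corrects each error yields −β1 = 1, whereas one that corrects by more than the observed error yields −β1 > 1, which corresponds to the overcompensation described for VA.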

The results from the functional test were complementary to those from the psychophysical tests. We found that all kinds of augmented feedback (VA, VAT, and VT) enabled users to perform significantly better than with vision alone (Figure 7). It was expected that VT would allow for better performance than V alone (Clemente et al., 2016). Further, this result supports the view that continuous audio biofeedback can enhance motor control of a myoelectric prosthesis [in agreement with the work of Dosen et al. (2015a) and our previous study (Shehata et al., 2018a)]. However, it also reveals a significant deviation from the results of the other tests. Indeed, while participants with VT or VAT integrated the sensory input and exhibited a predictive control behavior, participants with VA used it for continuously regulating their grip force in real time, in a closed-loop manner (as seen in the data from the adaptation test in Figures 5, 6B). We believe this was due to the nature of the feedback: the time-discrete sensory cues could only be used by participants as checkpoints for the motor task (Johansson and Edin, 1993; Clemente et al., 2016), whereas the audio biofeedback – as discussed above – induced the participants to use it constantly, even when the grasp was successful. Both approaches seem to be equally adequate to improve grasping performance with a prosthetic hand.

During object manipulation, the brain uses sensory predictions and afferent signals to adapt the motor output to the physical properties of the manipulated object, as well as to monitor and update task performance (Johansson and Flanagan, 2009). In this way, humans can predict and use an adequate level of grip force required to lift an object by producing highly coordinated grasping and lifting forces and correcting their actions in the case of unexpected events (e.g., object slip or incorrectly predicted weight). Sensory feedback plays a crucial role in building and keeping the motor control repertoire updated. However, neural delays make continuous closed-loop control of dynamic motor behaviors impractical at frequencies above 1 Hz (Hogan et al., 1987; Johansson and Edin, 1993). Hence, natural grasping largely involves predictive feedforward rather than closed-loop (servo control) mechanisms. With this in mind, and considering that VAT and VT yielded a successful predictive control behavior in the functional test (a sign of a mature internal model), we suspect that the psychophysical tests used may not capture all the facets of the internal model. In particular, they may not be capable of properly assessing the contribution of touch-related sensory information, or, alternatively, the discrete tactile feedback may have masked the measurements.

Written informed consent according to the University of New Brunswick Research and Ethics Board and the Scuola Superiore Sant’Anna Ethical Committee was obtained from all participants before conducting the experiments (UNB REB 2014-019 and SSSA 02/2017). This work is not a clinical trial. All experiments were conducted in a lab and not in a clinic.

All authors took part in conceiving and designing the study, discussed the results, contributed to manuscript revision, and read and approved the submitted version. AS and LE conducted the study and wrote the manuscript. AS analyzed the data.

This work was supported by the Natural Sciences and Engineering Research Council of Canada (NSERC), the New Brunswick Health Research Foundation (NBHRF), and the European Commission (DeTOP – #687905). The work by CC was also funded by the European Research Council (MYKI – #679820).

CC is co-founder and holds shares of Prensilia S.r.l., which manufactures and sells the IH2 Azzurra hand used in this study.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors thank Francesco Clemente and Michele Bacchereti for their maintenance of the IH2 Azzurra Hand and the iVE.

DESC, discrete event-driven sensory control; DoF, degree of freedom; EMG, electromyography/electromyographic; iVE, instrumented virtual egg; V, visual feedback; VA, visual + audio biofeedback; VAT, visual + audio + tactile feedback; VT, visual + tactile feedback.

Aboseria, M., Clemente, F., Engels, L. F., and Cipriani, C. (2018). Discrete vibro-tactile feedback prevents object slippage in hand prostheses more intuitively than other modalities. IEEE Trans. Neural Syst. Rehabil. Eng. 26, 1577–1584. doi: 10.1109/TNSRE.2018.2851617

Antfolk, C., D’Alonzo, M., Rosén, B., Lundborg, G., Sebelius, F., and Cipriani, C. (2013). Sensory feedback in upper limb prosthetics. Expert Rev. Med. Devices 10, 45–54. doi: 10.1586/erd.12.68

Barone, D., D’Alonzo, M., Controzzi, M., Clemente, F., and Cipriani, C. (2017). A cosmetic prosthetic digit with bioinspired embedded touch feedback. IEEE Int. Conf. Rehabil. Robot 2017, 1136–1141. doi: 10.1109/ICORR.2017.8009402

Bastian, A. J. (2008). Understanding sensorimotor adaptation and learning for rehabilitation. Curr. Opin. Neurol. 21, 628–633. doi: 10.1097/WCO.0b013e328315a293

Chatterjee, A., Chaubey, P., Martin, J., and Thakor, N. (2008). Testing a prosthetic haptic feedback simulator with an interactive force matching task. J. Prosthetics Orthot. 20, 27–34. doi: 10.1097/01.JPO.0000311041.61628.be

Childress, D. S. (1980). Closed-loop control in prosthetic systems: historical perspective. Ann. Biomed. Eng. 8, 293–303. doi: 10.1007/BF02363433

Cipriani, C., Segil, J. L., Clemente, F., Weir, R. F., and Edin, B. (2014). Humans can integrate feedback of discrete events in their sensorimotor control of a robotic hand. Exp. Brain Res. 232, 3421–3429. doi: 10.1007/s00221-014-4024-8

Clemente, F., D’Alonzo, M., Controzzi, M., Edin, B. B., and Cipriani, C. (2016). Non-invasive, temporally discrete feedback of object contact and release improves grasp control of closed-loop myoelectric transradial prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 24, 1314–1322. doi: 10.1109/tnsre.2015.2500586

Controzzi, M., Clemente, F., Pierotti, N., Bacchereti, M., Cipriani, C., Superiore, S., et al. (2017). “Evaluation of hand function trasporting fragile objects: the virtual eggs test,” in Myoelectric Control Symposium, eds W. Hill and J. Sensinger (New Brunswick, CA: University of Salford).

Crea, S., Cipriani, C., Donati, M., Carrozza, M. C., and Vitiello, N. (2015). Providing time-discrete gait information by wearable feedback apparatus for lower-limb amputees: usability and functional validation. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 250–257. doi: 10.1109/TNSRE.2014.2365548

Dipietro, L., Krebs, H. I., Fasoli, S. E., Volpe, B. T., and Hogan, N. (2009). Submovement changes characterize generalization of motor recovery after stroke. Cortex 45, 318–324. doi: 10.1016/j.cortex.2008.02.008

Doeringer, J. A., and Hogan, N. (1998). Intermittency in preplanned elbow movements persists in the absence of visual feedback. J. Neurophysiol. 80, 1787–1799. doi: 10.1152/jn.1998.80.4.1787

Dosen, S., Markovic, M., Somer, K., Graimann, B., and Farina, D. (2015a). EMG biofeedback for online predictive control of grasping force in a myoelectric prosthesis. J. Neuroeng. Rehabil. 12:55. doi: 10.1186/s12984-015-0047-z

Dosen, S., Markovic, M., Wille, N., Henkel, M., Koppe, M., Ninu, A., et al. (2015b). Building an internal model of a myoelectric prosthesis via closed-loop control for consistent and routine grasping. Exp. Brain Res. 233, 1855–1865. doi: 10.1007/s00221-015-4257-1

Dosen, S., Markovic, M., Strbac, M., Perovic, M., Kojic, V., Bijelic, G., et al. (2016). Multichannel electrotactile feedback with spatial and mixed coding for closed-loop control of grasping force in hand prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 4320, 1–1. doi: 10.1109/TNSRE.2016.2550864

Elliott, D., and Allard, F. (1985). The utilization of visual feedback information during rapid pointing movements. Q. J. Exp. Psychol. 37A, 407–425. doi: 10.1080/14640748508400942

Engdahl, S. M., Christie, B. P., Kelly, B., Davis, A., Chestek, C. A., and Gates, D. H. (2015). Surveying the interest of individuals with upper limb loss in novel prosthetic control techniques. J. Neuroeng. Rehabil. 12:53. doi: 10.1186/s12984-015-0044-2

Ernst, M. O. (2006). “A Bayesian view on multimodal cue integration,” in Human Body Perception from the Inside Out, eds G. Knoblich, I. Thornton, M. Grosjean, and M. Shiffrar (New York, NY: Oxford University Press), 105–131.

Ernst, M. O., and Banks, M. S. (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429–433. doi: 10.1038/415429a

Fishbach, A., Roy, S. A., Bastianen, C., Miller, L. E., and Houk, J. C. (2007). Deciding when and how to correct a movement: discrete submovements as a decision making process. Exp. Brain Res. 177, 45–63. doi: 10.1007/s00221-006-0652-y

Geethanjali, P. (2016). Myoelectric control of prosthetic hands: state-of-the-art review. Med. Devices Evid. Res. 9, 247–255. doi: 10.2147/MDER.S91102

Giggins, O. M., Persson, U. M. C., and Caulfield, B. (2013). Biofeedback in rehabilitation. J. Neuroeng. Rehabil. 10, 6–19. doi: 10.1186/1743-0003-10-60

Graczyk, E. L., Schiefer, M. A., Saal, H. P., Delaye, B. P., Bensmaia, S. J., and Tyler, D. J. (2016). The neural basis of perceived intensity in natural and artificial touch. Sci. Transl. Med. 142, 1–11. doi: 10.1126/scitranslmed.aaf5187

Hermsdörfer, J., Elias, Z., Cole, J. D., Quaney, B. M., and Nowak, D. A. (2008). Preserved and impaired aspects of feed-forward grip force control after chronic somatosensory deafferentation. Neurorehabil. Neural Repair 22, 374–384. doi: 10.1177/1545968307311103

Hogan, N., Bizzi, E., Mussa-Ivaldi, F. A., and Flash, T. (1987). Controlling multijoint motor behavior. Exerc. Sport Sci. Rev. 15, 153–190.

Imamizu, H., Tamada, T., Yoshioka, T., and Pu, B. (2000). Human cerebellar activity reflecting an acquired internal model of a new tool. Nature 403, 192–195. doi: 10.1038/35003194

Jenmalm, P., Schmitz, C., Forssberg, H., and Ehrsson, H. H. (2006). Lighter or heavier than predicted: neural correlates of corrective mechanisms during erroneously programmed lifts. J. Neurosci. 26, 9015–9021. doi: 10.1523/jneurosci.5045-05.2006

Johansson, R. S., and Cole, K. J. (1992). Sensory-motor coordination during grasping and manipulative actions. Curr. Opin. Neurobiol. 2, 815–823. doi: 10.1016/0959-4388(92)90139-C

Johansson, R. S., and Edin, B. B. (1993). Predictive feed-forward sensory control during grasping and manipulation in man. Biomed. Res. 14, 95–106.

Johansson, R. S., and Flanagan, J. R. (2009). Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat. Rev. Neurosci. 10, 345–359. doi: 10.1038/nrn2621

Johnson, R. E., Kording, K. P., Hargrove, L. J., and Sensinger, J. W. (2017). Adaptation to random and systematic errors: comparison of amputee and non-amputee control interfaces with varying levels of process noise. PLoS One 12:e0170473. doi: 10.1371/journal.pone.0170473

Kawato, M. (1999). Internal models for motor control and trajectory planning. Curr. Opin. Neurobiol. 9, 718–727. doi: 10.1016/s0959-4388(99)00028-8

Knill, D. C. (2003). Mixture models and the probabilistic structure of depth cues. Vision Res. 43, 831–854. doi: 10.1016/S0042-6989(03)00003-8

Körding, K. P., Beierholm, U., Ma, W. J., Quartz, S., Tenenbaum, J. B., and Shams, L. (2007). Causal inference in multisensory perception. PLoS One 2:e943. doi: 10.1371/journal.pone.0000943

Kositsky, M., and Barto, A. G. (2001). The emergence of multiple movement units in the presence of noise and feedback delay. Adv. Neural Inf. Process. Syst. 14, 43–50.

Lum, P. S., Black, I., Holley, R. J., Barth, J., and Dromerick, A. W. (2014). Internal models of upper limb prosthesis users when grasping and lifting a fragile object with their prosthetic limb. Exp. Brain Res. 232, 3785–3795. doi: 10.1007/s00221-014-4071-1

Markovic, M., Karnal, H., Graimann, B., Farina, D., and Dosen, S. (2017). GLIMPSE: google glass interface for sensory feedback in myoelectric hand prostheses. J. Neural Eng. 14:036007. doi: 10.1088/1741-2552/aa620a

Markovic, M., Schweisfurth, M. A., Engels, L. F., Bentz, T., Wüstefeld, D., Farina, D., et al. (2018a). The clinical relevance of advanced artificial feedback in the control of a multi-functional myoelectric prosthesis. J. Neuroeng. Rehabil. 15:28. doi: 10.1186/s12984-018-0371-1

Markovic, M., Schweisfurth, M. A., Engels, L. F., Farina, D., and Dosen, S. (2018b). Myocontrol is closed-loop control: incidental feedback is sufficient for scaling the prosthesis force in routine grasping. J. Neuroeng. Rehabil. 15:81. doi: 10.1186/s12984-018-0422-7

Mathiowetz, V., Volland, G., Kashman, N., and Weber, K. (1985). Adult norms for the box and block test of manual dexterity. Am. J. Occup. Ther. 39, 386–391. doi: 10.5014/ajot.39.6.386

Ninu, A., Dosen, S., Muceli, S., Rattay, F., Dietl, H., and Farina, D. (2014). Closed-loop control of grasping with a myoelectric hand prosthesis: which are the relevant feedback variables for force control? IEEE Trans. Neural Syst. Rehabil. Eng. 22, 1041–1052. doi: 10.1109/TNSRE.2014.2318431

Ortiz-Catalan, M., Mastinu, E., Brånemark, R., and Håkansson, B. (2017). Direct neural sensory feedback and control via osseointegration. XVI World Congr. Int. Soc. Prosthetics Orthot. 11, 1–2.

Raspopovic, S., Capogrosso, M., Petrini, F. M., Bonizzato, M., Rigosa, J., Di Pino, G., et al. (2014). Restoring natural sensory feedback in real-time bidirectional hand prostheses. Sci. Transl. Med. 6:222ra19. doi: 10.1126/scitranslmed.3006820

Riso, R. R. (1999). Strategies for providing upper extremity amputees with tactile and hand position feedback – moving closer to the bionic arm. Technol. Heal. Care 7, 401–409. doi: 10.1080/09640560701402075

Saunders, I., and Vijayakumar, S. (2011). The role of feed-forward and feedback processes for closed-loop prosthesis control. J. Neuroeng. Rehabil. 8:60. doi: 10.1186/1743-0003-8-60

Shehata, A. W., Engels, L. F., Controzzi, M., Cipriani, C., Scheme, E. J., and Sensinger, J. W. (2018a). Improving internal model strength and performance of prosthetic hands using augmented feedback. J. Neuroeng. Rehabil. 15:70. doi: 10.1186/s12984-018-0417-4

Shehata, A. W., Scheme, E. J., and Sensinger, J. W. (2018b). Audible feedback improves internal model strength and performance of myoelectric prosthesis control. Sci. Rep. 8:8541. doi: 10.1038/s41598-018-26810-w

Shehata, A. W., Scheme, E. J., and Sensinger, J. W. (2018c). Evaluating internal model strength and performance of myoelectric prosthesis control strategies. IEEE Trans. Neural Syst. Rehabil. Eng. 26, 1046–1055. doi: 10.1109/TNSRE.2018.2826981

Shehata, A. W., Scheme, E. J., and Sensinger, J. W. (2017). The effect of myoelectric prosthesis control strategies and feedback level on adaptation rate for a target acquisition task. Int. Conf. Rehabil. Robot 2017, 200–204. doi: 10.1109/ICORR.2017.8009246

Simpson, D. C. (1973). “The control and supply of a multimovement externally powered upper limb prosthesis,” in Proceedings of the 4th International Symposium on External Control of Human Extremities, (Dubrovnik), 247–254.

Tan, D. W., Schiefer, M. A., Keith, M. W., Anderson, J. R., Tyler, J., and Tyler, D. J. (2014). A neural interface provides long-term stable natural touch perception. Sci. Transl. Med. 6:257ra138. doi: 10.1126/scitranslmed.3008669

Keywords: internal model, prosthetics, sensory feedback, psychophysics, motor learning, electromyography

Citation: Engels LF, Shehata AW, Scheme EJ, Sensinger JW and Cipriani C (2019) When Less Is More – Discrete Tactile Feedback Dominates Continuous Audio Biofeedback in the Integrated Percept While Controlling a Myoelectric Prosthetic Hand. Front. Neurosci. 13:578. doi: 10.3389/fnins.2019.00578

Received: 05 February 2019; Accepted: 21 May 2019;

Published: 06 June 2019.

Edited by:

Andrew Joseph Fuglevand, The University of Arizona, United States

Reviewed by:

Sabato Santaniello, University of Connecticut, United States

Copyright © 2019 Engels, Shehata, Scheme, Sensinger and Cipriani. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ahmed W. Shehata

†These authors have contributed equally to this work

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
