
ORIGINAL RESEARCH article

Front. Neurosci., 20 March 2019

Sec. Brain Imaging Methods

Volume 13 - 2019 | https://doi.org/10.3389/fnins.2019.00210

This article is part of the Research Topic Artificial Intelligence for Medical Image Analysis of NeuroImaging Data

Chenxi Huang1, Ganxun Tian1, Yisha Lan1, Yonghong Peng2, E. Y. K. Ng3, Yongtao Hao1*, Yongqiang Cheng4* and Wenliang Che5*

Recent research has reported the application of image fusion technologies to medical images in a wide range of tasks, such as the diagnosis of brain diseases, the detection of glioma and the diagnosis of Alzheimer’s disease. In our study, a new fusion method based on the combination of the shuffled frog leaping algorithm (SFLA) and the pulse coupled neural network (PCNN) is proposed for the fusion of SPECT and CT images to improve the quality of fused brain images. First, the intensity component of the intensity-hue-saturation (IHS) transform of the SPECT image and the CT image are each decomposed with a non-subsampled contourlet transform (NSCT) into low-frequency and high-frequency images. We then used the combined SFLA and PCNN to fuse the high-frequency sub-band images and the low-frequency images, with the SFLA optimizing the PCNN network parameters. Finally, the fused image was produced by the inverse NSCT and inverse IHS transforms. We evaluated our algorithm using standard deviation (SD), mean gradient (Ḡ), spatial frequency (SF) and information entropy (E) on three different sets of brain images. The experimental results demonstrated the superior performance of the proposed fusion method, which improved both precision and spatial resolution.

In 1895, Röntgen obtained the first human medical image by X-ray, after which research on medical imaging gained momentum, laying the foundation for medical image fusion. With the development of medical imaging technology and hardware, a range of medical images with different characteristics and information have become available, and they are now a key source of information for disease diagnosis. At present, clinical medical images mainly include Computed Tomography (CT), Magnetic Resonance Imaging (MRI), Single-Photon Emission Computed Tomography (SPECT), Dynamic Single-Photon Emission Computed Tomography (DSPECT) and ultrasound images (Jodoin et al., 2015; Hansen et al., 2017; Zhang J. et al., 2017). Fusing medical images of different modalities into more informative images with suitable fusion algorithms provides doctors with more reliable information during clinical diagnosis (Kavitha and Chellamuthu, 2014; Zeng et al., 2014). Medical image fusion has already been applied to tasks such as the localization of brain diseases, the detection of glioma and the diagnosis of Alzheimer’s disease (AD) (Huang, 1996; Singh et al., 2015; Zeng et al., 2018).

Image fusion is the synthesis of two or more images into a new image using a specific algorithm. The process can fully exploit the spatio-temporal correlation and complementarity of the information in the source images, giving a more comprehensive representation of the scene (Wu et al., 2005; Choi, 2006). Conventional methods for fusing SPECT and CT images mainly include component substitution and multi-resolution analysis (Amolins et al., 2007; Huang and Du, 2008; Huang and Jiang, 2012). Component substitution mainly refers to the intensity-hue-saturation (IHS) transform, which has the advantage of improving the spatial resolution of SPECT images (Huang, 1999; Rahmani et al., 2010). However, the limited transform invariance of these methods makes it difficult to extract both image contours and edge details. To address this problem, the contourlet transform was introduced (Da et al., 2006; Zhao et al., 2012; Xin and Deng, 2013). The non-subsampled contourlet transform (NSCT) was subsequently proposed to fully extract the directional information of the SPECT and CT images to be fused, giving better performance in image decomposition (Da et al., 2006; Wang and Zhou, 2010; Yang et al., 2016).

The Pulse Coupled Neural Network (PCNN) was developed in the 1990s from the model of Eckhorn et al. (1989), who studied the imaging mechanisms of the visual cortex of small mammals. The PCNN requires no training process and can extract useful information from a complex background. Nevertheless, the PCNN has shortcomings, such as its numerous parameters and the complicated process of setting them. Novel algorithms for optimizing the PCNN parameters have therefore been introduced to improve the calculation speed of the PCNN (Huang, 2004; Huang et al., 2004; Jiang et al., 2014; Xiang et al., 2015). The shuffled frog leaping algorithm (SFLA) is a heuristic algorithm first presented by Eusuff and Lansey, which combines the advantages of the memetic algorithm and particle swarm optimization. It can search for optimal values in a complex space with few parameters and offers high performance and robustness (Samuel and Asir Rajan, 2015; Sapkheyli et al., 2015; Kaur and Mehta, 2017).

In our study, a new fusion approach based on the SFLA and PCNN is proposed to address the limitations discussed above. The proposed method uses SFLA optimization to learn the PCNN parameters effectively, and it produces high-quality fused images. A series of comparative experiments is discussed in terms of visual image quality and objective evaluation metrics.

The remainder of the paper is organized as follows. Related work is introduced in Section “Related Works.” The proposed fusion method is described in Section “Materials and Methods.” The experimental results are presented in Section “Result,” and Section “Conclusion” concludes the paper with an outlook on future work.

Image fusion involves a wide range of disciplines and falls under the broader category of information fusion, for which many methods have been presented. Zhang X. et al. (2017) presented a novel fusion method for multi-scale images using the Empirical Wavelet Transform (EWT). In their method, simultaneous empirical wavelet transforms (SEWT) were applied to one-dimensional and two-dimensional signals to ensure optimal wavelets for the processed signals. Experiments showed satisfying visual quality, and objective evaluations showed that the method outperformed other traditional algorithms. However, the method is time-consuming, mainly during image decomposition, which makes it difficult to apply in real-time systems. Noisy images should also be considered in future work, since noise may affect the generation of optimal wavelets (Zeng et al., 2016b; Zhang X. et al., 2017).

Aishwarya and Thangammal (2017) proposed a fusion method based on supervised dictionary learning. During dictionary training, to reduce the number of input patches, gradient information was first obtained for every patch in the training set. Second, the information content and edge strength were measured for each gradient patch. Finally, the patches with better focus features were selected by a selection rule to train the overcomplete dictionary. In the fusion stage, the globally learned dictionary was used to achieve better visual quality. Nevertheless, this approach also has high computational costs during sparse coding, and its final fusion performance may be affected by high-frequency noise (Zeng et al., 2016a; Aishwarya and Thangammal, 2017).

Moreover, Kanmani and Narasimhan introduced an algorithm for fusing thermal and visual images into a single comprehensive image. A novel method called self-tuning particle swarm optimization (STPSO) was presented to calculate the optimal weights, and a weighted averaging fusion rule was used to fuse the low- and high-frequency coefficients obtained with the Dual Tree Discrete Wavelet Transform (DT-DWT) (Kanmani and Narasimhan, 2017; Zeng et al., 2017a). Ji and Zhang proposed a new fusion algorithm based on an adaptive weighted method combined with fuzzy theory. In their algorithm, a membership function with fuzzy logic variables was designed so that coefficients at different levels are transformed with different weights. Experimental results indicated that the algorithm outperformed existing algorithms in visual quality and objective measures (Ji and Zhang, 2017; Zeng et al., 2017b).

Algorithm 1 presents an image fusion algorithm based on the PCNN and SFLA for fusing SPECT and CT images. In the proposed algorithm, the SPECT image is first decomposed into three components using the IHS transform: saturation S, hue H and intensity I. Component I is then decomposed into a low-frequency and a high-frequency image by NSCT. The CT image is likewise decomposed into a low-frequency and a high-frequency image by NSCT. The two low-frequency images are fused into a new low-frequency image using the combined SFLA and PCNN fusion rules, and the two high-frequency images are fused into a new high-frequency image in the same way. Next, the new low-frequency and high-frequency images are combined by the inverse NSCT into a new intensity image I’. Finally, the target image is obtained by applying the inverse IHS transform to the three components S, H and I’.

| Algorithm 1: An image fusion algorithm based on PCNN and SFLA |
|---|
| Input: A SPECT image A and a CT image B |
| Output: A fused image F |
| Step 1: Obtain the three components of image A using the IHS transform: saturation S, hue H and intensity I. |
| Step 2: Image decomposition |
| (1) Decompose component I of image A into a low-frequency image AL and a high-frequency image AH by NSCT. |
| (2) Decompose image B into a low-frequency image BL and a high-frequency image BH by NSCT. |
| Step 3: Image fusion |
| (1) Fuse the low-frequency images AL and BL into a new low-frequency image CL using the SFLA and PCNN combination fusion rules. |
| (2) Fuse the high-frequency images AH and BH into a new high-frequency image CH using the SFLA and PCNN combination fusion rules. |
| Step 4: Inverse transform |
| Combine the low-frequency image CL and the high-frequency image CH into a new intensity image I’ using the inverse NSCT. |
| Step 5: Inverse IHS transform |
| Integrate the three components S, H and I’ through the inverse IHS transform to obtain the target image F. |
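Steps 1 and 5 of Algorithm 1 can be illustrated with a minimal NumPy sketch of a forward and inverse IHS transform. The paper does not state which IHS variant it uses, so this assumes the common linear IHS model (intensity is the mean of R, G and B; hue and saturation come from two chromatic axes v1 and v2) and a pseudo-colour SPECT slice stored as an RGB float array. The function names `rgb_to_ihs` and `ihs_to_rgb` are hypothetical.

```python
import numpy as np

# Linear IHS model (an assumption: the paper does not name its IHS variant).
# Rows map (R, G, B) to intensity I and the two chromatic axes v1, v2.
_IHS_FWD = np.array([
    [1 / 3, 1 / 3, 1 / 3],
    [-np.sqrt(2) / 6, -np.sqrt(2) / 6, 2 * np.sqrt(2) / 6],
    [1 / np.sqrt(2), -1 / np.sqrt(2), 0.0],
])
_IHS_INV = np.linalg.inv(_IHS_FWD)


def rgb_to_ihs(rgb):
    """Step 1: split an (H, W, 3) float image into intensity I, hue H and saturation S."""
    i, v1, v2 = np.einsum("kc,hwc->khw", _IHS_FWD, rgb)
    hue = np.arctan2(v2, v1)
    sat = np.hypot(v1, v2)
    return i, hue, sat


def ihs_to_rgb(i, hue, sat):
    """Step 5: rebuild RGB from an intensity image (e.g. the fused I') and the original H, S."""
    v1 = sat * np.cos(hue)
    v2 = sat * np.sin(hue)
    ivv = np.stack([i, v1, v2], axis=-1)  # shape (H, W, 3), last axis = (I, v1, v2)
    return ivv @ _IHS_INV.T


# Usage with a random stand-in for a pseudo-colour SPECT slice.
spect = np.random.default_rng(0).random((8, 8, 3))
i, hue, sat = rgb_to_ihs(spect)
fused_i = i  # placeholder: Steps 2-4 would produce the fused intensity I' here
fused_rgb = ihs_to_rgb(fused_i, hue, sat)
```

Because only the intensity channel is replaced, the hue and saturation of the SPECT image (its functional colour coding) pass through unchanged, which is the usual motivation for IHS-based component substitution.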

The overall method of the proposed algorithm for the fusion of a SPECT and CT image is outlined in Figure 1.

In our proposed method, the intensity component of the SPECT image and the CT image are each decomposed into a low-frequency and a high-frequency image using NSCT.

The non-subsampled contourlet transform (Huang, 1999; Rahmani et al., 2010) is composed of a non-subsampled pyramid filter bank (NSPFB) and a non-subsampled directional filter bank (NSDFB). The NSPFB decomposes the source image into a low-frequency sub-band and a high-frequency sub-band, and the NSDFB then decomposes the high-frequency sub-band into directional sub-bands. The structure of a two-level NSCT decomposition is shown in Figure 2.

NSCT decomposition uses analysis filters {H₁(z), H₂(z)} and synthesis filters {G₁(z), G₂(z)} that satisfy $H_1(z)G_1(z) + H_2(z)G_2(z) = 1$. Decomposing the source image with the NSPFB yields low-frequency and high-frequency sub-band images, and each subsequent level of NSPFB decomposition is applied to the low-frequency component produced by the level above. The NSDFB likewise contains analysis filters {U₁(z), U₂(z)} and synthesis filters {V₁(z), V₂(z)} satisfying $U_1(z)V_1(z) + U_2(z)V_2(z) = 1$. Each high-pass sub-band produced by the J-level NSPFB is decomposed by an L-level NSDFB into $2^{L}$ directional sub-bands, where L is a positive integer. Because no subsampling is performed, NSCT is translation invariant, and fusion based on NSCT can produce fused images with clearer contours (Xin and Deng, 2013).
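The pyramid half of this perfect-reconstruction condition is easy to check numerically. The sketch below is an à trous (undecimated) pyramid, not the NSCT filters themselves: it assumes a separable binomial low-pass H₁ whose taps are spread apart by 2^level at each level instead of downsampling, takes H₂ = 1 − H₁ as the high-pass, and uses G₁ = G₂ = 1, so H₁G₁ + H₂G₂ = 1 holds and summing the bands recovers the input. The directional NSDFB stage is omitted, and the function names are hypothetical.

```python
import numpy as np

# Hypothetical 1-D binomial low-pass kernel; real NSCT uses specially designed 2-D filters.
_H = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0


def _smooth(img, level):
    """Separable low-pass filtering with the kernel upsampled by 2**level (no downsampling)."""
    step = 2 ** level
    kernel = np.zeros((len(_H) - 1) * step + 1)
    kernel[::step] = _H  # insert zeros between taps ("holes") instead of decimating the image
    pad = len(kernel) // 2
    out = img
    for axis in (0, 1):
        widths = [(pad, pad) if a == axis else (0, 0) for a in range(2)]
        padded = np.pad(out, widths, mode="edge")
        out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)
    return out


def nsp_decompose(img, levels):
    """Return [high_0, ..., high_{levels-1}, low]; every band keeps the input size."""
    bands = []
    low = np.asarray(img, dtype=float)
    for level in range(levels):
        smoothed = _smooth(low, level)
        bands.append(low - smoothed)  # high-pass H2 = 1 - H1
        low = smoothed                # next level works on the low-frequency component
    bands.append(low)
    return bands


def nsp_reconstruct(bands):
    """Synthesis with G1 = G2 = 1: summing all bands undoes the decomposition."""
    return np.sum(bands, axis=0)


# Usage on a random stand-in for a CT slice.
ct = np.random.default_rng(0).random((32, 32))
bands = nsp_decompose(ct, levels=2)
error = np.abs(nsp_reconstruct(bands) - ct).max()  # floating-point round-off only
```

Every band has the same size as the input, which is what makes coefficient-by-coefficient fusion rules straightforward in the non-subsampled setting.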

The fusion rules determine how the sub-band coefficients of the source images are combined, so their selection largely determines the quality of the final fused image. This section introduces the SFLA-optimized PCNN fusion algorithm applied to the low-frequency and high-frequency sub-band images obtained by NSCT.

The PCNN is a single-layer feedback neural network model that simulates the processing of visual signals in the cat visual cortex. It consists of interconnected neurons, each composed of three parts: the receptive field, the linking modulation field and the pulse generator. In PCNN-based image fusion, the M × N neurons of a two-dimensional PCNN correspond to the M × N pixels of the two-dimensional input image, and the gray value of each pixel is taken as the external stimulus of the corresponding neuron. Initially, the internal activation of each neuron equals its external stimulus, and a neuron fires when its internal activation exceeds its threshold. When a neuron fires, its threshold rises sharply and then decays exponentially over time; once the threshold falls below the internal activation, the neuron fires again, so each neuron generates a pulse sequence. Firing neurons also stimulate adjacent neurons to fire through their interconnections, so an auto-wave propagates outward from the activated region (Ge et al., 2009).
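The firing mechanism just described can be sketched with a simplified PCNN of the kind often used in fusion work, where the feeding input is simply the stimulus. This is a sketch under stated assumptions: the 3 × 3 linking kernel, the default values of `alpha_theta`, `beta` and `v_theta`, the use of absolute coefficient values as stimuli, and the firing-count fusion rule (keep the coefficient whose neuron fires more often) are illustrative choices, not settings reported in this paper. The three parameters are the ones the SFLA is used to tune.

```python
import numpy as np

# Hypothetical 3x3 linking weights (a common choice in PCNN fusion papers).
_W = np.array([[0.5, 1.0, 0.5],
               [1.0, 0.0, 1.0],
               [0.5, 1.0, 0.5]])


def _link(y):
    """Weighted sum of the 8-neighbour pulses (zero padding at the border)."""
    p = np.pad(y, 1)
    rows, cols = y.shape
    out = np.zeros(y.shape, dtype=float)
    for di in range(3):
        for dj in range(3):
            out += _W[di, dj] * p[di:di + rows, dj:dj + cols]
    return out


def pcnn_fire_counts(stimulus, alpha_theta=0.2, beta=0.2, v_theta=20.0, iterations=200):
    """Simplified PCNN: count how often each neuron fires.

    U = S * (1 + beta * L)                 internal activation (feeding = stimulus S)
    Y = [U > theta]                        pulse output, compared with the previous threshold
    theta <- exp(-alpha_theta) * theta + v_theta * Y   sharp rise on firing, then decay
    """
    s = np.asarray(stimulus, dtype=float)
    y = np.zeros_like(s)
    theta = np.zeros_like(s)
    counts = np.zeros(s.shape, dtype=int)
    for _ in range(iterations):
        u = s * (1.0 + beta * _link(y))
        y = (u > theta).astype(float)
        theta = np.exp(-alpha_theta) * theta + v_theta * y
        counts += y.astype(int)
    return counts


def fuse_by_firing(coef_a, coef_b, **pcnn_params):
    """Per coefficient, keep the one whose neuron fired more often (ties go to A)."""
    fa = pcnn_fire_counts(np.abs(coef_a), **pcnn_params)
    fb = pcnn_fire_counts(np.abs(coef_b), **pcnn_params)
    return np.where(fa >= fb, coef_a, coef_b)


# Usage: fuse two random stand-ins for NSCT high-frequency sub-bands.
rng = np.random.default_rng(0)
band_a, band_b = rng.normal(size=(16, 16)), rng.normal(size=(16, 16))
fused_band = fuse_by_firing(band_a, band_b, alpha_theta=0.2, beta=0.2, v_theta=20.0, iterations=50)
```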

The PCNN parameters affect the quality of image fusion, and most current studies set them by repeated trial and error, which is somewhat subjective. Setting the PCNN parameters appropriately is therefore key to improving its performance. In our paper, the SFLA is used to optimize the PCNN network parameters.

The shuffled frog leaping algorithm is a population-based swarm search method for finding optimal solutions. Its flowchart is shown in Figure 3. First, the population size F, the number of sub-populations m, the maximum number of local-search iterations for each sub-population N and the number of frogs in each sub-population n are defined. Second, a population is initialized, and the fitness value of each frog is calculated and sorted in descending order. The search follows a memetic scheme and is carried out within groups. All groups are then merged, and the frogs are re-sorted according to an established rule. The frog population is divided again according to the established rule, and global information exchange is achieved in this way until the maximum number of iterations N is reached (Li et al., 2018).
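The sorting-and-grouping step can be sketched in a few lines, assuming the usual SFLA convention that, after sorting by fitness in descending order, the frog with rank k (counting from 0) goes to group k mod m. The helper name `partition_memeplexes` is hypothetical.

```python
import numpy as np


def partition_memeplexes(fitness, m):
    """Sort frogs by fitness (descending) and deal them round-robin into m groups.

    Returns m arrays of frog indices; with F frogs, each group holds n = F / m frogs.
    """
    order = np.argsort(-np.asarray(fitness, dtype=float), kind="stable")  # best frog first
    return [order[k::m] for k in range(m)]


# Usage: six frogs split into m = 2 groups of n = 3.
groups = partition_memeplexes([0.1, 0.9, 0.5, 0.7, 0.3, 0.2], m=2)
print([g.tolist() for g in groups])  # [[1, 2, 5], [3, 4, 0]]
```

Dealing frogs round-robin rather than in contiguous blocks gives every group a mix of strong and weak frogs, so each group has a good local leader.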

Let F(x) be the fitness function and Ω the feasible domain. In each iteration, P_g is the best frog in the whole population, P_b is the best frog in each group and P_w is the worst frog in each group. The algorithm adopts the following update strategy for the local search in each group:

$$S_j = \mathrm{rand}() \cdot (P_b - P_w), \qquad -S_{\max} \le S_j \le S_{\max}$$

$$P_{w,\mathrm{new}} = P_w + S_j$$

where S_j is the leap of the frog, rand() is a random number between 0 and 1, S_max is the maximum leap and P_w,new is the updated worst frog of the group. If P_w,new ∈ Ω and F(P_w,new) > F(P_w), P_w is replaced by P_w,new; otherwise, P_b is replaced by P_g in the update above, giving a new leap S′_j and a new candidate P′_w,new. If P′_w,new ∈ Ω and F(P′_w,new) > F(P_w), P_w is replaced by P′_w,new; otherwise, P_w is replaced by a randomly generated new frog. The iteration continues until the maximum number of iterations is reached.
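The local-search step for one group can be sketched as follows. The sketch assumes a box-shaped feasible domain Ω = [lower, upper], that higher fitness is better (as in the condition F(P_w,new) > F(P_w)), and that the leap is clipped element-wise to [−S_max, S_max]; `update_worst_frog` is a hypothetical name.

```python
import numpy as np


def update_worst_frog(p_w, p_b, p_g, fitness, lower, upper, s_max, rng):
    """One SFLA local-search step for the worst frog P_w of a group (higher fitness is better).

    Leaps towards the group best P_b; if that fails, towards the global best P_g;
    if both fail, P_w is replaced by a random frog inside the box [lower, upper].
    """
    f_w = fitness(p_w)
    for leader in (p_b, p_g):
        s_j = np.clip(rng.random() * (leader - p_w), -s_max, s_max)  # leap S_j, bounded by S_max
        p_new = p_w + s_j                                             # candidate P_w,new
        in_omega = np.all((p_new >= lower) & (p_new <= upper))       # P_w,new in Omega?
        if in_omega and fitness(p_new) > f_w:
            return p_new
    return rng.uniform(lower, upper)  # neither leap helped: random new frog


# Usage: maximize -||x||^2 with one leap from P_w = (2, 2) towards P_b = P_g = (0, 0).
rng = np.random.default_rng(0)
origin = np.zeros(2)
new_frog = update_worst_frog(np.array([2.0, 2.0]), origin, origin,
                             lambda x: -float(np.sum(x ** 2)),
                             np.full(2, -5.0), np.full(2, 5.0), 1.0, rng)
```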

Three PCNN parameters, the threshold decay coefficient $\alpha_\theta$, the linking strength $\beta$ and the threshold amplitude $V_\theta$, are essential for the results of image fusion. Therefore, as shown in Figure 4, our study uses the SFLA to optimize the PCNN and obtain optimal values of these parameters. Each frog is defined as a candidate solution $X = (\alpha_\theta, \beta, V_\theta)$, and the optimal PCNN parameter configuration is obtained by searching for the best frog $X_b = (\alpha_\theta, \beta, V_\theta)$.

In our proposed method, the possible parameter configurations are defined first and constitute the solution space for parameter optimization. After an initial frog population is generated, the F frogs are divided into m groups that search independently of one another. Starting from the initial solution, the frogs in each group first carry out intra-group optimization by local search, continuously updating their fitness values. Over N iterations of local optimization, the quality of the whole population improves as the frogs in every group improve. The frogs of the population are then merged and regrouped according to the established rule, and local optimization within each group is repeated until the termination condition is reached. Finally, the global optimal solution of the frog population is taken as the optimal PCNN parameter configuration, and the final fused image is obtained with this configuration.
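Putting the grouping, local search and shuffling together, a compact SFLA loop over frogs X = (α_θ, β, V_θ) might look like the sketch below. The paper does not give its fitness function, parameter bounds or population settings, so `toy_fitness`, the bounds, S_max and the defaults (F = 30 frogs, m = 5 groups, N = 10 local iterations, 20 shuffles) are hypothetical placeholders. In practice the fitness would score the image fused with a given parameter set, for example with one of the evaluation metrics used in the experiments.

```python
import numpy as np


def sfla(fitness, lower, upper, n_frogs=30, m=5, local_iters=10, shuffles=20, seed=0):
    """Maximize `fitness` over the box [lower, upper] with a basic shuffled frog leaping search."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    s_max = (upper - lower) / 2.0  # hypothetical choice of the maximum leap S_max
    frogs = rng.uniform(lower, upper, size=(n_frogs, lower.size))
    scores = np.array([fitness(f) for f in frogs], dtype=float)
    for _ in range(shuffles):
        order = np.argsort(-scores)  # shuffle step: sort all frogs, best first
        frogs, scores = frogs[order], scores[order]
        p_g = frogs[0].copy()  # global best frog P_g
        for k in range(m):
            group = np.arange(k, n_frogs, m)  # round-robin deal into group k
            for _ in range(local_iters):
                b = group[np.argmax(scores[group])]  # group best P_b
                w = group[np.argmin(scores[group])]  # group worst P_w
                new, f_new = None, None
                for leader in (frogs[b].copy(), p_g):  # leap towards P_b, then P_g
                    trial = frogs[w] + np.clip(rng.random() * (leader - frogs[w]), -s_max, s_max)
                    if np.all((trial >= lower) & (trial <= upper)):
                        f_trial = fitness(trial)
                        if f_trial > scores[w]:
                            new, f_new = trial, f_trial
                            break
                if new is None:  # no improvement: replace P_w by a random frog
                    new = rng.uniform(lower, upper)
                    f_new = fitness(new)
                frogs[w], scores[w] = new, f_new
    best = int(np.argmax(scores))
    return frogs[best], scores[best]


# Hypothetical bounds for a frog X = (alpha_theta, beta, V_theta); the paper does not report them.
LOWER, UPPER = [0.01, 0.01, 1.0], [1.0, 1.0, 50.0]


def toy_fitness(x):
    """Stand-in for an image-quality score of the PCNN-fused image; peaks at (0.2, 0.3, 20)."""
    scale = np.array([1.0, 1.0, 50.0])
    return -float(np.sum(((x - np.array([0.2, 0.3, 20.0])) / scale) ** 2))


best_x, best_f = sfla(toy_fitness, LOWER, UPPER)
```

Each fitness evaluation would normally involve a full PCNN fusion, so the population size and iteration counts directly control the optimization cost.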

To verify the accuracy and edge-detail preservation of our proposed method, three sets of CT and SPECT images were fused with it. The results for each set were compared with those of four fusion methods: IHS, NSCT+FL, DWT and NSCT+PCNN. In NSCT+FL, the images are first decomposed by NSCT into high-frequency and low-frequency coefficients, and the fused image is obtained by selecting the larger high-frequency coefficients and averaging the low-frequency coefficients. In NSCT+PCNN, the images are decomposed by NSCT and fused by the PCNN.
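The NSCT+FL coefficient rules can be written in a few lines. Here "selecting the larger high-frequency coefficient" is interpreted as choosing the coefficient with the larger absolute value, which is the usual convention but is an assumption; the sub-band arrays are assumed to come from an NSCT decomposition computed elsewhere, and `fuse_max_average` is a hypothetical name.

```python
import numpy as np


def fuse_max_average(low_a, low_b, highs_a, highs_b):
    """NSCT+FL-style rules: average the low-frequency bands; per coefficient, keep the
    high-frequency value with the larger magnitude (ties go to image A)."""
    low = (np.asarray(low_a, dtype=float) + np.asarray(low_b, dtype=float)) / 2.0
    highs = [np.where(np.abs(ha) >= np.abs(hb), ha, hb) for ha, hb in zip(highs_a, highs_b)]
    return low, highs


# Usage with tiny hand-made sub-bands (one directional high-frequency band per image).
low, highs = fuse_max_average([[1.0]], [[2.0]], [np.array([[1.0, -3.0]])], [np.array([[-2.0, 2.0]])])
# low -> [[1.5]], highs[0] -> [[-2.0, -3.0]]
```

Unlike the PCNN rule, these rules look at each coefficient in isolation, so neighbouring context plays no role in the selection.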

Experiments were carried out on images from the Whole Brain Atlas website of Harvard Medical School (Johnson and Becker, 2001). The data comprise two groups of images, CT and SPECT, and each group has three examples: normal brain images, glioma brain images and brain images of patients diagnosed with Alzheimer’s disease. These test images have been used in many related papers (Du et al., 2016a,b,c). All experiments were implemented in MATLAB R2018a.

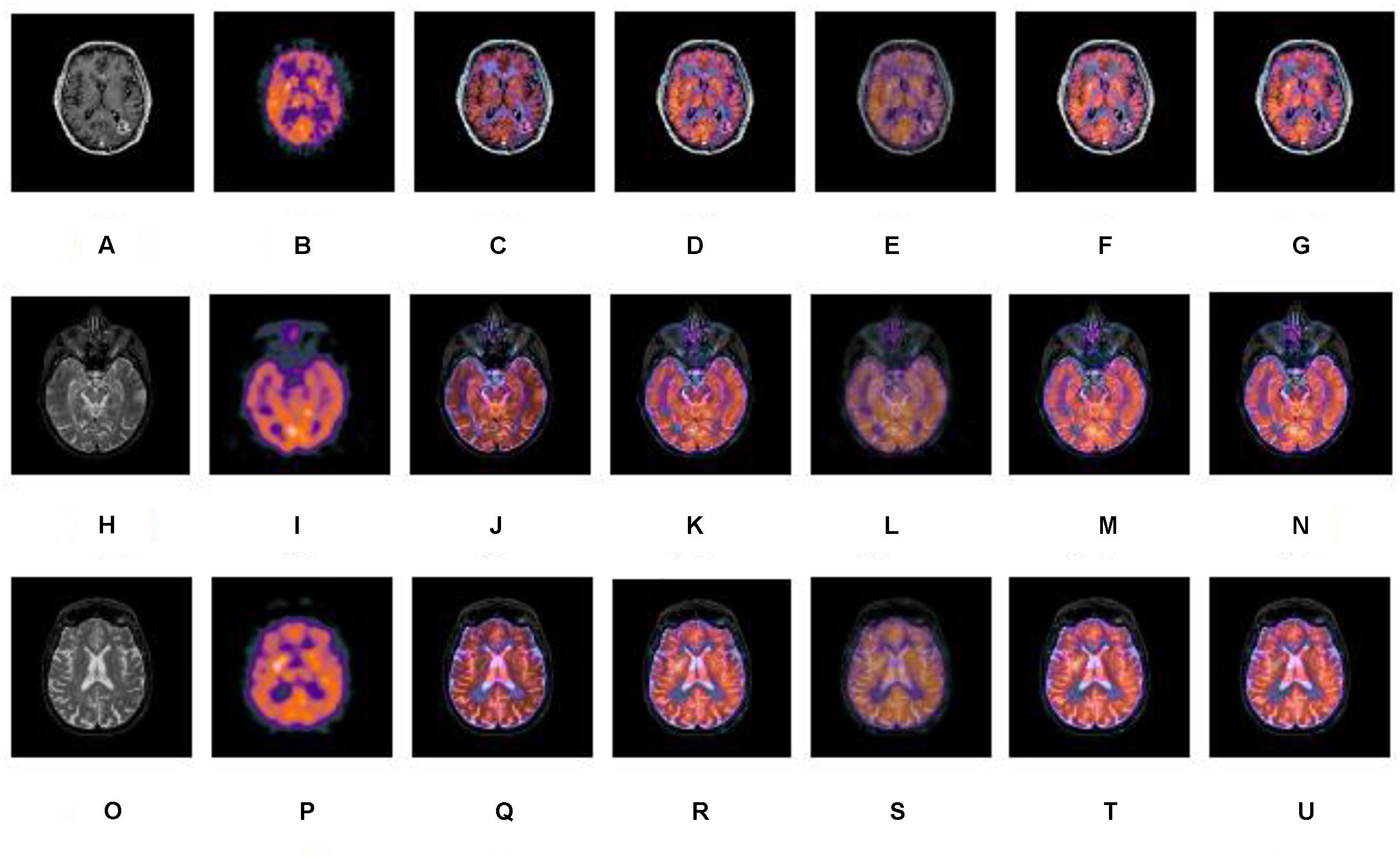

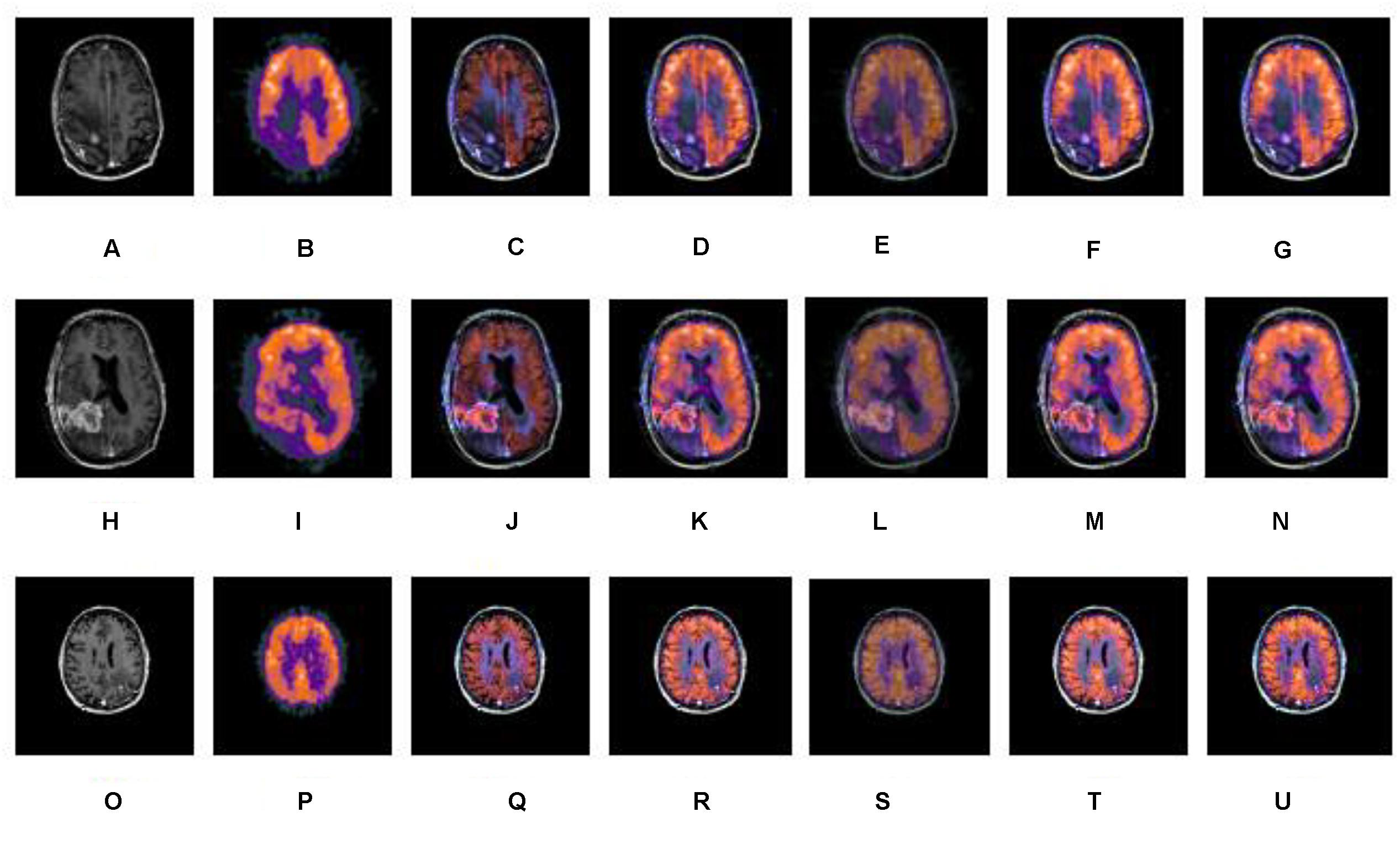

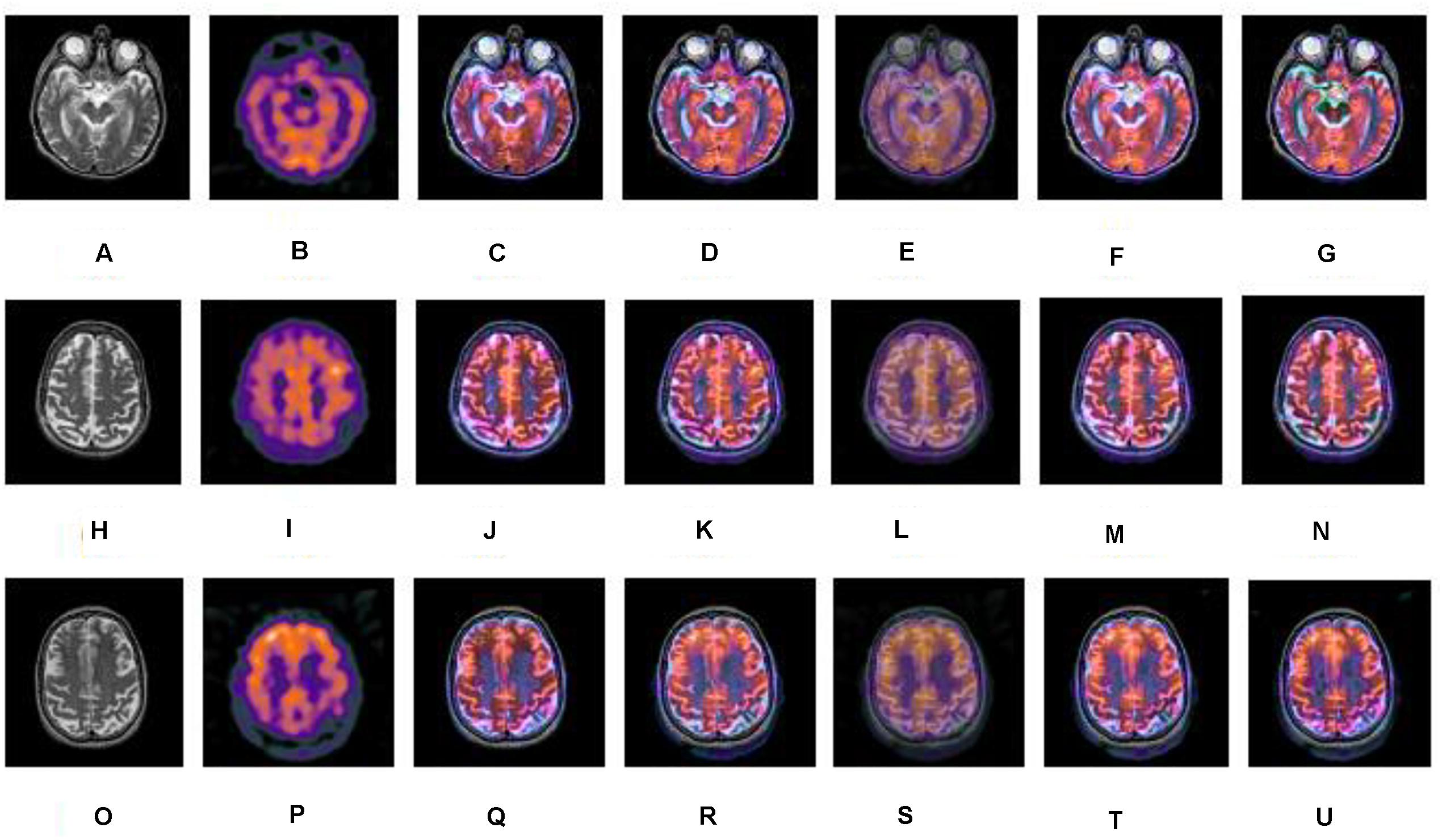

Figures 5–7 show fusion results for SPECT and CT images obtained with IHS, NSCT+FL, DWT, NSCT+PCNN and the proposed method: Figure 5 for a set of normal brain images, Figure 6 for a set of glioma brain images and Figure 7 for a set of brain images of patients diagnosed with Alzheimer’s disease. In Figures 5–7, (A), (H) and (O) are source CT images; (B), (I) and (P) are source SPECT images; (C), (J) and (Q) are fused with IHS; (D), (K) and (R) with NSCT+FL; (E), (L) and (S) with DWT; (F), (M) and (T) with NSCT+PCNN; and (G), (N) and (U) with the proposed method. The images fused by the proposed method appear more accurate and clearer than those produced by the other methods, with higher brightness and more edge detail.

Figure 5. Comparison of fusion results for normal brain images using different fusion methods (set 1). (A,H,O) are source CT images; (B,I,P) are source SPECT images; (C,J,Q) are fused images based on IHS; (D,K,R) are fused images based on NSCT+FL; (E,L,S) are fused images based on DWT; (F,M,T) are fused images based on the combination of NSCT+PCNN; (G,N,U) are fused images based on the proposed method.

Figure 6. Comparison of fusion results for glioma brain images using different fusion methods (set 2). (A,H,O) are source CT images; (B,I,P) are source SPECT images; (C,J,Q) are fused images based on IHS; (D,K,R) are fused images based on NSCT+FL; (E,L,S) are fused images based on DWT; (F,M,T) are fused images based on the combination of NSCT+PCNN; (G,N,U) are fused images based on the proposed method.

Figure 7. Comparison of fusion results for brain images of patients diagnosed with Alzheimer’s disease using different fusion methods (set 3). (A,H,O) are source CT images; (B,I,P) are source SPECT images; (C,J,Q) are fused images based on IHS; (D,K,R) are fused images based on NSCT+FL; (E,L,S) are fused images based on DWT; (F,M,T) are fused images based on the combination of NSCT+PCNN; (G,N,U) are fused images based on the proposed method.

A set of metrics is used to compare the performance of the fusion methods, including IHS, DWT, NSCT, PCNN, a combination of NSCT and the PCNN, and our proposed method. The evaluation metrics, standard deviation (SD), mean gradient (Ḡ), spatial frequency (SF) and information entropy (E), are defined as follows (Huang et al., 2018):

(1) Standard deviation

Standard deviation is used to evaluate the contrast of the fused image and is defined as

$$SD = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(Z(i,j) - \bar{Z}\right)^{2}}$$

where Z(i, j) is the pixel value of the M × N fused image at position (i, j) and $\bar{Z}$ is the mean pixel value of the image.

SD reflects the dispersion of the gray levels around the mean gray level; a higher SD indicates higher contrast and therefore better performance of the fused image.

(2) Mean gradient (Ḡ)

Ḡ reflects how sensitively a fused image represents the contrast of fine details. It can be written as

$$\bar{G} = \frac{1}{(M-1)(N-1)}\sum_{i=1}^{M-1}\sum_{j=1}^{N-1}\sqrt{\frac{\left(Z(i+1,j) - Z(i,j)\right)^{2} + \left(Z(i,j+1) - Z(i,j)\right)^{2}}{2}}$$

The higher the mean gradient, the clearer the fused image.

(3) Spatial frequency (SF)

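Spatial frequency measures the overall activity level of a fused image. For an M × N image with gray value Z(i, j) at position (i, j), the spatial frequency is defined as

$$SF = \sqrt{RF^{2} + CF^{2}}$$

where the row frequency is

$$RF = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=2}^{N}\left(Z(i,j) - Z(i,j-1)\right)^{2}}$$

and the column frequency is

$$CF = \sqrt{\frac{1}{MN}\sum_{i=2}^{M}\sum_{j=1}^{N}\left(Z(i,j) - Z(i-1,j)\right)^{2}}$$

The higher the spatial frequency, the better the quality of the fused image.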

(4) Information entropy (E)

Information entropy is defined as

$$E = -\sum_{i=0}^{L-1} P_{i}\log_{2} P_{i}$$

where L is the number of gray levels of the image and P_i is the proportion of pixels with gray value i among all pixels. A higher entropy indicates that the fused image contains more information.
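The four metrics can be computed with the NumPy sketch below. It uses the standard textbook definitions of SD, mean gradient, spatial frequency and entropy, which we assume match those of Huang et al. (2018); if that work normalizes differently (for example in the mean gradient), values will differ by a constant factor. The entropy function assumes an 8-bit image whose gray values round to integers in [0, 255].

```python
import numpy as np


def standard_deviation(z):
    """SD: spread of gray values around the image mean (contrast)."""
    z = np.asarray(z, dtype=float)
    return float(np.sqrt(np.mean((z - z.mean()) ** 2)))


def mean_gradient(z):
    """Mean gradient: average of sqrt((dx^2 + dy^2) / 2) over the (M-1) x (N-1) grid."""
    z = np.asarray(z, dtype=float)
    dx = z[1:, :-1] - z[:-1, :-1]  # Z(i+1, j) - Z(i, j)
    dy = z[:-1, 1:] - z[:-1, :-1]  # Z(i, j+1) - Z(i, j)
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def spatial_frequency(z):
    """SF = sqrt(RF^2 + CF^2) from horizontal (row) and vertical (column) first differences."""
    z = np.asarray(z, dtype=float)
    m, n = z.shape
    rf2 = np.sum((z[:, 1:] - z[:, :-1]) ** 2) / (m * n)
    cf2 = np.sum((z[1:, :] - z[:-1, :]) ** 2) / (m * n)
    return float(np.sqrt(rf2 + cf2))


def entropy(z, levels=256):
    """Shannon entropy in bits of the gray-level histogram."""
    g = np.clip(np.rint(np.asarray(z, dtype=float)), 0, levels - 1).astype(np.int64)
    p = np.bincount(g.ravel(), minlength=levels) / g.size
    p = p[p > 0]  # 0 * log 0 is taken as 0
    return float(-np.sum(p * np.log2(p)))


# Usage on a random 8-bit stand-in for a fused image.
fused = np.random.default_rng(0).integers(0, 256, size=(64, 64))
metrics = {name: f(fused) for name, f in [("SD", standard_deviation), ("G", mean_gradient),
                                           ("SF", spatial_frequency), ("E", entropy)]}
```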

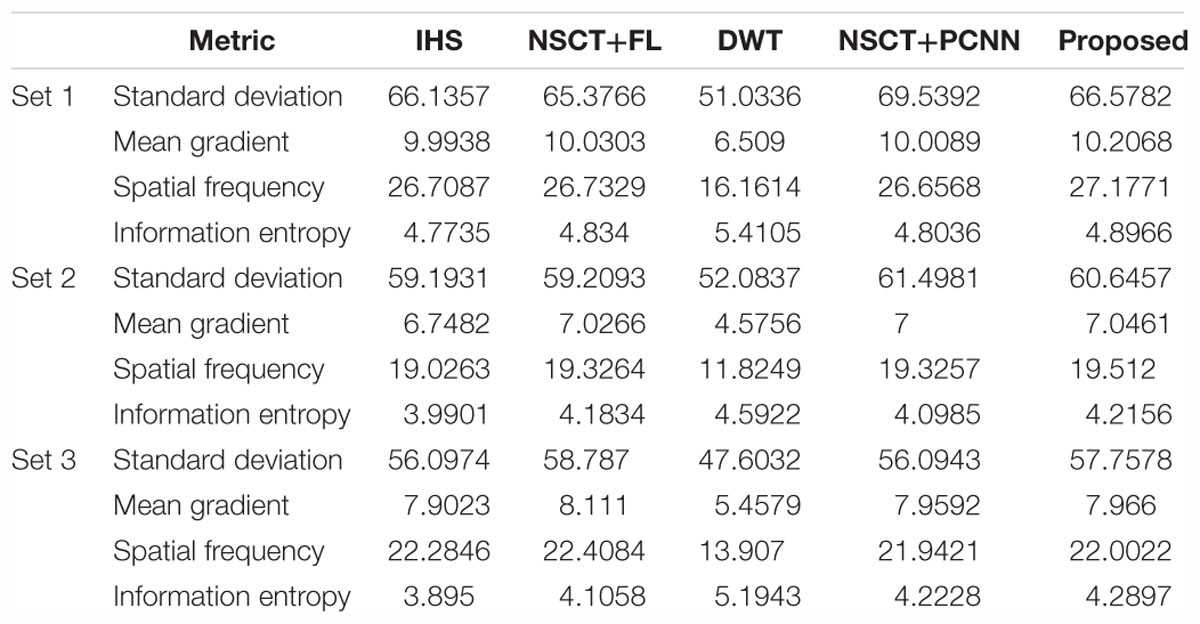

Experimental results on the images fused from SPECT and CT images are shown in Tables 1–3: Table 1 for a set of normal brain images, Table 2 for a set of glioma brain images and Table 3 for a set of brain images of patients diagnosed with Alzheimer’s disease. Compared with the other fusion methods, our proposed method generally achieves higher SD, Ḡ, SF and E values. These results indicate that images fused by the proposed method contain more information, preserve detail better and have higher resolution.

Table 3. Performance evaluations on fused brain images of patients diagnosed with Alzheimer’s disease, based on different methods.

In this paper, a new fusion method for SPECT and CT brain images was put forward. First, NSCT was used to decompose the intensity component of the IHS-transformed SPECT image and the CT image. A fusion rule based on regional average energy was then used for the low-frequency coefficients, and the combination of SFLA and the PCNN was used for the high-frequency sub-bands. Finally, the fused image was produced by the inverse NSCT and inverse IHS transforms. Both subjective and objective evaluations were used to analyze the quality of the fused images. The results demonstrated that the proposed method retains the information of the source images better and reveals more detail in the fused result. The proposed method is therefore valid and effective in achieving satisfactory fusion results, with potential for a wide range of practical applications.

This paper focuses on multimodal medical image fusion. However, there is a trade-off between real-time processing speed and fusion effectiveness. Improving the efficiency of the method while maintaining the quality of the fusion results should be considered in future work.

Publicly available datasets were analyzed in this study. These data can be found here: http://www.med.harvard.edu/aanlib/.

CH conceived the study. GT and CH designed the model. YC and YP analyzed the data. YL and WC wrote the draft. EN and YH interpreted the results. All authors gave critical revision and consent for this submission.

This work was supported in part by the Tongji University Short-term Study Abroad Program under Grant 2018020017, National Science and Technology Support Program under Grant 2015BAF10B01, and National Natural Science Foundation of China under Grants 81670403, 81500381, and 81201069. CH acknowledges support from Tongji University for the exchange with Nanyang Technological University.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Aishwarya, N., and Thangammal, C. B. (2017). An image fusion framework using novel dictionary based sparse representation. Multimed. Tools Appl. 76, 21869–21888. doi: 10.1007/s11042-016-4030-x

Amolins, K., Zhang, Y., and Dare, P. (2007). Wavelet based image fusion techniques- an introduction, review and comparison. ISPRS J. Photogramm. Remote Sens. 62, 249–263. doi: 10.1016/j.isprsjprs.2007.05.009

Choi, M. (2006). A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter. IEEE Trans. Geosci. Remote Sens. 44, 1672–1682. doi: 10.1109/TGRS.2006.869923

Da, C., Zhou, J., and Do, M. (2006). The non-subsampled contourlet transform: theory, design, and applications. IEEE Trans. Image Process. 15, 3089–3101. doi: 10.1109/TIP.2006.877507

Du, J., Li, W., Xiao, B., and Nawaz, Q. (2016a). An overview of multi-modal medical image fusion. Knowl. Based Syst. 215, 3–20.

Du, J., Li, W., Xiao, B., and Nawaz, Q. (2016b). Medical image fusion by combining parallel features on multi-scale local extrema scheme. Knowl. Based Syst. 113, 4–12. doi: 10.1016/j.knosys.2016.09.008

Du, J., Li, W., Xiao, B., and Nawaz, Q. (2016c). Union Laplacian pyramid with multiple features for medical image fusion. Neurocomputing 194, 326–339. doi: 10.1016/j.neucom.2016.02.047

Eckhorn, R., Reitboeck, H. J., Arndt, M., and Dicke, P. (1989). “A neural network for feature linking via synchronous activity: results from cat visual cortex and from simulations,” in Models of Brain Function, ed. R. M. J. Cotterill (Cambridge: Cambridge University Press), 255–272. doi: 10.1139/w00-039

Ge, J., Wang, Y., Zhang, H., and Zhou, B. (2009). Study of intelligent inspection machine based on modified pulse coupled neural network. Chin. J. Sci. Instrum. 30, 1866–1873.

Hansen, N. L., Koo, B. C., Gallagher, F. A., Warren, A. Y., Doble, A., Gnanapragasam, V., et al. (2017). Comparison of initial and tertiary centre second opinion reads of multiparametric magnetic resonance imaging of the prostate prior to repeat biopsy. Eur. Radiol. 27, 2259–2266. doi: 10.1007/s00330-016-4635-5

Huang, C., Xie, Y., Lan, Y., Hao, Y., Chen, F., Cheng, Y., et al. (2018). A new framework for the integrative analytics of intravascular ultrasound and optical coherence tomography images. IEEE Access 6, 36408–36419. doi: 10.1109/ACCESS.2018.2839694

Huang, D. (1999). Radial basis probabilistic neural networks: model and application. Int. J. Pattern Recogn. 13, 1083–1101. doi: 10.1109/LGRS.2010.2046715

Huang, D. (2004). A constructive approach for finding arbitrary roots of polynomials by neural networks. IEEE Trans. Neural Netw. 15, 477–491. doi: 10.1109/TNN.2004.824424

Huang, D., and Du, J. (2008). A constructive hybrid structure optimization methodology for radial basis probabilistic neural networks. IEEE Trans. Neural Netw. 19, 2099–2115. doi: 10.1109/TNN.2008.2004370

Huang, D., Horace, H., and Chi, Z. (2004). A neural root finder of polynomials based on root moments. Neural Comput. 16, 1721–1762. doi: 10.1162/089976604774201668

Huang, D., and Jiang, W. (2012). A general CPL-AdS methodology for fixing dynamic parameters in dual environments. IEEE Trans. Syst. Man Cybern. B Cybern. 42, 1489–1500. doi: 10.1109/TSMCB.2012.2192475

Huang, S. (1996). Systematic Theory of Neural Networks for Pattern Recognition (in Chinese). Beijing: Publishing House of Electronic Industry of China.

Ji, X., and Zhang, G. (2017). Image fusion method of SAR and infrared image based on Curvelet transform with adaptive weighting. Multimed. Tools Appl. 76, 17633–17649. doi: 10.1007/s11042-016-4030-x

Jiang, P., Zhang, Q., Li, J., and Zhang, J. (2014). Fusion algorithm for infrared and visible image based on NSST and adaptive PCNN. Laser Infrared 44, 108–113.

Jodoin, P. M., Pinheiro, F., Oudot, A., and Lalande, A. (2015). Left-ventricle segmentation of SPECT images of rats. IEEE Trans. Biomed. Eng. 5, 2260–2268. doi: 10.1109/TBME.2015.2422263

Johnson, K. A., and Becker, J. A. (2001). The Whole Brain Atlas. Available at: http://www.med.harvard.edu/aanlib/

Kanmani, M., and Narasimhan, V. (2017). An optimal weighted averaging fusion strategy for thermal and visible images using dual tree discrete wavelet transform and self tunning particle swarm optimization. Multimed. Tools Appl. 76, 20989–21010. doi: 10.1007/s11042-016-4030-x

Kaur, P., and Mehta, S. (2017). Resource provisioning and work flow scheduling in clouds using augmented shuffled frog leaping algorithm. J. Parallel Distrib. Comput. 101, 41–50. doi: 10.1016/j.jpdc.2016.11.003

Kavitha, C. T., and Chellamuthu, C. (2014). Fusion of SPECT and MRI images using integer wavelet transform in combination with curvelet transform. Imaging Sci. J. 63, 17–24. doi: 10.1179/1743131X14Y.0000000092

Li, R., Ji, C., Sun, P., Liu, D., Zhang, P., and Li, J. (2018). Optimizing operation of cascade reservoirs based on improved shuffled frog leaping algorithm. J. Yangtze River Sci. Res. Inst. 35, 30–35.

Rahmani, S., Strait, M., Merkurjev, D., Moeller, M., and Wittman, T. (2010). An adaptive IHS pan-sharpening method. IEEE Geosci. Remote Sens. Lett. 7, 746–750. doi: 10.1109/LGRS.2010.2046715

Samuel, G. C., and Asir Rajan, C. C. (2015). Hybrid: particle swarm optimization-genetic algorithm and particle swarm optimization-shuffled frog leaping algorithm for long-term generator maintenance scheduling. Int. J. Elec. Power 65, 432–442. doi: 10.1016/j.ijepes.2014.10.042

Sapkheyli, A., Zain, A. M., and Sharif, S. (2015). The role of basic, modified and hybrid shuffled frog leaping algorithm on optimization problems: a review. Soft Comput. 19, 2011–2038. doi: 10.1007/s00500-014-1388-4

Singh, S., Gupta, D., Anand, R. S., and Kumar, V. (2015). Nonsubsampled shearlet based CT and MRI medical image fusion using biologically inspired spiking neural network. Biomed. Signal Process. Control 18, 91–101. doi: 10.1016/j.bspc.2014.11.009

Wang, D., and Zhou, J. (2010). Image fusion algorithm based on the nonsubsampled contourlet transform. Comput. Syst. Appl. 19, 220–224. doi: 10.1109/ICFCC.2010.5497801

Wu, J., Huang, H., Qiu, Y., Wu, H., Tian, J., and Liu, J. (2005). “Remote sensing image fusion based on average gradient of wavelet transform,” in Proceedings of the 2005 IEEE International Conference on Mechatronics and Automation, Vol. 4 (Niagara Falls, ON: IEEE), 1817–1821. doi: 10.1109/ICMA.2005.1626836

Xiang, T., Yan, L., and Gao, R. (2015). A fusion algorithm for infrared and visible images based on adaptive dual-channel unit-linking PCNN in NSCT domain. Infrared Phys. Technol. 69, 53–61. doi: 10.1016/j.infrared.2015.01.002

Xin, Y., and Deng, L. (2013). An improved remote sensing image fusion method based on wavelet transform. Laser Optoelectron. Prog. 15, 133–138.

Yang, J., Wu, Y., Wang, Y., and Xiong, Y. (2016). “A novel fusion technique for CT and MRI medical image based on NSST,” in Proceedings of the Chinese Control and Decision Conference (Yinchuan: IEEE), 4367–4372. doi: 10.1109/CCDC.2016.7531752

Zeng, N., Qiu, H., Wang, Z., Liu, W., Zhang, H., and Li, Y. (2018). A new switching-delayed-PSO-based optimized SVM algorithm for diagnosis of Alzheimer’s disease. Neurocomputing 320, 195–202. doi: 10.1016/j.neucom.2018.09.001

Zeng, N., Wang, H., Liu, W., Liang, J., and Alsaadi, F. E. (2017a). A switching delayed PSO optimized extreme learning machine for short-term load forecasting. Neurocomputing 240, 175–182. doi: 10.1016/j.neucom.2017.01.090

Zeng, N., Zhang, H., Li, Y., Liang, J., and Dobaie, A. M. (2017b). Denoising and deblurring gold immunochromatographic strip images via gradient projection algorithms. Neurocomputing 247, 165–172. doi: 10.1016/j.neucom.2017.03.056

Zeng, N., Wang, Z., and Zhang, H. (2016a). Inferring nonlinear lateral flow immunoassay state-space models via an unscented Kalman filter. Sci. China Inform. Sci. 59:112204. doi: 10.1007/s11432-016-0280-9

Zeng, N., Wang, Z., Zhang, H., and Alsaadi, F. E. (2016b). A novel switching delayed PSO algorithm for estimating unknown parameters of lateral flow immunoassay. Cogn. Comput. 8, 143–152. doi: 10.1007/s12559-016-9396-6

Zeng, N., Wang, Z., Zineddin, B., Li, Y., Du, M., Xiao, L., et al. (2014). Image-based quantitative analysis of gold immunochromatographic strip via cellular neural network approach. IEEE Trans. Med. Imaging 33, 1129–1136. doi: 10.1109/TMI.2014.2305394

Zhang, J., Sun, Y., Zhang, Y., and Teng, J. (2017). Double regularization medical CT image blind restoration reconstruction based on proximal alternating direction method of multipliers. EURASIP J. Image Video Process. 2017:70. doi: 10.1186/s13640-017-0218-x

Zhang, X., Li, X., and Feng, Y. (2017). Image fusion based on simultaneous empirical wavelet transform. Multimed. Tools Appl. 76, 8175–8193. doi: 10.1007/s11042-016-4030-x

Keywords: single-photon emission computed tomography image, computed tomography image, image fusion, pulse coupled neural network, shuffled frog leaping

Citation: Huang C, Tian G, Lan Y, Peng Y, Ng EYK, Hao Y, Cheng Y and Che W (2019) A New Pulse Coupled Neural Network (PCNN) for Brain Medical Image Fusion Empowered by Shuffled Frog Leaping Algorithm. Front. Neurosci. 13:210. doi: 10.3389/fnins.2019.00210

Received: 21 October 2018; Accepted: 25 February 2019;

Published: 20 March 2019.

Edited by:

Nianyin Zeng, Xiamen University, China

Reviewed by:

Ming Zeng, Xiamen University, China

Copyright © 2019 Huang, Tian, Lan, Peng, Ng, Hao, Cheng and Che. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yongtao Hao, hao0yt@163.com; Yongqiang Cheng, Y.Cheng@hull.ac.uk; Wenliang Che, chewenliang@tongji.edu.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.