
ORIGINAL RESEARCH article

Front. Neurosci., 24 December 2015

Sec. Neuromorphic Engineering

Volume 9 - 2015 | https://doi.org/10.3389/fnins.2015.00488

This article is part of the Research Topic “Enabling technologies for very large-scale synaptic electronics.”

Xinjie Guo¹†, Farnood Merrikh-Bayat¹†, Ligang Gao¹, Brian D. Hoskins¹, Fabien Alibart², Bernabe Linares-Barranco³, Luke Theogarajan¹, Christof Teuscher⁴ and Dmitri B. Strukov¹*

The purpose of this work was to demonstrate the feasibility of building recurrent artificial neural networks with hybrid complementary metal oxide semiconductor (CMOS)/memristor circuits. To do so, we modeled a Hopfield network implementing an analog-to-digital converter (ADC) with up to 8 bits of precision. Major shortcomings affecting the ADC's precision, such as the non-ideal behavior of CMOS circuitry and the specific limitations of memristors, were investigated and an effective solution was proposed, capitalizing on the in-field programmability of memristors. The theoretical work was validated experimentally by demonstrating the successful operation of a 4-bit ADC circuit implemented with discrete Pt/TiO2−x/Pt memristors and CMOS integrated circuit components.

Recurrent artificial neural networks are an important computational paradigm capable of solving a number of optimization problems (Hopfield, 1984; Tank and Hopfield, 1986). A classic example of such networks is the Hopfield analog-to-digital converter (Tank and Hopfield, 1986; Lee and Sheu, 1989; Smith and Portmann, 1989). Although such a circuit may be of little practical use, being inferior, for example, to similar feed-forward ADC implementations (Chigusa and Tanaka, 1990), it belongs to a broader class of constrained-optimization networks, which minimize certain pre-programmed energy functions and have several applications in control and signal processing (Tank and Hopfield, 1986). The Hopfield network ADC also represents an important bridge between computational neuroscience and circuit design, and understanding the potential shortcomings of such a relatively simple circuit is therefore important for implementing more complex recurrent neural networks.

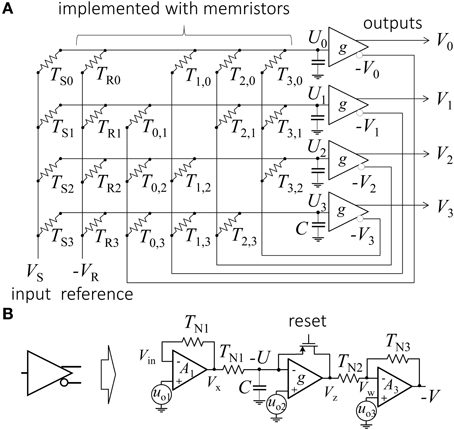

An example of a 4-bit Hopfield network ADC is shown in Figure 1 (Tank and Hopfield, 1986). The originally proposed network consists of an array of linear resistors (also called weights or synapses) and four peripheral inverting amplifiers (neurons). Each neuron receives currents from the input and reference lines and from all other neurons via the corresponding synapses. The analog input voltage VS is converted to the digital code V3V2V1V0, i.e.,

$$V_S \approx \sum_{i=0}^{3} 2^{i} V_i, \qquad (1)$$

by first forcing all neuron outputs to zero (Lee and Sheu, 1989) and then letting the system evolve to the appropriate stationary state.

Figure 1. (A) Conventional Hopfield network implementation of a 4-bit ADC and (B) specific implementation of a neuron as considered in this paper.

To understand how the Hopfield network performs the ADC operation, let us first describe its electrical behavior. Assuming leakage-free neurons with infinite input and zero output impedances, the dynamic equation governing the evolution of the input voltage Uj of the j-th neuron is:

where g(·) is a neuron activation function, C is the neuron's input capacitance, Tij is a conductance of the synapse connecting the output of the i-th neuron with the input of the j-th neuron, while

are the corresponding effective offset input current and effective input conductance for the j-th neuron. Here VR is a reference voltage, while TR and TS are the conductances of the reference and input weights, respectively (Figure 1A). Note that the neuron input Ui can be either positive or negative, but the neuron output is either zero or positive. The inverted outputs of the neurons, which are fed back to the network, are therefore either negative or zero. One activation function suitable for such a mapping is the sigmoid function 1/(1 + exp[−U]). The neuron output needs to be inverted to keep the feedback weights positive and thus to allow physical implementation with passive devices, such as resistors.¹
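To see how the synapse currents regroup into an effective offset current and an effective input conductance, Kirchhoff's current law at the input node of neuron j can be evaluated numerically. The sketch below is an illustration under the idealized assumptions stated above (input node at Uj drawing no current, feedback taken from the inverted outputs −Vi); the function and argument names, including the per-neuron input and reference conductances `t_s[j]` and `t_r[j]`, are hypothetical and are not the paper's notation. Both functions return the same current, C·dUj/dt; the second one only collects the terms.

```python
"""Kirchhoff's current law at the input node of neuron j (illustrative sketch).

Assumptions: the input node of neuron j sits at voltage u[j] and draws no
current; synapse t[i][j] connects the *inverted* output -v[i] of neuron i to
that node; t_s[j] and t_r[j] are the input and reference conductances.
"""


def node_current(j, u, v, t, t_s, t_r, v_s, v_r):
    """Net current into neuron j's input capacitance, i.e. C * dU_j/dt."""
    current = t_s[j] * (v_s - u[j]) + t_r[j] * (v_r - u[j])
    for i in range(len(v)):
        if i != j:
            # Feedback arrives from the inverted output, at voltage -v[i].
            current += t[i][j] * (-v[i] - u[j])
    return current


def node_current_regrouped(j, u, v, t, t_s, t_r, v_s, v_r):
    """Same current, written as -feedback + offset - conductance * U_j."""
    others = [i for i in range(len(v)) if i != j]
    # Effective offset current: contributions that do not depend on U_j or V_i.
    offset = t_s[j] * v_s + t_r[j] * v_r
    # Effective input conductance: every conductance attached to the node.
    conductance = t_s[j] + t_r[j] + sum(t[i][j] for i in others)
    feedback = sum(t[i][j] * v[i] for i in others)
    return -feedback + offset - conductance * u[j]
```

In this grouping the offset current is TS·VS + TR·VR and the effective conductance is the sum of all conductances attached to the node. It describes only the idealized network of Figure 1A, not the three-op-amp neuron of Figure 1B.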

Alternatively, the Hopfield network operation can be described by an energy function. The evolution of the dynamic system described by Equation (2) is equivalent to a minimization of the energy function:

where the last term can be neglected for very steep transfer functions (Hopfield, 1984). Tank and Hopfield (1986) showed that a 4-bit ADC task (Equation 1) can be described by the following energy function:

Here the first term favors states that satisfy Equation (1), while the second tends to force each digital output Vi to be either “0” or “1.” After rearranging the terms in Equation (6) and comparing the result with Equation (5), the appropriate weights for performing the ADC task are found to be:

In the Hopfield ADC network, the number of synapses grows quadratically with the number of neurons. Compact implementation of the synapses is therefore required if such circuits are to be practical. This is certainly challenging to achieve with conventional CMOS technology because, according to Equation (7), it requires analog weights with a relatively large dynamic range, i.e., on the order of $2^{2N}$, where N is the bit precision. Weights can be stored digitally, but this approach comes with a large overhead (Moopenn et al., 1990). On the other hand, analog CMOS implementations of the synapses have to cope with the mismatch issues often encountered in CMOS circuits (Indiveri et al., 2011). Consequently, several attempts have been made to implement synapses with alternative, unconventional technologies. In some of the early implementations of Hopfield networks, weights were realized as thin-film (Jackel et al., 1987) or metal-line (Graf et al., 1986; Schwartz et al., 1987) conductances, patterned using e-beam lithography and reactive-ion etching. The main limitation of these approaches was that the weights were essentially one-time programmable, with rather crude accuracy. A much more attractive solution was recently demonstrated by Eryilmaz et al. (2014), who describe a Hopfield network implementation with synapses based on phase-change memory paired with conventional field-effect transistors. That work, together with other recent advances in device technologies (Wu et al., 2012; Zhang et al., 2012), has revived interest in the theoretical modeling of recurrent neural networks based on hybrid circuits (Waser et al., 2009; Strukov and Kohlstedt, 2012; Lehtonen et al., 2014; Rakkiyappan et al., 2014; Walls and Likharev, 2014).
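The weight structure and its dynamic range can be checked numerically. The sketch below assumes the ADC energy in the form given by Tank and Hopfield (1986), in normalized units (digital “1” = 1, input x = VS expressed in LSB units): E = ½(x − Σ 2^i V_i)² + Σ 2^(2i−1) V_i(1 − V_i). Expanding it reproduces, up to the constant x²/2, a standard Hopfield quadratic form with T_ij = −2^(i+j) for i ≠ j and bias I_i = 2^i x − 2^(2i−1). In the positive-weight, inverted-output convention of this paper these magnitudes correspond to feedback weights 2^(i+j), input weights 2^i and reference weights 2^(2i−1), assuming a unit reference voltage; the scaling actually used in the circuit (Equations 7 and 10) may differ, and all function names are illustrative.

```python
"""Tank-Hopfield ADC energy, its Hopfield weights, and their dynamic range.

Sketch in normalized units: x is the analog input in LSB units and v[i] is
output bit i (LSB first). |V_R| = 1 is an assumption of this sketch.
"""
from itertools import product


def adc_energy(x, v):
    """E = 1/2 (x - sum 2^i v_i)^2 + sum 2^(2i-1) v_i (1 - v_i)."""
    s = sum(2.0 ** i * vi for i, vi in enumerate(v))
    penalty = sum(2.0 ** (2 * i - 1) * vi * (1 - vi) for i, vi in enumerate(v))
    return 0.5 * (x - s) ** 2 + penalty


def hopfield_weights(x, n_bits):
    """T[i][j] = -2^(i+j) for i != j (zero diagonal); bias I_i = 2^i x - 2^(2i-1)."""
    t = [[0.0 if i == j else -(2.0 ** (i + j)) for j in range(n_bits)]
         for i in range(n_bits)]
    bias = [2.0 ** i * x - 2.0 ** (2 * i - 1) for i in range(n_bits)]
    return t, bias


def hopfield_energy(t, bias, v):
    """Standard Hopfield form: -1/2 sum_ij T_ij v_i v_j - sum_i I_i v_i."""
    n = len(v)
    quad = sum(t[i][j] * v[i] * v[j] for i in range(n) for j in range(n))
    return -0.5 * quad - sum(bias[i] * v[i] for i in range(n))


def best_code(x, n_bits=4):
    """Binary state with the lowest energy (exhaustive search, LSB first)."""
    return min(product((0, 1), repeat=n_bits), key=lambda v: adc_energy(x, v))


def dynamic_range(n_bits):
    """Largest / smallest weight magnitude over feedback, input and reference."""
    feedback = [2.0 ** (i + j) for i in range(n_bits)
                for j in range(n_bits) if i != j]
    inputs = [2.0 ** i for i in range(n_bits)]
    reference = [2.0 ** (2 * i - 1) for i in range(n_bits)]
    weights = feedback + inputs + reference
    return max(weights) / min(weights)


if __name__ == "__main__":
    x = 6.0
    t, bias = hopfield_weights(x, 4)
    for v in [(0, 1, 1, 0), (1, 0, 0, 1), (0.3, 0.7, 0.1, 0.9)]:
        # The two energies differ only by the constant x**2 / 2 (= 18 here).
        print(adc_energy(x, v) - hopfield_energy(t, bias, v))
    print(best_code(x))                        # (0, 1, 1, 0), i.e. 6 = 2 + 4
    print(dynamic_range(4), dynamic_range(8))  # 64.0 16384.0
```

The exhaustive search only confirms that the correct code is the global energy minimum; whether the analog dynamics actually reach it depends on the initialization (the reset of all outputs to zero) and on the non-idealities analyzed below. The computed ratio, 2^(2N−2), is consistent with the order-of-2^(2N) estimate in the text.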

This paper explores the implementation of synapses with an emerging and very promising type of memory device, namely metal-oxide resistive switching devices (“memristors”) (Wu et al., 2012; Zhang et al., 2012). In the next section we discuss the general implementation details of the Hopfield network ADC, including the memristive devices used in the experimental setup. This is followed by a theoretical analysis of the sensitivity of the considered hybrid circuits to certain representative sources of non-ideal behavior, and a discussion of a possible solution. The theoretical results were validated with SPICE simulations (Section Simulation Results) and experimental work (Section Experimental Results). The paper concludes with a Discussion section. Note that preliminary experimental results, without any theoretical analysis, were reported earlier in Gao et al. (2013a), where we first presented a Hopfield network implementation with metal-oxide memristors. The only other relevant experimental work on memristor-based Hopfield networks that we are aware of was published recently by Hu et al. (2015). However, the network demonstrated in Hu et al. (2015) was based on 9 memristors, whereas the circuit presented in this work involves 16.

Following on from our earlier works (Alibart et al., 2013; Gao et al., 2013b; Merrikh-Bayat et al., 2014), we here consider the implementation of a hybrid CMOS/memristive circuit (Figure 1). In this circuit, density-critical synapses are implemented with Pt/TiO2−x/Pt memristive devices, while neurons are implemented by CMOS circuits.

In their simplest form, memristors are two-terminal passive elements whose conductance can be modulated reversibly by applying electrical stress. Owing to the simple structure and ionic nature of their memory mechanism, metal-oxide memristors have excellent scaling prospects, often combined with fast, low-energy switching and long retention (Strukov and Kohlstedt, 2012). Many metal-oxide memristors can also be switched continuously, i.e., in an analog manner, by applying electrical bias (current or voltage pulses) with gradually increasing amplitude and/or duration.

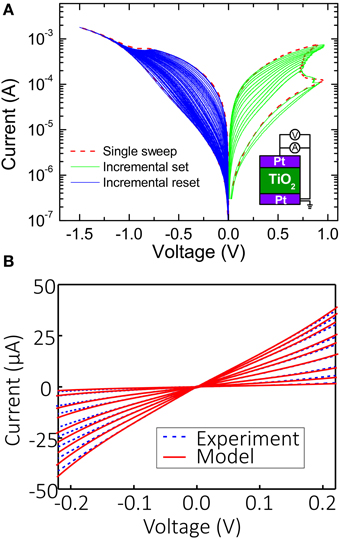

Figure 2A shows typical continuous switching I-V curves for the considered Pt/TiO2−x/Pt devices (Alibart et al., 2012). The devices were implemented in a “bone-structure” geometry with an active area of ~1 μm². An evaporated Ti/Pt bottom electrode (5 nm/25 nm) was patterned by conventional optical lithography on a Si/SiO2 substrate (500 μm/200 nm, respectively). A 30 nm TiO2 switching layer was then deposited by atomic layer deposition at 200°C, using titanium isopropoxide (C12H28O4Ti) and water as the precursor and reactant, respectively. A Pt/Au top electrode (15 nm/25 nm) was evaporated on top of the TiO2 blanket layer, and the device was finally rapidly annealed at 500°C in an N2 and N2+O2 atmosphere for 5 min to improve the crystallinity of the TiO2 material. Details of the fabrication and characterization of the considered memristors are given in Alibart et al. (2012).

Figure 2. (A) Typical I-V curves with current-controlled set and voltage-controlled reset switching for the considered Pt/TiO2−x/Pt memristors. (B) Modeling of static I-V curves at small disturb-free voltages for several different states. The fitting parameters are β = 1, α1 = 14.7 V−3, ΩV−3, Ω2V−3 for V > 0, and α1 = 34.6 V−3, ΩV−3, Ω2V−3 for V < 0.

After the memristors were programmed to the desired resistance, their state had to remain unchanged during operation of the Hopfield network. To prevent any disturbance, the voltage drop across them was therefore always kept within the |V| ≤ 0.2 V “disturb-free” range (Alibart et al., 2012).

The static I-V characteristics (i.e., those within the disturb-free regime) for several different memory states are shown in Figure 2B. To assist SPICE simulation, the experimental I-V curves at small biases were fitted by the following static equation with a single memory state G:

The need to keep the voltage drop across memristive devices small also affects neuron design. A simple leaky operational amplifier (op-amp) integrator could be sufficient to implement neuron functionality, but ensuring disturb-free operation with such a design is not easy. This issue was resolved by implementing each neuron with three op-amps connected in series (Figure 1B). The first op-amp was an inverting amplifier that held its input at virtual ground even when the neuron's output was saturated. The second op-amp was an open-loop amplifier implementing a sign-like activation function. The field-effect transistor in the negative feedback path of this op-amp was initially turned on to force the neuron's outputs to zero (i.e., to set the initial state before computing the output) and then turned off during network convergence. The last op-amp inverted the signal and ensured that the neuron output stayed within the −0.2 V ≤ V ≤ 0 range. Since the neuron bandwidth was mainly determined by the input capacitance of the second amplifier, and other sources of parasitic capacitance could be neglected for simplicity, the capacitive load of the second amplifier (Figure 1B) effectively played the role of the neuron input capacitance (Figure 1A).

Assuming ideal op-amps and that the first and last amplifiers never saturate, the dynamic equation for this neuron design can be written as:

where g(·) is the transfer function of the second op-amp (see Appendix for details of the derivation).

For a very steep transfer function, the second term on the right-hand side of Equation (9a) can be neglected (Hopfield, 1984). The network is then described by the original energy function (Equation 5), and the weights are proportional to those defined in Equation (7), i.e.,

where the additional factor of 5 is due to the reduced output voltage (0.2 V) corresponding to a digital “1” in the considered circuit, as opposed to the 1 V output assumed in the original ADC energy function (Equation 6) and in the weights derived from it (Equation 7).

The physical implementation of this Hopfield network ADC posed a number of additional challenges. It should first be mentioned, however, that variations in neuron delays and input capacitances, which may result in oscillatory behavior and in settling into false energy minima (Lee and Sheu, 1989; Smith and Portmann, 1989), were not a problem in our case thanks to the slow operating speed, which was imposed to reduce capacitive coupling. The main problems specific to this implementation were virtual-ground offsets resulting from the voltage offsets (uo) and limited gain (A) of the op-amps (Figure 1B). Another, somewhat less severe, problem was the nonlinear conductance of the memristive devices (characterized by the parameter β; see Equation 8). The Appendix shows how limited gain and non-zero offset result in an additional constant term I0 in the dynamic equation (Equation A7), which can be absorbed into the reference weights as follows:

A Hopfield network with practical, non-ideal neurons can therefore still be approximated by the original energy function, and it should be possible to circumvent the effects of limited gain and voltage offset by fine-tuning the reference weights. This idea was verified via SPICE modeling and experimental work, as described in the next section.
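The idea of absorbing a constant current into the reference weights can be illustrated with a deliberately simplified model: suppose the summing node of a neuron sits at a constant error voltage δ instead of 0 V. Every synapse current then shifts by −G·δ, giving a constant extra current I0 = −δ·ΣG in this sketch, and since the reference branch contributes TR·VR, a corrected conductance TR'' = TR + I0/VR restores the original total. This is a sketch under stated assumptions, not the paper's Equations (11) and (A12): it treats δ as constant (with finite gain it actually depends on the amplifier output), ignores the accompanying change in effective input conductance, and uses hypothetical names and numbers.

```python
"""Absorbing a constant error current into a reference weight (sketch).

Hypothetical model: a neuron's summing node sits at a small, constant error
voltage `delta` instead of 0 V, so a synapse of conductance g driven by a
voltage v delivers g * (v - delta) rather than g * v.
"""


def error_current(delta, conductances):
    """Extra constant current I0 = -delta * sum(g) caused by the node error."""
    return -delta * sum(conductances)


def corrected_reference(t_r, v_r, i0):
    """Return T_R'' such that T_R'' * V_R == T_R * V_R + I0."""
    t_new = t_r + i0 / v_r
    if t_new <= 0:
        raise ValueError("correction would need a non-positive conductance")
    return t_new


if __name__ == "__main__":
    # Illustrative numbers only (not the experimental values).
    conductances = [10e-6, 20e-6, 70e-6]    # siemens, all branches into the node
    i0 = error_current(3e-3, conductances)  # 3 mV node error
    t_r = corrected_reference(50e-6, -0.2, i0)
    print(f"I0 = {i0:.2e} A, T_R'' / T_R = {t_r / 50e-6:.3f}")
```

The sign of the correction depends on the signs of δ and VR, so the same model can call for a larger or a smaller reference conductance.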

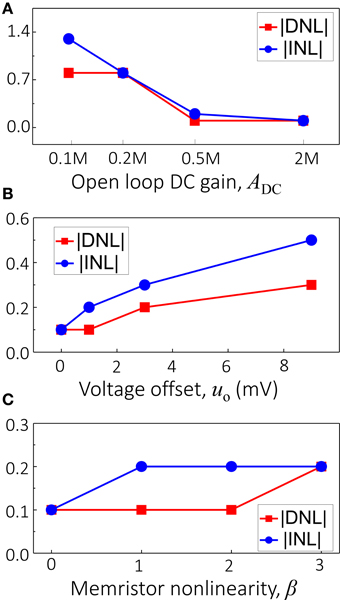

Using Equation (8) for the memristors and SPICE models for the IC components, we next ran a series of simulations to study how particular non-idealities affect the differential (DNL) and integral (INL) nonlinearities of the ADC (van de Plassche, 2003). Figure 3A shows INL and DNL as a function of the open-loop DC gain, which was varied simultaneously for all three op-amps, assuming ideal memristors with β = 0 and no voltage offset. Note that in this simulation, the gain-bandwidth product (GBP) was increased in proportion to the open-loop DC gain, and was equal to 3 MHz at . Because the circuit operated at about 1.5 kHz, the effective gain was A ≈ A_DC/100 for all simulations (and also for the experimental work discussed below). Figure 3B shows the impact on DNL and INL of the voltage offset (simulated as an offset on the ground nodes), which was varied simultaneously for all three op-amps. Finally, Figure 3C shows the effect of I-V nonlinearity, which was varied by changing the constant β in Equation (8), assuming all other network parameters to be close to ideal, i.e., a voltage offset uo = 0 and an open-loop DC gain . Note that for β > 0, the memristor weights were chosen so that the conductance of each device at −0.2 V matched the corresponding value prescribed by Equation (10).
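The relation between DC gain and effective gain can be sketched with a single-pole op-amp model, which is an assumption of this sketch (real op-amps have further poles): the open-loop gain at frequency f is A_DC/√(1 + (f/f_p)²), with pole f_p = GBP/A_DC. Because the GBP was scaled in proportion to the DC gain, f_p is the same in every simulation, so the ratio A/A_DC depends only on f/f_p; within this model, a ratio of about 1/100 at 1.5 kHz corresponds to f_p ≈ 15 Hz. The values in the example are illustrative and are not the specifications of the op-amps used.

```python
"""Effective open-loop gain of a single-pole op-amp model (sketch).

Assumed model: |A(f)| = A_DC / sqrt(1 + (f / f_p)^2), with f_p = GBP / A_DC.
"""
import math


def effective_gain(a_dc, gbp_hz, f_hz):
    """Open-loop gain magnitude at frequency f_hz."""
    f_pole = gbp_hz / a_dc
    return a_dc / math.sqrt(1.0 + (f_hz / f_pole) ** 2)


if __name__ == "__main__":
    # Illustrative values: GBP scaled with A_DC so that the pole stays at 15 Hz.
    for a_dc in (1e5, 4e5):
        gbp = 15.0 * a_dc
        a = effective_gain(a_dc, gbp, 1.5e3)
        print(f"A_DC = {a_dc:.0e}: A = {a:.1f}, A_DC / A = {a_dc / a:.1f}")
```

Since both A_DC and GBP are scaled together, the printed ratio A_DC/A stays the same for every DC gain, which is why a single factor applies to all the simulations.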

Figure 3. Theoretical analysis of the performance sensitivity of a 4-bit Hopfield network ADC with respect to (A) open-loop DC gain, (B) voltage offsets in the operational amplifiers, and (C) the nonlinearity of memristive devices.
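The DNL and INL shown in Figure 3 are standard ADC metrics; a minimal way to compute them from measured or simulated code-transition levels is sketched below. Conventions differ in detail (for example, endpoint versus best-fit INL; see van de Plassche, 2003), so the choice made here (ideal transitions at first_ideal + k·LSB, all results in LSB) is an assumption, and the function names are illustrative.

```python
"""DNL and INL of an ADC from its code-transition levels (sketch).

t[k] is the input level at which the output changes from code k to k + 1.
Ideal transitions are assumed at first_ideal + k * lsb.
"""
from typing import List, Sequence


def dnl(t: Sequence[float], lsb: float) -> List[float]:
    """Width of each inner code in LSB, minus 1 (0 for an ideal converter)."""
    return [(t[k + 1] - t[k]) / lsb - 1.0 for k in range(len(t) - 1)]


def inl(t: Sequence[float], lsb: float, first_ideal: float) -> List[float]:
    """Deviation of each transition from its ideal level, in LSB."""
    return [(tk - (first_ideal + k * lsb)) / lsb for k, tk in enumerate(t)]


if __name__ == "__main__":
    # Toy 3-bit transfer curve (LSB = 1) with one transition shifted by 0.5 LSB.
    transitions = [1.0, 2.0, 3.5, 4.0, 5.0, 6.0, 7.0]
    print("DNL:", dnl(transitions, 1.0))
    print("INL:", inl(transitions, 1.0, 1.0))
```

A DNL of −1 for some code indicates a missing code; outputs with non-monotonic or repeated codes need more careful handling than this sketch provides.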

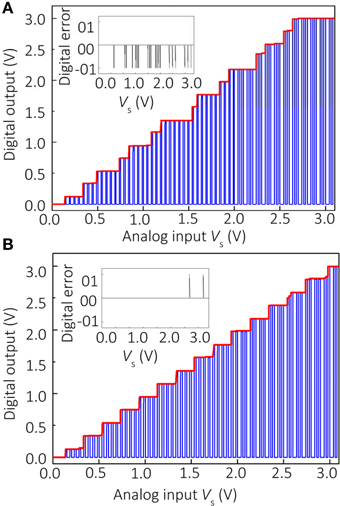

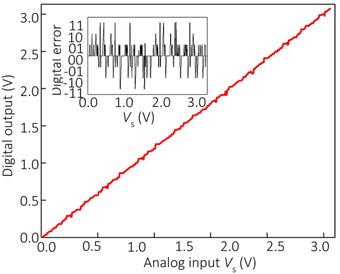

The results shown in Figure 3 confirm that each of the considered non-idealities, taken individually, significantly affects the ADC's performance. Figure 4A shows the simulation results when all of these factors are considered together, for the specific values uo = 3 mV, β = 1, , and GBP = 3 MHz, which are representative of the experimental setup. The gain and voltage offset values were taken from the specifications of the discrete IC op-amps used in the experiment. Clearly, the ADC output is distorted and contains numerous errors, with the largest contribution to INL coming from finite gain (Figure 3). Figures 4B and 5 show the simulation results with new values for the reference weights, calculated according to Equation (11), for the 4-bit and 8-bit ADCs, respectively. These results confirm that non-ideal op-amp behavior, such as limited gain and voltage offsets, can be efficiently compensated by fine-tuning the memristive reference weights.

Figure 4. Simulation results for (A) the original and (B) the optimized 4-bit Hopfield network ADC with β = 1, , and uo = 3 mV voltage offset, which are representative parameters for the experimental setup. For the optimized network, TR1” = 0.97 TR1, TR2” = 0.86 TR2, TR3” = 0.95 TR3, TR4” = 0.97 TR4.

Figure 5. Simulation results for the optimized 8-bit Hopfield network ADC with TR1” = 0.8 TR1, TR2” = 0.81 TR2, TR3” = 0.89 TR3, TR4” = 0.83 TR4, TR5” = 0.55 TR5, TR6” = 0.74 TR6, TR7” = 0.71 TR7, TR8” = 0.75 TR8. All other parameters are equal to those used for Figure 4.

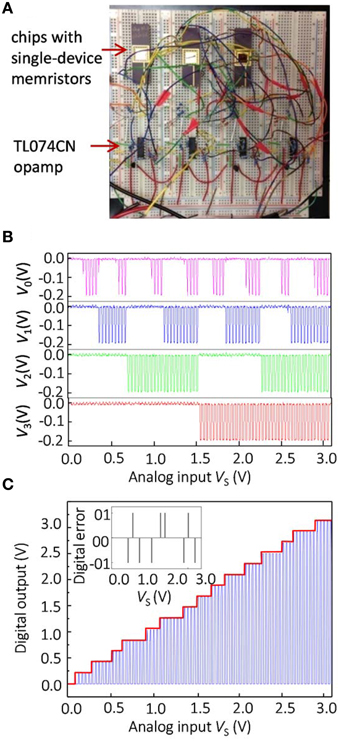

The simulation results were also validated experimentally by implementing a 4-bit Hopfield network ADC in a breadboard setup consisting of Pt/TiO2−x/Pt memristive devices and discrete IC CMOS components (Figure 6A). The memristor chips were assembled in standard 40-pin DIP packages by wire-bonding 20 standalone memristive devices. Because the input voltage range is 0 ≤ VS ≤ V, the weights TS were realized with regular resistors.² The discrete memristors and other IC components were then connected with external wires as shown in Figure 1.

Figure 6. Experimental results for the optimized 4-bit Hopfield ADC: (A) experimental setup, (B) measured outputs for every output channel, and (C) measured transfer characteristics.

The memristors implementing feedback and reference weights were first tuned ex-situ to the values defined by Equation (10), using a previously developed algorithm (Alibart et al., 2012). Each memristor was tuned individually before the devices were connected in the circuit. This was done to simplify the experiment; in general, it should be possible to tune memristors after they are connected in a crossbar circuit, as our group has demonstrated experimentally both for standalone devices connected in crossbar circuits (Alibart et al., 2013; Gao et al., 2013c) and for integrated passive crossbar circuits (Prezioso et al., 2015a,b).

As discussed in Sections Materials and Methods for Hopfield Network Implementation with Hybrid Circuits and Results, the limited gain and voltage offsets of operational amplifiers can be compensated by adjusting the reference weights according to Equations (11, A12). To demonstrate the in-field configurability of memristors, the reference weights were fine-tuned in-situ. In particular, the reference weights were adjusted to ensure correct outputs at four particular input voltages, when VS is equal to 1/16, 1/8, 1/4, and 1/2 of its maximum value. Tuning was performed first at VS equal to 1/16 of its maximum value, at which correct ADC operation requires the least significant output bit V0 to flip from 0 to 1 (corresponding to 0.2 V in our case); this was ensured by fine-tuning reference weight TR0. Similarly, output bit V1 should flip from 0 to 1 when VS equals 1/8 of its maximum value, which was ensured by fine-tuning reference weight TR1, and so on. Because fine-tuning started from the least significant output, it was sufficient to fine-tune only one reference weight at a time for a particular input voltage, which greatly simplified the in-situ tuning procedure. Also, the direction of adjustment was always straightforward to determine, because the input voltage at which a particular output bit flips from 0 to 1 depends monotonically on the corresponding reference weight (Equation 11).
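The LSB-first procedure amounts to a sequence of one-dimensional searches, one reference weight per bit. Because the flip voltage depends monotonically on the reference weight, bisection is one simple way to automate each search; the paper does not state that bisection was used. The sketch assumes a hypothetical callback `measure_flip(w)` that returns the input voltage at which a bit flips when its reference weight is `w`; in hardware each call would correspond to programming a memristor and sweeping VS, and the toy linear model in the example is invented for illustration, not fitted to the experiment.

```python
"""LSB-first tuning of reference weights by bisection (illustrative sketch)."""
from typing import Callable, List


def tune_weight(measure_flip: Callable[[float], float], target: float,
                lo: float, hi: float, tol: float = 1e-4,
                increasing: bool = True, max_iter: int = 60) -> float:
    """Return a weight in [lo, hi] whose flip voltage is within tol of target.

    Requires only that the flip voltage is monotonic in the weight and that
    the correct weight lies inside the bracket [lo, hi].
    """
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        error = measure_flip(mid) - target
        if abs(error) <= tol:
            return mid
        # Bit flips too late (error > 0): lower the weight if the dependence is
        # increasing, raise it if decreasing.
        if (error > 0) == increasing:
            hi = mid
        else:
            lo = mid
    raise RuntimeError("no convergence; check the bracket [lo, hi]")


def tune_all(measure_flips: List[Callable[[float], float]], v_max: float,
             lo: float, hi: float) -> List[float]:
    """Tune bit k so that it flips at v_max * 2**(k - n_bits), LSB first."""
    n_bits = len(measure_flips)
    return [tune_weight(measure, v_max * 2.0 ** (k - n_bits), lo, hi)
            for k, measure in enumerate(measure_flips)]


if __name__ == "__main__":
    # Hypothetical circuit model: the flip voltage of bit k grows linearly with
    # its reference weight w (arbitrary units).
    flips = [lambda w, k=k: (w + 0.01 * (k + 1)) / 2 ** k for k in range(4)]
    weights = tune_all(flips, v_max=1.0, lo=0.0, hi=10.0)
    for k, (f, w) in enumerate(zip(flips, weights)):
        print(f"bit {k}: weight {w:.4f}, flips at {f(w):.4f}")
```

The sketch treats each bit's flip voltage as independent of the others; in the real circuit, the LSB-first order is what keeps each search one-dimensional, because the lower bits are already tuned when a higher bit is adjusted.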

The network parameters for the experimental work are summarized in Table 1. Although there were a few A/D conversion errors in the experimental work (Figure 6), the results are comparable with the simulations of the optimized network, and much better than those obtained for the unoptimized network. The experimental results for the unoptimized network were significantly worse in comparison with the simulation, and are not shown in this paper.

It is worth mentioning that, for the considered memristors, drift of the conductive state over time was negligible owing to the highly nonlinear switching kinetics specific to these devices (Alibart et al., 2012, 2013; Prezioso et al., 2015a). In principle, for other types of memristors with inferior retention, it should be possible to occasionally re-tune the memristor state to cope with conductance drift. A related issue might be measurement noise when reading the state of the memristor, e.g., due to fluctuations in the device conductance over time, which are sometimes observed as random telegraph noise (Gao et al., 2012, 2013b; Prezioso et al., 2015b). Such noise can be tolerated by performing quasi-DC read measurements; the downside would be a potentially much slower tuning process.

To conclude, in this work we investigated a hybrid CMOS/metal-oxide-memristor circuit implementation of a Hopfield recurrent neural network performing analog-to-digital conversion. We showed that a naïve implementation of such networks, with weights prescribed by the original theory, produces many conversion errors, mainly due to the non-ideal behavior of the CMOS integrated circuit components. We then proposed a method of adjusting the weights of the Hopfield network to overcome the non-ideal behavior of its components and successfully validated this technique experimentally on a 4-bit ADC circuit. The ability to fine-tune the conductances of memristors in a circuit was essential for implementing the proposed technique. In our opinion, this work is an important milestone, and its results will be valuable for implementing more practical large-scale recurrent neural networks with CMOS/memristor circuits. Experimental research into CMOS/memristor neural networks is still very scarce and, to the best of our knowledge, the demonstrated Hopfield network is the most complex network of its type reported to date. From a broader perspective, this paper demonstrates one of the main advantages of using memristors in analog circuits, namely the feasibility of fine-tuning memristors after fabrication to overcome variations in analog circuits.

XG and FMB performed simulation work. FMB, XG, and LG performed the experimental demo. LG, BH, and FA fabricated devices. DS supervised the project. All discussed the results.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This work was supported by NSF grant CCF-1028378 and by the Air Force Office of Scientific Research (AFOSR) under MURI grant FA9550-12-1-0038, and by Spanish grant TEC2012-37868-C04-01 (BIOSENSE) (with support from the European Regional Development Fund).

1. The sign of the first term on the left in Equation (2a), and of all right-hand terms in Equation (5), is different from that of the original paper (Hopfield, 1984). In this work we assume that all weights are strictly positive, which makes it necessary to explicitly flip the sign of the neuron feedback signal.

2. In principle, the input voltage range could be reduced by correspondingly increasing the input weights. However, such rescaling would require a larger dynamic range of conductances (Equation 6), which was not achievable with the considered memristive devices.

Alibart, F., Gao, L., Hoskins, B. D., and Strukov, D. B. (2012). High precision tuning of state for memristive devices by adaptable variation-tolerant algorithm. Nanotechnology 23:075201. doi: 10.1088/0957-4484/23/7/075201

Alibart, F., Zamanidoost, E., and Strukov, D. B. (2013). Pattern classification by memristive crossbar circuits using ex situ and in situ training. Nat. Commun. 4, 2072. doi: 10.1038/ncomms3072

Chigusa, Y., and Tanaka, M. (1990). “A neural-like feed-forward ADC,” in Proc. ISCAS'90 (New Orleans, LA), 2959–2962.

Eryilmaz, S. B., Kuzum, D., Jeyasingh, R., Kim, S., BrightSky, M., Lam, C., et al. (2014). Brain-like associative learning using a nanoscale non-volatile phase change synaptic device array. Front. Neurosci. 8:205. doi: 10.3389/fnins.2014.00205

Gao, L., Merrikh-Bayat, F., Alibart, F., Guo, X., Hoskins, B. D., Cheng, K.-T., et al. (2013a). “Digital-to-analog and analog-to-digital conversion with metal oxide memristors for ultra-low power computing,” in Proc. NanoArch'13 (New York, NY), 19–22.

Gao, L., Alibart, F., and Strukov, D. (2013b). Programmable CMOS/memristor threshold logic. IEEE Trans. Nanotechnol. 12, 115–119. doi: 10.1109/TNANO.2013.2241075

Gao, L., Alibart, F., Strukov, D., and B. (2013c). “A high resolution nonvolatile analog memory ionic devices,” in Proc. Non-Volatile Memories Workshop (San Diego, CA).

Gao, L., Alibart, F., and Strukov, D. B. (2012). “Analog-input analog-weight dot-product operation with Ag/a-Si/Pt memristive devices,” in Proc. VLSI-SoC'12 (Santa Cruz, CA), 88–93.

Graf, H. P., Jackel, L. D., Howard, R. E., Straughn, B., Denker, J. S., Hubbard, W., et al. (1986). VLSI implementation of a neural network memory with several hundreds of neurons. AIP Conf. Proc. 151, 182–187. doi: 10.1063/1.36253

Hopfield, J. J. (1984). Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Natl. Acad. Sci. U.S.A. 81, 3088–3092. doi: 10.1073/pnas.81.10.3088

Hu, S. G., Liu, Y., Liu, Z., Chen, T. P., Wang, J. J., Yu, Q., et al. (2015). Associative memory realized by a configurable memristive Hopfield neural network. Nat. Commun. 6, 7522. doi: 10.1038/ncomms8522

Indiveri, G., Linares-Barranco, B., Hamilton, T. J., van Schaik, A., Etienne-Cummings, R., Delbruck, T., et al. (2011). Neuromorphic silicon neuron circuits. Front. Neurosci. 5:73. doi: 10.3389/fnins.2011.00073

Jackel, L. D., Graf, H. P., and Howard, R. E. (1987). Electronic neural network chips. Appl. Opt. 26, 5077–5080. doi: 10.1364/AO.26.005077

Lee, B. W., and Sheu, B. J. (1989). Design of a neural-based A/D converter using modified Hopfield network. IEEE J. Solid-State Circ. 24, 1129–1135. doi: 10.1109/4.34101

Lehtonen, E., Poikonen, J. H., Laiho, M., and Kanerva, P. (2014). Large-scale memristive associative memories. IEEE Trans. VLSI 22, 562–574. doi: 10.1109/TVLSI.2013.2250319

Merrikh-Bayat, F., Alibart, F., Gao, L., and Strukov, D. B. (2014). “A reconfigurable FIR filter with memristor-based weights,” in Proc. ISCAS'15 (Melbourne, VIC).

Moopenn, A., Duong, T., and Thakoor, A. P. (1990). Digital-analog hybrid synapse chips for electronic neural networks. Adv. Neural Inform. Process. Syst. 2, 769–776.

Prezioso, M., Kataeva, I., Merrikh-Bayat, F., Hoskins, B., Adam, G., Sota, T., et al. (2015b). “Modeling and implementation of firing-rate neuromorphic-network classifiers with bilayer Pt/Al2O3/TiO2−x/Pt memristors,” in IEDM'15. (Washington, DC).

Prezioso, M., Merrikh Bayat, F., Hoskins, B. D., Adam, G. C., Likharev, K. K., and Strukov, D. B. (2015a). Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64. doi: 10.1038/nature14441

Rakkiyappan, R., Chandrasekar, A., Laksmanan, S., and Ju Park, H. (2014). State estimation of memristor-based recurrent neural networks with time-varying delays based on passivity theory. Complexity 19, 32–43. doi: 10.1002/cplx.21482

Schwartz, D. B., Howard, R. E., Denker, J. S., Epworth, R. W., Graf, H. P., Hubbard, W., et al. (1987). Dynamics of microfabricated electronic neural networks. Appl. Phys. Lett. 50, 1110–1112.

Smith, M. J. S., and Portmann, C. L. (1989). Practical design and analysis of a simple “neural” optimization circuit. IEEE Trans. Circ. Syst. 36, 42–50. doi: 10.1109/31.16562

Strukov, D. B., and Kohlstedt, H. (2012). Resistive switching phenomena in thin films: Materials, devices, and applications. MRS Bull. 37, 108–114. doi: 10.1557/mrs.2012.2

Tank, D. W., and Hopfield, J. J. (1986). Simple neural optimization networks—an A/D converter, signal decision circuit, and a linear-programming circuit. IEEE Trans. Circ. Syst. 33, 533–541. doi: 10.1109/TCS.1986.1085953

van de Plassche, R. J. (2003). CMOS Integrated Analog-to-Digital and Digital-to-Analog Converters, 2nd Edn. Norwell, MA: Kluwer Academic Publishers.

Walls, T. J., and Likharev, K. K. (2014). Self-organization in autonomous, recurrent, firing-rate CrossNets with quasi-hebbian plasticity. IEEE Trans. Neural Netw. Learn. Syst. 25, 819–824. doi: 10.1109/TNNLS.2013.2280904

Waser, R., Dittmann, R., Staikov, G., and Szot, K. (2009). Redox-based resistive switching memories – nanoionic mechanisms, prospects, and challenges. Adv. Mat. 21, 2632–2663. doi: 10.1002/adma.200900375

Wu, A., Wen, S., and Zeng, Z. (2012). Synchronization control of a class of memristor-based recurrent neural networks. Inform. Sci. 183, 106–116. doi: 10.1016/j.ins.2011.07.044

Zhang, G., Shen, Y., and Sun, J. (2012). Global exponential stability of a class of memristor-based recurrent neural networks with time-varying delays. Neurocomputing 97, 149–154. doi: 10.1016/j.neucom.2012.05.002

Assuming negligible op-amp input currents and output impedances, the Hopfield network is described by the following equations, which also account for limited gain and voltage offsets:

Solving these equations results in the following dynamic equation

where g(·) is the transfer function of the saturating amplifier implemented with the second op-amp, and

Keywords: Hopfield network, recurrent neural network, hybrid circuits, memristor, resistive switching, analog-to-digital conversion

Citation: Guo X, Merrikh-Bayat F, Gao L, Hoskins BD, Alibart F, Linares-Barranco B, Theogarajan L, Teuscher C and Strukov DB (2015) Modeling and Experimental Demonstration of a Hopfield Network Analog-to-Digital Converter with Hybrid CMOS/Memristor Circuits. Front. Neurosci. 9:488. doi: 10.3389/fnins.2015.00488

Received: 20 October 2015; Accepted: 07 December 2015;

Published: 24 December 2015.

Edited by: Emmanuel Michael Drakakis, Imperial College London, UK

Reviewed by: Duygu Kuzum, University of California, San Diego, USA

Copyright © 2015 Guo, Merrikh-Bayat, Gao, Hoskins, Alibart, Linares-Barranco, Theogarajan, Teuscher and Strukov. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dmitri B. Strukov, strukov@ece.ucsb.edu

†These authors have contributed equally to this work.

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
