- 1 Leibniz Institute for Neurobiology, Magdeburg, Germany

- 2 Institute of Biology, Otto-von-Guericke University, Magdeburg, Germany

- 3 Department of Psychology, The University of Texas, Austin, TX, USA

Learning from punishment is a powerful means for behavioral adaptation with high relevance for various mechanisms of self-protection. Several studies have explored the contribution of dopamine (DA) release and DA neuron responses to reward seeking using rewards such as food, water, and sex. Phasic DA signals evoked by rewards or conditioned reward predictors are well documented, as are modulations of these signals by such parameters as reward magnitude, probability, and deviation of actually occurring from expected rewards. Less attention has been paid to DA neuron firing and DA release in response to aversive stimuli, and to the prediction and avoidance of punishment. In this review, we first focus on DA changes in response to aversive stimuli as measured by microdialysis and voltammetry, followed by changes in electrophysiological signatures evoked by aversive stimuli and fearful events. We subsequently focus on the role of DA in, and the effect of DA manipulations on, signaled avoidance learning, which consists of learning the significance of a warning cue through Pavlovian associations and the execution of an instrumental avoidance response. We present a coherent framework utilizing the data on microdialysis, voltammetry, electrophysiological recording, electrical brain stimulation, and behavioral analysis. We end by outlining current gaps in the literature and proposing future directions aimed at incorporating technical and conceptual progress to understand the involvement of the reward circuit in punishment-based decisions.

Introduction

According to Skinner (1938), events that strengthen or increase the likelihood of preceding responses are called positive reinforcers, and events whose removal strengthens preceding responses are called negative reinforcers. Based on the affective attributes that determine the reinforcing nature of the unconditioned stimulus (US), these can also be classified as appetitive and aversive reinforcers, respectively (Konorski, 1967). Decades of research have documented phasic (short latency and short duration) dopamine (DA) signals evoked by appetitive reward or conditioned reward predictors and the modification of these signals by changes in reward value (e.g., magnitude, probability, and delay) or reward omission (Schultz et al., 1997). However, the DA neuron response to aversive reinforcers as a function of punishment prediction or avoidance has received far less research attention. Here we review convergent findings, obtained utilizing microdialysis, voltammetry, electrophysiological recording, and electrical brain stimulation, indicating that DA not only plays a role in coding aversive stimuli, but also serves essential functions for the formation of behavioral learning strategies aimed at the avoidance of aversive stimuli.

The Dopaminergic System and Aversive Stimuli

The release of DA in the context of aversive stimuli has been extensively studied using microdialysis. For example, after stressful tail-stimulation extracellular DA levels were increased in the dorsal striatum, nucleus accumbens (NAc), and medial prefrontal cortex (PFC), suggesting involvement of nigrostriatal, mesolimbic, and mesocortical DA systems (Abercrombie et al., 1989; Boutelle et al., 1990; Pei et al., 1990). Moreover, regional differences in DA release have been demonstrated within the ventral striatum in response to aversive stimuli. Prolonged administration of footshock increased extracellular DA in the NAc shell but not core (Kalivas and Duffy, 1995). Furthermore, presentation of sensory stimuli preconditioned with footshock elevated DA levels in NAc (Young et al., 1993). Pretreatment with footshock over several days decreased cocaine-induced DA elevation in mPFC but increased DA in the NAc (Sorg and Kalivas, 1991, 1993; Ungless et al., 2010). In some studies, the DA response to aversive stimuli declined with repeated stress exposure (Imperato et al., 1992). Across studies, different experimental procedures (seconds vs. minutes; 1 min sampling period vs. 10 min sampling period; brief, novel aversive stimuli vs. repeated, chronic aversive stimuli) have made it difficult to draw coherent conclusions.

While microdialysis is useful for directly measuring the localized concentration of DA within a brain region, its temporal sensitivity is limited, usually reflecting more tonic fluctuations in DA release averaged across intervals of 2–10 min. Fast scan cyclic voltammetry (FSCV), on the other hand, is an indirect measure of DA release interpreted from the electrical currents associated with the oxidation and reduction of DA but has high temporal resolution (on the order of 200 ms), which is capable of detecting phasic DA signals associated with a single learning trial. A recent study clarified the role of DA for processing appetitive and aversive reinforcers by measuring the phasic DA signal every 100 ms using FSCV in response to opposite hedonic taste stimuli (rewarding sucrose vs. aversive quinine). A strong DA increase in response to sucrose and DA decrease in response to quinine was found in the NAc and dorsolateral bed nucleus of the stria terminalis, suggesting suppression of DA in these two regions in response to aversive taste stimuli (Roitman et al., 2008; Park et al., 2012). However, a 3 s tail pinch with a soft rubber glove led to different results. A phasic DA increase was time-locked to the tail pinch in the dorsal striatum and NAc core, while an increase in the NAc shell was evident once the tail pinch was removed (Budygin et al., 2012). This suggests that the delivery and removal of aversive stimuli may trigger different DA responses in different projection regions. In addition to phasic DA transients in the NAc core time-locked to aversive physical stimuli, spontaneous DA transients have also been reported in response to aversive social confrontations, such as facing an aggressive resident followed by social defeat (Anstrom et al., 2009). 
The difference in phasic DA transients in the NAc shell and core in response to aversive events is consistent with a specific motivational role executed by different DA pathways (Salamone, 1994; Ikemoto and Panksepp, 1999; Salamone and Correa, 2002; Ikemoto, 2007).
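
The contrast in temporal sensitivity between the two methods can be illustrated with a toy calculation. The following is a minimal sketch, not a model of either technique: it assumes a hypothetical DA trace in arbitrary units with a flat baseline and a single 3 s step increase (all values are illustrative assumptions, not data from the cited studies), and compares the peak seen at 100 ms resolution with the value obtained when the same trace is averaged over one 10 min sample.

```python
# Toy comparison of fast sampling vs. slow averaging of a DA trace.
# All numbers are illustrative assumptions, not measurements.

DT_S = 0.1             # fast sampling interval (100 ms)
DURATION_S = 600.0     # total trace length (one 10 min sample)
BASELINE = 1.0         # hypothetical baseline DA level (arbitrary units)
TRANSIENT_GAIN = 2.0   # hypothetical doubling during the transient
ONSET_S = 300.0        # hypothetical onset of the phasic transient
TRANSIENT_LEN_S = 3.0  # hypothetical transient duration


def simulate_da_trace():
    """Return DA levels sampled every DT_S seconds (hypothetical step transient)."""
    n = int(round(DURATION_S / DT_S))
    onset_idx = int(round(ONSET_S / DT_S))
    end_idx = onset_idx + int(round(TRANSIENT_LEN_S / DT_S))
    return [
        BASELINE * TRANSIENT_GAIN if onset_idx <= i < end_idx else BASELINE
        for i in range(n)
    ]


def bin_average(trace, dt_s, bin_s):
    """Average the trace over consecutive, non-overlapping bins of bin_s seconds."""
    per_bin = int(round(bin_s / dt_s))
    return [
        sum(trace[i:i + per_bin]) / per_bin
        for i in range(0, len(trace) - per_bin + 1, per_bin)
    ]


trace = simulate_da_trace()
fast_peak = max(trace)                              # peak at 100 ms resolution
slow_peak = max(bin_average(trace, DT_S, 600.0))    # peak with one 10 min average

print(f"peak at 100 ms resolution: {fast_peak:.3f}")
print(f"peak after 10 min averaging: {slow_peak:.3f}")
```

Under these assumptions, a transient that doubles the signal for 3 s changes the 10 min average by well under 1%, which is one way to see why slow sampling mainly reflects tonic fluctuations.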

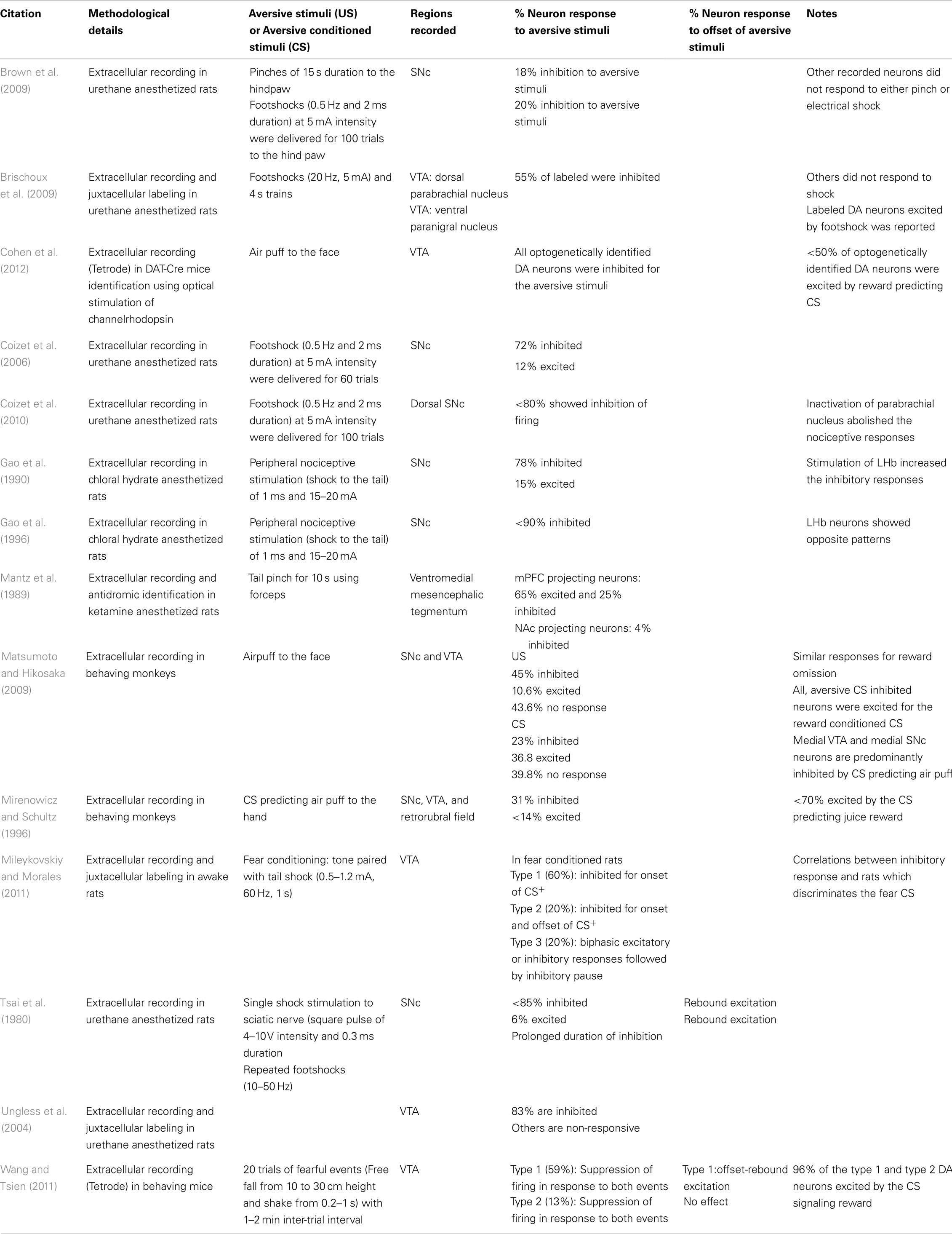

At the level of single-neuron activity, aversive stimuli have often been reported to inhibit phasic DA neuron firing in several species (Mirenowicz and Schultz, 1996; Schultz et al., 1997; see Table 1). However, some studies also reported increased phasic firing in response to an aversive conditioned stimulus (CS; e.g., Guarraci and Kapp, 1999). To gain further insight into such discrepant results, recent studies combined extracellular recording with unit identification by juxtacellular neurobiotin labeling (Ungless et al., 2004; Brischoux et al., 2009; Mileykovskiy and Morales, 2011). In response to aversive footshock, DA neurons from different subregions of the ventral tegmental area (VTA), namely the dorsal parabrachial pigmented nucleus and the ventral paranigral nucleus, showed opposite modulation of firing, i.e., a reduction and an increase, respectively (Ungless et al., 2004; Brischoux et al., 2009). Valenti et al. (2011) further demonstrated that a single footshock inhibited most of the recorded DA neurons, whereas repeated footshock evoked different responses in DA neuronal population activity along the mediolateral axis, with predominant excitation on the medial side. In addition, DA neurons that were inhibited by a CS signaling the arrival of an aversive airpuff were located more medially, in the VTA and the medial substantia nigra pars compacta (SNc), whereas DA neurons in the lateral SNc were predominantly excited (Matsumoto and Hikosaka, 2009).

Mileykovskiy and Morales (2011) studied the response of VTA DA neurons to a CS paired with a tail shock US. Three types of responses from DA neurons were observed during the presentation of the aversive CS, some of which featured biphasic inhibition and excitation. But all of the response types featured an inhibitory pause in firing, the duration of which was correlated with the expression of fear.

Furthermore, the inhibition of firing evoked in 59% of DA neurons by fearful events such as free fall and shake was followed by offset-rebound excitation (phasic burst firing) upon termination of these events. Interestingly, the same DA neurons also displayed a reward prediction signal (modulated firing in response to a stimulus that is associated with the later occurrence of a reward) when subsequently conditioned with a sugar pellet (Wang and Tsien, 2011). With the available evidence, including recent optogenetic insights, our understanding of DA neuron responses to appetitive and aversive stimuli has broadened. The vast majority of DA neurons appear to be excited by appetitive rewards and their predictors, and inhibited by aversive punishments and their predictors, as well as by reward omission (Tobler et al., 2003; Mileykovskiy and Morales, 2011; Wang and Tsien, 2011; Cohen et al., 2012).

Dopamine and Avoidance Learning

Avoidance learning is the process by which an individual learns a behavioral response to avoid aversive stimuli. An important feature of avoidance learning is that it is governed by negative reinforcement; that is, the absence of a stimulus motivates behavioral change. The mechanism for exactly how the absence of something can come to serve as a reinforcer has been a puzzle for learning theorists and the focus of much behavioral research. A popular theory accounting for this phenomenon is the two-process theory of avoidance (Dinsmoor, 2001), which states that an animal first learns a Pavlovian association that a CS, such as a tone, will be followed by an aversive US, such as a shock. This Pavlovian association then becomes the basis for operant learning, in that the CS becomes aversive in its own right and thus capable of motivating an operant response. The two-process theory proposes that the CS triggers a state of fear, which the animal then acts to reduce. Thus, fear reduction becomes the ultimate mechanism for negative reinforcement learning. However, here we outline evidence for an alternative mechanism: namely, the formation of an expectation of CS-US contingency is indeed a critical prerequisite, but the violation of aversive expectation when the animal performs the correct avoidance response directly activates the DA reward system. Thus, the ultimate mechanism for negative reinforcement learning is isomorphic with that of positive reinforcement learning, and it is dopaminergic.

Numerous studies have found specific effects of DA manipulations on avoidance learning. Beninger et al. (1989) found that low doses of DA antagonists impaired active avoidance responses without affecting motor behavior. Depletion of DA by 6-hydroxydopamine (6-OHDA) in the SNc (Cooper et al., 1973; Jackson et al., 1977; Salamone, 1994), NAc (McCullough et al., 1993), or PFC (Sokolowski et al., 1994) impaired the development and maintenance of active avoidance strategies, usually without affecting motor responses, including escape responses. Active avoidance behavior was also disrupted by alpha-methyl-p-tyrosine injections in NAc and rescued by DA injections (Bracs et al., 1982). The D2 antagonist sulpiride inhibited avoidance learning when injected into NAc, but not when injected into PFC, amygdala, or caudate putamen (Wadenberg et al., 1990). However, other studies found that D2 antagonist injections into NAc did not impair acquisition but did reduce conditioned responding during subsequent tests, whereas D1 antagonist injections into NAc impaired conditioned responding during both acquisition and subsequent testing (Boschen et al., 2011; Wietzikoski et al., 2012).

While it seems clear from these studies that some dopaminergic target regions play a DA-dependent role in avoidance learning, it is not yet fully clear what this role is or which DA receptors are essential for it. Extensive work in our laboratory has addressed these questions using shuttle-box avoidance learning, either conditioned by a frequency-modulated (FM) tone or by a GO-NO GO discrimination paradigm using rising and falling FM tones, the processing of which depends on auditory cortex (Wetzel et al., 1998, 2008; Ohl et al., 1999). Microdialysis in auditory cortex and medial PFC (mPFC) showed that DA release in both structures reaches a peak during the first few trials of successful avoidance (Stark et al., 2001, 2008). The consequences of this initial DA release were clarified by subsequent reversal learning experiments, in which a consolidated GO response to two oppositely modulated FM tones was challenged by switching the requirement for one of the FM tones to a NO GO response (Stark et al., 2004). This resulted in an initial breakdown in avoidance responding to chance levels for all animals. However, some animals showed improvement in discrimination learning over subsequent days, and only these animals showed strong DA release in mPFC. This suggests an association between mPFC DA and the discovery of correct discrimination contingencies, and a facilitative or perhaps even causal role for DA in the formation of successful GO vs. NO GO discrimination.

Neuronal activity in auditory cortex is known to be influenced by dopaminergic inputs (e.g., Bao et al., 2001) compatible with the anatomical connectivity from the VTA to the auditory cortex (e.g., Budinger et al., 2008). To investigate the role of specific DA receptors in auditory discrimination learning, a variety of DA agonists and antagonists were administered bilaterally to the auditory cortex both before and after training (Tischmeyer et al., 2003; Schicknick et al., 2008, 2012). The chief conclusion from these experiments was that only drugs affecting D1/D5 receptors are capable of depressing or enhancing discrimination learning. The most interesting effect was that the D1 agonist SKF 38393 injected before training did not influence acquisition during the training session but did lead to improved retrieval the next day. This effect was blocked by concurrent application of rapamycin, a specific inhibitor of the protein kinase mTOR implicated in the control of synaptic protein synthesis and relevant for memory consolidation in discriminative avoidance learning (Kraus et al., 2002). Taken together, these experiments suggest that DA release in auditory cortex is necessary for the FM tone conditioned avoidance response, and may enhance memory consolidation via a D1-receptor-mediated pathway.

While the administration of pharmacological agents is useful for elucidating specific receptor pathways, this approach is limited in that it alters tonic neuromodulation over a prolonged period of time without informing, and perhaps even interfering with, the role of dynamic neuromodulation, i.e., the up-and-down fluctuations in neuromodulators over very short time scales. Based on the evidence outlined in the previous sections, such phasic changes in the DA signal may be especially relevant to incentivized learning. Specifically, DA neurons are known to respond to the omission of an expected appetitive stimulus with a momentary cessation in firing. We theorized that DA neurons would greet the omission of an expected aversive stimulus in a symmetrical manner, namely, with a transient burst in firing. Signaled active avoidance learning inherently leads to such a negative expectation (e.g., shock will follow tone) as well as its subsequent violation (e.g., shock does not follow tone if hurdle is promptly crossed). Could DA involvement in avoidance learning be specific to the trials when the expectation of shock is violated, that is, when the animal first performs a successful avoidance response? Could a pronounced DA increase at this critical moment be responsible for reinforcing the avoidance response?
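
The logic of this hypothesis can be made concrete with a simple error-driven learning rule. The following is a minimal sketch assuming a Rescorla-Wagner-style update, which is a swapped-in textbook rule and not a model fitted to any of the cited data. The learning rate, the shock value of -1, and the trial at which the animal first avoids are all hypothetical parameters chosen for illustration. The expectation after the warning cue becomes negative during shocked trials; when the shock is then omitted, the prediction error (outcome minus expectation) is positive, and it shrinks as avoidance becomes well learned.

```python
# Hypothetical Rescorla-Wagner-style sketch of signaled avoidance.
# Parameters are illustrative assumptions, not estimates from data.

def simulate_avoidance(n_trials=20, learning_rate=0.3, shock_value=-1.0,
                       first_avoid_trial=5):
    """Return a list of (trial, avoided, prediction_error) tuples.

    expectation: predicted outcome after the warning cue
                 (negative means that shock is expected).
    The animal is assumed to fail on trials before first_avoid_trial
    (shock delivered) and to avoid on every trial from then on.
    """
    expectation = 0.0
    history = []
    for trial in range(n_trials):
        avoided = trial >= first_avoid_trial
        outcome = 0.0 if avoided else shock_value
        # Positive when the outcome is better than expected.
        prediction_error = outcome - expectation
        history.append((trial, avoided, prediction_error))
        # Move the expectation a fraction of the way toward the outcome.
        expectation += learning_rate * prediction_error
    return history


history = simulate_avoidance()
for trial, avoided, error in history:
    label = "avoided" if avoided else "shocked"
    print(f"trial {trial:2d}  {label:8s} prediction error = {error:+.3f}")
```

In this sketch the prediction error is negative on shocked trials, jumps to a large positive value on the first successful avoidance, and decays toward zero over later avoidance trials, which mirrors the early vs. late distinction drawn in the text. One known limitation of such a simple rule is that the shock expectation itself extinguishes under continued avoidance, so it should be read only as an illustration of the sign and time course of the hypothesized signal.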

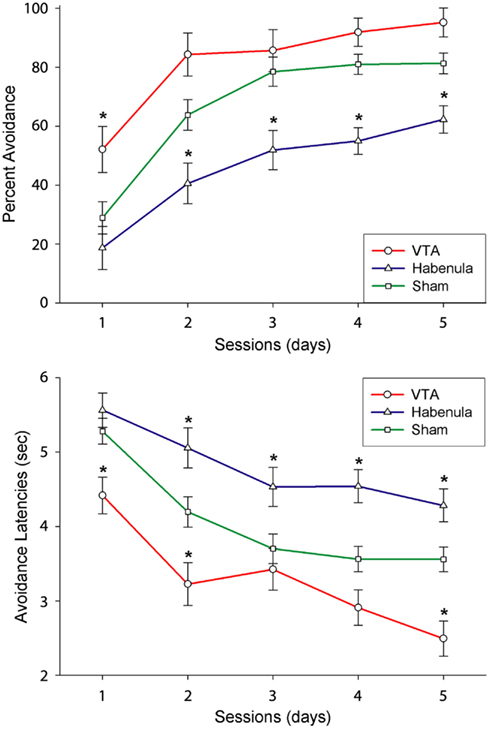

If so, a transient disruption of DA transmission following the initial trials of successful avoidance responding (when the animal is pleasantly surprised by the absence of shock) should disrupt learning. On the other hand, an equivalent manipulation following later trials after the avoidance response is well learned (when the animal fully expects that its behavior will lead to the absence of shock) should have no effect. Electrical stimulation of the lateral habenula (LHb), which results in transient, widespread inhibition of DA neurons in rodents and primates (Christoph et al., 1986; Ji and Shepard, 2007; Matsumoto and Hikosaka, 2007), was used to test this hypothesis (Shumake et al., 2010). Specifically, we implanted the LHb with a stimulation electrode and delivered brief electrical stimulation whenever the animal performed a correct avoidance response, i.e., when the initial avoidance of foot shock was hypothesized to trigger an intrinsic reward signal. As predicted, LHb stimulation initiated early in training impaired learning, but LHb stimulation initiated late in training had no effect (Shumake et al., 2010; Figure 1). These findings suggest a vital role for phasic DA signaling in the successful acquisition of active avoidance behavior. What is not yet clear is whether the presumed phasic increases in DA add up to the tonic increases in forebrain DA levels previously observed (Stark et al., 1999, 2000; Giorgi et al., 2003), or whether phasic and tonic DA signals convey differential information in the context of avoidance learning.

Figure 1. Effect of VTA vs. LHb stimulation on the acquisition of avoidance. Upper and lower panels show the means and SEs of successful avoidance trials and avoidance latency, respectively. Asterisks indicate significant differences between stimulated and control groups (modified from Shumake et al., 2010).

Brain Stimulation Reward and Avoidance Learning

Since James Olds discovered intracranial self-stimulation (ICSS; Olds and Milner, 1954; Olds, 1958), several ICSS-supporting regions have been characterized. The majority of these regions lie along DA projections, such that robust ICSS can be evoked from the VTA, substantia nigra, and lateral hypothalamus. Moreover, extracellular DA elevation is necessary to maintain ICSS (Fibiger et al., 1987; Fiorino et al., 1993; Owesson-White et al., 2008). Over the years, the effects of brain stimulation reward (BSR) were studied in learning and memory experiments, and it was found that BSR applied as experimenter-delivered stimulation or as self-stimulation by the animal facilitated avoidance learning (Mondadori et al., 1976; Huston et al., 1977; Destrade and Jaffard, 1978; Segura-Torres et al., 1988, 1991, 2010; Huston and Oitzl, 1989; Aldavert-Vera et al., 1997; Ruiz-Medina et al., 2008). These results show that BSR given before or after training improved the efficiency of avoidance learning. However, correct avoidance responding is reinforced not only by terminating the aversive warning signal, i.e., relief from fear, but also by producing a safety signal, i.e., response-generated feedback stimuli signaling safety (Cicala and Owen, 1976; Dinsmoor, 1977; Masterson et al., 1978). Concerning the aversive component, the potential for enhancing the strength of reinforcement, e.g., by increasing shock intensity, is rather limited. Concerning the appetitive component, however, it is possible to enhance the magnitude of reinforcement by using additional feedback stimuli, e.g., sensory cues contingent on avoidance (Morris, 1975; Cicala and Owen, 1976), access to a safe place (Modaresi, 1975; Baron et al., 1977), or handling during the inter-trial interval (Wahlsten and Sharp, 1969).

These data support the view that any stimuli negatively correlated with shock, whether exteroceptive (presented by the experimenter) or interoceptive (presented by the subject’s own behavior), are inherently rewarding (Dinsmoor, 2001). Compatible with this idea, recent MRI studies in humans have suggested that activity in the medial orbitofrontal cortex, a reinforcement evaluating area, reflects an intrinsic reward signal that serves to reinforce avoidance behavior (Kim et al., 2006). Thus, we can assume that in aversively motivated learning, avoidance learning responses come under the control of positive incentives. Earlier investigations on appetitive-aversive interactions have shown that appetitive training appears to facilitate subsequent aversive conditioning (Dickinson, 1976; Dickinson and Pearce, 1977) and that operant behavior is enhanced by using concurrent schedules of positive and negative reinforcement (Kelleher and Cook, 1959; Olds and Olds, 1962). Moreover, a few studies reported the facilitation of discrete-trial avoidance (Stein, 1965; Castro-Alamancos and Borrell, 1992) and Sidman avoidance (in which shock is not signaled but rather occurs at fixed intervals unless the animal performs the operant response; Margules and Stein, 1968; Carder, 1970) by non-contingent rewarding brain stimulation, an effect resembling the action of stimulant drugs like amphetamine on self-stimulation and avoidance performance.

These results support the idea that the brain reward system facilitates operant behavior, whether positively or negatively reinforced. Not tested, however, was the effect of BSR given contingent on a correct response, i.e., exactly at the time point when the response-generated safety signal occurs. Thus, in our studies we used the shuttle-box two-way avoidance paradigm to combine BSR with footshock negative reinforcement to drive the same learned operant behavior. We found that this reinforcer combination accelerated acquisition, led to superior (nearly 100% correct) performance, and delayed extinction, as compared to either reinforcer alone (Ilango et al., 2010, 2011; Shumake et al., 2010). These findings demonstrate that adding intrinsic reward (by stimulating dopaminergic structures) to the relief from punishment results in maximum avoidance performance, supporting the view that brain reward circuits serve as a common neural substrate for both appetitively and aversively motivated behavior.

Perspectives

In conclusion, several lines of evidence strongly argue in favor of the involvement of reward circuitry in the processing of aversive stimuli, especially in encoding their predictors and forming an instrumental strategy to avoid them (e.g., Brischoux et al., 2009; Matsumoto and Hikosaka, 2009; Bromberg-Martin et al., 2010; Ilango et al., 2010, 2011; Budygin et al., 2012). Specifically, the neurotransmitter DA is involved in neuronal and behavioral responses to cues predicting reward (approach) or punishment (avoidance), both of which are vital for adaptive behavior. Electrophysiological signatures obtained from VTA DA neurons have begun to reveal their convergent encoding strategy for mediating both appetitive and aversive learning (Kim et al., 2012). Furthermore, VTA BSR can be integrated into avoidance learning tasks to investigate the nature of reinforcer interaction, and to understand the similarity between affective states associated with absence of predicted appetitive stimuli (frustration) and predicted aversive stimuli (fear) vs. absence of predicted aversive stimuli (relief) and predicted appetitive stimuli (hope; Seymour et al., 2007; Ilango et al., 2010).

Further progress in understanding the neuronal basis of affective behaviors will rely on both technical and conceptual progress. On the technical side, optogenetic approaches will allow triggering temporally precise events in specific cell types. For example, driving DA neurons in VTA by channelrhodopsin has already been demonstrated to support vigorous intracranial self-stimulation and place preference (Tsai et al., 2009; Witten et al., 2011). Such approaches could be extended to clarify the respective roles of several cell populations in different behaviors.

On the conceptual side, behavioral paradigms that allow the assessment of DA-related neuronal signatures in flexible scenarios will be important. For example, deeper insight into the role of DA with respect to the dissociation between (1) the association of specific behavioral meaning to stimuli and (2) the organization of appropriate behaviors can be expected from comparison of Pavlovian and instrumental paradigms. Also, discriminative avoidance learning tasks can be used to investigate how the same DA neuron responds to a CS+ in a hit vs. a miss trial or to a CS- in false-alarm vs. a correct-rejection trial, thereby allowing assessment of which factors govern the recruitment of excitatory and inhibitory contributions to neuronal and behavioral responses.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by grants from the Deutsche Forschungsgemeinschaft (DFG), SFB 779 and SFB TRR 62. We thank all the technicians involved in the work over the years, especially Kathrin Ohl and Lydia Löw, for help in animal training, care, and histology.

References

Abercrombie, E. D., Keefe, K. A., DiFrischia, D. S., and Zigmond, M. J. (1989). Differential effect of stress on in vivo dopamine release in striatum, nucleus accumbens, and medial frontal cortex. J. Neurochem. 52, 1655–1658.

Aldavert-Vera, L., Costa-Miserachs, D., Massanes-Rotger, E., Soriano-Mas, C., Segura-Torres, P., and Morgado-Bernal, I. (1997). Facilitation of a distributed shuttle-box conditioning with posttraining intracranial self-stimulation in old rats. Neurobiol. Learn. Mem. 67, 254–258.

Anstrom, K. K., Miczek, K. A., and Budygin, E. A. (2009). Increased phasic dopamine signaling in the mesolimbic pathway during social defeat in rats. Neuroscience 161, 3–12.

Bao, S., Chan, V. T., and Merzenich, M. M. (2001). Cortical remodeling induced by activity of ventral tegmental dopamine neurons. Nature 412, 79–83.

Baron, A., Dewaard, R. J., and Lipson, J. (1977). Increased reinforcement when timeout from avoidance includes access to a safe place. J. Exp. Anal. Behav. 27, 479–494.

Beninger, R. J., Hoffman, D. C., and Mazurski, E. J. (1989). Receptor subtype-specific dopaminergic agents and conditioned behavior. Neurosci. Biobehav. Rev. 13, 113–122.

Boschen, S. L., Wietzikoski, E. C., Winn, P., and Da Cunha, C. (2011). The role of nucleus accumbens and dorsolateral striatal D2 receptors in active avoidance conditioning. Neurobiol. Learn. Mem. 96, 254–262.

Boutelle, M. G., Zetterström, T., Pei, Q., Svensson, L., and Fillenz, M. (1990). In vivo neurochemical effects of tail pinch. J. Neurosci. Methods 34, 151–157.

Bracs, P. U., Jackson, D. M., and Gregory, P. (1982). alpha-methyl-p-tyrosine inhibition of a conditioned avoidance response: reversal by dopamine applied to the nucleus accumbens. Psychopharmacology (Berl.) 77, 159–163.

Brischoux, F., Chakraborty, S., Brierley, D. I., and Ungless, M. A. (2009). Phasic excitation of dopamine neurons in ventral VTA by noxious stimuli. Proc. Natl. Acad. Sci. U.S.A. 106, 4894–4899.

Bromberg-Martin, E. S., Matsumoto, M., and Hikosaka, O. (2010). Dopamine in motivational control: rewarding, aversive, and alerting. Neuron 68, 815–834.

Brown, M. T., Henny, P., Bolam, J. P., and Magill, P. J. (2009). Activity of neurochemically heterogeneous dopaminergic neurons in the substantia nigra during spontaneous and driven changes in brain state. J. Neurosci. 29, 2915–2925.

Budinger, E., Laszcz, A., Lison, H., Scheich, H., and Ohl, F. W. (2008). Non-sensory cortical and subcortical connections of the primary auditory cortex in Mongolian gerbils: bottom-up and top-down processing of neuronal information via field AI. Brain Res. 1220, 2–32.

Budygin, E. A., Park, J., Bass, C. E., Grinevich, V. P., Bonin, K. D., and Wightman, R. M. (2012). Aversive stimulus differentially triggers subsecond dopamine release in reward regions. Neuroscience 201, 331–337.

Carder, B. (1970). Lateral hypothalamic stimulation and avoidance in rats. J. Comp. Physiol. Psychol. 71, 325–333.

Castro-Alamancos, M. A., and Borrell, J. (1992). Facilitation and recovery of shuttle box avoidance behavior after frontal cortex lesions is induced by a contingent electrical stimulation in the ventral tegmental nucleus. Behav. Brain Res. 50, 69–76.

Christoph, G. R., Leonzio, R. J., and Wilcox, K. S. (1986). Stimulation of the lateral habenula inhibits dopamine-containing neurons in the substantia nigra and ventral tegmental area of the rat. J. Neurosci. 6, 613–619.

Cicala, G. A., and Owen, I. W. (1976). Warning signal termination and a feedback signal may not serve the same function. Learn. Motiv. 7, 356–367.

Cohen, J. Y., Haesler, S., Vong, L., Lowell, B. B., and Uchida, N. (2012). Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature 482, 85–88.

Coizet, V., Dommett, E. J., Klop, E. M., Redgrave, P., and Overton, P. G. (2010). The parabrachial nucleus is a critical link in the transmission of short latency nociceptive information to midbrain dopaminergic neurons. Neuroscience 168, 263–272.

Coizet, V., Dommett, E. J., Redgrave, P., and Overton, P. G. (2006). Nociceptive responses of midbrain dopaminergic neurones are modulated by the superior colliculus in the rat. Neuroscience 139, 1479–1493.

Cooper, B. R., Breese, G. R., Grant, L. D., and Howard, J. L. (1973). Effects of 6-hydroxydopamine treatments on active avoidance responding: evidence for involvement of brain dopamine. J. Pharmacol. Exp. Ther. 185, 358–370.

Destrade, C., and Jaffard, R. (1978). Post-trial hippocampal and lateral hypothalamic electrical stimulation. Facilitation on long-term memory of appetitive and avoidance learning tasks. Behav. Biol. 22, 354–374.

Dickinson, A. (1976). Appetitive-aversion interactions: facilitation of aversive conditioning by prior appetitive training in the rat. Anim. Learn. Behav. 4, 416–420.

Dickinson, A., and Pearce, J. M. (1977). Inhibitory interactions between appetitive and aversive stimuli. Psychol. Bull. 84, 690–711.

Dinsmoor, J. A. (1977). Escape, avoidance, punishment: where do we stand? J. Exp. Anal. Behav. 28, 83–95.

Dinsmoor, J. A. (2001). Stimuli inevitably generated by behavior that avoids electric shock are inherently reinforcing. J. Exp. Anal. Behav. 75, 311–333.

Fibiger, H. C., LePiane, F. G., Jakubovic, A., and Phillips, A. G. (1987). The role of dopamine in intracranial self-stimulation of the ventral tegmental area. J. Neurosci. 7, 3888–3896.

Fiorino, D. F., Coury, A., Fibiger, H. C., and Phillips, A. G. (1993). Electrical stimulation of reward sites in the ventral tegmental area increases dopamine transmission in the nucleus accumbens of the rat. Behav. Brain Res. 55, 131–141.

Gao, D. M., Hoffman, D., and Benabid, A. L. (1996). Simultaneous recording of spontaneous activities and nociceptive responses from neurons in the pars compacta of substantia nigra and in the lateral habenula. Eur. J. Neurosci. 8, 1474–1478.

Gao, D. M., Jeaugey, L., Pollak, P., and Benabid, A. L. (1990). Intensity-dependent nociceptive responses from presumed dopaminergic neurons of the substantia nigra pars compacta in the rat and their modification by lateral habenula inputs. Brain Res. 529, 315–319.

Giorgi, O., Lecca, D., Piras, G., Driscoll, P., and Corda, M. G. (2003). Dissociation between mesocortical dopamine release and fear-related behaviours in two psychogenetically selected lines of rats that differ in coping strategies to aversive conditions. Eur. J. Neurosci. 17, 2716–2726.

Guarraci, F. A., and Kapp, B. S. (1999). An electrophysiological characterization of ventral tegmental area dopaminergic neurons during differential pavlovian fear conditioning in the awake rabbit. Behav. Brain Res. 99, 169–179.

Huston, J. P., Mueller, C. C., and Mondadori, C. (1977). Memory facilitation by posttrial hypothalamic stimulation and other reinforcers: a central theory of reinforcement. Biobehav. Rev. 1, 143–150.

Huston, J. P., and Oitzl, M. S. (1989). The relationship between reinforcement and memory: parallels in the rewarding and mnemonic effects of the neuropeptide substance P. Neurosci. Biobehav. Rev. 13, 171–180.

Ikemoto, S. (2007). Dopamine reward circuitry: two projection systems from the ventral midbrain to the nucleus accumbens-olfactory tubercle complex. Brain Res. Rev. 56, 27–78.

Ikemoto, S., and Panksepp, J. (1999). The role of nucleus accumbens dopamine in motivated behavior: a unifying interpretation with special reference to reward-seeking. Brain Res. Brain Res. Rev. 31, 6–41.

Ilango, A., Wetzel, W., Scheich, H., and Ohl, F. W. (2010). The combination of appetitive and aversive reinforcers and the nature of their interaction during auditory learning. Neuroscience 166, 752–762.

Ilango, A., Wetzel, W., Scheich, H., and Ohl, F. W. (2011). Effects of ventral tegmental area stimulation on the acquisition and long term retention of active avoidance learning. Behav. Brain Res. 225, 515–521.

Imperato, A., Angelucci, L., Casolini, P., Zocchi, A., and Puglisi-Allegra, S. (1992). Repeated stressful experiences differently affect limbic dopamine release during and following stress. Brain Res. 577, 194–199.

Jackson, D. M., Ahlenius, S., Andén, N. E., and Engel, J. (1977). Antagonism by locally applied dopamine into the nucleus accumbens or the corpus striatum of alpha-methyltyrosine-induced disruption of conditioned avoidance behaviour. J. Neural Transm. 41, 231–239.

Ji, H., and Shepard, P. D. (2007). Lateral habenula stimulation inhibits rat midbrain dopamine neurons through a GABA(A) receptor-mediated mechanism. J. Neurosci. 27, 6923–6930.

Kalivas, P. W., and Duffy, P. (1995). Selective activation of dopamine transmission in the shell of the nucleus accumbens by stress. Brain Res. 675, 325–328.

Kelleher, R. T., and Cook, L. (1959). An analysis of the behavior of rats and monkeys on concurrent fixed-ratio avoidance schedules. J. Exp. Anal. Behav. 2, 203–211.

Kim, H., Shimojo, S., and O’Doherty, J. P. (2006). Is avoiding an aversive outcome rewarding? Neural substrates of avoidance learning in the human brain. PLoS Biol. 4, e233. doi:10.1371/journal.pbio.0040233

Kim, Y., Wood, J., and Moghaddam, B. (2012). Coordinated activity of ventral tegmental neurons adapts to appetitive and aversive learning. PLoS ONE 7, e29766. doi:10.1371/journal.pone.0029766

Konorski, J. (1967). The Integrative Activity of the Brain: An Interdisciplinary Approach. Chicago: University of Chicago Press.

Kraus, M., Schicknick, H., Wetzel, W., Ohl, F., Staak, S., and Tischmeyer, W. (2002). Memory consolidation for the discrimination of frequency-modulated tones in Mongolian gerbils is sensitive to protein-synthesis inhibitors applied to the auditory cortex. Learn. Mem. 9, 293–303.

Mantz, J., Thierry, A. M., and Glowinski, J. (1989). Effect of noxious tail pinch on the discharge rate of mesocortical and mesolimbic dopamine neurons: selective activation of the mesocortical system. Brain Res. 476, 377–381.

Margules, D. L., and Stein, L. (1968). Facilitation of Sidman avoidance behavior by positive brain stimulation. J. Comp. Physiol. Psychol. 66, 182–184.

Masterson, F. A., Crawford, M., and Bartter, W. D. (1978). Brief escape from a dangerous place: the role of reinforcement in the rat’s one-way avoidance acquisition. Learn. Motiv. 9, 141–163.

Matsumoto, M., and Hikosaka, O. (2007). Lateral habenula as a source of negative reward signals in dopamine neurons. Nature 447, 1111–1115.

Matsumoto, M., and Hikosaka, O. (2009). Two types of dopamine neuron distinctly convey positive and negative motivational signals. Nature 459, 837–841.

McCullough, L. D., Sokolowski, J. D., and Salamone, J. D. (1993). A neurochemical and behavioral investigation of the involvement of nucleus accumbens dopamine in instrumental avoidance. Neuroscience 52, 919–925.

Mileykovskiy, B., and Morales, M. (2011). Duration of inhibition of ventral tegmental area dopamine neurons encodes a level of conditioned fear. J. Neurosci. 31, 7471–7476.

Mirenowicz, J., and Schultz, W. (1996). Preferential activation of midbrain dopamine neurons by appetitive rather than aversive stimuli. Nature 379, 449–451.

Modaresi, H. A. (1975). One-way characteristic performance of rats under two-way signaled avoidance conditions. Learn. Motiv. 6, 484–497.

Mondadori, C., Ornstein, K., Waser, P. G., and Huston, J. P. (1976). Post-trial reinforcing hypothalamic stimulation can facilitate avoidance learning. Neurosci. Lett. 2, 183–187.

Morris, R. G. M. (1975). Preconditioning of reinforcing properties to an exteroceptive feedback stimulus. Learn. Motiv. 6, 289–298.

Ohl, F. W., Wetzel, W., Wagner, T., Rech, A., and Scheich, H. (1999). Bilateral ablation of auditory cortex in Mongolian gerbil affects discrimination of frequency modulated tones but not of pure tones. Learn. Mem. 6, 347–362.

Olds, J. (1958). Self-stimulation of the brain. Its use to study local effects of hunger, sex, and drugs. Science 127, 315–324.

Olds, J., and Milner, P. M. (1954). Positive reinforcement produced by electrical stimulation of septal area and other regions of rat brain. J. Comp. Physiol. Psychol. 47, 419–427.

Olds, M. E., and Olds, J. (1962). Approach-escape interactions in rat brain. Am. J. Physiol. 203, 803–810.

Owesson-White, C. A., Cheer, J. F., Beyene, M., Carelli, R. M., and Wightman, R. M. (2008). Dynamic changes in accumbens dopamine correlate with learning during intracranial self-stimulation. Proc. Natl. Acad. Sci. U.S.A. 105, 11957–11962.

Park, J., Wheeler, R. A., Fontillas, K., Keithley, R. B., Carelli, R. M., and Wightman, R. M. (2012). Catecholamines in the bed nucleus of the stria terminalis reciprocally respond to reward and aversion. Biol. Psychiatry 71, 327–334.

Pei, Q., Zetterström, T., and Fillenz, M. (1990). Tail pinch-induced changes in the turnover and release of dopamine and 5-hydroxytryptamine in different brain regions of the rat. Neuroscience 35, 133–138.

Roitman, M. F., Wheeler, R. A., Wightman, R. M., and Carelli, R. M. (2008). Real-time chemical responses in the nucleus accumbens differentiate rewarding and aversive stimuli. Nat. Neurosci. 11, 1376–1377.

Ruiz-Medina, J., Redolar-Ripoll, D., Morgado-Bernal, I., Aldavert-Vera, L., and Segura-Torres, P. (2008). Intracranial self-stimulation improves memory consolidation in rats with little training. Neurobiol. Learn. Mem. 89, 574–581.

Salamone, J. D. (1994). The involvement of nucleus accumbens dopamine in appetitive and aversive motivation. Behav. Brain Res. 61, 117–133.

Salamone, J. D., and Correa, M. (2002). Motivational views of reinforcement: implications for understanding the behavioral functions of nucleus accumbens dopamine. Behav. Brain Res. 137, 3–25.

Schicknick, H., Reichenbach, N., Smalla, K. H., Scheich, H., Gundelfinger, E. D., and Tischmeyer, W. (2012). Dopamine modulates memory consolidation of discrimination learning in the auditory cortex. Eur. J. Neurosci. 35, 763–774.

Schicknick, H., Schott, B. H., Budinger, E., Smalla, K. H., Riedel, A., Seidenbecher, C. I., Scheich, H., Gundelfinger, E. D., and Tischmeyer, W. (2008). Dopaminergic modulation of auditory cortex-dependent memory consolidation through mTOR. Cereb. Cortex 18, 2646–2658.

Schultz, W., Dayan, P., and Montague, P. R. (1997). A neural substrate of prediction and reward. Science 275, 1593–1599.

Segura-Torres, P., Aldavert-Vera, L., Gatell-Segura, A., Redolar-Ripoll, D., and Morgado-Bernal, I. (2010). Intracranial self-stimulation recovers learning and memory capacity in basolateral amygdala-damaged rats. Neurobiol. Learn. Mem. 93, 117–126.

Segura-Torres, P., Capdevila-Ortís, L., Martí-Nicolovius, M., and Morgado-Bernal, I. (1988). Improvement of shuttle-box learning with pre- and post-trial intracranial self-stimulation in rats. Behav. Brain Res. 29, 111–117.

Segura-Torres, P., Portell-Cortés, I., and Morgado-Bernal, I. (1991). Improvement of shuttle-box avoidance with post-training intracranial self-stimulation, in rats: a parametric study. Behav. Brain Res. 42, 161–167.

Seymour, B., Singer, T., and Dolan, R. (2007). The neurobiology of punishment. Nat. Rev. Neurosci. 8, 300–311.

Shumake, J., Ilango, A., Scheich, H., Wetzel, W., and Ohl, F. W. (2010). Differential neuromodulation of acquisition and retrieval of avoidance learning by the lateral habenula and ventral tegmental area. J. Neurosci. 30, 5876–5883.

Sokolowski, J. D., McCullough, L. D., and Salamone, J. D. (1994). Effects of dopamine depletions in the medial prefrontal cortex on active avoidance and escape in the rat. Brain Res. 651, 293–299.

Sorg, B. A., and Kalivas, P. W. (1991). Effects of cocaine and footshock stress on extracellular dopamine levels in the ventral striatum. Brain Res. 559, 29–36.

Sorg, B. A., and Kalivas, P. W. (1993). Effects of cocaine and footshock stress on extracellular dopamine levels in the medial prefrontal cortex. Neuroscience 53, 695–703.

Stark, H., Bischof, A., and Scheich, H. (1999). Increase of extracellular dopamine in prefrontal cortex of gerbils during acquisition of the avoidance strategy in the shuttle-box. Neurosci. Lett. 264, 77–80.

Stark, H., Bischof, A., Wagner, T., and Scheich, H. (2000). Stages of avoidance strategy formation in gerbils are correlated with dopaminergic transmission activity. Eur. J. Pharmacol. 405, 263–275.

Stark, H., Bischof, A., Wagner, T., and Scheich, H. (2001). Activation of the dopaminergic system of medial prefrontal cortex of gerbils during formation of relevant associations for the avoidance strategy in the shuttle-box. Prog. Neuropsychopharmacol. Biol. Psychiatry 25, 409–426.

Stark, H., Rothe, T., Deliano, M., and Scheich, H. (2008). Dynamics of cortical theta activity correlates with stages of auditory avoidance strategy formation in a shuttle-box. Neuroscience 151, 467–475.

Stark, H., Rothe, T., Wagner, T., and Scheich, H. (2004). Learning a new behavioral strategy in the shuttle-box increases prefrontal dopamine. Neuroscience 126, 21–29.

Stein, L. (1965). Facilitation of avoidance behaviour by positive brain stimulation. J. Comp. Physiol. Psychol. 60, 9–19.

Tischmeyer, W., Schicknick, H., Kraus, M., Seidenbecher, C. I., Staak, S., Scheich, H., and Gundelfinger, E. D. (2003). Rapamycin-sensitive signalling in long-term consolidation of auditory cortex-dependent memory. Eur. J. Neurosci. 18, 942–950.

Tobler, P. N., Dickinson, A., and Schultz, W. (2003). Coding of predicted reward omission by dopamine neurons in a conditioned inhibition paradigm. J. Neurosci. 23, 10402–10410.

Tsai, C. T., Nakamura, S., and Iwama, K. (1980). Inhibition of neuronal activity of the substantia nigra by noxious stimuli and its modification by the caudate nucleus. Brain Res. 195, 299–311.

Tsai, H. C., Zhang, F., Adamantidis, A., Stuber, G. D., Bonci, A., de Lecea, L., and Deisseroth, K. (2009). Phasic firing in dopaminergic neurons is sufficient for behavioral conditioning. Science 324, 1080–1084.

Ungless, M. A., Argilli, E., and Bonci, A. (2010). Effects of stress and aversion on dopamine neurons: implications for addiction. Neurosci. Biobehav. Rev. 35, 151–156.

Ungless, M. A., Magill, P. J., and Bolam, J. P. (2004). Uniform inhibition of dopamine neurons in the ventral tegmental area by aversive stimuli. Science 303, 2040–2042.

Valenti, O., Lodge, D. J., and Grace, A. A. (2011). Aversive stimuli alter ventral tegmental area dopamine neuron activity via a common action in the ventral hippocampus. J. Neurosci. 31, 4280–4289.

Wadenberg, M. L., Ericson, E., Magnusson, O., and Ahlenius, S. (1990). Suppression of conditioned avoidance behavior by the local application of (-)sulpiride into the ventral, but not the dorsal, striatum of the rat. Biol. Psychiatry 28, 297–307.

Wahlsten, D., and Sharp, D. (1969). Improvement of shuttle avoidance by handling during the intertrial interval. J. Comp. Physiol. Psychol. 67, 252–259.

Wang, D. V., and Tsien, J. Z. (2011). Convergent processing of both positive and negative motivational signals by the VTA dopamine neuronal populations. PLoS ONE 6, e17047. doi:10.1371/journal.pone.0017047

Wetzel, W., Ohl, F. W., and Scheich, H. (2008). Global versus local processing of frequency-modulated tones in gerbils: an animal model of lateralized auditory cortex functions. Proc. Natl. Acad. Sci. U.S.A. 105, 6753–6758.

Wetzel, W., Ohl, F. W., Wagner, T., and Scheich, H. (1998). Right auditory cortex lesion in Mongolian gerbils impairs discrimination of rising and falling frequency-modulated tones. Neurosci. Lett. 252, 115–118.

Wietzikoski, E. C., Boschen, S. L., Miyoshi, E., Bortolanza, M., Dos Santos, L. M., Frank, M., Brandão, M. L., Winn, P., and Da Cunha, C. (2012). Roles of D1-like dopamine receptors in the nucleus accumbens and dorsolateral striatum in conditioned avoidance responses. Psychopharmacology (Berl.) 219, 159–169.

Witten, I. B., Steinberg, E. E., Lee, S. Y., Davidson, T. J., Zalocusky, K. A., Brodsky, M., Yizhar, O., Cho, S. L., Gong, S., Ramakrishnan, C., Stuber, G. D., Tye, K. M., Janak, P. H., and Deisseroth, K. (2011). Recombinase-driver rat lines: tools, techniques, and optogenetic application to dopamine-mediated reinforcement. Neuron 72, 721–733.

Keywords: dopamine, aversive stimuli, avoidance learning, intracranial self-stimulation, reward and punishment, dorsal vs. ventral striatum, lateral habenula, ventral tegmental area

Citation: Ilango A, Shumake J, Wetzel W, Scheich H and Ohl FW (2012) The role of dopamine in the context of aversive stimuli with particular reference to acoustically signaled avoidance learning. Front. Neurosci. 6:132. doi: 10.3389/fnins.2012.00132

Received: 29 April 2012; Paper pending published: 14 May 2012; Accepted: 25 August 2012; Published online: 14 September 2012.

Edited by:

Philippe N. Tobler, University of Zurich, Switzerland

Reviewed by:

Shunsuke Kobayashi, Fukushima Medical University, Japan

Mitchell Roitman, University of Illinois at Chicago, USA

Copyright: © 2012 Ilango, Shumake, Wetzel, Scheich and Ohl. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and subject to any copyright notices concerning any third-party graphics etc.

*Correspondence: Anton Ilango, Intramural Research Programme, National Institute on Drug Abuse, National Institutes of Health, 251 Bayview Blvd., Baltimore, MD 21224, USA. e-mail: antonilango@hotmail.com; Frank W. Ohl, Leibniz Institute for Neurobiology, Brenneckestrasse 6, D-39118 Magdeburg, Germany. e-mail: frank.ohl@ifn-magdeburg.de

†Present address: Anton Ilango, Intramural Research Programme, National Institute on Drug Abuse, National Institutes of Health, 251 Bayview Blvd., Baltimore, MD 21224, USA.

Jason Shumake1,3