- 1 Department of Psychology and Neuroscience, Duke University, Durham, NC, USA

- 2 Center for Cognitive Neuroscience, Duke University, Durham, NC, USA

Decision neuroscience research, as currently practiced, employs the methods of neuroscience to investigate concepts drawn from the social sciences. A typical study selects one or more variables from psychological or economic models, manipulates or measures choices within a simplified choice task, and then identifies neural correlates. Using this “neuroeconomic” approach, researchers have described brain systems whose functioning shapes key economic variables, most notably aspects of subjective value. Yet, the standard approach has fundamental limitations. Important aspects of the mechanisms of decision making – from the sources of variability in decision making to the very computations supported by decision-related regions – remain incompletely understood. Here, I outline 10 outstanding challenges for future research in decision neuroscience. While some will be readily addressed using current methods, others will require new conceptual frameworks. Accordingly, a new strain of decision neuroscience will marry methods from economics and cognitive science to concepts from neurobiology and cognitive neuroscience.

Introduction

Decision neuroscience, including its subfield of neuroeconomics, has provided new insights into the mechanisms that underlie a wide range of economic and social phenomena, from risky choice and temporal discounting to altruism and cooperation. However, its greatest successes clearly lie within one domain: identifying and mapping neural signals for value. Canonical results include the linking of dopaminergic neuron activity to information about current and future rewards (Schultz et al., 1997); the generalization of value signals from primary rewards to include money (Delgado et al., 2000; Knutson et al., 2001), social stimuli and interpersonal interactions (Sanfey et al., 2003; King-Casas et al., 2005); and the identification of neural markers for economic transactions (Padoa-Schioppa and Assad, 2006; Plassmann et al., 2007). And, in recent studies, these value signals can be shown to be simultaneously and automatically computed for complex stimuli (Hare et al., 2008; Lebreton et al., 2009; Smith et al., 2010). In all, research has coalesced on a common framework for the neural basis of valuation; for reviews see Platt and Huettel (2008), Rangel et al. (2008), Kable and Glimcher (2009).

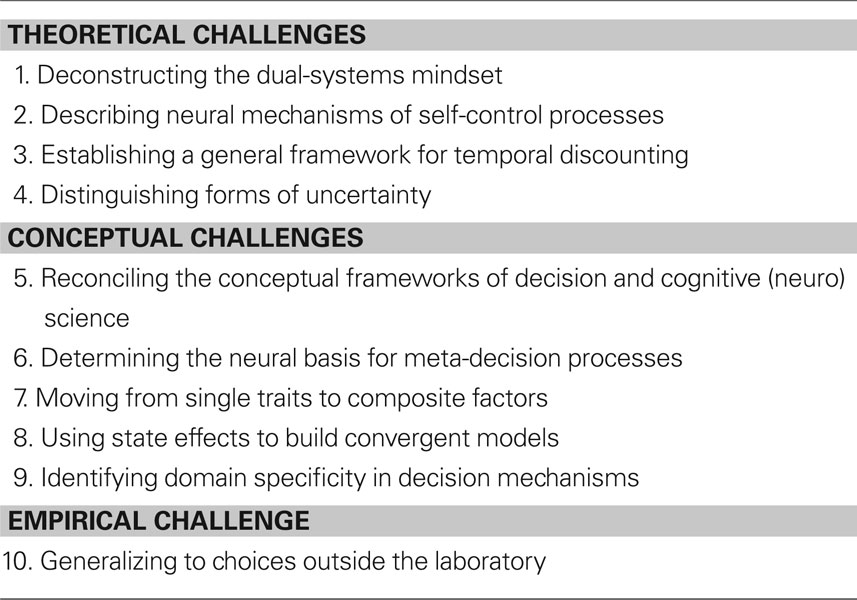

Despite these successes, other aspects of the neural basis of decision making remain much less well understood. Even where there has been significant progress – as in elucidating the neural basis of other decision variables like probability and temporal delay – there remain key open and unanswered questions. Below are described ten major problems for future research in decision neuroscience (Table 1). By focusing on theoretical and conceptual challenges specific to decision neuroscience, this review necessarily omits important future methodological advances that will shape all of neuroscience: applications to new populations, longitudinal analyses of individuals, genomic advances, and new technical advances (e.g., linking single-unit and fMRI studies). Even with these caveats, this list provides a broad overview of the capabilities of and challenges facing this new discipline.

Deconstructing the Dual-Systems Mindset

The most pervasive conceptual frameworks for decision neuroscience – and for decision science and even all of psychology, more generally – are dual-systems models. As typically framed, such models postulate that decisions result from the competitive interactions between two systems: one slow, effortful, deliberative, and foresightful, the other rapid, automatic, unconscious, and focused on the present state (Kahneman, 2003). Considerable behavioral research supports key predictions of this class of models: e.g., time pressure and cognitive load have more limiting effects on deliberative than automatic judgments (Shiv and Fedorikhin, 1999). Many decision neuroscience studies, including seminal work, have described their findings in dual-systems terms (Sanfey et al., 2003; McClure et al., 2004a; De Martino et al., 2006), typically linking regions like lateral prefrontal cortex to the slow deliberative system and regions like the ventral striatum and amygdala to the rapid automatic system.

The dual-systems framework has been undoubtedly successful for psychological research, both by sparking studies of the contributions of affect to decision making and by generating testable predictions for new experiments. Yet, it has had some unintended consequences for decision neuroscience, often because the psychological and neural instantiations of each system vary dramatically across studies. Psychological factors attributed to the emotional system include a range of disparate processes, from time pressure and anger to pain and temptation. Conversely, brain regions like the orbitofrontal cortex can be labeled as making cognitive or emotional contributions, in different contexts (Bechara et al., 2000; Schoenbaum et al., 2006; Pessoa, 2008). Some key regions do not fit readily into either system; e.g., insula and dorsomedial prefrontal cortex, which may play important roles in shaping activation in other regions (Sridharan et al., 2008; Venkatraman et al., 2009a). As a final and most general limitation, studies of functional connectivity throughout the brain indicate the often-simultaneous engagement of a larger set of functional systems (Beckmann et al., 2005; van den Heuvel et al., 2009).

The intuitiveness of the dual-system framework poses challenges for its replacement. Even so, considering models that involve a wider array of processes – each engaged according to task demands – would better match decision neuroscience to adaptive, flexible choice mechanisms (Payne et al., 1992; Gigerenzer and Goldstein, 1996).

Describing Neural Mechanisms of Self-Control Processes

Self-control is a common construct in decision research, both in interpretations of real-world behavior (Thaler and Shefrin, 1981; Baumeister et al., 2007) and in explanations of neuroscience results (Hare et al., 2009). Social psychology researchers have operationalized self-control as the ability to pursue long-term goals instead of immediate rewards. Cognitive psychology and neuroscience researchers often adopt a broader perspective: control processes shape our thoughts and actions in a goal-directed and context-dependent manner. Prior cognitive neuroscience research has linked control processing to the prefrontal cortex (PFC), specifically lateral PFC, which is assumed to modulate processing in other parts of the brain based on current goals (Miller and Cohen, 2001). Decision neuroscience studies have argued that lateral PFC exerts an influence upon regions involved in the construction of value signals (Barraclough et al., 2004; McClure et al., 2004b; Plassmann et al., 2007), potentially leading to the adaptive behaviors (e.g., delay of gratification) considered by social psychology research (Figner et al., 2010).

But, control demands are not identical across contexts, nor is control processing likely to be linked to one neural module. Even a unique link to PFC would be an oversimplification; across humans and other great apes the PFC constitutes approximately one-third of the brain, by volume (Semendeferi et al., 2002). Accordingly, a core theme of cognitive neuroscience research has been to parse PFC according to distinct sub-regions’ contributions to control of behavior. Considerable evidence now supports the idea that, within lateral PFC, more posterior regions contribute to the control of action in an immediate temporal context, while more anterior regions support more abstract, integrative, and planning-oriented processes (Koechlin et al., 2003; Badre and D’Esposito, 2007). Recent work has extended this posterior-to-anterior organization to dorsomedial PFC (Kouneiher et al., 2009; Venkatraman et al., 2009b), which has often been implicated in processes related to reward evaluation.

By connecting to this burgeoning literature on PFC organization, decision neuroscience could move beyond simple reverse-inference interpretations of control (Poldrack, 2006). Simple links can be made through increased specificity in descriptions of activation locations and their putative contributions to control (Hare et al., 2009). Stronger links could be made through parallel experimentation. When a decision variable is mapped to a specific sub-region (e.g., the frontopolar cortex), researchers should also test non-decision-making tasks that challenge the hypothesized control processes of that region (e.g., relational integration). If the attributed function is correct, then both sorts of tasks should modulate the same brain region, ideally with similar effects of state and similar variability across individuals. Furthermore, manipulation techniques like transcranial magnetic stimulation should not only alter preferences and choices in the decision task, but also should influence performance in the simpler non-decision context – providing converging evidence for the underlying control processes.

Distinguishing Forms of Uncertainty

Uncertainty pervades decision making. Nearly all real-world choices involve some form of psychological uncertainty, whether about the likelihood of an event or about the nature of our future preferences. Most studies in the decision neuroscience literature – as in its counterparts in the social sciences – have examined the effects of risk; for reviews see Knutson and Bossaerts (2007), Platt and Huettel (2008), Rushworth and Behrens (2008). While definitions vary across contexts, a “risky decision” involves potential outcomes that are known but probabilistic, such that risk increases with variance among those outcomes, potentially normalized by the expected value (Weber et al., 2004). Uncertainty can have other forms, however. Outcomes may be known but occur with unknown probability; such decisions reflect ambiguity (Ellsberg, 1961). Only a handful of studies, so far, have investigated the neural basis of ambiguity (Smith et al., 2002; Hsu et al., 2005; Huettel et al., 2006; Bach et al., 2009). And, still other states of uncertainty might be evoked in cases where the outcomes themselves are unknown, as is the case in complex real-world decisions.
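As an illustration of the definition of risk given above, the following minimal sketch computes the variance of a gamble and one possible normalization by expected value. The choice of the coefficient of variation (standard deviation divided by expected value) as the normalization is an assumption made here for illustration, as are the function names and the two example gambles.

```python
import math


def expected_value(outcomes, probs):
    """Probability-weighted mean of the outcomes."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * x for x, p in zip(outcomes, probs))


def variance(outcomes, probs):
    """Probability-weighted squared deviation from the expected value."""
    ev = expected_value(outcomes, probs)
    return sum(p * (x - ev) ** 2 for x, p in zip(outcomes, probs))


def coefficient_of_variation(outcomes, probs):
    """Standard deviation normalized by the expected value (assumed normalization)."""
    ev = expected_value(outcomes, probs)
    if ev == 0:
        raise ValueError("coefficient of variation is undefined when the expected value is 0")
    return math.sqrt(variance(outcomes, probs)) / abs(ev)


# Two hypothetical gambles with the same expected value but different risk.
sure_thing = ([10.0], [1.0])            # $10 for certain
coin_flip = ([0.0, 20.0], [0.5, 0.5])   # $0 or $20 with equal probability

for name, (outcomes, probs) in [("sure thing", sure_thing), ("coin flip", coin_flip)]:
    print(name,
          expected_value(outcomes, probs),
          variance(outcomes, probs),
          coefficient_of_variation(outcomes, probs))
```

Both gambles have the same expected value, so any difference in choice between them would be attributed to risk rather than to reward magnitude. Ambiguity, by contrast, cannot be expressed in this form, because the probabilities themselves are unknown.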

So far, decision neuroscience research has established weak, albeit numerous, links between uncertainty and its neural substrates. During active decision making, risk modulates regions of lateral prefrontal cortex, parietal cortex, and anterior insular cortex (Mohr et al., 2010), all of which contribute to the adaptive control of other aspects of behavior. Yet, risk also influences activation in other regions seemingly associated with simpler sensory, motor, or attentional processes (McCoy and Platt, 2005), as well as in the brain’s reward system directly (Berns et al., 2001; Fiorillo et al., 2003). The presence of ambiguity likewise modulates activation in both regions that support executive control (Huettel et al., 2006) and regions that track aversive outcomes (Hsu et al., 2005). In some of the above studies, these brain regions have been linked to the characteristics of the decision problem, in others to the choices made by a participant, and in still others to individual differences in uncertainty aversion. Still needed are characterizations of both common and distinct computational demands associated with different sorts of uncertainty – which would in turn provide new insights into neural function.

Establishing a General Framework for Temporal Discounting

Decision neuroscience research has long sought to understand the neural mechanisms of temporal discounting (Luhmann, 2009). Much of the recent debate in this area has revolved around questions of value: Do rewards available at different delays engage distinct value systems, such as a rapid, immediate system versus a patient, delayed system (McClure et al., 2004a)? Conversely, other evidence indicates that immediate rewards have no special status, at least in the monetary domain; they engage the same value system as observed for more distal rewards (Kable and Glimcher, 2007).

Monetary value, however, constitutes an unnatural reward for discounting experiments. The same research subject who discounts rewards by 5% per month – as when indifferent between $40 now and $42 four weeks later – might simultaneously evince discounting of a few percent a year in their financial investments. This participant, who would likely be coded as relatively “patient”, evinces a laboratory discount rate more than an order of magnitude faster than that of typical real-world monetary investments. Even more strikingly, non-human animals often discount rewards completely within a few seconds (Mazur, 1987). Some differences in discount rate may come from task effects; e.g., animals are tested during reward learning while humans are asked about the value of prospective rewards. However, reward modality also affects discount rate: When human subjects make decisions about primary and social rewards, they show appreciable discounting within the seconds-to-minutes range (Hayden et al., 2007; McClure et al., 2007). Non-monetary rewards, therefore, may represent a better model for evolutionarily conserved processes of discounting.
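The arithmetic behind the comparison of laboratory and real-world discount rates can be sketched as follows. The indifference point ($40 now versus $42 four weeks later) comes from the example above; the compounding convention, the count of 13 four-week periods per year, and the 5% annual investment rate are assumptions chosen only to make the comparison concrete.

```python
def period_discount_rate(amount_now, amount_later):
    """Proportional premium the later amount carries at the indifference point."""
    return amount_later / amount_now - 1.0


def annualize(rate_per_period, periods_per_year):
    """Compound a per-period rate over one year."""
    return (1.0 + rate_per_period) ** periods_per_year - 1.0


# Indifference point from the text: $40 now versus $42 four weeks later.
lab_rate = period_discount_rate(40.0, 42.0)

# Assumption: 13 four-week periods per year (52 weeks / 4).
lab_annual = annualize(lab_rate, periods_per_year=13)

# Assumption: 5% per year stands in for a typical investment rate.
investment_annual = 0.05

ratio = lab_annual / investment_annual
print(f"laboratory rate per four weeks: {lab_rate:.1%}")
print(f"laboratory rate per year:       {lab_annual:.1%}")
print(f"ratio to investment rate:       {ratio:.1f}x")
```

Under these assumptions the annualized laboratory rate exceeds the assumed investment rate by more than a factor of ten, which is the sense in which a nominally “patient” participant still discounts far faster in the laboratory than in financial decisions.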

The vast range of temporal discounting behavior – from patient real-world investments to impulsive laboratory choices – presents both a challenge and an opportunity. Research using human participants will need to test a wider range of rewards – both primary rewards like juice and more complex rewards like visual experiences (McClure et al., 2007) – and will need within-participant comparisons to monetary stimuli. By investigating individual differences in resistance to immediate rewards, more generally, researchers may identify interactions between control-related regions and value-related regions that together shape intertemporal choice (Hare et al., 2009). And, research will need better links to the well-established literature on interval timing (Buhusi and Meck, 2005); for example, parallel manipulations of time perception could provide a bridge between discounting phenomena and the associated neural processes. Through such integrative approaches, new research will provide more satisfying answers to core decision neuroscience questions about differences in processing of temporally immediate and distant rewards.

Reconciling the Conceptual Frameworks of Decision and Cognitive (Neuro)Science

For the dual-systems model to be replaced, simple criticisms will be insufficient – new models must be set forth in its place. Ideally, any replacement model should build upon cutting-edge findings in cognitive neuroscience about how brain systems are organized and interact. Yet, there is a conceptual disconnect between decision neuroscience and cognitive neuroscience. In decision neuroscience, concepts are typically described in terms of their behavioral consequences (e.g., temporal discounting) or decision variables (e.g., risk, ambiguity); i.e., contributions to a model of behavior. In cognitive science and cognitive neuroscience, however, functional concepts are typically described in terms of their contributions to a model of process (e.g., inhibition, working memory). Without reconciling these concepts, research in each field will continue apace.

The key challenge, accordingly, will be to create a functional taxonomy that maps decision behavior onto its underlying process. Because most economic models predict choices, but do not describe the choice process, they may have only an “as if” relationship to mechanisms. Each variable or operation in the model results from a host of independent computations, many of which may correspond to specific functions studied by cognitive neuroscience. To elucidate these relations will require two changes to typical decision-neuroscience methods. First, psychological studies of decision-making behavior can decompose key processes; e.g., interference observed in a dual-task paradigm can reveal that the primary decision process relies on the secondary process. Second, parallel measurement of cognitive tasks alongside decision-making tasks can strengthen functional claims, especially when an individual-difference variable or external manipulation exerts similar effects on multiple measures.

Determining the Neural Basis for Meta-Decision Processes

Early integrations of behavioral economics and psychology shared a common perspective: individuals vary in their approaches to decision making, especially in realistic scenarios (Simon, 1959; Tversky and Kahneman, 1974). Individuals can choose based on complex rules that involve compensatory trade-offs between decision variables or based on simplifying rules that ignore some information and emphasize other information, depending on immediate task demands (Payne et al., 1992). Yet, the nature of most neuroscience experimentation discourages analysis of strategic, meta-decision processes. The fMRI signal associated with a single decision is relatively small, compared to ongoing noise, while PET and TMS studies collapse across all decisions in an entire experimental session. Thus, trial-to-trial variability is an infrequent target for analyses. Tasks in most studies are simple, with small stakes (e.g., tens to hundreds of dollars) obtained over a short duration, reducing the incentive to explore the full space of possible decision strategies. Participants are often well-practiced, especially in non-human primate single-unit studies that can involve thousands of trials; this can lead to stereotypy of behavior. Moreover, meta-decision processing can be difficult to model – in many contexts, different strategies could lead to the same expressed behavior.

Despite the challenges of describing strategic aspects of decision making, such analyses will become increasingly feasible. Neuroimaging techniques, in particular, provide a unique advantage by characterizing large-scale functional relationships, via recent advances in connectivity analyses (Buckner et al., 2009; Greicius et al., 2009). The explosion of interest in condition-specific connectivity modeling, functional mediation analyses, and similar techniques will provide new insights into how the same brain regions can support very different sorts of behavior, across individuals and contexts. Moreover, proof-of-concept examples can be seen in domains where a priori models exist – as in cases of exploration/exploitation (Daw et al., 2006) or compensatory/heuristic choice (Venkatraman et al., 2009a).

Moving from Single Traits to Composite Factors

Some of the most striking results in decision neuroscience link specific brain regions to complex cognitive traits. Most such examples come from across-subjects correlations between trait scores – whether derived based on observed behavior or self-reported on a questionnaire – and fMRI activation associated with a relevant task. In recent years, researchers have identified potential neural correlates for behaviors and traits as diverse as reward sensitivity (Beaver et al., 2006), Machiavellian personality (Spitzer et al., 2007), loss aversion (Tom et al., 2007), and altruism (Tankersley et al., 2007; Hare et al., 2010).

Trait-to-brain correlations, in themselves, provide only limited information about the specific processes supported by the associated brain regions. Due to the small sample sizes of fMRI research, relatively few studies adopt the methods of social and personality psychology. Even if a single trait is desired, incorporating related measures can improve specificity of claims; e.g., identifying the effects of altruism, controlling for empathy and theory-of-mind. In other areas of cognitive psychology, for example, measures of processing speed, memory, and other basic abilities can predict individual differences in more complex cognitive functions (Salthouse, 1996). And, factor and cluster analyses can take a set of related measures and derive composite traits – or can segment a sample into groups with shared characteristics, as frequently done in clinical settings. Improved trait measures will also facilitate genomic analyses; single genes will rarely match to traditional trait measures, making identification of robust traits crucial for multi-gene analyses.
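The derivation of a composite trait from a set of related measures can be sketched in a few lines. The example below is not a full factor analysis: as a simpler stand-in, it extracts the first principal component of the standardized measures by power iteration. The three measures, the six participants, and all scores are hypothetical values invented for illustration.

```python
import math


def standardize(column):
    """Convert raw scores to z-scores (sample standard deviation)."""
    n = len(column)
    mean = sum(column) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in column) / (n - 1))
    return [(x - mean) / sd for x in column]


def first_component(columns, iterations=200):
    """Loadings and participant scores for the first principal component."""
    z = [standardize(c) for c in columns]
    n, k = len(z[0]), len(z)
    # Correlation matrix of the standardized measures.
    corr = [[sum(a * b for a, b in zip(z[i], z[j])) / (n - 1) for j in range(k)]
            for i in range(k)]
    # Power iteration converges to the leading eigenvector of the matrix.
    w = [1.0] * k
    for _ in range(iterations):
        w = [sum(corr[i][j] * w[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        w = [x / norm for x in w]
    # Composite score: loading-weighted sum of each participant's z-scores.
    scores = [sum(w[i] * z[i][r] for i in range(k)) for r in range(n)]
    return w, scores


# Hypothetical questionnaire scores for six participants (illustrative only).
altruism = [2, 4, 5, 7, 8, 9]
empathy = [1, 3, 6, 6, 9, 8]
theory_of_mind = [3, 3, 5, 8, 7, 9]

loadings, composite = first_component([altruism, empathy, theory_of_mind])
print("loadings:", [round(x, 3) for x in loadings])
print("composite scores:", [round(x, 3) for x in composite])
```

The composite scores could then serve as the across-subjects regressor in place of any single questionnaire measure. A dedicated factor-analysis or clustering routine would be the appropriate tool for real data, where the number of factors and their rotation also need to be chosen.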

Using State Effects to Build Convergent Models

Choices depend on one’s internal state. The effects of emotion and mood states have long been recognized; e.g., anger can lead to impulsive and overly optimistic choices, while fear and sadness can lead to considered, analytic, but pessimistic choices (Lerner and Keltner, 2001). Sleep deprivation leads to attentional lapses and to impairments in memory, reducing the quality of subsequent decisions and increasing risk-seeking behavior (Killgore et al., 2006), with concomitant effects on brain function (Venkatraman et al., 2007). And, state manipulations of key neurotransmitter systems can have effects similar to those of chronic drug abuse and of brain damage (Rogers et al., 1999). Given the wide variety of phenomena investigated, the study of state effects provides some of the clearest applications for decision neuroscience research.

The standard approach, so far, has been the characterization (cataloging) of each state effect separately. That is, researchers adopt a paradigm used in prior decision neuroscience research and then measure how a single manipulation of state alters the functioning of targeted brain regions. The result has been a collection of independent observations – each valuable in itself, but difficult to combine. A challenge for subsequent research, therefore, will be to create mechanistic models that allow generalization across a range of states. For one potential direction, consider the growing evidence that emotion interacts with cognitive control in a complex, and not necessarily antagonistic, manner (Gray et al., 2002; Pessoa, 2008). A general model of control would need to predict the effects of individual difference variables across several states; e.g., how trait impulsiveness influences choices under anger, following sleep deprivation, and after consumption of alcohol. Even more problematic will be creating models that account for combinations of states, such as interactions between drug abuse and depression. A key milestone for the maturity of decision neuroscience, as a discipline, will be the development of biologically plausible models that can predict behavior across a range of states.

Identifying Domain Specificity in Decision Mechanisms

Research in decision neuroscience is often motivated via examples from evolutionary biology. A canonical example comes from the domain of resource acquisition (e.g., foraging): Humans and other animals must identify potential sources of food in their local environment, each of which may lead to positive (e.g., nourishment) or negative (e.g., predation) consequences with different likelihoods. Thus, organisms have evolved with faculties for learning and deciding among options with benefits, costs, and probabilities – and the expression of these faculties in the modern environment results in the general models of decision-making found within economics and psychology (Weber et al., 2004). While such examples can provide important insights into the behavior of humans and our primate relatives, they also can lead to simple, ad hoc (or “just-so”) explanations based on evolutionary pressures.

An important new direction for decision neuroscience will lie in the evaluation of potential domain specificity. Different sorts of decision problems may lead to different sorts of selection pressures. For example, compared to foraging decisions, choices in social contexts may have dramatically different properties. Our social decisions can be infrequent but have long-lasting consequences; poor social decisions limit the space of our future interactions; and all social interactions are made in a dynamic landscape altered by others’ behavior. Some of these features may change the cognitive processing necessary for adaptive social decisions, resulting in distinct neural substrates (Behrens et al., 2009). Because of this potential variability, decision neuroscience will need to consider how domains shape decision-making priors – the biases (and even mechanisms) that people bring to a decision problem. A focus on contextual priors has been very profitable in explaining other adaptive behaviors; perceptual biases, for example, reflect the natural statistics of our visual environment (Howe and Purves, 2002; Weiss et al., 2002). By identifying and characterizing distinct domains of decision making, and by understanding the natural properties of those domains, researchers will be better able to construct models that span both individual and social decision making.

Generalizing to Choices Outside the Laboratory

Concepts and paradigms from decision making have had unquestionably salutary effects on neuroscience research. Neuroscience, conversely, has had a much more limited influence on decision-making research in the social sciences. Concepts from decision neuroscience now appear in the marketing (Hedgcock and Rao, 2009), game theory (Bhatt and Camerer, 2005), finance (Bossaerts, 2009), and economics (Caplin and Dean, 2008) literatures. In several striking examples, researchers have used decision neuroscience experimentation to guide mechanism design in auctions (Delgado et al., 2008) and allocation of public goods (Krajbich et al., 2009). These sorts of conceptual influences can be labeled “weak decision neuroscience”, or the study of brain function to provide insight into potential regularities, without making novel predictions about real-world choices.

The most difficult challenge for future research will be to use neuroscience data, which by definition are collected in limited samples in laboratory settings, to predict the patterns of choices made by the larger population. Predictive power will be unlikely to come from the traditional experimental methods of cognitive neuroscience, which typically seek to predict an individual’s behavior based on their brain function (and thus cannot scale to the larger population). There are compelling arguments that, even in principle, no decision neuroscience experiment can falsify or prove any social science model (Clithero et al., 2008; Gul and Pesendorfer, 2008). The goal of a “strong decision neuroscience” – i.e., to use a single decision-neuroscience experiment to shape economic modeling or to guide a real-world policy – may be unattainable with conventional methods. However, this goal requires too much of decision neuroscience, in isolation (Clithero et al., 2008). The ability to better predict real-world choices will come from the iterative combination of neuroscience methods with measures of choice behavior, with the specific goal of using neuroscience to constrain the space of needed behavioral experiments. By identifying biologically plausible models and potentially productive lines of experimentation, decision neuroscience will speed the generation of novel predictions and better models.

Summary

So far, the field of decision neuroscience reflects a particular merging of disciplines: It integrates concepts from economics and cognitive psychology with the research methods of neuroscience. This combination has not only shaped understanding of the neural mechanisms that underlie decision making, it has influenced how researchers approach problems throughout the cognitive neurosciences – as one of many examples, consider recent work on the interactions between reward and memory (Adcock et al., 2006; Axmacher et al., 2010; Han et al., 2010).

Yet, fundamental problems remain. Some will be resolved over the coming years through new analysis techniques, novel experimental tasks, or the accumulation of new data. Other, more difficult problems may not be addressable using the methods typical to current studies. They require, instead, a reversal of the traditional approach – changing to one that integrates research methods from economics and psychology (e.g., econometrics, psychometric analyses) with conceptual models from neuroscience (e.g., topographic functional maps, computational biology). In doing so, decision neuroscience can extend the progress it has made in understanding value to the full range of choice phenomena.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The author thanks John Clithero for comments on the manuscript. Support was provided by an Incubator Award from the Duke Institute for Brain Sciences.

References

Adcock, R. A., Thangavel, A., Whitfield-Gabrieli, S., Knutson, B., and Gabrieli, J. D. (2006). Reward-motivated learning: mesolimbic activation precedes memory formation. Neuron 50, 507–517.

Axmacher, N., Cohen, M. X., Fell, J., Haupt, S., Dumpelmann, M., Elger, C. E., Schlaepfer, T. E., Lenartz, D., Sturm, V., and Ranganath, C. (2010). Intracranial EEG correlates of expectancy and memory formation in the human hippocampus and nucleus accumbens. Neuron 65, 541–549.

Bach, D. R., Seymour, B., and Dolan, R. J. (2009). Neural activity associated with the passive prediction of ambiguity and risk for aversive events. J. Neurosci. 29, 1648–1656.

Badre, D., and D’Esposito, M. (2007). Functional magnetic resonance imaging evidence for a hierarchical organization of the prefrontal cortex. J. Cogn. Neurosci. 19, 2082–2099.

Barraclough, D. J., Conroy, M. L., and Lee, D. (2004). Prefrontal cortex and decision making in a mixed-strategy game. Nat. Neurosci. 7, 404–410.

Baumeister, R. F., Vohs, K. D., and Tice, D. M. (2007). The strength model of self-control. Curr. Dir. Psychol. Sci. 16, 351–355.

Beaver, J. D., Lawrence, A. D., van Ditzhuijzen, J., Davis, M. H., Woods, A., and Calder, A. J. (2006). Individual differences in reward drive predict neural responses to images of food. J. Neurosci. 26, 5160–5166.

Bechara, A., Damasio, H., and Damasio, A. R. (2000). Emotion, decision making and the orbitofrontal cortex. Cereb. Cortex 10, 295–307.

Beckmann, C. F., DeLuca, M., Devlin, J. T., and Smith, S. M. (2005). Investigations into resting-state connectivity using independent component analysis. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 360, 1001–1013.

Behrens, T. E., Hunt, L. T., and Rushworth, M. F. (2009). The computation of social behavior. Science 324, 1160–1164.

Berns, G. S., McClure, S. M., Pagnoni, G., and Montague, P. R. (2001). Predictability modulates human brain response to reward. J. Neurosci. 21, 2793–2798.

Bhatt, M., and Camerer, C. F. (2005). Self-referential thinking and equilibrium as states of mind in games: fMRI evidence. Games Econ. Behav. 52, 424–459.

Bossaerts, P. (2009). What decision neuroscience teaches us about financial decision making. Annu. Rev. Fin. Econ. 1, 383–404.

Buckner, R. L., Sepulcre, J., Talukdar, T., Krienen, F. M., Liu, H., Hedden, T., Andrews-Hanna, J. R., Sperling, R. A., and Johnson, K. A. (2009). Cortical hubs revealed by intrinsic functional connectivity: mapping, assessment of stability, and relation to Alzheimer’s disease. J. Neurosci. 29, 1860–1873.

Buhusi, C. V., and Meck, W. H. (2005). What makes us tick? Functional and neural mechanisms of interval timing. Nat. Rev. Neurosci. 6, 755–765.

Caplin, A., and Dean, M. (2008). Dopamine, reward prediction error, and economics. Q. J. Econ. 123, 663–701.

Clithero, J. A., Tankersley, D., and Huettel, S. A. (2008). Foundations of neuroeconomics: from philosophy to practice. PLoS Biol. 6: e298. doi:10.1371/journal.pbio.0060298.

Daw, N. D., O’Doherty, J. P., Dayan, P., Seymour, B., and Dolan, R. J. (2006). Cortical substrates for exploratory decisions in humans. Nature 441, 876–879.

De Martino, B., Kumaran, D., Seymour, B., and Dolan, R. J. (2006). Frames, biases, and rational decision-making in the human brain. Science 313, 684–687.

Delgado, M. R., Nystrom, L. E., Fissell, C., Noll, D. C., and Fiez, J. A. (2000). Tracking the hemodynamic responses to reward and punishment in the striatum. J. Neurophysiol. 84, 3072–3077.

Delgado, M. R., Schotter, A., Ozbay, E. Y., and Phelps, E. A. (2008). Understanding overbidding: using the neural circuitry of reward to design economic auctions. Science 321, 1849–1852.

Figner, B., Knoch, D., Johnson, E. J., Krosch, A. R., Lisanby, S. H., Fehr, E., and Weber, E. U. (2010). Lateral prefrontal cortex and self-control in intertemporal choice. Nat. Neurosci. 13, 538–539.

Fiorillo, C. D., Tobler, P. N., and Schultz, W. (2003). Discrete coding of reward probability and uncertainty by dopamine neurons. Science 299, 1898–1902.

Gigerenzer, G., and Goldstein, D. G. (1996). Reasoning the fast and frugal way: models of bounded rationality. Psychol. Rev. 103, 650–669.

Gray, J. R., Braver, T. S., and Raichle, M. E. (2002). Integration of emotion and cognition in the lateral prefrontal cortex. Proc. Natl. Acad. Sci. U.S.A. 99, 4115–4120.

Greicius, M. D., Supekar, K., Menon, V., and Dougherty, R. F. (2009). Resting-state functional connectivity reflects structural connectivity in the default mode network. Cereb. Cortex 19, 72–78.

Gul, F., and Pesendorfer, W. (2008). “The case for mindless economics,” in The Foundations of Positive and Normative Economics, eds A. Caplin and A. Schotter (Oxford: Oxford University Press), 3–39.

Han, S., Huettel, S. A., Raposo, A., Adcock, R. A., and Dobbins, I. G. (2010). Functional significance of striatal responses during episodic decisions: recovery or goal attainment? J. Neurosci. 30, 4767–4775.

Hare, T. A., Camerer, C. F., Knoepfle, D. T., and Rangel, A. (2010). Value computations in ventral medial prefrontal cortex during charitable decision making incorporate input from regions involved in social cognition. J. Neurosci. 30, 583–590.

Hare, T. A., Camerer, C. F., and Rangel, A. (2009). Self-control in decision-making involves modulation of the vmPFC valuation system. Science 324, 646–648.

Hare, T. A., O’Doherty, J., Camerer, C. F., Schultz, W., and Rangel, A. (2008). Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J. Neurosci. 28, 5623–5630.

Hayden, B. Y., Parikh, P. C., Deaner, R. O., and Platt, M. L. (2007). Economic principles motivating social attention in humans. Proc. Biol. Sci. 274, 1751–1756.

Hedgcock, W., and Rao, A. R. (2009). Trade-off aversion as an explanation for the attraction effect: A functional magnetic resonance imaging study. J. Market. Res. 46, 1–13.

Howe, C. Q., and Purves, D. (2002). Range image statistics can explain the anomalous perception of length. Proc. Natl. Acad. Sci. U.S.A. 99, 13184–13188.

Hsu, M., Bhatt, M., Adolphs, R., Tranel, D., and Camerer, C. F. (2005). Neural systems responding to degrees of uncertainty in human decision-making. Science 310, 1680–1683.

Huettel, S. A., Stowe, C. J., Gordon, E. M., Warner, B. T., and Platt, M. L. (2006). Neural signatures of economic preferences for risk and ambiguity. Neuron 49, 765–775.

Kable, J. W., and Glimcher, P. W. (2007). The neural correlates of subjective value during intertemporal choice. Nat. Neurosci. 10, 1625–1633.

Kable, J. W., and Glimcher, P. W. (2009). The neurobiology of decision: consensus and controversy. Neuron 63, 733–745.

Kahneman, D. (2003). Maps of bounded rationality: Psychology for behavioral economics. Am. Econ. Rev. 93, 1449–1475.

Killgore, W. D., Balkin, T. J., and Wesensten, N. J. (2006). Impaired decision making following 49 h of sleep deprivation. J. Sleep Res. 15, 7–13.

King-Casas, B., Tomlin, D., Anen, C., Camerer, C. F., Quartz, S. R., and Montague, P. R. (2005). Getting to know you: reputation and trust in a two-person economic exchange. Science 308, 78–83.

Knutson, B., Adams, C. M., Fong, G. W., and Hommer, D. (2001). Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J. Neurosci. 21, 1–5.

Knutson, B., and Bossaerts, P. (2007). Neural antecedents of financial decisions. J. Neurosci. 27, 8174–8177.

Koechlin, E., Ody, C., and Kouneiher, F. (2003). The architecture of cognitive control in the human prefrontal cortex. Science 302, 1181–1185.

Kouneiher, F., Charron, S., and Koechlin, E. (2009). Motivation and cognitive control in the human prefrontal cortex. Nat. Neurosci. 12, 939–945.

Krajbich, I., Camerer, C., Ledyard, J., and Rangel, A. (2009). Using neural measures of economic value to solve the public goods free-rider problem. Science 326, 596–599.

Lebreton, M., Jorge, S., Michel, V., Thirion, B., and Pessiglione, M. (2009). An automatic valuation system in the human brain: evidence from functional neuroimaging. Neuron 64, 431–439.

Luhmann, C. C. (2009). Temporal decision-making: Insights from cognitive neuroscience. Front. Behav. Neurosci. 3: 39. doi:10.3389/neuro.08.039.2009.

Mazur, J. E. (1987). “An adjusting procedure for studying delayed reinforcement,” in Quantitative Analyses of Behavior, vol. 5: The Effect of Delay and of Intervening Events on Reinforcement Value, eds M. L. Commons, J. E. Mazur, J. A. Nevin, and H. Rachlin (Mahwah, NJ: Erlbaum), 55–73.

McClure, S. M., Ericson, K. M., Laibson, D. I., Loewenstein, G., and Cohen, J. D. (2007). Time discounting for primary rewards. J. Neurosci. 27, 5796–5804.

McClure, S. M., Laibson, D. I., Loewenstein, G., and Cohen, J. D. (2004a). Separate neural systems value immediate and delayed monetary rewards. Science 306, 503–507.

McClure, S. M., Li, J., Tomlin, D., Cypert, K. S., Montague, L. M., and Montague, P. R. (2004b). Neural correlates of behavioral preference for culturally familiar drinks. Neuron 44, 379–387.

McCoy, A. N., and Platt, M. L. (2005). Risk-sensitive neurons in macaque posterior cingulate cortex. Nat. Neurosci. 8, 1220–1227.

Miller, E. K., and Cohen, J. D. (2001). An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202.

Mohr, P. N., Biele, G., and Heekeren, H. R. (2010). Neural processing of risk. J. Neurosci. 30, 6613–6619.

Padoa-Schioppa, C., and Assad, J. A. (2006). Neurons in the orbitofrontal cortex encode economic value. Nature 441, 223–226.

Payne, J. W., Bettman, J. R., and Johnson, E. J. (1992). Behavioral decision research: A constructive processing perspective. Annu. Rev. Psychol. 43, 87–131.

Pessoa, L. (2008). On the relationship between emotion and cognition. Nat. Rev. Neurosci. 9, 148–158.

Plassmann, H., O’Doherty, J., and Rangel, A. (2007). Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. J. Neurosci. 27, 9984–9988.

Platt, M. L., and Huettel, S. A. (2008). Risky business: The neuroeconomics of decision making under uncertainty. Nat. Neurosci. 11, 398–403.

Poldrack, R. A. (2006). Can cognitive processes be inferred from neuroimaging data? Trends Cogn. Sci. 10, 59–63.

Rangel, A., Camerer, C., and Montague, P. R. (2008). A framework for studying the neurobiology of value-based decision making. Nat. Rev. Neurosci. 9, 545–556.

Rogers, R. D., Everitt, B. J., Baldacchino, A., Blackshaw, A. J., Swainson, R., Wynne, K., Baker, N. B., Hunter, J., Carthy, T., Booker, E., London, M., Deakin, J. F., Sahakian, B. J., and Robbins, T. W. (1999). Dissociable deficits in the decision-making cognition of chronic amphetamine abusers, opiate abusers, patients with focal damage to prefrontal cortex, and tryptophan-depleted normal volunteers: Evidence for monoaminergic mechanisms. Neuropsychopharmacology 20, 322–339.

Rushworth, M. F., and Behrens, T. E. (2008). Choice, uncertainty and value in prefrontal and cingulate cortex. Nat. Neurosci. 11, 389–397.

Salthouse, T. A. (1996). The processing-speed theory of adult age differences in cognition. Psychol. Rev. 103, 403–428.

Sanfey, A. G., Rilling, J. K., Aronson, J. A., Nystrom, L. E., and Cohen, J. D. (2003). The neural basis of economic decision-making in the ultimatum game. Science 300, 1755–1758.

Schoenbaum, G., Roesch, M. R., and Stalnaker, T. A. (2006). Orbitofrontal cortex, decision-making and drug addiction. Trends Neurosci. 29, 116–124.

Schultz, W., Dayan, P., and Montague, P. R. (1997). A neural substrate of prediction and reward. Science 275, 1593–1599.

Semendeferi, K., Lu, A., Schenker, N., and Damasio, H. (2002). Humans and great apes share a large frontal cortex. Nat. Neurosci. 5, 272–276.

Shiv, B., and Fedorikhin, A. (1999). Heart and mind in conflict: The interplay of affect and cognition in consumer decision making. J. Consum. Res. 26, 278–292.

Simon, H. A. (1959). Theories of decision-making in economics and behavioral science. Am. Econ. Rev. 49, 253–283.

Smith, D. V., Hayden, B. Y., Truong, T. K., Song, A. W., Platt, M. L., and Huettel, S. A. (2010). Distinct value signals in anterior and posterior ventromedial prefrontal cortex. J. Neurosci. 30, 2490–2495.

Smith, K., Dickhaut, J., McCabe, K., and Pardo, J. V. (2002). Neuronal substrates for choice under ambiguity, risk, gains, and losses. Manage. Sci. 48, 711–718.

Spitzer, M., Fischbacher, U., Herrnberger, B., Gron, G., and Fehr, E. (2007). The neural signature of social norm compliance. Neuron 56, 185–196.

Sridharan, D., Levitin, D. J., and Menon, V. (2008). A critical role for the right fronto-insular cortex in switching between central-executive and default-mode networks. Proc. Natl. Acad. Sci. U.S.A. 105, 12569–12574.

Tankersley, D., Stowe, C. J., and Huettel, S. A. (2007). Altruism is associated with an increased neural response to agency. Nat. Neurosci. 10, 150–151.

Thaler, R. H., and Shefrin, H. M. (1981). An economic theory of self-control. J. Polit. Econ. 89, 392–406.

Tom, S. M., Fox, C. R., Trepel, C., and Poldrack, R. A. (2007). The neural basis of loss aversion in decision-making under risk. Science 315, 515–518.

Tversky, A., and Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science 185, 1124–1131.

van den Heuvel, M. P., Mandl, R. C., Kahn, R. S., and Hulshoff Pol, H. E. (2009). Functionally linked resting-state networks reflect the underlying structural connectivity architecture of the human brain. Hum. Brain Mapp. 30, 3127–3141.

Venkatraman, V., Chuah, Y. M., Huettel, S. A., and Chee, M. W. (2007). Sleep deprivation elevates expectation of gains and attenuates response to losses following risky decisions. Sleep 30, 603–609.

Venkatraman, V., Payne, J. W., Bettman, J. R., Luce, M. F., and Huettel, S. A. (2009a). Separate neural mechanisms underlie choices and strategic preferences in risky decision making. Neuron 62, 593–602.

Venkatraman, V., Rosati, A. G., Taren, A. A., and Huettel, S. A. (2009b). Resolving response, decision, and strategic control: Evidence for a functional topography in dorsomedial prefrontal cortex. J. Neurosci. 29, 13158–13164.

Weber, E. U., Shafir, S., and Blais, A. (2004). Predicting risk sensitivity in humans and lower animals: Risk as variance or coefficient of variation. Psychol. Rev. 111, 430–445.

Keywords: decision making, neuroeconomics, reward, uncertainty, value

Citation: Huettel SA (2010) Ten challenges for decision neuroscience. Front. Neurosci. 4:171. doi:10.3389/fnins.2010.00171

Received: 30 May 2010;

Paper pending published: 25 June 2010;

Accepted: 30 August 2010;

Published online: 21 September 2010

Edited by:

Read Montague, Baylor College of Medicine, USA

Reviewed by:

Antonio Rangel, California Institute of Technology, USA

Samuel McClure, Stanford University, USA

Nathaniel Daw, New York University, USA

Copyright: © 2010 Huettel. This is an open-access article subject to an exclusive license agreement between the authors and the Frontiers Research Foundation, which permits unrestricted use, distribution, and reproduction in any medium, provided the original authors and source are credited.

*Correspondence: Scott A. Huettel, Center for Cognitive Neuroscience, Box 90999, Duke University, Durham, NC 27708, USA. e-mail: scott.huettel@duke.edu