- ¹School of Automation, Chongqing University of Posts and Telecommunications, Chongqing, China

- ²Department of Integrated Chinese and Western Medicine, The Second Affiliated Hospital of Chongqing Medical University, Chongqing, China

- ³College of Business and Economics, California State University, Los Angeles, CA, United States

- ⁴Double Deuce Sports, Bowling Green, KY, United States

- ⁵Computer Information Systems Department, State University of New York at Buffalo State, Buffalo, NY, United States

- ⁶China Merchants Chongqing Communications Research and Design Institute Co., Ltd., Chongqing, China

As a type of biometric recognition, palmprint recognition uses the unique discriminative features of a person's palm to establish identity. It has attracted much attention because it is contactless, stable, and secure. Recently, many palmprint recognition methods based on convolutional neural networks (CNNs) have been proposed. However, CNNs are limited by the size of the convolutional kernel and lack the ability to extract global information from palmprints. This paper proposes GLGAnet, a framework that integrates a CNN and a Transformer for palmprint recognition and thereby combines the CNN's local information extraction with the Transformer's global modeling capability. A gating mechanism and an adaptive feature fusion module are also designed for palmprint feature extraction. The gating mechanism filters features with a feature selection algorithm, and the adaptive feature fusion module fuses them with the features extracted by the backbone network. In extensive experiments on two datasets, the recognition accuracy is 98.5% for 12,000 palmprints in the Tongji University dataset and 99.5% for 600 palmprints in the Hong Kong Polytechnic University dataset. These results indicate that the proposed method outperforms existing methods in recognition accuracy on both datasets. The source code will be available at https://github.com/Ywatery/GLnet.git.

1. Introduction

With the rapid development of information security, various identification technologies using human-specific biometric features have attracted widespread attention. Human-specific biometric features include face, gait, and fingerprints (Shi et al., 2020; Yang, 2020; Zhang et al., 2021). As an emerging biometric recognition technique, palmprint recognition can analyze various recognizable features, including main lines, ridges, minutiae, and textures (Zhang et al., 2018). Palmprint features are considered to be unique biometric information for each person, with a high degree of security and reliability. Research on palmprint recognition techniques is also receiving more and more attention from academia (Wang et al., 2019; Zhong et al., 2019; Fei et al., 2020a). Traditional palmprint recognition methods are mainly developed based on both texture and structure features (Fei et al., 2018). Many effective methods, such as apparent latent direction code (ALDC; Fei et al., 2019) and joint multi-view feature learning (JMvFL; Fei et al., 2020b), have been applied to palmprint recognition. Some researchers have also proposed techniques such as 2D palmprint recognition (Korichi et al., 2021) and 3D palmprint recognition (Yang et al., 2020) for different palmprint morphologies. Recently, new breakthroughs in palmprint recognition performance have been achieved by using deep learning methods (Ungureanu et al., 2020). Deep learning-based palmprint recognition methods can avoid the information loss caused by traditional hand-crafted features and significantly improve recognition performance (Zhong et al., 2019). For example, Liu et al. (2021) proposed a novel and effective end-to-end algorithm for palmprint recognition, known as the similarity metric hash network.

However, most current deep learning-based palmprint recognition methods have certain shortcomings. First, general image classification networks are applied to recognize palmprints (Zhong and Zhu, 2019), and these are not specially designed for palmprint features. In other words, the relationship between palmprint features involving fine details, texture, and global mainline information is not considered. Second, although some researchers have designed specialized palmprint recognition neural networks based on the characteristics of palmprints (Genovese et al., 2019), most of these networks are based on CNNs. Constrained by the size of the convolution kernel, a CNN can extract only local features of palmprints and cannot capture their global feature information. For palmprint recognition, it is therefore necessary to study network designs that extract global palmprint features. The Transformer, a framework based entirely on a self-attention mechanism with powerful modeling capabilities for global information, has been widely used in image feature extraction (Zhu et al., 2022, 2023; He et al., 2023).

This paper designs a network structure, GLGAnet, based on CNN and Transformer. The CNN is used to extract local features such as texture and fine details of the palmprint. The Transformer module is used to establish the connection between main lines and ridges in the palmprint features. In the network structure, the features extracted by each component of the GLGAnet backbone network are controlled by the designed gating mechanism. The proposed network structure is able to extract both global features, such as the main lines and ridges of the palmprint, and local features, such as palm texture and fine details. This paper makes three main contributions.

1. A new lightweight network, GLGANet, is designed to process local features (e.g., texture) and global features (e.g., main lines) of palmprints. The proposed network extracts local features of palmprints with deep convolutional layers and global feature information of palmprints with a Transformer.

2. A gating mechanism is designed based on the different features extracted by the deep convolutional layer and the Transformer module. The proposed gating mechanism first selects the features of the palmprint by using a feature selection algorithm, and then multiplies them with the features extracted by the backbone network to achieve control over the features extracted by the backbone network.

3. An adaptive convolutional fusion module is designed. This module performs dimensionality reduction and fusion of multi-level features extracted by the gating mechanism through multi-level convolution and single convolution.

The rest of this paper is organized as follows. Section 2 briefly introduces the related topics. Section 3 elaborates on the deep learning network structure we proposed for palmprint recognition. Section 4 presents the experimental results. Section 5 offers the concluding remarks.

2. Related work

2.1. Traditional palmprint recognition methods

After more than two decades of development, traditional palmprint recognition methods fall into two groups: hand-crafted methods and machine learning methods. The most typical hand-crafted feature recognition methods include Competitive code (Kong and Zhang, 2004), Palm code (Zhang et al., 2003), Fusion code (Kong et al., 2006), RLOC (Jia et al., 2008), and BOCV (Guo et al., 2009). These methods are characterized by fast recognition, but a distance measure is often used in the matching process, which may reduce recognition accuracy. For example, the competitive coding scheme (CompCode) proposed by Kong and Zhang (2004) uses several Gabor filters in different directions to encode the palmprint features and applies the Hamming distance to match palmprints. Traditional machine learning recognition methods typically include PCA (Wang et al., 2022), SVM (Yaddaden and Parent, 2022), histograms (Jia et al., 2013), etc. Compared with hand-crafted feature recognition methods, these methods are more stable and extract more palmprint features, at the cost of higher computational complexity. For example, Patil et al. (2015) extracted palmprint features by some discrete transforms, reduced the feature dimensionality using principal component analysis (PCA), and finally used the reduced features for matching.
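To make the distance-based matching step concrete, the following is a minimal sketch of a normalized Hamming distance between two binary feature codes. It is a generic illustration rather than the CompCode matcher itself: the function name `hamming_distance` and the example codes are hypothetical, and real palmprint codes are much longer and are usually compared under small translations.

```python
def hamming_distance(code_a, code_b):
    """Return the fraction of positions at which two binary codes differ.

    Both codes are assumed to be equal-length sequences of 0/1 values
    (a hypothetical stand-in for encoded palmprint features).
    """
    if len(code_a) != len(code_b):
        raise ValueError("codes must have the same length")
    differing = sum(a != b for a, b in zip(code_a, code_b))
    return differing / len(code_a)


# Two toy codes that differ in two of four positions.
probe = [1, 0, 1, 1]
template = [1, 1, 1, 0]
distance = hamming_distance(probe, template)
```

A smaller distance indicates a closer match; a decision threshold on this value would have to be chosen empirically.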

2.2. Deep learning based palmprint recognition methods

In recent years, CNN-based approaches have achieved remarkable performance on many computer vision tasks. Many deep learning-based recognition methods have also been proposed in the field of palmprint recognition. For example, Tarawneh et al. (2018) first used a pre-trained deep neural network, VGG-19 (Simonyan and Zisserman, 2014), to extract palmprint features and then used an SVM to match and classify palmprints. Dian and Dongmei (2016) used AlexNet (Krizhevsky et al., 2017) to extract palmprint features and then applied the Hausdorff distance to match and recognize palmprint images. Yang et al. (2017) proposed a deep learning and local coding approach. Zhang et al. (2018) proposed PalmRCNN, an improved Inception-ResNet-v1 network structure that introduces a center loss function for feature extraction. Matkowski et al. (2019) proposed an end-to-end palmprint recognition network (EE-PRnet). The network consists of two main networks, the ROI localization and alignment network (ROI-lanet) and the feature extraction and recognition network (FERnet). Most of these deep learning-based palmprint recognition methods are designed for image classification tasks, and none of them is designed around the characteristics of the main lines, fine details, and textures of palmprints. Other researchers have studied the characteristics of palmprints and have integrated deep learning into traditional hand-crafted design methods. As a typical approach, Genovese et al. (2019) proposed an unsupervised model, PalmNet, which trains palmprint-specific filters through an unsupervised process based on Gabor responses and principal component analysis (PCA). The method was evaluated on several datasets and achieved remarkable results. Also, Liang et al. (2021) proposed a competitive convolutional neural network (CompNet) with constrained learnable Gabor filters, which extracts palmprint features for recognition by training learnable Gabor convolutional kernels.
In addition, some researchers have designed lightweight neural networks for palmprint recognition so that recognition can run on edge devices. For example, Jia et al. (2022) proposed a lightweight palmprint recognition neural network (EEPNet), which incorporates five strategies, such as image stitching and image downscaling, to improve palmprint recognition performance. The deep learning-based methods mentioned above are limited by the receptive field of the convolutional kernel when extracting features. They cannot effectively extract the global contextual information of palmprints and can only perform matching based on local features. The proposed GLGAnet, which integrates CNN and Transformer architectures, can not only model the relationship between the main lines and ridges of palmprints but also effectively extract their texture information.

3. The proposed method

This section first introduces the general framework of the improved network architecture integrating CNN and Transformer. The functions of the individual modules are then described.

3.1. Network structure

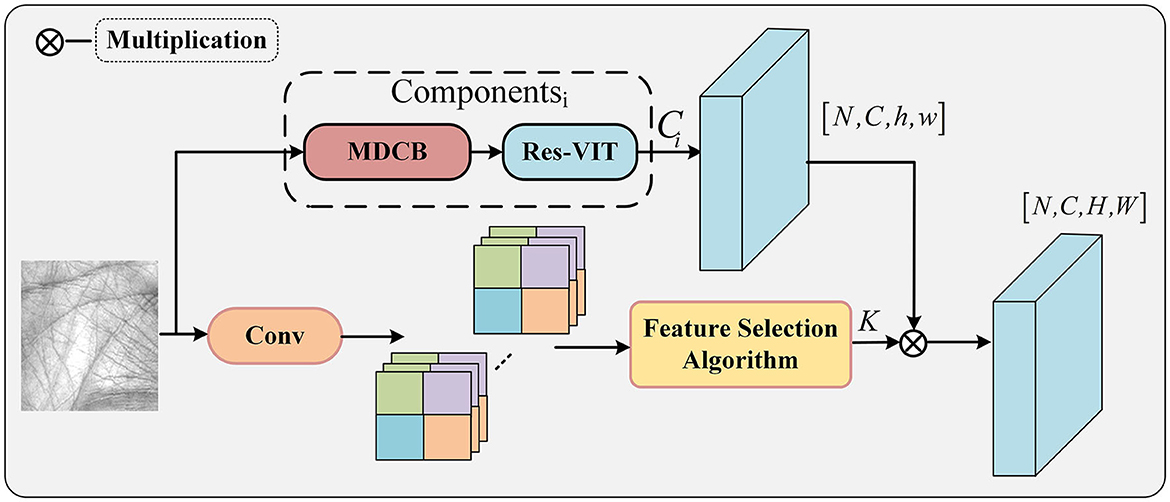

The proposed palmprint recognition framework is shown in Figure 1. It mainly consists of a multi-layer depth convolution module, a residual Vision Transformer (Res-VIT) module, a gating mechanism module, and an adaptive convolutional fusion module. The multi-layer depth convolution module is composed of depth-separable convolutional layers, which extract various local features of palmprints, such as ridge features and texture features, from the original palmprint image. The Res-VIT module uses the Transformer as its backbone structure to model the palmprint image globally and extract global contextual features of the palmprint, such as the orientation features of the three main lines in the palmprint. The gating mechanism module is built around a feature selection algorithm, which controls the features extracted by the multi-layer depth convolution module and the Res-VIT module. The adaptive convolution fusion module fuses the gated features through multi-level convolution and single-level convolution.

Figure 1. The structure of the proposed network. Xi denotes the output of the gating mechanism.

3.2. Multilayer deep convolution block and res-VIT block

Multiple multi-layer depth convolution blocks (MDCB) are used to extract local information from palmprints. Each block consists of a depth-separable convolution layer, two ordinary convolution layers, a regularization layer, and two activation layers. The depth-separable convolution learns the correlation of spatial features across channels of the palmprint image, such as local textures of the palmprint. Specifically, when the block receives the input palmprint image, it first expands the feature volume to multiple channels by ordinary convolution. The resulting features are then passed to the depth-separable convolution layer, and more local feature information of the palmprint is obtained recursively across multiple depth-separable convolution layers. The feature volume is then compressed by an ordinary convolution layer to the same number of channels as the input features. Finally, to avoid vanishing gradients during computation, the features are added to the original input to obtain the depth convolution feature output Fmap:

Here, X is the input of the multi-layer depth convolution module.
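Assuming the block applies exactly the steps described in the text (channel expansion, depth-separable convolution, channel compression, and a residual addition), its output can be sketched in symbols as follows. The operator names $\mathrm{Conv}_{\mathrm{exp}}$, $\mathrm{DWConv}$, and $\mathrm{Conv}_{\mathrm{comp}}$ are illustrative labels chosen here, and the regularization and activation layers are omitted for brevity, so this is a plausible form rather than a verbatim equation.

$$
F_{map} = X + \mathrm{Conv}_{\mathrm{comp}}\big(\mathrm{DWConv}\big(\mathrm{Conv}_{\mathrm{exp}}(X)\big)\big)
$$

The additive term $X$ is the skip connection: because the compressed features have the same number of channels as the input, the two can be summed element-wise.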

The most important structure in Res-VIT is the Vision Transformer. The Transformer is built entirely on a self-attention mechanism and has good global modeling capabilities. The input Y is linearly projected into Q, K, and V, which denote the query, key, and value, respectively, and the key dimension is used to scale the attention scores. The self-attention output is given by Equation (2):
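Assuming Equation (2) is the standard scaled dot-product attention, softmax(QKᵀ/√d)V with d the key dimension, a minimal pure-Python sketch of the computation is given below. The function names and the toy matrices are illustrative assumptions; a practical implementation would use batched tensor operations and multiple heads.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for lists of row vectors.

    Q has shape (n_q, d), K has shape (n_k, d), V has shape (n_k, d_v).
    Returns a list of n_q output vectors of length d_v.
    """
    d = len(K[0])
    outputs = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        )
    return outputs


Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
out = attention(Q, K, V)
```

Each output row is a convex combination of the value vectors, which is why every token can draw on information from all other tokens regardless of their spatial distance.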

Since the Transformer structure is built entirely on a self-attention mechanism, it lacks the inductive bias of convolution. Therefore, in the Res-VIT module, the input Y is first unfolded into M non-overlapping flattened blocks, where P = wh, and the relationships between the blocks are then encoded by applying the Transformer to obtain YG as:

Where λ is the number of Transformer blocks.

After encoding, Res-VIT loses neither the order of the individual blocks nor the order of the pixel space of the palmprint image within each block. Thus, by a collapse operation, YG is transformed to YU, and YU is then projected into the lower dimensional space by point-by-point convolution to obtain the features. Finally, the input features of the original palmprint are spliced with this feature by means of the channel adaptive convolution module.
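The order-preserving property can be illustrated with a small unfold/fold round trip. The sketch below assumes a single-channel image stored as a list of rows and a layout of P pixel positions by M blocks; the function names `unfold` and `fold` and the 4 × 4 toy image are hypothetical and only mirror the description above, not the released implementation.

```python
def unfold(image, h, w):
    """Split an H x W image into non-overlapping h x w blocks.

    Returns a P x M table (P = h * w pixel positions, M blocks) in which
    entry [p][m] is the pixel at position p inside block m.
    """
    H, W = len(image), len(image[0])
    assert H % h == 0 and W % w == 0, "image must tile evenly"
    blocks_per_row = W // w
    M = (H // h) * blocks_per_row
    P = h * w
    table = [[None] * M for _ in range(P)]
    for r in range(H):
        for c in range(W):
            m = (r // h) * blocks_per_row + (c // w)  # index of the block
            p = (r % h) * w + (c % w)                 # position inside the block
            table[p][m] = image[r][c]
    return table


def fold(table, H, W, h, w):
    """Inverse of unfold: rebuild the H x W image from the P x M table."""
    blocks_per_row = W // w
    image = [[None] * W for _ in range(H)]
    for p, row in enumerate(table):
        for m, value in enumerate(row):
            r = (m // blocks_per_row) * h + p // w
            c = (m % blocks_per_row) * w + p % w
            image[r][c] = value
    return image


image = [[r * 4 + c for c in range(4)] for r in range(4)]
table = unfold(image, 2, 2)          # P = 4 positions, M = 4 blocks
restored = fold(table, 4, 4, 2, 2)   # identical to the original image
```

Because both the block index and the within-block position are recoverable from the table indices, any encoding applied along the block axis can be folded back onto the original pixel grid.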

3.3. Gate control mechanism

The multi-layer depth convolution module extracts local feature information of the palmprint, and Res-VIT extracts global feature information of the palmprint. A gating mechanism is designed to process the extracted information using convolution and a feature selection algorithm. Its structure is shown schematically in Figure 2. Specifically, the palmprint image is first convolved to obtain preliminary palmprint features, and these features are then processed by the feature selection algorithm to select the more prominent features of the palmprint. The selected features are then multiplied with the features extracted by the multi-layer depth convolution and Res-VIT structures, which enhances the features extracted by those structures.

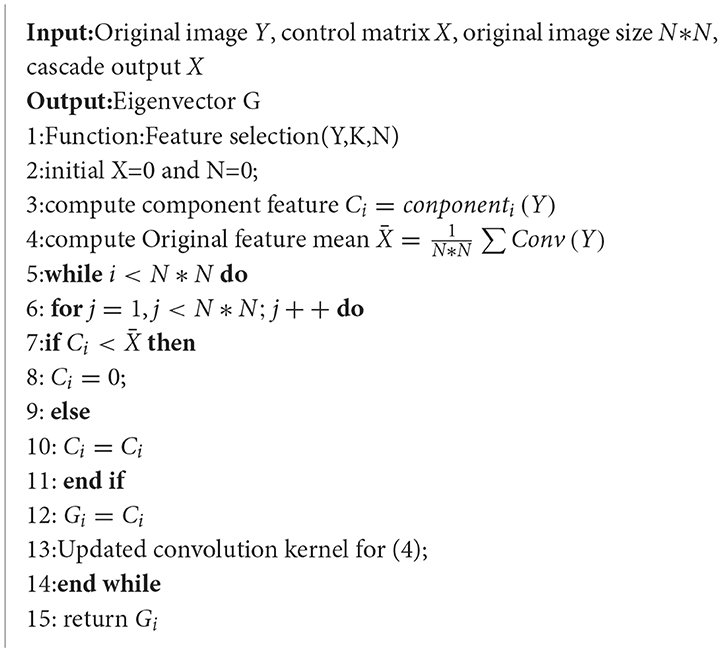

Feature selection algorithm: First, each input palmprint image is convolved, and the mean value of the resulting features is calculated. Second, feature values that are higher than the mean of the feature map are retained, and values that are lower than the mean are set to zero, yielding a selected feature map. Finally, this feature map is multiplied with the features of component i, so that the features obtained from component i become more prominent. The convolution kernel is updated once for each execution of the feature selection algorithm. The algorithm flow is shown in Algorithm 1.

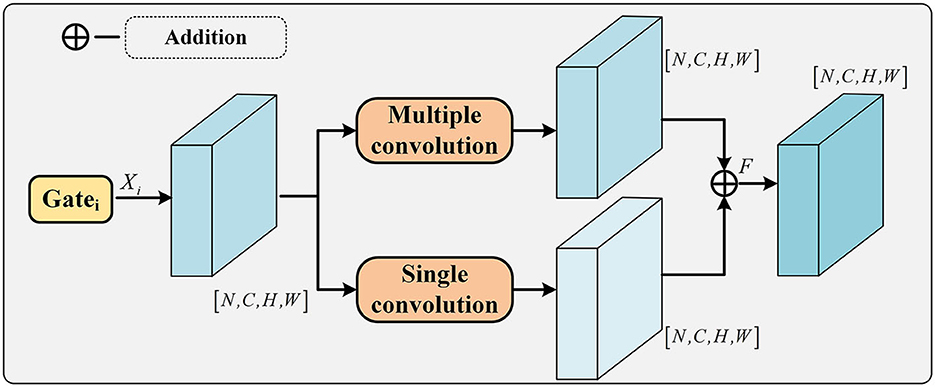
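The thresholding and gating steps of the feature selection algorithm can be sketched as follows. This is a simplified illustration under stated assumptions: the learned convolution is omitted (the input is treated as an already convolved feature map), values exactly equal to the mean are set to zero, and the function names `select_features` and `gate` are hypothetical.

```python
def select_features(feature_map):
    """Keep values above the mean of the feature map and zero the rest."""
    values = [v for row in feature_map for v in row]
    mean = sum(values) / len(values)
    # Assumption: a value equal to the mean is treated as "not above" it.
    return [[v if v > mean else 0.0 for v in row] for row in feature_map]


def gate(selected, component_features):
    """Multiply the selected map element-wise with component i's features."""
    return [
        [s * f for s, f in zip(s_row, f_row)]
        for s_row, f_row in zip(selected, component_features)
    ]


conv_features = [[1.0, 2.0], [3.0, 6.0]]       # toy convolved features, mean 3.0
component_i = [[1.0, 1.0], [1.0, 0.5]]         # toy features from component i
selected = select_features(conv_features)       # only 6.0 survives
gated = gate(selected, component_i)
```

In this toy case only the strongest response passes the gate, so the corresponding location of component i is emphasized while the other locations are suppressed.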

3.4. Adaptive convolution fusion module

Because the feature maps extracted by the gating mechanism are too large to be fused effectively with the feature maps of the backbone network, an adaptive convolutional fusion module is designed. A schematic diagram of the module is shown in Figure 3. The module combines multi-level convolution and single-level convolution, and it fuses the feature maps of each level output by the gating mechanism with the feature maps extracted by the backbone network. The single-level convolution consists of a convolutional layer, a combined pooling layer, and an activation layer. Specifically, in Figure 2, the output of each layer is fed into the adaptive convolution module by the gating mechanism. The features of the first two layers are resized by multi-level convolution in the adaptive convolution module, which also further extracts local features. The last three layers contain Res-VIT, which extracts the global contextual information of the palmprint; this information is compressed by single-level convolution in the adaptive convolution module. Finally, the features of the five layers are fused. The process can be represented by Equation (4).

Where Xi denotes the features output by the gating mechanism and F denotes the output features of the adaptive convolution module.
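Based only on the verbal description (multi-level convolution on the first two gated outputs, single-level convolution on the last three, then fusion of all five), the structure of the fusion step can be sketched as follows. The operator names $\mathrm{MC}$, $\mathrm{SC}$, and $\mathrm{Fuse}$ are assumptions introduced here for illustration, and the concrete fusion operation (for example concatenation or summation) is deliberately left unspecified.

$$
F = \mathrm{Fuse}\big(\mathrm{MC}(X_1),\ \mathrm{MC}(X_2),\ \mathrm{SC}(X_3),\ \mathrm{SC}(X_4),\ \mathrm{SC}(X_5)\big)
$$

Here $\mathrm{MC}$ stands for the multi-level convolution branch and $\mathrm{SC}$ for the single-level convolution branch.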

3.5. Loss function

Palmprint recognition is essentially a classification problem. To achieve good recognition performance, the network is trained with a cross-entropy loss function, which can be expressed by Equation (5).

Where M denotes the number of palmprint categories; yij is an indicator function (0 or 1) that takes 1 if the true category of palmprint sample i is equal to j and 0 otherwise; and pij denotes the predicted probability that the observed palmprint sample i belongs to category j.
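Assuming Equation (5) has the usual categorical cross-entropy form averaged over N samples, that is, the negative mean over i of the sum over the M categories j of y_ij · log(p_ij), where p_ij is the predicted probability of category j for sample i, the loss can be sketched as below. The averaging over N and the function name `cross_entropy` are assumptions; because y_ij is one-hot, only the probability of the true category contributes to each sample's term.

```python
import math


def cross_entropy(probs, labels):
    """Mean cross-entropy over N samples.

    probs[i][j] is the predicted probability that sample i belongs to
    category j; labels[i] is the index of the true category of sample i.
    """
    total = 0.0
    for p_i, true_j in zip(probs, labels):
        # With a one-hot target, the inner sum reduces to one log term.
        total += -math.log(p_i[true_j])
    return total / len(probs)


# Two toy samples with M = 3 categories.
probs = [[0.7, 0.2, 0.1], [0.25, 0.5, 0.25]]
labels = [0, 1]
loss = cross_entropy(probs, labels)
```

The loss approaches zero as the predicted probability of the true category approaches 1 and grows without bound as that probability approaches 0.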

4. Experiments

4.1. Experimental preparation

To verify the validity of the proposed method, experiments were conducted on the Tongji University palmprint dataset (Tongji) and the Hong Kong Polytechnic University palmprint dataset (Poly-U). The Tongji dataset contains contactless palmprint images collected from 300 volunteers, including 192 males and 108 females. All volunteers were from Tongji University in Shanghai, China. The images were collected in two sessions, with an average interval of ~2 months between the first and second sessions. In each session, 10 palmprint images were collected from each palm of each volunteer, so that 6,000 palmprint images were collected from 600 palms per session. In total, the Tongji dataset contains 12,000 (300 × 2 × 10 × 2) high-quality contactless palmprint images. A schematic diagram of the Tongji palmprint dataset is shown in Figure 4A. The Poly-U dataset contains contact palmprint images collected from 50 volunteers on two occasions. The Poly-U palmprint dataset is shown in Figure 4B.

Figure 4. Schematic diagram of the two palmprint datasets. (A) Tongji palmprint dataset. (B) Poly-U palmprint dataset.

The proposed model was implemented in PyTorch. For both the Tongji and Poly-U datasets, the input image size is 128 × 128 and the batch size is 8. Two NVIDIA TITAN RTX graphics cards were used for training. In the experiments, the number of Transformer blocks λ was set to 4. The optimizer is Adam, with all other parameters at their default values. The initial learning rate and weight decay for model training were 1e-4 and 1e-5, respectively. Each dataset was divided into a training set and a testing set at a 6:4 ratio: 7,200 images in the Tongji dataset are used for training and 4,800 for testing, and 360 images in the Poly-U dataset are used for training and 240 for testing.
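The dataset sizes and the 6:4 split quoted above can be checked with a few lines of arithmetic. The helper name `split_counts` is hypothetical; the sketch only reproduces the counts and does not say how individual images were assigned to each subset.

```python
def split_counts(total, train_parts=6, test_parts=4):
    """Return (train, test) sizes for a train_parts:test_parts split."""
    train = total * train_parts // (train_parts + test_parts)
    return train, total - train


# Tongji: volunteers x palms per volunteer x images per palm per session x sessions.
tongji_total = 300 * 2 * 10 * 2
tongji_train, tongji_test = split_counts(tongji_total)

# Poly-U: 600 images in total, as stated for this dataset.
polyu_train, polyu_test = split_counts(600)
```

The resulting counts match the training and testing set sizes reported for both datasets.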

4.2. Comparative experiments

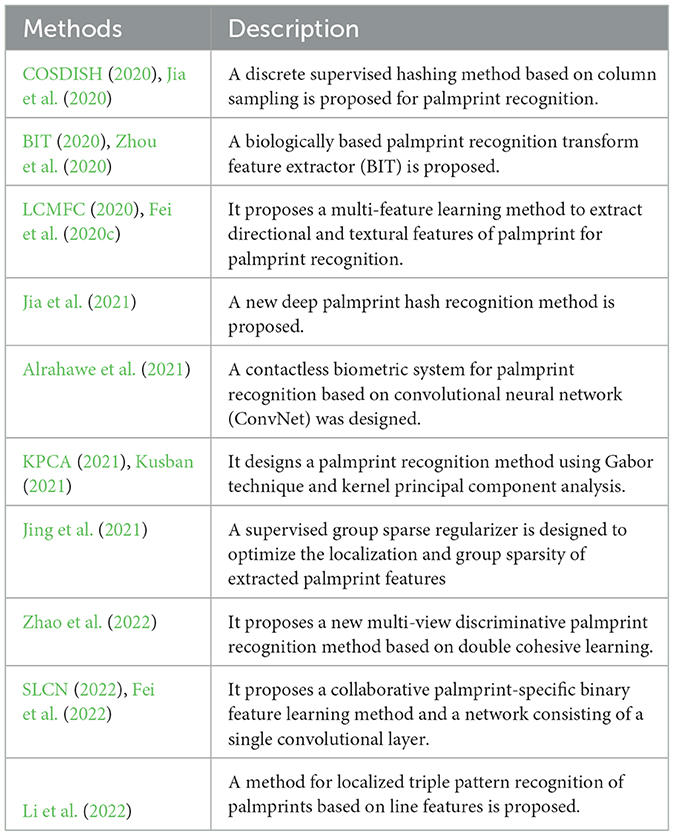

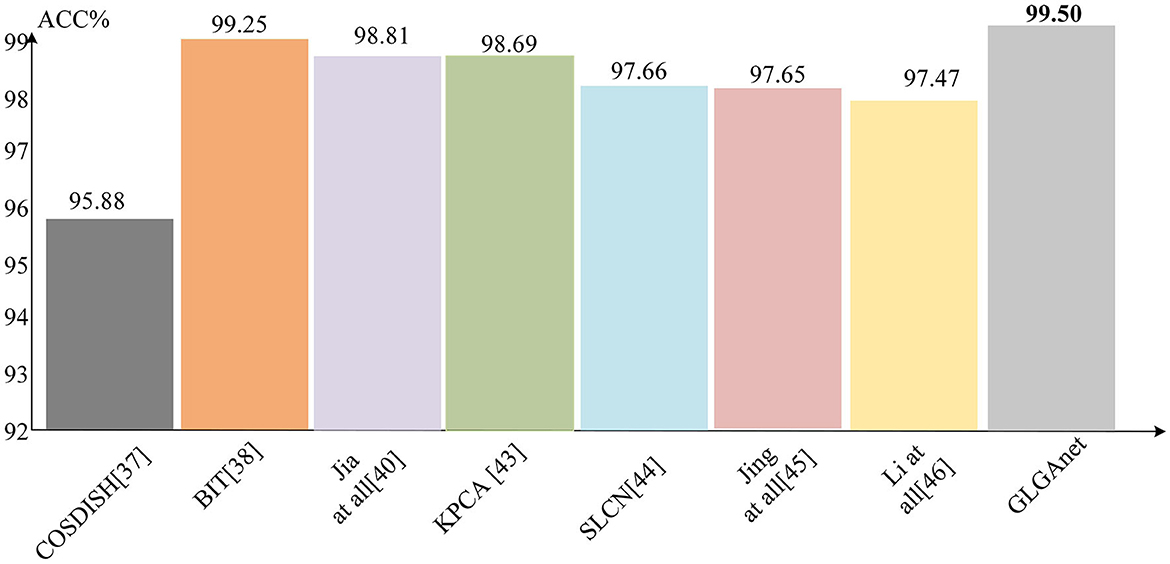

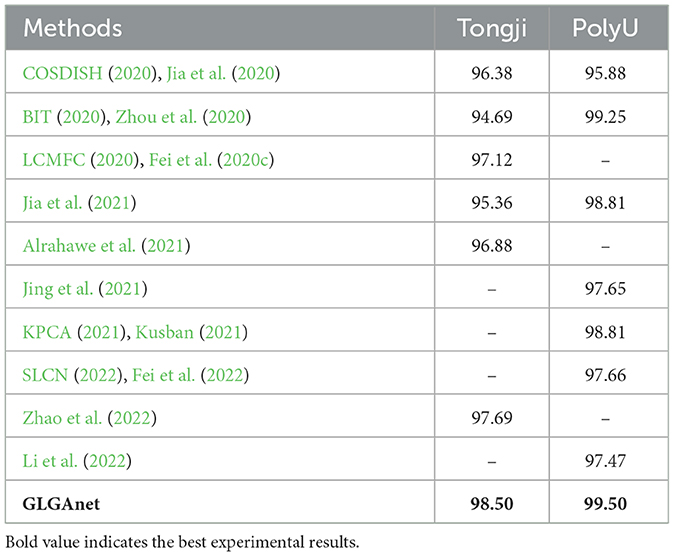

To validate the effectiveness of the proposed method in palmprint recognition, it is compared with several state-of-the-art recognition methods that were tested on the Tongji and Poly-U palmprint dataset benchmarks. These include traditional descriptor-based palmprint recognition methods (Fei et al., 2020c, 2022; Jia et al., 2020; Zhou et al., 2020; Kusban, 2021; Li et al., 2022; Zhao et al., 2022) and deep learning-based palmprint recognition methods (Alrahawe et al., 2021; Jia et al., 2021; Jing et al., 2021; Fei et al., 2022). A brief description of these methods is given in Table 1. The source codes of many existing palmprint recognition methods are not publicly available. To avoid biases introduced by retraining models, the results of the corresponding methods are taken directly from their publications, which is a common approach in palmprint recognition research. The recognition accuracy of the different methods on the Tongji University palmprint dataset and the Hong Kong Polytechnic University palmprint dataset is shown in Figures 5 and 6, respectively. The method with the highest recognition accuracy on each dataset is bolded in the corresponding bar.

Table 2 shows the correct recognition rates of the different methods on the two datasets. According to the results in Table 2, the proposed method achieves high performance on both datasets compared with the traditional and deep learning-based methods in existing work. The proposed method performs better on the Poly-U dataset, which can be attributed to the higher requirement for image alignment accuracy during that dataset's image acquisition phase. On the Tongji dataset, the next best method after the proposed one is a multi-view discriminative palmprint recognition method based on double cohesive learning proposed by Zhao et al. (2022), which improves palmprint recognition performance by using palmprint features from multiple views presented in a low-dimensional subspace. On the Poly-U dataset, the next best performer after the proposed method is a biologically inspired transform feature extractor (BIT) for palmprint recognition proposed by Zhou et al. (2020). This method recognizes palmprints by simulating the visual perception mechanism of simple cells; it is computationally complex, not robust, and cannot be directly applied to palmprints. The proposed method achieves stable recognition performance by controlling the transfer of features through a gating mechanism.

According to the results reported in Figures 5 and 6, the method proposed in this paper is competitive with the other methods. Specifically, on the Tongji University palmprint dataset, the recognition accuracy of the proposed method was 98.5%, outperforming the other methods by 2.12, 3.81, 1.38, 3.14, 1.62, and 0.81 percentage points, respectively. On the Hong Kong Polytechnic University palmprint dataset, the proposed method achieved a 99.8% recognition rate, outperforming the other methods by 3.62, 0.25, 0.69, 0.81, 1.84, 1.85, and 2.03 percentage points, respectively. On the Tongji University palmprint dataset, the existing convolutional neural network methods were not effective for palmprint recognition. For example, Jia et al. (2021) used a deep palmprint hash recognition method that obtained palmprint features through an ordinary convolutional neural network and neglected the global features of palmprints. The ordinary convolutional neural network was limited by the size of its receptive field and could not achieve good results even when a hash layer and multiple loss functions were used to jointly supervise the training process. Alrahawe et al. (2021) designed a palmprint recognition system based on a convolutional neural network that is limited by its size; the designed network can only extract some shallow information and cannot achieve high recognition accuracy. On the Poly-U dataset, some researchers have also designed convolutional neural networks for palmprint recognition. For example, Fei et al. (2022) proposed a collaborative palmprint-specific binary feature learning method and a compact network consisting of a single convolutional layer. The feature learning in this method uses a feature projection function, which loses some palmprint information and cannot achieve good recognition results.
In contrast, the proposed method uses a deep convolutional neural network to extract local features of the palmprint image in separate channels. The global features of the palmprint are then obtained using a Res-VIT structure based on a self-attention mechanism, which allows the designed network to avoid the shortcomings of the studies above. The network can therefore model and extract not only the local but also the global information of the palmprint. In addition, the proposed method uses a gating mechanism to select and control the features extracted by the deep convolutional neural network and Res-VIT, which further improves recognition performance.

4.3. Ablation experiments

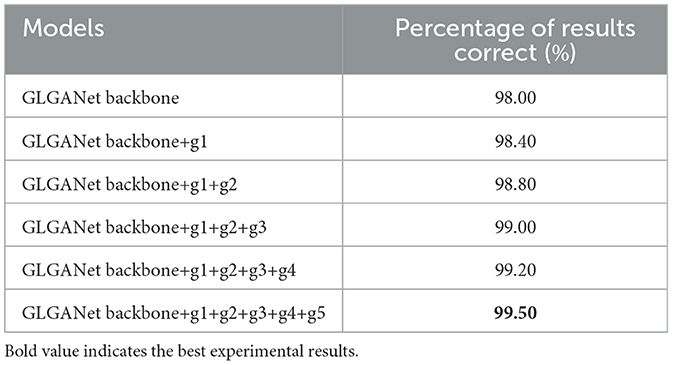

To further validate the importance and practical contribution of the backbone network and the designed modules (including the gating mechanism) used in this paper, relevant ablation experiments were conducted.

In this experiment, the GLGANet backbone is used as the baseline model, and the effectiveness of the gating mechanisms is verified by adding them one by one. Specifically, the performance of the following five models is compared:

- GLGANet backbone: a pre-trained model for palmprint recognition using the GLGANet backbone network, which is the baseline model.
- GLGANet backbone+G1: the first gating mechanism is added on top of the GLGANet backbone network to control the features extracted by the first deep convolutional layer.
- GLGANet backbone+G1+G2: a second gating mechanism is added to GLGANet backbone+G1 to control the features extracted by the first and second deep convolutional layers.
- GLGANet backbone+G1+G2+G3+G4: on top of the above model, a fourth gating mechanism is added to control the deeper global palmprint features extracted by the second Transformer structure.
- Full model: the proposed full model (GLGANet+G1+G2+G3+G4+G5).

A comparison of GLGANet backbone and GLGANet backbone+G1 demonstrates the effectiveness of the gating mechanism in controlling the selection of the local palmprint features extracted by the first depth convolutional layer. A comparison of GLGANet backbone+G1 and GLGANet backbone+G1+G2 shows that the gating mechanism is effective in controlling the selection of features extracted by the deep convolutional layers. Comparing GLGANet backbone+G1+G2 with GLGANet backbone+G1+G2+G3 and GLGANet backbone+G1+G2+G3+G4 verifies that the gating mechanism is effective not only in controlling the local palmprint features extracted by the depth convolution layers but also in controlling the global palmprint features extracted by the Transformer structure. Finally, comparing GLGANet backbone+G1+G2+G3+G4 with the full model verifies the effectiveness of the gating mechanism in controlling the local features and of the adaptive convolution fusion module in fusing the local and global features of the palmprint.

Table 3 lists the objective performance of the different models. Overall, the gating mechanisms described above improved the accuracy of the palmprint recognition results. However, the results did not improve when only the first two gating mechanisms were added. The reason is that the features extracted with the first two gating mechanisms were fused with the layer-level features extracted by the backbone network and then convolved and pooled several times, which lost some key information. Starting with the addition of the third gating mechanism, the results improved significantly. The improvement is most pronounced when all five gating mechanisms and the adaptive convolution module are added.

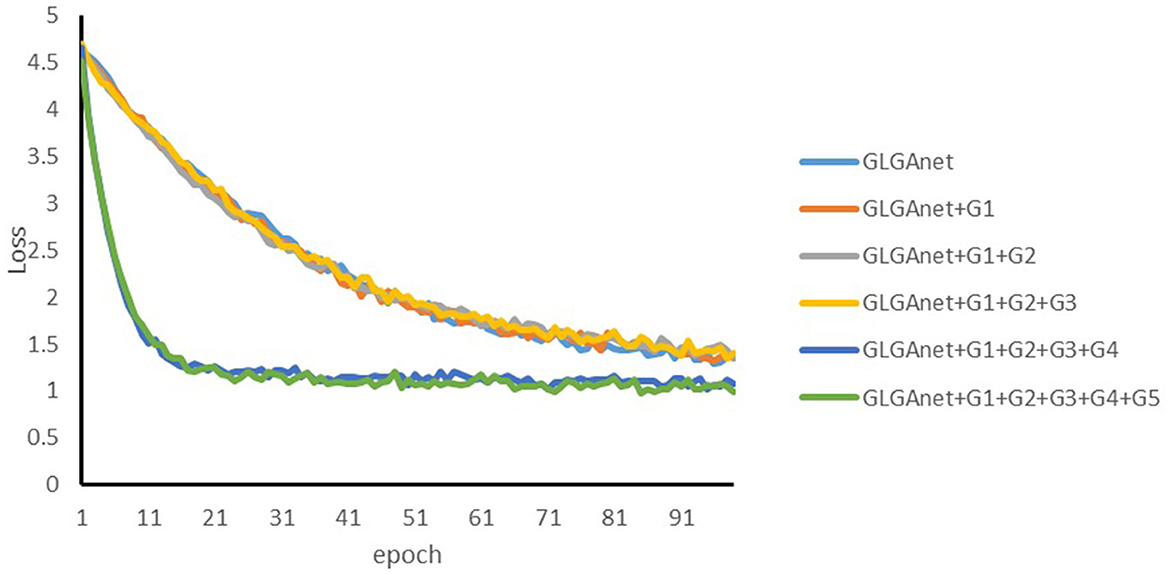

Figure 7 shows the convergence of the loss function during the training of the different models. As shown in Figure 7, the proposed method does not achieve the best result when only the first three gating mechanisms are added, which corresponds to the results of the ablation experiments (the effect improves significantly once the third gating mechanism is added). As the number of gating mechanisms increases, the convergence of the loss function further supports the effectiveness of the gating mechanisms. In particular, the loss function converges best, and the best recognition is achieved, when all five gating mechanisms are added.

5. Summary

This paper proposes a framework for palmprint recognition based on CNN and Transformer. The method extracts the local information of the palmprint with a CNN and the global contextual information of the palmprint with a Transformer. A gating mechanism is designed based on the difference between the features extracted by the CNN and the Transformer, and it is used to control the selection of the extracted local and global features. Furthermore, an adaptive convolutional fusion module is designed to effectively fuse the features obtained by the gating mechanism with those of the backbone network through single or multiple convolutions. Experimental results verify the effectiveness of the key components of the proposed method. The method also achieved better performance in benchmark tests on the Tongji palmprint dataset and the Poly-U dataset compared with state-of-the-art methods. Future work may focus on exploring the use of fewer gating mechanisms to achieve high accuracy in palmprint recognition and on extending the proposed method to other biometric recognition problems.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://cslinzhang.github.io/ContactlessPalm/.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This research was jointly sponsored by National Natural Science Foundation of China (82205049), Natural Science Foundation of Chongqing (cstc2020jcyj-msxmX0259), Chongqing Medical Scientific Research Project (Joint project of Chongqing Health Commission and Science and Technology Bureau, 2022MSXM184), and Kuanren Talents Program of the Second Affiliated Hospital of Chongqing Medical University, China, Research on intelligent control technology of highway tunnel operation based on digital twin, the Key Project of Science and Technology of Ministry of Transport of China (Grant No. 2021-MS6-145).

Conflict of interest

XY was employed by China Merchants Chongqing Communications Research and Design Institute Co., Ltd. YKJ was employed by Double Deuce Sports.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alrahawe, E. A., Humbe, V. T., and Shinde, G. (2021). A contactless palmprint biometric system based on CNN. Turkish J. Comput. Math. Educ. 12, 6344–6356. doi: 10.1109/eSmarTA52612.2021.9515726

BIT. (2020). A biologically inspired transform feature extractor for palmprint recognition, namely BIT.

COSDISH. (2020). A supervised hashing method for palmprint recognition- column sampling based discrete supervised hashing (COSDISH).

Dian, L., and Dongmei, S. (2016). “Contactless palmprint recognition based on convolutional neural network,” in 2016 IEEE 13th International Conference on Signal Processing (ICSP) (Chengdu: IEEE), 1363–1367. doi: 10.1109/ICSP.2016.7878049

Fei, L., Lu, G., Jia, W., Teng, S., and Zhang, D. (2018). Feature extraction methods for palmprint recognition: a survey and evaluation. IEEE Trans. Syst. Man Cybern. 49, 346–363. doi: 10.1109/TSMC.2018.2795609

Fei, L., Zhang, B., Jia, W., Wen, J., and Zhang, D. (2020a). Feature extraction for 3-d palmprint recognition: a survey. IEEE Trans. Instrum. Meas. 69, 645–656. doi: 10.1109/TIM.2020.2964076

Fei, L., Zhang, B., Teng, S., Guo, Z., Li, S., and Jia, W. (2020b). Joint multiview feature learning for hand-print recognition. IEEE Trans. Instrum. Meas. 69, 9743–9755. doi: 10.1109/TIM.2020.3002463

Fei, L., Zhang, B., Zhang, L., Jia, W., Wen, J., and Wu, J. (2020c). Learning compact multifeature codes for palmprint recognition from a single training image per palm. IEEE Trans. Multim. 23, 2930–2942. doi: 10.1109/TMM.2020.3019701

Fei, L., Zhang, B., Zhang, W., and Teng, S. (2019). Local apparent and latent direction extraction for palmprint recognition. Inform. Sci. 473, 59–72. doi: 10.1016/j.ins.2018.09.032

Fei, L., Zhao, S., Jia, W., Zhang, B., Wen, J., and Xu, Y. (2022). Toward efficient palmprint feature extraction by learning a single-layer convolution network. IEEE Trans. Neural Netw. Learn. Syst. doi: 10.1109/TNNLS.2022.3160597

Genovese, A., Piuri, V., Plataniotis, K. N., and Scotti, F. (2019). Palmnet: Gabor-PCA convolutional networks for touchless palmprint recognition. IEEE Trans. Inform. Forens. Sec. 14, 3160–3174. doi: 10.1109/TIFS.2019.2911165

Guo, Z., Zhang, D., Zhang, L., and Zuo, W. (2009). Palmprint verification using binary orientation co-occurrence vector. Pattern Recogn. Lett. 30, 1219–1227. doi: 10.1016/j.patrec.2009.05.010

He, X., Qi, G., Zhu, Z., Li, Y., Cong, B., and Bai, L. (2023). Medical image segmentation method based on multi-feature interaction and fusion over cloud computing. Simul. Model. Pract. Theory 126:102769. doi: 10.1016/j.simpat.2023.102769

Jia, W., Hu, R.-X., Lei, Y.-K., Zhao, Y., and Gui, J. (2013). Histogram of oriented lines for palmprint recognition. IEEE Trans. Syst. Man Cybern. 44, 385–395. doi: 10.1109/TSMC.2013.2258010

Jia, W., Huang, D.-S., and Zhang, D. (2008). Palmprint verification based on robust line orientation code. Pattern Recogn. 41, 1504–1513. doi: 10.1016/j.patcog.2007.10.011

Jia, W., Huang, S., Wang, B., Fei, L., Zhao, Y., and Min, H. (2021). “Deep multi-loss hashing network for palmprint retrieval and recognition,” in 2021 IEEE International Joint Conference on Biometrics (IJCB) (Shenzhen: IEEE), 1–8. doi: 10.1109/IJCB52358.2021.9484403

Jia, W., Ren, Q., Zhao, Y., Li, S., Min, H., and Chen, Y. (2022). EEPNet: an efficient and effective convolutional neural network for palmprint recognition. Pattern Recogn. Lett. 159, 140–149. doi: 10.1016/j.patrec.2022.05.015

Jia, W., Wang, B., Zhao, Y., Min, H., and Feng, H. (2020). A performance evaluation of hashing techniques for 2D and 3D palmprint retrieval and recognition. IEEE Sensors J. 20, 11864–11873. doi: 10.1109/JSEN.2020.2973357

Jing, K., Zhang, X., and Xu, X. (2021). Double-Laplacian mixture-error model-based supervised group-sparse coding for robust palmprint recognition. IEEE Trans. Circuits Syst. Video Technol. 32, 3125–3140. doi: 10.1109/TCSVT.2021.3103941

Kong, A., Zhang, D., and Kamel, M. (2006). Palmprint identification using feature-level fusion. Pattern Recogn. 39, 478–487. doi: 10.1016/j.patcog.2005.08.014

Kong, A.-K., and Zhang, D. (2004). “Competitive coding scheme for palmprint verification,” in Proceedings of the 17th International Conference on Pattern Recognition, Vol. 1 (Cambridge), 520–523. doi: 10.1109/ICPR.2004.1334184

Korichi, M., Samai, D., Meraoumia, A., and Benlamoudi, A. (2021). “Towards effective 2D and 3D palmprint recognition using transfer learning deep features and Reliff method,” in 2021 International Conference on Recent Advances in Mathematics and Informatics (ICRAMI) (Tebessa), 1–6. doi: 10.1109/ICRAMI52622.2021.9585911

KPCA. (2021). A dimensionality reduction method for palmprint image processing-kernel principal component analysis (KPCA).

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2017). ImageNet classification with deep convolutional neural networks. Commun. ACM 60, 84–90. doi: 10.1145/3065386

Kusban, M. (2021). Improvement palmprint recognition system by adjusting image data reference points. J. Phys. 1858, 012077. doi: 10.1088/1742-6596/1858/1/012077

LCMFC. (2020). A multifeature learning method to jointly learn compact multifeature codes (LCMFCs) for palmprint recognition with a single training sample per palmprint.

Li, M., Wang, H., Liu, H., and Meng, Q. (2022). Palmprint recognition based on the line feature local tri-directional patterns IET Biometr. 11, 570–580. doi: 10.1049/bme2.12085

Liang, X., Yang, J., Lu, G., and Zhang, D. (2021). CompNet: competitive neural network for palmprint recognition using learnable Gabor kernels. IEEE Signal Process. Lett. 28, 1739–1743. doi: 10.1109/LSP.2021.3103475

Liu, C., Zhong, D., and Shao, H. (2021). Few-shot palmprint recognition based on similarity metric hashing network. Neurocomputing 456, 540–549. doi: 10.1016/j.neucom.2020.07.153

Matkowski, W. M., Chai, T., and Kong, A. W. K. (2019). Palmprint recognition in uncontrolled and uncooperative environment. IEEE Trans. Inform. Forens. Secur. 15, 1601–1615. doi: 10.1109/TIFS.2019.2945183

Patil, J. P., Nayak, C., and Jain, M. (2015). “Palmprint recognition using DWT, DCT and PCA techniques,” in 2015 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC) (IEEE), 1–5. doi: 10.1109/ICCIC.2015.7435677

Shi, Y., Yu, X., Sohn, K., Chandraker, M., and Jain, A. K. (2020). “Towards universal representation learning for deep face recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (IEEE), 6817–6826. doi: 10.1109/CVPR42600.2020.00685

Simonyan, K., and Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv [Preprint]. arXiv: 1409.1556. doi: 10.48550/arXiv.1409.1556

Tarawneh, A. S., Chetverikov, D., and Hassanat, A. B. (2018). Pilot comparative study of different deep features for palmprint identification in low-quality images. arXiv [Preprint]. arXiv: 1804.04602. doi: 10.48550/arXiv.1804.04602

Ungureanu, A.-S., Salahuddin, S., and Corcoran, P. (2020). Toward unconstrained palmprint recognition on consumer devices: a literature review. IEEE Access 8, 86130–86148. doi: 10.1109/ACCESS.2020.2992219

Wang, J., Zhang, X., Gong, W., and Xu, X. (2019). “A summary of palmprint recognition technology,” in 2019 4th International Conference on Control, Robotics and Cybernetics (CRC) (Tokyo), 91–97. doi: 10.1109/CRC.2019.00027

Wang, J., Zhao, M., Xie, X., Zhang, L., and Zhu, W. (2022). Fusion of bilateral 2DPCA information for image reconstruction and recognition. Appl. Sci. 12, 12913. doi: 10.3390/app122412913

Yaddaden, Y., and Parent, J. (2022). “An efficient palmprint authentication system based on one-class SVM and hog descriptor,” in 2022 2nd International Conference on Advanced Electrical Engineering (ICAEE) (Constantine), 1–6. doi: 10.1109/ICAEE53772.2022.9962020

Yang, A., Zhang, J., Sun, Q., and Zhang, Q. (2017). “Palmprint recognition based on CNN and local coding features,” in 2017 6th International Conference on Computer Science and Network Technology (ICCSNT) (Dalian), 482–487. doi: 10.1109/ICCSNT.2017.8343744

Yang, B., Xiang, X., Yao, J., and Xu, D. (2020). 3D palmprint recognition using complete block wise descriptor. Multim. Tools Appl. 79, 21987–22006. doi: 10.1007/s11042-020-09000-7

Yang, M. (2020). “Fingerprint recognition system based on big data and multi-feature fusion,” in 2020 International Conference on Culture-Oriented Science & Technology (ICCST) (Beijing), 473–475. doi: 10.1109/ICCST50977.2020.00097

Zhang, D., Kong, W.-K., You, J., and Wong, M. (2003). Online palmprint identification. IEEE Trans. Pattern Anal. Mach. Intell. 25, 1041–1050. doi: 10.1109/TPAMI.2003.1227981

Zhang, L., Cheng, Z., Shen, Y., and Wang, D. (2018). Palmprint and palmvein recognition based on DCNN and a new large-scale contactless palmvein dataset. Symmetry 10:78. doi: 10.3390/sym10040078

Zhang, S., Wang, Y., and Li, A. (2021). “Cross-view gait recognition with deep universal linear embeddings,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Nashville, TN: IEEE), 9095–9104. doi: 10.1109/CVPR46437.2021.00898

Zhao, S., Wu, J., Fei, L., Zhang, B., and Zhao, P. (2022). Double-cohesion learning based multiview and discriminant palmprint recognition. Inform. Fus. 83, 96–109. doi: 10.1016/j.inffus.2022.03.005

Zhong, D., Du, X., and Zhong, K. (2019). Decade progress of palmprint recognition: a brief survey. Neurocomputing 328, 16–28. doi: 10.1016/j.neucom.2018.03.081

Zhong, D., and Zhu, J. (2019). Centralized large margin cosine loss for open-set deep palmprint recognition. IEEE Trans. Circuits Syst. Video Technol. 30, 1559–1568. doi: 10.1109/TCSVT.2019.2904283

Zhou, X., Zhou, K., and Shen, L. (2020). Rotation and translation invariant palmprint recognition with biologically inspired transform. IEEE Access 8, 80097–80119. doi: 10.1109/ACCESS.2020.2990736

Zhu, Z., He, X., Qi, G., Li, Y., Cong, B., and Liu, Y. (2023). Brain tumor segmentation based on the fusion of deep semantics and edge information in multimodal MRI. Inform. Fusion 91, 376–387. doi: 10.1016/j.inffus.2022.10.022

Keywords: palmprint recognition, convolutional neural networks (CNN), gate control mechanism, adaptive feature fusion, deep learning-based artificial neural networks

Citation: Zhang K, Xu G, Jin YK, Qi G, Yang X and Bai L (2023) Palmprint recognition based on gating mechanism and adaptive feature fusion. Front. Neurorobot. 17:1203962. doi: 10.3389/fnbot.2023.1203962

Received: 11 April 2023; Accepted: 08 May 2023;

Published: 26 May 2023.

Edited by:

Qian Jiang, Yunnan University, China

Reviewed by:

Alwin Poulose, Indian Institute of Science Education and Research, Thiruvananthapuram, India

Shuaiqi Liu, Hebei University, China

Xinghua Feng, Southwest University of Science and Technology, China

Copyright © 2023 Zhang, Xu, Jin, Qi, Yang and Bai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Litao Bai, blt@cqmu.edu.cn
