- ¹School of Information Engineering, Changsha Medical University, Changsha, China

- ²School of Information and Electrical Engineering, Hunan University of Science and Technology, Xiangtan, China

Dynamic complex matrix equations (DCMEs) are frequently encountered in mathematics and industry, and numerous recurrent neural network (RNN) models have been reported to solve DCMEs effectively in a noise-free environment. However, noise is unavoidable in reality, and dynamic systems are inevitably affected by it, so developing anti-noise neural network models is increasingly important. By introducing a new activation function (NAF), this paper proposes and investigates a robust zeroing neural network (RZNN) model for solving DCMEs in noise-polluted environments. The robustness and convergence of the proposed RZNN model are established by rigorous mathematical proof and verified by comparative numerical simulations. Furthermore, the proposed RZNN model is applied to manipulator trajectory tracking control and completes the tracking task successfully, which further validates its practical application prospects.

Introduction

Complex matrix problems frequently arise in mathematics and engineering, since complex matrices are widely used in signal processing (Liu et al., 2014), image quality assessment (Wang, 2012), joint diagonalization (Maurandi et al., 2013), and robot path tracking (Guo et al., 2019, 2020; Jin et al., 2020, 2022a,c,e; Shi et al., 2021, 2022a; Liu et al., 2022). Various numerical algorithms have been presented to solve complex matrix problems, such as the Newton iterative method (Rajbenbach et al., 1987) and the Greville recursive method (Gan and Ling, 2008). These iterative algorithms are effective for low-dimensional matrices, but their computational complexity grows with the dimension of the matrix, so the computational workload increases dramatically as the dimension increases. Moreover, with the development of big data science, large-scale computation has become unavoidable, and owing to their serial-processing nature, the limitations of iterative algorithms in large-scale computation have become increasingly apparent.

To address this issue, neural network methods have been proposed and extensively investigated, owing to their potential advantages of distributed storage and parallel computation in large-scale computation (Lin et al., 2022a,b; Zhou et al., 2022a,b). As a typical recurrent neural network (RNN), the gradient-based neural network (GNN) has been widely used to solve matrix problems in recent years (Liu et al., 2021; Jin et al., 2022b). For example, a GNN model activated by an odd activation function (AF) is presented in Zhang (2005) and solves the matrix inversion problem effectively, and an improved GNN model for solving linear inequalities is presented in Xiao and Zhang (2011). However, when applied to time-varying problems, GNN models can only approach the theoretical solutions with fluctuating errors rather than converging to them precisely, so they are mainly used to solve static problems. As time-varying problems are increasingly encountered with the development of engineering techniques, neural network models for solving time-varying problems are urgently needed.

It is worth mentioning that the zeroing neural network (ZNN) model for solving dynamic problems was proposed by Zhang and Ge (2005). Because the time derivatives of the coefficient matrices are fully exploited, the ZNN model obtains accurate solutions to dynamic problems, which makes it a powerful tool for such problems. In Li et al. (2013), a ZNN model activated by the sign-bi-power activation function (SBPAF) converges in finite time to the theoretical solution of the dynamic Sylvester equation. In Jin (2021b), a finite-time convergence recurrent neural network (FTCRNN) model is developed for solving time-varying complex matrix equations, and it converges faster than the conventional ZNN model. These improved ZNN models guarantee accurate and fast solutions to dynamic problems in an ideal noise-free environment. However, noise is unavoidable in reality, so anti-noise ability must be considered for all neural models, and many anti-noise neural models have been reported in recent years. In Jin et al. (2016), an integration-enhanced ZNN (IEZNN) model is reported for dynamic matrix inversion in noise-polluted environments. In Jin et al. (2017), a noise-tolerant ZNN (NTZNN) model is presented for solving dynamic problems in noisy environments. These anti-noise models work properly in noisy environments, but their finite-time convergence performance can be further improved, so improving the convergence and robustness of existing neural models remains an open problem. Moreover, previous neural models have focused on solving real-domain dynamic problems (Li et al., 2020, 2021, 2022; Gong and Jin, 2021; Jin, 2021a; Jin and Qiu, 2022; Jin et al., 2022d,f; Shi et al., 2022b; Zhu et al., 2022), whereas neural network research on complex-domain dynamic problems is also indispensable. Extending neural models to the complex domain allows various complex-domain scientific and engineering problems to be solved readily.

Motivated by the above issues, this work proposes a robust zeroing neural network (RZNN) model with fast convergence and robustness to noise for solving dynamic complex matrix equation (DCME) problems. Its fast convergence, which is independent of the initial system state, and its robustness to various noises are established by rigorous mathematical analysis. For comparison, the SBPAF-activated ZNN model is also applied to solve the DCME under the same conditions, and the simulation results further demonstrate the superior convergence and robustness of the proposed RZNN model for solving dynamic complex-domain problems.

The dynamic complex matrix equation and its transformation

In general, the DCME problem can be described by the following equation:

$$A(t)D(t) = B(t), \tag{1}$$

where A(t) ∈ C^{n×n} and B(t) ∈ C^{n×n} are known dynamic complex matrices, and D(t) ∈ C^{n×n} is the unknown dynamic complex matrix to be solved.

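Solving for D(t) directly in the complex domain is difficult. However, every complex matrix consists of a real part and an imaginary part, so D(t) can be obtained through the following transformation. Writing $A(t) = A_{re}(t) + jA_{im}(t)$, $B(t) = B_{re}(t) + jB_{im}(t)$, and $D(t) = D_{re}(t) + jD_{im}(t)$, where $j = \sqrt{-1}$, Eq. 1 becomes

$$\big(A_{re}(t) + jA_{im}(t)\big)\big(D_{re}(t) + jD_{im}(t)\big) = B_{re}(t) + jB_{im}(t). \tag{2}$$

Then, expanding Eq. 2 yields (the argument t is omitted for brevity)

$$A_{re}D_{re} + jA_{re}D_{im} + jA_{im}D_{re} - A_{im}D_{im} = B_{re} + jB_{im}. \tag{3}$$

Equating the real and imaginary parts, Eq. 3 simplifies to

$$\begin{cases} A_{re}(t)D_{re}(t) - A_{im}(t)D_{im}(t) = B_{re}(t), \\ A_{im}(t)D_{re}(t) + A_{re}(t)D_{im}(t) = B_{im}(t). \end{cases} \tag{4}$$

Equation 4 can be further rewritten in block-matrix form as

$$G(t)X(t) = H(t), \tag{5}$$

where $G(t) = \begin{bmatrix} A_{re}(t) & -A_{im}(t) \\ A_{im}(t) & A_{re}(t) \end{bmatrix} \in \mathbb{R}^{2n\times 2n}$, $X(t) = \begin{bmatrix} D_{re}(t) \\ D_{im}(t) \end{bmatrix} \in \mathbb{R}^{2n\times n}$, and $H(t) = \begin{bmatrix} B_{re}(t) \\ B_{im}(t) \end{bmatrix} \in \mathbb{R}^{2n\times n}$. We assume $\det(G(t)) \neq 0$ (equivalently, A(t) is nonsingular, since $\det(G(t)) = |\det(A(t))|^2$) to guarantee a unique solution of Eq. 5 for $t \in [0, +\infty)$.

Based on the above transformation, solving DCME (1) is equivalent to finding the solution of the real-domain dynamic matrix equation (DME) (5), and the solution $X(t) = [D_{re}(t); D_{im}(t)]$ of Eq. 5 yields $D(t) = D_{re}(t) + jD_{im}(t)$.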
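The real block-matrix form of the complex equation can be checked numerically at a single, frozen time instant. The sketch below assumes the DCME has the form A(t)D(t) = B(t), that G = [[A_re, −A_im], [A_im, A_re]], and that X stacks D_re over D_im; the random complex matrices are hypothetical test data, not the coefficient matrices of Example 1.

```python
import numpy as np

def complex_to_real_system(A, B):
    """Build G and H of the real-domain equation G X = H from A D = B."""
    G = np.block([[A.real, -A.imag],
                  [A.imag,  A.real]])       # 2n x 2n real matrix
    H = np.vstack([B.real, B.imag])         # 2n x n real matrix
    return G, H

def real_solution_to_complex(X, n):
    """Recover D = D_re + j D_im from the stacked real solution X = [D_re; D_im]."""
    return X[:n] + 1j * X[n:]

rng = np.random.default_rng(0)
n = 3
A = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
B = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))

G, H = complex_to_real_system(A, B)
X = np.linalg.solve(G, H)                   # real-domain solve
D = real_solution_to_complex(X, n)

print(np.allclose(A @ D, B))                                       # expected: True
print(np.isclose(np.linalg.det(G), abs(np.linalg.det(A)) ** 2))    # det(G) = |det(A)|^2
```

The second check illustrates why assuming G(t) nonsingular is the same as assuming A(t) nonsingular.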

Zeroing neural network and robust zeroing neural network models

We can follow the steps below to construct the ZNN model for solving DME (5).

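Firstly, we define the dynamic error matrix

$$E(t) = G(t)X(t) - H(t). \tag{6}$$

Then, design formula (7) is applied to force E(t) to converge to zero:

$$\dot{E}(t) = -\gamma\,\Gamma\big(E(t)\big), \tag{7}$$

where γ > 0 is an adjustable coefficient that scales the convergence speed, and Γ(·) is an AF array that acts element-wise on E(t).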
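As a worked instance of design formula (7), $\dot{E}(t) = -\gamma\Gamma(E(t))$, assume the linear AF $\Gamma(E) = E$ (the simplest admissible choice, not the AF proposed in this paper). Each element of E(t) then obeys an independent scalar equation:

$$\dot{e}_{ij}(t) = -\gamma\, e_{ij}(t) \;\Longrightarrow\; e_{ij}(t) = e_{ij}(0)\, e^{-\gamma t}, \qquad \|E(t)\|_F = \|E(0)\|_F\, e^{-\gamma t}.$$

A larger γ therefore speeds up convergence, but with a linear AF the error vanishes only asymptotically; convergence within a finite or fixed time requires a nonlinear AF.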

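Since $\dot{E}(t) = \dot{G}(t)X(t) + G(t)\dot{X}(t) - \dot{H}(t)$, combining (6) and (7) yields the ZNN model for solving DME (5):

$$G(t)\dot{X}(t) = -\dot{G}(t)X(t) + \dot{H}(t) - \gamma\,\Gamma\big(G(t)X(t) - H(t)\big). \tag{8}$$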
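A minimal sketch of how ZNN model (8) can be simulated: at each step, (8) is solved for Ẋ(t) and integrated with forward Euler. The 2 × 2 coefficient matrix G(t), the right-hand side H(t), the linear AF, γ = 10, and the step size are all hypothetical choices for illustration; the simulations in this paper use the SBPAF and AF (9) with the matrices of Example 1.

```python
import numpy as np

def linear_af(E):
    return E  # linear activation Gamma(E) = E (illustrative choice)

# Hypothetical time-varying coefficients; det G(t) = (3 + sin t)^2 + cos^2 t > 0.
def G(t):
    return np.array([[3 + np.sin(t), np.cos(t)],
                     [-np.cos(t), 3 + np.sin(t)]])

def dG(t):
    return np.array([[np.cos(t), -np.sin(t)],
                     [np.sin(t), np.cos(t)]])

def H(t):
    return np.array([[np.sin(t)], [np.cos(t)]])

def dH(t):
    return np.array([[np.cos(t)], [-np.sin(t)]])

def znn_step(X, t, dt, gamma, af):
    """One forward-Euler step of G X' = -G' X + H' - gamma * af(G X - H)."""
    E = G(t) @ X - H(t)
    rhs = -dG(t) @ X + dH(t) - gamma * af(E)
    X_dot = np.linalg.solve(G(t), rhs)
    return X + dt * X_dot

def simulate(X0, T=5.0, dt=1e-3, gamma=10.0, af=linear_af):
    X, t = X0.copy(), 0.0
    for _ in range(int(round(T / dt))):
        X = znn_step(X, t, dt, gamma, af)
        t += dt
    return X, t

X_end, t_end = simulate(np.array([[5.0], [-5.0]]))
residual = np.linalg.norm(G(t_end) @ X_end - H(t_end), "fro")
print(f"residual at t = {t_end:.2f}: {residual:.2e}")
```

Solving the linear system with `np.linalg.solve` instead of forming G(t)⁻¹ explicitly is the usual, numerically safer choice; any other AF with the same element-wise signature can be passed as `af`.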

It is worth pointing out that ZNN model (8) is stable as long as each element of the AF array Γ(·) is a monotonically increasing odd function. As a vital part of the ZNN model, the AF Γ(·) strongly influences its convergence and robustness, and various AFs have been reported in recent years, such as the linear AF, the bi-power AF, and the SBPAF. In the simulation section, the SBPAF is adopted as Γ(·) in ZNN model (8) for comparison with the proposed RZNN model.
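For reference, the SBPAF used as Γ(·) in the comparisons can be sketched as follows. We assume the form commonly quoted in the ZNN literature, φ(x) = (|x|^r + |x|^{1/r}) sgn(x)/2 with 0 < r < 1 (r = 0.5 is an arbitrary choice here); the code also checks numerically the two properties required for stability of model (8), oddness and monotonic increase.

```python
import numpy as np

def sbpaf(x, r=0.5):
    """Sign-bi-power AF, assumed form (|x|^r + |x|^(1/r)) * sgn(x) / 2 with 0 < r < 1."""
    x = np.asarray(x, dtype=float)
    return 0.5 * (np.abs(x) ** r + np.abs(x) ** (1.0 / r)) * np.sign(x)

xs = np.linspace(-3.0, 3.0, 601)
ys = sbpaf(xs)
print(np.allclose(sbpaf(-xs), -ys))       # odd function
print(bool(np.all(np.diff(ys) > 0)))     # monotonically increasing on the grid
```

The |x|^r term dominates near zero and the |x|^{1/r} term dominates for large |x|, which is the intuition behind its fast convergence.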

To further improve the convergence and robustness of the ZNN model, a new AF is presented below.

where sgn(·) is the signum function and m, k, a, b > 0, mk > 1.

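On the basis of the new AF (9), the RZNN model proposed in this work is obtained by replacing Γ(·) in (8) with the AF array Φ(·):

$$G(t)\dot{X}(t) = -\dot{G}(t)X(t) + \dot{H}(t) - \gamma\,\Phi\big(G(t)X(t) - H(t)\big), \tag{10}$$

where φ(·), defined in (9), is the corresponding element of the AF array Φ(·).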

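When noise is taken into account, the noise-polluted RZNN model is represented as

$$G(t)\dot{X}(t) = -\dot{G}(t)X(t) + \dot{H}(t) - \gamma\,\Phi\big(G(t)X(t) - H(t)\big) + N(t), \tag{11}$$

where N(t) denotes the additive matrix noise, and $n_{ij}(t)$ is its ijth element.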

Robust zeroing neural network model analysis

In this section, the convergence and robustness of the proposed RZNN model will be analyzed and verified. For the convenience of subsequent analysis, the following Lemma 1 is introduced in advance.

Lemma 1. Consider the following dynamic system (Aouiti and Miaadi, 2020)

where m, k, a, b > 0, mk > 1. Dynamic system (12) is fixed-time stable, and x(t) will converge to zero within t.
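The key property in Lemma 1, a settling time bounded independently of the initial state, can be illustrated numerically. The exact form of system (12) is not reproduced in this text, so the sketch below assumes, for illustration only, the scalar dynamics ẋ = −(a|x|^m + b)^k sgn(x) with the same constraints m, k, a, b > 0 and mk > 1. Under this assumption, the settling time from x(0) is T(x(0)) = ∫₀^{|x(0)|} dv / (a v^m + b)^k, and splitting the integral at v* = (b/a)^{1/m} gives the bound T ≤ (b/a)^{1/m} · mk / (b^k (mk − 1)). The parameter values are hypothetical.

```python
import numpy as np

def settling_time(x0, a, b, m, k, num=200_001):
    """T(x0) = integral_0^{|x0|} dv / (a v^m + b)^k: time for v = |x| to reach 0
    under dv/dt = -(a v^m + b)^k, evaluated by the trapezoidal rule on a log grid."""
    x0 = abs(float(x0))
    v = np.concatenate(([0.0], np.geomspace(1e-9, x0, num)))
    f = 1.0 / (a * v**m + b) ** k
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(v)))

def fixed_time_bound(a, b, m, k):
    """Upper bound (b/a)^(1/m) * mk / (b^k (mk - 1)), valid when mk > 1."""
    assert min(a, b, m, k) > 0 and m * k > 1
    return (b / a) ** (1.0 / m) * m * k / (b**k * (m * k - 1))

a, b, m, k = 2.0, 0.5, 2.0, 0.75          # hypothetical values, mk = 1.5 > 1
bound = fixed_time_bound(a, b, m, k)
for x0 in (1.0, 1e2, 1e4, 1e6):
    T = settling_time(x0, a, b, m, k)
    print(f"|x(0)| = {x0:8.0e}   settling time = {T:.4f}   (bound {bound:.4f})")
```

The computed settling times level off below the bound as |x(0)| grows by six orders of magnitude, which is the behavior that "fixed-time stable" describes.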

On the basis of Lemma 1, the convergence and robustness of the proposed RZNN model without noise and with noise are analyzed below.

Case 1: Without noise

Theorem 1 below guarantees the fixed-time convergence of the proposed RZNN model (10) in a noise-free environment.

Theorem 1. For an arbitrary initial state, the state solution X(t) generated by RZNN model (10) converges to the theoretical solution X*(t) of DME (5) within a fixed time $t_s$.

Proof. According to (7), the design formula of RZNN model (10) is $\dot{E}(t) = -\gamma\,\Phi(E(t))$, and its element-wise subsystems can be written as

$$\dot{e}_{ij}(t) = -\gamma\,\phi\big(e_{ij}(t)\big). \tag{14}$$

Consider the Lyapunov candidate function $v_{ij}(t) = |e_{ij}(t)|$, and substitute AF (9) into (14); then the time derivative of $v_{ij}(t)$ is

Based on Lemma 1, the convergence time $t_{ij}$ of the ijth subsystem is

Then, the upper bound $t_s$ of the convergence time of RZNN model (10) follows as the maximum of $t_{ij}$ over all subsystems.

Case 2: Polluted by dynamic bounded disappearing noise (DBDN)

Theorem 2 below guarantees the fixed-time convergence of the proposed RZNN model (11) polluted by DBDN.

Theorem 2. Suppose N(t) in (11) is a DBDN satisfying $|n_{ij}(t)| \le \delta |e_{ij}(t)|$, and $\lambda c \ge \delta$ with $\delta \in (0, +\infty)$. Then, for an arbitrary initial state, the state solution X(t) generated by RZNN model (11) converges to the theoretical solution X*(t) of DME (5) within a fixed time $t_s$.

Proof. The evolution formula of RZNN model (11) with noise can be written as $\dot{E}(t) = -\gamma\,\Phi(E(t)) + N(t)$, and its element-wise subsystems are

$$\dot{e}_{ij}(t) = -\gamma\,\phi\big(e_{ij}(t)\big) + n_{ij}(t). \tag{16}$$

Consider the Lyapunov candidate function $v_{ij}(t) = |e_{ij}(t)|$ and the inequalities $|n_{ij}(t)| \le \delta |e_{ij}(t)|$ and $\lambda c \ge \delta$, and substitute AF (9) into (16); then the time derivative of $v_{ij}(t)$ is

Based on Lemma 1, the convergence time $t_{ij}$ of the ijth subsystem is

Then, the upper bound $t_s$ of the convergence time of RZNN model (11) polluted by DBDN follows as the maximum of $t_{ij}$ over all subsystems.

Case 3: Polluted by dynamic bounded non-disappearing noise (DBNDN)

Theorem 3 below guarantees the fixed-time convergence of the proposed RZNN model (11) polluted by DBNDN.

Theorem 3. Suppose N(t) in (11) is a DBNDN satisfying $|n_{ij}(t)| \le \delta$, and $\lambda d \ge \delta$ with $\delta \in (0, +\infty)$. Then, for an arbitrary initial state, the state solution X(t) generated by RZNN model (11) converges to the theoretical solution X*(t) of DME (5) within a fixed time $t_s$.

Proof. The evolution formula of RZNN model (11) with noise can be written as $\dot{E}(t) = -\gamma\,\Phi(E(t)) + N(t)$, and its element-wise subsystems are

$$\dot{e}_{ij}(t) = -\gamma\,\phi\big(e_{ij}(t)\big) + n_{ij}(t). \tag{18}$$

Consider the Lyapunov candidate function $v_{ij}(t) = |e_{ij}(t)|$ and the inequalities $|n_{ij}(t)| \le \delta$ and $\lambda d \ge \delta$, and substitute AF (9) into (18); then the time derivative of $v_{ij}(t)$ is

According to Lemma 1, the convergence time $t_{ij}$ of the ijth subsystem is

Then, the upper bound $t_s$ of the convergence time of RZNN model (11) polluted by DBNDN follows as the maximum of $t_{ij}$ over all subsystems.
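The mechanism behind Theorem 3, an activation gain that dominates a bounded non-vanishing noise, can be illustrated on a single scalar error subsystem ė = −γφ(e) + n with constant noise n = 0.5 (the value used in the simulations). This is an assumption-laden sketch, not AF (9): it compares a linear AF with a hypothetical AF that adds a sign term of gain d = 1 > δ = 0.5.

```python
import numpy as np

def simulate_scalar(af, gamma=1.0, noise=0.5, e0=2.0, T=20.0, dt=1e-3):
    """Forward-Euler simulation of one error subsystem e' = -gamma * af(e) + noise."""
    e = e0
    for _ in range(int(round(T / dt))):
        e = e + dt * (-gamma * af(e) + noise)
    return e

linear = lambda e: e
# Hypothetical robust AF: linear part plus a sign term whose gain (1.0) exceeds the noise bound (0.5).
robust = lambda e: e + 1.0 * np.sign(e)

print(f"linear AF: e(T) = {simulate_scalar(linear):.4f}")   # approx noise / gamma = 0.5
print(f"robust AF: e(T) = {simulate_scalar(robust):.4f}")   # chatters around 0 with amplitude of order dt
```

With the linear AF the error settles at n/γ and never vanishes, which mirrors the residual floor that a noise-sensitive model exhibits; with the dominating sign term the error is driven to zero, up to discretization chattering.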

Based on the above theorems, the proposed RZNN model converges to the theoretical solution of DME (5) within a fixed time and is robust to both DBDN and DBNDN.

Numerical verification and robotic manipulator application

The convergence and robustness of the proposed RZNN model in noisy environments were analyzed in the previous section. In this section, two examples are presented: solving DCME (1) with the proposed RZNN model, and robotic manipulator trajectory tracking.

Example 1. DCME (1) solving

Consider DCME (1) with the following dynamic coefficient matrices.

According to the transformation theory in Section “The dynamic complex matrix equation and its transformation,” the above DCME (1) can be transformed to DME (5) with the following dynamic coefficient matrices.

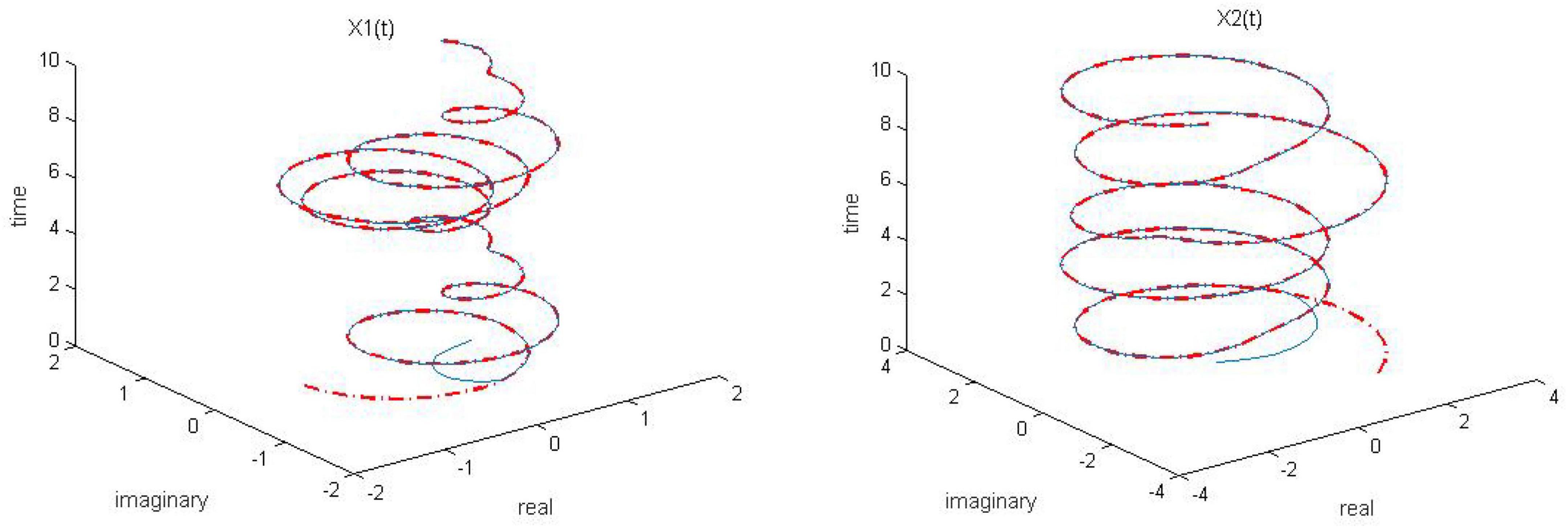

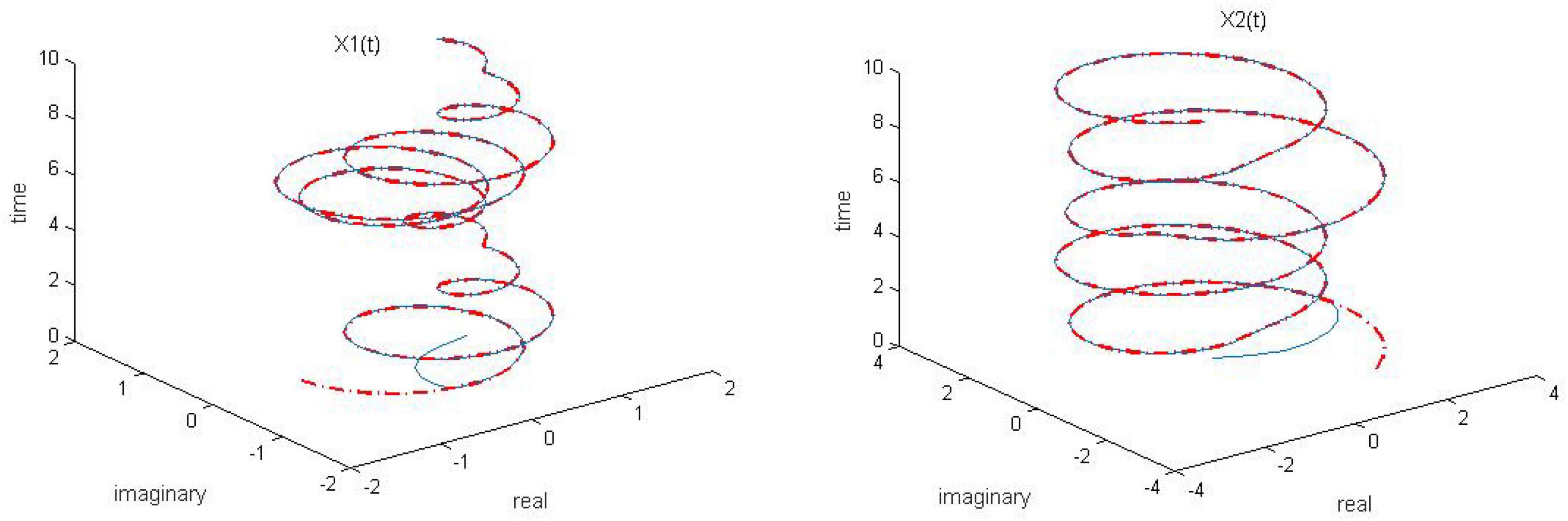

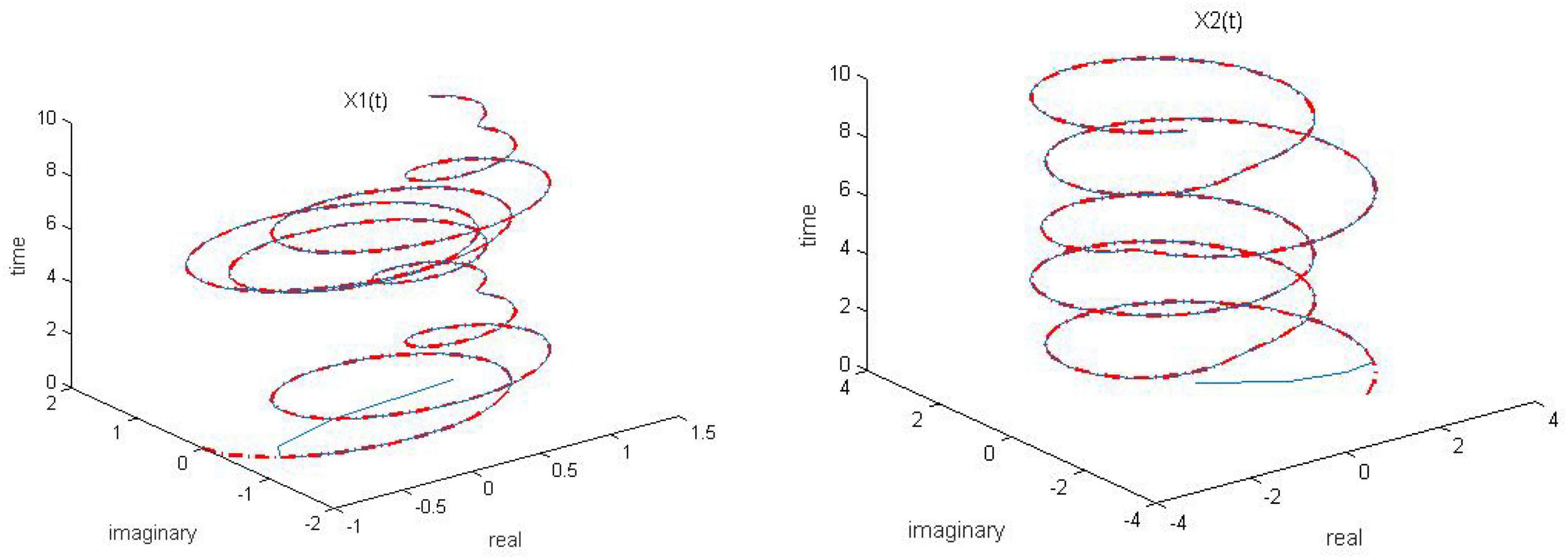

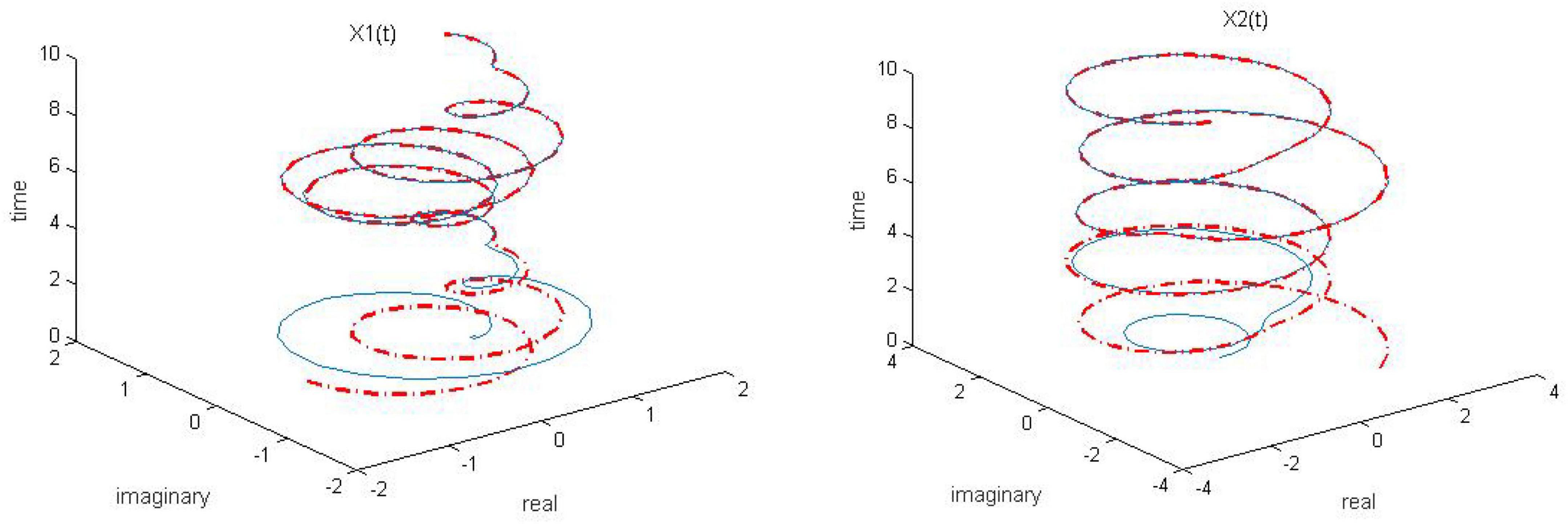

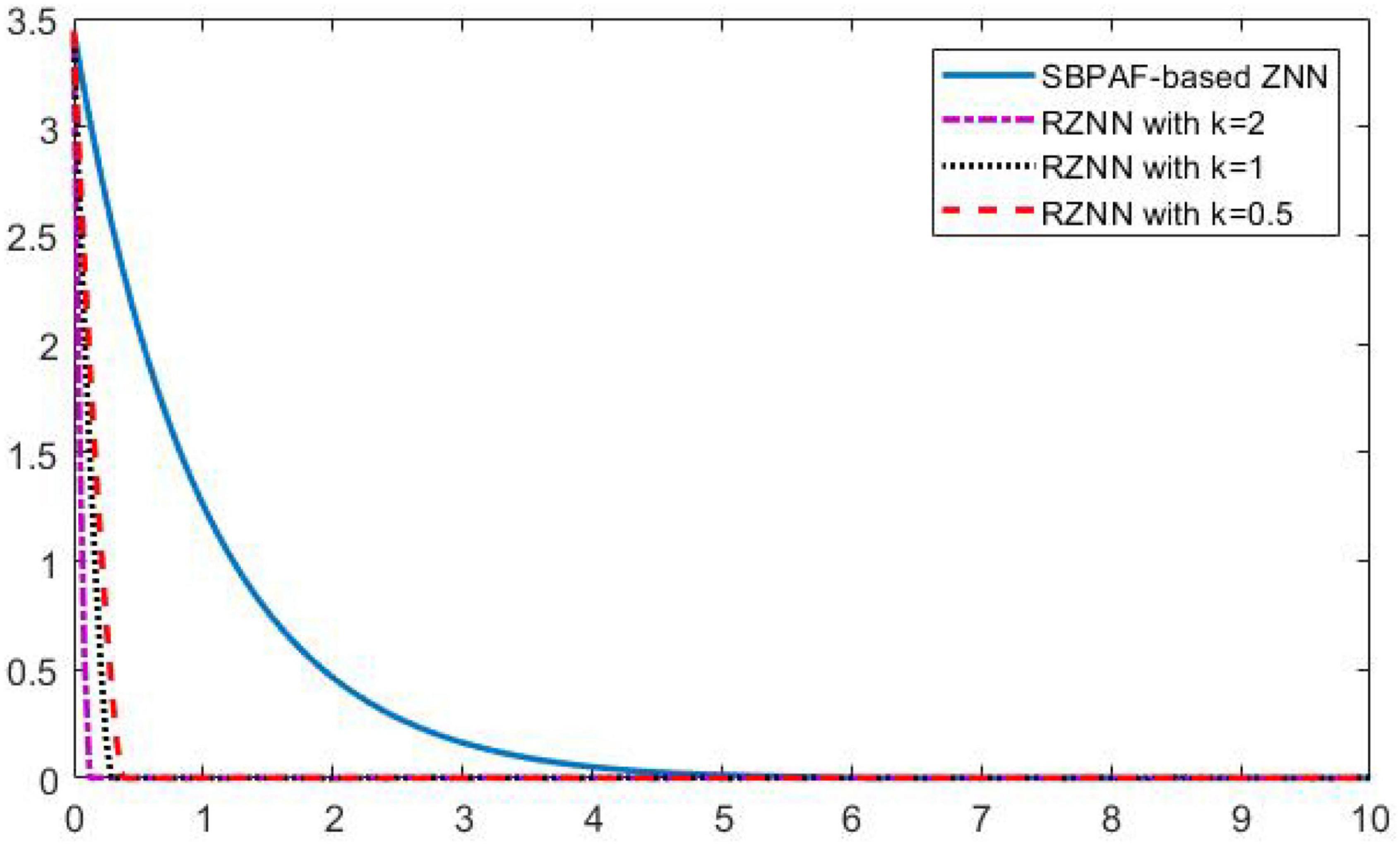

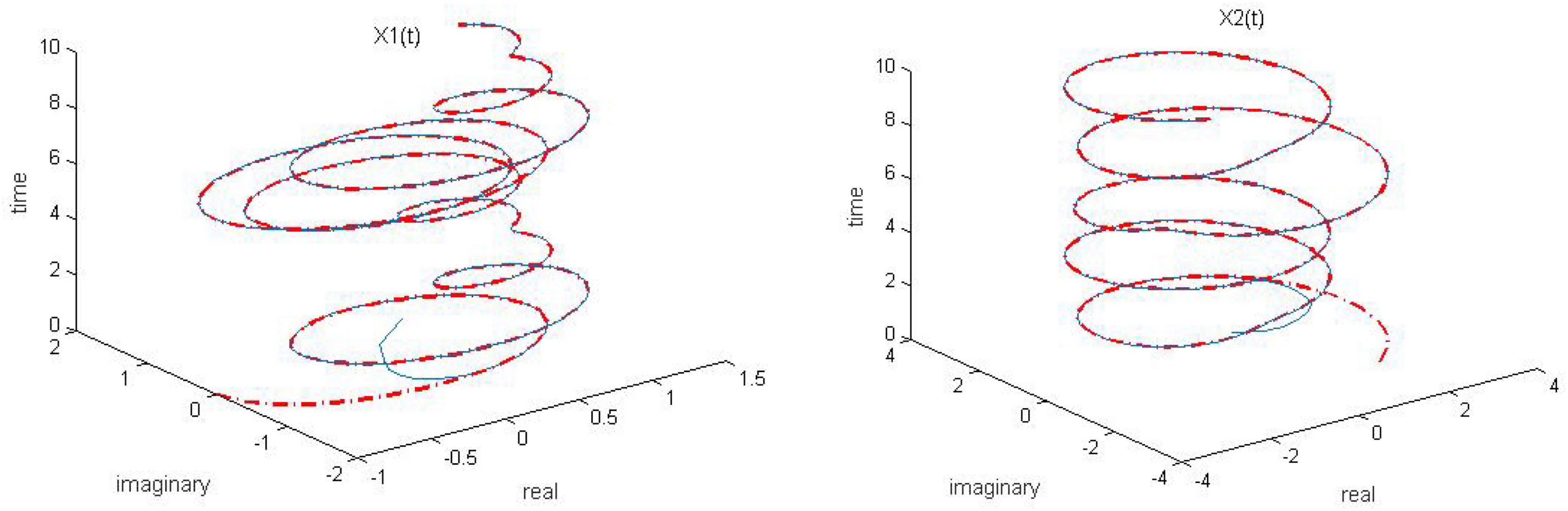

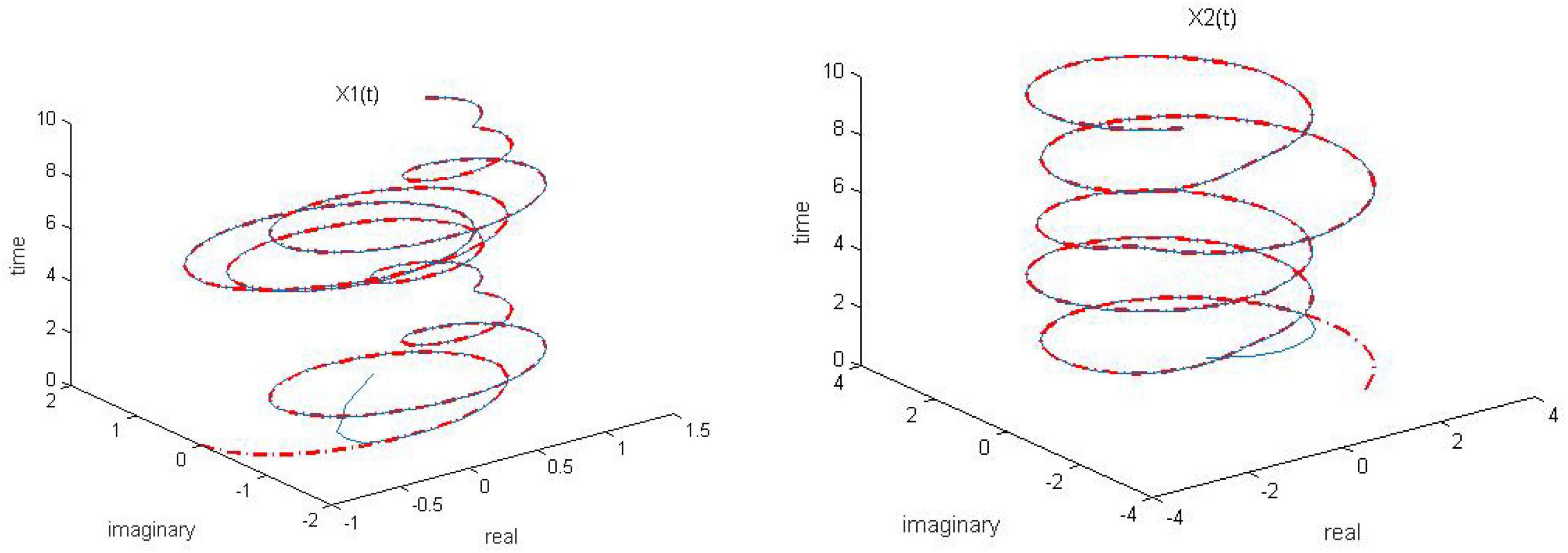

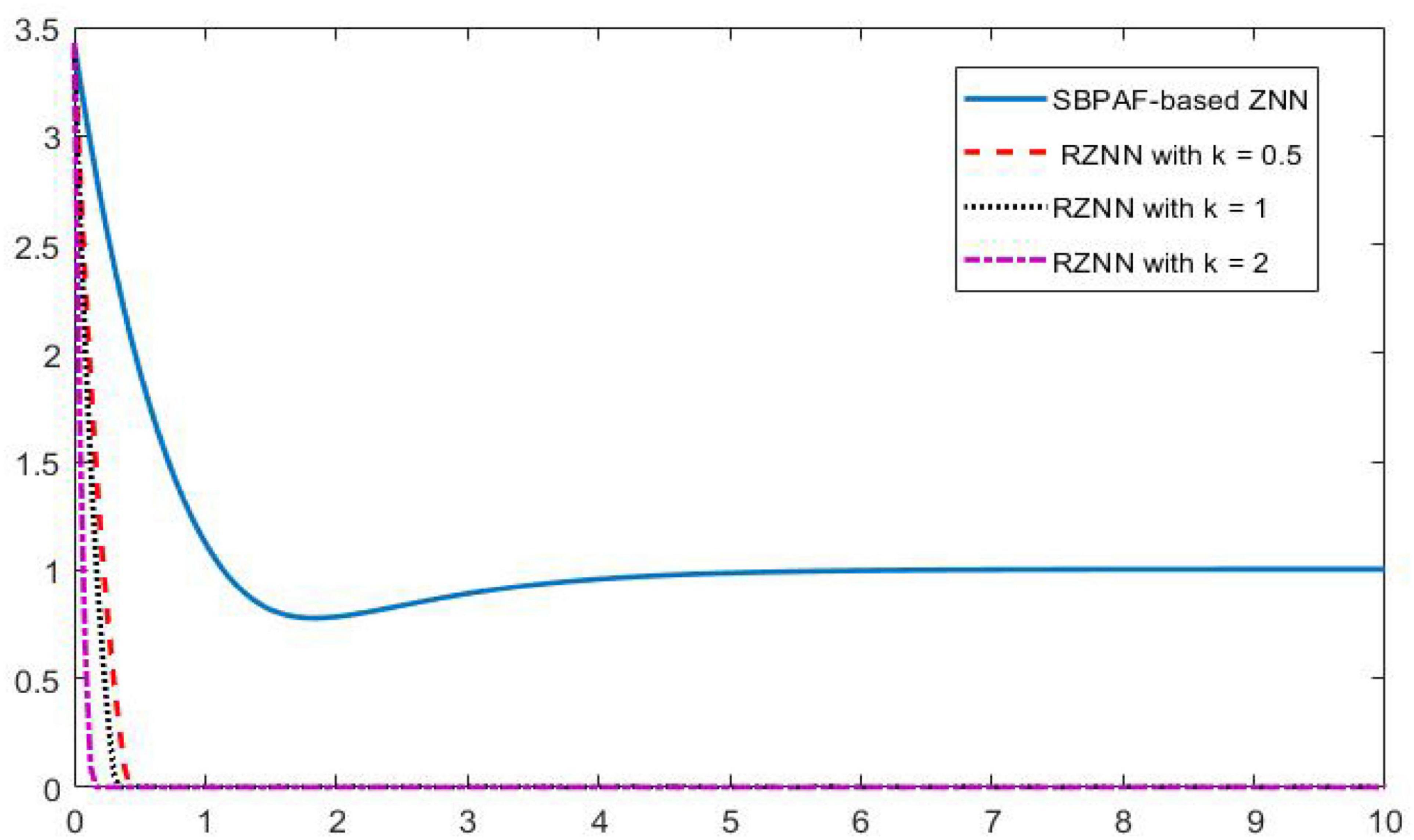

With γ = 1, both the proposed RZNN model (10) and the SBPAF-based ZNN model (8) are used to solve the above DME (5) in a noise-free environment from arbitrary initial states X(0). Moreover, to examine how the parameter k in AF (9) adjusts the convergence speed of RZNN model (10), k is set to 0.5, 1, and 2, respectively. The corresponding simulation results are presented in Figures 1–5, where the solid blue curves are the state solutions of DME (5) obtained by the neural network models and the red dotted curves are the theoretical solutions of DME (5).

Figure 1. Dynamic complex matrix equation (DCME) (1) solved by robust zeroing neural network (RZNN) model (10) with k = 0.5 in no noise environment.

Figure 2. Dynamic complex matrix equation (DCME) (1) solved by robust zeroing neural network (RZNN) model (10) with k = 1 in no noise environment.

Figure 3. Dynamic complex matrix equation (DCME) (1) solved by robust zeroing neural network (RZNN) model (10) with k = 2 in no noise environment.

Figure 4. Dynamic complex matrix equation (DCME) (1) solved by sign-bi-power activation function (SBPAF)-based zeroing neural network (ZNN) model (8) in no noise environment.

Figure 5. Residual errors of robust zeroing neural network (RZNN) model (10) and sign-bi-power activation function (SBPAF)-based ZNN model (8) in no noise environment.

As observed in Figures 1–4, both the proposed RZNN model (10) and the SBPAF-based ZNN model (8) effectively solve DME (5) in a noise-free environment. Moreover, the parameter k in AF (9) has an important influence on the convergence of RZNN model (10): its convergence speed increases as k increases. The residual errors $\|G(t)X(t) - H(t)\|_F$ of RZNN model (10) and SBPAF-based ZNN model (8) are presented in Figure 5, which clearly shows that the proposed RZNN model (10) converges faster than the SBPAF-based ZNN model (8) in a noise-free environment.
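The residual error plotted in the comparisons is ‖G(t)X(t) − H(t)‖_F. The sketch below computes it and adds a hypothetical "convergence time" criterion (the first sample time after which the residual stays below a tolerance; tol = 10⁻³ is an assumption) that could be used to compare models quantitatively. The residual trace in the usage lines is synthetic, not data from Figure 5 or Figure 10.

```python
import numpy as np

def residual_fro(G, X, H):
    """Residual error ||G X - H||_F of the real-domain DME (5)."""
    return float(np.linalg.norm(G @ X - H, ord="fro"))

def convergence_time(times, residuals, tol=1e-3):
    """Earliest sample time after which the residual stays below tol (None if it never does)."""
    residuals = np.asarray(residuals)
    above = np.flatnonzero(residuals >= tol)
    if above.size == 0:
        return float(times[0])
    last = above[-1]
    return float(times[last + 1]) if last + 1 < len(times) else None

# Synthetic, exponentially decaying residual trace (illustration only).
times = np.linspace(0.0, 3.0, 3001)
residuals = 10.0 * np.exp(-5.0 * times)
ct = convergence_time(times, residuals)
print(f"residual stays below 1e-3 from t = {ct:.3f} s")
```

Requiring the residual to *stay* below the tolerance, rather than merely touch it, avoids crediting a model whose residual oscillates around the threshold, as a noise-polluted model's residual may.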

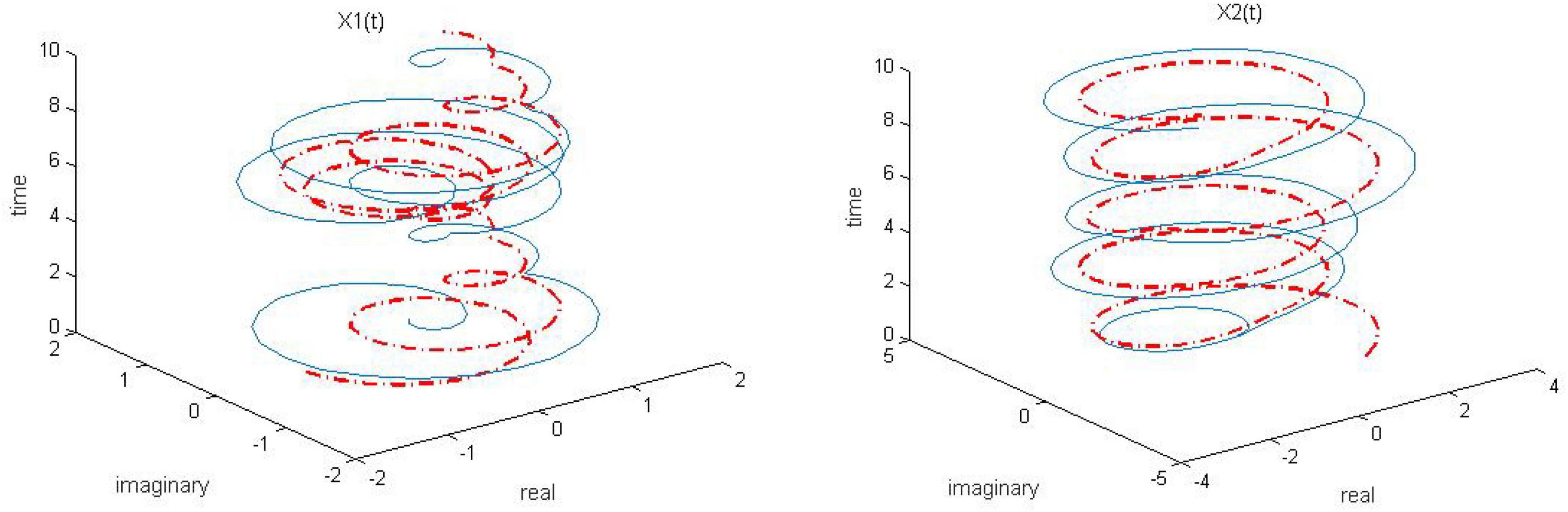

To further examine convergence and robustness, the noise-polluted RZNN model (11) and the SBPAF-based ZNN model (8) are used to solve the same DME (5) in an environment polluted by the constant noise n(t) = 0.5, with the parameter k again set to 0.5, 1, and 2, respectively. The corresponding simulation results are presented in Figures 6–10.

Figure 6. Dynamic complex matrix equation (DCME) (1) solved by robust zeroing neural network (RZNN) model (11) with k = 0.5 in n(t) = 0.5 polluted environment.

Figure 7. Dynamic complex matrix equation (DCME) (1) solved by robust zeroing neural network (RZNN) model (11) with k = 1 in n(t) = 0.5 polluted environment.

Figure 8. Dynamic complex matrix equation (DCME) (1) solved by robust zeroing neural network (RZNN) model (11) with k = 2 in n(t) = 0.5 polluted environment.

Figure 9. Dynamic complex matrix equation (DCME) (1) solved by sign-bi-power activation function (SBPAF)-based zeroing neural network (ZNN) model (8) in n(t) = 0.5 polluted environment.

Figure 10. Residual errors of robust zeroing neural network (RZNN) model (11) and sign-bi-power activation function (SBPAF)-based ZNN model (8) in n(t) = 0.5 polluted environment.

As seen in Figures 6–8, the proposed RZNN model (11) still effectively solves DME (5) in the noise-polluted environment. In contrast, as shown in Figure 9, the SBPAF-based ZNN model (8) fails to converge to the theoretical solution of DME (5) owing to the additive noise. The residual errors $\|G(t)X(t) - H(t)\|_F$ of the two models are presented in Figure 10, which clearly shows that the proposed RZNN model (11) has better convergence and robustness than the SBPAF-based ZNN model (8) in the noise-polluted environment.

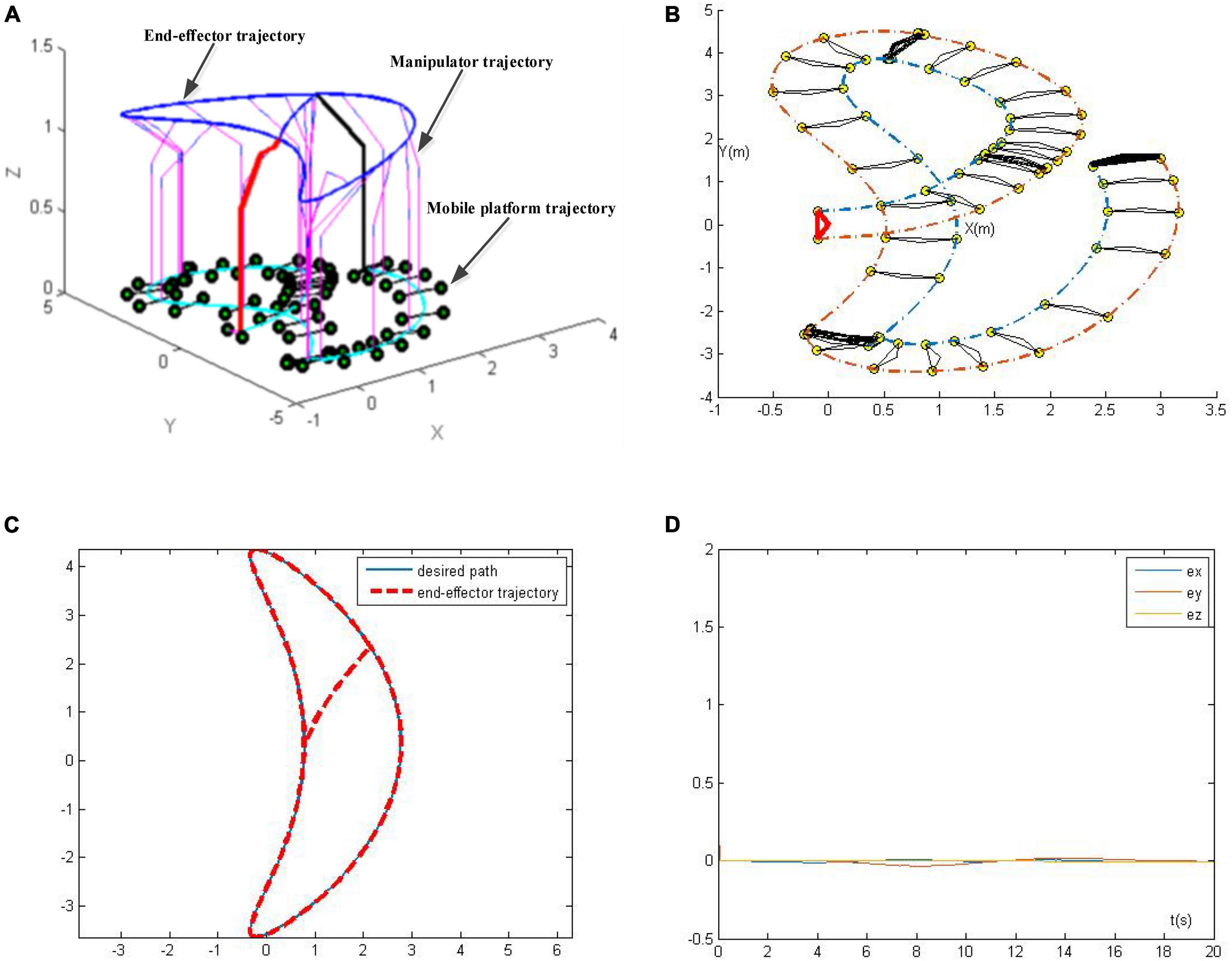

Example 2. Robotic manipulator trajectory tracking

With the development of artificial intelligence, robots have drawn considerable interest in academia and industry (Guo et al., 2021; Jin and Gong, 2021). In this section, the proposed RZNN model is applied to robotic manipulator trajectory tracking in a noisy environment.

According to Zhou et al. (2022c), the kinematic model of a robotic manipulator is

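$$r(t) = \xi\big(\theta(t)\big),$$

where r(t) is the end-effector position, θ(t) is the joint-angle vector, and ξ(·) is a nonlinear forward-kinematics mapping. The velocity-level motion equation can be expressed as

$$\dot{r}(t) = J(\theta)\,\dot{\theta}(t),$$

where J(θ) = ∂ξ(θ)/∂θ is the Jacobian matrix.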

Let $r_d(t)$ be the desired path and r(t) the end-effector trajectory. We design a control law $\dot{\theta}(t)$ that drives the tracking error $e(t) = r(t) - r_d(t)$ to 0. To this end, the RZNN design formula $\dot{e}(t) = -\gamma\,\Phi(e(t))$ is applied to the tracking error, which, together with the velocity-level motion equation, yields the RZNN-based kinematic control model

$$J(\theta)\,\dot{\theta}(t) = \dot{r}_d(t) - \gamma\,\Phi\big(\xi(\theta(t)) - r_d(t)\big). \tag{21}$$
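The control law can be sketched on a much simpler robot. The example below is an illustration under stated assumptions: a planar two-link arm with hypothetical link lengths, a hypothetical circular desired path, the linear AF in place of Φ(·), no noise, and a pseudoinverse to solve J(θ)θ̇ = ṙ_d − γΦ(r − r_d) for θ̇. The mobile manipulator and the RZNN AF used for Figure 11 are not reproduced.

```python
import numpy as np

LINK1, LINK2 = 1.0, 0.8  # hypothetical link lengths (m)

def fk(theta):
    """Forward kinematics r = xi(theta) of a planar two-link arm (illustrative stand-in)."""
    t1, t12 = theta[0], theta[0] + theta[1]
    return np.array([LINK1 * np.cos(t1) + LINK2 * np.cos(t12),
                     LINK1 * np.sin(t1) + LINK2 * np.sin(t12)])

def jacobian(theta):
    """J(theta) = d xi / d theta for the same arm."""
    t1, t12 = theta[0], theta[0] + theta[1]
    return np.array([[-LINK1 * np.sin(t1) - LINK2 * np.sin(t12), -LINK2 * np.sin(t12)],
                     [ LINK1 * np.cos(t1) + LINK2 * np.cos(t12),  LINK2 * np.cos(t12)]])

def desired(t, center=(1.0, 0.5), radius=0.3, omega=1.0):
    """Hypothetical circular path r_d(t) and its time derivative."""
    rd = np.asarray(center) + radius * np.array([np.cos(omega * t), np.sin(omega * t)])
    drd = radius * omega * np.array([-np.sin(omega * t), np.cos(omega * t)])
    return rd, drd

def track(theta0, T=10.0, dt=1e-3, gamma=10.0, af=lambda e: e):
    """Integrate J(theta) theta_dot = dr_d - gamma * af(r - r_d) with forward Euler."""
    theta, t, errors = np.array(theta0, dtype=float), 0.0, []
    for _ in range(int(round(T / dt))):
        rd, drd = desired(t)
        e = fk(theta) - rd                      # tracking error e = r - r_d
        theta_dot = np.linalg.pinv(jacobian(theta)) @ (drd - gamma * af(e))
        theta = theta + dt * theta_dot
        t += dt
        errors.append(np.linalg.norm(e))
    return theta, np.array(errors)

theta_end, errs = track([0.3, 0.8])
print(f"initial error {errs[0]:.3f} m, final error {errs[-1]:.2e} m")
```

The hypothetical path stays well inside the arm's workspace, so J(θ) remains nonsingular and the pseudoinverse coincides with the inverse; a redundant manipulator would instead rely on the pseudoinverse's minimum-norm solution.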

The corresponding simulation results are presented in Figure 11. Figure 11A is the overall view of the tracking trajectory, Figure 11B is the mobile platform trajectory, Figure 11C presents the actual trajectory of end-effector and the desired tracking path, and Figure 11D presents tracking errors of the robotic manipulator in X, Y, and Z directions. As seen in Figure 11, the RZNN-based kinematic control model (21) completes the trajectory tracking task successfully, and the tracking errors of the robotic manipulator in X, Y, and Z directions are all less than 0.1 mm in noise polluted environment, which further demonstrates its superior convergence and robustness to noise.

Figure 11. Trajectory tracking results of the robotic manipulator synthesized by robust zeroing neural network (RZNN) model with n(t) = 0.5. (A) Whole tracking trajectory of the manipulator, (B) mobile platform trajectory, (C) desired path and the end-effector trajectory, and (D) tracking errors.

Conclusion

In this paper, by introducing a new AF, an RZNN model for DCME solving and robotic manipulator trajectory tracking is presented. Rigorous mathematical analysis proves that the RZNN model solves the DCME problem accurately and within a fixed time in various noise-polluted environments, and comparative numerical simulations verify its convergence and robustness. Compared with the SBPAF-based ZNN model, the proposed RZNN model has superior convergence and robustness to noise. Future research will focus on further improving the convergence and robustness of the RZNN model and on expanding the engineering applications of ZNN models.

Data availability statement

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 62273141), Natural Science Foundation of Hunan Province (Grant No. 2020JJ4315), and Scientific Research Fund of Hunan Provincial Education Department (Grant No. 20B216).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aouiti, C., and Miaadi, F. (2020). A new fixed-time stabilization approach for neural networks with time-varying delays. Neural Comput. Appl. 32, 3295–3309.

Gan, L., and Ling, C. (2008). Computation of the para-pseudoinverse for oversampled filter banks: forward and backward greville formulas. IEEE Trans. Signal Process. 56, 5851–5860.

Gong, J., and Jin, J. (2021). A better robustness and fast convergence zeroing neural network for solving dynamic nonlinear equations. Neural Comput. Appl. [Epub ahead of print]. doi: 10.1007/s00521-020-05617-9

Guo, D., Feng, Q., and Cai, J. (2019). Acceleration-level obstacle avoidance of redundant manipulators. IEEE Access 7, 183040–183048.

Guo, D., Li, S., and Stanimirovic, P. S. (2020). Analysis and application of modified ZNN design with robustness against harmonic noise. IEEE Trans. Industr. Inform. 16, 4627–4638.

Guo, D., Li, Z., Khan, A. H., Feng, Q., and Cai, J. (2021). Repetitive motion planning of robotic manipulators with guaranteed precision. IEEE Trans. Industr. Inform. 17, 356–366.

Jin, J. (2021a). A robust zeroing neural network for solving dynamic nonlinear equations and its application to kinematic control of mobile manipulator. Complex Intelligent Syst. 7, 87–99.

Jin, J. (2021b). An improved finite time convergence recurrent neural network with application to time-varying linear complex matrix equation solution. Neural Process. Lett. 53, 777–786.

Jin, J., and Gong, J. (2021). An interference-tolerant fast convergence zeroing neural network for dynamic matrix inversion and its application to mobile manipulator path tracking. Alexandria Eng. J. 60, 659–669.

Jin, J., and Qiu, L. (2022). A robust fast convergence zeroing neural network and its applications to dynamic Sylvester equation solving and robot trajectory tracking. J. Franklin Instit. 359, 3183–3209.

Jin, J., Zhao, L., Li, M., Yu, F., and Xi, Z. (2020). Improved zeroing neural networks for finite time solving nonlinear equations. Neural Comput. Appl. 32, 4151–4160.

Jin, L., Li, J., Sun, Z., Lu, J., and Wang, F. (2022a). Neural dynamics for computing perturbed nonlinear equations applied to ACP-based lower limb motion intention recognition. IEEE Trans. Syst. Man Cybernet. Syst. 52, 5105–5113.

Jin, J., Chen, W., Zhao, L., Long, C., and Tang, Z. (2022c). A nonlinear zeroing neural network and its applications on time-varying linear matrix equations solving, electronic circuit currents computing and robotic manipulator trajectory tracking. Comput. Appl. Math. 41:319.

Jin, J., Zhu, J., Zhao, L., and Chen, L. (2022e). A fixed-time convergent and noise-tolerant zeroing neural network for online solution of time-varying matrix inversion. Appl. Soft Comput. 130:109691.

Jin, L., Li, W., and Li, S. (2022b). “Gradient-based differential neural-solution to time-dependent nonlinear optimization,” in Proceedings of the IEEE Transactions on Automatic Control, (Piscataway, NJ: IEEE).

Jin, J., Zhu, J., Gong, J., and Chen, W. (2022d). Novel activation functions-based ZNN models for fixed-time solving dynamic Sylvester equation. Neural Comput. Appl. 34, 14297–14315.

Jin, J., Zhu, J., Zhao, L., Chen, L., and Gong, J. (2022f). “A robust predefined-time convergence zeroing neural network for dynamic matrix inversion,” in Proceedings of the IEEE Transactions on Cybernetics, (Piscataway, NJ: IEEE). doi: 10.1109/TCYB.2022.3179312

Jin, L., Zhang, Y., and Li, S. (2016). Integration-enhanced Zhang neural network for real-time-varying matrix inversion in the presence of various kinds of noises. IEEE Trans. Neural Netw. Learn. Syst. 27, 2615–2627. doi: 10.1109/TNNLS.2015.2497715

Jin, L., Zhang, Y., Li, S., and Zhang, Y. (2017). Noise-tolerant ZNN models for solving time-varying zero-finding problems: a control-theoretic approach. IEEE Trans. Automatic Control 62, 992–997.

Li, S., Chen, S., and Liu, B. (2013). Accelerating a recurrent neural network to finite-time convergence for solving time-varying Sylvester equation by using a sign-bi-power activation function. Neural Process. Lett. 37, 189–205.

Li, W., Ma, X., Luo, J., and Jin, L. (2021). A strictly predefined-time convergent neural solution to equality- and inequality-constrained time-variant quadratic programming. IEEE Trans. Syst. Man Cybernet. Syst. 51, 4028–4039.

Li, W., Ng, W. Y., Zhang, X., Huang, Y., Li, Y., Song, C., et al. (2022). A kinematic modeling and control scheme for different robotic endoscopes: a rudimentary research prototype. IEEE Robot. Autom. Lett. 7, 8885–8892.

Li, W., Xiao, L., and Liao, B. (2020). A finite-time convergent and noise-rejection recurrent neural network and its discretization for dynamic nonlinear equations solving. IEEE Trans. Cybernet. 50, 3195–3207. doi: 10.1109/TCYB.2019.2906263

Lin, H., Wang, C., Cui, L., Sun, Y., Xu, C., and Yu, F. (2022a). Brain-like initial-boosted hyperchaos and application in biomedical image encryption. IEEE Trans. Ind. Inform. 18, 8839–8850. doi: 10.1109/TII.2022.3155599

Lin, H., Wang, C., Xu, C., Zhang, X., and Iu, H. H. C. (2022b). “A memristive synapse control method to generate diversified multi-structure chaotic attractors,” in Proceedings of the IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, (Piscataway, NJ: IEEE).

Liu, L., Liu, X., and Xia, S. (2014). “Deterministic complex-valued measurement matrices based on Berlekamp-Justesen codes,” in Proceedings of the 2014 IEEE China Summit & International Conference on Signal and Information Processing (ChinaSIP), Xi’an, 723–727.

Liu, M., Chen, L., Du, X., Jin, L., and Shang, M. (2021). “Activated gradients for deep neural networks,” in Proceedings of the IEEE Transactions on Neural Networks and Learning Systems, (Piscataway, NJ: IEEE).

Liu, M., Zhang, X., Shang, M., and Jin, L. (2022). Gradient-based differential kWTA network with application to competitive coordination of multiple robots. IEEE CAA J. Autom. Sin. 9, 1452–1463.

Maurandi, V., De Luigi, C., and Moreau, E. (2013). “Fast Jacobi like algorithms for joint diagonalization of complex symmetric matrices,” in Proceedings of the 21st European Signal Processing Conference (EUSIPCO 2013), Marrakech, 1–5.

Rajbenbach, H., Fainman, Y., and Lee, S. H. (1987). Optical implementation of an iterative algorithm for matrix inversion. Appl. Opt. 26, 1024–1031.

Shi, Y., Jin, L., Li, S., Li, J., Qiang, J., and Gerontitis, D. K. (2022a). Novel discrete-time recurrent neural networks handling discrete-form time-variant multi-augmented Sylvester matrix problems and manipulator application. IEEE Trans. Neural Netw. Learn. Syst. 33, 587–599. doi: 10.1109/TNNLS.2020.3028136

Shi, Y., Wang, J., Li, S., Li, B., and Sun, X. (2022b). “Tracking control of cable-driven planar robot based on discrete-time recurrent neural network with immediate discretization method,” in Proceedings of the IEEE Transactions on Industrial Informatics, (Piscataway, NJ: IEEE).

Shi, Y., Zhao, W., Li, S., Li, B., and Sun, X. (2021). Novel discrete-time recurrent neural network for robot manipulator: a direct discretization technical route. IEEE Trans. Neural Netw. Learn. Syst. [Epub ahead of print]. doi: 10.1109/TNNLS.2021.3108050

Wang, Y. (2012). “Image quality assessment based on gradient complex matrix,” in Proceedings of the 2012 International Conference on Systems and Informatics (ICSAI2012), Yantai, 1932–1935.

Xiao, L., and Zhang, Y. (2011). Zhang neural network versus gradient neural network for solving time-varying linear inequalities. IEEE Trans. Neural Netw. 22, 1676–1684. doi: 10.1109/TNN.2011.2163318

Zhang, Y. (2005). “Revisit the analog computer and gradient-based neural system for matrix inversion,” in Proceedings of the 2005 IEEE International Symposium on Intelligent Control, Mediterranean Conference on Control and Automation, (Limassol: IEEE), 1411–1416.

Zhang, Y., and Ge, S. S. (2005). Design and analysis of a general recurrent neural network model for time-varying matrix inversion. IEEE Trans. Neural Netw. 16, 1477–1490. doi: 10.1109/TNN.2005.857946

Zhou, C., Wang, C., Sun, Y., Yao, W., and Lin, H. (2022a). Cluster output synchronization for memristive neural networks. Inform. Sci. 589, 459–477.

Zhou, C., Wang, C., Yao, W., and Lin, H. (2022b). Observer-based synchronization of memristive neural networks under DoS attacks and actuator saturation and its application to image encryption. Appl. Math. Comput. 425:127080.

Zhou, P., Tan, M., Ji, J., and Jin, J. (2022c). Design and analysis of anti-noise parameter-variable zeroing neural network for dynamic complex matrix inversion and manipulator trajectory tracking. Electronics 11:824.

Keywords: recurrent neural network, zeroing neural network, dynamic complex matrix equation, activation function, convergence

Citation: Jin J, Zhao L, Chen L and Chen W (2022) A robust zeroing neural network and its applications to dynamic complex matrix equation solving and robotic manipulator trajectory tracking. Front. Neurorobot. 16:1065256. doi: 10.3389/fnbot.2022.1065256

Received: 09 October 2022; Accepted: 31 October 2022;

Published: 15 November 2022.

Edited by:

Long Jin, Lanzhou University, China

Reviewed by:

Dongsheng Guo, Hainan University, China

Weibing Li, Sun Yat-sen University, China

Yang Shi, Yangzhou University, China

Copyright © 2022 Jin, Zhao, Chen and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jie Jin, amo2NzEyM0BobnVzdC5lZHUuY24=

Jie Jin

Lv Zhao1,2