- 1Laboratory of Cognitive Neuroscience, Center for Neuroprosthetics and Brain Mind Institute, Ecole Polytechnique Fédérale de Lausanne, Geneva, Switzerland

- 2Laboratory of Robotic Systems, Ecole Polytechnique Fédérale de Lausanne, Lausanne, Switzerland

- 3Laboratoire de Recherche en Neuroimagerie, University Hospital (CHUV) and University of Lausanne (UNIL), Lausanne, Switzerland

- 4Department of Clinical Neurosciences, Faculty of Medicine, University Hospital, Geneva, Switzerland

Visuo-motor integration shapes our daily experience and underpins the feeling of being in control of our actions. The last decade has seen a surge in robotically and virtually mediated interactions, whereby bodily actions ultimately result in an artificial movement. But despite the growing number of applications, the neurophysiological correlates of visuo-motor processing during human-machine interactions under dynamic conditions remain poorly understood. Here we address this issue by employing a bimanual robotic interface able to track voluntary hand movements, rendered in real time into the motion of two virtual hands. We experimentally manipulated the visual feedback in virtual reality with spatial and temporal conflicts and investigated their impact on (1) visuo-motor integration and (2) the subjective experience of being the author of one's actions (i.e., the sense of agency). Using somatosensory evoked responses measured with electroencephalography, we investigated the neural differences that occur when the integration between motor commands and visual feedback is disrupted. Our results show that the right posterior parietal cortex encodes differences between congruent and spatially incongruent interactions. The experimental manipulations also induced a decrease in the sense of agency over the robotically mediated actions. These findings offer solid neurophysiological grounds that can be used in the future to monitor integration mechanisms during movements and ultimately enhance subjective experience during human-machine interactions.

Introduction

In recent years, continuous advances in the fields of robotics and virtual reality (VR) have made human-machine interactions increasingly widespread in our society. Rehabilitation robotics (Klamroth-Marganska et al., 2014), upper-limb neuroprostheses (Borton et al., 2013; Shokur et al., 2021), surgical robotic interfaces (Hussain et al., 2014; D'Ettorre et al., 2021), and manipulators for industrial applications (Ajoudani et al., 2018) are concrete examples of how robotic technology can be applied to enhance human manipulation skills and to recover sensorimotor functions in patients affected by motor impairments. This rapid expansion has led to the emergence of a new interdisciplinary field that brings together cognitive neuroscience, VR and robotics, providing a new approach to study cognitive functions (Rognini and Blanke, 2016; Beckerle et al., 2019; Wilf et al., 2021) and to investigate abnormal mental states (Blanke et al., 2014; Salomon et al., 2020; Bernasconi et al., 2021). However, despite recent technological progress, current knowledge of the perceptual, sensorimotor and neural mechanisms involved in these sophisticated human-robot interactions remains limited. This might be because most efforts have so far been dedicated to the “machine” side, to improve control and usability, rather than to understanding the brain mechanisms involved in robotically-mediated interactions. To fill this gap, a dedicated line of work has exploited behavioral measures of multisensory integration of bodily cues to characterize robotically-mediated interactions in a perceptually grounded fashion (Ionta et al., 2011; Blanke, 2012; Sengül et al., 2012, 2013, 2018; Rognini et al., 2013; Salomon et al., 2013; Pfeiffer et al., 2014; Akselrod et al., 2021).
Some of these approaches consist of measuring the extent to which information related to a robot is processed similarly to information related to the user's body (i.e., how the two sources are integrated), as an implicit measure of the ease and effectiveness of human-robot interaction (Sengül et al., 2012, 2013, 2018; Rognini et al., 2013). One way to experimentally address this aspect is a well-known paradigm in cognitive science, the crossmodal congruency task, which has been extensively employed to investigate visuo-tactile spatial integration (Maravita and Iriki, 2004). In the context of human-robot interaction, this task has made it possible to characterize visuo-motor and visuo-tactile integration (Rognini et al., 2013; Romano et al., 2015) as well as visuo-proprioceptive integration (Sengül et al., 2013). Importantly, those studies also tackled subjective aspects such as the sense of agency [i.e., the subjective experience of controlling one's own actions (Haggard, 2017)] and the feeling of a presence (Blanke et al., 2014). Despite these insights, the neural correlates of robotic interactions under dynamic conditions (i.e., during active body movements) remain largely unknown.

Here we used a robotic VR set-up (Rognini et al., 2013) to characterize the neural correlates of visuo-motor integration and their link with the sense of agency during self-generated, robotically-mediated interactions. We used a bimanual haptic interface, originally developed as a prototype device for a robotic surgery manipulator (Da Vinci System; Sengül et al., 2012, 2013; Rognini et al., 2013), to track hand movement trajectories while realistic visual feedback was provided through VR. To interfere with visuo-motor integration mechanisms, we manipulated the visual feedback in selected trials by introducing spatial (change in the movement direction from horizontal to vertical) and temporal (600 ms delay) mismatches between the executed movement and the one observed by the participant in the VR environment. In addition to multisensory integration, these experimental conflicts allow us to investigate modulations in the subjective sense of agency over these self-generated actions. The neural correlates of visuo-motor integration were identified by recording somatosensory evoked potentials (SEPs) to stimulation of the median nerve at the wrist while participants performed voluntary bimanual hand movements through the interface. The measurement of SEPs is a well-established method to probe neural activity in the context of multisensory integration, having been employed to study interactions between somatosensory cues and auditory (Foxe et al., 2000; Kisley and Cornwell, 2006; Touge et al., 2008), visual (Schürmann et al., 2002; Sebastianelli et al., 2022), and visuo-motor (Bernier et al., 2009) signals.
In our study, the choice of somatosensory stimulation was motivated by a large body of literature showing that somatosensory pathways are prominently involved in processing sensorimotor stimuli (Huttunen et al., 1996; Forss and Jousmaki, 1998; Avikainen et al., 2002) and are modulated by self-generated movements (Blakemore et al., 2000; Wasaka and Kakigi, 2012; Macerollo et al., 2018). Thus, changes in SEPs induced by experimentally manipulating the visual feedback would inform us about which brain regions are involved in the integration of visual and motor cues. Based on longstanding evidence in this context, we expected the involvement of multimodal brain regions such as parietal and prefrontal association areas (Quintana and Fuster, 1993; Goodale, 1998; Fogassi and Luppino, 2005; Iacoboni, 2006; Kanayama et al., 2012; Limanowski and Blankenburg, 2016).

In addition, SEP modulations have also been linked to changes in the subjective experience of one's own body that rely on multisensory integration mechanisms (Dieguez et al., 2009; Aspell et al., 2012; Heydrich et al., 2018; Palluel et al., 2020). In accordance with a previous study employing the same robotic interface (Rognini et al., 2013), we predicted a decrease in the reported sense of agency when the visual feedback was experimentally manipulated as compared to when no conflict was induced. Crucially, activity in associative areas has also been widely associated with the sense of agency and other fundamental aspects of bodily self-consciousness (David et al., 2008; Blanke, 2012).

Materials and methods

Participants

A total of 13 individuals (three females and 10 males, mean age 23.4 years, SD ± 1.5, range 21–27) participated in this study, but data from three of them were discarded due to poor signal quality or the absence of somatosensory evoked components when averaged according to stimulation onset, leaving 10 participants for the EEG analyses. All participants had normal or corrected-to-normal vision and no history of neurological or psychiatric conditions. All participants gave written informed consent and were compensated for their participation. The study protocol was approved by the local ethics research committee, the Commission cantonale (VD) d'éthique de la recherche sur l'être humain, and was performed in accordance with the ethical standards laid down in the Declaration of Helsinki.

Experimental procedure

In this study we combined robotics, VR and high-density electrical source imaging to study visuo-motor integration and the sense of agency during robotically-mediated interactions. Participants were instructed to perform continuous horizontal hand movements while interacting with a bimanual haptic interface, during which somatosensory evoked potentials (SEPs) were recorded.

Robotic interface and virtual reality environment

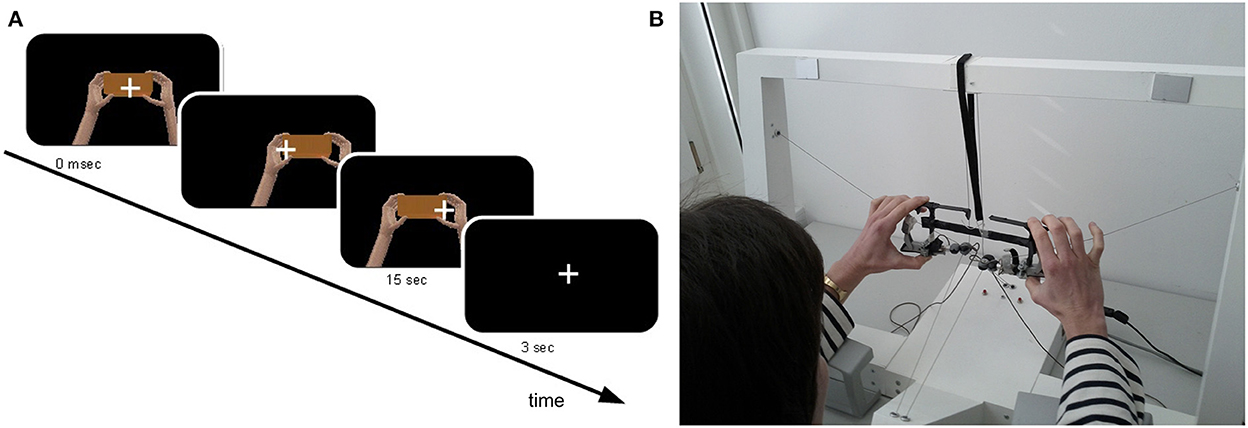

As in a previous study conducted in our laboratory (Rognini et al., 2013), a bimanual haptic interface was used to track hand movements in real time. This platform was designed as a training device for users of the Da Vinci Surgical System (Intuitive Surgical, Sunnyvale, CA, USA), a robotic platform used to perform minimally invasive surgical procedures (Lanfranco et al., 2004). For each hand, this haptic interface provides seven degrees of freedom of motion and force feedback along three translational axes (Kenney et al., 2009; Lerner et al., 2010). Two grippers held by the participant are connected through cables to the motors, so that force feedback and movement tracking are both performed through a cable-driven system. To maintain a fixed distance between the hands (20 cm) and between the thumb and index finger (8 cm), and to give the impression of holding a cube, the two grippers were linked through a mechanical frame (24 × 8 cm) made of lightweight material (~100 g). During the experiment, participants sat at a table on which the haptic interface was placed, holding the grippers with their hands, with the head supported by a chin rest to minimize head movements. The movements performed with the haptic device were presented in real time (except during the asynchronous conditions, see below) in a virtual environment through a head-mounted display (HMD, eMagin Z800 3DVisor, ~40° angle view, 1.44-megapixel resolution, 50 cd/m2 brightness, 227 g). Real-time rendering, crucial for the investigation of multisensory integration, had already been established in previous experiments employing the same robotics-VR set-up (Sengül et al., 2012, 2013; Rognini et al., 2013). To mimic reality as closely as possible, the virtual scene showed two hands moving and holding a rectangular block (Figure 1A).
To compensate for the underestimation of perceived distances that occurs in virtual reality when HMDs are used, we applied a scale factor (i.e., the ratio between distances in the virtual and the physical world) of 1.5.

Figure 1. Experimental set-up and virtual reality environment. Participants sat at a table and held the frame of the robotic device between the index finger and the thumb of each hand while performing continuous horizontal (left-right) hand movements for 15 s. (A) Participants' hand movements were translated in real time into the movement of two virtual hands, presented through a head-mounted display. To mimic reality, the virtual hands were shown holding a block (B).

The virtual reality environment was developed with the open-source platform CHAI 3D (http://www.chai3d.org; Conti et al., 2003) and a set of C++ libraries. The original source code of CHAI3D can be found at www.chai3d.org/download/releases, while the main code of the experimental paradigm can be found in the GitHub repository github.com/sissymarche/SEP_VRMimic_Study.

EEG acquisition and median nerve stimulation

Continuous EEG was acquired at 2048 Hz through a 64-channel Biosemi ActiveTwo system (Biosemi, Amsterdam, Netherlands) referenced to the CMS-DRL ground. Electrodes were evenly spaced according to the 10–20 EEG system and included conventional midline sites Fz, Cz, Pz, Oz, and sites over the left and right hemispheres. This high-density EEG montage was chosen to perform source reconstruction and to allow us to investigate changes in the neural generators throughout the entire brain. Electrooculogram (EOG) was also recorded to control for eye movements.

Somatosensory stimulation was delivered to the median nerve at the participants' right wrist using a bipolar transcutaneous stimulator (Grass S48, West Warwick, USA) able to produce square waves with a constant current output through an isolation unit (Grass SIU5 RF Transformer isolation unit, West Warwick, USA). The stimulation intensity was tuned separately for each participant: after finding the intensity required to elicit thumb abduction, the current was reduced to 80% of this motor threshold and kept constant throughout the entire recording. Stimuli were delivered at a frequency of 2 Hz with a pulse duration of 0.2 ms to avoid electrical artifacts in the recorded EEG traces. No participant reported pain or discomfort at this level of stimulation.

Procedure

Participants sat at a table and held the frame of the robotic interface between the index finger and the thumb of each hand while performing continuous horizontal (left-right) hand movements (Figure 1B). They were asked to follow narrow trajectories, keeping movement velocity as constant as possible and avoiding sudden movements. Additionally, participants were instructed to keep their elbows lifted during the movement to avoid tactile cues arising from contact with the table. A fixation cross was presented in the middle of the display to minimize eye movements. The median nerve stimulation, delivered at the right wrist, continued throughout the entire experiment without interruption.

The VR environment (see Section Robotic interface and virtual reality environment) was presented through the head-mounted display at the very beginning of each trial: participants could start interacting with the robotic interface immediately afterwards and kept performing horizontal movements until the end of the trial, for a total duration of 15 s. A 3 s inter-trial break allowed participants to rest; during this time the virtual environment was replaced by a black screen. Each session consisted of four blocks, each comprising 16 trials (four repetitions per condition) arranged in randomized order and lasting ~5 min. A total of 480 somatosensory stimulations per condition were delivered to each participant.
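The reported stimulus count follows from the trial structure: with continuous 2 Hz stimulation, each 15 s trial contains 30 stimuli, and 4 blocks × 4 repetitions give 16 trials per condition, i.e. 16 × 30 = 480 stimuli per condition. The Python sketch below checks this arithmetic and shows one way to build a block-wise randomized trial list. It is not the study's code: it assumes that blocks follow one another with the same 3 s break and that the stimulation train has an arbitrary phase relative to trial onset, and the function names are hypothetical.

```python
import random
from collections import Counter

# Design parameters from the Procedure section; times in integer milliseconds.
CONDITIONS = [(c, s) for c in ("congruent", "incongruent")
              for s in ("synchronous", "asynchronous")]
N_BLOCKS, REPS_PER_BLOCK = 4, 4
TRIAL_MS, BREAK_MS = 15_000, 3_000
STIM_PERIOD_MS = 500  # 2 Hz median nerve stimulation


def make_schedule(seed=None):
    """Hypothetical helper: 4 blocks of 16 trials, shuffled within each block."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(N_BLOCKS):
        block = CONDITIONS * REPS_PER_BLOCK  # four repetitions of each condition
        rng.shuffle(block)
        schedule.extend(block)
    return schedule


def ceil_div(a, b):
    """Integer ceiling of a / b for b > 0."""
    return -(-a // b)


def stims_per_condition(schedule, stim_phase_ms=137):
    """Count stimuli of a continuous 2 Hz train falling inside each trial window.

    Stimuli occur at stim_phase_ms + k * STIM_PERIOD_MS for every integer k;
    the phase is an arbitrary (assumed) value, since the count does not depend on it.
    """
    counts = Counter()
    for i, condition in enumerate(schedule):
        start = i * (TRIAL_MS + BREAK_MS)
        end = start + TRIAL_MS  # half-open window [start, end)
        first_k = ceil_div(start - stim_phase_ms, STIM_PERIOD_MS)
        stop_k = ceil_div(end - stim_phase_ms, STIM_PERIOD_MS)
        counts[condition] += stop_k - first_k
    return counts


schedule = make_schedule(seed=1)
print(stims_per_condition(schedule))  # 480 stimuli for each of the four conditions
```

Because 15 s is an exact multiple of the 500 ms stimulation period, every trial window contains exactly 30 stimuli whatever the phase of the train.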

We tested the effect of visuo-motor integration on SEPs and on the sense of agency by experimentally manipulating the visual feedback provided through VR with two types of visuo-motor conflict. In selected trials, the visual feedback was perturbed in real time by changing the displayed movement direction from horizontal (left-right) to vertical (up-down; congruency factor) and by introducing a 600 ms delay (synchrony factor) between the actual and the seen movements. This temporal delay was chosen in accordance with a previous study employing the same robotic interface (Rognini et al., 2013), and because delays of this duration strongly affect subjective experience (Tsakiris et al., 2006). The experiment therefore included four experimental conditions arranged in a 2 × 2 factorial design with congruency (congruent/incongruent) and synchrony (synchronous/asynchronous) as factors. Of the four conditions, which were randomly intermixed across trials, only one (congruent and synchronous) provided visual feedback that fully reflected the actual movement.
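The two visuo-motor conflicts can be pictured as a transformation applied to each tracked hand position before it is rendered. The Python sketch below is a hypothetical illustration, not the study's C++/CHAI3D implementation: the spatial conflict swaps the horizontal and vertical axes, the temporal conflict displays the most recent sample that is at least 600 ms old, and the 1.5 scale factor described for the VR rendering is applied to positions expressed relative to an assumed workspace origin. Positions are treated as 2-D for simplicity, and the class and parameter names are our own.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class FeedbackMapper:
    """Hypothetical mapping from physical to rendered hand position for one condition.

    Positions are 2-D (x = left-right, y = up-down) relative to an assumed
    workspace origin; timestamps are integer milliseconds.
    """
    congruent: bool = True
    synchronous: bool = True
    delay_ms: int = 600   # temporal conflict used in the asynchronous conditions
    scale: float = 1.5    # virtual / physical distance ratio
    _buffer: deque = field(default_factory=deque, repr=False)

    def update(self, t_ms, x, y):
        """Feed the position sampled at t_ms and return the (x, y) to render now."""
        if not self.congruent:
            x, y = y, x  # spatial conflict: horizontal motion is displayed as vertical
        x, y = self.scale * x, self.scale * y
        if self.synchronous:
            return x, y
        self._buffer.append((t_ms, x, y))
        # Drop the oldest sample while the next one is also at least delay_ms old,
        # so that buffer[0] is the newest sample old enough to be shown (or, at the
        # very start of a trial, the oldest sample available).
        while len(self._buffer) > 1 and self._buffer[1][0] <= t_ms - self.delay_ms:
            self._buffer.popleft()
        _, shown_x, shown_y = self._buffer[0]
        return shown_x, shown_y
```

One mapper per trial reproduces the 2 × 2 design: `FeedbackMapper(congruent=False, synchronous=False)` corresponds to the condition in which both conflicts are combined.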

At the end of the session, the sense of agency over the seen movement in each of the four experimental conditions was assessed by means of one question concerning the perceived feeling of control (“I felt as I was responsible for the movement in the virtual reality”) and one control question (“I felt as if my hands were turning virtual”). The control question allows us to check for task compliance (as verified by differences between the average ratings of the different questionnaire items), and it has been effectively used for the same purpose in several VR-based studies (e.g., Slater et al., 2008; Perez-Marcos et al., 2009; Sanchez-Vives et al., 2010), including one from our group that employed the same robotic platform to address the sense of agency and ownership over virtual hands (Rognini et al., 2013). In our study, it is particularly suitable as a control item for the sense of agency question because it addresses static rather than dynamic perceptual aspects, whereas dynamic aspects are required to investigate the sense of agency. Participants answered by rating their subjective experience on a scale from 0 (strongly disagree) to 10 (strongly agree).

Data analysis

EEG preprocessing

EEG data preprocessing was conducted using the EEGLAB (Delorme and Makeig, 2004) and FASTER (Nolan et al., 2010) toolboxes within the MATLAB (The MathWorks) environment. Data were first down-sampled to 512 Hz, band-pass filtered (1–40 Hz) and re-referenced to the common average. For SEP calculation, EEG epochs were time-locked to the electrical stimulation onset and spanned from 50 ms before to 300 ms after the stimulus, with the pre-stimulus interval used for baseline correction. Epochs with amplitudes exceeding a threshold value (±20 μV) were excluded. Bad channels were interpolated using spherical interpolation. Artifactual epochs were removed via visual inspection, and residual artifacts were removed using independent component analysis (ICA).
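A simplified NumPy/SciPy version of the down-sampling, filtering, re-referencing, epoching, baseline correction and amplitude-based rejection steps is sketched below. It is not the EEGLAB/FASTER pipeline used in the study: the anti-aliased decimation, the zero-phase 4th-order Butterworth band-pass and the function name are assumptions, and channel interpolation, visual inspection and ICA are omitted.

```python
import numpy as np
from scipy import signal


def preprocess_and_epoch(raw_uv, fs_in, stim_samples, fs_out=512, band=(1.0, 40.0),
                         tmin=-0.050, tmax=0.300, reject_uv=20.0):
    """Hypothetical helper returning epochs (n_epochs, n_channels, n_times) in microvolts.

    raw_uv: (n_channels, n_samples) continuous EEG sampled at fs_in (e.g. 2048 Hz).
    stim_samples: stimulation onsets as sample indices at fs_in.
    """
    factor = int(round(fs_in / fs_out))  # 2048 Hz -> 512 Hz gives a factor of 4
    data = signal.decimate(raw_uv, factor, axis=1, zero_phase=True)  # anti-aliased
    sos = signal.butter(4, band, btype="bandpass", fs=fs_out, output="sos")
    data = signal.sosfiltfilt(sos, data, axis=1)  # zero-phase band-pass (assumed design)
    data = data - data.mean(axis=0, keepdims=True)  # common average reference

    onsets = np.round(np.asarray(stim_samples) / factor).astype(int)
    n_pre = int(round(-tmin * fs_out))   # 26 samples for 50 ms at 512 Hz
    n_post = int(round(tmax * fs_out))   # 154 samples for 300 ms at 512 Hz
    epochs = []
    for onset in onsets:
        if onset - n_pre < 0 or onset + n_post > data.shape[1]:
            continue  # skip epochs that run past the recording edges
        epoch = data[:, onset - n_pre:onset + n_post]
        epoch = epoch - epoch[:, :n_pre].mean(axis=1, keepdims=True)  # baseline
        if np.abs(epoch).max() <= reject_uv:  # +/-20 uV rejection threshold
            epochs.append(epoch)
    if not epochs:
        return np.empty((0, data.shape[0], n_pre + n_post))
    return np.stack(epochs)
```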

Topographic analysis of EEG data and source estimation

The analysis of the EEG signals followed a well-established multi-step procedure to investigate changes in the configuration of intracranial generators across conditions, implemented mainly with the software Cartool (https://sites.google.com/site/cartoolcommunity/; Murray et al., 2008; Brunet et al., 2011). In brief, a topographic clustering algorithm is first used to select periods of time within the evoked response in which brain activity is modulated by the experimental manipulation. Second, an inverse solution method is applied to evaluate differences across experimental conditions in the neural generators of the signals recorded during the time periods identified in the first step. This procedure has been extensively described in previous work (Michel et al., 2004; Murray et al., 2008) and, being reference-independent and data-driven, offers analytical and interpretational advantages over canonical waveform analyses (Murray et al., 2008).

As a first step, the predominant topographies (maps) are identified by applying an Atomize and Agglomerate Hierarchical Clustering algorithm to the group-averaged data across all conditions. This approach initially considers all possible clusters (one for each data point) and, through successive iterations, reduces their number based on their contribution to the global explained variance (GEV). The optimal number of template maps is then found by applying a modified version of the Krzanowski–Lai criterion (Pascual-Marqui et al., 1995; Murray et al., 2008). Here we considered solutions whose topographies accounted for at least 90% of the global variance of the dataset. The pattern of template maps found at the group level is then statistically evaluated on single-subject data in a procedure referred to as “fitting.” This consists of computing the spatial correlation between the template maps and single-subject data at each time point and in each experimental condition. From this fitting procedure we extracted, for each condition and participant, each map's presence (in milliseconds) and the associated global field power (GFP), two orthogonal features of the recorded electric field. GFP is a reference-independent measure, computationally equivalent to the standard deviation of the potentials across all electrodes at a given time; its value is thus proportional to the strength of the electric field.
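The fitting step and the quantities extracted from it can be sketched as follows. This is a hypothetical NumPy illustration, not Cartool's implementation: each time point is assigned to the template map with the highest spatial correlation (respecting polarity by default, an assumption suited to evoked potentials), map presence is the number of assigned time points converted to milliseconds, GFP is the spatial standard deviation across electrodes, and GEV uses the common definition Σt (GFPt · corrt)² / Σt GFPt².

```python
import numpy as np


def gfp(v):
    """Global field power: spatial standard deviation across electrodes.

    v: (n_channels, n_times) potentials; returns (n_times,).
    """
    return v.std(axis=0)


def spatial_corr(v, maps):
    """Spatial correlation between template maps (n_maps, n_channels) and each time point."""
    vc = v - v.mean(axis=0, keepdims=True)          # average-reference the data
    mc = maps - maps.mean(axis=1, keepdims=True)    # and the template maps
    vc = vc / np.linalg.norm(vc, axis=0, keepdims=True)
    mc = mc / np.linalg.norm(mc, axis=1, keepdims=True)
    return mc @ vc                                   # (n_maps, n_times)


def fit_maps(v, maps, fs, ignore_polarity=False):
    """Hypothetical winner-take-all fitting of template maps to one subject/condition SEP."""
    corr = spatial_corr(v, maps)
    score = np.abs(corr) if ignore_polarity else corr
    labels = score.argmax(axis=0)                    # best-matching map at each time point
    best = score.max(axis=0)
    duration_ms = np.bincount(labels, minlength=len(maps)) * 1000.0 / fs
    g = gfp(v)
    mean_gfp = np.array([g[labels == k].mean() if np.any(labels == k) else np.nan
                         for k in range(len(maps))])
    gev = np.sum((g * best) ** 2) / np.sum(g ** 2)   # global explained variance
    return labels, duration_ms, mean_gfp, gev
```

Map durations and mean GFP values obtained this way, one per participant, condition and map, are the inputs to the statistical test described next.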

Map duration and GFP values were then submitted to a permutation-based 2 × 2 repeated measures ANOVA with congruency and synchrony as factors and 10,000 permutations. This non-parametric analysis was carried out using the “permuco” R package, an approach that is more appropriate than parametric ANOVA when the assumption of normality is violated or with moderately sized datasets (Frossard and Renaud, 2021). This analysis allowed us to investigate whether maps were specific to a given condition and, consequently, possible differences in the underlying intracranial generators.
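In a 2 × 2 within-subject design, each main effect and the interaction has one numerator degree of freedom, so its F(1, n − 1) equals the squared one-sample t statistic of a per-participant contrast. A simple permutation test can then randomly flip the sign of each participant's contrast, which amounts to exchanging the factor levels within that participant. The Python sketch below illustrates this logic only: it is a sign-flipping scheme chosen for clarity, not the permutation method implemented in the permuco package, and the function names are hypothetical.

```python
import numpy as np


def _f_from_contrast(d):
    """F(1, n-1) for per-subject contrasts d (last axis = subjects): F = t**2."""
    n = d.shape[-1]
    t = d.mean(axis=-1) / (d.std(axis=-1, ddof=1) / np.sqrt(n))
    return t ** 2


def rm_anova_2x2_perm(y, n_perm=10_000, seed=0):
    """Hypothetical sign-flip permutation test for a 2 x 2 repeated-measures design.

    y: (n_subjects, 2, 2) array indexed as [subject, congruency, synchrony].
    Returns {effect: (F, permutation p-value)}.
    """
    y = np.asarray(y, dtype=float)
    contrasts = {
        "congruency": y[:, 0, :].mean(axis=1) - y[:, 1, :].mean(axis=1),
        "synchrony": y[:, :, 0].mean(axis=1) - y[:, :, 1].mean(axis=1),
        "interaction": y[:, 0, 0] - y[:, 0, 1] - y[:, 1, 0] + y[:, 1, 1],
    }
    rng = np.random.default_rng(seed)
    signs = rng.choice([-1.0, 1.0], size=(n_perm, y.shape[0]))  # one row per permutation
    results = {}
    for name, d in contrasts.items():
        f_obs = _f_from_contrast(d)
        f_null = _f_from_contrast(signs * d)  # F for every sign-flipped data set
        p = (1 + np.sum(f_null >= f_obs)) / (n_perm + 1)
        results[name] = (float(f_obs), float(p))
    return results
```

The same function can be applied to map durations, GFP values, questionnaire ratings or movement parameters, since all of them share the 2 × 2 within-subject structure.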

To estimate the periods of time during which evoked activity could be reliably measured, we carried out a topographic consistency test (TCT; Koenig and Melie-García, 2010), which identifies time periods showing a consistent relationship between the somatosensory stimulation and the neural electric sources. This analysis is based on the argument that the GFP of the average response across participants depends on the consistency of the individual participants' topographies. That is, only if topographies are similar across participants can the GFP of the grand average be higher than what would be observed if topographies mainly contained noise. This is tested by shuffling the measured potentials across electrodes in each topography and computing the probability of obtaining a GFP larger than or equal to the empirical one (Michel et al., 2009). Of note, the TCT does not aim at investigating differences among experimental conditions, as the test is performed separately for each condition.
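The logic of the TCT can be sketched in a few lines of Python. This is a simplified illustration, not the implementation of Koenig and Melie-García (2010): each participant's electrode labels are shuffled with one random permutation applied to all time points, the GFP of the resulting grand average is compared with the observed one at every time point, and the function name is hypothetical.

```python
import numpy as np


def topographic_consistency_test(erps, n_perm=1000, seed=0):
    """Hypothetical TCT sketch returning a p-value per time point for one condition.

    erps: (n_subjects, n_channels, n_times) average-referenced evoked potentials.
    """
    rng = np.random.default_rng(seed)

    def grand_average_gfp(x):
        # GFP (spatial standard deviation) of the grand average at each time point
        return x.mean(axis=0).std(axis=0)

    observed = grand_average_gfp(erps)
    n_subjects, n_channels, _ = erps.shape
    exceed = np.zeros_like(observed)
    shuffled = np.empty_like(erps)
    for _ in range(n_perm):
        for s in range(n_subjects):
            # destroy the spatial correspondence of electrodes across subjects
            shuffled[s] = erps[s, rng.permutation(n_channels), :]
        exceed += grand_average_gfp(shuffled) >= observed
    return (exceed + 1) / (n_perm + 1)
```

Time points where all participants share a topography yield small p-values, whereas topographies that cancel out across participants yield a grand-average GFP no larger than chance.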

We then estimated the intracranial sources over the time period showing a significant topographic modulation and a stable topography according to the TCT, using a distributed linear inverse solution with the local autoregressive average regularization approach (LAURA; Grave De Peralta Menendez et al., 2001, 2004). This method addresses the non-uniqueness of the inverse problem and is based on the biophysical principle that the potential recorded on the scalp decays with the inverse of the squared distance to the source. Its spatial accuracy was found to be superior in previous studies comparing different inverse solution techniques using real and simulated data (Grech et al., 2008; Carboni et al., 2022). In our study we used a solution space including 4,996 nodes, selected from a 2 × 2 × 2 mm grid equally distributed within the Montreal Neurological Institute's (MNI) average template brain. As assessed in previous studies, localization accuracy is considered to be on the order of the grid size, here equal to 2 mm (Grave De Peralta Menendez et al., 2004; Martuzzi et al., 2009). Before the estimation of the neural generators, the SEP data were first averaged across the time window identified in the preceding topographic pattern analysis, yielding a single data point for each participant and condition and thereby increasing the signal-to-noise ratio (Thelen et al., 2012). The source estimation yields a current density value for each node of the solution space, which can be statistically tested for differences in brain sources between conditions. A 2 × 2 repeated measures ANOVA with factors congruency and synchrony was performed at each node using the STEN toolbox, developed by Jean-François Knebel and Michael Notter (http://doi.org/10.5281/zenodo.1164038).
Only nodes with p-values < 0.05 belonging to clusters of at least 17 contiguous nodes were considered statistically significant, using the same spatial threshold as in previous studies (Cappe et al., 2012; Thelen et al., 2012).
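Two steps of this source-level analysis can be illustrated with a short Python sketch: averaging each participant's SEP over the 21–56 ms window before applying a linear inverse operator, and retaining only nodes with p < 0.05 that belong to clusters of at least 17 contiguous nodes. The inverse matrix is a hypothetical placeholder (computing the LAURA operator itself is beyond this sketch), current density is taken as the norm of a 3-D dipole vector per node, and contiguity is defined here by face-sharing neighbors on the solution grid; the exact neighborhood definition used by STEN may differ.

```python
import numpy as np
from scipy import ndimage


def window_current_density(sep, times_ms, inverse, window=(21.0, 56.0)):
    """Average an SEP over a time window, then apply a linear inverse operator.

    sep: (n_channels, n_times); times_ms: (n_times,);
    inverse: hypothetical (3 * n_nodes, n_channels) matrix with x, y, z rows per node.
    Returns the current density magnitude at each node, shape (n_nodes,).
    """
    in_window = (times_ms >= window[0]) & (times_ms <= window[1])
    v = sep[:, in_window].mean(axis=1)     # one data point per subject and condition
    j = (inverse @ v).reshape(-1, 3)       # dipole vector at each node
    return np.linalg.norm(j, axis=1)


def cluster_extent_mask(p_grid, alpha=0.05, min_size=17, connectivity=1):
    """Keep nodes with p < alpha belonging to clusters of >= min_size contiguous nodes.

    p_grid: 3-D array of node-wise p-values on the solution grid (NaN outside the brain).
    connectivity=1 uses face neighbors only (an assumption).
    """
    significant = np.nan_to_num(p_grid, nan=1.0) < alpha
    structure = ndimage.generate_binary_structure(3, connectivity)
    labels, _ = ndimage.label(significant, structure=structure)
    sizes = np.bincount(labels.ravel())
    large = np.flatnonzero(sizes >= min_size)
    large = large[large != 0]              # label 0 is the background
    return np.isin(labels, large)
```

Under this criterion, a 12-node cluster such as the PCC cluster reported in the Results is discarded, while a 37-node cluster is retained.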

Analysis of behavioral data

All behavioral data (questionnaires and movement parameters) were analyzed using a permutation-based 2 × 2 repeated measures ANOVA with congruency and synchrony as factors and 10,000 permutations. Ratings for the control question were missing for two participants; to control for the resulting smaller sample size, we repeated the same analysis on a larger pool of participants that also included ratings from the participants whose EEG data had been excluded (n = 11), and compared the results with those obtained from the final pool (n = 8).

Correlation analyses were performed between significant changes in neural generators and the corresponding differences in the reported sense of agency (Pearson correlation coefficient).
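A minimal sketch of this correlation is shown below. It assumes (our assumption, not stated in the text) that both measures are expressed as per-participant differences between the congruent and incongruent conditions, each averaged across the two synchrony levels; the function names are hypothetical.

```python
import numpy as np
from scipy import stats


def congruency_difference(y):
    """Per-participant congruent-minus-incongruent difference.

    y: (n_subjects, 2, 2) array indexed as [subject, congruency, synchrony],
    with index 0 = congruent; synchrony levels are averaged (an assumption).
    """
    y = np.asarray(y, dtype=float)
    return y[:, 0, :].mean(axis=1) - y[:, 1, :].mean(axis=1)


def brain_behavior_correlation(ppc_density, agency_ratings):
    """Hypothetical helper: Pearson r between PPC current-density and agency differences."""
    r, p = stats.pearsonr(congruency_difference(ppc_density),
                          congruency_difference(agency_ratings))
    return r, p
```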

Raw movements recorded through the robotic interface were analyzed to investigate whether, despite the explicit instruction to keep the horizontal movement as consistent as possible throughout the experiment, our experimental manipulations of the visual feedback affected the executed movement. We considered two parameters: velocity and trajectory norm, the latter computed as the Euclidean norm of each left-right path (as in Rognini et al., 2013). For each participant we then computed the average values of both parameters for each experimental condition and submitted them to statistical analysis.
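One possible way to compute the two movement parameters from sampled gripper positions is sketched below. Because the text only states that the trajectory norm is the Euclidean norm of each left-right path, we assume here that a path is a stroke between two consecutive reversals of horizontal velocity and that its norm is the Euclidean distance between the stroke's endpoints; velocity is summarized as mean speed. These choices, the function name and the absence of smoothing (real tracking data would likely need low-pass filtering before reversal detection) are all assumptions.

```python
import numpy as np


def movement_parameters(t, pos):
    """Hypothetical helper: mean speed and mean stroke norm for one trial.

    t: (n,) timestamps in s; pos: (n, 3) gripper positions in m, x = left-right.
    """
    vel = np.gradient(pos, t, axis=0)            # finite-difference velocity
    speed = np.linalg.norm(vel, axis=1)

    # Detect left-right reversals from sign changes of the horizontal velocity,
    # carrying the last nonzero sign forward so exact zeros do not hide a reversal.
    sign = np.sign(vel[:, 0])
    for i in range(1, len(sign)):
        if sign[i] == 0:
            sign[i] = sign[i - 1]
    turns = np.flatnonzero(sign[1:] * sign[:-1] < 0) + 1

    bounds = np.concatenate(([0], turns, [len(t) - 1]))
    norms = [np.linalg.norm(pos[b] - pos[a])
             for a, b in zip(bounds[:-1], bounds[1:]) if b > a]
    return float(speed.mean()), float(np.mean(norms))
```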

Results

Electrical neuroimaging results

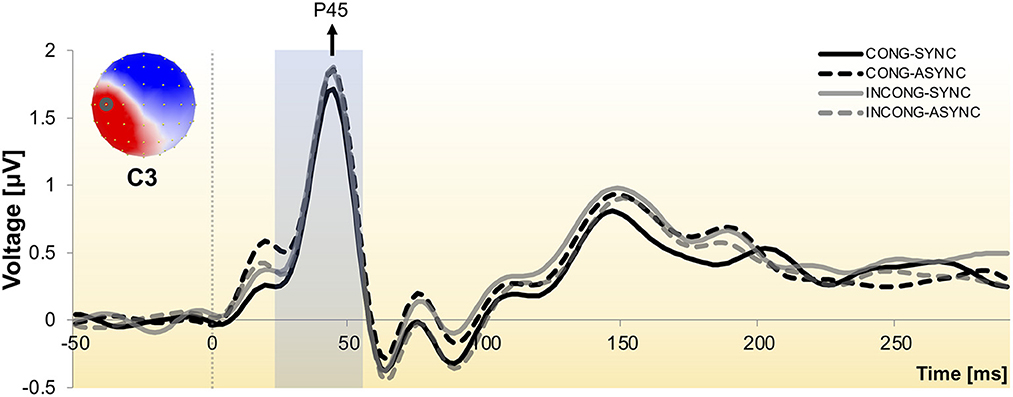

The waveform analysis revealed the prototypical components evoked by median nerve stimulation at the wrist (e.g., P45, N60), with maximal amplitude displayed by electrodes placed over central and parietal regions, contralateral to the stimulation side (Figure 2).

Figure 2. Somatosensory evoked potential to stimulation of the median nerve at the right wrist. Group-averaged SEP waveform across the four experimental conditions as recorded from one exemplar central electrode (C3), contralateral to the stimulation side. Responses exhibit the prototypical peaks, including the P45 and N60 components. The scalp topography is shown with the nasion upwards and left scalp leftwards and depicts the neural activity at the P45 peak, with electrode C3 encircled. The shaded time interval indicates the window of interest over which the neuroimaging analyses were performed.

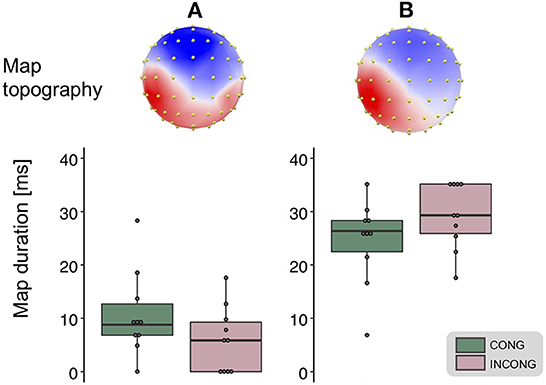

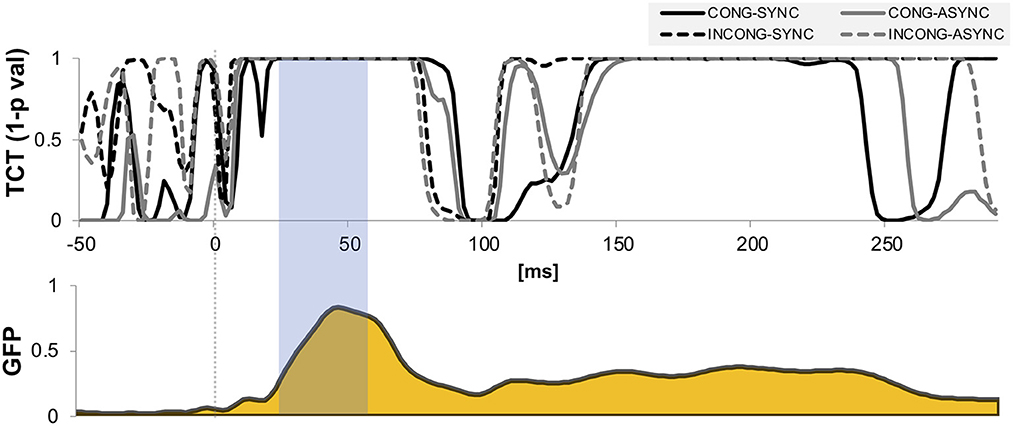

We performed hierarchical topographic clustering to identify periods of stable electric field topography in the group-averaged data from all conditions. The resulting template maps accounted for 91% of the global explained variance and identified several periods of stable topography, one of which spanned the 21–56 ms post-stimulus interval. This interval encompassed two topographic maps, both displaying a clear lateralization over left central and parietal regions, contralateral to the stimulation side (Figure 3). Interestingly, one of the two topographies was selectively present in the congruent and synchronous condition (i.e., the one without any experimental manipulation; Figure 3A) and showed stronger activity over right parietal regions than the other topography, which predominated during the three experimental conditions characterized by a visuo-motor mismatch (Figure 3B). The 21–56 ms interval overlapped with a window of topographic consistency across all four conditions, as assessed through the TCT, and included the GFP peak (Figure 4). This is in accordance with the inverse relationship between GFP and TCT p-values, and confirms the presence of evoked activity during this early time period in all four conditions of interest (Murray et al., 2008). In the following, we focus on the statistical analysis of the topographic representation of the EEG signal during the 21–56 ms post-stimulus period.

Figure 3. Topographic clustering and “fitting” procedure. The topographic pattern analysis identified periods of stable topography within the 300 ms following the onset of electrical stimulation. For one of these time periods (21–56 ms), two maps were identified from the group-averaged SEPs. One map most prominently accounted for the congruent condition [map (A), left], while the other accounted for the incongruent one [map (B), right]. The reliability of this result, observed at the group-average level, was assessed at the single-subject level using a spatial correlation fitting procedure. This analysis revealed a significantly different duration of the two maps in the congruent (green) as compared to the incongruent (pink) condition (main effect of the congruency factor, whisker plots).

Figure 4. Topographic consistency and global field power. Results of the topographic consistency test (TCT, upper plot) show intervals of time in which the null hypothesis that the observed topographies are entirely explained by noise is rejected (p-values < 0.05). For display purposes, the y-axis is expressed in terms of 1 − p values. The TCT across the four conditions shows periods of stable topography during two time windows, 20–75 ms and 150–220 ms post-stimulus onset. The first time window covers the onset and peak of the global field power (GFP, lower plot) and occurs around the P45 evoked potential. The highlighted time interval indicates the interval of interest over which the neuroimaging analyses were performed.

The reliability of the topographic pattern observed at the group level in the previous step was tested at the single-subject level using a spatial correlation “fitting” procedure. Statistical tests on map presence confirmed the predominance of the map with more bilateral posterior activity (map A) when the virtual movement was spatially congruent with the executed one: over the 21–56 ms interval we found a main effect of congruency on map duration [F(1,9) = 11.42, p < 0.01, η2p = 0.56, Figure 3]. A trend in the same direction was present for the synchrony factor but did not reach statistical significance [F(1,9) = 3.52, p = 0.09, η2p = 0.28]. There was no statistically significant interaction between the two factors [F(1,9) = 1.19, p = 0.3, η2p = 0.12]. We then tested the average GFP over the time window of interest and found no significant difference between the experimental conditions (all p-values > 0.05).
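The partial eta squared values reported alongside each F test can be recovered from F and its degrees of freedom with the standard identity η²p = F·df_effect / (F·df_effect + df_error), which follows from η²p = SS_effect / (SS_effect + SS_error). A small Python check using the map-duration statistics reported above (the function name is our own):

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic: SS_effect / (SS_effect + SS_error)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# Map-duration effects reported above, F(1, 9)
print(round(partial_eta_squared(11.42, 1, 9), 2))  # 0.56 (congruency)
print(round(partial_eta_squared(3.52, 1, 9), 2))   # 0.28 (synchrony)
```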

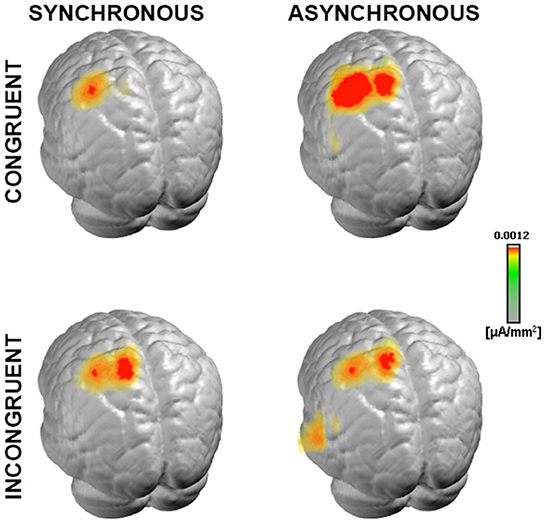

Next, to test the hypothesis that these topographic differences reflect changes in the underlying neural generators, we computed and statistically compared the source estimations over the time interval of interest across the four conditions. All experimental conditions showed neural generators located predominantly in the left somatosensory cortex, contralateral to the stimulation side (Figure 5), as expected from the lateralized pattern observed in the scalp topography (Figures 2, 3).

Figure 5. Neural generators of somatosensory evoked response. Group-averaged LAURA source density estimations over the 21–56 ms period post-stimulus onset for each of the four experimental conditions. Results are displayed on an average MNI brain. All conditions exhibited neural generators located within the left somatosensory area, contralateral to the stimulation side (right wrist).

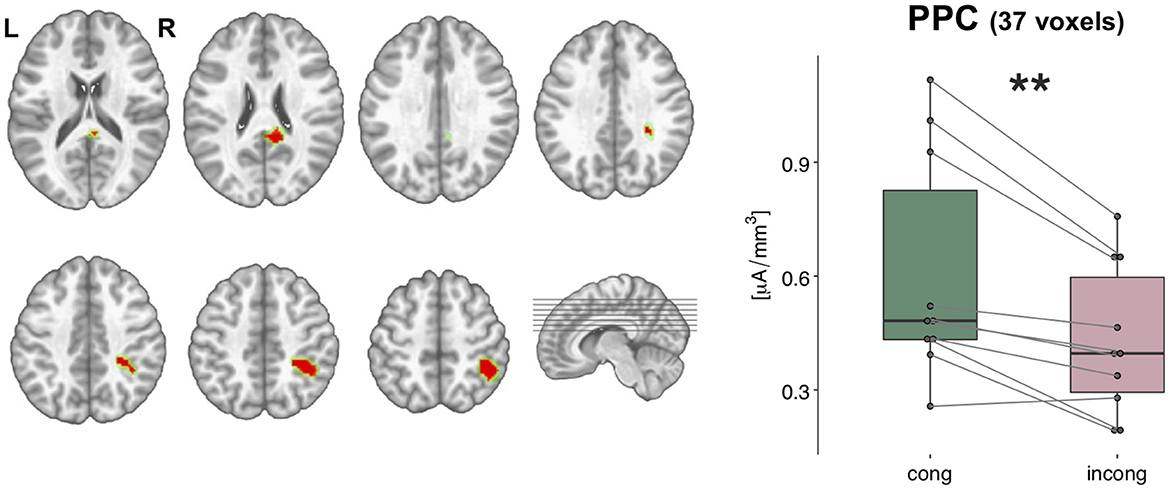

Scalar values of the source current density from each participant and condition were statistically tested with a 2 × 2 ANOVA performed in the brain space. This analysis highlighted a cluster of 37 nodes in the posterior parietal cortex (PPC), where the estimated sources were stronger in the congruent than in the incongruent condition [F(1,9) = 17.42, p < 0.01, η2p = 0.66, Figure 6]. Another cluster of 12 nodes over the posterior cingulate cortex (PCC) also showed a significant main effect of congruency at the node level, but it is not discussed further because it did not survive the spatial threshold of at least 17 contiguous nodes.

Figure 6. Statistical analyses of the source estimations. Group-averaged source estimations were calculated over the 21–56 ms post-stimulus interval for each experimental condition and submitted to a 2 × 2 repeated-measures ANOVA performed in the brain space. Two clusters that exhibited a significant main effect of the factor congruency are shown in axial slices of the MNI template brain, corresponding to the posterior parietal cortex (PPC, 37 nodes) and the posterior cingulate cortex (PCC, 12 nodes). Only nodes meeting the p < 0.05 statistical threshold and the spatial criterion of at least 17 contiguous nodes were considered reliable. Whisker plots depict the current density values in the PPC cluster in the congruent (green) and incongruent (pink) conditions. Significance is denoted with ** for p < 0.01.
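The spatial criterion described above (p < 0.05 at each node and at least 17 contiguous nodes) can be sketched as a connected-components search over the solution-point grid. The neighbour lists are a hypothetical input: how contiguity was defined on the actual source space is not specified in this section. Under this criterion, a 12-node cluster such as the PCC one would be discarded.

```python
from collections import deque
import numpy as np

def significant_clusters(p_values, neighbours, alpha=0.05, min_size=17):
    """Clusters of contiguous nodes with p < alpha, keeping only those with >= min_size nodes.

    p_values: one p-value per solution point; neighbours: list of adjacent node indices per node.
    """
    sig = np.asarray(p_values) < alpha
    seen = np.zeros(sig.size, dtype=bool)
    clusters = []
    for start in np.flatnonzero(sig):
        if seen[start]:
            continue
        # Breadth-first search collecting all significant nodes connected to `start`
        queue, cluster = deque([start]), []
        seen[start] = True
        while queue:
            node = queue.popleft()
            cluster.append(int(node))
            for nb in neighbours[node]:
                if sig[nb] and not seen[nb]:
                    seen[nb] = True
                    queue.append(nb)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters
```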

Behavioral data

Questionnaire analysis

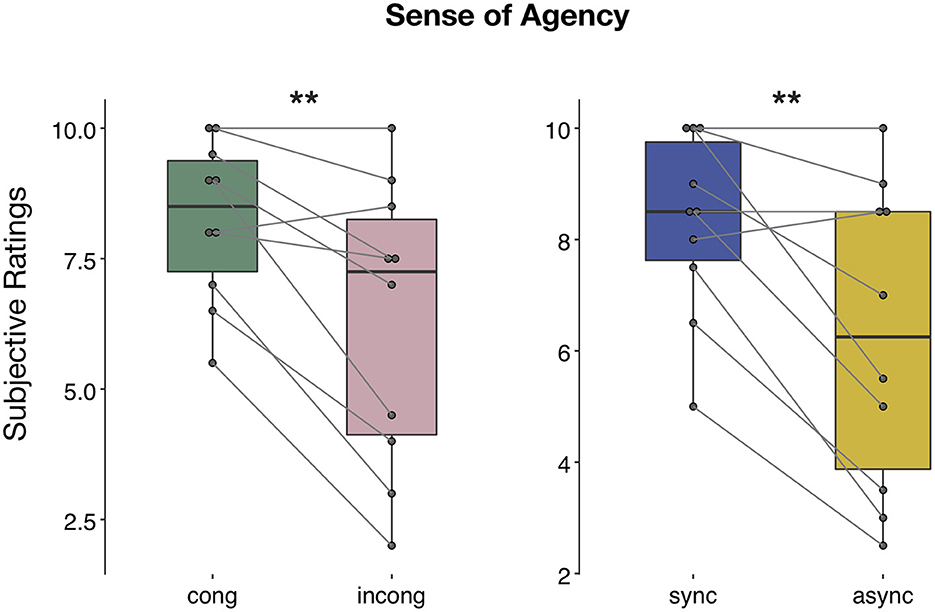

The ANOVA performed on the questionnaire scores for the sense of agency revealed main effects of both congruency [F(1,9) = 13.05, p < 0.01, η2p = 0.59] and synchrony [F(1,9) = 12.1, p < 0.01, η2p = 0.57], with participants reporting a stronger sense of agency during the congruent and synchronous conditions (Figure 7), but no significant interaction between the two factors [F(1,9) = 1.12, p > 0.05, η2p = 0.11]. Conversely, ratings for the control question revealed no significant difference between the four conditions [main effect of congruency: F(1,7) = 2.39, p > 0.05, η2p = 0.26; main effect of synchrony: F(1,7) = 0.02, p > 0.05, η2p = 0.004; interaction congruency × synchrony: F(1,7) = 1, p > 0.05, η2p = 0.12; Supplementary Figure 1]. The absence of any statistical difference in the control-question ratings was confirmed in a larger sample that included participants excluded from the EEG data analyses [main effect of congruency: F(1,10) = 2.66, p > 0.05, η2p = 0.21; main effect of synchrony: F(1,10) = 0.003, p > 0.05, η2p = 0.0003; interaction congruency × synchrony: F(1,10) = 0.51, p > 0.05, η2p = 0.05].
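In a 2 × 2 fully within-subject design, each effect has one numerator degree of freedom. Its F statistic therefore equals the squared one-sample t of a per-participant contrast, and partial eta squared follows from η2p = F·df1 / (F·df1 + df2). For example, F(1,9) = 13.05 gives 13.05/22.05 ≈ 0.59. The sketch below illustrates these relations with a hypothetical data layout [participant, congruency, synchrony]. It is a parametric illustration only: the study states that permutation-based tests were used (Frossard and Renaud, 2021), and this code does not reproduce them.

```python
import numpy as np

def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)

def rm_anova_2x2(data):
    """Parametric 2 x 2 within-subject ANOVA computed from per-participant contrasts.

    data: array (n_participants, 2, 2) indexed [participant, congruency, synchrony].
    Returns {effect: (F, df_error, partial_eta_squared)}; every effect has df_effect = 1.
    """
    data = np.asarray(data, dtype=float)
    n = data.shape[0]
    contrasts = {
        # Level 0 minus level 1 of congruency, averaged over synchrony
        "congruency": data[:, 0, :].mean(axis=1) - data[:, 1, :].mean(axis=1),
        # Level 0 minus level 1 of synchrony, averaged over congruency
        "synchrony": data[:, :, 0].mean(axis=1) - data[:, :, 1].mean(axis=1),
        # Difference of differences
        "interaction": (data[:, 0, 0] - data[:, 0, 1]) - (data[:, 1, 0] - data[:, 1, 1]),
    }
    results = {}
    for name, c in contrasts.items():
        t = c.mean() / (c.std(ddof=1) / np.sqrt(n))  # one-sample t on the contrast
        f = t ** 2  # F(1, n - 1) equals t squared for a one-df effect
        results[name] = (f, n - 1, partial_eta_squared(f, 1, n - 1))
    return results
```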

Figure 7. Sense of agency during human-robot interactions. Questionnaire results for the question on the sense of agency: the ANOVA revealed main effects of both congruency and synchrony. Significance is denoted with ** for p < 0.01.

To investigate whether the congruency-related changes in PPC activity were related to the perceived SoA, we computed Pearson's correlation between the congruent-minus-incongruent differences in current density and in reported SoA, and found no significant relationship between these two variables (r = 0.09, p > 0.05).
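This analysis amounts to a Pearson correlation between two per-participant difference scores. The minimal sketch below assumes one current-density value (for example, averaged over the PPC cluster) and one SoA rating per participant and condition. The function names are hypothetical. `numpy.corrcoef` returns r only; `scipy.stats.pearsonr` would also return a p-value.

```python
import numpy as np

def difference_scores(congruent, incongruent):
    """Per-participant congruent-minus-incongruent differences."""
    return np.asarray(congruent, dtype=float) - np.asarray(incongruent, dtype=float)

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length vectors."""
    return float(np.corrcoef(x, y)[0, 1])

def r_to_t(r, n):
    """t statistic (df = n - 2) testing whether a Pearson r differs from zero."""
    return r * np.sqrt(n - 2) / np.sqrt(1.0 - r ** 2)
```

Assuming the ten participants implied by F(1,9), the correlation has df = 8. The two-tailed critical t is then about 2.306, which corresponds to |r| ≈ 0.63, so r = 0.09 lies well below the significance threshold.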

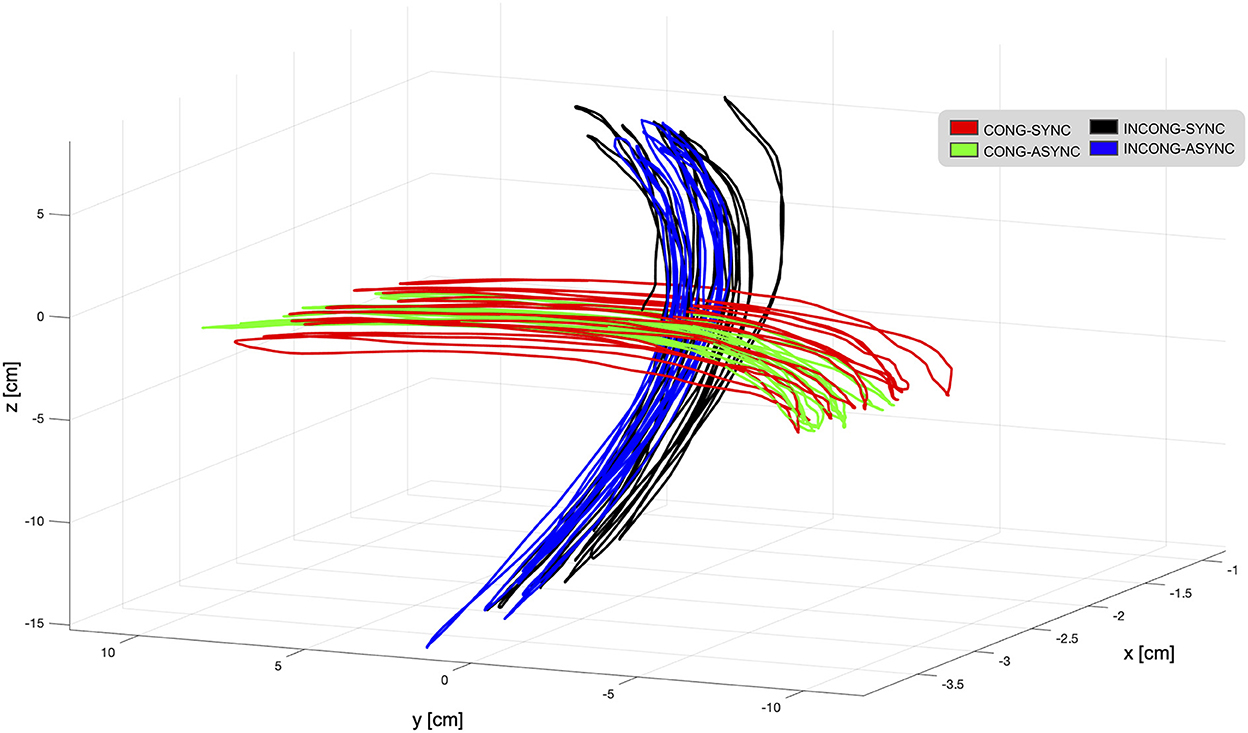

Movement trajectory

During the experiment, participants performed continuous movements with a mean trajectory norm of 10.6 cm (SE 0.6 cm) in the left-right direction and a mean velocity of 6.7 cm/s (SE 0.3 cm/s; Figure 8). Although the extent and velocity of the movements varied between participants, within-participant differences between conditions were minimal (below 1 cm for trajectory and below 1 cm/s for velocity in all but two participants). This indicates that individual participants performed the task consistently despite the experimental manipulations. The group-level analysis revealed a significant interaction between congruency and synchrony on the trajectory norm [F(1,9) = 6.6; p < 0.05, η2p = 0.42]. Post-hoc analyses indicated that the trajectory during the incongruent-synchronous condition tended to be shorter than during the incongruent-asynchronous [t(9) = 2.1, p = 0.05, d = 0.69] and congruent-asynchronous conditions [t(9) = 1.85, p = 0.09, d = 0.58], although neither comparison fell below the conventional p < 0.05 threshold.
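The trajectory and velocity summaries can be sketched from sampled hand positions. This section does not give the exact definition of the "trajectory norm". The sketch therefore assumes it is the left-right extent (maximum minus minimum position) of a trial and that velocity is the mean absolute left-right speed. The sampling rate and function names are hypothetical.

```python
import numpy as np

def movement_metrics(x_cm, fs_hz):
    """Return (extent_cm, mean_speed_cm_s) for one trial of left-right positions.

    Assumption: extent = max - min of position, used as a stand-in for the trajectory norm.
    """
    x = np.asarray(x_cm, dtype=float)
    extent = x.max() - x.min()
    speed = np.abs(np.diff(x)) * fs_hz  # displacement per sample (cm) times samples per second
    return extent, float(speed.mean())

def condition_spread(per_condition_means):
    """Largest difference between one participant's condition means (e.g., the < 1 cm check)."""
    m = np.asarray(per_condition_means, dtype=float)
    return float(m.max() - m.min())
```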

Figure 8. Movement trajectories as displayed in the virtual environment for one representative participant. During the task, participants were instructed to perform continuous horizontal left-right hand movements while interacting with the robotic interface. These movements were rendered in real time in the virtual reality environment, presented to the participant through a head-mounted display. To interfere with visuo-motor integration mechanisms, the visual feedback provided in VR was either in accordance with the executed movement (congruent-synchronous condition, red trajectory) or experimentally manipulated. To this end, a spatial mismatch translating the horizontal movement into a vertical one (incongruent conditions, black and blue trajectories) and a temporal delay (asynchronous conditions, green and blue trajectories) were introduced in selected trials, resulting in a total of four experimental conditions. Trajectories are displayed for each condition during a single trial. Overall, the mean trajectory norm in the left-right direction was 10.6 cm, and the mean velocity 6.7 cm/s.
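The four feedback conditions combine two independent transformations of the tracked hand position. This is a minimal sketch under two assumptions: the spatial mismatch is implemented by swapping the horizontal and vertical axes, and the temporal delay by a fixed-length buffer of past positions. The class, the axis convention, and the `delay_samples` parameter are hypothetical, and the actual delay used in the experiment is not stated in this section.

```python
from collections import deque

class FeedbackManipulator:
    """Hypothetical mapping from tracked hand position to rendered virtual-hand position."""

    def __init__(self, congruent: bool, delay_samples: int):
        self.congruent = congruent
        # Holds the current sample plus delay_samples past ones; the oldest is rendered
        self.buffer = deque(maxlen=delay_samples + 1)

    def update(self, x: float, y: float) -> tuple:
        # Incongruent: swap axes so left-right hand motion is rendered as up-down motion
        rendered = (x, y) if self.congruent else (y, x)
        self.buffer.append(rendered)
        # Until the buffer fills, the earliest available sample is shown
        return self.buffer[0]
```

With `congruent=True` and `delay_samples=0`, the sketch reproduces the congruent-synchronous condition. The other three conditions follow from the remaining combinations of the two parameters.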

Statistical tests on average velocity revealed a main effect of synchrony [F(1,9) = 34.8; p < 0.001, η2p = 0.79] and a significant interaction between the two factors [F(1,9) = 27.9; p < 0.001, η2p = 0.75]. Movements were significantly slower during the delayed conditions, irrespective of the presence of a spatial conflict [incongruent-synchronous > congruent-asynchronous: t(9) = 2.41, p < 0.05, d = 0.76; incongruent-synchronous > incongruent-asynchronous: t(9) = 2.86, p < 0.05, d = 0.9]. This finding is in line with the observation that, during the recordings, participants tended to slow down during the asynchronous trials when approaching the end of the right-left trajectory. Overall, manipulating the visual feedback reduced velocity compared with trials in which the feedback matched the executed movement [congruent-synchronous vs. congruent-asynchronous: t(9) = 9.22, p < 0.001, d = 2.9; vs. incongruent-synchronous: t(9) = 3, p < 0.05, d = 0.96; vs. incongruent-asynchronous: t(9) = 4.55, p = 0.001, d = 1.44].
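The reported post-hoc effect sizes appear consistent with Cohen's d for paired samples, d_z = mean difference / SD of differences = t/√n. For example, with n = 10, t(9) = 9.22 gives 9.22/√10 ≈ 2.92 and t(9) = 4.55 gives ≈ 1.44. This convention is inferred from the numbers rather than stated in this section, and the function names below are illustrative.

```python
import math
import numpy as np

def paired_t(a, b):
    """Paired-samples t statistic and Cohen's d_z for two conditions in the same participants."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    n = diff.size
    dz = diff.mean() / diff.std(ddof=1)  # standardized mean difference
    t = dz * math.sqrt(n)  # equivalent to mean / (sd / sqrt(n))
    return float(t), float(dz)

def dz_from_t(t, n):
    """Recover d_z from a reported paired t statistic and the sample size."""
    return t / math.sqrt(n)
```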

Discussion

Our study aimed to identify the neural correlates of visuo-motor integration during human-robot interactions under dynamic conditions. To do so, we used a robotic interface to track bimanual movements and provided real-time visual feedback through VR (Rognini et al., 2013). This set-up allowed us to introduce precise spatial and temporal mismatches between the executed and the observed movement. To investigate how these visuo-motor conflicts modulated brain activity, we recorded somatosensory evoked potentials (SEPs) to median nerve stimulation at the right wrist. High-density electrical source imaging indicated that the spatial manipulation (congruency factor), but not the temporal one (synchrony factor), elicited a decrease in neural activity in the right posterior parietal cortex (PPC). At the behavioral level, both spatial and temporal manipulations significantly decreased the subjective experience of being in control of the movements of the virtual hands.

PPC has traditionally been considered an associative area that integrates information from different sensory modalities (see, for instance, Xing and Andersen, 2000). Several studies that introduced mismatches between the observed and felt movement by manipulating visual feedback consistently showed the involvement of this region in detecting visuo-motor incongruences (Fink et al., 1999; Schnell et al., 2007; Wasaka and Kakigi, 2012). This region has also been proposed to be part of the fronto-parietal mirror neuron network, responsible for the correspondence between observed and executed movements (Rizzolatti and Craighero, 2004). Interestingly, we found that PPC was selectively sensitive to spatial rather than temporal conflicts. In line with this finding, PPC has been associated mainly with the integration and processing of spatial information (Andersen and Zipser, 1988; Quintana and Fuster, 1993; Haggard, 2017) and with sensory-spatial transformation processes (Torres et al., 2010), rather than with temporal cues (Quintana and Fuster, 1993).

Moreover, the spatial manipulation revealed stronger, rather than reduced, PPC activity when the observed movement matched the executed one. This contrasts with previous studies reporting suppression of neural activity associated with self-generated actions or with the attribution of actions to the self (Fink et al., 1999; Farrer and Frith, 2002; Jeannerod, 2009). However, suppression mechanisms that appear at the behavioral level, such as the well-described inability to tickle oneself (Blakemore et al., 2000), and the physiological attenuation of SEP amplitude due to the gating effect during movement have been shown to have different neurophysiological correlates (Palmer et al., 2016). Similar to our results, a previous study employing the same topographic analysis also showed a stronger evoked response when no experimental conflict was introduced than in a condition characterized by a visuo-tactile mismatch (Aspell et al., 2012). The authors proposed that this phenomenon might be due to bottom-up multisensory integration mechanisms triggered by congruent sensory cues, which would enhance activity in modality-specific regions such as the somatosensory cortex, as previously reported for visuo-tactile integration (Eimer and Driver, 2001; Taylor-Clarke et al., 2002) and for sensorimotor integration through power increases in the gamma band (Aoki et al., 2001). In addition, enhanced activation of the intraparietal sulcus (IPS), a structure anatomically close to the cluster we found, has been associated with the integration of visual and spatial information in hand-centered coordinates (Makin et al., 2007).

Another important aspect of our findings is the lateralization to the right hemisphere, which cannot be ascribed to the task itself (the movement was bimanual) or to differences in the executed movements (the analysis of movement trajectory and velocity revealed no main effect of congruency). This lateralization is consistent with a large body of literature supporting the prominent role of the right hemisphere in visuo-spatial processing. Previous studies have implicated the right PPC, rather than the left PPC, as a key site for visuo-spatial processing (Vallar, 1998) and for monitoring self- vs. externally generated movements (Ogawa and Inui, 2007).

The analyses of participants' movements highlighted differences between experimental conditions in mean trajectory (interaction synchrony × congruency) and velocity (main effect of synchrony and interaction). These effects likely reflect behavioral adjustments to mismatches in the visual feedback (e.g., slowing down during the asynchronous conditions to compensate for the temporal delay). They are unlikely to account for the changes observed at the neural level, since congruency, the factor that modulated PPC activity, had no main effect on either kinematic measure. Active movements can indeed modulate the SEP waveform, likely through a top-down attenuation of afferent proprioceptive information (i.e., gating effect; Kakigi et al., 1995; Palmer et al., 2016). However, a previous study has shown that changes in SEPs to median nerve stimulation are associated with the presence of a visuo-motor conflict, but not with differences in movement kinematics (Bernier et al., 2009). Furthermore, our experimental manipulations did not alter proprioceptive cues, since the distance between the two hands was kept fixed by a mechanical frame held by participants.

Our experimental set-up allowed us to test the sense of agency for robotically mediated movements, an aspect of bodily self-consciousness deeply rooted in the integration of visual and somatomotor information (Jeannerod and Pacherie, 2004; Daprati et al., 2007; Farrer et al., 2008). Participants reported decreased SoA during conditions with spatial and temporal conflicts compared with conditions without any visuo-motor mismatch. This confirms classical findings (for a review, see Haggard, 2017) as well as previous results obtained with the same robotic-VR platform (Rognini et al., 2013). Although we found no relationship between the activity decrease in PPC and the reported SoA, parietal regions have been consistently associated with this subjective experience (Haggard, 2017). In this context, the involvement of PPC has been previously assessed in healthy individuals (Chaminade and Decety, 2002; Farrer et al., 2008; Jeannerod, 2009) and in patients with parietal lesions (Sirigu et al., 1999; Daprati et al., 2000; Ronchi et al., 2018). Converging evidence points to this area as a key player in monitoring the consistency between actions and their visual outcomes (David et al., 2008). Further, the lateralization to the right PPC is compatible with previous neuroimaging evidence showing the prominent involvement of this region in the experience of controlling one's own actions. This involvement has been shown both in healthy individuals (Farrer et al., 2003) and in psychiatric and neurological patients affected by disorders of agency (Spence et al., 1997; Simeon et al., 2000). Indeed, damage to the right rather than the left parietal hemisphere is associated not only with misattribution of one's own movements (Daprati et al., 2000) but also with disturbances of other aspects of bodily self-consciousness (Berti et al., 2005; Arzy et al., 2006; Heydrich et al., 2010).

Limitations

The gender imbalance in our participant cohort reflects the demographics of the campus of the École Polytechnique Fédérale de Lausanne, from which we recruited our participants. Previous studies have generally not revealed any significant effect of sex on multisensory perception (basic auditory, visual and somatosensory stimuli: Hagmann and Russo, 2016; audiovisual speech: Ross et al., 2015). Thus, we have no reason to assume that our results were confounded by the gender imbalance of our cohort. Moreover, the task consisted of performing continuous bimanual horizontal movements for 15 s without a specific goal, which, to the best of our knowledge, is unlikely to elicit gender differences at the behavioral or neurophysiological level.

Another potential limitation of the current study is the relatively small number of participants. However, our sample size matches that of previously published studies which, like ours, used SEPs to investigate neural changes associated with multisensory conflicts and subjective experience (e.g., Heydrich et al., 2018; Palluel et al., 2020). Furthermore, we chose robust, data-driven approaches to data analysis, such as permutation-based statistical tests (Frossard and Renaud, 2021) and reference-independent topographical analysis of the EEG data (Murray et al., 2008). The large effect sizes we observed also support the adequacy of our sample size (Cohen, 1988).
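To illustrate the permutation logic, a within-subject contrast can be tested by flipping the sign of each participant's difference score. This is valid if the differences are symmetric around zero under the null hypothesis. With ten participants, all 2^10 = 1024 sign patterns can be enumerated exactly. This is a minimal sketch of a single paired contrast, not the algorithm of the permuco package (which handles full ANOVA designs), and the function name is hypothetical.

```python
import itertools
import numpy as np

def sign_flip_p(diffs):
    """Exact two-sided sign-flip permutation p-value for H0: mean within-subject difference = 0."""
    d = np.asarray(diffs, dtype=float)
    observed = abs(d.mean())
    # Every combination of +1/-1 signs, one row per permutation (2 ** n rows)
    signs = np.array(list(itertools.product([-1.0, 1.0], repeat=d.size)))
    null = np.abs(signs @ d) / d.size
    # Small tolerance so ties with the observed statistic are counted despite rounding
    return float(np.mean(null >= observed - 1e-12))
```

Exhaustive enumeration grows as 2^n, so for larger samples one would draw a random subset of sign patterns instead.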

Conclusion

By using a unique experimental set-up combined with electrical source imaging, we were able to uncover the neural correlates of visuo-motor integration during voluntary bimanual movement. Our results show that robotic interfaces combined with virtual reality are powerful tools for neuroscientific investigations, as they allow complex dynamic interactions to be controlled while introducing precise and consistent manipulations, free from potential experimenter bias. Understanding the neural substrates of robotically mediated interactions might help improve the controllability of current robotic systems and could be exploited in neurorehabilitation applications (Mehrholz et al., 2015). Together with VR, now an established tool for facilitating functional recovery and neural reorganization (Adamovich et al., 2010; Laver et al., 2017), EEG recordings can provide specific neural signatures to monitor changes over the course of rehabilitative interventions.

Data availability statement

The data supporting the conclusions of this article will be made available on request by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Commission cantonale d'éthique de la recherche sur l'être humain (CER-VD). The patients/participants provided their written informed consent to participate in this study.

Author contributions

OB conceived and supervised the research. OB, SM, and GR designed the experiment. SM carried out the experiment and wrote the manuscript. SM, FB, and MD analyzed the data. All authors contributed to the manuscript.

Funding

This research was supported by two donors advised by CARIGEST SA (Fondazione Teofilo Rossi di Montelera e di Premuda and a second one wishing to remain anonymous) and by the Bertarelli Foundation to OB. SM is supported by the NCCR Evolving Language, Swiss National Science Foundation Agreement #51NF40_180888.

Acknowledgments

We thank A. Thelen for the valuable discussions about the topographical analyses, A. Sengül for technical support, and P. Mégevand for comments on the final manuscript. The Cartool software (cartoolcommunity.unige.ch) has been programmed by Denis Brunet, from the Functional Brain Mapping Laboratory (FBMLab), Geneva, Switzerland and is supported by the Center for Biomedical Imaging (CIBM) of Geneva and Lausanne. The STEN toolbox (http://doi.org/10.5281/zenodo.1164038) has been programmed by Jean-François Knebel and Michael Notter, from the Laboratory for Investigative Neurophysiology (the LINE), Lausanne, Switzerland and is supported by the Center for Biomedical Imaging (CIBM) of Geneva and Lausanne and by National Center of Competence in Research project “SYNAPSY – The Synaptic Bases of Mental Disease”; project no. 51AU40_125759.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnbot.2022.1034615/full#supplementary-material

References

Adamovich, S. V., Fluet, G. G., Tunik, E., and Merians, A. S. (2010). Sensorimotor training in virtual reality: a review. NeuroRehabilitation 25, 1–21. doi: 10.3233/NRE-2009-0497

Ajoudani, A., Zanchettin, A. M., Ivaldi, S., Albu-Schäffer, A., Kosuge, K., and Khatib, O. (2018). Progress and prospects of the human–robot collaboration. Auton. Robots 42, 957–975. doi: 10.1007/s10514-017-9677-2

Akselrod, M., Vigaru, B., Duenas, J., Martuzzi, R., Sulzer, J., Serino, A., et al. (2021). Contribution of interaction force to the sense of hand ownership and the sense of hand agency. Sci. Rep. 11, 1–18. doi: 10.1038/s41598-021-97540-9

Andersen, R. A., and Zipser, D. (1988). The role of the posterior parietal cortex in coordinate transformations for visual–motor integration. Can. J. Physiol. Pharmacol. 66, 488–501. doi: 10.1139/y88-078

Aoki, F., Fetz, E., Shupe, L., Lettich, E., and Ojemann, G. (2001). Changes in power and coherence of brain activity in human sensorimotor cortex during performance of visuomotor tasks. Biosystems 63, 89–99. doi: 10.1016/S0303-2647(01)00149-6

Arzy, S., Thut, G., Mohr, C., Michel, C. M., and Blanke, O. (2006). Neural basis of embodiment: distinct contributions of temporoparietal junction and extrastriate body area. J. Neurosci. 26, 8074–8081. doi: 10.1523/JNEUROSCI.0745-06.2006

Aspell, J. E., Palluel, E., and Blanke, O. (2012). Early and late activity in somatosensory cortex reflects changes in bodily self-consciousness: an evoked potential study. Neuroscience 216, 110–122. doi: 10.1016/j.neuroscience.2012.04.039

Avikainen, S., Forss, N., and Hari, R. (2002). Modulated activation of the human SI and SII cortices during observation of hand actions. Neuroimage 15, 640–646. doi: 10.1006/nimg.2001.1029

Beckerle, P., Castellini, C., and Lenggenhager, B. (2019). Robotic interfaces for cognitive psychology and embodiment research: a research roadmap. Wiley Interdiscip. Rev. Cogn. Sci. 10, 1–9. doi: 10.1002/wcs.1486

Bernasconi, F., Blondiaux, E., Potheegadoo, J., Stripeikyte, G., Pagonabarraga, J., Bejr-Kasem, H., et al. (2021). Robot-induced hallucinations in Parkinson's disease depend on altered sensorimotor processing in fronto-temporal network. Sci. Transl. Med. 13, eabc8362. doi: 10.1126/scitranslmed.abc8362

Bernier, P. M., Burle, B., Vidal, F., Hasbroucq, T., and Blouin, J. (2009). Direct evidence for cortical suppression of somatosensory afferents during visuomotor adaptation. Cereb. Cortex 19, 2106–2113. doi: 10.1093/cercor/bhn233

Berti, A., Bottini, G., Gandola, M., Pia, L., Smania, N., Stracciari, A., et al. (2005). Shared cortical anatomy for motor awareness and motor control. Science 309, 488–491. doi: 10.1126/science.1110625

Blakemore, S. J., Wolpert, D., and Frith, C. (2000). Why can't you tickle yourself? Neuroreport 11, R11–R16. doi: 10.1097/00001756-200008030-00002

Blanke, O. (2012). Multisensory brain mechanisms of bodily self-consciousness. Nat. Rev. Neurosci. 13, 556–571. doi: 10.1038/nrn3292

Blanke, O., Pozeg, P., Hara, M., Heydrich, L., Serino, A., Yamamoto, A., et al. (2014). Neurological and robot-controlled induction of an apparition. Curr. Biol. 24, 2681–2686. doi: 10.1016/j.cub.2014.09.049

Borton, D., Micera, S., Millán, J. D. R., and Courtine, G. (2013). Personalized neuroprosthetics. Sci. Transl. Med. 5, 210rv2. doi: 10.1126/scitranslmed.3005968

Brunet, D., Murray, M. M., and Michel, C. M. (2011). Spatiotemporal analysis of multichannel EEG: CARTOOL. Comput. Intell. Neurosci. 2011, 813870. doi: 10.1155/2011/813870

Cappe, C., Thelen, A., Romei, V., Thut, G., and Murray, M. M. (2012). Looming signals reveal synergistic principles of multisensory integration. J. Neurosci. 32, 1171–1182. doi: 10.1523/JNEUROSCI.5517-11.2012

Carboni, M., Brunet, D., Seeber, M., Michel, C. M., Vulliemoz, S., and Vorderwülbecke, B. J. (2022). Linear distributed inverse solutions for interictal EEG source localisation. Clin. Neurophysiol. 133, 58–67. doi: 10.1016/j.clinph.2021.10.008

Chaminade, T., and Decety, J. (2002). Leader or follower? Involvement of the inferior parietal lobule in agency. Neuroreport 13, 1975–1978. doi: 10.1097/00001756-200210280-00029

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences, 2nd Edn. New York, NY: Lawrence Erlbaum Associates.

Conti, F., Barbagli, F., Balaniuk, R., Halg, M., Lu, C., Morris, D., et al. (2003). "The CHAI libraries," in Proceedings of Eurohaptics 2003 (Dublin, Ireland), 496–500.

Daprati, E., Sirigu, A., Pradat-Diehl, P., Franck, N., and Jeannerod, M. (2000). Recognition of self-produced movement in a case of severe neglect. Neurocase 6, 477–486. doi: 10.1080/13554790008402718

Daprati, E., Wriessnegger, S., and Lacquaniti, F. (2007). Kinematic cues and recognition of self-generated actions. Exp. Brain Res. 177, 31–44. doi: 10.1007/s00221-006-0646-9

David, N., Newen, A., and Vogeley, K. (2008). The “sense of agency” and its underlying cognitive and neural mechanisms. Conscious. Cogn. 17, 523–534. doi: 10.1016/j.concog.2008.03.004

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

D'Ettorre, C., Mariani, A., Stilli, A., Rodriguez Y Baena, F., Valdastri, P., Deguet, A., et al. (2021). Accelerating surgical robotics research: a review of 10 years with the da Vinci Research Kit. IEEE Robot. Autom. Mag. 28, 56–78. doi: 10.1109/MRA.2021.3101646

Dieguez, S., Mercier, M. R., Newby, N., and Blanke, O. (2009). Feeling numbness for someone else's finger. Curr. Biol. 19, 1108–1109. doi: 10.1016/j.cub.2009.10.055

Eimer, M., and Driver, J. (2001). Crossmodal links in endogenous and exogenous spatial attention: evidence from event-related brain potential studies. Neurosci. Biobehav. Rev. 25, 497–511. doi: 10.1016/S0149-7634(01)00029-X

Farrer, C., Bouchereau, M., Jeannerod, M., and Franck, N. (2008). Effect of distorted visual feedback on the sense of agency. Behav. Neurol. 19, 53–57. doi: 10.1155/2008/425267

Farrer, C., Franck, N., Georgieff, N., Frith, C. D., Decety, J., and Jeannerod, M. (2003). Modulating the experience of agency: a positron emission tomography study. Neuroimage 18, 324–333. doi: 10.1016/S1053-8119(02)00041-1

Farrer, C., and Frith, C. D. (2002). Experiencing oneself vs. another person as being the cause of an action: the neural correlates of the experience of agency. Neuroimage 15, 596–603. doi: 10.1006/nimg.2001.1009

Fink, G. R., Marshall, J. C., Halligan, P. W., Frith, C. D., Driver, J., Frackowiak, R. S. J., et al. (1999). The neural consequences of conflict between intention and the senses. Brain 122, 497–512. doi: 10.1093/brain/122.3.497

Fogassi, L., and Luppino, G. (2005). Motor functions of the parietal lobe. Curr. Opin. Neurobiol. 15, 626–631. doi: 10.1016/j.conb.2005.10.015

Forss, N., and Jousmaki, V. (1998). Sensorimotor integration in human primary and secondary somatosensory cortices. Brain Res. 781, 259–267. doi: 10.1016/S0006-8993(97)01240-7

Foxe, J. J., Morocz, I. A., Murray, M. M., Higgins, B. A., Javitt, D. C., and Schroeder, C. E. (2000). Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Cogn. Brain Res. 10, 77–83. doi: 10.1016/S0926-6410(00)00024-0

Frossard, J., and Renaud, O. (2021). Permutation tests for regression, anova, and comparison of signals: the permuco package. J. Stat. Softw. 99, i15. doi: 10.18637/jss.v099.i15

Goodale, M. A. (1998). Visuomotor control: where does vision end and action begin? Curr. Biol. 8, 489–491. doi: 10.1016/S0960-9822(98)70314-8

Grave De Peralta Menendez, R., Andino, S. G., Lantz, G., Michel, C. M., and Landis, T. (2001). Noninvasive localization of electromagnetic epileptic activity. I. Method descriptions and simulations. Brain Topogr. 14, 131–137. doi: 10.1023/A:1012944913650

Grave De Peralta Menendez, R., Murray, M. M., Michel, C. M., Martuzzi, R., and Gonzalez Andino, S. L. (2004). Electrical neuroimaging based on biophysical constraints. Neuroimage 21, 527–539. doi: 10.1016/j.neuroimage.2003.09.051

Grech, R., Cassar, T., Muscat, J., Camilleri, K. P., Fabri, S. G., Zervakis, M., et al. (2008). Review on solving the inverse problem in EEG source analysis. J. Neuroeng. Rehabil. 5, 1–33. doi: 10.1186/1743-0003-5-25

Haggard, P. (2017). Sense of agency in the human brain. Nat. Rev. Neurosci. 18, 197–208. doi: 10.1038/nrn.2017.14

Hagmann, C. E., and Russo, N. (2016). Multisensory integration of redundant trisensory stimulation. Attent. Percept. Psychophys. 78, 2558–2568. doi: 10.3758/s13414-016-1192-6

Heydrich, L., Aspell, J. E., Marillier, G., Lavanchy, T., Herbelin, B., and Blanke, O. (2018). Cardio-visual full body illusion alters bodily self-consciousness and tactile processing in somatosensory cortex. Sci. Rep. 8, 1–8. doi: 10.1038/s41598-018-27698-2

Heydrich, L., Dieguez, S., Grunwald, T., Seeck, M., and Blanke, O. (2010). Illusory own body perceptions: case reports and relevance for bodily self-consciousness. Conscious. Cogn. 19, 702–710. doi: 10.1016/j.concog.2010.04.010

Hussain, A., Malik, A., Halim, M. U., and Ali, A. M. (2014). The use of robotics in surgery: a review. Int. J. Clin. Pract. 68, 1376–1382. doi: 10.1111/ijcp.12492

Huttunen, J., Wikström, H., Korvenoja, A., Seppäläinen, A. M., Aronen, H., and Ilmoniemi, R. J. (1996). Significance of the second somatosensory cortex in sensorimotor integration: enhancement of sensory responses during finger movements. Neuroreport 7, 1009–1012. doi: 10.1097/00001756-199604100-00011

Iacoboni, M. (2006). Visuo-motor integration and control in the human posterior parietal cortex: evidence from TMS and fMRI. Neuropsychologia 44, 2691–2699. doi: 10.1016/j.neuropsychologia.2006.04.029

Ionta, S., Gassert, R., and Blanke, O. (2011). Multi-sensory and sensorimotor foundation of bodily self-consciousness - an interdisciplinary approach. Front. Psychol. 2, 1–8. doi: 10.3389/fpsyg.2011.00383

Jeannerod, M. (2009). The sense of agency and its disturbances in schizophrenia: a reappraisal. Exp. Brain Res. 192, 527–532. doi: 10.1007/s00221-008-1533-3

Jeannerod, M., and Pacherie, E. (2004). Agency, simulation and self-identification. Mind Lang. 19, 113–146. doi: 10.1111/j.1468-0017.2004.00251.x

Kakigi, R., Koyama, S., Hoshiyama, M., Watanabe, S., Shimojo, M., and Kitamura, Y. (1995). Gating of somatosensory evoked responses during active finger movements magnetoencephalographic studies. J. Neurol. Sci. 128, 195–204. doi: 10.1016/0022-510X(94)00230-L

Kanayama, N., Tamè, L., Ohira, H., and Pavani, F. (2012). Top down influence on visuo-tactile interaction modulates neural oscillatory responses. Neuroimage 59, 3406–3417. doi: 10.1016/j.neuroimage.2011.11.076

Kenney, P. A., Wszolek, M. F., Gould, J. J., Libertino, J. A., and Moinzadeh, A. (2009). Face, content, and construct validity of dV-trainer, a novel virtual reality simulator for robotic surgery. Urology 73, 1288–1292. doi: 10.1016/j.urology.2008.12.044

Kisley, M. A., and Cornwell, Z. M. (2006). Gamma and beta neural activity evoked during a sensory gating paradigm: effects of auditory, somatosensory and cross-modal stimulation. Clin. Neurophysiol. 117, 3. doi: 10.1016/j.clinph.2006.08.003

Klamroth-Marganska, V., Blanco, J., Campen, K., Curt, A., Dietz, V., Ettlin, T., et al. (2014). Three-dimensional, task-specific robot therapy of the arm after stroke: a multicentre, parallel-group randomised trial. Lancet Neurol. 13, 159–166. doi: 10.1016/S1474-4422(13)70305-3

Koenig, T., and Melie-García, L. (2010). A method to determine the presence of averaged event-related fields using randomization tests. Brain Topogr. 23, 233–242. doi: 10.1007/s10548-010-0142-1

Lanfranco, A. R., Castellanos, A. E., Desai, J. P., and Meyers, W. C. (2004). Robotic surgery: a current perspective. Ann. Surg. 239, 14–21. doi: 10.1097/01.sla.0000103020.19595.7d

Laver, K., Lange, B., George, S., Deutsch, J., Saposnik, G., and Crotty, M. (2017). Virtual reality for stroke rehabilitation. Cochrane Database Syst. Rev. 2017, CD008349. doi: 10.1002/14651858.CD008349.pub4

Lerner, M. A., Ayalew, M., Peine, W. J., and Sundaram, C. P. (2010). Does training on a virtual reality robotic simulator improve performance on the da Vinci® surgical system? J. Endourol. 24, 467–472. doi: 10.1089/end.2009.0190

Limanowski, J., and Blankenburg, F. (2016). Integration of visual and proprioceptive limb position information in human posterior parietal, premotor, and extrastriate cortex. J. Neurosci. 36, 2582–2589. doi: 10.1523/JNEUROSCI.3987-15.2016

Macerollo, A., Brown, M. J. N., Kilner, J. M., and Chen, R. (2018). Neurophysiological changes measured using somatosensory evoked potentials. Trends Neurosci. 41, 294–310. doi: 10.1016/j.tins.2018.02.007

Makin, T. R., Holmes, N. P., and Zohary, E. (2007). Is that near my hand? Multisensory representation of peripersonal space in human intraparietal sulcus. J. Neurosci. 27, 731–740. doi: 10.1523/JNEUROSCI.3653-06.2007

Maravita, A., and Iriki, A. (2004). Tools for the body (schema). Trends Cogn. Sci. 8, 79–86. doi: 10.1016/j.tics.2003.12.008

Martuzzi, R., Murray, M. M., Meuli, R. A., Thiran, J. P., Maeder, P. P., Michel, C. M., et al. (2009). Methods for determining frequency- and region-dependent relationships between estimated LFPs and BOLD responses in humans. J. Neurophysiol. 101, 491–502. doi: 10.1152/jn.90335.2008

Mehrholz, J., Pohl, M., Platz, T., Kugler, J., and Elsner, B. (2015). Electromechanical and robot-assisted arm training for improving activities of daily living, arm function, and arm muscle strength after stroke. Cochrane Database Syst. Rev. 2015, CD006876. doi: 10.1002/14651858.CD006876.pub4

Michel, C. M., Koenig, T., Brandeis, D., Gianotti, L. R. R., and Wackermann, J. (2009). Electrical Neuroimaging. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511596889

Michel, C. M., Murray, M. M., Lantz, G., Gonzalez, S., Spinelli, L., and Grave de Peralta, R. (2004). EEG source imaging. Clin. Neurophysiol. 115, 2195–2222. doi: 10.1016/j.clinph.2004.06.001

Murray, M. M., Brunet, D., and Michel, C. M. (2008). Topographic ERP analyses: a step-by-step tutorial review. Brain Topogr. 20, 249–264. doi: 10.1007/s10548-008-0054-5

Nolan, H., Whelan, R., and Reilly, R. B. (2010). FASTER: fully automated statistical thresholding for EEG artifact rejection. J. Neurosci. Methods 192, 152–162. doi: 10.1016/j.jneumeth.2010.07.015

Ogawa, K., and Inui, T. (2007). Lateralization of the posterior parietal cortex for internal monitoring of self- versus externally generated movements. J. Cogn. Neurosci. 19, 1827–1835. doi: 10.1162/jocn.2007.19.11.1827

Palluel, E., Falconer, C. J., Lopez, C., Marchesotti, S., Hartmann, M., Blanke, O., et al. (2020). Imagined paralysis alters somatosensory evoked-potentials. Cogn. Neurosci. 11, 205–215. doi: 10.1080/17588928.2020.1772737

Palmer, C. E., Davare, M., and Kilner, J. M. (2016). Physiological and perceptual sensory attenuation have different underlying neurophysiological correlates. J. Neurosci. 36, 10803–10812. doi: 10.1523/JNEUROSCI.1694-16.2016

Pascual-Marqui, R. D., Michel, C. M., and Lehmann, D. (1995). Segmentation of brain electrical activity into microstates: model estimation and validation. IEEE Trans. Biomed. Eng. 42, 658–665. doi: 10.1109/10.391164

Perez-Marcos, D., Slater, M., and Sanchez-Vives, M. V. (2009). Inducing a virtual hand ownership illusion through a brain-computer interface. Neuroreport 20, 589–594. doi: 10.1097/WNR.0b013e32832a0a2a

Pfeiffer, C., Schmutz, V., and Blanke, O. (2014). Visuospatial viewpoint manipulation during full-body illusion modulates subjective first-person perspective. Exp. Brain Res. 232, 4021–4033. doi: 10.1007/s00221-014-4080-0

Quintana, J., and Fuster, J. M. (1993). Spatial and temporal factors in the role of prefrontal and parietal cortex in visuomotor integration. Cereb. Cortex 3, 122–132. doi: 10.1093/cercor/3.2.122

Rizzolatti, G., and Craighero, L. (2004). The mirror-neuron system. Annu. Rev. Neurosci. 27, 169–192. doi: 10.1146/annurev.neuro.27.070203.144230

Rognini, G., and Blanke, O. (2016). Cognetics: robotic interfaces for the conscious mind. Trends Cogn. Sci. 20, 162–164. doi: 10.1016/j.tics.2015.12.002

Rognini, G., Sengül, A., Aspell, J. E., Salomon, R., Bleuler, H., and Blanke, O. (2013). Visuo-tactile integration and body ownership during self-generated action. Eur. J. Neurosci. 37, 1120–1129. doi: 10.1111/ejn.12128

Romano, D., Caffa, E., Hernandez-Arieta, A., Brugger, P., and Maravita, A. (2015). The robot hand illusion: inducing proprioceptive drift through visuo-motor congruency. Neuropsychologia 70, 414–420. doi: 10.1016/j.neuropsychologia.2014.10.033

Ronchi, R., Park, H. D., and Blanke, O. (2018). Bodily Self-consciousness and Its Disorders, 1st Edn. Amsterdam: Elsevier B.V. doi: 10.1016/B978-0-444-63622-5.00015-2

Ross, L. A., Del Bene, V. A., Molholm, S., Frey, H. P., and Foxe, J. J. (2015). Sex differences in multisensory speech processing in both typically developing children and those on the autism spectrum. Front. Neurosci. 9, 1–13. doi: 10.3389/fnins.2015.00185

Salomon, R., Lim, M., Pfeiffer, C., Gassert, R., and Blanke, O. (2013). Full body illusion is associated with widespread skin temperature reduction. Front. Behav. Neurosci. 7, 1–11. doi: 10.3389/fnbeh.2013.00065

Salomon, R., Progin, P., Griffa, A., Rognini, G., Do, K. Q., Conus, P., et al. (2020). Sensorimotor induction of auditory misattribution in early psychosis. Schizophr. Bull. 46, 947–954. doi: 10.1093/schbul/sbz136

Sanchez-Vives, M. V., Spanlang, B., Frisoli, A., Bergamasco, M., and Slater, M. (2010). Virtual hand illusion induced by visuomotor correlations. PLoS ONE 5, 1–6. doi: 10.1371/journal.pone.0010381

Schnell, K., Heekeren, K., Schnitker, R., Daumann, J., Weber, J., Heßelmann, V., et al. (2007). An fMRI approach to particularize the frontoparietal network for visuomotor action monitoring: detection of incongruence between test subjects' actions and resulting perceptions. Neuroimage 34, 332–341. doi: 10.1016/j.neuroimage.2006.08.027

Schürmann, M., Kolev, V., Menzel, K., and Yordanova, J. (2002). Spatial coincidence modulates interaction between visual and somatosensory evoked potentials. Neuroreport 13, 779–783. doi: 10.1097/00001756-200205070-00009

Sebastianelli, G., Abagnale, C., Casillo, F., Cioffi, E., Parisi, V., Di Lorenzo, C., et al. (2022). Bimodal sensory integration in migraine: a study of the effect of visual stimulation on somatosensory evoked cortical responses. Cephalalgia 42, 654–662. doi: 10.1177/03331024221075073

Sengül, A., Rognini, G., Van Elk, M., Aspell, J. E., Bleuler, H., and Blanke, O. (2013). Force feedback facilitates multisensory integration during robotic tool use. Exp. Brain Res. 227, 497–507. doi: 10.1007/s00221-013-3526-0

Sengül, A., van Elk, M., Blanke, O., and Bleuler, H. (2018). “Congruent visuo-tactile feedback facilitates the extension of peripersonal space,” in Haptics: Science, Technology, and Applications, eds D. Prattichizzo, H. Shinoda, H. Z. Tan, E. Ruffaldi, and A. Frisoli (Springer International Publishing), 673–684. doi: 10.1007/978-3-319-93399-3_57

Sengül, A., van Elk, M., Rognini, G., Aspell, J. E., Bleuler, H., and Blanke, O. (2012). Extending the body to virtual tools using a robotic surgical interface: evidence from the crossmodal congruency task. PLoS ONE 7, e49473. doi: 10.1371/journal.pone.0049473

Shokur, S., Mazzoni, A., Schiavone, G., Weber, D. J., and Micera, S. (2021). A modular strategy for next-generation upper-limb sensory-motor neuroprostheses. Med 2, 912–937. doi: 10.1016/j.medj.2021.05.002

Simeon, D., Guralnik, O., Hazlett, E. A., Spiegel-Cohen, J., Hollander, E., and Buchsbaum, M. S. (2000). Feeling unreal: a PET study of depersonalization disorder. Am. J. Psychiatry 157, 1782–1788. doi: 10.1176/appi.ajp.157.11.1782

Sirigu, A., Daprati, E., Pradat-Diehl, P., Franck, N., and Jeannerod, M. (1999). Perception of self-generated movement following left parietal lesion. Brain 122, 1867–1874. doi: 10.1093/brain/122.10.1867

Slater, M., Perez-Marcos, D., Ehrsson, H. H., and Sanchez-Vives, M. V. (2008). Towards a digital body: the virtual arm illusion. Front. Hum. Neurosci. 2, 6. doi: 10.3389/neuro.09.006.2008

Spence, S. A., Brooks, D. J., Hirsch, S. R., Liddle, P. F., Meehan, J., and Grasby, P. M. (1997). A PET study of voluntary movement in schizophrenic patients experiencing passivity phenomena (delusions of alien control). Brain 120, 1997–2011. doi: 10.1093/brain/120.11.1997

Taylor-Clarke, M., Kennett, S., and Haggard, P. (2002). Vision modulates somatosensory cortical processing. Curr. Biol. 12, 233–236. doi: 10.1016/S0960-9822(01)00681-9

Thelen, A., Cappe, C., and Murray, M. M. (2012). Electrical neuroimaging of memory discrimination based on single-trial multisensory learning. Neuroimage 62, 1478–1488. doi: 10.1016/j.neuroimage.2012.05.027

Torres, E. B., Raymer, A., Gonzalez Rothi, L. J., Heilman, K. M., and Poizner, H. (2010). Sensory-spatial transformations in the left posterior parietal cortex may contribute to reach timing. J. Neurophysiol. 104, 2375–2388. doi: 10.1152/jn.00089.2010

Touge, T., Gonzalez, D., Wu, J., Deguchi, K., Tsukaguchi, M., Shimamura, M., et al. (2008). The interaction between somatosensory and auditory cognitive processing assessed with event-related potentials. J. Clin. Neurophysiol. 25, 90–97. doi: 10.1097/WNP.0b013e31816a8ffa

Tsakiris, M., Prabhu, G., and Haggard, P. (2006). Having a body vs. moving your body: how agency structures body-ownership. Conscious. Cogn. 15, 423–432. doi: 10.1016/j.concog.2005.09.004

Vallar, G. (1998). Spatial hemineglect in humans. Trends Cogn. Sci. 2, 87–97. doi: 10.1016/S1364-6613(98)01145-0

Wasaka, T., and Kakigi, R. (2012). Conflict caused by visual feedback modulates activation in somatosensory areas during movement execution. Neuroimage 59, 1501–1507. doi: 10.1016/j.neuroimage.2011.08.024

Wilf, M., Cerra Cheraka, M., Jeanneret, M., Ott, R., Perrin, H., Crottaz-Herbette, S., et al. (2021). Combined virtual reality and haptic robotics induce space and movement invariant sensorimotor adaptation. Neuropsychologia 150, 107692. doi: 10.1016/j.neuropsychologia.2020.107692

Keywords: robotics, electroencephalography, virtual reality, visuo-motor integration, sense of agency, bimanual movements, somatosensory evoked potentials, source imaging

Citation: Marchesotti S, Bernasconi F, Rognini G, De Lucia M, Bleuler H and Blanke O (2023) Neural signatures of visuo-motor integration during human-robot interactions. Front. Neurorobot. 16:1034615. doi: 10.3389/fnbot.2022.1034615

Received: 01 September 2022; Accepted: 23 November 2022;

Published: 26 January 2023.

Edited by:

Fiorenzo Artoni, Université de Genève, Switzerland

Reviewed by:

Xian Zhang, Yale University, United States

Thierry Chaminade, Centre National de la Recherche Scientifique (CNRS), France

Bradly J. Alicea, Orthogonal Research and Education Laboratory, United States

Copyright © 2023 Marchesotti, Bernasconi, Rognini, De Lucia, Bleuler and Blanke. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Silvia Marchesotti, silvia.marchesotti@unige.ch; Olaf Blanke, olaf.blanke@epfl.ch

†Present address: Silvia Marchesotti, Speech and Language Group, Department of Basic Neurosciences, Faculty of Medicine, University of Geneva, Geneva, Switzerland
