95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Neurorobot. , 12 November 2020

Volume 14 - 2020 | https://doi.org/10.3389/fnbot.2020.582385

This article is part of the Research Topic Advanced Planning, Control, and Signal Processing Methods and Applications in Robotic Systems View all 13 articles

Shumei Yu1

Shumei Yu1 Pengcheng Hou1

Pengcheng Hou1 Rongchuan Sun1*

Rongchuan Sun1* Shaolong Kuang1

Shaolong Kuang1 Fengfeng Zhang1

Fengfeng Zhang1 Mingchuan Zhou2

Mingchuan Zhou2 Jing Guo3

Jing Guo3 Lining Sun1

Lining Sun1In robotic radiosurgery, motion tracking is crucial for accurate treatment planning of tumor inside the thoracic or abdominal cavity. Currently, motion characterization for respiration tracking mainly focuses on markers that are placed on the surface of human chest. Nevertheless, limited markers are not capable of expressing the comprehensive motion feature of the human chest and abdomen. In this paper, we proposed a method of respiratory motion characterization based on the voxel modeling of the thoracoabdominal torso. Point cloud data from depth cameras were used to achieve three-dimensional modeling of the chest and abdomen surface during respiration, and a dimensionality reduction algorithm was proposed to extract respiratory features from the established voxel model. Finally, experimental results including the accuracy of voxel model and correlation coefficient were compared to validate the feasibility of the proposed method, which provides enhanced accuracy of target motion correlation than traditional methods that utilized external markers.

Robotic radiosurgery shows significant advantages in treating tumors that are not suitable to be treated by chemotherapy (Coste-Maniere et al., 2005). Since control of radioactive beam according to tumor motion tracking ensures treatment of high precision, accurate tumor respiratory motion tracking is crucial for stereotactic radiosurgical robots. Movement of tumors caused by respiration is complex to be modeled; usually movement of tumors is obtained by X-ray imaging. However, the method of locating tumors in vivo by frequent irradiation of X-rays causes unnecessary secondary damage to normal tissues. Therefore, correlating the skin motion with tumor motion simultaneously caused by respiration is necessary before establishing a prediction model to obtain the upcoming position of tumor.

To develop a treatment planning system considering tumor motion caused by human breath, respiratory motion characterization of the skin surface is needed first. Several methods have been developed to model the respiratory skin motion. According to surrogate variations, researchers have studied the correlation of tumor movements with motion of markers on the chest and abdomen surface, multimodal (airflow, tidal volume, acceleration, force) features, and motion measurement of the chest and abdomen surface, respectively.

Currently, placing markers on the skin surface is the mostly adopted way to study the respiration characterization and external–internal motion correlation. In the study of the characterization of respiratory motion with a single marker, the motion of one marker on the abdominal skin and tumor was fitted as a linear relationship in vivo, but insufficient characterization in experiments was found (Kanoulas et al., 2007). CyberKnife (Cheng and Adler, 2006) used three markers to record the motion of skin surface in respiratory tracking system. Nevertheless, if the patient performs complex breathing pattern, limited surrogates could not reflex the complicated respiration mode. The correlation models between three markers and tumor in vivo based on linear/quadratic fitting, artificial neural network, and fuzzy logic were compared, respectively, and the results found that the correlation coefficient of fuzzy logic algorithm is higher (Torshabi et al., 2013). The tumor tracking method proposed (Iizuka et al., 2015) is based on a pre-established 4D model, which connects internal tumor motion with external respiratory signals. Respiratory signals were collected by monitoring four infrared (IR) markers on the abdominal wall of the patient using an IR camera. The method can effectively reduce the influence of radiotherapy on normal tissues and further provides local control. However, due to insufficient characterization of respiratory signals by a limited number of IR markers, there was inevitable accumulation error of targeting in the abdominal region. The correlation factors between four surface markers and the tumor in different directions of motion were analyzed (Koch et al., 2004). Studies have shown that the association degree of skin surface markers depends on the placement of markers and the breathing pattern of patients. In the study of six markers, the centroid motion curves of all markers and the corresponding tumor motion curves were calculated; finally, tumor locations by proportional linear interpolation were obtained (Schweikard et al., 2000). Furthermore, 19 infrared LED markers on abdominal skin of swine were adopted to study the respiration feature and proved that the more markers utilized on the skin, the more respiratory movement information can be extracted (Ernst et al., 2009).

For the study of multimodal information of the chest and abdomen surface, researchers studied the linear correlation factors of tidal volume, abdominal surface displacement, and the anterior–posterior displacement of tumor during human respiration and concluded that tidal volume has higher correlation with tumor displacement (Hoisak et al., 2004). However, the correlation between tidal volume and tumor has a noticed drift with respiratory phase, and the measurement of tidal volume brings great pain to patients. In addition, multimodal information using tension bands, optical markers, acceleration sensors, airflow acceleration, and temperature sensors was collected to compare their correlation effects of inner–outer respiratory motion and found that optical marker achieves the best performance for the tumor motion tracking (Durichen et al., 2013).

For the study of correlating tumor motion with chest and abdomen surface motion, there have been some exploratory researches on the measurement of the whole thoracoabdominal surface motion. A multiradar wireless system was designed to track respiratory movements in real time. Through the two radar devices integrated on the linear accelerator, the movements of the chest and abdomen are monitored in real time (Gu et al., 2011; Gu and Li, 2015). However, the subjects need to spend much time and energy on training the breathing modes, which are required for the breathing exercise. One study printed markers as 11 circles on Lycra T-shirt (Ernst and Saß, 2015). Experiments demonstrated the advantages of depth camera over traditional optical acquisition equipment in measuring multiple moving targets, along with defects of image distortion and noise increase. Studies used RGB-D camera to collect continuous depth images of chest and abdomen regions of patients, with principal component analysis (PCA) used to create a respiratory motion model that displayed as tidal volume change (Wijenayake and Park, 2017). A method was proposed to estimate tidal volume changes by using depth camera to reconstruct three-dimensional isochronal surface of chest (Transue et al., 2016). The whole isosurface was extracted to characterize the deformation and volume changes of the patient's chest. Due to the inherent inconsistencies in the depth values provided by the depth camera, there are still problems such as depth measurement errors that need to be resolved.

The study of chest and abdomen surface measurement shows the advantages of the whole skin surface for the complete expression of motion information. Thus, to better model the motion of the whole thoracoabdominal surface and correlate, the surface information with the tumor motion is crucial. To obtain the respiratory motion characteristics of chest and abdomen surface, proper and accurate feature extraction is necessary. To the best knowledge of the authors, there are no available methods to obtain the feature extraction for the respiratory motion model comprised of voxels. However, our proposed method is inspired by previous research on feature extraction specially focusing on the classification and recognition in image processing. Studies used a convolutional network to train simple linear iterative clustering (SLIC) superpixels and obtained the feature embedding space of each superpixel, from which the superpixel feature vector was extracted (Liu et al., 2018). Some researchers used multifactor distribution as soft label and extracted supplementary information through visual input. Convolution neural network was used for visual feature learning, and the painting styles were classified through label distribution learning (Yang et al., 2018). In the research of data dimensionality reduction, locally linear embedding (LLE) and Gustafson–Kessel algorithms were used for dimension reduction in gray and RGB color images (Wang et al., 2018). A novel method introduced an end-to-end trainable and deep Siamese-like network named PersEmoN that consists of two convolutional network branches. Both networks share their bottom feature extraction module and are optimized within a multitask learning framework (Zhang et al., 2019). Experiments showed the effectiveness of PersEmoN on two apparent personality and emotion datasets.

In this paper, based on our previous work on dynamic voxel modeling of thoracic–abdominal surface (Hou et al., 2019), a characterization method of respiratory motion was proposed, as shown in Figure 1. A visual information acquisition system with two depth cameras was established to collect the point cloud data of respiration movement. Afterwards, we constructed the point cloud surface into a watertight model using boundary interpolation. The watertight model was built into voxel model in the final step. Specifically, the established surface models based on time series are unprecedentedly to be transformed into voxel models with more three-dimensional structural information. Respiration features are extracted from voxel models by dimensionality reduction and used as a description of the whole thoracoabdominal torso. A correlation model between respiratory features and tumor motion was established. Finally, experimental results with model accuracy and correlation factor were studied to validate the proposed approach.

Compared with the solutions using limited number of infrared markers placed on the abdomen, the proposed method obtained more rich information about the patient's body surface by building 3D voxel models. A dimensionality reduction method based on 3D voxel model could extract more robust respiratory features from the established body surface model. It could also overcome the limitation of information loss problem that existed in current respiration tracking methods.

The main framework of constructing correlation model is (1) establish dynamic thoracoabdominal surface voxel model, (2) reduce the dimension of the voxel model and extract the low-dimensional representation vector of the voxel model, and (3) establish the correlation model between the representation vector and the tumor motion state.

Three-dimensional modeling of dynamic human thoracoabdominal skin surface during respiration mainly includes point cloud collecting of dynamic skin surface using multiple cameras of Kinect V2, model establishment of thoracoabdominal skin surface, and surface reconstruction into voxel model.

With modeling integrity considered, we adopted time-series-based strategy when building the model with data from multiple cameras. Simultaneous frames of different cameras were fused into one frame, and then, the fused frames were arranged in time sequences. Due to the overlap in the fusion of two frames of time asynchrony, it is necessary to consider the time synchronization problem of multicamera sampling.

Since the exposure and trigger times of multiple cameras cannot be completely unified, we considered sampling with an approximate synchronization strategy. Two computers control two cameras in a one-to-one manner.

As images from multiple cameras have their own coordinates, an algorithm based on 2D image calibration was developed to unify the separate coordinates. In the algorithm, a calibration plate coordinate was built first by identification of the corner points, and then, the camera coordinate could be converted to the universal coordinates. Transformation from the camera coordinates system at any point xs to the calibration plate coordinate system as xm was as follows:

Among them, ts is the center of the calibration plate in the camera coordinate system, and Rs is the rotation matrix. The position of the calibration plate in the world coordinate system is known, so any point can be converted from the coordinate system xm to the world coordinate system xc:

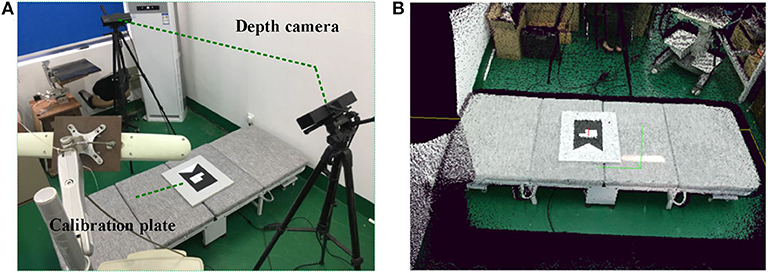

where Rm and tm are the rotation and translation of the calibration plate in the world coordinate system. Figure 2A shows the calibration setup consisting of two depth cameras and one calibration plate, and Figure 2B shows the point cloud data obtained through the above calibration procedures.

Figure 2. Calibration process for two Kinect V2 cameras. (A) Multicamera system. (B) Point cloud after calibration.

Although the point clouds of multiple cameras have been unified into the same coordinate system, the raw data of point cloud has noises and outliers brought by cameras themselves and infrared interference between each other camera. To pre-process the raw data, we used bilateral filtering (Tomasi and Manduchi, 1998) in denoise and statistical filtering (Moore, 1978) to eliminate outliers.

Due to certain calibration errors, the registration of the adjusted multiple point clouds still has overlap, which requires precise registration. We used a classical point cloud registration algorithm iterative closest point (ICP) to register multiple point clouds. The basic principle of ICP algorithm is to find the nearest point (pi, qi) in the target point cloud P and the source point cloud Q according to certain constraint conditions and then calculate the optimal matching parameter R and t to minimize the error function E(R, t). The error function is:

where n is the number of nearest point pairs, pi is one point in the target point cloud P, qi is the nearest point corresponding to pi in the source point cloud Q, R is the rotation matrix, and t is the translation vector. Figure 2 shows the two-frame point cloud fusion.

In order to obtain the required model of thoracoabdominal surface region and reduce the computation of point cloud processing, the existing model needs to be segmented. Due to the fixed placement of multiple cameras and the fixed scene, this paper adopted a fast and convenient segmentation algorithm with distance and color thresholds. The chest and abdomen area of the lying subject and the position of the treatment bed are constrained, and the auxiliary limit of the color threshold is applied to divide the expected chest and abdomen surface area. The segmented surface is uneven and has burrs. In order to make the thoracoabdominal surface model smooth, point clouds need to be smoothed. In this paper, moving least squares method (Breitkopf et al., 2005) was used to smooth point clouds.

To present more available information for respiration movement feature extraction, the surface model was transformed into a watertight model first. The procedure mainly contains boundary estimation and boundary insertion. The purpose of transforming the point cloud into watertight model is to facilitate the follow-up research to analyze the three-dimensional structure of the chest and abdomen surface based on time series and to avoid the structural problems such as unsmooth boundary and voids on the surface caused by the unclosed model.

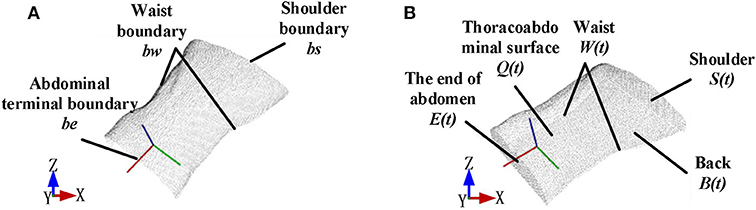

For boundary estimation, angles between searching points p and its adjacent points p1,…,pk were adopted as criteria to detect the boundary. Threshold of the angle value was set to classify the boundary after angles of point p, and its neighbor points were calculated. Classified boundary points combined a set named as Qb. Figure 3A shows the boundary point sets Qb were divided into bw, bs, be ∈ Qb, and Figure 3B shows the watertight thoracoabdominal model after point cloud interpolation.

Figure 3. Point cloud interpolation to form a watertight model. (A) Boundary division before point cloud interpolation. (B) Watertight model after point cloud interpolation.

Figure 4 shows the workflow of the watertight model construction. First, the original point cloud input was used to generate the back part of the model. During the process, the projection plane was established as a xy projection plane PL that is 5 cm lower than the xy plane bounded by bw boundary. The back part of the point cloud set B(t) was built by projecting the corresponding points qi ∈ Q in the original point cloud Q to the plane PL. To make the model watertight, boundary insertion was executed by using a method based on projection interpolation. As has been described above in Figure 3A, the boundary point cloud input was divided into the shoulder, waist, and abdominal end boundaries by boundary segmentation. After that, the shoulder part S(t) and the end of the abdomen E(t) was obtained by interpolating point cloud equally along the z negative axis to the plane PL, respectively; the waist part W(t) was obtained by interpolating point cloud equally along the waist curvature to the plane PL.

The watertight model composed of point cloud expressed the 3D information of the measured surface by independent points. To illustrate the space relationship of the entire surface, meshing was made to the point cloud model. Here, we used the classical Poisson reconstruction method (Kazhdan et al., 2006), in which normal vectors of the point clouds were calculated to display the curvature changes of the surface model. A smooth surface was built by estimation through indicator function's implicit fitting. Thus, the watertight thoracoabdominal point cloud model can be reconstructed into a model with smooth surface, as is shown in Figure 5A.

To study the volume changes of thoracoabdominal trunk movement, voxelization of the surface model is needed to convert the geometric representation of an object to the nearest voxel representation, which not only contains the surface information of the model but also describes the internal properties of the model. We used Octomap library (Hornung et al., 2013) to transform the point cloud into a voxel model. The voxelization of the model is shown in Figure 5B.

Due to the huge dimension of voxel model (arranging each voxel unit in columns up to several 100,000 dimensions), it is unrealistic to directly use these data to correlate with tumor motion because of a lot of noise and redundant information in huge data. In order to more effectively correlate with tumor motion and fully reflect surface motion characteristics, we first extract its physical significance features, then perform data analysis on the voxel model to extract respiratory features.

The movement of breathing is accompanied by the heaving of the chest and abdomen. Intuitively speaking, the expansion and contraction of the chest and abdomen must cause the changes of the entire volume V and surface area S of thoracoabdominal trunk.

The entire voxel model's voxel number variation with time series reflects the volume change of the thoracoabdominal trunk. To study the respiration characteristic displayed by volume changes, the voxel units of voxel model of each frame were traversed, and then, the volume of voxel units was accumulated; therefore, the volume change is reflected in the time series. The area change of voxel model is reflected in the area of voxel's outer layer in the similar way.

Intrinsic data characteristics refer to the information that reveals the motional information of the body surface obtained by the voxel model. It is obtained by reducing the dimensionality of the voxel model. It is necessary for the construction of the respiration tracking model.

Just as point cloud generation came with time stamps, the state of voxel model varied over time series. Each frame of the voxel model stood for a respiratory state, which was expressed by probability of voxel occupancy. The overview of the proposed method was presented in Algorithm 1.

Depending on the voxels' occupation and idleness, the state of voxels in the template-bounding box were marked with probability 1, 0, respectively. Thus, the voxel model state varied by time was expressed by the marker value of each voxel in the template-bounding box, as shown in the left diagram in Figure 6. Since the bounding box contained a large number of voxels, which could be illustrated as columns of a superhigh-dimensional vector, it would cost huge calculation if the original vectors were used to build the correlation model. Therefore, the vector Υ with superhigh dimension was transformed into a low-dimensional vector ψ that remained the characteristics of the voxel model changes. To accomplish dimension reduction, an algorithm based on LLE (Roweis, 2000) is shown in Algorithm 2.

Through combining physical variables V, S and essential parameters ψ = [ψ1, ψ2, .., ψm](m ≤ d + 1) after data analysis, characteristic variables Γ that can express time-varied states of the voxel model can be obtained as:

After feature extraction of voxel model in previous section, we obtained the representation vector of the whole thoracoabdominal surface motion. The establishment of respiratory correlation model is a key part of tumor tracking. The correlation function is to take the breathing surrogate signal (the characteristic signal of skin surface motion that is highly correlated with tumor motion in the body) as the input data and realize the motion estimation of the internal tumor by correlating the surrogate with the movement of the internal tumor. Therefore, we used the extracted representation vector as external surface motion surrogate to establish a correlation model with internal tumor motion.

Tumor movement is complex since it is caused by various factors such as respiration, heartbeat, and abdominal pressure. During inhalation, the volume of air inhaled by the lungs becomes larger because of the thoracoabdominal cavity and the diaphragm deform. During the exhalation process, the volume of gas remaining in the lungs becomes smaller; at the same time, the thoracoabdominal cavity and diaphragm are restored to their original state. During normal breathing, at the same transpulmonary pressure, the expiratory volume is greater than the inspiratory volume. The so-called hysteresis phenomenon (the phase lag between body surface characteristic respiratory motion and tumor respiratory motion) is attributed to the complex respiratory pressure–volume relationship of the lungs and chest and abdomen.

Therefore, exhalation and inhalation are two irreversible motion states. Before establishing the correlation model, we need to divide the entire breathing process into exhalation part and inhalation part and model these two processes, respectively. This paper divides the respiratory phase according to the amplitude of the external motion and uses the peak and valley values of the motion as the dividing points of exhalation and inspiration.

Due to the non-linear relationship between internal (the motion information of tumor in vivo) and external motion (the motion information of body surface), we adopt a polynomial model (Peressutti et al., 2012). That is, the trajectory of the tumor in vivo is approximated as a linear combination of multiple power terms of the external signal. In this paper, different polynomial functions are used to model the breathing movement during the exhalation and inhalation phases:

in which Aj is a polynomial coefficient, N is the highest power, and ki is the dividing points of each expiratory and inspiratory process. The highest power of the polynomial model is preferably 2 or 3. Higher powers are prone to overfitting and reduce the generalization ability of the model.

Experiments have been carried out to verify the feasibility of the respiratory motion characterization method based on thoracoabdominal surface modeling. Our experiments mainly focused on two aspects as validity of thoracoabdominal surface described by point cloud and validity of feature extraction on surface–tumor correlation. Position Comparison of Multiple Markers and Comparison of Marker Position Obtained by Point Cloud and NDI Method validated that whether the representation method of skin surface modeling has the same effect as the traditional representation method of finite markers; Optimization of Dimension Reduction and Correlation Coefficient of Dimension-Reduced Feature Vector and NDI Markers With Tumor Motion validated whether this method has more comprehensive characterization capability than the traditional finite marker method; in Correlation Model of Dimension-Reduced Feature and Tumor, correlation model between dimension-reduced features of thoracoabdominal voxel data and tumor motion was built and compared with the traditional method.

We used two Kinect V2 (Microsoft Co.) depth cameras placed at both sides of the experimental bed to collect data. Because the view of a single camera is limited, blind areas cannot be observed on the abdomen exit and will prevent a successful 3D modeling of thoracic–abdominal surface. However, using two Kinect V2 cameras has a complete view of the patient's body and is conducive to building the whole thoracic–abdominal model. The experimental subject is a phantom developed in our lab (Hou et al., 2018) for simulating respiratory movement of thoracoabdominal cavity. The experimental scene is shown in Figure 7. In order to compare the skin surface characterization method with traditional markers, we used NDI Polaris Spectra (Northern Digital Inc.), an optical tracking system (accuracy, 0.25 mm RMS) to record the skin markers' movement. Before experiments, we need to calibrate the coordinate systems of NDI Polaris Spectra and Kinect V2 depth cameras. To unify the two coordinate systems of point cloud and NDI system, spherical center of NDI optical markers in point cloud data was fitted to match with the marker's coordinates obtained by NDI Polaris Spectra.

In order to display the differences among multiple markers in characterizing the respiratory movement, the position of the markers on the chest and abdomen surface is changed, and the NDI sensor is utilized to track the motion change. Figure 8 shows the principal movement of the markers analyzed by PCA at different positions. Although the frequency and phase of the three markers are the same, the maximum motion range of marker 1 is about 5 mm, the maximum motion range of marker 2 is about 2 cm, and the maximum motion range of marker 3 is about 1 cm. The markers at different positions reflect different movements. It can be inferred that limited skin surface markers are not complete in motion representation of the whole thoracoabdominal surface.

To clarify whether our characterization method based on thoracoabdominal surface modeling contains the information provided by traditional finite markers, we made comparisons of the same marker position obtained by Kinect V2 and NDI optical tracking system. Before the comparison experiments, we need to unify the point cloud data into the NDI coordinates system. Therefore, calibration of NDI coordinates system and Kinect V2 coordinate system was carried out as follows.

Suppose the transformation equation of the two coordinate systems is A = RB + T, where A is the position of one point in coordinates of NDI, R is the rotation matrix, B is the coordinates of the same point in the point cloud coordinate system, and T is the translation matrix. To solve R and T, three markers were placed on the chest of the phantom for five times, each time with different positions, respectively. NDI optical tracking system and Kinect V2 recorded data simultaneously at the five experiments. After collecting the data, NDI data were used to calculate the corresponding transformation matrix R, T. Then, the point cloud obtained by Kinect V2 system was compared with the position of the marker under the NDI sensor.

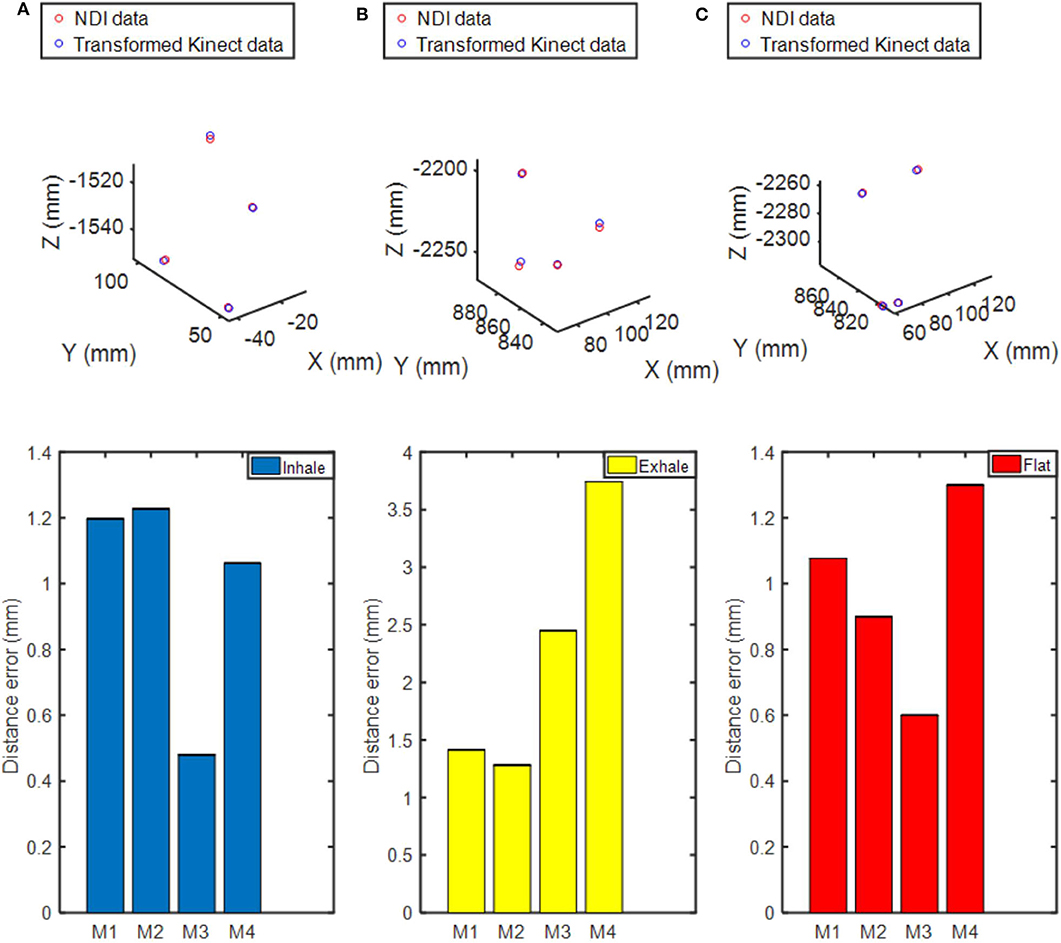

Center positions of four NDI markers' by fitting of point cloud obtained by Kinect V2 system were transformed and compared with the position of NDI markers under NDI sensor at three breathing fractions. As shown in Figure 9, there are three respiratory states: inhalation, exhalation, and transition between inhalation and exhalation. The uppermost figure represents the position relationship between the two coordinates in the three-dimensional space after conversion. By comparing the Euclidean distance between the two coordinates, it can be seen from the figure that the maximum distance error between the two coordinates is within 4 mm. The coordinates of the two can basically correspond to each other through conversion. It can be concluded that the characterization method based on thoracoabdominal surface modeling has the same effect as the traditional characterization method of finite markers.

Figure 9. Comparison of the spatial position of the markers in the depth camera coordinate system and the NDI system coordinate system. (A) Inhalation. (B) Exhalation. (C) Transition between inhalation and exhalation. The uppermost figure describes the spatial position of the NDI markers and the transformed ones from point cloud data in the NDI system coordinate. The figure below shows the distance error between the transformed position and the actual NDI position.

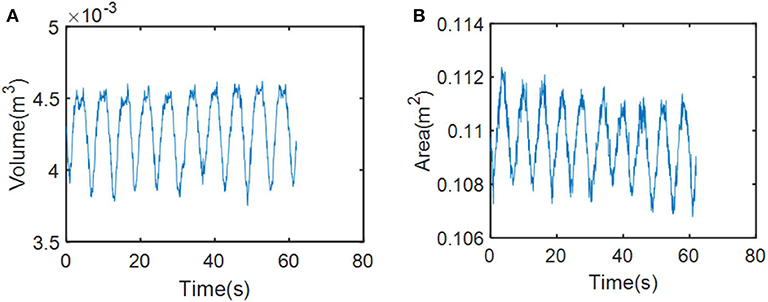

Unlike the traditional method of representing several isolated points, the advantage of our method lies in characterizing the entire surface feature. We collected respiratory motion data from our phantom for 1 min and obtained voxel models based on time series through the modeling method described in Dynamic Thoracoabdominal Surface Voxel Modeling. Figure 10 shows the volume and area features, respectively, extracted by using the method mentioned above. We can see that volume and area characteristics display periodicity and rhythmicity and conform to the law of respiratory movement. Then, we used dimension reduction analysis of voxel model proposed in Feature Vector of Dynamic Voxel Model. The bounding box contains 172,530 voxel units with a resolution of 5 mm and constitutes a 172,530-dimensional column vector, which can be reduced to any dimension. For example, we reduced the high-dimensional data to a characteristic vector with six dimensions. The respiratory characteristics in six dimensions are shown in Figure 11.

Figure 10. Changes in respiratory motion of physical characteristics. (A) Changes in respiratory motion of volume feature. (B) Changes in respiratory motion of area feature.

Whether the results of dimensionality reduction can accurately reflect the respiratory movement of the surface needs to be validated. In addition, optimal number of the characteristic vector after dimension reduction needs to be ascertained. To solve these problems, Pearson correlation method (Person, 1895) was used for correlation analysis in this paper. The formula is as follows:

where the Pearson correlation coefficient ρX, Y of two continuous variables (X, Y) is equal to the covariance cov(X, Y) between them divided by the product of their respective standard deviations (σX, σY). The value of the coefficient is always between −1.0 and 1.0. Variables close to 0 are regarded as uncorrelated, while variables close to 1 or −1 are regarded as strongly correlated.

Three sets of phantom data were, respectively, used to build voxel models and were reduced into characteristic vectors with 10 dimensions first. Then, correlation analysis between each dimension and tumor movement in vivo is carried out using Equation (6). As shown in Table 1, there is only one dimension with a correlation coefficient >0.5 with tumor motion in vivo. The correlation coefficient between this only dimension and tumor in vivo reached more than 0.9. Therefore, we can conclude that reducing the superhigh-dimensional voxel model to 1 dimension is the optimal operation.

In order to verify the correlation between each variable and tumor movement, we also calculated the correlation coefficient between tumor motion, dimension-reduced data, and NDI markers, respectively, by using Pearson method as Equation (6). The results are in Table 2. The correlation coefficient between the dimension-reduced feature vector and tumor motion is higher than that of skin surface markers and tumor motion. To sum up, the movement of chest and abdomen surface can be featured by one-dimensional feature vector. Besides, fusion of multidimensional voxel model data represents movement of chest and abdomen surface more accurately than markers.

As the correlation model has been introduced in the previous section, one-dimensional data were obtained by the dimension reduction method to establish the correlation model with tumor motion in vivo.

The obtained data (last for 1 min) is divide into two parts: one part was used to establish the correlation model, and the other one was used to verify the accuracy of the correlation model. That is, the correlation model was used to estimate the tumor motion in vivo using skin surface motion, while the feasibility and accuracy of the method were proved by comparing the error between the actual tumor motion and the estimated value.

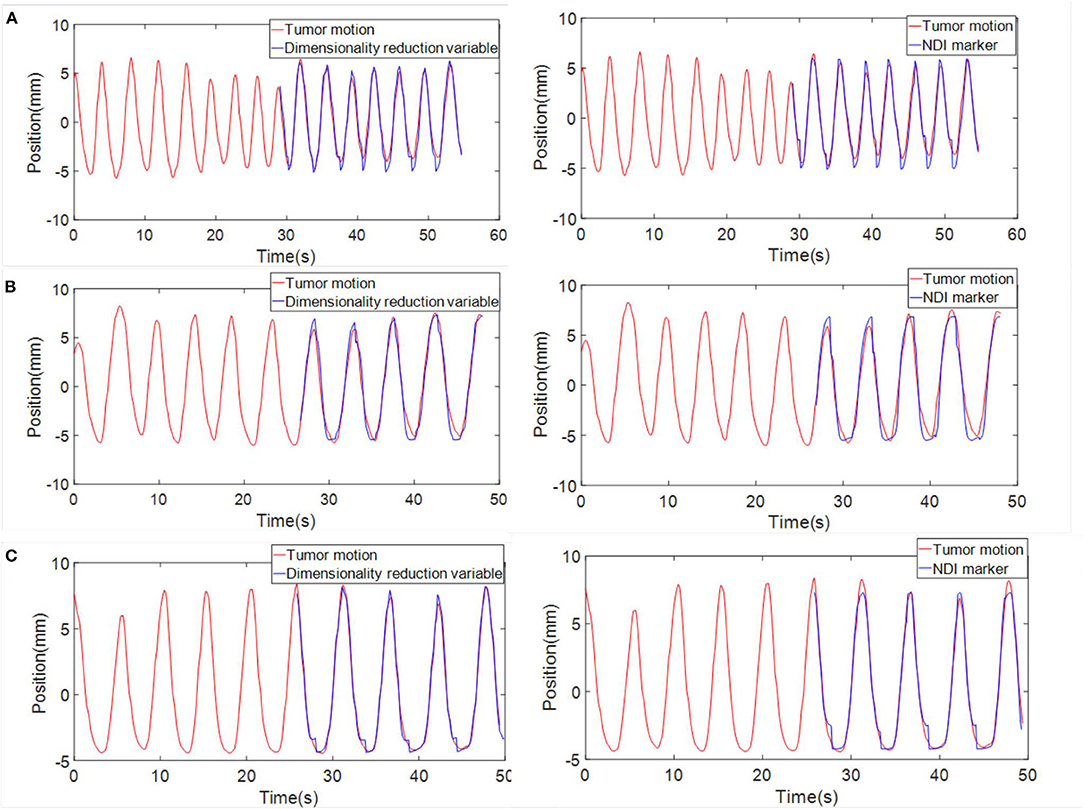

Then, we established the correlation model with tumor motion by using the dimensionality reduction results and NDI marker positions, respectively. After that, correlation models were used to estimate tumor motion. Three sets of phantom data with point cloud collection and NDI maker positions were used to test and compare the two correlation models. Figure 12 shows the comparison results. The mean squared error (MSE) between the estimated and actual values was also calculated and presented in Table 3. From Table 3, it can be seen that the accuracy of the correlation model obtained by dimension reduction results is much higher than the one of the correlation model obtained by NDI marker, which also indicates that the proposed approach in this work is feasible to extract respiratory features based on horacoabdominal surface modeling, and it is capable to establish an enhanced respiratory correlation model than skin surface marker method.

Figure 12. Comparison between the tumor motion estimation and their actual value (red, actual value; blue, estimated value). (A) Phantom data 1. (B) Phantom data 2. (C) Phantom data 3. Three sets of data are displayed. Curves in the left column show the estimation of tumor motion obtained through the correlation model built with dimensionality reduction results. Curves in the right column shows the estimation of tumor motion when NDI marker position was chosen as the input of the correlation model.

In this paper, a novel characterization method of human external respiratory motion for tumor correlation was proposed. Compared with previous methods of limited markers and multiple modality surrogates, our method accomplished better characterization by establishing a 3D voxel model containing rich structural information of thoracic and abdominal torso during the respiratory motion. Dimensions of the voxel model were reduced by a dimensionality reduction method. Through these procedures, features that can reflect changes in external respiratory motion were obtained. This paper builds the model of chest and abdomen torso's respiratory motion, from multicamera system construction, point cloud processing, and watertight model construction to model voxelization. A dimensionality reduction method was proposed to extract respiratory features from the established voxel model. The feasibility of the characterization method based on chest and abdomen surface modeling was verified and compared with the traditional finite surface marker representation method. The proposed method was proved to be more accurate than the traditional one with limited external markers. Furthermore, it breaks through the limitation of information loss in current respiration correlation methods, which describe the respiratory motion by using sparse sensing data. In future work, to form a sound solution of respiration tracking for radiosurgical robots, we will optimize the process of thoracoabdominal skin surface modeling, study the feature extraction of voxel model, and apply this method to the prediction of tumor motion under the clinical application of tumor tracking.

All datasets generated for this study are included in the article/supplementary material.

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

SY contributed to the manuscript writing, data analysis, and experiment design. PH contributed to the manuscript writing and data analysis. RS conceived the presented idea and contributed to the algorithm design. MZ and JG contributed to the manuscript revision and system design. FZ, SK, and LS contributed to the support of experimental equipment. All authors discussed the results and contributed to the final manuscript.

This work was supported by the National Natural Science Foundation of China Study on three-dimensional reconstruction of body surface and tumor motion tracking for stereo radiotherapy robot based on point cloud data fusion (project number 61773273). This work was supported by grants from the National Natural Science Foundation of China (nos. 61673288 and 61773273).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors would like to acknowledge the contribution of multiple volunteers to the experimental data and Professor Zhang toward the experimental equipment. The authors would also like to acknowledge Jiateng Wang for manuscript revision and the work of experiments.

Breitkopf, P., Naceur, H., Rassineux, A., and Villon, P. (2005). Moving least squares response surface approximation: formulation and metal forming applications. Comput. Struct. 83, 1411–1428. doi: 10.1016/j.compstruc.2004.07.011

Cheng, W., and Adler, J. (2006). An overview of cyberknife radiosurgery. Chin. J. Clin. Oncol. 3, 229–243. doi: 10.1007/s11805-006-0049-5

Coste-Maniere, E., Olender, D., Kilby, W., and Schulz, R. A. (2005). Robotic whole body stereotactic radiosurgery: clinical advantages of the cyberknife integrated system. Int. J. Med. Rob. Comput. Assist. Surg. 1, 28–39. doi: 10.1002/rcs.39

Durichen, R., Davenport, L., Bruder, R., Wissel, T., Schweikard, A., and Ernst, F. (2013). “Evaluation of the potential of multi-modal sensors for respiratory motion prediction and correlation,” in 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Osaka), 5678–5681. doi: 10.1109/EMBC.2013.6610839

Ernst, F., and Saß, P. (2015). Respiratory motion tracking using microsoft's kinect v2 camera. Curr. Dir. Biomed. Eng. 1, 192–195. doi: 10.1515/cdbme-2015-0048

Ernst, F., Schlaefer, A., and Schweikard, A. (2009). Smoothing of respiratory motion traces for motion-compensated radiotherapy. Med. Phys. 37, 282–294. doi: 10.1118/1.3271684

Gu, C., and Li, C. (2015). Assessment of human respiration patterns via noncontact sensing using doppler multi-radar system. Sensors 15, 6383–6398. doi: 10.3390/s150306383

Gu, C., Li, R., Jiang, S. B., and Li, C. (2011). “A multi-radar wireless system for respiratory gating and accurate tumor tracking in lung cancer radiotherapy,” in 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Boston, MA), 417–420. doi: 10.1109/IEMBS.2011.6090054

Hoisak, J. D. P., Sixel, K. E., Tirona, R., Cheung, P. C. F., and Pignol, J.-P. (2004). Correlation of lung tumor motion with external surrogate indicators of respiration. Int. J. Radiat. Oncol. Biol. Phys. 60, 1298–1306. doi: 10.1016/j.ijrobp.2004.07.681

Hornung, A., Wurm, K. M., Bennewitz, M., Stachniss, C., and Burgard, W. (2013). OctoMap: an efficient probabilistic 3D mapping framework based on octrees. Auton. Rob. 34, 189–206. doi: 10.1007/s10514-012-9321-0

Hou, P., Sun, R., and Yu, S. (2019). “Feasibility of respiratory motion characterization based on skin-tumor correlated thoracoabdominal surface modeling,” in Proc. IEEE Int. Conf. Intelligent Robots and Systems (Macau).

Hou, P., Sun, R., Yu, S., and Sun, L. (2018). “Design of a holistic thoracoabdominal phantom for respiration tracking test in robotic radiosurgery,” in 3rd International Conference on Advanced Robotics and Mechatronics (Singapore), 318–322. doi: 10.1109/ICARM.2018.8610803

Iizuka, Y., Matsuo, Y., Ishihara, Y., Akimoto, M., Tanabe, H., Takayama, K., et al. (2015). Dynamic tumor-tracking radiotherapy with real-time monitoring for liver tumors using a gimbal mounted linac. Radiother. Oncol. 117, 496–500. doi: 10.1016/j.radonc.2015.08.033

Kanoulas, E., Aslam, J. A., Sharp, G. C., Berbeco, R. I., Nishioka, S., Shirato, H., et al. (2007). Derivation of the tumor position from external respiratory surrogates with periodical updating of the internal/external correlation. Phys. Med. Biol. 52, 5443–5456. doi: 10.1088/0031-9155/52/17/023

Kazhdan, M., Bolitho, M., and Hoppe, H. (2006). “Poisson surface reconstruction,” in Proceedings of Symposium on Geometry Processing (Cagliari), 61–70.

Koch, N., Liu, H. H., Starkschall, G., Jacobson, M., Forster, K., Liao, Z., et al. (2004). Evaluation of internal lung motion for respiratory-gated radiotherapy using MRI: part I—correlating internal lung motion with skin fiducial motion. Int. J. Radiat. Oncol. Biol. Phys. 60, 1459–1472. doi: 10.1016/j.ijrobp.2004.05.055

Liu, Y., Jiang, P., Petrosyan, V., Li, S., Bian, J., Zhang, L., et al. (2018). “DEL: deep embedding learning for efficient image segmentation,” in 27th Proceedings of International Conference on Artificial Intelligence Main track (Stockholm), 864–870. doi: 10.24963/ijcai.2018/120

Moore, J. B. (1978). Statistical filtering. Math. Control Theory 680, 152–169. doi: 10.1007/BFb0065316

Peressutti, D., Rijkhorst, E. J., Barratt, D. C., Penney, G. P., and King, A. P. (2012). “Estimating and resolving uncertainty in cardiac respiratory motion modelling,” in 9th IEEE International Conference Symposium on Biomedical Imaging (Barcelona), 262–265. doi: 10.1109/ISBI.2012.6235534

Person, K. (1895). Notes on regression and inheritance in the case of two parents. Proc. R. Soc. Lond. 58, 240–224. doi: 10.1098/rspl.1895.0041

Roweis, S. T. (2000). Nonlinear dimensionality reduction by locally linear embedding. Science 290, 2323–2326. doi: 10.1126/science.290.5500.2323

Schweikard, A., Glosser, G., Bodduluri, M., Murphy, M. J., and Adler, J. R. (2000). Robotic motion compensation for respiratory movement during radiosurgery. Comput. Aided Surg. 5, 263–277. doi: 10.3109/10929080009148894

Tomasi, C., and Manduchi, R. (1998). “Bilateral filtering for gray and color images,” in 1998 IEEE International Conference on Computer Vision (Bombay), 839–846. doi: 10.1109/ICCV.1998.710815

Torshabi, A. E., Riboldi, M., Fooladi, A. A. I., Mosalla, S. M. M., and Baroni, G. (2013). An adaptive fuzzy prediction model for real time tumor tracking in radiotherapy via external surrogates. J. Appl. Clin. Med. Phys. 14, 102–114. doi: 10.1120/jacmp.v14i1.4008

Transue, S., Nguyen, P., Vu, T., and Choi, M.-H. (2016). “Real-time tidal volume estimation using iso-surface reconstruction,” in 2016 IEEE First International Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE) (Washington, DC), 209–218. doi: 10.1109/CHASE.2016.72

Wang, X., Xie, Q., Ma, T., and Zhu, J. (2018). Feature extraction based on dimension reduction and clustering for maize leaf spot images. Int. J. Pattern Recognit. Artif. Intell. 32:1854029. doi: 10.1142/S0218001418540290

Wijenayake, U., and Park, S.-Y. (2017). Real-time external respiratory motion measuring technique using an RGB-D camera and principal component analysis. Sensors 17:1840. doi: 10.3390/s17081840

Yang, J., Chen, L., Zhang, L., Sun, X., She, D., Lu, S.-P., et al. (2018). “Historical context-based style classification of painting images via label distribution learning,” in 2018 ACM Multimedia Conference on Multimedia Conference (Amsterdam), 1154–1162. doi: 10.1145/3240508.3240593

Keywords: respiratory motion characterization, voxel model, correlation model, tumor tracking, surface modeling

Citation: Yu S, Hou P, Sun R, Kuang S, Zhang F, Zhou M, Guo J and Sun L (2020) Correlated Skin Surface and Tumor Motion Modeling for Treatment Planning in Robotic Radiosurgery. Front. Neurorobot. 14:582385. doi: 10.3389/fnbot.2020.582385

Received: 11 July 2020; Accepted: 25 September 2020;

Published: 12 November 2020.

Edited by:

Zhan Li, University of Electronic Science and Technology of China, ChinaReviewed by:

Qiang Li, Bielefeld University, GermanyCopyright © 2020 Yu, Hou, Sun, Kuang, Zhang, Zhou, Guo and Sun. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rongchuan Sun, c3Vucm9uZ2NodWFuQHN1ZGEuZWR1LmNu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.