ORIGINAL RESEARCH article

Front. Neurorobot., 17 February 2017

Volume 11 - 2017 | https://doi.org/10.3389/fnbot.2017.00006

This article is part of the Research Topic Neural & Bio-inspired Processing and Robot Control

In this paper, a hybrid electroencephalography–functional near-infrared spectroscopy (EEG–fNIRS) scheme to decode eight active brain commands from the frontal brain region for brain–computer interface is presented. The eight commands are decoded by fNIRS, with optodes positioned on the prefrontal cortex, and by EEG, with electrodes around the frontal, parietal, and visual cortices. Mental arithmetic, mental counting, mental rotation, and word formation tasks are decoded with fNIRS, in which the selected features for classification and command generation are the peak, minimum, and mean ΔHbO values within a 2-s moving window. In the case of EEG, two eyeblinks, three eyeblinks, and eye movement in the up/down and left/right directions are used for four-command generation. The features in this case are the number of peaks and the mean of the EEG signal within a 1-s window. We tested the generated commands on a quadcopter in an open space. An average accuracy of 75.6% was achieved with fNIRS for four-command decoding and 86% with EEG for another four-command decoding. The testing results show the possibility of controlling a quadcopter online and in real time using eight commands from the prefrontal and frontal cortices via the proposed hybrid EEG–fNIRS interface.

Brain–computer interface (BCI), or brain–machine interface (BMI), is a method of communication between the brain and hardware by means of signals generated from the brain without the involvement of muscles or the peripheral nervous system (Naseer and Hong, 2015b; Schroeder and Chestek, 2016). Although prosthetic devices utilize muscle or peripheral nerve signals (Ravindra and Castellini, 2014; Chadwell et al., 2016; Chen et al., 2016), brain signals are equally viable for provision of direct neural signals for interface purposes (Waldert et al., 2009; Quandt et al., 2012; Kao et al., 2014). A BCI, specifically, is an artificial intelligence system that can recognize a certain set of patterns generated by the brain. BCI holds promise as a platform for improving the quality of life of individuals with severe motor disabilities (Muller-Putz et al., 2015). The BCI procedure for acquiring control commands from the brain consists of five steps: signal acquisition, signal enhancement, feature extraction, classification, and control-interfacing (Nicolas-Alonso and Gomez-Gil, 2012).

The complicated surgical procedures performed for microelectrode implantation and establishment of BCI have been outstandingly successful in achieving control of robotic and prosthetic arms by means of neuronal-signal acquisition (Hochberg et al., 2006, 2012). These methods, however, are far from perfect options for BCI purposes, as they are all invasive and incur significant risks (Jerbi et al., 2011; Schultz and Kuiken, 2011; Rak et al., 2012; Ortiz-Rosario and Adeli, 2013).

The alternative non-invasive methods measure brain activities via either detection of electrophysiological signals (Li et al., 2010; Bai et al., 2015; Weyand et al., 2015) or determination of hemodynamic response (Bhutta et al., 2014; Ruiz et al., 2014; Hong et al., 2015; Naseer and Hong, 2015a; Weyand et al., 2015). Electrophysiological activity is generated by the neuronal firings prompted in the performance of brain tasks (Guntekin and Basar, 2016). The hemodynamic response is the increase of hemoglobin as a result of the neuronal firing that occurs when the brain performs an activity (Ferrari and Quaresima, 2012; Boas et al., 2014). The leading non-invasive BCI modalities in terms of cost and portability are electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) (von Luhmann et al., 2015; Lin and Hsieh, 2016). The selection criterion for each modality is task dependent.

Electroencephalography has applications for active-, passive-, and reactive-type BCIs (Turnip et al., 2011; Zander and Kothe, 2011; Turnip and Hong, 2012; Urgen et al., 2013; Yoo et al., 2014). It is most widely employed with reactive-type tasks in the performance of which the brain output is generated in reaction to external stimulation. Commands are generated by detection of steady-state visually evoked potentials (SSVEP) and P300-based activations (Li et al., 2010, 2013; Turnip and Hong, 2012; Cao et al., 2014; Bai et al., 2015). fNIRS-based BCIs, meanwhile, are most commonly of the active type, which obtains brain activity output via user intentionality, independent of external events. For the purposes of fNIRS-based active BCIs, mostly mental (e.g., math, counting, etc.) and motor-related tasks (e.g., motor imagery) are selected (Naseer et al., 2014; Hong et al., 2015; Hong and Naseer, 2016). Although recent studies have shown the importance of fNIRS-based BCI for reactive and passive tasks (Hu et al., 2012; Santosa et al., 2014; Bhutta et al., 2015; Khan and Hong, 2015), active-type tasks are primarily used to increase the number of commands for this modality. The active-type BCI is preferred over the reactive BCI, as it allows a person to communicate with a machine at will. For both EEG and fNIRS, the drawback of increasing the number of active commands is the decrease in accuracy for BCI (Vuckovic and Sepulveda, 2012; Naseer and Hong, 2015a).

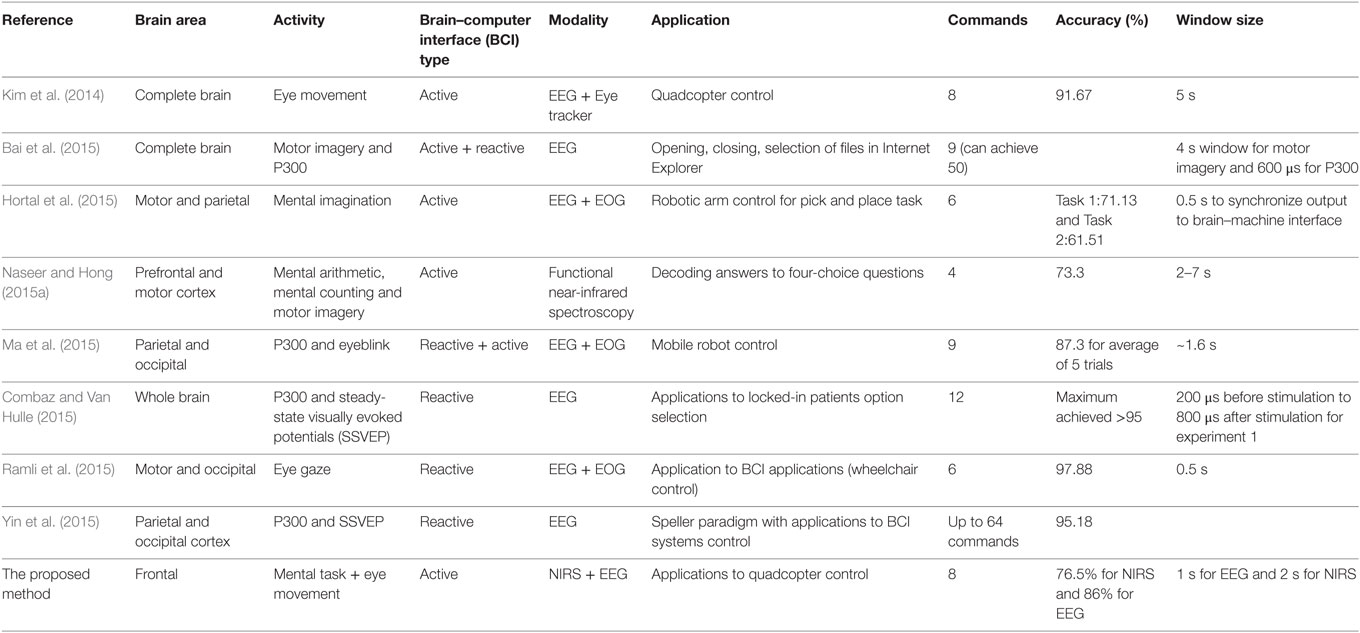

As a means of compensating for the accuracy reduction that arises when a single brain-signal acquisition modality is used, the hybrid BCI concept was proposed (Pfurtscheller et al., 2010). The design of a hybrid BCI entails the combination of either two modalities (at least one of which is a brain signal acquisition modality) or different brain signals (e.g., SSVEP and P300). The EEG–fNIRS-based hybrid BCI has been reported to enhance classification accuracy (Fazli et al., 2012; Putze et al., 2014; Tomita et al., 2014) and increase the number of commands (Khan et al., 2014). Classification accuracy can be improved by simultaneously decoding EEG and fNIRS signals for the same activity and combining the features. The number of active commands can be increased by decoding brain activities from different brain regions (e.g., motor tasks for EEG and mental tasks for fNIRS). However, for these cases, the reported window size using fNIRS for optimal classification is around 10 s (Tomita et al., 2014). Window-size reduction and other problems relevant to real-time/online BCI applications require further research. Table 1 summarizes the most recent work (Kim et al., 2014; Bai et al., 2015; Combaz and Van Hulle, 2015; Hortal et al., 2015; Ma et al., 2015; Naseer and Hong, 2015a; Ramli et al., 2015; Yin et al., 2015) in terms of command number, accuracy, and window size as those parameters relate to robotic-control applications.

Table 1. Comparison of our proposed method with recent electroencephalography (EEG)-based work on command generation, accuracy, and window size.

In the present BCI research, we decoded eight active commands using signals from the frontal and prefrontal cortices. Four tasks (mental math, mental counting, word formation, and mental rotation) were decoded using fNIRS, and four eye movement signals (up/down eye movement, left/right eye movement, two eyeblinks, and three eyeblinks) were decoded using EEG. In the fNIRS classification and generation of commands, a 0- to 2-s window was used, whereas in the case of EEG, a 1-s window was used. The commands thus generated were used to update a quadcopter’s movement coordinates (six movements and start/stop). Briefly, the signal mean, peak, and minimum-value features obtained from oxyhemoglobin data in the 0–2 s window provided 76.5% accurate classification. For EEG, the signal mean and number of peaks achieved 86% accurate results. The testing of the drone in an arena showed the possibility of quadcopter control using eight brain commands from the frontal cortex. To the authors’ best knowledge, this is the first fNIRS study to decode and classify brain activity in a 0–2 s window. Also, this is the first study to decode four commands from the prefrontal cortex using fNIRS. Moreover, this work shows the first hybrid EEG–fNIRS-based decoding of eight active commands from the frontal and prefrontal cortices.

A total of 10 healthy adults were recruited (all right-handed males; mean age: 28.5 ± 4.8 years). Right-handers were sought in order to minimize any variations in the electrophysiological and hemodynamic responses due to differences in hemispheric dominance. None of the selected participants had participated in any previous brain signal acquisition experiment, and none had a history of any psychiatric, neurological, or visual disorder. All of them had normal or corrected-to-normal vision, and all provided written consent after having been informed in detail about the experimental procedure. Experiments with fNIRS and EEG were approved by the Institutional Review Board of Pusan National University, and they were conducted in accordance with the ethical standards encoded in the latest Declaration of Helsinki.

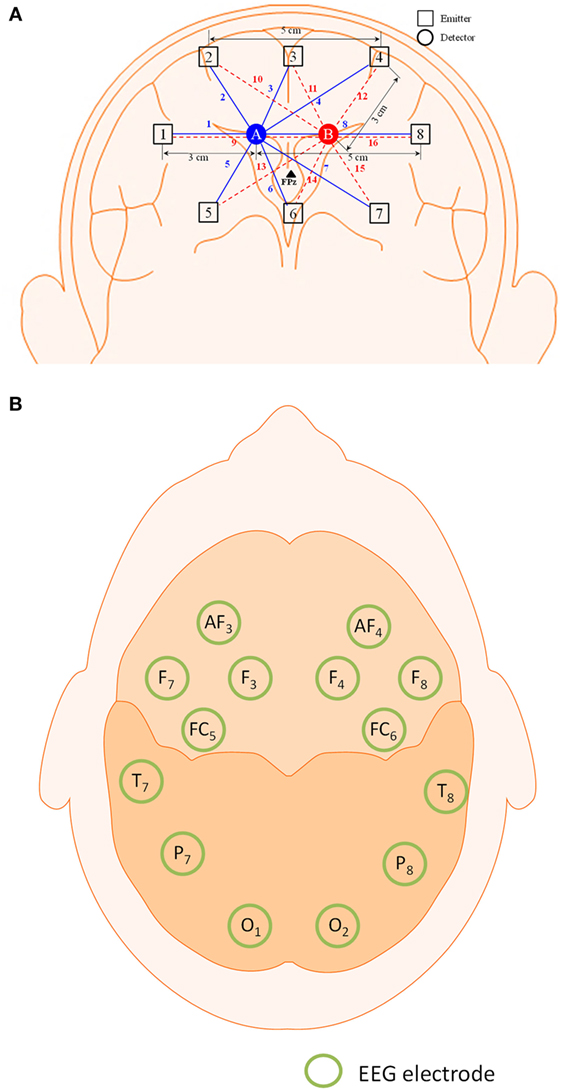

The frequency domain system ISS Imagent (ISS Inc., USA) was used for the signal acquisition. A total of eight sources and two detectors, making a combination of 16 channels, were positioned around the prefrontal cortex. The FPz location was positioned between the two detectors. The Emotiv EEG headset (Emotiv Epoc, USA) was used to acquire the EEG signals. The electrodes/optodes were positioned on the head according to the International 10–20 system (Jurcak et al., 2007). The electrode and optode placement is illustrated in Figure 1.

Figure 1. Configuration of optodes and electrodes for hybrid functional near-infrared spectroscopy–electroencephalography (fNIRS–EEG) experiment. (A) 16-channel fNIRS with 2 detectors and 8 emitters in the prefrontal brain region and (B) 14-electrode configuration of the Emotiv EEG headset.

The experimental procedure consisted of two sessions: training and testing. The subjects were trained to perform eight tasks detected simultaneously by EEG and fNIRS, after which the recorded brain activities were tested using real-time/online analysis.

For the training session, the subjects were seated in a comfortable chair and told to relax. A computer monitor was set up approximately 70 cm in front of the subjects. The session began with a resting period of 2 min to establish a data baseline. After the resting period, the screen cued the participants to perform one of eight specific tasks. The tasks were as follows:

• Mental counting: counting backward from a displayed number;

• Mental arithmetic: subtraction of two-digit numbers from three-digit numbers in pseudo random order (e.g., 233 − 52 = ?, ? − 23 = ?);

• Mental rotation: visualization of the clockwise rotation of a displayed stationary object (i.e., a cube);

• Word formation: formation of five scrambled words (e.g., “lloonab”), the first letter of which is shown as a cue (e.g., “B”);

• Two eyeblinks: blinking twice within a 1-s window;

• Three eyeblinks: blinking three times within a 1-s window;

• Up/down eye movement: movement of both eyes in the up or down direction within a 1-s window;

• Left/right eye movement: movement of both eyes in the left or right direction.

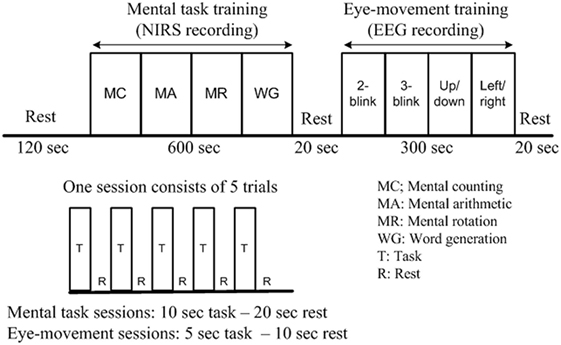

The mental tasks were recorded mainly using fNIRS, as previous work (Weyand et al., 2015) has shown its utility for high-accuracy detection of the above-noted tasks. The eye movement tasks were recorded principally using EEG, as the Emotiv EEG headset is commercially available as a system for detection of various facial movements and motor signals. The training session was divided into two parts: mental task training and eye movement training. In the first part, the subjects were trained for the mental arithmetic, counting, rotation, and word formation tasks. Each task consisted of five 10-s trials separated by 20-s resting periods. In the second part of the training session, the subjects were instructed to move their eyes according to the cue given. Each trial in this case was 5 s in duration, and the resting period was 10 s. Details on the experimental paradigm and data recording sequence are provided in Figure 2.

Figure 2. Experimental paradigm of a training session (per subject). After the initial 2-min rest, each functional near-infrared spectroscopy recording block consists of five 10-s activations and five 20-s rests, while each electroencephalography block consists of five 5-s tasks and five 10-s rests. The total duration of the experiment is 17 min.
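The stated 17-min total can be checked with a short calculation. The sketch below assumes one recording block per task (four fNIRS tasks and four EEG tasks) and uses the trial and rest durations given in the paradigm description, with 5-s EEG trials; the constant and function names are illustrative and do not come from the original study.

```python
# Durations in seconds, taken from the paradigm description in the text.
INITIAL_REST_S = 120          # 2-min baseline rest
TRIALS_PER_TASK = 5

FNIRS_TASKS = 4               # arithmetic, counting, rotation, word formation
FNIRS_TRIAL_S, FNIRS_REST_S = 10, 20

EEG_TASKS = 4                 # two blinks, three blinks, up/down, left/right
EEG_TRIAL_S, EEG_REST_S = 5, 10


def session_duration_s() -> int:
    """Total training-session length in seconds (one block per task assumed)."""
    fnirs = FNIRS_TASKS * TRIALS_PER_TASK * (FNIRS_TRIAL_S + FNIRS_REST_S)
    eeg = EEG_TASKS * TRIALS_PER_TASK * (EEG_TRIAL_S + EEG_REST_S)
    return INITIAL_REST_S + fnirs + eeg


print(session_duration_s() / 60)  # 17.0
```

Under these assumptions the fNIRS blocks take 10 min, the EEG blocks 5 min, and the initial rest 2 min.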

As part of the testing session, the training data were used to test the movement of a quadcopter (Parrot AR drone 2.0, Parrot SA., France). Specifically, the eight commands recorded during the training session were used to navigate the quadcopter in an open arena. The data were translated into commands and the subjects were asked to move the quadcopter in a rectangular path.

The data for both modalities (EEG and fNIRS) were independently processed and filtered to acquire the desired output signals. In both cases, band-pass filtering was used to remove physiological noise from the acquired signals.

The frequency domain fNIRS system used two wavelengths (690 and 830 nm) to determine the changes in the concentration of hemoglobin. A sampling rate of 15.625 Hz was used to acquire the data. The modified Beer-Lambert law (Baker et al., 2014; Bhatt et al., 2016) was utilized to convert the data into concentration changes of oxy- and deoxy-hemoglobin (ΔHbO and ΔHbR):

$$A = \log_{10}\left(\frac{I_{\mathrm{in}}}{I_{\mathrm{out}}}\right) = \alpha \times c \times l \times d + \eta \tag{1}$$

where $A$ is the absorbance of light (optical density), $I_{\mathrm{in}}$ is the incident intensity of light, $I_{\mathrm{out}}$ is the detected intensity of light, $\alpha$ is the specific extinction coefficient in µM⁻¹ cm⁻¹, $c$ is the absorber concentration in micromolars, $l$ is the distance between the source and the detector in centimeters, $d$ is the differential path-length factor, and $\eta$ is the loss of light due to scattering.

The data were first preprocessed to remove physiological noises related to respiration, cardiac, and low-frequency drift signals. In order to minimize the physiological noise due to heart pulsation (1–1.5 Hz for adults), respiration (approximately 0.4 Hz for adults), and eye movement (0.3–1 Hz), the signals were low-pass filtered using a fourth-order Butterworth filter at a cutoff frequency of 0.15 Hz. The low-frequency drift signals were minimized from the data using a high-pass filter with a cutoff frequency of 0.033 Hz (Kamran and Hong, 2014; Bhutta et al., 2015; Hong and Santosa, 2016).
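The filtering step described above could be sketched as follows. This is an illustration rather than the authors' MATLAB implementation: it uses SciPy, and both the order of the high-pass stage and the zero-phase (forward-backward) application are assumptions, since the text specifies only a fourth-order low-pass Butterworth filter. The function name `filter_hbo` is hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_FNIRS = 15.625  # Hz, sampling rate stated in the text


def filter_hbo(signal, fs=FS_FNIRS, low_cut=0.033, high_cut=0.15, order=4):
    """Low-pass at `high_cut` and high-pass at `low_cut` (Butterworth).

    Assumptions: the high-pass stage reuses the low-pass order, and both
    stages are applied forward and backward (zero phase, offline only).
    """
    sos_lp = butter(order, high_cut, btype="lowpass", fs=fs, output="sos")
    sos_hp = butter(order, low_cut, btype="highpass", fs=fs, output="sos")
    return sosfiltfilt(sos_hp, sosfiltfilt(sos_lp, signal))


# Synthetic check: a 0.1-Hz slow component plus 1.2-Hz cardiac-like noise.
t = np.arange(0, 300, 1 / FS_FNIRS)
slow = np.sin(2 * np.pi * 0.1 * t)
cardiac = np.sin(2 * np.pi * 1.2 * t)
filtered = filter_hbo(slow + cardiac)
```

For online use, a causal filter such as `scipy.signal.sosfilt` would be needed in place of `sosfiltfilt`, at the cost of some phase delay.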

The 14-channel EEG data were acquired at a sampling rate of 128 Hz. The α-, β-, Δ-, and θ-bands, acquired by band-pass filtering between 8 and 12 Hz, 12 and 28 Hz, 0.5 and 4 Hz, and 4 and 8 Hz, respectively, enabled isolation of the electrodes corresponding to the eye movement activities (Lotte et al., 2007; Ortiz-Rosario and Adeli, 2013; Ma et al., 2015).

Several channels were activated for both EEG and fNIRS. Proper channel selection is essential to the high-accuracy generation of commands. Previous work has employed t-value-based approaches (Hong and Nguyen, 2014; Hong and Santosa, 2016), bundled-optode-based approaches (Nguyen and Hong, 2016; Nguyen et al., 2016), and channel-averaging approaches (Khan and Hong, 2015; Naseer and Hong, 2015a). Other studies alternatively have employed their own algorithms, for instance, independent component analysis, etc. (Hu et al., 2010; Kamran and Hong, 2013; Santosa et al., 2013). We used the following criteria for selection of fNIRS and EEG channels.

For fNIRS channel selection, we calculated the peak (max) value of ΔHbO in the baseline and in the first trials of the mental arithmetic, mental counting, mental rotation, and word formation tasks, respectively. If the difference between the max value of the trial and the baseline value was positive, the channel was selected for classification; if neutral or negative (equal to or less than zero), it was discarded.
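The selection rule above can be written in a few lines. The sketch below is a minimal illustration in plain Python; the function name and the toy values are made up for demonstration and are not data from the study.

```python
def select_channels(baseline, first_trial):
    """Return indices of channels whose first-trial peak exceeds the baseline peak.

    baseline, first_trial: lists of per-channel sample lists (ΔHbO values).
    """
    selected = []
    for ch, (rest, task) in enumerate(zip(baseline, first_trial)):
        if max(task) - max(rest) > 0:   # positive difference: keep the channel
            selected.append(ch)
    return selected


# Toy data for three channels (illustrative values only).
baseline = [[0.0, 0.1, 0.05], [0.2, 0.3, 0.25], [0.0, 0.1, 0.0]]
first_trial = [[0.1, 0.4, 0.2], [0.1, 0.3, 0.2], [0.0, 0.05, 0.02]]
print(select_channels(baseline, first_trial))  # [0]
```

Channel 1 is discarded because the difference is exactly zero, and channel 2 because it is negative. Running the function once per task and intersecting the results would give the channels common to all four tasks.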

In the case of EEG, we measured the power spectrum for each channel. The selected channels were those in which the signal power corresponding to the eyeblink and eye movement tasks was significant. In this case, mostly the channels near the frontal brain region were active.
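One way to compare channels by spectral power is sketched below with NumPy. The frequency band in which eye activity is measured is not stated in the text, so the 0.5–4 Hz default here is an assumption, as are the function names and the synthetic signals.

```python
import numpy as np

FS_EEG = 128  # Hz, sampling rate stated in the text


def band_power(x, fs=FS_EEG, band=(0.5, 4.0)):
    """Mean periodogram power of x inside `band` (Hz); the band is an assumption."""
    x = np.asarray(x, dtype=float)
    freqs = np.fft.rfftfreq(len(x), d=1 / fs)
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2 / len(x)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return power[mask].mean()


def most_active_channel(channels, **kwargs):
    """Name of the channel with the highest band power."""
    return max(channels, key=lambda name: band_power(channels[name], **kwargs))


# Synthetic 4-s recordings: a strong 2-Hz component on F3, a weak one on P7.
t = np.arange(4 * FS_EEG) / FS_EEG
channels = {
    "F3": 5.0 * np.sin(2 * np.pi * 2 * t),
    "P7": 0.1 * np.sin(2 * np.pi * 2 * t),
}
print(most_active_channel(channels))  # F3
```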

In order to generate commands, we first extracted the relevant features for classification. We selected the signal peak and signal mean as features because, according to the literature (Khan and Hong, 2015), they provide better performance for fNIRS-based BCI systems. Also, in consideration of a recently reported possibility of an initial fNIRS signal dip (Hong and Naseer, 2016; Zafar and Hong, 2017), we added the minimum (min) signal value as a feature. We also investigated the possibility of minimizing the time for command generation by means of 0–0.5, 0–1, 0–1.5, and 0–2 s windows.
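As an illustration of the three fNIRS features, the following sketch computes the mean, peak, and minimum of the samples in a window that starts at task onset. The function name and the synthetic ramp are assumptions made for the example.

```python
FS_FNIRS = 15.625  # Hz, sampling rate stated in the text


def window_features(hbo, fs=FS_FNIRS, window_s=2.0):
    """Mean, peak (max), and minimum of the samples in the 0..window_s window.

    hbo: list of ΔHbO samples, with index 0 at task onset.
    """
    n = int(window_s * fs)          # 2 s at 15.625 Hz gives 31 samples
    segment = hbo[:n]
    return {
        "mean": sum(segment) / len(segment),
        "peak": max(segment),
        "min": min(segment),
    }


hbo = [float(i) for i in range(100)]   # synthetic ramp, not real data
print(window_features(hbo))  # {'mean': 15.0, 'peak': 30.0, 'min': 0.0}
```

Shorter windows can be tried by changing `window_s`, for example to 0.5, 1.0, or 1.5.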

For EEG signals, following channel selection we selected the signal mean and number of peaks as features for command generation. In this case, we used a moving window of 1 s to extract the relevant feature values.
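The two EEG features can be sketched in a similar way. The text does not define how a peak is detected, so the definition below (a local maximum above a threshold) is an assumption, as are the non-overlapping step size and the function names.

```python
FS_EEG = 128  # Hz, sampling rate stated in the text


def eeg_window_features(samples, threshold):
    """Number of peaks and mean of one EEG window.

    A "peak" here is a sample above `threshold` that is larger than its left
    neighbor and at least as large as its right neighbor (assumed definition).
    """
    peaks = 0
    for i in range(1, len(samples) - 1):
        s = samples[i]
        if s > threshold and s > samples[i - 1] and s >= samples[i + 1]:
            peaks += 1
    return peaks, sum(samples) / len(samples)


def moving_windows(signal, fs=FS_EEG, window_s=1.0, step_s=1.0):
    """Yield successive windows of `window_s` seconds (step size is an assumption)."""
    n, step = int(window_s * fs), int(step_s * fs)
    for start in range(0, len(signal) - n + 1, step):
        yield signal[start:start + n]


# Synthetic 1-s window with two blink-like deflections (illustrative values).
window = [0.0] * FS_EEG
window[30] = 100.0
window[90] = 100.0
print(eeg_window_features(window, threshold=50.0))  # (2, 1.5625)
```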

For both modalities, MATLAB®-based functions were used to calculate the features of the mean, peak, min, and number of peaks. For offline processing, the extracted features were rescaled between 0 and 1 by the equation

$$a' = \frac{a - \min a}{\max a - \min a} \tag{2}$$

where $a \in \mathbb{R}^n$ represents the feature value, $a'$ is the rescaled value between 0 and 1, $\max a$ denotes the largest value, and $\min a$ indicates the smallest value. These normalized feature values were used in a four-class classifier for training and testing of the data. We used linear discriminant analysis (LDA) to classify the signals for EEG and fNIRS because, in one of our previous studies, we found it to be faster than a support vector machine (Khan and Hong, 2015).
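The rescaling step is ordinary min-max normalization. A minimal sketch in plain Python follows; the handling of a constant feature is an assumption, since the text does not address that case.

```python
def rescale(values):
    """Min-max rescale a list of feature values to the interval [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:                      # constant feature: avoid division by zero
        return [0.0 for _ in values]  # (this fallback is an assumption)
    return [(v - lo) / (hi - lo) for v in values]


print(rescale([2.0, 4.0, 6.0]))  # [0.0, 0.5, 1.0]
```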

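For our case, $x_i \in \mathbb{R}^2$, where, for fNIRS, $i$ denotes the classification class, $\mu_i$ is the sample mean of class $i$, and $\mu$ is the total mean over all of the samples $x_l$. That is,

$$\mu_i = \frac{1}{n_i} \sum_{x \in \text{class } i} x \tag{3}$$

$$\mu = \frac{1}{n} \sum_{l=1}^{n} x_l \tag{4}$$

where $n_i$ is the number of samples of class $i$ and $n$ is the total number of samples. The optimal projection matrix $V$ for LDA is the one that maximizes the following Fisher’s criterion:

$$J(V) = \frac{\det\left(V^{T} S_B V\right)}{\det\left(V^{T} S_W V\right)} \tag{5}$$

where $S_B$ and $S_W$ are the between-class scatter matrix and the within-class scatter matrix, respectively, given by

$$S_B = \sum_{i=1}^{m} n_i \left(\mu_i - \mu\right)\left(\mu_i - \mu\right)^{T} \tag{6}$$

$$S_W = \sum_{i=1}^{m} \sum_{x_l \in \text{class } i} \left(x_l - \mu_i\right)\left(x_l - \mu_i\right)^{T} \tag{7}$$

where the total number of classes is given by $m$. Equation 5 was treated as an eigenvalue problem in order to obtain the optimal vector $V$ corresponding to the largest eigenvalue. In the case of offline testing, 10-fold cross-validation was used to estimate the classification accuracy (Lotte et al., 2007; Hwang et al., 2013; Ortiz-Rosario and Adeli, 2013).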
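To make the eigenvalue formulation concrete, the sketch below builds the between-class and within-class scatter matrices and takes the leading eigenvectors of the matrix obtained by solving the within-class scatter against the between-class scatter. It is a generic NumPy illustration of Fisher's LDA, not the authors' MATLAB code, and the function name is hypothetical.

```python
import numpy as np


def lda_projection(X, y, n_components=1):
    """Columns of V maximizing Fisher's criterion for features X and labels y."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    mu = X.mean(axis=0)                              # total mean
    d = X.shape[1]
    S_B, S_W = np.zeros((d, d)), np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        mu_c = Xc.mean(axis=0)                       # class mean
        diff = (mu_c - mu)[:, None]
        S_B += len(Xc) * (diff @ diff.T)             # between-class scatter
        S_W += (Xc - mu_c).T @ (Xc - mu_c)           # within-class scatter
    # Generalized eigenproblem S_B v = lambda S_W v, solved via S_W^{-1} S_B.
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(S_W, S_B))
    order = np.argsort(eigvals.real)[::-1]           # largest eigenvalue first
    return eigvecs.real[:, order[:n_components]]
```

A new sample can then be projected with `x @ V` and assigned to the class whose projected mean is nearest, which is one common decision rule; the text does not state which rule was used.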

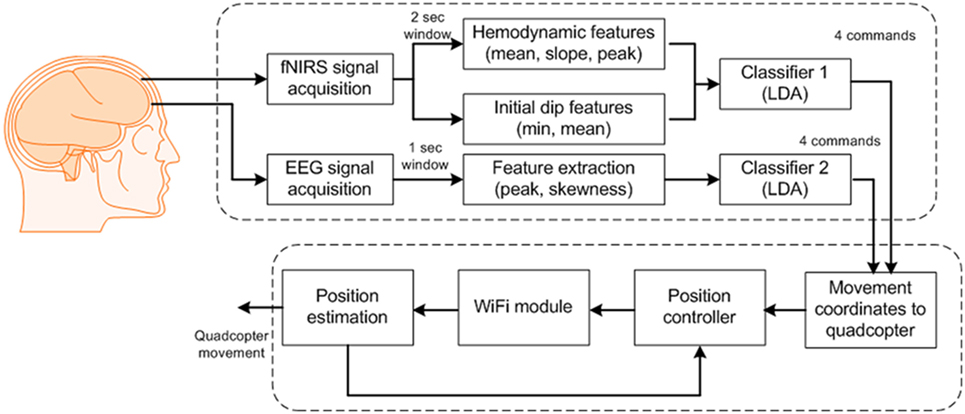

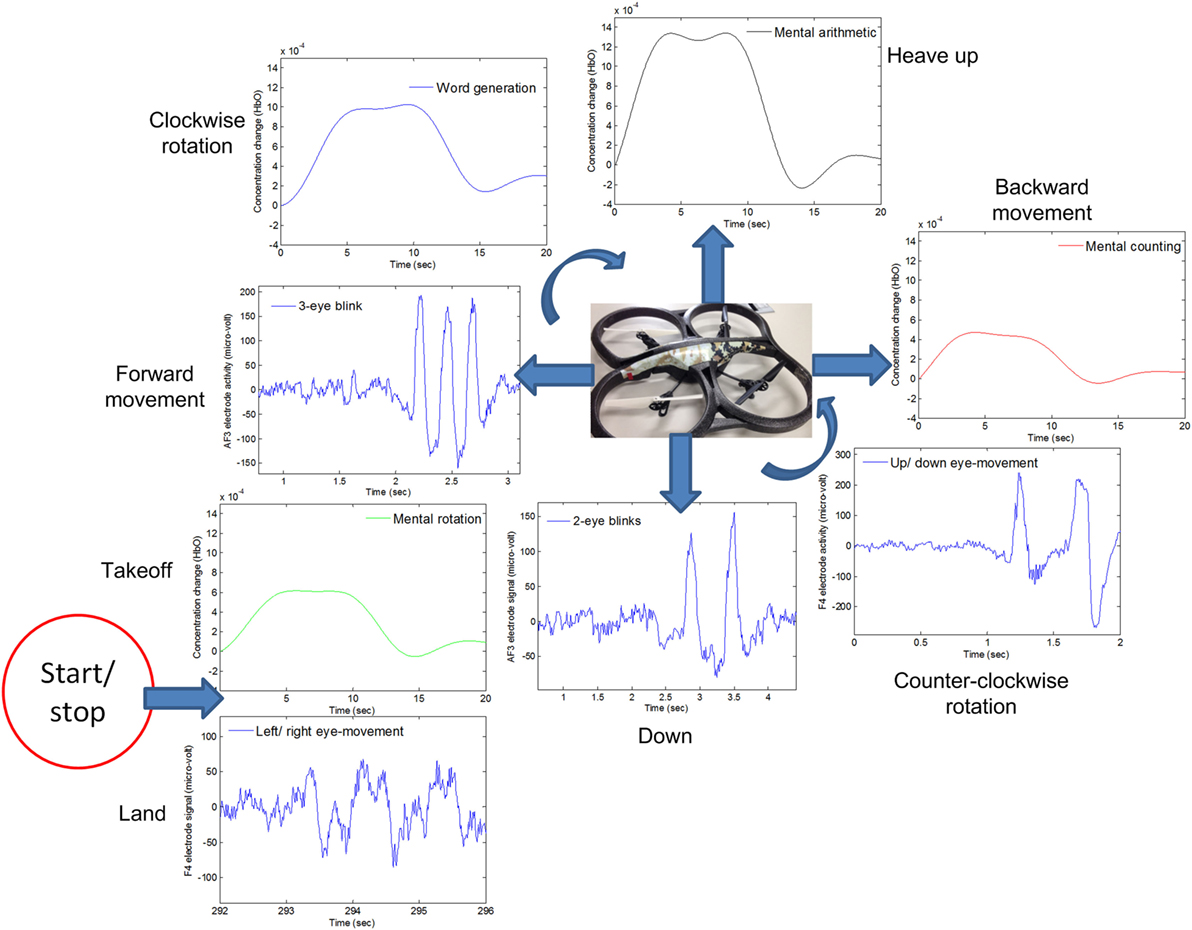

For control of the quadcopter, we formulated eight commands for classification: up/down movements, clockwise/counterclockwise rotations, forward/backward movements, and start/stop. After classification, we updated the quadcopter’s movement coordinates via Wi-Fi communication. The quadcopter then navigated using the transmitted commands. Figure 3 provides a block diagram of the BCI scheme for quadcopter control.

Figure 3. Block diagram of the proposed brain–computer interface scheme for generation of eight commands.

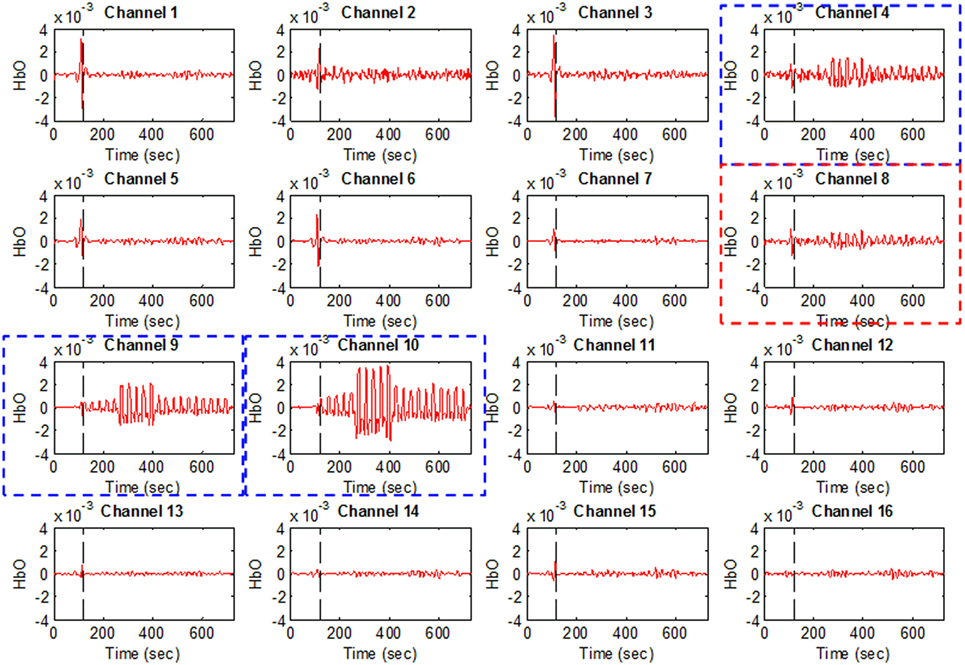

Figure 4 plots Subject 2’s ΔHbO values for all 16 channels and four activities. It can be seen that not all of the channels were active when performing a brain activity. However, for all four of the mental tasks, the activation pattern appears in the same channels.

Figure 4. HbO examples for Figure 1A (Subject 2). Channels 4, 9, and 10 were selected as active channels by the proposed method, but channel 8 was not (even if it was identified as such by the t-value analysis).

The plots in Figure 4 serve to emphasize the necessity of selecting proper channels for distinguishing of brain activities. As per our channel-selection criterion, we subtracted the max value in the baseline from the max value of the first trial. Accordingly, channels 4, 9, and 10 were selected for Subject 2, whereas channel 8 was not, due to having a higher value of baseline. We intended to identify different brain channels for different activities; therefore, as per our criterion, the subtraction of the first trial for each activity can identify different channels. However, in this case, for all subjects selected, the common channels were activated as corresponding to the mental tasks.

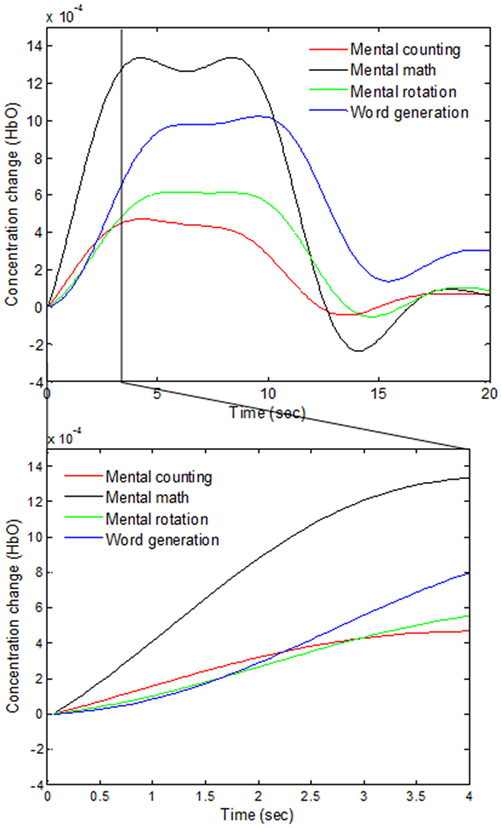

As various window sizes have been used for detection of fNIRS features in different studies (Utsugi et al., 2008; Luu and Chau, 2009; Power et al., 2010; Naseer and Hong, 2013; Schudlo et al., 2013; Schudlo and Chau, 2015; Weyand and Chau, 2015; Weyand et al., 2015; Naseer et al., 2016a,b), we intended to minimize the window size applicable to BCI applications. We therefore selected 0–0.5, 0–1, 0–1.5, and 0–2 s windows for feature acquisition and investigated both hemodynamic and initial dip features to find the best window size for reduced computation time. The signals of Subject 2, averaged over all of the trials in the reduced window, are plotted in Figure 5.

Figure 5. Comparison of the averaged HbOs of four mental tasks and the magnified responses during 0–4 s window.

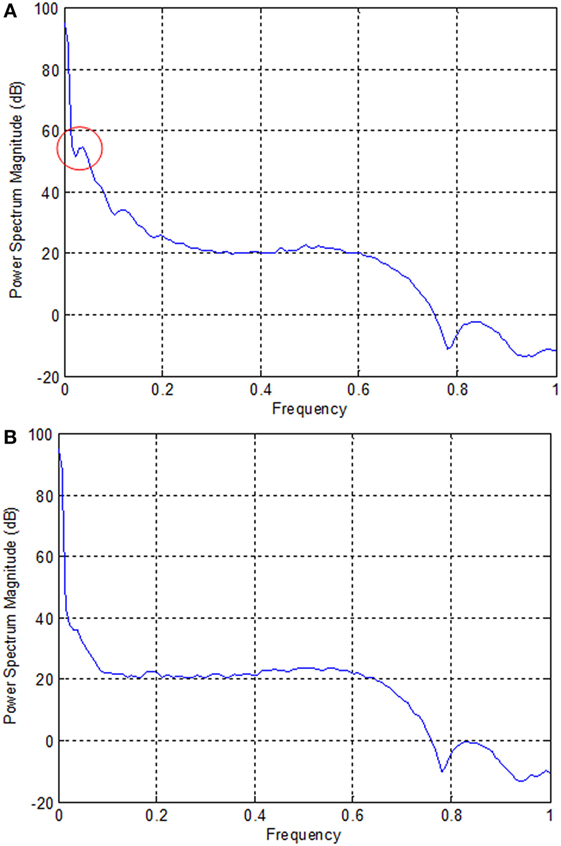

In the case of EEG, we examined the power spectrum in order to identify the activated channels. The F3, F4, O1, and O2 regions were most active. We selected the channel showing the highest power corresponding to the eye movement task. Figure 6 plots the normalized power spectrum of the selected channel for Subject 2.

Figure 6. Normalized power spectra of electroencephalography (Subject 2). (A) F3 electrode and (B) P7 electrode. The local peak in the red circle in (A) corresponds to the eye movement task measured by the F3 electrode.

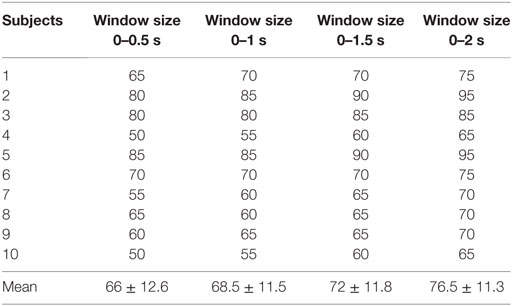

The accuracies obtained for fNIRS are shown in Table 2. The accuracies achieved using EEG for the selected channels are shown in Table 3.

Table 2. Classification accuracies of four functional near-infrared spectroscopy window sizes (based upon the mean, peak, and minimum values of ΔHbO).

For real-time/online testing, we associated each activity with quadcopter movement. The associated activity for each is shown in Figure 7.

Figure 7. The quadcopter control scheme based on electroencephalography and functional near-infrared spectroscopy signals.

We associated opposite movements with EEG/fNIRS activities; for example, if forward movement was associated with EEG signals, backward movement was associated with fNIRS signals. This was done to ensure the safety of the quadcopter and of anyone in the area. This scheme has benefits in any case: if a command is misclassified or misinterpreted, a command from the second modality can be generated to counter the misclassified movement. As per Figure 7, when EEG was used for one motion, fNIRS was used for its counter motion. This selection reflected EEG’s demonstrably higher accuracy for most of the subjects.
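The pairing rule can be expressed as a small lookup. In the sketch below, the task names and movement names come from the text, but the specific task-to-movement assignment is HYPOTHETICAL and chosen only for illustration; the actual assignment is the one shown in Figure 7.

```python
OPPOSITE = {
    "forward": "backward", "backward": "forward",
    "up": "down", "down": "up",
    "clockwise": "counterclockwise", "counterclockwise": "clockwise",
    "start": "stop", "stop": "start",
}

# HYPOTHETICAL assignment for illustration only (the real one is in Figure 7).
COMMAND_MAP = {
    ("EEG", "two_eyeblinks"): "start",
    ("fNIRS", "mental_counting"): "stop",
    ("EEG", "three_eyeblinks"): "up",
    ("fNIRS", "mental_arithmetic"): "down",
    ("EEG", "up_down_eye_movement"): "forward",
    ("fNIRS", "mental_rotation"): "backward",
    ("EEG", "left_right_eye_movement"): "clockwise",
    ("fNIRS", "word_formation"): "counterclockwise",
}


def counter_command(modality, task):
    """Return the (modality, task) pair that undoes the given command."""
    target = OPPOSITE[COMMAND_MAP[(modality, task)]]
    for key, movement in COMMAND_MAP.items():
        if movement == target:
            return key
    raise KeyError(target)


print(counter_command("EEG", "two_eyeblinks"))  # ('fNIRS', 'mental_counting')
```

With any assignment that follows the rule, the counter command for a movement always comes from the other modality, so a misclassification in one modality can be corrected with the other.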

We tested the movement of the quadcopter in an arena. The subjects were asked to move the quadcopter along a rectangular path and to land it near the takeoff position. After takeoff, the subjects were instructed to move the drone approximately 3 m forward and then 2 m to the left. On reaching the left corner, they had to increase the height by approximately 0.5 m. After increasing the height, the subjects were to move the quadcopter backward 3 m and then 2 m to the right to reach the takeoff spot. After reaching the final position, the subjects were asked to land the drone. The path followed by the drone for Subject 2 is shown in Figure 8.

Because the drone requires quick-response commands to maneuver, the path was clearly not followed exactly. The subject had to correct the trajectory to reach the final position. This is due to the delay in command generation and transmission to the drone for movement control. Further improvement can be made by incorporating an adaptive control algorithm into the drone’s control and by reducing the window size, so as to stabilize the trajectory followed by the drone.

In this study, we decoded eight active brain commands using hybrid EEG–fNIRS for BCI. The generated commands were tested using a quadcopter. To the best of the authors’ knowledge, there are only two previous studies that have tested their BCI schemes for control of a quadcopter in 3D space (LaFleur et al., 2013; Kim et al., 2014). Our work has an advantage over both studies, as we were able to control the quadcopter with a greater number of active commands. LaFleur et al. (2013) controlled the height and rotation of a quadcopter using motor imageries for the left, right, and both hands. However, in that case, the quadcopter was given a fixed forward speed. Therefore, that work did not give full quadcopter control to the user for navigation. Also, as it is impossible for some subjects to perform motor imagery (Vidaurre and Blankertz, 2010), their scheme is suited only to a specific set of users who can in fact perform it. Kim et al. (2014) integrated EEG with an eye tracker for generation of eight commands. Although their eye tracking was effective, an LED-based flash light was used to monitor the eye movement. As such, with the illuminating LED enhancing the contrast between the pupil and iris, it would be difficult to maintain quadcopter-controlling concentration for any significant span of time. In our case, there are no such drawbacks, as the necessary mental and eye movement commands are easy to generate. Also, our scheme yields more freedom to the user for drone control. It also includes a measure, specifically EEG/fNIRS integration, for avoidance of any misdirected movement. A given EEG command has been matched with an opposing fNIRS command (see Figure 7). Thus, in the event that an EEG command is incorrectly classified and the quadcopter follows a wrong direction, fNIRS signals can be used to counteract that command.

Our proposed scheme for fNIRS classification incorporates features for both hemodynamics and initial dips. To the best of our knowledge, this is the first work to generate commands in a 0–2 s window for BCI. Whereas different windows have previously been reported for fNIRS-based classification, in the current work, the smallest window size for classification was used. Although the reported optimal window size, which is to say, the size allowing for the highest classification accuracy, is 2–7 s (Naseer and Hong, 2013), the 0–2 s window size, albeit causing a decrease in accuracy, is still usable for BCI. Also, we used the mean, min, and peak values of ΔHbO for classification. Further investigation of feature selection and the use of adaptive algorithms can be expected to improve the results in both window size and classification.

Another advantage of the proposed scheme is its decoding of all four fNIRS activities from the prefrontal cortex. In previous research (Naseer and Hong, 2015a), four choices have been decoded using fNIRS in a 2–7 s window. However, in this case, only two classes were decoded from the prefrontal cortex, the other two commands having been generated using data from the motor cortex. Our proposed work is more advantageous for more users, as those suffering from locked-in syndrome cannot properly perform motor tasks. Another advantage of our work is the reduced command-generation time using fNIRS. This enables patients who are partially locked-in (with minor eye movements) to use eight commands in controlling a robot in online/real-time scenarios.

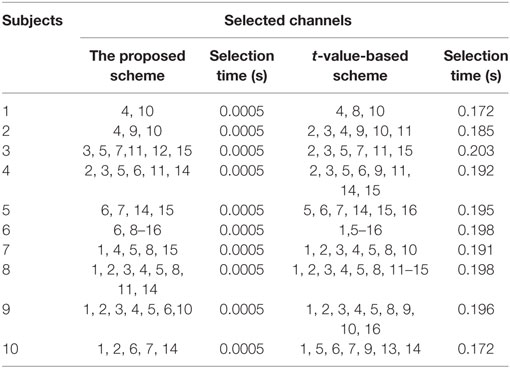

A previous fNIRS study (Hong and Santosa, 2016) proposed channel selection based on a t-value criterion. It classified four sound categories from the auditory cortex using the channels’ highest t-values. In our approach, we used the baseline as a reference for selection of channels. For classification, we selected, for four prefrontal activities, the common channels yielding a positive value after taking the difference between the peak value and the baseline. The drawback here is that only a limited number of channels can be selected. The algorithm can be further improved by adding the “difference of mean” for channel selection. In the comparison of our approach with t-value-based channel selection (see Table 4), most of the selected channels were common. It can be seen that the t-value-based scheme can identify more active channels. However, channel detection time also is an important factor for real-time applications, and our proposed scheme can identify the activated channels much more quickly than the previous schemes. Thus, our method allows much room for further development in terms of command generation and real-time control.

Table 4. Comparison of selected channels and time between the proposed method and the t-value-based method.

A limitation of this method is the acquisition of activities using eye movement tasks. Although the use of eye movement for robot control has already been demonstrated to be effective (Ma et al., 2015), eye movements are related to motor activity, and so, it is difficult for motor-disabled patients to generate four EEG-based commands. The selection of different active tasks for EEG can improve the results. Another, fNIRS-related limitation of the proposed method is the variation in hemodynamic responses in subjects due to trial-to-trial variability (Hu et al., 2013). Granted, the proposed features (peak, mean, and min ΔHbO) might not yield the best performance for each subject in the 0–2 s window. However, this problem is not insoluble, and certainly, it will be addressed in further investigations into feature selection. Also, the use of adaptive algorithms promises improvement in fNIRS command generation time.

In this study, we investigated the possibility of decoding eight commands from the frontal and prefrontal cortices by combining electroencephalography and functional near-infrared spectroscopy (fNIRS) for a BCI. Four EEG commands were generated by eye movements (two and three blinks as well as left/right and up/down movements), using the number of peaks and the mean value as features. In the case of fNIRS, we chose mental counting, mental arithmetic, mental rotation, and word formation tasks for the purpose of activity decoding. We selected a 0–2 s window to generate the commands using fNIRS signals. The signal mean, peak, and minimum values were used as features for incorporation of hemodynamic signals and initial dip features in the classifier. The obtained 76.5% accuracy indicates the possibility of classifying the activities in reduced windows. We tested the generated commands in a real-time scenario using a quadcopter. The movement coordinates of the quadcopter were updated using the hybrid EEG–fNIRS-based commands. The performed experiments served to demonstrate the BCI feasibility and potential applications of the proposed eight-command decoding scheme. Further research on better feature selection and minimization of time window for command generation can improve the controllability of the quadcopter. Moreover, the incorporation of adaptive algorithms for flight control along with brain signal decoding for stable flight can further strengthen the results.

MJK conducted all the experiments, carried out the data processing, and wrote the initial manuscript. K-SH suggested the theoretical aspects of the current study and supervised the entire process from the beginning. All the authors have approved the final manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This research was supported by the Mid-Career Researcher Program (grant no. NRF-2014R1A2A1A10049727) and the Convergence Technology Development Program for Bionic Arm (grant no. 2016M3C1B2912986) through the National Research Foundation of Korea (NRF) under the auspices of the Ministry of Science, ICT and Future Planning, Republic of Korea.

Bai, L. J., Yu, T. Y., and Li, Y. Q. (2015). A brain computer interface-based explorer. J. Neurosci. Methods 244, 2–7. doi:10.1016/j.jneumeth.2014.06.015

Baker, W. B., Parthasarathy, A. B., Busch, D. R., Mesquita, R. C., Greenberg, J. H., and Yodh, A. G. (2014). Modified Beer-Lambert law for blood flow. Biomed. Opt. Express 5, 4053–4075. doi:10.1364/BOE.5.004053

Bhatt, M., Ayyalasomayajula, K. R., and Yalavarthy, P. K. (2016). Generalized Beer-Lambert model for near-infrared light propagation in thick biological tissues. J. Biomed. Opt. 21, 076012. doi:10.1117/1.JBO.21.7.076012

Bhutta, M. R., Hong, K.-S., Kim, B.-M., Hong, M. J., Kim, Y.-H., and Lee, S.-H. (2014). Note: three wavelengths near-infrared spectroscopy system for compensating the light absorbance by water. Rev. Sci. Instrum. 85, 026111. doi:10.1063/1.4865124

Bhutta, M. R., Hong, M. J., Kim, Y.-H., and Hong, K.-S. (2015). Single-trial lie detection using a combined fNIRS-polygraph system. Front. Psychol. 6:709. doi:10.3389/fpsyg.2015.00709

Boas, D. A., Elwell, C. E., Ferrari, M., and Taga, G. (2014). Twenty years of functional near-infrared spectroscopy: introduction for the special issue. Neuroimage 85, 1–5. doi:10.1016/j.neuroimage.2013.11.033

Cao, L., Li, J., Ji, H. F., and Jiang, C. J. (2014). A hybrid brain computer interface system based on the neurophysiological protocol and brain-actuated switch for wheelchair control. J. Neurosci. Methods 229, 33–43. doi:10.1016/j.jneumeth.2014.03.011

Chadwell, A., Kenney, L., Thies, S., Galpin, A., and Head, J. (2016). The reality of myoelectric prostheses: understanding what makes these devices difficult for some users to control. Front. Neurorobot. 10:7. doi:10.3389/fnbot.2016.00007

Chen, B. J., Feng, Y. G., and Wang, Q. N. (2016). Combining vibrotactile feedback with volitional myoelectric control for robotic transtibial prostheses. Front. Neurorobot. 10:8. doi:10.3389/fnbot.2016.00008

Combaz, A., and Van Hulle, M. M. (2015). Simultaneous detection of P300 and steady-state visually evoked potentials for hybrid brain-computer interface. PLoS ONE 10:e0121481. doi:10.1371/journal.pone.0121481

Fazli, S., Mehnert, J., Steinbrink, J., Curio, G., Villringer, A., Muller, K. R., et al. (2012). Enhanced performance by a hybrid NIRS-EEG brain computer interface. Neuroimage 59, 519–529. doi:10.1016/j.neuroimage.2011.07.084

Ferrari, M., and Quaresima, V. (2012). A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. Neuroimage 63, 921–935. doi:10.1016/j.neuroimage.2012.03.049

Guntekin, B., and Basar, E. (2016). Review of evoked and event-related delta responses in the human brain. Int. J. Psychophysiol. 103, 43–52. doi:10.1016/j.ijpsycho.2015.02.001

Hochberg, L. R., Bacher, D., Jarosiewicz, B., Masse, N. Y., Simeral, J. D., Vogel, J., et al. (2012). Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 485, 372–375. doi:10.1038/nature11076

Hochberg, L. R., Serruya, M. D., Friehs, G. M., Mukand, J. A., Saleh, M., Caplan, A. H., et al. (2006). Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature 442, 164–171. doi:10.1038/nature04970

Hong, K.-S., and Naseer, N. (2016). Reduction of delay in detecting initial dips from functional near-infrared spectroscopy signals using vector-based phase analysis. Int. J. Neural Syst. 26, 16500125. doi:10.1142/S012906571650012X

Hong, K.-S., Naseer, N., and Kim, Y.-H. (2015). Classification of prefrontal and motor cortex signals for three-class fNIRS-BCI. Neurosci. Lett. 587, 87–92. doi:10.1016/j.neulet.2014.12.029

Hong, K.-S., and Nguyen, H.-D. (2014). State-space models of impulse hemodynamic responses over motor, somatosensory, and visual cortices. Biomed. Opt. Express 5, 1778–1798. doi:10.1364/BOE.5.001778

Hong, K.-S., and Santosa, H. (2016). Decoding four different sound-categories in the auditory cortex using functional near-infrared spectroscopy. Hear. Res. 333, 157–166. doi:10.1016/j.heares.2016.01.009

Hortal, E., Ianez, E., Ubeda, A., Perez-Vidal, C., and Azorin, J. M. (2015). Combining a brain-machine interface and an electrooculography interface to perform pick and place tasks with a robotic arm. Robot. Auton. Syst. 72, 181–188. doi:10.1016/j.robot.2015.05.010

Hu, X.-S., Hong, K.-S., and Ge, S. S. (2012). fNIRS-based online deception decoding. J. Neural Eng. 9, 026012. doi:10.1088/1741-2560/9/2/026012

Hu, X.-S., Hong, K.-S., and Ge, S. S. (2013). Reduction of trial-to-trial variability in functional near-infrared spectroscopy signals by accounting for resting-state functional connectivity. J. Biomed. Opt. 18, 017003. doi:10.1117/1.JBO.18.1.017003

Hu, X.-S., Hong, K.-S., Ge, S. S., and Jeong, M.-Y. (2010). Kalman estimator- and general linear model-based on-line brain activation mapping by near-infrared spectroscopy. Biomed. Eng. Online 9, 82. doi:10.1186/1475-925X-9-82

Hwang, H. J., Kim, S., Choi, S., and Im, C. H. (2013). EEG-based brain-computer interfaces: a thorough literature survey. Int. J. Hum. Comput. Interact. 29, 814–826. doi:10.1080/10447318.2013.780869

Jerbi, K., Vidal, J. R., Mattout, J., Maby, E., Lecaignard, F., Ossandon, T., et al. (2011). Inferring hand movement kinematics from MEG, EEG and intracranial EEG: from brain-machine interfaces to motor rehabilitation. IRBM 32, 8–18. doi:10.1016/j.irbm.2010.12.004

Jurcak, V., Tsuzuki, D., and Dan, I. (2007). 10/20, 10/10, and 10/5 systems revisited: their validity as relative head-surface-based positioning systems. Neuroimage 34, 1600–1611. doi:10.1016/j.neuroimage.2006.09.024

Kamran, M. A., and Hong, K.-S. (2013). Linear parameter-varying model and adaptive filtering technique for detecting neuronal activities: an fNIRS study. J. Neural Eng. 10, 056002. doi:10.1088/1741-2560/10/5/056002

Kamran, M. A., and Hong, K.-S. (2014). Reduction of physiological effects in fNIRS waveforms for efficient brain-state decoding. Neurosci. Lett. 580, 130–136. doi:10.1016/j.neulet.2014.07.058

Kao, J. C., Stavisky, S. D., Sussillo, D., Nuyujukian, P., and Shenoy, K. V. (2014). Information systems opportunities in brain-machine interface decoders. Proc. IEEE 102, 666–682. doi:10.1109/JPROC.2014.2307357

Khan, M. J., and Hong, K.-S. (2015). Passive BCI based on drowsiness detection: an fNIRS study. Biomed. Opt. Express 6, 4063–4078. doi:10.1364/BOE.6.004063

Khan, M. J., Hong, M. J., and Hong, K.-S. (2014). Decoding of four movement directions using hybrid NIRS-EEG brain-computer interface. Front. Hum. Neurosci. 8:244. doi:10.3389/fnhum.2014.00244

Kim, B. H., Kim, M., and Jo, S. (2014). Quadcopter flight control using a low-cost hybrid interface with EEG-based classification and eye tracking. Comput. Biol. Med. 51, 82–92. doi:10.1016/j.compbiomed.2014.04.020

LaFleur, K., Cassady, K., Doud, A., Shades, K., Rogin, E., and He, B. (2013). Quadcopter control in three-dimensional space using a noninvasive motor imagery-based brain-computer interface. J. Neural Eng. 10, 046003. doi:10.1088/1741-2560/10/4/046003

Li, Y. Q., Long, J. Y., Yu, T. Y., Yu, Z. L., Wang, C. C., Zhang, H. H., et al. (2010). An EEG-based BCI system for 2-D cursor control by combining Mu/Beta rhythm and P300 potential. IEEE Trans. Biomed. Eng. 57, 2495–2505. doi:10.1109/TBME.2010.2055564

Li, Y. Q., Pan, J. H., Wang, F., and Yu, Z. L. (2013). A hybrid BCI system combining P300 and SSVEP and its application to wheelchair control. IEEE Trans. Biomed. Eng. 60, 3156–3166. doi:10.1109/TBME.2013.2270283

Lin, J. S., and Hsieh, C. H. (2016). A wireless BCI-controlled integration system in smart living space for patients. Wirel. Pers. Commun. 88, 395–412. doi:10.1007/s11277-015-3129-0

Lotte, F., Congedo, M., Lecuyer, A., Lamarche, F., and Arnaldi, B. (2007). A review of classification algorithms for EEG-based brain-computer interfaces. J. Neural Eng. 4, R1–R13. doi:10.1088/1741-2560/4/R01

Luu, S., and Chau, T. (2009). Decoding subjective preference from single-trial near-infrared spectroscopy signals. J. Neural Eng. 6, 016003. doi:10.1088/1741-2560/6/1/016003

Ma, J. X., Zhang, Y., Cichocki, A., and Matsuno, F. (2015). A novel EOG/EEG hybrid human-machine interface adopting eye movements and ERPs: application to robot control. IEEE Trans. Biomed. Eng. 62, 876–889. doi:10.1109/TBME.2014.2369483

Muller-Putz, G., Leeb, R., Tangermann, M., Hohne, J., Kubler, A., Cincotti, F., et al. (2015). Towards noninvasive hybrid brain-computer interfaces: framework, practice, clinical application, and beyond. Proc. IEEE 103, 926–943. doi:10.1109/JPROC.2015.2411333

Naseer, N., and Hong, K.-S. (2013). Classification of functional near-infrared spectroscopy signals corresponding to the right- and left-wrist motor imagery for development of a brain-computer interface. Neurosci. Lett. 553, 84–89. doi:10.1016/j.neulet.2013.08.021

Naseer, N., and Hong, K.-S. (2015a). Decoding answers to four-choice questions using functional near infrared spectroscopy. J. Near Infrared Spectrosc. 23, 23–31. doi:10.1255/jnirs.1145

Naseer, N., and Hong, K.-S. (2015b). fNIRS-based brain-computer interfaces: a review. Front. Hum. Neurosci. 9:3. doi:10.3389/fnhum.2015.00003

Naseer, N., Hong, M. J., and Hong, K.-S. (2014). Online binary decision decoding using functional near-infrared spectroscopy for the development of brain-computer interface. Exp. Brain Res. 232, 555–564. doi:10.1007/s00221-013-3764-1

Naseer, N., Noori, F. M., Qureshi, N. K., and Hong, K.-S. (2016a). Determining optimal feature-combination for LDA classification of functional near-infrared spectroscopy signals in brain-computer interface application. Front. Hum. Neurosci. 10:237. doi:10.3389/fnhum.2016.00237

Naseer, N., Qureshi, N. K., Noori, F. M., and Hong, K.-S. (2016b). Analysis of different classification techniques for two-class functional near-infrared spectroscopy based brain-computer interface. Comput. Intell. Neurosci. 2016, 5480760. doi:10.1155/2016/5480760

Nguyen, H.-D., and Hong, K.-S. (2016). Bundled-optode implementation for 3D imaging in functional near-infrared spectroscopy. Biomed. Opt. Express 7, 3491–3507. doi:10.1364/BOE.7.003491

Nguyen, H.-D., Hong, K.-S., and Shin, Y.-I. (2016). Bundled-optode method in functional near-infrared spectroscopy. PLoS ONE 11:e0165146. doi:10.1371/journal.pone.0165146

Nicolas-Alonso, L. F., and Gomez-Gil, J. (2012). Brain computer interfaces, a review. Sensors 12, 1211–1279. doi:10.3390/s120201211

Ortiz-Rosario, A., and Adeli, H. (2013). Brain-computer interface technologies: from signal to action. Rev. Neurosci. 24, 537–552. doi:10.1515/revneuro-2013-0032

Pfurtscheller, G., Allison, B. Z., Brunner, C., Bauernfeind, G., Solis-Escalante, T., Scherer, R., et al. (2010). The hybrid BCI. Front. Neurosci. 4:30. doi:10.3389/fnpro.2010.00003

Power, S. D., Falk, T. H., and Chau, T. (2010). Classification of prefrontal activity due to mental arithmetic and music imagery using hidden Markov models and frequency domain near-infrared spectroscopy. J. Neural Eng. 7, 026002. doi:10.1088/1741-2560/7/2/026002

Putze, F., Hesslinger, S., Tse, C. Y., Huang, Y. Y., Herff, C., Guan, C. T., et al. (2014). Hybrid fNIRS-EEG based classification of auditory and visual perception processes. Front. Neurosci. 8:373. doi:10.3389/fnins.2014.00373

Quandt, F., Reichert, C., Schneider, B., Durschmid, S., Richter, D., Hinrichs, H., et al. (2012). Fundamentals and application of brain-machine interfaces (BMI). Klin. Neurophysiol. 43, 158–167. doi:10.1055/s-0032-1308970

Rak, R. J., Kolodziej, M., and Majkowski, A. (2012). Brain-computer interface as measurement and control system the review paper. Metrol. Meas. Syst. 19, 427–444. doi:10.2478/v10178-012-0037-4

Ramli, R., Arof, H., Ibrahim, F., Idris, M. Y. I., and Khairuddin, A. S. M. (2015). Classification of eyelid position and eyeball movement using EEG signals. Malays. J. Comput. Sci. 28, 28–45.

Ravindra, V., and Castellini, C. (2014). A comparative analysis of three non-invasive human-machine interfaces for the disabled. Front. Neurorobot. 8:24. doi:10.3389/fnbot.2014.00024

Ruiz, S., Buyukturkoglu, K., Rana, M., Birbaumer, N., and Sitaram, R. (2014). Real-time fMRI brain computer interfaces: self-regulation of single brain regions to networks. Biol. Psychol. 95, 4–20. doi:10.1016/j.biopsycho.2013.04.010

Santosa, H., Hong, M. J., and Hong, K.-S. (2014). Lateralization of music processing auditory cortex: an fNIRS study. Front. Behav. Neurosci. 8:418. doi:10.3389/fnbeh.2014.00418

Santosa, H., Hong, M. J., Kim, S.-P., and Hong, K.-S. (2013). Noise reduction in functional near-infrared spectroscopy signals by independent component analysis. Rev. Sci. Instrum. 84, 073106. doi:10.1063/1.4812785

Schroeder, K. E., and Chestek, C. A. (2016). Intracortical brain-machine interfaces advance sensorimotor neuroscience. Front. Neurosci. 10:291. doi:10.3389/fnins.2016.00291

Schudlo, L. C., and Chau, T. (2015). Single-trial classification of near-infrared spectroscopy signals arising from multiple cortical regions. Behav. Brain Res. 290, 131–142. doi:10.1016/j.bbr.2015.04.053

Schudlo, L. C., Power, S. D., and Chau, T. (2013). Dynamic topographical pattern classification of multichannel prefrontal NIRS signals. J. Neural Eng. 10, 046018. doi:10.1088/1741-2560/10/4/046018

Schultz, A. E., and Kuiken, T. A. (2011). Neural interfaces for control of upper limb prostheses: the state of the art and future possibilities. PM R 3, 55–67. doi:10.1016/j.pmrj.2010.06.016

Tomita, Y., Vialatte, F. B., Dreyfus, G., Mitsukura, Y., Bakardjian, H., and Cichocki, A. (2014). Bimodal BCI using simultaneously NIRS and EEG. IEEE Trans. Biomed. Eng. 61, 1274–1284. doi:10.1109/TBME.2014.2300492

Turnip, A., and Hong, K.-S. (2012). Classifying mental activities from EEG-P300 signals using adaptive neural networks. Int. J. Innov. Comput. Inf. Control 8, 6429–6443.

Turnip, A., Hong, K.-S., and Jeong, M.-Y. (2011). Real-time feature extraction of P300 component using adaptive nonlinear principal component analysis. Biomed. Eng. Online 10, 83. doi:10.1186/1475-925X-10-83

Urgen, B. A., Plank, M., Ishiguro, H., Poizner, H., and Saygin, A. P. (2013). EEG Theta and Mu oscillations during perception of human and robot actions. Front. Neurorobot. 7:19. doi:10.3389/fnbot.2013.00019

Utsugi, K., Obata, A., Sato, H., Aoki, R., Maki, A., Koizumi, H., et al. (2008). GO-STOP control using optical brain-computer interface during calculation task. IEICE Trans. Commun. E91b, 2133–2141. doi:10.1093/ietcom/e91-b.7.2133

Vidaurre, C., and Blankertz, B. (2010). Towards a cure for BCI illiteracy. Brain Topogr. 23, 194–198. doi:10.1007/s10548-009-0121-6

von Luhmann, A., Herff, C., Heger, D., and Schultz, T. (2015). Toward a wireless open source instrument: functional near-infrared spectroscopy in mobile neuroergonomics and BCI applications. Front. Hum. Neurosci. 9:617. doi:10.3389/fnhum.2015.00617

Vuckovic, A., and Sepulveda, F. (2012). A two-stage four-class BCI based on imaginary movements of the left and the right wrist. Med. Eng. Phys. 34, 964–971. doi:10.1016/j.medengphy.2011.11.001

Waldert, S., Pistohl, T., Braun, C., Ball, T., Aertsen, A., and Mehring, C. (2009). A review on directional information in neural signals for brain-machine interfaces. J. Physiol. Paris 103, 244–254. doi:10.1016/j.jphysparis.2009.08.007

Weyand, S., and Chau, T. (2015). Correlates of near-infrared spectroscopy brain-computer interface accuracy in a multi-class personalization framework. Front. Hum. Neurosci. 9:536. doi:10.3389/fnhum.2015.00536

Weyand, S., Takehara-Nishiuchi, K., and Chau, T. (2015). Weaning off mental tasks to achieve voluntary self-regulatory control of a near-infrared spectroscopy brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 548–561. doi:10.1109/TNSRE.2015.2399392

Yin, E. W., Zeyl, T., Saab, R., Chau, T., Hu, D. W., and Zhou, Z. T. (2015). A hybrid brain-computer interface based on the fusion of P300 and SSVEP scores. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 693–701. doi:10.1109/TNSRE.2015.2403270

Yoo, J., Kwon, J., and Choe, Y. (2014). Predictable internal brain dynamics in EEG and its relation to conscious states. Front. Neurorobot. 8:18. doi:10.3389/fnbot.2014.00018

Zafar, A., and Hong, K.-S. (2017). Detection and classification of three-class initial dips from prefrontal cortex. Biomed. Opt. Express 8, 367–383. doi:10.1364/BOE.8.000367

Keywords: brain–computer interface, hybrid EEG–fNIRS, mental task, classification, quadcopter control

Citation: Khan MJ and Hong K-S (2017) Hybrid EEG–fNIRS-Based Eight-Command Decoding for BCI: Application to Quadcopter Control. Front. Neurorobot. 11:6. doi: 10.3389/fnbot.2017.00006

Received: 25 October 2016; Accepted: 24 January 2017; Published: 17 February 2017

Edited by:

Xin Luo, Chongqing Institute of Green and Intelligent Technology (CAS), China

Reviewed by:

Huanqing Wang, Carleton University, Canada

Copyright: © 2017 Khan and Hong. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Keum-Shik Hong, kshong@pusan.ac.kr

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
