ORIGINAL RESEARCH article

Front. Neurol., 06 April 2018

Sec. Applied Neuroimaging

Volume 9 - 2018 | https://doi.org/10.3389/fneur.2018.00224

This article is part of the Research Topic: Neuroimaging of Affective Empathy and Emotional Communication

Sona Patel1, Kenichi Oishi2, Amy Wright2, Harry Sutherland-Foggio2, Sadhvi Saxena2, Shannon M. Sheppard2, Argye E. Hillis2*

Impaired expression of emotion through pitch, loudness, rate, and rhythm of speech (affective prosody) is common and disabling after right hemisphere (RH) stroke. These deficits impede all social interactions. Previous studies have identified cortical areas associated with impairments of expression, recognition, or repetition of affective prosody, but have not identified critical white matter tracts. We hypothesized that: (1) differences across patients in specific acoustic features correlate with listener judgment of affective prosody and (2) these differences are associated with infarcts of specific RH gray and white matter regions. To test these hypotheses, 41 acute ischemic RH stroke patients had MRI diffusion weighted imaging and described a picture. Affective prosody of picture descriptions was rated by 21 healthy volunteers. We identified percent damage (lesion load) to each of seven regions of interest previously associated with expression of affective prosody and two control areas that have been associated with recognition but not expression of prosody. We identified acoustic features that correlated with listener ratings of prosody (hereafter “prosody acoustic measures”) with Spearman correlations and linear regression. We then identified demographic variables and brain regions where lesion load independently predicted the lowest quartile of each of the “prosody acoustic measures” using logistic regression. We found that listener ratings of prosody positively correlated with four acoustic measures. Furthermore, the lowest quartile of each of these four “prosody acoustic measures” was predicted by sex, age, lesion volume, and percent damage to the seven regions of interest. Lesion load in pars opercularis, supramarginal gyrus, or associated white matter tracts (and not control regions) predicted lowest quartile of the four “prosody acoustic measures” in logistic regression. 
Results indicate that listener perception of reduced affective prosody after RH stroke is due to reduction in specific acoustic features caused by infarct in right pars opercularis or supramarginal gyrus, or associated white matter tracts.

A flat tone-of-voice is often interpreted as apathy, displeasure, sadness, or lack of empathy of the speaker, depending on the context. Yet, survivors of right hemisphere (RH) stroke (1–5) and people with certain neurological diseases—e.g., Parkinson’s disease (6–8), frontotemporal dementia (9–13), schizophrenia (14, 15)—may have trouble modulating their tone-of-voice to express emotion, even when they feel joyful or empathetic. Affective prosody (changes in pitch, loudness, rate, and rhythm of speech to convey emotion) communicates the speaker’s emotion and social intent. Thus, impairments in affective prosody can disrupt all daily interactions and interpersonal relationships, as well as influence social behavior (16).

It has long been recognized that strokes involving the right frontal lobe, particularly posterior inferior frontal cortex, are associated with impaired expression of affective prosody (3, 17). Infarcts in the right temporal lobe are often associated with impaired recognition of affective prosody (3) or impaired recognition and expression (17). Previous studies have identified cortical areas important for expression of emotion through prosody, using either functional MRI (fMRI) of healthy participants (18–22) or lesion-symptom mapping in individuals with focal brain damage (3, 23). Several studies show activation in inferior frontal cortex, specifically during evoked expressions. However, the brain regions involved seem to be dependent on the type of emotion expressed by the speaker (22). Although most studies of affective prosody impairments have focused on cortical regions, one study showed that infarcts that affected the right sagittal stratum (a large bundle of white matter fibers connecting occipital, cingulate, and temporal regions to the thalamus and basal ganglia) interfered with recognition of sarcasm (24). Nevertheless, few studies have identified the role of specific white matter tracts in the neural network underlying emotional expression.

The majority of studies investigating emotional prosody have focused on the perception rather than the production of emotion in speech. It has been suggested that, similar to the well-established dual-stream model subserving language processing in the left hemisphere (25–27), prosody comprehension proceeds along analogous dual ventral and dorsal streams in the right hemisphere (28). Specifically, it is proposed that the dorsal “how” pathway is critical for evaluating prosodic contours and mapping them to subvocal articulation, while the ventral “what” pathway, which includes the superior temporal sulcus and much of the temporal lobe, maps prosody to communicative meaning. While the research investigating these pathways in affective prosody generation is sparse, it has been proposed that bilateral basal ganglia play an important role in modulation of motor behavior during the preparation of emotional prosody generation, while RH cortical structures are involved in auditory feedback mechanisms during speech production (29).

Recent advances in acoustic analysis of speech and voice allow characterization of the fundamental frequency (i.e., the high versus low quality of the voice; measures include the mean, range, peak, and variation), intensity (i.e., how loud or soft the voice is; measures include the mean, range, peak, nadir, variation within, and across bandwidths), speech duration (i.e., how fast or slow the speech is), and rhythm (rate, timing, and relative intensity of various speech segments, such as vowels, consonants, pauses, and so on). Any of these features might be affected by focal brain damage, and changes in one or more feature can influence the perception of the emotion or intent of the speaker.

Previous studies have identified changes in acoustic features that are responsible for abnormal affective prosody in Parkinson’s disease (6, 8), schizotypal personality disorder and schizophrenia (15, 30), and frontotemporal dementia (10, 31). Almost all studies report that less pitch variability and slower rate of speech are associated with reduced prosody in these individuals. A reduction in the pitch variability is what is often referred to as “flat affect,” i.e., a “flat” pitch contour or one that is not as variable. Some conditions such as Parkinson’s disease result in a reduced vocal intensity as well, potentially due to an underlying motor control problem (32), resulting in a quieter voice. Taken together, the impact of these changes is a less variable and therefore a more monotone sounding voice. It has yet to be established whether abnormalities in specific acoustic features account for listener perception of impaired affective prosody after RH stroke.

In this study, we hypothesized that: (1) abnormal patterns of specific acoustic features correlate with lower listener rating of emotional expression and (2) these abnormal acoustic features are associated with infarcts of specific RH gray and white matter regions. Since no one-to-one map between each acoustic feature and a specific brain region exists, a standard set of acoustic parameters were investigated based on the features that are known to be affected in pathological conditions, including RH stroke.

A consecutive series of 41 acute ischemic RH stroke patients who provided written informed consent for all study procedures were enrolled. Consent forms and procedures were approved by the Johns Hopkins Institutional Review Board. Exclusion criteria included: previous neurological disease involving the brain (including prior stroke), impaired arousal or ongoing sedation, lack of premorbid competency in English, left handedness, <10th grade education, or contraindication for MRI (e.g., implanted ferrous metal). The mean age was 62.7 ± SD 12.5 years. The mean education was 14.4 ± 3.3 years. The mean lesion volume was 37.2 ± 67.0 cc. Participants were 41.5% women. Within 48 h of stroke onset, the participants were each administered a battery of assessments of affective prosody expression and recognition, but in this study we focused on affective prosody expression to test our hypotheses.

The speech samples from each participant included a description of the “Cookie Theft” picture, originally from the Boston Diagnostic Aphasia Examination (33). This same picture is also used in the National Institutes of Health Stroke Scale (34, 35). The stimulus is shown in Figure 1. Participants were instructed to describe the picture as if they were telling a story to a child. Participants were prompted to continue (“anything else that you can tell me?”) once. Recordings were made using a head-worn microphone placed two inches from the mouth of the participant. All samples were segmented and converted into mono recordings for analysis in Praat (36). A total of 26 parameters were automatically extracted from the speech samples using customized scripts. The parameters included measurements related to fundamental frequency (F0), intensity, duration, rate, and voice quality. The full list of parameters is given in Table 1 along with a short description of each measure. Because we had no a priori evidence to hypothesize that some of these features would be more affected than others, we included a standard list of features (measures of F0 and intensity and durations of various parts of speech) as well as a set of features that were either relative to certain frequency bands or parts of speech. We followed the same procedures followed in previous publications; for example, see Ref. (37) for details of the analyses.
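To illustrate the F0-related measures, the following minimal Python sketch computes the F0 range and the F0 coefficient of variation from a frame-wise pitch track. It is not the customized Praat script used in the study. The function name `f0_summary`, the convention that unvoiced frames are encoded as `None` or 0, and the use of the sample standard deviation are all assumptions made for the example.

```python
import statistics


def f0_summary(f0_track_hz):
    """Summarize a frame-wise F0 track given in Hz.

    Assumption: unvoiced frames are encoded as None or 0 and are skipped.
    """
    voiced = [f for f in f0_track_hz if f]  # drops None and 0
    if len(voiced) < 2:
        raise ValueError("need at least two voiced frames")
    mean_f0 = statistics.mean(voiced)
    sd_f0 = statistics.stdev(voiced)  # sample SD (an assumption)
    return {
        "mean": mean_f0,
        "range": max(voiced) - min(voiced),
        "cov": sd_f0 / mean_f0,  # coefficient of variation = SD / mean
    }


# Hypothetical pitch track containing two unvoiced frames
summary = f0_summary([100.0, 120.0, None, 140.0, 0, 160.0])
print(summary["range"], round(summary["cov"], 3))
```

A narrower range and a smaller coefficient of variation both correspond to a flatter pitch contour.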

The emotional expression of the speech samples was rated by 21 healthy volunteers, using a 1–7 scale (from no emotion to very emotional). They were given several practice items with feedback. The mean score for the 21 listeners for each voice sample was used to identify the acoustic features related to the listener ratings of emotional expression.

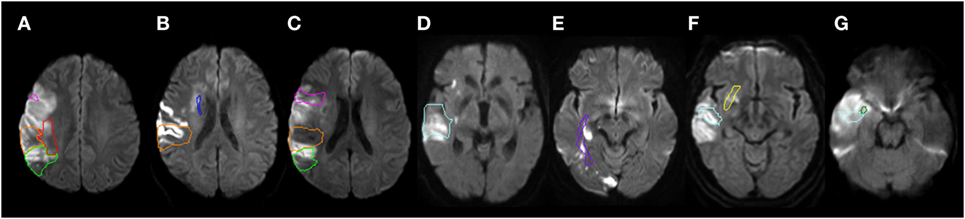

Participants were evaluated with MRI diffusion weighted imaging (DWI), fluid attenuated inversion recovery (to rule out old lesions), susceptibility weighted imaging (to rule out hemorrhage), and T2-weighted imaging to evaluate for other structural lesions. A neurologist (Kenichi Oishi) who was blind to the results of the acoustic analyses identified the percent damage to each of seven gray and white matter regions that have previously been associated with deficits in expression of affective prosody and two control areas that have been associated with deficits in recognition but not expression of prosody (3, 13, 23, 24, 38). The seven regions of interest hypothesized to be related to prosody expression in the RH were: inferior frontal gyrus pars opercularis; supramarginal gyrus; angular gyrus; inferior fronto-occipital fasciculus; superior fronto-occipital fasciculus; superior longitudinal fasciculus (SLF); and uncinate fasciculus. The control areas that have previously been identified as critical for prosody recognition but not production (22, 24, 30) were: superior temporal gyrus and sagittal stratum. The procedure followed previous publications (39–41). In brief, the boundaries of the acute stroke lesion(s) were defined by a threshold of >30% intensity increase from the unaffected area in the DWI (42, 43), then manually modified by the same neurologist to avoid false-positive and false-negative areas. Kenichi Oishi was blinded to the results of the acoustic analyses to avoid bias in lesion identification. Then, the non-diffusion weighted image (b0) was transformed to the JHU-MNI-b0 atlas using affine transformation, followed by large deformation diffeomorphic metric mapping (LDDMM) (44, 45). The resultant matrices were applied to the stroke lesion for normalization. LDDMM provides optimal normalization to minimize warping regions of interest (37, 38).
A customized version of the JHU-MNI Brain Parcelation Map1 was then overlaid on the normalized lesion map to determine the percentage volume of the nine regions (Figure 2), using DiffeoMap.2
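The two numerical steps described above (intensity thresholding and percent damage per region) can be sketched as follows. This is a simplified illustration, not the DiffeoMap workflow: the flat lists stand in for 3-D volumes that are already in atlas space, the manual correction step is not modeled, and the function names and the single `reference_intensity` value for unaffected tissue are assumptions.

```python
def threshold_lesion(dwi, reference_intensity, increase=0.30):
    """Flag voxels whose DWI intensity exceeds the reference by more than `increase`."""
    cutoff = reference_intensity * (1.0 + increase)
    return [1 if value > cutoff else 0 for value in dwi]


def lesion_load(lesion_mask, roi_mask):
    """Percent of ROI voxels that fall inside the lesion mask."""
    if len(lesion_mask) != len(roi_mask):
        raise ValueError("masks must have the same number of voxels")
    roi_voxels = sum(1 for r in roi_mask if r)
    if roi_voxels == 0:
        raise ValueError("ROI mask is empty")
    damaged = sum(1 for l, r in zip(lesion_mask, roi_mask) if l and r)
    return 100.0 * damaged / roi_voxels


# Toy example: eight voxels, reference intensity of unaffected tissue = 100
dwi = [100, 135, 140, 150, 110, 105, 100, 98]
roi = [0, 0, 1, 1, 1, 1, 0, 0]  # hypothetical ROI covering four voxels
lesion = threshold_lesion(dwi, reference_intensity=100)
load = lesion_load(lesion, roi)
print(lesion, load)
```

In this toy case, two of the four ROI voxels exceed the cutoff, so the lesion load for that ROI is 50%.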

Figure 2. Representative individuals with acute infarction in the selected structures. The structures are color-contoured: inferior frontal gyrus, pars opercularis [pink (A,C)], superior temporal gyrus [cyan (D,F,G)], supramarginal gyrus [orange (A,B,C)], angular gyrus [chartreuse green (A,C)], inferior fronto-occipital fasciculus [yellow (F)], sagittal stratum [purple (E)], superior fronto-occipital fasciculus [blue (B)], superior longitudinal fasciculus [red (A)], and the uncinate fasciculus [green (G)]. Diffusion weighted images were normalized to the JHU-MNI atlas space and pre-defined ROIs were overlaid on the normalized images. Images are all in radiological convention: left side of the figure is the right side of the individual.

All analyses were carried out with Stata, version 12 (StataCorp3). Acoustic features (from the 26 listed in Table 1) that correlated with mean listener judgments of affective prosody were identified with Spearman correlations. An alpha level of p < 0.05, after correction for multiple comparisons (n = 26) with Bonferroni correction, was considered significant. Acoustic features that independently contributed to listener rating of emotional expression, after adjustment for other acoustic features and age, were identified with linear regression, separately for men and women speakers, and were used in further analyses as the “prosody acoustic measures.” Then, percent damage to each ROI that independently predicted the lowest quartile of each of the identified prosody acoustic measures was identified using logistic regression. The independent variables included age, sex, education, and percent damage to (lesion load in) each of the nine ROIs (including two control regions). We included age and sex in all multivariable logistic regressions, along with percent damage to each of the four cortical regions of interest and the five white matter bundles of fibers, because age and sex can influence all acoustic features. Because we did not include healthy controls, we defined the lowest quartile of each prosody acoustic measure as abnormal. We chose this definition because we aimed to focus only on the most disrupted prosody for our analyses. Thus, the dependent variables were whether or not a patient’s score on a particular acoustic measure fell in the lowest quartile of the distribution across patients, coded as 0 or 1.
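As a rough illustration of three steps in this analysis plan (Spearman correlation, the Bonferroni-corrected threshold for 26 comparisons, and the 0/1 coding of the lowest quartile), a minimal Python sketch is given below. The analyses in the study were run in Stata; the function names here are hypothetical, the quartile cut point uses one of several possible conventions (the "inclusive" method of `statistics.quantiles`), and treating values equal to the cut point as abnormal is an assumption.

```python
import math
import statistics


def average_ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = statistics.mean(rx), statistics.mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


def bonferroni_significant(p_value, n_tests=26, alpha=0.05):
    """True if p_value passes the Bonferroni-corrected threshold alpha / n_tests."""
    return p_value < alpha / n_tests


def lowest_quartile_flags(values):
    """Code each value as 1 if it is at or below the first quartile, else 0."""
    q1 = statistics.quantiles(values, n=4, method="inclusive")[0]
    return [1 if v <= q1 else 0 for v in values]


# Made-up data for illustration only
rho = spearman_rho([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])
flags = lowest_quartile_flags([1, 2, 3, 4, 5, 6, 7, 8])
print(rho, flags, bonferroni_significant(0.0002))
```

The resulting 0/1 flags would serve as the dependent variable of a logistic regression; fitting that model is left to a statistics package.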

Mean scores (and SDs) for each of the acoustic features for men and women are shown in Table 2. There was no significant difference between men and women in age [male mean = 62.0, female mean = 63.8, t(39) = 0.41, ns]. Listener judgments of prosody correlated with certain cues, namely the relative articulation duration, i.e., the relative duration of voiced segments to the total duration of speech segments excluding pauses (Durv/s) (rho = 0.63; p < 0.00001) and spectral flatness (SF) (rho = −0.55; p = 0.0002). None of the other acoustic features correlated with listener judgment of prosody in univariate analyses.
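The definition of Durv/s given above can be made concrete with a short Python sketch. It assumes the speech sample has already been segmented into labeled intervals; the labels `"voiced"`, `"unvoiced"`, and `"pause"` and the function name are hypothetical and do not come from the study's Praat scripts.

```python
def relative_voiced_duration(segments):
    """Durv/s: voiced duration divided by total speech duration, pauses excluded.

    `segments` is a list of (label, duration_in_seconds) pairs using the
    hypothetical labels "voiced", "unvoiced", and "pause".
    """
    voiced = sum(d for label, d in segments if label == "voiced")
    speech = sum(d for label, d in segments if label in ("voiced", "unvoiced"))
    if speech == 0:
        raise ValueError("no speech segments found")
    return voiced / speech


# Made-up segmentation of a short utterance
example = [("voiced", 0.3), ("unvoiced", 0.1), ("pause", 0.5), ("voiced", 0.2)]
durv_s = relative_voiced_duration(example)
print(round(durv_s, 3))
```

Because pauses are excluded from the denominator, a speaker who pauses often but voices most of the remaining speech still obtains a high Durv/s.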

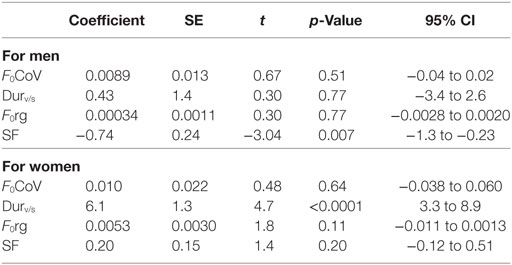

In multivariable analyses, mean listener rating (from 1 to 7) was best accounted for by a model that included Durv/s, SF, F0 range, and F0 coefficient of variation (F0CoV) in both women [F(4, 12) = 6.58; p = 0.0048; r² = 0.69] and men [F(5, 18) = 5.13; p = 0.0056; r² = 0.52]. These identified acoustic measures that correlated with listener judgment of prosody were then considered the “prosody acoustic measures” used in further analyses. The only feature found to be independently associated with rating of emotional expression was Durv/s (p < 0.0001) for women, and SF (p = 0.007) for men, after adjusting for other variables (age and the other acoustic features, from the set of 26) (Table 3). Durv/s was positively correlated with perceived emotional rating in both women (rho = 0.71; p = 0.0015) and men (rho = 0.55; p = 0.0052), but the correlation was stronger in women. SF was negatively correlated with perceived emotional expression in both women (rho = −0.39; p = 0.13) and men (rho = −0.69; p = 0.0002), but the association was significant only in men. That is, women (and to a lesser degree, men) who used more voicing were rated as having higher emotional prosody, and men who had higher SF were rated as having lower emotional prosody.

Table 3. Results of linear regression to identify “prosody acoustic measures”—measures that contributed to listener rating of affective prosody.

As indicated above, speech samples with the lowest level of each of the four “prosody acoustic measures” were rated as having the lowest affective prosody by healthy listeners. The lowest quartile of SF was predicted by sex, age, lesion volume, and percent damage to the nine RH regions (χ² = 27; p = 0.0081). Sex, lesion volume, damage to inferior frontal gyrus pars opercularis, inferior fronto-occipital (IFO) fasciculus, SLF, and uncinate fasciculus were the only independent predictors, after adjusting for the other variables. The lowest quartile of F0CoV was predicted by sex, age, lesion volume, and percent damage to the nine RH regions (χ² = 33; p = 0.0005); age and damage to supramarginal gyrus and SLF were the only independent predictors. The lowest quartile of Durv/s was predicted by sex, age, education, lesion volume, and percent damage to the nine RH regions (χ² = 25; p = 0.02), but none of the variables were independent predictors of Durv/s, after adjustment for other independent variables. The more ventral control regions (STG and sagittal stratum) were not independent predictors of any of the prosody acoustic features in the logistic regression models.

There are two novel and important results of this study. First, we identified abnormal patterns of acoustic features that contribute to diminished emotional expression of RH stroke survivors, as rated by healthy listeners. The features that together best accounted for diminished emotional expression were: the relative duration of the voiced parts of speech (Durv/s), SF, F0 range, and F0CoV. The first two features, Durv/s and SF, are measures of rhythm; the latter two features, F0 range and F0CoV, relate to pitch. Several previous studies have shown that F0 range (31) or F0CoV (3, 23, 46) is abnormal in neurological diseases associated with impaired prosody, but most studies have not compared these acoustic features to other acoustic features that might convey emotional expression. We found that Durv/s was particularly important in emotional expression of female stroke participants, and SF was particularly important in emotional expression of male stroke participants. SF (computed as the ratio of the geometric to the arithmetic mean of the spectral energy distribution) has been shown to be important in conveying happy and sad tone-of-voice (47); see also (48). Differences between sexes might reflect differences in which emotions were rated as less emotional in men versus women. It is possible that men were rated as less emotional mostly on the happy and sad stimuli (which depend on SF), whereas women were rated less emotional on emotions that depend more on less noise or breathiness (captured by Durv/s), such as angry and happy. Our study was not powered to evaluate each emotion separately, so this speculation will need to be evaluated in future research.
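The definition of SF quoted above (the ratio of the geometric mean to the arithmetic mean of the spectral energy distribution) can be written as a short Python sketch. The function name and the requirement that all power values be strictly positive are assumptions of this example. How the spectrum is estimated from the recording (windowing, bandwidth) is not specified here and is left outside the sketch.

```python
import math


def spectral_flatness(power_spectrum):
    """Ratio of the geometric mean to the arithmetic mean of spectral power.

    Values near 1 indicate a flat, noise-like spectrum; values near 0
    indicate energy concentrated in a few frequency bins.
    """
    if not power_spectrum or any(p <= 0 for p in power_spectrum):
        raise ValueError("power values must be strictly positive")
    n = len(power_spectrum)
    # The geometric mean is computed in the log domain for numerical stability.
    geometric_mean = math.exp(sum(math.log(p) for p in power_spectrum) / n)
    arithmetic_mean = sum(power_spectrum) / n
    return geometric_mean / arithmetic_mean


flat = spectral_flatness([2.0, 2.0, 2.0, 2.0])      # noise-like toy spectrum
peaky = spectral_flatness([1.0, 1.0, 1.0, 1000.0])  # one dominant bin
print(round(flat, 3), round(peaky, 3))
```

Under this definition, a higher SF reflects a noisier, less tonal spectrum, which fits the negative correlation between SF and listener ratings reported in the Results.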

The variable of Durv/s has been less studied than the other prosody acoustic measures we identified, with respect to emotional communication. However, one study showed that vocal fold contact time (which underlies Durv/s) varied substantially between expression of different emotions (49), consistent with a role for the percentage of voiced speech segments in conveying emotion. Yildirim et al. (50) carried out acoustic analysis of transitions from neutral to happy, sad, or angry speech, and found that angry and happy speech are characterized by longer utterance duration, as well as shorter pauses between words, higher F0, and wider ranges of energy, resulting in exaggerated, or hyperarticulated speech (51); but they did not specifically evaluate Durv/s.

The second important finding is that the measures of acoustic features associated with impaired expression of emotion (“prosody acoustic measures”) were associated with lesion load in right IFG pars opercularis or supramarginal gyrus, or associated white matter tracts, particularly right IFO fasciculus, SLF, and uncinate fasciculus. These findings are consistent with, but add specificity to, the proposal of a dorsal stream for transcoding acoustic information into motor speech modulation for affective prosody expression in the RH and a ventral stream for transcoding acoustic information into emotional meaning for affective prosody recognition (38, 52). The areas we identified that affected prosody acoustic measures, particularly IFG pars opercularis, supramarginal gyrus, and SLF (roughly equivalent to the arcuate fasciculus), are regions often considered to be included in the dorsal stream of speech production in the left hemisphere (53) and the dorsal stream of affective prosody production in the RH (29, 44). Because we focused on affective prosody expression, we did not provide evidence for the role of the proposed ventral stream. However, lesions in relatively ventral areas, including STG and sagittal stratum (white matter tracts connecting basal ganglia and thalamus with temporal and occipital lobes), which served as control regions, were not associated with impaired (lowest quartile) prosody acoustic measures in multivariable logistic regression. Other studies are needed to evaluate the cortical and white matter regions associated with recognition of affective prosody. One study identified an association between damage to the sagittal stratum and impaired recognition of sarcastic voice (30).

An important role of right inferior frontal gyrus lesions in disrupting affective prosody expression has also been reported by Ross and Monnot (3). Furthermore, in an fMRI study of healthy controls, evoked expressions of anger (compared with neutral expressions) produced activation in the inferior frontal cortex and dorsal basal ganglia (22). Expression of anger was also associated with activation of the amygdala and anterior cingulate cortex (23), areas important for some aspects of emotional processing, such as empathy (31). The role of disruption to specific white matter tract bundles on affective prosody expression has been less studied than the role of cortical regions. One study showed that in left hemisphere stroke patients, deficits in emotional expression that were independent of the aphasic deficit were associated with deep white matter lesions below the supplementary motor area (which disrupt interhemispheric connections through the mid-rostral corpus callosum) (54). Here, we identified RH white matter tracts that are critical for expression of emotion through prosody, including IFO fasciculus, SLF, and uncinate fasciculus. Results indicate that affective prosody production relies on right IFO fasciculus, SLF, uncinate fasciculus, as well as supramarginal gyrus and inferior frontal gyrus pars opercularis.

Limitations of our study include the relatively small number of patients, which also limited the number of regions of interest we could evaluate. The small number of patients also reduces the power to detect associations between behavior and regions that are rarely damaged by stroke. Thus, there may be other areas that are critical for expression of emotion through prosody. We also did not analyze speech of healthy controls for this study, so we defined as “abnormal” those who were rated as having low-emotional expression by healthy controls. Despite its limitations, this study provides new information on specific gray and white matter regions where damage causes impaired expression of emotion through prosody.

This study was carried out in accordance with the recommendations of the Johns Hopkins Institutional Review Board with written informed consent from all subjects. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the Johns Hopkins Institutional Review Board. Analysis of the voice data was also performed under a protocol approved by the Seton Hall University Institutional Review Board.

SP was responsible for acoustic analysis, design of the study, and editing of the paper. KO was responsible for image analysis and editing of the paper. AW, HS-F, and SS were responsible for data collection and analysis and editing of the paper. AH was responsible for data analysis, design of the study, and drafting of the paper.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The research reported in this paper was supported by the National Institutes of Health (National Institute on Deafness and Other Communication Disorders) through awards R01DC015466, R01DC05375, and P50 DC014664. The content is solely the responsibility of the authors and does not necessarily represent the views of the National Institutes of Health. The authors declare no competing financial interests.

1. Pell MD. Reduced sensitivity to prosodic attitudes in adults with focal right hemisphere brain damage. Brain Lang (2007) 101(1):64–79. doi:10.1016/j.bandl.2006.10.003

2. Bowers D, Blonder LX, Feinberg T, Heilman KM. Differential impact of right and left hemisphere lesions on facial emotion and object imagery. Brain (1991) 114(Pt 6):2593–609. doi:10.1093/brain/114.6.2593

3. Ross ED, Monnot M. Neurology of affective prosody and its functional–anatomic organization in right hemisphere. Brain Lang (2008) 104(1):51–74. doi:10.1016/j.bandl.2007.04.007

4. Barrett AM, Buxbaum LJ, Coslett HB, Edwards E, Heilman KM, Hillis AE, et al. Cognitive rehabilitation interventions for neglect and related disorders: moving from bench to bedside in stroke patients. J Cogn Neurosci (2006) 18(7):1223–36. doi:10.1162/jocn.2006.18.7.1223

5. Pell MD, Baum SR. Unilateral brain damage, prosodic comprehension deficits, and the acoustic cues to prosody. Brain Lang (1997) 57(2):195–214. doi:10.1006/brln.1997.1736

6. Schröder C, Nikolova Z, Dengler R. Changes of emotional prosody in Parkinson’s disease. J Neurol Sci (2010) 289(1):32–5. doi:10.1016/j.jns.2009.08.038

7. Patel S, Parveen S, Anand S. Prosodic changes in Parkinson’s disease. J Acoust Soc Am (2016) 140(4):3442–3442. doi:10.1121/1.4971102

8. Péron J, Cekic S, Haegelen C, Sauleau P, Patel S, Drapier D, et al. Sensory contribution to vocal emotion deficit in Parkinson’s disease after subthalamic stimulation. Cortex (2015) 63:172–83. doi:10.1016/j.cortex.2014.08.023

9. Kumfor F, Irish M, Hodges JR, Piguet O. Discrete neural correlates for the recognition of negative emotions: insights from frontotemporal dementia. PLoS One (2013) 8(6):e67457. doi:10.1371/journal.pone.0067457

10. Dara C, Kirsch-Darrow L, Ochfeld E, Slenz J, Agranovich A, Vasconcellos-Faria A, et al. Impaired emotion processing from vocal and facial cues in frontotemporal dementia compared to right hemisphere stroke. Neurocase (2013) 19(6):521–9. doi:10.1080/13554794.2012.701641

11. Ghacibeh GA, Heilman KM. Progressive affective aprosodia and prosoplegia. Neurology (2003) 60(7):1192–4. doi:10.1212/01.WNL.0000055870.48864.87

12. Shany-Ur T, Poorzand P, Grossman SN, Growdon ME, Jang JY, Ketelle RS, et al. Comprehension of insincere communication in neurodegenerative disease: lies, sarcasm, and theory of mind. Cortex (2012) 48(10):1329–41. doi:10.1016/j.cortex.2011.08.003

13. Rankin KP, Salazar A, Gorno-Tempini ML, Sollberger M, Wilson SM, Pavlic D, et al. Detecting sarcasm from paralinguistic cues: anatomic and cognitive correlates in neurodegenerative disease. Neuroimage (2009) 47(4):2005–15. doi:10.1016/j.neuroimage.2009.05.077

14. Dondaine T, Robert G, Péron J, Grandjean D, Vérin M, Drapier D, et al. Biases in facial and vocal emotion recognition in chronic schizophrenia. Front Psychol (2014) 5:900. doi:10.3389/fpsyg.2014.00900

15. Martínez-Sánchez F, Muela-Martínez JA, Cortés-Soto P, Meilán JJG, Ferrándiz JAV, Caparrós AE, et al. Can the acoustic analysis of expressive prosody discriminate schizophrenia? Span J Psychol (2015) 18:E86. doi:10.1017/sjp.2015.85

16. De Stefani E, De Marco D, Gentilucci M. The effects of meaning and emotional content of a sentence on the kinematics of a successive motor sequence mimiking the feeding of a conspecific. Front Psychol (2016) 7:672. doi:10.3389/fpsyg.2016.00672

17. Wright A, Tippett D, Davis C, Gomez Y, Posner J, Ross ED, et al. Affective prosodic deficits during the hyperacute stage of right hemispheric ischemic infarction. Annu Meet Am Acad Neurol (2015) Baltimore, MD.

18. Buchanan TW, Lutz K, Mirzazade S, Specht K, Shah NJ, Zilles K, et al. Recognition of emotional prosody and verbal components of spoken language: an fMRI study. Brain Res Cogn Brain Res (2000) 9(3):227–38. doi:10.1016/S0926-6410(99)00060-9

19. Wildgruber D, Ackermann H, Kreifelts B, Ethofer T. Cerebral processing of linguistic and emotional prosody: fMRI studies. Prog Brain Res (2006) 156:249–68. doi:10.1016/S0079-6123(06)56013-3

20. Riecker A, Wildgruber D, Dogil G, Grodd W, Ackermann H. Hemispheric lateralization effects of rhythm implementation during syllable repetitions: an fMRI study. Neuroimage (2002) 16(1):169–76. doi:10.1006/nimg.2002.1068

21. Johnstone T, Van Reekum CM, Oakes TR, Davidson RJ. The voice of emotion: an FMRI study of neural responses to angry and happy vocal expressions. Soc Cogn Affect Neurosci (2006) 1(3):242–9. doi:10.1093/scan/nsl027

22. Frühholz S, Klaas HS, Patel S, Grandjean D. Talking in fury: the cortico-subcortical network underlying angry vocalizations. Cereb Cortex (2014) 25(9):2752–62. doi:10.1093/cercor/bhu074

23. Wright AE, Davis C, Gomez Y, Posner J, Rorden C, Hillis AE, et al. Acute ischemic lesions associated with impairments in expression and recognition of affective prosody. Perspect ASHA Spec Interest Groups (2016) 1(2):82–95. doi:10.1044/persp1.SIG2.82

24. Davis CL, Oishi K, Faria AV, Hsu J, Gomez Y, Mori S, et al. White matter tracts critical for recognition of sarcasm. Neurocase (2016) 22(1):22–9. doi:10.1080/13554794.2015.1024137

25. Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci (2007) 8(5):393. doi:10.1038/nrn2113

26. Hickok G, Poeppel D. Towards a functional neuroanatomy of speech perception. Trends Cogn Sci (Regul Ed) (2000) 4(4):131–8. doi:10.1016/S1364-6613(00)01463-7

27. Saur D, Kreher BW, Schnell S, Kümmerer D, Kellmeyer P, Vry MS, et al. Ventral and dorsal pathways for language. Proc Natl Acad Sci U S A (2008) 105(46):18035–40. doi:10.1073/pnas.0805234105

28. Sammler D, Grosbras M, Anwander A, Bestelmeyer PE, Belin P. Dorsal and ventral pathways for prosody. Curr Biol (2015) 25(23):3079–85. doi:10.1016/j.cub.2015.10.009

29. Pichon S, Kell CA. Affective and sensorimotor components of emotional prosody generation. J Neurosci (2013) 33(4):1640–50. doi:10.1523/JNEUROSCI.3530-12.2013

30. Dickey CC, Vu MT, Voglmaier MM, Niznikiewicz MA, McCarley RW, Panych LP. Prosodic abnormalities in schizotypal personality disorder. Schizophr Res (2012) 142(1):20–30. doi:10.1016/j.schres.2012.09.006

31. Nevler N, Ash S, Jester C, Irwin DJ, Liberman M, Grossman M. Automatic measurement of prosody in behavioral variant FTD. Neurology (2017) 89(7):650–6. doi:10.1212/WNL.0000000000004236

32. Liu T, Pinheiro AP, Deng G, Nestor PG, McCarley RW, Niznikiewicz MA. Electrophysiological insights into processing nonverbal emotional vocalizations. Neuroreport (2012) 23(2):108–12. doi:10.1097/WNR.0b013e32834ea757

33. Goodglass H, Kaplan E, Barresi B. Boston Diagnostic Aphasia Examination-Third Edition (BDAE-3). San Antonio: Pearson (2000).

34. Lyden P, Claesson L, Havstad S, Ashwood T, Lu M. Factor analysis of the National Institutes of Health Stroke Scale in patients with large strokes. Arch Neurol (2004) 61(11):1677–80. doi:10.1001/archneur.61.11.1677

35. Lyden P, Lu M, Jackson C, Marler J, Kothari R, Brott T, et al. Underlying structure of the National Institutes of Health Stroke Scale: results of a factor analysis. NINDS tPA Stroke Trial Investigators. Stroke (1999) 30(11):2347–54. doi:10.1161/01.STR.30.11.2347

36. Boersma P. Praat, a system for doing phonetics by computer. Glot International (2001) 5(9/10):341–5.

37. Patel S, Scherer KR, Björkner E, Sundberg J. Mapping emotions into acoustic space: the role of voice production. Biol Psychol (2011) 87(1):93–8. doi:10.1016/j.biopsycho.2011.02.010

38. Leitman DI, Wolf DH, Ragland JD, Laukka P, Loughead J, Valdez JN, et al. “It’s Not What You Say, But How You Say it”: a reciprocal temporo-frontal network for affective prosody. Front Hum Neurosci (2010) 4:19. doi:10.3389/fnhum.2010.00019

39. Leigh R, Oishi K, Hsu J, Lindquist M, Gottesman RF, Jarso S, et al. Acute lesions that impair affective empathy. Brain (2013) 136(Pt 8):2539–49. doi:10.1093/brain/awt177

40. Oishi K, Faria AV, Hsu J, Tippett D, Mori S, Hillis AE. Critical role of the right uncinate fasciculus in emotional empathy. Ann Neurol (2015) 77(1):68–74. doi:10.1002/ana.24300

41. Sebastian R, Schein MG, Davis C, Gomez Y, Newhart M, Oishi K, et al. Aphasia or neglect after thalamic stroke: the various ways they may be related to cortical hypoperfusion. Front Neurol (2014) 5:231. doi:10.3389/fneur.2014.00231

42. Wittsack H, Ritzl A, Fink GR, Wenserski F, Siebler M, Seitz RJ, et al. MR imaging in acute stroke: diffusion-weighted and perfusion imaging parameters for predicting infarct size. Radiology (2002) 222(2):397–403. doi:10.1148/radiol.2222001731

43. Kuhl CK, Textor J, Gieseke J, von Falkenhausen M, Gernert S, Urbach H, et al. Acute and subacute ischemic stroke at high-field-strength (3.0-T) diffusion-weighted MR imaging: intraindividual comparative study. Radiology (2005) 234(2):509–16. doi:10.1148/radiol.2342031626

44. Ceritoglu C, Oishi K, Li X, Chou M, Younes L, Albert M, et al. Multi-contrast large deformation diffeomorphic metric mapping for diffusion tensor imaging. Neuroimage (2009) 47(2):618–27. doi:10.1016/j.neuroimage.2009.04.057

45. Oishi K, Faria A, Jiang H, Li X, Akhter K, Zhang J, et al. Atlas-based whole brain white matter analysis using large deformation diffeomorphic metric mapping: application to normal elderly and Alzheimer’s disease participants. Neuroimage (2009) 46(2):486–99. doi:10.1016/j.neuroimage.2009.01.002

46. Guranski K, Podemski R. Emotional prosody expression in acoustic analysis in patients with right hemisphere ischemic stroke. Neurol Neurochir Pol (2015) 49(2):113–20. doi:10.1016/j.pjnns.2015.03.004

47. Monzo C, Alías F, Iriondo I, Gonzalvo X, Planet S. Discriminating expressive speech styles by voice quality parameterization. Proc of ICPhS Saarbrucken, Germany (2007) 2081–84.

48. Přibil J, Přibilová A. Statistical analysis of spectral properties and prosodic parameters of emotional speech. Meas Sci Rev (2009) 9(4):95–104. doi:10.2478/v10048-009-0016-4

49. Waaramaa T, Kankare E. Acoustic and EGG analyses of emotional utterances. Logoped Phoniatr Vocol (2013) 38(1):11–8. doi:10.3109/14015439.2012.679966

50. Yildirim S, Bulut M, Lee CM, Kazemzadeh A, Deng Z, Lee S. An acoustic study of emotions expressed in speech. Eighth International Conference on Spoken Language Processing. Jeju Island, Korea (2004).

51. Truong KP, van Leeuwen DA. Visualizing acoustic similarities between emotions in speech: an acoustic map of emotions. Eighth Annual Conference of the International Speech Communication Association Proceedings of Interspeech. Antwerp, Belgium (2007) 2265–8.

52. Schirmer A, Kotz SA. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn Sci (Regul Ed) (2006) 10(1):24–30. doi:10.1016/j.tics.2005.11.009

53. Poeppel D, Hickok G. Towards a new functional anatomy of language. Cognition (2004) 92(1):1–12. doi:10.1016/j.cognition.2003.11.001

Keywords: prosody expression, stroke, right hemisphere, emotion, communication

Citation: Patel S, Oishi K, Wright A, Sutherland-Foggio H, Saxena S, Sheppard SM and Hillis AE (2018) Right Hemisphere Regions Critical for Expression of Emotion Through Prosody. Front. Neurol. 9:224. doi: 10.3389/fneur.2018.00224

Received: 01 November 2017; Accepted: 22 March 2018; Published: 06 April 2018.

Edited by:

Peter Sörös, University of Oldenburg, Germany

Reviewed by:

Daniel L. Bowling, Universität Wien, Austria

Copyright: © 2018 Patel, Oishi, Wright, Sutherland-Foggio, Saxena, Sheppard and Hillis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Argye E. Hillis, argye@jhmi.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
