ORIGINAL RESEARCH article

Front. Neuroinform. , 09 March 2023

Volume 17 - 2023 | https://doi.org/10.3389/fninf.2023.852105

This article is part of the Research Topic Insights in Neuroinformatics: 2021

Umberto A. Gava1,2, Federico D’Agata2*, Enzo Tartaglione3, Riccardo Renzulli3, Marco Grangetto3, Francesca Bertolino1,2, Ambra Santonocito2, Edwin Bennink4,5, Giacomo Vaudano6, Andrea Boghi6, Mauro Bergui1,2

Objective: In this study, we investigate whether a Convolutional Neural Network (CNN) can generate informative parametric maps from the pre-processed CT perfusion data in patients with acute ischemic stroke in a clinical setting.

Methods: The CNN training was performed on a subset of 100 pre-processed perfusion CT datasets, while 15 samples were kept for testing. All the data used for the training/testing of the network and for generating ground truth (GT) maps, using a state-of-the-art deconvolution algorithm, were previously pre-processed using a pipeline for motion correction and filtering. Threefold cross-validation was used to estimate the performance of the model on unseen data, reporting Mean Squared Error (MSE). Map accuracy was checked through manual segmentation of infarct core and total hypo-perfused regions on both CNN-derived and GT maps. Concordance among segmented lesions was assessed using the Dice Similarity Coefficient (DSC). Correlation and agreement among different perfusion analysis methods were evaluated using mean absolute volume differences, Pearson correlation coefficients, Bland-Altman analysis, and coefficient of repeatability across lesion volumes.

Results: The MSE was very low for two out of three maps, and low in the remaining map, showing good generalizability. Mean Dice scores from two different raters and the GT maps ranged from 0.80 to 0.87. Inter-rater concordance was high, and a strong correlation was found between lesion volumes of CNN maps and GT maps (0.99, 0.98, respectively).

Conclusion: The agreement between our CNN-based perfusion maps and the state-of-the-art deconvolution-algorithm perfusion analysis maps highlights the potential of machine learning methods applied to perfusion analysis. CNN approaches can reduce the volume of data required by deconvolution algorithms to estimate the ischemic core, and thus might allow the development of novel perfusion protocols with a lower radiation dose delivered to the patient.

Occlusion of a cerebral artery causes a sudden decrease of blood perfusion in the vascular territory matching the occluded vessel. In the peripheral regions of the affected area, the blood flow deficit is reduced by the collateral circulation in comparison to the center of the affected territory. Ischemic lesions develop rapidly, originating from the center of the occluded vascular territory and progressively expanding to the most peripheral regions.

From the onset of symptoms, in the ischemic hypo-perfused area of the brain two different regions may be identified: a central “core,” and a peripheral “penumbra,” where the former corresponds to the area of irreversible damage, and the latter to the area of a potential recovery, provided recanalization of the occluded vessel. Therefore, identification of core and penumbra may predict the fate of the tissue and drive reperfusion treatments (Donahue and Wintermark, 2015; Wannamaker et al., 2018). The extension of core and penumbra may be estimated using perfusion techniques, in particular CT Perfusion (CTP). During CTP, a series of low-dose scans is acquired after contrast bolus injection, allowing for computing time-density curves; deconvolution of these curves allows for generating parametric maps to track perfusion parameters dynamics. Cerebral Blood Volume (CBV), Cerebral Blood Flow (CBF), time to peak (TTP), time to peak of the deconvolved tissue residue function (Tmax), and mean transit time (MTT) are the estimated perfusion parameters most frequently used in clinical practice. Ischemic core and penumbra can be predicted by assessing perfusion parameters mismatch across different parametric maps, i.e., brain tissues characterized by reduced CBV and CBF, and increased MTT or TTP are interpreted as core lesions (Konstas et al., 2009a,b; Campbell et al., 2011).

Tmax has been found to approximate both penumbra and core identified with an increasing tissue perfusion delay (Demeestere et al., 2020).

The role of CTP is of particular importance in patients with unknown time from the onset of symptoms, or outside the 4.5- and 6-hour windows used to select patients for intra-venous and intra-arterial reperfusion treatments, respectively. Two trials (DAWN and DEFUSE-3) demonstrated the clinical usefulness of intra-arterial reperfusion in patients selected using CBV and CBF estimation based on CTP (Albers et al., 2018; Nogueira et al., 2018).

Different algorithms are used to perform deconvolution of time-intensity curves, some of which are not public and may produce largely different maps (Kudo et al., 2010). In an ideal setting of limited noise, low variance, and no motion artifacts, a pixel-by-pixel analysis, as performed by deconvolution-based algorithms, is probably the best choice to obtain realistic, affordable, and reproducible maps. In a realistic setting, however, redundant information is acquired in order to overcome problems due to noise, large variance and movement. In practice, this imposes obtaining more slices, requiring a larger number of acquisitions and more X-ray exposure for the patients, to perform a series of spatial pre-processing steps for noise and variance reduction and to extract the arterial input function (AIF).

Machine Learning (ML) approaches offer several potential advantages over canonical algorithms when applied to the problem of deconvolving time-intensity curves. In fact, ML techniques allow extracting information that is relatively insensitive to noise, misalignments, and intra-subject variance (Dashtbani Moghari et al., 2021a; Li et al., 2021a).

Several supervised and unsupervised machine learning algorithms (e.g., Support Vector Machines, Random Forests, Ridge Regression, Feed-forward Neural Networks) have been applied to CTP and MRI perfusion, showing better performance compared to simpler linear models (McKinley et al., 2018; Cheng et al., 2021).

In our study we explored whether a properly trained Convolutional Neural Network (CNN), based on a U-Net-like structure, can generate informative, AIF-independent, parametric maps of CBV, CBF, and time to peak (TTP) on a pre-processed dataset of CTP images. CTP images were obtained from a real-world dataset of patients with acute ischemic stroke (AIS), no large lesions on non-contrast CT scans, and candidates for reperfusion therapies (Barber et al., 2000). This dataset was chosen because it corresponds to the population studied in the DAWN and DEFUSE-3 trials, for which perfusion studies drive reperfusion therapies. We publicly released two versions of this dataset for data sharing following the FAIR protocol [UniToBrain, doi: 10.5281/zenodo.481760, doi: 10.21227/x8ea-vh1, (Gava et al., 2021)].

The datasets and the CNN training were developed as a use case of the European project DeepHealth1: a framework envisioned to tackle the real needs of the health sector and facilitate the daily work of medical personnel and expert users in terms of image processing and the use and training of predictive models without the need of combining numerous tools. To this end, the project will combine open High-Performance Computing infrastructure with ML techniques to support biomedical applications that require the analysis of large and complex biomedical datasets.

All procedures performed in this study involved human participants and thus followed the ethical standards of our institutional review board (Comitato Etico Interaziendale, CEI, id number 596.345), and the 1964 Helsinki declaration and its later amendments or comparable ethical standards. The requirement for written informed consent was waived because of the retrospective nature of the study.

CT Perfusion data gathered for this study is part of a larger open-access dataset developed for the DeepHealth project which now includes 258 consecutive patients, obtained retrospectively from hospital Picture Archiving and Communication System (PACS) and is available for download (Gava et al., 2021).

Perfusion data from a subset of 115 patients were extracted. For training the CNN, 115 subjects were randomly split into a training set of 100, and a testing set of 15, used to compare our results to a gold standard method.

CT Perfusion acquisition parameters were as follows: Scanner GE 64 slice, 80 kV, 150 mAs, 44.5-s duration, 89 volumes (40 mm axial coverage) with slice thickness 5 mm, injection of 40 ml of Iodine contrast agent (300 mg/ml) at 4 ml/s speed.

We calculated perfusion maps, including CBF, CBV, and TTP, using a pipeline of spatial pre-processing and a state-of-the-art fast model-based non-linear regression (NLR) method developed by Bennink et al. (2016).

Motion correction was done using Elastix 5.0.1 (Klein et al., 2010), by rigid registration of all CTP frames to the first frame. Registration was initiated on a coarse resolution level (8× down-sampling), followed by a full-resolution level. The sum of squared differences between the frames was minimized using stochastic gradient descent with 2,000 and 8,000 samples, respectively, and 300 iterations per resolution level.

After motion correction, the CTP frames were filtered using a 3D bilateral filter (Tomasi and Manduchi, 1998), guided by the mean of all CTP frames. The spatial kernel size (standard deviation) was 3 mm and the range kernel size was 20 Hounsfield units.
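The weighting scheme of a guided (joint) bilateral filter can be sketched in one dimension as follows. This is only an illustration under stated assumptions: the function name `joint_bilateral_1d`, the 1D simplification, the 1 mm sample spacing, the 3-sigma kernel truncation, and the example profile are all hypothetical, whereas the study applied a 3D filter guided by the mean of all CTP frames. Only the two kernel sizes (3 mm and 20 Hounsfield units) come from the text.

```python
import math


def joint_bilateral_1d(signal, guide, spacing_mm=1.0, sigma_s_mm=3.0, sigma_r_hu=20.0):
    """Smooth `signal` with weights from spatial distance and guide-intensity difference."""
    # Assumption: the spatial kernel is truncated at three standard deviations.
    radius = int(math.ceil(3 * sigma_s_mm / spacing_mm))
    out = []
    for i in range(len(signal)):
        num = 0.0
        den = 0.0
        for j in range(max(0, i - radius), min(len(signal), i + radius + 1)):
            dist_mm = (i - j) * spacing_mm
            w_spatial = math.exp(-0.5 * (dist_mm / sigma_s_mm) ** 2)
            w_range = math.exp(-0.5 * ((guide[i] - guide[j]) / sigma_r_hu) ** 2)
            num += w_spatial * w_range * signal[j]
            den += w_spatial * w_range
        out.append(num / den)
    return out


# Hypothetical attenuation profile (in HU) across a sharp tissue boundary.
guide = [30.0] * 10 + [130.0] * 10
noise = [3.0, -2.0, 1.0, -3.0, 2.0, -1.0, 3.0, -2.0, 1.0, -1.0] * 2
noisy = [g + n for g, n in zip(guide, noise)]
smoothed = joint_bilateral_1d(noisy, guide)
```

Because the range weight collapses when the guide values differ by much more than 20 HU, samples on opposite sides of the boundary barely mix, so noise is averaged out within each tissue while the edge is preserved.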

The CBV, CBF, and TTP were estimated by fitting a model to the measured attenuation curve in each voxel by means of non-linear regression. The model convolves the measured AIF with a box-shaped impulse response function (IRF). The CBF determines the height of the box, the MTT determines its width, and the TTP determines its position on the time-axis (Bennink et al., 2016). A downhill-simplex algorithm was used to minimize the sum of squared errors in 300 iterations. The initial CBF, MTT, and TTP were 60 mL/100 g/min, 5 s, and 3.5 s, respectively.
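A minimal sketch of the forward model described above is given here: a box-shaped IRF convolved with an AIF, plus the sum-of-squared-errors cost that a simplex optimizer would minimize. It is not the implementation of Bennink et al. (2016). The function names, the triangular AIF, and the uniform 0.5 s sampling (inferred from 89 volumes over 44.5 s) are assumptions made for illustration, and the optimizer itself is omitted.

```python
def box_irf(times_s, cbf, mtt_s, delay_s):
    """Box-shaped IRF: height set by CBF, width by MTT, position by the delay."""
    height = cbf / 60.0  # mL/100 g/min -> mL/100 g/s
    return [height if delay_s <= t < delay_s + mtt_s else 0.0 for t in times_s]


def tissue_curve(aif, irf, dt_s):
    """Discrete convolution of the AIF with the IRF, truncated to the scan length."""
    return [
        dt_s * sum(aif[j] * irf[i - j] for j in range(i + 1))
        for i in range(len(aif))
    ]


def sum_squared_errors(measured, modelled):
    """Cost that a simplex optimizer would minimize over (CBF, MTT, position)."""
    return sum((m - s) ** 2 for m, s in zip(measured, modelled))


dt_s = 0.5  # assumption: uniform sampling, 89 volumes over 44.5 s
times_s = [i * dt_s for i in range(89)]
# Hypothetical triangular AIF peaking at 12 s (arbitrary attenuation units).
aif = [200.0 * max(0.0, 1.0 - abs(t - 12.0) / 6.0) for t in times_s]

# Initial values quoted in the text: CBF 60 mL/100 g/min, MTT 5 s, position 3.5 s.
irf = box_irf(times_s, cbf=60.0, mtt_s=5.0, delay_s=3.5)
curve = tissue_curve(aif, irf, dt_s)

# The area under the box is CBF * MTT, i.e. the CBV (central volume principle),
# so the ratio of the areas under the tissue curve and the AIF recovers it.
cbv = sum(curve) / sum(aif)
```

With the quoted initial values the box has height 1 mL/100 g/s and width 5 s, so the recovered CBV is 5 mL/100 g. This relation is why only three free parameters are needed to describe CBF, MTT, CBV, and the position of the box.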

Arterial input function (AIF) and venous output function (VOF) calculations were done automatically on a sample of 100 voxels.

The box-shaped model developed by Bennink et al. (2016) describes the impulse response function (IRF) of the perfused tissue in terms of CBV, MTT, and tracer delay. The box-shaped IRF enables fast NLR analysis, which is critical in a clinical setting such as ischemic stroke.

The time attenuation curve of the tissue and the relative CBV, CBF, and TTP maps were estimated using the AIF in conjunction with the IRF.

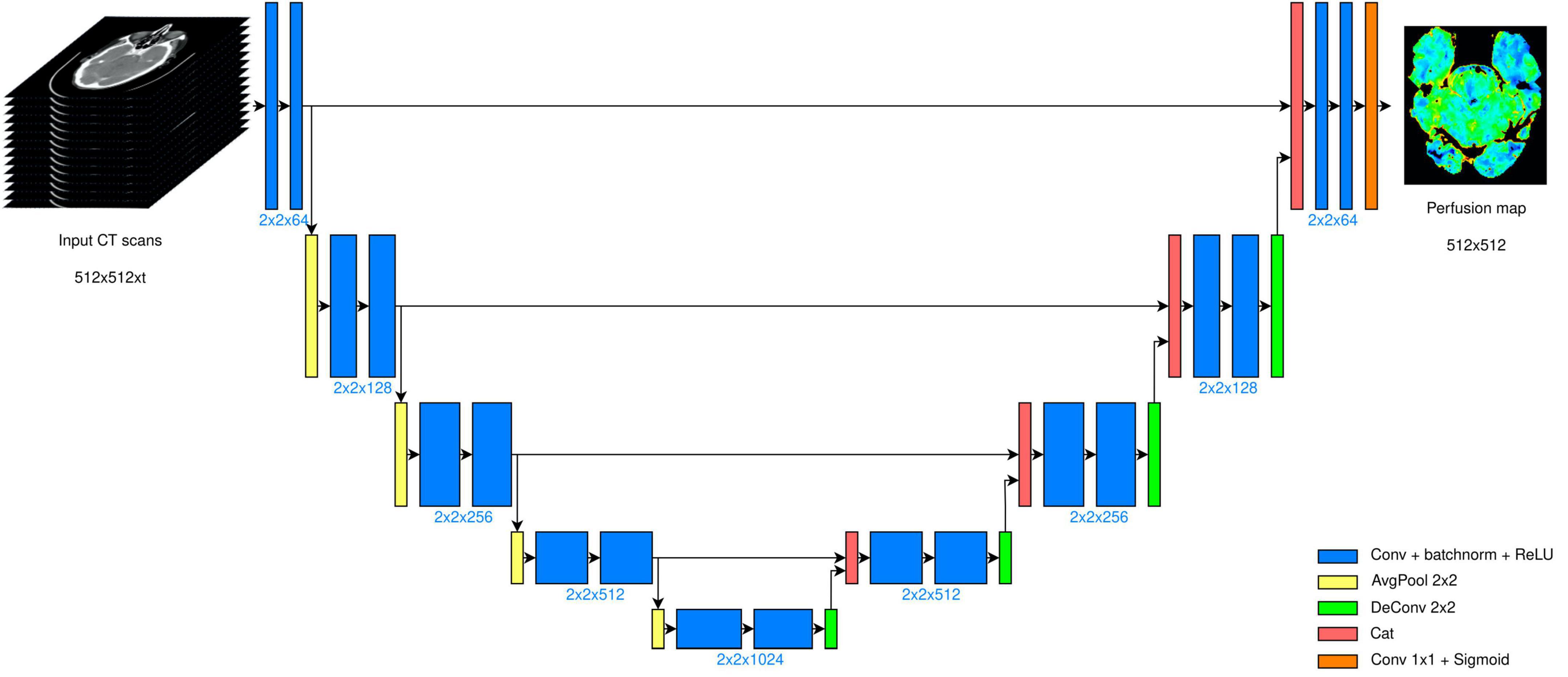

The filtered and registered images were the only input provided to the U-Net-like architecture. This network architecture was originally developed for image segmentation; however, it has proved to be robust and to perform well in other scenarios (Chen et al., 2018; Falk et al., 2019). The original architecture was proposed by Ronneberger et al. (2015) and consisted of 18 convolutional layers with 3 × 3 filters, 4 up-convolution layers with 2 × 2 kernels, 4 max-pool layers with 2 × 2 kernels, and 1 convolutional layer with 1 × 1 convolution. Unlike similar models, U-Net is able to extract features at different spatial resolutions and, thanks to its “copy and crop” connections, which resemble the residual connections in ResNets (He et al., 2016), the model automatically selects the optimal resolution(s) to extract features for the target training task, disregarding the other scales. In the original work, the authors proposed an extraction with five different resolutions (from 572 × 572 down to 32 × 32), which required a large number of filters per layer, from 64 at the highest resolution to 1,024 at the lowest.

To apply it to our problem, we needed to introduce modifications at the architectural level.

For segmentation tasks, a state-of-the-art choice is to use max-pooling layers for sub-sampling.

This operator, however, introduces a non-linear behavior that prevents the forward propagation of a great part of the information content (Sabour et al., 2017). Moreover, since we do not expect sparse features to be extracted, the standard max-pool layer was suboptimal in our context, and we employed average pooling layers in its place. By the same token, we used 2 × 2 kernels instead of 3 × 3 kernels not only for the average pooling and for the up-convolution, but also for the convolutional layers. The input to our model was then a 512 × 512 × t image stack (in place of 572 × 572 × 3), where t is the number of CT volumes acquired. The CT scans were hence processed as 3D tensors, where the third dimension is time. Depending on the chosen time granularity, the number of input channels changed accordingly. The overall CNN structure is displayed in Figure 1.
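The difference between the two sub-sampling operators can be illustrated with a small sketch. The helper `pool2x2` and the 4 × 4 example patch are hypothetical and written in plain Python for clarity; they are not taken from the released source code.

```python
def pool2x2(image, reduce_fn):
    """Apply a 2x2, stride-2 pooling, reducing each block with `reduce_fn`."""
    rows, cols = len(image), len(image[0])
    return [
        [
            reduce_fn([image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1]])
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


def mean(values):
    return sum(values) / len(values)


# Hypothetical 4x4 patch of a feature map.
patch = [
    [1.0, 3.0, 0.0, 0.0],
    [5.0, 7.0, 0.0, 8.0],
    [2.0, 2.0, 4.0, 4.0],
    [2.0, 2.0, 6.0, 6.0],
]
max_pooled = pool2x2(patch, max)   # keeps only the largest value of each block
avg_pooled = pool2x2(patch, mean)  # every value contributes to the output
```

In the top-right block, max pooling returns 8 regardless of the three zeros around it, while average pooling returns 2 and therefore still reflects the whole neighborhood. This is the sense in which averaging forwards more of the information content for a dense regression target.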

Figure 1. U-Net architecture deployed. The model takes scans of size 512 × 512, acquired in t different time instants. The bottleneck layer is placed after four encoding stages, and the output is a 512 × 512 map.

During training, instead of using standard cross-entropy loss, or dice score/focal loss, which are typical in segmentation tasks, we minimized the mean squared error loss (MSE), which was compatible with the desired ground truth output. No additional information (like the AIF) was provided to the CNN: all the information is implicitly extracted or inferred from the registered CT scans.

The model was pre-trained on 128 × 128 sub-sampled inputs for 250 epochs, after which it was fine-tuned for 50 additional epochs at the full 512 × 512 resolution. Training on full-resolution images requires a large amount of GPU memory, and the time needed to reach model convergence becomes very high.

Training on down-sampled images, on the contrary, allows us to reach a solution in a much shorter time since the task is simplified. The pre-training step produces a suitable initialization for the model: this type of approach is often used for training generative networks, and the results are comparable to, and smoother than, those produced by training directly on very high-dimensional images. The training of the entire model for each target was done using Adam with a learning rate of 10⁻⁵, β1 = 0.9, β2 = 0.999, and a batch size of 8.
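For reference, a single Adam update with the hyperparameters quoted above can be sketched as follows. This scalar version is only an illustration of the update rule: the function name `adam_step` and the example values are hypothetical, and `eps = 1e-8` is an assumed default that the text does not state.

```python
import math


def adam_step(param, grad, m, v, step, lr=1e-5, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; `step` counts from 1."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of the gradient
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of the squared gradient
    m_hat = m / (1 - beta1 ** step)             # bias correction
    v_hat = v / (1 - beta2 ** step)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Hypothetical first update: parameter 0.5 with gradient 2.0.
new_param, m, v = adam_step(param=0.5, grad=2.0, m=0.0, v=0.0, step=1)
first_update = 0.5 - new_param
```

After bias correction the first update has a magnitude close to the learning rate whatever the gradient scale, which is one reason a small rate such as 10⁻⁵ still gives steady progress during fine-tuning.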

The output of the model was a 512 × 512 map (Figure 2), where all the pixel values were normalized in the range 0–1. The entire model was trained using an SGD optimization strategy with a learning rate decay policy self-tuned according to the performance on the validation set. The source code is publicly available at https://github.com/EIDOSLAB/Neural-Network-derived-perfusion-maps.

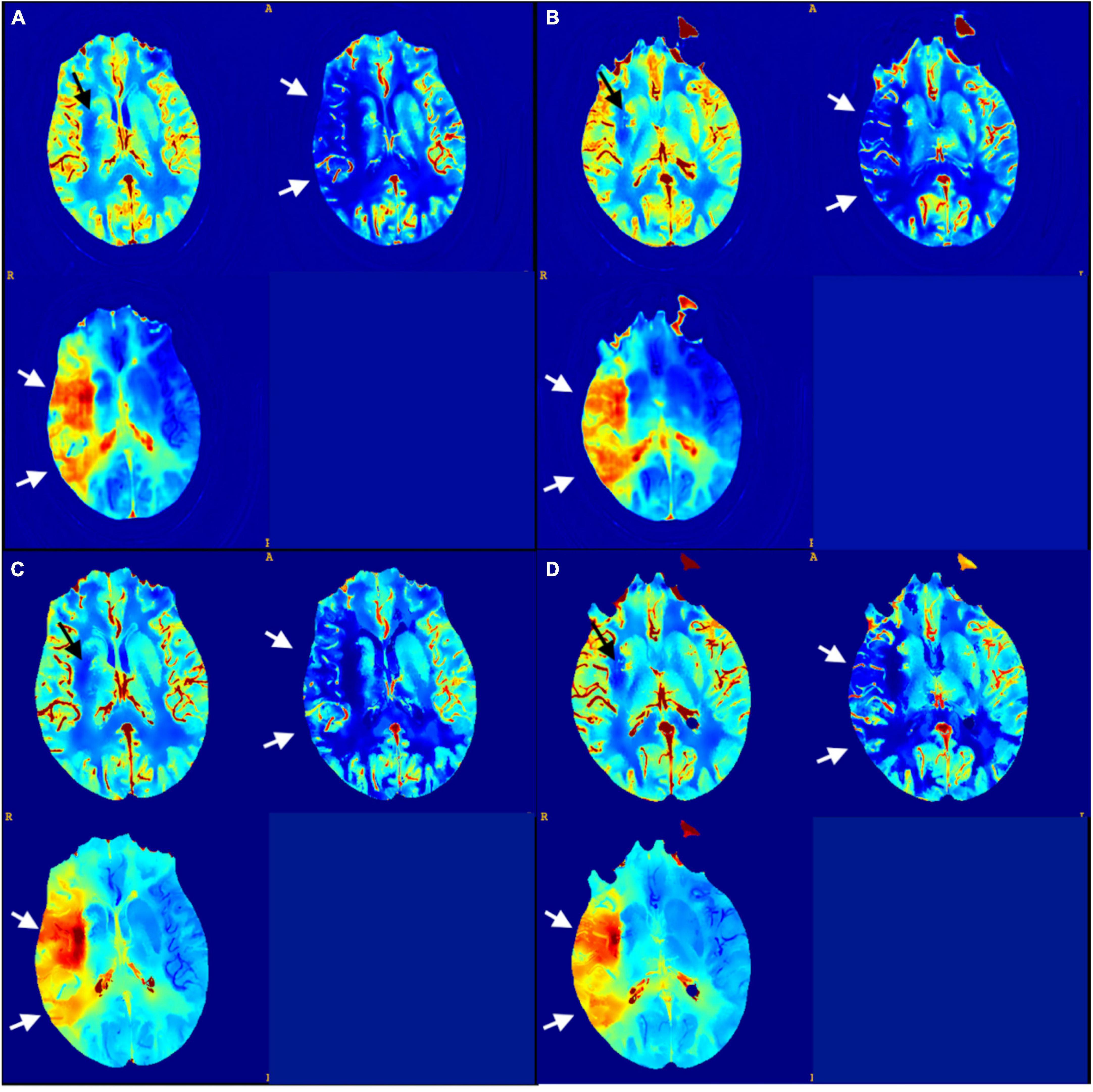

Figure 2. (A,B) CNN output maps from the testing set (CBV, CBF, and TTP); (C,D) matching sections of GT maps. There is a small infarct core displayed in the CBV map at the right basal ganglia (black arrows) and an extended penumbra shown in the CBF and TTP maps across right middle cerebral artery territories (white arrows).

To evaluate the performance of the CNN model in generating new maps, we implemented a threefold cross-validation protocol on the training dataset.
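A threefold split performed at the patient level, so that all images of a patient fall in the same fold, could be sketched as follows. The function `patient_folds`, the fixed seed, and the integer patient identifiers are assumptions for illustration and do not reproduce the actual split used in the study.

```python
import random


def patient_folds(patient_ids, n_folds=3, seed=0):
    """Return (train_ids, val_ids) pairs, splitting by patient rather than by image."""
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)  # assumption: reproducible random assignment
    folds = [ids[i::n_folds] for i in range(n_folds)]
    splits = []
    for k in range(n_folds):
        val_ids = folds[k]
        train_ids = [p for i, fold in enumerate(folds) if i != k for p in fold]
        splits.append((train_ids, val_ids))
    return splits


# The 100 training patients, identified here by hypothetical integer IDs.
splits = patient_folds(range(100))
fold_sizes = [len(val_ids) for _, val_ids in splits]
```

Splitting by patient avoids leaking slices of the same subject between training and validation, which would otherwise make the validation MSE optimistic.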

The accuracy of the CNN maps was assessed through the segmentation of the infarct core (CBV) and total hypo-perfused territory (CBF, TTP) on the test set of 15 CNN-parametric maps. The segmentation was performed manually by two expert radiologists using the ITK-SNAP open-source software (Yushkevich et al., 2006). Segmentation was carried out section-wise following the axial direction.

To avoid bias induced by repetitive evaluation of the same patients, GT-maps segmentation was performed by two different radiologists.

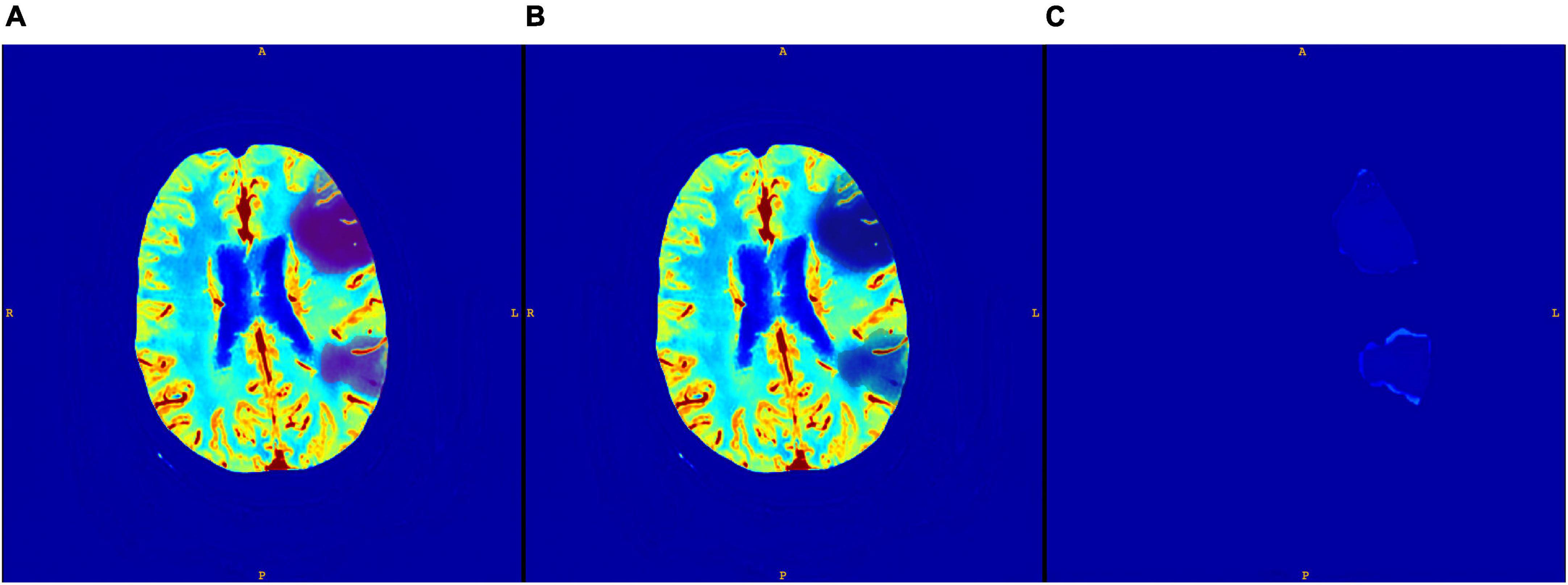

An example of a core segmentation on both CNN and GT maps is displayed in Figure 3. We notice that the map is perfectly reconstructed everywhere, except in the core region, where the CNN generated map has a positive, uniform bias, which however does not compromise the segmentation of the core area.

Figure 3. Core segmented on CNN CBV map (A); core segmented on GT CBV map (B); and the difference between the two maps (C).

Penumbra volumes correspond to the mismatch between the total hypo-perfused region and the ischemic core.

The CNN segmented volumes from both raters were matched with the GT to assess overlapping regions by calculating the Dice Similarity Coefficient (DSC).

Dice Similarity Coefficient was also calculated by matching CNN segmentations from different raters of the same set of maps to evaluate inter-rater concordance.
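The DSC between two segmentations is twice the overlap divided by the sum of the two volumes. A minimal sketch, with masks represented as sets of voxel coordinates, is given below. The function name, the set-based representation, the example masks, and the convention of returning 1.0 for two empty masks are assumptions for illustration.

```python
def dice(mask_a, mask_b):
    """Dice Similarity Coefficient between two sets of voxel coordinates."""
    a, b = set(mask_a), set(mask_b)
    if not a and not b:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2 * len(a & b) / (len(a) + len(b))


# Hypothetical 10x10 lesions on one slice, shifted by two voxels along x.
cnn_mask = {(x, y) for x in range(0, 10) for y in range(10)}
gt_mask = {(x, y) for x in range(2, 12) for y in range(10)}
score = dice(cnn_mask, gt_mask)  # 80 shared voxels out of 100 + 100
```

The same function serves both comparisons described above: CNN against GT segmentations, and one rater against the other on the same CNN maps.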

Pearson correlation coefficient (r) was used to assess the relationship between the lesion volumes on GT and CNN maps. Bland-Altman and coefficient of repeatability (CR) analysis was also performed across volumes segmented on CNN and GT maps to assess agreement between the different methods.
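These agreement measures can be sketched on paired lesion volumes as follows. The volumes below are hypothetical and are not data from the study. The coefficient of repeatability is taken here as 1.96 times the standard deviation of the paired differences, which is one common convention; the text does not state which definition was used.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation coefficient between two paired samples."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def bland_altman(x, y):
    """Return bias, lower and upper 95% limits of agreement, and the CR."""
    diffs = [a - b for a, b in zip(x, y)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    cr = 1.96 * sd                # assumed definition of the CR
    return bias, bias - cr, bias + cr, cr


# Hypothetical lesion volumes in cm3 measured on CNN and GT maps.
cnn_volumes = [10.0, 25.0, 40.0, 55.0]
gt_volumes = [12.0, 24.0, 43.0, 53.0]

r = pearson_r(cnn_volumes, gt_volumes)
bias, lower, upper, cr = bland_altman(cnn_volumes, gt_volumes)
```

Correlation and agreement answer different questions: r can be high even with a systematic offset between methods, whereas the bias and limits of agreement expose such an offset directly.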

Intraclass correlation coefficient (ICC) was used to assess reliability across volumes segmented from different raters.

A Friedman test was used to check for significant differences across volumes of segmented lesions.

Statistical analysis was performed using the 3D-convert toolbox from ITK-SNAP, intraclass_corr function in Python 3.6.5 (ICC1k, pingouin library),2 and Statistical Package for the Social Sciences (SPSS) software (version 27.0.1.0).

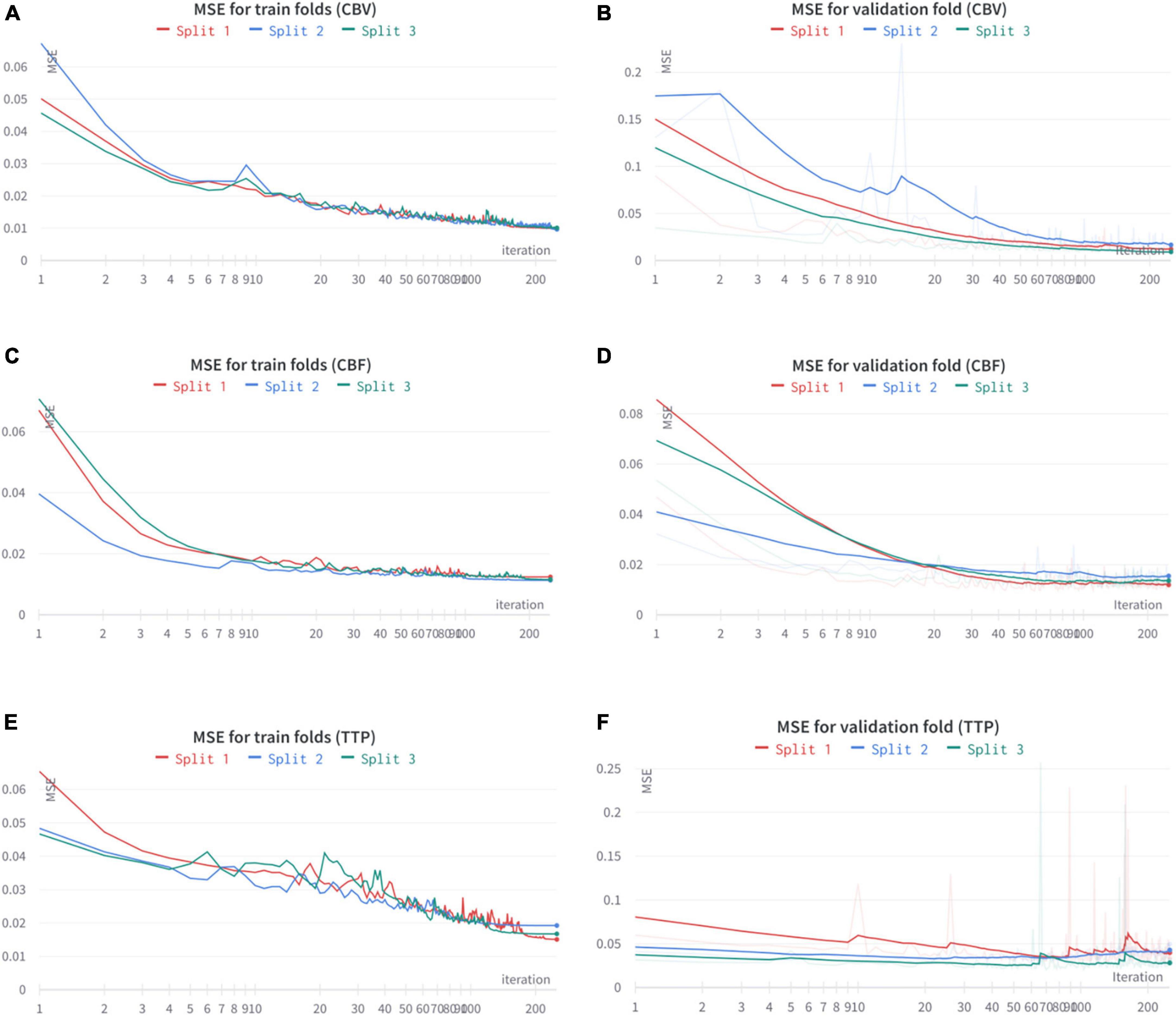

In Table 1 we report the final performance of the models as both the MSE evaluated on the training set and the one obtained on the validation set. We remark that the threefold split was performed on a per-patient basis. The MSE for the generation of both CBV and CBF is very low, on the order of 0.01 (corresponding to a PSNR close to 20 dB), with a generalization gap of only 0.001. This shows that these two maps can be produced with very high fidelity, even on new subjects. Unlike the previous two maps, the generation of TTP is more problematic, with an average MSE of 0.017 and a higher generalization gap. Given the extremely high non-linearity of TTP maps, a worse generation performance is expected. All the training and validation curves are shown in Figure 4.
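The correspondence between MSE and PSNR quoted above follows from the maps being normalized to the range 0–1, so that the peak value is 1. A short sketch is given below; the function names and the four-pixel example are illustrative and not part of the released code.

```python
import math


def mse(pred, target):
    """Mean squared error between two equally sized sequences of pixel values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def psnr_db(mse_value, max_value=1.0):
    """Peak signal-to-noise ratio in dB for a given MSE and peak pixel value."""
    return 10 * math.log10(max_value ** 2 / mse_value)


psnr_cbv_cbf = psnr_db(0.01)  # MSE on the order of 0.01, as reported for CBV and CBF
psnr_ttp = psnr_db(0.017)     # average MSE reported for TTP

# Hypothetical four-pixel example with a uniform error of 0.1.
example_mse = mse([0.1, 0.6, 0.4, 0.9], [0.2, 0.5, 0.5, 0.8])
```

An MSE of 0.01 thus gives 10 log10(1/0.01) = 20 dB, while the TTP value of 0.017 corresponds to roughly 17.7 dB.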

Figure 4. Train and validation curves for the threefold cross-validation results on CBV (A,B), CBF (C,D), and TTP (E,F).

A total of 3 out of the 15 CTP datasets used for CNN testing exhibited normal perfusion parameters on both GT and CNN-parametric maps and showed no vessel occlusion on CT Angiography (CTA). Normal perfusion maps were excluded from the DSC analysis to avoid overestimating the agreement between segmentations.

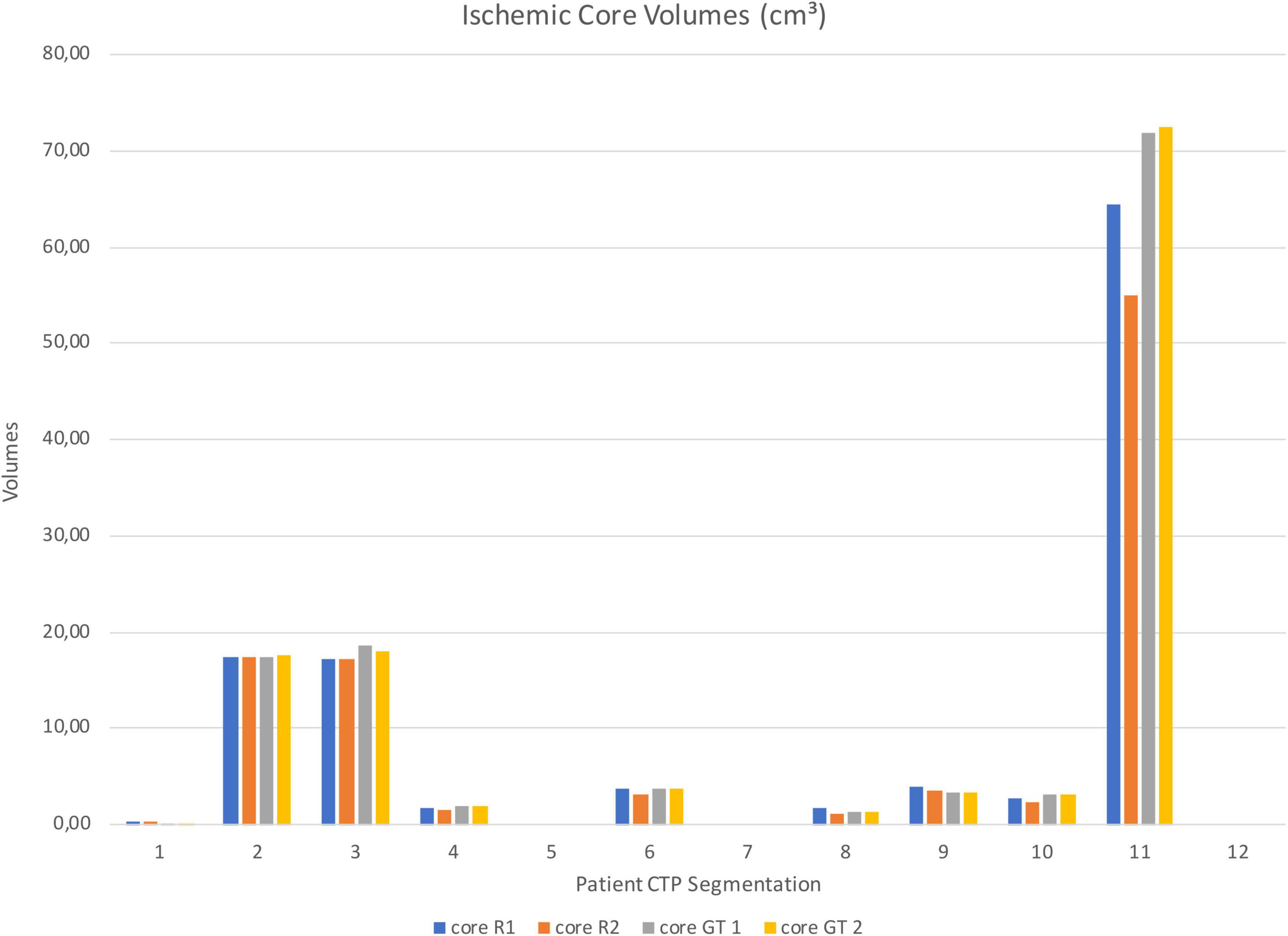

Segmented core (CBV) and hypo-perfused region (CBF/TTP) volumes of the remaining patients are shown in Figures 5, 6; 3 out of 12 patients presented with hypo-perfused territories without ischemic cores.

Figure 5. Test sample ischemic core segmentation volumes from Rater 1 (R1), Rater 2 (R2), GT 1 and GT 2.

Mean DSC for all CBV lesions ranged from 0.80 to 0.86 and for CBF/TTP lesions from 0.82 to 0.87, with high DSC values found both across and within different methods of perfusion analysis. DSC values resulting from segmentation matching are presented as mean and standard deviation (SD) in Table 2.

The Friedman test did not reveal a significant difference among segmented volumes on CBV and CBF/TTP maps. Mean ICC for absolute agreement was excellent for volumes segmented both on CBV and CBF/TTP maps (0.98 C.I. 0.95–1.00; 0.99 C.I. 0.98–1.00).

We also found a strong positive correlation (r = 0.99, r = 0.98 with p < 0.001) between CBV–CBF/TTP lesion volume on GT and CNN maps for both raters (Table 2).

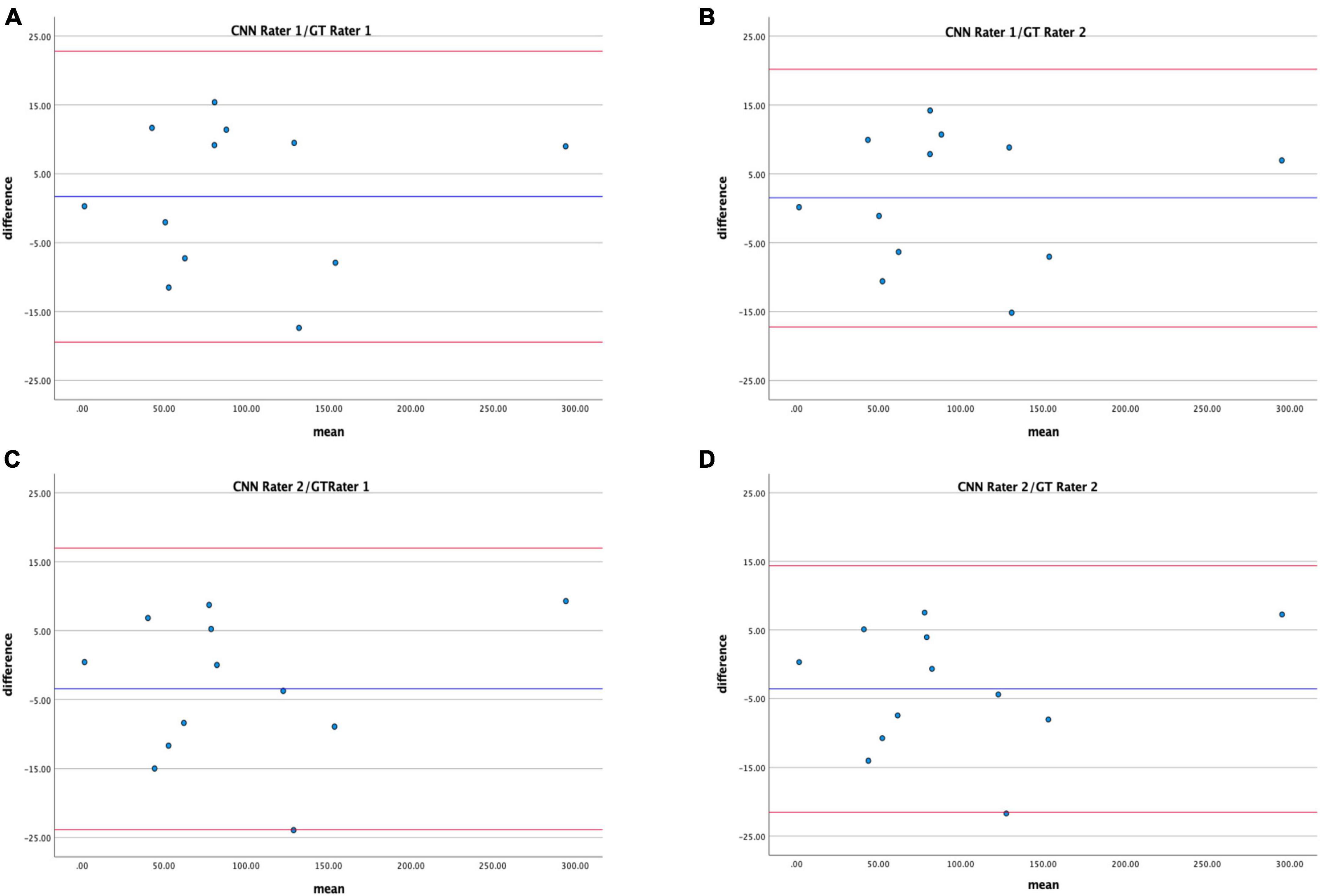

Bland–Altman analysis displays good agreement between the CNN proposed method and the GT in estimating hypo-perfused regions on CTP maps (Figure 7); CR for Rater 1 and Rater 2 were 19.9–17.7 and 20.9–18.4.

Figure 7. Bland–Altman plots showing agreement between total hypo-perfused volume measurements derived from CNN and GT maps for Rater 1 (A,B) and Rater 2 (C,D).

Mean absolute volume differences across the ischemic core from different observers and the GT were 1.20 ± 2.31 cm3 (Rater 1) and 2.27 ± 5.44 cm3 (Rater 2), while mean volume differences for the whole hypo-perfused region were 9.37 ± 4.84 cm3 (Rater 1) and 8.52 ± 6.49 cm3 (Rater 2).

The study demonstrated that parametric maps generated by our CNN-based approach are comparable to maps resulting from a state-of-the-art CTP NLR algorithm when working on pre-processed images.

There is a high DSC and strong linear correlation between CNN and GT segmented volumes.

The performance of the CNN at estimating ischemic core and penumbra is comparable to a state-of-the-art CTP NLR algorithm without additional inputs such as AIF or VOF.

To precisely estimate perfusion parameters, the proposed CNN requires only registered CT scans, while deconvolution-based CT and MRI brain perfusion analysis methods need additional inputs, such as the AIF curve measured in a large feeding artery (Fieselmann et al., 2011). This suggests that our CNN is capable of combining information from arterial and tissue density to obtain quantitative estimates for the CBV, CBF, and MTT. On the other hand, other recent automated CTP analysis methods such as RAPID (Campbell et al., 2015), use AIF and the venous output function (VOF) from a major venous system to compute perfusion parameters and subsequently estimate ischemic core and penumbra (Laughlin et al., 2019).

In a model developed by Hess et al. (2018), the performance of the CNN in regressing the Tmax parameter on DSC-MRI perfusion imaging increases with the addition of bolus information. Future regression of the Tmax using our CNN could show if the assumption made by Hess et al. (2018) for MRI perfusion imaging also extends to CTP.

The CTP datasets were obtained from a population of patients eligible for reperfusion therapy, with limited or no hypodense lesions on non-contrast CT.

Such a population was targeted to simulate the clinical setting where CTP is a key parameter for clinical decisions: when the time of onset is not known, CTP allows effective selection of patients for treatment, as shown in the DAWN and DEFUSE-3 trials (Albers et al., 2018; Nogueira et al., 2018). Our method shows a highly accurate performance on patients with no vessel occlusion on CTA and normal CTP parameters on GT maps, therefore suggesting that our CNN-based approach can yield highly reliable results even within the general population. Moreover, hypodense lesions may mark an ischemic core on CTP; this can introduce additional information to be exploited by our CNN-based approach without additionally estimating the tissue-curve offset, as required by deconvolution-based algorithms.

The CNN was tested on a high dose/signal-to-noise ratio (SNR) dataset with limited axial coverage, thus an application to noisier datasets is required for a complete comparison with state-of-the-art techniques. Moreover, in order to confirm its robustness, our approach should undergo further testing on a larger population sample and use the latest CTP protocols from different scanners.

Considering the highly similar performance of CNNs and deconvolution-based algorithms, one might ask why the former approach might be preferable. ML algorithms have been largely proven to outperform conventional image processing algorithms in practically every field [segmentation (Zhou et al., 2017; Clèrigues et al., 2019; Kloenne et al., 2020), noise reduction (Chen et al., 2017; Xiao et al., 2019; Kloenne et al., 2020; Dashtbani Moghari et al., 2021a), novelty detection (Chen et al., 2017; Zhang et al., 2019), radiation dose reduction (Chen et al., 2017; Dashtbani Moghari et al., 2021b; Li et al., 2021a)] and in recent years their use has been expanding to CTP and MRI perfusion imaging as well. In particular, the generation of synthetic maps using ML approaches has been performed with MRI DSC perfusion by Ho et al. (2016) and Meier et al. (2019). Meier et al. (2019) obtained results similar to ours: they compared the performance of a commercial FDA-approved perfusion software and a CNN not only to generate Tmax MRI perfusion maps, but also to identify selection criteria for reperfusion therapies. They concluded that CNN-based approaches may lead to greater standardization, a faster analysis pipeline, and increased robustness (Meier et al., 2019). Ho et al. (2016), instead, estimated voxel-wise MRI perfusion parameters using a deep learning approach exploiting the concentration time curve and AIF as inputs. Their approach, however, proved to be time-consuming and thus not ideal for clinical practice.

de la Rosa et al. (2021) developed a novel supervised CNN designed for estimating vascular function (AIF and VOF) in perfusion imaging, which showed improved CTP results when combined with traditional deconvolution algorithms. Their CNN used 4D CTP data as inputs to generate the AIF and VOF curves associated with a probability map showing the voxel-wise contribution to the estimated parameters (de la Rosa et al., 2021).

In the ISLES 2018 challenge, Song was ranked first using a deep learning method based on a U-Net architecture to produce synthetic diffusion-weighted imaging (DWI) images by combining information from CTP raw data and post-processed parametric maps. The CNN-derived DWI images were compared to the DWI GT using a discriminator to determine whether the presented image was synthetic or not. The information useful to determine the origin of the DWI maps was fed back into the deep-learning algorithm to improve the prediction of the synthetic DWI images (Hakim et al., 2021).

In a recent study, Kuang et al. (2020) developed an ML model based on a random forest classifier to predict ischemic tissue probability in each voxel. They combined different CTP parameters with specific clinical time variables (onset to imaging and imaging to reperfusion). Their threshold-free model proved to perform better than the current clinically used method with fixed thresholding (Kuang et al., 2020). Similarly, Robben et al. (2019) showed that a deep learning approach combining native CTP images with metadata such as time parameters and treatment outcome was able to effectively predict the final infarct probability.

In their work, Dolz et al. (2018) propose a “multipath dense U-Net,” where the connectivity with respect to the original architecture is enhanced on the encoder side. In this work, the model takes as input the perfusion maps (in particular, CBV, CTP, DWT, and MTT) and outputs the segmentation of the infarcted tissue. In addition, the architecture is further modified by introducing inception modules in the encoder.

Segmentation of ischemic stroke lesions from MRI imaging is the task tackled by Kadry et al. (2021). Leveraging the ISLES 2015 database, a U-Net architecture is trained to learn and extract the segmentation of the ischemic stroke lesions. Unlike the original U-Net architecture, the authors use a VGG-11 backbone with 32, 64, 128, 256, and 512 filters on the encoder side in the various depth stages (which are then mirrored in the decoder).

Very recently, Li et al. (2021b) have proposed a multi-scale U-Net model for ischemic stroke lesion segmentation. The authors here combine multiple convolution kernel sizes in the same layer, similarly to inception modules and as seen in the work from Dolz et al. (2018), but they also introduce dilated convolutions, which allow for extracting non-strictly local information.

In order to assess performance on segmentation of ischemic stroke lesions, Pinheiro et al. (2019) provided an extensive study on many configurations of U-Net and V-Nets, applied to relatively small datasets of MRI and CT images. The authors showed that deeper U-Nets perform better than shallow ones, and that including CT modality improves the results. Finally, they showed that employing perfusion maps yields much better results than using raw perfusion data alone.

In our work, we leverage the key messages from Pinheiro et al. (2019) to focus on the following question: can a neural network model produce perfusion maps that allow for a more accurate segmentation afterward? To answer this question, we cannot base our work on the existing literature, which has employed the U-Net model as a black box to extract the segmentation of the ischemic lesion from the raw perfusion or from the already-processed maps. Instead of using the U-Net model to solve the segmentation problem, we employ it to solve the regression problem of matching the perfusion maps. These contain the information needed by the medical expert to predict the extension of the ischemic lesion.

The proposed CNN-based method generated informative, AIF-independent perfusion maps of patients with AIS, approximating perfusion mismatch in brain tissues very well. Our ML model performed similarly to the state-of-the-art NLR perfusion analysis methods used as GT in estimating CBF, CBV, and TTP parametric maps.

More frequent use of ML methods for perfusion analysis can lead to the reduction of data inputs needed for perfusion mismatch prediction and therefore to a smaller radiation dose for the patients. In the near future, combining different ML approaches to CTP analysis and integrating clinical parameters in the model has the potential to bring new improved standards in terms of acquisition protocols and ischemic core prediction.

The datasets presented in this study can be found in online repositories: the Zenodo repository (doi: 10.5281/zenodo.481760) and the IEEE DataPort repository (doi: 10.21227/x8ea-vh1).

The studies involving human participants were reviewed and approved by the Comitato Etico Interaziendale, CEI, Città della Salute e della Scienza, Turin, Italy, id number 596.345. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

UG, FD’A, ET, MG, EB, GV, and MB contributed to the conception and design of the study. UG, FD’A, ET, FB, AS, EB, AB, and RR organized the database. UG, ET, FB, AS, EB, AB, and RR performed the statistical analysis. UG, FD’A, and MB wrote the first draft of the manuscript. UG, FD’A, ET, RR, MG, EB, AB, and MB wrote sections of the manuscript. All authors contributed to the manuscript revision, read, and approved the submitted version.

This project received funding from the European Union’s Horizon 2020 Research and Innovation Programme under grant agreement No. 825111, DeepHealth Project (https://doi.org/10.3030/825111).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Albers, G. W., Marks, M. P., Kemp, S., Christensen, S., Tsai, J. P., Ortega-Gutierrez, S., et al. (2018). Thrombectomy for stroke at 6 to 16 hours with selection by perfusion imaging. N. Engl. J. Med. 378, 708–718. doi: 10.1056/NEJMoa1713973

Barber, P. A., Demchuk, A. M., Zhang, J., and Buchan, A. M. (2000). Validity and reliability of a quantitative computed tomography score in predicting outcome of hyperacute stroke before thrombolytic therapy. Lancet 355, 1670–1674. doi: 10.1016/S0140-6736(00)02237-6

Bennink, E., Oosterbroek, J., Kudo, K., Viergever, M. A., Velthuis, B. K., and de Jong, H. W. A. M. (2016). Fast nonlinear regression method for CT brain perfusion analysis. J. Med. Imaging 3:026003. doi: 10.1117/1.jmi.3.2.026003

Campbell, B. C. V., Christensen, S., Levi, C. R., Desmond, P. M., Donnan, G. A., Davis, S. M., et al. (2011). Cerebral blood flow is the optimal CT perfusion parameter for assessing infarct core. Stroke 42, 3435–3440. doi: 10.1161/STROKEAHA.111.618355

Campbell, B. C. V., Yassi, N., Ma, H., Sharma, G., Salinas, S., Churilov, L., et al. (2015). Imaging selection in ischemic stroke: Feasibility of automated CT-perfusion analysis. Int. J. Stroke 10, 51–54. doi: 10.1111/ijs.12381

Chen, H., Zhang, Y., Kalra, M. K., Lin, F., Chen, Y., Liao, P., et al. (2017). Low-Dose CT with a residual encoder-decoder convolutional neural network. IEEE Trans. Med. Imaging 36, 2524–2535. doi: 10.1109/TMI.2017.2715284

Chen, S., Qin, A., Zhou, D., and Yan, D. (2018). Technical Note: U-net-generated synthetic CT images for magnetic resonance imaging-only prostate intensity-modulated radiation therapy treatment planning. Med. Phys. 45, 5659–5665. doi: 10.1002/mp.13247

Cheng, K., Atchaneeyasakul, K., Barakat, Z., Liebeskind, D., and Scalzo, F. (2021). “CT perfusion imaging of the brain with machine learning,” in Proceedings of the international symposium on visual computing, 4 October 2021, eds G. Bebis, V. Athitsos, T. Yan, M. Lau, F. Li, C. Shi, et al. (Heidelberg: Springer-Verlag Berlin), 41–52. doi: 10.1007/978-3-030-90436-4_4

Clèrigues, A., Valverde, S., Bernal, J., Freixenet, J., Oliver, A., and Lladó, X. (2019). Acute ischemic stroke lesion core segmentation in CT perfusion images using fully convolutional neural networks. Comput. Biol. Med. 115:103487. doi: 10.1016/j.compbiomed.2019.103487

Dashtbani Moghari, M., Young, N., Moore, K., Fulton, R. R., Evans, A., and Kyme, A. Z. (2021a). Head movement during cerebral CT perfusion imaging of acute ischaemic stroke: Characterisation and correlation with patient baseline features. Eur. J. Radiol. 144:109979. doi: 10.1016/j.ejrad.2021.109979

Dashtbani Moghari, M., Zhou, L., Yu, B., Young, N., Moore, K., Evans, A., et al. (2021b). Efficient radiation dose reduction in whole-brain CT perfusion imaging using a 3D GAN: Performance and clinical feasibility. Phys. Med. Biol. 66:075008. doi: 10.1088/1361-6560/abe917

de la Rosa, E., Sima, D., Menze, B., Kirschke, J., and Robben, D. (2021). AIFNet: Automatic vascular function estimation for perfusion analysis using deep learning. Med. Image Anal. 74:102211. doi: 10.1016/j.media.2021.102211

Demeestere, J., Wouters, A., Christensen, S., Lemmens, R., and Lansberg, M. G. (2020). Review of perfusion imaging in acute ischemic stroke: From time to tissue. Stroke 51, 1017–1024. doi: 10.1161/STROKEAHA.119.028337

Dolz, J., ben Ayed, I., and Desrosiers, C. (2018). Dense multi-path U-net for ischemic stroke lesion segmentation in multiple image modalities. arXiv [preprint] arXiv:1810.07003

Donahue, J., and Wintermark, M. (2015). Perfusion CT and acute stroke imaging: Foundations, applications, and literature review. J. Neuroradiol. 42, 21–29. doi: 10.1016/j.neurad.2014.11.003

Falk, T., Mai, D., Bensch, R., Çiçek, Ö., Abdulkadir, A., Marrakchi, Y., et al. (2019). U-Net: Deep learning for cell counting, detection, and morphometry. Nat. Methods 16, 67–70. doi: 10.1038/s41592-018-0261-2

Fieselmann, A., Kowarschik, M., Ganguly, A., Hornegger, J., and Fahrig, R. (2011). Deconvolution-based CT and MR brain perfusion measurement: Theoretical model revisited and practical implementation details. Int. J. Biomed. Imaging 2011:467563. doi: 10.1155/2011/467563

Gava, U., D’Agata, F., Bennink, E., Tartaglione, E., Perlo, D., Vernone, A., et al. (2021). UniTOBrain [Internet]. doi: 10.21227/x8ea-vh16

Hakim, A., Christensen, S., Winzeck, S., Lansberg, M. G., Parsons, M. W., Lucas, C., et al. (2021). Predicting infarct core from computed tomography perfusion in acute ischemia with machine learning: Lessons from the ISLES challenge. Stroke 52, 2328–2337. doi: 10.1161/STROKEAHA.120.030696

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the 2016 IEEE conference on computer vision and pattern recognition (CVPR), Las Vegas, NV, 770–778. doi: 10.1109/CVPR.2016.90

Hess, A., Meier, R., Kaesmacher, J., Jung, S., Scalzo, F., Liebeskind, D., et al. (2018). “Synthetic perfusion maps: Imaging perfusion deficits in DSC-MRI with deep learning,” in Brainlesion: Glioma, multiple sclerosis, stroke and traumatic brain injuries, Vol. 11383, eds A. Crimi, S. Bakas, H. Kuijf, F. Keyvan, M. Reyes, and T. van Walsum (Cham: Springer).

Ho, K. C., Scalzo, F., Sarma, K. V., El-Saden, S., and Arnold, C. W. (2016). “A temporal deep learning approach for MR perfusion parameter estimation in stroke,” in Proceedings of the international conference on pattern recognition, Cancun, 1315–1320. doi: 10.1109/ICPR.2016.7899819

Kadry, S., Damaševičius, R., Taniar, D., Rajinikanth, V., and Lawal, I. A. (2021). “U-Net supported segmentation of ischemic-stroke-lesion from brain MRI slices,” in Proceedings of the 2021 seventh international conference on bio signals, images, and instrumentation (ICBSII), Chennai, 1–5. doi: 10.1109/ICBSII51839.2021.9445126

Klein, S., Staring, M., Murphy, K., Viergever, M. A., and Pluim, J. P. W. (2010). Elastix: A toolbox for intensity-based medical image registration. IEEE Trans. Med. Imaging 29, 196–205. doi: 10.1109/TMI.2009.2035616

Kloenne, M., Niehaus, S., Lampe, L., Merola, A., Reinelt, J. D., Roeder, I., et al. (2020). Domain-specific cues improve robustness of deep learning-based segmentation of CT volumes. Sci. Rep. 10:10712. doi: 10.1038/s41598-020-67544-y

Konstas, A. A., Goldmakher, G. V., Lee, T. Y., and Lev, M. H. (2009a). Theoretic basis and technical implementations of CT perfusion in acute ischemic stroke, part 1: Theoretic basis. Am. J. Neuroradiol. 30, 662–668. doi: 10.3174/ajnr.A1487

Konstas, A. A., Goldmakher, G. V., Lee, T. Y., and Lev, M. H. (2009b). Theoretic basis and technical implementations of CT perfusion in acute ischemic stroke, Part 2: Technical implementations. Am. J. Neuroradiol. 30, 885–892. doi: 10.3174/ajnr.A1492

Kuang, H., Qiu, W., Boers, A., Brown, S., Muir, K., Majoie, C. B. L. M., et al. (2020). CT perfusion based machine learning model better predicts follow-up infarction in patients with acute ischemic stroke. Stroke 52, 223–231. doi: 10.1161/STROKEAHA.120.030092

Kudo, K., Sasaki, M., Yamada, K., Momoshima, S., Utsunomiya, H., Shirato, H., et al. (2010). Differences in CT perfusion maps generated by different commercial software: Quantitative analysis by using identical source data of acute stroke patients. Radiology 254, 200–209. doi: 10.1148/radiol.254082000

Laughlin, B. B., Chan, A., Tai, W. A., and Moftakhar, P. (2019). RAPID automated CT perfusion in clinical practice. Neuroimaging 38–55.

Li, S., Zeng, D., Bian, Z., Li, D., Zhu, M., Huang, J., et al. (2021a). Learning non-local perfusion textures for high-quality computed tomography perfusion imaging. Phys. Med. Biol. 66:115007. doi: 10.1088/1361-6560/abfc90

Li, S., Zheng, J., and Li, D. (2021b). Precise segmentation of non-enhanced computed tomography in patients with ischemic stroke based on multi-scale U-Net deep network model. Comput. Methods Programs Biomed. 208:106278. doi: 10.1016/j.cmpb.2021.106278

McKinley, R., Hung, F., Wiest, R., Liebeskind, D., and Scalzo, F. (2018). A machine learning approach to perfusion imaging with dynamic susceptibility contrast MR. Front. Neurol. 9:717. doi: 10.3389/fneur.2018.00717

Meier, R., Lux, P., Med, B., Jung, S., Fischer, U., Gralla, J., et al. (2019). Neural network–derived perfusion maps for the assessment of lesions in patients with acute ischemic stroke. Radiol. Artif. Intell. 1:e190019. doi: 10.1148/ryai.2019190019

Nogueira, R. G., Jadhav, A. P., Haussen, D. C., Bonafe, A., Budzik, R. F., Bhuva, P., et al. (2018). Thrombectomy 6 to 24 hours after stroke with a mismatch between deficit and infarct. N. Engl. J. Med. 378, 11–21. doi: 10.1056/NEJMoa1706442

Pinheiro, G. R., Voltoline, R., Bento, M., and Rittner, L. (2019). “V-net and U-net for ischemic stroke lesion segmentation in a small dataset of perfusion data,” in Brainlesion: Glioma, multiple sclerosis, stroke and traumatic brain injuries, eds A. Crimi, S. Bakas, H. Kuijf, F. Keyvan, M. Reyes, and T. van Walsum (Cham: Springer International Publishing), 301–309.

Robben, D., Boers, A., Marquering, H., Langezaal, L. C., Roos, Y. B., Oostenbrugge, R. J., et al. (2019). Prediction of final infarct volume from native CT perfusion and treatment parameters using deep learning. Med. Image Anal. 59:101589. doi: 10.1016/j.media.2019.101589

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-net: Convolutional networks for biomedical image segmentation,” in Medical image computing and computer-assisted intervention–MICCAI 2015. Lecture notes in computer science, Vol. 9351, eds N. Navab, J. Hornegger, W. Wells, and A. Frangi (Cham: Springer). doi: 10.1007/978-3-319-24574-4_28

Sabour, S., Frosst, N., and Hinton, G. (2017). Dynamic routing between capsules. arXiv [preprint] arXiv:1710.09829.

Tomasi, C., and Manduchi, R. (1998). “Bilateral filtering for gray and color images,” in Proceedings of the IEEE international conference on computer vision, (Piscataway, NJ: IEEE). doi: 10.1109/iccv.1998.710815

Wannamaker, R., Guinand, T., Menon, B. K., Demchuk, A., Goyal, M., Frei, D., et al. (2018). Computed tomographic perfusion predicts poor outcomes in a randomized trial of endovascular therapy. Stroke 49, 1426–1433. doi: 10.1161/STROKEAHA.117.019806

Xiao, Y., Liu, P., Liang, Y., Stolte, S., Sanelli, P., Gupta, A., et al. (2019). STIR-net: Deep spatial-temporal image restoration net for radiation reduction in CT perfusion. Front. Neurol. 10:647. doi: 10.3389/fneur.2019.00647

Yushkevich, P. A., Piven, J., Hazlett, H. C., Smith, R. G., Ho, S., Gee, J. C., et al. (2006). User-guided 3D active contour segmentation of anatomical structures: Significantly improved efficiency and reliability. Neuroimage 31, 1116–1128. doi: 10.1016/j.neuroimage.2006.01.015

Zhang, H., Chen, Y., Song, Y., Xiong, Z., Yang, Y., and Jonathan Wu, Q. M. (2019). Automatic kidney lesion detection for CT images using morphological cascade convolutional neural networks. IEEE Access. 7, 83001–83011. doi: 10.1109/ACCESS.2019.2924207

Zhou, X., Takayama, R., Wang, S., Zhou, X., Hara, T., and Fujita, H. (2017). “Automated segmentation of 3D anatomical structures on CT images by using a deep convolutional network based on end-to-end learning approach,” in Proceedings of the medical imaging 2017: Image processing, Orlando, FL. doi: 10.1117/12.2254201

Keywords: machine learning, convolutional neural network (CNN), CT-perfusion imaging, perfusion maps, stroke

Citation: Gava UA, D’Agata F, Tartaglione E, Renzulli R, Grangetto M, Bertolino F, Santonocito A, Bennink E, Vaudano G, Boghi A and Bergui M (2023) Neural network-derived perfusion maps: A model-free approach to computed tomography perfusion in patients with acute ischemic stroke. Front. Neuroinform. 17:852105. doi: 10.3389/fninf.2023.852105

Received: 10 January 2022; Accepted: 24 February 2023; Published: 09 March 2023.

Edited by:

Ludovico Minati, Tokyo Institute of Technology, Japan

Reviewed by:

Elisa Francesca Ciceri, IRCCS Carlo Besta Neurological Institute Foundation, Italy

Copyright © 2023 Gava, D’Agata, Tartaglione, Renzulli, Grangetto, Bertolino, Santonocito, Bennink, Vaudano, Boghi and Bergui. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Federico D’Agata, federico.dagata@unito.it
