ORIGINAL RESEARCH article

Front. Neuroinform., 21 September 2022

Volume 16 - 2022 | https://doi.org/10.3389/fninf.2022.997068

This article is part of the Research Topic Brain-Computer Interface and Its Applications

In this study, we proposed a new type of hybrid visual stimuli for steady-state visual evoked potential (SSVEP)-based brain-computer interfaces (BCIs), which incorporate various periodic motions into conventional flickering stimuli (FS) or pattern reversal stimuli (PRS). Furthermore, we investigated the optimal periodic motions for FS and PRS each to enhance the performance of SSVEP-based BCIs. Periodic motions were implemented by changing the size of the stimulus according to four different temporal functions denoted by none, square, triangular, and sine, yielding a total of eight hybrid visual stimuli. Additionally, we developed an extended version of filter bank canonical correlation analysis (FBCCA), which is a state-of-the-art training-free classification algorithm for SSVEP-based BCIs, to enhance the classification accuracy for PRS-based hybrid visual stimuli. Twenty healthy individuals participated in the SSVEP-based BCI experiment to discriminate four visual stimuli with different frequencies. The average classification accuracy and information transfer rate (ITR) were evaluated to compare the performances of SSVEP-based BCIs for different hybrid visual stimuli. Additionally, the user's visual fatigue for each of the hybrid visual stimuli was evaluated. As a result, for FS, the highest performances were observed when the periodic motion of the sine waveform was incorporated, for all window sizes except 3 s. For PRS, the periodic motion of the square waveform showed the highest classification accuracies for all tested window sizes. A statistically significant difference in performance between the two best stimuli was not observed. The averaged fatigue scores were 5.3 ± 2.05 and 4.05 ± 1.28 for FS with sine-wave periodic motion and PRS with square-wave periodic motion, respectively. 
Consequently, our results demonstrated that FS with sine-wave periodic motion and PRS with square-wave periodic motion could effectively improve the BCI performances compared to conventional FS and PRS. In addition, thanks to its low visual fatigue, PRS with square-wave periodic motion can be regarded as the most appropriate visual stimulus for the long-term use of SSVEP-based BCIs, particularly for window sizes equal to or larger than 2 s.

Brain-computer interfaces (BCIs) are promising alternative communication technologies that have been generally developed for people who suffer from neuromuscular disorders or physical disabilities such as spinal cord injury, amyotrophic lateral sclerosis, and locked-in syndrome (Daly and Wolpaw, 2008). BCIs have provided new non-muscular communication channels that allowed for interaction between a user and the external environment. A variety of non-invasive brain imaging modalities have been employed to record brain activities in the field of BCIs. For example, functional magnetic resonance imaging (fMRI), magnetoencephalography, and functional near-infrared spectroscopy have been successfully employed to implement BCIs. In addition, electroencephalography (EEG) is another representative non-invasive neuroimaging modality that has been the most intensively studied owing to its advantages over the other modalities, such as high temporal resolution, affordability, and portability (Dai et al., 2020; Zhang et al., 2021).

In the EEG-based BCIs, the user performs certain mental tasks according to paradigms designed for eliciting task-related neural activities. Motor imagery, event-related potential, P300, and auditory steady-state response are popular paradigms employed to implement EEG-based BCIs (Lotte et al., 2018; Abiri et al., 2019). Steady-state visual evoked potential (SSVEP) is also one of the most promising EEG-based BCI paradigms, which has attracted increased interest from BCI researchers in recent decades (Waytowich et al., 2018). SSVEPs are periodic brain activities evoked in response to the presentation of visual stimulus flickering or pattern-reversing at a specific temporal frequency. SSVEP signals are entrained at the fundamental and harmonic frequencies of the visual stimulus and are well-known to be mainly observed in the occipital region of the brain over a wide range of 1–90 Hz (Herrmann, 2001; Choi et al., 2019a). SSVEP-based BCIs interpret the user's intention by detecting the visual stimulus that the user gazed at based on these characteristics and have various advantages over the other paradigms, such as high information transfer rate (ITR), excellent stability, and little training requirement (Zhang et al., 2020; Kim and Im, 2021). Thanks to these advantages, SSVEP-based BCIs have been successfully applied to various applications including mental speller (Nakanishi et al., 2018), assistive technology for patients (Perera et al., 2016), online home appliance control (Kim et al., 2019), and hands-free controllers for virtual reality (VR) (Armengol-Urpi and Sarma, 2018) or augmented reality (AR) (Arpaia et al., 2021).

In general, two types of visual stimuli have been employed to evoke SSVEPs: (1) flickering stimulus (FS) and (2) pattern-reversal stimulus (PRS) (Bieger et al., 2010; Zhu et al., 2010). FS is the visual stimulus that modulates the color or luminance of the stimulus at a specific frequency. Flickering single graphics in the form of squares or circles rendered on an LCD monitor is the representative FS used to elicit the SSVEPs. PRS evokes SSVEP responses by alternating the patterns of the visual stimuli (e.g., checkerboard or line boxes) at a constant frequency. Based on these visual stimuli, a number of studies have been conducted to improve the performance of SSVEP-based BCIs, examples of which include optimization of stimulus parameters such as spatial frequency of PRS, stimulation frequencies, colors, and waveform of FS (Bieger et al., 2010; Teng et al., 2011; Duszyk et al., 2014; Jukiewicz and Cysewska-Sobusiak, 2016; Chen et al., 2019). Recently, Choi et al. (2019b) and Park et al. (2019) proposed a novel type of visual stimulus called grow/shrink stimulus (GSS) to improve the performance of SSVEP-based BCI in AR and VR environments, respectively. GSS was implemented by incorporating a periodic motion into FS to concurrently evoke SSVEP and steady-state motion visual evoked potential (SSMVEP), inspired by previous studies that reported that the periodic motion-based visual stimuli could elicit SSMVEP (Xie et al., 2012; Yan et al., 2017). GSS has shown a higher BCI performance compared to the conventional PRS or FS in both VR and AR environments. However, no study has been conducted on the performance of GSS-like visual stimuli for SSVEP-based BCI when the LCD monitor is used as a rendering device. Furthermore, the effect of the motion parameters (i.e., the waveform of the temporal motion dynamics) on the BCI performances has not been investigated. 
Indeed, the investigation of the performance of various GSS-like visual stimuli with the LCD monitor environment is important because most SSVEP-based BCI studies employ the LCD monitor to present the visual stimuli (Ge et al., 2019; Chen et al., 2021; Xu et al., 2021). In addition, to the best of our knowledge, hybrid visual stimuli that consolidate PRS with periodic motions have never been proposed in previous studies.

In this study, we proposed novel hybrid visual stimuli that consolidate the conventional PRS with periodic motions and further investigated the effect of waveforms of the periodic motions for hybrid visual stimuli based on either FS or PRS on the performance of SSVEP-based BCIs. As for the periodic motions, the stimulus size was changed according to four different waveforms: none (no change in the size), square (changing size in a binary manner), triangular (linearly increasing and decreasing size), and sine (changing size with a sinusoidal waveform), resulting in a total of eight different hybrid visual stimuli (i.e., FS and PRS each with four periodic motions). We evaluated two crucial factors for the practical use of SSVEP-based BCIs: (1) BCI performances and (2) visual fatigue, for each visual stimulus, with 20 healthy participants. A filter bank canonical correlation analysis (FBCCA) algorithm, which is a state-of-the-art training-free algorithm for SSVEP-based BCIs was employed to evaluate the performances of SSVEP-based BCIs in terms of classification accuracy and information transfer rate (ITR). Moreover, an extended version of FBCCA, named subharmonic-FBCCA (sFBCCA) was developed for the SSVEP-based BCIs with PRS-based hybrid visual stimuli.

A total of 20 healthy adults (10 males, aged 23.7 ± 3.5 years) with normal or corrected-to-normal vision participated in the experiments. None of them reported any serious history of neurological, psychiatric, or other severe diseases that could otherwise influence the experimental results. All participants were informed of the detailed experimental procedure and provided written consent before the experiment. This study and the experimental paradigm were approved by the Institutional Review Board Committee of Hanyang University, Republic of Korea (IRB No. HYU-202006-004-03) according to the Declaration of Helsinki.

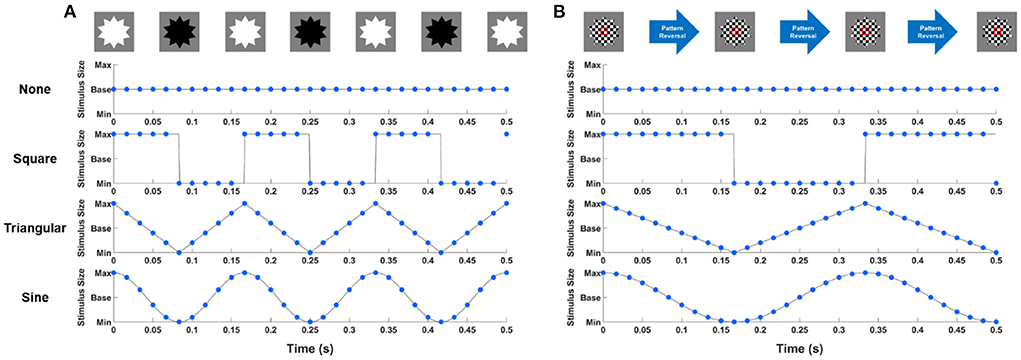

The visual stimuli were developed with the Unity 3D engine (Unity Technologies ApS, San Francisco, CA, USA). Based on previous GSS studies (Choi et al., 2019b; Park et al., 2019), all stimuli were designed in a star shape, with a base size of 7 cm (5.7°) to increase the visibility of periodic motions. The background color was set to gray. Both FS and PRS changed the color or reversed the patterns with the periodic square waveform according to the results of previous studies that reported that square-wave FS exhibited significantly higher classification accuracy than FS of other waveforms (Teng et al., 2011; Chen et al., 2019). The periodic motions were implemented by varying the size of visual stimuli according to four different types of waveforms: none (no change in the size), square (changing size in a binary manner), triangular (linearly increasing and decreasing size), and sine (changing size with a sinusoidal waveform) waveforms with a modulation ratio of 33% compared to the base size (i.e., the radius of each stimulus was changed from 0.67 to 1.33 when the radius of the base stimulus was assumed to be one). The conventional visual stimuli of FS and PRS were combined with four periodic motions, resulting in eight hybrid visual stimuli. Hereinafter, none, square, triangular, and sine waveforms are referred to as None, Square, Triangular, and Sine, respectively, and each hybrid visual stimuli are referred to as FS-None, FS-Square, FS-Triangular, FS-Sine, PRS-None, PRS-Square, PRS-Triangular, and PRS-Sine. Note that FS-None and PRS-None were the same as the conventional FS and PRS with the base size. Figure 1 illustrates the examples of the hybrid visual stimuli when the stimulation frequency was set to 6 Hz. Blue circles indicate the stimulus size presented to the participants considering the refresh rate of the LCD monitor (= 60 Hz). 
It is worthwhile noting that the most important difference between FS and PRS is that FS elicits SSVEP responses at the number of full cycles (i.e., two reversals) per second, whereas PRS evokes SSVEP responses at the number of reversals per second (Zhu et al., 2010). Therefore, the stimulation frequencies of periodic motions for PRS were set to be half of those for FS, which were considered as subharmonics of the stimulation frequencies in the further analysis.
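The four size waveforms described above can be sketched per display frame as follows. This is only an illustrative sketch: the function names, the phase alignment of each waveform (e.g., the triangular wave starting at its minimum), and the frame-based sampling are assumptions, since the original stimuli were implemented in Unity and the exact implementation is not given in the text.

```python
import math

def motion_frequency(stim_freq, kind):
    """Motion frequency in Hz: equal to the stimulation frequency for FS,
    half of it for PRS (as stated in the text)."""
    return stim_freq / 2.0 if kind == "PRS" else stim_freq

def size_scale(waveform, frame, motion_freq, refresh_rate=60, ratio=0.33):
    """Relative stimulus radius (base radius = 1) at a given display frame.

    Phase alignment is an assumption made for this sketch.
    """
    phase = (frame * motion_freq / refresh_rate) % 1.0  # position in cycle, [0, 1)
    if waveform == "none":
        return 1.0
    if waveform == "square":
        return 1.0 + ratio if phase < 0.5 else 1.0 - ratio
    if waveform == "triangular":
        # linear rise from -1 to +1 over the first half cycle, then linear fall
        tri = 4.0 * phase - 1.0 if phase < 0.5 else 3.0 - 4.0 * phase
        return 1.0 + ratio * tri
    if waveform == "sine":
        return 1.0 + ratio * math.sin(2.0 * math.pi * phase)
    raise ValueError(f"unknown waveform: {waveform}")

# One 6 Hz cycle (10 frames at 60 Hz) of the sine motion for an FS stimulus
sizes = [size_scale("sine", k, motion_frequency(6.0, "FS")) for k in range(10)]
```

With a modulation ratio of 0.33, every waveform stays within the 0.67 to 1.33 range quoted in the text.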

Figure 1. Examples of (A) FS-based hybrid visual stimuli and (B) PRS-based hybrid visual stimuli when the stimulation frequency was 6 Hz. Blue circles indicate the stimulus size presented to participants considering the refresh rate of the LCD monitor.

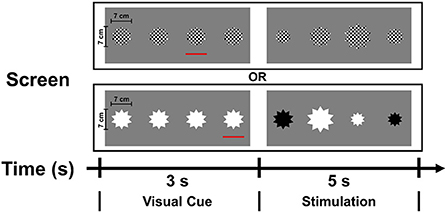

The participants sat 70 cm away from a 27-inch LCD monitor with a resolution of 1920 × 1080 pixels and a 60 Hz refresh rate. The experiment consisted of eight sessions, one for each of the hybrid visual stimuli, and the order of the sessions was randomized for each participant. Each session was composed of 20 trials (5 trials × 4 stimuli), each of which consisted of a visual cue of 3 s and a stimulation time of 5 s. A red bar was presented under the target stimulus during the visual cue period, and the targets were presented in a randomized order. The timing sequence of a single trial is shown in Figure 2. The stimulation frequencies of the four stimuli were set to 6, 6.67, 7.5, and 10 Hz considering the refresh rate of the LCD monitor. In each trial, the participants were instructed to focus their attention on the target stimulus among four simultaneously flickering stimuli, without eye blinks or body movements during the stimulation time. At the end of each session, the participants rated the visual fatigue for the corresponding hybrid visual stimulus on a scale of 1–10 (1, low fatigue; 10, high fatigue).
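The choice of 6, 6.67, 7.5, and 10 Hz is consistent with each stimulation cycle spanning a whole number of frames on a 60 Hz display. The short check below illustrates this; the helper name is hypothetical, and the reasoning is an inference from the phrase "considering the refresh rate" rather than a procedure stated by the authors.

```python
def frames_per_cycle(freq_hz, refresh_rate=60):
    """Nearest whole number of display frames in one stimulation cycle."""
    return round(refresh_rate / freq_hz)

stim_freqs = [6.0, 6.67, 7.5, 10.0]
frames = [frames_per_cycle(f) for f in stim_freqs]
# Frequencies actually realized by whole-frame cycles on a 60 Hz display
realized = [60 / n for n in frames]
```

Under this reading, the nominal 6.67 Hz stimulus corresponds to a 9-frame cycle, i.e., 60/9 Hz.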

Figure 2. The timing sequence of a single trial. Each trial consisted of the visual cue of 3 s and the stimulation time of 5 s.

EEG data were recorded from eight scalp electrodes (O1, Oz, O2, PO7, PO3, POz, PO4, and PO8) using a commercial EEG system (BioSemi Active Two; Biosemi, Amsterdam, The Netherlands) at a sampling rate of 2,048 Hz. A CMS active electrode and a DRL passive electrode were used to form a feedback loop for the amplifier reference (Park et al., 2019). MATLAB 2020b (Mathworks; Natick, MA) was used to analyze the EEG data, and the functions implemented in the BBCI toolbox (https://github.com/bbci/bbci_public) were employed. The raw EEG data were down-sampled to 256 Hz to reduce the computational cost and then bandpass-filtered using a sixth-order zero-phase Butterworth filter with cutoff frequencies of 2 and 54 Hz. Considering a latency delay in the visual pathway, the EEG data were segmented into epochs from 0.135 to 0.135+w s with respect to the task onset time (0 s), where w indicates the window size used for SSVEP detection (Rabiul Islam et al., 2017).
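As a small illustration of the epoching step, the sketch below converts the 0.135 s latency and a window size w into sample indices at the down-sampled rate of 256 Hz. Rounding to the nearest sample is an assumption; the text does not say how fractional samples were handled, and the function name is hypothetical.

```python
def epoch_bounds(window_s, fs=256, latency_s=0.135):
    """Return (start, stop) sample indices of an epoch relative to task onset.

    The epoch covers latency_s to latency_s + window_s seconds.
    Rounding to the nearest sample is an assumption of this sketch.
    """
    start = round(latency_s * fs)
    stop = start + round(window_s * fs)
    return start, stop

# Down-sampling factor from the recording rate to the analysis rate
decimation_factor = 2048 // 256

start, stop = epoch_bounds(2.0)  # a 2-s analysis window
```

An epoch would then be taken as `eeg[:, start:stop]` from a channels × samples array aligned to the task onset.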

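CCA is a multivariate statistical method used to measure the underlying correlation between two sets of multidimensional variables, $X \in \mathbb{R}^{d_x \times N_s}$ and $Y \in \mathbb{R}^{d_y \times N_s}$, where $N_s$ is the number of sample points and $d_x$ and $d_y$ indicate the dimensions of $X$ and $Y$, respectively (Nakanishi et al., 2015). Considering their linear combinations $x = W_X^T X$ and $y = W_Y^T Y$, CCA finds a pair of weight vectors $W_X \in \mathbb{R}^{d_x \times 1}$ and $W_Y \in \mathbb{R}^{d_y \times 1}$ that maximize Pearson's correlation coefficient between $x$ and $y$ using the following equation:

$$\rho = \max_{W_X, W_Y} \frac{E\left[W_X^T X Y^T W_Y\right]}{\sqrt{E\left[W_X^T X X^T W_X\right] E\left[W_Y^T Y Y^T W_Y\right]}}$$

Here, $T$ denotes the transpose operation. The maximum correlation coefficient with respect to $W_X$ and $W_Y$ is called the “CCA coefficient.”

For SSVEP detection, the CCA coefficients, $\rho_f$, between multichannel EEG signals, $X \in \mathbb{R}^{N_c \times N_s}$, and the reference signals for each stimulus frequency, $Y_f \in \mathbb{R}^{2N_h \times N_s}$, were evaluated and the frequency with the largest CCA coefficient was classified as the target frequency, as follows:

$$f_{target} = \arg\max_{f} \rho_f, \quad f = f_1, f_2, \ldots, f_K$$

Here, $K$ is the number of stimulus frequencies presented to the participants.

The reference signal for each stimulus frequency ($Y_f$) was set as

$$Y_f = \begin{bmatrix} \sin(2\pi f n) \\ \cos(2\pi f n) \\ \vdots \\ \sin(2\pi N_h f n) \\ \cos(2\pi N_h f n) \end{bmatrix}, \quad n = \frac{1}{f_s}, \frac{2}{f_s}, \ldots, \frac{N_s}{f_s}$$

where $f$ is the stimulus frequency. In this study, $N_c$ and $f_s$ denote the number of channels and the sampling frequency, which were set to 8 and 256 Hz, respectively. $N_h$ represents the number of harmonics, which was set to 5 according to previous studies (Chen et al., 2015).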
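A minimal sketch of CCA-based SSVEP detection is given below, assuming sine and cosine reference signals at the stimulus frequency and its harmonics. It is written in Python with NumPy rather than the MATLAB and BBCI toolbox functions used in the study, and the function names are hypothetical. The canonical correlation is computed from a QR decomposition followed by a singular value decomposition, which is one standard way to obtain it and not necessarily the route taken by the authors.

```python
import numpy as np

def reference_signals(freq, n_samples, fs=256, n_harmonics=5):
    """Sine/cosine references at freq and its harmonics, shape (2*Nh, Ns)."""
    t = np.arange(1, n_samples + 1) / fs
    rows = []
    for h in range(1, n_harmonics + 1):
        rows.append(np.sin(2 * np.pi * h * freq * t))
        rows.append(np.cos(2 * np.pi * h * freq * t))
    return np.vstack(rows)

def cca_coefficient(X, Y):
    """Largest canonical correlation between X (dx, Ns) and Y (dy, Ns)."""
    Xc = (X - X.mean(axis=1, keepdims=True)).T  # centered, samples in rows
    Yc = (Y - Y.mean(axis=1, keepdims=True)).T
    Qx, _ = np.linalg.qr(Xc)  # orthonormal basis of the column space
    Qy, _ = np.linalg.qr(Yc)
    s = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    return float(min(s[0], 1.0))  # guard against rounding slightly above 1

def classify(X, freqs, fs=256, n_harmonics=5):
    """Return the candidate frequency with the largest CCA coefficient."""
    rhos = [cca_coefficient(X, reference_signals(f, X.shape[1], fs, n_harmonics))
            for f in freqs]
    return freqs[int(np.argmax(rhos))], rhos
```

Here `X` is expected as a channels × samples array, for example 8 × 512 for a 2-s window at 256 Hz.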

FBCCA combines CCA with filter bank analysis to extract the discriminative information in the harmonic components (Chen et al., 2015). The filter bank is applied to decompose EEG data into multiple sub-band data, and CCA coefficients are evaluated for each sub-band. The weighted sums of the squared sub-band CCA coefficients for each stimulus frequency are calculated using the following equations:

$$\tilde{\rho}_f = \sum_{m=1}^{N_m} w(m) \left(\rho_f^{m}\right)^2$$

$$w(m) = m^{-a} + b$$

where $N_m$ is the number of sub-bands, $m$ is the index of the sub-bands, and $\rho_f^{m}$ denotes the CCA coefficient of sub-band $m$. The target frequency is determined in the same manner as in CCA. According to previous studies (Chen et al., 2015; Zhao et al., 2020), the following parameters were set: a = 1.25, b = 0.25, and Nm = 5. The filter bank for the five sub-bands was designed with lower and upper cutoff frequencies of 4–52, 8–52, 12–52, 16–52, and 20–52 Hz, respectively (Chen et al., 2015). In this study, FBCCA was employed to identify SSVEPs because it is generally regarded as the best available training-free algorithm, yielding the highest classification accuracy without the need for training sessions (Zerafa et al., 2018; Liu et al., 2020).
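The sub-band weighting can be illustrated with a few lines of Python. The sketch assumes the standard FBCCA weight of Chen et al. (2015), w(m) = m^(-a) + b, with the parameter values quoted above; the function names are hypothetical, and the sub-band CCA coefficients are taken as given rather than computed here.

```python
def subband_weight(m, a=1.25, b=0.25):
    """FBCCA weight for sub-band index m (1-based): w(m) = m^(-a) + b."""
    return m ** (-a) + b

def fbcca_score(subband_rhos, a=1.25, b=0.25):
    """Weighted sum of squared sub-band CCA coefficients for one frequency."""
    return sum(subband_weight(m, a, b) * rho ** 2
               for m, rho in enumerate(subband_rhos, start=1))

# Weights for the five sub-bands; lower sub-bands receive larger weights
weights = [subband_weight(m) for m in range(1, 6)]
```

The target is then the stimulus frequency whose score is largest, exactly as in plain CCA.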

In this study, we proposed an extended version of FBCCA, named subharmonic-FBCCA (sFBCCA), to utilize the information in the subharmonic component, elicited by periodic motions for PRS. In sFBCCA, the reference signal was expanded to include the subharmonic component as follows:

In addition, the equation for the weighted sums of the squared sub-band CCA coefficients is extended as the following equations:

where, msub represents the index of the subharmonic, set to 0.5, in this study. The bandpass filter for the subharmonic component was designed with lower and upper cutoff frequencies of 1–52 Hz. sFBCCA was employed to classify SSVEPs for hybrid visual stimuli of PRS-Square, PRS-Triangular, and PRS-Sine.
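Because the sFBCCA equations are not reproduced in this text, the sketch below shows only one plausible reading of the description: the reference set gains a sine/cosine pair at the subharmonic (msub × f), and the score gains one extra term for the subharmonic sub-band (1–52 Hz), weighted with the same function w(m) = m^(-a) + b evaluated at m = msub. Both the row ordering and this weighting are assumptions, as are the function names; the authors' exact formulation may differ.

```python
import numpy as np

def sfbcca_reference(freq, n_samples, fs=256, n_harmonics=5, m_sub=0.5):
    """References at the subharmonic (m_sub * freq) plus the usual harmonics.

    Placing the subharmonic rows first is an assumption of this sketch.
    """
    t = np.arange(1, n_samples + 1) / fs
    multiples = [m_sub] + list(range(1, n_harmonics + 1))
    rows = []
    for k in multiples:
        rows.append(np.sin(2 * np.pi * k * freq * t))
        rows.append(np.cos(2 * np.pi * k * freq * t))
    return np.vstack(rows)

def sfbcca_score(rho_sub, subband_rhos, m_sub=0.5, a=1.25, b=0.25):
    """Assumed sFBCCA score: subharmonic term plus the standard FBCCA sum."""
    def weight(m):
        return m ** (-a) + b
    harmonic_part = sum(weight(m) * rho ** 2
                        for m, rho in enumerate(subband_rhos, start=1))
    return weight(m_sub) * rho_sub ** 2 + harmonic_part
```

Under this assumed weighting, the subharmonic term receives the largest weight because msub = 0.5 is smaller than every sub-band index.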

In addition to the classification accuracy, ITR (bits per minute) has been widely employed as a metric to assess the performance of the BCI system (Wolpaw et al., 2002). The ITR was evaluated using the following equation:

$$\mathrm{ITR} = \frac{60}{T}\left[\log_2 N + p \log_2 p + (1 - p)\log_2\frac{1 - p}{N - 1}\right]$$

where $T$ denotes the window size (in seconds), $N$ indicates the number of classes, and $p$ represents the classification accuracy. In the present study, the $N$ value was 4.
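The ITR computation can be sketched as follows, assuming the standard definition of Wolpaw et al. (2002) with T taken as the window size only, as stated above. The function name and the explicit handling of p = 0 and p = 1 (where a term of the formula would otherwise involve log2 of zero) are choices made for this sketch.

```python
import math

def itr_bits_per_min(p, n_classes, window_s):
    """Information transfer rate in bits/min for accuracy p in [0, 1]."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("accuracy p must lie in [0, 1]")
    bits = math.log2(n_classes)
    if 0.0 < p < 1.0:
        bits += p * math.log2(p) + (1 - p) * math.log2((1 - p) / (n_classes - 1))
    elif p == 0.0:
        # p*log2(p) tends to 0 as p -> 0; only the error term remains
        bits += math.log2(1.0 / (n_classes - 1))
    # for p == 1 both extra terms vanish in the limit
    return bits * 60.0 / window_s

# Example: four classes, perfect accuracy, 1-s window
itr_max = itr_bits_per_min(1.0, 4, 1.0)
```

With four classes, chance-level accuracy (p = 0.25) gives an ITR of zero, and perfect accuracy with a 1-s window gives 120 bits/min.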

Statistical analyses were also performed using MATLAB 2020b (MathWorks; Natick, MA, USA). The non-parametric method was employed because the normality criterion was not satisfied owing to the small sample size. Friedman test was conducted to verify if there were significant differences among the BCI performances. Wilcoxon signed-rank test with the false discovery rates (FDRs) correction for multiple comparisons was performed for post-hoc analyses.
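The text does not state which FDR procedure was applied in the post-hoc analyses. As an illustration only, the sketch below implements the Benjamini-Hochberg adjustment, which is a common choice; treating it as the procedure used here is an assumption, and the function name is hypothetical.

```python
def fdr_bh(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    n = len(pvalues)
    order = sorted(range(n), key=lambda i: pvalues[i])  # indices by ascending p
    adjusted = [0.0] * n
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity
    for rank in range(n, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * n / rank)
        adjusted[i] = running_min
    return adjusted

# Example with four hypothetical pairwise p-values
adjusted = fdr_bh([0.01, 0.04, 0.03, 0.005])
```

The raw p-values in the example are made up for demonstration and are not taken from the study.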

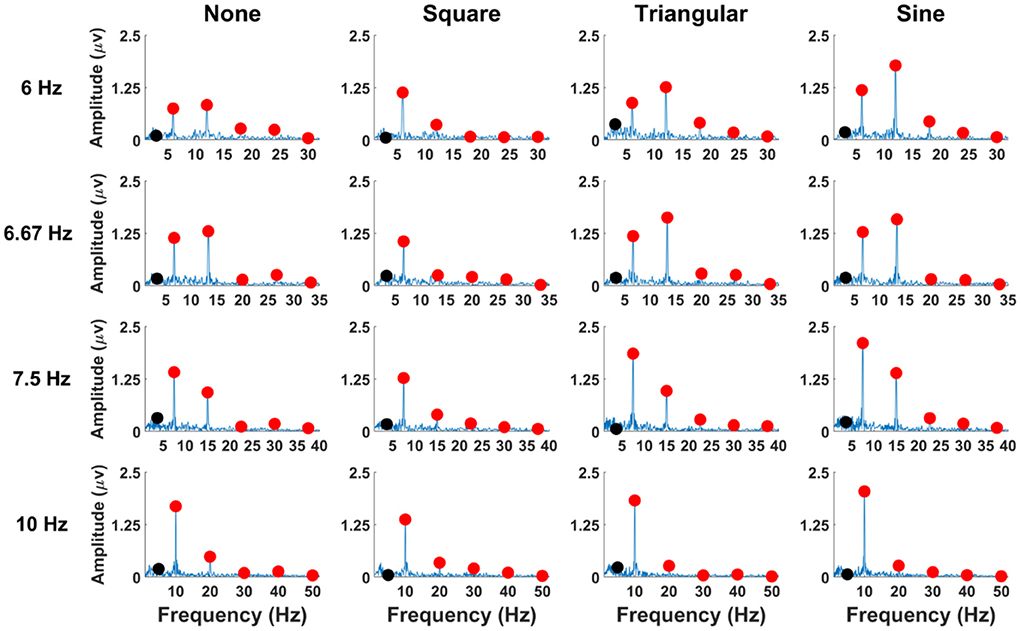

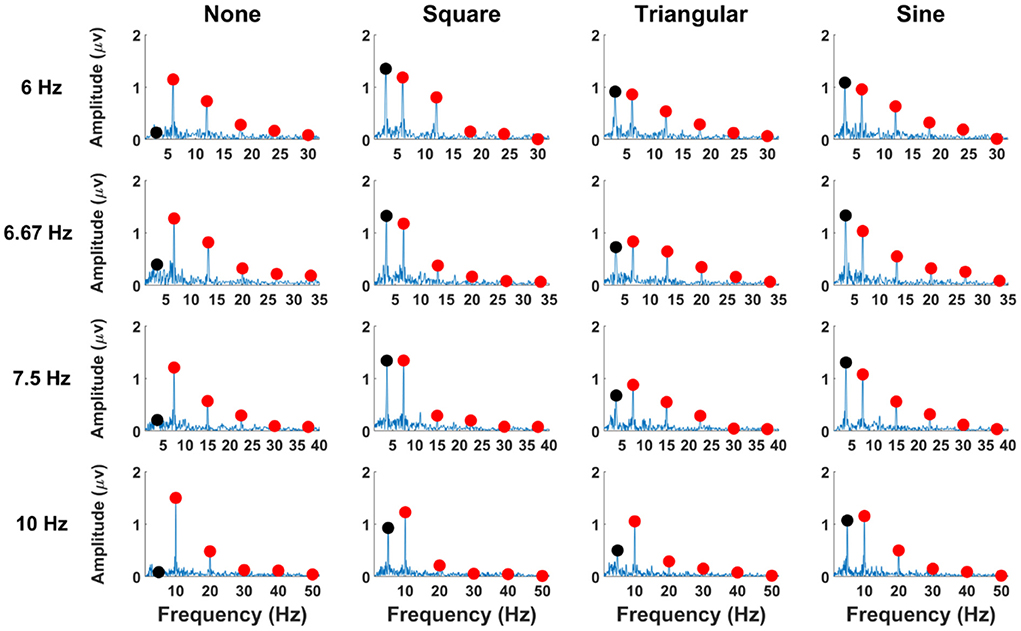

Figure 3 illustrates the grand mean amplitude spectra of SSVEPs averaged across all participants with respect to waveforms of periodic motions. The amplitudes of SSVEPs were obtained from the EEG signals of 5-s long recorded at the Oz electrode. Here, the first five harmonic components of the stimulation frequencies, which were used for the classification, and the subharmonic components were presented in the figure. The red circles indicate the fundamentals and harmonics of stimulation frequencies, and the black circles represent the subharmonics. For FS-based hybrid visual stimuli, clear SSVEP peaks were mainly evoked at the fundamental and second harmonic frequencies. No SSVEP peaks were observed at the subharmonic frequency. The grand average amplitudes of each SSVEP component are listed in Supplementary Table 1.

Figure 3. SSVEP amplitudes of the averaged EEG signals across all the participants at the Oz electrode for FS-based hybrid visual stimuli. Red circles indicate the stimulation frequencies and their harmonics, and black circles represent the subharmonic frequencies.

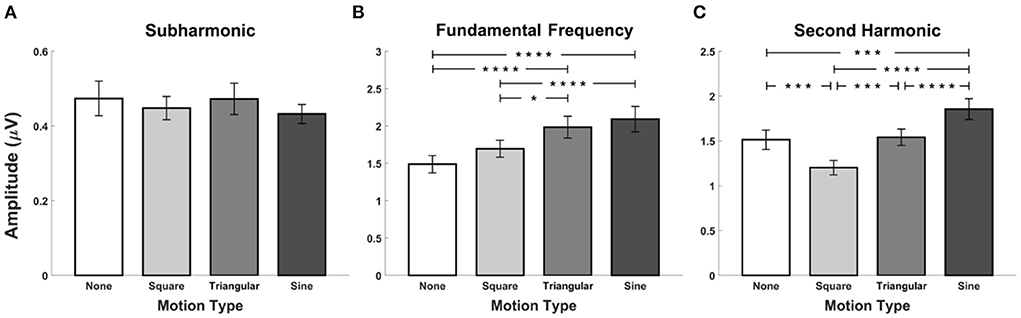

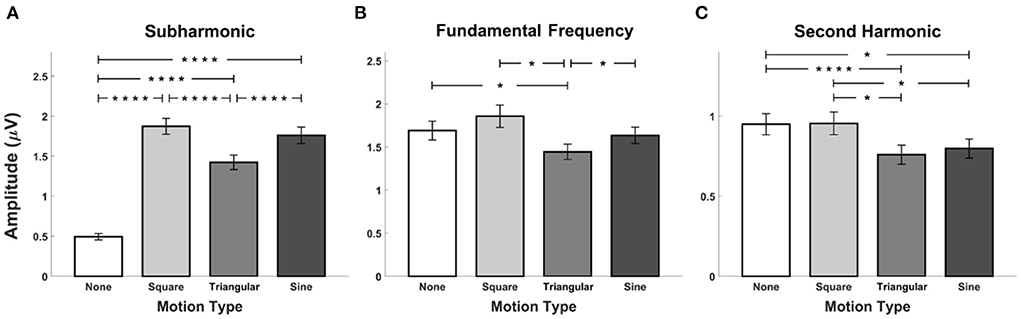

The grand mean amplitudes of SSVEP components averaged over all stimulation frequencies across all the participants are illustrated in Figure 4 as a function of waveforms of periodic motions, where the error bars represent the standard errors. The statistical analyses were performed to compare the differences in the amplitude of SSVEP components at the subharmonic, fundamental, and second harmonic frequencies among FS-based hybrid visual stimuli. Four SSVEP amplitudes at each harmonic frequency were calculated from the EEG signals averaged over each stimulation frequency recorded at the Oz electrode for each participant. Consequently, a total of 80 SSVEP amplitudes (4 stimulation frequencies ×20 participants) were statistically compared. The Friedman test indicated significant differences in the amplitudes at fundamental and second harmonic frequencies (subharmonic frequency: χ2 = 0.05, p = 0.998, fundamental frequency: χ2 = 28.26, p < 0.001, second harmonic frequency: χ2 = 32.81, p < 0.001). At the fundamental frequency, SSVEP amplitude elicited by FS-Sine and FS-Triangular was significantly higher than that elicited by FS-None and FS-Square (FDRs-corrected p < 0.05 between FS-Square vs FS-Triangular, and FDRs-corrected p < 0.001 for the others, Wilcoxon signed-rank test). For the second harmonic frequency, SSVEP amplitude evoked by FS-Sine was significantly higher than that evoked by FS with other waveforms (p < 0.005 for FS-None and p < 0.001 for the others, Wilcoxon signed-rank test with FDRs correction). Additionally, FS-Square elicited significantly lower SSVEP amplitude than that elicited by the other waveforms (FDRs-corrected p < 0.005 for FS-None and FS-Triangular, and FDRs-corrected p < 0.001 for FS-Sine, Wilcoxon signed-rank test).

Figure 4. Grand mean SSVEP amplitudes for FS-based hybrid visual stimuli averaged across all the participants at (A) the subharmonic frequency, (B) the fundamental frequency, and (C) the second harmonic frequency. Error bars represent the standard errors. Here, the asterisks of *, ***, and **** represent FDRs-corrected p < 0.05, p < 0.005, and p < 0.001, respectively (Wilcoxon signed-rank test).

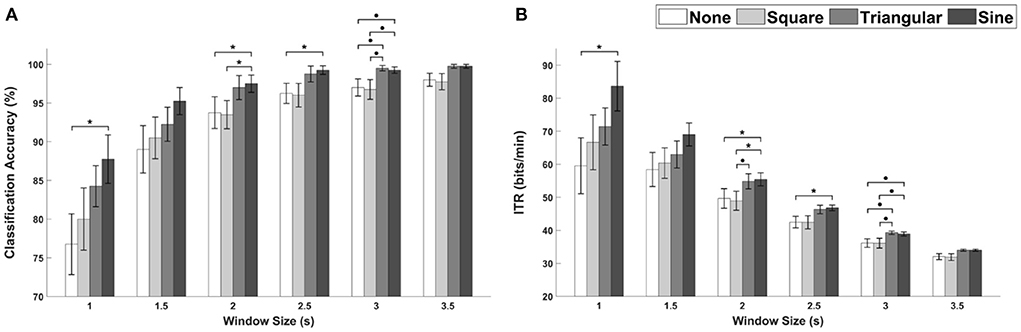

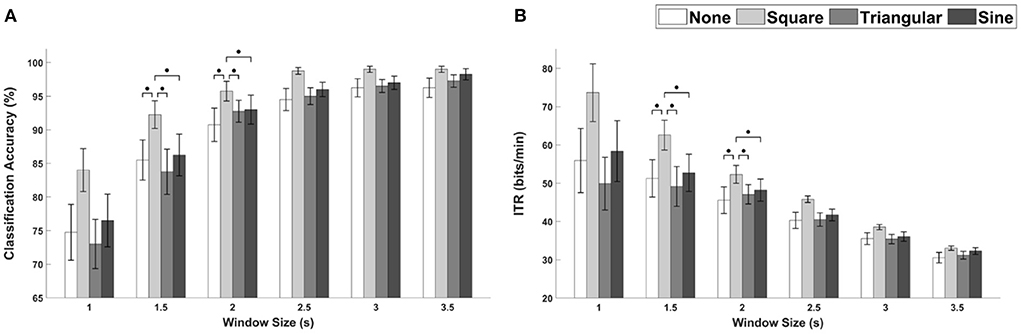

The average classification accuracies and ITRs for FS-based hybrid visual stimuli with respect to different window sizes are depicted in Figures 5A,B, respectively. The Friedman test indicated statistically significant differences for all window sizes except for 1.5 and 3.5 s (1 s, χ2 = 8.10, p < 0.05; 1.5 s, χ2 = 6.20, p = 0.102; 2 s, χ2 = 9.50, p < 0.05; 2.5 s, χ2 = 11.38, p < 0.01; 3 s, χ2 = 9.88, p < 0.05; 3.5 s, χ2 = 7.47, p = 0.058, identical for both the classification accuracy and ITR). The Wilcoxon signed-rank test with FDRs correction showed statistically significant differences in both classification accuracy and ITR between FS-None and FS-Sine for window sizes of 1, 2, and 2.5 s (p < 0.05 for both classification accuracies and ITRs). Additionally, the performances of FS-Sine were significantly higher than those of FS-Square for the window size of 2 s (p < 0.05, Wilcoxon signed-rank test with FDRs correction). As illustrated in the figure, the performances of SSVEP-based BCI could be improved by incorporating triangular- and sine-wave periodic motions into the conventional FS for all window sizes. Among the FS-based stimuli, FS-Sine exhibited the highest average performances for every window size except for 3 s, especially for short window sizes.

Figure 5. The average performance of FS-based hybrid visual stimuli in terms of (A) classification accuracies and (B) ITRs as a function of waveforms with different window sizes. Error bars represent the standard errors. Here, ∙p < 0.1 and *p < 0.05 are the FDRs-corrected p-values from Wilcoxon signed-rank test.

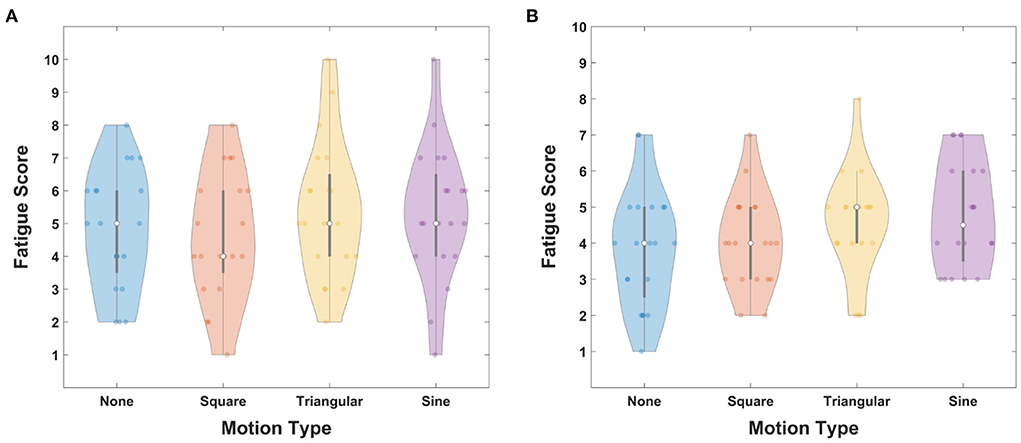

Figure 6A illustrates the fatigue scores of FS-based hybrid stimuli as a function of periodic motion waveforms. The gray bars represent the interquartile ranges from the first quartile to the third quartile and white circles indicate the median values. The averaged fatigue scores were 4.8 ± 1.82, 4.6 ± 1.90, 5.4 ± 2.01, and 5.3 ± 2.05 for FS-None, FS-Square, FS-Triangular, and FS-Sine, respectively. A statistically significant difference was not observed in the Friedman test (χ2 = 2.55, p = 0.467).

Figure 6. Fatigue scores for (A) FS-based hybrid visual stimuli and (B) PRS-based hybrid visual stimuli as a function of waveforms. Gray bars represent the interquartile range from 25 to 75% and white circles indicate median values.

The grand mean amplitude spectra of SSVEPs averaged across all participants are illustrated in Figure 7 as a function of waveforms of PRS-based hybrid visual stimuli. The red circles indicate the fundamental and harmonic frequencies, and the black circles represent the subharmonic frequencies. Unlike the FS-based hybrid visual stimuli, clear SSVEP peaks were observed at the subharmonic frequency for PRS-Square, PRS-Triangular, and PRS-Sine, as expected. The grand mean amplitudes of each SSVEP component are listed in Supplementary Table 2.

Figure 7. SSVEP amplitudes of averaged EEG signals over all participants for PRS-based hybrid visual stimuli at the Oz electrode. Red circles indicate the stimulation frequencies and their harmonics, and black circles represent the subharmonic frequencies.

The grand mean amplitudes of SSVEP components at the subharmonic, fundamental, and second harmonic frequencies averaged over all stimulation frequencies across all the participants are illustrated in Figure 8 with respect to the periodic motion waveforms incorporated with PRS. The error bars represent the standard errors. The Friedman test indicated significant differences in amplitudes at all harmonic frequencies (χ2 = 110.53, p < 0.001; χ2 = 10.69, p < 0.05; χ2 = 32.81, p < 0.05). PRS-None evoked the lowest SSVEP amplitudes at the subharmonic frequency (p < 0.001 for PRS-Square, PRS-Triangular, and PRS-Sine, Wilcoxon signed-rank test with FDRs correction). In addition, the SSVEP amplitude induced by PRS-Triangular was significantly lower than that induced by PRS-Square and Sine (FDRs-corrected p < 0.001, Wilcoxon signed-rank test). For the fundamental frequency, PRS-Triangular induced significantly lower SSVEP amplitudes compared to other PRS-based hybrid visual stimuli and even conventional PRS (p < 0.05, Wilcoxon signed-rank test with FDRs correction). The SSVEP amplitudes elicited by PRS-None and PRS-Square were significantly higher than those elicited by PRS-Triangular and PRS-Sine at the second harmonic frequency (p < 0.001 between PRS-None and PRS-Triangular, and p < 0.05 for the others, Wilcoxon signed-rank test, FDRs-corrected).

Figure 8. Grand mean SSVEP amplitudes for PRS-based hybrid visual stimuli averaged across all the participants at (A) the subharmonic frequency, (B) the fundamental frequency, and (C) the second harmonic frequency. Error bars represent the standard errors. Asterisks of * and **** represent FDRs-corrected p < 0.05 and p < 0.001, respectively (Wilcoxon signed-rank test).

Figures 9A,B depict the average classification accuracies and ITRs, respectively, for PRS-based hybrid visual stimuli with respect to different window sizes. Here, all the performances of SSVEP-based BCIs were evaluated using FBCCA for PRS-None and sFBCCA for the PRS-Square, PRS-Triangular, and PRS-Sine cases. The Friedman test indicated statistically significant differences for window sizes of 1.5 and 2 s (1 s, χ2 = 5.59, p = 0.133; 1.5 s, χ2 = 9.30, p < 0.05; 2 s, χ2 = 8.06, p < 0.05; 2.5 s, χ2 = 6.38, p < 0.1; 3 s, χ2 = 7.12, p < 0.1; 3.5 s, χ2 = 4.63, p = 0.201, identical for both the classification accuracy and ITR). For all window sizes, PRS-Square showed the highest performance in terms of both classification accuracy and ITR, although statistically significant differences were not observed in the post-hoc comparisons.

Figure 9. The average performance of PRS-based hybrid visual stimuli in terms of (A) classification accuracies and (B) ITRs as a function of waveforms with different window sizes. Error bars represent the standard errors. Here, ∙p < 0.1 is the FDRs-corrected p-value from Wilcoxon signed-rank test.

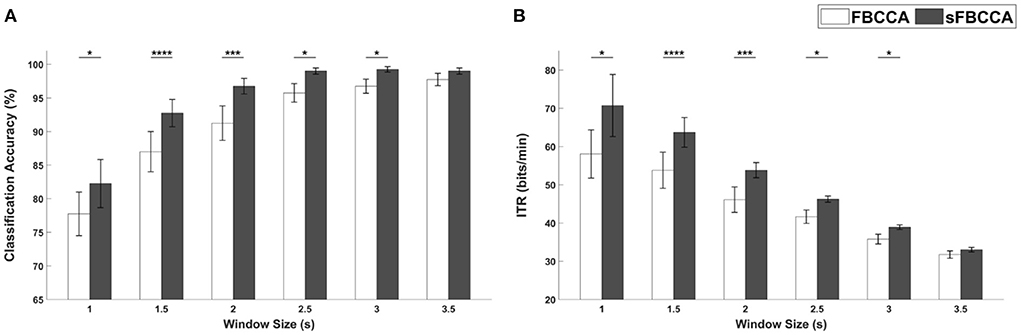

To investigate the effect of sFBCCA, the SSVEP-based BCI performances for PRS-Square were evaluated using FBCCA and sFBCCA with respect to different window sizes. In Figure 10, the white and gray bars indicate the averaged classification accuracies and ITRs evaluated using FBCCA and sFBCCA, respectively. The error bars represent standard errors. The SSVEP-based BCI performances evaluated using sFBCCA were significantly improved compared to those evaluated using FBCCA for every window size except 3.5 s (Wilcoxon signed-rank test). The result demonstrated that the proposed sFBCCA could significantly improve the performance of SSVEP-based BCIs when PRS-based hybrid visual stimuli are employed.

Figure 10. (A) Classification accuracies and (B) ITRs of SSVEP-based BCI for PRS-Square evaluated using FBCCA and sFBCCA with different window sizes. Error bars represent the standard errors. Here, *, ***, and **** represent p < 0.05, p < 0.005, and p < 0.001, respectively (Wilcoxon signed-rank test).

The fatigue scores for PRS-based hybrid visual stimuli are illustrated in Figure 6B as a function of periodic motion waveforms. The gray bars represent the interquartile ranges from 25 to 75% and white circles indicate the median values. For PRS-None, PRS-Square, PRS-Triangular, and PRS-Sine, the averaged fatigue scores were reported as 3.85 ± 1.63, 4.05 ± 1.28, 4.55 ± 1.43, and 4.8 ± 1.51, respectively.

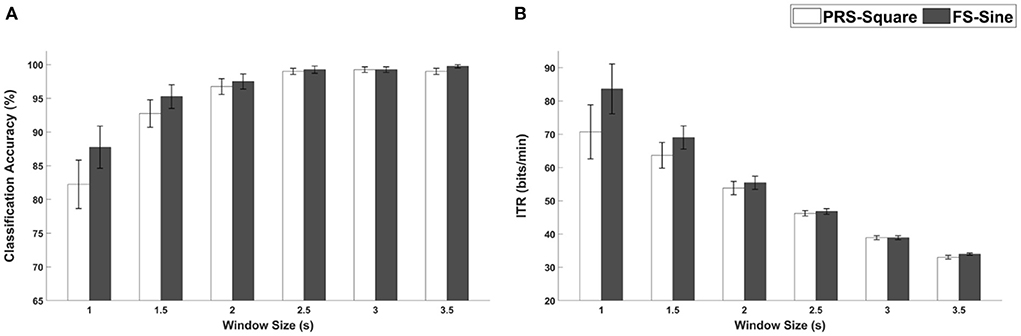

The average classification accuracies and ITRs for FS-Sine and PRS-Square, which exhibited the highest performances among FS- and PRS-based hybrid visual stimuli, are shown in Figures 11A,B, respectively. The differences in the average classification accuracies were reported to be 5.5, 2.5, 0.75, 0.25, 0.00, and 0.75%p for window sizes of 1, 1.5, 2, 2.5, 3, and 3.5 s, respectively. As for the ITRs, the differences were 12.93, 5.35, 1.60, 0.56, 0.00, 0.94 bits/min for the 1-, 1.5-, 2-, 2.5-, 3-, and 3.5-s window sizes. Statistically significant differences were not observed (Wilcoxon signed-rank test).

Figure 11. (A) Classification accuracies and (B) ITRs for PRS-Square and FS-Sine with different window sizes. Error bars represent the standard errors.

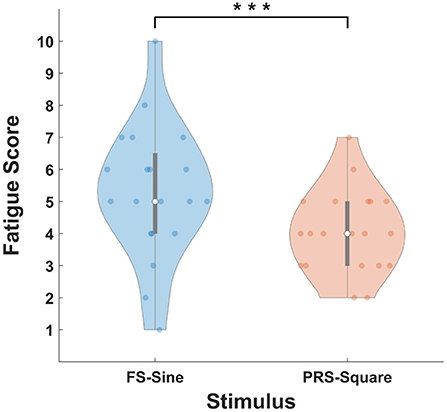

The violin plot in Figure 12 illustrates the fatigue scores for FS-Sine and PRS-Square. The distributions of fatigue scores from the first quartile to the third quartile are presented as gray bars and the median values are indicated as white circles. The average fatigue scores were reported to be 5.3 ± 2.05 and 4.05 ± 1.28 for FS-Sine and PRS-Square, respectively. A statistically significant difference in the fatigue score was observed between the FS-Sine and PRS-Square conditions (p < 0.005, Wilcoxon signed-rank test), implying that PRS-Square is more visually comfortable to the users than FS-Sine.

Figure 12. Average fatigue scores for FS-Sine and PRS-Square. Gray bars represent the interquartile range from 25 to 75% and white circles indicate the median values. Here, the asterisk of *** represents p < 0.005 (Wilcoxon signed-rank test).

In this study, we proposed novel types of hybrid visual stimuli that incorporate periodic motions into conventional SSVEP visual stimuli. Periodic motions were realized by changing the size of the visual stimulus according to four different types of waveforms. We then investigated, for the first time, the effect of periodic motion waveforms for the hybrid visual stimuli on the performances of SSVEP-based BCIs. Our results demonstrated that the conventional SSVEP visual stimuli combined with appropriate periodic motions could increase the SSVEP amplitudes significantly, resulting in the enhancement of SSVEP-based BCI performances. For FS, the hybrid stimulus of FS-Sine elicited the highest SSVEP amplitudes at the fundamental and second harmonic frequencies, thereby resulting in the highest average performances in terms of classification accuracies and ITRs for every window size except for 3 s. As for PRS, PRS-Square evoked the highest SSVEP components, thereby exhibiting the highest performances for all window sizes. No statistically significant difference in the performances between FS-Sine and PRS-Square was observed; however, the visual fatigue score of PRS-Square was significantly lower than that of FS-Sine. Visual fatigue is one of the main obstacles to implementing practical SSVEP-based BCIs because visual fatigue generally decreases SSVEP amplitudes, yielding degradation of overall SSVEP BCI performances (Makri et al., 2015; Ajami et al., 2018). Therefore, our results suggest that the proposed PRS-Square is the most appropriate stimulus that could improve the SSVEP-based BCI performance without inducing high visual fatigue. We believe that the use of PRS-Square stimuli has great potential to improve the practicality of SSVEP-based BCIs, particularly for long-term use.

We hypothesized that SSVEP-based BCIs with any kind of hybrid visual stimulus would outperform those with conventional SSVEP visual stimuli because hybrid visual stimuli could induce both SSVEP and SSMVEP. However, contrary to our expectation, FS-Square and PRS-Triangular exhibited lower average classification accuracies and ITRs than the conventional visual stimuli for some window sizes. In addition, a periodic motion of the same waveform had different effects on FS and PRS. For example, unlike FS-Square, PRS-Square achieved the highest classification accuracies and ITRs for every window size, suggesting that it is important to combine conventional visual stimuli with periodic motions of an appropriate waveform to implement high-performance SSVEP-based BCIs. We believe that these results might originate from the different mechanisms by which FS and PRS evoke SSVEP. However, imaging modalities with higher spatial resolution, such as fMRI, would be necessary to investigate these mechanisms further. In addition, the luminance or pattern of FS and PRS was changed according to a square waveform because previous studies (Teng et al., 2011; Chen et al., 2019) demonstrated that square-wave FS achieved significantly higher classification performance than stimuli of other waveforms. Nevertheless, hybrid visual stimuli that combine FS or PRS of other waveforms with periodic motions might also improve the performance of SSVEP-based BCIs, and further investigation is needed.
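To make the four periodic-motion conditions concrete, the sketch below shows one way a stimulus size could be modulated over time by the none, square, triangular, and sine waveforms. It is an illustration only: the function name `stimulus_scale`, the scale limits `min_scale` and `max_scale`, and the phase convention are all hypothetical and are not taken from the stimulation program used in this study.

```python
import math


def stimulus_scale(waveform, motion_freq_hz, t, min_scale=0.5, max_scale=1.0):
    """Relative stimulus size at time t (seconds) for a periodic motion.

    The size limits and phase origin are illustrative assumptions.
    """
    x = (motion_freq_hz * t) % 1.0  # position within the current cycle
    if waveform == "none":
        level = 1.0  # no motion: the size stays at its maximum
    elif waveform == "square":
        level = 1.0 if x < 0.5 else 0.0  # abrupt switch between two sizes
    elif waveform == "triangular":
        level = 1.0 - 2.0 * abs(x - 0.5)  # linear growth, then linear shrink
    elif waveform == "sine":
        level = 0.5 * (1.0 + math.sin(2.0 * math.pi * x))  # smooth oscillation
    else:
        raise ValueError(f"unknown waveform: {waveform}")
    return min_scale + (max_scale - min_scale) * level
```

In a frame-based renderer, such a function would be evaluated once per frame with `t = frame_index / refresh_rate`. For the PRS-based stimuli, `motion_freq_hz` would be set to half of the pattern-reversal frequency, as described in this study.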

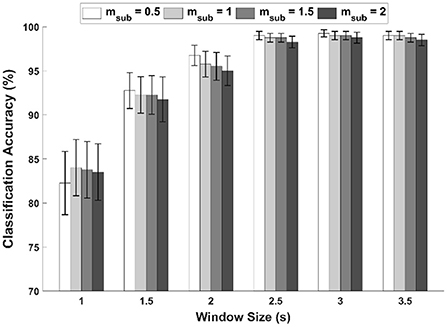

In contrast to the FS-based hybrid visual stimuli, the PRS-based hybrid visual stimuli were implemented by incorporating periodic motions whose stimulation frequency was half of the PRS frequency. Although not reported in this manuscript, in our preliminary tests we also tested PRS-based hybrid visual stimuli with periodic motions whose stimulation frequency was the same as that of PRS. With these faster periodic motions (twice the frequency finally adopted), most participants complained of severe visual fatigue and discomfort due to the rapid change in stimulus size, although the classification accuracy was almost the same as that of the PRS-based hybrid visual stimuli employed in this study. Consequently, the stimulation frequency of the periodic motions was set to half of the PRS frequency. Because the reduced stimulation frequency of the periodic motions evoked a subharmonic component, we extended the conventional FBCCA and proposed sFBCCA to fully exploit the useful information contained in the SSVEP evoked by the proposed PRS-based hybrid visual stimuli. The use of sFBCCA significantly enhanced the classification performance compared with FBCCA applied to PRS-Square, as shown in Figure 10. In sFBCCA, the index of the subharmonic, msub, was set to 0.5, which yielded the highest classification accuracies for all window sizes except 1 s, as shown in Figure 13. However, SSVEP-based BCI performance might still be improved further by optimizing the sFBCCA parameters. Additionally, the PRS-based hybrid visual stimulus has the potential to increase the number of commands, which is limited by the refresh rate of the LCD monitor (Li et al., 2021), thanks to its dual main stimulation frequencies induced by SSVEP and SSMVEP. This is one of the promising topics that we would like to investigate in future studies toward implementing high-performance SSVEP-based BCIs.
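The idea of exploiting a subharmonic component can be illustrated with a minimal NumPy sketch. It is not the sFBCCA implementation used in this study: it assumes that the subharmonic enters as one additional sine/cosine pair at msub times the stimulation frequency in the CCA reference set, and it omits the filter-bank stage and sub-band weighting of FBCCA entirely. The function names and the default of three harmonics are hypothetical.

```python
import numpy as np


def make_reference(freq, fs, n_samples, n_harmonics=3, m_sub=None):
    """Sine/cosine reference signals for one stimulation frequency.

    If m_sub is given (e.g. 0.5), a sine/cosine pair at m_sub * freq is
    appended. Adding the subharmonic this way is an assumption.
    """
    t = np.arange(n_samples) / fs
    multipliers = list(range(1, n_harmonics + 1))
    if m_sub is not None:
        multipliers.append(m_sub)
    rows = []
    for m in multipliers:
        rows.append(np.sin(2 * np.pi * m * freq * t))
        rows.append(np.cos(2 * np.pi * m * freq * t))
    return np.array(rows)  # shape: (2 * len(multipliers), n_samples)


def max_canonical_corr(X, Y):
    """Largest canonical correlation between X and Y (rows are variables)."""
    Xc = (X - X.mean(axis=1, keepdims=True)).T
    Yc = (Y - Y.mean(axis=1, keepdims=True)).T
    # Orthonormal bases of the two column spaces; the singular values of
    # their cross-product are the canonical correlations.
    Qx, _ = np.linalg.qr(Xc)
    Qy, _ = np.linalg.qr(Yc)
    s = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    return float(np.clip(s[0], 0.0, 1.0))


def classify_with_subharmonic(eeg, candidate_freqs, fs, m_sub=0.5):
    """Return the candidate frequency with the highest canonical correlation.

    eeg has shape (n_channels, n_samples).
    """
    scores = [
        max_canonical_corr(
            eeg, make_reference(f, fs, eeg.shape[1], m_sub=m_sub)
        )
        for f in candidate_freqs
    ]
    return candidate_freqs[int(np.argmax(scores))], scores
```

Calling `classify_with_subharmonic(eeg, freqs, fs, m_sub=None)` reduces the sketch to standard CCA with harmonic references only, which gives a simple way to compare the two reference sets on the same data.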

Figure 13. Average classification accuracies for PRS-Square as a function of msub in sFBCCA with different window sizes.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Institutional Review Board Committee of Hanyang University. The patients/participants provided their written informed consent to participate in this study.

JK designed the experiment, developed the algorithm, and analyzed the data. JH developed the visual stimulation program. HN performed experiments. C-HI supervised the study. JK and C-HI wrote the manuscript. All authors reviewed the manuscript.

This work was supported in part by Hyundai Motor Group in 2021. The funder was not involved in the study design, collection, analysis, interpretation of data, the writing of this article, or the decision to submit it for publication. This work was also supported in part by the National Research Foundation of Korea (NRF) grant funded by the Korea Government (MSIT) (No. NRF2019R1A2C2086593), and in part by the Institute for Information and communications Technology Promotion (IITP) grant funded by the Korea government (MSIT) (2017-0-00432).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2022.997068/full#supplementary-material

Abiri, R., Borhani, S., Sellers, E. W., Jiang, Y., and Zhao, X. (2019). A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 16, 011001. doi: 10.1088/1741-2552/aaf12e

Ajami, S., Mahnam, A., and Abootalebi, V. (2018). An adaptive SSVEP-based brain-computer interface to compensate fatigue-induced decline of performance in practical application. IEEE Trans. Neural Syst. Rehabil. Eng. 26, 2200–2209. doi: 10.1109/TNSRE.2018.2874975

Armengol-Urpi, A., and Sarma, S. E. (2018). “Sublime: a hands-free virtual reality menu navigation system using a high-frequency SSVEP-based brain-computer interface,” in Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology, (Tokyo, Japan: Association for Computing Machinery) 1–8. doi: 10.1145/3281505.3281514

Arpaia, P., De Benedetto, E., and Duraccio, L. (2021). Design, implementation, and metrological characterization of a wearable, integrated AR-BCI hands-free system for health 4.0 monitoring. Measurement 177, 109280. doi: 10.1016/j.measurement.2021.109280

Bieger, J., Molina, G. G., and Zhu, D. (2010). “Effects of stimulation properties in steady-state visual evoked potential based brain-computer interfaces,” in Proceedings of 32nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, (Buenos Aires, Argentina: IEEE) 3345–3348.

Chen, X., Wang, Y., Gao, S., Jung, T.-P., and Gao, X. (2015). Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain–computer interface. J. Neural Eng. 12, 046008. doi: 10.1088/1741-2560/12/4/046008

Chen, X., Wang, Y., Zhang, S., Xu, S., and Gao, X. (2019). Effects of stimulation frequency and stimulation waveform on steady-state visual evoked potentials using a computer monitor. J. Neural Eng. 16, 066007. doi: 10.1088/1741-2552/ab2b7d

Chen, Y., Yang, C., Ye, X., Chen, X., Wang, Y., and Gao, X. (2021). Implementing a calibration-free SSVEP-based BCI system with 160 targets. J. Neural Eng. 18, 046094. doi: 10.1088/1741-2552/ac0bfa

Choi, G.-Y., Han, C.-H., Jung, Y.-J., and Hwang, H.-J. (2019a). A multi-day and multi-band dataset for a steady-state visual-evoked potential–based brain-computer interface. GigaScience 8, giz133. doi: 10.1093/gigascience/giz133

Choi, K.-M., Park, S., and Im, C.-H. (2019b). Comparison of visual stimuli for steady-state visual evoked potential-based brain-computer interfaces in virtual reality environment in terms of classification accuracy and visual comfort. Comput. Intell. Neurosci. 2019, 9680697. doi: 10.1155/2019/9680697

Dai, G., Zhou, J., Huang, J., and Wang, N. (2020). HS-CNN: a CNN with hybrid convolution scale for EEG motor imagery classification. J. Neural Eng. 17, 016025. doi: 10.1088/1741-2552/ab405f

Daly, J. J., and Wolpaw, J. R. (2008). Brain–computer interfaces in neurological rehabilitation. Lancet Neurol. 7, 1032–1043. doi: 10.1016/S1474-4422(08)70223-0

Duszyk, A., Bierzyńska, M., Radzikowska, Z., Milanowski, P., Kuś, R., Suffczyński, P., et al. (2014). Towards an optimization of stimulus parameters for brain-computer interfaces based on steady state visual evoked potentials. PLoS ONE 9, e112099. doi: 10.1371/journal.pone.0112099

Ge, S., Jiang, Y., Wang, P., Wang, H., and Zheng, W. (2019). Training-free steady-state visual evoked potential brain–computer interface based on filter bank canonical correlation analysis and spatiotemporal beamforming decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 1714–1723. doi: 10.1109/TNSRE.2019.2934496

Herrmann, C. S. (2001). Human EEG responses to 1–100 Hz flicker: resonance phenomena in visual cortex and their potential correlation to cognitive phenomena. Exp. Brain Res. 137, 346–353. doi: 10.1007/s002210100682

Jukiewicz, M., and Cysewska-Sobusiak, A. (2016). Stimuli design for SSVEP-based brain computer-interface. Int. J. Electr. Telecomm. 62, 109–113. doi: 10.1515/eletel-2016-0014

Kim, H., and Im, C.-H. (2021). Influence of the number of channels and classification algorithm on the performance robustness to electrode shift in steady-state visual evoked potential-based brain-computer interfaces. Front. Neuroinform. 15, 750839. doi: 10.3389/fninf.2021.750839

Kim, M., Kim, M.-K., Hwang, M., Kim, H.-Y., Cho, J., and Kim, S.-P. (2019). Online home appliance control using EEG-based brain–computer interfaces. Electronics 8, 1101. doi: 10.3390/electronics8101101

Li, M., He, D., Li, C., and Qi, S. (2021). Brain–computer interface speller based on steady-state visual evoked potential: a review focusing on the stimulus paradigm and performance. Brain Sci. 11, 450. doi: 10.3390/brainsci11040450

Liu, B., Huang, X., Wang, Y., Chen, X., and Gao, X. (2020). BETA: a large benchmark database toward SSVEP-BCI application. Front. Neurosci. 14, 627. doi: 10.3389/fnins.2020.00627

Lotte, F., Bougrain, L., Cichocki, A., Clerc, M., Congedo, M., Rakotomamonjy, A., et al. (2018). A review of classification algorithms for EEG-based brain–computer interfaces: a 10 year update. J. Neural Eng. 15, 031005. doi: 10.1088/1741-2552/aab2f2

Makri, D., Farmaki, C., and Sakkalis, V. (2015). “Visual fatigue effects on steady state visual evoked potential-based brain computer interfaces,” in 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), (Montpellier, France: IEEE). doi: 10.1109/NER.2015.7146562

Nakanishi, M., Wang, Y., Chen, X., Wang, Y. T., Gao, X., and Jung, T. P. (2018). Enhancing detection of SSVEPs for a high-speed brain speller using task-related component analysis. IEEE Trans. Biomed. Eng. 65, 104–112. doi: 10.1109/TBME.2017.2694818

Nakanishi, M., Wang, Y., Wang, Y.-T., and Jung, T.-P. (2015). A comparison study of canonical correlation analysis based methods for detecting steady-state visual evoked potentials. PLoS ONE 10, e0140703. doi: 10.1371/journal.pone.0140703

Park, S., Cha, H., and Im, C.-H. (2019). Development of an online home appliance control system using augmented reality and an SSVEP-based brain–computer interface. IEEE Access 7, 163604–163614. doi: 10.1109/ACCESS.2019.2952613

Perera, C. J., Naotunna, I., Sadaruwan, C., Gopura, R. A. R. C., and Lalitharatne, T. D. (2016). “SSVEP based BMI for a meal assistance robot,” in 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), (Budapest, Hungary: IEEE) 002295–002300. doi: 10.1109/SMC.2016.7844580

Rabiul Islam, M., Khademul Islam Molla, M., Nakanishi, M., and Tanaka, T. (2017). Unsupervised frequency-recognition method of SSVEPs using a filter bank implementation of binary subband CCA. J. Neural Eng. 14, 026007. doi: 10.1088/1741-2552/aa5847

Teng, F., Chen, Y., Choong, A. M., Gustafson, S., Reichley, C., Lawhead, P., et al. (2011). Square or sine: finding a waveform with high success rate of eliciting SSVEP. Comput. Intell. Neurosci. 2011, 1–5. doi: 10.1155/2011/364385

Waytowich, N., Lawhern, V. J., Garcia, J. O., Cummings, J., Faller, J., Sajda, P., et al. (2018). Compact convolutional neural networks for classification of asynchronous steady-state visual evoked potentials. J. Neural Eng. 15, 066031. doi: 10.1088/1741-2552/aae5d8

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain–computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/S1388-2457(02)00057-3

Xie, J., Xu, G., Wang, J., Zhang, F., and Zhang, Y. (2012). Steady-state motion visual evoked potentials produced by oscillating Newton's rings: implications for brain-computer interfaces. PLoS ONE 7, e39707. doi: 10.1371/journal.pone.0039707

Xu, L., Xu, M., Jung, T.-P., and Ming, D. (2021). Review of brain encoding and decoding mechanisms for EEG-based brain–computer interface. Cogn. Neurodyn. 15, 569–584. doi: 10.1007/s11571-021-09676-z

Yan, W., Xu, G., Li, M., Xie, J., Han, C., Zhang, S., et al. (2017). Steady-state motion visual evoked potential (SSMVEP) based on equal luminance colored enhancement. PLoS ONE 12, e0169642. doi: 10.1371/journal.pone.0169642

Zerafa, R., Camilleri, T., Falzon, O., and Camilleri, K. P. (2018). To train or not to train? A survey on training of feature extraction methods for SSVEP-based BCIs. J. Neural Eng. 15, 051001. doi: 10.1088/1741-2552/aaca6e

Zhang, C., Kim, Y.-K., and Eskandarian, A. (2021). EEG-inception: an accurate and robust end-to-end neural network for EEG-based motor imagery classification. J. Neural Eng. 18, 046014. doi: 10.1088/1741-2552/abed81

Zhang, Y., Xie, S. Q., Wang, H., Yu, Z., and Zhang, Z. (2020). Data analytics in steady-state visual evoked potential-based brain-computer interface: a review. IEEE Sens. J. 21, 1124–1138. doi: 10.1109/JSEN.2020.3017491

Zhao, J., Zhang, W., Wang, J. H., Li, W., Lei, C., Chen, G., et al. (2020). Decision-making selector (DMS) for integrating CCA-based methods to improve performance of SSVEP-based BCIs. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 1128–1137. doi: 10.1109/TNSRE.2020.2983275

Keywords: brain-computer interfaces (BCIs), steady-state visual evoked potential (SSVEP), steady-state motion visual evoked potential (SSMVEP), hybrid visual stimulus, periodic motion

Citation: Kwon J, Hwang J, Nam H and Im C-H (2022) Novel hybrid visual stimuli incorporating periodic motions into conventional flickering or pattern-reversal visual stimuli for steady-state visual evoked potential-based brain-computer interfaces. Front. Neuroinform. 16:997068. doi: 10.3389/fninf.2022.997068

Received: 18 July 2022; Accepted: 30 August 2022;

Published: 21 September 2022.

Edited by:

Luzheng Bi, Beijing Institute of Technology, China

Reviewed by:

Sung-Phil Kim, Ulsan National Institute of Science and Technology, South Korea

Copyright © 2022 Kwon, Hwang, Nam and Im. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chang-Hwan Im, ich@hanyang.ac.kr
