ORIGINAL RESEARCH article

Front. Neuroinform., 29 April 2022

Volume 16 - 2022 | https://doi.org/10.3389/fninf.2022.886365

This article is part of the Research Topic Weakly Supervised Deep Learning-based Methods for Brain Image Analysis

Wenchao Li1, Jiaqi Zhao2, Chenyu Shen1, Jingwen Zhang3, Ji Hu1, Mang Xiao4, Jiyong Zhang1*, Minghan Chen3* for the Alzheimer's Disease Neuroimaging Initiative†

Alzheimer's disease (AD) has raised extensive concern in healthcare and academia as one of the most prevalent health threats to the elderly. Because AD is irreversible, early and accurate diagnosis is essential for effective prevention and treatment. However, diverse clinical symptoms and the limited accuracy of neuroimaging make diagnosis challenging. In this article, we built a brain network for each subject that combines several commonly used neuroimaging modalities in a simple and principled way, including structural magnetic resonance imaging (MRI), diffusion-weighted imaging (DWI), and amyloid positron emission tomography (PET). Building on existing research results, we applied statistical methods to analyze (i) the distinct affinity of AD burden for each brain region, (ii) the topological lateralization between the left and right hemispheric sub-networks, and (iii) the asymmetry of AD attacks on the left and right hemispheres. In light of advances in graph convolutional networks (GCNs) for graph classification and of the summarized characteristics of brain networks and AD pathology, we proposed a regional brain fusion-graph convolutional network (RBF-GCN). It is built mainly on an RBF framework comprising three sub-modules, namely, a hemispheric network generation module, a multichannel GCN module, and a feature fusion module. In the multichannel GCN module, a GCN improved by our proposed adaptive native node attribute (ANNA) unit is embedded in each channel independently. We verified the effectiveness of the RBF framework and the ANNA unit and achieved competitive results on multiple AD stage classification tasks in hundreds of experiments on the ADNI clinical dataset.

Alzheimer's disease (AD) is a progressive, degenerative, and irreversible brain disorder. Accurate early detection of AD offers enormous benefits to patients, families, and society as a whole (Alzheimer's Association, 2019). Owing to advances in neuroimaging and machine learning technology in recent years, numerous machine learning algorithms have been developed to diagnose the stage of AD from various neuroimaging data and have achieved promising results (Klöppel et al., 2008; Vemuri et al., 2008; Cuingnet et al., 2011; Wee et al., 2011; O'Dwyer et al., 2012; Dyrba et al., 2013; Nir et al., 2015; Prasad et al., 2015; Zhang and Liu, 2018; Yan et al., 2019; Yang and Mohammed, 2020).

Compared with diffusion magnetic resonance imaging (dMRI), structural MRI (sMRI) is a more mature technology, making it more accessible in both clinical and academic settings. Various auxiliary diagnosis algorithms for AD have been developed based on sMRI data (Klöppel et al., 2008; Vemuri et al., 2008; Cuingnet et al., 2011; Tanveer et al., 2020), using macrostructural changes of the brain, such as brain atrophy and neuronal tissue loss, to indicate AD progression. As dMRI techniques have advanced in recent years, some AD-related pathological studies have shown that microstructural changes of the brain may appear before macrostructural changes and occur in the early stages of the disease (Amlien and Fjell, 2014; Araque Caballero et al., 2018; Veale et al., 2021). Given this potential of dMRI for early AD diagnosis, many discriminative methods based on dMRI scans have emerged in recent years. They can be divided into two categories, namely, diffusivity measure-based methods (O'Dwyer et al., 2012; Dyrba et al., 2013; Nir et al., 2015; Zhang and Liu, 2018) and network neuroscience-based methods (Wee et al., 2011; Prasad et al., 2015). Although auxiliary diagnosis algorithms for AD based on dMRI scans have achieved good results, both types of methods have limitations. The former usually consider only the local characteristics of nerve fibers. The latter can account for both local and global features to some extent, but they inevitably lose some key discriminative information when specific network measures are selected by hand.

With the rise of graph convolutional networks (GCNs) for graph classification tasks on graph-structured data (Bruna et al., 2013; Henaff et al., 2015; Ying et al., 2018; Gao and Ji, 2019; Huang et al., 2019; Lee et al., 2019), a new methodology has become available for intelligent clinical diagnosis algorithms for AD based on network neuroscience. Song et al. (2019) directly used conventional GCNs to discriminate the AD stages of subjects from structural connectivity inputs derived from diffusion tensor imaging. However, although a conventional GCN can learn the latent representation of graph-structured data more comprehensively, it cannot fully take the unique characteristics of AD pathology and the brain network into account. A closer topographical analysis reveals that AD pathology affects the two hemispheres with different affinities, which aligns with AD pathology studies (Giannakopoulos et al., 1994; Braak and Del Tredici, 2015; Ossenkoppele et al., 2016; Vogel et al., 2020). Meanwhile, studies of the brain network have demonstrated significant hemispheric lateralization of the topological organization of the structural brain network (Iturria-Medina et al., 2011; Caeyenberghs and Leemans, 2014; Nusbaum et al., 2017; Yang et al., 2017). Some studies have also indicated that AD-related pathologies attack different brain regions in a certain sequence as the disease progresses (Crossley et al., 2014; Bischof et al., 2016; Cope et al., 2018; Pereira et al., 2019; Vogel et al., 2020).

Although Aβ status has been included in the revised diagnostic criteria for AD (Sperling et al., 2011), compared with other modalities of neuroimaging data, studies related to the AD diagnosis algorithms based on amyloid-PET data are still relatively rare (Vandenberghe et al., 2013; Yan et al., 2019). In the study by Son et al. (2020), the clinical feasibility of the deep learning method was validated in comparison with the visual rating or quantitative measures for evaluating the diagnosis and prognosis of subjects with equivocal amyloid-PET scans, so studies on an auxiliary diagnosis algorithm for AD based on amyloid-PET scans ought to receive more attention.

A large amount of literature demonstrates that data from multiple modalities, such as sMRI, DWI, fMRI, and PET biomarkers, can reveal the pathological characteristics of AD from different perspectives, so the fusion of complementary information from multimodal data can usually boost the performance of AD-related classifications and predictions. However, the conventional multimodality fusion methods, both direct (Schouten et al., 2016; Tang et al., 2016) and indirect (Yu et al., 2016; Zheng et al., 2017) mechanical combinations of multimodal features, neglect the intrinsic relationships between biomarkers derived from different modalities. For instance, some studies have revealed that amyloid-β (detected by PET) proteins spread along neural pathways (detected by dMRI) in a prion-like manner in AD progression (Iturria-Medina et al., 2014; Kim et al., 2019; Raj, 2021).

In this article, we proposed a novel regional brain fusion-graph convolutional network (RBF-GCN), which explicitly exploits both the characteristics of AD pathology and the topological structure of brain networks. To let the more discriminative node attributes contribute more to AD diagnosis, we devised an adaptive native node attribute (ANNA) unit to improve the classic GCN, and the improved GCN can be embedded into the RBF framework. Inspired by the propagation mechanism of AD pathology and by the message-propagation mechanism of GCNs, we used graph-structured data to fuse, simply and naturally, the information from DWI scans (i.e., network topology) and amyloid-PET scans (i.e., nodal attributes).

The rest of this article is organized as follows. In the following sections, we describe the dataset used in this study as well as the proposed method. Later, we present details of our experimental results and discuss them. Finally, we conclude this article.

Our dataset consists of 502 subjects, including 168 normal controls (NCs), 165 subjects with mild cognitive impairment (MCI), and 169 subjects with AD. The demographic information of our dataset is shown in Table 1. All neuroimaging data were selected from the Alzheimer's Disease Neuroimaging Initiative (ADNI; http://adni.loni.usc.edu), and each subject has T1-weighted MRI, DWI, and amyloid-PET scans. Amyloid-PET and T1-weighted MRI are jointly used to calculate the regional amyloid standardized uptake value ratio (SUVR) of each subject, and DWI and T1-weighted MRI are jointly used to construct the corresponding structural brain network. The data processing is described in the next section.

In this study, the Destrieux atlas (Destrieux et al., 2010) is used to calculate the amyloid SUVR of 148 cortical regions from the amyloid-PET scan and construct structural brain networks. Following the Destrieux atlas, the human cerebral cortex was parcellated into 74 different brain regions per hemisphere. The parcellation process of the cortical surface applied the standard internationally accepted nomenclature and criteria, so we selected this atlas. The data are processed using our in-house pipeline built on top of FreeSurfer (https://surfer.nmr.mgh.harvard.edu/) and FSL (FMRIB Software Library, https://fsl.fmrib.ox.ac.uk/fsl/fslwiki). Below is a detailed description of the processing steps.

For the SUVR calculation, we first applied a set of image processing steps on MR images to obtain the region parcellations. The preprocessing of each MR image consists of four major steps (Rajapakse et al., 1997; Tohka et al., 2004; Brendel et al., 2015), namely, (1) skull stripping; (2) tissue segmentation, where we segmented the volumetric intensity image into white matter (WM), gray matter (GM), and cerebrospinal fluid (CSF); (3) constructing the cortical surface based on tissue segmentation results; and (4) registering the Destrieux atlas to the underlying MR image using deformable image registration, which allows us to map the region parcellation from atlas space to individual space. We then performed an image registration between amyloid-PET images and associated MR images such that two imaging modality data are spatially aligned. Next, we selected the cerebellum as the reference region to calculate the SUVR for each brain region, which is essentially equal to the ratio between the average SUV in the region under consideration and the average SUV at the cerebellum (Vogel et al., 2020; Gonzalez-Escamilla et al., 2021). To construct the structural brain network, based on the Destrieux parcellation of the MR image, we used a seed-based probabilistic fiber tractography method over the DWI scan data, which determines how many fibers (directly) connect any two brain regions (Messaritaki et al., 2019). Then, a structural brain network consisting of 148 nodes and associated edges is constructed where connectivity strength reflects the number of fibers between two nodes.
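The SUVR step described above reduces to a ratio of regional means. The following minimal Python sketch illustrates it on toy arrays. The function name `regional_suvr`, the label values, and the toy data are hypothetical and are not part of the in-house pipeline, which operates on registered PET volumes and atlas parcellations.

```python
import numpy as np

def regional_suvr(pet, labels, region_ids, reference_ids):
    """Return {region id: mean uptake in region / mean uptake in reference}.

    pet           : array of uptake values (any shape)
    labels        : integer array of the same shape, one region label per voxel
    region_ids    : labels of the regions of interest
    reference_ids : labels that make up the reference region (here: cerebellum)
    """
    ref_mask = np.isin(labels, reference_ids)
    ref_mean = pet[ref_mask].mean()
    return {rid: float(pet[labels == rid].mean() / ref_mean) for rid in region_ids}

# Toy 2-D "image": labels 1 and 2 are cortical regions, label 9 is the reference.
pet = np.array([[2.0, 2.0, 4.0],
                [1.0, 1.0, 4.0]])
labels = np.array([[1, 1, 2],
                   [9, 9, 2]])
suvr = regional_suvr(pet, labels, region_ids=[1, 2], reference_ids=[9])
print(suvr)
```

In the toy data the reference mean is 1.0, so each regional SUVR equals the regional mean.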

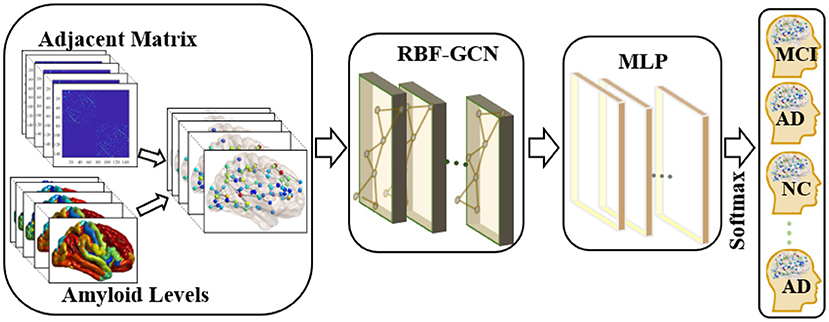

In this study, subjects $S = [s_1, \cdots, s_n]$ are each associated with a structural brain network $\mathbb{N} = \{V, L, F\}$, where $V$ denotes the set of nodes (brain regions), $L$ denotes the set of links (white matter fiber bundles between pairs of nodes), and $F$ denotes the node attribute matrix. More specifically, $m = |V|$ is the total number of nodes in each brain network, and each node $v_i \in V$ has a $d$-dimensional attribute feature representation $f_i$, which can naturally incorporate the nodal identification code, amyloid level, and so on. Hence, a subject's node attribute matrix is $F \in \mathbb{R}^{m \times d}$. The weighted adjacency matrix $A \in \mathbb{R}^{m \times m}$ quantitatively describes the strength of the network links $L$. $Y = [y_1, \cdots, y_n]$ represents the status of the subjects, and a subject's status $y_i$ is one of three diagnostic labels, namely, NC, MCI, or AD. As illustrated in Figure 1, our objective is to discriminate each subject's status using a model learned from the aforementioned brain network dataset.

Figure 1. Overview of the pipeline used in the classification of each subject's clinical diagnosis. First, we applied the graph-structured data to incorporate the topology of the brain network and nodal attributes (i.e., amyloid levels) in each brain region. Then, a regional brain fusion-graph convolutional network (RBF-GCN) model is developed to extract the underlying features of the graph-structured data. Finally, a multilayer perceptron (MLP) module and a Softmax layer are added to predict each subject's Alzheimer's disease (AD) stage.

Based on the information propagation mechanism, the classical GCN can be used to integrate the structural information of the brain network into the nodal feature representation, which is commonly defined as (Kipf and Max, 2017; Huang et al., 2019; Ranjan et al., 2020):

$$H^{(k+1)} = \sigma\left(\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} H^{(k)} W^{(k)}\right)$$

$H^{(k)}$ denotes the input of the $k$-th GCN layer, and $H^{(0)}$ is initialized with the initial node attribute matrix, i.e., $H^{(0)} = F$. $\tilde{A} = A + I_m$ represents the adjacency matrix of the network with self-loops, and $I_m$ denotes the identity matrix. The diagonal matrix $\tilde{D}$ is computed by $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$ and $\tilde{D}_{ij} = 0$ for $i \neq j$. $W^{(k)} \in \mathbb{R}^{d^{(k)} \times d^{(k+1)}}$ is a learnable parameter matrix in the $k$-th GCN layer, which is shared by each node of the brain network, and $\sigma(\cdot)$ denotes a nonlinear activation function.
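As a concrete illustration of the propagation rule described above, the NumPy sketch below performs one layer of symmetric-normalized graph convolution with self-loops. It assumes the standard form of this rule, a ReLU activation, and non-negative edge weights; the function name `gcn_layer` and the toy two-node network are hypothetical.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One GCN layer: ReLU(D~^{-1/2} (A + I) D~^{-1/2} H W).

    A : (m, m) weighted adjacency matrix with non-negative entries
    H : (m, d_in) node features entering the layer
    W : (d_in, d_out) weight matrix shared by all nodes
    """
    m = A.shape[0]
    A_tilde = A + np.eye(m)                # add self-loops
    deg = A_tilde.sum(axis=1)              # diagonal of D~ (>= 1 thanks to self-loops)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    A_hat = d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]
    return np.maximum(A_hat @ H @ W, 0.0)  # ReLU is an assumed activation

# Toy network: two connected nodes, one scalar attribute each.
A = np.array([[0.0, 1.0],
              [1.0, 0.0]])
H0 = np.array([[1.0],
               [3.0]])
W0 = np.array([[1.0]])
H1 = gcn_layer(A, H0, W0)
print(H1)
```

With self-loops both nodes have degree 2, so each node's output is the average of the two attributes. This is the smoothing effect that deeper stacks of such layers amplify.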

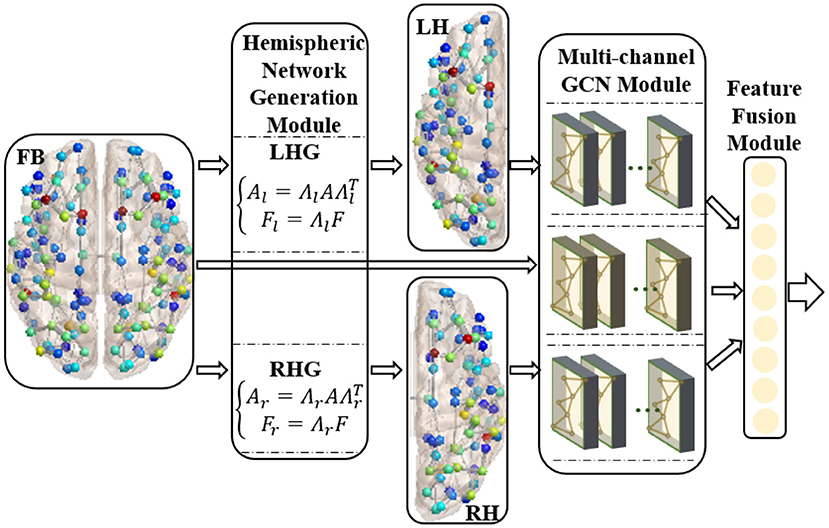

We proposed the RBF-GCN inspired by the asymmetry of AD pathology between hemispheres (Giannakopoulos et al., 1994; Braak and Del Tredici, 2015; Ossenkoppele et al., 2016; Vogel et al., 2020) and the hemispheric lateralization of topology organization in the structural brain network (Iturria-Medina et al., 2011; Caeyenberghs and Leemans, 2014; Nusbaum et al., 2017; Yang et al., 2017). As illustrated in Figure 2, RBF-GCN is built using an RBF framework mainly comprised of three modules, namely, (1) hemispheric network generation module, which generates left and right hemispheric subnetwork based on the whole brain network, (2) multichannel GCN module, which extracts the representation information from the left and right hemispheric and the full brain, respectively, and (3) feature fusion module (Lu et al., 2020), which merges feature vectors from left and right hemispheres and the full brain. In the multichannel GCN module of RBF-GCN, the improved GCN enhanced by our proposed ANNA unit is embedded into each channel independently.

Figure 2. Regional brain fusion framework. FB, LH, and RH denote full brain network and left and right hemispheric subnetwork, respectively. LHG and RHG separately denote left and right hemispheric network generation modules. FB and LH generated by LHG and RH generated by RHG are input to the corresponding channels in the multichannel GCN module, and then the extracted representation information is merged in the feature fusion module.

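The subnetworks of the two hemispheres are generated by the following operation:

$$A_l = \Lambda_l A \Lambda_l^{\top}, \quad F_l = \Lambda_l F, \quad A_r = \Lambda_r A \Lambda_r^{\top}, \quad F_r = \Lambda_r F$$

where $A_l$, $A_r$ and $F_l$, $F_r$ denote the weighted adjacency matrices and node attribute matrices of the left and right hemispheric networks, respectively (the left and right hemispheric networks have the same number of nodes). $\Lambda_l$ and $\Lambda_r$ are selection matrices used to extract the left and right hemispheric subnetworks and node attribute matrices. When the nodes are ordered with the left-hemisphere nodes first, followed by the right-hemisphere nodes, in the adjacency matrix of the whole brain network, the two matrices are defined as follows:

$$\Lambda_l = \begin{bmatrix} I_{m/2} & 0_{m/2} \end{bmatrix}, \quad \Lambda_r = \begin{bmatrix} 0_{m/2} & I_{m/2} \end{bmatrix}$$

where $I_{m/2}$ and $0_{m/2}$ are the identity and zero matrices of size $\frac{m}{2} \times \frac{m}{2}$.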
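The extraction of hemispheric subnetworks can be sketched with explicit selection matrices. The example below assumes that the first half of the node indices belongs to the left hemisphere and the second half to the right hemisphere, and that the selection matrices are block matrices built from an identity and a zero block. The function names and the toy 4-node network are hypothetical.

```python
import numpy as np

def hemisphere_selectors(m):
    """Build (m/2, m) selection matrices for the left and right hemispheres."""
    if m % 2 != 0:
        raise ValueError("expected an even number of nodes")
    half = m // 2
    eye, zero = np.eye(half), np.zeros((half, half))
    return np.hstack([eye, zero]), np.hstack([zero, eye])

def split_hemispheres(A, F):
    """Return ((A_l, F_l), (A_r, F_r)) from whole-brain A (m, m) and F (m, d)."""
    sel_l, sel_r = hemisphere_selectors(A.shape[0])
    left = (sel_l @ A @ sel_l.T, sel_l @ F)
    right = (sel_r @ A @ sel_r.T, sel_r @ F)
    return left, right

# Toy whole-brain network with 4 nodes (2 per hemisphere) and 2 attributes per node.
A = np.arange(16.0).reshape(4, 4)
F = np.arange(8.0).reshape(4, 2)
(A_l, F_l), (A_r, F_r) = split_hemispheres(A, F)
print(A_l, A_r, sep="\n")
```

Under this node ordering the matrix products are equivalent to slicing the diagonal blocks of the adjacency matrix, so inter-hemispheric connections appear only in the full-brain channel.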

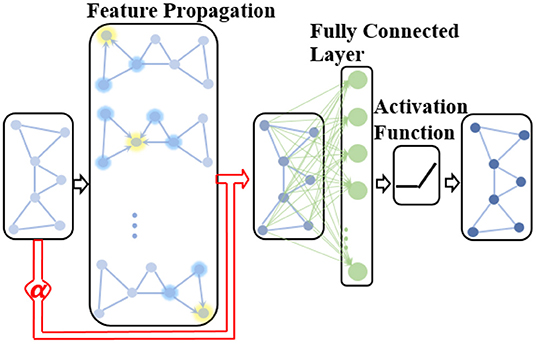

In a classical GCN, the increased depth of GCN allows each node to aggregate features from more topologically distant k-hop nodes, which could encode structural information of brain networks more comprehensively. Meanwhile, the attribute features representation at each node is smoothed. However, a consensus has emerged that AD attacks different brain regions in a certain sequence during disease progression in various existing AD pathological studies (Crossley et al., 2014; Bischof et al., 2016; Cope et al., 2018; Pereira et al., 2019; Vogel et al., 2020). From this, it can be inferred that the expressive power of attribute features of some specific brain network nodes (brain regions) is relatively stronger, which is more conducive to AD diagnosis. To evaluate the importance of regional structural network and attribute features in determining AD stages, we improved the classic GCN by adding an ANNA unit, which is more suitable for brain network data. The improved GCN is defined as follows:

where $\alpha^{(k)}$ is a learnable parameter in the $k$-th GCN layer that adaptively adjusts the contribution of the native node attributes to the extracted network representation. The ANNA unit therefore lets both modalities, the network topology from DWI and the nodal amyloid attributes from PET, contribute to the diagnosis of AD stages, thereby improving prediction accuracy. The overall structure of the improved GCN is shown in Figure 3.
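To make the idea of an adaptively weighted native-attribute term concrete, the sketch below shows one plausible form. It is an assumption made for illustration and should not be read as the definition of the ANNA unit: a scalar `alpha` scales a skip term that carries each node's own, un-aggregated features alongside the usual propagated term. In a trained model `alpha` would be a learnable parameter; here it is a plain float, and all names are hypothetical.

```python
import numpy as np

def normalize_adjacency(A):
    """Symmetric normalization with self-loops: D~^{-1/2} (A + I) D~^{-1/2}."""
    A_tilde = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))
    return d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]

def gcn_layer_with_native_term(A, H, W, alpha):
    """Assumed form: ReLU(A_hat H W + alpha * H W).

    The second term bypasses neighborhood aggregation, so alpha controls how
    much of each node's own attribute survives the smoothing of the first term.
    """
    propagated = normalize_adjacency(A) @ H @ W
    native = alpha * (H @ W)
    return np.maximum(propagated + native, 0.0)

A = np.array([[0.0, 1.0],
              [1.0, 0.0]])
H0 = np.array([[1.0],
               [3.0]])
W0 = np.array([[1.0]])
plain = gcn_layer_with_native_term(A, H0, W0, alpha=0.0)   # reduces to a plain GCN layer
mixed = gcn_layer_with_native_term(A, H0, W0, alpha=0.5)   # node differences are retained
print(plain, mixed, sep="\n")
```

With `alpha=0.0` both nodes receive the same smoothed value, whereas a positive `alpha` keeps the two nodes distinguishable, which is the behavior the text attributes to weighting native node attributes.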

Figure 3. The structure of the improved GCN with the adaptive native node attribute (ANNA) unit. The red section indicates the ANNA unit, which adaptively adjusts the contribution of native node features in the extracted network representation.

To improve the discrimination capacity of the feature maps of the brain network, we explored two commonly used feature fusion methods, namely, concatenation and addition, to integrate the full brain network and left and right hemispheric subnetworks (Ma et al., 2019; Du et al., 2020; Saad et al., 2022).

The process of concatenation can be described by the following formula:

$$H_{concat} = H_f \,\Vert\, H_l \,\Vert\, H_r$$

where $\Vert$ denotes concatenation along the column dimension, and $H_f$, $H_l$, and $H_r$ denote the feature maps of the full brain network and the left and right hemispheric subnetworks, which are output by the corresponding channels of the multichannel GCN module. The combined feature map is denoted by $H_{concat}$, whose column dimension equals the sum of the column dimensions of the three feature maps on the right-hand side. The feature map generated by this method therefore carries a more diverse feature representation.

The process of addition is given by the following formula:

$$H_{add} = H_f \oplus H_l \oplus H_r$$

where $\oplus$ means element-wise addition. The three terms on the right side of the formula must have the same shape (i.e., both row and column dimensions must match), and their sum, the combined feature map $H_{add}$, has that same shape. Compared with concatenation, addition is more space efficient: this fusion method condenses the feature representation into a narrower dimensional space, which places higher demands on the classifier's ability to identify feature details.
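The two fusion methods differ only in how the three channel outputs are combined, as the short sketch below shows. It assumes each channel has already been reduced to a graph-level feature map of shape (1, 32); the function name `fuse` and the constant toy values are hypothetical.

```python
import numpy as np

def fuse(h_f, h_l, h_r, mode="concat"):
    """Fuse full-brain, left, and right feature maps by concatenation or addition."""
    if mode == "concat":
        # Column dimensions add up; row dimensions must already agree.
        return np.concatenate([h_f, h_l, h_r], axis=-1)
    if mode == "add":
        if not (h_f.shape == h_l.shape == h_r.shape):
            raise ValueError("element-wise addition requires identical shapes")
        return h_f + h_l + h_r
    raise ValueError(f"unknown fusion mode: {mode}")

h_f = np.full((1, 32), 1.0)   # full-brain channel output (toy values)
h_l = np.full((1, 32), 2.0)   # left-hemisphere channel output
h_r = np.full((1, 32), 3.0)   # right-hemisphere channel output

h_concat = fuse(h_f, h_l, h_r, mode="concat")
h_add = fuse(h_f, h_l, h_r, mode="add")
print(h_concat.shape, h_add.shape)
```

Concatenation triples the input width of the downstream classifier, while addition keeps it unchanged at the cost of mixing the three channels into the same coordinates.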

To evaluate the performance of our proposed method, we implemented three binary classification tasks (i.e., NC vs. AD, NC vs. MCI, and MCI vs. AD) and one multiclass classification task (i.e., NC vs. MCI vs. AD) on the real-world dataset from the ADNI database in AD diagnosis. In recent years, although computer-aided diagnosis research has achieved breakthrough results in the first binary classification task (i.e., NC vs. AD), the remaining three classification tasks have relatively more room for improvement (Tanveer et al., 2020). Thus, for more refined disease state transformation prediction and more accurate early screening, we focused more on the remaining three classification tasks.

All our experiments employ 10-fold cross-validation to ensure a fair performance evaluation. The classifier is evaluated comprehensively on three quantitative metrics, namely, accuracy (ACC), sensitivity (SEN), and specificity (SPE) (Liu et al., 2016). As an exception, multiclass classification reports only accuracy. The three metrics are calculated as follows (Baratloo et al., 2015):

$$ACC = \frac{TP + TN}{TP + TN + FP + FN}, \quad SEN = \frac{TP}{TP + FN}, \quad SPE = \frac{TN}{TN + FP}$$

where TP, FP, TN, and FN represent the numbers of subjects correctly identified as patients, incorrectly identified as patients, correctly identified as healthy, and incorrectly identified as healthy, respectively. For each classification task, the group with the more severe condition is treated as patients and the other group as healthy; for example, in the task MCI vs. AD, AD subjects are considered patients and MCI subjects are considered healthy. We established a 3-layered GCN with 32 hidden units as the baseline in our experiments. For a fair comparison, our RBF-GCN model also adopts a 3-layered network, and each of its three network channels (the whole brain and the two hemispheres) has 32 hidden units (Huang et al., 2019). The MLP modules used in our experiments are all 2-layered fully connected networks with 32 hidden units. For model training, the number of epochs is set to 500, the learning rate to 0.001, and the batch size to 20; the weight parameters are initialized using the Xavier normal distribution (Glorot and Bengio, 2010), and the model is optimized with the negative log-likelihood loss function and the Adam method (Kingma and Ba, 2014).
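The three evaluation metrics follow directly from the confusion counts. The pure-Python sketch below computes them for a binary task in which the more severe group is the positive ("patient") class. The function name `binary_metrics` and the five toy predictions are hypothetical, and the code assumes both classes occur in the labels.

```python
def binary_metrics(y_true, y_pred, positive):
    """Return (accuracy, sensitivity, specificity) with `positive` as the patient class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    acc = (tp + tn) / (tp + tn + fp + fn)
    sen = tp / (tp + fn)   # fraction of patients that were detected
    spe = tn / (tn + fp)   # fraction of healthy subjects that were cleared
    return acc, sen, spe

# Toy MCI vs. AD task: AD is the patient class, MCI the "healthy" class.
y_true = ["AD", "AD", "MCI", "MCI", "AD"]
y_pred = ["AD", "MCI", "MCI", "AD", "AD"]
acc, sen, spe = binary_metrics(y_true, y_pred, positive="AD")
print(acc, sen, spe)
```

In this toy example TP = 2, FN = 1, TN = 1, and FP = 1. In a 10-fold setting the metrics would be computed per fold and then summarized by their mean and standard deviation.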

This section first verifies the effectiveness of our ANNA unit and RBF framework through ablation experiments and then verifies the superior performance of our proposed RBF-GCN model on multiple sets of AD stages classification tasks through comparative experiments.

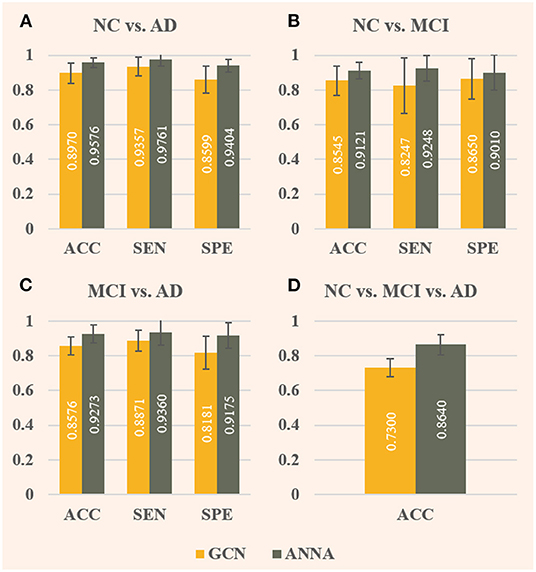

As shown in Figure 4, the ANNA unit can effectively improve the classification performance on all four tasks. For the fairness of comparison, both models employed the same parameter configuration and training method except for the ANNA unit. Regarding classification accuracy, the highest improvement was obtained on the multiclassification task shown in Figure 4D, which increased by 18.36%, and the classification task with the smallest improvement (i.e., NC vs. MCI) also increased by 6.74%, which is only slightly lower than 6.76% from the classification task (i.e., NC vs. AD). With regards to sensitivity, the tasks with greater difficulty of discrimination (i.e., NC vs. MCI and MCI vs. AD) show a relatively higher improvement, which is 12.14 and 5.51%, respectively. Therefore, it is reasonable to deduce that the improved GCN enhanced by the ANNA unit has a more pronounced effect on more difficult classification tasks. As for specificity, the highest improvement was on the task MCI vs. AD, which increased by 12.15%; the smallest improvement was on the task NC vs. MCI, which increased by 4.16%. In addition, as the error bars demonstrate, the GCN with the ANNA unit generally has a smaller standard deviation in performance, which indicates that the training of this model is more stable.

Figure 4. Evaluation of baseline GCN and the improved GCN with ANNA unit for AD stages classification. The baseline GCN is denoted by GCN and plotted in yellow; the GCN with ANNA unit is denoted by ANNA and plotted in green. The specific index for each column is displayed in white in the middle. The error bars depict the standard deviation of the three evaluation indicators across the 10 different folds, respectively. The panels (A–D), respectively, display the results of the four groups of classification tasks.

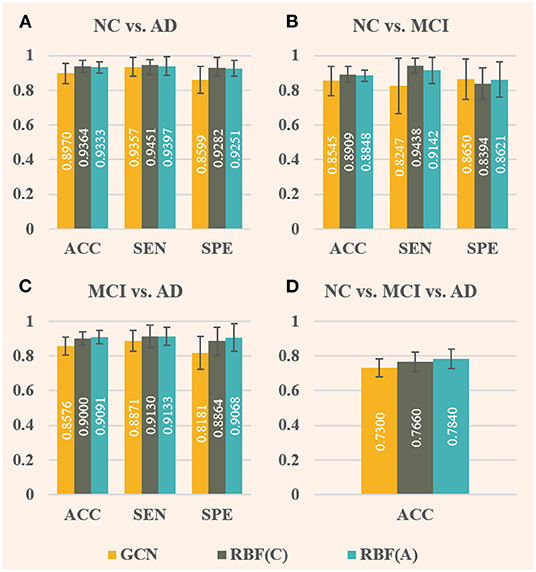

As illustrated in Figure 5, the RBF framework is compared with the baseline GCN (denoted by GCN), where RBF (C) denotes the RBF framework with the concatenation module and RBF (A) denotes the one with the addition module. Comparing Figures 4 and 5, the overall performance improvement from the RBF framework is not as large as that from the ANNA unit, but the framework still improves classification performance on all four tasks. Regarding classification accuracy, RBF (C) performs better in the first two tasks (i.e., NC vs. AD and NC vs. MCI), that is, its accuracy improvement is more pronounced. Conversely, RBF (A) performs better in the other two tasks. The highest accuracy improvement (7.40%) is achieved by RBF (A) in the multiclass task, and the lowest (3.55%) appears in the task NC vs. MCI, which is also obtained by RBF (A). RBF (A) is thus more dependent on the data, whereas RBF (C), whose gains are more balanced across tasks, is better suited to wider use. With regard to sensitivity, both RBF (C) and RBF (A) performed exceptionally well on the task NC vs. MCI, the former improving by up to 14.44%, but their corresponding specificity decreased slightly. Methods that strike a compromise between sensitivity and specificity can be more clinically acceptable. For the other two binary classification tasks, both RBF (C) and RBF (A) achieved large improvements in specificity. Among them, the highest (10.84%) and the lowest (7.58%) were obtained by RBF (A) in the task MCI vs. AD and the task NC vs. AD, respectively. As illustrated by the error bars of Figure 5, the RBF framework can also enhance the stability of the model on the test set.

Figure 5. Comparison of baseline GCN (denoted by GCN, in yellow) and both improved GCNs with RBF framework [denoted by RBF (C), in green, and denoted by RBF (A), in blue] for AD stages classification. Among them, RBF (C) corresponds to the RBF framework using the concatenation module, whereas RBF (A) corresponds to the one using the addition module. In this case, only baseline GCNs are embedded in the multichannel GCN module of the RBF framework. The specific index for each column is displayed in white in the middle. The error bars depict the standard deviation of the three evaluation indicators across the 10-folds, respectively. The panels (A–D), respectively, display the results of the four groups of classification tasks.

Besides investigating how the different components of our proposed RBF-GCN model impact AD stages classification performance, we further compared the performance of RBF-GCN to several competitive methods proposed in recent literature, which include (1) Multiscale Laplacian Graph (MLG) kernel, which is a multilevel, recursively defined kernel that captures topological relationships between individual vertices, and between sub-graphs (Kondor and Pan, 2016); (2) graph2vec, which defines an unsupervised representation learning method to learn the embedding of graphs of arbitrary sizes (Narayanan et al., 2017); (3) Infograph, which is an unsupervised graph representation learning method that applies contrastive learning to graph learning by maximizing the mutual information between both graph-level and node-level representations (Sun et al., 2019); (4) PSCN (PATCHY-SAN), which extracts and normalizes a neighborhood of exactly k nodes for every node and then uses selected neighborhood as the receptive field in traditional convolutional operations (Niepert et al., 2016); and (5) baseline GCN. To reduce the impact of external factors, such as model training and evaluation process on evaluation fairness, we implemented the aforementioned methods using libraries GraKeL (Siglidis et al., 2020) and CogDL (Cen et al., 2021) in Python and Pytorch. Some of the hyperparameters were adjusted to achieve the best performance. These models were trained and evaluated on our dataset of 502 subjects with 10-fold cross-validation.

As shown in Table 2, compared with the first four typical graph classification methods, the baseline GCN model achieves promising performance, especially for the task NC vs. MCI vs. AD: the classification accuracies of the first four methods are about 50%, while the baseline GCN model achieves 73%. This result again confirms that GCN is a powerful and promising tool in AD stage classification studies. Given the outstanding performance of the baseline GCN, we used it directly as a benchmark and compared the proposed RBF-GCN (C) and RBF-GCN (A) models with it. For the task NC vs. AD, in terms of accuracy, sensitivity, and specificity, RBF-GCN (C) and RBF-GCN (A) increased by 7.09, 4.32, 10.08% and 7.09, 5.58, 8.63%, respectively. Both models achieved the same classification accuracy (96.06%). For the task NC vs. MCI, in terms of accuracy, sensitivity, and specificity, RBF-GCN (C) and RBF-GCN (A) increased by 7.81, 14.90, 4.54% and 8.52, 13.46, 6.94%, respectively. Compared with the ablation results for the GCN with the ANNA unit and for the RBF framework, RBF-GCN (C) and RBF-GCN (A) noticeably improve accuracy and specificity. For the task MCI vs. AD, in terms of accuracy, sensitivity, and specificity, RBF-GCN (C) and RBF-GCN (A) increased by 10.95, 11.27, 11.72% and 10.95, 9.45, 14.46%, respectively. As on the task NC vs. AD, both methods achieved the same accuracy (95.15%). For the task NC vs. MCI vs. AD, RBF-GCN (C) and RBF-GCN (A) inherited the superior performance of the classic GCN and achieved accuracies of 90 and 87.2%, respectively, an increase of 23.29 and 19.45%. For all four tasks, RBF-GCN (A) and RBF-GCN (C) achieved the lowest standard deviations of the evaluation metrics. In summary, both methods achieved better performance on multiple tasks than the GCN with the ANNA unit and the RBF framework. Their performance also appears to be closer to each other, implying that the ANNA unit improves the adaptability of the RBF framework to a certain extent and has the potential for clinical applications. Under the same preconditions, our proposed RBF-GCN model significantly improves classification performance over the baseline GCN. Although the computational cost increases slightly, the network is relatively small, so the model remains quite competitive in terms of computational cost. Each epoch takes about 0.6 s on a machine with an NVIDIA GeForce GTX 1080 Ti GPU (11 GB memory), an 8-core Intel Xeon CPU (3.0 GHz), and 64 GB of RAM.

In this section, we examine the pathological basis for designing the ANNA unit and RBF framework with statistical analysis.

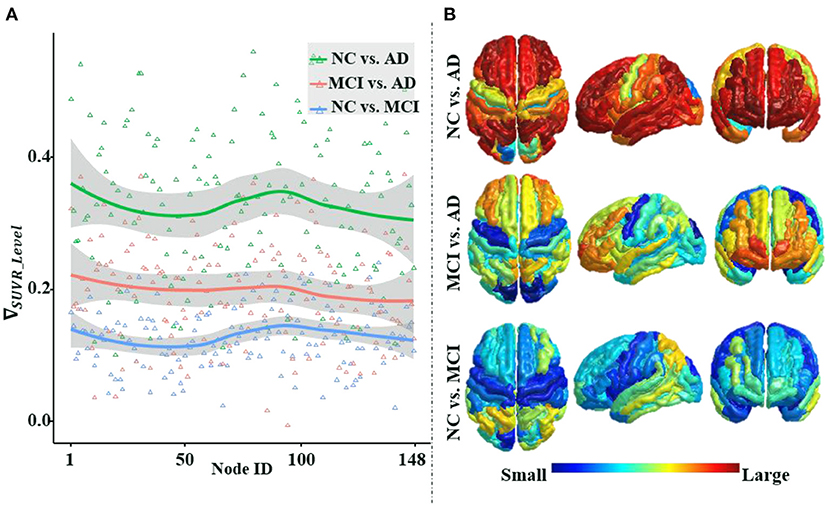

Figure 6 illustrates that the differences in average amyloid levels between cohorts vary by brain region, implying that AD burden has different affinities for different brain regions, which is consistent with the results of numerous existing studies (Crossley et al., 2014; Bischof et al., 2016; Cope et al., 2018; Pereira et al., 2019; Vogel et al., 2020). This further supports the need for our proposed ANNA unit, which helps the attributes of the more discriminative brain regions contribute to AD diagnosis. Comparing the three cohort pairs, the magnitude of the differences in amyloid levels decreases in the order NC vs. AD, MCI vs. AD, and NC vs. MCI. The difference for the pair NC vs. AD is significantly larger than for the other two pairs, whereas the gap between the other two pairs (i.e., MCI vs. AD and NC vs. MCI) is small. This is consistent not only with the general clinical manifestations of AD development but also with our experimental results, that is, the same classification method performs significantly better on the task NC vs. AD than on the other two binary classification tasks. In sum, the agreement between the manifestation of amyloid-β in AD pathological development and our experimental results further substantiates the necessity and effectiveness of our proposed ANNA unit.

Figure 6. An illustration of the differences in the average regional amyloid level between cohorts. The three curves from top to bottom in panel (A), respectively, depict the differences in the average amyloid level of each brain region between cohorts NC vs. AD, MCI vs. AD, and NC vs. MCI. Corresponding to panel (A), from top to bottom, panel (B) depicts the brain region maps of the average amyloid level differences between the cohorts. Node IDs (1–74) correspond to the 74 brain regions in the left hemisphere, node IDs (75–148) to the 74 brain regions in the right hemisphere, and within each hemisphere, the brain regions are numbered based on the Destrieux atlas parcellation. MCI, mild cognitive impairment; NC, normal control.

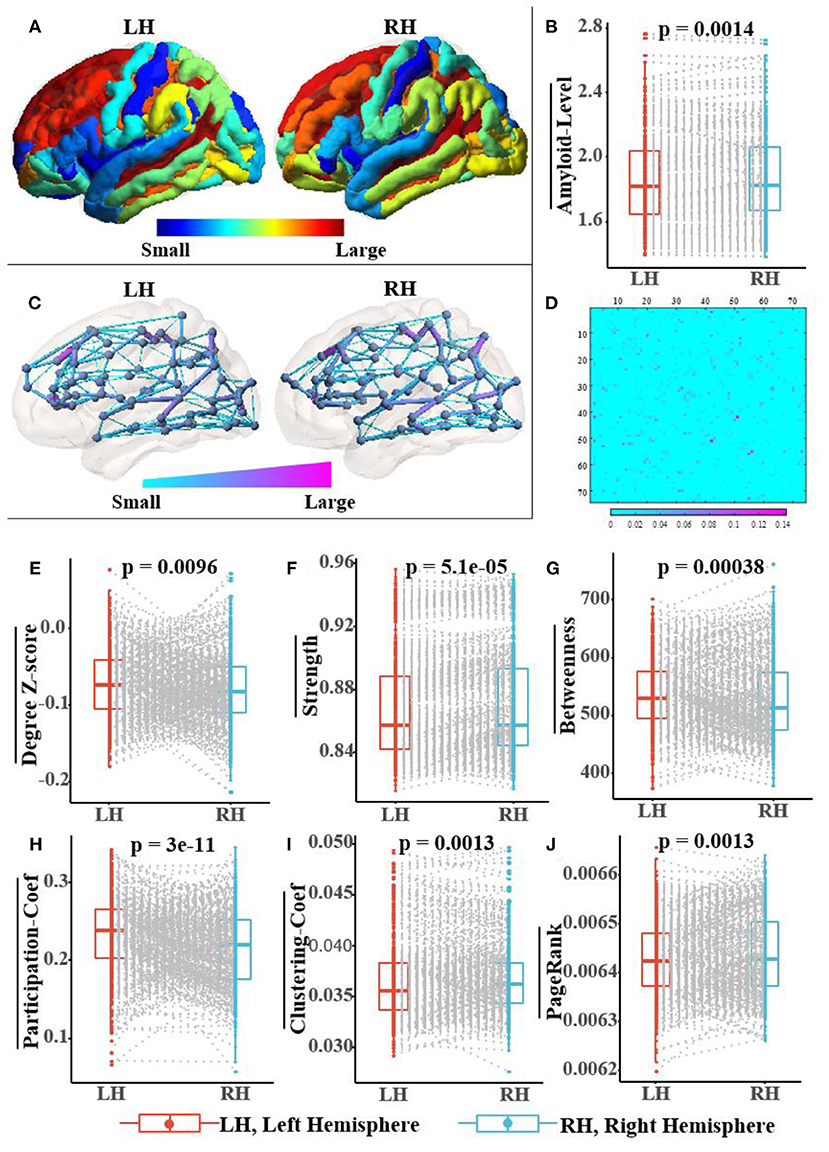

Figure 7 shows a significant difference between the left and right hemispheres not only in the distribution of amyloid-β but also in the topological structure of the hemispheric subnetworks. In Figure 7A, we visualized the asymmetric distribution of amyloid-β in the left and right hemispheres, and in Figure 7B we further checked it with a Wilcoxon signed-rank test; the result is consistent with existing research on AD pathology (Giannakopoulos et al., 1994; Braak and Del Tredici, 2015; Ossenkoppele et al., 2016; Vogel et al., 2020). In line with reports in the literature (Iturria-Medina et al., 2011; Caeyenberghs and Leemans, 2014; Nusbaum et al., 2017; Yang et al., 2017), Figures 7C,D visualize the hemispheric lateralization of topological organization in the structural brain network, which Figures 7E–J further illustrate with six typical graph measures: the within-module degree z-score, strength, betweenness, participation coefficient, clustering coefficient, and PageRank (Kaiser, 2011; Fornito et al., 2016). Among them, two measures (i.e., the within-module degree z-score and participation coefficient) reveal the modularity of the brain network, while the three centrality measures (i.e., strength, PageRank, and betweenness) and the clustering coefficient jointly reveal its hierarchical nature. In Figures 7E–J, the Wilcoxon signed-rank test indicates significant hemispheric lateralization of the brain network. In brief, the above statistical analysis strongly supports the rationale of our RBF framework design, and the improved experimental results in turn corroborate the analysis.
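To make three of the six graph measures concrete, the sketch below computes node strength, the participation coefficient, and the within-module degree z-score with NumPy. It uses the standard definitions from the network-science literature, P_i = 1 − Σ_m (k_im / k_i)² and z_i = (k_i,in − mean) / SD within node i's module. The toy adjacency matrix `W` and the module assignment `modules` are hypothetical; the article does not state which partition or software was used, so this should be read as an illustration rather than the authors' implementation.

```python
import numpy as np


def strength(W):
    """Node strength: sum of edge weights attached to each node."""
    return W.sum(axis=1)


def participation_coefficient(W, modules):
    """P_i = 1 - sum_m (k_im / k_i)^2, with k_im the strength of node i into module m."""
    k = W.sum(axis=1)
    P = np.ones(len(W))
    for m in np.unique(modules):
        k_m = W[:, modules == m].sum(axis=1)
        ratio = np.divide(k_m, k, out=np.zeros(len(W)), where=k > 0)
        P -= ratio ** 2
    P[k == 0] = 0.0  # isolated nodes get a coefficient of 0 by convention
    return P


def within_module_z(W, modules):
    """z-score of each node's within-module strength (population SD, ddof=0)."""
    z = np.zeros(len(W))
    for m in np.unique(modules):
        idx = modules == m
        k_in = W[np.ix_(idx, idx)].sum(axis=1)
        sd = k_in.std()
        if sd > 0:
            z[idx] = (k_in - k_in.mean()) / sd
    return z


# Toy undirected network: a chain 0-1-2-3 split into two hypothetical modules.
W = np.array(
    [
        [0.0, 1.0, 0.0, 0.0],
        [1.0, 0.0, 1.0, 0.0],
        [0.0, 1.0, 0.0, 1.0],
        [0.0, 0.0, 1.0, 0.0],
    ]
)
modules = np.array([0, 0, 1, 1])

print("strength:     ", strength(W))
print("participation:", participation_coefficient(W, modules))
print("within-z:     ", within_module_z(W, modules))
```

In the toy chain, the two middle nodes split their connections evenly between modules and therefore have a participation coefficient of 0.5, while the end nodes connect only within their own module and score 0. The remaining measures (betweenness, clustering coefficient, PageRank) are available in general-purpose graph libraries such as NetworkX.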

Figure 7. Population-wide comparison between left and right hemispheres. Panel (A) exhibits the average amyloid level map of the corresponding brain regions on the left and right hemispheres. Panel (B) shows the paired comparison box plots of the average amyloid levels for the respective brain regions in the left and right hemispheres. Panel (C) exhibits the population topological structures of the left and right hemispheres. Panel (D) displays the difference between the adjacency matrices of the left and right hemispheres. Panels (E–J) show the paired comparison box plots of the six graph measures of the brain networks from the left and right hemispheres, respectively. Pairs of brain regions in the left and right hemispheres with the same name are matched in the paired-samples Wilcoxon test.
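The paired left/right comparison described in the caption can be sketched with `scipy.stats.wilcoxon`. The example assumes a 148-element vector of regional values in which entry i (left hemisphere) and entry i + 74 (right hemisphere) refer to the region with the same name; the helper name `paired_hemisphere_test` and the synthetic data are hypothetical and serve only to show the mechanics of the test.

```python
import numpy as np
from scipy.stats import wilcoxon

N_PER_HEMISPHERE = 74  # regions per hemisphere in the Destrieux atlas


def paired_hemisphere_test(values):
    """Two-sided Wilcoxon signed-rank test on homologous left/right regions.

    values: length-148 vector; the first 74 entries are left-hemisphere
    regions and the last 74 the matching right-hemisphere regions (assumed
    to be in the same order within each hemisphere).
    """
    values = np.asarray(values, dtype=float)
    left = values[:N_PER_HEMISPHERE]
    right = values[N_PER_HEMISPHERE:]
    return wilcoxon(left, right)


# Synthetic regional values with a built-in leftward asymmetry (illustration only).
rng = np.random.default_rng(0)
right_values = rng.normal(loc=1.0, scale=0.1, size=N_PER_HEMISPHERE)
left_values = right_values + rng.uniform(0.01, 0.05, size=N_PER_HEMISPHERE)

result = paired_hemisphere_test(np.concatenate([left_values, right_values]))
print(f"W = {result.statistic:.1f}, p = {result.pvalue:.3g}")
```

The same helper could be applied to any per-region quantity, for example the average amyloid level or one of the six graph measures, as long as the left and right entries are aligned by region name.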

In this study, we introduced RBF-GCN, a novel graph-level classification model designed specifically for classifying AD stages. An RBF framework is devised to explicitly exploit the asymmetry of AD pathology and the lateralization of the brain network between the two hemispheres. Meanwhile, an ANNA unit is proposed to adaptively weigh the role of the structural information of the brain network against the attribute feature information at each node. We validated the effectiveness of the RBF-GCN components using ablation experiments and data-driven statistical analysis. Through extensive experiments, we demonstrated that RBF-GCN reaches state-of-the-art performance on AD stage classification tasks. Future research should explore the possibility of integrating different GCNs with higher expressive power into the multichannel GCN module to further improve the performance of RBF-GCN. Regarding multimodality fusion, more biomarker information about AD pathology should be incorporated to improve the accuracy of the diagnosis algorithm. Additionally, larger-scale datasets should be constructed to verify the generalization ability of the model.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

WL contributed to conceptualization, methodology, software, data curation, and writing. JiaZ and CS contributed to methodology and software. JinZ contributed to writing the manuscript. JH and MX contributed to conceptualization. JiyZ contributed to conceptualization, methodology, and supervision. MC contributed to conceptualization, writing, and supervision. All authors have read and agreed to the published version of the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Alzheimer's Association (2019). 2019 Alzheimer's disease facts and figures. Alzheimers Dementia 15, 321–387. doi: 10.1016/j.jalz.2019.01.010

Amlien, I., and Fjell, A. (2014). Diffusion tensor imaging of white matter degeneration in Alzheimer's disease and mild cognitive impairment. Neuroscience 276, 206–215. doi: 10.1016/j.neuroscience.2014.02.017

Araque Caballero, M. Á., Suárez-Calvet, M., Duering, M., Franzmeier, N., Benzinger, T., Fagan, A. M., et al. (2018). White matter diffusion alterations precede symptom onset in autosomal dominant Alzheimer's disease. Brain 141, 3065–3080. doi: 10.1093/brain/awy229

Baratloo, A., Hosseini, M., Negida, A., and El Ashal, G. (2015). Part 1: simple definition and calculation of accuracy, sensitivity and specificity.

Bischof, G. N., Jessen, F., Fliessbach, K., Dronse, J., Hammes, J., Neumaier, B., et al. (2016). Impact of tau and amyloid burden on glucose metabolism in Alzheimer's disease. Ann. Clin. Transl. Neurol. 3, 934–939. doi: 10.1002/acn3.339

Braak, H., and Del Tredici, K. (2015). The preclinical phase of the pathological process underlying sporadic Alzheimer's disease. Brain 138, 2814–2833. doi: 10.1093/brain/awv236

Brendel, M., Högenauer, M., Delker, A., Sauerbeck, J., Bartenstein, P., Seibyl, J., et al. (2015). Improved longitudinal [18F]-AV45 amyloid PET by white matter reference and VOI-based partial volume effect correction. Neuroimage 108, 450–459. doi: 10.1016/j.neuroimage.2014.11.055

Bruna, J., Zaremba, W., Szlam, A., and LeCun, Y. (2013). Spectral networks and locally connected networks on graphs. arXiv [Preprint]. arXiv: 1312.6203. Available online at: https://arxiv.org/abs/1312.6203

Caeyenberghs, K., and Leemans, A. (2014). Hemispheric lateralization of topological organization in structural brain networks. Hum. Brain Mapp. 35, 4944–4957. doi: 10.1002/hbm.22524

Cen, Y., Hou, Z., Wang, Y., Chen, Q., Luo, Y., Yao, X., et al. (2021). CogDL: Toolkit for deep learning on graphs. arXiv [Preprint]. arXiv: 2103.00959. Available online at: https://arxiv.org/abs/2103.00959

Cope, T. E., Rittman, T., Borchert, R. J., Jones, P. S., Vatansever, D., Allinson, K., et al. (2018). Tau burden and the functional connectome in Alzheimer's disease and progressive supranuclear palsy. Brain 141, 550–567. doi: 10.1093/brain/awx347

Crossley, N. A., Mechelli, A., Scott, J., Carletti, F., Fox, P. T., Mcguire, P., et al. (2014). The hubs of the human connectome are generally implicated in the anatomy of brain disorders. Brain 137, 2382–2395. doi: 10.1093/brain/awu132

Cuingnet, R., Gerardin, E., Tessieras, J., Auzias, G., Lehéricy, S., Habert, M.-O., et al. (2011). Automatic classification of patients with Alzheimer's disease from structural MRI: a comparison of ten methods using the ADNI database. Neuroimage 56, 766–781. doi: 10.1016/j.neuroimage.2010.06.013

Destrieux, C., Fischl, B., Dale, A., and Halgren, E. (2010). Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. Neuroimage 53, 1–15. doi: 10.1016/j.neuroimage.2010.06.010

Du, C., Wang, Y., Wang, C., Shi, C., and Xiao, B. (2020). Selective feature connection mechanism: concatenating multi-layer CNN features with a feature selector. Patt. Recogn. Lett. 129, 108–114. doi: 10.1016/j.patrec.2019.11.015

Dyrba, M., Ewers, M., Wegrzyn, M., Kilimann, I., Plant, C., Oswald, A., et al. (2013). Robust automated detection of microstructural white matter degeneration in Alzheimer's disease using machine learning classification of multicenter DTI data. PLoS ONE 8, e64925. doi: 10.1371/journal.pone.0064925

Fornito, A., Zalesky, A., and Bullmore, E. (2016). Fundamentals of Brain Network Analysis. Cambridge: Academic Press.

Gao, H., and Ji, S. (2019). “Graph u-nets,” in International Conference on Machine Learning (Long Beach, CA: PMLR), 2083–2092.

Giannakopoulos, P., Bouras, C., and Hof, P. R. (1994). Alzheimer's disease with asymmetric atrophy of the cerebral hemispheres: morphometric analysis of four cases. Acta Neuropathol. 88, 440–447. doi: 10.1007/BF00389496

Glorot, X., and Bengio, Y. (2010). “Understanding the difficulty of training deep feedforward neural networks,” in Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics: JMLR Workshop and Conference Proceedings (Sardinia), 249–256.

Gonzalez-Escamilla, G., Miederer, I., Grothe, M. J., Schreckenberger, M., Muthuraman, M., and Groppa, S. (2021). Metabolic and amyloid PET network reorganization in Alzheimer's disease: differential patterns and partial volume effects. Brain Imaging Behav. 15, 190–204. doi: 10.1007/s11682-019-00247-9

Henaff, M., Bruna, J., and LeCun, Y. (2015). Deep convolutional networks on graph-structured data. arXiv [Preprint]. arXiv: 1506.05163. Available online at: https://arxiv.org/abs/1506.05163

Huang, J., Li, Z., Li, N., Liu, S., and Li, G. (2019). “Attpool: Towards hierarchical feature representation in graph convolutional networks via attention mechanism,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (Seoul: IEEE), 6480–6489.

Iturria-Medina, Y., Pérez Fernández, A., Morris, D. M., Canales-Rodríguez, E. J., Haroon, H. A., García Pentón, L., et al. (2011). Brain hemispheric structural efficiency and interconnectivity rightward asymmetry in human and nonhuman primates. Cerebr. Cortex 21, 56–67. doi: 10.1093/cercor/bhq058

Iturria-Medina, Y., Sotero, R. C., Toussaint, P. J., Evans, A. C., and Alzheimer's Disease Neuroimaging Initiative. (2014). Epidemic spreading model to characterize misfolded proteins propagation in aging and associated neurodegenerative disorders. PLoS Comput. Biol. 10, e1003956. doi: 10.1371/journal.pcbi.1003956

Kaiser, M. (2011). A tutorial in connectome analysis: topological and spatial features of brain networks. Neuroimage 57, 892–907. doi: 10.1016/j.neuroimage.2011.05.025

Kim, H.-R., Lee, P., Seo, S. W., Roh, J. H., Oh, M., Oh, J. S., et al. (2019). Comparison of amyloid β and tau spread models in Alzheimer's disease. Cerebr. Cortex 29, 4291–4302. doi: 10.1093/cercor/bhy311

Kingma, D. P., and Ba, J. (2014). Adam: a method for stochastic optimization. arXiv [Preprint]. arXiv: 1412.6980. Available online at: https://arxiv.org/abs/1412.6980

Kipf, T. N., and Welling, M. (2017). “Semi-supervised classification with graph convolutional networks,” in The 5th International Conference on Learning Representations (Toulon).

Klöppel, S., Stonnington, C. M., Chu, C., Draganski, B., Scahill, R. I., Rohrer, J. D., et al. (2008). Automatic classification of MR scans in Alzheimer's disease. Brain 131, 681–689. doi: 10.1093/brain/awm319

Kondor, R., and Pan, H. (2016). “The multiscale laplacian graph kernel,” in Proceedings of the 30th International Conference on Neural Information Processing Systems (Barcelona: NIPS), 2990–2998.

Lee, J., Lee, I., and Kang, J. (2019). “Self-attention graph pooling,” in International Conference on Machine Learning (Long Beach, CA: PMLR), 3734–3743.

Liu, J., Li, M., Lan, W., Wu, F.-X., Pan, Y., and Wang, J. (2016). Classification of Alzheimer's disease using whole brain hierarchical network. IEEE/ACM Transact. Comp. Biol. Bioinformat. 15, 624–632. doi: 10.1109/TCBB.2016.2635144

Lu, T., Wang, J., Jiang, J., and Zhang, Y. (2020). Global-local fusion network for face super-resolution. Neurocomputing 387, 309–320. doi: 10.1016/j.neucom.2020.01.015

Ma, C., Mu, X., and Sha, D. (2019). Multi-layers feature fusion of convolutional neural network for scene classification of remote sensing. IEEE Access 7, 121685–121694. doi: 10.1109/ACCESS.2019.2936215

Messaritaki, E., Dimitriadis, S. I., and Jones, D. K. (2019). Optimization of graph construction can significantly increase the power of structural brain network studies. Neuroimage 199, 495–511. doi: 10.1016/j.neuroimage.2019.05.052

Narayanan, A., Chandramohan, M., Venkatesan, R., Chen, L., Liu, Y., and Jaiswal, S. (2017). graph2vec: Learning distributed representations of graphs. arXiv [Preprint]. arXiv: 1707.05005. Available online at: https://arxiv.org/abs/1707.05005

Niepert, M., Ahmed, M., and Kutzkov, K. (2016). “Learning convolutional neural networks for graphs,” in International Conference on Machine Learning (New York, NY: PMLR), 2014–2023.

Nir, T. M., Villalon-Reina, J. E., Prasad, G., Jahanshad, N., Joshi, S. H., Toga, A. W., et al. (2015). Diffusion weighted imaging-based maximum density path analysis and classification of Alzheimer's disease. Neurobiol. Aging 36, S132–S140. doi: 10.1016/j.neurobiolaging.2014.05.037

Nusbaum, F., Hannoun, S., Kocevar, G., Stamile, C., Fourneret, P., Revol, O., et al. (2017). Hemispheric differences in white matter microstructure between two profiles of children with high intelligence quotient vs. controls: a tract-based spatial statistics study. Front. Neurosci. 11, 173. doi: 10.3389/fnins.2017.00173

O'Dwyer, L., Lamberton, F., Bokde, A. L., Ewers, M., Faluyi, Y. O., Tanner, C., et al. (2012). Using support vector machines with multiple indices of diffusion for automated classification of mild cognitive impairment. PLoS ONE 7, e32441. doi: 10.1371/journal.pone.0032441

Ossenkoppele, R., Schonhaut, D. R., Schöll, M., Lockhart, S. N., Ayakta, N., Baker, S. L., et al. (2016). Tau PET patterns mirror clinical and neuroanatomical variability in Alzheimer's disease. Brain 139, 1551–1567. doi: 10.1093/brain/aww027

Pereira, J. B., Ossenkoppele, R., Palmqvist, S., Strandberg, T. O., Smith, R., Westman, E., et al. (2019). Amyloid and tau accumulate across distinct spatial networks and are differentially associated with brain connectivity. Elife 8, e50830. doi: 10.7554/eLife.50830.sa2

Prasad, G., Joshi, S. H., Nir, T. M., Toga, A. W., Thompson, P. M., and Alzheimer's Disease Neuroimaging Initiative. (2015). Brain connectivity and novel network measures for Alzheimer's disease classification. Neurobiol. Aging 36, S121–S131. doi: 10.1016/j.neurobiolaging.2014.04.037

Raj, A. (2021). Graph models of pathology spread in Alzheimer's disease: an alternative to conventional graph theoretic analysis. Brain Connect. 11, 799–814. doi: 10.1089/brain.2020.0905

Rajapakse, J. C., Giedd, J. N., and Rapoport, J. L. (1997). Statistical approach to segmentation of single-channel cerebral MR images. IEEE Trans. Med. Imag. 16, 176–186. doi: 10.1109/42.563663

Ranjan, E., Sanyal, S., and Talukdar, P. (2020). “Asap: adaptive structure aware pooling for learning hierarchical graph representations,” in Proceedings of the AAAI Conference on Artificial Intelligence (New York, NY: AAAI), 5470–5477.

Saad, W., Shalaby, W. A., Shokair, M., Abd El-Samie, F., Dessouky, M., and Abdellatef, E. (2022). COVID-19 classification using deep feature concatenation technique. J. Ambient Intell. Human. Comp. 13, 2025–2043. doi: 10.1007/s12652-021-02967-7

Schouten, T. M., Koini, M., De Vos, F., Seiler, S., Van Der Grond, J., Lechner, A., et al. (2016). Combining anatomical, diffusion, and resting state functional magnetic resonance imaging for individual classification of mild and moderate Alzheimer's disease. NeuroImage Clin. 11, 46–51. doi: 10.1016/j.nicl.2016.01.002

Siglidis, G., Nikolentzos, G., Limnios, S., Giatsidis, C., Skianis, K., and Vazirgiannis, M. (2020). GraKeL: a graph kernel library in python. J. Mach. Learn. Res. 21, 1–5.

Son, H. J., Oh, J. S., Oh, M., Kim, S. J., Lee, J.-H., Roh, J. H., et al. (2020). The clinical feasibility of deep learning-based classification of amyloid PET images in visually equivocal cases. Eur. J. Nucl. Med. Mol. Imaging 47, 332–341. doi: 10.1007/s00259-019-04595-y

Song, T.-A., Chowdhury, S. R., Yang, F., Jacobs, H., El Fakhri, G., Li, Q., et al. (2019). “Graph convolutional neural networks for Alzheimer's disease classification,” in 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019) (Venice: IEEE), 414–417.

Sperling, R. A., Aisen, P. S., Beckett, L. A., Bennett, D. A., Craft, S., Fagan, A. M., et al. (2011). Toward defining the preclinical stages of Alzheimer's disease: recommendations from the National Institute on Aging-Alzheimer's Association workgroups on diagnostic guidelines for Alzheimer's disease. Alzheimers Dementia 7, 280–292. doi: 10.1016/j.jalz.2011.03.003

Sun, F.-Y., Hoffmann, J., Verma, V., and Tang, J. (2019). Infograph: unsupervised and semi-supervised graph-level representation learning via mutual information maximization. arXiv [Preprint]. arXiv: 1908.01000. Available online at: https://arxiv.org/abs/1908.01000

Tang, X., Qin, Y., Wu, J., Zhang, M., Zhu, W., and Miller, M. I. (2016). Shape and diffusion tensor imaging based integrative analysis of the hippocampus and the amygdala in Alzheimer's disease. Magn. Reson. Imaging 34, 1087–1099. doi: 10.1016/j.mri.2016.05.001

Tanveer, M., Richhariya, B., Khan, R., Rashid, A., Khanna, P., Prasad, M., et al. (2020). Machine learning techniques for the diagnosis of Alzheimer's disease: a review. ACM Transact. Multimedia Comp. Commun. Appl. 16, 1–35. doi: 10.1145/3344998

Tohka, J., Zijdenbos, A., and Evans, A. (2004). Fast and robust parameter estimation for statistical partial volume models in brain MRI. Neuroimage 23, 84–97. doi: 10.1016/j.neuroimage.2004.05.007

Vandenberghe, R., Nelissen, N., Salmon, E., Ivanoiu, A., Hasselbalch, S., Andersen, A., et al. (2013). Binary classification of 18F-flutemetamol PET using machine learning: comparison with visual reads and structural MRI. Neuroimage 64, 517–525. doi: 10.1016/j.neuroimage.2012.09.015

Veale, T., Malone, I. B., Poole, T., Parker, T. D., Slattery, C. F., Paterson, R. W., et al. (2021). Loss and dispersion of superficial white matter in Alzheimer's disease: a diffusion MRI study. Brain Commun. 3, fcab272. doi: 10.1093/braincomms/fcab272

Vemuri, P., Gunter, J. L., Senjem, M. L., Whitwell, J. L., Kantarci, K., Knopman, D. S., et al. (2008). Alzheimer's disease diagnosis in individual subjects using structural MR images: validation studies. Neuroimage 39, 1186–1197. doi: 10.1016/j.neuroimage.2007.09.073

Vogel, J. W., Iturria-Medina, Y., Strandberg, O. T., Smith, R., Levitis, E., Evans, A. C., et al. (2020). Spread of pathological tau proteins through communicating neurons in human Alzheimer's disease. Nat. Commun. 11, 1–15. doi: 10.1038/s41467-020-15701-2

Wee, C.-Y., Yap, P.-T., Li, W., Denny, K., Browndyke, J. N., Potter, G. G., et al. (2011). Enriched white matter connectivity networks for accurate identification of MCI patients. Neuroimage 54, 1812–1822. doi: 10.1016/j.neuroimage.2010.10.026

Yan, Y., Somer, E., and Grau, V. (2019). Classification of amyloid PET images using novel features for early diagnosis of Alzheimer's disease and mild cognitive impairment conversion. Nucl. Med. Commun. 40, 242–248. doi: 10.1097/MNM.0000000000000953

Yang, C., Zhong, S., Zhou, X., Wei, L., Wang, L., and Nie, S. (2017). The abnormality of topological asymmetry between hemispheric brain white matter networks in Alzheimer's disease and mild cognitive impairment. Front. Aging Neurosci. 9, 261. doi: 10.3389/fnagi.2017.00261

Yang, K., and Mohammed, E. A. (2020). A review of artificial intelligence technologies for early prediction of Alzheimer's Disease. arXiv [Preprint]. arXiv: 2101.01781. Available online at: https://arxiv.org/abs/2101.01781

Ying, R., You, J., Morris, C., Ren, X., Hamilton, W. L., and Leskovec, J. (2018). “Hierarchical graph representation learning with differentiable pooling,” in Proceedings of the 32nd International Conference on Neural Information Processing Systems (Montreal, QC), 4805–4815.

Yu, G., Liu, Y., and Shen, D. (2016). Graph-guided joint prediction of class label and clinical scores for the Alzheimer's disease. Brain Struct. Funct. 221, 3787–3801. doi: 10.1007/s00429-015-1132-6

Keywords: brain network, amyloid-PET, regional brain fusion-graph convolutional network (RBF-GCN), adaptive native node attribute (ANNA), Alzheimer's disease

Citation: Li W, Zhao J, Shen C, Zhang J, Hu J, Xiao M, Zhang J and Chen M (2022) Regional Brain Fusion: Graph Convolutional Network for Alzheimer's Disease Prediction and Analysis. Front. Neuroinform. 16:886365. doi: 10.3389/fninf.2022.886365

Received: 28 February 2022; Accepted: 30 March 2022;

Published: 29 April 2022.

Edited by:

Mingxia Liu, University of North Carolina at Chapel Hill, United States

Reviewed by:

Hongtao Xie, University of Science and Technology of China, China

Copyright © 2022 Li, Zhao, Shen, Zhang, Hu, Xiao, Zhang and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jiyong Zhang, jzhang@hdu.edu.cn; Minghan Chen, chenm@wfu.edu

†Data used in preparation of this article were obtained from the Alzheimer's Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf
